30.8 Modern Estimators for Staggered Adoption

30.8.1 Group-Time Average Treatment Effects (Callaway and Sant’Anna 2021)

Notation Recap

\(Y_{it}(0)\): Potential outcome for unit \(i\) at time \(t\) in the absence of treatment.

\(Y_{it}(g)\): Potential outcome for unit \(i\) at time \(t\) if first treated in period \(g\).

\(Y_{it}\): Observed outcome for unit \(i\) at time \(t\).

\[ Y_{it} = \begin{cases} Y_{it}(0), & \text{if unit } i \text{ never treated ( } C_i = 1 \text{)} \\ 1\{G_i > t\} Y_{it}(0) + 1\{G_i \le t\} Y_{it}(G_i), & \text{otherwise} \end{cases} \]

\(G_i\): Group assignment, i.e., the time period when unit \(i\) first receives treatment.

\(C_i = 1\): Indicator that unit \(i\) never receives treatment (the never-treated group).

\(D_{it} = 1\{G_i \le t\}\): Indicator that unit \(i\) has been treated by time \(t\).

Assumptions

The following assumptions are typically imposed to identify treatment effects in staggered adoption settings.

Staggered Treatment Adoption

Once treated, a unit remains treated in all subsequent periods.

Formally, \(D_{it}\) is non-decreasing in \(t\).

Parallel Trends Assumptions (Conditional or Unconditional on Covariates)

Two common variants:

- Parallel trends based on never-treated units: \[

\mathbb{E}[Y_t(0) - Y_{t-1}(0) | G_i = g] = \mathbb{E}[Y_t(0) - Y_{t-1}(0) | C_i = 1]

\] Interpretation:

- The average potential outcome trends of the treated group (\(G_i = g\)) are the same as the never-treated group, absent treatment.

- Parallel trends based on not-yet-treated units: \[

\mathbb{E}[Y_t(0) - Y_{t-1}(0) | G_i = g] = \mathbb{E}[Y_t(0) - Y_{t-1}(0) | D_{is} = 0, G_i \ne g]

\] Interpretation:

- Units not yet treated by period \(s\) (\(D_{is} = 0\)), for \(s \ge t\), can serve as controls for units first treated at \(g\).

These assumptions can also be conditional on covariates \(X\), as:

\[ \mathbb{E}[Y_t(0) - Y_{t-1}(0) | X_i, G_i = g] = \mathbb{E}[Y_t(0) - Y_{t-1}(0) | X_i, C_i = 1] \]


Random Sampling

Units are sampled independently and identically from the population.

Irreversibility of Treatment

Once treated, units do not revert to untreated status.

Overlap (Positivity)

For each group \(g\), the propensity of receiving treatment at \(g\) lies strictly within \((0, 1)\): \[ 0 < \mathbb{P}(G_i = g | X_i) < 1 \]

The Group-Time ATT, \(ATT(g, t)\), measures the average treatment effect for units first treated in period \(g\), evaluated at time \(t\).

\[ ATT(g, t) = \mathbb{E}[Y_t(g) - Y_t(0) | G_i = g] \]

Interpretation:

\(g\) indexes when the group first receives treatment.

\(t\) is the time period when the effect is evaluated.

\(ATT(g, t)\) captures how treatment effects evolve over time, following adoption at time \(g\).

Identification of \(ATT(g, t)\)

Using Never-Treated Units as Controls: \[ ATT(g, t) = \mathbb{E}[Y_t - Y_{g-1} | G_i = g] - \mathbb{E}[Y_t - Y_{g-1} | C_i = 1], \quad \forall t \ge g \]

Using Not-Yet-Treated Units as Controls: \[ ATT(g, t) = \mathbb{E}[Y_t - Y_{g-1} | G_i = g] - \mathbb{E}[Y_t - Y_{g-1} | D_{it} = 0, G_i \ne g], \quad \forall t \ge g \]

Conditional Parallel Trends (with Covariates):

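If treatment assignment depends on covariates \(X_i\), adjust the parallel trends assumption so that the comparisons are made conditional on \(X_i\) and then averaged over the distribution of \(X_i\) among units in group \(g\):

- Never-treated controls: \[ ATT(g, t) = \mathbb{E}[Y_t - Y_{g-1} | X_i, G_i = g] - \mathbb{E}[Y_t - Y_{g-1} | X_i, C_i = 1], \quad \forall t \ge g \]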

- Not-yet-treated controls: \[ ATT(g, t) = \mathbb{E}[Y_t - Y_{g-1} | X_i, G_i = g] - \mathbb{E}[Y_t - Y_{g-1} | X_i, D_{it} = 0, G_i \ne g], \quad \forall t \ge g \]
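As a rough illustration of the never-treated identification result, the following base-R sketch computes \(ATT(g, t)\) as a difference of long differences on a small, noise-free toy panel. The panel itself, the helper name `att_gt_never`, and the coding of never-treated units as `G = Inf` are assumptions made for this example only; none of them come from a package discussed in this section.

```r
# Toy panel (hypothetical): units 1-3 are first treated at g = 3,
# units 4-6 are never treated (coded here as G = Inf).
panel <- expand.grid(id = 1:6, t = 1:4)
panel$G <- ifelse(panel$id <= 3, 3, Inf)

# Outcome = unit level + common trend + effect (t - 1) once treated,
# so the true ATT(3, 3) is 2 and the true ATT(3, 4) is 3.
panel$y <- panel$id + 0.5 * panel$t +
  ifelse(panel$G <= panel$t, panel$t - 1, 0)

# ATT(g, t) with never-treated controls:
# E[Y_t - Y_{g-1} | G = g] - E[Y_t - Y_{g-1} | C = 1]
att_gt_never <- function(data, g, t) {
  now  <- data[data$t == t, c("id", "G", "y")]
  base <- data[data$t == g - 1, c("id", "y")]
  wide <- merge(now, base, by = "id", suffixes = c("_t", "_base"))
  long_diff <- wide$y_t - wide$y_base
  mean(long_diff[wide$G == g]) - mean(long_diff[is.infinite(wide$G)])
}

att_33 <- att_gt_never(panel, g = 3, t = 3)
att_34 <- att_gt_never(panel, g = 3, t = 4)
print(c(att_33 = att_33, att_34 = att_34))
```

Because the toy outcome satisfies parallel trends exactly, the two estimates recover the effects built into the data. With real data, sampling noise and covariate adjustment would also have to be handled.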

Aggregating \(ATT(g, t)\): Common Parameters of Interest

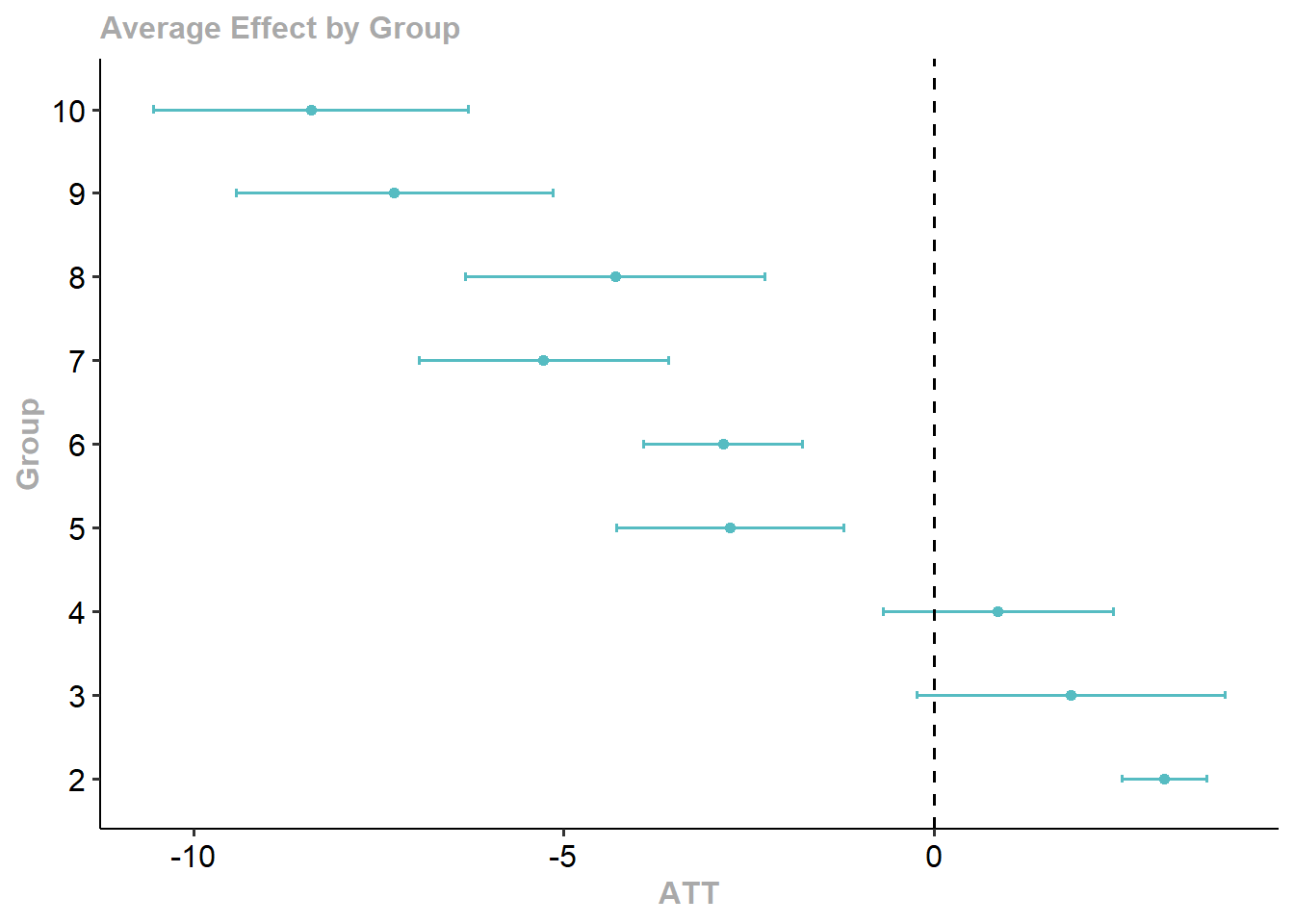

Average Treatment Effect per Group (\(\theta_S(g)\)):

Average effect over all periods after treatment for group \(g\): \[ \theta_S(g) = \frac{1}{\tau - g + 1} \sum_{t = g}^{\tau} ATT(g, t) \]

- \(\tau\): Last time period in the panel.

Overall Average Treatment Effect on the Treated (ATT) (\(\theta_S^O\)):

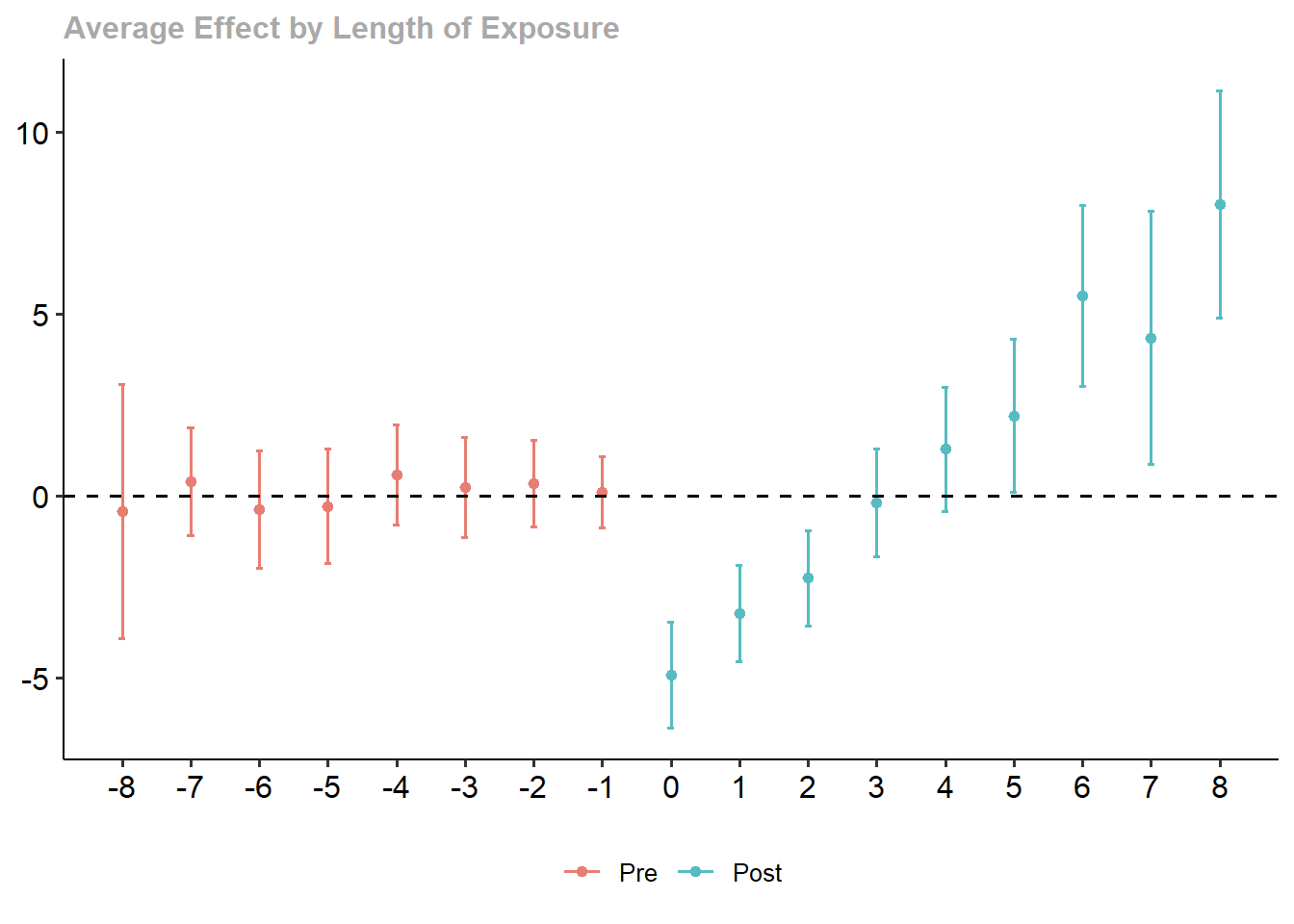

Weighted average of \(\theta_S(g)\) across groups \(g\), weighted by each group's share among the ever-treated units: \[ \theta_S^O = \sum_{g=2}^{\tau} \theta_S(g) \cdot \mathbb{P}(G_i = g \mid G_i \le \tau) \]

Dynamic Treatment Effects (\(\theta_D(e)\)):

Average effect after \(e\) periods of treatment exposure: \[ \theta_D(e) = \sum_{g=2}^{\tau} \mathbb{1}\{g + e \le \tau\} \cdot ATT(g, g + e) \cdot \mathbb{P}(G_i = g \mid G_i + e \le \tau) \]

Calendar Time Treatment Effects (\(\theta_C(t)\)):

Average treatment effect at time \(t\) across all groups treated by \(t\): \[ \theta_C(t) = \sum_{g=2}^{\tau} \mathbb{1}\{g \le t\} \cdot ATT(g, t) \cdot \mathbb{P}(G_i = g \mid G_i \le t) \]

Average Calendar Time Treatment Effect (\(\theta_C\)):

Average of \(\theta_C(t)\) across all post-treatment periods: \[ \theta_C = \frac{1}{\tau - 1} \sum_{t=2}^{\tau} \theta_C(t) \]
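To make the aggregation schemes concrete, here is a minimal base-R sketch that applies them to a small table of group-time effects. The \(ATT(g, t)\) values and group shares are invented for illustration, and the shares `p_g` are assumed to be measured among ever-treated units so that they sum to one.

```r
tau    <- 4                       # last period in the (hypothetical) panel
groups <- c(2, 3)                 # adoption periods g
p_g    <- c("2" = 0.6, "3" = 0.4) # assumed shares among ever-treated units

# Hypothetical ATT(g, t) values; rows are groups, columns are periods 1..tau
att <- matrix(NA_real_, nrow = 2, ncol = tau,
              dimnames = list(c("2", "3"), as.character(1:tau)))
att["2", 2:4] <- c(1, 2, 3)
att["3", 3:4] <- c(2, 4)

# theta_S(g): average over post-treatment periods t = g, ..., tau
theta_S <- sapply(groups, function(g) mean(att[as.character(g), g:tau]))
names(theta_S) <- groups

# theta_S^O: group averages weighted by group share
theta_SO <- sum(theta_S * p_g)

# Weighted average of ATT(g, t_of(g)) over the groups in `keep`
weighted_att <- function(keep, t_of) {
  w <- p_g[as.character(keep)] / sum(p_g[as.character(keep)])
  sum(w * sapply(keep, function(g) att[as.character(g), t_of(g)]))
}

# theta_D(e): effect after e periods of exposure, groups with g + e <= tau
theta_D <- function(e) weighted_att(groups[groups + e <= tau], function(g) g + e)

# theta_C(t): effect in calendar period t, groups already treated by t
theta_C <- function(t) weighted_att(groups[groups <= t], function(g) t)

# theta_C: simple average of theta_C(t) over t = 2, ..., tau
theta_C_avg <- mean(sapply(2:tau, theta_C))

print(list(theta_S = theta_S, theta_SO = theta_SO,
           theta_D = sapply(0:2, theta_D), theta_C_avg = theta_C_avg))
```

The same table yields different summary numbers depending on the weighting scheme, which is why the choice of estimand should be reported alongside the estimate.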

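The staggered() function offers several estimands, each defining a different way of aggregating group-time average treatment effects into a single overall treatment effect:

Simple: Averages over all treated group-time cells, with weights proportional to cohort size.

Cohort: First averages effects over time within each treated cohort, then weights the cohort averages by cohort size.

Calendar: First averages effects across treated cohorts within each calendar period (weighting by cohort size), then weights calendar periods equally.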

library(staggered)

library(fixest)

data("base_stagg")

# Simple weighted average ATT

staggered(

df = base_stagg,

i = "id",

t = "year",

g = "year_treated",

y = "y",

estimand = "simple"

)

#> estimate se se_neyman

#> 1 -0.7110941 0.2211943 0.2214245

# Cohort weighted ATT (i.e., by treatment cohort size)

staggered(

df = base_stagg,

i = "id",

t = "year",

g = "year_treated",

y = "y",

estimand = "cohort"

)

#> estimate se se_neyman

#> 1 -2.724242 0.2701093 0.2701745

# Calendar weighted ATT (i.e., by year)

staggered(

df = base_stagg,

i = "id",

t = "year",

g = "year_treated",

y = "y",

estimand = "calendar"

)

#> estimate se se_neyman

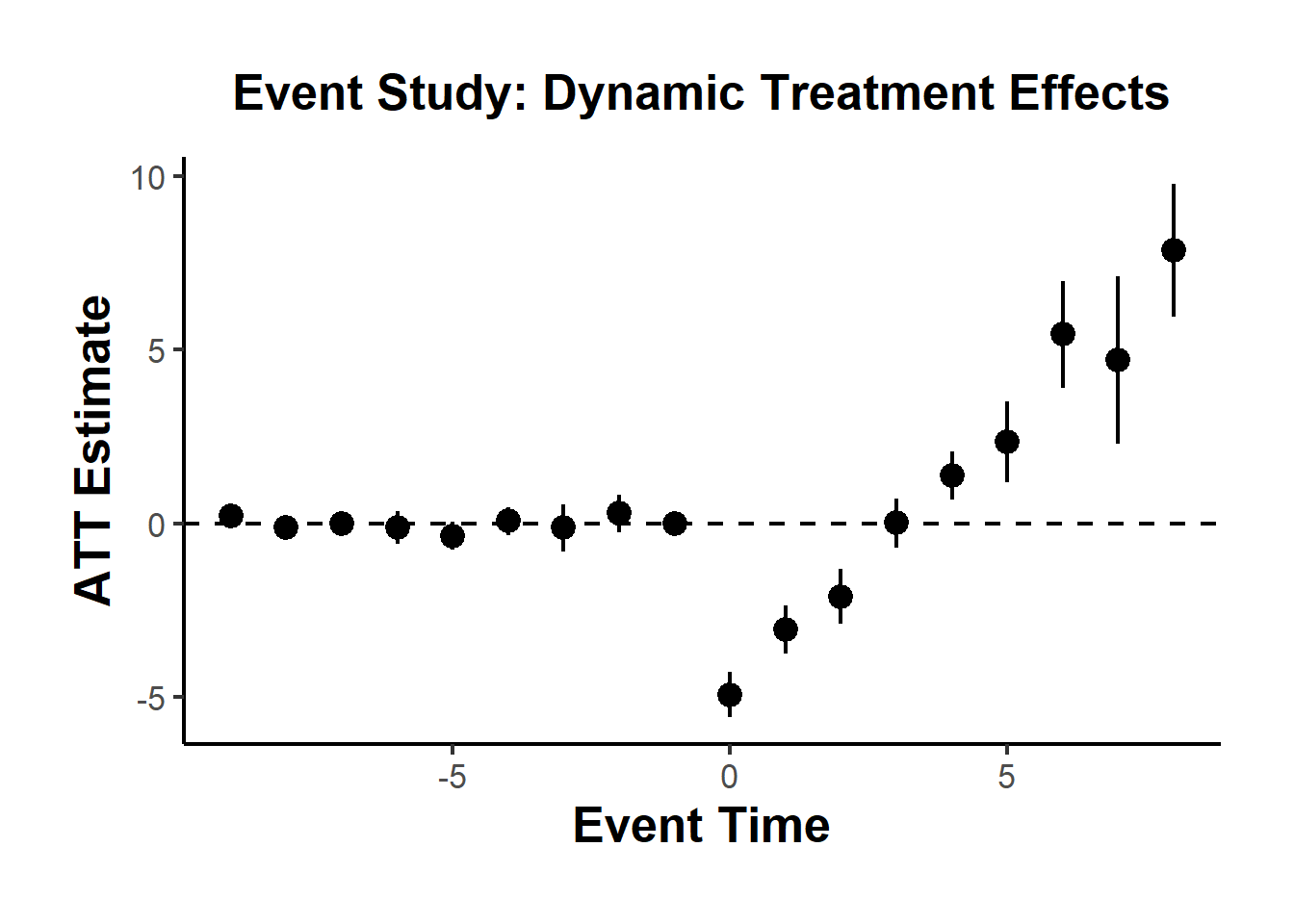

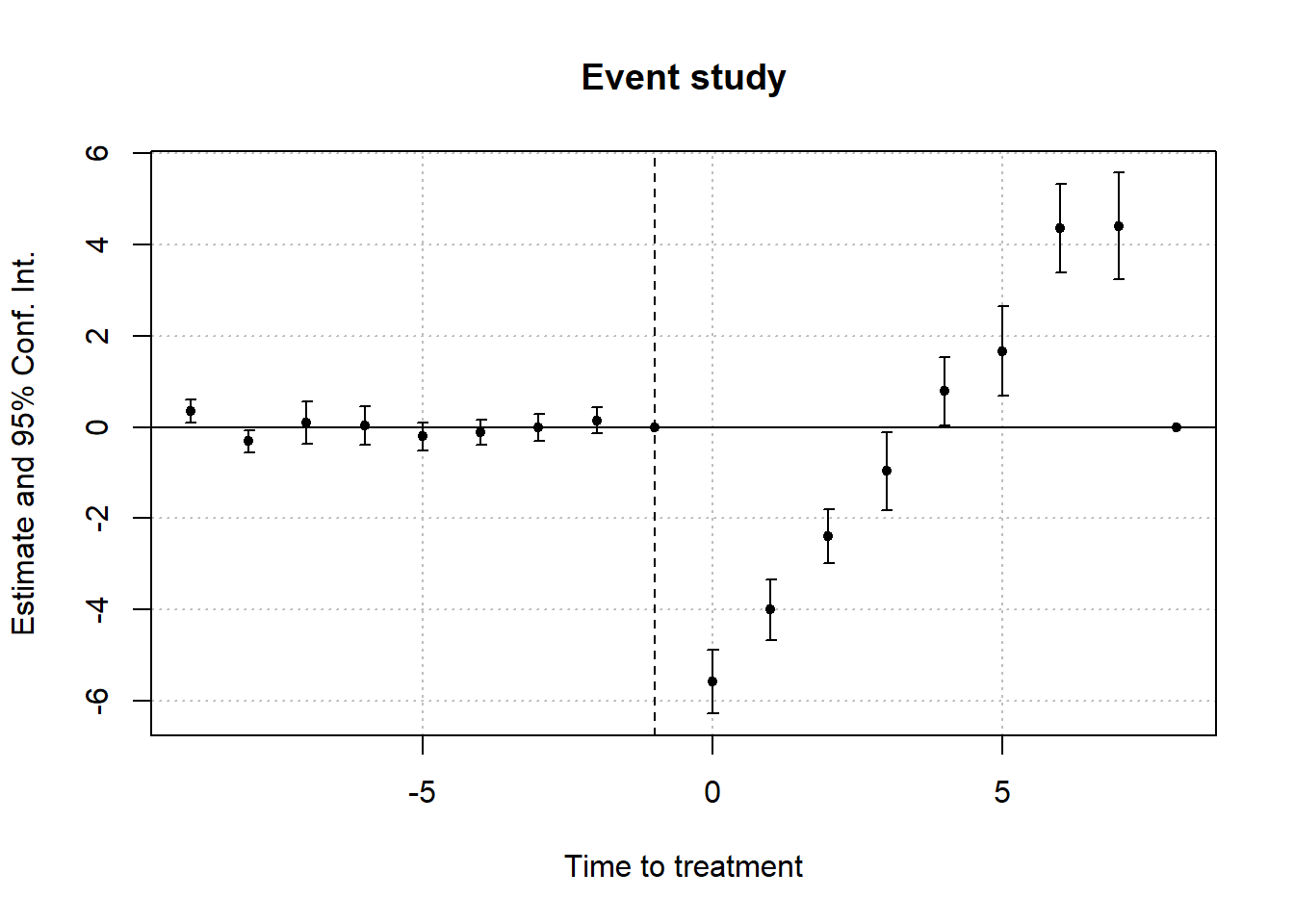

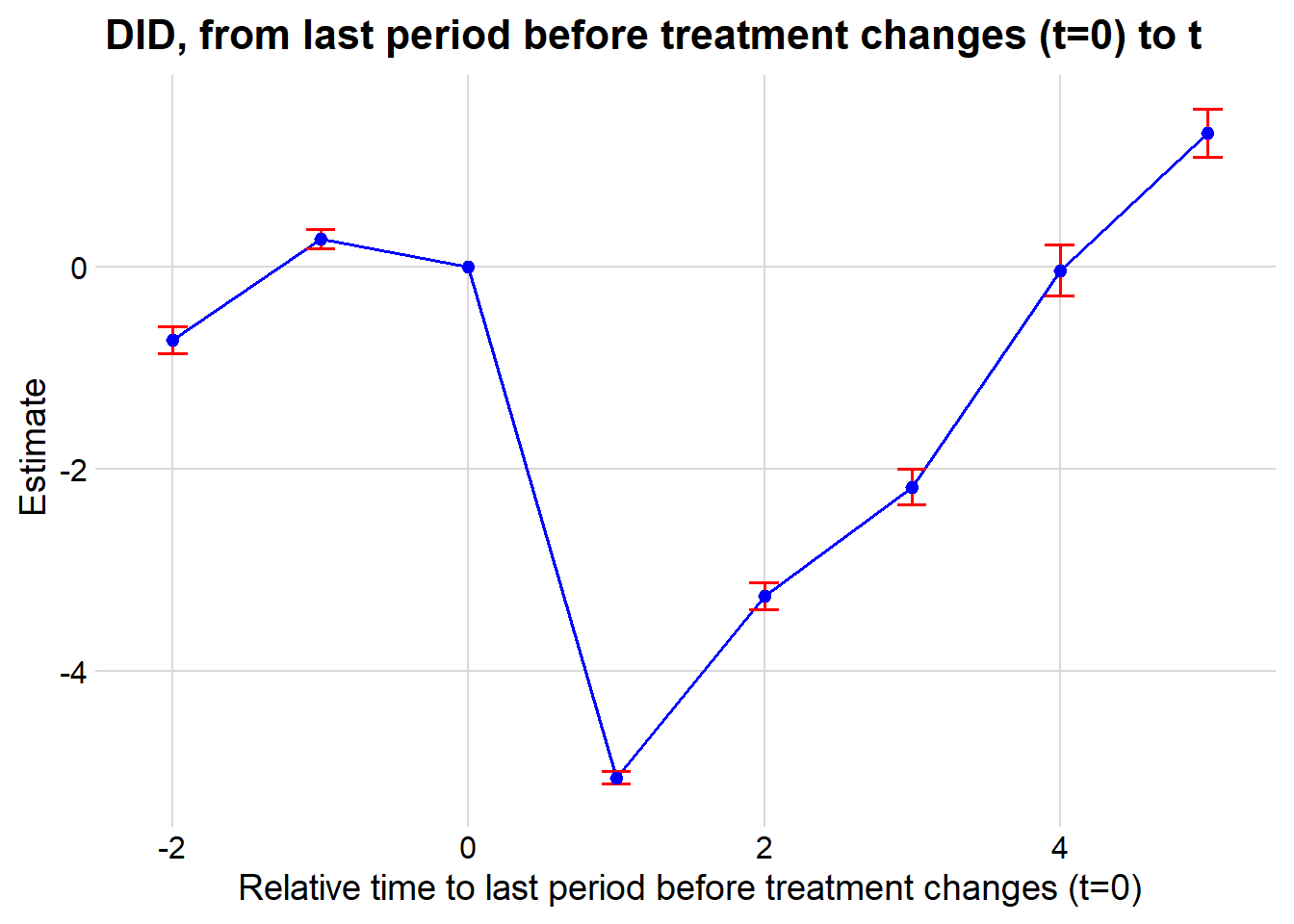

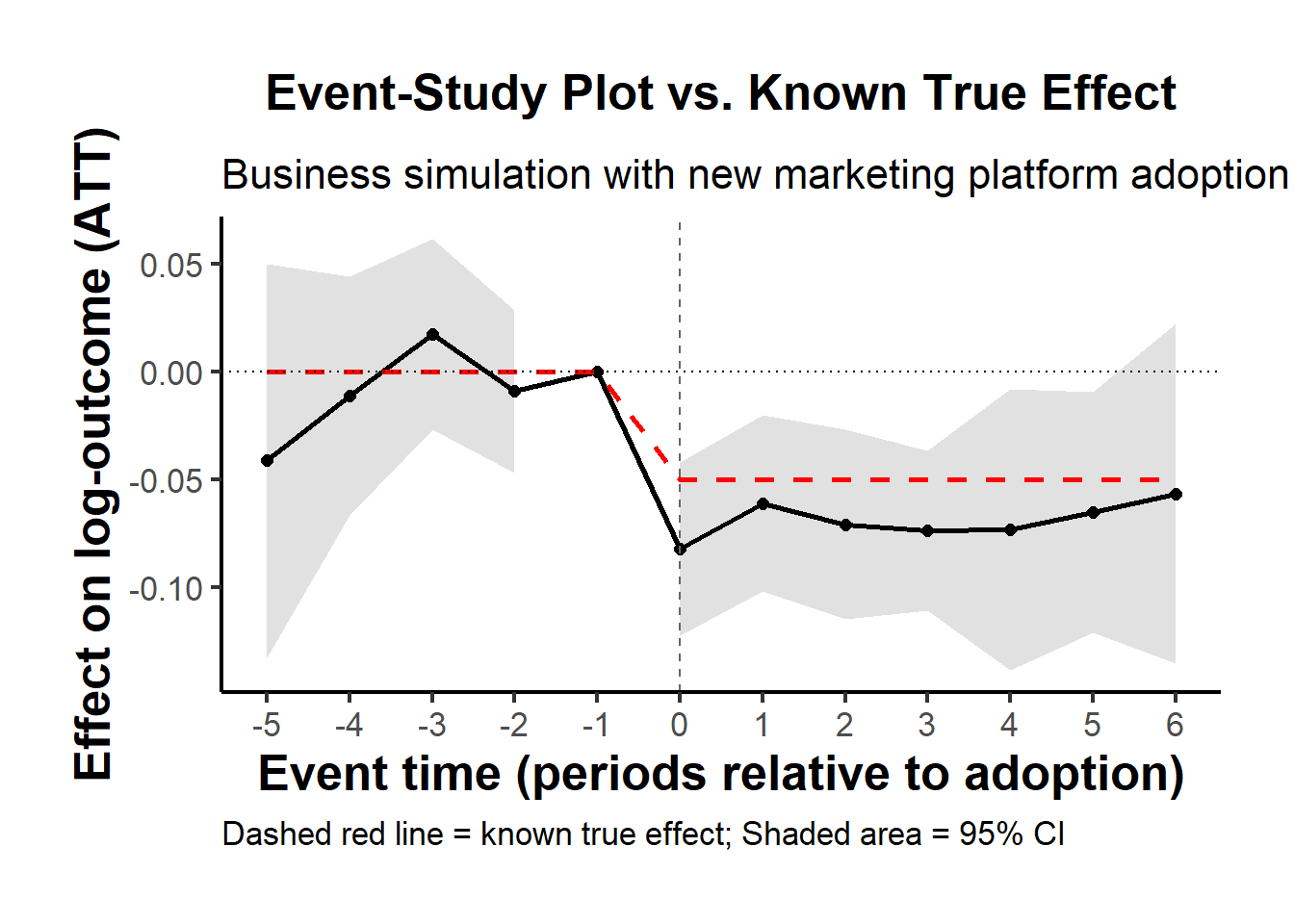

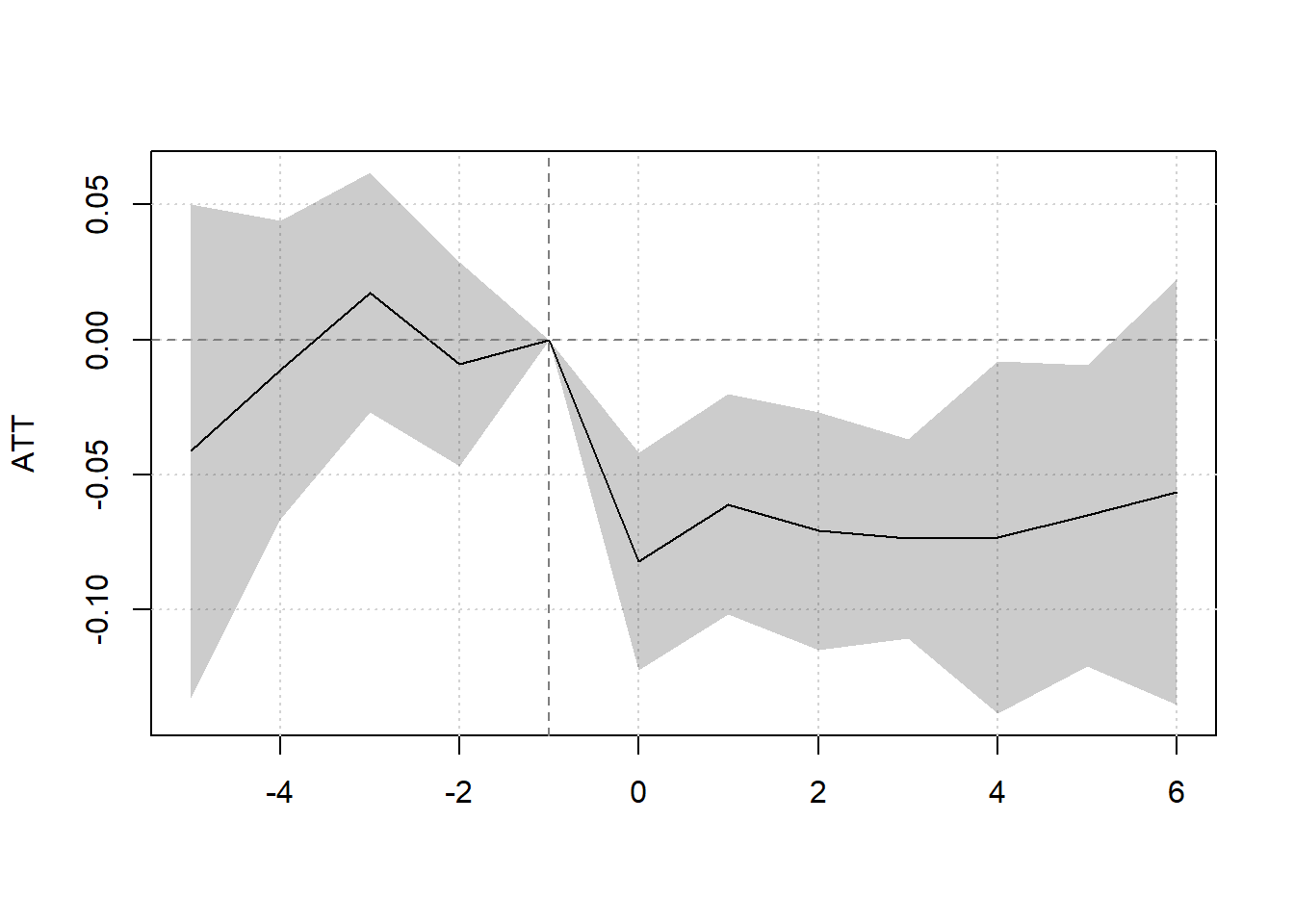

#> 1 -0.5861831 0.1768297 0.1770729To visualize treatment dynamics around the time of adoption, the event study specification estimates dynamic treatment effects relative to the time of treatment (Figure 30.9).

res <- staggered(

df = base_stagg,

i = "id",

t = "year",

g = "year_treated",

y = "y",

estimand = "eventstudy",

eventTime = -9:8

)

# Plotting the event study with pointwise confidence intervals

library(ggplot2)

library(dplyr)

ggplot(

res |> mutate(

ymin_ptwise = estimate - 1.96 * se,

ymax_ptwise = estimate + 1.96 * se

),

aes(x = eventTime, y = estimate)

) +

geom_pointrange(aes(ymin = ymin_ptwise, ymax = ymax_ptwise)) +

geom_hline(yintercept = 0, linetype = "dashed") +

xlab("Event Time") +

ylab("ATT Estimate") +

ggtitle("Event Study: Dynamic Treatment Effects") +

causalverse::ama_theme()

Figure 30.9: Event Study Dynamic Treatment Effects

The staggered package also includes direct implementations of alternative estimators:

- staggered_cs() implements the Callaway and Sant’Anna (2021) estimator.

- staggered_sa() implements the L. Sun and Abraham (2021) estimator, which adjusts for bias from comparisons involving already-treated units.

# Callaway and Sant’Anna estimator

staggered_cs(

df = base_stagg,

i = "id",

t = "year",

g = "year_treated",

y = "y",

estimand = "simple"

)

#> estimate se se_neyman

#> 1 -0.7994889 0.4484987 0.4486122

# Sun and Abraham estimator

staggered_sa(

df = base_stagg,

i = "id",

t = "year",

g = "year_treated",

y = "y",

estimand = "simple"

)

#> estimate se se_neyman

#> 1 -0.7551901 0.4407818 0.4409525

To assess statistical significance under the sharp null hypothesis \(H_0: \text{TE} = 0\), the staggered package includes an option for Fisher’s randomization (permutation) test. This approach tests whether the observed estimate could plausibly occur under a random reallocation of treatment timings.

# Fisher Randomization Test

staggered(

df = base_stagg,

i = "id",

t = "year",

g = "year_treated",

y = "y",

estimand = "simple",

compute_fisher = TRUE,

num_fisher_permutations = 100

)

#> estimate se se_neyman fisher_pval fisher_pval_se_neyman

#> 1 -0.7110941 0.2211943 0.2214245 0 0

#> num_fisher_permutations

#> 1 100

This test provides a non-parametric method for inference and is particularly useful when the number of groups is small or standard errors are unreliable due to clustering or heteroskedasticity.
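The logic of a randomization test can be sketched in a few lines of base R. This is a deliberately simplified two-period illustration that enumerates every possible assignment of treatment to units; it is not the package's internal procedure, which reallocates treatment timings in the staggered design. The outcome changes in `delta` and the helper `did_stat` are hypothetical.

```r
# Hypothetical post-minus-pre outcome changes for six units;
# units 1-3 are the ones actually treated.
delta   <- c(3, 4, 5, 0, 1, 2)
treated <- c(1, 2, 3)

# Test statistic: difference in mean changes (treated minus control)
did_stat <- function(idx) mean(delta[idx]) - mean(delta[-idx])
obs <- did_stat(treated)

# Under the sharp null, the changes are fixed and only the labels move.
# Enumerate all ways of choosing 3 treated units out of 6.
assignments <- combn(length(delta), length(treated))
perm_stats  <- apply(assignments, 2, did_stat)

# Two-sided exact p-value: share of assignments at least as extreme
p_value <- mean(abs(perm_stats) >= abs(obs) - 1e-12)
print(c(observed = obs, p_value = p_value))
```

With only 20 possible assignments, the smallest attainable two-sided p-value here is 0.1, which shows why the number of permutations (or units) bounds the resolution of the test.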

30.8.2 Cohort Average Treatment Effects (L. Sun and Abraham 2021)

L. Sun and Abraham (2021) propose a solution to the TWFE problem in staggered adoption settings by introducing an interaction-weighted estimator for dynamic treatment effects. This estimator is based on the concept of Cohort Average Treatment Effects on the Treated (CATT), which accounts for variation in treatment timing and dynamic treatment responses.

Traditional TWFE estimators implicitly assume homogeneous treatment effects and often rely on treated units serving as controls for later-treated units. When treatment effects vary over time or across groups, this leads to contaminated comparisons, especially in event-study specifications.

L. Sun and Abraham (2021) address this issue by:

Estimating cohort-specific treatment effects relative to time since treatment.

Using never-treated units as controls, or in their absence, the last-treated cohort.

30.8.2.1 Defining the Parameter of Interest: CATT

Let \(E_i = e\) denote the period when unit \(i\) first receives treatment. The cohort-specific average treatment effect on the treated (CATT) is defined as: \[ CATT_{e, l} = \mathbb{E}[Y_{i, e + l} - Y_{i, e + l}^\infty \mid E_i = e] \]

where:

\(l\) is the relative period (e.g., \(l = -1\) is one year before treatment, \(l = 0\) is the treatment year).

\(Y_{i, e + l}^\infty\) is the potential outcome without treatment.

\(Y_{i, e + l}\) is the observed outcome.

This formulation allows one to trace out the dynamic effect of treatment for each cohort, relative to their treatment start time.

L. Sun and Abraham (2021) extend to panel settings the interaction-weighted idea originally introduced by Gibbons, Suárez Serrato, and Urbancic (2018) in a cross-sectional context.

They propose regressing the outcome on:

Relative time indicators constructed by interacting treatment cohort (\(E_i\)) with time (\(t\)).

Unit and time fixed effects.

This method explicitly estimates \(CATT_{e, l}\) terms, avoiding the contaminating influence of already-treated units that TWFE models often suffer from.

Relative Period Bin Indicator

\[ D_{it}^l = \mathbb{1}(t - E_i = l) \]

- \(E_i\): The time period when unit \(i\) first receives treatment.

- \(l\): The relative time period: how many periods have passed since treatment began.

- Static Specification

\[ Y_{it} = \alpha_i + \lambda_t + \mu_g \sum_{l \ge 0} D_{it}^l + \epsilon_{it} \]

- \(\alpha_i\): Unit fixed effects.

- \(\lambda_t\): Time fixed effects.

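- \(\mu_g\): Single pooled coefficient on the bin \(g = \{l : l \ge 0\}\) of post-treatment relative periods.

- Pre-treatment periods are excluded and serve as the reference category.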

- Dynamic Specification

\[ Y_{it} = \alpha_i + \lambda_t + \sum_{\substack{l = -K \\ l \neq -1}}^{L} \mu_l D_{it}^l + \epsilon_{it} \]

- Includes leads and lags of treatment indicators \(D_{it}^l\).

- Excludes one period (typically \(l = -1\)) to avoid perfect collinearity.

- Tests for pre-treatment parallel trends rely on the leads (\(l < 0\)).
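The dynamic specification can be sketched with base R alone. The example below builds the relative-period indicators \(D_{it}^l\) by hand on a noise-free toy panel and estimates the regression with `lm()`. The data, the column names (`D_m2`, `D_0`, ...), and the treatment-effect path are assumptions chosen so that the true coefficients are known.

```r
# Toy panel (hypothetical): units 1-2 adopt at E = 3, units 3-4 never adopt
panel <- expand.grid(id = 1:4, t = 1:5)
E   <- c(3, 3, Inf, Inf)[panel$id]
rel <- panel$t - E                      # relative period l = t - E_i (-Inf if never treated)

# True effect path: 1, 2, 3 at l = 0, 1, 2 and zero before treatment
effect  <- ifelse(is.finite(rel) & rel >= 0, rel + 1, 0)
panel$y <- panel$id + 0.5 * panel$t + effect

# Relative-period indicators D_it^l; l = -1 is omitted as the reference
leads_lags <- c(D_m2 = -2, D_0 = 0, D_1 = 1, D_2 = 2)
for (nm in names(leads_lags)) {
  panel[[nm]] <- as.integer(rel == leads_lags[[nm]])
}

# Unit and time fixed effects enter as factor dummies
fit <- lm(y ~ factor(id) + factor(t) + D_m2 + D_0 + D_1 + D_2, data = panel)
mu  <- coef(fit)[names(leads_lags)]
print(round(mu, 6))
```

The coefficient on the lead (`D_m2`) is zero by construction, which is what a pre-trend check looks for. This clean recovery relies on there being a single treated cohort; with several cohorts and heterogeneous effects, the same regression can be contaminated.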

30.8.2.2 Identifying Assumptions

- Parallel Trends

For identification, it is assumed that untreated potential outcomes follow parallel trends across cohorts in the absence of treatment: \[ \mathbb{E}[Y_{it}^\infty - Y_{i, t-1}^\infty \mid E_i = e] = \text{constant across } e \] This allows us to use never-treated or not-yet-treated units as valid counterfactuals.

- No Anticipatory Effects

Treatment should not influence outcomes before it is implemented. That is: \[ CATT_{e, l} = 0 \quad \text{for all } l < 0 \] This ensures that any pre-trends are not driven by behavioral changes in anticipation of treatment.

- Treatment Effect Homogeneity (Optional)

Under homogeneity, the treatment effect at each relative period is the same across cohorts, so every adoption cohort follows the same path of treatment effects.

L. Sun and Abraham (2021) do not require this assumption, but it marks the difference between their design and simpler ones:

Homogeneity means each cohort has the same pattern of response over time.

It is relaxed in their design but assumed in simpler TWFE settings.

30.8.2.3 Comparison to Other Designs

Different DiD designs make distinct assumptions about how treatment effects vary (Table 30.7).

| Study | Vary Over Time | Vary Across Cohorts | Notes |
|---|---|---|---|
| L. Sun and Abraham (2021) | ✓ | ✓ | Allows full heterogeneity |
| Callaway and Sant’Anna (2021) | ✓ | ✓ | Estimates group × time ATTs |
| Borusyak, Jaravel, and Spiess (2024) | ✓ | ✗ | Homogeneous across cohorts; heterogeneous over time |
| Athey and Imbens (2022) | ✗ | ✓ | Heterogeneity only across adoption cohorts |
| Clement De Chaisemartin and D’haultfœuille (2023) | ✓ | ✓ | Complete heterogeneity |
| Goodman-Bacon (2021) | ✓ or ✗ | ✗ or ✓ | Restricts one dimension: effects either “vary across units but not over time” or “vary over time but not across units” |

30.8.2.4 Sources of Treatment Effect Heterogeneity

Several forces can generate heterogeneous treatment effects:

Calendar Time Effects: Macro events (e.g., recessions, policy changes) affect cohorts differently.

Selection into Timing: Units self-select into early/late treatment based on anticipated effects.

Composition Differences: Adoption cohorts may differ in observed or unobserved ways.

Such heterogeneity can bias TWFE estimates, which often average effects across incomparable groups.

30.8.2.5 Technical Issues

When using an event-study TWFE regression to estimate dynamic treatment effects in staggered adoption settings, one must exclude certain relative time indicators to avoid perfect multicollinearity. This arises because relative period indicators are linearly dependent due to the presence of unit and time fixed effects.

Specifically, the following two terms must be addressed:

The period immediately before treatment (\(l = -1\)): This period is typically omitted and serves as the baseline for comparison. This normalization has been standard practice in event study regressions prior to L. Sun and Abraham (2021).

A distant post-treatment period (e.g., \(l = +5\) or \(l = +10\)): L. Sun and Abraham (2021) clarified that in addition to the baseline period, at least one other relative time indicator, typically from the far tail of the post-treatment distribution, must be dropped, binned, or trimmed to avoid multicollinearity among the relative time dummies. This issue emerges because fixed effects absorb much of the within-unit and within-time variation, reducing the effective rank of the design matrix.

Dropping certain relative periods (especially pre-treatment periods) introduces an implicit normalization: the estimates for included periods are now interpreted relative to the omitted periods. If treatment effects are present in these omitted periods (e.g., due to anticipation or early effects), this will contaminate the estimates of included relative periods.

To avoid this contamination, researchers often assume that all pre-treatment periods have zero treatment effect, i.e.,

\[ CATT_{e, l} = 0 \quad \text{for all } l < 0 \]

This assumption ensures that excluded pre-treatment periods form a valid counterfactual, and estimates for \(l \geq 0\) are not biased due to normalization.
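The collinearity problem can be checked numerically. The sketch below, in base R, builds the design matrix for a toy balanced panel in which every unit is eventually treated (the adoption periods are made up) and compares the number of columns with the matrix rank as relative-period dummies are dropped. The helper `design_rank` is a hypothetical name introduced for this illustration.

```r
# Toy balanced panel (hypothetical): 6 units, 6 periods, no never-treated units
panel <- expand.grid(id = 1:6, t = 1:6)
panel$E   <- c(3, 3, 4, 4, 5, 5)[panel$id]   # adoption period E_i
panel$rel <- panel$t - panel$E               # relative period l, from -4 to 3

# Unit dummies, time dummies (first period dropped), relative-period dummies
unit_fe <- model.matrix(~ 0 + factor(id), data = panel)
time_fe <- model.matrix(~ 0 + factor(t), data = panel)[, -1]
rel_dum <- model.matrix(~ 0 + factor(rel), data = panel)
colnames(rel_dum) <- paste0("l", sort(unique(panel$rel)))

# Number of columns and rank of the design after dropping some dummies
design_rank <- function(drop = character(0)) {
  keep <- setdiff(colnames(rel_dum), drop)
  X <- cbind(unit_fe, time_fe, rel_dum[, keep, drop = FALSE])
  c(columns = ncol(X), rank = qr(X)$rank)
}

all_periods <- design_rank()                 # every relative period included
drop_one    <- design_rank("l-1")            # baseline l = -1 omitted
drop_two    <- design_rank(c("l-1", "l3"))   # also omit the most distant lag

print(rbind(all_periods, drop_one, drop_two))
```

In this toy design the matrix only reaches full column rank once two relative-period dummies are removed. The second dependency arises because relative time equals calendar time minus adoption time, and both of those are already spanned by the time and unit fixed effects.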

L. Sun and Abraham (2021) resolve the issues of weighting and aggregation by using a clean weighting scheme that avoids contamination from excluded periods. Their method produces a weighted average of cohort- and time-specific treatment effects (\(CATT_{e, l}\)), where the weights are:

- Non-negative

- Sum to one

- Interpretable as the share of each cohort among the treated units observed \(l\) periods from treatment, averaged over the relative periods in the bin \(g\)

This interaction-weighted estimator ensures that the estimated average treatment effect reflects a convex combination of dynamic treatment effects from different cohorts and times.

In this way, their aggregation logic closely mirrors that of Callaway and Sant’Anna (2021), who also construct average treatment effects from group-time ATTs using interpretable weights that align with the sampling structure.
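The weighting scheme can be written out explicitly. The expression below is a sketch of the interaction-weighted estimate for a bin \(g\) of relative periods, following the description above; here \(\hat{\delta}_{e, l}\) denotes the estimate of \(CATT_{e, l}\) and \(T\) the last period in the panel, both of which are notational assumptions introduced for this illustration.

```latex
\[
\hat{\nu}_g
  = \frac{1}{|g|} \sum_{l \in g} \sum_{e}
    \hat{\delta}_{e, l} \cdot
    \widehat{\Pr}\left\{ E_i = e \mid E_i \in [-l, \, T - l] \right\}
\]
```

For example, suppose two cohorts with 30 and 10 units are both observed at \(l = 0\), with hypothetical estimates \(\hat{\delta}_{e, 0}\) of \(-1\) and \(-2\). The cohort shares are 0.75 and 0.25, so the aggregated effect at \(l = 0\) is \(0.75 \times (-1) + 0.25 \times (-2) = -1.25\).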

library(fixest)

data("base_stagg")

# Estimate Sun & Abraham interaction-weighted model

res_sa20 <- feols(

y ~ x1 + sunab(year_treated, year) | id + year,

data = base_stagg

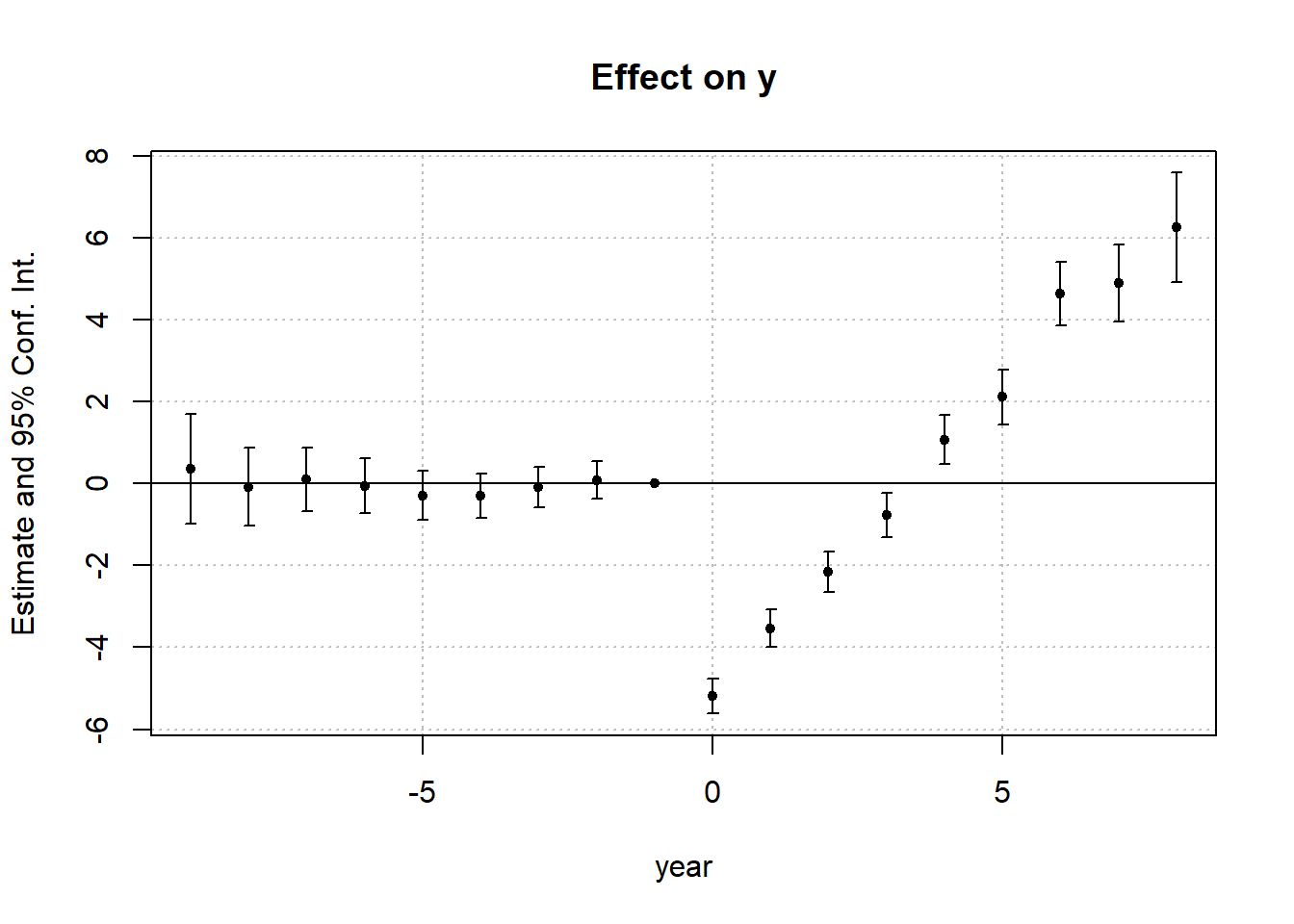

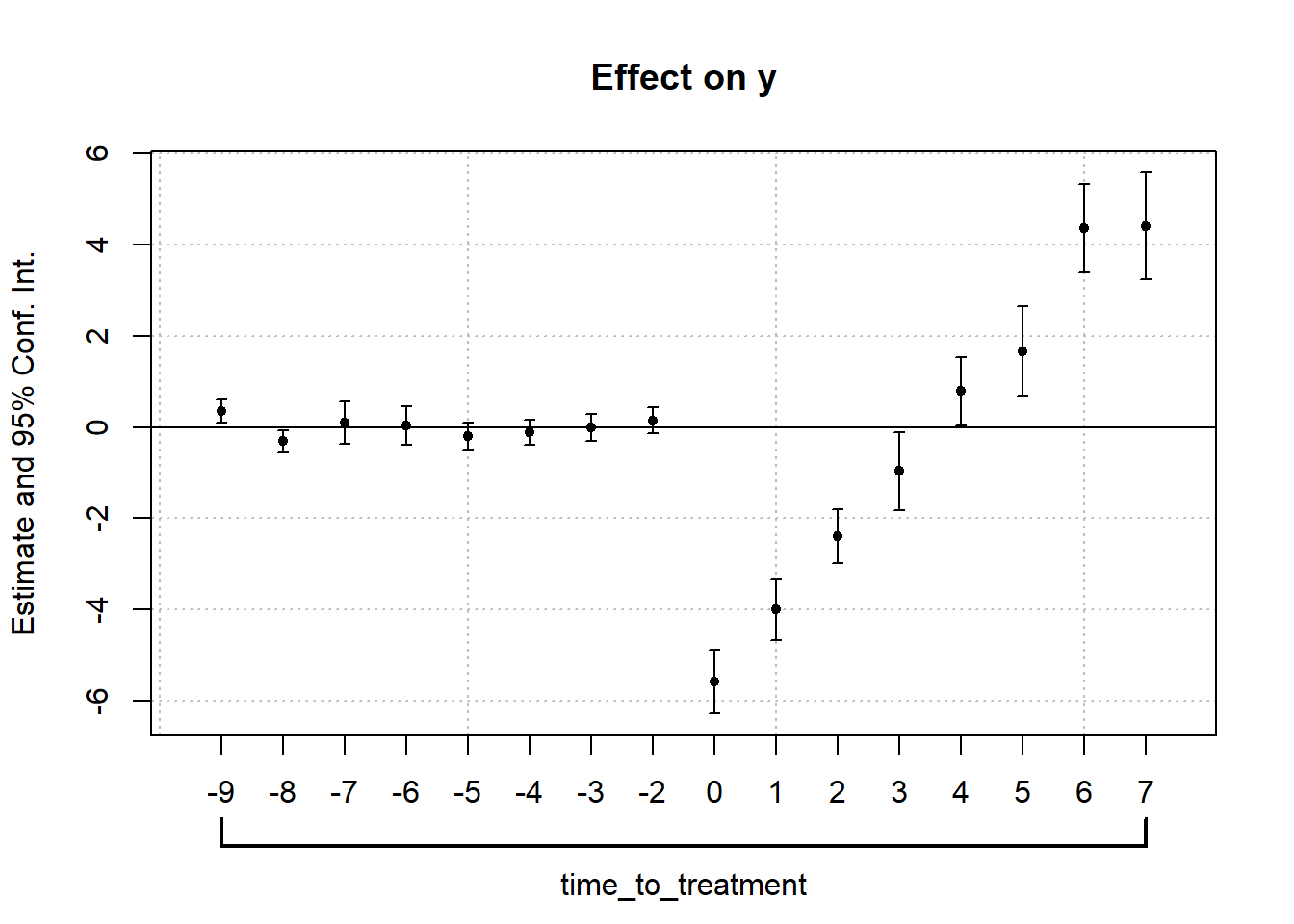

)Use iplot() to visualize the estimated dynamic treatment effects across relative time (Figure 30.10)

Figure 30.10: Effect on y

You can summarize the results using different aggregation options:

# Overall average ATT

summary(res_sa20, agg = "att")

#> OLS estimation, Dep. Var.: y

#> Observations: 950

#> Fixed-effects: id: 95, year: 10

#> Standard-errors: IID

#> Estimate Std. Error t value Pr(>|t|)

#> x1 0.994678 0.018823 52.84242 < 2.2e-16 ***

#> ATT -1.133749 0.194764 -5.82113 8.6022e-09 ***

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#> RMSE: 0.921817 Adj. R2: 0.887984

#> Within R2: 0.876406

# Aggregation across post-treatment periods (excluding leads)

summary(res_sa20, agg = c("att" = "year::[^-]"))

#> OLS estimation, Dep. Var.: y

#> Observations: 950

#> Fixed-effects: id: 95, year: 10

#> Standard-errors: IID

#> Estimate Std. Error t value Pr(>|t|)

#> x1 0.994678 0.018823 52.842416 < 2.2e-16 ***

#> year::-9:cohort::10 0.351766 0.682887 0.515116 6.0662e-01

#> year::-8:cohort::9 0.033914 0.682561 0.049686 9.6039e-01

#> year::-8:cohort::10 -0.191932 0.681848 -0.281488 7.7841e-01

#> year::-7:cohort::8 -0.589387 0.681850 -0.864394 3.8764e-01

#> year::-7:cohort::9 0.872995 0.683597 1.277061 2.0197e-01

#> year::-7:cohort::10 0.019512 0.682179 0.028603 9.7719e-01

#> year::-6:cohort::7 -0.042147 0.681865 -0.061811 9.5073e-01

#> year::-6:cohort::8 -0.657571 0.682009 -0.964167 3.3527e-01

#> year::-6:cohort::9 0.877743 0.682578 1.285923 1.9886e-01

#> year::-6:cohort::10 -0.403635 0.681921 -0.591909 5.5409e-01

#> year::-5:cohort::6 -0.658034 0.682137 -0.964666 3.3502e-01

#> year::-5:cohort::7 -0.316974 0.683243 -0.463926 6.4283e-01

#> year::-5:cohort::8 -0.238213 0.682050 -0.349261 7.2699e-01

#> year::-5:cohort::9 0.301477 0.684068 0.440712 6.5955e-01

#> year::-5:cohort::10 -0.564801 0.681846 -0.828340 4.0774e-01

#> year::-4:cohort::5 -0.983453 0.681867 -1.442295 1.4963e-01

#> year::-4:cohort::6 0.360407 0.682386 0.528156 5.9754e-01

#> year::-4:cohort::7 -0.430610 0.681846 -0.631535 5.2788e-01

#> year::-4:cohort::8 -0.895195 0.681846 -1.312898 1.8961e-01

#> year::-4:cohort::9 -0.392478 0.682433 -0.575116 5.6538e-01

#> year::-4:cohort::10 0.519001 0.681901 0.761110 4.4683e-01

#> year::-3:cohort::4 0.591288 0.681879 0.867144 3.8614e-01

#> year::-3:cohort::5 -1.000650 0.682072 -1.467074 1.4277e-01

#> year::-3:cohort::6 0.072188 0.681865 0.105868 9.1571e-01

#> year::-3:cohort::7 -0.836820 0.681850 -1.227279 2.2010e-01

#> year::-3:cohort::8 -0.783148 0.681958 -1.148382 2.5117e-01

#> year::-3:cohort::9 0.811285 0.682635 1.188461 2.3502e-01

#> year::-3:cohort::10 0.527203 0.682593 0.772354 4.4014e-01

#> year::-2:cohort::3 0.036941 0.682296 0.054143 9.5684e-01

#> year::-2:cohort::4 0.832250 0.681851 1.220575 2.2262e-01

#> year::-2:cohort::5 -1.574086 0.681930 -2.308281 2.1250e-02 *

#> year::-2:cohort::6 0.311758 0.681848 0.457225 6.4764e-01

#> year::-2:cohort::7 -0.558631 0.681891 -0.819239 4.1291e-01

#> year::-2:cohort::8 0.429591 0.681861 0.630028 5.2886e-01

#> year::-2:cohort::9 1.201899 0.681871 1.762649 7.8359e-02 .

#> year::-2:cohort::10 -0.002429 0.682085 -0.003562 9.9716e-01

#> att -1.133749 0.194764 -5.821130 8.6022e-09 ***

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#> RMSE: 0.921817 Adj. R2: 0.887984

#> Within R2: 0.876406

# Aggregate post-treatment effects from l = 0 to 8

summary(res_sa20, agg = c("att" = "year::[012345678]")) |>

etable(digits = 2)

#> summary(res_..

#> Dependent Var.: y

#>

#> x1 0.99*** (0.02)

#> year = -9 x cohort = 10 0.35 (0.68)

#> year = -8 x cohort = 9 0.03 (0.68)

#> year = -8 x cohort = 10 -0.19 (0.68)

#> year = -7 x cohort = 8 -0.59 (0.68)

#> year = -7 x cohort = 9 0.87 (0.68)

#> year = -7 x cohort = 10 0.02 (0.68)

#> year = -6 x cohort = 7 -0.04 (0.68)

#> year = -6 x cohort = 8 -0.66 (0.68)

#> year = -6 x cohort = 9 0.88 (0.68)

#> year = -6 x cohort = 10 -0.40 (0.68)

#> year = -5 x cohort = 6 -0.66 (0.68)

#> year = -5 x cohort = 7 -0.32 (0.68)

#> year = -5 x cohort = 8 -0.24 (0.68)

#> year = -5 x cohort = 9 0.30 (0.68)

#> year = -5 x cohort = 10 -0.56 (0.68)

#> year = -4 x cohort = 5 -0.98 (0.68)

#> year = -4 x cohort = 6 0.36 (0.68)

#> year = -4 x cohort = 7 -0.43 (0.68)

#> year = -4 x cohort = 8 -0.90 (0.68)

#> year = -4 x cohort = 9 -0.39 (0.68)

#> year = -4 x cohort = 10 0.52 (0.68)

#> year = -3 x cohort = 4 0.59 (0.68)

#> year = -3 x cohort = 5 -1.0 (0.68)

#> year = -3 x cohort = 6 0.07 (0.68)

#> year = -3 x cohort = 7 -0.84 (0.68)

#> year = -3 x cohort = 8 -0.78 (0.68)

#> year = -3 x cohort = 9 0.81 (0.68)

#> year = -3 x cohort = 10 0.53 (0.68)

#> year = -2 x cohort = 3 0.04 (0.68)

#> year = -2 x cohort = 4 0.83 (0.68)

#> year = -2 x cohort = 5 -1.6* (0.68)

#> year = -2 x cohort = 6 0.31 (0.68)

#> year = -2 x cohort = 7 -0.56 (0.68)

#> year = -2 x cohort = 8 0.43 (0.68)

#> year = -2 x cohort = 9 1.2. (0.68)

#> year = -2 x cohort = 10 -0.002 (0.68)

#> att -1.1*** (0.19)

#> Fixed-Effects: --------------

#> id Yes

#> year Yes

#> _______________________ ______________

#> S.E. type IID

#> Observations 950

#> R2 0.90982

#> Within R2 0.87641

#> ---

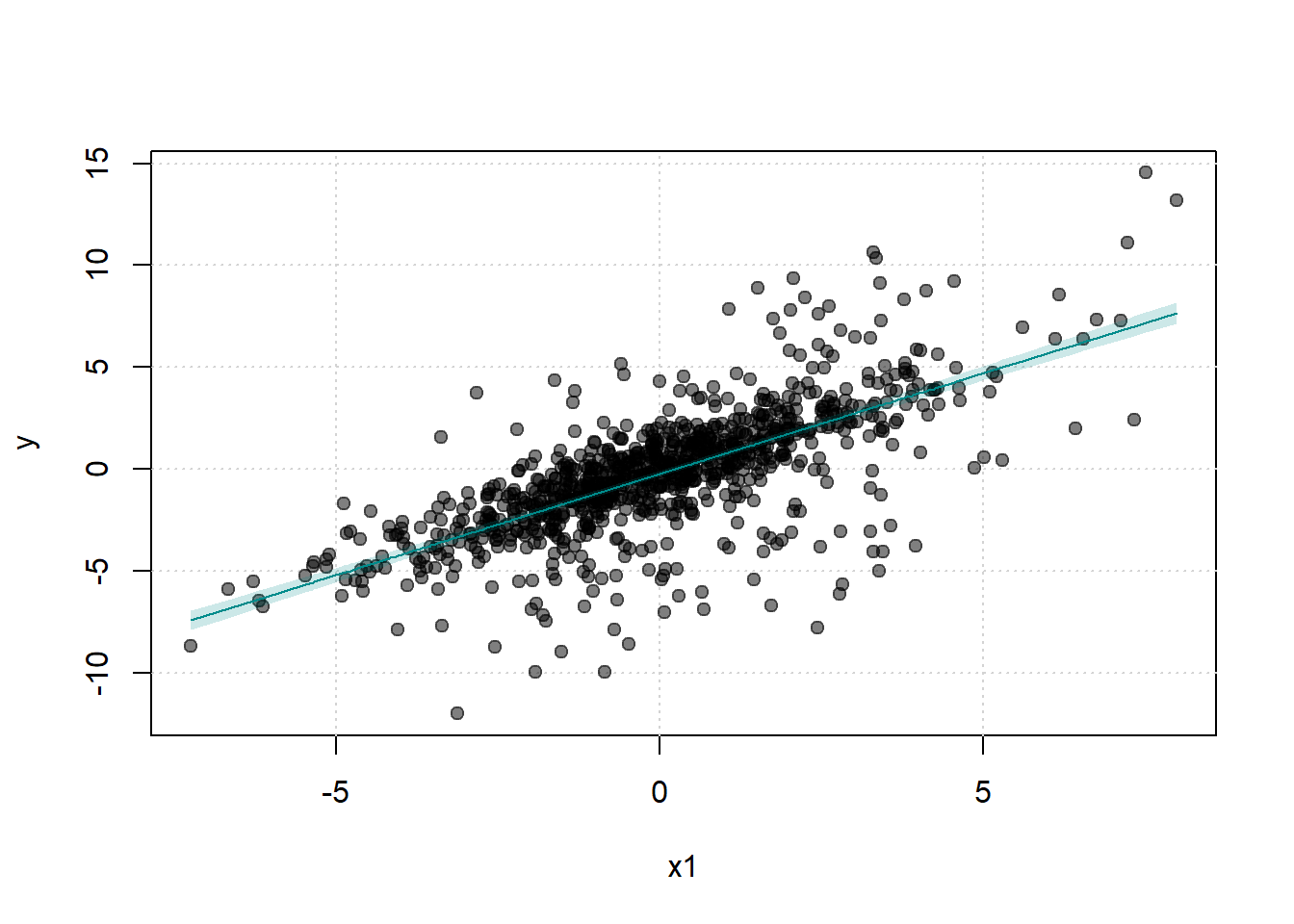

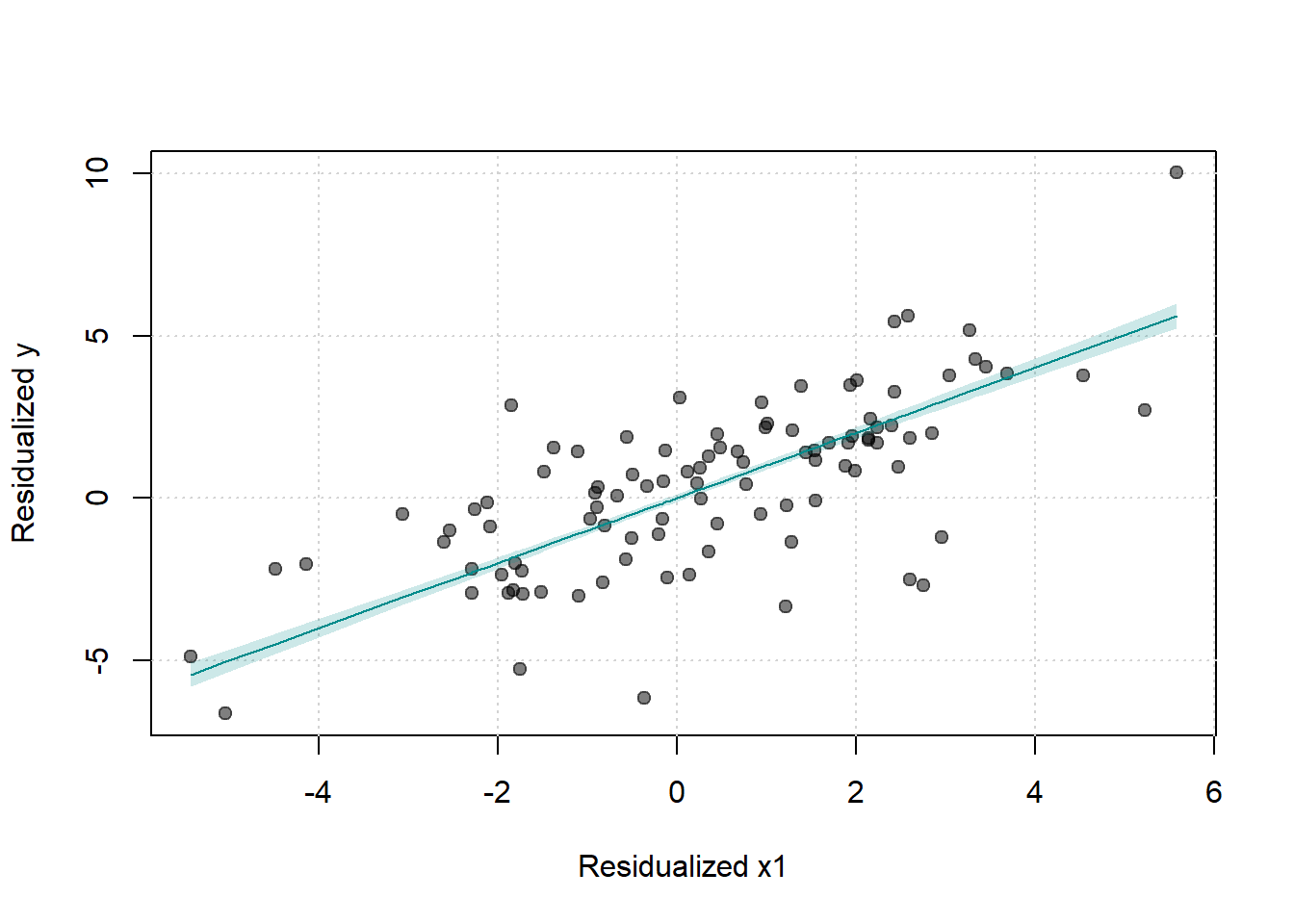

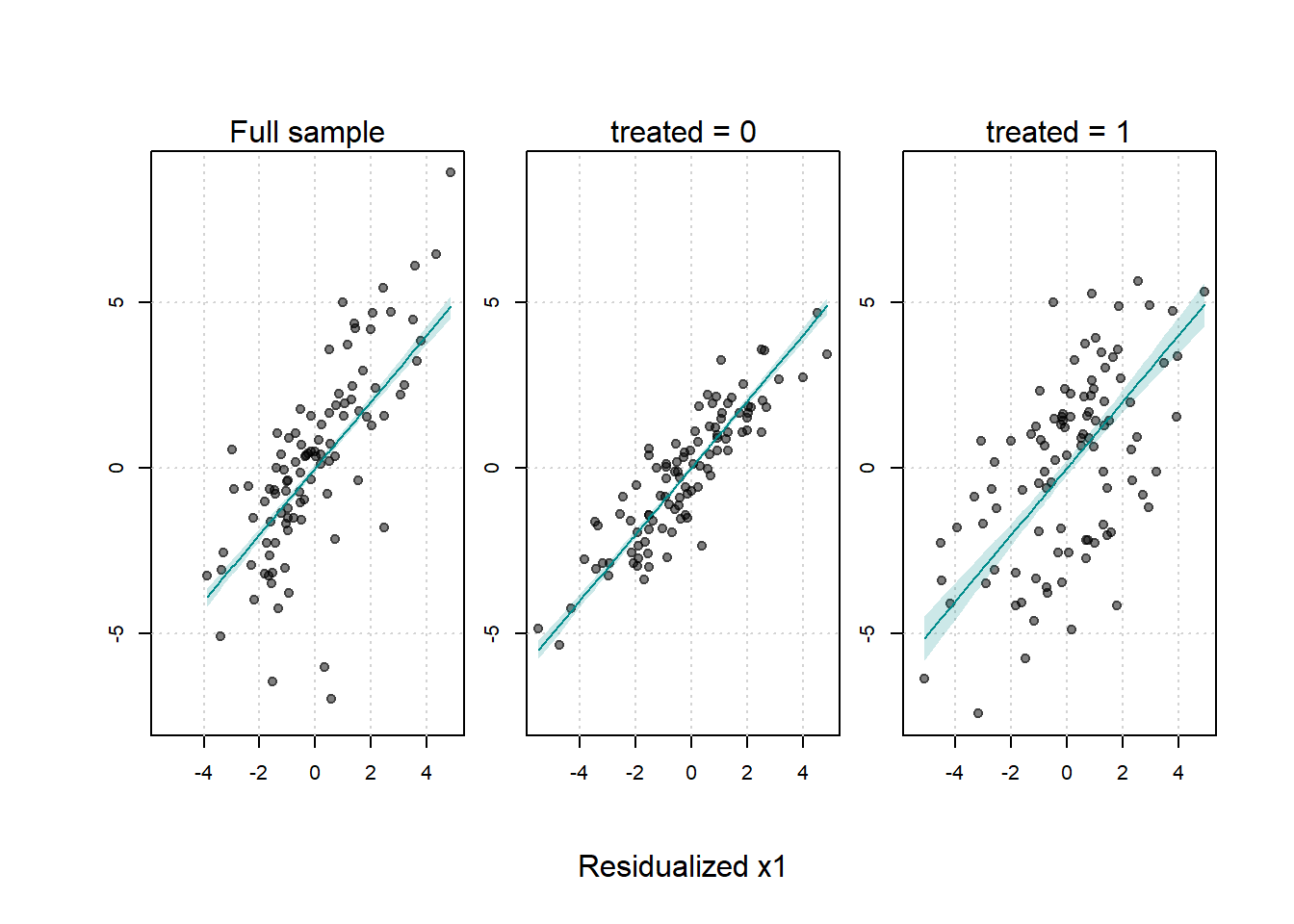

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1The fwlplot package provides diagnostics for how much variation is explained by fixed effects or covariates (Figures 30.11, (ref?)(fig:residualized-scatter-x1-y) and 30.13).

Figure 30.11: Scatter Plot of Y agaisnt X1

Figure 30.12: Scatter Plot of Residualized Y against Residualized X1

# Splitting by treatment status

fwl_plot(

y ~ x1 |

id + year,

data = base_stagg,

n_sample = 100,

fsplit = ~ treated

)

Figure 30.13: Scatter Plot of Residualized Y against Residualized X1 by Treatment Status

30.8.3 Stacked Difference-in-Differences

The Stacked DiD approach addresses key limitations of standard TWFE models in staggered adoption designs, particularly treatment effect heterogeneity and timing variations. By constructing sub-experiments around each treatment event, researchers can isolate cleaner comparisons and reduce contamination from improperly specified control groups.

Basic TWFE Specification

\[ Y_{it} = \beta_{FE} D_{it} + A_i + B_t + \epsilon_{it} \]

- \(Y_{it}\): Outcome for unit \(i\) at time \(t\).

- \(D_{it}\): Treatment indicator (1 if treated, 0 otherwise).

- \(A_i\): Unit (group) fixed effects.

- \(B_t\): Time period fixed effects.

- \(\epsilon_{it}\): Idiosyncratic error term.

Steps in the Stacked DiD Procedure

30.8.3.1 Choose an Event Window

Define:

- \(\kappa_a\): Number of pre-treatment periods to include in the event window (lead periods).

- \(\kappa_b\): Number of post-treatment periods to include in the event window (lag periods).

Implication:

Only events with sufficient pre- and post-treatment periods are included; events that do not meet these criteria are excluded.

30.8.3.2 Enumerate Sub-Experiments

Define:

- \(T_1\): First period in the panel.

- \(T_T\): Last period in the panel.

- \(\Omega_A\): The set of treatment adoption periods that fit within the event window:

\[ \Omega_A = \left\{ A_i \middle| T_1 + \kappa_a \le A_i \le T_T - \kappa_b \right\} \]

- Each \(A_i\) represents an adoption period for unit \(i\) that has enough time on both sides of the event.

Let \(d = 1, \dots, D\) index the sub-experiments in \(\Omega_A\).

- \(\omega_d\): The event (adoption) date of the \(d\)-th sub-experiment.

30.8.3.3 Define Inclusion Criteria

Valid Treated Units

- In sub-experiment \(d\), treated units have adoption date exactly equal to \(\omega_d\).

- A unit may only be treated in one sub-experiment to avoid duplication.

Clean Control Units

- Controls are units where \(A_i > \omega_d + \kappa_b\), i.e.,

- They are never treated, or

- They are treated in the far future (beyond the post-event window).

- A control unit can appear in multiple sub-experiments, but this requires correcting standard errors (see below).

Valid Time Periods

- Only observations where

\[ \omega_d - \kappa_a \le t \le \omega_d + \kappa_b \]

are included.

- This ensures the analysis is centered on the event window.
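The enumeration and inclusion rules above can be sketched in base R. The adoption dates, panel bounds, and window lengths below are hypothetical, never-treated units are coded as `Inf` for convenience, and the list structure `sub_experiments` is just one possible way to hold the result.

```r
# Hypothetical adoption dates A_i (Inf = never treated)
adoption <- c(u1 = 2, u2 = 3, u3 = 5, u4 = 7, u5 = 8, u6 = Inf)
T_first <- 1    # first period in the panel
T_last  <- 10   # last period in the panel
kappa_a <- 2    # pre-treatment periods in the window
kappa_b <- 3    # post-treatment periods in the window

# Omega_A: adoption dates with a full event window inside the panel
event_dates <- sort(unique(unname(adoption[is.finite(adoption)])))
omega <- event_dates[event_dates >= T_first + kappa_a &
                     event_dates <= T_last - kappa_b]

# One sub-experiment per event date: treated units, clean controls, periods
sub_experiments <- lapply(omega, function(w) {
  list(
    event_date = w,
    treated    = names(adoption)[adoption == w],
    controls   = names(adoption)[adoption > w + kappa_b],
    periods    = (w - kappa_a):(w + kappa_b)
  )
})

print(omega)
str(sub_experiments[[1]])
```

In this toy example the unit adopting in period 2 and the unit adopting in period 8 form no sub-experiment of their own because their event windows run outside the panel, although the period-8 adopter still serves as a clean control for the period-3 event. The never-treated unit appears as a control in every sub-experiment, which is the duplication that the clustering choice has to account for.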

30.8.3.4 Specify Estimating Equation

Basic DiD Specification in the Stacked Dataset

\[ Y_{itd} = \beta_0 + \beta_1 T_{id} + \beta_2 P_{td} + \beta_3 (T_{id} \times P_{td}) + \epsilon_{itd} \]

Where:

\(i\): Unit index

\(t\): Time index

\(d\): Sub-experiment index

\(T_{id}\): Indicator for treated units in sub-experiment \(d\)

\(P_{td}\): Indicator for post-treatment periods in sub-experiment \(d\)

\(\beta_3\): Captures the DiD estimate of the treatment effect.

Equivalent Form with Fixed Effects

\[ Y_{itd} = \beta_3 (T_{id} \times P_{td}) + \theta_{id} + \gamma_{td} + \epsilon_{itd} \]

where

\(\theta_{id}\): Unit-by-sub-experiment fixed effect.

\(\gamma_{td}\): Time-by-sub-experiment fixed effect.

Note:

- \(\beta_3\) summarizes the average treatment effect across all sub-experiments but does not allow for dynamic effects by time since treatment.

30.8.3.5 Stacked Event Study Specification

Define Time Since Event (\(YSE_{td}\)):

\[ YSE_{td} = t- \omega_d \]

where

Measures time since the event (relative time) in sub-experiment \(d\).

\(YSE_{td} \in \{-\kappa_a, \dots, 0, \dots, \kappa_b\}\) in every sub-experiment.

Event-Study Regression (Sub-Experiment Level)

\[ Y_{it}^d = \sum_{j = -\kappa_a}^{\kappa_b} \beta_j^d \cdot \mathbb{1}(YSE_{td} = j) + \sum_{j = -\kappa_a}^{\kappa_b} \delta_j^d \left( T_{id} \cdot \mathbb{1}(YSE_{td} = j) \right) + \theta_i^d + \epsilon_{it}^d \]

where

Separate coefficients for each sub-experiment \(d\).

\(\delta_j^d\): Captures treatment effects at relative time \(j\) within sub-experiment \(d\).

Pooled Stacked Event-Study Regression

\[ Y_{itd} = \sum_{j = -\kappa_a}^{\kappa_b} \beta_j \cdot \mathbb{1}(YSE_{td} = j) + \sum_{j = -\kappa_a}^{\kappa_b} \delta_j \left( T_{id} \cdot \mathbb{1}(YSE_{td} = j) \right) + \theta_{id} + \epsilon_{itd} \]

- Pooled coefficients \(\delta_j\) reflect average treatment effects by event time \(j\) across sub-experiments.

30.8.3.6 Clustering in Stacked DID

Cluster at Unit × Sub-Experiment Level (Cengiz et al. 2019): Accounts for units appearing multiple times across sub-experiments.

Cluster at Unit Level (Deshpande and Li 2019): Appropriate when units are uniquely identified and do not appear in multiple sub-experiments.

library(did)

library(tidyverse)

library(fixest)

# Load example data

data(base_stagg)

# Get treated cohorts (exclude never-treated units coded as 10000)

cohorts <- base_stagg %>%

filter(year_treated != 10000) %>%

distinct(year_treated) %>%

pull()

# Function to generate data for each sub-experiment

getdata <- function(j, window) {

base_stagg %>%

filter(

year_treated == j | # treated units in cohort j

year_treated > j + window # controls not treated soon after

) %>%

filter(

year >= j - window &

year <= j + window # event window bounds

) %>%

mutate(df = j) # sub-experiment indicator

}

# Generate the stacked dataset

stacked_data <- map_df(cohorts, ~ getdata(., window = 5)) %>%

mutate(

rel_year = if_else(df == year_treated, time_to_treatment, NA_real_)

) %>%

fastDummies::dummy_cols("rel_year", ignore_na = TRUE) %>%

mutate(across(starts_with("rel_year_"), ~ replace_na(., 0)))

# Estimate fixed effects regression on the stacked data

stacked_result <- feols(

y ~ `rel_year_-5` + `rel_year_-4` + `rel_year_-3` + `rel_year_-2` +

rel_year_0 + rel_year_1 + rel_year_2 + rel_year_3 +

rel_year_4 + rel_year_5 |

id ^ df + year ^ df,

data = stacked_data

)

# Extract coefficients and standard errors

stacked_coeffs <- stacked_result$coefficients

stacked_se <- stacked_result$se

# Insert zero for the omitted period (usually -1)

stacked_coeffs <- c(stacked_coeffs[1:4], 0, stacked_coeffs[5:10])

stacked_se <- c(stacked_se[1:4], 0, stacked_se[5:10])

# Plotting estimates from three methods: Callaway & Sant'Anna, Sun & Abraham, and Stacked DiD

cs_out <- att_gt(

yname = "y",

data = base_stagg,

gname = "year_treated",

idname = "id",

# xformla = "~x1",

tname = "year"

)

cs <-

aggte(

cs_out,

type = "dynamic",

min_e = -5,

max_e = 5,

bstrap = FALSE,

cband = FALSE

)

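res_sa20 = feols(y ~ sunab(year_treated, year) |

id + year, base_stagg)

# Rows 5:14 of the tidied output cover relative periods -5 to 5 (period -1 is the omitted reference)

sa_tidy = broom::tidy(res_sa20)[5:14, ]

sa = sa_tidy %>% pull(estimate)

sa = c(sa[1:4], 0, sa[5:10])

# Take standard errors from the same rows so they line up with the estimates

sa_se = sa_tidy %>% pull(std.error)

sa_se = c(sa_se[1:4], 0, sa_se[5:10])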

compare_df_est = data.frame(

period = -5:5,

cs = cs$att.egt,

sa = sa,

stacked = stacked_coeffs

)

compare_df_se = data.frame(

period = -5:5,

cs = cs$se.egt,

sa = sa_se,

stacked = stacked_se

)

compare_df_longer <- compare_df_est %>%

pivot_longer(!period, names_to = "estimator", values_to = "est") %>%

full_join(compare_df_se %>%

pivot_longer(!period, names_to = "estimator", values_to = "se")) %>%

mutate(upper = est + 1.96 * se,

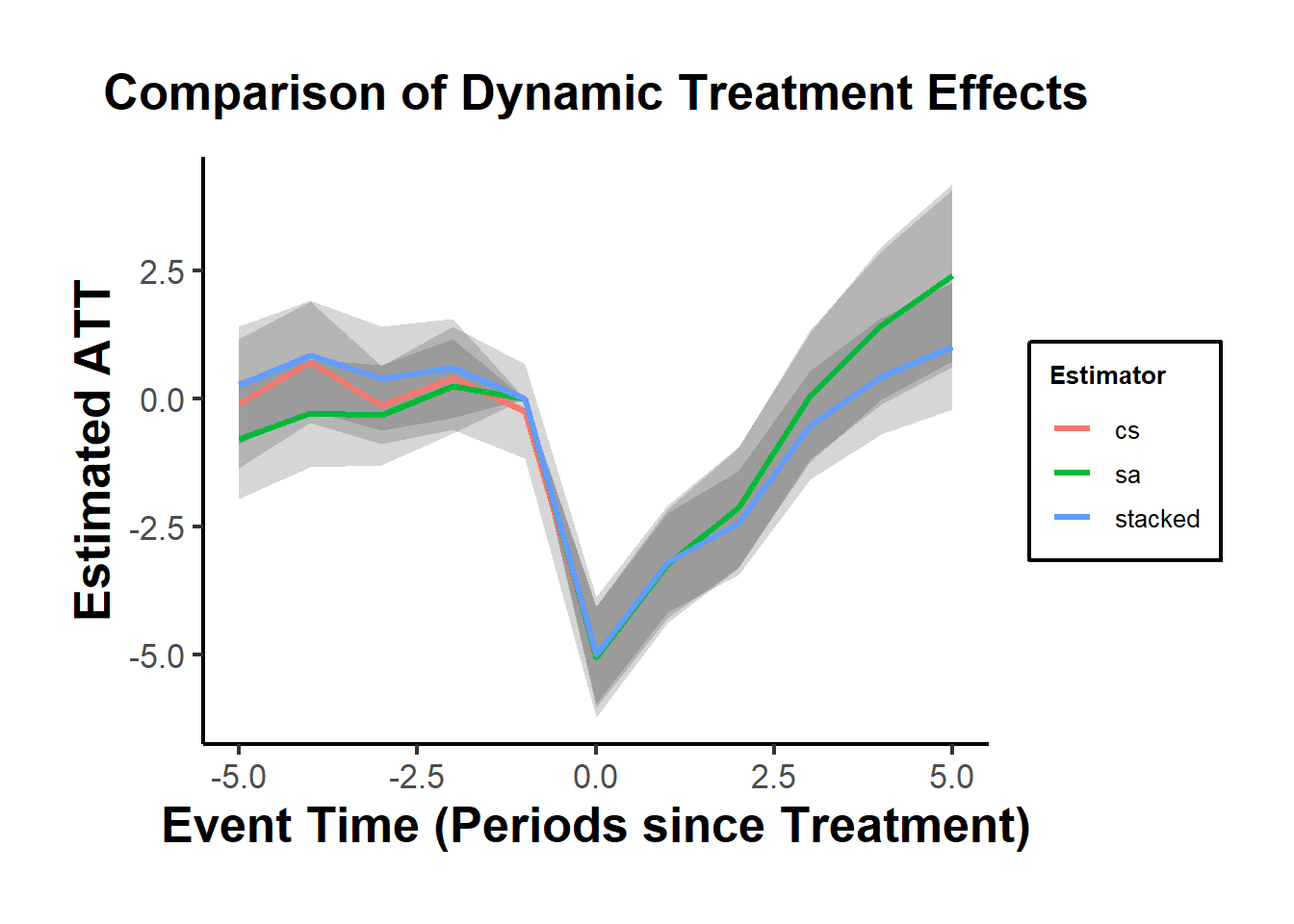

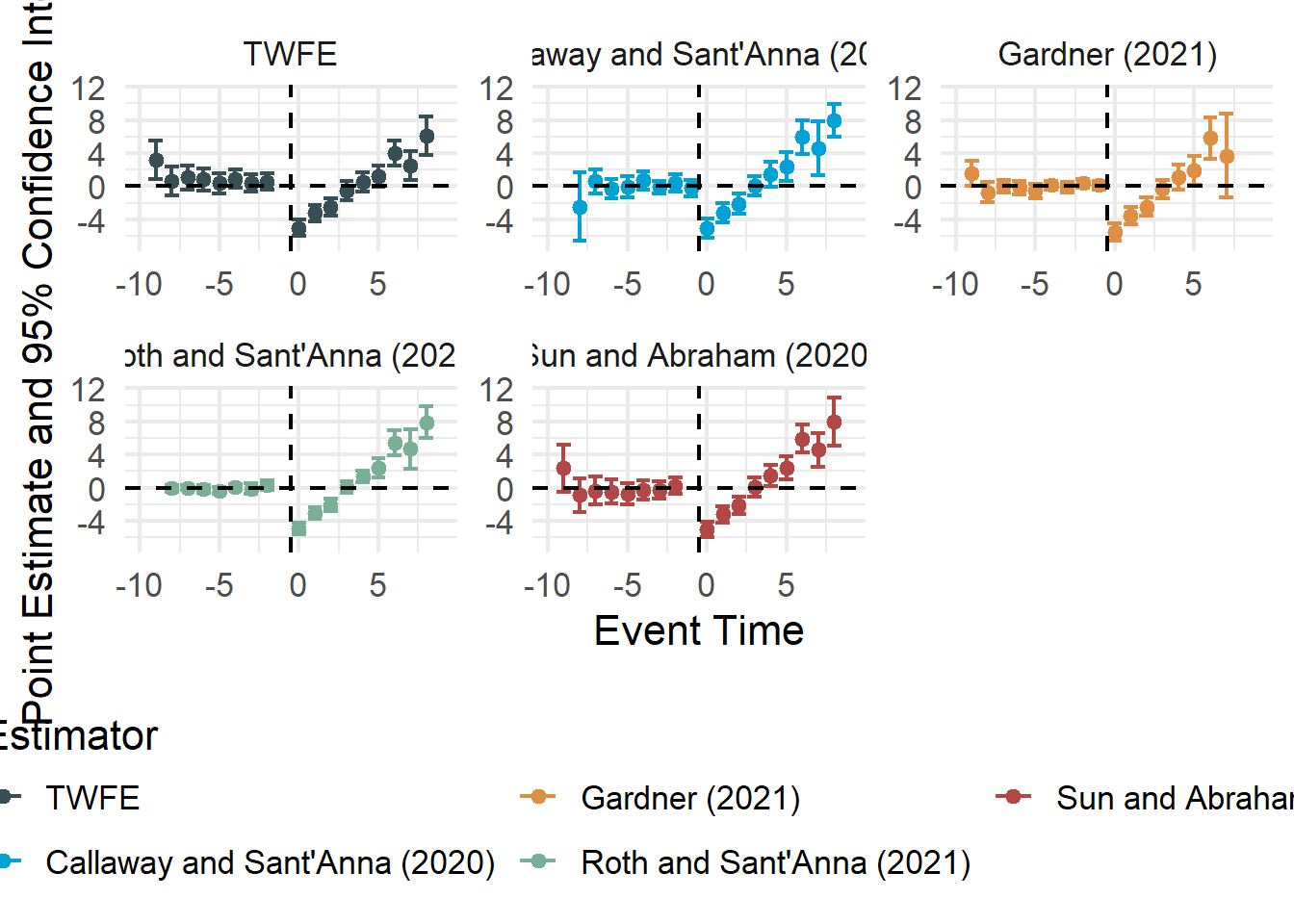

lower = est - 1.96 * se)Figure 30.14 shows a comparison between three estimators (i.e., CS, SA, and stacked)

ggplot(compare_df_longer) +

geom_ribbon(aes(

x = period,

ymin = lower,

ymax = upper,

group = estimator

), alpha = 0.2) +

geom_line(aes(

x = period,

y = est,

group = estimator,

color = estimator

),

linewidth = 1.2) +

labs(

title = "Comparison of Dynamic Treatment Effects",

x = "Event Time (Periods since Treatment)",

y = "Estimated ATT",

color = "Estimator"

) +

causalverse::ama_theme()

Figure 30.14: Comparison of Dynamic Average Treatment Effects

30.8.4 Panel Match DiD Estimator with In-and-Out Treatment Conditions

As noted in Imai and Kim (2021), the TWFE regression model is widely used but fundamentally relies on strong modeling assumptions, particularly linearity and additivity. It does not constitute a fully nonparametric estimation method and may yield biased results under model misspecification.

Researchers often prefer TWFE due to its ability to control for both unit- and time-specific unobserved confounders:

- \(\alpha_i = h(\mathbf{U}_i)\) accounts for unit-level confounders.

- \(\gamma_t = f(\mathbf{V}_t)\) adjusts for time-level confounders.

The functional forms \(h(\cdot)\) and \(f(\cdot)\) are left unspecified, but additivity and separability are assumed. TWFE is based on the model:

\[ Y_{it} = \alpha_i + \gamma_t + \beta X_{it} + \epsilon_{it} \]

for \(i = 1, \dots, N\), and \(t = 1, \dots, T\). However, this formulation requires a linear specification for the treatment effect \(\beta\). Contrary to popular belief, the model does require functional form assumptions for validity (Imai and Kim 2021, 406; 2019).

30.8.4.1 Matching and the Panel Match DiD Estimator

To mitigate model dependence and improve causal inference validity, Imai and Kim (2021) propose a matching-based framework for panel data. This method is implemented via the wfe and PanelMatch R packages and offers design-based identification under relaxed assumptions.

This setting generalizes staggered adoption, allowing units to transition in and out of treatment. The core idea is to construct matched control groups that share the same treatment history as treated units and then apply a Difference-in-Differences logic. Relative to synthetic control methods (e.g., Xu (2017)), it requires less data to achieve good performance and can adapt to contexts where units switch treatment status multiple times.

Key Properties of PM-DiD (Imai, Kim, and Wang 2021)

- Designed for multiple treatment switches over time.

- Addresses issues of carryover, reversal, and attenuation bias.

- Allows estimation of short-term and long-term causal effects, accounting for time dynamics.

Key Findings (Imai, Kim, and Wang 2021)

- Even under favorable conditions for OLS, PM-DiD is more robust to model misspecification and omitted lags.

- This robustness comes with a cost: reduced efficiency (larger variance).

- This reflects the classic bias-variance tradeoff between flexible and parametric estimators.

Data and Software Requirements

- Treatment variable: binary (0 = control, 1 = treated).

- Unit and time variables: integer/numeric and ordered.

- Input data must be in data.frame format.

Examples of settings that can utilize this method:

30.8.4.2 Two-Way Matching Interpretation of TWFE

The least squares estimate of \(\beta\) in the TWFE model can be re-expressed as a matching estimator that compares each treated unit to observations within:

- The same unit (within-unit match),

- The same time period (within-time match),

- Adjusted by a third set of observations in neither group.

This leads to mismatches: some treated observations are compared with observations that have the same treatment status, which causes attenuation bias.

The adjustment factor \(K\) corrects for this by weighting matches appropriately. However, even the weighted TWFE estimator contains some mismatches and relies on comparisons across units that differ in key characteristics.

In the simple two-period, two-group DiD setting, the TWFE and DiD estimators coincide. However, in multi-period DiD with treatment reversals, this equivalence breaks down (Imai and Kim 2021).

- The unweighted TWFE is not equivalent to multi-period DiD.

- The multi-period DiD is equivalent to a weighted TWFE, but some weights are negative, which is a problematic feature from a design-based perspective.

This means that justifying TWFE via DiD logic is incorrect unless the linearity assumption is satisfied.

30.8.4.3 Estimation Using Panel Match DiD

Core Estimation Steps (Imai, Kim, and Wang 2021):

- Match treated observations with control observations from the same time period and with identical treatment histories over the past \(L\) periods.

- Use standard matching or weighting methods to refine control sets (e.g., Mahalanobis distance, propensity score).

- Apply a DiD estimator to compute treatment effects at time \(t + F\).

- Evaluate match quality using covariate balance diagnostics (Ho et al. 2007).
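The first step, exact matching on treatment history, can be sketched in base R. This is an illustrative toy rather than the PanelMatch implementation: the treatment matrix `treat` and the helper `find_matched_set()` are hypothetical names introduced here.

```r
# Hypothetical toy panel: rows are units, columns are periods 1..5
treat <- rbind(
  A = c(0, 0, 0, 1, 1),
  B = c(0, 0, 0, 0, 0),
  C = c(0, 1, 0, 0, 0),
  D = c(0, 0, 0, 0, 1)
)
colnames(treat) <- 1:5

# Controls for treated observation (unit, t): other units that are untreated
# at t and share the treated unit's treatment history from t - L to t - 1
find_matched_set <- function(treat, unit, t, L) {
  hist_cols <- (t - L):(t - 1)
  stopifnot(min(hist_cols) >= 1)
  target <- treat[unit, hist_cols]
  others <- setdiff(rownames(treat), unit)
  same_history <- vapply(
    others,
    function(u) all(treat[u, hist_cols] == target),
    logical(1)
  )
  untreated_now <- treat[others, t] == 0
  others[same_history & untreated_now]
}

# Unit A switches into treatment in period 4
matched_A <- find_matched_set(treat, unit = "A", t = 4, L = 2)
matched_A
```

With \(L = 2\), units B and D share A's untreated history in periods 2 and 3 and are untreated in period 4, so they form the matched set; unit C is excluded because it was treated in period 2.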

Causal Estimand

Let \(F\) be the number of leads (future periods) and \(L\) be the number of lags (past treatment periods). Define the average treatment effect as:

\[ \delta(F, L) = \mathbb{E}\left[Y_{i, t+F}(1) - Y_{i, t+F}(0) \mid \text{treatment history from } t-L \text{ to } t\right] \]

- \(F = 0\): contemporaneous effect (short-run ATT)

- \(F > 0\): future outcomes (long-run ATT)

- \(L\): adjusts for potential carryover effects

The estimator also allows for estimation of the Average Reversal Treatment Effect (ART) when treatment status switches from 1 to 0.

30.8.4.4 Model Assumptions

- No spillover effects across units (i.e., SUTVA holds).

- Carryover effects are allowed for up to \(L\) periods:

  - After \(L\) lags, prior treatments are assumed to have no effect on \(Y_{i,t+F}\).

  - The potential outcome at \(t + F\) is independent of treatment assignments beyond \(t - L\).

- The key identifying assumption is a conditional parallel trends assumption. Outcome trends are assumed parallel across treated and matched control units, conditional on:

  - Past treatment,

  - Covariate histories,

  - Lagged outcomes (excluding the most recent).

Unlike standard TWFE, strong ignorability is not required.

30.8.4.5 Covariate Balance Assessment

Assessing balance before estimating ATT is critical:

- Compute the mean standardized difference between treated and matched control units.

- Check balance across covariates and lagged outcomes for all \(L\) pretreatment periods.

- Imbalanced covariates may indicate violations of the parallel trends assumption.

30.8.4.6 Implementing the Panel Match DiD Estimator

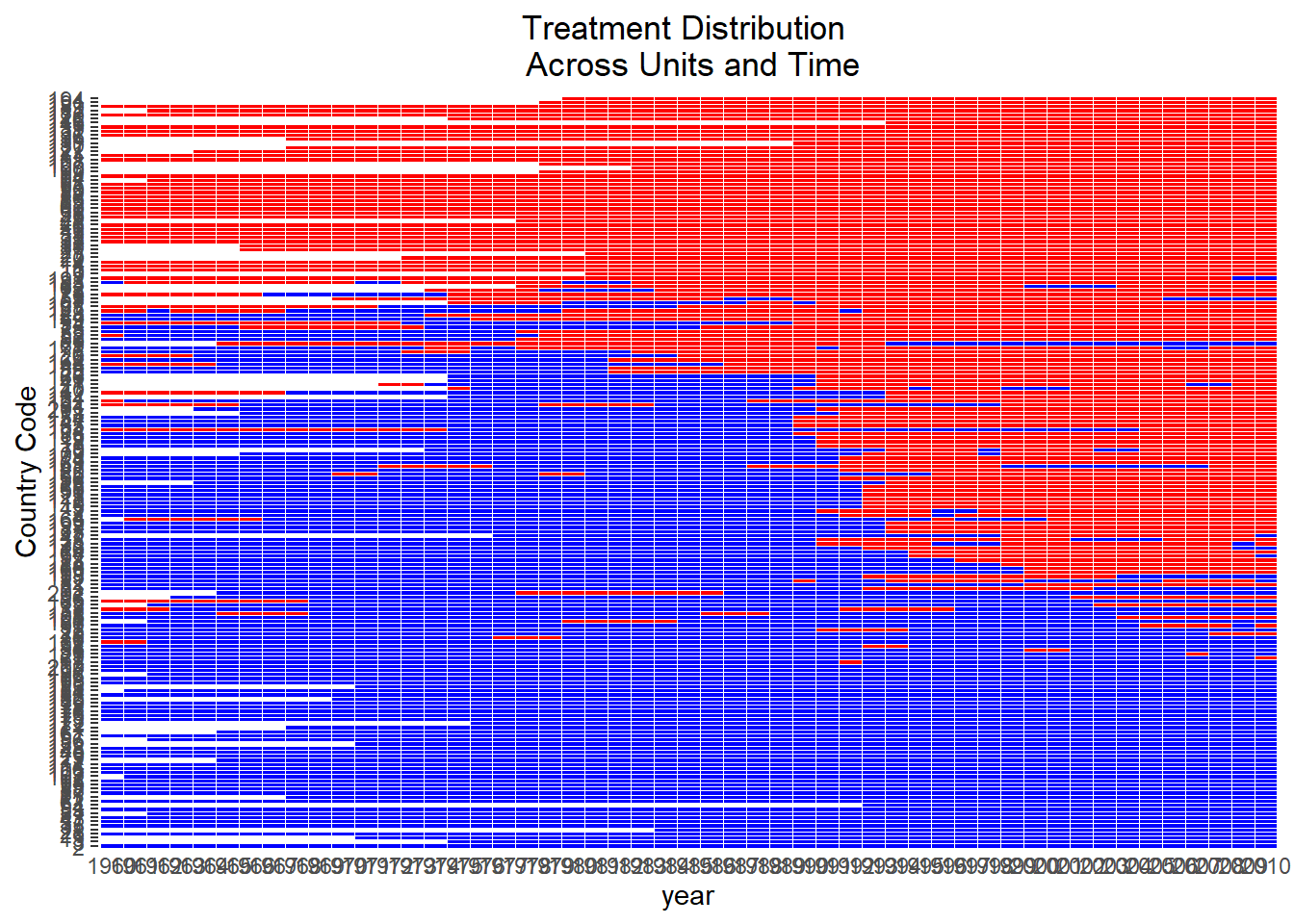

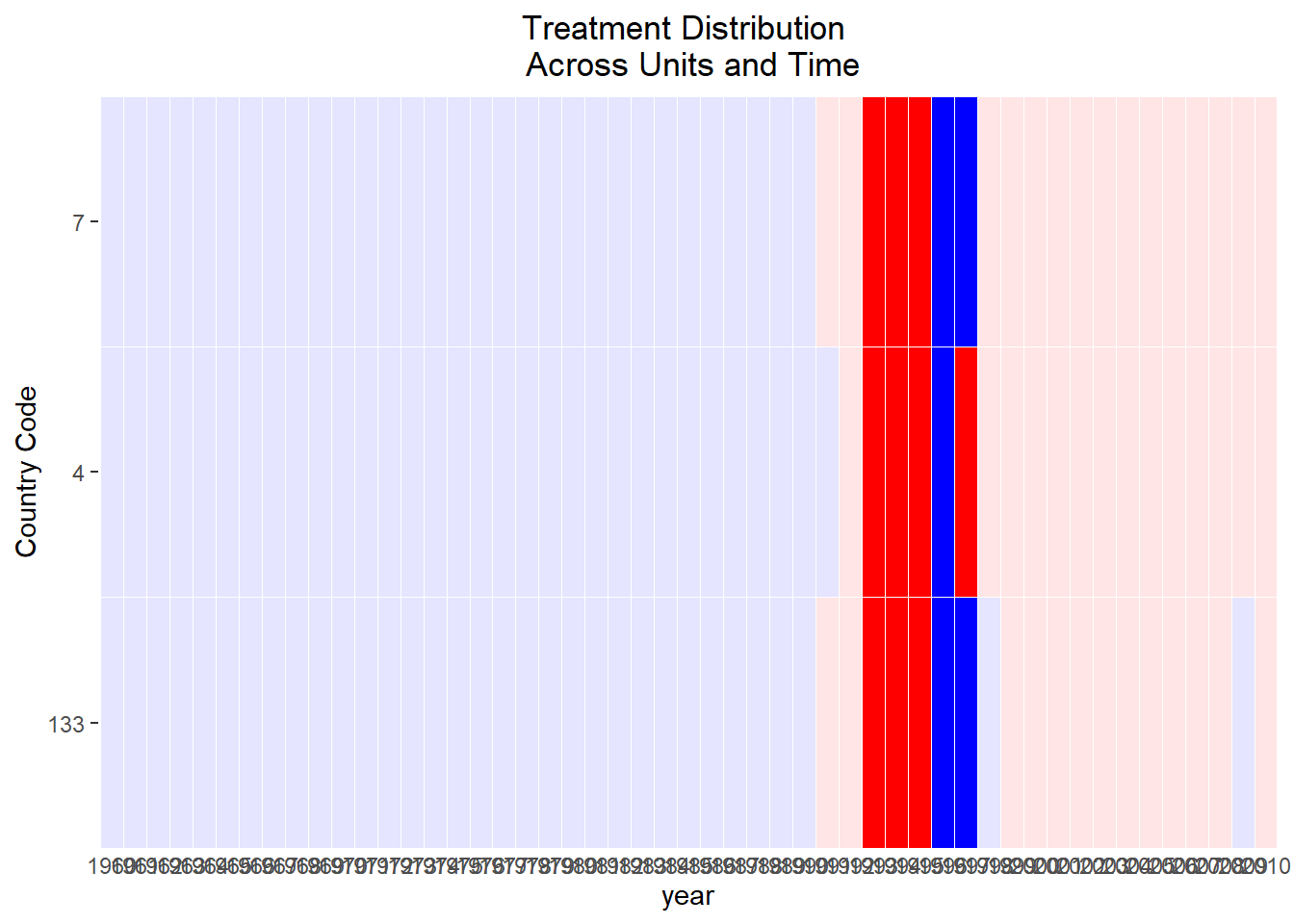

Treatment Variation Plot

Visualizing the variation of treatment across space and time is essential to assess whether the treatment has sufficient heterogeneity to support credible causal identification.

library(PanelMatch) # provides DisplayTreatment(), PanelData(), and the dem dataset

DisplayTreatment(

panel.data = PanelData(

panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"

),

legend.position = "none",

xlab = "year",

ylab = "Country Code"

)

Figure 30.15: Treatment Distribution Across Units and Time

This plot aids in identifying whether the treatment is broadly distributed or concentrated among a few units or time periods.

Insufficient treatment variation may weaken identification or reduce the precision of estimated effects.

30.8.4.6.1 Setting Parameters \(F\) and \(L\)

- Select \(F\): the number of leads, or time periods after treatment, for which the effect is measured.

\(F = 0\): contemporaneous (short-term) treatment effect.

\(F > 0\): long-term or cumulative effects.

- Select \(L\): the number of lags (prior treatment periods) used in matching to adjust for carryover effects.

Increasing \(L\) enhances credibility but reduces match quality and sample size.

This selection reflects the bias-variance tradeoff.

30.8.4.6.2 Causal Quantity of Interest

The ATT is defined as:

\[ \delta(F, L) = \mathbb{E} \left[ Y_{i,t+F}(1) - Y_{i,t+F}(0) \mid X_{i,t} = 1, \text{History}_{i,t-L:t-1} \right] \]

This estimator accounts for carryover history (via \(L\)) and post-treatment dynamics (via \(F\)).

It is also robust to treatment reversals (i.e., treatment switching back to control).

A related estimand, the Average Reversal Treatment Effect (ART), measures the causal effect of switching from treatment to control.

30.8.4.6.3 Choosing \(F\) and \(L\)

Large \(L\):

Improves identification of causal effect by accounting for long-term treatment confounding.

Reduces sample size due to stricter matching requirements.

Large \(F\):

Enables analysis of delayed effects.

Complicates interpretation if units switch treatment again before \(t + F\).

Researchers should select \(F\) and \(L\) based on substantive context, theoretical considerations, and sensitivity analysis.

30.8.4.6.4 Constructing and Refining Matched Sets

- Initial Matching

Each treated observation is matched to control units from other units in the same time period.

Matching is based on exact treatment histories from \(t - L\) to \(t - 1\).

Purpose

Controls for carryover effects.

Ensures matched units have similar latent propensities for treatment.

- Refinement Process

Refined matched sets additionally adjust for pre-treatment covariates and lagged outcomes.

Matching strategies:

Mahalanobis distance.

Propensity score.

Up to \(J\) best matches per treated unit may be used.

- Weighting

Assigns weights to matched controls to emphasize similarity.

Weighting is often done via inverse propensity scores, or other balance-enhancing metrics.

Can be considered a generalization of traditional matching.

30.8.4.6.5 Difference-in-Differences Estimation

Once matched sets are constructed:

The counterfactual for each treated unit is a weighted average of outcomes from its matched control set.

The DiD estimate of ATT is:

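\[ \widehat{\delta}_{\text{ATT}} = \frac{1}{|T_1|} \sum_{(i,t) \in T_1} \left[ \left( Y_{i,t+F} - Y_{i,t-1} \right) - \sum_{j \in \mathcal{C}_{it}} w_{ijt} \left( Y_{j,t+F} - Y_{j,t-1} \right) \right] \]

where \(T_1\) is the set of treated observations, \(\mathcal{C}_{it}\) is the matched control set, and \(w_{ijt}\) are normalized weights. Each outcome is differenced against its value in period \(t - 1\), the last pre-treatment period, so the estimator compares changes rather than levels.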
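A small numerical sketch may help make the DiD logic concrete for a single treated observation. It assumes the pre-treatment baseline is period \(t - 1\), as in Imai, Kim, and Wang (2021); all numbers and object names below are hypothetical.

```r
# Hypothetical outcomes for one treated observation (i, t)
y_treated_pre  <- 10  # Y_{i, t-1}
y_treated_post <- 14  # Y_{i, t+F}

# Hypothetical matched controls with normalized weights
controls <- data.frame(
  y_pre  = c(9, 11, 10),      # Y_{j, t-1}
  y_post = c(10, 13, 11),     # Y_{j, t+F}
  w      = c(0.5, 0.3, 0.2)   # weights w_{ijt}, summing to one
)
stopifnot(isTRUE(all.equal(sum(controls$w), 1)))

# Change for the treated unit minus the weighted change for its controls
treated_change <- y_treated_post - y_treated_pre
control_change <- sum(controls$w * (controls$y_post - controls$y_pre))
did_it <- treated_change - control_change
did_it
```

Here the treated unit's outcome rises by 4 while the weighted control change is 1.3, giving a contribution of 2.7; the ATT estimate averages such contributions over all treated observations.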

Considerations when \(F > 0\):

Matched controls may themselves switch into treatment before \(t + F\).

Some treated units may revert to control.

30.8.4.6.6 Checking Covariate Balance

One of the main advantages of matching-based estimators is the ability to diagnose balance:

- For each covariate and each lag, compute:

\[ \text{Standardized Difference} = \frac{\bar{X}_{\text{treated}} - \bar{X}_{\text{control}}}{\text{SD}_{\text{treated}}} \]

Aggregate these over all treated observations and time periods.

Examine balance on:

Time-varying covariates,

Lagged outcomes,

Baseline covariates

Balance checks provide indirect validation of the parallel trends assumption.
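The standardized difference above can be computed lag by lag in a few lines of base R. The sketch below uses made-up covariate values; `x_treated` and `x_control` are hypothetical matrices whose rows are observations and whose columns are lags.

```r
# Hypothetical covariate values; rows are observations, columns are lags
x_treated <- cbind(lag1 = c(2, 3, 4, 5), lag2 = c(1, 3, 3, 5))
x_control <- cbind(lag1 = c(1.5, 2.5, 3.5, 4.5), lag2 = c(1, 2, 3, 4))

# Difference in means, scaled by the standard deviation among the treated
std_diff <- (colMeans(x_treated) - colMeans(x_control)) /
  apply(x_treated, 2, sd)
std_diff
```

Values close to zero at every lag suggest that treated and matched control observations are comparable on that covariate before treatment.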

30.8.4.6.7 Standard Error Estimation

Standard errors are calculated conditional on the matching weights (G. W. Imbens and Rubin 2015), analogous to the conditional variance in regression models.

They measure sampling uncertainty given the matched design.

Note: They do not incorporate uncertainty from the matching procedure itself (Ho et al. 2007).

30.8.4.6.8 Matching on Treatment History

The goal is to compare treated units transitioning into treatment to control units with comparable treatment histories.

Set qoi to one of:

- "att": Average Treatment on the Treated,

- "atc": Average Treatment on the Controls,

- "art": Average Reversal Treatment Effect,

- "ate": Average Treatment Effect.

library(PanelMatch)

# All examples follow the package's vignette

# Create the matched sets

PM.results.none <-

PanelMatch(

lag = 4,

refinement.method = "none",

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

match.missing = TRUE,

size.match = 5,

qoi = "att",

lead = 0:4,

forbid.treatment.reversal = FALSE,

use.diagonal.variance.matrix = TRUE

)

# visualize the treated unit and matched controls

DisplayTreatment(

legend.position = "none",

xlab = "year",

ylab = "Country Code",

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

matched.set = PM.results.none$att[1],

# highlight the particular set

show.set.only = TRUE

)

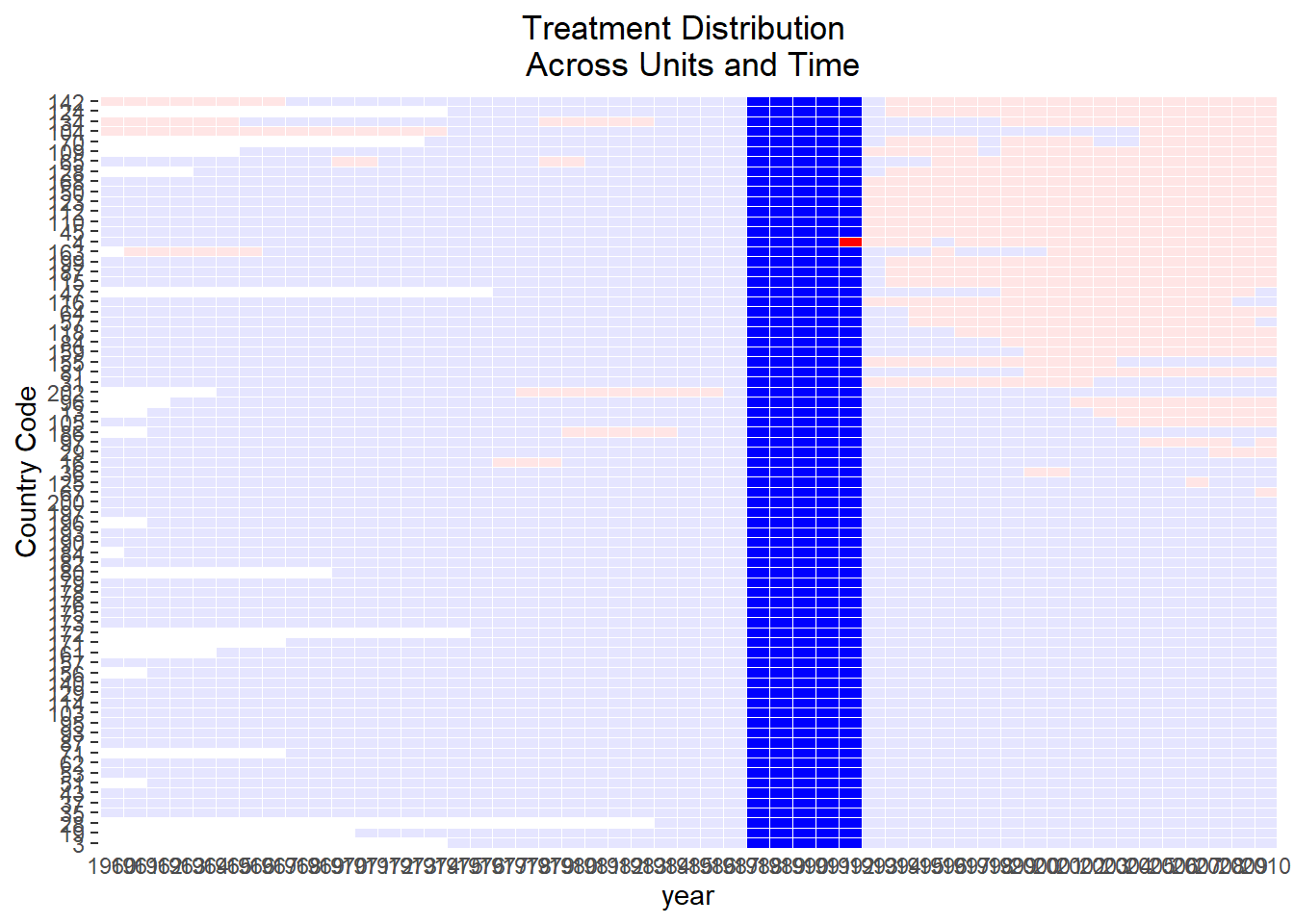

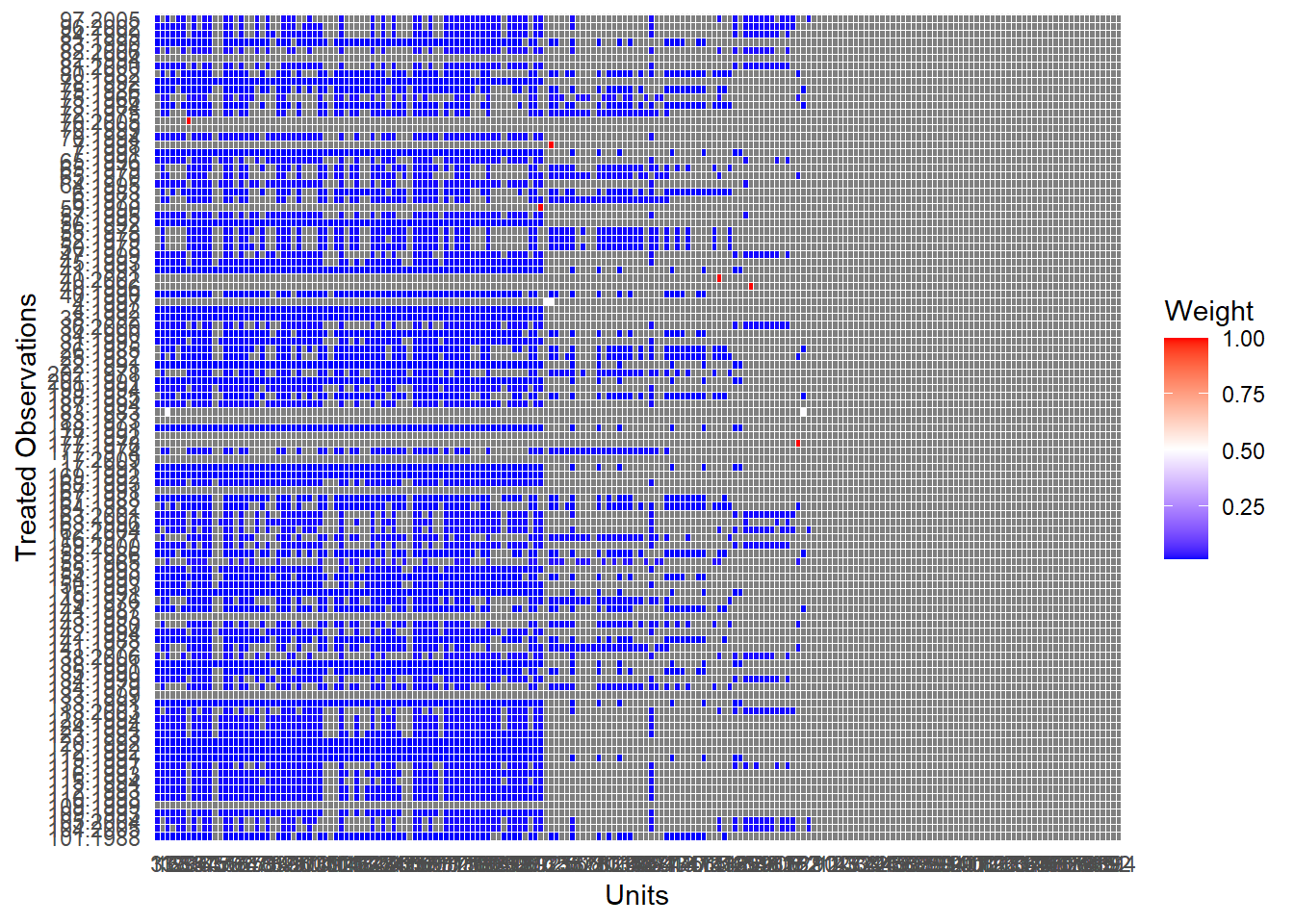

Figure 30.16: Treatment by Country and Year

Control units and the treated unit have identical treatment histories over the lag window (1988-1991).

DisplayTreatment(

legend.position = "none",

xlab = "year",

ylab = "Country Code",

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

matched.set = PM.results.none$att[2],

# highlight the particular set

show.set.only = TRUE

)

Figure 30.17: Focused Treatment Visualization

This set is more limited than the first one, but we can still see that we have exact past histories.

Refining Matched Sets

Refinement involves assigning weights to control units.

Users must:

Specify a method for calculating unit similarity/distance.

Choose variables for similarity/distance calculations.

Select a Refinement Method

Users determine the refinement method via the refinement.method argument. Options include:

- mahalanobis

- ps.match

- CBPS.match

- ps.weight

- CBPS.weight

- ps.msm.weight

- CBPS.msm.weight

- none

Methods with “match” in the name and Mahalanobis will assign equal weights to similar control units.

“Weighting” methods give higher weights to control units more similar to treated units.

Variable Selection

Users need to define which covariates will be used through the covs.formula argument, a one-sided formula object.

Variables on the right side of the formula are used for calculations.

“Lagged” versions of variables can be included using the format: I(lag(name.of.var, 0:n)).

Understanding PanelMatch and matched.set objects

The PanelMatch function returns a PanelMatch object.

The most crucial element within the PanelMatch object is the matched.set object.

Within the PanelMatch object, the matched.set object will have names like att, art, or atc.

If qoi = "ate", there will be two matched.set objects: att and atc.

Matched.set Object Details

matched.set is a named list with added attributes.

Attributes include:

Lag

Names of treatment

Unit and time variables

Each list entry represents a matched set of treated and control units.

Naming follows a structure: [id variable].[time variable].

Each list element is a vector of control unit ids that match the treated unit mentioned in the element name.

Since it’s a matching method, weights are only given to the size.match most similar control units based on distance calculations.

# PanelMatch without any refinement

PM.results.none <-

PanelMatch(

lag = 4,

refinement.method = "none",

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

match.missing = TRUE,

size.match = 5,

qoi = "att",

lead = 0:4,

forbid.treatment.reversal = FALSE,

use.diagonal.variance.matrix = TRUE

)

# Extract the matched.set object

msets.none <- PM.results.none$att

# PanelMatch with refinement

PM.results.maha <-

PanelMatch(

lag = 4,

refinement.method = "mahalanobis", # use Mahalanobis distance

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

match.missing = TRUE,

covs.formula = ~ tradewb,

size.match = 5,

qoi = "att" ,

lead = 0:4,

forbid.treatment.reversal = FALSE,

use.diagonal.variance.matrix = TRUE

)

msets.maha <- PM.results.maha$att

# these 2 should be identical because weights are not shown

msets.none |> head()

#> wbcode2 year matched.set.size

#> 1 4 1992 74

#> 2 4 1997 2

#> 3 6 1973 63

#> 4 6 1983 73

#> 5 7 1991 81

#> ... [1 more matched set(s) not printed]

msets.maha |> head()

#> wbcode2 year matched.set.size

#> 1 4 1992 74

#> 2 4 1997 2

#> 3 6 1973 63

#> 4 6 1983 73

#> 5 7 1991 81

#> ... [1 more matched set(s) not printed]

# summary(msets.none)

# summary(msets.maha)

Visualizing Matched Sets with the plot method

Users can visualize the distribution of the matched set sizes.

A red line, by default, indicates the count of matched sets where treated units had no matching control units (i.e., empty matched sets).

Plot adjustments can be made using graphics::plot.

plot(

msets.none,

panel.data = PanelData(

panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"

)

)

Figure 30.18: Weight Distribution

Comparing Methods of Refinement

Users are encouraged to:

- Use substantive knowledge for experimentation and evaluation.

- Consider the following when configuring PanelMatch:

  - The number of matched sets.

  - The number of controls matched to each treated unit.

  - Achieving covariate balance.

Note: Large numbers of small matched sets can lead to larger standard errors during the estimation stage.

Covariates that aren’t well balanced can lead to undesirable comparisons between treated and control units.

Aspects to consider include:

- Refinement method.

- Variables for weight calculation.

- Size of the lag window.

- Procedures for addressing missing data (refer to the match.missing and listwise.delete arguments).

- Maximum size of matched sets (for matching methods).

Supportive Features:

- print, plot, and summary methods assist in understanding matched sets and their sizes.

- get_covariate_balance helps evaluate covariate balance: lower values in the covariate balance calculations are preferred.

PM.results.none <-

PanelMatch(

lag = 4,

refinement.method = "none",

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

match.missing = TRUE,

size.match = 5,

qoi = "att",

lead = 0:4,

forbid.treatment.reversal = FALSE,

use.diagonal.variance.matrix = TRUE

)

PM.results.maha <-

PanelMatch(

lag = 4,

refinement.method = "mahalanobis",

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

match.missing = TRUE,

covs.formula = ~ I(lag(tradewb, 1:4)) + I(lag(y, 1:4)),

size.match = 5,

qoi = "att",

lead = 0:4,

forbid.treatment.reversal = FALSE,

use.diagonal.variance.matrix = TRUE

)

# listwise deletion used for missing data

PM.results.listwise <-

PanelMatch(

lag = 4,

refinement.method = "mahalanobis",

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

match.missing = FALSE,

listwise.delete = TRUE,

covs.formula = ~ I(lag(tradewb, 1:4)) + I(lag(y, 1:4)),

size.match = 5,

qoi = "att",

lead = 0:4,

forbid.treatment.reversal = FALSE,

use.diagonal.variance.matrix = TRUE

)

# propensity score based weighting method

PM.results.ps.weight <-

PanelMatch(

lag = 4,

refinement.method = "ps.weight",

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

match.missing = FALSE,

listwise.delete = TRUE,

covs.formula = ~ I(lag(tradewb, 1:4)) + I(lag(y, 1:4)),

size.match = 5,

qoi = "att",

lead = 0:4,

forbid.treatment.reversal = FALSE

)

get_covariate_balance(

PM.results.none,

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

covariates = c("tradewb", "y")

)

#>

#> ==============================

#> PM.results.none

#> ==============================

#>

#> --- QOI: att ---

#> tradewb y

#> t_4 -0.07245466 0.291871990

#> t_3 -0.20930129 0.208654876

#> t_2 -0.24425207 0.107736647

#> t_1 -0.10806125 -0.004950238

#> t_0 -0.09493854 -0.015198483

# Compare covariate balance to refined sets

# See large improvement in balance

get_covariate_balance(

PM.results.ps.weight,

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

covariates = c("tradewb", "y")

)

#>

#> ==============================

#> PM.results.ps.weight

#> ==============================

#>

#> --- QOI: att ---

#> tradewb y

#> t_4 0.014362590 0.04035905

#> t_3 0.005529734 0.04188731

#> t_2 0.009410044 0.04195008

#> t_1 0.027907540 0.03975173

#> t_0 0.040272235 0.04167921

PanelEstimate

Standard Error Calculation Methods

There are different methods available:

Bootstrap (default method with 1000 iterations).

Conditional: Assumes independence across units, but not time.

Unconditional: Doesn’t make assumptions of independence across units or time.

For qoi values set to att, art, or atc (Imai, Kim, and Wang 2021):

- You can use analytical methods for calculating standard errors, which include both “conditional” and “unconditional” methods.

PE.results <- PanelEstimate(

sets = PM.results.ps.weight,

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

se.method = "bootstrap",

number.iterations = 1000,

confidence.level = .95

)

# point estimates (stored under "estimate"; the "estimates" key returned NULL)

PE.results[["estimate"]]

# standard errors

PE.results[["standard.error"]]

#> t+0 t+1 t+2 t+3 t+4

#> 0.6378008 1.0580194 1.4415817 1.8023301 2.2215475

# use conditional method

PE.results <- PanelEstimate(

sets = PM.results.ps.weight,

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

se.method = "conditional",

confidence.level = .95

)

# point estimates (stored under "estimate"; the "estimates" key returned NULL)

PE.results[["estimate"]]

# standard errors

PE.results[["standard.error"]]

#> t+0 t+1 t+2 t+3 t+4

#> 0.4844805 0.8170604 1.1171942 1.4116879 1.7172143

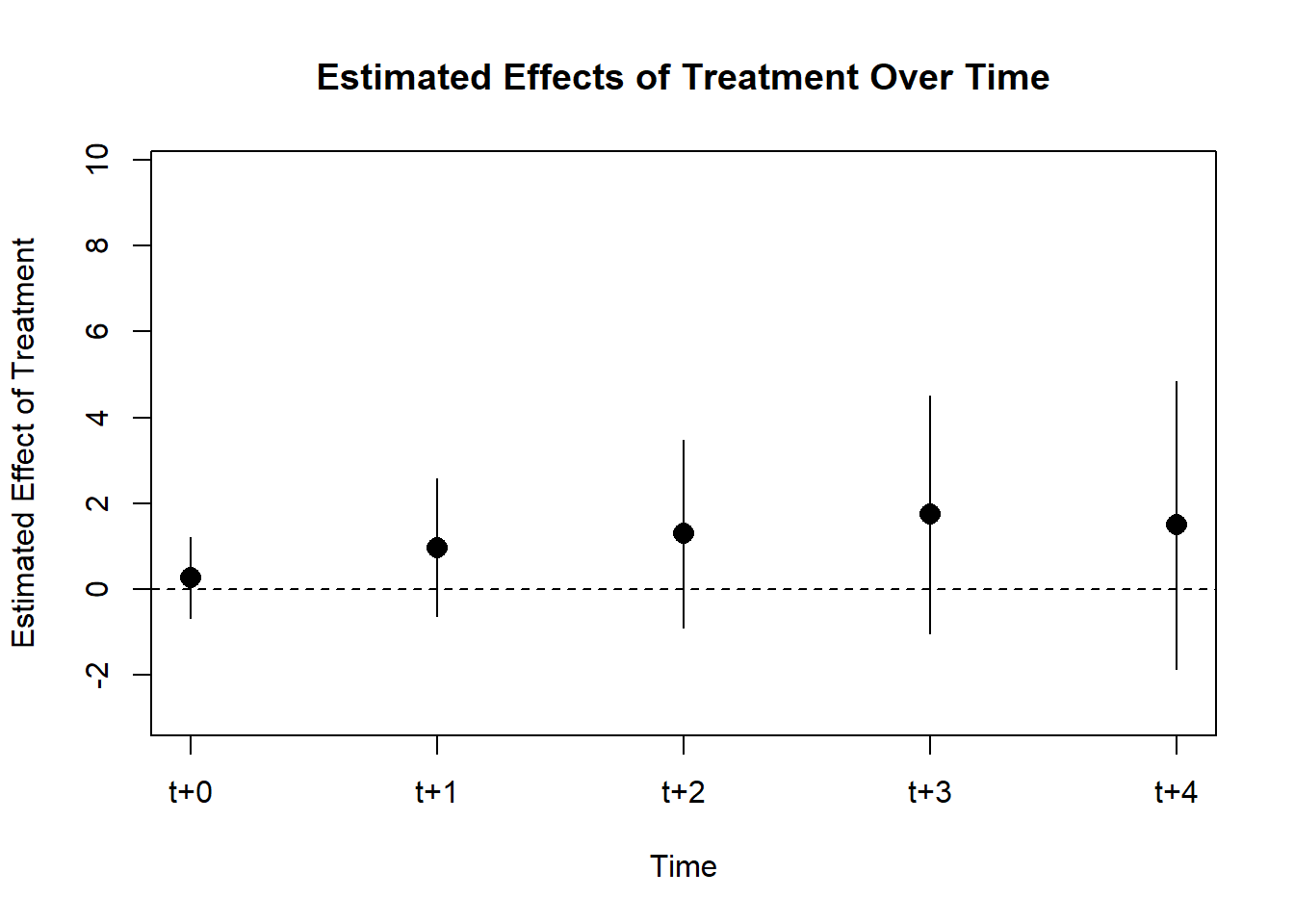

summary(PE.results)

#> estimate std.error 2.5% 97.5%

#> t+0 0.2609565 0.4844805 -0.6886078 1.210521

#> t+1 0.9630847 0.8170604 -0.6383243 2.564494

#> t+2 1.2851017 1.1171942 -0.9045586 3.474762

#> t+3 1.7370930 1.4116879 -1.0297644 4.503950

#> t+4 1.4871846 1.7172143 -1.8784937 4.852863

Figure 30.19: Post-treatment Effects Over Time

Moderating Variables

# moderating variable

dem$moderator <- 0

dem$moderator <- ifelse(dem$wbcode2 > 100, 1, 2)

PM.results <-

PanelMatch(

lag = 4,

# time.id = "year",

# unit.id = "wbcode2",

# treatment = "dem",

refinement.method = "mahalanobis",

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

match.missing = TRUE,

covs.formula = ~ I(lag(tradewb, 1:4)) + I(lag(y, 1:4)),

size.match = 5,

qoi = "att",

# outcome.var = "y",

lead = 0:4,

forbid.treatment.reversal = FALSE,

use.diagonal.variance.matrix = TRUE

)

PE.results <-

PanelEstimate(

sets = PM.results,

panel.data = PanelData(

panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"

),

moderator = "moderator"

)

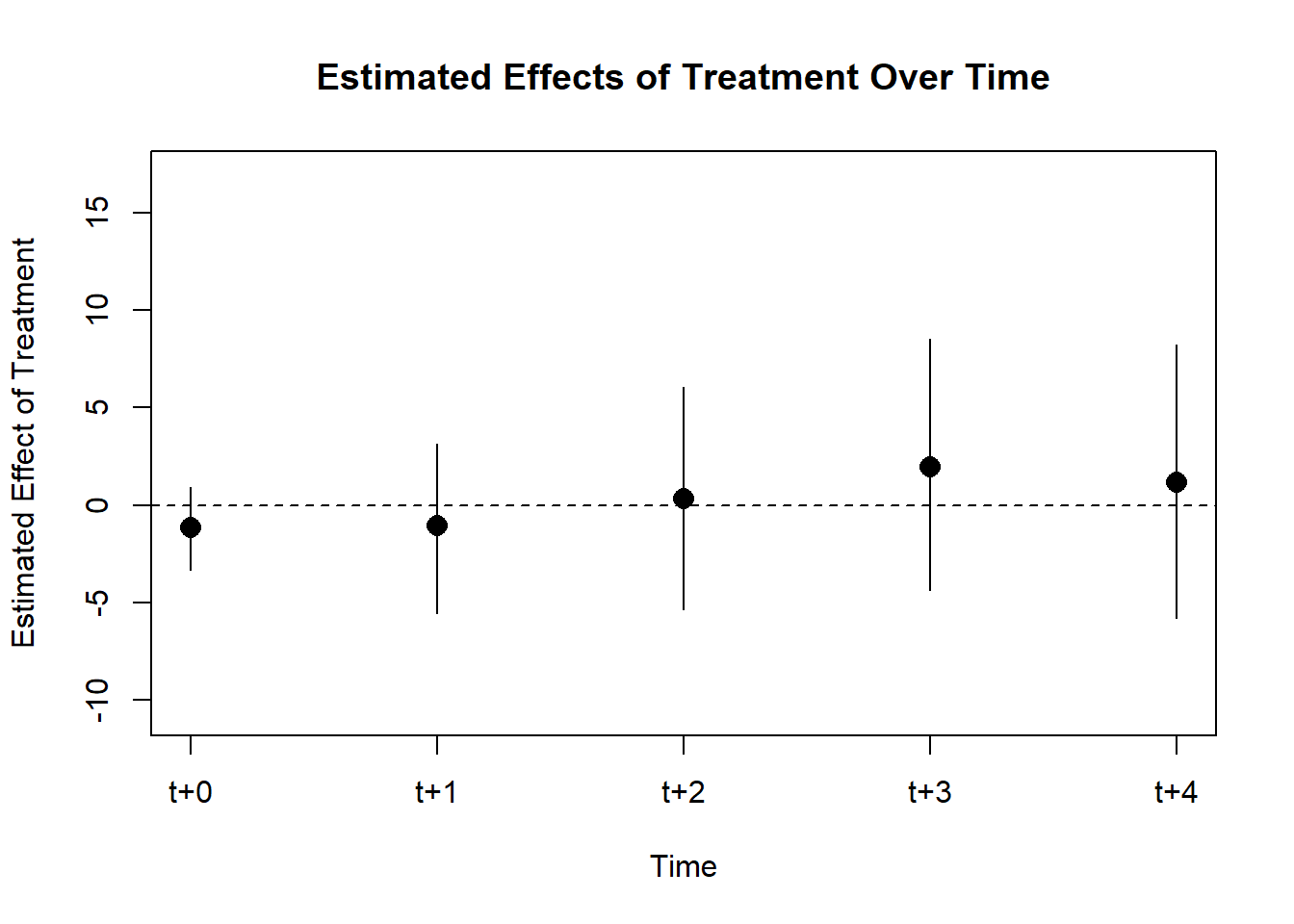

Figure 30.20: Estimated Effects of Treatment Over Time

In this study, aligned with the research by Acemoglu et al. (2019), two key effects of democracy on economic growth are estimated: the impact of democratization and that of authoritarian reversal. The treatment variable, \(X_{it}\), is defined to be one if country \(i\) is democratic in year \(t\), and zero otherwise.

The Average Treatment Effect for the Treated (ATT) under democratization is formulated as follows:

\[ \begin{aligned} \delta(F, L) &= \mathbb{E} \left\{ Y_{i, t + F} (X_{it} = 1, X_{i, t - 1} = 0, \{X_{i,t-l}\}_{l=2}^L) \right. \\ &\left. - Y_{i, t + F} (X_{it} = 0, X_{i, t - 1} = 0, \{X_{i,t-l}\}_{l=2}^L) | X_{it} = 1, X_{i, t - 1} = 0 \right\} \end{aligned} \]

In this framework, the treated observations are countries that transition from an authoritarian regime \(X_{it-1} = 0\) to a democratic one \(X_{it} = 1\). The variable \(F\) represents the number of leads, denoting the time periods following the treatment, and \(L\) signifies the number of lags, indicating the time periods preceding the treatment.

The ATT under authoritarian reversal is given by:

\[ \begin{aligned} &\mathbb{E} \left[ Y_{i, t + F} (X_{it} = 0, X_{i, t - 1} = 1, \{ X_{i, t - l}\}_{l=2}^L ) \right. \\ &\left. - Y_{i, t + F} (X_{it} = 1, X_{it-1} = 1, \{X_{i, t - l} \}_{l=2}^L ) | X_{it} = 0, X_{i, t - 1} = 1 \right] \end{aligned} \]

The ATT is calculated conditioning on 4 years of lags (\(L = 4\)) and up to 4 years following the policy change \(F = 1, 2, 3, 4\). Matched sets for each treated observation are constructed based on its treatment history, with the number of matched control units generally decreasing when considering a 4-year treatment history as compared to a 1-year history.

To enhance the quality of matched sets, methods such as Mahalanobis distance matching, propensity score matching, and propensity score weighting are utilized. These approaches enable us to evaluate the effectiveness of each refinement method. In the process of matching, we employ both up-to-five and up-to-ten matching to investigate how sensitive our empirical results are to the maximum number of allowed matches. For more information on the refinement process, please see the Web Appendix.

For Mahalanobis distance matching, we aim to pair each treated unit with a maximum of \(J\) control units, permitting replacement, denoted as \(|\mathcal{M}_{it}| \le J\). The average Mahalanobis distance between a treated unit and each control unit over time is computed as:

\[ S_{it} (i') = \frac{1}{L} \sum_{l = 1}^L \sqrt{(\mathbf{V}_{i, t - l} - \mathbf{V}_{i', t -l})^T \mathbf{\Sigma}_{i, t - l}^{-1} (\mathbf{V}_{i, t - l} - \mathbf{V}_{i', t -l})} \]

For a matched control unit \(i' \in \mathcal{M}_{it}\), \(\mathbf{V}_{it'}\) represents the time-varying covariates to adjust for, and \(\mathbf{\Sigma}_{it'}\) is the sample covariance matrix for \(\mathbf{V}_{it'}\). Essentially, we calculate a standardized distance using time-varying covariates and average this across different time intervals.
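The averaged distance can be sketched with `stats::mahalanobis()`, which returns the squared distance, so a square root is taken for each lag before averaging. The covariate values are hypothetical, and for simplicity the sketch assumes a single covariance matrix `Sigma` shared across lags; `avg_mahalanobis()` is an illustrative helper, not part of PanelMatch.

```r
# Hypothetical covariates: rows are lags l = 1, ..., L; columns are covariates
V_treated <- rbind(c(1.0, 2.0), c(1.5, 2.5))
V_control <- rbind(c(0.0, 2.0), c(1.5, 0.5))

# Assumed covariance matrix, shared across lags in this sketch
Sigma <- diag(c(1, 4))

avg_mahalanobis <- function(V_i, V_j, Sigma) {
  L <- nrow(V_i)
  d <- vapply(seq_len(L), function(l) {
    # mahalanobis() returns the squared distance, hence the sqrt()
    sqrt(mahalanobis(V_i[l, ], center = V_j[l, ], cov = Sigma))
  }, numeric(1))
  mean(d)
}

S_example <- avg_mahalanobis(V_treated, V_control, Sigma)
S_example
```

In this toy case each lag contributes a distance of 1, so the average is 1; smaller values indicate control units whose covariate histories are closer to the treated unit's.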

In the context of propensity score matching, we employ a logistic regression model with balanced covariates to derive the propensity score. Defined as the conditional probability of treatment given pre-treatment covariates (Rosenbaum and Rubin 1983), the propensity score is estimated by first creating a data subset comprised of all treated units and their matched control units from the same year. This logistic regression model is then fitted as follows:

\[ \begin{aligned} & e_{it} (\{\mathbf{U}_{i, t - l} \}^L_{l = 1}) \\ &= Pr(X_{it} = 1| \mathbf{U}_{i, t -1}, \ldots, \mathbf{U}_{i, t - L}) \\ &= \frac{1}{1 + \exp(- \sum_{l = 1}^L \beta_l^T \mathbf{U}_{i, t - l})} \end{aligned} \]

where \(\mathbf{U}_{it'} = (X_{it'}, \mathbf{V}_{it'}^T)^T\). Given this model, the estimated propensity score for all treated and matched control units is then computed. This enables the adjustment for lagged covariates via matching on the calculated propensity score, resulting in the following distance measure:

\[ S_{it} (i') = | \text{logit} \{ \hat{e}_{it} (\{ \mathbf{U}_{i, t - l}\}^L_{l = 1})\} - \text{logit} \{ \hat{e}_{i't}( \{ \mathbf{U}_{i', t - l} \}^L_{l = 1})\} | \]

Here, \(\hat{e}_{i't} (\{ \mathbf{U}_{i', t - l}\}^L_{l = 1})\) represents the estimated propensity score for each matched control unit \(i' \in \mathcal{M}_{it}\).

Once the distance measure \(S_{it} (i')\) has been determined for all control units in the original matched set, we fine-tune this set by selecting up to \(J\) closest control units, which meet a researcher-defined caliper constraint \(C\). All other control units receive zero weight. This results in a refined matched set for each treated unit \((i, t)\):

\[ \mathcal{M}_{it}^* = \{i' : i' \in \mathcal{M}_{it}, S_{it} (i') < C, S_{it} (i') \le S_{it}^{(J)}\} \]

\(S_{it}^{(J)}\) is the \(J\)th smallest distance among the control units in the original set \(\mathcal{M}_{it}\).
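The refinement rule can be sketched as a small base R helper that keeps control units within the caliper and among the \(J\) closest. The function `refine_matched_set()` and the distances are hypothetical, intended only to illustrate the set definition above.

```r
# distances: named numeric vector of S_it(i') for the original matched set
refine_matched_set <- function(distances, J, caliper = Inf) {
  ordered <- sort(distances)
  # J-th smallest distance (or the largest, if fewer than J controls exist)
  cutoff <- ordered[min(J, length(ordered))]
  keep <- distances < caliper & distances <= cutoff
  names(distances)[keep]
}

# Hypothetical distances for four control units
S <- c(B = 0.8, C = 0.2, D = 1.5, E = 0.5)
refined <- refine_matched_set(S, J = 3, caliper = 1)
refined
```

With \(J = 3\) and a caliper of 1, unit D is dropped because its distance exceeds both thresholds, leaving B, C, and E in the refined set.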

For further refinement using weighting, a weight is assigned to each control unit \(i'\) in a matched set corresponding to a treated unit \((i, t)\), with greater weight accorded to more similar units. We utilize inverse propensity score weighting, based on the propensity score model mentioned earlier:

\[ w_{it}^{i'} \propto \frac{\hat{e}_{i't} (\{ \mathbf{U}_{i', t-l} \}^L_{l = 1} )}{1 - \hat{e}_{i't} (\{ \mathbf{U}_{i', t-l} \}^L_{l = 1} )} \]

In this model, \(\sum_{i' \in \mathcal{M}_{it}} w_{it}^{i'} = 1\) and \(w_{it}^{i'} = 0\) for \(i' \notin \mathcal{M}_{it}\). The model is fitted to the complete sample of treated and matched control units.
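As a brief sketch of the weighting step, the weights are proportional to the estimated odds of treatment and are normalized to sum to one within each matched set. The propensity scores in `e_hat` are hypothetical values, not estimates from the data used in this section.

```r
# Hypothetical estimated propensity scores for controls in one matched set
e_hat <- c(B = 0.2, C = 0.5, E = 0.4)

# Odds of treatment, then normalization within the matched set
odds <- e_hat / (1 - e_hat)
w <- odds / sum(odds)
w
```

Control units with higher estimated propensity scores, which resemble the treated unit more closely in terms of treatment likelihood, receive larger weights; units outside the matched set implicitly receive zero weight.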

Checking Covariate Balance

A distinct advantage of the proposed methodology over regression methods is the ability it offers researchers to inspect the covariate balance between treated and matched control observations. This facilitates the evaluation of whether treated and matched control observations are comparable regarding observed confounders.

To investigate the mean difference of each covariate (e.g., \(V_{it'j}\), representing the \(j\)-th variable in \(\mathbf{V}_{it'}\)) between the treated observation and its matched control observation at each pre-treatment time period (i.e., \(t' < t\)), we further standardize this difference. For any given pretreatment time period, we adjust by the standard deviation of each covariate across all treated observations in the dataset. Thus, the mean difference is quantified in terms of standard deviation units. Formally, for each treated observation \((i,t)\) where \(D_{it} = 1\), we define the covariate balance for variable \(j\) at the pretreatment time period \(t - l\) as:

\[ B_{it}(j, l) = \frac{V_{i, t- l,j}- \sum_{i' \in \mathcal{M}_{it}}w_{it}^{i'}V_{i', t-l,j}}{\sqrt{\frac{1}{N_1 - 1} \sum_{i'=1}^N \sum_{t' = L+1}^{T-F}D_{i't'}(V_{i', t'-l, j} - \bar{V}_{t' - l, j})^2}} \]

where \(N_1 = \sum_{i'= 1}^N \sum_{t' = L+1}^{T-F} D_{i't'}\) denotes the total number of treated observations and \(\bar{V}_{t-l,j} = \sum_{i=1}^N V_{i,t-l,j}/N\). We then aggregate this covariate balance measure across all treated observations for each covariate and pre-treatment time period:

\[ \bar{B}(j, l) = \frac{1}{N_1} \sum_{i=1}^N \sum_{t = L+ 1}^{T-F}D_{it} B_{it}(j,l) \]

Lastly, we evaluate the balance of lagged outcome variables over several pre-treatment periods and that of time-varying covariates. This examination aids in assessing the validity of the parallel trend assumption integral to the DiD estimator justification.

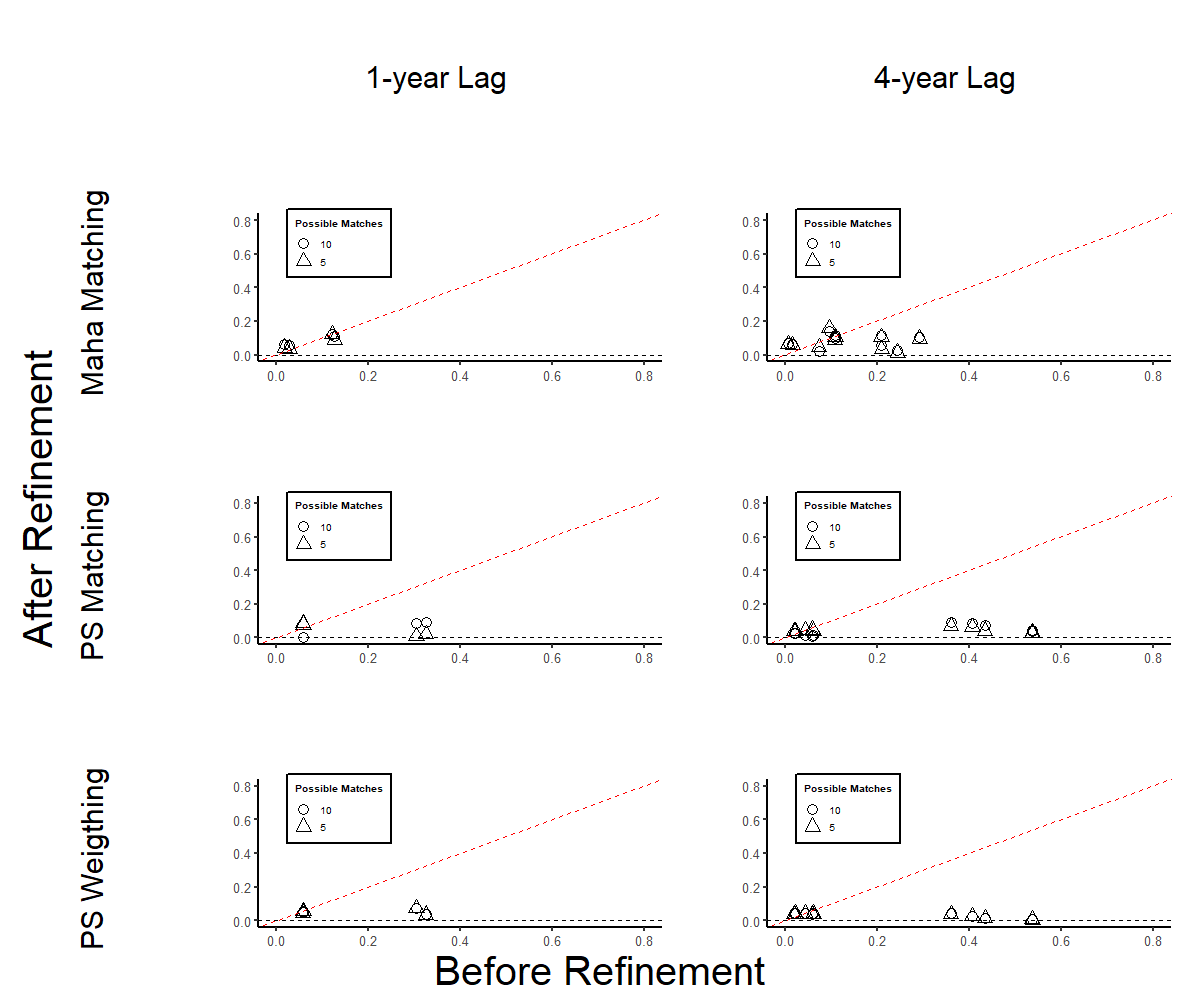

In the balance scatter figure, we demonstrate the enhancement of covariate balance thanks to the refinement of matched sets. Each scatter plot contrasts the absolute standardized mean difference before (horizontal axis) and after (vertical axis) this refinement. Points below the 45-degree line indicate an improved standardized mean balance for certain time-varying covariates post-refinement. The majority of variables benefit from this refinement process. Notably, the propensity score weighting (bottom panel) shows the most significant improvement, whereas Mahalanobis matching (top panel) yields a more modest improvement.

library(PanelMatch)

library(causalverse)

runPanelMatch <- function(method, lag, size.match=NULL, qoi="att") {

# Default parameters for PanelMatch

common.args <- list(

lag = lag,

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

covs.formula = ~ I(lag(tradewb, 1:4)) + I(lag(y, 1:4)),

qoi = qoi,

lead = 0:4,

forbid.treatment.reversal = FALSE,

size.match = size.match # setting size.match here for all methods

)

if(method == "mahalanobis") {

common.args$refinement.method <- "mahalanobis"

common.args$match.missing <- TRUE

common.args$use.diagonal.variance.matrix <- TRUE

} else if(method == "ps.match") {

common.args$refinement.method <- "ps.match"

common.args$match.missing <- FALSE

common.args$listwise.delete <- TRUE

} else if(method == "ps.weight") {

common.args$refinement.method <- "ps.weight"

common.args$match.missing <- FALSE

common.args$listwise.delete <- TRUE

}

return(do.call(PanelMatch, common.args))

}

methods <- c("mahalanobis", "ps.match", "ps.weight")

lags <- c(1, 4)

sizes <- c(5, 10)

You can either do it sequentially

res_pm <- list()

for(method in methods) {

for(lag in lags) {

for(size in sizes) {

name <- paste0(method, ".", lag, "lag.", size, "m")

res_pm[[name]] <- runPanelMatch(method, lag, size)

}

}

}

# Now, you can access res_pm using res_pm[["mahalanobis.1lag.5m"]] etc.

# for treatment reversal

res_pm_rev <- list()

for(method in methods) {

for(lag in lags) {

for(size in sizes) {

name <- paste0(method, ".", lag, "lag.", size, "m")

res_pm_rev[[name]] <- runPanelMatch(method, lag, size, qoi = "art")

}

}

}

or in parallel

library(foreach)

library(doParallel)

registerDoParallel(cores = 4)

# Initialize an empty list to store results

res_pm <- list()

# Replace nested for-loops with foreach

results <-

foreach(

method = methods,

.combine = 'c',

.multicombine = TRUE,

.packages = c("PanelMatch", "causalverse")

) %dopar% {

tmp <- list()

for (lag in lags) {

for (size in sizes) {

name <- paste0(method, ".", lag, "lag.", size, "m")

tmp[[name]] <- runPanelMatch(method, lag, size)

}

}

tmp

}

# Collate results

for (name in names(results)) {

res_pm[[name]] <- results[[name]]

}

# Treatment reversal

# Initialize an empty list to store results

res_pm_rev <- list()

# Replace nested for-loops with foreach

results_rev <-

foreach(

method = methods,

.combine = 'c',

.multicombine = TRUE,

.packages = c("PanelMatch", "causalverse")

) %dopar% {

tmp <- list()

for (lag in lags) {

for (size in sizes) {

name <- paste0(method, ".", lag, "lag.", size, "m")

tmp[[name]] <-

runPanelMatch(method, lag, size, qoi = "art")

}

}

tmp

}

# Collate results

for (name in names(results_rev)) {

res_pm_rev[[name]] <- results_rev[[name]]

}

stopImplicitCluster()

library(gridExtra)

# Updated plotting function

create_balance_plot <- function(method, lag, sizes, res_pm, dem) {

matched_set_lists <- lapply(sizes, function(size) {

res_pm[[paste0(method, ".", lag, "lag.", size, "m")]]$att

})

return(

balance_scatter_custom(

matched_set_list = matched_set_lists,

legend.title = "Possible Matches",

set.names = as.character(sizes),

legend.position = c(0.2, 0.8),

# for compiled plot, you don't need x,y, or main labs

x.axis.label = "",

y.axis.label = "",

main = "",

data = dem,

dot.size = 5,

# show.legend = F,

theme_use = causalverse::ama_theme(base_size = 32),

covariates = c("y", "tradewb")

)

)

}

plots <- list()

for (method in methods) {

for (lag in lags) {

plots[[paste0(method, ".", lag, "lag")]] <-

create_balance_plot(method, lag, sizes, res_pm, dem)

}

}

# # Arranging plots in a 3x2 grid

# grid.arrange(plots[["mahalanobis.1lag"]],

# plots[["mahalanobis.4lag"]],

# plots[["ps.match.1lag"]],

# plots[["ps.match.4lag"]],

# plots[["ps.weight.1lag"]],

# plots[["ps.weight.4lag"]],

# ncol=2, nrow=3)

# Standardized Mean Difference of Covariates

library(gridExtra)

library(grid)

# Create column and row labels using textGrob

col_labels <- c("1-year Lag", "4-year Lag")

row_labels <- c("Maha Matching", "PS Matching", "PS Weighting")

major.axes.fontsize <- 40

minor.axes.fontsize <- 30

png(

file.path(getwd(), "images", "did_balance_scatter.png"),

width = 1200,

height = 1000

)

# Create a list-of-lists, where each inner list represents a row

grid_list <- list(

list(

nullGrob(),

textGrob(col_labels[1], gp = gpar(fontsize = minor.axes.fontsize)),

textGrob(col_labels[2], gp = gpar(fontsize = minor.axes.fontsize))

),

list(textGrob(

row_labels[1],

gp = gpar(fontsize = minor.axes.fontsize),

rot = 90

), plots[["mahalanobis.1lag"]], plots[["mahalanobis.4lag"]]),

list(textGrob(

row_labels[2],

gp = gpar(fontsize = minor.axes.fontsize),

rot = 90

), plots[["ps.match.1lag"]], plots[["ps.match.4lag"]]),

list(textGrob(

row_labels[3],

gp = gpar(fontsize = minor.axes.fontsize),

rot = 90

), plots[["ps.weight.1lag"]], plots[["ps.weight.4lag"]])

)

# "Flatten" the list-of-lists into a single list of grobs

grobs <- do.call(c, grid_list)

grid.arrange(

grobs = grobs,

ncol = 3,

nrow = 4,

widths = c(0.15, 0.42, 0.42),

heights = c(0.15, 0.28, 0.28, 0.28)

)

grid.text(

"Before Refinement",

x = 0.5,

y = 0.03,

gp = gpar(fontsize = major.axes.fontsize)

)

grid.text(

"After Refinement",

x = 0.03,

y = 0.5,

rot = 90,

gp = gpar(fontsize = major.axes.fontsize)

)

dev.off()

Figure 30.21: Variable Balance After Matched Set Refinement

Note: Scatter plots display the standardized mean difference of each covariate \(j\) and lag year \(l\) before (x-axis) and after (y-axis) matched set refinement. Each plot includes varying numbers of possible matches for each matching method. Rows represent different matching/weighting methods, while columns indicate adjustments for various lag lengths.
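The standardized mean difference plotted in these figures can be illustrated with a simplified, pooled calculation. The sketch below is not PanelMatch's internal code: the helper `std_mean_diff()` and the toy values are hypothetical, and it assumes that the gap between the treated mean and the weighted control mean is scaled by the standard deviation among treated observations.

```r
# Hypothetical helper: standardized mean difference between treated units
# and (optionally weighted) control units for a single covariate.
std_mean_diff <- function(x_treated, x_control, w_control = NULL) {
  # Default to equal weights, i.e. balance before refinement
  if (is.null(w_control)) {
    w_control <- rep(1 / length(x_control), length(x_control))
  }
  stopifnot(
    length(w_control) == length(x_control),
    isTRUE(all.equal(sum(w_control), 1))
  )
  (mean(x_treated) - sum(w_control * x_control)) / sd(x_treated)
}

# Made-up covariate values, for illustration only
x_treated <- c(2, 4, 6, 8)
x_control <- c(1, 2, 3, 4)

# Before refinement: every control unit gets equal weight
smd_before <- std_mean_diff(x_treated, x_control)

# After refinement: more weight on controls that resemble the treated units
w_refined <- c(0, 0.1, 0.3, 0.6)
smd_after <- std_mean_diff(x_treated, x_control, w_refined)

print(c(before = smd_before, after = smd_after))
```

Here the refined weights move the weighted control mean toward the treated mean, so the absolute standardized difference falls. In the scatter plots, this case corresponds to a point below the 45-degree line.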

# Step 1: Define configurations

configurations <- list(

list(refinement.method = "none", qoi = "att"),

list(refinement.method = "none", qoi = "art"),

list(refinement.method = "mahalanobis", qoi = "att"),

list(refinement.method = "mahalanobis", qoi = "art"),

list(refinement.method = "ps.match", qoi = "att"),

list(refinement.method = "ps.match", qoi = "art"),

list(refinement.method = "ps.weight", qoi = "att"),

list(refinement.method = "ps.weight", qoi = "art")

)

# Step 2: Use lapply or loop to generate results

results <- lapply(configurations, function(config) {

PanelMatch(

lag = 4,

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

match.missing = FALSE,

listwise.delete = TRUE,

size.match = 5,

lead = 0:4,

forbid.treatment.reversal = FALSE,

refinement.method = config$refinement.method,

covs.formula = ~ I(lag(tradewb, 1:4)) + I(lag(y, 1:4)),

qoi = config$qoi

)

})

# Step 3: Get covariate balance and plot

plots <- mapply(function(result, config) {

df <- get_covariate_balance(

if (config$qoi == "att")

result$att

else

result$art,

panel.data = PanelData(panel.data = dem,

unit.id = "wbcode2",

time.id = "year",

treatment = "dem",

outcome = "y"),

covariates = c("tradewb", "y"),

plot = FALSE

)

causalverse::plot_covariate_balance_pretrend(df, main = "", show_legend = FALSE)

}, results, configurations, SIMPLIFY = FALSE)

# Set names for plots

names(plots) <- sapply(configurations, function(config) {

paste(config$qoi, config$refinement.method, sep = ".")

})

To export:

library(gridExtra)

library(grid)

# Column and row labels

col_labels <-

c("None",

"Mahalanobis",

"Propensity Score Matching",

"Propensity Score Weighting")

row_labels <- c("ATT", "ART")

# Specify your desired fontsize for labels

minor.axes.fontsize <- 16

major.axes.fontsize <- 20

png(file.path(getwd(), "images", "p_covariate_balance.png"), width=1200, height=1000)

# Create a list-of-lists, where each inner list represents a row

grid_list <- list(

list(

nullGrob(),

textGrob(col_labels[1], gp = gpar(fontsize = minor.axes.fontsize)),

textGrob(col_labels[2], gp = gpar(fontsize = minor.axes.fontsize)),

textGrob(col_labels[3], gp = gpar(fontsize = minor.axes.fontsize)),

textGrob(col_labels[4], gp = gpar(fontsize = minor.axes.fontsize))

),

list(

textGrob(

row_labels[1],

gp = gpar(fontsize = minor.axes.fontsize),

rot = 90

),

plots$att.none,

plots$att.mahalanobis,

plots$att.ps.match,

plots$att.ps.weight

),

list(

textGrob(

row_labels[2],

gp = gpar(fontsize = minor.axes.fontsize),

rot = 90

),

plots$art.none,

plots$art.mahalanobis,

plots$art.ps.match,

plots$art.ps.weight

)

)

# "Flatten" the list-of-lists into a single list of grobs

grobs <- do.call(c, grid_list)

# Arrange your plots with text labels

grid.arrange(

grobs = grobs,

ncol = 5,

nrow = 3,

widths = c(0.1, 0.225, 0.225, 0.225, 0.225),

heights = c(0.1, 0.45, 0.45)

)

# Add main x and y axis titles

grid.text(

"Refinement Methods",

x = 0.5,

y = 0.01,

gp = gpar(fontsize = major.axes.fontsize)

)

grid.text(

"Quantities of Interest",

x = 0.02,

y = 0.5,

rot = 90,

gp = gpar(fontsize = major.axes.fontsize)

)

dev.off()

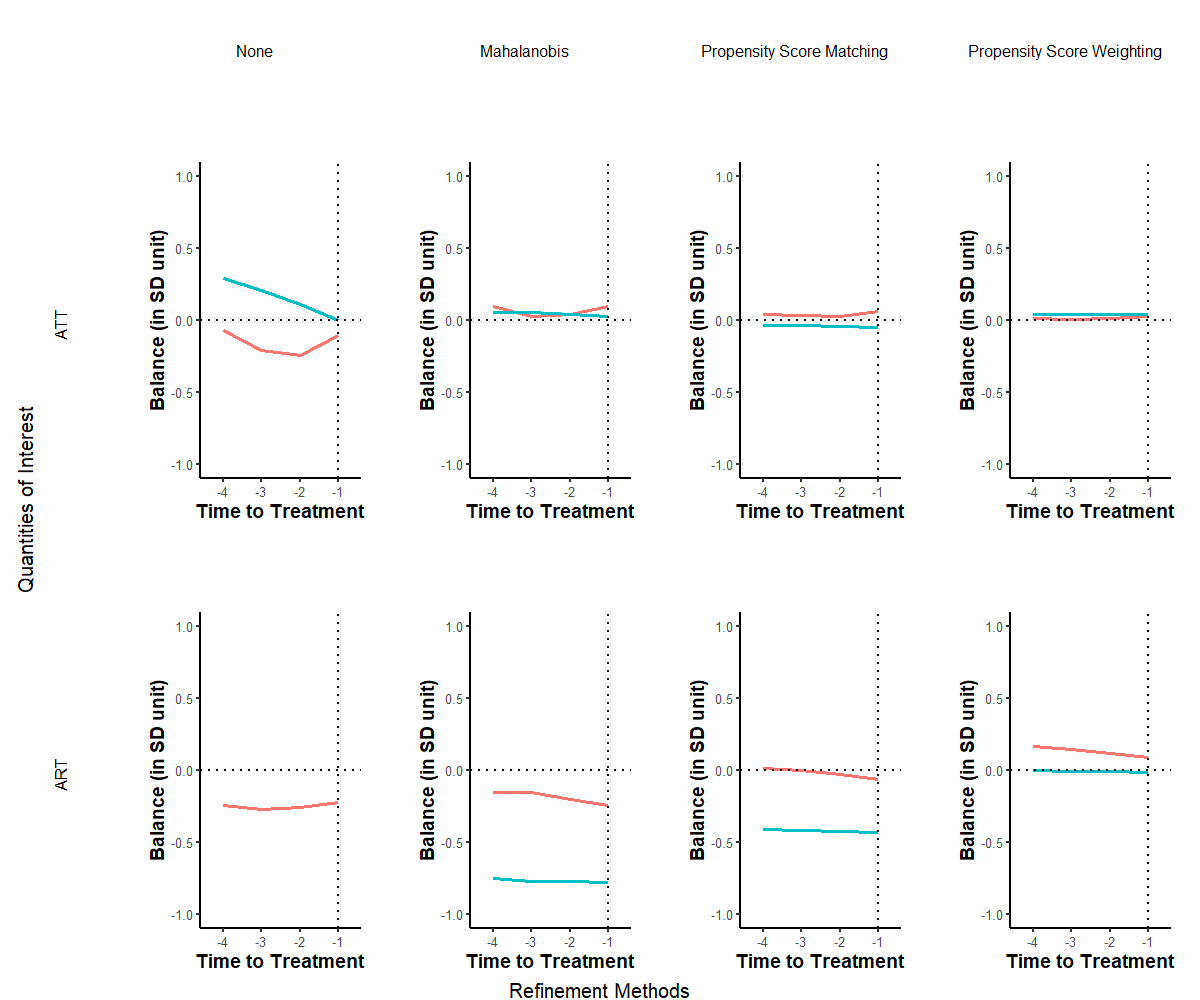

Figure 30.22: Covariate Balance Over Time by Refinement Method and Estimand

Note: Each graph plots the standardized mean difference (vertical axis) over the four pre-treatment years (horizontal axis). The leftmost column shows balance before refinement, and the remaining three columns show covariate balance after applying each refinement method. Each line tracks the balance of one variable during the pre-treatment period. The red line is tradewb and the blue line is the lagged outcome variable.

In Figure 30.22, we observe a marked improvement in pre-treatment covariate balance once the matched sets are refined. Our analysis focuses on methods that adjust for time-varying covariates over the four years preceding treatment. The two rows show the standardized mean difference for the two quantities of interest (ATT in the top row, ART in the bottom row), with one line per covariate.

Across all scenarios, refining the matched sets improves balance. Propensity score weighting in particular reduces imbalance in the confounders considerably. Some imbalance remains under Mahalanobis distance matching and propensity score matching, but the standardized mean difference for the lagged outcome stays stable throughout the pre-treatment period. This stability lends credence to the validity of the proposed DiD estimator.
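One way to make the stability claim checkable is to summarize, for each covariate, how much its standardized mean difference moves across the pre-treatment periods. The sketch below uses a hypothetical balance matrix (rows are periods t-4 to t-1, columns are covariates) with made-up values. It does not reproduce the numbers behind Figure 30.22, and the object returned by `get_covariate_balance()` may be structured differently depending on the package version.

```r
# Hypothetical balance matrix: made-up standardized mean differences,
# one row per pre-treatment period and one column per covariate.
balance <- matrix(
  c(0.30, 0.28, 0.25, 0.27,   # tradewb
    0.10, 0.11, 0.10, 0.09),  # lagged outcome y
  nrow = 4,
  dimnames = list(c("t-4", "t-3", "t-2", "t-1"), c("tradewb", "y"))
)

# Largest absolute imbalance over the pre-treatment window
max_abs_smd <- apply(abs(balance), 2, max)

# Spread of the imbalance over time: a small range means a flat line,
# i.e. the covariate's imbalance is stable before treatment
smd_range <- apply(balance, 2, function(x) diff(range(x)))

summary_tbl <- data.frame(
  covariate = colnames(balance),
  max_abs_smd = max_abs_smd,
  smd_range = smd_range,
  row.names = NULL
)
print(summary_tbl)
```

A covariate with a small range but a nonzero level is imbalanced yet stable, which is the pattern described for the lagged outcome: a constant gap is differenced out by DiD, whereas a trending gap would signal diverging pre-trends.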

Estimation Results

We now present the estimates obtained with each matching and weighting method. The figure below shows the estimated effects of treatment initiation (upper panel) and treatment reversal (lower panel) on the outcome variable for the 5 years following the transition (\(F = 0, 1, \dots, 4\)). Across the five methods (columns), the point estimates for treatment initiation stay close to zero throughout the 5-year window. In contrast, the estimated effects of treatment reversal are negative and statistically significant under every refinement method, both in the year of transition and in the 1 to 4 years that follow, provided treatment reversal is allowed. These effects are sizable, pointing to an estimated reduction of roughly X% in the outcome variable.

Taken together, these findings indicate that moving from the untreated to the treated state does not necessarily raise the outcome. Instead, moving from the treated state back to the untreated state has a considerable negative effect on the outcome variable in both the short and medium term. Hence, the positive treatment effect that a traditional DiD would report is actually driven by the negative effect of treatment reversal.
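A stylized calculation shows how this can happen. Suppose, purely for illustration, that entering treatment leaves the outcome unchanged while leaving treatment lowers it by one unit. A model that forces entry and exit to have equal and opposite effects, as a single treatment coefficient does, then attributes the drop at exit to a positive treatment effect. The numbers below are made up, and the first-difference regression is a simplified stand-in for a conventional DiD specification rather than the estimator used in this section.

```r
# Stylized first-differenced data (all values made up for illustration):
# 6 units switch into treatment (+1), 4 units switch out of treatment (-1).
d_change <- c(rep(1, 6), rep(-1, 4))

# Entering treatment leaves the outcome unchanged;
# leaving treatment lowers the outcome by one unit.
y_change <- c(rep(0, 6), rep(-1, 4))

# Symmetric model: one coefficient for both entry and exit
# (first-difference regression without an intercept).
fit <- lm(y_change ~ 0 + d_change)
beta_hat <- unname(coef(fit))

# Direction-specific averages, analogous to estimating entry and exit separately
effect_onset <- mean(y_change[d_change == 1])
effect_reversal <- mean(y_change[d_change == -1])

print(c(symmetric = beta_hat, onset = effect_onset, reversal = effect_reversal))
```

The symmetric coefficient is positive even though no unit's outcome rises on entering treatment. The entire estimate comes from the reversal observations, where a negative change in treatment coincides with a negative change in the outcome.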

# sequential

# Step 1: Apply PanelEstimate function