13 Imputation (Missing Data)

Missing data is a pervasive issue in applied statistics, and this chapter offers a comprehensive treatment of its diagnosis and resolution. Beginning with a conceptual introduction, we discuss the mechanisms underlying missingness—MCAR, MAR, and MNAR—and their consequences for unbiased estimation. Later, the chapter provides practical tools for identifying patterns of missingness, including graphical and statistical diagnostics. It then reviews a spectrum of imputation methods, from simple techniques (mean substitution, regression imputation) to advanced algorithms (multiple imputation using chained equations and random forest imputation). Each method is evaluated in terms of bias, efficiency, and robustness, with detailed comparisons. Practical criteria for choosing an appropriate imputation strategy are also provided, followed by an in-depth discussion on ethical issues and transparency in reporting. Emerging trends in handling high-dimensional and MNAR data are also covered. Finally, the last section walks through a complete imputation example, ensuring readers can apply these concepts immediately.

13.1 Introduction to Missing Data

Missing data is a common problem in statistical analyses and data science, impacting the quality and reliability of insights derived from datasets. One widely used approach to address this issue is imputation, where missing data is replaced with reasonable estimates.

13.1.1 Types of Imputation

Imputation can be categorized into:

- Unit Imputation: Replacing an entire missing observation (i.e., all features for a single data point are missing).

- Item Imputation: Replacing missing values for specific variables (features) within a dataset.

While imputation offers a means to make use of incomplete datasets, it has historically been viewed skeptically. This skepticism arises from:

- Frequent misapplication of imputation techniques, which can introduce significant bias to estimates.

- Limited applicability, as imputation works well only under certain assumptions about the missing data mechanism and research objectives.

Biases in imputation can arise from various factors, including:

Imputation method: The chosen method can influence the results and introduce biases.

Missing data mechanism: The nature of the missing data—whether it is Missing Completely at Random (MCAR) or Missing at Random (MAR)—affects the accuracy of imputation.

Proportion of missing data: The amount of missing data significantly impacts the reliability of the imputation.

Available information in the dataset: Limited information reduces the robustness of the imputed values.

13.1.2 When and Why to Use Imputation

The appropriateness of imputation depends on the nature of the missing data and the research goal:

Missing Data in the Outcome Variable (\(y\)): Imputation in such cases is generally problematic, as it can distort statistical models and lead to misleading conclusions. For example, imputing outcomes in regression or classification problems can alter the underlying relationship between the dependent and independent variables.

Missing Data in Predictive Variables (\(x\)): Imputation is more commonly applied here, especially for non-random missing data. Properly handled, imputation can enable the use of incomplete datasets while minimizing bias.

13.1.2.1 Objectives of Imputation

The utility of imputation methods differs substantially depending on whether the goal of the analysis is inference/explanation or prediction. Each goal has distinct priorities and tolerances for bias, variance, and assumptions about the missing data mechanism:

13.1.2.1.1 Inference/Explanation

In causal inference or explanatory analyses, the primary objective is to ensure valid statistical inference, emphasizing unbiased estimation of parameters and accurate representation of uncertainty. The treatment of missing data must align closely with the assumptions about the mechanism behind the missing data—whether it is Missing Completely at Random (MCAR), Missing at Random (MAR), or Missing Not at Random (MNAR):

Bias Sensitivity: Inference analyses require that imputed data preserve the integrity of the relationships among variables. Poorly executed imputation can introduce bias, even when it addresses missingness superficially.

Variance and Confidence Intervals: For inference, the quality of the standard errors, confidence intervals, and test statistics is critical. Naive imputation methods (e.g., mean imputation) often fail to appropriately reflect the uncertainty due to missingness, leading to overconfidence in parameter estimates.

Mechanism Considerations: Imputation methods, such as multiple imputation (MI), attempt to generate values consistent with the observed data distribution while accounting for missing data uncertainty. However, MI’s performance depends heavily on the validity of the MAR assumption. If the missingness mechanism is MNAR and not addressed adequately, the imputed data could yield biased parameter estimates, undermining the purpose of inference.

13.1.2.1.2 Prediction

In predictive modeling, the primary goal is to maximize model accuracy (e.g., minimizing mean squared error for continuous outcomes or maximizing classification accuracy). Here, the focus shifts to optimizing predictive performance rather than ensuring unbiased parameter estimates:

Loss of Information: Missing data reduces the amount of usable information in a dataset. Imputation allows the model to leverage all available data, rather than excluding incomplete cases via listwise deletion, which can significantly reduce sample size and model performance.

Impact on Model Fit: In predictive contexts, imputation can reduce standard errors of the predictions and stabilize model coefficients by incorporating plausible estimates for missing values.

Flexibility with Mechanism: Predictive models are less sensitive to the missing data mechanism than inferential models, as long as the imputed values help reduce variability and align with patterns in the observed data. Methods like K-Nearest Neighbors (KNN), iterative imputation, or even machine learning models (e.g., random forests for imputation) can be valuable, regardless of strict adherence to MAR or MCAR assumptions.

Trade-offs: Overimputation, where too much noise or complexity is introduced in the imputation process, can harm prediction by introducing artifacts that degrade model generalizability.

13.1.2.1.3 Key Takeaways

The usefulness of imputation depends on whether the goal of the analysis is inference or prediction:

Inference/Explanation: The primary concern is valid statistical inference, where biased estimates are unacceptable. Imputation is often of limited value for this purpose, as it may not address the underlying missing data mechanism appropriately (Rubin 1996).

Prediction: Imputation can be more useful in predictive modeling, as it reduces the loss of information from incomplete cases. By leveraging observed data, imputation can lower standard errors and improve model accuracy.

13.1.3 Importance of Missing Data Treatment in Statistical Modeling

Proper handling of missing data ensures:

- Unbiased Estimates: Avoiding distortions in parameter estimates.

- Accurate Standard Errors: Ensuring valid hypothesis testing and confidence intervals.

- Adequate Statistical Power: Maximizing the use of available data.

Ignoring or mishandling missing data can lead to:

- Bias: Systematic errors in parameter estimates, especially under MAR or MNAR mechanisms.

- Loss of Power: Reduced sample size leads to larger standard errors and weaker statistical significance.

- Misleading Conclusions: Over-simplistic imputation methods (e.g., mean substitution) can distort relationships among variables.

13.1.4 Prevalence of Missing Data Across Domains

Missing data affects virtually all fields:

- Business: Non-responses in customer surveys, incomplete sales records, and transactional errors.

- Healthcare: Missing data in electronic health records (EHRs) due to incomplete patient histories or inconsistent data entry.

- Social Sciences: Non-responses or partial responses in large-scale surveys, leading to biased conclusions.

13.1.5 Practical Considerations for Imputation

- Diagnostic Checks: Always examine the patterns and mechanisms of missing data before applying imputation (Diagnosing the Missing Data Mechanism).

- Model Selection: Align the imputation method with the missing data mechanism and research goal.

- Validation: Assess the impact of imputation on results through sensitivity analyses or cross-validation.

13.2 Theoretical Foundations of Missing Data

13.2.1 Definition and Classification of Missing Data

Missing data refers to the absence of values for some variables in a dataset. The mechanisms underlying missingness significantly impact the validity of statistical analyses and the choice of handling methods. These mechanisms are classified into three categories:

Missing Completely at Random (MCAR): The probability of missingness is independent of both observed and unobserved data. In other words, the missing data occur entirely at random and are unrelated to any values in the dataset.

Missing at Random (MAR): The probability of missingness is related to the observed data but not to the missing data itself. This means that, after controlling for observed variables, the missingness is random.

Missing Not at Random (MNAR): The probability of missingness depends on unobserved data or the missing values themselves. In this case, the missingness is related to the very information that is missing, making it the most challenging type to handle in analysis.

13.2.1.1 Missing Completely at Random (MCAR)

MCAR occurs when the probability of missingness is entirely random and unrelated to either observed or unobserved variables. Under this mechanism, missing data do not introduce bias in parameter estimates when ignored, although statistical efficiency is reduced due to the smaller sample size.

Mathematical Definition: The missingness is independent of all data, both observed and unobserved:

\[ P(Y_{\text{missing}} | Y, X) = P(Y_{\text{missing}}) \]

Characteristics of MCAR:

- Missingness is completely unrelated to both observed and unobserved data.

- Analyses remain unbiased even if missing data are ignored, though they may lack efficiency due to reduced sample size.

- The missing data points represent a random subset of the overall data.

Examples:

- A sensor randomly fails at specific time points, unrelated to environmental or operational conditions.

- Survey participants randomly omit responses to certain questions without any systematic pattern.

Methods for Testing MCAR:

Little’s MCAR Test: A formal statistical test to assess whether data are MCAR. A significant result suggests deviation from MCAR.

-

Mean Comparison Tests:

- T-tests or similar approaches compare observed and missing data groups on other variables. Significant differences indicate potential bias.

- Failure to reject the null hypothesis of no difference does not confirm MCAR but suggests consistency with the MCAR assumption.

Handling MCAR:

Since MCAR data introduce no bias, they can be handled using the following techniques:

-

Complete Case Analysis (Listwise Deletion):

- Analyses are performed only on cases with complete data. While unbiased under MCAR, this method reduces sample size and efficiency.

-

Universal Singular Value Thresholding (USVT):

- This technique is effective for MCAR data recovery but can only recover the mean structure, not the entire true distribution (Chatterjee 2015).

-

SoftImpute:

- A matrix completion method useful for some missing data problems but less effective when missingness is not MCAR (Hastie et al. 2015).

-

Synthetic Nearest Neighbor Imputation:

- A robust method for imputing missing data. While primarily designed for MCAR, it can also handle certain cases of missing not at random (MNAR) (Agarwal et al. 2023). Available on GitHub: syntheticNN.

Notes:

- The “missingness” on one variable can be correlated with the “missingness” on another variable without violating the MCAR assumption.

- Absence of evidence for bias (e.g., failing to reject a t-test) does not confirm that the data are MCAR.

13.2.1.2 Missing at Random (MAR)

Missing at Random (MAR) occurs when missingness depends on observed variables but not the missing values themselves. This mechanism assumes that observed data provide sufficient information to explain the missingness. In other words, there is a systematic relationship between the propensity of missing values and the observed data, but not the missing data.

Mathematical Definition:

The probability of missingness is conditional only on observed data:

\[ P(Y_{\text{missing}} | Y, X) = P(Y_{\text{missing}} | X) \]

This implies that whether an observation is missing is unrelated to the missing values themselves but is related to the observed values of other variables.

Characteristics of MAR:

- Missingness is systematically related to observed variables.

- The propensity for a data point to be missing is not related to the missing data but is related to some of the observed data.

- Analyses must account for observed data to mitigate bias.

Examples:

- Women are less likely to disclose their weight, but their gender is recorded. In this case, weight is MAR.

- Missing income data is correlated with education, which is observed. For example, individuals with higher education levels might be less likely to reveal their income.

Challenges in MAR:

- MAR is weaker than Missing Completely at Random (MCAR).

- It is impossible to directly test for MAR. Evidence for MAR relies on domain expertise and indirect statistical checks rather than direct tests.

Handling MAR:

Common methods for handling MAR include:

Multiple Imputation by Chained Equations (MICE): Iteratively imputes missing values based on observed data.

Maximum Likelihood Estimation: Estimates model parameters directly while accounting for MAR assumptions.

Regression-Based Imputation: Predicts missing values using observed covariates.

These methods assume that observed variables fully explain the missingness. Effective handling of MAR requires careful modeling and often domain-specific knowledge to validate the assumptions underlying the analysis.

13.2.1.3 Missing Not at Random (MNAR)

Missing Not at Random (MNAR) is the most complex missing data mechanism. Here, missingness depends on unobserved variables or the values of the missing data themselves. This makes MNAR particularly challenging, as ignoring this dependency introduces significant bias in analyses.

Mathematical Definition:

The probability of missingness depends on the missing values:

\[ P(Y_{\text{missing}} | Y, X) \neq P(Y_{\text{missing}} | X) \]

Characteristics of MNAR:

- Missingness cannot be fully explained by observed data.

- The cause of missingness is directly related to the unobserved values.

- Ignoring MNAR introduces significant bias in parameter estimates, often leading to invalid conclusions.

Examples:

- High-income individuals are less likely to disclose their income, and income itself is unobserved.

- Patients with severe symptoms drop out of a clinical study, leaving their health outcomes unrecorded.

Challenges in MNAR:

- MNAR is the most difficult missingness mechanism to address because the missing data mechanism must be explicitly modeled.

- Identifying MNAR often requires domain knowledge and auxiliary information beyond the observed dataset.

Handling MNAR:

MNAR requires explicit modeling of the missingness mechanism. Common approaches include:

Heckman Selection Models: These models explicitly account for the selection process leading to missing data, adjusting for potential bias (J. J. Heckman 1976a).

Instrumental Variables: Variables predictive of missingness but unrelated to the outcome can be used to mitigate bias (B. Sun et al. 2018; E. J. Tchetgen Tchetgen and Wirth 2017).

Pattern-Mixture Models: These models separate the data into groups (patterns) based on missingness and model each group separately. They are particularly useful when the relationship between missingness and missing values is complex.

Sensitivity Analysis: Examines how conclusions change under different assumptions about the missing data mechanism.

-

Use of Auxiliary Data

Auxiliary data refers to external data sources or variables that can help explain the missingness mechanism.

Surrogate Variables: Adding variables that correlate with missing data can improve imputation accuracy and mitigate the MNAR challenge.

Linking External Datasets: Merging datasets from different sources can provide additional context or predictors for missingness.

Applications in Business: In marketing, customer demographics or transaction histories often serve as auxiliary data to predict missing responses in surveys.

Additionally, data collection strategies, such as follow-up surveys or targeted sampling, can help mitigate MNAR effects by collecting information that directly addresses the missingness mechanism. However, such approaches can be resource-intensive and require careful planning.

13.2.2 Missing Data Mechanisms

| Mechanism | Missingness Depends On | Implications | Examples |

|---|---|---|---|

| MCAR | Neither observed nor missing data | No bias; simplest to handle; decreases efficiency due to data loss. | Random sensor failure. |

| MAR | Observed data only | Requires observed data to explain missingness; common assumption in imputation methods. | Gender-based missingness of weight. |

| MNAR | Missing data itself or unobserved variables | Requires explicit modeling of the missingness mechanism; significant bias if ignored. | High-income individuals not disclosing income. |

13.2.3 Relationship Between Mechanisms and Ignorability

The concept of ignorability is central to determining whether the missingness process must be explicitly modeled. Ignorability impacts the choice of methods for handling missing data and whether the missing data mechanism can be safely disregarded or must be explicitly accounted for.

13.2.3.1 Ignorable Missing Data

Missing data is ignorable under the following conditions:

- The missing data mechanism is MAR or MCAR.

- The parameters governing the missing data process are unrelated to the parameters of interest in the analysis.

In cases of ignorable missing data, there is no need to model the missingness mechanism explicitly unless you aim to improve the efficiency or precision of parameter estimates. Common imputation techniques, such as multiple imputation or maximum likelihood estimation, rely on the assumption of ignorability to produce unbiased parameter estimates.

Practical Considerations for Ignorable Missingness

Even though ignorable mechanisms simplify analysis, researchers must rigorously assess whether the missingness mechanism meets the MAR or MCAR criteria. Violations can lead to biased results, even if unintentionally overlooked.

For example: A survey on income may assume MAR if missingness is associated with respondent age (observed variable) but not income itself (unobserved variable). However, if income directly influences nonresponse, the assumption of MAR is violated.

13.2.3.2 Non-Ignorable Missing Data

Missing data is non-ignorable when:

- The missingness mechanism depends on the values of the missing data themselves or on unobserved variables.

- The missing data mechanism is related to the parameters of interest, resulting in bias if the mechanism is not modeled explicitly.

This type of missingness (i.e., Missing Not at Random (MNAR) requires modeling the missing data mechanism directly to produce unbiased estimates.

Characteristics of Non-Ignorable Missingness

-

Dependence on Missing Values: The likelihood of missingness is associated with the missing values themselves.

- Example: In a study on health, individuals with more severe conditions are more likely to drop out, leading to an underrepresentation of the sickest individuals in the data.

-

Bias in Complete Case Analysis: Analyses based solely on complete cases can lead to substantial bias.

- Example: In income surveys, if wealthier individuals are less likely to report their income, the estimated mean income will be systematically lower than the true population mean.

- Need for Explicit Modeling: To address MNAR, the analyst must model the missing data mechanism. This often involves specifying relationships between observed data, missing data, and the missingness process itself.

13.2.3.3 Implications of Non-Ignorable Missingness

Non-ignorable mechanisms are often associated with sensitive or personal data:

-

Examples:

Individuals with lower education levels may omit their education information.

Participants with controversial or stigmatized health conditions might opt out of surveys entirely.

-

Impact on Policy and Decision-Making:

- Biases introduced by MNAR can have serious consequences for policymaking, such as underestimating the prevalence of poverty or mischaracterizing population health needs.

By explicitly addressing non-ignorable missingness, researchers can mitigate biases and ensure that findings accurately reflect the underlying population.

13.3 Diagnosing the Missing Data Mechanism

Understanding the mechanism behind missing data is critical to choosing the appropriate methods for handling it. The three main mechanisms for missing data are MCAR (Missing Completely at Random), MAR (Missing at Random), and MNAR (Missing Not at Random). This section discusses methods for diagnosing these mechanisms, including descriptive and inferential approaches.

13.3.1 Descriptive Methods

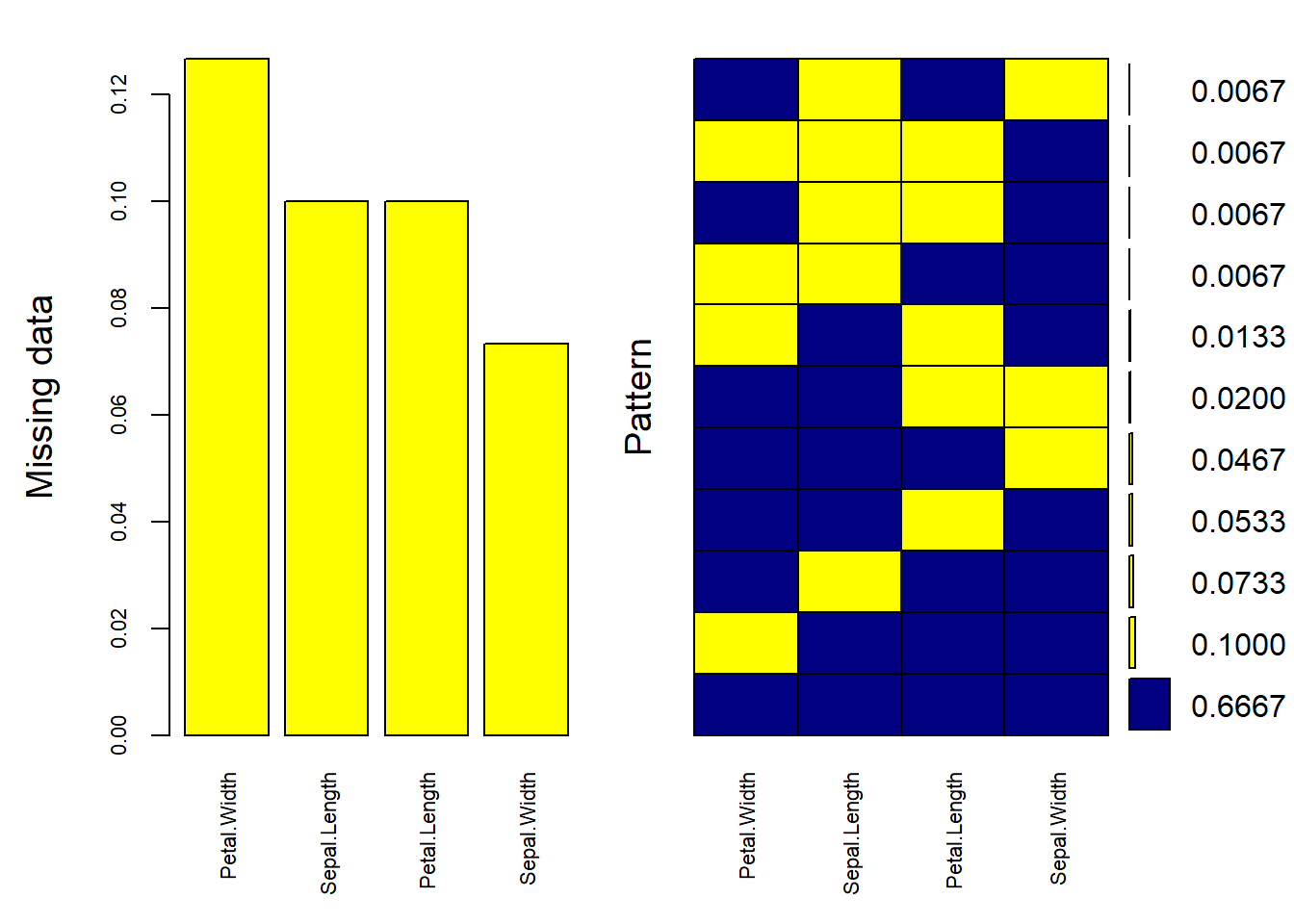

13.3.1.1 Visualizing Missing Data Patterns

Visualization tools are essential for detecting patterns in missing data. Heatmaps and correlation plots can help identify systematic missingness and provide insights into the underlying mechanism.

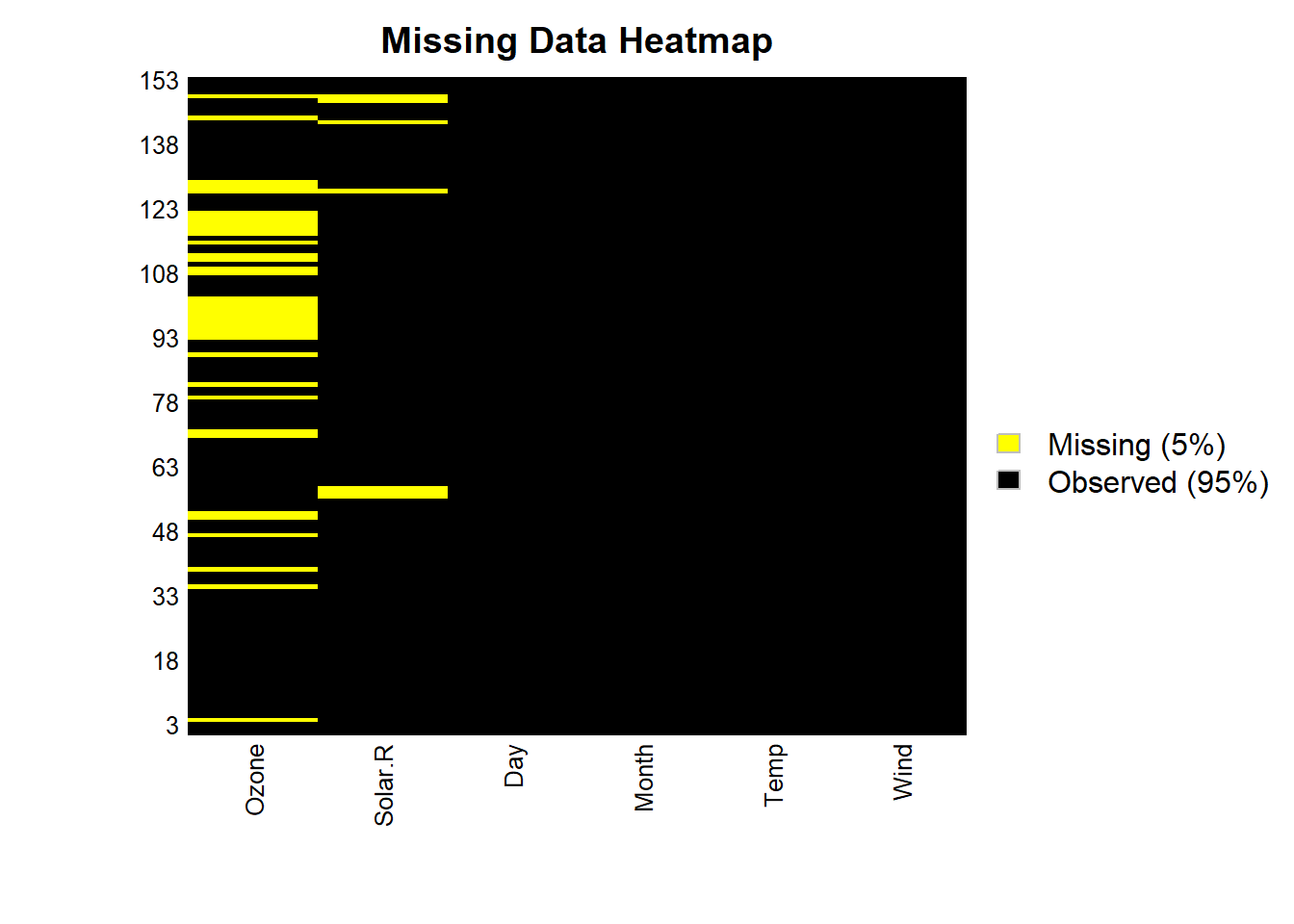

# Example: Visualizing missing data

library(Amelia)

missmap(

airquality,

main = "Missing Data Heatmap",

col = c("yellow", "black"),

legend = TRUE

)

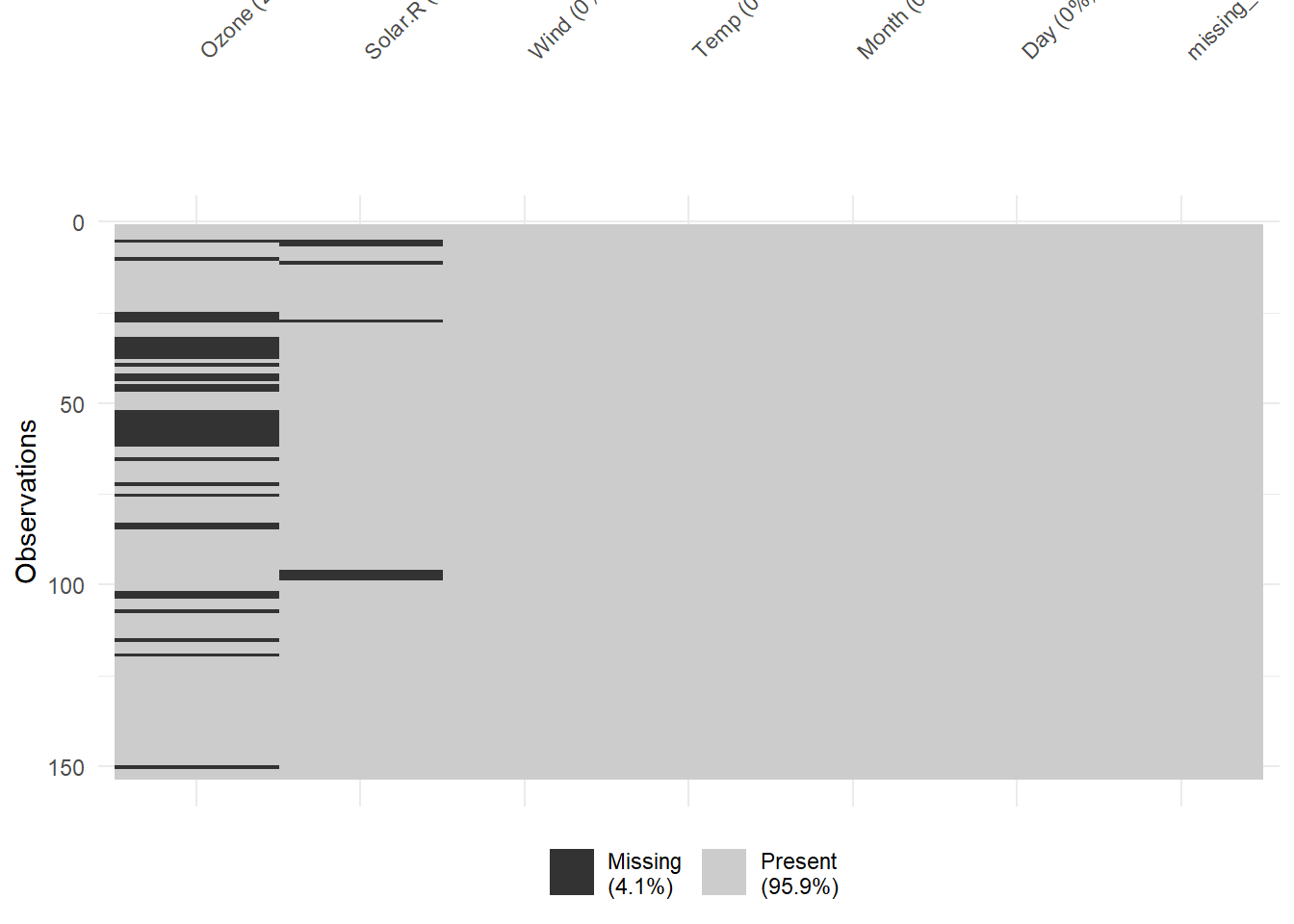

Figure 13.1: Missing Data Heatmap

Heatmaps: Highlight where missingness occurs in a dataset.

Correlation Plots: Show relationships between missingness indicators of different variables.

Exploring Univariate and Multivariate Missingness

- Univariate Analysis: Calculate the proportion of missing data for each variable.

# Example: Proportion of missing values

missing_proportions <- colSums(is.na(airquality)) / nrow(airquality)

print(missing_proportions)

#> Ozone Solar.R Wind Temp Month Day

#> 0.24183007 0.04575163 0.00000000 0.00000000 0.00000000 0.00000000- Multivariate Analysis: Examine whether missingness in one variable is related to others. This can be visualized using scatterplots of observed vs. missing values.

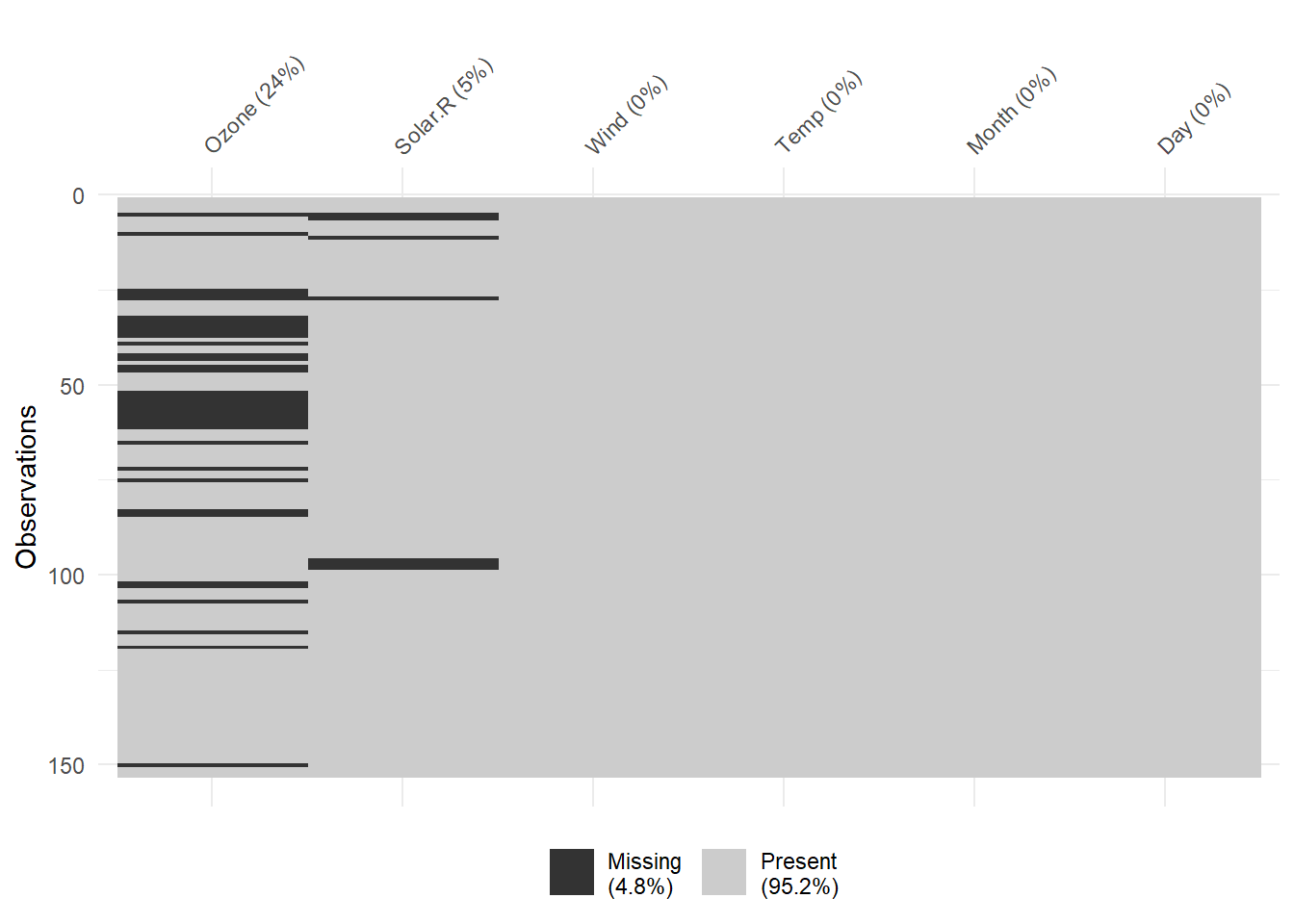

vis_miss(airquality)

Figure 13.2: Missing Data Heatmap

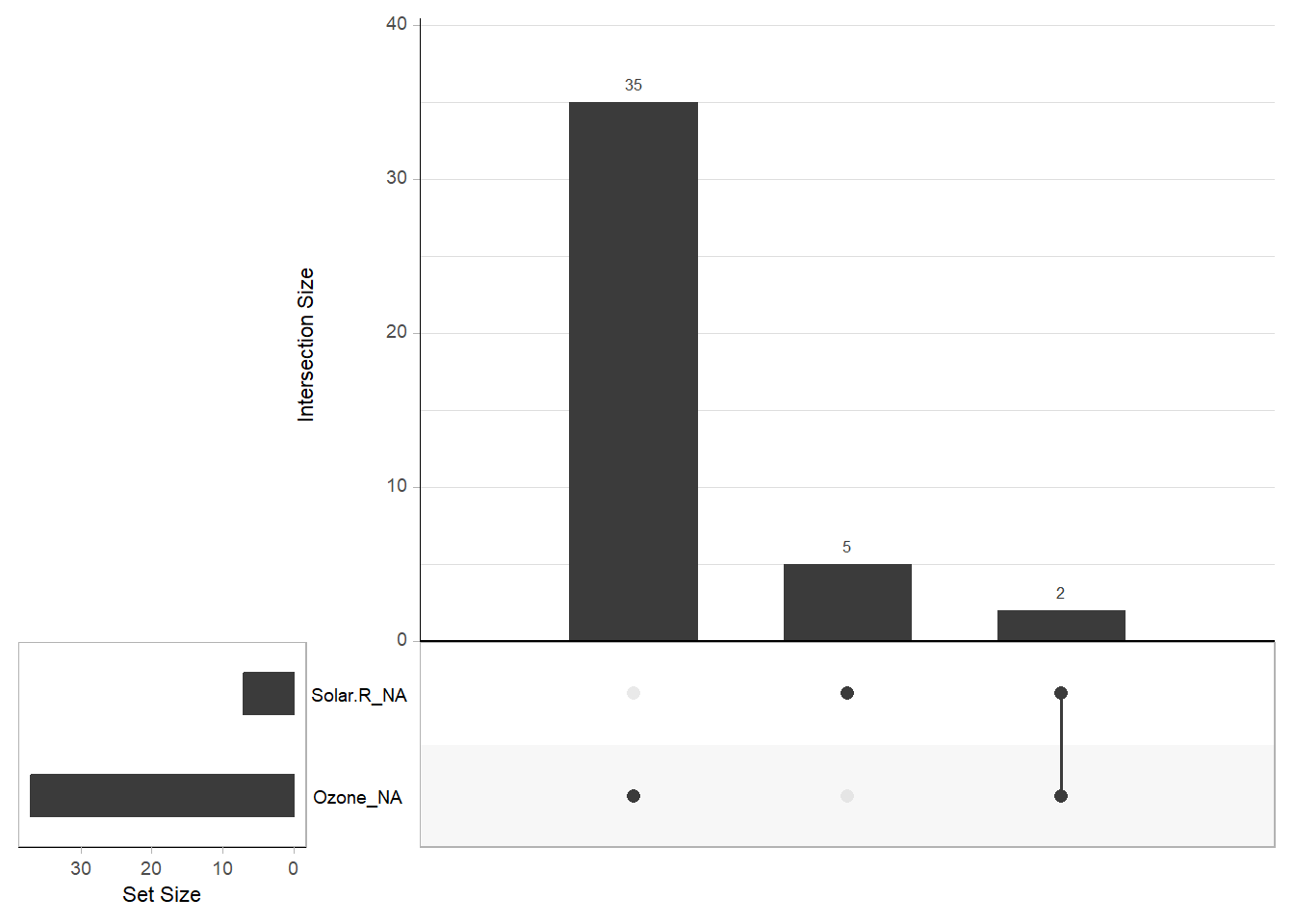

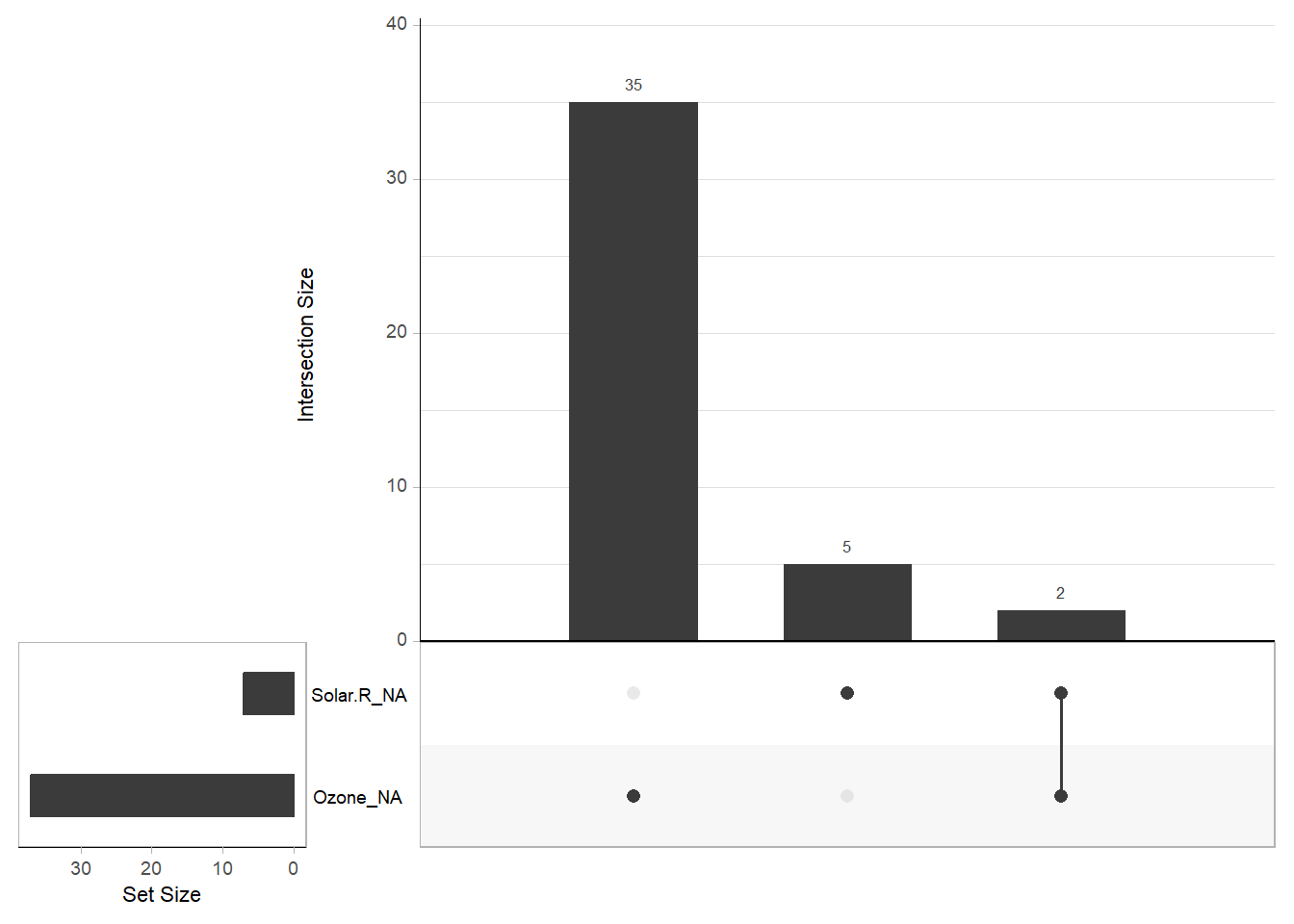

gg_miss_upset(airquality) # Displays a missingness upset plot

Figure 13.3: Missing Data Barchart

13.3.2 Statistical Tests for Missing Data Mechanisms

13.3.2.1 Diagnosing MCAR: Little’s Test

Little’s test is a hypothesis test to determine if the missing data mechanism is MCAR. It tests whether the means of observed and missing data are significantly different. The null hypothesis is that the data are MCAR.

\[ \chi^2 = \sum_{i=1}^n \frac{(O_i - E_i)^2}{E_i} \]

Where:

\(O_i\)= Observed frequency

\(E_i\)= Expected frequency under MCAR

13.3.2.2 Diagnosing MCAR via Dummy Variables

Creating a binary indicator for missingness allows you to test whether the presence of missing data is related to observed data. For instance:

-

Create a dummy variable:

1 = Missing

0 = Observed

-

Conduct a chi-square test or t-test:

Chi-square: Compare proportions of missingness across groups.

T-test: Compare means of (other) observed variables with missingness indicators.

# Example: Chi-square test

airquality$missing_var <- as.factor(ifelse(is.na(airquality$Ozone), 1, 0))

# Across groups of months

table(airquality$missing_var, airquality$Month)

#>

#> 5 6 7 8 9

#> 0 26 9 26 26 29

#> 1 5 21 5 5 1

chisq.test(table(airquality$missing_var, airquality$Month))

#>

#> Pearson's Chi-squared test

#>

#> data: table(airquality$missing_var, airquality$Month)

#> X-squared = 44.751, df = 4, p-value = 4.48e-09

# Example: T-test (of other variable)

t.test(Wind ~ missing_var, data = airquality)

#>

#> Welch Two Sample t-test

#>

#> data: Wind by missing_var

#> t = -0.60911, df = 63.646, p-value = 0.5446

#> alternative hypothesis: true difference in means between group 0 and group 1 is not equal to 0

#> 95 percent confidence interval:

#> -1.6893132 0.8999377

#> sample estimates:

#> mean in group 0 mean in group 1

#> 9.862069 10.25675713.3.3 Assessing MAR and MNAR

13.3.3.1 Sensitivity Analysis

Sensitivity analysis involves simulating different scenarios of missing data and assessing how the results change. For example, imputing missing values under different assumptions can provide insight into whether the data are MAR or MNAR.

13.3.3.2 Proxy Variables and External Data

Using proxy variables or external data sources can help assess whether missingness depends on unobserved variables (MNAR). For example, in surveys, follow-ups with non-respondents can reveal systematic differences.

13.3.3.3 Practical Challenges in Distinguishing MAR from MNAR

Distinguishing between Missing at Random (MAR) and Missing Not at Random (MNAR) is a critical and challenging task in data analysis. Properly identifying the nature of the missing data has significant implications for the choice of imputation strategies, model robustness, and the validity of conclusions. While statistical tests can sometimes aid in this determination, the process often relies heavily on domain knowledge, intuition, and exploratory analysis. Below, we discuss key considerations and examples that highlight these challenges:

Sensitive Topics: Missing data related to sensitive or stigmatized topics, such as income, drug use, or health conditions, are often MNAR. For example, individuals with higher incomes might deliberately choose not to report their earnings due to privacy concerns. Similarly, participants in a health survey may avoid answering questions about smoking if they perceive social disapproval. In such cases, the probability of missingness is directly related to the unobserved value itself, making MNAR likely.

Field-Specific Norms: Understanding norms and typical data collection practices in a specific field can provide insights into missingness patterns. For instance, in marketing surveys, respondents may skip questions about spending habits if they consider the questions intrusive. Prior research or historical data from the same domain can help infer whether missingness is more likely MAR (e.g., random skipping due to survey fatigue) or MNAR (e.g., deliberate omission by higher spenders).

Analyzing Auxiliary Variables: Leveraging auxiliary variables—those correlated with the missing variable—can help infer the missingness mechanism. For example, if missing income data strongly correlates with employment status, this suggests a MAR mechanism, as the missingness depends on observed variables. However, if missingness persists even after accounting for observable predictors, MNAR might be at play.

Experimental Design and Follow-Up: In longitudinal studies, dropout rates can signal MAR or MNAR patterns. For example, if dropouts occur disproportionately among participants reporting lower satisfaction in early surveys, this indicates an MNAR mechanism. Designing follow-up surveys to specifically investigate dropout reasons can clarify missingness patterns.

Sensitivity Analysis: To account for uncertainty in the missingness mechanism, researchers can conduct sensitivity analyses by comparing results under different assumptions (e.g., imputing data using both MAR and MNAR approaches). This process helps to quantify the potential impact of misclassifying the missingness mechanism on study conclusions.

-

Real-World Examples:

- In customer feedback surveys, higher ratings might be overrepresented due to non-response bias. Customers with negative experiences might be less likely to complete surveys, leading to an MNAR scenario.

- In financial reporting, missing audit data might correlate with companies in financial distress, a classic MNAR case where the missingness depends on unobserved financial health metrics.

Summary

MCAR: No pattern in missingness; use Little’s test or dummy variable analysis.

MAR: Missingness related to observed data; requires modeling assumptions or proxy analysis.

MNAR: Missingness depends on unobserved data; requires external validation or sensitivity analysis.

13.4 Methods for Handling Missing Data

13.4.1 Basic Methods

13.4.1.1 Complete Case Analysis (Listwise Deletion)

Listwise deletion retains only cases with complete data for all features, discarding rows with any missing values.

Advantages:

- Universally applicable to various statistical tests (e.g., SEM, multilevel regression).

- When data are Missing Completely at Random (MCAR), parameter estimates and standard errors are unbiased.

- Under specific Missing at Random (MAR) conditions, such as when the probability of missing data depends only on independent variables, listwise deletion can still yield unbiased estimates. For instance, in the model \(y = \beta_{0} + \beta_1X_1 + \beta_2X_2 + \epsilon\), if missingness in \(X_1\) is independent of \(y\) but depends on \(X_1\) and \(X_2\), the estimates remain unbiased (Little 1992).

- This aligns with principles of stratified sampling, which does not bias estimates.

- In logistic regression, if missing data depend only on the dependent variable but not on independent variables, listwise deletion produces consistent slope estimates, though the intercept may be biased (Vach and Vach 1994).

- For regression analysis, listwise deletion is more robust than Maximum Likelihood (ML) or Multiple Imputation (MI) when the MAR assumption is violated.

Disadvantages:

- Results in larger standard errors compared to advanced methods.

- If data are MAR but not MCAR, biased estimates can occur.

- In non-regression contexts, more sophisticated methods often outperform listwise deletion.

13.4.1.2 Available Case Analysis (Pairwise Deletion)

Pairwise deletion calculates estimates using all available data for each pair of variables, without requiring complete cases. It is particularly suitable for methods like linear regression, factor analysis, and SEM, which rely on correlation or covariance matrices.

Advantages:

- Under MCAR, pairwise deletion produces consistent and unbiased estimates in large samples.

- Compared to listwise deletion (Glasser 1964):

- When variable correlations are low, pairwise deletion provides more efficient estimates.

- When correlations are high, listwise deletion becomes more efficient.

Disadvantages:

- Yields biased estimates under MAR conditions.

- In small samples, covariance matrices might not be positive definite, rendering coefficient estimation infeasible.

- Software implementation varies in how sample size is handled, potentially affecting standard errors.

Note: Carefully review software documentation to understand how sample size is treated, as this influences standard error calculations.

13.4.1.3 Indicator Method (Dummy Variable Adjustment)

Also known as the Missing Indicator Method, this approach introduces an additional variable to indicate missingness in the dataset.

Implementation:

- Create an indicator variable:

\[ D = \begin{cases} 1 & \text{if data on } X \text{ are missing} \\ 0 & \text{otherwise} \end{cases} \]

- Modify the original variable to accommodate missingness:

\[ X^* = \begin{cases} X & \text{if data are available} \\ c & \text{if data are missing} \end{cases} \]

Note: A common choice for \(c\) is the mean of \(X\).

Interpretation:

- The coefficient of \(D\) represents the difference in the expected value of \(Y\) between cases with missing data and those without.

- The coefficient of \(X^*\) reflects the effect of \(X\) on \(Y\) for cases with observed data.

Disadvantages:

- Produces biased estimates of coefficients, even under MCAR conditions (Jones 1996).

- May lead to overinterpretation of the “missingness effect,” complicating model interpretation.

13.4.1.4 Advantages and Limitations of Basic Methods

| Method | Advantages | Disadvantages |

|---|---|---|

| Listwise Deletion | Simple and universally applicable; unbiased under MCAR; robust in certain MAR scenarios. | Inefficient (larger standard errors); biased under MAR in many cases; discards potentially useful data. |

| Pairwise Deletion | Utilizes all available data; efficient under MCAR with low correlations; avoids discarding all cases. | Biased under MAR; prone to non-positive-definite covariance matrices in small samples. |

| Indicator Method | Simple implementation; explicitly models missingness effect. | Biased even under MCAR; complicates interpretation; may not reflect true underlying relationships. |

13.4.2 Single Imputation Techniques

Single imputation methods replace missing data with a single value, generating a complete dataset that can be analyzed using standard techniques. While convenient, single imputation generally underestimates variability and risks biasing results.

13.4.2.1 Deterministic Methods

13.4.2.1.1 Mean, Median, Mode Imputation

This method replaces missing values with the mean, median, or mode of the observed data.

Advantages:

- Simplicity and ease of implementation.

- Useful for quick exploratory data analysis.

Disadvantages:

- Bias in Variances and Relationships: Mean imputation reduces variance and disrupts relationships among variables, leading to biased estimates of variances and covariances (Haitovsky 1968).

- Underestimated Standard Errors: Results in overly optimistic conclusions and increased risk of Type I errors.

- Dependency Structure Ignored: Particularly problematic in high-dimensional data, as it fails to capture dependencies among features.

13.4.2.1.2 Forward and Backward Filling (Time Series Contexts)

Used in time series analysis, this method replaces missing values using the preceding (forward filling) or succeeding (backward filling) values.

Advantages:

- Simple and preserves temporal ordering.

- Suitable for datasets where adjacent values are strongly correlated.

Disadvantages:

- Biased if missingness spans long gaps or occurs systematically.

- Cannot capture trends or changes in the underlying process.

13.4.2.2 Statistical Prediction Models

13.4.2.2.1 Linear Regression Imputation

Missing values in a variable are imputed based on a linear regression model using observed values of other variables.

Advantages:

- Preserves relationships between variables.

- More sophisticated than mean or median imputation.

Disadvantages:

- Assumes linear relationships, which may not hold in all datasets.

- Fails to capture variability, leading to downwardly biased standard errors.

13.4.2.2.2 Logistic Regression for Categorical Variables

Similar to linear regression imputation but used for categorical variables. The missing category is predicted using a logistic regression model.

Advantages:

- Useful for binary or multinomial categorical data.

- Preserves relationships with other variables.

Disadvantages:

- Assumes the underlying logistic model is appropriate.

- Does not account for uncertainty in the imputed values.

13.4.2.3 Non-Parametric Methods

13.4.2.3.1 Hot Deck Imputation

Hot Deck Imputation is a method of handling missing data where missing values are replaced with observed values from “donor” cases that are similar in other characteristics. This technique has been widely used in survey data, including by organizations like the U.S. Census Bureau, due to its flexibility and ability to maintain observed data distributions.

Advantages of Hot Deck Imputation

- Retains observed data distributions: Since missing values are imputed using actual observed data, the overall distribution remains realistic.

- Flexible: This method is applicable to both categorical and continuous variables.

- Constrained imputations: Imputed values are always feasible, as they come from observed cases.

- Adds variability: By randomly selecting donors, this method introduces variability, which can aid in robust standard error estimation.

Disadvantages of Hot Deck Imputation

- Sensitivity to similarity definitions: The quality of imputed values depends on the criteria used to define similarity between cases.

- Computational intensity: Identifying similar cases and randomly selecting donors can be computationally expensive, especially for large datasets.

- Subjectivity: Deciding how to define “similar” can introduce subjectivity or bias.

Algorithm for Hot Deck Imputation

Let \(n_1\) represent the number of cases with complete data on the variable \(Y\), and \(n_0\) represent the number of cases with missing data on \(Y\). The steps are as follows:

- From the \(n_1\) cases with complete data, take a random sample (with replacement) of \(n_1\) cases.

- From this sampled pool, take another random sample (with replacement) of size \(n_0\).

- Assign the values from the sampled \(n_0\) cases to the cases with missing data in \(Y\).

- Repeat this process for every variable in the dataset.

- For multiple imputation, repeat the above four steps multiple times to create multiple imputed datasets.

Variations and Considerations

- Skipping Step 1: If Step 1 is skipped, the variability of imputed values is reduced. This approach might not fully account for the uncertainty in missing data, which can underestimate standard errors.

- Defining similarity: A major challenge in this method is deciding what constitutes “similarity” between cases. Common approaches include matching based on distance metrics (e.g., Euclidean distance) or grouping cases by strata or clusters.

Practical Example

The U.S. Census Bureau employs an approximate Bayesian bootstrap variation of Hot Deck Imputation. In this approach:

Similar cases are identified based on shared characteristics or grouping variables.

A randomly chosen value from a similar individual in the sample is used to replace the missing value.

This method ensures imputed values are plausible while incorporating variability.

Key Notes

-

Good aspects:

- Imputed values are constrained to observed possibilities.

- Random selection introduces variability, helpful for multiple imputation scenarios.

-

Challenges:

- Defining and operationalizing “similarity” remains a critical step in applying this method effectively.

Below is an example code snippet illustrating Hot Deck Imputation in R:

library(Hmisc)

# Example dataset with missing values

data <- data.frame(

ID = 1:10,

Age = c(25, 30, NA, 40, NA, 50, 60, NA, 70, 80),

Gender = c("M", "F", "F", "M", "M", "F", "M", "F", "M", "F")

)

# Perform Hot Deck Imputation using Hmisc::impute

data$Age_imputed <- impute(data$Age, "random")

# Display the imputed dataset

print(data)

#> ID Age Gender Age_imputed

#> 1 1 25 M 25

#> 2 2 30 F 30

#> 3 3 NA F 40

#> 4 4 40 M 40

#> 5 5 NA M 40

#> 6 6 50 F 50

#> 7 7 60 M 60

#> 8 8 NA F 80

#> 9 9 70 M 70

#> 10 10 80 F 80This code randomly imputes missing values in the Age column based on observed data using the Hmisc package’s impute function.

13.4.2.3.2 Cold Deck Imputation

Cold Deck Imputation is a systematic variant of Hot Deck Imputation where the donor pool is predefined. Instead of selecting donors dynamically from within the same dataset, Cold Deck Imputation relies on an external reference dataset, such as historical data or other high-quality external sources.

Advantages of Cold Deck Imputation

- Utilizes high-quality external data: This method is particularly useful when reliable external reference datasets are available, allowing for accurate and consistent imputations.

- Consistency: If the same donor pool is used across multiple datasets, imputations remain consistent, which can be advantageous in longitudinal studies or standardized processes.

Disadvantages of Cold Deck Imputation

- Lack of adaptability: External data may not adequately reflect the unique characteristics or variability of the current dataset.

- Potential for systematic bias: If the donor pool is significantly different from the target dataset, imputations may introduce bias.

- Reduces variability: Unlike Hot Deck Imputation, Cold Deck Imputation systematically selects values, which removes random variation. This can affect the estimation of standard errors and other inferential statistics.

Key Characteristics

- Systematic Selection: Cold Deck Imputation selects donor values systematically based on predefined rules or matching criteria, rather than using random sampling.

- External Donor Pool: Donors are typically drawn from a separate dataset or historical records.

Algorithm for Cold Deck Imputation

- Identify an external reference dataset or predefined donor pool.

- Define the matching criteria to find “similar” cases between the donor pool and the current dataset (e.g., based on covariates or stratification).

- Systematically assign values from the donor pool to missing values in the current dataset based on the matching criteria.

- Repeat the process for each variable with missing data.

Practical Considerations

- Cold Deck Imputation works well when external data closely resemble the target dataset. However, when there are significant differences in distributions or relationships between variables, imputations may be biased or unrealistic.

- This method is less useful for datasets without access to reliable external reference data.

Suppose we have a current dataset with missing values and a historical dataset with similar variables. The following example demonstrates how Cold Deck Imputation can be implemented:

# Current dataset with missing values

current_data <- data.frame(

ID = 1:5,

Age = c(25, 30, NA, 45, NA),

Gender = c("M", "F", "F", "M", "M")

)

# External reference dataset (donor pool)

reference_data <- data.frame(

Age = c(28, 35, 42, 50),

Gender = c("M", "F", "F", "M")

)

# Perform Cold Deck Imputation

library(dplyr)

# Define a matching function to find closest donor

impute_cold_deck <- function(missing_row, reference_data) {

# Filter donors with the same gender

possible_donors <- reference_data %>%

filter(Gender == missing_row$Gender)

# Return the mean age of matching donors as an example of systematic imputation

return(mean(possible_donors$Age, na.rm = TRUE))

}

# Apply Cold Deck Imputation to the missing rows

current_data <- current_data %>%

rowwise() %>%

mutate(

Age_imputed = ifelse(

is.na(Age),

impute_cold_deck(cur_data(), reference_data),

Age

)

)

# Display the imputed dataset

print(current_data)

#> # A tibble: 5 × 4

#> # Rowwise:

#> ID Age Gender Age_imputed

#> <int> <dbl> <chr> <dbl>

#> 1 1 25 M 25

#> 2 2 30 F 30

#> 3 3 NA F 38.8

#> 4 4 45 M 45

#> 5 5 NA M 38.8Comparison to Hot Deck Imputation

| Feature | Hot Deck Imputation | Cold Deck Imputation |

|---|---|---|

| Donor Pool | Internal (within the dataset) | External (predefined dataset) |

| Selection | Random | Systematic |

| Variability | Retained | Reduced |

| Bias Potential | Lower | Higher (if donor pool differs) |

This method suits situations where external reference datasets are trusted and representative. However, careful consideration is required to ensure alignment between the donor pool and the target dataset to avoid systematic biases.

13.4.2.3.3 Random Draw from Observed Distribution

This imputation method replaces missing values by randomly sampling from the observed distribution of the variable with missing data. It is a simple, non-parametric approach that retains the variability of the original data.

Advantages

-

Preserves variability:

- By randomly drawing values from the observed data, this method ensures that the imputed values reflect the inherent variability of the variable.

-

Computational simplicity:

- The process is straightforward and does not require model fitting or complex calculations.

Disadvantages

-

Ignores relationships among variables:

- Since the imputation is based solely on the observed distribution of the variable, it does not consider relationships or dependencies with other variables.

-

May not align with trends:

- Imputed values are random and may fail to align with patterns or trends present in the data, such as time series structures or interactions.

Steps in Random Draw Imputation

- Identify the observed (non-missing) values of the variable.

- For each missing value, randomly sample one value from the observed distribution with or without replacement.

- Replace the missing value with the randomly sampled value.

The following example demonstrates how to use random draw imputation to fill in missing values:

# Example dataset with missing values

set.seed(123)

data <- data.frame(

ID = 1:10,

Value = c(10, 20, NA, 30, 40, NA, 50, 60, NA, 70)

)

# Perform random draw imputation

random_draw_impute <- function(data, variable) {

observed_values <- data[[variable]][!is.na(data[[variable]])] # Observed values

data[[variable]][is.na(data[[variable]])] <- sample(observed_values,

sum(is.na(data[[variable]])),

replace = TRUE)

return(data)

}

# Apply the imputation

imputed_data <- random_draw_impute(data, variable = "Value")

# Display the imputed dataset

print(imputed_data)

#> ID Value

#> 1 1 10

#> 2 2 20

#> 3 3 70

#> 4 4 30

#> 5 5 40

#> 6 6 70

#> 7 7 50

#> 8 8 60

#> 9 9 30

#> 10 10 70Considerations

-

When to Use:

- This method is suitable for exploratory analysis or as a quick way to handle missing data in univariate contexts.

-

Limitations:

- Random draws may result in values that do not fit well in the broader context of the dataset, especially in cases where the variable has strong relationships with others.

| Feature | Random Draw from Observed Distribution | Regression-Based Imputation |

|---|---|---|

| Complexity | Simple | Moderate to High |

| Preserves Variability | Yes | Limited in deterministic forms |

| Considers Relationships | No | Yes |

| Risk of Implausible Values | Low (if observed values are plausible) | Moderate to High |

This method is a quick and computationally efficient way to address missing data but is best complemented by more sophisticated methods when relationships between variables are important.

13.4.2.4 Semi-Parametric Methods

13.4.2.4.1 Predictive Mean Matching (PMM)

Predictive Mean Matching (PMM) imputes missing values by finding observed values closest in predicted value (based on a regression model) to the missing data. The donor values are then used to fill in the gaps.

Advantages:

Maintains observed variability in the data.

Ensures imputed values are realistic since they are drawn from observed data.

Disadvantages:

Requires a suitable predictive model.

Computationally intensive for large datasets.

Steps for PMM:

- Regress \(Y\) on \(X\) (matrix of covariates) for the \(n_1\) (non-missing cases) to estimate coefficients \(\hat{b}\) and residual variance \(s^2\).

- Draw from the posterior predictive distribution of residual variance: \[s^2_{[1]} = \frac{(n_1-k)s^2}{\chi^2},\] where \(\chi^2\) is a random draw from \(\chi^2_{n_1-k}\).

- Randomly sample from the posterior distribution of \(\hat{b}\): \[b_{[1]} \sim MVN(\hat{b}, s^2_{[1]}(X'X)^{-1}).\]

- Standardize residuals for \(n_1\) cases: \[e_i = \frac{y_i - \hat{b}x_i}{\sqrt{s^2(1-k/n_1)}}.\]

- Randomly draw a sample (with replacement) of \(n_0\) residuals from Step 4.

- Calculate imputed values for \(n_0\) missing cases: \[y_i = b_{[1]}x_i + s_{[1]}e_i.\]

- Repeat Steps 2–6 (except Step 4) to create multiple imputations.

Notes:

PMM can handle heteroskedasticity

works for multiple variables, imputing each using all others as predictors.

Example:

Example from Statistics Globe

set.seed(1) # Seed

N <- 100 # Sample size

y <- round(runif(N,-10, 10)) # Target variable Y

x1 <- y + round(runif(N, 0, 50)) # Auxiliary variable 1

x2 <- round(y + 0.25 * x1 + rnorm(N,-3, 15)) # Auxiliary variable 2

x3 <- round(0.1 * x1 + rpois(N, 2)) # Auxiliary variable 3

# (categorical variable)

x4 <- as.factor(round(0.02 * y + runif(N))) # Auxiliary variable 4

# Insert 20% missing data in Y

y[rbinom(N, 1, 0.2) == 1] <- NA

data <- data.frame(y, x1, x2, x3, x4) # Store data in dataset

head(data) # First 6 rows of our data

#> y x1 x2 x3 x4

#> 1 NA 28 -10 5 0

#> 2 NA 15 -2 2 1

#> 3 1 15 -12 6 1

#> 4 8 58 22 10 1

#> 5 NA 26 -12 7 0

#> 6 NA 19 36 5 1

library("mice") # Load mice package

##### Impute data via predictive mean matching (single imputation)#####

imp_single <- mice::mice(data, m = 1, method = "pmm") # Impute missing values

#>

#> iter imp variable

#> 1 1 y

#> 2 1 y

#> 3 1 y

#> 4 1 y

#> 5 1 y

data_imp_single <- mice::complete(imp_single) # Store imputed data

# head(data_imp_single)

# Since single imputation underestiamtes stnadard errors,

# we use multiple imputaiton

##### Predictive mean matching (multiple imputation) #####

# Impute missing values multiple times

imp_multi <- mice(data, m = 5, method = "pmm")

#>

#> iter imp variable

#> 1 1 y

#> 1 2 y

#> 1 3 y

#> 1 4 y

#> 1 5 y

#> 2 1 y

#> 2 2 y

#> 2 3 y

#> 2 4 y

#> 2 5 y

#> 3 1 y

#> 3 2 y

#> 3 3 y

#> 3 4 y

#> 3 5 y

#> 4 1 y

#> 4 2 y

#> 4 3 y

#> 4 4 y

#> 4 5 y

#> 5 1 y

#> 5 2 y

#> 5 3 y

#> 5 4 y

#> 5 5 y

data_imp_multi_all <-

# Store multiply imputed data

mice::complete(imp_multi,

"repeated",

include = TRUE)

data_imp_multi <-

# Combine imputed Y and X1-X4 (for convenience)

data.frame(data_imp_multi_all[, 1:6], data[, 2:5])

head(data_imp_multi)

#> y.0 y.1 y.2 y.3 y.4 y.5 x1 x2 x3 x4

#> 1 NA -1 6 -1 -3 3 28 -10 5 0

#> 2 NA -10 10 4 0 2 15 -2 2 1

#> 3 1 1 1 1 1 1 15 -12 6 1

#> 4 8 8 8 8 8 8 58 22 10 1

#> 5 NA 0 -1 -6 2 0 26 -12 7 0

#> 6 NA 4 0 3 3 3 19 36 5 1Example from UCLA Statistical Consulting

library(mice)

library(VIM)

library(lattice)

library(ggplot2)

## set observations to NA

anscombe <- within(anscombe, {

y1[1:3] <- NA

y4[3:5] <- NA

})

## view

head(anscombe)

#> x1 x2 x3 x4 y1 y2 y3 y4

#> 1 10 10 10 8 NA 9.14 7.46 6.58

#> 2 8 8 8 8 NA 8.14 6.77 5.76

#> 3 13 13 13 8 NA 8.74 12.74 NA

#> 4 9 9 9 8 8.81 8.77 7.11 NA

#> 5 11 11 11 8 8.33 9.26 7.81 NA

#> 6 14 14 14 8 9.96 8.10 8.84 7.04

## check missing data patterns

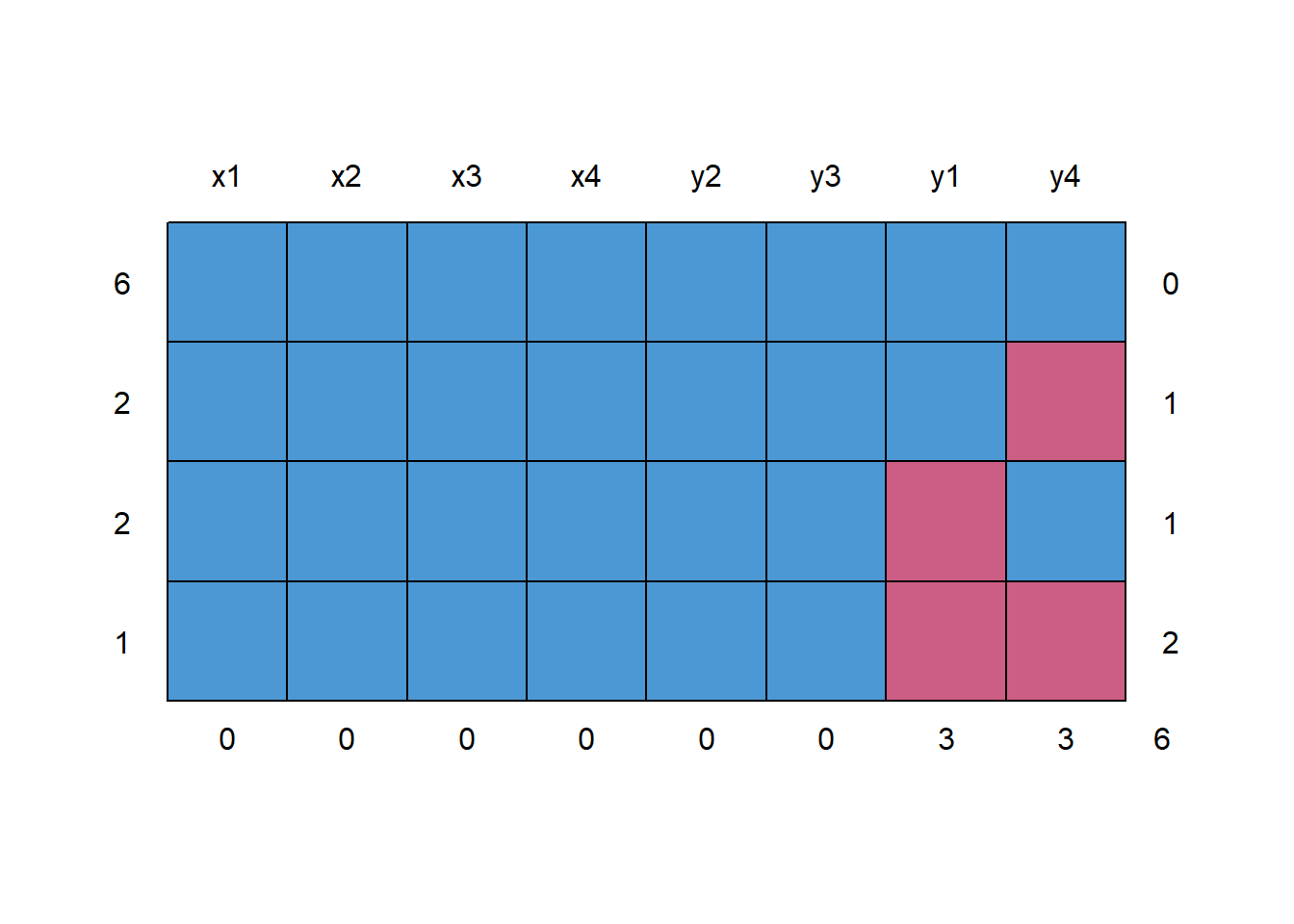

md.pattern(anscombe)

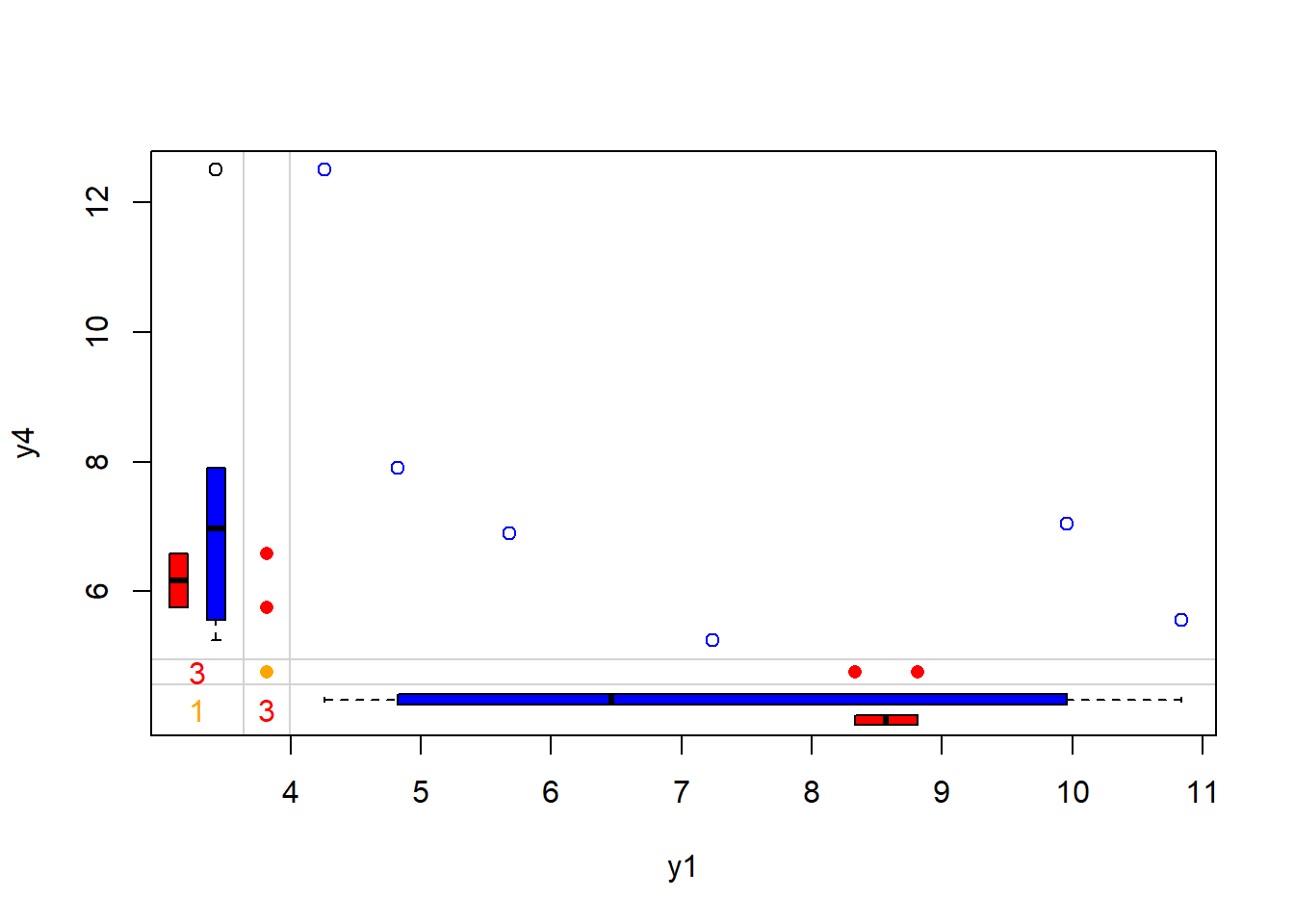

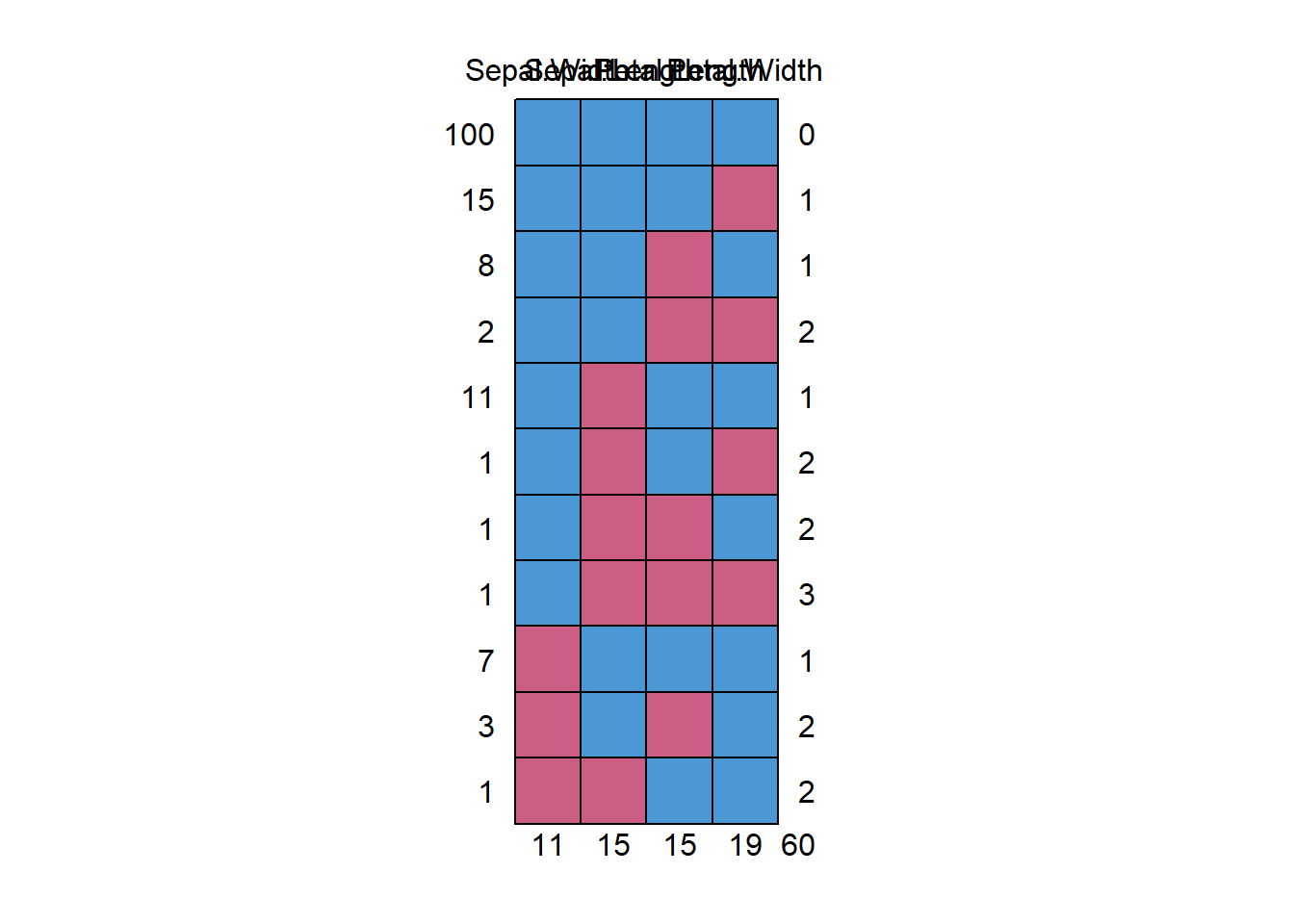

Figure 13.4: Missing Data Pattern

#> x1 x2 x3 x4 y2 y3 y1 y4

#> 6 1 1 1 1 1 1 1 1 0

#> 2 1 1 1 1 1 1 1 0 1

#> 2 1 1 1 1 1 1 0 1 1

#> 1 1 1 1 1 1 1 0 0 2

#> 0 0 0 0 0 0 3 3 6

## Number of observations per patterns for all pairs of variables

p <- md.pairs(anscombe)

p

#> $rr

#> x1 x2 x3 x4 y1 y2 y3 y4

#> x1 11 11 11 11 8 11 11 8

#> x2 11 11 11 11 8 11 11 8

#> x3 11 11 11 11 8 11 11 8

#> x4 11 11 11 11 8 11 11 8

#> y1 8 8 8 8 8 8 8 6

#> y2 11 11 11 11 8 11 11 8

#> y3 11 11 11 11 8 11 11 8

#> y4 8 8 8 8 6 8 8 8

#>

#> $rm

#> x1 x2 x3 x4 y1 y2 y3 y4

#> x1 0 0 0 0 3 0 0 3

#> x2 0 0 0 0 3 0 0 3

#> x3 0 0 0 0 3 0 0 3

#> x4 0 0 0 0 3 0 0 3

#> y1 0 0 0 0 0 0 0 2

#> y2 0 0 0 0 3 0 0 3

#> y3 0 0 0 0 3 0 0 3

#> y4 0 0 0 0 2 0 0 0

#>

#> $mr

#> x1 x2 x3 x4 y1 y2 y3 y4

#> x1 0 0 0 0 0 0 0 0

#> x2 0 0 0 0 0 0 0 0

#> x3 0 0 0 0 0 0 0 0

#> x4 0 0 0 0 0 0 0 0

#> y1 3 3 3 3 0 3 3 2

#> y2 0 0 0 0 0 0 0 0

#> y3 0 0 0 0 0 0 0 0

#> y4 3 3 3 3 2 3 3 0

#>

#> $mm

#> x1 x2 x3 x4 y1 y2 y3 y4

#> x1 0 0 0 0 0 0 0 0

#> x2 0 0 0 0 0 0 0 0

#> x3 0 0 0 0 0 0 0 0

#> x4 0 0 0 0 0 0 0 0

#> y1 0 0 0 0 3 0 0 1

#> y2 0 0 0 0 0 0 0 0

#> y3 0 0 0 0 0 0 0 0

#> y4 0 0 0 0 1 0 0 3-

rr= number of observations where both pairs of values are observed -

rm= the number of observations where both variables are missing values -

mr= the number of observations where the first variable’s value (e.g. the row variable) is observed and second (or column) variable is missing -

mm= the number of observations where the second variable’s value (e.g. the col variable) is observed and first (or row) variable is missing

## Margin plot of y1 and y4

marginplot(anscombe[c(5, 8)], col = c("blue", "red", "orange"))

Figure 13.5: Scatterplot of y4 versus y1 with Marginal Boxplots

## 5 imputations for all missing values

imp1 <- mice(anscombe, m = 5)

#>

#> iter imp variable

#> 1 1 y1 y4

#> 1 2 y1 y4

#> 1 3 y1 y4

#> 1 4 y1 y4

#> 1 5 y1 y4

#> 2 1 y1 y4

#> 2 2 y1 y4

#> 2 3 y1 y4

#> 2 4 y1 y4

#> 2 5 y1 y4

#> 3 1 y1 y4

#> 3 2 y1 y4

#> 3 3 y1 y4

#> 3 4 y1 y4

#> 3 5 y1 y4

#> 4 1 y1 y4

#> 4 2 y1 y4

#> 4 3 y1 y4

#> 4 4 y1 y4

#> 4 5 y1 y4

#> 5 1 y1 y4

#> 5 2 y1 y4

#> 5 3 y1 y4

#> 5 4 y1 y4

#> 5 5 y1 y4

## linear regression for each imputed data set - 5 regression are run

fitm <- with(imp1, lm(y1 ~ y4 + x1))

summary(fitm)

#> # A tibble: 15 × 7

#> term estimate std.error statistic p.value nobs df.residual

#> <chr> <dbl> <dbl> <dbl> <dbl> <int> <dbl>

#> 1 (Intercept) 7.33 2.44 3.01 0.0169 11 8

#> 2 y4 -0.416 0.223 -1.86 0.0996 11 8

#> 3 x1 0.371 0.141 2.63 0.0302 11 8

#> 4 (Intercept) 7.27 2.90 2.51 0.0365 11 8

#> 5 y4 -0.435 0.273 -1.59 0.150 11 8

#> 6 x1 0.387 0.160 2.41 0.0422 11 8

#> 7 (Intercept) 6.54 2.80 2.33 0.0479 11 8

#> 8 y4 -0.322 0.255 -1.26 0.243 11 8

#> 9 x1 0.362 0.156 2.32 0.0491 11 8

#> 10 (Intercept) 5.93 3.08 1.92 0.0907 11 8

#> 11 y4 -0.286 0.282 -1.02 0.339 11 8

#> 12 x1 0.418 0.176 2.37 0.0451 11 8

#> 13 (Intercept) 8.16 2.67 3.05 0.0158 11 8

#> 14 y4 -0.489 0.251 -1.95 0.0867 11 8

#> 15 x1 0.326 0.151 2.17 0.0622 11 8

## pool coefficients and standard errors across all 5 regression models

mice::pool(fitm)

#> Class: mipo m = 5

#> term m estimate ubar b t dfcom df

#> 1 (Intercept) 5 7.0445966 7.76794670 0.719350800 8.63116766 8 5.805314

#> 2 y4 5 -0.3896685 0.06634920 0.006991497 0.07473900 8 5.706243

#> 3 x1 5 0.3727865 0.02473847 0.001134293 0.02609962 8 6.178032

#> riv lambda fmi

#> 1 0.11112601 0.10001207 0.3044313

#> 2 0.12644909 0.11225460 0.3161877

#> 3 0.05502168 0.05215218 0.2586992

## output parameter estimates

summary(mice::pool(fitm))

#> term estimate std.error statistic df p.value

#> 1 (Intercept) 7.0445966 2.9378849 2.397846 5.805314 0.05483678

#> 2 y4 -0.3896685 0.2733843 -1.425350 5.706243 0.20638512

#> 3 x1 0.3727865 0.1615538 2.307508 6.178032 0.0592399913.4.2.4.2 Stochastic Imputation

Stochastic Imputation is an enhancement of regression imputation that introduces randomness into the imputation process by adding a random residual to the predicted values from a regression model. This approach aims to retain the variability of the original data while reducing the bias introduced by deterministic regression imputation.

Stochastic Imputation can be described as:

\[ \text{Imputed Value} = \text{Predicted Value (from regression)} + \text{Random Residual} \]

This method is commonly used as a foundation for multiple imputation techniques.

Advantages of Stochastic Imputation

-

Retains all the benefits of regression imputation:

- Preserves relationships between variables in the dataset.

- Utilizes information from observed data to inform imputations.

-

Introduces randomness:

- Adds variability by including a random residual term, making imputed values more realistic and better representing the uncertainty of missing data.

-

Supports multiple imputation:

- By generating different random residuals for each iteration, it facilitates the creation of multiple plausible datasets for robust statistical analysis.

Disadvantages of Stochastic Imputation

-

Implausible values:

- Depending on the random residuals, imputed values may fall outside the plausible range (e.g., negative values for variables like age or income).

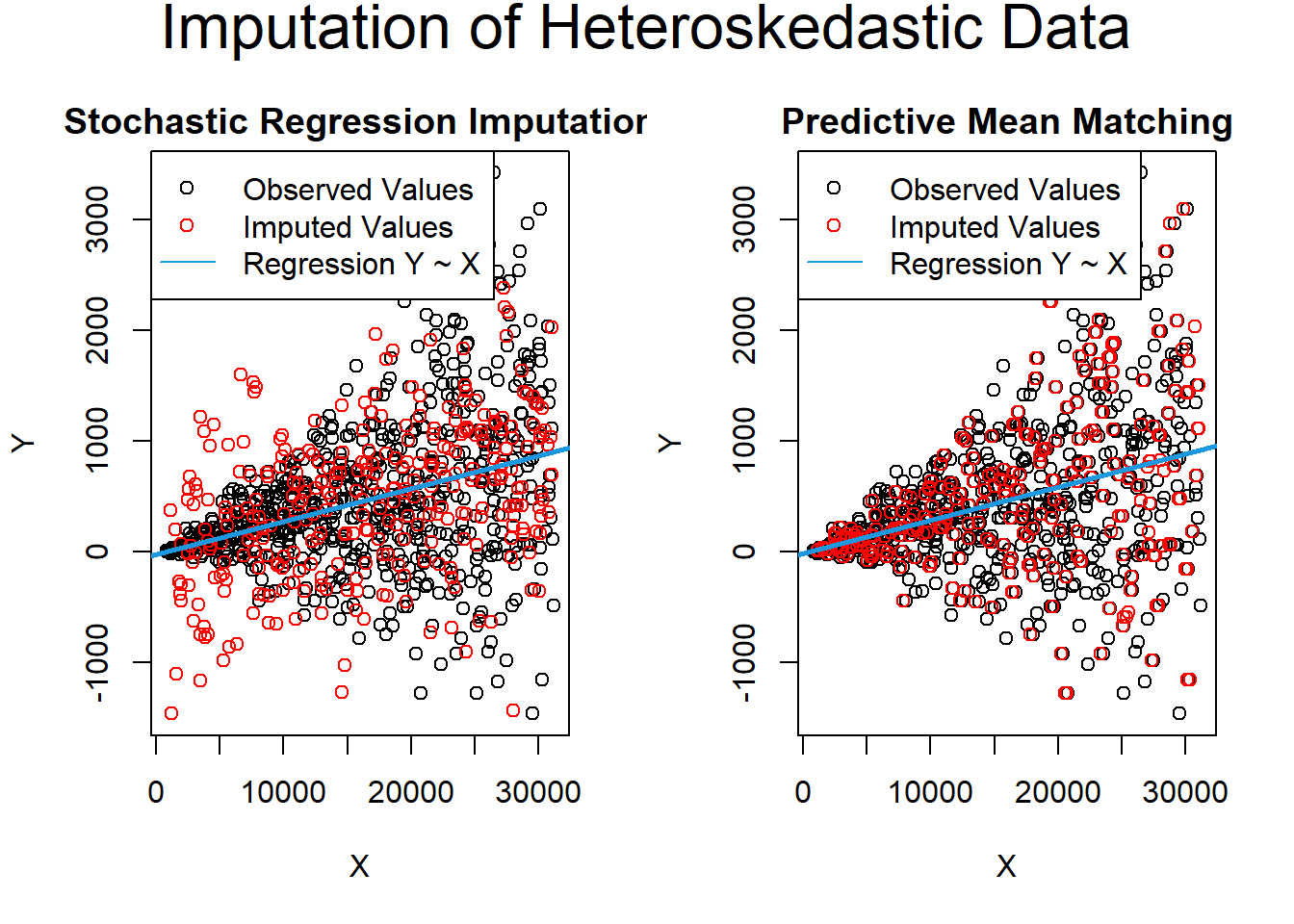

-

Cannot handle heteroskedasticity:

- If the data exhibit heteroskedasticity (i.e., non-constant variance of residuals), the randomness added by stochastic imputation may not accurately reflect the underlying variability.

Steps in Stochastic Imputation

- Fit a regression model using cases with complete data for the variable with missing values.

- Predict missing values using the fitted model.

- Generate random residuals based on the distribution of residuals from the regression model.

- Add the random residuals to the predicted values to impute missing values.

# Example dataset with missing values

set.seed(123)

data <- data.frame(

X = rnorm(10, mean = 50, sd = 10),

Y = c(100, 105, 110, NA, 120, NA, 130, 135, 140, NA)

)

# Perform stochastic imputation

stochastic_impute <- function(data, predictor, target) {

# Subset data with complete cases

complete_data <- data[!is.na(data[[target]]), ]

# Fit a regression model

model <- lm(as.formula(paste(target, "~", predictor)), data = complete_data)

# Predict missing values

missing_data <- data[is.na(data[[target]]), ]

predictions <- predict(model, newdata = missing_data)

# Add random residuals

residual_sd <- sd(model$residuals, na.rm = TRUE)

stochastic_values <- predictions + rnorm(length(predictions), mean = 0, sd = residual_sd)

# Impute missing values

data[is.na(data[[target]]), target] <- stochastic_values

return(data)

}

# Apply stochastic imputation

imputed_data <- stochastic_impute(data, predictor = "X", target = "Y")

# Display the imputed dataset

print(imputed_data)

#> X Y

#> 1 44.39524 100.0000

#> 2 47.69823 105.0000

#> 3 65.58708 110.0000

#> 4 50.70508 137.0070

#> 5 51.29288 120.0000

#> 6 67.15065 114.9107

#> 7 54.60916 130.0000

#> 8 37.34939 135.0000

#> 9 43.13147 140.0000

#> 10 45.54338 127.9359Notes

Multiple Imputation: Most multiple imputation methods are extensions of stochastic regression imputation. By repeating the imputation process with different random seeds, multiple datasets can be generated to account for uncertainty in the imputed values.

Dealing with Implausible Values: Additional constraints or transformations (e.g., truncating imputed values to a plausible range) may be necessary to address the issue of implausible values.

Comparison to Deterministic Regression Imputation

| Feature | Deterministic Regression Imputation | Stochastic Imputation |

|---|---|---|

| Randomness | None | Adds random residuals |

| Preserves Variability | No | Yes |

| Use in Multiple Imputation | Limited | Well-suited |

| Bias Potential | Higher | Lower |

# Income data

set.seed(1) # Set seed

N <- 1000 # Sample size

income <-

round(rnorm(N, 0, 500)) # Create some synthetic income data

income[income < 0] <- income[income < 0] * (-1)

x1 <- income + rnorm(N, 1000, 1500) # Auxiliary variables

x2 <- income + rnorm(N,-5000, 2000)

# Create 10% missingness in income

income[rbinom(N, 1, 0.1) == 1] <- NA

data_inc_miss <- data.frame(income, x1, x2)Single stochastic regression imputation

imp_inc_sri <- mice(data_inc_miss, method = "norm.nob", m = 1)

#>

#> iter imp variable

#> 1 1 income

#> 2 1 income

#> 3 1 income

#> 4 1 income

#> 5 1 income

data_inc_sri <- mice::complete(imp_inc_sri)Single predictive mean matching

imp_inc_pmm <- mice(data_inc_miss, method = "pmm", m = 1)

#>

#> iter imp variable

#> 1 1 income

#> 2 1 income

#> 3 1 income

#> 4 1 income

#> 5 1 income

data_inc_pmm <- mice::complete(imp_inc_pmm)Stochastic regression imputation contains negative values

data_inc_sri$income[data_inc_sri$income < 0]

#> [1] -23.85404 -58.37790 -61.86396 -57.47909 -21.29221 -73.26549

#> [7] -61.76194 -42.45942 -351.02991 -317.69090

# No values below 0

data_inc_pmm$income[data_inc_pmm$income < 0]

#> numeric(0)Evidence for heteroskadastic data

# Heteroscedastic data

set.seed(1) # Set seed

N <- 1:1000 # Sample size

a <- 0

b <- 1

sigma2 <- N^2

eps <- rnorm(N, mean = 0, sd = sqrt(sigma2))

y <- a + b * N + eps # Heteroscedastic variable

x <- 30 * N + rnorm(N[length(N)], 1000, 200) # Correlated variable

y[rbinom(N[length(N)], 1, 0.3) == 1] <- NA # 30% missing

data_het_miss <- data.frame(y, x)Single stochastic regression imputation

imp_het_sri <- mice(data_het_miss, method = "norm.nob", m = 1)

#>

#> iter imp variable

#> 1 1 y

#> 2 1 y

#> 3 1 y

#> 4 1 y

#> 5 1 y

data_het_sri <- mice::complete(imp_het_sri)Single predictive mean matching

imp_het_pmm <- mice(data_het_miss, method = "pmm", m = 1)

#>

#> iter imp variable

#> 1 1 y

#> 2 1 y

#> 3 1 y

#> 4 1 y

#> 5 1 y

data_het_pmm <- mice::complete(imp_het_pmm)Comparison between predictive mean matching and stochastic regression imputation

# Both plots in one graphic

par(mfrow = c(1, 2))

# Plot of observed values

plot(x[!is.na(data_het_sri$y)],

data_het_sri$y[!is.na(data_het_sri$y)],

main = "",

xlab = "X",

ylab = "Y")

# Plot of missing values

points(x[is.na(y)], data_het_sri$y[is.na(y)],

col = "red")

# Title of plot

title("Stochastic Regression Imputation",

line = 0.5)

# Regression line

abline(lm(y ~ x, data_het_sri),

col = "#1b98e0", lwd = 2.5)

# Legend

legend(

"topleft",

c("Observed Values", "Imputed Values", "Regression Y ~ X"),

pch = c(1, 1, NA),

lty = c(NA, NA, 1),

col = c("black", "red", "#1b98e0")

)

# Plot of observed values

plot(x[!is.na(data_het_pmm$y)],

data_het_pmm$y[!is.na(data_het_pmm$y)],

main = "",

xlab = "X",

ylab = "Y")

# Plot of missing values

points(x[is.na(y)], data_het_pmm$y[is.na(y)],

col = "red")

# Title of plot

title("Predictive Mean Matching",

line = 0.5)

abline(lm(y ~ x, data_het_pmm),

col = "#1b98e0", lwd = 2.5)

# Legend

legend(

"topleft",

c("Observed Values", "Imputed Values", "Regression Y ~ X"),

pch = c(1, 1, NA),

lty = c(NA, NA, 1),

col = c("black", "red", "#1b98e0")

)

mtext(

"Imputation of Heteroskedastic Data",

# Main title of plot

side = 3,

line = -1.5,

outer = TRUE,

cex = 2

)

Figure 13.6: Imputation of Heteroskedastic Data

13.4.2.5 Matrix Completion

Matrix completion is a method used to impute missing data in a feature matrix while accounting for dependence between features. This approach leverages principal components to approximate the data matrix, a process referred to as matrix completion (James et al. 2013, Sec 12.3).

Problem Setup

Consider an \(n \times p\) feature matrix \(\mathbf{X}\), where the element \(x_{ij}\) represents the value for the \(i\)th observation and \(j\)th feature. Some elements of \(\mathbf{X}\) are missing, and we aim to impute these missing values.

The matrix \(\mathbf{X}\) can be approximated using its leading principal components. Specifically, we consider \(M\) principal components that minimize the following objective:

\[ \underset{\mathbf{A} \in \mathbb{R}^{n \times M}, \mathbf{B} \in \mathbb{R}^{p \times M}}{\operatorname{min}} \left\{ \sum_{(i,j) \in \mathcal{O}} (x_{ij} - \sum_{m=1}^M a_{im}b_{jm})^2 \right\} \]

where \(\mathcal{O}\) is the set of observed indices \((i,j)\), which is a subset of the total \(n \times p\) pairs. Here: - \(\mathbf{A}\) is an \(n \times M\) matrix of principal component scores. - \(\mathbf{B}\) is a \(p \times M\) matrix of principal component loadings.

Imputation of Missing Values

After solving the minimization problem:

- Missing observations \(x_{ij}\) can be imputed using the formula: \[ \hat{x}_{ij} = \sum_{m=1}^M \hat{a}_{im}\hat{b}_{jm} \] where \(\hat{a}_{im}\) and \(\hat{b}_{jm}\) are the estimated elements of \(\mathbf{A}\) and \(\mathbf{B}\), respectively.

- The leading \(M\) principal component scores and loadings can be approximately recovered, as is done in complete data scenarios.

Iterative Algorithm

The eigen-decomposition used in standard principal component analysis is not applicable here because of missing values. Instead, an iterative algorithm, as described in (James et al. 2013, Alg 12.1), is employed:

Initialize the Complete Matrix: Construct an initial complete matrix \(\tilde{\mathbf{X}}\) of dimension \(n \times p\) where: \[ \tilde{x}_{ij} = \begin{cases} x_{ij} & \text{if } (i,j) \in \mathcal{O} \\ \bar{x}_j & \text{if } (i,j) \notin \mathcal{O} \end{cases} \] Here, \(\bar{x}_j\) is the mean of the observed values for the \(j\)th variable in the incomplete data matrix \(\mathbf{X}\). \(\mathcal{O}\) indexes the observed elements of \(\mathbf{X}\).

-

Iterative Steps: Repeat the following steps until convergence:

Minimize the Objective: Solve the problem: \[ \underset{\mathbf{A} \in R^{n \times M}, \mathbf{B} \in R^{p \times M}}{\operatorname{min}} \left\{ \sum_{(i,j) \in \mathcal{O}} (x_{ij} - \sum_{m=1}^M a_{im}b_{jm})^2 \right\} \] by computing the principal components of the current \(\tilde{\mathbf{X}}\).

Update Missing Values: For each missing element \((i,j) \notin \mathcal{O}\), set: \[ \tilde{x}_{ij} \leftarrow \sum_{m=1}^M \hat{a}_{im}\hat{b}_{jm} \]

Recalculate the Objective: Compute the objective: \[ \sum_{(i,j) \in \mathcal{O}} (x_{ij} - \sum_{m=1}^M \hat{a}_{im} \hat{b}_{jm})^2 \]

Return Imputed Values: Once the algorithm converges, return the estimated missing entries \(\tilde{x}_{ij}\) for \((i,j) \notin \mathcal{O}\).

Key Considerations

- This approach assumes that the missing data are missing at random (MAR).

- Convergence criteria for the iterative algorithm often involve achieving a threshold for the change in the objective function or limiting the number of iterations.

- The choice of \(M\), the number of principal components, can be guided by cross-validation or other model selection techniques.

13.4.2.6 Comparison of Single Imputation Techniques

| Method | Advantages | Disadvantages |

|---|---|---|

| Mean, Median, Mode Imputation | Simple, quick implementation. | Biased variances and covariances; ignores relationships among variables. |

| Forward/Backward Filling | Preserves temporal ordering. | Biased for systematic gaps or long missing sequences. |

| Linear Regression Imputation | Preserves relationships among variables. | Fails to capture variability; assumes linearity. |

| Logistic Regression Imputation | Handles categorical variables well. | Requires appropriate model assumptions; ignores variability. |

| PMM | Maintains variability; imputes realistic values. | Computationally intensive; requires a good predictive model. |

| Hot Deck Imputation | Flexible; maintains data distribution. | Sensitive to donor selection; computationally demanding. |

| Cold Deck Imputation | Consistent across datasets with predefined donor pools. | Risk of bias if donor data are not representative. |

| Random Draw from Observed | Simple; retains variability in data. | Does not preserve relationships among variables; random imputation may distort trends. |

| Matrix Completion | Captures dependencies; imputes structurally consistent values. | Computationally intensive; assumes principal components capture data relationships. |

Single imputation techniques are straightforward and accessible, but they often underestimate uncertainty and fail to fully leverage relationships among variables. These limitations make them less ideal for rigorous analyses compared to multiple imputation or model-based approaches.

13.4.3 Machine Learning and Modern Approaches

13.4.3.1 Tree-Based Methods

13.4.3.1.1 Random Forest Imputation (missForest)

Random Forest Imputation uses an iterative process where a random forest model predicts missing values for one variable at a time, treating other variables as predictors. This process continues until convergence.

-

Mathematical Framework:

- For a variable \(X_j\) with missing values, treat \(X_j\) as the response variable.

- Fit a random forest model \(f(X_{-j})\) using the other variables \(X_{-j}\) as predictors.

- Predict missing values \(\hat{X}_j = f(X_{-j})\).

- Repeat for all variables with missing data until imputed values stabilize.

-

Advantages:

- Captures complex interactions and non-linearities.

- Handles mixed data types seamlessly.

-

Limitations:

- Computationally intensive for large datasets.

- Sensitive to the quality of data relationships.

13.4.3.1.2 Gradient Boosting Machines (GBM)

Gradient Boosting Machines iteratively build models to minimize loss functions. For imputation, missing values are treated as a target variable to be predicted.

Mathematical Framework: The GBM algorithm minimizes the loss function: \[ L = \sum_{i=1}^n \ell(y_i, f(x_i)), \] where \(\ell\) is the loss function (e.g., mean squared error), \(y_i\) are observed values, and \(f(x_i)\) are predictions.

Missing values are treated as the \(y_i\) and predicted iteratively.

-

Advantages:

- Highly accurate predictions.

- Captures variable importance.

-

Limitations:

- Overfitting risks.

- Requires careful parameter tuning.

13.4.3.2 Neural Network-Based Imputation

13.4.3.2.1 Autoencoders

Autoencoders are unsupervised neural networks that compress and reconstruct data. Missing values are estimated during reconstruction.

-

Mathematical Framework: An autoencoder consists of:

- An encoder function: \(h = g(Wx + b)\), which compresses the input \(x\).

- A decoder function: \(\hat{x} = g'(W'h + b')\), which reconstructs the data.

The network minimizes the reconstruction loss: \[ L = \sum_{i=1}^n (x_i - \hat{x}_i)^2. \]

-

Advantages:

- Handles high-dimensional and non-linear data.

- Unsupervised learning.

-

Limitations:

- Computationally demanding.

- Requires large datasets for effective training.

13.4.3.2.2 Generative Adversarial Networks (GANs) for Data Imputation

GANs consist of a generator and a discriminator. For imputation, the generator fills in missing values, and the discriminator evaluates the quality of the imputations.

-

Mathematical Framework: GAN training involves optimizing: \[

\min_G \max_D \mathbb{E}[\log D(x)] + \mathbb{E}[\log(1 - D(G(z)))].

\]

- \(D(x)\): Discriminator’s probability that \(x\) is real.

- \(G(z)\): Generator’s output for latent input \(z\).

-

Advantages:

- Realistic imputations that reflect underlying distributions.

- Handles complex data types.

-

Limitations:

- Difficult to train and tune.

- Computationally intensive.

13.4.3.3 Matrix Factorization and Matrix Completion

13.4.3.3.1 Singular Value Decomposition (SVD)

SVD decomposes a matrix \(A\) into three matrices: \[ A = U\Sigma V^T, \] where \(U\) and \(V\) are orthogonal matrices, and \(\Sigma\) contains singular values. Missing values are estimated by reconstructing \(A\) using a low-rank approximation: \[ \hat{A} = U_k \Sigma_k V_k^T. \]

-

Advantages:

- Captures global patterns.

- Efficient for structured data.

-

Limitations:

- Assumes linear relationships.

- Sensitive to sparsity.

13.4.3.3.2 Collaborative Filtering Approaches

Collaborative filtering uses similarities between rows (users) or columns (items) to impute missing data. For instance, the value of \(X_{ij}\) is predicted as: \[ \hat{X}_{ij} = \frac{\sum_{k \in N(i)} w_{ik} X_{kj}}{\sum_{k \in N(i)} w_{ik}}, \] where \(w_{ik}\) represents similarity weights and \(N(i)\) is the set of neighbors.

13.4.3.4 K-Nearest Neighbor (KNN) Imputation

KNN identifies the \(k\) nearest observations based on a distance metric and imputes missing values using a weighted average (continuous variables) or mode (categorical variables).

Mathematical Framework: For a missing value \(x\), its imputed value is: \[ \hat{x} = \frac{\sum_{i=1}^k w_i x_i}{\sum_{i=1}^k w_i}, \] where \(w_i = \frac{1}{d(x, x_i)}\) and \(d(x, x_i)\) is a distance metric (e.g., Euclidean or Manhattan).

-

Advantages:

- Simple and interpretable.

- Non-parametric.

-

Limitations:

- Computationally expensive for large datasets.

13.4.3.5 Hybrid Methods

Hybrid methods combine statistical and machine learning approaches. For example, mean imputation followed by fine-tuning with machine learning models. These methods aim to leverage the strengths of multiple techniques.

13.4.3.6 Summary Table

| Method | Advantages | Limitations | Applications |

|---|---|---|---|

| Random Forest (missForest) | Handles mixed data types, captures interactions | Computationally intensive | Mixed data types |

| Gradient Boosting Machines | High accuracy, feature importance | Sensitive to parameters | Predictive tasks |

| Autoencoders | Handles high-dimensional, non-linear data | Computationally expensive | Complex datasets |

| GANs | Realistic imputations, complex distributions | Difficult to train, resource-intensive | Healthcare, finance |

| SVD | Captures global patterns, efficient | Assumes linear relationships | Recommendation systems |

| Collaborative Filtering | Intuitive for user-item data | Struggles with sparse or new data | Recommender systems |

| KNN Imputation | Simple, interpretable | Computationally intensive, sensitive to k | General-purpose |

| Hybrid Methods | Combines multiple strengths | Complexity in design | Flexible |

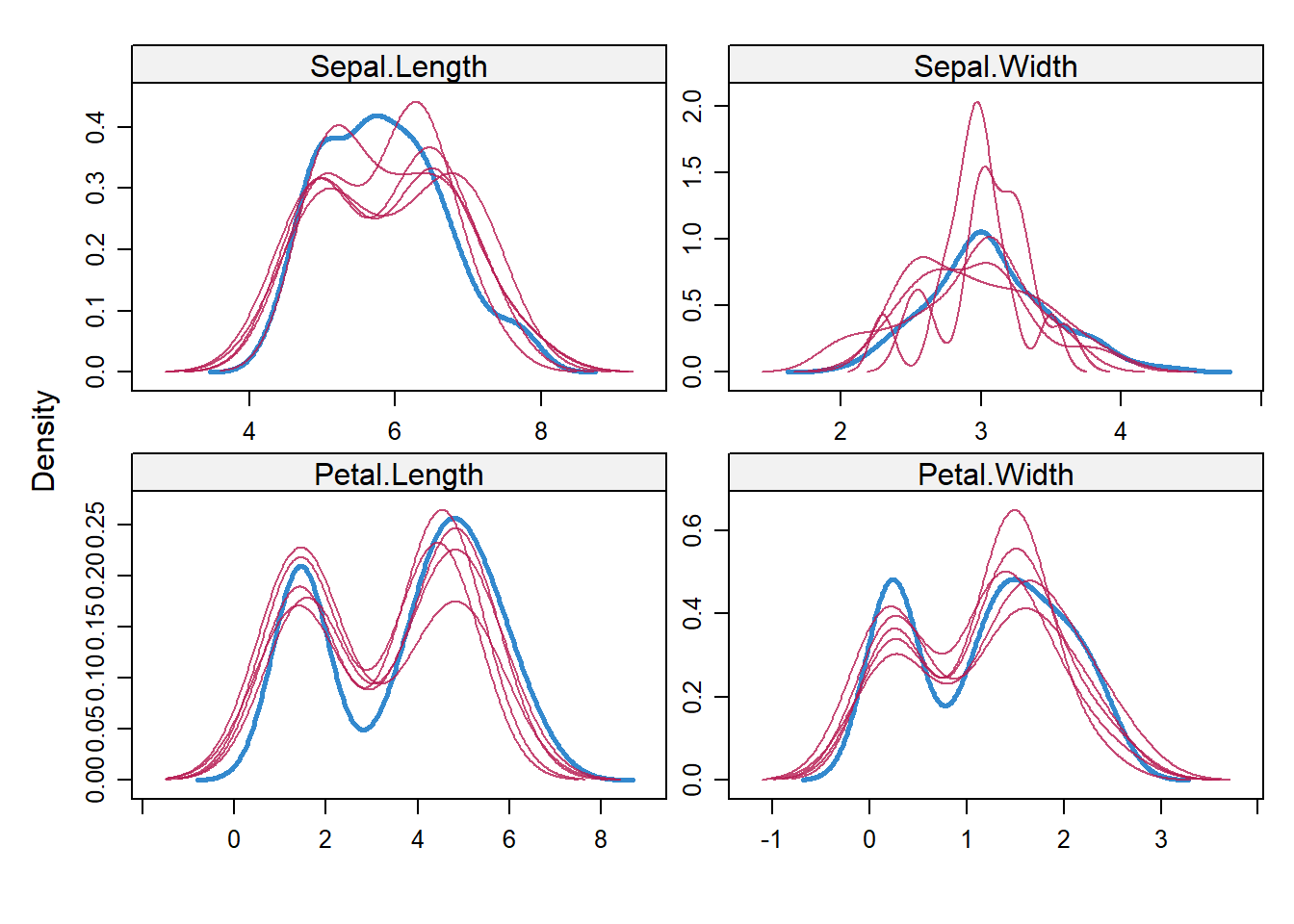

13.4.4 Multiple Imputation

Multiple Imputation (MI) is a statistical technique for handling missing data by creating several plausible datasets through imputation, analyzing each dataset separately, and then combining the results to account for uncertainty in the imputations. MI operates under the assumption that missing data is either Missing Completely at Random (MCAR) or Missing at Random (MAR).

Unlike Single Imputation Techniques, MI reflects the uncertainty inherent in the missing data by introducing variability in the imputed values. It avoids biases introduced by ad hoc methods and produces more reliable statistical inferences.

The three fundamental steps in MI are:

- Imputation: Replace missing values with a set of plausible values to create multiple “completed” datasets.

- Analysis: Perform the desired statistical analysis on each imputed dataset.

- Combination: Combine the results using rules to account for within- and between-imputation variability.

13.4.4.1 Why Multiple Imputation is Important

Imputed values are estimates and inherently include random error. However, when these estimates are treated as exact values in subsequent analysis, the software may overlook this additional error. This oversight results in underestimated standard errors and overly small p-values, leading to misleading conclusions.