39 Controls

Adding more control variables to a regression model is often considered harmless or even beneficial. The idea is simple: controlling for potential confounders (variables that influence both the treatment and the outcome) should help isolate the causal effect of the variable of interest. However, this intuition can be misleading, especially when it leads to overcontrolling, an issue often overlooked in both academic research and the review process.

In many fields, the peer review process frequently encourages the addition of more control variables. Reviewers often ask authors to add variables to “control” for potential confounding. While this seems reasonable on the surface, adding controls without a solid causal justification can lead to erroneous conclusions.


Question: Why are reviewers often quick to suggest adding more controls?

- Answer: It is often assumed that more control variables lead to more accurate models, but this assumption does not hold in many situations. The true causal relationship between the treatment (e.g., marketing campaign) and outcome (e.g., sales) may become obscured if irrelevant or inappropriate variables are controlled for.

Counterpoint: Rarely will a reviewer suggest removing variables that could distort the analysis, even though doing so may be more appropriate in some cases. This is a subtle issue that is often connected to the concept of Coefficient Stability.

Overcontrolling occurs when we adjust for variables that should not be adjusted for given the causal structure linking the exposure (e.g., treatment) and the outcome, such as mediators, colliders, or their descendants. This practice can bias the estimated causal effect or make it less precise. There are two main issues with overcontrolling:

- Overcontrol and Collider Bias: Conditioning on a mediator removes part of the effect we want to estimate, and conditioning on a collider (as described in Directed Acyclic Graphs) can induce a spurious correlation between the exposure and the outcome.

- Loss of Variability: Controls that are strongly correlated with the treatment (multicollinearity) absorb much of its variation, leaving less independent variation to estimate the treatment effect and inflating its standard error.

39.1 Bad Controls

39.1.1 M-bias

A common intuition in causal inference is to control for any variable that precedes the treatment. This logic underpins much of the guidance in traditional econometric texts (G. W. Imbens and Rubin 2015; J. D. Angrist and Pischke 2009), where pre-treatment variables like \(Z\) are often recommended as controls if they correlate with both the treatment \(X\) and the outcome \(Y\).

This perspective is especially prevalent in Matching Methods, where all observed pre-treatment covariates are typically included in the matching process. However, controlling for every pre-treatment variable can lead to bad control bias.

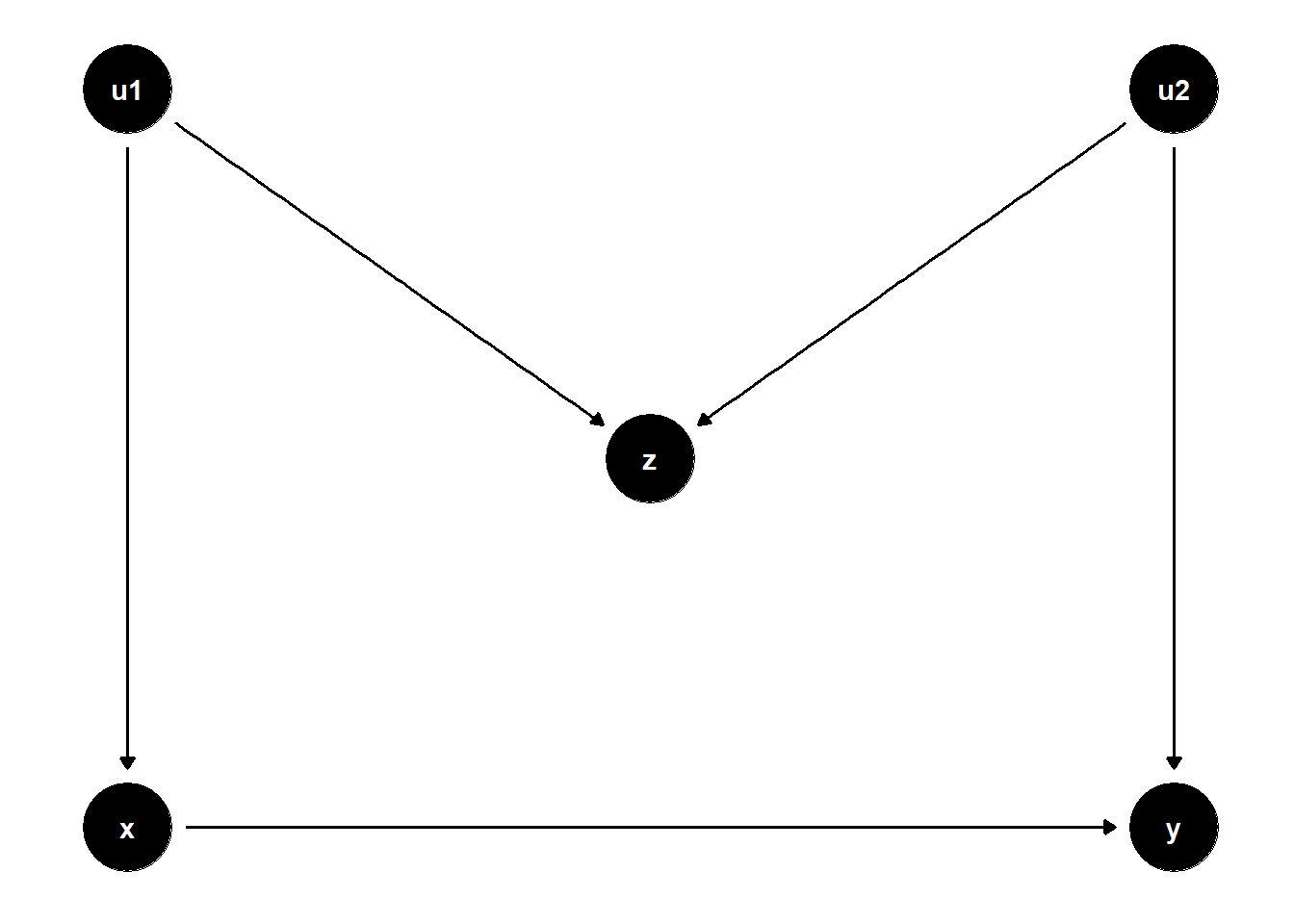

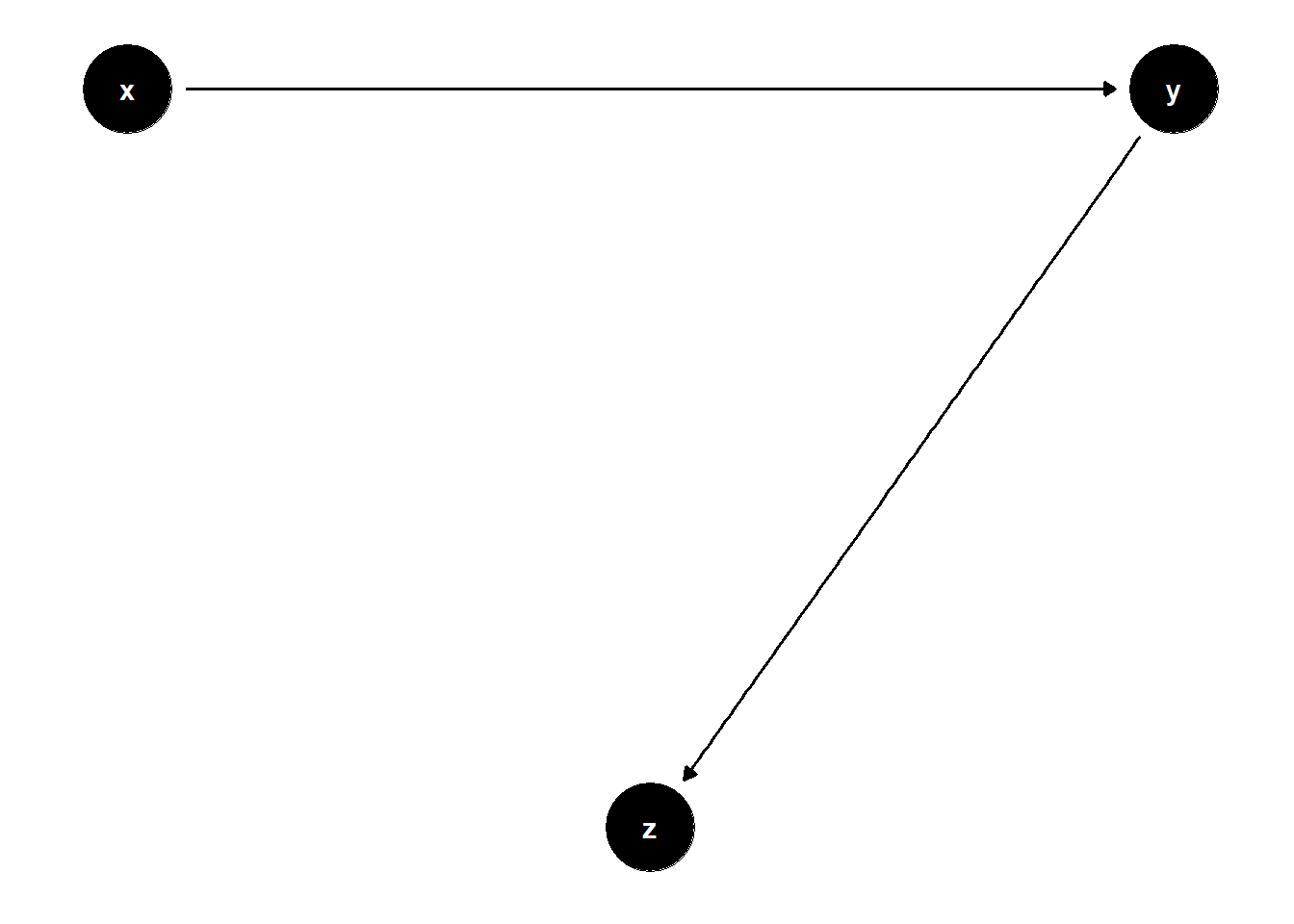

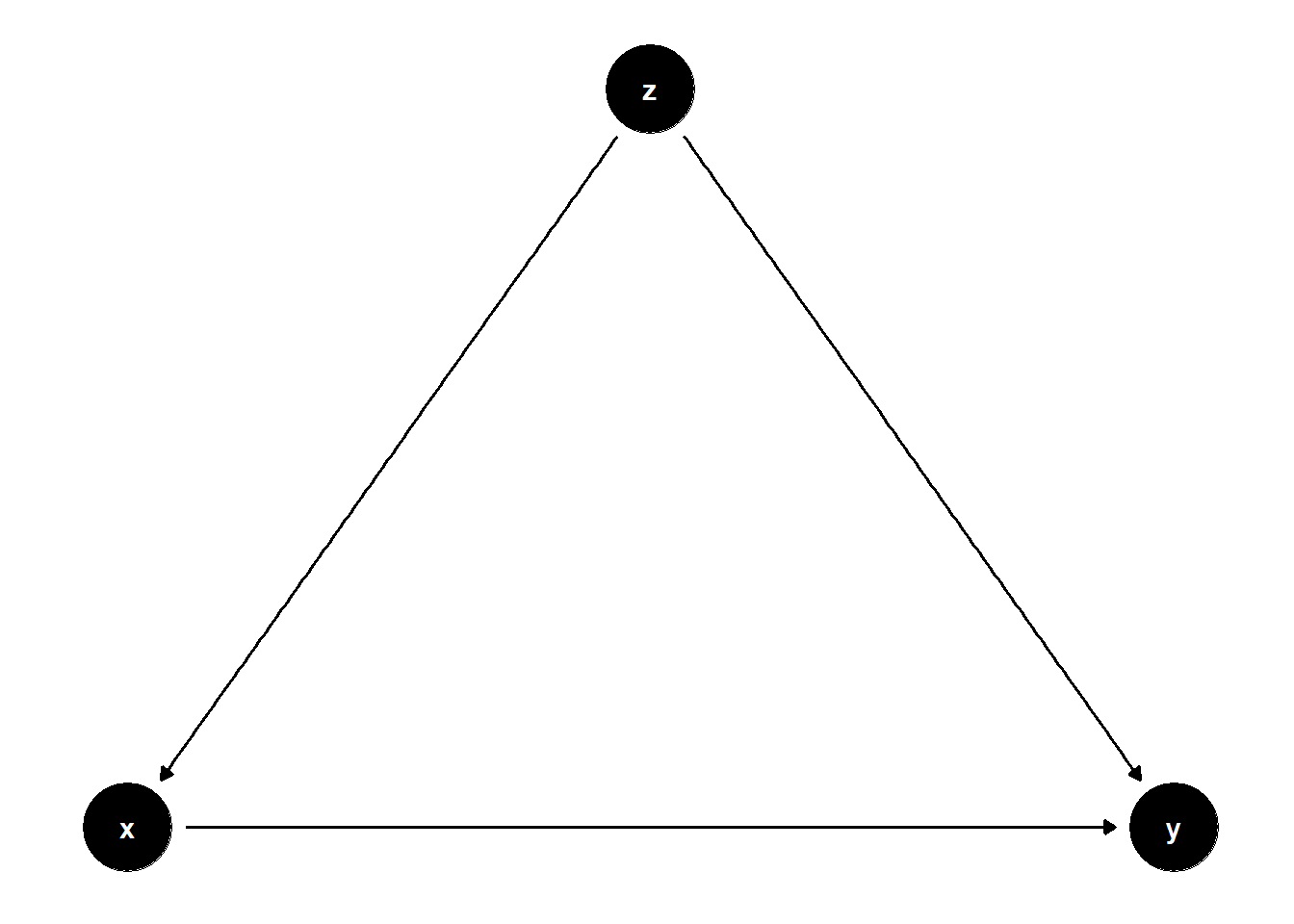

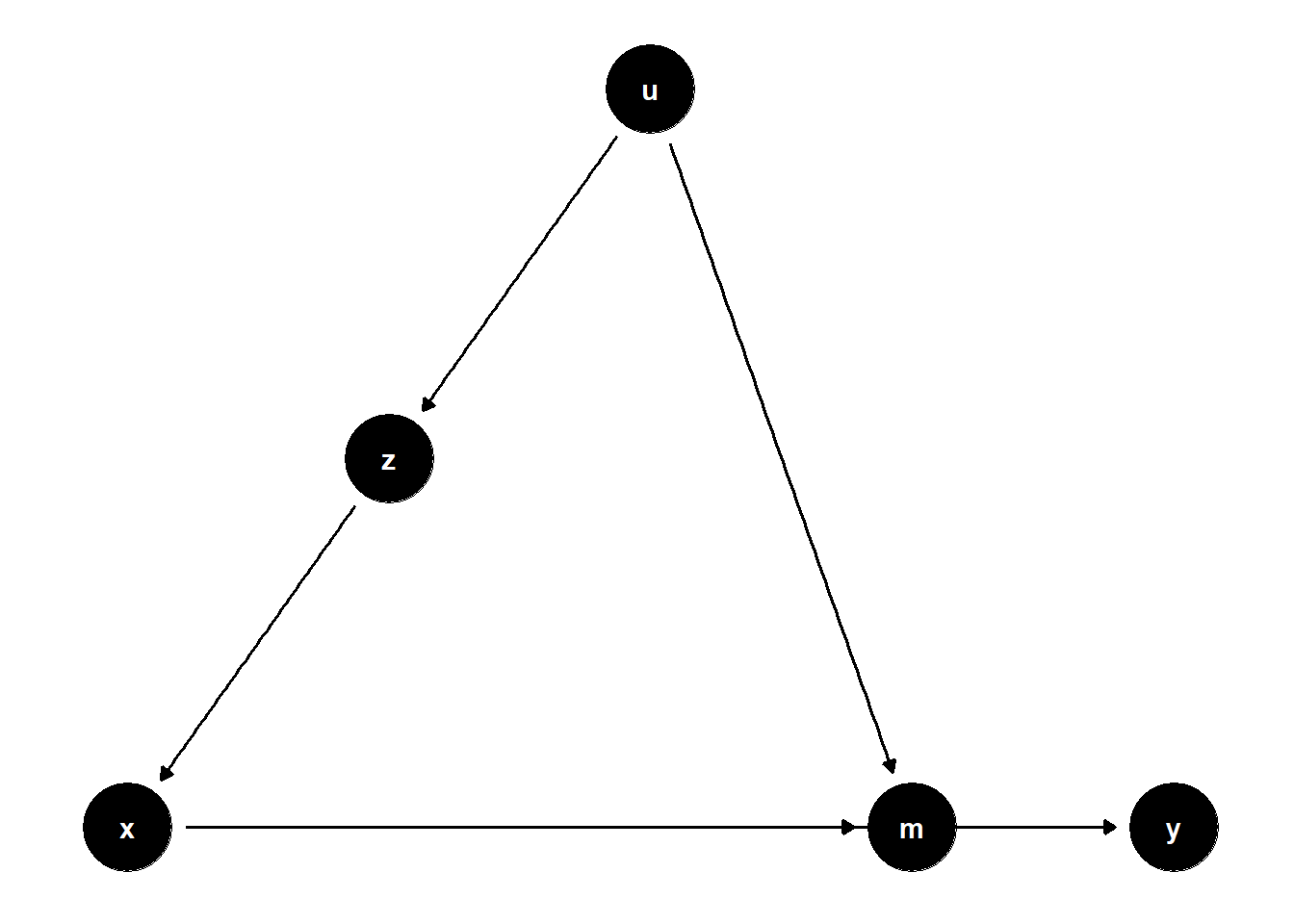

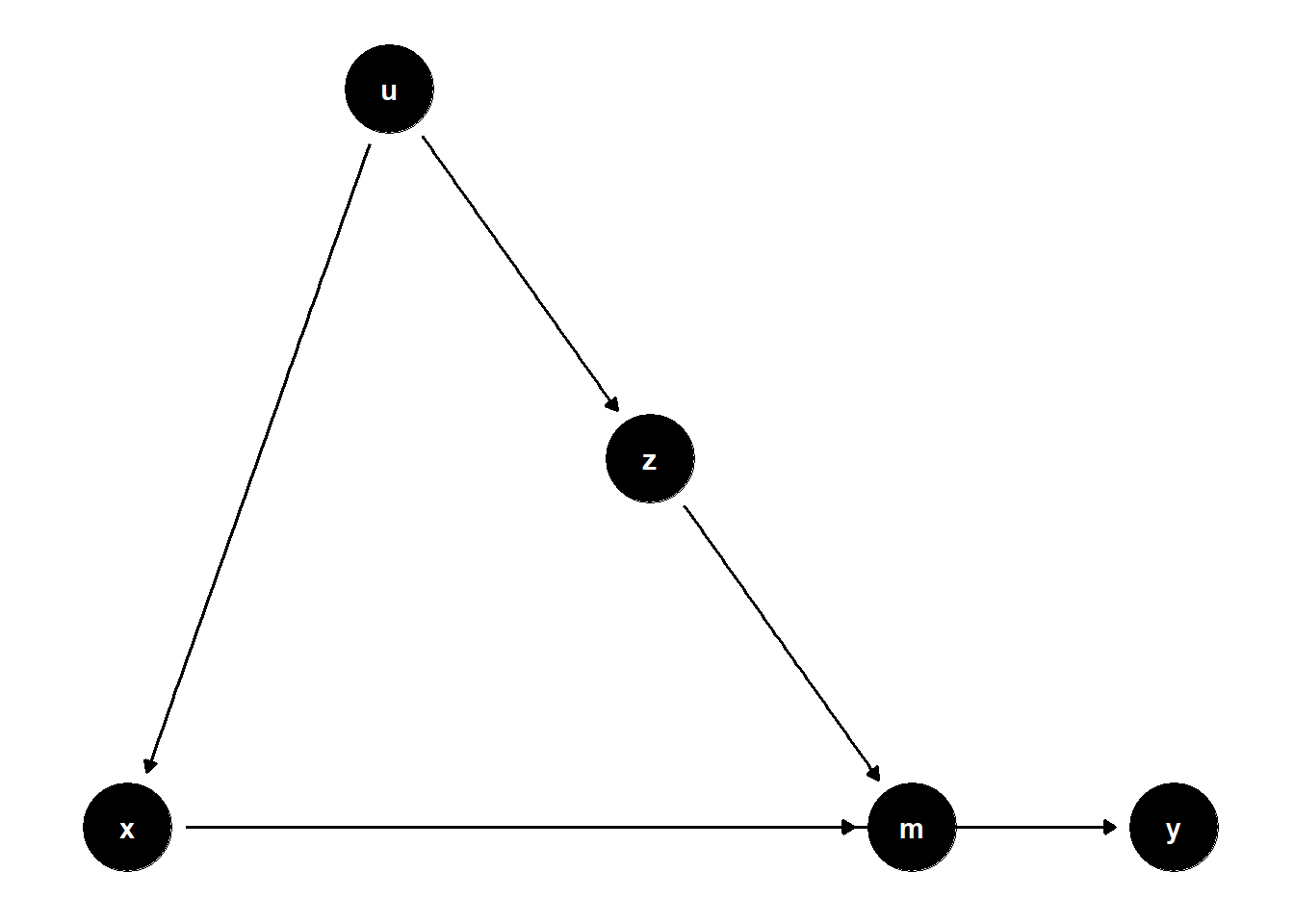

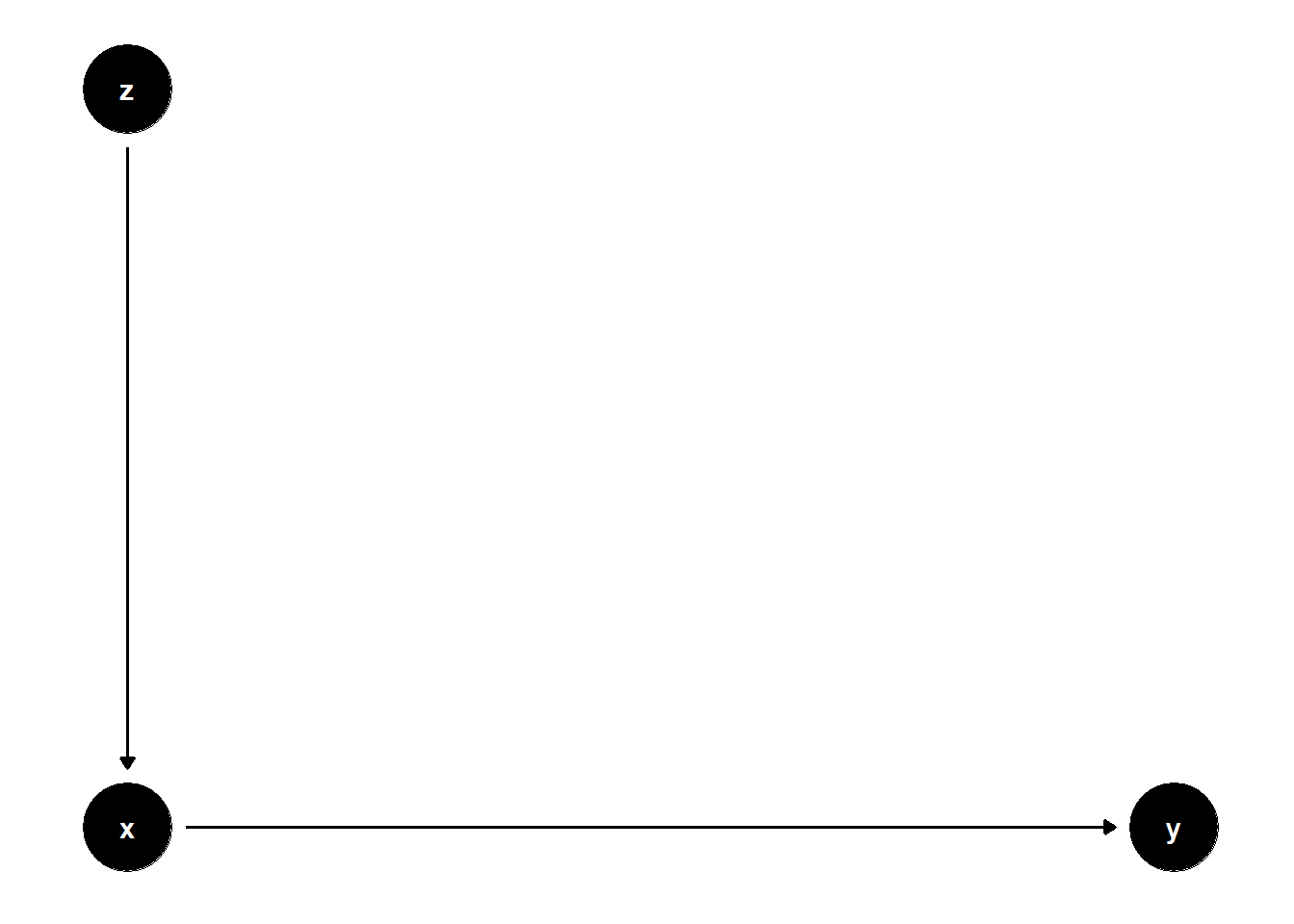

One such example is M-bias, which arises when we condition on a collider: a pre-treatment variable caused by two unobserved variables, one of which also affects the treatment and the other the outcome. The DAG below illustrates a case where \(Z\) appears to be a good control but actually opens a biasing path:

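# Packages used throughout this chapter
library(dagitty)  # dagitty(), latents(), coordinates(), adjustmentSets()
library(ggdag)    # ggdag() and theme_dag() for plotting DAGs

# Clean workspace

rm(list = ls())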

# DAG specification

model <- dagitty("dag{

x -> y

u1 -> x

u1 -> z

u2 -> z

u2 -> y

}")

# Set latent variables

latents(model) <- c("u1", "u2")

# Coordinates for plotting

coordinates(model) <- list(

x = c(x = 1, u1 = 1, z = 2, u2 = 3, y = 3),

y = c(x = 1, u1 = 2, z = 1.5, u2 = 2, y = 1)

)

# Plot the DAG

ggdag(model) + theme_dag()

In this structure, \(Z\) is a collider on the path \(X \leftarrow U_1 \rightarrow Z \leftarrow U_2 \rightarrow Y\). Controlling for \(Z\) opens this path, introducing a spurious association between \(X\) and \(Y\) even if none existed originally.

Even though \(Z\) is statistically correlated with both \(X\) and \(Y\), it is not a confounder: the only back-door path it lies on is already blocked, because \(Z\) is a collider on that path. Adjusting for \(Z\) unblocks the path and biases the estimate of the causal effect \(X \to Y\).

Let’s illustrate this with a simulation:

set.seed(123)

n <- 1e4

u1 <- rnorm(n)

u2 <- rnorm(n)

z <- u1 + u2 + rnorm(n)

x <- u1 + rnorm(n)

causal_coef <- 2

y <- causal_coef * x - 4 * u2 + rnorm(n)

# Compare unadjusted and adjusted models

jtools::export_summs(

lm(y ~ x),

lm(y ~ x + z),

model.names = c("Unadjusted", "Adjusted")

)

| | Unadjusted | Adjusted |
|---|---|---|
| (Intercept) | 0.05 | 0.03 |
| | (0.04) | (0.03) |
| x | 2.01 *** | 2.80 *** |
| | (0.03) | (0.03) |
| z | | -1.57 *** |
| | | (0.02) |
| N | 10000 | 10000 |
| R2 | 0.32 | 0.57 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

Notice how adjusting for \(Z\) moves the estimate of the effect of \(X\) on \(Y\) from about 2.01, close to the true value of 2, to about 2.80, even though \(Z\) is not a true confounder. This is a textbook example of M-bias in practice.
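As a cross-check on the simulation, we can derive the values the two regressions converge to in large samples. The sketch below assumes the same structural equations as above (all latent variables and error terms independent with unit variance), writes down the implied population covariances, and solves the OLS normal equations directly.

```r
# Population covariances implied by the M-bias simulation:
# x = u1 + e_x, z = u1 + u2 + e_z, y = 2 * x - 4 * u2 + e_y,
# with u1, u2 and all error terms independent N(0, 1).
var_x  <- 2               # Var(u1) + Var(e_x)
var_z  <- 3               # Var(u1) + Var(u2) + Var(e_z)
cov_xz <- 1               # shared u1
cov_yx <- 2 * var_x       # u2 is independent of x
cov_yz <- 2 * cov_xz - 4  # 2 * Cov(x, z) - 4 * Var(u2)

# Normal equations for y ~ x + z: Sigma_XX %*% beta = Sigma_XY
sigma_xx <- matrix(c(var_x, cov_xz,
                     cov_xz, var_z), nrow = 2)
sigma_xy <- c(cov_yx, cov_yz)
beta_adjusted <- solve(sigma_xx, sigma_xy)
names(beta_adjusted) <- c("x", "z")

# Regression y ~ x alone
beta_unadjusted <- cov_yx / var_x

print(beta_unadjusted)  # equals the true causal effect of 2
print(beta_adjusted)    # x = 2.8, z = -1.6
```

The population values (2.8 and -1.6) match the simulated adjusted coefficients (2.80 and -1.57), confirming that the shift comes from the structure of the DAG rather than sampling noise.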

39.1.1.1 Worse: M-bias with Direct Effect from Z to Y

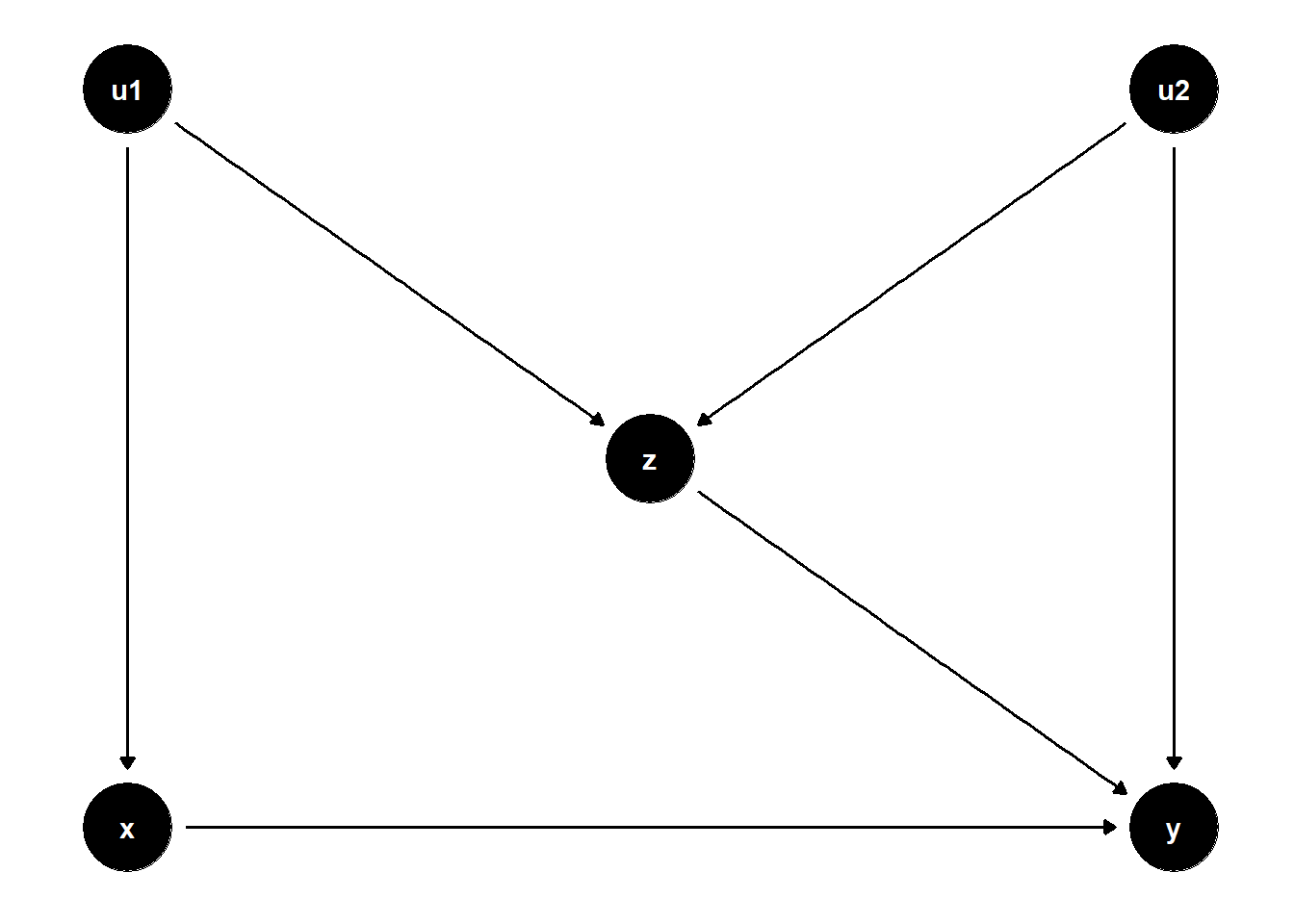

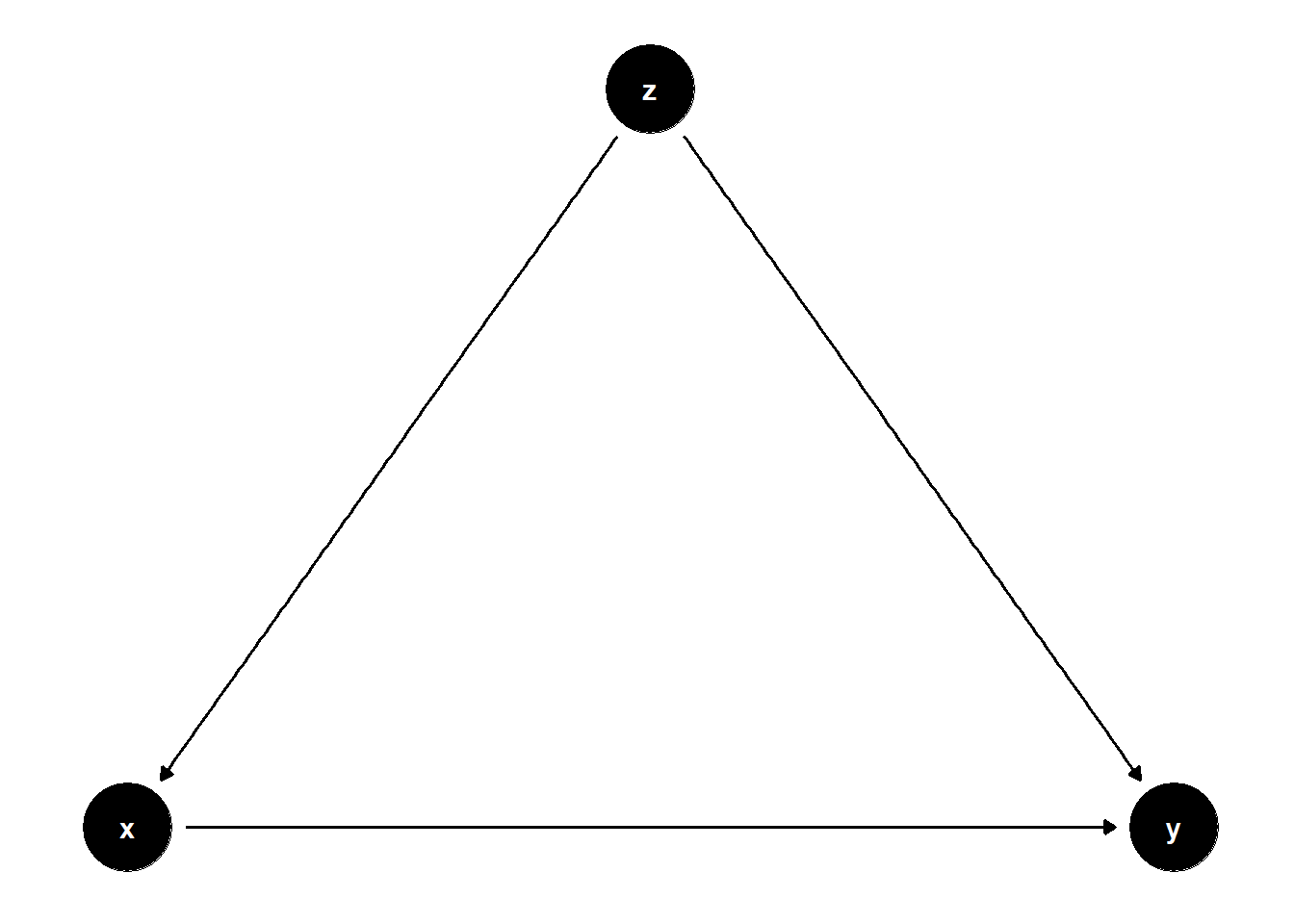

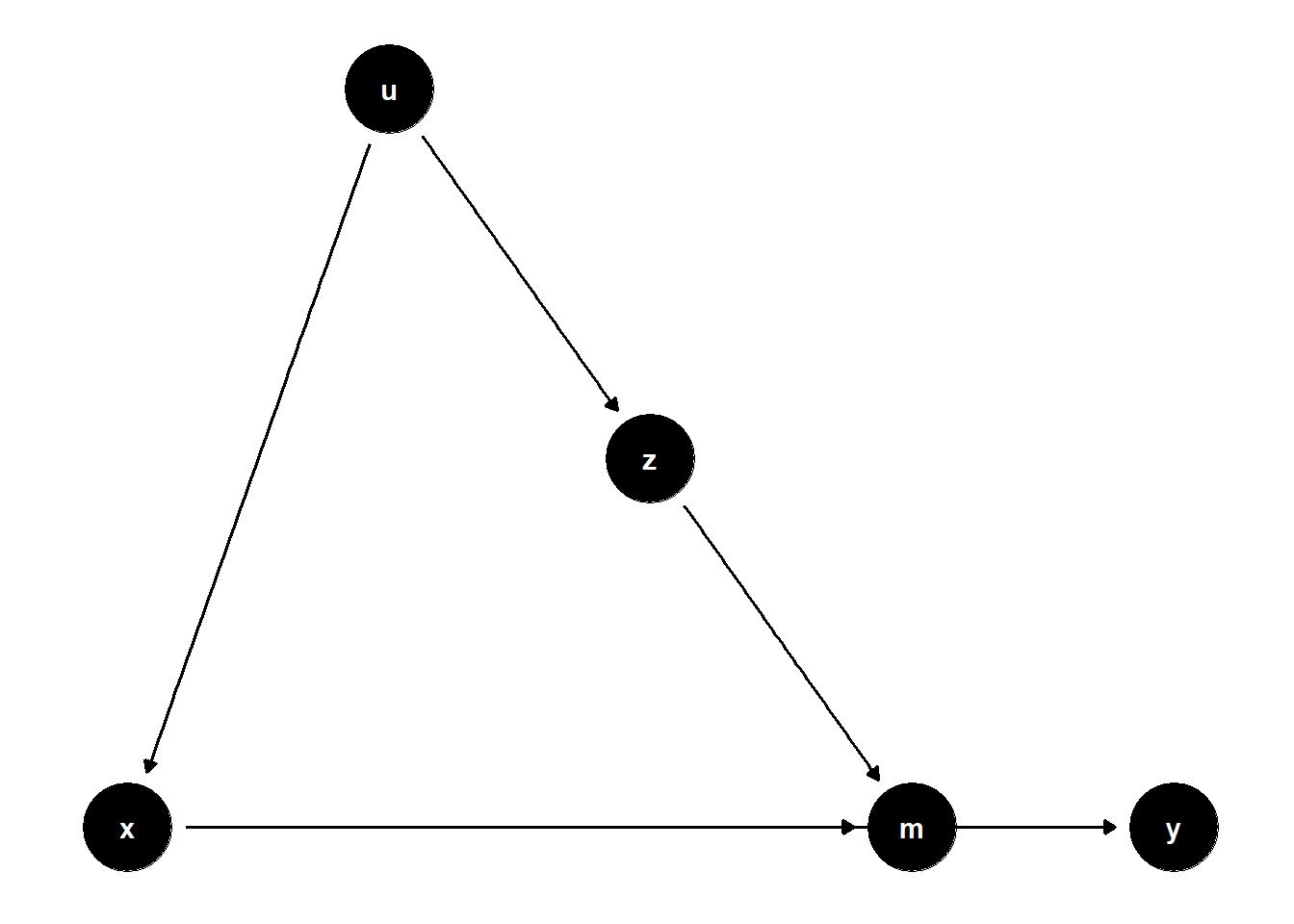

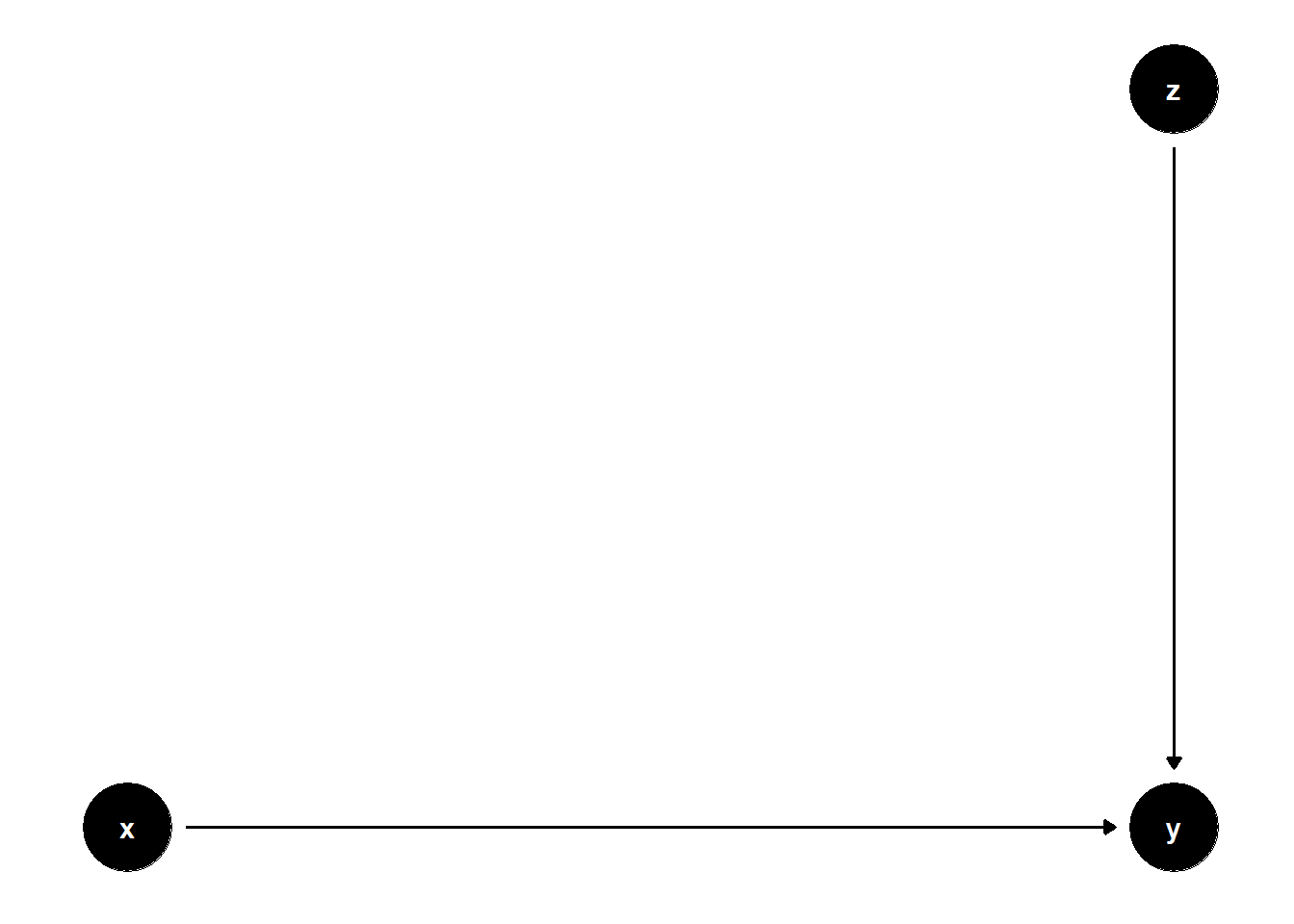

A more difficult case arises when \(Z\) also has a direct effect on \(Y\). Consider the DAG below:

# Clean workspace

rm(list = ls())

# DAG specification

model <- dagitty("dag{

x -> y

u1 -> x

u1 -> z

u2 -> z

u2 -> y

z -> y

}")

# Set latent variables

latents(model) <- c("u1", "u2")

# Coordinates for plotting

coordinates(model) <- list(

x = c(x = 1, u1 = 1, z = 2, u2 = 3, y = 3),

y = c(x = 1, u1 = 2, z = 1.5, u2 = 2, y = 1)

)

# Plot the DAG

ggdag(model) + theme_dag()

This situation presents a dilemma:

- Not controlling for \(Z\) leaves the back-door path \(X \leftarrow U_1 \to Z \to Y\) open, introducing confounding bias.

- Controlling for \(Z\) opens the collider path \(X \leftarrow U_1 \to Z \leftarrow U_2 \to Y\), which also biases the estimate.

In short, when only \(X\), \(Z\), and \(Y\) are observed, no adjustment strategy can fully remove bias from the estimate of \(X \to Y\).

What Can Be Done?

When facing such situations, we often turn to sensitivity analysis to assess how robust our causal conclusions are to unmeasured confounding. Specifically, recent advances (Cinelli et al. 2019; Cinelli and Hazlett 2020) allow us to quantify:

- Plausible bounds on the strength of the direct effect \(Z \to Y\)

- Sensitivity parameters reflecting the possible influence of the latent variables \(U_1\) and \(U_2\)

These tools help us understand how large the unmeasured biases would have to be in order to overturn our conclusions — a pragmatic approach when perfect control is impossible.
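To make this concrete, here is a minimal base-R sketch of one such quantity, the robustness value \(RV_q\) of Cinelli and Hazlett (2020). It is computed from the treatment's t-statistic and the residual degrees of freedom, and gives the minimum share of residual variation (partial \(R^2\)) that an unobserved confounder would need to explain in both the treatment and the outcome to reduce the estimate by \(100q\%\). The helper name `rv_from_t()` and the toy data are our own illustration; the `sensemakr` R package provides a complete implementation of these tools.

```r
# Robustness value (Cinelli and Hazlett 2020):
# RV_q = 0.5 * (sqrt(f_q^4 + 4 * f_q^2) - f_q^2), with f_q = q * |t| / sqrt(df)
rv_from_t <- function(t_stat, df, q = 1) {
  f_q <- q * abs(t_stat) / sqrt(df)
  0.5 * (sqrt(f_q^4 + 4 * f_q^2) - f_q^2)
}

# Hypothetical example: a simple regression with a modest treatment effect
set.seed(123)
n <- 1e3
x <- rnorm(n)
y <- 0.2 * x + rnorm(n)
fit <- lm(y ~ x)

t_x  <- summary(fit)$coefficients["x", "t value"]
rv_x <- rv_from_t(t_x, df = fit$df.residual)
rv_x
```

With \(q = 1\), an RV of, say, 0.18 means that a confounder explaining 18% of the residual variance of both the treatment and the outcome would be enough to drive the point estimate to zero.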

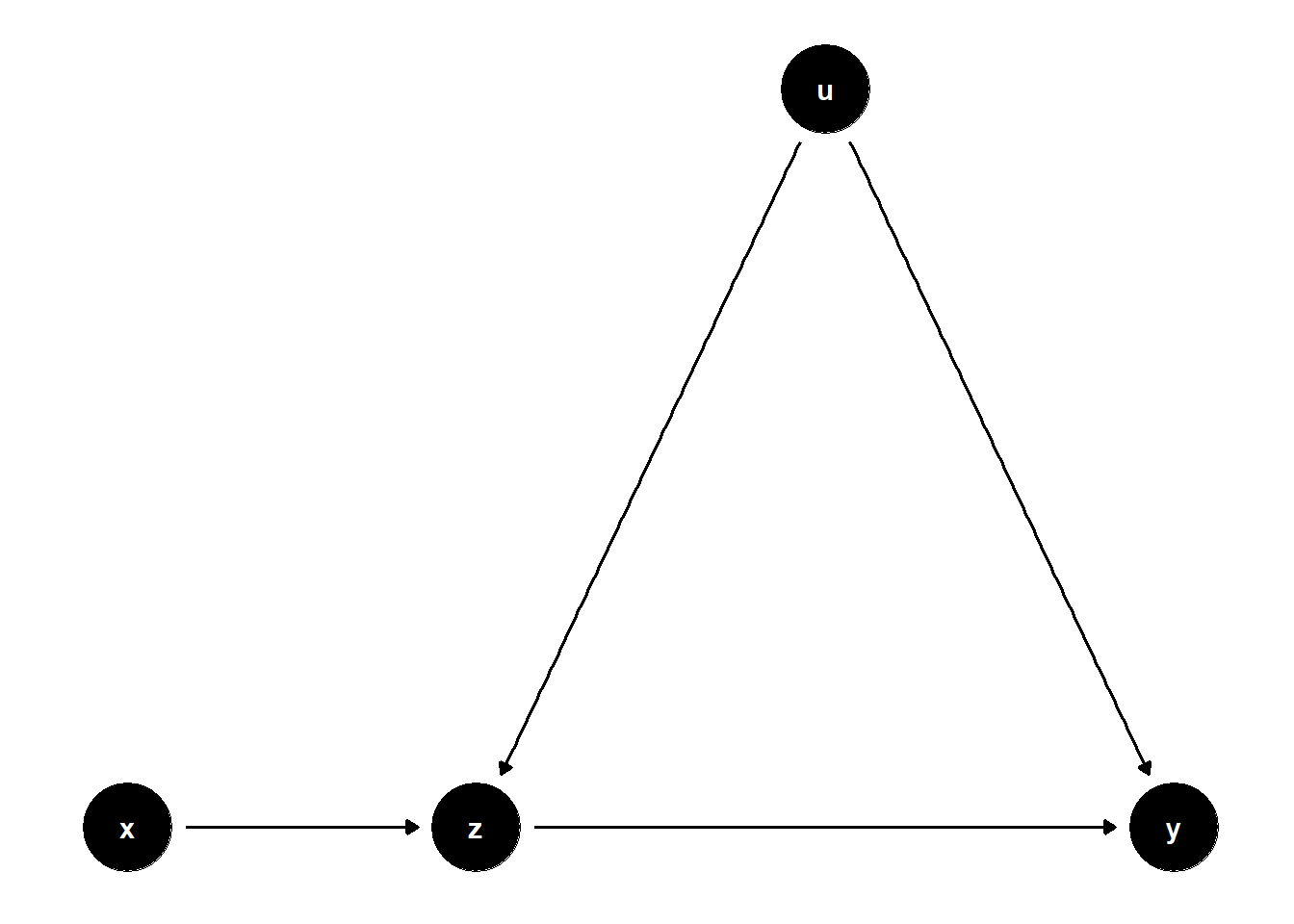

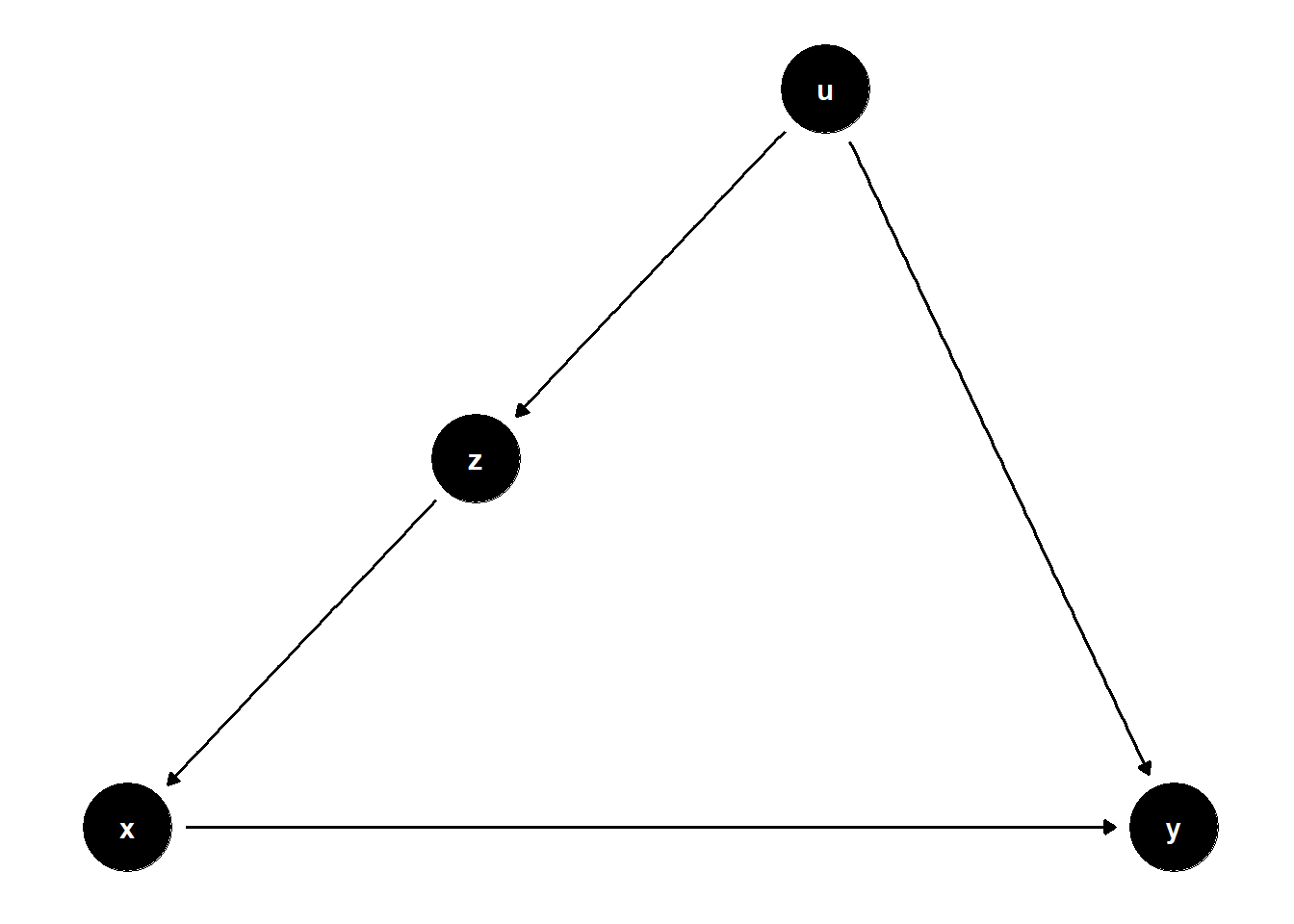

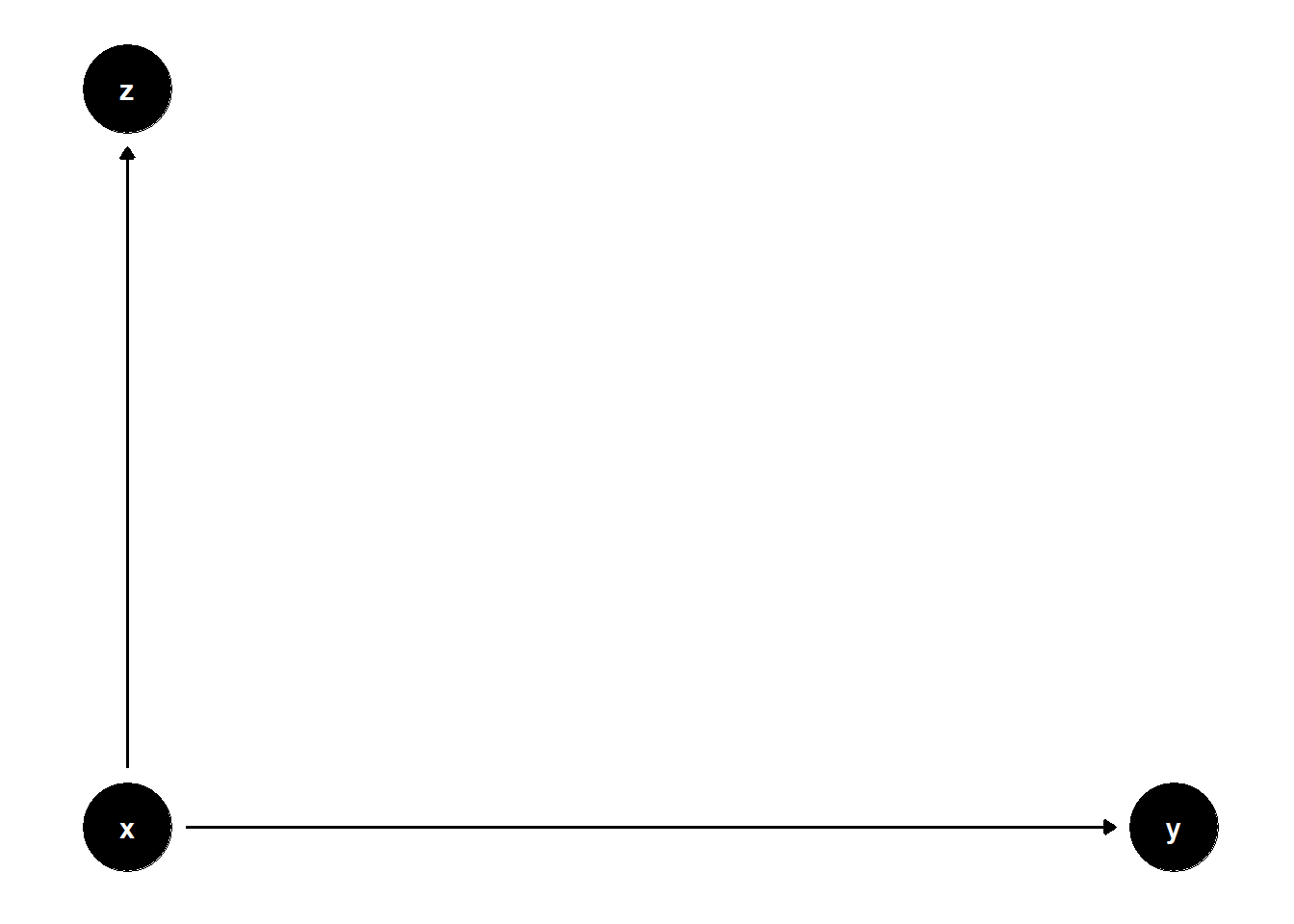

39.1.2 Bias Amplification

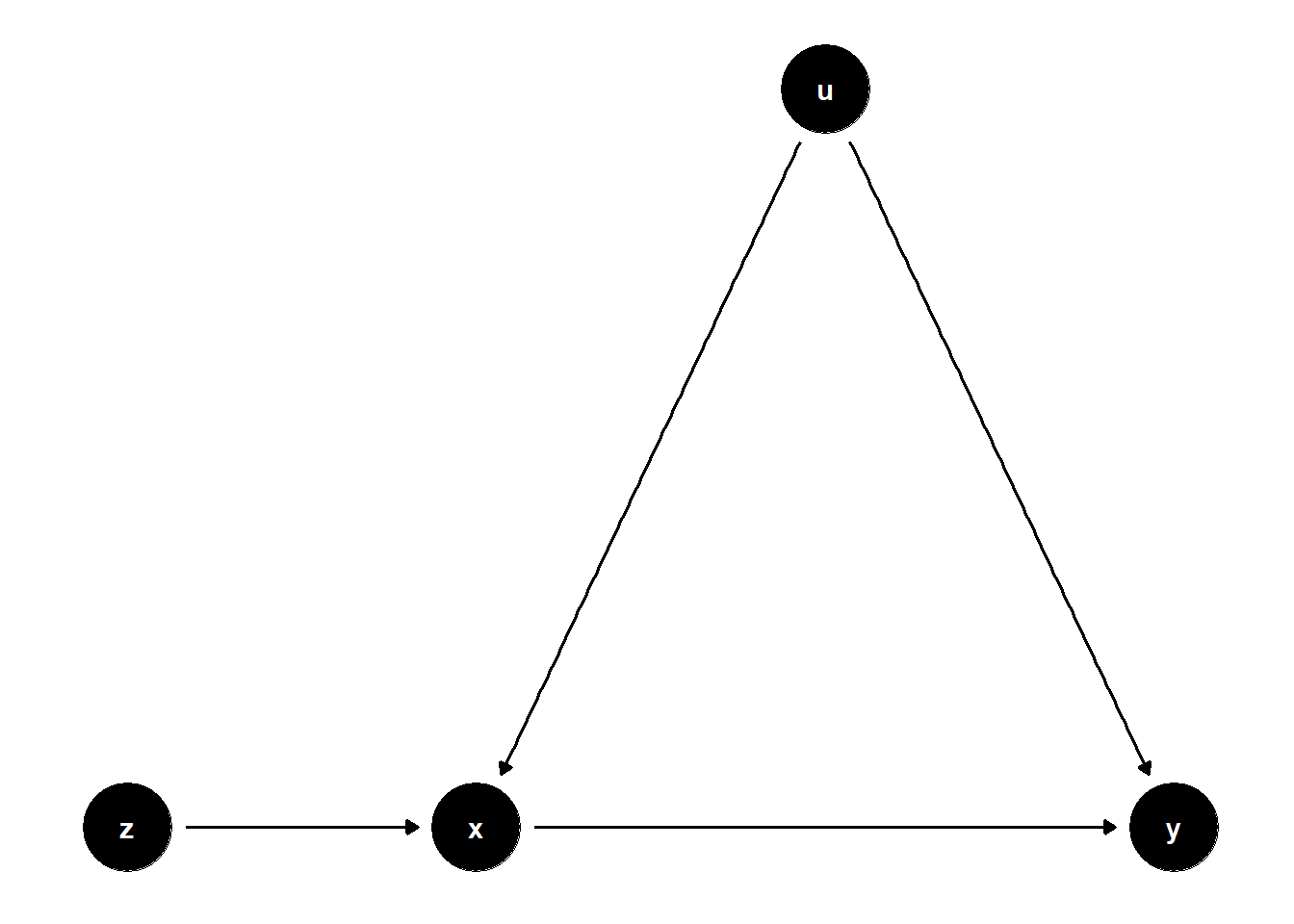

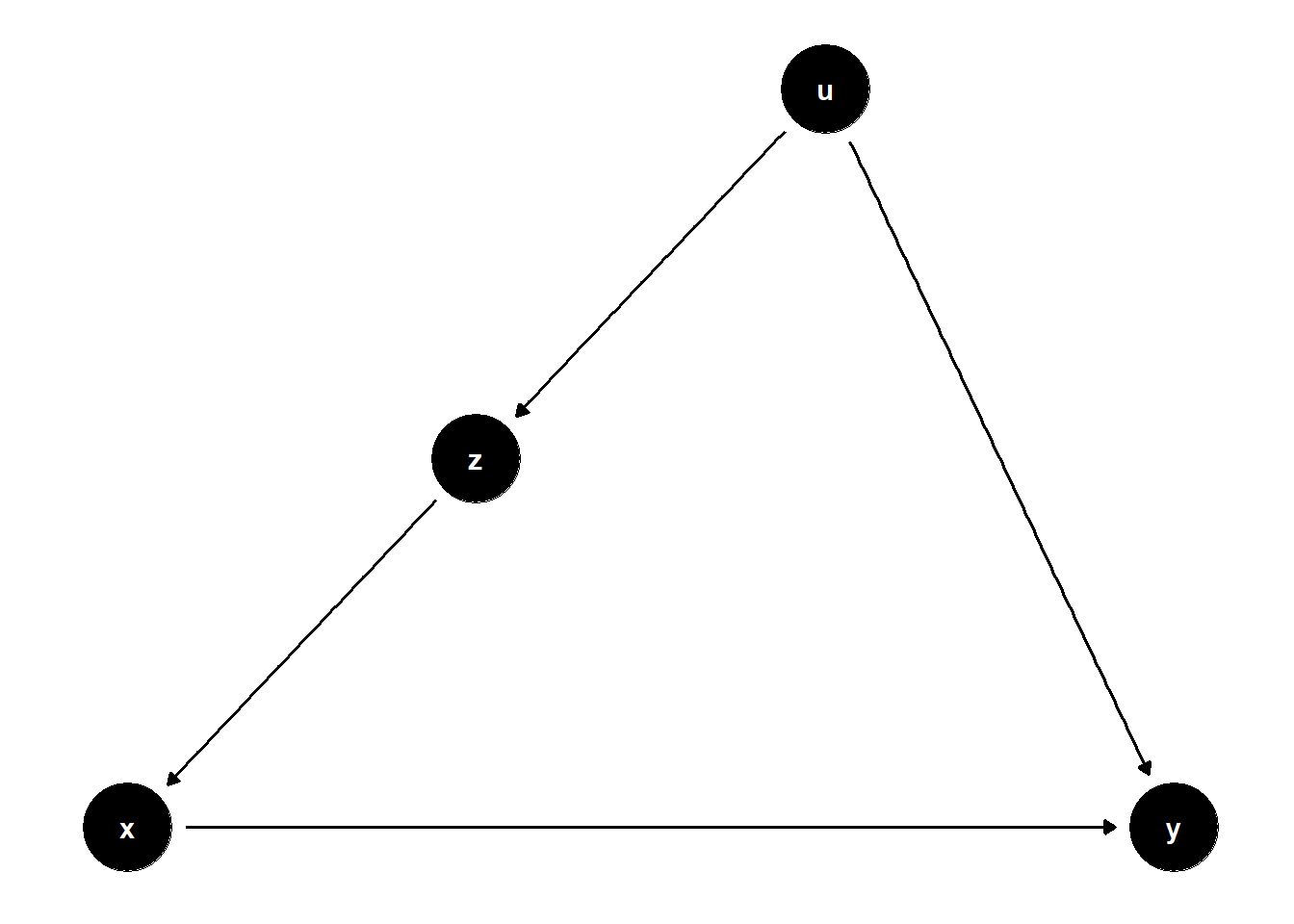

Bias amplification occurs when we control for a variable that is not a confounder but a strong predictor of the treatment; doing so increases the bias due to an unobserved confounder.

In the DAG below, \(U\) is an unobserved common cause of both \(X\) and \(Y\). \(Z\) influences \(X\) but has no causal relationship with \(Y\). Including \(Z\) in the model does not block any back-door path; instead, it amplifies the bias from \(U\).

# Clean workspace

rm(list = ls())

# DAG specification

model <- dagitty("dag{

x -> y

u -> x

u -> y

z -> x

}")

# Set latent variable

latents(model) <- c("u")

# Coordinates for plotting

coordinates(model) <- list(

x = c(z = 1, x = 2, u = 3, y = 4),

y = c(z = 1, x = 1, u = 2, y = 1)

)

# Plot the DAG

ggdag(model) + theme_dag()

Even though \(Z\) is a strong predictor of \(X\), it is not a confounder, because it is not a common cause of \(X\) and \(Y\). Controlling for \(Z\) removes the part of \(X\)’s variation that comes from \(Z\), which is unconfounded, so a larger share of the remaining variation in \(X\) is driven by \(U\). This amplifies the bias in the estimated effect of \(X\) on \(Y\).

Simulation:

set.seed(123)

n <- 1e4

z <- rnorm(n)

u <- rnorm(n)

x <- 2*z + u + rnorm(n)

y <- x + 2*u + rnorm(n)

jtools::export_summs(

lm(y ~ x),

lm(y ~ x + z),

model.names = c("Unadjusted", "Adjusted")

)

| | Unadjusted | Adjusted |
|---|---|---|
| (Intercept) | -0.02 | -0.01 |
| | (0.02) | (0.02) |
| x | 1.32 *** | 1.99 *** |
| | (0.01) | (0.01) |
| z | | -2.01 *** |
| | | (0.03) |
| N | 10000 | 10000 |
| R2 | 0.71 | 0.80 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

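Observe that the adjusted model is more biased than the unadjusted one: the true effect is 1, the unadjusted estimate is about 1.32, and the adjusted estimate is about 1.99. This illustrates how controlling for a variable like \(Z\) can amplify omitted variable bias.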
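The amplification can be worked out by hand. A minimal sketch, assuming the simulation's equations \(x = 2z + u + e_x\) and \(y = x + 2u + e_y\) with independent unit-variance sources: the bias of a regression on \(X\) equals the effect of \(U\) on \(Y\) times \(\text{Cov}(U, X)/\text{Var}(X)\), computed on whatever variation in \(X\) remains after adjustment.

```r
# Structural equations from the bias-amplification simulation:
# x = a * z + u + e_x,  y = beta * x + g * u + e_y,  all sources N(0, 1)
a    <- 2  # effect of z on x
g    <- 2  # effect of the unobserved confounder u on y
beta <- 1  # true causal effect of x on y

# Unadjusted: bias = g * Cov(u, x) / Var(x), with Var(x) = a^2 + 1 + 1
var_x <- a^2 + 1 + 1
bias_unadjusted <- g * 1 / var_x

# Adjusted for z: only u + e_x remains in x, so Var(x | z) = 2
var_x_given_z <- 1 + 1
bias_adjusted <- g * 1 / var_x_given_z

# Amplification factor (a^2 + 2) / 2: grows with the strength of z -> x
bias_ratio <- bias_adjusted / bias_unadjusted

c(unadjusted = beta + bias_unadjusted,  # about 1.33
  adjusted   = beta + bias_adjusted,    # 2
  ratio      = bias_ratio)              # 3
```

The adjusted bias does not depend on \(a\), while the unadjusted bias shrinks as \(a\) grows, so the stronger \(Z\) predicts \(X\), the worse adjusting for it becomes relative to leaving it out.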

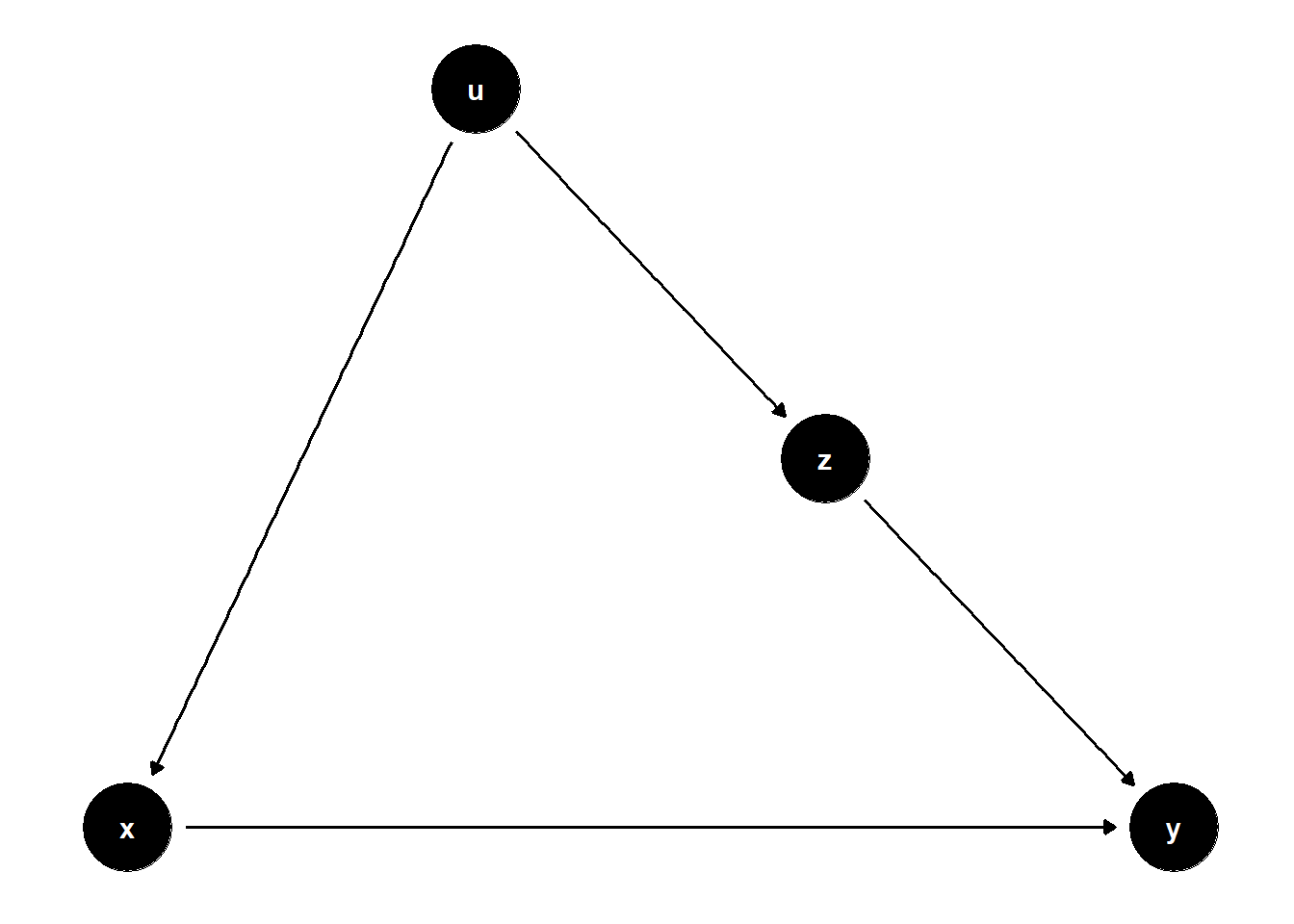

39.1.3 Overcontrol Bias

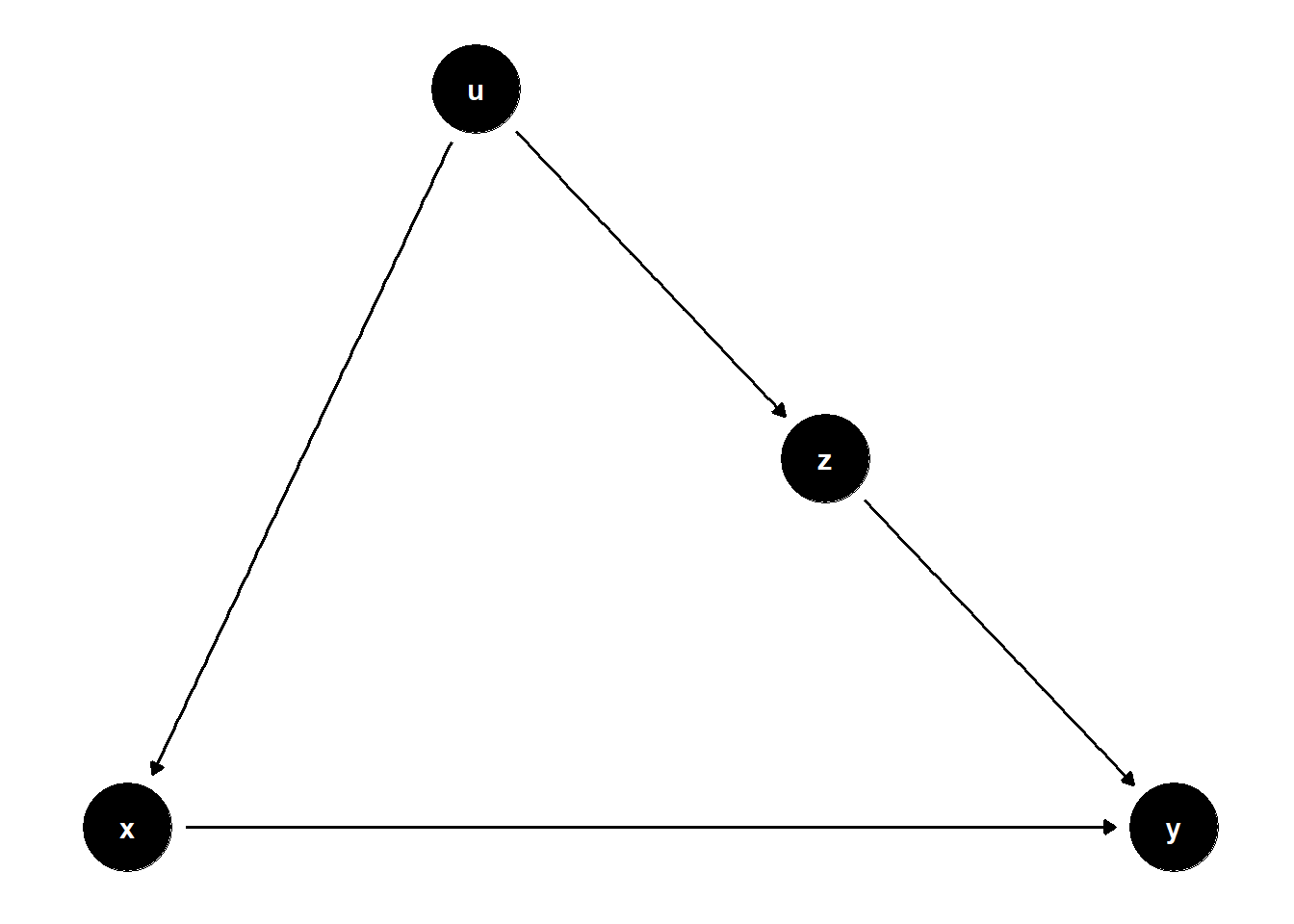

Overcontrol bias arises when we adjust for variables that lie on the causal path from treatment to outcome, or that serve as proxies for the outcome.

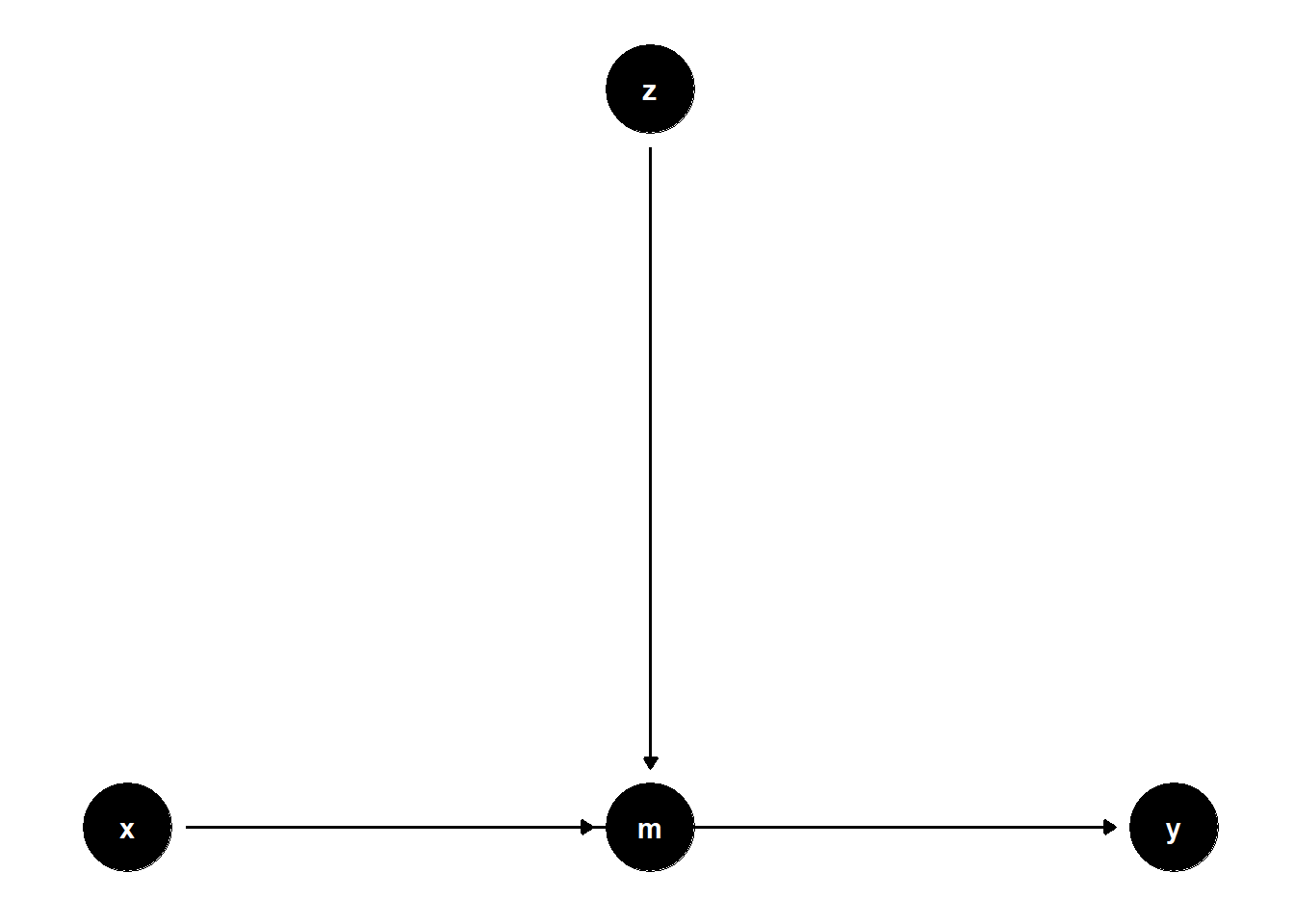

39.1.3.1 Mediator Control

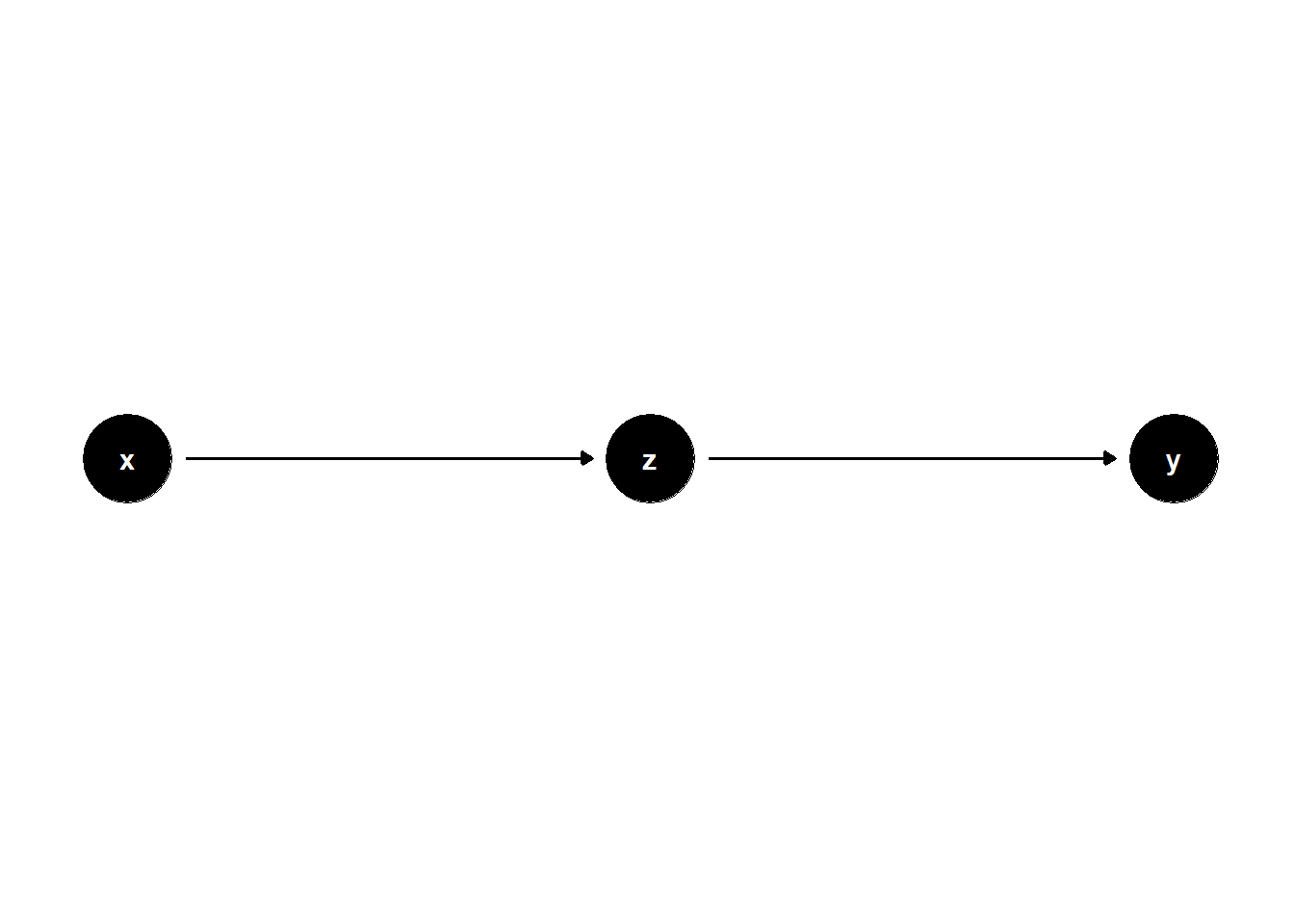

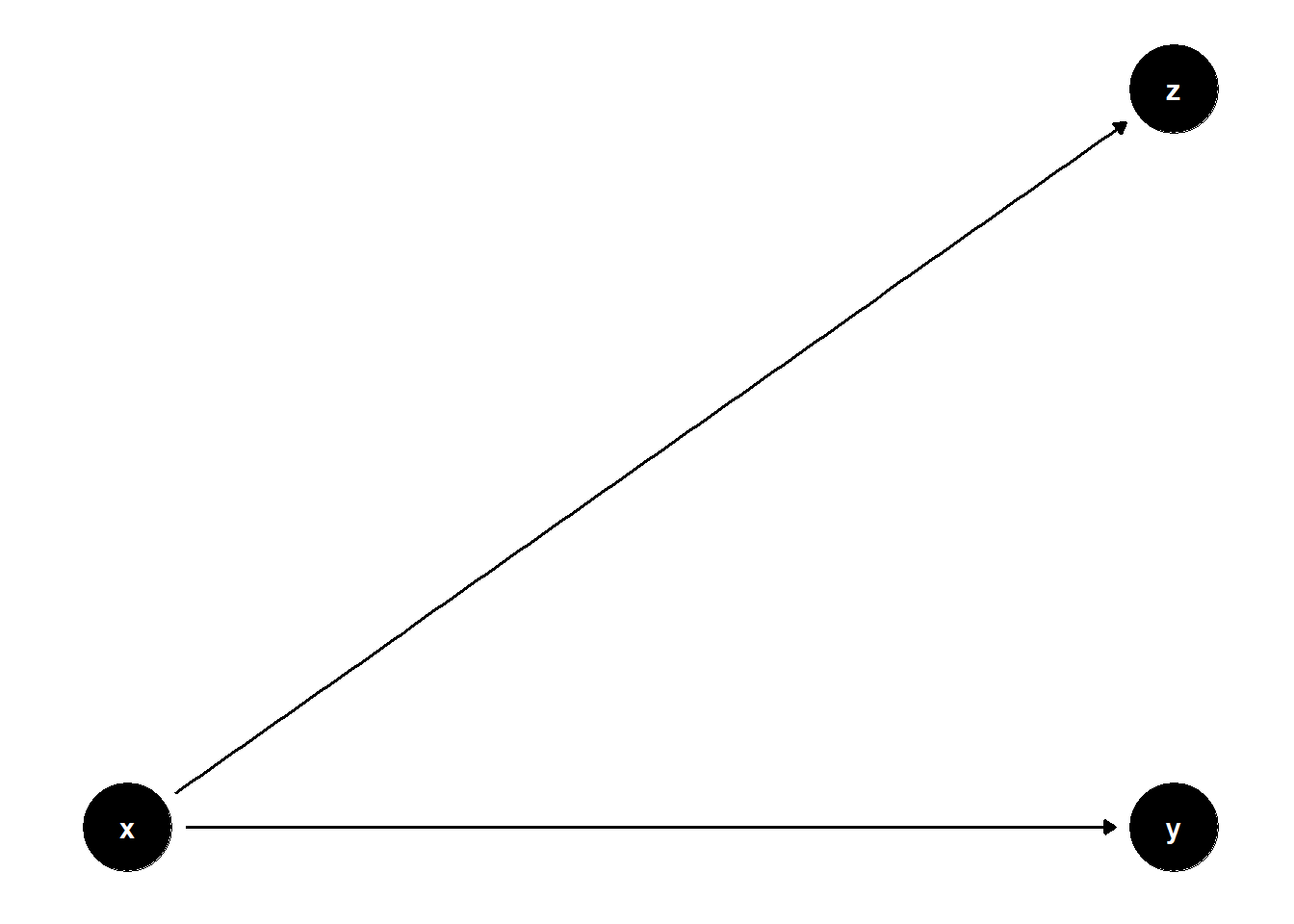

Controlling for a mediator — a variable that lies on the causal path between treatment and outcome — removes part of the effect we are trying to estimate.

# Clean workspace

rm(list = ls())

# DAG: X → Z → Y

model <- dagitty("dag{

x -> z

z -> y

}")

coordinates(model) <- list(

x = c(x = 1, z = 2, y = 3),

y = c(x = 1, z = 1, y = 1)

)

ggdag(model) + theme_dag()

If we want to estimate the total effect of \(X\) on \(Y\), controlling for \(Z\) (a mediator) leads to overcontrol bias.

set.seed(123)

n <- 1e4

x <- rnorm(n)

z <- x + rnorm(n)

y <- z + rnorm(n)

jtools::export_summs(

lm(y ~ x),

lm(y ~ x + z),

model.names = c("Total Effect", "Controlled for Mediator")

)

| | Total Effect | Controlled for Mediator |
|---|---|---|
| (Intercept) | -0.02 | -0.01 |
| | (0.01) | (0.01) |
| x | 1.03 *** | 0.02 |
| | (0.01) | (0.01) |
| z | | 1.00 *** |
| | | (0.01) |
| N | 10000 | 10000 |
| R2 | 0.34 | 0.67 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

Here, \(Z\) appears highly significant, but including it blocks the causal path from \(X\) to \(Y\): the coefficient on \(X\) falls from about 1.03 to 0.02. This is misleading when the goal is to estimate the total effect of \(X\).
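One way to see what the mediator-adjusted coefficient measures is the linear decomposition of the total effect. In linear OLS models the identity total = direct + \(a \times b\) holds exactly in-sample, where \(a\) comes from regressing the mediator on \(X\) and \(b\) is the mediator's coefficient in the outcome model. The sketch below reuses the simulation above; the variable names are our own.

```r
set.seed(123)
n <- 1e4
x <- rnorm(n)
z <- x + rnorm(n)  # mediator
y <- z + rnorm(n)

total   <- coef(lm(y ~ x))["x"]   # total effect of x
a_path  <- coef(lm(z ~ x))["x"]   # x -> z
fit_yxz <- lm(y ~ x + z)
direct  <- coef(fit_yxz)["x"]     # effect of x holding z fixed
b_path  <- coef(fit_yxz)["z"]     # z -> y, holding x fixed
indirect <- a_path * b_path

# total = direct + indirect (exact for OLS with an intercept in every model)
c(total = unname(total), direct = unname(direct), indirect = unname(indirect))
```

Almost all of the effect is indirect here, so "controlling for the mediator" leaves only the near-zero direct effect.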

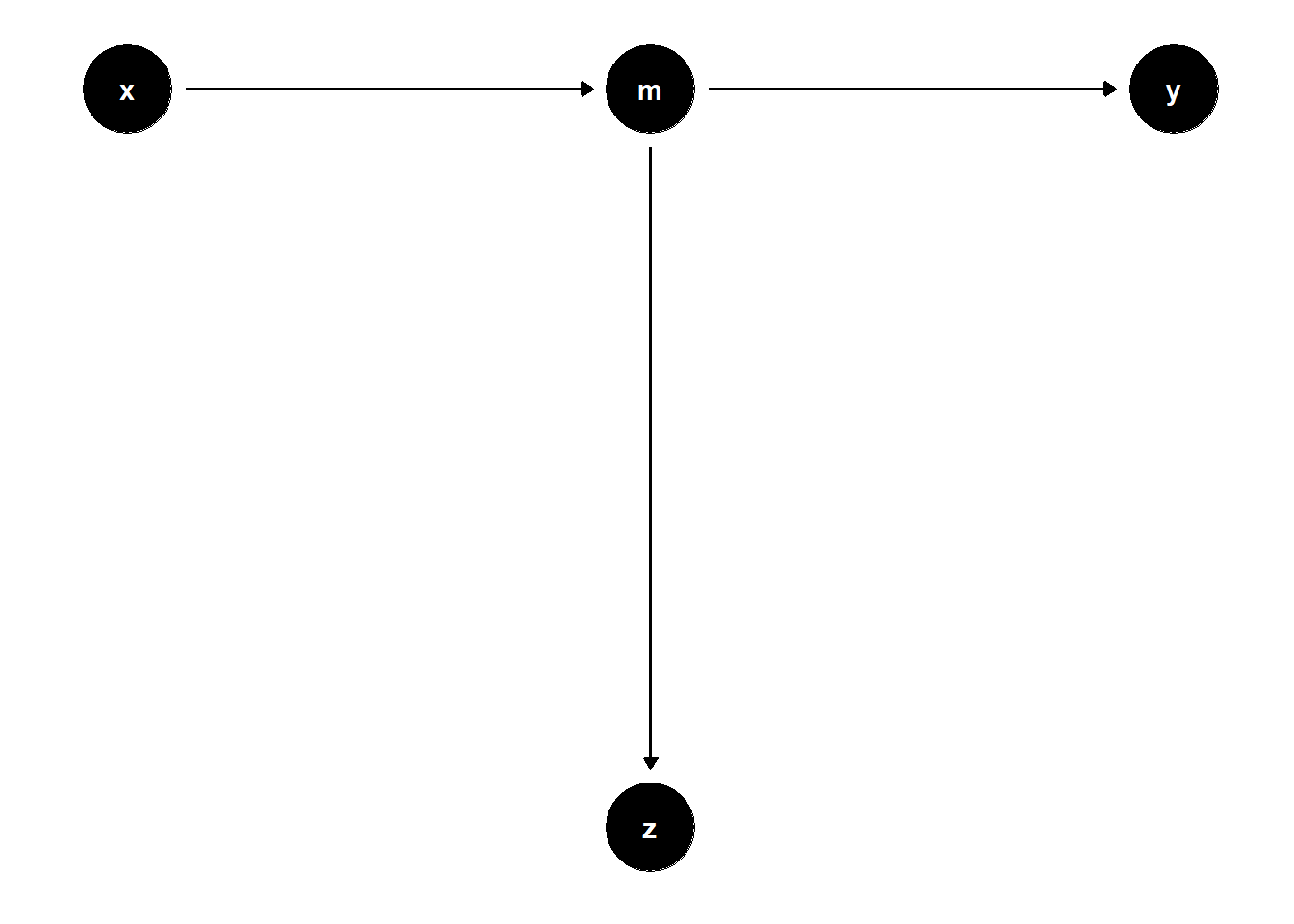

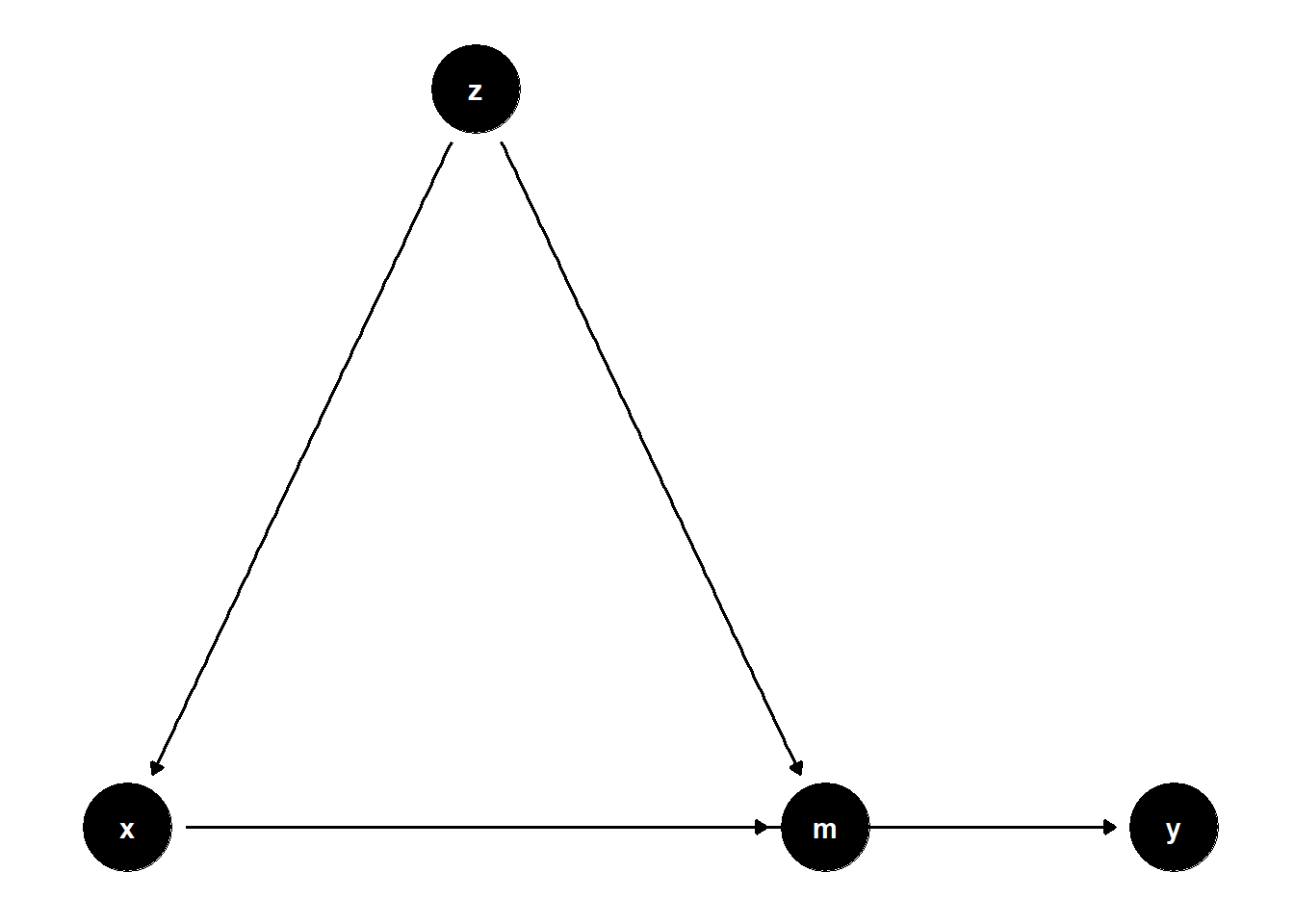

39.1.3.2 Proxy for Mediator

In more complex scenarios, controlling for variables that proxy for mediators can introduce similar distortions.

# Clean workspace

rm(list = ls())

# DAG: X → M → Z, M → Y

model <- dagitty("dag{

x -> m

m -> z

m -> y

}")

coordinates(model) <- list(

x = c(x = 1, m = 2, z = 2, y = 3),

y = c(x = 2, m = 2, z = 1, y = 2)

)

ggdag(model) + theme_dag()

set.seed(123)

n <- 1e4

x <- rnorm(n)

m <- x + rnorm(n)

z <- m + rnorm(n)

y <- m + rnorm(n)

jtools::export_summs(lm(y ~ x),

lm(y ~ x + z),

model.names = c("Total Effect", "Controlled for Proxy Z"))

| | Total Effect | Controlled for Proxy Z |
|---|---|---|
| (Intercept) | -0.01 | -0.01 |
| | (0.01) | (0.01) |
| x | 0.99 *** | 0.49 *** |
| | (0.01) | (0.02) |
| z | | 0.49 *** |
| | | (0.01) |
| N | 10000 | 10000 |
| R2 | 0.33 | 0.49 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

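Even though \(Z\) is not on the path from \(X\) to \(Y\), it is a descendant of the mediator \(M\), so controlling for it partially controls for \(M\). This removes part of the causal variation flowing through \(M\) and cuts the estimate roughly in half (from 0.99 to 0.49).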
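How much of the effect is lost depends on how good a proxy \(Z\) is. A minimal sketch, assuming the same unit-variance equations as above but with a hypothetical noise scale \(s\) in \(z = m + s \cdot e\): solving the normal equations gives a coefficient on \(X\) of \(s^2 / (1 + s^2)\), which equals 0.5 at \(s = 1\) (the simulation above), approaches 0 for a near-perfect proxy, and approaches the full effect of 1 for a very noisy one. The Monte Carlo loop checks this formula for a few values of \(s\).

```r
# Probability limit of the coefficient on x in y ~ x + z
# when z = m + s * e is a noisy proxy of the mediator m
proxy_coef <- function(s) s^2 / (1 + s^2)

set.seed(42)
n <- 1e5
x <- rnorm(n)
m <- x + rnorm(n)
y <- m + rnorm(n)

s_grid <- c(0.25, 1, 4)  # hypothetical proxy noise levels
sim <- sapply(s_grid, function(s) {
  z <- m + s * rnorm(n)
  unname(coef(lm(y ~ x + z))["x"])
})

cbind(s = s_grid, theory = proxy_coef(s_grid), simulated = sim)
```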

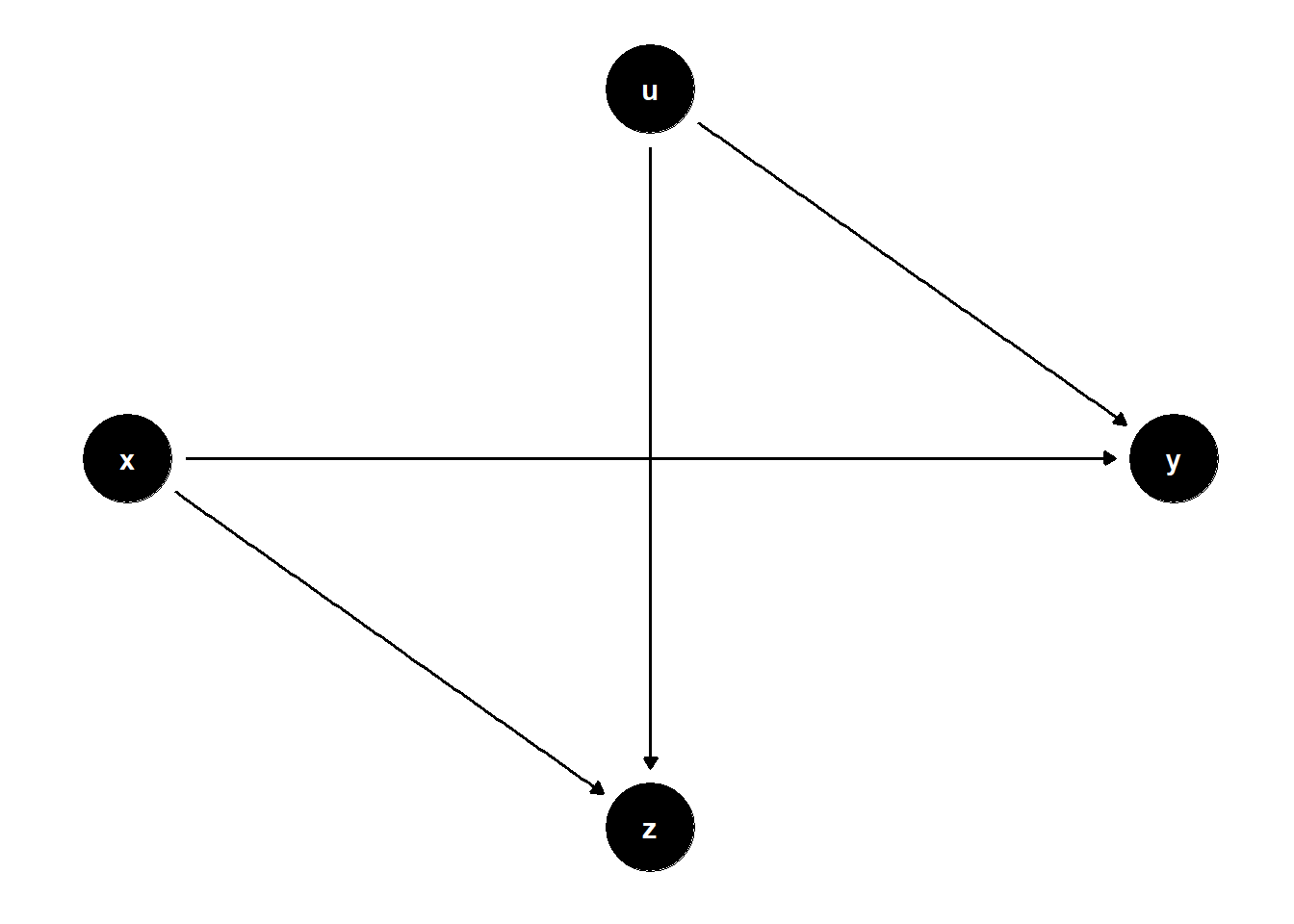

39.1.3.3 Overcontrol with Unobserved Confounding

When \(Z\) is influenced by both \(X\) and a latent confounder \(U\) that also affects \(Y\), controlling for \(Z\) again biases the estimate.

# Clean workspace

rm(list = ls())

# DAG: X → Z → Y; U → Z, U → Y

model <- dagitty("dag{

x -> z

z -> y

u -> z

u -> y

}")

latents(model) <- "u"

coordinates(model) <- list(

x = c(x = 1, z = 2, u = 3, y = 4),

y = c(x = 1, z = 1, u = 2, y = 1)

)

ggdag(model) + theme_dag()

set.seed(1)

n <- 1e4

x <- rnorm(n)

u <- rnorm(n)

z <- x + u + rnorm(n)

y <- z + u + rnorm(n)

jtools::export_summs(

lm(y ~ x),

lm(y ~ x + z),

model.names = c("Unadjusted", "Controlled for Z")

)

| | Unadjusted | Controlled for Z |
|---|---|---|
| (Intercept) | -0.01 | -0.01 |
| | (0.02) | (0.01) |
| x | 1.01 *** | -0.47 *** |
| | (0.02) | (0.01) |
| z | | 1.48 *** |
| | | (0.01) |
| N | 10000 | 10000 |
| R2 | 0.15 | 0.78 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

Although the total effect of \(X\) on \(Y\) is correctly captured in the unadjusted model, adjusting for \(Z\) introduces bias via the collider path \(X \to Z \leftarrow U \to Y\).

Insight: Controlling for \(Z\) blocks the only causal path \(X \to Z \to Y\), and because \(Z\) is also a collider, it opens a biasing path through \(U\). The adjusted coefficient on \(X\) (about -0.47) even has the wrong sign, which makes the adjusted model unreliable for causal inference.

These examples highlight the importance of conceptual clarity and causal reasoning in model specification. Not all covariates should be controlled for — especially not those that are:

- Mediators (on the causal path)

- Proxies for mediators or outcomes

- Colliders or descendants of colliders

In business contexts, this often arises when analysts include intermediate variables like sales leads, customer engagement scores, or operational metrics without understanding whether these mediate the effect of a treatment (e.g., ad spend) or confound it.

39.1.4 Selection Bias

Selection bias — also known as collider stratification bias — occurs when conditioning on a variable that is a collider (a common effect of two or more variables). This inadvertently opens non-causal paths, inducing spurious associations between variables that are otherwise independent or unconfounded.

39.1.4.1 Classic Collider Bias

In the DAG below, \(Z\) is a collider between \(X\) and a latent variable \(U\). Controlling for \(Z\) opens the non-causal path \(X \to Z \leftarrow U \to Y\), introducing bias.

rm(list = ls())

# DAG

model <- dagitty("dag{

x -> y

x -> z

u -> z

u -> y

}")

latents(model) <- "u"

coordinates(model) <- list(

x = c(x = 1, z = 2, u = 2, y = 3),

y = c(x = 3, z = 2, u = 4, y = 3)

)

ggdag(model) + theme_dag()

Simulation:

set.seed(123)

n <- 1e4

x <- rnorm(n)

u <- rnorm(n)

z <- x + u + rnorm(n)

y <- x + 2*u + rnorm(n)

jtools::export_summs(

lm(y ~ x),

lm(y ~ x + z),

model.names = c("Unadjusted", "Adjusted for Z (collider)")

)

| | Unadjusted | Adjusted for Z (collider) |
|---|---|---|
| (Intercept) | -0.02 | -0.01 |
| | (0.02) | (0.02) |
| x | 0.99 *** | -0.02 |
| | (0.02) | (0.02) |
| z | | 0.99 *** |
| | | (0.01) |
| N | 10000 | 10000 |
| R2 | 0.17 | 0.49 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

Controlling for \(Z\) opens the non-causal path \(X \to Z \leftarrow U \to Y\), resulting in biased estimates of the effect of \(X\) on \(Y\).
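In practice, selection bias often arises not from adding \(Z\) as a regressor but from analyzing only a subset of units defined by \(Z\). The sketch below reuses the structural equations above and applies a hypothetical selection rule, keeping only units with \(z > 0\). Within the selected sample, \(X\) and \(U\) become negatively correlated, so the slope of \(Y\) on \(X\) falls well below the true effect of 1.

```r
set.seed(123)
n <- 1e4
x <- rnorm(n)
u <- rnorm(n)
z <- x + u + rnorm(n)
y <- x + 2 * u + rnorm(n)

selected <- z > 0  # hypothetical selection rule based on the collider

slope_full     <- unname(coef(lm(y ~ x))["x"])
slope_selected <- unname(coef(lm(y ~ x, subset = selected))["x"])

c(full = slope_full, selected = slope_selected)
```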

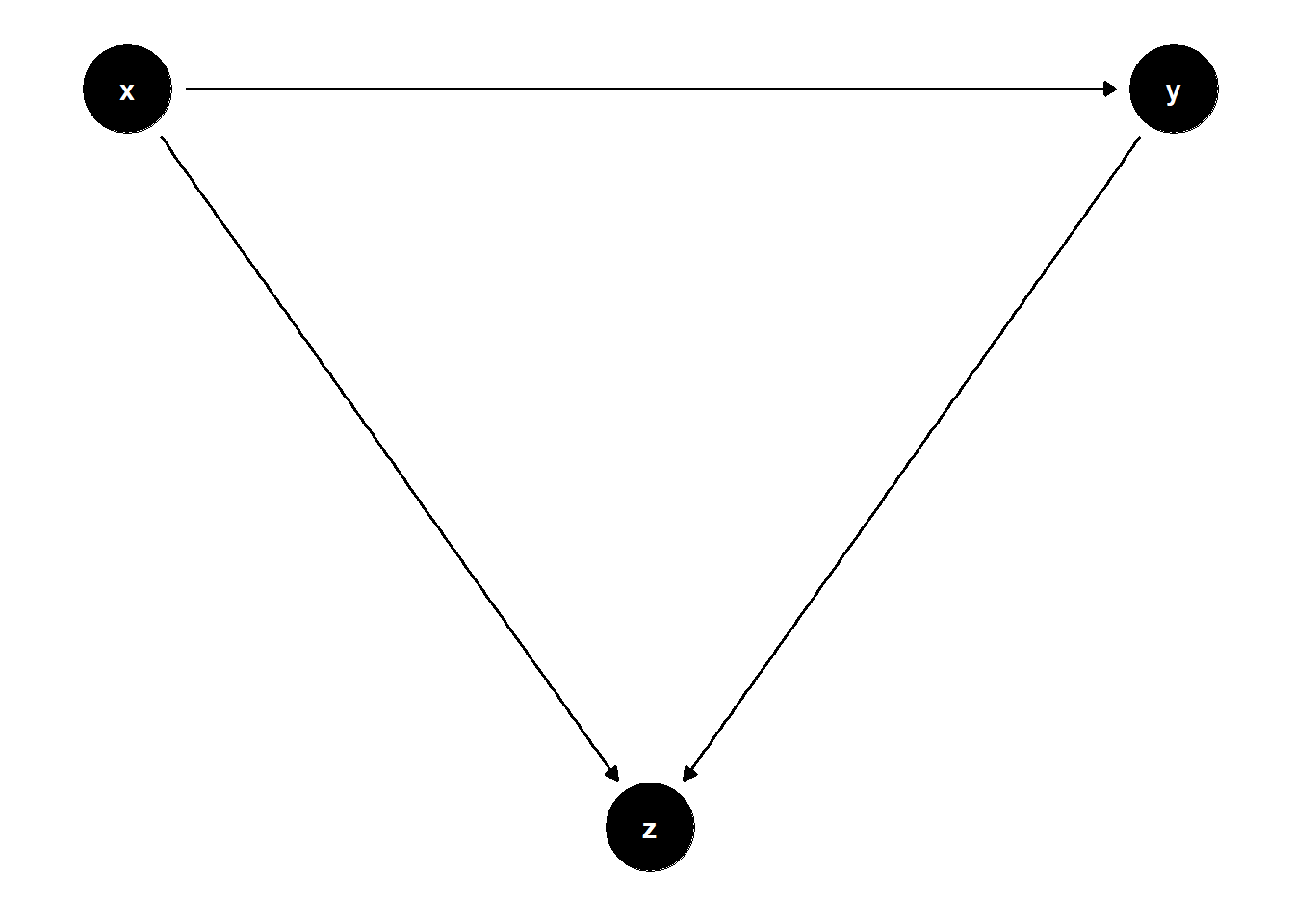

39.1.4.2 Collider Between Treatment and Outcome

In some cases, the collider is influenced directly by both the treatment and the outcome. This setting is also highly relevant in observational designs, particularly in retrospective or convenience sampling scenarios.

rm(list = ls())

# DAG: X → Z ← Y

model <- dagitty("dag{

x -> y

x -> z

y -> z

}")

coordinates(model) <- list(

x = c(x = 1, z = 2, y = 3),

y = c(x = 2, z = 1, y = 2)

)

ggdag(model) + theme_dag()

Simulation:

set.seed(123)

n <- 1e4

x <- rnorm(n)

y <- x + rnorm(n)

z <- x + y + rnorm(n)

jtools::export_summs(

lm(y ~ x),

lm(y ~ x + z),

model.names = c("Unadjusted", "Adjusted for Collider Z")

)

| | Unadjusted | Adjusted for Collider Z |
|---|---|---|
| (Intercept) | -0.01 | -0.00 |
| | (0.01) | (0.01) |
| x | 1.01 *** | -0.01 |
| | (0.01) | (0.01) |
| z | | 0.50 *** |
| | | (0.01) |
| N | 10000 | 10000 |
| R2 | 0.50 | 0.75 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

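Even though \(Z\) is associated with both \(X\) and \(Y\), it should not be controlled for: conditioning on the collider \(Z\) in \(X \to Z \leftarrow Y\) induces a spurious dependence between \(X\) and \(Y\) that, in this simulation, wipes out the true effect (the coefficient on \(X\) falls from about 1.01 to -0.01).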

39.1.5 Case-Control Bias

Case-control studies often condition on the outcome (or its descendants), which can lead to collider bias if not properly accounted for.

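In the DAG below, \(Z\) is a descendant of the outcome \(Y\). Because \(Y\) is itself a collider between \(X\) and its own unobserved error term, controlling for \(Z\) partially conditions on that collider and can again induce spurious correlations by opening non-causal paths.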

rm(list = ls())

# DAG: X → Y → Z

model <- dagitty("dag{

x -> y

y -> z

}")

coordinates(model) <- list(

x = c(x = 1, z = 2, y = 3),

y = c(x = 2, z = 1, y = 2)

)

ggdag(model) + theme_dag()

Simulation:

set.seed(123)

n <- 1e4

x <- rnorm(n)

y <- x + rnorm(n)

z <- y + rnorm(n)

jtools::export_summs(

lm(y ~ x),

lm(y ~ x + z),

model.names = c("Unadjusted", "Adjusted for Descendant Z")

)

| | Unadjusted | Adjusted for Descendant Z |
|---|---|---|
| (Intercept) | -0.01 | -0.00 |
| | (0.01) | (0.01) |
| x | 1.01 *** | 0.49 *** |
| | (0.01) | (0.01) |
| z | | 0.50 *** |
| | | (0.01) |
| N | 10000 | 10000 |
| R2 | 0.50 | 0.75 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

Note the subtlety: if \(X\) has a true causal effect on \(Y\), then controlling for \(Z\) biases the estimate. However, if \(X\) has no causal effect on \(Y\), then \(X\) is d-separated from \(Y\), even when adjusting for \(Z\). In that special case, controlling for \(Z\) will not falsely suggest an effect.

Key Insight: Whether or not adjustment induces bias depends on the presence or absence of a true causal path. This highlights the importance of DAGs in clarifying assumptions and guiding valid statistical inference.
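Both cases can be compared side by side. The sketch below, with variable names of our own choosing, generates one outcome that depends on \(X\) and one that does not, each with a descendant \(Z\). Adjusting for \(Z\) halves the true effect in the first case but leaves the null estimate near zero in the second, as the d-separation argument predicts.

```r
set.seed(123)
n <- 1e4
x <- rnorm(n)

# Case 1: X has a true effect of 1 on Y; Z is a descendant of Y
y1 <- x + rnorm(n)
z1 <- y1 + rnorm(n)
coef_effect <- unname(coef(lm(y1 ~ x + z1))["x"])  # about 0.5, biased

# Case 2: X has no effect on Y; X stays d-separated from Y given Z
y0 <- rnorm(n)
z0 <- y0 + rnorm(n)
coef_null <- unname(coef(lm(y0 ~ x + z0))["x"])    # about 0, no false effect

c(with_effect = coef_effect, null_effect = coef_null)
```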

39.1.6 Summary

| Bias Type | Key Mistake | Path Opened | Consequence |
|---|---|---|---|
| M-Bias | Controlling for a pre-treatment collider | \(X \leftarrow U_1 \to Z \leftarrow U_2 \to Y\) | Spurious association |
| Bias Amplification | Controlling for a non-confounder that predicts \(X\) | Amplifies unobserved confounding | Larger bias than before |
| Overcontrol Bias | Controlling for a mediator or proxy | Blocks part of causal effect | Underestimates total effect |
| Selection Bias | Conditioning on a collider or its descendant | \(X \to Z \leftarrow Y\) or similar | Induced non-causal correlation |
| Case-Control Bias | Conditioning on a variable affected by the outcome | \(X \to Y \to Z\) | Collider-induced association |

39.2 Good Controls

39.2.1 Omitted Variable Bias Correction

A variable \(Z\) is a good control when conditioning on it (possibly together with other observed covariates) blocks all back-door paths from the treatment \(X\) to the outcome \(Y\) without opening new ones. This is the back-door criterion in causal inference.

39.2.1.1 Simple Confounder

In this DAG, \(Z\) is a common cause of both \(X\) and \(Y\), i.e., a confounder.

rm(list = ls())

model <- dagitty("dag{

x -> y

z -> x

z -> y

}")

coordinates(model) <- list(

x = c(x = 1, z = 2, y = 3),

y = c(x = 1, z = 2, y = 1)

)

ggdag(model) + theme_dag()

Controlling for \(Z\) removes the bias from the back-door path \(X \leftarrow Z \rightarrow Y\).

n <- 1e4

z <- rnorm(n)

causal_coef <- 2

beta2 <- 3

x <- z + rnorm(n)

y <- causal_coef * x + beta2 * z + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))

| | Model 1 | Model 2 |
|---|---|---|
| (Intercept) | -0.02 | 0.01 |
| | (0.02) | (0.01) |
| x | 3.49 *** | 2.00 *** |
| | (0.02) | (0.01) |
| z | | 2.98 *** |
| | | (0.01) |
| N | 10000 | 10000 |
| R2 | 0.82 | 0.97 |

*** p < 0.001; ** p < 0.01; * p < 0.05.
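The gap between the two models is exactly the omitted variable bias. In OLS, the short-regression coefficient equals the long-regression coefficient on \(X\) plus the coefficient on \(Z\) times the slope from regressing \(Z\) on \(X\). A minimal sketch, assuming the same data-generating process as above (with a seed added for reproducibility):

```r
set.seed(123)
n <- 1e4
z <- rnorm(n)
x <- z + rnorm(n)
y <- 2 * x + 3 * z + rnorm(n)

short <- coef(lm(y ~ x))["x"]   # omits the confounder z
long  <- coef(lm(y ~ x + z))     # includes z
delta <- coef(lm(z ~ x))["x"]    # auxiliary regression of z on x

# Omitted variable bias identity: short = long_x + long_z * delta
ovb <- long["z"] * delta
c(short = unname(short), long_x = unname(long["x"]), bias = unname(ovb))
```

Here \(\delta \approx 0.5\) and the coefficient on \(Z\) is about 3, so the bias is about 1.5, matching the move from roughly 3.5 to 2.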

39.2.1.2 Confounding via a Latent Variable

In this structure, \(U\) is unobserved but causes both \(Z\) and \(Y\), and \(Z\) affects \(X\). Even though \(U\) is not observed, adjusting for \(Z\) blocks the back-door path \(X \leftarrow Z \leftarrow U \to Y\).

rm(list = ls())

model <- dagitty("dag{

x -> y

u -> z

z -> x

u -> y

}")

latents(model) <- "u"

coordinates(model) <- list(

x = c(x = 1, z = 2, u = 3, y = 4),

y = c(x = 1, z = 2, u = 3, y = 1)

)

ggdag(model) + theme_dag()

n <- 1e4

u <- rnorm(n)

z <- u + rnorm(n)

x <- z + rnorm(n)

y <- 2 * x + u + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))

| | Model 1 | Model 2 |
|---|---|---|
| (Intercept) | -0.02 | -0.01 |
| | (0.01) | (0.01) |
| x | 2.33 *** | 1.99 *** |
| | (0.01) | (0.01) |
| z | | 0.51 *** |
| | | (0.01) |
| N | 10000 | 10000 |
| R2 | 0.91 | 0.92 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

Even though \(Z\) appears significant, its inclusion serves to reduce omitted variable bias; its own coefficient has no causal interpretation.

39.2.1.3 \(Z\) is caused by \(U\), but also causes \(Y\)

This DAG illustrates a subtle case where \(Z\) lies on the back-door path \(X \leftarrow U \to Z \to Y\). Conditioning on \(Z\) blocks this path, and hence the bias from the shared cause \(U\), even though \(U\) itself is unobserved.

rm(list = ls())

model <- dagitty("dag{

x -> y

u -> z

u -> x

z -> y

}")

latents(model) <- "u"

coordinates(model) <- list(

x = c(x = 1, z = 3, u = 2, y = 4),

y = c(x = 1, z = 2, u = 3, y = 1)

)

ggdag(model) + theme_dag()

n <- 1e4

u <- rnorm(n)

z <- u + rnorm(n)

x <- u + rnorm(n)

y <- 2 * x + z + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))

| | Model 1 | Model 2 |
|---|---|---|
| (Intercept) | 0.02 | 0.01 |
| | (0.02) | (0.01) |
| x | 2.49 *** | 1.98 *** |
| | (0.01) | (0.01) |
| z | | 1.02 *** |
| | | (0.01) |
| N | 10000 | 10000 |
| R2 | 0.83 | 0.93 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

Again, we cannot interpret the coefficient on \(Z\) causally, but including \(Z\) helps reduce omitted variable bias from the unobserved confounder \(U\).

39.2.1.4 Summary of Omitted Variable Correction

# Model 1: Z is a confounder
model1 <- dagitty("dag{ x -> y; z -> x; z -> y }")
coordinates(model1) <- list(x = c(x = 1, z = 2, y = 3),
                            y = c(x = 1, z = 2, y = 1))

# Model 2: Z is on the path from U to X
model2 <- dagitty("dag{ x -> y; u -> z; z -> x; u -> y }")
latents(model2) <- "u"
coordinates(model2) <- list(x = c(x = 1, z = 2, u = 3, y = 4),
                            y = c(x = 1, z = 2, u = 3, y = 1))

# Model 3: Z is influenced by U and affects Y
model3 <- dagitty("dag{ x -> y; u -> z; u -> x; z -> y }")
latents(model3) <- "u"
coordinates(model3) <- list(x = c(x = 1, z = 3, u = 2, y = 4),
                            y = c(x = 1, z = 2, u = 3, y = 1))

# par(mfrow = ...) only affects base graphics, not ggplot2 objects,
# so arrange the three ggdag plots side by side with gridExtra instead
gridExtra::grid.arrange(
  ggdag(model1) + theme_dag(),
  ggdag(model2) + theme_dag(),
  ggdag(model3) + theme_dag(),
  nrow = 1
)

39.2.2 Omitted Variable Bias in Mediation Correction

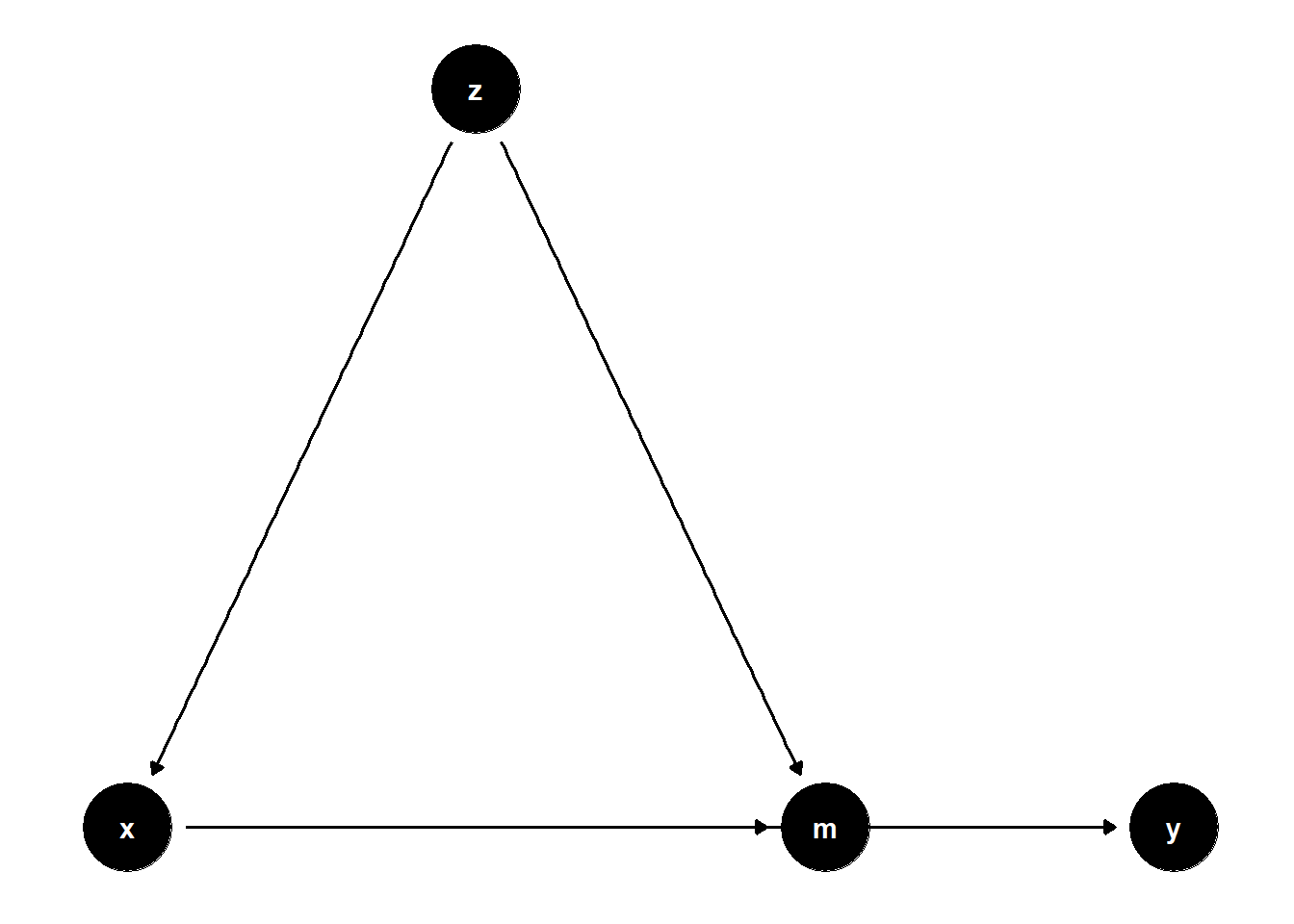

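When a variable \(Z\) shares a common cause with both the treatment \(X\) and a mediator \(M\) (directly, or through a latent variable), it lies on a back-door path such as \(X \leftarrow Z \to M \to Y\). Controlling for \(Z\) blocks this path and recovers the total effect of \(X\) on \(Y\).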

39.2.2.1 Observed Confounder of Mediator and Treatment

rm(list = ls())

model <- dagitty("dag{

x -> y

z -> x

x -> m

z -> m

m -> y

}")

coordinates(model) <-

list(x = c(

x = 1,

z = 2,

m = 3,

y = 4

),

y = c(

x = 1,

z = 2,

m = 1,

y = 1

))

ggdag(model) + theme_dag()

n <- 1e4

z <- rnorm(n)

x <- z + rnorm(n)

m <- 2 * x + z + rnorm(n)

y <- m + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))

| | Model 1 | Model 2 |
|---|---|---|
| (Intercept) | -0.01 | 0.01 |
| | (0.02) | (0.01) |
| x | 2.50 *** | 1.99 *** |
| | (0.01) | (0.01) |
| z | | 1.01 *** |
| | | (0.02) |
| N | 10000 | 10000 |
| R2 | 0.84 | 0.87 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

39.2.2.2 Latent Common Cause of Mediator and Treatment

rm(list = ls())

model <- dagitty("dag{

x -> y

u -> z

z -> x

x -> m

u -> m

m -> y

}")

latents(model) <- "u"

coordinates(model) <-

list(x = c(

x = 1,

z = 2,

u = 3,

m = 4,

y = 5

),

y = c(

x = 1,

z = 2,

u = 3,

m = 1,

y = 1

))

ggdag(model) + theme_dag()

n <- 1e4

u <- rnorm(n)

z <- u + rnorm(n)

x <- z + rnorm(n)

m <- 2 * x + u + rnorm(n)

y <- m + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))

| | Model 1 | Model 2 |
|---|---|---|
| (Intercept) | 0.01 | 0.01 |
| | (0.02) | (0.02) |
| x | 2.34 *** | 2.01 *** |
| | (0.01) | (0.02) |
| z | | 0.50 *** |
| | | (0.02) |
| N | 10000 | 10000 |
| R2 | 0.86 | 0.87 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

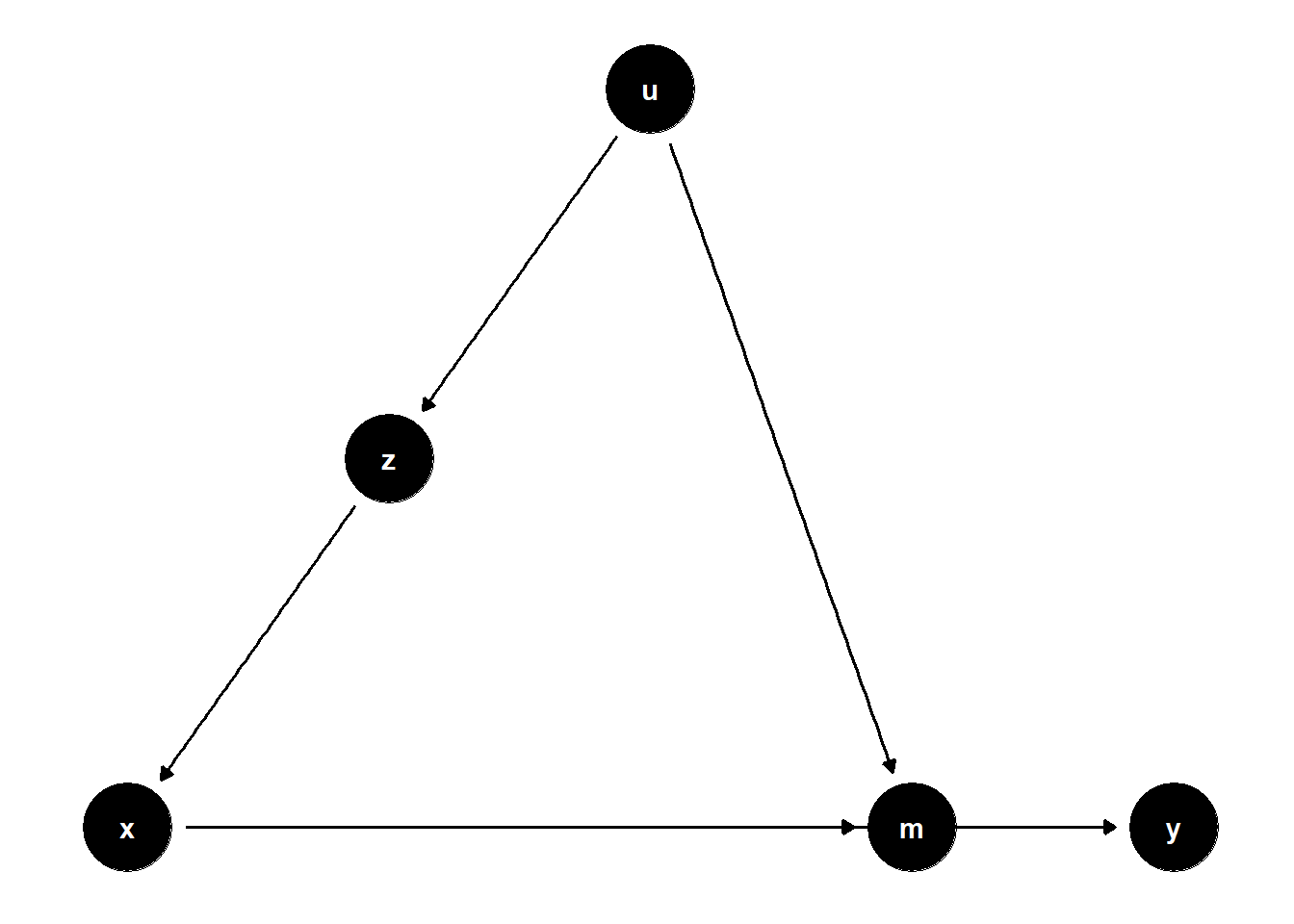

39.2.2.3 Z Affects Mediator, U Affects Both X and Z

rm(list = ls())

model <- dagitty("dag{

x -> y

u -> z

z -> m

x -> m

u -> x

m -> y

}")

latents(model) <- "u"

coordinates(model) <-

list(x = c(

x = 1,

z = 3,

u = 2,

m = 4,

y = 5

),

y = c(

x = 1,

z = 2,

u = 3,

m = 1,

y = 1

))

ggdag(model) + theme_dag()

n <- 1e4

u <- rnorm(n)

z <- u + rnorm(n)

x <- u + rnorm(n)

m <- 2 * x + z + rnorm(n)

y <- m + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))

| | Model 1 | Model 2 |
|---|---|---|
| (Intercept) | 0.00 | 0.00 |
| | (0.02) | (0.01) |
| x | 2.49 *** | 1.99 *** |
| | (0.01) | (0.01) |
| z | | 1.00 *** |
| | | (0.01) |
| N | 10000 | 10000 |
| R2 | 0.78 | 0.87 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

39.2.2.4 Summary of Mediation Correction

# Model 4: Z confounds X and the mediator M
model4 <- dagitty("dag{ x -> y; z -> x; x -> m; z -> m; m -> y }")
coordinates(model4) <- list(x = c(x = 1, z = 2, m = 3, y = 4),
                            y = c(x = 1, z = 2, m = 1, y = 1))

# Model 5: latent U causes Z and M; Z causes X
model5 <- dagitty("dag{ x -> y; u -> z; z -> x; x -> m; u -> m; m -> y }")
latents(model5) <- "u"
coordinates(model5) <- list(x = c(x = 1, z = 2, u = 3, m = 4, y = 5),
                            y = c(x = 1, z = 2, u = 3, m = 1, y = 1))

# Model 6: latent U causes X and Z; Z causes M
model6 <- dagitty("dag{ x -> y; u -> z; z -> m; x -> m; u -> x; m -> y }")
latents(model6) <- "u"
coordinates(model6) <- list(x = c(x = 1, z = 3, u = 2, m = 4, y = 5),
                            y = c(x = 1, z = 2, u = 3, m = 1, y = 1))

# par(mfrow = ...) only affects base graphics, not ggplot2 objects,
# so arrange the three ggdag plots side by side with gridExtra instead
gridExtra::grid.arrange(
  ggdag(model4) + theme_dag(),
  ggdag(model5) + theme_dag(),
  ggdag(model6) + theme_dag(),
  nrow = 1
)

Although \(Z\) is statistically significant in these models, its coefficient should not be read as a causal effect. In these valid control scenarios, \(Z\) serves to isolate the causal effect of \(X\); it is not itself the quantity of interest.

39.3 Neutral Controls

Not all covariates used in regression adjustment are necessary for identification. Neutral controls do not help with causal identification but may affect estimation precision. Including them:

- Does not introduce bias, because they do not open back-door or collider paths.

- May reduce standard errors, if they explain additional variation in the outcome, or inflate them, if they mainly explain variation in the treatment.

39.3.1 Good Predictive Controls

When a variable is correlated with the outcome \(Y\), but not a cause of the treatment \(X\), controlling for it is optional for identification but may increase precision.

39.3.1.1 \(Z\) predicts \(Y\), not \(X\)

# Clean workspace

rm(list = ls())

model <- dagitty("dag{

x -> y

z -> y

}")

coordinates(model) <- list(

x = c(x = 1, z = 2, y = 2),

y = c(x = 1, z = 2, y = 1)

)

ggdag(model) + theme_dag()

n <- 1e4

z <- rnorm(n)

x <- rnorm(n)

y <- x + 2 * z + rnorm(n)

jtools::export_summs(

lm(y ~ x),

lm(y ~ x + z),

model.names = c("Without Z", "With Predictive Z")

)

| | Without Z | With Predictive Z |
|---|---|---|
| (Intercept) | -0.01 | -0.01 |
| | (0.02) | (0.01) |
| x | 1.01 *** | 1.01 *** |
| | (0.02) | (0.01) |
| z | | 2.01 *** |
| | | (0.01) |
| N | 10000 | 10000 |
| R2 | 0.17 | 0.83 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

The coefficient on \(X\) remains unbiased in both models, but standard errors are smaller in the model with \(Z\).
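The precision gain follows from the usual OLS formula \(\text{se}(\hat\beta_X) \approx \hat\sigma / \sqrt{n \, \text{Var}(X) \, (1 - R^2_{X \mid Z})}\). Because \(Z\) is independent of \(X\) here, \(R^2_{X \mid Z} \approx 0\), and the only change is that the residual standard deviation \(\hat\sigma\) drops from about \(\sqrt{5}\) to about 1. A minimal sketch that extracts these quantities from the two fits; `se_x()` is a helper of our own.

```r
set.seed(123)
n <- 1e4
z <- rnorm(n)
x <- rnorm(n)
y <- x + 2 * z + rnorm(n)

# Standard error of the coefficient on x in a fitted lm
se_x <- function(fit) summary(fit)$coefficients["x", "Std. Error"]

fit_without <- lm(y ~ x)
fit_with    <- lm(y ~ x + z)

c(se_without    = se_x(fit_without),
  se_with       = se_x(fit_with),
  sigma_without = sigma(fit_without),  # about sqrt(5)
  sigma_with    = sigma(fit_with))     # about 1
```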

39.3.1.2 \(Z\) predicts a mediator \(M\)

rm(list = ls())

model <- dagitty("dag{

x -> y

x -> m

z -> m

m -> y

}")

coordinates(model) <- list(

x = c(x = 1, z = 2, m = 2, y = 3),

y = c(x = 1, z = 2, m = 1, y = 1)

)

ggdag(model) + theme_dag()

n <- 1e4

z <- rnorm(n)

x <- rnorm(n)

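m <- 2 * z + rnorm(n)  # note: the x -> m edge has zero strength in this simulation, so x's total effect equals its direct effect of 1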

y <- x + 2 * m + rnorm(n)

jtools::export_summs(

lm(y ~ x),

lm(y ~ x + z),

model.names = c("Without Z", "With Predictive Z")

)

| | Without Z | With Predictive Z |
|---|---|---|
| (Intercept) | 0.02 | 0.02 |
| | (0.05) | (0.02) |
| x | 1.04 *** | 1.00 *** |
| | (0.05) | (0.02) |
| z | | 3.98 *** |
| | | (0.02) |
| N | 10000 | 10000 |
| R2 | 0.05 | 0.77 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

Even though \(Z\) is not on any causal path from \(X\) to \(Y\), controlling for it may reduce residual variance in \(Y\), hence increasing precision.

39.3.2 Good Selection Bias

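In more complex selection structures, conditioning on a collider can be made harmless if we also condition on a variable that blocks the path the collider opens. In the example below, \(W\) is a collider on \(X \to Z \to W \leftarrow U \to Y\), and additionally adjusting for \(Z\) closes that path again.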

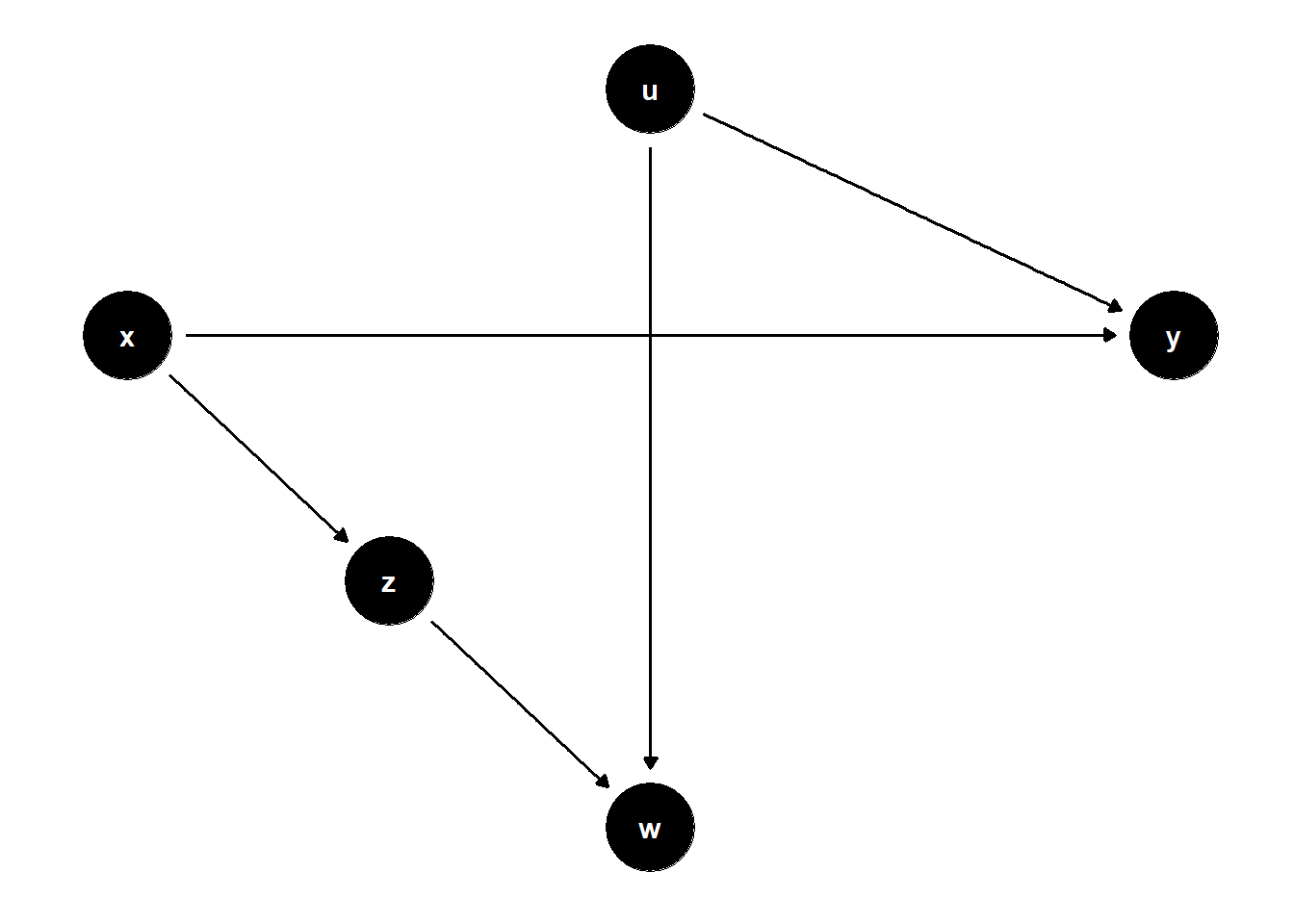

39.3.2.1 \(W\) is a collider; \(Z\) helps condition on selection

rm(list = ls())

model <- dagitty("dag{

x -> y

x -> z

z -> w

u -> w

u -> y

}")

latents(model) <- "u"

coordinates(model) <- list(

x = c(x = 1, z = 2, w = 3, u = 3, y = 5),

y = c(x = 3, z = 2, w = 1, u = 4, y = 3)

)

ggdag(model) + theme_dag()

n <- 1e4

x <- rnorm(n)

u <- rnorm(n)

z <- x + rnorm(n)

w <- z + u + rnorm(n)

y <- x - 2 * u + rnorm(n)

jtools::export_summs(

lm(y ~ x),

lm(y ~ x + w),

lm(y ~ x + z + w),

model.names = c("Unadjusted", "Control W", "Control W + Z")

)

| | Unadjusted | Control W | Control W + Z |
|---|---|---|---|
| (Intercept) | -0.00 | 0.00 | -0.02 |
| | (0.02) | (0.02) | (0.02) |
| x | 1.00 *** | 1.69 *** | 1.01 *** |
| | (0.02) | (0.02) | (0.02) |
| w | | -0.67 *** | -1.00 *** |
| | | (0.01) | (0.01) |
| z | | | 1.01 *** |
| | | | (0.02) |
| N | 10000 | 10000 | 10000 |
| R2 | 0.18 | 0.40 | 0.51 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

- The unadjusted model is unbiased.

- Controlling only for \(W\) is biased because it opens the collider path \(X \to Z \to W \leftarrow U \to Y\) (the coefficient on \(X\) rises to about 1.69).

- Adding \(Z\) restores identification by blocking that path.

39.3.3 Bad Predictive Controls

Not all predictive variables are useful — some may reduce precision by soaking up degrees of freedom or increasing multicollinearity.

39.3.3.1 \(Z\) predicts \(X\), not \(Y\)

rm(list = ls())

model <- dagitty("dag{

x -> y

z -> x

}")

coordinates(model) <- list(

x = c(x = 1, z = 1, y = 2),

y = c(x = 1, z = 2, y = 1)

)

ggdag(model) + theme_dag()

n <- 1e4

z <- rnorm(n)

x <- 2 * z + rnorm(n)

y <- x + rnorm(n)

jtools::export_summs(

lm(y ~ x),

lm(y ~ x + z),

model.names = c("Without Z", "With Z (predicts X)")

)

| | Without Z | With Z (predicts X) |
|---|---|---|
| (Intercept) | 0.01 | 0.01 |
| | (0.01) | (0.01) |
| x | 1.00 *** | 1.00 *** |
| | (0.00) | (0.01) |
| z | | -0.00 |
| | | (0.02) |
| N | 10000 | 10000 |
| R2 | 0.84 | 0.84 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

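\(Z\) explains no additional variation in \(Y\), but it absorbs much of the variation in \(X\), which increases the standard error of the estimated effect of \(X\) (from about 0.00 to 0.01 in the rounded output).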
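The size of this inflation is governed by the variance inflation factor \(\text{VIF}_X = 1 / (1 - R^2_{X \mid Z})\). With \(x = 2z + e\), about 80% of the variance of \(X\) is explained by \(Z\), so the VIF is about 5 and the standard error grows by roughly \(\sqrt{5} \approx 2.2\). A minimal sketch reproducing the simulation above; `se_x()` is our own helper.

```r
set.seed(123)
n <- 1e4
z <- rnorm(n)
x <- 2 * z + rnorm(n)
y <- x + rnorm(n)

# Standard error of the coefficient on x in a fitted lm
se_x <- function(fit) summary(fit)$coefficients["x", "Std. Error"]

fit_without <- lm(y ~ x)
fit_with    <- lm(y ~ x + z)

# Variance inflation factor of x once z is included: 1 / (1 - R^2 of x on z)
r2_x_on_z <- summary(lm(x ~ z))$r.squared
vif_x <- 1 / (1 - r2_x_on_z)

c(vif      = vif_x,
  se_ratio = se_x(fit_with) / se_x(fit_without),
  sqrt_vif = sqrt(vif_x))
```

The SE ratio equals \(\sqrt{\text{VIF}_X}\) up to the (here negligible) change in the residual standard deviation, since \(Z\) has no effect on \(Y\).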

39.3.3.2 \(Z\) is a child of \(X\)

rm(list = ls())

model <- dagitty("dag{

x -> y

x -> z

}")

coordinates(model) <- list(

x = c(x = 1, z = 1, y = 2),

y = c(x = 1, z = 2, y = 1)

)

ggdag(model) + theme_dag()

n <- 1e4

x <- rnorm(n)

z <- 2 * x + rnorm(n)

y <- x + rnorm(n)

jtools::export_summs(

lm(y ~ x),

lm(y ~ x + z),

model.names = c("Without Z", "With Child Z")

)

| | Without Z | With Child Z |
|---|---|---|
| (Intercept) | 0.00 | 0.00 |
| | (0.01) | (0.01) |
| x | 1.02 *** | 1.06 *** |
| | (0.01) | (0.02) |
| z | | -0.02 * |
| | | (0.01) |
| N | 10000 | 10000 |
| R2 | 0.49 | 0.49 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

Here, \(Z\) is a post-treatment variable. Though it does not bias the estimate of \(X\), it adds noise and increases the SE.

39.3.4 Bad Selection Bias

Controlling for some post-treatment variables can hurt precision without affecting bias.

rm(list = ls())

model <- dagitty("dag{

x -> y

x -> z

}")

coordinates(model) <- list(

x = c(x = 1, z = 2, y = 2),

y = c(x = 1, z = 2, y = 1)

)

ggdag(model) + theme_dag()

n <- 1e4

x <- rnorm(n)

z <- 2 * x + rnorm(n)

y <- x + rnorm(n)

jtools::export_summs(

lm(y ~ x),

lm(y ~ x + z),

model.names = c("Without Z", "With Post-treatment Z")

)

| | Without Z | With Post-treatment Z |
|---|---|---|
| (Intercept) | -0.00 | -0.00 |
| | (0.01) | (0.01) |
| x | 1.00 *** | 0.99 *** |
| | (0.01) | (0.02) |
| z | | 0.01 |
| | | (0.01) |
| N | 10000 | 10000 |
| R2 | 0.50 | 0.50 |

*** p < 0.001; ** p < 0.01; * p < 0.05.

Although \(Z\) is a descendant of \(X\), it does not affect \(Y\), so including it does not bias the estimate. It does, however, add redundant information that absorbs variation in \(X\) and inflates the standard error (from 0.01 to 0.02). In this case, \(Z\) is not a “bad control” in the M-bias sense, but it is still suboptimal.

39.3.5 Summary Table: Predictive vs. Causal Utility of Controls

| Control Type | Bias Impact | SE Impact | Causal Use? |
|---|---|---|---|
| Predictive of \(Y\), not \(X\) | None | Improves SE | Optional |
| Predictive of \(X\), not \(Y\) | None | Hurts SE | Avoid if possible |
| On causal path (\(X \to Z\)) | None (if not a mediator) | SE or undercontrol | Avoid unless estimating direct effect |
| Post-treatment collider | May induce bias | Hurts SE | Avoid |
| Aids blocking collider paths | May help | Mixed | Conditional |
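Several rows of this table can be checked in a single simulation. The sketch below uses a hypothetical data-generating process (not from the chapter): `q` predicts only `x`, `w` predicts only `y`, and `s` is a post-treatment collider caused by both `x` and `y`; the true effect of `x` is 1. Under this design the algebra implies estimates near 1 for the first three specifications, with SEs of roughly 0.010 (no control), 0.007 (control predictive of \(Y\)) and 0.014 (control predictive of \(X\)), while conditioning on the collider pulls the estimate to about \(-1/3\).

```r
set.seed(42)  # arbitrary seed
n <- 1e4
q <- rnorm(n)              # predictive of x only
w <- rnorm(n)              # predictive of y only
x <- q + rnorm(n)
y <- x + w + rnorm(n)      # true effect of x on y is 1
s <- x + y + rnorm(n)      # post-treatment collider (child of both x and y)

# Estimate and SE of the x coefficient from a fitted lm
est_x <- function(fit) summary(fit)$coefficients["x", c("Estimate", "Std. Error")]

res <- rbind(
  "No control"              = est_x(lm(y ~ x)),
  "Predictive of Y only"    = est_x(lm(y ~ x + w)),
  "Predictive of X only"    = est_x(lm(y ~ x + q)),
  "Post-treatment collider" = est_x(lm(y ~ x + s))
)
round(res, 3)
```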

39.4 Choosing Controls

Identifying which variables to control for is one of the most important — and difficult — steps in causal inference. The goal is to block all back-door paths between the treatment \(X\) and the outcome \(Y\), without introducing bias from colliders or mediators.

When done correctly, adjustment removes confounding bias. When done incorrectly, it can introduce bias, increase variance, or obscure the true causal relationship.

39.4.1 Step 1: Use a Causal Diagram (DAG)

Causal diagrams provide a graphical representation of assumptions about the data-generating process. With a DAG, we can:

- Identify all back-door paths from \(X\) to \(Y\)

- Determine which paths are blocked or opened by conditioning

- Use software to identify minimal sufficient adjustment sets

For example, using dagitty:

library(dagitty)

dag <- dagitty("dag {
  X -> Y
  Z -> X
  Z -> Y
  U -> X
  U -> Y
}")

adjustmentSets(dag, exposure = "X", outcome = "Y")

This will return the set(s) of covariates that must be controlled for to estimate the causal effect of \(X\) on \(Y\) under the back-door criterion.
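Extending the dagitty example, the sketch below lists the paths between \(X\) and \(Y\) with `paths()`, shows that conditioning on \(Z\) alone leaves \(X \leftarrow U \to Y\) open, and then marks \(U\) as latent to show what happens when a required confounder is unobserved. With everything observed, the only minimal sufficient set is \(\{U, Z\}\); with \(U\) latent, no valid adjustment set exists, which is the situation where instruments or sensitivity analysis become relevant. Treating \(U\) as latent is an illustrative assumption, not part of the original example.

```r
library(dagitty)

dag <- dagitty("dag {
  X -> Y
  Z -> X
  Z -> Y
  U -> X
  U -> Y
}")

# Every path between X and Y and whether it is open; no conditioning yet
p0 <- paths(dag, from = "X", to = "Y")
p0
# Conditioning on Z closes X <- Z -> Y, but X <- U -> Y stays open
p1 <- paths(dag, from = "X", to = "Y", Z = "Z")
p1

# With all variables observed, the only minimal sufficient set is {U, Z}
sets <- adjustmentSets(dag, exposure = "X", outcome = "Y")
sets

# Same graph with U declared latent (unobserved): no valid set remains
dag_latent <- dagitty("dag {
  U [latent]
  X -> Y
  Z -> X
  Z -> Y
  U -> X
  U -> Y
}")
adjustmentSets(dag_latent, exposure = "X", outcome = "Y")
```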

39.4.2 Step 2: Use Algorithmic Tools

Several tools can automate the process of selecting appropriate controls given a DAG:

DAGitty provides an intuitive browser-based interface to:

- Draw causal diagrams
- Identify minimal sufficient adjustment sets
- Simulate interventions (do-calculus)
- Diagnose overcontrol or collider bias

It supports direct integration with R and allows reproducible workflows.
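In the dagitty R package, diagnosing a bad control can be as simple as asking whether a candidate set satisfies the adjustment criterion with `isAdjustmentSet()`. The DAG below is hypothetical: `W` confounds \(X\) and \(Y\), `M` is a mediator, and `K` is a collider of \(X\) and \(Y\). Only the set containing the confounder alone should pass for the total effect.

```r
library(dagitty)

# Hypothetical DAG: W confounds X and Y, M mediates X -> Y, K is a collider
g <- dagitty("dag {
  W -> X
  W -> Y
  X -> M -> Y
  X -> K
  Y -> K
}")

# Candidate control sets for the total effect of X on Y
candidates <- list(W = "W", W_M = c("W", "M"), W_K = c("W", "K"))
ok <- sapply(candidates, function(s)
  isAdjustmentSet(g, s, exposure = "X", outcome = "Y"))
ok  # expected: W TRUE, W_M FALSE (mediator), W_K FALSE (collider)
```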

Fusion is a powerful tool for:

- Computing identification formulas using do-calculus
- Handling complex longitudinal data and selection bias
- Formalizing queries for total, direct, and mediated effects

Fusion implements algorithms that go beyond standard adjustment and allow for nonparametric identification when latent confounders are present.

39.4.3 Step 3: Theoretical Principles

Key guidelines include:

- Do not control for mediators if estimating the total effect
- Control for pre-treatment confounders (common causes of treatment and outcome)
- Avoid colliders and their descendants
- Consider the use of instrumental variables when no suitable adjustment set exists
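The first guideline can be illustrated with a small simulation under an assumed design: \(X\) affects \(Y\) directly (effect 1) and through a mediator \(M\) (\(0.5 \times 2 = 1\)), so the total effect is 2. Regressing \(Y\) on \(X\) alone recovers the total effect, while adding \(M\) as a control leaves only the direct effect.

```r
set.seed(7)  # arbitrary seed
n <- 1e4
x <- rnorm(n)
m <- 0.5 * x + rnorm(n)      # mediator on the path X -> M -> Y
y <- x + 2 * m + rnorm(n)    # direct effect 1, indirect 0.5 * 2 = 1, total 2

b_total  <- coef(lm(y ~ x))[["x"]]      # targets the total effect (about 2)
b_direct <- coef(lm(y ~ x + m))[["x"]]  # conditioning on M keeps only the direct effect (about 1)

c(total = b_total, direct = b_direct)
```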

39.4.4 Step 4: Consider Sensitivity Analysis

Even with well-reasoned DAGs, our control set may be imperfect, especially if some variables are unobserved or measured with error. In these cases, sensitivity analysis tools help quantify how robust our causal conclusions are.

The sensemakr package (Cinelli et al. 2019; Cinelli and Hazlett 2020) allows for:

- Quantifying how strong unmeasured confounding would have to be to change conclusions
- Reporting robustness values: the minimal strength of confounding needed to explain away the effect
- Graphical summaries of confounding thresholds

This allows researchers to report assumptions transparently, even in the presence of unmeasured bias.
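A minimal sensemakr workflow might look like the sketch below. The data are simulated (an assumption, not an example from the chapter), and the observed covariate `w` serves as the benchmark: `kd = 1` asks how the estimate would change under an unobserved confounder as strongly related to the treatment as `w`. The exact numbers depend on the simulation, so none are quoted here.

```r
library(sensemakr)

set.seed(123)  # arbitrary seed; simulated data
n <- 1000
w <- rnorm(n)                       # observed covariate used as a benchmark
x <- 0.5 * w + rnorm(n)             # treatment
y <- 0.3 * x + 0.5 * w + rnorm(n)   # outcome
fit <- lm(y ~ x + w)

sens <- sensemakr(model = fit, treatment = "x",
                  benchmark_covariates = "w", kd = 1)
summary(sens)  # estimate, robustness values, and benchmark bounds
plot(sens)     # contour plot of the adjusted estimate

# Robustness value for bringing the point estimate to zero (q = 1)
rv <- robustness_value(fit, covariates = "x", q = 1)
rv
```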

39.4.5 Step 5: Know When Not to Control

Remember, not every variable should be adjusted for. The table below summarizes when to control and when to avoid:

| Variable Type | Control? | Why |
|---|---|---|
| Confounder (\(Z \to X\) and \(Z \to Y\)) | Yes | Blocks back-door path |
| Mediator (\(X \to Z \to Y\)) | No | Blocks part of the effect (unless estimating direct effect) |
| Collider (\(X \to Z \leftarrow Y\)) | No | Opens non-causal paths |
| Instrument (\(Z \to X\), \(Z \not\to Y\)) | No | Blocks no back-door path; adjusting for it hurts precision and can amplify bias from unobserved confounding. Use it in an IV design instead |
| Pre-treatment proxy for outcome | Caution | May amplify bias or introduce overcontrol |
| Predictor of outcome, not \(X\) | Optional | Improves precision, does not affect identification |
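The instrument row can be illustrated with a small simulation under assumed values: an unobserved confounder `u` biases the naive regression, and adding the instrument `q` as a control removes clean variation in \(X\), so the remaining variation is relatively more confounded. Under this design the naive bias is \(\operatorname{Cov}(x,u)/\operatorname{Var}(x) = 1/3\), the bias after controlling for `q` grows to \(1/2\), and the simple IV (Wald) ratio recovers the true effect of 1.

```r
set.seed(2024)  # arbitrary seed
n <- 1e4
u <- rnorm(n)               # unobserved confounder (never used as a control)
q <- rnorm(n)               # instrument: affects y only through x
x <- q + u + rnorm(n)
y <- x + u + rnorm(n)       # true effect of x on y is 1

b_naive  <- coef(lm(y ~ x))[["x"]]      # bias = Cov(x, u) / Var(x) = 1/3
b_with_q <- coef(lm(y ~ x + q))[["x"]]  # bias = Cov(x, u | q) / Var(x | q) = 1/2
b_iv     <- cov(y, q) / cov(x, q)       # simple IV (Wald) ratio, close to 1

c(naive = b_naive, with_q = b_with_q, iv = b_iv)
```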

39.4.6 Summary: Control Selection Pipeline

1. Define your causal question clearly (total effect, direct effect, etc.)
2. Draw a DAG that reflects substantive knowledge
3. Use DAGitty/Fusion to identify minimal sufficient control sets
4. Double-check for bad controls (colliders, mediators)
5. If in doubt, conduct sensitivity analysis using sensemakr
6. Report assumptions transparently: causal conclusions are only as valid as the assumptions they rely on
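Steps 2 through 4 of the pipeline can be chained in R. The helper `estimate_with_dag()` below is hypothetical (it is not part of dagitty or any other package): it asks dagitty for minimal sufficient sets for the total effect, stops if none exists, and otherwise fits OLS with the first set. The simulated data and DAG are also assumptions for illustration: `W` is a confounder, `M` a mediator, and `K` a collider, so only `W` should be selected.

```r
library(dagitty)

# Hypothetical helper (not part of dagitty): regress the outcome on the
# exposure plus the first minimal sufficient adjustment set from the DAG.
estimate_with_dag <- function(dag, data, exposure, outcome) {
  sets <- adjustmentSets(dag, exposure = exposure, outcome = outcome,
                         type = "minimal", effect = "total")
  if (length(sets) == 0) {
    stop("No valid adjustment set; consider an IV design or sensitivity analysis.")
  }
  controls <- as.character(sets[[1]])
  rhs <- paste(c(exposure, controls), collapse = " + ")
  fit <- lm(as.formula(paste(outcome, "~", rhs)), data = data)
  list(controls = controls, fit = fit)
}

# Simulated data consistent with the DAG below (arbitrary seed)
set.seed(99)
n <- 1e4
W <- rnorm(n)
X <- W + rnorm(n)
M <- X + rnorm(n)
Y <- M + W + rnorm(n)   # total effect of X on Y is 1, all through M
K <- X + Y + rnorm(n)
dat <- data.frame(W, X, M, Y, K)

g <- dagitty("dag {
  W -> X
  W -> Y
  X -> M -> Y
  X -> K
  Y -> K
}")

res <- estimate_with_dag(g, dat, exposure = "X", outcome = "Y")
res$controls            # "W": the mediator M and collider K are left out
coef(res$fit)[["X"]]    # close to the true total effect of 1
```

Any sensitivity analysis (step 5) would then be run on `res$fit`.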

The most important confounders are often unmeasured. But recognizing which ones should have been measured is already half the battle.