22.6 Emerging Research

Recent research highlights significant challenges in measuring the causal effects of ad content in online advertising experiments. Digital advertising platforms employ algorithmic targeting and dynamic ad delivery mechanisms, which can introduce systematic biases in A/B testing (Braun and Schwartz 2025). Key concerns include:

- Nonrandom Exposure: Online advertising platforms optimize ad delivery dynamically, so users are not randomly assigned to different ad variants. Instead, platforms use proprietary algorithms to serve ads to different, often highly heterogeneous, user groups.

- Divergent Delivery: The optimization process can lead to "divergent delivery," where differences in user engagement patterns and platform objectives produce non-comparable treatment groups. This confounds the true effect of ad content with algorithmic differences in exposure.

- Bias in Measured Effects: Algorithmic targeting, user heterogeneity, and data aggregation can distort both the magnitude and the direction of estimated ad effects. As a result, traditional A/B test results may not reflect the true impact of ad creatives.

- Limited Transparency: Platforms have little incentive to help advertisers or researchers disentangle ad content effects from proprietary targeting mechanisms. Experimenters must therefore design careful identification strategies to mitigate these biases.
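A stylized simulation can make divergent delivery concrete. Every number below is a hypothetical assumption, not a platform estimate: two user types with baseline conversion rates of 10% and 2%, ad content with no causal effect at all, and a delivery algorithm that shows ad A to an audience that is 80% high-type and ad B to one that is 20% high-type. The helper `simulate_arm()` is illustrative.

```r
# Stylized simulation of divergent delivery (all parameters are hypothetical)
set.seed(42)
n_per_arm <- 100000

# Two hypothetical user types with different baseline conversion rates
p_convert <- c(high = 0.10, low = 0.02)

# Assumption: the algorithm shows ad A mostly to high-type users and
# ad B mostly to low-type users. Ad content itself has NO effect.
share_high <- c(A = 0.8, B = 0.2)

simulate_arm <- function(arm) {
  type <- ifelse(runif(n_per_arm) < share_high[[arm]], "high", "low")
  converted <- rbinom(n_per_arm, 1, p_convert[type])
  data.frame(arm = arm, type = type, converted = converted)
}

sim <- rbind(simulate_arm("A"), simulate_arm("B"))

# Naive A/B comparison of conversion rates between the two ads
naive_diff <- mean(sim$converted[sim$arm == "A"]) -
  mean(sim$converted[sim$arm == "B"])

# Comparisons within each user type remove the audience difference
within_diff <- sapply(c("high", "low"), function(t) {
  mean(sim$converted[sim$arm == "A" & sim$type == t]) -
    mean(sim$converted[sim$arm == "B" & sim$type == t])
})

naive_diff   # expected value: 0.084 - 0.036 = 0.048
within_diff  # expected value: 0 for both types
```

The naive difference is driven entirely by who saw each ad. The within-type comparison recovers the true (zero) effect only because the simulation records user type; real platforms generally do not expose their targeting variables, which is the transparency problem described above.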

22.6.1 Covariate Balancing in Online A/B Testing: The Pigeonhole Design

J. Zhao and Zhou (2024) address the challenge of covariate balancing in online A/B testing when experimental subjects arrive sequentially. Traditional experimental designs struggle to maintain balance in real-time settings where treatment assignments must be made immediately. This work introduces the online blocking problem, in which subjects with heterogeneous covariates must be assigned to treatment or control as they arrive, with the goal of minimizing total discrepancy, defined as the total weight of a minimum-weight perfect matching between the treated and control subjects (each treated subject is paired with a distinct control subject so that the summed covariate distances are as small as possible).
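To make the discrepancy measure concrete, here is a minimal sketch under simplifying assumptions of our own: a single covariate, absolute distance as the matching cost, and equal numbers of treated and control subjects. In one dimension, a minimum-weight perfect matching under absolute distance pairs the two groups in sorted order, so no general matching solver is needed. The function name `matching_discrepancy()` is hypothetical.

```r
# Total weight of a minimum-weight perfect matching between treated and
# control covariates, with cost |x_i - x_j|.
# Simplification: one covariate and equal group sizes; in 1-D the optimal
# matching pairs the k-th smallest treated value with the k-th smallest
# control value.
matching_discrepancy <- function(x_treat, x_ctrl) {
  stopifnot(length(x_treat) == length(x_ctrl))
  sum(abs(sort(x_treat) - sort(x_ctrl)))
}

x_t <- c(0.1, 0.9, 0.4)
x_c <- c(0.5, 0.2, 1.0)
matching_discrepancy(x_t, x_c)  # |0.1-0.2| + |0.4-0.5| + |0.9-1.0| = 0.3
```

A well-balanced design keeps this number small: every treated subject has a control subject with a similar covariate value.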

To improve covariate balance, the authors propose the pigeonhole design, a randomized experimental design that operates as follows:

- The covariate space is partitioned into smaller subspaces called “pigeonholes.”

- Within each pigeonhole, the design balances the number of treated and control subjects, reducing systematic imbalances.
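The two steps above can be sketched as follows. This is a simplified illustration, not the exact algorithm of J. Zhao and Zhou (2024): we assume a single covariate in [0, 1), equal-width pigeonholes, and a rule that assigns a subject to the under-represented arm whenever its pigeonhole's counts differ, using a fair coin when they are tied. The function name `pigeonhole_assign()` and the parameter `K` are hypothetical.

```r
# Hypothetical simplification of a pigeonhole-style online design:
# - covariate x in [0, 1) is split into K equal-width pigeonholes
# - subjects arrive one at a time and are assigned immediately
# - within a pigeonhole: fair coin if treated/control counts are tied,
#   otherwise assign to the arm with fewer subjects in that pigeonhole
pigeonhole_assign <- function(x, K = 10) {
  hole <- pmin(floor(x * K) + 1, K)  # pigeonhole index in 1..K
  n_treat <- integer(K)
  n_ctrl <- integer(K)
  assignment <- integer(length(x))
  for (i in seq_along(x)) {
    h <- hole[i]
    if (n_treat[h] == n_ctrl[h]) {
      d <- rbinom(1, 1, 0.5)               # tie: randomize
    } else {
      d <- as.integer(n_treat[h] < n_ctrl[h])  # rebalance the pigeonhole
    }
    assignment[i] <- d
    if (d == 1) n_treat[h] <- n_treat[h] + 1L else n_ctrl[h] <- n_ctrl[h] + 1L
  }
  assignment
}

set.seed(1)
x <- runif(500)                    # covariates of sequentially arriving subjects
d <- pigeonhole_assign(x, K = 10)

# Treated-minus-control count in each pigeonhole never exceeds 1 in size
hole <- pmin(floor(x * 10) + 1, 10)
imbalance <- tapply(d, hole, function(z) sum(z) - sum(1 - z))
imbalance
```

Under a completely randomized design, by contrast, nothing prevents a pigeonhole from accumulating many more treated than control subjects.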

Theoretical analysis demonstrates the effectiveness of this design in reducing discrepancy compared to existing approaches. Specifically, the pigeonhole design is benchmarked against:

- Matched-pair design

- Completely randomized design

The results highlight scenarios where the pigeonhole design outperforms these alternatives in maintaining covariate balance.

Using Yahoo! data, the authors validate the pigeonhole design in practice, reporting a 10.2% reduction in the variance of the estimated average treatment effect (ATE). This improvement underscores the design's practical utility in reducing noise and improving the precision of experimental results.

The findings of J. Zhao and Zhou (2024) provide valuable insights for practitioners conducting online A/B tests:

- Ensuring real-time covariate balance improves the reliability of causal estimates.

- The pigeonhole design offers a structured yet flexible approach to balancing covariates dynamically.

- By reducing variance in treatment effect estimation, it enhances statistical efficiency in large-scale online experiments.

This study contributes to the broader discussion of randomized experimental design in dynamic settings, providing a compelling alternative to traditional approaches.

22.6.2 Handling Zero-Valued Outcomes

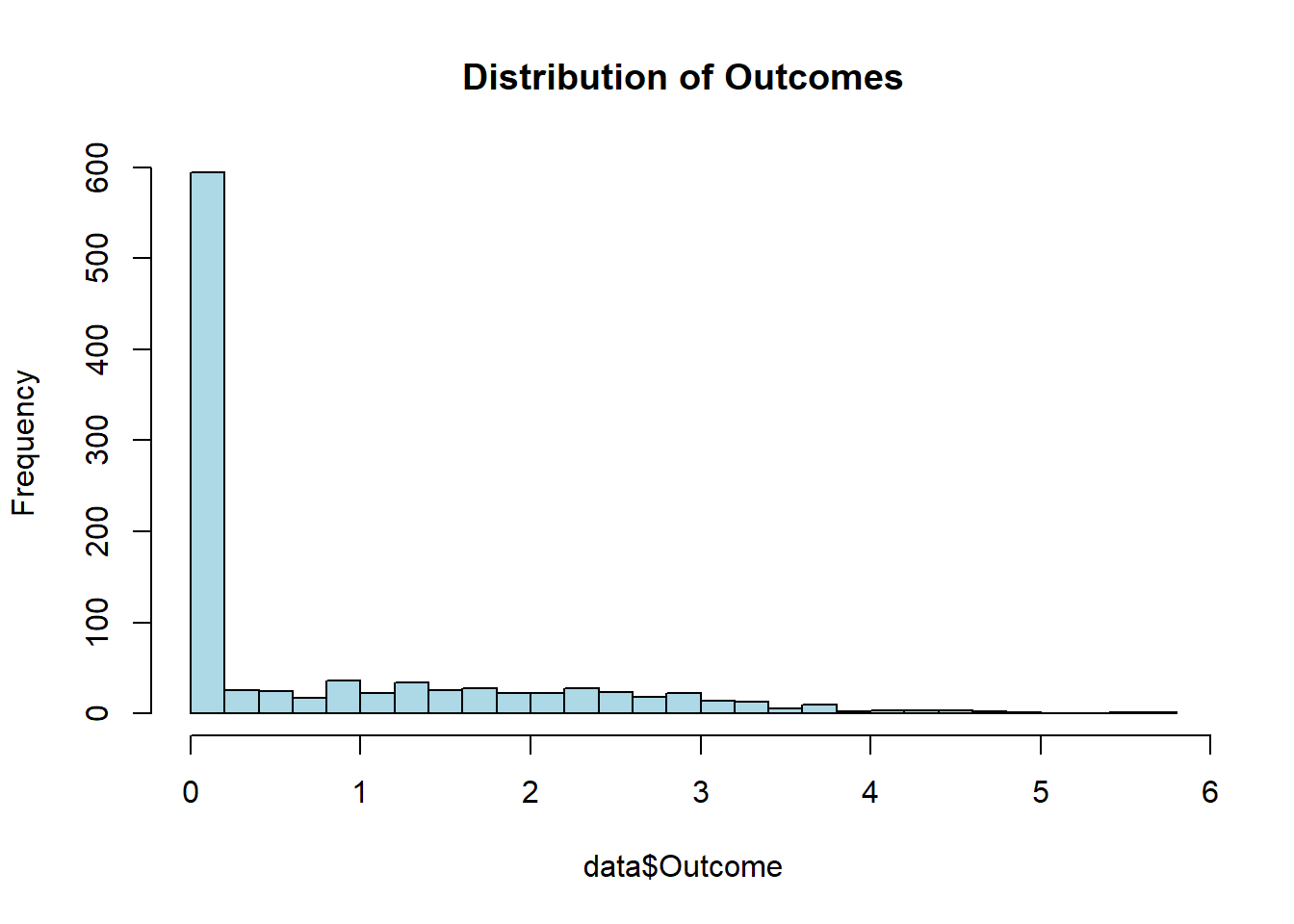

When analyzing treatment effects, a common issue arises when the outcome variable includes zero values.

For example, in business applications, many customers make no purchase at all, so sales are zero for a large share of observations whether or not they received a marketing intervention. Applying a log transformation to such an outcome is problematic because:

- \(\log(0)\) is undefined.

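- Common workarounds such as \(\log(1 + Y)\) or \(\text{arcsinh}(Y)\) produce estimates that depend on the units of the outcome (e.g., dollars vs. cents), which makes them hard to interpret (J. Chen and Roth 2024).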
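Both problems can be seen in a few lines of R. The numbers below are hypothetical toy data: rescaling the same outcomes from dollars to cents changes the estimated effect on \(\log(1 + Y)\), while the proportional change in means is unit-free.

```r
log(0)  # -Inf in R, so observations with Y = 0 cannot enter a log model

# Hypothetical outcomes with many zeros, measured in dollars
y_control <- c(0, 0, 0, 10, 20)
y_treated <- c(0, 0, 5, 15, 30)

# Difference in means of log(1 + Y) with the outcome in dollars ...
ate_dollars <- mean(log1p(y_treated)) - mean(log1p(y_control))
# ... and with the same outcome expressed in cents
ate_cents <- mean(log1p(100 * y_treated)) - mean(log1p(100 * y_control))
c(dollars = ate_dollars, cents = ate_cents)  # the two estimates differ

# The proportional change in means does not depend on units
ratio_dollars <- mean(y_treated) / mean(y_control) - 1
ratio_cents <- mean(100 * y_treated) / mean(100 * y_control) - 1
all.equal(ratio_dollars, ratio_cents)  # TRUE
```

The proportional change in means is exactly the quantity that Poisson QMLE targets.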

Instead of applying a log transformation, we can use methods that handle zero values directly:

- Percentage changes in the average: Using Poisson quasi-maximum likelihood estimation (QMLE), we can interpret the treatment coefficient in terms of the percentage change in the mean outcome of the treatment group relative to the control group.

- Extensive vs. intensive margins: This approach distinguishes between:

  - Extensive margin: the effect on the probability of moving from zero to a positive outcome (e.g., increasing the probability of making a sale).

  - Intensive margin: the effect on the size of the outcome given that it is positive (e.g., increasing the sales amount).

  To bound the intensive-margin effect, we can use Lee (2009) bounds, which assume that treatment affects the extensive margin monotonically (no one with a positive outcome when untreated has a zero outcome when treated).
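Both quantities can be computed with base R on simulated data. This sketch uses hypothetical toy data and base R's `glm()` with a `quasipoisson` family instead of `fixest::fepois()`, so it runs without extra packages; both fit the same Poisson pseudo-likelihood. With a single binary regressor, the Poisson QMLE percentage change equals the ratio of group means minus one.

```r
set.seed(2024)
n <- 2000
d <- rbinom(n, 1, 0.5)

# Hypothetical outcome: zero with high probability, otherwise positive;
# treatment raises both the chance of a positive value and its size
positive <- rbinom(n, 1, ifelse(d == 1, 0.6, 0.3))
y <- positive * rexp(n, rate = ifelse(d == 1, 1 / 3, 1 / 2))

# Percentage change in the mean via Poisson QMLE (quasipoisson avoids
# warnings for non-integer outcomes; point estimates match family = poisson)
fit <- glm(y ~ d, family = quasipoisson(link = "log"))
pct_change <- exp(coef(fit)[["d"]]) - 1

# With one binary regressor this equals the ratio of group means minus 1
ratio_of_means <- mean(y[d == 1]) / mean(y[d == 0]) - 1

# Extensive margin: difference in the share of positive outcomes
extensive <- mean(y[d == 1] > 0) - mean(y[d == 0] > 0)

c(pct_change = pct_change, ratio_of_means = ratio_of_means,
  extensive_margin = extensive)
```

Note that simply comparing mean outcomes among units with positive values would not give a causal intensive-margin effect, because treatment changes who has a positive outcome. This selection problem is what Lee (2009) bounds address.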

We first generate a dataset to simulate a scenario where an outcome variable (e.g., sales, website clicks) has many zeros, and treatment affects both the extensive and intensive margins.

```r
set.seed(123) # For reproducibility
library(tidyverse)

n <- 1000          # Number of observations
p_treatment <- 0.5 # Probability of being treated

# Step 1: Generate the treatment variable D
D <- rbinom(n, 1, p_treatment)

# Step 2: Generate potential outcomes
# Untreated potential outcome (mostly zeros)
Y0 <- rnorm(n, mean = 0, sd = 1) * (runif(n) < 0.3)

# Treated potential outcome (affecting both extensive and intensive margins)
Y1 <- Y0 + rnorm(n, mean = 2, sd = 1) * (runif(n) < 0.7)

# Step 3: Combine effects based on treatment assignment
Y_observed <- (1 - D) * Y0 + D * Y1

# Ensure non-negative outcomes (modeling real-world situations)
Y_observed[Y_observed < 0] <- 0

# Create a dataset with an additional control variable
data <- data.frame(
  ID = 1:n,
  Treatment = D,
  Outcome = Y_observed,
  X = rnorm(n) # Control variable
) |>
  dplyr::mutate(positive = Outcome > 0) # Indicator for extensive margin

# View first few rows
head(data)
#>   ID Treatment   Outcome          X positive
#> 1  1         0 0.0000000  1.4783345    FALSE
#> 2  2         1 2.2369379 -1.4067867     TRUE
#> 3  3         0 0.0000000 -1.8839721    FALSE
#> 4  4         1 3.2192276 -0.2773662     TRUE
#> 5  5         1 0.6649693  0.4304278     TRUE
#> 6  6         0 0.0000000 -0.1287867    FALSE
```

```r
# Plot distribution of outcomes
hist(
  data$Outcome,
  breaks = 30,
  main = "Distribution of Outcomes",
  col = "lightblue"
)
```

Figure 2.15: Distribution of Outcomes

22.6.2.1 Estimating Percentage Changes in the Average

Since we cannot use a log transformation, we estimate percentage changes using Poisson QMLE, which is robust to zero-valued outcomes.

```r
library(fixest)

# Poisson Quasi-Maximum Likelihood Estimation (QMLE)
res_pois <- fepois(
  fml = Outcome ~ Treatment + X,
  data = data,
  vcov = "hetero" # Heteroskedasticity-robust standard errors
)

# Display results
etable(res_pois)
#>                           res_pois
#> Dependent Var.:            Outcome
#>
#> Constant        -2.223*** (0.1440)
#> Treatment        2.579*** (0.1494)
#> X                  0.0235 (0.0406)
#> _______________ __________________
#> S.E. type       Heteroskedas.-rob.
#> Observations                 1,000
#> Squared Cor.               0.33857
#> Pseudo R2                  0.26145
#> BIC                        1,927.9
#> ---
#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```

To interpret the results:

The coefficient on `Treatment` is the difference in the log of the mean outcome between the treatment and control groups; it approximates a percentage change only when it is small. To compute the exact proportional effect, we exponentiate the coefficient and subtract one:

```r
# Compute the proportional effect of treatment: exp(beta) - 1
treatment_effect <- exp(coefficients(res_pois)["Treatment"]) - 1
treatment_effect
#> Treatment
#>  12.17757
```

Compute the standard error:

```r
# Delta-method standard error: SE(exp(beta) - 1) = exp(beta) * SE(beta)
se_treatment <- exp(coefficients(res_pois)["Treatment"]) *
  sqrt(res_pois$cov.scaled["Treatment", "Treatment"])
se_treatment
#> Treatment
#>  1.968684
```

Thus, the treatment increases the mean outcome by about 1218% (\(e^{2.579} - 1 \approx 12.18\)) relative to the control group, with a standard error of about 197 percentage points.

22.6.2.2 Estimating Extensive vs. Intensive Margins

We now estimate treatment effects separately for the extensive margin (probability of a nonzero outcome) and the intensive margin (magnitude of effect among those with a nonzero outcome).

First, we use Lee bounds to bound the intensive-margin effect for always-takers, that is, individuals whose outcome is positive whether or not they are treated.

```r
library(causalverse)

res <- causalverse::lee_bounds(
  df = data,
  d = "Treatment",
  m = "positive",
  y = "Outcome",
  numdraws = 10
) |>
  causalverse::nice_tab(2)

print(res)
#>          term estimate std.error
#> 1 Lower bound    -0.22      0.09
#> 2 Upper bound     2.77      0.14
```

Since the bounds run from -0.22 to 2.77 and therefore include zero, we cannot conclude that the treatment has a nonzero intensive-margin effect.
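For intuition about what these bounds measure, here is a minimal base-R sketch of Lee's (2009) trimming procedure. It is not the causalverse implementation: it assumes treatment weakly increases the share of positive outcomes, trims by rounding rather than by exact quantiles, reports no standard errors, and uses simulated toy data. The function `lee_bounds_sketch()` is hypothetical. Among treated units with a positive outcome, a fraction \(p = (q_1 - q_0)/q_1\) are compliers, where \(q_1\) and \(q_0\) are the shares of positive outcomes in the treated and control groups; dropping the largest (smallest) \(p\) share of these outcomes gives the lower (upper) bound.

```r
# Hypothetical sketch of Lee (2009) trimming bounds
lee_bounds_sketch <- function(y, d, s) {
  q1 <- mean(s[d == 1])            # share positive among treated
  q0 <- mean(s[d == 0])            # share positive among controls
  stopifnot(q1 >= q0)              # monotonicity: treatment weakly raises selection
  p <- (q1 - q0) / q1              # share of treated positives to trim
  y1 <- sort(y[d == 1 & s == 1])   # treated outcomes among positives
  k <- length(y1)
  stopifnot(k > 0)
  n_keep <- max(1, round((1 - p) * k))
  m0 <- mean(y[d == 0 & s == 1])   # always-takers' untreated mean
  c(
    lower = mean(y1[seq_len(n_keep)]) - m0,     # drop the largest values
    upper = mean(y1[(k - n_keep + 1):k]) - m0   # drop the smallest values
  )
}

# Simulated toy data: treatment raises both selection and outcome size
set.seed(7)
n <- 5000
d <- rbinom(n, 1, 0.5)
s <- rbinom(n, 1, ifelse(d == 1, 0.7, 0.3))  # positive-outcome indicator
y <- s * rexp(n, rate = 1 / ifelse(d == 1, 2.5, 2))
bounds <- lee_bounds_sketch(y, d, s)
bounds
```

When selection rates are equal across arms, no trimming is needed and the two bounds coincide.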

22.6.2.3 Sensitivity Analysis: Varying the Effect for Compliers

To further investigate the intensive-margin effect, we consider how sensitive our results are to different assumptions about compliers.

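Here, compliers are individuals whose outcome is positive only when treated. We assume that the expected treated outcome for compliers is \(100 \times c\%\) of that for always-takers:

\[ E[Y(1) \mid \text{Complier}] = c \, E[Y(1) \mid \text{Always-taker}] \]

Under this assumption, the intensive-margin effect for always-takers is point-identified, so for each value of \(c\) we obtain a point estimate rather than bounds: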

```r
set.seed(1)

c_values <- c(0.1, 0.5, 0.7)

# Use c_ratio rather than c to avoid shadowing base::c()
combined_res <- dplyr::bind_rows(lapply(c_values, function(c_ratio) {
  res <- causalverse::lee_bounds(
    df = data,
    d = "Treatment",
    m = "positive",
    y = "Outcome",
    numdraws = 10,
    c_at_ratio = c_ratio
  )
  res$c_value <- as.character(c_ratio)
  res
}))

combined_res |>
  dplyr::select(c_value, everything()) |>
  causalverse::nice_tab()
#>   c_value           term estimate std.error
#> 1     0.1 Point estimate     6.60      0.71
#> 2     0.5 Point estimate     2.54      0.13
#> 3     0.7 Point estimate     1.82      0.08
```

- If \(c = 0.1\), meaning compliers' mean treated outcome is 10% of always-takers', the intensive-margin effect for always-takers is 6.60 units.

- If \(c = 0.5\) (50%), the effect is 2.54 units.

- If \(c = 0.7\) (70%), the effect is 1.82 units.

These results highlight how assumptions about compliers affect conclusions about the intensive margin.
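The pattern in these estimates follows from a short derivation of our own, which may differ in detail from what `causalverse::lee_bounds()` computes. Assume compliers' mean treated outcome is \(c\) times that of always-takers. Under monotonicity, a share \(s = q_0 / q_1\) of treated units with positive outcomes are always-takers, so the observed treated mean among positives is \(m_1 = s\,\mu_{AT} + (1 - s)\,c\,\mu_{AT}\), which gives \(\mu_{AT} = m_1 / (s + (1 - s)c)\). Subtracting the control mean among positives, \(m_0\), yields the always-taker effect. The helper `at_effect_given_ratio()` and its inputs are illustrative values, not estimates from `data`.

```r
# Hypothetical helper: always-taker intensive-margin effect when compliers'
# mean treated outcome equals c_ratio times always-takers' mean
at_effect_given_ratio <- function(m1, m0, q1, q0, c_ratio) {
  share_at <- q0 / q1                                  # always-takers among treated positives
  mu_at <- m1 / (share_at + (1 - share_at) * c_ratio)  # solve the mixture for mu_AT
  mu_at - m0
}

# Illustrative (made-up) inputs: means among positives and shares positive
m1 <- 2.0; m0 <- 0.8; q1 <- 0.72; q0 <- 0.15
effects <- sapply(c(0.1, 0.5, 0.7, 1),
                  function(r) at_effect_given_ratio(m1, m0, q1, q0, r))
effects
```

With \(c = 1\), the estimate reduces to the naive difference \(m_1 - m_0\); smaller values of \(c\) attribute more of the treated mean to always-takers and so raise the implied effect, which matches the ordering of the point estimates above.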

When dealing with zero-valued outcomes, log transformations are not appropriate. Instead:

- Poisson QMLE provides a robust way to estimate percentage changes in the mean outcome.

- Extensive vs. intensive margins allow us to distinguish between:

  - the probability of a nonzero outcome (extensive margin), and

  - the magnitude of change among those with a nonzero outcome (intensive margin).

- Lee bounds provide a way to bound the intensive-margin effect, though results can be sensitive to assumptions about always-takers and compliers.