5.2 “Law of the unconscious statistician” (LOTUS)

A distribution is the complete picture of the long-run pattern of variability of a random variable. An expected value is just one particular characteristic of a distribution, namely, the long-run average value. We can often compute expected values without first finding the entire distribution.

Example 5.7 Flip a fair coin 3 times. Let \(X\) be the number of flips that result in H, and let \(Y=(X-1.5)^2\). (We will see later why we might be interested in such a transformation.)

- Find the distribution of \(Y\).

- Compute \(\textrm{E}(Y)\).

- How could we have computed \(\textrm{E}(Y)\) without first finding the distribution of \(Y\)?

- Is \(\textrm{E}((X-1.5)^2)\) equal to \((\textrm{E}(X)-1.5)^2\)?

Solution to Example 5.7


The possible values of \(X\) are 0, 1, 2, 3, so the possible values of \(Y\) are \((0 - 1.5)^2= 2.25\), \((1 - 1.5)^2= 0.25\), \((2 - 1.5)^2=0.25\), \((3 - 1.5)^2=2.25\). That is, the possible values of \(Y\) are 0.25 and 2.25, with \(\textrm{P}(Y = 0.25) = \textrm{P}(X = 1)+\textrm{P}(X = 2) = 6/8\) and \(\textrm{P}(Y = 2.25) = \textrm{P}(X = 0)+\textrm{P}(X = 3) = 2/8\). The following table provides the distribution of \(Y\).

| \(y\) | \(p_Y(y)\) |
|---|---|
| 0.25 | 6/8 |
| 2.25 | 2/8 |

\(\textrm{E}(Y) = 0.25(6/8) + 2.25(2/8) = 0.75\).

Since \(X\) takes the value 0 with probability 1/8, 1 with probability 3/8, 2 with probability 3/8, and 3 with probability 1/8, the random variable \((X - 1.5)^2\) takes value \((0 - 1.5)^2\) with probability 1/8, \((1 - 1.5)^2\) with probability 3/8, \((2 - 1.5)^2\) with probability 3/8, and \((3 - 1.5)^2\) with probability 1/8. Therefore \[ \textrm{E}((X-1.5)^2) = (0 - 1.5)^2(1/8)+ (1 - 1.5)^2(3/8) + (2 - 1.5)^2(3/8) + (3 - 1.5)^2(1/8) = 0.75. \] Finding the distribution of \(Y\) and then using it to compute the expected value of \(Y\) basically just groups some of the terms in this calculation together.

NO! \(\textrm{E}(X) = 1.5\), so \((\textrm{E}(X)-1.5)^2=0\), whereas \(\textrm{E}((X-1.5)^2) = 0.75\).
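As a quick check of Example 5.7, the following Python sketch uses exact fractions to compute \(\textrm{E}(Y)\) both ways: first collecting the \(x\) values that share the same \(g(x)\) to get the pmf of \(Y\) (the “long way”), then applying LOTUS directly to the pmf of \(X\). It also evaluates \((\textrm{E}(X)-1.5)^2\) for comparison. The names `p_X`, `p_Y`, and `g` are illustrative choices, not from any library.

```python
from collections import defaultdict
from fractions import Fraction

# pmf of X = number of heads in 3 fair coin flips
p_X = {0: Fraction(1, 8), 1: Fraction(3, 8), 2: Fraction(3, 8), 3: Fraction(1, 8)}

def g(x):
    """The transformation g(x) = (x - 1.5)^2."""
    return (x - Fraction(3, 2)) ** 2

# "Long way": build the pmf of Y = g(X) by collecting x values with the same g(x)
p_Y = defaultdict(Fraction)
for x, p in p_X.items():
    p_Y[g(x)] += p
E_Y_long = sum(y * p for y, p in p_Y.items())

# LOTUS: weight each g(x) by p_X(x); the pmf of Y is never needed
E_Y_lotus = sum(g(x) * p for x, p in p_X.items())

# Plugging E(X) into g gives a different (wrong) answer
E_X = sum(x * p for x, p in p_X.items())
g_of_E_X = g(E_X)

print(dict(p_Y))                       # {Fraction(9, 4): Fraction(1, 4), Fraction(1, 4): Fraction(3, 4)}
print(E_Y_long, E_Y_lotus, g_of_E_X)   # 3/4 3/4 0
```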

The calculation of \(\textrm{E}((X-1.5)^2)\) in part 3 of the previous example probably seemed pretty natural, and only required working with the distribution of \(X\) rather than first finding the distribution of a transformed random variable. It’s so natural, we could probably do it without thinking; this is the idea behind the following.

Definition 5.2 (Law of the unconscious statistician (LOTUS)) The “law of the unconscious statistician” (LOTUS) says that the expected value of a transformed random variable can be found without finding the distribution of the transformed random variable, simply by applying the probability weights of the original random variable to the transformed values.

\[\begin{align*} & \text{Discrete $X$ with pmf $p_X$:} & \textrm{E}[g(X)] & = \sum_x g(x) p_X(x)\\ & \text{Continuous $X$ with pdf $f_X$:} & \textrm{E}[g(X)] & =\int_{-\infty}^\infty g(x) f_X(x) dx \end{align*}\]
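The two formulas translate directly into code. The sketch below is one possible implementation, assuming a discrete pmf stored as a Python dictionary `{x: p_X(x)}` and, for the continuous case, a pdf that is 0 outside a finite interval \((a, b)\). The integral is approximated with the midpoint rule, a numerical method chosen here only for illustration; the function names `lotus_discrete` and `lotus_continuous` are hypothetical.

```python
def lotus_discrete(g, pmf):
    """E[g(X)] = sum over x of g(x) * p_X(x), where pmf is a dict {x: p_X(x)}."""
    return sum(g(x) * p for x, p in pmf.items())

def lotus_continuous(g, pdf, a, b, n=100_000):
    """Approximate E[g(X)] = integral of g(x) f_X(x) dx over (a, b) using the
    midpoint rule with n subintervals. Assumes f_X is 0 outside (a, b)."""
    h = (b - a) / n
    total = 0.0
    for i in range(n):
        x = a + (i + 0.5) * h   # midpoint of the i-th subinterval
        total += g(x) * pdf(x)
    return total * h

# Discrete test case: X = roll of a fair four-sided die, g(x) = x^2
die = {x: 0.25 for x in (1, 2, 3, 4)}
print(lotus_discrete(lambda x: x ** 2, die))   # 7.5

# Continuous test case: X ~ Uniform(0, 1) (pdf 1 on (0, 1)), g(x) = x^2; exact value 1/3
approx = lotus_continuous(lambda x: x ** 2, lambda x: 1.0, 0.0, 1.0)
print(approx)
```

In both functions the pmf or pdf of \(X\) is evaluated at \(x\), and only the values being averaged are transformed.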

The left-hand side of LOTUS, \(\textrm{E}[g(X)]\), represents finding the expected value the “long way”: define \(Y=g(X)\), find the distribution of \(Y\) (e.g., using the cdf method in Section 4.6), then use the definition of expected value to compute \(\textrm{E}(Y)\). LOTUS says we don’t have to first find the distribution of \(Y=g(X)\) to find \(\textrm{E}[g(X)]\); rather, we simply apply the transformation \(g\) to each possible value \(x\) of \(X\) and weight \(g(x)\) by the probability (pmf) or density (pdf) of \(X\) at \(x\).

From a simulation perspective, the left-hand side of LOTUS, \(\textrm{E}[g(X)]\), represents first constructing a spinner according to the distribution of \(Y=g(X)\) (e.g., using the cdf method in Section 4.6), then spinning it to simulate many \(Y\) values and averaging the simulated values. LOTUS says we don’t have to construct the \(Y\) spinner; we can simply spin the \(X\) spinner to simulate many \(X\) values, apply the transformation \(g\) to each simulated \(X\) value, and then average the transformed values.
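The two simulation strategies can be sketched in plain Python for the coin-flipping setting of Example 5.7, using only the standard `random` module (the seed, the number of repetitions `N`, and the variable names are arbitrary choices for illustration). The “\(Y\) spinner” requires already knowing the distribution of \(Y\); the “\(X\) spinner” only needs the distribution of \(X\).

```python
import random

rng = random.Random(2024)   # seeded so reruns give the same numbers
N = 100_000

def g(x):
    return (x - 1.5) ** 2

# X spinner: simulate X = number of heads in 3 fair coin flips,
# transform each simulated x, then average the transformed values (LOTUS)
x_sims = [sum(rng.random() < 0.5 for _ in range(3)) for _ in range(N)]
lotus_estimate = sum(g(x) for x in x_sims) / N

# Y spinner: simulate Y directly from its distribution,
# 0.25 with probability 6/8 and 2.25 with probability 2/8
y_sims = rng.choices([0.25, 2.25], weights=[6, 2], k=N)
direct_estimate = sum(y_sims) / N

print(lotus_estimate, direct_estimate)   # both should be close to E(Y) = 0.75
```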

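LOTUS is especially useful for continuous random variables. For a discrete \(X\), finding the distribution of \(g(X)\) mostly amounts to collecting terms, as in Example 5.7; for a continuous \(X\), it typically requires the cdf method of Section 4.6, followed by differentiation to obtain the pdf.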

Example 5.8 Let \(X\) be a random variable with a Uniform(-1, 1) distribution and let \(Y=X^2\). Recall that in Example 4.30 we found the pdf of \(Y\): \(f_Y(y) = \frac{1}{2\sqrt{y}}, 0<y<1\).

- Find \(\textrm{E}(X^2)\) using the distribution of \(Y\) and the definition of expected value. Remember: if we did not have the distribution of \(Y\), we would first have to derive it as in Example 4.30.

- Describe how to use simulation to approximate \(\textrm{E}(Y)\), in a way that is analogous to the method in the previous part.

- Find \(\textrm{E}(X^2)\) using LOTUS.

- Describe how to use simulation to approximate \(\textrm{E}(X^2)\), in a way that is analogous to the method in the previous part.

- Is \(\textrm{E}(X^2)\) equal to \((\textrm{E}(X))^2\)?

Solution to Example 5.8


- If we have the distribution of \(Y\), we compute the expected value according to the definition, weighting \(y\) values by the pdf of \(Y\), \(f_Y(y)\), and then integrating over possible \(y\) values. \[ \textrm{E}(X^2) = \textrm{E}(Y) = \int_{-\infty}^\infty y f_Y(y) dy = \int_0^1 y\left(\frac{1}{2\sqrt{y}}\right)dy = \int_0^1 \frac{\sqrt{y}}{2}\,dy = \frac{1}{2}\cdot\frac{2}{3} = 1/3 \]

- Construct a spinner corresponding to the distribution of \(Y\). Remember: this would involve first finding the cdf of \(Y\) as we did in Example 4.30, and then the corresponding quantile function to determine how to stretch/shrink the values on the spinner. Spin the \(Y\) spinner many times to simulate many values of \(Y\) and then average the simulated \(Y\) values to approximate \(\textrm{E}(Y)\).

- The pdf of \(X\) is \(f_X(x) = 1/2, -1<x<1\). Now we just work with the distribution of \(X\), but average the squared values \(x^2\), instead of the \(x\) values. Notice that the integral below is a \(dx\) integral. Using LOTUS \[ \textrm{E}(X^2) = \int_{-\infty}^\infty x^2 f_X(x) dx = \int_{-1}^1 x^2 (1/2)dx = 1/3 \] We hope you recognize that this calculation is much easier than first calculating the pdf as in Example 4.30.

- Spin the Uniform(-1, 1) spinner to simulate many values of \(X\). For each simulated value \(x\) compute \(x^2\), then average the \(x^2\) values. (We can construct a Uniform(-1, 1) spinner by a linear rescaling of the Uniform(0, 1) spinner: \(u\mapsto 2u-1\).)

- NO!!! \(\textrm{E}(X)= 0\), so \((\textrm{E}(X))^2 = 0\), but \(\textrm{E}(X^2)=1/3\).
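The four approaches in the solution can be compared numerically with a short standard-library Python sketch. The integrals are approximated with the midpoint rule (an illustrative choice of numerical method), and the \(Y\) spinner uses the quantile function \(u \mapsto u^2\), which follows because integrating \(f_Y(y) = \frac{1}{2\sqrt{y}}\) gives the cdf \(F_Y(y) = \sqrt{y}\) for \(0<y<1\). The helper name `midpoint_integral`, the seed, and `N` are hypothetical.

```python
import math
import random

def midpoint_integral(h, a, b, n=200_000):
    """Midpoint-rule approximation of the integral of h over (a, b)."""
    w = (b - a) / n
    return w * sum(h(a + (i + 0.5) * w) for i in range(n))

# Long way: E(Y) = integral of y f_Y(y) dy with f_Y(y) = 1 / (2 sqrt(y)) on (0, 1)
E_Y_long = midpoint_integral(lambda y: y / (2 * math.sqrt(y)), 0, 1)

# LOTUS: E(X^2) = integral of x^2 f_X(x) dx with f_X(x) = 1/2 on (-1, 1)
E_X2_lotus = midpoint_integral(lambda x: x ** 2 * 0.5, -1, 1)

rng = random.Random(1)
N = 100_000
# Y spinner: F_Y(y) = sqrt(y), so the quantile function is u -> u^2
y_sims = [rng.random() ** 2 for _ in range(N)]
# X spinner: Uniform(-1, 1) via u -> 2u - 1, then square each simulated x
x_sims = [2 * rng.random() - 1 for _ in range(N)]
sq_sims = [x ** 2 for x in x_sims]

print(E_Y_long, E_X2_lotus)            # both close to 1/3
print(sum(y_sims) / N, sum(sq_sims) / N)   # both close to 1/3
print(sum(x_sims) / N)                 # close to E(X) = 0, so (E(X))^2 is near 0
```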

Recall Section 2.9. Whether in the short run or the long run, in general \[\begin{align*} \text{Average of $g(X)$} & \neq g(\text{Average of $X$}) \end{align*}\]

In terms of expected values, in general \[\begin{align*} \textrm{E}(g(X)) & \neq g(\textrm{E}(X)) \end{align*}\] The left side \(\textrm{E}(g(X))\) represents first transforming the \(X\) values and then averaging the transformed values. The right side \(g(\textrm{E}(X))\) represents first averaging the \(X\) values and then plugging the average (a single number) into the transformation formula.
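The difference between the two orders of operations already shows up in a short run. The sketch below uses a hypothetical short run of eight simulated values of \(X\) from Example 5.7 (the numbers are made up for illustration) with \(g(x) = (x-1.5)^2\).

```python
from statistics import mean

def g(x):
    return (x - 1.5) ** 2

# A hypothetical short run of simulated X values (number of heads in 3 flips)
xs = [1, 3, 2, 2, 0, 1, 2, 1]

avg_of_g = mean(g(x) for x in xs)   # transform each value first, then average
g_of_avg = g(mean(xs))              # average first, then transform the single number

print(avg_of_g, g_of_avg)           # 0.75 0.0
```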

X = RV(Uniform(-1, 1))

Y = X ** 2

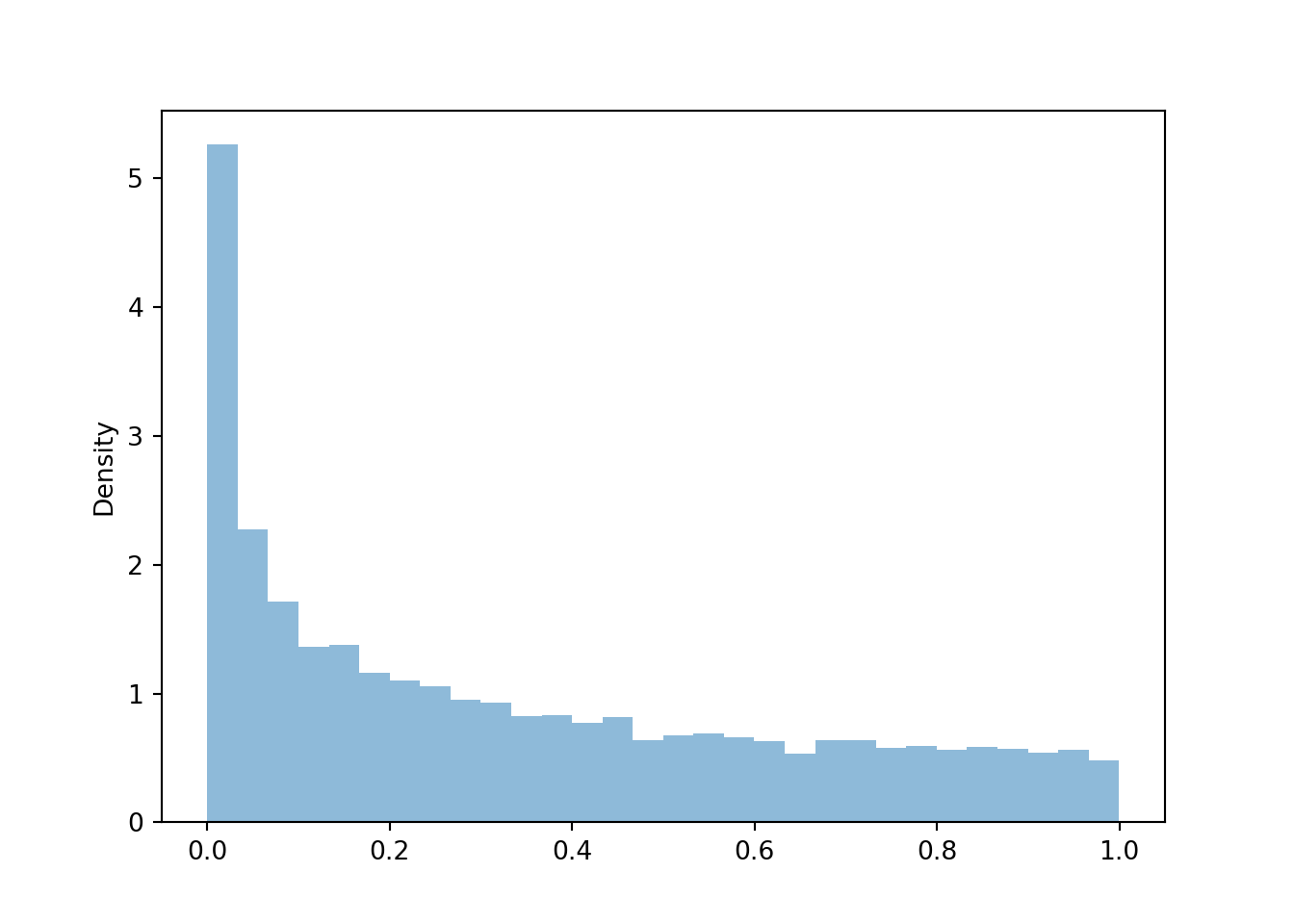

y = Y.sim(10000)

y.mean()## 0.3387249830110265y.plot()

Example 5.9 We want to find \(\textrm{E}(X^2)\) if \(X\) has an Exponential(1) distribution. Donny Dont says: “I can just use LOTUS and replace \(x\) with \(x^2\), so \(\textrm{E}(X^2)\) is \(\int_{-\infty}^{\infty} x^2 e^{-x^2} dx\)”. Do you agree?

Solution to Example 5.9


No. In LOTUS, \(x^2\) is multiplied by the density of \(X\) evaluated at \(x\), \(f_X(x)=e^{-x}\), not at \(x^2\). Think of the discrete random variable in Example 5.7. There we had \((x-1.5)^2 p_X(x)\), e.g., \((3-1.5)^2(1/8)\) because \(p_X(3)= 1/8\). If we had squared the \(x\) values inside the pmf, we would have \(p_X(3^2)\), which is 0. Also, the Exponential(1) pdf is 0 for \(x<0\), so the integral only runs over \(x>0\). The correct use of LOTUS is

\[ \textrm{E}(X^2) = \int_0^\infty x^2 e^{-x} dx = 2 \]

The whole point of LOTUS is that you can work with the distribution of the original random variable \(X\). Keep the pdf of \(X\), \(f_X(x)\), as is, and only transform the values being averaged. Keeping the pdf \(f_X(x)\) unchanged is like sticking with the \(X\) spinner rather than constructing a \(Y\) spinner. The \(X\) spinner itself doesn’t change; rather, you transform the values that it generates before averaging.
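A numerical sketch of Example 5.9 contrasts the correct LOTUS integral with Donny’s integral and with a simulation that spins the Exponential(1) spinner and squares the results. Assumptions made for illustration: the integrals use the midpoint rule, and the infinite limits are truncated at \(\pm 50\), where the integrands are negligible; `midpoint_integral`, the seed, and `N` are hypothetical names and settings.

```python
import math
import random

def midpoint_integral(h, a, b, n=200_000):
    """Midpoint-rule approximation of the integral of h over (a, b)."""
    w = (b - a) / n
    return w * sum(h(a + (i + 0.5) * w) for i in range(n))

# Correct LOTUS: x^2 weighted by f_X(x) = e^{-x} on (0, infinity), truncated at 50
correct = midpoint_integral(lambda x: x ** 2 * math.exp(-x), 0, 50)

# Donny's integral: the density evaluated at x^2 instead of at x
donny = midpoint_integral(lambda x: x ** 2 * math.exp(-x ** 2), -50, 50)

# Simulation: spin the Exponential(1) spinner, square each value, then average
rng = random.Random(3)
N = 200_000
sim = sum(rng.expovariate(1.0) ** 2 for _ in range(N)) / N

print(correct, donny, sim)   # about 2, about 0.886 (= sqrt(pi)/2), about 2
```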

Example 5.10 Roll a fair four-sided die twice. Let \(X\) be the sum of the two rolls, and let \(W\) be the number of rolls that are equal to 4.

- Find the joint distribution of \(X\) and \(W\).

- Let \(Z = XW\) be the product of \(X\) and \(W\). Find the distribution of \(Z\).

- Find \(\textrm{E}(Z)\).

- How could you find \(\textrm{E}(XW)\) without first finding the distribution of \(Z = XW\)?

- Is \(\textrm{E}(XW)\) equal to the product of \(\textrm{E}(X)\) and \(\textrm{E}(W)\)?

Solution to Example 5.10


The table displays the joint pmf \(p_{X, W}(x, w)\).

| \(x\) \ \(w\) | 0 | 1 | 2 | \(p_X(x)\) |
|---|---|---|---|---|
| 2 | 1/16 | 0 | 0 | 1/16 |
| 3 | 2/16 | 0 | 0 | 2/16 |
| 4 | 3/16 | 0 | 0 | 3/16 |
| 5 | 2/16 | 2/16 | 0 | 4/16 |
| 6 | 1/16 | 2/16 | 0 | 3/16 |
| 7 | 0 | 2/16 | 0 | 2/16 |
| 8 | 0 | 0 | 1/16 | 1/16 |
| \(p_{W}(w)\) | 9/16 | 6/16 | 1/16 | |

For each \((x, w)\) pair, find the product \(xw\), and then collect like values to compute probabilities. For example, since \(X>0\), \(\textrm{P}(Z = 0) = \textrm{P}(XW = 0) = \textrm{P}(W=0)=9/16\). The table displays the pmf of \(Z\).

| \(z\) | \(p_Z(z)\) |
|---|---|
| 0 | 9/16 |
| 5 | 2/16 |
| 6 | 2/16 |
| 7 | 2/16 |
| 16 | 1/16 |

Since we have the distribution of \(Z\), we can just use the definition of expected value. \[ \textrm{E}(Z) = 0(9/16) + 5(2/16)+6(2/16) + 7(2/16) + 16(1/16) = 3.25 \] So \(\textrm{E}(XW) = 3.25\).

We can use the joint pmf of \(X\) and \(W\): find the product \(xw\) for each possible pair, multiply by the probability of that pair, and sum. \[\begin{align*} \textrm{E}(XW) & = (2)(0)(1/16) + (3)(0)(2/16) + (4)(0)(3/16) + (5)(0)(2/16) + (6)(0)(1/16) \\ & \quad + (5)(1)(2/16)+(6)(1)(2/16) + (7)(1)(2/16) + (8)(2)(1/16)\\ & = 3.25 \end{align*}\] Finding the distribution of \(Z\) and then using it to compute the expected value of \(Z\) basically just groups some of the terms in this calculation together.

No! \(\textrm{E}(X)= 5\) and \(\textrm{E}(W) = 0.5\), so \(\textrm{E}(X)\textrm{E}(W) = 2.5\), while \(\textrm{E}(XW) = 3.25\). The expected value of the product is not equal to the product of the expected values.
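Example 5.10 is small enough to check by brute force. The sketch below enumerates the 16 equally likely outcomes of two rolls of a fair four-sided die with exact fractions, builds the joint pmf of \(X\) and \(W\), and then computes \(\textrm{E}(XW)\) both from the pmf of \(Z = XW\) and by LOTUS over the joint pmf. Variable names such as `joint` and `p_Z` are illustrative only.

```python
from collections import defaultdict
from fractions import Fraction
from itertools import product

p = Fraction(1, 16)   # each (roll1, roll2) pair is equally likely

joint = defaultdict(Fraction)   # joint pmf p_{X,W}(x, w)
for r1, r2 in product(range(1, 5), repeat=2):
    x = r1 + r2                  # sum of the two rolls
    w = (r1 == 4) + (r2 == 4)    # number of rolls equal to 4
    joint[(x, w)] += p

# Long way: pmf of Z = XW, then the definition of expected value
p_Z = defaultdict(Fraction)
for (x, w), prob in joint.items():
    p_Z[x * w] += prob
E_Z = sum(z * prob for z, prob in p_Z.items())

# LOTUS for two random variables: sum of x * w * p_{X,W}(x, w)
E_XW = sum(x * w * prob for (x, w), prob in joint.items())

E_X = sum(x * prob for (x, w), prob in joint.items())
E_W = sum(w * prob for (x, w), prob in joint.items())

print(E_Z, E_XW, E_X * E_W)   # 13/4 13/4 5/2
```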

Recall Section 2.9. Whether in the short run or the long run, in general \[\begin{align*} \text{Average of $g(X, Y)$} & \neq g(\text{Average of $X$}, \text{Average of $Y$}) \end{align*}\]

In terms of expected values, in general

\[\begin{align*} \textrm{E}\left(g(X, Y)\right) & \neq g\left(\textrm{E}(X), \textrm{E}(Y)\right) \end{align*}\]

There is also LOTUS for two random variables. \[\begin{align*} & \text{Discrete $X, Y$ with joint pmf $p_{X, Y}$:} & \textrm{E}[g(X, Y)] & = \sum_{x}\sum_{y} g(x, y) p_{X, Y}(x, y)\\ & \text{Continuous $X, Y$ with joint pdf $f_{X, Y}$:} & \textrm{E}[g(X, Y)] & = \int_{-\infty}^\infty\int_{-\infty}^\infty g(x, y) f_{X, Y}(x, y)\,dx\,dy \end{align*}\]
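To see the continuous two-variable formula at work, here is a sketch under an assumed setup not taken from the text: \(X\) and \(Y\) are independent Uniform(0, 1), so the joint pdf is 1 on the unit square, and \(g(x, y) = |x - y|\), for which \(\textrm{E}|X - Y| = 1/3\). The double integral is approximated on a midpoint grid (an illustrative numerical choice), and a simulation applies \(g\) to simulated \((X, Y)\) pairs. The helper `lotus_2d_midpoint` is hypothetical.

```python
import random

def lotus_2d_midpoint(g, joint_pdf, n=400):
    """Approximate E[g(X, Y)] as a double integral over the unit square using an
    n-by-n midpoint grid. Assumes joint_pdf is 0 outside (0, 1) x (0, 1)."""
    h = 1.0 / n
    mids = [(i + 0.5) * h for i in range(n)]
    total = 0.0
    for x in mids:
        for y in mids:
            total += g(x, y) * joint_pdf(x, y)
    return total * h * h

def g(x, y):
    return abs(x - y)

def joint_pdf(x, y):
    return 1.0   # assumed: independent Uniform(0, 1) X and Y

grid_estimate = lotus_2d_midpoint(g, joint_pdf)

# Simulation version: simulate (X, Y) pairs, apply g to each pair, then average
rng = random.Random(7)
N = 100_000
sim_estimate = sum(g(rng.random(), rng.random()) for _ in range(N)) / N

g_of_means = g(0.5, 0.5)   # g(E(X), E(Y)) = 0, far from E[g(X, Y)] = 1/3

print(grid_estimate, sim_estimate, g_of_means)   # about 1/3, about 1/3, 0.0
```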

LOTUS for two continuous random variables requires double integration. However, we will later see other tools for computing expected values, and using such tools it is often possible to avoid double integration.

Of course, LOTUS only gives a shortcut to computing expected values of transformations. Remember that expected values are only summary characteristics of a distribution. If we want more information about a distribution, e.g., its pdf or cdf, then LOTUS will not be enough.