9.8 Resources

This section provides some pointers to resources on character data and text processing in R.

9.8.1 Character strings

Read Chapter 14: Strings of the r4ds textbook (Wickham & Grolemund, 2017) and corresponding examples and exercises.

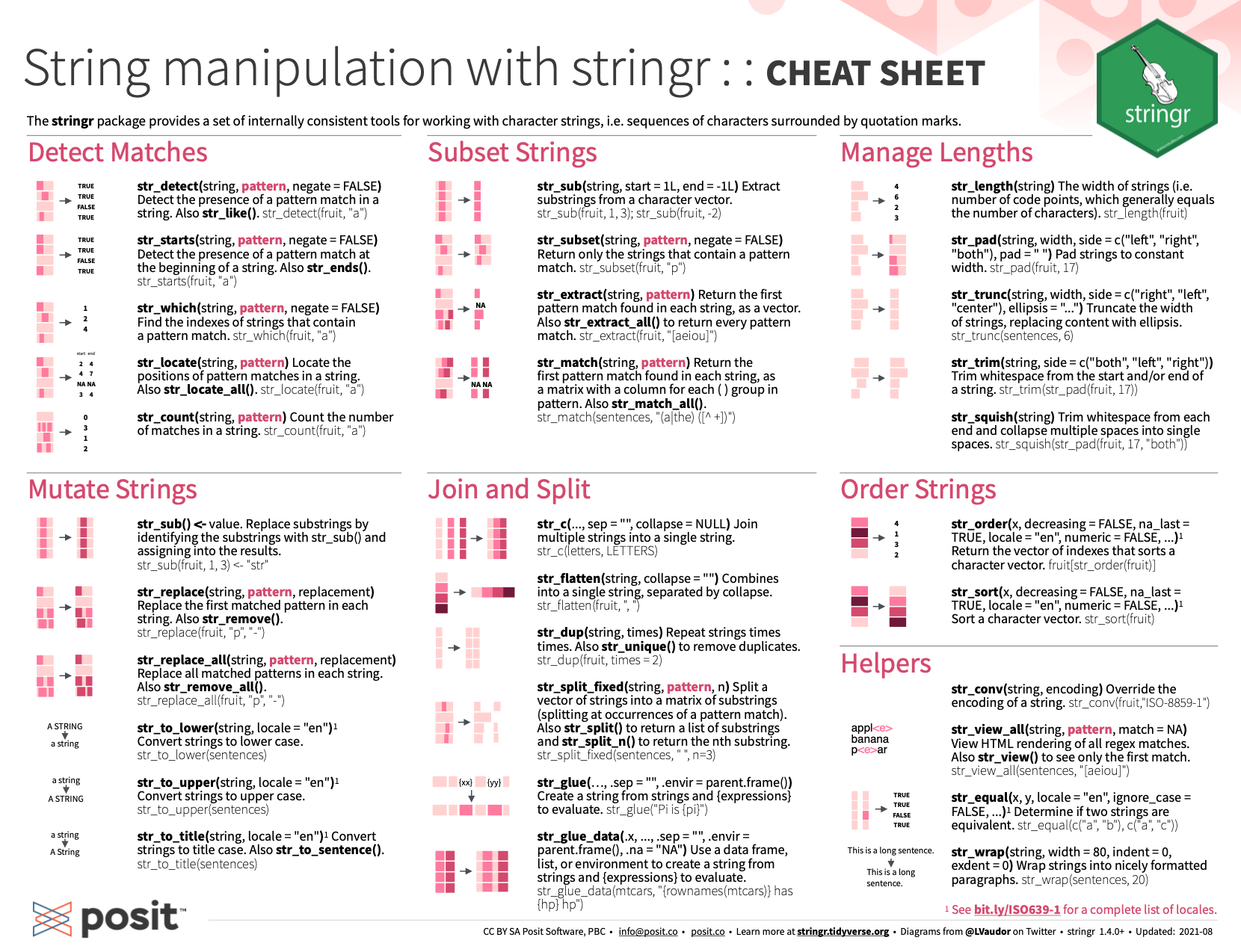

Figure 9.8: Text and string manipulation with stringr and regular expressions from RStudio cheatsheets.

9.8.2 Unicode characters and encodings

An inquisitive mind can easily spend a few weeks on Unicode characters and encodings:

For Unicode references, see

The lists of Unicode characters at unicode.org and Wikipedia

For additional details on character encodings, see the article What every programmer absolutely, positively needs to know about encodings and character sets to work with text at Kunststube.net.

Text rendering hates you (by Alexis Beingessner, 2019-09-28) provides a humorous perspective on the difficulties of rendering character symbols.

For details on German Umlaut letters (aka. diaeresis/diacritic), see Wikipedia: Diaeresis_diacritic and Wikipedia: Germanic Umlaut.

9.8.3 Regular expressions

Regular expressions are yet another topic to waste more time on than anyone must know:

Wikipedia: Regular_expression provides background information.

Regular-expressions.info provides regex information on many platforms, including R.

See the cheatsheet on Basic Regular Expressions in R (contributed by Ian Kopacka):

.](images/cheat/regex.png)

Figure 9.9: Basic regular expressions in R (by Ian Kopacka)

available at RStudio cheatsheets.

See Section E.4 of Appendix E for additional resources on regular expressions.

9.8.4 Books on text data

The topic of handling text data in R is big enough to receive book-length treatments:

The book Handling Strings with R (by Gaston Sanchez) provides a comprehensive overview of string manipulation in R.

The book Text Mining with R (by Julia Silge and David Robinson) provides a guide to text analysis within a tidy data framework. It uses the tidytext package to format text into tables with one token of text per row and manipulate them to perform advanced tasks.

Not a book on processing text, but a wonderful source of public-domain texts to process is https://www.gutenberg.org. The R package gutenbergr (Robinson, 2020) allows to search, download, and process books from this collection.

9.8.5 Natural language processing

Venturing from manipulating character symbols to the challenges of organizing text corpora and analyzing its semantics and pragmatics involves many additional techniques and transformation steps.

The CRAN Task View: Natural Language Processing provides an overview of many related R packages.

Here are some pointers to R packages that may be helpful along the way:

The lsa package provides routines for performing latent semantic analysis (LSA, see Wikipedia) in R.

The quanteda package provides a framework for quantitative text analysis in R.

The tokenizers package provides a consistent interface for converting natural language text into tokens (e.g., paragraphs, sentences, words).