10.4 Probing the interaction

10.4.1 The pick-a-point approach.

10.4.1.1 Omnibus inference.

Hayes used the omnibus testing framework to assess how important coefficients \(b_{1}\) and \(b_{2}\) were to our interaction model, model1. Before fitting the models, he discussed why he preferred to fit models after centering sexism (i.e., \(W\)) to 4.25. Here we’ll call our centered variable sexism_p, where _p stands in for “prime”.

protest <-

protest %>%

mutate(sexism_p = sexism - 4.25)From here on, model3 is the moderation model without the lower-order D1 and D2 terms; model4 is the full moderation model.

# The model without D1 + D2

model3 <-

update(model2,

newdata = protest,

liking ~ 1 + sexism_p + D1:sexism_p + D2:sexism_p,

chains = 4, cores = 4)

# The full model with D1 + D2

model4 <-

update(model2,

newdata = protest,

liking ~ 1 + D1 + D2 + sexism_p + D1:sexism_p + D2:sexism_p,

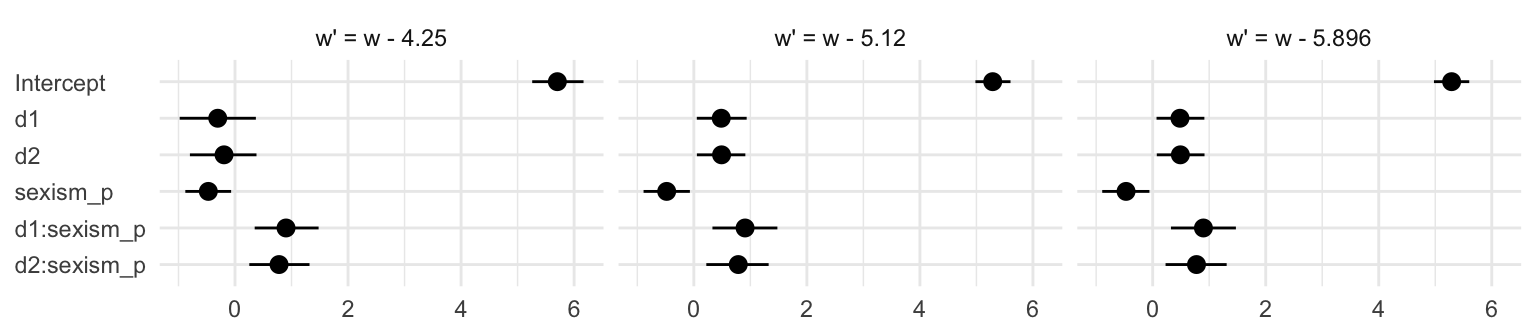

chains = 4, cores = 4)The coefficient summaries for model4 correspond to the top section of Table 10.3 (p. 373).

fixef(model4) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## Intercept 5.688 0.225 5.238 6.124

## D1 -0.285 0.337 -0.936 0.399

## D2 -0.170 0.306 -0.744 0.445

## sexism_p -0.464 0.204 -0.846 -0.062

## D1:sexism_p 0.894 0.286 0.320 1.445

## D2:sexism_p 0.767 0.276 0.222 1.305We can compare their Bayesian \(R^2\) distributions like we usually do.

r2s <-

bayes_R2(model3, summary = F) %>%

as_tibble() %>%

rename(`Model without D1 + D2` = R2) %>%

bind_cols(

bayes_R2(model4, summary = F) %>%

as_tibble() %>%

rename(`Model with D1 + D2` = R2)

) %>%

mutate(`The R2 difference` = `Model with D1 + D2` - `Model without D1 + D2`)

r2s %>%

gather() %>%

mutate(key = factor(key, levels = c("Model without D1 + D2", "Model with D1 + D2", "The R2 difference"))) %>%

group_by(key) %>%

summarize(median = median(value),

ll = quantile(value, probs = .025),

ul = quantile(value, probs = .975)) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 3 x 4

## key median ll ul

## <fct> <dbl> <dbl> <dbl>

## 1 Model without D1 + D2 0.139 0.05 0.245

## 2 Model with D1 + D2 0.155 0.066 0.254

## 3 The R2 difference 0.016 -0.118 0.151Our results differ a bit from those in the text, but the substantive interpretation is the same. The D1 and D2 parameters added little predictive power to the model in terms of \(R^2\). We can also use information criteria to compare the models. Here are the results from using the LOO-CV.

loo(model3, model4,

reloo = T)## LOOIC SE

## model3 371.97 22.10

## model4 374.77 21.75

## model3 - model4 -2.81 1.57[When I ran the loo() without the reloo argument, I got a warning message about an observation with an overly-large pareto \(k\) value. Setting reloo = T fixed the problem.]

The LOO-CV difference between the two models was pretty small and its standard error was of about the same magnitude of its difference. Thus, the LOO-CV gives the same general message as the \(R^2\). The D1 and D2 parameters were sufficiently small and uncertain enough that constraining them to zero did little in terms of reducing the explanatory power of the statistical model.

Here’s the same thing all over again, but this time after centering sexism on 5.120.

protest <-

protest %>%

mutate(sexism_p = sexism - 5.120)

# The model without D1 + D2

model3 <-

update(model2,

newdata = protest,

liking ~ 1 + sexism_p + D1:sexism_p + D2:sexism_p,

chains = 4, cores = 4)

# The full model with D1 + D2

model4 <-

update(model2,

newdata = protest,

liking ~ 1 + D1 + D2 + sexism_p + D1:sexism_p + D2:sexism_p,

chains = 4, cores = 4)These coefficient summaries correspond to the middle section of Table 10.3 (p. 373).

fixef(model4) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## Intercept 5.288 0.159 4.971 5.600

## D1 0.483 0.226 0.041 0.914

## D2 0.491 0.220 0.049 0.914

## sexism_p -0.467 0.210 -0.871 -0.050

## D1:sexism_p 0.898 0.293 0.323 1.482

## D2:sexism_p 0.777 0.281 0.205 1.334Here are the Bayesian \(R^2\) summaries and the summary for their difference.

r2s <-

bayes_R2(model3, summary = F) %>%

as_tibble() %>%

rename(`Model without D1 + D2` = R2) %>%

bind_cols(

bayes_R2(model4, summary = F) %>%

as_tibble() %>%

rename(`Model with D1 + D2` = R2)

) %>%

mutate(`The R2 difference` = `Model with D1 + D2` - `Model without D1 + D2`)

r2s %>%

gather() %>%

mutate(key = factor(key, levels = c("Model without D1 + D2", "Model with D1 + D2", "The R2 difference"))) %>%

group_by(key) %>%

summarize(median = median(value),

ll = quantile(value, probs = .025),

ul = quantile(value, probs = .975)) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 3 x 4

## key median ll ul

## <fct> <dbl> <dbl> <dbl>

## 1 Model without D1 + D2 0.098 0.027 0.191

## 2 Model with D1 + D2 0.155 0.063 0.259

## 3 The R2 difference 0.055 -0.072 0.185And the LOO-CV:

loo(model3, model4)## LOOIC SE

## model3 377.34 23.81

## model4 375.02 21.83

## model3 - model4 2.32 6.02Here again our Bayesian \(R^2\) and loo() results cohere, both suggesting the D1 and D2 parameters were of little predictive utility. Note how this differs a little from the second \(F\)-test on page 370.

Here’s what happens when we center sexism on 5.896.

protest <-

protest %>%

mutate(sexism_p = sexism - 5.896)

# The model without D1 + D2

model3 <-

update(model2,

newdata = protest,

liking ~ 1 + sexism_p + D1:sexism_p + D2:sexism_p,

chains = 4, cores = 4)

# The full model with D1 + D2

model4 <-

update(model2,

newdata = protest,

liking ~ 1 + D1 + D2 + sexism_p + D1:sexism_p + D2:sexism_p,

chains = 4, cores = 4)These coefficient summaries correspond to the lower section of Table 10.3 (p. 373).

fixef(model4) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## Intercept 4.915 0.235 4.449 5.360

## D1 1.190 0.317 0.572 1.828

## D2 1.104 0.325 0.467 1.741

## sexism_p -0.476 0.213 -0.889 -0.068

## D1:sexism_p 0.902 0.300 0.298 1.484

## D2:sexism_p 0.783 0.284 0.249 1.346Again, the \(R^2\) distributions and their difference-score distribution.

r2s <-

bayes_R2(model3, summary = F) %>%

as_tibble() %>%

rename(`Model without D1 + D2` = R2) %>%

bind_cols(

bayes_R2(model4, summary = F) %>%

as_tibble() %>%

rename(`Model with D1 + D2` = R2)

) %>%

mutate(`The R2 difference` = `Model with D1 + D2` - `Model without D1 + D2`)

r2s %>%

gather() %>%

mutate(key = factor(key, levels = c("Model without D1 + D2", "Model with D1 + D2", "The R2 difference"))) %>%

group_by(key) %>%

summarize(median = median(value),

ll = quantile(value, probs = .025),

ul = quantile(value, probs = .975)) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 3 x 4

## key median ll ul

## <fct> <dbl> <dbl> <dbl>

## 1 Model without D1 + D2 0.028 0.003 0.092

## 2 Model with D1 + D2 0.155 0.062 0.265

## 3 The R2 difference 0.123 0.018 0.237loo(model3, model4)## LOOIC SE

## model3 387.78 26.72

## model4 375.15 21.86

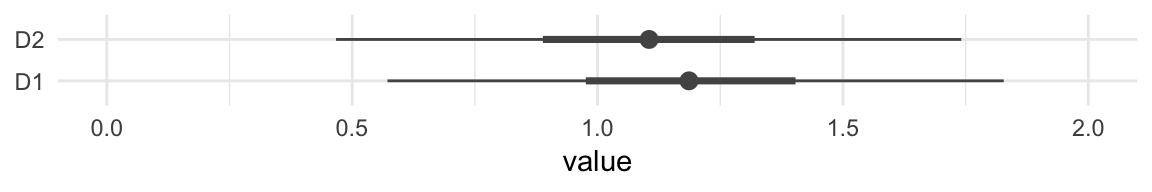

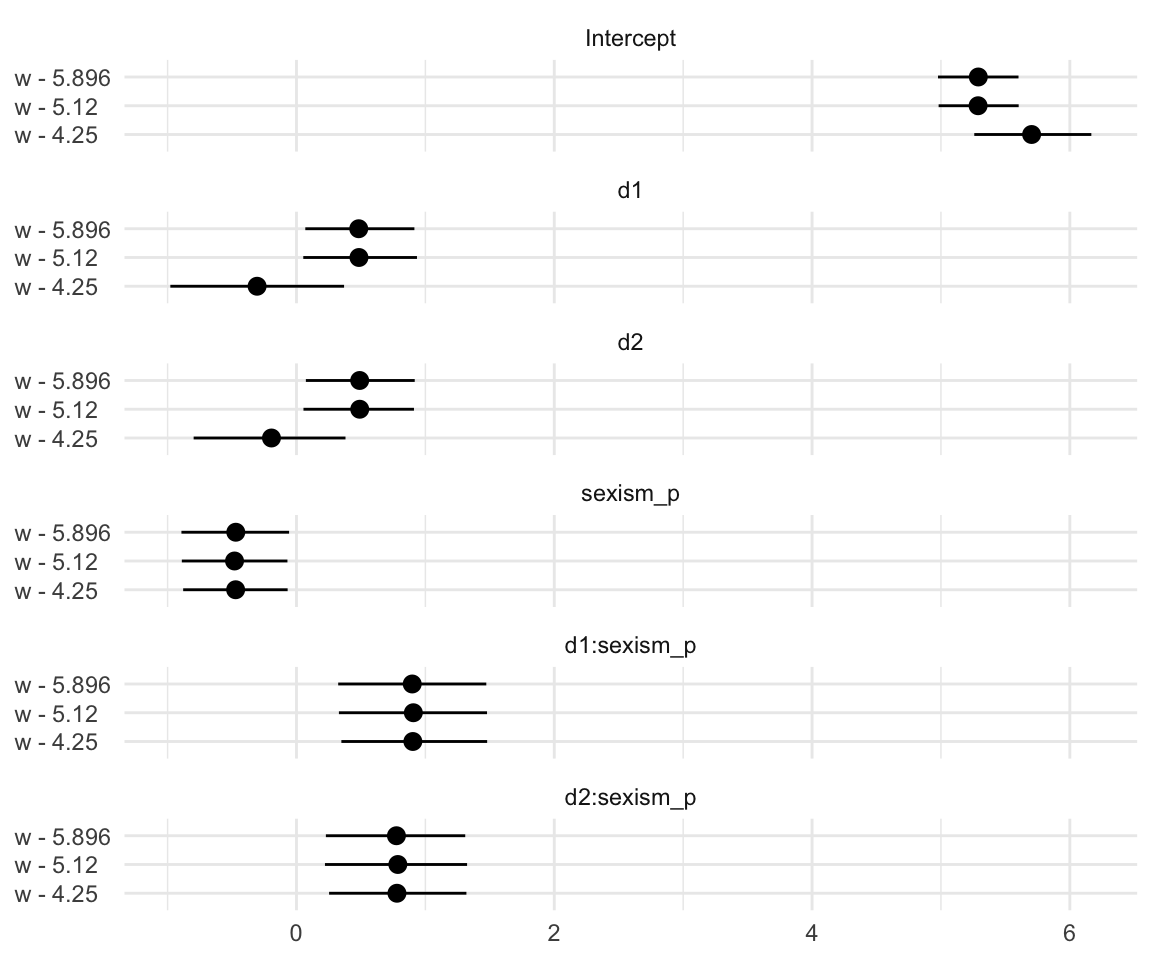

## model3 - model4 12.64 11.12Although our Bayesian \(R^2\) difference is now predominantly positive, the LOO-CV difference for the two models remains uncertain. Here’s a look at the two parameters in question using a handmade coefficient plot.

posterior_samples(model4) %>%

select(b_D1:b_D2) %>%

gather() %>%

mutate(key = str_remove(key, "b_")) %>%

ggplot(aes(key, y = value)) +

stat_summary(fun.y = median,

fun.ymin = function(i){quantile(i, probs = .025)},

fun.ymax = function(i){quantile(i, probs = .975)},

color = "grey33") +

stat_summary(geom = "linerange",

fun.ymin = function(i){quantile(i, probs = .25)},

fun.ymax = function(i){quantile(i, probs = .75)},

color = "grey33",

size = 1.25) +

xlab(NULL) +

coord_flip(ylim = 0:2) +

theme_minimal()

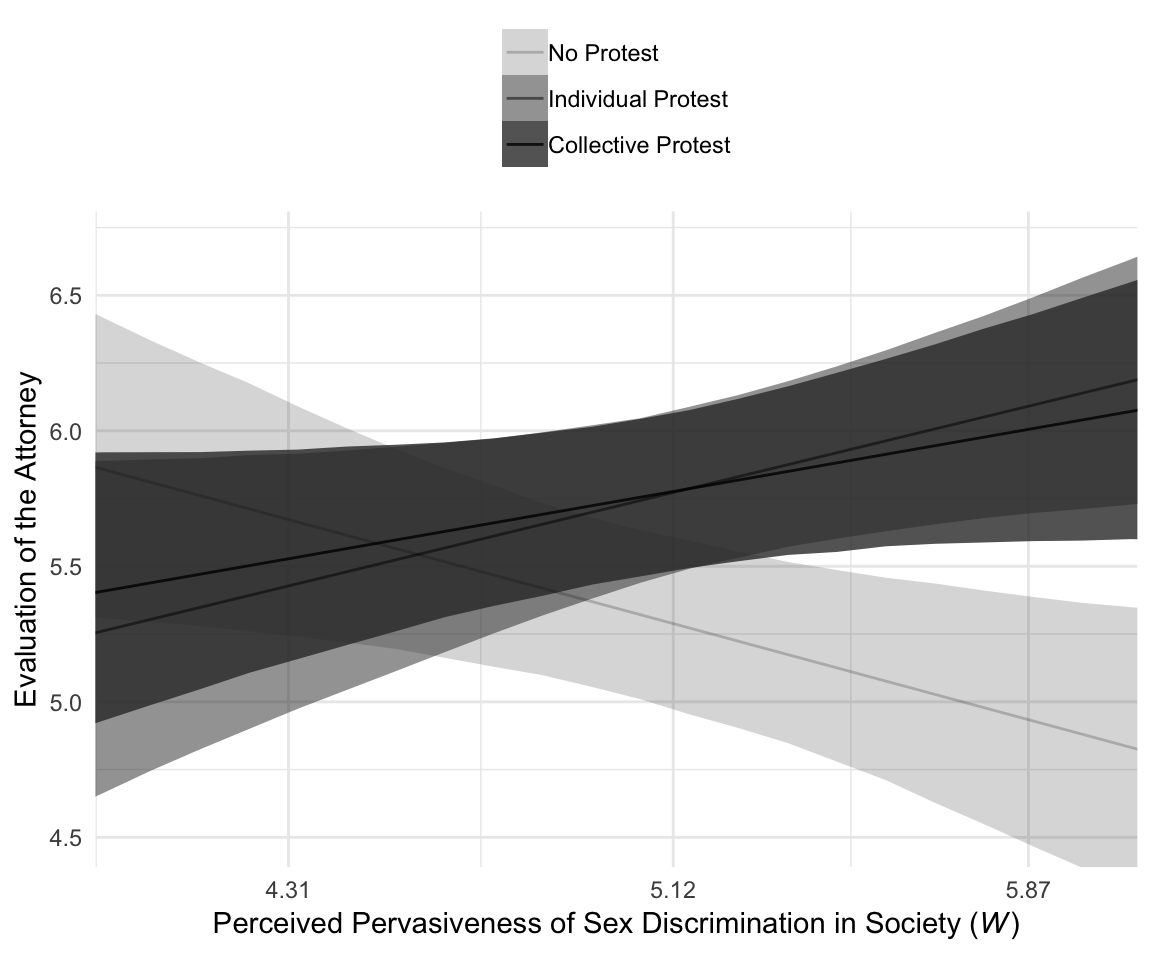

For Figure 10.4, we’ll drop our faceting approach and just make one big plot. Heads up: I’m going to drop the 50% intervals from this plot. They’d just make it too busy.

model2_fitted %>%

ggplot(aes(x = sexism, alpha = condition)) +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5),

size = 0) +

geom_line(aes(y = Estimate)) +

scale_alpha_manual(values = c(.2, .5, .8)) +

scale_x_continuous(breaks = breaks$values,

labels = breaks$labels) +

coord_cartesian(xlim = 4:6,

ylim = c(4.5, 6.7)) +

labs(x = expression(paste("Perceived Pervasiveness of Sex Discrimination in Society (", italic(W), ")")),

y = "Evaluation of the Attorney") +

theme_minimal() +

theme(legend.title = element_blank(),

legend.position = "top",

legend.direction = "vertical")

10.4.1.2 Pairwise inference.

To “consider the effect of Catherine’s behavior on how she is perceived among people who are relatively high in their perceptions of the pervasiveness of sex discrimination in society (p. 372)”, we’ll use fitted(). Since the number of unique predictor values is small for this example, we’ll just plug them directly into the newdata argument rather than first saving them as a nd object.

fitted(model2,

newdata = tibble(D1 = c(0, 1, 0),

D2 = c(0, 0, 1),

sexism = 5.896)) %>%

round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## [1,] 4.922 0.235 4.455 5.383

## [2,] 6.102 0.202 5.698 6.506

## [3,] 6.013 0.216 5.596 6.440Note that for these analyses, we just used model2, the model based on the un-centered sexism variable. We can also continue using fitted() in conjunction with the original model2 to get the group comparisons for when \(W\) = 4.250. Since these involve computing difference scores, we’ll have to use summary = F and do some wrangling.

fitted(model2,

newdata = tibble(D1 = c(0, 1, 0),

D2 = c(0, 0, 1),

sexism = 4.25),

summary = F) %>%

as_tibble() %>%

rename(`No Protest` = V1,

`Individual Protest` = V2,

`Collective Protest` = V3) %>%

mutate(difference_a = `Individual Protest` - `No Protest`,

difference_b = `Collective Protest` - `No Protest`) %>%

gather() %>%

mutate(key = factor(key, levels = c("No Protest", "Individual Protest", "Collective Protest",

"difference_a", "difference_b"))) %>%

group_by(key) %>%

summarize(mean = mean(value),

sd = sd(value),

ll = quantile(value, probs = .025),

ul = quantile(value, probs = .975)) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 5 x 5

## key mean sd ll ul

## <fct> <dbl> <dbl> <dbl> <dbl>

## 1 No Protest 5.7 0.229 5.26 6.15

## 2 Individual Protest 5.40 0.255 4.92 5.91

## 3 Collective Protest 5.51 0.205 5.12 5.93

## 4 difference_a -0.297 0.349 -0.974 0.391

## 5 difference_b -0.19 0.314 -0.784 0.427Here’s the same thing for when \(W\) = 5.120.

fitted(model2,

newdata = tibble(D1 = c(0, 1, 0),

D2 = c(0, 0, 1),

sexism = 5.120),

summary = F) %>%

as_tibble() %>%

rename(`No Protest` = V1,

`Individual Protest` = V2,

`Collective Protest` = V3) %>%

mutate(difference_a = `Individual Protest` - `No Protest`,

difference_b = `Collective Protest` - `No Protest`) %>%

gather() %>%

mutate(key = factor(key, levels = c("No Protest", "Individual Protest", "Collective Protest",

"difference_a", "difference_b"))) %>%

group_by(key) %>%

summarize(mean = mean(value),

sd = sd(value),

ll = quantile(value, probs = .025),

ul = quantile(value, probs = .975)) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 5 x 5

## key mean sd ll ul

## <fct> <dbl> <dbl> <dbl> <dbl>

## 1 No Protest 5.29 0.159 4.97 5.61

## 2 Individual Protest 5.77 0.155 5.47 6.07

## 3 Collective Protest 5.78 0.149 5.48 6.06

## 4 difference_a 0.484 0.224 0.047 0.92

## 5 difference_b 0.488 0.22 0.065 0.936Finally, here it is for when \(W\) = 5.986.

fitted(model2,

newdata = tibble(D1 = c(0, 1, 0),

D2 = c(0, 0, 1),

sexism = 5.986),

summary = F) %>%

as_tibble() %>%

rename(`No Protest` = V1,

`Individual Protest` = V2,

`Collective Protest` = V3) %>%

mutate(difference_a = `Individual Protest` - `No Protest`,

difference_b = `Collective Protest` - `No Protest`) %>%

gather() %>%

mutate(key = factor(key, levels = c("No Protest", "Individual Protest", "Collective Protest",

"difference_a", "difference_b"))) %>%

group_by(key) %>%

summarize(mean = mean(value),

sd = sd(value),

ll = quantile(value, probs = .025),

ul = quantile(value, probs = .975)) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 5 x 5

## key mean sd ll ul

## <fct> <dbl> <dbl> <dbl> <dbl>

## 1 No Protest 4.88 0.249 4.38 5.36

## 2 Individual Protest 6.14 0.214 5.71 6.57

## 3 Collective Protest 6.04 0.228 5.60 6.49

## 4 difference_a 1.26 0.326 0.62 1.92

## 5 difference_b 1.16 0.341 0.499 1.8410.4.2 The Johnson-Neyman technique.

10.4.2.1 Omnibus inference.

Consider the first sentence of the section:

Applied to probing an interaction between a multicategorical \(X\) and a continuous \(W\), an omnibus version of the JM technique involves finding the value or values of \(W\) where their \(F\)-ratio comparing the \(g\) estimated values of \(Y\) is just statistically significant.

Since we’re not using \(F\)-tests with our approach to Bayesian modeling, the closest we might have is a series of \(R^2\) difference tests, which would require refitting the model multiple times over many ways of centering the \(W\)-variable, sexism. I suppose you could do this if you wanted, but it just seems silly, to me. I’ll leave this one up to the interested reader.

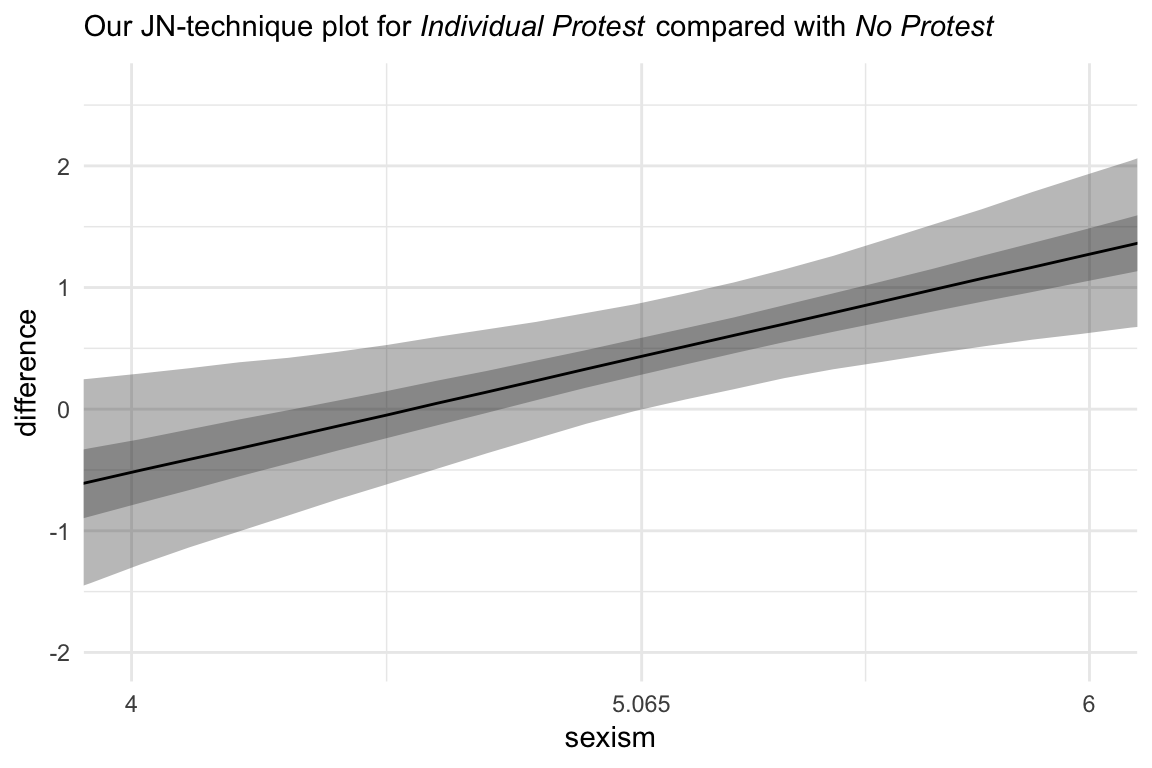

10.4.2.2 Pairwise inference.

Hayes didn’t make plots for this section, but if you’re careful constructing your nd and with the subsequent wrangling, you can make the usual plots. Since we have two conditions we’d like to compare with No Protest, we’ll make two plots. Here’s the comparison using Individual Protest, first.

# the transition value Hayes identified in the text

Hayes_value <- 5.065

nd <-

tibble(D1 = rep(0:1, each = 30),

D2 = rep(0, times = 30*2),

sexism = rep(seq(from = 3.5, to = 6.5, length.out = 30),

times = 2))

# we need some new data

fitted(model2,

newdata = nd,

summary = F) %>%

as_tibble() %>%

gather() %>%

mutate(sexism = rep(rep(seq(from = 3.5, to = 6.5, length.out = 30),

each = 4000),

times = 2)) %>%

mutate(condition = rep(c("No Protest", "Individual Protest"),

each = 4000*30)) %>%

mutate(iter = rep(1:4000, times = 30*2)) %>%

select(-key) %>%

rename(estimate = value) %>%

spread(key = condition, value = estimate) %>%

mutate(difference = `Individual Protest` - `No Protest`) %>%

# the plot

ggplot(aes(x = sexism, y = difference)) +

stat_summary(geom = "ribbon",

fun.ymin = function(i){quantile(i, probs = .025)},

fun.ymax = function(i){quantile(i, probs = .975)},

alpha = 1/3) +

stat_summary(geom = "ribbon",

fun.ymin = function(i){quantile(i, probs = .25)},

fun.ymax = function(i){quantile(i, probs = .75)},

alpha = 1/3) +

stat_summary(geom = "line",

fun.y = median) +

scale_x_continuous(breaks = c(4, Hayes_value, 6),

labels = c("4", Hayes_value, "6")) +

coord_cartesian(xlim = 4:6) +

labs(subtitle = expression(paste("Our JN-technique plot for ", italic("Individual Protest"), " compared with ", italic("No Protest")))) +

theme_minimal()

Now we’re ready to compare No Protest to Collective Protest. The main difference is with the rep() code in the D1 and D2 columns in nd. Other than that, we just switched out a few “Individual” labels with “Collective”.

# the transition value Hayes identified in the text

Hayes_value <- 5.036

nd <-

tibble(D1 = rep(0, times = 30*2),

D2 = rep(0:1, each = 30),

sexism = rep(seq(from = 3.5, to = 6.5, length.out = 30),

times = 2))

fitted(model2,

newdata = nd,

summary = F) %>%

as_tibble() %>%

gather() %>%

mutate(sexism = rep(rep(seq(from = 3.5, to = 6.5, length.out = 30),

each = 4000),

times = 2)) %>%

mutate(condition = rep(c("No Protest", "Collective Protest"),

each = 4000*30)) %>%

mutate(iter = rep(1:4000, times = 30*2)) %>%

select(-key) %>%

rename(estimate = value) %>%

spread(key = condition, value = estimate) %>%

mutate(difference = `Collective Protest` - `No Protest`) %>%

ggplot(aes(x = sexism, y = difference)) +

stat_summary(geom = "ribbon",

fun.ymin = function(i){quantile(i, probs = .025)},

fun.ymax = function(i){quantile(i, probs = .975)},

alpha = 1/3) +

stat_summary(geom = "ribbon",

fun.ymin = function(i){quantile(i, probs = .25)},

fun.ymax = function(i){quantile(i, probs = .75)},

alpha = 1/3) +

stat_summary(geom = "line",

fun.y = median) +

scale_x_continuous(breaks = c(4, Hayes_value, 6),

labels = c("4", Hayes_value, "6")) +

coord_cartesian(xlim = 4:6) +

labs(subtitle = expression(paste("Our JN-technique plot for ", italic("Collective Protest"), " compared with ", italic("No Protest")))) +

theme_minimal()

And here we do it one last time between the two active protest conditions.

nd <-

tibble(D1 = rep(1:0, each = 30),

D2 = rep(0:1, each = 30),

sexism = rep(seq(from = 3.5, to = 6.5, length.out = 30),

times = 2))

fitted(model2,

newdata = nd,

summary = F) %>%

as_tibble() %>%

gather() %>%

mutate(sexism = rep(rep(seq(from = 3.5, to = 6.5, length.out = 30),

each = 4000),

times = 2)) %>%

mutate(condition = rep(c("Individual Protest", "Collective Protest"),

each = 4000*30)) %>%

mutate(iter = rep(1:4000, times = 30*2)) %>%

select(-key) %>%

rename(estimate = value) %>%

spread(key = condition, value = estimate) %>%

mutate(difference = `Collective Protest` - `Individual Protest`) %>%

ggplot(aes(x = sexism, y = difference)) +

stat_summary(geom = "ribbon",

fun.ymin = function(i){quantile(i, probs = .025)},

fun.ymax = function(i){quantile(i, probs = .975)},

alpha = 1/3) +

stat_summary(geom = "ribbon",

fun.ymin = function(i){quantile(i, probs = .25)},

fun.ymax = function(i){quantile(i, probs = .75)},

alpha = 1/3) +

stat_summary(geom = "line",

fun.y = median) +

coord_cartesian(xlim = 4:6) +

labs(subtitle = expression(paste("Our JN-technique plot for ", italic("Collective Protest"), " compared with ", italic("Individual Protest")))) +

theme_minimal()

Not much difference there.