Chapter 18: statistics

18.1 Hung Hung

https://www.youtube.com/playlist?list=PLTpF-A8hKVUOqfNyA6mOD6lo2cc6clZZP

https://www.youtube.com/watch?v=3S2r4XBzKts

population

census vs. statistics

random variable

\[ X \]

sample has randomness

probability function

\[ \mathrm{P}_{{\scriptscriptstyle X}}\left(E\right)\in\left[0,1\right] \]

event = subset of sample space

\[ E \]

the probability function takes an event as input and outputs a probability in \(\left[0,1\right]\)

\[ \mathrm{P}_{{\scriptscriptstyle X}}:\left\{ E_{{\scriptscriptstyle i}}\right\} _{{\scriptscriptstyle i\in I}}\rightarrow\left[0,1\right] \]

target of interest

- probability function

but events are hard to list or enumerate, so outcomes are mapped to real numbers by a random variable

\[ X:\left\{ \omega_{{\scriptscriptstyle i}}\right\} _{{\scriptscriptstyle i}\in I}\rightarrow\mathbb{R} \]

CDF = cumulative distribution function

\[ F_{{\scriptscriptstyle X}}\left(x\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(\left(-\infty,x\right]\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(X\le x\right) \]

a real-valued function is much easier to work with: it can be differentiated (continuous case) or differenced (discrete case)

target of interest

- CDF = cumulative distribution function

- probability function

target of interest

- \(\mathrm{P}_{{\scriptscriptstyle X}}\left(\cdot\right)\) PF = probability function

- \(f_{{\scriptscriptstyle X}}\left(x\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(X=x\right)\) PMF = probability mass function

- \(f_{{\scriptscriptstyle X}}\left(x\right)=\dfrac{\mathrm{d}}{\mathrm{d}x}\mathrm{P}_{{\scriptscriptstyle X}}\left(X\le x\right)\) PDF = probability density function

- \(F_{{\scriptscriptstyle X}}\left(x\right)\) CDF = cumulative distribution function[18.1.2.1]

- \(M_{{\scriptscriptstyle X}}\left(\xi\right)\) MGF = moment generating function[18.1.2.7.1]

- \(\varphi_{{\scriptscriptstyle X}}\left(\xi\right)\) CF = characteristic function[18.1.2.7.2]

\[ \begin{array}{ccccccc} X & \sim & F_{{\scriptscriptstyle X}}\left(x\right) & \overset{\text{FToC}}{\longleftrightarrow} & f_{{\scriptscriptstyle X}}\left(x\right) & \leftrightarrow & \mathrm{P}_{{\scriptscriptstyle X}}\\ & \text{inversion formula}\ : & \updownarrow & \forall\xi\approx0\left[M_{{\scriptscriptstyle X}}\left(\xi\right)\in\mathbb{R}\right]\ \wedge & \uparrow\downarrow & \searrow\nwarrow & \looparrowleft\wedge\ \mathrm{supp}\left(f_{{\scriptscriptstyle X}}\right)\text{ is bounded}\\ & & \varphi_{{\scriptscriptstyle X}}\left(\xi\right) & \leftrightarrows & M_{{\scriptscriptstyle X}}\left(\xi\right) & \rightarrow & \left\{ \mu_{{\scriptscriptstyle n}}\middle|n\in\mathbb{N}\right\} \end{array} \]

In population,

\[ X\sim F_{{\scriptscriptstyle X}}\left(x\right) \]

by sampling,

\[ X_{{\scriptscriptstyle 1}},\cdots,X_{{\scriptscriptstyle i}},\cdots,X_{{\scriptscriptstyle n}}=X_{{\scriptscriptstyle i}}\sim F_{{\scriptscriptstyle X}}\left(x\right) \]

or

\[ X_{{\scriptscriptstyle 1}},\cdots,X_{{\scriptscriptstyle i}},\cdots,X_{{\scriptscriptstyle n}}=X_{{\scriptscriptstyle i}}\overset{\text{i.i.d.}}{\sim}F_{{\scriptscriptstyle X}}\left(x\right) \]

\(\text{i.i.d.}\) = independent and identically distributed

and infer back to the population

parametrically

\[ \widehat{X}\sim\widehat{F}_{{\scriptscriptstyle X}}\left(x\right)=\widehat{F}_{{\scriptscriptstyle X}}\left(x|\theta\right) \]

or nonparametrically

\[ \hat{X}\sim\hat{F}_{{\scriptscriptstyle X}}\left(x\right) \]

an estimator is a function of the samples (a statistic, hence itself a random variable) used to estimate unknown parameters

\[ \widehat{\Theta}\leftarrow\left(X_{{\scriptscriptstyle 1}},\cdots,X_{{\scriptscriptstyle i}},\cdots,X_{{\scriptscriptstyle n}}\right)=T\left(X_{{\scriptscriptstyle 1}},\cdots,X_{{\scriptscriptstyle n}}\right)=T\left(\cdots,X_{{\scriptscriptstyle i}},\cdots\right)=T\left(X_{{\scriptscriptstyle i}}\right) \]

corresponding CDF of the estimator, i.e. of a function of the sample random variables

\[ T\left(X_{{\scriptscriptstyle 1}},\cdots,X_{{\scriptscriptstyle n}}\right)=T\sim F_{{\scriptscriptstyle T}}\left(t\right) \]

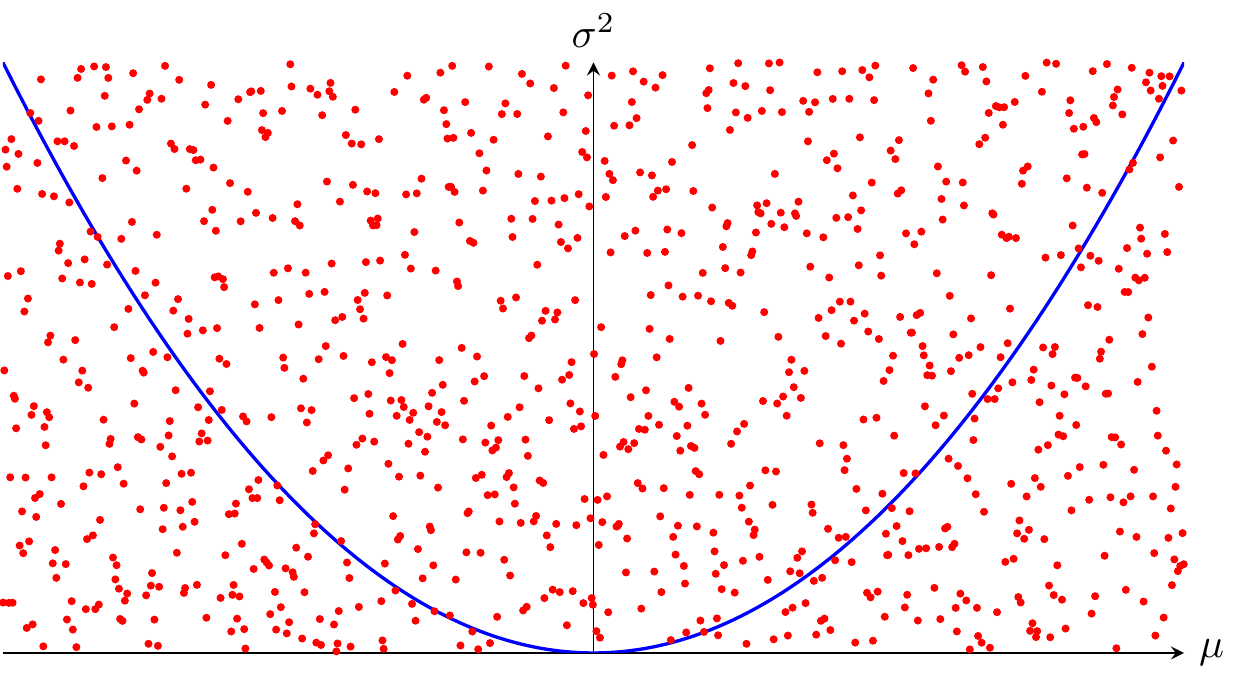

we want the estimator to be unbiased and consistent

\[ \begin{cases} \mathrm{E}\left(\widehat{\Theta}\right)=\theta\Leftrightarrow\mathrm{E}\left(\widehat{\Theta}\right)-\theta=0 & \text{unbiasedness}\\ \lim\limits _{n\rightarrow\infty}\mathrm{V}\left(\widehat{\Theta}\right)=0 & \text{consistency (sufficient when }\widehat{\Theta}\text{ is unbiased)} \end{cases} \]

unbiasedness is usually harder to achieve than consistency, so consistency is usually considered first.
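A minimal simulation sketch of the two properties, assuming a Uniform(0, 1) population (so \(\theta=0.5\)) and the sample mean as the estimator; the population, the seed, and the helper name `sample_mean_estimates` are illustrative choices, not part of the lecture.

```python
import random
import statistics

def sample_mean_estimates(n, reps, rng):
    """Draw `reps` samples of size n from Uniform(0, 1); return their sample means."""
    return [statistics.fmean([rng.random() for _ in range(n)]) for _ in range(reps)]

rng = random.Random(0)   # fixed seed so the run is repeatable
theta = 0.5              # true mean of Uniform(0, 1), the assumed population

results = {}
for n in (25, 100, 400):
    estimates = sample_mean_estimates(n, reps=2000, rng=rng)
    bias = statistics.fmean(estimates) - theta    # near 0 for an unbiased estimator
    variance = statistics.pvariance(estimates)    # shrinks roughly like 1 / (12 n)
    results[n] = (bias, variance)
    print(f"n={n:4d}  bias={bias:+.4f}  variance={variance:.6f}")
```

The bias stays near zero for every \(n\), while the variance of the estimator drops as \(n\) grows, which is the behavior the two conditions describe.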

modeling or parameterizing with unknown parameter \(\theta\)

\[ F_{{\scriptscriptstyle X}}\left(x\right)\overset{M}{=}F_{{\scriptscriptstyle X}}\left(x|\theta\right)=F_{{\scriptscriptstyle X}}\left(x;\theta\right) \]

parameterization reduces the unknowns from infinitely many (the whole function \(F_{{\scriptscriptstyle X}}\)) to finitely many parameters

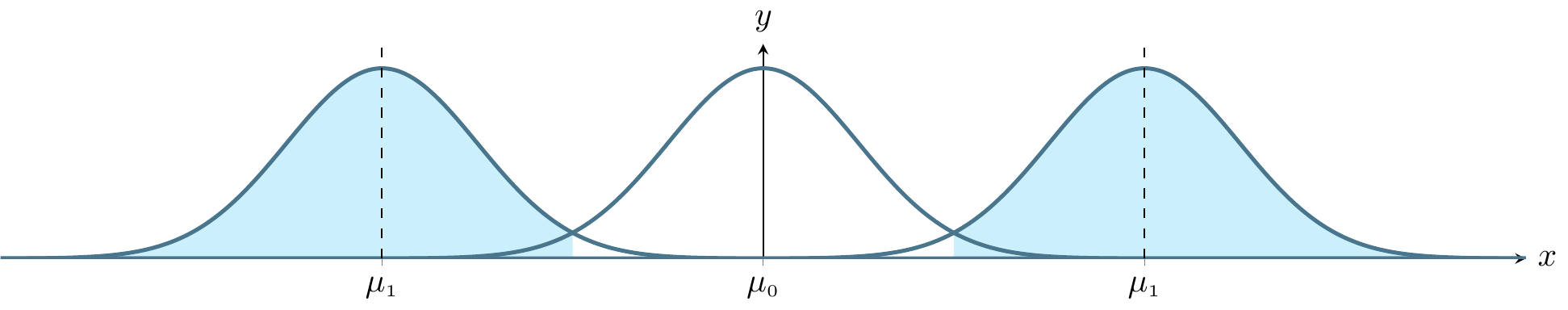

e.g. for normally distributed data

\[ f_{{\scriptscriptstyle X}}\left(x\right)\overset{M}{=}f_{{\scriptscriptstyle X}}\left(x|\theta\right)=\dfrac{\mathrm{e}^{{\scriptscriptstyle \frac{-1}{2}\left(\frac{x-\mu}{\sigma}\right)^{2}}}}{\sqrt{2\pi\sigma^{2}}}=f_{{\scriptscriptstyle X}}\left(x|\mu,\sigma^{2}\right)=f_{{\scriptscriptstyle X}}\left(x|\mu,\sigma\right) \]
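As a quick numerical check of the normal density above, the sketch below evaluates \(f_{{\scriptscriptstyle X}}\left(x|\mu,\sigma^{2}\right)\) and integrates it with a plain trapezoid rule. The parameter values and the helper names `normal_pdf` and `trapezoid` are assumptions made for illustration.

```python
import math

def normal_pdf(x, mu, sigma):
    """Density of N(mu, sigma^2), written exactly as in the formula above."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / math.sqrt(2.0 * math.pi * sigma ** 2)

def trapezoid(f, a, b, steps):
    """Composite trapezoid rule for the integral of f over [a, b]."""
    h = (b - a) / steps
    total = 0.5 * (f(a) + f(b)) + sum(f(a + k * h) for k in range(1, steps))
    return total * h

mu, sigma = 1.5, 2.0   # hypothetical parameter values
area = trapezoid(lambda x: normal_pdf(x, mu, sigma),
                 mu - 10 * sigma, mu + 10 * sigma, 20000)
print(area)            # should be very close to 1
```

Only two numbers, \(\mu\) and \(\sigma\), pin down the whole density, which is the point of parameterization.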

the price of parameterization is the risk of guessing the wrong model.

For some non-negative data, instead of normal distribution, consider distributions skewed to the right

topics

- \(\mathrm{P}_{{\scriptscriptstyle X}}\) probability theory

- \(f_{{\scriptscriptstyle X}}\left(x\right)\overset{M}{=}f_{{\scriptscriptstyle X}}\left(x|\theta\right)=f_{{\scriptscriptstyle X}}\left(x;\theta\right)\) various univariable distribution

- \(f_{\boldsymbol{{\scriptscriptstyle X}}}\left(\boldsymbol{x}\right)\overset{M}{=}f_{\boldsymbol{{\scriptscriptstyle X}}}\left(\boldsymbol{x}|\boldsymbol{\theta}\right)=f_{\boldsymbol{{\scriptscriptstyle X}}}\left(\boldsymbol{x};\boldsymbol{\theta}\right)\) multivariable distribution

- \(T\left(X_{{\scriptscriptstyle 1}},\cdots,X_{{\scriptscriptstyle n}}\right)\) inference

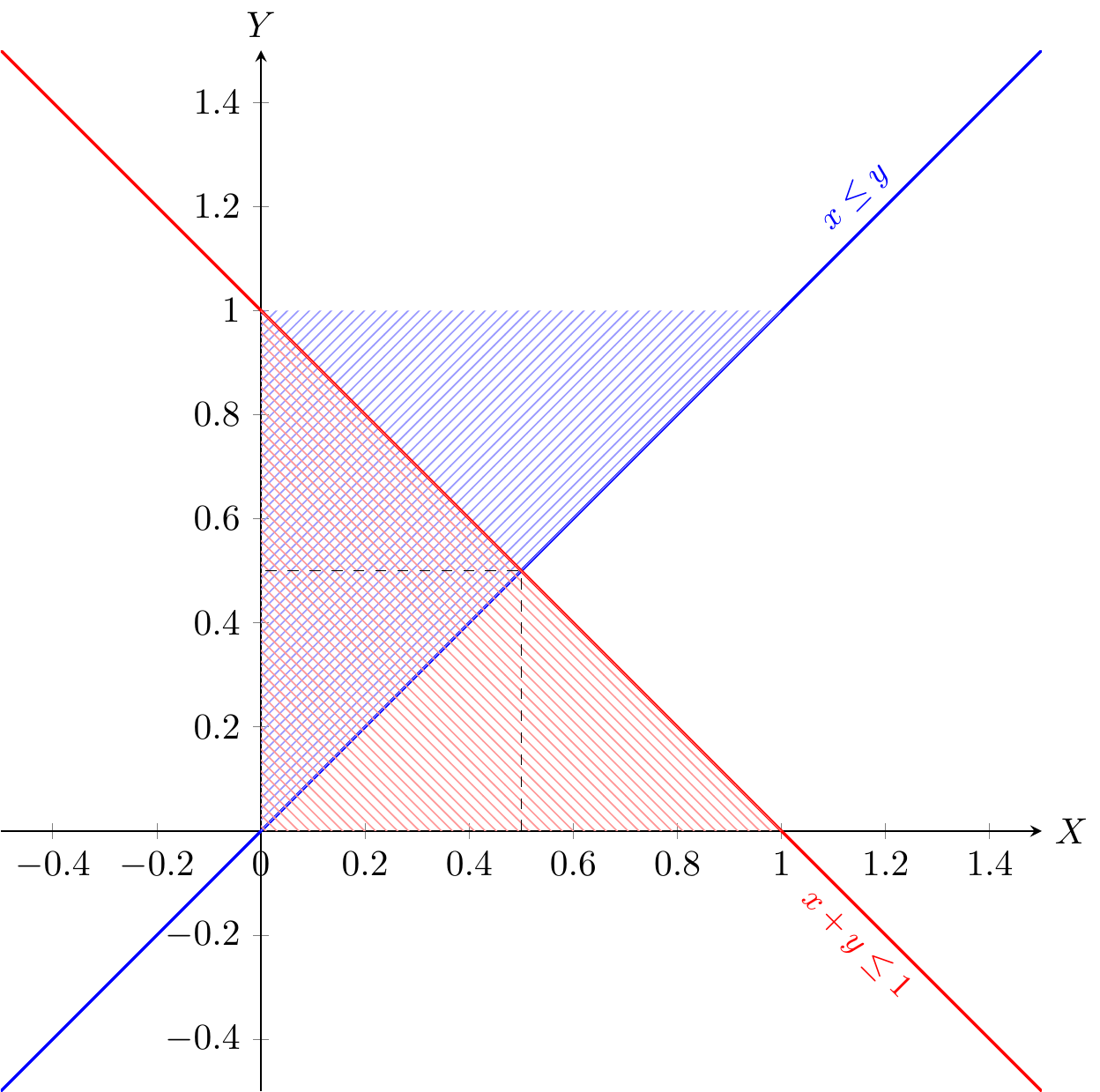

- point estimation \[ \widehat{\mu}=\begin{cases} \overline{X} & \rightarrow\mu\\ \mathrm{median}\left(X_{{\scriptscriptstyle 1}},\cdots,X_{{\scriptscriptstyle n}}\right) & \rightarrow\mu\\ \vdots \end{cases} \]

- interval estimation = hypothesis testing \[ \begin{cases} H_{{\scriptscriptstyle 0}}:\theta=\theta_{{\scriptscriptstyle 0}}\\ H_{{\scriptscriptstyle 1}}:\theta\ne\theta_{{\scriptscriptstyle 0}} \end{cases}\leftarrow T\in\left\{ 0,1\right\} \]

- how to find \(T\)

- behavior of random function \(T\left(X_{{\scriptscriptstyle 1}},\cdots,X_{{\scriptscriptstyle n}}\right)=T\sim F_{{\scriptscriptstyle T}}\left(t\right)\)

- statistical properties of \(T\)

- asymptotic properties \[ n\rightarrow\infty\begin{cases} \text{CLT}=\text{central limit theorem}\\ \text{LLN}=\text{law of large numbers} \end{cases} \]
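The point estimators and asymptotic properties listed above can be sketched with a small simulation. It assumes a normal population with hypothetical parameters \(\mu=10,\sigma=2\) for the LLN part and an Exponential(1) population for the CLT part; all names and sample sizes are illustrative.

```python
import math
import random
import statistics

rng = random.Random(1)
mu, sigma = 10.0, 2.0    # hypothetical population parameters

# LLN: sample mean and sample median both settle near mu as n grows
for n in (10, 1000, 100000):
    xs = [rng.gauss(mu, sigma) for _ in range(n)]
    last_mean, last_median = statistics.fmean(xs), statistics.median(xs)
    print(n, round(last_mean, 3), round(last_median, 3))

# CLT: standardized means of a non-normal population behave like N(0, 1)
def standardized_mean(n):
    xs = [rng.expovariate(1.0) for _ in range(n)]   # Exponential(1): mean 1, sd 1
    return (statistics.fmean(xs) - 1.0) / (1.0 / math.sqrt(n))

zs = [standardized_mean(200) for _ in range(2000)]
coverage = sum(abs(z) <= 1.96 for z in zs) / len(zs)
print("share of |Z| <= 1.96:", coverage)   # roughly 0.95 under the normal approximation
```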

18.1.1 probability theory

https://www.youtube.com/watch?v=HBmTDtMBr3c

Definition 18.1 sample space: The set \(S\) of all possible outcomes of an experiment is called the sample space

\[ S=\left\{ \omega_{{\scriptscriptstyle i}}\right\} _{{\scriptscriptstyle i}\in I} \]

Definition 18.2 event: An event \(E\) is any collection of possible outcomes of an experiment, i.e. any subset of \(S\)

\[ E \subseteq S \]

set operations

commutativity, associativity, distributivity

De Morgan law

pairwise disjoint = mutually exclusive

partition

18.1.1.1 probability function

probability function axioms = probability function definition

Kolmogorov axioms of probability [6, p.72]

Definition 18.3 probability function: Given a sample space \(S\) and its event \(E\), a probability function is a function \(\mathrm{P}\) satisfying

\[ \begin{cases} \mathrm{P}\left(S\right)=1\\ \forall E\subseteq S\left(\mathrm{P}\left(E\right)\ge0\right)\\ E_{{\scriptscriptstyle 1}},\cdots,E_{{\scriptscriptstyle i}},\cdots\text{ are pairwise disjoint}\Rightarrow\mathrm{P}\left(\bigcup\limits _{i\in I}E_{{\scriptscriptstyle i}}\right)=\sum\limits _{i\in I}\mathrm{P}\left(E_{{\scriptscriptstyle i}}\right) \end{cases} \]

tossing a die
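For the die example, a minimal sketch of a probability function on a finite sample space, assuming a fair six-sided die (equally likely outcomes is an assumption, not stated in the notes). Exact fractions avoid rounding issues.

```python
from fractions import Fraction

S = frozenset(range(1, 7))   # sample space of one die; fairness is assumed

def P(event):
    """Probability of an event (a subset of S) under equally likely outcomes."""
    event = frozenset(event)
    assert event <= S, "an event must be a subset of the sample space"
    return Fraction(len(event), len(S))

even, low = {2, 4, 6}, {1}   # two disjoint events

print(P(S) == 1)                           # axiom: P(S) = 1
print(P(even) >= 0)                        # axiom: non-negativity
print(P(even | low) == P(even) + P(low))   # axiom: additivity for disjoint events
print(P(S - even) == 1 - P(even))          # complement rule
```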

theorems

\[ \mathrm{P}\left(\emptyset\right)=0 \]

\[ \mathrm{P}\left(E\right)\le1 \]

\[ \mathrm{P}\left(E^{\mathrm{C}}\right)=\mathrm{P}\left(\overline{E}\right)=1-\mathrm{P}\left(E\right) \]

\[ \mathrm{P}\left(E_{{\scriptscriptstyle 2}}\cap\overline{E}_{{\scriptscriptstyle 1}}\right)=\mathrm{P}\left(E_{{\scriptscriptstyle 2}}\right)-\mathrm{P}\left(E_{{\scriptscriptstyle 2}}\cap E_{{\scriptscriptstyle 1}}\right) \]

\[ E_{{\scriptscriptstyle 1}}\subseteq E_{{\scriptscriptstyle 2}}\Rightarrow\mathrm{P}\left(E_{{\scriptscriptstyle 1}}\right)\le\mathrm{P}\left(E_{{\scriptscriptstyle 2}}\right) \]

addition rule [6, p.75] and extended addition rule [6, p.76]

inclusion-exclusion principle = sieve principle

\[ \mathrm{P}\left(E_{{\scriptscriptstyle 1}}\cup E_{{\scriptscriptstyle 2}}\right)=\mathrm{P}\left(E_{{\scriptscriptstyle 1}}\right)+\mathrm{P}\left(E_{{\scriptscriptstyle 2}}\right)-\mathrm{P}\left(E_{{\scriptscriptstyle 1}}\cap E_{{\scriptscriptstyle 2}}\right) \]

\[ \mathrm{P}\left(E_{{\scriptscriptstyle 1}}\cup E_{{\scriptscriptstyle 2}}\cup E_{{\scriptscriptstyle 3}}\right)=\mathrm{P}\left(E_{{\scriptscriptstyle 1}}\right)+\mathrm{P}\left(E_{{\scriptscriptstyle 2}}\right)+\mathrm{P}\left(E_{{\scriptscriptstyle 3}}\right)-\mathrm{P}\left(E_{{\scriptscriptstyle 1}}\cap E_{{\scriptscriptstyle 2}}\right)-\mathrm{P}\left(E_{{\scriptscriptstyle 2}}\cap E_{{\scriptscriptstyle 3}}\right)-\mathrm{P}\left(E_{{\scriptscriptstyle 3}}\cap E_{{\scriptscriptstyle 1}}\right)+\mathrm{P}\left(E_{{\scriptscriptstyle 1}}\cap E_{{\scriptscriptstyle 2}}\cap E_{{\scriptscriptstyle 3}}\right) \]

\[ \mathrm{P}\left(\bigcup\limits _{i=1}^{n}E_{{\scriptscriptstyle i}}\right)=\sum\limits _{k=1}^{n}\left(\left(-1\right)^{k-1}\sum\limits _{1\le i_{1}<\cdots<i_{k}\le n}\mathrm{P}\left(\bigcap\limits _{i\in\left\{ i_{1},\dots,i_{k}\right\} }E_{{\scriptscriptstyle i}}\right)\right) \]
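The general inclusion-exclusion formula can be checked mechanically on a small finite sample space. The sketch below assumes a fair die and three arbitrarily chosen events; `union_by_inclusion_exclusion` is a hypothetical helper that follows the double sum term by term.

```python
from fractions import Fraction
from itertools import combinations

S = frozenset(range(1, 7))   # fair die, assumed for illustration

def P(event):
    return Fraction(len(frozenset(event) & S), len(S))

def union_by_inclusion_exclusion(events):
    """Sum over k of (-1)^(k-1) times the sum of P(intersection) over all k-subsets."""
    total = Fraction(0)
    for k in range(1, len(events) + 1):
        sign = (-1) ** (k - 1)
        for subset in combinations(events, k):
            total += sign * P(frozenset.intersection(*subset))
    return total

events = [frozenset({1, 2, 3}), frozenset({2, 4, 6}), frozenset({3, 6})]
direct = P(frozenset.union(*events))          # probability of the union, computed directly
by_formula = union_by_inclusion_exclusion(events)
upper_bound = sum(P(e) for e in events)       # sum of individual probabilities

print(direct, by_formula, upper_bound)
```

The direct value and the formula agree, and both stay below the plain sum of the individual probabilities.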

symmetric difference [6, p.75]

the probability of a union is bounded above by the sum of the individual probabilities

\[ \mathrm{P}\left(E_{{\scriptscriptstyle 1}}\cup E_{{\scriptscriptstyle 2}}\right)=\mathrm{P}\left(E_{{\scriptscriptstyle 1}}\right)+\mathrm{P}\left(E_{{\scriptscriptstyle 2}}\right)-\mathrm{P}\left(E_{{\scriptscriptstyle 1}}\cap E_{{\scriptscriptstyle 2}}\right)\le\mathrm{P}\left(E_{{\scriptscriptstyle 1}}\right)+\mathrm{P}\left(E_{{\scriptscriptstyle 2}}\right) \]

\[ E_{{\scriptscriptstyle 1}}\cap E_{{\scriptscriptstyle 2}}=\emptyset\Rightarrow\mathrm{P}\left(E_{{\scriptscriptstyle 1}}\cup E_{{\scriptscriptstyle 2}}\right)=\mathrm{P}\left(E_{{\scriptscriptstyle 1}}\right)+\mathrm{P}\left(E_{{\scriptscriptstyle 2}}\right) \]

Boole inequality

\[ \mathrm{P}\left(\bigcup\limits _{i\in I}E_{{\scriptscriptstyle i}}\right)\le\sum\limits _{i\in I}\mathrm{P}\left(E_{{\scriptscriptstyle i}}\right) \]

\[ \mathrm{P}\left(\overbrace{H_{{\scriptscriptstyle 0}}}\middle|H_{{\scriptscriptstyle 0}}\right)=\mathrm{P}\left(\text{reject }H_{{\scriptscriptstyle 0}}\middle|H_{{\scriptscriptstyle 0}}\text{ is true}\right)=\alpha=\text{type I error rate} \]

multiple hypothesis testing

How to control the family-wise error rate?

Ideally,

FWER = family-wise error rate

\[ \begin{aligned} \alpha= & \mathrm{P}\left(\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle 1}}}\cup\cdots\cup\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle m}}}\middle|H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle 1}}\cap\cdots\cap H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle m}}\right)=\mathrm{P}\left(\text{reject any }H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}\middle|\text{all }H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\text{ are true}\right)\\ = & \mathrm{P}\left(\bigcup\limits _{i=1}^{m}\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)=1-\mathrm{P}\left(\overbrace{\bigcup\limits _{i=1}^{m}\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)\\ = & 1-\mathrm{P}\left(\bigcap\limits _{i=1}^{m}\underbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)=1-\mathrm{P}\left(\text{not to reject any }H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)\\ & \overset{\text{tests of }H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\text{ mutually independent}}{=}1-\prod\limits _{i=1}^{m}\mathrm{P}\left(\underbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)=1-\prod\limits _{i=1}^{m}\left(1-\mathrm{P}\left(\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)\right)\\ & \overset{\forall i,j\left[\mathrm{P}\left(\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)=\alpha_{{\scriptscriptstyle 0}}\right]}{=}1-\prod\limits _{i=1}^{m}\left(1-\alpha_{{\scriptscriptstyle 0}}\right)=1-\left(1-\alpha_{{\scriptscriptstyle 0}}\right)^{m}\\ \alpha= & 1-\left(1-\alpha_{{\scriptscriptstyle 0}}\right)^{m}\\ \alpha_{{\scriptscriptstyle 0}}= & 1-\left(1-\alpha\right)^{\frac{1}{m}}=1-\sqrt[m]{1-\alpha}\\ \Downarrow\\ \text{set } & \mathrm{P}\left(\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)=\alpha_{{\scriptscriptstyle 0}}=1-\sqrt[m]{1-\alpha} \end{aligned} \]

But the independence condition is too strong: the product step needs the \(m\) tests to be mutually independent, which rarely holds in practice.

Practically,

\[ \begin{aligned} \text{FWER}= & \mathrm{P}\left(\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle 1}}}\cup\cdots\cup\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle m}}}\middle|H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle 1}}\cap\cdots\cap H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle m}}\right)=\mathrm{P}\left(\text{reject any }H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}\middle|\text{all }H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\text{ are true}\right)\\ = & \mathrm{P}\left(\bigcup\limits _{i=1}^{m}\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)\overset{\mathrm{P}\left(\bigcup\limits _{i\in I}E_{{\scriptscriptstyle i}}\right)\le\sum\limits _{i\in I}\mathrm{P}\left(E_{{\scriptscriptstyle i}}\right)}{\underset{\text{Boole inequality}}{\le}}\sum\limits _{i=1}^{m}\mathrm{P}\left(\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)\underset{\Uparrow}{=}\sum\limits _{i=1}^{m}\alpha_{{\scriptscriptstyle 0}}=m\alpha_{{\scriptscriptstyle 0}}\underset{\Downarrow}{=}\alpha\\ \text{let }\forall i,j & \left[\mathrm{P}\left(\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)=\alpha_{{\scriptscriptstyle 0}}\right]\Rightarrow\sum\limits _{i=1}^{m}\mathrm{P}\left(\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)=\sum\limits _{i=1}^{m}\alpha_{{\scriptscriptstyle 0}}=m\alpha_{{\scriptscriptstyle 0}}\Rightarrow\alpha_{{\scriptscriptstyle 0}}=\dfrac{\alpha}{m}\\ \Downarrow\\ \text{set } & \mathrm{P}\left(\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)=\alpha_{{\scriptscriptstyle 0}}=\dfrac{\alpha}{m} \end{aligned} \]

Bonferroni correction

\[ \mathrm{P}\left(\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)=\dfrac{\alpha}{m}\Rightarrow\mathrm{P}\left(\bigcup\limits _{i=1}^{m}\overbrace{H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle i}}}\middle|\bigcap\limits _{j=1}^{m}H_{{\scriptscriptstyle 0}}^{{\scriptscriptstyle j}}\right)\le\alpha \]
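The two per-test thresholds derived above can be compared numerically. The independence-based threshold \(1-\sqrt[m]{1-\alpha}\) is commonly called the Šidák correction (a name not used in these notes); the values \(\alpha=0.05\), \(m=10\) and the function names are illustrative assumptions.

```python
def independent_threshold(alpha, m):
    """Per-test level from alpha = 1 - (1 - alpha0)^m, valid for independent tests."""
    return 1.0 - (1.0 - alpha) ** (1.0 / m)

def bonferroni_threshold(alpha, m):
    """Per-test level from Boole's inequality: alpha0 = alpha / m."""
    return alpha / m

def fwer_if_independent(alpha0, m):
    """Family-wise error rate when m independent tests each use level alpha0."""
    return 1.0 - (1.0 - alpha0) ** m

alpha, m = 0.05, 10   # hypothetical target FWER and number of tests
a_ind = independent_threshold(alpha, m)
a_bon = bonferroni_threshold(alpha, m)

print(a_ind, a_bon)
print(fwer_if_independent(a_ind, m))   # recovers alpha up to rounding
print(fwer_if_independent(a_bon, m))   # slightly below alpha: Bonferroni is conservative
```

Bonferroni gives a slightly smaller per-test level, which is the price paid for not assuming independence.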

Bonferroni inequality [6, p.77]

Bonferroni inequality and Boole inequality are equivalent inequalities

birthday problem [6, p.78]
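A short sketch of the birthday problem via the complement rule, assuming 365 equally likely birthdays and independent people (the usual simplifying assumptions; leap years are ignored). The function name is illustrative.

```python
def birthday_collision_probability(n, days=365):
    """P(at least two of n people share a birthday) = 1 - P(all birthdays distinct)."""
    p_all_distinct = 1.0
    for k in range(n):
        p_all_distinct *= (days - k) / days   # k-th person avoids the k birthdays already taken
    return 1.0 - p_all_distinct

for n in (10, 22, 23, 50):
    print(n, round(birthday_collision_probability(n), 4))
```

Under these assumptions 23 people are the smallest group for which the probability exceeds one half.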

18.1.2 univariable distribution

\[ \begin{cases} \mathrm{P}_{{\scriptscriptstyle X}}\left(X\in E\right) & \forall E\subseteq S\\ \mathrm{P}_{{\scriptscriptstyle X}}\left(X\le x\right) & \forall x\in\mathbb{R} \end{cases} \]

\[ \begin{cases} \mathrm{P}_{{\scriptscriptstyle X}}\left(X\in E\right) & \forall E\subseteq S\\ \mathrm{P}_{{\scriptscriptstyle X}}\left(X\le x\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(\left(-\infty,x\right]\right)=F_{{\scriptscriptstyle X}}\left(x\right) & \forall x\in\mathbb{R} \end{cases} \]

the CDF also determines the probability of the open interval \(\left(-\infty,x\right)\) through a left limit

\[ \begin{aligned} & \mathrm{P}_{{\scriptscriptstyle X}}\left(X<x\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(\left(-\infty,x\right)\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(E\right),E=\left(-\infty,x\right)\\ = & \mathrm{P}_{{\scriptscriptstyle X}}\left(\bigcup\limits _{\epsilon>0}\left(-\infty,x-\epsilon\right]\right)=\lim_{\epsilon\rightarrow0^{+}}\mathrm{P}_{{\scriptscriptstyle X}}\left(\left(-\infty,x-\epsilon\right]\right)\\ = & \lim_{\epsilon\rightarrow0^{+}}F_{{\scriptscriptstyle X}}\left(x-\epsilon\right)=F_{{\scriptscriptstyle X}}\left(x^{-}\right) \end{aligned} \]

18.1.2.1 cumulative distribution function

CDF = cumulative distribution function

\[ F_{{\scriptscriptstyle X}}\left(x\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(\left(-\infty,x\right]\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(X\le x\right) \] \[ X\sim\mathrm{P}_{{\scriptscriptstyle X}}\leftrightarrow F_{{\scriptscriptstyle X}}\left(x\right) \]

\[ X\sim F_{{\scriptscriptstyle X}}\left(x\right)\leftrightarrow\mathrm{P}_{{\scriptscriptstyle X}} \]

Definition 18.4 CDF = cumulative distribution function: A cumulative distribution function is a function \(F:\mathbb{R} \rightarrow \left[0,1\right]\) satisfying

\[ F_{{\scriptscriptstyle X}}\left(x\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(\left(-\infty,x\right]\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(X\le x\right) \]

Theorem 18.1 CDF = cumulative distribution function: \(F\left(x\right)\) is a cumulative distribution function iff

\[ \begin{cases} \begin{cases} \lim\limits _{x\rightarrow-\infty}F\left(x\right)=0 & \lim\limits _{x\rightarrow+\infty}F\left(x\right)=1\end{cases} & \left(01\right)\left[0,1\right]\\ \forall x_{{\scriptscriptstyle 1}}<x_{{\scriptscriptstyle 2}}\left[F\left(x_{{\scriptscriptstyle 1}}\right)\le F\left(x_{{\scriptscriptstyle 2}}\right)\right] & \left(nd\right)\text{non-decreasing}\\ \lim\limits _{x\rightarrow x_{{\scriptscriptstyle 0}}^{+}}F\left(x\right)=F\left(x_{{\scriptscriptstyle 0}}\right) & \left(rc\right)\text{right-continuous} \end{cases} \]

Definition 18.5 RV = r.v. = random variable

\[ \begin{cases} X\text{ is a continuous RV} & \lim\limits _{x\rightarrow x_{{\scriptscriptstyle 0}}}F_{{\scriptscriptstyle X}}\left(x\right)=F_{{\scriptscriptstyle X}}\left(x_{{\scriptscriptstyle 0}}\right)\\ X\text{ is a discrete RV} & F_{{\scriptscriptstyle X}}\text{ is a step function of }x \end{cases} \]

[6, p.103]

Definition 18.6 RV = r.v. = random variable

[6, p.104]

Definition 18.7 range of r.v. = range of RV = the range of a random variable

\[ \begin{aligned} \mathcal{R}_{{\scriptscriptstyle X}}=&\left\{ x\middle|\begin{cases} \omega\in S\\ x=X\left(\omega\right) \end{cases}\right\} \\=&\left\{ x\middle|\exists\omega\in S\left[x=X\left(\omega\right)\right]\right\} \\=&\left\{ x\middle|x\in X\left(\Omega\right)\right\} =X\left(\Omega\right),\ \Omega=S \end{aligned} \]

18.1.2.2 probability density function

\[ \begin{cases} \mathrm{P}_{{\scriptscriptstyle X}}\left(X\le x\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(\left(-\infty,x\right]\right)=F_{{\scriptscriptstyle X}}\left(x\right)\\ \mathrm{P}_{{\scriptscriptstyle X}}\left(X=x\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(x\right)=? \end{cases} \]

Definition 18.8 PDF = probability density function

PMF = probability mass function

\[ \begin{cases} f_{{\scriptscriptstyle X}}\left(x\right)=\dfrac{\mathrm{d}}{\mathrm{d}x}F_{{\scriptscriptstyle X}}\left(x\right) & X\text{ continuous RV}\\ f_{{\scriptscriptstyle X}}\left(x\right)=F_{{\scriptscriptstyle X}}\left(x\right)-F_{{\scriptscriptstyle X}}\left(x^{-}\right) & X\text{ discrete RV} \end{cases} \]

\[ \begin{cases} f_{{\scriptscriptstyle X}}\left(x\right)=\text{derivative of }F_{{\scriptscriptstyle X}}\left(x\right) & X\text{ continuous}\\ f_{{\scriptscriptstyle X}}\left(x\right)=\text{difference of }F_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases} \]

\[ \begin{cases} f_{{\scriptscriptstyle X}}\left(x\right)=\dfrac{\mathrm{d}}{\mathrm{d}x}F_{{\scriptscriptstyle X}}\left(x\right) & \Leftrightarrow F_{{\scriptscriptstyle X}}\left(x\right)=\intop\limits _{-\infty}^{x}f_{{\scriptscriptstyle X}}\left(t\right)\mathrm{d}t\\ f_{{\scriptscriptstyle X}}\left(x\right)=F_{{\scriptscriptstyle X}}\left(x\right)-F_{{\scriptscriptstyle X}}\left(x^{-}\right) & \Leftrightarrow F_{{\scriptscriptstyle X}}\left(x\right)=\sum\limits _{t\le x}f_{{\scriptscriptstyle X}}\left(t\right) \end{cases} \]
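For the discrete case, the two directions (CDF as a cumulative sum of the PMF, PMF as the jump of the CDF) can be sketched with a fair die; the die and all variable names are assumptions made for illustration.

```python
from fractions import Fraction
from itertools import accumulate

values = [1, 2, 3, 4, 5, 6]
pmf = {x: Fraction(1, 6) for x in values}   # fair die, assumed

# PMF -> CDF at the support points: F(x) = sum of f(t) over t <= x
cdf = dict(zip(values, accumulate(pmf[x] for x in values)))

def F(x):
    """Step-function CDF evaluated at any real x."""
    return sum((pmf[t] for t in values if t <= x), Fraction(0))

# CDF -> PMF: f(x) = F(x) - F(x^-), the size of the jump at x
recovered = {}
previous = Fraction(0)
for x in values:
    recovered[x] = cdf[x] - previous
    previous = cdf[x]

print(cdf)
print(recovered == pmf)
print(F(3), F(3.5), F(2.999))   # flat between jumps, jump located exactly at 3
```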

\[ \begin{cases} X\sim\mathrm{P}_{{\scriptscriptstyle X}}\leftrightarrow F_{{\scriptscriptstyle X}}\left(x\right)\leftrightarrow f_{{\scriptscriptstyle X}}\left(x\right) & \text{ e.g. probability theory}\\ X\sim F_{{\scriptscriptstyle X}}\left(x\right)\leftrightarrow\mathrm{P}_{{\scriptscriptstyle X}} & \Rightarrow F_{{\scriptscriptstyle X}}\left(x\right)\overset{M}{=}F_{{\scriptscriptstyle X}}\left(x|\theta\right)\text{ e.g. survival analysis}\\ X\sim f_{{\scriptscriptstyle X}}\left(x\right)\leftrightarrow F_{{\scriptscriptstyle X}}\left(x\right)\leftrightarrow\mathrm{P}_{{\scriptscriptstyle X}} & \Rightarrow f_{{\scriptscriptstyle X}}\left(x\right)\overset{M}{=}f_{{\scriptscriptstyle X}}\left(x|\theta\right)\text{ e.g. general statistics} \end{cases} \]

Theorem 18.2 PDF = probability density function or PMF = probability mass function: \(f\left(x\right)\) is a probability density function or probability mass function iff

\[ \begin{cases} \forall x\in\mathbb{R}\left[f\left(x\right)\ge0\right]\\ \begin{cases} \intop\limits _{-\infty}^{+\infty}f\left(x\right)\mathrm{d}x=1 & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}f\left(x\right)=1 & X\text{ discrete} \end{cases} \end{cases} \]

\[ \forall E\subseteq S\left[\mathrm{P}_{{\scriptscriptstyle X}}\left(X\in E\right)=\begin{cases} \int_{x\in E}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in E}f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases}\right] \]

https://www.youtube.com/watch?v=KIXBlj-3M2k

\[ \begin{aligned} \mathrm{P}_{{\scriptscriptstyle X}}\left(X=x\right)= & \lim_{\epsilon\rightarrow0}\mathrm{P}_{{\scriptscriptstyle X}}\left(\left[x-\epsilon,x+\epsilon\right]\right)\\ = & \lim_{\epsilon\rightarrow0}\mathrm{P}_{{\scriptscriptstyle X}}\left(x-\epsilon\le X\le x+\epsilon\right)\\ = & \lim_{\epsilon\rightarrow0}\left[F_{{\scriptscriptstyle X}}\left(x+\epsilon\right)-F_{{\scriptscriptstyle X}}\left(x-\epsilon\right)\right]\\ = & \begin{cases} F_{{\scriptscriptstyle X}}\left(x\right)-F_{{\scriptscriptstyle X}}\left(x\right)=0 & X\text{ continuous}\\ F_{{\scriptscriptstyle X}}\left(x\right)-F_{{\scriptscriptstyle X}}\left(x^{-}\right)=f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases} \end{aligned} \]

\[ X\sim F_{{\scriptscriptstyle X}}\left(x\right)\leftrightarrow\mathrm{P}_{{\scriptscriptstyle X}} \]

\[ Y=g\left(X\right) \]

\[ \begin{cases} Y\sim F_{{\scriptscriptstyle Y}}\left(y\right)\leftrightarrow f_{{\scriptscriptstyle Y}}\left(y\right) & \Rightarrow F_{{\scriptscriptstyle Y}}\left(y\right)\overset{M}{=}F_{{\scriptscriptstyle Y}}\left(y|\theta\right)\\ Y\sim f_{{\scriptscriptstyle Y}}\left(y\right)\leftrightarrow F_{{\scriptscriptstyle Y}}\left(y\right)\leftrightarrow\mathrm{P}_{{\scriptscriptstyle Y}} & \Rightarrow f_{{\scriptscriptstyle Y}}\left(y\right)\overset{M}{=}f_{{\scriptscriptstyle Y}}\left(y|\theta\right) \end{cases} \]

18.1.2.3 range vs. support

Definition 18.9 range of r.v. = range of RV = the range of a random variable

\[ \begin{aligned} \mathcal{R}_{{\scriptscriptstyle X}}=&\left\{ x\middle|\begin{cases} \omega\in S\\ x=X\left(\omega\right) \end{cases}\right\} \\=&\left\{ x\middle|\exists\omega\in S\left[x=X\left(\omega\right)\right]\right\} \\=&\left\{ x\middle|x\in X\left(\Omega\right)\right\} =X\left(\Omega\right),\ \Omega=S \end{aligned} \]

Definition 18.10 support

\[ \mathrm{supp}\left(f\right)=\left\{ x\middle|\begin{cases} f:D\rightarrow\mathbb{R}\\ x\in D\\ f\left(x\right)\ne0 \end{cases}\right\} \]

Definition 18.11 support of r.v. = support of RV = the support of a random variable

\[ \mathrm{supp}\left(f_{{\scriptscriptstyle X}}\right)=\left\{ x\middle|\begin{cases} x\in X\left(\Omega\right)\\ f_{{\scriptscriptstyle X}}\left(x\right)\ne0 \end{cases}\right\} \overset{f_{{\scriptscriptstyle X}}\left(x\right)\ge0}{=}\left\{ x\middle|\begin{cases} x\in X\left(\Omega\right)\\ f_{{\scriptscriptstyle X}}\left(x\right)>0 \end{cases}\right\} \]
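A toy sketch of why range and support can differ: below, a hypothetical three-outcome sample space has one outcome with probability zero, so its image under \(X\) belongs to the range but not to the support. The outcome labels and probabilities are invented for illustration.

```python
from fractions import Fraction

# hypothetical probability on a three-outcome sample space; outcome "c" has probability 0
P = {"a": Fraction(1, 2), "b": Fraction(1, 2), "c": Fraction(0)}
X = {"a": 0, "b": 1, "c": 2}   # random variable: outcome -> real number

# range: every value X takes on the sample space
range_X = {X[w] for w in P}

# PMF of X: add up the probabilities of the outcomes mapped to each value
pmf = {}
for w, p in P.items():
    pmf[X[w]] = pmf.get(X[w], Fraction(0)) + p

# support: values in the range where the PMF is strictly positive
support_X = {x for x, fx in pmf.items() if fx > 0}

print(range_X, support_X)
```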

18.1.2.4 continuous monotone transformation

Theorem 18.3 If random variable \(Y\) is a strictly monotone (increasing or decreasing) transformation of a continuous random variable \(X\) with differentiable inverse \(g^{-1}\), i.e. \(\begin{cases} X\sim F_{{\scriptscriptstyle X}}\left(x\right)\leftrightarrow f_{{\scriptscriptstyle X}}\left(x\right)\\ Y=g\left(X\right)\begin{cases} \forall x_{{\scriptscriptstyle 1}}<x_{{\scriptscriptstyle 2}}\left[g\left(x_{{\scriptscriptstyle 1}}\right)<g\left(x_{{\scriptscriptstyle 2}}\right)\right]\\ \forall x_{{\scriptscriptstyle 1}}<x_{{\scriptscriptstyle 2}}\left[g\left(x_{{\scriptscriptstyle 1}}\right)>g\left(x_{{\scriptscriptstyle 2}}\right)\right] \end{cases}\Rightarrow & \exists g^{-1}:Y\rightarrow X \end{cases}\), then

\[ f_{{\scriptscriptstyle Y}}\left(y\right)=f_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right)\left|\dfrac{\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y}\right| \]

Proof:

\[ \begin{aligned} F_{{\scriptscriptstyle Y}}\left(y\right)= & \mathrm{P}_{{\scriptscriptstyle Y}}\left(Y\le y\right)\\ = & \mathrm{P}\left(g\left(X\right)\le y\right)\begin{cases} \forall x_{{\scriptscriptstyle 1}}<x_{{\scriptscriptstyle 2}}\left[g\left(x_{{\scriptscriptstyle 1}}\right)<g\left(x_{{\scriptscriptstyle 2}}\right)\right] & \Leftrightarrow\forall g\left(x_{{\scriptscriptstyle 1}}\right)<g\left(x_{{\scriptscriptstyle 2}}\right)\left[x_{{\scriptscriptstyle 1}}<x_{{\scriptscriptstyle 2}}\right]\\ \forall x_{{\scriptscriptstyle 1}}<x_{{\scriptscriptstyle 2}}\left[g\left(x_{{\scriptscriptstyle 1}}\right)>g\left(x_{{\scriptscriptstyle 2}}\right)\right] & \Leftrightarrow\forall g\left(x_{{\scriptscriptstyle 1}}\right)>g\left(x_{{\scriptscriptstyle 2}}\right)\left[x_{{\scriptscriptstyle 1}}<x_{{\scriptscriptstyle 2}}\right] \end{cases}\\ = & \begin{cases} \mathrm{P}_{{\scriptscriptstyle X}}\left(X\le g^{-1}\left(y\right)=x\right) & \forall y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)<g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right]\\ \mathrm{P}_{{\scriptscriptstyle X}}\left(X\ge g^{-1}\left(y\right)=x\right) & \forall y_{{\scriptscriptstyle 1}}>y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)<g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right] \end{cases}\\ = & \begin{cases} \mathrm{P}_{{\scriptscriptstyle X}}\left(X\le g^{-1}\left(y\right)=x\right) & \forall y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)<g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right]\\ \mathrm{P}_{{\scriptscriptstyle X}}\left(X\ge g^{-1}\left(y\right)=x\right) & \forall y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)>g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right] \end{cases}\\ = & \begin{cases} F_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right) & \forall 
y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)<g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right]\\ 1-F_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right) & \forall y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)>g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right] \end{cases}\\ F_{{\scriptscriptstyle Y}}\left(y\right)= & \begin{cases} F_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right) & \forall y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)<g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right]\\ 1-F_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right) & \forall y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)>g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right] \end{cases} \end{aligned} \]

\[ \begin{aligned} f_{{\scriptscriptstyle Y}}\left(y\right)=\dfrac{\mathrm{d}}{\mathrm{d}y}F_{{\scriptscriptstyle Y}}\left(y\right)= & \begin{cases} \dfrac{\mathrm{d}}{\mathrm{d}y}F_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right) & \forall y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)<g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right]\\ \dfrac{\mathrm{d}}{\mathrm{d}y}\left[1-F_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right)\right] & \forall y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)>g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right] \end{cases}\\ = & \begin{cases} \dfrac{\mathrm{d}F_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right)}{\mathrm{d}g^{-1}\left(y\right)}\dfrac{\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y} & \forall y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)<g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right]\\ \dfrac{-\mathrm{d}F_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right)}{\mathrm{d}g^{-1}\left(y\right)}\dfrac{\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y} & \forall y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)>g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right] \end{cases}\\ = & \begin{cases} \dfrac{\mathrm{d}F_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right)}{\mathrm{d}g^{-1}\left(y\right)}\dfrac{\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y} & \forall y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)<g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right]\\ \dfrac{\mathrm{d}F_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right)}{\mathrm{d}g^{-1}\left(y\right)}\dfrac{-\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y} & \forall y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 
2}}\left[g^{-1}\left(y_{{\scriptscriptstyle 1}}\right)>g^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right] \end{cases}\\ = & \begin{cases} f_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right)\dfrac{\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y} & \begin{cases} \dfrac{\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y}\ge0\\ f_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right)\ge0 \end{cases}\Rightarrow f_{{\scriptscriptstyle Y}}\left(y\right)\ge0\\ f_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right)\dfrac{-\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y} & \begin{cases} \dfrac{-\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y}\ge0\\ f_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right)\ge0 \end{cases}\Rightarrow f_{{\scriptscriptstyle Y}}\left(y\right)\ge0 \end{cases}\\ f_{{\scriptscriptstyle Y}}\left(y\right)= & \begin{cases} f_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right)\dfrac{\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y} & \dfrac{\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y}\ge0\\ f_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right)\dfrac{-\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y} & \dfrac{-\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y}\ge0 \end{cases}\\ f_{{\scriptscriptstyle Y}}\left(y\right)= & f_{{\scriptscriptstyle X}}\left(g^{-1}\left(y\right)\right)\left|\dfrac{\mathrm{d}g^{-1}\left(y\right)}{\mathrm{d}y}\right| \end{aligned} \]

\[ \tag*{$\Box$} \]
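The formula just derived can be sanity-checked numerically. The sketch below is only an illustration under assumptions that are not in the notes: \(X\) is taken to be standard normal, and the two monotone maps \(g\left(x\right)=e^{x}\) (increasing) and \(g\left(x\right)=e^{-x}\) (decreasing) are hypothetical choices. It compares \(f_{X}\left(g^{-1}\left(y\right)\right)\left|\mathrm{d}g^{-1}\left(y\right)/\mathrm{d}y\right|\) with a finite-difference derivative of \(F_{Y}\).

```python
import math

def normal_pdf(x):
    """Density f_X of a standard normal X (the example distribution assumed here)."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)

def normal_cdf(x):
    """CDF F_X of the standard normal, written with the error function."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def transformed_pdf(pdf_x, g_inv, dg_inv_dy, y):
    """f_Y(y) = f_X(g^{-1}(y)) * |d g^{-1}(y) / dy| for a monotone g."""
    return pdf_x(g_inv(y)) * abs(dg_inv_dy(y))

def numeric_derivative(func, y, h=1e-5):
    """Central finite-difference approximation of d func / d y."""
    return (func(y + h) - func(y - h)) / (2.0 * h)

# Increasing case: Y = exp(X), so g^{-1}(y) = log(y) and F_Y(y) = F_X(log y).
def pdf_increasing(y):
    return transformed_pdf(normal_pdf, math.log, lambda t: 1.0 / t, y)

def cdf_increasing(y):
    return normal_cdf(math.log(y))

# Decreasing case: Y = exp(-X), so g^{-1}(y) = -log(y) and F_Y(y) = 1 - F_X(-log y).
def pdf_decreasing(y):
    return transformed_pdf(normal_pdf, lambda t: -math.log(t), lambda t: -1.0 / t, y)

def cdf_decreasing(y):
    return 1.0 - normal_cdf(-math.log(y))

for y in (0.5, 1.0, 2.0):
    print(y, pdf_increasing(y), numeric_derivative(cdf_increasing, y))
    print(y, pdf_decreasing(y), numeric_derivative(cdf_decreasing, y))
```

In the decreasing case the derivative of \(g^{-1}\) is negative, so the absolute value is what keeps the density non-negative.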

When \(g\) is not monotone on the whole support of \(X\), split the support into pieces on which \(g\) is monotone and treat each piece separately.

For example, \(\begin{cases} g\left(x\right)=x^{2}\\ Y=g\left(X\right) \end{cases}\Rightarrow Y=g\left(X\right)=X^{2}\),

\[ \begin{cases} Y=g\left(X\right)=X^{2}\\ X\in\left(-\infty,+\infty\right) \end{cases} \]

\[ \begin{aligned} & Y=g\left(X\right)=X^{2}\\ \Rightarrow & Y=\begin{cases} X^{2}=g\left(X\right) & X\ge0\Leftrightarrow X\in\left[0,+\infty\right)\Rightarrow\forall X_{{\scriptscriptstyle 1}}<X_{{\scriptscriptstyle 2}}\left[X_{{\scriptscriptstyle 1}}^{2}<X_{{\scriptscriptstyle 2}}^{2}\right]\\ X^{2}=g\left(X\right) & X<0\Leftrightarrow X\in\left(-\infty,0\right)\Rightarrow\forall X_{{\scriptscriptstyle 1}}<X_{{\scriptscriptstyle 2}}\left[X_{{\scriptscriptstyle 1}}^{2}>X_{{\scriptscriptstyle 2}}^{2}\right] \end{cases}\\ \Rightarrow & X=\begin{cases} \sqrt{Y}=g^{-1}\left(Y\right) & X\ge0\Rightarrow\forall X_{{\scriptscriptstyle 1}}^{2}<X_{{\scriptscriptstyle 2}}^{2}\left[X_{{\scriptscriptstyle 1}}<X_{{\scriptscriptstyle 2}}\right]\Rightarrow\forall Y_{{\scriptscriptstyle 1}}<Y_{{\scriptscriptstyle 2}}\left[X_{{\scriptscriptstyle 1}}<X_{{\scriptscriptstyle 2}}\right]\\ -\sqrt{Y}=g^{-1}\left(Y\right) & X<0\Rightarrow\forall X_{{\scriptscriptstyle 1}}^{2}<X_{{\scriptscriptstyle 2}}^{2}\left[X_{{\scriptscriptstyle 1}}>X_{{\scriptscriptstyle 2}}\right]\Rightarrow\forall Y_{{\scriptscriptstyle 1}}<Y_{{\scriptscriptstyle 2}}\left[X_{{\scriptscriptstyle 1}}>X_{{\scriptscriptstyle 2}}\right] \end{cases}\\ \Rightarrow & X=\begin{cases} \sqrt{Y}=g^{-1}\left(Y\right) & Y\in\left[0,\infty\right)\Rightarrow X\ge0\Rightarrow\forall Y_{{\scriptscriptstyle 1}}<Y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(Y_{{\scriptscriptstyle 1}}\right)<g^{-1}\left(Y_{{\scriptscriptstyle 2}}\right)\right]\\ -\sqrt{Y}=g^{-1}\left(Y\right) & Y\in\left[0,\infty\right)\Rightarrow X<0\Rightarrow\forall Y_{{\scriptscriptstyle 1}}<Y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(Y_{{\scriptscriptstyle 1}}\right)>g^{-1}\left(Y_{{\scriptscriptstyle 2}}\right)\right] \end{cases} \end{aligned} \]

\[ \begin{aligned} F_{{\scriptscriptstyle Y}}\left(y\right)= & \mathrm{P}_{{\scriptscriptstyle Y}}\left(Y\le y\right)=\mathrm{P}\left(X^{2}\le y\right)\\ = & \mathrm{P}\left(\left\{ X^{2}\le y\right\} \cap\left(\left\{ X<0\right\} \cup\left\{ X\ge0\right\} \right)\right)\\ = & \mathrm{P}\left(\left(\left\{ X^{2}\le y\right\} \cap\left\{ X<0\right\} \right)\cup\left(\left\{ X^{2}\le y\right\} \cap\left\{ X\ge0\right\} \right)\right)\\ = & \mathrm{P}\left(\left\{ X^{2}\le y\right\} \cap\left\{ X<0\right\} \right)+\mathrm{P}\left(\left\{ X^{2}\le y\right\} \cap\left\{ X\ge0\right\} \right)\\ & -\mathrm{P}\left(\left(\left\{ X^{2}\le y\right\} \cap\left\{ X<0\right\} \right)\cap\left(\left\{ X^{2}\le y\right\} \cap\left\{ X\ge0\right\} \right)\right)\\ = & \mathrm{P}\left(\left\{ X^{2}\le y\right\} \cap\left\{ X<0\right\} \right)+\mathrm{P}\left(\left\{ X^{2}\le y\right\} \cap\left\{ X\ge0\right\} \right)-\mathrm{P}\left(\emptyset\right)\\ = & \mathrm{P}\left(\left\{ X^{2}\le y\right\} \cap\left\{ X<0\right\} \right)+\mathrm{P}\left(\left\{ X^{2}\le y\right\} \cap\left\{ X\ge0\right\} \right)-0\\ = & \mathrm{P}\left(\left\{ X^{2}\le y\right\} \cap\left\{ X<0\right\} \right)+\mathrm{P}\left(\left\{ X^{2}\le y\right\} \cap\left\{ X\ge0\right\} \right)\\ = & \mathrm{P}\left(\left\{ -X\le\sqrt{y}\right\} \cap\left\{ X<0\right\} \right)+\mathrm{P}\left(\left\{ X\le\sqrt{y}\right\} \cap\left\{ X\ge0\right\} \right)\\ = & \mathrm{P}\left(\left\{ X\ge-\sqrt{y}\right\} \cap\left\{ X<0\right\} \right)+\mathrm{P}\left(\left\{ X\le\sqrt{y}\right\} \cap\left\{ X\ge0\right\} \right)\\ = & \mathrm{P}_{{\scriptscriptstyle X}}\left(-\sqrt{y}\le X<0\right)+\mathrm{P}_{{\scriptscriptstyle X}}\left(0\le X\le\sqrt{y}\right)\\ = & \left[F_{{\scriptscriptstyle X}}\left(0\right)-F_{{\scriptscriptstyle X}}\left(-\sqrt{y}\right)\right]+\left[F_{{\scriptscriptstyle X}}\left(\sqrt{y}\right)-F_{{\scriptscriptstyle X}}\left(0\right)\right]\\ = & F_{{\scriptscriptstyle 
X}}\left(\sqrt{y}\right)-F_{{\scriptscriptstyle X}}\left(-\sqrt{y}\right) \end{aligned} \]

\[ \tag*{$\Box$} \]
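As a rough check of \(F_{Y}\left(y\right)=F_{X}\left(\sqrt{y}\right)-F_{X}\left(-\sqrt{y}\right)\), the following sketch assumes (purely for illustration) that \(X\) is standard normal and compares the formula with an empirical CDF of simulated values of \(X^{2}\). The seed and sample size are arbitrary choices.

```python
import math
import random

def normal_cdf(x):
    """CDF F_X of the standard normal (assumed example distribution)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

def cdf_of_square(y):
    """F_Y(y) = F_X(sqrt(y)) - F_X(-sqrt(y)) for y >= 0, and 0 for y < 0."""
    if y < 0:
        return 0.0
    root = math.sqrt(y)
    return normal_cdf(root) - normal_cdf(-root)

# Simulate Y = X^2 with a fixed seed so the run is reproducible.
rng = random.Random(0)
samples = [rng.gauss(0.0, 1.0) ** 2 for _ in range(200_000)]

def empirical_cdf(y):
    """Fraction of simulated values of Y that are <= y."""
    return sum(s <= y for s in samples) / len(samples)

for y in (0.25, 1.0, 4.0):
    print(y, cdf_of_square(y), empirical_cdf(y))
```

The two columns should agree up to Monte Carlo error, which shrinks like one over the square root of the sample size.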

Another example, \(\begin{cases} Y=g\left(X\right)=X^{2}\\ X\in\left[-1,\infty\right) \end{cases}\),

\[ \begin{aligned} & Y=g\left(X\right)=X^{2}\\ \Rightarrow & Y=\begin{cases} X^{2}=g\left(X\right) & X\ge0\Leftrightarrow X\in\left[0,+\infty\right)\Rightarrow\forall X_{{\scriptscriptstyle 1}}<X_{{\scriptscriptstyle 2}}\left[X_{{\scriptscriptstyle 1}}^{2}<X_{{\scriptscriptstyle 2}}^{2}\right]\\ X^{2}=g\left(X\right) & -1\le X<0\Leftrightarrow X\in\left[-1,0\right)\Rightarrow\forall X_{{\scriptscriptstyle 1}}<X_{{\scriptscriptstyle 2}}\left[X_{{\scriptscriptstyle 1}}^{2}>X_{{\scriptscriptstyle 2}}^{2}\right] \end{cases}\\ \Rightarrow & X=\begin{cases} \sqrt{Y}=g^{-1}\left(Y\right) & X\in\left[0,\infty\right)\Rightarrow\forall X_{{\scriptscriptstyle 1}}^{2}<X_{{\scriptscriptstyle 2}}^{2}\left[X_{{\scriptscriptstyle 1}}<X_{{\scriptscriptstyle 2}}\right]\Rightarrow\forall Y_{{\scriptscriptstyle 1}}<Y_{{\scriptscriptstyle 2}}\left[X_{{\scriptscriptstyle 1}}<X_{{\scriptscriptstyle 2}}\right]\\ -\sqrt{Y}=g^{-1}\left(Y\right) & X\in\left[-1,0\right)\Rightarrow\forall X_{{\scriptscriptstyle 1}}^{2}<X_{{\scriptscriptstyle 2}}^{2}\left[X_{{\scriptscriptstyle 1}}>X_{{\scriptscriptstyle 2}}\right]\Rightarrow\forall Y_{{\scriptscriptstyle 1}}<Y_{{\scriptscriptstyle 2}}\left[X_{{\scriptscriptstyle 1}}>X_{{\scriptscriptstyle 2}}\right] \end{cases}\\ \Rightarrow & X=\begin{cases} \sqrt{Y}=g^{-1}\left(Y\right) & Y\in\left[0,\infty\right)\Rightarrow X\in\left[0,\infty\right)\Rightarrow\forall Y_{{\scriptscriptstyle 1}}<Y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(Y_{{\scriptscriptstyle 1}}\right)<g^{-1}\left(Y_{{\scriptscriptstyle 2}}\right)\right]\\ -\sqrt{Y}=g^{-1}\left(Y\right) & Y\in\left(0,1\right]\Rightarrow X\in\left[-1,0\right)\Rightarrow\forall Y_{{\scriptscriptstyle 1}}<Y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(Y_{{\scriptscriptstyle 1}}\right)>g^{-1}\left(Y_{{\scriptscriptstyle 2}}\right)\right] \end{cases}\\ \Rightarrow & X=\begin{cases} \sqrt{Y}=g^{-1}\left(Y\right) & Y\in\left(1,\infty\right)\Rightarrow X\in\left(1,\infty\right)\Rightarrow\forall 
Y_{{\scriptscriptstyle 1}}<Y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(Y_{{\scriptscriptstyle 1}}\right)<g^{-1}\left(Y_{{\scriptscriptstyle 2}}\right)\right]\\ \sqrt{Y}=g^{-1}\left(Y\right) & Y\in\left[0,1\right]\Rightarrow X\in\left[0,1\right]\Rightarrow\forall Y_{{\scriptscriptstyle 1}}<Y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(Y_{{\scriptscriptstyle 1}}\right)<g^{-1}\left(Y_{{\scriptscriptstyle 2}}\right)\right]\\ -\sqrt{Y}=g^{-1}\left(Y\right) & Y\in\left(0,1\right]\Rightarrow X\in\left[-1,0\right)\Rightarrow\forall Y_{{\scriptscriptstyle 1}}<Y_{{\scriptscriptstyle 2}}\left[g^{-1}\left(Y_{{\scriptscriptstyle 1}}\right)>g^{-1}\left(Y_{{\scriptscriptstyle 2}}\right)\right] \end{cases} \end{aligned} \]

\[ \begin{aligned} & F_{{\scriptscriptstyle Y}}\left(y\right)=\mathrm{P}_{{\scriptscriptstyle Y}}\left(Y\le y\right)=\mathrm{P}\left(X^{2}\le y\right),\begin{cases} Y=g\left(X\right)=X^{2}\\ X\in\left[-1,\infty\right) \end{cases},y\ge0\\ = & \mathrm{P}\left(\left\{ X^{2}\le y\right\} \cap\left(\left\{ X<0\right\} \cup\left\{ X\ge0\right\} \right)\right)=\cdots\text{ as }\begin{cases} Y=g\left(X\right)=X^{2}\\ X\in\left(-\infty,+\infty\right) \end{cases}\\ = & \mathrm{P}\left(\left\{ X^{2}\le y\right\} \cap\left\{ X<0\right\} \right)+\mathrm{P}\left(\left\{ X^{2}\le y\right\} \cap\left\{ X\ge0\right\} \right)\\ = & \mathrm{P}\left(\left\{ X\ge-\sqrt{y}\right\} \cap\left\{ -1\le X<0\right\} \right)+\mathrm{P}\left(\left\{ X\le\sqrt{y}\right\} \cap\left\{ X\ge0\right\} \right)\\ = & \mathrm{P}_{{\scriptscriptstyle X}}\left(\max\left\{ -1,-\sqrt{y}\right\} \le X<0\right)+\mathrm{P}_{{\scriptscriptstyle X}}\left(0\le X\le\sqrt{y}\right)\\ = & \begin{cases} \mathrm{P}_{{\scriptscriptstyle X}}\left(-1\le X<0\right)+\mathrm{P}_{{\scriptscriptstyle X}}\left(0\le X\le\sqrt{y}\right) & y>1\\ \mathrm{P}_{{\scriptscriptstyle X}}\left(-\sqrt{y}\le X<0\right)+\mathrm{P}_{{\scriptscriptstyle X}}\left(0\le X\le\sqrt{y}\right) & 0\le y\le1 \end{cases}\\ = & \begin{cases} \left[F_{{\scriptscriptstyle X}}\left(0\right)-F_{{\scriptscriptstyle X}}\left(-1\right)\right]+\left[F_{{\scriptscriptstyle X}}\left(\sqrt{y}\right)-F_{{\scriptscriptstyle X}}\left(0\right)\right] & y>1\\ \left[F_{{\scriptscriptstyle X}}\left(0\right)-F_{{\scriptscriptstyle X}}\left(-\sqrt{y}\right)\right]+\left[F_{{\scriptscriptstyle X}}\left(\sqrt{y}\right)-F_{{\scriptscriptstyle X}}\left(0\right)\right] & 0\le y\le1 \end{cases}\\ = & \begin{cases} F_{{\scriptscriptstyle X}}\left(\sqrt{y}\right)-F_{{\scriptscriptstyle X}}\left(-1\right)=F_{{\scriptscriptstyle X}}\left(\sqrt{y}\right) & y>1\text{, since }F_{{\scriptscriptstyle X}}\left(-1\right)=0\\ F_{{\scriptscriptstyle X}}\left(\sqrt{y}\right)-F_{{\scriptscriptstyle X}}\left(-\sqrt{y}\right) & 0\le y\le1 \end{cases} \end{aligned} \]

\[ \tag*{$\Box$} \]

18.1.2.5 discrete monotone transformation

\[ \begin{cases} Y=g\left(X\right)=X^{2}\\ X\text{ discrete}\Rightarrow & Y\text{ discrete} \end{cases} \]

\[ \begin{aligned} f_{{\scriptscriptstyle Y}}\left(y\right)= & \mathrm{P}_{{\scriptscriptstyle Y}}\left(Y=y\right)\\ = & \mathrm{P}\left(X^{2}=y\right)\\ = & \mathrm{P}\left(\left\{ X=\sqrt{y}\right\} \cup\left\{ X=-\sqrt{y}\right\} \right),y>0\\ = & \mathrm{P}\left(\left\{ X=\sqrt{y}\right\} \right)+\mathrm{P}\left(\left\{ X=-\sqrt{y}\right\} \right)-\mathrm{P}\left(\left\{ X=\sqrt{y}\right\} \cap\left\{ X=-\sqrt{y}\right\} \right)\\ = & \mathrm{P}_{{\scriptscriptstyle X}}\left(X=\sqrt{y}\right)+\mathrm{P}_{{\scriptscriptstyle X}}\left(X=-\sqrt{y}\right)-\mathrm{P}\left(\emptyset\right)\\ = & \mathrm{P}_{{\scriptscriptstyle X}}\left(X=\sqrt{y}\right)+\mathrm{P}_{{\scriptscriptstyle X}}\left(X=-\sqrt{y}\right)-0\\ = & \mathrm{P}_{{\scriptscriptstyle X}}\left(X=\sqrt{y}\right)+\mathrm{P}_{{\scriptscriptstyle X}}\left(X=-\sqrt{y}\right)\\ = & f_{{\scriptscriptstyle X}}\left(\sqrt{y}\right)+f_{{\scriptscriptstyle X}}\left(-\sqrt{y}\right),y>0\\ f_{{\scriptscriptstyle Y}}\left(0\right)= & \mathrm{P}\left(X^{2}=0\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(X=0\right)=f_{{\scriptscriptstyle X}}\left(0\right) \end{aligned} \]

\[ \tag*{$\Box$} \]

Theorem 18.4 discrete monotone transformation

\[ \begin{array}{c} \begin{cases} Y=g\left(X\right)\\ X\text{ discrete}\Rightarrow & Y\text{ discrete} \end{cases}\\ \Downarrow\\ f_{{\scriptscriptstyle Y}}\left(y\right)=\sum\limits _{\left\{ x\middle|g\left(x\right)=y\right\} }f_{{\scriptscriptstyle X}}\left(x\right)=\sum\limits _{\left\{ x\middle|x=g^{-1}\left(y\right)\right\} }f_{{\scriptscriptstyle X}}\left(x\right) \end{array} \]

Proof:

\[ \begin{aligned} f_{{\scriptscriptstyle Y}}\left(y\right)= & \mathrm{P}_{{\scriptscriptstyle Y}}\left(Y=y\right)\\ = & \mathrm{P}\left(g\left(X\right)=y\right)=\sum_{t\in\left\{ x\middle|g\left(x\right)=y\right\} }f_{{\scriptscriptstyle X}}\left(t\right)=\sum_{x\in\left\{ x\middle|g\left(x\right)=y\right\} }f_{{\scriptscriptstyle X}}\left(x\right)=\sum\limits _{\left\{ x\middle|g\left(x\right)=y\right\} }f_{{\scriptscriptstyle X}}\left(x\right)\\ = & \mathrm{P}_{{\scriptscriptstyle X}}\left(X=g^{-1}\left(y\right)\right)=\sum_{t\in\left\{ x\middle|x=g^{-1}\left(y\right)\right\} }f_{{\scriptscriptstyle X}}\left(t\right)=\sum_{x\in\left\{ x\middle|x=g^{-1}\left(y\right)\right\} }f_{{\scriptscriptstyle X}}\left(x\right)=\sum\limits _{\left\{ x\middle|x=g^{-1}\left(y\right)\right\} }f_{{\scriptscriptstyle X}}\left(x\right) \end{aligned} \]

\[ \begin{array}{ccccccccc} f_{{\scriptscriptstyle Y}}\left(y\right) & = & \mathrm{P}_{{\scriptscriptstyle Y}}\left(Y=y\right)\\ & = & \mathrm{P}\left(g\left(X\right)=y\right) & = & \sum\limits _{t\in\left\{ x\middle|g\left(x\right)=y\right\} }f_{{\scriptscriptstyle X}}\left(t\right) & = & \sum\limits _{x\in\left\{ x\middle|g\left(x\right)=y\right\} }f_{{\scriptscriptstyle X}}\left(x\right) & = & \sum\limits _{\left\{ x\middle|g\left(x\right)=y\right\} }f_{{\scriptscriptstyle X}}\left(x\right)\\ & = & \mathrm{P}_{{\scriptscriptstyle X}}\left(X=g^{-1}\left(y\right)\right) & = & \sum\limits _{t\in\left\{ x\middle|x=g^{-1}\left(y\right)\right\} }f_{{\scriptscriptstyle X}}\left(t\right) & = & \sum\limits _{x\in\left\{ x\middle|x=g^{-1}\left(y\right)\right\} }f_{{\scriptscriptstyle X}}\left(x\right) & = & \sum\limits _{\left\{ x\middle|x=g^{-1}\left(y\right)\right\} }f_{{\scriptscriptstyle X}}\left(x\right) \end{array} \]

\[ \tag*{$\Box$} \]
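The sum over \(\left\{ x\middle|g\left(x\right)=y\right\}\) in Theorem 18.4 translates directly into a loop that accumulates mass by image value. The sketch below is a minimal illustration; the helper name `pmf_of_transform` and the example distribution (uniform on \(\left\{ -2,-1,0,1,2\right\}\)) are assumptions, not part of the notes.

```python
from collections import defaultdict
from fractions import Fraction

def pmf_of_transform(pmf_x, g):
    """Return the PMF of Y = g(X): f_Y(y) is the sum of f_X(x) over {x | g(x) = y}."""
    pmf_y = defaultdict(Fraction)  # missing keys start at Fraction(0)
    for x, p in pmf_x.items():
        pmf_y[g(x)] += p
    return dict(pmf_y)

# Hypothetical example: X uniform on {-2, -1, 0, 1, 2} and Y = X^2.
pmf_x = {x: Fraction(1, 5) for x in (-2, -1, 0, 1, 2)}
pmf_y = pmf_of_transform(pmf_x, lambda x: x * x)
print(pmf_y)
```

Here \(y=1\) and \(y=4\) each collect mass from two preimages, while \(y=0\) has the single preimage \(x=0\).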

Theorem 18.5 probability integral transformation

\[ \begin{array}{c} \begin{cases} \begin{cases} X\text{ continuous} & \left(c\right)\\ X\sim F_{{\scriptscriptstyle X}}\left(x\right) & \left(d\right) \end{cases}\\ Y=F_{{\scriptscriptstyle X}}\left(X\right) & \left(t\right) \end{cases}\\ \Downarrow\\ F_{{\scriptscriptstyle Y}}\left(y\right)=y,\forall y\in\left[0,1\right]\\ \Updownarrow\text{def.}\\ Y\sim U=U\left(y\right)\Leftrightarrow Y\sim U\left(y\right)\Leftrightarrow Y\text{ is uniformly distributed on }\left[0,1\right] \end{array} \]

Proof:

\[ \begin{aligned} F_{{\scriptscriptstyle Y}}\left(y\right)= & \mathrm{P}_{{\scriptscriptstyle Y}}\left(Y\le y\right)\overset{\left(t\right)}{=}\mathrm{P}\left(F_{{\scriptscriptstyle X}}\left(X\right)\le y\right),\forall x_{{\scriptscriptstyle 1}}<x_{{\scriptscriptstyle 2}}\left[F_{{\scriptscriptstyle X}}\left(x_{{\scriptscriptstyle 1}}\right)<F_{{\scriptscriptstyle X}}\left(x_{{\scriptscriptstyle 2}}\right)\right]\Rightarrow\begin{cases} \exists F_{{\scriptscriptstyle X}}^{-1}:Y\rightarrow X\\ \forall y_{{\scriptscriptstyle 1}}<y_{{\scriptscriptstyle 2}}\left[F_{{\scriptscriptstyle X}}^{-1}\left(y_{{\scriptscriptstyle 1}}\right)<F_{{\scriptscriptstyle X}}^{-1}\left(y_{{\scriptscriptstyle 2}}\right)\right] \end{cases}\\ = & \mathrm{P}_{{\scriptscriptstyle X}}\left(X\le F_{{\scriptscriptstyle X}}^{-1}\left(y\right)=x\right)=\mathrm{P}_{{\scriptscriptstyle X}}\left(X\le x\right),x=F_{{\scriptscriptstyle X}}^{-1}\left(y\right)\\ = & \mathrm{P}_{{\scriptscriptstyle X}}\left(X\le x\right)=F_{{\scriptscriptstyle X}}\left(x\right)\overset{x=F_{{\scriptscriptstyle X}}^{-1}\left(y\right)}{=}F_{{\scriptscriptstyle X}}\left(F_{{\scriptscriptstyle X}}^{-1}\left(y\right)\right)=y\\ F_{{\scriptscriptstyle Y}}\left(y\right)= & y \end{aligned} \]

\[ \tag*{$\Box$} \]

Note:

According to Theorem 18.5,

\[ \begin{aligned} & U=F_{{\scriptscriptstyle X}}\left(X\right)\overset{\text{Thm. 18.5}}{\sim}U\left(u\right)\text{ on }\left[0,1\right]\\ \Rightarrow & X=F_{{\scriptscriptstyle X}}^{-1}\left(U\right)\wedge X\sim F_{{\scriptscriptstyle X}}\left(x\right)\Rightarrow F_{{\scriptscriptstyle X}}^{-1}\left(U\right)=X\sim F_{{\scriptscriptstyle X}}\left(x\right)\Rightarrow F_{{\scriptscriptstyle X}}^{-1}\left(U\right)\sim F_{{\scriptscriptstyle X}}\left(x\right)\\ \Rightarrow & X=F_{{\scriptscriptstyle X}}^{-1}\left(U\right)\sim F_{{\scriptscriptstyle X}}\left(x\right)\\ \text{i.e. } & \text{substituting a uniform random variable into the inverse of }F_{{\scriptscriptstyle X}}\\ & \text{yields a random variable that follows }F_{{\scriptscriptstyle X}}\left(x\right) \end{aligned} \]
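This note is the basis of inverse transform sampling. As a minimal sketch, assume an exponential distribution with rate \(\lambda\) (an example chosen here, not in the notes), for which \(F_{X}\left(x\right)=1-e^{-\lambda x}\) and \(F_{X}^{-1}\left(u\right)=-\ln\left(1-u\right)/\lambda\). The code draws uniform numbers, maps them through \(F_{X}^{-1}\), and then maps the draws back through \(F_{X}\) to illustrate Theorem 18.5.

```python
import math
import random

def exponential_cdf(x, rate):
    """F_X(x) = 1 - exp(-rate * x) for x >= 0, and 0 otherwise."""
    return 1.0 - math.exp(-rate * x) if x >= 0 else 0.0

def exponential_quantile(u, rate):
    """F_X^{-1}(u) = -log(1 - u) / rate for u in [0, 1)."""
    return -math.log(1.0 - u) / rate

rng = random.Random(1)  # fixed seed for reproducibility
rate = 2.0              # hypothetical rate parameter

# X = F_X^{-1}(U) with U uniform on [0, 1).
draws = [exponential_quantile(rng.random(), rate) for _ in range(100_000)]
sample_mean = sum(draws) / len(draws)  # should be near 1 / rate

# Mapping back: F_X(X) should look uniform on [0, 1], so its mean is near 1/2.
mean_of_cdf_values = sum(exponential_cdf(x, rate) for x in draws) / len(draws)

print(sample_mean, mean_of_cdf_values)
```

`random.Random.random()` returns values in \(\left[0,1\right)\), so the argument of the logarithm stays strictly positive.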

18.1.2.6 expected value

\[ \mathrm{E}\left(g\left(X\right)\right)=\mathrm{E}\left[g\left(X\right)\right]=\mathrm{E}g\left(X\right)=\mathbb{E}\left[g\left(X\right)\right]=\mathbb{E}g\left(X\right) \]

Definition 18.12 expected value: The expected value of a random variable \(g\left(X\right)\) is

\[ \mathrm{E}\left(g\left(X\right)\right)=\mathrm{E}\left[g\left(X\right)\right]=\begin{cases} \intop\limits _{-\infty}^{+\infty}g\left(x\right)f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}g\left(x\right)f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases} \]

6 p.126

Definition 18.13 expected value or expectation function: The expected value of a random variable \(X\) is

\[ \mathrm{E}\left(X\right)=\mathrm{E}\left[X\right]=\begin{cases} \intop\limits _{-\infty}^{+\infty}xf_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}xf_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases} \]

Theorem 18.6 the rule of the lazy statistician

the law of the unconscious statistician = the LOTUS

\[ \mathrm{E}\left(g\left(X\right)\right)=\mathrm{E}\left[g\left(X\right)\right]=\begin{cases} \intop\limits _{-\infty}^{+\infty}g\left(x\right)f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}g\left(x\right)f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases} \]

Proof:7 p.162 for p.119

Discrete case:

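\[ \begin{aligned} \mathrm{E}\left[g\left(X\right)\right]=\mathrm{E}\left[Y\right]= & \sum\limits _{y\in Y\left(\Omega\right)}y\thinspace f_{{\scriptscriptstyle Y}}\left(y\right),Y=g\left(X\right)\\ = & \sum\limits _{y\in Y\left(\Omega\right)}y\sum\limits _{\left\{ x\middle|g\left(x\right)=y\right\} }f_{{\scriptscriptstyle X}}\left(x\right)\text{ by Theorem 18.4}\\ = & \sum\limits _{y\in Y\left(\Omega\right)}\sum\limits _{\left\{ x\middle|g\left(x\right)=y\right\} }g\left(x\right)f_{{\scriptscriptstyle X}}\left(x\right)\\ = & \sum\limits _{x\in X\left(\Omega\right)}g\left(x\right)f_{{\scriptscriptstyle X}}\left(x\right),\text{ since the sets }\left\{ x\middle|g\left(x\right)=y\right\} \text{ partition }X\left(\Omega\right)\\ & \text{(assuming the sum converges absolutely, so that terms may be regrouped)} \end{aligned} \]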

Continuous case:

\[ \begin{aligned} \text{to be proved} \end{aligned} \]

\[ \tag*{$\Box$} \]
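For a discrete \(X\), the statement of Theorem 18.6 can be checked by computing \(\mathrm{E}\left[g\left(X\right)\right]\) two ways: first through the PMF of \(Y=g\left(X\right)\), then directly against \(f_{X}\). The sketch below assumes a fair six-sided die and the hypothetical choice \(g\left(x\right)=\left(x-3\right)^{2}\); exact fractions avoid rounding noise.

```python
from fractions import Fraction

# Assumed example: X is a fair die, g(x) = (x - 3)^2.
pmf_x = {x: Fraction(1, 6) for x in range(1, 7)}

def g(x):
    return (x - 3) ** 2

def expectation_via_lotus(pmf, func):
    """Sum of g(x) f_X(x) over the support of X."""
    return sum(func(x) * p for x, p in pmf.items())

def expectation_via_pmf_of_y(pmf, func):
    """Build f_Y first, then sum y f_Y(y) over the support of Y."""
    pmf_y = {}
    for x, p in pmf.items():
        pmf_y[func(x)] = pmf_y.get(func(x), Fraction(0)) + p
    return sum(y * p for y, p in pmf_y.items())

lotus_value = expectation_via_lotus(pmf_x, g)
direct_value = expectation_via_pmf_of_y(pmf_x, g)
print(lotus_value, direct_value)  # both are 19/6
```

The practical point is that the first function never needs the distribution of \(Y\).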

By linearity of \(\intop\) and \(\sum\), expected values have the following properties or theorems,

- \(\mathrm{E}\left[a_{{\scriptscriptstyle 1}}g_{{\scriptscriptstyle 1}}\left(X_{{\scriptscriptstyle 1}}\right)+a_{{\scriptscriptstyle 2}}g_{{\scriptscriptstyle 2}}\left(X_{{\scriptscriptstyle 2}}\right)+c\right]=a_{{\scriptscriptstyle 1}}\mathrm{E}\left[g_{{\scriptscriptstyle 1}}\left(X_{{\scriptscriptstyle 1}}\right)\right]+a_{{\scriptscriptstyle 2}}\mathrm{E}\left[g_{{\scriptscriptstyle 2}}\left(X_{{\scriptscriptstyle 2}}\right)\right]+c\)

- \(\forall x\in\mathbb{R}\left[g\left(x\right)\ge0\right]\Rightarrow\mathrm{E}\left[g\left(X\right)\right]\ge0\)

- \(\forall x\in\mathbb{R}\left[g_{{\scriptscriptstyle 1}}\left(x\right)\ge g_{{\scriptscriptstyle 2}}\left(x\right)\right]\Rightarrow\mathrm{E}\left[g_{{\scriptscriptstyle 1}}\left(X\right)\right]\ge\mathrm{E}\left[g_{{\scriptscriptstyle 2}}\left(X\right)\right]\)

- \(\forall x\in\mathbb{R}\left[a\le g\left(x\right)\le b\right]\Rightarrow a\le\mathrm{E}\left[g\left(X\right)\right]\le b\)

Theorem 18.7 \(\mathrm{E}\left[X\right]\) minimizes the expected squared distance \(\mathrm{E}\left[\left(X-b\right)^{2}\right]\) over \(b\), i.e.

\[ \mathrm{E}\left[X\right]=\underset{b}{\arg\min}\thinspace\mathrm{E}\left[\left(X-b\right)^{2}\right] \]

Proof:

\[ \begin{aligned} \mathrm{E}\left[\left(X-b\right)^{2}\right]= & \mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]+\mathrm{E}\left[X\right]-b\right)^{2}\right]\\ = & \mathrm{E}\left[\left\{ \left(X-\mathrm{E}\left[X\right]\right)+\left(\mathrm{E}\left[X\right]-b\right)\right\} ^{2}\right]\\ = & \mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}+2\left(X-\mathrm{E}\left[X\right]\right)\left(\mathrm{E}\left[X\right]-b\right)+\left(\mathrm{E}\left[X\right]-b\right)^{2}\right]\\ = & \mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]+2\left(\mathrm{E}\left[X\right]-b\right)\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)\right]+\mathrm{E}\left[\left(\mathrm{E}\left[X\right]-b\right)^{2}\right]\\ = & \mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]+2\left(\mathrm{E}\left[X\right]-b\right)\mathrm{E}\left[X-\mathrm{E}\left[X\right]\right]+\left(\mathrm{E}\left[X\right]-b\right)^{2}\\ = & \mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]+2\left(\mathrm{E}\left[X\right]-b\right)\left(\mathrm{E}\left[X\right]-\mathrm{E}\left[X\right]\right)+\left(\mathrm{E}\left[X\right]-b\right)^{2}\\ = & \mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]+2\left(\mathrm{E}\left[X\right]-b\right)0+\left(\mathrm{E}\left[X\right]-b\right)^{2}\\ = & \mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]+0+\left(\mathrm{E}\left[X\right]-b\right)^{2}\\ = & \mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]+\left(\mathrm{E}\left[X\right]-b\right)^{2}\overset{\left(\mathrm{E}\left[X\right]-b\right)^{2}\ge0}{\ge}\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]\\ \mathrm{E}\left[\left(X-b\right)^{2}\right]\ge & \mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]\\ \Downarrow\\ \mathrm{E}\left[\left(X-b\right)^{2}\right]= & \mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]\text{ holds if }\left(\mathrm{E}\left[X\right]-b\right)^{2}=0\Rightarrow 
b=\mathrm{E}\left[X\right]\Rightarrow\mathrm{E}\left[X\right]=\underset{b}{\arg\min}\thinspace\mathrm{E}\left[\left(X-b\right)^{2}\right] \end{aligned} \]

\[ \tag*{$\Box$} \]

Note:

When \(b=\mathrm{E}\left[X\right]\), the loss \(\mathrm{E}\left[\left(X-b\right)^{2}\right]\) attains its minimum \(\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]=\mathrm{V}\left[X\right]=\mathrm{V}\left(X\right)\), which is exactly the definition of variance.
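The decomposition used in the proof of Theorem 18.7, \(\mathrm{E}\left[\left(X-b\right)^{2}\right]=\mathrm{V}\left[X\right]+\left(\mathrm{E}\left[X\right]-b\right)^{2}\), can be illustrated on an empirical distribution. The sketch below treats a small made-up data set as the whole population (an assumption for illustration) and scans a grid of candidate values of \(b\).

```python
import statistics

# Hypothetical data, treated as the full population with equal weights.
data = [1.0, 2.0, 2.0, 3.0, 10.0]

def mean_squared_loss(b):
    """Empirical version of E[(X - b)^2]."""
    return sum((x - b) ** 2 for x in data) / len(data)

mean = sum(data) / len(data)
variance = statistics.pvariance(data)  # population variance

# Scan b over 0.0, 0.1, ..., 10.0 and keep the minimizer.
candidates = [i / 10 for i in range(0, 101)]
best = min(candidates, key=mean_squared_loss)

print(mean, best, mean_squared_loss(mean), variance)
```

The grid minimizer coincides with the mean, and the minimum loss equals the population variance.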

Theorem 18.8 \(\mathrm{median}\left[X\right]\) minimizes \(\mathrm{E}\left[\left|X-b\right|\right]\) over \(b\), i.e.

\[ \mathrm{median}\left[X\right]=\underset{b}{\arg\min}\thinspace\mathrm{E}\left[\left|X-b\right|\right] \]

Proof:

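\[ \begin{aligned} & X\text{ continuous},\mathrm{E}\left[\left|X\right|\right]<\infty\\ \mathrm{E}\left[\left|X-b\right|\right]= & \intop\limits _{-\infty}^{b}\left(b-x\right)f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x+\intop\limits _{b}^{+\infty}\left(x-b\right)f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x\\ \dfrac{\mathrm{d}}{\mathrm{d}b}\mathrm{E}\left[\left|X-b\right|\right]= & \intop\limits _{-\infty}^{b}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x-\intop\limits _{b}^{+\infty}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x=F_{{\scriptscriptstyle X}}\left(b\right)-\left[1-F_{{\scriptscriptstyle X}}\left(b\right)\right]=2F_{{\scriptscriptstyle X}}\left(b\right)-1\\ \dfrac{\mathrm{d}^{2}}{\mathrm{d}b^{2}}\mathrm{E}\left[\left|X-b\right|\right]= & 2f_{{\scriptscriptstyle X}}\left(b\right)\ge0\\ 2F_{{\scriptscriptstyle X}}\left(b\right)-1=0\Rightarrow & F_{{\scriptscriptstyle X}}\left(b\right)=\dfrac{1}{2}\Rightarrow b=\mathrm{median}\left[X\right]\\ & \text{discrete case: to be proved} \end{aligned} \]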

\[ \tag*{$\Box$} \]

Note:

When \(b=\mathrm{median}\left[X\right]\), the loss \(\mathrm{E}\left[\left|X-b\right|\right]\) attains its minimum \(\mathrm{E}\left[\left|X-\mathrm{median}\left[X\right]\right|\right]\), which is the definition of the MAD (mean absolute deviation about the median) used in robust statistics.
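Theorem 18.8 can be illustrated the same way as the squared-loss case. The sketch below uses a small made-up data set with one outlier (an assumption for illustration) and scans a grid of candidate values of \(b\) for the empirical version of \(\mathrm{E}\left[\left|X-b\right|\right]\).

```python
import statistics

# Hypothetical data with one outlier, treated as the full population.
data = [1.0, 2.0, 2.0, 3.0, 10.0]

def mean_absolute_loss(b):
    """Empirical version of E[|X - b|]."""
    return sum(abs(x - b) for x in data) / len(data)

median = statistics.median(data)
mean = sum(data) / len(data)

# Scan b over 0.0, 0.1, ..., 10.0 and keep the minimizer.
candidates = [i / 10 for i in range(0, 101)]
best = min(candidates, key=mean_absolute_loss)

print(median, best, mean_absolute_loss(median), mean_absolute_loss(mean))
```

The outlier pulls the mean to 3.6 but leaves the median at 2, which is why the absolute loss is the usual choice in robust settings.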

Definition 18.14 indicator function

\[ \begin{aligned} 1\left(E\right)=1\left(x\in E\right)=1\left(\left\{ x\in E\right\} \right)=1\left(\left\{ x\middle|x\in E\right\} \right)= & \begin{cases} 1 & E\\ 0 & \overline{E} \end{cases}=\begin{cases} 1 & \text{if }E\\ 0 & \text{if }\overline{E}=E^{\mathrm{C}} \end{cases}\\ = & \begin{cases} 1 & \text{if event }E\text{ occurs}\\ 0 & \text{if event }E\text{ does not occur} \end{cases} \end{aligned} \]

Note:

Theorem 18.9 probability as expected value

\[ \mathrm{P}_{{\scriptscriptstyle X}}\left(E\right)=\mathrm{P}\left(X\in E\right)=\mathrm{E}\left[1\left(X\in E\right)\right] \]

Proof:

\[ \begin{aligned} \mathrm{P}_{{\scriptscriptstyle X}}\left(E\right)=\mathrm{P}\left(X\in E\right)= & \int_{x\in E}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x=\int_{E}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x\\ = & \int1\left(x\in E\right)f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x\\ = & \int g\left(x\right)f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x,g\left(x\right)=1\left(x\in E\right)\\ = & \mathrm{E}\left[g\left(X\right)\right],g\left(X\right)=1\left(X\in E\right)\\ = & \mathrm{E}\left[1\left(X\in E\right)\right]\\ \mathrm{P}_{{\scriptscriptstyle X}}\left(E\right)=\mathrm{P}\left(X\in E\right)= & \mathrm{E}\left[1\left(X\in E\right)\right] \end{aligned} \]

\[ \tag*{$\Box$} \]
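Theorem 18.9 is what justifies estimating a probability by the average of an indicator over simulated draws. A minimal sketch, assuming a standard normal \(X\) and the event \(E=\left(-1,1\right]\) (both chosen here for illustration):

```python
import math
import random

def indicator(condition):
    """1(E): 1 if the event occurs, 0 otherwise."""
    return 1 if condition else 0

def normal_cdf(x):
    """CDF of the standard normal (assumed example distribution)."""
    return 0.5 * (1.0 + math.erf(x / math.sqrt(2.0)))

rng = random.Random(2)  # fixed seed for reproducibility
draws = [rng.gauss(0.0, 1.0) for _ in range(200_000)]

lower, upper = -1.0, 1.0  # hypothetical event E = (lower, upper]

# Sample average of 1(X in E), an estimate of E[1(X in E)].
mean_of_indicator = sum(indicator(lower < x <= upper) for x in draws) / len(draws)

# P(X in E) computed from the CDF for comparison.
exact_probability = normal_cdf(upper) - normal_cdf(lower)

print(mean_of_indicator, exact_probability)
```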

Iverson bracket https://en.wikipedia.org/wiki/Iverson_bracket

\[ \begin{cases} v\left(p\left(x\right)\right)=\mathrm{T} & \Leftrightarrow\left[p\left(x\right)\right]=1\\ v\left(p\left(x\right)\right)=\mathrm{F} & \Leftrightarrow\left[p\left(x\right)\right]=0 \end{cases} \]

\[ \left[p\left(x\right)\right]=\begin{cases} 1 & v\left(p\left(x\right)\right)=\mathrm{T}\\ 0 & v\left(\neg p\left(x\right)\right)=\mathrm{T} \end{cases}=\begin{cases} 1 & p\left(x\right)\\ 0 & \neg p\left(x\right) \end{cases} \]

negation = NOT

\[ \left[\neg p\right]=1-\left[p\right] \]

in set theory or domain of events,

\[ 1\left(\overline{E}\right)=1-1\left(E\right) \]

conjunction = AND

\[ \left[p\wedge q\right]=\left[p\right]\left[q\right] \]

in set theory or domain of events,

\[ 1\left(E_{{\scriptscriptstyle 1}}\cap E_{{\scriptscriptstyle 2}}\right)=1\left(E_{{\scriptscriptstyle 1}}\right)1\left(E_{{\scriptscriptstyle 2}}\right) \]

disjunction = OR

\[ \left[p\vee q\right]=\left[p\right]+\left[q\right]-\left[p\right]\left[q\right]=\left[p\right]+\left[q\right]-\left[p\wedge q\right] \]

Proof:

in set theory or domain of events,

\[ \begin{aligned} 1\left(E_{{\scriptscriptstyle 1}}\cup E_{{\scriptscriptstyle 2}}\right)\overset{\text{De Morgan}}{=} & 1\left(\overline{\overline{E}_{{\scriptscriptstyle 1}}\cap\overline{E}_{{\scriptscriptstyle 2}}}\right)\\ = & 1-1\left(\overline{E}_{{\scriptscriptstyle 1}}\cap\overline{E}_{{\scriptscriptstyle 2}}\right)=1-1\left(\overline{E}_{{\scriptscriptstyle 1}}\right)1\left(\overline{E}_{{\scriptscriptstyle 2}}\right)\\ = & 1-\left[1-1\left(E_{{\scriptscriptstyle 1}}\right)\right]\left[1-1\left(E_{{\scriptscriptstyle 2}}\right)\right]\\ = & 1-\left[1-1\left(E_{{\scriptscriptstyle 1}}\right)-1\left(E_{{\scriptscriptstyle 2}}\right)+1\left(E_{{\scriptscriptstyle 1}}\right)1\left(E_{{\scriptscriptstyle 2}}\right)\right]\\ = & 1\left(E_{{\scriptscriptstyle 1}}\right)+1\left(E_{{\scriptscriptstyle 2}}\right)-1\left(E_{{\scriptscriptstyle 1}}\right)1\left(E_{{\scriptscriptstyle 2}}\right)\\ = & 1\left(E_{{\scriptscriptstyle 1}}\right)+1\left(E_{{\scriptscriptstyle 2}}\right)-1\left(E_{{\scriptscriptstyle 1}}\cap E_{{\scriptscriptstyle 2}}\right) \end{aligned} \]

\[ 1\left(E_{{\scriptscriptstyle 1}}\cup E_{{\scriptscriptstyle 2}}\right)=1\left(E_{{\scriptscriptstyle 1}}\right)+1\left(E_{{\scriptscriptstyle 2}}\right)-1\left(E_{{\scriptscriptstyle 1}}\right)1\left(E_{{\scriptscriptstyle 2}}\right)=1\left(E_{{\scriptscriptstyle 1}}\right)+1\left(E_{{\scriptscriptstyle 2}}\right)-1\left(E_{{\scriptscriptstyle 1}}\cap E_{{\scriptscriptstyle 2}}\right) \]

\[ \tag*{$\Box$} \]

implication = conditional

\[ \begin{aligned} \left[p\rightarrow q\right]= & \left[\neg p\vee q\right]\\ = & \left[\neg p\right]+\left[q\right]-\left[\neg p\right]\left[q\right]\\ = & 1-\left[p\right]+\left[q\right]-\left(1-\left[p\right]\right)\left[q\right]\\ = & 1-\left[p\right]+\left[p\right]\left[q\right] \end{aligned} \]

exclusive disjunction = XOR

\[ \begin{aligned} \left[p\veebar q\right]=\left[p\oplus q\right]= & \left|\left[p\right]-\left[q\right]\right|=\left(\left[p\right]-\left[q\right]\right)^{2}\\ = & \left[p\right]\left(1-\left[q\right]\right)+\left(1-\left[p\right]\right)\left[q\right] \end{aligned} \]

biconditional = XNOR

\[ \left[p\leftrightarrow q\right]=\left[p\odot q\right]=\left[\neg\left(p\oplus q\right)\right]=\left[\neg\left(p\veebar q\right)\right]=\left(\left[p\right]+\left(1-\left[q\right]\right)\right)\left(\left(1-\left[p\right]\right)+\left[q\right]\right) \]
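Because each identity involves only two truth values, all of them can be verified exhaustively over the four rows of a truth table. The sketch below does that; the function names `iverson` and `identities_hold` are chosen here for illustration.

```python
from itertools import product

def iverson(statement):
    """Iverson bracket [statement]: 1 if true, 0 if false."""
    return 1 if statement else 0

def identities_hold():
    """Check the bracket identities for NOT, AND, OR, implication, XOR, XNOR."""
    for p, q in product((False, True), repeat=2):
        P, Q = iverson(p), iverson(q)
        checks = [
            iverson(not p) == 1 - P,                          # negation
            iverson(p and q) == P * Q,                        # conjunction
            iverson(p or q) == P + Q - P * Q,                 # disjunction
            iverson((not p) or q) == 1 - P + P * Q,           # implication
            iverson(p != q) == abs(P - Q) == (P - Q) ** 2,    # exclusive or
            iverson(p != q) == P * (1 - Q) + (1 - P) * Q,     # exclusive or, expanded
            iverson(p == q) == (P + (1 - Q)) * ((1 - P) + Q), # biconditional
        ]
        if not all(checks):
            return False
    return True

print(identities_hold())
```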

Kronecker delta

\[ \delta_{ij}=\left[i=j\right] \]

single-argument notation

\[ \delta_{i}=\delta_{i0}=\left[i=0\right]=\begin{cases} 1 & i=0\\ 0 & i\neq0 \end{cases} \]

sign function

\[ \mathrm{sgn}\left(x\right)=\begin{cases} 1 & x>0\\ 0 & x=0\\ -1 & x<0 \end{cases}=\left[x>0\right]-\left[x<0\right] \]

absolute function

\[ \begin{aligned} \left|x\right|= & \begin{cases} x & x\ge0\\ -x & x<0 \end{cases}=\begin{cases} x & x>0\\ -x & x\le0 \end{cases}=\begin{cases} x & x>0\\ 0 & x=0\\ -x & x<0 \end{cases}\\ = & \begin{cases} x\cdot1 & x>0\\ x\cdot0 & x=0\\ x\cdot\left(-1\right) & x<0 \end{cases}=\begin{cases} x\cdot\mathrm{sgn}\left(x\right) & x>0\\ x\cdot\mathrm{sgn}\left(x\right) & x=0\\ x\cdot\mathrm{sgn}\left(x\right) & x<0 \end{cases}\\ = & x\cdot\mathrm{sgn}\left(x\right)=x\left(\left[x>0\right]-\left[x<0\right]\right)=x\left[x>0\right]-x\left[x<0\right] \end{aligned} \]

binary min and max function

\[ \max\left(x,y\right)=x\left[x>y\right]+y\left[x\le y\right] \]

\[ \min\left(x,y\right)=x\left[x\le y\right]+y\left[x>y\right] \]

binary max function

\[ \max\left(x,y\right)=\dfrac{x+y+\left|x-y\right|}{2} \]

floor and ceiling functions

floor function

\[ \begin{aligned} \left\lfloor x\right\rfloor = & n,\,n\in\mathbb{Z},\,n\le x<n+1\\ = & \sum_{n\in\mathbb{Z}}n\left[n\le x<n+1\right] \end{aligned} \]

ceiling function

\[ \begin{aligned} \left\lceil x\right\rceil = & n,\,n\in\mathbb{Z},\,n-1<x\le n\\ = & \sum_{n\in\mathbb{Z}}n\left[n-1<x\le n\right] \end{aligned} \]

Heaviside step function

\[ \mathrm{H}\left(x\right)=\begin{cases} 1 & x>0\\ 0 & x\le0 \end{cases}=\left[x>0\right]=1_{\left(0,\infty\right)}\left(x\right) \]

or, for convenience, define the “unit step function”, which differs from \(\mathrm{H}\) only at \(x=0\),

\[ u\left(x\right)=\begin{cases} 1 & x\ge0\\ 0 & x<0 \end{cases}=\left[x\ge0\right]=1_{\left[0,\infty\right)}\left(x\right) \]

ramp function = rectified linear unit activation function = ReLU

\[ \mathrm{ReLU}\left(x\right)=\begin{cases} x & x\ge0\\ 0 & x<0 \end{cases}=x\left[x\ge0\right] \]
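The bracket expressions for these elementary functions can be written almost verbatim in code, since a Boolean converts to 0 or 1. The sketch below is illustrative only: the function names are chosen here, and the floor and ceiling sums run over a finite window of integers (an assumption that replaces the infinite sum and restricts the inputs to that window).

```python
import math

def bracket(statement):
    """Iverson bracket as an integer 0 or 1."""
    return int(bool(statement))

def sgn(x):
    return bracket(x > 0) - bracket(x < 0)

def absolute(x):
    return x * sgn(x)

def maximum(x, y):
    return x * bracket(x > y) + y * bracket(x <= y)

def minimum(x, y):
    return x * bracket(x <= y) + y * bracket(x > y)

def heaviside(x):
    return bracket(x > 0)

def unit_step(x):
    return bracket(x >= 0)

def relu(x):
    return x * bracket(x >= 0)

def floor_via_brackets(x, window=range(-100, 100)):
    """Sum of n [n <= x < n + 1]; exactly one bracket in the window is 1."""
    return sum(n * bracket(n <= x < n + 1) for n in window)

def ceil_via_brackets(x, window=range(-100, 100)):
    """Sum of n [n - 1 < x <= n]; exactly one bracket in the window is 1."""
    return sum(n * bracket(n - 1 < x <= n) for n in window)

for x in (-2.5, -1, 0, 0.5, 3):
    print(x, sgn(x), absolute(x), relu(x), floor_via_brackets(x), ceil_via_brackets(x))
```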

indicator function

\[ A\subseteq X\Rightarrow\begin{cases} 1_{A}:X\rightarrow\left\{ 0,1\right\} & \Leftrightarrow x\in X\overset{1_{A}}{\rightarrow}\left\{ 0,1\right\} \\ 1_{A}\left(x\right)=\begin{cases} 1 & x\in A\\ 0 & x\notin A \end{cases} & =\left[x\in A\right]=\begin{cases} 1 & v\left(x\in A\right)=\mathrm{T}\\ 0 & v\left(\neg\left(x\in A\right)\right)=\mathrm{T} \end{cases} \end{cases} \]

\(A,B\subseteq\Omega\),

\[ A=B\Leftrightarrow1_{A}=1_{B} \]

\[ A=\Omega\Leftrightarrow1_{A}\left(x\right)=1 \]

\[ A=\emptyset\Leftrightarrow1_{A}\left(x\right)=0 \]

Theorem 18.10 subset indicator order

\[ A\subset B\Rightarrow1_{A}\left(x\right)\le1_{B}\left(x\right) \]

Proof:

\[ \begin{aligned} & \forall x\left(1_{A}\left(x\right)=1\Rightarrow1_{B}\left(x\right)=1\right)\\ \Leftrightarrow & \forall x\left(\neg1_{A}\left(x\right)=1\vee1_{B}\left(x\right)=1\right)\\ \Leftrightarrow & \forall x\neg\left(1_{A}\left(x\right)=1\wedge\neg1_{B}\left(x\right)=1\right)\\ \Rightarrow & \neg\exists x\left(1_{A}\left(x\right)=1\wedge1_{B}\left(x\right)=0\right)\\ \Rightarrow & \neg\exists x\left(1_{B}\left(x\right)=0<1=1_{A}\left(x\right)\right)\\ \Rightarrow & \neg\exists x\left(1_{B}\left(x\right)<1_{A}\left(x\right)\right)\\ \Rightarrow & \forall x\left(1_{B}\left(x\right)\ge1_{A}\left(x\right)\right) \end{aligned} \]

\[ \tag*{$\Box$} \]

in set theory or domain of events,

\[ 1\left(E_{{\scriptscriptstyle 1}}\cap E_{{\scriptscriptstyle 2}}\right)=1\left(E_{{\scriptscriptstyle 1}}\right)1\left(E_{{\scriptscriptstyle 2}}\right) \]

\[ 1\left(\overline{E}\right)=1-1\left(E\right) \]

\[ 1\left(E_{{\scriptscriptstyle 1}}\cup E_{{\scriptscriptstyle 2}}\right)=1\left(E_{{\scriptscriptstyle 1}}\right)+1\left(E_{{\scriptscriptstyle 2}}\right)-1\left(E_{{\scriptscriptstyle 1}}\right)1\left(E_{{\scriptscriptstyle 2}}\right)=1\left(E_{{\scriptscriptstyle 1}}\right)+1\left(E_{{\scriptscriptstyle 2}}\right)-1\left(E_{{\scriptscriptstyle 1}}\cap E_{{\scriptscriptstyle 2}}\right) \]
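On a finite sample space the indicator identities can be checked pointwise. The sketch below assumes a small made-up sample space and events; `indicator` returns the function \(1_{A}\) for a given set \(A\), and the last check illustrates the subset ordering of Theorem 18.10.

```python
def indicator(subset):
    """Return the indicator function 1_A of the set A."""
    return lambda x: 1 if x in subset else 0

# Hypothetical finite sample space and events.
omega = set(range(10))
E1 = {1, 2, 3}
E2 = {3, 4, 5}
A = {1, 2}     # A is a subset of B
B = {1, 2, 3}

def identities_hold():
    """Check intersection, complement, union, and subset-order identities pointwise."""
    one_E1, one_E2 = indicator(E1), indicator(E2)
    for x in omega:
        if indicator(E1 & E2)(x) != one_E1(x) * one_E2(x):
            return False
        if indicator(omega - E1)(x) != 1 - one_E1(x):
            return False
        if indicator(E1 | E2)(x) != one_E1(x) + one_E2(x) - one_E1(x) * one_E2(x):
            return False
        if indicator(A)(x) > indicator(B)(x):
            return False
    return True

print(identities_hold())
```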

expectation from several perspectives

\[ Y=g\left(X\right) \]

\[ \intop\limits _{-\infty}^{+\infty}y\thinspace f_{{\scriptscriptstyle Y}}\left(y\right)\mathrm{d}y=\mathrm{E}\left[Y\right]=\mathrm{E}\left[g\left(X\right)\right]=\intop\limits _{-\infty}^{+\infty}g\left(x\right)f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x \]

\[ \mathrm{E}_{{\scriptscriptstyle Y}}\left[Y\right]=\intop\limits _{-\infty}^{+\infty}y\thinspace f_{{\scriptscriptstyle Y}}\left(y\right)\mathrm{d}y=\mathrm{E}\left[Y\right]=\mathrm{E}\left[g\left(X\right)\right]=\intop\limits _{-\infty}^{+\infty}g\left(x\right)f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x=\mathrm{E}_{{\scriptscriptstyle X}}\left[g\left(X\right)\right] \]

\[ \mathrm{E}_{{\scriptscriptstyle Y}}\left[Y\right]=\mathrm{E}_{{\scriptscriptstyle X}}\left[g\left(X\right)\right] \]

\[ \begin{aligned} & \mathrm{E}\left[a_{{\scriptscriptstyle 1}}g_{{\scriptscriptstyle 1}}\left(X_{{\scriptscriptstyle 1}}\right)+a_{{\scriptscriptstyle 2}}g_{{\scriptscriptstyle 2}}\left(X_{{\scriptscriptstyle 2}}\right)+c\right],\begin{cases} Y_{{\scriptscriptstyle 1}}=g_{{\scriptscriptstyle 1}}\left(X_{{\scriptscriptstyle 1}}\right)\\ Y_{{\scriptscriptstyle 2}}=g_{{\scriptscriptstyle 2}}\left(X_{{\scriptscriptstyle 2}}\right) \end{cases}\\ = & \mathrm{E}\left[a_{{\scriptscriptstyle 1}}Y_{{\scriptscriptstyle 1}}+a_{{\scriptscriptstyle 2}}Y_{{\scriptscriptstyle 2}}+c\right] \end{aligned} \]

\[ \begin{aligned} & \mathrm{E}\left[a_{{\scriptscriptstyle 1}}g_{{\scriptscriptstyle 1}}\left(X_{{\scriptscriptstyle 1}}\right)+a_{{\scriptscriptstyle 2}}g_{{\scriptscriptstyle 2}}\left(X_{{\scriptscriptstyle 2}}\right)+c\right]=a_{{\scriptscriptstyle 1}}\mathrm{E}\left[g_{{\scriptscriptstyle 1}}\left(X_{{\scriptscriptstyle 1}}\right)\right]+a_{{\scriptscriptstyle 2}}\mathrm{E}\left[g_{{\scriptscriptstyle 2}}\left(X_{{\scriptscriptstyle 2}}\right)\right]+c\\ = & \mathrm{E}\left[a_{{\scriptscriptstyle 1}}Y_{{\scriptscriptstyle 1}}+a_{{\scriptscriptstyle 2}}Y_{{\scriptscriptstyle 2}}+c\right]=a_{{\scriptscriptstyle 1}}\mathrm{E}\left[Y_{{\scriptscriptstyle 1}}\right]+a_{{\scriptscriptstyle 2}}\mathrm{E}\left[Y_{{\scriptscriptstyle 2}}\right]+c \end{aligned} \]

\[ a_{{\scriptscriptstyle 1}}\mathrm{E}\left[g_{{\scriptscriptstyle 1}}\left(X_{{\scriptscriptstyle 1}}\right)\right]+a_{{\scriptscriptstyle 2}}\mathrm{E}\left[g_{{\scriptscriptstyle 2}}\left(X_{{\scriptscriptstyle 2}}\right)\right]+c=a_{{\scriptscriptstyle 1}}\mathrm{E}_{{\scriptscriptstyle X_{{\scriptscriptstyle 1}}}}\left[g_{{\scriptscriptstyle 1}}\left(X_{{\scriptscriptstyle 1}}\right)\right]+a_{{\scriptscriptstyle 2}}\mathrm{E}_{{\scriptscriptstyle X_{{\scriptscriptstyle 2}}}}\left[g_{{\scriptscriptstyle 2}}\left(X_{{\scriptscriptstyle 2}}\right)\right]+c \]

\[ a_{{\scriptscriptstyle 1}}\mathrm{E}\left[Y_{{\scriptscriptstyle 1}}\right]+a_{{\scriptscriptstyle 2}}\mathrm{E}\left[Y_{{\scriptscriptstyle 2}}\right]+c=a_{{\scriptscriptstyle 1}}\mathrm{E}_{{\scriptscriptstyle Y_{{\scriptscriptstyle 1}}}}\left[Y_{{\scriptscriptstyle 1}}\right]+a_{{\scriptscriptstyle 2}}\mathrm{E}_{{\scriptscriptstyle Y_{{\scriptscriptstyle 2}}}}\left[Y_{{\scriptscriptstyle 2}}\right]+c \]

\[ a_{{\scriptscriptstyle 1}}\mathrm{E}_{{\scriptscriptstyle X_{{\scriptscriptstyle 1}}}}\left[g_{{\scriptscriptstyle 1}}\left(X_{{\scriptscriptstyle 1}}\right)\right]+a_{{\scriptscriptstyle 2}}\mathrm{E}_{{\scriptscriptstyle X_{{\scriptscriptstyle 2}}}}\left[g_{{\scriptscriptstyle 2}}\left(X_{{\scriptscriptstyle 2}}\right)\right]+c=a_{{\scriptscriptstyle 1}}\mathrm{E}_{{\scriptscriptstyle Y_{{\scriptscriptstyle 1}}}}\left[Y_{{\scriptscriptstyle 1}}\right]+a_{{\scriptscriptstyle 2}}\mathrm{E}_{{\scriptscriptstyle Y_{{\scriptscriptstyle 2}}}}\left[Y_{{\scriptscriptstyle 2}}\right]+c \]
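
Linearity of expectation can be checked exactly on a small joint distribution. In the sketch below the joint PMF, the constants `a1`, `a2`, `c` and the maps `g1`, `g2` are all assumed for illustration; the two variables are deliberately dependent, since linearity does not require independence.

```python
from fractions import Fraction

# Assumed joint PMF of (X1, X2); X2 depends on X1.
joint = {
    (0, 0): Fraction(1, 2),
    (1, 1): Fraction(1, 4),
    (1, 2): Fraction(1, 4),
}


def expect(h):
    """E[h(X1, X2)] under the joint PMF."""
    return sum(h(x1, x2) * p for (x1, x2), p in joint.items())


a1, a2, c = 2, -3, 5


def g1(x):
    return x * x


def g2(x):
    return x + 1


# Left side: expectation of the whole linear combination.
lhs = expect(lambda x1, x2: a1 * g1(x1) + a2 * g2(x2) + c)
# Right side: the same combination of the separate expectations.
rhs = a1 * expect(lambda x1, x2: g1(x1)) + a2 * expect(lambda x1, x2: g2(x2)) + c

print(lhs, rhs)
```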

18.1.2.7 moment

Definition 18.15 \(n^\text{th}\) moment: For each integer \(n\), the \(n^\text{th}\) moment of \(X\) is \(\mathrm{E}\left[X^{n}\right]\).

The \(n^\text{th}\) central moment of \(X\) is \(\mu_{{\scriptscriptstyle n}}=\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{n}\right]\).

\[ \mathrm{E}\left[X^{n}\right]=\begin{cases} \intop\limits _{-\infty}^{+\infty}x^{n}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}x^{n}f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases} \]

\[ \mu=\mathrm{E}\left[X^{1}\right]=\mathrm{E}\left[X\right]=\begin{cases} \intop\limits _{-\infty}^{+\infty}x^{1}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}x^{1}f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases}=\begin{cases} \intop\limits _{-\infty}^{+\infty}x\thinspace f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}x\thinspace f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases} \]

\[ \begin{aligned} \mu_{{\scriptscriptstyle n}}=\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{n}\right]=\begin{cases} \intop\limits _{-\infty}^{+\infty}\left(x-\mu\right)^{n}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}\left(x-\mu\right)^{n}f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases} \end{aligned} \]

\(1^\text{st}\) moment of \(X\) = mean

\[ \mu=\mathrm{E}\left[X^{1}\right]=\mathrm{E}\left[X\right]=\begin{cases} \intop\limits _{-\infty}^{+\infty}x^{1}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}x^{1}f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases}=\begin{cases} \intop\limits _{-\infty}^{+\infty}x\thinspace f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}x\thinspace f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases} \]

\(1^\text{st}\) central moment of \(X\) = \(0\)

\[ \begin{aligned} \mu_{{\scriptscriptstyle 1}}=\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{1}\right]= & \begin{cases} \intop\limits _{-\infty}^{+\infty}\left(x-\mu\right)^{1}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}\left(x-\mu\right)^{1}f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases}=\begin{cases} \intop\limits _{-\infty}^{+\infty}\left(x-\mu\right)f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}\left(x-\mu\right)f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases}\\ = & \begin{cases} \intop\limits _{-\infty}^{+\infty}x\thinspace f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x-\intop\limits _{-\infty}^{+\infty}\mu\thinspace f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}x\thinspace f_{{\scriptscriptstyle X}}\left(x\right)-\sum\limits _{x\in X\left(\Omega\right)}\mu\thinspace f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases}\\ = & \begin{cases} \mathrm{E}\left[X\right]-\mu\intop\limits _{-\infty}^{+\infty}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \mathrm{E}\left[X\right]-\mu\sum\limits _{x\in X\left(\Omega\right)}f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases}=\begin{cases} \mathrm{E}\left[X\right]-\mu\cdot1 & X\text{ continuous}\\ \mathrm{E}\left[X\right]-\mu\cdot1 & X\text{ discrete} \end{cases}\\ = & \begin{cases} \mathrm{E}\left[X\right]-\mu & X\text{ continuous}\\ \mathrm{E}\left[X\right]-\mu & X\text{ discrete} \end{cases}=\begin{cases} \mathrm{E}\left[X\right]-\mathrm{E}\left[X\right] & X\text{ continuous}\\ \mathrm{E}\left[X\right]-\mathrm{E}\left[X\right] & X\text{ discrete} \end{cases}\\ = & \begin{cases} 0 & X\text{ continuous}\\ 0 & X\text{ discrete} \end{cases}=0\\ 
\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)\right]= & 0\\ \mathrm{E}\left[X-\mathrm{E}\left[X\right]\right]= & 0 \end{aligned} \]

\[ \forall X\left(\mathrm{E}\left[X-\mathrm{E}\left[X\right]\right]=0\right) \]

For the normal distribution, and in fact for any distribution with a finite mean,

\[ \begin{array}{c} X\sim\mathrm{n}\left(0,1\right)=\mathcal{N}\left(0,1^{2}\right)\\ \Downarrow\\ \mathrm{E}\left[X-\mathrm{E}\left[X\right]\right]=0 \end{array} \]
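
As a concrete instance of the discrete branch of the moment formulas, the sketch below computes raw and central moments of a fair six-sided die with exact fractions. The die is an assumed example and `raw_moment` and `central_moment` are hypothetical helper names; the point is only that the first central moment comes out as exactly zero.

```python
from fractions import Fraction

# Assumed example: PMF of a fair six-sided die.
pmf = {x: Fraction(1, 6) for x in range(1, 7)}


def raw_moment(pmf, n):
    """n-th moment E[X^n] of a discrete distribution."""
    return sum(x ** n * p for x, p in pmf.items())


def central_moment(pmf, n):
    """n-th central moment E[(X - E[X])^n] of a discrete distribution."""
    mu = raw_moment(pmf, 1)
    return sum((x - mu) ** n * p for x, p in pmf.items())


print(raw_moment(pmf, 1))       # mean
print(central_moment(pmf, 1))   # first central moment
print(central_moment(pmf, 2))   # second central moment
```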

\(2^\text{nd}\) central moment of \(X\) = variance

\[ \begin{aligned} \mu_{{\scriptscriptstyle 2}}=\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]= & \begin{cases} \intop\limits _{-\infty}^{+\infty}\left(x-\mu\right)^{2}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}\left(x-\mu\right)^{2}f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases}\\ = & \mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]=\mathrm{V}\left[X\right]=\mathrm{V}\left(X\right) \end{aligned} \]

For the standard normal distribution,

\[ \begin{array}{c} X\sim\mathrm{n}\left(0,1\right)=\mathcal{N}\left(0,1^{2}\right)=\mathcal{N}\left(\mu=0,\mathrm{V}\left[X\right]=1^{2}\right)\\ \Downarrow\\ \mathrm{V}\left[X\right]=\mathrm{V}\left(X\right)=1 \end{array} \]

variance properties

\[ \begin{aligned} \mathrm{V}\left[aX+b\right]=a^{2}\mathrm{V}\left[X\right] \end{aligned} \]

Proof:

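\[ \begin{aligned} \mathrm{V}\left[aX+b\right]= & \mathrm{E}\left[\left(aX+b-\mathrm{E}\left[aX+b\right]\right)^{2}\right]=\mathrm{E}\left[\left(aX+b-a\mathrm{E}\left[X\right]-b\right)^{2}\right]\\ = & \mathrm{E}\left[a^{2}\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]=a^{2}\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]=a^{2}\mathrm{V}\left[X\right] \end{aligned} \]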

\[ \tag*{$\Box$} \]
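
The scaling property \(\mathrm{V}\left[aX+b\right]=a^{2}\mathrm{V}\left[X\right]\) can also be checked exactly on a small discrete distribution. The PMF and the constants `a`, `b` below are assumed for illustration, and `variance` and `affine_pmf` are hypothetical helper names.

```python
from fractions import Fraction


def variance(pmf):
    """Second central moment of a discrete distribution."""
    mean = sum(x * p for x, p in pmf.items())
    return sum((x - mean) ** 2 * p for x, p in pmf.items())


def affine_pmf(pmf, a, b):
    """PMF of a*X + b; masses are merged if several x map to the same value."""
    out = {}
    for x, p in pmf.items():
        y = a * x + b
        out[y] = out.get(y, 0) + p
    return out


# Assumed example PMF and constants.
pmf_x = {0: Fraction(1, 2), 1: Fraction(1, 3), 4: Fraction(1, 6)}
a, b = -3, 7

v_x = variance(pmf_x)
v_y = variance(affine_pmf(pmf_x, a, b))

print(v_x, v_y, a * a * v_x)
```

The shift `b` drops out entirely, and the sign of `a` does not matter because only `a * a` enters.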

\(3^\text{rd}\) central moment of \(X\)

\[ \mu_{{\scriptscriptstyle 3}}=\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{3}\right]=\begin{cases} \intop\limits _{-\infty}^{+\infty}\left(x-\mu\right)^{3}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}\left(x-\mu\right)^{3}f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases} \]

skewness (偏度), the standardized \(3^\text{rd}\) central moment

\[ \begin{aligned} \mathrm{skewness}\left[X\right]= & \dfrac{\mu_{{\scriptscriptstyle 3}}}{\mu_{{\scriptscriptstyle 2}}^{\frac{3}{2}}}=\dfrac{\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{3}\right]}{\left(\mathrm{V}\left[X\right]\right)^{\frac{3}{2}}}=\dfrac{\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{3}\right]}{\left(\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]\right)^{\frac{3}{2}}}\\ = & \begin{cases} \dfrac{\intop\limits _{-\infty}^{+\infty}\left(x-\mu\right)^{3}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x}{\left(\intop\limits _{-\infty}^{+\infty}\left(x-\mu\right)^{2}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x\right)^{\frac{3}{2}}} & X\text{ continuous}\\ \dfrac{\sum\limits _{x\in X\left(\Omega\right)}\left(x-\mu\right)^{3}f_{{\scriptscriptstyle X}}\left(x\right)}{\left(\sum\limits _{x\in X\left(\Omega\right)}\left(x-\mu\right)^{2}f_{{\scriptscriptstyle X}}\left(x\right)\right)^{\frac{3}{2}}} & X\text{ discrete} \end{cases} \end{aligned} \]

For the standard normal distribution,

\[ \begin{array}{c} X\sim\mathrm{n}\left(0,1\right)=\mathcal{N}\left(0,1^{2}\right)=\mathcal{N}\left(\mu=0,\mathrm{V}\left[X\right]=1^{2}\right)\\ \Downarrow\\ \mathrm{skewness}\left[X\right]=\dfrac{\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{3}\right]}{\left(\mathrm{V}\left[X\right]\right)^{\frac{3}{2}}}=\dfrac{\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{3}\right]}{1^{\frac{3}{2}}}=0 \end{array} \]

Proof:

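\[ \begin{aligned} f_{{\scriptscriptstyle X}}\left(x\right)= & \dfrac{1}{\sqrt{2\pi}}e^{-\frac{x^{2}}{2}},\quad\mathrm{E}\left[X\right]=0\\ \mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{3}\right]= & \mathrm{E}\left[X^{3}\right]=\intop\limits _{-\infty}^{+\infty}x^{3}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x=0\quad\text{since }x^{3}f_{{\scriptscriptstyle X}}\left(x\right)\text{ is odd and absolutely integrable} \end{aligned} \]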

\[ \tag*{$\Box$} \]
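
For a distribution that is not symmetric, skewness is generally nonzero. The sketch below evaluates the discrete branch of the skewness formula for a Bernoulli variable with \(p=\frac{1}{4}\) and for a symmetric three-point distribution; both PMFs are assumed examples, and the closed form \(\left(1-2p\right)/\sqrt{p\left(1-p\right)}\) for the Bernoulli case is used only as a cross-check.

```python
import math
from fractions import Fraction


def central_moment(pmf, n):
    """n-th central moment of a discrete distribution."""
    mu = sum(x * p for x, p in pmf.items())
    return sum((x - mu) ** n * p for x, p in pmf.items())


def skewness(pmf):
    """Third central moment divided by the variance to the power 3/2."""
    mu2 = central_moment(pmf, 2)
    mu3 = central_moment(pmf, 3)
    return float(mu3) / float(mu2) ** 1.5


# Assumed examples.
p = Fraction(1, 4)
bernoulli = {0: 1 - p, 1: p}
symmetric = {-1: Fraction(1, 4), 0: Fraction(1, 2), 1: Fraction(1, 4)}

closed_form = float(1 - 2 * p) / math.sqrt(float(p * (1 - p)))

print(skewness(bernoulli), closed_form)
print(skewness(symmetric))
```

The Bernoulli value is positive because the rare outcome lies to the right of the mean, while the symmetric distribution has skewness zero.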

\(4^\text{th}\) central moment of \(X\)

\[ \mu_{{\scriptscriptstyle 4}}=\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{4}\right]=\begin{cases} \intop\limits _{-\infty}^{+\infty}\left(x-\mu\right)^{4}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x & X\text{ continuous}\\ \sum\limits _{x\in X\left(\Omega\right)}\left(x-\mu\right)^{4}f_{{\scriptscriptstyle X}}\left(x\right) & X\text{ discrete} \end{cases} \]

kurtosis (峰度), the standardized \(4^\text{th}\) central moment

\[ \begin{aligned} \mathrm{kurtosis}\left[X\right]= & \dfrac{\mu_{{\scriptscriptstyle 4}}}{\mu_{{\scriptscriptstyle 2}}^{2}}=\dfrac{\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{4}\right]}{\left(\mathrm{V}\left[X\right]\right)^{2}}=\dfrac{\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{4}\right]}{\left(\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right]\right)^{2}}\\ = & \begin{cases} \dfrac{\intop\limits _{-\infty}^{+\infty}\left(x-\mu\right)^{4}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x}{\left(\intop\limits _{-\infty}^{+\infty}\left(x-\mu\right)^{2}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x\right)^{2}} & X\text{ continuous}\\ \dfrac{\sum\limits _{x\in X\left(\Omega\right)}\left(x-\mu\right)^{4}f_{{\scriptscriptstyle X}}\left(x\right)}{\left(\sum\limits _{x\in X\left(\Omega\right)}\left(x-\mu\right)^{2}f_{{\scriptscriptstyle X}}\left(x\right)\right)^{2}} & X\text{ discrete} \end{cases} \end{aligned} \]

For the standard normal distribution,

\[ \begin{array}{c} X\sim\mathrm{n}\left(0,1\right)=\mathcal{N}\left(0,1^{2}\right)=\mathcal{N}\left(\mu=0,\mathrm{V}\left[X\right]=1^{2}\right)\\ \Downarrow\\ \mathrm{kurtosis}\left[X\right]=\dfrac{\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{4}\right]}{\left(\mathrm{V}\left[X\right]\right)^{2}}=\dfrac{\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{4}\right]}{1^{2}}=3 \end{array} \]

Proof:

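\[ \begin{aligned} f_{{\scriptscriptstyle X}}\left(x\right)= & \dfrac{1}{\sqrt{2\pi}}e^{-\frac{x^{2}}{2}},\quad\dfrac{\mathrm{d}}{\mathrm{d}x}f_{{\scriptscriptstyle X}}\left(x\right)=-x\thinspace f_{{\scriptscriptstyle X}}\left(x\right),\quad\mathrm{E}\left[X\right]=0\\ \mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{4}\right]= & \mathrm{E}\left[X^{4}\right]=\intop\limits _{-\infty}^{+\infty}x^{3}\cdot x\thinspace f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x=\left[-x^{3}f_{{\scriptscriptstyle X}}\left(x\right)\right]_{-\infty}^{+\infty}+3\intop\limits _{-\infty}^{+\infty}x^{2}f_{{\scriptscriptstyle X}}\left(x\right)\mathrm{d}x\\ = & 0+3\mathrm{E}\left[X^{2}\right]=3\mathrm{V}\left[X\right]=3\\ \mathrm{kurtosis}\left[X\right]= & \dfrac{3}{1^{2}}=3 \end{aligned} \]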

\[ \tag*{$\Box$} \]
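
Kurtosis as defined here is the ratio \(\mu_{{\scriptscriptstyle 4}}/\mu_{{\scriptscriptstyle 2}}^{2}\), so for a PMF with rational masses it can be computed exactly. The sketch below does this for a fair coin and a fair die; both are assumed examples, chosen because their kurtosis is well below the normal value of 3.

```python
from fractions import Fraction


def central_moment(pmf, n):
    """n-th central moment of a discrete distribution."""
    mu = sum(x * p for x, p in pmf.items())
    return sum((x - mu) ** n * p for x, p in pmf.items())


def kurtosis(pmf):
    """Fourth central moment divided by the squared variance (not excess kurtosis)."""
    return central_moment(pmf, 4) / central_moment(pmf, 2) ** 2


# Assumed examples.
coin = {0: Fraction(1, 2), 1: Fraction(1, 2)}
die = {x: Fraction(1, 6) for x in range(1, 7)}

print(kurtosis(coin))
print(kurtosis(die))
```

Subtracting 3 from these values would give the excess kurtosis, which uses the normal distribution as its zero point.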

For the standard normal distribution,

\[ \begin{array}{c} X\sim\mathrm{n}\left(0,1\right)=\mathcal{N}\left(0,1^{2}\right)=\mathcal{N}\left(\mu=0,\mathrm{V}\left[X\right]=1^{2}\right)\\ \Downarrow \end{array} \]

\[ \begin{cases} \mu=\mathrm{E}\left[X\right] & =0\\ \mu_{{\scriptscriptstyle 1}}=\mathrm{E}\left[X-\mathrm{E}\left[X\right]\right] & =0\\ \mathrm{variance}\left[X\right]=\mathrm{V}\left[X\right]=\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{2}\right] & =1\\ \mathrm{skewness}\left[X\right]=\dfrac{\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{3}\right]}{\left(\mathrm{V}\left[X\right]\right)^{\frac{3}{2}}} & =0\\ \mathrm{kurtosis}\left[X\right]=\dfrac{\mathrm{E}\left[\left(X-\mathrm{E}\left[X\right]\right)^{4}\right]}{\left(\mathrm{V}\left[X\right]\right)^{2}} & =3 \end{cases} \]
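
The five values in this summary can be reproduced numerically from the continuous branch of the moment formulas. The sketch below is a rough check, not a proof: it assumes the standard normal density \(\frac{1}{\sqrt{2\pi}}e^{-x^{2}/2}\), truncates the integrals to \(\left[-12,12\right]\) (where the neglected tails are negligible), and uses a plain composite trapezoid rule; `phi`, `integrate` and `central` are hypothetical helper names.

```python
import math


def phi(x):
    """Standard normal density."""
    return math.exp(-0.5 * x * x) / math.sqrt(2.0 * math.pi)


def integrate(h, lo=-12.0, hi=12.0, steps=24000):
    """Composite trapezoid rule for h on [lo, hi]."""
    dx = (hi - lo) / steps
    total = 0.5 * (h(lo) + h(hi))
    for k in range(1, steps):
        total += h(lo + k * dx)
    return total * dx


mean = integrate(lambda x: x * phi(x))


def central(n):
    """Numerical n-th central moment of the standard normal."""
    return integrate(lambda x: (x - mean) ** n * phi(x))


variance = central(2)
skew = central(3) / variance ** 1.5
kurt = central(4) / variance ** 2

print(mean, central(1), variance, skew, kurt)
```

Up to floating-point error, the printed values should agree with the summary: mean and first central moment near 0, variance near 1, skewness near 0 and kurtosis near 3.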