38 Controls

This section follows (Cinelli, Forney, and Pearl 2022) and code

Traditional literature usually considers adding additional control variables is harmless to analysis.

More specifically, this problem is most prevalent in the review process. Reviewers only ask authors to add more variables to “control” for such variable, which can be asked with only limited rationale. Rarely ever you will see a reviewer asks an author to remove some variables to see the behavior of the variable of interest (This is also related to Coefficient stability).

However, adding more controls is only good in limited cases.

38.1 Bad Controls

38.1.1 M-bias

Traditional textbooks (G. W. Imbens and Rubin 2015; J. D. Angrist and Pischke 2009) consider Z as a good control because it’s a pre-treatment variable, where it correlates with the treatment and the outcome.

This is most prevalent in Matching Methods, where we are recommended to include all “pre-treatment” variables.

However, it is a bad control because it opens the back-door path Z←U1→Z←U2→Y

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; u1->x; u1->z; u2->z; u2->y}")

# set u as latent

latents(model) <- c("u1", "u2")

## coordinates for plotting

coordinates(model) <- list(x = c(

x = 1,

u1 = 1,

z = 2,

u2 = 3,

y = 3

),

y = c(

x = 1,

u1 = 2,

z = 1.5,

u2 = 2,

y = 1

))

## ggplot

ggdag(model) + theme_dag()

Even though Z can correlate with both X and Y very well, it’s not a confounder.

Controlling for Z can bias the X→Y estimate, because it opens the colliding path X←U1→Z←U2←Y

n <- 1e4

u1 <- rnorm(n)

u2 <- rnorm(n)

z <- u1 + u2 + rnorm(n)

x <- u1 + rnorm(n)

causal_coef <- 2

y <- causal_coef * x - 4*u2 + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | -0.05 | -0.06 |

| (0.04) | (0.03) | |

| x | 1.94 *** | 2.76 *** |

| (0.03) | (0.03) | |

| z | -1.60 *** | |

| (0.02) | ||

| N | 10000 | 10000 |

| R2 | 0.30 | 0.56 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

Another worse variation is

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; u1->x; u1->z; u2->z; u2->y; z->y}")

# set u as latent

latents(model) <- c("u1", "u2")

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, u1=1, z=2, u2=3, y=3),

y = c(x=1, u1=2, z=1.5, u2=2, y=1))

## ggplot

ggdag(model) + theme_dag()

You can’t do much in this case.

If you don’t control for Z, then you have an open back-door path X←U1→Z→Y, and the unadjusted estimate is biased

If you control for Z, then you open backdoor path X←U1→Z←U2→Y, and the adjusted estimate is also biased

Hence, we cannot identify the causal effect in this case.

We can do sensitivity analyses to examine (Cinelli et al. 2019; Cinelli and Hazlett 2020)

- the plausible bounds on the strength of the direct effect of Z→Y

- the strength of the effects of the latent variables

38.1.2 Bias Amplification

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; u->x; u->y; z->x}")

# set u as latent

latents(model) <- c("u")

## coordinates for plotting

coordinates(model) <- list(

x = c(z=1, x=2, u=3, y=4),

y = c(z=1, x=1, u=2, y=1))

## ggplot

ggdag(model) + theme_dag()

Controlling for Z amplifies the omitted variable bias

n <- 1e4

z <- rnorm(n)

u <- rnorm(n)

x <- 2*z + u + rnorm(n)

y <- x + 2*u + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | -0.03 | -0.02 |

| (0.02) | (0.02) | |

| x | 1.34 *** | 2.01 *** |

| (0.01) | (0.01) | |

| z | -2.02 *** | |

| (0.03) | ||

| N | 10000 | 10000 |

| R2 | 0.71 | 0.80 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

38.1.3 Overcontrol bias

Sometimes, this is similar to controlling for variables that are proxy of the dependent variable.

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->z; z->y}")

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=2, y=3),

y = c(x=1, z=1, y=1))

## ggplot

ggdag(model) + theme_dag()

If X is a proxy for Z (i.e., a mediator between Z and Y), controlling for Z is bad

n <- 1e4

x <- rnorm(n)

z <- x + rnorm(n)

y <- z + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | 0.04 ** | 0.01 |

| (0.01) | (0.01) | |

| x | 0.99 *** | 0.00 |

| (0.01) | (0.01) | |

| z | 0.99 *** | |

| (0.01) | ||

| N | 10000 | 10000 |

| R2 | 0.33 | 0.66 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

Now you see that Z is significant, which is technically true, but we are interested in the causal coefficient of X on Y.

Another setting for overcontrol bias is

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->m; m->z; m->y}")

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, m=2, z=2, y=3),

y = c(x=2, m=2, z=1, y=2))

## ggplot

ggdag(model) + theme_dag()

n <- 1e4

x <- rnorm(n)

m <- x + rnorm(n)

z <- m + rnorm(n)

y <- m + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | -0.02 | -0.02 |

| (0.01) | (0.01) | |

| x | 0.97 *** | 0.48 *** |

| (0.01) | (0.01) | |

| z | 0.50 *** | |

| (0.01) | ||

| N | 10000 | 10000 |

| R2 | 0.32 | 0.49 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

Another setting for this bias is

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->z; z->y; u->z; u->y}")

# set u as latent

latents(model) <- "u"

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=2, u=3, y=4),

y = c(x=1, z=1, u=2, y=1))

## ggplot

ggdag(model) + theme_dag()

set.seed(1)

n <- 1e4

x <- rnorm(n)

u <- rnorm(n)

z <- x + u + rnorm(n)

y <- z + u + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | -0.01 | -0.01 |

| (0.02) | (0.01) | |

| x | 1.01 *** | -0.47 *** |

| (0.02) | (0.01) | |

| z | 1.48 *** | |

| (0.01) | ||

| N | 10000 | 10000 |

| R2 | 0.15 | 0.78 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

The total effect of X on Y is not biased (i.e., 1.01≈1.48−0.47).

Controlling for Z will fail to identify the direct effect of X on Y and opens the biasing path X→Z←U→Y

38.1.4 Selection Bias

Also known as “collider stratification bias”

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; x->z; u->z;u->y}")

# set u as latent

latents(model) <- "u"

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=2, u=2, y=3),

y = c(x=3, z=2, u=4, y=3))

## ggplot

ggdag(model) + theme_dag()

Adjusting Z opens the colliding path X→Z←U→Y

n <- 1e4

x <- rnorm(n)

u <- rnorm(n)

z <- x + u + rnorm(n)

y <- x + 2*u + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | -0.01 | 0.01 |

| (0.02) | (0.02) | |

| x | 0.97 *** | -0.03 |

| (0.02) | (0.02) | |

| z | 1.00 *** | |

| (0.01) | ||

| N | 10000 | 10000 |

| R2 | 0.16 | 0.49 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

Another setting is

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; x->z; y->z}")

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=2, y=3),

y = c(x=2, z=1, y=2))

## ggplot

ggdag(model) + theme_dag()

Controlling Z opens the colliding path X→Z←Y

n <- 1e4

x <- rnorm(n)

y <- x + rnorm(n)

z <- x + y + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | 0.00 | 0.00 |

| (0.01) | (0.01) | |

| x | 1.03 *** | -0.00 |

| (0.01) | (0.01) | |

| z | 0.51 *** | |

| (0.00) | ||

| N | 10000 | 10000 |

| R2 | 0.51 | 0.76 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

38.1.5 Case-control Bias

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; y->z}")

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=2, y=3),

y = c(x=2, z=1, y=2))

## ggplot

ggdag(model) + theme_dag()

Controlling Z opens a virtual collider (a descendant of a collider).

However, if X truly has no causal effect on Y. Then, controlling for Z is valid for testing whether the effect of X on Y is 0 because X is d-separated from Y regardless of adjusting for Z

n <- 1e4

x <- rnorm(n)

y <- x + rnorm(n)

z <- y + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | -0.00 | -0.00 |

| (0.01) | (0.01) | |

| x | 1.00 *** | 0.50 *** |

| (0.01) | (0.01) | |

| z | 0.50 *** | |

| (0.00) | ||

| N | 10000 | 10000 |

| R2 | 0.50 | 0.75 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

38.2 Good Controls

38.2.1 Omitted Variable Bias Correction

This is when Z can block all back-door paths.

rm(list = ls())

model <- dagitty("dag{x->y; z->x; z->y}")

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, y=3, z=2),

y = c(x=1, y=1, z=2))

## ggplot

ggdag(model) + theme_dag()

Unadjusted estimate is biased

adjusting for Z blocks the backdoor path

n <- 1e4

z <- rnorm(n)

causal_coef = 2

beta2 = 3

x <- z + rnorm(n)

y <- causal_coef * x + beta2 * z + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | 0.01 | -0.00 |

| (0.02) | (0.01) | |

| x | 3.51 *** | 2.00 *** |

| (0.02) | (0.01) | |

| z | 3.02 *** | |

| (0.01) | ||

| N | 10000 | 10000 |

| R2 | 0.82 | 0.97 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

# cleans workspace

rm(list = ls())

# Draw DAG

# specify edges

model <- dagitty("dag{x->y; u->z; z->x; u->y}")

# set u as latent

latents(model) <- "u"

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=2, u=3, y = 4),

y = c(x=1, y=1, z=2, u = 3))

## ggplot

ggdag(model) + theme_dag()

Unadjusted estimate is biased

adjusting for Z blocks the backdoor door path due to U

n <- 1e4

u <- rnorm(n)

z <- u + rnorm(n)

causal_coef = 2

x <- z + rnorm(n)

y <- causal_coef * x + u + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | 0.03 * | 0.03 * |

| (0.01) | (0.01) | |

| x | 2.34 *** | 2.01 *** |

| (0.01) | (0.01) | |

| z | 0.49 *** | |

| (0.01) | ||

| N | 10000 | 10000 |

| R2 | 0.91 | 0.92 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

Even though Z is significant, we cannot give it a causal interpretation.

# cleans workspace

rm(list = ls())

# Draw DAG

# specify edges

model <- dagitty("dag{x->y; u->z; u->x; z->y}")

# set u as latent

latents(model) <- "u"

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=3, u=2, y = 4),

y = c(x=1, y=1, z=2, u = 3))

## ggplot

ggdag(model) + theme_dag()

n <- 1e4

u <- rnorm(n)

z <- u + rnorm(n)

x <- u + rnorm(n)

causal_coef <- 2

y <- causal_coef * x + z + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | -0.03 | -0.01 |

| (0.02) | (0.01) | |

| x | 2.51 *** | 2.01 *** |

| (0.01) | (0.01) | |

| z | 1.01 *** | |

| (0.01) | ||

| N | 10000 | 10000 |

| R2 | 0.84 | 0.93 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

Even though Z is significant, we cannot give it a causal interpretation.

Summary

# cleans workspace

rm(list = ls())

# Model 1

model1 <- dagitty("dag{x->y; z->x; z->y}")

## coordinates for plotting

coordinates(model1) <- list(

x = c(x=1, y=3, z=2),

y = c(x=1, y=1, z=2))

# Model 2

# specify edges

model2 <- dagitty("dag{x->y; u->z; z->x; u->y}")

# set u as latent

latents(model2) <- "u"

## coordinates for plotting

coordinates(model2) <- list(

x = c(x=1, z=2, u=3, y = 4),

y = c(x=1, y=1, z=2, u = 3))

# Model 3

# specify edges

model3 <- dagitty("dag{x->y; u->z; u->x; z->y}")

# set u as latent

latents(model3) <- "u"

## coordinates for plotting

coordinates(model3) <- list(

x = c(x=1, z=3, u=2, y = 4),

y = c(x=1, y=1, z=2, u = 3))

par(mfrow=c(1,3))

## ggplot

ggdag(model1) + theme_dag()

38.2.2 Omitted Variable Bias in Mediation Correction

Common causes of X and any mediator (between X and Y) confound the effect of X on Y

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; z->x; x->m; z->m; m->y}")

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=2, m=3, y=4),

y = c(x=1, z=2, m=1, y=1))

## ggplot

ggdag(model) + theme_dag()

Z is a confounder of both the mediator M and X

n <- 1e4

z <- rnorm(n)

x <- z + rnorm(n)

causal_coef <- 2

m <- causal_coef * x + z + rnorm(n)

y <- m + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | -0.02 | -0.01 |

| (0.02) | (0.01) | |

| x | 2.49 *** | 1.97 *** |

| (0.01) | (0.01) | |

| z | 1.02 *** | |

| (0.02) | ||

| N | 10000 | 10000 |

| R2 | 0.83 | 0.86 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; u->z; z->x; x->m; u->m; m->y}")

# set u as latent

latents(model) <- "u"

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=2, u=3, m=4, y=5),

y = c(x=1, z=2, u=3, m=1, y=1))

## ggplot

ggdag(model) + theme_dag()

n <- 1e4

u <- rnorm(n)

z <- u + rnorm(n)

x <- z + rnorm(n)

causal_coef <- 2

m <- causal_coef * x + u + rnorm(n)

y <- m + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | -0.01 | -0.01 |

| (0.02) | (0.02) | |

| x | 2.31 *** | 2.00 *** |

| (0.01) | (0.02) | |

| z | 0.49 *** | |

| (0.02) | ||

| N | 10000 | 10000 |

| R2 | 0.86 | 0.86 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; u->z; z->m; x->m; u->x; m->y}")

# set u as latent

latents(model) <- "u"

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=3, u=2, m=4, y=5),

y = c(x=1, z=2, u=3, m=1, y=1))

## ggplot

ggdag(model) + theme_dag()

n <- 1e4

u <- rnorm(n)

z <- u + rnorm(n)

x <- u + rnorm(n)

causal_coef <- 2

m <- causal_coef * x + z + rnorm(n)

y <- m + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | 0.01 | -0.00 |

| (0.02) | (0.01) | |

| x | 2.50 *** | 1.99 *** |

| (0.01) | (0.01) | |

| z | 1.02 *** | |

| (0.01) | ||

| N | 10000 | 10000 |

| R2 | 0.78 | 0.87 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

Summary

# model 4

model4 <- dagitty("dag{x->y; z->x; x->m; z->m; m->y}")

## coordinates for plotting

coordinates(model4) <- list(

x = c(x=1, z=2, m=3, y=4),

y = c(x=1, z=2, m=1, y=1))

# model 5

model5 <- dagitty("dag{x->y; u->z; z->x; x->m; u->m; m->y}")

# set u as latent

latents(model5) <- "u"

## coordinates for plotting

coordinates(model5) <- list(

x = c(x=1, z=2, u=3, m=4, y=5),

y = c(x=1, z=2, u=3, m=1, y=1))

# model 6

model6 <- dagitty("dag{x->y; u->z; z->m; x->m; u->x; m->y}")

# set u as latent

latents(model6) <- "u"

## coordinates for plotting

coordinates(model6) <- list(

x = c(x=1, z=3, u=2, m=4, y=5),

y = c(x=1, z=2, u=3, m=1, y=1))

par(mfrow=c(1,3))

## ggplot

ggdag(model4) + theme_dag()

38.3 Neutral Controls

38.3.1 Good Predictive Controls

Good for precision

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; z->y}")

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=2, y=2),

y = c(x=1, z=2, y=1))

## ggplot

ggdag(model) + theme_dag()

Controlling for Z does not help or hurt identification, but it can increase precision (i.e., reducing SE)

n <- 1e4

z <- rnorm(n)

x <- rnorm(n)

y <- x + 2 * z + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | 0.01 | 0.01 |

| (0.02) | (0.01) | |

| x | 1.00 *** | 1.01 *** |

| (0.02) | (0.01) | |

| z | 2.00 *** | |

| (0.01) | ||

| N | 10000 | 10000 |

| R2 | 0.17 | 0.83 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

Similar coefficients, but smaller SE when controlling for Z

Another variation is

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; x->m; z->m; m->y}")

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=2, m=2, y=3),

y = c(x=1, z=2, m=1, y=1))

## ggplot

ggdag(model) + theme_dag()

n <- 1e4

z <- rnorm(n)

x <- rnorm(n)

m <- 2 * z + rnorm(n)

y <- x + 2 * m + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | -0.00 | -0.00 |

| (0.05) | (0.02) | |

| x | 0.97 *** | 0.99 *** |

| (0.05) | (0.02) | |

| z | 4.02 *** | |

| (0.02) | ||

| N | 10000 | 10000 |

| R2 | 0.04 | 0.77 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

Controlling for Z can reduce SE

38.3.2 Good Selection Bias

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; x->z; z->w; u->w;u->y}")

# set u as latent

latents(model) <- "u"

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=2, w=3, u=3, y=5),

y = c(x=3, z=2, w=1, u=4, y=3))

## ggplot

ggdag(model) + theme_dag()

- Unadjusted estimate is unbiased

- Controlling for Z can increase SE

- Controlling for Z while having on W can help identify X

n <- 1e4

x <- rnorm(n)

u <- rnorm(n)

z <- x + rnorm(n)

w <- z + u + rnorm(n)

y <- x - 2*u + rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + w), lm(y ~ x + z + w))| Model 1 | Model 2 | Model 3 | |

|---|---|---|---|

| (Intercept) | 0.01 | 0.01 | 0.03 |

| (0.02) | (0.02) | (0.02) | |

| x | 0.99 *** | 1.65 *** | 0.99 *** |

| (0.02) | (0.02) | (0.02) | |

| w | -0.67 *** | -1.01 *** | |

| (0.01) | (0.01) | ||

| z | 1.02 *** | ||

| (0.02) | |||

| N | 10000 | 10000 | 10000 |

| R2 | 0.16 | 0.39 | 0.50 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | |||

38.3.3 Bad Predictive Controls

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; z->x}")

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=1, y=2),

y = c(x=1, z=2, y=1))

## ggplot

ggdag(model) + theme_dag()

n <- 1e4

z <- rnorm(n)

x <- 2 * z + rnorm(n)

y <- x + 2 * rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | -0.02 | -0.02 |

| (0.02) | (0.02) | |

| x | 0.99 *** | 1.00 *** |

| (0.01) | (0.02) | |

| z | -0.00 | |

| (0.04) | ||

| N | 10000 | 10000 |

| R2 | 0.55 | 0.55 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

Similar coefficients, but greater SE when controlling for Z

Another variation is

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; x->z}")

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=1, y=2),

y = c(x=1, z=2, y=1))

## ggplot

ggdag(model) + theme_dag()

set.seed(1)

n <- 1e4

x <- rnorm(n)

z <- 2 * x + rnorm(n)

y <- x + 2 * rnorm(n)

jtools::export_summs(lm(y ~ x), lm(y ~ x + z))| Model 1 | Model 2 | |

|---|---|---|

| (Intercept) | 0.02 | 0.02 |

| (0.02) | (0.02) | |

| x | 1.00 *** | 0.99 *** |

| (0.02) | (0.05) | |

| z | 0.00 | |

| (0.02) | ||

| N | 10000 | 10000 |

| R2 | 0.20 | 0.20 |

| *** p < 0.001; ** p < 0.01; * p < 0.05. | ||

Worse SE when controlling for Z (0.02<0.05)

38.3.4 Bad Selection Bias

# cleans workspace

rm(list = ls())

# DAG

## specify edges

model <- dagitty("dag{x->y; x->z}")

## coordinates for plotting

coordinates(model) <- list(

x = c(x=1, z=2, y=2),

y = c(x=1, z=2, y=1))

## ggplot

ggdag(model) + theme_dag()

Not all post-treatment variables are bad.

Controlling for Z is neutral, but it might hurt the precision of the causal effect.

38.4 Choosing Controls

By providing a causal diagram, deciding the appropriateness of controls are automated.

Guide on how to choose confounders: T. J. VanderWeele (2019)

In cases where it’s hard to determine the plausibility of controls, we might need to further analysis.

sensemakr provides such tools.

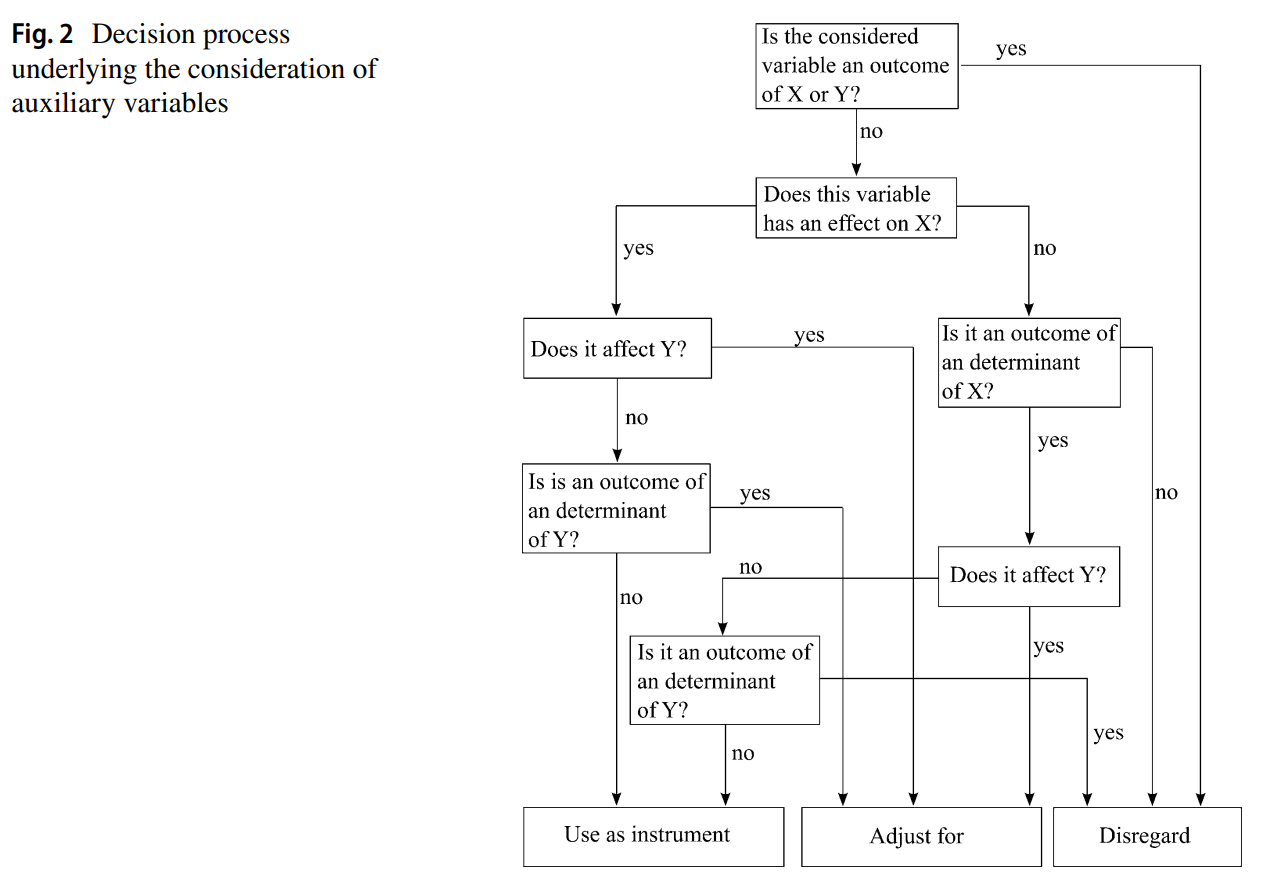

In simple cases, we can follow the simple rules of thumb provided by Steinmetz and Block (2022) (p. 614, Fig 2)