hidden_states = c("hot", "cold")

N = length(hidden_states)

# transition matrix of hidden states

A = rbind(

c(0.7, 0.3),

c(0.4, 0.6)

)

# initial distribution of hidden state

pi_0 = c(0.571, 0.429)

observed_states = c("small", "medium", "large")

M = length(observed_states)

# emission matrix: hidden -> observed

B = rbind(

c(0.1, 0.4, 0.5),

c(0.7, 0.2, 0.1)

)Hidden Markov Models

Simulating HMM

T = 100

# Note: +1's below because first entries represent initial time 0

X = rep(NA, T + 1)

O = rep(NA, T + 1)

# initial state

X[1] = sample(1:N, 1, prob = pi_0)

O[1] = sample(1:M, 1, prob = B[X[1], ])

for (t in 1:T) {

# simulate current hidden state, given previous hidden state

X[t + 1] = sample(1:N, 1, prob = A[X[t], ])

# simulate current observed state, given current hidden state

O[t + 1] = sample(1:M, 1, prob = B[X[t + 1], ])

}

data.frame(t = 0:T, X_t = hidden_states[X], O_t = observed_states[O]) |>

head(10) |> kbl() |> kable_styling()| t | X_t | O_t |

|---|---|---|

| 0 | hot | large |

| 1 | hot | large |

| 2 | hot | large |

| 3 | hot | large |

| 4 | hot | large |

| 5 | cold | large |

| 6 | cold | medium |

| 7 | hot | medium |

| 8 | hot | medium |

| 9 | hot | small |

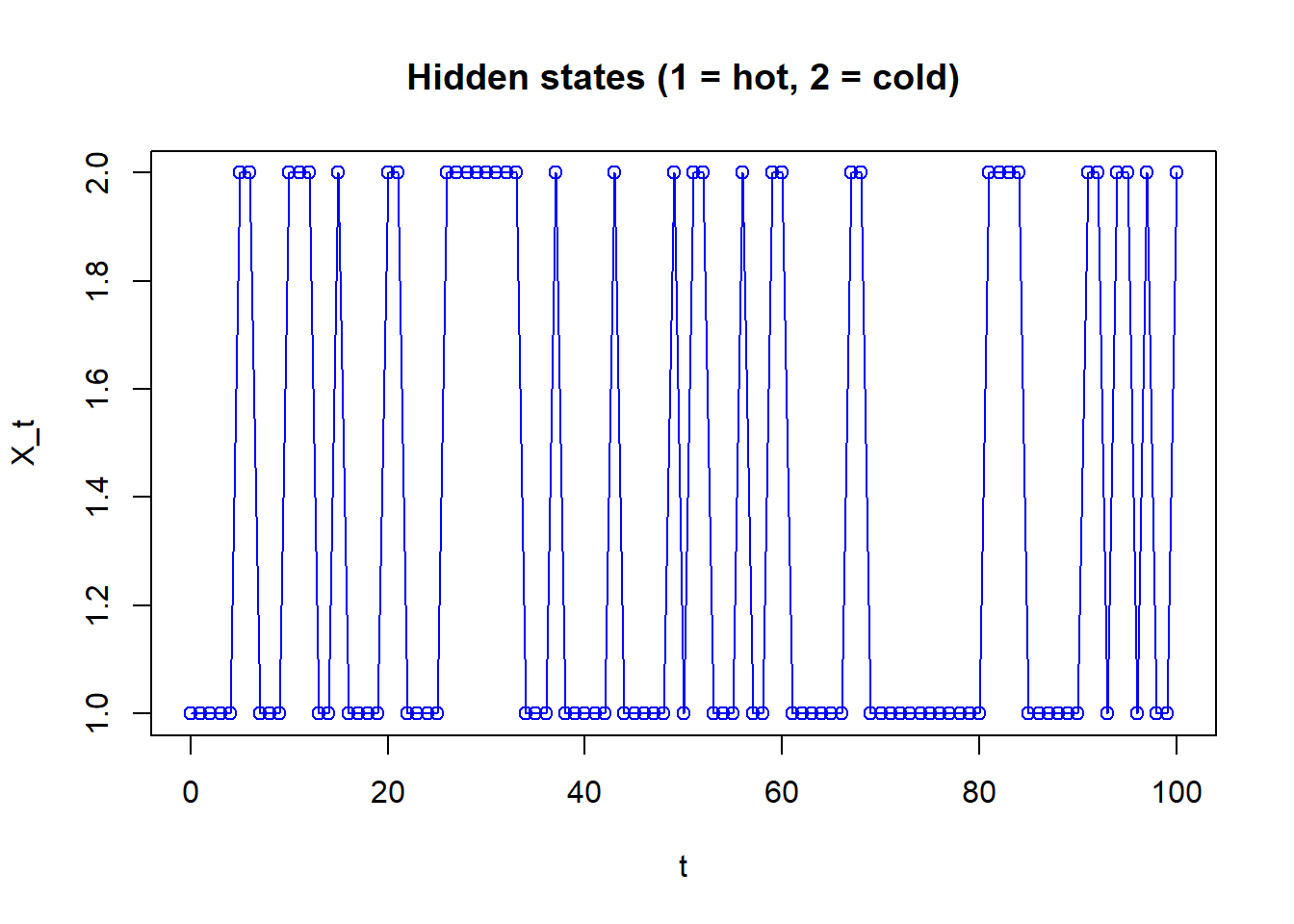

plot(0:T, X, type = "o", xlab = "t", ylab = "X_t", col = "blue",

main = "Hidden states (1 = hot, 2 = cold)")

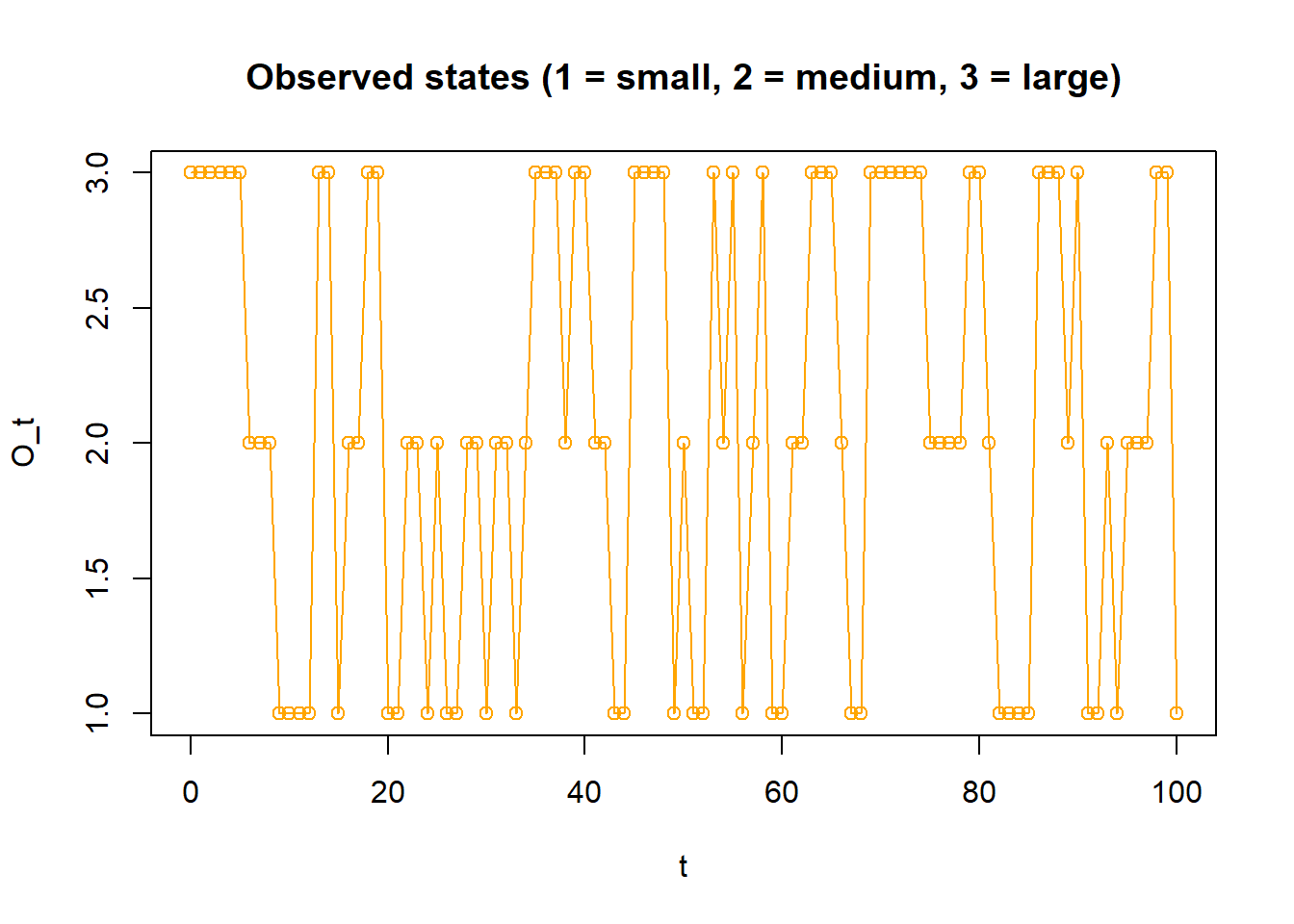

plot(0:T, O, type = "o", xlab = "t", ylab = "O_t", col = "orange",

main = "Observed states (1 = small, 2 = medium, 3 = large)")

Stationary/Marginal at single time

# hidden states

pi_sd = compute_stationary_distribution(A)

pi_sd [,1] [,2]

[1,] 0.5714286 0.4285714# observed states

pi_sd %*% B [,1] [,2] [,3]

[1,] 0.3571429 0.3142857 0.3285714HMM package

library(HMM)

# Initialise HMM

hmm = initHMM(States = hidden_states,

Symbols = observed_states,

transProbs = A,

emissionProbs = B,

startProbs = pi_sd)

hmm$States

[1] "hot" "cold"

$Symbols

[1] "small" "medium" "large"

$startProbs

hot cold

0.5714286 0.4285714

$transProbs

to

from hot cold

hot 0.7 0.3

cold 0.4 0.6

$emissionProbs

symbols

states small medium large

hot 0.1 0.4 0.5

cold 0.7 0.2 0.1HMM package simulation

simHMM(hmm, 10)$states

[1] "hot" "cold" "hot" "cold" "hot" "cold" "cold" "cold" "cold" "hot"

$observation

[1] "medium" "small" "large" "small" "medium" "small" "small" "small"

[9] "small" "medium"Likelihood of observations

# Sequence of observations

observations = c("small", "medium")

log_forward_probability = forward(hmm, observations)

forward_probability = exp(log_forward_probability)

forward_probability index

states 1 2

hot 0.05714286 0.06400000

cold 0.30000000 0.03942857colSums(forward_probability) 1 2

0.3571429 0.1034286 # Sequence of observations

observations = simHMM(hmm, 10)$observation

observations [1] "large" "large" "medium" "small" "medium" "small" "large" "medium"

[9] "small" "medium"log_forward_probability = forward(hmm, observations)

forward_probability = exp(log_forward_probability)

forward_probability index

states 1 2 3 4 5 6

hot 0.28571429 0.10857143 0.032182857 0.002566857 0.002327680 0.0002173669

cold 0.04285714 0.01114286 0.007851429 0.010056000 0.001360731 0.0010603200

index

states 7 8 9 10

hot 2.881424e-04 9.190230e-05 7.461376e-06 6.904504e-06

cold 7.014021e-05 2.570537e-05 3.009574e-05 4.059171e-06colSums(forward_probability) 1 2 3 4 5 6

3.285714e-01 1.197143e-01 4.003429e-02 1.262286e-02 3.688411e-03 1.277687e-03

7 8 9 10

3.582826e-04 1.176077e-04 3.755711e-05 1.096367e-05 Decoding

observations = c("small", "medium")

viterbi(hmm, observations)[1] "cold" "hot" # Sequence of observation

observations = simHMM(hmm, 100)$observation

observations [1] "large" "medium" "large" "medium" "small" "large" "large" "small"

[9] "small" "small" "medium" "medium" "large" "large" "small" "medium"

[17] "medium" "large" "medium" "small" "large" "medium" "medium" "small"

[25] "large" "small" "medium" "medium" "large" "large" "large" "small"

[33] "large" "small" "small" "small" "medium" "large" "large" "large"

[41] "large" "large" "medium" "large" "medium" "medium" "small" "small"

[49] "large" "small" "small" "medium" "large" "small" "medium" "small"

[57] "large" "medium" "medium" "large" "small" "medium" "medium" "large"

[65] "large" "medium" "large" "small" "small" "large" "small" "medium"

[73] "large" "small" "medium" "small" "medium" "medium" "medium" "medium"

[81] "small" "small" "small" "medium" "medium" "small" "small" "large"

[89] "large" "large" "medium" "small" "large" "small" "small" "medium"

[97] "small" "large" "medium" "large" viterbi(hmm, observations) [1] "hot" "hot" "hot" "hot" "cold" "hot" "hot" "cold" "cold" "cold"

[11] "hot" "hot" "hot" "hot" "cold" "hot" "hot" "hot" "hot" "cold"

[21] "hot" "hot" "hot" "cold" "hot" "cold" "hot" "hot" "hot" "hot"

[31] "hot" "cold" "hot" "cold" "cold" "cold" "hot" "hot" "hot" "hot"

[41] "hot" "hot" "hot" "hot" "hot" "hot" "cold" "cold" "hot" "cold"

[51] "cold" "hot" "hot" "cold" "cold" "cold" "hot" "hot" "hot" "hot"

[61] "cold" "hot" "hot" "hot" "hot" "hot" "hot" "cold" "cold" "hot"

[71] "cold" "hot" "hot" "cold" "cold" "cold" "hot" "hot" "hot" "hot"

[81] "cold" "cold" "cold" "hot" "hot" "cold" "cold" "hot" "hot" "hot"

[91] "hot" "cold" "hot" "cold" "cold" "cold" "cold" "hot" "hot" "hot" Estimation

# Sequence of observation

observation = simHMM(hmm, 10000)$observation

table(observation) / 10000observation

large medium small

0.3205 0.3211 0.3584 # Baum-Welch

baumWelch(hmm, observation, maxIterations = 10)$hmm

$hmm$States

[1] "hot" "cold"

$hmm$Symbols

[1] "small" "medium" "large"

$hmm$startProbs

hot cold

0.5714286 0.4285714

$hmm$transProbs

to

from hot cold

hot 0.6990122 0.3009878

cold 0.4018597 0.5981403

$hmm$emissionProbs

symbols

states small medium large

hot 0.09936791 0.4157953 0.4848368

cold 0.70413347 0.1947090 0.1011575

$difference

[1] 0.018781129 0.002290127 0.002154031 0.002030550 0.001917701 0.001813952

[7] 0.001718117 0.001629262 0.001546632 0.001469609All or nothing

library(HMM)

hidden_states = c("learning", "mastery")

observed_states = c("incorrect", "correct")

A = rbind(

c(0.8, 0.2),

c(0.001, 0.999)

)

B = rbind(

c(0.5, 0.5),

c(0.1, 0.9)

)

pi_0 = c(0.999, 0.001)

# Initialise HMM

hmm = initHMM(States = hidden_states,

Symbols = observed_states,

transProbs = A,

emissionProbs = B,

startProbs = pi_0)

hmm$States

[1] "learning" "mastery"

$Symbols

[1] "incorrect" "correct"

$startProbs

learning mastery

0.999 0.001

$transProbs

to

from learning mastery

learning 0.800 0.200

mastery 0.001 0.999

$emissionProbs

symbols

states incorrect correct

learning 0.5 0.5

mastery 0.1 0.9simHMM(hmm, 10)$states

[1] "learning" "learning" "mastery" "mastery" "mastery" "mastery"

[7] "mastery" "mastery" "mastery" "mastery"

$observation

[1] "correct" "correct" "correct" "correct" "correct" "correct" "correct"

[8] "correct" "correct" "correct"# Sequence of observation

observations = simHMM(hmm, 100)$observation

observations [1] "incorrect" "correct" "correct" "correct" "correct" "correct"

[7] "correct" "correct" "correct" "correct" "correct" "correct"

[13] "correct" "correct" "correct" "correct" "correct" "correct"

[19] "correct" "incorrect" "correct" "correct" "correct" "correct"

[25] "correct" "correct" "correct" "incorrect" "correct" "correct"

[31] "correct" "correct" "correct" "correct" "correct" "correct"

[37] "correct" "correct" "correct" "correct" "correct" "correct"

[43] "correct" "correct" "correct" "incorrect" "correct" "correct"

[49] "correct" "correct" "correct" "correct" "correct" "correct"

[55] "correct" "correct" "correct" "correct" "correct" "correct"

[61] "correct" "correct" "correct" "correct" "correct" "correct"

[67] "correct" "correct" "correct" "correct" "correct" "incorrect"

[73] "correct" "incorrect" "correct" "correct" "incorrect" "correct"

[79] "incorrect" "correct" "correct" "correct" "correct" "incorrect"

[85] "incorrect" "correct" "correct" "incorrect" "incorrect" "incorrect"

[91] "correct" "correct" "correct" "correct" "correct" "correct"

[97] "correct" "correct" "correct" "correct" viterbi(hmm, observations) [1] "learning" "mastery" "mastery" "mastery" "mastery" "mastery"

[7] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[13] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[19] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[25] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[31] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[37] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[43] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[49] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[55] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[61] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[67] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[73] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[79] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[85] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[91] "mastery" "mastery" "mastery" "mastery" "mastery" "mastery"

[97] "mastery" "mastery" "mastery" "mastery" # Sequence of observation

observation = simHMM(hmm, 10000)$observation

table(observation) / 10000observation

correct incorrect

0.8988 0.1012 # Baum-Welch

baumWelch(hmm, observation, maxIterations = 10)$hmm

$hmm$States

[1] "learning" "mastery"

$hmm$Symbols

[1] "incorrect" "correct"

$hmm$startProbs

learning mastery

0.999 0.001

$hmm$transProbs

to

from learning mastery

learning 0.895489932 0.1045101

mastery 0.001329809 0.9986702

$hmm$emissionProbs

symbols

states incorrect correct

learning 0.45947571 0.5405243

mastery 0.09629494 0.9037051

$difference

[1] 0.112359065 0.040630020 0.032319113 0.024353848 0.018073108 0.013406443

[7] 0.010007670 0.007541187 0.005744390 0.004425991