8.5 Gradient Boosting

Note: I learned gradient boosting from explained.ai.

Gradient boosting machine (GBM) is an additive modeling algorithm that gradually builds a composite model by iteratively adding M weak sub-models based on the performance of the prior iteration’s composite,

\[F_M(x) = \sum_{m = 1}^M f_m(x).\]

The idea is to fit a weak model, then replace the response values with the residuals from that model, and fit another model to those residuals. Adding the residual prediction model to the original response prediction model produces a more accurate model. GBM repeats this process over and over, fitting new models to the residuals of the previous composite model and adding the results to produce a new composite. With each iteration, the composite fits the training data more closely. The successive trees are usually weighted by a learning rate to slow down learning. This “shrinkage” reduces the influence of each individual tree and leaves room for future trees to improve the model.

\[F_M(x) = f_0 + \eta\sum_{m = 1}^M f_m(x).\]

The smaller the learning rate, \(\eta\), the more trees, \(M\), are needed to reach the same fit. Both \(\eta\) and \(M\) are hyperparameters. Other constraints on the trees are usually applied as additional hyperparameters, including tree depth, number of nodes, minimum observations per split, and minimum improvement to loss.
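To make the residual-fitting loop concrete, here is a minimal base R sketch under some loud assumptions: squared-error loss, simulated data, and depth-1 trees ("stumps") as the weak learners. The helpers `fit_stump()` and `predict_stump()` are hypothetical names written for this illustration; this is not how the gbm package is implemented.

```r
# Minimal boosting sketch for squared-error loss (base R only).
# fit_stump() and predict_stump() are hypothetical helpers, not part of gbm.

# Fit a depth-1 regression tree: pick the split on x that minimises the
# sum of squared errors of the two leaf means.
fit_stump <- function(x, r) {
  xs <- sort(unique(x))
  cuts <- (xs[-1] + xs[-length(xs)]) / 2   # midpoints between distinct x values
  sse <- sapply(cuts, function(cut) {
    left <- r[x <= cut]
    right <- r[x > cut]
    sum((left - mean(left))^2) + sum((right - mean(right))^2)
  })
  best <- cuts[which.min(sse)]
  list(cut = best, left = mean(r[x <= best]), right = mean(r[x > best]))
}

predict_stump <- function(stump, x) {
  ifelse(x <= stump$cut, stump$left, stump$right)
}

set.seed(1234)
x <- runif(200, 0, 10)
y <- sin(x) + rnorm(200, sd = 0.3)   # simulated data, for illustration only

eta <- 0.1   # learning rate (shrinkage)
M <- 100     # number of boosting iterations

f0 <- mean(y)                 # initial model: the mean response
F_hat <- rep(f0, length(y))   # current composite prediction
train_mse <- numeric(M)

for (m in seq_len(M)) {
  res <- y - F_hat                                 # residuals of the current composite
  stump <- fit_stump(x, res)                       # weak learner fit to the residuals
  F_hat <- F_hat + eta * predict_stump(stump, x)   # shrunken additive update
  train_mse[m] <- mean((y - F_hat)^2)
}

mse_start <- mean((y - f0)^2)   # training MSE of the initial model
mse_end <- train_mse[M]         # training MSE after M rounds
```

Rerunning the loop with a smaller `eta` and the same `M` should leave a larger training error, which is the trade-off between \(\eta\) and \(M\) described above.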

The name “gradient boosting” refers to boosting a model by following a gradient. Each round of training builds a weak learner and uses the residuals to calculate a gradient, the partial derivative of the loss function with respect to the model’s current prediction. Gradient boosting “descends the gradient”, adjusting the model to reduce the error in the next round of training.
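A short derivation shows why residuals and gradients go together. It assumes a squared-error loss with the conventional factor of one half; the symbols \(L\) and \(y_i\) are introduced here for illustration. With

\[L(y_i, F(x_i)) = \frac{1}{2}\left(y_i - F(x_i)\right)^2,\]

the negative gradient with respect to the current prediction is

\[-\frac{\partial L}{\partial F(x_i)} = y_i - F(x_i),\]

which is the residual. Fitting the next weak learner \(f_m\) to the residuals and adding a shrunken copy of it,

\[F_m(x) = F_{m-1}(x) + \eta f_m(x),\]

is therefore a step down the gradient of the loss. For other losses the same recipe applies, with the negative gradient (sometimes called the pseudo-residual) taking the place of the ordinary residual.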

In the case of classification problems, the loss function is typically the log-loss; for regression problems, it is typically mean squared error. GBM continues until it reaches the maximum number of trees or an acceptable error level.
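Here is a small sketch of those two loss functions in base R. The helper names `mse()` and `log_loss()` and all of the toy values are assumptions made up for this example; packages such as gbm compute their losses internally.

```r
# Hypothetical helpers for the two losses named above (base R only).
mse <- function(obs, pred) mean((obs - pred)^2)

# obs is coded 0/1; prob is the predicted probability of class 1.
log_loss <- function(obs, prob, eps = 1e-15) {
  prob <- pmin(pmax(prob, eps), 1 - eps)   # guard against log(0)
  -mean(obs * log(prob) + (1 - obs) * log(1 - prob))
}

# Toy classification values, invented for illustration.
obs_class <- c(1, 0, 1, 1, 0)
good_prob <- c(0.9, 0.1, 0.8, 0.7, 0.2)   # confident and mostly right
poor_prob <- c(0.6, 0.5, 0.5, 0.4, 0.6)   # hesitant and partly wrong

ll_good <- log_loss(obs_class, good_prob)
ll_poor <- log_loss(obs_class, poor_prob)

# Toy regression values, invented for illustration.
obs_num <- c(3.0, 5.5, 7.2)
pred_num <- c(2.5, 6.0, 7.0)
mse_val <- mse(obs_num, pred_num)   # (0.25 + 0.25 + 0.04) / 3 = 0.18
```

The better-calibrated probabilities give the smaller log-loss, so either function can serve as the quantity that each boosting round tries to reduce.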

8.5.0.1 Gradient Boosting Classification Tree

In addition to the gradient boosting machine algorithm, implemented in caret with method = "gbm", there is a variant called Extreme Gradient Boosting, XGBoost, which frankly I don’t know anything about other than it is supposed to work extremely well. Let’s try them both!

8.5.0.1.1 GBM

I’ll predict Purchase from the OJ data set again, this time using the GBM method by specifying method = "gbm". gbm has the following tuneable hyperparameters (see modelLookup("gbm")).

- n.trees: number of boosting iterations, \(M\)
- interaction.depth: maximum tree depth
- shrinkage: shrinkage, \(\eta\)
- n.minobsinnode: minimum terminal node size

I’ll use tuneLength = 5.

set.seed(1234)

garbage <- capture.output(

oj_mdl_gbm <- train(

Purchase ~ .,

data = oj_train,

method = "gbm",

metric = "ROC",

tuneLength = 5,

trControl = oj_trControl

))

oj_mdl_gbm

## Stochastic Gradient Boosting

##

## 857 samples

## 17 predictor

## 2 classes: 'CH', 'MM'

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 772, 772, 771, 770, 771, 771, ...

## Resampling results across tuning parameters:

##

## interaction.depth n.trees ROC Sens Spec

## 1 50 0.88 0.87 0.72

## 1 100 0.89 0.87 0.74

## 1 150 0.88 0.87 0.75

## 1 200 0.88 0.87 0.75

## 1 250 0.88 0.87 0.74

## 2 50 0.88 0.87 0.75

## 2 100 0.89 0.87 0.75

## 2 150 0.88 0.86 0.75

## 2 200 0.88 0.86 0.75

## 2 250 0.88 0.85 0.75

## 3 50 0.89 0.87 0.76

## 3 100 0.88 0.86 0.77

## 3 150 0.88 0.86 0.76

## 3 200 0.87 0.87 0.77

## 3 250 0.87 0.86 0.76

## 4 50 0.88 0.85 0.75

## 4 100 0.88 0.86 0.76

## 4 150 0.87 0.85 0.77

## 4 200 0.87 0.85 0.76

## 4 250 0.87 0.85 0.75

## 5 50 0.89 0.86 0.76

## 5 100 0.88 0.85 0.74

## 5 150 0.88 0.85 0.76

## 5 200 0.87 0.85 0.74

## 5 250 0.87 0.84 0.75

##

## Tuning parameter 'shrinkage' was held constant at a value of 0.1

##

## Tuning parameter 'n.minobsinnode' was held constant at a value of 10

## ROC was used to select the optimal model using the largest value.

## The final values used for the model were n.trees = 50, interaction.depth =

## 5, shrinkage = 0.1 and n.minobsinnode = 10.

train() tuned n.trees (\(M\)) and interaction.depth, holding shrinkage = 0.1 (\(\eta\)) and n.minobsinnode = 10. The optimal hyperparameter values were n.trees = 50 and interaction.depth = 5.
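The candidate grid behind the printed results can be written out explicitly with base R's `expand.grid()`. The values below are read off the results table (five depths, five tree counts, and the two held-constant parameters); the object name `gbm_grid` is my own.

```r
# Candidate grid implied by the printed resampling results:
# 5 tree depths x 5 tree counts, with shrinkage and n.minobsinnode fixed.
gbm_grid <- expand.grid(
  interaction.depth = 1:5,
  n.trees = seq(50, 250, by = 50),
  shrinkage = 0.1,
  n.minobsinnode = 10
)

n_candidates <- nrow(gbm_grid)   # 25 candidate models
```

Passing a data frame like this to train() through its tuneGrid argument, in place of tuneLength = 5, should search the same candidates, and it is the natural place to try other values such as a smaller shrinkage.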

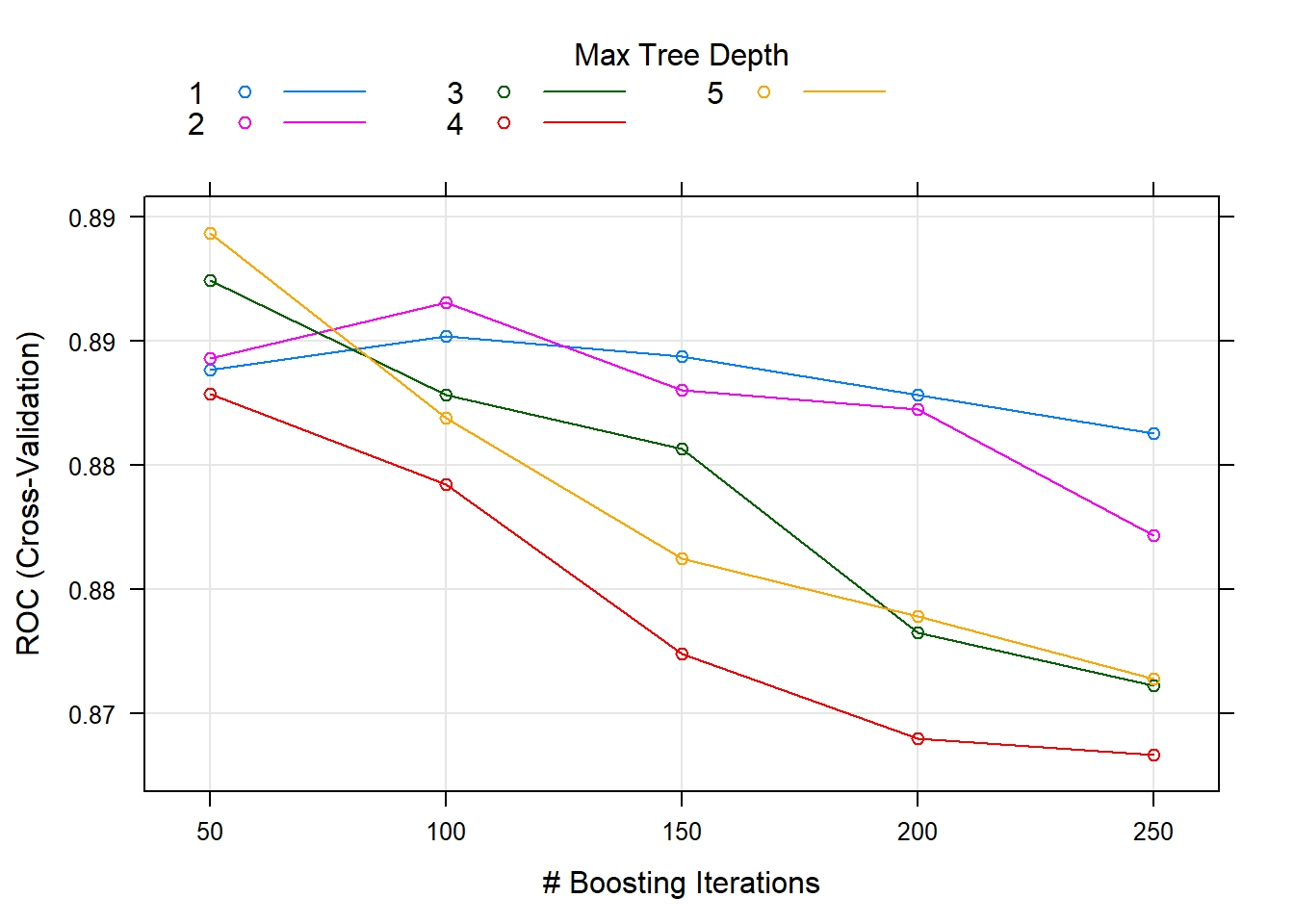

You can see from the tuning plot that the cross-validated ROC AUC (the metric used for selection) is maximized at \(M=50\) for a tree depth of 5, but \(M=50\) with a tree depth of 3 worked nearly as well.

Let’s see how the model performed on the holdout set. The accuracy was 0.8451.

oj_preds_gbm <- bind_cols(

predict(oj_mdl_gbm, newdata = oj_test, type = "prob"),

Predicted = predict(oj_mdl_gbm, newdata = oj_test, type = "raw"),

Actual = oj_test$Purchase

)

oj_cm_gbm <- confusionMatrix(oj_preds_gbm$Predicted, reference = oj_preds_gbm$Actual)

oj_cm_gbm

## Confusion Matrix and Statistics

##

## Reference

## Prediction CH MM

## CH 113 16

## MM 17 67

##

## Accuracy : 0.845

## 95% CI : (0.789, 0.891)

## No Information Rate : 0.61

## P-Value [Acc > NIR] : 0.0000000000000631

##

## Kappa : 0.675

##

## Mcnemar's Test P-Value : 1

##

## Sensitivity : 0.869

## Specificity : 0.807

## Pos Pred Value : 0.876

## Neg Pred Value : 0.798

## Prevalence : 0.610

## Detection Rate : 0.531

## Detection Prevalence : 0.606

## Balanced Accuracy : 0.838

##

## 'Positive' Class : CH
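The headline statistics in that printout follow directly from the four cell counts. Here is a base R sketch that recomputes them by hand; the counts are copied from the matrix above, and the variable names are my own.

```r
# Counts copied from the GBM confusion matrix above
# (rows = prediction, columns = reference; "CH" is the positive class).
cm <- matrix(
  c(113, 17, 16, 67), nrow = 2,
  dimnames = list(Prediction = c("CH", "MM"), Reference = c("CH", "MM"))
)

accuracy    <- sum(diag(cm)) / sum(cm)             # (113 + 67) / 213
sensitivity <- cm["CH", "CH"] / sum(cm[, "CH"])    # 113 / 130
specificity <- cm["MM", "MM"] / sum(cm[, "MM"])    # 67 / 83
ppv         <- cm["CH", "CH"] / sum(cm["CH", ])    # 113 / 129
npv         <- cm["MM", "MM"] / sum(cm["MM", ])    # 67 / 84
balanced    <- (sensitivity + specificity) / 2

# Cohen's kappa: observed agreement corrected for chance agreement.
p_chance <- sum(rowSums(cm) * colSums(cm)) / sum(cm)^2
kappa    <- (accuracy - p_chance) / (1 - p_chance)

round(c(accuracy, sensitivity, specificity, ppv, npv, balanced, kappa), 3)
# 0.845 0.869 0.807 0.876 0.798 0.838 0.675
```

Sensitivity and specificity are defined relative to the positive class, so they would swap if "MM" were treated as positive.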

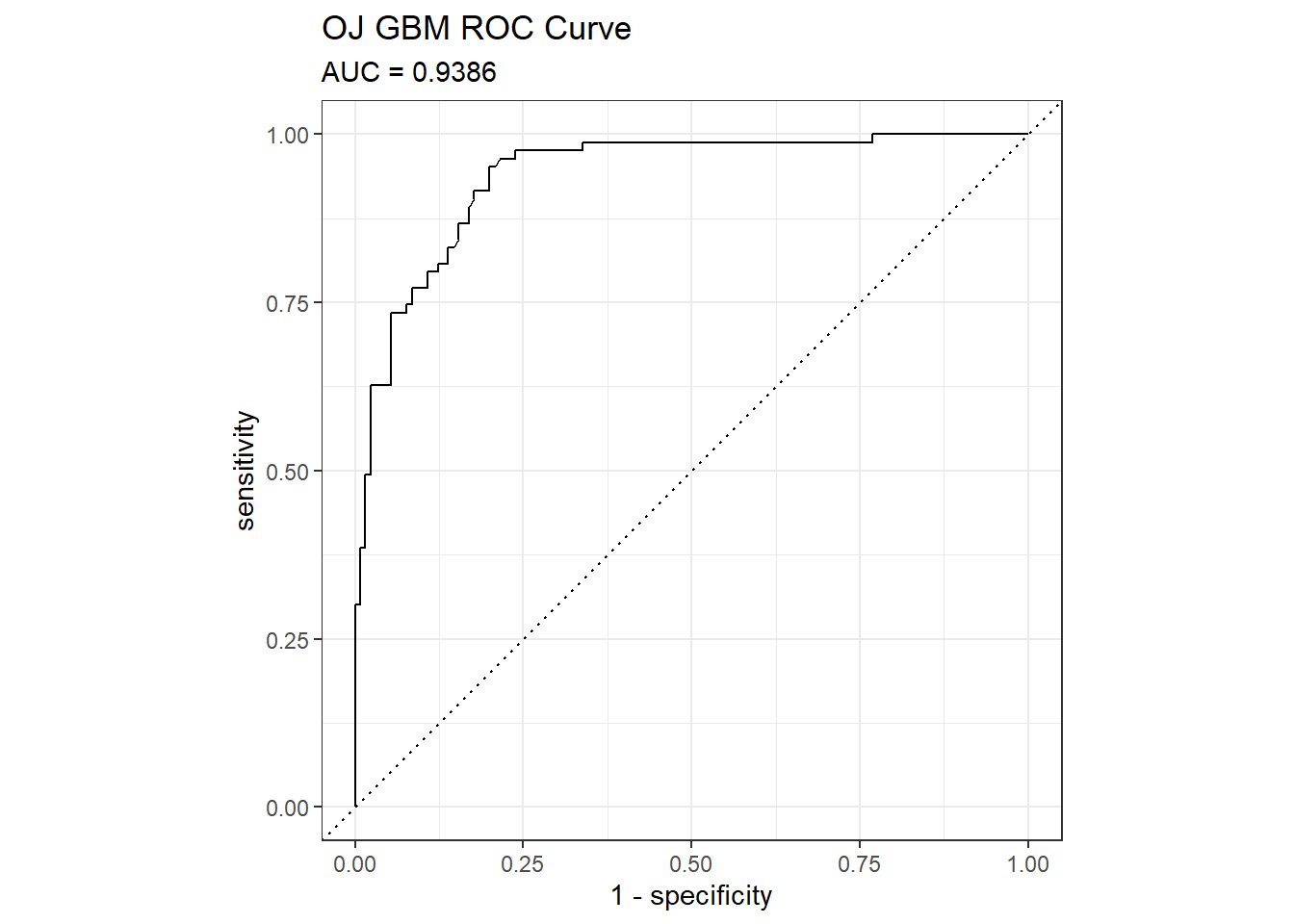

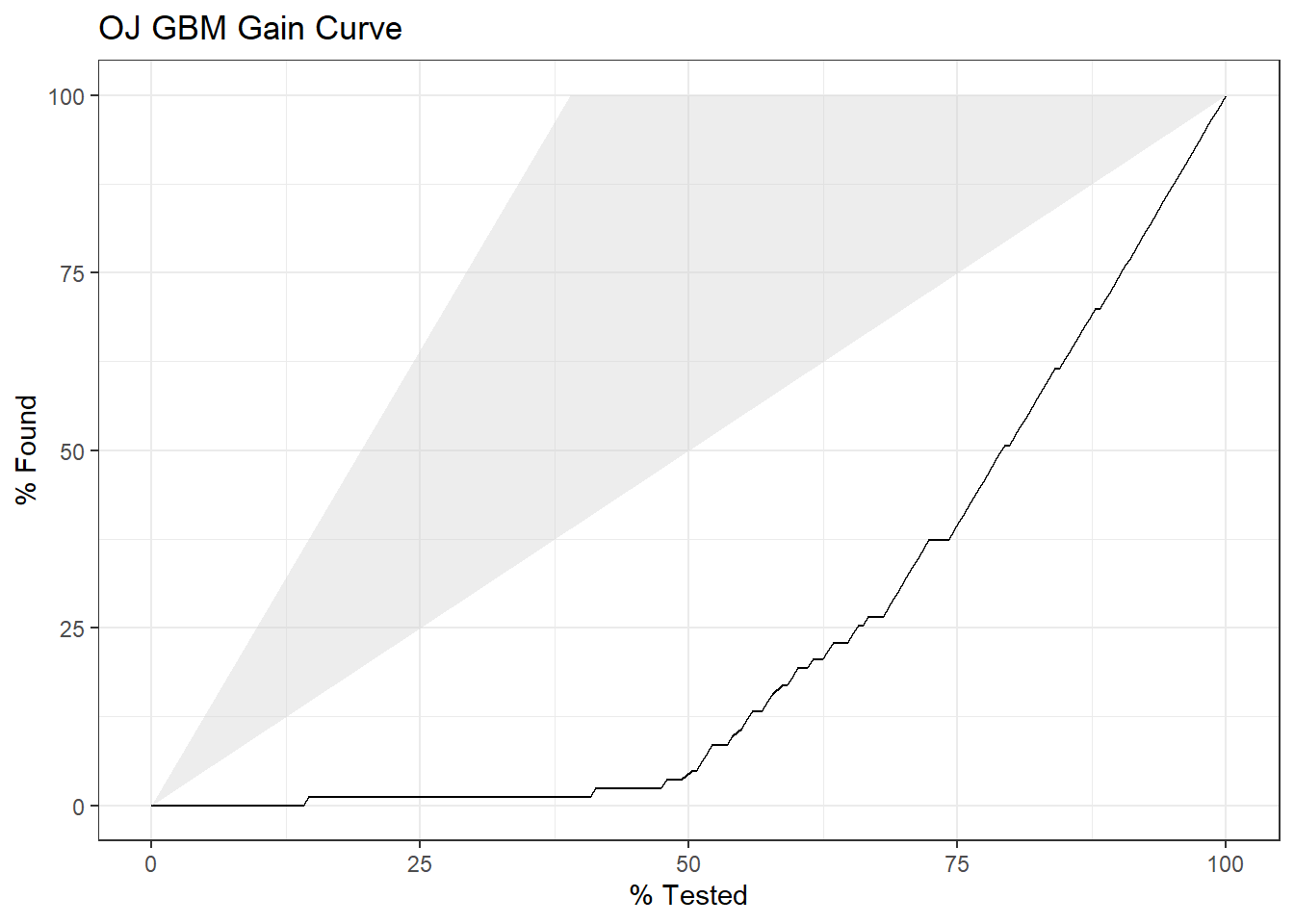

AUC was 0.9386. Here are the ROC and gain curves.

mdl_auc <- Metrics::auc(actual = oj_preds_gbm$Actual == "CH", oj_preds_gbm$CH)

yardstick::roc_curve(oj_preds_gbm, Actual, CH) %>%

autoplot() +

labs(

title = "OJ GBM ROC Curve",

subtitle = paste0("AUC = ", round(mdl_auc, 4))

)
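For intuition about what the AUC measures, here is a base R sketch of the rank-based formula: the AUC is the share of positive-negative pairs in which the positive case gets the higher score. The helper `auc_rank()` and the toy data are assumptions for illustration; this is not meant to mirror the internals of Metrics::auc().

```r
# Rank-based AUC: the probability that a randomly chosen positive case
# receives a higher score than a randomly chosen negative case.
# auc_rank() is a hypothetical helper written for this example.
auc_rank <- function(is_positive, score) {
  r <- rank(score)   # average ranks, so tied scores count as half
  n_pos <- sum(is_positive)
  n_neg <- sum(!is_positive)
  (sum(r[is_positive]) - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)
}

# Toy data, invented for illustration: TRUE means the actual class is "CH".
is_ch <- c(TRUE, TRUE, FALSE, TRUE, FALSE, FALSE)
p_ch  <- c(0.90, 0.80, 0.70, 0.60, 0.55, 0.40)

toy_auc <- auc_rank(is_ch, p_ch)   # 8 of the 9 pairs are ordered correctly: 8/9
```

A model that ranks every CH purchase above every MM purchase would score 1, and random scores would hover around 0.5.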

Now the variable importance. Just a few variables. LoyalCH is at the top again.

8.5.0.1.2 XGBoost

I’ll predict Purchase from the OJ data set again, this time using the XGBoost method by specifying method = "xgbTree". xgbTree has the following tuneable hyperparameters (see modelLookup("xgbTree")). The first three are the same as in gbm.

- nrounds: number of boosting iterations, \(M\)
- max_depth: maximum tree depth
- eta: shrinkage, \(\eta\)
- gamma: minimum loss reduction
- colsample_bytree: subsample ratio of columns
- min_child_weight: minimum sum of instance weight
- subsample: subsample percentage

I’ll use tuneLength = 5 again.

set.seed(1234)

garbage <- capture.output(

oj_mdl_xgb <- train(

Purchase ~ .,

data = oj_train,

method = "xgbTree",

metric = "ROC",

tuneLength = 5,

trControl = oj_trControl

))

oj_mdl_xgb

## eXtreme Gradient Boosting

##

## 857 samples

## 17 predictor

## 2 classes: 'CH', 'MM'

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 772, 772, 771, 770, 771, 771, ...

## Resampling results across tuning parameters:

##

## eta max_depth colsample_bytree subsample nrounds ROC Sens Spec

## 0.3 1 0.6 0.50 50 0.88 0.88 0.75

## 0.3 1 0.6 0.50 100 0.88 0.87 0.75

## 0.3 1 0.6 0.50 150 0.88 0.87 0.73

## 0.3 1 0.6 0.50 200 0.88 0.86 0.73

## 0.3 1 0.6 0.50 250 0.88 0.86 0.74

## 0.3 1 0.6 0.62 50 0.89 0.88 0.75

## 0.3 1 0.6 0.62 100 0.88 0.87 0.76

## 0.3 1 0.6 0.62 150 0.88 0.86 0.75

## 0.3 1 0.6 0.62 200 0.88 0.86 0.74

## 0.3 1 0.6 0.62 250 0.88 0.86 0.73

## 0.3 1 0.6 0.75 50 0.89 0.88 0.75

## 0.3 1 0.6 0.75 100 0.88 0.87 0.75

## 0.3 1 0.6 0.75 150 0.88 0.88 0.72

## 0.3 1 0.6 0.75 200 0.88 0.87 0.73

## 0.3 1 0.6 0.75 250 0.88 0.86 0.74

## 0.3 1 0.6 0.88 50 0.89 0.87 0.75

## 0.3 1 0.6 0.88 100 0.88 0.87 0.74

## 0.3 1 0.6 0.88 150 0.88 0.86 0.75

## 0.3 1 0.6 0.88 200 0.88 0.86 0.74

## 0.3 1 0.6 0.88 250 0.88 0.86 0.75

## 0.3 1 0.6 1.00 50 0.89 0.88 0.75

## 0.3 1 0.6 1.00 100 0.89 0.88 0.74

## 0.3 1 0.6 1.00 150 0.89 0.88 0.74

## 0.3 1 0.6 1.00 200 0.88 0.88 0.75

## 0.3 1 0.6 1.00 250 0.88 0.87 0.75

## 0.3 1 0.8 0.50 50 0.88 0.87 0.75

## 0.3 1 0.8 0.50 100 0.88 0.86 0.74

## 0.3 1 0.8 0.50 150 0.88 0.87 0.74

## 0.3 1 0.8 0.50 200 0.87 0.85 0.74

## 0.3 1 0.8 0.50 250 0.87 0.86 0.74

## 0.3 1 0.8 0.62 50 0.88 0.87 0.74

## 0.3 1 0.8 0.62 100 0.88 0.86 0.76

## 0.3 1 0.8 0.62 150 0.88 0.87 0.74

## 0.3 1 0.8 0.62 200 0.88 0.86 0.74

## 0.3 1 0.8 0.62 250 0.88 0.87 0.73

## 0.3 1 0.8 0.75 50 0.89 0.88 0.74

## 0.3 1 0.8 0.75 100 0.88 0.87 0.74

## 0.3 1 0.8 0.75 150 0.88 0.88 0.74

## 0.3 1 0.8 0.75 200 0.88 0.87 0.75

## 0.3 1 0.8 0.75 250 0.88 0.86 0.74

## 0.3 1 0.8 0.88 50 0.89 0.87 0.74

## 0.3 1 0.8 0.88 100 0.88 0.88 0.74

## 0.3 1 0.8 0.88 150 0.88 0.87 0.74

## 0.3 1 0.8 0.88 200 0.88 0.86 0.75

## 0.3 1 0.8 0.88 250 0.88 0.86 0.73

## 0.3 1 0.8 1.00 50 0.89 0.87 0.74

## 0.3 1 0.8 1.00 100 0.89 0.87 0.74

## 0.3 1 0.8 1.00 150 0.88 0.87 0.75

## 0.3 1 0.8 1.00 200 0.88 0.88 0.75

## 0.3 1 0.8 1.00 250 0.88 0.87 0.75

## 0.3 2 0.6 0.50 50 0.88 0.86 0.76

## 0.3 2 0.6 0.50 100 0.87 0.86 0.75

## 0.3 2 0.6 0.50 150 0.87 0.85 0.75

## 0.3 2 0.6 0.50 200 0.86 0.85 0.74

## 0.3 2 0.6 0.50 250 0.86 0.84 0.73

## 0.3 2 0.6 0.62 50 0.89 0.86 0.74

## 0.3 2 0.6 0.62 100 0.88 0.84 0.76

## 0.3 2 0.6 0.62 150 0.87 0.84 0.76

## 0.3 2 0.6 0.62 200 0.87 0.83 0.76

## 0.3 2 0.6 0.62 250 0.86 0.84 0.74

## 0.3 2 0.6 0.75 50 0.88 0.87 0.77

## 0.3 2 0.6 0.75 100 0.88 0.86 0.75

## 0.3 2 0.6 0.75 150 0.87 0.86 0.74

## 0.3 2 0.6 0.75 200 0.87 0.86 0.73

## 0.3 2 0.6 0.75 250 0.86 0.85 0.74

## 0.3 2 0.6 0.88 50 0.89 0.86 0.77

## 0.3 2 0.6 0.88 100 0.88 0.85 0.74

## 0.3 2 0.6 0.88 150 0.87 0.85 0.76

## 0.3 2 0.6 0.88 200 0.87 0.85 0.75

## 0.3 2 0.6 0.88 250 0.87 0.84 0.75

## 0.3 2 0.6 1.00 50 0.89 0.87 0.76

## 0.3 2 0.6 1.00 100 0.88 0.85 0.76

## 0.3 2 0.6 1.00 150 0.88 0.85 0.75

## 0.3 2 0.6 1.00 200 0.87 0.86 0.75

## 0.3 2 0.6 1.00 250 0.87 0.85 0.75

## 0.3 2 0.8 0.50 50 0.88 0.85 0.74

## 0.3 2 0.8 0.50 100 0.87 0.86 0.75

## 0.3 2 0.8 0.50 150 0.87 0.85 0.74

## 0.3 2 0.8 0.50 200 0.86 0.83 0.74

## 0.3 2 0.8 0.50 250 0.86 0.83 0.75

## 0.3 2 0.8 0.62 50 0.88 0.86 0.76

## 0.3 2 0.8 0.62 100 0.87 0.85 0.76

## 0.3 2 0.8 0.62 150 0.87 0.85 0.76

## 0.3 2 0.8 0.62 200 0.87 0.85 0.76

## 0.3 2 0.8 0.62 250 0.86 0.84 0.74

## 0.3 2 0.8 0.75 50 0.88 0.85 0.76

## 0.3 2 0.8 0.75 100 0.88 0.84 0.74

## 0.3 2 0.8 0.75 150 0.87 0.85 0.75

## 0.3 2 0.8 0.75 200 0.87 0.85 0.75

## 0.3 2 0.8 0.75 250 0.87 0.84 0.74

## 0.3 2 0.8 0.88 50 0.88 0.86 0.75

## 0.3 2 0.8 0.88 100 0.88 0.85 0.75

## 0.3 2 0.8 0.88 150 0.87 0.86 0.75

## 0.3 2 0.8 0.88 200 0.87 0.85 0.75

## 0.3 2 0.8 0.88 250 0.86 0.85 0.74

## 0.3 2 0.8 1.00 50 0.88 0.86 0.76

## 0.3 2 0.8 1.00 100 0.88 0.85 0.75

## 0.3 2 0.8 1.00 150 0.87 0.85 0.75

## 0.3 2 0.8 1.00 200 0.87 0.85 0.75

## 0.3 2 0.8 1.00 250 0.87 0.85 0.74

## 0.3 3 0.6 0.50 50 0.88 0.86 0.76

## 0.3 3 0.6 0.50 100 0.87 0.84 0.73

## 0.3 3 0.6 0.50 150 0.85 0.84 0.72

## 0.3 3 0.6 0.50 200 0.85 0.84 0.72

## 0.3 3 0.6 0.50 250 0.85 0.84 0.73

## 0.3 3 0.6 0.62 50 0.88 0.86 0.76

## 0.3 3 0.6 0.62 100 0.87 0.84 0.74

## 0.3 3 0.6 0.62 150 0.86 0.84 0.73

## 0.3 3 0.6 0.62 200 0.86 0.85 0.73

## 0.3 3 0.6 0.62 250 0.85 0.85 0.74

## 0.3 3 0.6 0.75 50 0.88 0.85 0.75

## 0.3 3 0.6 0.75 100 0.86 0.84 0.74

## 0.3 3 0.6 0.75 150 0.86 0.84 0.73

## 0.3 3 0.6 0.75 200 0.86 0.84 0.74

## 0.3 3 0.6 0.75 250 0.85 0.84 0.74

## 0.3 3 0.6 0.88 50 0.87 0.86 0.77

## 0.3 3 0.6 0.88 100 0.87 0.85 0.75

## 0.3 3 0.6 0.88 150 0.86 0.85 0.75

## 0.3 3 0.6 0.88 200 0.86 0.84 0.75

## 0.3 3 0.6 0.88 250 0.86 0.85 0.75

## 0.3 3 0.6 1.00 50 0.88 0.86 0.76

## 0.3 3 0.6 1.00 100 0.87 0.86 0.75

## 0.3 3 0.6 1.00 150 0.87 0.86 0.74

## 0.3 3 0.6 1.00 200 0.87 0.84 0.75

## 0.3 3 0.6 1.00 250 0.86 0.85 0.73

## 0.3 3 0.8 0.50 50 0.87 0.84 0.75

## 0.3 3 0.8 0.50 100 0.86 0.85 0.73

## 0.3 3 0.8 0.50 150 0.86 0.84 0.71

## 0.3 3 0.8 0.50 200 0.85 0.83 0.74

## 0.3 3 0.8 0.50 250 0.84 0.83 0.71

## 0.3 3 0.8 0.62 50 0.88 0.87 0.75

## 0.3 3 0.8 0.62 100 0.87 0.85 0.73

## 0.3 3 0.8 0.62 150 0.87 0.85 0.74

## 0.3 3 0.8 0.62 200 0.86 0.84 0.74

## 0.3 3 0.8 0.62 250 0.85 0.84 0.72

## 0.3 3 0.8 0.75 50 0.88 0.86 0.75

## 0.3 3 0.8 0.75 100 0.87 0.85 0.72

## 0.3 3 0.8 0.75 150 0.86 0.84 0.73

## 0.3 3 0.8 0.75 200 0.86 0.84 0.73

## 0.3 3 0.8 0.75 250 0.86 0.84 0.72

## 0.3 3 0.8 0.88 50 0.88 0.86 0.74

## 0.3 3 0.8 0.88 100 0.87 0.85 0.74

## 0.3 3 0.8 0.88 150 0.86 0.85 0.75

## 0.3 3 0.8 0.88 200 0.86 0.84 0.75

## 0.3 3 0.8 0.88 250 0.86 0.84 0.74

## 0.3 3 0.8 1.00 50 0.88 0.85 0.75

## 0.3 3 0.8 1.00 100 0.87 0.86 0.75

## 0.3 3 0.8 1.00 150 0.87 0.85 0.74

## 0.3 3 0.8 1.00 200 0.86 0.85 0.75

## 0.3 3 0.8 1.00 250 0.86 0.84 0.75

## 0.3 4 0.6 0.50 50 0.87 0.85 0.74

## 0.3 4 0.6 0.50 100 0.85 0.84 0.74

## 0.3 4 0.6 0.50 150 0.86 0.84 0.73

## 0.3 4 0.6 0.50 200 0.85 0.84 0.72

## 0.3 4 0.6 0.50 250 0.85 0.85 0.72

## 0.3 4 0.6 0.62 50 0.87 0.85 0.75

## 0.3 4 0.6 0.62 100 0.87 0.85 0.74

## 0.3 4 0.6 0.62 150 0.86 0.83 0.75

## 0.3 4 0.6 0.62 200 0.86 0.83 0.75

## 0.3 4 0.6 0.62 250 0.86 0.84 0.74

## 0.3 4 0.6 0.75 50 0.87 0.85 0.74

## 0.3 4 0.6 0.75 100 0.86 0.85 0.72

## 0.3 4 0.6 0.75 150 0.86 0.84 0.75

## 0.3 4 0.6 0.75 200 0.86 0.83 0.73

## 0.3 4 0.6 0.75 250 0.85 0.83 0.72

## 0.3 4 0.6 0.88 50 0.87 0.86 0.75

## 0.3 4 0.6 0.88 100 0.86 0.85 0.74

## 0.3 4 0.6 0.88 150 0.85 0.85 0.73

## 0.3 4 0.6 0.88 200 0.85 0.84 0.73

## 0.3 4 0.6 0.88 250 0.85 0.84 0.72

## 0.3 4 0.6 1.00 50 0.88 0.86 0.76

## 0.3 4 0.6 1.00 100 0.86 0.85 0.73

## 0.3 4 0.6 1.00 150 0.86 0.85 0.73

## 0.3 4 0.6 1.00 200 0.86 0.83 0.73

## 0.3 4 0.6 1.00 250 0.85 0.82 0.72

## 0.3 4 0.8 0.50 50 0.86 0.85 0.72

## 0.3 4 0.8 0.50 100 0.85 0.85 0.72

## 0.3 4 0.8 0.50 150 0.85 0.85 0.73

## 0.3 4 0.8 0.50 200 0.85 0.84 0.72

## 0.3 4 0.8 0.50 250 0.84 0.83 0.73

## 0.3 4 0.8 0.62 50 0.87 0.85 0.76

## 0.3 4 0.8 0.62 100 0.86 0.85 0.74

## 0.3 4 0.8 0.62 150 0.86 0.84 0.71

## 0.3 4 0.8 0.62 200 0.85 0.83 0.72

## 0.3 4 0.8 0.62 250 0.85 0.83 0.71

## 0.3 4 0.8 0.75 50 0.87 0.84 0.75

## 0.3 4 0.8 0.75 100 0.86 0.84 0.74

## 0.3 4 0.8 0.75 150 0.86 0.84 0.73

## 0.3 4 0.8 0.75 200 0.85 0.84 0.72

## 0.3 4 0.8 0.75 250 0.85 0.83 0.72

## 0.3 4 0.8 0.88 50 0.87 0.84 0.75

## 0.3 4 0.8 0.88 100 0.86 0.84 0.74

## 0.3 4 0.8 0.88 150 0.86 0.83 0.73

## 0.3 4 0.8 0.88 200 0.85 0.83 0.75

## 0.3 4 0.8 0.88 250 0.85 0.83 0.74

## 0.3 4 0.8 1.00 50 0.87 0.85 0.74

## 0.3 4 0.8 1.00 100 0.86 0.86 0.73

## 0.3 4 0.8 1.00 150 0.86 0.85 0.73

## 0.3 4 0.8 1.00 200 0.86 0.84 0.73

## 0.3 4 0.8 1.00 250 0.86 0.84 0.72

## 0.3 5 0.6 0.50 50 0.86 0.85 0.73

## 0.3 5 0.6 0.50 100 0.85 0.84 0.71

## 0.3 5 0.6 0.50 150 0.85 0.83 0.72

## 0.3 5 0.6 0.50 200 0.85 0.82 0.73

## 0.3 5 0.6 0.50 250 0.85 0.81 0.72

## 0.3 5 0.6 0.62 50 0.86 0.84 0.73

## 0.3 5 0.6 0.62 100 0.86 0.84 0.72

## 0.3 5 0.6 0.62 150 0.85 0.84 0.73

## 0.3 5 0.6 0.62 200 0.85 0.85 0.72

## 0.3 5 0.6 0.62 250 0.85 0.84 0.70

## 0.3 5 0.6 0.75 50 0.86 0.84 0.75

## 0.3 5 0.6 0.75 100 0.86 0.83 0.73

## 0.3 5 0.6 0.75 150 0.85 0.82 0.73

## 0.3 5 0.6 0.75 200 0.85 0.82 0.71

## 0.3 5 0.6 0.75 250 0.85 0.83 0.71

## 0.3 5 0.6 0.88 50 0.86 0.85 0.75

## 0.3 5 0.6 0.88 100 0.86 0.84 0.74

## 0.3 5 0.6 0.88 150 0.85 0.84 0.73

## 0.3 5 0.6 0.88 200 0.85 0.83 0.72

## 0.3 5 0.6 0.88 250 0.85 0.82 0.73

## 0.3 5 0.6 1.00 50 0.87 0.85 0.74

## 0.3 5 0.6 1.00 100 0.86 0.84 0.72

## 0.3 5 0.6 1.00 150 0.86 0.84 0.73

## 0.3 5 0.6 1.00 200 0.85 0.83 0.73

## 0.3 5 0.6 1.00 250 0.85 0.83 0.73

## 0.3 5 0.8 0.50 50 0.86 0.84 0.74

## 0.3 5 0.8 0.50 100 0.85 0.84 0.73

## 0.3 5 0.8 0.50 150 0.85 0.84 0.73

## 0.3 5 0.8 0.50 200 0.85 0.84 0.72

## 0.3 5 0.8 0.50 250 0.85 0.83 0.71

## 0.3 5 0.8 0.62 50 0.86 0.85 0.72

## 0.3 5 0.8 0.62 100 0.86 0.84 0.74

## 0.3 5 0.8 0.62 150 0.86 0.83 0.72

## 0.3 5 0.8 0.62 200 0.85 0.83 0.73

## 0.3 5 0.8 0.62 250 0.85 0.83 0.72

## 0.3 5 0.8 0.75 50 0.86 0.85 0.75

## 0.3 5 0.8 0.75 100 0.85 0.84 0.73

## 0.3 5 0.8 0.75 150 0.85 0.82 0.72

## 0.3 5 0.8 0.75 200 0.84 0.83 0.72

## 0.3 5 0.8 0.75 250 0.84 0.82 0.71

## 0.3 5 0.8 0.88 50 0.87 0.85 0.75

## 0.3 5 0.8 0.88 100 0.86 0.84 0.74

## 0.3 5 0.8 0.88 150 0.85 0.84 0.72

## 0.3 5 0.8 0.88 200 0.85 0.82 0.72

## 0.3 5 0.8 0.88 250 0.85 0.83 0.73

## 0.3 5 0.8 1.00 50 0.87 0.85 0.73

## 0.3 5 0.8 1.00 100 0.86 0.85 0.75

## 0.3 5 0.8 1.00 150 0.86 0.84 0.74

## 0.3 5 0.8 1.00 200 0.85 0.84 0.72

## 0.3 5 0.8 1.00 250 0.85 0.84 0.72

## 0.4 1 0.6 0.50 50 0.88 0.86 0.74

## 0.4 1 0.6 0.50 100 0.88 0.86 0.72

## 0.4 1 0.6 0.50 150 0.87 0.85 0.74

## 0.4 1 0.6 0.50 200 0.87 0.86 0.73

## 0.4 1 0.6 0.50 250 0.87 0.85 0.74

## 0.4 1 0.6 0.62 50 0.89 0.87 0.73

## 0.4 1 0.6 0.62 100 0.88 0.86 0.75

## 0.4 1 0.6 0.62 150 0.88 0.85 0.75

## 0.4 1 0.6 0.62 200 0.87 0.85 0.75

## 0.4 1 0.6 0.62 250 0.88 0.86 0.74

## 0.4 1 0.6 0.75 50 0.88 0.87 0.74

## 0.4 1 0.6 0.75 100 0.88 0.87 0.75

## 0.4 1 0.6 0.75 150 0.88 0.87 0.76

## 0.4 1 0.6 0.75 200 0.88 0.87 0.73

## 0.4 1 0.6 0.75 250 0.88 0.86 0.74

## 0.4 1 0.6 0.88 50 0.89 0.88 0.74

## 0.4 1 0.6 0.88 100 0.88 0.88 0.75

## 0.4 1 0.6 0.88 150 0.88 0.87 0.74

## 0.4 1 0.6 0.88 200 0.88 0.87 0.73

## 0.4 1 0.6 0.88 250 0.87 0.86 0.74

## 0.4 1 0.6 1.00 50 0.89 0.87 0.74

## 0.4 1 0.6 1.00 100 0.89 0.87 0.74

## 0.4 1 0.6 1.00 150 0.88 0.87 0.75

## 0.4 1 0.6 1.00 200 0.88 0.87 0.75

## 0.4 1 0.6 1.00 250 0.88 0.87 0.74

## 0.4 1 0.8 0.50 50 0.88 0.88 0.75

## 0.4 1 0.8 0.50 100 0.88 0.87 0.74

## 0.4 1 0.8 0.50 150 0.87 0.86 0.72

## 0.4 1 0.8 0.50 200 0.87 0.86 0.73

## 0.4 1 0.8 0.50 250 0.87 0.85 0.73

## 0.4 1 0.8 0.62 50 0.88 0.88 0.75

## 0.4 1 0.8 0.62 100 0.88 0.87 0.74

## 0.4 1 0.8 0.62 150 0.88 0.86 0.77

## 0.4 1 0.8 0.62 200 0.87 0.85 0.73

## 0.4 1 0.8 0.62 250 0.87 0.85 0.75

## 0.4 1 0.8 0.75 50 0.89 0.87 0.75

## 0.4 1 0.8 0.75 100 0.88 0.86 0.74

## 0.4 1 0.8 0.75 150 0.88 0.86 0.73

## 0.4 1 0.8 0.75 200 0.88 0.86 0.73

## 0.4 1 0.8 0.75 250 0.87 0.85 0.73

## 0.4 1 0.8 0.88 50 0.89 0.88 0.74

## 0.4 1 0.8 0.88 100 0.88 0.87 0.75

## 0.4 1 0.8 0.88 150 0.88 0.86 0.74

## 0.4 1 0.8 0.88 200 0.88 0.87 0.75

## 0.4 1 0.8 0.88 250 0.87 0.86 0.74

## 0.4 1 0.8 1.00 50 0.89 0.87 0.75

## 0.4 1 0.8 1.00 100 0.88 0.88 0.75

## 0.4 1 0.8 1.00 150 0.88 0.88 0.74

## 0.4 1 0.8 1.00 200 0.88 0.87 0.74

## 0.4 1 0.8 1.00 250 0.88 0.87 0.75

## 0.4 2 0.6 0.50 50 0.88 0.86 0.75

## 0.4 2 0.6 0.50 100 0.87 0.85 0.76

## 0.4 2 0.6 0.50 150 0.87 0.85 0.75

## 0.4 2 0.6 0.50 200 0.86 0.85 0.71

## 0.4 2 0.6 0.50 250 0.86 0.85 0.72

## 0.4 2 0.6 0.62 50 0.88 0.85 0.76

## 0.4 2 0.6 0.62 100 0.87 0.85 0.75

## 0.4 2 0.6 0.62 150 0.87 0.85 0.76

## 0.4 2 0.6 0.62 200 0.86 0.84 0.74

## 0.4 2 0.6 0.62 250 0.86 0.83 0.73

## 0.4 2 0.6 0.75 50 0.87 0.86 0.77

## 0.4 2 0.6 0.75 100 0.87 0.86 0.73

## 0.4 2 0.6 0.75 150 0.87 0.84 0.76

## 0.4 2 0.6 0.75 200 0.86 0.85 0.75

## 0.4 2 0.6 0.75 250 0.86 0.85 0.73

## 0.4 2 0.6 0.88 50 0.88 0.85 0.75

## 0.4 2 0.6 0.88 100 0.87 0.85 0.77

## 0.4 2 0.6 0.88 150 0.87 0.85 0.74

## 0.4 2 0.6 0.88 200 0.87 0.84 0.74

## 0.4 2 0.6 0.88 250 0.86 0.85 0.74

## 0.4 2 0.6 1.00 50 0.88 0.86 0.77

## 0.4 2 0.6 1.00 100 0.87 0.85 0.74

## 0.4 2 0.6 1.00 150 0.87 0.84 0.73

## 0.4 2 0.6 1.00 200 0.87 0.84 0.74

## 0.4 2 0.6 1.00 250 0.86 0.85 0.74

## 0.4 2 0.8 0.50 50 0.87 0.85 0.74

## 0.4 2 0.8 0.50 100 0.87 0.85 0.72

## 0.4 2 0.8 0.50 150 0.86 0.86 0.73

## 0.4 2 0.8 0.50 200 0.85 0.84 0.71

## 0.4 2 0.8 0.50 250 0.86 0.84 0.70

## 0.4 2 0.8 0.62 50 0.87 0.85 0.75

## 0.4 2 0.8 0.62 100 0.87 0.85 0.75

## 0.4 2 0.8 0.62 150 0.86 0.84 0.75

## 0.4 2 0.8 0.62 200 0.85 0.84 0.73

## 0.4 2 0.8 0.62 250 0.85 0.84 0.73

## 0.4 2 0.8 0.75 50 0.87 0.85 0.75

## 0.4 2 0.8 0.75 100 0.87 0.85 0.75

## 0.4 2 0.8 0.75 150 0.86 0.84 0.75

## 0.4 2 0.8 0.75 200 0.86 0.85 0.73

## 0.4 2 0.8 0.75 250 0.86 0.83 0.74

## 0.4 2 0.8 0.88 50 0.88 0.87 0.75

## 0.4 2 0.8 0.88 100 0.87 0.85 0.74

## 0.4 2 0.8 0.88 150 0.87 0.85 0.73

## 0.4 2 0.8 0.88 200 0.87 0.85 0.74

## 0.4 2 0.8 0.88 250 0.86 0.85 0.74

## 0.4 2 0.8 1.00 50 0.88 0.85 0.75

## 0.4 2 0.8 1.00 100 0.87 0.85 0.74

## 0.4 2 0.8 1.00 150 0.87 0.85 0.74

## 0.4 2 0.8 1.00 200 0.87 0.85 0.75

## 0.4 2 0.8 1.00 250 0.86 0.85 0.75

## 0.4 3 0.6 0.50 50 0.86 0.85 0.74

## 0.4 3 0.6 0.50 100 0.86 0.84 0.75

## 0.4 3 0.6 0.50 150 0.86 0.85 0.73

## 0.4 3 0.6 0.50 200 0.85 0.83 0.74

## 0.4 3 0.6 0.50 250 0.85 0.84 0.72

## 0.4 3 0.6 0.62 50 0.88 0.86 0.76

## 0.4 3 0.6 0.62 100 0.86 0.85 0.73

## 0.4 3 0.6 0.62 150 0.86 0.83 0.72

## 0.4 3 0.6 0.62 200 0.86 0.84 0.72

## 0.4 3 0.6 0.62 250 0.85 0.84 0.71

## 0.4 3 0.6 0.75 50 0.87 0.85 0.74

## 0.4 3 0.6 0.75 100 0.87 0.85 0.73

## 0.4 3 0.6 0.75 150 0.86 0.83 0.72

## 0.4 3 0.6 0.75 200 0.86 0.85 0.71

## 0.4 3 0.6 0.75 250 0.86 0.84 0.72

## 0.4 3 0.6 0.88 50 0.87 0.84 0.75

## 0.4 3 0.6 0.88 100 0.87 0.86 0.75

## 0.4 3 0.6 0.88 150 0.86 0.85 0.73

## 0.4 3 0.6 0.88 200 0.86 0.84 0.73

## 0.4 3 0.6 0.88 250 0.85 0.84 0.72

## 0.4 3 0.6 1.00 50 0.88 0.86 0.76

## 0.4 3 0.6 1.00 100 0.87 0.85 0.73

## 0.4 3 0.6 1.00 150 0.86 0.85 0.74

## 0.4 3 0.6 1.00 200 0.86 0.84 0.74

## 0.4 3 0.6 1.00 250 0.86 0.84 0.72

## 0.4 3 0.8 0.50 50 0.87 0.84 0.74

## 0.4 3 0.8 0.50 100 0.86 0.85 0.74

## 0.4 3 0.8 0.50 150 0.85 0.84 0.73

## 0.4 3 0.8 0.50 200 0.85 0.83 0.72

## 0.4 3 0.8 0.50 250 0.84 0.83 0.72

## 0.4 3 0.8 0.62 50 0.87 0.85 0.74

## 0.4 3 0.8 0.62 100 0.86 0.83 0.73

## 0.4 3 0.8 0.62 150 0.85 0.83 0.72

## 0.4 3 0.8 0.62 200 0.85 0.82 0.72

## 0.4 3 0.8 0.62 250 0.85 0.83 0.72

## 0.4 3 0.8 0.75 50 0.87 0.86 0.73

## 0.4 3 0.8 0.75 100 0.86 0.84 0.73

## 0.4 3 0.8 0.75 150 0.85 0.85 0.73

## 0.4 3 0.8 0.75 200 0.86 0.84 0.72

## 0.4 3 0.8 0.75 250 0.85 0.85 0.72

## 0.4 3 0.8 0.88 50 0.87 0.86 0.74

## 0.4 3 0.8 0.88 100 0.86 0.85 0.75

## 0.4 3 0.8 0.88 150 0.85 0.83 0.74

## 0.4 3 0.8 0.88 200 0.85 0.84 0.75

## 0.4 3 0.8 0.88 250 0.85 0.83 0.73

## 0.4 3 0.8 1.00 50 0.87 0.85 0.73

## 0.4 3 0.8 1.00 100 0.87 0.84 0.74

## 0.4 3 0.8 1.00 150 0.86 0.83 0.74

## 0.4 3 0.8 1.00 200 0.86 0.84 0.74

## 0.4 3 0.8 1.00 250 0.86 0.83 0.73

## 0.4 4 0.6 0.50 50 0.86 0.84 0.75

## 0.4 4 0.6 0.50 100 0.85 0.83 0.75

## 0.4 4 0.6 0.50 150 0.85 0.83 0.73

## 0.4 4 0.6 0.50 200 0.84 0.84 0.73

## 0.4 4 0.6 0.50 250 0.84 0.84 0.74

## 0.4 4 0.6 0.62 50 0.86 0.84 0.72

## 0.4 4 0.6 0.62 100 0.85 0.84 0.73

## 0.4 4 0.6 0.62 150 0.85 0.83 0.73

## 0.4 4 0.6 0.62 200 0.84 0.82 0.72

## 0.4 4 0.6 0.62 250 0.84 0.83 0.71

## 0.4 4 0.6 0.75 50 0.86 0.84 0.75

## 0.4 4 0.6 0.75 100 0.86 0.84 0.74

## 0.4 4 0.6 0.75 150 0.85 0.83 0.74

## 0.4 4 0.6 0.75 200 0.85 0.83 0.73

## 0.4 4 0.6 0.75 250 0.85 0.83 0.72

## 0.4 4 0.6 0.88 50 0.87 0.85 0.73

## 0.4 4 0.6 0.88 100 0.86 0.84 0.75

## 0.4 4 0.6 0.88 150 0.86 0.84 0.72

## 0.4 4 0.6 0.88 200 0.85 0.83 0.73

## 0.4 4 0.6 0.88 250 0.85 0.83 0.72

## 0.4 4 0.6 1.00 50 0.87 0.84 0.73

## 0.4 4 0.6 1.00 100 0.86 0.83 0.73

## 0.4 4 0.6 1.00 150 0.86 0.83 0.73

## 0.4 4 0.6 1.00 200 0.85 0.82 0.72

## 0.4 4 0.6 1.00 250 0.85 0.81 0.72

## 0.4 4 0.8 0.50 50 0.86 0.85 0.73

## 0.4 4 0.8 0.50 100 0.86 0.84 0.72

## 0.4 4 0.8 0.50 150 0.85 0.84 0.71

## 0.4 4 0.8 0.50 200 0.85 0.83 0.71

## 0.4 4 0.8 0.50 250 0.85 0.83 0.71

## 0.4 4 0.8 0.62 50 0.86 0.84 0.77

## 0.4 4 0.8 0.62 100 0.85 0.83 0.73

## 0.4 4 0.8 0.62 150 0.85 0.83 0.72

## 0.4 4 0.8 0.62 200 0.84 0.82 0.73

## 0.4 4 0.8 0.62 250 0.85 0.82 0.73

## 0.4 4 0.8 0.75 50 0.87 0.85 0.72

## 0.4 4 0.8 0.75 100 0.85 0.85 0.72

## 0.4 4 0.8 0.75 150 0.85 0.83 0.72

## 0.4 4 0.8 0.75 200 0.85 0.83 0.71

## 0.4 4 0.8 0.75 250 0.85 0.82 0.72

## 0.4 4 0.8 0.88 50 0.86 0.85 0.74

## 0.4 4 0.8 0.88 100 0.85 0.84 0.74

## 0.4 4 0.8 0.88 150 0.85 0.84 0.74

## 0.4 4 0.8 0.88 200 0.85 0.82 0.73

## 0.4 4 0.8 0.88 250 0.85 0.83 0.73

## 0.4 4 0.8 1.00 50 0.87 0.85 0.75

## 0.4 4 0.8 1.00 100 0.86 0.85 0.73

## 0.4 4 0.8 1.00 150 0.86 0.85 0.71

## 0.4 4 0.8 1.00 200 0.86 0.84 0.72

## 0.4 4 0.8 1.00 250 0.85 0.84 0.70

## 0.4 5 0.6 0.50 50 0.86 0.84 0.75

## 0.4 5 0.6 0.50 100 0.85 0.84 0.72

## 0.4 5 0.6 0.50 150 0.84 0.83 0.72

## 0.4 5 0.6 0.50 200 0.84 0.84 0.71

## 0.4 5 0.6 0.50 250 0.84 0.84 0.72

## 0.4 5 0.6 0.62 50 0.86 0.83 0.72

## 0.4 5 0.6 0.62 100 0.85 0.83 0.73

## 0.4 5 0.6 0.62 150 0.85 0.84 0.74

## 0.4 5 0.6 0.62 200 0.85 0.84 0.72

## 0.4 5 0.6 0.62 250 0.84 0.84 0.72

## 0.4 5 0.6 0.75 50 0.86 0.84 0.72

## 0.4 5 0.6 0.75 100 0.85 0.85 0.71

## 0.4 5 0.6 0.75 150 0.84 0.83 0.70

## 0.4 5 0.6 0.75 200 0.84 0.84 0.71

## 0.4 5 0.6 0.75 250 0.84 0.83 0.71

## 0.4 5 0.6 0.88 50 0.86 0.85 0.73

## 0.4 5 0.6 0.88 100 0.86 0.84 0.73

## 0.4 5 0.6 0.88 150 0.85 0.83 0.73

## 0.4 5 0.6 0.88 200 0.85 0.84 0.73

## 0.4 5 0.6 0.88 250 0.85 0.82 0.72

## 0.4 5 0.6 1.00 50 0.86 0.84 0.74

## 0.4 5 0.6 1.00 100 0.85 0.83 0.74

## 0.4 5 0.6 1.00 150 0.85 0.82 0.73

## 0.4 5 0.6 1.00 200 0.85 0.82 0.72

## 0.4 5 0.6 1.00 250 0.84 0.82 0.72

## 0.4 5 0.8 0.50 50 0.87 0.85 0.71

## 0.4 5 0.8 0.50 100 0.86 0.85 0.71

## 0.4 5 0.8 0.50 150 0.85 0.84 0.72

## 0.4 5 0.8 0.50 200 0.85 0.84 0.73

## 0.4 5 0.8 0.50 250 0.84 0.83 0.70

## 0.4 5 0.8 0.62 50 0.86 0.85 0.73

## 0.4 5 0.8 0.62 100 0.86 0.84 0.73

## 0.4 5 0.8 0.62 150 0.85 0.84 0.74

## 0.4 5 0.8 0.62 200 0.85 0.83 0.73

## 0.4 5 0.8 0.62 250 0.85 0.83 0.73

## 0.4 5 0.8 0.75 50 0.86 0.85 0.74

## 0.4 5 0.8 0.75 100 0.85 0.84 0.72

## 0.4 5 0.8 0.75 150 0.85 0.83 0.72

## 0.4 5 0.8 0.75 200 0.84 0.82 0.72

## 0.4 5 0.8 0.75 250 0.84 0.82 0.72

## 0.4 5 0.8 0.88 50 0.86 0.85 0.73

## 0.4 5 0.8 0.88 100 0.85 0.83 0.74

## 0.4 5 0.8 0.88 150 0.85 0.83 0.73

## 0.4 5 0.8 0.88 200 0.85 0.83 0.73

## 0.4 5 0.8 0.88 250 0.85 0.83 0.73

## 0.4 5 0.8 1.00 50 0.87 0.85 0.72

## 0.4 5 0.8 1.00 100 0.86 0.85 0.71

## 0.4 5 0.8 1.00 150 0.86 0.84 0.72

## 0.4 5 0.8 1.00 200 0.85 0.84 0.72

## 0.4 5 0.8 1.00 250 0.85 0.84 0.72

##

## Tuning parameter 'gamma' was held constant at a value of 0

## Tuning

## parameter 'min_child_weight' was held constant at a value of 1

## ROC was used to select the optimal model using the largest value.

## The final values used for the model were nrounds = 50, max_depth = 1, eta

## = 0.3, gamma = 0, colsample_bytree = 0.6, min_child_weight = 1 and subsample

## = 1.

train() tuned eta (\(\eta\)), max_depth, colsample_bytree, subsample, and nrounds, holding gamma = 0 and min_child_weight = 1. The optimal hyperparameter values were eta = 0.3, max_depth = 1, colsample_bytree = 0.6, subsample = 1, and nrounds = 50.

With so many hyperparameters, the tuning plot is nearly unreadable.
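A quick count shows why. The sketch below rebuilds the candidate grid from the values visible in the results table; the subsample values are an assumption, since the printout shows them rounded to two decimals, and the name `xgb_grid` is my own.

```r
# Candidate grid implied by the printed resampling results.
xgb_grid <- expand.grid(
  eta = c(0.3, 0.4),
  max_depth = 1:5,
  colsample_bytree = c(0.6, 0.8),
  subsample = seq(0.5, 1, length.out = 5),   # assumed; printed as 0.50, 0.62, 0.75, 0.88, 1.00
  nrounds = seq(50, 250, by = 50),
  gamma = 0,
  min_child_weight = 1
)

n_combos <- nrow(xgb_grid)   # 2 * 5 * 2 * 5 * 5 = 500 combinations
```

That is 500 candidate models against the 25 tried for gbm. A narrower data frame of the same shape, passed through train()'s tuneGrid argument, is one way to keep both the search and the plot manageable.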

Let’s see how the model performed on the holdout set. The accuracy was 0.8732, better than the 0.8451 from regular gradient boosting, although the two 95% confidence intervals overlap.

oj_preds_xgb <- bind_cols(

predict(oj_mdl_xgb, newdata = oj_test, type = "prob"),

Predicted = predict(oj_mdl_xgb, newdata = oj_test, type = "raw"),

Actual = oj_test$Purchase

)

oj_cm_xgb <- confusionMatrix(oj_preds_xgb$Predicted, reference = oj_preds_xgb$Actual)

oj_cm_xgb

## Confusion Matrix and Statistics

##

## Reference

## Prediction CH MM

## CH 120 17

## MM 10 66

##

## Accuracy : 0.873

## 95% CI : (0.821, 0.915)

## No Information Rate : 0.61

## P-Value [Acc > NIR] : <0.0000000000000002

##

## Kappa : 0.729

##

## Mcnemar's Test P-Value : 0.248

##

## Sensitivity : 0.923

## Specificity : 0.795

## Pos Pred Value : 0.876

## Neg Pred Value : 0.868

## Prevalence : 0.610

## Detection Rate : 0.563

## Detection Prevalence : 0.643

## Balanced Accuracy : 0.859

##

## 'Positive' Class : CH
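The "Mcnemar's Test P-Value" line in both printouts can be reproduced with base R's `mcnemar.test()`, which asks whether the two kinds of error (CH predicted as MM, and MM predicted as CH) are equally frequent. This is a sketch using the cell counts copied from the two confusion matrices; the object names are my own.

```r
# Cell counts copied from the two confusion matrices
# (filled column-wise: reference CH first, then reference MM).
cm_gbm <- matrix(c(113, 17, 16, 67), nrow = 2)
cm_xgb <- matrix(c(120, 10, 17, 66), nrow = 2)

acc_gbm <- sum(diag(cm_gbm)) / sum(cm_gbm)   # 180 / 213
acc_xgb <- sum(diag(cm_xgb)) / sum(cm_xgb)   # 186 / 213

# McNemar's test (with the default continuity correction) compares
# the two off-diagonal cells of each matrix.
p_gbm <- mcnemar.test(cm_gbm)$p.value   # off-diagonals 16 vs 17
p_xgb <- mcnemar.test(cm_xgb)$p.value   # off-diagonals 17 vs 10

extra_correct <- sum(diag(cm_xgb)) - sum(diag(cm_gbm))   # 6 more correct out of 213
```

Neither p-value is small, so neither model shows a clear lean toward one kind of mistake. The accuracy gap itself comes down to six more correct predictions out of 213.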

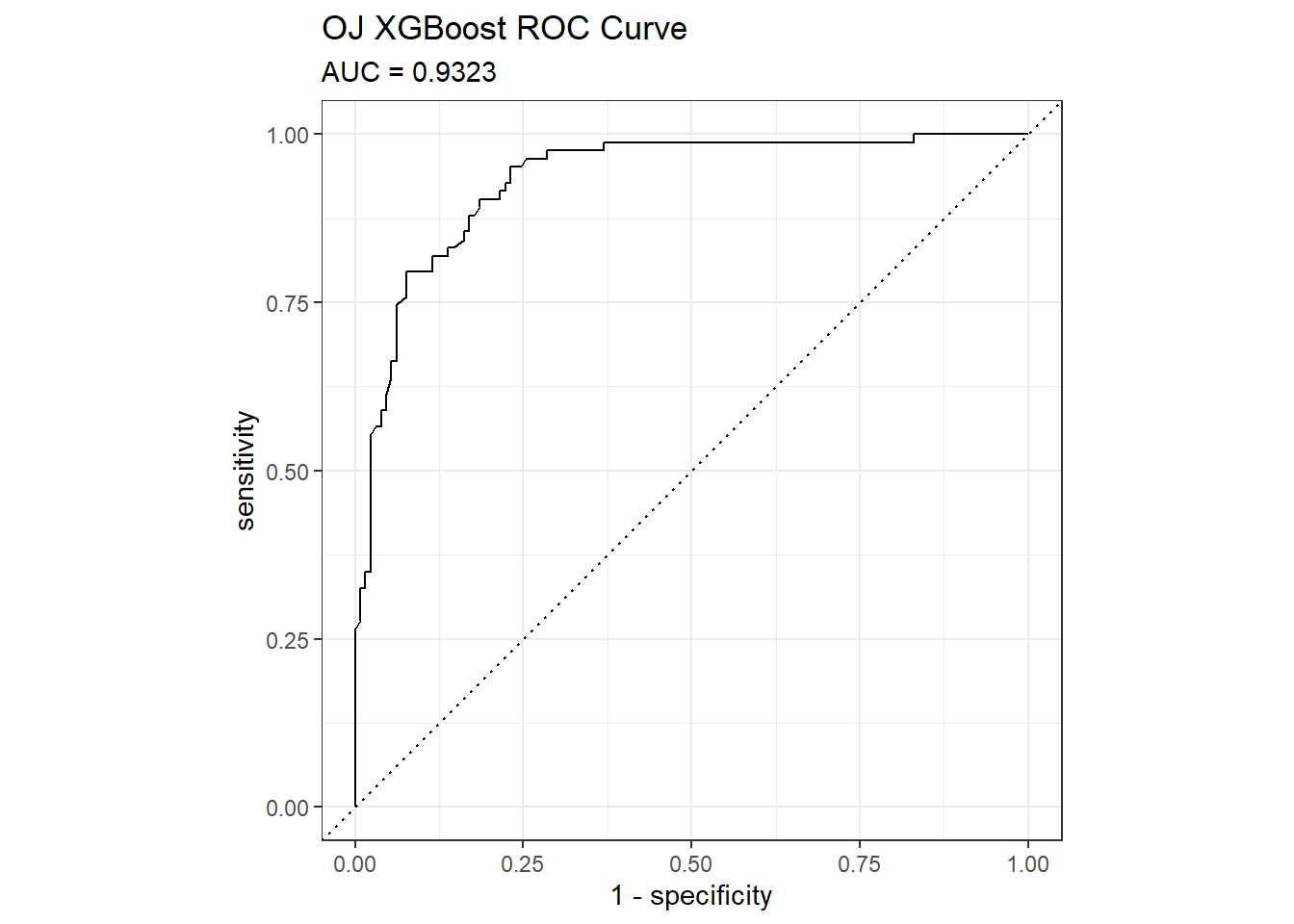

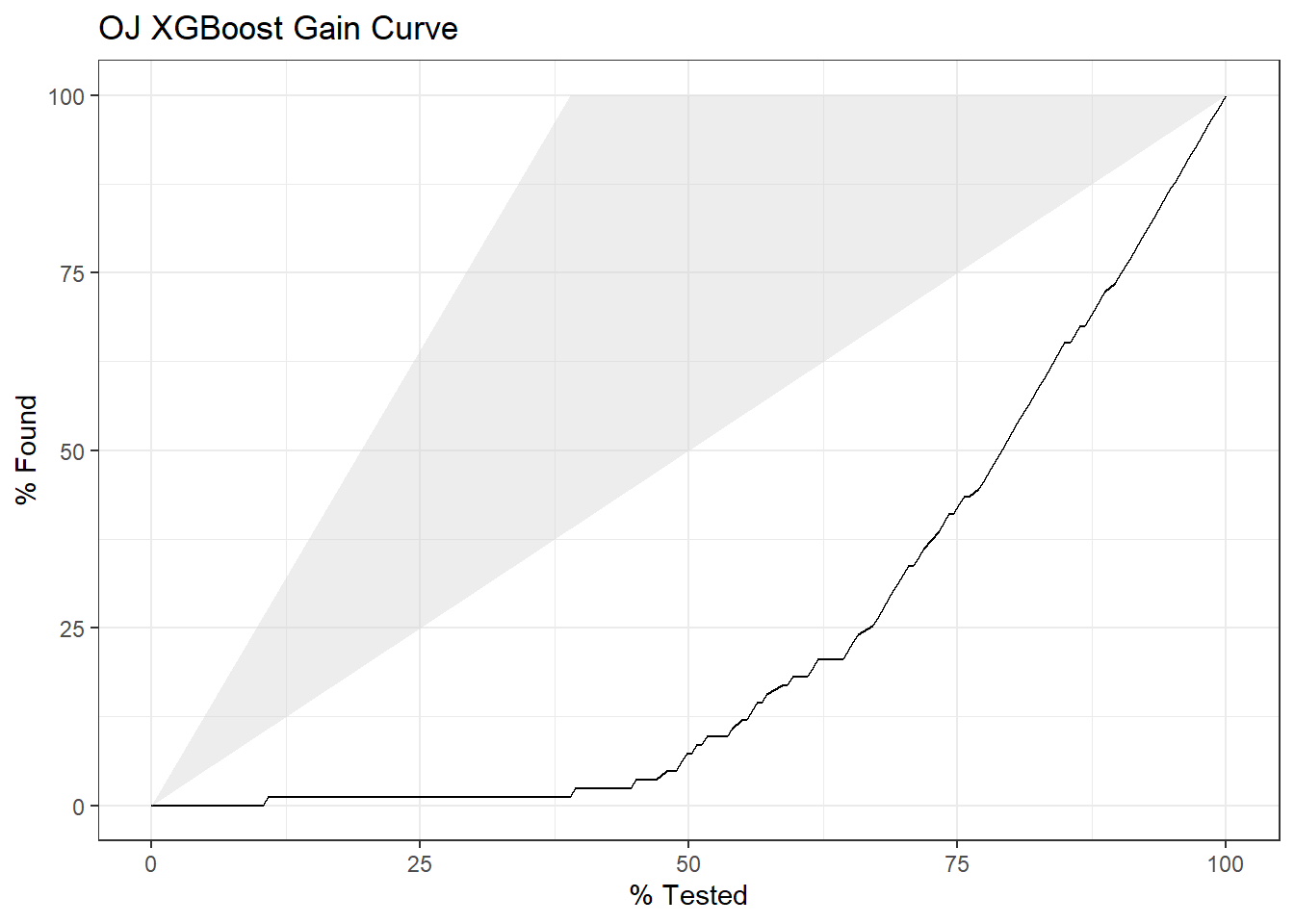

AUC was 0.9386 for gradient boosting, and here it is 0.9323. Here are the ROC and gain curves.

mdl_auc <- Metrics::auc(actual = oj_preds_xgb$Actual == "CH", oj_preds_xgb$CH)

yardstick::roc_curve(oj_preds_xgb, Actual, CH) %>%

autoplot() +

labs(

title = "OJ XGBoost ROC Curve",

subtitle = paste0("AUC = ", round(mdl_auc, 4))

)

yardstick::gain_curve(oj_preds_xgb, Actual, CH) %>%

autoplot() +

labs(title = "OJ XGBoost Gain Curve")

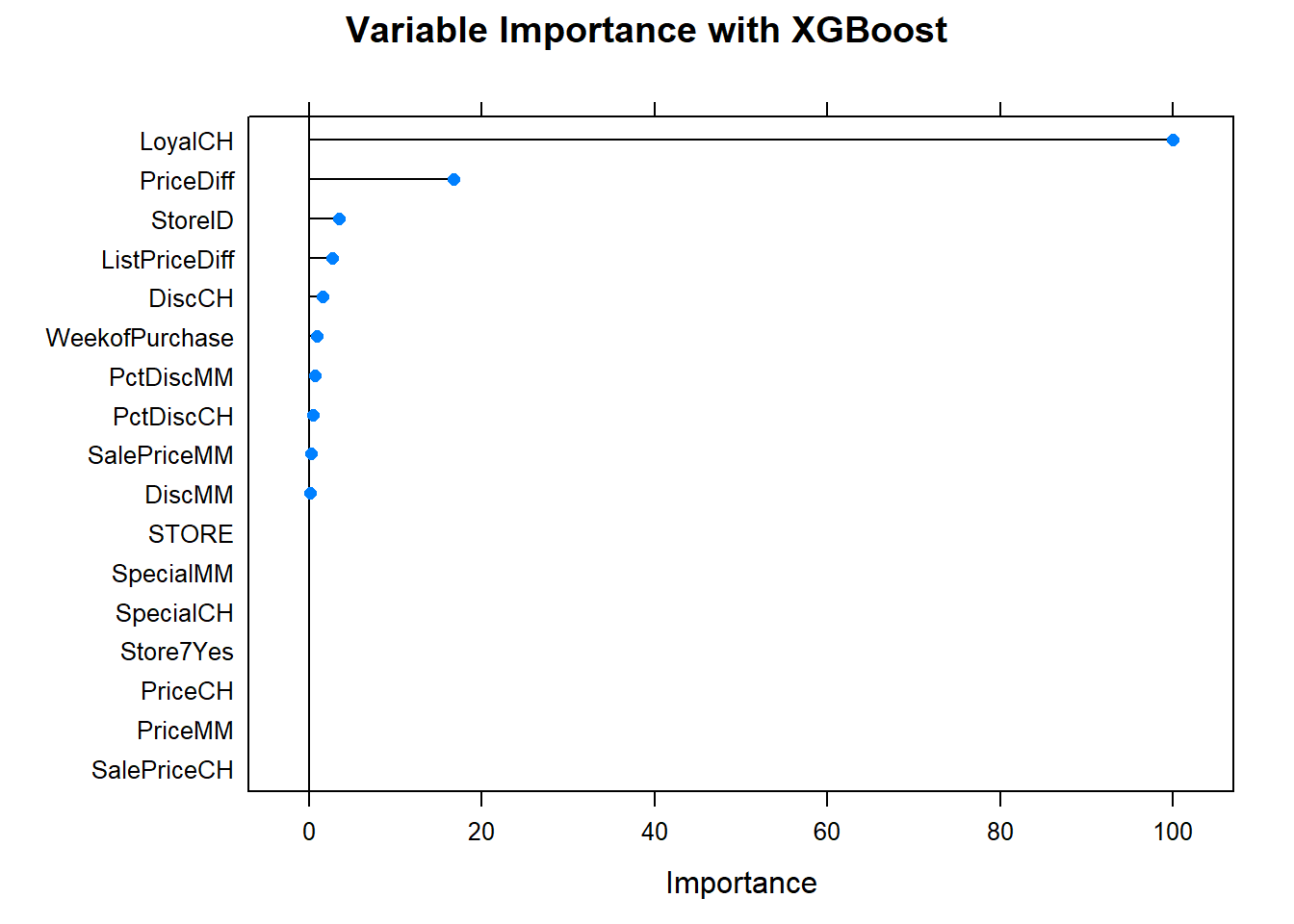

Now the variable importance. Nothing jumps out at me here. It’s the same top variables as regular gradient boosting.

Okay, let’s check in with the leader board. Wow, XGBoost is extreme.

oj_scoreboard <- rbind(oj_scoreboard,

data.frame(Model = "Gradient Boosting", Accuracy = oj_cm_gbm$overall["Accuracy"])) %>%

rbind(data.frame(Model = "XGBoost", Accuracy = oj_cm_xgb$overall["Accuracy"])) %>%

arrange(desc(Accuracy))

scoreboard(oj_scoreboard)

| Model | Accuracy |
|---|---|
| XGBoost | 0.8732 |
| Single Tree | 0.8592 |
| Single Tree (caret) | 0.8545 |
| Bagging | 0.8451 |
| Gradient Boosting | 0.8451 |
| Random Forest | 0.8310 |

8.5.0.2 Gradient Boosting Regression Tree

8.5.0.2.1 GBM

I’ll predict Sales from the Carseats data set again, this time using the GBM method by specifying method = "gbm".

set.seed(1234)

garbage <- capture.output(

cs_mdl_gbm <- train(

Sales ~ .,

data = cs_train,

method = "gbm",

tuneLength = 5,

trControl = cs_trControl

))

cs_mdl_gbm

## Stochastic Gradient Boosting

##

## 321 samples

## 10 predictor

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 289, 289, 289, 289, 289, 289, ...

## Resampling results across tuning parameters:

##

## interaction.depth n.trees RMSE Rsquared MAE

## 1 50 1.8 0.67 1.50

## 1 100 1.5 0.78 1.24

## 1 150 1.3 0.83 1.06

## 1 200 1.2 0.84 0.99

## 1 250 1.2 0.85 0.94

## 2 50 1.5 0.78 1.22

## 2 100 1.2 0.84 1.01

## 2 150 1.2 0.84 0.96

## 2 200 1.2 0.84 0.95

## 2 250 1.2 0.84 0.95

## 3 50 1.4 0.81 1.13

## 3 100 1.2 0.83 0.99

## 3 150 1.2 0.83 0.97

## 3 200 1.2 0.83 0.98

## 3 250 1.2 0.82 0.99

## 4 50 1.3 0.81 1.09

## 4 100 1.3 0.82 0.99

## 4 150 1.2 0.82 0.99

## 4 200 1.3 0.82 0.99

## 4 250 1.3 0.81 1.01

## 5 50 1.3 0.81 1.06

## 5 100 1.3 0.82 1.00

## 5 150 1.2 0.82 0.99

## 5 200 1.3 0.82 1.01

## 5 250 1.3 0.81 1.02

##

## Tuning parameter 'shrinkage' was held constant at a value of 0.1

##

## Tuning parameter 'n.minobsinnode' was held constant at a value of 10

## RMSE was used to select the optimal model using the smallest value.

## The final values used for the model were n.trees = 250, interaction.depth =

## 1, shrinkage = 0.1 and n.minobsinnode = 10.

The optimal tuning parameters were \(M = 250\) and interaction.depth = 1.

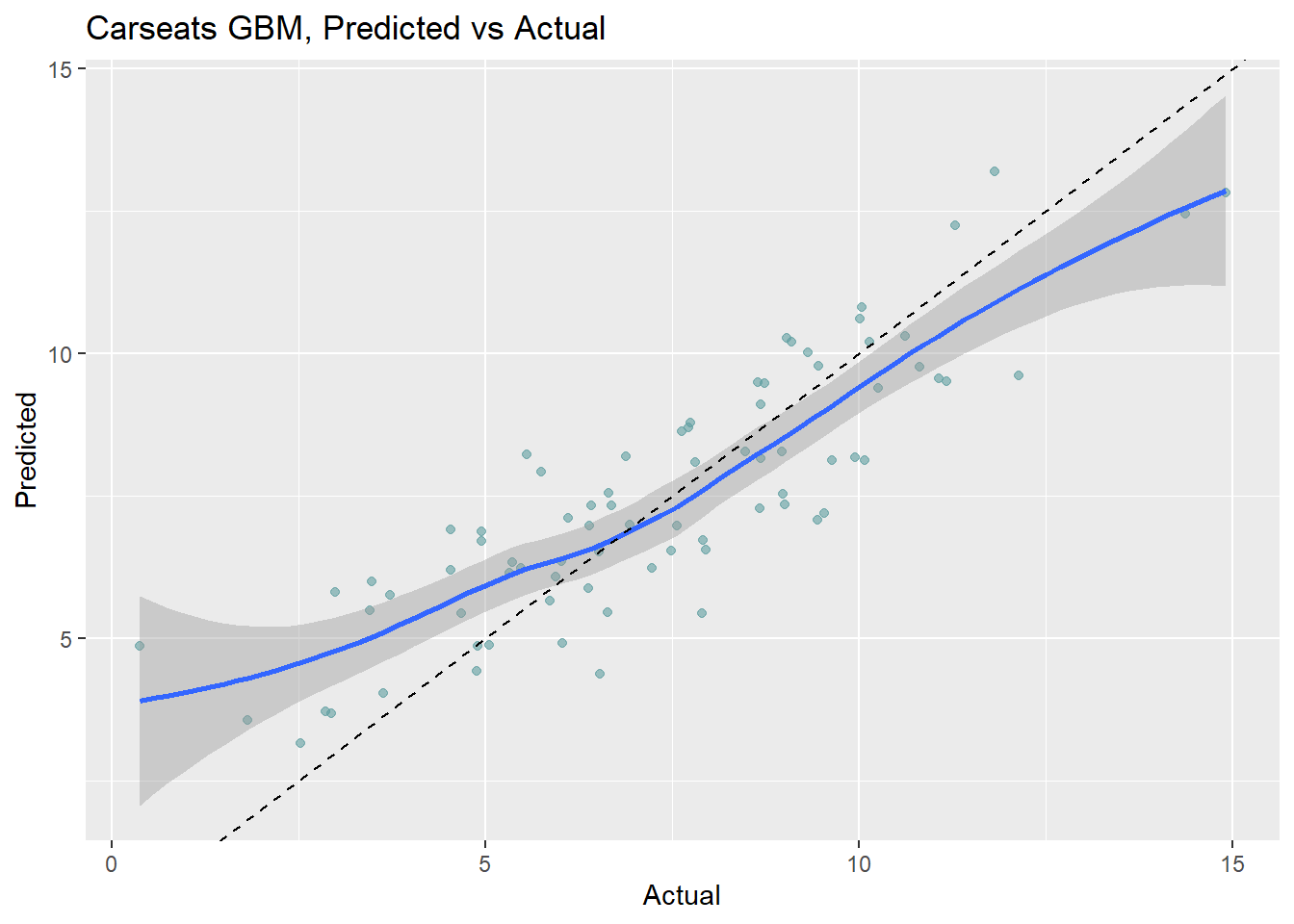

Here is the holdout set performance.

cs_preds_gbm <- bind_cols(

Predicted = predict(cs_mdl_gbm, newdata = cs_test),

Actual = cs_test$Sales

)

# Model over-predicts at low end of Sales and under-predicts at high end

cs_preds_gbm %>%

ggplot(aes(x = Actual, y = Predicted)) +

geom_point(alpha = 0.6, color = "cadetblue") +

geom_smooth(method = "loess", formula = "y ~ x") +

geom_abline(intercept = 0, slope = 1, linetype = 2) +

labs(title = "Carseats GBM, Predicted vs Actual")

The RMSE is 1.438 - the best of the bunch.

cs_rmse_gbm <- RMSE(pred = cs_preds_gbm$Predicted, obs = cs_preds_gbm$Actual)

cs_scoreboard <- rbind(cs_scoreboard,

data.frame(Model = "GBM", RMSE = cs_rmse_gbm)

) %>% arrange(RMSE)

scoreboard(cs_scoreboard)

| Model | RMSE |
|---|---|
| GBM | 1.4381 |
| Random Forest | 1.7184 |
| Bagging | 1.9185 |
| Single Tree (caret) | 2.2983 |
| Single Tree | 2.3632 |
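For reference, the RMSE that ranks this scoreboard is just the square root of the mean squared prediction error. Here is a base R sketch with made-up numbers; `rmse_by_hand()` is a hypothetical helper written for illustration, not caret's RMSE() function.

```r
# Root mean squared error, written out by hand.
rmse_by_hand <- function(pred, obs) sqrt(mean((pred - obs)^2))

# Toy values, invented for illustration (not Carseats data).
obs  <- c(7, 9, 4, 11)
pred <- c(8, 8, 6, 9)   # errors of 1, -1, 2, -2

toy_rmse <- rmse_by_hand(pred, obs)   # sqrt((1 + 1 + 4 + 4) / 4) = sqrt(2.5)
```

Because the errors are squared before averaging, the two larger misses dominate the result, and the score is in the same units as Sales.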

8.5.0.2.2 XGBoost

I’ll predict Sales from the Carseats data set again, this time using the XGBoost method by specifying method = "xgbTree".

set.seed(1234)

garbage <- capture.output(

cs_mdl_xgb <- train(

Sales ~ .,

data = cs_train,

method = "xgbTree",

tuneLength = 5,

trControl = cs_trControl

))

cs_mdl_xgb

## eXtreme Gradient Boosting

##

## 321 samples

## 10 predictor

##

## No pre-processing

## Resampling: Cross-Validated (10 fold)

## Summary of sample sizes: 289, 289, 289, 289, 289, 289, ...

## Resampling results across tuning parameters:

##

## eta max_depth colsample_bytree subsample nrounds RMSE Rsquared MAE

## 0.3 1 0.6 0.50 50 1.4 0.80 1.11

## 0.3 1 0.6 0.50 100 1.2 0.84 0.92

## 0.3 1 0.6 0.50 150 1.1 0.85 0.91

## 0.3 1 0.6 0.50 200 1.1 0.85 0.90

## 0.3 1 0.6 0.50 250 1.1 0.85 0.90

## 0.3 1 0.6 0.62 50 1.4 0.81 1.13

## 0.3 1 0.6 0.62 100 1.2 0.85 0.96

## 0.3 1 0.6 0.62 150 1.1 0.85 0.91

## 0.3 1 0.6 0.62 200 1.1 0.85 0.92

## 0.3 1 0.6 0.62 250 1.1 0.85 0.91

## 0.3 1 0.6 0.75 50 1.4 0.81 1.14

## 0.3 1 0.6 0.75 100 1.2 0.84 0.97

## 0.3 1 0.6 0.75 150 1.1 0.85 0.92

## 0.3 1 0.6 0.75 200 1.1 0.85 0.92

## 0.3 1 0.6 0.75 250 1.1 0.85 0.92

## 0.3 1 0.6 0.88 50 1.4 0.81 1.13

## 0.3 1 0.6 0.88 100 1.2 0.85 0.95

## 0.3 1 0.6 0.88 150 1.1 0.86 0.89

## 0.3 1 0.6 0.88 200 1.1 0.86 0.89

## 0.3 1 0.6 0.88 250 1.1 0.86 0.89

## 0.3 1 0.6 1.00 50 1.4 0.81 1.15

## 0.3 1 0.6 1.00 100 1.2 0.85 0.96

## 0.3 1 0.6 1.00 150 1.1 0.86 0.90

## 0.3 1 0.6 1.00 200 1.1 0.86 0.88

## 0.3 1 0.6 1.00 250 1.1 0.86 0.88

## 0.3 1 0.8 0.50 50 1.4 0.80 1.14

## 0.3 1 0.8 0.50 100 1.2 0.84 0.96

## 0.3 1 0.8 0.50 150 1.2 0.84 0.95

## 0.3 1 0.8 0.50 200 1.2 0.84 0.94

## 0.3 1 0.8 0.50 250 1.2 0.84 0.93

## 0.3 1 0.8 0.62 50 1.4 0.81 1.11

## 0.3 1 0.8 0.62 100 1.2 0.85 0.95

## 0.3 1 0.8 0.62 150 1.1 0.85 0.91

## 0.3 1 0.8 0.62 200 1.1 0.85 0.90

## 0.3 1 0.8 0.62 250 1.2 0.84 0.92

## 0.3 1 0.8 0.75 50 1.4 0.81 1.12

## 0.3 1 0.8 0.75 100 1.2 0.85 0.95

## 0.3 1 0.8 0.75 150 1.1 0.86 0.91

## 0.3 1 0.8 0.75 200 1.1 0.85 0.90

## 0.3 1 0.8 0.75 250 1.1 0.85 0.90

## 0.3 1 0.8 0.88 50 1.4 0.80 1.15

## 0.3 1 0.8 0.88 100 1.2 0.84 0.96

## 0.3 1 0.8 0.88 150 1.1 0.85 0.91

## 0.3 1 0.8 0.88 200 1.1 0.85 0.91

## 0.3 1 0.8 0.88 250 1.1 0.85 0.90

## 0.3 1 0.8 1.00 50 1.4 0.81 1.15

## 0.3 1 0.8 1.00 100 1.2 0.85 0.96

## 0.3 1 0.8 1.00 150 1.1 0.86 0.91

## 0.3 1 0.8 1.00 200 1.1 0.86 0.89

## 0.3 1 0.8 1.00 250 1.1 0.86 0.89

## 0.3 2 0.6 0.50 50 1.3 0.81 1.04

## 0.3 2 0.6 0.50 100 1.3 0.80 1.04

## 0.3 2 0.6 0.50 150 1.3 0.79 1.06

## 0.3 2 0.6 0.50 200 1.4 0.79 1.05

## 0.3 2 0.6 0.50 250 1.4 0.77 1.09

## 0.3 2 0.6 0.62 50 1.3 0.81 1.02

## 0.3 2 0.6 0.62 100 1.3 0.82 0.99

## 0.3 2 0.6 0.62 150 1.3 0.81 1.02

## 0.3 2 0.6 0.62 200 1.3 0.80 1.04

## 0.3 2 0.6 0.62 250 1.3 0.79 1.05

## 0.3 2 0.6 0.75 50 1.2 0.83 0.98

## 0.3 2 0.6 0.75 100 1.2 0.83 0.96

## 0.3 2 0.6 0.75 150 1.3 0.82 0.98

## 0.3 2 0.6 0.75 200 1.3 0.81 1.00

## 0.3 2 0.6 0.75 250 1.3 0.80 1.02

## 0.3 2 0.6 0.88 50 1.3 0.83 1.02

## 0.3 2 0.6 0.88 100 1.2 0.82 1.00

## 0.3 2 0.6 0.88 150 1.3 0.82 0.99

## 0.3 2 0.6 0.88 200 1.3 0.81 1.00

## 0.3 2 0.6 0.88 250 1.3 0.80 1.03

## 0.3 2 0.6 1.00 50 1.3 0.83 1.02

## 0.3 2 0.6 1.00 100 1.2 0.83 1.01

## 0.3 2 0.6 1.00 150 1.3 0.82 1.01

## 0.3 2 0.6 1.00 200 1.3 0.80 1.04

## 0.3 2 0.6 1.00 250 1.3 0.80 1.04

## 0.3 2 0.8 0.50 50 1.3 0.82 1.00

## 0.3 2 0.8 0.50 100 1.3 0.81 1.03

## 0.3 2 0.8 0.50 150 1.3 0.80 1.06

## 0.3 2 0.8 0.50 200 1.3 0.80 1.05

## 0.3 2 0.8 0.50 250 1.4 0.79 1.08

## 0.3 2 0.8 0.62 50 1.3 0.81 1.02

## 0.3 2 0.8 0.62 100 1.3 0.80 1.02

## 0.3 2 0.8 0.62 150 1.3 0.79 1.05

## 0.3 2 0.8 0.62 200 1.3 0.79 1.06

## 0.3 2 0.8 0.62 250 1.4 0.78 1.08

## 0.3 2 0.8 0.75 50 1.3 0.81 1.00

## 0.3 2 0.8 0.75 100 1.3 0.80 1.02

## 0.3 2 0.8 0.75 150 1.4 0.78 1.06

## 0.3 2 0.8 0.75 200 1.4 0.77 1.08

## 0.3 2 0.8 0.75 250 1.4 0.77 1.11

## 0.3 2 0.8 0.88 50 1.2 0.83 1.00

## 0.3 2 0.8 0.88 100 1.2 0.82 0.99

## 0.3 2 0.8 0.88 150 1.3 0.81 1.01

## 0.3 2 0.8 0.88 200 1.3 0.80 1.03

## 0.3 2 0.8 0.88 250 1.3 0.79 1.04

## 0.3 2 0.8 1.00 50 1.2 0.82 1.01

## 0.3 2 0.8 1.00 100 1.3 0.82 1.01

## 0.3 2 0.8 1.00 150 1.3 0.81 1.02

## 0.3 2 0.8 1.00 200 1.3 0.80 1.04

## 0.3 2 0.8 1.00 250 1.3 0.79 1.05

## 0.3 3 0.6 0.50 50 1.5 0.75 1.16

## 0.3 3 0.6 0.50 100 1.4 0.75 1.16

## 0.3 3 0.6 0.50 150 1.5 0.74 1.16

## 0.3 3 0.6 0.50 200 1.5 0.75 1.16

## 0.3 3 0.6 0.50 250 1.5 0.74 1.17

## 0.3 3 0.6 0.62 50 1.4 0.75 1.12

## 0.3 3 0.6 0.62 100 1.5 0.74 1.15

## 0.3 3 0.6 0.62 150 1.5 0.74 1.17

## 0.3 3 0.6 0.62 200 1.5 0.73 1.18

## 0.3 3 0.6 0.62 250 1.5 0.73 1.18

## 0.3 3 0.6 0.75 50 1.4 0.76 1.13

## 0.3 3 0.6 0.75 100 1.4 0.76 1.13

## 0.3 3 0.6 0.75 150 1.5 0.75 1.15

## 0.3 3 0.6 0.75 200 1.5 0.74 1.16

## 0.3 3 0.6 0.75 250 1.5 0.74 1.16

## 0.3 3 0.6 0.88 50 1.4 0.79 1.09

## 0.3 3 0.6 0.88 100 1.4 0.78 1.09

## 0.3 3 0.6 0.88 150 1.4 0.77 1.09

## 0.3 3 0.6 0.88 200 1.4 0.77 1.10

## 0.3 3 0.6 0.88 250 1.4 0.77 1.10

## 0.3 3 0.6 1.00 50 1.4 0.78 1.06

## 0.3 3 0.6 1.00 100 1.4 0.78 1.05

## 0.3 3 0.6 1.00 150 1.4 0.77 1.06

## 0.3 3 0.6 1.00 200 1.4 0.77 1.07

## 0.3 3 0.6 1.00 250 1.4 0.77 1.08

## 0.3 3 0.8 0.50 50 1.5 0.74 1.15

## 0.3 3 0.8 0.50 100 1.5 0.75 1.18

## 0.3 3 0.8 0.50 150 1.5 0.74 1.19

## 0.3 3 0.8 0.50 200 1.5 0.74 1.19

## 0.3 3 0.8 0.50 250 1.5 0.73 1.19

## 0.3 3 0.8 0.62 50 1.4 0.77 1.08

## 0.3 3 0.8 0.62 100 1.4 0.76 1.12

## 0.3 3 0.8 0.62 150 1.5 0.75 1.14

## 0.3 3 0.8 0.62 200 1.5 0.74 1.15

## 0.3 3 0.8 0.62 250 1.5 0.74 1.15

## 0.3 3 0.8 0.75 50 1.3 0.80 1.05

## 0.3 3 0.8 0.75 100 1.4 0.78 1.09

## 0.3 3 0.8 0.75 150 1.4 0.78 1.11

## 0.3 3 0.8 0.75 200 1.4 0.77 1.12

## 0.3 3 0.8 0.75 250 1.4 0.77 1.13

## 0.3 3 0.8 0.88 50 1.4 0.78 1.08

## 0.3 3 0.8 0.88 100 1.4 0.77 1.10

## 0.3 3 0.8 0.88 150 1.4 0.77 1.12

## 0.3 3 0.8 0.88 200 1.4 0.77 1.13

## 0.3 3 0.8 0.88 250 1.4 0.76 1.13

## 0.3 3 0.8 1.00 50 1.4 0.77 1.12

## 0.3 3 0.8 1.00 100 1.4 0.76 1.13

## 0.3 3 0.8 1.00 150 1.4 0.76 1.14

## 0.3 3 0.8 1.00 200 1.5 0.75 1.15

## 0.3 3 0.8 1.00 250 1.5 0.75 1.16

## 0.3 4 0.6 0.50 50 1.6 0.71 1.23

## 0.3 4 0.6 0.50 100 1.6 0.70 1.25

## 0.3 4 0.6 0.50 150 1.6 0.70 1.27

## 0.3 4 0.6 0.50 200 1.6 0.70 1.27

## 0.3 4 0.6 0.50 250 1.6 0.70 1.27

## 0.3 4 0.6 0.62 50 1.5 0.73 1.19

## 0.3 4 0.6 0.62 100 1.5 0.73 1.19

## 0.3 4 0.6 0.62 150 1.5 0.72 1.19

## 0.3 4 0.6 0.62 200 1.5 0.72 1.19

## 0.3 4 0.6 0.62 250 1.5 0.72 1.19

## 0.3 4 0.6 0.75 50 1.5 0.72 1.22

## 0.3 4 0.6 0.75 100 1.6 0.71 1.23

## 0.3 4 0.6 0.75 150 1.6 0.71 1.23

## 0.3 4 0.6 0.75 200 1.6 0.71 1.23

## 0.3 4 0.6 0.75 250 1.6 0.71 1.23

## 0.3 4 0.6 0.88 50 1.5 0.75 1.16

## 0.3 4 0.6 0.88 100 1.5 0.75 1.17

## 0.3 4 0.6 0.88 150 1.5 0.74 1.17

## 0.3 4 0.6 0.88 200 1.5 0.74 1.17

## 0.3 4 0.6 0.88 250 1.5 0.74 1.17

## 0.3 4 0.6 1.00 50 1.5 0.73 1.20

## 0.3 4 0.6 1.00 100 1.5 0.73 1.20

## 0.3 4 0.6 1.00 150 1.5 0.73 1.21

## 0.3 4 0.6 1.00 200 1.5 0.73 1.21

## 0.3 4 0.6 1.00 250 1.5 0.73 1.21

## 0.3 4 0.8 0.50 50 1.6 0.70 1.23

## 0.3 4 0.8 0.50 100 1.6 0.70 1.24

## 0.3 4 0.8 0.50 150 1.6 0.70 1.25

## 0.3 4 0.8 0.50 200 1.6 0.70 1.25

## 0.3 4 0.8 0.50 250 1.6 0.70 1.25

## 0.3 4 0.8 0.62 50 1.5 0.74 1.19

## 0.3 4 0.8 0.62 100 1.5 0.74 1.20

## 0.3 4 0.8 0.62 150 1.5 0.74 1.20

## 0.3 4 0.8 0.62 200 1.5 0.74 1.20

## 0.3 4 0.8 0.62 250 1.5 0.74 1.20

## 0.3 4 0.8 0.75 50 1.5 0.74 1.20

## 0.3 4 0.8 0.75 100 1.5 0.74 1.21

## 0.3 4 0.8 0.75 150 1.5 0.74 1.21

## 0.3 4 0.8 0.75 200 1.5 0.74 1.21

## 0.3 4 0.8 0.75 250 1.5 0.74 1.21

## 0.3 4 0.8 0.88 50 1.5 0.75 1.13

## 0.3 4 0.8 0.88 100 1.5 0.75 1.15

## 0.3 4 0.8 0.88 150 1.5 0.75 1.15

## 0.3 4 0.8 0.88 200 1.5 0.75 1.15

## 0.3 4 0.8 0.88 250 1.5 0.75 1.15

## 0.3 4 0.8 1.00 50 1.5 0.75 1.16

## 0.3 4 0.8 1.00 100 1.5 0.75 1.16

## 0.3 4 0.8 1.00 150 1.5 0.75 1.17

## 0.3 4 0.8 1.00 200 1.5 0.75 1.17

## 0.3 4 0.8 1.00 250 1.5 0.75 1.17

## 0.3 5 0.6 0.50 50 1.7 0.66 1.32

## 0.3 5 0.6 0.50 100 1.7 0.66 1.32

## 0.3 5 0.6 0.50 150 1.7 0.66 1.32

## 0.3 5 0.6 0.50 200 1.7 0.66 1.32

## 0.3 5 0.6 0.50 250 1.7 0.66 1.32

## 0.3 5 0.6 0.62 50 1.6 0.71 1.25

## 0.3 5 0.6 0.62 100 1.6 0.70 1.25

## 0.3 5 0.6 0.62 150 1.6 0.70 1.25

## 0.3 5 0.6 0.62 200 1.6 0.70 1.25

## 0.3 5 0.6 0.62 250 1.6 0.70 1.25

## 0.3 5 0.6 0.75 50 1.6 0.69 1.27

## 0.3 5 0.6 0.75 100 1.6 0.69 1.27

## 0.3 5 0.6 0.75 150 1.6 0.69 1.27

## 0.3 5 0.6 0.75 200 1.6 0.69 1.27

## 0.3 5 0.6 0.75 250 1.6 0.69 1.27

## 0.3 5 0.6 0.88 50 1.6 0.71 1.23

## 0.3 5 0.6 0.88 100 1.6 0.71 1.23

## 0.3 5 0.6 0.88 150 1.6 0.71 1.23

## 0.3 5 0.6 0.88 200 1.6 0.71 1.23

## 0.3 5 0.6 0.88 250 1.6 0.71 1.23

## 0.3 5 0.6 1.00 50 1.6 0.71 1.24

## 0.3 5 0.6 1.00 100 1.6 0.71 1.24

## 0.3 5 0.6 1.00 150 1.6 0.71 1.24

## 0.3 5 0.6 1.00 200 1.6 0.71 1.24

## 0.3 5 0.6 1.00 250 1.6 0.71 1.24

## 0.3 5 0.8 0.50 50 1.5 0.75 1.18

## 0.3 5 0.8 0.50 100 1.5 0.74 1.19

## 0.3 5 0.8 0.50 150 1.5 0.74 1.19

## 0.3 5 0.8 0.50 200 1.5 0.74 1.19

## 0.3 5 0.8 0.50 250 1.5 0.74 1.19

## 0.3 5 0.8 0.62 50 1.5 0.74 1.15

## 0.3 5 0.8 0.62 100 1.5 0.74 1.16

## 0.3 5 0.8 0.62 150 1.5 0.74 1.16

## 0.3 5 0.8 0.62 200 1.5 0.74 1.16

## 0.3 5 0.8 0.62 250 1.5 0.74 1.16

## 0.3 5 0.8 0.75 50 1.5 0.74 1.20

## 0.3 5 0.8 0.75 100 1.5 0.74 1.20

## 0.3 5 0.8 0.75 150 1.5 0.74 1.21

## 0.3 5 0.8 0.75 200 1.5 0.74 1.21

## 0.3 5 0.8 0.75 250 1.5 0.74 1.21

## 0.3 5 0.8 0.88 50 1.6 0.72 1.24

## 0.3 5 0.8 0.88 100 1.6 0.72 1.24

## 0.3 5 0.8 0.88 150 1.6 0.72 1.24

## 0.3 5 0.8 0.88 200 1.6 0.72 1.24

## 0.3 5 0.8 0.88 250 1.6 0.72 1.24

## 0.3 5 0.8 1.00 50 1.5 0.73 1.17

## 0.3 5 0.8 1.00 100 1.5 0.73 1.18

## 0.3 5 0.8 1.00 150 1.5 0.73 1.18

## 0.3 5 0.8 1.00 200 1.5 0.73 1.18

## 0.3 5 0.8 1.00 250 1.5 0.73 1.18

## 0.4 1 0.6 0.50 50 1.3 0.81 1.04

## 0.4 1 0.6 0.50 100 1.2 0.84 0.95

## 0.4 1 0.6 0.50 150 1.2 0.83 0.95

## 0.4 1 0.6 0.50 200 1.2 0.83 0.96

## 0.4 1 0.6 0.50 250 1.2 0.83 0.96

## 0.4 1 0.6 0.62 50 1.3 0.82 1.02

## 0.4 1 0.6 0.62 100 1.1 0.85 0.91

## 0.4 1 0.6 0.62 150 1.1 0.85 0.91

## 0.4 1 0.6 0.62 200 1.2 0.84 0.93

## 0.4 1 0.6 0.62 250 1.2 0.84 0.94

## 0.4 1 0.6 0.75 50 1.3 0.83 1.04

## 0.4 1 0.6 0.75 100 1.1 0.85 0.92

## 0.4 1 0.6 0.75 150 1.1 0.85 0.90

## 0.4 1 0.6 0.75 200 1.1 0.85 0.90

## 0.4 1 0.6 0.75 250 1.1 0.85 0.90

## 0.4 1 0.6 0.88 50 1.3 0.82 1.09

## 0.4 1 0.6 0.88 100 1.2 0.84 0.95

## 0.4 1 0.6 0.88 150 1.1 0.85 0.92

## 0.4 1 0.6 0.88 200 1.1 0.85 0.93

## 0.4 1 0.6 0.88 250 1.1 0.85 0.93

## 0.4 1 0.6 1.00 50 1.3 0.83 1.04

## 0.4 1 0.6 1.00 100 1.1 0.86 0.92

## 0.4 1 0.6 1.00 150 1.1 0.86 0.89

## 0.4 1 0.6 1.00 200 1.1 0.86 0.89

## 0.4 1 0.6 1.00 250 1.1 0.86 0.89

## 0.4 1 0.8 0.50 50 1.3 0.83 1.01

## 0.4 1 0.8 0.50 100 1.2 0.84 0.96

## 0.4 1 0.8 0.50 150 1.2 0.84 0.94

## 0.4 1 0.8 0.50 200 1.2 0.83 0.96

## 0.4 1 0.8 0.50 250 1.2 0.83 0.95

## 0.4 1 0.8 0.62 50 1.3 0.83 1.02

## 0.4 1 0.8 0.62 100 1.2 0.83 0.97

## 0.4 1 0.8 0.62 150 1.2 0.83 0.96

## 0.4 1 0.8 0.62 200 1.2 0.83 0.97

## 0.4 1 0.8 0.62 250 1.2 0.83 0.97

## 0.4 1 0.8 0.75 50 1.3 0.83 1.03

## 0.4 1 0.8 0.75 100 1.1 0.85 0.92

## 0.4 1 0.8 0.75 150 1.2 0.84 0.92

## 0.4 1 0.8 0.75 200 1.2 0.84 0.93

## 0.4 1 0.8 0.75 250 1.2 0.84 0.94

## 0.4 1 0.8 0.88 50 1.3 0.83 1.04

## 0.4 1 0.8 0.88 100 1.2 0.85 0.92

## 0.4 1 0.8 0.88 150 1.1 0.85 0.92

## 0.4 1 0.8 0.88 200 1.1 0.85 0.92

## 0.4 1 0.8 0.88 250 1.2 0.84 0.93

## 0.4 1 0.8 1.00 50 1.3 0.84 1.03

## 0.4 1 0.8 1.00 100 1.1 0.86 0.91

## 0.4 1 0.8 1.00 150 1.1 0.86 0.88

## 0.4 1 0.8 1.00 200 1.1 0.86 0.88

## 0.4 1 0.8 1.00 250 1.1 0.86 0.88

## 0.4 2 0.6 0.50 50 1.4 0.77 1.12

## 0.4 2 0.6 0.50 100 1.4 0.77 1.12

## 0.4 2 0.6 0.50 150 1.4 0.77 1.16

## 0.4 2 0.6 0.50 200 1.5 0.75 1.19

## 0.4 2 0.6 0.50 250 1.5 0.75 1.20

## 0.4 2 0.6 0.62 50 1.3 0.80 1.03

## 0.4 2 0.6 0.62 100 1.3 0.79 1.06

## 0.4 2 0.6 0.62 150 1.3 0.79 1.06

## 0.4 2 0.6 0.62 200 1.4 0.79 1.07

## 0.4 2 0.6 0.62 250 1.4 0.77 1.10

## 0.4 2 0.6 0.75 50 1.3 0.80 1.03

## 0.4 2 0.6 0.75 100 1.3 0.78 1.06

## 0.4 2 0.6 0.75 150 1.4 0.77 1.08

## 0.4 2 0.6 0.75 200 1.4 0.76 1.12

## 0.4 2 0.6 0.75 250 1.4 0.76 1.12

## 0.4 2 0.6 0.88 50 1.3 0.81 1.02

## 0.4 2 0.6 0.88 100 1.3 0.81 1.01

## 0.4 2 0.6 0.88 150 1.3 0.80 1.03

## 0.4 2 0.6 0.88 200 1.3 0.79 1.05

## 0.4 2 0.6 0.88 250 1.3 0.78 1.06

## 0.4 2 0.6 1.00 50 1.2 0.82 1.01

## 0.4 2 0.6 1.00 100 1.3 0.81 1.03

## 0.4 2 0.6 1.00 150 1.3 0.81 1.05

## 0.4 2 0.6 1.00 200 1.3 0.80 1.07

## 0.4 2 0.6 1.00 250 1.3 0.79 1.08

## 0.4 2 0.8 0.50 50 1.4 0.78 1.09

## 0.4 2 0.8 0.50 100 1.4 0.76 1.13

## 0.4 2 0.8 0.50 150 1.5 0.75 1.16

## 0.4 2 0.8 0.50 200 1.5 0.73 1.18

## 0.4 2 0.8 0.50 250 1.6 0.72 1.22

## 0.4 2 0.8 0.62 50 1.3 0.80 1.06

## 0.4 2 0.8 0.62 100 1.3 0.79 1.05

## 0.4 2 0.8 0.62 150 1.3 0.78 1.08

## 0.4 2 0.8 0.62 200 1.4 0.78 1.08

## 0.4 2 0.8 0.62 250 1.4 0.78 1.09

## 0.4 2 0.8 0.75 50 1.3 0.79 1.04

## 0.4 2 0.8 0.75 100 1.4 0.78 1.07

## 0.4 2 0.8 0.75 150 1.4 0.77 1.10

## 0.4 2 0.8 0.75 200 1.5 0.75 1.13

## 0.4 2 0.8 0.75 250 1.4 0.76 1.13

## 0.4 2 0.8 0.88 50 1.3 0.80 1.05

## 0.4 2 0.8 0.88 100 1.3 0.78 1.08

## 0.4 2 0.8 0.88 150 1.4 0.77 1.09

## 0.4 2 0.8 0.88 200 1.4 0.76 1.12

## 0.4 2 0.8 0.88 250 1.4 0.75 1.14

## 0.4 2 0.8 1.00 50 1.3 0.81 1.02

## 0.4 2 0.8 1.00 100 1.3 0.80 1.03

## 0.4 2 0.8 1.00 150 1.3 0.79 1.07

## 0.4 2 0.8 1.00 200 1.4 0.78 1.10

## 0.4 2 0.8 1.00 250 1.4 0.77 1.12

## 0.4 3 0.6 0.50 50 1.5 0.75 1.18

## 0.4 3 0.6 0.50 100 1.5 0.74 1.20

## 0.4 3 0.6 0.50 150 1.5 0.73 1.21

## 0.4 3 0.6 0.50 200 1.5 0.73 1.22

## 0.4 3 0.6 0.50 250 1.5 0.73 1.22

## 0.4 3 0.6 0.62 50 1.4 0.75 1.14

## 0.4 3 0.6 0.62 100 1.5 0.73 1.18

## 0.4 3 0.6 0.62 150 1.5 0.73 1.19

## 0.4 3 0.6 0.62 200 1.5 0.73 1.19

## 0.4 3 0.6 0.62 250 1.5 0.73 1.19

## 0.4 3 0.6 0.75 50 1.4 0.77 1.12

## 0.4 3 0.6 0.75 100 1.5 0.75 1.16

## 0.4 3 0.6 0.75 150 1.5 0.75 1.17

## 0.4 3 0.6 0.75 200 1.5 0.74 1.18

## 0.4 3 0.6 0.75 250 1.5 0.74 1.18

## 0.4 3 0.6 0.88 50 1.4 0.77 1.07

## 0.4 3 0.6 0.88 100 1.4 0.75 1.11

## 0.4 3 0.6 0.88 150 1.5 0.74 1.12

## 0.4 3 0.6 0.88 200 1.5 0.74 1.13

## 0.4 3 0.6 0.88 250 1.5 0.74 1.13

## 0.4 3 0.6 1.00 50 1.4 0.78 1.11

## 0.4 3 0.6 1.00 100 1.4 0.77 1.11

## 0.4 3 0.6 1.00 150 1.4 0.77 1.12

## 0.4 3 0.6 1.00 200 1.4 0.76 1.12

## 0.4 3 0.6 1.00 250 1.4 0.76 1.13

## 0.4 3 0.8 0.50 50 1.6 0.71 1.25

## 0.4 3 0.8 0.50 100 1.6 0.69 1.29

## 0.4 3 0.8 0.50 150 1.6 0.69 1.30

## 0.4 3 0.8 0.50 200 1.6 0.69 1.31

## 0.4 3 0.8 0.50 250 1.6 0.69 1.31

## 0.4 3 0.8 0.62 50 1.4 0.76 1.13

## 0.4 3 0.8 0.62 100 1.5 0.75 1.16

## 0.4 3 0.8 0.62 150 1.5 0.74 1.18

## 0.4 3 0.8 0.62 200 1.5 0.74 1.18

## 0.4 3 0.8 0.62 250 1.5 0.74 1.18

## 0.4 3 0.8 0.75 50 1.5 0.75 1.18

## 0.4 3 0.8 0.75 100 1.5 0.72 1.22

## 0.4 3 0.8 0.75 150 1.6 0.71 1.24

## 0.4 3 0.8 0.75 200 1.6 0.71 1.25

## 0.4 3 0.8 0.75 250 1.6 0.71 1.25

## 0.4 3 0.8 0.88 50 1.4 0.78 1.10

## 0.4 3 0.8 0.88 100 1.4 0.78 1.10

## 0.4 3 0.8 0.88 150 1.4 0.77 1.12

## 0.4 3 0.8 0.88 200 1.4 0.77 1.12

## 0.4 3 0.8 0.88 250 1.4 0.77 1.12

## 0.4 3 0.8 1.00 50 1.3 0.79 1.07

## 0.4 3 0.8 1.00 100 1.4 0.78 1.10

## 0.4 3 0.8 1.00 150 1.4 0.78 1.11

## 0.4 3 0.8 1.00 200 1.4 0.77 1.12

## 0.4 3 0.8 1.00 250 1.4 0.77 1.12

## 0.4 4 0.6 0.50 50 1.6 0.71 1.25

## 0.4 4 0.6 0.50 100 1.6 0.70 1.26

## 0.4 4 0.6 0.50 150 1.6 0.70 1.27

## 0.4 4 0.6 0.50 200 1.6 0.70 1.27

## 0.4 4 0.6 0.50 250 1.6 0.70 1.27

## 0.4 4 0.6 0.62 50 1.6 0.70 1.26

## 0.4 4 0.6 0.62 100 1.6 0.70 1.27

## 0.4 4 0.6 0.62 150 1.6 0.69 1.27

## 0.4 4 0.6 0.62 200 1.6 0.69 1.27

## 0.4 4 0.6 0.62 250 1.6 0.69 1.27

## 0.4 4 0.6 0.75 50 1.6 0.70 1.27

## 0.4 4 0.6 0.75 100 1.6 0.70 1.28

## 0.4 4 0.6 0.75 150 1.6 0.70 1.28

## 0.4 4 0.6 0.75 200 1.6 0.70 1.28

## 0.4 4 0.6 0.75 250 1.6 0.70 1.28

## 0.4 4 0.6 0.88 50 1.6 0.72 1.25

## 0.4 4 0.6 0.88 100 1.6 0.72 1.27

## 0.4 4 0.6 0.88 150 1.6 0.72 1.27

## 0.4 4 0.6 0.88 200 1.6 0.71 1.27

## 0.4 4 0.6 0.88 250 1.6 0.71 1.28

## 0.4 4 0.6 1.00 50 1.6 0.71 1.23

## 0.4 4 0.6 1.00 100 1.6 0.70 1.24

## 0.4 4 0.6 1.00 150 1.6 0.70 1.24

## 0.4 4 0.6 1.00 200 1.6 0.70 1.24

## 0.4 4 0.6 1.00 250 1.6 0.70 1.24

## 0.4 4 0.8 0.50 50 1.7 0.66 1.37

## 0.4 4 0.8 0.50 100 1.7 0.64 1.42

## 0.4 4 0.8 0.50 150 1.7 0.64 1.42

## 0.4 4 0.8 0.50 200 1.8 0.64 1.43

## 0.4 4 0.8 0.50 250 1.8 0.64 1.43

## 0.4 4 0.8 0.62 50 1.6 0.69 1.24

## 0.4 4 0.8 0.62 100 1.6 0.68 1.25

## 0.4 4 0.8 0.62 150 1.6 0.68 1.25

## 0.4 4 0.8 0.62 200 1.6 0.68 1.25

## 0.4 4 0.8 0.62 250 1.6 0.68 1.25

## 0.4 4 0.8 0.75 50 1.6 0.72 1.21

## 0.4 4 0.8 0.75 100 1.6 0.72 1.22

## 0.4 4 0.8 0.75 150 1.6 0.71 1.22

## 0.4 4 0.8 0.75 200 1.6 0.71 1.22

## 0.4 4 0.8 0.75 250 1.6 0.71 1.22

## 0.4 4 0.8 0.88 50 1.6 0.71 1.22

## 0.4 4 0.8 0.88 100 1.6 0.71 1.23

## 0.4 4 0.8 0.88 150 1.6 0.71 1.23

## 0.4 4 0.8 0.88 200 1.6 0.71 1.23

## 0.4 4 0.8 0.88 250 1.6 0.71 1.23

## 0.4 4 0.8 1.00 50 1.6 0.71 1.24

## 0.4 4 0.8 1.00 100 1.6 0.71 1.25

## 0.4 4 0.8 1.00 150 1.6 0.70 1.25

## 0.4 4 0.8 1.00 200 1.6 0.70 1.25

## 0.4 4 0.8 1.00 250 1.6 0.70 1.25

## 0.4 5 0.6 0.50 50 1.9 0.59 1.52

## 0.4 5 0.6 0.50 100 1.9 0.59 1.53

## 0.4 5 0.6 0.50 150 1.9 0.59 1.53

## 0.4 5 0.6 0.50 200 1.9 0.59 1.53

## 0.4 5 0.6 0.50 250 1.9 0.59 1.53

## 0.4 5 0.6 0.62 50 1.7 0.67 1.35

## 0.4 5 0.6 0.62 100 1.7 0.67 1.35

## 0.4 5 0.6 0.62 150 1.7 0.67 1.36

## 0.4 5 0.6 0.62 200 1.7 0.67 1.36

## 0.4 5 0.6 0.62 250 1.7 0.67 1.36

## 0.4 5 0.6 0.75 50 1.7 0.66 1.30

## 0.4 5 0.6 0.75 100 1.7 0.66 1.30

## 0.4 5 0.6 0.75 150 1.7 0.66 1.30

## 0.4 5 0.6 0.75 200 1.7 0.66 1.30

## 0.4 5 0.6 0.75 250 1.7 0.66 1.30

## 0.4 5 0.6 0.88 50 1.7 0.65 1.37

## 0.4 5 0.6 0.88 100 1.7 0.65 1.37

## 0.4 5 0.6 0.88 150 1.7 0.65 1.37

## 0.4 5 0.6 0.88 200 1.7 0.65 1.37

## 0.4 5 0.6 0.88 250 1.7 0.65 1.37

## 0.4 5 0.6 1.00 50 1.6 0.68 1.30

## 0.4 5 0.6 1.00 100 1.6 0.68 1.30

## 0.4 5 0.6 1.00 150 1.6 0.68 1.30

## 0.4 5 0.6 1.00 200 1.6 0.68 1.30

## 0.4 5 0.6 1.00 250 1.6 0.68 1.30

## 0.4 5 0.8 0.50 50 1.6 0.69 1.27

## 0.4 5 0.8 0.50 100 1.6 0.69 1.27

## 0.4 5 0.8 0.50 150 1.6 0.69 1.27

## 0.4 5 0.8 0.50 200 1.6 0.69 1.27

## 0.4 5 0.8 0.50 250 1.6 0.69 1.27

## 0.4 5 0.8 0.62 50 1.6 0.69 1.26

## 0.4 5 0.8 0.62 100 1.6 0.69 1.27

## 0.4 5 0.8 0.62 150 1.6 0.69 1.27

## 0.4 5 0.8 0.62 200 1.6 0.69 1.27

## 0.4 5 0.8 0.62 250 1.6 0.69 1.27

## 0.4 5 0.8 0.75 50 1.7 0.69 1.29

## 0.4 5 0.8 0.75 100 1.7 0.69 1.30

## 0.4 5 0.8 0.75 150 1.7 0.69 1.30

## 0.4 5 0.8 0.75 200 1.7 0.69 1.30

## 0.4 5 0.8 0.75 250 1.7 0.69 1.30

## 0.4 5 0.8 0.88 50 1.6 0.71 1.24

## 0.4 5 0.8 0.88 100 1.6 0.71 1.24

## 0.4 5 0.8 0.88 150 1.6 0.71 1.24

## 0.4 5 0.8 0.88 200 1.6 0.71 1.24

## 0.4 5 0.8 0.88 250 1.6 0.71 1.24

## 0.4 5 0.8 1.00 50 1.6 0.69 1.27

## 0.4 5 0.8 1.00 100 1.6 0.69 1.27

## 0.4 5 0.8 1.00 150 1.6 0.69 1.27

## 0.4 5 0.8 1.00 200 1.6 0.69 1.27

## 0.4 5 0.8 1.00 250 1.6 0.69 1.27

##

## Tuning parameter 'gamma' was held constant at a value of 0

## Tuning

## parameter 'min_child_weight' was held constant at a value of 1

## RMSE was used to select the optimal model using the smallest value.

## The final values used for the model were nrounds = 150, max_depth = 1, eta

## = 0.4, gamma = 0, colsample_bytree = 0.8, min_child_weight = 1 and subsample

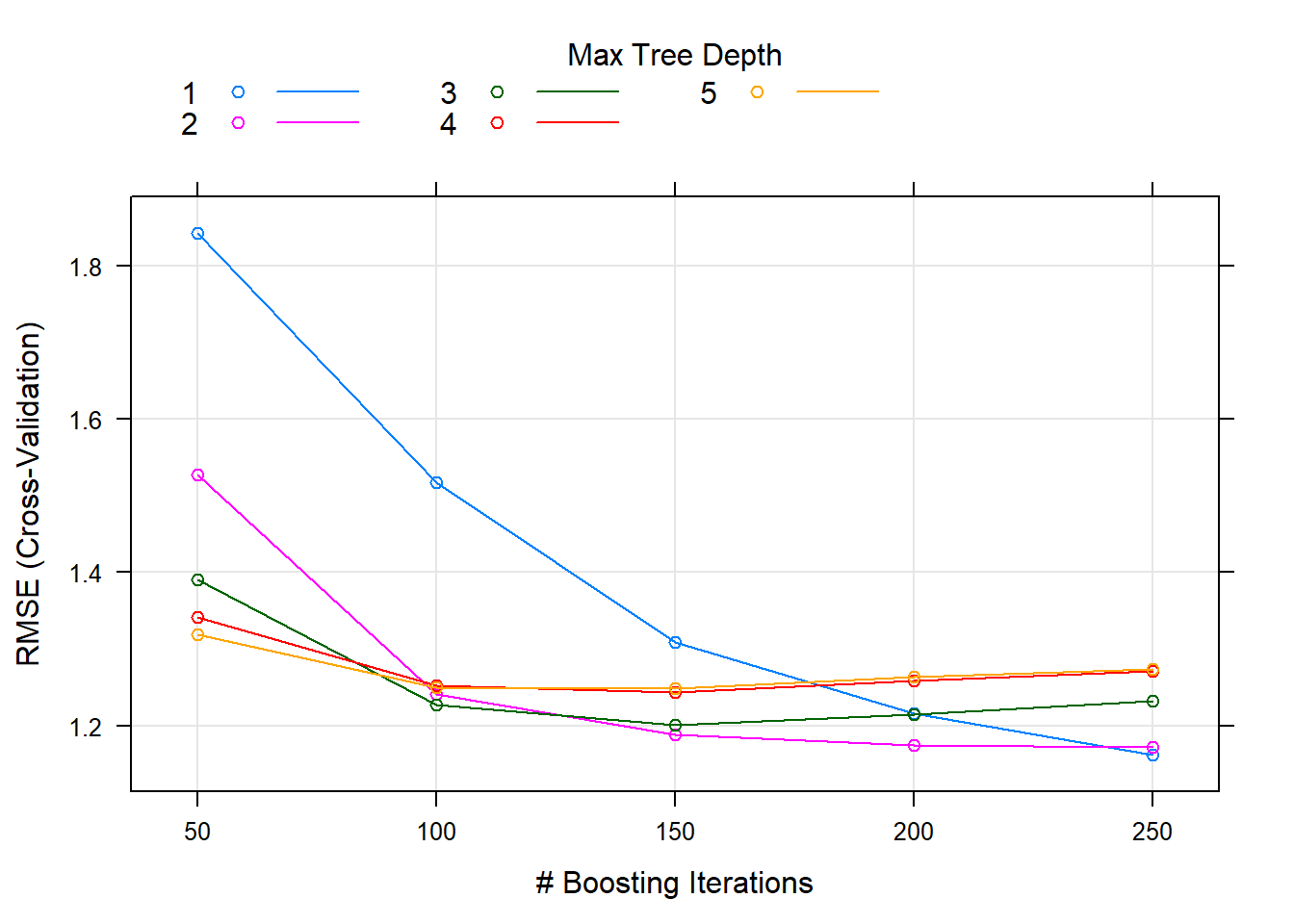

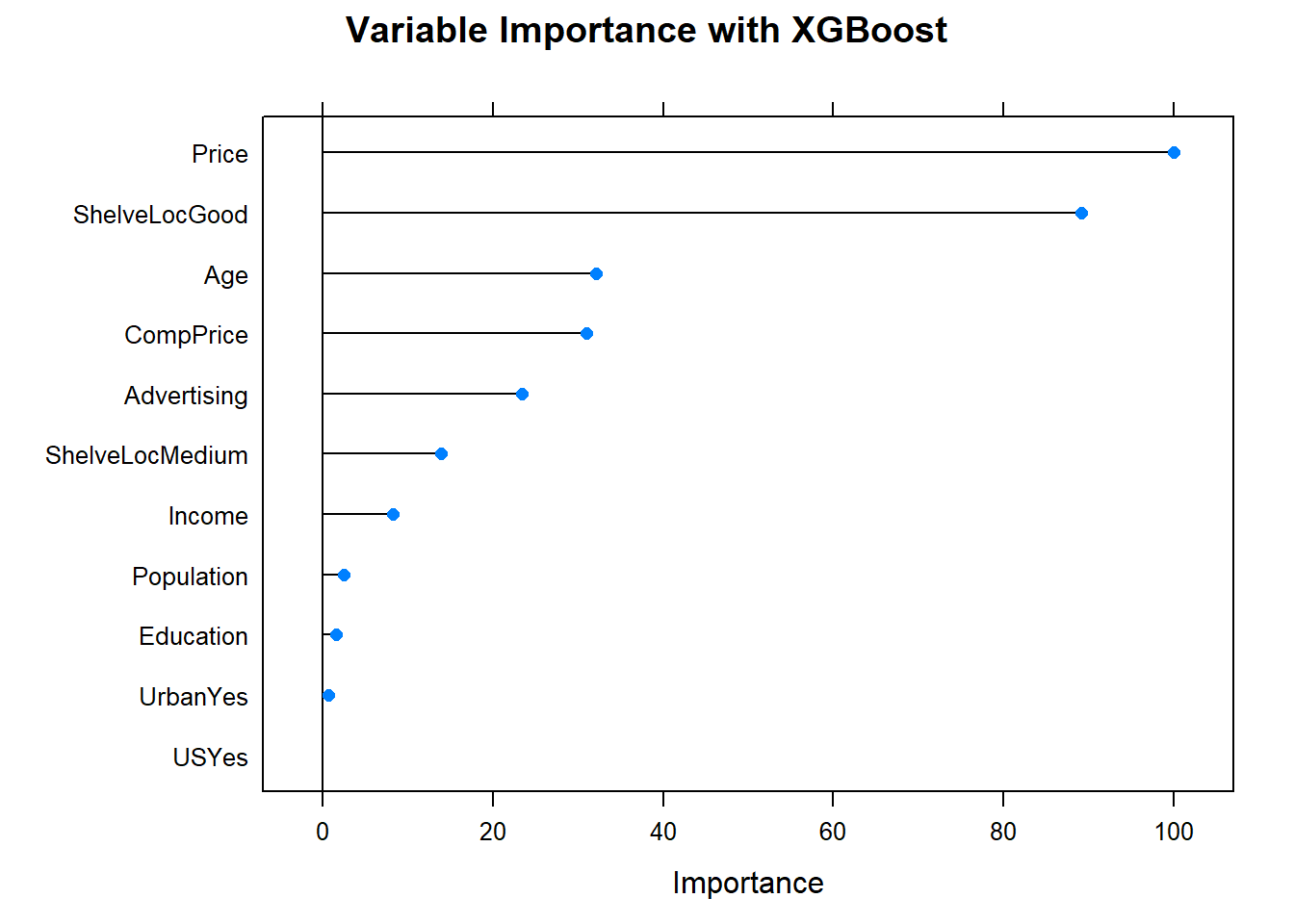

## = 1.The optimal tuning parameters were at \(M = 250\) and interation.depth = 1.

train() tuned eta (\(\eta\)), max_depth, colsample_bytree, subsample, and nrounds, holding gamma = 0 and min_child_weight = 1. The optimal hyperparameter values were eta = 0.4, max_depth = 1, gamma = 0, colsample_bytree = 0.8, subsample = 1, and nrounds = 150.
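To see how large the search was, the candidate grid can be rebuilt by hand. The sketch below is base R only, with candidate values read off the printed resampling table. It assumes the printed subsample values 0.62 and 0.88 are rounded from 0.625 and 0.875, and `xgb_grid` is a name introduced here for illustration.

```r
# Candidate values read off the printed resampling table.
# Assumption: subsample values 0.62 and 0.88 in the printout are
# rounded from 0.625 and 0.875.
xgb_grid <- expand.grid(
  nrounds          = seq(50, 250, by = 50),
  max_depth        = 1:5,
  eta              = c(0.3, 0.4),
  gamma            = 0,                        # held constant
  colsample_bytree = c(0.6, 0.8),
  min_child_weight = 1,                        # held constant
  subsample        = seq(0.5, 1, length.out = 5)
)

# 5 * 5 * 2 * 2 * 5 = 500 combinations, one per row of the printout.
nrow(xgb_grid)
```

A data frame shaped like this could be passed to train() through its tuneGrid argument in place of tuneLength = 5, for example to search learning rates below 0.3 or to drop the deeper trees.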

With so many hyperparameters, the tuning plot is nearly unreadable.

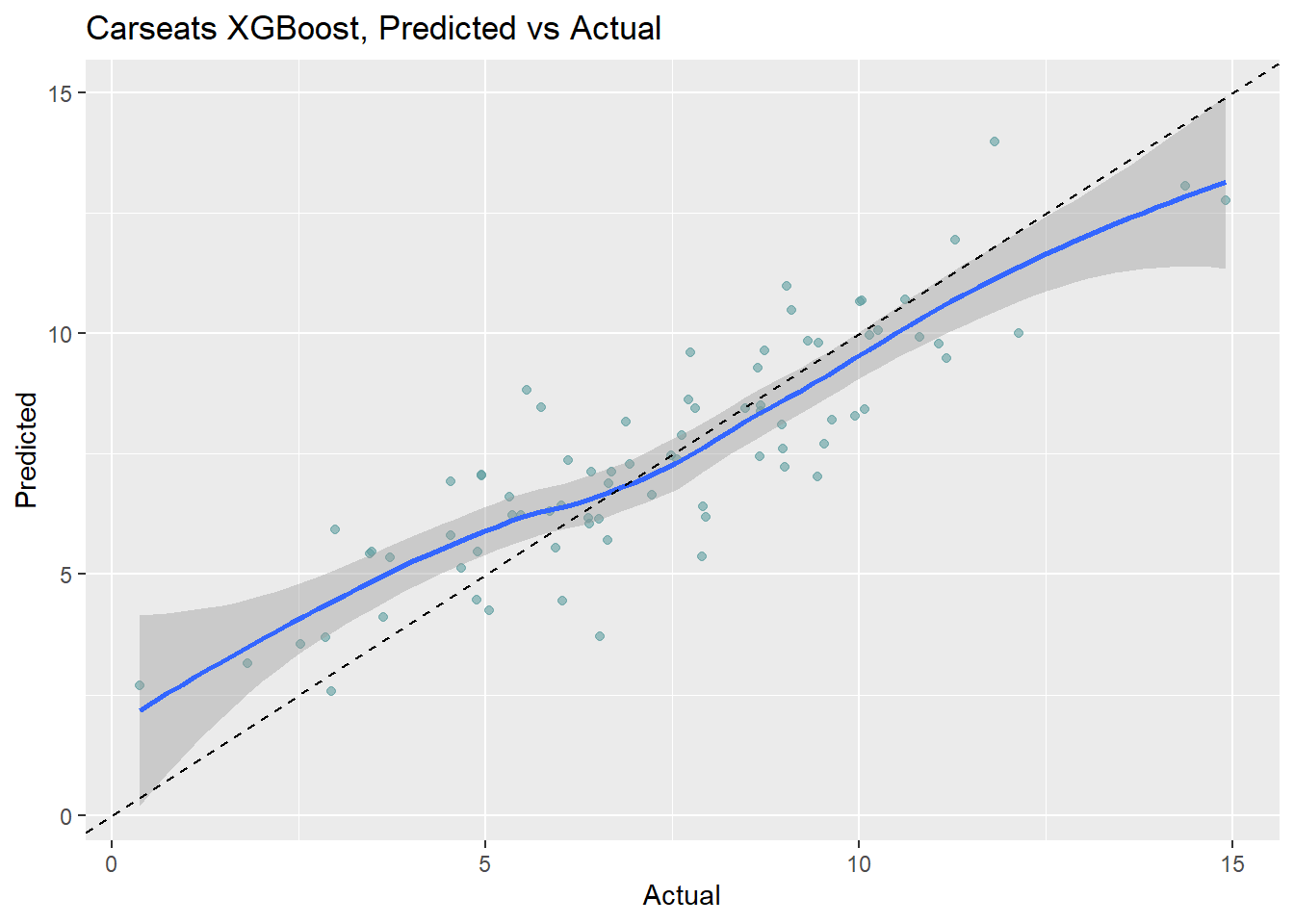
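One way to make the results easier to read is to collapse the grid to one number per value of a single hyperparameter. The sketch below does this in base R on a small data frame whose rows are copied from the printed table (eta = 0.4, colsample_bytree = 0.8, subsample = 1, nrounds of 50 and 150). `xgb_subset` and `best_by_depth` are hypothetical names. With the fitted model, the same idea could presumably be applied to cs_mdl_xgb$results.

```r
# Ten rows copied from the printed resampling results
# (eta = 0.4, colsample_bytree = 0.8, subsample = 1).
xgb_subset <- data.frame(
  max_depth = rep(1:5, each = 2),
  nrounds   = rep(c(50, 150), times = 5),
  RMSE      = c(1.3, 1.1, 1.3, 1.3, 1.3, 1.4, 1.6, 1.6, 1.6, 1.6),
  MAE       = c(1.03, 0.88, 1.02, 1.07, 1.07, 1.11, 1.24, 1.25, 1.27, 1.27)
)

# Best (smallest) cross-validated error for each tree depth.
best_by_depth <- aggregate(
  cbind(RMSE, MAE) ~ max_depth,
  data = xgb_subset,
  FUN  = min
)
best_by_depth
```

In this subset the error rises with tree depth, which is consistent with train() selecting max_depth = 1.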

Here is the holdout set performance.

cs_preds_xgb <- bind_cols(

Predicted = predict(cs_mdl_xgb, newdata = cs_test),

Actual = cs_test$Sales

)

# Model over-predicts at low end of Sales and under-predicts at high end

cs_preds_xgb %>%

ggplot(aes(x = Actual, y = Predicted)) +

geom_point(alpha = 0.6, color = "cadetblue") +

geom_smooth(method = "loess", formula = "y ~ x") +

geom_abline(intercept = 0, slope = 1, linetype = 2) +

labs(title = "Carseats XGBoost, Predicted vs Actual")

The RMSE is 1.395 - the best of the bunch.

cs_rmse_xgb <- RMSE(pred = cs_preds_xgb$Predicted, obs = cs_preds_xgb$Actual)

cs_scoreboard <- rbind(cs_scoreboard,

data.frame(Model = "XGBoost", RMSE = cs_rmse_xgb)

) %>% arrange(RMSE)

scoreboard(cs_scoreboard)

| Model | RMSE |
|---|---|
| XGBoost | 1.3952 |
| GBM | 1.4381 |
| Random Forest | 1.7184 |
| Bagging | 1.9185 |
| Single Tree (caret) | 2.2983 |
| Single Tree | 2.3632 |