Chapter 21 Time series

21.1 Introduction

Time Series Analysis and Time Series Modeling are powerful forecasting tools

A prior knowledge of the statistical theory behind Time Series is useful before Time series Modeling

ARMA and ARIMA are important models for performing Time Series Analysis

Time is the most valuable resource we have.

It measures and tracks our mere existence. Many people have searched out sages to tell them about their future.

In statistics, we use special methods to predict & forecast how certain variables change over time.

One such method, which deals with time based data is Time Series Modeling.

As the name suggests, it involves working on time (years, days, hours, minutes) based data, to derive hidden insights to make informed decision making.

Time series models are very useful models when you have serially correlated data.

Most of business houses work on time series data to analyze sales number for the next year, website traffic, competition position and much more.

However, it is also one of the areas, which many analysts do not understand.

21.2 Business Application

Business Application: Sales Forecasting

Time series analysis is commonly used in business for sales forecasting. Accurate sales forecasting is crucial for businesses to make informed decisions regarding inventory management, production planning, resource allocation, and financial projections. By analyzing historical sales data, businesses can identify patterns, trends, and seasonality to make predictions about future sales.

Numerical Example:

Let’s consider a fictional retail company, “Loui-Mart,” that sells electronic gadgets. They want to forecast their monthly sales for the next six months based on the past 24 months of sales data. Here is a simplified example of their historical sales data:

| Month | Sales (in thousands) |

|---|---|

| Jan-2021 | $100 |

| Feb-2021 | $120 |

| Mar-2021 | $130 |

| Apr-2021 | $110 |

| May-2021 | $140 |

| Jun-2021 | $160 |

| Jul-2021 | $170 |

| Aug-2021 | $180 |

| Sep-2021 | $150 |

| Oct-2021 | $190 |

| Nov-2021 | $210 |

| Dec-2021 | $230 |

| Jan-2022 | $110 |

| Feb-2022 | $130 |

| Mar-2022 | $140 |

| Apr-2022 | $120 |

| May-2022 | $150 |

| Jun-2022 | $170 |

| Jul-2022 | $180 |

| Aug-2022 | $190 |

| Sep-2022 | $160 |

| Oct-2022 | $200 |

| Nov-2022 | $220 |

| Dec-2022 | $240 |

Now, Loui-Mart can use time series analysis techniques, such as moving averages, exponential smoothing, or ARIMA (AutoRegressive Integrated Moving Average), to forecast sales for the next six months (Jan 2023 to Jun 2023).

After applying a suitable forecasting method, the projected sales for the next six months might look like this:

| Forecasted Month | Forecasted Sales |

|---|---|

| Jan-2023 | $160 |

| Feb-2023 | $180 |

| Mar-2023 | $190 |

| Apr-2023 | $170 |

| May-2023 | $200 |

| Jun-2023 | $220 |

Based on this forecast, Loui-Mart can plan its inventory, production schedules, and allocate resources accordingly. For instance, they might need to increase their stock in anticipation of higher sales in May and June, or they could adjust their marketing strategies to boost sales in months with lower projected revenue.

By consistently updating and refining their time series forecasting models with new data, Loui-Mart can make more accurate predictions, leading to better decision-making and ultimately improved business performance.

21.3 What we will cover in these notes

- Basics – Time Series Modeling

- Exploration of Time Series Data in R

- Introduction to ARMA Time Series Modeling

- Framework and Application of ARIMA Time Series Modeling

21.4 Basics – Time Series Modeling

Let’s begin from basics. This includes

- stationary series

- random walks - \(\rho\) Coefficient

- Dickey Fuller Test of Stationarity.

If these terms are already scaring you, don’t worry – they will become clear in a bit and I bet you will start enjoying the subject as I explain it.

21.4.1 Stationary Series

There are three basic criterion for a series to be classified as stationary series :

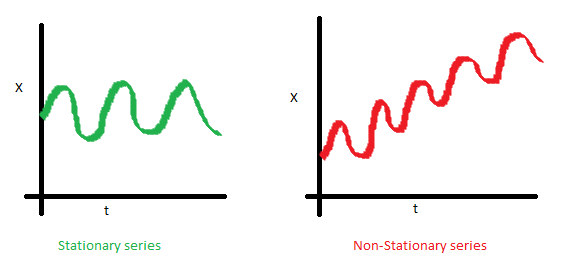

- The mean of the series should not be a function of time rather should be a constant. The image below has the left hand graph satisfying the condition whereas the graph in red has a time dependent mean.

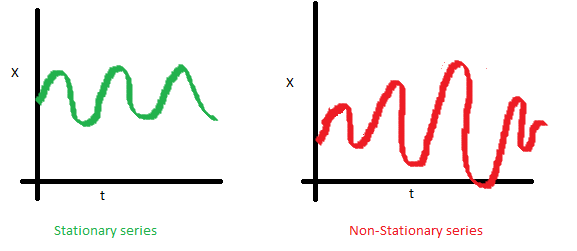

- The variance of the series should not a be a function of time. This property is known as homoscedasticity. Following graph depicts what is and what is not a stationary series. (Notice the varying spread of distribution in the right hand graph)

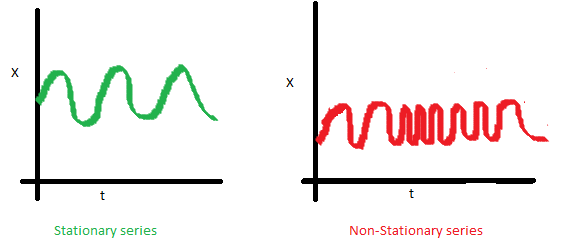

- The covariance of the \(i\) th term and the \((i + m)\) th term should not be a function of time. In the following graph, you will notice the spread becomes closer as the time increases. Hence, the covariance is not constant with time for the ‘red series’.

21.4.1.1 Why do I care about stationarity of a time series?

You cannot build a statistical time series model if it isn’t stationary.

In cases where the stationary criterion are violated, the first requisite becomes to stationarize the time series and then try stochastic models to predict this time series.

We will learn a few methods to make a time series stationary

21.5 Random Walk

- This is the most basic concept of the time series.

- You might know the concept well.

- But, I found many people in the industry who interprets random walk as a stationary process.

- In this section with the help of some mathematics, I will make this concept crystal clear for ever. Let’s take an example.

21.5.1 Example:

Imagine a person moving randomly on a giant chess board.

In this case, the next position of the person is only dependent on the last position.

Now imagine, you are sitting in another room and are not able to see the person.

You want to predict the position of the person with time. How accurate will you be? - Of course you will become more and more inaccurate as the position of the person changes. - At t=0 you know exactly where the person is. - At t=1, she can move to 8 different squares with one step. Your probability dips to 1/8 instead of 1 and it keeps on going down with each additional step.

21.6 Time Series Function

Now let’s try to formulate this series :

\[X(t) = X(t-1) + Er(t)\] where \(Er(t)\) is the error at time point \(t\). This is the randomness the girl brings at every point in time.

We can then adjust the equation for the time period \(t-1\), which gives us \(X(t-1) = X(t-2) + Er(t-1)\). Let’s plug these in to the first equation.

\[X(t)=X(t-1)+Er(t)=X(t-2)+Er(t)+Er(t-1)\] If we recursively fit in all the \(Xs\), we end up with the following equation :

\[X(t) = X(0) + Sum(Er(1),Er(2),Er(3).....Er(t))\] The equation tells us that our guess of where you are today at time \(t\) is dependent on where you started \(X(0)\) and some random steps.

21.6.1 Is the Mean constant ?

Describing the equation as a series of errors makes it easier for us to calculate the expected mean and variance.

The Expected Value of the series is

\[E[X(t)] = E[X(0)] + Sum(E[Er(1)],E[Er(2)],E[Er(3)].....E[Er(t)])\] We know that Expectation of any Error will be zero as it is random.

Hence we get \(E[X(t)] = E[X(0)] = Constant\).

21.6.2 Is the Variance constant?

Remember that if \(a\) and \(b\) are random variables, then \(VAR(a+b)=VAR(a)+VAR(b)-COV(a,b)\).

If \(a\) and \(b\) are independent, then \(COV(a,b)=0\).

We know the regression errors are independent so we can write,

\[Var[X(t)] = Var[X(0)] + Sum(Var[Er(1)],Var[Er(2)],Var[Er(3)].....Var[Er(t)])\] \[Var[X(t)] = t * Var(Error) = \text{Time dependent}.\]

Hence, we infer that the random walk is not a stationary process as it has a time variant variance.

Also, if we check the covariance, we see that too is dependent on time.

Here is some simple intuition \[Cov[X(t),X(t-1)]=Cov[X(t-1)+Er(t),X(t-l)]=Var[X(t-1)]\]

21.6.3 \(\huge{\rho}\)

Let’s spice up things a bit, We already know that a random walk is a non-stationary process.

Let us introduce a new coefficient in the equation to see if we can make the formulation stationary.

Introduced coefficient : \(\rho\)

This coefficient \(\rho\) tell us how important the past is to the present.

\[X(t) = \rho * X(t-1) + Er(t)\] Now, we will vary the value of \(\rho\) to see if we can make the series stationary.

21.6.4 \(\huge{\rho = 0}\)

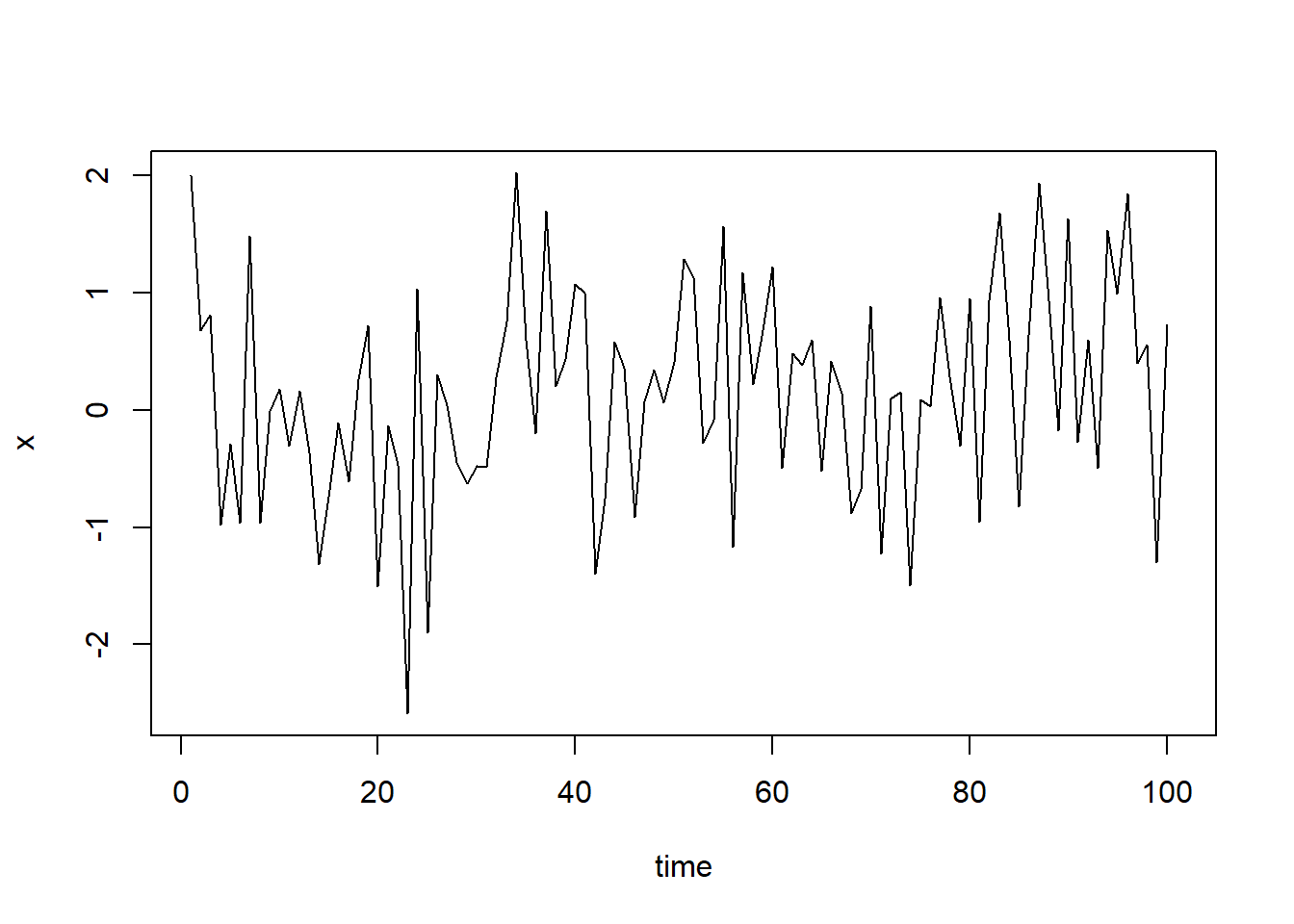

Let’s start with a perfectly stationary series with \(\rho = 0\). Here is the plot for the time series :

21.6.5 \(\huge{\rho = 0.5}\)

Increase the value of \(\rho\) to 0.5 gives us following graph :

You might notice that our cycles have become broader but essentially there does not seem to be a serious violation of stationary assumptions.

21.6.6 \(\huge{\rho = 0.9}\)

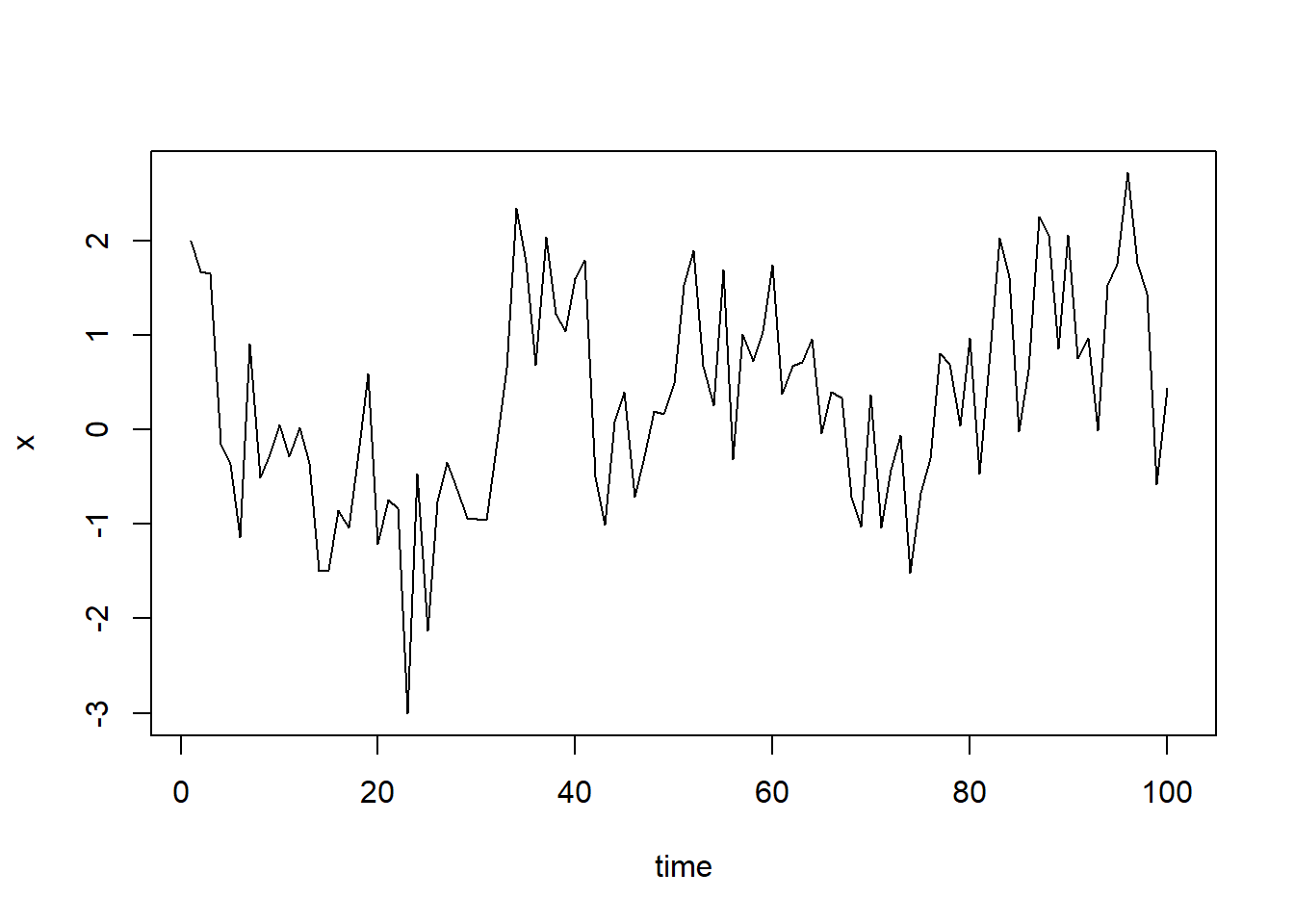

Let’s now take a more extreme case of \(\rho\) = 0.9

We still see that the X returns back from extreme values to zero after some intervals. This series also is not violating non-stationarity significantly.

21.6.7 \(\huge{\rho = 1}\)

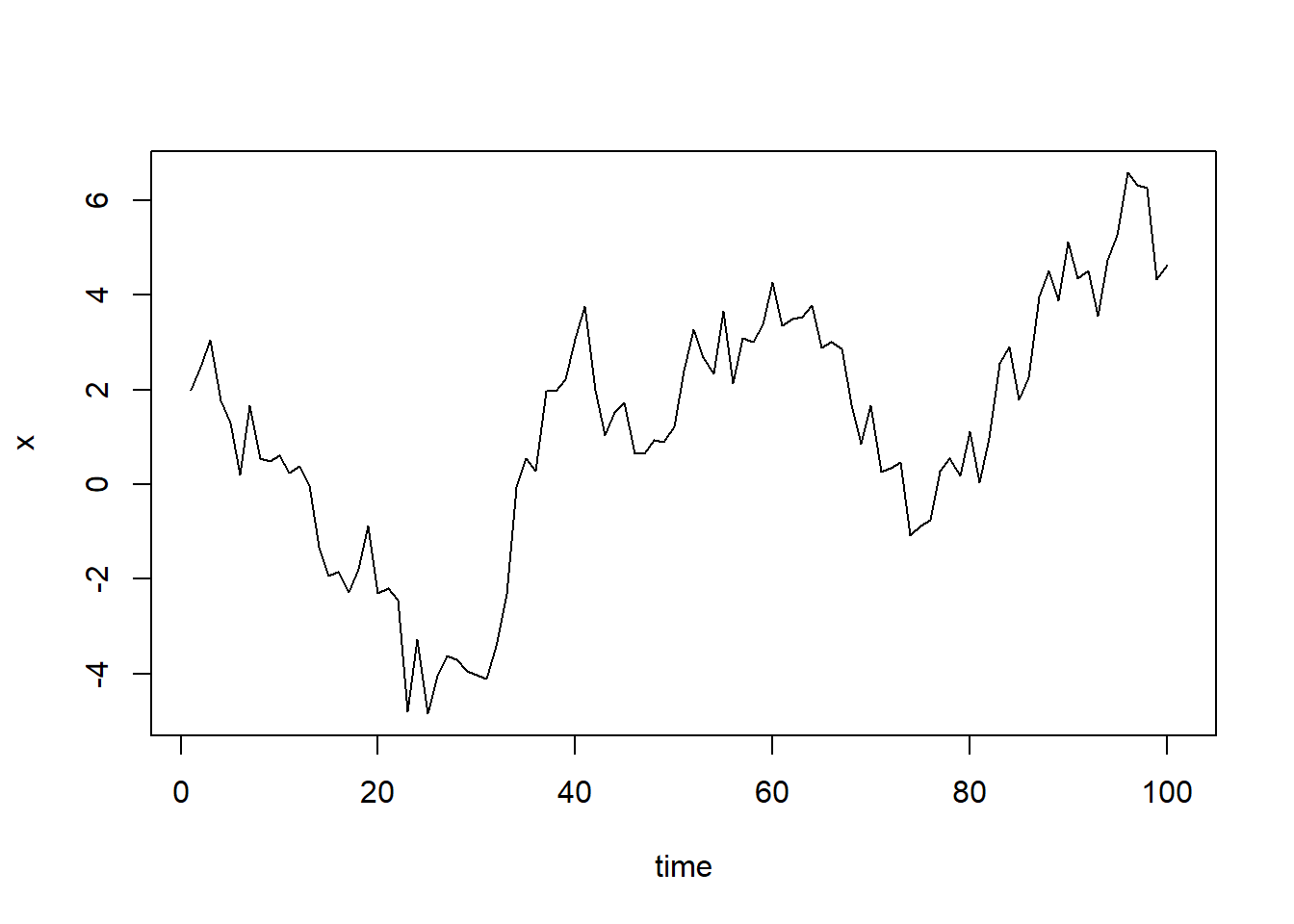

Now, let’s take a look at the random walk with \(\rho = 1\).

This obviously is an violation to stationary conditions.

21.6.8 Not Stationairy

What makes \(\rho = 1\) a special case which comes out badly in stationary test? We will find the mathematical reason to this.

Let’s take expectation on each side of the equation \[X(t) = \rho * X(t-1) + Er(t)\]

\[E[X(t)] = \rho *E[ X(t-1)]\] This equation is very insightful. The next X (or at time point t) is being pulled down to \(\rho\) * Last value of X.

For instance, if \(X(t – 1 ) = 1\), \(E[X(t)] = 0.5\) ( for \(\rho = 0.5\)) . Now, if X moves to any direction from zero, it is pulled back to zero in next step.

What happens when the \(\rho\) becomes 1? No force can pull the X down in the next step.

21.6.9 Dickey Fuller Test of Stationarity

What you just learnt in the last section is formally known as Dickey Fuller test. Here is a small tweak which is made for our equation to convert it to a Dickey Fuller test:

\[X(t) = \rho * X(t-1) + Er(t) => X(t) - X(t-1) = (\rho - 1) X(t - 1) + Er(t)\] We have to test if \(\rho\) – 1 is significantly different than zero or not. If the null hypothesis gets rejected, we’ll get a stationary time series.

Stationary testing and converting a series into a stationary series are the most critical processes in a time series modelling. You need to memorize each and every detail of this concept to move on to the next step of time series modelling.

Let’s now consider an example to show you what a time series looks like.

21.7 Exploration of Time Series Data in R

Here we’ll learn to handle time series data on R. Our scope will be restricted to data exploring in a time series type of data set and not go to building time series models.

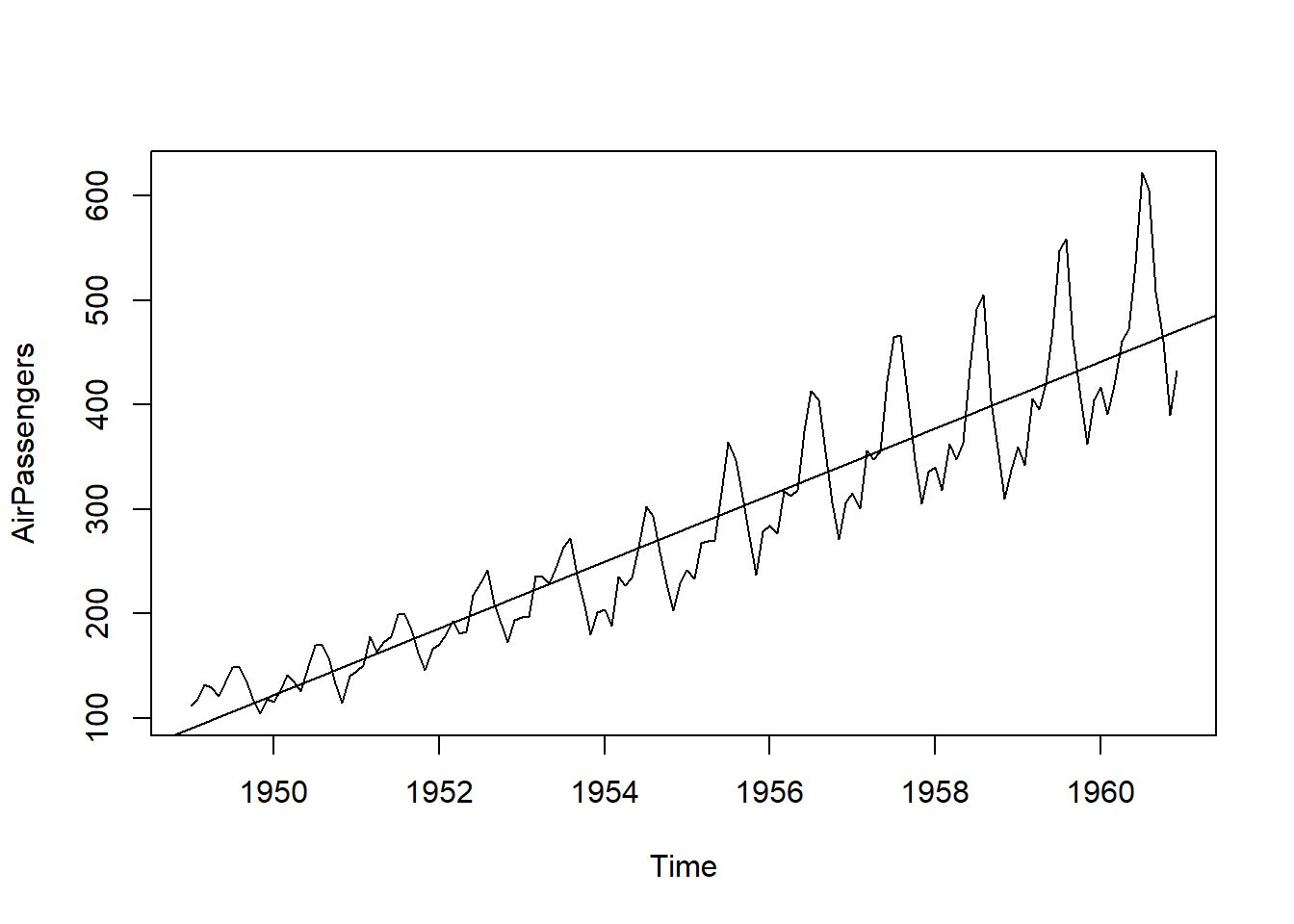

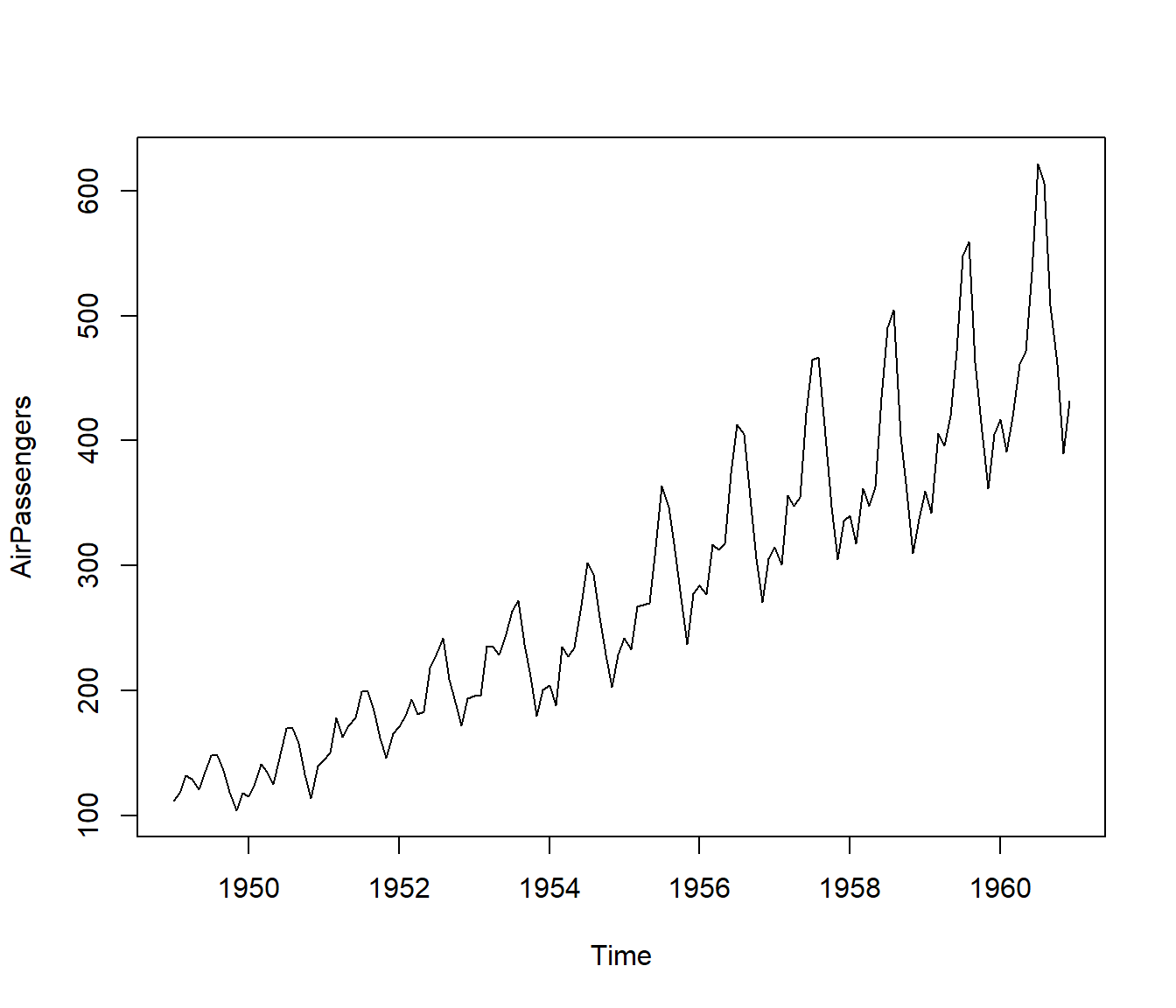

I have used a built-in data set of R called AirPassengers.

The dataset consists of monthly totals of international airline passengers, 1949 to 1960.

Loading the Data Set Following is the code which will help you load the data set and spill out a few top level metrics.

## [1] "ts"## [1] 1949 1## [1] 1960 12Summary Values

## [1] 12## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 104.0 180.0 265.5 280.3 360.5 622.0Detailed Metrics

#The number of passengers are distributed across the spectrum

plot(AirPassengers)

#This will plot the time series

abline(reg=lm(AirPassengers~time(AirPassengers)))

More operations

## Jan Feb Mar Apr May Jun Jul Aug Sep Oct Nov Dec

## 1949 1 2 3 4 5 6 7 8 9 10 11 12

## 1950 1 2 3 4 5 6 7 8 9 10 11 12

## 1951 1 2 3 4 5 6 7 8 9 10 11 12

## 1952 1 2 3 4 5 6 7 8 9 10 11 12

## 1953 1 2 3 4 5 6 7 8 9 10 11 12

## 1954 1 2 3 4 5 6 7 8 9 10 11 12

## 1955 1 2 3 4 5 6 7 8 9 10 11 12

## 1956 1 2 3 4 5 6 7 8 9 10 11 12

## 1957 1 2 3 4 5 6 7 8 9 10 11 12

## 1958 1 2 3 4 5 6 7 8 9 10 11 12

## 1959 1 2 3 4 5 6 7 8 9 10 11 12

## 1960 1 2 3 4 5 6 7 8 9 10 11 12

Important Inferences

- The year on year trend clearly shows that the #passengers have been increasing without fail.

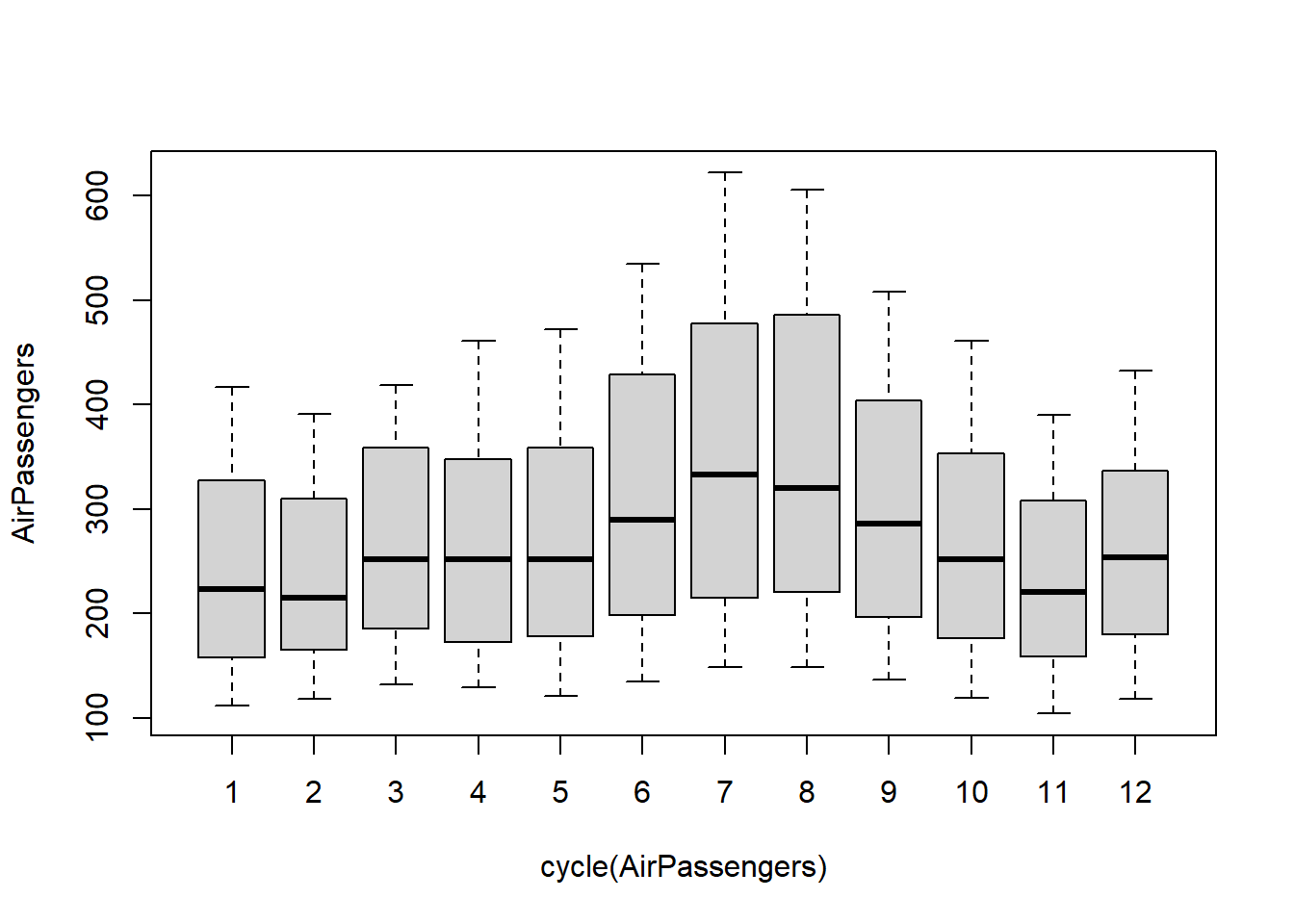

- The variance and the mean value in July and August is much higher than rest of the months.

- Even though the mean value of each month is quite different their variance is small. Hence, we have strong seasonal effect with a cycle of 12 months or less.

- Exploring data becomes most important in a time series model – without this exploration, you will not know whether a series is stationary or not. As in this case we already know many details about the kind of model we are looking out for.

- Let’s now take up a few time series models and their characteristics. We will also take this problem forward and make a few predictions.

21.8 Introduction to ARMA Time Series Modeling

ARMA models are commonly used in time series modeling. In ARMA model, AR stands for auto-regression and MA stands for moving average. If these words sound intimidating to you, worry not – I’ll simplify these concepts in next few minutes for you!

We will now develop a knack for these terms and understand the characteristics associated with these models. But before we start, you should remember, AR or MA are not applicable on non-stationary series.

In case you get a non stationary series, you first need to stationarize the series (by taking difference / transformation) and then choose from the available time series models.

First, I’ll explain each of these two models (AR & MA) individually. Next, we will look at the characteristics of these models.

21.8.1 Auto-Regressive Time Series Model

Let’s understanding AR models using the case below:

The current GDP of a country say x(t) is dependent on the last year’s GDP i.e. x(t – 1).

The hypothesis being that the total cost of production of products & services in a country in a fiscal year (known as GDP) is dependent on the set up of manufacturing plants / services in the previous year and the newly set up industries / plants / services in the current year.

But the primary component of the GDP is the former one.

GDP as an AR Time Series

Hence, we can formally write the equation of GDP as:

\[x(t) = alpha * x(t – 1) + error (t)\]

This equation is known as AR(1) formulation. The numeral one (1) denotes that the next instance is solely dependent on the previous instance. The alpha is a coefficient which we seek so as to minimize the error function. Notice that \(x(t- 1)\) is indeed linked to \(x(t-2)\) in the same fashion. Hence, any shock to \(x(t)\) will gradually fade off in future.

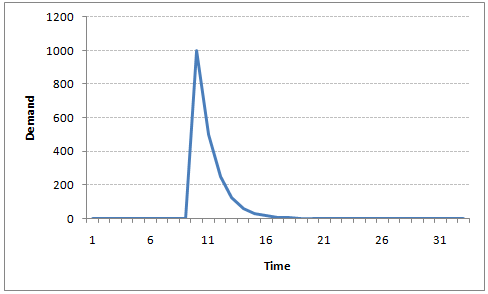

Juice Bottles Time Series

For instance, let’s say \(x(t)\) is the number of juice bottles sold in a city on a particular day.

During winters, very few vendors purchased juice bottles.

Suddenly, on a particular day, the temperature rose and the demand of juice bottles soared to 1000.

However, after a few days, the climate became cold again.

But, knowing that the people got used to drinking juice during the hot days, there were 50% of the people still drinking juice during the cold days.

The Inertia Property In following days, the proportion went down to 25% (50% of 50%) and then gradually to a small number after significant number of days.

The following graph explains the inertia property of AR series:

21.9 Moving Average Time Series Model

Let’s take another case to understand Moving average time series model.

- A manufacturer produces a certain type of bag, which was readily available in the market.

- Being a competitive market, the sale of the bag stood at zero for many days.

- The manufacture innovates and produces a new type of bag.

- This type of bag was not available anywhere in the market.

- He was able to sell the entire stock of 1000 bags (lets call this as \(x(t)\) ). The demand got so high that the bag ran out of stock.

- As a result, some 100 odd customers couldn’t purchase this bag. Lets call this gap as the error at that time point.

- With time, the bag had lost its WOW factor. But still few customers were left who went empty handed the previous day.

Apply the senario to time series Following is a simple formulation to depict the scenario :

\[x(t) = beta * error(t-1) + error (t)\]

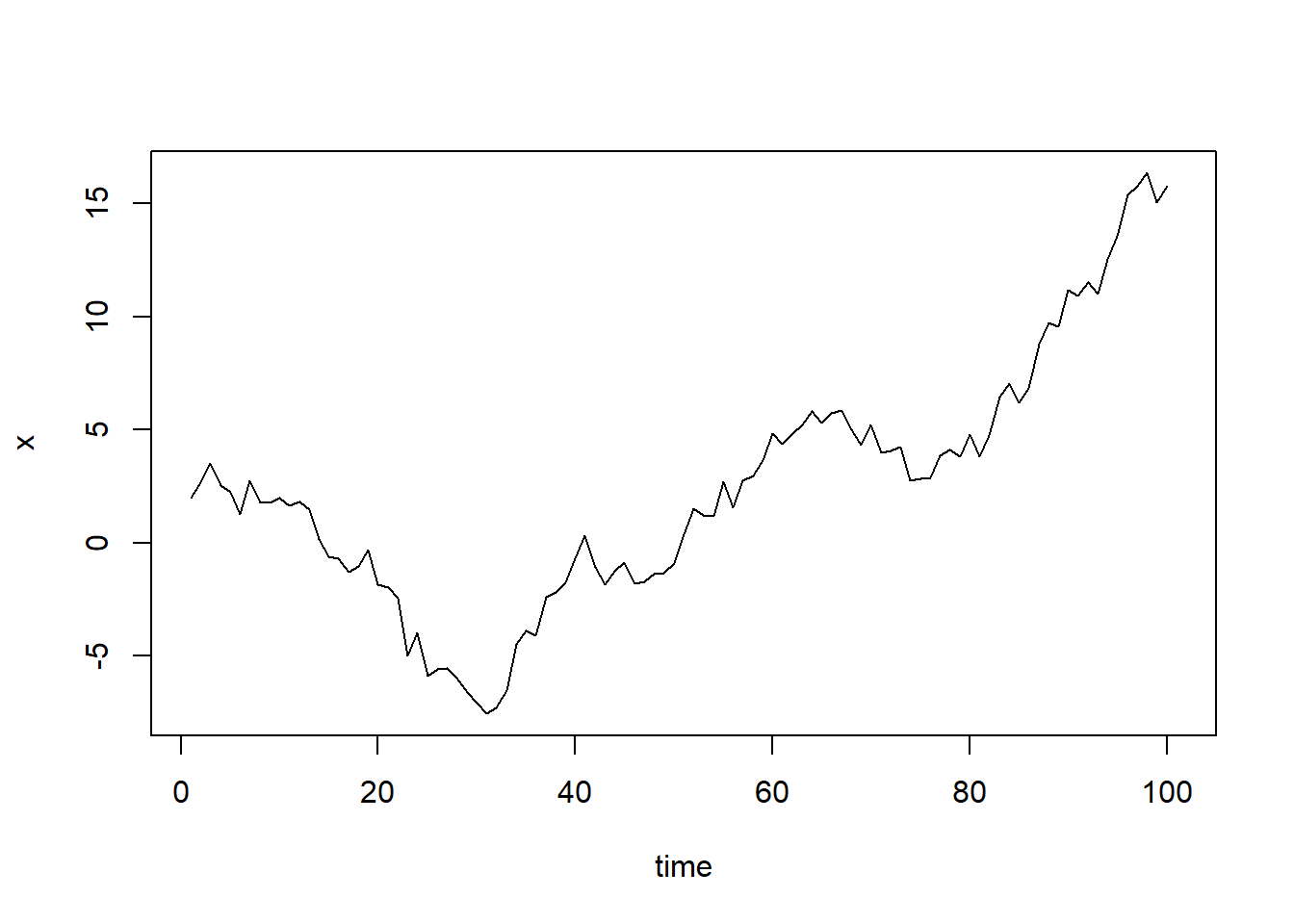

If we try plotting this graph, it will look something like this :

Did you notice the difference between MA and AR model?

In MA model, noise / shock quickly vanishes with time. The AR model has a much lasting effect of the shock.

Difference between AR and MA models The primary difference between an AR and MA model is based on the correlation between time series objects at different time points.

- The correlation between \(x(t)\) and \(x(t-n)\) for \(n >\) order of MA is always zero.

- This directly flows from the fact that covariance between \(x(t)\) and \(x(t-n)\) is zero for MA models

- The correlation of \(x(t)\) and \(x(t-n)\) gradually declines with n becoming larger in the AR model.

- This difference gets exploited irrespective of having the AR model or MA model. The correlation plot can give us the order of MA model.

21.10 Exploiting ACF and PACF plots

Once we have got the stationary time series, we must answer two primary questions:

Q1. Is it an AR or MA process?

Q2. What order of AR or MA process do we need to use?

The trick to solve these questions is available in the previous section. Didn’t you notice?

Exploiting ACF and PACF plots

The first question can be answered using Total Correlation Chart (also known as Auto – correlation Function / ACF).

- ACF is a plot of total correlation between different lag functions. For instance, in GDP problem, the GDP at time point t is \(x(t)\).

- We are interested in the correlation of \(x(t)\) with \(x(t-1)\) , \(x(t-2)\) and so on.

Moving Average Determination In a moving average series of lag n, we will not get any correlation between \(x(t)\) and \(x(t – n -1)\) . Hence, the total correlation chart cuts off at nth lag.

- For example, an MA(2) is a moving average where the current value is uncorrelated with the third value, (i.e. \(COV(x(t),x(t-3))=0\)).

- For an AR series this correlation will gradually go down without any cut off value. So what do we do if it is an AR series?

Determining the AR lag

We need the partial correlation graph to determine the optimal AR length. The partial correlation lags will become insignificant after the degree of the AR series.

For instance, if we have a AR(1) series, if we exclude the effect of 1st lag \((x(t-1))\), our 2nd lag \((x(t-2))\) is independent of \(x(t)\).

Hence, the partial correlation function (PACF) will drop sharply after the 1st lag.

Here is an AR(2)

The blue line above shows significantly different values than zero. Clearly, the graph above has a cut off on PACF curve after 2nd lag which means this is mostly an AR(2) process.

Clearly, the graph above has a cut off on ACF curve after 2nd lag which means this is mostly a MA(2) process.

21.11 Framework and Application of ARIMA Time Series Modeling

A quick revision, Till here we’ve learnt basics of time series modeling, time series in R and ARMA modeling. Now is the time to join these pieces and make an interesting story.

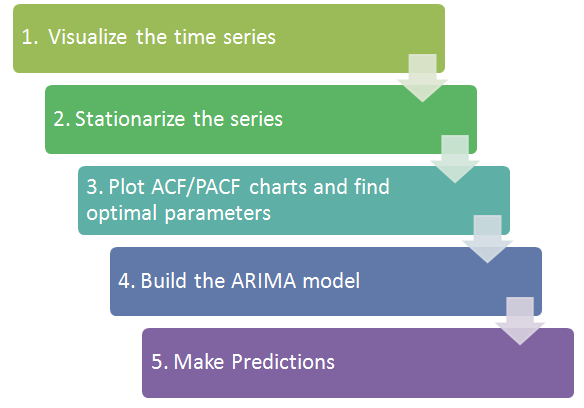

Overview of the Framework

Step 1: Visualize the Time Series It is essential to analyze the trends prior to building any kind of time series model.

The details we are interested in pertains to any kind of trend, seasonality or random behavior in the series.

We have covered this part in the second part of this series.

Understanding your Time Series Each data point \(X(t)\) at time t in a Time Series can be expressed as either a sum or a product of 3 components, namely, Seasonality \([S(t)]\), Trend \([T(t)]\) and Error \([Er(t)]\) (a.k.a White Noise).

For Additive Time Series, \[X(t) = S(t)+T(t)+Er(t)\]

For Multiplicative Time Series, \[X(t) = S(t)*T(t)*Er(t)\]

but taking the natural log of the time series returns it back to the additive model. \[\log X(t) = \log S(t)+\log T(t)+\log Er(t)\]

Step 2: Stationarize the Series Once we know the patterns, trends, cycles and seasonality , we can check if the series is stationary or not.

Dickey – Fuller is one of the popular test to check the same.

We have covered this test in the first part of this article series.

This doesn’t ends here!

What if the series is found to be non-stationary?

Detrending There are three commonly used technique to make a time series stationary:

- Detrending : Here, we simply remove the trend component from the time series. For instance, the equation of my time series is:

\[x(t) = (mean + trend * t) + error\]

We’ll simply remove the part in the parentheses and build model for the rest.

Differencing 2. Differencing : This is the commonly used technique to remove non-stationarity. Here we try to model the differences of the terms and not the actual term. For instance,

\[x(t) – x(t-1) = ARMA (p , q)\]

This differencing is called as the Integration part in AR(I)MA. Now, we have three parameters

\[p : AR\] \[d : I\] \[q : MA\]

Seasonality 3. Seasonality : Seasonality can easily be incorporated in the ARIMA model directly. More on this has been discussed in the applications part below.

Step 3: Find Optimal Parameters The parameters p,d,q can be found using ACF and PACF plots.

If both ACF and PACF decreases gradually, it indicates that we need to make the time series stationary and introduce a value to “d”.

Step 4: Build ARIMA Model The value found in the previous section might be an approximate estimate and we need to explore more (p,d,q) combinations.

- Select the one with the lowest BIC and AIC should be our choice.

- We can also try some models with a seasonal component.

Step 5: Make Predictions Once we have the final ARIMA model, we are now ready to make predictions on the future time points.

We can also visualize the trends to cross validate if the model works fine.

21.12 Applications of Time Series Model

Now, we’ll use the same example that we have used above. Then, using time series, we’ll make future predictions. We recommend you to check out the example before proceeding further.

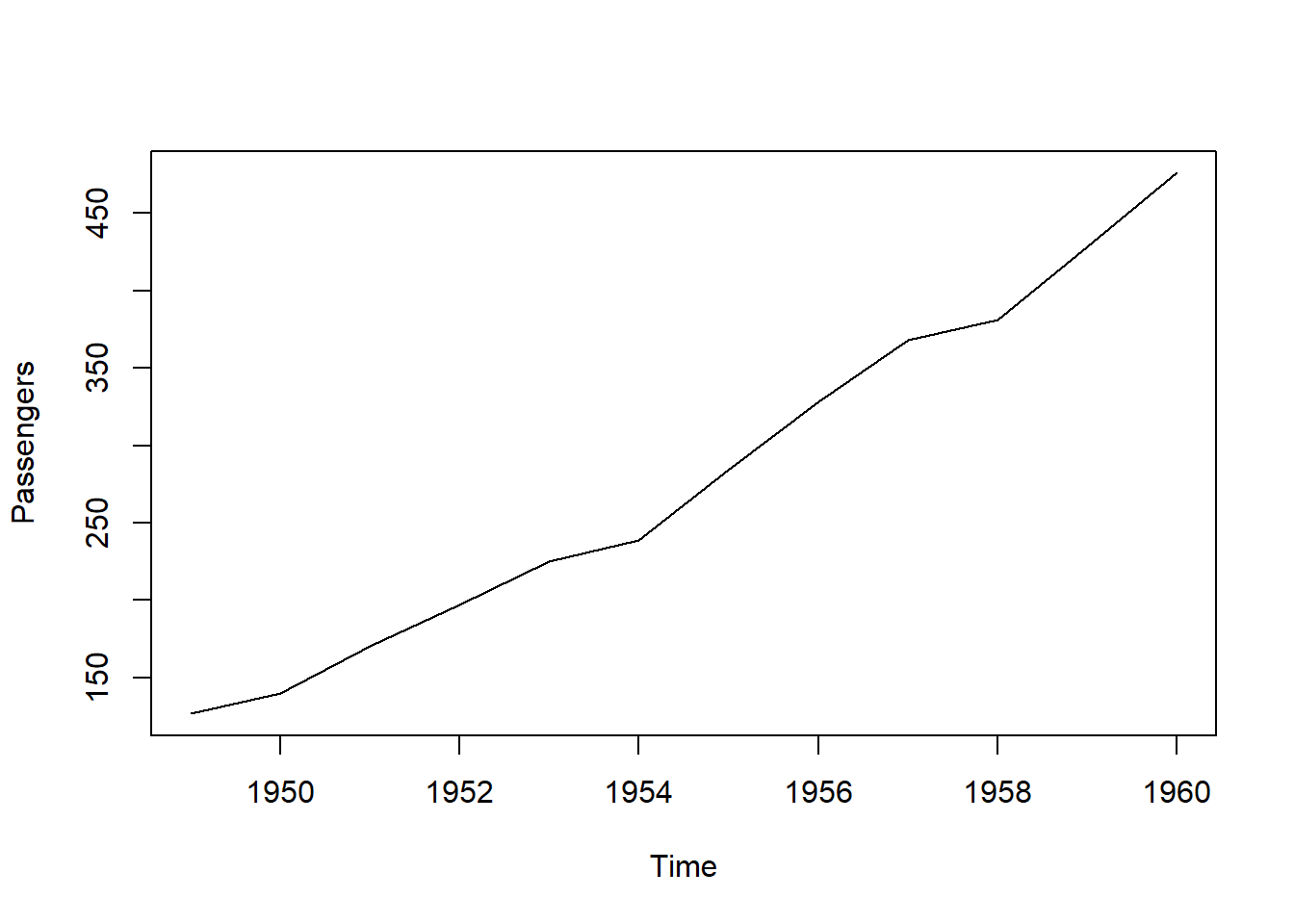

Where did we start? Below we plot the number of passengers by years. Make some observation.

Here are my observations:

There is a trend component which grows the passenger year by year.

There looks to be a seasonal component which has a cycle less than 12 months.

The variance in the data keeps on increasing with time.

We know that we need to address two issues before we test stationary series. One, we need to remove unequal variances. We do this using log of the series. Two, we need to address the trend component. We do this by taking difference of the series.

Dickey - Fuller Test

##

## Augmented Dickey-Fuller Test

##

## data: diff(log(AirPassengers))

## Dickey-Fuller = -9.6003, Lag order = 0, p-value = 0.01

## alternative hypothesis: stationaryWe see that the series is stationary enough to do any kind of time series modelling.

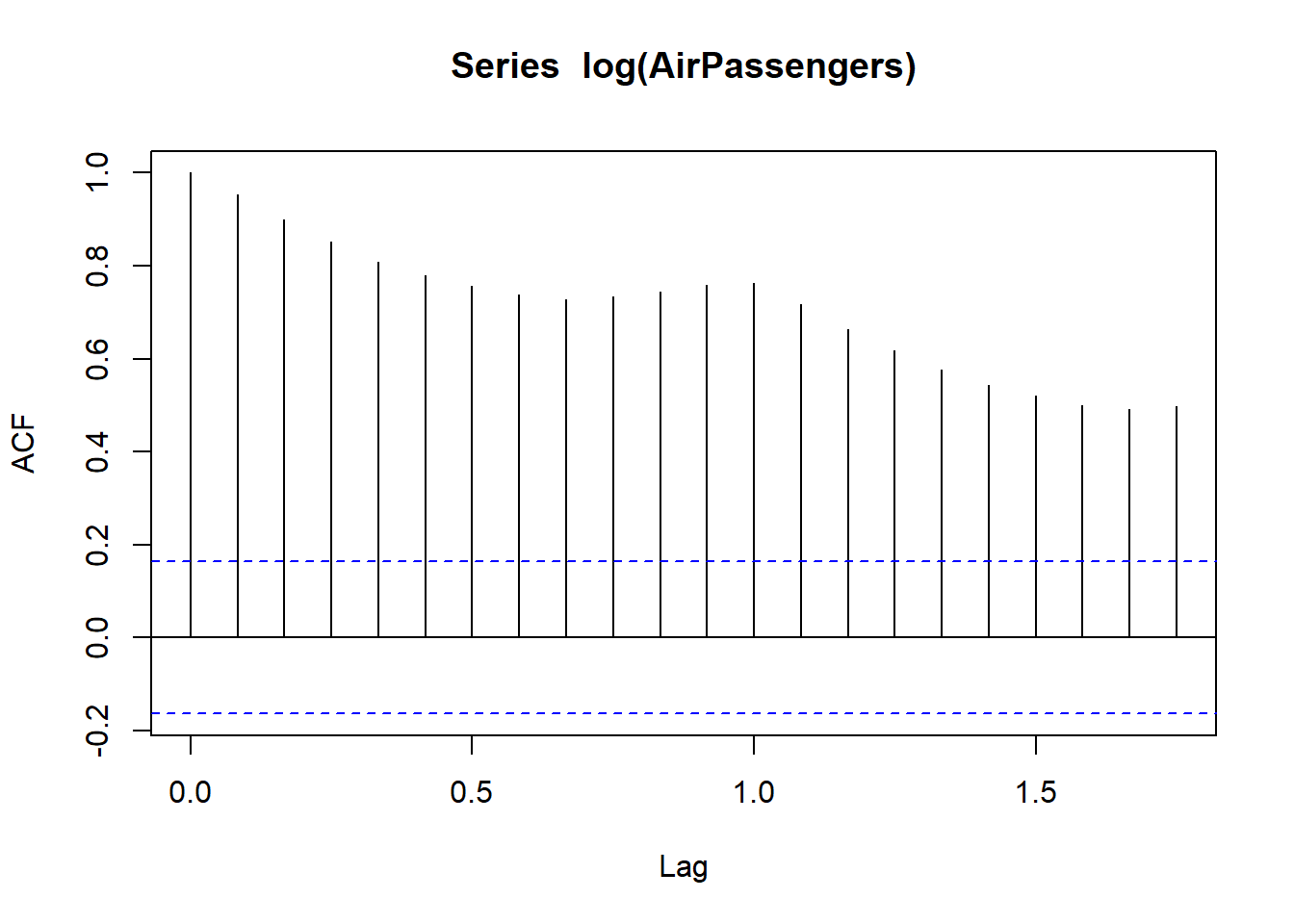

Next step is to find the right parameters to be used in the ARIMA model. We already know that the ‘d’ component is 1 as we need 1 difference to make the series stationary. We do this using the Correlation plots. Following are the ACF plots for the series :

ACF Plots

What do you see in the chart shown above? Clearly, the decay of ACF chart is very slow, which means that the population is not stationary. We have already discussed above that we now intend to regress on the difference of logs rather than log directly. Let’s see how ACF and PACF curve come out after regressing on the difference.

Clearly, ACF plot cuts off after the first lag. Hence, we understood that value of p should be 0 as the ACF is the curve getting a cut off. While value of q should be 1 or 2. After a few iterations, we found that (0,1,1) as (p,d,q) comes out to be the combination with least AIC and BIC.

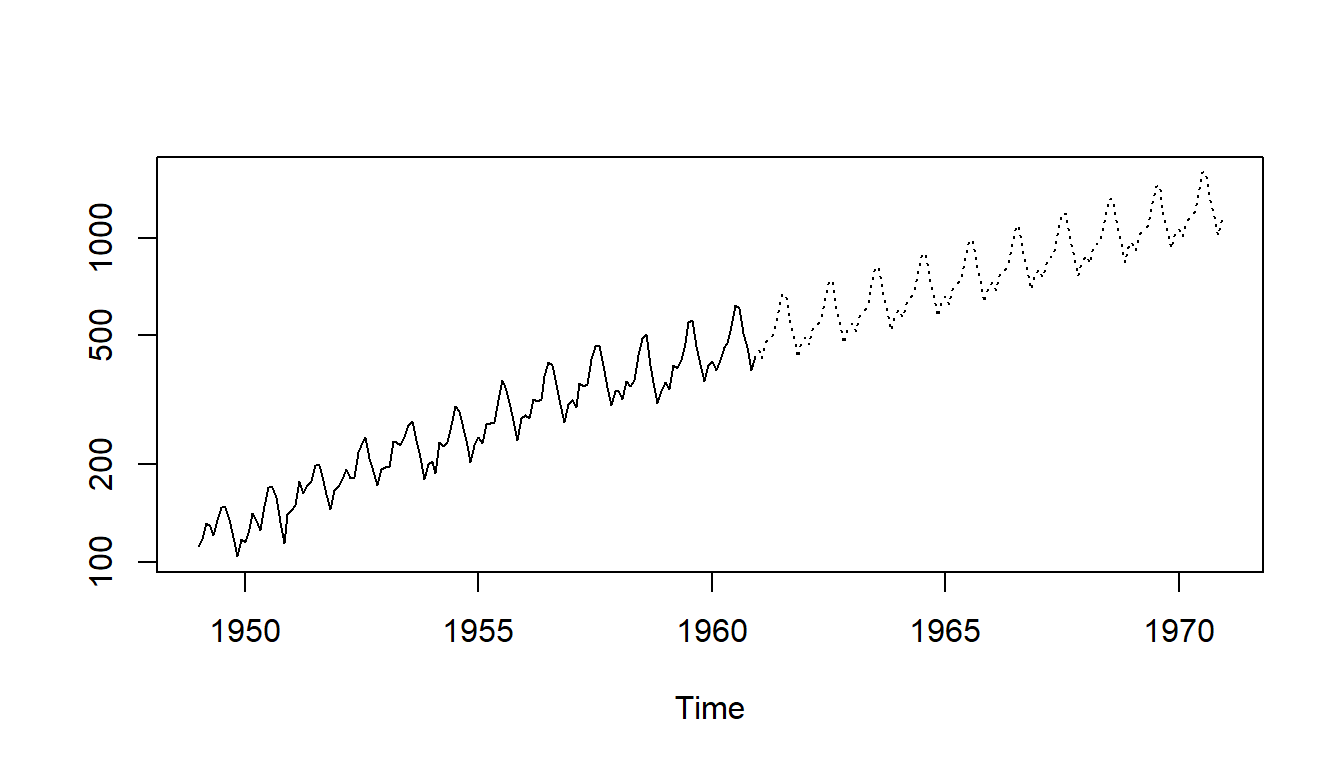

Fitting an ARIMA Model Let’s fit an ARIMA model - predict the future 10 years. - add a seasonal component in the ARIMA formulation. - visualize the model and predictions

ARIMA code You can use the following code to do the same :

fit <- arima(log(AirPassengers), c(0, 1, 1),seasonal = list(order = c(0, 1, 1), period = 12))

pred <- predict(fit, n.ahead = 10*12)

ts.plot(AirPassengers,2.718^pred$pred, log = "y", lty = c(1,3))

Projects Now, its time to take the plunge and actually play with some other real datasets.

So are you ready to take on the challenge?

Test the techniques discussed in this post and accelerate your learning in Time Series Analysis with the following Practice Problems:

COVID-19 Time Series in Class Assignments.