28 Day 28 (July 15)

28.1 Announcements

Please email me and request a 20 min time period during the final week of class (July 22 - 26) to give your final presentation. When you email me please suggest three times/dates that work for you.

Read Ch. 7 and 8 in linear models with R

28.3 Collinearity

When two or more of the predictor variables (i.e., columns of \(\mathbf{X}\)) are correlated, the predictors are said to be collinear. Also called

- Collinearity

- Multicollinearity

- Correlation among predictors/covariates

- Partial parameter identifiability

Collinearity cause coefficient estimates (i.e., \(\hat{\boldsymbol{\beta}}\) to change when a collinear predictor is removed (or added) to the model.

- Extremely common problem with observational data and data collected from poorly designed experiments.

Example

- The data

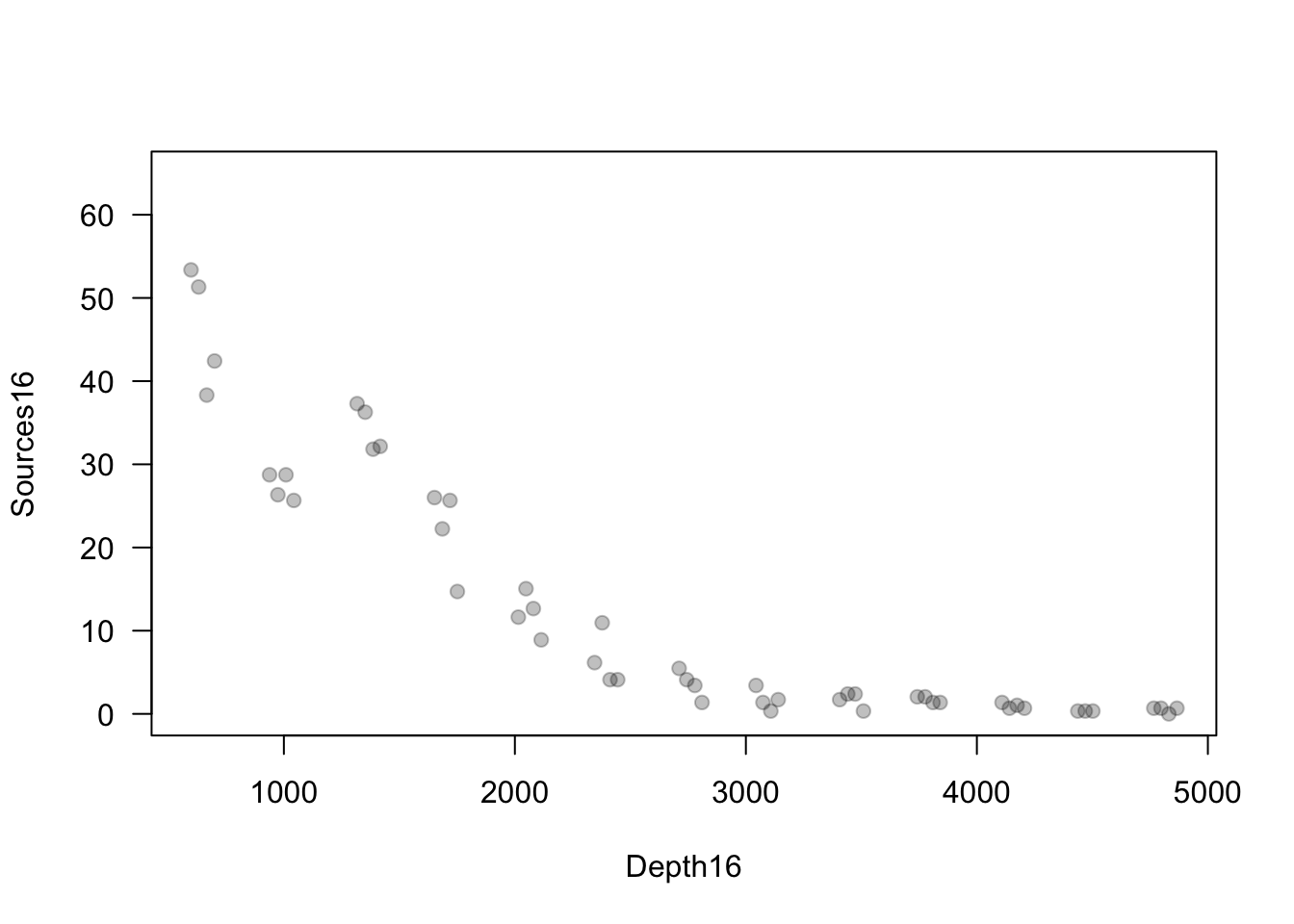

library(httr) library(readxl) url <- "https://doi.org/10.1371/journal.pone.0148743.s005" GET(url, write_disk(path <- tempfile(fileext = ".xls")))df1 <- read_excel(path=path,sheet = "Data", col_names=TRUE,col_types="numeric") notes <- read_excel(path=path,sheet = "Notes", col_names=FALSE)## # A tibble: 6 × 9 ## States Year CDEP WKIL WPOP BRPA NCAT SDEP NSHP ## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> ## 1 1 1987 6 4 10 2 9736 10 1478 ## 2 1 1988 0 0 14 1 9324 0 1554 ## 3 1 1989 3 1 12 1 9190 0 1572 ## 4 1 1990 5 1 33 3 8345 0 1666 ## 5 1 1991 2 0 29 2 8733 2 1639 ## 6 1 1992 1 0 41 4 9428 0 1608## # A tibble: 9 × 2 ## ...1 ...2 ## <chr> <chr> ## 1 Year <NA> ## 2 State 1=Montana,2=Wyoming,3=Idaho ## 3 Cattle depredated CDEP ## 4 Sheep depredated SDEP ## 5 Wolf population WPOP ## 6 Wolves killed WKIL ## 7 Breeding pairs BRPA ## 8 Number of cattleA* NCAT ## 9 Number of SheepB* NSHPFit a linear model

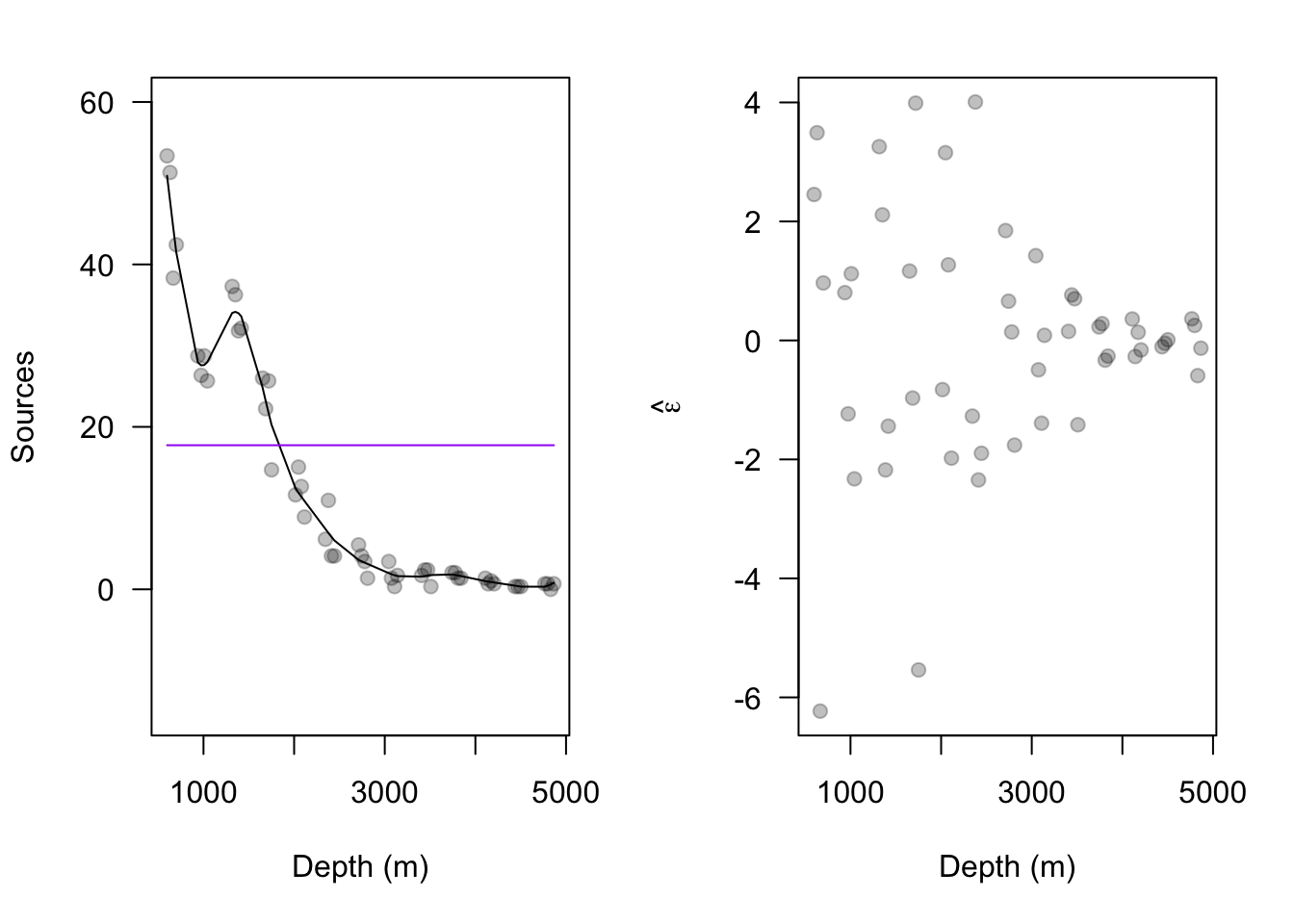

## ## Call: ## lm(formula = CDEP ~ NCAT + WPOP, data = df1) ## ## Residuals: ## Min 1Q Median 3Q Max ## -40.289 -8.147 -3.475 2.678 92.257 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -3.8455597 8.1697216 -0.471 0.64 ## NCAT 0.0009372 0.0010363 0.904 0.37 ## WPOP 0.1031119 0.0119644 8.618 7.51e-12 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 20.23 on 56 degrees of freedom ## (3 observations deleted due to missingness) ## Multiple R-squared: 0.5855, Adjusted R-squared: 0.5707 ## F-statistic: 39.55 on 2 and 56 DF, p-value: 1.951e-11- Killing wolves should reduce the number of cattle depredated, right? Let’s add the number of wolves killed to our model.

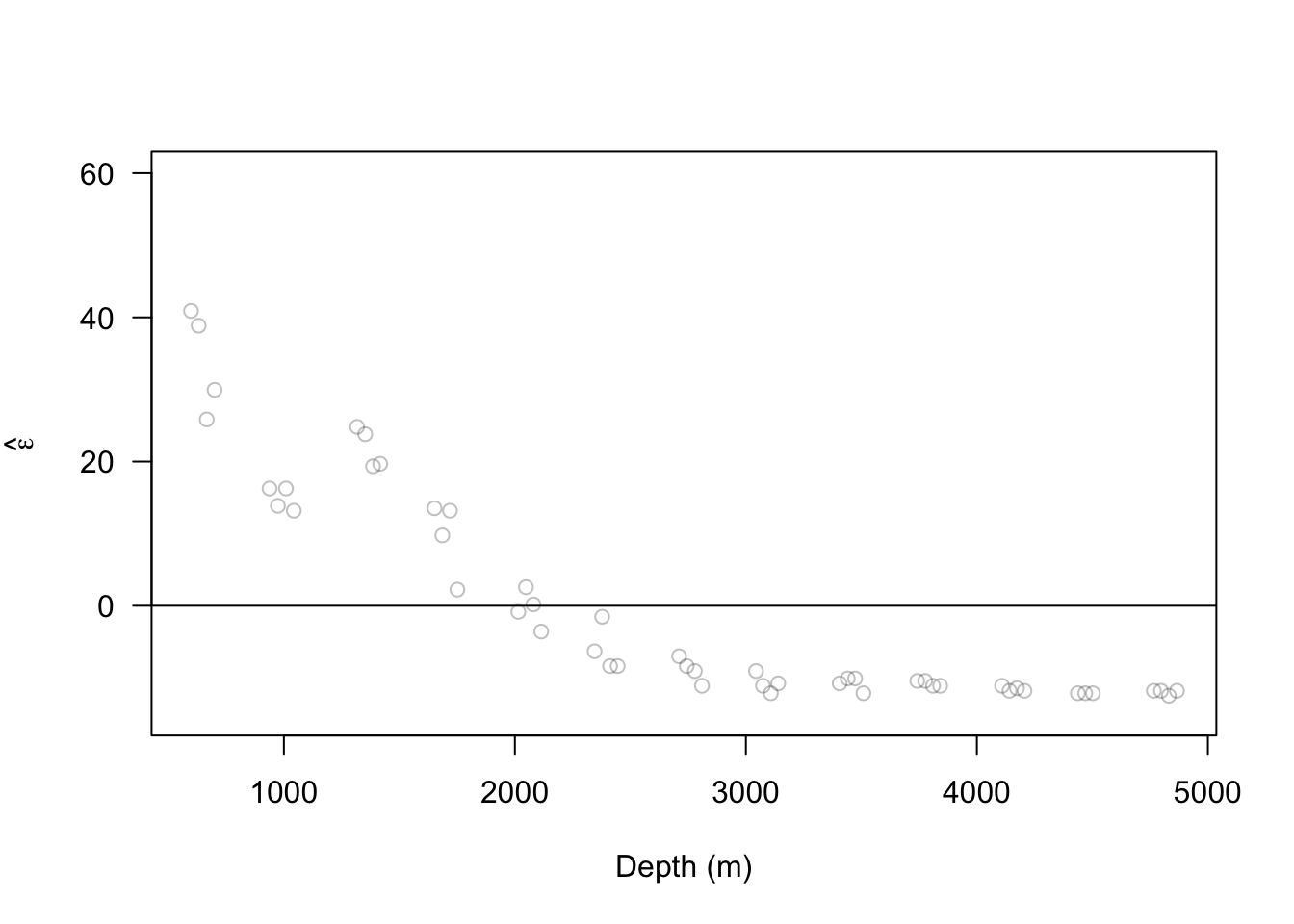

## ## Call: ## lm(formula = CDEP ~ NCAT + WPOP + WKIL, data = df1) ## ## Residuals: ## Min 1Q Median 3Q Max ## -18.519 -8.549 -1.588 4.049 79.041 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) 13.901373 6.511267 2.135 0.0372 * ## NCAT -0.001385 0.000830 -1.669 0.1008 ## WPOP 0.011868 0.015724 0.755 0.4536 ## WKIL 0.683946 0.097769 6.996 3.84e-09 *** ## --- ## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1 ## ## Residual standard error: 14.85 on 55 degrees of freedom ## (3 observations deleted due to missingness) ## Multiple R-squared: 0.7807, Adjusted R-squared: 0.7687 ## F-statistic: 65.25 on 3 and 55 DF, p-value: < 2.2e-16- What happened?!

## WKIL WPOP NCAT ## WKIL 1.00000000 0.7933354 -0.04460099 ## WPOP 0.79333543 1.0000000 -0.34440797 ## NCAT -0.04460099 -0.3444080 1.00000000Some analytical results

- Assume the linear model \[\mathbf{y}=\beta_{1}\mathbf{x}+\beta_{2}\mathbf{z}+\boldsymbol{\varepsilon}\] where \(\boldsymbol{\varepsilon}\sim\text{N}(\mathbf{0},\sigma^{2}\mathbf{I})\)

- Assume that the predictors \(\mathbf{x}\) and \(\mathbf{z}\) are correlated (i.e., are not orthogonal \(\mathbf{x}^{\prime}\mathbf{z}\neq 0\) and \(\mathbf{z}^{\prime}\mathbf{x}\neq 0\)).

- Then \[\hat{\beta}_1 = (\mathbf{x}^{\prime}\mathbf{x}\times\mathbf{z}^{\prime}\mathbf{z} - \mathbf{x}^{\prime}\mathbf{z}\times\mathbf{z}^{\prime}\mathbf{x})^{-1}((\mathbf{z}^{\prime}\mathbf{z})\mathbf{x}^{\prime} - (\mathbf{x}^{\prime}\mathbf{z})\mathbf{z}^{\prime})\mathbf{y}\] and \[\hat{\beta}_2 = (\mathbf{z}^{\prime}\mathbf{z}\times\mathbf{x}^{\prime}\mathbf{x} - \mathbf{z}^{\prime}\mathbf{x}\times\mathbf{x}^{\prime}\mathbf{z})^{-1}((\mathbf{x}^{\prime}\mathbf{x})\mathbf{z}^{\prime} - (\mathbf{z}^{\prime}\mathbf{x})\mathbf{x}^{\prime})\mathbf{y}.\] The variance is \[\text{Var}(\hat{\beta}_{1})=\sigma^{2}\bigg{(}\frac{1}{1-R^{2}_{xz}}\bigg{)}\frac{1}{\sum_{i=1}^{n}(x_{i}-\bar{x})^{2}}\] \[\text{Var}(\hat{\beta}_{2})=\sigma^{2}\bigg{(}\frac{1}{1-R^{2}_{xz}}\bigg{)}\frac{1}{\sum_{i=1}^{n}(z_{i}-\bar{z})^{2}}\]where \(R^{2}_{xz}\) is the correlation between \(\mathbf{x}\) and \(\mathbf{z}\) (see pg. 107 in Faraway (2014)).

Take away message

- \(\hat{\beta}_i\) is the unbiased and minimum variance estimator assuming all predictors for \(\beta_i \neq 0\) are in the model.

- \(\hat{\beta}_i\) depends on the other predictors in the model.

- Correlation among predictors increases the variance of \(\hat{\beta}_i\).

- Known as the variance inflation factor

- Collinearity is ubiquitous in any model with correlated predictors

## NCAT WPOP WKIL ## 1.350723 3.637257 3.212207

Literature cited

Faraway, J. J. 2014. Linear Models with r. CRC Press.