11 Day 11 (June 17)

11.1 Announcements

- Questions about assignment 3

- Update on assignment 2

- Read Ch. 2 in linear models with R (estimation)

- Read Ch. 3 in linear models with R (inference)

11.2 Maximum Likelihood Estimation

- Maximum likelihood estimation for the linear model

- Assume that \(\mathbf{y}\sim \text{N}(\mathbf{X}\boldsymbol{\beta},\sigma^2\mathbf{I})\)

- Write out the likelihood function \[\mathcal{L}(\boldsymbol{\beta},\sigma^2)=\prod_{i=1}^n\frac{1}{\sqrt{2\pi\sigma^2}}\textit{e}^{-\frac{1}{2\sigma^2}(y_{i} - \mathbf{x}_{i}^{\prime}\boldsymbol{\beta})^2}.\]

- Maximize the likelihood function

- First take the natural log of \(\mathcal{L}(\boldsymbol{\beta},\sigma^2)\), which gives \[\mathcal{l}(\boldsymbol{\beta},\sigma^2)=-\frac{n}{2}\text{ log}(2\pi\sigma^2) - \frac{1}{2\sigma^2}\sum_{i=1}^{n}(y_{i} - \mathbf{x}_{i}^{\prime}\boldsymbol{\beta})^2.\]

- Recall that \(\sum_{i=1}^{n}(y_{i} - \mathbf{x}_{i}^{\prime}\boldsymbol{\beta})^2 = (\mathbf{y} - \mathbf{X}\boldsymbol{\beta})^{\prime}(\mathbf{y} - \mathbf{X}\boldsymbol{\beta})\)

- Maximize the log-likelihood function \[\underset{\boldsymbol{\beta}, \sigma^2}{\operatorname{argmax}} -\frac{n}{2}\text{ log}(2\pi\sigma^2) - \frac{1}{2\sigma^2}(\mathbf{y} - \mathbf{X}\boldsymbol{\beta})^{\prime}(\mathbf{y} - \mathbf{X}\boldsymbol{\beta})\]

- f- Three ways to do it in program R

- Using matrix calculus and algebra

# Data y <- c(0.16,2.82,2.24) X <- matrix(c(1,1,1,1,2,3),nrow=3,ncol=2,byrow=FALSE) n <- length(y) # Maximum likelihood estimation using closed from estimators beta <- solve(t(X)%*%X)%*%t(X)%*%y sigma2 <- (1/n)*t(y-X%*%beta)%*%(y-X%*%beta) beta## [,1] ## [1,] -0.34 ## [2,] 1.04## [,1] ## [1,] 0.5832- Using modern (circa 1970’s) optimization techniques

# Maximum likelihood estimation estimation using the Nelder-Mead algorithm y <- c(0.16,2.82,2.24) X <- matrix(c(1,1,1,1,2,3),nrow=3,ncol=2,byrow=FALSE) negloglik <- function(par){ beta <- par[1:2] sigma2 <- par[3] -sum(dnorm(y,X%*%beta,sqrt(sigma2),log=TRUE)) } optim(par=c(0,0,1),fn=negloglik,method = "Nelder-Mead")## $par ## [1] -0.3397162 1.0398552 0.5831210 ## ## $value ## [1] 3.447978 ## ## $counts ## function gradient ## 150 NA ## ## $convergence ## [1] 0 ## ## $message ## NULL- Using modern and user friendly statistical computing software

# Maximum likelihood estimation using lm function # Note: estimate of sigma2 is not the MLE! df <- data.frame(y = c(0.16,2.82,2.24),x = c(1,2,3)) m1 <- lm(y~x,data=df) coef(m1)## (Intercept) x ## -0.34 1.04## [1] 1.7496## ## Call: ## lm(formula = y ~ x, data = df) ## ## Residuals: ## 1 2 3 ## -0.54 1.08 -0.54 ## ## Coefficients: ## Estimate Std. Error t value Pr(>|t|) ## (Intercept) -0.3400 2.0205 -0.168 0.894 ## x 1.0400 0.9353 1.112 0.466 ## ## Residual standard error: 1.323 on 1 degrees of freedom ## Multiple R-squared: 0.5529, Adjusted R-squared: 0.1057 ## F-statistic: 1.236 on 1 and 1 DF, p-value: 0.4663 - Live example

11.3 Confidence intervals for paramters

- Example

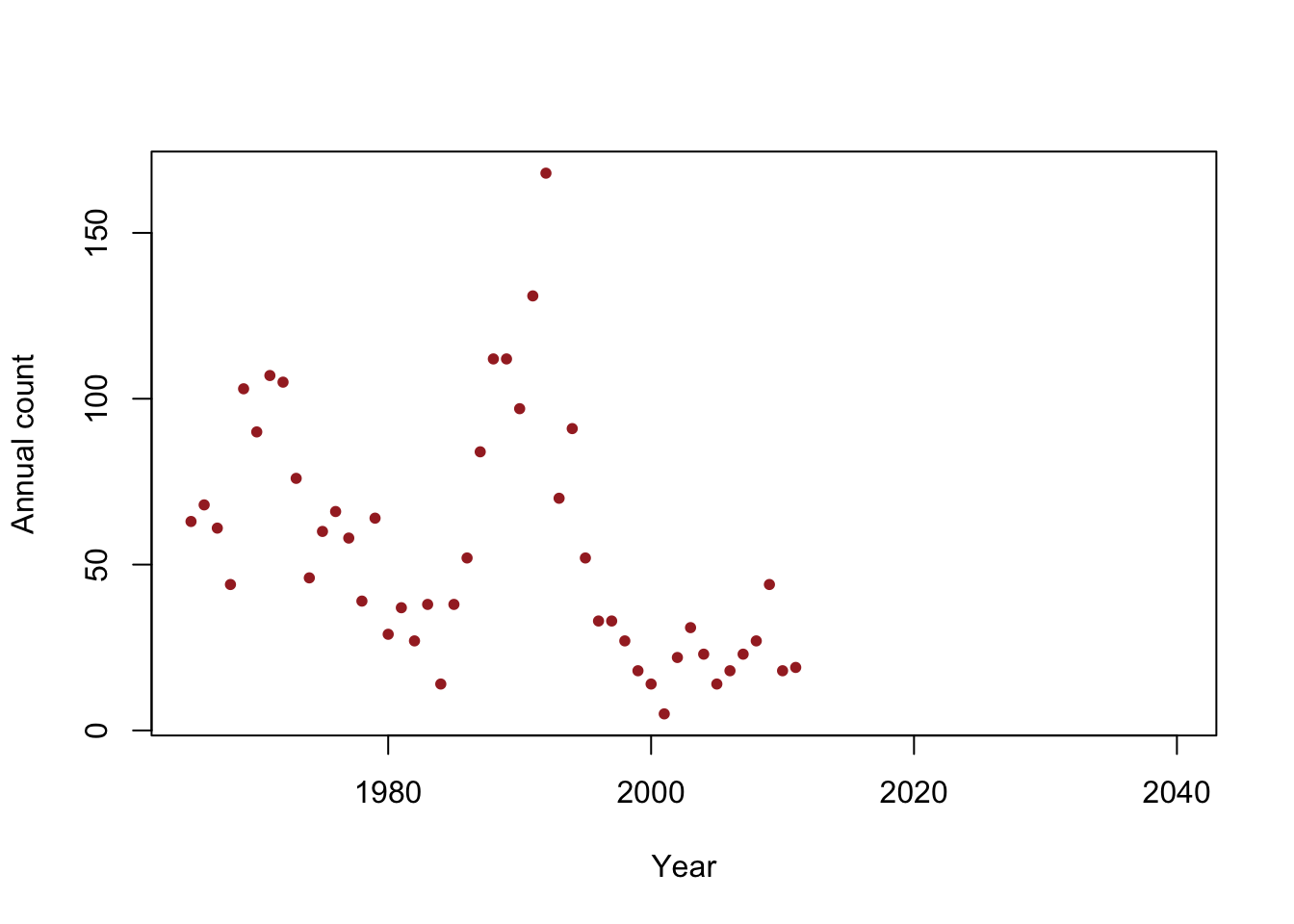

y <- c(63, 68, 61, 44, 103, 90, 107, 105, 76, 46, 60, 66, 58, 39, 64, 29, 37, 27, 38, 14, 38, 52, 84, 112, 112, 97, 131, 168, 70, 91, 52, 33, 33, 27, 18, 14, 5, 22, 31, 23, 14, 18, 23, 27, 44, 18, 19) year <- 1965:2011 df <- data.frame(y = y, year = year) plot(x = df$year, y = df$y, xlab = "Year", ylab = "Annual count", main = "", col = "brown", pch = 20, xlim = c(1965, 2040))

- Is the population size really decreasing?

- Write out the model we should use answer this question

- How can we assess the uncertainty in our estimate of \(\beta_1\)?