Chapter 2 Jan 25–31: Sampling Distribution, Hypothesis Tests, Independent Sample T-Tests

As you can see in the course calendar, this is the only chapter you need to look at for January 25–31, 2021. For now, you can ignore all subsequent chapters in this e-book.

Please read this entire chapter and then complete the assignment at the end.

This week, our goals are to…

Review the process of inference, including the relationship between sample and population.

Review the basic logic of hypothesis testing.

Explore how samples and populations are related to each other.

Conduct an independent samples t-test on the type of data used in randomized controlled trials (RCTs).

Announcements and reminders

I recommend that you quickly skim through the assignment at the end of the chapter before going through this chapter’s content, so that you know in advance the tasks that you will be expected to do.

If you have data of your own that you are interested in analyzing, you can often use your own data instead of the provided data for the weekly assignments. Please discuss this with the instructors as desired. As long as you are adequately practicing the new skills each week, it doesn’t matter to us which data you use!

Please let all instructors know any time you will be turning in an assignment later than the deadline.

Contact both Anshul and Nicole any time with questions or to set up meetings. Additionally, you should have already received calendar invitations by e-mail with already-scheduled group work / office hour sessions for this week.

2.1 Inference, Sample, and Population

2.1.1 Key concepts

One of the most basic goals of quantitative analysis is to be able to use numbers to answer questions about a group of people. These questions often involve measuring a construct or examining a potential relationship.

Here are some examples of quantitative questions we might ask:

How many households in Karachi, Pakistan have a motorcycle?

Do physician assistant students in Massachusetts score higher on the Physician Assistant Clinical Knowledge Rating and Assessment Tool (PACKRAT) after their second year of training compared to after their first year of training?

In the United States of America, what is the relationship between the number of words a person knows and a person’s age?

To answer each of the questions above, we would need to first define and identify the following:

Question – What we are trying to answer using collected data, numbers, and quantitative analysis methods.

Population (also called population of interest) – The group about which we are trying to answer our question. This can be a group of people, objects, or anything else about which we can collect information.

Sample – A subgroup of the population of interest. Typically, it is not possible for us to collect data on all members of our entire population of interest, so we have to select a subgroup of the population of interest to analyze and collect data on. This subgroup is called our sample.

Inference – The process of analyzing the data in our sample to learn about our population. This process often involves making guesses or assumptions about the relationship between our sample and our population of interest. We want to be responsible practitioners of quantitative analysis when we conduct inference. Quite often, we will consider the assumptions we have made and we might realize that it would not be reasonable for us to make an inference to draw a conclusion about our population of interest using the sample that we have.

Inference procedure – A specific quantitative method that we select to make an inference, that is, to use information about our sample to draw a conclusion about our population of interest. You will learn about many inference procedures during this course, including the t-test, linear regression, and logistic regression. The inference procedure has to be selected carefully, so that it allows us to answer our question and is well-suited to the type of data we have. All limitations of our selected inference procedure need to be transparently communicated when we share our results. Frequently, we will try out multiple inference procedures to help us answer a single question. We will then compare the results from these multiple procedures and draw our conclusion carefully.22 It is nice when multiple analytic approaches confirm the same result, but that doesn’t always happen.

Unit of observation – The level within your sample at which you collect your data. This is often the person or object. A unit of observation can also be thought of as an individual row in your data spreadsheet. What does each row of your dataset represent? The answer to this question is usually your unit of observation.

Please watch the following video to help reinforce the concepts of sample and population:23

The video above can be viewed externally at https://www.youtube.com/watch?v=eIZD1BFfw8E.

Please watch the following video to learn more about the process of inference:24

The video above can be viewed externally at https://www.youtube.com/watch?v=tFRXsngz4UQ.

2.1.2 Examples

The concepts above may be confusing before you have some experience using them. Let’s start to build that experience using some examples. In the table below, we revisit the quantitative questions that were introduced earlier.

Selected concepts for each example quantitative research question:

| Concept | Question 1 | Question 2 | Question 3 |
|---|---|---|---|
| Question | How many households in Karachi, Pakistan have a motorcycle? | Do physician assistant students in Massachusetts score higher on the Physician Assistant Clinical Knowledge Rating and Assessment Tool (PACKRAT) after their second year of training compared to after their first year of training? | In the United States of America (USA), what is the relationship between the number of words a person knows and a person’s age? |
| Population | All households in Karachi. | All first and second year physician assistant students in Massachusetts. | All residents of the USA. |
| Sample | 100 households in Karachi that we were able to survey. | First and second year physician assistant students at MGH Institute of Health Professions (MGHIHP). | 28,867 residents of the USA surveyed and given a vocabulary test between 1978 and 2016. |
| Inference | Use data collected on motorcycle ownership in our sample (100 households in Karachi) to make guesses about motorcycle ownership in our entire population (all households in Karachi). | Use data in our sample (PACKRAT results of first and second year students at MGHIHP) to make a guess about our population of interest (PACKRAT performance of all physician assistant students in Massachusetts). | Determine the relationship between vocabulary test score and age in our sample (28,867 residents) and use this to guess about the nature of this relationship in our population of interest (all USA residents). |
| Inference procedure | Calculate the percentage of households in our sample that have a motorcycle. Check whether our sample is appropriately representative of our population; if so, assume that the percentage of households with motorcycles is the same in the population as in the sample. Calculate and report the range of error on our estimate. | Compare PACKRAT performance of first year physician assistant students to that of second year students at MGHIHP, using a t-test. If the assumptions required for the t-test result to be trustworthy for inference are met, the t-test result can be reported as the result for the entire population. | In the sampled survey participants’ data, calculate the slope describing the relationship between vocabulary test score and age, using linear regression. If the assumptions required for the linear regression result to be trustworthy for inference are met, the linear regression result can be reported as the result for the entire population. |
| Unit of observation | Household | Physician assistant student | Person |
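The inference procedure for Question 1 (a percentage plus a "range of error") can be sketched in a few lines of Python. This is a minimal sketch, not the course's required method: it assumes a simple random sample and uses the common normal-approximation (Wald) margin of error. The count of 37 motorcycle-owning households is a hypothetical number chosen only for illustration.

```python
from math import sqrt
from statistics import NormalDist


def proportion_with_margin(successes: int, n: int, level: float = 0.95):
    """Sample proportion and its normal-approximation margin of error.

    Assumes a simple random sample and a reasonably large n.
    """
    p_hat = successes / n
    z = NormalDist().inv_cdf(0.5 + level / 2)  # about 1.96 for a 95% level
    margin = z * sqrt(p_hat * (1 - p_hat) / n)
    return p_hat, margin


# Hypothetical survey result: 37 of 100 sampled households own a motorcycle.
p_hat, margin = proportion_with_margin(37, 100)
print(f"Estimated share: {p_hat:.0%} +/- {margin:.1%}")
```

The margin shrinks as the sample grows (it is divided by the square root of n), which is one reason larger samples support more precise inferences.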

Most analytic methods presented in this textbook allow us to conduct the process of inference. In most cases, we will have a question of interest, a population in which we are interested in answering that question, and a sample of data that is drawn from that population. Most of the time, we will first answer our question of interest only within our sample. Then, we will do some diagnostic tests of assumptions. If our analysis passes all of these tests, then we can often use our results from our sample alone to answer our question of interest in our population. The process below illustrates this procedure.

Process of inferential quantitative analysis:

- Ask a question

- Identify population of interest

- Gather data on a sample (subgroup) from the population

- Answer question on sample using appropriate quantitative method

- Run diagnostic tests of assumptions to see if results from sample can apply to entire population (and be used to infer the answer to the question for the population)

- Report results about population, to the extent possible.

When we answer quantitative questions using the process described in this section, we often have to test hypotheses using hypothesis tests. Hypothesis tests allow us to weigh the quantitative evidence that is available to us and then responsibly draw a conclusion (or not) to our question of interest. Keep reading to learn about hypothesis tests!

2.2 Hypothesis Tests

Hypothesis tests are one of the most basic tools we use in inferential statistics as we try to use quantitative methods to answer a question. They help us weigh the quantitative evidence that we have for or against a particular conclusion. More specifically, they tell us the extent to which we can be confident in a particular conclusion.

In this section, we will go through the basic logic and formulation of hypothesis tests and then introduce some more formal language and terms that we will be using frequently going forward.

2.2.1 Basic logic and formulation

Before we formalize how a hypothesis test works, we will look at a few examples to become familiar with the logic of hypothesis testing. Keep in mind that what you are about to read is a logic-based framework for problem-solving. It is not exclusively a process related to quantitative analysis.

For our first example, let’s pretend that there is a high jumper25 named Weeda. We want to answer the following question: Can Weeda jump over a 7-foot bar?

One thing we could do is simply guess and give an answer based on what we already know about Weeda. However, as responsible quantitative researchers, we’re not going to do that. We’re going to be more strict. We are going to use the following analytic strategy: We will assume that Weeda can NOT jump over a 7-foot bar UNLESS we see convincing evidence that Weeda CAN do it.

This can be rewritten as a hypothesis test:

Null hypothesis – Weeda cannot jump over a 7-foot bar. This is our default assumption if we don’t see any evidence to the contrary.

Alternate hypothesis – Weeda can jump over a 7-foot bar.

Next, we go to a sports facility to collect some data and we watch Weeda.

There are three possible outcomes:

Weeda tries to jump over the 7-foot bar and is able to do it. In this case, we reject the null hypothesis because we have evidence to support the alternate hypothesis.

Weeda tries to jump over the 7-foot bar and is not able to do it. In this case, we fail to reject the null hypothesis because we do not have evidence to support the alternate hypothesis.

Weeda never attempts to jump. In this case, we fail to reject the null hypothesis because we do not have evidence to support the alternate hypothesis.

So, what is the truth? Can Weeda jump over the 7-foot bar or not? Let’s unpack the situation:

Basically, if we have evidence that Weeda can jump over the 7-foot bar, then we conclude that the truth is that Weeda can jump over the 7-foot bar. And we reject the null hypothesis, which says that Weeda can’t accomplish the jump. We found convincing evidence against the null hypothesis.

If we do not have evidence that Weeda can jump over the 7-foot bar, then we don’t know the truth.26 Maybe Weeda can do it but didn’t do it when we collected data. Or maybe Weeda can’t do it. We just don’t know. For this reason, when we do not have evidence to support the alternate hypothesis, we DO NOT say that we “accept” the null hypothesis. We NEVER EVER EVER “accept” the null hypothesis. Instead, we just fail to find evidence against the null hypothesis, leaving the truth unknown. So we say that we fail to reject the null hypothesis.

You might be wondering: What if our goal is to prove that Weeda cannot jump over the 7-foot bar? Well, we can’t do that. All we can do is say that we failed to find evidence that Weeda can jump over the 7-foot bar.

To review, here is what we did above:

- Identify a question we want to answer.

- Write null and alternate hypotheses.

- Gather data/evidence.

- Evaluate strength of evidence in favor of alternate hypothesis.

- If evidence in favor of alternate hypothesis is strong enough, reject null hypothesis.

- If evidence in favor of alternate hypothesis is not strong—or doesn’t exist at all—then we fail to reject the null hypothesis.

Please watch the following video to reinforce the basic framework of hypothesis tests:27

The video above can be viewed externally at https://www.youtube.com/watch?v=ZzeXCKd5a18.

So we know now that in order to reject the null hypothesis in favor of the alternate hypothesis, we need the requisite evidence. This suggests the following question:

How strong does evidence have to be in order to reject the null hypothesis?

Now that we have covered the possible scenarios that can occur as we a) use evidence to b) conduct a hypothesis test to c) answer a question, we can start to consider how this process relates to quantitative questions specifically and how we will use numbers to evaluate the extent or strength of our evidence in favor of the alternate hypothesis. This will be addressed in the next section!

2.2.2 Formal language and terms

In the previous section, you read an example of the use of evidence and a hypothesis test to answer a question. Now, we will build on that framework and consider how it can help us specifically with quantitative analysis. To do this, we will use another example.

Imagine that we want to determine if a medication tablet is effective in lowering patients’ blood pressure over the course of 30 days. To find out, we enroll 100 patients as subjects in a study and randomly assign them to two groups. Fifty patients are in the treatment group and receive the medication. The other 50 are in the control group and do not receive the medication. At the end of the 30-day experiment, we see if the blood pressure of the 50 subjects in the treatment group decreased more than the blood pressure of the 50 subjects in the control group.

The diagram below summarizes this quantitative research design:

Let’s specify some now-familiar characteristics of this research design:

Question: Does the blood pressure medication reduce our patients’ blood pressure?

Population of interest: All patients with high blood pressure.

Sample: 100 patients with high blood pressure.

Data/evidence: Collected during randomized controlled trial.

Dependent variable: change in blood pressure over 30 days.

Independent variable: experimental group (meaning membership in treatment or control group).

Unit of observation: Patient
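Because the data come from a randomized controlled trial, chance rather than the researcher decides which patients receive the medication. A minimal sketch of simple 1:1 randomization, assuming patients are labeled with hypothetical IDs 1 to 100; real trials often use more elaborate schemes, such as blocked or stratified randomization.

```python
import random


def randomize(patient_ids, seed=None):
    """Randomly split patients into two equal-sized groups (1:1 allocation)."""
    rng = random.Random(seed)  # a fixed seed makes the allocation reproducible
    shuffled = list(patient_ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return {"T": sorted(shuffled[:half]), "C": sorted(shuffled[half:])}


# Hypothetical patient IDs 1 to 100.
groups = randomize(range(1, 101), seed=2021)
print(len(groups["T"]), len(groups["C"]))
```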

Hypotheses

- Null hypothesis: The blood pressure medication does not work, meaning that there will be no difference between the improvement in the treatment and control groups on Day 30.

- Alternate hypothesis: The blood pressure medication does work, meaning that there will be a difference between the improvement in the treatment and control groups on Day 30.

Now we will re-write the hypotheses above in a slightly more technical way:

Null hypothesis (\(H_0\)): The change in blood pressure in the treatment group will be equal to the change in blood pressure in the control group.

Alternate hypothesis (\(H_A\)): The change in blood pressure in the treatment group will not be equal to the change in blood pressure in the control group.

Basically, the null hypothesis is saying that the two groups—treatment and control—have the same outcome. The alternate hypothesis is saying that these two groups have different outcomes. Note that \(H_0\) is a common way to write null hypothesis and \(H_A\) is a common way to write alternate hypothesis.
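The same pair of hypotheses can be written compactly in symbols. Here \(\mu_T\) and \(\mu_C\) are notation introduced only for this illustration: the (unknown) mean change in blood pressure in the population if everyone received the treatment or the control, respectively.

\[
H_0: \mu_T - \mu_C = 0 \qquad \text{versus} \qquad H_A: \mu_T - \mu_C \neq 0
\]

Because \(H_A\) only says "not equal," this is a two-sided test: a difference in either direction counts as evidence against \(H_0\).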

To conduct a hypothesis test like the one we have set up above, we will usually choose a particular inference procedure to run in R and then we will receive results which might include an effect size, confidence interval for the effect size, and a p-value. We can answer our question of interest and reach a conclusion for our hypothesis test by interpreting these results.

Below, we will define effect size, confidence interval, and p-value. It is natural for these concepts to feel unclear even after reading the descriptions below. You will gradually start to understand them better as you encounter more examples and run analyses of your own.

2.2.2.1 Effect size

Effect size means how large or small an outcome of interest is. In the context of our blood pressure medication example, if systolic blood pressure in the treatment group goes down by 10 mmHg on average, while systolic blood pressure in the control group goes down by 1 mmHg on average, the effect size of the medication in our sample of 100 subjects is \(10-1=9 \text{ mmHg}\). It is then up to the researcher to decide if an effect size of 9 mmHg within the 100 sampled subjects is a meaningful effect size or not.

Imagine if the treatment group’s systolic blood pressure decreased by 1.5 mmHg while the control group’s systolic blood pressure decreased by 1 mmHg. Then the effect size of the medication in our sample of 100 subjects is \(1.5-1=0.5 \text{ mmHg}\). Such a small effect size is probably not clinically meaningful, although it would still be up to the researcher to decide.

In both of the scenarios above—effect sizes of 9 or 0.5 mmHg—if we had strong enough evidence, we would reject the null hypothesis, because the treatment and control group results were not equal. This means that even if we do reject the null hypothesis, it still doesn’t necessarily mean that we got a meaningful result. A quantitative researcher has to also consider the size of the difference—in this case between treatment and control groups—that caused us to reject the null hypothesis and whether that size means anything or not.
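The subtraction above can be written as a small function. This sketch assumes each list holds per-patient changes in systolic blood pressure, with negative values meaning blood pressure went down; passing one-element lists reproduces the two scenarios in the text.

```python
from statistics import fmean


def effect_size(treatment_changes, control_changes):
    """Difference in mean reduction (mmHg) between treatment and control.

    Changes are negative when blood pressure goes down, so the mean
    reduction in each group is the negated mean change.
    """
    reduction_t = -fmean(treatment_changes)
    reduction_c = -fmean(control_changes)
    return reduction_t - reduction_c


# The two scenarios from the text, using only the group averages.
print(effect_size([-10.0], [-1.0]))
print(effect_size([-1.5], [-1.0]))
```

The function only reports the size of the difference in the sample; deciding whether that size is clinically meaningful is still the researcher's job.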

In most quantitative analyses, we are not only interested in the effect size in an outcome of interest within our sample. Rather, we want to use data about our sample to determine the effect size in our outcome of interest in the entire population from which our sample was drawn (selected). In the context of our example, this means that we not only want to see if the medication is associated with a decrease in blood pressure in our sample of 100 subjects. Rather, we want to use our results from our sample of 100 subjects to determine if the medication is associated with a decrease in blood pressure in the entire population of patients who have high blood pressure.

While we are able to use subtraction to calculate an exact average effect size—either 9 or 0.5 mmHg in our scenarios above—for just our sample of 100 subjects, we cannot calculate an exact effect size for the entire population of patients with high blood pressure. Instead, all we can do is use statistical theory—which we are relying on the computer to implement for us—to generate a range of possible effect sizes in the entire population. We refer to this range of possibilities as a confidence interval.

2.2.2.2 Confidence interval

A confidence interval is a range of numbers that gives plausible values of an outcome of interest in the population that we are trying to make inferences about. In our blood pressure example, perhaps we would find that while the effect size (of taking the medication) in our sample of 100 subjects is 9 mmHg, the 95% confidence interval for the effect size in the entire population is 2 to 16 mmHg. We would then say that we are 95% confident that the true effect of the medication on systolic blood pressure in the entire population of interest is no lower than 2 mmHg and no higher than 16 mmHg. More precisely, "95% confident" describes the method rather than this one interval: if we repeated the study many times and computed a 95% confidence interval each time, about 95% of those intervals would contain the true population effect.

Now imagine that we were less certain about the association between taking the medication and blood pressure reduction. Imagine that the 95% confidence interval had been from -2 to 20 mmHg. In this case, the direction of the association is also in question. When the 95% confidence interval crosses 0, it means that we don’t even know the direction of the effect size in the population. If we gave this medication to the entire population, it is possible that their blood pressure would get better and also possible that it would get worse.
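The repeated-sampling meaning of "95% confident" can be checked by simulation. This is a sketch under assumptions chosen for illustration: hypothetical normally distributed populations with a true effect of -9 mmHg (treatment minus control), a standard deviation of 3 mmHg, 50 patients per group, and a normal-approximation interval (difference plus or minus 1.96 standard errors) rather than the t-based interval that R would report.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean, stdev

TRUE_EFFECT = -9.0  # hypothetical true difference in mean change (T minus C)
SD = 3.0            # hypothetical population standard deviation in each group
N = 50              # patients per group
Z = NormalDist().inv_cdf(0.975)  # about 1.96


def one_interval(rng):
    """Simulate one trial and return its approximate 95% CI for T minus C."""
    t = [rng.gauss(TRUE_EFFECT, SD) for _ in range(N)]
    c = [rng.gauss(0.0, SD) for _ in range(N)]
    diff = fmean(t) - fmean(c)
    se = sqrt(stdev(t) ** 2 / N + stdev(c) ** 2 / N)
    return diff - Z * se, diff + Z * se


def coverage(reps=2000, seed=1):
    """Fraction of simulated intervals that contain the true effect."""
    rng = random.Random(seed)
    hits = 0
    for _ in range(reps):
        low, high = one_interval(rng)
        if low <= TRUE_EFFECT <= high:
            hits += 1
    return hits / reps


print(f"Coverage: {coverage():.3f}")  # close to, but not exactly, 0.95
```

Each individual interval either contains the true effect or it does not; the 95% describes how often the procedure succeeds across many repetitions.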

Confidence intervals are directly related to p-values, which are discussed below.

2.2.2.3 p-value

A p-value (sometimes just called p) is a number that is often reported as a result of a hypothesis test. More specifically, the p-value is a probability, so it is always between 0 and 1.

All p-values are the result of a hypothesis test in which we have a null hypothesis and are trying to determine if we have strong enough evidence to reject that null hypothesis in favor of an alternate hypothesis. The p-value tells us how strong or weak this evidence is: the smaller the p-value, the stronger the evidence against the null hypothesis.

p =

- the probability of getting a result at least as extreme as the one we observed (for example, a difference between the treatment and control groups at least as large as ours), assuming that the null hypothesis is true.

- the probability that a result at least this extreme would occur due to random chance alone, if the null hypothesis were the truth.28

p is NOT:

- the probability that the null hypothesis is true (and 1-p is NOT the probability that the alternate hypothesis is true).

- the probability that rejecting the null hypothesis was a mistake. The probability of a Type I error (see below) is set by the alpha level, not by the p-value.

Here are some examples:

If \(p=.03\), then if the null hypothesis were true, a result at least as extreme as ours would occur only about 3% of the time. This is fairly strong evidence against the null hypothesis; with an alpha level of 0.05 (see below), we would reject the null hypothesis.

If \(p=.43\), then a result at least as extreme as ours would occur about 43% of the time even if the null hypothesis were true. Our data are not surprising under the null hypothesis, so we fail to reject it.
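One intuitive way to see where a p-value comes from is a permutation test. This is a different inference procedure from the t-test used later in this chapter, swapped in here purely as an illustration. If the null hypothesis is true, the group labels do not matter, so we can reassign them in every possible way and ask how often a difference at least as large as the observed one appears by chance alone. To keep the exact enumeration small, the sketch uses only the first eight treatment and first eight control patients from the data table later in this chapter, giving 12,870 possible relabelings.

```python
from itertools import combinations
from statistics import fmean

# First eight patients in each group, from the table later in this chapter.
treatment = [-8.0, -14.8, -6.8, -11.6, -10.2, -11.6, -4.8, -6.5]
control = [0.0, -3.5, -2.6, 3.7, -1.1, -0.6, -0.3, -1.5]


def exact_permutation_p(group_a, group_b):
    """Two-sided exact permutation p-value for a difference in means.

    Counts the relabelings of the pooled data whose absolute mean
    difference is at least as large as the observed one.
    """
    pooled = group_a + group_b
    n_a, n_b = len(group_a), len(group_b)
    observed = abs(fmean(group_a) - fmean(group_b))
    total = sum(pooled)
    extreme = count = 0
    for idx in combinations(range(len(pooled)), n_a):
        sum_a = sum(pooled[i] for i in idx)
        diff = sum_a / n_a - (total - sum_a) / n_b
        if abs(diff) >= observed - 1e-9:  # small tolerance for float rounding
            extreme += 1
        count += 1
    return extreme / count


p = exact_permutation_p(treatment, control)
print(f"p = {p:.5f}")
```

Here every treatment value is lower than every control value, so only the observed labeling and its mirror image are that extreme, and p equals 2 out of 12,870.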

And here are some guidelines to keep in mind about p-values:

The threshold for the p-value below which we reject the null hypothesis is called an \(\alpha\text{-level}\), pronounced “alpha level.” If \(p < \alpha\), meaning if the p-value is below the alpha level, we reject the null hypothesis.

An alpha level is the threshold for what is commonly called statistical significance. This term can be misleading and we should try to avoid using it without providing additional context. If results of a hypothesis test are “statistically significant,” it does not necessarily mean that they are meaningful in a tangible or “real world” sense. Effect sizes, confidence intervals, and p-values all need to be considered together before answering a quantitative question. Diagnostic metrics and tests that are specific to each inference procedure also need to be taken into consideration before a conclusion can be drawn.

A commonly used alpha level is 0.05. In this common scenario, the null hypothesis is rejected when \(p < 0.05\). Choosing \(\alpha = 0.05\) means that, when the null hypothesis is actually true, we accept a 5% chance of wrongly rejecting it (a Type I error, described below).

Higher p-values mean weaker evidence against the null hypothesis. For a given effect size, higher p-values go along with wider (and often inconclusive) confidence intervals; for example, when a 95% confidence interval crosses 0, the matching test gives \(p > 0.05\).

Lower p-values mean stronger evidence against the null hypothesis. For a given effect size, lower p-values go along with narrower (and more definitive) confidence intervals.
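The alpha-level rule can be written as a tiny function. This sketch assumes the strict rule p < α; its only two possible outputs mirror the only two conclusions we are allowed to draw, since we never "accept" the null hypothesis.

```python
def decide(p_value: float, alpha: float = 0.05) -> str:
    """Apply the alpha-level rule: reject H0 only if p < alpha.

    There is deliberately no "accept H0" outcome.
    """
    if not 0.0 <= p_value <= 1.0:
        raise ValueError("a p-value must be between 0 and 1")
    if p_value < alpha:
        return "reject the null hypothesis"
    return "fail to reject the null hypothesis"


for p in (0.03, 0.43):
    print(p, decide(p))
```

Note that the same p-value can lead to different decisions under different alpha levels, so the alpha level should be chosen before looking at the results.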

2.2.3 Type I and Type II Errors in Hypothesis Testing

When we formulate a hypothesis test and reach a conclusion to that test, there are two types of errors we can make. These can be referred to as Type I and Type II errors, summarized below. I personally find these terms difficult to remember and not as useful as simply thinking through the concepts myself. It is optional (not required) for you to memorize these terms for the two types of error.

Possible errors in hypothesis testing:

Type I error: Reject the null hypothesis when it is really true.

Type II error: Fail to reject the null hypothesis when it is really false.

Remember: The p-value is not the probability that we made a mistake by rejecting the null hypothesis. The probability of making a Type I error is controlled by the alpha level that we choose in advance: with \(\alpha = 0.05\), if the null hypothesis is really true, we will wrongly reject it in about 5% of studies. The p-value instead tells us how surprising our result would be if the null hypothesis were true. You don’t need to memorize the terms Type I and Type II; the key is to think through what each kind of mistake would mean for your question.
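The link between the alpha level and Type I error can be checked by simulation. This sketch makes simplifying assumptions of its own: normally distributed data with a known standard deviation of 1 (so a simple z-test can stand in for the t-test), 30 observations per group, and a hypothetical true difference of 0.5 standard deviations for the Type II case. When the null hypothesis is true, rejecting whenever p < 0.05 produces a Type I error in roughly 5% of simulated studies.

```python
import random
from math import sqrt
from statistics import NormalDist, fmean

STD_NORMAL = NormalDist()


def z_test_p(a, b, sigma=1.0):
    """Two-sided z-test p-value for a difference in means with known sigma."""
    se = sigma * sqrt(1 / len(a) + 1 / len(b))
    z = (fmean(a) - fmean(b)) / se
    return 2 * (1 - STD_NORMAL.cdf(abs(z)))


def rejection_rate(true_diff, n=30, reps=4000, alpha=0.05, seed=7):
    """Share of simulated studies in which H0 (no difference) is rejected."""
    rng = random.Random(seed)
    rejections = 0
    for _ in range(reps):
        a = [rng.gauss(true_diff, 1.0) for _ in range(n)]
        b = [rng.gauss(0.0, 1.0) for _ in range(n)]
        if z_test_p(a, b) < alpha:
            rejections += 1
    return rejections / reps


# H0 true: every rejection is a Type I error, so the rate should be near alpha.
type_1 = rejection_rate(true_diff=0.0)
# H0 false (hypothetical difference of 0.5 SD): each failure to reject is a Type II error.
type_2 = 1 - rejection_rate(true_diff=0.5)
print(f"Type I error rate ~ {type_1:.3f}, Type II error rate ~ {type_2:.3f}")
```

With these particular assumptions the Type II error rate comes out near one half, a reminder that failing to reject the null hypothesis is not evidence that it is true.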

The following video also describes Type I and Type II error:29

The video above can be viewed externally at https://www.youtube.com/watch?v=a_l991xUAOU.

2.3 Sampling Distributions

Now that you have learned about the concepts of inference, sample, population, and hypothesis testing, we will turn to one of the most foundational concepts related to samples and population. We know that we have to use a sample to try to answer questions about a population. So, obviously, samples are going to be extremely important to everything we do in quantitative analysis. We rely on samples a lot. However, we have not yet discussed any more specific details about sampling.

Here are some questions that we will start to explore in the content below:

What are some actual qualitative and quantitative characteristics of the sample?

What are the sample’s capabilities and limitations when we try to use it to make inferences about the population?

Remember: A sample is just a group of observations (like people, cars, cells, any unit you want to study) that you have selected from a bigger group (the bigger group is called the population and is defined as all possible observations/entities that you want to study).

2.3.1 Central Limit Theorem

One of the most important concepts not only in statistics but also—in my opinion—in nature is the central limit theorem.

Please watch the following video, which introduces the central limit theorem:30

The video above can be viewed externally at https://www.youtube.com/watch?v=YAlJCEDH2uY.

Next, have a look at this video that also introduces the central limit theorem and contains an illustrative example (you can follow along on a piece of paper, if you wish):31

The video above can be viewed externally at https://www.youtube.com/watch?v=JNm3M9cqWyc.

The Wikipedia article on the central limit theorem also contains a good explanation and example, if you would like to read about it (this is optional, not required):

- Central Limit Theorem. Wikipedia. https://en.wikipedia.org/wiki/Central_limit_theorem.
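The central limit theorem can be seen in a quick simulation. This sketch assumes a population invented for illustration, an exponential distribution, which is strongly right-skewed. As the sample size n grows, the distribution of sample means becomes more symmetric (its skewness shrinks toward 0, the value for a normal distribution), even though the population itself never changes.

```python
import random
from statistics import fmean, pstdev


def skewness(values):
    """Sample skewness (0 for a perfectly symmetric distribution)."""
    m = fmean(values)
    s = pstdev(values)
    return fmean([((v - m) / s) ** 3 for v in values])


def sample_means(n, reps=5000, seed=42):
    """Means of `reps` samples of size n from a right-skewed population."""
    rng = random.Random(seed)
    # Exponential population with mean 1: strongly skewed, not bell-shaped.
    return [fmean(rng.expovariate(1.0) for _ in range(n)) for _ in range(reps)]


for n in (1, 5, 30):
    print(n, round(skewness(sample_means(n)), 2))
```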

2.3.2 Sample Mean

Another important foundational concept is the sampling distribution of the sample mean. Please watch the following video, which will introduce you to this concept:32

The video above can be viewed externally at https://www.youtube.com/watch?v=FXZ2O1Lv-KE.

The following resources are optional (not required) for you to read. They can also serve as useful references where you can look up concepts later, if you would like:

- Selecting Subjects for Survey Research. Educational Research Basics by Del Siegle. June 20 2019. Neag School of Education – University of Connecticut. https://researchbasics.education.uconn.edu/sampling/.

- PowerPoint slides on Sampling Distribution of the Mean

- Sections A–H in Online Stat Book: Sampling Distributions. David M. Lane. http://onlinestatbook.com/2/sampling_distributions/sampling_distributions.html.
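Two key facts about the sampling distribution of the sample mean can be checked by simulation: the sample means center on the population mean, and their standard deviation is close to \(\sigma/\sqrt{n}\). This is a sketch under assumptions chosen for illustration: a hypothetical, normally generated population of 10,000 values and repeated samples of size 25.

```python
import random
from math import sqrt
from statistics import fmean, pstdev

rng = random.Random(2021)
# Hypothetical population of 10,000 values with mean about 120 and SD about 15.
population = [rng.gauss(120, 15) for _ in range(10_000)]
mu = fmean(population)
sigma = pstdev(population)

n = 25
# Draw 4,000 samples of size n and keep each sample's mean.
means = [fmean(rng.sample(population, n)) for _ in range(4000)]

print(f"population mean {mu:.2f}, mean of sample means {fmean(means):.2f}")
print(f"sigma / sqrt(n) = {sigma / sqrt(n):.2f}, SD of sample means = {pstdev(means):.2f}")
```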

2.3.3 Standard Deviation and Standard Error

As you may have noticed, we are developing a unique vocabulary related to quantitative methods. Sometimes these terms can get confusing, especially when it comes to remembering whether a concept refers to a sample or a population. Two terms that are often confused are standard deviation and standard error.

This video explains the difference:33

The video above can be viewed externally at https://www.youtube.com/watch?v=A82brFpdr9g.
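In short, the standard deviation describes how spread out the individual observations are, while the standard error estimates how much the sample mean would vary from sample to sample. A minimal sketch using the usual formula \(SE = SD/\sqrt{n}\); the example SDs are the group summaries reported later in this chapter (50 patients per group).

```python
from math import sqrt
from statistics import stdev


def standard_error(sd: float, n: int) -> float:
    """Standard error of a sample mean: SD divided by the square root of n."""
    return sd / sqrt(n)


def describe(values):
    """Return (SD, SE) for one sample of raw observations."""
    sd = stdev(values)  # spread of individual observations (n - 1 denominator)
    return sd, standard_error(sd, len(values))


# Group SDs from the summary table later in this chapter (n = 50 each).
for group, sd in (("C", 2.95), ("T", 3.22)):
    print(group, "SD =", sd, "SE =", round(standard_error(sd, 50), 3))
```

Because of the square root, quadrupling the sample size only halves the standard error.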

2.4 Introduction to t-tests

A t-test is one of the most basic ways to determine if there is a difference between two groups in an outcome of interest. T-tests can be used to compare independent samples of data, where the observations in each comparison group are separate; they can also be used for paired data, where the goal is to determine if there is a difference in an outcome of interest measured twice on the same observations. In this week of the course, we will learn some basic details about t-tests and you will do an independent samples t-test practice example in your homework. Then, in a future week, you will run both types of t-tests on your own computer and examine the conditions under which we can or cannot trust our t-test results.
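For the independent samples case, R's `t.test()` performs a Welch (unequal variances) t-test by default. A sketch of the same arithmetic in Python, computed from group summaries (n, mean, SD) rather than raw data. The helper names are our own, hypothetical choices; the p-value uses the standard relationship between the t distribution and the regularized incomplete beta function, evaluated with a textbook continued-fraction method. This illustrates the calculation and is not a replacement for R's implementation. The summaries plugged in at the end are the ones reported for the blood pressure example later in this chapter.

```python
from math import exp, lgamma, log, sqrt


def _betacf(a, b, x, max_iter=300, eps=3e-14):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    qab, qap, qam = a + b, a + 1.0, a - 1.0
    c = 1.0
    d = 1.0 - qab * x / qap
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        m2 = 2 * m
        # Even step of the continued fraction.
        aa = m * (b - m) * x / ((qam + m2) * (a + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        h *= d * c
        # Odd step of the continued fraction.
        aa = -(a + m) * (qab + m) * x / ((a + m2) * (qap + m2))
        d = 1.0 + aa * d
        d = 1.0 / (d if abs(d) > tiny else tiny)
        c = 1.0 + aa / c
        c = c if abs(c) > tiny else tiny
        delta = d * c
        h *= delta
        if abs(delta - 1.0) < eps:
            break
    return h


def _betai(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = exp(lgamma(a + b) - lgamma(a) - lgamma(b) + a * log(x) + b * log(1.0 - x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * _betacf(a, b, x) / a
    return 1.0 - front * _betacf(b, a, 1.0 - x) / b


def t_two_sided_p(t, df):
    """Two-sided p-value for a t statistic with df degrees of freedom."""
    return _betai(df / 2.0, 0.5, df / (df + t * t))


def welch_from_summary(mean_t, sd_t, n_t, mean_c, sd_c, n_c):
    """Welch's independent samples t-test computed from group summaries."""
    v_t, v_c = sd_t ** 2 / n_t, sd_c ** 2 / n_c
    se = sqrt(v_t + v_c)
    t = (mean_t - mean_c) / se
    # Welch-Satterthwaite approximation to the degrees of freedom.
    df = (v_t + v_c) ** 2 / (v_t ** 2 / (n_t - 1) + v_c ** 2 / (n_c - 1))
    return t, df, t_two_sided_p(t, df)


t, df, p = welch_from_summary(-10.5, 3.22, 50, -0.38, 2.95, 50)
print(f"t = {t:.2f}, df = {df:.1f}, p = {p:.2g}")
```

Because the inputs are rounded summaries, R run on the raw data would give slightly different digits.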

Below, you will find videos that introduce you to t-tests as well as a written example of an independent samples t-test using the blood pressure example from earlier in this chapter. Currently, the videos come before the written example. However, depending on your learning style, it would also be reasonable to read the example first and then watch the videos.

2.4.1 t-test Videos – Required

We will begin by watching the following video, which explains the relationship between hypothesis testing and t-tests:34

The video above can be viewed externally at https://www.youtube.com/watch?v=0zZYBALbZgg.

Next, have a look at this video that uses a single example to show the purpose of a t-test:35

The video above can be viewed externally at https://www.youtube.com/watch?v=N2dYGnZ70X0.

2.4.2 t-test Videos – Optional

This section contains a few more videos that are optional (not required).

In the video below, you can see an example of a t-test being conducted, including how it can be done in spreadsheet software and calculated by hand. You are not required to learn how to conduct a t-test in spreadsheet software or by hand. Here is the video:36

The video above can be viewed externally at https://www.youtube.com/watch?v=pTmLQvMM-1M.

And this video goes through two examples in careful detail (much more than you are required to learn for our course):37

The video above can be viewed externally at https://youtu.be/UcZwyzwWU7o.

2.4.3 Independent Samples t-test Example

Below, we will go through a t-test example. This example has been generated using R code. You will not be using R this week, but you will soon.

An independent samples t-test is used when you have an outcome from two separate groups that you want to compare. More specifically, a t-test compares means from two different groups. The blood pressure medication example from earlier in this chapter is again applicable here. We want to compare two separate groups—treatment and control—to see if their mean change in blood pressure over 30 days is the same or different.38

Let’s examine this data ourselves in the table below (T means treatment and C means control):

## group SysBPChange

## 1 T -8.0

## 2 T -14.8

## 3 T -6.8

## 4 T -11.6

## 5 T -10.2

## 6 T -11.6

## 7 T -4.8

## 8 T -6.5

## 9 T -9.9

## 10 T -17.2

## 11 T -10.7

## 12 T -11.9

## 13 T -8.7

## 14 T -11.6

## 15 T -8.6

## 16 T -8.3

## 17 T -10.9

## 18 T -14.1

## 19 T -8.8

## 20 T -12.4

## 21 T -14.1

## 22 T -16.8

## 23 T -1.8

## 24 T -10.8

## 25 T -15.1

## 26 T -8.5

## 27 T -11.2

## 28 T -9.8

## 29 T -10.9

## 30 T -12.8

## 31 T -9.2

## 32 T -10.1

## 33 T -12.2

## 34 T -8.8

## 35 T -8.5

## 36 T -7.5

## 37 T -10.2

## 38 T -6.2

## 39 T -11.4

## 40 T -8.1

## 41 T -15.3

## 42 T -6.3

## 43 T -7.2

## 44 T -5.9

## 45 T -13.4

## 46 T -16.8

## 47 T -10.7

## 48 T -10.9

## 49 T -12.9

## 50 T -13.1

## 51 C 0.0

## 52 C -3.5

## 53 C -2.6

## 54 C 3.7

## 55 C -1.1

## 56 C -0.6

## 57 C -0.3

## 58 C -1.5

## 59 C 4.9

## 60 C -1.8

## 61 C -0.3

## 62 C 0.2

## 63 C -0.6

## 64 C -6.0

## 65 C -4.1

## 66 C -0.5

## 67 C -2.6

## 68 C 0.4

## 69 C 0.5

## 70 C 1.9

## 71 C -1.0

## 72 C -0.1

## 73 C 1.6

## 74 C -3.2

## 75 C -1.0

## 76 C -3.7

## 77 C 1.8

## 78 C -4.2

## 79 C 1.0

## 80 C -2.2

## 81 C 2.0

## 82 C 5.5

## 83 C 1.5

## 84 C 1.0

## 85 C 3.7

## 86 C -1.5

## 87 C -3.3

## 88 C -2.7

## 89 C -4.3

## 90 C -7.0

## 91 C -1.4

## 92 C 5.2

## 93 C 3.8

## 94 C 4.6

## 95 C -1.5

## 96 C 2.1

## 97 C -5.1

## 98 C 0.5

## 99 C 3.5

## 100 C -0.7

I know that was a really long table above and probably not that useful to look at. It’s there in case you want to copy and paste it into a spreadsheet or see an example of how this type of data can be organized.

We can also summarize the data above in a way that is easier to understand. Let’s look at the mean and standard deviation of our control and treatment groups separately:

## # A tibble: 2 x 4

## group `Group Count` `Mean of SysBPChange` `SD of SysBPChange`

## * <fct> <int> <dbl> <dbl>

## 1 C 50 -0.38 2.95

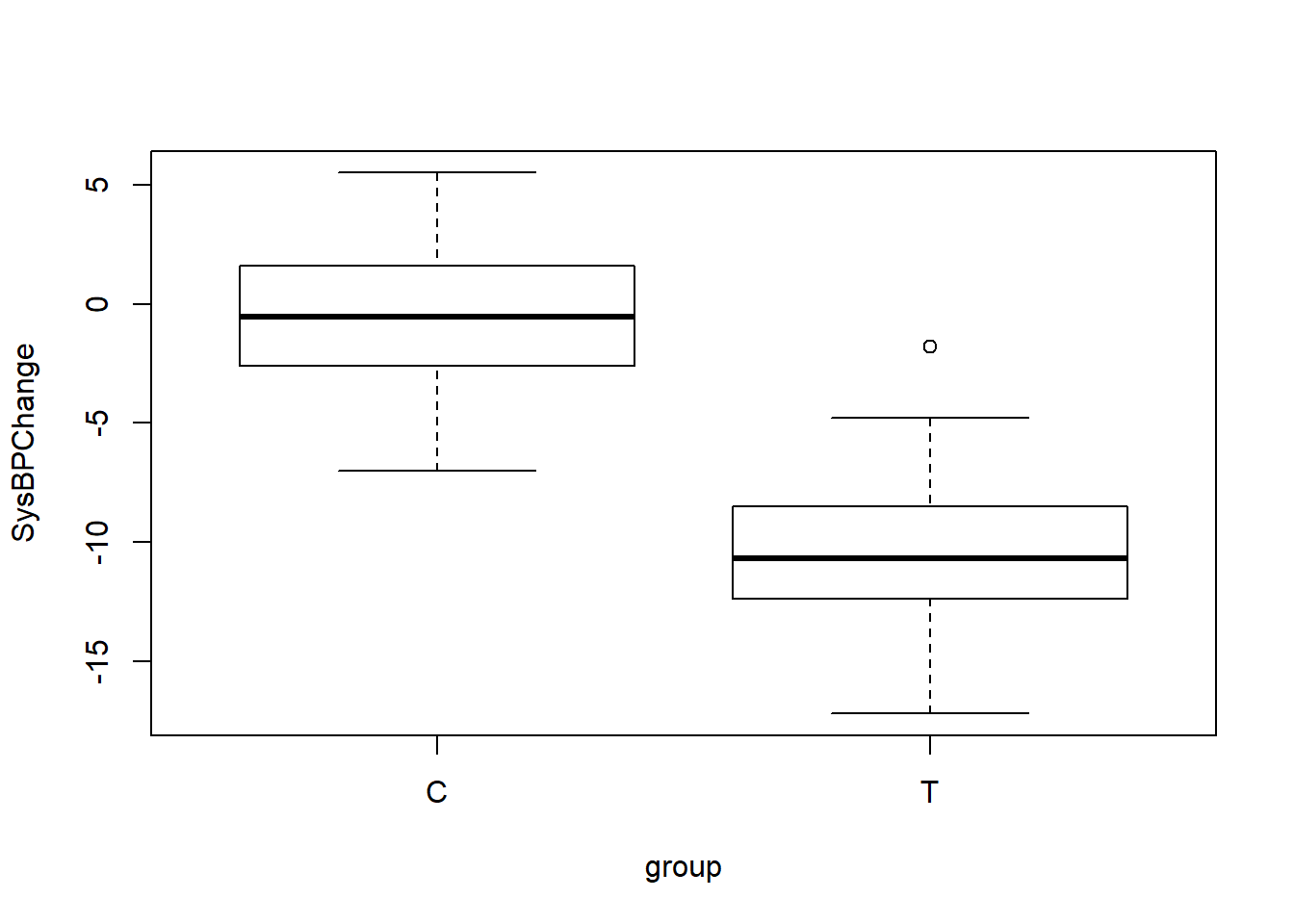

## 2 T 50 -10.5 3.22Above, we see that…

- There are 50 observations in the treatment group—identified as

T—and their mean change in systolic blood pressure was -10.5 mmHg, with a standard deviation of 3.2 mmHg. - There are 50 observations in the control group—identified as

C—and their mean change in systolic blood pressure was -0.4 mmHg, with a standard deviation of 2.9 mmHg.

We can also visualize this blood pressure data using a boxplot:

We are now ready to run an independent samples t-test.
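Before running the test, you may want to confirm the summary table yourself. The minimal Python sketch below recomputes each group's count, mean, and standard deviation from the data listed above. It is an illustration only, since the course will use R for this later. The names `treatment`, `control`, `summary`, and `diff` are hypothetical and were chosen for this sketch.

```python
from statistics import mean, stdev

# SysBPChange (mmHg) copied from the blood pressure table above.
treatment = [
    -8.0, -14.8, -6.8, -11.6, -10.2, -11.6, -4.8, -6.5, -9.9, -17.2,
    -10.7, -11.9, -8.7, -11.6, -8.6, -8.3, -10.9, -14.1, -8.8, -12.4,
    -14.1, -16.8, -1.8, -10.8, -15.1, -8.5, -11.2, -9.8, -10.9, -12.8,
    -9.2, -10.1, -12.2, -8.8, -8.5, -7.5, -10.2, -6.2, -11.4, -8.1,
    -15.3, -6.3, -7.2, -5.9, -13.4, -16.8, -10.7, -10.9, -12.9, -13.1,
]
control = [
    0.0, -3.5, -2.6, 3.7, -1.1, -0.6, -0.3, -1.5, 4.9, -1.8,
    -0.3, 0.2, -0.6, -6.0, -4.1, -0.5, -2.6, 0.4, 0.5, 1.9,
    -1.0, -0.1, 1.6, -3.2, -1.0, -3.7, 1.8, -4.2, 1.0, -2.2,
    2.0, 5.5, 1.5, 1.0, 3.7, -1.5, -3.3, -2.7, -4.3, -7.0,
    -1.4, 5.2, 3.8, 4.6, -1.5, 2.1, -5.1, 0.5, 3.5, -0.7,
]

summary = {}
for label, values in (("C", control), ("T", treatment)):
    # stdev() divides by n - 1 (the sample standard deviation), like R's sd().
    summary[label] = (len(values), round(mean(values), 3), round(stdev(values), 2))
    print(label, *summary[label])

# Difference in sample means (control minus treatment), roughly 10.1 mmHg.
diff = round(mean(control) - mean(treatment), 3)
print("difference in sample means:", diff)
```

The results should agree with the summary table above. That table rounds the treatment mean of -10.478 to -10.5.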

The null hypothesis for this t-test is that the means of SysBPChange are equal in the treatment and control groups. We can write the null hypothesis like this:

\[H_0\text{: } \mu_T = \mu_C\]

In the notation above, \(\mu\) represents the population mean (not the sample mean) of our outcome of interest. \(\mu_T\) is the population mean of SysBPChange in our treatment group, and \(\mu_C\) is the population mean of SysBPChange in our control group.

Another way to write this very same null hypothesis is like this:

\[H_0\text{: } \mu_T - \mu_C = 0\]

The alternate hypothesis is as follows:

\[H_A\text{: } \mu_T \ne \mu_C\]

or

\[H_A\text{: } \mu_T - \mu_C \ne 0\]

Note that for this independent samples t-test, our hypotheses relate to the population means of our treatment and control groups, not our sample means. It is important to solidify this concept before we continue. There are two populations that we are interested in learning about:

Those patients who receive the blood pressure medication. We have a sample of only 50 such patients, and we know that their systolic blood pressure dropped by 10.5 mmHg on average. That average drop (a mean SysBPChange of -10.5 mmHg) is the sample mean. We do not know what happened to the blood pressure of the entire population of people who took the medication. The population mean is unknown.

Those patients who do not receive the blood pressure medication. We have a sample of only 50 such patients, and we know that their systolic blood pressure dropped by 0.4 mmHg on average. That average drop (a mean SysBPChange of -0.4 mmHg) is the sample mean. We do not know what happened to the blood pressure of the entire population of people who did not take the medication. The population mean is unknown.

We already know that there is a difference of \(10.5-0.4=10.1 \text{ mmHg}\) in our sample means. That is not what we are trying to learn from our t-test. Instead, we are trying to learn about the difference in the population means of these two types of people (those who received the medication and those who didn’t in the entire populations from which our 50-patient samples were selected).

We want to answer the question: If we were to hypothetically do an enormous experiment in which ALL patients with high blood pressure were subjects, how much of a difference (if any) would we see in systolic blood pressure change between those who did and didn’t receive the medication?

We are trying to answer this question using our tiny study of 50 treatment subjects, 50 control subjects, and a t-test.
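The gap between a sample difference and a population difference can be hard to picture, because in real research we never see the population. The sketch below assumes a made-up population in which we do know the truth. In it, the medication lowers the mean change by exactly 10 mmHg, and both groups have a standard deviation of 3 mmHg. These population values are hypothetical and are not estimates from the course data. The code repeats a two-group study with 50 subjects per group 1,000 times and records the difference in sample means each time.

```python
import random
from statistics import mean, stdev

# Hypothetical population values (assumptions for illustration only).
TRUE_MEAN_T = -10.0   # population mean change with the medication (mmHg)
TRUE_MEAN_C = 0.0     # population mean change without the medication (mmHg)
POP_SD = 3.0          # population standard deviation in both groups (mmHg)
N_PER_GROUP = 50
N_STUDIES = 1000

rng = random.Random(2021)  # fixed seed so every run gives the same numbers


def run_one_study():
    """Simulate one two-group study; return the difference in sample means (C minus T)."""
    treated = [rng.gauss(TRUE_MEAN_T, POP_SD) for _ in range(N_PER_GROUP)]
    untreated = [rng.gauss(TRUE_MEAN_C, POP_SD) for _ in range(N_PER_GROUP)]
    return mean(untreated) - mean(treated)


diffs = [run_one_study() for _ in range(N_STUDIES)]

# The population difference is exactly 10 mmHg, but each study sees something a bit different.
print("smallest and largest sample difference:", round(min(diffs), 2), round(max(diffs), 2))
print("average of the sample differences:", round(mean(diffs), 2))
print("spread (SD) of the sample differences:", round(stdev(diffs), 2))
```

Each simulated study lands near the true difference of 10 mmHg, but rarely exactly on it. If you change `N_PER_GROUP` to 200, the spread of the sample differences should shrink to roughly half. This is one reason larger studies give more precise answers.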

Now that we have clarified what exactly we are doing, let’s review the hypotheses in plain words. We start with the assumption (the null hypothesis) that there is no difference in systolic blood pressure change between those who did and did not receive the medication in the entire population. If we find sufficient evidence that there is a difference, we will reject the null hypothesis in favor of the alternate hypothesis. The alternate hypothesis states that there is a difference in systolic blood pressure change between those who did and did not receive the medication in the population. If we do not find sufficient evidence that the systolic blood pressure changes of those who did and did not take the medication in the population are different, we fail to reject the null hypothesis.

Now it is time to run our t-test. You can see the result of this t-test below:39

##

## Welch Two Sample t-test

##

## data: SysBPChange by group

## t = 16.354, df = 97.233, p-value < 2.2e-16

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## 8.872546 11.323454

## sample estimates:

## mean in group C mean in group T

## -0.380 -10.478

Let’s interpret the results of the t-test from the computer output above:

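The 95% confidence interval for the difference in population means (the effect size) is 8.9 to 11.3 mmHg. This means that we can be 95% confident that in the entire population (not just our sample of 100 subjects), people who take the medication lower their systolic blood pressure by somewhere between 8.9 and 11.3 mmHg more, on average, than those who don’t take the medication. Strictly speaking, the 95% describes the method rather than this one interval. If we repeated the study many times, about 95% of the intervals calculated this way would contain the true population difference.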

The p-value is extremely small, lower than 0.001.40

We have sufficient evidence (pending diagnostic tests that we will learn in a later week) to reject the null hypothesis and conclude that, in the population, the systolic blood pressure of those who take the medication decreases more, on average, than that of those who do not take the medication.
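To show where the numbers in the R output come from, the sketch below reproduces the Welch two-sample t-test using only Python's standard library. This is an illustration, not the course's method, since you will run t-tests in R later. The function names are hypothetical. The standard library has no t distribution, so the sketch relies on the known identity P(|T| ≥ t) = I_{ν/(ν+t²)}(ν/2, 1/2). It evaluates the regularized incomplete beta function I with the standard continued-fraction method (modified Lentz algorithm), and it finds the confidence-interval critical value by bisection. Its results should match the R output up to rounding.

```python
from math import exp, lgamma, log, sqrt
from statistics import mean, variance

# SysBPChange (mmHg) copied from the blood pressure table in this chapter.
treatment = [
    -8.0, -14.8, -6.8, -11.6, -10.2, -11.6, -4.8, -6.5, -9.9, -17.2,
    -10.7, -11.9, -8.7, -11.6, -8.6, -8.3, -10.9, -14.1, -8.8, -12.4,
    -14.1, -16.8, -1.8, -10.8, -15.1, -8.5, -11.2, -9.8, -10.9, -12.8,
    -9.2, -10.1, -12.2, -8.8, -8.5, -7.5, -10.2, -6.2, -11.4, -8.1,
    -15.3, -6.3, -7.2, -5.9, -13.4, -16.8, -10.7, -10.9, -12.9, -13.1,
]
control = [
    0.0, -3.5, -2.6, 3.7, -1.1, -0.6, -0.3, -1.5, 4.9, -1.8,
    -0.3, 0.2, -0.6, -6.0, -4.1, -0.5, -2.6, 0.4, 0.5, 1.9,
    -1.0, -0.1, 1.6, -3.2, -1.0, -3.7, 1.8, -4.2, 1.0, -2.2,
    2.0, 5.5, 1.5, 1.0, 3.7, -1.5, -3.3, -2.7, -4.3, -7.0,
    -1.4, 5.2, 3.8, 4.6, -1.5, 2.1, -5.1, 0.5, 3.5, -0.7,
]


def beta_continued_fraction(a, b, x, max_iter=300, eps=1e-14):
    """Continued fraction for the incomplete beta function (modified Lentz method)."""
    tiny = 1e-300
    c = 1.0
    d = 1.0 - (a + b) * x / (a + 1.0)
    d = 1.0 / (d if abs(d) > tiny else tiny)
    h = d
    for m in range(1, max_iter + 1):
        even = m * (b - m) * x / ((a + 2 * m - 1) * (a + 2 * m))
        odd = -(a + m) * (a + b + m) * x / ((a + 2 * m) * (a + 2 * m + 1))
        for coef in (even, odd):
            d = 1.0 + coef * d
            d = 1.0 / (d if abs(d) > tiny else tiny)
            c = 1.0 + coef / c
            c = c if abs(c) > tiny else tiny
            h *= d * c
        if abs(d * c - 1.0) < eps:  # last factor close to 1 means converged
            break
    return h


def reg_incomplete_beta(a, b, x):
    """Regularized incomplete beta function I_x(a, b)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    front = exp(lgamma(a + b) - lgamma(a) - lgamma(b) + a * log(x) + b * log(1.0 - x))
    if x < (a + 1.0) / (a + b + 2.0):
        return front * beta_continued_fraction(a, b, x) / a
    return 1.0 - front * beta_continued_fraction(b, a, 1.0 - x) / b


def t_two_sided_p(t, df):
    """P(|T| >= |t|) for a Student's t distribution with df degrees of freedom."""
    return reg_incomplete_beta(df / 2.0, 0.5, df / (df + t * t))


def t_critical(df, alpha=0.05):
    """Find t* with P(|T| >= t*) = alpha by bisection (the p-value falls as t grows)."""
    lo, hi = 0.0, 100.0
    for _ in range(200):
        mid = (lo + hi) / 2.0
        if t_two_sided_p(mid, df) > alpha:
            lo = mid
        else:
            hi = mid
    return (lo + hi) / 2.0


def welch_t_test(group1, group2, conf=0.95):
    """Welch two-sample t-test of mean(group1) - mean(group2)."""
    n1, n2 = len(group1), len(group2)
    v1 = variance(group1) / n1  # squared standard error of mean(group1)
    v2 = variance(group2) / n2  # squared standard error of mean(group2)
    diff = mean(group1) - mean(group2)
    se = sqrt(v1 + v2)
    t = diff / se
    # Welch-Satterthwaite degrees of freedom (usually not a whole number).
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    margin = t_critical(df, 1.0 - conf) * se
    return t, df, t_two_sided_p(t, df), (diff - margin, diff + margin)


# R's output lists group C first, so its difference is mean(C) - mean(T); we do the same.
t, df, p, (low, high) = welch_t_test(control, treatment)
print(f"t = {t:.3f}, df = {df:.3f}, p-value = {p:.2e}")
print(f"95% CI: {low:.6f} to {high:.6f}")
```

The standard error combines the two groups' variances without assuming they are equal, which is what makes this the Welch version of the test. The degrees of freedom are adjusted to match, which is why the output shows a non-integer df of 97.233.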

Before we can consider the results of the t-test above to be trustworthy, we must first make sure that our data passes a few diagnostic tests of assumptions that must be met. These assumptions are discussed later in the course (not this week).
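What does the 95% in a 95% confidence interval refer to? One way to see it is through simulation. The sketch below assumes a hypothetical population with a true difference of 10 mmHg (control minus treatment) and a standard deviation of 3 mmHg in each group. These values are invented for illustration. The sketch builds an approximate 95% confidence interval from each of 2,000 simulated studies and counts how many of the intervals contain the true difference. The critical value 1.984 is taken from a t table for about 98 degrees of freedom. It is an assumed approximation, not computed here.

```python
import random
from math import sqrt
from statistics import mean, variance

# Hypothetical population (assumption): the true difference in mean change,
# control minus treatment, is 10 mmHg, with an SD of 3 mmHg in each group.
TRUE_DIFF = 10.0
POP_SD = 3.0
N_PER_GROUP = 50
N_STUDIES = 2000
T_CRIT = 1.984  # approximate 97.5th percentile of t with about 98 df (assumed, not computed)

rng = random.Random(31)  # fixed seed for reproducibility


def one_interval():
    """Simulate one study and return its approximate 95% CI for mean(C) - mean(T)."""
    control = [rng.gauss(0.0, POP_SD) for _ in range(N_PER_GROUP)]
    treatment = [rng.gauss(-TRUE_DIFF, POP_SD) for _ in range(N_PER_GROUP)]
    diff = mean(control) - mean(treatment)
    se = sqrt(variance(control) / N_PER_GROUP + variance(treatment) / N_PER_GROUP)
    return diff - T_CRIT * se, diff + T_CRIT * se


intervals = [one_interval() for _ in range(N_STUDIES)]
hits = sum(low <= TRUE_DIFF <= high for low, high in intervals)
coverage = hits / N_STUDIES
print(f"{hits} of {N_STUDIES} intervals contain the true difference ({coverage:.1%})")
```

Roughly 95% of the intervals should contain the true value of 10 mmHg, and the remaining 5% or so miss it. In a real study, we cannot tell which kind of interval we have.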

2.4.3.1 Example with uncertain confidence interval and high p-value

Below, the t-test procedure from above is repeated with an example in which we fail to reject the null hypothesis. All details are the same except for the outcome data for the treatment group, which have been modified for illustrative purposes.41

Let’s see the data in a table again:

## group SysBPChange

## 1 T 1.5

## 2 T -5.3

## 3 T 2.7

## 4 T -2.1

## 5 T -0.7

## 6 T -2.1

## 7 T 4.7

## 8 T 3.0

## 9 T -0.4

## 10 T -7.7

## 11 T -1.2

## 12 T -2.4

## 13 T 0.8

## 14 T -2.1

## 15 T 0.9

## 16 T 1.2

## 17 T -1.4

## 18 T -4.6

## 19 T 0.7

## 20 T -2.9

## 21 T -4.6

## 22 T -7.3

## 23 T 7.7

## 24 T -1.3

## 25 T -5.6

## 26 T 1.0

## 27 T -1.7

## 28 T -0.3

## 29 T -1.4

## 30 T -3.3

## 31 T 0.3

## 32 T -0.6

## 33 T -2.7

## 34 T 0.7

## 35 T 1.0

## 36 T 2.0

## 37 T -0.7

## 38 T 3.3

## 39 T -1.9

## 40 T 1.4

## 41 T -5.8

## 42 T 3.2

## 43 T 2.3

## 44 T 3.6

## 45 T -3.9

## 46 T -7.3

## 47 T -1.2

## 48 T -1.4

## 49 T -3.4

## 50 T -3.6

## 51 C 0.0

## 52 C -3.5

## 53 C -2.6

## 54 C 3.7

## 55 C -1.1

## 56 C -0.6

## 57 C -0.3

## 58 C -1.5

## 59 C 4.9

## 60 C -1.8

## 61 C -0.3

## 62 C 0.2

## 63 C -0.6

## 64 C -6.0

## 65 C -4.1

## 66 C -0.5

## 67 C -2.6

## 68 C 0.4

## 69 C 0.5

## 70 C 1.9

## 71 C -1.0

## 72 C -0.1

## 73 C 1.6

## 74 C -3.2

## 75 C -1.0

## 76 C -3.7

## 77 C 1.8

## 78 C -4.2

## 79 C 1.0

## 80 C -2.2

## 81 C 2.0

## 82 C 5.5

## 83 C 1.5

## 84 C 1.0

## 85 C 3.7

## 86 C -1.5

## 87 C -3.3

## 88 C -2.7

## 89 C -4.3

## 90 C -7.0

## 91 C -1.4

## 92 C 5.2

## 93 C 3.8

## 94 C 4.6

## 95 C -1.5

## 96 C 2.1

## 97 C -5.1

## 98 C 0.5

## 99 C 3.5

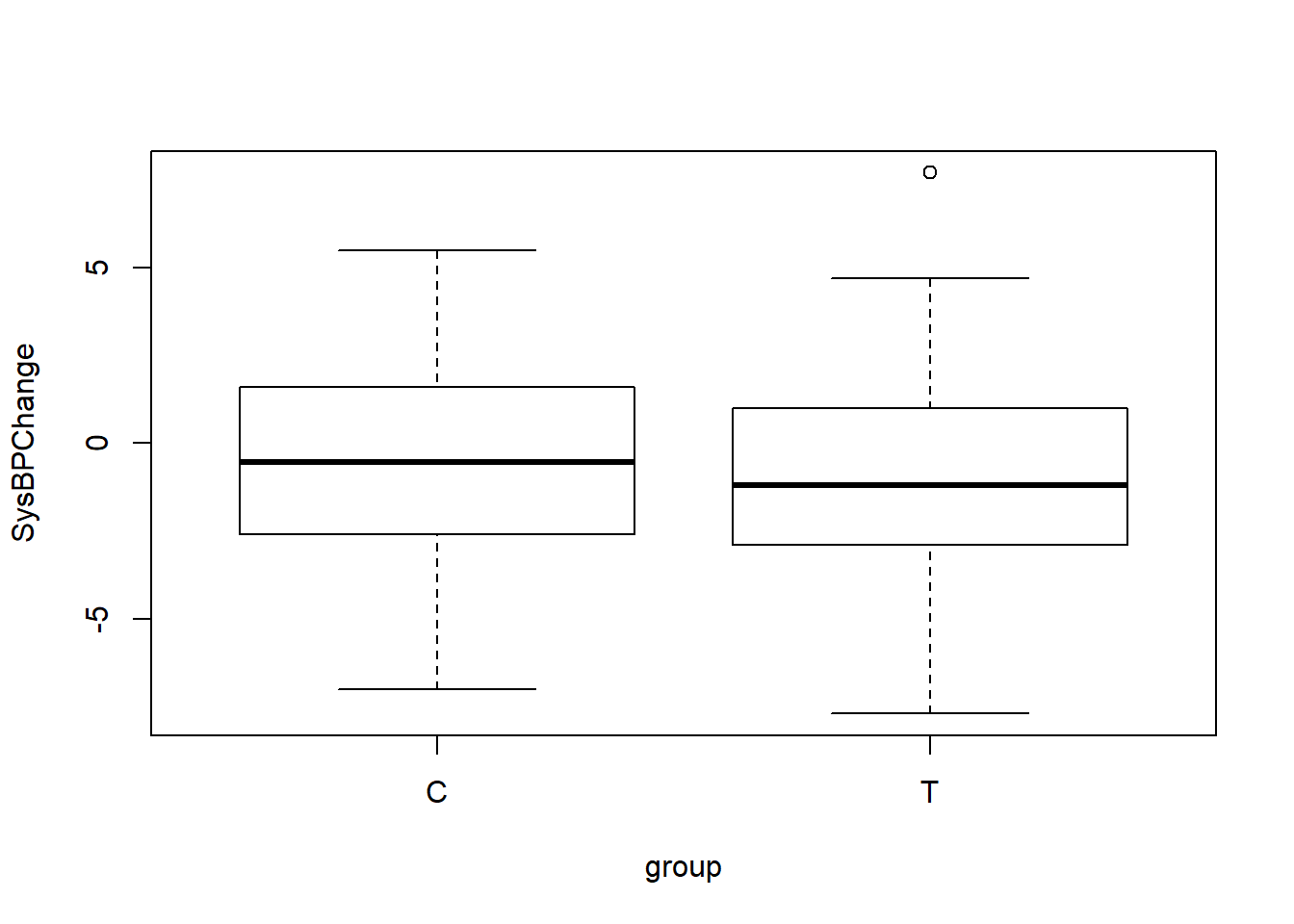

## 100 C -0.7The following boxplot helps us quickly understand this new data:

In this new version of the data, the medication did not make a difference in the treatment group, as the boxplot above shows.

Our hypotheses are the same as before:

- \(H_0\text{: } \mu_T = \mu_C\)

- \(H_A\text{: } \mu_T \ne \mu_C\)

And now we again run the t-test and generate an output:

##

## Welch Two Sample t-test

##

## data: SysBPChange by group

## t = 0.96848, df = 97.233, p-value = 0.3352

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -0.6274544 1.8234544

## sample estimates:

## mean in group C mean in group T

## -0.380 -0.978

We can interpret the results of the t-test from the output we received (pending the diagnostic tests that you will learn in a later week):

The 95% confidence interval for the difference in population means (the effect size) is -0.6 to 1.8 mmHg. This means that we can be 95% confident of the following: in the entire population (not just our sample of 100 subjects), the systolic blood pressure changes of people who take the medication are somewhere between 0.6 mmHg worse and 1.8 mmHg better, on average, than those of people who don’t take the medication. Because this interval includes 0, we cannot tell whether the true difference is:

- negative, meaning that the medication makes the blood pressure of patients who take it worse than that of patients who do not;
- zero, meaning that those who do and do not take the medication have the same blood pressure outcomes; or
- positive, meaning that the medication makes the blood pressure of patients who take it better than that of patients who do not.

This is an inconclusive result.

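The p-value is 0.34, which is quite large. Suppose there were truly no difference between the population means. Then, just from random sampling, we would expect a difference in sample means at least as large as the one we observed about 34% of the time. In other words, our data are quite consistent with the null hypothesis.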

We do not have sufficient evidence to reject the null hypothesis and conclude that, in the population, the systolic blood pressure of those who take the medication decreases more, on average, than that of those who do not take the medication.

We fail to reject the null hypothesis.

Note once again that before we can consider the results of the t-test above to be trustworthy, we must first make sure that our data passes a few diagnostic tests of assumptions that must be met. These assumptions are discussed later in the course (not this week).
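According to the chapter's note on this second example, its treatment data were created by adding 9.5 mmHg to every treatment observation from the first example. The short arithmetic sketch below uses only numbers printed in the first R output. It shows why both outputs report the same df (97.233). Shifting every value in a group by a constant moves that group's mean, but it leaves the group's standard deviation, and therefore the standard error, unchanged. Only the difference in means, the t statistic, and the position of the confidence interval change. The variable names are hypothetical.

```python
# Numbers copied from the first Welch t-test output (difference is C minus T).
diff_1 = -0.380 - (-10.478)        # 10.098 mmHg
t_1 = 16.354
ci_1 = (8.872546, 11.323454)
SHIFT = 9.5                        # added to every treatment observation

# The standard error depends only on the group SDs and sizes, which a shift does not change.
se = diff_1 / t_1

# Raising every treatment value by 9.5 raises the treatment mean by 9.5,
# so the difference (C minus T) falls by 9.5 while the SE and df stay the same.
diff_2 = diff_1 - SHIFT
t_2 = diff_2 / se
ci_2 = (ci_1[0] - SHIFT, ci_1[1] - SHIFT)

print(f"SE = {se:.4f}")
print(f"new difference = {diff_2:.3f}, new t = {t_2:.3f}")
print(f"new 95% CI: {ci_2[0]:.4f} to {ci_2[1]:.4f}")
```

These values match the second output (t = 0.96848 and a 95% CI of -0.63 to 1.82 mmHg), up to rounding in the first output's t value.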

You have now reached the end of this week’s content. Please proceed to the assignment below.

2.5 Assignment

2.5.1 Dance of the Means

For the first part of the homework this week, you will complete the Dance of the Means activity. Please download the following three files to your computer:

- ESCI Dance of the Means NITOP.xlsm Excel file. Note that some students have reported trouble when clicking on the link to download this file. If you also experience difficulty, try right-clicking on the link and opening it in a new tab.42

- Guided Activity Word file.43

- This PowerPoint file may also help you get the activity up and running.

You may have to click on Enable Content or Enable Macros in the Excel file to get it to work.

Please follow along in the activity guide and then answer the following questions:

Task 1: What is the sampling distribution of the mean?

Task 2: Are sample means from random samples always normally distributed around the population mean? Why or why not?

Task 3: What factors influence the MoE (margin of error) and why?

Task 4: When will the sampling distribution of means be “normal”?

Task 5: Why is the central limit theorem important?

2.5.2 Comparing Distributions With an Independent Samples T-Test

For this part of the assignment, we will use the 2 Sample T-Test tool (click here to open it!), which I will call the tool throughout this section of the assignment.

Imagine that we, some researchers, are trying to answer the following research question: How does fertilizer affect plant growth?

We conduct a randomized controlled trial in which some plants are given a fixed amount of fertilizer (treatment group) and other plants are given no fertilizer (control group). Then we measure how much each plant grows over the course of a month. Let’s say we have ten plants in each group and we find the following amounts of growth.

The 10 plants in the control group each grew this much (each number corresponds to one plant’s growth):

3.8641111

4.1185322

2.6598828

0.3559656

2.8639095

0.9020122

5.0527020

2.3293899

3.5117162

4.3417785

The 10 plants in the treatment group each grew this much:

7.532292

1.445972

6.875600

6.518691

1.193905

4.659153

3.512655

4.578366

8.791810

4.891557

Delete the numbers that are pre-populated in the tool. Copy and paste our control data in as Sample 1 and our treatment data in as Sample 2.

Task 6: What are the mean and standard deviation of the control data? What are the mean and standard deviation of the treatment data? Do not calculate these by hand. The tool will tell you these in the sample summary section.

You’ll see that the tool has drawn the distributions of the data for our treatment and control groups. That’s how you can visualize the effect size (impact) of an RCT. It has also given us a verdict at the bottom that the “Sample 2 mean is greater.” This means that this particular statistical test (a t-test) found a statistically significant difference at the conventional 0.05 level. Suppose sample 1 (the control group) and sample 2 (the treatment group) were drawn from populations with the same mean. Then a difference in sample means at least this large would occur less than 5% of the time. We therefore conclude that the two samples are drawn from separate populations. In this case, the control group is sampled from the “population” of plants that didn’t get fertilizer, and the treatment group is sampled from the “population” of those that did.

This process is called inference. We are making the inference, based on our 20-plant study, that in the broader population of plants, fertilizer is associated with more growth. The typical threshold for statistical significance is a p-value of 0.05 or lower, which corresponds to a 95% confidence level. In the difference of means section of the tool, you’ll see p = 0.0468 written. This is called a p-value. It is the probability of getting a difference in sample means at least as large as the one we observed if the null hypothesis were true. The confidence level is set by the significance threshold, written as \(\alpha\), through the formula \(\text{Confidence Level} = (1-\alpha)*100\). With \(\alpha = 0.05\), the confidence level is 95%, so a result is statistically significant at the 95% level when the p-value is equal to 0.05 or lower. That’s why you will often see p<0.05 written in studies and/or results tables.
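The decision rule described above, under which a p-value of 0.05 or lower counts as statistically significant, can be written as a tiny Python function. This is a sketch for illustration only, and `decision`, `ALPHA`, and `CONFIDENCE_LEVEL` are hypothetical names. The 95% figure corresponds to a significance threshold of 0.05, since (1 - 0.05) × 100 = 95.

```python
ALPHA = 0.05                          # conventional significance threshold
CONFIDENCE_LEVEL = (1 - ALPHA) * 100  # about 95 (percent)


def decision(p_value, alpha=ALPHA):
    """Return the conventional decision for a two-sided test, given its p-value."""
    if p_value <= alpha:
        return "reject the null hypothesis"
    return "fail to reject the null hypothesis"


# p-values reported in this chapter. R printed "< 2.2e-16" for the first
# blood pressure example, so 2.2e-16 is used here as an upper bound.
reported = [
    ("blood pressure, first example", 2.2e-16),
    ("blood pressure, second example", 0.3352),
    ("fertilizer example in the tool", 0.0468),
]
print(f"confidence level: {CONFIDENCE_LEVEL:.0f}%")
for label, p in reported:
    print(f"{label}: p = {p} -> {decision(p)}")
```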

With these particular results, our experiment found statistically significant evidence that fertilizer is associated with plant growth.

Task 7: What was the null hypothesis in this t-test that we conducted?

Task 8: What was the alternate hypothesis in this t-test that we conducted?

Now, click on the radio buttons next to ‘Sample 1 summary’ and ‘Sample 2 summary.’ This will allow you to compare different distributions to each other quickly, without having to change the numbers (raw data) above. Let’s imagine that the treatment group had not had as much growth as it did. Change the Sample 2 mean from 5 to 4.5.

Task 9: What is the new p-value of this t-test, with the new mean for Sample 2? What is the conclusion of our experiment, with these new numbers? Use the proper statistical language to write your answer.

Task 10: Gradually reduce the standard deviation of Sample 2 until the results are statistically significant at the 95% confidence level (p ≤ 0.05). What is the relationship between the standard deviation of your samples and our ability to distinguish them from each other statistically?44

2.5.3 Follow up and Submission

You have now reached the end of this week’s assignment. The tasks below will guide you through submission of the assignment and allow us to gather questions and/or feedback from you.

Task 11: Please write any questions you have for the course instructors (optional).

Task 12: Please write any feedback you have about the instructional materials (optional).

Task 13: Please submit your assignment (multiple files are fine) to the D2L assignment drop-box corresponding to this chapter and week of the course. Please e-mail all instructors if you experience any difficulty with this process.

As you continue the course, you will learn a variety of methods for comparing the results of different inference procedures and deciding which ones to trust and not trust.↩︎

Population vs sample. 365 Data Science. Aug 11, 2017. YouTube. https://www.youtube.com/watch?v=eIZD1BFfw8E.↩︎

Understanding Statistical Inference - statistics help. Dr Nic’s Maths and Stats. Nov 8, 2015. YouTube. https://www.youtube.com/watch?v=tFRXsngz4UQ.↩︎

An athlete who tries to jump over bars to see how high of a bar they can jump over.↩︎

The reason for which we don’t have evidence doesn’t matter. It could be because Weeda attempted to jump and could not do it or because Weeda never attempted to jump. We just don’t have evidence that Weeda can do it.↩︎

Hypothesis testing. Null vs alternative. 365 Data Science. Aug 11, 2017. YouTube. https://www.youtube.com/watch?v=ZzeXCKd5a18.↩︎

This particular bullet is a little oversimplified. In a two-sample t-test (whether independent or paired), for example, the t-test helps us determine whether the two samples come from different populations. Occasionally, the two samples do not come from separate populations, but the p-value is still low and we reject the null hypothesis. In this scenario, the individuals we happened to sample made it seem like there is a difference between the two samples purely due to random chance. The p-value tells us how likely it would be to get a difference at least as large as the one we observed from random sampling alone, if the two samples really came from the same population. It is not necessary to understand this explanation yet, especially since you won’t learn about t-tests until later.↩︎

Type I error vs Type II error. 365 Data Science. Aug 11, 2017. YouTube. https://www.youtube.com/watch?v=a_l991xUAOU.↩︎

The Central Limit Theorem. StatQuest with Josh Starmer. Sep 3, 2018. YouTube. https://www.youtube.com/watch?v=YAlJCEDH2uY.↩︎

Central limit theorem | Inferential statistics | Probability and Statistics | Khan Academy. Khan Academy. Jan 26, 2010. YouTube. https://www.youtube.com/watch?v=JNm3M9cqWyc.↩︎

Sampling distribution of the sample mean | Probability and Statistics | Khan Academy. Khan Academy. Jan 26, 2010. YouTube. https://www.youtube.com/watch?v=FXZ2O1Lv-KE.↩︎

StatQuickie: Standard Deviation vs Standard Error. StatQuest with Josh Starmer. Mar 20, 2017. YouTube. https://www.youtube.com/watch?v=A82brFpdr9g.↩︎

Hypothesis testing: step-by-step, p-value, t-test for difference of two means - Statistics Help. Dr Nic’s Maths and Stats. Dec 5, 2011. YouTube. https://www.youtube.com/watch?v=0zZYBALbZgg.↩︎

What is a t-test?. U of G Library. Oct 23, 2017. YouTube. https://www.youtube.com/watch?v=N2dYGnZ70X0.↩︎

Student’s t-test. Bozeman Science. Apr 13, 2016. YouTube. https://www.youtube.com/watch?v=pTmLQvMM-1M.↩︎

Hypothesis Testing - Difference of Two Means - Student’s t-Distribution & Normal Distribution. The Organic Chemistry Tutor. Nov 14, 2019. YouTube. https://youtu.be/UcZwyzwWU7o.↩︎

This is a variety of between-subjects research design.↩︎

Soon, in a future week of the course, you will learn how to run this yourself in R. For now, we’ll just focus on interpreting the results.↩︎

The test output reports p-value < 2.2e-16. The number 2.2e-16 means \(2.2*10^{-16} = 0.00000000000000022\), which is a very small number! This is telling us that, if the null hypothesis were true, there would be an extremely small chance of getting a difference in sample means at least as large as the one we observed.↩︎

A value of 9.5 has been added to all treatment observations, to make the difference in sample means 0.6 mmHg now instead of 10.1 mmHg as it was initially. This mimics a situation in which the blood pressure medication did not help much.↩︎

This note about an alternate way to access the Excel file was added on January 31 2021.↩︎

The source of both files is: https://thenewstatistics.com/itns/esci/dance-of-the-means/.↩︎

Remember, when we are analyzing the data in an RCT, we are trying to figure out if the treatment and control groups had different or similar results. We are seeing if we can distinguish the two groups from each other in any way. The mean and standard deviation of the data in the two groups are the key parameters that help us tell the treatment and control groups apart, which is why you need to play around with the t-test tool to understand these relationships.↩︎