Chapter 13 Measures of Association

13.1 Getting Ready

This chapter examines multiple ways to measure the strength of relationships between two variables in a contingency table. This material forms a basis for the remaining chapters, all of which focus on different methods for assessing the strength and direction of relationships between numeric variables. To follow along in R, you should load the anes20 data set, as well as the libraries for the DescTools and descr packages. You might also want to keep a calculator handy, or be ready to use R as a calculator.

13.2 Going Beyond Chi-squared

As discussed at the end of the previous chapter, chi-squared is a good measure of statistical significance, but it is not appropriate to use it to judge the strength of a relationship, or effect size. By “strength of the relationship” in crosstabs, we generally mean the extent to which values of the dependent variable are conditioned by (or depend upon) values of the independent variable. As a general rule, when chi-squared is not significant, the outcomes of the dependent variable vary only slightly and randomly around their expected (null hypothesis) outcomes. If chi-squared is statistically significant, we know there is a relationship between the two variables, but we don’t know the degree to which the outcomes on the dependent variable are conditioned by the values of the independent variable. We know only that they differ enough from the expected outcomes under the null hypothesis that we can conclude there is a real relationship between the two variables.

Of course, we can use the column percentages to try to get a handle on how strong the relationship is, but it can be difficult to do so with any precision, in part because there is no uniform standard for interpreting patterns in column percentages. For instance, if we consider the relationship between education and religious importance from the previous chapter (shown below), we might note that in the first row, the percent assigning low importance to religion ranges from 25.5% among those with less than a high school degree to 41% among those with a graduate degree, an increase of 15.5 percentage points. If we look at those who assign a moderate level of importance to religion (second row), there is about a 7-percentage-point decrease between those whose highest level of education is less than high school and those with an advanced degree, and there is about an 8-point drop-off in the third row (high importance) across levels of education, though not a steady decrease.

#Crosstab for religious importance by education
crosstab(anes20$relig_imp, anes20$educ,
         plot=F,
         prop.c=T,
         chisq=T)

   Cell Contents
|-------------------------|
|                   Count |
|          Column Percent |
|-------------------------|

============================================================================
                    anes20$educ
anes20$relig_imp   LT HS      HS   Some Coll   4yr degr   Grad degr   Total
----------------------------------------------------------------------------
Low                   96     365         860        789         650    2760
                   25.5%   27.4%       30.9%      38.4%       41.0%
----------------------------------------------------------------------------
Moderate              93     284         535        389         275    1576
                   24.7%   21.3%       19.2%      18.9%       17.4%
----------------------------------------------------------------------------
High                 187     684        1387        875         660    3793
                   49.7%   51.3%       49.9%      42.6%       41.6%
----------------------------------------------------------------------------
Total                376    1333        2782       2053        1585    8129
                    4.6%   16.4%       34.2%      25.3%       19.5%
============================================================================

Statistics for All Table Factors

Pearson's Chi-squared test
------------------------------------------------------------
Chi^2 = 108.23      d.f. = 8      p <2e-16

Minimum expected frequency: 72.897

Describing the differences in column percentages like this is an important part of telling the story of the relationship between two variables, so we should always give them a close look and be comfortable discussing them. However, we should not rely on them alone, as they do not provide a clear, consistent, and standard basis for judging the strength of the relationship in the table. There are fifteen cells in the table shown above, and focusing on a few cells with relatively high or low percentages does not take into account all of the information in the table. Also, as you learned in Chapter 12, discussions of findings like the one presented above implicitly assign equal weight to each column. For instance, the first column of data represents only 4.6% of the sample, but we discuss the 25.5% of that column’s observations in the first row as if it carries the same weight as the other column percentages in that row, even though each of the other columns contributes much more to the overall pattern in the table. What we need are statistics that complement the discussion of column percentages by taking into account the outcomes across the entire table and weighting those outcomes according to their share of the overall sample.
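To make the weighting point concrete, here is a minimal base-R sketch (no anes20 data needed) that re-enters the counts from the education table above as a matrix, computes the column percentages with prop.table(), and then computes each column’s share of the whole sample. The object names are illustrative only.

```r
# Counts re-entered by hand from the education crosstab above
# (rows = religious importance, columns = education); illustrative object name.
educ_tab <- matrix(c( 96, 365,  860, 789, 650,
                      93, 284,  535, 389, 275,
                     187, 684, 1387, 875, 660),
                   nrow = 3, byrow = TRUE,
                   dimnames = list(relig_imp = c("Low", "Moderate", "High"),
                                   educ = c("LT HS", "HS", "Some Coll",
                                            "4yr degr", "Grad degr")))

# Column percentages: each column sums to 100
col_pct <- round(100 * prop.table(educ_tab, margin = 2), 1)
print(col_pct)

# Share of the whole sample in each column: the weight each column actually carries
col_share <- round(100 * colSums(educ_tab) / sum(educ_tab), 1)
print(col_share)
```

Read together, the two outputs show that the 25.5% in the first cell of the Low row comes from a column holding only 4.6% of the sample, which is the kind of weighting that measures built on cell frequencies take into account.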

13.3 Measures of Association for Crosstabs

13.3.1 Cramer’s V

Measures of association are statistics that summarize the strength of the relationship between two variables. The measures of effect size examined in previous chapters, Cohen’s D (for t-scores) and \(\eta^2\) (for F-ratios), are essentially measures of association. One useful measure of association for crosstabs, especially if one of the variables is a nominal-level variable, is Cramer’s V. We begin with this measure because it is based on chi-squared. One of the many virtues of chi-squared is that it uses information from every cell of the table and is based on cell frequencies rather than percentages, so each cell is weighted appropriately. Recall, though, that the “problem” with chi-squared is that its size reflects not just the strength of the relationship but also the sample size and the size of the table. Cramer’s V discounts the value of chi-squared for both the sample size and the size of the table by incorporating them in the denominator of the formula:

\[\text{Cramer's V}=\sqrt{\frac{\chi^2}{N*min(r-1,c-1)}}\]

Here we take the square root of chi-squared divided by the sample size times either the number of rows minus 1 or the number of columns minus 1, whichever is smaller.[38] In the education and religiosity crosstab, rows minus 1 (2) is smaller than columns minus 1 (4), so plugging in the values of chi-squared (108.2) and the sample size (8129):

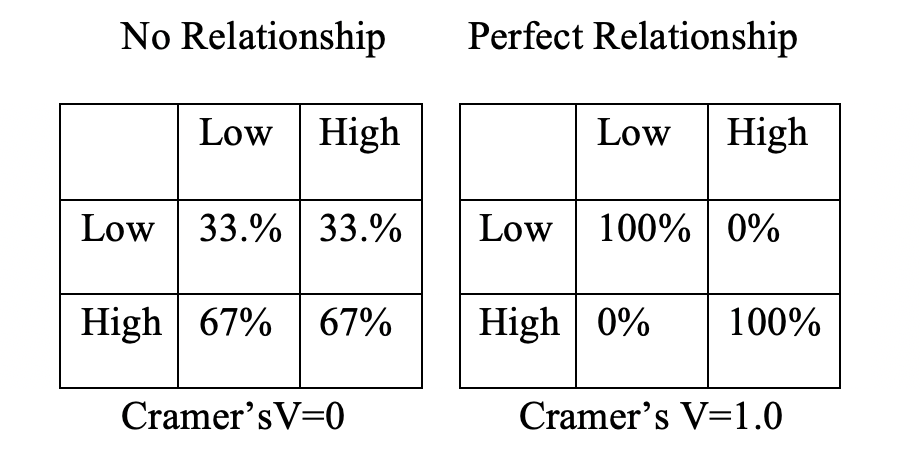

\[V=\sqrt{\frac{108.2}{8129*2}}=.082\] Interpreting Cramer’s V is relatively straightforward, as long as you understand that it is bounded by 0 and 1, where a value of zero means there is absolutely no relationship between the two variables, and a value of one means there is a perfect relationship between the two variables. The Figure below illustrate these two extremes:

Figure 13.1: Hypothetical Outcomes for Cramer’s V

In the table on the left, there is no change in the column percentages as you move across columns. We know from the discussion of chi-square in the last chapter that this is exactly what statistical independence looks like: the row outcome does not depend upon the column outcome. In the table on the right, you can perfectly predict the outcome of the dependent variable based on levels of the independent variable. Given these bounds, the Cramer’s V value for the relationship between education and religious importance (V = .08) suggests that this is a fairly weak relationship.
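As a check on the hand calculation, the sketch below applies the formula directly, using base R’s chisq.test() on the education counts typed in from the crosstab shown earlier. The helper name cramers_v() is hypothetical; it is not the DescTools function used later in the chapter.

```r
# Hypothetical helper: Cramer's V from a table of counts, following the formula
# V = sqrt(chi-squared / (N * min(r - 1, c - 1)))
cramers_v <- function(tab) {
  chi2 <- unname(chisq.test(tab)$statistic)  # Pearson chi-squared
  n <- sum(tab)                              # sample size
  k <- min(nrow(tab) - 1, ncol(tab) - 1)     # smaller of rows - 1 and columns - 1
  sqrt(chi2 / (n * k))
}

# Education crosstab counts (rows = religious importance, columns = education)
educ_tab <- matrix(c( 96, 365,  860, 789, 650,
                      93, 284,  535, 389, 275,
                     187, 684, 1387, 875, 660),
                   nrow = 3, byrow = TRUE)

round(unname(chisq.test(educ_tab)$statistic), 2)
round(cramers_v(educ_tab), 3)
```

The min() term is what lets the same formula serve tables of any shape; for this 3x5 table it evaluates to 2, just as in the hand calculation.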

Let’s calculate Cramer’s V for the other tables we used in Chapter 12, regional and age-based differences in religious importance. First up, regional differences:

#Crosstab for religious importance by region
crosstab(anes20$relig_imp, anes20$V203003,
         plot=F,
         prop.c=T,
         chisq=T)

   Cell Contents
|-------------------------|
|                   Count |
|          Column Percent |
|-------------------------|

==========================================================================
                    SAMPLE: Census region
anes20$relig_imp  1. Northeast  2. Midwest  3. South  4. West  Total
--------------------------------------------------------------------------
Low                        549         649       811      782   2791
                         39.4%       32.6%     26.4%    43.5%
--------------------------------------------------------------------------
Moderate                   320         385       548      343   1596
                         23.0%       19.3%     17.9%    19.1%
--------------------------------------------------------------------------
High                       525         956      1710      671   3862
                         37.7%       48.0%     55.7%    37.4%
--------------------------------------------------------------------------
Total                     1394        1990      3069     1796   8249
                         16.9%       24.1%     37.2%    21.8%
==========================================================================

Statistics for All Table Factors

Pearson's Chi-squared test
------------------------------------------------------------
Chi^2 = 238.17      d.f. = 6      p <2e-16

Minimum expected frequency: 269.71

On its face, based on differences in the column percentages within rows, this looks like a stronger relationship than in the first table. Let’s check out the value of Cramer’s V for this table to see if we are correct.

\[V=\sqrt{\frac{238.2}{8249*2}}=.12\]

Although this result shows a somewhat stronger impact on religious importance from region than from education, Cramer’s V still points to a weak relationship.

Finally, let’s take another look at the relationship between age and religious importance.

#Crosstab for religious importance by age
crosstab(anes20$relig_imp, anes20$age5,
         plot=F,
         prop.c=T,
         chisq=T)

   Cell Contents
|-------------------------|
|                   Count |
|          Column Percent |
|-------------------------|

=================================================================
                    PRE: SUMMARY: Respondent age
anes20$relig_imp  18-29  30-44  45-60  61-75    76+  Total
-----------------------------------------------------------------
Low                 505    877    666    522    135   2705
                  50.4%  43.7%  32.3%  24.4%  19.4%
-----------------------------------------------------------------
Moderate            189    357    396    470    122   1534
                  18.9%  17.8%  19.2%  22.0%  17.5%
-----------------------------------------------------------------
High                308    774   1003   1149    440   3674
                  30.7%  38.5%  48.6%  53.7%  63.1%
-----------------------------------------------------------------
Total              1002   2008   2065   2141    697   7913
                  12.7%  25.4%  26.1%  27.1%   8.8%
=================================================================

Statistics for All Table Factors

Pearson's Chi-squared test
------------------------------------------------------------
Chi^2 = 396.66      d.f. = 8      p <2e-16

Minimum expected frequency: 135.12

Of the three tables, this one shows the greatest differences in column percentages within rows, around thirty-point differences in both the top and bottom rows, so we might expect Cramer’s V to show a stronger relationship as well.

\[V=\sqrt{\frac{396.7}{7913*2}}=.16\]

Hmmm. That’s interesting. Cramer’s V does show that age has a stronger impact on religious importance than either region or education, but not by much. Are you surprised by the rather meager reading on Cramer’s V for this table? After all, we saw some pretty dramatic differences in the column percentages, so we might have expected Cramer’s V to come in somewhat higher. What this reveals is an inherent limitation of focusing on a few of the differences in column percentages rather than taking in information from the entire table. For instance, by focusing on the large differences in the Low and High rows, we ignored the tiny differences in the Moderate row. Another problem is that we tend to treat the column percentages as if they carry equal weight. Again, column percentages are important (they tell us what is happening between the two variables), but focusing on them exclusively invariably provides an incomplete accounting of the relationship. Good measures of association such as Cramer’s V take into account information from all cells in a table and provide a more complete assessment of the relationship.
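One detail that is easy to get wrong when doing these calculations by hand is that each table has its own N, because respondents with missing values on the independent variable drop out of that table. The sketch below defines a small hypothetical helper, cramers_v(), that applies the formula to a matrix of counts, and uses the region and age counts typed in from the tables above.

```r
# Hypothetical helper: Cramer's V = sqrt(chi-squared / (N * min(r - 1, c - 1)))
cramers_v <- function(tab) {
  chi2 <- unname(chisq.test(tab)$statistic)
  sqrt(chi2 / (sum(tab) * min(dim(tab) - 1)))
}

# Counts from the region and age crosstabs (rows = Low, Moderate, High)
region_tab <- matrix(c(549, 649,  811, 782,
                       320, 385,  548, 343,
                       525, 956, 1710, 671), nrow = 3, byrow = TRUE)
age_tab <- matrix(c(505, 877,  666,  522, 135,
                    189, 357,  396,  470, 122,
                    308, 774, 1003, 1149, 440), nrow = 3, byrow = TRUE)

# Each table has its own sample size: 8249 for region, 7913 for age
c(region = sum(region_tab), age = sum(age_tab))

round(c(region = cramers_v(region_tab), age = cramers_v(age_tab)), 3)
```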

Getting Cramer’s V in R. The CramerV function is found in the DescTools package and can be used to get the values of Cramer’s V in R.

#V for education and religious importance:
CramerV(anes20$relig_imp, anes20$educ)
[1] 0.081592
#V for region and religious importance:
CramerV(anes20$relig_imp, anes20$V203003)
[1] 0.12015
#V for age and religious importance:
CramerV(anes20$relig_imp, anes20$age5)
[1] 0.15831

These results confirm the earlier calculations.

13.3.2 Lambda

Another sometimes useful statistic for judging the strength of a relationship is lambda (\(\lambda\)). Lambda is referred to as a proportional reduction in error (PRE) statistic because it summarizes how much we can reduce the error in guessing (or predicting) the outcome of the dependent variable by using information from an independent variable. The concept of proportional reduction in error plays an important role in many of the topics included in the last several chapters of this book.

The formula for lambda is:

\[\text{Lambda } (\lambda)=\frac{E_1-E_2}{E_1}\]

Where:

- \(E_1\) = error by guessing with no information on the independent variable.

- \(E_2\) = error by guessing with information about the independent variable.

Okay, so what does this mean? Let’s think about it in terms of the relationship between region and religious importance. The table below includes just the raw frequencies for these two variables:

#Cell frequencies for religious importance by region
crosstab(anes20$relig_imp, anes20$V203003, plot=F)

   Cell Contents
|-------------------------|
|                   Count |
|-------------------------|

==========================================================================
                    SAMPLE: Census region
anes20$relig_imp  1. Northeast  2. Midwest  3. South  4. West  Total
--------------------------------------------------------------------------
Low                        549         649       811      782   2791
--------------------------------------------------------------------------
Moderate                   320         385       548      343   1596
--------------------------------------------------------------------------
High                       525         956      1710      671   3862
--------------------------------------------------------------------------
Total                     1394        1990      3069     1796   8249
==========================================================================

Now, suppose that you had to “guess,” or “predict,” the value of the dependent variable for each of the 8249 respondents from this table. What would be your best guess? By “best guess,” I mean the category that would give the least overall error in guessing. As a rule, with ordinal or nominal dependent variables, it is best to guess the modal outcome (you may recall this from Chapter 4) if you want to minimize error in guessing. In this case, that outcome is the “High” row, which has 3862 respondents. If you guess this, you will be correct 3862 times and wrong 4387 times. This seems like a lot of error, but no other guess can give you an error rate this low (go ahead, try).

From this, we get \(E_1=4387\).

\(E_2\) is the error we get when we are able to guess the value of the dependent variable based on the value of the independent variable. Here’s how we get \(E_2\): we look within each column of the independent variable and choose the category of the dependent variable that will give us the least overall error for that column (the modal outcome within each column). For instance, for “Northeast” we would guess “Low” and we would be correct 549 times and wrong 845 times (make sure you understand how I got these numbers); for “Midwest” we would guess “High” and would be wrong 1034 times; for “South” we would guess “High” and be wrong 1359 times; and for “West” we would guess “Low” and be wrong 1014 times. Now that we have given our best guess within each category of the independent variable, we can calculate \(E_2\), which is the sum of all of the errors from guessing when we have information from the independent variable:

\[E_2= 845+1034+1359+1014= 4252\]

Note that \(E_2\) is less than \(E_1\). This means that the independent variable reduced the error in guessing the outcome of the dependent variable (\(E_1 - E_2=135\)). On its own, it is difficult to tell whether a reduction of 135 errors is a lot or a little. Because of this, we express the reduction in error as a proportion of the original error:

\[\text{Lambda } (\lambda)=\frac{4387-4252}{4387}=\frac{135}{4387}=.031\]

What this tells us is that knowing the region of residence for respondents in this table reduces the error in predicting the dependent variable by 3.1%. Lambda ranges from 0 to 1, where 0 means that the independent variable does not reduce any of the error in predicting the dependent variable, and 1 means the independent variable eliminates all of the error in predicting the dependent variable. You might notice that this interpretation is very similar to the interpretation of \(\eta^2\) in Chapter 11. That’s because both statistics measure how much of the error in predicting the dependent variable is explained by the independent variable.
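The \(E_1\)/\(E_2\) logic translates almost line for line into code. This is a minimal sketch, assuming the dependent variable is in the rows (as in all of the tables in this chapter) and using the region counts typed in from the table above; lambda_rows() is a hypothetical name, not the DescTools Lambda() function discussed next.

```r
# Hypothetical helper: lambda for predicting the row (dependent) variable
lambda_rows <- function(tab) {
  n  <- sum(tab)
  e1 <- n - max(rowSums(tab))                   # errors when always guessing the overall mode
  e2 <- sum(colSums(tab) - apply(tab, 2, max))  # errors when guessing each column's mode
  list(E1 = e1, E2 = e2, lambda = (e1 - e2) / e1)
}

# Region crosstab counts (rows = Low, Moderate, High; columns = NE, MW, S, W)
region_tab <- matrix(c(549, 649,  811, 782,
                       320, 385,  548, 343,
                       525, 956, 1710, 671), nrow = 3, byrow = TRUE)

res <- lambda_rows(region_tab)
res$E1                 # 4387
res$E2                 # 4252
round(res$lambda, 3)   # 0.031
```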

One of the nice things about lambda, compared to Cramer’s V, is that it has a very intuitive meaning. Think about how we interpret Cramer’s V for the table above (.12). We know that .12 is rather low on a scale from 0 to 1, indicating a weak relationship, but it is hard to ascribe more meaning to it than that (.12 of what?). Now, contrast that with lambda (.031), which also indicates a weak relationship but at the same time conveys an additional piece of information: knowing the outcome of the independent variable leads to a 3.1% decrease in error when predicting the dependent variable.

Getting Lambda from R. The Lambda function, also from the DescTools package, can be used to calculate lambda for a pair of variables. Along with the variable names, we need to specify two additional pieces of information in the Lambda function: direction="row" means that we are predicting row outcomes, and conf.level =.95 adds a 95% confidence interval around lambda.

#For Region and Religious importance
Lambda(anes20$relig_imp, anes20$V203003, direction="row", conf.level =.95)
   lambda    lwr.ci    upr.ci
0.0307727 0.0086624 0.0528831

Here, we get confirmation that the calculations for the region/religious importance table were correct (lambda = .03). Also, since the 95% confidence interval does not include 0 (barely!), we can reject the null hypothesis and conclude that there is a statistically significant but quite small reduction in error when predicting religious importance from region.

We can also see the values of lambda for the other two tables: lambda is 0 for education and religious importance and .07 for age and religious importance.

#For Education and Religious importance
Lambda(anes20$relig_imp, anes20$educ, direction="row", conf.level =.95)
lambda lwr.ci upr.ci
     0      0      0
#For Age and Religious importance
Lambda(anes20$relig_imp, anes20$age5, direction="row", conf.level =.95)
  lambda   lwr.ci   upr.ci
0.070771 0.048647 0.092896

The finding for education and religious importance points to one limitation of lambda: it can equal 0 even if there is clearly a relationship in the table. The problem is that the relationship might not be a proportional reduction in error relationship; that is, the independent variable does not improve on guessing, even if there is a significant pattern (according to chi-square) in the data. This usually happens when the distribution of the dependent variable is skewed enough toward the modal value that the error from guessing with the independent variable (\(E_2\)) is the same as the error from guessing without it (\(E_1\)). Looking at the table for education and religious importance (reproduced below), about 47% of all responses are in the “High” row. This is not what I would call heavily skewed toward the modal category (after all, the modal category will always have at least as many outcomes as any other category), but it does tilt that way enough that a weak relationship like the one between education and religious importance is not going to result in any reduction in error from guessing.

If you start to work through calculating lambda for this table, you will see that the best guess within each category of the independent variable is always in the “High” row, which is also the best guess without information about the independent variable. Hence, lambda=0.

#Cell frequencies for religious importance by education
crosstab(anes20$relig_imp, anes20$educ,
         plot=F)

   Cell Contents
|-------------------------|
|                   Count |
|-------------------------|

===========================================================================
                    anes20$educ
anes20$relig_imp   LT HS      HS   Some Coll   4yr degr   Grad degr   Total
---------------------------------------------------------------------------
Low                   96     365         860        789         650    2760
---------------------------------------------------------------------------
Moderate              93     284         535        389         275    1576
---------------------------------------------------------------------------
High                 187     684        1387        875         660    3793
---------------------------------------------------------------------------
Total                376    1333        2782       2053        1585    8129
===========================================================================

Lambda and Cramer’s V use different standards to evaluate the strength of a relationship. Lambda focuses on whether using the independent variable leads to less error in guessing outcomes of the dependent variable. Cramer’s V, on the other hand, focuses on how different the pattern in the table is from what you would expect if there were no relationship.
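The claim that every column’s best guess is “High” is easy to verify. The sketch below, using the education counts typed in from the table above, finds the modal row within each column and the overall modal row, then computes \(E_1\) and \(E_2\) in the same way as in the region example. Object names are illustrative.

```r
# Education crosstab counts (rows = religious importance, columns = education)
educ_tab <- matrix(c( 96, 365,  860, 789, 650,
                      93, 284,  535, 389, 275,
                     187, 684, 1387, 875, 660),
                   nrow = 3, byrow = TRUE,
                   dimnames = list(c("Low", "Moderate", "High"),
                                   c("LT HS", "HS", "Some Coll", "4yr degr", "Grad degr")))

# Modal category within each column, and for the table as a whole
col_modes <- rownames(educ_tab)[apply(educ_tab, 2, which.max)]
names(col_modes) <- colnames(educ_tab)
overall_mode <- rownames(educ_tab)[which.max(rowSums(educ_tab))]
print(col_modes)
print(overall_mode)

# Same guessing errors with or without the independent variable, so lambda = 0
e1 <- sum(educ_tab) - max(rowSums(educ_tab))
e2 <- sum(colSums(educ_tab) - apply(educ_tab, 2, max))
c(E1 = e1, E2 = e2, lambda = (e1 - e2) / e1)
```

Note how close the Grad degr column comes (660 High versus 650 Low): if Low had been the larger of the two in that column, the best guess there would change and \(E_2\) would fall below \(E_1\).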

13.4 Ordinal Measures of Association

Cramer’s V and lambda measure the association between two variables by focusing on deviation from expected outcomes and reduction in error, respectively, two useful pieces of information. One drawback to these measures, though, is that they do not express the strength of a relationship from a directional perspective. Consider, for example, the impact of education and age on religious importance, as discussed above. In both cases, there are clear directional expectations based on the ordinal nature of both variables: religious importance should decrease as levels of education increase (negative relationship) and it should increase as age increases (positive relationship). The findings in the crosstabs supported these expectations. One problem, however, is that the measures of association we’ve looked at so far are not designed to capture the extent to which the pattern in a crosstab follows a particular directional pattern. What we need, then, are measures of association that incorporate the idea of directionality into their calculations.

To demonstrate some of these ordinal measures of association, I use a somewhat simpler 3x3 crosstab that still focuses on religious importance as the dependent variable (the smaller table works better for demonstration). Here, I use anes20$ideol3, a three-category version of anes20$V201200, to look at how political ideology is related to religious importance.

anes20$ideol3<-anes20$V201200
levels(anes20$ideol3)<-c("Liberal","Liberal","Liberal","Moderate",
                         "Conservative","Conservative","Conservative")
#Crosstab of religious importance by ideology
crosstab(anes20$relig_imp, anes20$ideol3,
         prop.c=T, chisq=T,
         plot=F)

   Cell Contents
|-------------------------|
|                   Count |
|          Column Percent |
|-------------------------|

=============================================================
        PRE: 7pt scale liberal-conservative self-placement
anes20$relig_imp   Liberal   Moderate   Conservative   Total
-------------------------------------------------------------
Low                   1410        570            489    2469
                     56.6%      31.5%          17.9%
-------------------------------------------------------------
Moderate               428        427            498    1353
                     17.2%      23.6%          18.2%
-------------------------------------------------------------
High                   654        815           1750    3219
                     26.2%      45.0%          63.9%
-------------------------------------------------------------
Total                 2492       1812           2737    7041
                     35.4%      25.7%          38.9%
=============================================================

Statistics for All Table Factors

Pearson's Chi-squared test
------------------------------------------------------------
Chi^2 = 997.01      d.f. = 4      p <2e-16

Minimum expected frequency: 348.19

CramerV(anes20$relig_imp, anes20$ideol3, conf.level = .95)
Cramer V   lwr.ci   upr.ci
 0.26608  0.24915  0.28221

Lambda(anes20$relig_imp, anes20$ideol3, direction="row", conf.level = .95)
 lambda  lwr.ci  upr.ci
0.19780 0.17694 0.21867

The observant among you might question whether political ideology is an ordinal variable. After all, none of the category labels carry obvious hints, such as “Low” and “High”, or “Less than” and “Greater than.” However, as pointed out in Chapter 1, you can think of the categories of political ideology as growing more conservative (and less liberal) as you move from “Liberal” to “Moderate” to “Conservative”.

Here’s what I would say about this relationship based on what we’ve learned thus far. There is a positive relationship between political ideology and the importance people attach to religion. Looking from the Liberal column to the Conservative column, we see a steady decrease in the percent who attach low importance to religion and a steady increase in the percent who attach high importance to religion. Almost 57% of liberals are in the Low row, compared to only about 18% of conservatives; and only 26% of liberals are in the High row, compared to about 64% of conservatives. The p-value for chi-square is near 0, so we can reject the null hypothesis; the value of Cramer’s V (.27) suggests a weak to moderate relationship; and the value of lambda shows that ideology reduces the error in guessing religious importance by about 20%.

There is nothing wrong with using lambda and Cramer’s V for this table, as long as you understand that they do not address the directionality in the data. For that, we need an ordinal measure of association, one that assesses the degree to which high values of the independent variable are related to high or low values of the dependent variable. In essence, these statistics focus on the ranking of categories and summarize how well we can predict the ordinal ranking of the dependent variable based on values of the independent variable. Ordinal-level measures of association range in value from -1 to +1, with 0 indicating no relationship, -1 indicating a perfect negative relationship, and +1 indicating a perfect positive relationship.

13.4.1 Gamma

One example of an ordinal measure of association is gamma (\(\gamma\)). Very generally, gamma compares how much of the table follows a positive pattern with how much follows a negative pattern, though it describes these patterns with different terminology. More formally, gamma is based on the number of similarly ranked and differently ranked pairs of observations in the contingency table. Similarly ranked pairs can be thought of as pairs that follow a positive pattern, and differently ranked pairs are those that follow a negative pattern.

\[\text{Gamma}=\frac{N_{similar} - N_{different}}{N_{similar} + N_{different}}\]

If you look at the parts of this equation, it is easy to see that gamma is a measure of the degree to which positive or negative pairs dominate the table. When positive (similarly ranked) pairs dominate, gamma will be positive; when negative (differently ranked) pairs dominate, gamma will be negative; and when there is no clear trend, gamma will be zero or near zero.

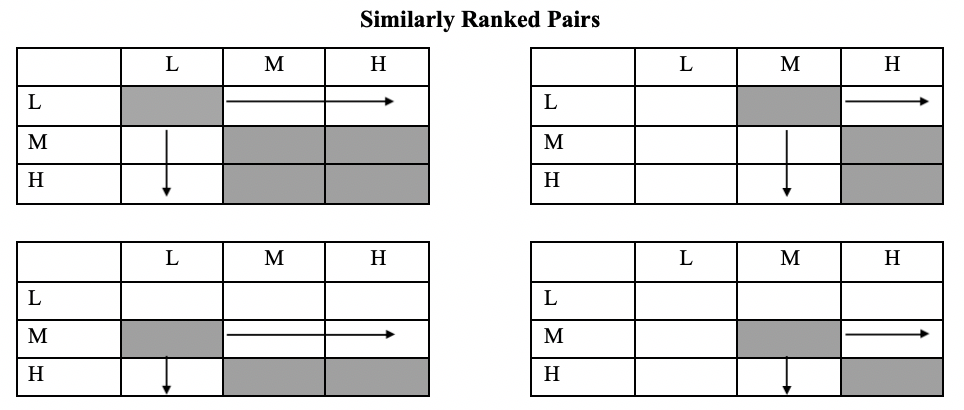

But how do we find these pairs? Let’s look at similarly ranked pairs in a generic 3x3 table first.

Figure 13.2: Similarly Ranked Pairs

For each cell in the table in Figure 13.2, we multiply that cell’s number of observations by the sum of the observations found in all cells ranked higher in value on both the independent and dependent variables (below and to the right). We refer to these cells as “similarly ranked” because they have the same ranking on both the independent and dependent variables, relative to the cell of interest. These pairs of cells are highlighted in Figure 13.2.

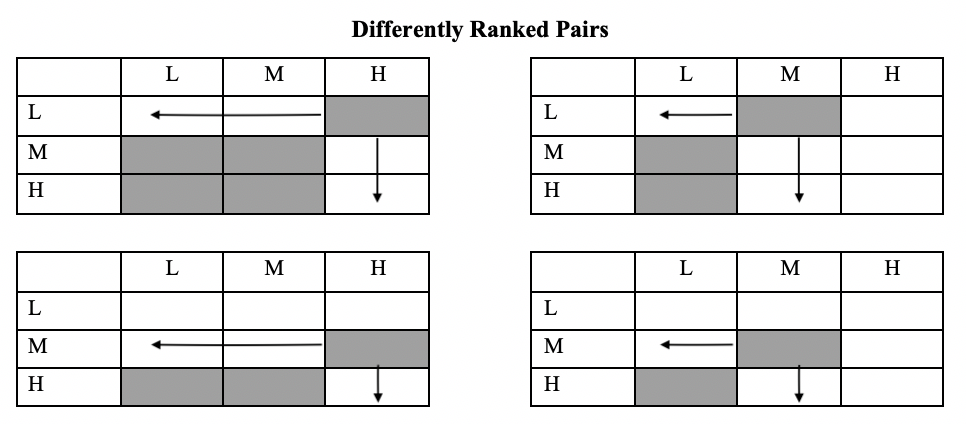

For differently ranked pairs, we need to match each cell with cells that are inconsistently ranked on the independent and dependent variables (higher in value on one and lower in value on the other) . Because of this inconsistent ranking, we call these differently ranked pairs. These cells are below and to the left of the reference cell, as highlighted in Figure 13.3

Figure 13.3: Differently Ranked Pairs

Using this information to calculate gamma may seem a bit cumbersome (and it is), but it becomes clearer after working through it a few times. We’ll work through this once (I promise, just once) using the crosstab for ideology and religious importance. That crosstab is reproduced below, using just the raw frequencies.

Let’s start with the similarly ranked pairs. In the calculation below, I begin in the upper-left corner (Liberal/Low) and pair those 1410 respondents with all respondents in cells below and to the right (1410 x (427+498+815+1750)). Note that the four cells below and to the right are all higher in value on both the independent and dependent variables than the reference cell. Then I go to the Liberal/Moderate cell and match those 428 respondents with the two cells below and to the right (428 x (815+1750)). Next, I go to the Moderate/Low cell and match those 570 respondents with the two cells below and to the right (570 x (498+1750)), and finally, I go to the Moderate/Moderate cell and match those 427 respondents with respondents in the one cell below and to the right (427 x 1750).

#Calculate similarly ordered pairs
similar<-1410*(427+498+815+1750)+428*(815+1750)+570*(498+1750)+(427*1750)
similar
[1] 8047330

This gives us 8,047,330 similarly ranked pairs. That seems like a big number, and it is, but we are really interested in how big it is relative to the number of differently ranked pairs.

   Cell Contents
|-------------------------|
|                   Count |
|-------------------------|

=============================================================
        PRE: 7pt scale liberal-conservative self-placement
anes20$relig_imp   Liberal   Moderate   Conservative   Total
-------------------------------------------------------------
Low                   1410        570            489    2469
-------------------------------------------------------------
Moderate               428        427            498    1353
-------------------------------------------------------------
High                   654        815           1750    3219
-------------------------------------------------------------
Total                 2492       1812           2737    7041
=============================================================

To calculate differently ranked pairs, I begin in the upper-right corner (Conservative/Low) and pair those 489 respondents with respondents in all cells below and to the left (489 x (427+428+654+815)). Note that the four cells below and to the left are all lower in value on the independent variable and higher in value on the dependent variable than the reference cell. Then, I match the 570 respondents in the Moderate/Low cell with all respondents in cells below and to the left (570 x (428+654)). Next, I match the 498 respondents in the Conservative/Moderate cell with all respondents in cells below and to the left (498 x (654+815)). And, finally, I match the 427 respondents in the Moderate/Moderate cell with all respondents in the one cell below and to the left (427 x 654).

#Calculate differently ordered pairs
different<-489*(427+428+654+815)+570*(428+654)+498*(654+815)+427*654
different
[1] 2763996

This gives us 2,763,996 differently ranked pairs. Again, this is a large number, but we now have a basis of comparison, the 8,047,330 similarly ranked pairs. Since there are more similarly ranked pairs, we know that there is a positive relationship in this table. Let’s plug these values into the gamma formula to get a more definitive sense of this relationship.

For this table,

\[\text{gamma}=\frac{8047330-2763996}{8047330+2763996}=\frac{5283334}{10811326}=.4887\]

Now, let’s check this with the R function for gamma.

#Get Gamma from R
GoodmanKruskalGamma(anes20$relig_imp, anes20$ideol3, conf.level = .95)
  gamma  lwr.ci  upr.ci
0.48869 0.46254 0.51483

Two things stand out here: the value of gamma is the same as what we calculated on our own, and the 95% confidence interval around the point estimate for gamma does not include 0, so we can reject the null hypothesis (\(H_0: \gamma=0\)). Further, we can interpret the value of gamma (.49) as meaning that there is a moderate-to-strong, positive relationship between ideology and religious importance.
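The pair-counting steps can be generalized to any table whose rows and columns are both ordered from low to high. The function below is a minimal sketch with a hypothetical name, gamma_pairs(); it loops over every cell and multiplies the cell count by the total in the cells below and to the right (similarly ranked) and below and to the left (differently ranked), so it should reproduce the hand calculation and the GoodmanKruskalGamma() result above. Reversing the column order also shows the directional nature of gamma: the two kinds of pairs trade places and the sign flips.

```r
# Hypothetical helper: gamma from a table of counts whose rows (dependent variable)
# and columns (independent variable) are both ordered from low to high
gamma_pairs <- function(tab) {
  nr <- nrow(tab)
  nc <- ncol(tab)
  similar <- 0
  different <- 0
  for (i in seq_len(nr)) {
    for (j in seq_len(nc)) {
      below <- if (i < nr) (i + 1):nr else integer(0)
      right <- if (j < nc) (j + 1):nc else integer(0)
      left  <- if (j > 1) 1:(j - 1) else integer(0)
      # Below and to the right: higher on both variables
      similar <- similar + tab[i, j] * sum(tab[below, right])
      # Below and to the left: higher on the row variable, lower on the column variable
      different <- different + tab[i, j] * sum(tab[below, left])
    }
  }
  c(similar = similar, different = different,
    gamma = (similar - different) / (similar + different))
}

# Ideology crosstab counts (rows = Low, Moderate, High; columns = Lib, Mod, Cons)
ideol_tab <- matrix(c(1410, 570,  489,
                       428, 427,  498,
                       654, 815, 1750), nrow = 3, byrow = TRUE)

gamma_pairs(ideol_tab)          # similar and different match the hand counts above
gamma_pairs(ideol_tab[, 3:1])   # columns reversed: same magnitude, opposite sign
```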

13.4.1.1 A Problem with Gamma

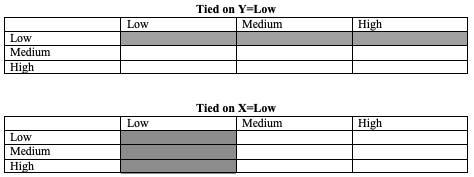

Gamma is a useful statistic from an instructional perspective, in part because the logic of taking the balance of similar and different rankings to determine directionality is so intuitively clear. In practice, however, gamma comes with a bit of baggage: it tends to overstate the strength of relationships. For instance, in the example of the relationship between ideology and religious importance, the value of gamma (.49) far outstrips the values of Cramer’s V (.266) and lambda (.198). We don’t expect different measures to produce the same results, but when two measures of association suggest a somewhat modest relationship and another suggests a much stronger one, the difference is hard to reconcile. Gamma tends to produce stronger effects because it focuses only on the diagonal pairs. In other words, gamma is calculated only from pairs of observations that follow clearly positive (similarly ranked) or negative (differently ranked) patterns. But not all pairs of observations in a table follow these directional patterns. Many pairs are “tied” in value on one variable but have different values on the other variable, so they follow neither a positive nor a negative pattern. If tied pairs were also taken into account, the denominator would be much larger and gamma would be somewhat smaller.

The two tables below illustrate examples of tied pairs. In the first table, the shaded area represents cells that are tied in their value of Y (low) but have different values of X (low, medium, and high). In the second table, the highlighted cells are tied in their value of X (low) but differ in their value of Y (low, medium, and high). Many more tied pairs occur throughout the tables. None of these pairs are included in the calculation of gamma, but they can make up a substantial part of the table, especially if the directional pattern is weak. To the extent that a table contains many tied pairs, gamma is likely to substantially overstate the magnitude of the relationship between X and Y, because its denominator is smaller than it would be if it represented the entire table.

Figure 13.4: Tied Pairs in a Crosstab

If you are using gamma, you should always keep in mind that it tends to overstate relationships, and that there are alternative measures of association that do account for tied pairs. Still, as a general rule, gamma will not report a significant relationship when the other statistics do not. In other words, gamma does not increase the likelihood of concluding there is a relationship when there actually isn’t one.
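Tied pairs can be counted with the same kind of bookkeeping used for similar and different pairs. The sketch below is an illustration only: `count_ties()` is a hypothetical helper, and the 2x2 table is made up. Within a single row, the number of pairs drawn from different cells equals (row total squared minus the sum of the squared cell counts) / 2, and the same shortcut works within a column.

```r
# Hypothetical helper: count pairs tied on only one variable.
# tied_y: same row (same value of Y) but different columns (different X)
# tied_x: same column (same value of X) but different rows (different Y)
count_ties <- function(tab) {
  tab <- as.matrix(tab)
  # Pairs from different cells within each row, summed over rows
  tied_y <- sum((rowSums(tab)^2 - rowSums(tab^2)) / 2)
  # Pairs from different cells within each column, summed over columns
  tied_x <- sum((colSums(tab)^2 - colSums(tab^2)) / 2)
  c(tied_y = tied_y, tied_x = tied_x)
}

# Made-up 2x2 table: rows = Y (Low, High), columns = X (Low, High)
toy <- matrix(c(30, 10, 20, 40), nrow = 2,
              dimnames = list(Y = c("Low", "High"), X = c("Low", "High")))

count_ties(toy)  # tied_y = 1000, tied_x = 1100
```

In this made-up table there are 1,200 similarly ranked pairs (30 x 40) and 200 differently ranked pairs (20 x 10), so gamma's denominator is 1,400, even though another 2,100 pairs are tied on one variable or the other. That gap is what measures that account for ties are designed to address.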

13.4.2 Tau-b and Tau-c

There are many useful alternatives to gamma. The ones I like to use are tau-b and tau-c, both of which keep the focus on the number of similarly and differently ranked pairs in the numerator but also take the number of tied pairs into account in the denominator, albeit in somewhat different ways.39 In practice, the primary difference between the two is that tau-b is appropriate for square tables (e.g., 3x3 or 4x4), while tau-c is appropriate for rectangular tables (e.g., 2x3 or 3x4). Like gamma, tau-b and tau-c are bounded by -1 (perfect negative relationship) and +1 (perfect positive relationship), with 0 indicating no relationship.
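The footnoted tau-b formula and the standard formula for Stuart's tau-c can be written as two small R functions. These are hypothetical helpers that take pair counts as inputs; tau-c uses \(m\), the smaller of the number of rows and columns, which is how it adjusts for rectangular tables. The example plugs in counts for a made-up 2x2 table with N = 100: 1,200 similarly ranked pairs, 200 differently ranked pairs, 1,000 pairs tied only on Y, and 1,100 pairs tied only on X.

```r
# Hypothetical helper: tau-b from pair counts
# (similar - different) / sqrt((similar + different + tied_y) * (similar + different + tied_x))
tau_b_from_counts <- function(similar, different, tied_y, tied_x) {
  (similar - different) /
    sqrt((similar + different + tied_y) * (similar + different + tied_x))
}

# Hypothetical helper: Stuart's tau-c from pair counts
# 2 * m * (similar - different) / (n^2 * (m - 1)), with m = min(rows, columns)
tau_c_from_counts <- function(similar, different, n, n_rows, n_cols) {
  m <- min(n_rows, n_cols)
  2 * m * (similar - different) / (n^2 * (m - 1))
}

# Counts for a made-up 2x2 table with N = 100
tau_b_from_counts(1200, 200, tied_y = 1000, tied_x = 1100)    # 1000 / sqrt(2400 * 2500), about 0.408
tau_c_from_counts(1200, 200, n = 100, n_rows = 2, n_cols = 2)  # 4 * 1000 / 10000 = 0.4
```

For the same counts, gamma would be (1200 - 200)/(1200 + 200), or about .71, so bringing the tied pairs into the denominator pulls the estimate down considerably.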

The R functions for tau-b and tau-c are similar to the function for gamma. Although tau-b is the most appropriate measure of association for the relationship between ideology and religion (a square table), both statistics are reported below in order to demonstrate the commands:

#Get tau-b and tau-c for religious importance by ideology

KendallTauB(anes20$relig_imp, anes20$ideol3, conf.level=0.95)

tau_b lwr.ci upr.ci

0.33091 0.31151 0.35030

StuartTauC(anes20$relig_imp, anes20$ideol3, conf.level=0.95)

tauc lwr.ci upr.ci

0.31971 0.30104 0.33839

The results for tau-b and tau-c are very close in magnitude (they usually are), and both point to a moderate, positive relationship between ideology and religious importance. Note also that neither confidence interval includes 0, so we can reject the null hypothesis (\(H_0\): tau-b = 0, or tau-c = 0). You probably noticed that these values are somewhat stronger than those for Cramer’s V and lambda but somewhat weaker than the result obtained from gamma. In my experience, this is usually the case.

Setting gamma aside, since it tends to overstate the magnitude of relationships, you can use the table below as a rough guide to how the measures of association discussed here correspond to judgments of effect size. Using this information in conjunction with the contents of a crosstab should enable you to provide a fair and substantive assessment of the relationship in the table.

| Absolute Value | Effect Size |
|---|---|
| .05 | |
| .15 | Weak |
| .25 | |
| .35 | Moderate |
| .45 | |
| .55 | Strong |
| .65 | |
| .75 | Very Strong |
| .85 | |

Always remember that the column percentages tell the story of how the variables are connected, but you still need a measure of association to summarize the strength and direction of the relationship. At the same time, the measure of association is not very useful on its own without referencing the contents of the table.

13.5 Revisiting the Gender Gap in Abortion Attitudes

Back in Chapter 10, we looked at the gender gap in abortion attitudes, using t-tests for sex-based differences in two outcomes: the proportion who thought abortion should always be illegal and the proportion who thought abortion should be available as a matter of choice. Those tests found no significant gender-based difference in preferring that abortion be illegal and a significant but small difference in the proportion preferring that abortion be available as a matter of choice. With crosstabs, we can do better than testing just these two polar-opposite positions by using a wider range of preferences, as shown below.

#Create abortion attitude variable

anes20$abortion<-factor(anes20$V201336)

#Change levels to create four-category variable

levels(anes20$abortion)<-c("Illegal","Rape/Incest/Life",

"Other Conditions","Choice","Other Conditions")

#Create factor variable for sex

anes20$Rsex<-factor(anes20$V201600)

levels(anes20$Rsex)<-c("Male", "Female")

crosstab(anes20$abortion, anes20$Rsex, prop.c=TRUE,

chisq=TRUE, plot=FALSE)

Cell Contents

|-------------------------|

| Count |

| Column Percent |

|-------------------------|

==========================================

anes20$Rsex

anes20$abortion Male Female Total

------------------------------------------

Illegal 388 475 863

10.4% 10.7%

------------------------------------------

Rape/Incest/Life 929 978 1907

24.9% 22.1%

------------------------------------------

Other Conditions 688 706 1394

18.5% 16.0%

------------------------------------------

Choice 1723 2263 3986

46.2% 51.2%

------------------------------------------

Total 3728 4422 8150

45.7% 54.3%

==========================================

Statistics for All Table Factors

Pearson's Chi-squared test

------------------------------------------------------------

Chi^2 = 24.499 d.f. = 3 p = 0.0000196

Minimum expected frequency: 394.76

Here, the dependent variable ranges from wanting abortion to be illegal in all circumstances, to allowing it only in cases of rape, incest, or threat to the life of the mother, to allowing it in some other circumstances, to allowing it generally as a matter of choice. These categories can be seen as ranging from the most to the least restrictive views on abortion. As expected, based on the analysis in Chapter 10, the differences between men and women on this issue are not very great. In fact, the greatest difference in column percentages is in the “Choice” row, where women are about five percentage points more likely than men to favor this position (51.2% vs. 46.2%). This weak effect is also reflected in the measures of association reported below.

#Get Cramer's V and tau-c for abortion attitudes by sex

CramerV(anes20$abortion, anes20$Rsex)

[1] 0.054827

StuartTauC(anes20$abortion, anes20$Rsex, conf.level=0.95)

tauc lwr.ci upr.ci

0.041292 0.018106 0.064479

Tau-c is used here because the table is rectangular (4x2). This relationship is another demonstration of the need to go beyond just reporting statistical significance. Had we simply reported that chi-square is statistically significant, with a p-value of .0000196, we might have concluded that sex plays an important role in shaping attitudes on abortion. However, by paying attention to the column percentages and measures of association, we can more accurately report that sex is related to how people answered this question but that its impact is quite small.
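As a check on the output above, both statistics can be reproduced from the counts printed in the crosstab. This sketch assumes the standard formulas for Cramer's V and Stuart's tau-c, treats the rows as ordered from most to least restrictive and the columns as Male then Female, and uses only base R (`chisq.test()` rather than the descr or DescTools functions).

```r
# Counts copied from the crosstab above
abortion_tab <- matrix(c(388, 929, 688, 1723,    # Male
                         475, 978, 706, 2263),   # Female
                       ncol = 2,
                       dimnames = list(abortion = c("Illegal", "Rape/Incest/Life",
                                                    "Other Conditions", "Choice"),
                                       Rsex = c("Male", "Female")))
n <- sum(abortion_tab)  # 8150

# Cramer's V = sqrt(chi-squared / (N * (min(rows, columns) - 1)))
chi2 <- unname(chisq.test(abortion_tab)$statistic)  # about 24.499
cramers_v <- sqrt(chi2 / (n * (min(dim(abortion_tab)) - 1)))
cramers_v  # about 0.0548

# Stuart's tau-c from similarly and differently ranked pairs.
# With two columns, a similar pair matches a Male cell with Female cells in lower rows;
# a differently ranked pair matches a Female cell with Male cells in lower rows.
similar <- 0
different <- 0
for (i in seq_len(nrow(abortion_tab) - 1)) {
  lower <- (i + 1):nrow(abortion_tab)
  similar   <- similar   + abortion_tab[i, "Male"]   * sum(abortion_tab[lower, "Female"])
  different <- different + abortion_tab[i, "Female"] * sum(abortion_tab[lower, "Male"])
}
m <- min(dim(abortion_tab))
tau_c <- 2 * m * (similar - different) / (n^2 * (m - 1))
tau_c  # about 0.0413
```

Both values should match `CramerV()` and `StuartTauC()` to rounding, which is a useful way to confirm that the table is being read in the intended order.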

13.5.1 When to Use Which Measure

A number of measures of association are available for describing the relationship between two variables in a contingency table. Not all measures of association are appropriate for all tables, however. To determine the appropriate measure of association, you need to know the level of measurement of the variables in your table.

For tables in which at least one variable is nominal, such as the table featuring region and religious importance, you must use non-directional measures of association. For these tables, Cramer’s V and lambda serve as excellent measures of association. You can certainly use both, or you may decide that you are more comfortable with one over the other and use it on its own; just make sure that if you are using just one of these two statistics, you are not doing so because it makes the relationship look stronger.

For tables in which both variables are ordinal, you can choose between gamma, tau-b, and tau-c. My own preference is to rely on tau-b and tau-c, since they incorporate more information from the table than gamma does and, hence, provide a more realistic impression of the relationship in the table. If you use gamma, you should remember and acknowledge that it tends to overstate the magnitude of relationships because it focuses just on the positive and negative patterns in the table. You should also remember that tau-b is appropriate for square tables, and tau-c is for rectangular tables.
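The guidelines above can be summarized as a small decision rule. The function below is a hypothetical convenience helper, not part of DescTools or descr; it simply encodes the recommendations in this section, and it leaves gamma out in keeping with the preference for tau-b and tau-c.

```r
# Hypothetical helper encoding this section's guidelines.
# row_ordinal / col_ordinal: TRUE if that variable is ordinal, FALSE if nominal
suggest_measures <- function(row_ordinal, col_ordinal, n_rows, n_cols) {
  if (!row_ordinal || !col_ordinal) {
    # At least one nominal variable: use non-directional measures
    return(c("Cramer's V", "lambda"))
  }
  # Both variables ordinal: tau-b for square tables, tau-c for rectangular ones
  if (n_rows == n_cols) "tau-b" else "tau-c"
}

suggest_measures(FALSE, TRUE, 4, 3)  # "Cramer's V" "lambda"
suggest_measures(TRUE, TRUE, 3, 3)   # "tau-b"
suggest_measures(TRUE, TRUE, 4, 2)   # "tau-c"
```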

13.6 Next Steps

The next several chapters build on this and earlier chapters and turn to assessing relationships between numeric variables. In Chapter 14, we examine how to use scatter plots and correlation coefficients to assess relationships between numeric variables. The correlation coefficient is an interval-ratio version of the same type of measures of association you learned about in this chapter. Like other directional measures of association, it measures the extent to which the relationship between the independent and dependent variables follows a positive versus negative pattern, and it provides a sense of both the direction and strength of the relationship. The remaining chapters focus on regression analysis, first examining how single variables influence a dependent variable, then how we can assess the impact of multiple independent variables on a single dependent variable. If you’ve been able to follow along so far, the remaining chapters won’t pose a problem for you.

13.7 Exercises

13.7.1 Concepts and Calculations

The table below provides the raw frequencies for the relationship between respondent sex and political ideology in the 2020 ANES survey.

| | Male | Female | Total |
|---|---|---|---|
| Liberal | 1043 | 1443 | 2486 |
| Moderate | 812 | 984 | 1796 |
| Conservative | 1443 | 1283 | 2726 |
| Total | 3298 | 3710 | 7008 |

Chi-square=66.23

What percent of female respondents identify as Liberal? What percent of male respondents identify as Liberal? What about Conservatives? What percent of male and female respondents identify as Conservative? Do the differences between male and female respondents seem substantial?

Calculate and interpret Cramer’s V, Lambda, and Gamma for this table. Show your work.

If you were using tau-b or tau-c for this table, which would be most appropriate? Why?

13.7.2 R Problems

In the wake of the Dobbs v. Jackson Supreme Court decision, which overturned the longstanding precedent from Roe v. Wade, it is interesting to consider who is most likely to be upset by the Court’s decision. You should examine how three different independent variables influence anes20$upset_court, a slightly transformed version of anes20$V201340. This variable measures responses to a question that asked respondents if they would be pleased or upset if the Supreme Court reduced abortion rights. The three independent variables you should use are anes20$V201600 (sex of respondent), anes20$ptyID3 (party identification), and anes20$age5 (age of the respondent).

Copy and run the two code chunks below to create anes20$upset_court and anes20$age5.

#Create anes20$upset_court

anes20$upset_court<-anes20$V201340

levels(anes20$upset_court)<- c("Pleased", "Upset", "Neither")

anes20$upset_court<-ordered(anes20$upset_court,

levels=c("Upset", "Neither", "Pleased"))

#Collapse age into fewer categories (cut2() comes from the Hmisc package)

library(Hmisc)

anes20$age5<-cut2(anes20$V201507x, c(30, 45, 61, 76))

#Assign labels to levels

levels(anes20$age5)<-c("18-29", "30-44", "45-60", "61-75", "76+")

Create three crosstabs using the dependent and independent variables described above. Do not include the mosaic plots. For each crosstab, discuss the contents of the table, focusing on the strength and direction of the relationship (if direction is appropriate). This discussion should rely primarily on the column percentages. Think of this as telling whatever story there is to tell about how the variables are related.

Decide which measures of association are most appropriate for summarizing the relationship in each of the tables, run the commands for those measures of association, and discuss the results. What do these measures of association tell you about the strength, direction, and statistical significance of the relationships?
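The age recode in the code above relies on `cut2()` from the Hmisc package. If you would rather stay in base R, `cut()` with `right = FALSE` should produce the same five groups, assuming (as is the `cut2()` default) that each interval includes its lower cut point, so that a 30-year-old, for example, falls in "30-44". The ages below are made up for illustration; with the real data you would pass `anes20$V201507x` instead.

```r
# Made-up ages, two per intended group
ages <- c(18, 29, 30, 44, 45, 60, 61, 75, 76, 80)

# right = FALSE makes each interval closed on the left: [30, 45), [45, 61), ...
age5 <- cut(ages,
            breaks = c(-Inf, 30, 45, 61, 76, Inf),
            right = FALSE,
            labels = c("18-29", "30-44", "45-60", "61-75", "76+"))

table(age5)  # two cases in each of the five groups
```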

Another popular measure of association for crosstabs based on chi-square is phi, which takes into account sample size but not table size. \[phi=\sqrt{\frac{\chi^2}{N}}\] I’m not adding phi to the discussion here because it is most appropriate for 2x2 tables, in which case it is equivalent to Cramer’s V.↩︎

If you are itching for another formula, this one shows how tau-b integrates tied pairs: \[\text{tau-b}=\frac{N_{s} - N_{d}}{\sqrt{(N_{s}+N_{d}+N_{ty})(N_{s}+N_{d}+N_{tx})}}\] where \(N_{s}\) and \(N_{d}\) are the numbers of similarly and differently ranked pairs, and \(N_{ty}\) and \(N_{tx}\) are the numbers of pairs tied only on y and only on x, respectively.↩︎