data = read_csv("sixers.csv") |>

rename(FTpct = "FT%") |>

arrange(desc(FTA)) 24 Introduction to Bayesian Hierarchical Models

In a Bayesian hierarchical1 model, the model for the data depends on certain parameters, and those parameters in turn depend on other parameters. Hierarchical modeling provides a framework for building complex and high-dimensional models from simple and low-dimensional building blocks.

Suppose we want to estimate the true, long run free throw percentage for each basketball player currently on the Philadelphia 76ers2. That is, we want to estimate each player’s true probability of successfully scoring on any free throw attempt. We’ll consider data from games in the 2022-2023 NBA season obtained from basketball-reference as of Feb 27, summarized in Section 24.1.1.

Example 24.1 Let \(\theta_j\) denote player \(j\)’s true (long run) FT percentage; for example, \(\theta_{\text{Embiid}}\), \(\theta_{\text{Harden}}\), etc. Let \(n_j\) denote the number of free throw attempts and \(y_j\) the number of free throws made for player \(j\).

One way to estimate \(\theta_j\) is to pool all the data together. Over the 19 players in the data set, there are 1482 attempts, of which 1229 were successful, for an overall free throw percentage of 82.9%. The sample mean free throw percentage, among players with nonzero attempts, is 77.7%. So we could just estimate each \(\theta_j\) with the overall free throw percentage (or the sample mean free throw percentage). What are some issues with this approach?

At the other extreme, we could estimate \(\theta_j\) using just the attempts for player \(j\). For example, use only the attempts for Embiid to estimate \(\theta_{\text{Embiid}}\), only the attempts for Harden to estimate \(\theta_{\text{Harden}}\), etc. What are some issues with this approach? (Hint: consider the players with no attempts, or few attempts.)

In a Bayesian model, the \(\theta_j\)’s would have a prior distribution. We could assume a Bayesian model with a prior in which all \(\theta_j\) are independent. What are some issues with this approach?

Example 24.2 Let’s first consider just a single player with true (long run) free throw percentage \(\theta\). Given \(n\) independent attempts, conditional on \(\theta\), we might assume that \(y\) follows a Binomial(\(n, \theta\)) distribution.

If we imagine the player is randomly selected from among current NBA players, how might we interpret the prior distribution for \(\theta\)?

The prior distribution for \(\theta\) has a prior mean; let’s denote it \(\mu\). How might we interpret \(\mu\)?

The prior mean \(\mu\) is also a parameter, so we could place a prior distribution on it. What would the prior distribution on \(\mu\) represent?

In this model \(\mu\) and \(\theta\) are dependent. Why is this dependence useful?

The prior distribution for \(\theta\) has a prior standard deviation; let’s denote it \(\sigma\). How might we interpret \(\sigma\)?

The prior standard deviation \(\sigma\) is also a parameter, so we could place a prior distribution on it. What would the prior distribution on \(\sigma\) represent?

- A Bayesian hierarchical model consists of the following layers in the hierarchy.

- A model for the data \(y\) which depends on model parameters \(\theta\) and is represented by the likelihood \(f(y|\theta)\).

- The model usually involves multiple parameters. Generically, \(\theta\) represents a vector of parameters that show up in the likelihood function.

- Remember: While the likelihood for a particular \(y\) sample is computed by evaulating the conditional density of \(y\) given \(\theta\), the likelihood function is a function of \(\theta\), treating the data \(y\) as fixed.

- A (joint) prior distribution for the model parameter \(\theta\), denoted \(\pi(\theta|\phi)\), which depends on hyperparameters \(\phi\).

- A hyperprior distribution, i.e., a prior distribution for the hyperparameters, denoted \(\pi(\phi)\). (Again, \(\phi\) generically represents a vector of hyperparameters.)

- A model for the data \(y\) which depends on model parameters \(\theta\) and is represented by the likelihood \(f(y|\theta)\).

- The above specification implicitly assumes that the data distribution and likelihood function only depend on \(\theta\); the hyperparameters only affect \(y\) through \(\theta\). That is, the general hierarchical model above assumes that the data \(y\) and hyperparameters \(\phi\) are conditionally independent given the model parameters \(\theta\), so the likelihood satisfies \[ f(y|\theta, \phi) = f(y|\theta) \] The joint prior distribution on parameters and hyperparameters is \[ \pi(\theta, \phi) = \pi(\theta|\phi)\pi(\phi) \]

- Given the observed data \(y\), we find the joint posterior density of the parameters and hyperparameters, denoted \(\pi(\theta, \phi|y)\), using Bayes rule: posterior \(\propto\) likelihood \(\times\) prior. \[\begin{align*} \pi(\theta, \phi|y) & \propto f(y|\theta, \phi)\pi(\theta, \phi)\\ & \propto f(y|\theta)\pi(\theta|\phi)\pi(\phi) \end{align*}\]

- In Bayesian hierarchical models, there will generally be dependence between parameters. But dependence among parameters is actually useful for several reasons:

- The dependence is natural and meaningful in the problem context.

- If parameters are dependent, then all data can inform parameter estimates.

Example 24.3 Now suppose want to estimate the free throw percentages for \(J\) NBA players, given data consisting of the number of free throws attempted \(n_j\) and made \(y_j\) by player \(j\). Specify a hierarchical model, including your choice of prior distribution. How many parameters are there in the model?

Example 24.4 Use software to fit the Bayesian model using the 76ers data. Summarize the posterior distribution of \(\mu\).

Example 24.5 For each player \(j\), consider the posterior mean and posterior SD of \(\theta_j\).

What is the posterior mean of \(\theta_j\) for players with no attempts? Why does this make sense?

Why is the posterior mean of \(\theta_j\) not the same for players with no attempts?

For which players is the posterior mean closest to the observed free throw percentage? Why?

Which players have the smallest posterior SDs? Why?

Find and interpret 50%, 80%, and 98% posterior credible intervals for Joel Embiid.

Find and interpret 50%, 80%, and 98% posterior credible intervals for Kevin Ross.

24.1 Notes

24.1.1 Sample data

data |>

select(Player, FTA, FT, FTpct) |>

kbl() |> kable_styling()| Player | FTA | FT | FTpct |

|---|---|---|---|

| Joel Embiid | 556 | 477 | 0.858 |

| James Harden | 279 | 241 | 0.864 |

| Tyrese Maxey | 155 | 126 | 0.813 |

| Tobias Harris | 90 | 77 | 0.856 |

| Shake Milton | 89 | 76 | 0.854 |

| Montrezl Harrell | 85 | 59 | 0.694 |

| De'Anthony Melton | 59 | 46 | 0.780 |

| Danuel House Jr. | 40 | 28 | 0.700 |

| Georges Niang | 32 | 28 | 0.875 |

| Furkan Korkmaz | 29 | 19 | 0.655 |

| Paul Reed | 22 | 16 | 0.727 |

| P.J. Tucker | 20 | 17 | 0.850 |

| Matisse Thybulle | 12 | 9 | 0.750 |

| Jalen McDaniels | 5 | 2 | 0.400 |

| Kevin Ross | 5 | 5 | 1.000 |

| Jaden Springer | 4 | 3 | 0.750 |

| Saben Lee | 0 | 0 | NA |

| Julian Champagnie | 0 | 0 | NA |

| Michael Foster Jr. | 0 | 0 | NA |

24.1.2 Approximating the posterior distribution

brms is structured around linear models. In the situation of this example, brms wants to fit a logistic regression model. Rather than doing that, I used another software program, JAGS, to fit our current model. Don’t worry about the syntax. Just notice that the inputs are: (1) the data, (2) the model for the data which determines the likelihood (dbinom), and (3) the prior (dbeta) and hyperprior (dnorm). And the output is an approximation of the posterior distribution of the parameters.

library(rjags)Loading required package: codaLinked to JAGS 4.3.1Loaded modules: basemod,bugsplayer = data$Player

y = data$FT

n = data$FTA

J = length(y)

# specify the model: likelihood, prior, hyperprior

model_string <- "model{

# Likelihood

for (j in 1:J){

y[j] ~ dbinom(theta[j], n[j])

}

# Prior Beta(alpha, beta)

# We put hyperpriors on the mean mu and the SD sigma,

# So we need to convert to shape parameters alpha and beta

for (j in 1:J){

theta[j] ~ dbeta(mu * (mu * (1 - mu) / sigma ^ 2 - 1), (1 - mu) * (mu * (1 - mu) / sigma ^ 2 - 1))

}

# Hyperprior, phi=(mu, sigma)

# In JAGS the second parameter of dnorm is precision = 1 / SD ^ 2

mu ~ dnorm(0.75, 1 / 0.05 ^ 2)

sigma ~ dnorm(0.08, 1 / 0.02 ^ 2)

}"

dataList = list(y = y, n = n, J = J)

model <- jags.model(file = textConnection(model_string),

data = dataList)Compiling model graph

Resolving undeclared variables

Allocating nodes

Graph information:

Observed stochastic nodes: 19

Unobserved stochastic nodes: 21

Total graph size: 77

Initializing modelupdate(model, n.iter = 1000)

posterior_sample <- coda.samples(model,

variable.names = c("theta", "mu", "sigma"),

n.iter = 10000,

n.chains = 5)head(posterior_sample)[[1]]

Markov Chain Monte Carlo (MCMC) output:

Start = 2001

End = 2007

Thinning interval = 1

mu sigma theta[1] theta[2] theta[3] theta[4] theta[5]

[1,] 0.7324699 0.07280416 0.8263356 0.7838183 0.7522553 0.7925202 0.8312793

[2,] 0.7296958 0.09717432 0.8141839 0.8862601 0.8249744 0.8156394 0.8328928

[3,] 0.7534373 0.05886149 0.8110601 0.8514074 0.8309432 0.7985060 0.8187285

[4,] 0.7629686 0.07312367 0.8180578 0.7921141 0.8082493 0.7919141 0.8548020

[5,] 0.7870372 0.06456467 0.8835235 0.8170800 0.8524726 0.7964469 0.8361199

[6,] 0.8223509 0.07197705 0.8675150 0.8211591 0.8601450 0.8308076 0.8118094

[7,] 0.8140601 0.05526624 0.8583838 0.8854689 0.8308901 0.8721061 0.8384305

theta[6] theta[7] theta[8] theta[9] theta[10] theta[11] theta[12]

[1,] 0.7846246 0.6916464 0.6939036 0.7983741 0.7050658 0.7155877 0.6774993

[2,] 0.7901079 0.8378239 0.7379439 0.8160219 0.7703497 0.6920512 0.6844990

[3,] 0.6379728 0.7846579 0.7741689 0.7738598 0.6385470 0.6963456 0.6589052

[4,] 0.6623805 0.8124167 0.7870318 0.8195600 0.7737182 0.6999984 0.6556901

[5,] 0.7569271 0.8260051 0.8013455 0.7371848 0.8072837 0.6953802 0.8782308

[6,] 0.7726494 0.7482264 0.7235362 0.9043854 0.7518377 0.7711486 0.8039431

[7,] 0.6970380 0.7127409 0.7793467 0.8513418 0.6763837 0.7747981 0.8146501

theta[13] theta[14] theta[15] theta[16] theta[17] theta[18] theta[19]

[1,] 0.6350453 0.6921780 0.7309112 0.7886032 0.6774284 0.6919365 0.7830328

[2,] 0.7725379 0.7102491 0.6557252 0.7879546 0.8156375 0.6958028 0.7808721

[3,] 0.7666308 0.6869822 0.6875977 0.7752541 0.8532899 0.7412464 0.7684919

[4,] 0.8021445 0.7648994 0.7053264 0.6848467 0.8487722 0.7219288 0.7456395

[5,] 0.7270379 0.8763493 0.7174345 0.8468590 0.9170156 0.8161639 0.7850196

[6,] 0.7158149 0.8372383 0.9061728 0.7975963 0.9153025 0.8361973 0.7211391

[7,] 0.8104942 0.6915508 0.8848918 0.8361592 0.7239111 0.8180481 0.7721977

attr(,"class")

[1] "mcmc.list"summary(posterior_sample)

Iterations = 2001:12000

Thinning interval = 1

Number of chains = 1

Sample size per chain = 10000

1. Empirical mean and standard deviation for each variable,

plus standard error of the mean:

Mean SD Naive SE Time-series SE

mu 0.78291 0.02181 0.0002181 0.0005265

sigma 0.07238 0.01555 0.0001555 0.0003948

theta[1] 0.85365 0.01444 0.0001444 0.0001945

theta[2] 0.85498 0.02008 0.0002008 0.0002667

theta[3] 0.80739 0.02928 0.0002928 0.0003891

theta[4] 0.83614 0.03404 0.0003404 0.0004654

theta[5] 0.83419 0.03479 0.0003479 0.0004822

theta[6] 0.71958 0.04249 0.0004249 0.0006357

theta[7] 0.78051 0.04287 0.0004287 0.0005661

theta[8] 0.73819 0.05282 0.0005282 0.0007801

theta[9] 0.82846 0.04741 0.0004741 0.0006556

theta[10] 0.72301 0.05898 0.0005898 0.0009410

theta[11] 0.76063 0.05882 0.0005882 0.0008344

theta[12] 0.80944 0.05468 0.0005468 0.0007363

theta[13] 0.77353 0.06525 0.0006525 0.0009496

theta[14] 0.73072 0.07864 0.0007864 0.0013104

theta[15] 0.81267 0.06626 0.0006626 0.0009952

theta[16] 0.77947 0.07125 0.0007125 0.0010301

theta[17] 0.78342 0.07710 0.0007710 0.0011776

theta[18] 0.78427 0.07603 0.0007603 0.0011103

theta[19] 0.78360 0.07639 0.0007639 0.0011818

2. Quantiles for each variable:

2.5% 25% 50% 75% 97.5%

mu 0.73847 0.76863 0.78363 0.79781 0.8244

sigma 0.04388 0.06152 0.07183 0.08249 0.1045

theta[1] 0.82420 0.84419 0.85379 0.86384 0.8809

theta[2] 0.81375 0.84161 0.85562 0.86918 0.8917

theta[3] 0.74677 0.78804 0.80870 0.82787 0.8610

theta[4] 0.76550 0.81355 0.83788 0.85966 0.8980

theta[5] 0.76165 0.81191 0.83551 0.85849 0.8973

theta[6] 0.63319 0.69172 0.72092 0.74920 0.7974

theta[7] 0.68946 0.75317 0.78295 0.81046 0.8590

theta[8] 0.62594 0.70380 0.74154 0.77584 0.8326

theta[9] 0.72829 0.79817 0.83021 0.86200 0.9152

theta[10] 0.59761 0.68492 0.72691 0.76474 0.8278

theta[11] 0.63342 0.72369 0.76482 0.80197 0.8646

theta[12] 0.69251 0.77489 0.81288 0.84832 0.9075

theta[13] 0.62958 0.73301 0.77802 0.81971 0.8879

theta[14] 0.55554 0.68251 0.73859 0.78698 0.8605

theta[15] 0.66385 0.77161 0.81700 0.85939 0.9280

theta[16] 0.62482 0.73499 0.78464 0.83042 0.9028

theta[17] 0.61326 0.73640 0.79018 0.83717 0.9170

theta[18] 0.62112 0.73601 0.79064 0.83797 0.9169

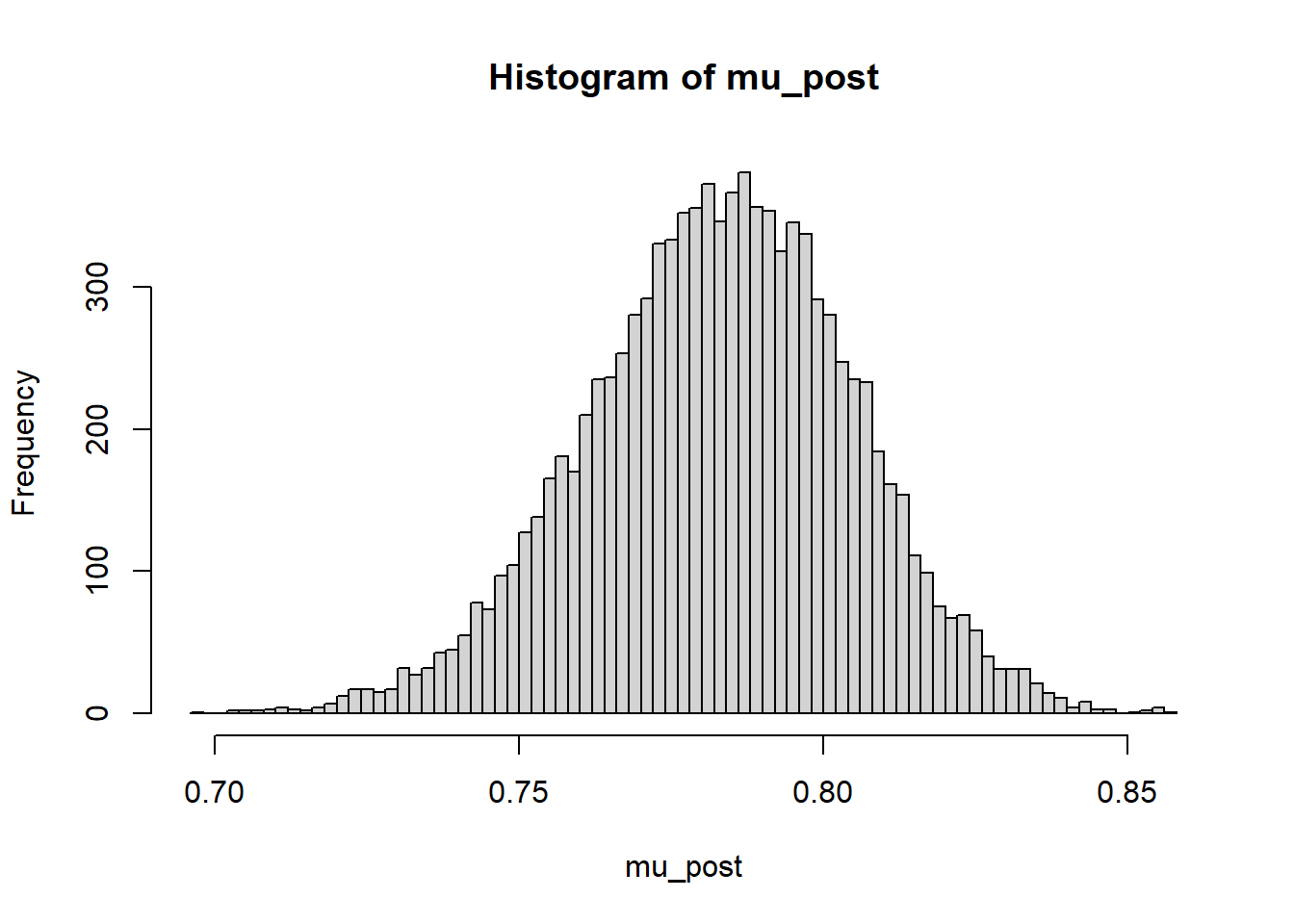

theta[19] 0.61229 0.73840 0.78999 0.83634 0.914624.1.3 Posterior distribution of \(\mu\)

mu_post = as.matrix(posterior_sample)[, "mu"]

hist(mu_post, breaks = 100)

quantile(mu_post, c(0.01, 0.10, 0.25, 0.5, 0.75, 0.90, 0.99)) 1% 10% 25% 50% 75% 90% 99%

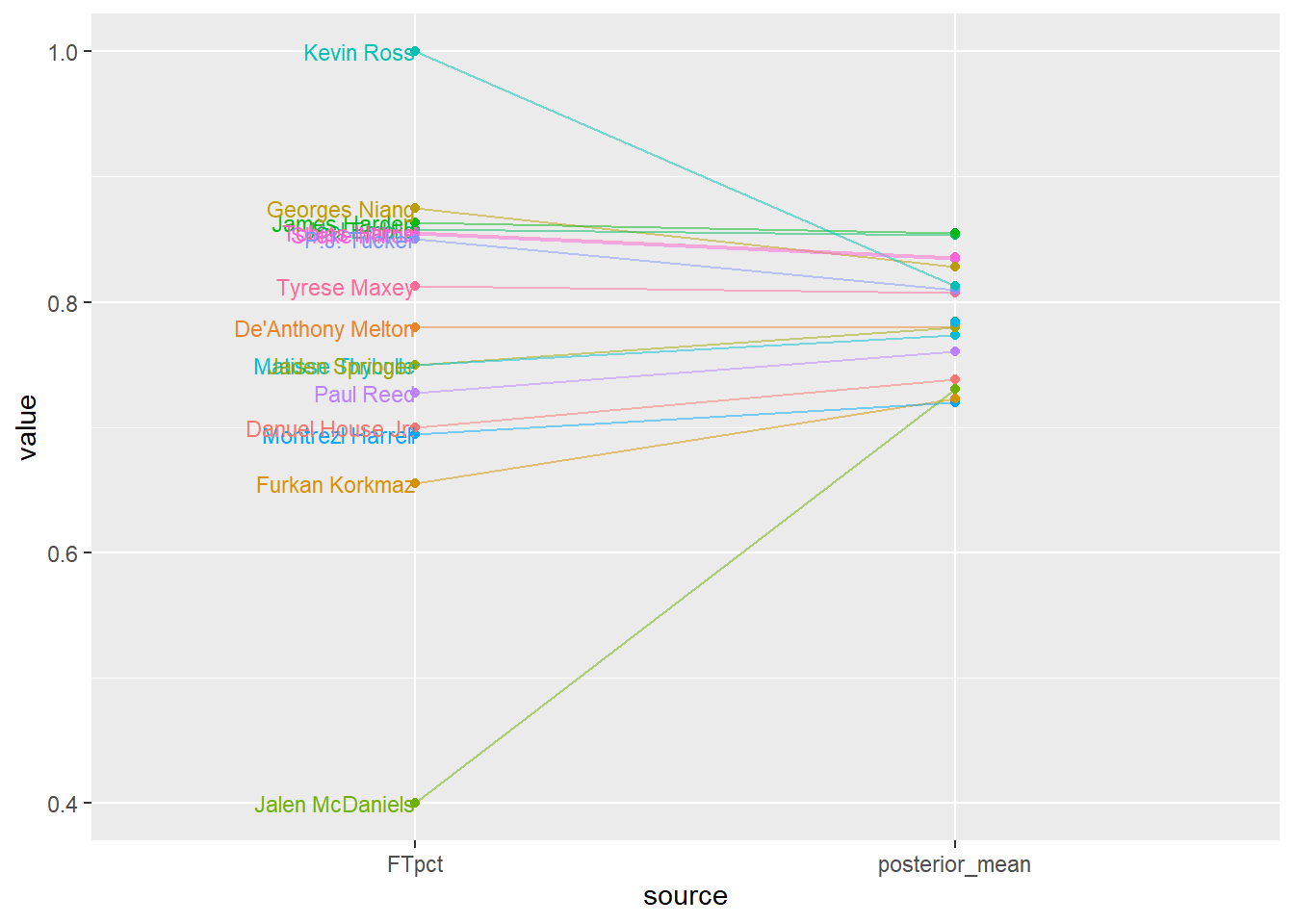

0.7290810 0.7544493 0.7686335 0.7836262 0.7978124 0.8099958 0.8321037 24.1.4 Posterior mean and SD of \(\theta_j\)s

player_summary = data.frame(player, n, y, y / n)

names(player_summary) = c("player", "FTA", "FT", "FTpct")

# In posterior_sample list, the first two entries correspond to mu and sigma,

# so we remove them.

player_summary[c("posterior_mean", "posterior_SD")] =

summary(posterior_sample)$statistics[-(1:2), c("Mean", "SD")]

player_summary |> kbl(digits = 4) |> kable_styling()| player | FTA | FT | FTpct | posterior_mean | posterior_SD |

|---|---|---|---|---|---|

| Joel Embiid | 556 | 477 | 0.8579 | 0.8536 | 0.0144 |

| James Harden | 279 | 241 | 0.8638 | 0.8550 | 0.0201 |

| Tyrese Maxey | 155 | 126 | 0.8129 | 0.8074 | 0.0293 |

| Tobias Harris | 90 | 77 | 0.8556 | 0.8361 | 0.0340 |

| Shake Milton | 89 | 76 | 0.8539 | 0.8342 | 0.0348 |

| Montrezl Harrell | 85 | 59 | 0.6941 | 0.7196 | 0.0425 |

| De'Anthony Melton | 59 | 46 | 0.7797 | 0.7805 | 0.0429 |

| Danuel House Jr. | 40 | 28 | 0.7000 | 0.7382 | 0.0528 |

| Georges Niang | 32 | 28 | 0.8750 | 0.8285 | 0.0474 |

| Furkan Korkmaz | 29 | 19 | 0.6552 | 0.7230 | 0.0590 |

| Paul Reed | 22 | 16 | 0.7273 | 0.7606 | 0.0588 |

| P.J. Tucker | 20 | 17 | 0.8500 | 0.8094 | 0.0547 |

| Matisse Thybulle | 12 | 9 | 0.7500 | 0.7735 | 0.0652 |

| Jalen McDaniels | 5 | 2 | 0.4000 | 0.7307 | 0.0786 |

| Kevin Ross | 5 | 5 | 1.0000 | 0.8127 | 0.0663 |

| Jaden Springer | 4 | 3 | 0.7500 | 0.7795 | 0.0713 |

| Saben Lee | 0 | 0 | NaN | 0.7834 | 0.0771 |

| Julian Champagnie | 0 | 0 | NaN | 0.7843 | 0.0760 |

| Michael Foster Jr. | 0 | 0 | NaN | 0.7836 | 0.0764 |

player_summary |>

select(player, FTpct, posterior_mean) |>

pivot_longer(!player, names_to = "source", values_to = "value") |>

mutate(label = if_else(source == "FTpct", player, "")) |>

ggplot(aes(x = source,

y = value,

group = player,

col = player,

label = label)) +

scale_color_discrete() +

geom_point() +

geom_text(hjust = 1, size = 3) +

geom_line(alpha = 0.5) +

theme(legend.position = "none")

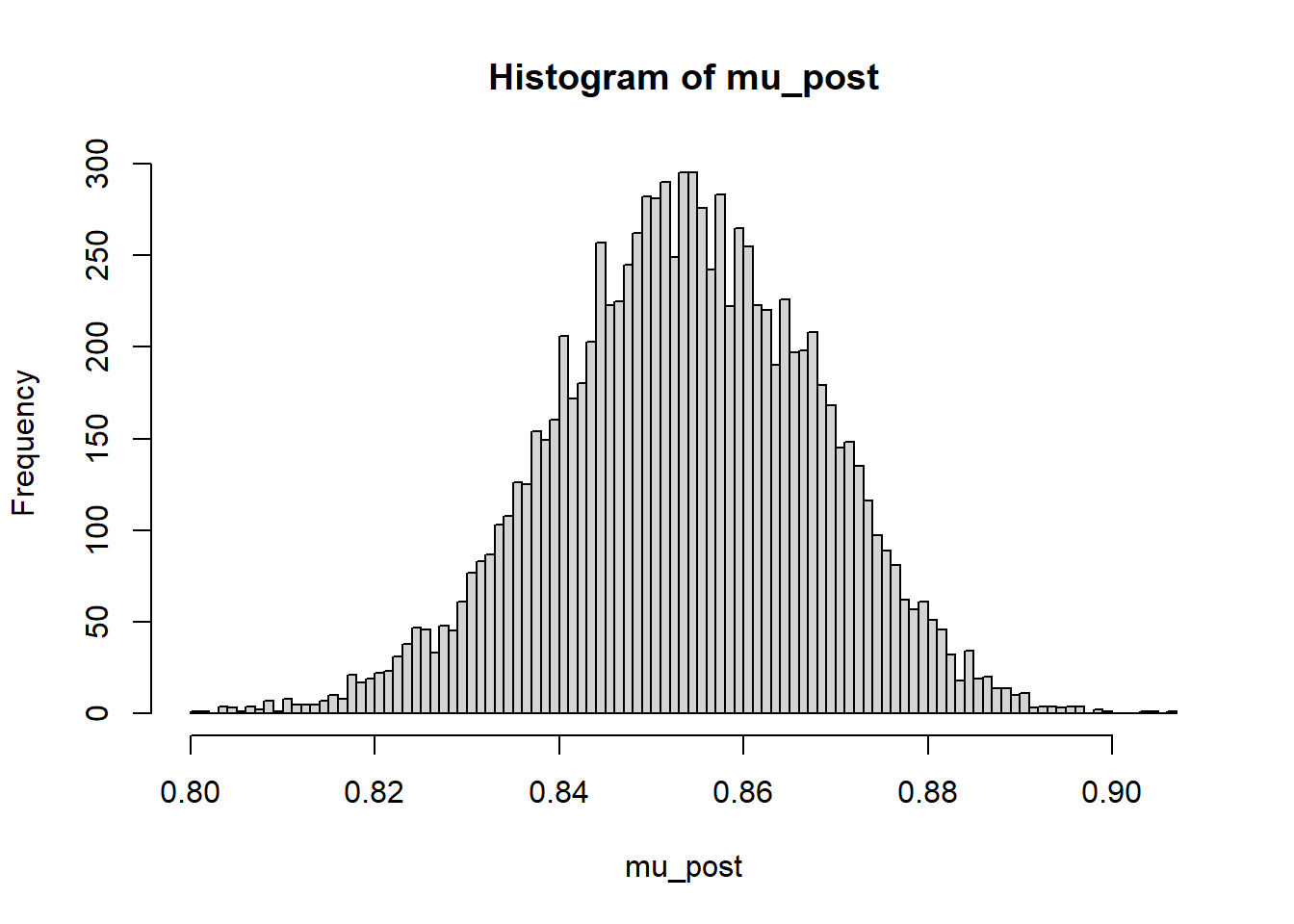

24.1.5 Posterior distribution of \(\theta_{\text{Embiid}}\)

mu_post = as.matrix(posterior_sample)[, "theta[1]"]

hist(mu_post, breaks = 100)

quantile(mu_post, c(0.01, 0.10, 0.25, 0.5, 0.75, 0.90, 0.99)) 1% 10% 25% 50% 75% 90% 99%

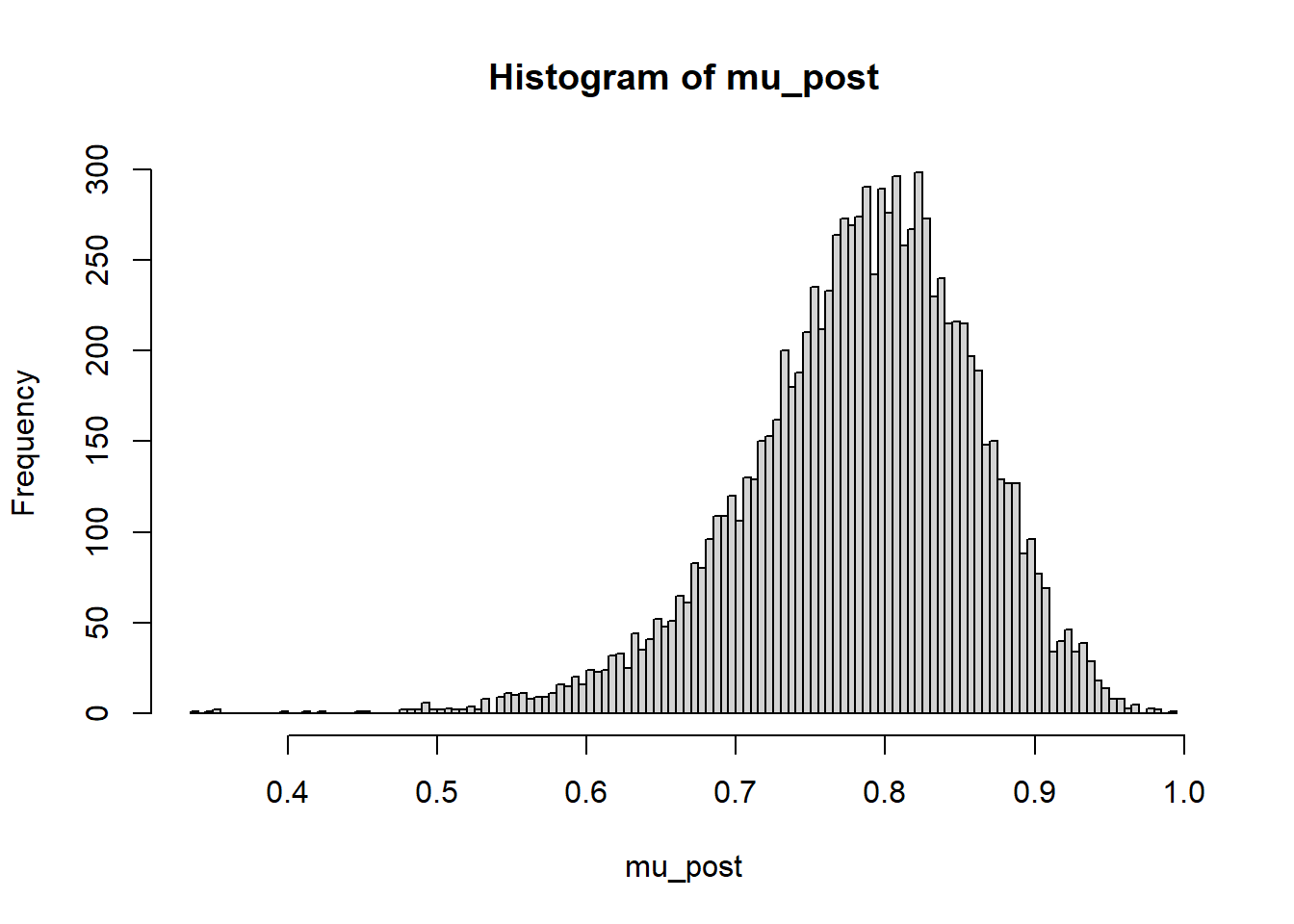

0.8184544 0.8352037 0.8441910 0.8537942 0.8638389 0.8719677 0.8858650 24.1.6 Posterior distribution of \(\theta_{\text{Ross}}\)

mu_post = as.matrix(posterior_sample)[, "theta[19]"]

hist(mu_post, breaks = 100)

quantile(mu_post, c(0.01, 0.10, 0.25, 0.5, 0.75, 0.90, 0.99)) 1% 10% 25% 50% 75% 90% 99%

0.5676829 0.6844631 0.7383997 0.7899897 0.8363422 0.8748987 0.9339276 ShinyStan provides a nice interface to interactively investigate the posterior distribution.

library(shinystan)

sixers_sso <- as.shinystan(posterior_sample, model_name = "76ers Free Throws")

sixers_sso <- drop_parameters(sixers_sso, pars = c("sigma"))

launch_shinystan(sixers_sso)