12 Independence

- Recall that events \(A\) and \(B\) are independent if knowing whether or not one occurs does not change the probability of the other.

- For events \(A\) and \(B\) (with \(0<\text{P}(A)<1\) and \(0<\text{P}(B)<1\)) the following are equivalent. That is, if one is true then they all are true; if one is false, then they all are false.

\[\begin{align*} \text{$A$ and $B$} & \text{ are independent}\\ \text{P}(A \cap B) & = \text{P}(A)\text{P}(B)\\ \text{P}(A^c \cap B) & = \text{P}(A^c)\text{P}(B)\\ \text{P}(A \cap B^c) & = \text{P}(A)\text{P}(B^c)\\ \text{P}(A^c \cap B^c) & = \text{P}(A^c)\text{P}(B^c)\\ \text{P}(A|B) & = \text{P}(A)\\ \text{P}(A|B) & = \text{P}(A|B^c)\\ \text{P}(B|A) & = \text{P}(B)\\ \text{P}(B|A) & = \text{P}(B|A^c) \end{align*}\]
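To see why the product rule for \(A\) and \(B\) carries over to complements, here is a short derivation sketch for one of the equivalences, starting from \(\text{P}(A \cap B) = \text{P}(A)\text{P}(B)\). The first step uses the fact that \(B\) is the disjoint union of \(A \cap B\) and \(A^c \cap B\); the remaining equivalences can be checked with similar arguments.

\[\begin{align*} \text{P}(A^c \cap B) & = \text{P}(B) - \text{P}(A \cap B)\\ & = \text{P}(B) - \text{P}(A)\text{P}(B)\\ & = \left(1 - \text{P}(A)\right)\text{P}(B)\\ & = \text{P}(A^c)\text{P}(B) \end{align*}\]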

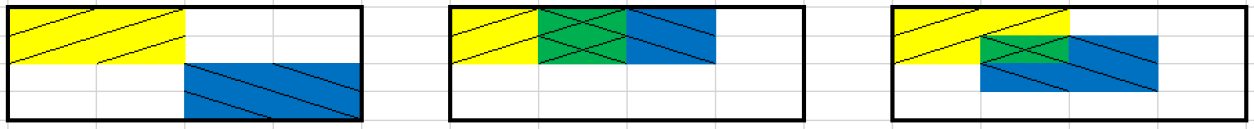

Example 12.1 Each of the three Venn diagrams below represents a sample space with 16 equally likely outcomes. Let \(A\) be the yellow / event, \(B\) the blue \ event, and their intersection \(A\cap B\) the green \(\times\) event. Suppose that areas represent probabilities, so that for example \(\text{P}(A) = 4/16\).

In which of the scenarios are events \(A\) and \(B\) independent?

- Do not confuse “disjoint” with “independent”.

- Disjoint means two events do not “overlap”. Independence means two events “overlap in just the right way”.

- You can pretty much forget “disjoint” exists; you will naturally apply the addition rule for disjoint events correctly without even thinking about it.

- Independence is much more important and useful, but also requires more care.

Example 12.2 Roll two fair four-sided dice, one green and one gold. There are 16 total possible outcomes (roll on green, roll on gold), all equally likely. Consider the event \(E=\{\text{the green die lands on 1}\}\). Answer the following questions by computing and comparing appropriate probabilities.

Consider \(A=\{\text{the gold die lands on 4}\}\). Are \(A\) and \(E\) independent?

Consider \(B=\{\text{the sum of the dice is 3}\}\). Are \(B\) and \(E\) independent?

Consider \(C=\{\text{the sum of the dice is 5}\}\). Are \(C\) and \(E\) independent?
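One way to check answers to Example 12.2 is to enumerate the 16 outcomes and compare \(\text{P}(\cdot \cap E)\) with the product of the individual probabilities. The following is a minimal sketch; the helper names `prob` and `is_independent` are illustrative choices, not part of the example.

```python
from fractions import Fraction
from itertools import product

# Sample space: (green roll, gold roll) for two fair four-sided dice.
outcomes = list(product(range(1, 5), repeat=2))

def prob(event):
    """Probability of an event (a predicate on outcomes) when all outcomes are equally likely."""
    favorable = sum(1 for w in outcomes if event(w))
    return Fraction(favorable, len(outcomes))

def is_independent(e1, e2):
    """Check the product rule P(E1 and E2) == P(E1) * P(E2) exactly."""
    both = prob(lambda w: e1(w) and e2(w))
    return both == prob(e1) * prob(e2)

E = lambda w: w[0] == 1          # the green die lands on 1
A = lambda w: w[1] == 4          # the gold die lands on 4
B = lambda w: w[0] + w[1] == 3   # the sum of the dice is 3
C = lambda w: w[0] + w[1] == 5   # the sum of the dice is 5

for name, event in [("A", A), ("B", B), ("C", C)]:
    print(name, "P =", prob(event), "independent of E:", is_independent(event, E))
```

Using `Fraction` keeps the comparison exact, so the product rule is tested without floating-point rounding.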

- Independence concerns whether or not the occurrence of one event affects the probability of the other.

- Given two events it is not always obvious whether or not they are independent.

- Independence depends on the underlying probability measure. Events that are independent under one probability measure might not be independent under another.

- Independence is often assumed. Whether or not independence is a valid assumption depends on the underlying random phenomenon.
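To illustrate how independence depends on the probability measure, the sketch below compares the same two events from Example 12.2 under two measures: fair dice, and hypothetical weighted dice where face \(f\) has probability \(f/10\). The weights are an assumption made up for illustration. In both cases the two dice are rolled independently of each other; only the probabilities of the faces change.

```python
from fractions import Fraction
from itertools import product

faces = range(1, 5)
outcomes = list(product(faces, repeat=2))  # (green roll, gold roll)

def make_measure(face_probs):
    """Outcome probabilities when both dice use face_probs and are rolled independently."""
    return {(g, y): face_probs[g] * face_probs[y] for g, y in outcomes}

def prob(measure, event):
    """Probability of an event (a predicate on outcomes) under the given measure."""
    return sum(p for w, p in measure.items() if event(w))

def is_independent(measure, e1, e2):
    """Check the product rule exactly under the given measure."""
    both = prob(measure, lambda w: e1(w) and e2(w))
    return both == prob(measure, e1) * prob(measure, e2)

E = lambda w: w[0] == 1          # the green die lands on 1
C = lambda w: w[0] + w[1] == 5   # the sum of the dice is 5

fair = make_measure({f: Fraction(1, 4) for f in faces})
weighted = make_measure({f: Fraction(f, 10) for f in faces})  # hypothetical weights

print("fair dice:    ", is_independent(fair, E, C))
print("weighted dice:", is_independent(weighted, E, C))
```

Under the weighted measure, \(\text{P}(E) = 1/10\), \(\text{P}(C) = 1/5\), and \(\text{P}(E \cap C) = 1/25\), so the product rule fails even though it holds for fair dice.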

Example 12.3 Flip a fair coin twice. Let

- \(A\) be the event that the first flip lands on heads,

- \(B\) be the event that the second flip lands on heads,

- \(C\) be the event that both flips land on the same side.

- Are the two events \(A\) and \(B\) independent?

- Are the two events \(A\) and \(C\) independent?

- Are the two events \(B\) and \(C\) independent?

- Are the three events \(A\), \(B\), and \(C\) independent?

- Events \(A_1, A_2, A_3, \ldots\) are independent if:

    - any pair of events \(A_i, A_j\) (\(i \neq j\)) satisfies \(\text{P}(A_i\cap A_j)=\text{P}(A_i)\text{P}(A_j)\),

    - and any triple of events \(A_i, A_j, A_k\) (distinct \(i,j,k\)) satisfies \(\text{P}(A_i\cap A_j\cap A_k)=\text{P}(A_i)\text{P}(A_j)\text{P}(A_k)\),

    - and any quadruple of events \(A_i, A_j, A_k, A_m\) (distinct \(i,j,k,m\)) satisfies \(\text{P}(A_i\cap A_j\cap A_k \cap A_m)=\text{P}(A_i)\text{P}(A_j)\text{P}(A_k)\text{P}(A_m)\),

    - and so on.

- Intuitively, a collection of events is independent if knowing whether or not any combination of the events in the collection occurs does not change the probability of any other event in the collection.
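The definition of independence for a collection of events can be checked by brute force on a small sample space: test the product rule for every sub-collection of size two or more. Here is a minimal sketch applied to the coin flip events of Example 12.3; the function name `are_independent` is an illustrative choice, and events are represented as Python sets of outcomes.

```python
from fractions import Fraction
from itertools import combinations, product
from math import prod

outcomes = list(product("HT", repeat=2))  # two flips of a fair coin, equally likely

def prob(event):
    """Probability of an event given as a set of outcomes."""
    return Fraction(sum(1 for w in outcomes if w in event), len(outcomes))

def are_independent(events):
    """Check the product rule for every sub-collection of size 2 or more."""
    for k in range(2, len(events) + 1):
        for subset in combinations(events, k):
            intersection = set.intersection(*subset)
            if prob(intersection) != prod(prob(e) for e in subset):
                return False
    return True

A = {w for w in outcomes if w[0] == "H"}   # first flip lands on heads
B = {w for w in outcomes if w[1] == "H"}   # second flip lands on heads
C = {w for w in outcomes if w[0] == w[1]}  # both flips land on the same side

print("A, B:   ", are_independent([A, B]))
print("A, C:   ", are_independent([A, C]))
print("B, C:   ", are_independent([B, C]))
print("A, B, C:", are_independent([A, B, C]))
```

Each pair passes the product rule, but the triple does not: \(\text{P}(A \cap B \cap C) = 1/4\) while \(\text{P}(A)\text{P}(B)\text{P}(C) = 1/8\). Pairwise independence alone is not enough.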

Example 12.4 A certain system consists of three components made by three different manufacturers. Past experience indicates that component A will fail within 100 hours with probability 0.01, component B will fail within 100 hours with probability 0.02, and component C will fail within 100 hours with probability 0.03. Assume the components fail independently. Find the probability that the system fails (within 100 hours) if:

- The components are connected in parallel: the system fails only if all of the components fail.

- The components are connected in series: the system fails whenever at least one of the components fails.

- When events are independent, the multiplication rule simplifies greatly. \[ \text{P}(A_1 \cap A_2 \cap A_3 \cap \cdots \cap A_n) = \text{P}(A_1)\text{P}(A_2)\text{P}(A_3)\cdots\text{P}(A_n) \quad \text{if $A_1, A_2, A_3, \ldots, A_n$ are independent} \]

- When a problem involves independence, you will want to take advantage of it. Work with “and” events whenever possible in order to use the multiplication rule. For example, for problems involving “at least one” (an “or” event) take the complement to obtain “none” (an “and” event).
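As a sketch of this strategy, the numbers from Example 12.4 can be plugged in directly. The parallel case is already an "and" event; the series case is an "at least one" event, so the code works with its complement, "no component fails". Variable names are illustrative.

```python
from math import prod

# Failure probabilities within 100 hours, from Example 12.4.
p_fail = {"A": 0.01, "B": 0.02, "C": 0.03}

# Parallel: the system fails only if all components fail (an "and" event),
# so independence lets us multiply the failure probabilities.
p_parallel_fails = prod(p_fail.values())

# Series: the system fails if at least one component fails (an "or" event).
# Take the complement: no component fails (an "and" event).
p_none_fail = prod(1 - p for p in p_fail.values())
p_series_fails = 1 - p_none_fail

print(f"parallel: {p_parallel_fails:.6f}")
print(f"series:   {p_series_fails:.6f}")
```

The parallel system fails with probability \(0.01 \times 0.02 \times 0.03 = 0.000006\), and the series system with probability \(1 - 0.99 \times 0.98 \times 0.97 \approx 0.0589\).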

Example 12.5 In the meeting problem, assume Regina’s arrival time \(R\) follows a Uniform(0, 60) distribution and Cady’s arrival time \(Y\) follows a Normal(30, 10) distribution, independently of each other. (Remember, arrival times are measured in minutes after noon.) Let \(T=\min(R, Y)\). Compute and interpret \(\text{P}(T < 10)\).
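One possible approach to Example 12.5 uses the complement: \(T < 10\) means at least one of the two arrives before 12:10, and the complement is that both arrive at or after 12:10, an "and" event to which the multiplication rule applies. The sketch below assumes the second parameter of Normal(30, 10) is the standard deviation; if it is meant as a variance, the result changes. The helper functions are illustrative.

```python
from math import erf, sqrt

def uniform_cdf(x, low=0.0, high=60.0):
    """P(R <= x) for R ~ Uniform(low, high)."""
    if x <= low:
        return 0.0
    if x >= high:
        return 1.0
    return (x - low) / (high - low)

def normal_cdf(x, mean=30.0, sd=10.0):
    """P(Y <= x) for Y ~ Normal(mean, sd); sd is ASSUMED to be the standard deviation."""
    return 0.5 * (1 + erf((x - mean) / (sd * sqrt(2))))

t = 10  # minutes after noon

# Independence: P(R >= t and Y >= t) = P(R >= t) * P(Y >= t).
p_both_at_or_after_t = (1 - uniform_cdf(t)) * (1 - normal_cdf(t))

# Complement: P(min(R, Y) < t) = 1 - P(both arrive at or after t).
p_first_arrival_before_t = 1 - p_both_at_or_after_t

print(round(p_first_arrival_before_t, 4))
```

Under these assumptions the probability is roughly 0.19: on a bit under one in five days, the first person to arrive does so before 12:10.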

Example 12.6 A very large petri dish starts with a single microorganism. After one minute, the microorganism either splits into two with probability 0.75, or dies. All subsequent microorganisms behave in the same way — splitting into two with probability 0.75 or dying after each minute — independently of each other. What is the probability that the population eventually goes extinct? (Hint: condition on the first step.)
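Following the hint, let \(q\) be the extinction probability. Conditioning on the first step: with probability 0.25 the first microorganism dies, and with probability 0.75 it splits into two whose lineages must both die out, independently, each with probability \(q\). This gives \(q = 0.25 + 0.75q^2\). The sketch below solves the equation numerically by iterating from \(q = 0\), which corresponds to the probability of extinction within \(n\) minutes as \(n\) grows; the function name and tolerance are illustrative choices.

```python
def extinction_probability(p_split=0.75, tol=1e-12, max_iter=10_000):
    """Iterate q <- (1 - p_split) + p_split * q**2 starting from q = 0.

    After n iterations, q is the probability that the population is extinct
    within n minutes; the limit is the probability of eventual extinction.
    """
    q = 0.0
    for _ in range(max_iter):
        q_next = (1 - p_split) + p_split * q**2
        if abs(q_next - q) < tol:
            return q_next
        q = q_next
    return q

q = extinction_probability()
print(round(q, 6))
```

The quadratic \(0.75q^2 - q + 0.25 = 0\) has roots \(q = 1/3\) and \(q = 1\). The iteration converges to the smaller root, \(1/3\), which is the extinction probability.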