Chapter 4 Data analysis

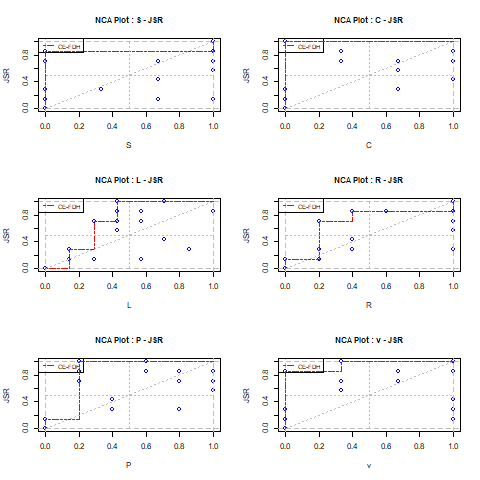

4.1 Visual inspection of the scatter plot

The starting point for NCA’s data analysis is a visual inspection of the \(XY\) scatter plot. This is a qualitative examination of the data pattern. By visual inspection the following questions can be answered:

- Is the expected corner of the \(XY\) plot empty?

- What is an appropriate ceiling line?

- What are potential outliers?

- What is the data pattern in the rest of the plot?

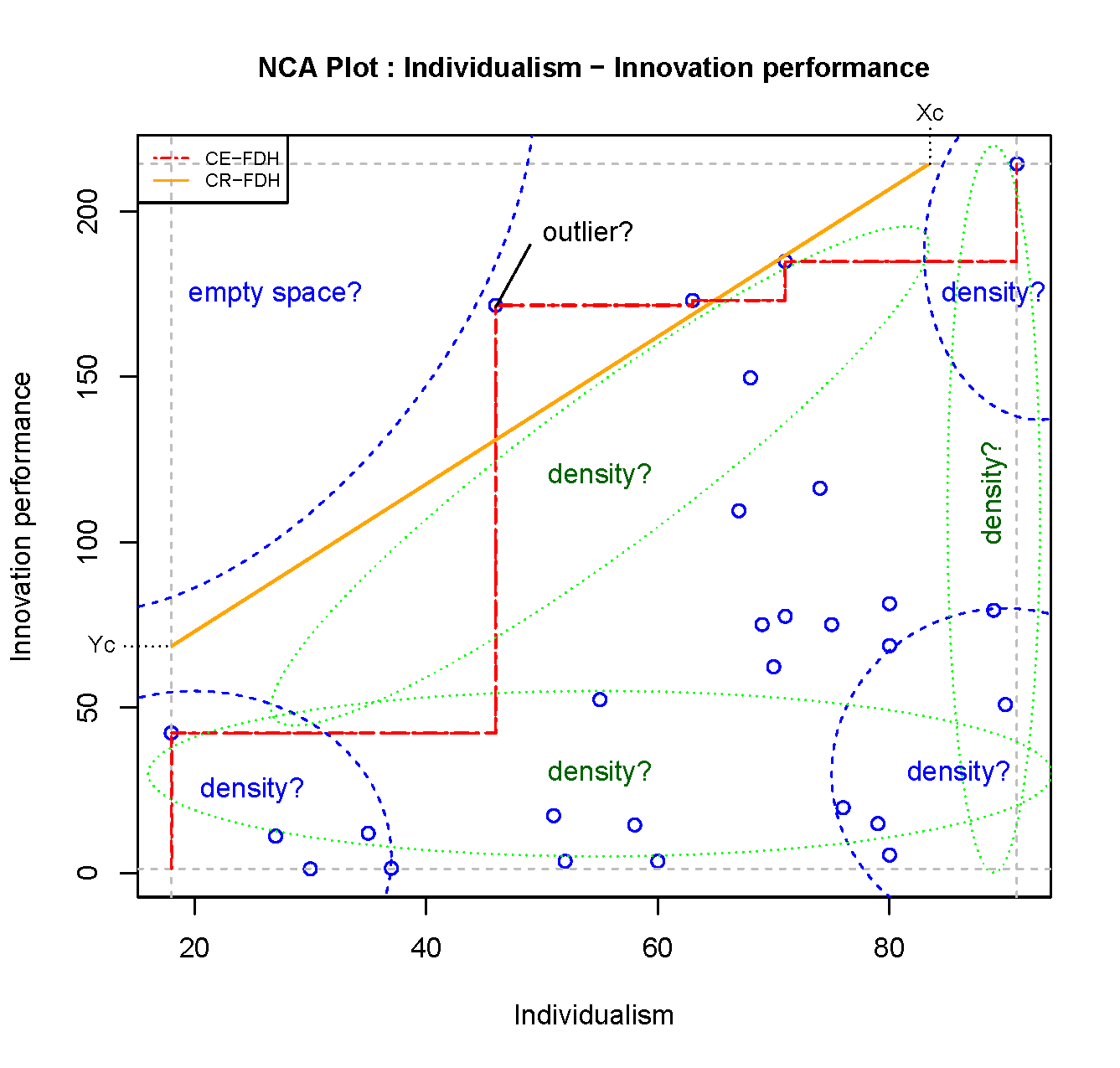

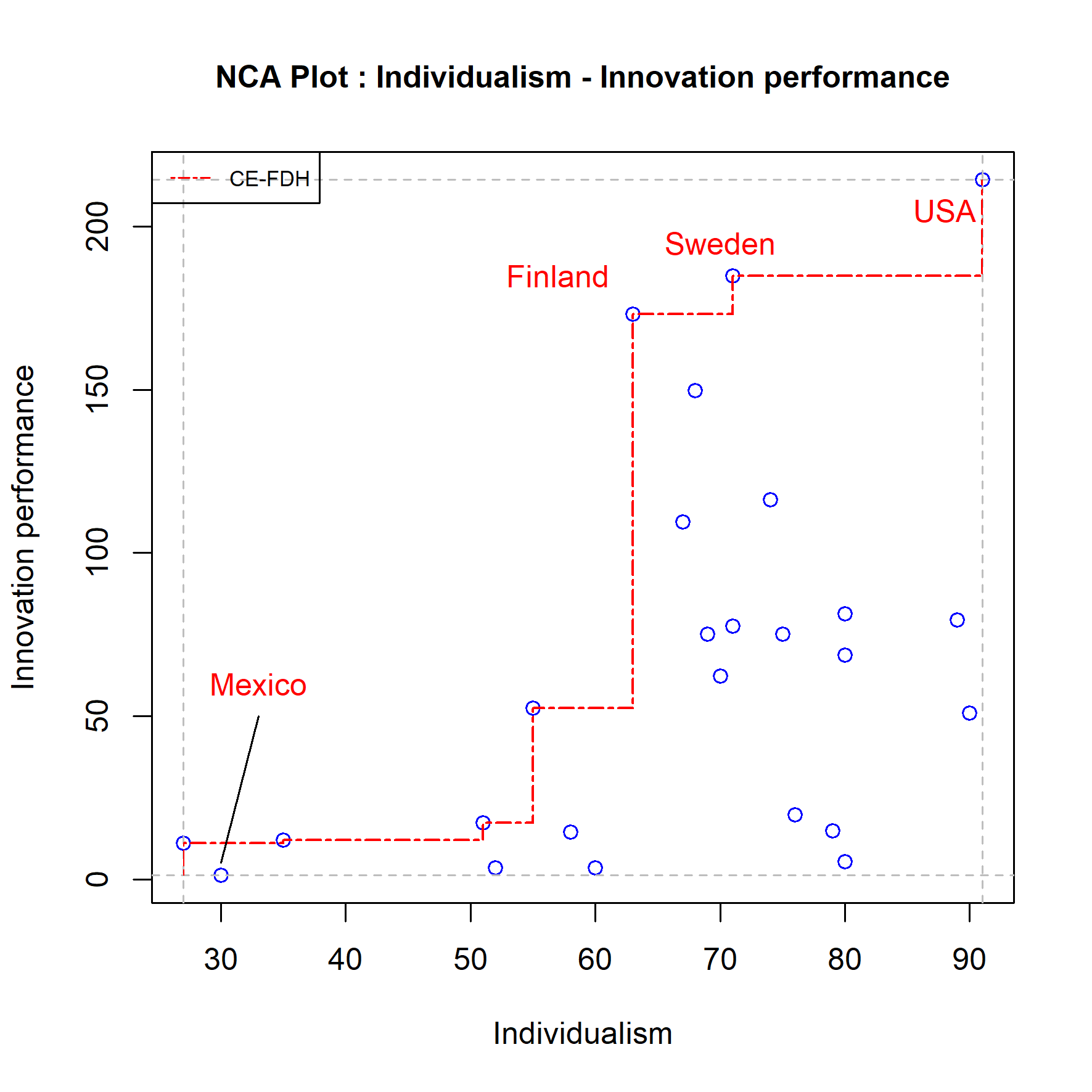

Visual inspection of the scatter plot is illustrated with nca.example, in particular for testing of the hypothesis that Individualism is necessary for Innovation performance. Figure 4.1 shows the scatter plot of Individualism versus Innovation performance with all 28 cases as black dots.

Figure 4.1: Scatter plot of Individualism and Innovation performance for evaluating the empty space, the appropriateness of the ceiling line, potential outliers, and the density of the cases in relevant areas.

4.1.1 Is the expected corner empty?

According to the hypothesis a high level of \(X\) is necessary for a high level of \(Y\) such that the upper left corner is expected to be empty. Figure 4.1 shows that cases with a low value of \(X\) have a low value of \(Y\), and that cases with a high value of \(Y\) have a high value of \(X\). The upper left corner is indeed empty. NCA’s effect size quantifies the size of the empty area in the scatter plot. After visual inspection the size of the empty space can be obtained with the NCA software by using the summaries argument of the nca_output function.

4.1.2 What is an appropriate ceiling line?

The ceiling line represents the border between the area with cases and the area without cases. Figure 4.1 shows the two default ceiling lines. The CR-FDH ceiling is often selected when the border is theoretically assumed to be linear, or when the condition and outcome have many levels (e.g., are continuous). The CE-FDH ceiling is often selected when the condition or outcome has few levels (e.g., is discrete). When the decision about the ceiling line is not made a priori, the ceiling line can be selected by visual inspection of the scatter plot. When the border looks linear the CR-FDH line may be selected, and when it looks non-linear the CE-FDH line may be the best choice. After visual inspection the appropriateness of the ceiling line may be verified with NCA’s ceiling accuracy and fit measures. Ceiling accuracy is the percentage of cases on or below the ceiling line. The ceiling accuracy of an appropriate ceiling line is close to 100%. NCA’s fit measure is the effect size of a selected ceiling as a percentage of the effect size of the CE-FDH line. The selected ceiling line may be inappropriate when fit deviates considerably from 100%. Ceiling accuracy and fit can be obtained with the NCA software by using the summaries argument of the nca_output function.
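The two measures can be illustrated with a small standalone calculation. The sketch below is written in plain Python and is not the NCA software; the data, the straight ceiling line (intercept 0.5, slope 0.8) and the two effect sizes are hypothetical values chosen only for illustration.

```python
def ceiling_accuracy(x, y, intercept, slope):
    """Percentage of cases on or below the straight line y = intercept + slope * x."""
    on_or_below = sum(1 for xi, yi in zip(x, y) if yi <= intercept + slope * xi)
    return 100.0 * on_or_below / len(x)


def fit(d_selected, d_ce_fdh):
    """Effect size of the selected ceiling as a percentage of the CE-FDH effect size."""
    return 100.0 * d_selected / d_ce_fdh


# Hypothetical cases and a hypothetical straight ceiling line (assumptions).
x = [1, 2, 3, 4, 5]
y = [1.0, 2.0, 2.5, 3.8, 4.0]
accuracy = ceiling_accuracy(x, y, intercept=0.5, slope=0.8)

# Hypothetical effect sizes: selected ceiling 0.45, CE-FDH ceiling 0.50.
fit_value = fit(0.45, 0.50)

print(accuracy, fit_value)
```

In this example one of the five cases lies above the line, so the ceiling accuracy is 80%, and the selected ceiling reaches 90% of the CE-FDH effect size.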

4.1.3 What are potential outliers?

Outliers are cases that are relatively ‘far away’ from other cases. These cases can be identified by visual inspection of the scatter plot. In NCA potential outliers are the cases that construct the ceiling line (‘ceiling outliers’) and the cases that construct the scope (‘scope outliers’). NCA defines an outlier as a case that has a large influence on the effect size when removed (see Section 3.8). After visual inspection, potential outliers can be verified with the NCA software by using the nca_outliers function.

4.1.4 What is the data pattern in the rest of the plot?

Although NCA focuses on the empty area in the scatter plot, the full space can also be informative for necessity. In particular the density of cases in other corners, the density of cases near the scope limits, and the density of cases near the ceiling line contain relevant information for necessity.

4.1.4.1 Density of cases in other corners

The presence of many cases in the lower left corner, thus cases with a low value of \(X\) that do not show a high value of \(Y\), supports the necessary condition hypothesis. Similarly, the presence of many cases in the upper right corner, thus cases with a high value of \(X\) that show a high value of \(Y\), also supports the hypothesis. If only a few cases were present in these corners, the emptiness of the upper left corner could be a random result. This also applies when the majority of cases are in the lower right corner. After visual inspection, the probability that the empty space is a random result of unrelated variables can be evaluated with NCA’s statistical test, which is part of the NCA software. NCA’s p value can be obtained by using the test.rep argument in the nca_analysis function and subsequently the summaries argument in the nca_output function.

Although NCA focuses on the emptiness of the corner that is expected to be empty according to the hypothesis, it is also possible to explore the emptiness of other corners. When theoretical support is available, the emptiness of another corner could be formulated as an additional necessary condition hypothesis. Such a hypothesis is formulated in terms of the absence or a low value of \(X\) or \(Y\) (see Section 2.6). After visual inspection, any corner in the scatter plot can be evaluated with the NCA software by using the corner argument in the nca_analysis function.

4.1.4.2 Density of cases near the scope limits

Cases near the scope limit of \(X = X_{max}\) have met the necessary condition of a high level of \(X\) being necessary for a high level of \(Y\). For certain levels of \(X > X_c\), where \(X_c\) is the intersection between the ceiling line and the line \(Y = Y_{max}\), \(X\) is not necessary for \(Y\) (‘condition inefficiency’). When most cases are in the condition inefficiency area \(X_c < X < X_{max}\), the necessary condition could be considered as ‘trivial’. Most cases have met the necessary condition, whereas only a few cases have not.

Cases near the scope limit of \(Y = Y_{min}\) have a low level of the outcome. Up to a level \(Y = Y_c\), where \(Y_c\) is the intersection between the ceiling line and the line \(X = X_{min}\), \(Y\) is not constrained by \(X\) (‘outcome inefficiency’). When most cases are in the outcome inefficiency area \(Y_{min} < Y < Y_c\), the necessary condition could be considered ‘irrelevant’; the condition is not constraining the outcome in that area. The condition and outcome inefficiencies can be obtained with the NCA software by using the summaries argument of the nca_output function.
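For a straight ceiling line the two intersection points \(X_c\) and \(Y_c\) can be computed directly. The sketch below is plain Python, not the NCA software. It assumes a ceiling line \(Y = a + bX\) with \(b > 0\) and the empty space in the upper left corner, and it expresses each inefficiency as a percentage of the corresponding range, which is an assumption about the reporting format; the function name and the numbers are hypothetical.

```python
def inefficiencies(intercept, slope, x_min, x_max, y_min, y_max):
    """Condition and outcome inefficiency (in % of the X and Y range)
    for a straight ceiling line y = intercept + slope * x with slope > 0."""
    x_c = (y_max - intercept) / slope   # ceiling line meets Y = Y_max
    y_c = intercept + slope * x_min     # ceiling line meets X = X_min
    # Keep both intersection points inside the scope.
    x_c = min(max(x_c, x_min), x_max)
    y_c = min(max(y_c, y_min), y_max)
    condition_ineff = 100.0 * (x_max - x_c) / (x_max - x_min)
    outcome_ineff = 100.0 * (y_c - y_min) / (y_max - y_min)
    return condition_ineff, outcome_ineff


# Hypothetical scope [0, 10] x [0, 10] and ceiling line y = 2 + 1 * x.
cond, outc = inefficiencies(intercept=2.0, slope=1.0,
                            x_min=0.0, x_max=10.0, y_min=0.0, y_max=10.0)
print(cond, outc)
```

Here the ceiling line reaches \(Y_{max}\) at \(X_c = 8\) and starts at \(Y_c = 2\), so \(X\) does not constrain \(Y\) in the upper 20% of the \(X\) range and in the lower 20% of the \(Y\) range.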

4.1.4.3 Density of cases near the ceiling line

Cases near the ceiling line will not be able to achieve a higher outcome unless the level of the necessary condition increases. These cases can be considered as “best cases” (assuming that the outcome is desirable, and the condition is an effort). For a given level of the condition, the maximum possible outcome for that level is achieved (by other, unknown variables). Thus, for a certain level of the condition, cases near the ceiling have achieved a relatively high level of the outcome compared to cases with a similar level of the condition. Furthermore, cases near the ceiling line ‘support’ the estimation of the ceiling line. With many cases near the ceiling line, the support of the ceiling line is high.

4.2 The ‘empty space’: Necessary condition ‘in kind’

Necessary condition hypotheses are qualitative statements like ‘\(X\) is necessary for \(Y\)’. The statement indicates that it is impossible to have \(Y\) without \(X\). Consequently, if the statement holds, the space corresponding to having \(Y\) but not \(X\) in an \(XY\) plot or contingency table does not have observations, thus is empty. To calculate the size of the empty space, NCA draws a border line (ceiling line) between the empty space without observation and the full space with observations. The size of the empty space relative to the full space is the effect size and indicates the constraint that \(X\) poses on \(Y\). When the effect size is relevant (e.g., \(d > 0.1\)), and when the empty space is unlikely to be a random result of unrelated \(X\) and \(Y\) (e.g., \(p < 0.05\)), the researcher may conclude that there is empirical evidence for the hypothesis. Thus, after having evaluated theoretical support, the effect size and the p value, the hypothesis ‘\(X\) is necessary for \(Y\)’ may be supported. This hypothesis is formulated in a qualitative way and describes the necessary condition in kind. The effect size and its p value can be produced with the NCA software using the nca_analysis function.

Even for small samples, NCA can estimate an effect size and a relevant p value. An effect size can be calculated for a sample size \(N > 1\) (at least two cases must be available) and a relevant p value can be calculated for a sample size \(N > 3\). The reasons for these thresholds are as follows. NCA’s effect size can be calculated as long as a ceiling line can be drawn in the \(XY\) plot. NCA estimates the ceiling line from empirical data, and for drawing a line at least two points are needed (\(N = 2\)). NCA assumes that the ceiling line is not purely horizontal and not purely vertical (although the CE-FDH step function has horizontal and vertical parts). When the ceiling line represents the situation that the presence or high value of \(X\) is necessary for the presence or high value of \(Y\), the empty space is located in the upper left corner of the scatter plot. For \(N = 2\), when the first case is in the lower left corner (\(x = 0, y = 0\)) and the second case is in the upper right corner (\(x = 1, y = 1\)), the effect size is 1 (CE-FDH). For all other situations with \(N = 2\), the effect size is 0 or not defined (purely horizontal or purely vertical line). For calculating the p value, NCA’s statistical test (see Section 6.2) compares the effect size of the observed sample (with \(N\) observed cases) with the effect sizes of created samples with \(N\) fictive cases when \(X\) and \(Y\) are unrelated. Fictive cases that represent non-relatedness between \(X\) and \(Y\) are obtained by combining observed \(X\) values with observed \(Y\) values into new samples. The total number of created samples with a unique combination (permutations) of fictive cases is \(N!\) (\(N\) factorial). Only one of the permutations corresponds to the observed sample. For each sample the effect size is calculated. The p value is then defined as the probability (p) that the effect size of a sample is equal to or larger than the effect size of the observed sample, that is, the proportion of all samples with an effect size at least as large as the observed one. If this probability is small (e.g., \(p < .05\)), a ‘significant’ result is obtained. This means that the observed sample is unlikely to be the result of a random process of unrelated variables (the null hypothesis is rejected), suggesting support for an alternative hypothesis.

For example, for a sample size of \(N = 2\) the total number of unique samples or permutations (including the observed sample) is \(1 * 2 = 2\). When the observed sample consists of case 1 \((x_1,y_1)\) and case 2 \((x_2,y_2)\), then the observed \(x\)-values \((x_1,x_2)\) and the observed \(y\)-values \((y_1,y_2)\) can be combined into two unique samples of size \(N = 2\): the observed sample with observed case 1 \((x_1,y_1)\) and observed case 2 \((x_2, y_2)\), and the alternative created sample with two fictive cases \((x_1,y_2)\) and \((x_2, y_1)\). For example, when in the observed sample case 1 is \((0,0)\) and case 2 is \((1,1)\), the observed effect size is 1. The alternative sample has the cases \((0,1)\) and \((1,0)\), and its effect size is 0. Only one of the two samples (the observed sample itself) has an effect size equal to or larger than the observed effect size, thus \(p = 1/2 = 0.5\). This means that the effect size of 1 is a ‘non-significant’ result because the probability that the observed effect size is caused by unrelated variables is not small enough (not below 0.05).

Similarly, for sample size \(N = 3\) the total number of unique samples is \(1 * 2 * 3 = 6\), and the smallest possible p value is \(1/6 = 0.167\), for \(N = 4\) the total number of unique samples is \(1 * 2 * 3 * 4 = 24\), and the smallest possible p value is \(1/24 = 0.042\), and for \(N = 5\) the total number of unique samples is \(1 * 2 * 3 * 4 * 5 = 120\), and the smallest possible p value is \(1/120 = 0.008\), etc. Thus, only from \(N = 4\) it is possible to obtain a p value that is small enough for making conclusions about the ‘statistical significance’ of an effect size.
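The relation between sample size and the smallest possible p value, \(1/N!\), can be checked with a few lines of standalone Python. The function name is hypothetical and the sketch is independent of the NCA software.

```python
from math import factorial


def smallest_p_value(n):
    """Smallest p value an exact permutation test can produce for n cases."""
    return 1 / factorial(n)


# Number of unique samples and smallest possible p value for N = 2..5.
for n in range(2, 6):
    print(n, factorial(n), round(smallest_p_value(n), 3))

# Smallest sample size for which p < 0.05 is attainable.
smallest_n = next(n for n in range(2, 100) if smallest_p_value(n) < 0.05)
print(smallest_n)
```

The loop reproduces the values 0.5, 0.167, 0.042 and 0.008 mentioned above, and the last line confirms that \(N = 4\) is the first sample size for which a p value below 0.05 can occur.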

When the sample size increases, the total number of unique samples increases rapidly, and the smallest possible p value decreases rapidly. For \(N = 10\) the total number of samples (permutations) is 3628800 and the corresponding smallest possible p value is smaller than 0.0000003. For \(N = 20\) the total number of samples is a number of 19 digits, with a corresponding smallest possible p value that has 18 zeros after the decimal point. When \(N\) is large, the computation of the effect sizes of all samples (permutations) requires unrealistic computation times. Therefore, NCA uses a random sample from all possible samples and estimates the p value with this selection of samples. For this reason, NCA’s statistical test is an ‘approximate permutation test’. In the NCA software this test is implemented with the test.rep argument in the nca_analysis function, which sets the number of samples to be analyzed, for example 10000 (the larger the number, the more precision but also the more computation time). When the test.rep value is larger than \(N!\) (which is relevant for small \(N\)), the software selects all \(N!\) samples for the p value calculation.
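The logic of the exact and the approximate permutation test can be sketched in standalone Python. This is an illustration of the idea, not the implementation in the NCA software: the effect size function is a simplified CE-FDH calculation for the upper left corner with the empirical scope, and the function names, the seed argument and the tie tolerance are assumptions.

```python
import itertools
import math
import random


def ce_fdh_effect_size(x, y):
    """Empty area above a non-decreasing step ceiling divided by the scope
    (upper left corner, empirical scope). Returns None when undefined."""
    x_min, x_max, y_min, y_max = min(x), max(x), min(y), max(y)
    scope = (x_max - x_min) * (y_max - y_min)
    if scope == 0:
        return None
    xs = sorted(set(x))
    empty = 0.0
    for left, right in zip(xs, xs[1:]):
        # Ceiling on [left, right): highest Y among cases with X <= left.
        ceiling = max(yi for xi, yi in zip(x, y) if xi <= left)
        empty += (y_max - ceiling) * (right - left)
    return empty / scope


def permutation_p_value(x, y, test_rep=10000, seed=1):
    """Share of created samples with an effect size >= the observed one."""
    d_obs = ce_fdh_effect_size(x, y)
    if d_obs is None:
        return None
    n = len(x)
    if test_rep >= math.factorial(n):
        samples = itertools.permutations(y)  # all N! created samples
    else:
        rng = random.Random(seed)
        samples = (rng.sample(list(y), n) for _ in range(test_rep))
    count = total = 0
    for y_perm in samples:
        total += 1
        if ce_fdh_effect_size(x, y_perm) >= d_obs - 1e-12:
            count += 1
    return count / total


p_two = permutation_p_value([0, 1], [0, 1])                 # N = 2
p_four = permutation_p_value([0, 1, 2, 3], [0, 1, 2, 3])    # N = 4
print(p_two, p_four)
```

For the two-case example the function enumerates both permutations and returns 0.5. For four perfectly ordered cases only the observed permutation reaches the observed effect size, which gives the smallest possible value of 1/24. For larger samples the same function switches to random permutations, so its result then depends on the number of repetitions and the seed.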

The NCA software can also be used for estimating the effect size and p value for small N.

4.3 The bottleneck table: Necessary conditions ‘in degree’

For additional insights, a necessity relationship can be formulated in a quantitative way: the necessary condition in degree: “level of \(X\) is necessary for level of \(Y\)”. The bottleneck table is a helpful tool for evaluating necessary conditions in degree. The bottleneck table is the tabular representation of the ceiling line. The first column is the outcome \(Y\) and the next columns are the necessary conditions. The values in the table are levels of \(X\) and \(Y\) corresponding to the ceiling line.

By reading the bottleneck table row by row from left to right it can be evaluated for which particular level of \(Y\), which particular threshold levels of the conditions \(X\) are necessary. The bottleneck table includes only conditions that are supposed to be necessary conditions in kind. Conditions that are not supposed to be necessary (e.g., because the effect size is too small or the p value too large) are usually excluded from the table (see Section 4.8). The bottleneck table can be produced with the NCA software using the argument bottlenecks = TRUE in the nca_output function.

Figure 4.2 shows the software output of a particular bottleneck table (Dul, Hauff, et al., 2021) with two personality traits of sales persons that were identified as necessary conditions for Sales performance (\(Y\)): Ambition (\(X_1\)) and Sociability (\(X_2\)). Both were identified as necessary because there is theoretical support, the effect sizes are relatively large (\(d > 0.10\)), and the p values are relatively low (\(p < 0.05\)).

![Bottleneck table with two necessary conditions for sales performance [Adapted from @dul2021marketing]. NN = not necessary.](sales%20output.png)

Figure 4.2: Bottleneck table with two necessary conditions for sales performance (Adapted from Dul, Hauff, et al., 2021). NN = not necessary.

The example shows that up to level 40 of Sales performance, Ambition and Sociability are not necessary (NN). For levels 50 and 60 of Sales performance only Ambition is necessary, and for higher levels of Sales performance both personality traits are necessary. For example, for a level of 70 of Sales performance, Ambition must be at least 29.4 and Sociability at least 23.2. If a case (a sales person) has a level of a condition that is lower than the threshold value, this person cannot achieve the corresponding level of Sales performance. The condition is a bottleneck. For the highest level of Sales performance, the required threshold levels of Ambition and Sociability are 66.8 and 98.4 respectively.

4.3.1 Levels expressed as percentage of range

Figure 4.2 is an example of a default bottleneck table produced by the NCA software. This table has 11 rows with levels of \(X\) and \(Y\) expressed as ‘percentage.range’, that is, as percentages of their respective ranges. The range is the maximum level minus the minimum level. A level of 0% of the range corresponds to the minimum level, 100% to the maximum level, and 50% to the middle level between these extremes. In the first row, the \(Y\) level is 0% of the \(Y\) range, and in the eleventh row it is 100%.
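The conversion between a percentage of the range and an actual value is a linear rescaling. The standalone Python sketch below shows it for a hypothetical variable that ranges from 20 to 70; the function names and numbers are illustrative assumptions and not part of the NCA software.

```python
def percentage_range_to_actual(pct, v_min, v_max):
    """Actual value that corresponds to pct percent of the range."""
    return v_min + pct / 100.0 * (v_max - v_min)


def actual_to_percentage_range(value, v_min, v_max):
    """Position of an actual value as a percentage of the range."""
    return 100.0 * (value - v_min) / (v_max - v_min)


# The 11 default rows (0%, 10%, ..., 100%) for a hypothetical range 20..70.
levels = [percentage_range_to_actual(p, 20, 70) for p in range(0, 101, 10)]
print(levels)
print(actual_to_percentage_range(45, 20, 70))
```

The first row corresponds to the minimum (20), the last row to the maximum (70), and the 50% row to the midpoint (45).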

4.3.2 Levels expressed as actual values

The levels of \(X\) and \(Y\) in the bottleneck table can also be expressed as actual values. Actual values are the values of \(X\) and \(Y\) as they are in the original dataset (and in the scatter plot). This way of expressing the levels can help to compare the bottleneck table results with the original data and with the scatter plot results. Expressing the levels of \(X\) with actual values can be done with the argument bottleneck.x = 'actual' in the nca_analysis function of the NCA software, and the actual values of \(Y\) can be shown in the bottleneck table with the argument bottleneck.y = 'actual'.

One practical use of a bottleneck table with actual values is illustrated with the example of sales performance. Figure 4.3 combines the bottleneck table with the scatter plots.

![Left: Bottleneck table with two necessary conditions for sales performance. Right: The red dot is a specific sales person with sales performance = 4, Ambition = 65 and Sociability = 59 [Adapted from @dul2021marketing]. NN = not necessary.](sales.png)

Figure 4.3: Left: Bottleneck table with two necessary conditions for sales performance. Right: The red dot is a specific sales person with sales performance = 4, Ambition = 65 and Sociability = 59 (Adapted from Dul, Hauff, et al., 2021). NN = not necessary.

The actual values of Sales performance range from 0 to 5.5, and for both conditions from 0 to 100. The left side of Figure 4.3 shows that up to level 2 of Sales performance, none of the conditions are necessary. The scatter plots show that cases with a very low level of Ambition or Sociability can still achieve level 2 of Sales performance. However, for level 4 of Sales performance Ambition must be at least 33 and Sociability at least 29. The scatter plots show that several cases have not reached these threshold levels of the conditions. This means that these cases do not reach level 4 of Sales performance. The scatter plots also show that cases exist with (much) higher levels of the conditions than these threshold levels that nevertheless do not reach level 4 of Sales performance: Ambition and Sociability are necessary, but not sufficient for Sales performance.

The right side of Figure 4.3 shows a particular case with Sales performance level 4, Ambition level 65 and Sociability level 59. For this person Sociability is the bottleneck for moving from level 4 to level 5 of Sales performance. Level 5 of Sales performance requires level 55 of Ambition and this is already achieved by this person. However, the person’s level of Sociability is 59 and this is below the threshold level that is required for level 5 of Sales performance. For reaching this level of Sales performance, this person must increase the level of Sociability (e.g., by training). Improving the Ambition without improving Sociability has no effect and is a waste of effort. For other persons, the individual situation might be different (e.g., Ambition but not Sociability is the bottleneck, both are bottlenecks, or none is a bottleneck). This type of bottleneck analysis allows the researcher to better understand the bottlenecks of individual cases and how to act on individual cases.

4.3.3 Levels expressed as percentiles

The levels of \(X\) and \(Y\) can also be expressed as percentiles. This can be helpful for selecting ‘important’ necessary conditions when acting on a group of cases. A percentile is a score below which a certain percentage of scores fall. For example, the 90th percentile of \(X\) is a score of \(X\) that is so high that 90% of the observed \(X\)-scores fall below that \(X\)-score. Similarly, the 5th percentile of \(X\) is a score of \(X\) that is so low that 5% of the observed \(X\)-scores fall below that \(X\)-score.

The bottleneck table can be expressed with percentiles by using the argument bottleneck.x = 'percentile' and/or bottleneck.y = 'percentile' in the nca_analysis function of the NCA software. Figure 4.4 shows the example bottleneck table with the level of \(Y\) expressed as actual values, and the level of the \(X\)s as percentiles.

![Bottleneck table with levels of $Y$ expressed as actual and levels of $X$ as percentiles. Between brackets are the cumulative number of cases that have not reached the threshold levels. The total number of cases is 108. [Adapted from @dul2021marketing].](salespercentiles.png)

Figure 4.4: Bottleneck table with levels of \(Y\) expressed as actual and levels of \(X\) as percentiles. Between brackets are the cumulative number of cases that have not reached the threshold levels. The total number of cases is 108. (Adapted from Dul, Hauff, et al., 2021).

A percentile level of \(X\) corresponds to the percentage of cases that are unable to reach the threshold level of \(X\) and thus the corresponding level of \(Y\) in the same row. If in a certain row (e.g., row with \(Y\) = 3) the percentile of \(X\) is small (e.g., 4% for Ambition), only a few cases (4 of 108 = 4%) were not able to reach the required level of \(X\) for the corresponding level of \(Y\). If the percentile of \(X\) is large (e.g., row with \(Y\) = 5.5), many cases were not able to reach the required level of \(X\) for the corresponding level of \(Y\): 68 of 108 = 63% for Ambition and 106 of 108 = 98% for Sociability. Therefore, the percentile for \(X\) is an indication of the ‘importance’ of the necessary conditions: how many cases were unable to reach the required level of the necessary condition for a particular level of the outcome. When several necessary conditions exist, this information may be relevant for prioritizing a collective action on a group of cases by focusing on bottleneck conditions with high percentile values of \(X\).
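The link between a threshold level, the number of bottleneck cases and the percentile can be shown with a short standalone Python sketch. The function name and the five example scores are hypothetical; the counts 4, 68 and 106 out of 108 are the ones reported in the text, and the sketch is not the NCA software.

```python
def bottleneck_cases(x_scores, threshold):
    """Number and percentage of cases whose X score is below the threshold."""
    n_below = sum(1 for score in x_scores if score < threshold)
    return n_below, 100.0 * n_below / len(x_scores)


# Hypothetical X scores of five cases and a hypothetical threshold of 35.
n_below, pct_below = bottleneck_cases([10, 20, 30, 40, 50], threshold=35)
print(n_below, pct_below)

# Counts reported in the text, converted to rounded percentages of 108 cases.
reported = {"Ambition, Y = 3": 4, "Ambition, Y = 5.5": 68, "Sociability, Y = 5.5": 106}
percentages = {label: round(100 * count / 108) for label, count in reported.items()}
print(percentages)
```

In the hypothetical example three of the five cases fall below the threshold, so the threshold sits at the 60th percentile and 60% of the cases are bottleneck cases for that row.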

Further details of using and interpreting the bottleneck table in this specific example can be seen in this video.

4.3.4 Levels expressed as percentage of maximum

The final way of expressing the levels of \(X\) and \(Y\) is less commonly used. It is possible to express levels of \(X\) and \(Y\) as percentage of their maximum levels. The bottleneck table can be expressed with percentage of maximum by using the argument bottleneck.x = 'percentage.max' or bottleneck.y = 'percentage.max' in the nca_analysis function of the NCA software.

4.3.5 Interpretation of the bottleneck table with other corners

The above sections refer to analyzing the upper left corner in the \(XY\) plot, corresponding to the situation that the presence or a high value of \(X\) is necessary for the presence or a high value of \(Y\). Then, when \(X\) is expressed as ‘percentage range’, ‘actual value’ or ‘percentage maximum’ the bottleneck table shows the minimum required level of \(X\) for a given value of \(Y\). When \(X\) is expressed as ‘percentiles’, the bottleneck table shows the percentage of cases that are unable to reach the required level of \(X\) for a given value of \(Y\).

The interpretation of the bottleneck table is different when other corners than the upper left corner are analysed (see Section 1.3).

4.3.5.1 Interpretation of the bottleneck table with corner = 2

For corner = 2, the upper right corner is empty suggesting that the absence or low value of \(X\) is necessary for the presence or high value of \(Y\). This means that for a given value of \(Y\), the value of \(X\) must be equal to or lower than the threshold value according to the ceiling line. When \(X\) is expressed as ‘percentage range’, ‘actual value’ or ‘percentage maximum’ the bottleneck table shows the maximum required level of \(X\) for a given value of \(Y\). In other words, for each row in the bottleneck table (representing the target level of \(Y\)), the corresponding level of \(X\) must be at most the level mentioned in the bottleneck table. When \(X\) is expressed as ‘percentiles’, the bottleneck table still shows the percentage and number of cases that are unable to reach the required level of \(X\) for a given value of \(Y\).

4.3.5.2 Interpretation of the bottleneck table with corner = 3

For corner = 3, the lower left corner is empty suggesting that the presence or high value of \(X\) is necessary for the absence or low value of \(Y\). This means that for a given value of \(Y\), the value of \(X\) must be equal to or higher than the threshold value according to the ceiling line. When \(X\) is expressed as ‘percentage range’, ‘actual value’ or ‘percentage maximum’ the bottleneck table shows the minimum required level of \(X\) for a given value of \(Y\). In other words, for each row in the bottleneck table (representing the target level of \(Y\)), the corresponding level of \(X\) must be at least the level mentioned in the bottleneck table. However, the first column in the bottleneck table (representing \(Y\)) is now reversed. The first row has a high \(Y\) value and the last row has a low \(Y\) value, as the target outcome is low. When percentages are used for \(Y\) (percentile or percentage of maximum), 0% corresponds to the highest level of \(Y\) and 100% to the lowest level of \(Y\). When \(X\) is expressed as ‘percentiles’, the bottleneck table still shows the percentage and number of cases that are unable to reach the required level of \(X\) for a given value of \(Y\).

4.3.5.3 Interpretation of the bottleneck table with corner = 4

For corner = 4, the lower right corner is empty suggesting that the absence or low value of \(X\) is necessary for the absence or low value of \(Y\). This means that for a given value of \(Y\), the value of \(X\) must be equal to or lower than the threshold value according to the ceiling line. When \(X\) is expressed as ‘percentage range’, ‘actual value’ or ‘percentage maximum’ the bottleneck table shows the maximum required level of \(X\) for a given value of \(Y\). In other words, for each row in the bottleneck table (representing the target level of \(Y\)), the corresponding level of \(X\) must be at most the level mentioned in the bottleneck table. However, the first column in the bottleneck table (representing \(Y\)) is now reversed. The first row has a high \(Y\) value and the last row has a low \(Y\) value, as the target outcome is low. When percentages are used for \(Y\) (percentile or percentage of maximum), 0% corresponds to the highest level of \(Y\) and 100% to the lowest level of \(Y\). When \(X\) is expressed as ‘percentiles’, the bottleneck table still shows the percentage and number of cases that are unable to reach the required level of \(X\) for a given value of \(Y\).

4.3.6 NN and NA in the bottleneck table

An NN (Not Necessary) in the bottleneck table means that \(X\) is not necessary for \(Y\) for the particular level of \(Y\). With any value of \(X\) it is possible to achieve the particular level of \(Y\).

An NA (Not Applicable) in the bottleneck table is a warning that it is not possible to compute a value for \(X\). There are two possible reasons for this, the first occurring more often than the second:

- The maximum possible value of the condition for the particular level of \(Y\) according to the ceiling line is lower than the actually observed maximum value. This can happen, for example, when the CR ceiling (which is a trend line) runs at \(X = X_{max}\) (the right vertical line of the scope in the scatter plot) under the line \(Y = Y_{max}\) (the upper horizontal line of the scope). If this happens the researcher can either explain why this NA appears in the bottleneck table, or change the NA into the highest observed level of \(X\). The latter can be done with the argument cutoff = 1 in the nca_analysis function.

- In a bottleneck table with multiple conditions, one case determines the \(Y_{max}\) value and that case has a missing value for the condition with the NA (but not for another condition). When all cases are complete (have no missing values) or when at least one case exists that has a complete observation (\(X\), \(Y_{max}\)) for the given condition, the NA will not appear. The action to be taken is either to explain why this NA appears, or to delete the incomplete case from the bottleneck table analysis (and accept that the \(Y_{max}\) in the bottleneck table does not correspond to the actually observed \(Y_{max}\)).

4.4 Robustness checks

When conducting empirical research, a researcher must make a variety of theoretical and methodological choices to obtain results. Often, other plausible choices could also have been made. In a robustness check the sensitivity of the results to other plausible choices is evaluated. The check focuses on the sensitivity of the main estimates and the researcher’s main conclusion (e.g., about a hypothesis). Evaluating the robustness of a result is therefore a good practice for any empirical research method. For example, in regression analysis the regression coefficient is a main estimate that can be sensitive to the inclusion or exclusion of potential confounders in the regression model (model specification) (X. Lu & White, 2014) or to the selection of the functional form of the regression equation (linear or non-linear). Another example of a researcher’s choice is the threshold p value (e.g., \(p = 0.05\) or \(p = 0.01\)) for (non)rejection of the hypothesis (Benjamin et al., 2018). In NCA, the effect size and the p value are two main estimates for concluding whether a necessity relationship is credible. These estimates can be sensitive to several choices. Some choices are outside the realm of NCA, for example choices related to the data that are used as input to NCA (research design, sampling, measurement, pre-processing of data) or the threshold level of statistical significance. Other choices are NCA-specific, such as the choice of the ceiling line (e.g., CE-FDH or CR-FDH), the choice of the scope (empirical or theoretical), the threshold level of the necessity effect size (e.g., 0.1 or 0.2), and the handling of NCA-relevant outliers (keep or remove). All these choices have an effect on the necessity in kind conclusion about whether or not a condition is necessary. A robustness check for necessity in degree evaluates the effect on the number of bottleneck cases (cases that are unable to achieve the required level of the condition for the target level of the outcome).

This section discusses the researcher’s choices that are candidates for a robustness check. The selected candidates are choices regarding the ceiling line, the threshold levels of the d value and the p value, the scope, and outliers. The section introduces the NCA robustness table. This table shows the influence of the researcher’s choices on the main NCA results.

4.4.1 Choice of ceiling line

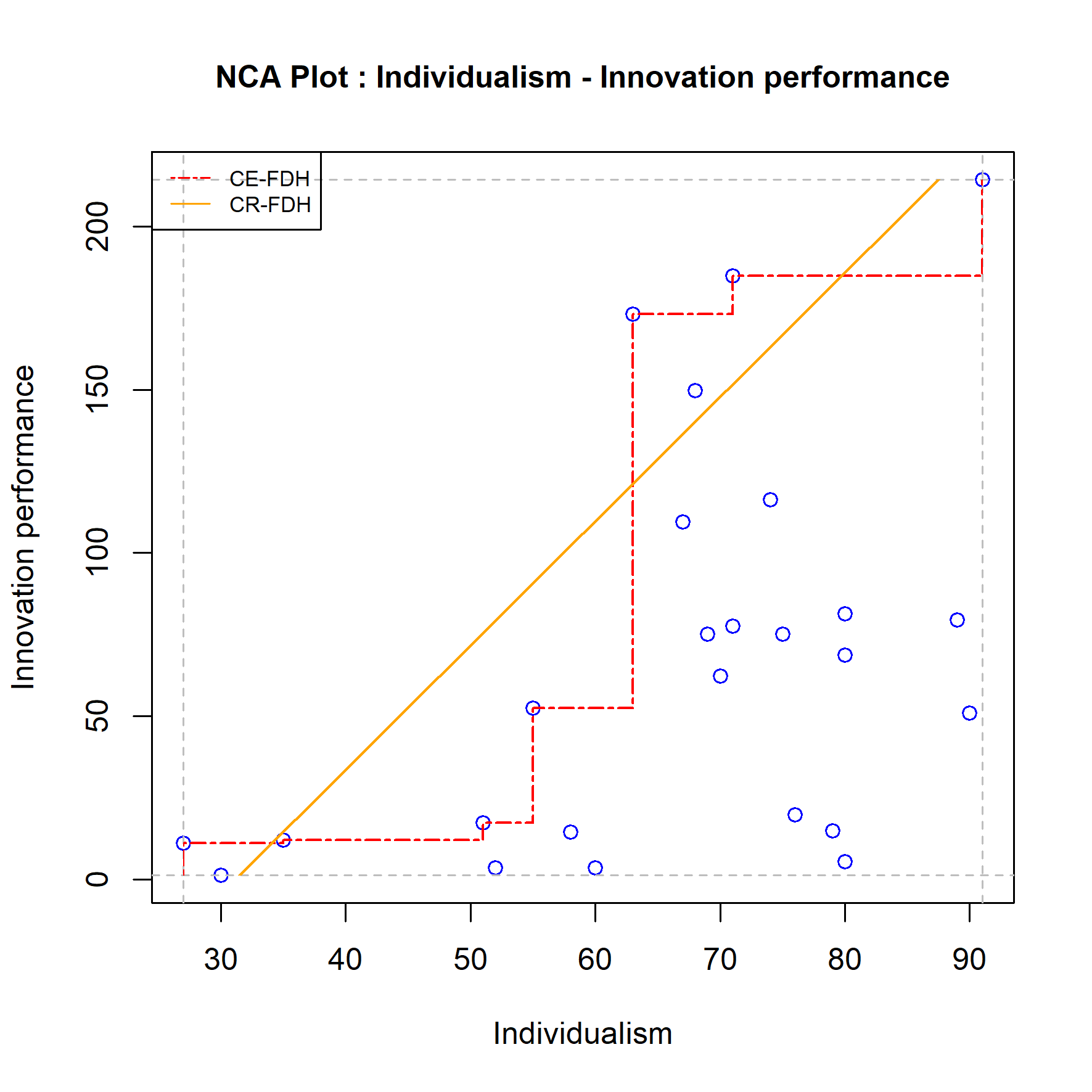

In NCA model specification refers to the variables that are selected as potential necessary conditions. Functional form refers to the form of the ceiling line. NCA is a (multiple) bivariate analysis where the inclusion or exclusion of other variables does not affect the results. However, the functional form of the necessity model may affect the results. The two default ceiling lines are linear (straight line CR-FDH) and piece-wise linear (step function CE-FDH), and the NCA results depend on the choice of the ceiling line. The researcher usually selects the ceiling line based on the type of data (discrete or continuous) or on whether the expected or observed pattern of the border between the area with and without data is linear or non-linear. For example, when the data has a small number of discrete levels with a jumpy border, the researcher may decide to select the CE-FDH ceiling line. When the researcher assumes a linear ceiling line in the population, the straight ceiling line CR-FDH may be selected. The choice of the ceiling line is partly subjective because there are no hard criteria for the best ceiling form (see also Section 6.3.3). Therefore, a robustness check with another plausible ceiling line is warranted. When the selected ceiling line is obviously the best choice (e.g., CE-FDH when the condition or outcome is dichotomous), it makes no sense to mechanistically select another line (e.g., CR-FDH) as this is not a reasonable alternative. If other ceiling line choices are also plausible, it makes sense to check the effect of this other choice. For example, Figure 4.5 shows the scatter plot with the two default ceiling lines for testing the hypothesis that in Western countries a culture of individualism is necessary for innovation performance (Dul, 2020).

Figure 4.5: Example of a ceiling line robustness check: Comparing the results of two ceiling lines.

In this example the choice of the ceiling line is not obvious. As suggested in Dul (2020) a robustness check could consist of performing the analysis with both ceiling lines and comparing the results. The results for the CE-FDH ceiling line are \(d = 0.58\) and \(p = 0.002\), and for the CR-FDH ceiling line are \(d = 0.51\) and \(p = 0.003\). Given the researcher’s other choices (e.g., effect size threshold = 0.1; p value threshold = 0.05) the ceiling line robustness check consists of evaluating whether the researcher’s conclusion about the necessity hypothesis remains the same (robust result) or differs (fragile result). In this case both ceiling lines produce the same conclusion regarding necessity in kind: the necessity hypothesis is supported, which suggests that this is a robust result.

In the situation of a dichotomous necessary condition where the condition or the outcome (or both) have only two values, the only feasible ceiling line is the CE-FDH ceiling line. The CR-FDH ceiling line is not a plausible alternative as it does not fit the step-wise data pattern, and the results are already known: the CR-FDH effect size is half of the CE-FDH effect size, whereas the p values are the same. In this situation, the robustness check should exclude selecting another ceiling line.
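The factor of one half can be made concrete with a worked case. This is a sketch under assumptions that are not in the text: both variables are coded 0 and 1, the scope is the unit square with area \(S\), and cases occur in the cells (0,0), (1,0) and (1,1) while the upper left cell (0,1) is empty. The CE-FDH step function then stays at \(Y = 0\) until \(X = 1\), and the CR-FDH line runs through the corner points (0,0) and (1,1). With \(C\) denoting the empty area above the ceiling:

\[
\begin{aligned}
S &= (1 - 0)(1 - 0) = 1 \\
C_{\text{CE-FDH}} &= 1 \cdot 1 = 1, & d_{\text{CE-FDH}} &= C_{\text{CE-FDH}} / S = 1 \\
C_{\text{CR-FDH}} &= \tfrac{1}{2} \cdot 1 \cdot 1 = \tfrac{1}{2}, & d_{\text{CR-FDH}} &= C_{\text{CR-FDH}} / S = \tfrac{1}{2}
\end{aligned}
\]

The straight line cuts the empty square diagonally, so it can only report half of the empty space that the step function reports.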

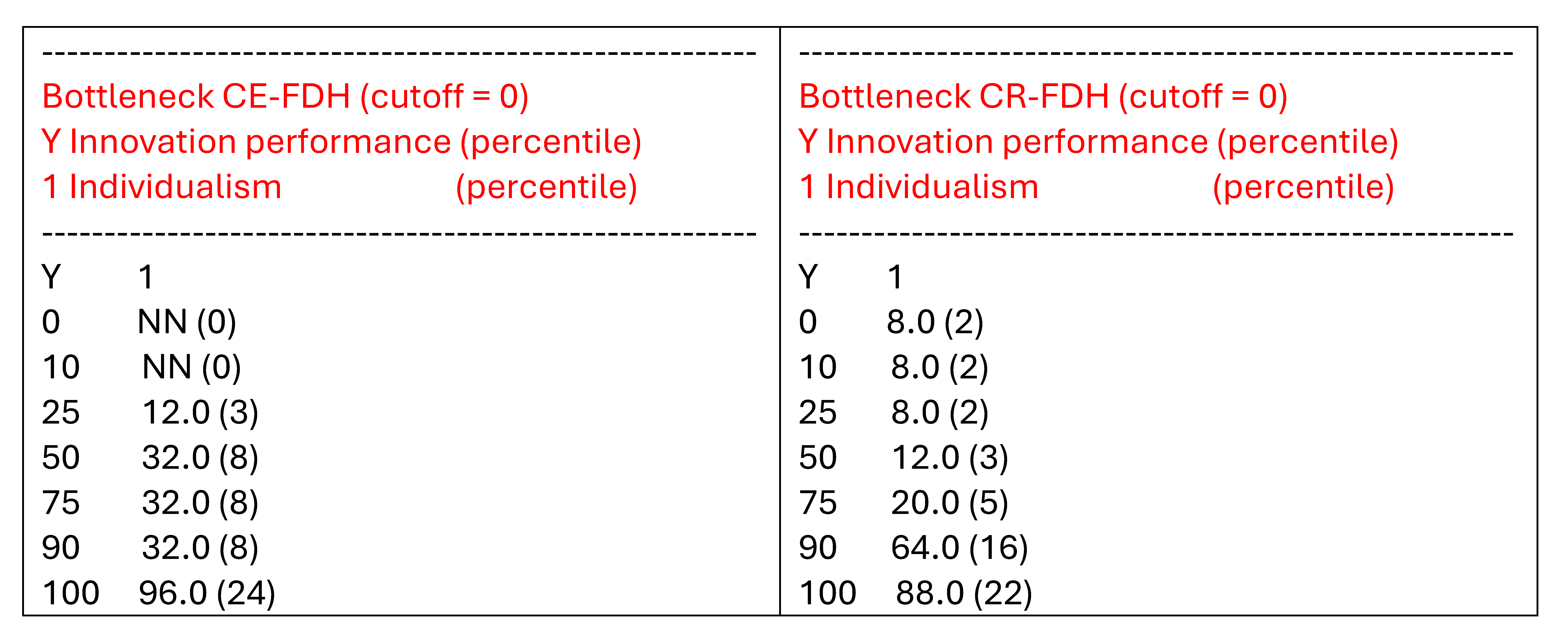

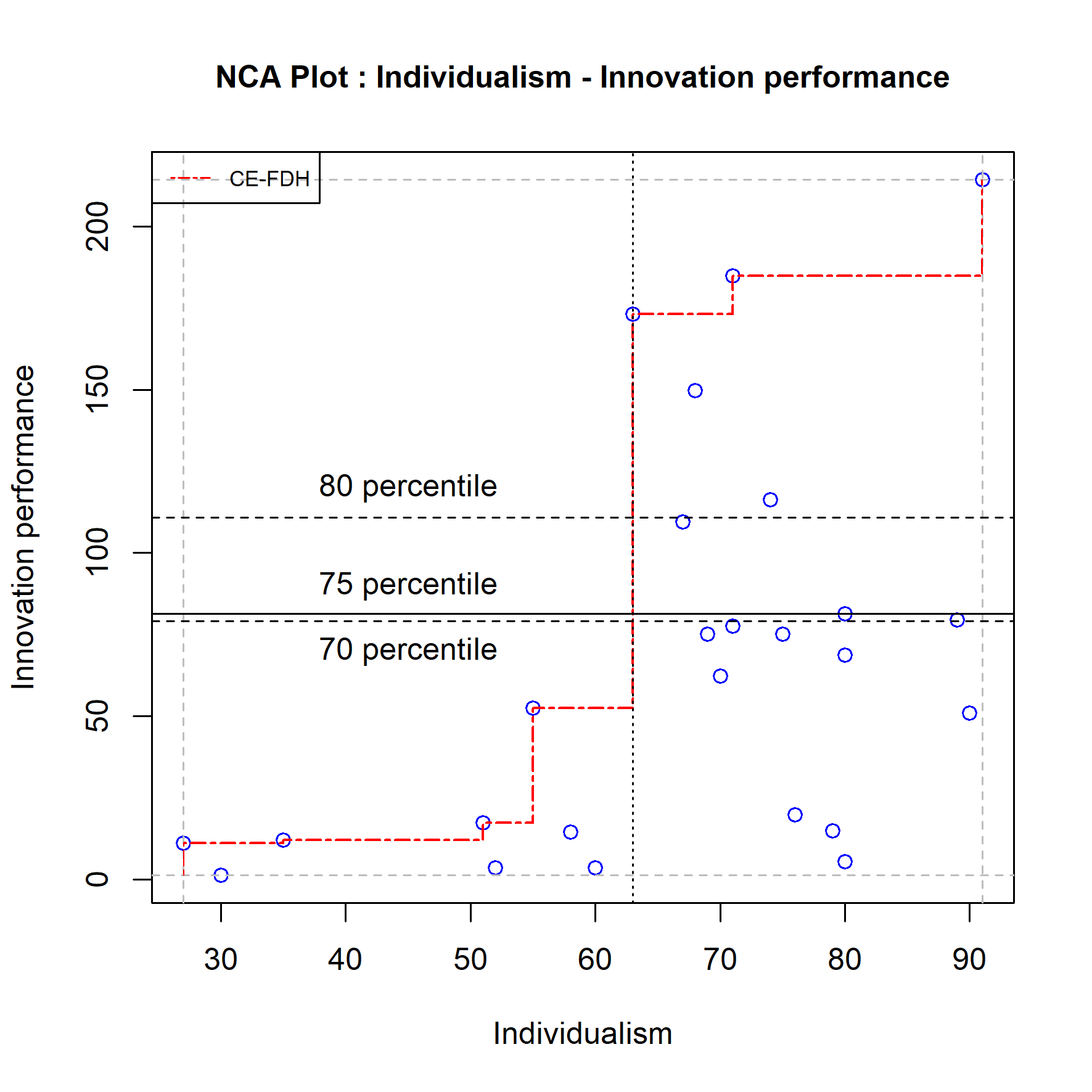

Regarding necessity in degree, the bottleneck tables (with outcome and conditions expressed as percentiles) show that the results are more variable. For example, Figure 4.6 shows that for a “high” level of the outcome that can only be achieved by 25% of the cases (75th percentile), 32% of the cases (8 cases) cannot achieve the required level of the condition individualism when the CE-FDH line is used, whereas 20% of the cases (5 cases) cannot achieve it when the CR-FDH line is used.

Figure 4.6: Bottleneck tables for two ceiling lines.

4.4.2 Choice of the threshold level of the effect size

The researcher could also have made another choice for the effect size threshold level. If the threshold level is changed from the common 0.1 value (“small effect”) to a larger value of 0.2 (“medium effect”), the conclusion about necessity would not change. Since the observed effect size is very large, the robustness check of the effect size threshold suggests a robust result.

4.4.3 Choice of the threshold level of the p value

The researcher could also have made another choice for the p value threshold level. If the threshold level is changed from the common 0.05 value to a smaller value of 0.01, the conclusion about necessity would not change. Since the estimated p value is very small, the robustness check of the p value threshold suggests a robust result.
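The ceiling line and threshold checks of Sections 4.4.1 to 4.4.3 can be combined in a few lines of base R. The sketch below uses only the effect sizes and p values reported in the text. The helper `is_supported` and its decision rule (effect size at or above the d threshold and p value below the p threshold) are assumptions made for illustration, not functions of the NCA package.

```r
# Results reported in the text for the two default ceiling lines.
results <- data.frame(
  ceiling = c("ce_fdh", "cr_fdh"),
  d = c(0.58, 0.51),
  p = c(0.002, 0.003)
)

# Hypothetical helper (assumed decision rule): necessity in kind is supported
# when the effect size reaches the d threshold and the p value is below the
# p threshold.
is_supported <- function(d, p, d_threshold = 0.1, p_threshold = 0.05) {
  d >= d_threshold & p < p_threshold
}

# All combinations of ceiling line and threshold levels discussed in the text.
grid <- expand.grid(
  ceiling = results$ceiling,
  d_threshold = c(0.1, 0.2),
  p_threshold = c(0.05, 0.01),
  stringsAsFactors = FALSE
)
grid <- merge(grid, results, by = "ceiling")
grid$supported <- is_supported(grid$d, grid$p,
                               grid$d_threshold, grid$p_threshold)
print(grid)

# The conclusion is robust when it is identical for every choice.
robust <- length(unique(grid$supported)) == 1
print(robust)
```

Listing the choices as a grid makes it explicit which combinations were checked, and a fragile result would show up as a row with a different conclusion.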

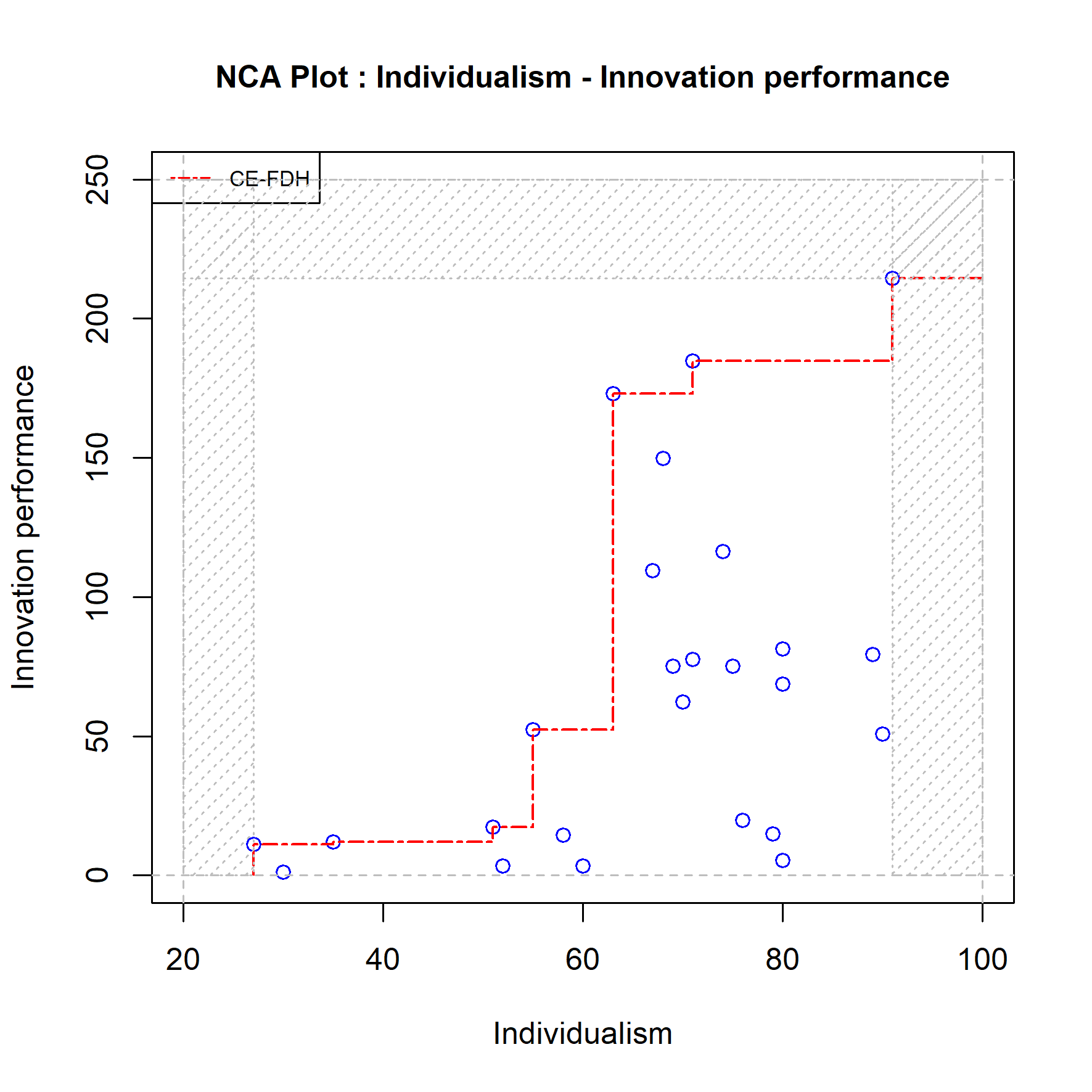

4.4.4 Choice of the scope

Whereas regression analysis assumes that the variables of the regression model are unbounded (can have values between minus and plus infinity, see Section 4.5), NCA assumes that the variables in the necessity model are bounded (have minimum and maximum values). By default, NCA selects the bounds based on the observed minimum and maximum values of the condition and the outcome to define the ‘empirical scope’. However, it is also possible to conduct NCA with a theoretically defined scope (‘theoretical scope’). The robustness of the result can be checked by selecting a plausible theoretical scope instead of the default empirical scope or, when the original choice was a theoretical scope, by selecting the empirical scope or a different theoretical scope. For example, the researcher may have selected the empirical scope for an analysis with variables measured with a Likert scale, while the scale’s minimum and maximum values do not occur in the data. Then the researcher can conduct a robustness check with a theoretical scope defined by the extreme values of the scale. Changing the scope to a larger theoretical scope generally results in a larger effect size. Therefore, such a change may affect the researcher’s original conclusion that the hypothesis is rejected, but will not change an original conclusion that the hypothesis is supported. The latter is shown in Figure 4.7. The scatter plot has a theoretical scope that is larger than the empirical scope. The condition ranges from 20 to 100 and the outcome from 0 to 120. The effect size for the CE-FDH ceiling line has increased from \(d = 0.58\) (\(p = 0.002\)) to \(d = 0.61\) (\(p = 0.002\)). Changing a theoretical scope to a smaller scope generally results in a smaller effect size. Such a change may affect the researcher’s original conclusion that the hypothesis is supported, but will not change an original conclusion that the hypothesis is rejected.

Figure 4.7: Example of a scope robustness check: Comparing the results with the empirical and the theoretical scope (difference is shaded).

If the researcher had selected a theoretical scope for the analysis and changes it to a smaller theoretical scope, the effect size will generally be smaller, which may change the researcher’s conclusion about the hypothesis from ‘supported’ to ‘rejected’.
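Why a larger scope increases the effect size ‘generally’ rather than always follows from the definition of the effect size as the ceiling zone divided by the scope, \(d = C/S\). In the sketch below, \(\Delta S\) (the area added to the scope) and \(\Delta C\) (the part of the added area that lies in the empty zone above the ceiling) are notation introduced here for illustration only:

\[
d' = \frac{C + \Delta C}{S + \Delta S}, \qquad d' - d = \frac{\Delta C - d\,\Delta S}{S + \Delta S}, \qquad 0 \le \Delta C \le \Delta S
\]

The effect size thus increases when the empty share of the added area, \(\Delta C / \Delta S\), is larger than the original effect size \(d\), and decreases when it is smaller.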

4.4.5 Choice of outlier removal

The removal of outliers potentially has a large influence on the results, as NCA results can be sensitive to outliers (see Section 3.8). Ceiling outliers (outliers that define the ceiling line) and scope outliers (outliers that define the scope) usually increase the effect size when removed. For example, in Figure 4.10 Finland is a potential ceiling outlier whose removal increases the CE-FDH effect size from 0.58 (\(p = 0.002\)) to 0.62 (\(p = 0.001\)), and Mexico is a potential scope outlier whose removal increases the effect size slightly. However, when an outlier is both a ceiling outlier and a scope outlier, the effect size may decrease when it is removed. This applies to the potential outlier USA, where the effect size decreases from 0.58 (\(p = 0.002\)) to 0.52 (\(p = 0.012\)) when this potential outlier is removed.
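The mechanics behind these changes can be illustrated without the NCA package. The sketch below is a simplified base R computation of the CE-FDH effect size with the empirical scope, applied to hypothetical toy data (not nca.example). The function name `ce_fdh_effect_size`, the column names, and the toy values are assumptions made for illustration; the NCA package remains the reference for real analyses.

```r
# Simplified CE-FDH effect size with the empirical scope (base R only).
ce_fdh_effect_size <- function(x, y) {
  ord <- order(x, -y)
  x <- x[ord]
  y <- y[ord]
  ceiling_y <- cummax(y)      # height of the step function from each x onwards
  step_width <- diff(x)       # distance to the next observed x value
  # Empty area between the step function and the top of the scope.
  empty_area <- sum(step_width * (max(y) - ceiling_y[-length(y)]))
  scope_area <- (max(x) - min(x)) * (max(y) - min(y))
  empty_area / scope_area
}

# Hypothetical toy data: case E defines both the scope and the ceiling.
toy <- data.frame(
  case = c("A", "B", "C", "D", "E"),
  x = c(1, 2, 3, 4, 5),
  y = c(1, 2, 2, 3, 9)
)

eff_or <- ce_fdh_effect_size(toy$x, toy$y)

# Remove each case in turn and recompute the effect size.
eff_nw <- sapply(seq_len(nrow(toy)), function(i) {
  ce_fdh_effect_size(toy$x[-i], toy$y[-i])
})

loo <- data.frame(case = toy$case, eff_or = eff_or, eff_nw = eff_nw,
                  dif_abs = eff_nw - eff_or)
loo <- loo[order(-abs(loo$dif_abs)), ]  # most influential case first
print(loo)
```

In this toy example the removal of case E shrinks the scope more than it shrinks the empty area, so the effect size decreases, whereas removing a case that only defines the ceiling increases it.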

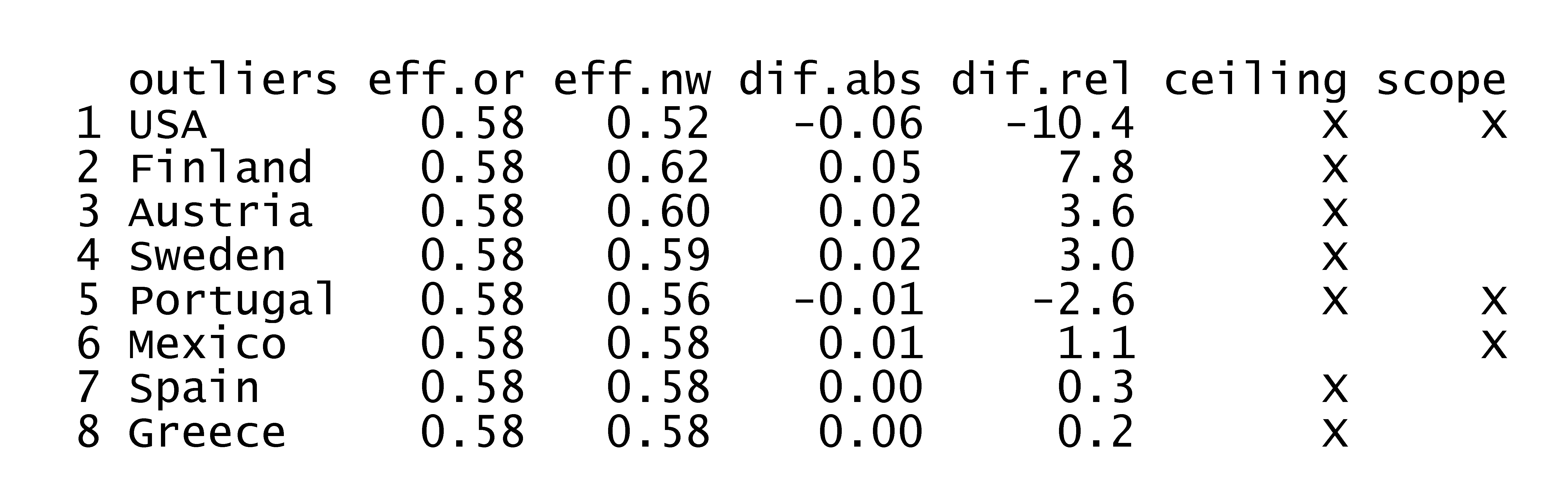

To get a first impression of the role of removing outliers, two robustness checks can be done. In the first check, the most influential single outlier (the one with the largest effect on the effect size if removed) is selected, and this potential outlier is removed from the dataset. The potential outliers can be identified with the NCA software as follows:
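A minimal sketch of such a call, assuming the `nca_outliers` function of the NCA R package with its `ceiling` argument, and the Western-country subset of nca.example that is used for the robustness table later in this section:

```r
library(NCA)

# Western-country subset of nca.example (rows 14, 22 and 26 excluded).
data(nca.example)
data <- nca.example[-c(14, 22, 26), ]

# Evaluate single potential outliers for the CE-FDH ceiling line.
outliers_single <- nca_outliers(data,
                                x = "Individualism",
                                y = "Innovation performance",
                                ceiling = "ce_fdh")
print(outliers_single)
```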

The results are shown in Figure 4.8, where eff.or is the original effect size, eff.nw is the new effect size after removing the potential outlier, dif.abs and dif.rel are the absolute and relative differences between the new and original effect size, and ‘ceiling’ and ‘scope’ indicate whether the potential outlier is a ceiling outlier or a scope outlier (or both). It turns out that the USA is the largest outlier. When this case is removed from the dataset the NCA results change as indicated above.

Figure 4.8: The influence of removing a single potential outlier on the effect size.

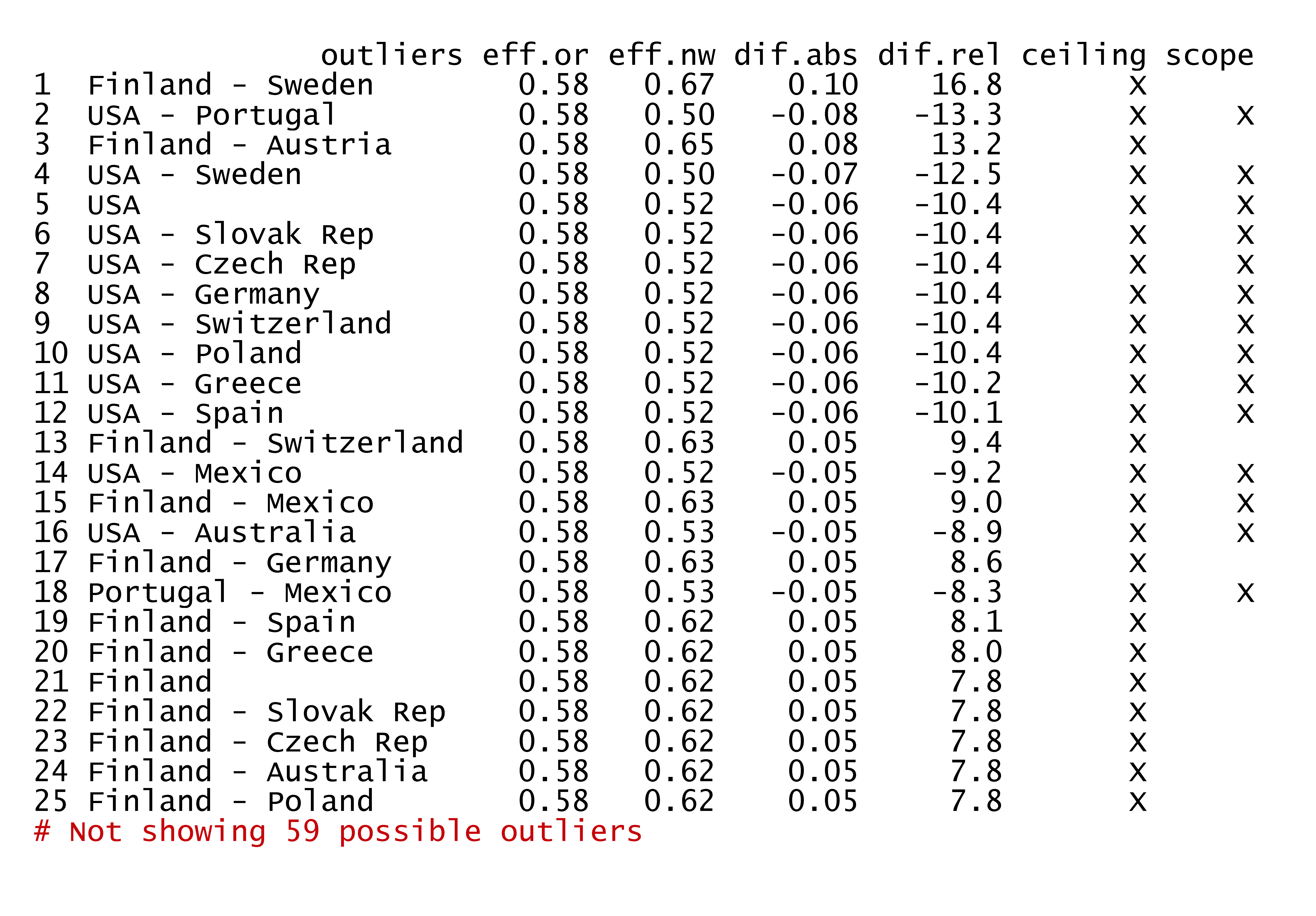

In the second check, multiple outliers (for example a set of two) are selected that have the largest influence on the effect size when removed as a set. This outlier analysis can be initiated as follows:
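A minimal sketch of such a call, assuming that the argument `k` of `nca_outliers` in the NCA R package sets the number of cases that are removed together, and using the same Western-country subset of nca.example:

```r
library(NCA)

# Western-country subset of nca.example (rows 14, 22 and 26 excluded).
data(nca.example)
data <- nca.example[-c(14, 22, 26), ]

# Evaluate sets of two potential outliers for the CE-FDH ceiling line.
outliers_duo <- nca_outliers(data,
                             x = "Individualism",
                             y = "Innovation performance",
                             ceiling = "ce_fdh",
                             k = 2)
print(outliers_duo)
```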

Figure 4.9: The influence of removing two potential outliers on the effect size.

The results show that the set ‘Finland-Sweden’ is the most influential outlier duo. If this duo is removed from the dataset, the effect size increases and the p value decreases.

Figure 4.10: Potential outliers. Mexico = potential scope outlier; Finland, Sweden = potential ceiling outliers; USA = potential scope and ceiling outlier. USA is the most influential single outlier. Finland - Sweden is the most influential combination of multiple (two) outliers.

If this crude outlier approach of removing the single most influential outlier and a set of the most influential multiple outliers does not change the conclusions, the results can be considered robust. Note, however, that an outlier decision needs careful consideration: What are potential outliers, what are the reasons for removing or not removing them (see the decision tree about outliers in Section 3.8), and what is their influence on the effect size? Outliers are usually only removed when they are erroneous (measurement error or sampling error) or when they are exceptional (very large influence on the effect size when removed). In the current example, the influence of removing potential outliers is relatively modest; keeping them seems to be the most appropriate approach.

4.4.6 Choice of target outcome

The last robustness check explores the stability of the bottleneck analysis in terms of the number of bottleneck cases (cases that cannot achieve the target outcome because the condition is not satisfied) when the target outcome is mildly changed. For example, when the original target outcome is the 75th percentile, what is the influence on the percentage and number of bottleneck cases when the target outcome is somewhat lower (70th percentile) or higher (80th percentile)?

Figure 4.11: The effect of changing the target outcome from the 75th percentile to the 70th and 80th percentiles.

Figure 4.11 shows that for each of these levels of the outcome the required minimum level of the condition is 63. Eight cases (32%) have not reached that level of the condition, which makes them bottleneck cases. The number of bottleneck cases does not change with minor changes of the target outcome.

4.4.7 Robustness table

The results of the robustness checks can be summarized in an NCA robustness table (Table 4.1), in which the original NCA results are compared with the results when different, also feasible choices are made. The first row shows the original results, and the next rows give the results after changes are explored. The eight changes are: ceiling line change, d threshold change, p threshold change, scope change, single outlier removal, multiple outlier removal, target lower, and target higher. For checking the robustness of necessity in kind, the effect size, the p value, and the conclusion about necessity (assuming theoretical support) are displayed. For checking the robustness of necessity in degree, the percentage (%) and number (#) of bottleneck cases that are unable to achieve the required level of the condition for a selected target level of the outcome are shown. The NCA robustness table shows that the original results of this example are robust.

| Robustness check | Effect size | p value | Necessity | Bottlenecks (%) | Bottlenecks (#) |
|---|---|---|---|---|---|
| Individualism | | | | | |
| Original | 0.58 | 0.100 | no | 32 | 8 |
| Ceiling change | 0.51 | 0.100 | no | 20 | 5 |
| d threshold change | 0.58 | 0.100 | no | 32 | 8 |
| p threshold change | 0.58 | 0.100 | no | 32 | 8 |
| Scope change | 0.61 | 0.100 | no | 32 | 8 |
| Single outlier removal | 0.52 | 0.100 | no | 33.3 | 8 |
| Multiple outlier removal | 0.67 | 0.100 | no | 34.8 | 8 |
| Target lower | 0.58 | 0.100 | no | 32 | 8 |
| Target higher | 0.58 | 0.100 | no | 32 | 8 |
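The percentages of bottleneck cases in the table are the number of bottleneck cases divided by the number of cases in the analysis. As a check of the arithmetic, assuming 25 Western cases in the original analysis (the 28 cases of nca.example minus the 3 excluded ones) and 24 and 23 cases after removing one and two outliers:

\[
\frac{8}{25} = 32\%, \qquad \frac{5}{25} = 20\%, \qquad \frac{8}{24} \approx 33.3\%, \qquad \frac{8}{23} \approx 34.8\%
\]

This is why the percentage rises slightly after outlier removal although the number of bottleneck cases stays at 8.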

The NCA robustness table for a specific necessity relationship with one condition and one outcome can be produced with the following code that makes use of three functions: nca_robustness_table_general, nca_robustness_checks_general, and get_outliers. The R code for these functions can be found in Sections 7.4.1, 7.4.2, and 7.4.3, respectively.

```r
# General robustness table for a single necessity relationship.
library(NCA)
source("nca_robustness_table_general.R")
source("nca_robustness_checks_general.R")
source("get_outliers.R")

# Example (nca.example with Western countries)
data(nca.example)
data <- nca.example
data <- data[-c(14, 22, 26), ] # exclude non-Western countries
conditions <- "Individualism"
outcome <- "Innovation performance"
bottleneck.y <- "percentile"
plots <- FALSE # do not show scatter plots

# Define original analysis
define_check <- function(
    name,
    ceiling = "ce_fdh",   # original
    d_threshold = 0.1,    # original
    p_threshold = 0.05,   # original
    scope = NULL,         # original
    outliers = 0,         # original
    target_outcome = 75   # original
) {
  list(
    name = name,
    ceiling = ceiling,
    d_threshold = d_threshold,
    p_threshold = p_threshold,
    scope = scope,
    outliers = outliers,
    target_outcome = target_outcome
  )
}

# Define robustness checks (differences with Original)
checks <- list(
  define_check("Original"),
  define_check("Ceiling change", ceiling = "cr_fdh"),
  define_check("d threshold change", d_threshold = 0.2),
  define_check("p threshold change", p_threshold = 0.01),
  define_check("Scope change", scope = list(c(20, 100, 0, 250))),
  define_check("Single outlier removal", outliers = 1),
  define_check("Multiple outlier removal", outliers = 2),
  define_check("Target lower", target_outcome = 70),  # 70th percentile, as in Figure 4.11
  define_check("Target higher", target_outcome = 80)  # 80th percentile, as in Figure 4.11
)

# Conduct robustness checks and produce robustness table
results <- nca_robustness_table_general(
  data = data,
  conditions = conditions,
  outcome = outcome,
  ceiling = ceiling,
  scope = scope,
  d_threshold = d_threshold,
  p_threshold = p_threshold,
  outliers = outliers,
  bottleneck.y = bottleneck.y,
  target_outcome = target_outcome,
  plots = plots,
  checks = checks
)

# View results
print(results)
```

4.5 Combining NCA with regression analysis

4.5.1 Introduction

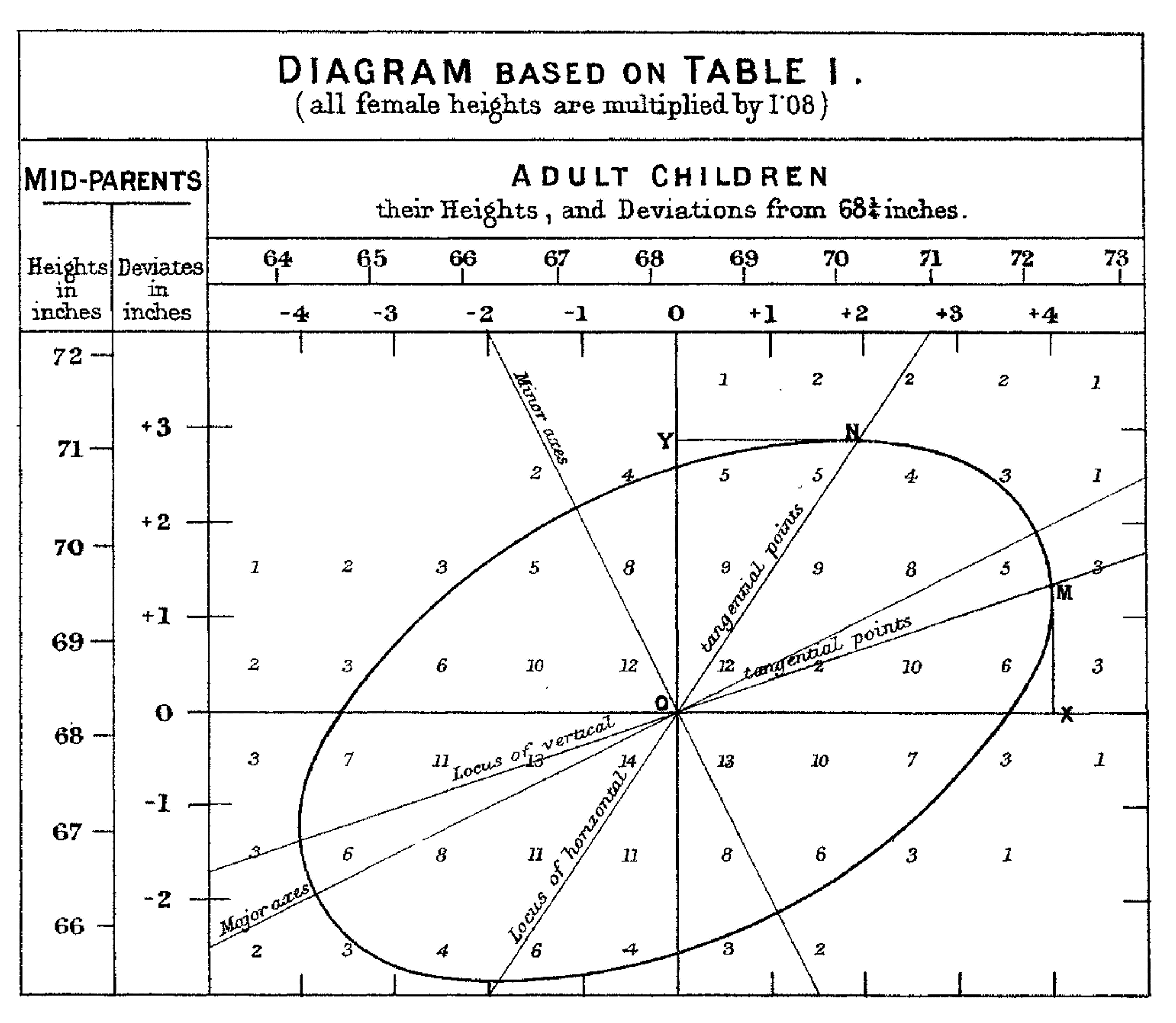

Regression is the mother of all data analyses in the social sciences. It was invented more than 100 years ago when Francis Galton (Galton, 1886) quantified the pattern in the scores of parental height and child height (see Figure 4.12 showing the original graph).

Figure 4.12: Francis Galton’s (1886) graph with data on Parent height (‘mid-parents height’) and Child height (‘Adult children height’).

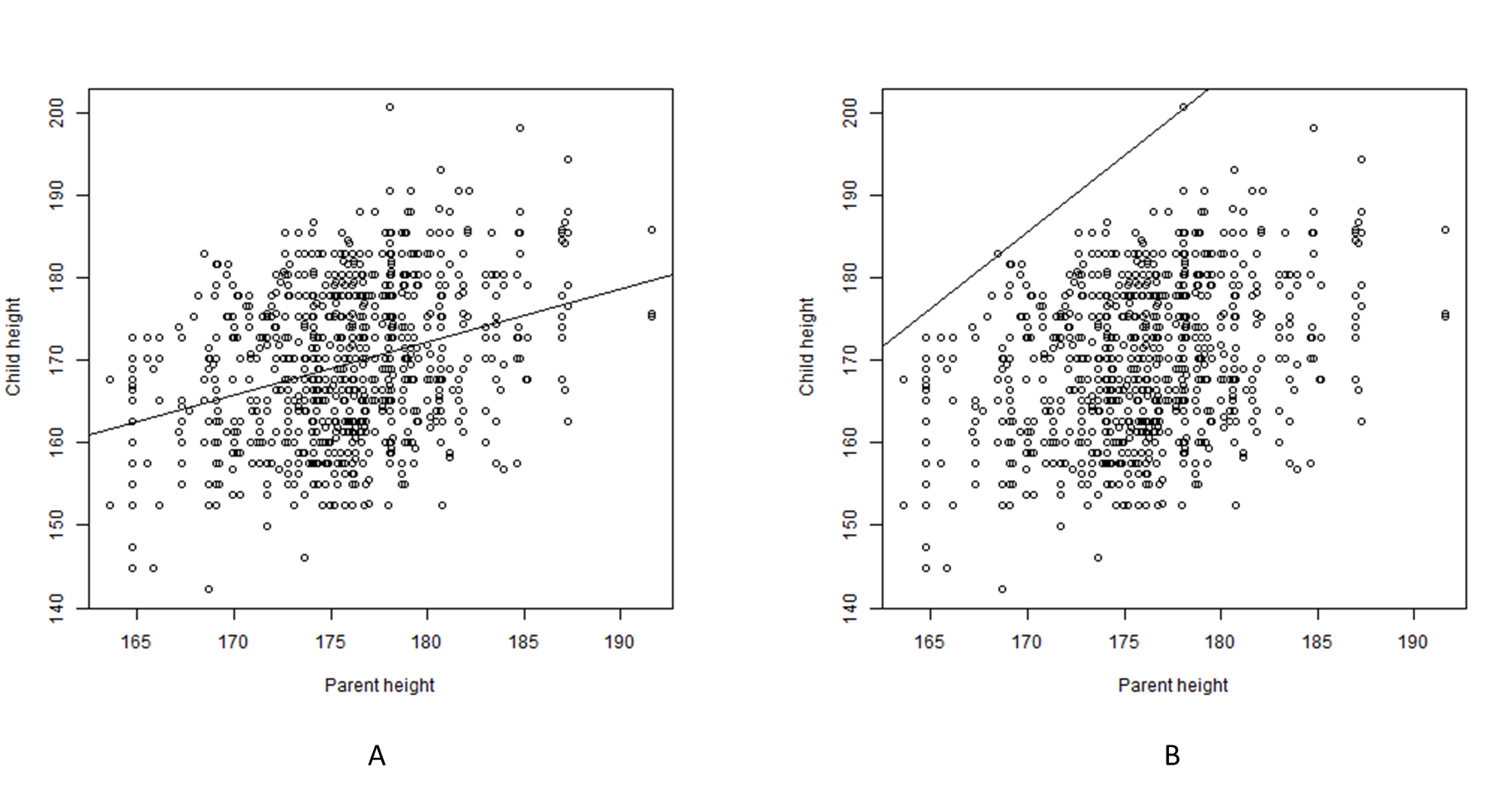

In Figure 4.13 Galton’s data are shown in two \(XY\) scatter plots.

Figure 4.13: Scatter plots of the relationship between Parent height (\(X\)) and Child height (\(Y\)) (after Galton, 1886). A. With a regression line. B. With a ceiling line.

Galton drew lines through the middle of the data for describing the average trend between Parent height and Child height: the regression line (Figure 4.13 A). For example, with a Parent height of 175 cm, the estimated average Child height is about 170 cm. Galton could also have drawn a line on top of the data for describing the necessity of Parent height for Child height: the ceiling line (Figure 4.13 B). For example, with a Parent height of 175 cm, the estimated maximum possible Child height is about 195 cm. But Galton did not draw a ceiling line, and the social sciences have adopted the average trend line as the basis for many data analysis approaches. Regression analysis has developed over the years and many variants exist. The main variant is Ordinary Least Squares (OLS) regression. It is used for example in Simple Linear Regression (SLR), Multiple Linear Regression (MLR), path analysis, variance-based Structural Equation Modeling (SEM) and Partial Least Squares Structural Equation Modeling (PLS-SEM). In this section I compare OLS regression with NCA.

4.5.2 Logic and theory

OLS regression uses additive, average effect logic. The regression line (Figure 4.13 A) predicts the average \(Y\) for a given \(X\). Because the cases are scattered, for a given \(X\) also higher and lower values of \(Y\) than the average value of \(Y\) are possible. With one \(X\) (Simple Linear Regression), \(Y\) is predicted by the regression equation:

\[\begin{equation} \tag{4.1} Y = β_0 + β_1 X + ɛ(X) \end{equation}\]

where \(β_0\) is the intercept of the regression line, \(β_1\) is the slope of the regression line, and \(ɛ(X)\) is the error term representing the scatter around the regression line for a given \(X\). The slope of the regression line (regression coefficient) is estimated by minimizing the squared vertical distances between the observed \(Y\)-values and the regression line (‘least squares’). The error term includes the effect of all other factors that can contribute to the outcome \(Y\).

For the parent-child data, the regression equation is \(Y = 57.5 + 0.64 X + ɛ(X)\). OLS regression assumes that on average \(ɛ(X) = 0\). Thus, when \(X\) (Parent height) is 175 cm, the estimated average Child height is about 170 cm. In contrast, NCA’s C-LP ceiling line is defined by \(Y_c = -129 + 1.85 X\). Thus, when \(X\) (Parent height) is 175 cm, the estimated maximum possible Child height is about 195 cm. Normally, in NCA the ceiling line is interpreted inversely (e.g., in the bottleneck table): \(X_c = (Y_c + 129)/1.85\), indicating, while assuming a non-decreasing ceiling line, that a minimum level of \(X = X_c\) is necessary (but not sufficient) for a target level of \(Y = Y_c\). When parents wish to have a child of 200 cm it is necessary (but not sufficient) that their Parent height is at least about 178 cm.
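The three readings of the two lines can be reproduced with a few lines of base R. This is a sketch that uses the rounded coefficients reported above, so the results are approximate; the function names are chosen here for illustration.

```r
# Rounded coefficients as reported in the text for Galton's data.
regression_line <- function(x) 57.5 + 0.64 * x    # average Child height
ceiling_line    <- function(x) -129 + 1.85 * x    # maximum possible Child height
required_x      <- function(y) (y + 129) / 1.85   # inverse of the ceiling line

parent_height <- 175
avg_child  <- regression_line(parent_height)  # average outcome for this X
max_child  <- ceiling_line(parent_height)     # ceiling for this X
min_parent <- required_x(200)                 # minimum X needed for Y = 200

print(c(avg_child = avg_child, max_child = max_child, min_parent = min_parent))
```

With these rounded coefficients the average Child height is 169.5 cm, the ceiling is 194.75 cm, and the required Parent height for a child of 200 cm is roughly 177.8 cm.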

To allow for doing statistical tests with OLS, it is usually assumed that the error term for a given \(X\) is normally distributed (with average value 0): cases close to the regression line for the given \(X\) are more likely than cases far from the regression line. The normal distribution is unbounded, hence very high or very low values of \(Y\) are possible, though not likely. This implies that any high value of \(Y\) is possible. Even without the assumption of the normal distribution of the error term, a fundamental assumption of OLS is that the \(Y\) value is unbounded (Berry, 1993). Thus, very large child heights (e.g., 300 cm) are theoretically possible in OLS, but unlikely. This assumption contradicts NCA’s logic in which \(X\) and \(Y\) are presumed bounded. \(X\) puts a limit on \(Y\) and thus there is a border represented by the ceiling line. The limits can be empirically observed in the sample (e.g., the height of the observed tallest person in the sample is 205 cm) for defining NCA’s empirical scope or can be theoretically defined (e.g., the height of the ever-observed tallest person of 272 cm) for defining the NCA’s theoretical scope.

Additivity is another part of regression logic. It is assumed that the terms of the regression equation are added. Next to \(X\), the error term is always added in the regression equation. Possibly also other \(X\)’s or combination of \(X\)’s are added in the regression equation (multiple regression, see below). This means that the terms that make up the equation can compensate for each other. For example, when \(X\) is low, \(Y\) can still be achieved when other terms (error term or other \(X\)’s) give a higher contribution to \(Y\). The additive logic implies that for achieving a certain level of \(Y\), no \(X\) is necessary. This additive logic contradicts NCA’s logic that \(X\) is necessary: \(Y\) cannot be achieved when the necessary factor does not have the right level, and this absence of \(X\) cannot be compensated by other factors.

Results of a regression analysis are usually interpreted in terms of probabilistic sufficiency. A common probabilistic sufficiency-type hypothesis is ‘\(X\) likely increases \(Y\)’ or ‘\(X\) has an average positive effect on \(Y\)’. Such a hypothesis can be tested with regression analysis. The hypothesis is considered to be supported if the regression coefficient is positive. Often, it is then suggested that \(X\) is sufficient to produce an increase in the likelihood of the outcome \(Y\). The results also suggest that a given \(X\) is not necessary for producing the outcome \(Y\) because other factors in the regression model (other \(X\)’s and the error term) can compensate for the absence or a low level of \(X\).

4.5.3 Data analysis

Most regression models include more than one \(X\). The black box of the error term is opened and other \(X\)’s are added to the regression equation (Multiple Linear Regression - MLR), for example:

\[\begin{equation} \tag{4.2} Y = β_0 + β_1 X_1 + β_2 X_2 + ɛ(X) \end{equation}\]

where \(β_1\) and \(β_2\) are the regression coefficients. By adding more factors that contribute to \(Y\) into the equation, \(Y\) is predicted for given combinations of \(X\)’s, and a larger part of the scatter is explained. R\(^2\) is the amount of explained variance of a regression model and can have values between 0 and 1. By adding more factors, usually more variance is explained, resulting in higher values of R\(^2\).

Another reason to add more factors is to avoid ‘omitted variable bias’. This bias is the result of not including factors that correlate with \(X\) and \(Y\), which results in biased estimates of the regression coefficients. Hence, the common standard of regression is not the simple OLS regression with one factor, but multiple regression with many factors including control variables to reduce omitted variable bias. By adding more relevant factors, the prediction of \(Y\) becomes better and the risk of omitted variable bias is reduced. Adding factors in the equation is not just adding new factors (\(X\)). Some factors may be combined, such as squaring a factor (\(X^2\)) to represent a non-linear effect of \(X\) on \(Y\), or taking the product of two factors \((X_1 * X_2)\) to represent the interaction between these factors. Such combinations of factors are added as separate terms into the regression equation. Also, other regression-based approaches such as SEM and PLS-SEM include many factors. In SEM models factors are ‘latent variables’ of the measurement model of the SEM approach.

A study by Bouquet & Birkinshaw (2008) is an example of the prediction of an average outcome using MLR by adding many terms, including combined factors, in the regression equation. This highly cited article in the Academy of Management Journal studies multinational enterprises (MNEs) to predict how subsidiary companies gain attention from their headquarters (\(Y\)). They use a multiple regression model with 25 terms (\(X\)’s and combinations of \(X\)’s) and an ‘error’ term \(ɛ\). With the regression model the average outcome (average attention) for a group of cases (or for the theoretical ‘average case’) for given values of the terms can be estimated. The error term represents all unknown factors that have a positive or negative effect on the outcome but are not included in the model, assuming that the average effect of the error term is zero. \(β_0\) is a constant and the other \(β_i\)’s are the regression coefficients of the terms, indicating how strongly the term is related to the outcome (when all other terms are constant). The regression model is:

\(Attention = \\ β_0 \\ + β_1\ Subsidiary\ size \\ + β_2\ Subsidiary\ age \\ + β_3\ (Subsidiary\ age)^2 \\ + β_4\ Subsidiary\ autonomy \\ + β_5\ Subsidiary\ performance \\ + β_6\ (Subsidiary\ performance)^2 \\ + β_7\ Subsidiary\ functional\ scope \\ + β_8\ Subsidiary\ market\ scope \\ + β_9\ Geographic\ area\ structure \\ + β_{10}\ Matrix\ structure \\ + β_{11}\ Geographic\ scope \\ + β_{12}\ Headquarter\ AsiaPacific\ parentage \\ + β_{13}\ Headquarter\ NorthAmerican\ parentage \\ + β_{14}\ Headquarter\ subsidiary\ cultural\ distance \\ + β_{15}\ Presence\ of\ MNEs\ in\ local\ market \\ + β_{16}\ Local\ market\ size \\ + β_{17}\ Subsidiary\ strength\ within\ MNE\ Network \\ + β_{18}\ Subsidiary\ initiative\ taking \\ + β_{19}\ Subsidiary\ profile\ building \\ + β_{20}\ Headquarter\ subsidiary\ geographic\ distance \\ + β_{21}\ (Initiative\ taking * Geographic\ distance) \\ + β_{22}\ (Profile\ building * Geographic\ distance) \\ + β_{23}\ Subsidiary\ downstream\ competence \\ + β_{24}\ (Initiative\ taking * Downstream\ competence) \\ + β_{25}\ (Profile\ building * Downstream\ competence) \\ + \epsilon\)

The 25 terms of single and combined factors in the regression equation explain 27% of the variance (R\(^2\) = 0.27). Thus, the error term (representing the factors that are not included) represents the other 73% (unexplained variance). Single terms predict only a small part of the outcome. For example, ‘subsidiary initiative taking’ (term 18) contributes 2% to the explained variance.

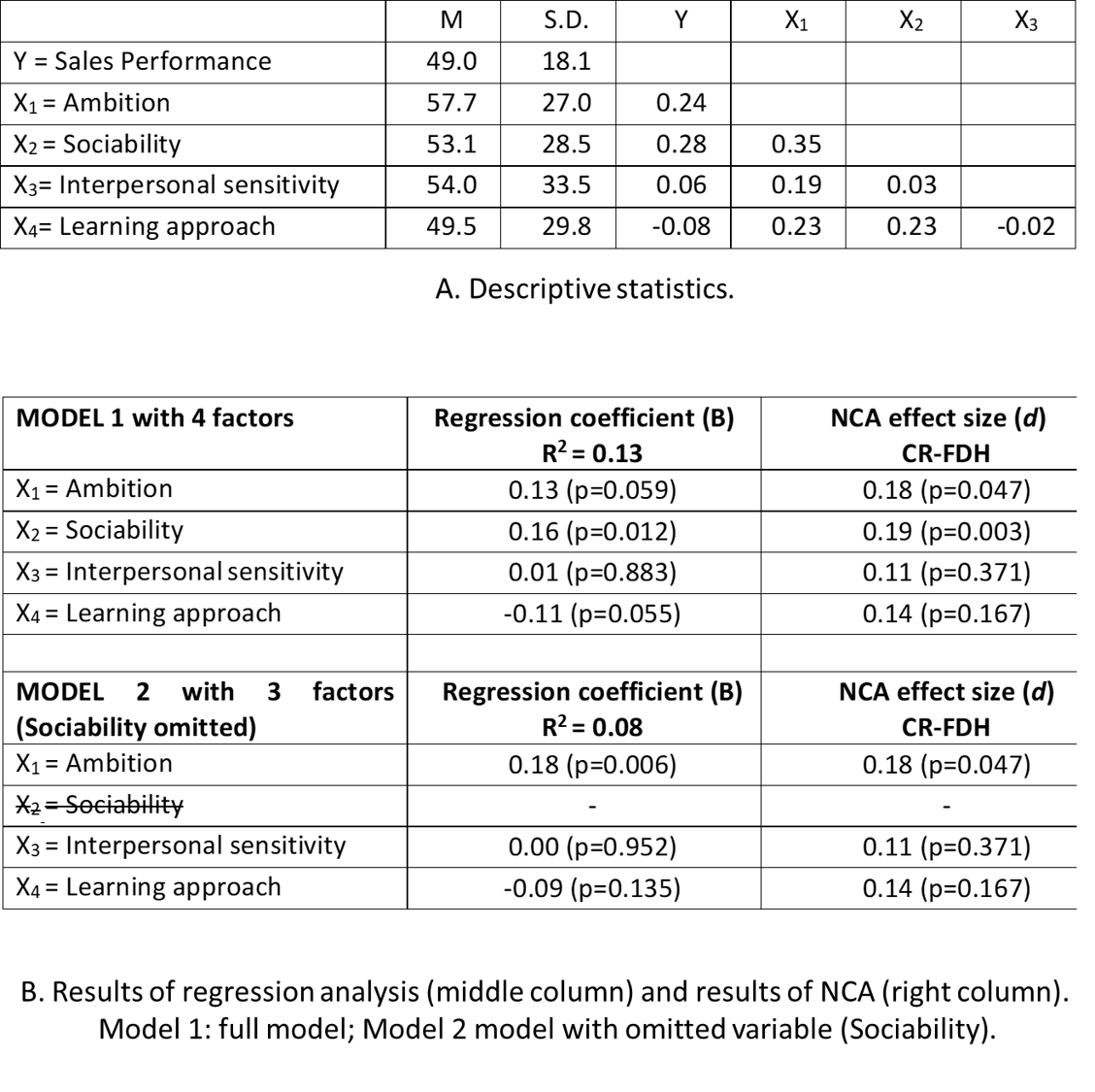

The example shows that adding more factors makes the model more complex and less understandable and therefore less useful in practice. The contrast with NCA is large. NCA can have a model with only one factor that perfectly explains the absence of a certain level of an outcome when the factor is not present at the right level for that outcome. Whereas regression models must include factors that correlate with other factors and with the outcome to avoid biased estimation of the regression coefficient, NCA’s effect size for a necessary factor is not influenced by the absence or presence of other factors in the model. This is illustrated with an example about the effect of a sales person’s personality on sales performance using data of 108 cases (sales representatives from a large USA food manufacturer) obtained with the Hogan Personality Inventory (HPI) personality assessment tool for predicting organizational performance (Hogan & Hogan, 2007). Details of the example are in Dul, Hauff, et al. (2021). The statistical descriptives of the data (mean, standard deviation, correlation) are shown in Figure 4.14 A. Ambition and Sociability are correlated with \(Y\) as well as with each other. Hence, if one of them is omitted from the model the regression results may be biased.

Figure 4.14: Example of the results of a regression analysis and NCA. Effect of four personality traits of sales persons on sales performance. A. Descriptive statistics. B. Results of regression analysis and NCA for two different models. Data from Hogan & Hogan (2007).

The omission of one variable is shown in Figure 4.14 B, middle column. The full model (Model 1) includes all four personality factors. The regression results show that Ambition and Sociability have positive average effects on Sales performance (regression coefficients 0.13 and 0.16, respectively), and Learning approach has a negative average effect on Sales performance (regression coefficient -0.11). Interpersonal sensitivity has virtually no average effect on Sales performance (regression coefficient 0.01). The p values for Ambition and Sociability are relatively small. Model 2 has only three factors because Sociability is omitted. The regression results show that the regression coefficients of all three remaining factors have changed. The regression coefficient for Ambition has increased to 0.18, and the regression coefficients of the other two factors show minor differences (because these factors are less correlated with the omitted variable). Hence, in a regression model that is not correctly specified because it omits factors that correlate both with the included factors and with the outcome, the regression coefficients of the included factors may be biased (omitted variable bias). However, the results of the NCA analysis do not change when a variable is omitted (Figure 4.14 B, right column). This means that an NCA model does not suffer from omitted variable bias.
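Omitted variable bias can be reproduced with simulated data. The sketch below does not use the HPI data; the sample size, the coefficients, and the correlation between the two factors are hypothetical values chosen for illustration.

```r
set.seed(1)
n <- 5000

# Two correlated factors that both contribute to the outcome (hypothetical values).
x1 <- rnorm(n)
x2 <- 0.6 * x1 + rnorm(n, sd = 0.8)
y  <- 0.5 * x1 + 0.5 * x2 + rnorm(n)

full    <- lm(y ~ x1 + x2)  # correctly specified model
reduced <- lm(y ~ x1)       # x2 is omitted

coef_full    <- unname(coef(full)["x1"])
coef_reduced <- unname(coef(reduced)["x1"])
print(c(full = coef_full, reduced = coef_reduced))
```

In the reduced model the coefficient of `x1` absorbs part of the effect of the omitted `x2` and moves from about 0.5 towards about 0.5 + 0.5 × 0.6 = 0.8. A bivariate ceiling analysis of `x1` and `y` uses only these two columns, so its result cannot depend on whether `x2` is part of the model.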

4.5.4 How to combine NCA and regression

Regression and NCA are fundamentally different and complementary. A regression analysis can be added to an NCA study to evaluate the average effect of the identified necessary condition for the outcome. However, the researcher must then include all relevant factors, also those that are not expected to be necessary, to avoid omitted variable bias, and must obtain measurement scores for these factors. When NCA is added to a regression study, not much extra effort is required. Such a regression study can be a multiple regression analysis, or the analysis of the structural model of variance-based structural equation modeling (SEM), Partial Least Squares structural equation modeling (PLS-SEM) or another regression technique. If a theoretical argument is available for a factor (indicator or latent construct) being necessary for an outcome, any factor that is included in the regression model (independent variables, moderators, mediators) can also be treated as a potential necessary condition that can be tested with NCA. This could be done systematically:

- For all factors in the regression model that are potential necessary conditions.

- For those factors that provide a surprising result in the regression analysis (e.g., in terms of direction of the regression coefficient, see below the situation of disparate duality) to better understand the result.

- For those factors that show no or a limited effect in the regression analysis (small regression coefficient) to check whether such ‘unimportant’ factors on average still may be necessary for a certain outcome.

- For those factors that have a large effect in the regression analysis (large regression coefficient) to check whether an ‘important’ factor on average may also be necessary.

When adding NCA to a regression analysis more insight about the effect of \(X\) on \(Y\) can be obtained.

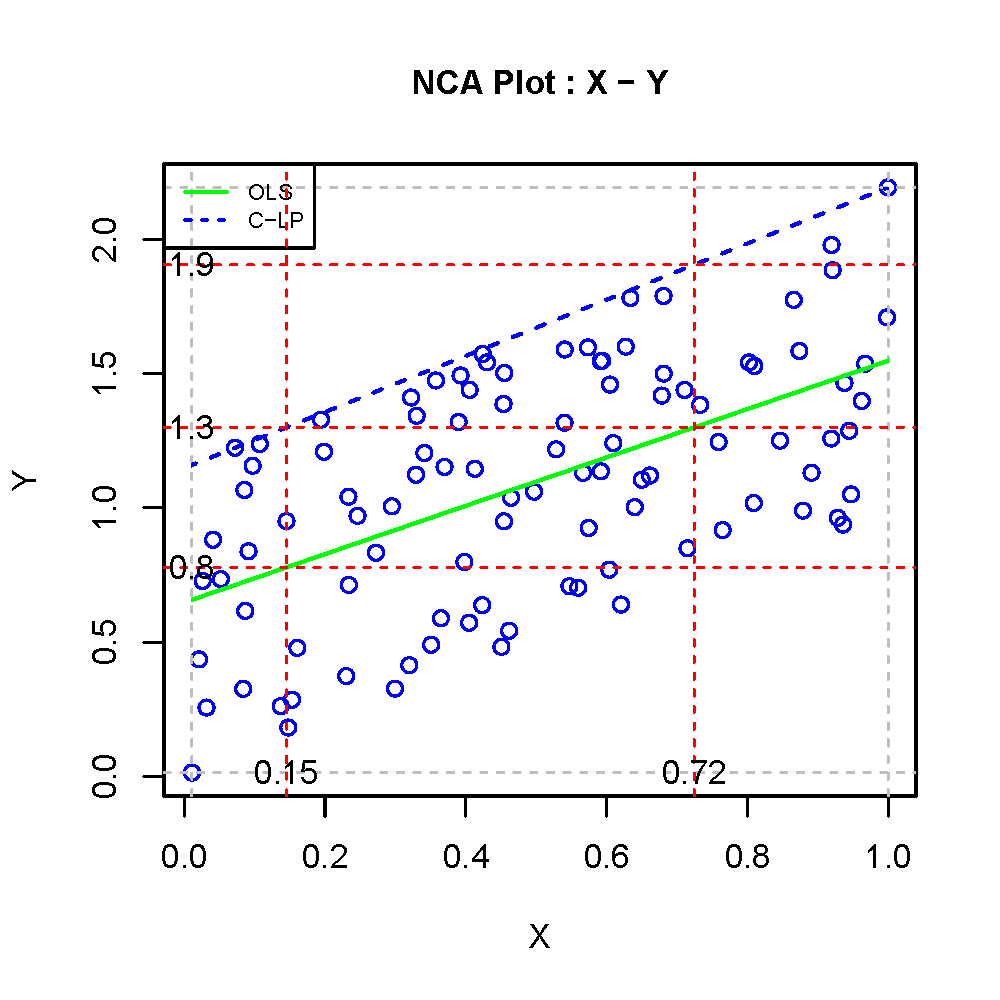

Figure 4.15 shows an example of a combined NCA and simple OLS regression analysis.

Figure 4.15: Example of interpretation of findings from regression analysis and NCA

\(X\) and \(Y\) are related with correlation coefficient 0.58. The data may be interpreted as an indication of a necessity relationship, an indication of an average effect relationship, or both. The average relationship is illustrated with a green OLS regression line with intercept 0.66 and slope 0.9 (p < 0.001). The ceiling line (C-LP) is illustrated with the dashed blue line with intercept 1.1 and slope 1.0 (effect size d = 0.24; p < 0.001). According to the ceiling line, for an outcome value \(Y = 1.3\), a minimum level of the condition of \(X = 0.15\) is necessary. According to the vertical line \(X = 0.15\), the average value of the outcome for this value of the condition is \(Y = 0.8\). In other words, for a given value of \(X\) (e.g., \(X = 0.15\)), NCA predicts the maximum possible value of \(Y\) (\(Y = 1.3\)), whereas OLS regression predicts the average value of \(Y\) (\(Y = 0.8\)). OLS regression does not predict a maximum value of \(Y\). Regression analysis assumes that any value of \(Y\) is possible. Also very high values of \(Y\), up to infinity, are possible, but unlikely. The horizontal line \(Y = 1.3\) not only intersects the ceiling line, showing the required level of \(X = 0.15\) that all cases must have satisfied to be able to reach \(Y = 1.3\), but also the OLS regression line, showing that the average outcome \(Y = 1.3\) is obtained when \(X = 0.72\). The vertical line \(X = 0.72\) intersects the ceiling line at \(Y = 1.9\), showing that this outcome level is maximally possible when the condition level is \(X = 0.72\).

In this example the regression line has the same direction as the ceiling line. There is a positive average effect and a ‘positive’ necessity effect (high level of \(X\) is necessary for high level of \(Y\)). I call this situation ‘commensurate duality’. Commensurate duality also applies when both lines have negative slopes. It is also possible, but less common, that the slope of the ceiling line is positive and the slope of the regression line is negative. In this situation there is a negative average effect and a ‘positive’ necessity effect. A high level of \(X\) is necessary for a high level of \(Y\), but increasing \(X\) on average reduces \(Y\). This can occur when a relatively high number of cases are in the lower right corner of the scatter plot (cases with high \(X\) that do not reach high \(Y\)). The situation in which the two lines have opposite slopes is called ‘disparate duality’. A third possible situation is that there is no significant average effect and yet a significant necessity effect, or the other way around. I call this situation ‘impact duality’. The word ‘duality’ refers to a data interpretation battle. Should the observed data pattern be described with regression analysis, NCA or both? The answer depends on the researcher’s theory. When a conventional theory with additive/average effect logic is used, regression analysis ensures theory-method fit. When a necessity theory is used, NCA ensures theory-method fit. When a conventional theory and a necessity theory are both used (e.g., in an ‘embedded necessity theory’, see Bokrantz & Dul, 2023 and see Chapter 2), both methods should be used to ensure theory-method fit. Hence, it depends on the underlying theory what method(s) should be selected to analyse the data.
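The three duality situations can be summarized as a small decision rule. The sketch below is an interpretation of the definitions above, not part of the NCA software: the function `classify_duality`, its arguments, and the label for the case in which neither effect is significant are assumptions made for illustration.

```r
# Hypothetical helper that labels the combination of an OLS and an NCA result.
classify_duality <- function(reg_slope, ceiling_slope,
                             reg_significant, nec_significant) {
  if (!reg_significant && !nec_significant) {
    return("no effect identified")   # assumption: this case is not named in the text
  }
  if (xor(reg_significant, nec_significant)) {
    return("impact duality")         # only one of the two effects is significant
  }
  if (sign(reg_slope) == sign(ceiling_slope)) {
    "commensurate duality"           # both lines have the same direction
  } else {
    "disparate duality"              # the lines have opposite slopes
  }
}

# Figure 4.15: regression slope 0.9 and ceiling slope 1.0, both with p < 0.001.
fig_4_15 <- classify_duality(0.9, 1.0, TRUE, TRUE)
print(fig_4_15)

# Hypothetical contrasting cases.
print(classify_duality(-0.4, 1.0, TRUE, TRUE))
print(classify_duality(0.1, 1.0, FALSE, TRUE))
```

The label only describes the data pattern; which method(s) to report still depends on the researcher’s theory.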

4.5.5 What is the same in NCA and regression?

I showed that regression has several characteristics that are fundamentally different from the characteristics of NCA. Regression is about average trends, uses additive logic, assumes unbounded \(Y\) values, is prone to omitted variable bias, needs control variables, and is used for testing sufficiency-type hypotheses, whereas NCA is about necessity logic, assumes limited \(X\) and \(Y\), is immune to omitted variable bias, does not need control variables, and is used for testing necessity hypotheses.

However, NCA and regression also share several characteristics. Both NCA and regression are variance-based approaches and use linear algebra (although NCA can also be applied with the set theory approach with Boolean algebra; see Section 4.7 on NCA and QCA). Both methods need good (reliable and valid) data without measurement error, although NCA may be more sensitive to measurement error. For statistical generalization from sample to population both methods need a probability sample that is representative of the population, and larger samples usually give more reliable estimates of the population parameters, although NCA can handle small sample sizes. Additionally, for generalization of the findings of a study both methods need replications with different samples; a one-shot study is not conclusive. Neither method allows strong causal interpretations when observational data are used; at least theoretical support is then also needed. When null hypothesis testing is used in either method, such tests and the corresponding p values have strong limitations and are prone to misinterpretation; a low p value only indicates that the observed data pattern is unlikely to be the result of randomness, and is not proof of the specific alternative hypothesis of interest (average effect, or necessity effect).

When a researcher uses NCA or OLS, these common fundamental limitations should be acknowledged. When NCA and OLS are used in combination the fundamental differences between the methods should be acknowledged. It is important to stress that one method is not better than the other. NCA and OLS are different and address different research questions. To ensure theory-method fit, OLS is the preferred method when the researcher is interested in an average effect of \(X\) on \(Y\), and NCA is the preferred method when the researcher is interested in the necessity effect of \(X\) on \(Y\).

4.6 Combining NCA with (PLS-)SEM

One of the most common applications of combining NCA with a regression-based method is the use of NCA in the context of Partial Least Squares - Structural Equation Modeling (PLS-SEM). The reason for this popularity may be two-fold.

- Leading PLS-SEM proponents introduced NCA as a methodological enrichment of PLS-SEM, and provided specific recommendations (Richter, Schubring, et al., 2020; Richter et al., 2022; Richter, Hauff, Ringle, et al., 2023) and extensions (Hauff et al., 2024; Sarstedt et al., 2024) on how to use NCA in combination with PLS-SEM.

- A basic version of the NCA software became part of a popular software package for conducting PLS-SEM (SmartPLS, see Section 7.1.2).

NCA has also been applied in combination with other types of SEM such as the classical covariance-based SEM (CB-SEM).

A SEM model consists of two parts. The measurement model defines how latent variables are represented by measured indicators. A latent variable is an unobserved construct that is derived from observed indicators. The structural model specifies how the latent variables are related to each other.

In both PLS-SEM and CB-SEM, latent variable scores can be obtained and used for further analysis. However, the estimation approach differs. PLS-SEM uses partial least squares to estimate latent variable scores as weighted composites of indicators and to maximize the explained variance in the dependent variables. CB-SEM estimates model parameters based on the covariance matrix, using techniques such as maximum likelihood or generalized least squares. Latent variable scores are not directly estimated in the model fitting process, but can be computed afterward if needed (e.g., as factor scores).

Combining NCA with SEM involves using the estimated scores of the latent variables as input for NCA. This allows researchers to examine whether two latent variables are related not only in terms of probabilistic sufficiency (as modeled in SEM) but also in terms of necessity, assuming a theoretical rationale exists. In NCA, latent variables from the structural model can take the role of condition or outcome to study necessity relationships.

All guidelines for applying NCA in general (see Sections 1.7 and 1.8) also apply to combining NCA with SEM.

4.6.1 Steps for conducting NCA with SEM

Figure 4.16 shows a flowchart for conducting NCA in combination with SEM.

Figure 4.16: Flowchart for conducting NCA in combination with Structural Equation Modeling (SEM).

Below the steps are discussed in detail and an example is given about how to use NCA with PLS-SEM. Since SEM terminology about ‘indicators’, ‘weights’, ‘latent variables’ and ‘rescaling’ varies across the SEM literature, the following terminology is used in this book:

Terminology

General:

- Rescaling. Normalization or standardization of original scores.

- Normalization (or min-max normalization). Rescaling of a scale to new minimum and maximum values (e.g., 0-1 or 0-100).

- Standardization. Rescaling of observed scale values based on their probability distribution (z-score).

Indicator:

- Indicator (variable). A manifest/measured variable linked to a latent variable.

- Indicator score. The value of an indicator.

- Raw indicator score. The value of the indicator measured on a scale. The scale usually has minimum and maximum values, e.g., a Likert scale ranging from 1-5 or 1-7.

- Normalized indicator score. The value of an indicator after min-max normalization of the raw indicator score (e.g., 0-1 scale or percentage scale 0-100).

- Standardized indicator score. The value of an indicator after standardization of the raw indicator score (z-score: rescaled such that the mean = 0 and standard deviation = 1).

- Original indicator score. The indicator score that is used as input for the SEM model, which can be raw or standardized depending on the estimation settings.

Weight and loading:

- (Indicator) weight. In PLS-SEM, the extent to which an indicator contributes to the latent variable score (as part of a weighted composite).

- Standardized indicator weight. Indicator weight using standardized indicators.

- Estimated indicator weight. In PLS-SEM, the estimated coefficient that multiplies an indicator to construct the latent variable score.

- Factor loading. In CB-SEM, the strength of indicator–latent variable relationships.

Latent variable:

- Latent variable. An unobserved construct linked to multiple indicators.

- Latent variable score. The value of a latent variable, calculated as a linear combination (weighted sum) of indicator scores.

- Standardized latent variable score. The value of a latent variable obtained by linear combination (weighted sum) of standardized indicator scores (z-score).

- Unstandardized latent variable score. The value of a latent variable expressed in the scale of the original indicators (commonly raw original scores).

- Normalized unstandardized latent variable score. Unstandardized latent variable score after normalization.
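The rescaling terms above can be written compactly as formulas. The notation is introduced here only for illustration and is an assumption, not the book's own: \(x\) is a raw score on a scale with minimum \(x_{\min}\) and maximum \(x_{\max}\), \([a, b]\) is the new range, \(\bar{x}\) and \(s_x\) are the mean and standard deviation of the observed scores, and \(w_i\) is the weight of indicator \(i\) among the \(k\) indicators of a latent variable \(LV\).

\[
x^{\text{norm}} = a + (b - a)\,\frac{x - x_{\min}}{x_{\max} - x_{\min}},
\qquad
z = \frac{x - \bar{x}}{s_x},
\qquad
LV = \sum_{i=1}^{k} w_i \, x_i
\]

For example, a raw score of 4 on a 1-5 Likert scale normalized to a 0-100 scale becomes \(0 + 100 \cdot (4 - 1)/(5 - 1) = 75\).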

4.6.1.1 Step 1: Theorize probabilistic and necessity relationships

The first step is a fundamental step for any combined NCA and SEM study. An NCA-SEM study implies that the relationships between the variables are considered from two different causal perspectives: probabilistic sufficiency (SEM) and necessity (NCA). Both SEM and NCA require that the study starts with formulating theoretical expectations about the relationship (e.g., hypotheses). According to SEM, all relationships between latent variables in the structural model are considered probabilistic sufficiency relationships. According to NCA, all, a few, or none of the relationships can be hypothesized as necessity relationships as well. Each necessity relationship between latent variables should be theoretically justified (see Section 2.2). The theoretical justification of applying these different causal lenses to the structural model is essential (Dul, 2024a). A theory that includes one or more necessity relationships in addition to probabilistic sufficiency relationships is called an ‘embedded necessity theory’ (Bokrantz & Dul, 2023). The latent variables of a structural model can have two different roles. A ‘predictor’ variable (independent variable; ‘condition’ in NCA) explains or predicts a ‘predicted’ variable (dependent variable; ‘outcome’ in NCA). For example, when in a structural model a latent variable \(M\) mediates the relationship between \(X\) and \(Y\), then \(M\) has two different roles. For the \(X\)-\(M\) relationship \(X\) is the predictor and \(M\) is the predicted variable, and for the \(M\)-\(Y\) relationship \(M\) is the predictor and \(Y\) the predicted variable. When a relationship is (also) considered a necessity relationship, NCA calls the predictor variable the ‘condition’ and the predicted variable the ‘outcome’.

4.6.1.2 Step 2: Conduct SEM

Conducting SEM assumes that data are available on observed indicators that are used to represent latent variables. SEM involves specifying a measurement model, which defines the relationships between indicators and latent variables, and a structural model, which specifies the relationships between latent variables. The complete model is then estimated using a chosen algorithm (e.g., PLS-SEM or CB-SEM). NCA is not part of the SEM estimation process itself, but it uses latent variable scores obtained from SEM as input for its own analysis. Therefore, it is important that the analyst ensures that the latent variable scores extracted from the SEM model are valid, reliable, and interpretable. Recommendations for good SEM practices are available in the literature and are not discussed here. Both commercial and open-source software tools are available to conduct SEM.

4.6.1.3 Step 3: Extract latent variable scores

The latent variable scores obtained from the SEM model serve as input for NCA. During SEM estimation, latent variable scores are often standardized (z-scores), resulting in scores with a mean of 0 and standard deviation of 1. Although NCA results are invariant to affine transformations (such as standardization or linear rescaling), it is recommended for purposes of interpretation to first unstandardize the latent variable scores, and then, if desired, apply normalization (e.g., min-max scaling). Unstandardized latent variable scores (see Step 3a, if needed) are expressed on a scale that is directly related to the original indicator scale, provided that the same measurement scale was used for all indicators of the latent construct. This allows for more meaningful interpretation of the NCA results, especially when discussing real-world implications and when results are discussed in terms of necessity ‘in degree’ with specified levels of \(X\) and \(Y\). Note that not all SEM software packages offer the option to extract unstandardized latent variable scores, particularly in CB-SEM, where latent scores are not estimated by default. Below is an example showing how to convert standardized scores back to unstandardized scores when needed. When all unstandardized latent variable scores are derived from indicators measured on the same scale (i.e., with identical minimum and maximum values), normalization is not required. However, if latent variables have different scales, min-max normalization is recommended (see Step 3b). This ensures that the latent variable scores are comparable, which is particularly important in later steps (e.g., Step 5: producing a BIPMA).

A common approach to min-max normalization is to rescale scores to a 0–100 range, allowing latent variable scores to be interpreted as percentages of their original scale range. Alternatively, rescaling to a 0–1 range can be used, which is often more convenient for NCA, as it yields a scope of 1. However, this is optional and not required for the validity of NCA results.
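The claim that rescaling does not change the NCA results can be checked numerically. The Python sketch below is a simplified, hypothetical re-implementation of the CE-FDH effect size (ceiling zone divided by scope, with the empirical scope and an empty upper left corner assumed). It is not the NCA software, and the five latent variable scores are made up for illustration. Only order-preserving rescalings (positive scale factor) are considered.

```python
from statistics import mean, pstdev


def ce_fdh_effect_size(x, y):
    """Effect size d = ceiling zone / scope for a CE-FDH step ceiling.

    Assumptions: empirical scope (observed min/max of x and y) and an
    expected empty space in the upper left corner.
    """
    y_max = max(y)
    scope = (max(x) - min(x)) * (y_max - min(y))
    cases = sorted(zip(x, y))
    ceiling_zone = 0.0
    ceiling = float("-inf")
    # Walk left to right; the ceiling is the highest y seen so far.
    for (x_cur, y_cur), (x_next, _) in zip(cases, cases[1:]):
        ceiling = max(ceiling, y_cur)
        ceiling_zone += (x_next - x_cur) * (y_max - ceiling)
    return ceiling_zone / scope


def standardize(values):
    """z-scores: mean 0 and standard deviation 1."""
    m, s = mean(values), pstdev(values)
    return [(v - m) / s for v in values]


def min_max_normalize(values, scale_min, scale_max, new_max=100.0):
    """Rescale from [scale_min, scale_max] to [0, new_max]."""
    return [(v - scale_min) / (scale_max - scale_min) * new_max for v in values]


# Hypothetical unstandardized latent variable scores on a 1-5 scale.
x = [1.0, 2.0, 3.0, 4.0, 5.0]
y = [1.0, 1.0, 3.0, 2.0, 5.0]

d_raw = ce_fdh_effect_size(x, y)
d_std = ce_fdh_effect_size(standardize(x), standardize(y))
d_norm = ce_fdh_effect_size(min_max_normalize(x, 1, 5), min_max_normalize(y, 1, 5))
print(d_raw, d_std, d_norm)
```

The three effect sizes agree up to floating-point error, so the choice between standardized, unstandardized, and normalized scores affects only how levels of \(X\) and \(Y\) are read, not the size of the empty space relative to the scope.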

4.6.1.4 Step 4: Conduct NCA

The unstandardized and possibly normalized latent variable scores are input to NCA. In the first part of the analysis the hypothesized relationships are tested for ‘necessity in kind’, which is the qualitative statement that \(X\) is necessary for \(Y\) without specifying levels of \(X\) and \(Y\) other than absence/presence or low/high. The observed effect size \(d\) and p value are compared with their threshold values (e.g., \(d\) = 0.10; p = 0.05) that are set by the researcher to decide if a predictor variable is necessary for a predicted variable, as suggested by the hypothesis.
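The ‘necessity in kind’ decision amounts to a comparison against the two thresholds. The Python sketch below uses a hypothetical function name and assumes that the effect size must be at least its threshold and the p value at most its threshold; whether the boundaries are inclusive is a choice made here, not stated in the text.

```python
def necessity_in_kind(d, p, d_threshold=0.10, p_threshold=0.05):
    """Return True when the necessity hypothesis is supported 'in kind'.

    Assumption: inclusive boundaries (d >= d_threshold, p <= p_threshold).
    The default thresholds are the example values from the text.
    """
    return d >= d_threshold and p <= p_threshold


# Illustrative values only: a sizeable effect with a low p value,
# a small effect, and a sizeable but non-significant effect.
print(necessity_in_kind(d=0.24, p=0.0005))
print(necessity_in_kind(d=0.05, p=0.01))
print(necessity_in_kind(d=0.30, p=0.20))
```

Both criteria must hold: a large empty space that could easily arise by chance, or a significant but negligible empty space, does not support the hypothesis.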