16.1 Worked Example

Melanie A. Murphy & Jeffrey S. Evans

1. Overview of Worked Example

a. Background

There are many ways graphs can be implemented to understand population structure and relate that structure to landscape characteristics (see Dyer and Nason 2004). In this exercise, we will calculate various graph metrics and apply graphs to fit a gravity model.

Gravity models are a type of inferential model that exploit graph characteristics. Gravity models include both at-site and between-site landscape data. They are a type of graph consisting of nodes and edges. These nodes and edges represent landscape characteristics associated with these graph elements.

2. Setup

Add required packages

This code checks of all required packages are installed, and if so, loads them. If any are missing, it will return a message that identifies the packages that need to be installed. If that happens, install the packages and run the code again.

The package then checks if your version of the GeNetIt package is up-to-date (at least 0.1-5), and if not, installs the latest version.

p <- c("raster", "igraph", "sp", "GeNetIt", "spatialEco", "leaflet",

"sf", "terra", "sfnetworks", "spdep", "dplyr", "tmap", "devtools")

if(any(!unlist(lapply(p, requireNamespace, quietly=TRUE)))) {

m = which(!unlist(lapply(p, requireNamespace, quietly=TRUE)))

suppressMessages(invisible(lapply(p[-m], require,

character.only=TRUE)))

stop("Missing library, please install ", paste(p[m], collapse = " "))

} else {

if(packageVersion("GeNetIt") < "0.1-5") {

remotes::install_github("jeffreyevans/GeNetIt")

}

suppressMessages(invisible(lapply(p, require, character.only=TRUE)))

}3. Wetland complex data preparation

In this sections, we will read in all wetland locations in the study area and calculate a few graph-based metrics to assign to wetland sites that data was collected at. This allows us to put our samples into the context of the larger wetland system thus, accounting for proximity and juxtaposition.

a. Read in wetlands data

Import file “Wetlands.csv”.

wetlands <- read.csv(system.file("extdata/Wetlands.csv", package="LandGenCourse"),

header = TRUE)

head(wetlands)## ID X Y RALU SiteName

## 1 1 688835 5002939 y AirplaneLake

## 2 2 687460 4994400 n AlpineInletCreek

## 3 3 687507 4994314 n AlpineInletMeadow

## 4 4 687637 4994117 n AlpineLake

## 5 5 688850 4997750 n AxeHandleMeadow

## 6 6 688500 4998900 y BachelorMeadowMake it a spatial object.

## Classes 'sf' and 'data.frame': 121 obs. of 4 variables:

## $ ID : int 1 2 3 4 5 6 7 8 9 10 ...

## $ RALU : chr "y" "n" "n" "n" ...

## $ SiteName: chr "AirplaneLake" "AlpineInletCreek" "AlpineInletMeadow" "AlpineLake" ...

## $ geometry:sfc_POINT of length 121; first list element: 'XY' num 688835 5002939

## - attr(*, "sf_column")= chr "geometry"

## - attr(*, "agr")= Factor w/ 3 levels "constant","aggregate",..: 1 1 1

## ..- attr(*, "names")= chr [1:3] "ID" "RALU" "SiteName"b. Create wetlands graph

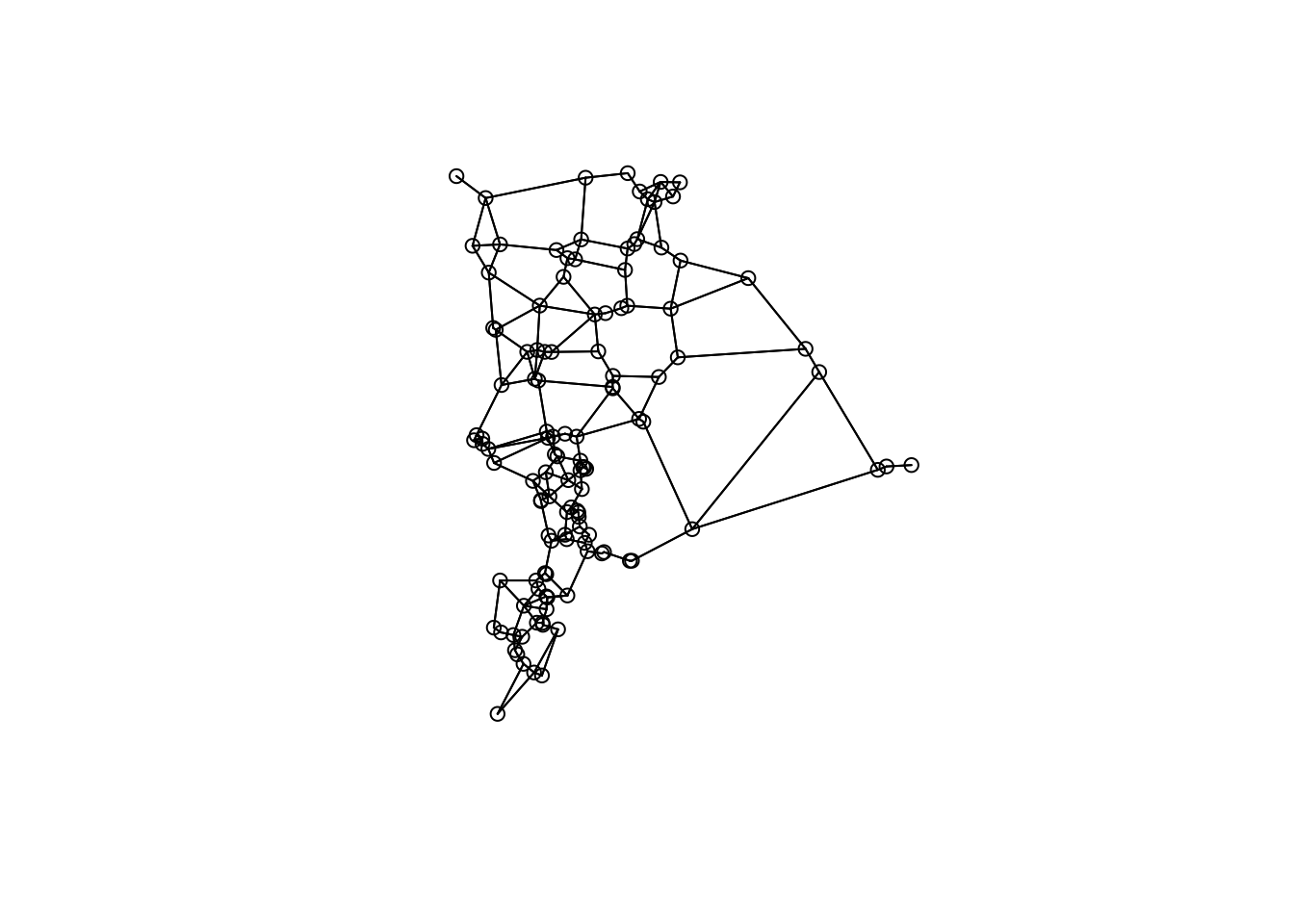

Create Gabriel graph from the wetlands to represent a “realization” of connectivity and spatial arrangement.

Derive Gabriel graph

gg <- graph2nb(gabrielneigh(st_coordinates(wetlands)),sym=TRUE)

plot(gg, coords=st_coordinates(wetlands))

Questions:

- This graph may or may not be the best representation of wetland connectivity. What other types of graphs could you build?

- What wetlands are connected to each other based on the graph?

c. Graph metrics

To calculate graph metrics, we need to do a few steps:

Coerce to sf line object (will be used to create igraph object)

Coerce to a sfnetwork, which is an igraph object:

wg <- as_sfnetwork(gg, edges=gg, nodes=wetlands, directed = FALSE,

node_key = "SiteName", length_as_weight = TRUE,

edges_as_lines = TRUE)Calculate weights

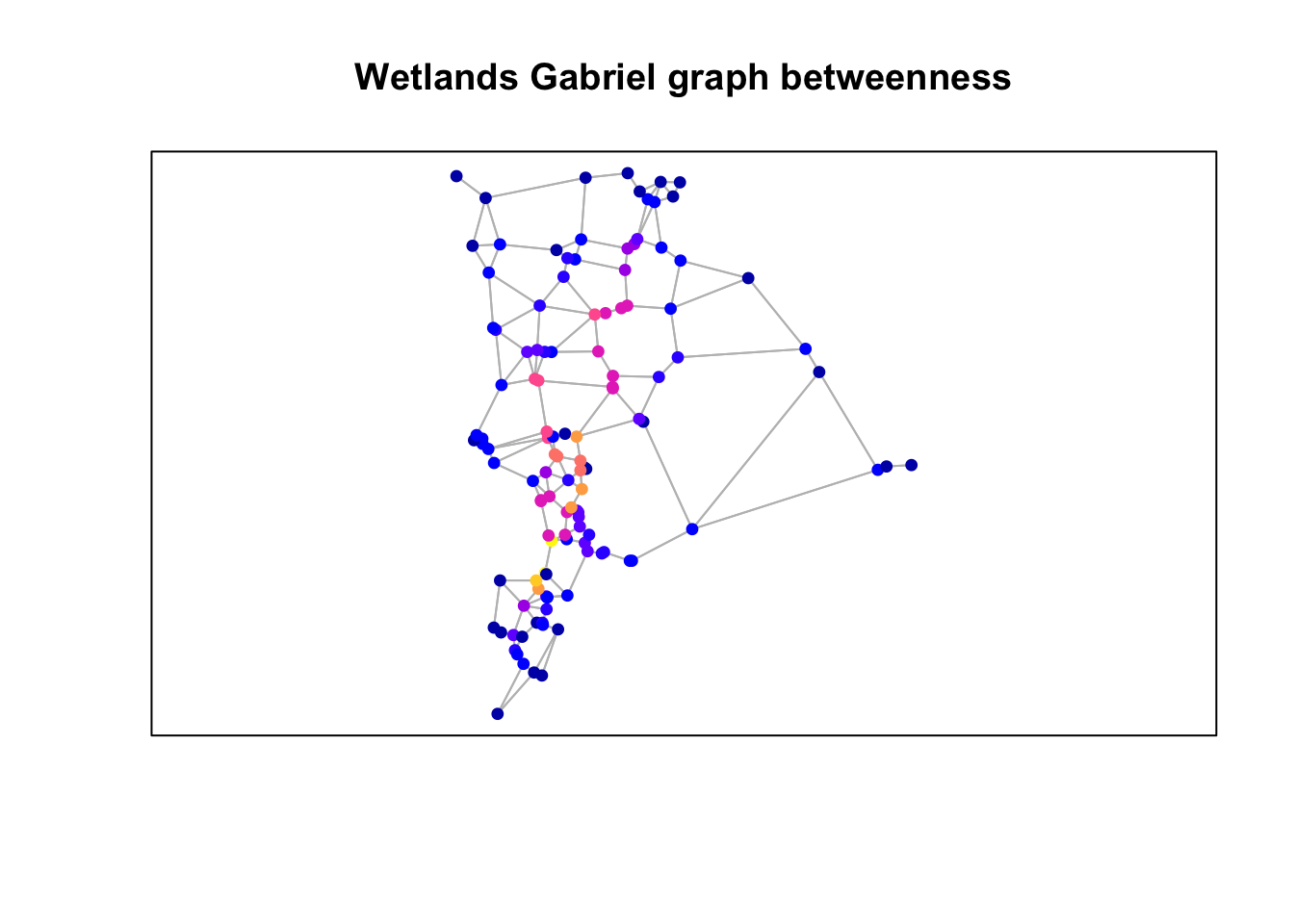

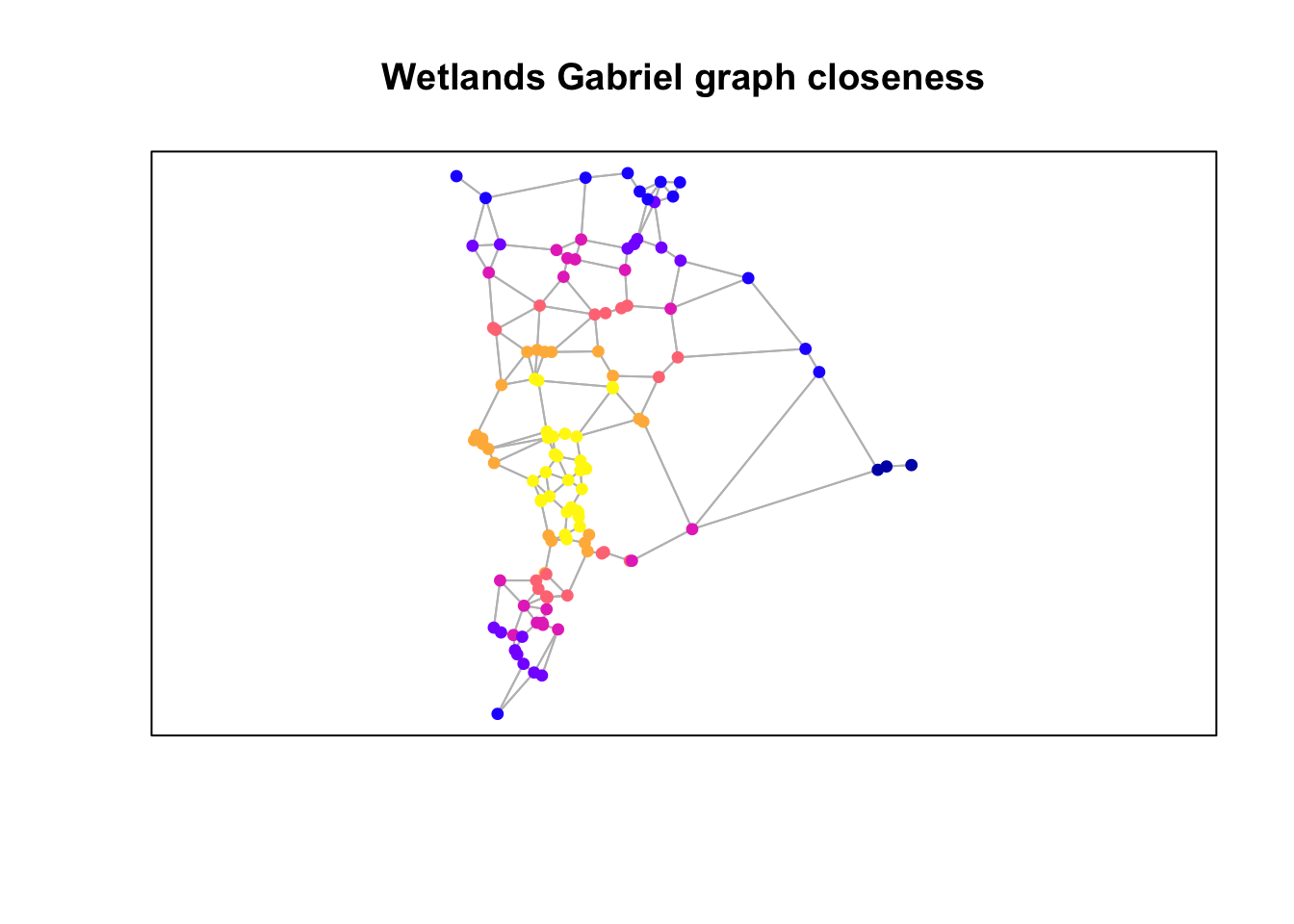

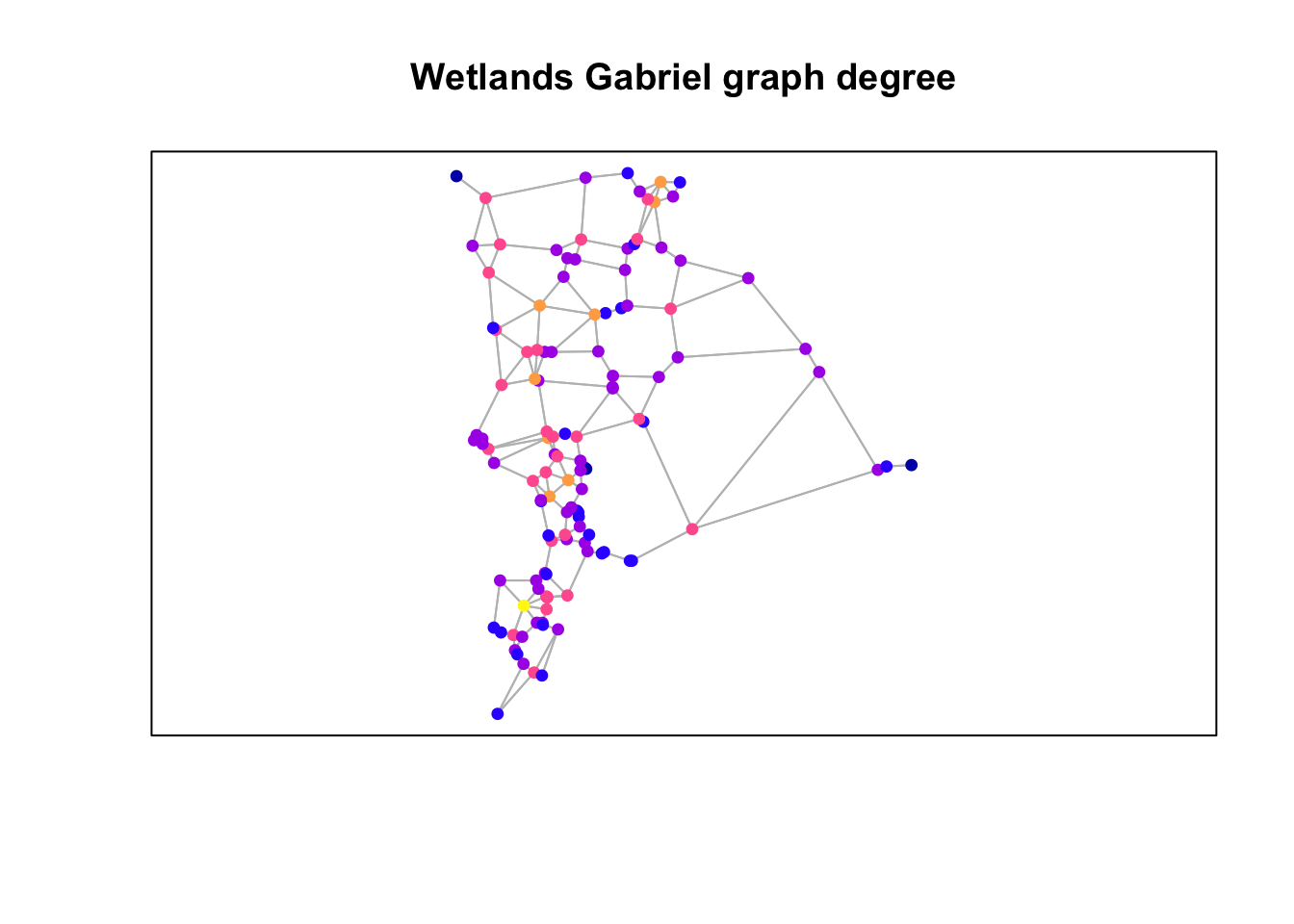

Calculate graph metrics of betweenness and closeness with weights and degree. We’ll add these as attributes (columns) to the wetlands sf object.

- degree - the number of connections a node has

- betweenness - the number of shortest paths going through a node

- closensess - the average of the shortest path length from the node to every other node in the network

wetlands$betweenness <- igraph::betweenness(wg, directed=FALSE, weights=w)

wetlands$degree <- igraph::degree(wg)

wetlands$closeness <- igraph::closeness(wg, weights=w)

wetlands## Simple feature collection with 121 features and 6 fields

## Attribute-geometry relationships: constant (3), NA's (3)

## Geometry type: POINT

## Dimension: XY

## Bounding box: xmin: 686244 ymin: 4993077 xmax: 695699 ymax: 5004317

## Projected CRS: WGS 84 / UTM zone 11N

## First 10 features:

## ID RALU SiteName geometry betweenness degree

## 1 1 y AirplaneLake POINT (688835 5002939) 288 8

## 2 2 n AlpineInletCreek POINT (687460 4994400) 443 6

## 3 3 n AlpineInletMeadow POINT (687507 4994314) 332 4

## 4 4 n AlpineLake POINT (687637 4994117) 220 6

## 5 5 n AxeHandleMeadow POINT (688850 4997750) 1690 6

## 6 6 y BachelorMeadow POINT (688500 4998900) 195 4

## 7 7 y BarkingFoxLake POINT (687944 5000006) 1323 6

## 8 8 n BarkingFoxWetland POINT (687872 5000041) 1291 10

## 9 9 n BartPond POINT (687150 4995850) 0 6

## 10 10 n BigClearLake POINT (690888 5004126) 0 4

## closeness

## 1 0.3538381

## 2 0.3407043

## 3 0.3352415

## 4 0.3226762

## 5 0.5426490

## 6 0.5334932

## 7 0.5094841

## 8 0.5066511

## 9 0.3783181

## 10 0.2706522d. Plot graph metric

Plot results using the graph edges and wetlands points with the attributes “betweenness”, “closeness” and “degree”.

Plot betweenness

plot(st_geometry(gg), col="grey")

plot(wetlands["betweenness"], pch=19,

cex=0.75, add=TRUE)

box()

title("Wetlands Gabriel graph betweenness")

Plot closeness

plot(st_geometry(gg), col="grey")

plot(wetlands["closeness"], pch=19,

cex=0.75, add=TRUE)

box()

title("Wetlands Gabriel graph closeness")

Plot degree

plot(st_geometry(gg), col="grey")

plot(wetlands["degree"], pch=19,

cex=0.75, add=TRUE)

box()

title("Wetlands Gabriel graph degree")

Questions: Consider the three figures above and how the graph was constructed.

- In what way(s) is the resulting graph potentially ecologically meaningful?

- How might it not be ecologically or biologically meaningful?

4. Wetland field-data preparation

In this section we will read the field data and add the node metrics we just calculated.

Using RALU_Site.csv, we read in the data, add the node data (betweenness and degree), create a spatial object that includes the node data.

Read in site data

sites <- read.csv(system.file("extdata/RALU_Site.csv", package="LandGenCourse"),

header = TRUE)

sites$SiteID <- as.character(sites$SiteID)Add the node data.

Note: using names is dangerous as, small changes in names can result in non-matches. In this case, the ID fields are not consistent (data were collected at different times for different purposes originally). However, names are standardized in a drop-down list of a database. So they are a matching field. My recommendation to you all is to do to this type of operation on a numeric field.

nodestats <- st_drop_geometry(wetlands[,c(3,5:7)])

nodestats <- nodestats[which(nodestats$SiteName %in% sites$SiteName),]

sites <- merge(nodestats, sites, by="SiteName")Convert data frame to sf object.

## Simple feature collection with 6 features and 14 fields

## Attribute-geometry relationships: constant (14)

## Geometry type: POINT

## Dimension: XY

## Bounding box: xmin: 687944 ymin: 4996458 xmax: 690127 ymax: 5002939

## Projected CRS: WGS 84 / UTM zone 11N

## SiteName betweenness degree closeness SiteID Elev Length Area

## 1 AirplaneLake 288 8 0.3538381 27 2564.381 390 62582.2

## 2 BachelorMeadow 195 4 0.5334932 15 2591.781 0 225.0

## 3 BarkingFoxLake 1323 6 0.5094841 19 2545.275 160 12000.0

## 4 BobLake 116 4 0.4652124 16 2649.125 143 4600.0

## 5 CacheLake 673 4 0.5189996 10 2475.829 75 2268.8

## 6 DoeLake 758 6 0.4710215 8 2463.006 170 13034.9

## Perim Depth pH Dforest Drock Dshrub geometry

## 1 1142.8 21.64 6.5 0.398 0.051 0.00 POINT (688835 5002939)

## 2 60.0 0.40 6.1 0.000 0.000 0.20 POINT (688500 4998900)

## 3 435.0 5.00 6.5 0.400 0.250 0.05 POINT (687944 5000006)

## 4 321.4 2.00 7.0 0.550 0.000 0.05 POINT (690127 4999150)

## 5 192.0 1.86 6.5 0.508 0.000 0.00 POINT (688777 4997264)

## 6 463.2 6.03 7.6 0.254 0.000 0.00 POINT (688968 4996458)Question:

- What are the fields here? What data are included in

sites?

5. Saturated Graph

a. Create graph from site locations

To assess connectivity using a gravity model, we need to build a graph from the occupied frog sites create a graph. This could be any type of graph, but I generally use saturated (essentially a full genetic distance matrix) or pruned by some maximum distance.

dist.graph <- knn.graph(sites, row.names = sites$SiteName)

dist.graph <- merge(dist.graph, st_drop_geometry(sites),

by.y="SiteName", by.x="from_ID")

dist.graph <- dist.graph[,-c(11:19)] ## d.op extra columnsNote: Can create a distance-constrained graph with max.dist arg (not run)

b. Merge the graph with genetic distance

Read in the genetic distance data and make a matrix, gdist, then unfold data

gdist <- read.csv(system.file("extdata/RALU_Dps.csv", package="LandGenCourse"), header=TRUE)

rownames(gdist) <- t(names(gdist))

gdist <- dmatrix.df(as.matrix(gdist))

names(gdist) <- c("FROM", "TO", "GDIST") #unfold the file

gdist <- gdist[!gdist$FROM == gdist$TO ,]

gdist[,1] <-sub("X", "", gdist[,1])

gdist[,2] <-sub("X", "", gdist[,2])

gdist <- cbind(from.to=paste(gdist[,1], gdist[,2], sep="."), gdist)Transform genetic distance to genetic flow (1-distance)

Merge graph with genetic distances, based on from node - to node combination

dist.graph$from.to <- paste(dist.graph$i, dist.graph$j, sep=".")

dist.graph <- merge(dist.graph, gdist, by = "from.to")Question:

- What is in the resulting object?

6. Spatial model data prepration

b. Reclassify wetlands

NLCD is land cover data. The wetland classes are 11 and 90-95 (for this system and vintage NLCD data)

c. Calculate the proportion of the landscape around sites

Assign proportion of landcover that is wetland to sites as pwetland. You could create a binary raster for any cover type of interest and calculate this parameter.

Question:

- You want to know if areas of dense wetlands produce more frogs. What buffer distance will you use?

Create function to extract the proportion of wetland.

## method 1 (can result in Inf if all zero)

# prop.land <- function(x) {

# length(x[x==1]) / length(x)

# }

## method 2 (no divide by zero error)

prop.land <- function(x) {

prop.table(table(factor(x, levels=c(0,1))))[2]

}Apply the function to extract the proportion of wetland within a buffer (here: 300 m).

## Warning: [extract] transforming vector data to the CRS of the rasterAdd the proportion of wetland back to the dataframe.

## [1] 0.205047319 0.018987342 0.047923323 0.000000000 0.003174603 0.115755627Challenge:

- What happens if you change this radius?

- What radius do you think makes the most sense ecologically?

Alternatively, you can use the landscapemetrics package (see Week 2) to calculate a broader set of landscape metrics. Here is an example for calculating pland.

Note: as per help file, the argument y of the function sample_lsm can accept an sf points object (e.g., sites). This returned an error though, hence we first convert sites to an sp object, using the function as_Spatial.

nlcd_sampled <- landscapemetrics::sample_lsm(landscape = xvars[["wetlnd"]],

what = "lsm_c_pland",

shape = "circle",

y = sf::st_coordinates(sites),

size = 300,

return_raster = FALSE,

plot_id=sites$SiteID)

pwetland <- dplyr::select(dplyr::filter(nlcd_sampled, class == 1,

metric == "pland"), plot_id, value)

names(pwetland) <- c("SiteID", "pwetland")

pwetland$pwetland <- pwetland$pwetland/100

head(pwetland)Note: these values are sorted by SiteID and are not in the same order as the sf object sites, that’s why the first six values may not be the same.

d. Add values of rasters to sample sites

This adds potential “at site” variables, keep as sf POINT class object. We are removing raster 6 as knowing the cover class from NLCD that intersects the same points is not very useful information.

## Warning: [extract] transforming vector data to the CRS of the rastere. Add raster covariates to graph edges (lines)

Remove nlcd and wetlnd rasters before calculating statistics, as calculating statistics on categorical data is nonsensical.

Calculating stats - this will take a while!

idx <- which(names(xvars) %in% c("nlcd","wetlnd"))

suppressWarnings(

stats <- graph.statistics(dist.graph, r = xvars[[-idx]],

buffer= NULL, stats = c("min",

"mean","max", "var", "median")))Add statistics to graph.

f. What about categorical variables?

Statistical moments (mean, variance, etc.) are nonsensical for categorical variables. Here we create a function for returning the percent wetland between sites. Then we use it to calculate an additional statistic and add the result to the graph.

Question:

- Are there other categorical variables that you think may be ecologically important?

Function to calculate percent wetland between pairs of sites:

wet.pct <- function(x) {

x <- ifelse(x == 11 | x == 12 | x == 90 | x == 95, 1, 0)

prop.table(table(factor(x, levels=c(0,1))))[2]

} Calculate statistic and add it to graph.

g. Evaluate node and edge correlations

We need to evaluate correlations in the data to avoid overdispersion in our models (remember lab from Week 12, where we calculated the variance inflation factor, VIF).

Note, we are not going to actually remove the correlated variables but, just go through a few methods of evaluating them. The code to remove colinear variables is commented out for reference. We do have to log transform the data as to evaluate the actual model structure.

Create data frame with the node variables to be evaluated.

node.var <- c("degree", "betweenness", "Elev", "Length", "Area", "Perim",

"Depth", "pH","Dforest","Drock", "Dshrub", "pwetland", "cti",

"dd5", "ffp","gsp","pratio","hli","rough27","srr")

s <- st_drop_geometry(sites) %>% select("degree", "betweenness", "Elev", "Length", "Area", "Perim",

"Depth", "pH","Dforest","Drock", "Dshrub", "pwetland", "cti", "ffp","gsp")Log-transform values, set log of negative values or zero (where log is not defined) to 0.00001.

Site correlations:

p = 0.8 # Set upper limit of for collinearity

site.cor <- cor(s, y = NULL,

use = "complete.obs",

method = "pearson")

diag(site.cor) <- 0

cor.idx <- which(site.cor > p | site.cor < -p, arr.ind = TRUE)

cor.names <- vector()

cor.p <- vector()

for(i in 1:nrow(cor.idx))

{

cor.p[i] <- site.cor[cor.idx[i,][1], cor.idx[i,][2]]

cor.names [i] <- paste(rownames(site.cor)[cor.idx[i,][1]],

colnames(site.cor)[cor.idx[i,][2]], sep="_")

}

data.frame(parm=cor.names, p=cor.p)## parm p

## 1 ffp_Elev -0.9016287

## 2 gsp_Elev 0.8934291

## 3 Area_Length 0.8715535

## 4 Perim_Length 0.8392532

## 5 Length_Area 0.8715535

## 6 Perim_Area 0.9912114

## 7 Length_Perim 0.8392532

## 8 Area_Perim 0.9912114

## 9 Elev_ffp -0.9016287

## 10 gsp_ffp -0.9656032

## 11 Elev_gsp 0.8934291

## 12 ffp_gsp -0.9656032This returns a list of pairwise correlations that are than the threshold p. Instead of doing this manually, we can use the function collinear to check this. It will make a suggestions which variable to drop.

## Collinearity between Area and Perim correlation = 0.9912## Correlation means: 0.446 vs 0.276## recommend dropping Area## Collinearity between Area and Length correlation = 0.8716## Correlation means: 0.446 vs 0.278## recommend dropping Area## Collinearity between Perim and Length correlation = 0.8393## Correlation means: 0.441 vs 0.278## recommend dropping Perim## Collinearity between ffp and Elev correlation = 0.9016## Correlation means: 0.341 vs 0.283## recommend dropping ffp## Collinearity between ffp and gsp correlation = 0.9656## Correlation means: 0.341 vs 0.284## recommend dropping ffp## Collinearity between Elev and gsp correlation = 0.8934## Correlation means: 0.336 vs 0.284## recommend dropping ElevIt also returns a vector of correlated variables:

## [1] "Elev" "Area" "Perim" "ffp"h. Add node data

Build and add node (at-site) level data to graph, then merge edge (distance graph) and edge (between-site) data.

Merge node and edges for model data.frame

gdata <- merge(st_drop_geometry(dist.graph)[c(1,2,5,11,14,7)], node,

by.x="SiteID", by.y="SiteID")

gdata <- merge(gdata, st_drop_geometry(dist.graph)[c(11, 8:10, 15:40)],

by.x="SiteID", by.y="SiteID")

# log transform matrix

for(i in 5:ncol(gdata))

{

gdata[,i] <- ifelse(gdata[,i] <= 0, 0.00001, log(gdata[,i]))

}7. Gravity model

a. Develop hypothesis

What type of gravity model do you wish to run (production or attraction constraint)? Think about what hypotheses that you want to test. Write out model statements that group parameters into hypotheses and run models. Remember to run a NULL that is just distance.

At-site (node) potential parameters. These are all at-site variables. Remember that we pulled all raster variables. We want to critically think about hypotheses and not use all of these parameters.

degree- graph degreebetweenness- graph betweenessElev- elevation (see comments below)Length- geographic distanceArea- wetland area (field)Perim- wetland perimeter (field)Depth- wetland depth (field)- highly correlated with predatory fish presence/abundancepH- wetland pH (field)Dforest- distance to forest (field)Drock- distance to rock (field)Dshrub- distance to shrub (field)pwetland- proportion of wetland in X buffer (calculated above)cti- compound topographic wetness index - steady-state measure of wetness based on topography (raster data)dd5- degree days >5 C (sum of temp) - (raster data)ffp- frost free period (raster data)gsp- growing season precipitation (raster data)pratio- ratio of growing season precip to annual precip (raster data) - can indicate amount of snow to rainhli- heat load index - topographic measure of exposure, related to productivity (ice-off and primary productivity) in this system (raster data)rough27- unscale topographic variation at a 27 X 27 (cells) window size (raster data)ssr- measure of topographic variation at a 27X27 (cells) windo size - for this system pulling out ridgelines (raster data)

NOTE: we are adding elevation here as a covariate. HOWEVER - elevation does not represent ecological processes in and of itself. I strongly encourage using the components (temp, moisture, rainfall, vegetation, accessibility, etc.) directly and not elevation as a surrogate parameter.

Between site (edge) potential parameters include:

cti,dd5,ffp,gsp,pratio,hli,rough27,ssr(min, mean, max, var, median for each)wet.pct.nlcd- percent of cells that are wetland class

# null model (under Maximum Likelihood)

( null <- gravity(y = "GDIST", x = c("length"), d = "length", group = "from_ID",

data = gdata, fit.method = "ML") )## [1] "Running singly-constrained gravity model"## Gravity model

##

## Linear mixed-effects model fit by maximum likelihood

## Data: gdata

## AIC BIC logLik

## 20554.37 20585.62 -10273.18

##

## Random effects:

## Formula: GDIST ~ 1 | from_ID

## (Intercept) Residual

## StdDev: 0.2009152 0.4232432

##

## Fixed effects: stats::as.formula(paste(paste(y, "~", sep = ""), paste(x, collapse = "+")))

## Value Std.Error DF t-value p-value

## (Intercept) -1.2019984 0.07467247 18224 -16.096941 0

## length 0.1418157 0.03059252 18224 4.635633 0

## Correlation:

## (Intr)

## length -0.854

##

## Standardized Within-Group Residuals:

## Min Q1 Med Q3 Max

## -3.2285925 -0.5168847 0.1184083 0.6182159 2.4848654

##

## Number of Observations: 18252

## Number of Groups: 27# Fish hypothesis (under Maximum Likelihood)

( depth <- gravity(y = "GDIST", x = c("length","from.Depth"), d = "length",

group = "from_ID", data = gdata, fit.method = "ML", ln = FALSE) )## [1] "Running singly-constrained gravity model"## Gravity model

##

## Linear mixed-effects model fit by maximum likelihood

## Data: gdata

## AIC BIC logLik

## -13067.22 -13028.16 6538.61

##

## Random effects:

## Formula: GDIST ~ 1 | from_ID

## (Intercept) Residual

## StdDev: 0.08102556 0.168483

##

## Fixed effects: stats::as.formula(paste(paste(y, "~", sep = ""), paste(x, collapse = "+")))

## Value Std.Error DF t-value p-value

## (Intercept) -0.4101360 0.028357541 18224 -14.463032 0.0000

## length -0.0002202 0.001624886 18224 -0.135530 0.8922

## from.Depth -0.0200540 0.014527936 25 -1.380377 0.1797

## Correlation:

## (Intr) length

## length -0.462

## from.Depth -0.693 -0.002

##

## Standardized Within-Group Residuals:

## Min Q1 Med Q3 Max

## -5.07683320 -0.58959052 0.02162005 0.65913788 3.21875035

##

## Number of Observations: 18252

## Number of Groups: 27# Productivity hypothesis (under Maximum Likelihood)

#( production <- gravity(y = "GDIST", x = c("length", "from.ffp", "from.hli"),

# d = "length", group = "from_ID", data = gdata,

# fit.method = "ML", ln = FALSE) )

# Climate hypothesis (under Maximum Likelihood)

#( climate <- gravity(y = "GDIST", x = c("length", "median.ffp", "median.pratio"),

# d = "length", group = "from_ID", data = gdata,

# fit.method = "ML", ln = FALSE) )

# Wetlands hypothesis (under Maximum Likelihood)

( wetlands <- gravity(y = "GDIST", x = c("length", "from.degree", "from.betweenness", "from.pwetland"),

d = "length", group = "from_ID", data = gdata, fit.method = "ML",

ln = FALSE) )## [1] "Running singly-constrained gravity model"## Gravity model

##

## Linear mixed-effects model fit by maximum likelihood

## Data: gdata

## AIC BIC logLik

## -13061.9 -13007.22 6537.952

##

## Random effects:

## Formula: GDIST ~ 1 | from_ID

## (Intercept) Residual

## StdDev: 0.08303671 0.168483

##

## Fixed effects: stats::as.formula(paste(paste(y, "~", sep = ""), paste(x, collapse = "+")))

## Value Std.Error DF t-value p-value

## (Intercept) -0.3844698 0.10679706 18224 -3.600004 0.0003

## length -0.0002183 0.00162517 18224 -0.134338 0.8931

## from.degree 0.0035277 0.01270448 23 0.277677 0.7837

## from.betweenness -0.0318164 0.05937320 23 -0.535872 0.5972

## from.pwetland 0.0069227 0.01136835 23 0.608941 0.5485

## Correlation:

## (Intr) length frm.dg frm.bt

## length -0.126

## from.degree -0.163 0.014

## from.betweenness -0.782 -0.007 -0.429

## from.pwetland 0.218 0.004 0.443 -0.286

##

## Standardized Within-Group Residuals:

## Min Q1 Med Q3 Max

## -5.07691616 -0.58884894 0.02146133 0.65939630 3.21854105

##

## Number of Observations: 18252

## Number of Groups: 27# Topography hypothesis (under Maximum Likelihood)

#( topo <- gravity(y = "GDIST", x = c("length", "median.srr", "median.rough27"), d = "length",

# group = "from_ID", data = gdata, fit.method = "ML",

# ln = FALSE) )

# Habitat hypothesis (under Maximum Likelihood)

( habitat <- gravity(y = "GDIST", x = c("length", "wet.pct.nlcd", "median.gsp"),

d = "length", group = "from_ID", data = gdata, fit.method = "ML",

ln = FALSE, method="ML") )## [1] "Running singly-constrained gravity model"## Gravity model

##

## Linear mixed-effects model fit by maximum likelihood

## Data: gdata

## AIC BIC logLik

## -13063.38 -13016.51 6537.689

##

## Random effects:

## Formula: GDIST ~ 1 | from_ID

## (Intercept) Residual

## StdDev: 0.083851 0.168483

##

## Fixed effects: stats::as.formula(paste(paste(y, "~", sep = ""), paste(x, collapse = "+")))

## Value Std.Error DF t-value p-value

## (Intercept) -0.4356243 0.3357628 18222 -1.2974167 0.1945

## length -0.0002220 0.0016250 18222 -0.1365924 0.8914

## wet.pct.nlcd 0.0000254 0.0008624 18222 0.0294094 0.9765

## median.gsp -0.0002840 0.0592239 18222 -0.0047961 0.9962

## Correlation:

## (Intr) length wt.pc.

## length -0.039

## wet.pct.nlcd -0.129 0.000

## median.gsp -0.998 0.000 0.135

##

## Standardized Within-Group Residuals:

## Min Q1 Med Q3 Max

## -5.07738165 -0.58923728 0.02085918 0.65955597 3.21896871

##

## Number of Observations: 18252

## Number of Groups: 27# Global model (under Maximum Likelihood)

#( global <- gravity(y = "GDIST", x = c("length", "wet.pct.nlcd", "median.gsp",

# "from.Depth", "from.ffp", "from.hli", "from.pratio", "from.degree",

# "from.betweenness", "from.pwetland", "median.srr", "median.rough27"),

# d = "length", group = "from_ID", data = gdata, fit.method = "ML",

# ln = FALSE) )

( global <- gravity(y = "GDIST", x = c("length", "wet.pct.nlcd", "median.gsp",

"from.Depth", "from.ffp",

"from.betweenness", "from.pwetland"),

d = "length", group = "from_ID", data = gdata, fit.method = "ML",

ln = FALSE) )## [1] "Running singly-constrained gravity model"## Gravity model

##

## Linear mixed-effects model fit by maximum likelihood

## Data: gdata

## AIC BIC logLik

## -13058.71 -12980.59 6539.355

##

## Random effects:

## Formula: GDIST ~ 1 | from_ID

## (Intercept) Residual

## StdDev: 0.0788026 0.168483

##

## Fixed effects: stats::as.formula(paste(paste(y, "~", sep = ""), paste(x, collapse = "+")))

## Value Std.Error DF t-value p-value

## (Intercept) -0.5297034 0.3719807 18222 -1.4240077 0.1545

## length -0.0002215 0.0016251 18222 -0.1363155 0.8916

## wet.pct.nlcd 0.0000281 0.0008625 18222 0.0325393 0.9740

## median.gsp 0.0013770 0.0592685 18222 0.0232332 0.9815

## from.Depth -0.0307357 0.0179440 22 -1.7128674 0.1008

## from.ffp 0.0442308 0.0548190 22 0.8068514 0.4284

## from.betweenness 0.0291035 0.0606375 22 0.4799595 0.6360

## from.pwetland 0.0071061 0.0099232 22 0.7161115 0.4815

## Correlation:

## (Intr) length wt.pc. mdn.gs frm.Dp frm.ff frm.bt

## length -0.036

## wet.pct.nlcd -0.117 0.000

## median.gsp -0.909 0.000 0.135

## from.Depth 0.176 -0.002 0.001 -0.004

## from.ffp -0.340 0.004 0.003 0.038 -0.288

## from.betweenness -0.273 0.000 0.001 -0.012 -0.536 0.068

## from.pwetland 0.112 -0.004 0.001 0.001 -0.128 -0.137 -0.012

##

## Standardized Within-Group Residuals:

## Min Q1 Med Q3 Max

## -5.07768386 -0.58928064 0.02237781 0.65817829 3.21958216

##

## Number of Observations: 18252

## Number of Groups: 27b. Compare competing models

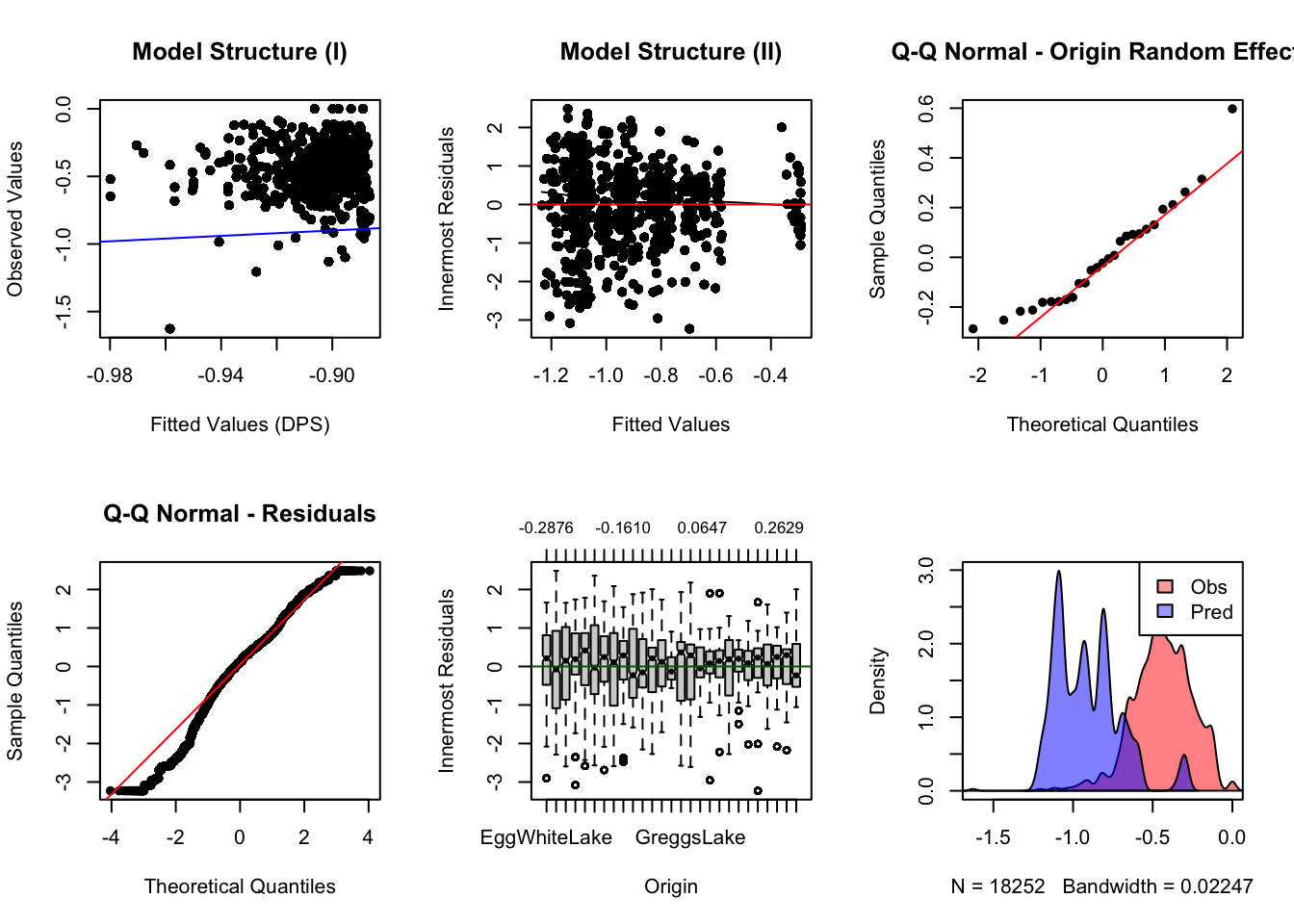

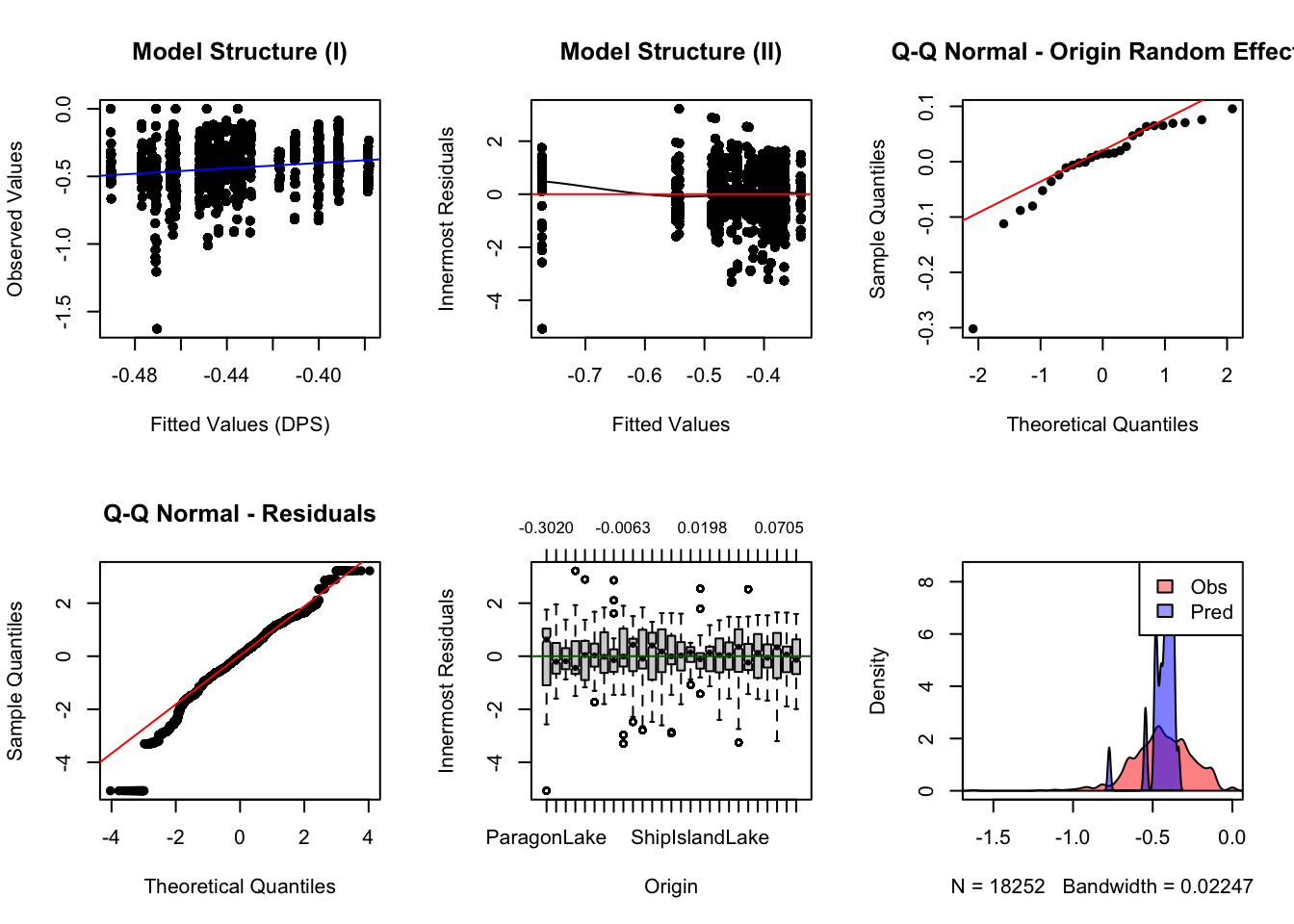

Should you use ML or REML? Create diagnostic plots.

Can you directly compare ML and REML? Why not?

#compare.models(null, depth, production, climate, wetlands,

# topo, habitat, global)

compare.models(null, depth, wetlands,

habitat, global)## model AIC BIC log.likelihood RMSE nparms fit.method

## 1 null 20554.37 20585.62 -10273.185 0.2894 2 ML

## 2 depth -13067.22 -13028.16 6538.610 0.1213 3 ML

## 3 wetlands -13061.90 -13007.22 6537.952 0.1217 5 ML

## 4 habitat -13063.38 -13016.51 6537.689 0.1220 4 ML

## 5 global -13058.71 -12980.59 6539.355 0.1207 8 ML

## deltaAIC deltaBIC

## 1 33621.588595 33613.77657

## 2 0.000000 0.00000

## 3 5.315719 20.93978

## 4 3.840564 11.65259

## 5 8.509063 47.56921

c. Fit final model(s) and calculate effect size

To calculate effect size, we refit the models with REML.

# productivity fit (under REML)

#h <- c("length", "from.ffp", "from.hli")

#production_fit <- gravity(y = "GDIST", x = h, d = "length", group = "from_ID",

# data = gdata, ln=FALSE)

## g.obal fit (under REML)

#g <- c("length", "wet.pct.nlcd", "median.gsp", "from.Depth", "from.ffp",

# "from.hli", "from.pratio", "from.degree", "from.betweenness",

# "from.pwetland", "median.srr", "median.rough27")

g <- c("length", "wet.pct.nlcd", "median.gsp", "from.Depth", "from.ffp",

"from.betweenness", "from.pwetland")

global_fit <- gravity(y = "GDIST", x = g, d = "length",

group = "from_ID", data = gdata, ln=FALSE)## [1] "Running singly-constrained gravity model"Effect size calculation: global model

## t.value df cohen.d p.value low.ci

## length -0.13233104 18222 -0.0019606212 0.894724 -0.02249547

## wet.pct.nlcd 0.02652092 18222 0.0003929348 0.978842 -0.02014191

## median.gsp 0.01893690 18222 0.0002805698 0.984892 -0.02025427

## from.Depth -1.54640025 22 -0.6593872813 0.136273 -1.29966538

## from.ffp 0.72841095 22 0.3105954724 0.474045 -0.31611957

## from.betweenness 0.43335537 22 0.1847833506 0.668976 -0.43940100

## from.pwetland 0.64649121 22 0.2756647800 0.524649 -0.35021986

## up.ci

## length 0.01857423

## wet.pct.nlcd 0.02092778

## median.gsp 0.02081541

## from.Depth -0.01910919

## from.ffp 0.93731051

## from.betweenness 0.80896770

## from.pwetland 0.90154942

Production model:

d. Back predict global_fit model

Feel free to add other top models.

gd <- back.transform(gdata$GDIST)

# Make individual-level (group) predictions (per slope) and show RMSE

global.p <- predict(global_fit, y = "GDIST", x = g,

newdata=gdata, groups = gdata$from_ID,

back.transform = "simple")## Making individual-level (per-slope group)

## constrained predictions## Back-transforming exp(y-hat)*0.5*variance(y-hat),

## assumes normally distributed errors#production.p <- predict(production_fit, y = "GDIST", x = h,

# newdata=gdata, groups = gdata$from_ID,

# back.transform = "simple")

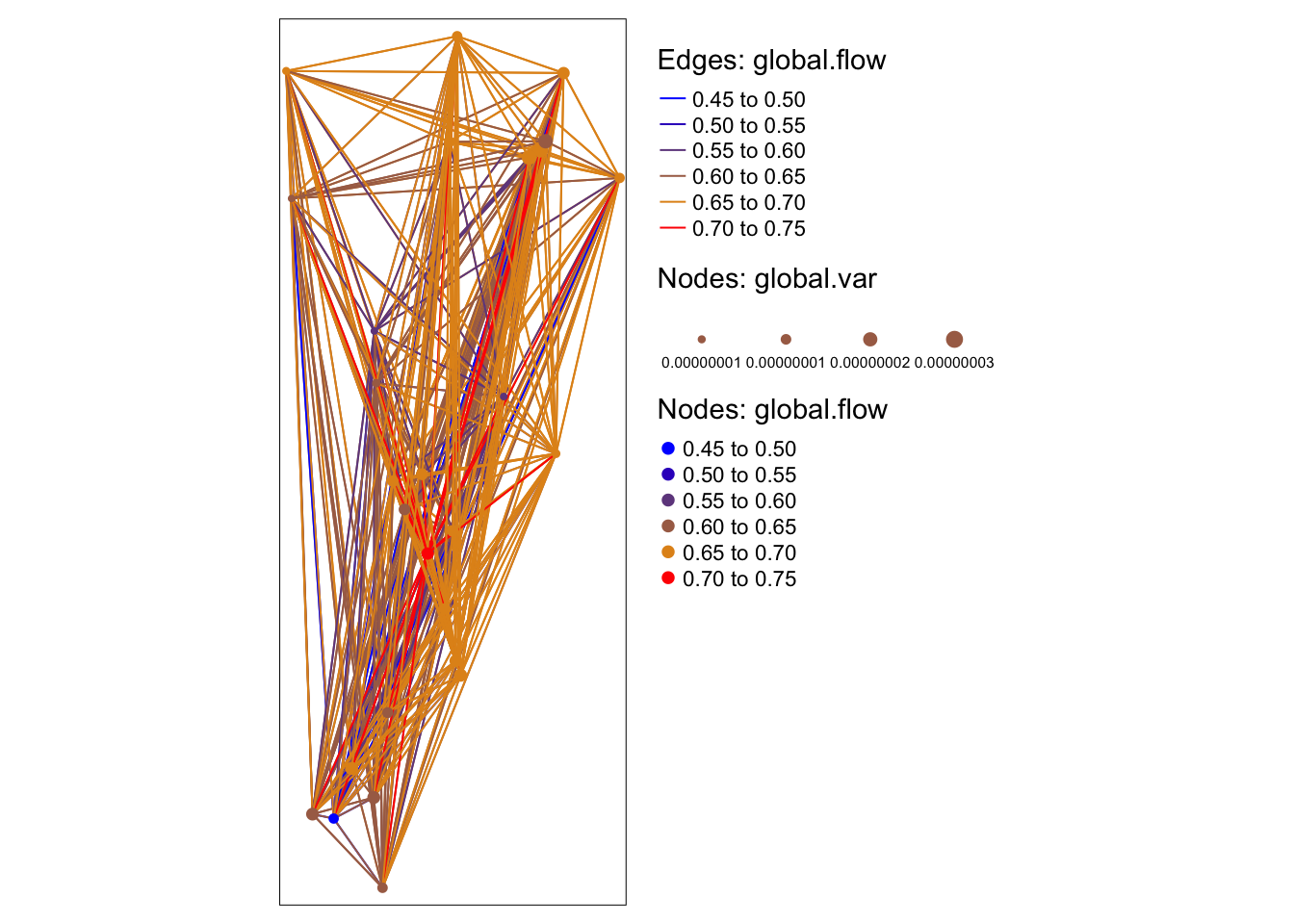

cat("RMSE of global", rmse(global.p, gd), "\n")## RMSE of global 0.1104107e. Aggregrate estimates and plot

We can aggregrate estimates back to the edges and nodes. An interactive map can be created using the tmap package (see Week 2 Bonus vignette).

Aggregate estimates to graph and node.

global.p <- data.frame(EID = gdata$from.to,

NID = gdata$from_ID,

p=global.p)

edge.p <- tapply(global.p$p, global.p$EID, mean)

dist.graph$global.flow <- edge.p

node.p <- tapply(global.p$p, global.p$NID, mean)

node.var <- tapply(global.p$p, global.p$NID, var)

idx <- which(sites$SiteName %in% names(node.p))

sites$global.flow[idx] <- node.p

sites$global.var[idx] <- node.varDefine the map and store it in object Map.

pal <- colorRampPalette(rev(c("red","orange","blue")), bias=0.15)

Map <-

tm_shape(dist.graph) +

tm_lines("global.flow", palette=pal(10), title.col="Edges: global.flow") +

tm_shape(sites) +

tm_symbols(col = "global.flow", size = "global.var",

shape = 20, scale = 0.75, palette=pal(10),

title.col="Nodes: global.flow", title.size="Nodes: global.var") Plot static map: here we tell R to plot the legend outside of the map.

## tmap mode set to plotting## Legend labels were too wide. Therefore, legend.text.size has been set to 0.49. Increase legend.width (argument of tm_layout) to make the legend wider and therefore the labels larger.

Plot interactive map: this may not render e.g. in Bookdown or when you knit your notebook to output formats other than .html.