Chapter 21 Speeding up R

library(tidyverse) # dplyr, ggplot2, etc...

library(microbenchmark) # for measuring how long stuff takes

# library(doMC) # do multi-core stuff

library(foreach) # parallelizable for loops

library(faraway) # some examples

library(boot)

library(caret)

library(glmnet)CHAPTER IS STILL BEING EDITED

The package doMC is no longer supported. doParallel will replace.

Extreme caution is encouraged running code chunks of this chapter. Parallel computing is a useful and exciting topic, but can have very high demands on personal computers. The author and NAU are not responsible for computer issues related to running code in this chapter.

Dr. Sonderegger’s Video Companion: Video Lecture.

Eventually if you have large enough data sets, an R user eventually writes code that is slow to execute and needs to be sped up. This chapter tries to lay out common problems and bad habits and shows how to correct them. However, the correctness and maintainability of code should take precedence over speed. Too often, misguided attempts to obtain efficient code results in an un-maintainable mess that is no faster that the initial code.

Hadley Wickham has a book aimed at advanced R user that describes many of the finer details about R. One section in the book describes his process for building fast, maintainable software projects and if you have the time, I highly suggest reading the on-line version, Advanced R.

First we need some way of measuring how long our code took to run. For this we will use the package microbenchmark. The idea is that we want to evaluate two or three expressions that solve a problem.

# The expressions can be as many lines as you'd like, enclosed by { }.

# For a single line, you can skip the enclosing { }.

#

# For evaluating large chunks of code, I like to

# just wrap the code up in a function.

x <- runif(1000) # x vector of 1000 numbers between 0 and 1.

microbenchmark(

sqrt(x), # First expression to compare

x^(0.5) # second expression to compare

) %>% print(digits=3)## Unit: microseconds

## expr min lq mean median uq max neval

## sqrt(x) 3.2 3.3 3.55 3.4 3.45 6.9 100

## x^(0.5) 21.9 22.1 23.31 22.2 22.55 63.4 100What microbenchmark does is run the two expressions a number of times and then

produces the 5-number summary of those times. By running it multiple times (by

default, 100 times), we

account for the randomness associated with a operating system that is also running

at the same time. Being good statisticians, the cld column stands for compact

letter display and if the letters are different, there is a statistically

significant difference in the timing. If we cause the microbenchmark() function to

run more times (1000s or 100,000s times), we could eventually end up with the smallest

difference to be statistically significant, but I think we shouldn’t complain about

speed differences if a sample of 100 runs can’t detect the difference.

21.1 Faster for loops?

Often we need to perform some simple action repeatedly. It is natural to write

a for loop to do the action and we wish to speed the up. In this first case,

we will consider having to do the action millions of times and each chunk of

computation within the for takes very little time.

Consider frame of 4 columns, and for each of \(n\) rows, we wish to know which column has the largest value.

make.data <- function(n){

data <- data.frame(

Norm = rnorm(n, mean=5, sd=2),

Pois = rpois(n, lambda = 5),

Gamma = rgamma(n, shape = 2, scale = 3),

Exp = rexp(n, rate = 1/5))

return(data)

}

data <- make.data(6)

data## Norm Pois Gamma Exp

## 1 6.080710 4 10.455435 3.961338

## 2 7.571859 8 6.439578 7.347794

## 3 4.117350 5 8.870075 1.692957

## 4 2.927725 3 1.712223 13.276074

## 5 4.248720 4 5.151792 2.451040

## 6 4.407426 5 14.849483 6.216384The way that you might first think about solving this problem is to write a for loop and, for each row, figure it out.

f1 <- function( input ){

output <- NULL

for( i in 1:nrow(input) ){

output[i] <- which.max( input[i,] )

}

return(output)

}We might consider that there are two ways to return a value from a function

(using the return function and just printing it). In fact, I’ve always heard

that using the return statement is a touch slower.

f2.noReturn <- function( input ){

output <- NULL

for( i in 1:nrow(input) ){

output[i] <- which.max( input[i,] )

}

output

}## Unit: milliseconds

## expr min lq mean median uq max neval

## f1(data) 31.4 32.3 37.0 32.9 33.9 291.8 100

## f2.noReturn(data) 31.4 32.5 35.2 33.0 34.6 47.6 100In fact, it looks like it is a touch slower, but not massively compared to the run-to-run variability. I prefer to use the return statement for readability, but if we agree have the last line of code in the function be whatever needs to be returned, readability isn’t strongly effected.

We next consider whether it would be faster to allocate the output vector once we figure out the number of rows needed, or just build it on the fly?

f3.AllocOutput <- function( input ){

n <- nrow(input)

output <- rep(NULL, n)

for( i in 1:nrow(input) ){

output[i] <- which.max( input[i,] )

}

return(output)

}data <- make.data(10000) # Moderately large sample size

microbenchmark(

f1(data),

f3.AllocOutput(data)

) %>% print(digits=3)## Unit: milliseconds

## expr min lq mean median uq max neval

## f1(data) 313 325 342 337 350 428 100

## f3.AllocOutput(data) 318 327 344 335 351 576 100There isn’t a significant improvement allocating the size of output first. So given this, we shouldn’t feel to bad being lazy and using output <- NULL to initialize things.

21.2 Vectorizing loops

In general, for loops in R are very slow and we want to avoid them as much as possible. The apply family of functions can be quite helpful for applying a function to each row or column of a matrix or data.frame or to each element of a list.

To test this, instead of a for loop, we will use apply.

f4.apply <- function( input ){

output <- apply(input, 1, which.max) # 1 = apply to rows

return(output)

}## Unit: milliseconds

## expr min lq mean median uq max neval

## f1(data) 312.2 329 351.3 338.0 361.2 563.8 100

## f4.apply(data) 20.3 21 23.6 22.3 24.1 37.3 100This is the type of speed up that matters. We have a 10-fold speed up in execution time and particularly the maximum time has dropped impressively.

Unfortunately, I have always found the apply functions a little cumbersome and I prefer to use dplyr functions for readability. For this example, we’ll compare summarizing each column by calculating the mean.

First for a small sample sized data:

data <- make.data(1000)

microbenchmark(

data %>% apply(2, mean),

data %>% summarize_all(mean)

) %>% print(digits=3)## Unit: microseconds

## expr min lq mean median uq max neval

## data %>% apply(2, mean) 126 153 164 160 175 448 100

## data %>% summarize_all(mean) 1688 1839 1858 1862 1871 2636 100Unfortunately dplyr is a lot slower than apply in this case. I wonder if the dynamics would change with a larger n?

data <- make.data(1000000)

microbenchmark(

data %>% apply(2, mean),

data %>% summarize_all(mean)

) %>% print(digits=3)## Unit: milliseconds

## expr min lq mean median uq max neval

## data %>% apply(2, mean) 50.2 54.34 74.06 56.66 61.16 291.7 100

## data %>% summarize_all(mean) 7.5 7.73 8.17 7.91 8.11 13.7 100What just happened? The package dplyr is designed to work well for large data sets, and utilizes a modified structure, called a tibble, which provides massive benefits for large tables, but at the small scale, the overhead of converting the data.frame to a tibble overwhelms any speed up. But because the small sample case is already fast enough to not be noticeable, we don’t really care about the small n case.

21.3 Parallel Processing

Most modern computers have multiple computing cores, and can run muliple processes at the same time. Sometimes this means that you can run multiple programs and switch back and forth easily without lag, but we are now interested in using as many cores as possible to get our statistical calculations completed by using muliple processing cores at the same time. This is referred to as running the process “in parallel” and there are many tasks in modern statistical computing that are “embarrassingly easily parallelized”. In particular bootstrapping and cross validation techniques are extremely easy to implement in a parallel fashion.

However, running commands in parallel incurs some overhead cost in set up computation, as well as all the message passing from core to core. For example, to have 5 cores all perform an analysis on a set of data, all 5 cores must have access to the data, and not overwrite any of it. So parallelizing code only makes sense if the individual steps that we pass to each core is of sufficient size that the overhead incurred is substantially less than the time to run the job.

We should think of executing code in parallel as having three major steps: 1. Tell R that there are multiple computing cores available and to set up a useable cluster to which we can pass jobs to. 2. Decide what ‘computational chunk’ should be sent to each core and distribute all necessary data, libraries, etc to each core. 3. Combine the results of each core back into a unified object.

21.4 Parallel Aware Functions

There are many packages that address problems that are “embarrassingly easily parallelized” and they will happily work with multiple cores. Methods that rely on re-sampling certainly fit into this category.

The first step is letting R know it has access to multiple cores. This is quite simple using the doMC package (aka the do Multi-Core package).

The registration of multiple cores is actually pretty easy.

21.4.1 boot::boot

Bootstrapping relies on re-sampling the dataset and calculating test statistics from each re-sample. In R, the most common way to do this is using the package boot and we just need to tell the boot function, to use the multiple cores available. (Note, we have to have registered the cores first!)

# make the trees dataset a bit larger,

# just so the overhead cost isn't as large

# compared to the computation of the regression

# coefficients.

for(i in 1:4){

trees <- rbind(trees, trees)

}

# define the model using the original sample data

model <- lm( Volume ~ Girth, data=trees)

# define a function that calculates the model parameters

# given an index of which data points to use.

my.fun <- function(df, index){

model.star <- lm( Volume ~ Girth, data= trees[index,] )

model.star$coefficients

}

# the boot::boot() function has a parallel option we just need

# to switch on

microbenchmark(

serial = boot::boot( trees, my.fun, R=1000 ),

parallel = boot::boot( trees, my.fun, R=1000,

parallel='multicore', ncpus=2 )

) %>% print(digits=3)## Unit: milliseconds

## expr min lq mean median uq max neval

## serial 623 653 707 679 719 1067 100

## parallel 625 656 701 677 714 1026 100In this case, we had a bit of a speed up, but not a factor of 2. This is due to the overhead of splitting the job across both cores.

21.4.2 caret::train

The statistical learning package caret also handles all the work to do cross validation in a parallel computing environment. The functions in caret have an option allowParallel which by default is TRUE, which controls if we should use all the cores. Assuming we have already registered the number of cores, then by default caret will use them all.

library(caret)

# Remember to register how many cores to use!

# doMC::registerDoMC(cores = 2) # my laptop only has two cores.

caret.model <- function(parallel){

grid <- data.frame(

alpha = 1, # 1 => Lasso Regression

lambda = exp(seq(-6, 1, length=50)))

ctrl <- trainControl(

method='repeatedcv', number=5, repeats=4,

preProcOptions = c('center','scale'),

allowParallel = parallel)

model <- train(

lpsa ~ . -svi, data=prostate, method='glmnet',

trControl=ctrl,

tuneGrid=grid,

lambda = grid$lambda )

return(model)

}

# make the dataset a bit larger, just so the overhead

# of moving to multi-core doesn't overwhelm the benefits

# of using two processors. You should never do this

# in a real analysis!

data('prostate', package='faraway')

for( i in 1:7 ){

prostate <- rbind(prostate,prostate)

}

microbenchmark(

caret.model(parallel = FALSE),

caret.model(parallel = TRUE )

) %>% print(digits=3)## Unit: seconds

## expr min lq mean median uq max neval

## caret.model(parallel = FALSE) 1.20 1.26 1.34 1.29 1.41 1.73 100

## caret.model(parallel = TRUE) 1.21 1.27 1.33 1.30 1.39 1.67 100Again, we saw only moderate gains by using both cores, however it didn’t really cost us anything. Because the caret package by default allows parallel processing, it doesn’t hurt to just load the doMC package and register the number of cores. Even in just the two core case, it is a good habit to get into so that when you port your code to a huge computer with many cores, the only thing to change is how many cores you have access to.

21.5 Parallelizing for loops

One of the easiest ways to parallelize a for loop is using a package called foreach. This package was created by R-Revolutions company that was subsequently bought by Microsoft. They have a nice introduction to the package here.

We will consider an example that is common in modern statistics. We will examine parallel computing utilizing a bootstrap example where we create bootstrap samples for calculating confidence intervals for regression coefficients.

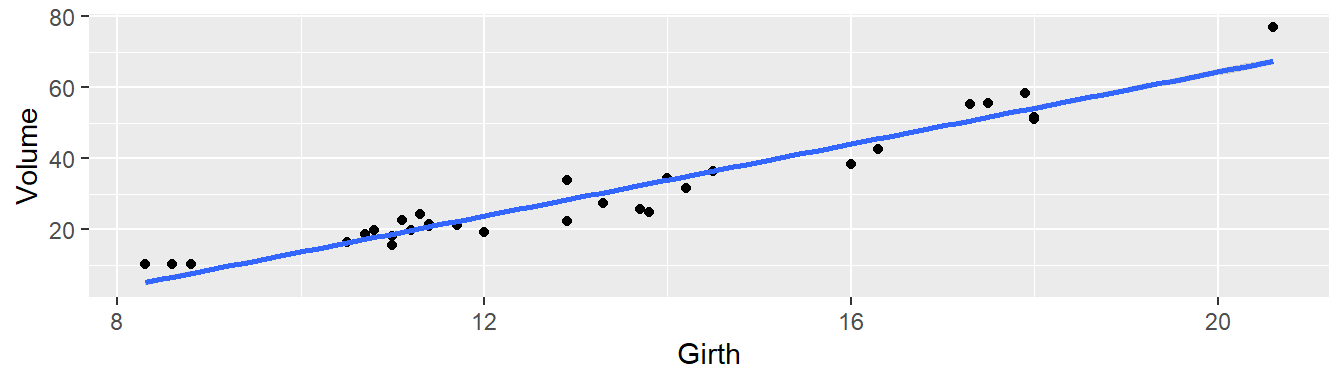

## `geom_smooth()` using formula = 'y ~ x'

This is how we would do this previously.

# f is a formula

# df is the input data frame

# M is the number of bootstrap iterations

boot.for <- function( f, df, M=999){

output <- list()

for( i in 1:100 ){

# Do stuff

model.star <- lm( f, data=df %>% sample_frac(1, replace=TRUE) )

output[[i]] <- model.star$coefficients

}

# use rbind to put the list of results together into a data.frame

output <- sapply(output, rbind) %>% t() %>% data.frame()

return(output)

}We will first ask about how to do the same thing using the function foreach

# f is a formula

# df is the input data frame

# M is the number of bootstrap iterations

boot.foreach <- function(f, df, M=999){

output <- foreach( i=1:100 ) %dopar% {

# Do stuff

model.star <- lm( f, data=df %>% sample_frac(1, replace=TRUE) )

model.star$coefficients

}

# use rbind to put the list of results together into a data.frame

output <- sapply(output, rbind) %>% t() %>% data.frame()

return(output)

}Not much has changed in our code. Lets see which is faster.

microbenchmark(

boot.for( Volume~Girth, trees ),

boot.foreach( Volume~Girth, trees )

) %>% print(digits=3)## Warning: executing %dopar% sequentially: no parallel backend registered## Unit: milliseconds

## expr min lq mean median uq max neval

## boot.for(Volume ~ Girth, trees) 147 149 158 161 164 184 100

## boot.foreach(Volume ~ Girth, trees) 164 167 177 179 182 207 100In this case, the overhead associated with splitting the job across two cores, copying the data over, and then combining the results back together was more than we saved by using both cores. If the nugget of computation within each pass of the for loop was larger, then it would pay to use both cores.

# massiveTrees has 31000 observations

massiveTrees <- NULL

for( i in 1:1000 ){

massiveTrees <- rbind(massiveTrees, trees)

}

microbenchmark(

boot.for( Volume~Girth, massiveTrees ) ,

boot.foreach( Volume~Girth, massiveTrees )

) %>% print(digits=3)## Unit: seconds

## expr min lq mean median uq max

## boot.for(Volume ~ Girth, massiveTrees) 11.4 11.5 11.9 11.8 12.1 15.4

## boot.foreach(Volume ~ Girth, massiveTrees) 11.4 11.5 11.9 11.8 12.1 16.0

## neval

## 100

## 100Because we often generate a bunch of results that we want to see as a data.frame, the foreach function includes a .combine option which allows us to specify a function to do the combining.

output <- foreach( i=1:1000, .combine=rbind ) %dopar% {

# Do stuff

model.star <- lm( Volume ~ Girth, data= trees %>% sample_frac(1, replace=TRUE) )

model.star$coefficients %>% t() %>% as.data.frame()

}It is important to recognize that the data.frame trees was utilized inside the foreach loop. So when we called the foreach loop and distributed the workload across the cores, it was smart enough to distribute the data to each core. However, if there were functions that we utilized inside the for loop that came from a package, we need to tell each core to load the function.