Chapter 4 Probability

4.1 Overview

In this chapter we introduce probability as a measure associated with a random experiment. After a short motivation for probability (Section 4.2), we introduce the notion of a sample space (Section 4.3), the set of possible outcomes of a random experiment, and of events (Section 4.4), the outcome(s) which occur. This enables us in Section 4.5 to define probability as a finite measure which uses the scale 0 (impossible) to 1 (certain) to quantify the likelihood of an event. We then introduce conditional probability (Section 4.6), the probability of one event given (conditional upon) another event (or events) having occurred, and present two key results: the Theorem of Total Probability and Bayes’ formula. The discussion of conditional probability leads naturally to the dependence between two (or more) events and the notion of independence, where the probability of an event occurring is not affected by whether or not another event has occurred. We explore this further in Section 4.7.

4.2 Motivation

There are many situations where we have uncertainty and want to quantify that uncertainty.

- (a) Manchester United will win the Premier League this season.

- (b) The Labour Party will win the next general election.

- (c) The £ will rise against the $ today.

- (d) A coin is tossed repeatedly: a head will turn up eventually.

- (e) In 5 throws of a dart, I will hit the Bull’s eye once.

- (f) If I play the lottery every week I will win a prize next year.

(a)-(c) are subjective probabilities, whereas (d)-(f) are objective/statistical/physical probabilities.

The general idea is:

- A conceptual random experiment \(\mathcal{E}\).

- List all possible outcomes \(\Omega\) of the experiment \(\mathcal{E}\); we do not know which outcome occurs, has occurred or will occur.

- Assign to each possible outcome \(\omega\) a real number which is the probability of that outcome.

4.3 Sample Space

We begin by defining a set.

Set.

A set is a collection of objects. The notation for a set is to simply list every object separated by commas, and to surround this list by curly braces \(\{\) and \(\}\). The objects in a set are referred to as the elements of the set.

There are no restrictions on what constitutes an object in a set. A set can have a finite or infinite number of elements. The ordering of the elements in a set is irrelevant. Two sets are equal if and only if they have the same elements.

Sample space.

The sample space \(\Omega\) for a random experiment \(\mathcal{E}\) is the set of all possible outcomes of the random experiment.

Rolling a die.

The sample space for the roll of a die is \[ \Omega = \{1,2,3,4,5,6\}, \] that is, the set of the six possible outcomes.

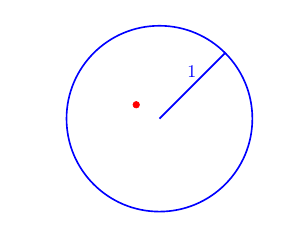

Dart in a target.

A dart is thrown into a circular target of radius 1. The sample space is \[ \Omega = \{(x,y): x^2 + y^2 < 1\}, \] that is, the set of pairs of real numbers that are less than a distance 1 from the origin \((0,0)\).

Figure 4.1: Example: \((x,y) =(-0.25,0.15)\).

Note that the examples illustrate how \(\Omega\) may be discrete or continuous.

4.4 Events

Event.

An event relating to an experiment is a subset of \(\Omega\).

Toss two coins.

The sample space for the toss of two fair coins is \[ \Omega = \{HH, HT, TH, TT\}. \]

Let \(A\) be the event that at least one head occurs, then \[ A = \{HH, HT, TH\}. \]

Note that \(HT\) (Head on coin 1 and Tail on coin 2) and \(TH\) (Tail on coin 1 and Head on coin 2) are distinct outcomes.
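As a small illustration, the sample space and the event \(A\) for the two-coin experiment can be enumerated in Python. This is only a sketch: the variable names `omega` and `A` are our own choices, and outcomes are written as two-letter strings.

```python
from itertools import product

# Sample space for tossing two coins: all ordered pairs of H/T.
omega = {"".join(pair) for pair in product("HT", repeat=2)}

# Event A: at least one head occurs.
A = {outcome for outcome in omega if "H" in outcome}

print(sorted(omega))  # ['HH', 'HT', 'TH', 'TT']
print(sorted(A))      # ['HH', 'HT', 'TH']
```

Because the pairs are ordered, `HT` and `TH` appear as separate elements of `omega`.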

Volcano eruption.

The sample space of time in years until a volcano next erupts is \[ \Omega = \{t: t >0 \} =(0,\infty),\] that is, all positive real numbers. Let event \(L\) be the volcano erupting in the next \(10\) years, then \[L = \{t: 0 < t \leq 10\} =(0,10].\]

We summarise key set notation, involving sets \(E\) and \(F\), below:

- We use \(\omega \in E\) to denote that \(\omega\) is an element of the set \(E\). Likewise, \(\omega \notin E\) denotes that \(\omega\) is not an element of \(E\);

- The notation \(E \subseteq F\) means that if \(\omega \in E\), then \(\omega \in F\). In this case, we say \(E\) is a subset of \(F\);

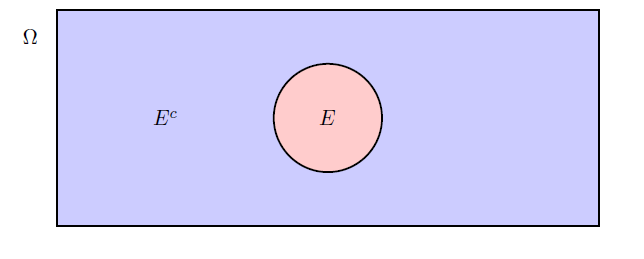

- \(E^{c}\) - complement of \(E\), sometimes written \(\bar{E}\).

\(E^{c}\) consists of all points in \(\Omega\) that are not in \(E\). Thus, \(E^{c}\) occurs if and only if \(E\) does not occur, see Figure 4.2.

Figure 4.2: Complement example.

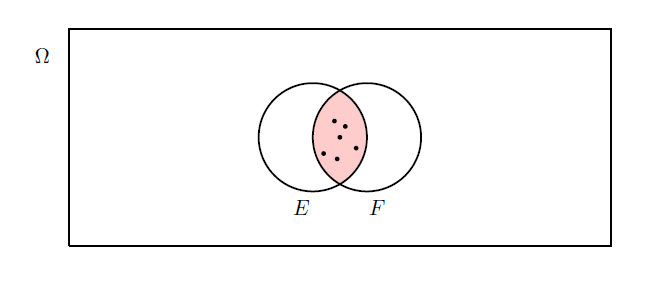

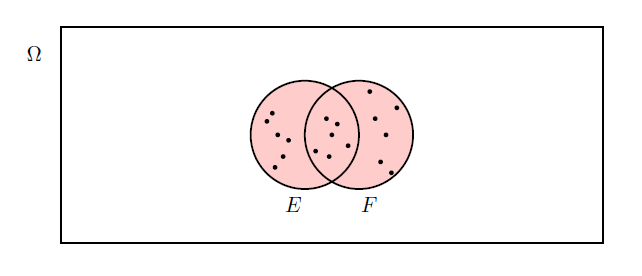

- The intersection of two sets \(E\) and \(F\), denoted \(E \cap F\), is the set of all elements that belong to both \(E\) and \(F\), see Figure 4.3.

Figure 4.3: Intersection example.

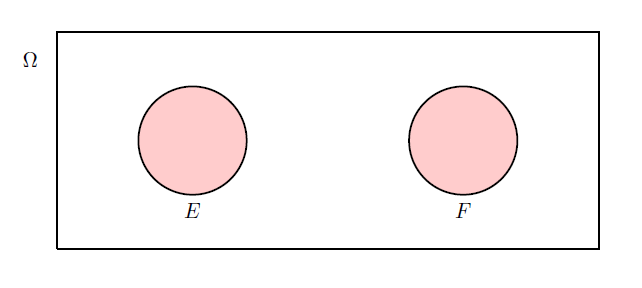

- If \(E \cap F = \emptyset\) then \(E\) and \(F\) cannot both occur, i.e. \(E\) and \(F\) are disjoint (or exclusive) sets, see Figure 4.4.

Figure 4.4: Disjoint (exclusive) example.

- The union of two sets \(E\) and \(F\), denoted \(E \cup F\), is the set of all elements that belong to \(E\) or to \(F\) (or to both), see Figure 4.5.

Figure 4.5: Union example.

- The set \(\{\}\) with no elements in it is called the empty set and is denoted \(\emptyset\).

Note: \(\Omega^c = \emptyset\) and \(\emptyset^c = \Omega\).
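The set notation above maps directly onto Python's built-in `set` type. The sketch below uses the outcomes of a six-sided die; the particular events `E`, `F` and `G` are chosen here purely for illustration.

```python
# Sample space for one roll of a six-sided die.
omega = {1, 2, 3, 4, 5, 6}

E = {2, 4, 6}   # an even number is rolled
F = {3, 6}      # a multiple of 3 is rolled
G = {1, 3, 5}   # an odd number is rolled

E_complement = omega - E        # E^c: points of omega not in E
E_and_F = E & F                 # intersection of E and F
E_or_F = E | F                  # union of E and F
E_G_disjoint = E.isdisjoint(G)  # True when E and G share no element

print(E_complement, E_and_F, E_or_F, E_G_disjoint)
print(3 in F, 4 in F, F <= omega)  # membership and subset checks
```

Here `in` plays the role of \(\in\), `<=` the role of \(\subseteq\), and the empty set \(\emptyset\) is written `set()`.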

A summary of set notation, using the outcomes of a six-sided die, is presented in Video 6.

Video 6: Set notation

4.5 Probability

There are different possible interpretations of the meaning of a probability:

Classical interpretation. Assuming that all outcomes of an experiment are equally likely, the probability of an event \(A\) is \(P(A) = \frac{n(A)}{n(\Omega)}\), where \(n(A)\) is the number of outcomes satisfying \(A\) and \(n(\Omega)\) is the number of outcomes in \(\Omega\) (total number of possible outcomes).

Frequency interpretation. The probability of an event is the relative frequency of observing a particular outcome when an experiment is repeated a large number of times under similar circumstances.

Subjective interpretation. The probability of an event is an individual’s perception as to the likelihood of an event’s occurrence.
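The frequency interpretation can be illustrated by simulation. The sketch below is an assumption-laden toy: it uses Python's pseudo-random generator with a fixed seed to imitate repeated rolls of a fair die, and compares the relative frequency of an odd number with the classical value \(n(A)/n(\Omega)\). The function name `relative_frequency` is ours.

```python
import random

def relative_frequency(event, n_trials, seed=0):
    """Fraction of n_trials simulated die rolls that land in `event`."""
    rng = random.Random(seed)  # fixed seed so the run is reproducible
    hits = sum(rng.randint(1, 6) in event for _ in range(n_trials))
    return hits / n_trials

odd = {1, 3, 5}
classical = len(odd) / 6  # n(A) / n(Omega) for equally likely outcomes

for n in (100, 10_000, 100_000):
    print(n, relative_frequency(odd, n), classical)
```

As the number of trials grows, the simulated relative frequency should settle close to the classical value of 0.5.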

Probability.

A probability (measure) is a real-valued set function \(P\) defined on the events (subsets) of a sample space \(\Omega\) satisfying the following three axioms (see Kolmogorov, 1933):

1. \(P(E)\geq0\) for any event \(E\);

2. \(P(\Omega)=1\);

3. If \(E_1,E_2,\dots, E_n\) are disjoint events (i.e. \(E_i \cap E_j = \emptyset\) for all \(i \neq j\)), then \[P \left( \bigcup_{i=1}^n E_i \right) = \sum_{i=1}^n P(E_i).\]

If \(\Omega\) is infinite then axiom 3 can be extended to:

3’. If \(E_1,E_2,\dots\) is any infinite sequence of disjoint events (i.e. \(E_i \cap E_j = \emptyset\) for all \(i\not=j\)), then

\[P \left( \bigcup_{i=1}^\infty E_i \right) = \sum_{i=1}^\infty P(E_i).\]

Note that all of the other standard properties of probability (measures) that we use are derived from these three axioms.

Using only the axioms above, prove:

\(0 \leq P(E) \leq 1\) for any event \(E\);

\(P(E^c) = 1 - P(E)\) where \(E^c\) is the complement of \(E\);

\(P(\emptyset) = 0\);

\(P(A \cup B) = P(A) + P(B) - P(A \cap B)\).

A summary of probability, along with proofs of the results in Example 4.5.2, is provided in Video 7.

Video 7: Probability

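Solution to Example 4.5.2.

Since \(E\) and \(E^c\) are disjoint and \(E \cup E^c = \Omega\), the axioms give \[ 1 = P(\Omega) = P(E \cup E^c) = P(E) + P(E^c), \] which rearranges to give \(P(E^c) = 1-P(E)\).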

Special cases

If \(E = \emptyset\), then \(E^c = \Omega\) giving \(1 = P(\emptyset) +1\) and it follows that \(P(\emptyset)=0\).

Since \(P(E^c) \geq 0\), we have that \(P(E) \leq 1\) and hence \(0\leq P(E) \leq 1\).

In many cases, \(\Omega\) consists of \(N (=n(\Omega))\) equally likely elements, i.e. \[ \Omega = \left\{ \omega_{1}, \omega_{2}, \ldots, \omega_{N} \right\}, \] with \(P(\omega_i) = \frac{1}{N}\).

Then, for any event \(E\) (i.e. subset of \(\Omega\)), \[ P(E) = \frac{n(E)}{n(\Omega)} = \frac{n(E)}{N} \] coinciding with the Classical interpretation of probability.

1. Throw a die. \(\Omega = \left\{ 1,2,3,4,5,6 \right\}\).

\[ P(\{1\})=P(\{2\})= \ldots = P(\{6\})= \frac{1}{6}. \]

The probability of throwing an odd number is

\[P(\mbox{Odd}) = P( \left\{ 1,3,5 \right\}) = \frac{3}{6} = \frac{1}{2}. \]

2. Draw a card at random from a standard pack of 52.

\[\Omega = \left\{ A\clubsuit, 2\clubsuit, 3\clubsuit, \ldots, K\diamondsuit \right\}. \]

\(P(\omega) = 1/52\) for all \(\omega \in \Omega\).

Let \(E = \left\{ \mbox{Black} \right\}\) and \(F = \left\{ \mbox{King} \right\}\). There are 26 black cards, \(n(E) =26\), 4 kings, \(n(F)=4\), and 2 black kings (\(K\clubsuit\) and \(K\spadesuit\)), \(n(E\cap F)=2\). Hence

\[\begin{eqnarray*}
P(E \cup F) & = & P(E) + P(F) - P(E \cap F) \\
& = & \frac{26}{52} + \frac{4}{52} - \frac{2}{52} \\
& = & \frac{7}{13}.
\end{eqnarray*}\]
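The card calculation can be checked by enumerating the pack and counting, which is exactly the classical interpretation \(P(E) = n(E)/n(\Omega)\). This is a minimal sketch; the string labels for ranks and suits and the helper `prob` are our own conventions.

```python
from fractions import Fraction
from itertools import product

ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["clubs", "spades", "hearts", "diamonds"]
omega = set(product(ranks, suits))  # 52 equally likely (rank, suit) pairs

def prob(event):
    """Classical probability n(E) / n(Omega)."""
    return Fraction(len(event), len(omega))

E = {card for card in omega if card[1] in ("clubs", "spades")}  # black
F = {card for card in omega if card[0] == "K"}                  # king

lhs = prob(E | F)                      # P(E union F) by direct counting
rhs = prob(E) + prob(F) - prob(E & F)  # inclusion-exclusion
print(lhs, rhs)  # 7/13 7/13
```

Using `Fraction` keeps the arithmetic exact, so the two sides can be compared with `==` rather than a floating-point tolerance.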

4.6 Conditional probability

Conditional Probability.

The conditional probability of an event \(E\) given an event \(F\) is \[ P(E|F) = \frac{P(E \cap F)}{P(F)}, \] provided \(P(F)>0\).

Note that if \(P(F)>0\), then \(P(E \cap F) = P(E|F) P(F)\).

Moreover, since \(E \cap F = F \cap E\), we have that \[ P(E|F) P(F) = P(E \cap F) = P (F \cap E) = P(F|E) P(E). \] In other words, to compute the probability of both events \(E\) and \(F\) occurring, we can either:

- Consider first whether \(E\) occurs, \(P(E)\), and then whether \(F\) occurs given that \(E\) has occurred, \(P(F|E)\),

- Or consider first whether \(F\) occurs, \(P(F)\), and then whether \(E\) occurs given that \(F\) has occurred, \(P(E|F)\).

Rolling a die.

Consider the experiment of tossing a fair 6-sided die. What is the probability of observing a 2 if the outcome was even?

Let event \(T\) be observing a 2 and let event \(E\) be that the outcome is even. Then \(T \cap E = \{2\}\) and \[ P(T|E) = \frac{P(T \cap E)}{P(E)} = \frac{1/6}{1/2} = \frac{1}{3}. \]
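The conditional probability asked for above can also be computed by counting outcomes. The sketch below assumes equally likely outcomes; the helpers `prob` and `cond_prob` are hypothetical names introduced for this illustration.

```python
from fractions import Fraction

omega = {1, 2, 3, 4, 5, 6}  # fair die: outcomes equally likely

def prob(event):
    """Classical probability of an event (a subset of omega)."""
    return Fraction(len(event & omega), len(omega))

def cond_prob(event, given):
    """P(event | given) = P(event and given) / P(given); needs P(given) > 0."""
    if prob(given) == 0:
        raise ValueError("conditioning event has probability zero")
    return prob(event & given) / prob(given)

T = {2}        # a 2 is observed
E = {2, 4, 6}  # the outcome is even

print(cond_prob(T, E))  # 1/3
```

Conditioning on \(E\) effectively shrinks the sample space to \(\{2,4,6\}\), in which the outcome 2 is one of three equally likely possibilities.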

Independence.

Two events \(E\) and \(F\) are independent if \[ P(E \cap F) = P(E)P(F). \]

If \(P(F)>0\), two events, \(E\) and \(F\), are independent if and only if \(P(E|F)=P(E)\).

Observations on Independence

- If \(E\) and \(F\) are NOT independent then \[ P( E \cap F) \neq P(E)P (F). \]

- \(E\) and \(F\) being independent is NOT the same as \(E\) and \(F\) being disjoint.

Independence: \(P( E \cap F) = P(E)P(F)\).

Disjoint (exclusive): \(P( E \cap F) = P (\emptyset) = 0\).

Rolling a die.

Consider the experiment of tossing a fair 6-sided die.

Let \(E= \{2,4,6\}\) be the event that an even number is rolled and \(F= \{3,6\}\) the event that a multiple of 3 is rolled.

Are \(E\) and \(F\) independent?

\(E \cap F = \{6 \}\), so \(P(E \cap F) = \frac{1}{6}\). Since \(P(E)P(F) = \frac{1}{2} \times \frac{1}{3} = \frac{1}{6} = P(E \cap F)\), \(E\) and \(F\) are independent.
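The independence question above can be checked by direct enumeration. The sketch below also includes a third event \(G\) (an odd number is rolled), added here only to illustrate that disjoint events need not be independent; `independent` is a hypothetical helper name.

```python
from fractions import Fraction

omega = {1, 2, 3, 4, 5, 6}  # fair die

def prob(event):
    return Fraction(len(event), len(omega))

def independent(a, b):
    """True when P(a and b) equals P(a) P(b)."""
    return prob(a & b) == prob(a) * prob(b)

E = {2, 4, 6}  # even
F = {3, 6}     # multiple of 3
G = {1, 3, 5}  # odd, so disjoint from E

print(independent(E, F))  # True
print(independent(E, G))  # False: P(E and G) = 0 but P(E) P(G) = 1/4
```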

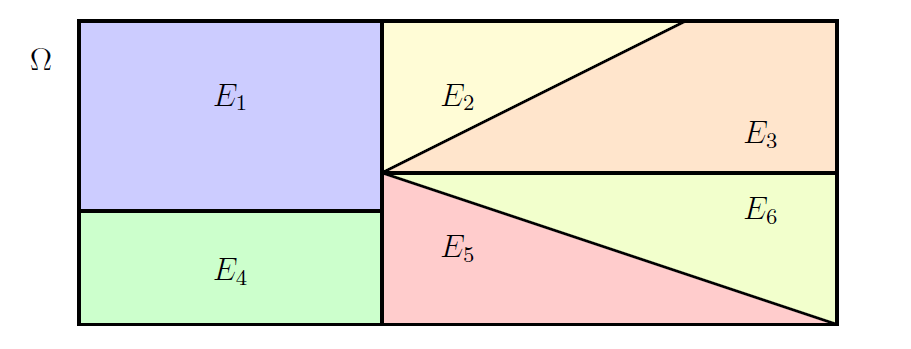

Partition.

A partition of a sample space \(\Omega\) is a collection of events \(E_{1}, E_{2}, \ldots,E_n\) in \(\Omega\) such that:

- \(E_{i} \cap E_{j} = \emptyset\) for all \(i \neq j\) (disjoint sets)

- \(E_{1} \cup E_{2} \cup \ldots \cup E_n \; = \bigcup_{i=1}^n E_i = \Omega\).

We can set \(n=\infty\) in Definition 4.6.6 and have infinitely many events constituting the partition.

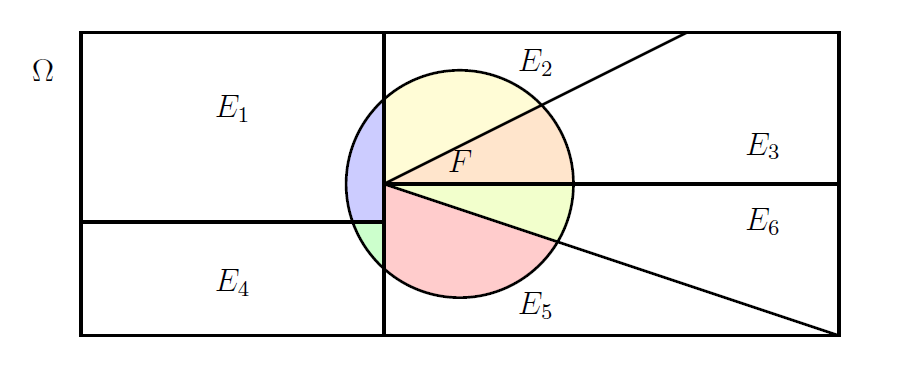

Figure 4.6 presents an example of a partition of \(\Omega\) using six events \(E_1,E_2, \ldots, E_6\).

Figure 4.6: Example of a partition of a sample space using six events.

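Given a partition, any event \(F\) can be written as the union of the disjoint pieces \(F \cap E_1, F \cap E_2, \ldots, F \cap E_n\). This is illustrated in Figure 4.7 using the partition given in Figure 4.6.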

Figure 4.7: The event F expressed in terms of the union of events.

Theorem of Total Probability.

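Let \(E_1,E_2,\dots, E_n\) be a partition of \(\Omega\) (i.e. \(E_i\cap E_j=\emptyset\) for all \(i\not=j\) and \(\bigcup\limits_{i=1}^n E_i=\Omega\)) and let \(F\subseteq \Omega\) be any event. Then, \[ P(F) = \sum_{i=1}^n P(F|E_i) P(E_i). \]

Since the \(E_i\)’s form a partition:

- \(F = F \cap \Omega = \bigcup_{i=1}^n [ F \cap E_i ]\).

- For each \(i \neq j\), \([F \cap E_i] \cap [F \cap E_j] = \emptyset\).

Hence, by the additivity axiom and the definition of conditional probability, \[ P(F) = \sum_{i=1}^n P(F \cap E_i) = \sum_{i=1}^n P(F|E_i) P(E_i). \]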

Tin can factory.

Suppose that a factory uses three different machines to produce tin cans. Machine I produces 50% of all cans, machine II produces 30% of all cans and machine III produces the rest of the cans. It is known that 4% of cans produced on machine I are defective, 2% of the cans produced on machine II are defective and 5% of the cans produced on machine III are defective. If a can is selected at random, what is the probability that it is defective?
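One way to answer the question is to apply the Theorem of Total Probability with the three machines as the partition. The sketch below does this with exact fractions; the dictionary names are ours, and the 20% share for machine III is inferred from "the rest of the cans".

```python
from fractions import Fraction

# P(machine) for machines I and II; machine III produces the rest.
p_machine = {"I": Fraction(50, 100), "II": Fraction(30, 100)}
p_machine["III"] = 1 - sum(p_machine.values())  # 1/5, i.e. 20%

# P(defective | machine), as given in the example.
p_defective_given = {
    "I": Fraction(4, 100),
    "II": Fraction(2, 100),
    "III": Fraction(5, 100),
}

# Theorem of Total Probability: sum over the partition of machines.
p_defective = sum(p_defective_given[m] * p_machine[m] for m in p_machine)

print(p_defective, float(p_defective))  # 9/250 0.036
```

So, under these figures, a randomly selected can is defective with probability \(0.036\).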

Job interview problem.

A manager interviews 4 candidates for a job. The manager MUST make a decision offer/reject after each interview. Suppose that candidates are ranked \(1,2,3,4\) (1 best) and are interviewed in random order.

The manager interviews and rejects the first candidate. They then offer the job to the first candidate that is better than the rejected candidate. If all are worse then they offer the job to the last candidate.

What is the probability that the job is offered to the best candidate?

Attempt Example 4.6.9 (Job interview problem) and then watch Video 8 for the solution.

Video 8: Job Interview

Solution to Example 4.6.9 (Job interview problem).

Let \(F\) be the event that the best candidate is offered the job.

For \(k=1,2,3,4\), let \(E_k\) be the event that candidate \(k\) (the \(k^{th}\) best candidate) is interviewed first. Note that the \(E_k\)s form a partition of the sample space and, by randomness, \[ P(E_1) = P(E_2) = P(E_3) = P(E_4) = \frac{1}{4}. \]

We have that:

1. \(P(F|E_1) =0\). If the \(1^{st}\) ranked candidate is interviewed first they will be rejected and cannot be offered the job.

2. \(P(F|E_2) =1\). If the \(2^{nd}\) ranked candidate is interviewed first then all candidates will be rejected until the best (\(1^{st}\) ranked) candidate is interviewed and offered the job.

3. \(P(F|E_3) =\frac{1}{2}\). If the \(3^{rd}\) ranked candidate is interviewed first then whoever is interviewed first out of the \(1^{st}\) ranked and \(2^{nd}\) ranked candidates will be offered the job. Each of these possibilities is equally likely.

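4. \(P(F|E_4) =\frac{1}{3}\). If the \(4^{th}\) ranked (worst) candidate is interviewed first then the \(1^{st}\) ranked candidate will only be offered the job if they are interviewed second.

By the Theorem of Total Probability, \[ P(F) = \sum_{k=1}^4 P(F|E_k) P(E_k) = \frac{1}{4} \left( 0 + 1 + \frac{1}{2} + \frac{1}{3} \right) = \frac{11}{24}. \]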
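As a cross-check on the conditional probabilities listed above, the sketch below enumerates all 24 equally likely interview orders and applies the manager's rule to each. The function names `hired` and `p_best_given_first` are hypothetical; candidates are represented by their rank, with 1 the best.

```python
from fractions import Fraction
from itertools import permutations

def hired(order):
    """Rank of the candidate who is offered the job for a given order."""
    first = order[0]  # the first candidate is always rejected
    for candidate in order[1:]:
        if candidate < first:  # smaller rank means a better candidate
            return candidate
    return order[-1]  # nobody beat the first candidate: take the last

orders = list(permutations([1, 2, 3, 4]))  # 24 equally likely orders

def p_best_given_first(k):
    """P(F | E_k): the best is hired given rank k is interviewed first."""
    subset = [o for o in orders if o[0] == k]
    return Fraction(sum(hired(o) == 1 for o in subset), len(subset))

p_best = Fraction(sum(hired(o) == 1 for o in orders), len(orders))
print([str(p_best_given_first(k)) for k in (1, 2, 3, 4)], p_best)
```

The enumeration reproduces \(P(F|E_k) = 0, 1, \frac{1}{2}, \frac{1}{3}\) for \(k=1,2,3,4\) and gives \(P(F) = \frac{11}{24}\) overall.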

Bayes’ Formula.

Let \(E_1,E_2,\dots,E_n\) be a partition of \(\Omega\), i.e. \(E_i \cap E_j = \emptyset\) for all \(i \not= j\) and \(\bigcup\limits_{i=1}^n E_i=\Omega\), such that \(P(E_i)>0\) for all \(i=1,\dots,n\), and let \(F\subseteq\Omega\) be any event such that \(P(F)>0\). Then \[ P(E_i|F) = \frac{P(F|E_i) P(E_i)}{\sum_{j=1}^n P(F|E_j) P(E_j)}. \]

Tin can factory (continued).

Consider Example 4.6.8. Suppose now that we randomly select a can and find that it is defective.

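What is the probability that it was produced by machine I?

Let \(D\) be the event that the can is defective and let \(M_{I}\), \(M_{II}\), \(M_{III}\) be the events that it was produced by machine I, II, III respectively. By Bayes’ formula, \[ P(M_{I}|D) = \frac{P(D|M_{I}) P(M_{I})}{P(D|M_{I}) P(M_{I}) + P(D|M_{II}) P(M_{II}) + P(D|M_{III}) P(M_{III})} = \frac{(0.04)(0.5)}{(0.04)(0.5) + (0.02)(0.3) + (0.05)(0.2)} = \frac{0.02}{0.036} = \frac{5}{9}. \]

Guilty?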

At a certain stage of a jury trial a jury member gauges that the probability that the defendant is guilty is \(7/10\).

The prosecution then produces evidence that fibres of the victim’s clothing were found on the defendant.

If the probability of such fibres being found is 1 if the defendant is guilty and \(1/4\) if the defendant is not guilty, what now should be the jury member’s probability that the defendant is guilty?

Attempt Example 4.6.12 (Guilty?) and then watch Video 9 for the solution.

Video 9: Guilty?

Solution to Example 4.6.12 (Guilty?)

Let \(G\) be the event that the defendant is guilty and let \(F\) be the event that fibres of the victim’s clothing are found on the defendant.

We want \(P(G|F)\), the probability of being guilty given that the fibres are found.

We have that \(P(G)=0.7\), \(P(G^c) = 1- P(G)=0.3\), \(P(F|G)=1\) and \(P(F|G^c)=0.25\).

Therefore, by Bayes’ Theorem, \[ P(G|F) = \frac{P(F|G) P(G)}{P(F|G) P(G) + P(F|G^c) P(G^c)} = \frac{(1)(0.7)}{(1)(0.7) + (0.25)(0.3)} = \frac{0.7}{0.775} = \frac{28}{31} \approx 0.903. \]
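Bayes’ formula is mechanical enough to be written as a short function. The sketch below is one possible arrangement, not a standard library routine: `bayes` is a hypothetical helper that takes the prior probabilities \(P(E_i)\) and the likelihoods \(P(F|E_i)\) over a partition and returns every posterior \(P(E_i|F)\). It is applied to the jury example and to the tin can factory data.

```python
from fractions import Fraction

def bayes(prior, likelihood):
    """Posterior P(E_i | F) for every E_i in a partition.

    prior[i] = P(E_i) and likelihood[i] = P(F | E_i).
    """
    p_f = sum(likelihood[i] * prior[i] for i in prior)  # total probability
    if p_f == 0:
        raise ValueError("P(F) must be positive")
    return {i: likelihood[i] * prior[i] / p_f for i in prior}

# Guilty? example: the partition is {G, G^c}.
guilty = bayes(
    prior={"G": Fraction(7, 10), "Gc": Fraction(3, 10)},
    likelihood={"G": Fraction(1), "Gc": Fraction(1, 4)},
)
print(guilty["G"], float(guilty["G"]))  # 28/31, roughly 0.903

# Tin can factory: the partition is the machine that made the can.
cans = bayes(
    prior={"I": Fraction(1, 2), "II": Fraction(3, 10), "III": Fraction(1, 5)},
    likelihood={"I": Fraction(1, 25), "II": Fraction(1, 50), "III": Fraction(1, 20)},
)
print(cans["I"])  # 5/9
```

The denominator is the Theorem of Total Probability, so the posteriors over a partition always sum to 1.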

4.7 Mutual Independence

We can extend the concept of independence from two events to \(N\) events.

Mutual independence.

Events \(E_1,E_2,\dots,E_N\) are (mutually) independent if for any finite subset \(\{i_1,i_2,\dots,i_n\} \subseteq \{1,\dots,N\}\), \[ P \left( \bigcap_{j=1}^n E_{i_j} \right) = \prod_{j=1}^n P(E_{i_j}). \]

Aircraft safety.

An aircraft safety system contains \(n\) independent components. The aircraft can fly provided at least one of the components is working. The probability that the \(i\)th component works is \(p_{i}\). Then \[ P(\mbox{aircraft can fly}) = 1 - P(\mbox{all components fail}) = 1 - \prod_{i=1}^n (1-p_i). \]
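The aircraft example reduces to a product over the components' failure probabilities. The sketch below assumes mutual independence, as in the example; the function name `p_can_fly` and the component reliabilities are hypothetical values chosen only for illustration.

```python
from math import prod

def p_can_fly(p_works):
    """P(at least one component works) for independent components."""
    p_all_fail = prod(1 - p for p in p_works)  # independence: multiply
    return 1 - p_all_fail

# Hypothetical reliabilities for three components.
print(p_can_fly([0.9, 0.8, 0.5]))
```

With these made-up reliabilities the failure probabilities are 0.1, 0.2 and 0.5, so the aircraft can fly with probability about \(1 - 0.01 = 0.99\), higher than the reliability of any single component.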

Task: Session 3

Attempt the R Markdown file for Session 3:

Session 3: Probability in R

Student Exercises

Attempt the exercises below.

A card is drawn from a standard pack of 52. Let \(B\) be the event ‘the card is black’, \(NA\) the event ‘the card is not an Ace’ and \(H\) the event ‘the card is a Heart’. Calculate the following probabilities:

- \(P(B|NA)\);

- \(P(NA|B^c)\);

- \(P(B^c \cap H | NA)\);

- \(P(NA \cup B | H^c)\);

- \(P(NA \cup H | NA \cap B)\).

Solution to Exercise 4.1.

- \(P(B|NA) = \frac{P(B \cap NA)}{P(NA)} = \frac{24/52}{48/52} = \frac{1}{2}\);

- \(P(NA|B^c) = \frac{P(NA \cap B^c)}{P(B^c)} = \frac{24/52}{26/52} = \frac{12}{13}\);

- \(P(B^c \cap H|NA) = \frac{P(B^c \cap H \cap NA)}{P(NA)} = \frac{12/52}{48/52} = \frac{1}{4}\);

- \(P(NA \cup B|H^c) = \frac{P([NA \cup B] \cap H^c)}{P(H^c)} = \frac{38/52}{39/52} = \frac{38}{39}\);

- Since \([NA \cup H] \cap [NA \cap B] = NA \cap B\), we have that

\(P(NA \cup H | NA \cap B) = \frac{P([NA \cup H] \cap [NA \cap B])}{P(NA \cap B)} = \frac{P(NA \cap B)}{P(NA \cap B)} \left(= \frac{24/52}{24/52} \right) = 1\).
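The five answers to Exercise 4.1 can be verified by enumerating the pack. This is a sketch under the usual assumption that all 52 cards are equally likely; the card labels and the helper `cond_prob` are our own.

```python
from fractions import Fraction
from itertools import product

ranks = ["A", "2", "3", "4", "5", "6", "7", "8", "9", "10", "J", "Q", "K"]
suits = ["clubs", "spades", "hearts", "diamonds"]
omega = set(product(ranks, suits))  # 52 equally likely cards

def cond_prob(event, given):
    """P(event | given) by counting, for equally likely outcomes."""
    return Fraction(len(event & given), len(given))

B = {c for c in omega if c[1] in ("clubs", "spades")}  # black
NA = {c for c in omega if c[0] != "A"}                 # not an Ace
H = {c for c in omega if c[1] == "hearts"}             # heart

answers = [
    cond_prob(B, NA),                # P(B | NA)
    cond_prob(NA, omega - B),        # P(NA | B^c)
    cond_prob((omega - B) & H, NA),  # P(B^c and H | NA)
    cond_prob(NA | B, omega - H),    # P(NA or B | H^c)
    cond_prob(NA | H, NA & B),       # P(NA or H | NA and B)
]
print([str(a) for a in answers])  # ['1/2', '12/13', '1/4', '38/39', '1']
```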

A diagnostic test has a probability \(0.95\) of giving a positive result when applied to a person suffering from a certain disease, and a probability \(0.10\) of giving a (false) positive when applied to a non-sufferer. It is estimated that \(0.5\%\) of the population are sufferers. Suppose that the test is applied to a person chosen at random from the population. Find the probabilities of the following events.

- the test result will be positive.

- the person is a sufferer, given a positive result.

- the person is a non-sufferer, given a negative result.

- the person is misclassified.

Solution to Exercise 4.2.

Let \(T\) denote ‘test positive’ and \(S\) denote ‘person is a sufferer’. The question tells us that \(P(T | S) = 0.95\), \(P(T | S^c) = 0.1\), and \(P(S) = 0.005\).

- \(P(T) = P(T|S)P(S) + P(T|S^c)P(S^c) = (0.95)(0.005) + (0.1)(0.995) = 0.10425.\)

- \(P(S | T) = \frac{P(T | S) P(S)}{P(T)} = \frac{(0.95)(0.005)}{0.10425} = 0.04556.\)

- \(P(S^c | T^c) = \frac{P(T^c | S^c) P(S^c)}{P(T^c)} = \frac{[1 - P(T | S^c)] P(S^c)}{1-P(T)} = \frac{(0.9)(0.995)}{0.89575} =0.9997\).

- Misclassification means either the person is a sufferer and the test is negative, or the person is not a sufferer and the test is positive. These are disjoint events, so we require

\[\begin{eqnarray*} P(S \cap T^c) + P( S^c \cap T) & = & P(T^c | S)P(S) + P(T | S^c) P(S^c)\\ & = & [1 - P(T | S)]P(S) + P(T | S^c) P(S^c)\\ & = & (0.05)(0.005) + (0.1)(0.995) = 0.09975. \end{eqnarray*}\]
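The arithmetic in the solution to Exercise 4.2 can be reproduced in a few lines. The sketch below simply transcribes the formulas used above; the variable names are ours.

```python
p_s = 0.005             # P(S): person is a sufferer
p_t_given_s = 0.95      # P(T | S): positive test for a sufferer
p_t_given_not_s = 0.10  # P(T | S^c): false positive

# Theorem of Total Probability for a positive result.
p_t = p_t_given_s * p_s + p_t_given_not_s * (1 - p_s)

# Bayes' formula for the two conditional probabilities.
p_s_given_t = p_t_given_s * p_s / p_t
p_not_s_given_not_t = (1 - p_t_given_not_s) * (1 - p_s) / (1 - p_t)

# Misclassified: sufferer with negative test, or non-sufferer with positive.
p_misclassified = (1 - p_t_given_s) * p_s + p_t_given_not_s * (1 - p_s)

for value in (p_t, p_s_given_t, p_not_s_given_not_t, p_misclassified):
    print(round(value, 5))
```

Note how small \(P(S|T)\) is: because sufferers are rare, most positive results come from the much larger group of non-sufferers.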

In areas such as market research, medical research, etc., it is often hard to get people to answer embarrassing questions. One way around this is the following. Suppose that \(N\) people are interviewed, where \(N\) is even. Each person is given a card, chosen at random from \(N\) cards, containing a single question. Half of the cards contain the embarrassing question, to which the answer is either ‘Yes’ or ‘No’. The other half of the cards contain the question ‘Is your birthday between January and June inclusive?’

Suppose that of the \(N\) people interviewed, \(R\) answer ‘Yes’ to the question that they received. Let \(Y\) be the event that a person gives a ‘Yes’ answer, \(E\) the event that they received a card asking the embarrassing question. Assuming that half the population have birthdays between January and June inclusive, write down:

- \(P(Y)\);

- \(P(E)\);

- \(P(Y|E^c)\).

Hence calculate the proportion of people who answered ‘Yes’ to the embarrassing question.

Hint: Try writing down an expression for \(P(Y)\) using the Theorem of Total Probability.

Comment on your answer.

Solution to Exercise 4.3.

We have that:

- \(P(Y) = \frac{R}{N}\);

- \(P(E) = \frac{1}{2}\);

- \(P(Y|E^c) = \frac{1}{2}\).

A little thought should help you see why.