Chapter 7 Central Limit Theorem and Law of Large Numbers

7.1 Introduction

In this section we show why the Normal distribution, introduced in Section 5.7, is so important in probability and statistics. The Central Limit Theorem states that, under very weak conditions (almost all probability distributions you will see satisfy them), the sum of \(n\) i.i.d. random variables, \(S_n\), converges, when appropriately normalised, to a standard Normal distribution as \(n \to \infty\). For finite but large \(n\) (say \(n \geq 50\)), we can approximate \(S_n\) by a Normal distribution and use this approximation to answer questions concerning \(S_n\). In Section 7.2 we present the Central Limit Theorem and apply it to an example using exponential random variables. In Section 7.3 we explore how a continuous distribution (the Normal distribution) can be used to approximate sums of discrete distributions. Finally, in Section 7.4, we present the Law of Large Numbers, which states that the uncertainty in the sample mean of \(n\) observations, \(S_n/n\), decreases as \(n\) increases and that the sample mean converges to the population mean \(\mu\). Both the Central Limit Theorem and the Law of Large Numbers will be important later when we consider statistical questions.

7.2 Statement of Central Limit Theorem

Before stating the Central Limit Theorem, we introduce some notation.

Convergence in distribution

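A sequence of random variables \(Y_1, Y_2, \ldots\) is said to converge in distribution to a random variable \(Y\), if for all \(y \in \mathbb{R}\),

\[ P(Y_n \leq y) \to P(Y \leq y) \qquad \qquad \mbox{ as } \; n \to \infty. \]

(Strictly, the convergence is only required at points \(y\) where the distribution function of \(Y\) is continuous; for a Normal limit this is every \(y\).) We write \(Y_n \xrightarrow{\quad D \quad} Y\) as \(n \to \infty\).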

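Central Limit Theorem.

Let \(X_1,X_2,\dots,X_n\) be independent and identically distributed random variables (i.e. a random sample) with finite mean \(\mu\) and variance \(\sigma^2\). Let \(S_n = X_1 + \cdots + X_n\). Then

\[ \frac{S_n - n \mu}{\sigma \sqrt{n}} = \frac{\bar{X} - \mu}{\sigma/\sqrt{n}} \xrightarrow{\quad D \quad} N(0,1) \qquad \qquad \mbox{ as } \; n \to \infty, \]

where \(\bar{X} = \frac{1}{n} \sum_{i=1}^n X_i\) is the sample mean of \(X_1, X_2, \ldots , X_n\).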

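Therefore, we have that for large \(n\),

\[ S_n \approx N(n \mu, n \sigma^2) \qquad \mbox{and} \qquad \bar{X} \approx N\left(\mu, \frac{\sigma^2}{n} \right). \]

Suppose \(X_1,X_2,\dots,X_{100}\) are i.i.d. exponential random variables with parameter \(\lambda=4\).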

- Find \(P(S_{100} > 30)\).

- Find limits within which \(\bar{X}\) will lie with probability \(0.95\).

Since \(X_1,X_2,\dots,X_{100}\) are i.i.d. exponential random variables with parameter \(\lambda=4\), \(E\left[X_i\right]=\frac{1}{4}\) and \(var(X_i) = \frac{1}{16}\). Hence,

\[\begin{align*} E \left[ S_{100} \right] &= 100 \cdot \frac{1}{4} = 25; \\ var(S_{100}) &= 100 \cdot \frac{1}{16} = \frac{25}{4}. \end{align*}\]

Since \(n=100\) is sufficiently big, \(S_{100}\) is approximately normally distributed by the central limit theorem (CLT). Therefore,

\[\begin{align*} P(S_{100} > 30) &= P \left( \frac{S_{100}-25}{\sqrt{\frac{25}{4}}} > \frac{30-25}{\sqrt{\frac{25}{4}}} \right) \\ &\approx P( N(0,1) >2) \\ &= 1 - P( N(0,1) \leq 2) \\ &= 0.0228. \end{align*}\]

Given that \(S_{100} = \sum_{i=1}^{100} X_i \sim {\rm Gamma} (100,4)\), see Section 5.6.2, we can compute exactly \(P(S_{100} >30) = 0.0279\), which shows that the central limit theorem gives a reasonable approximation.
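The two probabilities quoted above can be reproduced in R. The following is a minimal sketch using the base functions `pnorm` and `pgamma`; the variable names are chosen here purely for illustration.

```r
# S_100 is the sum of 100 i.i.d. Exp(4) random variables.
n <- 100
lambda <- 4
mu_S <- n / lambda        # E[S_100] = 25
sd_S <- sqrt(n) / lambda  # sd(S_100) = sqrt(25/4) = 2.5

# CLT approximation: P(S_100 > 30) under N(25, 25/4).
p_clt <- pnorm(30, mean = mu_S, sd = sd_S, lower.tail = FALSE)

# Exact value: S_100 ~ Gamma(shape = 100, rate = 4).
p_exact <- pgamma(30, shape = n, rate = lambda, lower.tail = FALSE)

print(round(c(clt = p_clt, exact = p_exact), 4))
```

The Normal approximation slightly underestimates the upper tail here, which is consistent with the Gamma distribution being skewed to the right.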

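Since \(X_1,X_2,\dots,X_{100}\) are i.i.d. exponential random variables with parameter \(\lambda=4\), \(E\left[X_i\right]=\frac{1}{4}\) and \(var (X_i) = \frac{1}{16}\). Therefore, \(E\left[\bar{X}\right] = \frac{1}{4}\) and \(var(\bar{X}) = \frac{1/16}{100} = \frac{1}{1600}\), so that \(\bar{X}\) has standard deviation \(\frac{1}{40} = 0.025\).

By the central limit theorem, \(\bar{X} \approx N \left( \frac{1}{4}, \frac{1}{1600} \right)\). Since \(P(-1.96 \leq Z \leq 1.96) = 0.95\) for \(Z \sim N(0,1)\), symmetric limits are given by

\[ P \left( 0.25 - 1.96 \times 0.025 \leq \bar{X} \leq 0.25 + 1.96 \times 0.025 \right) \approx 0.95, \]

that is, \(\bar{X}\) lies between \(0.201\) and \(0.299\) with probability approximately \(0.95\).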
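For the second part of the example, limits symmetric about the mean can be computed directly from the Normal approximation to \(\bar{X}\). This is a sketch that assumes symmetric limits are wanted (other intervals with probability \(0.95\) exist); the variable names are illustrative.

```r
# Sample mean of 100 i.i.d. Exp(4) random variables.
n <- 100
lambda <- 4
mu <- 1 / lambda               # E[X-bar] = 0.25
se <- (1 / lambda) / sqrt(n)   # sd(X-bar) = sqrt(1/1600) = 0.025

# Standard Normal quantile leaving 2.5% in each tail.
z <- qnorm(0.975)

# Symmetric limits containing X-bar with probability approximately 0.95.
limits <- mu + c(-1, 1) * z * se
print(round(limits, 3))
```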

7.3 Central limit theorem for discrete random variables

The central limit theorem can be applied to sums of discrete random variables as well as continuous random variables. Let \(X_1, X_2, \ldots\) be i.i.d. copies of a discrete random variable \(X\) with \(E[X] =\mu\) and \(var(X) = \sigma^2\). Further suppose that the support of \(X\) is contained in the non-negative integers \(\{0,1, \ldots \}\). (This covers all the discrete distributions we have seen: binomial, negative binomial, Poisson and discrete uniform.)

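Let \(Y_n \sim N(n \mu, n \sigma^2)\). Then the central limit theorem states that for large \(n\), \(S_n \approx Y_n\). However, there will exist \(x \in \{0,1,\ldots \}\) such that

\[ P(S_n = x) > 0 \qquad \mbox{but} \qquad P(Y_n = x) = 0. \]

We therefore match each integer value \(x\) of \(S_n\) with the interval \((x-0.5, x+0.5]\) for \(Y_n\):

\[ P(S_n = x) \approx P(x - 0.5 < Y_n \leq x + 0.5), \qquad P(S_n \leq x) \approx P(Y_n \leq x + 0.5). \]

This is known as the continuity correction.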

Suppose that \(X\) is a Bernoulli random variable with \(P(X=1)=0.6 (=p)\), so \(E[X]=0.6\) and \(var(X) =0.6 \times (1-0.6) =0.24\). Then \[S_n = \sum_{i=1}^n X_i \sim {\rm Bin} (n, 0.6). \]

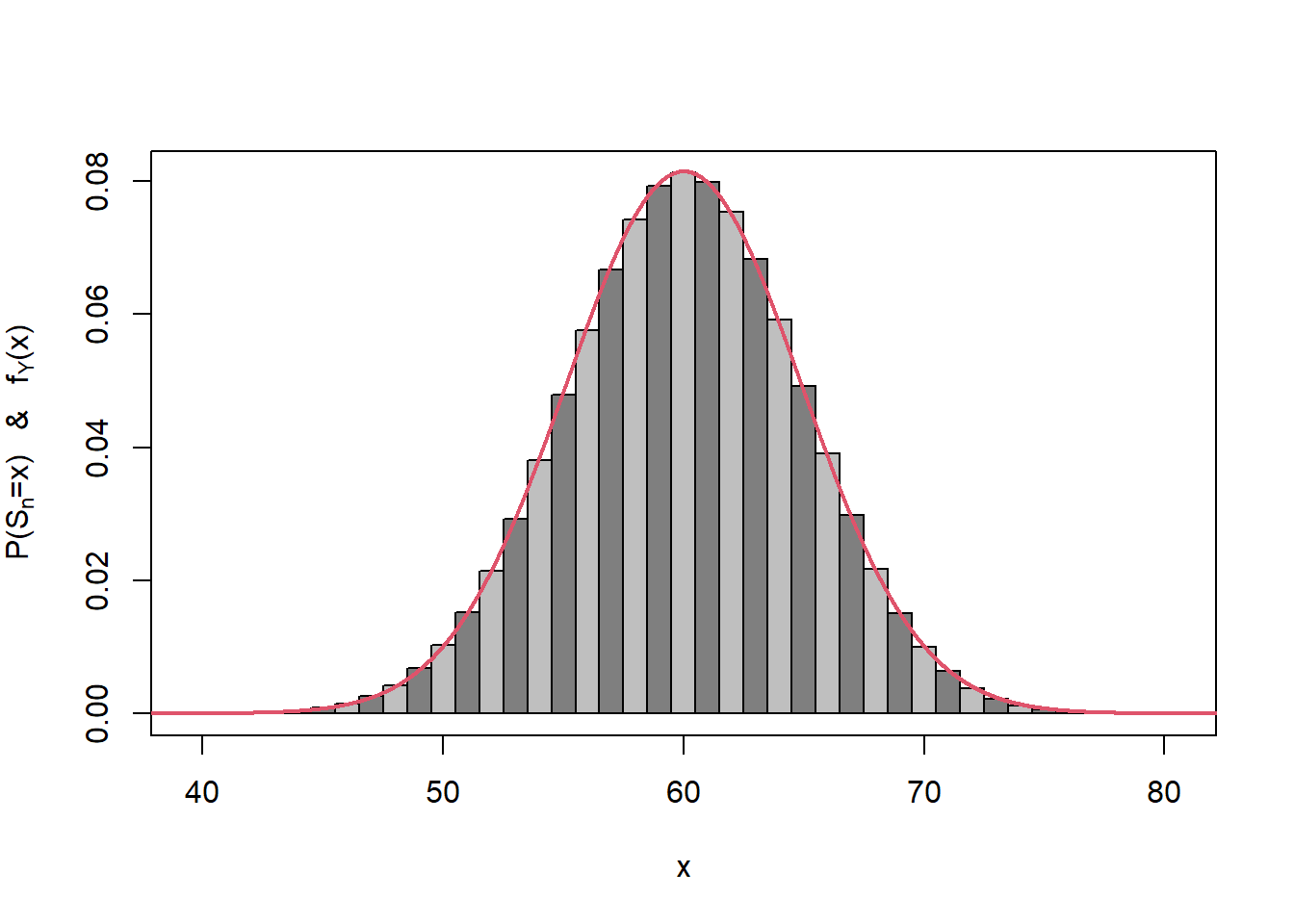

For \(n=100\), \(S_{100} \sim {\rm Bin} (100, 0.6)\) can be approximated by \(Y\sim N(60,24) (=N(np,np(1-p)))\), see Figure 7.1.

Figure 7.1: Central limit theorem approximation for the binomial.

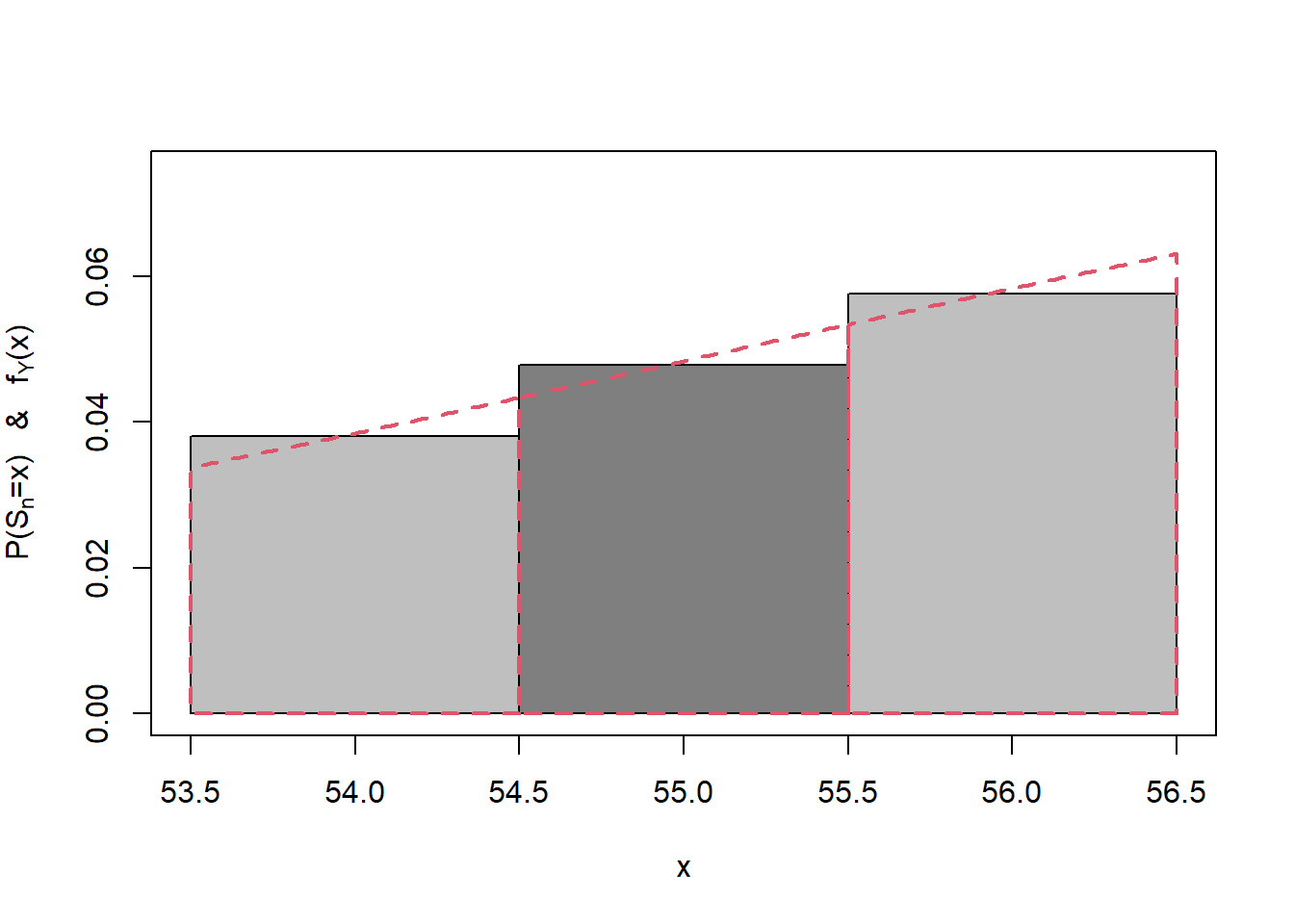

We can see the approximation in Figure 7.1 in close-up for \(x=54\) to \(56\) in Figure 7.2. The areas marked out by the red lines (normal approximation) are approximately equal to the areas of the bars in the histogram (binomial probabilities).

Figure 7.2: Central limit theorem approximation for the binomial for x=54 to 56.
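The comparison shown in the close-up can also be made numerically. The sketch below, with illustrative variable names, compares the exact \({\rm Bin}(100, 0.6)\) probabilities at \(x = 54, 55, 56\) with the areas under the \(N(60,24)\) density over \((x-0.5, x+0.5]\).

```r
# Binomial(100, 0.6) and its Normal approximation N(60, 24).
n <- 100
p <- 0.6
mu <- n * p
sigma <- sqrt(n * p * (1 - p))

x <- 54:56

# Exact binomial probabilities P(S_100 = x).
exact <- dbinom(x, size = n, prob = p)

# Normal areas over (x - 0.5, x + 0.5], i.e. with the continuity correction.
approx <- pnorm(x + 0.5, mean = mu, sd = sigma) -
  pnorm(x - 0.5, mean = mu, sd = sigma)

print(round(cbind(x, exact, approx), 4))
```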

7.4 Law of Large Numbers

We observed that \[ \bar{X} \approx N\left(\mu, \frac{\sigma^2}{n} \right), \] and the variance is decreasing as \(n\) increases.

Given that \[var (S_n) = var \left(\sum_{i=1}^n X_i \right) = \sum_{i=1}^n var \left( X_i \right) = n \sigma^2,\] we have in general that \[var (\bar{X}) = var \left(\frac{S_n}{n} \right) = \frac{1}{n^2} var (S_n) = \frac{\sigma^2}{n}.\]

A random variable \(Y\) which has \(E[Y]=\mu\) and \(var(Y)=0\) is the constant, \(Y \equiv \mu\), that is, \(P(Y=\mu) =1\). This suggests that as \(n \to \infty\), \(\bar{X}\) converges in some sense to \(\mu\). We can make this convergence rigorous.

Convergence in probability

A sequence of random variables \(Y_1, Y_2, \ldots\) is said to converge in probability to a random variable \(Y\), if for any \(\epsilon >0\), \[ P(|Y_n - Y|> \epsilon) \to 0 \qquad \qquad \mbox{ as } \; n \to \infty. \] We write \(Y_n \xrightarrow{\quad p \quad} Y\) as \(n \to \infty\).

We will often be interested in convergence in probability where \(Y\) is a constant.

A useful result for proving convergence in probability to a constant \(\mu\) is Chebychev’s inequality. Chebychev’s inequality is a special case of the Markov inequality which is helpful in bounding probabilities in terms of expectations.

Chebychev’s inequality.

Let \(X\) be a random variable with \(E[X] =\mu\) and \(var(X)=\sigma^2\). Then for any \(\epsilon >0\),

\[ P(|X - \mu| > \epsilon) \leq \frac{\sigma^2}{\epsilon^2}. \]
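To see why this is a special case of the Markov inequality, here is a short derivation. It assumes the Markov inequality in the form \(P(W \geq a) \leq E[W]/a\) for a non-negative random variable \(W\) and \(a > 0\), applied with \(W = (X-\mu)^2\) and \(a = \epsilon^2\):

\[\begin{align*} P(|X - \mu| > \epsilon) &= P \left( (X-\mu)^2 > \epsilon^2 \right) \\ &\leq P \left( (X-\mu)^2 \geq \epsilon^2 \right) \\ &\leq \frac{E \left[ (X-\mu)^2 \right]}{\epsilon^2} = \frac{\sigma^2}{\epsilon^2}. \end{align*}\]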

Law of Large Numbers.

Let \(X_1, X_2, \ldots\) be i.i.d. according to a random variable \(X\) with \(E[X] = \mu\) and \(var(X) =\sigma^2\). Then

\[\bar{X} = \frac{1}{n} \sum_{i=1}^n X_i \xrightarrow{\quad p \quad} \mu \qquad \qquad \mbox{ as } \; n \to \infty. \]

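Proof. Since \(E[\bar{X}] = \mu\) and \(var(\bar{X}) = \sigma^2/n\), Chebychev’s inequality applied to \(\bar{X}\) gives, for any \(\epsilon > 0\),

\[ P(|\bar{X} - \mu| > \epsilon) \leq \frac{\sigma^2}{n \epsilon^2} \to 0 \qquad \qquad \mbox{ as } \; n \to \infty, \]

and the Theorem follows.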
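The Law of Large Numbers can be illustrated by simulation. The sketch below is only an illustration, not part of the proof: it uses exponential random variables with \(\lambda = 4\) (so \(\mu = 0.25\)), and the seed and sample size are arbitrary choices.

```r
# Running sample mean of simulated Exp(4) observations; mu = 1/4.
set.seed(1)  # arbitrary seed, for reproducibility only
lambda <- 4
x <- rexp(100000, rate = lambda)

# running_mean[n] is the sample mean of the first n observations.
running_mean <- cumsum(x) / seq_along(x)

# The sample mean settles down near 0.25 as n grows.
print(running_mean[c(10, 100, 1000, 10000, 100000)])
```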

Central limit theorem for dice

Figure 7.3: Dice picture.

Let \(D_1, D_2, \ldots\) denote the outcomes of successive rolls of a fair six-sided dice.

Let \(S_n = \sum_{i=1}^n D_i\) denote the total score from \(n\) rolls of the dice and let \(M_n = \frac{1}{n} S_n\) denote the mean score from \(n\) rolls of the dice.

- What is the approximate distribution of \(S_{100}\)?

- What is the approximate probability that \(S_{100}\) lies between \(330\) and \(380\), inclusive?

- How large does \(n\) need to be such that \(P(|M_n - E[D]|>0.1) \leq 0.01\)?

Attempt Example 7.4.4 and then watch Video 15 for the solutions.

Video 15: Central limit theorem for dice

Solution to Example 7.4.4.

Each roll \(D_i\) is uniformly distributed on \(\{1,2,\ldots,6\}\), so \(E[D_1] = \frac{7}{2} =3.5\) and \(var (D_1) = \frac{35}{12}\).

Since the rolls of the dice are independent,

\[\begin{eqnarray*} E[S_{100}] &=& E \left[ \sum_{i=1}^{100} D_i \right] = \sum_{i=1}^{100}E \left[ D_i \right] \\ &=& 100 E[D_1] = 350. \end{eqnarray*}\]

and

\[\begin{eqnarray*} var(S_{100}) &=& var \left( \sum_{i=1}^{100} D_i \right) = \sum_{i=1}^{100}var \left( D_i \right) \\ &=& 100 var(D_1)= \frac{875}{3}. \end{eqnarray*}\]

Thus by the central limit theorem, \(S_{100} \approx Y \sim N \left(350, \frac{875}{3} \right)\).

Using the CLT approximation above and the continuity correction,

\[\begin{eqnarray*}P(330 \leq S_{100} \leq 380) &\approx& P(329.5 \leq Y \leq 380.5) \\ &=& P(Y \leq 380.5) - P(Y \leq 329.5) \\ &=& 0.9629 - 0.115 = 0.8479. \end{eqnarray*}\]

If using Normal tables, we have that

\[ P\left( Y \leq 380.5 \right) = P \left( Z = \frac{Y-350}{\sqrt{875/3}} \leq \frac{380.5-350}{\sqrt{875/3}} \right) = \Phi (1.786)\]

and

\[ P\left( Y \leq 329.5 \right) = P \left( Z = \frac{Y-350}{\sqrt{875/3}} \leq \frac{329.5-350}{\sqrt{875/3}} \right) = \Phi (-1.200).\]
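Instead of Normal tables, the same probability can be obtained with `pnorm`. This is a minimal sketch with illustrative variable names.

```r
# S_100 is approximately N(350, 875/3) for 100 rolls of a fair die.
mu <- 100 * 7 / 2
sigma <- sqrt(100 * 35 / 12)

# Continuity correction: P(330 <= S_100 <= 380) ~ P(329.5 <= Y <= 380.5).
upper <- pnorm(380.5, mean = mu, sd = sigma)
lower <- pnorm(329.5, mean = mu, sd = sigma)
prob <- upper - lower

print(round(c(upper = upper, lower = lower, prob = prob), 4))
```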

Using the Central Limit Theorem, \(M_n \approx W_n \sim N \left(\frac{7}{2}, \frac{35}{12 n} \right)\).

We know by the law of large numbers that \(M_n \xrightarrow{\quad p \quad} \frac{7}{2}\) as \(n \to \infty\), but how large does \(n\) need to be such that there is a \(99\%\) (or greater) chance of \(M_n\) being within \(0.1\) of \(3.5\)?

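Using the approximation \(W_n\), we want:

\[ P(|W_n - 3.5| > 0.1) \leq 0.01. \]

Note that \(Z = \frac{W_n - 3.5}{\sqrt{35/(12n)}} \sim N(0,1)\), so the requirement is equivalent to

\[ P \left( |Z| > 0.1 \sqrt{\frac{12 n}{35}} \right) \leq 0.01. \]

Let \(c\) satisfy \(P(|Z| > c) = 0.01\), that is, \(\Phi(c) = 0.995\). The qnorm function in R, `qnorm(0.995)`, gives \(c =\) 2.5758293. Therefore we need

\[ 0.1 \sqrt{\frac{12 n}{35}} \geq c \qquad \Longleftrightarrow \qquad n \geq \frac{35}{12} \left( \frac{c}{0.1} \right)^2 = 1935.2. \]

Given that we require \(n \geq 1935.2\) and \(n\) must be an integer, we need \(n \geq 1936\).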
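The required sample size can be computed in R as sketched below (variable names are illustrative). For comparison, the sketch also computes the sample size guaranteed by Chebychev’s inequality, \(P(|M_n - 3.5| > 0.1) \leq \frac{35/12}{n \times 0.1^2}\), which needs no Normal approximation but is far more conservative.

```r
# Smallest n with P(|M_n - 3.5| > 0.1) <= 0.01 under the CLT approximation.
var_D <- 35 / 12   # variance of a single die roll
eps <- 0.1         # required accuracy
alpha <- 0.01      # allowed probability of missing by more than eps

c_val <- qnorm(1 - alpha / 2)                # qnorm(0.995)
n_clt <- ceiling((c_val / eps)^2 * var_D)

# Chebychev: var_D / (n * eps^2) <= alpha gives a guaranteed, larger n.
n_cheb <- ceiling(var_D / (eps^2 * alpha))

print(c(clt = n_clt, chebychev = n_cheb))
```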

Task: Session 4

Attempt the R Markdown file for Session 4:

Session 4: Convergence and the Central Limit Theorem

Student Exercises

Attempt the exercises below.

Let \(X_1, X_2, \dots, X_{25}\) be independent Poisson random variables each having mean 1. Use the central limit theorem to approximate \(P \left( \sum_{i=1}^{25} X_i > 20 \right)\).

Solution to Exercise 7.1.

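Let \(X = \sum_{i=1}^{25} X_i\). Then \(E[X] = 25\) and \(var(X) = 25\), so by the central limit theorem \(X \approx Y \sim N(25,25)\). Taking the quantity of interest to be \(P(X > 20)\) and using the continuity correction,

\[\begin{align*} P(X > 20) = P(X \geq 21) &\approx P(Y > 20.5) \\ &= P \left( Z > \frac{20.5 - 25}{5} \right) = P(Z > -0.9) \\ &= P(Z < 0.9) = 0.8159, \end{align*}\]

where \(Z \sim N(0,1)\). Note that the final step comes from the symmetry of the normal distribution.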

For comparison, since \(X \sim {\rm Po} (25)\), we have that \(P(X >20) = 0.8145\).
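Both the approximation and the exact comparison value can be checked in R. This sketch takes the target to be \(P(X > 20)\) for \(X \sim {\rm Po}(25)\), as in the comparison above, and assumes the continuity correction is used for the Normal approximation.

```r
# X = X_1 + ... + X_25 ~ Po(25), approximated by Y ~ N(25, 25).
mu <- 25
sigma <- sqrt(25)

# CLT with continuity correction: P(X > 20) = P(X >= 21) ~ P(Y > 20.5).
p_clt <- pnorm(20.5, mean = mu, sd = sigma, lower.tail = FALSE)

# Exact Poisson probability P(X > 20) = 1 - P(X <= 20).
p_exact <- ppois(20, lambda = 25, lower.tail = FALSE)

print(round(c(clt = p_clt, exact = p_exact), 4))
```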

The lifetime of a Brand X TV (in years) is an exponential random variable with mean 10. By using the central limit theorem, find the approximate probability that the average lifetime of a random sample of 36 TVs is at least 10.5.

Solution to Exercise 7.2.

Let \(X_i\) denote the lifetime of the \(i^{th}\) TV in the sample, \(i=1, 2, \ldots, 36\). Then (from the lecture notes) we know that \(E[X_i] = 10\), \(var(X_i) = 100\).

The sample mean is \(\bar{X} = (X_1 + \cdots + X_{36})/36\).

Using the central limit theorem, \(X_1 + \cdots + X_{36} \approx N(360, 3600)\), so

\[ P(\bar{X} \geq 10.5) = P(X_1 + \cdots + X_{36} \geq 378) \approx P \left( Z \geq \frac{378 - 360}{\sqrt{3600}} \right) = P(Z \geq 0.3), \]

where \(Z \sim N(0,1)\). Thus the required probability is approximately \(P(Z \geq 0.3) = 1 - \Phi(0.3) = 0.3821\).
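As a check, the approximation can be computed in R and compared with the exact probability. The exact calculation in this sketch relies on the fact (used in Section 7.2) that a sum of i.i.d. exponential random variables has a Gamma distribution; the variable names are illustrative.

```r
# Total lifetime of 36 TVs, each exponential with mean 10 (rate 1/10).
n <- 36
mean_life <- 10
mu_S <- n * mean_life          # E[sum] = 360
sd_S <- sqrt(n) * mean_life    # sd(sum) = sqrt(3600) = 60
threshold <- n * 10.5          # X-bar >= 10.5 is the same as sum >= 378

# CLT approximation.
p_clt <- pnorm(threshold, mean = mu_S, sd = sd_S, lower.tail = FALSE)

# Exact value: the sum is Gamma(shape = 36, rate = 1/10).
p_exact <- pgamma(threshold, shape = n, rate = 1 / mean_life,
                  lower.tail = FALSE)

print(round(c(clt = p_clt, exact = p_exact), 4))
```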

Prior to October 2015, in the UK National Lottery gamblers bought a ticket on which they marked six different numbers from \(\{ 1,2,\ldots,49 \}\). Six balls were drawn uniformly at random without replacement from a set of \(49\) similarly numbered balls. A ticket won the jackpot if the six numbers marked were the same as the six numbers drawn.

- Show that the probability a given ticket won the jackpot is \(1/13983816\).

- In Week 9 of the UK National Lottery \(69,846,979\) tickets were sold and there were \(133\) jackpot winners. If all gamblers chose their numbers independently and uniformly at random, use the central limit theorem to determine the approximate distribution of the number of jackpot winners that week. Comment on this in the light of the actual number of jackpot winners.

Solution to Exercise 7.3.

- There are \(\binom{49}{6}=13,983,816\) different ways of choosing 6 distinct numbers from \(1,2,\ldots,49\), so the probability a ticket wins the jackpot is \(1/13983816\).

- Let \(X\) be the number of jackpot winners in Week 9 if gamblers chose their numbers independently and uniformly at random. Then

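\[ X \sim{\rm Bin} \left( 69,846,979, \frac{1}{13,983,816} \right) = {\rm Bin} (n,p), \text{ say}. \] Then to 4 decimal places \(E[X] =n p = 4.9948\) and \(var(X)=np(1-p)=4.9948\).

Hence, by the central limit theorem,\[ X \approx N(4.9948,4.9948). \]

The observed number of jackpot winners was \(133\). Under this approximation, using the continuity correction,

\[ P(X \geq 133) \approx P \left( Z \geq \frac{132.5 - 4.9948}{\sqrt{4.9948}} \right) = P(Z \geq 57.05), \]

where \(Z \sim N(0,1)\). This probability is extremely small, so there is very strong evidence that the gamblers did not choose their numbers independently and uniformly at random.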
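The quantities in this solution can be computed in R as sketched below. The Normal tail probability is too small to represent as an ordinary floating-point number, so the sketch asks `pnorm` for its logarithm via `log.p = TRUE`; the exact binomial tail is included for comparison. Variable names are illustrative.

```r
# Number of jackpot winners X ~ Bin(n_tickets, p_win) under random choice.
n_tickets <- 69846979
p_win <- 1 / choose(49, 6)   # choose(49, 6) = 13983816

mu <- n_tickets * p_win
sigma <- sqrt(n_tickets * p_win * (1 - p_win))

# Standardised value for P(X >= 133) with the continuity correction.
z <- (132.5 - mu) / sigma

# Natural log of the Normal tail probability P(Z >= z).
log_p_clt <- pnorm(z, lower.tail = FALSE, log.p = TRUE)

# Exact binomial tail P(X >= 133) = P(X > 132).
p_exact <- pbinom(132, size = n_tickets, prob = p_win, lower.tail = FALSE)

print(c(mean = mu, z = z, log_p_clt = log_p_clt, p_exact = p_exact))
```

With a mean of only about 5, the Normal approximation is rough, but either calculation leads to the same conclusion: 133 winners is essentially impossible under independent uniform choices.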