Chapter 19 Hypothesis Testing

19.1 Introduction to hypothesis testing

In estimation, we ask what the value of a particular parameter of interest in the population is. For example, what is the average annual income of residents in the UK?

Often in statistics we are not interested in the specific value of a parameter. Instead, we want to assess a statement about the parameter of interest. Some examples:

- We want to claim that the average annual income of UK residents is more than or equal to \(£35,000\).

- We want to assess whether the average annual income of men in academia in the UK is the same as that of women in similar ranks.

- We want to determine whether the number of cars crossing a certain intersection follows a Poisson distribution or whether it is more likely to come from a geometric distribution.

To perform a statistical hypothesis test, one needs to specify two disjoint hypotheses in terms of the parameters of the distribution that are of interest. They are

- \(H_0:\) Null Hypothesis,

- \(H_1:\) Alternative Hypothesis.

Traditionally, we choose \(H_0\) to be the claim that we would like to assert.

Returning to our examples:

- We want to claim that the average annual income of UK residents is more than or equal to \(£35,000\). We test

\[ H_0: \mu \geq 35,000 \quad \text{vs.} \quad H_1: \mu < 35,000. \]

- We want to assess whether the average annual income of men in academia in the UK is the same as that of women at similar ranks. We test

\[ H_0: \mu_{\text{men}} = \mu_{\text{women}} \quad \text{vs.} \quad H_1: \mu_{\text{men}} \neq \mu_{\text{women}}.\]

- We want to determine whether the number of cars crossing a certain intersection follows a Poisson distribution or whether it is more likely to come from a geometric distribution. We test

\[ H_0: X \sim {\rm Po} (2) \quad \text{vs.} \quad H_1: X \sim {\rm Geom} (0.5).\]

Hypotheses where the distribution is completely specified are called simple hypotheses. For example, \(H_0\) and \(H_1\) in the car example and \(H_0\) in the gender wage example are all simple hypotheses.

Hypotheses where the distribution is not completely specified are called composite hypotheses. For example, \(H_0\) and \(H_1\) in the average annual income example and \(H_1\) in the gender wage example are all composite hypotheses.

Note that in the average annual income and gender wage examples, the null and alternative hypotheses cover all possibilities, whereas for the car example there are many other choices of distributions which could be hypothesized.

The conclusion of a hypothesis test

We will reject \(H_0\) if there is sufficient information in our sample to indicate that the null hypothesis cannot be true, and we thereby conclude that the alternative hypothesis is true.

We will not reject \(H_0\) if there is not sufficient information in the sample to refute our claim.

The remainder of this chapter is structured as follows. We define Type I and Type II errors, the two ways of reaching the wrong decision in a hypothesis test, together with the probabilities of making them. In Section 19.3 we show how to construct hypothesis tests, starting with tests for the mean of a normal distribution with known variance. This is extended to the case where the variance is unknown and to the case where we have two samples we want to compare. We introduce \(p\)-values, which give a measure of how likely (or unlikely) the observed data are if the null hypothesis is true. We then consider hypothesis testing in a wider range of scenarios.

19.2 Type I and Type II errors

Type I error

A Type I error occurs when one chooses to incorrectly reject a true null hypothesis.

A Type I error is also commonly referred to as a false positive.

Type II error

A Type II error occurs when one fails to reject a false null hypothesis.

A Type II error is also commonly referred to as a false negative.

Type I error and Type II error are summarised in the following decision table.

| | One accepts the Null | One rejects the Null |
|---|---|---|
| Null hypothesis is true | Correct Conclusion | Type I Error |
| Null hypothesis is false | Type II Error | Correct Conclusion |

Significance level

The significance level or size of the test is

\[ \alpha = P(\text{Type I error}) = P(\text{reject } H_0 \mid H_0 \text{ is true}). \]

Typical choices for \(\alpha\) are 0.01, 0.05 and 0.10.

Probability of Type II error

The probability of a Type II error is

\[ \beta = P(\text{Type II error}) = P(\text{do not reject } H_0 \mid H_0 \text{ is false}). \]

Consider the following properties of \(\alpha\) and \(\beta\):

- It can be shown that there is an inverse relationship between \(\alpha\) and \(\beta\); that is, as \(\alpha\) increases, \(\beta\) decreases and vice versa. Therefore, for a fixed sample size, one can only choose to control one of the types of error. In hypothesis testing we choose to control the Type I error, so we set up our hypotheses so that the “worse” error is the Type I error.

- The values of both \(\alpha\) and \(\beta\) depend on the value of the underlying parameter. Consequently, we can control \(\alpha\) by first choosing \(H_0\) to include an equality for the parameter, and then showing that the Type I error probability is largest at this point of equality. We may therefore take the size of the test to be the Type I error probability at this boundary value. To illustrate, in the average annual income example above,

\[\begin{align*}
\alpha &= P (\text{rejecting } H_0 \mid \mu = 35,000) \\
&\geq P(\text{rejecting } H_0 \mid \mu) \quad \text{for all } \mu \geq 35,000.
\end{align*}\]

Therefore \(H_0: \mu \geq 35,000\) is often just written as \(H_0: \mu = 35,000\).

- Since \(H_0\) specifies a single value of the parameter while \(H_1\) covers a range of values, \(H_1\) is a composite hypothesis. Consequently, \(\beta = P(\text{Type II error})\) is a function of the parameter value within the alternative parameter space.
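The claim that the Type I error probability is largest at the boundary \(\mu = \mu_0\) can be checked numerically. The sketch below evaluates \(P(\text{reject } H_0 \mid \mu)\) for a lower-tailed rule of the form “reject \(H_0\) if \(\bar{x} < c\)” (this rule is constructed formally as Test 1 in Section 19.3). It assumes \(\sigma\) is known and uses Python's standard-library `statistics.NormalDist` in place of R's `pnorm`/`qnorm`. The helper name `reject_prob_lower` and the values of \(\sigma\) and \(n\) are hypothetical and chosen only for illustration.

```python
from math import sqrt
from statistics import NormalDist

Z = NormalDist()  # standard normal N(0, 1)


def reject_prob_lower(mu, mu0, sigma, n, alpha):
    """P(reject H0 | true mean mu) for the rule 'reject if xbar < c'.

    The cut-off c = mu0 - z_alpha * sigma / sqrt(n) is chosen so that this
    probability equals alpha when mu = mu0 (sigma assumed known).
    """
    se = sigma / sqrt(n)
    c = mu0 - Z.inv_cdf(1 - alpha) * se
    return NormalDist(mu, se).cdf(c)  # P(Xbar < c) when Xbar ~ N(mu, se^2)


# Hypothetical values for the income example, H0: mu >= 35,000.
mu0, sigma, n, alpha = 35_000, 5_000, 50, 0.05

# Under H0 (mu >= mu0) the Type I error probability is largest at mu = mu0.
for mu in (35_000, 35_500, 36_000):
    print(mu, round(reject_prob_lower(mu, mu0, sigma, n, alpha), 4))

# Under H1 (mu < mu0) the same function gives the power, and beta = 1 - power.
for mu in (34_000, 33_000):
    power = reject_prob_lower(mu, mu0, sigma, n, alpha)
    print(mu, round(power, 4), round(1 - power, 4))
```

The first loop should print exactly \(\alpha = 0.05\) at \(\mu = 35{,}000\) and smaller values above it. The second loop shows that \(\beta\) depends on where \(\mu\) lies within the alternative parameter space.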

Power of a Test

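The power of the test is

\[ 1 - \beta = P(\text{reject } H_0 \mid H_0 \text{ is false}). \]

The power of a test can be thought of as the probability of making the correct decision when the null hypothesis is false.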

19.3 Tests for normal means, \(\sigma\) known

In this section we study a number of standard hypothesis tests that one might perform on a random sample.

We assume throughout this section that \(x_1, x_2, \ldots, x_n\) are i.i.d. samples from \(X\) with \(E[X]=\mu\), where \(\mu\) is unknown and \(\text{Var}(X) = \sigma^2\) is known.

Test 1: \(H_0: \mu = \mu_0\) vs. \(H_1: \mu < \mu_0\); \(\sigma^2\) known.

Watch Video 28 for the construction of Hypothesis Test 1.

Video 28: Hypothesis Test 1

A summary of the construction of Hypothesis Test 1 is given below.

Data assumptions. We assume either

- \(X_1,X_2,\dots,X_n\) are a random sample from a normal distribution with known variance \(\sigma^2\);

- The sample size \(n\) is sufficiently large so that we can assume \(\bar{X}\) is approximately normally distributed by the Central Limit Theorem, and that either the variance is known or that the sample variance \(s^2 \approx \sigma^2\).

Step 1: Choose a test statistic based upon the random sample for the parameter we want to base our claim on. For example, we are interested in \(\mu\) so we want to choose a good estimator of \(\mu\) as our test statistic. That is, \(\hat{\mu} = \bar{X}\).

Step 2: Specify a decision rule. The smaller \(\bar{X}\) is, the more the evidence points towards the alternative hypothesis \(\mu < \mu_0\). Therefore our decision rule is to reject \(H_0\) if \(\bar{X} < c\), where \(c\) is called the cut-off value for the test.

Step 3: Based upon the sampling distribution of the test statistic and the specified significance level of the test, solve for the cut-off value \(c\). Under \(H_0\), \(\bar{X} \sim N(\mu_0, \sigma^2/n)\) (exactly or approximately), so to find \(c\) we solve

\[ \alpha = P(\bar{X} < c \mid \mu = \mu_0) = P\left( Z < \frac{c-\mu_0}{\sigma/\sqrt{n}} \right), \]

where \(Z \sim N(0,1)\). Since \(P(Z < -z_\alpha) = \alpha\), where \(z_\alpha\) satisfies \(P(Z < z_\alpha) = 1 - \alpha\) and can be found using qnorm(1-alpha) in R or statistical tables, we have

\[ -z_\alpha = \frac{c-\mu_0}{\sigma/\sqrt{n}} \]

and \(c = \mu_0 -z_\alpha \cdot \frac{\sigma}{\sqrt{n}}\).

So, the decision rule is to reject \(H_0\) if \(\bar{X} < \mu_0 -z_\alpha \cdot \frac{\sigma}{\sqrt{n}}\) or, equivalently, \[Z = \frac{\bar{X} - \mu_0}{\sigma/\sqrt{n}} < -z_\alpha.\]
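Steps 1 to 3 can be sketched in a few lines of Python. The sketch computes the cut-off \(c\) and applies the rule in both of its equivalent forms, \(\bar{x} < c\) and \(Z < -z_\alpha\). `NormalDist().inv_cdf(1 - alpha)` plays the role of R's `qnorm(1-alpha)`. The function names are hypothetical helpers, not part of the notes. The example inputs borrow the coffee-machine numbers used in Example 19.3.1 below.

```python
from math import sqrt
from statistics import NormalDist


def lower_tail_cutoff(mu0, sigma, n, alpha):
    """Cut-off c = mu0 - z_alpha * sigma / sqrt(n) for H1: mu < mu0."""
    z_alpha = NormalDist().inv_cdf(1 - alpha)  # analogue of R's qnorm(1 - alpha)
    return mu0 - z_alpha * sigma / sqrt(n)


def lower_tail_decision(xbar, mu0, sigma, n, alpha):
    """Apply both forms of the Test 1 rule; they always give the same answer."""
    c = lower_tail_cutoff(mu0, sigma, n, alpha)
    z = (xbar - mu0) / (sigma / sqrt(n))
    z_alpha = NormalDist().inv_cdf(1 - alpha)
    return xbar < c, z < -z_alpha


c = lower_tail_cutoff(mu0=6.0, sigma=0.2, n=20, alpha=0.05)
print(round(c, 3))  # about 5.926
print(lower_tail_decision(5.94, 6.0, 0.2, 20, 0.05))
```

With \(\mu_0 = 6.0\), \(\sigma = 0.2\), \(n = 20\) and \(\alpha = 0.05\), a sample mean of \(5.94\) lies above \(c \approx 5.926\), so neither form of the rule rejects \(H_0\).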

Test 2: \(H_0: \mu = \mu_0\) vs. \(H_1: \mu > \mu_0\); \(\sigma^2\) known.

This is similar to the previous test, except the decision rule is to reject \(H_0\) if \(\bar{X} > \mu_0 + z_\alpha \frac{\sigma}{\sqrt{n}}\) or, equivalently, \[Z = \frac{\bar{X} - \mu_0}{\sigma/\sqrt{n}} > z_\alpha.\]

Note that both these tests are called one-sided tests, since the rejection region falls on only one side of the outcome space.

Test 3: \(H_0: \mu = \mu_0\) vs. \(H_1: \mu \neq \mu_0\); \(\sigma^2\) known.

The test statistic \(\bar{X}\) does not change but the decision rule will. The decision rule is to reject \(H_0\) if \(\bar{X}\) is sufficiently far (above or below) from \(\mu_0\). Specifically, reject \(H_0\) if \(\bar{X} < \mu_0 -z_{\alpha/2} \cdot \frac{\sigma}{\sqrt{n}}\) or \(\bar{X} > \mu_0 + z_{\alpha/2} \cdot \frac{\sigma}{\sqrt{n}}\). Equivalent to both of these is \[|Z| = \left| \frac{\bar{X}-\mu_0}{\sigma/\sqrt{n}} \right| > z_{\alpha/2}.\]

This is called a two-sided test because the rejection region consists of two disjoint intervals, one in each tail of the outcome space.

Coffee machine.

Suppose that a coffee machine is designed to dispense 6 ounces of coffee per cup with a standard deviation \(\sigma=0.2\), where we assume the amount of coffee dispensed is normally distributed. A random sample of \(n=20\) cups gives \(\bar{x}=5.94\). Test whether the machine is correctly filling the cups.

We test \(H_0: \mu = 6.0\) vs. \(H_1: \mu \neq 6.0\) at significance level \(\alpha = 0.05\).

Using a two-sided test with known variance, the decision rule is to reject \(H_0\) if \(|Z| = \left| \frac{\bar{x}-6.0}{0.2/\sqrt{20}} \right| > z_{0.05/2}= z_{0.025} = 1.96\). Now

\[ |Z| = \left| \frac{5.94-6.0}{0.2/\sqrt{20}} \right| = 1.34 < 1.96. \]

Therefore, we conclude that there is not enough statistical evidence to reject \(H_0\) at \(\alpha=0.05\).
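The arithmetic of this example can be reproduced with a small Python sketch of Test 3. `z_test_two_sided` is a hypothetical helper name. The critical value comes from `statistics.NormalDist` rather than from tables.

```python
from math import sqrt
from statistics import NormalDist


def z_test_two_sided(xbar, mu0, sigma, n, alpha):
    """Test 3: reject H0: mu = mu0 in favour of mu != mu0 if |Z| > z_{alpha/2}."""
    z = (xbar - mu0) / (sigma / sqrt(n))
    z_crit = NormalDist().inv_cdf(1 - alpha / 2)  # z_{0.025} is about 1.96
    return z, z_crit, abs(z) > z_crit


z, z_crit, reject = z_test_two_sided(xbar=5.94, mu0=6.0, sigma=0.2, n=20, alpha=0.05)
print(f"Z = {z:.2f}, z_crit = {z_crit:.2f}, reject H0: {reject}")
# Z = -1.34, z_crit = 1.96, reject H0: False
```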

19.4 \(p\) values

So far, once our sample has determined the outcome of a hypothesis test, we only report whether or not we reject \(H_0\) at a particular significance level \(\alpha\). Hence, when we report our conclusion, the reader does not know how sensitive our decision is to the choice of \(\alpha\).

To illustrate, in Example 19.3.1 (Coffee Machine) we would have reached the same conclusion, that there is not enough statistical evidence to reject \(H_0\) at \(\alpha=0.05\), if \(|Z| = 1.95\) rather than \(|Z| = 1.34\). However, if the significance level were \(\alpha = 0.10\), we would reject \(H_0\) if \(|Z| = 1.95 > z_{0.10/2} = 1.6449\), but we would not reject \(H_0\) if \(|Z| = 1.34 < z_{0.10/2} = 1.6449\).

Note that the choice of \(\alpha\) should be made before the test is performed; otherwise, we run the risk of inducing experimenter bias!

\(p\)-value

The \(p\)-value of a test is the probability of obtaining a test statistic at least as extreme as the one observed, given that \(H_0\) is true.

Equivalently, the \(p\)-value is the smallest significance level at which the observed value of the test statistic would lead us to reject \(H_0\). That is, it is the critical value of \(\alpha\) for the decision of the hypothesis test.

If we report the \(p\)-value as well as the conclusion of the test, then the reader can decide how sensitive our result is to the choice of \(\alpha\).

Coffee machine (continued).

Compute the \(p\) value for the test in Example 19.3.1.

In Example 19.3.1 (Coffee machine), we were given \(\bar{x}=5.94\), \(n=20\) and \(\sigma=0.2\). Our decision rule was to reject \(H_0\) if \(|Z| = \left| \frac{\bar{x}-6.0}{0.2/\sqrt{20}} \right| > z_{0.025}\).

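To compute the \(p\)-value for the test, assume \(H_0\) is true, that is, \(\mu=6.0\). We want to find

\[ p = P\left( |Z| > \left| \frac{5.94-6.0}{0.2/\sqrt{20}} \right| \right) = P(|Z| > 1.34) = 2P(Z > 1.34) = 2(1 - 0.9099) = 0.1802. \]

Consider the following remarks on Example 19.4.2.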

- The multiplication factor of 2 has arisen since we are computing the \(p\) value for a two-sided test, so there is an equal-sized rejection region at both tails of the distribution. For a one-tailed test we only need to compute the probability of rejecting in one direction.

- The \(p\) value implies that if we had chosen an \(\alpha\) of at least \(0.1802\) then we would have been able to reject \(H_0\).

- In applied statistics, the \(p\) value is interpreted as the sample providing:

\[ \begin{cases} \text{strong evidence against $H_0$}, & \text{if } p \leq 0.01, \\ \text{evidence against $H_0$}, & \text{if } p \leq 0.05, \\ \text{slight evidence against $H_0$}, & \text{if } p \leq 0.10, \\ \text{no evidence against $H_0$}, & \text{if } p > 0.10. \end{cases}\]
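The \(p\)-value calculation, including the factor of 2 for the two-sided case, can be written as a short Python sketch. It assumes the known-variance setting of Section 19.3. `z_test_p_value` and its `alternative` argument are hypothetical names, and `NormalDist().cdf` stands in for R's `pnorm`. Using the unrounded \(|Z| \approx 1.3416\) gives \(p \approx 0.1797\), slightly below the 0.1802 quoted above, which rounds \(|Z|\) to 1.34. The conclusion is the same either way.

```python
from math import sqrt
from statistics import NormalDist


def z_test_p_value(xbar, mu0, sigma, n, alternative="two-sided"):
    """p-value of the known-variance tests of Section 19.3."""
    z = (xbar - mu0) / (sigma / sqrt(n))
    Phi = NormalDist().cdf                # standard normal cdf, like R's pnorm
    if alternative == "less":             # Test 1, H1: mu < mu0
        return Phi(z)
    if alternative == "greater":          # Test 2, H1: mu > mu0
        return 1 - Phi(z)
    return 2 * (1 - Phi(abs(z)))          # Test 3, H1: mu != mu0


p = z_test_p_value(xbar=5.94, mu0=6.0, sigma=0.2, n=20)
print(round(p, 4))
```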

19.5 Tests for normal means, \(\sigma\) unknown

Assume \(X_1,X_2,\dots,X_n\) is a random sample from a normal distribution with unknown variance \(\sigma^2\).

Test 4: \(H_0: \mu = \mu_0\) vs. \(H_1: \mu < \mu_0\); \(\sigma^2\) unknown.

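The test statistic is

\[ T = \frac{\bar{X} - \mu_0}{s/\sqrt{n}} \sim t_{n-1} \quad \text{under } H_0, \]

where \(s^2\) is the sample variance.

Hence, the decision rule is to reject \(H_0\) if \(T < -t_{n-1,\alpha}\), where \(t_{n-1,\alpha}\) satisfies \(P(T > t_{n-1,\alpha}) = \alpha\). It can be found using the qt function in R, with \(t_{n-1,\alpha} =\) qt(1-alpha,n-1), or using statistical tables similar to the normal tables in Section 5.7. Therefore we reject \(H_0\) if \(\bar{X} < \mu_0 - t_{n-1,\alpha} \cdot \frac{s}{\sqrt{n}}\).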

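Test 5: \(H_0: \mu = \mu_0\) vs. \(H_1: \mu > \mu_0\); \(\sigma^2\) unknown.

The decision rule is to reject \(H_0\) if \(T = \frac{\bar{X}-\mu_0}{s/\sqrt{n}} > t_{n-1,\alpha}\).

Test 6: \(H_0: \mu = \mu_0\) vs. \(H_1: \mu \neq \mu_0\); \(\sigma^2\) unknown.

The decision rule is to reject \(H_0\) if \(|T| = \left| \frac{\bar{X}-\mu_0}{s/\sqrt{n}} \right| > t_{n-1,\alpha/2}\).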
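Tests 4 to 6 share the statistic \(T = (\bar{X}-\mu_0)/(s/\sqrt{n})\) and differ only in the rejection region. The sketch below uses a hypothetical helper, `one_sample_t`, to make that explicit. Python's standard library has no \(t\) quantile function, so the critical value is passed in by the caller, for example from R's `qt` or from tables. That is a design choice for this sketch, not part of the notes. The usage line takes the coffee-machine figures and \(t_{19,0.025} = 2.093\) from the example below.

```python
from math import sqrt


def one_sample_t(xbar, s, n, mu0, t_crit, alternative="two-sided"):
    """Tests 4-6 (sigma unknown): return (T, reject H0).

    t_crit is supplied by the caller: t_{n-1,alpha} for the one-sided tests
    or t_{n-1,alpha/2} for the two-sided test, e.g. from R's qt or from tables.
    """
    T = (xbar - mu0) / (s / sqrt(n))
    if alternative == "less":            # Test 4, H1: mu < mu0
        return T, T < -t_crit
    if alternative == "greater":         # Test 5, H1: mu > mu0
        return T, T > t_crit
    return T, abs(T) > t_crit            # Test 6, H1: mu != mu0


# Coffee machine with sigma unknown; t_{19,0.025} = 2.093 as quoted in the example.
T, reject = one_sample_t(xbar=5.94, s=0.1501, n=20, mu0=6.0, t_crit=2.093)
print(round(T, 2), reject)
```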

Coffee machine (continued).

Suppose that \(\sigma\) is unknown in Example 19.3.1, though we still assume the amount of coffee dispensed is normally distributed. A random sample of \(n=20\) cups gives mean \(\bar{x} = 5.94\) and sample standard deviation \(s = 0.1501\).

Test whether the machine is correctly filling the cups.

We test \(H_0: \mu = 6.0\) vs. \(H_1: \mu \neq 6.0\) at significance level \(\alpha = 0.05\).

The decision rule is to reject \(H_0\) if \(|T| = \left| \frac{\bar{x}-6.0}{0.1501/\sqrt{20}} \right| > t_{20-1,0.05/2}= t_{19,0.025} = 2.093\).

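Now

\[ |T| = \left| \frac{5.94-6.0}{0.1501/\sqrt{20}} \right| = 1.79 < 2.093, \]

so we do not reject \(H_0\) at \(\alpha = 0.05\).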

19.6 Confidence intervals and two-sided tests

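Consider the two-sided \(t\)-test of size \(\alpha\). We reject \(H_0\) if \(|T| = \left| \frac{\bar{X} - \mu_0}{s/\sqrt{n}} \right| > t_{n-1,\alpha/2}\). This implies we do not reject \(H_0\) if

\[ -t_{n-1,\alpha/2} \leq \frac{\bar{X} - \mu_0}{s/\sqrt{n}} \leq t_{n-1,\alpha/2}, \]

or, equivalently, if

\[ \bar{X} - t_{n-1,\alpha/2} \frac{s}{\sqrt{n}} \leq \mu_0 \leq \bar{X} + t_{n-1,\alpha/2} \frac{s}{\sqrt{n}}. \]

The interval \(\left( \bar{X} - t_{n-1,\alpha/2} \frac{s}{\sqrt{n}}, \bar{X} + t_{n-1,\alpha/2} \frac{s}{\sqrt{n}} \right)\) is a \(100(1-\alpha)\%\) confidence interval for \(\mu\). Consequently, if \(\mu_0\), the value of \(\mu\) under \(H_0\), falls within the \(100(1-\alpha)\%\) confidence interval for \(\mu\), then we will not reject \(H_0\) at significance level \(\alpha\).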

In general, there is a correspondence between the “acceptance region” of a statistical test of size \(\alpha\) and the related \(100(1-\alpha)\%\) confidence interval. In particular, we will not reject \(H_0 : \theta = \theta_0\) vs. \(H_1: \theta \neq \theta_0\) at level \(\alpha\) if and only if \(\theta_0\) lies within the \(100(1-\alpha)\%\) confidence interval for \(\theta\).

Coffee machine (continued).

For the coffee machine in Example 19.5.1 (Coffee machine - continued) we wanted to test \(H_0 : \mu = 6.0\) vs. \(H_1: \mu \neq 6.0\) at significance level \(\alpha = 0.05\). We were given a random sample of \(n=20\) cups with \(\bar{x} = 5.94\) and \(s^2 = 0.1501^2\).

Construct a \(95\%\) confidence interval for \(\mu\).

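The limits of a 95% confidence interval for \(\mu\) are

\[ \bar{x} \pm t_{19,0.025} \frac{s}{\sqrt{n}} = 5.94 \pm 2.093 \times \frac{0.1501}{\sqrt{20}} = 5.94 \pm 0.0702, \]

so the 95% confidence interval for \(\mu\) is

\[ (5.8698, 6.0102). \]

If we use the confidence interval to perform our test, we see that

\[ \mu_0 = 6.0 \in (5.8698, 6.0102), \]

so we will not reject \(H_0\) at \(\alpha =0.05\).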
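The correspondence between the two-sided \(t\)-test and the confidence interval can be checked numerically with a short Python sketch. `t_interval` is a hypothetical helper. As in the example, the critical value \(t_{19,0.025} = 2.093\) is supplied by hand.

```python
from math import sqrt


def t_interval(xbar, s, n, t_crit):
    """100(1 - alpha)% interval xbar -/+ t_{n-1,alpha/2} * s / sqrt(n)."""
    half_width = t_crit * s / sqrt(n)
    return xbar - half_width, xbar + half_width


lo, hi = t_interval(xbar=5.94, s=0.1501, n=20, t_crit=2.093)  # t_{19,0.025}
mu0 = 6.0
# Duality: the two-sided t-test of size alpha rejects H0 exactly when mu0 is outside.
print(f"({lo:.3f}, {hi:.3f})", "reject H0:", not (lo <= mu0 <= hi))
```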

19.7 Distribution of the variance

Thus far we have considered hypothesis testing for the mean but we can also perform hypothesis tests for the variance of a normal distribution. However, first we need to consider the distribution of the sample variance.

Suppose that \(Z_1, Z_2, \ldots, Z_n\) are i.i.d. \(N(0,1)\). Then we have shown in Section 14.2 that

\[ \sum_{i=1}^n Z_i^2 \sim \chi^2_n. \]
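A quick Monte Carlo check of this fact, not a proof, is sketched below. A \(\chi^2_n\) random variable has mean \(n\) and variance \(2n\), so simulated sums of \(n\) squared standard normals should have a sample mean and variance close to those values. The choice of \(n = 5\), 20,000 replications, the seed, and the helper name `simulate_sum_of_squares` are arbitrary assumptions for illustration.

```python
import random
from statistics import mean, variance


def simulate_sum_of_squares(n, reps=20_000, seed=1):
    """Return `reps` draws of Z_1^2 + ... + Z_n^2 with Z_i i.i.d. N(0, 1)."""
    rng = random.Random(seed)  # seeded so the run is reproducible
    return [sum(rng.gauss(0.0, 1.0) ** 2 for _ in range(n)) for _ in range(reps)]


draws = simulate_sum_of_squares(n=5)
# For chi-squared with 5 degrees of freedom, expect a mean near 5 and a variance near 10.
print(round(mean(draws), 2), round(variance(draws), 2))
```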

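This can be extended to show that, if \(X_1, X_2, \ldots, X_n\) is a random sample from \(N(\mu, \sigma^2)\) and \(s^2\) is the sample variance, then

\[ \frac{(n-1)s^2}{\sigma^2} = \frac{1}{\sigma^2} \sum_{i=1}^n (X_i - \bar{X})^2 \sim \chi^2_{n-1}. \]

Note that the degrees of freedom of the \(\chi^2\) distribution is \(n-1\): the number of observations \(n\), minus 1 for the estimation of \(\mu\) by \(\bar{X}\).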

It follows that

19.8 Other types of tests

Test 7: \(H_0: \sigma_1^2 = \sigma_2^2\) vs. \(H_1: \sigma_1^2 \neq \sigma_2^2\).

Let \(X_1,X_2,\dots,X_m \sim N(\mu_1, \sigma^2_1)\) and \(Y_1,Y_2,\dots,Y_n \sim N(\mu_2, \sigma^2_2)\) be two independent random samples from normal populations.

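The test statistic is \(F=\frac{s_1^2}{s_2^2}\), where \(s_1^2\) and \(s_2^2\) are the sample variances of the \(X\) and \(Y\) samples respectively. Under \(H_0\), \(F \sim F_{m-1,n-1}\), so we reject \(H_0\) at significance level \(\alpha\) if \(F < F_{m-1,n-1,1-\alpha/2}\) or \(F > F_{m-1,n-1,\alpha/2}\), where \(F_{m-1,n-1,\gamma}\) denotes the value with \(P(F > F_{m-1,n-1,\gamma}) = \gamma\). These two critical values can be found in R using qf(alpha/2,m-1,n-1) and qf(1-alpha/2,m-1,n-1) respectively. Alternatively, Statistical Tables can be used. For the latter you may need to use the identity

\[ F_{m-1,n-1,1-\alpha/2} = \frac{1}{F_{n-1,m-1,\alpha/2}} \]

to obtain the required values from the table.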
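A minimal Python sketch of Test 7 follows. The hypothetical helper `f_test_equal_variances` takes the two critical values as inputs, because the standard library has no \(F\) quantile function. The usage lines plug in the Blood bank summary statistics from Example 19.8.1 together with the table values \(F_{11,9,0.025} = 3.915\) and \(F_{9,11,0.025} = 3.588\) quoted in its solution. The lower critical value is obtained through the reciprocal identity.

```python
def f_test_equal_variances(s1_sq, s2_sq, f_lower, f_upper):
    """Test 7: return (F, reject H0: sigma_1^2 = sigma_2^2).

    f_lower = F_{m-1,n-1,1-alpha/2} and f_upper = F_{m-1,n-1,alpha/2} must be
    supplied by the caller (R: qf(alpha/2, m-1, n-1) and qf(1-alpha/2, m-1, n-1)).
    """
    F = s1_sq / s2_sq
    return F, F < f_lower or F > f_upper


# Blood bank data (Example 19.8.1), m = 10 and n = 12, with the table values
# F_{11,9,0.025} = 3.915 and F_{9,11,0.025} = 3.588 quoted in its solution.
f_lower = 1 / 3.915  # F_{9,11,0.975} via the reciprocal identity
F, reject = f_test_equal_variances(17.111, 9.454, f_lower, f_upper=3.588)
print(round(F, 2), reject)
```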

Test 8: \(H_0: \mu_1 = \mu_2\) vs. \(H_1: \mu_1 \neq \mu_2\); \(\sigma^2\) unknown.

Assume \(X_1,X_2,\dots,X_m \sim N(\mu_1, \sigma^2)\) and \(Y_1,Y_2,\dots,Y_n \sim N(\mu_2, \sigma^2)\) are two independent random samples with unknown but equal variance \(\sigma^2\).

Note that

- \((\bar{X} - \bar{Y}) \sim N \Big( (\mu_1 -\mu_2), \sigma^2 \left( \frac{1}{m} + \frac{1}{n} \right) \Big)\), which implies

\[\frac{(\bar{X} - \bar{Y}) - (\mu_1 - \mu_2) }{ \sqrt{ \sigma^2 \left( \frac{1}{m} + \frac{1}{n} \right) }} \sim N(0,1);\]

- \((m+n-2) \frac{s_p^2}{\sigma^2} \sim \chi_{m+n-2}^2\);

- \(s_p^2\) is independent of \(\bar{X} - \bar{Y}\),

where \(s_p^2 = \frac{(m-1)s_X^2 + (n-1)s_Y^2}{m+n-2}\) is the pooled sample variance.

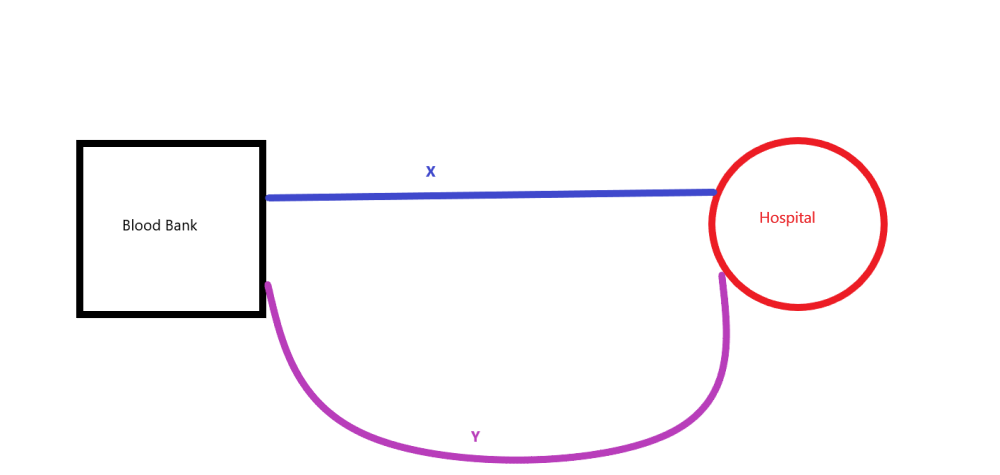

Blood bank.

Suppose that one wants to test whether the time it takes to get from a blood bank to a hospital via two different routes is the same on average. Independent random samples are selected from each of the different routes and we obtain the following information:

| Route | Sample size | Sample mean | Sample variance |
|---|---|---|---|
| X | \(m=10\) | \(\bar{x}=34\) | \(s_X^2 = 17.111\) |
| Y | \(n=12\) | \(\bar{y}=30\) | \(s_Y^2 = 9.454\) |

Figure 19.1: Routes from blood bank to hospital.

Test \(H_0: \mu_X = \mu_Y\) vs. \(H_1: \mu_X \neq \mu_Y\) at significance level \(\alpha=0.05\), where \(\mu_X\) and \(\mu_Y\) denote the mean travel times on routes X and Y, respectively.

Attempt Example 19.8.1: Blood bank and then watch Video 29 for the solutions.

Video 29: Blood bank

Solution to Example 19.8.1: Blood bank

Compute

- \(F = \frac{s_X^2}{s_Y^2} = \frac{17.111}{9.454} = 1.81\);

- \(F_{9,11,0.975} = \frac{1}{F_{11,9,0.025}} = \frac{1}{3.915} = 0.256\);

- \(F_{9,11,0.025} = 3.588\).

Hence \(F_{9,11,0.975} < F < F_{9,11,0.025}\), so we do not reject \(H_0\) at \(\alpha = 0.05\). Therefore it is reasonable to assume that the variances of the two populations are equal.

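Now we test \(H_0: \mu_X = \mu_Y\) vs. \(H_1: \mu_X \neq \mu_Y\) at significance level \(\alpha = 0.05\).

The decision rule is to reject \(H_0\) if

\[ |T| = \left| \frac{\bar{x} - \bar{y}}{s_p \sqrt{\frac{1}{m} + \frac{1}{n}}} \right| > t_{m+n-2,\alpha/2} = t_{20,0.025}. \]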
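A sketch of the pooled two-sample \(t\)-test (Test 8) on the Blood bank summary statistics follows. It computes \(s_p^2 = \frac{(m-1)s_X^2 + (n-1)s_Y^2}{m+n-2}\) and \(T = (\bar{x}-\bar{y})/(s_p\sqrt{1/m+1/n})\). The critical value \(t_{20,0.025} = 2.086\) is the standard table value (R: `qt(0.975, 20)`). It is not quoted in the written solution, so treat it as an input to check against your own tables. `pooled_t_test` is a hypothetical helper name. With these inputs \(|T| \approx 2.60\) exceeds 2.086, which would lead to rejecting \(H_0\) at the 5% level. Compare this with the worked solution in Video 29.

```python
from math import sqrt


def pooled_t_test(xbar, ybar, sx_sq, sy_sq, m, n, t_crit):
    """Test 8 (equal unknown variances): return (s_p^2, T, reject H0)."""
    sp_sq = ((m - 1) * sx_sq + (n - 1) * sy_sq) / (m + n - 2)  # pooled variance
    T = (xbar - ybar) / (sqrt(sp_sq) * sqrt(1 / m + 1 / n))
    return sp_sq, T, abs(T) > t_crit


# Blood bank summary statistics; t_{20,0.025} = 2.086 is taken from t tables.
sp_sq, T, reject = pooled_t_test(34, 30, 17.111, 9.454, m=10, n=12, t_crit=2.086)
print(f"s_p^2 = {sp_sq:.2f}, T = {T:.2f}, reject H0: {reject}")
```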

Test 9: \(H_0: \mu_1 = \mu_2\) vs. \(H_1: \mu_1 \neq \mu_2\); non-independent samples.

Suppose that we have two groups of observations \(X_1,X_2,\dots,X_n\) and \(Y_1,Y_2,\dots,Y_n\) where there is an obvious pairing between the observations, for example in before-and-after studies or when comparing different measuring devices. This means the samples are no longer independent.

An equivalent hypothesis test to the one stated is \(H_0: \mu_d = \mu_1 - \mu_2 = 0\) vs. \(H_1: \mu_d = \mu_1 - \mu_2 \neq 0\). With this in mind define \(D_i = X_i - Y_i\) for \(i=1,\dots,n\), and assume \(D_1,D_2,\dots,D_n \sim N(\mu_d, \sigma_d^2)\) and are i.i.d.

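The decision rule is to reject \(H_0\) if

\[ |T| = \left| \frac{\bar{D}}{s_D/\sqrt{n}} \right| > t_{n-1,\alpha/2}, \]

where \(\bar{D}\) and \(s_D^2\) are the sample mean and sample variance of \(D_1, D_2, \dots, D_n\).

Drug Trial.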

In a medical study of patients given a drug and a placebo, sixteen patients were paired up, with the members of each pair having a similar age and being the same sex. One member of each pair received the drug and the other received the placebo. The response score for each patient was recorded.

| Pair Number | \(1\) | \(2\) | \(3\) | \(4\) | \(5\) | \(6\) | \(7\) | \(8\) |
|---|---|---|---|---|---|---|---|---|
| Given Drug | \(0.16\) | \(0.97\) | \(1.57\) | \(0.55\) | \(0.62\) | \(1.12\) | \(0.68\) | \(1.69\) |
| Given Placebo | \(0.11\) | \(0.13\) | \(0.77\) | \(1.19\) | \(0.46\) | \(0.41\) | \(0.40\) | \(1.28\) |

Are the responses for the drug and placebo significantly different?

This is a “matched-pair” problem, since we expect a relation between the values of each pair. The difference within each pair is

| Pair Number | \(1\) | \(2\) | \(3\) | \(4\) | \(5\) | \(6\) | \(7\) | \(8\) |
|---|---|---|---|---|---|---|---|---|
| \(\mathbf{D_i=x_i-y_i}\) (drug minus placebo) | \(0.05\) | \(0.84\) | \(0.80\) | \(-0.64\) | \(0.16\) | \(0.71\) | \(0.28\) | \(0.41\) |

We consider the \(D_i\)’s to be a random sample from \(N(\mu_D, \sigma_D^2)\). We can calculate that \(\bar{D} = 0.326\), \(s_D^2 = 0.24\) so \(s_D = 0.49\).

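To test \(H_0: \mu_D = 0\) vs \(H_1: \mu_D \neq 0\), the decision rule is to reject \(H_0\) if \(|T| = \left| \frac{\bar{D}}{s_D/\sqrt{n}} \right| > t_{n-1,\alpha/2}\). Here

\[ |T| = \frac{0.326}{0.49/\sqrt{8}} = 1.88. \]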

Now \(t_{7,0.05} = 1.895\), so we would not reject \(H_0\) at the \(10\%\) level (just).
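The matched-pair calculation can be reproduced from the table with a short Python sketch. Here \(x_i\) is taken to be the drug response and \(y_i\) the placebo response, which matches the tabulated differences. `statistics.stdev` uses the \(n-1\) divisor, so it corresponds to \(s_D\). The critical value \(t_{7,0.05} = 1.895\) is the one quoted above, for a two-sided test at the 10% level.

```python
from math import sqrt
from statistics import mean, stdev

drug = [0.16, 0.97, 1.57, 0.55, 0.62, 1.12, 0.68, 1.69]
placebo = [0.11, 0.13, 0.77, 1.19, 0.46, 0.41, 0.40, 1.28]

# Test 9: work with the within-pair differences D_i = x_i - y_i (drug - placebo).
d = [x - y for x, y in zip(drug, placebo)]
n = len(d)
d_bar, s_d = mean(d), stdev(d)  # stdev uses the n - 1 divisor, like s_D
T = d_bar / (s_d / sqrt(n))

t_crit = 1.895  # t_{7,0.05}, quoted in the text, for a two-sided test at 10%
print(f"D-bar = {d_bar:.3f}, s_D = {s_d:.2f}, T = {T:.2f}, reject: {abs(T) > t_crit}")
```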

19.9 Sample size calculation

We have noted that, for a given sample size \(n\), if we decrease the Type \(I\) error \(\alpha\) then we increase the Type \(II\) error \(\beta\), and vice versa.

To control both Type \(I\) and Type \(II\) errors, that is, to ensure that \(\alpha\) and \(\beta\) are both sufficiently small, we need to choose an appropriate sample size \(n\).

Sample size calculations are appropriate when we have two simple hypotheses to compare. For example, we have a random variable \(X\) with unknown mean \(\mu = E[X]\) and known variance \(\sigma^2 = \text{Var} (X)\). We compare the hypotheses:

- \(H_0: \mu = \mu_0\),

- \(H_1: \mu = \mu_1\).

Without loss of generality we will assume that \(\mu_0 < \mu_1\).

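Suppose that \(x_1, x_2, \ldots, x_n\) represent i.i.d. samples from \(X\). Then by the central limit theorem

\[ \bar{X} \approx N\left(\mu, \frac{\sigma^2}{n}\right), \]

so a test of size \(\alpha\) rejects \(H_0\) if

\[ \bar{X} > \mu_0 + z_\alpha \frac{\sigma}{\sqrt{n}}. \]

Note that as \(n\) increases, the cut-off for rejecting \(H_0\) decreases towards \(\mu_0\).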

We now consider the choice of \(n\) to ensure that the Type \(II\) error is at most \(\beta\), or equivalently, that the power of the test is at least \(1-\beta\).

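The power of the test is

\[ 1 - \beta = P\left( \bar{X} > \mu_0 + z_\alpha \frac{\sigma}{\sqrt{n}} \,\Big|\, \mu = \mu_1 \right) = P\left( Z > z_\alpha - \frac{(\mu_1 - \mu_0)\sqrt{n}}{\sigma} \right). \]

Lemma 19.9.1 (Sample size calculation) gives the smallest sample size \(n\) that bounds the Type \(I\) and \(II\) errors by \(\alpha\) and \(\beta\) in the case where the variance \(\sigma^2\) is known.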

Sample size calculation.

Suppose that \(X\) is a random variable with unknown mean \(\mu\) and known variance \(\sigma^2\).

The required sample size, \(n\), to ensure significance level \(\alpha\) and power \(1-\beta\) for comparing hypotheses:

- \(H_0: \mu = \mu_0\)

- \(H_1: \mu = \mu_1\)

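is the smallest integer \(n\) satisfying \[ n \geq \left( \frac{\sigma}{\mu_1 - \mu_0} (z_\alpha - z_{1-\beta}) \right)^2, \] where \(P(Z > z_\alpha) = \alpha\) and \(P(Z > z_{1-\beta}) = 1-\beta\).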

The details of the proof of Lemma 19.9.1 (Sample size calculation) are provided but can be omitted.

Proof of Sample Size calculations.

Note:

- We need larger \(n\) as \(\sigma\) increases. (More variability in the observations.)

- We need larger \(n\) as \(\mu_1 - \mu_0\) gets closer to 0. (Harder to detect a small difference in mean.)

- Since \(\alpha, \beta < 0.5\), we have \(z_{\alpha} > 0\) and \(z_{1-\beta} < 0\). Hence \(z_{\alpha} - z_{1-\beta}\) becomes larger as \(\alpha\) and \(\beta\) decrease. (Smaller errors require larger \(n\).)
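Lemma 19.9.1 and the power formula above can be sketched in Python as a text-only counterpart to the app below. Following the convention \(P(Z > z_{1-\beta}) = 1-\beta\), the value \(z_{1-\beta}\) is computed as `NormalDist().inv_cdf(beta)`, which is negative for \(\beta < 0.5\). The helper names and the inputs (detecting a shift of half a standard deviation with \(\alpha = 0.05\) and power 0.8) are hypothetical and chosen only for illustration.

```python
from math import ceil, sqrt
from statistics import NormalDist

Z = NormalDist()  # standard normal N(0, 1)


def power(n, mu0, mu1, sigma, alpha):
    """P(Xbar > mu0 + z_alpha * sigma / sqrt(n) | mu = mu1), for mu0 < mu1."""
    z_alpha = Z.inv_cdf(1 - alpha)
    return 1 - Z.cdf(z_alpha - (mu1 - mu0) * sqrt(n) / sigma)


def sample_size(mu0, mu1, sigma, alpha, beta):
    """Smallest integer n >= (sigma * (z_alpha - z_{1-beta}) / (mu1 - mu0))^2."""
    z_alpha = Z.inv_cdf(1 - alpha)  # P(Z > z_alpha) = alpha
    z_1_beta = Z.inv_cdf(beta)      # P(Z > z_{1-beta}) = 1 - beta
    return ceil((sigma * (z_alpha - z_1_beta) / (mu1 - mu0)) ** 2)


# Hypothetical inputs: detect a shift of half a standard deviation.
n = sample_size(mu0=0.0, mu1=0.5, sigma=1.0, alpha=0.05, beta=0.2)
print(n, round(power(n, 0.0, 0.5, 1.0, 0.05), 3), round(power(n - 1, 0.0, 0.5, 1.0, 0.05), 3))
```

The printed powers show that \(n\) reaches the target power of \(1-\beta = 0.8\) while \(n-1\) falls just short, which is what "smallest sample size" means in the lemma.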

The following R Shiny App lets you explore the effect of \(\mu_1 - \mu_0\), \(\sigma\) and \(\alpha\) on the sample size \(n\) or power \(1-\beta\).

R Shiny app: Sample size calculation app

Task: Session 10

Attempt the R Markdown file for Session 10:

Session 10: Confidence intervals and hypothesis testing

Student Exercises

Attempt the exercises below.

Note that throughout the exercises, for a random variable \(X\) and \(0 <\beta <1\), \(c_\beta\) satisfies \(\mathrm{P} (X > c_\beta) = \beta\).

Eleven bags of sugar, each nominally containing 1 kg, were randomly selected from a large batch. The weights of sugar were:

You may assume these values are from a normal distribution.

- Calculate a \(95\%\) confidence interval for the mean weight for the batch.

- Test the hypothesis \(H_0: \mu=1\) vs \(H_1: \mu \neq 1\). Give your answer in terms of a p-value.

Note that

Solution to Exercise 19.1.

Hence, the sample standard deviation is \(s = \sqrt{0.00092} = 0.03033\).

- The \(95\%\) confidence interval for the mean is given by \(\bar{x} \pm t_{n-1,0.025}s/\sqrt{n}\). Now \(t_{10,0.025} = 2.2281\). Hence the confidence interval is

\[\begin{eqnarray*} 1.04 \pm 2.2281 \left(\frac{0.03033}{\sqrt{11}}\right) = 1.04 \pm 0.0204 = (1.0196, 1.0604) \end{eqnarray*}\]

- The population variance is unknown so we apply a \(t\) test with test statistic

\[ t= \frac{ \bar{x} - \mu_0 }{ s/\sqrt{n}} = \frac{1.04 - 1}{0.03033/\sqrt{11}} = 4.3741\]

and \(n-1 = 10\) degrees of freedom. The \(p\) value is \(P(|t_{10}|> 4.3741)\). From the critical values given, \(P(t_{10}> 4.1437)=0.001\) and \(P(t_{10}>4.5869)=0.0005\), so \(0.0005 < P(t_{10}> 4.3741) < 0.001\). Hence \(0.001 < p < 0.002\). Therefore, there is strong evidence that \(\mu \neq 1\).
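The arithmetic of this solution can be checked with a short Python sketch. The raw weights are not reproduced here, so it starts from the summary values used in the solution (\(\bar{x} = 1.04\), \(s = 0.03033\), \(n = 11\)) and the quoted values \(t_{10,0.025} = 2.2281\), 4.1437 and 4.5869.

```python
from math import sqrt

xbar, s, n = 1.04, 0.03033, 11  # summary values used in the solution
mu0 = 1.0
t_crit = 2.2281                  # t_{10,0.025}, as quoted in the solution

half_width = t_crit * s / sqrt(n)
ci = (xbar - half_width, xbar + half_width)
t_stat = (xbar - mu0) / (s / sqrt(n))
print(f"95% CI: ({ci[0]:.4f}, {ci[1]:.4f}), t = {t_stat:.4f}")

# Bracket the p-value with the quoted critical values of t_10:
# P(t_10 > 4.1437) = 0.001 and P(t_10 > 4.5869) = 0.0005.
if 4.1437 < t_stat < 4.5869:
    print("two-sided p-value lies between 0.001 and 0.002")
```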

Random samples of 13 and 11 chicks, respectively, were given from birth a protein supplement, either oil meal or meat meal. The weights of the chicks when six weeks old are recorded and the following sample statistics obtained:

- Carry out an \(F\)-test to examine whether or not the groups have significantly different variances.

- Calculate a \(95\%\) confidence interval for the difference between weights of 6-week-old chicks on the two diet supplements.

- Do you consider that the supplements have a significantly different effect? Justify your answer.

Note that

\(F_{10,12}\):

Solution to Exercise 19.2.

We regard the data as being from two independent normal distributions with unknown variances.

- F-test: \(H_0: \sigma_1^2 = \sigma_2^2\) vs. \(H_1: \sigma_1^2 \ne \sigma_2^2\).

We reject \(H_0\) if

\[F = \frac{s_1^2}{s_2^2} > F_{n_1-1,n_2-1,\alpha/2} \text{ or } F = \frac{s_1^2}{s_2^2} < F_{n_1-1,n_2-1,1-\alpha/2} = \frac{1}{F_{n_2-1,n_1-1,\alpha/2}}. \] Now, \(F_{12,10,0.025} = 3.6209\) and \(F_{12,10,0.975} = \frac{1}{F_{10,12,0.025}} = \frac{1}{3.3736} = 0.2964\). From the data \(F = s_1^2/s_2^2 = 0.7158\), which lies between these two values, so we do not reject \(H_0\). There is no evidence against equal population variances.

- Assume \(\sigma_1^2 = \sigma_2^2 = \sigma^2\) (unknown). The pooled estimate of the common variance \(\sigma^2\) is

\[s_p^2 = \frac{(n_1 -1)s_1^2 + (n_2-1)s_2^2}{n_1+n_2-2} = 3453.75,\] so \(s_p = 58.77\). The 95% confidence limits for \(\mu_1-\mu_2\) are

\[\begin{eqnarray*} \bar{x}_1 - \bar{x}_2 \pm t_{22, 0.025} s_p \sqrt{ \frac{1}{n_1}+\frac{1}{n_2} } &=& (247.9 - 275.5) \pm (2.0739 \times 0.4097 \times 58.77) \\ &=& -27.6 \pm 49.9355. \end{eqnarray*}\] So the interval is \((-77.5355,22.3355)\).

- Since the confidence interval in (b) includes zero (where \(\mu_1 -\mu_2 = 0\), \(\mu_1 =\mu_2\)) we conclude that the diet supplements do not have a significantly different effect (at \(5\%\) level).
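The confidence interval in (b) can be recomputed from the quantities in the solution (\(\bar{x}_1 = 247.9\), \(\bar{x}_2 = 275.5\), \(s_p = 58.77\), \(t_{22,0.025} = 2.0739\)) with the following Python sketch. Using the unrounded value of \(\sqrt{1/13 + 1/11}\) instead of 0.4097 gives a half-width of about 49.93 rather than 49.9355. The interval still contains zero.

```python
from math import sqrt

n1, n2 = 13, 11
xbar1, xbar2 = 247.9, 275.5
sp = 58.77       # pooled standard deviation from the solution
t_crit = 2.0739  # t_{22,0.025}

half_width = t_crit * sp * sqrt(1 / n1 + 1 / n2)
diff = xbar1 - xbar2
ci = (diff - half_width, diff + half_width)
print(f"({ci[0]:.2f}, {ci[1]:.2f}); contains 0: {ci[0] < 0 < ci[1]}")
```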

A random sample of 12 car drivers took part in an experiment to find out if alcohol increases the average reaction time. Each driver’s reaction time was measured in a laboratory before and after drinking a specified amount of alcoholic beverage. The reaction times were as follows:

Let \(\mu_B\) and \(\mu_A\) be the population mean reaction time, before and after drinking alcohol.

- Test \(H_0: \mu_B = \mu_A\) vs. \(H_1: \mu_B \neq \mu_A\) assuming the two samples are independent.

- Test \(H_0: \mu_B = \mu_A\) vs. \(H_1: \mu_B \neq \mu_A\) assuming the two samples contain ‘matched pairs’.

- Which of the tests in (a) and (b) is more appropriate for these data, and why?

Note that

and the critical values for \(t_{22}\) are given above in Exercise 19.2.

Solution to Exercise 19.3.

- The summary statistics of the reaction times before alcohol are \(\bar{x} = 0.7275\) and \(s_x^2 = 0.0103\). Similarly the summary statistics after alcohol are \(\bar{y} = 0.775\) and \(s_y^2 = 0.0088\). Assuming both samples are from normal distributions with the same variance, the pooled variance estimator is

\[ s_p^2 = \frac{(n-1)s_x^2 + (n-1)s_y^2}{2(n-1)} = 0.0096. \] The null hypothesis is rejected at \(\alpha=0.05\) if

\[ t = \left| \frac{\bar{x}-\bar{y}}{s_p\sqrt{\frac{1}{n}+\frac{1}{n}}} \right| > t_{22,0.025} = 2.0739.\] From the data,

\[ t = \left| \frac{0.7275 - 0.775}{ 0.098 \sqrt{ \frac{2}{12}} } \right| = 1.1873 \] Hence, the null hypothesis is not rejected. There is no significant difference between the reaction times.

- The difference in reaction time for each driver is

\[ \mbox{after}-\mbox{before}=(0.05,-0.02,0.10,0.07,0.05,0.15,0.04,0.00,0.09,0.06,0.00,-0.02) \] The sample mean and standard deviation of the differences are \(\bar{d} = 0.0475\) and \(s_d = 0.0517\). Assuming the differences are samples from a normal distribution, the null hypothesis is rejected at \(\alpha = 0.05\) if

\[ t = \left| \frac{\bar{d}}{s_d/\sqrt{12}} \right| > t_{11,0.025} = 2.201.\] From the data,

\[ t = \left| \frac{0.0475}{ 0.0517/\sqrt{12}} \right| = 3.1827. \] Hence, the null hypothesis is rejected. There is a significant difference between the reaction times.

- The matched pair test in (b) is more appropriate. By recording each driver’s reaction time before and after, and looking at the difference for each driver we are removing the driver effect. The driver effect says that some people are naturally slow both before and after alcohol, others are naturally quick. By working with the difference we have removed this factor.
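The contrast between (a) and (b) can be reproduced in Python from the differences and summary statistics listed in the solution. With unrounded intermediate values the paired statistic is about 3.18 and the independent-samples statistic is about 1.19. These differ slightly from 3.1827 and 1.1873 because the solution rounds \(s_d\) and \(s_p\), but the conclusions are unchanged.

```python
from math import sqrt
from statistics import mean, stdev

# Differences after - before for the 12 drivers, as listed in the solution.
d = [0.05, -0.02, 0.10, 0.07, 0.05, 0.15, 0.04, 0.00, 0.09, 0.06, 0.00, -0.02]
n = len(d)
d_bar, s_d = mean(d), stdev(d)
t_paired = d_bar / (s_d / sqrt(n))  # n = 12 differences, 11 degrees of freedom

# Two-sample version using the summary statistics quoted in part (a).
xbar, ybar, sx_sq, sy_sq = 0.7275, 0.775, 0.0103, 0.0088
sp = sqrt(((n - 1) * sx_sq + (n - 1) * sy_sq) / (2 * n - 2))
t_indep = (xbar - ybar) / (sp * sqrt(2 / n))

print(f"paired t = {t_paired:.2f} (vs 2.201), independent |t| = {abs(t_indep):.2f} (vs 2.0739)")
```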