Note 9.1: Different chapter structure, especially in EDA (Section 9.4)

As I have already read some books on linear regression I will not follow exactly the text in this chapter. I will leave out those passages that are not new for me and where I feel confident. Other passages I will only summarize to have content to consult whenever I would need it.

9.1 Achievements to unlock

Objectives for chapter 09

SwR Achievements

Achievement 1: Using exploratory data analysis to learn about the data before developing a linear regression model (Section 9.4)

Achievement 2: Exploring the statistical model for a line (Section 9.5)

Achievement 3: Computing the slope and intercept in a simple linear regression (Section 9.6)

Achievement 5: Model significance and model fit (Section 9.8)

Achievement 6: Checking assumptions and conducting diagnostics (Section 9.9)

Achievement 7: Adding variables to the model and using transformation (Section 9.10)

Objectives 9.1: Achievements for chapter 09

9.2 The needle exchange examination

Some infectious diseases like HIV and Hepatitis C are on the rise again with young people in non-urban areas having the highest increases and needle-sharing being a major factor. Clean needles are distributed by syringe services programs (SSPs), which can also provide a number of other related services including overdose prevention, referrals for substance use treatment, and infectious disease testing. But even there are programs in place — which is not allowed legally in some US states! — some people have to travel long distances for health services, especially for services that are specialized, such as needle exchanges.

In discussing the possible quenstion one could analyse it turned out that for some questions critical data are missing:

There is a distance-to-syringe-services-program variable among the health services data sources of amfAR (https://opioid.amfar.org/).

Many of the interesting variables were not available for much of the nation, and many of them were only at the state level.

Given these limitations, the book focuses whether the distance to a syringe program could be explained by

whether a county is urban or rural,

what percentage of the county residents have insurance (as a measure of both access to health care and socioeconomic status [SES]),

HIV prevalence,

and the number of people with opioid prescriptions.

As there is no variable for rural or urban status in the amfAR database, the book will tale a variable from the U.S. Department of Agriculture Economic Research Services website (https://www.ers.usda.gov/data-products/county-typology-codes/) that classifies all counties as metro or non-metro.

9.3 Resources & Chapter Outline

9.3.1 Data, codebook, and R packages

Resource 9.1 : Data, codebook, and R packages for learning about descriptive statistics

Data

Two options for accessing the data:

Download the clean data set dist_ssp_amfar_ch9.csv from https://edge.sagepub.com/harris1e.

Follow the instructions in Box 9.1 to import, merge, and clean the data from multiple files or from the original online source

Codebook

Two options for accessing the codebook:

Download the codebook file opioid_county_codebook.xlsx from https://edge.sagepub.com/harris1e.

Use the online codebook from the amfAR Opioid & Health Indicators Database website (https://opioid.amfar.org/)

Packages

Packages used with the book (sorted alphabetically)

{broom}, Section A.3 (David Robinson and Alex Hayes)

I will use the data file provided by the book because I am feeling quite confident with reading and recoding the original data. But I will change the columns names so that the variable names conform to the tidyverse style guide.

## run only once (manually)distance_ssp<-readr::read_csv("data/chap09/dist_ssp_amfar_ch9.csv", show_col_types =FALSE)distance_ssp_clean<-distance_ssp|>dplyr::rename( state ="STATEABBREVIATION", dist_ssp ="dist_SSP", hiv_prevalence ="HIVprevalence", opioid_rate ="opioid_RxRate", no_insurance ="pctunins")|>dplyr::mutate( state =forcats::as_factor(state), metro =forcats::as_factor(metro))|>dplyr::mutate( hiv_prevalence =dplyr::na_if( x =hiv_prevalence, y =-1))save_data_file("chap09", distance_ssp_clean, "distance_ssp_clean.rds")

For the exploratory data analysis I need more details about the association between the distance to the next SSP separated for people living in metro and non-metro areas. See

WATCH OUT! Do missing values have a pattern?

We know from Table 9.1 that the variable hiv_prevalence has many missing values. In all the forthcoming analyses we will remove those 70 NAs and work with complete cases. 70 NA’s in a sample of 500 is with 14% a big proportion from the available data. The question arises: Is there a reason why there are so many missing values? Could it be that this reason is distorting our analysis?

Most of the time I have provided code that suppresses these warnings. This is a dangerous enterprise as it could bias results and conclusions without knowledge of the researcher. I think that a more prudent approach would need an analysis of the missing values. I do not know how to do this yet, but with {naniar} (Section A.52) there is a package for exploring missing data structures. Its website and package has several vignettes to learn its functions and there is also an scientific article about the package (Tierney and Cook 2023).

Exploring missing data structures is in the book no planned achievement, therefore it is here enough to to get rid of the NA’s and to follow the books outline. But I am planning coming back to this issue and learn how to address missing data structures appropriately.

9.4 Achievement 1: Explorative Data Analysis

9.4.1 Introduction

Instead following linearly the chapter I will try to compute my own EDA. I will try three different method:

Manufacturing the data and graphs myself. Writing own functions and using {tidyverse} packages to provide summary plots and statistics.

Experimenting with {GGally}, an extension package to {ggplot2} where one part (GGally::ggpairs()) is the equivalent to the base R graphics::pairs() function.

9.4.2 Steps for EDA

I will apply the following steps:

Procedure 9.1 : Some useful steps to explore data for regression analysis

Order and completeness of the following tasks is not mandatory.

Browse the data:

RStudio Data Explorer: I am always using the data explorer in RStudio to get my first impression of the data. Although this step is not reproducible it forms my frame of mind what EDA steps I should follow and if there are issues I need especially to care about.

Skim data: Look at the data with skimr::skim() to get a holistic view of the data: names, data types, missing values, ordered (categorical) minimum, maximum, mean, sd, distribution (numerical).

Read the codebook: It is important to understand what the different variables mean.

Check structure: Examine with utils::str() if the dataset has special structures, e.g. labelled data, attributes etc.

Glimpse actual data: To get a feeling about data types and actual values use dplyr::glimpse().

Glance at example rows: As an alternative of utils::head() / utils::tails() get random row examples including first and last row of the dataset with my own function my_glance_data().

Check normality assumption:

Draw histograms of numeric variables: To get a better idea I have these histograms overlaid with the theoretical normal distributions and the density plots of the current data. The difference between these two curves gives a better impression if normality is met or not.

Draw Q-Q plots of numeric variables: Q-Q plots gives even a more detailed picture if normality is met.

Check homogeneity assumption: If the normality assumption is not met, then test if the homogeneity of variance assumption between groups is met with Levene’s test or with the more robust Fligner-Killeen’s test. In the following steps use always median instead of mean and do not compute the Pearson’s r but the Spearman’s rho coefficient.

Compute correlation coefficient: Apply either Pearson’s r or the Spearman’s rho coefficient.

Explore categorical data with box plots or violin plots: Box plots work well between a numerical and categorical variable. You could also overlay the data and violin plots to maximize the information in one single graph (see Graph 9.4).

There are packages like {GGally} and {ggfortify} (see Section A.25 and Section A.26) that provide a graphical and statistical representation of all combinations of bivariate relationships. They can be used as convenient shortcuts to many of the task listed here above.

9.4.3 Executing EDA for chapter 9

Example 9.2 : Explorative Data Analysis for chapter 9

skimr::skim() with Table 9.1 is a much better alternative! The second version of {tableone} in the book with the median instead of the mean is not necessary because it is in skimr::skim() integrated.

R Code 9.5 : Histograms of numeric variables

Code

hist_distance<-my_hist_dnorm( df =distance_ssp_clean, v =distance_ssp_clean$dist_ssp, n_bins =30, x_label ="Nearest syringe services program in miles")hist_hiv<-my_hist_dnorm( df =distance_ssp_clean, v =distance_ssp_clean$hiv_prevalence, n_bins =30, x_label ="People with diagnosed HIV per 100,000")hist_opioid<-my_hist_dnorm( df =distance_ssp_clean, v =distance_ssp_clean$opioid_rate, n_bins =30, x_label ="Opioid prescriptions per 100 people")hist_insurance<-my_hist_dnorm( df =distance_ssp_clean, v =distance_ssp_clean$no_insurance, n_bins =30, x_label ="Percentage with no health insurance coverage")gridExtra::grid.arrange(hist_distance, hist_hiv, hist_opioid, hist_insurance, nrow =2)

Graph 9.1: Histograms for numeric variables of chapter 9

I developed a function where I can overlay the theoretical normal distribution and the density of the current data. The difference between the two curves gives an indication if we have a normal distribution.

From our data we see that the biggest difference is between SPP distance and HIV prevalence. This right skewed distribution could also be detected from other indicator already present in the skimr::skim()view of Table 9.1: - The small histogram on the right is the most right skewed distribution. - The standard deviation of hiv_prevalence is the only one, that is bigger than the mean of the variable. - There is a huge difference between mean and the median (p50) where the mean is much bigger than the median (= right skewed distribution), e.g. there is a long tail to the right as can also be seen in the tiny histogram.

Aside from hiv_prevalence the variable distance_ssp is almost equally right skewed. The situation seems better for the rest of the numeric variables. But let’s manufacture Q-Q plots for all of them to see more in detail if they are normally distributed or not.

R Code 9.6 : Q-Q plots of numeric variables

Code

qq_distance<-my_qq_plot( df =distance_ssp_clean, v =distance_ssp_clean$dist_ssp, col_qq ="Distance to SSP")qq_hiv<-my_qq_plot( df =distance_ssp_clean, v =distance_ssp_clean$hiv_prevalence, col_qq ="HIV diagnosed")qq_opioid<-my_qq_plot( df =distance_ssp_clean, v =distance_ssp_clean$opioid_rate, col_qq ="Opioid prescriptions")qq_insurance<-my_qq_plot( df =distance_ssp_clean, v =distance_ssp_clean$no_insurance, col_qq ="Health insurance")gridExtra::grid.arrange(qq_distance, qq_hiv, qq_opioid, qq_insurance, nrow =2)

Graph 9.2: Q-Q plots for numeric variables of chapter 9

It turned out that all four numeric variables are not normally distributed. Some of them looked in the histograms quite OK, because the differences to the normal distribution on the lower and upper end of the data compensate each other.

Testing normality with Shapiro-Wilk or Anderson-Darling test will show that they are definitely not normally distributed.

R Code 9.7 : Normality checking with Shapiro-Wilk & Anderson-Darling tests

Table 9.3: Testing normality with Shapiro-Wilk & Anderson-Darling tests

#> # A tibble: 8 × 4

#> variable statistic p.value method

#> <chr> <dbl> <dbl> <chr>

#> 1 dist_ssp 0.874 1.10e-19 Shapiro-Wilk normality test

#> 2 hiv_prevalence 0.649 8.72e-29 Shapiro-Wilk normality test

#> 3 opioid_rate 0.938 1.55e-13 Shapiro-Wilk normality test

#> 4 no_insurance 0.946 1.85e-12 Shapiro-Wilk normality test

#> 5 dist_ssp 18.0 3.7 e-24 Anderson-Darling normality test

#> 6 hiv_prevalence 37.0 3.7 e-24 Anderson-Darling normality test

#> 7 opioid_rate 2.68 9.14e- 7 Anderson-Darling normality test

#> 8 no_insurance 3.43 1.39e- 8 Anderson-Darling normality test

The p-values from both tests are for all four variables very small, e.g. statistically significant. Therefore we have to reject the Null that they are normally distributed.

Report

It turned out that all four variable are not normally distributed. We can’t therefore not use Pearson’s r coefficient.

scatter_dist_hiv<-my_scatter( df =distance_ssp_clean, v =distance_ssp_clean$hiv_prevalence, w =distance_ssp_clean$dist_ssp, x_label ="HIV prevalence", y_label ="Distance to SSP")scatter_dist_opioid<-my_scatter( df =distance_ssp_clean, v =distance_ssp_clean$opioid_rate, w =distance_ssp_clean$dist_ssp, x_label ="Opioid rate", y_label ="Distance to SSP")scatter_dist_insurance<-my_scatter( df =distance_ssp_clean, v =distance_ssp_clean$no_insurance, w =distance_ssp_clean$dist_ssp, x_label ="No insurance", y_label ="Distance to SSP")gridExtra::grid.arrange(scatter_dist_hiv, scatter_dist_opioid, scatter_dist_insurance, nrow =3)

Graph 9.3: Scatterplots of numeric variables

R Code 9.9 : Testing homogeneity of variances with Levene’s and Fligner-Killeen’s test

Code

hiv_test<-stats::fligner.test(distance_ssp_clean$dist_ssp,distance_ssp_clean$hiv_prevalence)opioid_test<-stats::fligner.test(distance_ssp_clean$dist_ssp,distance_ssp_clean$opioid_rate)insurance_test<-stats::fligner.test(distance_ssp_clean$dist_ssp,distance_ssp_clean$no_insurance)hiv_test2<-car::leveneTest(distance_ssp_clean$dist_ssp,forcats::as_factor(distance_ssp_clean$hiv_prevalence))opioid_test2<-car::leveneTest(distance_ssp_clean$dist_ssp,forcats::as_factor(distance_ssp_clean$opioid_rate))insurance_test2<-car::leveneTest(distance_ssp_clean$dist_ssp,forcats::as_factor(distance_ssp_clean$no_insurance))homogeneity_test<-dplyr::bind_rows(broom::tidy(hiv_test2),broom::tidy(opioid_test2),broom::tidy(insurance_test2))|>dplyr::mutate(method ="Levene's Test for Homogeneity of Variance")|>dplyr::bind_rows(broom:::glance.htest(hiv_test),broom:::glance.htest(opioid_test),broom:::glance.htest(insurance_test),)|>dplyr::bind_cols( variable =c("dist_hiv","dist_opioid", "dist_insurance","dist_hiv","dist_opioid", "dist_insurance"))|>dplyr::relocate(variable)homogeneity_test

Table 9.4: Homogeneity of variances tested with Levene’s and Fligner-Killeen’s test

#> # A tibble: 6 × 7

#> variable statistic p.value df df.residual method parameter

#> <chr> <dbl> <dbl> <int> <int> <chr> <dbl>

#> 1 dist_hiv 0.493 0.999 395 34 Levene's Test fo… NA

#> 2 dist_opioid 0.893 0.770 406 93 Levene's Test fo… NA

#> 3 dist_insurance 0.750 0.983 171 328 Levene's Test fo… NA

#> 4 dist_hiv 408. 0.312 NA NA Fligner-Killeen … 395

#> 5 dist_opioid 451. 0.0609 NA NA Fligner-Killeen … 406

#> 6 dist_insurance 170. 0.502 NA NA Fligner-Killeen … 171

All p-values are higher than the threshold of .05 and are therefore not statistically significant. The Null must not rejected, the homogeneity of variance assumption for all variables is met.

R Code 9.10 : Correlations for numeric variables of chapter 9

Code

cor_pearson<-distance_ssp_clean|>dplyr::summarize( hiv_cor =stats::cor( x =dist_ssp, y =hiv_prevalence, use ="complete.obs", method ="pearson"), opioid_cor =stats::cor( x =dist_ssp, y =opioid_rate, use ="complete.obs", method ="pearson"), insurance_cor =stats::cor( x =dist_ssp, y =no_insurance, use ="complete.obs", method ="pearson"), `n (sample)` =dplyr::n())cor_spearman<-distance_ssp_clean|>dplyr::summarize( hiv_cor =stats::cor( x =dist_ssp, y =hiv_prevalence, use ="complete.obs", method ="spearman"), opioid_cor =stats::cor( x =dist_ssp, y =opioid_rate, use ="complete.obs", method ="spearman"), insurance_cor =stats::cor( x =dist_ssp, y =no_insurance, use ="complete.obs", method ="spearman"), `n (sample)` =dplyr::n())cor_kendall<-distance_ssp_clean|>dplyr::summarize( hiv_cor =stats::cor( x =dist_ssp, y =hiv_prevalence, use ="complete.obs", method ="kendall"), opioid_cor =stats::cor( x =dist_ssp, y =opioid_rate, use ="complete.obs", method ="kendall"), insurance_cor =stats::cor( x =dist_ssp, y =no_insurance, use ="complete.obs", method ="kendall"), `n (sample)` =dplyr::n())cor_chap09<-dplyr::bind_rows(cor_pearson, cor_spearman, cor_kendall)cor_chap09<-dplyr::bind_cols( method =c("Pearson", "Spearman", "Kendall"), cor_chap09)cor_chap09

Table 9.5: Correlations for numeric variables of chapter 9

Here I have computed for a comparison all three correlation coefficients of the nearest distance to the next SSP with the numeric variabeles of the dataset.

Pearson’s \(r\) is not allowed for all of the three variables, because our data didn’t meet the normality assumption.

Using Spearman’s \(\rho\) or Kendall’s \(\tau\) instead of Pearson’s \(r\) results in big differences. For instance: the correlation of distance to the next SSP and HIV prevalence reverses it direction.

Kendall’s tau \(\tau\) is more conservative (smaller) than Spearman’s rho and it is also preferred in most scenarios. (Kendall’s tau is not mentioned in the book. Maybe the reason is — as far as I understand – that Spearman’s is the most widely used correlation coefficient?)

I want to confirm my internet research with the following quotes:

First quote

In the normal case, Kendall correlation is more robust and efficient than Spearman correlation. It means that Kendall correlation is preferred when there are small samples or some outliers. (Pearson vs Spearman vs Kendall) (Pluviophile 2019).

Second quote

Kendall’s Tau: usually smaller values than Spearman’s rho correlation. Calculations based on concordant and discordant pairs. Insensitive to error. P values are more accurate with smaller sample sizes.

Spearman’s rho: usually have larger values than Kendall’s Tau. Calculations based on deviations. Much more sensitive to error and discrepancies in data.

The main advantages of using Kendall’s tau are as follows:

The distribution of Kendall’s tau has better statistical properties.

The interpretation of Kendall’s tau in terms of the probabilities of observing the agreeable (concordant) and non-agreeable (discordant) pairs is very direct.

The big difference between mean and median reflects a right skewed distribution. There are some people living extremely far from the next SSP both in non-metro and metro areas.

It is no surprise that the distance for people living in a non-metro area is much longer than for people in big city. But what certainly surprised me, is that even in big cities half of people live more than 50 miles form the next SSP.

R Code 9.12 : Distance in miles to nearest syringe programs by metro or non-metro status for a sample of 500 counties

Code

distance_ssp_clean|>ggplot2::ggplot(ggplot2::aes( x =metro, y =dist_ssp, fill =metro))+ggplot2::geom_violin(ggplot2::aes( color =metro), fill ="white", alpha =.8)+ggplot2::geom_boxplot(ggplot2::aes( fill =metro, color =metro), width =.2, alpha =.3)+ggplot2::geom_jitter(ggplot2::aes( color =metro), alpha =.4)+ggplot2::labs( x ="Type of county", y ="Miles to syringe program")+ggplot2::scale_fill_manual( values =c("#78A678", "#7463AC"), guide ="none")+ggplot2::scale_color_manual( values =c("#78A678", "#7463AC"), guide ="none")+ggplot2::coord_flip()

Graph 9.4: Distance in miles to nearest syringe programs by metro or non-metro status for a sample of 500 counties

TODO: Exploring several variables together with {**GGally}

During working the material of SwR I had often to look for more details in the internet. During one of this (re)searches I learned about about the possibility to explore multiple variables together with graphics::pairs and the {tidyverse} pendant {GGally}.

After exploring {GGally} I noticed that there is with the ggnostic() function, working as a wrapper around GGally::ggduo(), also a tool that displays full model diagnostics for each given explanatory variable. There are even many other tools where “GGally extends ggplot2 by adding several functions to reduce the complexity of combining geoms with transformed data” GGally: Extension to ggplot2.

I plotted some examples in Section 9.11 but I need to learn these very practical tools much more in detail. Admittedly I have to understand all these different diagnostic tests before I am going to read the extensive documentation (currently 14 articles) and trying to apply shortcuts with the {GGally} functions.

TODO 9.1: Learn to explore several variables together with {GGally}

9.5 Achivement 2: Exploring line model

9.5.1 Introduction

Formula 9.1 : Equation for linear model

\[

\begin{align*}

y = &m_{x}+b \\

y = &b_{0}+b_{1}x \\

y = &c+b_{1}x

\end{align*}

\tag{9.1}\]

\(b, b_{0}, c\): y-intercept of the line, or the value of y when x = 0

\(x, y\): the coordinates of each point along the line

Sometimes \(b^*\) is used. This means that the variable had been standardized, or transformed into z-scores, before the regression model was estimated.

An example of a linear equation would be \(y = 3 + 2x\).

Important 9.1: Variable names and the difference between deterministic and stochastic

The y variable on the left-hand side of the equation is called the dependent or outcome variable.

The x variable(s) on the right-hand side of the equation is/are called the independent or predictor variable(s).

A deterministic equation, or model, has one precise value for y for each value of x. Some equation in physics are deterministic, e.g., \(e = mc^2\).

In a stochastic equation, or model, you cannot predict or explain something exactly. Most of the time, there is some variability that cannot be fully explained or predicted. This unexplained variability is represented by an error term that is added to the equation. Relationships measured in social science are typically stochastic.

R Code 9.13 : Example of a deterministic linear model with gallons of water needed as an outcome and weeks as a predictor

Code

# make a vector called weeks that has the values 1 through 12 in itweeks<-1:12# use the regression model to make a vector called gallons with# weeks as the valuesgallons<-3+2*weeks# make a data frame of weeks and gallonswater<-data.frame(weeks, gallons)# Make a plot (Figure 9.9)water|>ggplot2::ggplot(ggplot2::aes( x =weeks, y =gallons))+ggplot2::geom_line(ggplot2::aes( linetype ="Linear model\ngallons=3+2*weeks"), color ="gray60", linewidth =1)+ggplot2::geom_point(ggplot2::aes( color ="Observation"), size =4, alpha =.6)+ggplot2::labs( x ="Weeks", y ="Gallons of water needed")+ggplot2::scale_linetype_manual(values =2, name ="")+ggplot2::scale_color_manual(values ="#7463AC", name ="")

Graph 9.5: Example of a linear model with gallons of water needed as an outcome and weeks as a predictor

There is nothing new in this code chunk, therefore I have just taken the code from the book only adapted with changes resulting from newer versions of {ggplot2} (e.g., linewidth instead of size).

It is important to know that the graph does not use the calculation of a linear model with ggplot2::geom_smooth() but merely uses ggplot2::geom_line to connect the points. We are using #eq-chap09-linear-model, e.g. a deterministic formula.

R Code 9.14 : Example of a stochastic linear model with gallons of water needed as an outcome and weeks as a predictor

Code

# make a vector called weeks that has the values 1 through 12 in itweeks<-1:12# use the regression model to make a vector called gallons with# weeks as the values # but this time with simulated residualsset.seed(1001)# for reproducibilitygallons<-3+(2*weeks)+rnorm(12, 0, 2.5)# make a data frame of weeks and gallonswater<-data.frame(weeks, gallons)# calculate the residuals from the linear modelres<-base::summary(stats::lm(gallons~weeks, data =water))$residualswater|>ggplot2::ggplot(ggplot2::aes( x =weeks, y =gallons))+ggplot2::geom_point(ggplot2::aes( color ="Observation"), size =4, alpha =.6)+ggplot2::geom_smooth( formula =y~x, method ="lm", se =FALSE,ggplot2::aes( linetype ="Linear model\ngallons=3+2*weeks"), color ="gray60", linewidth =1)+ggplot2::geom_segment(ggplot2::aes( x =weeks, y =gallons, xend =weeks, yend =gallons-res))+ggplot2::labs( x ="Weeks", y ="Gallons of water needed")+ggplot2::scale_linetype_manual(values =2, name ="")+ggplot2::scale_color_manual(values ="#7463AC", name ="")

Graph 9.6: Example of a linear model with gallons of water needed as an outcome and weeks as a predictor with deviations (errors)

This is my replication of book’s Figure 9.10, where no R example code is available. I am proud to state that I did this graph without looking ahead or to read the tutorial by Jackson (2016) that is recommended later in the book. To draw this graph I had to take three new actions:

Without these three code addition, I wouldn’t have been able to draw the vertical lines from the observations to the line of the linear model.

Although I managed to create Graph 9.6 myself I mixed up in the explaining text the concepts of errors and residuals.

Important 9.2: Errors vs. Residuals

Errors and residuals are two closely related and easily confused measures:

The error of an observation is the deviation of the observed value from the true value of a quantity of interest (for example, a population mean).

The residual is the difference between the observed value and the estimated value of the quantity of interest (for example, a sample mean).

9.6 Achievement 3: Slope and Intercept

9.6.1 Introduction

A simple linear- regression model could be used to examine the relationship between the percentage of people without health insurance and the distance to a syringe program for a county.

Formula 9.3 : Regression of people without health insurance and the distance to SSP

The slope formula in Equation 9.4 is adding up the product of differences between the observed values and mean value of percentage uninsured (no_insurance) and the observed values and mean value of distance to syringe program (dist_ssp) for each of the 500 counties. This value is divided by the summed squared differences between the observed and mean values of no_insurance for each county.

\(i\): individual observation, in this case a county

\(n\): sample size, in this case 500

\(x_{i}\): mean value of no_insurance for the sample

\(y_{i}\): value of dist_ssp for \(i\)

\(m_{y}\): mean value of dist_ssp for the sample

\(\sum\): symbol for the sum

\(b_{i}\): slope

9.6.3 Computing the intercept

Once the slope is computed, the intercept can be computed by putting the slope and the values of \(m_{x}\) and \(m_{y}\) into the equation for the line with x and y replaced by \(m_{x}\) and \(m_{y}\), \(m_{y} = b_{0} + b_{1} times m_{x}\), and solving it for \(b_{0}\), which is the y-intercept. Because this method of computing the slope and intercept relies on the squared differences and works to minimize the residuals overall, it is often called ordinary least squares or OLS regression.

9.6.4 Estimating the linear regression model with R

R Code 9.15 : Linear regression of distance to syringe program by percent uninsured

Code

## linear regression of distance to syringe program by percent uninsuredlm9.1<-stats::lm( formula =dist_ssp~no_insurance, data =distance_ssp_clean, na.action =na.exclude)save_data_file("chap09", lm9.1, "lm9.1.rds")lm9.1

Listing / Output 9.2: Linear regression of distance to syringe program by percent uninsured

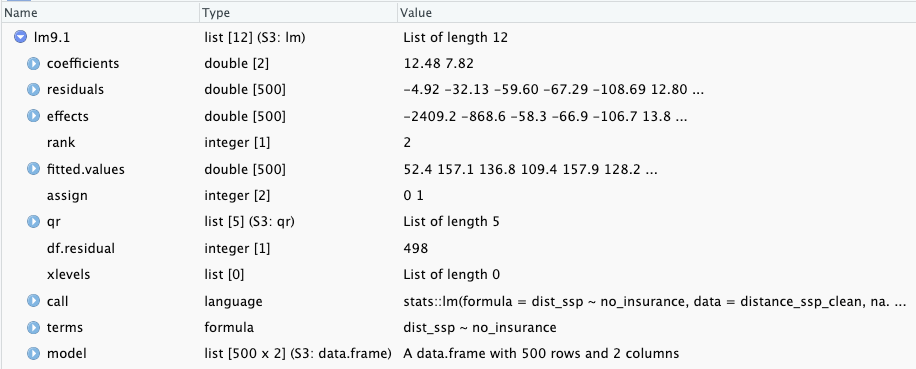

The books does not go into details of the results from stats::lm() but recommends immediately to use base::summary(stats::lm()) to get the best results. The reason is that the summary output of the linear model has much more details (See lst-chap09-summary-lm-distance-uninsured). But I think that it is important to know that there are many different aspects of the result incorporated in the lm object that are not reported.

Graph 9.7: Screenshot of the lm9.1 object

Hidden in the object are important results:

residuals: In Section 9.6.5 I have used the computed residuals from the lm9.1 object to draw the vertical lines from the observation points (dist_ssp) to the regression line in Listing / Output 9.4.

fitted.values: These values build the regression line and are identical with the results from stats::predict(lm9.1) used in Listing / Output 9.7.

effects: There is also a vector the size of the sample (500) called effects. I have looked up what this mean and came to the following definition which I do not (up to now) understand:

For a linear model fitted by lm or aov, the effects are the uncorrelated single-degree-of-freedom values obtained by projecting the data onto the successive orthogonal subspaces generated by the QR decomposition during the fitting process. The first r (the rank of the model) are associated with coefficients and the remainder span the space of residuals (but are not associated with particular residuals). (effects: Effects from Fitted Model)

Some help what effects are why they are important is the explanation of orthogonal in a statistical context. After reading (Orthogonal: Models, Definition & Finding) I got some first vague ideas about the meaning of effects. Hopefully I will learn later was they mean in details and how they are computed.

R Code 9.16 : Estimating the linear regression model of people without health insurance and the distance to SSP using R

Code

# linear regression of distance to syringe program by percent uninsuredbase::summary(lm9.1)

#>

#> Call:

#> stats::lm(formula = dist_ssp ~ no_insurance, data = distance_ssp_clean,

#> na.action = na.exclude)

#>

#> Residuals:

#> Min 1Q Median 3Q Max

#> -217.71 -60.86 -21.61 47.73 290.77

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) 12.4798 10.1757 1.226 0.221

#> no_insurance 7.8190 0.7734 10.110 <2e-16 ***

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Residual standard error: 85.91 on 498 degrees of freedom

#> Multiple R-squared: 0.1703, Adjusted R-squared: 0.1686

#> F-statistic: 102.2 on 1 and 498 DF, p-value: < 2.2e-16

Listing / Output 9.3: Summary of linear regression of distance to syringe program by percent uninsured

Intercept: The \(y\)-intercept of 12.48 is the \(y\)-value when \(x\) is zero. The model predicts that a county with 0% of people being uninsured would have a distance to the nearest syringe program of 12.48 miles.

Slope: The slope of 7.82 is the change in \(y\) for every one-unit change in \(x\). If the percent uninsured goes up by 1% in a county, the distance in miles to a syringe program would change by 7.82 miles.

\[

\begin{align*}

distance = 12.48 + 7.82 \times \text{no\_insurance} \\

distance = 12.48 + 7.82 \times 10 = 90.68

\end{align*}

\] Based on the linear regression model, a county with 10% of people uninsured would be 90.68 miles from the nearest syringe program.

9.6.5 Understanding residuals

In Graph 9.6 I have already graphed a demonstration how the residuals relate to the regression line. The regression line minimizes the residual differences between the values predicted by the regression line and the observed values.

This is how OLS works. OLS minimizes those distances captured in Graph 9.6 by the solid vertical lines: It minimizes the residuals.

R Code 9.17 : Regression with residuals between percentage without health insurance and distance to nearest SSP

Code

## for a later calculationdist_mean<-base::mean(distance_ssp_clean$dist_ssp)## provide all data into one data frame## to check which column to subtract from what columndf_lm<-distance_ssp_clean|>dplyr::mutate( lm_residuals =lm9.1$residuals, lm_fitted_values =lm9.1$fitted.values, lm_mean =dist_ssp-dist_mean)save_data_file("chap09", df_lm, "df_lm.rds")gg_residuals<-df_lm|>ggplot2::ggplot(ggplot2::aes( y =dist_ssp, x =no_insurance))+ggplot2::geom_smooth( formula =y~x, method ="lm", se =FALSE,ggplot2::aes( linetype ="Linear model"), color ="gray60", linewidth =1)+ggplot2::geom_point(ggplot2::aes( color ="Observation"), size =1, alpha =.6)+ggplot2::geom_segment(ggplot2::aes( x =no_insurance, y =dist_ssp, xend =no_insurance, yend =dist_ssp-lm_residuals, linewidth ="Residuals"), linetype ="solid", color ="grey", alpha =.6)+ggplot2::labs( y ="Distance to nearest SSP facility", x ="Percentage of people without health insurance ")+ggplot2::scale_linetype_manual(values =2, name ="")+ggplot2::scale_linewidth_manual(values =.5, name ="")+ggplot2::scale_color_manual(values ="#7463AC", name ="")gg_residuals

Listing / Output 9.4: Relationship between percentage without health insurance and distance to nearest syringe program in 500 counties with residuals (vertical lines)

This is the replication of Figure 9.12, where no example code is available. After I had calculated the linear model I needed either the position on the regression line (lm_fitted_values in my case) or the values of the residuals (lm_residuals). I couldn’t use base::summary(stats::lm()) because fitted.values are calculated only for the lm object (in my case lm_dist), which also has the residuals computed and included.

In the end I decided to subtract the residuals from the distance to get the position of the regression line (and the end of the vertical line from the observation).

9.7 Achievement 4: Slope interpretation and significance

9.7.1 Interpreting statistical significance of the slope

The output of Listing / Output 9.2 for the linear model included a p-value for the slope (<2e16) and a p-value for the intercept (0.221). The statistical significance of the slope in linear regression is tested using a Wald test, which is like a one-sample t-test where the hypothesized value of the slope is zero. To get the p-value from the regression model of distance to syringe program, the slope of 12.48 was compared to a hypothesized value of zero using the Wald test.

9.7.1.1 NHST Step 1

Write the null and alternate hypotheses:

Wording for H0 and HA

H0: The slope of the line is equal to zero.

HA: The slope of the line is not equal to zero.

9.7.1.2 NHST Step 2

Compute the test statistic.

The test statistic for the Wald test in OLS regression is the t-statistic.

Formula 9.5 : Formula for the for the Wald test in OLS regression

\[

\begin{align*}

t = &\frac{b_{1}-0}{se_{b_{1}}} \\

t = &\frac{7.8190-0}{0.7734} = 10.11

\end{align*}

\tag{9.5}\]

Note that the formula is the same as the formula for the one-sample t-test from Equation 6.1, but with the slope of the regression model instead of the mean. The t-statistic, that was computed manually in Equation 9.5 can also be found in the model output of Listing / Output 9.2.

9.7.1.3 NHST Step 3

Review and interpret the test statistics: Calculate the probability that your test statistic is at least as big as it is if there is no relationship (i.e., the null is true).

The p-value is < 0.01 and therefore the null hypothesis is rejected in favor of the alternate hypothesis that the slope is not equal to zero.

Report 9.1: Interpretation of the linear regression model lm9.1 after NHST (first draft)

The percentage of uninsured residents in a county is a statistically significant predictor of the distance to the nearest syringe program (b = 7.82; p < .05) in our sample. For every 1% increase in uninsured residents in a county, the predicted distance to the nearest syringe program increases by 7.82 miles.

9.7.2 Computing confidence intervals

stats::confint() computes the confidence interval for the intercept and the slope.

R Code 9.18 : Confidence interval for regression parameters

Listing / Output 9.5: Confidence interval for regression parameters

The intercept is often reported but not interpreted because it does not usually contribute much to answering the research question.

Report 9.2: Interpretation of the linear regression model lm9.1 after statistical significance and confidence intervals (second draft)

The percentage of uninsured residents in a county is a statistically significant predictor of the distance to the nearest syringe program (b = 7.82; p < .05). For every 1% increase in uninsured residents in a county, the predicted distance to the nearest syringe program increases by 7.82 miles. The value of the slope in the sample is 7.82, and the value of the slope is likely between 6.30 and 9.34 in the population that the sample came from (95% CI: 6.30–9.34). With every 1% increase in uninsured residents in a county, the nearest syringe program is between 6.30 and 9.34 more miles away. These results suggest that counties with a larger percentage of uninsured are farther from this resource, which may exacerbate existing health disparities.

9.7.3 Making predcitions

Predicted values of \(y\) are called y-hat and denoted \(\hat{y}\). The stats::predict() function can be used to find the predicted values for all observations, or for a specific value of the independent variable.

stats::predict() is a generic function for predictions from the results of various model fitting functions. The function invokes particular methods which depend on the class of the first argument.

In our case we have to consult the help page of stats::predict.lm(). R knows which method to apply so just using stats::predict() is enough to invoke the correct computation. But for us users to know which arguments to apply we need the specified help page and not the explanation of the generic command.

Most prediction methods which are similar to those for linear models have an argument newdata specifying the first place to look for explanatory variables to be used for prediction.

Listing / Output 9.6: Predicted distance for a county where 10% of people are uninsured

The predicted distance to a syringe program from a county with 10% of people uninsured is 90.67 miles with a confidence interval for the prediction (sometimes called a prediction interval) of 82.42 to 98.92 miles.

R Code 9.20 : Find predictions for all observed values

There is another p-value toward the bottom of Listing / Output 9.3. This p-value was from a test statistic that measures how much better the regression line is at getting close to the data points compared to the mean value of the outcome. Essentially, the question asked to produce this p-value is: Are the predicted values shown by the regression line in Figure 9.13 better than the mean value of the distance to the syringe program at capturing the relationship between no_insurance and dist_ssp?

Important 9.4: F-statistic for linear regression

Like the t-statistic is the test statistic for a t-test comparing two means, the F-statistic is the test statistic for linear regression comparing the regression line to the mean.

It is the same F-statistic that we have seen working with ANOVA in Chapter 7. ANOVA is actually a special type of linear model where all the predictors are categorical.

R Code 9.21 : Distance to mean versus regression line

Code

gg_means<-df_lm|>ggplot2::ggplot(ggplot2::aes( y =dist_ssp, x =no_insurance))+ggplot2::geom_point(ggplot2::aes( color ="County"), size =1, alpha =.6)+ggplot2::geom_hline(ggplot2::aes( linewidth ="Mean observed\ndistance to SSP", yintercept =dist_mean,), color ="grey60", alpha =.6)+ggplot2::geom_segment(ggplot2::aes( x =no_insurance, y =dist_ssp, xend =no_insurance, yend =dist_ssp-lm_mean, linetype ="Difference from mean\nto observed"), color ="grey", alpha =.6)+ggplot2::labs( y ="Miles to syringe program", x ="Percentage of people without health insurance ")+ggplot2::scale_linewidth_manual(values =1, name ="")+ggplot2::scale_linetype_manual(values =c(2, 2), name ="")+ggplot2::scale_color_manual(values ="#7463AC", name ="")gg_residuals2<-df_lm|>ggplot2::ggplot(ggplot2::aes( y =dist_ssp, x =no_insurance))+ggplot2::geom_smooth( formula =y~x, method ="lm", se =FALSE,ggplot2::aes( linetype ="Predicted distance to\nSSP (regression line)"), color ="gray60", linewidth =1)+ggplot2::geom_point(ggplot2::aes( color ="County"), size =1, alpha =.6)+ggplot2::geom_segment(ggplot2::aes( x =no_insurance, y =dist_ssp, xend =no_insurance, yend =dist_ssp-lm_residuals, linewidth ="Residuals (diff from\npredicted to observe)"), linetype ="dashed", color ="grey", alpha =.6)+ggplot2::labs( y ="Miles to syringe program", x ="Percentage of people without health insurance ")+ggplot2::scale_linetype_manual(values =1, name ="")+ggplot2::scale_linewidth_manual(values =.5, name ="")+ggplot2::scale_color_manual(values ="#7463AC", name ="")patchwork:::"/.ggplot"(gg_residuals2,gg_means)

Listing / Output 9.8: What is smaller? Sum of distances to regression line or distances to mean?

9.8.2 Understanding F-statistic

The F-statistic is a ratio of explained information (in the numerator) to unexplained information (in the denominator). If a model explains more than it leaves unexplained, the numerator is larger and the F-statistic is greater than 1. F-statistics that are much greater than 1 are explaining much more of the variation in the outcome than they leave unexplained. Large F-statistics are more likely to be statistically significant.

Formula 9.6 : F-statistic for the linear regression

\[

\begin{align*}

F = \frac{\frac{\sum_{i=1}^{n}(\hat{y}-m_{y})^2}{p-1}}{\frac{\sum_{i=1}^{n}(y_{i}-\hat{y_{i}})^2}{n-p}}

\end{align*}

\tag{9.6}\]

\(i\): individual observation, in this case a county

\(n\): sample size, or total number of counties, in this case 500

\(p\): number of parameters in the model; slope and intercept are parameters

\(y_{i}\): observed outcome of distance to syringe program for county \(i\)

\(\hat{y_{i}}\): predicted value of distance to syringe program for county \(i\)

\(m_{y}\): mean of the observed outcomes of distance to syringe program

Numerator: How much differ the predicted values from the observed mean on average. (\(MS_{regression}\)) Denominator: How much differ the predicted values from the actual observed values on average. (\(MS_{residual}\))

The F-statistic is how much a predicted value differs from the mean value on average — which is explained variance, or how much better (or worse) the prediction is than the mean at explaining the outcome — divided by how much an observed value differs from the predicted value on average, which is the residual information or unexplained variance. Or: Explained variance divided by residual information resp. unexplained variance,

Sometimes, these relationships are referred to in similar terminology to ANOVA: the numerator is the \(MS_{regression}\) (where MS stands for mean square) divided by the \(MS_{residual}\).

Bullet List 9.2

: Features of the F-statistic

The F-statistic is always positive, due to the squaring of the terms in the numerator and denominator.

The F-statistic starts at 0 where the regression line is exactly the same as the mean.

The larger the F-statistic gets the more the model explains the variation in the outcome.

F-statistics with large values are less likely to occur when there is no relationship between the variables.

The shape of the F-distribution depends on the number of parameters in the statistical model and the sample size, which determine two degrees of freedom (df) values.

For instance in the last line of Listing / Output 9.3 we see that the value of the F-statistic is 102.2 with 1 (p - 1 = 2 - 1 = 1) and 498 (n - p = 500 - 2 = 498) df.

\(R^2\) is the amount of variation in the outcome that the model explains and is reported as a measure of model fit.

When the model predicts values that are close to the observed values, the correlation is high and the \(R^2\) is high.

To get the percentage of variance explained by the model, multiply \(R^2\) by 100.

Subtracting \(R^2\) from 1 (1 – \(R^2\)) and multiplying by 100 for a percent will give the percent of variance not explained by the model.

The value of \(R^2\) tends to increase with each additional variable added to the model, whether the variable actually improves the model or not.

Adjusted \(R^2\) (\(R^2_{adj}\)) penalizes the value of \(R^2\) a small amount for each additional variable added to the model to ensure that the only increases when the additional predictors explain a notable amount of the variation in the outcome.

\(R^2\) is computed by squaring the value of the correlation between the observed distance to syringe programs in the 500 counties and the values of distance to syringe programs predicted for the 500 counties by the model.

For the relationship between uninsured percentage and distance to syringe program in Listing / Output 9.3, the \(R^2\) is 0.1703. To get the percentage of variance explained by the model, multiply by 100, so 17.03% of the variation in distance to syringe programs is explained by the percentage of uninsured people living in a county.

Important 9.5: \(R^2_{adj}\) is more commonly reported than \(R^2\)

9.8.4 Reproting linear regression results

Bullet List 9.4

: Simple linear regression analysis results to report

Interpretation of the value of the slope (b)

Significance of the slope (t and p, confidence intervals)

Significance of the model (F and p)

Model fit (\(R^2\) or better \(R_{adj}^2\))

The following report is for our linear model lm9.1 example takein into account information from the

Report 9.3: Interpretation of the linear regression model lm9.1 after model fit (third draft)

A simple linear regression analysis found that the percentage of uninsured residents in a county is a statistically significant predictor of the distance to the nearest syringe program (b = 7.82; p < .001). For every 1% increase in uninsured residents, the predicted distance to the nearest syringe program increases by 7.82 miles. The value of the slope is likely between 6.30 and 9.34 in the population that the sample came from (95% CI: 6.30–9.34). With every 1% increase in uninsured residents in a county, there is likely a 6.30- to 9.34-mile increase to the nearest syringe program. The model was statistically significantly better than the baseline model (the mean of the distance to syringe program) at explaining distance to syringe program [F(1, 498) = 102.2; p < .001] and explained 16.86% of the variance in the outcome (\(R_{adj}^2\) = .1686). These results suggest that counties with lower insurance rates are farther from this resource, which may exacerbate existing health disparities.

9.9 Achievement 6: Conducting diagnostics

9.9.1 Introduction

Bullet List 9.5

: Assumptions of simple linear regression

Observations are independent.

The outcome is continuous.

The relationship between the two variables is linear (linearity).

The variance is constant with the points distributed equally around the line (homoscedasticity).

There is no perfect multicollinearity. (Only valid for multiple regression for continuous variables, see Section 9.10.5.)

9.9.2 Linearity

R Code 9.24 : Check linearity with loess curve

Code

distance_ssp_clean|>ggplot2::ggplot(ggplot2::aes( x =no_insurance, y =dist_ssp))+ggplot2::geom_point(ggplot2::aes( size ="County"), color ="#7463AC", alpha =.6)+ggplot2::geom_smooth( formula =y~x,ggplot2::aes( color ="Linear fit line"), method ="lm", se =FALSE)+ggplot2::geom_smooth( formula =y~x,ggplot2::aes( color ="Loess curve"), method ="loess", se =FALSE)+ggplot2::labs( y ="Miles to syringe program", x ="Percent uninsured")+ggplot2::scale_color_manual( values =c("gray60", "deeppink"), name ="")+ggplot2::scale_size_manual(values =2, name ="")

Listing / Output 9.11: Checking the linearity assumption

The linearity assumption is met if a scatterplot of the two variables shows a relationship that falls along a line. An example where this assumption is met in the author’s opinion is Graph 8.3. The route of the loess curve follows not exact the regression line, but there are only small deviations from the regression line.

The situation in Listing / Output 9.11 is a little different: Here the loess line is curved and shows deviation from the linear relationship especially at the lower level of the predictor but also at the zeniths of the two arcs. Harris therefore decided that in Listing / Output 9.11 the linearity assumption has failed.

Conclusion: The linearity assumption is not met.

TODO: How to test the linearity assumption more objectively?

Diagnostics are very important for linear regression models. I have not (yet) much experience but I am pretty sure there exist some tests for the linearity assumption of linear models. I just have to look and find them!

TODO 9.2: Look for packages with linearity test functions

#>

#> studentized Breusch-Pagan test

#>

#> data: lm9.1

#> BP = 46.18, df = 1, p-value = 1.078e-11

Listing / Output 9.12: Checking the homoscedasticity with the Breusch-Pagan test

The assumption of homoscedasticity requires the data points are evenly distributed around the regression line. One way would be to check again Listing / Output 9.11: The points seem closer to the line on the far left and then are more spread out around the line at the higher values of percentage uninsured. Zhis is an indicator that the assumption has failed.

But the Breusch-Pagan test is another – more objective – way to test the homoscedasticity assumption. The tiny p-value confirm our first impression: The Null assumption, that the data points are evenly distributed around the regression line has to be rejected.

Conclusion: The homoscedasticity assumption is not met.

R Code 9.26 : Check homoscedasticity assumption plotting predicted values vs. residuals

Code

## scatterplot with mean as reference linegg_scatter_mean<-df_lm|>ggplot2::ggplot(ggplot2::aes( x =lm_fitted_values, y =lm_residuals))+ggplot2::geom_point(ggplot2::aes( color ="County"), size =1, alpha =.6)+ggplot2::geom_hline(ggplot2::aes( yintercept =base::mean(lm_residuals), linetype ="Perfect prediction\npredicted = observed"))+ggplot2::labs( x ="Predicted values of miles to syringe program", y ="Residuals (distance between observed\nand predicted value)")+ggplot2::scale_linetype_manual(values =2, name ="")+ggplot2::scale_color_manual(values ="#7463AC", name ="")gg_scatter_mean

Listing / Output 9.13: Predicted values and residuals from linear regression model of distance to syringe program by percentage uninsured in a county

Another (third) way to examine the constant error variance assumption is plotting the predicted values versus the residuals. In Listing / Output 9.13 a dashed line is shown to indicate no relationship between the fitted (or predicted) values and the residuals, which would be the ideal situation to meet the assumption. This line is a helpful reference point for looking at these types of graphs.

For the homoscedasticity assumption to be met, the points should be roughly evenly distributed around the dashed line with no clear patterns. It should look like a cloud or random points on the graph with no distinguishable patterns.

In Listing / Output 9.13, there is a clear pattern. Under the dashed line the distribution of the points suggest a negative correlation whereas above the line the points are distributed more randomly. This finding confirms the Breusch-Pagan test result.

Conclusion: The homoscedasticity assumption of the residuals is not met.

9.9.4 Independent residuals

R Code 9.27 : Testing the independence of residuals with the Durbin-Watson test

#>

#> Durbin-Watson test

#>

#> data: lm9.1

#> DW = 2.0103, p-value = 0.5449

#> alternative hypothesis: true autocorrelation is greater than 0

Listing / Output 9.14: Testing the independence of residuals with the Durbin-Watson test

Residuals have to be independent or unrelated to each other. Residuals that are independent do not follow a pattern. Conceptually, residuals are the variation in the outcome that the regression line does not explain. If the residuals form a pattern then the regression model is doing better for certain types of observations and worse for others.

The independence assumption of the residuals can be checked with the Durbin-Watson test. A Durbin-Watson (DW, or D-W) statistic of 2 indicates perfectly independent residuals, meaning that the null hypothesis of the test (that the residuals are independet) cannot be rejected. An this in fact the case in Listing / Output 9.14, as the DW-value is very near to 2.

Conclusion: The independence of residuals assumption is met.

9.9.5 Normality of residuals

Example 9.8 : Testing the normality of residuals assumption

my_hist_dnorm( df =df_lm, v =df_lm$lm_residuals, n_bins =30, x_label ="Residuals (distance between observed\nand predicted value)")

Listing / Output 9.15: Testing the normality of residuals assumption with a histogram of the residuals

The histogram with the overlaid density curve of the theoretical normal distribution in Listing / Output 9.15 shows a right skewed distribution. This is an indicator that the normality assumption is not met.

Conclusion: The normality assumption of the residuals is not met

R Code 9.29 : Checking normality assumption of the residuals with a Q-Q plot

Code

my_qq_plot( df =df_lm, v =df_lm$lm_residuals, x_label ="Theoretical normal distribution", y_label ="Observed residuals (distance between\nobserved and prdicted miles\nto syringe program)")

Listing / Output 9.16: Testing the normality of residuals assumption with a q-q plot

The q-q plot in Listing / Output 9.16 shows some deviation form the normal distribution. Although it is again a quite subjective decision (See TODO 9.2) it seems that the assumption of normality has failed.

Conclusion: The normality assumption of the residuals is not met.

R Code 9.30 : Checking normality assumption of the residuals with the Shapiro-Wilk normality test

#>

#> Shapiro-Wilk normality test

#>

#> data: df_lm$lm_residuals

#> W = 0.94357, p-value = 7.437e-13

Listing / Output 9.17: Checking normality assumption of the residuals of linear model lm9.1 with the Shapiro-Wilk normality test

This method is not mentioned, but I believe that you can also use the Shapiro-Wilk test for testing the normal distribution of residuals. At least the very tiny p-value rejects the Null (that the residuals have a normal distribution) and suggest the same conclusion as the other two (visually decided) tests.

Conclusion: The normality assumption of the residuals is not met.

9.9.6 Interpreting the assumption tests

Bullet List 9.6

: Checking simple linear regression assumptions for model lm9.1m

Observations are independent. No: Counties in the same state are not really independent. (This is a general problem for geographical data analysis.)

The outcome is continuous. Yes: The distance to a syringe program is measured in miles and can take any value of zero or higher.

The relationship between the two variables is linear (linearity). No: See: Section 9.9.2

Because linear model lm9.1 does not meet all the assumptions, the model has to be considered biased and should be interpreted with caution. Specifically, the results of a biased model are not usually considered generalizable to the population.

9.9.7 Using models diagnostics

We are not quite done checking model quality using diagnostics. In addition to testing assumptions, we need to identify problematic observations: outliers or other influential observations.

There are several measures:

standardized residuals,

df-betas,

Cook’s distance, and

leverage.

One good strategy for identifying the truly problematic observations is to identify those observations that are outliers or influential observations on two or more of these four measures.

Example 9.9 : Using models diagnostics to find outliers and influential values

Caution 9.1: Filter counties with absolute values of the residuals greater than 1.96

In my first trial, I forgot to filter using the absolute standardized residual values. I got a list of 24 counties – the two counties at the top of the list with negative values were missing.

That there are only two counties with negative values means that most counties had 2018 distances to syringe programs that were farther away than predicted. Another observation: Most of the outlier counties are situated in Texas, including one that is nearer than predicted.

There are 26 counties with outlier values, e.g., standardized residuals with an absolute value greater than 1.96.

R Code 9.32 : Standardize residuals manually to find outliers

With Listing / Output 9.19 I have standardized residuals manually through (lm_residuals - base::mean(lm_residuals)) / stats::sd(lm_residuals). There are small rounding differences in some counties but the list of outlier counties is identical.

R Code 9.33 : Using dfbeta to find influential values

Listing / Output 9.20: Computing dfbeta to find influential values with dfbeta > 2

Computing dfbeta is a method to find influential values. df_beta removes each observation from the data frame, conducts the analysis again, and compares the intercept and slope for the model with and without the observation. Observations with high dfbeta values may be influencing the model. The book recommends a cutoff of 2.

Warning 9.1: Difference between dfbeta and dfbetas

Reading other resources (Zach 2020; Goldstein-Greenwood 2022) I noticed that they used another threshold: Instead of using 2 they recommended \(2 / \sqrt{n}\), where n is the sample size. But this would result in a cutoff value of \(2 / \sqrt{500}\) = 0.0894427 with the absurd high number of 343 counties as influential values.

But later I became aware that these resources are talking of dfbetas (plural) and not of dfbeta (singular). Between these two measure there is a big difference: dfbetas are the standardized values, whereas dfbeta values depend on the scale of the data.

The result is a list of seven counties, six situated in Texas and one in Florida. All of them are listed because of higher absolute dfbeta intercept values.

R Code 9.34 : Using dfbetas to find influential values

#> # A tibble: 32 × 6

#> county state dist_ssp no_insurance predicted cooks_d

#> <chr> <fct> <dbl> <dbl> <dbl> <dbl>

#> 1 gillespie county TX 313 18.2 155. 0.00845

#> 2 webb county TX 436 30.2 249. 0.0713

#> 3 garfield county NE 300 8.8 81.3 0.00954

#> 4 starr county TX 510 35.1 287. 0.165

#> 5 hendry county FL 84.3 29.8 245. 0.0505

#> 6 scott county KS 210 4.1 44.5 0.0137

#> 7 presidio county TX 171. 35.9 293. 0.0533

#> 8 wyandotte county KS 7.25 21 177. 0.0164

#> 9 concordia parish LA 71.3 26.4 219. 0.0281

#> 10 coryell county TX 341. 10.2 92.2 0.00977

#> 11 hill county TX 326. 19.7 167. 0.0115

#> 12 essex county MA 191. 3.5 39.8 0.0127

#> 13 pawnee county KS 262 7.9 74.2 0.00838

#> 14 dewitt county TX 388. 14.8 128. 0.0118

#> 15 jim wells county TX 456 21 177. 0.0446

#> 16 llano county TX 318 17.8 152. 0.00862

#> 17 somervell county TX 329. 19.5 165. 0.0117

#> 18 kennebec county ME 316. 8.5 78.9 0.0118

#> 19 dallam county TX 106. 24.9 207. 0.0107

#> 20 gonzales county TX 386. 22.6 189. 0.0291

#> 21 guadalupe county TX 387. 14.5 126. 0.0113

#> 22 lamb county TX 54 21 177. 0.00860

#> 23 brooks county TX 487 23.5 196. 0.0727

#> 24 comal county TX 367. 13.9 121. 0.00918

#> 25 burnet county TX 342 15.8 136. 0.00885

#> 26 gaines county TX 49.7 32.6 267. 0.124

#> 27 el paso county TX 33.5 23.8 199. 0.0245

#> 28 maverick county TX 330 31.1 256. 0.0124

#> 29 bosque county TX 339 20.7 174. 0.0147

#> 30 falls county TX 343 18.9 160. 0.0129

#> 31 duval county TX 461. 27.7 229. 0.0816

#> 32 caledonia county VT 224. 5.3 53.9 0.0116

Listing / Output 9.22: Using Cook’s distance to find influential values

Cook’s D is computed in a very similar way as dfbeta(s). That is, each observation is removed and the model is re-estimated without it. Cook’s D then combines the differences between the models with and without an observation for all the parameters together instead of one at a time like the dfbeta(s).

The cutoff for a high Cook’s D value is usually \(4/n\).

Thirty-two counties had a high Cook’s D. Most were in Texas (TX), but a few were in Maine (ME), Massachusetts (MA), and Vermont (VT).

R Code 9.36 : Using leverage to find influential values

#> # A tibble: 28 × 6

#> county state dist_ssp no_insurance predicted leverage

#> <chr> <fct> <dbl> <dbl> <dbl> <dbl>

#> 1 berkshire county MA 104. 3 35.9 0.00883

#> 2 webb county TX 436 30.2 249. 0.0283

#> 3 starr county TX 510 35.1 287. 0.0446

#> 4 hendry county FL 84.3 29.8 245. 0.0271

#> 5 val verde county TX 248. 22.8 191. 0.0111

#> 6 presidio county TX 171. 35.9 293. 0.0476

#> 7 wyandotte county KS 7.25 21 177. 0.00830

#> 8 caldwell parish LA 116. 21.2 178. 0.00859

#> 9 concordia parish LA 71.3 26.4 219. 0.0184

#> 10 essex county MA 191. 3.5 39.8 0.00811

#> 11 carroll county IA 152. 3.3 38.3 0.00839

#> 12 jim wells county TX 456 21 177. 0.00830

#> 13 kinney county TX 291 22.8 191. 0.0111

#> 14 nicollet county MN 17.8 3.5 39.8 0.00811

#> 15 sabine county TX 168. 22.1 185. 0.00997

#> 16 bremer county IA 86.7 3.4 39.1 0.00825

#> 17 kodiak island borough AK 150 22.7 190. 0.0110

#> 18 dallam county TX 106. 24.9 207. 0.0151

#> 19 gonzales county TX 386. 22.6 189. 0.0108

#> 20 lamb county TX 54 21 177. 0.00830

#> 21 brooks county TX 487 23.5 196. 0.0124

#> 22 jefferson county TX 177. 21.5 181. 0.00903

#> 23 gaines county TX 49.7 32.6 267. 0.0358

#> 24 dawson county TX 77 20.8 175. 0.00802

#> 25 el paso county TX 33.5 23.8 199. 0.0129

#> 26 maverick county TX 330 31.1 256. 0.0310

#> 27 brantley county GA 226. 24.4 203. 0.0141

#> 28 duval county TX 461. 27.7 229. 0.0215

Listing / Output 9.23: Using leverage to find influential values

Leverage is determined by the difference between the value of an observation for a predictor and the mean value of the predictor. The farther away an observed value is from the mean for a predictor, the more likely the observation will be influential. Leverage values range between 0 and 1. A threshold of \(2p / n\) is often used with p being the number of parameters and n being the sample size.

The leverage values to find influential states are computed by using the stats::hatvalues() function, because the predicted value of \(y\) is often depicted as \(\hat{y}\).

This time we got a list of 28 counties.

9.9.8 Summarizing outliers and influential values

It would be useful to have all the counties identified by at least two of these four measures in a single list or table to more easily see all the counties that seemed problematic.

Example 9.10 : Summarizing outliers and influential values

Listing / Output 9.24: Listing of all counties with influential values at least in two tests (using dfbeta like in the book)

Here I have use the threshold for the non-standardized dfbeta values, which in my opinion is the wrong option.

I have used the R datatable package {DT} (see: Section A.19), so you can experiment with the listed counties, for example sorting after certain columns or filtering certain states.

R Code 9.38 : Summarizing diagnostics for outliers and influential values with dfbetas

Listing / Output 9.25: Listing of all counties with influential values at least in two tests (using dfbetas, different from the book)

Here I have use the threshold for the standardized dfbetas values, which in my opinion is the correct option. Although there is a big difference in the number of counties with cutoff dfbeta (7 counties) to dfbetas(40 counties) in the end the difference is only 11 counties. The listing shows some counties exceeding the threshold 5 times. The reason is that counties can have outliers corresponding to dfbetas intercept and dfbetas slope.

I have used the R datatable package {DT} (see: Section A.19), so you can experiment with the listed counties, for example sorting after certain columns or filtering certain states. If you sort for instance the distances to the next syringe program you will see that there are some counties with similar values that are not included in Listing / Output 9.24, like kiowa county with similar values as garfied county.

R Code 9.39 : Difference between dfbeta and dfbetas results

Code

county_join<-dplyr::full_join(df_lm_diag1, df_lm_diag2, by =c("county", "state"))county_diff<-county_join|>dplyr::filter(is.na(dist_ssp.x))|>dplyr::select(-(3:6))|>dplyr::arrange(desc(dist_ssp.y))print(county_diff, n =20)

Listing / Output 9.26: Additional counties calculated with the standardized dfbetas (and not dfbeta)

These 11 counties have not been included in the book’s version because of a different method (using unstandardized dfbeta with an undocumented threshold of 2 versus standardized dfbetas with the in several resource documented cutoff \(2 / \sqrt{n = samplesize}\).

When an observation is identified as an outlier or influential value, it is worth looking at the data to see if there is anything that seems unusual. Sometimes, the observations are just different from the rest of the data, and other times, there is a clear data entry or coding error.

Report 9.4: Interpretation of the linear regression model lm9.1 after diagnostics (final version)

A simple linear regression analysis found that the percentage of uninsured residents in a county is a statistically significant predictor of the distance to the nearest syringe program (b = 7.82; p < .001). For every 1% increase in uninsured residents, the distance to the nearest syringe program is expected to increase by 7.82 miles. The value of the slope is likely between 6.30 and 9.34 in the population that the sample came from (95% CI: 6.30–9.34), so with every 1% increase in uninsured residents, there is likely a 6.30- to 9.34-mile increase in distance to the nearest syringe program. The model was statistically significantly better than the baseline at explaining distance to syringe program (F[1, 498] = 102.2; p < .001) and explained 16.86% of the variance in the outcome (\(R_{adj}^2\) = .1686). These results suggest that counties with lower insurance rates are farther from this type of resource, which may exacerbate existing health disparities.

An examination of the underlying assumptions found that the data fail several of the assumptions for linear regression, and so the model should be interpreted with caution; the results do not necessarily generalize to other counties beyond the sample. In addition, regression diagnostics found a number of counties that were outliers or influential observations. Many of these counties were in Texas, which may suggest that counties in Texas are unlike the rest of the sample.

9.10 Achievement 7: Adding variables and transformation

9.10.1 Adding a binary variable metro to the model

Example 9.11 : Adding binary variable metro to the model

R Code 9.40 : Linear regression: Distance to syringe program by uninsured percent and metro status

Code

lm9.2<-stats::lm(formula =dist_ssp~no_insurance+metro, data =distance_ssp_clean)save_data_file("chap09", lm9.2, "lm9.2.rds")base::summary(lm9.2)

#>

#> Call:

#> stats::lm(formula = dist_ssp ~ no_insurance + metro, data = distance_ssp_clean)

#>

#> Residuals:

#> Min 1Q Median 3Q Max

#> -219.80 -60.07 -18.76 48.33 283.96

#>

#> Coefficients:

#> Estimate Std. Error t value Pr(>|t|)

#> (Intercept) 3.4240 10.3621 0.330 0.741212

#> no_insurance 7.3005 0.7775 9.389 < 2e-16 ***

#> metronon-metro 28.0525 7.7615 3.614 0.000332 ***

#> ---

#> Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

#>

#> Residual standard error: 84.89 on 497 degrees of freedom

#> Multiple R-squared: 0.1915, Adjusted R-squared: 0.1883

#> F-statistic: 58.88 on 2 and 497 DF, p-value: < 2.2e-16

Listing / Output 9.27: Linear regression: Distance to syringe program by uninsured percent and metro status

Small p-values indicate that percentage uninsured and metro status both statistically significantly help to explain the distance to a syringe program.

The model is statistically significant, with an F-statistic of F(2, 497) = 58.88 and a p-value of < .001.

\(R_{adj}^2\) indicates that 18.83% of the variation in distance to syringe program is accounted for by this model with no_insurance and metro in it. This is somewhat higher than the \(R_{adj}^2\) of 16.86 from the simple linear model with just no_insurance in it.

The coefficient for no_insurance of 7.30 means that for a county with 1% more uninsured people the distance to SSP grows by 7.30 miles.

metronon-metro is confusing because it combines two aspects: The variable name (here metro) and the category to which the coefficient refers (here non-metro). The other group name metro is the reference group for the metro variable2. The non-metro counties are 28.05 miles farther away from the nearest syringe program than the metro counties.

R Code 9.41 : Graphing the regression model lm9.2 with percent uninsured and metro

Code

distance_ssp_clean|>ggplot2::ggplot(ggplot2::aes( x =no_insurance, y =dist_ssp, group =metro))+ggplot2::geom_line( data =broom::augment(x =lm9.2),ggplot2::aes( y =.fitted, linetype =metro))+ggplot2::geom_point(ggplot2::aes( text =county, color =metro), size =2)+ggplot2::labs( y ="Miles to nearest syringe program", x ="County percent uninsured")+ggokabeito::scale_color_okabe_ito( order =c(1,3), alpha =.4, name ="County")+ggplot2::scale_linetype_manual( values =c(1, 2), name ="Regression line\n(predicted values)")

#> Warning in ggplot2::geom_point(ggplot2::aes(text = county, color = metro), :

#> Ignoring unknown aesthetics: text

Listing / Output 9.28: Regression model lm9.2 with percent uninsured and metro

This is my reproduction of Figure 9.21. There are two differences noteworthy:

I used the colorblind friendly palette scale_color_okabe_ito() from the {ggokabeito} package (See: Barrett (2021)). Therefore my colors are different from Figure 9.21. scale_color_okabe_ito() has 9 colors (default = order = 1:9). With the order argument you determine which colors you want to use. In my case the first and third color. order = c(1, 3).

Listing / Output 9.28 is the first time that I have used the augment() function from the {broom} package (See: Section A.3). I have already used broom::glance() and broom::tidy() in this chapter but I have not understood why and when to use one of these three functions. Therefore I am exploring how to apply these {broom} function in Section 9.11.2.

9.10.2 Using the multiple regression model

Formula 9.7 : Applying multiple regression model lm9.2

I am going to use as an example Miami county (Indiana) with exactly 10% of their residents not insured. This conforms to the book’s example. For a metro county I am using Sonoma county (California) with a slight different uninsured population of 10.1%.

#> county state dist_ssp no_insurance metro .fitted .lower .upper

#> 1 miami county IN 48.88 10.0 non-metro 104.4813 -62.6779 271.640

#> 2 sonoma county CA 13.11 10.1 metro 77.1589 -90.0093 244.327

#> .resid

#> 1 -55.6013

#> 2 -64.0489

Listing / Output 9.29: Compute lm9.2 model values for Miami county (IN) and Sonoma county (CA)

Our values calculated manually (104.48 and 77.15) match the values in .fitted column! We also see that the real values (48.88 and 13.11) are very far from our predicted values but inside the insanely huge 95% predicted intervals.

If we add the values in the column .resid to our predicted values in .fitted we will get the real distances to the nearest Syringe Services Program (SSP). For example: 104.4813 - 55.50131 = 48.97999.

9.10.3 Adding more variables

Before adding (more) variables one has to check the assumptions. For instance: Before we are going to add another continuous variable we should check for normality. If they are not normally distributed then it doesn’t pay the effort to regress with these variables without an appropriate transformation.

Checking the distribution of the continuous variables brings nothing new to my understanding. It is the already usual plotting of histograms and q-q plots. So I will not go into every details.

But what is interesting is the book’s conclusion of the no_insurance distribution (the percentage of uninsured residents): It claims that the normality assumption is met. But I will show that this is not the case and that a logit transformation improves the situation essentially.

Example 9.12 : Exploring no_insurance distribution

#>

#> Shapiro-Wilk normality test

#>

#> data: dist_ssp2$no_insurance_logit

#> W = 0.9921, p-value = 0.009428

Listing / Output 9.31: Checking normality assumption of residents without insurance after logit transformation. Left: Histogram with overlaid density curve. Right: Q-Q plot. Bottom: Shapiro-Wilk test.

The Shapiro-Wilk test is still statistically significant p < .05, and therefore the Null (that the no_insurance is normally distributed) has to be rejected. But the situation has drastically improved as one can seen in both graphs. In contrast to the book I would the percentage of uninsured residents logit transform.

Note 9.2

In contrast to Listing / Output 8.1 where I computed the logit transformation manually I used here the logit() function from the {car} package (see Section A.4).

9.10.4 Identifying transformation procedures

All of the continuous variables are right skewed. To find an appropriate transformation algorithm the book proposes to check with several procedures and to compare the results: Square, cube, inverse and log transformation are used. (For percent or proportions literature recommend the logit or arcsine transformation. — I have also tried arcsine and square but logit was by far the best.)

It is easy to find out with visual inspection the best transformation by comparing their histograms. Even if the situations with all of them has improved no histogram or q-q plot was so normally shaped as my no_insurance variable. But even my logit() transformation was not good enough that the Shapiro-Wilk test could confirm that the variable is normally distributed!

Remark 9.1. : What should I do if the assumptions are not met?

To choose the most normally distributed of the options does not guarantee that the variable finally really meet the assumption. What does this mean and how has one to proceed?

The only option as far as I have learned in SwR is to interpret the results cautiously and to prevent inferences from the sample to the population. But then I do not understand the purpose of the whole inference procedure when — in the end — one has to abstain from inferences.

As I am not going to reproduce all the details of the section about finding the appropriate transformation, I will at least report two other noteworthy things I learned from the book:

It is not necessary to generate a new column in the data.frame of the transformed variable. You could add the transformation directly into the ggplot2::aes() or stats:lm() code.

A disadvantages of transformed variables is the model interpretation. We cannot take the values from the printed output to interpret the model as I have demonstrated it in Equation 9.8. We have to take into account the transformation and to reverse the transformation for the interpretation. For instance if we have log-transformed one variable then we have to inverse this transformation by the appropriate exponential function. Or if we have applied a cube transformation then we need to raise the printed output by the power of 3.

9.10.5 No multicollinearity assumption

For multiple regression. e.g., when there are multiple predictor variables in the model, there is another assumption to met: Multicollinearity. (I have added this assumption to the Bullet List 9.5.)

Multicollinearity occurs when predictors are linearly related, or strongly correlated, to each other. When two or more variables are linearly related to one another, the standard errors for their coefficients will be large and statistical significance will be impacted. This can be a problem for model estimation, so variables that are linearly related to each other should not be in a model together.

There are several ways to check for multicollinearity. Examining correlation or — with more complex models, where one variable is linearly related to a combination of other variables — the use of variance inflation factor (VIF) statistics.

The VIF statistics are calculated by running a separate regression model for each of the predictors where the predictor is the outcome and everything else stays in the model as a predictor. With this model, for example, the VIF for the no_insurance variable would be computed by running the model \(no_insurance = log(x = hiv_prevalence) + metro\).

The \(R^2\) from this linear regression model would be used to determine the VIF by substituting it into Equation 9.9:

Formula 9.8 : Computing the variance inflation factor (VIF)