Achievement 1: (Understanding variable types and data types) (Section 2.4). As I already know this information I will skip this achievement. This empty section is only mentioned to get the same section numbering as in the book.

Achievement 2: Choosing and conducting descriptive analyses for categorical (factor) variables (Section 2.5)

Achievement 3: Choosing and conducting descriptive analyses for continuous (numeric) variables (Section 2.6)

Subject of this chapter is the transgender health care problem.

Transgender people are people whose biological sex is not consistent with their gender.

Cisgender people are people whose gender identity matches their biological sex.

In this chapter we are going to use data from the BRFSS website. The Behavioral Risk Factor Surveillance System (BRFSS) is a system of health-related telephone surveys that collect state data about U.S. residents regarding their health-related risk behaviors, chronic health conditions, and use of preventive services. Established in 1984 with 15 states, BRFSS now collects data in all 50 states as well as the District of Columbia and three U.S. territories. BRFSS completes more than 400,000 adult interviews each year, making it the largest continuously conducted health survey system in the world. (BRFSS)

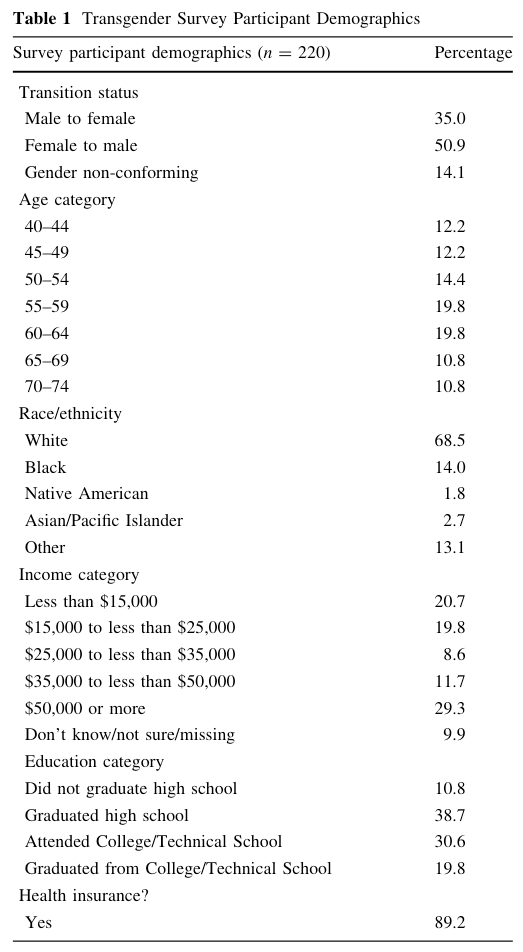

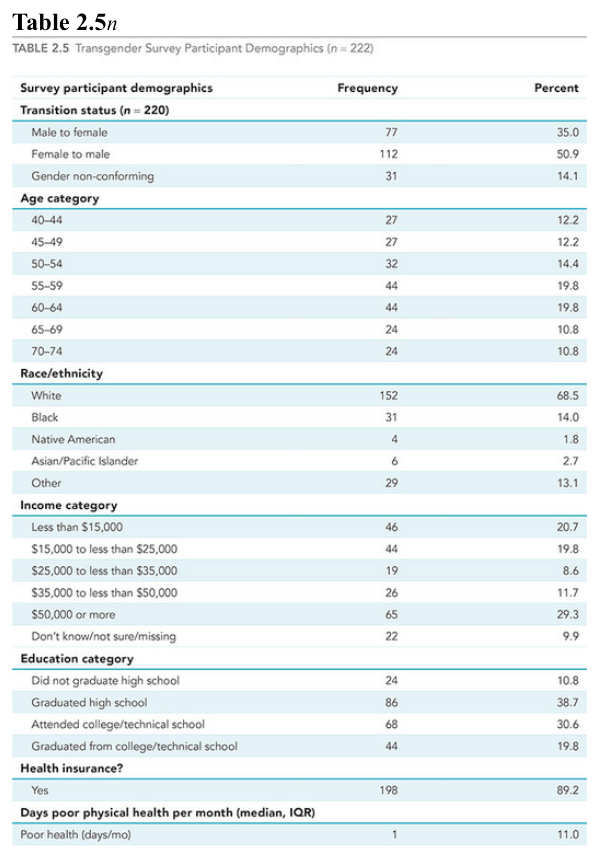

The rationale of this chapter is the fact, that prior to examining statistical relationships among variables, published studies in academic journals usually present descriptive statistics. What kind of measures exist and how to describe the data set is an important task before one can start to analyse statistical relations. The following screenshot from (Narayan, Lebron-Zapata, and Morris 2017, 876) demonstrates how such descriptive statistics can be summarized to create a so-called Table 1 as an introduction to the subject:

Graph 2.1: Screenshot of Table 1, “Transgender Survey Participants Demographics” from Narayan et. al. (2017)

In this chapter we are going to reproduce Graph 2.1 and add some additional information.

2.3 Data, codebook, and R packages

Resource 2.1 : Data, codebook, and R packages for learning about descriptive statistics

To know about variable and data types is essential as different types require different approaches for the analysis.

The following outline of the next sections is slightly adapted from the book. Harris has some measures of categorical variables (mode and index of qualitative variation) explained in the section on numeric variables.

Categorical variables:

Central tendency: The two most commonly used and reported descriptive statistics for categorical (or factor) data types are frequencies and percentages. Sometimes also the mode is used to identify the most common (or typical) category of a factor variable.

Spread / Variety: is for for categorical variables not often reported. Harris mentions the index of qualitative variation, which quantifies how much the observations are spread across the categories of a categorical variable.

Numerical variables:

Central tendency: The most important measures are the mean, median and mode.

For the decision if one should report mean or median in numerical variables the measures for skewness and kurtosis are helpful. Another issue with numerical variables is usage of scientific notation.

I will explain explicitly only to those subjects where I am not firm. This is

Bullet List 2.1: Subjects reviewed in this chapter

But I will address all the other measures in the examples resp. exercises.

2.4.2 Get the data

But before we are going to work with the data we have do download it form the BRFSS website.

Watch out!

Downloading the huge file (69 MB as ZIP and 1 GB unzipped) will take some minutes. So be patient!

R Code 2.1 : Download the SAS transport file data from the BRFSS website and save dataframe with selected variables as R object

Code

## run this code chunk only once (manually)url<-"http://www.cdc.gov/brfss/annual_data/2014/files/LLCP2014XPT.zip"utils::download.file( url =url, destfile =tf<-base::tempfile(), mode ="wb")brfss_2014<-haven::read_xpt(tf)brfss_tg_2014<-brfss_2014|>dplyr::select(TRNSGNDR, `_AGEG5YR`, `_RACE`, `_INCOMG`, `_EDUCAG`, HLTHPLN1, HADMAM, `_AGE80`, PHYSHLTH)data_folder<-base::paste0(here::here(), "/data/")if(!base::file.exists(data_folder)){base::dir.create(data_folder)}chap02_folder<-base::paste0(here::here(), "/data/chap02/")if(!base::file.exists(chap02_folder)){base::dir.create(chap02_folder)}base::saveRDS(object =brfss_tg_2014, file =paste0(chap02_folder, "/brfss_tg_2014_raw.rds"))

Listing / Output 2.1: Download and save the transgender data 2014 from the BRFSS website

The original file has 279 variables and 464664 observations. After selecting only 9 variable the file is with 31.9 MB (memory usage) and stored compressed (2.2 MB) much smaller.

Four observations about the code

It is not possible to download the file with haven::read_xpt(<URL>) directly. At first one has to create a temporary file to store the zipped file.

Whenever you meet a variable / row name with a forbidden R syntax surround the name with backticks (grave accents).

Instead of exporting the R object into a .csv file I save the data as a compressed R object that can loaded easily again into the computer memory with the base::readRDS() function.

With regard to the base::saveRDS() function I have to remind myself that the first argument is the R object without quotes. I committed this error several times.

2.5 Descriptive analyses for categorical (factor) variables

The goal of this section is to summarize and interpret the categorical variable TRNSGNDR. In contrast to the book I will work with a dataframe consisting only of the variable which I will recode to transgender.

2.5.1 Summarize categorical variables without recoding

Example 2.1 : Summarize categorical variables without recoding

Listing / Output 2.2: Show structure of the transgender data with utils::str()

#> tibble [464,664 × 1] (S3: tbl_df/tbl/data.frame)

#> $ TRNSGNDR: num [1:464664] NA NA NA NA NA NA NA NA NA NA ...

#> ..- attr(*, "label")= chr "DO YOU CONSIDER YOURSELF TO BE TRANSGEND"

{haven} has imported labelled data for the variables. We can use these labels to find the appropriate passages in the 126 pages of the codebook. Just copy the variable label and search this string in the PDF of the codebook.

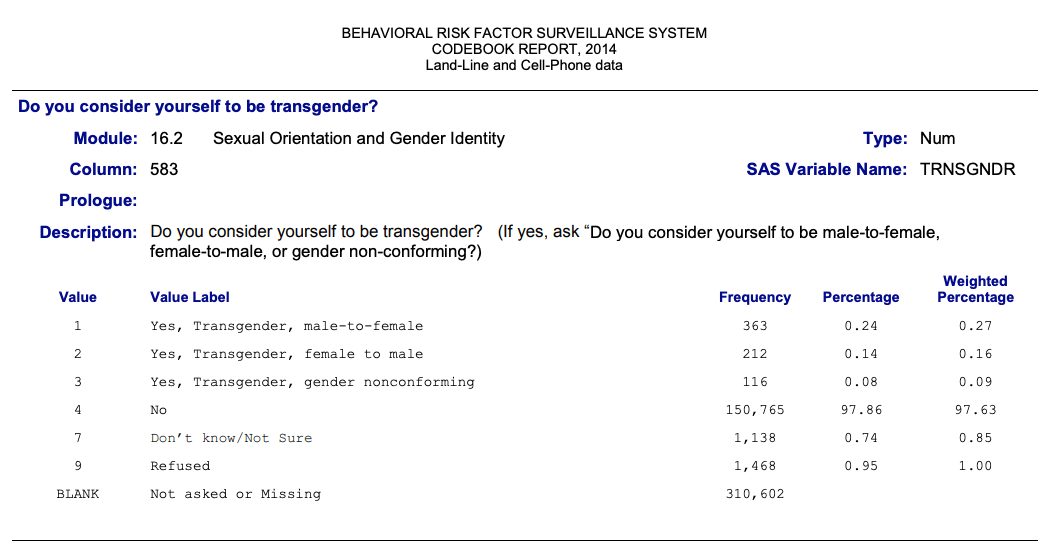

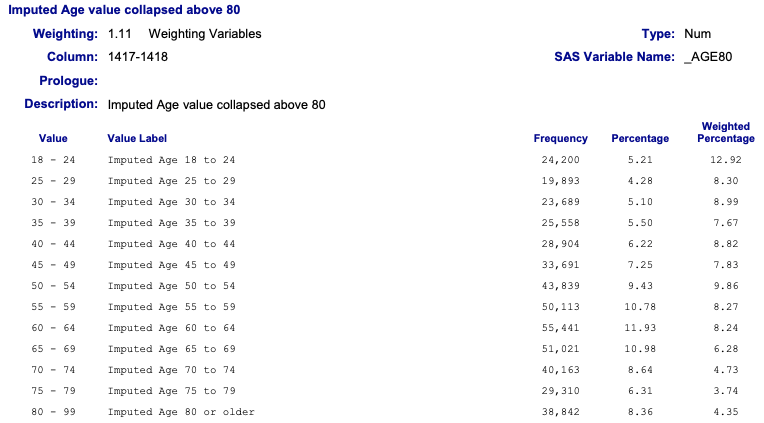

Graph 2.2: Behavior Risk Factor Surveillance Systems (BRFSS) Codebook Report, 2014 Land-Line and Cell-Phone data

Note that variable labels are restricted to 40 characters. I think this is an import limitation of {haven} because there is no such restriction using haven::labelled(). But we need to recode the data anyway, especially as there are no value labels available.

R Code 2.3 : Summarize transgender data with base::summary()

Table 2.1: A firt approach to produce a ‘Table 1’ statistics with {gtsummary} using labelled data

Characteristic

N = 464,6641

DO YOU CONSIDER YOURSELF TO BE TRANSGEND

1

363 (0.2%)

2

212 (0.1%)

3

116 (<0.1%)

4

150,765 (98%)

7

1,138 (0.7%)

9

1,468 (1.0%)

Unknown

310,602

1 n (%)

{gtsummary} uses here the labelled data imported with {haven}. Not bad, isn’t it? Just a one-liner produces this first trial for a pbulic ready Table 1 statistics. This first attempt of table would be even better if the data had also value labels.

For more information how to work with with labelled data see the full Section 1.8 with all its sub-sections. You will find some functions how to adapt Table 2.1 to get a better descriptive in Section 1.9.2. But follow also this chapter along!

To summarize a categorical variable without recoding is generally not purposeful. An exception is utils::str() as it display the internal structure including attributes. With utils::str() one can detect for example if the data are labelled. If this is the case a quick glance at the data with gtsummary::tbl_summary() could be sensible.

2.5.2 Convert numerical variable to factor and recode its levels

Categorical variable: Four steps to get sensible values for reporting data

To print a basic table() is always a useful first try.

Check the class() of the variable. For a categorical variable is has to be factor.

If this not the case then you have to recode the variable forcats::as_factor.

Compare the values with the screenshot from the codebook in Graph 2.2.

Normally table() is used for cross-classifying factors to build a contingency table of the counts at each combination of factor levels. But here I have only one variable.

R Code 2.7 : Check class of categorical variable `TRNSGNDR```

forcats::fct-count() gives you all the information you need to recode a categorical variable:

You will see the number of NAs.

And even more important: If there are (currently) unused levels then forcats::fct_count() will also list them as \(0\) observations.

You will see if some levels are not used much so you can decide if you should probably merge them to a category of “other”. {forcats} has several function to support you in this task.

You can now recode the levels according to the information in the codebook (see Graph 2.2).

R Code 2.10 : Recode the TRNSGNDR variable to match the codebook levels

Code

## create transgender_clean #########transgender_clean<-transgender_pb|>dplyr::mutate(TRNSGNDR =forcats::fct_recode(TRNSGNDR,"Male to female"='1',"Female to male"='2',"Gender nonconforming"='3',"Not transgender"='4',"Don’t know/Not sure"='7',"Refused"='9'))base::summary(transgender_clean)## saving the variable is useful in the developing stage## it helps to work with individual code chunks separatelychap02_folder<-base::paste0(here::here(), "/data/chap02/")base::saveRDS(object =transgender_clean, file =base::paste0(chap02_folder, "transgender_clean.rds"))

#> TRNSGNDR

#> Male to female : 363

#> Female to male : 212

#> Gender nonconforming: 116

#> Not transgender :150765

#> Don’t know/Not sure : 1138

#> Refused : 1468

#> NA's :310602

Listing / Output 2.3: Recoded TRNSGNDR variable to match the codebook levels

After recoding TRNSGNDR we have in Listing / Output 2.3 printed the resulted tibble. The output summarizes already understandable the essence of the variable. But we are still missing some information:

Besides the frequencies we need also the percentages to better understand the data.

As there is a huge amount of missing data, we need to calculate these percentages with and without NA’s.

In the following example we discuss functions of different packages to get the desired result.

Example 2.3 : Data management to display categorical variable for reports

The result of skimr::skim() for categorical variable is somewhat disappointing. It does not report the levels of the variable. The abbreviations of the top counts (4 levels of 6) are not understandable.

R Code 2.12 : Display summary of the TRNSGNDR variable with Hmisc::describe()

#> transgender_clean

#>

#> 1 Variables 464664 Observations

#> --------------------------------------------------------------------------------

#> TRNSGNDR

#> n missing distinct

#> 154062 310602 6

#>

#> Value Male to female Female to male Gender nonconforming

#> Frequency 363 212 116

#> Proportion 0.002 0.001 0.001

#>

#> Value Not transgender Don’t know/Not sure Refused

#> Frequency 150765 1138 1468

#> Proportion 0.979 0.007 0.010

#> --------------------------------------------------------------------------------

Hmisc::describe() is a completely new function for me. Sometimes I met in my reading the {Hmisc} package, but I have never applied functions independently. (Fore more information on {Hmisc} see Section A.39.)

In contrast to other methods it does not list the levels vertically but horizontally. This is unfortunately not super readable and one needs — at least in this case — to use the horizontal scroll bar.

But is has the advantage to display not only the frequencies but also the percentages.

I just learned about the existence of {report}. It is specialized to facilitate reporting of results and statistical models (See: Section A.74). The one-liner shows the levels of the variable, frequencies (n_obs) and percentages. Not bad!

In this example I have used the report::report_table() function because the verbal result of the standard report::report() function is rather underwhelming as you can see:

The data contains 464664 observations of the following 1 variables:

TRNSGNDR: 6 levels, namely Male to female (n = 363, 0.08%), Female to male (n = 212, 0.05%), Gender nonconforming (n = 116, 0.02%), Not transgender (n = 150765, 32.45%), Don’t know/Not sure (n = 1138, 0.24%), Refused (n = 1468, 0.32%) and missing (n = 310602, 66.84%)

Compare this to the example of the book:

Report

The 2014 BRFSS had a total of 464,664 participants. Of these, 310,602 (66.8%) were not asked or were otherwise missing a response to the transgender status question. A few participants refused to answer (n = 1,468; 0.32%), and a small number were unsure of their status (n = 1,138; 0.24%). Most reported being not transgender (n = 150,765; 32.4%), 116 were gender non-conforming (0.03%), 212 were female to male (0.05%), and 363 were male to female (0.08%).

Report 2.1: Do you consider yourself to be transgender? (Figures with missing values)

(There is another report of the TRNSGNDR without the many NAs: See Report 2.2 for a comparison.)

But it seems to me that with more complex results (e.g., reports from models) {report} is quite useful. In the course of this book I will try it out and compare with the reports of other functions.

Another thought: I have filed an issue because I think that it would be a great idea to provide a markdown compatible table so that Quarto resp. pandoc could interpret as a table and visualizing it accordingly. The table above would then appear as the following (copied and slightly edited) example:

Slightly modified {report} table to get a Markdown compatible table

Variable

Level

n_Obs

percentage_Obs

TRNSGNDR

Male to female

363

0.08

TRNSGNDR

Female to male

212

0.05

TRNSGNDR

Gender nonconforming

116

0.02

TRNSGNDR

Not transgender

150765

32.45

TRNSGNDR

Don’t know/Not sure

1138

0.24

TRNSGNDR

Refused

1468

0.32

TRNSGNDR

missing

310602

66.84

R Code 2.14 : Display summary of the TRNSGNDR variable with the recoding method from the book

#> # A tibble: 7 × 4

#> TRNSGNDR n perc_all perc_valid

#> <fct> <int> <dbl> <dbl>

#> 1 Male to female 363 0.0781 0.236

#> 2 Female to male 212 0.0456 0.138

#> 3 Gender nonconforming 116 0.0250 0.0753

#> 4 Not transgender 150765 32.4 97.9

#> 5 Don’t know/Not sure 1138 0.245 0.739

#> 6 Refused 1468 0.316 0.953

#> 7 <NA> 310602 66.8 202.

WATCH OUT! Wrong perc_valid cell value in NA row

I have the same logic used as the author in the book and got the same result too. But the result of one cell is wrong! The perc_valid cell value in the NA row should be empty, but it shows the value 202 (rounded).

R Code 2.15 : Display summary of the TRNSGNDR variable with descr::freq()

#> transgender_clean$TRNSGNDR

#> Frequency Percent Valid Percent

#> Male to female 363 0.07812 0.23562

#> Female to male 212 0.04562 0.13761

#> Gender nonconforming 116 0.02496 0.07529

#> Not transgender 150765 32.44603 97.85995

#> Don’t know/Not sure 1138 0.24491 0.73866

#> Refused 1468 0.31593 0.95286

#> NA's 310602 66.84443

#> Total 464664 100.00000 100.00000

Listing / Output 2.4: Using descr::freq() to calculate values with and without NA’s

This is the first time I used the {descr} package and it shows so far the best result! (See Section A.14.) The one-liner shows levels, frequencies, percentage with and without missing values. It even plots a bar graph, but this is not useful here, so I omitted it with plot = FALSE.

The only disadvantage: One has to learn a new package. And — searching about packages about descriptive statistics I learned that there are at least 10 other packages.

R Code 2.16 : Display summary of the TRNSGNDR variable with my recoding method using {dplyr} and {tidyr} from the {tidyverse}

Code

tg1<-transgender_clean|>dplyr::group_by(TRNSGNDR)|>dplyr::summarize(n =dplyr::n())|>dplyr::mutate(perc_all =100*(n/sum(n)))tg2<-transgender_clean|>dplyr::group_by(TRNSGNDR)|>tidyr::drop_na()|>dplyr::summarize(n =dplyr::n())|>dplyr::mutate(perc_valid =100*(n/sum(n)))dplyr::full_join(tg1, tg2, by =dplyr::join_by(TRNSGNDR, n))

#> # A tibble: 7 × 4

#> TRNSGNDR n perc_all perc_valid

#> <fct> <int> <dbl> <dbl>

#> 1 Male to female 363 0.0781 0.236

#> 2 Female to male 212 0.0456 0.138

#> 3 Gender nonconforming 116 0.0250 0.0753

#> 4 Not transgender 150765 32.4 97.9

#> 5 Don’t know/Not sure 1138 0.245 0.739

#> 6 Refused 1468 0.316 0.953

#> 7 <NA> 310602 66.8 NA

Here I created a tibble with frequencies and percentages with and another one without missing data. Then I join these two tibbles together.

Although this code with packages from the {tidyverse} collection is more complex and verbose than the one-liner from Listing / Output 2.4 using {descr} it has the advantage that one does not need to install and learn a new package. I believe that sometimes it is more efficient to be proficient with some packages than to know many packages superficially.

Report

The 2014 BRFSS had a total of 464,664 participants. Of these, 310,602 (66.8%) were not asked or were otherwise missing a response to the transgender status question. Of the 33.2% who responded, some refused to answer (n = 1,468; 0.95%), and a small number were unsure of their status (n = 1,138, 0.74%). Most reported being not transgender (n = 150,765; 97.9%), 116 were gender non-conforming (0.08%), 212 were female to male (0.14%), and 363 were male to female (0.24%).

Report 2.2: Do you consider yourself to be transgender? (Figures without missing values)

The index of qualitative variation (IQV) quantifies how much the observations are spread across categories of a categorical variable. While these indexes are computed in different ways, they all range from 0 to 1. The resulting values are high when observations are spread out among categories and low when they are not.

If, for instance, a variable has the same amount of data in each of its levels, e.g. the data are perfectly spread across groups, then the index value would be 1. If all variable levels are empty but one, then there is no spread at all and the index value would be 0.

Assessment 2.1 : Assessment of spread in categorical variables with the index of qualitative variation

The index ranges from 0 to 1:

0: No spread at all: All observations are in only one group.

1: Data perfectly spread over all levels of the categorical variable: all levels have the same amount of observations.

The qualitative assessment would be “low” or “high” depending if the index is (far) below or (far) above 0.5. But the most important use is the comparison of the proportions of levels in different observation. For a practical application see Table 2.5.

Harris recommends the qualvar::B() function. But the B index relies on the geometric mean and is therefore not usable if one of the proportions equals to \(0\), e.g., if one of the category levels has no observation the result is wrong. It returns always \(0\) independently of the frequency of categories in other levels.

The {qualvar} package has six indices of qualitative variations: The value of these standardized indices does not depend on the number of categories or number of observations:

ADA: Average Deviation Analog

B: modified geometric mean

DM: Deviation from the mode (DM)

HREL: Shannon Index for computing the “surprise” or uncertainty.

MDA: Mean Difference Analog

VA: Variance Analog

With the exception of two (B and HREL) these indices do not have problems with proportions that equals \(0\) (HREL returns NaN).

Resource 2.2 Resources about indices of variation (IQVs)

A vignette by Joël Gombin explains the origins of the several indices in the {qualvar} package.

The explication of the three indices calculated with the iqv() function of the {statpsych} package can be found in Introduction to Categorical Analysis by Douglas G. Bonett (n.d., 82f.).

In Experiment 2.1 I am going to apply several indices for qualitative variations using frequencies of the HADMAMvariable. Tab “IQV 1” the calculation uses the original frequencies of the categorical variable but without missing values. In tab “IQV 2” I added a new level with no observations (frequency = 0).

Experiment 2.1 : Computing the index of qualitative variation (IQV) for the HADMAM variable.

R Code 2.17 : Using different IQVs with the HADMAM variable

Code

hadmam_clean<-readRDS("data/chap02/hadmam_clean.rds")x<-hadmam_clean$n[1:4]glue::glue("The frequencies of the `HADMAM` variable (without missing values) are:")xglue::glue("Applying `statpsych::iqv(x)` to a vector of these frequenecies:")statpsych::iqv(x)

#> The frequencies of the `HADMAM` variable (without missing values) are:

#> [1] 204705 51067 253 317

#> Applying `statpsych::iqv(x)` to a vector of these frequenecies:

#> Simpson Berger Shannon

#> 0.4301463 0.2685839 0.3723222

Listing / Output 2.5: Computing the index of qualitative variation (IQV) with statpsych::iqv(x)

With {statpsych} I have found another package that computes three indices of qualitative variations (see: Section A.85). They have different names (Simpson, Berger and Shannon) but correspond to one of the Wilcox indices:

Simpson = VA

Berger = DM

Shannon = HREL

Compare these three values with Table 2.2. Again I have used the frequencies of the HADMAM variable:

Table 2.2: Comparison of different Indices of Qualitative Variation (IQV)

Function

Result

qualvar::ADA(x)

0.2685839

qualvar::B(x)

0.0035314

qualvar::DM(x)

0.2685839

qualvar::HREL(x)

0.3723222

qualvar::MDA(x)

0.1364323

qualvar::VA(x)

0.4301463

Normally the function glue::glue() is used to enclose expression by curly braces that will be evaluated as R code. Started with Listing / Output 2.5 I will use this function of the {{glue}} package to print comments as output of the R code chunk. (For more information about {glue }see Section A.34.)

R Code 2.18 : Using different IQVs with the HADMAM variable where one proportion is \(0\)

Code

hadmam_clean<-readRDS("data/chap02/hadmam_clean.rds")y<-c(hadmam_clean$n[1:4], 0)glue::glue("The frequencies of the `HADMAM` variable, \nwithout missing values but one level has no observations:")yglue::glue("Applying `statpsych::iqv(y)` to a vector of these frequenecies:")statpsych::iqv(y)

#> The frequencies of the `HADMAM` variable,

#> without missing values but one level has no observations:

#> [1] 204705 51067 253 317 0

#> Applying `statpsych::iqv(y)` to a vector of these frequenecies:

#> Simpson Berger Shannon

#> 0.4032622 0.2517974 NaN

Table 2.3: Comparison of different Indices of Qualitative Variation (IQV)

Function

Result

qualvar::ADA(y)

0.2517974

qualvar::B(y)

0

qualvar::DM(y)

0.2517974

qualvar::HREL(y)

NaN

qualvar::MDA(y)

0.1023242

qualvar::VA(y)

0.4032622

As you can see: B and HREL (Shannon) cannot be used if one level has no observations. The \(0\) result of B is especially dangerous as one could believe that there is no spread at all, e.g. assuming wrongly that all observations are in one level.

R Code 2.19 : Using different IQVs with the HADMAM variable where one value is NA

Code

hadmam_clean<-readRDS("data/chap02/hadmam_clean.rds")z<-c(hadmam_clean$n[1:4], NA)glue::glue("The frequencies of the `HADMAM` variable, \nwith NA for one level:")zglue::glue("Applying `statpsych::iqv(z)` to a vector of these frequenecies:")statpsych::iqv(z)glue::glue("Now with `statpsych::iqv(stats::na.omit(z))` to eliminate NA's:")statpsych::iqv(stats::na.omit(z))

#> The frequencies of the `HADMAM` variable,

#> with NA for one level:

#> [1] 204705 51067 253 317 NA

#> Applying `statpsych::iqv(z)` to a vector of these frequenecies:

#> Simpson Berger Shannon

#> NA NA NA

#> Now with `statpsych::iqv(stats::na.omit(z))` to eliminate NA's:

#> Simpson Berger Shannon

#> 0.4301463 0.2685839 0.3723222

Table 2.4: Comparison of different Indices of Qualitative Variation (IQV)

Function

Result

qualvar::ADA(z)

0.2685839

qualvar::B(z)

0.0035314

qualvar::DM(z)

0.2685839

qualvar::HREL(z)

0.3723222

qualvar::MDA(z)

0.1364323

qualvar::VA(z)

0.4301463

Missing values destroys completely the statpsych::iqv() function. The reason: The function lacks a rm.na = TRUE argument, a feature all the other indices have.

Bottom line: I recommend only to use the {qualvar} packages, because it covers not only all indices of statpsych::iqv(), but has other indices as well and is better adaptable to data.frames wit missing data.

2.5.3.2.3 Practical usage of IQV

The few values of the HADMAM variable are not very instructive for the usage of the different indices. I am going to apply the functions to another, much more complex dataset from the 1968 US presidential election (Table 1 of (Wilcox 1973, 332)).

R Code 2.20 Comparing different IQVs using data from the 1968 US presidential election

Code

data(package ="qualvar", list ="wilcox1973")### taken from vignette of {qualvar}### https://cran.r-project.org/web/packages/qualvar/vignettes/wilcox1973.htmlwilcox1973$MDA<-apply(wilcox1973[,2:4], 1, qualvar::MDA)wilcox1973$DM<-apply(wilcox1973[,2:4], 1, qualvar::DM)wilcox1973$ADA<-apply(wilcox1973[,2:4], 1, qualvar::ADA)wilcox1973$VA<-apply(wilcox1973[,2:4], 1, qualvar::VA)wilcox1973$HREL<-apply(wilcox1973[,2:4], 1, qualvar::HREL)wilcox1973$B<-apply(wilcox1973[,2:4], 1, qualvar::B)qv_indices<-apply(wilcox1973[, 2:4], 1, statpsych::iqv)|>base::t()|>tibble::as_tibble( .name_repair =~c("Simpson", "Berger", "Shannon"))qv_compared<-dplyr::bind_cols(wilcox1973, qv_indices)|>dplyr::mutate(dplyr::across(dplyr::where(~is.numeric(.)), ~round(., 3)))## the last line is equivalent to## dplyr::where(\(x) is.numeric(x)), \(y) round(y, 3))) or## dplyr::where(function(x) is.numeric(x)), function(y) round(y, 3))) DT::datatable(qv_compared, options =list(pageLength =10))

Table 2.5: Comparing different IQVs using data from the 1968 US presidential election

This is a rather complex code chunk. I combined the indices from {qualvar} and {statpsych} with the dataset qualvar::wilcox1973 and got a very wide table. You have to use the horizontal scroll bar to see all columns of the table. I tried with Quarto layout a different layout with more space for this part of the page. But this was not optimal: Moving the sidebars in reverse destroys the table view and one has to scroll outside of the table and start scrolling to the table again. So I stick with the standard layout.

The idea of IQV indices is to have a measure of spread for categorical variables. For the presidential election dataset a high IQV points out, that the three candidates are almost dead even. A good example is South Carolina (first row): With the exception of B are all indices well beyond 0.9.

As an contrasting example take Texas (eighth row): In Texas Wallace is well behind the other to candidates. The indices (with the notable exceptions of VA and HREL) reflect this difference with a lower index value.

Which index of qualitative variation (IQV) to choose?

From my (cursory) inspection I would recommend to use mostly MDA, DM and ADA for computing an index of qualitative variation (IQV).

B is not stable against levels without observation and HREL is not immune against missing values. Together with VA is HREL — in comparison to the other indices — not so responsive to (smaller) changes in the proportional distribution of the categorical variable.

2.5.4 Achievement 2: Report frequencies for a factorial variable

Use one of the methods shown to create a table of the frequencies for the HADMAM variable, which indicates whether or not each survey participant had a mammogram. Review the question and response options in the codebook and recode to ensure that the correct category labels show up in the table before you begin.

2.5.4.1 Details of the procedure

The following box outlines the different steps and methods I have used. The bold first part of every bullet point corresponds to the tab name of Exercise 2.1.

Procedure 2.1 Report frequencies and proportions for the HADMAM variable

Codebook: Get the appropriate information from the codebook.

Select variable

Look with utils::str() into the attributes to see the labelled variable value

Find with this information the passage in the codebook

Recode the variable

Convert the numeric to a categorical variable

Recode levels (include levels for missing values)

Prepare recoded variable for table output

Summarize absolute and percentage values with missing data

Summarize absolute and percentage values with missing data

Join both information into a data frame

In addition to check my understand for achievement 2 I will try to show the descriptive statistics as a Table 1, produced with {kable} but also with {gtsummary}. The table should contain all the features mentioned in Bullet List 2.2. I know that this looking ahead of achievement 4, but just let’s try it for fun.

Report 1: A manual description with copy & paste, always changing between table and document. A method that is very error prone!

Report 2: R inline reporting as an R markdown report with the gtsummary::inline_text() function. This method results into reproducible reports, an important part of good practices.

2.5.4.2 My solution

Exercise 2.1 Achievement 2: Frequencies and proportions of the categorical HADMAM variable

## read brfss_tg_2014 data #######brfss_tg_2014<-base::readRDS("data/chap02/brfss_tg_2014_raw.rds")## create hadmam_lab ######hadmam_lab<-brfss_tg_2014|>dplyr::select(HADMAM)str(hadmam_lab)

#> tibble [464,664 × 1] (S3: tbl_df/tbl/data.frame)

#> $ HADMAM: num [1:464664] 1 NA NA 1 1 NA NA 1 1 NA ...

#> ..- attr(*, "label")= chr "HAVE YOU EVER HAD A MAMMOGRAM"

In this case the name of the variable HADMAM would have been enough to find the correct passage in the codebook. But this is not always the case. Often the variable name is used many times and it is not often — like it was the case here — that the first appearance is the sought one.

Table 2.6: Have you ever had a mammogram? N = 464664

Response

n

% with NA’s

% without NA’s

Yes

204705

44.05

79.86

No

51067

10.99

19.92

Don’t know/Not sure

253

0.05

0.10

Refused

317

0.07

0.12

NA

208322

44.83

NA

To get a table with knitr::kable() it by far the easiest way: Just put the R dataframe object into the kable() function and — Voilà. And it doesn’t look bad!

R Code 2.25 Display HADMAM variable as descriptive statistics with {kableExtra}

Table 2.7: Have you ever had a mammogram? N = 464664

Response

n

% with NA's

% without NA's

Yes

204705

44.05

79.86

No

51067

10.99

19.92

Don't know/Not sure

253

0.05

0.10

Refused

317

0.07

0.12

NA

208322

44.83

NA

{kableExtra} need more lines to get a similar result as Table 2.6. But {kableExtra} has many more styling options for HTML but also for \(\LaTeX\) as you can see in the extensive documentation. For instance scroll down the HTML options page to get an impression about available features.

I don’t know how to reduce the size of this HTML table and to get rid of the horizontal scroll bar.

R Code 2.26 Display HADMAM variable as descriptive statistics with {gt}

Table 2.8: Have you ever had a mammogram? N = 464664

Response

n

% with NA's

% without NA's

Yes

204705

44.05

79.86

No

51067

10.99

19.92

Don't know/Not sure

253

0.05

0.10

Refused

317

0.07

0.12

NA

208322

44.83

NA

This is the first time that I used the {gt} package. The table looks nice but the construction was super complex: Every change had to be defined in several steps:

what type of change (e.g., table style)

what type of style (e.g., text of cells)

what kind of change (e.g., bold)

where in the table (location e.g., cell body, column labels)

what element (e.g., everything, a few or just a specific one).

R Code 2.27 Display HADMAM variable as descriptive statistics with {gtsummary}

Table 2.9: Have you ever had a mammogram? N = 464664

Characteristic

All values

Without missing values

N = 464,6641

N = 256,3421

HADMAM

Yes

204,705 (44.05%)

204,705 (79.86%)

No

51,067 (10.99%)

51,067 (19.92%)

Don't know/Not sure

253 (0.05%)

253 (0.10%)

Refused

317 (0.07%)

317 (0.12%)

(Missing)

208,322 (44.83%)

1 n (%)

Tables with {gtsummary} play in another league. They are not constructed for data tables but for display tables, especially for generating a so-called Table 1. I could therefore not apply my prepared dataset hadmam_tbl2 but had to use as input the labelled raw data in hadmam_lab.

Report

The 2014 phone survey of the BRFSS (Behavioral Risk Factor Surveillance System) was taken via land-line and cell-phone data. From 464,664 participants were 208,322 (44.83%) people not asked or failed otherwise to respond to the question “Have You Ever Had a Mammogram?”. Of the 256,342 participants that answered affirmed the vast majority (n = 204,705; 79.86%) the question but still almost one fifth (n = 51,067; 19.92%) never had a mammogram. Some women (n = 253; 0.10%) were not sure or refused an answer (n = 317; 0.12%).

Report 2.3: Have You Ever Had a Mammogram? (Written with copy & paste)

Compare my report with the description of a similar structured categorical variable of the same survey: Report 2.2.

Report

The 2014 phone survey of the BRFSS (Behavioral Risk Factor Surveillance System) was taken via land-line and cell-phone data. From NULL participants were 208,322 (44.83%) people not asked or failed otherwise to respond to the question “Have You Ever Had a Mammogram?”. The vast majority of the 256,342 participants affirmed the question 204,705 (79.86%), but still 51,067 (19.92%) — that is almost one fifth — never had a mammogram. A small amount of women 253 (0.10%) were not sure and some women 317 (0.12%) refused an answer.

I had to reformulate some text part, because the replacement was always <absolute number> (<percent number>%). But that was not a big deal. The advantage of gtsummary::inline_text() summaries are reproducible reports that prevents errors in copy & paste and are adapted automatically if the figures of the table changes!

Compare this report with the copy & paste description from Report 2.3.

2.6 Descriptive analyses for continuous (numeric) variables

I will not review explicitly all measures for numeric variables. Instead I will focus on the subjects mentioned in Bullet List 2.1.

2.6.1 Data for experiments with skewness and kurtosis

2.6.1.1 Body measures

To experiment with realistic data I will use the bdims dataset of {openintro} (see Section A.59). It is a body measurement of 507 physically active individuals (247 men and 260 women). To get the following code to run you need to install the {openintro} via CRAN.

I have chosen the bdims data because it has lots of numeric variable to experiment with skewness and kurtosis. As you can read in an article of the Journal of Statistics Education “these data can be used to provide statistics students practice in the art of data analysis” (Heinz et al. 2003). The dataset contains body girth measurements and skeletal diameter measurements, as well as age, weight, height and gender, are given for 507 physically active individuals – 247 men and 260 women. You can read more about the educational use of the data and you will find an explanation of the many variables in the online help of {openintro}.

I will mostly concentrate on the following measures (all values are in centimeter):

biacromial diameter (shoulder breadth)

biiliac diameter (pelvic breadth)

bitrochanteric diameter (hip bone breadth) and

waist girth

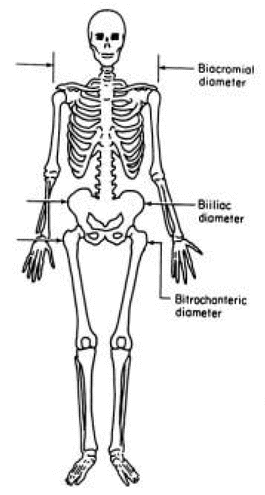

To give you a better understanding where some of these measure are located on the human body I replicate Graph 2.4 one figure of the article.

Graph 2.4: Biacromial, Biiliac, and Bitrochanteric Diameters

To facilitate the following experiment I have written a function to generate a histogram with an overlaid density curve. The code is loaded with other functions whenever this chapter is rendered.

R Code 2.28 : Function to create histogram with overlaid dnorm curve

Code

# df = data.frame or tibble# v = numerical column of data.frame: syntax for call df$v# x_label = title for x-axis# nbins = number of bins# col_fill = fill color# col_color = border color of bins# col = color of dnorm curve# return value is "ggplot" object to customize it further (set labels etc.)my_hist_dnorm<-function(df, v, n_bins=20,col_fill="gray90",col_color="black",col_dnorm="tomato"){p<-df|>ggplot2::ggplot(ggplot2::aes())+ggplot2::geom_histogram(ggplot2::aes(y =ggplot2::after_stat(density)), bins =n_bins, fill =col_fill, color =col_color, stat ="histogram")+ggplot2::stat_function(fun =dnorm, args =c(mean =mean(v), sd =sd(v)), col =col_dnorm)+ggplot2::theme_bw()p}

(This code chunk is not evaluated and has therefore no visible output.)

2.6.1.2 Data

R Code 2.29 : Load the bdims dataset of the {openintro} package and inspect the data

Code

data(package ="openintro", list ="bdims")skimr::skim(bdims)

Data summary

Name

bdims

Number of rows

507

Number of columns

25

_______________________

Column type frequency:

numeric

25

________________________

Group variables

None

Variable type: numeric

skim_variable

n_missing

complete_rate

mean

sd

p0

p25

p50

p75

p100

hist

bia_di

0

1

38.81

3.06

32.4

36.20

38.7

41.15

47.4

▃▇▇▅▁

bii_di

0

1

27.83

2.21

18.7

26.50

28.0

29.25

34.7

▁▂▇▆▁

bit_di

0

1

31.98

2.03

24.7

30.60

32.0

33.35

38.0

▁▃▇▆▁

che_de

0

1

19.23

2.52

14.3

17.30

19.0

20.90

27.5

▃▇▆▂▁

che_di

0

1

27.97

2.74

22.2

25.65

27.8

29.95

35.6

▂▇▆▅▁

elb_di

0

1

13.39

1.35

9.9

12.40

13.3

14.40

16.7

▂▇▇▇▂

wri_di

0

1

10.54

0.94

8.1

9.80

10.5

11.20

13.3

▁▆▇▅▁

kne_di

0

1

18.81

1.35

15.7

17.90

18.7

19.60

24.3

▂▇▅▁▁

ank_di

0

1

13.86

1.25

9.9

13.00

13.8

14.80

17.2

▁▅▇▆▂

sho_gi

0

1

108.20

10.37

85.9

99.45

108.2

116.55

134.8

▃▇▆▆▁

che_gi

0

1

93.33

10.03

72.6

85.30

91.6

101.15

118.7

▃▇▆▅▂

wai_gi

0

1

76.98

11.01

57.9

68.00

75.8

84.50

113.2

▆▇▆▂▁

nav_gi

0

1

85.65

9.42

64.0

78.85

84.6

91.60

121.1

▂▇▆▂▁

hip_gi

0

1

96.68

6.68

78.8

92.00

96.0

101.00

128.3

▂▇▅▁▁

thi_gi

0

1

56.86

4.46

46.3

53.70

56.3

59.50

75.7

▂▇▃▁▁

bic_gi

0

1

31.17

4.25

22.4

27.60

31.0

34.45

42.4

▃▇▇▅▁

for_gi

0

1

25.94

2.83

19.6

23.60

25.8

28.40

32.5

▂▇▆▇▂

kne_gi

0

1

36.20

2.62

29.0

34.40

36.0

37.95

49.0

▂▇▅▁▁

cal_gi

0

1

36.08

2.85

28.4

34.10

36.0

38.00

47.7

▁▇▇▂▁

ank_gi

0

1

22.16

1.86

16.4

21.00

22.0

23.30

29.3

▁▆▇▂▁

wri_gi

0

1

16.10

1.38

13.0

15.00

16.1

17.10

19.6

▂▇▆▆▁

age

0

1

30.18

9.61

18.0

23.00

27.0

36.00

67.0

▇▅▂▁▁

wgt

0

1

69.15

13.35

42.0

58.40

68.2

78.85

116.4

▅▇▇▂▁

hgt

0

1

171.14

9.41

147.2

163.80

170.3

177.80

198.1

▁▆▇▅▁

sex

0

1

0.49

0.50

0.0

0.00

0.0

1.00

1.0

▇▁▁▁▇

We can see from the tiny histograms which variable seems to be normally distributed and which are skewed — and a little less obvious — which variable has excess kurtosis.

2.6.2 Skewness

2.6.2.1 What is skewness?

Skewness is a measure of the extent to which a distribution is skewed.

The mean of \(x\), \(m_x\), is subtracted from each observation \(x_i\). These differences between the mean and each observed value are called deviation scores. Each deviation score is divided by the standard deviation, \(s\), and the result is cubed. Finally, sum the cubed values and divide by the number of cubed values (which is taking the mean) to get the skewness of \(x\). The resulting value is usually negative when the skew is to the left and usually positive when the skew is toward the right. Skewness is strongly impacted by the sample size, therefore the value of skew that is considered too skewed differs depending on sample size.

Assessment 2.2 : Assessment of skewness

Table 2.10: Skewness assessment

Sample Size

Z-values Range

Comment

n < 50

under -2 or over +2

Problem!

n > 50 & < 300

under -3.29 or over +3.29

Problem!

n > 300

under -7 or over 7

Problem, but visual inspection recommended!

Right skewed = tail to the right = skew index is positive

Left skewed = tail to the left = skew index is negative

There are many packages to compute the skewness of a distribution. (Type ??skewness into the console to get a list. Harris recommends the skew() function from the {semTools} package (See Section A.80).

R Code 2.30 : Checking skewness of a shoulder breadth distribution of 247 men

Code

## select men from the samplebdims_male<-bdims|>dplyr::filter(sex==1)## construct graph using my private function my_hist_dnorm()p<-my_hist_dnorm(bdims_male, bdims_male$bia_di)p+ggplot2::labs(x ="biacromial diameter in centimeters")## commented test outputglue::glue("##################################################")glue::glue("Using the `semTools::skew()` function")semTools::skew(bdims_male$bia_di)glue::glue(" ")glue::glue("##################################################")glue::glue("Using the `statpsych::test.skew()` function")statpsych::test.skew(bdims$bia_di)

#> ##################################################

#> Using the `semTools::skew()` function

#> skew (g1) se z p

#> -0.2157434 0.1558573 -1.3842370 0.1662859

#>

#> ##################################################

#> Using the `statpsych::test.skew()` function

#> Skewness p

#> 0.1563 0.1464

(a) Shoulder breadth distribution in centimeters of 247 physically active men

Listing / Output 2.6: Checking skewness of a shoulder breadth distribution of 247 men

The interpretation follows Table 2.10: The \(z\) result of Graph 2.6 (a) from the biacromial diameter of 247 men is only 1.44, e.g. well situated in the range of -3.29 and +3.29 and therefore we have no skewness problem. The p-value is with 0.17 big and therefore the null (there is no skewness) can’t be rejected.

[statpsych::test.skew() c]omputes a Monte Carlo p-value (250,000 replications) for the null hypothesis that the sample data come from a normal distribution. If the p-value is small (e.g., less than .05) and the skewness estimate is positive, then the normality assumption can be rejected due to positive skewness. If the p-value is small (e.g., less than .05) and the skewness estimate is negative, then the normality assumption can be rejected due to negative skewness. (R Documentation of statpsych::test.skew())

Resource 2.3 Articles on identifying non-normal distributions

Harris referenced (Kim 2013) for the assessment table above. I noticed that there are many short articles about different types of test.. Just put the search string “Statistical[ALL] AND notes[ALL] AND clinical[ALL] AND researchers[ALL]” into the Kamje search engine.

R Code 2.31 : Pelvic breadth distribution of 507 physically active individuals

Code

## construct graph using my private function my_hist_dnorm()p<-my_hist_dnorm(bdims, bdims$bii_di)p+ggplot2::labs(x ="respondent's biiliac diameter (pelvic breadth) in centimeters.")## commented test outputglue::glue("##################################################")glue::glue("Using the `semTools::skew()` function")semTools::skew(bdims$bii_di)glue::glue(" ")glue::glue("##################################################")glue::glue("Using the `statpsych::test.skew()` function")statpsych::test.skew(bdims$bii_di)

#> ##################################################

#> Using the `semTools::skew()` function

#> skew (g1) se z p

#> -0.4187365342 0.1087856586 -3.8491887572 0.0001185097

#>

#> ##################################################

#> Using the `statpsych::test.skew()` function

#> Skewness p

#> -0.4175 1e-04

Graph 2.5: Pelvic breadth distribution in centimeters of 507 physically active indidviduals

Both tests have a very low p-value and attest us excessive skewness. This is even true if the z-value of semTools::skew() is only -3.85, e.g. in the appropriate boundaries of -7 and + 7 for a sample of 507 individuals (compare Table 2.10). The recommended visual inspection of the histogram shows us a left skewed distribution: There are too much individuals with a smaller pelvic breadth than it would appropriate for a normal distribution. It is very unlikely that the sample comes from a normal distribution. We have to reject the null.

R Code 2.32 : Waist girth distribution in centimeters of 507 physically active individuals

Code

## construct graph using my private function my_hist_dnorm()p<-my_hist_dnorm(bdims, bdims$wai_gi)p+ggplot2::labs(x ="Respondent's waist girth in centimeters, \nmeasured at the narrowest part of torso below the rib cage as average of contracted and relaxed position")## commented test outputglue::glue("##################################################")glue::glue("Using the `semTools::skew()` function")semTools::skew(bdims$wai_gi)glue::glue(" ")glue::glue("##################################################")glue::glue("Using the `statpsych::test.skew()` function")statpsych::test.skew(bdims$wai_gi)

#> ##################################################

#> Using the `semTools::skew()` function

#> skew (g1) se z p

#> 5.422301e-01 1.087857e-01 4.984390e+00 6.215764e-07

#>

#> ##################################################

#> Using the `statpsych::test.skew()` function

#> Skewness p

#> 0.5406 0

Graph 2.6: Waist girth distribution in centimeters of 507 physically active individuals

Both tests have a very low p-value and attest us excessive skewness. Again is the corresponding the z-value of semTools::skew() in the appropriate boundaries of -7 and + 7 for a sample of 507 individuals (compare Table 2.10). But the recommended visual inspection of the histogram shows us a right skewed distribution very clearly: There are too much individuals with a bigger waist girth than it would appropriate for a normal distribution. It is very unlikely that the sample comes from a normal distribution. We have to reject the null.

2.6.3 Mode

The book claims: “… unfortunately, perhaps because it is rarely used, there is no mode function.” This is not correct. There exists {modeest}, a package specialized for mode estimation (see Section A.49).

Harris therefore explains how to sort the variable to get the value most used as first item. This methods works always out of the box and does not need a specialized package.

R Code 2.33 : Most frequent value(s) in a given numerical vector

Code

tr_f<-forcats::fct_count(transgender_pb$TRNSGNDR)tr_recoded<-forcats::fct_count(transgender_clean$TRNSGNDR)|>dplyr::rename(Recoded ="f")tr<-dplyr::full_join(tr_f, tr_recoded, by =dplyr::join_by(n))|>dplyr::relocate(n, .after =dplyr::last_col())glue::glue("Let's remember the frequencies of the TRNSGNDR variable")trglue::glue(" ")glue::glue("#############################################")glue::glue("TRNSGDR variable not recoded and with NA's")transgender_pb|>dplyr::pull(TRNSGNDR)|>modeest::mfv()glue::glue(" ")glue::glue("#############################################")glue::glue("TRNSGDR variable not recoded with NA's")transgender_pb|>dplyr::pull(TRNSGNDR)|>modeest::mfv(na_rm =TRUE)glue::glue(" ")glue::glue("#############################################")glue::glue("TRNSGDR variable recoded without NA's")transgender_clean|>dplyr::pull(TRNSGNDR)|>modeest::mfv(na_rm =TRUE)

#> Let's remember the frequencies of the TRNSGNDR variable

#> # A tibble: 7 × 3

#> f Recoded n

#> <fct> <fct> <int>

#> 1 1 Male to female 363

#> 2 2 Female to male 212

#> 3 3 Gender nonconforming 116

#> 4 4 Not transgender 150765

#> 5 7 Don’t know/Not sure 1138

#> 6 9 Refused 1468

#> 7 <NA> <NA> 310602

#>

#> #############################################

#> TRNSGDR variable not recoded and with NA's

#> [1] <NA>

#> Levels: 1 2 3 4 7 9

#>

#> #############################################

#> TRNSGDR variable not recoded with NA's

#> [1] 4

#> Levels: 1 2 3 4 7 9

#>

#> #############################################

#> TRNSGDR variable recoded without NA's

#> [1] Not transgender

#> 6 Levels: Male to female Female to male ... Refused

The function mfv() stands for Most Frequent Value(s) and is reexported by {modeest} from {statip}. It returns all modes, e.g. highest values from a vector. For instance: modeest::mfv(c("a", "a", "b", "c", "c")) returns “a, c”. If you want just the first mode value use modeest::mfv1().

R Code 2.34 Arrange data that most frequent value(s) are first row

Code

glue::glue("Let's remember the frequencies of the TRNSGNDR variable")trglue::glue(" ")glue::glue("#############################################. ")glue::glue("TRNSGDR variable with NA's")tr|>dplyr::arrange(desc(n))|>dplyr::dplyr_row_slice(1)glue::glue(" ")glue::glue("#############################################. ")glue::glue("TRNSGDR variable without NA's")tr|>tidyr::drop_na()|>dplyr::arrange(desc(n))|>dplyr::first()glue::glue(" ")glue::glue("#############################################. ")glue::glue("TRNSGDR variable without NA's: Just the mode value")tr|>tidyr::drop_na()|>dplyr::arrange(desc(n))|>dplyr::first()|>dplyr::pull(n)

#> Let's remember the frequencies of the TRNSGNDR variable

#> # A tibble: 7 × 3

#> f Recoded n

#> <fct> <fct> <int>

#> 1 1 Male to female 363

#> 2 2 Female to male 212

#> 3 3 Gender nonconforming 116

#> 4 4 Not transgender 150765

#> 5 7 Don’t know/Not sure 1138

#> 6 9 Refused 1468

#> 7 <NA> <NA> 310602

#>

#> #############################################.

#> TRNSGDR variable with NA's

#> # A tibble: 1 × 3

#> f Recoded n

#> <fct> <fct> <int>

#> 1 <NA> <NA> 310602

#>

#> #############################################.

#> TRNSGDR variable without NA's

#> # A tibble: 1 × 3

#> f Recoded n

#> <fct> <fct> <int>

#> 1 4 Not transgender 150765

#>

#> #############################################.

#> TRNSGDR variable without NA's: Just the mode value

#> [1] 150765

2.6.4 Kurtosis

2.6.4.1 What is kurtosis?

Kurtosis measures how many observations are in the tails of the distribution. If a distribution that looks bell-shaped has many observations in the tails it is more flat than a normal distribution and called platykurtic. On the other hand if a distribution has that looks bell-shaped has only a few observations in the tails it is more pointy than a normal distribution and called leptokurtic. A normal distribution is mesokurtic, e.g., it is neither platykurtic nor leptokurtic.

Platykurtic and leptokurtic deviations from normality do not necessarily influence the mean, since it will still be a good representation of the middle of the data if the distribution is symmetrical and not skewed. However, platykurtic and leptokurtic distributions will have smaller and larger values of variance and standard deviation, respectively, compared to a normal distribution. And this is a problem, as variance and standard deviation are not only used to quantify spread, but are also used in many of the common statistical tests.

R Code 2.35 : Checking kurtosis of hip girth distribution of 507 individuals

Code

## construct graph using my private function my_hist_dnorm()p<-my_hist_dnorm(bdims, bdims$bit_di)p+ggplot2::labs(x ="respondent's bitrochanteric diameter (hip girth) in centimeters of 507 individuals")glue::glue("###################################################################")glue::glue("Kurtosis of pelvic breadth using `semTools::kurtosis()`")semTools::kurtosis(bdims$bit_di)glue::glue(" ")glue::glue("###################################################################")glue::glue("Kurtosis of pelvic breadth using `statpsych::test.kurtosis()`")statpsych::test.kurtosis(bdims$bit_di)

#> ###################################################################

#> Kurtosis of pelvic breadth using `semTools::kurtosis()`

#> Excess Kur (g2) se z p

#> 0.04872448 0.21757132 0.22394715 0.82279843

#>

#> ###################################################################

#> Kurtosis of pelvic breadth using `statpsych::test.kurtosis()`

#> Kurtosis Excess p

#> 3.0364 0.0364 0.7486

Graph 2.7: Respondent’s bitrochanteric diameter (hip girth) in centimeters of 507 individuals

The excessive kurtosis computed by default is \(g_{2}\), the fourth standardized moment of the empirical distribution.

Kurtosis test of the pelvic breadth distribution

Both functions semTools::skew() and statpsych::test.skew() claim that there is no excess kurtosis. The z-value is small and the p-value is high, so we don’t have to reject the null: The probability is very high, that the data come from a normal distribution.

R Code 2.36 : Checking kurtosis of shoulder breadth distribution of 507 individuals

Code

## construct graph using my private function my_hist_dnorm()p<-my_hist_dnorm(bdims, bdims$bia_di)p+ggplot2::labs(x ="biachromial diameter distribution of 507 individuals")glue::glue("###################################################################")glue::glue("Kurtosis of biachromial diameter using `semTools::kurtosis()`")semTools::kurtosis(bdims$bia_di)glue::glue(" ")glue::glue("###################################################################")glue::glue("Kurtosis of biachromial diameter using `statpsych::test.kurtosis()`")statpsych::test.kurtosis(bdims$bia_di)

#> ###################################################################

#> Kurtosis of biachromial diameter using `semTools::kurtosis()`

#> Excess Kur (g2) se z p

#> -0.8258614973 0.2175713173 -3.7958197229 0.0001471564

#>

#> ###################################################################

#> Kurtosis of biachromial diameter using `statpsych::test.kurtosis()`

#> Kurtosis Excess p

#> 2.1704 -0.8296 0

Graph 2.8: Biachromial diameter (shoulder breadth) distribution of 507 individuals

Kurtosis test of the shoulder breadth distribution

Both functions semTools::skew() and statpsych::test.skew() assert that there is excess kurtosis. The p-value is very low, so we have to reject the null and assuming the alternative: The probability is very high, that the data didn’t come from a normal distribution.

As the z-value is below 3 and the excess value is negative we have a platykurtic (= flat) distribution. Looking at the histogram shows that this a reasonable interpretation.

R Code 2.37 : Checking kurtosis of pelvis breadth distribution of 507 individuals

Code

## construct graph using my private function my_hist_dnorm()p<-my_hist_dnorm(bdims, bdims$bii_di)p+ggplot2::labs(x ="Respondent's biiliac diameter (pelvic breadth) in centimeters (men and women, N = 507)")glue::glue("###################################################################")glue::glue("Kurtosis of pelvis breadth distribution using `semTools::kurtosis()`")semTools::kurtosis(bdims$bii_di)glue::glue(" ")glue::glue("###################################################################")glue::glue("Kurtosis of pelvis breadth distribution using `statpsych::test.kurtosis()`")statpsych::test.kurtosis(bdims$bii_di)

#> ###################################################################

#> Kurtosis of pelvis breadth distribution using `semTools::kurtosis()`

#> Excess Kur (g2) se z p

#> 1.112973e+00 2.175713e-01 5.115442e+00 3.130078e-07

#>

#> ###################################################################

#> Kurtosis of pelvis breadth distribution using `statpsych::test.kurtosis()`

#> Kurtosis Excess p

#> 4.0902 1.0902 5e-04

Graph 2.9: Respondent’s biiliac diameter (pelvic breadth) in centimeters of 507 individuals

Kurtosis test of the height distribution

Both functions semTools::skew() and statpsych::test.skew() assert that there is excess kurtosis. The p-value is very low, so we have to reject the null and assuming the alternative: The probability is very high, that the data didn’t come from a normal distribution.

As the z-value is above 3 and the excess value is positive we have a leptokurtic (= pointy) distribution. Looking at the histogram shows that this a reasonable interpretation.

Using only {semTools} for testing skewness and kurtosis

I haven’t found any difference between the results of {semTools} and {statpsych} in the computation of skewness and kurtosis. As the {statpsych} is more cumbersome, because of its 250,000 replications, I will stick with the {semTools} functions.

2.6.5 Scientifc notation

I have not troubles to read figures in scientific notation. But I often forget what command I must use to turn it off/on.

To turn off this option in R, type and run options(scipen = 999).

To turn it back on, type and run options(scipen = 000).

For graphs you could change the scale of the axis:

ggplot(aes(x = Year, y = num.guns/100000)) +

geom_line(aes(color = gun.type)) +

labs(y = "Number of firearms (in 100,000s)")

To turn off just for one variable: format(x, scientific = FALSE). It returns a character vector.

2.6.6 Data management for numerical variables

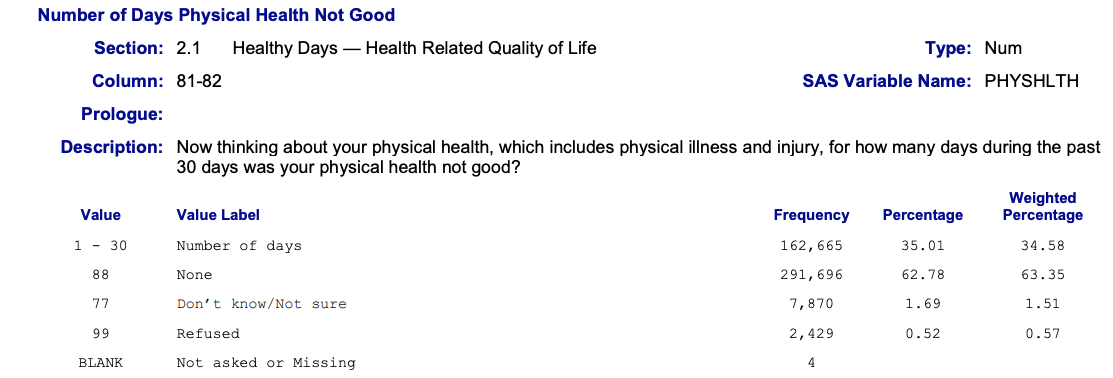

2.6.6.1 The book’s PHYSHLTH variable

Example 2.4 : Data management for numerical PHYSHLTH variable

Concerning the first warning: bins = 30 wouldn’t be ideal, because we have 31 values (0-30 days where the physical health was not good). So bins = 31 would also be a good choice.

I have to confess that I didn’t know that one can use the argument na.rm = TRUE inside a {ggplot2} function.

2. Removing the scientific notation in the y-axis

At first I used used outside the {ggplot2}-block options(scipen = 999)`. But then I decided not to turn off generally the scientific notation but to find a solution inside of the {ggplot2} code chunk.

I came up with ggplot2::scale_y_continuous(labels = scales::label_number()). Using the {scales} package is a common way to change scales and labels of {ggplot2} graphics (see Section A.78). My command changed the scientific notation to numbers, but the numbers still had many zeros and are not handy.

Finally I found a general solution, without using the {scales} package: Writing a local function for the trans argument of the ggplot2::scale_y_continuous() directive. I didn’t know about this method before and found this solution on StackOverflow.

2.6.6.1.0.1 Central tendency

R Code 2.41 : Computing mean, median and mode of the PHYSHLTH variable

Code

physical_health_clean|>tidyr::drop_na()|>dplyr::summarize(mean =base::mean(PHYSHLTH), median =stats::median(PHYSHLTH), mode =modeest::mfv(PHYSHLTH))

#> # A tibble: 1 × 3

#> mean median mode

#> <dbl> <dbl> <dbl>

#> 1 4.22 0 0

2.6.6.1.0.2 Spread

R Code 2.42 : Computing standard deviation, variance, the IQR and the qunatiles of the PHYSHLTH variable

Code

physical_health_clean|>tidyr::drop_na()|>dplyr::summarize(sd =stats::sd(PHYSHLTH), var =stats::var(PHYSHLTH), IQR =stats::IQR(PHYSHLTH))glue::glue(" quantiles corresponding to the given probabilities")stats::quantile(physical_health_clean$PHYSHLTH, na.rm =TRUE)

#> # A tibble: 1 × 3

#> sd var IQR

#> <dbl> <dbl> <dbl>

#> 1 8.78 77.0 3

#> quantiles corresponding to the given probabilities

#> 0% 25% 50% 75% 100%

#> 0 0 0 3 30

:::::

2.6.6.2 Achievement 3: The _AGE80 variable

Exercise 2.2 : Data management with the _AGE80 variable

#> _AGE80

#> Min. :18.00

#> 1st Qu.:44.00

#> Median :58.00

#> Mean :55.49

#> 3rd Qu.:69.00

#> Max. :80.00

Graph 2.12: Imputed Age value collapsed above 80

Remember: I have used as column name _AGE80 (and not X_AGE80) when I imported with {haven} the data in Listing / Output 2.1.

The age of the respondents range from 18 to 80.

The good news: There are no missing values or other codes that we need to recode.

The bad news: The age value of 80 includes all people 80 and above. Therefore age 80 will have more observations as it would be appropriate for this age. As a consequence we can expect that the age distribution would be biased to the right (= left skewed). This is confirmed in two ways: (1) The median is higher than the mean and (2) we see the left skewed distribution also in the tiny histogram from the skimr::skim() summary.

R Code 2.44 : Central tendency, spread, skewness & kurtosis of _AGE80

Code

glue::glue("###################################################################")glue::glue("Central tendency and spread measures of `_AGE80` variable")age80|>dplyr::summarize( mean =base::mean(`_AGE80`), median =stats::median(`_AGE80`), mode =modeest::mfv(`_AGE80`), min =base::min(`_AGE80`), max =base::max(`_AGE80`), sd =stats::sd(`_AGE80`), var =stats::var(`_AGE80`), IQR =stats::IQR(`_AGE80`))## construct graph using my private function my_hist_dnorm()p<-my_hist_dnorm(age80, age80$`_AGE80`, n_bins =62)p+ggplot2::labs(x ="Age distribution of 464664 individuals (age value collapsed above 80)")+ggplot2::scale_x_continuous(breaks =seq(20,80,10))glue::glue(" ")glue::glue("###################################################################")glue::glue("Skewness of `_AGE80` variable")semTools::skew(age80$`_AGE80`)glue::glue(" ")glue::glue("###################################################################")glue::glue("Kurtosis of `_AGE80` variable")semTools::kurtosis(age80$`_AGE80`)

#> ###################################################################

#> Central tendency and spread measures of `_AGE80` variable

#> # A tibble: 1 × 8

#> mean median mode min max sd var IQR

#> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 55.5 58 80 18 80 16.9 284. 25

#>

#> ###################################################################

#> Skewness of `_AGE80` variable

#> skew (g1) se z p

#> -4.002808e-01 3.593405e-03 -1.113932e+02 0.000000e+00

#>

#> ###################################################################

#> Kurtosis of `_AGE80` variable

#> Excess Kur (g2) se z p

#> -7.661943e-01 7.186809e-03 -1.066112e+02 0.000000e+00

Table 2.12: Age distribution of 464664 individuals (age value collapsed above 80)

As we already have assumed from the over sized 80 year value we have a left skewed distribution. But we have also excess kurtosis, because we have to much observation in the tails, e.g., the distribution is too flat (platykurtic) to come from a normal distribution.

Now lets see the values after we have removed the obvious bias with the 80 year value. We will generate a new variable _AGE79 that range only from 18 to 79 years.

R Code 2.45 : Central tendency, spread, skewness & kurtosis of _AGE79

Code

glue::glue("###################################################################")glue::glue("Central tendency and spread measures of `_AGE79` variable")age79<-age80|>dplyr::filter(`_AGE80`<=79)|>dplyr::rename(`_AGE79` =`_AGE80`)age79|>dplyr::summarize( mean =base::mean(`_AGE79`), median =stats::median(`_AGE79`), mode =modeest::mfv(`_AGE79`), min =base::min(`_AGE79`), max =base::max(`_AGE79`), sd =stats::sd(`_AGE79`), var =stats::var(`_AGE79`), IQR =stats::IQR(`_AGE79`))## construct graph using my private function my_hist_dnorm()p<-my_hist_dnorm(age79, age79$`_AGE79`, n_bins =61)p+ggplot2::labs(x ="Age distribution of 425822 individuals from age 18 to 79")+ggplot2::scale_x_continuous(breaks =seq(20,80,10))glue::glue(" ")glue::glue("###################################################################")glue::glue("Skewness of `_AGE79` variable")semTools::skew(age79$`_AGE79`)glue::glue(" ")glue::glue("###################################################################")glue::glue("Kurtosis of `_AGE79` variable")semTools::kurtosis(age79$`_AGE79`)

#> ###################################################################

#> Central tendency and spread measures of `_AGE79` variable

#> # A tibble: 1 × 8

#> mean median mode min max sd var IQR

#> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

#> 1 53.3 56 62 18 79 15.8 250. 24

#>

#> ###################################################################

#> Skewness of `_AGE79` variable

#> skew (g1) se z p

#> -4.407561e-01 3.753717e-03 -1.174186e+02 0.000000e+00

#>

#> ###################################################################

#> Kurtosis of `_AGE79` variable

#> Excess Kur (g2) se z p

#> -7.528034e-01 7.507435e-03 -1.002744e+02 0.000000e+00

Table 2.13: Age distribution of 425822 individuals from age 18 to 79

Filtering the data to get only values between 18-79 doesn’t normalize the distribution. We can clearly see that there are too many people between 58 to 74 years respectively there is a gap in the cohorts from 35 to 55 years.

2.7 Creating tables for publishing

2.7.1 Necessary features

We need to manipulate data to provide a table with all its required features as they are:

Bullet List

A main title indicating what is in the table, including

the overall sample size

key pieces of information that describe the data such as the year of data collection and the data source

the units of measurement (people, organizations, etc.)

Correct formatting, including

consistent use of the same number of decimal places throughout the table

numbers aligned to the right so that the decimal points line up

words aligned to the left

indentation and shading to differentiate rows or sections

Clear column and row labels that have

logical row and column names

a clear indication of what the data are, such as means or frequencies

row and column sample sizes when they are different from overall sample sizes (Harris 2020).

I will use Table 2.5 (here it is Graph 2.13) from the book as my goal model that I want to replicate. In my first approach I will use {gtsummary} and document every step of the development process.

As first step I have reduced the dataset to those categories that are outlined in Graph 2.13. We will work with 222 records, 2 more as in the model table data are mentioned. But in the header it says “(n = 222)”.

R Code 2.47 Recode

Code

brfss_2014_recoded<-brfss_2014_raw|>dplyr::mutate(TRNSGNDR =forcats::as_factor(TRNSGNDR))|>dplyr::mutate(TRNSGNDR =forcats::fct_recode(TRNSGNDR, "Male to female"="1"))|>dplyr::mutate(TRNSGNDR =forcats::fct_recode(TRNSGNDR, "Female to male"="2"))|>dplyr::mutate(TRNSGNDR =forcats::fct_recode(TRNSGNDR, "Gender non-conforming"="3"))|>dplyr::mutate(`_AGEG5YR` =forcats::as_factor(`_AGEG5YR`))|>dplyr::mutate(`_AGEG5YR` =forcats::fct_recode(`_AGEG5YR`, "40-44"="5"))|>dplyr::mutate(`_AGEG5YR` =forcats::fct_recode(`_AGEG5YR`, "45-49"="6"))|>dplyr::mutate(`_AGEG5YR` =forcats::fct_recode(`_AGEG5YR`, "50-54"="7"))|>dplyr::mutate(`_AGEG5YR` =forcats::fct_recode(`_AGEG5YR`, "55-59"="8"))|>dplyr::mutate(`_AGEG5YR` =forcats::fct_recode(`_AGEG5YR`, "60-64"="9"))|>dplyr::mutate(`_AGEG5YR` =forcats::fct_recode(`_AGEG5YR`, "65-69"="10"))|>dplyr::mutate(`_AGEG5YR` =forcats::fct_recode(`_AGEG5YR`, "70-74"="11"))|>dplyr::mutate(`_RACE` =forcats::as_factor(`_RACE`))|>dplyr::mutate(`_RACE` =forcats::fct_recode(`_RACE`, "White"="1"))|>dplyr::mutate(`_RACE` =forcats::fct_recode(`_RACE`, "Black"="2"))|>dplyr::mutate(`_RACE` =forcats::fct_recode(`_RACE`, "Native American"="3"))|>dplyr::mutate(`_RACE` =forcats::fct_recode(`_RACE`, "Asian/Pacific Islander"="4"))|>dplyr::mutate(`_RACE` =forcats::fct_collapse(`_RACE`, "Other"=c("5", "7", "8", "9")))|>dplyr::mutate(`_INCOMG` =forcats::as_factor(`_INCOMG`))|>dplyr::mutate(`_INCOMG` =forcats::fct_recode(`_INCOMG`, "Less than $15,000"="1"))|>dplyr::mutate(`_INCOMG` =forcats::fct_recode(`_INCOMG`, "$15,000 to less than $25,000"="2"))|>dplyr::mutate(`_INCOMG` =forcats::fct_recode(`_INCOMG`, "$25,000 to less than $35,000"="3"))|>dplyr::mutate(`_INCOMG` =forcats::fct_recode(`_INCOMG`, "$35,000 to less than $50,000"="4"))|>dplyr::mutate(`_INCOMG` =forcats::fct_recode(`_INCOMG`, "$50,000 or more"="5"))|>dplyr::mutate(`_INCOMG` =forcats::fct_recode(`_INCOMG`, "Don't know/not sure/missing"="9"))|>dplyr::mutate(`_EDUCAG` =forcats::as_factor(`_EDUCAG`))|>dplyr::mutate(`_EDUCAG` =forcats::fct_recode(`_EDUCAG`, "Did not graduate highschool"="1"))|>dplyr::mutate(`_EDUCAG` =forcats::fct_recode(`_EDUCAG`, "Graduated highschool"="2"))|>dplyr::mutate(`_EDUCAG` =forcats::fct_recode(`_EDUCAG`, "Attended college/technical school"="3"))|>dplyr::mutate(`_EDUCAG` =forcats::fct_recode(`_EDUCAG`, "Graduated from college/technical school"="4"))|>dplyr::mutate(HLTHPLN1 =forcats::as_factor(HLTHPLN1))|>dplyr::mutate(HLTHPLN1 =forcats::fct_recode(HLTHPLN1, "Yes"="1"))|>dplyr::mutate(HLTHPLN1 =forcats::fct_recode(HLTHPLN1, "No"="2"))|>dplyr::mutate(PHYSHLTH =dplyr::case_match(PHYSHLTH, 77~NA,99~NA,88~0, .default =PHYSHLTH))|>labelled::set_variable_labels( TRNSGNDR ="Transition status", `_AGEG5YR` ="Age category", `_RACE` ="Race/ethnicity", `_INCOMG` ="Income category", `_EDUCAG` ="Education category", HLTHPLN1 ="Health insurance?", PHYSHLTH ="Days poor physical health per month")

WATCH OUT! Warning because of missing level 6 in _RACE variable

In my first run of this long R code I got the following warning:

My first try for a table 1 was just a one-liner with gtsummary::tbl_summary(). It looks already pretty good. But let’s compare with Graph 2.13 in detail:

My version is different in the header. It has “Characteristics” instead of “Survey participants demographics” and “N = 222” instead of “Frequency” and “Percentage” as separated columns.

My percentage values do not have one decimal place.

It is not obvious to which variable the “median, (IQR)” footnote belongs.

My labels are not bold.

I am not sure if I should account also for another difference: My “Health insurance” does not show the value “Yes” in a separate line. The same happens with “Days of poor physical health per month”. But I believe that my version is better as it prevents to show redundant information. My separate last line “Unknown” is in any case better, because it is an important information.

The first difference may be challenged for the same reason: Including “Frequency” and (braced) “Percentage” in one column and explained by a footnote is practically the same information just more compressed. It would provide more space for further information for more complex tables. So I will concentrate on number (2) to (4) and change in (1) only “Characteristic” to “Survey participants demographics”.

R Code 2.49 : Transgender Survey Participants Demographics (2nd try)

This would be the final result adapted to the features and possibilities of {gtsummary}. But just for the sake of learn more about {gtsummary} let’s separate frequencies and percent values into two columns. The advice in StackOverflow is to generate two table objects with the standard gtsummary::tbl_summary() and then to merge them with gtsummary::tbl:merge().

R Code 2.50 : Transgender Survey Participants Demographics (3rd try)

Code

tbl1_gtsummary<-brfss_2014_recoded|>gtsummary::tbl_summary( statistic =list(gtsummary::all_continuous()~"{median}",gtsummary::all_categorical()~"{n}"), label =PHYSHLTH~"Days poor physical health per month (median, IQR)")tbl2_gtsummary<-brfss_2014_recoded|>gtsummary::tbl_summary( statistic =list(gtsummary::all_continuous()~"({p25}, {p75})",gtsummary::all_categorical()~"{p}%"), digits =list(gtsummary::all_categorical()~1), label =PHYSHLTH~"Days poor physical health per month (median, IQR)")tbl_merge_gtsummary<-gtsummary::tbl_merge(list(tbl1_gtsummary, tbl2_gtsummary))|>gtsummary::bold_labels()|>gtsummary::modify_header(list(label~"**Survey participants demographics (N = {n})**",stat_0_1~"**Frequency**", stat_0_2~"**Percent**"))|>gtsummary::modify_spanning_header(gtsummary::everything()~NA)|>gtsummary::modify_footnote(gtsummary::everything()~NA)

#> Warning: The `update` argument of `modify_header()` is deprecated as of gtsummary 2.0.0.

#> ℹ Use `modify_header(...)` input instead. Dynamic dots allow for syntax like

#> `modify_header(!!!list(...))`.

#> ℹ The deprecated feature was likely used in the gtsummary package.

#> Please report the issue at <https://github.com/ddsjoberg/gtsummary/issues>.

The separation of the frequencies and percentages into two columns worked. But now we have an unfavorable line break in the first column with the variable labels. The problem is that the space between the columns are to wide, especially between second and third column.

I couldn’t find a way to reduce the space between columns. Maybe I would need to generate a new theme. But I have chosen to other possibilities:

The trick to get these details is to convert the {gtsummary} object with gtsummary::as_gt() into a {gt} object and then change the relevant characteristic. {gt} has much more control over the table display then {gtsummary} which has its strength to calculate and display values in a predefined table 1 style.

Here I have used the predefined compact theme with reduced font size and smaller cell paddings.

2.8 Exercises (empty)

I have skipped the chapter exercises: They bring nothing new for me. I have already done the recoding and table construction extensively and feel secure about these data management tasks.

2.9 Glossary

term

definition

Arithmetic Mean

The arithmetic mean, also known as "arithmetic average", is the sum of the values divided by the number of values. If the data set were based on a series of observations obtained by sampling from a statistical population, the arithmetic mean is the sample mean $\overline{x}$ (pronounced x-bar) to distinguish it from the mean, or expected value, of the underlying distribution, the population mean $\mu$ (pronounced /'mjuː/). (<a href="https://en.wikipedia.org/wiki/Mean">Wikipedia</a>)

Backtick

The backtick ` is a typographical mark used mainly in computing. It is also known as backquote, grave, or grave accent. (<a href="https://en.wikipedia.org/wiki/Backtick">Wikipedia</a>)

BRFSS

The Behavioral Risk Factor Surveillance System (BRFSS) is a system of health-related telephone surveys that collect state data about U.S. residents regarding their health-related risk behaviors, chronic health conditions, and use of preventive services. Established in 1984 with 15 states, BRFSS now collects data in all 50 states as well as the District of Columbia and three U.S. territories. BRFSS completes more than 400,000 adult interviews each year, making it the largest continuously conducted health survey system in the world. (<a href="https://www.cdc.gov/brfss/index.html">BRFSS</a>)

Cisgender

People whose gender identity matches their biological sex. Contrast: Transgender (SwR, Chapter 2)

CSV