3.6 A Gentler Introduction

This section is my notes from DataCamp course Fundamentals of Bayesian Data Analysis in R. It is an intuitive approach to Bayesian inference.

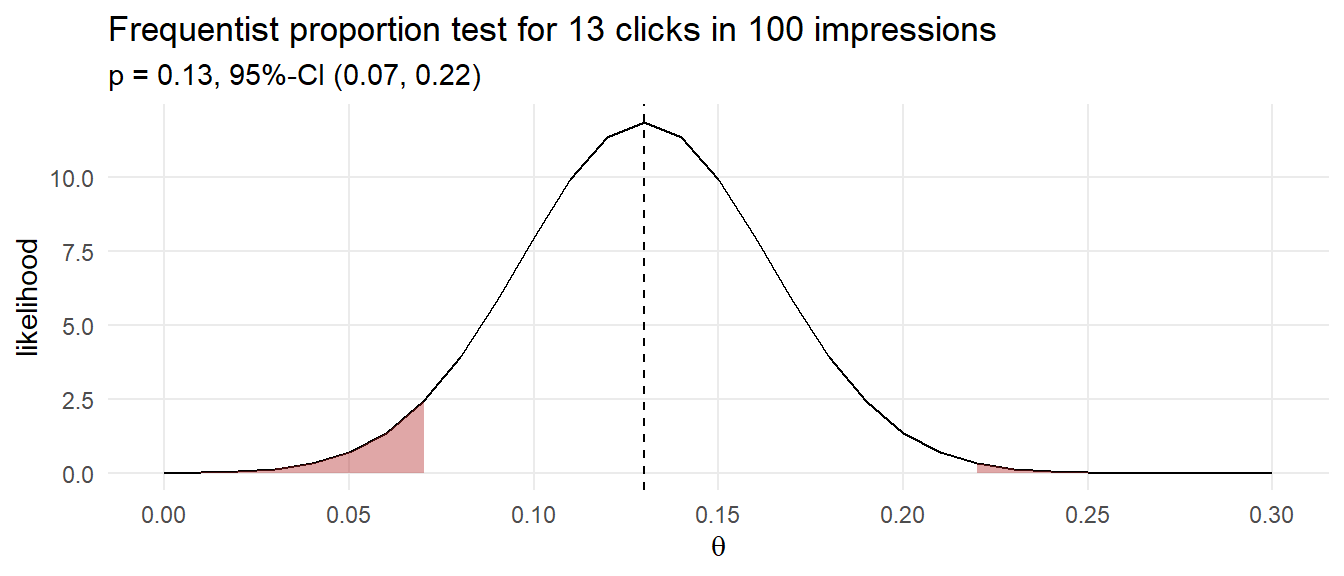

Suppose you purchase 100 ad impressions on a web site and receive 13 clicks. How would you describe the click rate? The Frequentist approach would be to construct a 95% CI around the click proportion.

(ad_prop_test <- prop.test(13, 100))##

## 1-sample proportions test with continuity correction

##

## data: 13 out of 100, null probability 0.5

## X-squared = 53.29, df = 1, p-value = 2.878e-13

## alternative hypothesis: true p is not equal to 0.5

## 95 percent confidence interval:

## 0.07376794 0.21560134

## sample estimates:

## p

## 0.13

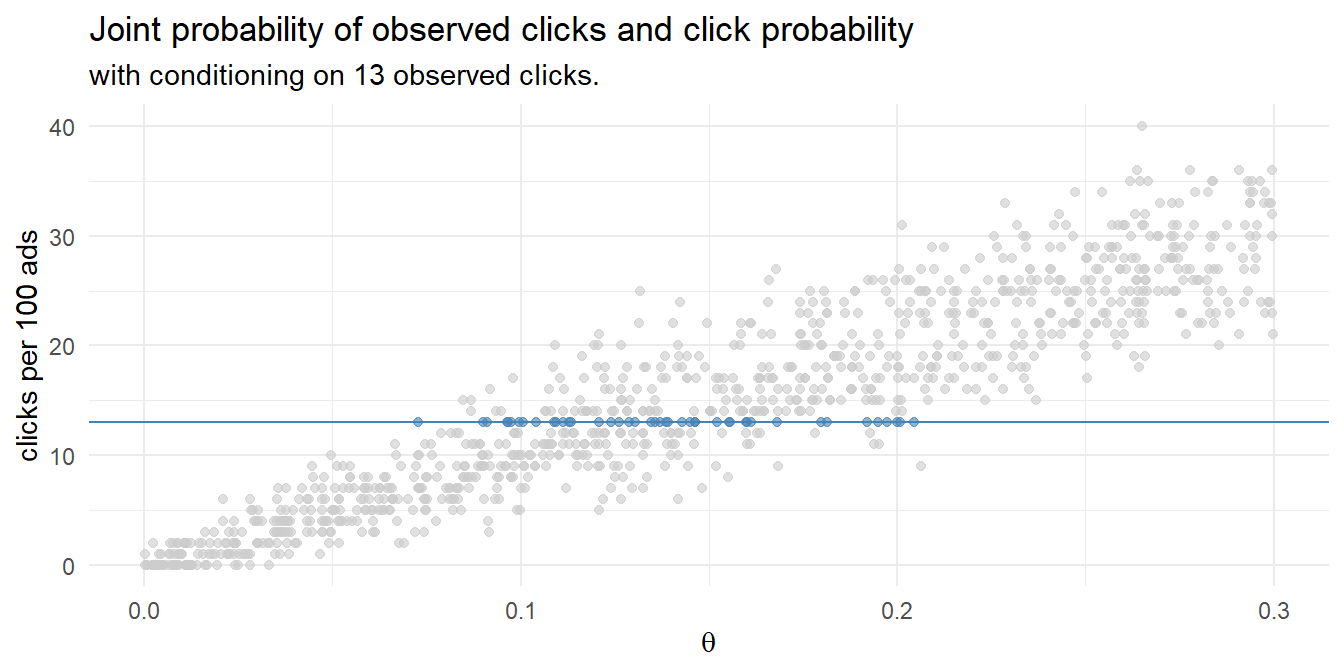

How might you model this using Bayesian reasoning? One way is to run 1,000 experiments that sample 100 ad impression events from an rbinom() generative model using a uniform prior distribution of 0-30% click probability. The resulting 1,000 row data set of click probabilities and sampled click counts forms a joint probability distribution. This method of Bayesian analysis is called rejection sampling because you sample across the whole parameter space, then condition on the observed evidence.

df_sim <- data.frame(click_prob = runif(1000, 0.0, 0.3))

df_sim$click_n <- rbinom(1000, 100, df_sim$click_prob)

Condition the joint probability distribution on the 13 observed clicks to update your prior. The quantile() function returns the median and the .025 and .975 percentile values - the credible interval.

# median and credible interval

(sim_ci <- df_sim %>% filter(click_n == 13) %>% pull(click_prob) %>%

quantile(c(.025, .5, .975)))## 2.5% 50% 97.5%

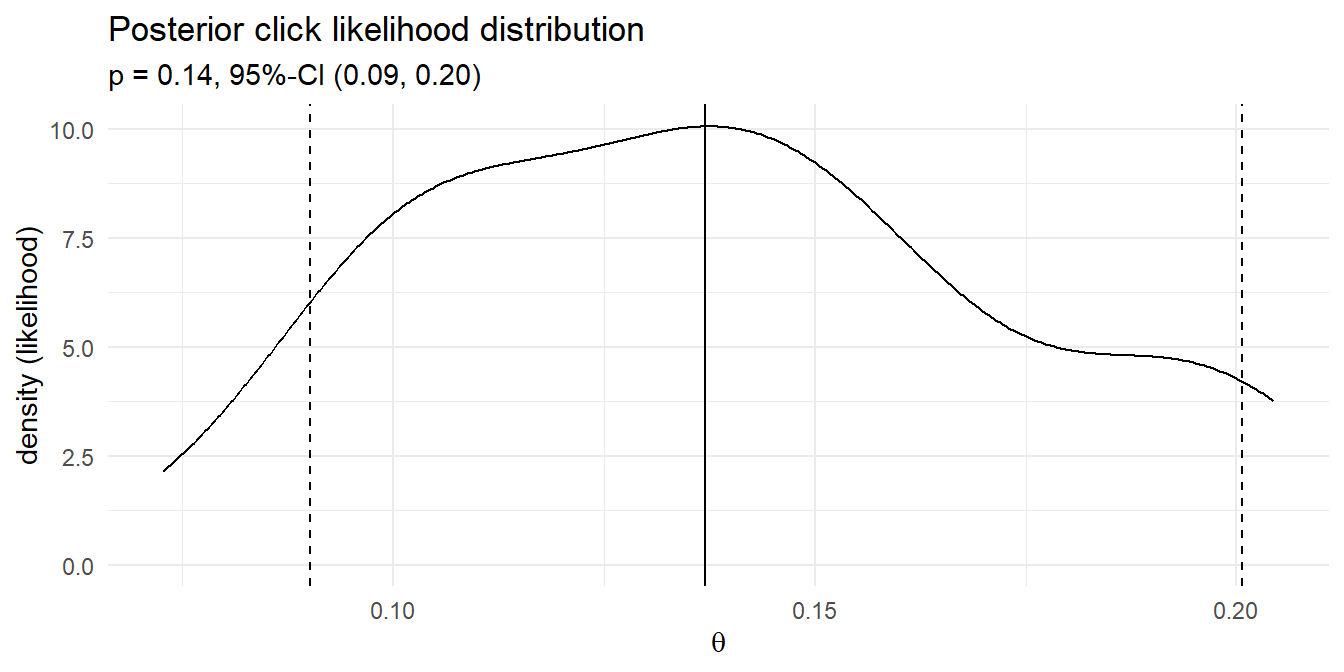

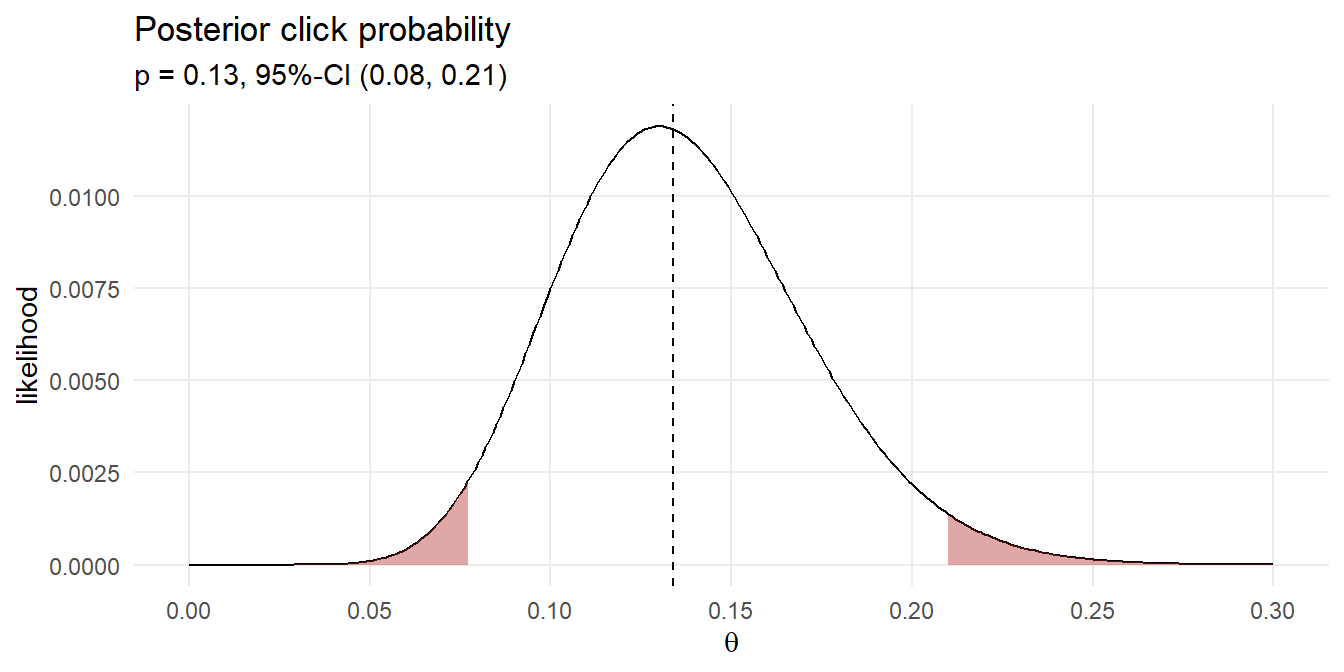

## 0.0901092 0.1370293 0.2007142Your posterior click rate likelihood is 13.7% with 95 credible interval [9.0%, 20.1%]. Here is the density plot of the 43 simulations that produced the 13 clicks. The median and 95% credible interval are marked.

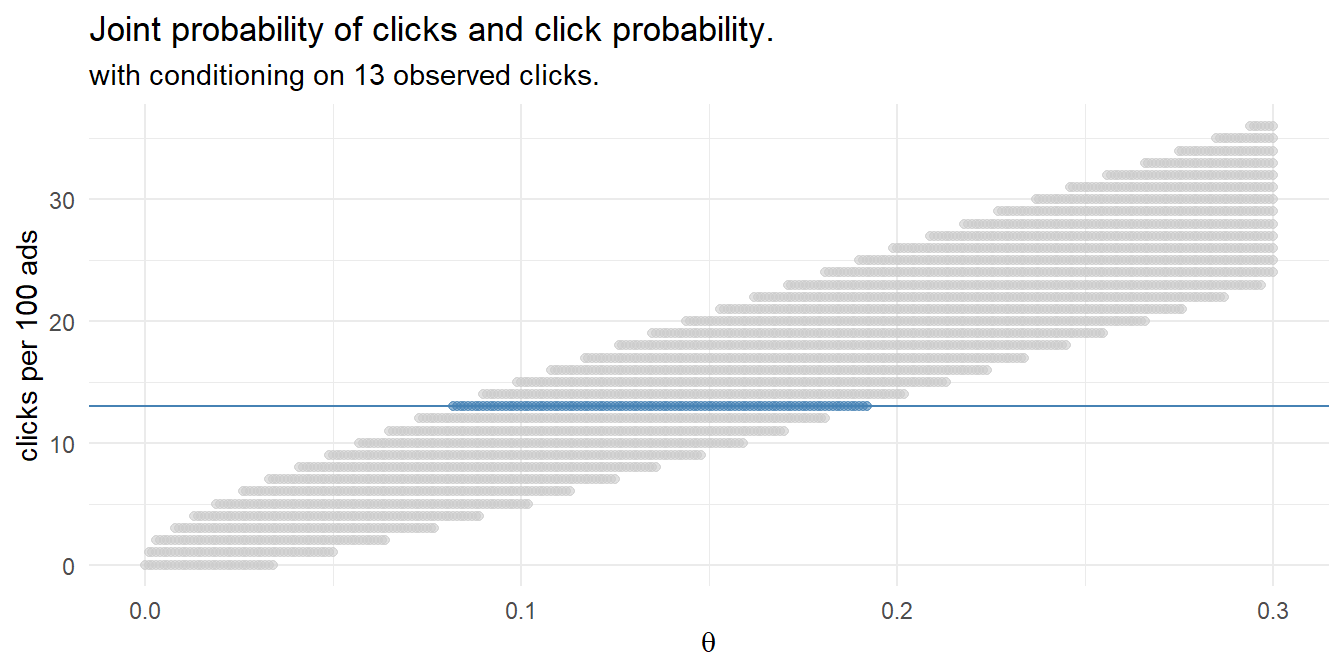

That’s pretty close to the frequentist result! Instead of running 1,000 experiments with randomly selected click probabilities and randomly selected click counts based on those probabilities, you could define a discrete set of candidate click probabilities, e.g. values between 0 and 0.3 incremented by .01, and calculate the click probability density for the 100 ad impressions. This method of Bayesian analysis is called grid approximation.

df_bayes <- expand.grid(

click_prob = seq(0, .3, by = .001),

click_n = 0:100

) %>%

mutate(

prior = dunif(click_prob, min = 0, max = 0.3),

likelihood = dbinom(click_n, 100, click_prob),

probability = likelihood * prior / sum(likelihood * prior)

)

Condition the joint probability distribution on the 13 observed clicks to update your prior.

df_bayes_13 <- df_bayes %>% filter(click_n == 13) %>%

mutate(posterior = probability / sum(probability))Instead of using the quantile() function on these values to measure the median and credible interval, resample the posterior probability to create a distribution.

sampling_idx <- sample(

1:nrow(df_bayes_13),

size = 10000,

replace = TRUE,

prob = df_bayes_13$posterior

)

sampling_vals <- df_bayes_13[sampling_idx, ]

(df_bayes_ci <- quantile(sampling_vals$click_prob, c(.025, .5, .975)))## 2.5% 50% 97.5%

## 0.078 0.134 0.209

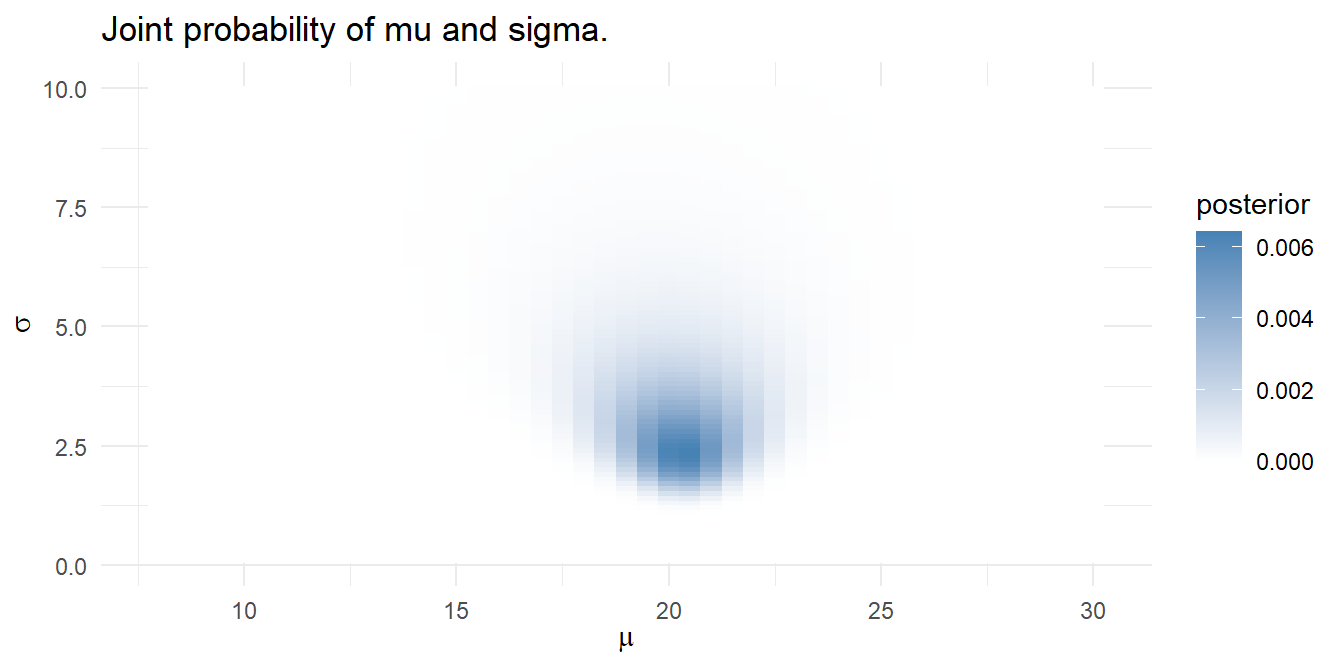

You can use a Bayesian model to estimate multiple parameters. Suppose you want to predict the water temperature in a lake on Jun 1 based on 5 years of prior water temperatures.

temp <- c(19, 23, 20, 17, 23)You model the water temperature as a normal distribution, \(\mathrm{N}(\mu, \sigma^2)\) with a prior distribution \(\mu = \mathrm{N}(18, 5^2)\) and \(\sigma = \mathrm{unif}(0, 10)\) based on past experience.

Using the grid approximation approach, construct a grid of candidate \(\mu\) values from 8 to 30 degrees incremented by .5 degrees, and candidate \(\sigma\) values from .1 to 10 incremented by .1 - a 4,500 row data frame.

mdl_grid <- expand_grid(mu = seq(8, 30, by = 0.5),

sigma = seq(.1, 10, by = 0.1))For each combination of \(\mu\) and \(\sigma\), the prior probabilities are the densities from \(\mu = \mathrm{N}(18, 5^2)\) and \(\sigma = \mathrm{unif}(0, 10)\). The combined prior is their product. The likelihoods are the products of the probabilities of observing each temp given the candidate \(\mu\) and \(\sigma\) values.

mdl_grid_2 <- mdl_grid %>%

mutate(

mu_prior = map_dbl(mu, ~dnorm(., mean = 18, sd = 5)),

sigma_prior = map_dbl(sigma, ~dunif(., 0, 10)),

prior = mu_prior * sigma_prior, # combined prior,

likelihood = map2_dbl(mu, sigma, ~dnorm(temp, .x, .y) %>% prod()),

posterior = likelihood * prior / sum(likelihood * prior)

)

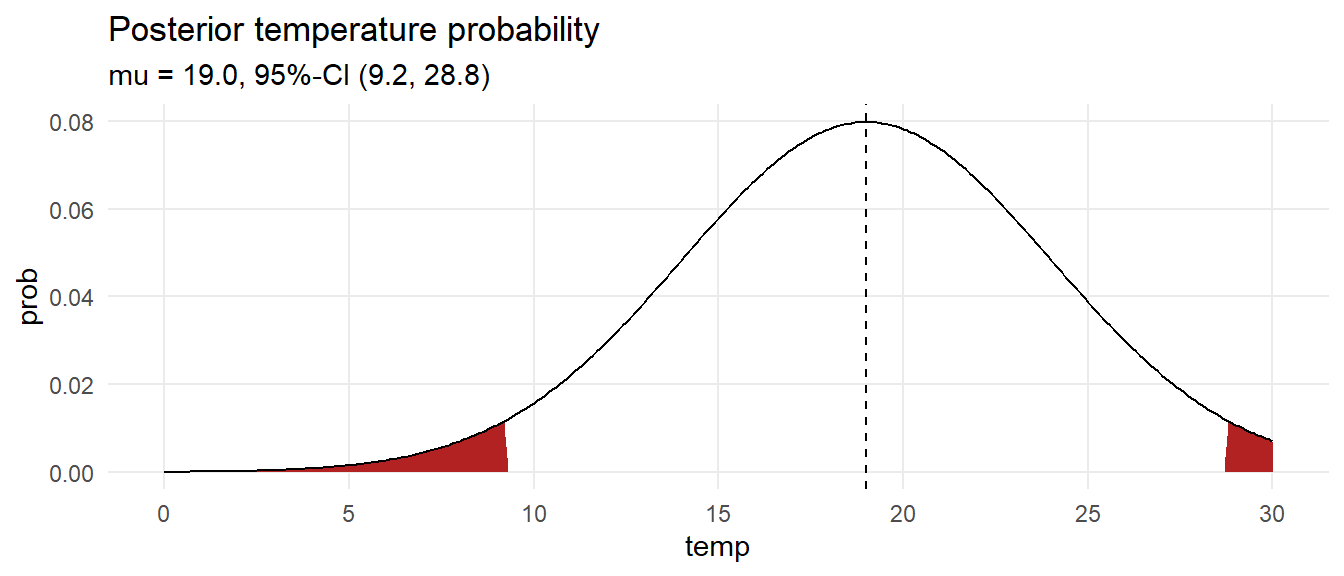

Calculate a credible interval by drawing 10,000 samples from the grid with sampling probability equal to the calculated posterior probabilities. Use the quantile() function to estimate the median and .025 and .975 quantile values.

sampling_idx <- sample(1:nrow(mdl_grid), size = 10000, replace = TRUE, prob = mdl_grid$posterior)## Warning: Unknown or uninitialised column: `posterior`.sampling_vals <- mdl_grid[sampling_idx, c("mu", "sigma")]

mu_ci <- quantile(sampling_vals$mu, c(.025, .5, .975))

sigma_ci <- quantile(sampling_vals$sigma, c(.025, .5, .975))

ci <- qnorm(c(.025, .5, .975), mean = mu_ci[2], sd = sigma_ci[2])

data.frame(temp = seq(0, 30, by = .1)) %>%

mutate(prob = map_dbl(temp, ~dnorm(., mean = ci[2], sd = sigma_ci[2])),

ci = if_else(temp >= ci[1] & temp <= ci[3], "Y", "N")) %>%

ggplot(aes(x = temp, y = prob)) +

geom_area(aes(y = if_else(ci == "N", prob, 0)),

fill = "firebrick", show.legend = FALSE) +

geom_line() +

geom_vline(xintercept = ci[2], linetype = 2) +

theme_minimal() +

scale_x_continuous(breaks = seq(0, 30, 5)) +

theme(panel.grid.minor = element_blank()) +

labs(title = "Posterior temperature probability",

subtitle = glue("mu = {ci[2] %>% scales::number(accuracy = .1)}, 95%-CI (",

"{ci[1] %>% scales::number(accuracy = .1)}, ",

"{ci[3] %>% scales::number(accuracy = .1)})"))

What is the probability the temperature is at least 18?

pred_temp <- rnorm(1000, mean = sampling_vals$mu, sampling_vals$sigma)

scales::percent(sum(pred_temp >= 18) / length(pred_temp))## [1] "54%"