Chapter 7 Optimization

In this chapter, the goal is to demonstrate how Gaussian process (GP) surrogate modeling can assist in optimizing a blackbox objective function. That is, a function about which one knows little – one opaque to the optimizer – and that can only be probed through expensive evaluation. We view optimization as an example of sequential design or active learning (§6.2). Many names have been given to this enterprise, and some will be explained alongside their origins below. Recently Bayesian Optimization (BO) has caught on, especially in the machine learning (ML) community, and likely that’ll stick in part because it’s punchier than the alternatives. BO terminology goes back to a paper predating use of GPs toward this end, and refers primarily to decision criteria for sequential selection and model updating. Modern ML vernacular prefers acquisition functions. BO’s recent gravitas is primarily due to the prevailing view of GP learning as marginalization over a latent random field (§5.3.2).

The role of modeling in optimization, more generically, has a rich history, and we’ll barely scratch the surface here. Models deployed to assist in optimization can be both statistical and non-statistical, however the latter often have strikingly similar statistical analogs. Potential for modern nonparametric statistical surrogate modeling in this context is just recently being recognized by communities for which optimization is bread-and-butter: mathematical programming, statistics, ML, and more. Optimization has played a vital role in stats and ML for decades. All those "L-BFGS-B" searches (Byrd et al. 1995) from optim in earlier chapters, say to find MLEs or optimal designs, are cases in point. It’s intriguing to wonder whether statistical thinking might have something to give back to the optimization world, as it were.

Whereas many communities have long settled for local refinement, statistical methods based on nonparametric surrogate models offer promise for greater scope. True global optimization, whatever that means, may always remain elusive and enterprises motivated by such lofty goals may be folly. But anyone suggesting that wider perspective is undesirable, and that systematic frameworks developed toward that end aren’t worth exploring, is ignoring practitioners and clients who are optimistic that the grass is greener just over the horizon.

Statistical decision criteria can leverage globally scoped surrogates to balance exploration (uncertainty reduction; Chapter 6) and exploitation (steepest ascent; Chapter 3) in order to more reliably find global optima. But that’s just the tip of the iceberg. Statistical models cope handily with noisy blackbox evaluations – a situation where probabilistic reasoning ought to have a monopoly – and thus lend a sense of robustness to solutions and to a notion of convergence. They offer the means of uncertainty quantification (UQ) about many aspects, including the chance that local or global optima were missed. Extension to related, optimization-like criteria such as level-set/contour finding (e.g., Ranjan, Bingham, and Michailidis 2008) is relatively straightforward, although these topics aren’t directly addressed in this chapter. See §10.3.4 for pointers along these lines.

It’s important to disclaim that thinking statistically need not preclude application of more classical approaches. Surrogate modeling naturally lends itself to hybridization. Ideas from mathematical programming not only have merit, but their software is exceedingly well engineered. Let’s not throw the baby out with the bath water. It makes sense to borrow strengths from multiple toolkits and leverage solid implementation and decades of stress testing.

Although the main ideas on statistical surrogate modeling for optimization originate in the statistics literature, it’s again machine learners who are making the most noise in this area today. This is, in part, because they’re more keen to adopt the rich language of mathematical programming, and therefore better able to connect with the wider optimization community. Statisticians tend to get caught up in modeling details and forget that optimization is as much about execution as it’s about methodology. Practitioners want to use these tools, but rarely have the in-house expertise required to code them up on their own. General purpose software leveraging surrogates has been slow to come online. An important goal of this chapter is to show how that might work, and to expose substantial inroads along those lines.

We’ll see how modern nonparametric surrogate modeling and clever (yet arguably heuristic) criteria, that often can be solved in closed form, may be combined to effectively balance exploration and exploitation. Emphasis here is on GPs, but many methods are agnostic to choices of surrogate. We begin by targeting globally scoped numerical optimization, leveraging only blackbox evaluations of an objective function supplied by the user. Subsequently, we shall embellish that setup with methods for handling constraints, known and unknown (i.e., also blackbox); hybridize with modern methods from mathematical programming; and talk about applications from toy to real data.

7.1 Surrogate-assisted optimization

Statistical methods in optimization, in particular of noisy blackbox functions, probably goes back to Box and Draper (1987), a precursor to a canonical response surface methods text by the same authors (Box and Draper 2007). Modern Bayesian optimization (BO) is closest in spirit to methods described by Mockus, Tiesis, and Zilinskas (1978) in a paper entitled “The application of Bayesian methods for seeking the extremum”. Yet many strategies suggested therein didn’t come to the fore until the late 1990’s, perhaps because they emphasized rather crude (linear) modeling. As GPs became established for modeling computer simulations, and subsequently in ML in the 2000s, new life was breathed in.

In the computer experiments literature, folks have been using GPs to optimize functions for some time. One of the best references for the core idea might be Booker et al. (1999), with many ingredients predating that paper. They called it surrogate-assisted optimization, and it involved a nice collaboration between optimization and computer modeling researchers. Non-statistical surrogates had been in play in optimization for some time. Nonparametric statistical ones, with more global scope, offered fresh perspective.

The methodology is simple: train a GP on function evaluations obtained so far; minimize the fitted surrogate predictive mean surface of the GP to select the next location for evaluation; repeat. This is an instance of Algorithm 6.1 in §6.2 where the criterion \(J(x)\) in Step 3 is based on GP predictive mean \(\mu_n(x) = \mathbb{E}\{Y(x) \mid D_n\}\) provided in Eq. (5.2). Although Step 3 deploys its own inner-optimization, minimizing \(\mu_n(x)\) is comparatively easy since it doesn’t involve evaluating a computationally intensive, and potentially noisy, blackbox. It’s something that can easily be solved with conventional methods. As a shorthand, and to connect with other acronyms like EI and PI below, I shall refer to this “mean criterion” as the EY heuristic for surrogate-assisted (Bayesian) optimization (BO).

Before we continue, let’s be clear about the problem. Whereas the RSM literature (Chapter 3) orients toward maxima, BO and math programming favor minimization, which I shall adopt for our discussions here. Specifically, we wish to find

\[ x^\star = \mathrm{argmin}_{x \in \mathcal{X}} \; f(x) \]

where \(\mathcal{X}\) is usually a hyperrectangle, a bounding box, or another simply constrained region. We don’t have access to derivative evaluations for \(f(x)\), nor do we necessarily want them (or want to approximate them) because that could represent additional substantial computational expense. As such, methods described here fall under the class of derivative-free optimization. See, e.g., Conn, Scheinberg, and Vicente (2009), for which many innovative algorithms have been proposed, and many solid implementations are widely available. For a somewhat more recent review, including several of the surrogate-assisted/BO methods introduced here, see Larson, Menickelly, and Wild (2019).

All we get to do is evaluate \(f(x)\), which for now is presumed to be deterministic. Generalizations will come after introducing main concepts, including simple extensions for the noisy case. The literature targets scenarios where \(f(x)\) is expensive to evaluate (in terms of computing time, say), but otherwise is well-behaved: continuous, relatively smooth, only real-valued inputs, etc. Again, these are relaxable modulo suitable surrogate and/or kernel structure. Several appropriate choices are introduced in later chapters.

Implicit in the computational expense of \(f(x)\) evaluations is a tacit “constraint” on the solver, namely that it minimize the number of such evaluations. Although it’s not uncommon to study aspects of convergence in these settings, often the goal is simply to find the best input, \(x^\star\) minimizing \(f\), in the fewest number of evaluations. So in empirical comparisons we typically track the best objective value (BOV) found as a function of the number of blackbox evaluations. In many applied contexts it’s more common to have a fixed evaluation budget than it is to enjoy the luxury of running to convergence. Nevertheless, monitoring progress plays a key role when deciding if further expensive evaluations may be required.

7.1.1 A running example

Consider an implementation of Booker et al.’s method on a re-scaled/coded version of the Goldstein–Price function. See §1.4 homework exercises for more on this challenging benchmark problem.

f <- function(X)

{

if(is.null(nrow(X))) X <- matrix(X, nrow=1)

m <- 8.6928

s <- 2.4269

x1 <- 4*X[,1] - 2

x2 <- 4*X[,2] - 2

a <- 1 + (x1 + x2 + 1)^2 *

(19 - 14*x1 + 3*x1^2 - 14*x2 + 6*x1*x2 + 3*x2^2)

b <- 30 + (2*x1 - 3*x2)^2 *

(18 - 32*x1 + 12*x1^2 + 48*x2 - 36*x1*x2 + 27*x2^2)

f <- log(a*b)

f <- (f - m)/s

return(f)

}Although this \(f(x)\) isn’t opaque to us, and not expensive to evaluate, we shall treat it as such for purposes of illustration. Not much insight can be gained by looking at its form in any case, except to convince the reader that it furnishes a suitably complicated surface despite residing in modest input dimension (\(m=2\)).

Begin with a small space-filling Latin hypercube sample (LHS; §4.1) seed design in 2d.

Next fit a separable GP to those data, with a small nugget for jitter. All of the same caveats about initial lengthscales in GP-based active learning – see §6.2.1 – apply here. To help create a prior on \(\theta\) that’s more stable, darg below utilizes a large auxiliary pseudo-design in lieu of X, which at early stages of design/optimization (\(n_0 = 12\) runs) may not yet possess a sufficient diversity of pairwise distances.

library(laGP)

da <- darg(list(mle=TRUE, max=0.5), randomLHS(1000, 2))

gpi <- newGPsep(X, y, d=da$start, g=1e-6, dK=TRUE)

mleGPsep(gpi, param="d", tmin=da$min, tmax=da$max, ab=da$ab)$msg## [1] "CONVERGENCE: REL_REDUCTION_OF_F <= FACTR*EPSMCH"Just like our ALM/C searches from §6.2, consider an objective based on GP predictive equations. This represents an implementation of Step 3 in Algorithm 6.1 for sequential design/active learning, setting EY as sequential design criterion \(J(x)\), or defining the acquisition function in ML jargon.

Now the predictive mean surface (like \(f\), through the evaluations it’s trained on) may have many local minima, but let’s punt for now on the ideal of global optimization of EY – of the so-called “inner loop” – and see where we get with a search initialized at the current best value. R code below extracts that value: m indexing the best y-value obtained so far, and uses its \(x\) coordinates to initialize a "L-BFGS-B" solver on obj.mean.

m <- which.min(y)

opt <- optim(X[m,], obj.mean, lower=0, upper=1, method="L-BFGS-B", gpi=gpi)

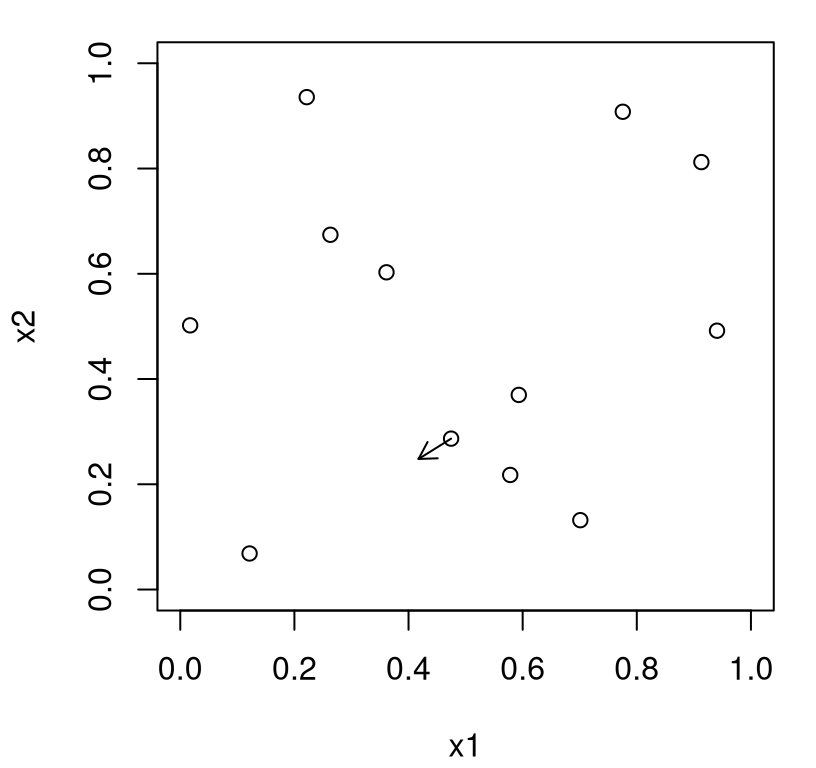

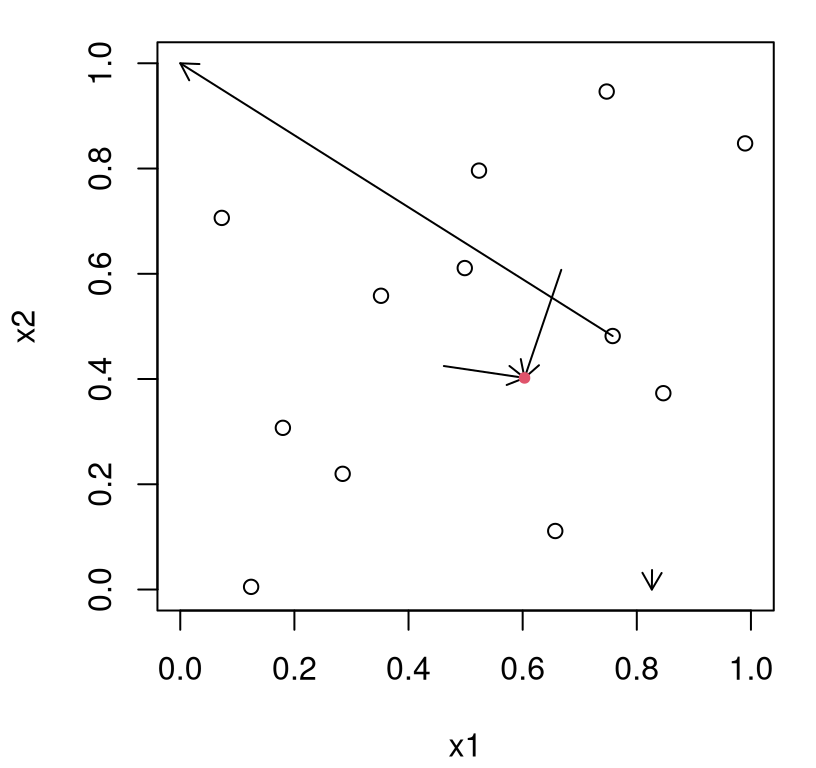

opt$par## [1] 0.4175 0.2481So this is the next point to try. Surrogate optima represent a sensible choice for the next evaluation of the expensive blackbox, or so the thinking goes. Before moving to the next acquisition, Figure 7.1 provides a visualization. Open circles indicate locations of the size-\(n_0\) LHS seed design. The origin of the arrow indicates X[m,]: the location whose y-value is lowest based on those initial evaluations. Its terminus shows the outcome of the optim call: the next point to try.

plot(X[1:ninit,], xlab="x1", ylab="x2", xlim=c(0,1), ylim=c(0,1))

arrows(X[m,1], X[m,2], opt$par[1], opt$par[2], length=0.1)

FIGURE 7.1: First iteration of EY-based search initialized from the value of the objective obtained so far.

Ok, now evaluate \(f\) at opt$par, update the GP and its hyperparameters …

ynew <- f(opt$par)

updateGPsep(gpi, matrix(opt$par, nrow=1), ynew)

mle <- mleGPsep(gpi, param="d", tmin=da$min, tmax=da$max, ab=da$ab)

X <- rbind(X, opt$par)

y <- c(y, ynew)… and solve for the next point.

m <- which.min(y)

opt <- optim(X[m,], obj.mean, lower=0, upper=1, method="L-BFGS-B", gpi=gpi)

opt$par## [1] 0.4930 0.2661Figure 7.2 shows what that looks like in the input domain, as an update on Figure 7.1. In particular, the terminus of the arrow in Figure 7.1 has become an open circle in Figure 7.2, as that input–output pair has been promoted into the training data with GP fit revised accordingly.

plot(X, xlab="x1", ylab="x2", xlim=c(0,1), ylim=c(0,1))

arrows(X[m,1], X[m,2], opt$par[1], opt$par[2], length=0.1)

FIGURE 7.2: Second iteration of EY search following Figure 7.1.

If the origin of the new arrow resides at the newly minted open circle, then we have progress: the predictive mean surface was accurate and indeed helpful in finding a new best point, minimizing \(f\). If not, then the origin is back at the same open circle it originated from before.

Now incorporate the new point into our dataset and update the GP predictor.

ynew <- f(opt$par)

updateGPsep(gpi, matrix(opt$par, nrow=1), ynew)

mle <- mleGPsep(gpi, param="d", tmin=da$min, tmax=da$max, ab=da$ab)

X <- rbind(X, opt$par)

y <- c(y, ynew)Let’s fast-forward a little bit. Code below wraps what we’ve been doing above into a while loop with a simple check on convergence in order to “break out”. If two outputs in a row are sufficiently close, within a tolerance 1e-4, then stop. That’s quite crude, but sufficient for illustrative purposes.

while(1) {

m <- which.min(y)

opt <- optim(X[m,], obj.mean, lower=0, upper=1,

method="L-BFGS-B", gpi=gpi)

ynew <- f(opt$par)

if(abs(ynew - y[length(y)]) < 1e-4) break

updateGPsep(gpi, matrix(opt$par, nrow=1), ynew)

mle <- mleGPsep(gpi, param="d", tmin=da$min, tmax=da$max, ab=da$ab)

X <- rbind(X, opt$par)

y <- c(y, ynew)

}

deleteGPsep(gpi)To help measure progress, code below implements some post-processing to track the best y-value (bov: best objective value) over those iterations. The function is written in some generality in order to accommodate application in several distinct settings, coming later.

bov <- function(y, end=length(y))

{

prog <- rep(min(y), end)

prog[1:min(end, length(y))] <- y[1:min(end, length(y))]

for(i in 2:end)

if(is.na(prog[i]) || prog[i] > prog[i-1]) prog[i] <- prog[i-1]

return(prog)

}In our application momentarily, note that we treat the \(n_0 = 12\) seed design the same as later acquisitions, even though they were not derived from the surrogate/criterion.

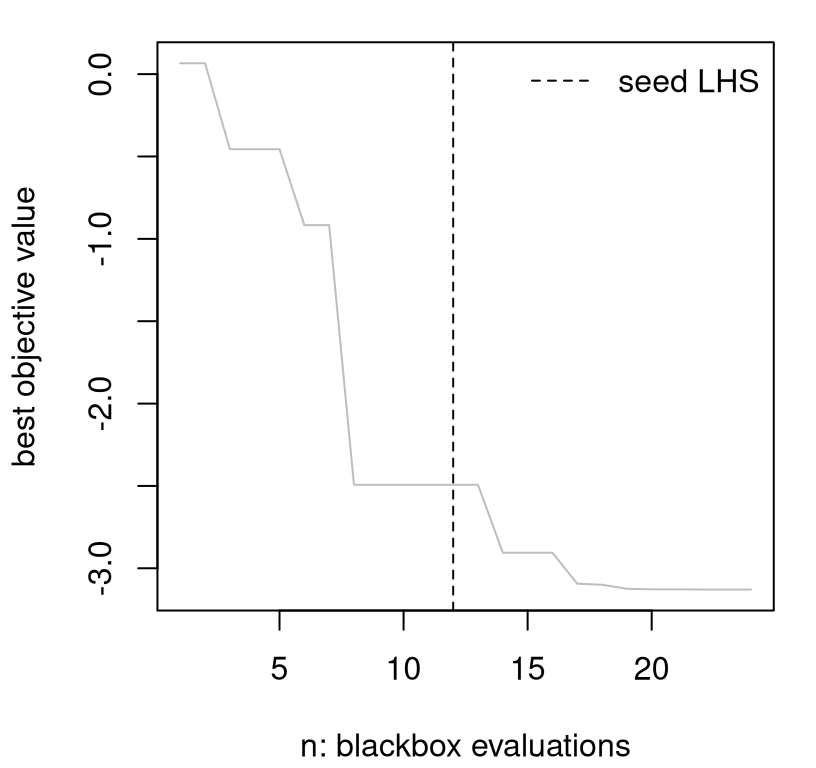

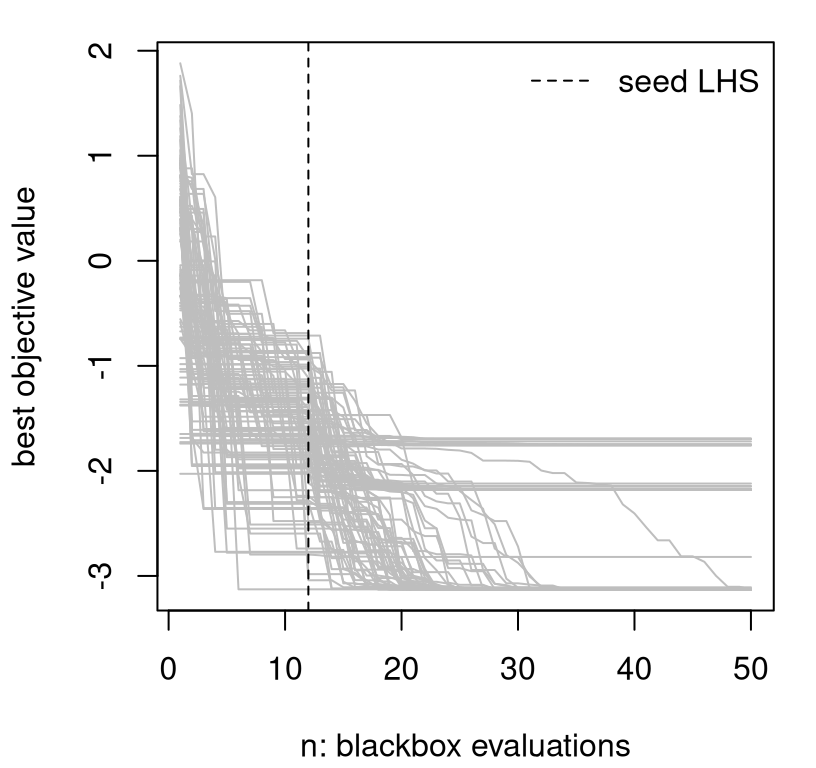

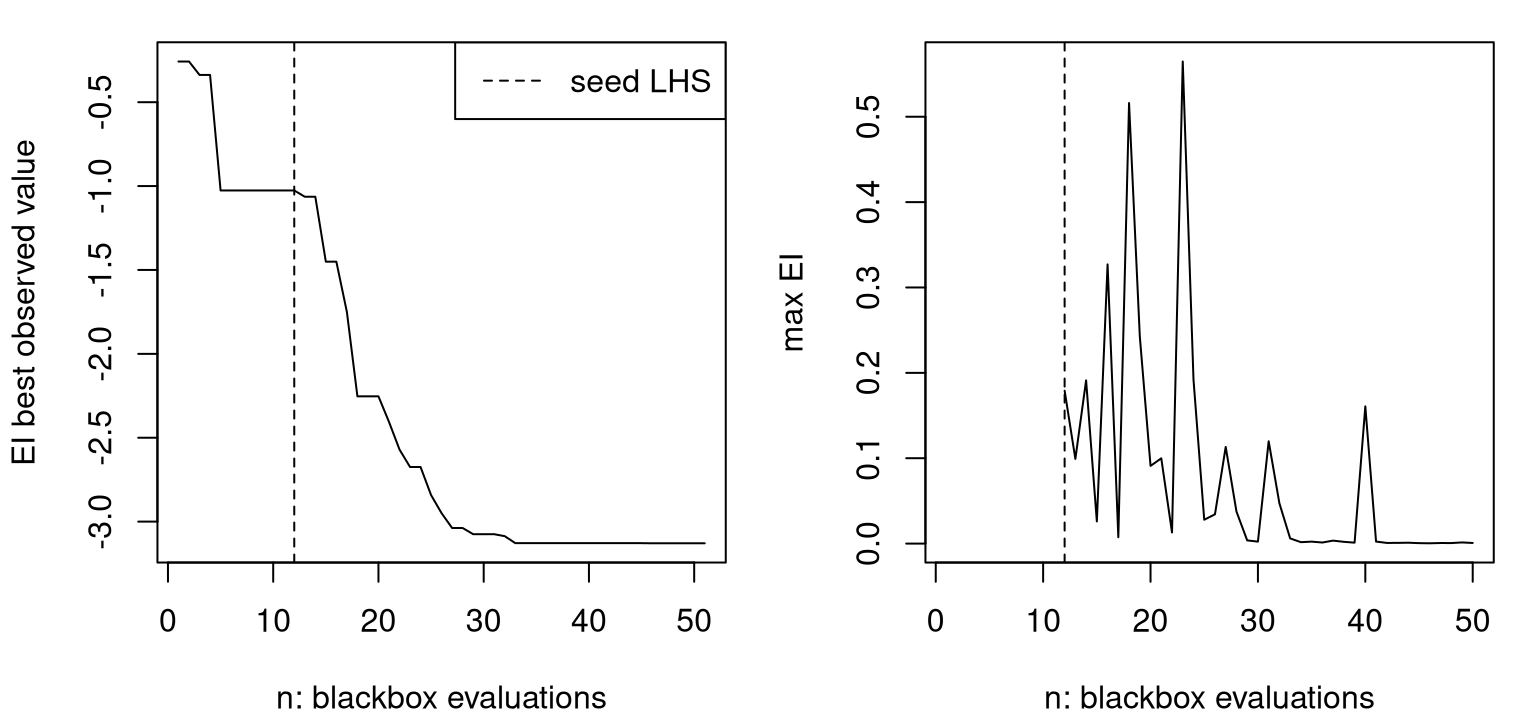

That progress meter is shown visually in Figure 7.3. A vertical dashed line indicates \(n_0\), the size of seed LHS design.

plot(prog, type="l", col="gray", xlab="n: blackbox evaluations",

ylab="best objective value")

abline(v=ninit, lty=2)

legend("topright", "seed LHS", lty=2, bty="n")

FIGURE 7.3: EY progress in terms of BOV over sequential design iterations.

Although it’s difficult to comment on particulars due to random initialization, in most variations substantial progress is apparent over the latter 12 iterations of active learning. There may be plateaus where consecutive iterations show no progress, but these are usually interspersed with modest “drops” and even large “plunges” in BOV. When comparing optimization algorithms based on such progress metrics, the goal is to have BOV curves hug the lower-left-hand corner of the plot to the greatest extent possible: to have the best value of the objective in the fewest number of iterations/evaluations of the expensive blackbox.

To better explore diversity in progress over repeated trials with different random seed designs, an R function below encapsulates our code from above. In addition to a tolerance on successive y-values, an end argument enforces a maximum number of iterations. The full dataset of inputs X and evaluations y is returned.

optim.surr <- function(f, m, ninit, end, tol=1e-4)

{

## initialization

X <- randomLHS(ninit, m)

y <- f(X)

da <- darg(list(mle=TRUE, max=0.5), randomLHS(1000, m))

gpi <- newGPsep(X, y, d=da$start, g=1e-6, dK=TRUE)

mleGPsep(gpi, param="d", tmin=da$min, tmax=da$max, ab=da$ab)

## optimization loop

for(i in (ninit+1):end) {

m <- which.min(y)

opt <- optim(X[m,], obj.mean, lower=0, upper=1,

method="L-BFGS-B", gpi=gpi)

ynew <- f(opt$par)

if(abs(ynew - y[length(y)]) < tol) break

updateGPsep(gpi, matrix(opt$par, nrow=1), ynew)

mleGPsep(gpi, param="d", tmin=da$min, tmax=da$max, ab=da$ab)

X <- rbind(X, opt$par)

y <- c(y, ynew)

}

## clean up and return

deleteGPsep(gpi)

return(list(X=X, y=y))

}Consider re-seeding and re-solving the optimization problem, minimizing f, in this way over 100 Monte Carlo (MC) repetitions. The loop below combines calls to optim.surr with prog post-processing. A maximum number of end=50 iterations is allowed, but often convergence is signaled after many fewer acquisitions.

reps <- 100

end <- 50

prog <- matrix(NA, nrow=reps, ncol=end)

for(r in 1:reps) {

os <- optim.surr(f, 2, ninit, end)

prog[r,] <- bov(os$y, end)

}It’s important to note that these are random initializations, not random searches. Searches (after initialization) are completely deterministic. Surrogate-assisted/Bayesian optimization is not a stochastic optimization, like simulated annealing, although sometimes deterministic optimizers are randomly initialized as we have done here. Stochastic optimizations, where each sequential decision involves a degree of randomness, don’t make good optimizers for expensive blackbox functions because random evaluations of \(f\) are considered too wasteful in computational terms.

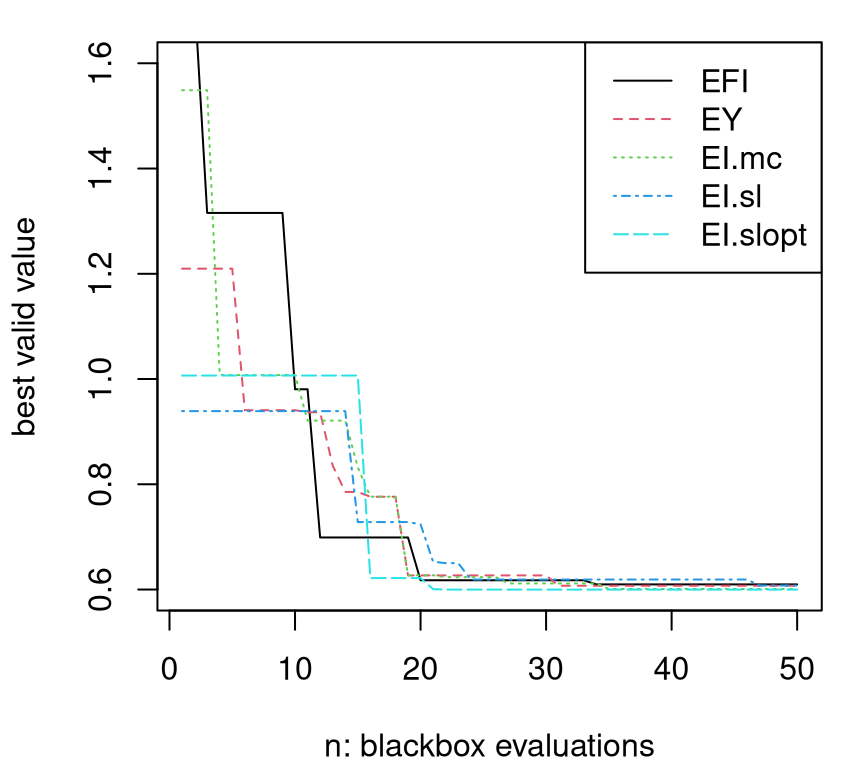

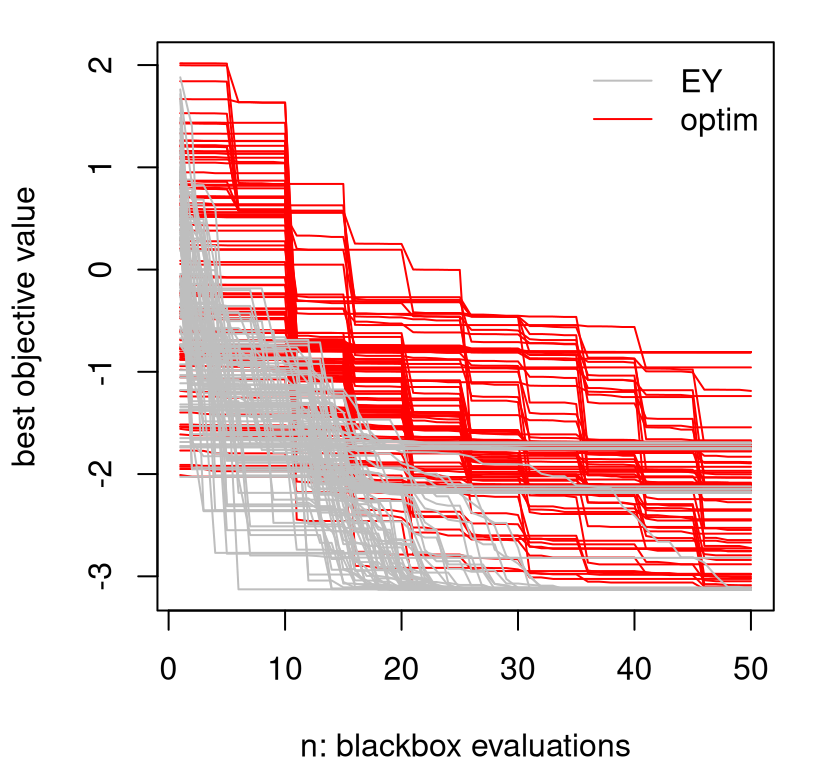

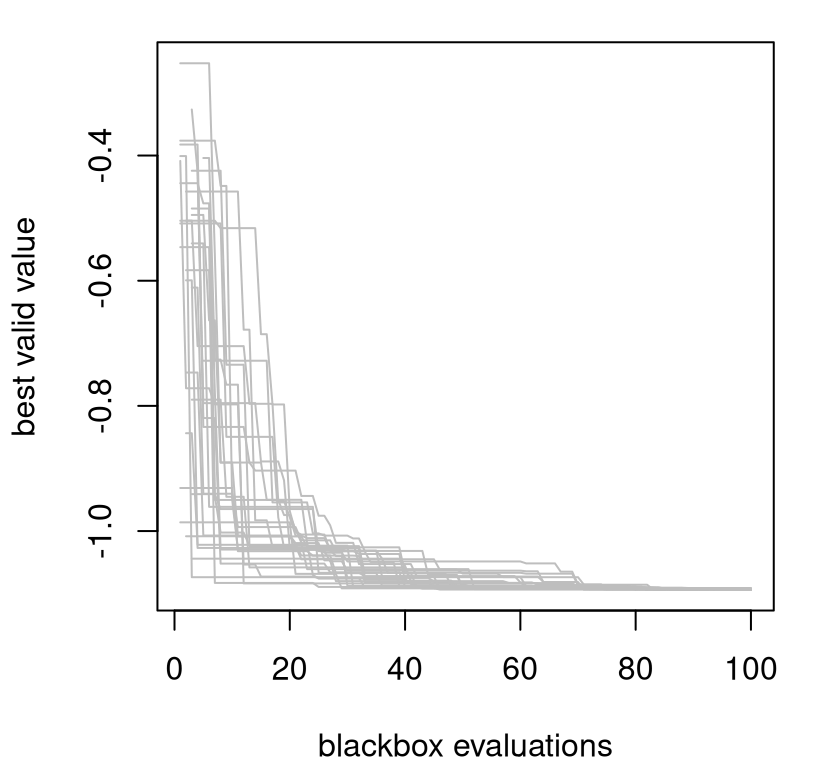

Figure 7.4 shows the 100 trajectories stored in prog.

matplot(t(prog), type="l", col="gray", lty=1,

xlab="n: blackbox evaluations", ylab="best objective value")

abline(v=ninit, lty=2)

legend("topright", "seed LHS", lty=2, bty="n")

FIGURE 7.4: Multiple BOV progress paths following Figure 7.3 under random reinitialization.

Clearly this is not a global optimization tool. It looks like there are three or four local optima, or at least the optimizer is being pulled toward three or four domains of attraction. Before commenting further, it’ll be helpful to have something to compare to.

7.1.2 A classical comparator

How about our favorite optimization library: optim using "L-BFGS-B"? Working optim in as a comparator requires a slight tweak.

Code below modifies our objective function to help keep track of the full set of y-values gathered over optimization iterations, as these are not saved by optim in a way that’s useful for backing out a progress meter (e.g., prog) for comparison. (The optim method works just fine, but it was not designed with my illustrative purpose in mind. It wants to iterate to convergence and give the final result rather than bother you with the details of each evaluation. A trace argument prints partial evaluation information to the screen, but doesn’t return those values for later use.) So the code below updates a y object stored in the calling environment.

Below is the same for loop we did for EY-based surrogate-assisted optimization, but with a direct optim instead. A single random coordinate is used to initialize search, which means that optim enjoys ninit - 1 extra decision-based acquisitions compared to the surrogate method. (No handicap is applied. All expensive function evaluations count equally.) Although optim may utilize more than fifty iterations, our extraction of prog implements a cap of fifty.

prog.optim <- matrix(NA, nrow=reps, ncol=end)

for(r in 1:reps) {

y <- c()

os <- optim(runif(2), fprime, lower=0, upper=1, method="L-BFGS-B")

prog.optim[r,] <- bov(y, end)

}How does optim compare to surrogate-assisted optimization with EY? Figure 7.5 shows that surrogates are much better on a fixed budget. New prog measures for optim are shown in red.

matplot(t(prog.optim), type="l", col="red", lty=1,

xlab="n: blackbox evaluations", ylab="best objective value")

matlines(t(prog), type="l", col="gray", lty=1)

legend("topright", c("EY", "optim"), col=c("gray", "red"), lty=1, bty="n")

FIGURE 7.5: Augmenting Figure 7.4 to include a "L-BFGS-B" comparator under random restarts.

What makes EY so much better; or optim so much worse? Several things. First, "L-BFGS-B" spends precious blackbox evaluations on approximating derivatives. That explains the regular plateaus every five or so iterations. Each step spends roughly \(2m\) calls to \(f\) on calculating a tangent plane, alongside one further call at each newly chosen location, which is often just a short distance away. By comparison, surrogates provide a sense of derivative for “free”, not just locally but everywhere. The curious reader may wish to try method="Nelder-Mead" instead (Nelder and Mead 1965), which is the default in optim. Nelder-Mead doesn’t support search bounds, so the objective must be modified slightly to prevent search wandering well outside our domain of interest. Nelder-Mead doesn’t approximate derivatives, so its progress is a little “smoother” and also a little faster on this 2d problem (when constrained to the box), but is ultimately not as good as the surrogate method. I find that "L-BFGS-B" is more robust, especially in higher dimension, and I like that you can specify a bounding box.

Second, whereas optim, using "L-BFGS-B" or "Nelder-Mead", emphasizes local refinements – leveraging some limited memory, which is like using a model of sorts but not a statistical one – surrogates hold potential for large steps because their view of the response surface is more broad. On occasion, that helps EY escape from local minima, which is more likely in early rather than later iterations.

By and large, surrogate-assisted optimization with EY is still a local affair. Its advantages stem primarily from an enhanced sense of perspective. It exploits, by moving to the next best spot from where it left off, descending with its own optim subroutine on \(\mu_n(x)\). As implemented, it doesn’t explore places that cannot easily be reached from the current best value. It could help to initialize optim subroutines elsewhere, but the success of such variations is highly problem dependent. Sometimes it helps a little, sometimes it hurts.

What’s missing is some way to balance exploration and exploitation. By the way, notice that we’re not actually doing statistics, because at no point is uncertainty being taken into account. Only predictive means are used; predictive variance doesn’t factor in. One way to incorporate uncertainty is through the lower confidence bound (LCB) heuristic (Srinivas et al. 2009). LCB is a simple linear combination between mean and standard deviation:

\[\begin{equation} \alpha_{\mathrm{LCB}}(x) = - \mu_n(x) + \beta_n \sigma_n(x), \quad \mbox{ and } \quad x_{n+1} = \mathrm{argmax}_x \; \alpha_{\mathrm{LCB}}(x). \tag{7.1} \end{equation}\]

LCB introduces a sequence of tuning parameters \(\beta_n\), targeting a balance between exploration and exploitation as iterations of optimization progress. Larger \(\beta_n\) lead to more conservative searches, until in the limit \(\mu_n(x)\) is ignored and acquisitions reduce to ALM (§6.2.1). A family of optimal choices \(\hat{\beta}_n\) may be derived by minimizing regret in a multi-armed bandit setting, however in practice such theoretically automatic selections remain unwieldy for practitioners.

There’s a twist on EY, called Thompson sampling (Thompson 1933), that gracefully incorporates predictive uncertainty. Rather than optimize the predictive mean directly, take a draw from the (full covariance) posterior predictive distribution (5.3), and optimize that instead. Each iteration of search would involve a different, independent surrogate function draw. Therefore, a collection of many optimization steps would feel the effect of a sense of relative uncertainty through a diversity of such draws. Sparsely sampled parts of the input space could see large swings in surrogate optima, which would lead to exploratory behavior. No setting of tuning parameters required.

There are several disadvantages to Thompson sampling however. One is that it’s a stochastic optimization. Each iteration of optimization is based on an inherently random process. Another is that you must commit to a predictive grid in advance, in order to draw from posterior predictive equations, which rules out (continuous) library-based optimization in the inner loop, say by "L-BFGS-B". Actually it’s possible to get around a predictive grid with a substantially more elaborate implementation involving iterative applications of MVN conditionals (5.1). But there’s a better, easier, and more deterministic way to accomplish the same feat by deliberately acknowledging a role for predictive variability in acquisition criteria.

7.2 Expected improvement

In the mid 1990s, Matthias Schonlau (1997) was working on his dissertation, which basically revisited Mockus’ Bayesian optimization idea from a GP and computer experiments perspective. He came up with a heuristic called expected improvement (EI), which is the basis of the so-called efficient global optimization (EGO) algorithm. This distinction is subtle: one is the sequential design criterion (EI), and the other is its repeated application toward minimizing a blackbox function (EGO). In the literature, you’ll see the overall method referred to by both names/acronyms.

Schonlau’s key insight was that predictive uncertainty is underutilized by surrogate frameworks for optimization, which is especially a shame when GPs are involved because they provide such a beautiful predictive variance function. The basic tenets, however, are not limited to GP surrogates. The key is to link prediction to potential for optimization via a measure of improvement. Let \(f_{\min}^n = \min \{y_1, \dots, y_n\}\) be the smallest, best blackbox objective evaluation obtained so far, i.e., the BOV quantity we tracked with prog in our earlier illustrations. Schonlau defined potential for improvement over \(f_{\min}^n\) at an input location \(x\) as

\[ I(x) = \max\{0, f^{\mathrm{min}}_n - Y(x)\}. \]

\(I(x)\) is a random variable. It measures the amount by which a response \(Y(x)\) could be below the BOV obtained so far. Here \(Y(x)\) is shorthand for \(Y(x) \mid D_n\), the predictive distribution obtained from a fitted model. If \(Y(x) \mid D_n\) has nonzero probability of taking on any value on the real line, as it does under a Gaussian predictive distribution, then \(I(x)\) has nonzero probability of being positive for any \(x\).

Now there are lots of things you could imagine doing with \(I(x)\). (Something must be done because in its raw form, as a random variable, it’s of little practical value.) One option is to convert it into a probability. The probability of improvement (PI) criterion is \(\mathrm{PI}(x) = P(I(x) > 0 \mid D_n)\), which is equivalent to \(P(Y(x) < f_{\min}^n \mid D_n)\). Maximizing PI is sensible, but could result in very small steps. The most probable input \(x^\star = \max_x \mathrm{PI}(x)\) may not hold the greatest potential for large improvement, which is important when considering the tacit goal of minimizing the number of expensive blackbox evaluations. Instead, maximizing expected improvement (EI), \(\mathrm{EI}(x) = \mathbb{E}\{I(x) \mid D_n \}\), more squarely targets potential for large improvement.

The easiest way to calculate PI or EI, where “easy” means agnostic to the form of \(Y(x) \mid D_n\), is through MC approximation. Draw \(y^{(t)} \sim Y(x) \mid D_n\), for \(t=1,\dots, T\), from their posterior predictive distribution, and average

\[ \begin{aligned} \mathrm{PI}(x) &\approx \frac{1}{T} \sum_{t=1}^T \mathbb{I}_{\{y^{(t)} > 0\}} & & \mbox{or} & \mathrm{EI}(x) &\approx \frac{1}{T} \sum_{t=1}^T \max \{0, f^n_{\min} - y^{(t)} \}. \end{aligned} \]

In the limit as \(T \rightarrow \infty\) these approximations become exact. This approach works no matter what the distribution of \(Y(x)\) is, so long as you can simulate from it. With fully Bayesian response surface methods leveraging Markov chain Monte Carlo (MCMC) posterior sampling, say, such approximation may represent the only viable option.

However if \(Y(x) \mid D_n\) is Gaussian, as it’s under the predictive equations of a GP surrogate conditional on a particular set of hyperparameters, both have a convenient closed form. PI involves a standard Gaussian CDF (\(\Phi\)) evaluation, as readily calculated with built-in functions in R.

\[\begin{equation} \mathrm{PI}(x) = \Phi\left(\frac{f^n_{\min} - \mu_n(x)}{\sigma_n(x)}\right) \tag{7.2} \end{equation}\]

Transparent in the formula above is that both predictive mean and uncertainty factor into the calculation.

Deriving EI takes a little more work, but nothing an A+ student of calculus couldn’t do using substitution and integration by parts. Details are in an appendix of Schonlau’s thesis. I shall simply quote the final result here.

\[\begin{equation} \mathrm{EI}(x) = (f^n_{\min} - \mu_n(x))\,\Phi\!\left( \frac{f^n_{\min} - \mu_n(x)}{\sigma_n(x)}\right) + \sigma_n(x)\, \phi\!\left( \frac{f^n_{\min} - \mu_n(x)}{\sigma_n(x)}\right), \tag{7.3} \end{equation}\]

where \(\phi\) is the standard Gaussian PDF. Notice how EI contains PI as a component in a larger expression. “One half” of EI is PI multiplied (or weighted) by the amount by which the predictive mean is below \(f^n_{\min}\). The other “half” is predictive variance weighted by a Gaussian density evaluation. In this way, maximizing EI organically and transparently balances competing goals of exploitation (\(\mu_n(x)\) below \(f_{\min}^n\)) and exploration (large predictive uncertainty \(\sigma_n(x)\)).

In what follows the discussion drops PI and focuses exclusively on EI. An exercise in §7.4 encourages the curious reader to rework examples below by swapping in a simple PI implementation, in addition to other alternatives.

7.2.1 Classic EI illustration

As a first illustration of EI, code chunks below recreate an example and visuals presented in the first published/journal manuscript describing EI/EGO (Jones, Schonlau, and Welch 1998). I like to introduce Schonlau’s thesis first to give him proper credit, whereas the Journal of Global Optimization article linked in the previous sentence is often cited as Jones, et al. (1998). Consider data \(D_n = (X_n, Y_n)\) hand-coded in R below, which was taken by eyeball from Figure 10 in that paper, and re-centered to have a mean near zero. Observe the deliberate gap in the input space between fourth and fifth inputs, scanning from the left.

Code below initializes a GP fit on these data with hyperparameterization chosen to match that figure, again by eyeball. (It’s such a great example from a pedagogical perspective, I didn’t want to blow it. We’ll do a more dynamic and novel illustration shortly.) Predictions may then be obtained on a dense grid in the input space.

gpi <- newGP(matrix(x, ncol=1), y, d=10, g=1e-8)

xx <- seq(0, 13, length=1000)

p <- predGP(gpi, matrix(xx, ncol=1), lite=TRUE)Ok, we have everything we need to calculate EI (7.3). R code below evaluates that equation using predictive quantities stored in p after calculating \(f^n_{\min}\) from the small initial set of y values.

m <- which.min(y)

fmin <- y[m]

d <- fmin - p$mean

s <- sqrt(p$s2)

dn <- d/s

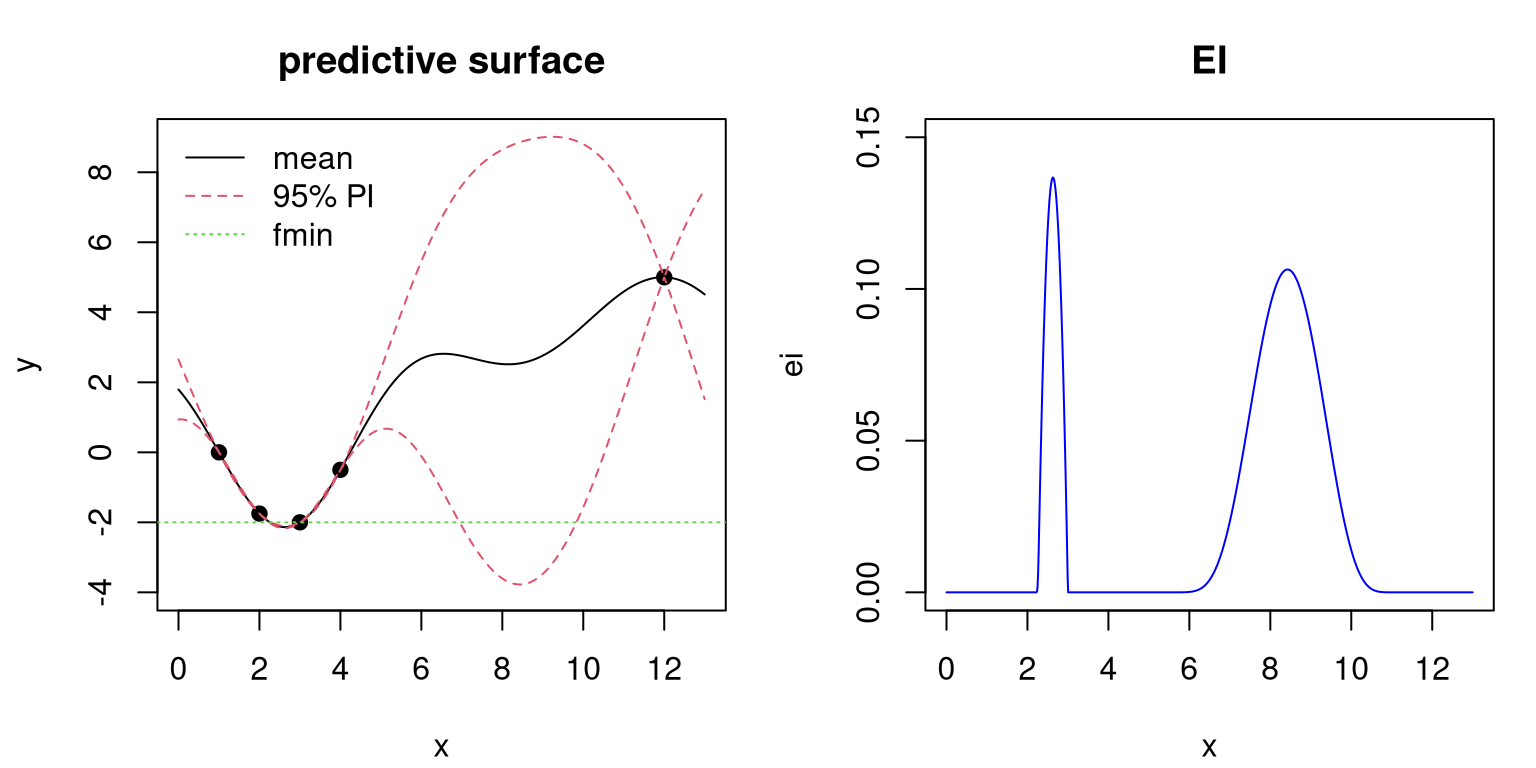

ei <- d*pnorm(dn) + s*dnorm(dn)The left panel in Figure 7.6 shows the predictive surface in terms of mean and approximate 95% error-bars. The predictive mean clearly indicates potential for a solution in the left half of the input space. Our simple surrogate-assisted EY optimizer (§7.1.2) would exploit that low mean and acquire the next point there. However, error-bars suggest great potential for minima in the right half of the input space. Although means are high there, error-bars fall well below the current best value \(f^n_{\min}\), suggesting exploration might be warranted. EI, shown in the right panel of the figure, synthesizes this information to strike a balance between exploration and exploitation.

par(mfrow=c(1,2))

plot(x, y, pch=19, xlim=c(0,13), ylim=c(-4,9), main="predictive surface")

lines(xx, p$mean)

lines(xx, p$mean + 2*sqrt(p$s2), col=2, lty=2)

lines(xx, p$mean - 2*sqrt(p$s2), col=2, lty=2)

abline(h=fmin, col=3, lty=3)

legend("topleft", c("mean", "95% PI", "fmin"), lty=1:3,

col=1:3, bty="n")

plot(xx, ei, type="l", col="blue", main="EI", xlab="x", ylim=c(0,0.15))

FIGURE 7.6: Classic EI illustration showing predictive surface (left) and corresponding EI surface (right).

That synthesis creates a multimodal EI surface. It’s a close call between the left and right options in the input space. Outside of those regions EI is essentially zero, although theoretically positive. The left mode is higher but narrower. Maximizing EI results in choosing the input for the next blackbox evaluation, \(x_{n+1}\), somewhere between 2 and 3. Options other than maximization, towards perhaps a better accounting for breadth of expected improvement, are discussed in §7.2.3. R code below makes a more precise selection and incorporates the new pair \((x_{n+1}, y_{n+1})\).

Notice that the predictive mean is being used for \(y_{n+1}\) in lieu of a “real” evaluation. For a clean illustration we’re supposing that our predictive mean was accurate, and that therefore we have a new global optima. A more realistic example follows soon. Code below updates the GP fit based on the new acquisition and re-evaluates predictive equations on our grid.

updateGP(gpi, matrix(xx[mm], ncol=1), p$mean[mm])

p <- predGP(gpi, matrix(xx, ncol=1), lite=TRUE)

deleteGP(gpi)Cutting-and-pasting from above, next convert those predictions into EIs based on new \(f^n_{\min}\).

m <- which.min(y)

fmin <- y[m]

d <- fmin - p$mean

s <- sqrt(p$s2)

dn <- d/s

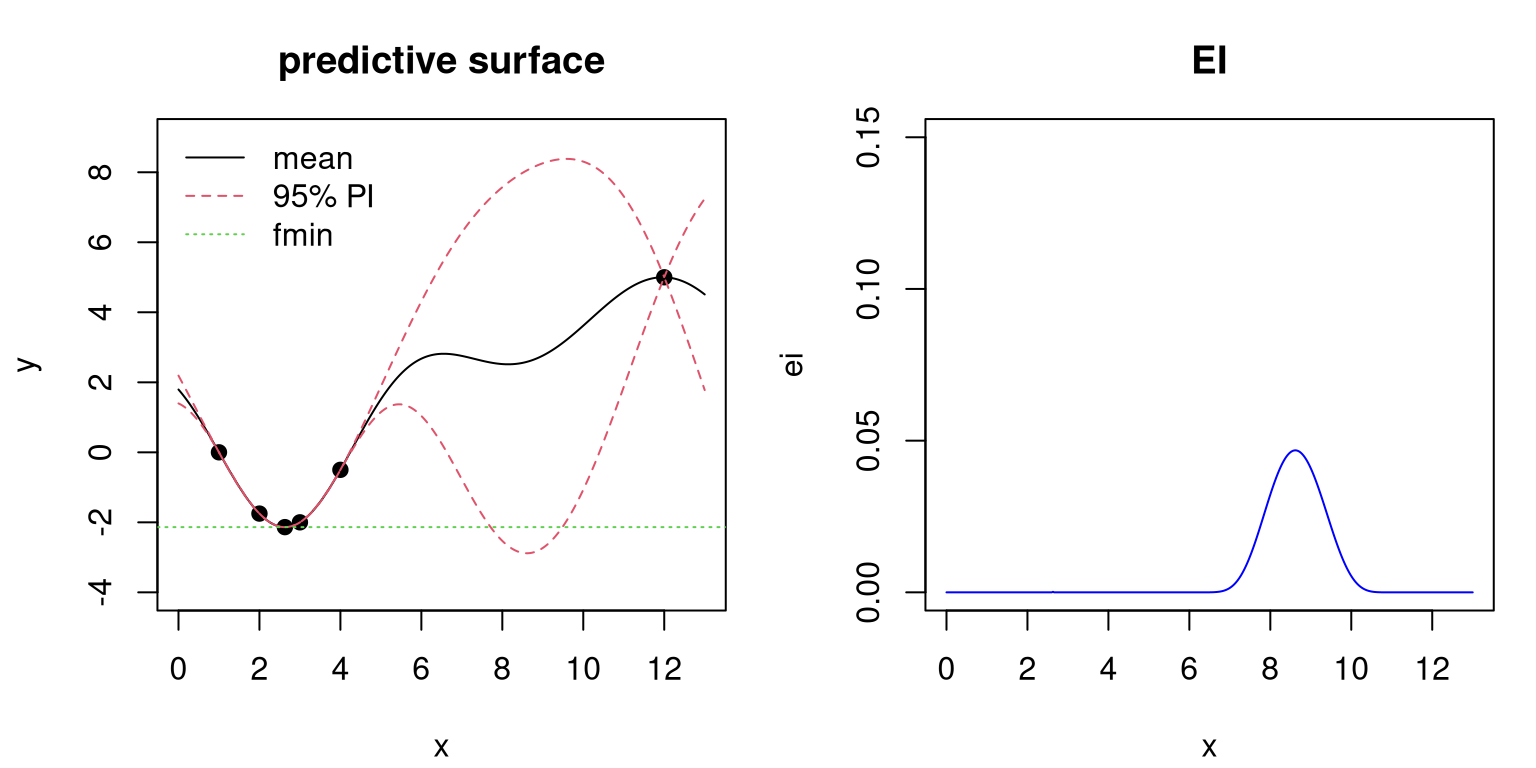

ei <- d*pnorm(dn) + s*dnorm(dn)Updated predictive surface (left) and EI criterion (right) are provided in Figure 7.7.

par(mfrow=c(1,2))

plot(x, y, pch=19, xlim=c(0,13), ylim=c(-4,9), main="predictive surface")

lines(xx, p$mean)

lines(xx, p$mean + 2*sqrt(p$s2), col=2, lty=2)

lines(xx, p$mean - 2*sqrt(p$s2), col=2, lty=2)

abline(h=fmin, col=3, lty=3)

legend("topleft", c("mean", "95% PI", "fmin"), lty=1:3,

col=1:3, bty="n")

plot(xx, ei, type="l", col="blue", main="EI", xlab="x", ylim=c(0,0.15))

FIGURE 7.7: Predictive surface (left) and EI (right) after the first acquisition; see Figure 7.6.

There are several things to notice from the plots in the figure. Perhaps the most striking feature lies in the updated EI surface, which now contains just one bump. Elsewhere, EI is essentially zero. Choosing max EI will result in exploring the high variance region. The plot is careful to keep the same \(y\)-axis compared to Figure 7.6 in order to make transparent that EI tends to decrease as data are added. It need not always decrease, especially when hyperparameters are re-estimated when new data arrive. Since \(\hat{\tau}^2\) is analytic, laGP-based subroutines always keep this hyperparameter up to date. The implementation above doesn’t update lengthscale and nugget hyperparameters, but it could. Comparing left panels between Figures 7.6 and 7.7, notice that predictive variability has been reduced globally even though a point was added in an already densely-sampled area. Data affect GP fits globally under this stationary, default specification, although influence does diminish exponentially as gaps between training and testing locations grow in the input space.

We’re at the limits of this illustration because it doesn’t really involve a function \(f\), but rather some data on evaluations gleaned by eyeball from a figure in a research paper. A more interactive demo is provided as gp_ei_sin.R in supplementary material on the book web page. The demo extends our basic sinusoid example from §5.1.1, but is quite similar in flavor to the illustration above. Specifically, it combines an EI function from the plgp package (Gramacy 2014) with GP fitting from laGP (Gramacy and Sun 2018) – somewhat of a hodgepodge but it keeps the code short and sweet. For enhanced transparency on our running Goldstein–Price example (§7.1.1), we’ll code up our own EI function here, basically cut-and-paste from above, for use with laGP below.

EI <- function(gpi, x, fmin, pred=predGPsep)

{

if(is.null(nrow(x))) x <- matrix(x, nrow=1)

p <- pred(gpi, x, lite=TRUE)

d <- fmin - p$mean

sigma <- sqrt(p$s2)

dn <- d/sigma

ei <- d*pnorm(dn) + sigma*dnorm(dn)

return(ei)

}Observe how this implementation combines prediction with EI calculation. That’s a little inefficient in situations where we wish to record both predictive and EI quantities, as we did in the example above, especially when evaluating on a dense grid. Rather, it’s designed with implementation in Step 3 of Algorithm 6.1 in mind. The final argument, pred, enhances flexibility in specification of fitted model and associated prediction routine.

7.2.2 EI on our running example

To set up EI as an objective for minimization, the function below acts as a wrapper around - EI.

We’ve seen that EI can be multimodal, but it’s not pathologically so like ALM and, to a lesser extent, ALC. ALM/C inherit multimodality from the sausage-shaped GP predictive distribution. Although EI is composed of a measure of predictive uncertainty, its hybrid nature with the predictive mean has a calming effect. Consequently, EI is only high when both mean is low and variance is high, each contributing to potential for low function realization. The number of modes in EI may fluctuate throughout acquisition iterations, but in the long run should resemble the number of troughs in \(f\), assuming minimization. By contrast, the number of ALM/C modes would increase with \(n\). Like with ALM/C, a multi-start scheme for EI searches is sensible, but need not include \(\mathcal{O}(n)\) locations and these need not be as carefully placed, e.g., at the widest parts of the sausages. A fixed number, perhaps composed of the input location of the BOV (that corresponding to \(f^n_{\min}\), where we know the function is low) and a few other points spread around the input space, is often sufficient for decent performance. If not for numerical issues that sometimes arise when EI evaluations are numerically zero for large swaths of inputs, having just two multi-start locations (one at \(f_{\min}^n\) and one elsewhere) often suffices. Working with \(\log \mathrm{EI}(x)\) sometimes helps in such situations, but I won’t bother in our implementation here.

With those considerations in mind, the function below completes our solution to Algorithm 6.1, specifying \(J(x)\) in Step 3 for EI-based optimization. EI.search is similar in flavor to xnp1.search from §6.2.1, but tailored to EI and the multi-start scheme described above.

eps <- sqrt(.Machine$double.eps) ## used lots below

EI.search <- function(X, y, gpi, pred=predGPsep, multi.start=5, tol=eps)

{

m <- which.min(y)

fmin <- y[m]

start <- matrix(X[m,], nrow=1)

if(multi.start > 1)

start <- rbind(start, randomLHS(multi.start - 1, ncol(X)))

xnew <- matrix(NA, nrow=nrow(start), ncol=ncol(X)+1)

for(i in 1:nrow(start)) {

if(EI(gpi, start[i,], fmin) <= tol) { out <- list(value=-Inf); next }

out <- optim(start[i,], obj.EI, method="L-BFGS-B",

lower=0, upper=1, gpi=gpi, pred=pred, fmin=fmin)

xnew[i,] <- c(out$par, -out$value)

}

solns <- data.frame(cbind(start, xnew))

names(solns) <- c("s1", "s2", "x1", "x2", "val")

solns <- solns[solns$val > tol,]

return(solns)

}Although a degree of stochasticity is being injected through the multi-start scheme, this is simply to help the inner loop (maximizing EI), where evaluations are cheap. From the perspective of the outer loop – iterations of sequential design, with details coming momentarily – the intention is still to perform a deliberate and deterministic search. Actually more multi-start locations translate into more careful acquisitions \(x_{n+1}\) and subsequent expensive blackbox evaluation. The data.frame returned on output has one row for each multi-start location, although rows yielding effectively zero EI are culled. Multi-start locations whose first EI evaluation is effectively zero are immediately aborted. There are many further ways to “robustify” EI.search, but it’s surprising how well things work without much fuss. For example, Jones, Schonlau, and Welch (1998) suggested branch and bound search rather than multi-start derivative-based numerical optimization (optim in our implementation above). Providing gradients to optim can help too. But the improvements that those enhancements offer are at best slight in my experience.

All right, let’s initialize an EI-based optimization – same as for the two earlier comparators.

X <- randomLHS(ninit, 2)

y <- f(X)

gpi <- newGPsep(X, y, d=0.1, g=1e-6, dK=TRUE)

da <- darg(list(mle=TRUE, max=0.5), randomLHS(1000, 2))Code below solves for the next input to try, extracting the best row from the output data.frame.

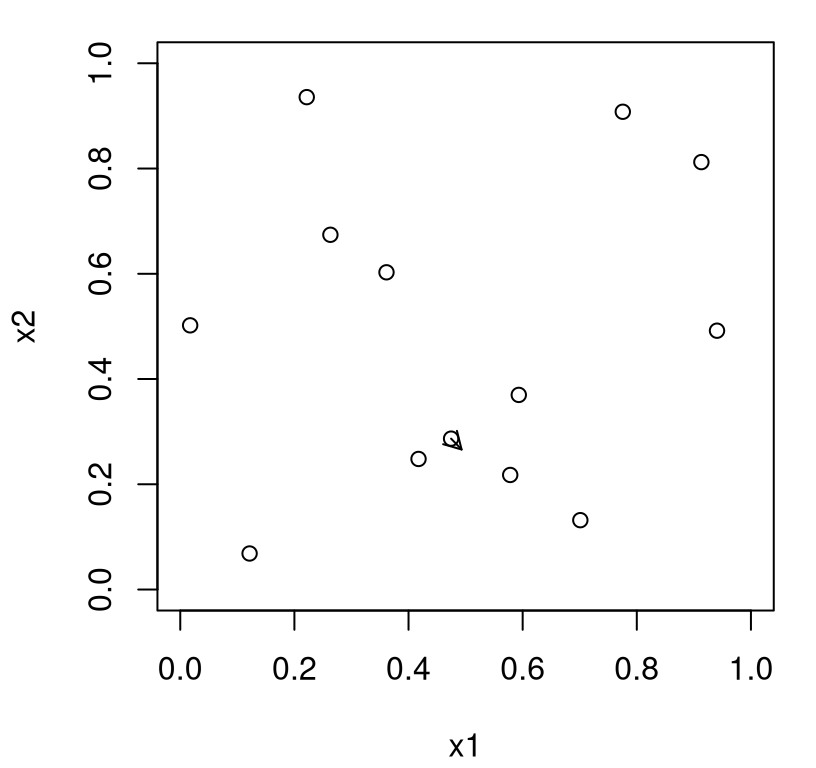

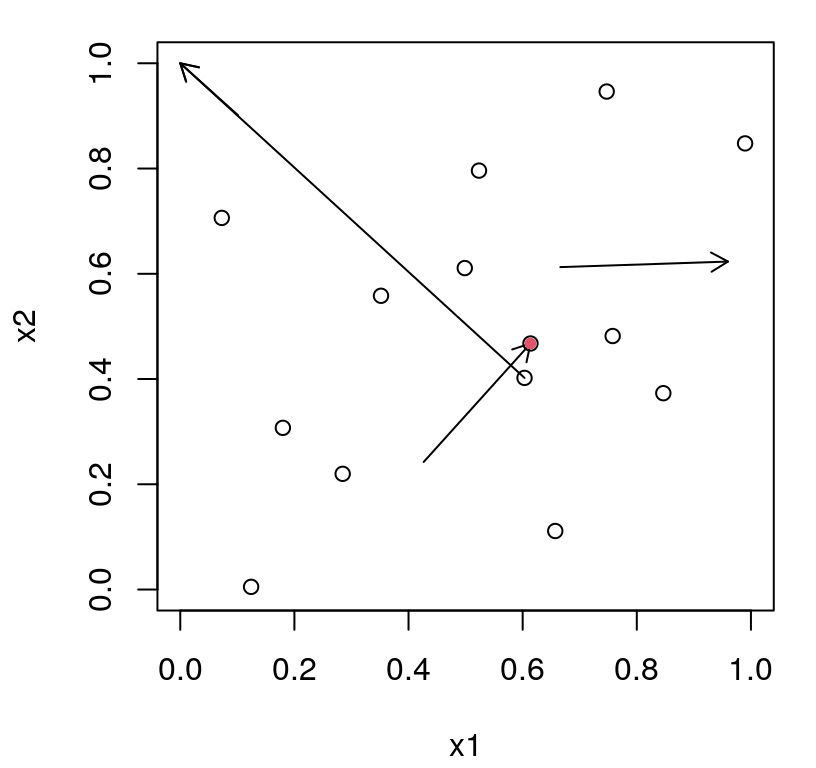

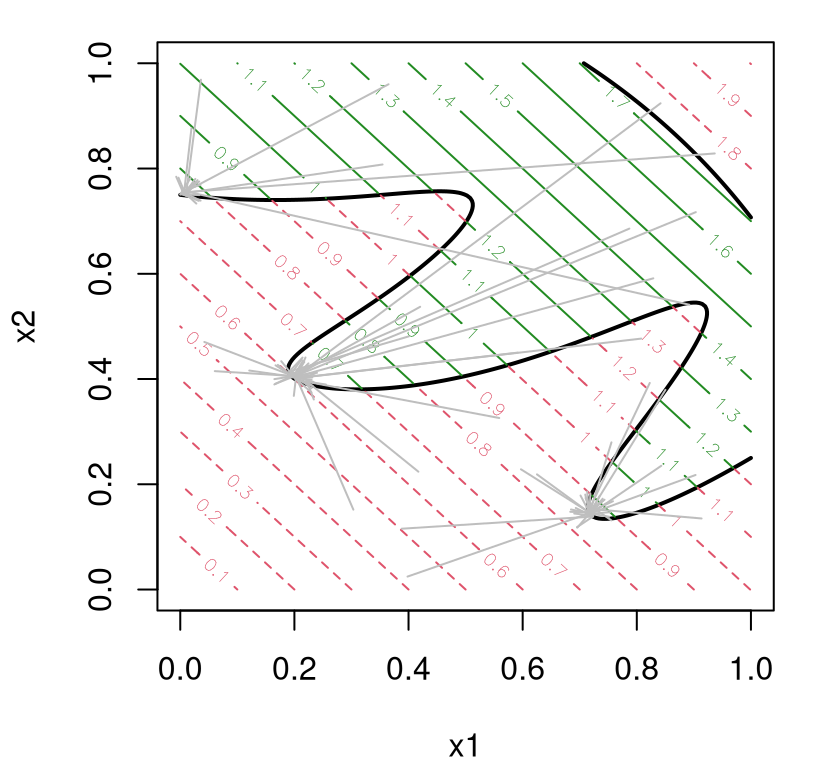

Before acting on that solution, Figure 7.8 summarizes the outcome of search with arrows indicating starting and ending locations of each multi-start EI optimization. Open circles mark the original/existing design. The red dot corresponds to the best row.

plot(X, xlab="x1", ylab="x2", xlim=c(0,1), ylim=c(0,1))

arrows(solns$s1, solns$s2, solns$x1, solns$x2, length=0.1)

points(solns$x1[m], solns$x2[m], col=2, pch=20)

FIGURE 7.8: First iteration of EI search on Goldstein–Price objective in the style of Figure 7.1.

One of the arrows originates from an open circle. This is the multi-start location corresponding to \(f_{\min}^n\). Up to four other arrows come from an LHS. If the total number of arrows is fewer than 5, the default multi.start in EI.search, that’s because some were initialized in numerically-zero EI locations, and consequently that search was voided at the outset. Usually two or more arrows appear, sometimes with distinct terminus indicating a multi-modal criterion.

Moving on now, code below incorporates the new data at the chosen input location (red dot) and updates the GP fit.

xnew <- as.matrix(solns[m,3:4])

X <- rbind(X, xnew)

y <- c(y, f(xnew))

updateGPsep(gpi, xnew, y[length(y)])

mle <- mleGPsep(gpi, param="d", tmin=da$min, tmax=da$max, ab=da$ab)We’re ready for the next acquisition. Below the search is combined with a second GP update, after incorporating the newly selected data pair.

solns <- EI.search(X, y, gpi)

m <- which.max(solns$val)

maxei <- c(maxei, solns$val[m])

xnew <- as.matrix(solns[m,3:4])

X <- rbind(X, xnew)

y <- c(y, f(xnew))

updateGPsep(gpi, xnew, y[length(y)])

mle <- mleGPsep(gpi, param="d", tmin=da$min, tmax=da$max, ab=da$ab)Figure 7.9 offers a visual, accompanied by a story that’s pretty similar to Figure 7.8.

plot(X, xlab="x1", ylab="x2", xlim=c(0,1), ylim=c(0,1))

arrows(solns$s1, solns$s2, solns$x1, solns$x2, length=0.1)

points(solns$x1[m], solns$x2[m], col=2, pch=20)

FIGURE 7.9: Second iteration of EI search after Figure 7.8.

You get the idea. Rather than continue with pedantic visuals, a for loop below repeats in this way until fifty samples of \(f\) have been collected. Hopefully one of them will offer a good solution to the optimization problem, an \(x^\star\) with a minimal objective value \(f(x^\star)\).

for(i in nrow(X):end) {

solns <- EI.search(X, y, gpi)

m <- which.max(solns$val)

maxei <- c(maxei, solns$val[m])

xnew <- as.matrix(solns[m,3:4])

ynew <- f(xnew)

X <- rbind(X, xnew)

y <- c(y, ynew)

updateGPsep(gpi, xnew, y[length(y)])

mle <- mleGPsep(gpi, param="d", tmin=da$min, tmax=da$max, ab=da$ab)

}

deleteGPsep(gpi)Notice that no stopping criteria are implemented in the loop. Our previous y-value-based criterion would not be appropriate here because progress isn’t measured by the value of the objective, but instead by potential for (expected) improvement. A relevant such quantity is recorded by maxei above. Still, y-progress is essential to drawing comparison to earlier results.

Figure 7.10 presents these two measures of progress side-by-side, with y on the left and maxei on the right.

par(mfrow=c(1,2))

plot(prog.ei, type="l", xlab="n: blackbox evaluations",

ylab="EI best observed value")

abline(v=ninit, lty=2)

legend("topright", "seed LHS", lty=2)

plot(ninit:end, maxei, type="l", xlim=c(1,end),

xlab="n: blackbox evaluations", ylab="max EI")

abline(v=ninit, lty=2)

FIGURE 7.10: EI progress in terms of BOV (left) and maximal EI used for acquisition (right).

First, notice how BOV (on the left) is eventually in the vicinity of \(-3\), which is as good or better than anything we obtained in previous optimizations. Even in this random Rmarkdown build I can be pretty confident about that outcome for reasons that’ll become more apparent after we complete a full MC study, shortly. The right panel, showing EI progress, is usually spiky. As the GP learns about the response surface, globally, it revises which areas it believes are high variance, and which give low response values, culminating in dramatic shifts in potential for improvement. Eventually maximal EI settles down, but there’s no guarantee that it won’t “pop back up” if a GP update is surprised by an acquisition. Sometimes those “pops” are big, sometimes small. Therefore maxei is more useful as a visual confirmation of convergence than it is an operational one, e.g., one that can be engineered into a library function. Hence it’s common to run out an EI search to exhaust a budget of evaluations. The function below, designed to encapsulate code above for repeated calls in an MC setting, demands an end argument in lieu of more automatic convergence criteria.

optim.EI <- function(f, ninit, end)

{

## initialization

X <- randomLHS(ninit, 2)

y <- f(X)

gpi <- newGPsep(X, y, d=0.1, g=1e-6, dK=TRUE)

da <- darg(list(mle=TRUE, max=0.5), randomLHS(1000, 2))

mleGPsep(gpi, param="d", tmin=da$min, tmax=da$max, ab=da$ab)

## optimization loop of sequential acquisitions

maxei <- c()

for(i in (ninit+1):end) {

solns <- EI.search(X, y, gpi)

m <- which.max(solns$val)

maxei <- c(maxei, solns$val[m])

xnew <- as.matrix(solns[m,3:4])

ynew <- f(xnew)

updateGPsep(gpi, xnew, ynew)

mleGPsep(gpi, param="d", tmin=da$min, tmax=da$max, ab=da$ab)

X <- rbind(X, xnew)

y <- c(y, ynew)

}

## clean up and return

deleteGPsep(gpi)

return(list(X=X, y=y, maxei=maxei))

}This optim.EI function hard-codes a separable laGP-based GP formulation, but is easily modified for other settings or an isotropic analog. Using that function, let’s repeatedly solve the problem in this way (and track progress) with 100 random initializations, duplicating work similar to our EY and "L-BFGS-B" optimizations from §7.1.1–7.1.2.

reps <- 100

prog.ei <- matrix(NA, nrow=reps, ncol=end)

for(r in 1:reps) {

os <- optim.EI(f, ninit, end)

prog.ei[r,] <- bov(os$y)

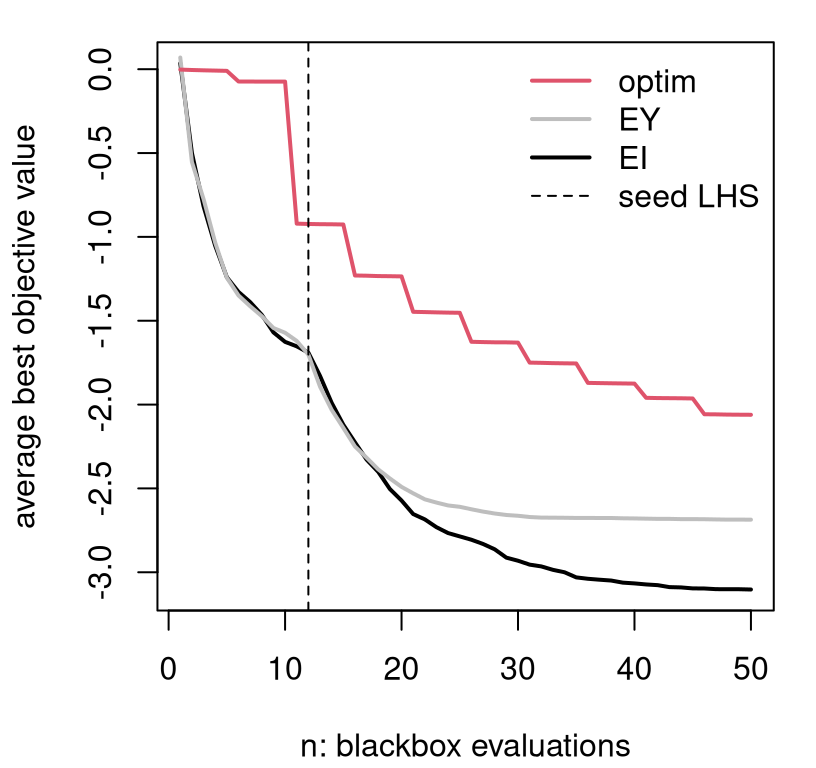

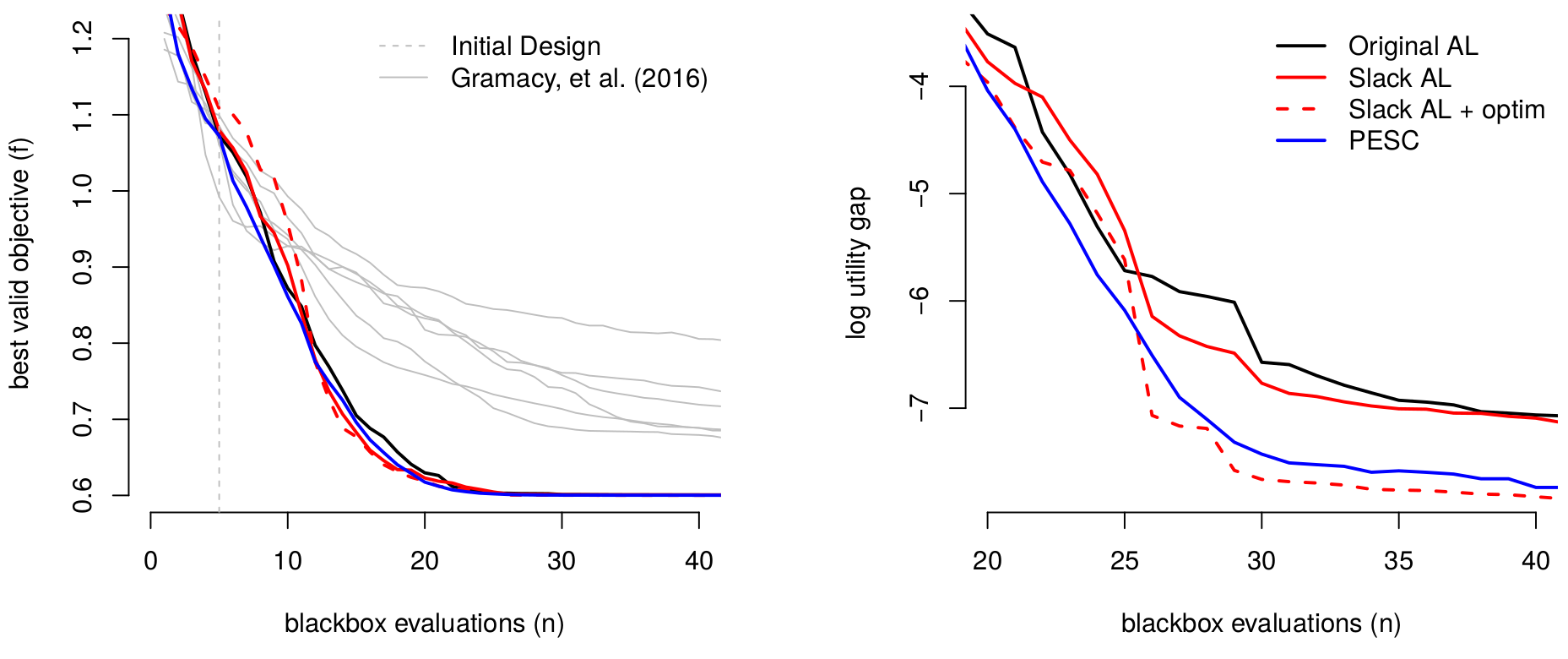

}Because showing three sets of 100 paths (300 total) would be a hot spaghetti mess, Figure 7.11 shows averages of those sets of 100 for our three comparators. Variability in those paths, which is mostly of interest for latter iterations/near the budget limit, is shown in a separate figure momentarily.

plot(colMeans(prog.ei), col=1, lwd=2, type="l",

xlab="n: blackbox evaluations", ylab="average best objective value")

lines(colMeans(prog), col="gray", lwd=2)

lines(colMeans(prog.optim, na.rm=TRUE), col=2, lwd=2)

abline(v=ninit, lty=2)

legend("topright", c("optim", "EY", "EI", "seed LHS"),

col=c(2, "gray", 1, 1), lwd=c(2,2,2,1), lty=c(1,1,1,2),

bty="n")

FIGURE 7.11: Average BOV progress for the three comparators entertained so far.

Although EI and EY perform similarly for the first few acquisitions after initialization, EI systematically outperforms in subsequent iterations. Whereas the classical surrogate-assisted EY heuristic gets stuck in inferior local optima, EI is better able to pop out and explore other alternatives. Both are clearly better than derivative-based methods like "L-BFGS-B", labeled as optim in Figure 7.11.

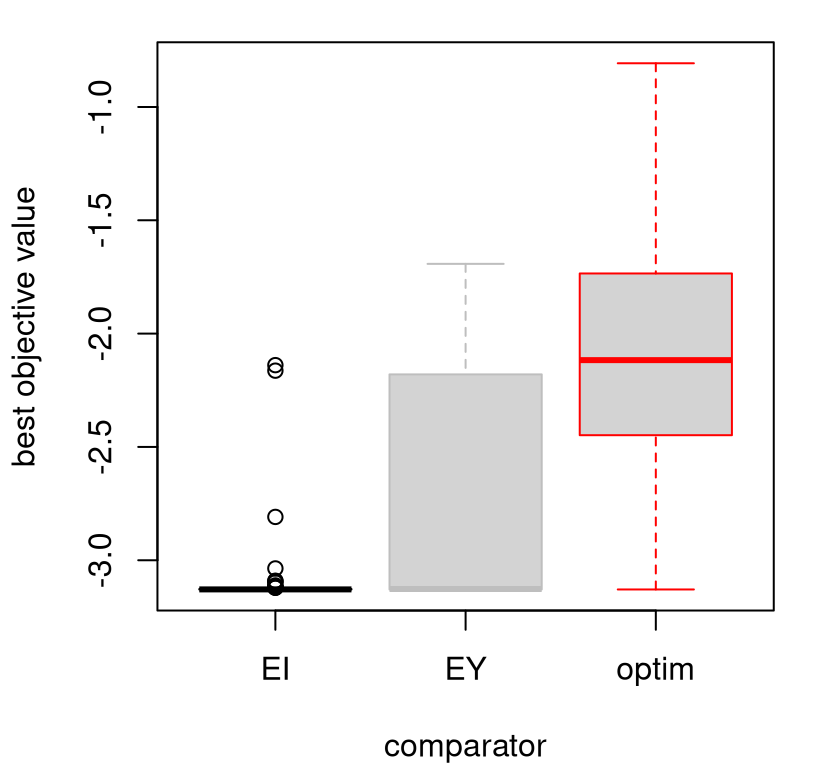

Figure 7.12 shows the diversity of solutions in the final, fiftieth iteration. Only in a small handful of 100 repeats does EI not find the global min after 50 iterations. Classical surrogate-assisted EY optimization fails to find the global optima about half of the time. Numerical "L-BFGS-B" optimization fails more than 75% of the time.

boxplot(prog.ei[,end], prog[,end], prog.optim[,end],

names=c("EI", "EY", "optim"), border=c("black", "gray", "red"),

xlab="comparator", ylab="best objective value")

FIGURE 7.12: Boxplots summarizing the distribution of progress after the final acquisition.

All of the methods would benefit from more iterations, but only marginally unless random restarts are implemented for EY and optim comparators. Once those become stuck in a local minima they lack the ability to pop out on their own. EI, on the other hand, is in a certain sense guaranteed to become unstuck. Under certain regularity conditions, like that hyperparameters are fixed and known and the objective function isn’t completely at odds with typical GP modeling assumptions, the EGO algorithm (i.e., repeated EI searches) will eventually converge to a global optimum. As usual with such theorems, the devil is in the details of the assumptions hidden in those regularity conditions. When are those satisfied, or easily verified, in practice? The real sleight-of-hand here is in the notion of convergence, not in the assumptions. EGO really only converges in the sense that eventually it’ll explore everywhere. Since completely random search also has that property, the accomplishment isn’t all that extraordinary. But the fact that its searches do not look random, but work as well as random in the limit, offers some comfort.

Perhaps a more important, or more relevant, theoretical result is that you can show that each EI-based acquisition is best in a certain sense: selection of \(x_{n+1}\) by EI is optimal if \(n+1 = N\), where \(N\) is the total budget available for evaluations of \(f\). That is, EI is best if the next sample is the last one you plan to take, and your intention is to predict that \(\min_x \; \mu_{n+1}(x)\) is the global minimum. For a sample of technical details relating to convergence of EI-like methods, see Bull (2011). Another perspective views EI as a greedy heuristic approximating a fuller “look-ahead” scheme. In the burgeoning BO literature there are several alternative acquisition functions (Snoek, Larochelle, and Adams 2012), i.e., sequential design heuristics, with similar behavior and theory. Bect, Bachoc, and Ginsbourger (2016) provide a compelling framework for studying properties and variations of EGO/EI-like methods.

7.2.3 Conditional improvement

As an example of a scheme intimately related to EI, but which is in principle forward thinking and targets even broader scope, consider integrated expected conditional improvement [IECI; Gramacy and Lee (2011)]. IECI was designed for optimization under constraints, our next topic, and that transition motivates its discussion here amid myriad similarly motivated extensions (see, e.g., Snoek, Larochelle, and Adams 2012; Bect, Bachoc, and Ginsbourger 2016) and methods based on the knowledge gradient (KG, as described by J. Wu et al. 2017 and references therein). I make no claims that IECI is superior to these alternatives, or superior to EI or KG. Rather it’s a well-motivated choice I know well, adding some breadth to a discussion of acquisition functions for optimization, paired with convenient library support.

In essence, IECI is to EI what ALC is to ALM. Instead of optimizing directly, first integrate then optimize. Like with ALM to ALC, developing intermediate conditional criteria represents a crucial first step. Recall that ALC (§6.2.2) entails measuring variance at a reference location \(x\) after a new location \(x_{n+1}\) is added into the design. Then that gets integrated, or more approximately summed, over a wider set of \(x \in \mathcal{X}\).

Similarly, conditional improvement measures improvement at reference location \(x\), after another location \(x_{n+1}\) is added into the design.

\[ I(x \mid x_{n+1}) = \max \{0, f_{\min}^n - Y(x \mid x_{n+1}) \} \]

“Deduce” moments of the distribution of \(Y(x \mid x_{n+1}) \mid D_n\) as follows:

- \(\mathbb{E}\{Y(x \mid x_{n+1}) \mid D_n\} = \mu_n(x)\) since \(y_{n+1}\) has not come yet.

- \(\mathbb{V}\mathrm{ar}[Y(x \mid x_{n+1}) \mid D_n] = \sigma_{n+1}^2(x)\) follows the ordinary GP predictive equations (5.2) with \(X_{n+1} = (X_n^\top ; x_{n+1}^\top)^\top\) and hyperparameters learned at iteration \(n\). See Eq. (6.6) in §6.2.2. In practice, this quantity is most efficiently calculated with partitioned inverse equations (6.8), just like with ALC.

Integrating \(I(x \mid x_{n+1})\) with respect to \(Y(x \mid x_{n+1})\) yields the expected conditional improvement (ECI), i.e., the analog of EI for conditional improvement.

\[ \mathbb{E}\{I(x \mid x_{n+1}) \mid D_n \} = (f^n_{\min} - \mu_n(x))\,\Phi\!\left( \frac{f^n_{\min}\!-\!\mu_n(x)}{\sigma_{n+1}(x)}\right) + \sigma_{n+1}(x)\, \phi\!\left( \frac{f^n_{\min}\!-\!\mu_n(x)}{\sigma_{n+1}(x)}\right) \]

To obtain a function of \(x_{n+1}\) only, i.e., a criterion for sequential design, integrate over the reference set \(x \in \mathcal{X}\)

\[\begin{equation} \mathrm{IECI}(x_{n+1}) = - \int_{x\in\mathcal{X}} \mathbb{E}\{I(x \mid x_{n+1}) \mid D_n \} w(x) \; dx. \tag{7.4} \end{equation}\]

Such is the integrated expected conditional improvement (IECI), for some (possibly uniform) weights \(w(x)\). As with ALC, in practice that integral is approximated with a sum.

\[ \mathrm{IECI}(x_{n+1}) \approx - \frac{1}{T} \sum_{t=1}^T \mathbb{E}\{I(x^{(t)} \mid x_{n+1}) \} w(x^{(t)}) \quad \mbox{ where } \; x^{(t)} \sim p(\mathcal{X}), \; \mbox{ for } \; t=1,\dots,T, \]

and \(p(\mathcal{X})\) is a measure on the input space \(\mathcal{X}\) which may be uniform. Alternatively, one may combine weights and measures on \(\mathcal{X}\) into a single measure. For now, take both to be uniform in \([0,1]^m\).

The minus in IECI may look peculiar at first glance. Since \(I(x \mid x_{x+1})\) is, in some sense, a two-step measure, small values are preferred over larger ones. ALC similarly prefers smaller future variances. Instead of measuring improvement directly at \(x_{n+1}\), as EI does, IECI measures improvement in a roundabout way, assessing it at a reference point \(x\) under the hypothetical scenario that \(x_{n+1}\) is added into the design. If \(x\) still has high improvement after \(x_{n+1}\) has been added in, then \(x_{n+1}\) must not have had much influence on potential for improvement at \(x\). If \(x_{n+1}\) is influential at \(x\), then improvement at \(x\) should be small after \(x_{n+1}\) is added in, not large. Observe that the above argument makes tacit use of the assumption that \(\mathbb{E}\{I(x \mid x_{n+1})\} \leq \mathbb{E}\{I(x)\}\), for all \(x\in \mathcal{X}\), a kind of monotonicity condition.

Alternately, consider instead expected reduction in improvement (RI), analogous to reduction in variance from ALC.

\[ \mathrm{RI}(x_{n+1}) = \int_{x\in\mathcal{X}} (\mathbb{E}\{I(x) \mid D_n \} - \mathbb{E}\{I(x \mid x_{n+1}) \mid D_n \}) w(x) \; dx \]

Clearly we wish to maximize RI, to reduce the potential for future improvement as much as possible. And observe that \(\mathbb{E}\{I(x)\}\) doesn’t depend on \(x_{n+1}\), so it doesn’t contribute substantively to the criterion. What’s left is exactly IECI. In order for RI to be positive, the very same monotonicity condition must be satisfied. For ALC we don’t need to worry about monotonicity since, conditional on hyperparameters, future (\(n+1\)) variance is always lower than past (\(n\)) variance. It turns out that the definition of \(f_{\min}\) is crucial to determining whether or not monotonicity holds.

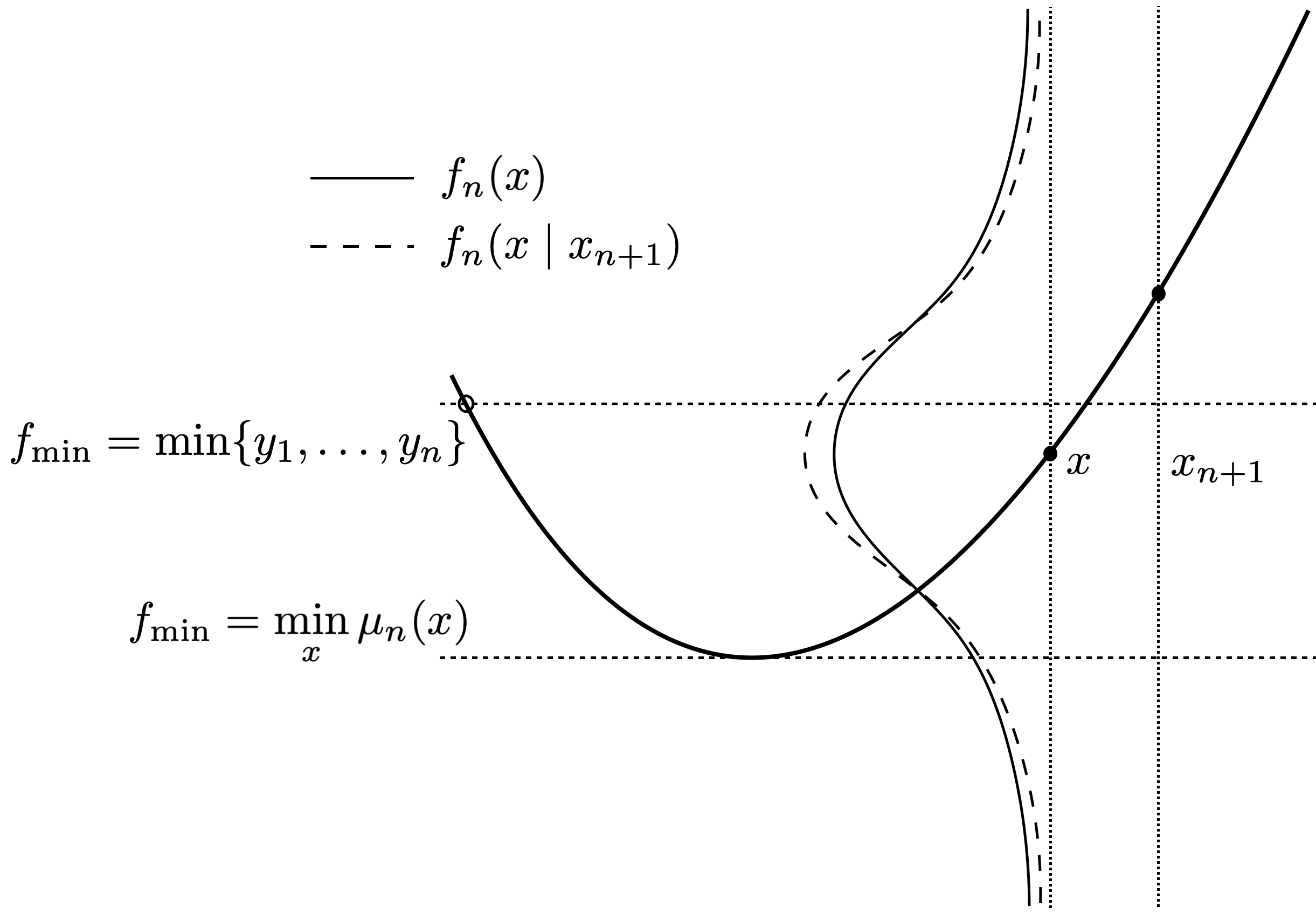

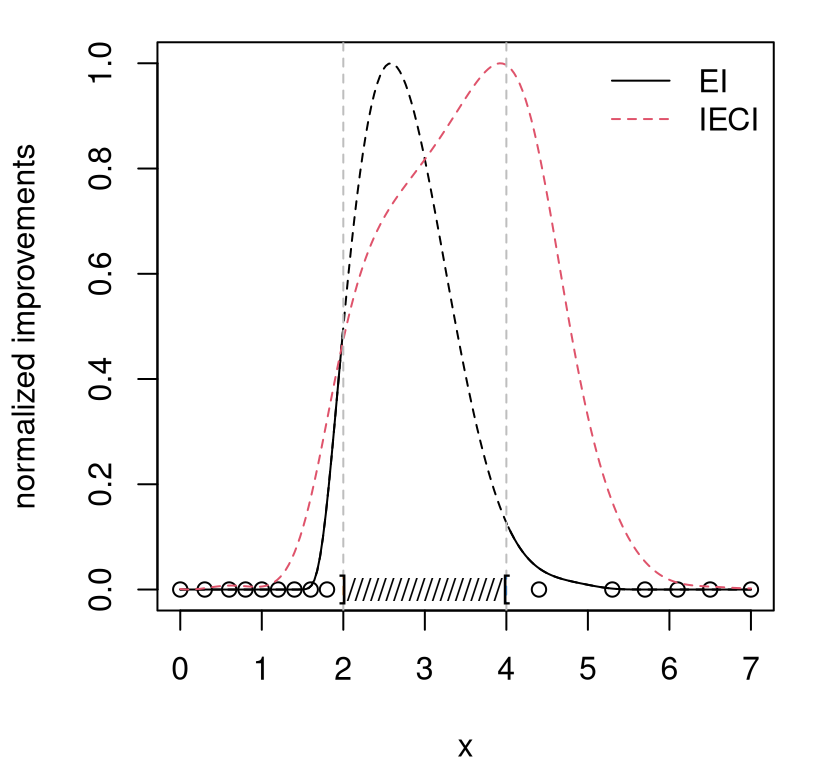

A drawing in Figure 7.13 illustrates how \(f_{\min}\) can influence ECI. Two choices of \(f_{\min}\) are entertained, drawn as horizontal lines. One uses only observed \(y\)-values, following exactly our definition above for \(f_{\min}\). The other takes \(f_{\min}\) from the extremum of the GP predictive mean, drawn as a solid parabolic curve: \(f_{\min} = \min_x \; \mu_n(x)\). EI is related to the area of the predictive density drawn as a solid line, plotted vertically and centered at \(\mu_n(x)\), which lies underneath the horizontal \(f_{\min}\) line(s). ECI is likewise derived from the area of the predictive density drawn as a dashed line lying below the horizontal \(f_{\min}\) line(s). This dashed density has the same mean/mode as the solid one, but is more sharply peaked by influence from \(x_{n+1}\).

FIGURE 7.13: Cartoon illustrating how choice of \(f_{\min}\) affects ECI. Adapted from Gramacy and Lee (2011).

If we suppose that these densities, drawn as bell-shaped curves in the figure, are symmetric (as they are for GPs), then it’s clear that the relationship between ECI and EI depends upon \(f_{\min}\). As the dashed line is more peaked, the left-tail cumulative distributions have the property that \(F_n(f_{\min}\mid x_{n+1}) \geq F_n(f_{\min})\) for all \(f_{\min} \geq \mathbb{E}\{Y(x \mid x_{n+1})\} = \mathbb{E}\{Y(x)\}\). Since \(f_{\min} = \min \{y_1,\dots, y_n\}\) is one such example, we could observe \(\mathbb{E}\{I(x \mid x_{n+1})\} \geq \mathbb{E}\{I(x)\}\), violating the monotonicity condition. Only \(f_{\min} = \min_x \mu_n(x)\) guarantees that ECI represents a reduction compared to EI. That said, in practice the choice of \(f_{\min}\) matters little. But while we’re on the topic of what constitutes improvement – i.e., improvement upon what? – let’s take a short segue and talk about noisy blackbox objectives.

7.2.4 Noisy objectives

The BO literature over-accentuates discord between surrogate-assisted (EI or EY) optimization algorithms for deterministic and noisy blackboxes. The first paper on BO of stochastic simulations is by D. Huang et al. (2006). Since then the gulf between noisy and deterministic methods for BO has seemed to widen despite a surrogate modeling literature which has, if anything, downplayed the state(s) of affairs: Need a GP for noisy simulations? Just estimate a nugget. (With laGP, use jmleGP for isotropic formulations, or mleGPsep with param="both" for separable ones.) EI relies on the same underlying surrogates, so no additional changes are required there either, at least not to modeling aspects. Whenever noise is present, a modest degree of replication – especially in seed designs – can be helpful as a means of separating signal from noise. More details on that front are deferred to Chapter 10.

The notion of improvement requires a subtle change. Actually, nothing is wrong with the form of \(I(x)\), but how its components \(f_{\min}^n\) and \(Y(x) \mid D_n\) are defined. If there’s noise, then \(Y(x_i) \ne y_i\). Responses \(Y_1, \dots, Y_n\) at \(X_n\) are random variables. So \(f_{\min}^n\) is also a random variable. At face value, this substantially complicates matters. MC evaluations of EI, extending sampling of \(Y(x)\) to \(\min \{Y_1, \dots, Y_n\}\), may be the only faithful means of taking expectation over all random quantities. Fully analytic EI in such cases seems out of reach. Unfortunately, MC thwarts library-based optimization of EI. For example, simple optim methods wouldn’t work because the EI approximation would lack smoothness, except in the case of prohibitively large MC sampling efforts.

A common alternative is to use \(f_{\min} = \min_x \; \mu_n(x)\), exactly as suggested above to ensure the monotonicity condition, and proceed as usual. Picheny et al. (2013) call this the “plug-in” method. Plugging in deterministic \(\min_x \; \mu_n(x)\) for random \(f_{\min}\) works well despite under-accounting for a degree of uncertainty. It’s a refreshing coincidence that this choice of \(f_{\min}\) addresses both issues (monotonicity and noise), and that this choice makes sense intuitively. The quantity \(\min_x \; \mu_n(x)\) is our model’s best guess about the global function minimum, and \(\min_x \; \mu_{n+1}(x)\) is the one we intend to report when measuring progress. It stands to reason, then, that that value is sensible as a means of assessing potential to improve upon said predictions in subsequent acquisition iterations.

A second consideration for noisy cases involves the distribution of \(Y(x) \mid D_n\), via \(\mu_n(x)\) and \(\sigma_n^2(x)\) from the GP predictive equations (5.2). In the deterministic case, and when GP modeling without a nugget, \(\sigma_n^2(x) \rightarrow 0\) for all \(x\) as \(n \rightarrow \infty\). Here we’re assuming that \(X_n\) becomes dense in the input space as \(n\rightarrow \infty\), as improvement-based heuristics essentially guarantee. With enough data there’s no predictive uncertainty, so there’s eventually no value to any location by improvement \(I(x)\), either through EI or IECI. This makes sense: if you sample everywhere you’ll know all of the optima in the study region, both global and local. But in the stochastic case, and when GP modeling with a non-zero nugget, \(\sigma_n^2(x) \nrightarrow 0\) as \(n \rightarrow \infty\). No matter how much data is collected, there will always be predictive uncertainty in \(Y(x) \mid D_n\), so there will always be nonzero improvement \(I(x)\), through EI, IECI or otherwise. Our algorithm will never converge.

A simple fix is to redefine improvement on the latent random field \(f(x)\), rather than directly on \(Y(x)\). See §5.3.2 for details. Eventually, with enough data, there’s no uncertainty about the function – no epistemic uncertainty – even though there would be some aleatoric uncertainty about its noisy measurements. What that means, operationally speaking, is that when calculating EI one should use a predictive standard deviation without a nugget, as opposed to the full version (5.2). Specifically, and at slight risk of redundancy (5.16), use

\[\begin{equation} \breve{\sigma}_n^2(x) = \hat{\tau}^2 (1 - C(x, X_n) K_n^{-1} C(x, X_n)^\top) \tag{7.5} \end{equation}\]

rather than the usual \(\sigma_n^2(x) = \hat{\tau}^2 (1 + \hat{g}_n - C(x, X_n) K_n^{-1} C(x, X_n)^\top)\) in EI acquisitions. IECI is similar via \(\breve{\tilde{\sigma}}_{n+1}^2(x)\). Recall that \(\hat{g}_n\) is still involved in \(K_n^{-1}\), so the nugget is still being “felt” by predictions. Crucially, \(\breve{\sigma}_n^2(x) \rightarrow 0\) as \(n \rightarrow \infty\) as long as the design eventually fills the space. Library functions predGP and predGPsep provide \(\breve{\sigma}_n^2(x)\), or its multivariate analog, when supplied with argument nonug=TRUE.

Finally, Thompson sampling, which was dismissed earlier in §7.1.2 as a stochastic optimization, is worth reconsidering in noisy contexts. Noisy evaluation of the blackbox introduces a degree of stochasticity which can’t be avoided. A little extra randomness in acquisition criteria doesn’t hurt much and can sometimes be advantageous, especially when ambiguity between signal and noise regimes is present. See exercises in §7.4 for a specific example. Hybrids of LCB with EI have been successful in noisy optimization contexts. For example, quantile EI [QEI; Picheny et al. (2013)] works well when noise level can be linked to simulation fidelity; more on this and similar methods when we get to optimizing heterskedastic processes in Chapter 10.3.4.

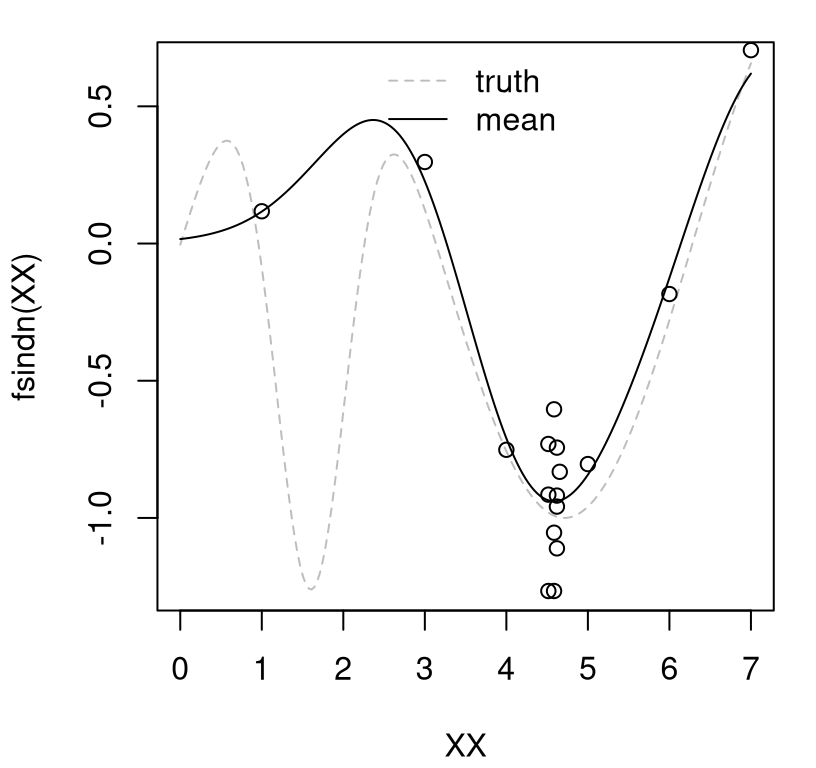

7.2.5 Illustrating conditional improvement and noise

Let’s illustrate both IECI and optimization of a noisy blackbox at the same time. Consider the following data, which is in 1d to ease visualization.

fsindn <- function(x)

sin(x) - 2.55*dnorm(x,1.6,0.45)

X <- matrix(c(0, 0.3, 0.6, 0.8, 1.0, 1.2, 1.4, 1.6, 1.8, 2.0, 2.2, 2.5,

2.8, 3.1, 3.4, 3.7, 4.4, 5.3, 5.7, 6.1, 6.5, 7), ncol=1)

y <- fsindn(X) + rnorm(length(X), sd=0.15)This seed design deliberately omits one of the two local minima of fsindn, although it’s otherwise uniformly spaced in the domain of interest, \(\mathcal{X} = [0,7]\). The code below initializes a GP fit, and performs inference for scale, lengthscale and nugget hyperparameters.

Before calculating EI and IECI, let’s peek at the predictive distribution. Code below extracts predictive quantities for both \(Y(x)\) and latent \(f(x)\), i.e., using \(\sigma_n^2(x)\) or \(\breve{\sigma}^2_n(x)\) from Eq. (7.5), respectively.

XX <- matrix(seq(0, 7, length=201), ncol=1)

pY <- predGP(gpi, XX, lite=TRUE)

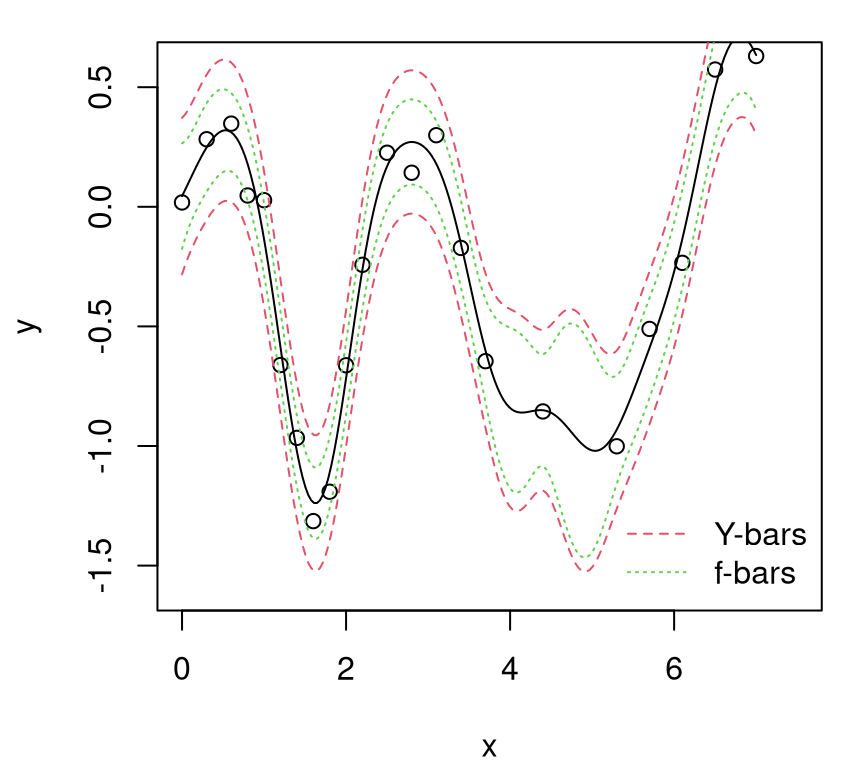

pf <- predGP(gpi, XX, lite=TRUE, nonug=TRUE)Figure 7.14 shows the mean surface, which is the same under both predictors, and two sets of error-bars. Red-dashed lines correspond to the usual error-bars, based on the full distribution of \(Y(x) \mid D_n\), using \(\sigma_n^2(x)\) above. Green-dotted ones use \(\breve{\sigma}^2_n(x)\) instead.

plot(X, y, xlab="x", ylab="y", ylim=c(-1.6,0.6), xlim=c(0,7.5))

lines(XX, pY$mean)

lines(XX, pY$mean + 1.96*sqrt(pY$s2), col=2, lty=2)

lines(XX, pY$mean - 1.96*sqrt(pY$s2), col=2, lty=2)

lines(XX, pf$mean + 1.96*sqrt(pf$s2), col=3, lty=3)

lines(XX, pf$mean - 1.96*sqrt(pf$s2), col=3, lty=3)

legend("bottomright", c("Y-bars", "f-bars"), col=2:4, lty=2:3, bty="n")

FIGURE 7.14: Comparing predictive quantiles under \(Y(x)\) and \(f(x)\).

Observe how “f-bars” quantiles are uniformly narrower than their “Y-bars” counterpart. With enough data \(D_n\), as \(n \rightarrow \infty\) and \(X_n\) filling the space, “f-bars” would collapse in on the mean surface, shown as the solid black line.

Now we’re ready to calculate EI and IECI under those two predictive distributions. EI with nonug=TRUE can be achieved by passing in a predictor pred with that option pre-set, e.g., by copying the function and modifying its default arguments (its formals). The ieciGP function provided by laGP has its own nonug argument. By default, ieciGP takes candidate locations, XX in the code below, identical to reference locations Xref, similar to alcGP.

fmin <- min(predGP(gpi, X, lite=TRUE)$mean)

ei <- EI(gpi, XX, fmin, pred=predGP)

ieci <- ieciGP(gpi, XX, fmin)

predGPnonug <- predGP

formals(predGPnonug)$nonug <- TRUE

ei.f <- EI(gpi, XX, fmin, pred=predGPnonug)

ieci.f <- ieciGP(gpi, XX, fmin, nonug=TRUE)To ease visualization, it helps to normalize acquisition function evaluations so that they span the same range.

ei <- scale(ei, min(ei), max(ei) - min(ei))

ei.f <- scale(ei.f, min(ei.f), max(ei.f) - min(ei.f))

ieci <- scale(ieci, min(ieci), max(ieci) - min(ieci))

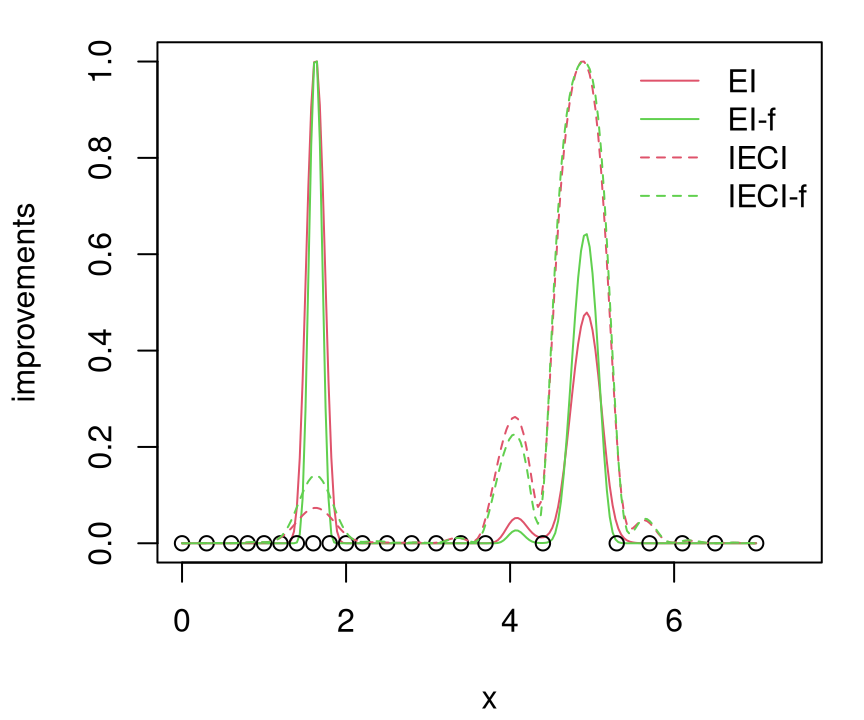

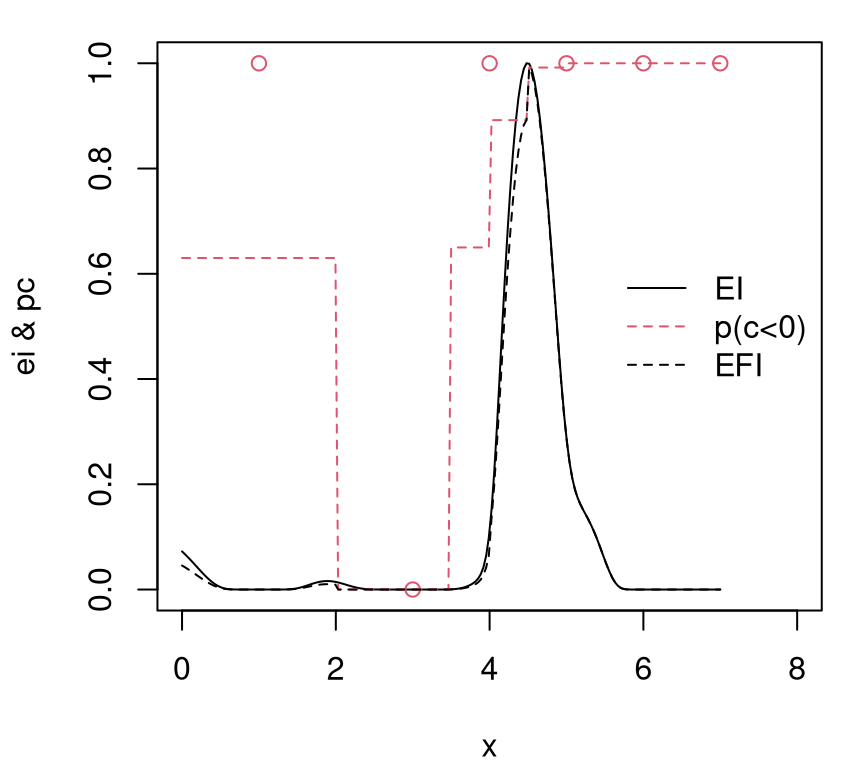

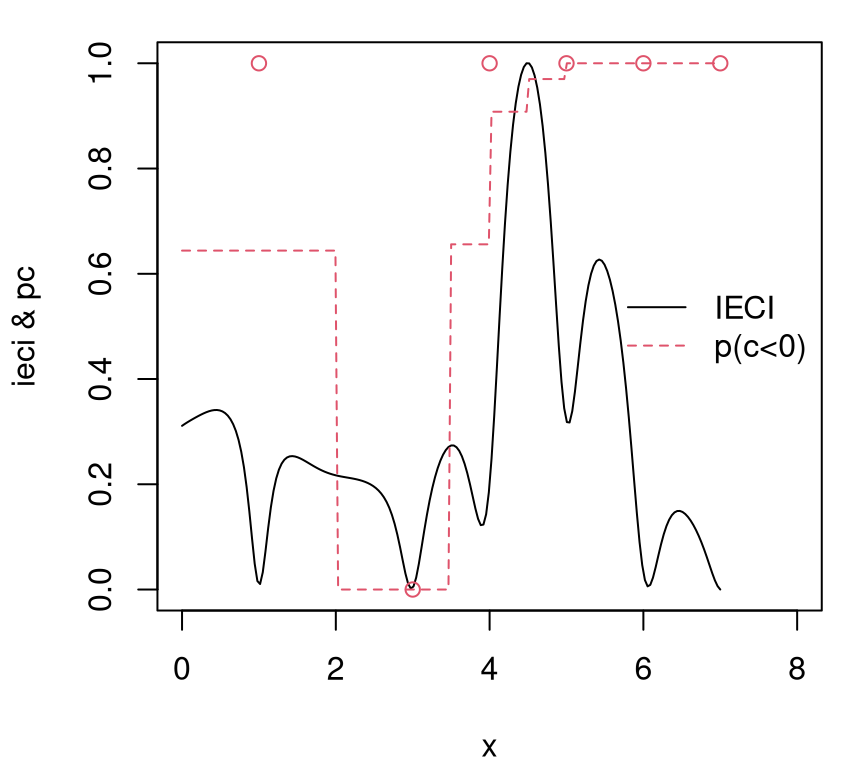

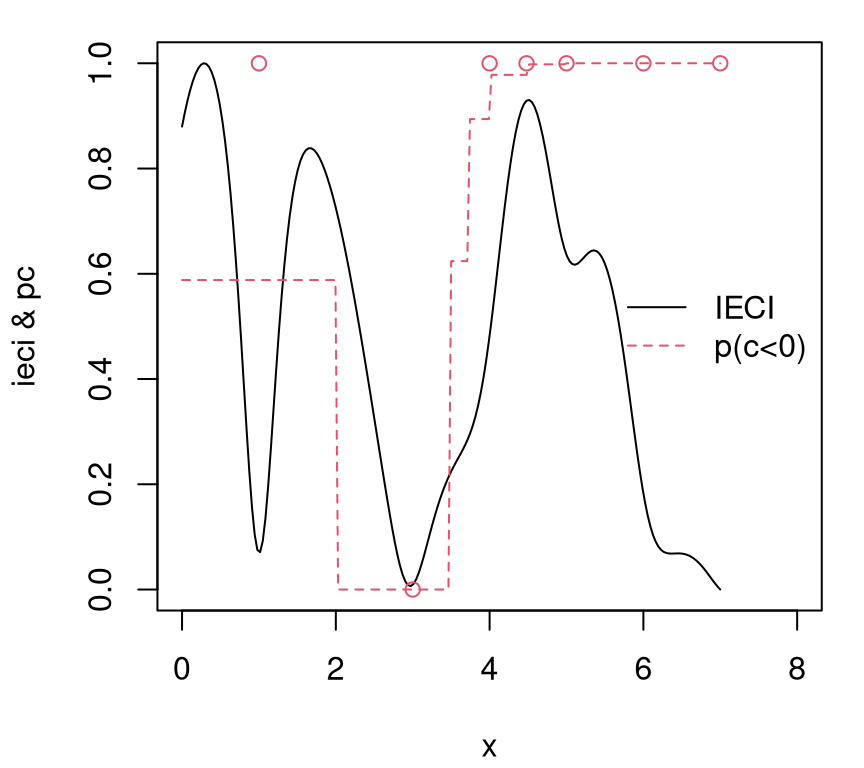

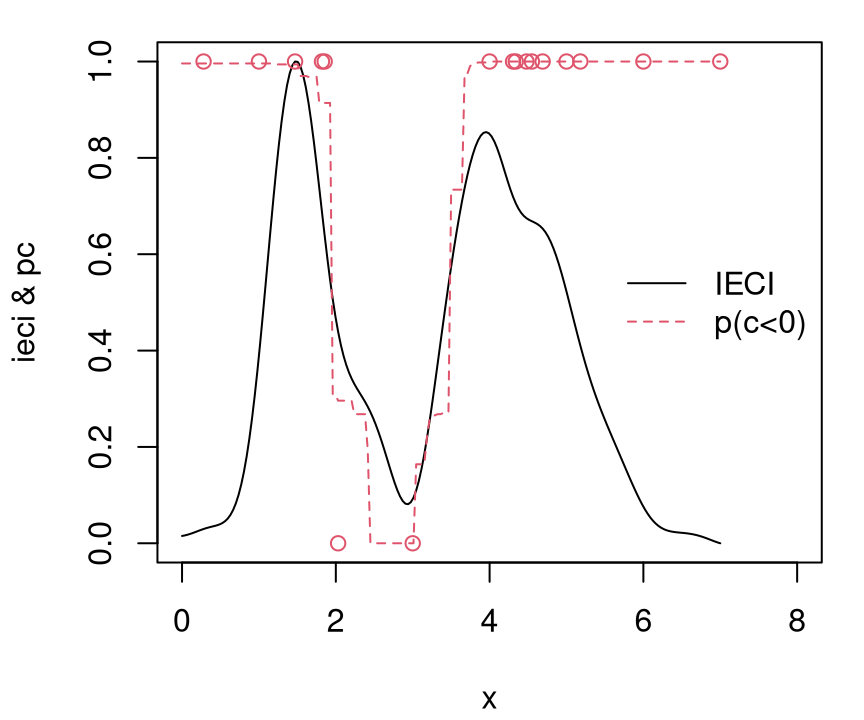

ieci.f <- scale(ieci.f, min(ieci.f), max(ieci.f) - min(ieci.f))Figure 7.15 shows these four acquisition comparators. Solid lines are for EI, and dashed for IECI; the color scheme matches up with predictive surfaces above, where “-f” is the nonug=TRUE version, i.e., based on the latent random field \(f\). So that all are maximizable criteria, negative IECIs are plotted.

plot(XX, ei, type="l", ylim=c(0, 1), xlim=c(0,7.5), col=2, lty=1,

xlab="x", ylab="improvements")

lines(XX, ei.f, col=3, lty=1)

points(X, rep(0, nrow(X)))

lines(XX, 1-ieci, col=2, lty=2)

lines(XX, 1-ieci.f, col=3, lty=2)

legend("topright", c("EI", "EI-f", "IECI", "IECI-f"), lty=c(1,1,2,2),

col=c(2,3,2,3), bty="n")

FIGURE 7.15: EI versus IECI in \(Y(x)\) and \(f(x)\) alternatives.

Since training data were randomly generated for this Rmarkdown build, it’s hard to pinpoint exactly the state of affairs illustrated in Figure 7.15. Usually EI prefers (in both variants) to choose \(x_{n+1}\) from the left half of the space, whereas IECI (both variants) weighs both minima more equally. Occasionally, however, EI prefers the right mode instead, and occasionally both prefer the left, all depending on the random data. Both represent local minima, but the one on the right experiences higher aggregate uncertainty due both to lower sampling and to a wider domain of attraction. The left minima has a narrow trough. As a more aggregate measure, IECI usually up-weights \(x_{n+1}\) from the right half, pooling together large ECI in a bigger geographical region, and consequently putting greater value on their potential to offer improvement globally in the input space.

Both EI and IECI cope with noise just fine. Variations with and without nugget are subtle, at least as exemplified by Figure 7.15. A careful inspection of the code behind that figure reveals that we didn’t actually minimize \(\mu_n(x)\) to choose \(f_{\min}^n\). Rather it was sufficient to use \(\min_i \mu_n(x_i)\), which is less work computationally because no auxiliary numerical optimization is required.

Rather than exhaust the reader with more for loops, say to incorporate IECI and noise into a bakeoff or to augment our running example (§7.1.1) on the (possibly noise-augmented) Goldstein–Price function, these are left to exercises in §7.4. IECI, while enjoying a certain conservative edge by “looking ahead”, rarely outperforms the simpler but more myopic ordinary EI in practice. This is perhaps in part because it’s challenging to engineer a test problem, like the one in our illustration above, exploiting just the scenario IECI was designed to hedge against. Both EI and IECI can be recast into the stepwise uncertainty reduction (SUR) framework (Bect, Bachoc, and Ginsbourger 2016) where one can show that they enjoy a supermartingale property, similar to a submodularity property common to many active learning techniques. But that doesn’t address the extent to which IECI’s limited degree of lookahead may or may not be of benefit, by comparison to EI say, along an arc of future iterations in the steps of a sequential design. Advantages or otherwise are problem dependent. In a context where blackbox objective functions \(f(x)\) almost never satisfy technical assumptions imposed by theory – the Goldstein–Price function isn’t a stationary GP – intuition may be the best guide to relative merits in practice.

Where IECI really shines is in a constrained optimization context, as this is what it was originally designed for – making for a nice segue into our next topic.

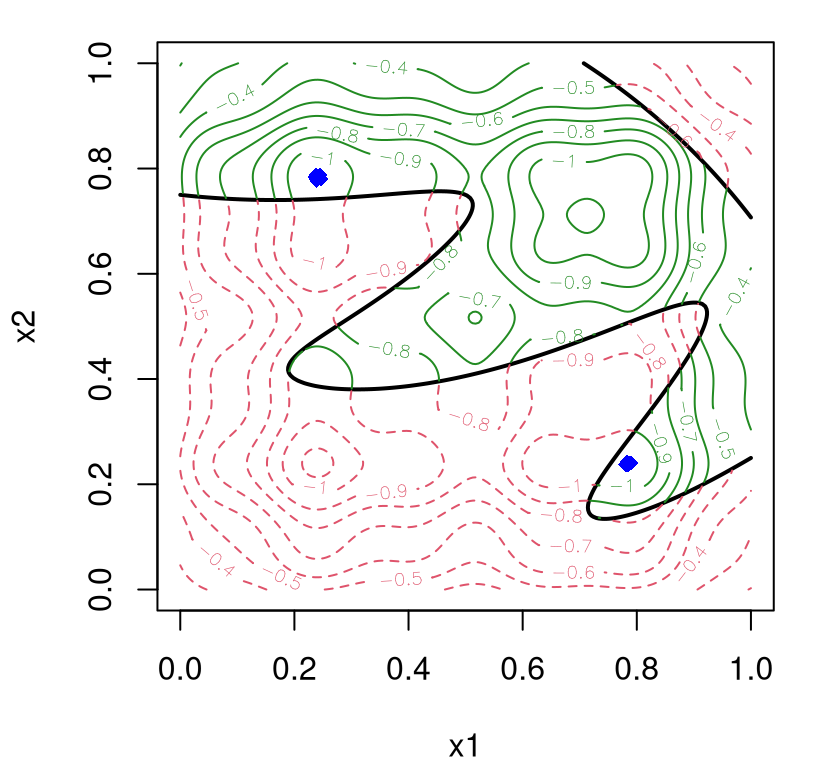

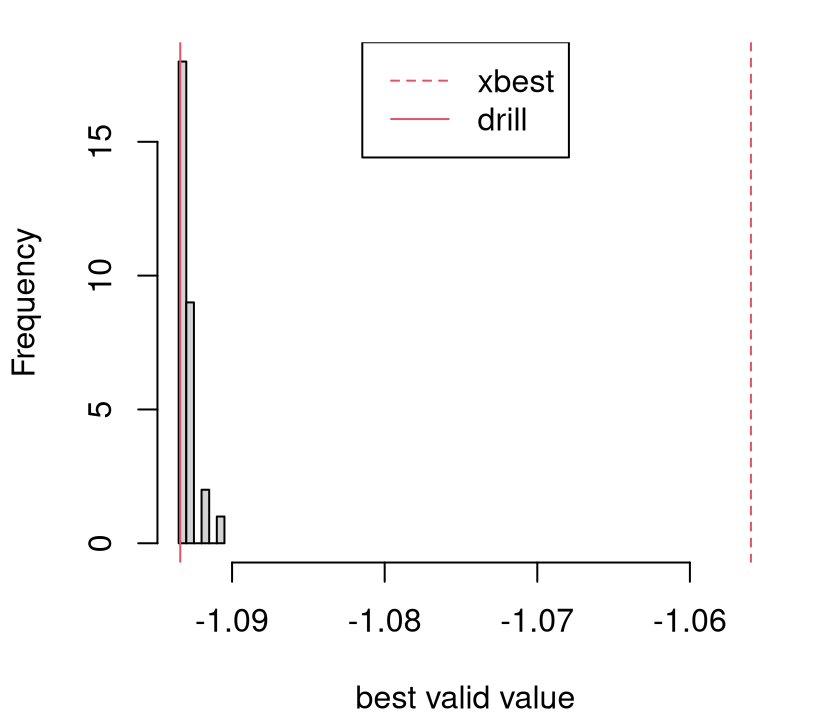

7.3 Optimization under constraints

To start off, we’ll keep it super simple and assume constraints are known, but that they take on non-trivial form. That is, not box/bound constraints (too easy), but something tracing out non-convex or even unconnected regions. Then we’ll move on to unknown, or blackbox constraints which require expensive evaluations to probe. First we’ll treat those as binary, valid or invalid, and then as real-valued. That distinction, binary or real-valued, impacts how constraints are modeled and how they fold into an effective sequential design criteria for optimization. Throughout, the goal is to find the best valid value (BVV) of the objective in the fewest number of blackbox evaluations. More details on the problem formulation(s) are provided below.

7.3.1 Known constraints

For now, assume constraints are known. Specifically, presume that we have access to a function, \(c(x): \mathcal{X} \rightarrow \{0,1\}\), returning zero (or equivalently a negative number) if the constraint is satisfied, or one (or a positive number) if the constraint is violated, and that we can evaluate it for “free”, i.e., as much as we want. Evaluation expense accrues on blackbox objective \(f(x)\) only.

The problem is given formally by the mathematical program

\[\begin{equation} x^\star = \mathrm{argmin}_{x \in \mathcal{\mathcal{X}}} \; f(x) \quad \mbox{ subject to } \quad c(x) \leq 0. \tag{7.6} \end{equation}\]

One simple surrogate-assisted solver entails extending EI to what’s called expected feasible improvement (EFI), which was described in a companion paper to the EI one by the same three authors, but with names of the first two authors swapped (Schonlau, Welch, and Jones 1998).

\[ \mbox{EFI}(x) = \mathbb{E}\{I(x)\} \mathbb{I}(c(x) \leq 0), \]

with \(I(x)\) using an \(f_{\min}^n\) defined over the valid region only. In deterministic settings, that means \(f_{\min}^n = \min_{i=1,\dots,n} \, \{y_i : c(x_i) \leq 0 \}\). When noise is present \(f_{\min}^n = \min_{x \in \mathcal{X}} \{ \mu_n(x) : c(x) \leq 0\}\). The former may be the empty set, in which case the latter is a good backup except in the pathological case that none of the study region \(\mathcal{X}\) is valid.

A verbal description of EFI might be: do EI but don’t bother evaluating, nor modeling, outside the valid region. Seems sensible: invalid evaluations can’t be solutions. We know in advance which inputs are in which set, valid or invalid, so don’t bother wasting precious blackbox evaluations that can’t improve upon the best solution so far.

Yet that may be an overly simplistic view. Mathematical programmers long ago found that approaching local solutions from outside of valid sets can sometimes be more effective than the other way around, especially if the valid region is comprised of disjoint or highly non-convex sets. One example is the augmented Lagrangian method, which we shall review in more detail in §7.3.4.

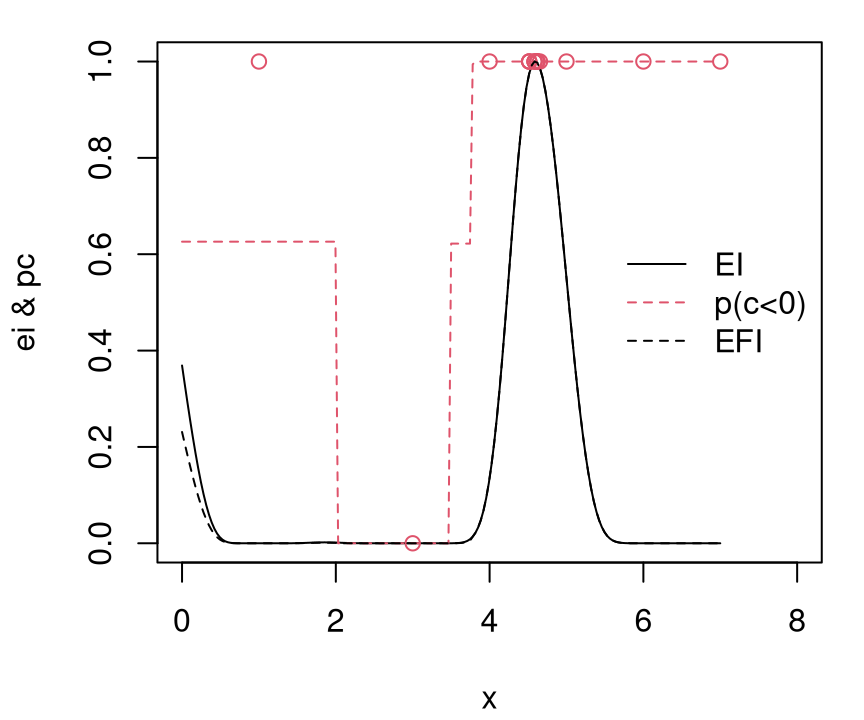

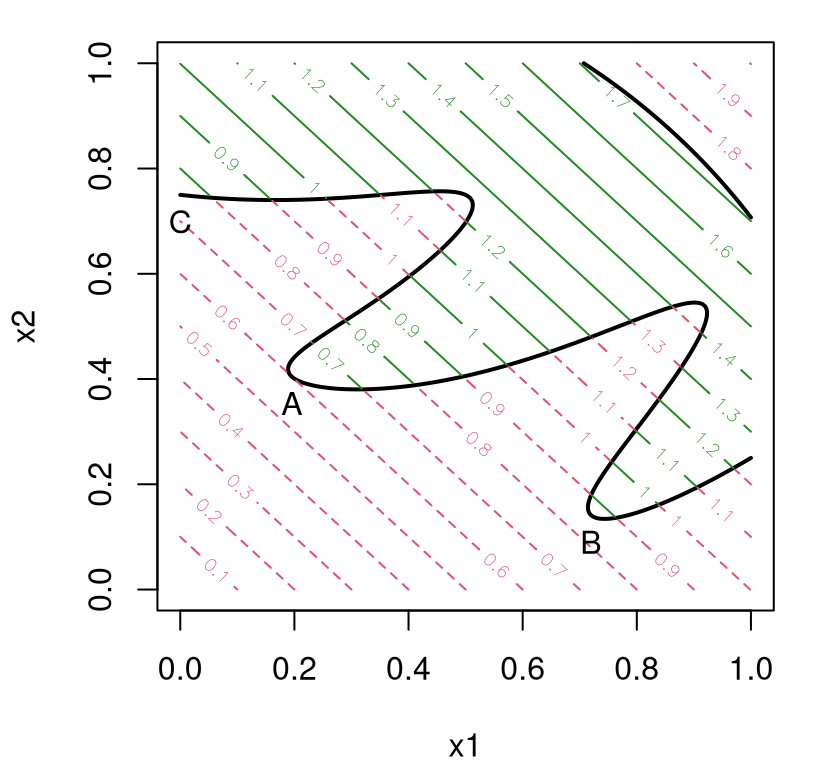

Surrogate modeling considerations also play a role here. Data acquisitions under GPs have a global effect on the updated predictive surface. Information from observations of the blackbox objective outside of the valid region might provide more insight into potential for improvement (inside the region) than any point inside the region could. Think of an invalid region sandwiched between two valid ones (see Figures 7.16–7.17) and how predictive uncertainty might look, and in particular its curvature, at the boundaries. One evaluation splitting the difference between the two boundaries may be nearly as effective as two right on each boundary. EFI would rule out that potential economy. Or, in situations where one really doesn’t want, or can’t perform an “invalid run”, but is still faced with a choice between high EI on either side of the invalid-region boundary, it might make sense to weigh potential for improvement by the amount of the valid region a potential acquisition would cover. That’s exactly what IECI was designed to do.

Adapting IECI to respect a constraint, but to still allow selection of evaluations “wherever they’re most effective”, is a matter of choosing indicator \(\mathbb{I}(c(x) \leq 0)\) as weight \(w(x)\):

\[ \mathrm{IECI}(x_{n+1}) = - \int_{x\in\mathcal{X}} \mathbb{E}\{I(x \mid x_{n+1})\} \mathbb{I}(c(x) \leq 0) \; dx \]

This downweights reference \(x\)-values not satisfying the constraint, and thus also \(x_{n+1}\)-values similarly, however it doesn’t preclude \(x_{n+1}\)-values from being chosen in the invalid region. Rather, the value of \(x_{n+1}\) is judged by its ability to impact improvement within the valid region. An alternative implementation which may be computationally more advantageous, especially when approximating the integral with a sum over a potentially dense collection Xref \(\subseteq \mathcal{X}\), is to exclude from Xref any input locations which don’t satisfy the constraint. More precisely,

\[ \mathrm{IECI}(x_{n+1}) \approx - \frac{1}{T} \sum_{t=1}^T \mathbb{E}\{I(x^{(t)} \mid x_{n+1}) \} \quad \mbox{ where } \; x^{(t)} \sim p_c(\mathcal{X}), \; \mbox{ for } \; t=1,\dots,T, \]

and \(p_c(\mathcal{X})\) is uniform on the valid set \(\{x\in \mathcal{X} : c(x) \leq 0\}\).

To illustrate, consider the same 1d data-generating mechanism as in §7.2.5 except with an invalid region \([2,4]\) sandwiched between two valid regions occupying the first and last third of the input space, respectively.

X <- matrix(c(0, 0.3, 0.6, 0.8, 1.0, 1.2, 1.4, 1.6, 1.8, 4.4, 5.3, 5.7,

6.1, 6.5, 7), ncol=1)

y <- fsindn(X) + rnorm(length(X), sd=0.15)No observations lie in the invalid region, yet. For dramatic effect in this illustration, it makes sense to hard-code a longer lengthscale when fitting the GP.

Readers are strongly advised to tinker with this (also with jmleGP) to see how it effects the results. Next, establish a dense grid in the input space \(\mathcal{X} \equiv\) XX and take evaluations of GP predictive equations thereupon.

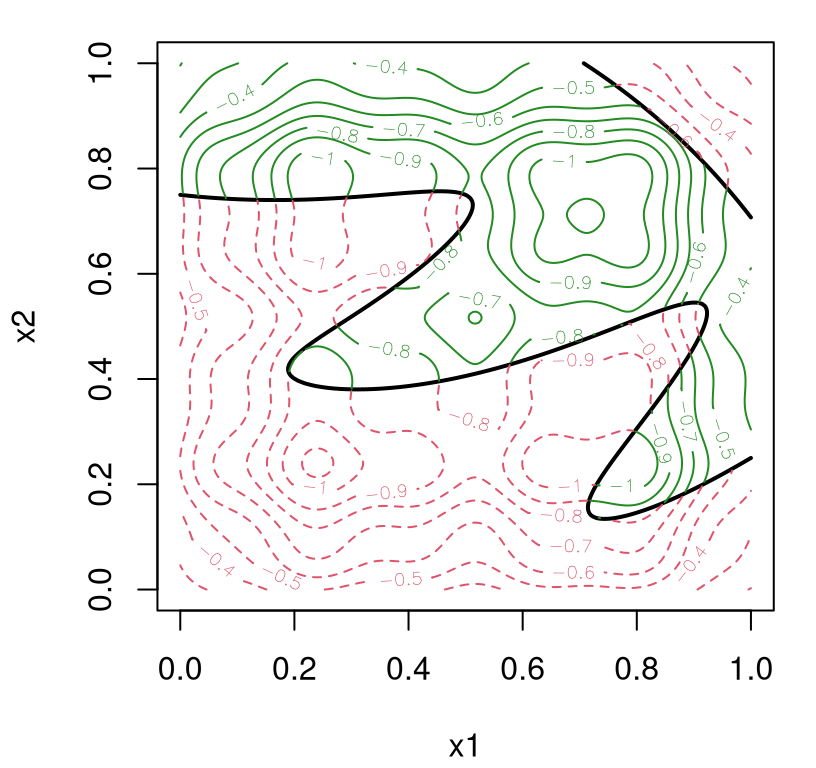

Figure 7.16 shows the resulting predictive surface.

plot(X, y, xlab="x", ylab="y", ylim=c(-3.25, 0.7))

lines(XX, p$mean)

lines(XX, p$mean + 1.96*sqrt(p$s2), col=2, lty=2)

lines(XX, p$mean - 1.96*sqrt(p$s2), col=2, lty=2)![Predictive surface for a 1d problem with invalid region $[2,4]$ sandwiched between two valid ones.](surrogates_files/figure-html/sandwich-1.png)

FIGURE 7.16: Predictive surface for a 1d problem with invalid region \([2,4]\) sandwiched between two valid ones.