Chapter 5 Gaussian Process Regression

Here the goal is humble on theoretical fronts, but fundamental in application. Our aim is to understand the Gaussian process (GP) as a prior over random functions, a posterior over functions given observed data, as a tool for spatial data modeling and surrogate modeling for computer experiments, and simply as a flexible nonparametric regression. We’ll see that, almost in spite of a technical over-analysis of its properties, and sometimes strange vocabulary used to describe its features, GP regression is a simple extension of linear modeling. Knowing that is all it takes to make use of it as a nearly unbeatable regression tool when input–output relationships are relatively smooth, and signal-to-noise ratios relatively high. And even sometimes when they’re not.

The subject of this chapter goes by many names and acronyms. Some call it kriging, which is a term that comes from geostatistics (Matheron 1963); some call it Gaussian spatial modeling or a Gaussian stochastic process. Both, if you squint at them the right way, have the acronym GaSP. Machine learning (ML) researchers like Gaussian process regression (GPR). All of these instances are about regression: training on inputs and outputs with the ultimate goal of prediction and uncertainty quantification (UQ), and ancillary goals that are either tantamount to, or at least crucially depend upon, qualities and quantities derived from a predictive distribution. Although the chapter is titled “Gaussian process regression”, and we’ll talk lots about Gaussian process surrogate modeling throughout this book, we’ll typically shorten that mouthful to Gaussian process (GP), or use “GP surrogate” for short. GPS would be confusing and GPSM is too scary. I’ll try to make this as painless as possible.

After understanding how it all works, we’ll see how GPs excel in several common response surface tasks: as a sequential design tool in Chapter 6; as the workhorse in modern (Bayesian) optimization of blackbox functions in Chapter 7; and all that with a “hands off” approach. Classical RSMs of Chapter 3 have many attractive features, but most of that technology was designed specifically, and creatively, to cope with limitations arising in first- and second-order linear modeling. Once in the more flexible framework that GPs provide, one can think big without compromising finer detail on smaller things.

Of course GPs are no panacea. Specialized tools can work better in less generic contexts. And GPs have their limitations. We’ll have the opportunity to explore just what they are, through practical examples. And down the line in Chapter 9 we’ll see that most of those are easy to sweep away with a bit of cunning. These days it’s hard to make the case that a GP shouldn’t be involved as a component in a larger analysis, or at least attempted as such, where ultimately limited knowledge of the modeling context can be met by a touch of flexibility, taking us that much closer to human-free statistical “thinking” – a fundamental desire in ML and thus, increasingly, in tools developed for modern analytics.

5.1 Gaussian process prior

Gaussian process is a generic term that pops up, taking on disparate but quite specific meanings, in various statistical and probabilistic modeling enterprises. As a generic term, all it means is that any finite collection of realizations (i.e., \(n\) observations) is modeled as having a multivariate normal (MVN) distribution. That, in turn, means that characteristics of those realizations are completely described by their mean \(n\)-vector \(\mu\) and \(n \times n\) covariance matrix \(\Sigma\). With interest in modeling functions, we’ll sometimes use the term mean function, thinking of \(\mu(x)\), and covariance function, thinking of \(\Sigma(x, x')\). But ultimately we’ll end up with vectors \(\mu\) and matrices \(\Sigma\) after evaluating those functions at specific input locations \(x_1, \dots, x_n\).

You’ll hear people talk about function spaces, reproducing kernel Hilbert spaces, and so on, in the context of GP modeling of functions. Sometimes thinking about those aspects/properties is important, depending on context. For most purposes that makes things seem fancier than they really need to be.

The action, at least the part that’s interesting, in a GP treatment of functions is all in the covariance. Consider a covariance function defined by inverse exponentiated squared Euclidean distance:

\[ \Sigma(x, x') = \exp\{ - || x - x '||^2 \}. \]

Here covariance decays exponentially fast as \(x\) and \(x'\) become farther apart in the input, or \(x\)-space. In this specification, observe that \(\Sigma(x,x) = 1\) and \(\Sigma(x, x') < 1\) for \(x' \ne x\). The function \(\Sigma(x, x')\) must be positive definite. For us this means that if we define a covariance matrix \(\Sigma_n\), based on evaluating \(\Sigma(x_i, x_j\)) at pairs of \(n\) \(x\)-values \(x_1, \dots, x_n\), we must have that

\[ x^\top \Sigma_n x > 0 \quad \mbox{ for all } x \ne 0. \]

We intend to use \(\Sigma_n\) as a covariance matrix in an MVN, and a positive (semi-) definite covariance matrix is required for MVN analysis. In that context, positive definiteness is the multivariate extension of requiring that a univariate Gaussian have positive variance parameter, \(\sigma^2\).

To ultimately see how a GP with that simple choice of covariance \(\Sigma_n\) can be used to perform regression, let’s first see how GPs can be used to generate random data following a smooth functional relationship. Suppose we take a bunch of \(x\)-values: \(x_1,\dots, x_n\), define \(\Sigma_n\) via \(\Sigma_n^{ij} = \Sigma(x_i, x_j)\), for \(i,j=1,\dots,n\), then draw an \(n\)-variate realization

\[ Y \sim \mathcal{N}_n(0, \Sigma_n), \]

and plot the result in the \(x\)-\(y\) plane. That was a mouthful, but don’t worry: we’ll see it in code momentarily. First note that the mean of this MVN is zero; this need not be but it’s quite surprising how well things work even in this special case. Location invariant zero-mean GP modeling, sometimes after subtracting off a middle value of the response (e.g., \(\bar{y}\)), is the default in computer surrogate modeling and (ML) literatures. We’ll talk about generalizing this later.

Here’s a version of that verbal description with \(x\)-values in 1d. First create an input grid with 100 elements.

Next calculate pairwise squared Euclidean distances between those inputs. I like the distance function from the plgp package (Gramacy 2014) in R because it was designed exactly for this purpose (i.e., for use with GPs), however dist in base R provides similar functionality.

Then build up covariance matrix \(\Sigma_n\) as inverse exponentiated squared Euclidean distances. Notice that the code below augments the diagonal with a small number eps \(\equiv \epsilon\). Although inverse exponentiated distances guarantee a positive definite matrix in theory, sometimes in practice the matrix is numerically ill-conditioned. Augmenting the diagonal a tiny bit prevents that. Neal (1998), a GP vanguard in the statistical/ML literature, calls \(\epsilon\) the jitter in this context.

Finally, plug that covariance matrix into an MVN random generator; below I use one from the mvtnorm package (Genz et al. 2018) on CRAN.

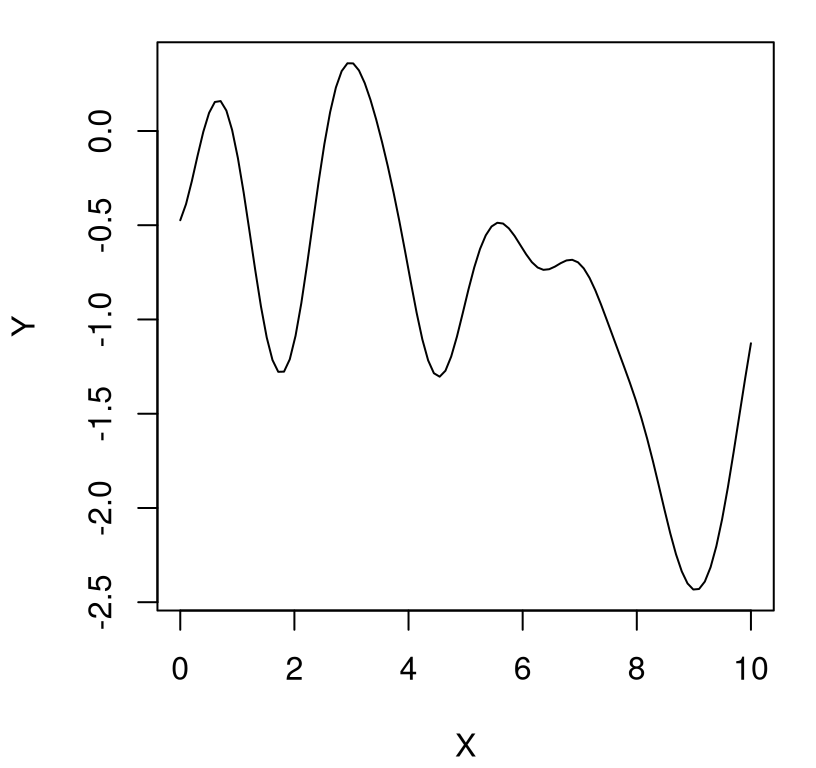

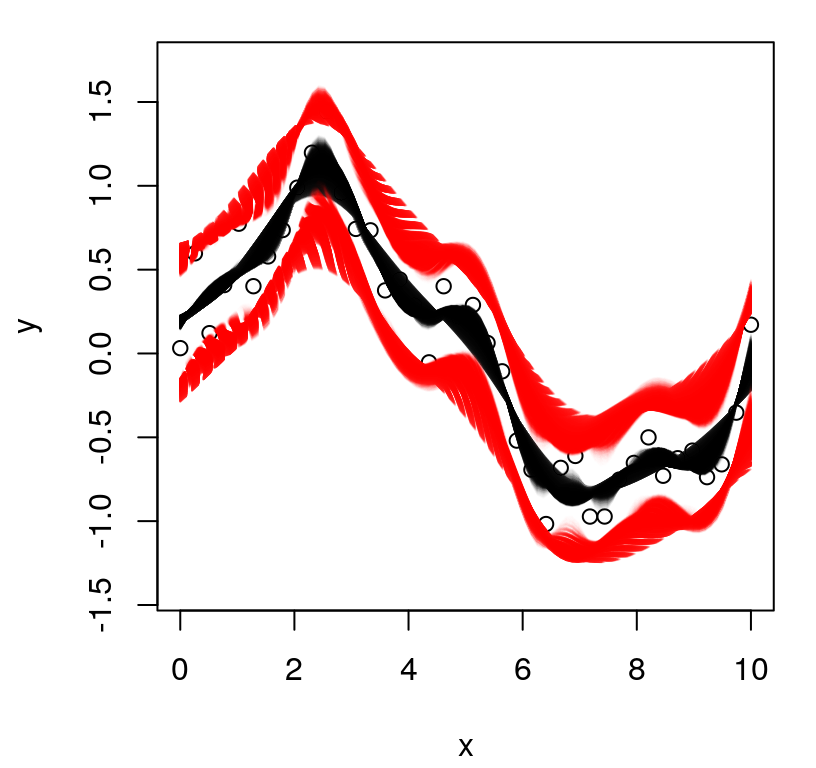

That’s it! We’ve generated a finite realization of a random function under a GP prior with a particular covariance structure. Now all that’s left is visualization. Figure 5.1 plots those X and Y pairs as tiny connected line segments on an \(x\)-\(y\) plane.

FIGURE 5.1: A random function under a GP prior.

Because the \(Y\)-values are random, you’ll get a different curve when you try this on your own. We’ll generate some more below in a moment. But first, what are the properties of this function, or more precisely of a random function generated in this way? Several are easy to deduce from the form of the covariance structure. We’ll get a range of about \([-2,2]\), with 95% probability, because the scale of the covariance is 1, ignoring the jitter \(\epsilon\) added to the diagonal. We’ll get several bumps in the \(x\)-range of \([0,10]\) because short distances are highly correlated (about 37%) and long distances are essentially uncorrelated (\(1e^{-7}\)).

## [1] 3.679e-01 1.125e-07Now the function plotted above is only a finite realization, meaning that we really only have 100 pairs of points. Those points look smooth, in a tactile sense, because they’re close together and because the plot function is “connecting the dots” with lines. The full surface, which you might conceptually extend to an infinite realization over a compact domain, is extremely smooth in a calculus sense because the covariance function is infinitely differentiable, a discussion we’ll table for a little bit later.

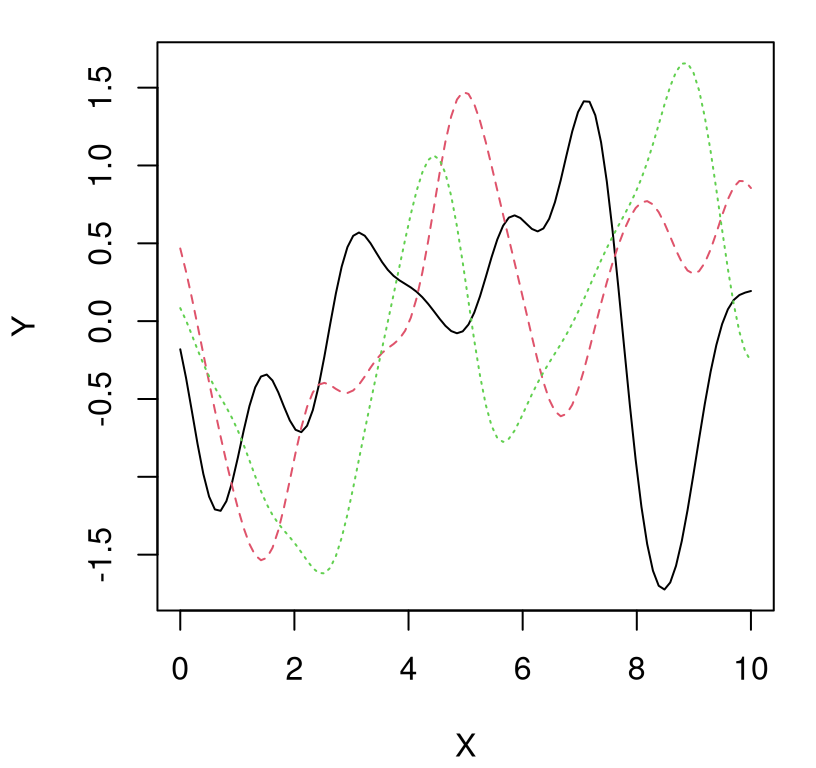

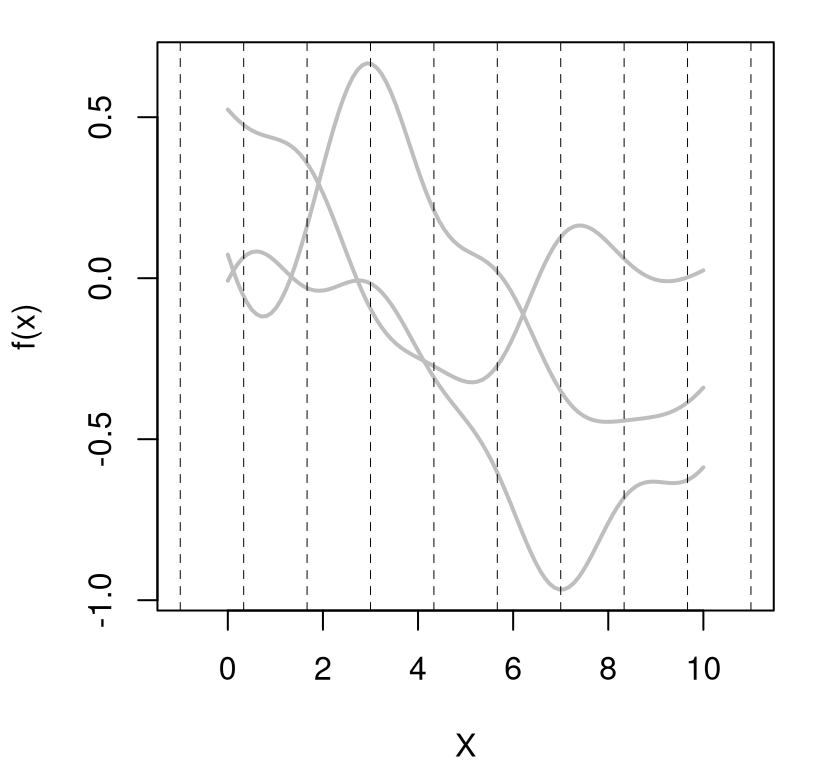

Besides those three things – scale of two, several bumps, smooth look – we won’t be able to anticipate much else about the nature of a particular realization. Figure 5.2 shows three new random draws obtained in a similar way, which will again look different when you run the code on your own.

FIGURE 5.2: Three more random functions under a GP prior.

Each random finite collection is different than the next. They all have similar range, about the same number of bumps, and are smooth. That’s what it means to have function realizations under a GP prior: \(Y(x) \sim \mathcal{GP}\).

5.1.1 Gaussian process posterior

Of course, we’re not in the business of generating random functions. I’m not sure what that would be useful for. Instead, we ask: given examples of a function in pairs \((x_1, y_1), \dots, (x_n, y_n)\), comprising data \(D_n = (X_n, Y_n)\), what random function realizations could explain – could have generated – those observed values? That is, we want to know about the conditional distribution of \(Y(x) \mid D_n\). If we call \(Y(x) \sim \mathcal{GP}\) the prior, then \(Y(x) \mid D_n\) must be the posterior.

Fortunately, you don’t need to be a card-carrying Bayesian to appreciate what’s going on, although that perspective has really taken hold in ML. That conditional distribution, \(Y(x) \mid D_n\), which one might more simply call a predictive distribution, is a familiar quantity in regression analysis. Forget for the moment that when regressing one is often interested in other aspects, like relevance of predictors through estimates of parameter standard errors, etc., and that so far our random functions look like they have no noise. The somewhat strange, and certainly most noteworthy, thing is that so far there are no parameters!

Let’s shelve interpretation (Bayesian updating or a twist on simple regression) for a moment and focus on conditional distributions, because that’s what it’s really all about. Deriving that predictive distribution is a simple application of deducing a conditional from a (joint) MVN. From Wikipedia, if an \(N\)-dimensional random vector \(X\) is partitioned as

\[ X = \left( \begin{array}{c} X_1 \\ X_2 \end{array} \right) \quad \mbox{ with sizes } \quad \left( \begin{array}{c} q \times 1 \\ (N-q) \times 1 \end{array} \right), \]

and accordingly \(\mu\) and \(\Sigma\) are partitioned as,

\[ \mu = \left( \begin{array}{c} \mu_1 \\ \mu_2 \end{array} \right) \quad \mbox{ with sizes } \quad \left( \begin{array}{c} q \times 1 \\ (N-q) \times 1 \end{array} \right) \]

and

\[ \Sigma = \left(\begin{array}{cc} \Sigma_{11} & \Sigma_{12} \\ \Sigma_{21} & \Sigma_{22} \end{array} \right) \ \mbox{ with sizes } \ \left(\begin{array}{cc} q \times q & q \times (N-q) \\ (N-q)\times q & (N - q)\times (N-q) \end{array} \right), \]

then the distribution of \(X_1\) conditional on \(X_2 = x_2\) is MVN \(X_1 \mid x_2 \sim \mathcal{N}_q (\bar{\mu}, \bar{\Sigma})\), where

\[\begin{align} \bar{\mu} &= \mu_1 + \Sigma_{12} \Sigma_{22}^{-1}(x_2 - \mu_2) \tag{5.1} \\ \mbox{and } \quad \bar{\Sigma} &= \Sigma_{11} - \Sigma_{12} \Sigma_{22}^{-1} \Sigma_{21}. \notag \end{align}\]

An interesting feature of this result is that conditioning upon \(x_2\) alters the variance of \(X_1\). Observe that \(\bar{\Sigma}\) above is reduced compared to its marginal analog \(\Sigma_{11}\). Reduction in variance when conditioning on data is a hallmark of statistical learning. We know more – have less uncertainty – after incorporating data. Curiously, the amount by which variance is decreased doesn’t depend on the value of \(x_2\). Observe that the mean is also altered, comparing \(\mu_1\) to \(\bar{\mu}\). In fact, the equation for \(\bar{\mu}\) is a linear mapping, i.e., of the form \(ax + b\) for vectors \(a\) and \(b\). Finally, note that \(\Sigma_{12} = \Sigma_{21}^\top\) so that \(\bar{\Sigma}\) is symmetric.

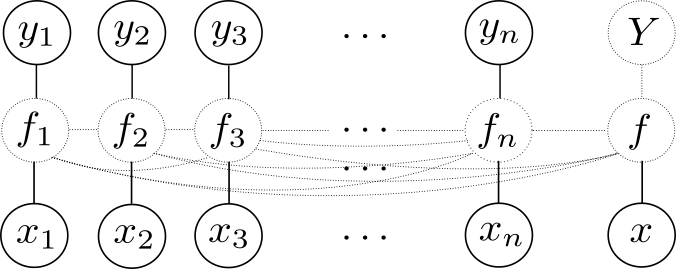

Ok, how do we deploy that fundamental MVN result towards deriving the GP predictive distribution \(Y(x) \mid D_n\)? Consider an \(n+1^{\mathrm{st}}\) observation \(Y(x)\). Allow \(Y(x)\) and \(Y_n\) to have a joint MVN distribution with mean zero and covariance function \(\Sigma(x,x')\). That is, stack

\[ \left( \begin{array}{c} Y(x) \\ Y_n \end{array} \right) \quad \mbox{ with sizes } \quad \left( \begin{array}{c} 1 \times 1 \\ n \times 1 \end{array} \right), \]

and if \(\Sigma(X_n,x)\) is the \(n \times 1\) matrix comprised of \(\Sigma(x_1, x), \dots, \Sigma(x_n, x)\), its covariance structure can be partitioned as follows:

\[ \left(\begin{array}{cc} \Sigma(x,x) & \Sigma(x,X_n) \\ \Sigma(X_n,x) & \Sigma_n \end{array} \right) \ \mbox{ with sizes } \ \left(\begin{array}{cc} 1 \times 1 & 1 \times n \\ n\times 1 & n \times n \end{array} \right). \]

Recall that \(\Sigma(x,x) = 1\) with our simple choice of covariance function, and that symmetry provides \(\Sigma(x,X_n) = \Sigma(X_n,x)^\top\).

Applying Eq. (5.1) yields the following predictive distribution

\[ Y(x) \mid D_n \sim \mathcal{N}(\mu(x), \sigma^2(x)) \]

with

\[\begin{align} \mbox{mean } \quad \mu(x) &= \Sigma(x, X_n) \Sigma_n^{-1} Y_n \tag{5.2} \\ \mbox{and variance } \quad \sigma^2(x) &= \Sigma(x,x) - \Sigma(x, X_n) \Sigma_n^{-1} \Sigma(X_n, x). \notag \end{align}\]

Observe that \(\mu(x)\) is linear in observations \(Y_n\), so we have a linear predictor! In fact it’s the best linear unbiased predictor (BLUP), an argument we’ll leave to other texts (e.g., Santner, Williams, and Notz 2018). Also notice that \(\sigma^2(x)\) is lower than the marginal variance. So we learn something from data \(Y_n\); in fact the amount that variance goes down is a quadratic function of distance between \(x\) and \(X_n\). Learning is most efficient for \(x\) that are close to training data locations \(X_n\). However the amount learned doesn’t depend upon \(Y_n\). We’ll return to that later.

The derivation above is for “pointwise” GP predictive calculations. These are sometimes called the kriging equations, especially in geospatial contexts. We can apply them, separately, for many predictive/testing locations \(x\), one \(x\) at a time, but that would ignore the obvious correlation they’d experience in a big MVN analysis. Alternatively, we may consider a bunch of \(x\) locations jointly, in a testing design \(\mathcal{X}\) of \(n'\) rows, say, all at once:

\[ Y(\mathcal{X}) \mid D_n \sim \mathcal{N}_{n'}(\mu(\mathcal{X}), \Sigma(\mathcal{X})) \]

with

\[\begin{align} \mbox{mean } \quad \mu(\mathcal{X}) &= \Sigma(\mathcal{X}, X_n) \Sigma_n^{-1} Y_n \tag{5.3}\\ \mbox{and variance } \quad \Sigma(\mathcal{X}) &= \Sigma(\mathcal{X},\mathcal{X}) - \Sigma(\mathcal{X}, X_n) \Sigma_n^{-1} \Sigma(\mathcal{X}, X_n)^\top, \notag \end{align}\]

where \(\Sigma(\mathcal{X}, X_n)\) is an \(n' \times n\) matrix. Having a full covariance structure offers a more complete picture of the random functions which explain data under a GP posterior, but also more computation. The \(n' \times n'\) matrix \(\Sigma(\mathcal{X})\) could be enormous even for seemingly moderate \(n'\).

Simple 1d GP prediction example

Consider a toy example in 1d where the response is a simple sinusoid measured at eight equally spaced \(x\)-locations in the span of a single period of oscillation. R code below provides relevant data quantities, including pairwise squared distances between the input locations collected in the matrix D, and its inverse exponentiation in Sigma.

n <- 8

X <- matrix(seq(0, 2*pi, length=n), ncol=1)

y <- sin(X)

D <- distance(X)

Sigma <- exp(-D) + diag(eps, ncol(D))Now this is where the example diverges from our earlier one, where we used such quantities to generate data from a GP prior. Applying MVN conditioning equations requires similar calculations on a testing design \(\mathcal{X}\), coded as XX below. We need inverse exponentiated squared distances between those XX locations …

XX <- matrix(seq(-0.5, 2*pi + 0.5, length=100), ncol=1)

DXX <- distance(XX)

SXX <- exp(-DXX) + diag(eps, ncol(DXX))… as well as between testing locations \(\mathcal{X}\) and training data locations \(X_n\).

Note that an \(\epsilon\) jitter adjustment is not required for SX because it need not be decomposed in the conditioning calculations (and SX is anyways not square). We do need jitter on the diagonal of SXX though, because this matrix is directly involved in calculation of the predictive covariance which we shall feed into an MVN generator below.

Now simply follow Eq. (5.3) to derive joint predictive equations for XX \(\equiv \mathcal{X}\): invert \(\Sigma_n\), apply the linear predictor, and calculate reduction in covariance.

Above mup maps to \(\mu(\mathcal{X})\) evaluated at our testing grid \(\mathcal{X} \equiv\) XX, and Sigmap similarly for \(\Sigma(\mathcal{X})\) via pairs in XX. As a computational note, observe that Siy <- Si %*% y may be pre-computed in time quadratic in \(n=\) length(y) so that mup may subsequently be calculated for any XX in time linear in \(n\), without redoing Siy; for example, as solve(Sigma, y). There are two reasons we’re not doing that here. One is to establish a clean link between code and mathematical formulae. The other is a presumption that the variance calculation, which remains quadratic in \(n\) no matter what, is at least as important as the mean.

Mean vector and covariance matrix in hand, we may generate \(Y\)-values from the posterior/predictive distribution \(Y(\mathcal{X}) \mid D_n\) in the same manner as we did from the prior.

Those \(Y(\mathcal{X}) \equiv\) YY samples may then be plotted as a function of predictive input \(\mathcal{X} \equiv\) XX locations. Before doing that, extract some pointwise quantile-based error-bars from the diagonal of \(\Sigma(\mathcal{X})\) to aid in visualization.

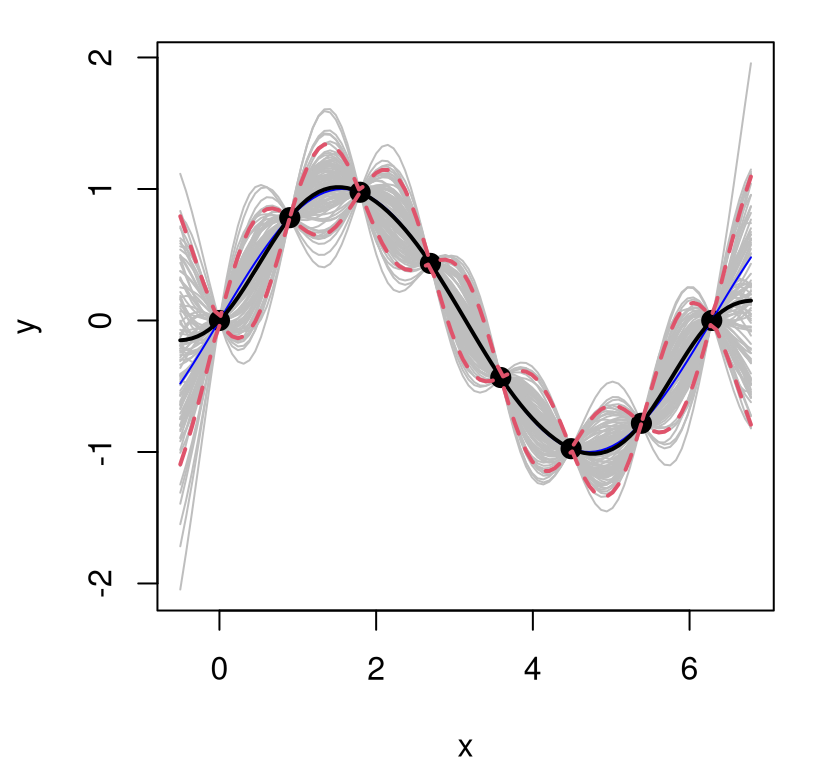

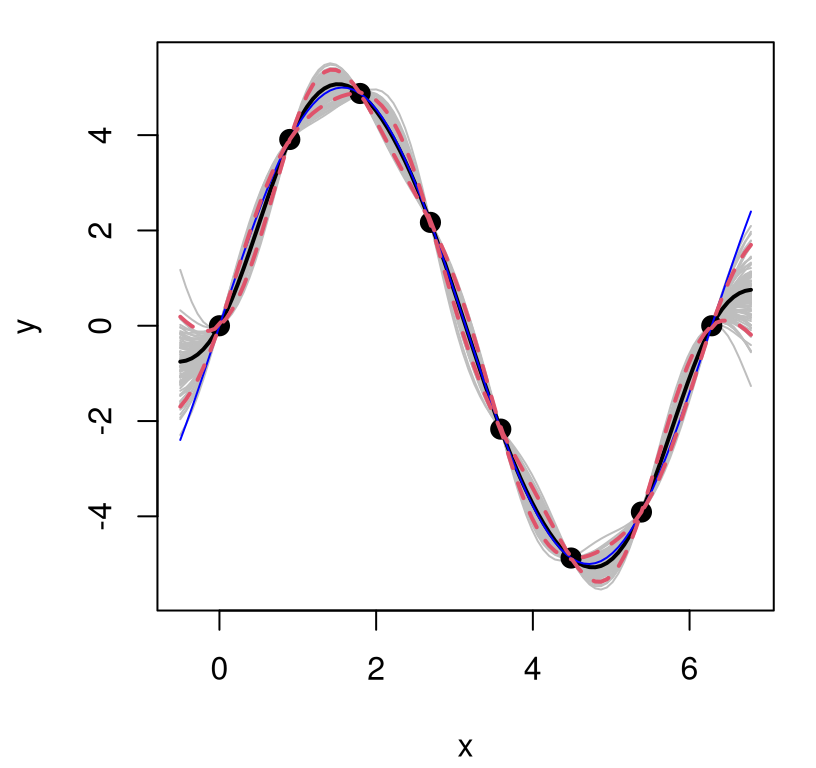

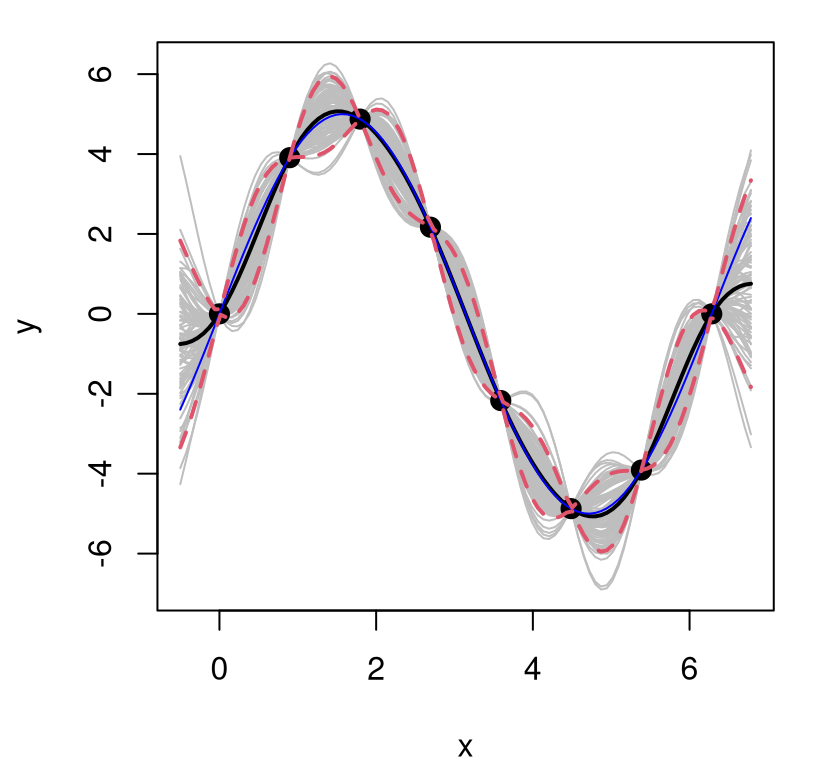

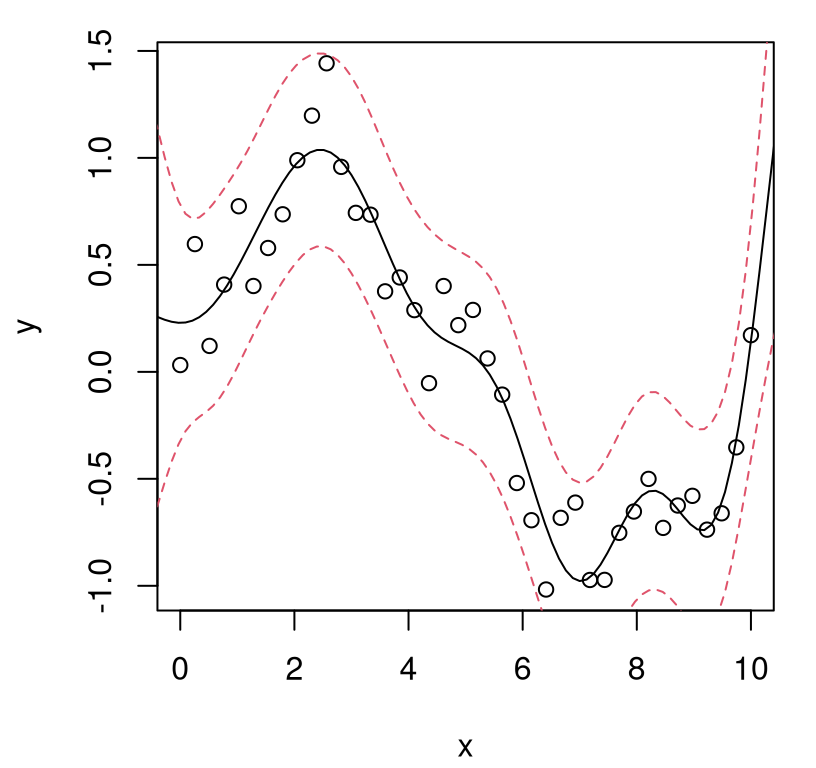

Figure 5.3 plots each of the random predictive, finite realizations as gray curves. Training data points are overlayed, along with true response at the \(\mathcal{X}\) locations as a thin blue line. Predictive mean \(\mu(\mathcal{X})\) in black, and 90% quantiles in dashed-red, are added as thicker lines.

matplot(XX, t(YY), type="l", col="gray", lty=1, xlab="x", ylab="y")

points(X, y, pch=20, cex=2)

lines(XX, sin(XX), col="blue")

lines(XX, mup, lwd=2)

lines(XX, q1, lwd=2, lty=2, col=2)

lines(XX, q2, lwd=2, lty=2, col=2)

FIGURE 5.3: Posterior predictive distribution in terms of means (solid black), quantiles (dashed-red), and draws (gray). The truth is shown as a thin blue line.

What do we observe in the figure? Notice how the predictive surface interpolates the data. That’s because \(\Sigma(x, x) = 1\) and \(\Sigma(x, x') \rightarrow 1^-\) as \(x' \rightarrow x\). Error-bars take on a “football” shape, or some say a “sausage” shape, being widest at locations farthest from \(x_i\)-values in the data. Error-bars get really big outside the range of the data, a typical feature in ordinary linear regression settings. But the predictive mean behaves rather differently than under an ordinary linear model. For GPs it’s mean-reverting, eventually leveling off to zero as \(x \in \mathcal{X}\) gets far away from \(X_n\). Predictive variance, as exemplified by those error-bars, is also reverting to something: a prior variance of 1. In particular, variance won’t continue to increase as \(x\) gets farther and farther from \(X_n\). Together those two “reversions” imply that although we can’t trust extrapolations too far outside of the data range, at least their behavior isn’t unpredictable, as can sometimes happen in linear regression contexts, for example when based upon feature-expanded (e.g., polynomial basis) covariates.

These characteristics, especially the football/sausage shape, is what makes GPs popular as surrogates for computer simulation experiments. That literature, which historically emphasized study of deterministic computer simulators, drew comfort from interpolation-plus-expansion of variance away from training simulations. Perhaps more importantly, they liked that out-of-sample prediction was highly accurate. Come to think of it, that’s why spatial statisticians and machine learners like them too. But hold that thought; there are a few more things to do before we get to predictive comparisons.

5.1.2 Higher dimension?

There’s nothing particularly special about the presentation above that would preclude application in higher input dimension. Except perhaps that visualization is a lot simpler in 1d or 2d. We’ll get to even higher dimensions with some of our later examples. For now, consider a random function in 2d sampled from a GP prior. The plan is to go back through the process above: first prior, then (posterior) predictive, etc.

Begin by creating an input set, \(X_n\), in two dimensions. Here we’ll use a regular \(20 \times 20\) grid.

Then calculate pairwise distances and evaluate covariances under inverse exponentiated squared Euclidean distances, plus jitter.

Finally make random MVN draws in exactly the same way as before. Below we save two such draws.

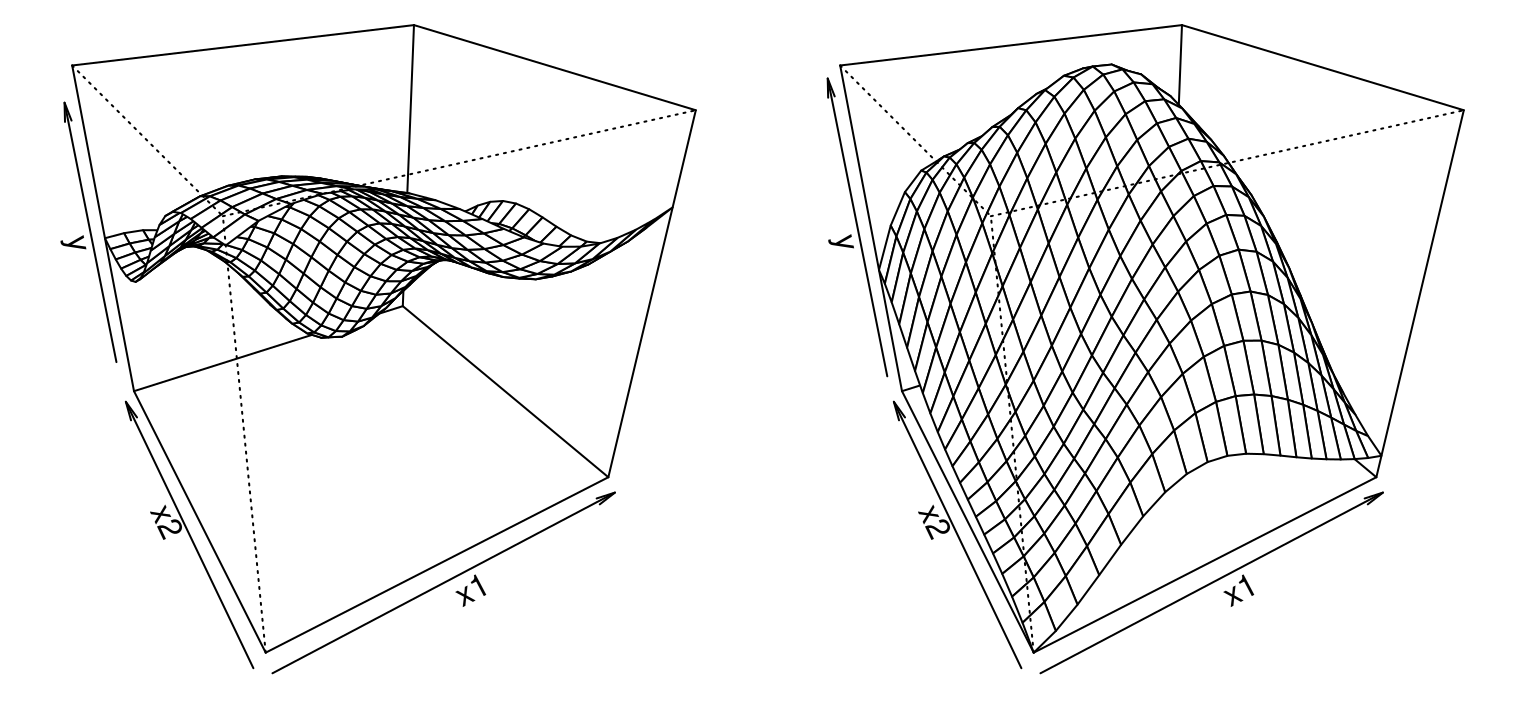

For visualization in Figure 5.4, persp is used to stretch each \(20 \times 20\) = 400-variate draw over a mesh with a fortuitously chosen viewing angle.

par(mfrow=c(1,2))

persp(x, x, matrix(Y[1,], ncol=nx), theta=-30, phi=30, xlab="x1",

ylab="x2", zlab="y")

persp(x, x, matrix(Y[2,], ncol=nx), theta=-30, phi=30, xlab="x1",

ylab="x2", zlab="y")

FIGURE 5.4: Two random functions under a GP prior in 2d.

So drawing from a GP prior in 2d is identical to the 1d case, except with a 2d input grid. All other code is “cut-and-paste”. Visualization is more cumbersome, but that’s a cosmetic detail. Learning from training data, i.e., calculating the predictive distribution for observed \((x_i, y_i)\) pairs, is no different: more cut-and-paste.

To try it out we need to cook up some toy data from which to learn. Consider the 2d function \(y(x) = x_1 \exp\{-x_1^2 - x_2^2\}\) which is highly nonlinear near the origin, but flat (zero) as inputs get large. This function has become a benchmark 2d problem in the literature for reasons that we’ll get more into in Chapter 9. Suffice it to say that thinking up simple-yet-challenging toy problems is a great way to get noticed in the community, even when you borrow a common example in vector calculus textbooks or one used to demonstrate 3d plotting features in MATLAB®.

library(lhs)

X <- randomLHS(40, 2)

X[,1] <- (X[,1] - 0.5)*6 + 1

X[,2] <- (X[,2] - 0.5)*6 + 1

y <- X[,1]*exp(-X[,1]^2 - X[,2]^2)Above, a Latin hypercube sample (LHS; §4.1) is used to generate forty (coded) input locations in lieu of a regular grid in order to create a space-filling input design. A regular grid with 400 elements would have been overkill, but a uniform random design of size forty or so would have worked equally well. Coded inputs are mapped onto a scale of \([-2,4]^2\) in order to include both bumpy and flat regions.

Let’s suppose that we wish to interpolate those forty points onto a regular \(40 \times 40\) grid, say for stretching over a mesh. Here’s code that creates such testing locations XX \(\equiv\mathcal{X}\) in natural units.

Now that we have inputs and outputs, X and y, and predictive locations XX we can start cutting-and-pasting. Start with the relevant training data quantities …

… and follow with similar calculations between input sets X and XX.

Then apply Eq. (5.3). Code wise, these lines are identical to what we did in the 1d case.

It’s hard to visualize a multitude of sample paths in 2d – two was plenty when generating from the prior – but if desired, we may obtain them with the same rmvnorm commands as in §5.1.1. Instead focus on plotting pointwise summaries, namely predictive mean \(\mu(x) \equiv\) mup and predictive standard deviation \(\sigma(x)\):

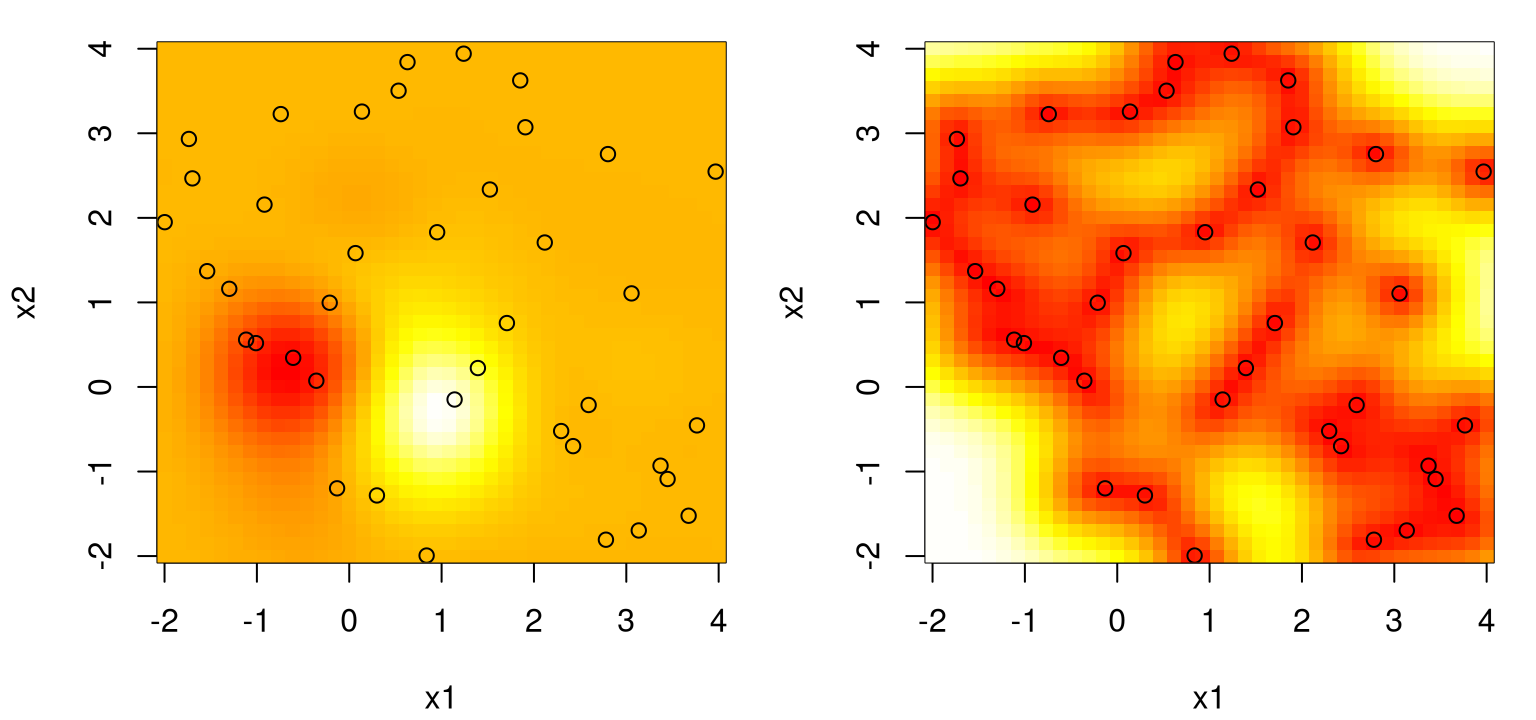

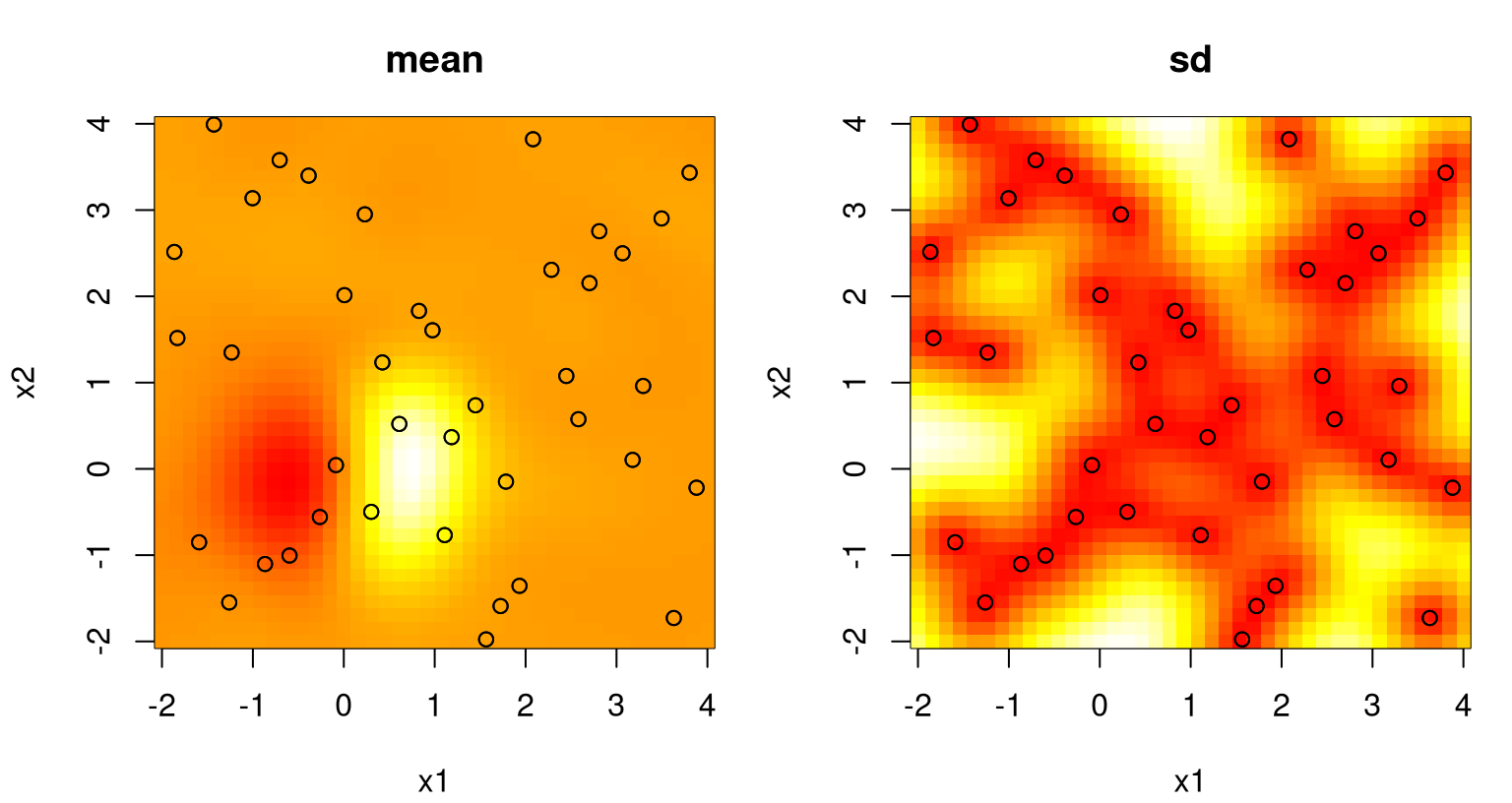

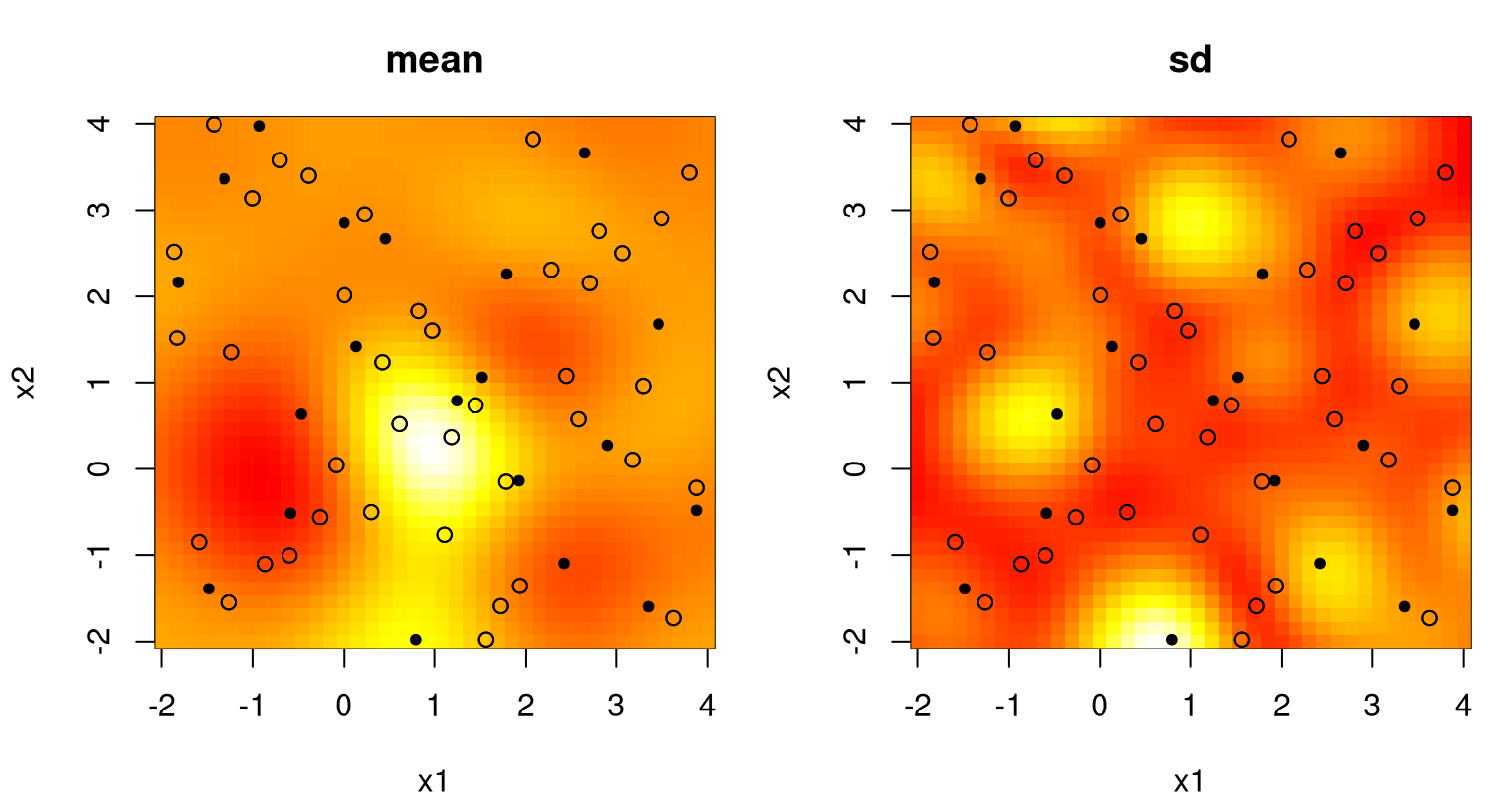

The left panel in Figure 5.5 provides an image plot of the mean over our regularly-gridded inputs XX; the right panel shows standard deviation.

par(mfrow=c(1,2))

cols <- heat.colors(128)

image(xx, xx, matrix(mup, ncol=length(xx)), xlab="x1", ylab="x2", col=cols)

points(X[,1], X[,2])

image(xx, xx, matrix(sdp, ncol=length(xx)), xlab="x1", ylab="x2", col=cols)

points(X[,1], X[,2])

FIGURE 5.5: Posterior predictive for a two-dimensional example, via mean (left) and standard deviation (right) surfaces. Training data input locations are indicated by open circles.

What do we observe? Pretty much the same thing as in the 1d case. We can’t see it, but the predictive surface interpolates. Predictive uncertainty, here as standard deviation \(\sigma(x)\), is highest away from \(x_i\)-values in the training data. Predictive intervals don’t look as much like footballs or sausages, yet somehow that analogy still works. Training data locations act as anchors to smooth variation between points with an organic rise in uncertainty as we imagine predictive inputs moving away from one toward the next.

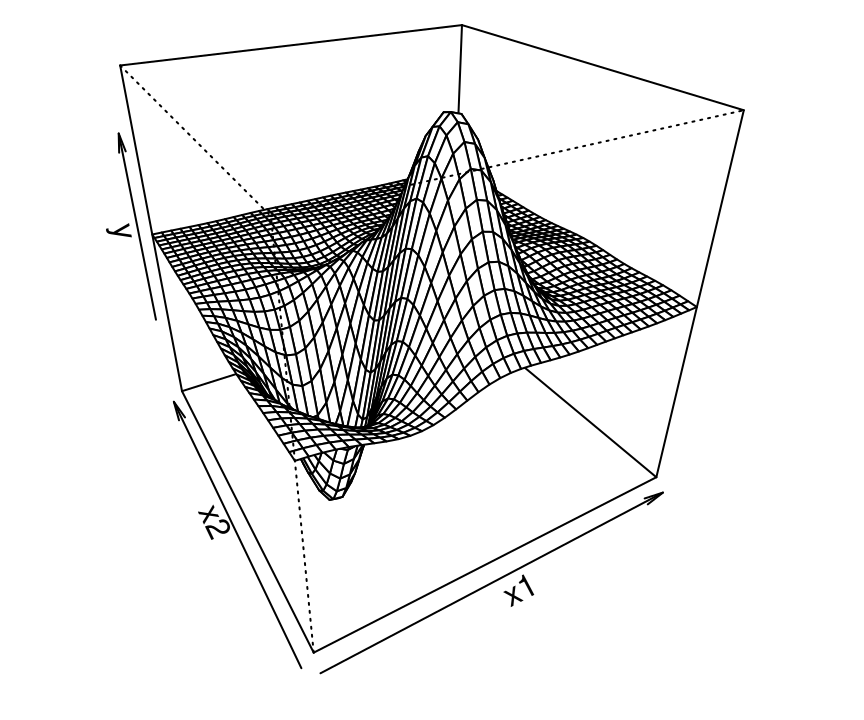

Figure 5.6 provides another look, obtained by stretching the predictive mean over a mesh. Bumps near the origin are clearly visible, with a flat region emerging for larger \(x_1\) and \(x_2\) settings.

FIGURE 5.6: Perspective view on the posterior mean surface from the left panel of Figure 5.5.

Well that’s basically it! Now you know GP regression. Where to go from here? Hopefully I’ve convinced you that GPs hold great potential as a nonlinear regression tool. It’s kinda-cool that they perform so well – that they “learn” – without having to tune anything. In statistics, we’re so used to seeking out optimal settings of parameters that a GP predictive surface might seem like voodoo. Simple MVN conditioning is able to capture input–output dynamics without having to “fit” anything, or without trying to minimize a loss criteria. That flexibility, without any tuning knobs, is what people think of when they call GPs a nonparametric regression tool. All we did was define covariance in terms of (inverse exponentiated squared Euclidean) distance, condition, and voilà.

But when you think about it a little bit, there are lots of (hidden) assumptions which are going to be violated by most real-data contexts. Data is noisy. The amplitude of all functions we might hope to learn will not be 2. Correlation won’t decay uniformly in all directions, i.e., radially. Even the most ideally smooth physical relationships are rarely infinitely smooth.

Yet we’ll see that even gross violations of those assumptions are easy to address, or “fix up”. At the same time GPs are relatively robust to transgressions between assumptions and reality. In other words, sometimes it works well even when it ought not. As I see it – once we clean things up – there are really only two serious problems that GPs face in practice: stationarity of covariance (§5.3.3), and computational burden, which in most contexts go hand-in-hand. Remedies for both will have to wait for Chapter 9. For now, let’s keep the message upbeat. There’s lots that can be accomplished with the canonical setup, whose description continues below.

5.2 GP hyperparameters

All this business about nonparametric regression and here we are introducing parameters, passive–aggressively you might say: refusing to call them parameters. How can one have hyperparameters without parameters to start with, or at least to somehow distinguish from? To make things even more confusing, we go about learning those hyperparameters in the usual way, by optimizing something, just like parameters. I guess it’s all to remind you that the real power – the real flexibility – comes from MVN conditioning. These hyperparameters are more of a fine tuning. There’s something to that mindset, as we shall see. Below we revisit the drawbacks alluded to above – scale, noise, and decay of correlation – with a (fitted) hyperparameter targeting each one.

5.2.1 Scale

Suppose you want your GP prior to generate random functions with an amplitude larger than two. You could introduce a scale parameter \(\tau^2\) and then take \(\Sigma_n = \tau^2 C_n\). Here \(C\) is basically the same as our \(\Sigma\) from before: a correlation function for which \(C(x,x) = 1\) and \(C(x,x') < 1\) for \(x \ne x'\), and positive definite; for example

\[ C(x, x') = \exp \{- || x - x' ||^2 \}. \]

But we need a more nuanced notion of covariance to allow more flexibility on scale, so we’re re-parameterizing a bit. Now our MVN generator looks like

\[ Y \sim \mathcal{N}_n(0, \tau^2 C_n). \]

Let’s check that that does the trick. First rebuild \(X_n\)-locations, e.g., a sequence of one hundred from zero to ten, and then calculate pairwise distances. Nothing different yet compared to our earlier illustration in §5.1.

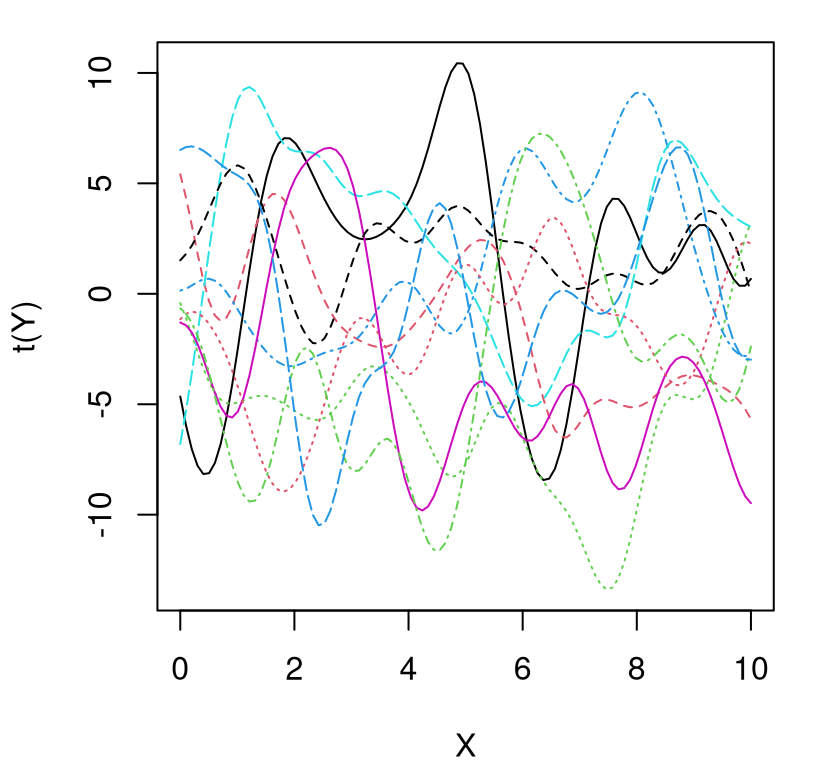

Now amplitude, via 95% of the range of function realizations, is approximately \(2\sigma(x)\) where \(\sigma^2 \equiv \mathrm{diag}(\Sigma_n)\). So for an amplitude of 10, say, choose \(\tau^2 = 5^2 = 25\). The code below calculates inverse exponentiated squared Euclidean distances in \(C_n\) and makes ten draws from an MVN whose covariance is obtained by pre-multiplying \(C_n\) by \(\tau^2\).

As Figure 5.7 shows, amplitude has increased. Not all draws completely lie between \(-10\) and \(10\), but most are in the ballpark.

FIGURE 5.7: Higher amplitude draws from a GP prior.

But again, who cares about generating random functions? We want to be able to learn about functions on any scale from training data. What would happen if we had some data with an amplitude of 5, say, but we used a GP with a built-in scale of 1 (amplitude of 2). In other words, what would happen if we did things the “old-fashioned way”, with code cut-and-pasted directly from §5.1.1?

First generate some data with that property. Here we’re revisiting sinusoidal data from §5.1.1, but multiplying by 5 on the way out of the sin call.

Next cut-and-paste code from earlier, including our predictive grid of 100 equally spaced locations.

D <- distance(X)

Sigma <- exp(-D)

XX <- matrix(seq(-0.5, 2*pi + 0.5, length=100), ncol=1)

DXX <- distance(XX)

SXX <- exp(-DXX) + diag(eps, ncol(DXX))

DX <- distance(XX, X)

SX <- exp(-DX)

Si <- solve(Sigma);

mup <- SX %*% Si %*% y

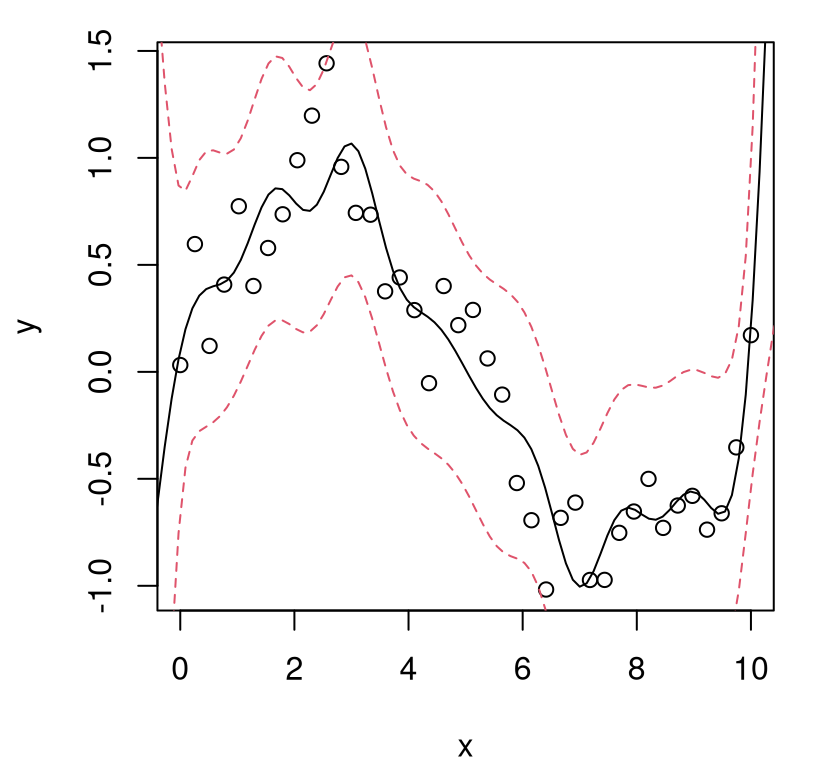

Sigmap <- SXX - SX %*% Si %*% t(SX)Now we have everything we need to visualize the resulting predictive surface, which is shown in Figure 5.8 using plotting code identical to that behind Figure 5.3.

YY <- rmvnorm(100, mup, Sigmap)

q1 <- mup + qnorm(0.05, 0, sqrt(diag(Sigmap)))

q2 <- mup + qnorm(0.95, 0, sqrt(diag(Sigmap)))

matplot(XX, t(YY), type="l", col="gray", lty=1, xlab="x", ylab="y")

points(X, y, pch=20, cex=2)

lines(XX, mup, lwd=2)

lines(XX, 5*sin(XX), col="blue")

lines(XX, q1, lwd=2, lty=2, col=2)

lines(XX, q2, lwd=2, lty=2, col=2)

FIGURE 5.8: GP fit to higher amplitude sinusoid.

What happened? In fact the “scale 1” GP is pretty robust. It gets the predictive mean almost perfectly, despite using the “wrong prior” relative to the actual data generating mechanism, at least as regards scale. But it’s over-confident. Besides a change of scale, the new training data exhibit no change in relative error, nor any other changes for that matter, compared to the example we did above where the scale was actually 1. So we must now be under-estimating predictive uncertainty, which is obvious by visually comparing the error-bars to those obtained from our earlier fit (Figure 5.3). Looking closely, notice that the true function goes well outside of our predictive interval at the edges of the input space. That didn’t happen before.

How to estimate the right scale? Well for starters, admit that scale may be captured by a parameter, \(\tau^2\), even though we’re going to call it a hyperparameter to remind ourselves that its impact on the overall estimation procedure is really more of a fine-tuning. The analysis above lends some credence to that perspective, since our results weren’t too bad even though we assumed an amplitude that was off by a factor of five. Whether benevolently gifted the right scale or not, GPs clearly retain a great deal of flexibility to adapt to the dynamics at play in data. Decent predictive surfaces often materialize, as we have seen, in spite of less than ideal parametric specifications.

As with any “parameter”, there are many choices when it comes to estimation: method of moments (MoM), likelihood (maximum likelihood, Bayesian inference), cross validation (CV), the “eyeball norm”. Some, such as those based on (semi-) variograms, are preferred in the spatial statistics literature. All of those are legitimate, except maybe the eyeball norm which isn’t very easily automated and challenges reproducibility. I’m not aware of any MoM approaches to GP inference for hyperparameters. Stochastic kriging (Ankenman, Nelson, and Staum 2010) utilizes MoM in a slightly more ambitious, latent variable setting which is the subject of Chapter 10. Whereas CV is common in some circles, such frameworks generalize rather less well to higher dimensional hyperparameter spaces, which we’re going to get to momentarily. I prefer likelihood-based inferential schemes for GPs, partly because they’re the most common and, especially in the case of maximizing (MLE/MAP) solutions, they’re also relatively hands-off (easy automation), and nicely generalize to higher dimensional hyperparameter spaces.

But wait a minute, what’s the likelihood in this context? It’s a bit bizarre that we’ve been talking about priors and posteriors without ever talking about likelihood. Both prior and likelihood are needed to form a posterior. We’ll get into finer detail later. For now, recognize that our data-generating process is \(Y \sim \mathcal{N}_n(0, \tau^2 C_n)\), so the relevant quantity, which we’ll call the likelihood now (but was our prior earlier), comes from an MVN PDF:

\[ L \equiv L(\tau^2, C_n) = (2\pi \tau^2)^{-\frac{n}{2}} | C_n |^{-\frac{1}{2}} \exp\left\{- \frac{1}{2\tau^2} Y_n^\top C_n^{-1} Y_n \right\}. \]

Taking the log of that is easy, and we get

\[\begin{equation} \ell = \log L = -\frac{n}{2} \log 2\pi - \frac{n}{2} \log \tau^2 - \frac{1}{2} \log |C_n| - \frac{1}{2\tau^2} Y_n^\top C_n^{-1} Y_n. \tag{5.4} \end{equation}\]

To maximize that (log) likelihood with respect to \(\tau^2\), just differentiate and solve:

\[\begin{align} 0 \stackrel{\mathrm{set}}{=} \ell' &= - \frac{n}{2 \tau^2} + \frac{1}{2 (\tau^2)^2} Y_n^\top C_n^{-1} Y_n, \notag \\ \mbox{so } \hat{\tau}^2 &= \frac{Y_n^\top C_n^{-1} Y_n}{n}. \tag{5.5} \end{align}\]

In other words, we get that the MLE for scale \(\tau^2\) is a mean residual sum of squares under the quadratic form obtained from an MVN PDF with a mean of \(\mu(x) = 0\): \((Y_n - 0)^\top C_n^{-1} (Y_n - 0)\).

How would this analysis change if we were to take a Bayesian approach? A homework exercise (§5.5) invites the curious reader to investigate the form of the posterior under prior \(\tau^2 \sim \mathrm{IG}\left(a/2, b/2\right)\). For example, what happens when \(a=b=0\) which is equivalent to \(p(\tau^2) \propto 1/\tau^2\), a so-called reference prior in this context (Berger, De Oliveira, and Sansó 2001; Berger, Bernardo, and Sun 2009)?

Estimate of scale \(\hat{\tau}^2\) in hand, we may simply “plug it in” to the predictive equations (5.2)–(5.3). Now technically, when you estimate a variance and plug it into a (multivariate) Gaussian, you’re turning that Gaussian into a (multivariate) Student-\(t\), in this case with \(n\) degrees of freedom (DoF). (There’s no loss of DoF when the mean is assumed to be zero.) For details, see for example Gramacy and Polson (2011). For now, presume that \(n\) is large enough so that this distinction doesn’t matter. As we generalize to more hyperparameters, DoF correction could indeed matter but we still obtain a decent approximation, which is so common in practice that the word “approximation” is often dropped from the description – a transgression I shall be guilty of as well.

So to summarize, we have the following scale-adjusted (approximately) MVN predictive equations:

\[ \begin{aligned} Y(\mathcal{X}) \mid D_n & \sim \mathcal{N}_{n'}(\mu(\mathcal{X}), \Sigma(\mathcal{X})) \\ \mbox{with mean } \quad \mu(\mathcal{X}) &= C(\mathcal{X}, X_n) C_n^{-1} Y_n \\ \mbox{and variance } \quad \Sigma(\mathcal{X}) &= \hat{\tau}^2[C(\mathcal{X},\mathcal{X}) - C(\mathcal{X}, X_n) C_n^{-1} C(\mathcal{X}, X_n)^\top]. \end{aligned} \]

Notice how \(\hat{\tau}^2\) doesn’t factor into the predictive mean, but it does figure into predictive variance. That’s important because it means that \(Y_n\)-values are finally involved in assessment of predictive uncertainty, whereas previously (5.2)–(5.3) only \(X_n\)-values were involved.

To see it all in action, let’s return to our simple 1d sinusoidal example, continuing from Figure 5.8. Start by performing calculations for \(\hat{\tau}^2\).

Checking that we get something reasonable, consider …

## [1] 5.487… which is quite close to what we know to be the true value of five in this case. Next plug \(\hat{\tau}^2\) into the MVN conditioning equations to obtain a predictive mean vector and covariance matrix.

Finally gather some sample paths using MVN draws and summarize predictive quantiles by cutting-and-pasting from above.

YY <- rmvnorm(100, mup2, Sigmap2)

q1 <- mup + qnorm(0.05, 0, sqrt(diag(Sigmap2)))

q2 <- mup + qnorm(0.95, 0, sqrt(diag(Sigmap2)))Figure 5.9 shows a much better surface compared to Figure 5.8.

matplot(XX, t(YY), type="l", col="gray", lty=1, xlab="x", ylab="y")

points(X, y, pch=20, cex=2)

lines(XX, mup, lwd=2)

lines(XX, 5*sin(XX), col="blue")

lines(XX, q1, lwd=2, lty=2, col=2); lines(XX, q2, lwd=2, lty=2, col=2)

FIGURE 5.9: Sinusoidal GP predictive surface with estimated scale \(\hat{\tau}^2\). Compare to Figure 5.8.

Excepting the appropriately expanded scale of the \(y\)-axis, the view in Figure 5.9 looks nearly identical to Figure 5.3 with data back on the two-unit scale. Besides that this last fit (with \(\hat{\tau}^2\)) looks better (particularly the variance) than the one before it (with implicit \(\tau^2=1\) when the observed scale was really much bigger), how can one be more objective about which is best out-of-sample?

A great paper by Gneiting and Raftery (2007) offers proper scoring rules that facilitate comparisons between predictors in a number of different situations, basically depending on what common distribution characterizes predictors being compared. These are a great resource when comparing apples and oranges, even though we’re about to use them to compare apples to apples: two GPs under different scales.

We have the first two moments, so Eq. (25) from Gneiting and Raftery (2007) may be used. Given \(Y(\mathcal{X})\)-values observed out of sample, the proper scoring rule is given by

\[\begin{equation} \mathrm{score}(Y, \mu, \Sigma; \mathcal{X}) = - \log | \Sigma(\mathcal{X}) | - (Y(\mathcal{X}) - \mu(\mathcal{X}))^\top (\Sigma(\mathcal{X}))^{-1} (Y(\mathcal{X}) - \mu(\mathcal{X})). \tag{5.6} \end{equation}\]

In the case where predictors are actually MVN, which they aren’t quite in our case (they’re Student-\(t\)), this is within an additive constant of what’s called predictive log likelihood. Higher scores, or higher predictive log likelihoods, are better. The first term \(-\log | \Sigma(\mathcal{X})|\) measures magnitude of uncertainty. Smaller uncertainty is better, all things considered, so larger is better here. The second term \((Y(\mathcal{X}) - \mu(\mathcal{X}))^\top (\Sigma(\mathcal{X}))^{-1} (Y(\mathcal{X}) - \mu(\mathcal{X}))\) is mean-squared error (MSE) adjusted for covariance. Smaller MSE is better, but when predictions are inaccurate it’s also important to capture that uncertainty through \(\Sigma(\mathcal{X})\). Score compensates for that second-order consideration: it’s ok to mispredict as long as you know you’re mispredicting.

A more recent paper by Bastos and O’Hagan (2009) tailors the scoring discussion to deterministic computer experiments, which better suits our current setting: interpolating function observations without noise. They recommend using Mahalanobis distance, which for the multivariate Gaussian is the same as the (negative of the) formula above, except without the determinant of \(\Sigma(\mathcal{X})\), and square-rooted.

\[\begin{equation} \mathrm{mah}(y, \mu, \Sigma; \mathcal{X}) = \sqrt{(y(\mathcal{X}) - \mu(\mathcal{X}))^\top (\Sigma(\mathcal{X}))^{-1} (y(\mathcal{X}) - \mu(\mathcal{X}))} \tag{5.7} \end{equation}\]

Smaller distances are otherwise equivalent to higher scores. Here’s code that calculates both in one function.

score <- function(Y, mu, Sigma, mah=FALSE)

{

Ymmu <- Y - mu

Sigmai <- solve(Sigma)

mahdist <- t(Ymmu) %*% Sigmai %*% Ymmu

if(mah) return(sqrt(mahdist))

return (- determinant(Sigma, logarithm=TRUE)$modulus - mahdist)

}How about using Mahalanobis distance (Mah for short) to make a comparison between the quality of predictions from our two most recent fits \((\tau^2=1\) versus \(\hat{\tau}^2)\)?

Ytrue <- 5*sin(XX)

df <- data.frame(score(Ytrue, mup, Sigmap, mah=TRUE),

score(Ytrue, mup2, Sigmap2, mah=TRUE))

colnames(df) <- c("tau2=1", "tau2hat")

df## tau2=1 tau2hat

## 1 6.259 2.282Estimated scale wins! Actually if you do score without mah=TRUE you come to the opposite conclusion, as Bastos and O’Hagan (2009) caution. Knowledge that the true response is deterministic is important to coming to the correct conclusion about estimates of accuracy as regards variations in scale, in this case, with signal and (lack of) noise contributing to the range of observed measurements. Now what about when there’s noise?

5.2.2 Noise and nuggets

We’ve been saying “regression” for a while, but actually interpolation is a more apt description. Regression is about extracting signal from noise, or about smoothing over noisy data, and so far our example training data have no noise. By inspecting a GP prior, in particular its correlation structure \(C(x, x')\), it’s clear that the current setup precludes idiosyncratic behavior because correlation decays smoothly as a function of distance. Observe that \(C(x,x') \rightarrow 1^-\) as \(x\rightarrow x'\), implying that the closer \(x\) is to \(x'\) the higher the correlation, until correlation is perfect, which is what “connects the dots” when conditioning on data and deriving the predictive distribution.

Moving from GP interpolation to smoothing over noise is all about breaking interpolation, or about breaking continuity in \(C(x,x')\) as \(x\rightarrow x'\). Said another way, we must introduce a discontinuity between diagonal and off-diagonal entries in the correlation matrix \(C_n\) to smooth over noise. There are a lot of ways to skin this cat, and a lot of storytelling that goes with it, but the simplest way to “break it” is with something like

\[ K(x, x') = C(x, x') + g \delta_{x, x'}. \]

Above, \(g > 0\) is a new hyperparameter called the nugget (or sometimes nugget effect), which determines the size of the discontinuity as \(x' \rightarrow x\). The function \(\delta\) is more like the Kronecker delta, although the way it’s written above makes it look like the Dirac delta. Observe that \(g\) generalizes Neal’s \(\epsilon\) jitter.

Neither delta is perfect in terms of describing what to do in practice. The simplest, correct description, of how to break continuity is to only add \(g\) on a diagonal – when indices of \(x\) are the same, not simply for identical values – and nowhere else. Never add \(g\) to an off-diagonal correlation even if that correlation is based on zero distances: i.e., identical \(x\) and \(x'\)-values. Specifically,

- \(K(x_i, x_j) = C(x_i, x_j)\) when \(i \ne j\), even if \(x_i = x_j\);

- only \(K(x_i, x_i) = C(x_i, x_i) + g\).

This leads to the following representation of the data-generating mechanism.

\[ Y \sim \mathcal{N}_n(0, \tau^2 K_n) \]

Unfolding terms, covariance matrix \(\Sigma_n\) contains entries

\[ \Sigma_n^{ij} = \tau^2 (C(x_i, x_j) + g \delta_{ij}), \]

or in other words \(\Sigma_n = \tau^2 K_n = \tau^2(C_n + g \mathbb{I}_n)\). This all looks like a hack, but it’s operationally equivalent to positing the following model.

\[ Y(x) = w(x) + \varepsilon, \]

where \(w(x) \sim \mathcal{GP}\) with scale \(\tau^2\), i.e., \(W \sim \mathcal{N}_n(0, \tau^2 C_n)\), and \(\varepsilon\) is independent Gaussian noise with variance \(\tau^2 g\), i.e., \(\varepsilon \stackrel{\mathrm{iid}}{\sim} \mathcal{N}(0, \tau^2 g)\).

A more aesthetically pleasing model might instead use \(w(x) \sim \mathcal{GP}\) with scale \(\tau^2\), i.e., \(W \sim \mathcal{N}_n(0, \tau^2 C_n)\), and where \(\varepsilon(x)\) is iid Gaussian noise with variance \(\sigma^2\), i.e., \(\varepsilon(x) \stackrel{\mathrm{iid}}{\sim} \mathcal{N}(0, \sigma^2)\). An advantage of this representation is two totally “separate” hyperparameters, with one acting to scale noiseless spatial correlations, and another determining the magnitude of white noise. Those two formulations are actually equivalent. There’s a 1:1 mapping between the two. Many researchers prefer the latter to the former on intuition grounds. But inference in the latter is harder. Conditional on \(g\), \(\hat{\tau}^2\) is available in closed form, which we’ll show momentarily. Conditional on \(\sigma^2\), numerical methods are required for \(\hat{\tau}^2\).

Ok, so back to plan-A with \(Y \sim \mathcal{N}(0, \Sigma_n)\), where \(\Sigma_n = \tau^2 K_n = \tau^2(C_n + g \mathbb{I}_n)\). Recall that \(C_n\) is an \(n \times n\) matrix of inverse exponentiated pairwise squared Euclidean distances. How, then, to estimate two hyperparameters: scale \(\tau^2\) and nugget \(g\)? Again, we have all the usual suspects (MoM, likelihood, CV, variogram) but likelihood-based methods are by far most common. First, suppose that \(g\) is known.

MLE \(\hat{\tau}^2\) given a fixed \(g\) is

\[ \hat{\tau}^2 = \frac{Y_n^\top K_n^{-1} Y_n}{n} = \frac{Y_n^\top (C_n + g \mathbb{I}_n)^{-1} Y_n}{n}. \]

The derivation involves an identical application of Eq. (5.5), except with \(K_n\) instead of \(C_n\).

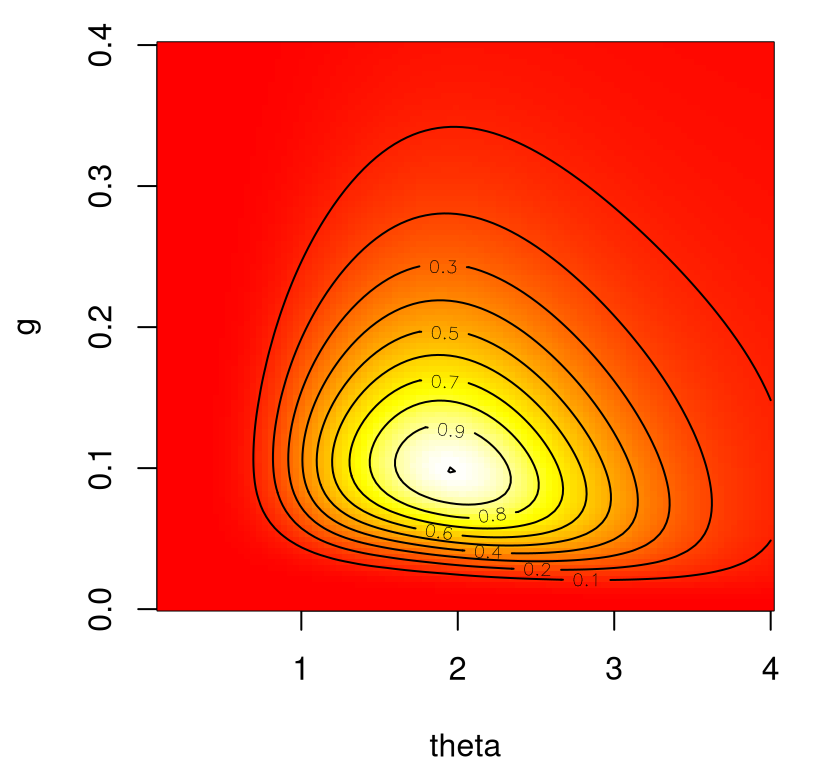

Plug \(\hat{\tau}^2\) back into our log likelihood to get a concentrated (or profile) log likelihood involving just the remaining parameter \(g\).

\[\begin{align} \ell(g) &= -\frac{n}{2} \log 2\pi - \frac{n}{2} \log \hat{\tau}^2 - \frac{1}{2} \log |K_n| - \frac{1}{2\hat{\tau}^2} Y_n^\top K_n^{-1} Y_n \notag \\ &= c - \frac{n}{2} \log Y_n^\top K_n^{-1} Y_n - \frac{1}{2} \log |K_n| \tag{5.8} \end{align}\]

Unfortunately taking a derivative and setting to zero doesn’t lead to a closed form solution. Calculating the derivative is analytic, which we show below momentarily, but solving is not. Maximizing \(\ell(g)\) requires numerical methods. The simplest thing to do is throw it into optimize and let a polished library do all the work. Since most optimization libraries prefer to minimize, we’ll code up \(-\ell(g)\) in R. The nlg function below doesn’t directly work on X inputs, rather through distances D. This is slightly more efficient since distances can be pre-calculated, rather than re-calculated in each evaluation for new g.

nlg <- function(g, D, Y)

{

n <- length(Y)

K <- exp(-D) + diag(g, n)

Ki <- solve(K)

ldetK <- determinant(K, logarithm=TRUE)$modulus

ll <- - (n/2)*log(t(Y) %*% Ki %*% Y) - (1/2)*ldetK

counter <<- counter + 1

return(-ll)

}Observe a direct correspondence between nlg and \(-\ell(g)\) with the exception of a counter increment (accessing a global variable). This variable is not required, but we’ll find it handy later when comparing alternatives on efficiency grounds in numerical optimization, via the number of times our likelihood objective function is evaluated. Although optimization libraries often provide iteration counts on output, sometimes that report can misrepresent the actual number of objective function calls. So I’ve jerry-rigged my own counter here to fill in.

Example: noisy 1d sinusoid

Before illustrating numerical nugget (and scale) optimization towards the MLE, we need some example data. Let’s return to our running sinusoid example from §5.1.1, picking up where we left off but augmented with standard Gaussian noise. Code below utilizes the same uniform Xs from earlier, but doubles them up. Adding replication into a design is recommended in noisy data contexts, as discussed in more detail in Chapter 10. Replication is not essential for this example, but it helps guarantee predictable outcomes which is important for a randomly seeded, fully reproducible Rmarkdown build.

Everything is in place to estimate the optimal nugget. The optimize function in R is ideal in 1d derivative-free contexts. It doesn’t require an initial value for g, but it does demand a search interval. A sensible yet conservative range for \(g\)-values is from eps to var(y). The former corresponds to the noise-free/jitter-only case we entertained earlier. The latter is the observed marginal variance of \(Y\), or in other words about as big as variance could be if these data were all noise and no signal.

## [1] 0.2878Now the value of that estimate isn’t directly useful to us, at least on an intuitive level. We need \(\hat{\tau}^2\) to understand the full decomposition of variance. But backing out those quantities is relatively straightforward.

K <- exp(-D) + diag(g, n)

Ki <- solve(K)

tau2hat <- drop(t(y) %*% Ki %*% y / n)

c(tau=sqrt(tau2hat), sigma=sqrt(tau2hat*g))## tau sigma

## 2.304 1.236Both are close to their true values of \(5/2 = 2.5\) and 1, respectively. Estimated hyperparameters in hand, prediction is a straightforward application of MVN conditionals. First calculate quantities involved in covariance between testing and training locations, and between testing locations and themselves.

Notice that only KXX is augmented with g on the diagonal. KX is not a square symmetric matrix calculated from identically indexed \(x\)-values. Even if it were coincidentally square, or if DX contained zero distances because elements of XX and X coincide, still no nugget augmentation is deployed. Only with KXX, which is identically indexed with respect to itself, does a nugget augment the diagonal.

Covariance matrices in hand, we may then calculate the predictive mean vector and covariance matrix.

mup <- KX %*% Ki %*% y

Sigmap <- tau2hat*(KXX - KX %*% Ki %*% t(KX))

q1 <- mup + qnorm(0.05, 0, sqrt(diag(Sigmap)))

q2 <- mup + qnorm(0.95, 0, sqrt(diag(Sigmap)))Showing sample predictive realizations that look pretty requires “subtracting” out idiosyncratic noise, i.e., the part due to nugget \(g\). Otherwise sample paths will be “jagged” and hard to interpret.

Sigma.int <- tau2hat*(exp(-DXX) + diag(eps, nrow(DXX))

- KX %*% Ki %*% t(KX))

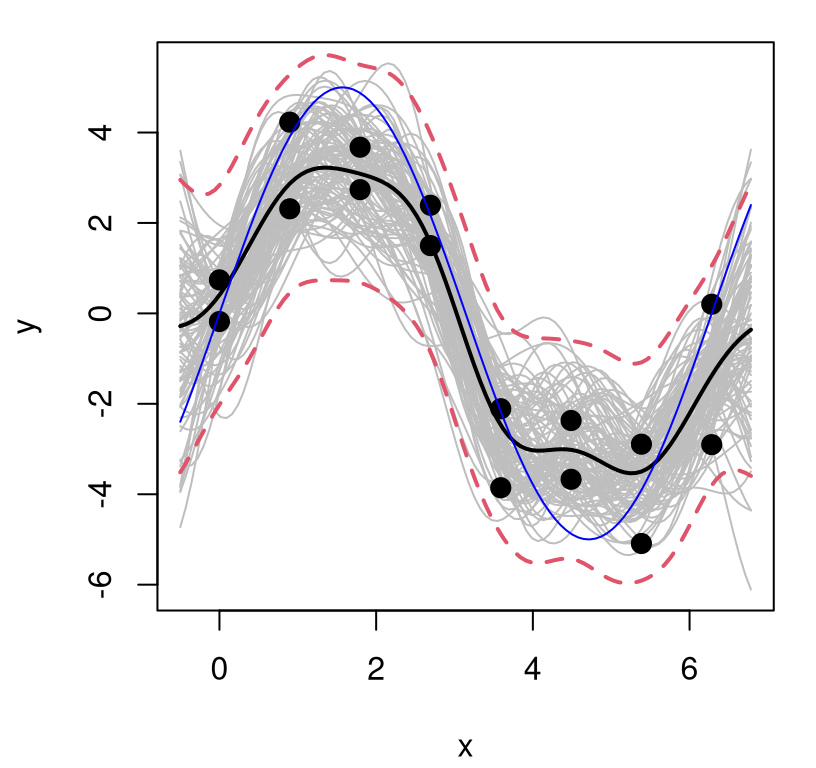

YY <- rmvnorm(100, mup, Sigma.int)§5.3.2 explains how this maneuver makes sense in a latent function-space view of GP posterior updating, and again when we delve into a deeper signal-to-noise discussion in Chapter 10. For now this is just a trick to get a prettier picture, only affecting gray lines plotted in Figure 5.10.

matplot(XX, t(YY), type="l", lty=1, col="gray", xlab="x", ylab="y")

points(X, y, pch=20, cex=2)

lines(XX, mup, lwd=2)

lines(XX, 5*sin(XX), col="blue")

lines(XX, q1, lwd=2, lty=2, col=2)

lines(XX, q2, lwd=2, lty=2, col=2)

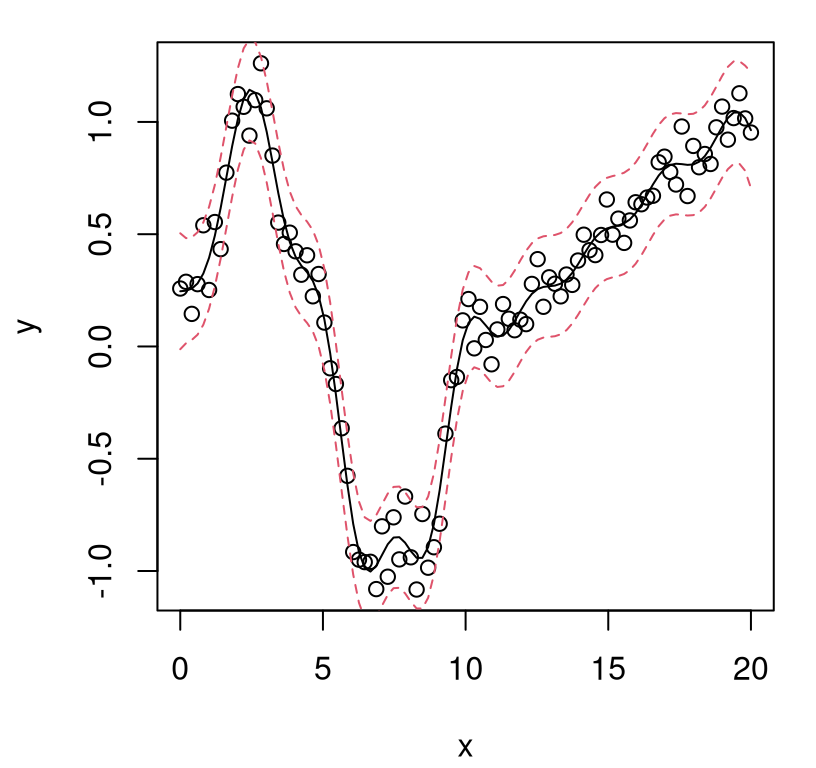

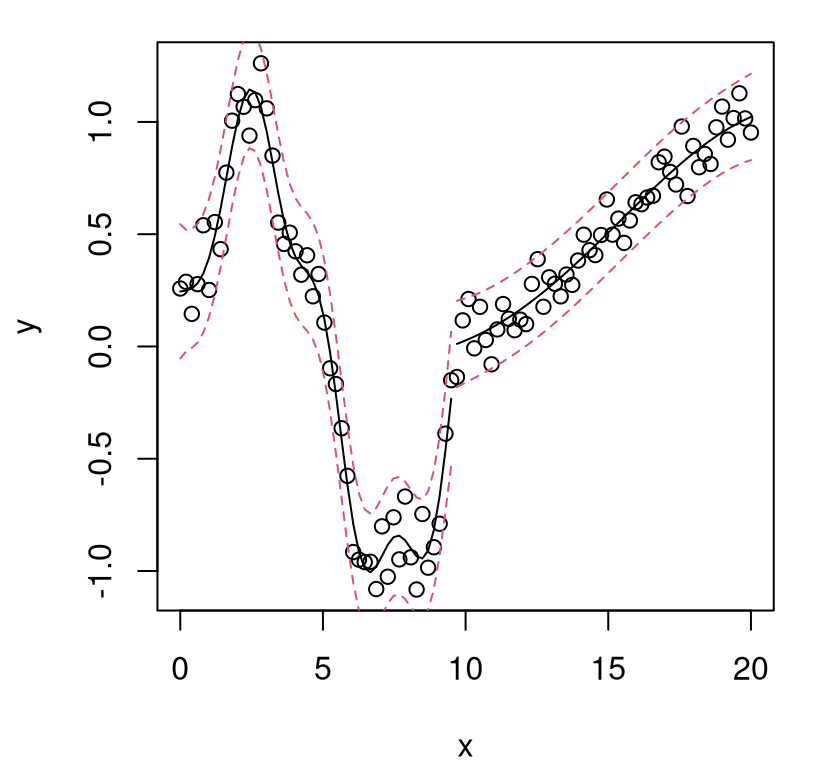

FIGURE 5.10: GP fit to sinusoidal data with estimated nugget.

Notice how the error-bars, which do provide a full accounting of predictive uncertainty, lie mostly outside of the gray lines and appropriately capture variability in the training data observations, shown as filled black dots. That’s it: now we can fit noisy data with GPs using a simple library-based numerical optimizer and about twenty lines of code.

5.2.3 Derivative-based hyperparameter optimization

It can be unsatisfying to brute-force an optimization for a hyperparameter like \(g\), even though 1d solving with optimize is often superior to cleverer methods. Can we improve upon the number of evaluations?

## [1] 16Actually, that’s pretty good. If you can already optimize numerically in fewer than twenty or so evaluations there isn’t much scope for improvement. Yet we’re leaving information on the table: closed-form derivatives. Differentiating \(\ell(g)\) involves pushing the chain rule through the inverse of covariance matrix \(K_n\) and its determinant, which is where hyperparameter \(g\) is involved. The following identities, which are framed for an arbitrary parameter \(\phi\), will come in handy.

\[\begin{equation} \frac{\partial K_n^{-1}}{\partial \phi} = - K_n^{-1} \frac{\partial K_n}{\partial \phi} K_n^{-1} \quad \mbox{ and } \quad \frac{\partial \log | K_n | }{\partial \phi} = \mathrm{tr} \left \{ K_n^{-1} \frac{\partial K_n}{\partial \phi} \right\} \tag{5.9} \end{equation}\]

The chain rule, and a single application of each of the identities above, gives

\[\begin{align} \ell'(g) &= - \frac{n}{2} \frac{Y_n^\top \frac{\partial K_n^{-1}}{\partial g} Y_n}{Y_n^\top K_n^{-1} Y_n} - \frac{1}{2} \frac{\partial \log |K_n|}{\partial g} \tag{5.10} \\ &= \frac{n}{2} \frac{Y_n^\top K_n^{-1} \frac{\partial K_n}{\partial g} K_n^{-1} Y_n}{Y_n^\top K_n^{-1} Y_n} - \frac{1}{2} \mathrm{tr} \left \{ K_n^{-1} \frac{\partial K_n}{\partial g} \right\}. \notag \end{align}\]

Off-diagonal elements of \(K_n\) don’t depend on \(g\). The diagonal is simply \(1 + g\). Therefore \(\frac{\partial K_n}{\partial g}\) is an \(n\)-dimensional identity matrix. Putting it all together:

\[ \ell'(g) = \frac{n}{2} \frac{Y_n^\top (K_n^{-1})^{2} Y_n}{Y_n^\top K_n^{-1} Y_n} - \frac{1}{2} \mathrm{tr} \left \{ K_n^{-1} \right\}. \]

Here’s an implementation of the negative of that derivative for the purpose of minimization. The letter “g” for gradient in the function name is overkill in this scalar context, but I’m thinking ahead to where yet more hyperparameters will be optimized.

gnlg <- function(g, D, Y)

{

n <- length(Y)

K <- exp(-D) + diag(g, n)

Ki <- solve(K)

KiY <- Ki %*% Y

dll <- (n/2) * t(KiY) %*% KiY / (t(Y) %*% KiY) - (1/2)*sum(diag(Ki))

return(-dll)

}Objective (negative concentrated log likelihood, nlg) and gradient (gnlg) in hand, we’re ready to numerically optimize using derivative information. The optimize function doesn’t support derivatives, so we’ll use optim instead. The optim function supports many optimization methods, and not all accommodate derivatives. I’ve chosen to illustrate method="L-BFGS-B" here because it supports derivatives and allows bound constraints (Byrd et al. 1995). As above, we know we don’t want a nugget lower than eps for numerical reasons, and it seems unlikely that \(g\) will be bigger than the marginal variance.

Here we go … first reinitializing the evaluation counter and choosing 10% of marginal variance as a starting value.

counter <- 0

out <- optim(0.1*var(y), nlg, gnlg, method="L-BFGS-B", lower=eps,

upper=var(y), D=D, Y=y)

c(g, out$par)## [1] 0.2878 0.2879Output is similar to what we obtained from optimize, which is reassuring. How many iterations?

## function gradient actual

## 8 8 8Notice that in this scalar case our internal, manual counter agrees with optim’s. Just 8 evaluations to optimize something is pretty excellent,

but possibly not noteworthy compared to optimize’s 16, especially when you consider that an extra 8 gradient evaluations (with similar computational complexity) are also required. When you put it that way, our new derivative-based version is potentially no better, requiring 16 combined evaluations of commensurate computational complexity. Hold that thought. We shall return to counting iterations after introducing more hyperparameters.

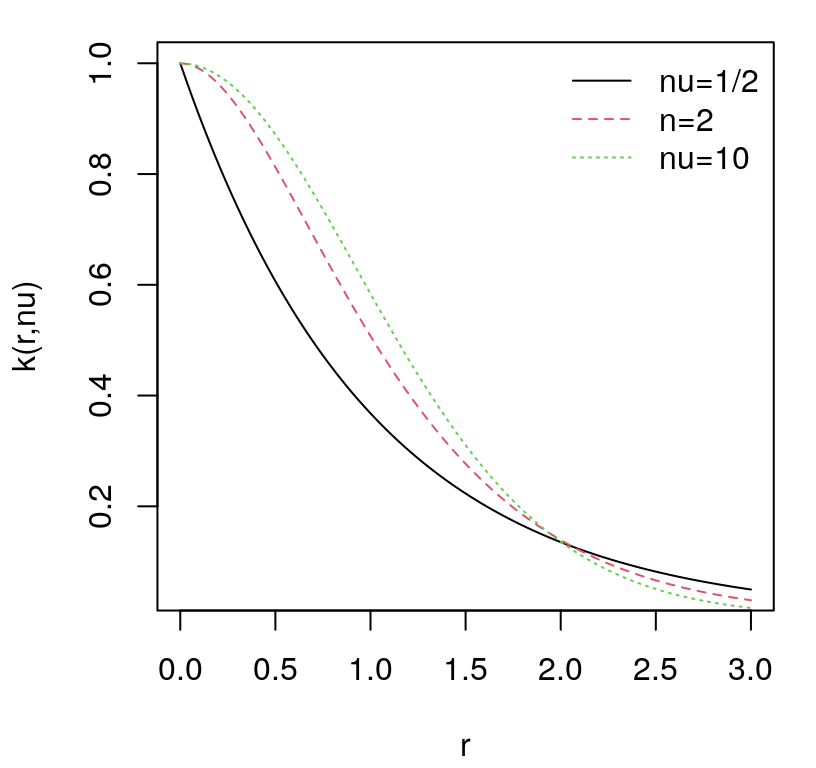

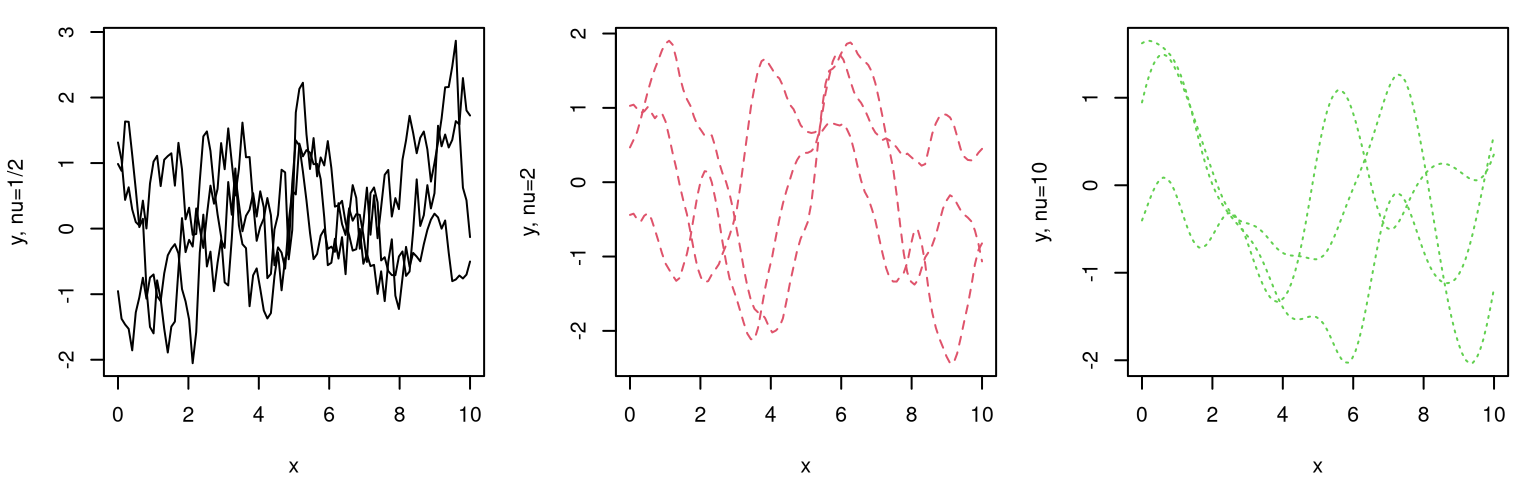

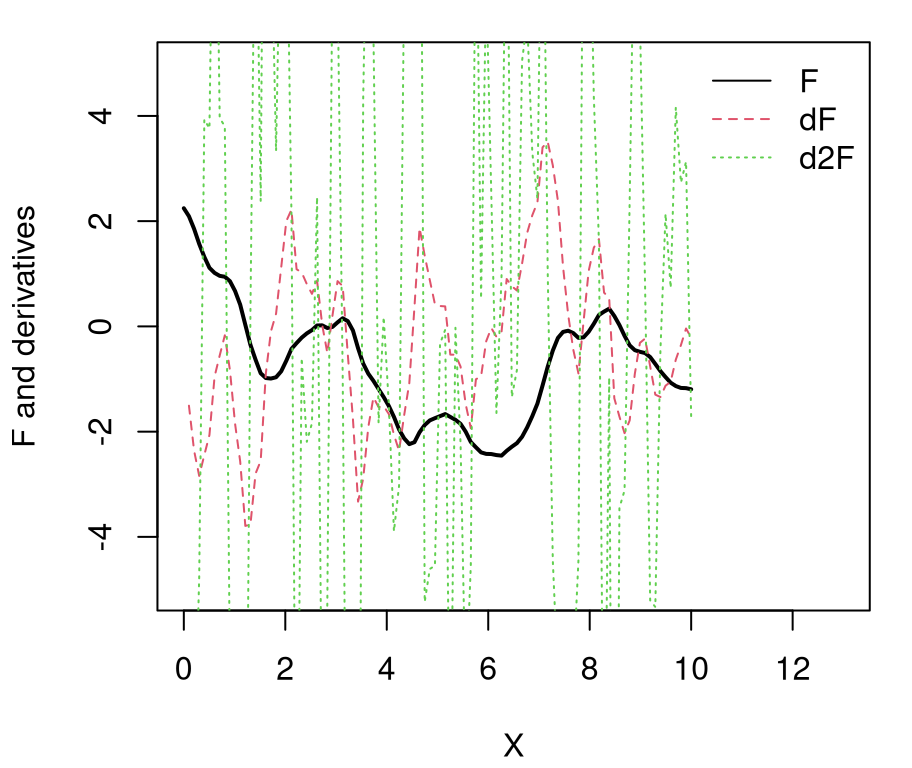

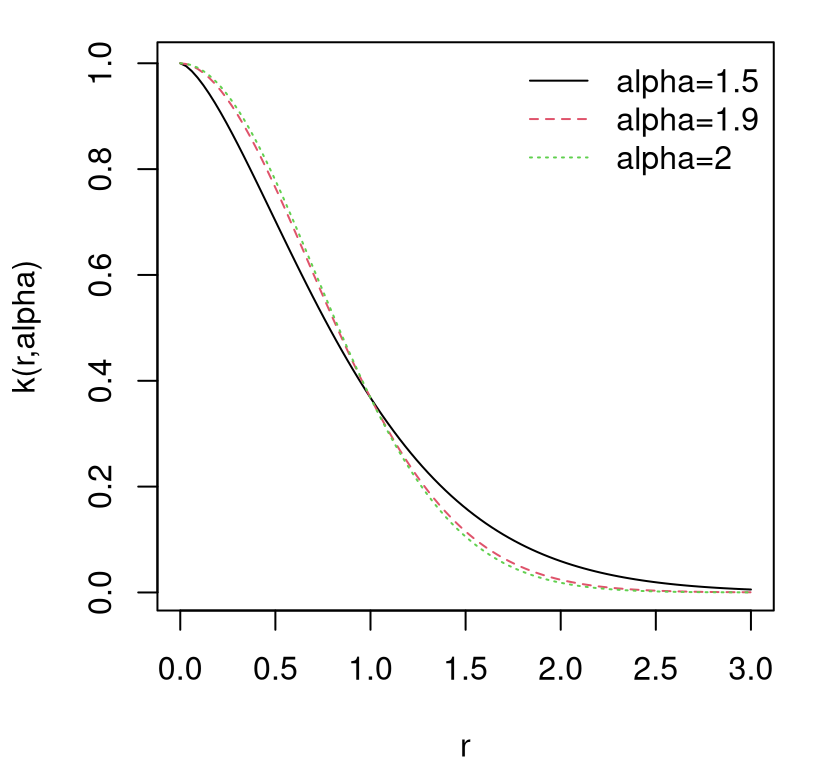

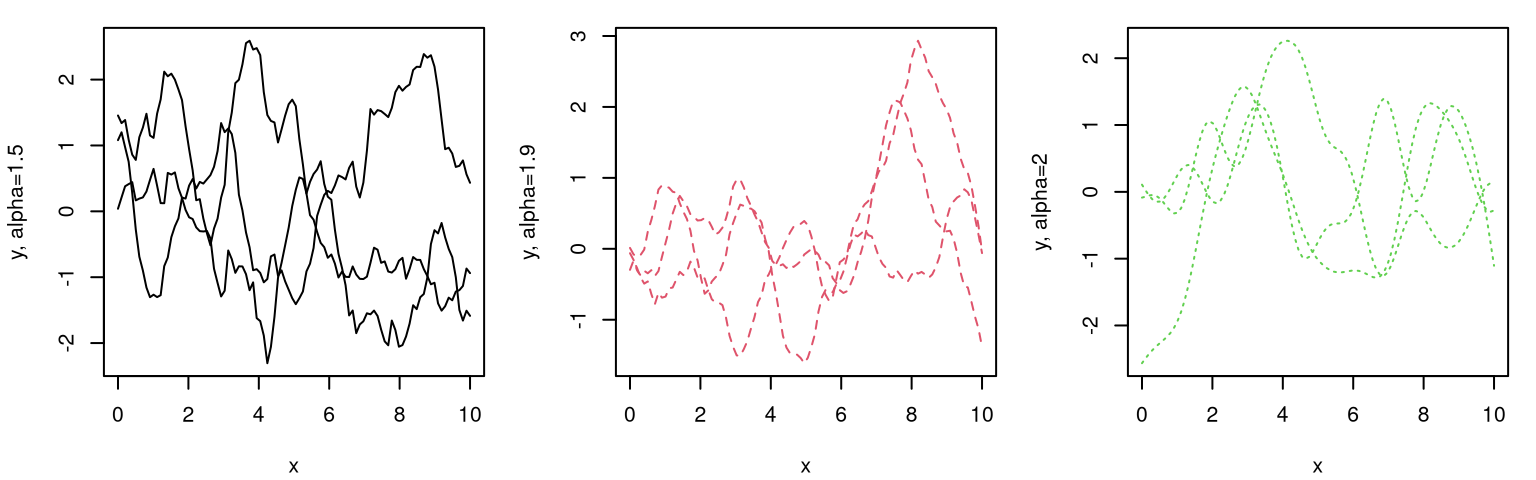

5.2.4 Lengthscale: rate of decay of correlation

How about modulating the rate of decay of spatial correlation in terms of distance? Surely unadulterated Euclidean distance isn’t equally suited to all data. Consider the following generalization, known as the isotropic Gaussian family.

\[ C_\theta(x, x') = \exp\left\{ - \frac{||x - x'||^2}{\theta} \right\} \]

Isotropic Gaussian correlation functions are indexed by a scalar hyperparameter \(\theta\), called the characteristic lengthscale. Sometimes this is shortened to lengthscale, or \(\theta\) may be referred to as a range parameter, especially in geostatistics. When \(\theta = 1\) we get back our inverse exponentiated squared Euclidean distance-based correlation as a special case. Isotropy means that correlation decays radially; Gaussian suggests inverse exponentiated squared Euclidean distance. Gaussian processes should not be confused with Gaussian-family correlation or kernel functions, which appear in many contexts. GPs get their name from their connection with the MVN, not because they often feature Gaussian kernels as a component of the covariance structure. Further discussion of kernel variations and properties is deferred until later in §5.3.3.

How to perform inference for \(\theta\)? Should our GP have a slow decay of correlation in space, leading to visually smooth/slowly changing surfaces, or a fast one looking more wiggly? Like with nugget \(g\), embedding \(\theta\) deep within coordinates of a covariance matrix thwarts analytic maximization of log likelihood. Yet again like \(g\), numerical methods are rather straightforward. In fact the setup is identical except now we have two unknown hyperparameters.

Consider brute-force optimization without derivatives. The R function nl is identical to nlg except argument par takes in a two-vector whose first coordinate is \(\theta\) and second is \(g\). Only two lines differ, and those are indicated by comments in the code below.

nl <- function(par, D, Y)

{

theta <- par[1] ## change 1

g <- par[2]

n <- length(Y)

K <- exp(-D/theta) + diag(g, n) ## change 2

Ki <- solve(K)

ldetK <- determinant(K, logarithm=TRUE)$modulus

ll <- - (n/2)*log(t(Y) %*% Ki %*% Y) - (1/2)*ldetK

counter <<- counter + 1

return(-ll)

}That’s it: just shove it into optim. Note that optimize isn’t an option here as that routine only optimizes in 1d. But first we’ll need an example. For variety, consider again our 2d exponential data from §5.1.2 and Figure 5.5, this time observed with noise and entertaining non-unit lengthscales.

library(lhs)

X2 <- randomLHS(40, 2)

X2 <- rbind(X2, X2)

X2[,1] <- (X2[,1] - 0.5)*6 + 1

X2[,2] <- (X2[,2] - 0.5)*6 + 1

y2 <- X2[,1]*exp(-X2[,1]^2 - X2[,2]^2) + rnorm(nrow(X2), sd=0.01)Again, replication is helpful for stability in reproduction, but is not absolutely necessary. Estimating lengthscale and nugget simultaneously represents an attempt to strike balance between signal and noise (Chapter 10). Once we get more experience, we’ll see that long lengthscales are more common when noise/nugget is high, whereas short lengthscales offer the potential to explain away noise as quickly changing dynamics in the data. Sometimes choosing between those two can be a difficult enterprise.

With optim it helps to think a little about starting values and search ranges. The nugget is rather straightforward, and we’ll copy ranges and starting values from our earlier example: from \(\epsilon\) to \(\mathbb{V}\mathrm{ar}\{Y\}\). The lengthscale is a little harder. Sensible choices for \(\theta\) follow the following rationale, leveraging \(x\)-values in coded units (\(\in [0,1]^2\)). A lengthscale of 0.1, which is about \(\sqrt{0.1} = 0.32\) in units of \(x\), biases towards surfaces three times more wiggly than in our earlier setup, with implicit \(\theta = 1\), in a certain loose sense. More precise assessments are quoted later after learning more about kernel properties (§5.3.3) and upcrossings (5.17). Initializing in a more signal, less noise regime seems prudent. If we thought the response was “really straight”, perhaps an ordinary linear model would suffice. A lower bound of eps allows the optimizer to find even wigglier surfaces, however it might be sensible to view solutions close to eps as suspect. A value of \(\theta=10\), or \(\sqrt{10} = 3.16\) is commensurately (3x) less wiggly than our earlier analysis. If we find a \(\hat{\theta}\) on this upper boundary we can always re-run with a new, bigger upper bound. For a more in-depth discussion of suitable lengthscale and nugget ranges, and even priors for regularization, see Appendix A of the tutorial (Gramacy 2016) for the laGP library (Gramacy and Sun 2018) introduced in more detail in §5.2.6.

Ok, here we go. (With new X we must first refresh D.)

D <- distance(X2)

counter <- 0

out <- optim(c(0.1, 0.1*var(y2)), nl, method="L-BFGS-B", lower=eps,

upper=c(10, var(y2)), D=D, Y=y2)

out$par## [1] 0.920257 0.009167Actually the outcome, as regards the first coordinate \(\hat{\theta}\), is pretty close to our initial version with implied \(\theta = 1\). Since "L-BFGS-B" is calculating a gradient numerically through finite differences, the reported count of evaluations in the output doesn’t match the number of actual evaluations.

## function gradient actual

## 13 13 65We’re searching in two input dimensions, and a rule of thumb is that it takes two evaluations in each dimension to build a tangent plane to approximate a derivative. So if 13 function evaluations are reported, it’d take about \(2\times 2 \rightarrow 4 \times 13 = 52\) additional runs to approximate derivatives, which agrees with our “by-hand” counter.

How can we improve upon those counts? Reducing the number of evaluations should speed up computation time. It might not be a big deal now, but as \(n\) gets bigger the repeated cubic cost of matrix inverses and determinants really adds up. What if we take derivatives with respect to \(\theta\) and combine with those for \(g\) to form a gradient? That requires \(\dot{K}_n \equiv \frac{\partial K_n}{\partial \theta}\), to plug into inverse and determinant derivative identities (5.9). The diagonal is zero because the exponent is zero no matter what \(\theta\) is. Off-diagonal entries of \(\dot{K}_n\) work out as follows. Since

\[ \begin{aligned} K_\theta(x, x') &= \exp\left\{ - \frac{||x - x'||^2}{\theta} \right\}, & \mbox{we have} && \frac{\partial K_\theta(x_i, x_j)}{\partial \theta} &= K_\theta(x_i, x_j) \frac{||x_i - x_j||^2}{\theta^2}. \\ \end{aligned} \]

A slightly more compact way to write the same thing would be \(\dot{K}_n = K_n \circ \mathrm{Dist}_n/\theta^2\) where \(\circ\) is a component-wise, Hadamard product, and \(\mathrm{Dist}_n\) contains a matrix of squared Euclidean distances – our D in the code. An identical application of the chain rule for the nugget (5.10), but this time for \(\theta\), gives

\[\begin{equation} \ell'(\theta) \equiv \frac{\partial}{\partial \theta} \ell(\theta, g) = \frac{n}{2} \frac{Y_n^\top K_n^{-1} \dot{K}_n K_n^{-1} Y_n}{Y_n^\top K_n^{-1} Y_n} - \frac{1}{2} \mathrm{tr} \left \{ K_n^{-1} \dot{K}_n \right\}. \tag{5.11} \end{equation}\]

A vector collecting the two sets of derivatives forms the gradient of \(\ell(\theta, g)\), a joint log likelihood with \(\tau^2\) concentrated out. R code below implements the negative of that gradient for the purposes of MLE calculation with optim minimization. Comments therein help explain the steps involved.

gradnl <- function(par, D, Y)

{

## extract parameters

theta <- par[1]

g <- par[2]

## calculate covariance quantities from data and parameters

n <- length(Y)

K <- exp(-D/theta) + diag(g, n)

Ki <- solve(K)

dotK <- K*D/theta^2

KiY <- Ki %*% Y

## theta component

dlltheta <- (n/2) * t(KiY) %*% dotK %*% KiY / (t(Y) %*% KiY) -

(1/2)*sum(diag(Ki %*% dotK))

## g component

dllg <- (n/2) * t(KiY) %*% KiY / (t(Y) %*% KiY) - (1/2)*sum(diag(Ki))

## combine the components into a gradient vector

return(-c(dlltheta, dllg))

}How well does optim work when it has access to actual gradient evaluations? Observe here that we’re otherwise using exactly the same calls as earlier.

counter <- 0

outg <- optim(c(0.1, 0.1*var(y2)), nl, gradnl, method="L-BFGS-B",

lower=eps, upper=c(10, var(y2)), D=D, Y=y2)

rbind(grad=outg$par, brute=out$par)## [,1] [,2]

## grad 0.9203 0.009167

## brute 0.9203 0.009167Parameter estimates are nearly identical. Availability of a true gradient evaluation changes the steps of the algorithm slightly, often leading to a different end-result even when identical convergence criteria are applied. What about the number of evaluations?

## function gradient actual

## grad 10 10 10

## brute 13 13 65Woah! That’s way better. No only does our actual “by-hand” count of evaluations match what’s reported on output from optim, but it can be an order of magnitude lower, roughly, compared to what we had before. (Variations depend on the random data used to generate this Rmarkdown document.) A factor of five-to-ten savings is definitely worth the extra effort to derive and code up a gradient. As you can imagine, and we’ll show shortly, gradients are commensurately more valuable when there are even more hyperparameters. “But what other hyperparameters?”, you ask. Hold that thought.

Optimized hyperparameters in hand, we can go about rebuilding quantities required for prediction. Begin with training quantities …

K <- exp(- D/outg$par[1]) + diag(outg$par[2], nrow(X2))

Ki <- solve(K)

tau2hat <- drop(t(y2) %*% Ki %*% y2 / nrow(X2))… then predictive/testing ones …

gn <- 40

xx <- seq(-2, 4, length=gn)

XX <- expand.grid(xx, xx)

DXX <- distance(XX)

KXX <- exp(-DXX/outg$par[1]) + diag(outg$par[2], ncol(DXX))

DX <- distance(XX, X2)

KX <- exp(-DX/outg$par[1])… and finally kriging equations.

The resulting predictive surfaces look pretty much the same as before, as shown in Figure 5.11.

par(mfrow=c(1,2))

image(xx, xx, matrix(mup, ncol=gn), main="mean", xlab="x1",

ylab="x2", col=cols)

points(X2)

image(xx, xx, matrix(sdp, ncol=gn), main="sd", xlab="x1",

ylab="x2", col=cols)

points(X2)

FIGURE 5.11: Predictive mean (left) and standard deviation (right) after estimating a lengthscale \(\hat{\theta}\).

This is perhaps not an exciting way to end the example, but it serves to illustrate the basic idea of estimating unknown quantities and plugging them into predictive equations. I’ve only illustrated 1d and 2d so far, but the principle is no different in higher dimensions.

5.2.5 Anisotropic modeling

It’s time to expand input dimension a bit, and get ambitious. Visualization will be challenging, but there are other metrics of success. Consider the Friedman function, a popular toy problem from the seminal multivariate adaptive regression splines [MARS; Friedman (1991)] paper. Splines are a popular alternative to GPs in low input dimension. The idea is to “stitch” together low-order polynomials. The “stitching boundary” becomes exponentially huge as dimension increases, which challenges computation. For more details, see the splines supplement linked here which is based on Hastie, Tibshirani, and Friedman (2009), Chapters 5, 7 and 8. MARS circumvents many of those computational challenges by simplifying basis elements (to piecewise linear) on main effects and limiting (to two-way) interactions. Over-fitting is mitigated by aggressively pruning useless basis elements with a generalized CV scheme.

fried <- function(n=50, m=6)

{

if(m < 5) stop("must have at least 5 cols")

X <- randomLHS(n, m)

Ytrue <- 10*sin(pi*X[,1]*X[,2]) + 20*(X[,3] - 0.5)^2 + 10*X[,4] + 5*X[,5]

Y <- Ytrue + rnorm(n, 0, 1)

return(data.frame(X, Y, Ytrue))

}The surface is nonlinear in five input coordinates,

\[\begin{equation} \mathbb{E}\{Y(x)\} = 10 \sin(\pi x_1 x_2) + 20(x_3 - 0.5)^2 + 10x_4 - 5x_5, \tag{5.12} \end{equation}\]

combining periodic, quadratic and linear effects. Notice that you can ask for more (useless) coordinates if you want: inputs \(x_6, x_7, \dots\) The fried function, as written above, generates both the \(X\)-values, via LHS (§4.1) in \([0,1]^m\), and \(Y\)-values. Let’s create training and testing sets in seven input dimensions, i.e., with two irrelevant inputs \(x_6\) and \(x_7\). Code below uses fried to generate an LHS training–testing partition (see, e.g., Figure 4.9) with \(n=200\) and \(n' = 1000\) observations, respectively. Such a partition could represent one instance in the “bakeoff” described by Algorithm 4.1. See §5.2.7 for iteration on that theme.

m <- 7

n <- 200

nprime <- 1000

data <- fried(n + nprime, m)

X <- as.matrix(data[1:n,1:m])

y <- drop(data$Y[1:n])

XX <- as.matrix(data[(n + 1):(n + nprime),1:m])

yy <- drop(data$Y[(n + 1):(n + nprime)])

yytrue <- drop(data$Ytrue[(n + 1):(n + nprime)])The code above extracts two types of \(Y\)-values for use in out-of-sample testing. De-noised yytrue values facilitate comparison with root mean-squared error (RMSE),

\[\begin{equation} \sqrt{\frac{1}{n'} \sum_{i=1}^{n'} (y_i - \mu(x_i))^2}. \tag{5.13} \end{equation}\]

Notice that RMSE is square-root Mahalanobis distance (5.7) calculated with an identity covariance matrix. Noisy out-of-sample evaluations yy can be used for comparison by proper score (5.6), combining both mean accuracy and estimates of covariance.

First learning. Inputs X and outputs y are re-defined, overwriting those from earlier examples. After re-calculating pairwise distances D, we may cut-and-paste gradient-based optim on objective nl and gradient gnl.

D <- distance(X)

out <- optim(c(0.1, 0.1*var(y)), nl, gradnl, method="L-BFGS-B", lower=eps,

upper=c(10, var(y)), D=D, Y=y)

out## $par

## [1] 2.533216 0.005201

##

## $value

## [1] 683.5

##

## $counts

## function gradient

## 33 33

##

## $convergence

## [1] 0

##

## $message

## [1] "CONVERGENCE: REL_REDUCTION_OF_F <= FACTR*EPSMCH"Output indicates convergence has been achieved. Based on estimated \(\hat{\theta} = 2.533\) and \(\hat{g} = 0.0052\), we may rebuild the data covariance quantities …

K <- exp(- D/out$par[1]) + diag(out$par[2], nrow(D))

Ki <- solve(K)

tau2hat <- drop(t(y) %*% Ki %*% y / nrow(D))… as well as those involved in predicting at XX testing locations.

DXX <- distance(XX)

KXX <- exp(-DXX/out$par[1]) + diag(out$par[2], ncol(DXX))

DX <- distance(XX, X)

KX <- exp(-DX/out$par[1])Kriging equations are then derived as follows.

Notice how not a single line in the code above, pasted directly from identical lines used in earlier examples, requires tweaking to accommodate the novel 7d setting. Our previous examples were in 1d and 2d, but the code works verbatim in 7d. However the number of evaluations required to maximize is greater now than in previous examples. Here we have 33 compared to 10 previously in 2d.

How accurate are predictions? RMSE on the testing set is calculated below, but we don’t yet have a benchmark to compare this to.

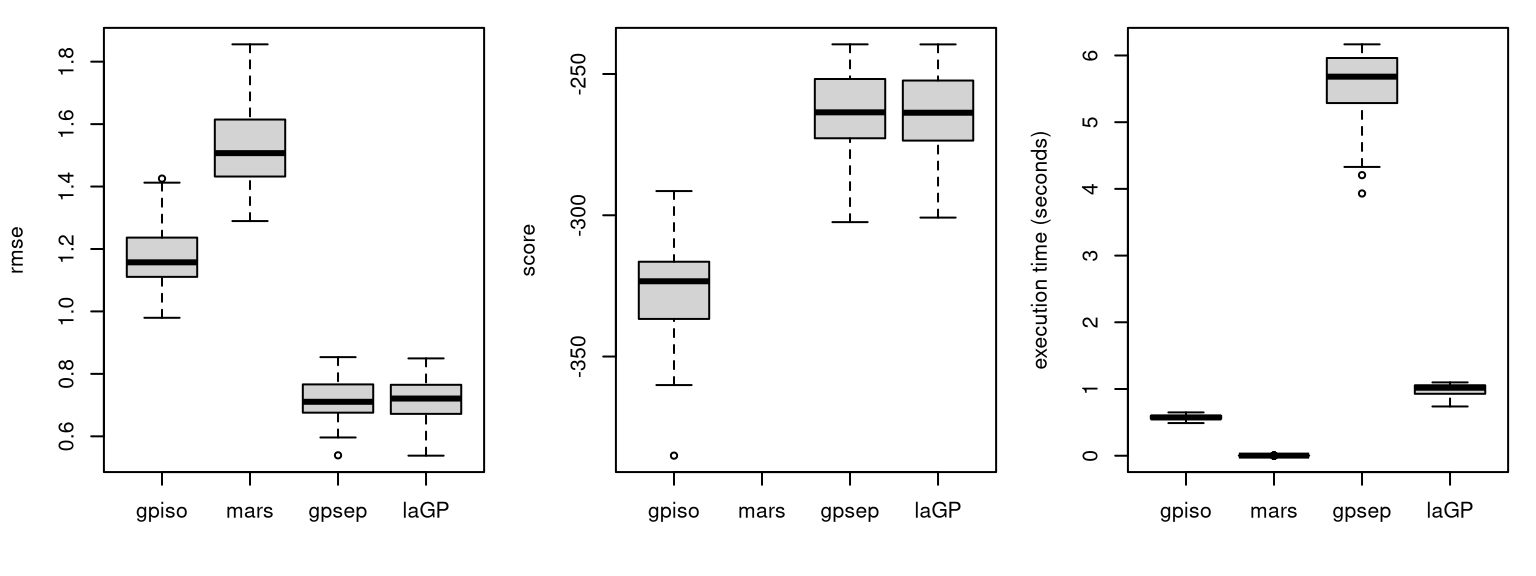

## gpiso

## 1.107How about comparing to MARS? That seems natural considering these data were created as a showcase for that very method. MARS implementations can be found in the mda (Leisch, Hornik, and Ripley 2017) and earth (Milborrow 2019) packages on CRAN.

Which wins between the isotropic GP and MARS based on RMSE to the truth?

## gpiso mars

## 1.107 1.518Usually the GP wins in this comparison. In about one time out of twenty random Rmarkdown rebuilds MARS wins. Unfortunately MARS doesn’t natively provide a notion of predictive variance. That is, not without an extra bootstrap layer or a Bayesian treatment; e.g., see BASS (Francom 2017) on CRAN. So a comparison to MARS by proper score isn’t readily available. Some may argue that this comparison isn’t fair. MARS software has lots of tuning parameters that we aren’t exploring. Results from mars improve with argument degree=2 and, for reasons that aren’t immediately clear to me at this time, they’re even better with earth after the same degree=2 modification. I’ve deliberately put up a relatively “vanilla” straw man in this comparison. This is in part because our GP setup is itself relatively vanilla. An exercise in §5.5 invites the reader to explore a wider range of alternatives on both fronts.

How can we add more flavor? If that was vanilla GP regression, what does rocky road look like? To help motivate, recall that the Friedman function involved a diverse combination of effects on the input variables: trigonometric, quadratic and linear. Although we wouldn’t generally know that much detail in a new application – and GPs excel in settings where little is known about input–output relationships, except perhaps that it might be worth trying methods beyond the familiar linear model – it’s worth wondering if our modeling apparatus is not at odds with typically encountered dynamics. More to the point, GP modeling flexibility comes from the MVN covariance structure which is based on scaled (by \(\theta\)) inverse exponentiated squared Euclidean distance. That structure implies uniform decay in correlation in each input direction. Is such radial symmetry reasonable? Probably not in general, and definitely not in the case of the Friedman function.

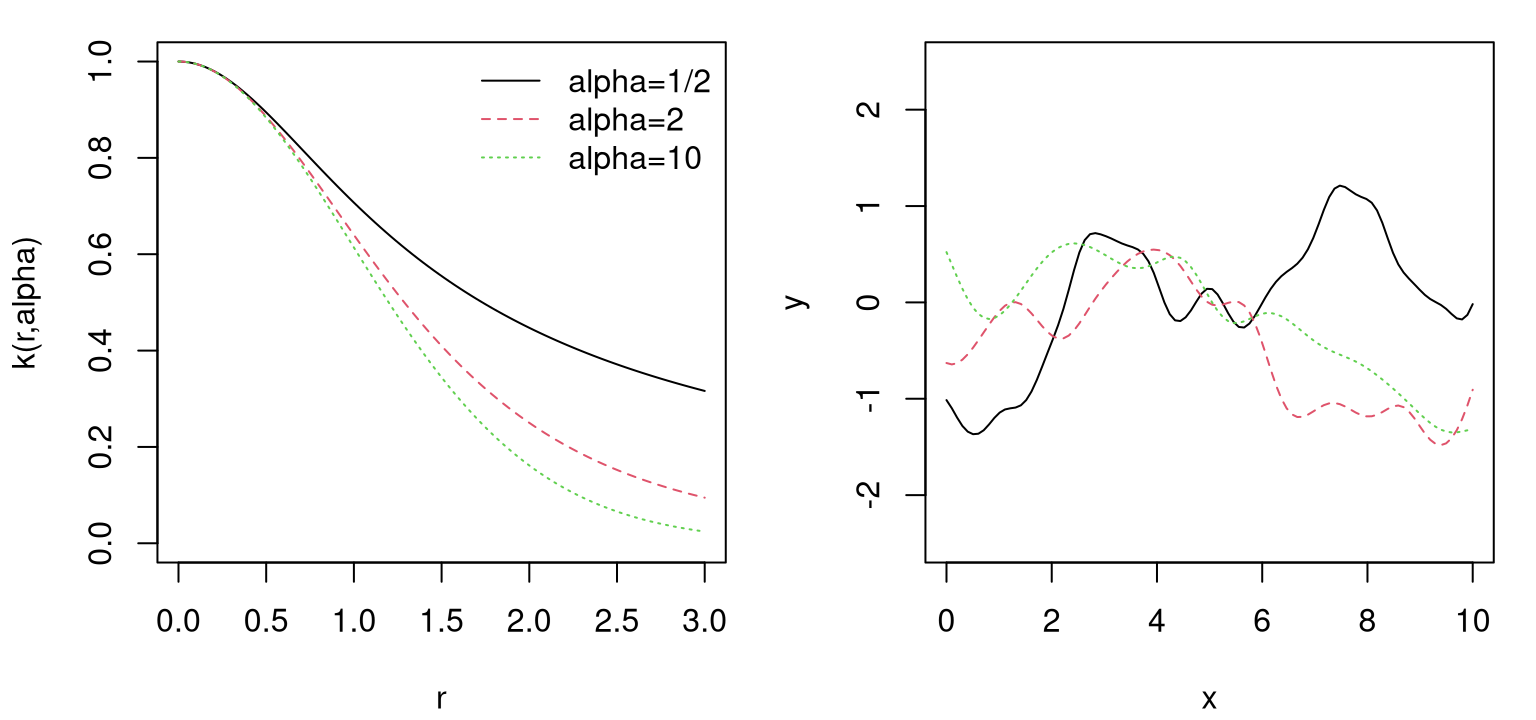

How about the following generalization?

\[ C_\theta(x, x') = \exp\left\{ - \sum_{k=1}^m \frac{(x_k - x'_k)^2}{\theta_k} \right\} \]

Here we’re using a vectorized lengthscale parameter \(\theta = (\theta_1,\dots,\theta_m)\), allowing strength of correlation to be modulated separately by distance in each input coordinate. This family of correlation functions is called the separable or anisotropic Gaussian. Separable because the sum is a product when taken outside the exponent, implying independence in each coordinate direction. Anisotopic because, except in the special case where all \(\theta_k\) are equal, decay of correlation is not radial.

How does one perform inference for such a vectorized parameter? Simple; just expand log likelihood and derivative functions to work with vectorized \(\theta\). Thinking about implementation: a for loop in the gradient function can iterate over coordinates, wherein each iteration we plug

\[\begin{equation} \frac{\partial K_n^{ij}}{\partial \theta_k} = K_n^{ij} \frac{(x_{ik} - x_{jk})^2}{\theta_k^2} \tag{5.14} \end{equation}\]

into our formula for \(\ell'(\theta_k)\) in Eq. (5.11), which is otherwise unchanged.

Each coordinate has a different \(\theta_k\), so pre-computing a distance matrix isn’t helpful. Instead we’ll use the covar.sep function from the plgp package which takes vectorized d \(\equiv \theta\) and scalar g arguments, combing distance and inverse-scaling into one step. Rather than going derivative crazy immediately, let’s focus on the likelihood first, which we’ll need anyways before going “whole hog”. The function below is nearly identical to nl from §5.2.4 except the first ncol(X) components of argument par are sectioned off for theta, and covar.sep is used directly on X inputs rather than operating on pre-calculated D.

nlsep <- function(par, X, Y)

{

theta <- par[1:ncol(X)]

g <- par[ncol(X)+1]

n <- length(Y)

K <- covar.sep(X, d=theta, g=g)

Ki <- solve(K)

ldetK <- determinant(K, logarithm=TRUE)$modulus

ll <- - (n/2)*log(t(Y) %*% Ki %*% Y) - (1/2)*ldetK

counter <<- counter + 1

return(-ll)

}As a testament to how easy it is to optimize that likelihood, at least in terms of coding, below we port our optim on nl above to nlsep below with the only change being to repeat upper and lower arguments, and supply X instead of D. (Extra commands for timing will be discussed momentarily.)

tic <- proc.time()[3]

counter <- 0

out <- optim(c(rep(0.1, ncol(X)), 0.1*var(y)), nlsep, method="L-BFGS-B",

X=X, Y=y, lower=eps, upper=c(rep(10, ncol(X)), var(y)))

toc <- proc.time()[3]

out$par## [1] 1.120385 1.075619 1.775699 9.047709 10.000000 10.000000

## [7] 9.256984 0.008255What can be seen on output? Notice how \(\hat{\theta}_k\)-values track what we know about the Friedman function. The first three inputs have relatively shorter lengthscales compared to inputs four and five. Recall that shorter lengthscale means “more wiggly”, which is appropriate for those nonlinear terms; longer lengthscale corresponds to linearly contributing inputs. Finally, the last two (save \(g\) in the final position of out$par) also have long lengthscales, which is similarly reasonable for inputs which aren’t contributing.

But how about the number of evaluations?

## function gradient actual

## 66 66 1122Woah, lots! Although only 66 optimization steps were required, in 8d (including nugget g in par) that amounts to evaluating the objective function more than one-thousand-odd times, plus-or-minus depending on the random Rmarkdown build. When \(n = 200\), and with cubic matrix decompositions, that can be quite a slog time-wise: about 9 seconds.

## elapsed

## 8.716To attempt to improve on that slow state of affairs, code below implements a gradient (5.14) for vectorized \(\theta\).

gradnlsep <- function(par, X, Y)

{

theta <- par[1:ncol(X)]

g <- par[ncol(X)+1]

n <- length(Y)

K <- covar.sep(X, d=theta, g=g)

Ki <- solve(K)

KiY <- Ki %*% Y

## loop over theta components

dlltheta <- rep(NA, length(theta))

for(k in 1:length(dlltheta)) {

dotK <- K * distance(X[,k])/(theta[k]^2)

dlltheta[k] <- (n/2) * t(KiY) %*% dotK %*% KiY / (t(Y) %*% KiY) -

(1/2)*sum(diag(Ki %*% dotK))

}

## for g

dllg <- (n/2) * t(KiY) %*% KiY / (t(Y) %*% KiY) - (1/2)*sum(diag(Ki))