Chapter 11 Differences Between Two Things (the \(t\)-test chapter)

11.1 Classical Parametric Tests of the Differences Between Two Things: \(t\)-tests

In the simplest terms I can think of, the \(t\)-test helps us analyze the difference between two things that are measured with numbers. There are three main types of \(t\)-test –

The One-sample \(t\)-test: differences between a sample mean and a single numeric value

The Repeated-measures \(t\)-test: differences between two measurements of the same (or similar) entities

The Independent-groups \(t\)-test: differences between two sample means

– but they are all based on the same idea:

What is the difference between two things in terms of sampling error?

The first part of that question – what is the difference – is relatively straightforward. When we are comparing two things, the most natural question to ask is how different they are. The numeric difference between two things is half of the \(t\)-test.

The other half – in terms of sampling error – is a little trickier. Please think briefly about a point made on the page on categorizing and summarizing information: if could measure the entire population, we would hardly need statistical testing at all. Because that is – for all intents and purposes – impossible, we instead base analyses on subsets of the population – samples – and contextualize differences based on a combination of the variation in the measurements and the size of the samples.

That combination of variation in measurements and sample size is captured in the sampling error or, equivalently, the standard error (the terms are interchangeable). The standard error is the standard deviation of sample means – it’s how we expect the means of our samples drawn from the same population-level distribution to differ from each other – more on that to come below. The \(t\)-statistic – regardless of which of the three types of \(t\)-test is being applied to the data – is the ratio of the difference between two things and the expected differences between them:

\[t=\frac{a~difference}{sampling~error}\]

The distinctions between the three main types of \(t\)-test come down to how we calculate the difference and _how we calculate the sampling error.

The difference in the numerator of the \(t\) formula is always a matter of subtraction. To understand the sampling error, we must go to the central limit theorem, first introduced in the page on probability distributions.

11.1.1 The Central Limit Theorem and the \(t\)-distribution

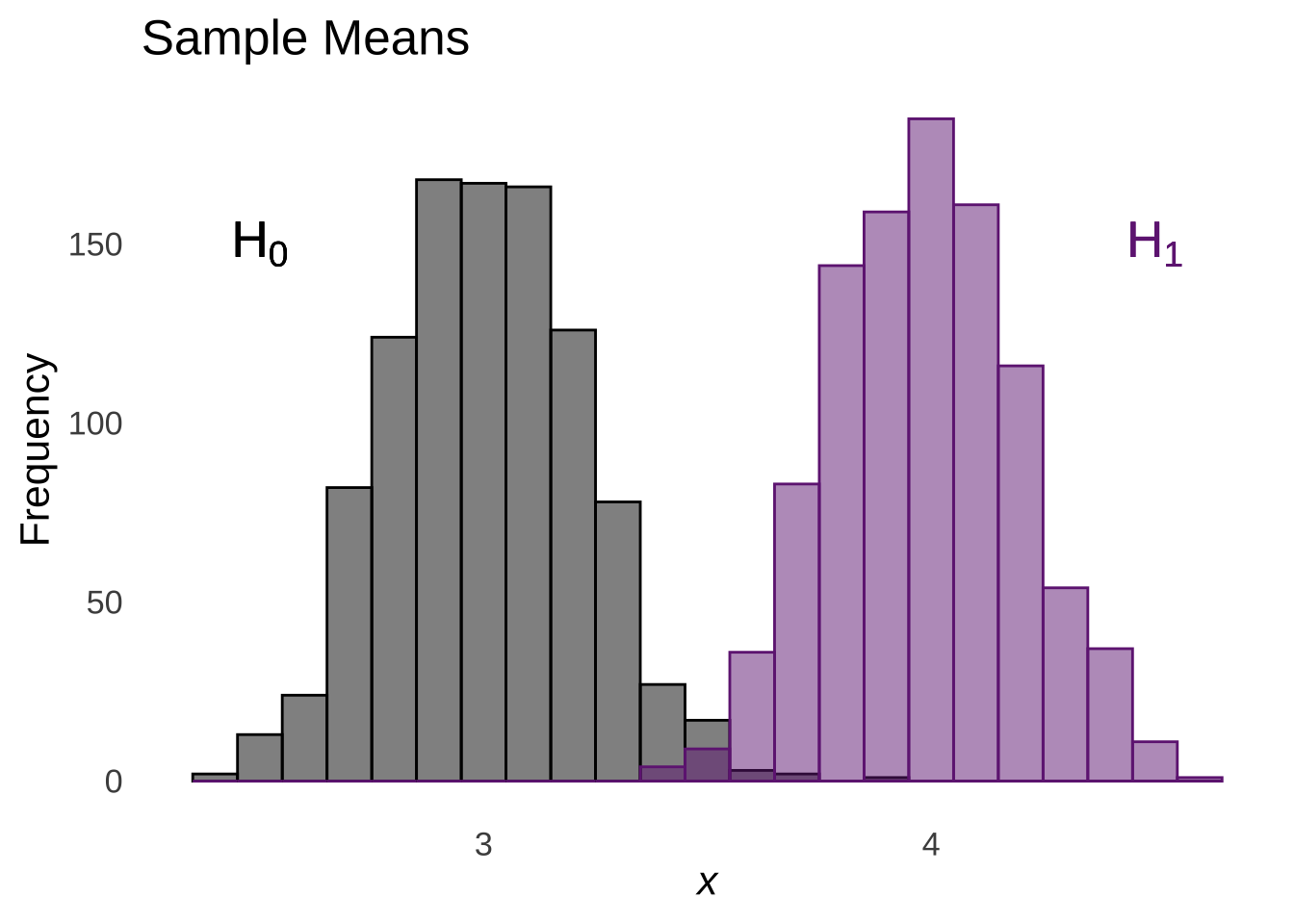

The central limit theorem (CLT) describes the distribution of means taken from a distribution (any distribution, although we will be focusing on normal distributions with regard to \(t\)-tests). It tells us that sample means (\(\bar{x}\)) are distributed as (\(\sim\)) a normal distribution \(N\) with a mean equal to the mean of the distribution from which they were sampled (\(\mu\)) and a variance equal to the variance of the population distribution (\(\sigma^2\)) divided by the number of observations in each sample \(n\):

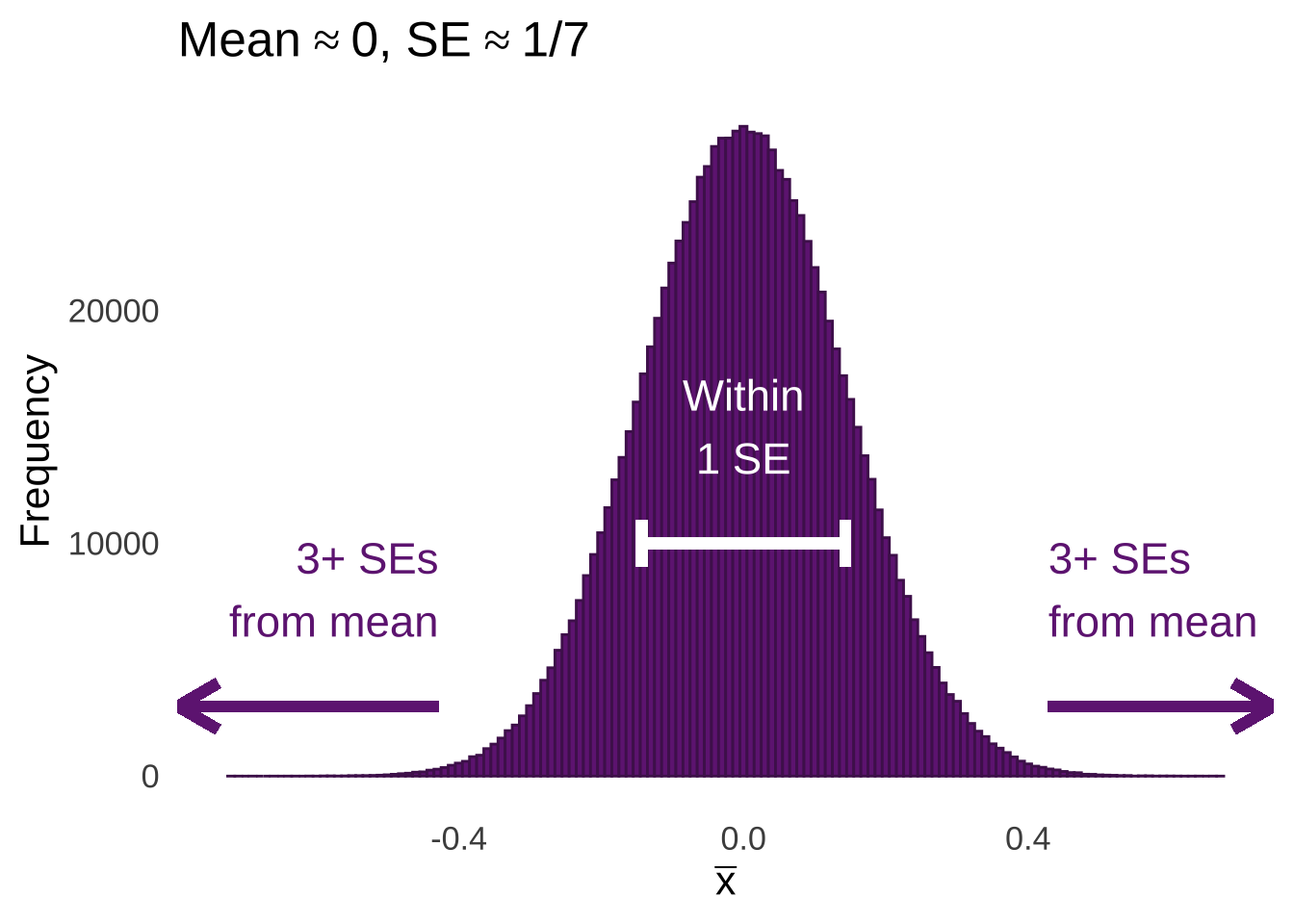

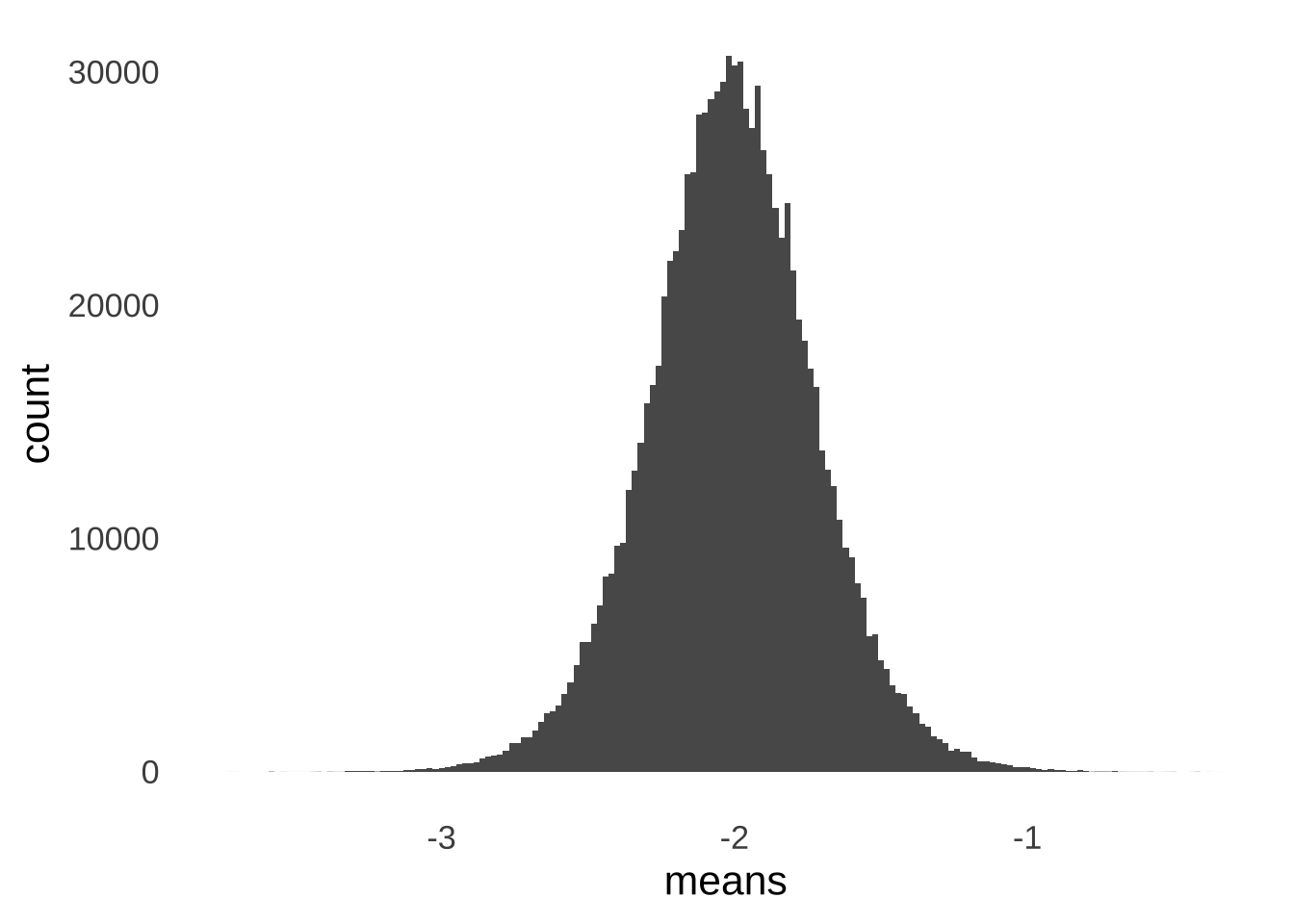

\[\bar{x}\sim N \left( \mu, \frac{\sigma^2}{n} \right)\] Figure 11.1 illustrates an example of the CLT in action: it’s a histogram of the means of one million samples of \(n=49\) each taken from a standard normal distribution. The mean of the sample means is approximately 0 – matching the mean of the standard normal distribution. The standard deviation of the sample means is approximately \(1/7\) – the ratio of the standard deviation of the standard normal distribution (1) and the square root of the size of each sample (\(\sqrt{49}=7\)).

Figure 11.1: Histogram of the Means of 1,000,000 Samples of \(n=49\) from a Standard Normal Distribution

The standard deviation of the sample means is the standard error (SE), which can be confusing since the it means that the standard error is a standard deviation with a standard deviation in the numerator of the formula to calculate it: \(SE = SD/\sqrt{n}\). As difficult as it might be to get a handle on that tricky concept, it’s worth the work, as it helps us understand how the \(t\)-distribution and \(t\)-tests work.

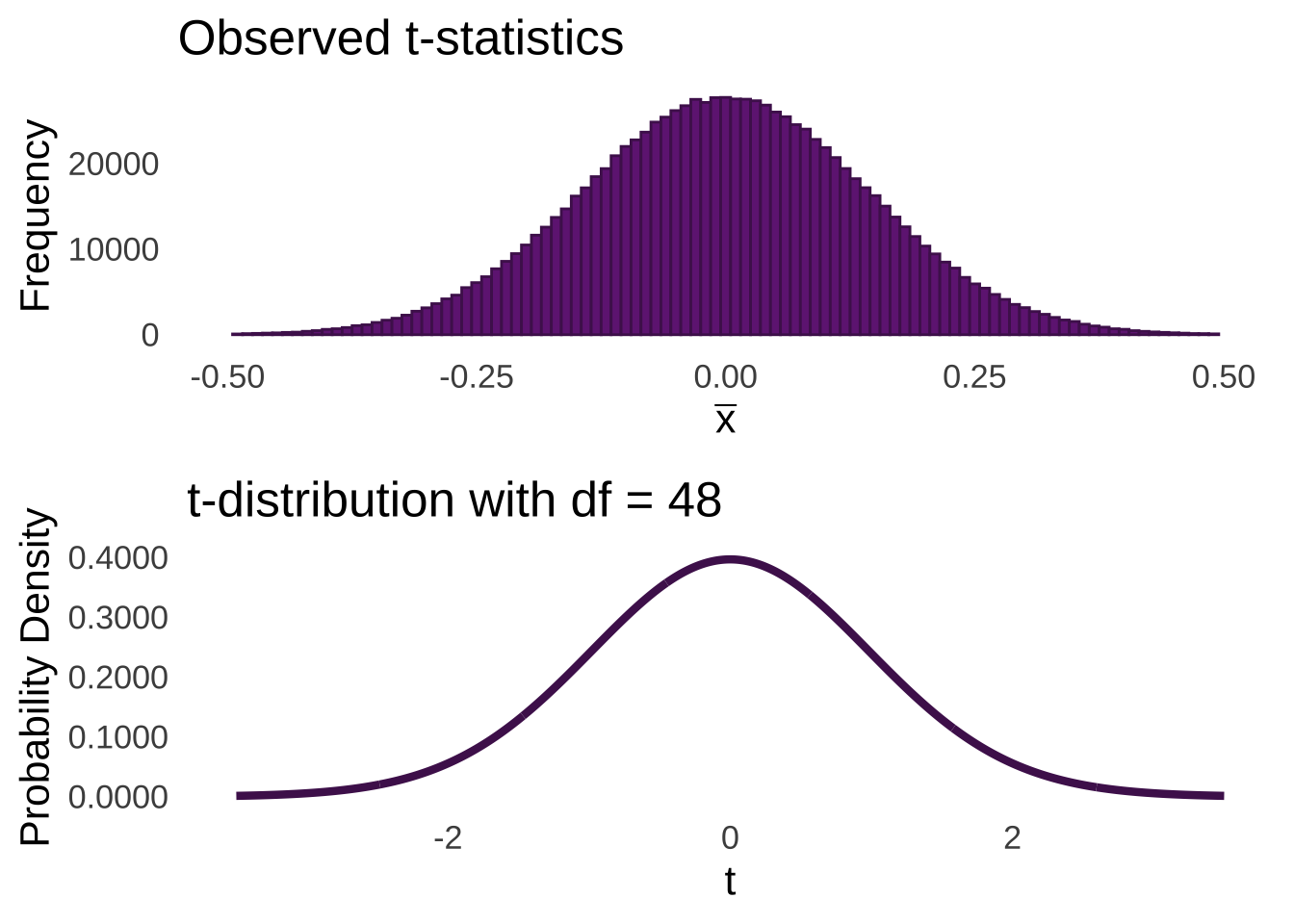

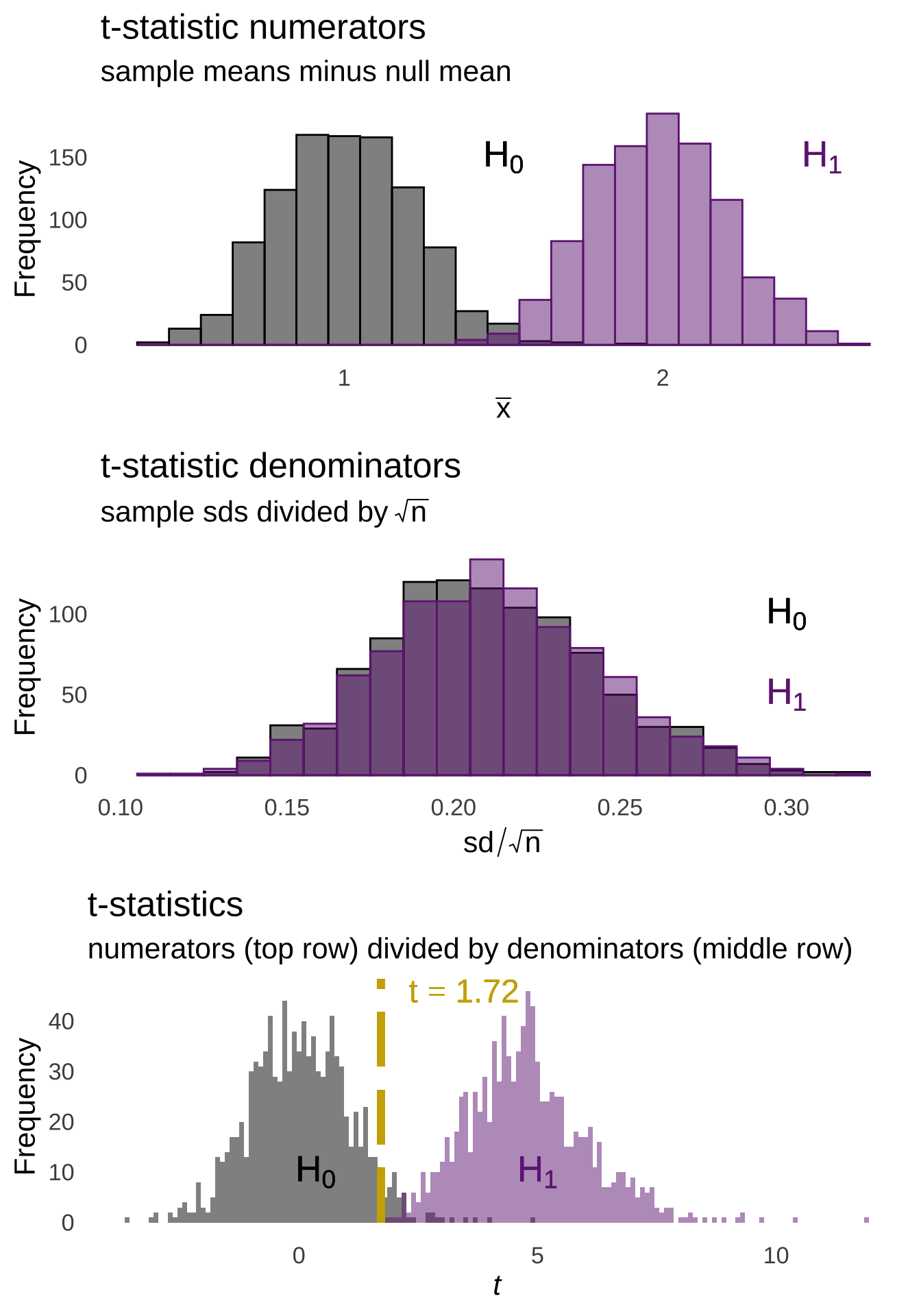

As annotated in Figure 11.1, the majority of sample means are going to fall within 1 standard error of the mean of the sample means. Relatively few sample means are going to fall 3 or more standard errors from the mean. We can model the expectations of the distribution of sampling means using the \(t\)-distribution. For example, if we take all of the million sample means shown in Figure 11.1, and we divide each of them by their respective standard errors – that is, we take the standard deviation of the observations in the sample and divide by the square root of the number of observations \((sd/\sqrt{n})\), we will get the histogram of observed \(t\)-statistics on the top of Figure 11.2.

Figure 11.2: Histogram of \(t\)-statistics (top) and \(t\)-distribution with \(df = 48\) (bottom)

Just as we can find areas under the curve of a normal distribution using \(z\)-scores given the mean and standard deviation of that normal distribution, we can use the number of standard errors from the mean of a \(t\)-distribution – which is zero – to determine areas under \(t\) curves. And, like how \(z\)-scores represent the number of standard deviations a number is from the mean of a normal, the \(t\)-statistic is the number of \(t\)-distribution standard deviations – those are standard errors – an observed \(t\) is from the mean.

To help describe what happens when sample means are drawn, please consider the two following examples that describe the process of using the smallest possible sample size – 1 – and the process of using the largest possible sample size – the size of the entire population, represented by approximately infinity – respectively.

If \(n=1\), then the distribution of the sample means – which, since \(n=1\), wouldn’t really be means so much as they would be the value of the samples themselves – would have a mean \(\mu_{\bar{x}}=\mu\) and a standard deviation of \(\frac{s^2_{\bar{x}}}{1}=\sigma^2\). Thus, each observation would be a sample from a normal distribution. As covered in the page on probability distributions, the probability of any one of those observations falling into a range is determined by the area under the normal curve. We know that the probability that a value sampled from a normal distribution is greater than the mean of the distribution is 50%. We know that the probability that a value sampled from a normal distribution is within one standard deviation of the mean is approximately 68%. And – this is important and we will come back to it several times – we know that the probability of sampling a value that is more than 1.645 standard deviations greater than the mean is approximately 5%:

## [1] 0.04998491the probability of sampling a value that is more than 1.645 standard deviations less than the mean is also approximately 5%:

## [1] 0.04998491and that the probability that sampling a value that is either 1.96 standard deviations greater than or less than the mean is also approximately 5%:

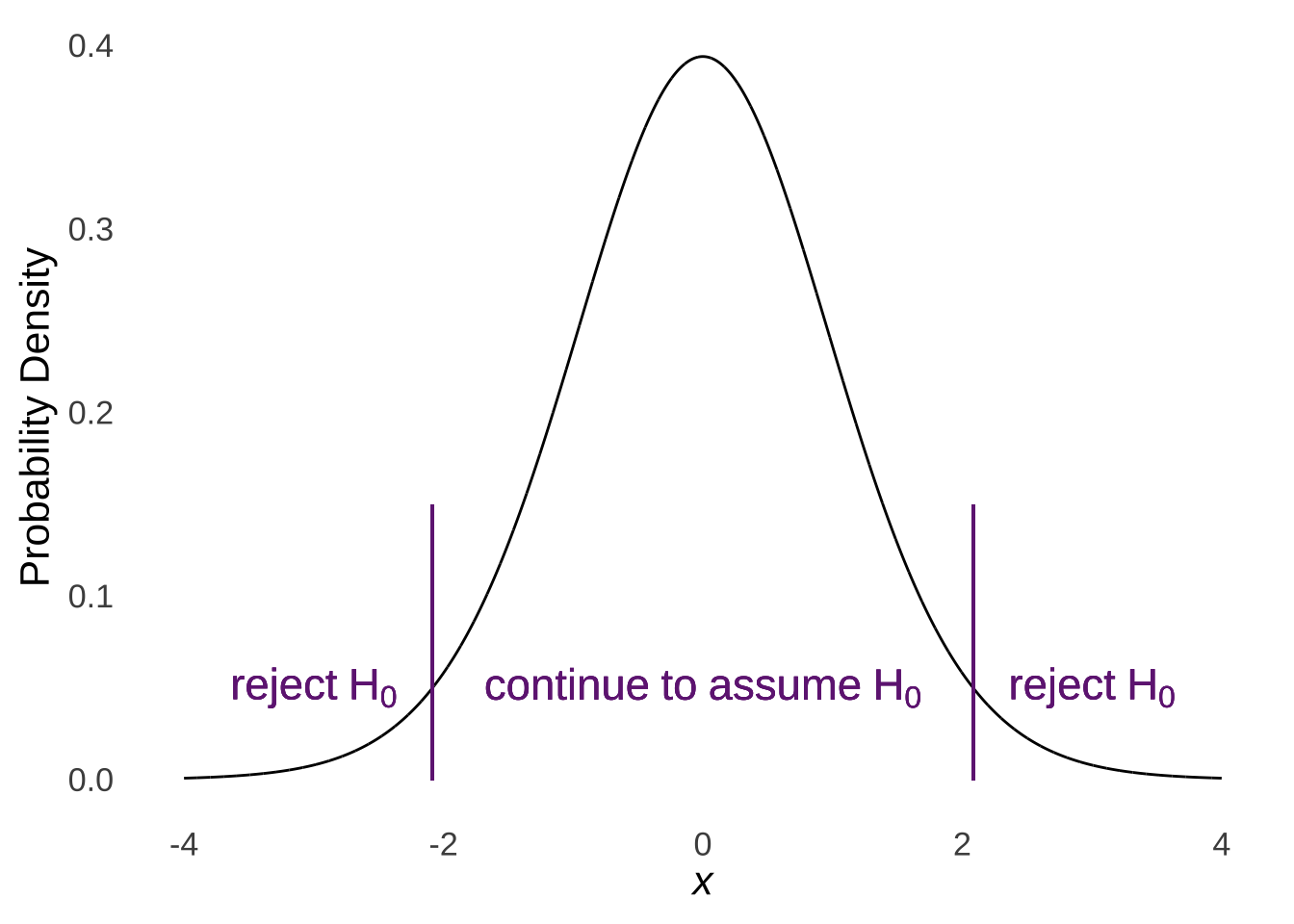

## [1] 0.04999579In classical statistical tests, the null hypothesis implies that the sample being studied is an ordinary sample from a given distribution defined by certain parameters. The alternative hypothesis is that the sample being studied was taken from different distribution, such as a distribution of the same type but defined by different parameters (e.g., if the null hypothesis implies sampling from a standard normal distribution, the alternative hypothesis may be that the sample comes from another normal distribution with a different mean). We reject the null hypothesis if the cumulative likelihood of observing the observed data given that the null hypothesis is true is extraordinarily small. So, if we knew the mean and variance of a population and happened to observe a single value (or the mean of a single value, which is the value itself) that was several standard deviations away from the mean of the population that we assume under the null that we are sampling from, then we may conclude that we should reject the null hypothesis that that observation came from the null-hypothesis distribution in favor of the alternative hypothesis that the observation came from some other distribution.

Figure 11.3: Buddy (Will Ferrell) learning to reject the null hypothesis that he is sampled from a population of elves is a major plot point in the 2003 holiday classic Elf

If \(n=\infty\), then the distribution of the sample means would have a mean equal to \(\mu\) and a variance of \(\sigma^2/\infty=0\)186. That means that if you could sample the entire population and take the mean, the mean you take would always be exactly the population mean. Thus, there would be no possible difference between the mean of the sample you take and the population mean – because the mean you calculated would necessarily be the population mean – and thus there would be no sampling error. In statistical terms, the end result would be a sampling error or standard error of 0.

With a standard error of 0, each mean we calculate would be expected to be the population mean. If we sampled an entire population and we did not calculate the expected population mean, then one of two things are true: either the expectation about the mean is wrong, or, more interestingly, we have sampled a different population than the one we expected.

11.1.2 One-sample \(t\)-test

In theory, the one-sample \(t\)-test assesses the difference between a sample mean and a hypothesized population mean. The idea is that a sample is taken, and the mean is measured. The variance of the sample data is used as an estimate of the population variance, so that the sample mean is considered to be – in the null hypothesis – one of the many possible means sampled from a normal distribution with the hypothesized population mean \(\mu\) and a variance estimated from the sample variance (\(\sigma^2 \approx s^2_{obs}\)). The central limit theorem tells us that the distribution of the sample means – of which the mean of our observed data is assumed to be one in the null hypothesis – has a mean equal to the population mean (\(\bar{x}=\mu\)) and a variance equal to the population variance divided by the size of the sample (\(\sigma^2_{\bar{x}}=\sigma^2/n\)) and therefore a standard deviation equal to the population standard deviation divided by the square root of the size of the sample (\(\sigma_{\bar{x}}=\sqrt{\sigma^2_{\bar{x}}}=\sigma/\sqrt{n}\), which is the standard error).

The \(t\)-statistic (or just \(t\), if you’re into the whole brevity thing) measures how unusual the observed sample mean is from the hypothesized sample mean. It does so by measuring how far away the observed sample mean is from the hypothesized population mean in terms of standard errors (see the illustration in Figure 11.1).

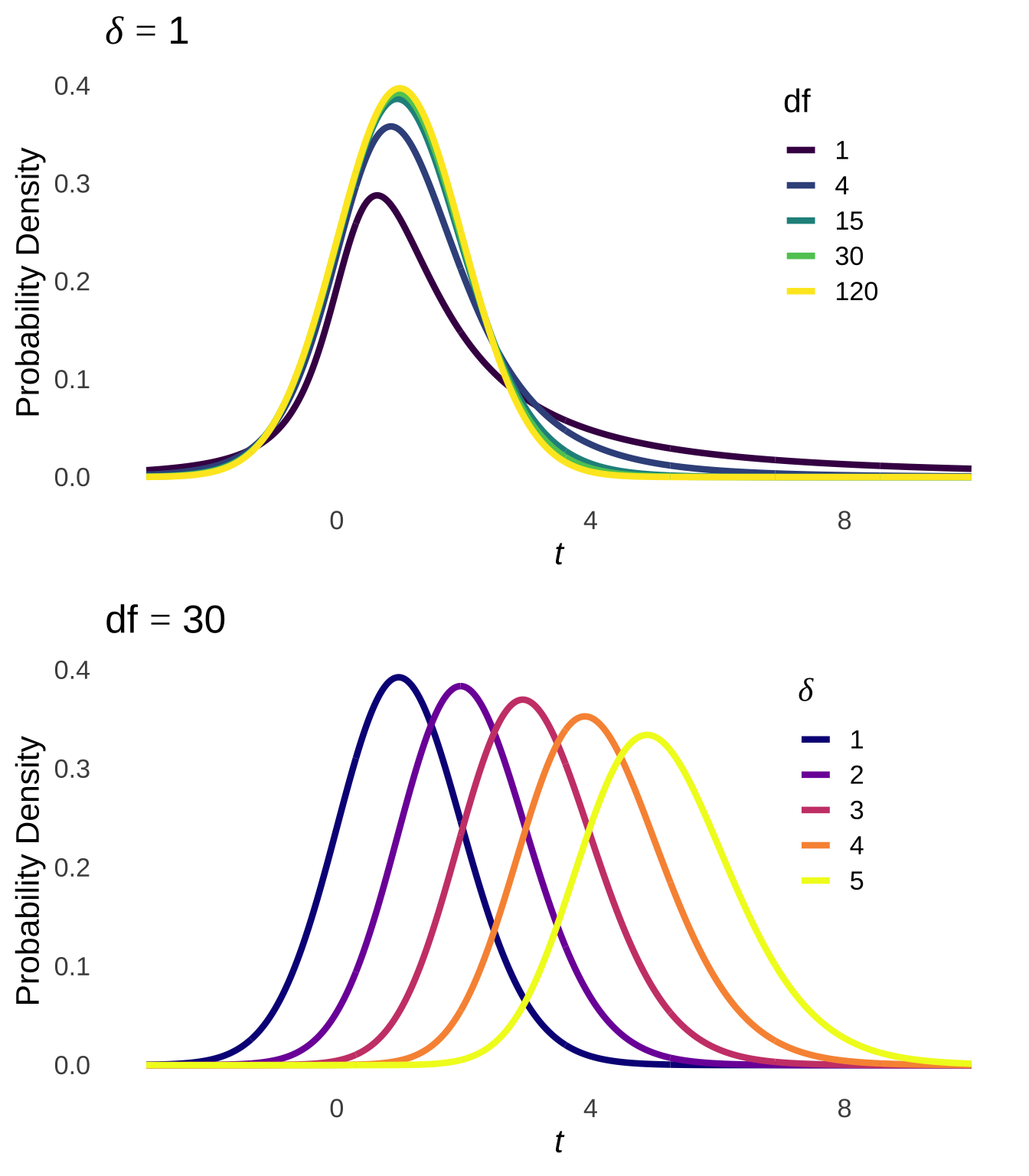

The \(t\)-distribution models the distances of sample means from the hypothesized population mean in terms of standard errors as a function of the degrees of freedom (\(df\)) used in the calculation of those sample means (i.e., \(n-1\)). Smaller (in terms of \(n\)) samples from normal distributions are naturally more likely to be far away from the mean – there is greater sampling error for smaller samples – and the \(t\)-distribution reflects that by being more kurtotic when \(df\) is small. That is, relative to \(t\)-distributions with large \(df\), the tails of \(t\)-distributions for small \(df\) are thicker and thus there are greater areas under the curve associated with extreme values of \(t\) (see Figure 11.4, which is reproduced from probability distributions ).

Figure 11.4: The \(t\) Distribution for Selected Values of \(df\)

Of course, it is possible to observe any mean value for a finite sample 187 taken from a normal distribution. But, at some point, an observed sample mean can be so far from the mean and therefore the probability of observing a sample mean at least that far away is so unlikely that we reject the null hypothesis that the observed sample mean came from the population described in the null hypothesis. We reject the null hypothesis in favor of the alternative hypothesis that the sample came from some other population. We use the \(t\)-statistic to help us make that decision.

The observed \(t\) that will help us make our decision is given by the formula:

\[t_{obs}=\frac{\bar{x}-\mu}{se}\]

where \(\bar{x}\) is the observed sample mean, \(\mu\) is the population mean posited in the null hypothesis, and \(se\) is the standard error of the sample mean \(s_{obs}/\sqrt{n}\), which approximates the standard deviation of all sample means of size \(n\) taken from the hypothesized normal distribution \(\sigma/\sqrt{n}\) (because we don’t really know what \(\sigma\) is in the population).

As noted above, \(\mu\) is the mean of a hypothetical population, but in practice it can be any number of interest. For example, if we were interested in whether the mean of a sample were significantly not equal to zero (\(> 0,~< 0\), or \(\ne 0\)), we could put some variation of \(\mu=0\) (\(\le 0,~\ge 0\), or \(=0\)) in the null hypothesis to simulate what would happen if the population mean were 0? even if we aren’t really thinking about 0 in terms of a population mean.

11.1.2.0.1 One-sample \(t\) Example

For our examples, let’s pretend that we are makers of large kitchen appliances. Let’s start by making freezers. First, we will have to learn how freezers work and how to build them.

Looks easy enough! Now we need to test our freezers. Let’s say we have built 10 freezers and we need to know that our sample of freezers produces temperatures that are significantly less than \(0^{\circ} C\) 188. Here, in degrees Celsius, are our observed data:

| Freezer | Temperature (\(^{\circ}C\)) |

|---|---|

| 1 | -2.14 |

| 2 | -0.80 |

| 3 | -2.75 |

| 4 | -2.58 |

| 5 | -2.26 |

| 6 | -2.46 |

| 7 | -1.33 |

| 8 | -2.85 |

| 9 | -0.93 |

| 10 | -2.01 |

Now we will use the six-step hypothesis testing procedure to test the scientific hypothesis that the mean of the internal temperatures of the freezers we built is significantly less than 0.

Oh, sorry, Nick Miller. First we must do the responsible thing and check the assumptions of the \(t\)-test. Since we have only one set of data (meaning we don’t have to worry about homoscedasticity), the only check we have to do is about normality:

##

## Shapiro-Wilk normality test

##

## data: one.sample.data

## W = 0.89341, p-value = 0.1852The Shapiro-Wilk test says we can continue to assume normality.

11.1.2.0.1.1 Six-Step Hypothesis Testing

- Define null and alternative hypotheses.

For this freezer-temperature-measuring experiment, we are going to start by assuming that the mean of our observed temperatures is sampled from a normal distribution with a mean of 0. We don’t hypothesize a variance in this step: for now, the population variance is unknown (it will be estimated by the sample variance in the process of doing the \(t\)-test calculations in step 5 of the procedure).

Now, it will not do us any good – as freezer-makers – if the mean internal temperatures of our freezers is greater than \(0^{\circ} C\). In this case, a point (or two-tailed) hypothesis will not do, because that would compel us to reject the null hypothesis if the mean were significantly less than or greater than \(0^{\circ} C\). Instead we will use a directional (or one-tailed) hypothesis, where our null hypothesis is that we have sampled our mean freezer temperatures from a normal distribution of freezer temperatures with a population mean that is greater than or equal to 0 and our alternative hypothesis is that we have sampled our mean freezer temperatures from a normal distribution of freezer temperatures with a population mean that is less than 0.:

\[H_0:\mu \ge 0\] \[H_1: \mu <0\] 2. Define the type-I error (false-alarm) rate \(\alpha\)

Let’s say \(\alpha=0.05\). Whatever.

- Identify the statistical test to be used.

Since this is the one-sample \(t\)-test section of the page, let’s go with “one-sample \(t\)-test.”

- Identify a rule for deciding between the null and alternative hypotheses

If the observed \(t\) indicates that the cumulative likelihood of the observed \(t\) or more extreme unobserved \(t\) values is less than the type-I error rate (that is, if \(p\le \alpha\)), we will reject \(H_0\) in favor of \(H_1\).

We can determine whether \(p \le \alpha\) in two different ways. First, we can directly compare the area under the \(t\) curve for \(t \le t_{obs}\) – which is \(p\) because the null hypothesis is one-tailed and we will reject \(H_0\) if \(t\) is significantly less than the hypothesized population parameter \(\mu\) – to \(\alpha\). We can accomplish that fairly easily using software and that is what we will do (sorry for the spoiler).

The second way we can determine whether \(p \le \alpha\) is by using critical values of \(t\). A critical value table such as this one lists the values of \(t\) for which \(p\) is exactly \(\alpha\) given the \(df\) and whether the test is one-tailed or two-tailed. Any value of \(t\) with an absolute value greater than the \(t\) listed in the table for the given \(\alpha\), \(df\), and type of test necessarily has an associated \(p\)-value that is less than \(\alpha\). The critical-value method is helpful if you (a) are in a stats class that doesn’t let you use R on quizzes and tests, (b) are stranded on a desert island with nothing but old stats books and for some reason need to conduct \(t\)-tests, and/or (c) live in 1955.

- Obtain data and make calculations

The mean of the observed sample data is -2.01, and the standard deviation is 0.74. We incorporate the assumption that the observed standard deviation is our best guess for the population standard deviation in the equation to find the standard error:

\[se=\frac{sd_{obs}}{\sqrt{n}}\] although, honestly, if you don’t care much for the theoretical underpinning of the \(t\) formula, it suffices to say the se is the sd divided by the square root of n.

Our null hypothesis indicated that the \(\mu\) of the population was 0, so that’s what goes in the numerator of the \(t_{obs}\) formula. Please note that it makes no difference at this point whether \(H_0\) is \(\mu \le 0\), \(\mu =0\), or \(\mu \ge 0\): the number associated with \(\mu\) goes in the \(t_{obs}\) formula; the equals sign or inequality sign comes into play in the interpretation of \(t_{obs}\).

Thus, the observed \(t\) is:

\[t=\frac{\bar{x}-\mu}{se}=\frac{-2.01-0}{0.23}=-8.59 \]

- Make a decision

Now that we have an observed \(t\), we must evaluate the cumulative likelihood of observing at least that \(t\) in the direction(s) indicated by the type of test (one-tailed or two-tailed). Because the alternative hypothesis was that the population from which the sample was drawn had a mean less than 0, the relevant \(p\)-value is the cumulative likelihood of observing \(t_{obs}\) or a lesser (more negative) \(t\).

Because \(n=10\) (there were 10 freezers for which we measured the internal temperature), \(df=9\). We thus are looking for the lower-tail cumulative probability of \(t \le t_{obs}\) given that \(df=9\):

## [1] 6.241159e-06That is a tiny \(p\)-value! It is much smaller than the \(\alpha\) rate that we stipulated (\(\alpha=0.05\)). We reject \(H_0.\)

OR: the critical \(t\) for \(\alpha=0.05\) and \(df=9\) for a one-tailed test is 1.833. The absolute value of \(t_{obs}\) is \(|-8.59|=8.59\), which is greater than the critical \(t\). We reject \(H_0\).

OR: we could have skipped all of this and just used R from the jump:

##

## One Sample t-test

##

## data: one.sample.data

## t = -8.5851, df = 9, p-value = 6.27e-06

## alternative hypothesis: true mean is less than 0

## 95 percent confidence interval:

## -Inf -1.580833

## sample estimates:

## mean of x

## -2.010017but then we wouldn’t have learned as much.

11.1.3 Repeated-Measures \(t\)-test

The repeated-measures \(t\)-test is used when we have two measurements of the same things and we want to see if the mean of the differences for each thing is statistically significant. Mathematically, the repeated-measures \(t\)-test is the exact same thing as the one-sample \(t\)-test! The only special thing about it is that we get the sample of data by subtracting, for each observation, one measure from the other measure to get difference scores.

The repeated-measures \(t\)-test is just a one-sample \(t\)-test of difference scores.

Repeated measures compare individuals against themselves (or against matched individuals).

This reduces the effect of individual differences, for example:

In a memory experiment with different conditions, a repeated-measures design accounts for the fact that people naturally have different mnemonic capabilities

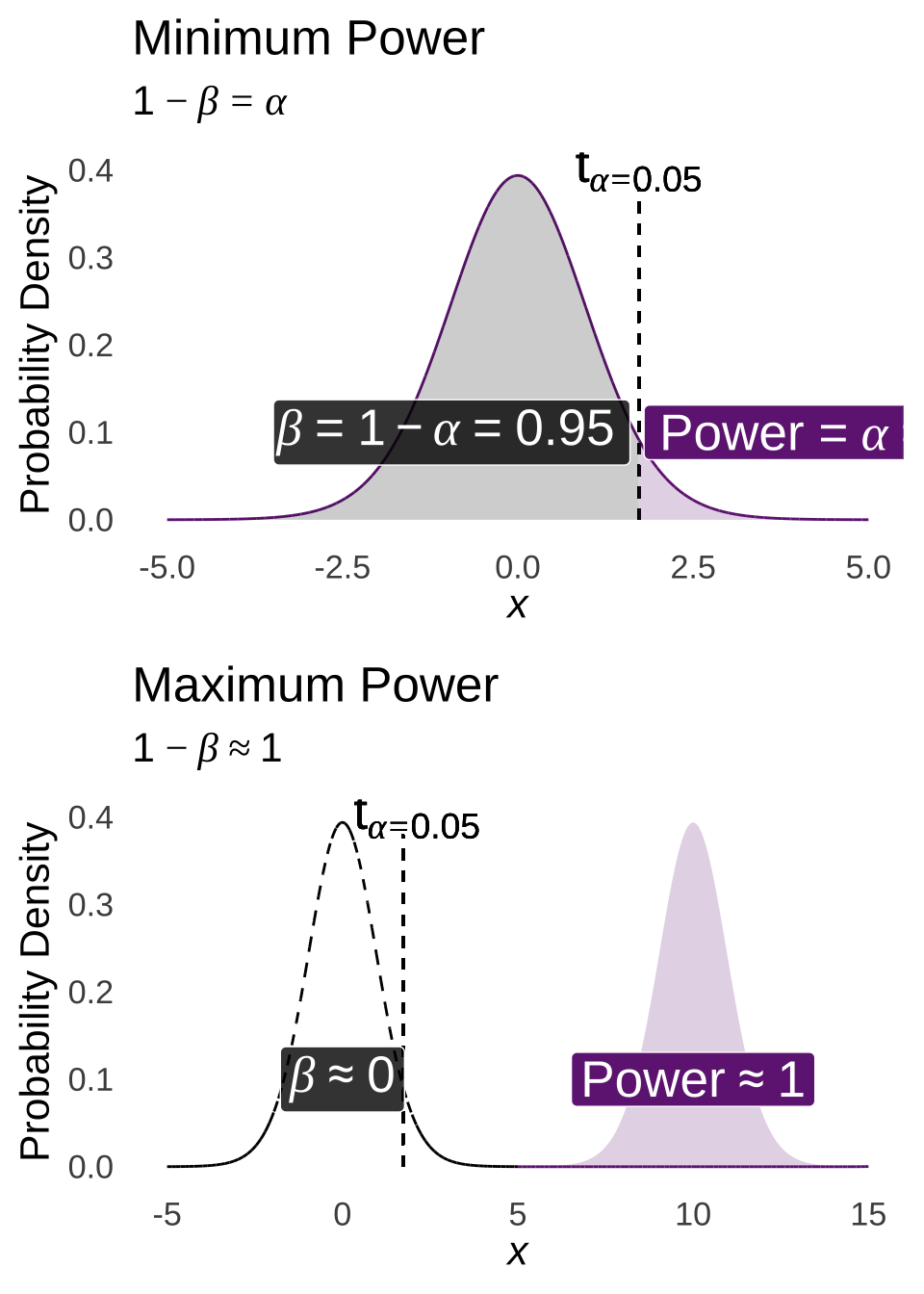

Differences related to different conditions are generally easier to detect, increasing power (more on that topic to come)

Another advantage of repeated-measures designs is that data can be collected under different conditions for the same participants, which saves the researcher from recruiting different participants for different conditions. The combination of reduced noise due to individual variation and collecting data from the same participants for different conditions means that repeated-measures designs are generally more efficient relative to similar independent-groups designs. And that’s not nothing: participant payment, animal subjects, and research time can be costly, and it’s generally good to do the best science one can given the resources at hand.

There are certain situations in which a repeated-measure design should be used with care. For example, some conditions may have order effects like fatigue or learning unrelated to the topic of the study. This is an example of an experimental design issue known as a confound: confounds tend to make it more difficult to interpret the results of a scientific study.

Also, there are certain situations in which a repeated-measure design cannot be used. Some research designs require making comparisons between completely different groups of people by the very nature of the research. Cognitive aging research, to name one field where that is frequently the case, often involves using younger adults as a comparison group for measuring cognition in older adults. Using a repeated-measures design for that kind of research would require recruiting young adults to participate, waiting several decades, and then asking those same, now-older adults to participate again. It’s highly impractical to do that in reality (unless you were working with a criminally unethical pharmaceutical company with access to a mystical beach that ages people a lifetime in 24 hours, which 1. you, personally, would not do and 2. was literally the plot to the 2021 movie Old), so that kind of research must use independent-groups designs.

11.1.3.0.1 Repeated-measures \(t\) Example

To generate another example, let’s go back to our imaginary careers as makers of large kitchen appliances. This time, let’s make an oven. To test our oven, we will make 10 cakes, measuring the temperature each tin of batter in \(^{\circ}C\) before they go into the oven and then measuring the temperature of each of the (hopefully) baked sponges after 45 minutes in the oven.

Here are the (made-up) data:

| Cake | Pre-bake (\(^{\circ} C\)) | Post-bake (\(^{\circ} C\)) | Difference (Post \(-\) Pre) |

|---|---|---|---|

| 1 | 20.83 | 100.87 | 80.04 |

| 2 | 19.72 | 98.58 | 78.86 |

| 3 | 19.64 | 109.09 | 89.44 |

| 4 | 20.09 | 121.83 | 101.74 |

| 5 | 22.25 | 122.78 | 100.53 |

| 6 | 20.83 | 111.41 | 90.58 |

| 7 | 21.31 | 103.96 | 82.65 |

| 8 | 22.50 | 121.81 | 99.31 |

| 9 | 21.17 | 127.85 | 106.68 |

| 10 | 19.57 | 115.17 | 95.60 |

Once we have calculated the difference scores – which for convenience we will abbreviate with \(d\) – we have no more use for the original paired data189. The calculations proceed using \(d\) precisely as they do for the observed data in the one-sample \(t\)-test.

11.1.3.1 Repeated Measures and Paired Samples

This is as good a time as any to pause and note that what I have been calling the repeated-measures \(t\)-test is often referred to as the paired-samples \(t\)-test. Either name is fine! What is important to note is that the measures in this test do not have to refer to the same individual. A paired sample could be identical twins. It could be pairs of animals that have been bred to be exactly the same with regard to some variable of interest (e.g., murine models). It could also be individuals that are matched on some demographic characteristic like age or level of education attained. From a statistical methods point of view, paired samples from different individuals are treated mathematically the same as are paired samples from the same individuals. Whether individuals are appropriately matched is a research methods issue.

Back to the math: the symbols and abbreviations we use are going to be specific to the repeated-measures \(t\)-test, but the formulas are going to be exactly the same. We calculate the observed \(t\) for the repeated-measures test the same way as we calculated the observed \(t\) for the one-samples test, but with assorted \(d\)’s in the formulas to remind us that we’re dealing with difference scores.

\[t_{obs}=\frac{\bar{d}-\mu_d}{se_d}\]

The null assumption is that we are drawing the sample of difference scores from a population of difference scores with a mean equal to \(\mu_d\). The alternative hypothesis is that we are are sampling from a distribution with a different \(\mu_d\).

So, now we are completely prepared to apply the six-step procedure to …

Oh, thanks, Han Solo! We have to test the normality of the differences:

##

## Shapiro-Wilk normality test

##

## data: difference

## W = 0.93616, p-value = 0.5111We can continue to assume that the differences are sampled from a normal distribution.

11.1.3.1.0.1 Six-step Hypothesis Testing

- Identify the null and alternative hypotheses.

Let’s start with the scientific hypothesis that the oven will make the cakes warmer. Thus, we are going to assume a null that the oven makes the cakes no warmer or possibly less warm, with the alternative being that the \(mu_d\) is any positive non-zero difference in the temperature of the cakes.

\[H_0: \mu_d \le 0\] \[H_1: \mu_d>0\] 2. Identify the type-I error (false alarm) rate.

Again, \(\alpha=0.05\), a type-I error rate that might be described as…

So, this time, let’s instead use \(\alpha=0.01\)

- Identify the statistical test to be used.

Repeated-measures \(t\)-test.

- Identify a rule for deciding between the null and alternative hypotheses

If \(p<\alpha\), which as noted for the one-sample \(t\)-test we can calculate with software or by consulting a critical-values table but really we’re just going to use software, reject \(H_0\) in favor of \(H_1\).

- Obtain data and make calculations

The mean of the observed sample data is 92.54, and the standard deviation is 9.77. As for the one-sample test, we incorporate the assumption that the observed standard deviation of the differences is our best guess for the population standard deviation of the differences in the equation to find the standard error:

\[se_d=\frac{sd_{d(obs)}}{\sqrt{n}}\]

The observed \(t\) for these data is:

\[t_{obs}=\frac{\bar{d}-\mu_d}{se_d}=\frac{92.54-0}{3.09}=29.97\]

Because the null hypothesis indicates that \(\mu_d \le 0\), the \(p\)-value is the cumulative likelihood that of \(t \ge t_{obs}\): an upper-tail probability. Thus, the observed \(p\)-value is:

## [1] 1.252983e-10The observed \(p\)-value is less than \(\alpha=0.01\), so we reject the null hypothesis in favor of the alternative hypothesis: the population-level mean difference between pre-bake batter and post-bake sponge warmth is greater than 0.

Of course, we can save ourselves some time by using R. Please note that when using the t.test() command with two arrays (as we do with the repeated-measures test and will again with the independent-samples test), we need to note whether the samples are paired or not using the paired = TRUE/FALSE option.

##

## Paired t-test

##

## data: postbake and prebake

## t = 29.966, df = 9, p-value = 1.255e-10

## alternative hypothesis: true mean difference is greater than 0

## 95 percent confidence interval:

## 86.88161 Inf

## sample estimates:

## mean difference

## 92.54283Based on this analysis, our ovens are in good working order.

But that result only tells us that the mean increase in temperature was significantly greater than 0. That is probably not good enough, even for imaginary makers of large kitchen appliances.

Suppose, then, that instead of using \(\mu_d=0\) as our \(H_0\), we used \(\mu_d=90\) as our \(H_0\). That would mean that we would be testing whether our ovens, on average, raise the temperature of our cakes by more than \(90^{\circ}C\). We’ll skip all of the usual steps and just alter our R commands by changing the value of mu:

##

## Paired t-test

##

## data: postbake and prebake

## t = 0.82337, df = 9, p-value = 0.2158

## alternative hypothesis: true mean difference is greater than 90

## 95 percent confidence interval:

## 86.88161 Inf

## sample estimates:

## mean difference

## 92.54283Thus, while the mean difference was greater than \(90^{\circ}C\), it was not significantly so.

A couple of things to note for that. The first is the \(p\)-value changes because we changed the null hypothesis – more evidence that the \(p\)-value is not the probability of the data themselves. The other is that altering \(\mu_d\) can be a helpful tool when assuming \(\mu_d=0\) – which is the norm (and also the default in R if you leave out the mu= part of the t.test() command) – is not scientifically interesting.

11.1.4 Independent-Groups \(t\)-test

The last type of \(t\)-test is the independent-groups (or independent-samples) \(t\)-test. The independent-groups test is used when we have completely different samples. They don’t even have to be the same size.190.

When groups are independent, the assumption is that they are drawn from different populations that have the same variance. The independent-samples \(t\) is a measure of the difference of the means of the two groups. The distribution that the difference of the means is sampled from is not one of a single population but of the combination of the two populations.

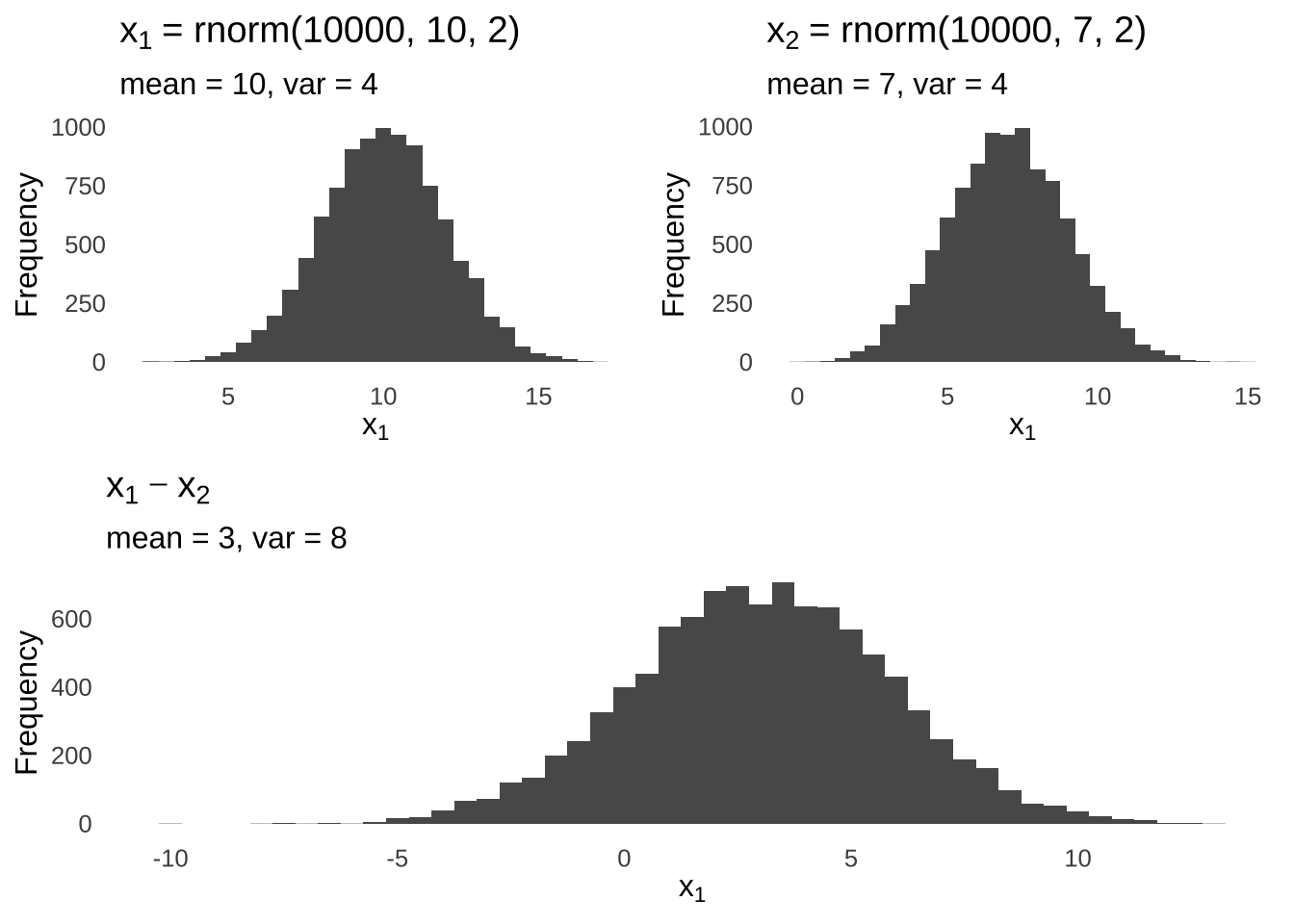

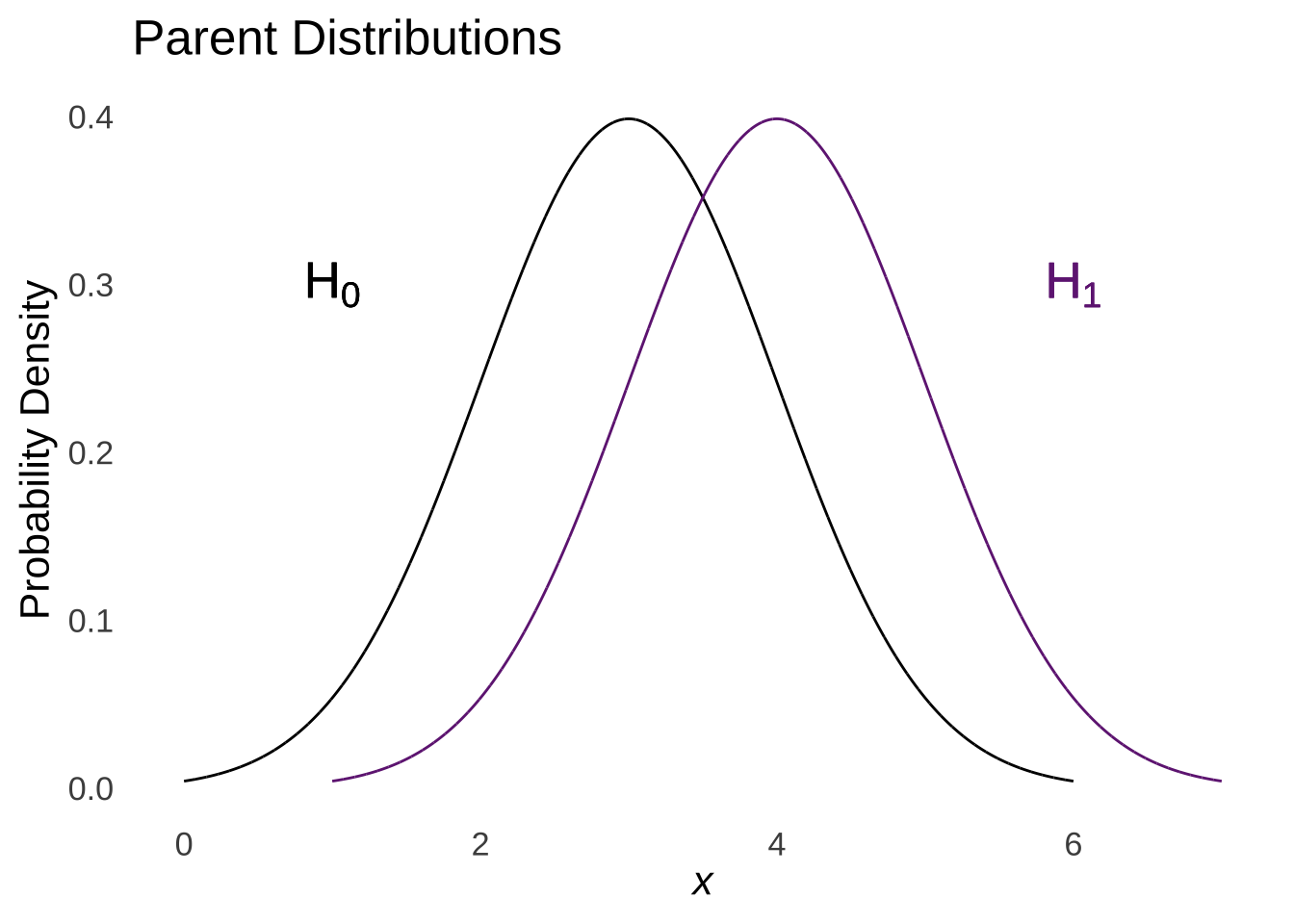

The rules of linear combinations of random variables tell us that the difference of two normal distributions is a normal with a mean equal to the difference of the means of the two distributions and a variance equal to the sum of the means of the two distributions:

Figure 11.5: Linear (Subtractive) Combination of two Normal Distributions

And so the difference of the means is hypothesized to come from the combined distribution of the differences of the means of the two populations.

11.1.4.1 Pooled Variance

The variance of the population distributions that each sample comes from are assumed to be the same: that’s the homescedasticity assumption. When performing the one-sample and repeated-measures \(t\)-tests, we used the sample variances of the single sample and the difference scores, respectively, to estimate the populations from which those numbers came. But, with two groups, we will most likely have two sample variances (we could have the exact same sample variance in both groups, but that would be quite improbable). We can’t say that one sample comes from a population with a variance approximated by the variance of the that sample and that the other sample comes from a population with a variance approximated by the variance of the other sample: that would imply that the populations have different variances, and we can’t have that, now, can we?

Instead, we treat the two sample variances together as estimators of the common variance value of the two populations by calculating what is known as the pooled variance:

\[s^2_{pooled}=\frac{s^2_1(n_1-1)+s^2_2(n_2-1)}{n_1+n_2-2}\] The pooled variance, in practice, acts like a weighted average of the two sample variances: if the samples are of uneven size (\(n1 \ne n_2\)), by multiplying each sample variance by \(n-1\), the variance of the larger sample is weighted more heavily than the variance of the smaller sample. In theory (in practice, too, I guess, but it’s a little harder to see), by multiplying the sample variances by their respective \(n -1\), the pooled variance takes the numerator of the sample variances – the sums of squares (SS) for each observation – adds them together, and creates a new variance.

\[s^2_{pooled}=\frac{\sum(x_1-\bar{x}_1)^2+\sum(x_2-\bar{x}_2)^2}{n_1+n_2-2}\] The denominator of the pooled variance is the total degrees of freedom of the estimate: because there are two means involved in the calculation of the numerator – \(\bar{x}_1\) to calculate the \(SS\) of sample 1 and \(\bar{x}_2\) to calculate the \(SS\) of sample 2, the total \(df\) is the total \(n=n_1+n_2\) minus 2.

Now, our null assumption is that the difference of the means comes from a distribution generated by the combination of the two distributions from which the two means were respectively sampled with a hypothesized mean of the difference of means. The variance of that distribution is unknown at the time that the null and alternative hypotheses are determined191. When we do estimate that variance, it will be the pooled variance. And, when we apply the central limit theorem, we will use a standard error that – based on the rules of linear combinations – is a sum of the standard errors of each sampling procedure:

\[\bar{x1}-\bar{x2} \sim N \left( \mu_1 - \mu_2,~ \frac{\sigma^2}{n_1}+\frac{\sigma^2}{n_2}\right)\]

And therefore our formula for the observed \(t\) for the independent-samples \(t\)-test is:

\[t_{obs}=\frac{\bar{x}_1-\bar{x}_2-\Delta}{\sqrt{\frac{s^2_{pooled}}{n_1}+\frac{s^2_{pooled}}{n_2}}}\] where \(\Delta\) is the hypothesized mean of the null-distribution of the difference of means.

Please note: in practice, as with the repeated-measures \(t\)-test, researchers rarely use a non-zero value for the mean of the null distribution (\(\mu_d\) in the case of the repeated-measures test; \(\Delta\) in the case of the independent-samples test). Still, it’s there if we need it.

The \(df\) that defines the \(t\)-distribution that our difference of sample means comes from is the sum of the degrees of freedom for each sample:

\[df=(n_1-1)+(n_2-1)=n_1+n_2-2\]

11.1.4.1.1 Independent-groups \(t\) Example

Now, let’s think up another example to work through the math. In this case, let’s say we have expanded our kitchen-appliance-making operation to include small kitchen appliances, and we have made two models of toaster: the Mark I and the Mark II. Imagine, please, that we want to test if there is any difference in the time it takes each model to properly toast pieces of bread. We test 10 toasters of each model (here \(n_1-n_2\) that’s doesn’t necessarily have to be true) of toaster and record the time it takes to finish the job:

| Mark I | Mark II |

|---|---|

| 4.72 | 9.19 |

| 7.40 | 11.44 |

| 3.50 | 9.64 |

| 3.85 | 12.09 |

| 4.47 | 10.80 |

| 4.09 | 12.71 |

| 6.34 | 10.04 |

| 3.30 | 9.06 |

| 7.13 | 6.31 |

| 4.99 | 9.44 |

We are now ready to test the hypothesis that there is any difference in the mean toasting time between the two models.

Oh, I almost forgot again! Thanks, Diana Ross! We have to test two assumptions for the independent-samples \(t\)-test: normality and homoscedasticity.

Mark.I.residuals<-Mark.I-mean(Mark.I)

Mark.II.residuals<-Mark.II-mean(Mark.II)

shapiro.test(c(Mark.I.residuals, Mark.II.residuals))##

## Shapiro-Wilk normality test

##

## data: c(Mark.I.residuals, Mark.II.residuals)

## W = 0.94817, p-value = 0.3402Good on normality!

model<-c(rep("Mark I", length(Mark.I)), rep("Mark II", length(Mark.II)))

times<-c(Mark.I, Mark.II)

independent.samples.df<-data.frame(model, times)

leveneTest(times~model, data=independent.samples.df)## Levene's Test for Homogeneity of Variance (center = median)

## Df F value Pr(>F)

## group 1 0.186 0.6714

## 18And good on homoscedasticity, too!

Now, we may proceed with the six-step procedure.

- Identify the null and alternative hypotheses.

We are looking in this case for evidence that either the Mark I toaster or the Mark II toaster is faster than the other. Logically, that means that we are interested in whether the difference between the mean toasting times is significantly different from 0. Thus, we are going to assume a null that indicates no difference between the population mean of Mark I toaster times and the population mean of Mark II toaster times.

\[H_0: \bar{x}_1- \bar{x}_2 = 0\] \[H_1: \bar{x}_1 - \bar{x}_2 \ne 0\] 2. Identify the type-I error (false alarm) rate.

The type-I error rate we used for the repeated-measures example – \(\alpha=0.01\) – felt good. Let’s use that again.

- Identify the statistical test to be used.

Independent-groups \(t\)-test.

- Identify a rule for deciding between the null and alternative hypotheses

If \(p<\alpha\), which again we can calculate with software or by consulting a critical-values table but there’s no need to get tables involved here in the year 2020, reject \(H_0\) in favor of \(H_1\).

- Obtain data and make calculations

The mean of the Mark I sample is 4.98, and the variance is 2.19. The mean of the Mark II sample is 10.07, and the variance is 3.33.

The pooled variance for the two samples is:

\[s^2_{pooled}=\frac{s^2_1(n_1-1)+s^2_2(n_2-1)}{n_1+n_2-2}=\frac{2.19(10-1)+3.33(10-1)}{10+10-2}=2.76\]

The observed \(t\) for these data is:

\[t_{obs}=\frac{\bar{x}_1-\bar{x}_2-\Delta}{\sqrt{\frac{s^2_{pooled}}{n_1}+\frac{s^2_{pooled}}{n_2}}}=\frac{4.98-10.07-0}{\sqrt{\frac{2.76}{10}+\frac{2.76}{10}}}=-6.86\]

Because this is a two-tailed test, the \(p\)-value is the sum of the cumulative likelihood of \(t\le-|t_{obs}|\) or \(t\ge|t_{obs}|\) – that is, the sum of the lower-tail probability that a \(t\) could be less than or equal to the negative version of \(t_{obs}\) and the upper-tail probability that a \(t\) could be greater than or equal to the positive version of \(t_{obs}\):

## [1] 2.033668e-06And all that matches what we could have done much more quickly and easily with the t.test() command. Note in the following code that paired=FALSE – otherwise, R would run a repeated-measures \(t\)-test – and that we have included the option var.equal=TRUE, which indicates that homoscedasticity is assumed. Assuming homoscedasticity is not the default option in R: more on that below.

##

## Two Sample t-test

##

## data: Mark.I and Mark.II

## t = -6.8552, df = 18, p-value = 2.053e-06

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -6.654528 -3.532506

## sample estimates:

## mean of x mean of y

## 4.979966 10.07348211.1.4.2 Welch’s \(t\)-test

The default option for the t.test() command when paired=FALSE is indicated is var.equal=FALSE. That means that without instructing the software to assume homoscedasticity, the default is to assume different variances. This default test is known as Welch’s \(t\)-test. Welch’s test differs from the traditional, homoscedasticity-assuming \(t\)-test in two ways:

- The pooled variance is replaced by separate population variance estimates based on the sample variances. The denominator for Welch’s \(t\) is therefore:

\[\sqrt{\frac{s^2_1}{n^1}+\frac{s^2_2}{n^2}}\] 2. The degrees of freedom of the \(t\)-distribution are adjusted to compensate for the differences in variance. The degrees of freedom for the Welch’s test are not \(n_1+n_2-2\), but rather:

\[df\approx\frac{\left( \frac{s^2_1}{n_1}+\frac{s^2_2}{n_2} \right)^2}{\frac{s^4_1}{n_1^2(n_1-1)}+\frac{s^4_2}{n_2^2(n_2-1)}}\]

Really, what you need to know there is that the Welch’s test uses a different $ than the traditional independent-samples test.

Repeating, then, the analysis of the toasters with var.equal=TRUE removed, the result of the Welch test is:

##

## Welch Two Sample t-test

##

## data: Mark.I and Mark.II

## t = -6.8552, df = 17.27, p-value = 2.566e-06

## alternative hypothesis: true difference in means is not equal to 0

## 95 percent confidence interval:

## -6.659274 -3.527760

## sample estimates:

## mean of x mean of y

## 4.979966 10.073482Note that for these data where homoscedasticity was observed, the \(t_obs\) is the same. The \(df\) is a non-integer value, but close to the value of \(df=18\) for the traditional independent-samples test, and the \(p\)-value is essentially the same.

The advantage of Welch’s test is that it accounts for possible violations of homoscedasticity. I don’t really see a downside except that you may have to explain it a tiny bit in a write-up of your results.

11.1.4.2.1 Notes on the \(t\)-test

Although I chose one or the other for each of the above examples, any type of \(t\)-test can be either a one-tailed test or a two-tailed test. The direction of the hypothesis depends only on the nature of the question one is trying to answer, not on the structure of the data.

Some advice for one-tailed tests: always be sure to keep track of your signs. For the one-tailed test, that is relatively easy: keep in mind whether you are testing whether the sample mean is supposed to be greater than the null value or less than the null value. For the repeated-measures and independent-groups tests, be careful which values you are subtracting from which. Which measurement you subtract from which in the repeated-measures test and which mean you subtract from which in the independent-groups test is arbitrary. However, it can be shocking if, for example, you expect scores to increase from one measurement to another and they appear to decrease, but only because you subtracted what was supposed to be bigger from what was supposed to be smaller (or vice versa).

Related to note 2: assuming that the proper subtractions have been made, if you have a directional hypothesis and the result is in the wrong direction, it cannot be statistically significant. If, for example, the null hypothesis is \(\mu \le 0\) and the \(t\) value is negative, then one cannot reject the null hypothesis no matter how big the magnitude of \(t\). An experiment testing a new drug with a directional hypothesis that the drug will make things better is not successful if the drug makes things waaaaaaaay worse.

11.1.5 \(t\)-tests and Regression

Check this out:

Let’s run a linear regression on our toaster data where the predicted variable (\(y\)) is toasting time and the predictor variable (\(x\)) is toaster model. Note the \(t\)-value on the “modelMark.II” line:

toaster.long<-data.frame(model=c(rep("Mark.I", 10), rep("Mark.II", 10)), times=c(Mark.I, Mark.II))

summary(lm(times~model, data=toaster.long))##

## Call:

## lm(formula = times ~ model, data = toaster.long)

##

## Residuals:

## Min 1Q Median 3Q Max

## -3.7588 -0.9213 -0.3457 1.3621 2.6393

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 4.9800 0.5254 9.479 2.02e-08 ***

## modelMark.II 5.0935 0.7430 6.855 2.05e-06 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.661 on 18 degrees of freedom

## Multiple R-squared: 0.7231, Adjusted R-squared: 0.7077

## F-statistic: 46.99 on 1 and 18 DF, p-value: 2.053e-06That \(t\)-value is the same as the \(t\) that we got from the \(t\)-test (but with the sign reversed), and the \(p\)-value is the same as well. The \(t\)-test is a special case of regression: we’ll come back to that later.

11.2 Nonparametric Tests of the Differences Between Two Things

Nonparametric Tests evaluate the pattern of observed results rather than the numeric descriptors (e.g., summary statistics like the mean and the standard deviation) of the results. Where a parametric test might evaluate the cumulative likelihood (given the null hypothesis, of course) that people in a study improved on average on a measure following treatment, a nonparametric test might take the same data and evaluate the cumulative likelihood that \(x\) out of \(n\) people improved on the same measure following treatment.

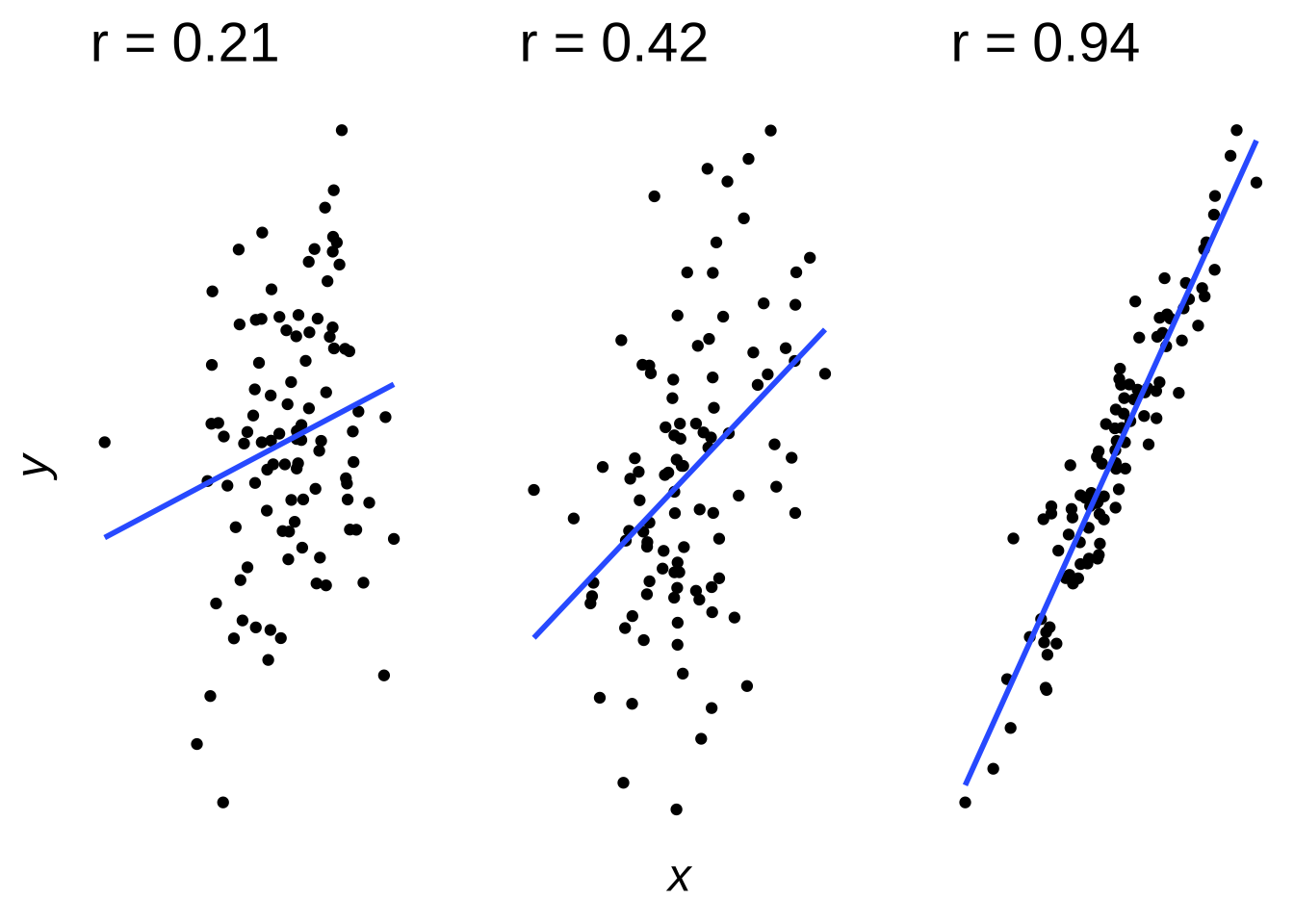

Three examples of nonparametric tests were covered in correlation and regression: the correlation of ranks \(\rho\), the concordance-based correlation \(\tau\), and the categorical correlation \(\gamma\). In each of those tests, it is not the relative values of paired observations with regard to the mean and standard deviation of the variables but the relative patterns of paired observations.

11.2.1 Nonparametric Tests for 2 Independent Groups

11.2.1.1 The \(\chi^2\) Test of Statistical Independence

Statistical Independence refers to a state where the patterns of the observed data do not depend on the number of possibilities for the data to be arranged. That means that there is no relationship between the number of categories that a set of datapoints can be classified into and the probability that the datapoints will be classified in a particular way. By contrast, statistical dependence is a state where there is a relationship between the possible data structure and the observed data structure.

The \(\chi^2\) test of statistical independence takes statistical dependence as its null hypothesis and statistical independence as its alternative hypothesis. It is essentially the same test as the \(\chi^2\) goodness-of-fit test, but used for a wider variety of applied statistical inference (that is, beyond evaluating goodness-of-fit). \(\chi^2\) tests of statistical independence can be categorized by the number of factors being analyzed in a given test: in this section, we will talk about the one factor case – one-way \(\chi^2\) tests – and the two factor case – two-way \(\chi^2\) tests.

11.2.1.1.1 One-way \(\chi^2\) Test of Statistical Independence

In the one-way \(\chi^2\) test, statistical independence is determined on the basis of the number of possible categories for the data.192

To help illustrate, please imagine that 100 people who are interested in ordering food open up a major third-party delivery service website and see the following:

There are two choices, neither of which is accompanied by any type of description. We would expect an approximately equal number of the 100 hungry people to pick each option. Given that there is no compelling reason to choose one over the other, the choice responses of the people are likely to be statistically dependent on the number of choices. We would expect approximately \(\frac{n}{2}=50\) people to order from Restaurant A and approximately \(\frac{n}{2}=50\) people to order from Restaurant B: statistical dependence means we can guess based solely on the possible options.

Now, let’s say our 100 hypothetical hungry people open the same third-party food-delivery website and instead see these options:

Given that the titles of each restaurant are properly descriptive and not ironic, this set of options would suggest that people’s choices will not depend solely on the number of options. The choice responses of the 100 Grubhub customers would likely follow a non-random pattern. Their choices would likely be statistically independent of the number of possible options.

As in the goodness-of-fit test, the \(\chi^2\) test of statistical independence uses an observed \(\chi^2\) test statistic that is calculated based on observed frequencies (\(f_o\), although it’s sometimes abbreviated \(O_f\)) and on _expected frequencies (\(f_e\), sometimes \(E_f\)):

\[\chi^2_{obs}=\sum_1^k \frac{(f_o-f_e)^2}{f_e}\] where \(k\) is the number of categories or cells that the data can fall into. For the one-way test, the expected frequencies for each cell are the total number of observations divided by the number of cells:

\[f_e=\frac{n}{k}.\] The expected frequencies do not have to be integers! In fact, unless the number of observations is a perfect multiple of the number of cells, they will not be.

11.2.1.1.2 Degrees of Freedom

The degrees of freedom (\(df\), sometimes abbreviated with the Greek letter \(\nu\) [“nu”]) for the one-way \(\chi^2\) test is the number of cells \(k\) minus 1:

\[df=k-1\]

Generally speaking, the degrees of freedom for a set of frequencies (as we have in the data that can be analyzed with the \(\chi^2\) test) are the number of cells whose count can change while maintaining the same marginal frequency. For a one-way, two-cell table of data with \(A\) observations in the first cell and \(B\) observations in the second cell, the marginal frequency is \(A+B\):

| cell | cell | margin |

|---|---|---|

| A | B | A+B |

thus, we can think of the marginal frequencies as totals on the margins of tables comprised of cells. If we know \(A\), and the marginal frequency \(A+B\) is fixed, then we know \(B\) by subtraction. The observed frequency of \(A\) can change, and then (given fixed \(A+B\)) we would know \(B\); \(B\) could change, and then we would know \(A\). We cannot freely change both \(A\) and \(B\) while keeping \(A+B\) constant; thus, there is 1 degree of freedom for the two-cell case.

As covered in probability distributions, \(df\) is the sufficient statistic for the \(\chi^2\) distribution: it determines both the mean (\(df\)) and the variance (\(2df\)). Thus, the \(df\) are all we need to know to calculate the area under the \(\chi^2\) curve at or above the observed \(\chi^2\) statistic: the cumulative likelihood of the observed or more extreme unobserved \(\chi^2\) values. The \(\chi^2\) distribution is a one-tailed distribution and the \(\chi^2\) test is a one-tailed test (the alternative hypothesis of statistical independence is a binary thing – there is no such thing as either negative or positive statistical independence), so that upper-tail probability is all we need to know.

As the \(\chi^2\) test is a classical inferential procedure (albeit one that makes an inference on the pattern of observed data and no inference on any given population-level parameters), it observes the traditional six-step procedure. The null hypothesis of the \(\chi^2\) test – as noted above – is always that there is statistical dependence and the alternative hypothesis is always that there is statistical independence. Whatever specific form dependence and independence take, respectively, depends (no pun intended) on the situation being analyzed. The \(\alpha\)-rate is set a priori, the test statistic is a \(\chi^2\) value with \(k-1\) degrees of freedom, and the null hypothesis will be rejected if \(p \le \alpha\).

To make the calculations, it is often convenient to keep track of the expected and observed frequencies in a table resembling this one:

| \(f_e\) | \(f_e\) | ||

| \(f_0\) | \(f_0\) |

We then determine whether the cumulative likelihood of the observed \(\chi^2\) value or more extreme \(\chi^2\) values given the null hypothesis of statistical dependence – mathematically, statistical dependence looks like \(\chi^2\approx 0\) – is less than or equal to the predetermined \(\alpha\) rate either by using a table of \(\chi^2\) quantiles.

Let’s work through an example using the fictional choices of 100 hypothetical people between the made-up restaurants Dan’s Delicious Dishes and Homicidal Harry’s House of Literal Poison. Suppose 77 people choose to order from Dan’s and 23 people choose to order from Homicidal Harry’s. Under the null hypothesis of statistical dependence, we would expect an equal frequency in each cell: 50 for Dan’s and 50 for Homicidal Harry’s:

fe1<-c("$f_e=50$", "")

fo1<-c("", "$f_0=77$")

fe2<-c("$f_e=50$", "")

fo2<-c("", "$f_0=23$")

kable(data.frame(fe1, fo1, fe2, fo2), "html", booktabs=TRUE, escape=FALSE, col.names = c("", "", "", "")) %>%

kable_styling() %>%

row_spec(1, italic=TRUE, font_size="small")%>%

row_spec(2, font_size = "large") %>%

add_header_above(c("Dan's"=2, "Harry's"=2)) %>%

column_spec(2, border_right = TRUE)| \(f_e=50\) | \(f_e=50\) | ||

| \(f_0=77\) | \(f_0=23\) |

The observed \(\chi^2\) statistic is:

\[\chi^2_{obs}=\sum_i^k \frac{(f_o-f_e)^2}{f_e}=\frac{(77-50)^2}{50}+\frac{(23-50)^2}{50}=14.58+14.58=29.16\] The cumulative probability of \(\chi^2\ge 29.16\) for a \(\chi^2\) distribution with \(df=1\) is:

## [1] 6.66409e-08which is smaller than an \(\alpha\) of 0.05, or an \(\alpha\) of 0.01, or really any \(\alpha\) that we would choose. Thus, we reject the null hypothesis of statistical dependence in favor of the alternative of statistical independence. In terms of the example: we reject the null hypothesis that people are equally likely to order from Dan’s Delicious Dishes or from Homicidal Harry’s House of Literal Poison in favor of the alternative that there is a statistically significant difference in people’s choices.

To perform the \(\chi^2\) test in R is blessedly more simple:

##

## Chi-squared test for given probabilities

##

## data: c(77, 23)

## X-squared = 29.16, df = 1, p-value = 6.664e-0811.2.1.1.3 Relationship with the Binomial

Now, you might be thinking:

Wait a minute … the two-cell one-way \(\chi^2\) test seems an awful lot like a binomial probability problem. What if we treated these data as binomial with \(s\) as the frequency of one cell and \(f\) as the frequency of the other with a null hypothesis of \(\pi=0.5\)?

And it is very impressive that you might be thinking that, because the answer is:

A one-way two-cell \(\chi^2\) test will result in approximately the same \(p\)-value as \(p(s \ge s_{obs} |\pi=0.5, N)\), where \(s\) is the greater of the two observed frequencies (or \(p(s \le s_{obs}| \pi=0.5, N)\) where \(s\) is the lesser of the two observed frequencies). In fact, the binomial test is the more accurate of the two methods – the \(\chi^2\) is more of an approximation – but the difference is largely negligible.

To demonstrate, we can use the data from the above example regarding fine dining choices:

## [1] 2.75679e-08The difference between the \(p\)-value from the binomial and from the \(\chi^2\) is about \(4 \times 10^8\). That’s real small!

11.2.1.1.4 Using Continuous Data with the \(\chi^2\) Test

The \(\chi^2\) test assesses categories of data, but that doesn’t necessarily mean that the data themselves have to be categorical. In the goodness-of-fit version, for example, numbers in a dataset were categorized by their values: we can do the same for the test of statistical independence.

Suppose a statistics class of 12 students received the following grades – \(\{85, 85, 92, 92, 93, 95, 95, 96, 97, 98, 99, 100 \}\) – and we wanted to know if their grade breakdown was significantly different from a 50-50 split between A’s and B’s. We could do a one-sample \(t\)-test based on the null hypothesis that \(\mu=90\). Or, we could use a \(\chi^2\) test where the expected frequencies reflect an equal number of students scoring above and below 90:

fe1<-c("$f_e=6$", "")

fo1<-c("", "$f_0=2$")

fe2<-c("$f_e=6$", "")

fo2<-c("", "$f_0=12$")

kable(data.frame(fe1, fo1, fe2, fo2), "html", booktabs=TRUE, escape=FALSE, col.names = c("", "", "", "")) %>%

kable_styling() %>%

row_spec(1, italic=TRUE, font_size="small")%>%

row_spec(2, font_size = "large") %>%

add_header_above(c("B's"=2, "A's"=2))| \(f_e=6\) | \(f_e=6\) | ||

| \(f_0=2\) | \(f_0=12\) |

The observed \(\chi^2\) statistic would be:

\[\chi^2_{obs}=\frac{(2-6)^2}{6}+\frac{(10-6)^2}{6}=5.33\] The \(p\)-value – given that \(df=1\) – would be 0.021, which would be considered significant at the \(\alpha=0.05\) level.

11.2.1.1.5 The Two-Way \(\chi^2\) Test

Often in statistical analysis we are interested in examining multiple factors to see if there is a relationship between things, like exposure and mutation, attitudes and actions, study and recall. The two-way version of the \(\chi^2\) test of statistical independence analyzes patterns in category membership for two different factors. To illustrate: imagine we have a survey comprising two binary-choice responses: people can answer \(0\) or \(1\) to Question 1 and they can answer \(2\) or \(3\) to Question 2. We can organize their responses into a \(2 \times 2\) (\(rows \times columns\)) table known as a contingency table, where the responses to each question – the marginal frequencies of responses – are broken down contingent upon their answers to the other question.193

| 2 | 3 | Margins | ||

|---|---|---|---|---|

| Question 1 | 0 | \(A\) | \(B\) | \(A+B\) |

| 1 | \(C\) | \(D\) | \(C+D\) | |

| Margins | \(A+C\) | \(B+D\) | \(n\) |

Statistical independence in the one-way \(\chi^2\) test was determined as a function of the number of possible options. Statistical independence in the two-way \(\chi^2\) test suggests that the two factors are independent of each other, that is, that the number of possibilities for one factor are unrelated to the categorization of another factor.

11.2.1.1.6 Degrees of Freedom for the 2-way \(\chi^2\) Test

Just as the degrees of freedom for the one-way \(\chi^2\) test were the number of cells in which the frequency was allowed to vary while keeping the margin total the same, the degrees of freedom for the two-way \(\chi^2\) test are the number of cells that are free to vary in frequency while keeping both sets of margins – the margins for each factor – the same. Thus, we have a set of \(df\) for the rows of a contingency table and a set of \(df\) for the columns of a contingency table, and the total \(df\) is the product of the two:

\[df=(k_{rows}-1)\times (k_{columns}-1))\]

11.2.1.1.7 Expected Frequencies for the 2-way \(\chi^2\) Test

Unlike in the one-way test, the expected frequencies across cells in the two-way \(\chi^2\) test do not need to be equal: in most cases, they aren’t. It is possible – and not at all uncommon – for the different response levels of each factor to have different frequencies, which the two-way test accounts for. The expected frequencies instead are proportionally equal given the marginal frequencies. In the arrangment in the table above, for example, we do not expect \(A\), \(B\), \(C\), and \(D\) to be equal to each other but we do expect \(A\) and \(B\) to be _proportional to \(A+C\) and \(A+D\), respectively; for \(A\) and \(C\) to be proportional to \(A+B\) and \(C+D\), respectively; etc.

Thus, the expected frequency for each cell in a 2-way contingency table is:

\[f_e=n \times row~proportion \times column~proportion\] For example, let’s say that we asked 140 people a 2-question survey:

- Is a hot dog a sandwich?

- Does a straw have one hole or two?

and that these are the observed frequencies:

| 1 | 2 | Margins | ||

|---|---|---|---|---|

| Is a hot dog a sandwich? | Yes | 40 | 10 | 50 |

| No | 20 | 70 | 90 | |

| Margins | 60 | 80 | 140 |

The expected frequencies, generated by the formula \(f_e=n \times row~proportion \times column~proportion\) for each cell, are presented in the following table above and to the left of their respective observed frequencies:

| 1 | 2 | Margins | ||||

|---|---|---|---|---|---|---|

| Is a hot dog a sandwich? | Yes | 21.43 | 28.57 | |||

| 40 | 10 | 50 | ||||

| No | 38.57 | 51.43 | ||||

| 20 | 70 | 90 | ||||

| Margins | 60 | 80 | 140 |

Note, for example, that the expected number of people to say “no” to the hot dog question and “1” to the straw question is greater than the expected number of people to say “yes” to the hot dog question and “1” to the straw question, even though the opposite was observed. That is because more people overall said “no” to the hot dog question, so proportionally we expect greater number of responses associated with either answer to the other question among those people who (correctly) said that a hot dog is not a sandwich.

The observed \(\chi^2\) value for the above data is:

\[\chi^2_{obs} (1)=\frac{(40-21.43)^2}{21.43}+\frac{(10-28.57)^2}{28.57}+\frac{(20-38.57)^2}{38.57}+\frac{(70-51.43)^2}{51.43}=43.81\]

The associated \(p\)-value is:

## [1] 6.530801e-16which is smaller than any reasonable \(\alpha\)-rate, so we reject the null hypothesis that there is no relationship between people’s responses to the hot dog question and the straw question.

To perform the one-way \(\chi^2\) test in R, we used the command chisq.test() with a vector of values inside the parentheses. The two-way test has an added dimension, so instead of a vector, we enter a matrix:

## [,1] [,2]

## [1,] 40 10

## [2,] 20 70When calculating the \(\chi^2\) test on a \(2 \times 2\) matrix, R defaults to applying Yates’s Continuity Correction. Personally, I think you can skip it, although it doesn’t seem to do too much harm. We can turn off that default with the option correct=FALSE.

##

## Pearson's Chi-squared test

##

## data: matrix(c(40, 20, 10, 70), nrow = 2)

## X-squared = 43.815, df = 1, p-value = 3.61e-1111.2.1.1.8 Beyond the 2-way \(\chi^2\) Test

Having had a blast with the one-way \(\chi^2\) test and the time of one’s life with the two-way \(\chi^2\) test, one might be tempted to add increasing dimensions to the \(\chi^2\) test…

Such things are mathematically doable, but not terribly advisable for two main reasons:

Adding dimensions increases the number of cells exponentially: a three-way test involves \(x \times y \times z\) cells, a four-way test involved \(x \times y \times z \times q\) cells, etc. In turn, that means that an exponentially rising number of observations is needed for reasonable analysis of the data. In a world of limited resources, that can be a dealbreaker.

The larger problem is one of scientific interpretation. It is relatively straightforward to explain a scientific hypothesis involving the statistical independence of two factors. It is far more difficult to generate a meaningful scientific hypothesis involving the statistical independence of three or more factors, especially if it turns out – as it can – that the \(\chi^2\) test indicates statistical independence of all of the factors but not some subsets of the factors or vice versa.

So, the official recommendation here is to use factorial analyses other than the \(\chi^2\) test should more than two factors apply to a scientific hypothesis.

11.2.1.1.8.1 Dealing with small \(f_e\)

As noted in our previous encounter with the \(\chi^2\) statistic, the only requirement for the \(\chi^2\) test is that \(f_e\ge 5\). If the structure of observed data are such that you would use a 1-way \(\chi^2\) test except for the problem of \(f_e < 5\), we can instead treat the data as binomial, testing the cumulative likelihood of \(s \ge s_{obs}\) given that \(\pi=0.5\). If the structure indicate a 2-way \(\chi^2\) test but \(f_e\) is too small, then we can use the Exact Test.

11.2.1.2 Exact Test

The Exact Test – technically known as Fisher’s Exact Test but since he was a historically shitty person I see no problem dropping his name from the title – is an alternative to the \(\chi^2\) test of statisical independence to use when there is:

- a \(2 \times 2\) data structure and

- inadequate \(f_e\) per cell to use the \(\chi^2\) test.

The exact test returns the cumulative likelihood of a given pattern of data given that the marginal totals remain constant. To illustrate how the exact test returns probabilities, please consider the following labels for a \(2 \times 2\) contingency table:

| Factor 1 | \(A\) | \(B\) | \(A+B\) |

| \(C\) | \(D\) | \(C+D\) | |

| Margins | \(A+C\) | \(B+D\) | \(n\) |

First, let’s consider the number of possible combinations for the row margins. Given that there are \(n\) observations, the number of combinations of observations that could put \(A+B\) of those observations in the top row – and therefore \(C+D\) observations in the second row – is given by:

\[nCr=\frac{n!}{(A+B)!(n-(A+B))!}=\frac{n!}{(A+B)!(C+D)!}\]

Next, let’s consider the arrangement of the row observations into column observations. The number of combinations of observations that lead to \(A\) observations in the first column is \(A+C\) things combined \(A\) at a time – \(_{A+C}C_A\) – and the number of combinations of observations that lead to \(B\) observations in the second column is \(B+D\) things combined \(B\) at a time – \(_{B+D}C_B\). Therefore, there are a total of \(_{A+C}C_A \times _{B+D}C_B\) possible combinations that lead to the observed \(A\) (and thus \(C\) because \(A+C\) is constant) and the observed \(B\) (and thus \(D\) because \(B+D\) is constant), and the formula:

\[\frac{(A+C)!}{A!C!} \times \frac{(B+D)!}{B!D!}=\frac{(A+C)!(B+D)!}{A!B!C!D!}\] describes the number of possible ways to get the observed arrangement of \(A\), \(B\), \(C\), and \(D\). Because, as noted above, there are \(\frac{n!}{(A+B)!(C+D)!}\) total possible arrangements of the data, probability of the observed arrangement is the number of ways to get the observed arrangement divided by the total possible number of arrangements:

\[p(configuration)=\frac{\frac{(A+C)!(B+D)!}{A!B!C!D!}}{\frac{n!}{(A+B)!(C+D)!}}=\frac{(A+B)!(C+D)!(A+C)!(B+D)!}{n!A!B!C!D!}\]

The \(p\)-value for the Exact Test is the cumulative likelihood of all configurations that are as extreme or more extreme than the observed configuration.194

The extremity of configurations is determined by the magnitude of the difference between each pair of observations that constitute a marginal frequency: that is: the difference between \(A\) and \(B\), the difference between \(C\) and \(D\), the difference between \(A\) and \(C\), and the difference between \(B\) and \(D\). The most extreme cases occur when the members of one group are split entirely into one of the cross-tabulated groups, for example: if \(A+B=A\) because all members of the \(A+B\) group are in \(A\) and 0 are in \(B\). Less extreme cases occur when the members of one group are indifferent to the cross-tabulated groups, for example: if \(A+B\approx 2A \approx 2B\) because \(A \approx B\).

Consider the following three examples: two with patterns of data suggesting no relationship between the two factors and another with a pattern of data suggesting a significant relationship between the two factors.

Example 1: An ordinary pattern of data

| 1 | 2 | Margins | ||

|---|---|---|---|---|

| Is a hot dog a sandwich? | Yes | 4 | 3 | 7 |

| No | 4 | 4 | 8 | |

| Margins | 8 | 7 | 15 |

In this example, an approximately equal number of responses are given to both questions: 7 people say a hot dog is a sandwich, 8 say it is not; 8 people say a straw has 1 hole, 7 say it has 2. Further, the cross-tabulation of the two answers shows that people who give either answer to one question have no apparent tendencies to give a particular answer to another question: the people who say a hot dog is a sandwich are about even-odds to say a straw has 1 hole or 2; people who say a hot dog is not a sandwich are exactly even-odds to say a straw has 1 or 2 holes (and vice versa).

The probability of the observed pattern of responses is:

\[p=\frac{(A+B)!(C+D)!(A+C)!(B+D)!}{n!A!B!C!D!}=\frac{7!8!8!7!}{15!4!3!4!4!}=0.381\] Given that \(p=0.381\) is greater than any reasonable \(\alpha\)-rate, calculating just the probability of the observed pattern is enough to know that the null hypothesis will not be rejected and we will continue to assume no relationship between the responses to the two questions. However, since this is more-or-less a textbook, and because there is no better place than a textbook than to do things by the book, we will examine the patterns that are more extreme than the observed data given that the margins remain constant.

Here is one such more extreme pattern:

| 1 | 2 | Margins | ||

|---|---|---|---|---|

| Is a hot dog a sandwich? | Yes | 5 | 2 | 7 |

| No | 3 | 5 | 8 | |

| Margins | 8 | 7 | 15 |

Note that the margins in the above table are the same as in the first table: \(A+B=7\), \(C+D=8\), \(A+C=8\), and \(B+D\)=7. However, the cell counts that make up those margins are a little more lopsided: \(A\) and \(D\) are a bit larger than \(B\) and \(C\). The probability of this pattern is:

\[p=\frac{(A+B)!(C+D)!(A+C)!(B+D)!}{n!A!B!C!D!}=\frac{7!8!8!7!}{15!5!2!3!5!}=0.183\] The next most extreme pattern is:

| 1 | 2 | Margins | ||

|---|---|---|---|---|

| Is a hot dog a sandwich? | Yes | 6 | 1 | 7 |

| No | 2 | 6 | 8 | |

| Margins | 8 | 7 | 15 |

The probability of this pattern is:

\[p=\frac{(A+B)!(C+D)!(A+C)!(B+D)!}{n!A!B!C!D!}=\frac{7!8!8!7!}{15!6!1!2!6!}=0.03\] Finally, the most extreme pattern possible given that the margins are constant is:

| 1 | 2 | Margins | ||

|---|---|---|---|---|

| Is a hot dog a sandwich? | Yes | 6 | 1 | 7 |

| No | 2 | 6 | 8 | |

| Margins | 8 | 7 | 15 |

(A good sign that you have the most extreme pattern is that there is a 0 in at least one of the cells.)

The probability of this pattern is:

\[p=\frac{(A+B)!(C+D)!(A+C)!(B+D)!}{n!A!B!C!D!}=\frac{7!8!8!7!}{15!6!1!2!6!}=0.0012\] The sum of the probabilities for the observed pattern and all more extreme unobserved patterns:

\[p=0.381+0.183+0.030+0.001=0.596\] is the \(p\)-value for a directional (one-tailed) hypothesis that there will be \(A\) or more observations in the \(A\) cell. That sort of test is helpful if we have a hypothesis about the odds ratio associated with observations being in the \(A\) cell, but isn’t super-relevant to the kinds of problems we are investigating here. More pertinent is the two-tailed test (of a point hypothesis) that there is any relationship between the two factors. To get that, we would repeat the above procedure the other way (switching values such that the \(B\) and \(C\) cells get bigger) and add all of the probabilities.

But, that’s a little too much work, even going by the book. Modern technology provides us with an easier solution. Using R, we can arrange the observed the data into a matrix:

## [,1] [,2]

## [1,] 4 3

## [2,] 4 4and then run an exact test with the base command fisher.test(). To check our math from above, we can use the option alternative = "greater":

##

## Fisher's Exact Test for Count Data

##

## data: exact.example.1

## p-value = 0.5952

## alternative hypothesis: true odds ratio is greater than 1

## 95 percent confidence interval:

## 0.1602859 Inf

## sample estimates:

## odds ratio

## 1.307924To test the two-tailed hypothesis, we can either use the option alternative = "two.sided" or – since two.sided is the default, just leave it out:

##

## Fisher's Exact Test for Count Data

##

## data: exact.example.1

## p-value = 1

## alternative hypothesis: true odds ratio is not equal to 1

## 95 percent confidence interval:

## 0.1164956 15.9072636

## sample estimates:

## odds ratio

## 1.307924Either way, the \(p\)-value is greater than any \(\alpha\)-rate we might want to use, so we continue to assume the null hypothesis that there is no relationship between the factors, in this case: that responses on the two questions are independent.

Example 2: A Pattern Indicating a Significant Relationship

| 1 | 2 | Margins | ||

|---|---|---|---|---|

| Is a hot dog a sandwich? | Yes | 7 | 0 | 7 |

| No | 0 | 8 | 8 | |

| Margins | 7 | 8 | 15 |

This table represents the most extreme possible patterns of responses: everybody who said a hot dog was a sandwich also said that a straw has one hole, and nobody who said a hot dog was a sandwich said that a straw has two holes. There is no more possible extreme on the other side of the responses: if \(A\) equalled 0 and \(B\) equalled 7, then \(C\) would have to be 7 and \(D\) would have to be 1 to keep the margins the same.

Thus, for this pattern, the one-tailed \(p\)-value for the observed data and the two-tailed \(p\)-value are both the probability of the observed pattern:

## [,1] [,2]

## [1,] 7 0

## [2,] 0 8##

## Fisher's Exact Test for Count Data

##

## data: exact.example.2

## p-value = 0.0001554

## alternative hypothesis: true odds ratio is not equal to 1

## 95 percent confidence interval:

## 5.83681 Inf

## sample estimates:

## odds ratio

## InfExample 3: A Pattern That Looks Extreme But Does Not Represent a Significant Relationship

Finally, let’s look at a pattern that seems to be as extreme as it possibly can be:

| 1 | 2 | Margins | ||

|---|---|---|---|---|

| Is a hot dog a sandwich? | Yes | 7 | 8 | 15 |

| No | 0 | 0 | 0 | |

| Margins | 7 | 8 | 15 |

In this observed pattern of responses, there is a big difference between \(A\) and \(C\) and between \(B\) and \(D\). Crucially, though, there is hardly any difference between \(A\) and \(B\) or between \(C\) and \(D\). Even though there are no moves we can make that maintain the same marginal numbers, the pattern here is one where the responses for one question don’t depend at all on the responses for the other question: in this set of data, people are split on the straw question but nobody regardless of their answer to the straw question thinks that a hot dog is a sandwich195. The \(p\)-value for the Exact Test accounts for the lack of dependency between the responses:

##

## Fisher's Exact Test for Count Data

##

## data: exact.example.3

## p-value = 1

## alternative hypothesis: true odds ratio is not equal to 1

## 95 percent confidence interval:

## 0 Inf

## sample estimates:

## odds ratio

## 011.2.1.2.1 Median Test

The core concept of the median test is: are there more values that are greater than the overall median of the data than there are from one sample than from another sample? The idea is that if one sample of data has values that tend to be bigger than values in another sample – with the overall median observation as the guide to what is bigger and what is smaller – then there is a difference between the two samples.

The median test arranges the observed data into two categories: less than the median and greater than the median. Values that are equal to the median can be part of either category – it’s largely a matter of preference – so those category designations, more precisely, can either be less than or equal to the median/greater than the median or less than the median/greater than or equal to the median (the difference will only be noticeable in rare, borderline situations). When those binary categories are crossed with the membership of values in one of two samples, the result is a \(2 \times 2\) contingency table: precisely the kind of thing we analyze with a \(\chi^2\) test of statistical independence or – if \(f_e\) is too small for the \(\chi^2\) test – the Exact Test.

To illustrate the median test, we will use two examples: one an example of data that do not differ with respect to the median value between groups and another where the values do differ between groups with respect to the overall median.

Example 1: an Ordinary Configuration

Imagine, if you will, the following data are observed for the dependent variable in an experiment with a control condition and an experimental condition:

| Control Condition | Experimental Condition | |

|---|---|---|

| Below Median (\(<57\) | 2, 12, 18, 23, 31, 35 | 4, 15, 16, 28, 44, 49 |

| At or Above Median (\(\ge 57\)) | 57, 63, 63, 66, 66, 69, 75 | 64, 67, 77, 84, 84, 85, 98, 100, 102 |

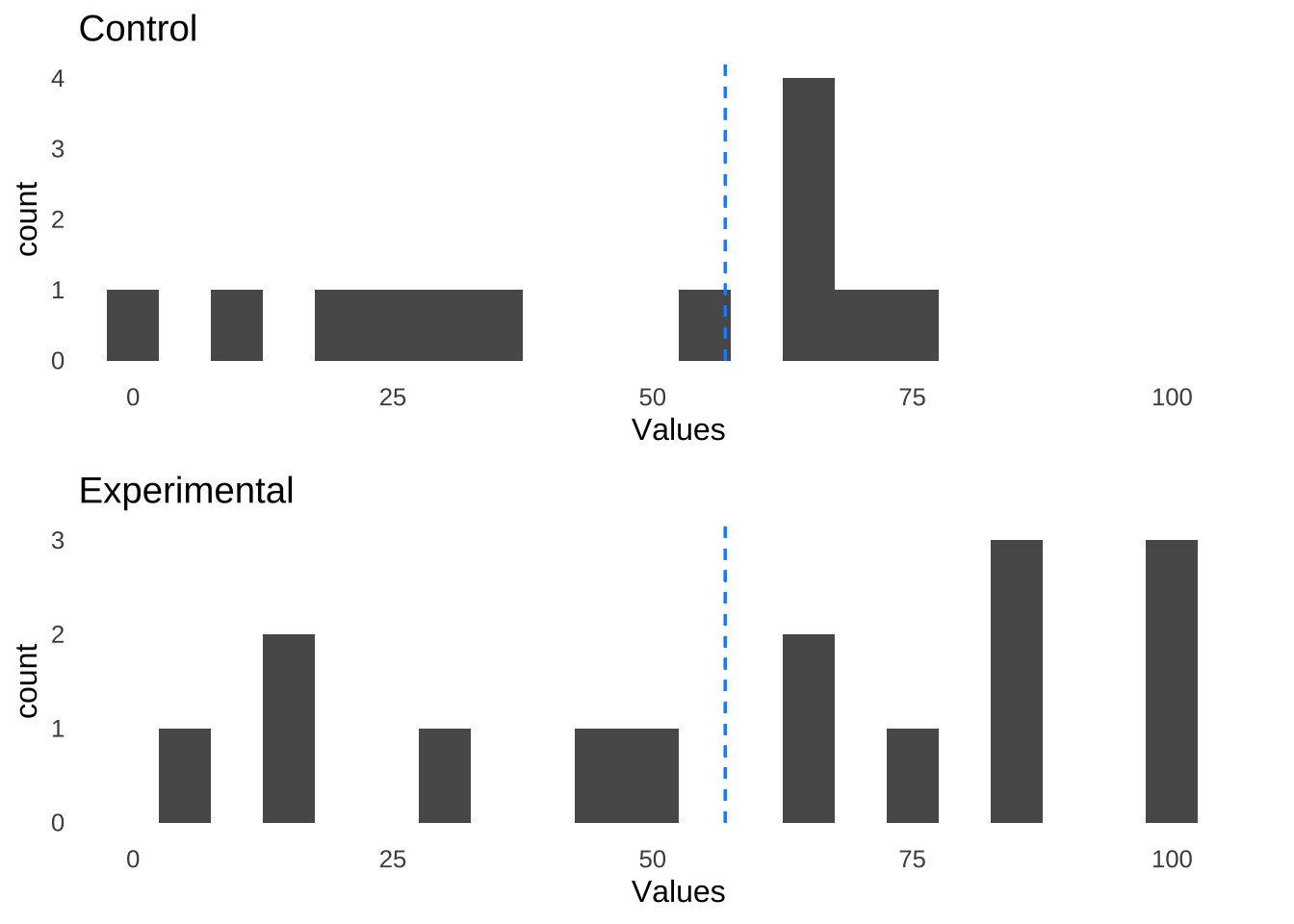

A plot of the above data with annotations for the overall median value (57, in this case), illustrates the similarity of the two groups:

Figure 11.6: Control Group and Experimental Group Data Similar to Each Other With Respect to the Overall Median of 57

Tallying up how many values are below the median and how many values are greater than or equal to the median in each group results in the following contingency table:

| Control | Experimental | |

|---|---|---|

| \(<57\) | 7 | 9 |

| \(\ge 57\) | 6 | 6 |

This arrangement lends itself nicely to a \(\chi^2\) test of statistical independence with \(df=1\):

\[\chi^2_{obs}(1)=\frac{(7-7.43)^2}{7.43}+\frac{(9-8.57)^2}{8.57}+\frac{(6-5.57)^2}{5.57}+\frac{(6-6.43)^2}{6.43}=0.1077, p = 0.743\]

The results of the \(\chi^2\) test lead to the continued assumption of independence between between category (less than/greater than or equal to the median) and condition (control/experimental), which we would interpret as there being no effect of being in the control vs. the experimental group with regard to the dependent variable.

Example 2: A Special Configuration

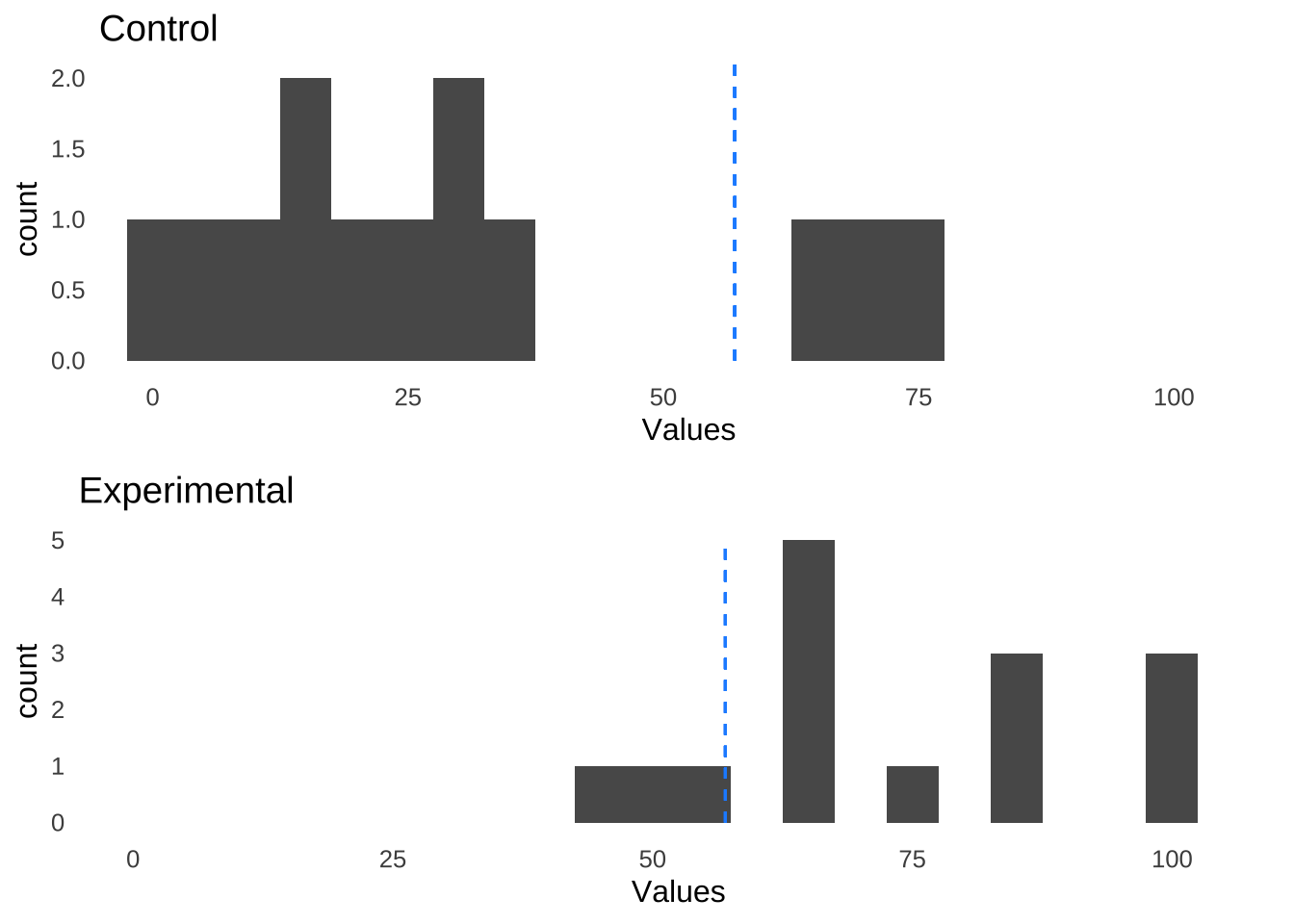

In the second example, there are more observed values in the control condition that are less than the median than there are values greater than or equal to the median and more observed values in the experimental condition that are greater than or equal to the median than there are values less than the median:

| Control Condition | Experimental Condition | |

|---|---|---|

| Below Median (\(<57\)) | 2, 4, 12, 15, 16, 18, 23, 28, 31, 35 | 44, 49 |

| At or Above Median (\(\ge 57\)) | 66, 69, 75 | 57, 63, 63, 64, 66, 67, 77, 84, 84, 85, 98, 100, 102 |

Counting up how many values in each group are either less than or greater than or equal to the overall median gives the following contingency table:

| Control | Experimental | |

|---|---|---|

| \(<57\) | 10 | 2 |

| \(\ge 57\) | 3 | 13 |

which in turn leads to the following \(\chi^2\) test result: