23 Ordinal Predicted Variable

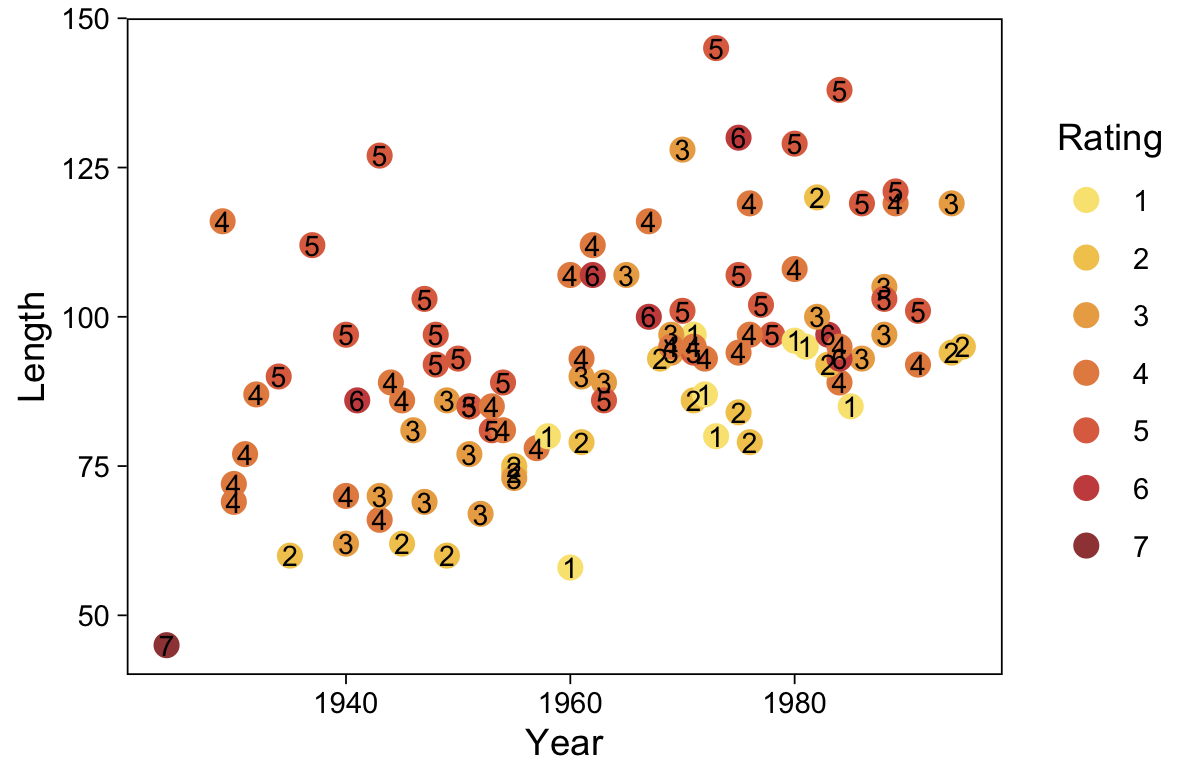

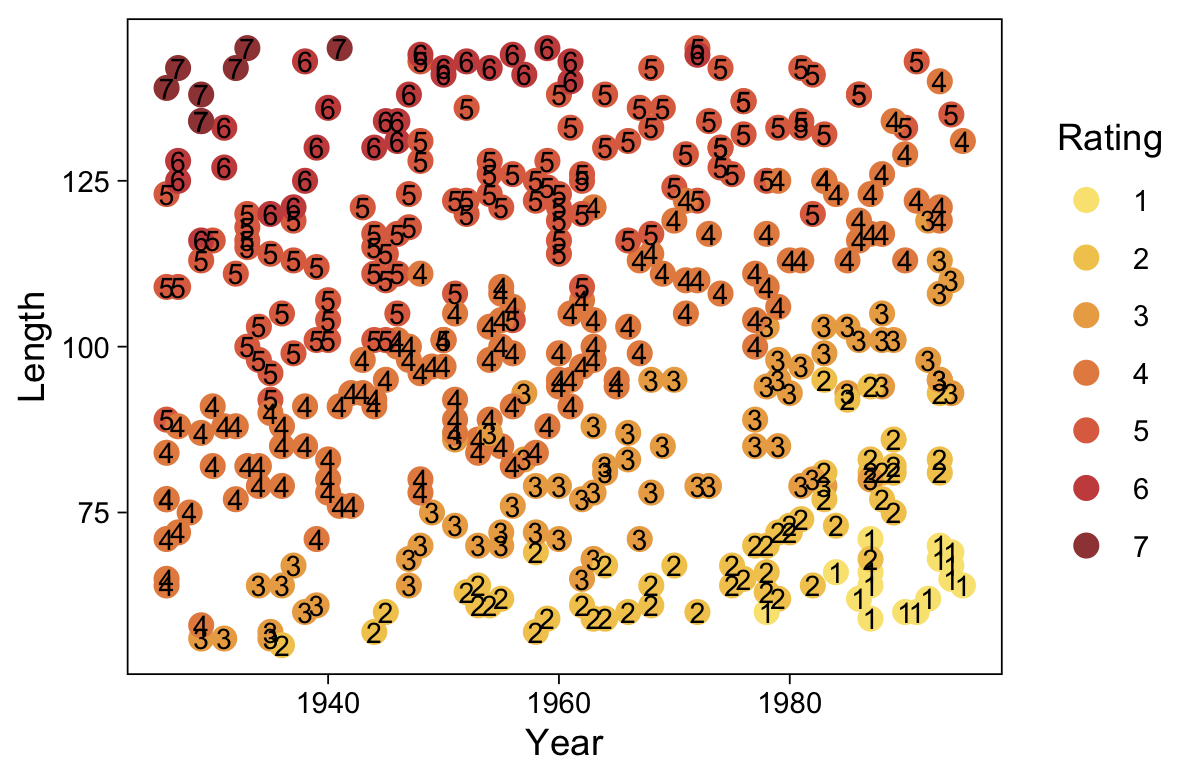

This chapter considers data that have an ordinal predicted variable. For example, we might want to predict people’s happiness ratings on a 1-to-7 scale as a function of their total financial assets. Or we might want to predict ratings of movies as a function of the year they were made.

One traditional treatment of this sort of data structure is called ordinal or ordered probit regression. We will consider a Bayesian approach to this model. As usual, in Bayesian software, it is easy to generalize the traditional model so it is robust to outliers, allows different variances within levels of a nominal predictor, or has hierarchical structure to share information across levels or factors as appropriate.

In the context of the generalized linear model (GLM) introduced in Chapter 15, this chapter’s situation involves an inverse-link function that is a thresholded cumulative normal with a categorical distribution for describing noise in the data, as indicated in the fourth row of Table 15.2 (p. 443). For a reminder of how this chapter’s combination of predicted and predictor variables relates to other combinations, see Table 15.3 (p. 444). (Kruschke, 2015, p. 671, emphasis in the original)

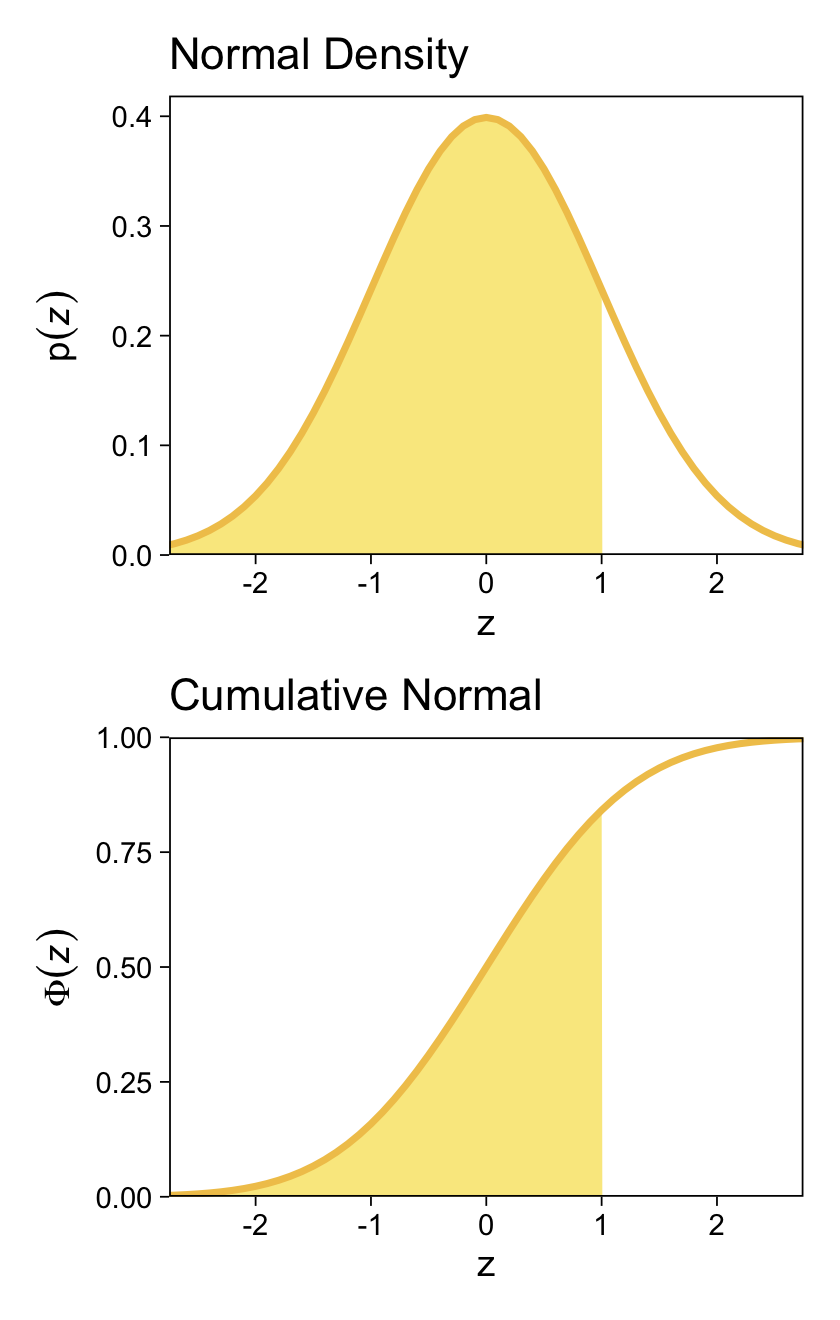

We might follow Kruschke’s advice and rewind a little before tackling this chapter. The cumulative normal function is denoted Φ(x;μ,σ), where x is some real number and μ and σ have their typical meaning. The function is cumulative in that it produces values ranging from zero to 1. Figure 15.8 showed an example of the normal distribution atop of the cumulative normal. Here it is, again.

library(tidyverse)

d <-

tibble(z = seq(from = -3, to = 3, by = .1)) %>%

# add the density values

mutate(`p(z)` = dnorm(z, mean = 0, sd = 1),

# add the CDF values

`Phi(z)` = pnorm(z, mean = 0, sd = 1))

head(d)## # A tibble: 6 × 3

## z `p(z)` `Phi(z)`

## <dbl> <dbl> <dbl>

## 1 -3 0.00443 0.00135

## 2 -2.9 0.00595 0.00187

## 3 -2.8 0.00792 0.00256

## 4 -2.7 0.0104 0.00347

## 5 -2.6 0.0136 0.00466

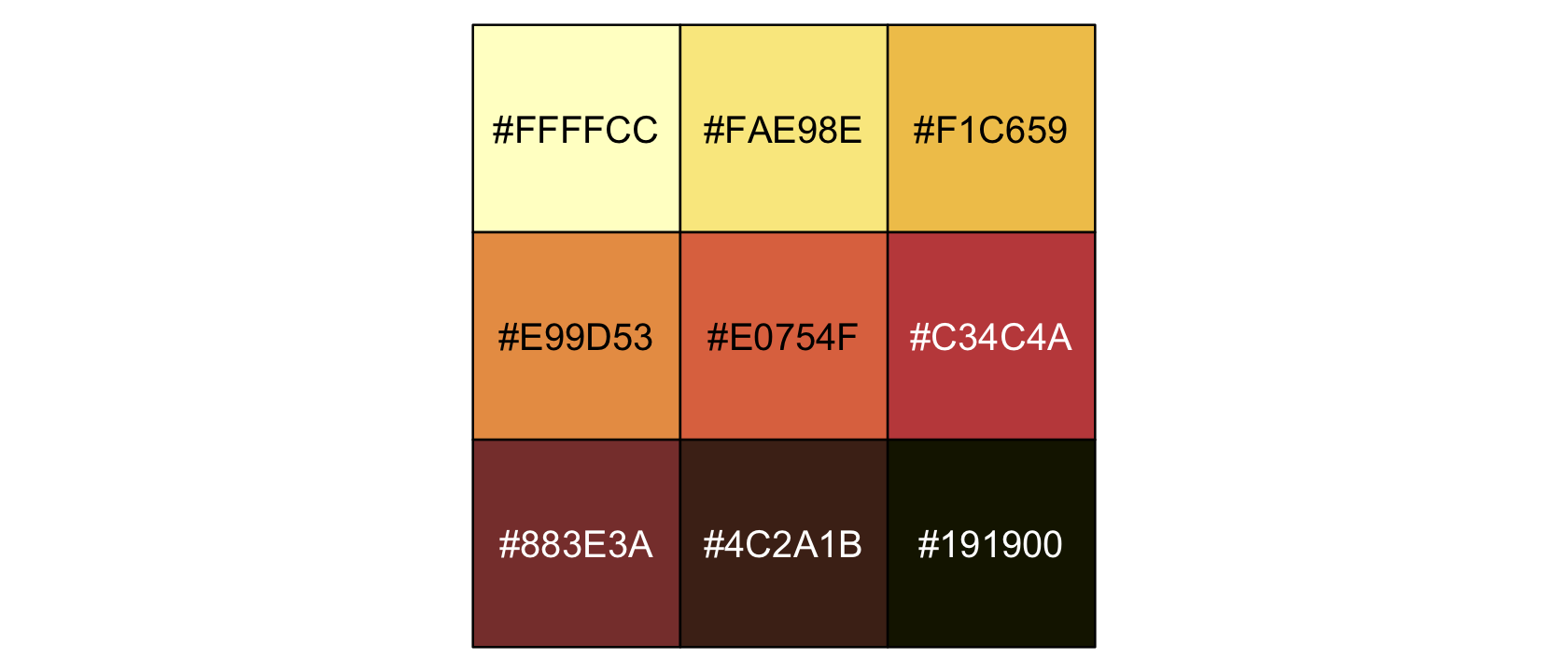

## 6 -2.5 0.0175 0.00621It’s time to talk color and theme. For this chapter, we’ll take our color palette from the scico package (Thomas Lin Pedersen & Crameri, 2021), which provides 17 perceptually-uniform and colorblind-safe palettes based on the work of Fabio Crameri. Our palette of interest will be "lajolla".

library(scico)

sl <- scico(palette = "lajolla", n = 9)

scales::show_col(sl)

Our overall plot theme will be based on ggplot2::theme_linedraw() with just a few adjustments.

theme_set(

theme_linedraw() +

theme(panel.grid = element_blank(),

strip.background = element_rect(color = sl[9], fill = sl[9]),

strip.text = element_text(color = sl[1]))

)Now plot!

p1 <-

d %>%

ggplot(aes(x = z, y = `p(z)`)) +

geom_area(aes(fill = z <= 1),

show.legend = F) +

geom_line(linewidth = 1, color = sl[3]) +

scale_fill_manual(values = c("transparent", sl[2])) +

scale_y_continuous(expand = expansion(mult = c(0, 0.05))) +

labs(title = "Normal Density",

y = expression(p(italic(z))))

p2 <-

d %>%

ggplot(aes(x = z, y = `Phi(z)`)) +

geom_area(aes(fill = z <= 1),

show.legend = F) +

geom_line(linewidth = 1, color = sl[3]) +

scale_fill_manual(values = c("transparent", sl[2])) +

scale_y_continuous(expand = expansion(mult = c(0, 0)), limits = 0:1) +

labs(title = "Cumulative Normal",

y = expression(Phi(italic(z))))

# combine and adjust with patchwork

library(patchwork)

p1 / p2 &

scale_x_continuous(breaks = -2:2) &

coord_cartesian(xlim = c(-2.5, 2.5))

For both plots, z is in a standardized metric (i.e., z-score). With the cumulative normal function, the cumulative probability Φ(z) increases nonlinearly with the z-scores such that, much like with the logistic curve, the greatest change occurs around z=0 and tapers off in the tails.

The inverse of Φ(x) is the probit function. As indicated in the above block quote, we’ll be making extensive use of the probit function in this chapter for our Bayesian models.

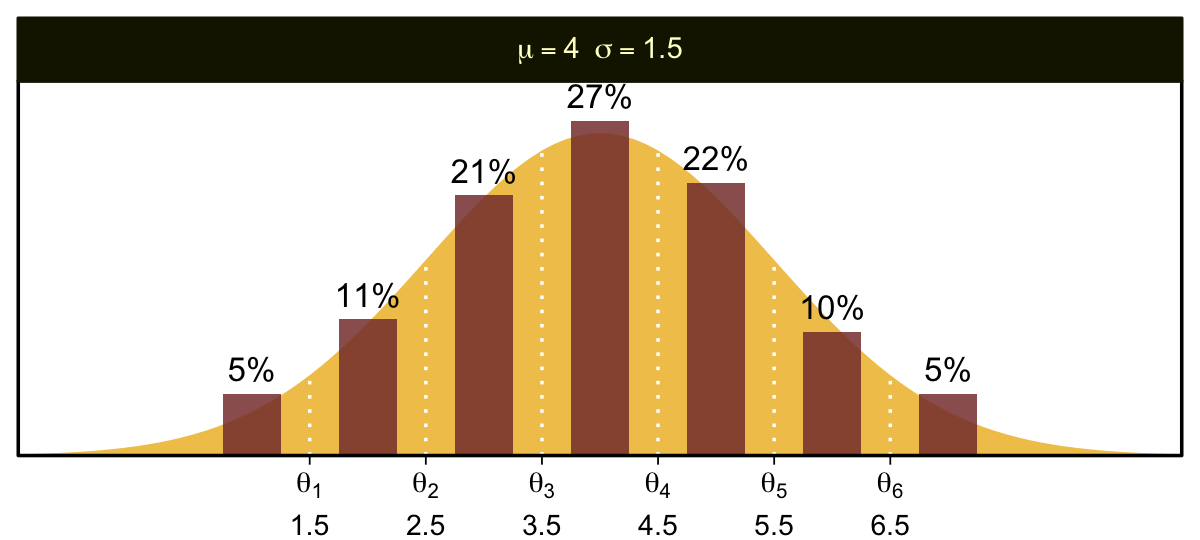

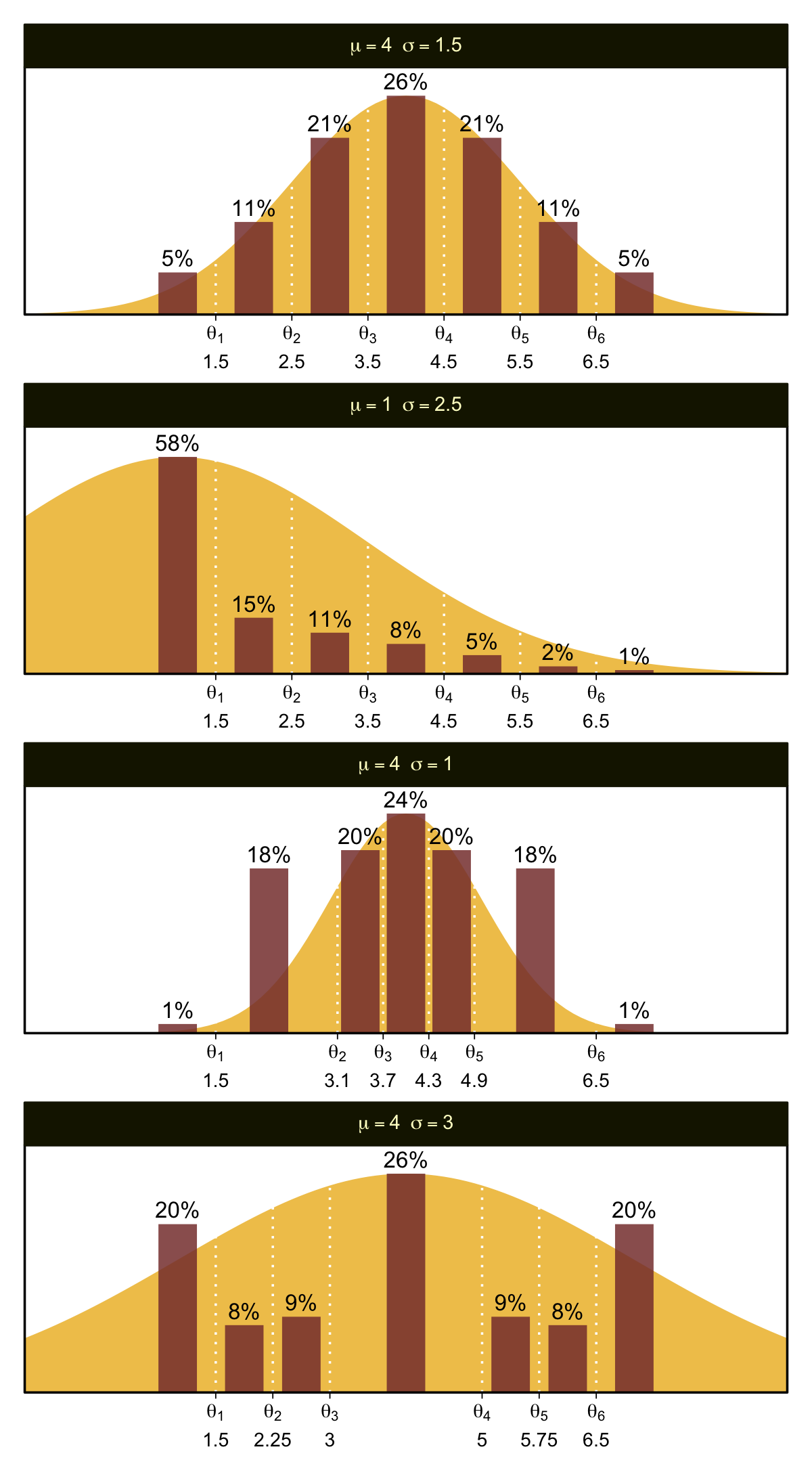

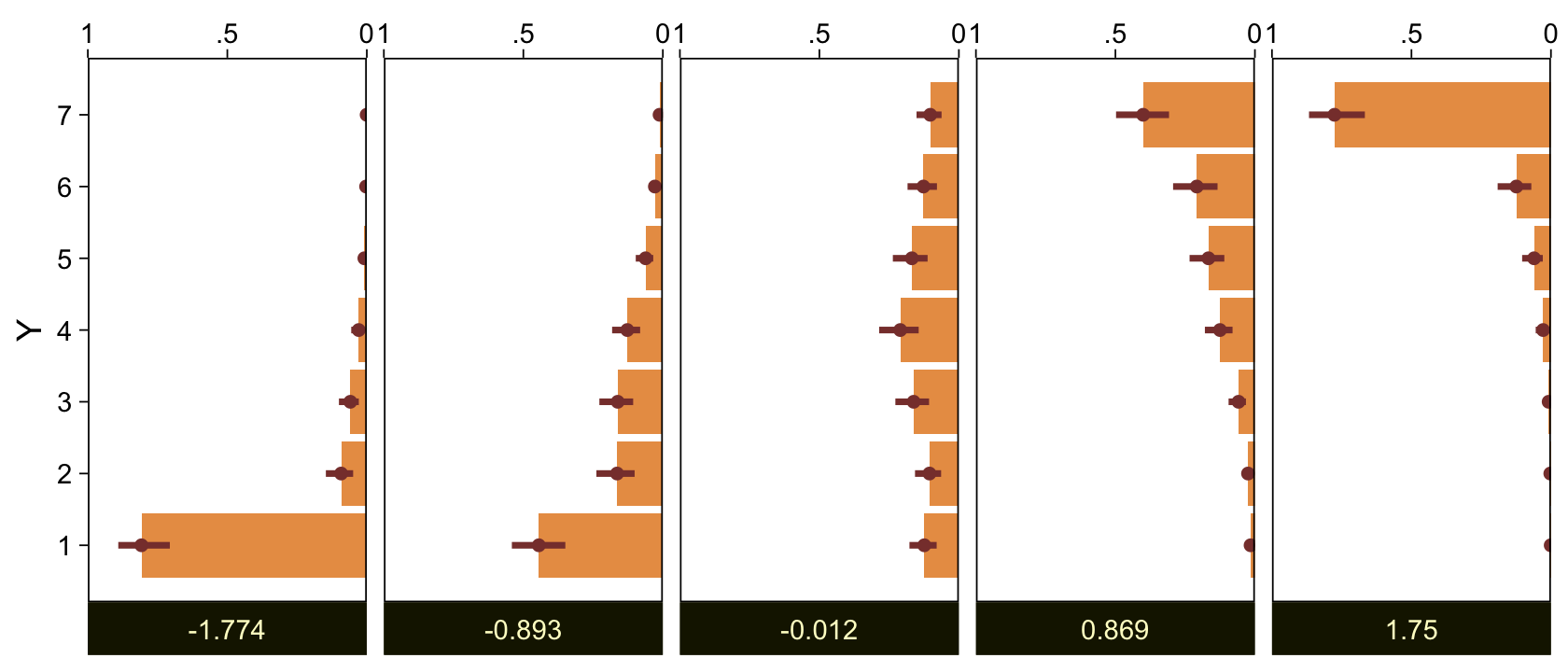

23.1 Modeling ordinal data with an underlying metric variable

You can imagine that the distribution of ordinal values might not resemble a normal distribution, even though the underlying metric values are normally distributed. Figure 23.1 shows some examples of ordinal outcome probabilities generated from an underlying normal distribution. The horizontal axis is the underlying continuous metric value. Thresholds are plotted as vertical dashed lines, labeled θ. In all examples, the ordinal scale has 7 levels, and hence, there are 6 thresholds. The lowest threshold is set at θ1=1.5 (to separate outcomes 1 and 2), and the highest threshold is set at θ1=6.5 (to separate outcomes 6 and 7). The normal curve in each panel shows the distribution of underlying continuous values. What differs across panels are the settings of means, standard deviations, and remaining thresholds. (p. 672)

The various Figure 23.1 subplots require a lot of ins and outs. We’ll start with the top panel and build from there. Here is how we might make the values necessary for the density curve.

den <-

# define the parameters for the underlying normal distribution

tibble(mu = 4,

sigma = 1.5) %>%

mutate(strip = str_c("mu==", mu, "~~sigma==", sigma)) %>%

# this will allow us to rescale the density in terms of the bar plot

mutate(multiplier = 26 / dnorm(mu, mu, sigma)) %>%

# we need values for the x-axis

expand_grid(y = seq(from = -1, to = 9, by = .1)) %>%

# compute the density values

mutate(density = dnorm(y, mu, sigma)) %>%

# use that multiplier value from above to rescale the density values

mutate(percent = density * multiplier)

head(den)## # A tibble: 6 × 7

## mu sigma strip multiplier y density percent

## <dbl> <dbl> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 4 1.5 mu==4~~sigma==1.5 97.8 -1 0.00103 0.101

## 2 4 1.5 mu==4~~sigma==1.5 97.8 -0.9 0.00128 0.125

## 3 4 1.5 mu==4~~sigma==1.5 97.8 -0.8 0.00159 0.155

## 4 4 1.5 mu==4~~sigma==1.5 97.8 -0.7 0.00196 0.192

## 5 4 1.5 mu==4~~sigma==1.5 97.8 -0.6 0.00241 0.236

## 6 4 1.5 mu==4~~sigma==1.5 97.8 -0.5 0.00295 0.289Before making the data for the bar portion of the plot, we’ll need to define the θ-values they’ll be placed between. We also need to define the exact points on the x-axis from which we’d like those bars to originate. Those points, which we’ll call label_1, will double as names for the individual bars.

(theta_1 <- seq(from = 1.5, to = 6.5, by = 1))## [1] 1.5 2.5 3.5 4.5 5.5 6.5(label_1 <- 1:7)## [1] 1 2 3 4 5 6 7Now we can define the data for the bars.

bar <-

# define the parameters for the underlying normal distribution

tibble(mu = 4,

sigma = 1.5) %>%

mutate(strip = str_c("mu==", mu, "~~sigma==", sigma)) %>%

# take random draws from the underlying normal distribution

mutate(draw = map2(mu, sigma, ~rnorm(1e4, mean = .x, sd = .y))) %>%

unnest(draw) %>%

# bin those draws into ordinal categories defined by `theta_1`

# and named by `label_1`

mutate(y = case_when(

draw < theta_1[1] ~ label_1[1],

draw < theta_1[2] ~ label_1[2],

draw < theta_1[3] ~ label_1[3],

draw < theta_1[4] ~ label_1[4],

draw < theta_1[5] ~ label_1[5],

draw < theta_1[6] ~ label_1[6],

draw >= theta_1[6] ~ label_1[7]

)) %>%

# summarize

count(y) %>%

mutate(percent = (100 * n / sum(n)) %>% round(0)) %>%

mutate(percent_label = str_c(percent, "%"),

percent_max = max(percent))

head(bar)## # A tibble: 6 × 5

## y n percent percent_label percent_max

## <int> <int> <dbl> <chr> <dbl>

## 1 1 470 5 5% 27

## 2 2 1104 11 11% 27

## 3 3 2080 21 21% 27

## 4 4 2671 27 27% 27

## 5 5 2160 22 22% 27

## 6 6 1047 10 10% 27Make the top subplot.

bar %>%

ggplot(aes(x = y, y = percent)) +

geom_area(data = den,

fill = sl[3]) +

geom_vline(xintercept = theta_1, color = "white", linetype = 3) +

geom_col(width = .5, alpha = .85, fill = sl[7]) +

geom_text(aes(y = percent + 2, label = percent_label),

size = 3.5) +

annotate(geom = "text",

x = theta_1, y = -5.55, label = theta_1,

size = 3) +

scale_x_continuous(NULL, expand = c(0, 0),

breaks = theta_1,

labels = parse(text = str_c("theta[", 1:6, "]"))) +

scale_y_continuous(NULL, breaks = NULL, expand = expansion(mult = c(0, 0.06))) +

coord_cartesian(ylim = c(0, 28.5), clip = F) +

theme(plot.margin = margin(5.5, 5.5, 11, 5.5)) +

facet_wrap(~ strip, labeller = label_parsed)

This method works okay for plotting one or two panels. The sheer number of code lines and moving parts seem unwieldy for plotting four. It’d be convenient if we could save the density information for all four panels in one data object. Here’s one way how.

den <-

tibble(panel = 1:4,

mu = c(4, 1, 4, 4),

sigma = c(1.5, 2.5, 1, 3)) %>%

mutate(strip = factor(panel,

labels = str_c("mu==", mu, "~~sigma==", sigma),

ordered = T)) %>%

mutate(multiplier = c(26, 58, 24, 26) / dnorm(mu, mu, sigma)) %>%

expand_grid(y = seq(from = -1, to = 9, by = .1)) %>%

mutate(density = dnorm(y, mu, sigma)) %>%

mutate(percent = density * multiplier)

head(den)## # A tibble: 6 × 8

## panel mu sigma strip multiplier y density percent

## <int> <dbl> <dbl> <ord> <dbl> <dbl> <dbl> <dbl>

## 1 1 4 1.5 mu==4~~sigma==1.5 97.8 -1 0.00103 0.101

## 2 1 4 1.5 mu==4~~sigma==1.5 97.8 -0.9 0.00128 0.125

## 3 1 4 1.5 mu==4~~sigma==1.5 97.8 -0.8 0.00159 0.155

## 4 1 4 1.5 mu==4~~sigma==1.5 97.8 -0.7 0.00196 0.192

## 5 1 4 1.5 mu==4~~sigma==1.5 97.8 -0.6 0.00241 0.236

## 6 1 4 1.5 mu==4~~sigma==1.5 97.8 -0.5 0.00295 0.289Notice we added a panel column for indexing the subplots. Next we’ll need to define theta_[i] and label_[i] values for the remaining plots.

theta_3 <- c(1.5, 3.1, 3.7, 4.3, 4.9, 6.5)

theta_4 <- c(1.5, 2.25, 3, 5, 5.75, 6.5)

label_3 <- c(1, 2.2, 3.4, 4, 4.6, 5.7, 7)

label_4 <- c(1, 1.875, 2.625, 4, 5.375, 6.125, 7)Since the values are the same for the top two panels, we didn’t bother defining a theta_2 or label_2. Now we have all the theta_[i] and label_[i] values, we’ll want to make a function that can use them within case_when() for any of the four panels. Here’s one way to make such a function, which we’ll call make_ordinal().

make_ordinal <- function(x, panel) {

if (panel < 3) {

case_when(

x < theta_1[1] ~ label_1[1],

x < theta_1[2] ~ label_1[2],

x < theta_1[3] ~ label_1[3],

x < theta_1[4] ~ label_1[4],

x < theta_1[5] ~ label_1[5],

x < theta_1[6] ~ label_1[6],

x >= theta_1[6] ~ label_1[7]

)

} else if (panel == 3) {

case_when(

x < theta_3[1] ~ label_3[1],

x < theta_3[2] ~ label_3[2],

x < theta_3[3] ~ label_3[3],

x < theta_3[4] ~ label_3[4],

x < theta_3[5] ~ label_3[5],

x < theta_3[6] ~ label_3[6],

x >= theta_3[6] ~ label_3[7]

)

} else {

case_when(

x < theta_4[1] ~ label_4[1],

x < theta_4[2] ~ label_4[2],

x < theta_4[3] ~ label_4[3],

x < theta_4[4] ~ label_4[4],

x < theta_4[5] ~ label_4[5],

x < theta_4[6] ~ label_4[6],

x >= theta_4[6] ~ label_4[7]

)

}

}Now put those values and our make_ordinal() function to work to make the data for the bar plots.

set.seed(23)

bar <-

tibble(panel = 1:4,

mu = c(4, 1, 4, 4),

sigma = c(1.5, 2.5, 1, 3)) %>%

mutate(strip = factor(panel,

labels = str_c("mu==", mu, "~~sigma==", sigma),

ordered = T)) %>%

mutate(draw = map2(mu, sigma, ~rnorm(1e5, mean = .x, sd = .y))) %>%

unnest(draw) %>%

mutate(y = map2_dbl(draw, panel, make_ordinal)) %>%

group_by(panel, strip) %>%

count(y) %>%

mutate(percent = (100 * n / sum(n)) %>% round(0)) %>%

mutate(percent_label = str_c(percent, "%"),

percent_max = max(percent))

head(bar)## # A tibble: 6 × 7

## # Groups: panel, strip [1]

## panel strip y n percent percent_label percent_max

## <int> <ord> <dbl> <int> <dbl> <chr> <dbl>

## 1 1 mu==4~~sigma==1.5 1 4763 5 5% 26

## 2 1 mu==4~~sigma==1.5 2 10844 11 11% 26

## 3 1 mu==4~~sigma==1.5 3 21174 21 21% 26

## 4 1 mu==4~~sigma==1.5 4 26256 26 26% 26

## 5 1 mu==4~~sigma==1.5 5 21233 21 21% 26

## 6 1 mu==4~~sigma==1.5 6 10951 11 11% 26Like before, we added a panel index. As our final preparatory step, we will make something of a super function with which we’ll plug the desired information into ggplot2, which will then make each subplot. Much of the plotting and data wrangling code will be the same across subplots. As far as I can tell, we only need to vary four parameters. First, we’ll want to be able to subset the data by panel index. We’ll do that with the panel_n argument. Second, we’ll want to select which of the theta_[i] values we’d like to use in geom_vline(), annotate(), and scale_x_continuous(). We’ll do that with the theta argument. We’ll make a y_second_x to pin down exactly where below the x-axis we’d like to put those secondary axis values defined by the theta_[i] values. Finally, we’ll want an ylim_ub parameter to set the upper limit of the y-axis with. The name of our four-parameter super function will be plot_bar_den().

plot_bar_den <- function(panel_n, theta, y_second_x, ylim_ub) {

bar %>%

filter(panel == panel_n) %>%

ggplot(aes(x = y)) +

geom_area(data = den %>% filter(panel == panel_n),

aes(y = percent),

fill = sl[3]) +

geom_vline(xintercept = theta, color = "white", linetype = 3) +

geom_linerange(aes(ymin = 0, ymax = percent),

color = sl[7], alpha = .85, linewidth = 8) +

geom_text(aes(y = percent + (percent_max / 15), label = percent_label),

size = 3.5) +

annotate(geom = "text", x = theta, y = y_second_x,

label = theta, size = 3) +

scale_x_continuous(NULL,

breaks = theta,

labels = parse(text = str_c("theta[", 1:6, "]")),

expand = c(0, 0)) +

scale_y_continuous(NULL, breaks = NULL, expand = expansion(mult = c(0, 0.05))) +

coord_cartesian(ylim = c(0, ylim_ub),

clip = F) +

theme(plot.margin = margin(5.5, 5.5, 11, 5.5)) +

facet_wrap(~ strip, labeller = label_parsed)

}Finally, make all four subplots and combine them with patchwork syntax!

p1 <- plot_bar_den(panel_n = 1,

theta = theta_1,

# y_second_x = -6.75,

y_second_x = -5.55,

ylim_ub = 28)

p2 <- plot_bar_den(panel_n = 2,

theta = theta_1,

# y_second_x = -15.5 * 3/4,

y_second_x = -12.37,

ylim_ub = 63)

p3 <- plot_bar_den(panel_n = 3,

theta = theta_3,

# y_second_x = -6.25 * 3/4,

y_second_x = -5.12,

ylim_ub = 25.75)

p4 <- plot_bar_den(panel_n = 4,

theta = theta_4,

# y_second_x = -6.75 * 3/4,

y_second_x = -5.55,

ylim_ub = 28)

p1 / p2 / p3 / p4

Oh mamma. “The crucial concept in Figure 23.1 is that the probability of a particular ordinal outcome is the area under the normal curve between the thresholds of that outcome” (p. 672, emphasis in the original). In each of the subplots, we used six thresholds to discretize the continuous data into seven categories. More generally, we need K thresholds to make K+1 ordinal categories. To make this work,

the idea is that we consider the cumulative area under the normal up the high-side threshold, and subtract away the cumulative area under the normal up to the low-side threshold. Recall that the cumulative area under the standardized normal is denoted Φ(z), as was illustrated in Figure 15.8 [which we remade at the top of this chapter]. Thus, the area under the normal to the left of θk is Φ((θk−μ)/σ), and the area under the normal to the left of θk−1 is Φ((θk−1−μ)/σ). Therefore, the area under the normal curve between the two thresholds, which is the probability of outcome k, is

p(y=k|μ,σ,{θj})=Φ((θk−μ)/σ)−Φ((θk−1−μ)/σ)

[This equation] applies even to the least and greatest ordinal values if we append two “virtual” thresholds at −∞ and +∞…

Thus, a normally distributed underlying metric value can yield a clearly non-normal distribution of discrete ordinal values. This result does not imply that the ordinal values can be treated as if they were themselves metric and normally distributed; in fact it implies the opposite: We might be able to model a distribution of ordinal values as consecutive intervals of a normal distribution on an underlying metric scale with appropriately positioned thresholds. (pp. 674–675)

23.2 The case of a single group

Given a model with no predictors, “if there are K ordinal values, the model has K+1 parameters: θ1,…,θK−1,μ, and σ. If you think about it a moment, you’ll realize that the parameter values trade-off and are undetermined” (p. 675). The solution Kruschke took throughout this chapter was to fix the two thresholds at the ends, θ1 and θK−1, to the constants

θ1≡1+0.5andθK−1≡K−0.5.

For example, all four subplots from Figure 23.1 had K=7 categories, ranging from 1 to 7. Following Kruschke’s convention would mean setting the endmost thresholds to

θ1≡1.5andθ6≡6.5.

As we’ll see, there are other ways to parameterize these models.

23.2.1 Implementation in JAGS brms.

The syntax to fit a basic ordered probit model with brms::brm() is pretty simple.

fit <-

brm(data = my_data,

family = cumulative(probit),

y ~ 1,

prior(normal(0, 4), class = Intercept))The family = cumulative(probit) tells brms you’d like to use the probit link for the ordered-categorical data. It’s important to specify probit because the brms default is to use the logit link, instead. We’ll talk more about that approach at the end of this chapter.

Remember how, at the end of the last section, we said there are other ways to parameterize the ordered probit model? As it turns out, brms does not follow Kruschke’s approach for fixing the thresholds on the ends. Rather, brms freely estimates all thresholds, θ1,...,θK−1, by fixing μ=0 and σ=1. That is, instead of estimating μ and σ from the normal cumulative density function Φ(x), brms::brm() uses the standard normal cumulative density function Φ(z).

This all probably seems abstract. We’ll get a lot of practice comparing the two approaches as we go along. Each has its strengths and weaknesses. At this point, the thing to get is that when fitting a single-group ordered-probit model with the brm() function, there will be no priors for μ and σ. We only have to worry about setting the priors for all K−1 thresholds. And because those thresholds are conditional on Φ(z), we should think about their priors with respect to the scale of standard normal distribution. Thus, to continue on with Kruschke’s minimally-informative prior approach, something like prior(normal(0, 4), class = Intercept) might be a good starting place. Do feel free to experiment with different settings.

23.2.2 Examples: Bayesian estimation recovers true parameter values.

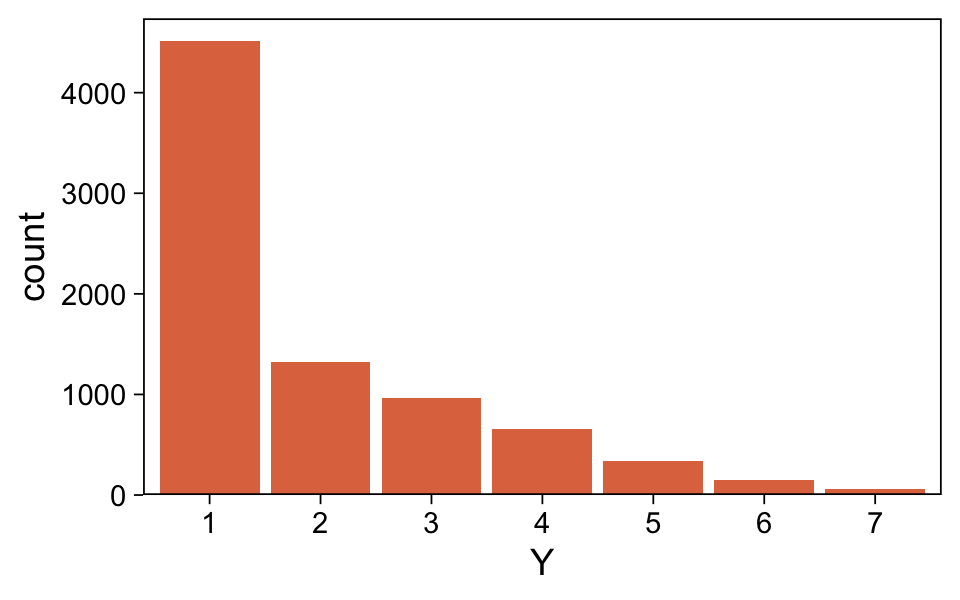

The data for Kruschke’s first example come from his OrdinalProbitData-1grp-1.csv file. Load the data.

my_data_1 <- read_csv("data.R/OrdinalProbitData-1grp-1.csv")

glimpse(my_data_1)## Rows: 100

## Columns: 1

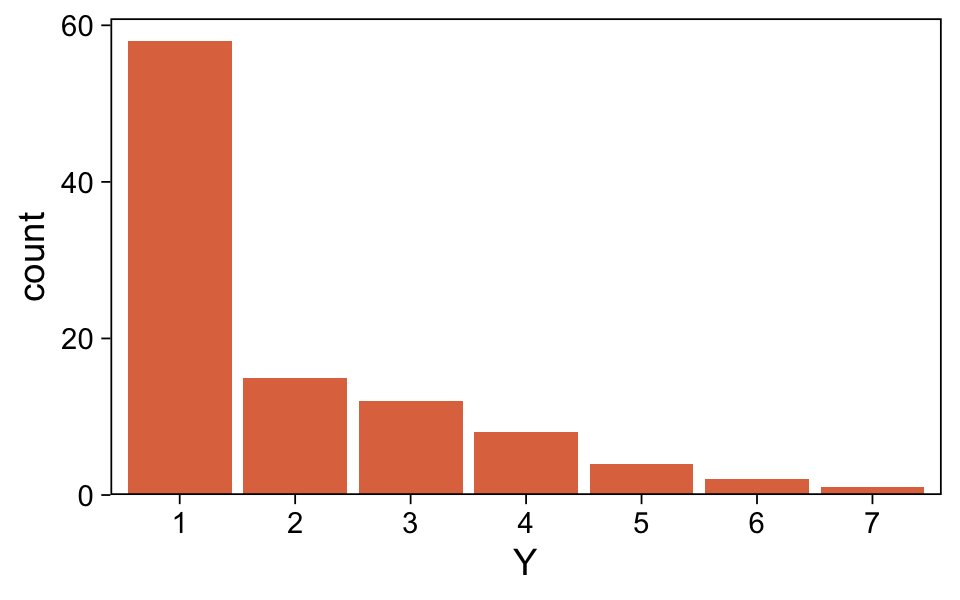

## $ Y <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…Plot the distribution for Y.

my_data_1 %>%

mutate(Y = factor(Y)) %>%

ggplot(aes(x = Y)) +

geom_bar(fill = sl[5]) +

scale_y_continuous(expand = expansion(mult = c(0, 0.05)))

It looks a lot like the distribution of the data from one of the panels from Figure 23.1. Load brms.

library(brms)Fit the first cumulative-probit model.

fit23.1 <-

brm(data = my_data_1,

family = cumulative(probit),

Y ~ 1,

prior(normal(0, 4), class = Intercept),

iter = 3000, warmup = 1000, chains = 4, cores = 4,

seed = 23,

file = "fits/fit23.01")Examine the model summary.

print(fit23.1)## Family: cumulative

## Links: mu = probit; disc = identity

## Formula: Y ~ 1

## Data: my_data_1 (Number of observations: 100)

## Draws: 4 chains, each with iter = 3000; warmup = 1000; thin = 1;

## total post-warmup draws = 8000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept[1] 0.18 0.12 -0.06 0.42 1.00 9787 6646

## Intercept[2] 0.60 0.13 0.35 0.87 1.00 11397 6343

## Intercept[3] 1.04 0.15 0.75 1.34 1.00 11713 6088

## Intercept[4] 1.50 0.19 1.14 1.88 1.00 12416 6754

## Intercept[5] 1.97 0.25 1.51 2.50 1.00 12059 6627

## Intercept[6] 2.57 0.40 1.90 3.44 1.00 11463 6870

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## disc 1.00 0.00 1.00 1.00 NA NA NA

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).The brms output for these kinds of models names the thresholds θ[i] as Intercept[i]. Again, whereas Kruschke identified his model by fixing θ1=1.5 (i.e., 1+0.5) and θ6=5.5 (i.e., 6−0.5), we freely estimated all six thresholds by using the cumulative density function for the standard normal. As a result, our thresholds are in a different metric from Kruschke’s.

Let’s extract the posterior draws.

draws <- as_draws_df(fit23.1)

glimpse(draws)## Rows: 8,000

## Columns: 12

## $ `b_Intercept[1]` <dbl> 0.44703738, 0.22443431, 0.30708260, -0.01127637, -0.18681541, 0.37826981, 0.0733989…

## $ `b_Intercept[2]` <dbl> 0.8253956, 0.5598467, 0.6366477, 0.3522155, 0.3877171, 0.7765147, 0.6104502, 0.5962…

## $ `b_Intercept[3]` <dbl> 1.0714275, 1.2367075, 0.9268432, 0.9791826, 1.0762356, 1.1229631, 0.8663489, 1.0003…

## $ `b_Intercept[4]` <dbl> 1.675261, 1.479992, 1.508809, 1.611100, 1.629725, 1.572215, 1.466856, 1.368460, 1.8…

## $ `b_Intercept[5]` <dbl> 2.439194, 1.817869, 2.224741, 2.034097, 1.955867, 1.749030, 2.591565, 1.605434, 2.2…

## $ `b_Intercept[6]` <dbl> 3.195665, 2.412770, 2.777146, 2.669718, 3.094114, 2.040137, 3.561389, 2.170847, 2.5…

## $ disc <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…

## $ lprior <dbl> -14.48757, -14.24420, -14.34068, -14.29838, -14.37510, -14.19703, -14.54015, -14.16…

## $ lp__ <dbl> -154.6614, -152.9830, -151.9284, -151.9778, -155.8266, -153.3221, -155.7108, -150.8…

## $ .chain <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…

## $ .iteration <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, …

## $ .draw <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, …Wrangle the draws a bit.

draws <-

draws %>%

select(.draw, `b_Intercept[1]`:`b_Intercept[6]`)Here’s our brms version of the bottom plot of Figure 23.2.

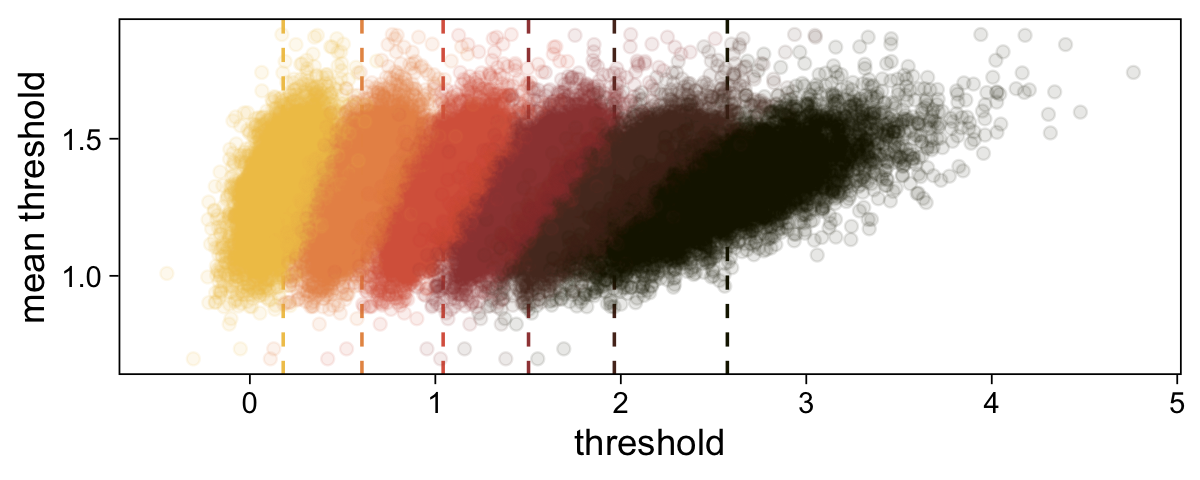

# compute the posterior means for each threshold

means <-

draws %>%

summarise_at(vars(`b_Intercept[1]`:`b_Intercept[6]`), mean) %>%

pivot_longer(everything(),

values_to = "mean")

# wrangle

draws %>%

pivot_longer(-.draw, values_to = "threshold") %>%

group_by(.draw) %>%

mutate(theta_bar = mean(threshold)) %>%

# finally we plot

ggplot(aes(x = threshold, y = theta_bar, color = name)) +

geom_vline(data = means,

aes(xintercept = mean, color = name),

linetype = 2) +

geom_point(alpha = 1/10) +

scale_color_scico_d(palette = "lajolla", begin = .25) +

ylab("mean threshold") +

theme(legend.position = "none")

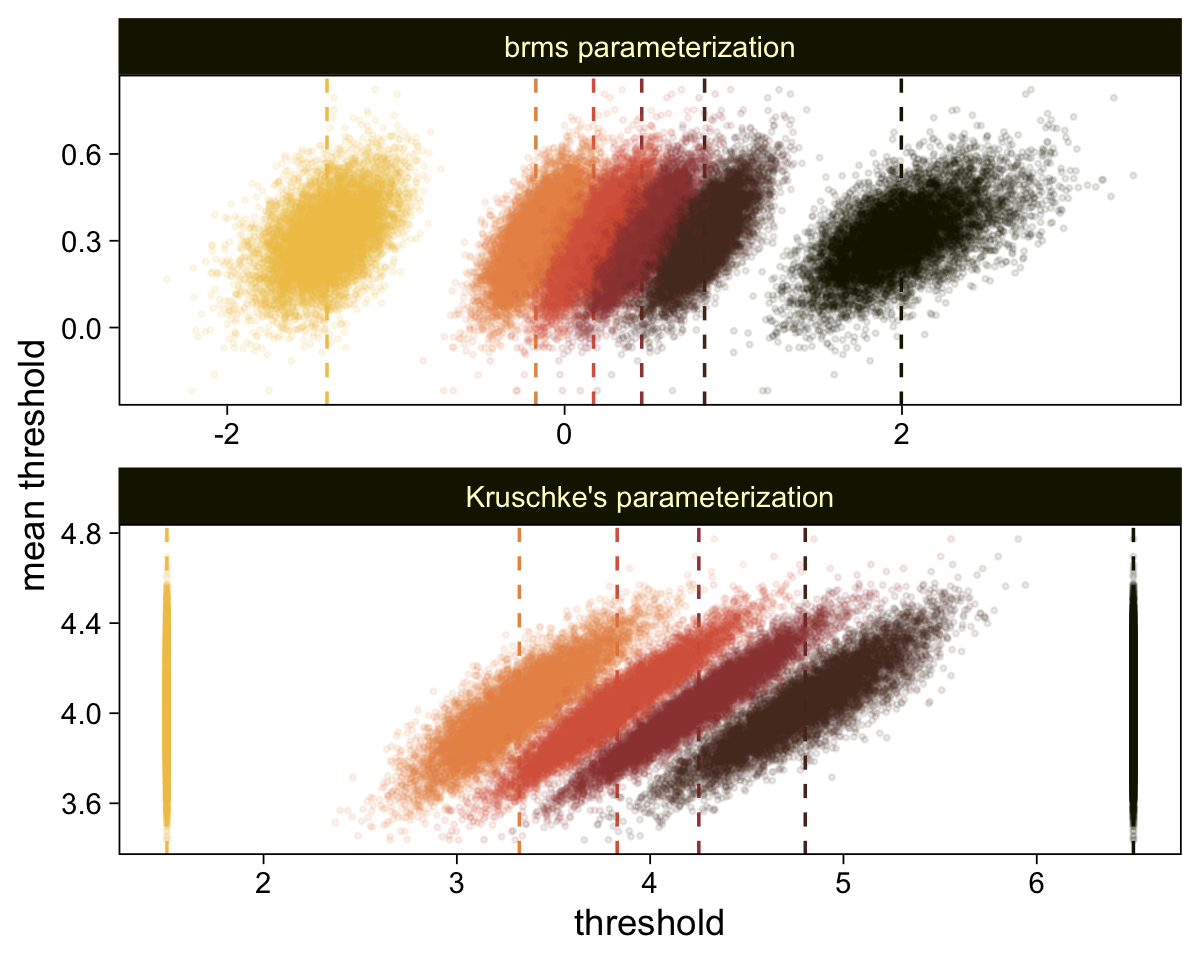

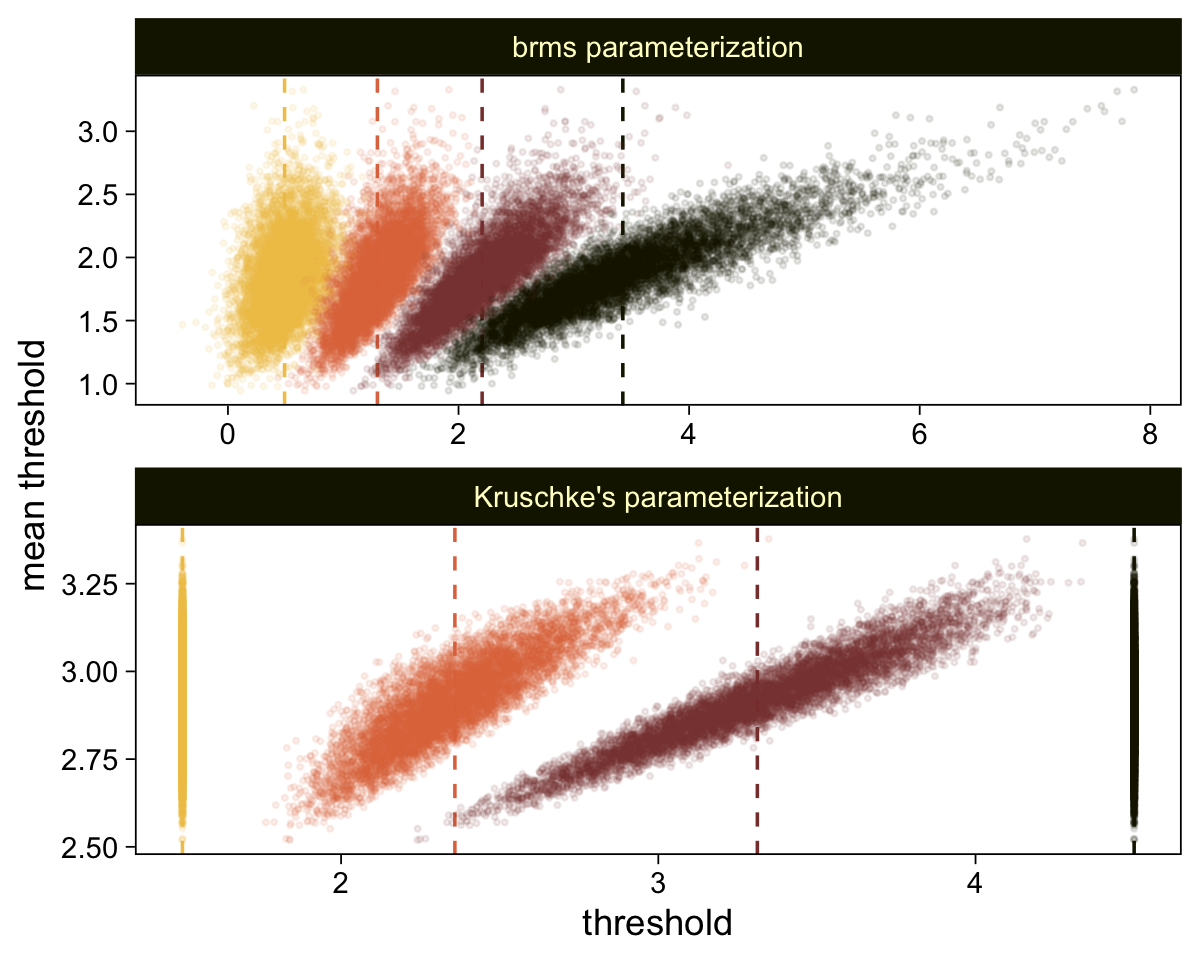

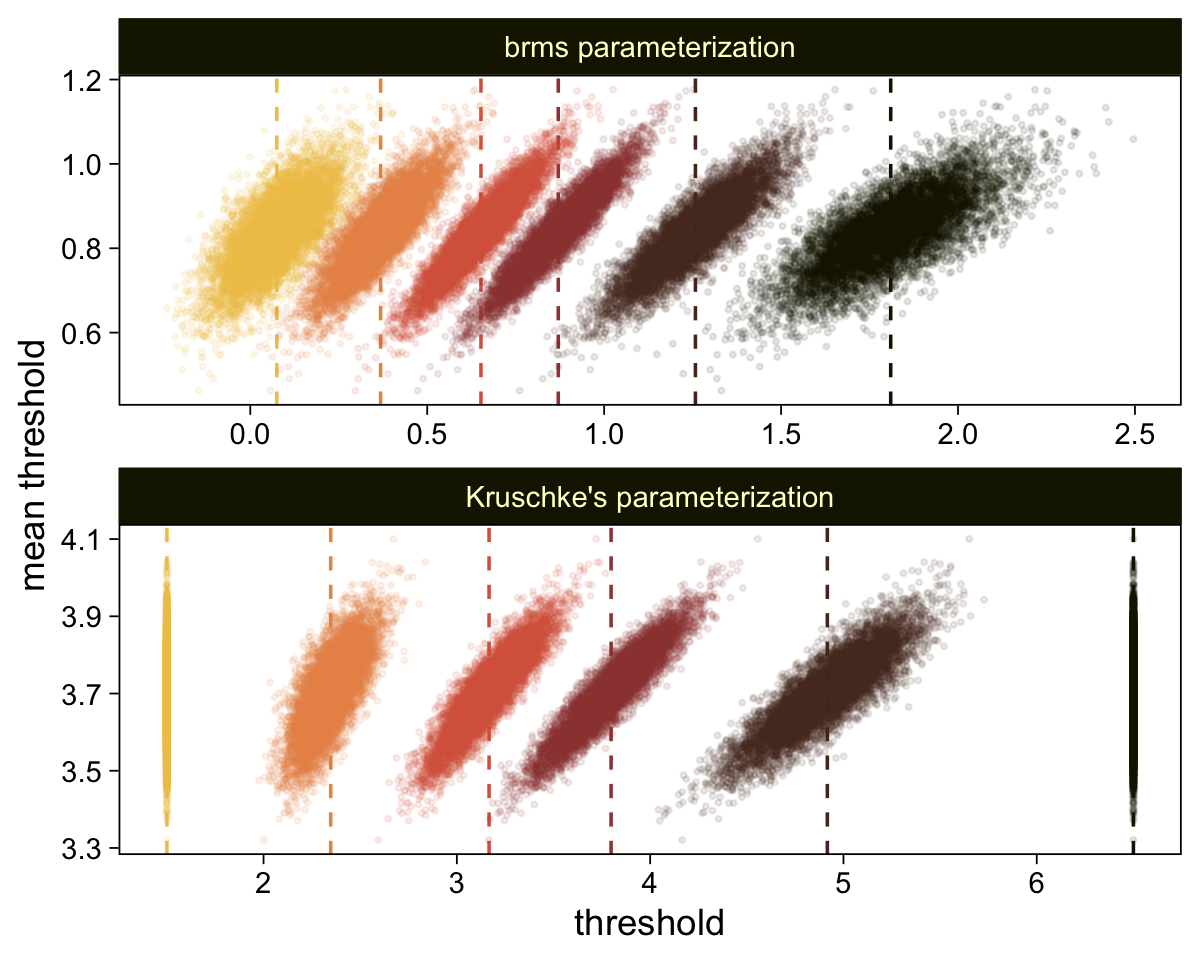

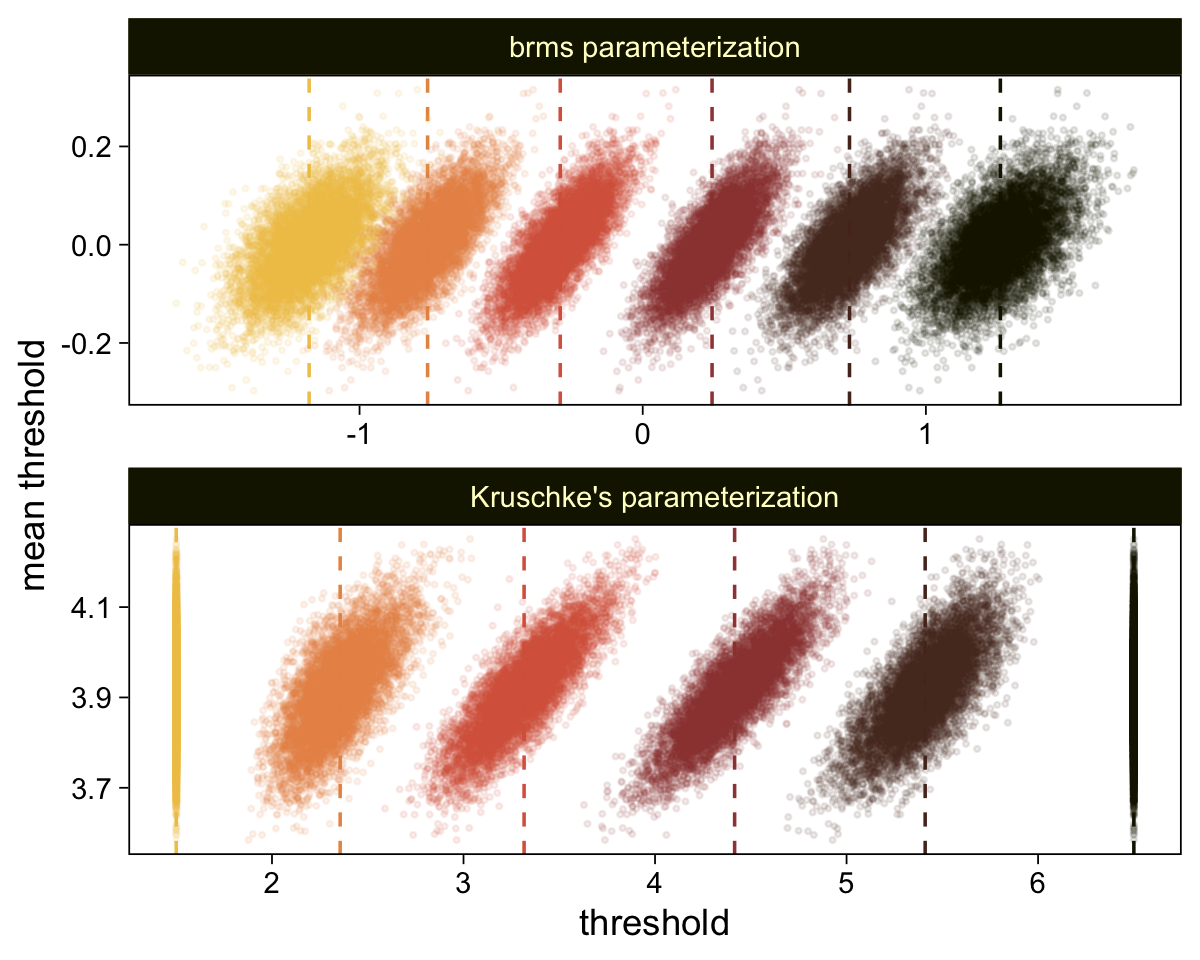

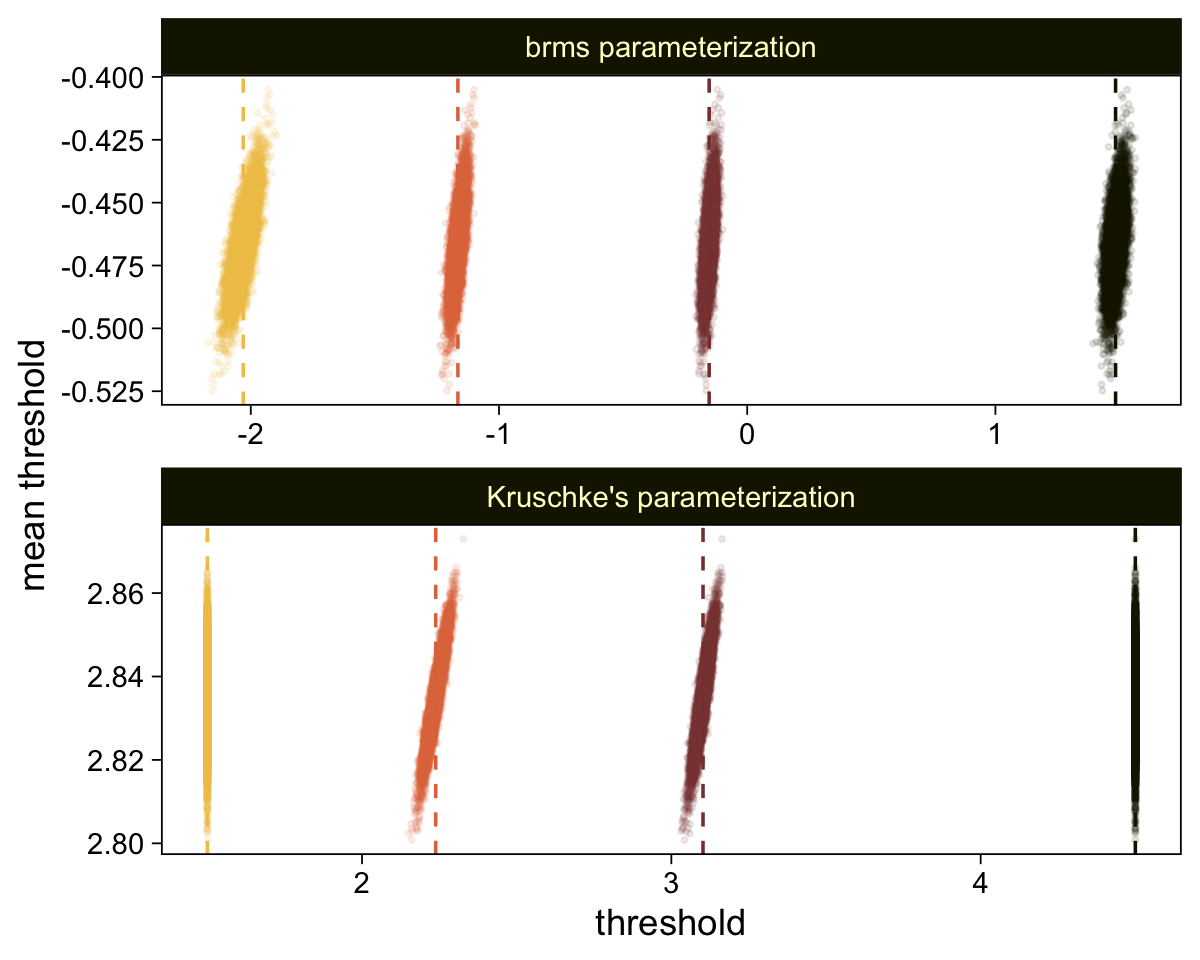

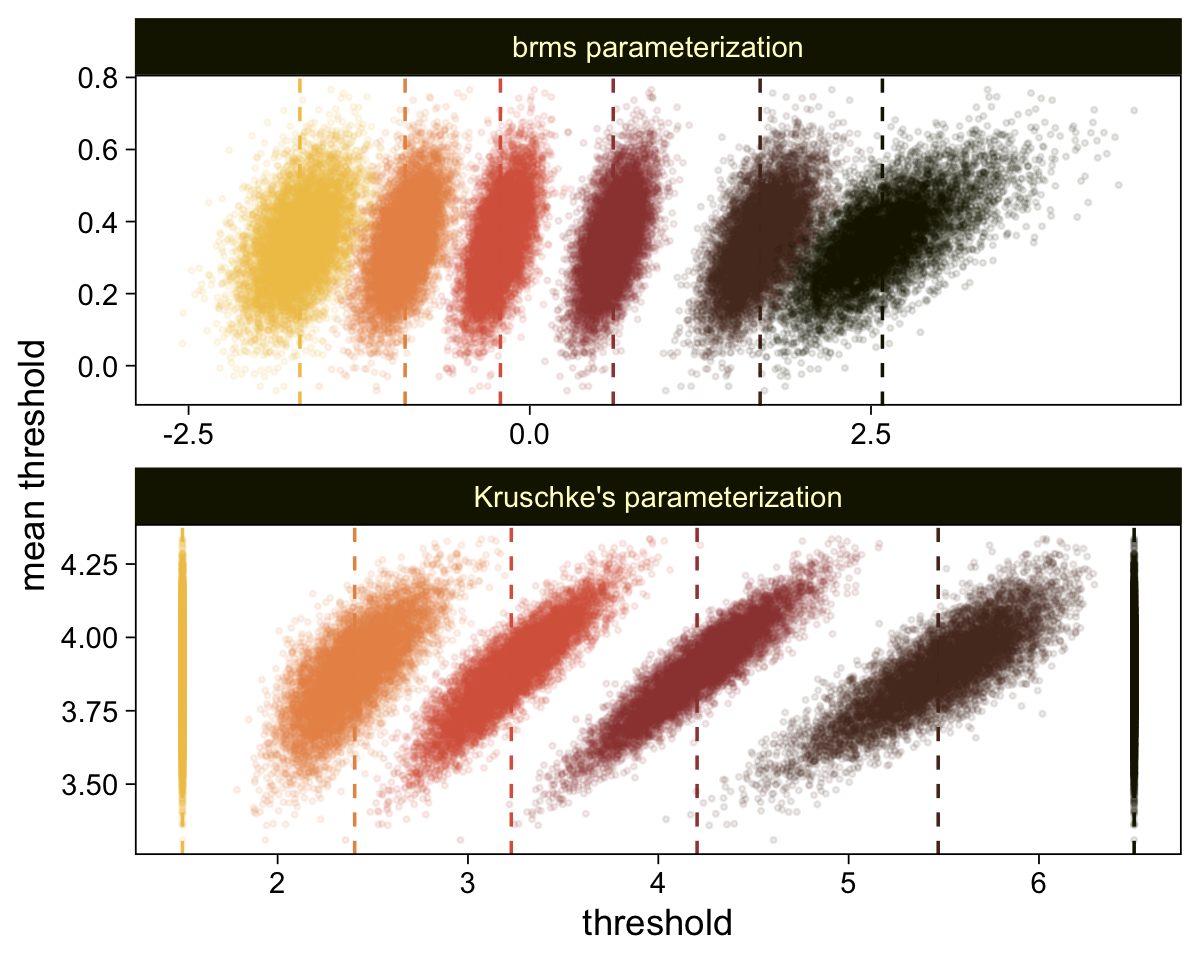

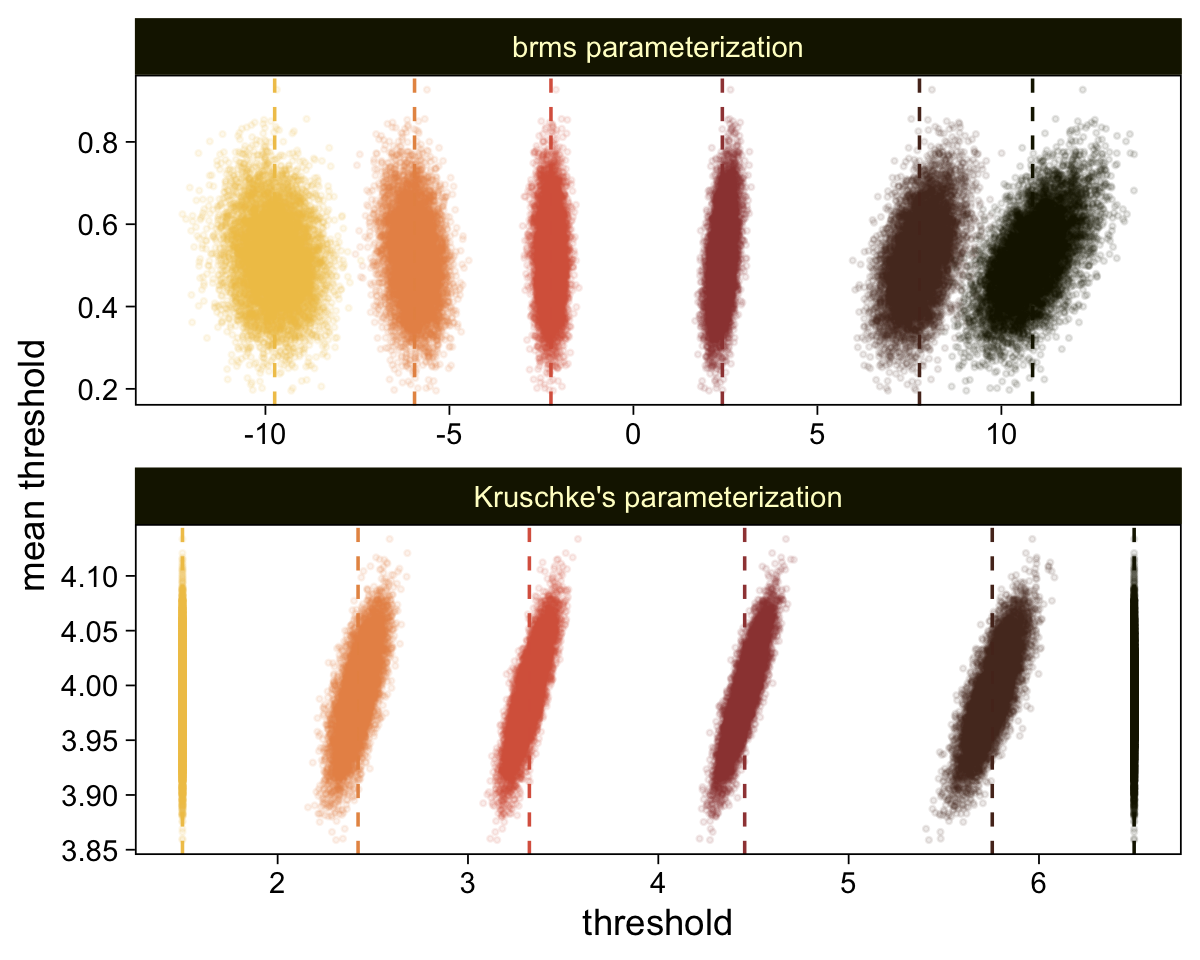

The initial means data at the top contains the θi-specific means, which we used to make the dashed vertical lines with geom_vline(). Did you see what we did there with those group_by() and mutate() lines? That’s how we computed the mean threshold within each step of the HMC chain, what Kruschke (p. 680) denoted as ˉθ(s)=∑K−1kθk(s)/(K−1), where s refers to particular steps in the HMC chain.

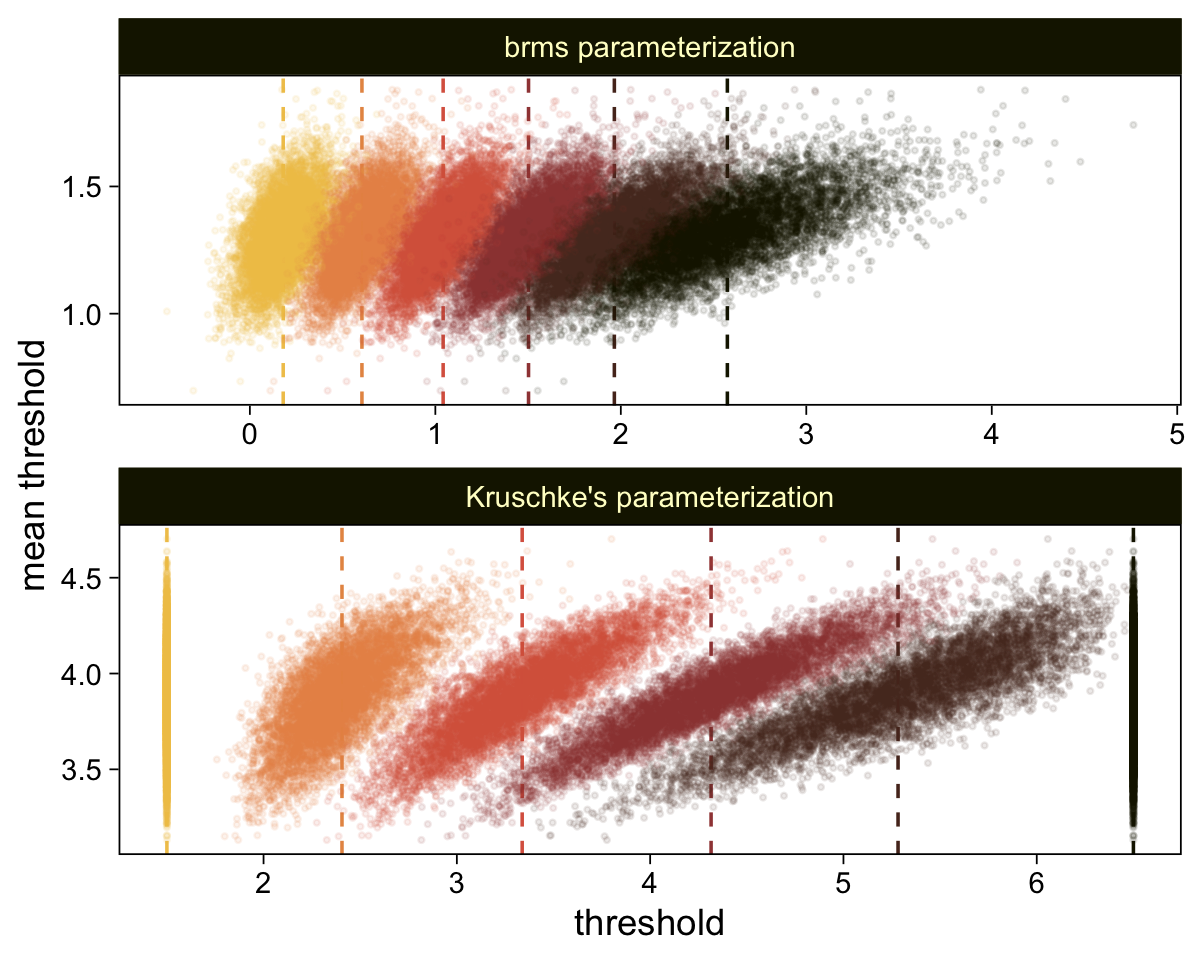

Perhaps of greater interest, you might have noticed how different our plot is from the one in the text. We might should compare the results of our brms parameterization of θ[i] with one based on the parameterization in the text in an expanded version of the bottom plot of Figure 23.2. To convert our brms output to match Kruschke’s, we’ll rescale our θ[i] draws with help from the scales::rescale() function, about which you might learn more here.

# primary data wrangling

p <-

bind_rows(

# brms parameterization

draws %>%

pivot_longer(-.draw, values_to = "threshold") %>%

group_by(.draw) %>%

mutate(theta_bar = mean(threshold)),

# Kruschke's parameterization

draws %>%

pivot_longer(-.draw, values_to = "threshold") %>%

group_by(.draw) %>%

mutate(threshold = scales::rescale(threshold, to = c(1.5, 6.5))) %>%

mutate(theta_bar = mean(threshold))

) %>%

# add an index

mutate(model = rep(c("brms parameterization", "Kruschke's parameterization"), each = n() / 2))

# compute the means by model and threshold for the vertical lines

means <-

p %>%

ungroup() %>%

group_by(model, name) %>%

summarise(mean = mean(threshold))

# plot!

p %>%

ggplot(aes(x = threshold, y = theta_bar)) +

geom_vline(data = means,

aes(xintercept = mean, color = name),

linetype = 2) +

geom_point(aes(color = name),

alpha = 1/10, size = 1/2) +

scale_color_scico_d(palette = "lajolla", begin = .25) +

ylab("mean threshold") +

theme(legend.position = "none") +

facet_wrap(~ model, ncol = 1, scales = "free")

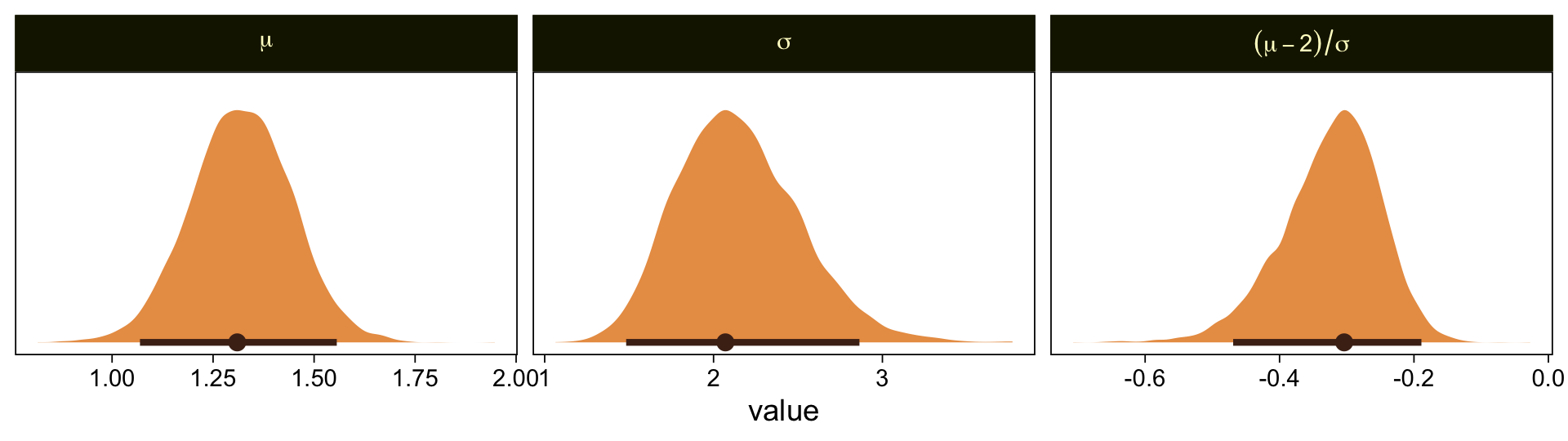

We can take our rescaling approach further to convert the posterior distributions for μ and σ from the brms Normal(0,1) constants to the metric from Kruschke’s approach. Say y1 and y2 are two draws from some Gaussian and z1 and z2 are their corresponding z-scores. Here’s how to solve for σ.

\begin{align*} z_1 - z_2 & = \frac{(y_1 - \mu)}{\sigma} - \frac{(y_2 - \mu)}{\sigma} \\ & = \frac{(y_1 - \mu) - (y_2 - \mu)}{\sigma} \\ & = \frac{y_1 - \mu - y_2 + \mu}{\sigma} \\ & = \frac{y_1 - y_2}{\sigma}, \;\; \text{therefore} \\ \sigma & = \frac{y_1 - y_2}{z_1 – z_2}. \end{align*}

If you’d like to compute \mu, it’s even simpler.

\begin{align*} z_1 & = \frac{y_1 - \mu}{\sigma} \\ z_1 \sigma & = y_1 - \mu \\ z_1 \sigma + \mu & = y_1, \;\; \text{therefore} \\ \mu & = y_1 - z_1 \sigma \end{align*}

Big shout out to my math-stats savvy friends academic twitter for the formulas, especially Ph.Demetri, Lukas Neugebauer, and Brenton Wiernik for walking out the formulas (see this twitter thread). For our application, Intercept[1] and Intercept[6] will be our two z-scores and Kruschke’s 1.5 and 6.5 will be their corresponding y-values.

library(tidybayes)

draws %>%

select(.draw, `b_Intercept[1]`, `b_Intercept[6]`) %>%

mutate(`y[1]` = 1.5,

`y[6]` = 6.5) %>%

mutate(mu = `y[1]` - `b_Intercept[1]` * 1,

sigma = (`y[1]` - `y[6]`) / (`b_Intercept[1]` - `b_Intercept[6]`)) %>%

mutate(`(mu-2)/sigma` = (mu - 2) / sigma) %>%

pivot_longer(mu:`(mu-2)/sigma`) %>%

mutate(name = factor(name, levels = c("mu", "sigma", "(mu-2)/sigma"))) %>%

ggplot(aes(x = value, y = 0)) +

stat_halfeye(point_interval = mode_hdi, .width = .95,

fill = sl[4], color = sl[8],

normalize = "panels") +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ name, scales = "free", labeller = label_parsed)

Our results are similar to Kruschke’s. Given we used a different algorithm, a different parameterization, and different priors, I’m not terribly surprised they’re a little different. If you have more insight on the matter or have spotted a flaw in this method, please share with the rest of us.

It’s unclear, to me, how we’d interpret the effect size. The difficulty isn’t that Kruschke’s comparison of C = 2.0 is arbitrary, but that we can only interpret the comparison given the model assumption of \theta_1 = 1.5 and \theta_6 = 6.5. If your theory doesn’t allow you to understand the meaning of those constants and why you’d prefer them to slightly different ones, you’d be fooling yourself if you attempted to interpret any effect sizes conditional on those values. Proceed with caution, friends.

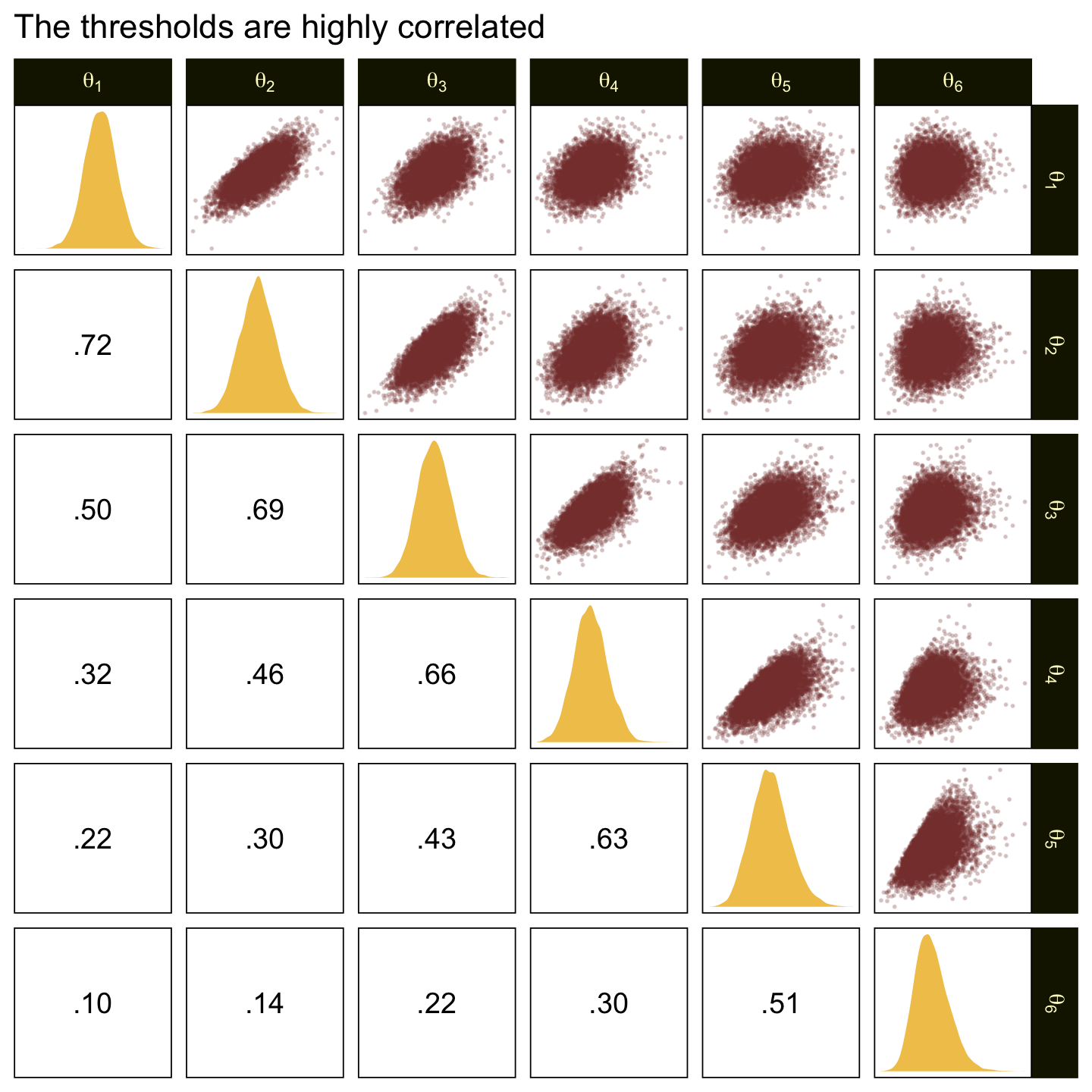

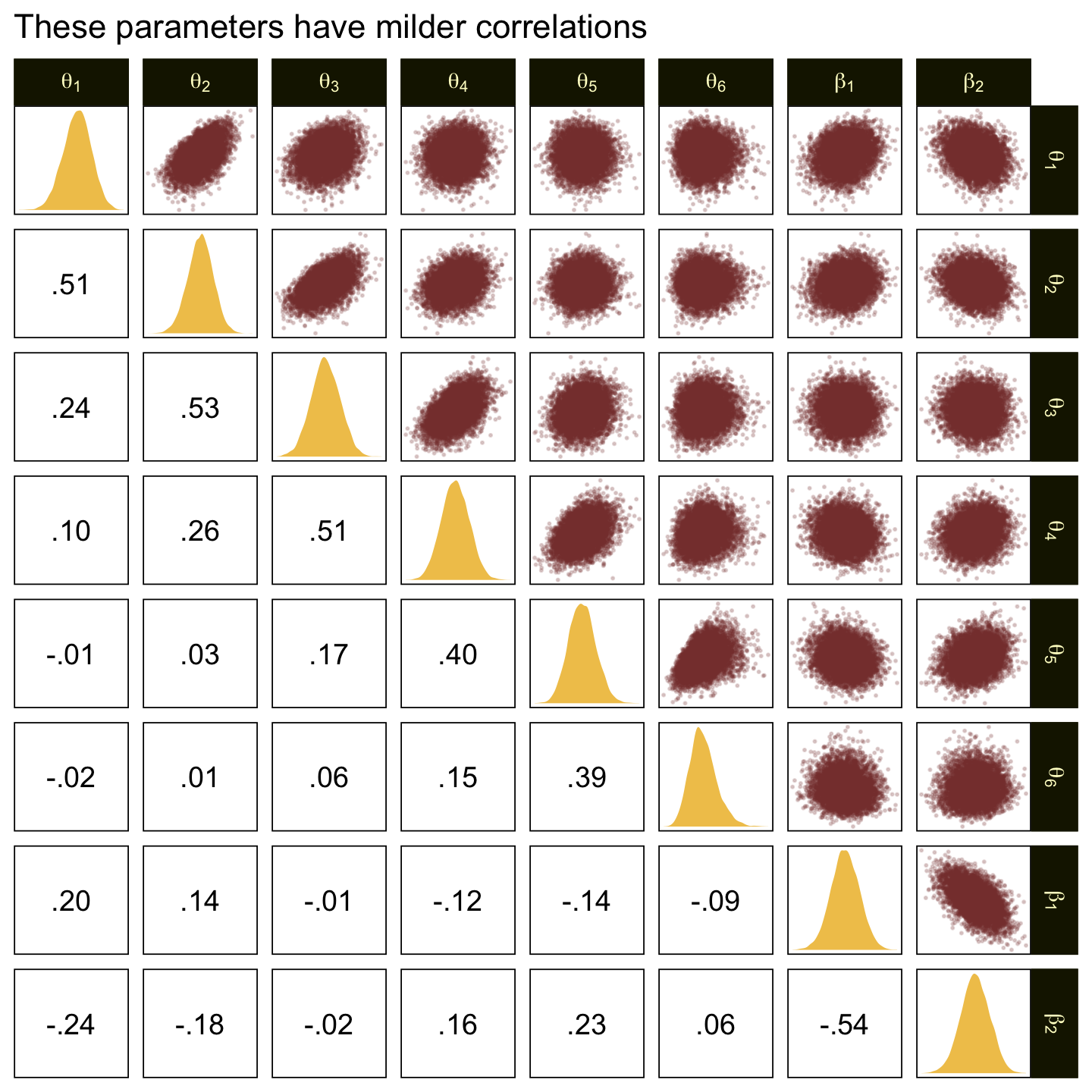

In the large paragraph on the lower part of page 679, Kruschke discussed why the thresholds tend to have nontrivial covariances. This is what he was trying to convey with the bottom subplot in Figure 23.2. Just for practice, we might also explore the correlation matrix among the thresholds with a customized GGally::ggpairs() plot.

# load

library(GGally)

# customize

my_upper <- function(data, mapping, ...) {

ggplot(data = data, mapping = mapping) +

geom_point(size = 1/5, alpha = 1/5, color = sl[7])

}

my_diag <- function(data, mapping, ...) {

ggplot(data = data, mapping = mapping) +

geom_density(linewidth = 0, fill = sl[3]) +

scale_x_continuous(NULL, breaks = NULL) +

scale_y_continuous(NULL, breaks = NULL)

}

my_lower <- function(data, mapping, ...) {

# get the x and y data to use the other code

x <- eval_data_col(data, mapping$x)

y <- eval_data_col(data, mapping$y)

# compute the correlations

corr <- cor(x, y, method = "p", use = "pairwise")

# plot the cor value

ggally_text(

label = formatC(corr, digits = 2, format = "f") %>% str_replace(., "0\\.", "."),

mapping = aes(),

color = "black",

size = 4) +

scale_x_continuous(NULL, breaks = NULL) +

scale_y_continuous(NULL, breaks = NULL)

}

# wrangle

as_draws_df(fit23.1) %>%

select(contains("Intercept")) %>%

set_names(str_c("theta[", 1:6, "]")) %>%

# plot!

ggpairs(upper = list(continuous = my_upper),

diag = list(continuous = my_diag),

lower = list(continuous = my_lower),

labeller = label_parsed) +

ggtitle("The thresholds are highly correlated")

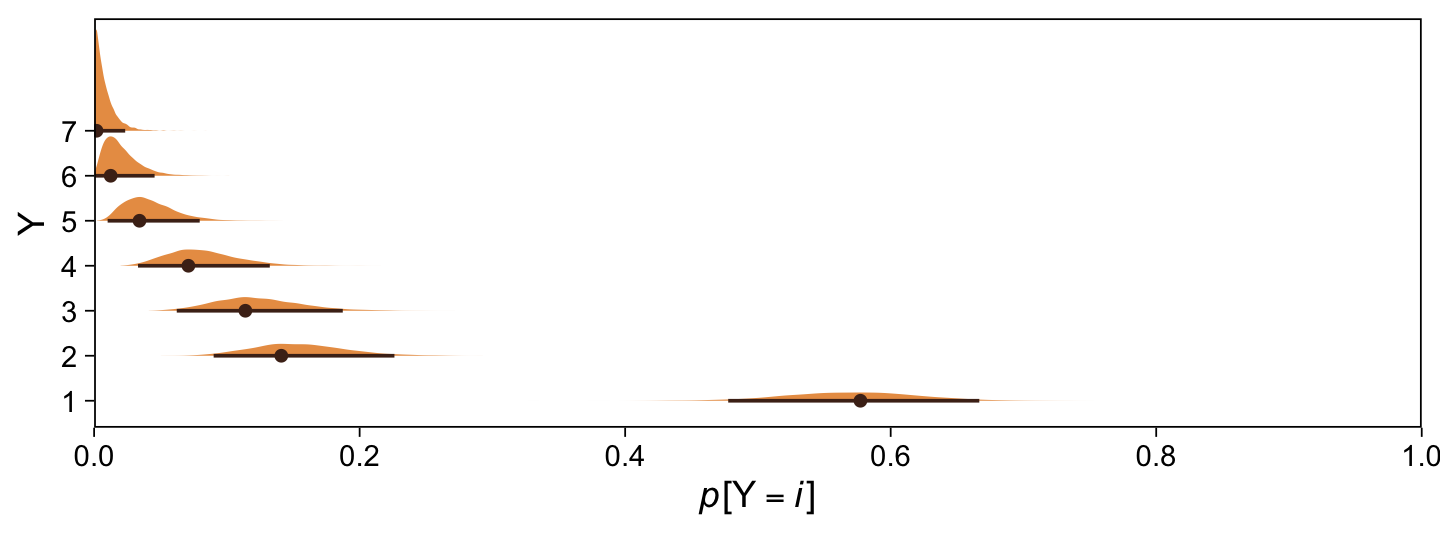

Kruschke didn’t do this in the text, but it might be informative to plot the probability distributions for the seven categories from Y, p(y = k | \mu = 0, \sigma = 1, \{ \theta_i \}).

library(tidybayes)

draws %>%

select(-.draw) %>%

mutate_all(.funs = ~pnorm(. ,0, 1)) %>%

transmute(`p[Y==1]` = `b_Intercept[1]`,

`p[Y==2]` = `b_Intercept[2]` - `b_Intercept[1]`,

`p[Y==3]` = `b_Intercept[3]` - `b_Intercept[2]`,

`p[Y==4]` = `b_Intercept[4]` - `b_Intercept[3]`,

`p[Y==5]` = `b_Intercept[5]` - `b_Intercept[4]`,

`p[Y==6]` = `b_Intercept[6]` - `b_Intercept[5]`,

`p[Y==7]` = 1 - `b_Intercept[6]`) %>%

set_names(1:7) %>%

pivot_longer(everything(), names_to = "Y") %>%

ggplot(aes(x = value, y = Y)) +

stat_halfeye(point_interval = mode_hdi, .width = .95,

fill = sl[4], color = sl[8], size = 1/2, height = 2.5) +

scale_x_continuous(expression(italic(p)*"["*Y==italic(i)*"]"),

breaks = 0:5 / 5,

expand = c(0, 0), limits = c(0, 1))

Happily, the model produces data that look a lot like those from which it was generated.

set.seed(23)

draws %>%

mutate(z = rnorm(n(), mean = 0, sd = 1)) %>%

mutate(Y = case_when(

z < `b_Intercept[1]` ~ 1,

z < `b_Intercept[2]` ~ 2,

z < `b_Intercept[3]` ~ 3,

z < `b_Intercept[4]` ~ 4,

z < `b_Intercept[5]` ~ 5,

z < `b_Intercept[6]` ~ 6,

z >= `b_Intercept[6]` ~ 7

) %>% as.factor(.)) %>%

ggplot(aes(x = Y)) +

geom_bar(fill = sl[5]) +

scale_y_continuous(expand = expansion(mult = c(0, 0.05)))

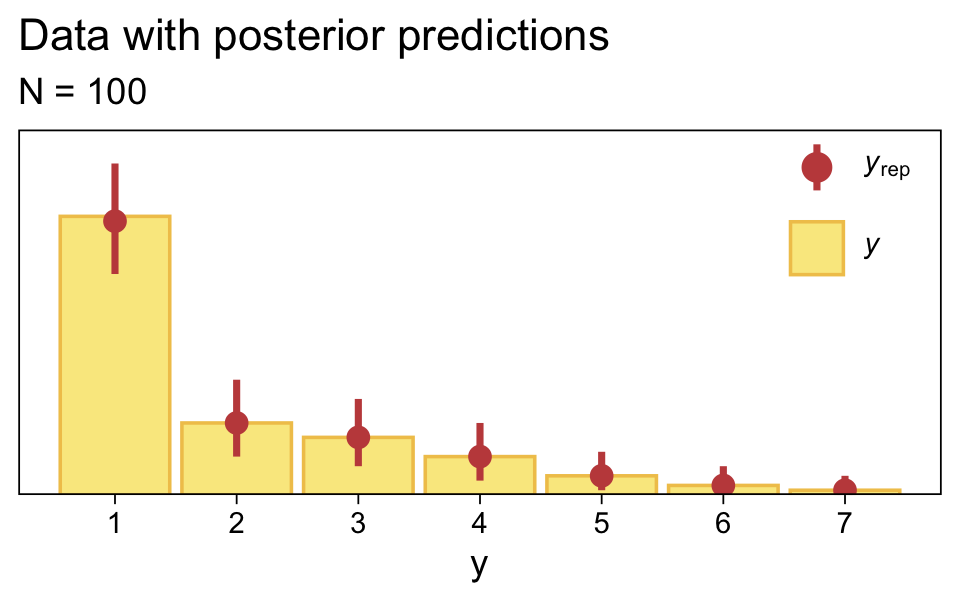

Along similar lines, we can use the pp_check() function to make a version of the upper right panel of Figure 23.2. The type = "bars" argument will allow us to summarize the posterior predictions as a dot (mean) and standard error bars superimposed on a bar plot of the original data. Note how this differs a little from Kruschke’s use of the posterior median and 95% HDIs. The ndraws = 1000 argument controls how many posterior predictions we wanted to summarize over. The rest is just formatting.

bayesplot::color_scheme_set(sl[2:7])

set.seed(23)

pp_check(fit23.1, type = "bars", ndraws = 1000, fatten = 2) +

scale_x_continuous("y", breaks = 1:7) +

scale_y_continuous(NULL, breaks = NULL, expand = expansion(mult = c(0, 0.05))) +

ggtitle("Data with posterior predictions",

subtitle = "N = 100") +

theme(legend.background = element_blank(),

legend.position = c(.9, .8))

Load the data for the next model.

my_data_2 <- read_csv("data.R/OrdinalProbitData-1grp-2.csv")Since we’re reusing all the specifications from the last model for this one, we can just use update().

fit23.2 <-

update(fit23.1,

newdata = my_data_2,

iter = 3000, warmup = 1000, chains = 4, cores = 4,

seed = 23,

file = "fits/fit23.02")print(fit23.2)## Family: cumulative

## Links: mu = probit; disc = identity

## Formula: Y ~ 1

## Data: my_data_2 (Number of observations: 70)

## Draws: 4 chains, each with iter = 3000; warmup = 1000; thin = 1;

## total post-warmup draws = 8000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept[1] -1.41 0.21 -1.83 -1.01 1.00 4879 4982

## Intercept[2] -0.17 0.15 -0.47 0.12 1.00 9111 6632

## Intercept[3] 0.17 0.15 -0.11 0.47 1.00 9222 7109

## Intercept[4] 0.46 0.15 0.15 0.76 1.00 8706 6590

## Intercept[5] 0.83 0.17 0.51 1.17 1.00 9277 6513

## Intercept[6] 2.00 0.31 1.46 2.67 1.00 8063 6649

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## disc 1.00 0.00 1.00 1.00 NA NA NA

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Extract and wrangle the posterior draws.

draws <-

as_draws_df(fit23.2) %>%

select(.draw, `b_Intercept[1]`:`b_Intercept[6]`)Now we might compare the brms parameterization of \theta_{[i]} with Kruschke’s parameterization in an expanded version of the bottom plot of Figure 23.3. As we’ll be making a lot of these plots throughout this chapter, it might be worthwhile to just make a custom function. We’ll call it compare_thresholds().

compare_thresholds <- function(data, lb = 1.5, ub = 6.5) {

# we have two parameters:

# lb = lower bound

# ub = upper bound

# primary data wrangling

p <-

bind_rows(

data %>%

pivot_longer(-.draw, values_to = "threshold") %>%

group_by(.draw) %>%

mutate(theta_bar = mean(threshold)),

data %>%

pivot_longer(-.draw, values_to = "threshold") %>%

group_by(.draw) %>%

mutate(threshold = scales::rescale(threshold, to = c(lb, ub))) %>%

mutate(theta_bar = mean(threshold))

) %>%

mutate(model = rep(c("brms parameterization", "Kruschke's parameterization"), each = n() / 2))

# compute the means by model and threshold for the vertical lines

means <-

p %>%

ungroup() %>%

group_by(model, name) %>%

summarise(mean = mean(threshold))

# plot!

p %>%

ggplot(aes(x = threshold, y = theta_bar)) +

geom_vline(data = means,

aes(xintercept = mean, color = name),

linetype = 2) +

geom_point(aes(color = name),

alpha = 1/10, size = 1/2) +

scale_color_scico_d(palette = "lajolla", begin = .25) +

ylab("mean threshold") +

theme(legend.position = "none") +

facet_wrap(~ model, ncol = 1, scales = "free")

}Take that puppy for a spin.

draws %>%

compare_thresholds(lb = 1.5, ub = 6.5)

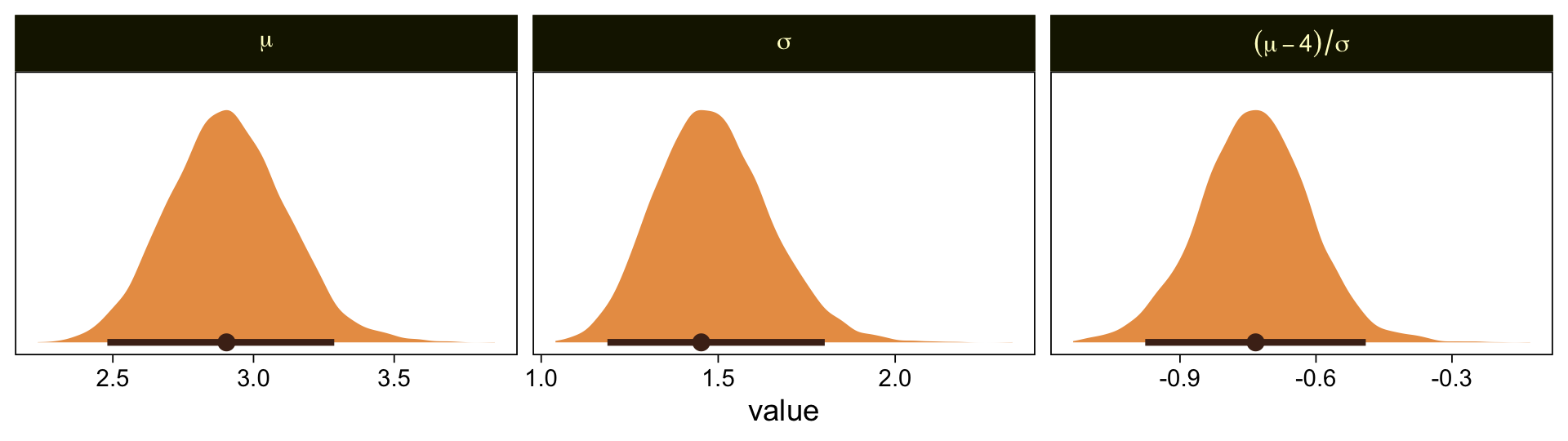

Oh man, that works sweet. Now let’s use the same parameter-transformation approach from before to get our un-standardized posteriors for \mu, \sigma, and the effect size.

draws %>%

select(.draw, `b_Intercept[1]`, `b_Intercept[6]`) %>%

mutate(`y[1]` = 1.5,

`y[6]` = 6.5) %>%

mutate(mu = `y[1]` - `b_Intercept[1]` * 1,

sigma = (`y[1]` - `y[6]`) / (`b_Intercept[1]` - `b_Intercept[6]`)) %>%

mutate(`(mu-4)/sigma` = (mu - 4) / sigma) %>%

pivot_longer(mu:`(mu-4)/sigma`) %>%

mutate(name = factor(name, levels = c("mu", "sigma", "(mu-4)/sigma"))) %>%

ggplot(aes(x = value, y = 0)) +

stat_halfeye(point_interval = mode_hdi, .width = .95,

fill = sl[4], color = sl[8],

normalize = "panels") +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ name, scales = "free", labeller = label_parsed)

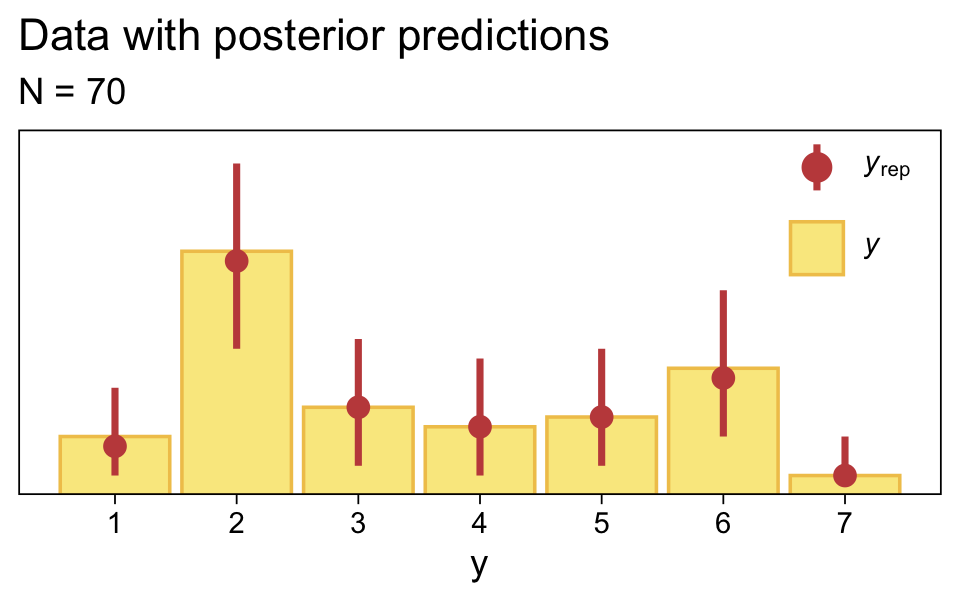

Use pp_check() to make our version of the upper-right panel of Figure 23.3.

set.seed(23)

pp_check(fit23.2, type = "bars", ndraws = 1000, fatten = 2) +

scale_x_continuous("y", breaks = 1:7) +

scale_y_continuous(NULL, breaks = NULL, expand = expansion(mult = c(0, 0.05))) +

ggtitle("Data with posterior predictions",

subtitle = "N = 70") +

theme(legend.background = element_blank(),

legend.position = c(.9, .8))

Just as in the text, “the posterior predictive distribution in the top-right subpanel accurately describes the bimodal distribution of the outcomes” (p. 680).

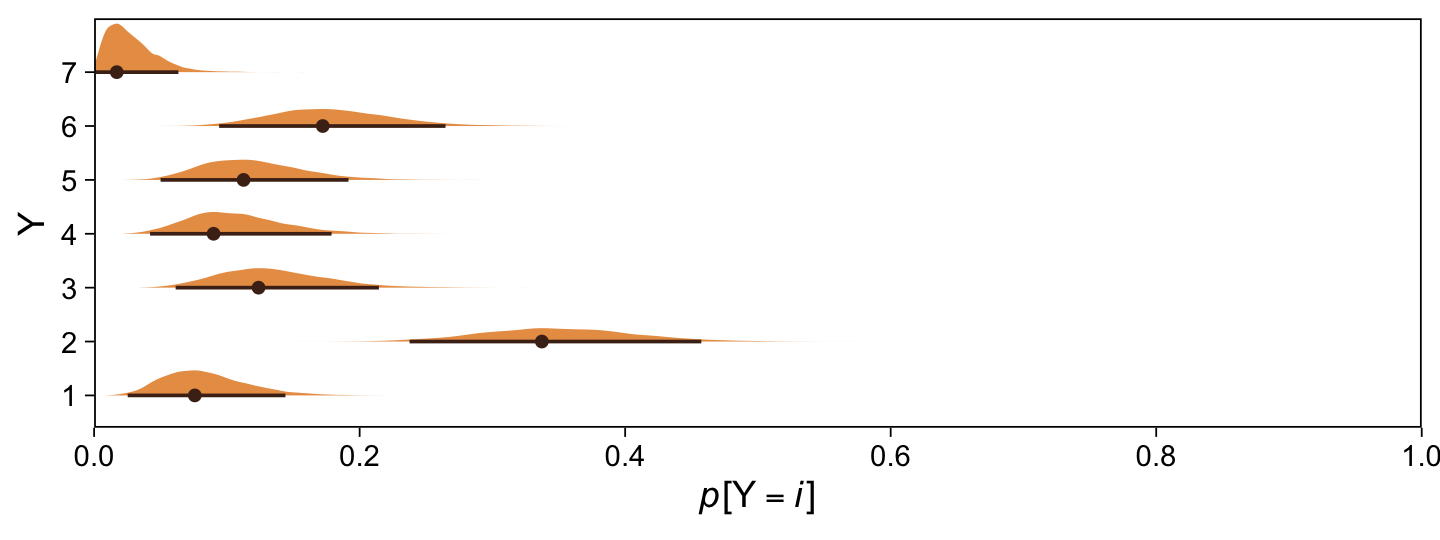

Here are the probability distributions for each of the 7 categories of Y.

draws %>%

select(-.draw) %>%

mutate_all(.funs = ~pnorm(. ,0, 1)) %>%

transmute(`p[Y==1]` = `b_Intercept[1]`,

`p[Y==2]` = `b_Intercept[2]` - `b_Intercept[1]`,

`p[Y==3]` = `b_Intercept[3]` - `b_Intercept[2]`,

`p[Y==4]` = `b_Intercept[4]` - `b_Intercept[3]`,

`p[Y==5]` = `b_Intercept[5]` - `b_Intercept[4]`,

`p[Y==6]` = `b_Intercept[6]` - `b_Intercept[5]`,

`p[Y==7]` = 1 - `b_Intercept[6]`) %>%

set_names(1:7) %>%

pivot_longer(everything(), names_to = "Y") %>%

ggplot(aes(x = value, y = Y)) +

stat_halfeye(point_interval = mode_hdi, .width = .95,

fill = sl[4], color = sl[8], size = 1/2) +

scale_x_continuous(expression(italic(p)*"["*Y==italic(i)*"]"),

breaks = 0:5 / 5,

expand = c(0, 0), limits = c(0, 1))

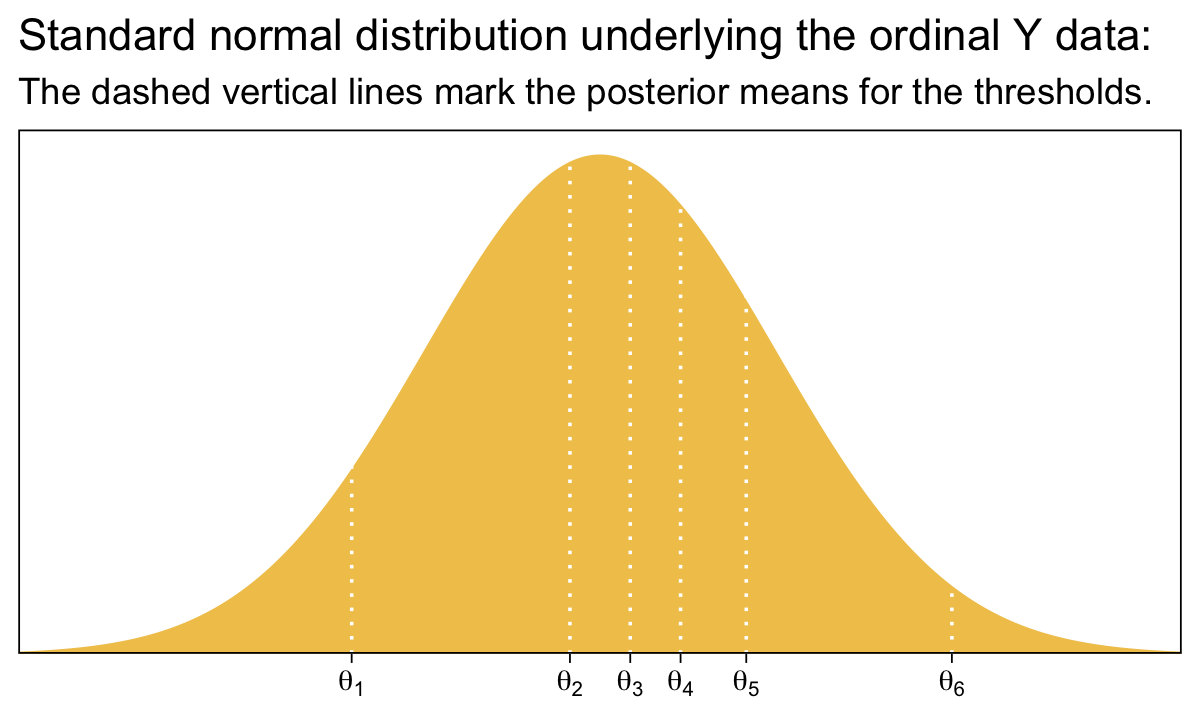

Before we move on, it might be helpful to nail down what the thresholds mean within the context of our brms parameterization. To keep things simple, we’ll focus on their posterior means.

tibble(x = seq(from = -3.5, to = 3.5, by = .01)) %>%

mutate(d = dnorm(x)) %>%

ggplot(aes(x = x, ymin = 0, ymax = d)) +

geom_ribbon(fill = sl[3]) +

geom_vline(xintercept = fixef(fit23.2)[, 1], color = "white", linetype = 3) +

scale_x_continuous(NULL, breaks = fixef(fit23.2)[, 1],

labels = parse(text = str_c("theta[", 1:6, "]"))) +

scale_y_continuous(NULL, breaks = NULL, expand = expansion(mult = c(0, 0.05))) +

ggtitle("Standard normal distribution underlying the ordinal Y data:",

subtitle = "The dashed vertical lines mark the posterior means for the thresholds.") +

coord_cartesian(xlim = c(-3, 3))

Compare that to Figure 23.1.

23.2.2.1 Not the same results as pretending the data are metric.

“In some conventional approaches to ordinal data, the data are treated as if they were metric and normally distributed” (p. 681). Here’s what that brms::brm() model might look like using methods from back in Chapter 16. First, we’ll define our stanvars.

mean_y <- mean(my_data_1$Y)

sd_y <- sd(my_data_1$Y)

stanvars <-

stanvar(mean_y, name = "mean_y") +

stanvar(sd_y, name = "sd_y")Fit the model.

fit23.3 <-

brm(data = my_data_1,

family = gaussian,

Y ~ 1,

prior = c(prior(normal(mean_y, sd_y * 100), class = Intercept),

prior(normal(0, sd_y), class = sigma)),

chains = 4, cores = 4,

stanvars = stanvars,

seed = 23,

file = "fits/fit23.03")Check the results.

print(fit23.3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: Y ~ 1

## Data: my_data_1 (Number of observations: 100)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 1.95 0.14 1.68 2.23 1.00 3454 2723

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 1.41 0.10 1.23 1.62 1.00 3535 2700

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).As Kruschke indicated in the text, it yielded a distributional mean of about 1.95 and a standard deviation of about 1.41. Here we’ll use a posterior predictive check to compare histograms of data generated from this model to that of the original data.

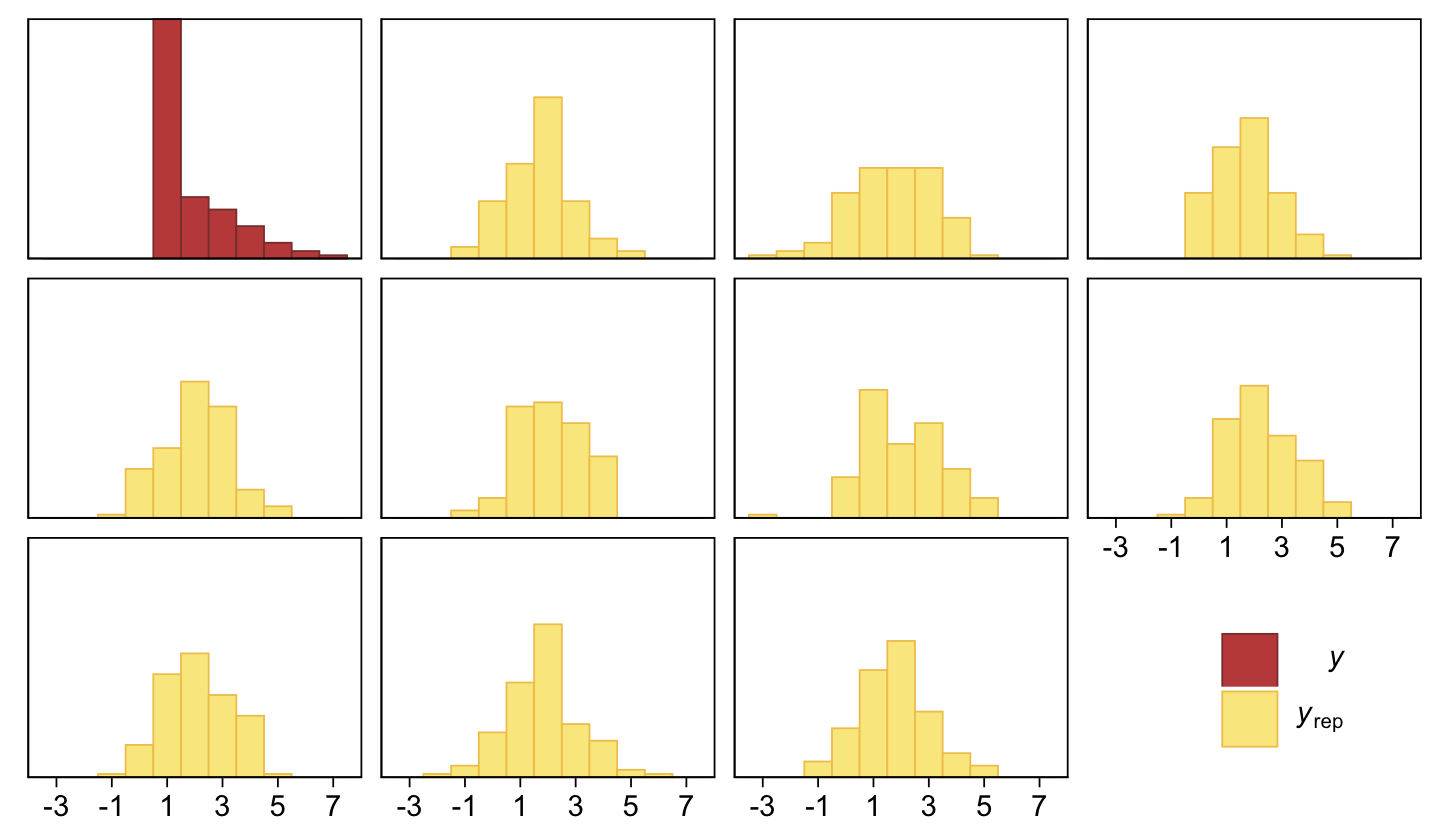

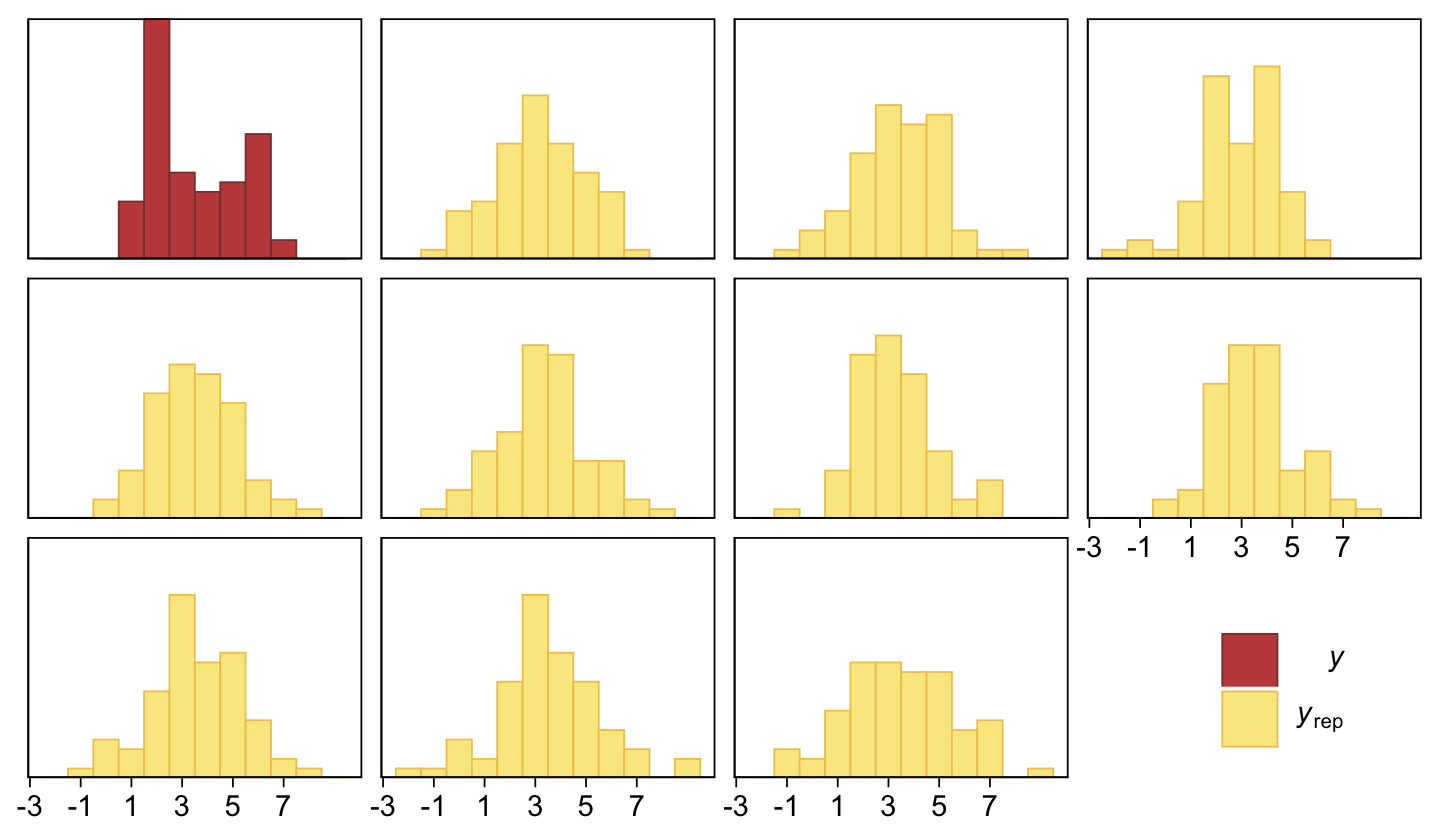

pp_check(fit23.3, type = "hist", ndraws = 10, binwidth = 1) +

scale_x_continuous(breaks = seq(from = -3, to = 7, by = 2)) +

theme(legend.position = c(.9, .15))

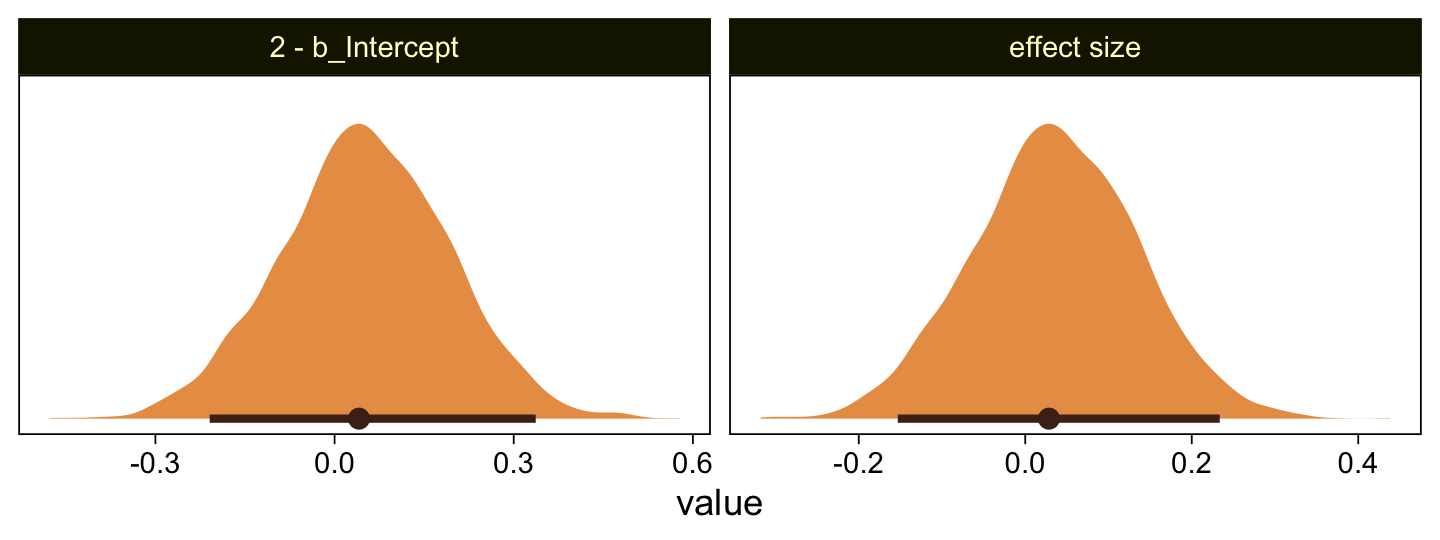

Yeah, that’s not a good fit. We won’t be conducting a t-test like Kruschke did on page 681. But we might compromise and take a look at the marginal distribution of the intercept (i.e., for \mu) and its difference from 2, the reference value.

as_draws_df(fit23.3) %>%

mutate(`2 - b_Intercept` = 2 - b_Intercept,

`effect size` = (2 - b_Intercept) / sigma) %>%

pivot_longer(`2 - b_Intercept`:`effect size`) %>%

ggplot(aes(x = value, y = 0)) +

stat_halfeye(point_interval = mode_hdi, .width = .95,

fill = sl[4], color = sl[8], normalize = "panels") +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ name, scales = "free")

Yes indeed, 2 is a credible value for the intercept. And as reported in the text, we got a very small d effect size. Now we repeat the process for the second data set.

mean_y <- mean(my_data_2$Y)

sd_y <- sd(my_data_2$Y)

stanvars <-

stanvar(mean_y, name = "mean_y") +

stanvar(sd_y, name = "sd_y")

fit23.4 <-

update(fit23.3,

newdata = my_data_2,

chains = 4, cores = 4,

stanvars = stanvars,

seed = 23,

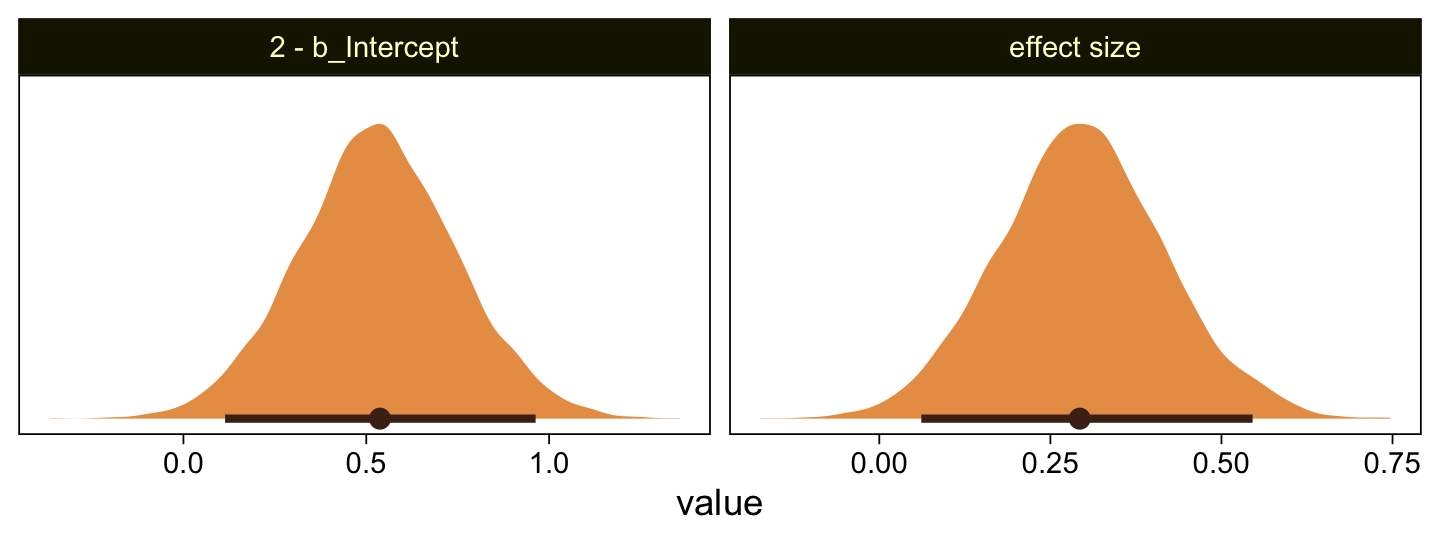

file = "fits/fit23.04")## The desired updates require recompiling the modelLet’s just jump to the plot. This time we’re comparing the b_Intercept to the value of 4.0.

as_draws_df(fit23.4) %>%

mutate(`2 - b_Intercept` = 4 - b_Intercept,

`effect size` = (4 - b_Intercept) / sigma) %>%

pivot_longer(`2 - b_Intercept`:`effect size`) %>%

ggplot(aes(x = value, y = 0)) +

stat_halfeye(point_interval = mode_hdi, .width = .95,

fill = sl[4], color = sl[8], normalize = "panels") +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ name, scales = "free")

As in the text, our d is centered around 0.3. Let’s use a posterior predictive check to see how well fit23.4 summarized these data.

pp_check(fit23.4, type = "hist", ndraws = 10, binwidth = 1) +

scale_x_continuous(breaks = seq(from = -3, to = 7, by = 2)) +

theme(legend.position = c(.9, .15))

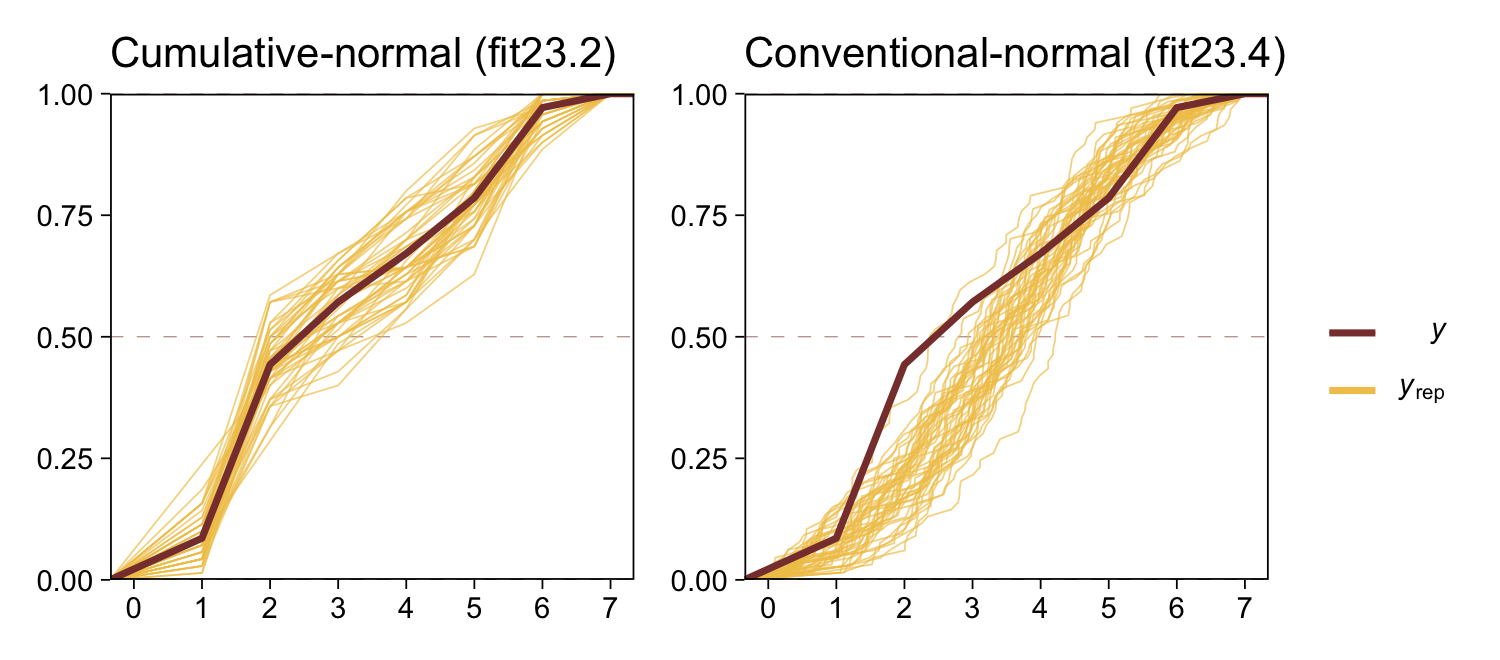

The histograms aren’t as awful as the ones from the previous model. But they’re still not great. We might further inspect the model misspecification with a cumulative distribution function overlay, this time comparing fit23.2 directly to fit23.4.

p1 <-

pp_check(fit23.2, type = "ecdf_overlay", ndraws = 50) +

ggtitle("Cumulative-normal (fit23.2)")

p2 <-

pp_check(fit23.4, type = "ecdf_overlay", ndraws = 50) +

ggtitle("Conventional-normal (fit23.4)")

(p1 + p2 &

scale_x_continuous(breaks = 0:7, limits = c(0, 7)) &

scale_y_continuous(expand = c(0, 0)) &

theme(title = element_text(size = 10.5))) +

plot_layout(guides = 'collect')

“Which of the analyses yields the more trustworthy conclusion? The one that describes the data better. In these cases, there is no doubt that the cumulative-normal model is the better description of the data” than the conventional Gaussian model (p. 682).

23.2.2.2 Ordinal outcomes versus Likert scales.

Just for fun,

rate how much you agree with the statement, “Bayesian estimation is more informative than null-hypothesis significance testing,” by selecting one option from the following: 1 = strongly disagree; 2 = disagree; 3 = undecided; 4 = agree; 5 = strongly agree. This sort of ordinal response interface is often called a Likert-type response (Likert, 1932), pronounced LICK-ert not LIKE-ert). Sometimes, it is called a Likert “scale” but the term “scale” in this context is more properly reserved for referring to an underlying metric variable that is indicated by the arithmetic mean of several meaningfully related Likert-type responses (Carifio & Perla, 2008; e.g., Carifio & Perla, 2007; Norman, 2010). (p. 681)

Kruschke then briefly introduced how one might combine several such meaningfully-related Likert-type responses with latent variable methods. He then clarified this text will not explore that approach, further. The current version of brms (i.e., 2.12.0) has very limited latent variable capacities. However, they are in the works. Interested modelers can follow Bürkner’s progress in GitHub issue #304. He also has a (2020) paper on how one might use brms to fit item response theory models, which can be viewed as a special family of latent variable models. One can also fit Bayesian latent variable models with the blavaan package.

23.3 The case of two groups

In both examples in the preceding text, the two groups of outcomes were on the same ordinal scale. In the first example, both questionnaire statements were answered on the same disagree–agree scale. In the second example, both groups responded on the same very unhappy–very happy scale. Therefore, we assume that both groups have the same underlying metric variable with the same thresholds. (p. 682)

23.3.1 Implementation in JAGS brms.

The brm() syntax for adding a single categorical predictor to an ordered-probit model is much like that for any other likelihood. We just add the variable name to the right side of the ~ in the formula argument. If you’re like me and like to use the verbose 1 syntax for your model intercepts–thresholds in these models–just use the + operator between them. For example, this is what it’d look like for an ordered-categorical criterion y and a single categorical predictor x.

fit <-

brm(data = my_data,

family = cumulative(probit),

y ~ 1 + x,

prior = c(prior(normal(0, 4), class = Intercept),

prior(normal(0, 4), class = b)))Also of note, we’ve expanded the prior section to include a line for class = b. As with the thresholds, interpret this prior through the context of the underlying standard normal cumulative distribution, \Phi(z). Note the interpretation, though. By brms defaults, the underlying Gaussian for the reference category of x will be \operatorname{Normal}(0, 1). Thus whatever parameter value you get for the other categories in x, those will be standardized mean differences, making them a kind of effect size.

Note, the above all presumes you’re only interested in comparing means between groups. Things get more complicated if you want groups to vary by \sigma, too. Hold on tight!

First, look back at the output from print(fit1) or print(fit2). The second line for both reads: Links: mu = probit; disc = identity. Hopefully the mu = probit part is no surprise. Probit regression is the primary focus of this chapter. But check out the disc = identity part and notice that nowhere in there is there any mention of sigma = identity like we get when treating the criterion as metric as in conventional Gaussian models (i.e., execute print(fit3) or print(fit4)).

Yes, there is a relationship between disc and sigma. disc is shorthand for discrimination. The term comes from the item response theory (IRT) literature and discrimination is the inverse of \sigma (see Bürkner’s Bayesian item response modelling in R with brms and Stan). In IRT, discrimination is often denoted a or \alpha. Here I’ll adopt the latter, making \sigma = 1 / \alpha. But focusing back on brms summary output, notice how both disc and sigma are modeled using the identity link. If you recall from earlier chapters, we switched to the log link to constrain the values to zero and above when we allowed \sigma to vary across groups. It’s the same thing for our discrimination parameter, \alpha. Because \alpha should always be zero or above, brms defaults to the log link when modeling it with predictors.

As with \sigma in conventional Gaussian models, we’ll be using some version of the bf() syntax when modeling the discrimination parameter in brms. For a general introduction to what Bürkner calls distributional modeling, see his (2022a) vignette, Estimating distributional models with brms. In the case of the discrimination parameter for the cumulative model, we’ll want more focused instructions. Happily, Bürkner & Vuorre (2019) have our backs. We read:

Conceptually, unequal variances are incorporated in the model by specifying an additional regression formula for the variance component of the latent variable \tilde Y. In brms, the parameter related to latent variances is called disc (short for “discrimination”), following conventions in item response theory. Note that disc is not the variance itself, but the inverse of the standard deviation, s. That is, s = 1/ \text{disc}. Further, because disc must be strictly positive, it is by default modeled on the log scale.

Predicting auxiliary parameters (parameters of the distribution other than the mean/location) in brms is accomplished by passing multiple regression formulas to the

brm()function. Each formula must first be wrapped in another function,bf()orlf()(for “linear formula”)–depending on whether it is a main or an auxiliary formula, respectively. The formulas are then combined and passed to theformulaargument ofbrm(). Because the standard deviation of the latent variable is fixed to 1 for the baseline [group, disc cannot be estimated for the baseline group]. We must therefore ensure that disc is estimated only for [non-baseline groups]. To do so, we omit the intercept from the model of disc by writing0 + ...on the right-hand side of the regression formula. By default, R applies cell-mean coding to factors in formulas without an intercept. That would lead to disc being estimated for [all groups], so we must deactivate it via thecmcargument oflf(). (pp. 11–12)

Here’s what that might look like.

fit <-

brm(data = my_data,

family = cumulative(probit),

bf(y ~ 1 + x) +

lf(disc ~ 0 + x, cmc = F),

prior = c(prior(normal(0, 4), class = Intercept),

prior(normal(0, 4), class = b),

prior(normal(0, 4), class = b, dpar = disc)))Note how when using the disc ~ 0 + ... syntax, the disc parameters are of class = b within the prior() function. If you’d like to assign them priors differing from the other b parameters, you’ll need to specify dpar = disc. Again, though the mean structure for this model is on the probit scale, the discrimination structure is on the log scale. Recalling that \sigma = 1/\alpha, which means \alpha = 1/\sigma, and also that we’re modeling \log (\alpha), the priors for the standard deviations of the non-reference category groups are on the scale of \log (1 / \sigma).

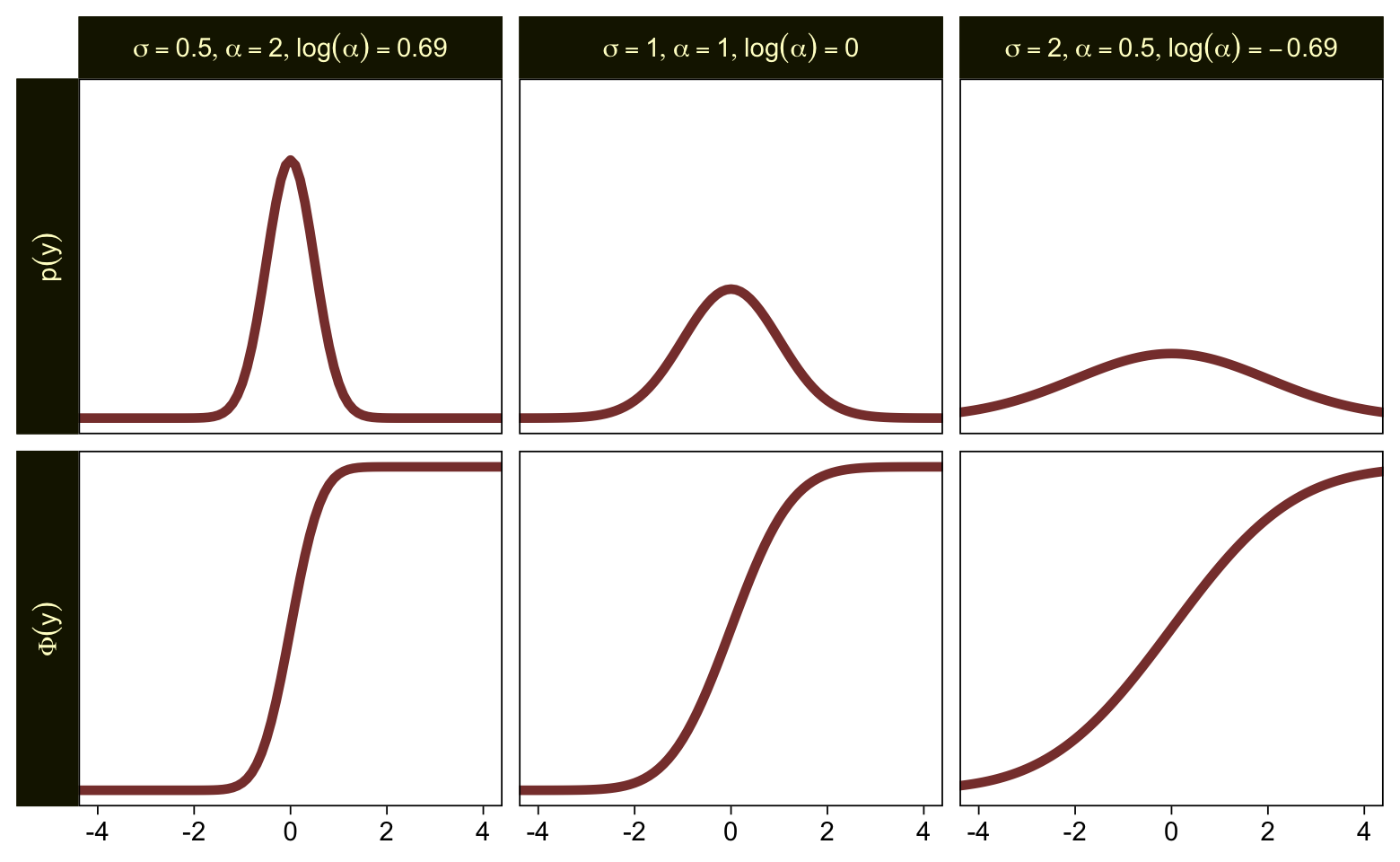

To get a better sense of how one might set a prior on such a scale, we might compare \sigma, \alpha, and \log (\alpha). Here are the density and cumulative density functions for \operatorname{Normal}(0, 0.5), \operatorname{Normal}(0, 1), and \operatorname{Normal}(0, 2).

tibble(mu = 0,

sigma = c(0.5, 1, 2)) %>%

expand_grid(y = seq(from = -5, to = 5, by = 0.1)) %>%

mutate(`p(y)` = dnorm(y, mu, sigma),

`Phi(y)` = pnorm(y, mu, sigma)) %>%

mutate(alpha = 1 / sigma,

loga = log(1 / sigma)) %>%

mutate(label = str_c("list(sigma==", sigma, ",alpha==", alpha, ",log(alpha)==", round(loga, 2), ")")) %>%

pivot_longer(`p(y)`:`Phi(y)`) %>%

ggplot(aes(x = y, y = value)) +

geom_line(linewidth = 1.5, color = sl[7]) +

scale_y_continuous(NULL, breaks = NULL) +

xlab(NULL) +

coord_cartesian(xlim = c(-4, 4)) +

facet_grid(name ~ label, labeller = label_parsed, switch = "y")

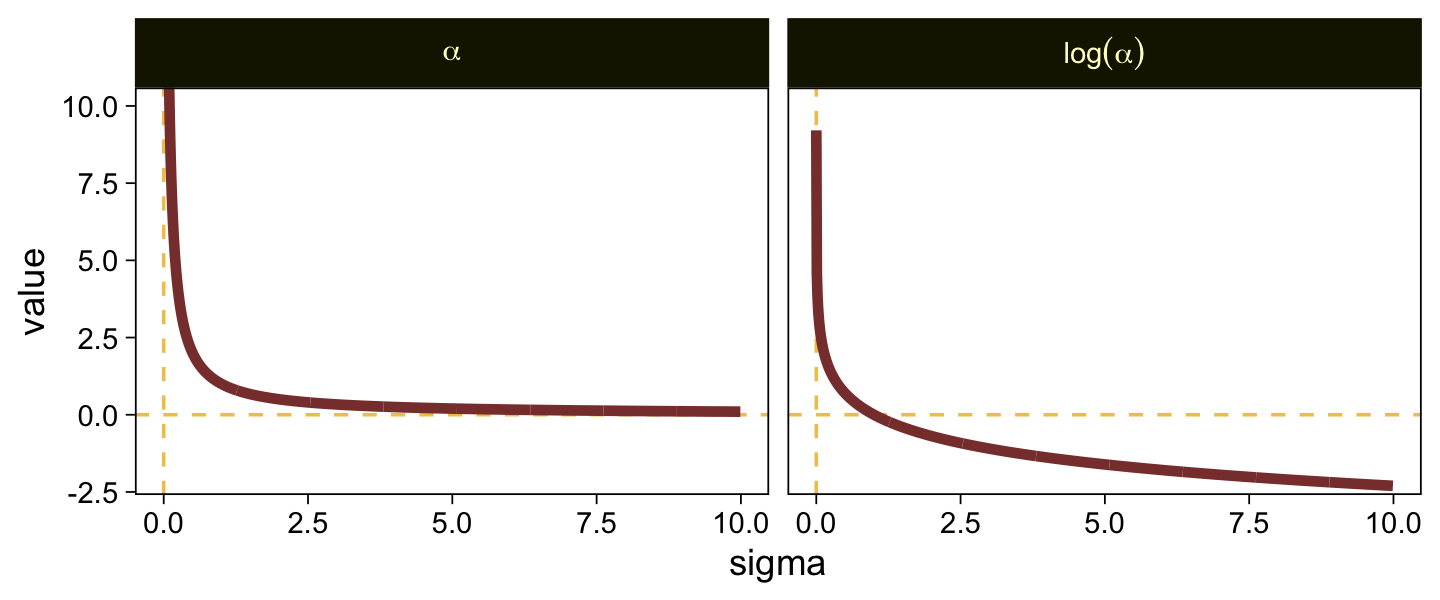

Put another way, here’s how \alpha and \log (\alpha) scale on values of \sigma ranging from 0.0001 to 10.

tibble(sigma = seq(from = 0.0001, to = 10, by = 0.01)) %>%

mutate(alpha = 1 / sigma,

`log(alpha)` = log(1 / sigma)) %>%

pivot_longer(-sigma, names_to = "labels") %>%

ggplot(aes(x = sigma, y = value)) +

geom_hline(yintercept = 0, color = sl[3], linetype = 2) +

geom_vline(xintercept = 0, color = sl[3], linetype = 2) +

geom_line(linewidth = 1.5, color = sl[7]) +

coord_cartesian(ylim = c(-2, 10)) +

facet_grid(~ labels, labeller = label_parsed)

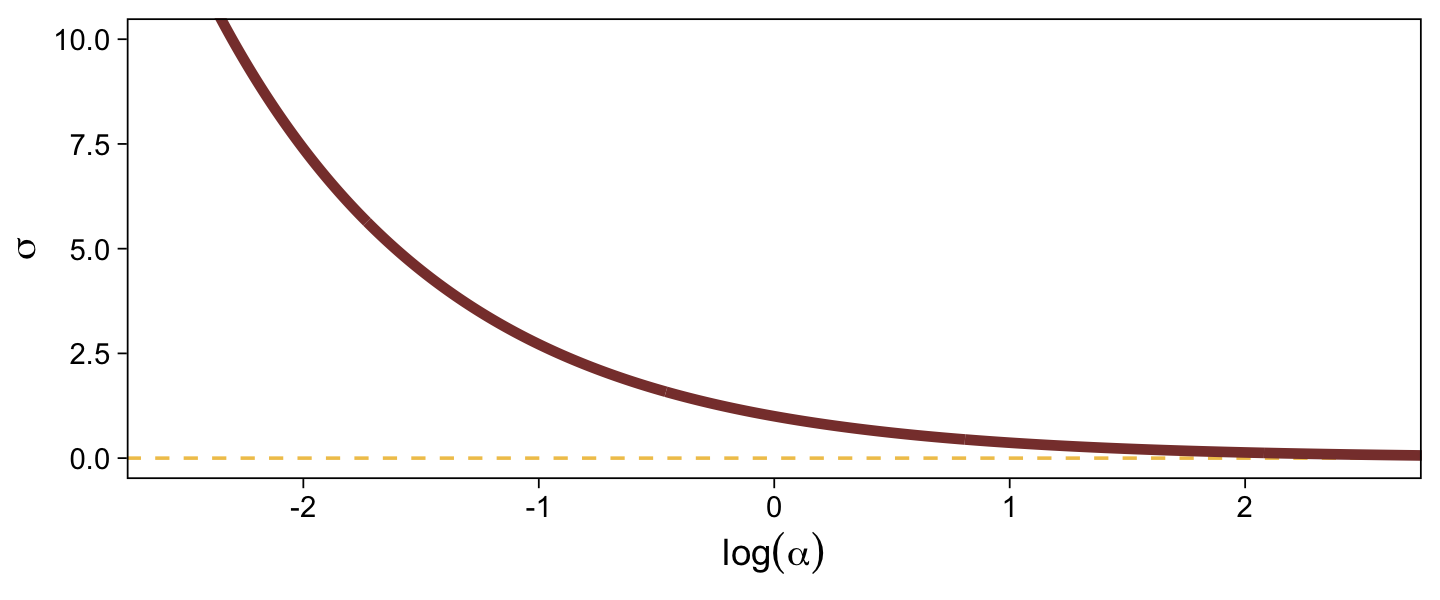

When \sigma goes below 1, both explode upward. As \sigma increases, \alpha asymptotes at zero and \log (\alpha) slowly descends below zero. Put another way, here is how \sigma scales as a function of \log (\alpha).

tibble(`log(alpha)` = seq(from = -3, to = 3, by = 0.01)) %>%

mutate(sigma = 1 / exp(`log(alpha)`)) %>%

ggplot(aes(x = `log(alpha)`, y = sigma)) +

geom_hline(yintercept = 0, color = sl[3], linetype = 2) +

geom_line(linewidth = 1.5, color = sl[7]) +

labs(x = expression(log(alpha)),

y = expression(sigma)) +

coord_cartesian(xlim = c(-2.5, 2.5),

ylim = c(0, 10))

In the context where the underlying distribution for the reference category will be the standard normal, it seems like a \operatorname{Normal}(0, 1) prior would be fairly permissive for \log (\alpha). This is what I will use going forward. Choose your priors with care.

23.3.2 Examples: Not funny.

Load the data for the next model.

my_data <- read_csv("data.R/OrdinalProbitData1.csv")

glimpse(my_data)## Rows: 88

## Columns: 2

## $ X <chr> "A", "A", "A", "A", "A", "A", "A", "A", "A", "A", "A", "A", "A", "A", "A", "A", "A", "A", "A", "A"…

## $ Y <dbl> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2,…Fit the first ordinal probit model with group-specific \mu and \sigma values for the underlying normal distributions for the ordinal variable Y.

fit23.5 <-

brm(data = my_data,

family = cumulative(probit),

bf(Y ~ 1 + X) +

lf(disc ~ 0 + X, cmc = FALSE),

prior = c(prior(normal(0, 4), class = Intercept),

prior(normal(0, 4), class = b),

prior(normal(0, 1), class = b, dpar = disc)),

iter = 3000, warmup = 1000, chains = 4, cores = 4,

seed = 23,

file = "fits/fit23.05")Look over the summary.

print(fit23.5)## Family: cumulative

## Links: mu = probit; disc = log

## Formula: Y ~ 1 + X

## disc ~ 0 + X

## Data: my_data (Number of observations: 88)

## Draws: 4 chains, each with iter = 3000; warmup = 1000; thin = 1;

## total post-warmup draws = 8000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept[1] 0.49 0.20 0.11 0.88 1.00 9760 6028

## Intercept[2] 1.30 0.24 0.85 1.78 1.00 11289 5956

## Intercept[3] 2.20 0.38 1.52 3.00 1.00 5687 5511

## Intercept[4] 3.43 0.82 2.15 5.38 1.00 4453 5631

## XB 0.43 0.34 -0.31 1.04 1.00 4682 3784

## disc_XB -0.32 0.28 -0.86 0.23 1.00 3221 4543

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Because our fancy new parameter disc_XB is on the \log (\alpha) scale, we can convert it to the \sigma scale with \frac{1}{\exp (\log \alpha)}. For a quick and dirty example, here it is with the posterior mean.

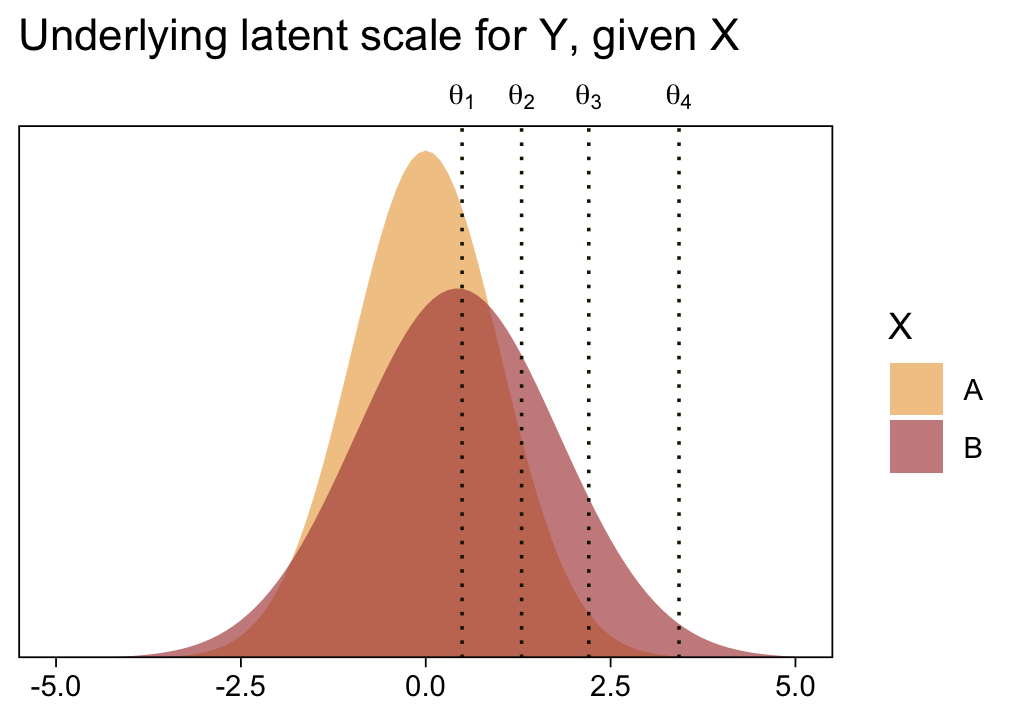

1 / (exp(fixef(fit23.5)["disc_XB", 1]))## [1] 1.372627Before we follow along with Kruschke, let’s hammer the meaning of these model parameters home. Here is a density plot of the two underlying latent distributions for Y, given X. We’ll throw in the thresholds for good measure. To keep things simple, we’ll just express the distributions in terms of the posterior means of each parameter.

tibble(X = LETTERS[1:2],

mu = c(0, fixef(fit23.5)["XB", 1]),

sigma = c(1, 1 / (exp(fixef(fit23.5)["disc_XB", 1])))) %>%

expand_grid(y = seq(from = -5, to = 5, by = 0.1)) %>%

mutate(d = dnorm(y, mu, sigma)) %>%

ggplot(aes(x = y, y = d, fill = X)) +

geom_area(alpha = 2/3, position = "identity") +

geom_vline(xintercept = fixef(fit23.5)[1:4, 1], linetype = 3, color = sl[9]) +

scale_fill_scico_d(palette = "lajolla", begin = .33, end = .67) +

scale_x_continuous(sec.axis = dup_axis(breaks = fixef(fit23.5)[1:4, 1] %>% as.double(),

labels = parse(text = str_c("theta[", 1:4, "]")))) +

scale_y_continuous(NULL, breaks = NULL, expand = expansion(mult = c(0, 0.05))) +

labs(title = "Underlying latent scale for Y, given X",

x = NULL) +

theme(axis.ticks.x.top = element_blank())

Returning to our previous workflow, extract the posterior draws and wrangle.

draws <- as_draws_df(fit23.5)

glimpse(draws)## Rows: 8,000

## Columns: 11

## $ `b_Intercept[1]` <dbl> 0.4952251, 0.7549364, 0.7251187, 0.5801829, 0.3644839, 0.6963412, 0.2042224, 0.4628…

## $ `b_Intercept[2]` <dbl> 1.0987189, 1.2573930, 1.3004260, 1.2258207, 0.9281913, 1.2340367, 1.3980522, 1.1853…

## $ `b_Intercept[3]` <dbl> 1.701087, 1.806990, 1.651997, 2.159145, 1.776843, 1.795096, 2.845807, 2.064523, 1.9…

## $ `b_Intercept[4]` <dbl> 2.377239, 2.446180, 2.469663, 2.678498, 2.348979, 2.197770, 6.031723, 3.376425, 4.1…

## $ b_XB <dbl> 0.48385430, 0.85921784, 0.67312708, 0.38551309, 0.09678844, 0.45100318, 0.29035289,…

## $ b_disc_XB <dbl> 0.230385189, 0.288865047, 0.302123290, 0.220952201, 0.033662260, -0.001259104, -1.0…

## $ lprior <dbl> -12.71293, -12.72098, -12.73492, -12.82612, -12.73203, -12.68872, -14.37737, -12.90…

## $ lp__ <dbl> -108.1915, -109.1719, -110.3546, -112.2361, -109.8350, -110.3082, -112.2452, -107.9…

## $ .chain <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…

## $ .iteration <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, …

## $ .draw <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22, 23, …Now, let’s use our handy compare_thresholds() function to make an expanded version of the lower-left plot of Figure 23.4.

draws %>%

select(`b_Intercept[1]`:`b_Intercept[4]`, .draw) %>%

compare_thresholds(lb = 1.5, ub = 4.5)

It will no longer be straightforward to use the formulas from 23.2.2 to convert the output from our brms parameterization to match the way Kruschke parameterized his conditional means and standard deviations. I will leave the conversion up to the interested reader. Going forward, we will focus on the output from our brms parameterization.

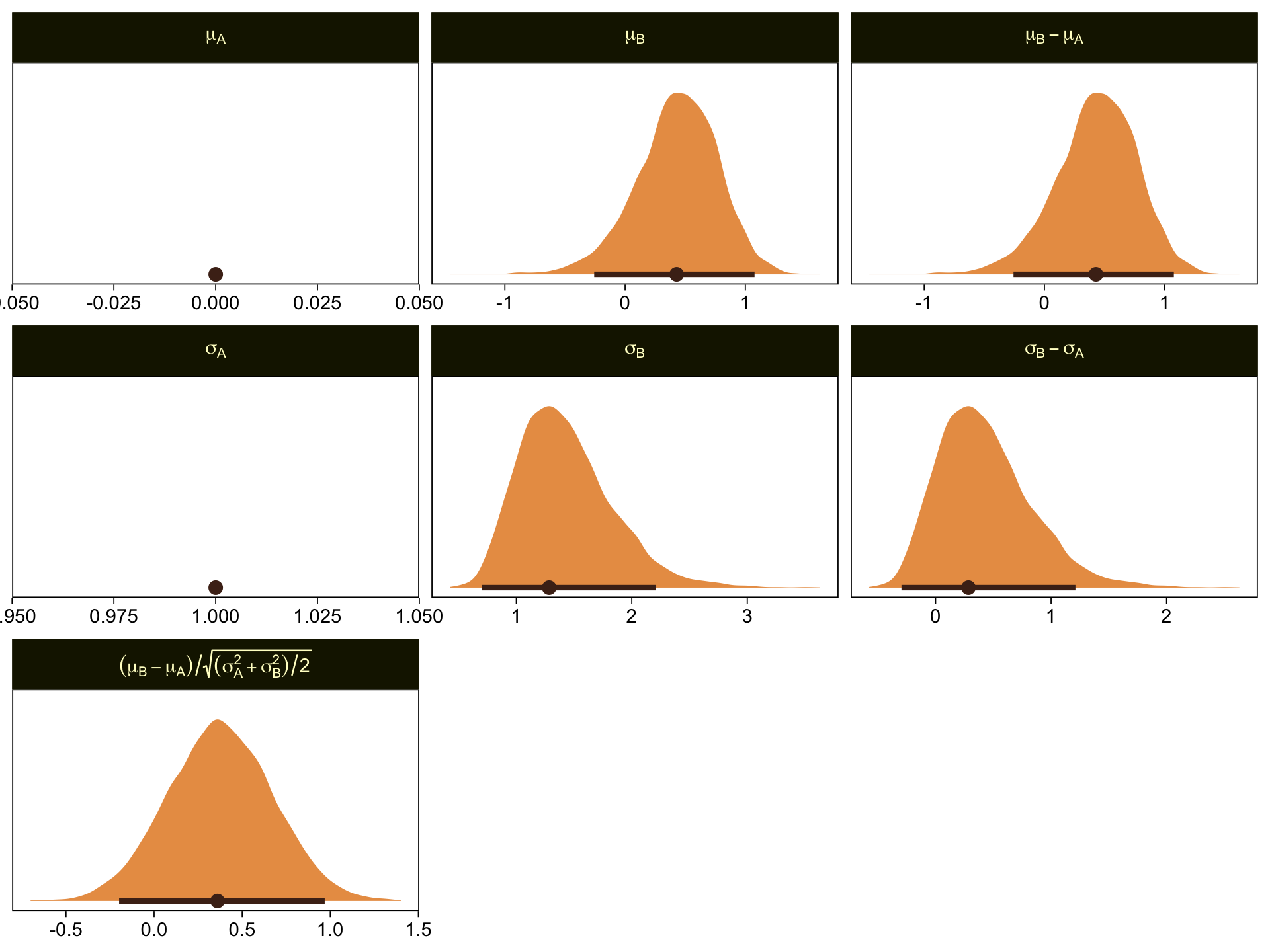

draws %>%

# simple parameters

mutate(`mu[A]` = 0,

`mu[B]` = b_XB,

`sigma[A]` = 1,

`sigma[B]` = 1 / exp(b_disc_XB)) %>%

# simple differences

mutate(`mu[B]-mu[A]` = `mu[B]` - `mu[A]`,

`sigma[B]-sigma[A]` = `sigma[B]` - `sigma[A]`) %>%

# effect size

mutate(`(mu[B]-mu[A])/sqrt((sigma[A]^2+sigma[B]^2)/2)` = (`mu[B]-mu[A]`) / sqrt((`sigma[A]`^2 + `sigma[B]`^2) / 2)) %>%

# wrangle

pivot_longer(`mu[A]`:`(mu[B]-mu[A])/sqrt((sigma[A]^2+sigma[B]^2)/2)`) %>%

mutate(name = factor(name,

levels = c("mu[A]", "mu[B]", "mu[B]-mu[A]",

"sigma[A]", "sigma[B]", "sigma[B]-sigma[A]",

"(mu[B]-mu[A])/sqrt((sigma[A]^2+sigma[B]^2)/2)"))) %>%

# plot

ggplot(aes(x = value, y = 0)) +

stat_halfeye(point_interval = mode_hdi, .width = .95,

fill = sl[4], color = sl[8], normalize = "panels") +

scale_y_continuous(NULL, breaks = NULL) +

xlab(NULL) +

facet_wrap(~ name, scales = "free", labeller = label_parsed)

\mu_A and \sigma_A are both constants, which doesn’t show up well with our stat_halfeye() approach with the scales freed across facets. If these plots really mattered for a scientific presentation or something for industry, you could experiment using either a common scale across all facets, or making the plots individually and then combining them with patchwork syntax. Returning to interpretation, because \mu_A = 0, it turns out that \mu_B - \mu_A = \mu_B, which is on display on the top row. Because \sigma_A = 1, it turns out that \sigma_B - \sigma_A is just \sigma_B moved over one unit to the left, which is hopefully clear in the panels of the second row. Very happily, the effect size formula worked with our brms parameters the same way it did for Kruschke’s. Both yield an effect size of about 0.5, with 95% intervals extending about \pm 0.5.

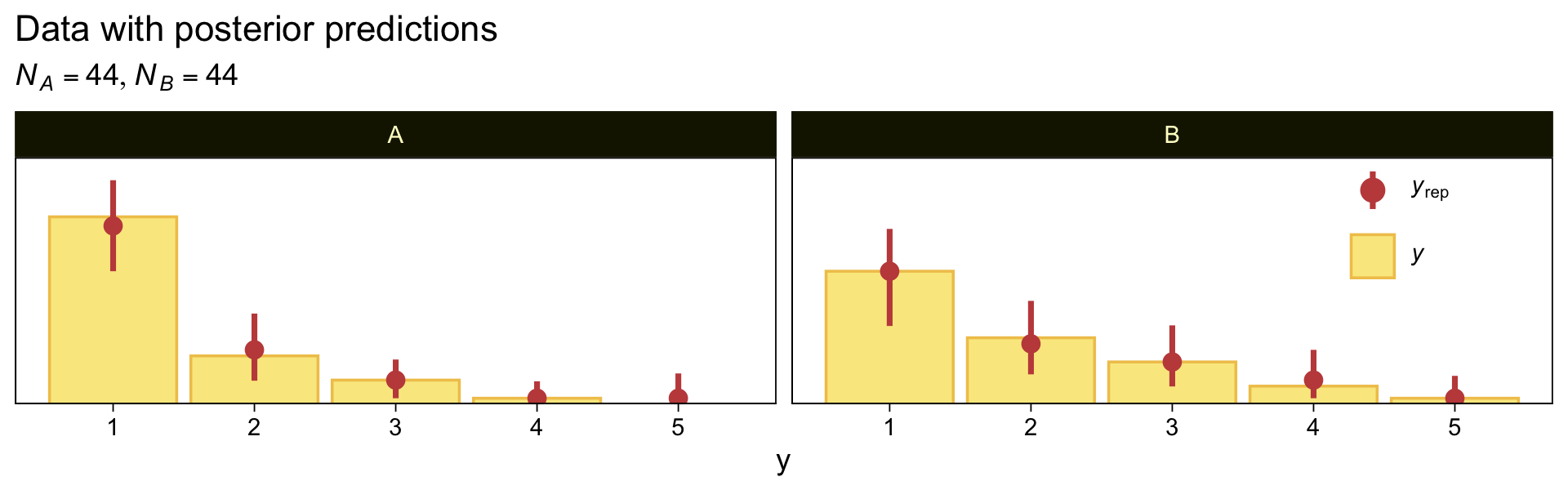

Here we make good use of the type = "bars_grouped" and group = "X" arguments to make the posterior predictive plots at the top right of Figure 23.4 with the brms::pp_check() function.

set.seed(23)

pp_check(fit23.5, type = "bars_grouped", ndraws = 100, group = "X", fatten = 2) +

scale_x_continuous("y", breaks = 1:7) +

scale_y_continuous(NULL, breaks = NULL, expand = expansion(mult = c(0, 0.05))) +

ggtitle("Data with posterior predictions",

subtitle = expression(list(italic(N[A])==44, italic(N[B])==44))) +

theme(legend.background = element_blank(),

legend.position = c(.9, .75))

Using more tricks from back in Chapter 16, here’s the corresponding conventional Gaussian model for metric data.

mean_y <- mean(my_data$Y)

sd_y <- sd(my_data$Y)

stanvars <-

stanvar(mean_y, name = "mean_y") +

stanvar(sd_y, name = "sd_y")

fit23.6 <-

brm(data = my_data,

family = gaussian,

bf(Y ~ 0 + X, sigma ~ 0 + X),

prior = c(prior(normal(mean_y, sd_y * 100), class = b),

prior(normal(0, 1), class = b, dpar = sigma)),

chains = 4, cores = 4,

stanvars = stanvars,

seed = 23,

file = "fits/fit23.06")Check the summary.

print(fit23.6)## Family: gaussian

## Links: mu = identity; sigma = log

## Formula: Y ~ 0 + X

## sigma ~ 0 + X

## Data: my_data (Number of observations: 88)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## XA 1.43 0.12 1.20 1.67 1.00 4176 2850

## XB 1.86 0.16 1.54 2.18 1.00 4514 2958

## sigma_XA -0.26 0.11 -0.46 -0.03 1.00 3919 2563

## sigma_XB 0.08 0.11 -0.12 0.30 1.00 4275 3139

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

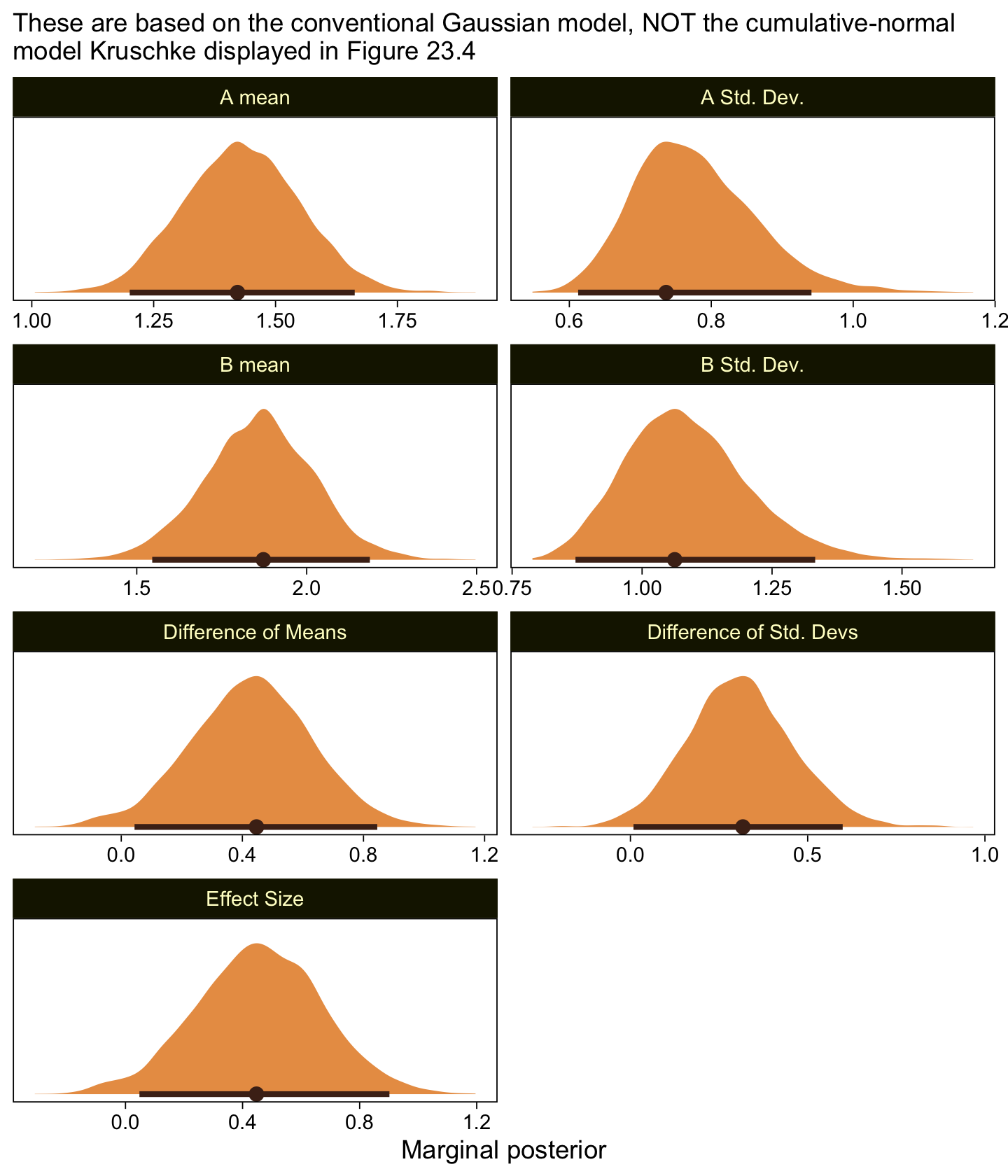

## scale reduction factor on split chains (at convergence, Rhat = 1).Here are the marginal posteriors, including the effect size (i.e., a standardized mean difference using the pooled standard deviation formula presuming equal sample sizes, (\mu_2 - \mu_1) / \sqrt{(\sigma_1^2 + \sigma_2^2) / 2}.

as_draws_df(fit23.6) %>%

mutate(`A mean` = b_XA,

`B mean` = b_XB,

`A Std. Dev.` = exp(b_sigma_XA),

`B Std. Dev.` = exp(b_sigma_XB)) %>%

mutate(`Difference of Means` = `B mean` - `A mean`,

`Difference of Std. Devs` = `B Std. Dev.` - `A Std. Dev.`) %>%

mutate(`Effect Size` = `Difference of Means` / sqrt((`A Std. Dev.`^2 + `B Std. Dev.`^2) / 2)) %>%

pivot_longer(`A mean`:`Effect Size`) %>%

ggplot(aes(x = value, y = 0)) +

stat_halfeye(point_interval = mode_hdi, .width = .95,

fill = sl[4], color = sl[8], normalize = "panels") +

scale_y_continuous(NULL, breaks = NULL) +

labs(subtitle = "These are based on the conventional Gaussian model, NOT the cumulative-normal\nmodel Kruschke displayed in Figure 23.4",

x = "Marginal posterior") +

facet_wrap(~ name, scales = "free", ncol = 2)

Compare those results to those Kruschke reported from an NHST analysis in the note below Figure 23.4:

M_1 = 1.43, M_2 = 1.86, t = 2.18, p = 0.032, with effect size d = 0.466 with 95\% CI of 0.036-0.895. An F test of the variances concludes that the standard deviations are significantly different: S_1 = 0.76, S_2 = 1.07, p = 0.027. Notice in this case that treating the values as metric greatly underestimates their variances, as well as erroneously concludes the variances are different. (p. 684)

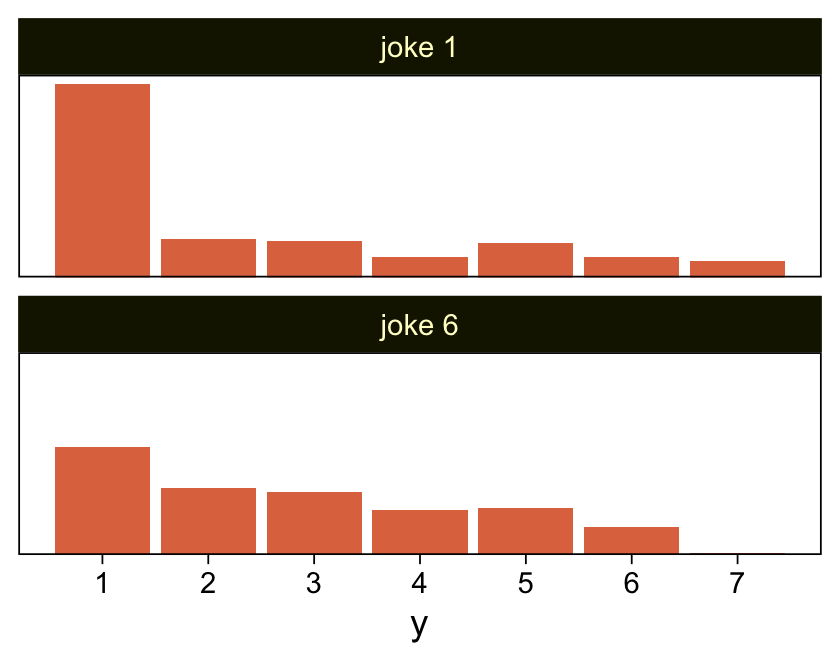

As to the data in the analyses Kruschke reported in Figure 23.5 and the prose in the second paragraph on page 685, I’m not aware that Kruschke provided them. From his footnote #2, we read: “Data in Figure 23.5 are from an as-yet unpublished study I conducted with the collaboration of Allison Vollmer as part of her undergraduate honors project.” In place of the real data, I eyeballed the values based on the upper-right panels in Figure 23.5. Here they are.

d <-

tibble(x = rep(str_c("joke ", c(1, 6)), each = 177),

y = c(rep(1:7, times = c(95, 19, 18, 10, 17, 10, 8)),

rep(1:7, times = c(53, 33, 31, 22, 23, 14, 1))))

glimpse(d)## Rows: 354

## Columns: 2

## $ x <chr> "joke 1", "joke 1", "joke 1", "joke 1", "joke 1", "joke 1", "joke 1", "joke 1", "joke 1", "joke 1"…

## $ y <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1,…My approximation to Kruschke’s data looks like this.

d %>%

ggplot(aes(x = y)) +

geom_bar(fill = sl[5]) +

scale_x_continuous(breaks = 1:7) +

scale_y_continuous(NULL, breaks = NULL, expand = expansion(mult = c(0, 0.05))) +

facet_wrap(~ x, ncol = 1)

Here we fit the cumulative-normal model based on our version of the data, continuing to allow both \mu and \sigma (i.e., 1 / \exp(\log \alpha)) to differ across groups.

fit23.7 <-

brm(data = d,

family = cumulative(probit),

bf(y ~ 1 + x) +

lf(disc ~ 0 + x, cmc = FALSE),

prior = c(prior(normal(0, 4), class = Intercept),

prior(normal(0, 4), class = b),

prior(normal(0, 1), class = b, dpar = disc)),

iter = 3000, warmup = 1000, chains = 4, cores = 4,

seed = 23,

file = "fits/fit23.07")Check the model summary.

print(fit23.7)## Family: cumulative

## Links: mu = probit; disc = log

## Formula: y ~ 1 + x

## disc ~ 0 + x

## Data: d (Number of observations: 354)

## Draws: 4 chains, each with iter = 3000; warmup = 1000; thin = 1;

## total post-warmup draws = 8000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept[1] 0.08 0.09 -0.11 0.25 1.00 5294 5858

## Intercept[2] 0.37 0.09 0.19 0.54 1.00 7251 6247

## Intercept[3] 0.65 0.09 0.47 0.83 1.00 8814 6164

## Intercept[4] 0.87 0.10 0.68 1.07 1.00 8039 5851

## Intercept[5] 1.26 0.12 1.03 1.49 1.00 6667 5937

## Intercept[6] 1.81 0.16 1.51 2.13 1.00 7075 5922

## xjoke6 0.39 0.10 0.20 0.59 1.00 7813 6439

## disc_xjoke6 0.49 0.12 0.27 0.72 1.00 4440 5751

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Save and wrangle the posterior draws, then use our compare_thresholds() function to compare the brms parameterization of \theta_{[i]} with the parameterization in the text in an expanded version of the lower-left plot of Figure 23.5.

draws <- as_draws_df(fit23.7)

draws %>%

select(`b_Intercept[1]`:`b_Intercept[6]`, .draw) %>%

compare_thresholds(lb = 1.5, ub = 6.5)

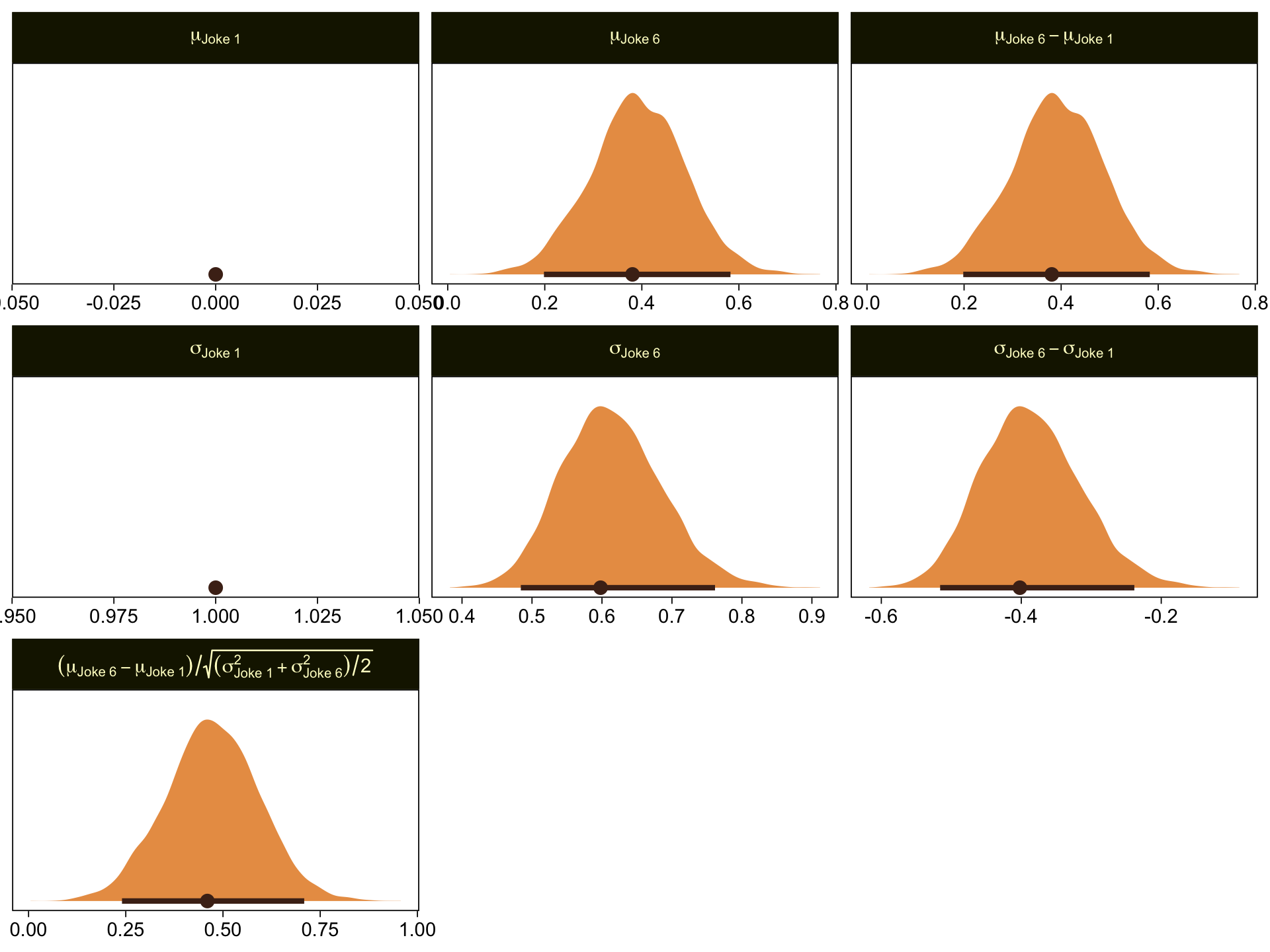

Given our data are only approximations of Kruschke’s, I think we did pretty good. Here are the histograms for our brms versions of the \mu- and \sigma-related parameters.

draws %>%

transmute(`mu[Joke~1]` = 0,

`mu[Joke~6]` = b_xjoke6,

`sigma[Joke~1]` = 1,

`sigma[Joke~6]` = 1 / exp(b_disc_xjoke6)) %>%

mutate(`mu[Joke~6]-mu[Joke~1]` = `mu[Joke~6]` - `mu[Joke~1]`,

`sigma[Joke~6]-sigma[Joke~1]` = `sigma[Joke~6]` - `sigma[Joke~1]`) %>%

mutate(`(mu[Joke~6]-mu[Joke~1])/sqrt((sigma[Joke~1]^2+sigma[Joke~6]^2)/2)` = (`mu[Joke~6]-mu[Joke~1]`) / sqrt((`sigma[Joke~1]`^2 + `sigma[Joke~6]`^2) / 2)) %>%

pivot_longer(everything()) %>%

mutate(name = factor(name,

levels = c("mu[Joke~1]", "mu[Joke~6]", "mu[Joke~6]-mu[Joke~1]",

"sigma[Joke~1]", "sigma[Joke~6]", "sigma[Joke~6]-sigma[Joke~1]",

"(mu[Joke~6]-mu[Joke~1])/sqrt((sigma[Joke~1]^2+sigma[Joke~6]^2)/2)"))) %>%

ggplot(aes(x = value, y = 0)) +

stat_halfeye(point_interval = mode_hdi, .width = .95,

fill = sl[4], color = sl[8],

normalize = "panels") +

scale_y_continuous(NULL, breaks = NULL) +

xlab(NULL) +

facet_wrap(~ name, scales = "free", labeller = label_parsed)

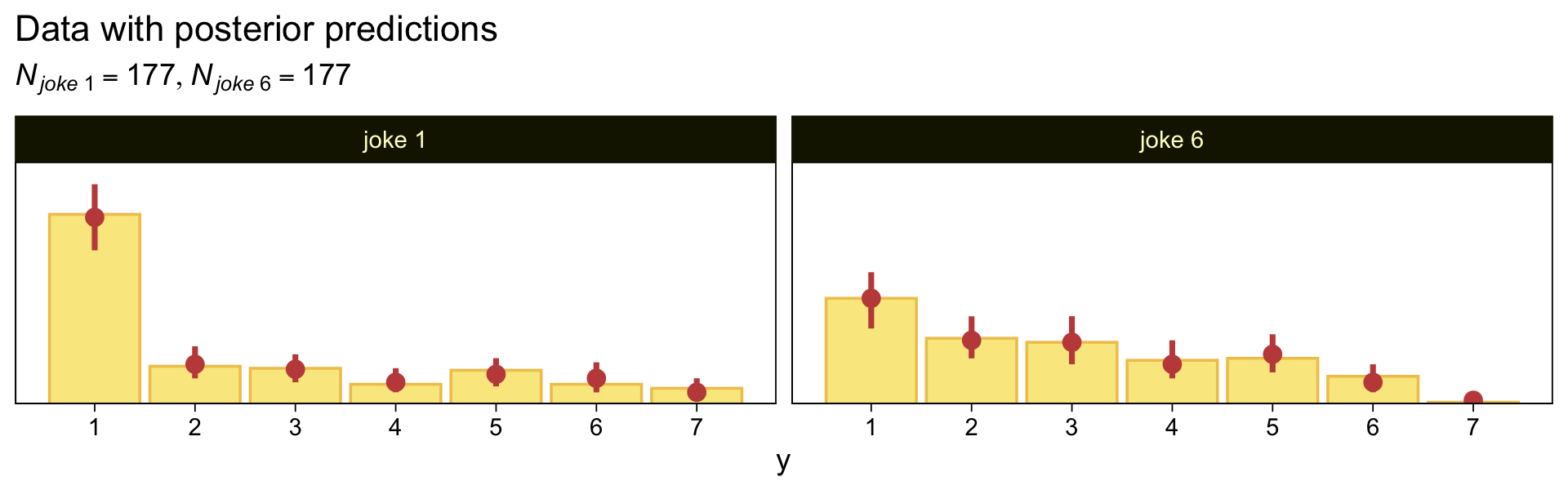

Here are our versions of the two panels in the upper right of Figure 23.5.

set.seed(23)

pp_check(fit23.7, type = "bars_grouped", ndraws = 100, group = "x", fatten = 2) +

scale_x_continuous("y", breaks = 1:7) +

scale_y_continuous(NULL, breaks = NULL, expand = expansion(mult = c(0, 0.05))) +

ggtitle("Data with posterior predictions",

subtitle = expression(list(italic(N["joke "*1])==177, italic(N["joke "*6])==177))) +

theme(legend.position = "none")

Now here’s the corresponding model where we treat the y data as metric.

mean_y <- mean(d$y)

sd_y <- sd(d$y)

stanvars <-

stanvar(mean_y, name = "mean_y") +

stanvar(sd_y, name = "sd_y")

fit23.8 <-

brm(data = d,

family = gaussian,

bf(y ~ 0 + x, sigma ~ 0 + x),

prior = c(prior(normal(mean_y, sd_y * 100), class = b),

prior(normal(0, exp(sd_y)), class = b, dpar = sigma)),

chains = 4, cores = 4,

stanvars = stanvars,

seed = 23,

file = "fits/fit23.08")Check the summary.

print(fit23.8)## Family: gaussian

## Links: mu = identity; sigma = log

## Formula: y ~ 0 + x

## sigma ~ 0 + x

## Data: d (Number of observations: 354)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## xjoke1 2.42 0.14 2.15 2.69 1.00 4201 3145

## xjoke6 2.86 0.13 2.60 3.10 1.00 4581 3030

## sigma_xjoke1 0.64 0.05 0.54 0.75 1.00 5039 3027

## sigma_xjoke6 0.52 0.05 0.42 0.63 1.00 4602 3110

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Make the marginal posteriors, including the effect size.

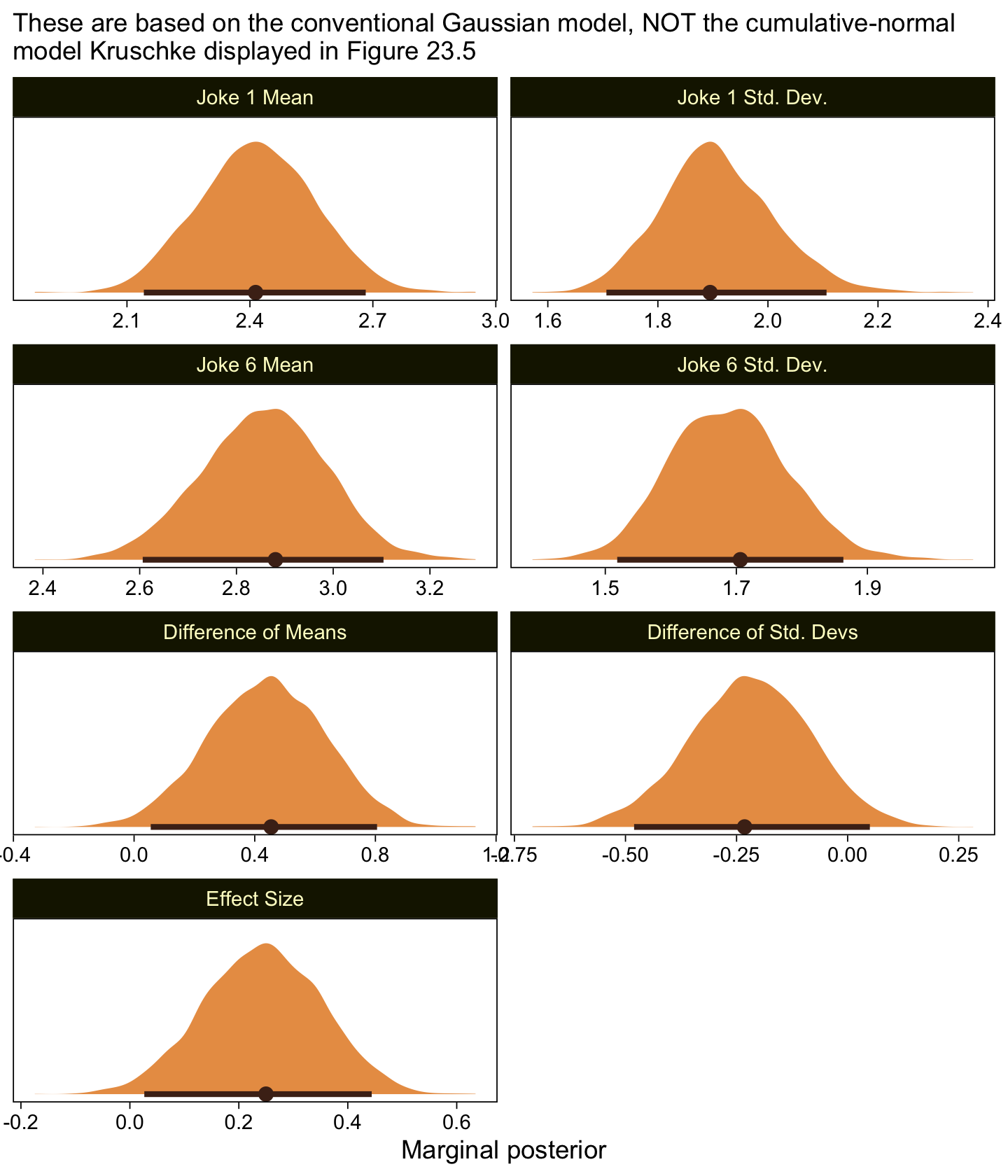

as_draws_df(fit23.8) %>%

mutate(`Joke 1 Mean` = b_xjoke1,

`Joke 6 Mean` = b_xjoke6,

`Joke 1 Std. Dev.` = exp(b_sigma_xjoke1),

`Joke 6 Std. Dev.` = exp(b_sigma_xjoke6)) %>%

mutate(`Difference of Means` = `Joke 6 Mean` - `Joke 1 Mean`,

`Difference of Std. Devs` = `Joke 6 Std. Dev.` - `Joke 1 Std. Dev.`) %>%

mutate(`Effect Size` = `Difference of Means` / sqrt((`Joke 1 Std. Dev.`^2 + `Joke 6 Std. Dev.`^2) / 2)) %>%

pivot_longer(`Joke 1 Mean`:`Effect Size`) %>%

mutate(name = factor(name,

levels = c("Joke 1 Mean", "Joke 1 Std. Dev.",

"Joke 6 Mean", "Joke 6 Std. Dev.",

"Difference of Means", "Difference of Std. Devs",

"Effect Size"))) %>%

ggplot(aes(x = value, y = 0)) +

stat_halfeye(point_interval = mode_hdi, .width = .95,

fill = sl[4], color = sl[8], normalize = "panels") +

scale_y_continuous(NULL, breaks = NULL) +

labs(subtitle = "These are based on the conventional Gaussian model, NOT the cumulative-normal\nmodel Kruschke displayed in Figure 23.5",

x = "Marginal posterior") +

facet_wrap(~ name, scales = "free", ncol = 2)

If you think you have a better approximation of Kruschke’s data, please share.

23.4 The Case of metric predictors

“This type of model is often referred to as ordinal probit regression or ordered probit regression because the probit function is the link function corresponding to the cumulative-normal inverse-link function” (p. 688, emphasis in the original).

23.4.1 Implementation in JAGS brms.

This model is easy to specify in brms. Just make sure to think clearly about your priors.

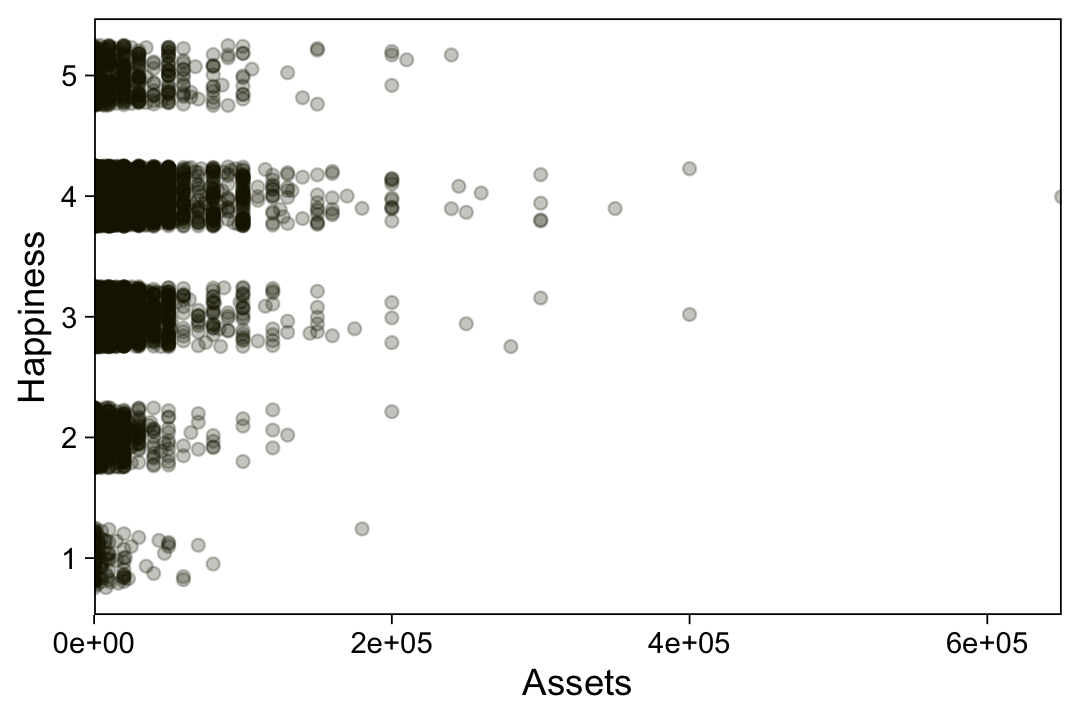

23.4.2 Example: Happiness and money.

Load the data for the next model.

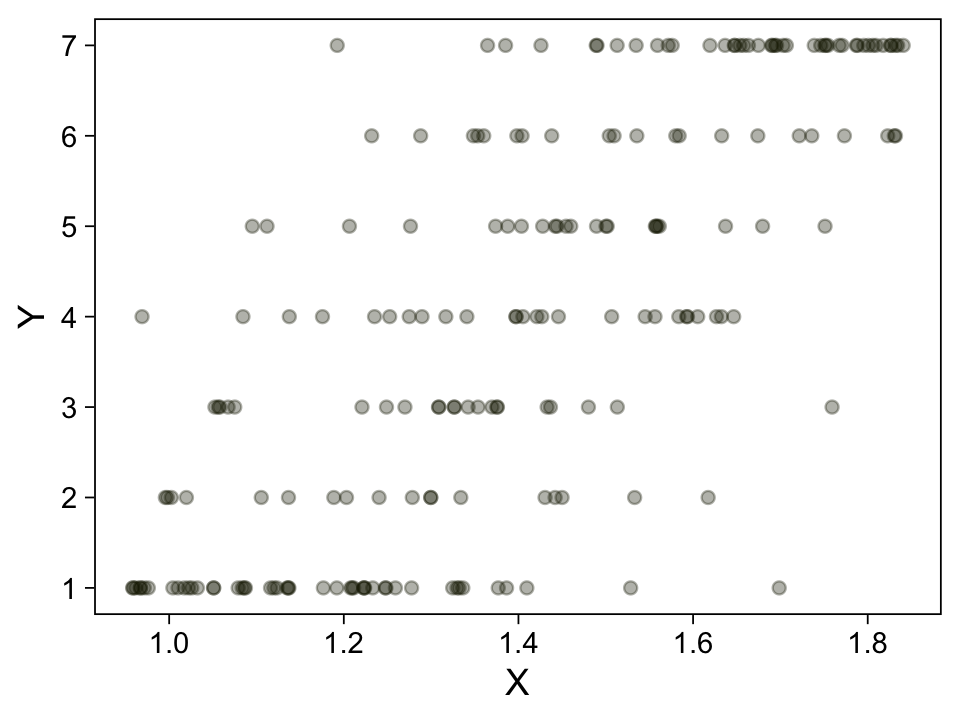

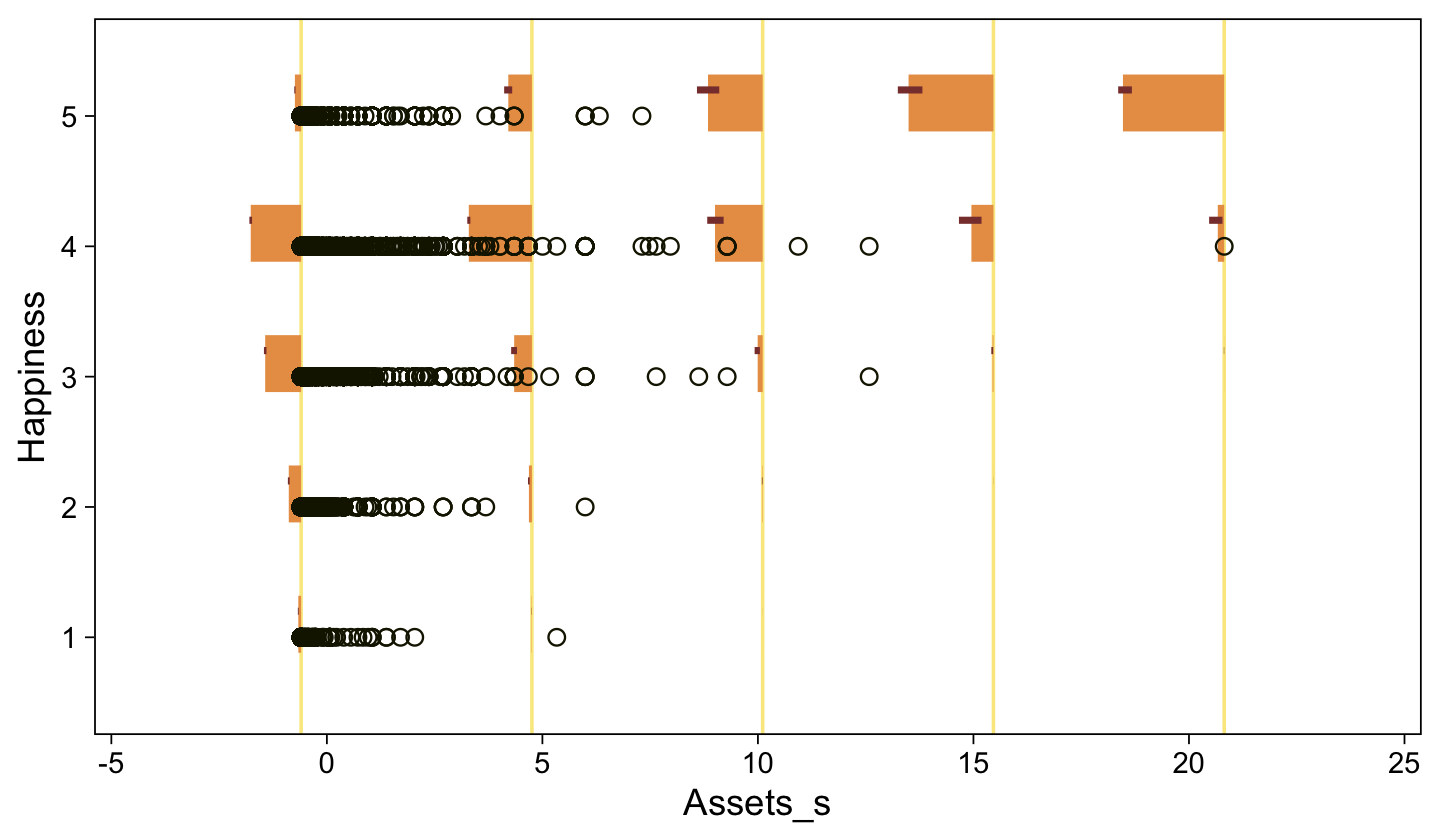

my_data <- read_csv("data.R/OrdinalProbitData-LinReg-2.csv")

glimpse(my_data)## Rows: 200

## Columns: 2

## $ X <dbl> 1.386389, 1.223879, 1.454505, 1.112068, 1.222715, 1.545099, 1.360256, 1.533071, 1.501657, 1.426755…

## $ Y <dbl> 1, 1, 5, 5, 1, 4, 6, 2, 5, 4, 1, 4, 4, 4, 4, 6, 1, 1, 6, 2, 1, 7, 1, 3, 1, 1, 7, 5, 7, 1, 4, 6, 7,…Take a quick look at the data.

my_data %>%

ggplot(aes(x = X, y = Y)) +

geom_point(alpha = 1/3, color = sl[9]) +

scale_y_continuous(breaks = 1:7)

Kruschke standardized his predictor within his model code. Here we’ll standardize X before fitting the model.

my_data <-

my_data %>%

mutate(X_s = (X - mean(X)) / sd(X))Fit the model.

fit23.9 <-

brm(data = my_data,

family = cumulative(probit),

Y ~ 1 + X_s,

prior = c(prior(normal(0, 4), class = Intercept),

prior(normal(0, 4), class = b)),

iter = 3000, warmup = 1000, chains = 4, cores = 4,

seed = 23,

file = "fits/fit23.09")Check the summary.

print(fit23.9)## Family: cumulative

## Links: mu = probit; disc = identity

## Formula: Y ~ 1 + X_s

## Data: my_data (Number of observations: 200)

## Draws: 4 chains, each with iter = 3000; warmup = 1000; thin = 1;

## total post-warmup draws = 8000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept[1] -1.18 0.12 -1.42 -0.94 1.00 5504 5515

## Intercept[2] -0.76 0.11 -0.99 -0.54 1.00 7562 6456

## Intercept[3] -0.29 0.11 -0.50 -0.08 1.00 8824 6672

## Intercept[4] 0.24 0.11 0.04 0.45 1.00 9672 6707

## Intercept[5] 0.73 0.11 0.51 0.96 1.00 9080 7114

## Intercept[6] 1.26 0.13 1.02 1.52 1.00 8399 7198

## X_s 1.16 0.10 0.97 1.35 1.00 6487 6073

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## disc 1.00 0.00 1.00 1.00 NA NA NA

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

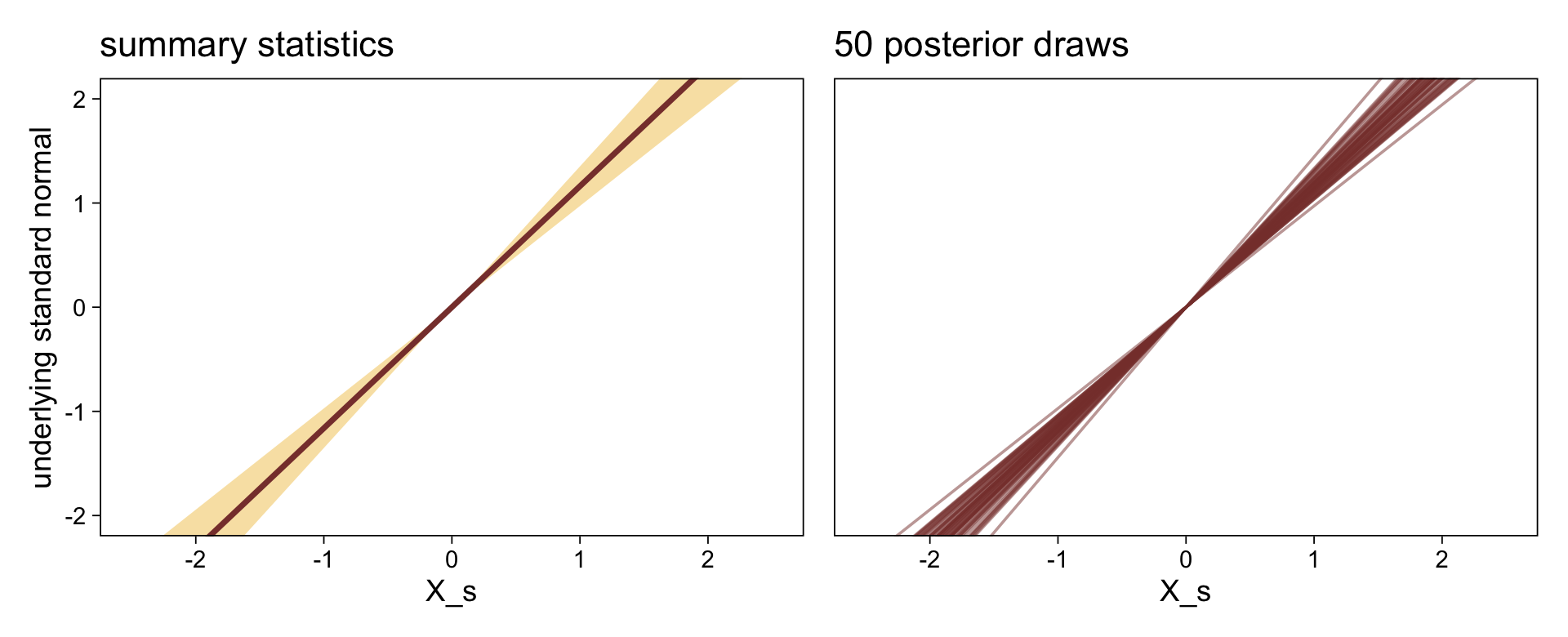

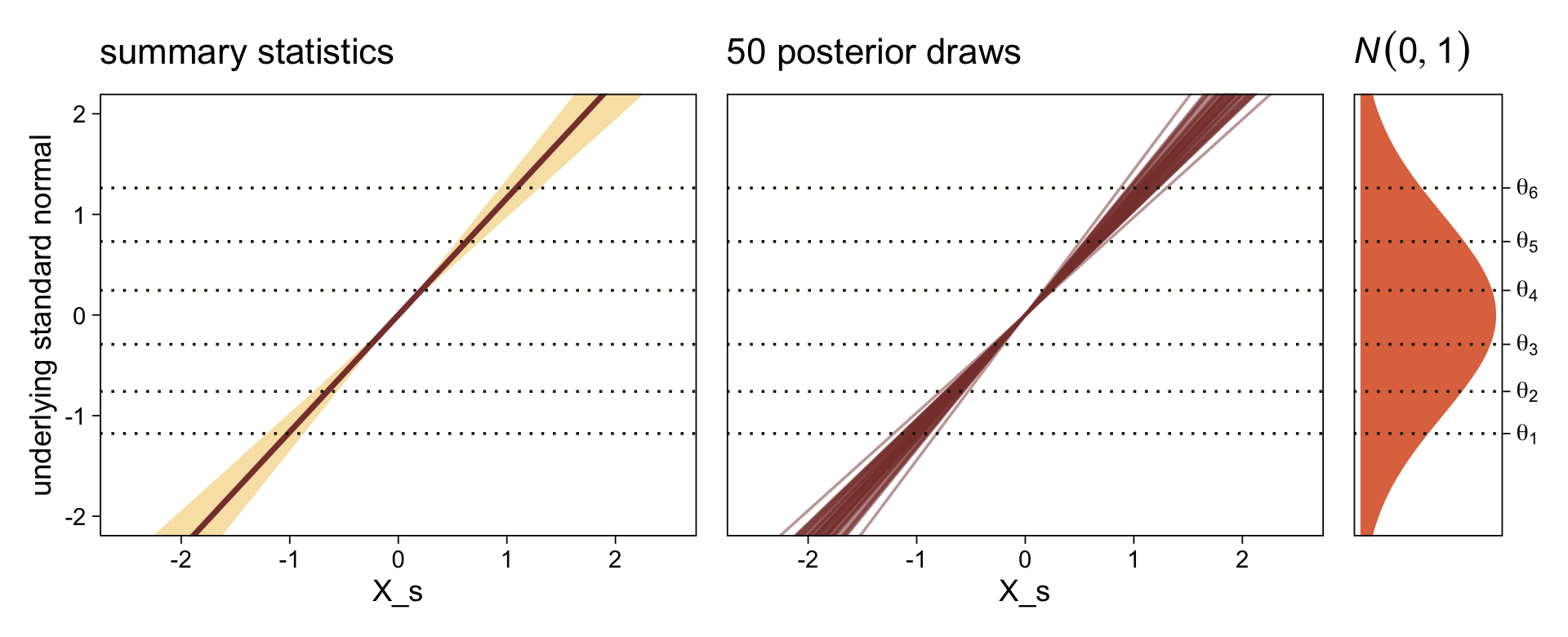

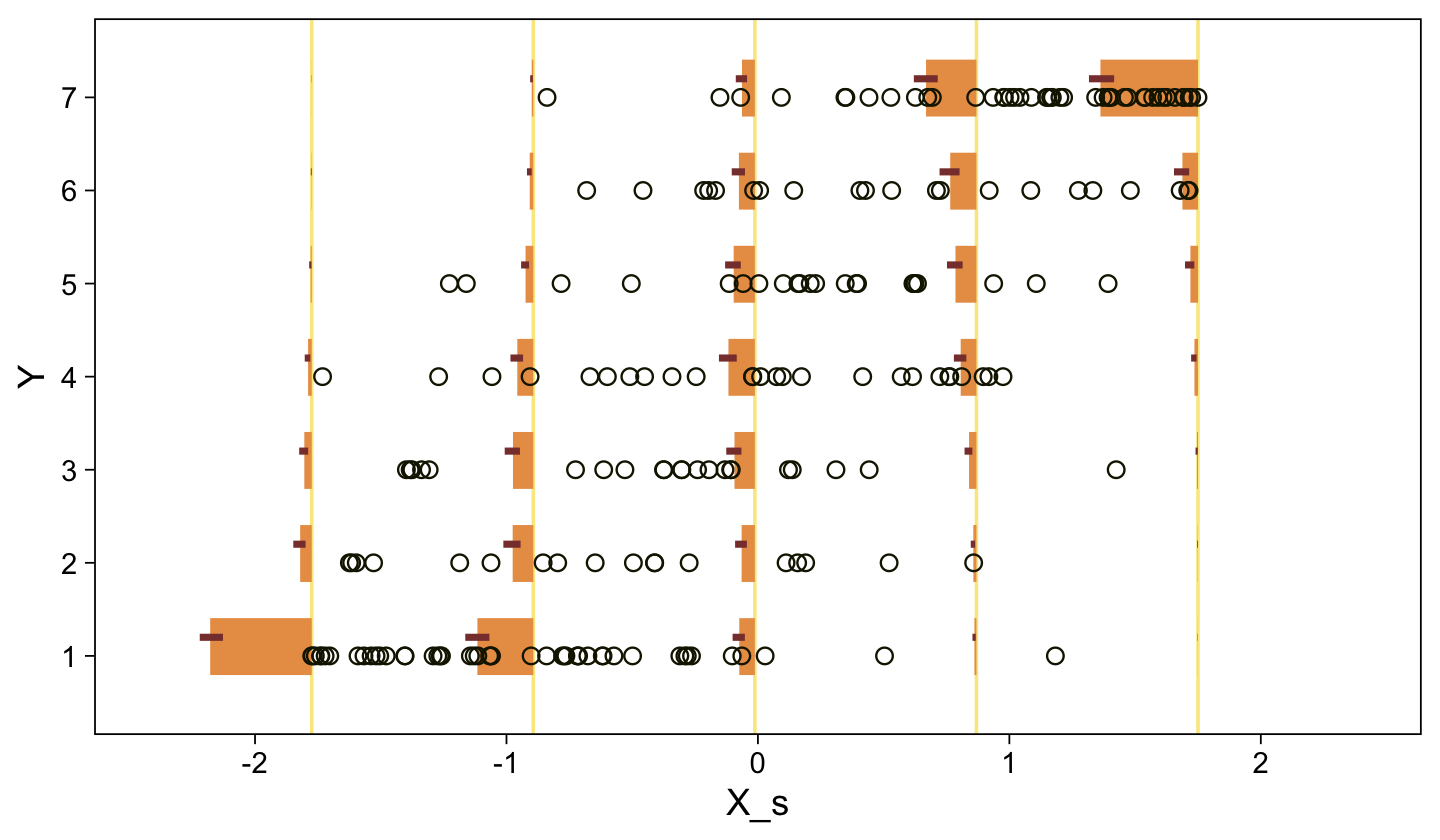

## scale reduction factor on split chains (at convergence, Rhat = 1).Extract the posterior draws and compare the brms parameterization of \theta_{[i]} with the parameterization in the text in an expanded version of the bottom panel of Figure 23.7.

draws <- as_draws_df(fit23.9)

draws %>%

select(`b_Intercept[1]`:`b_Intercept[6]`, .draw) %>%

compare_thresholds(lb = 1.5, ub = 6.5)

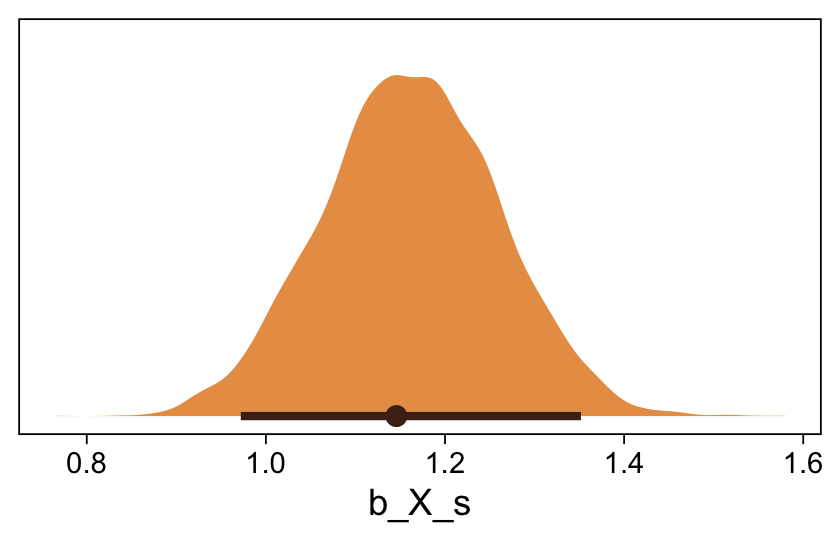

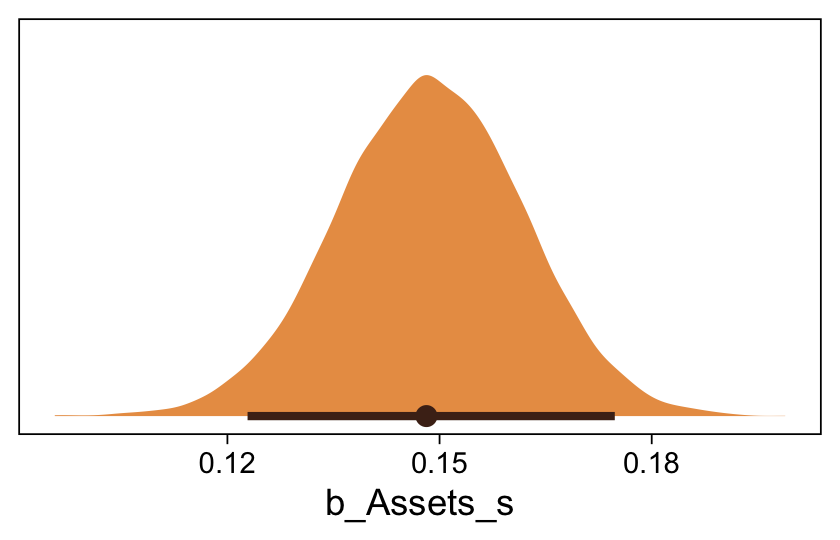

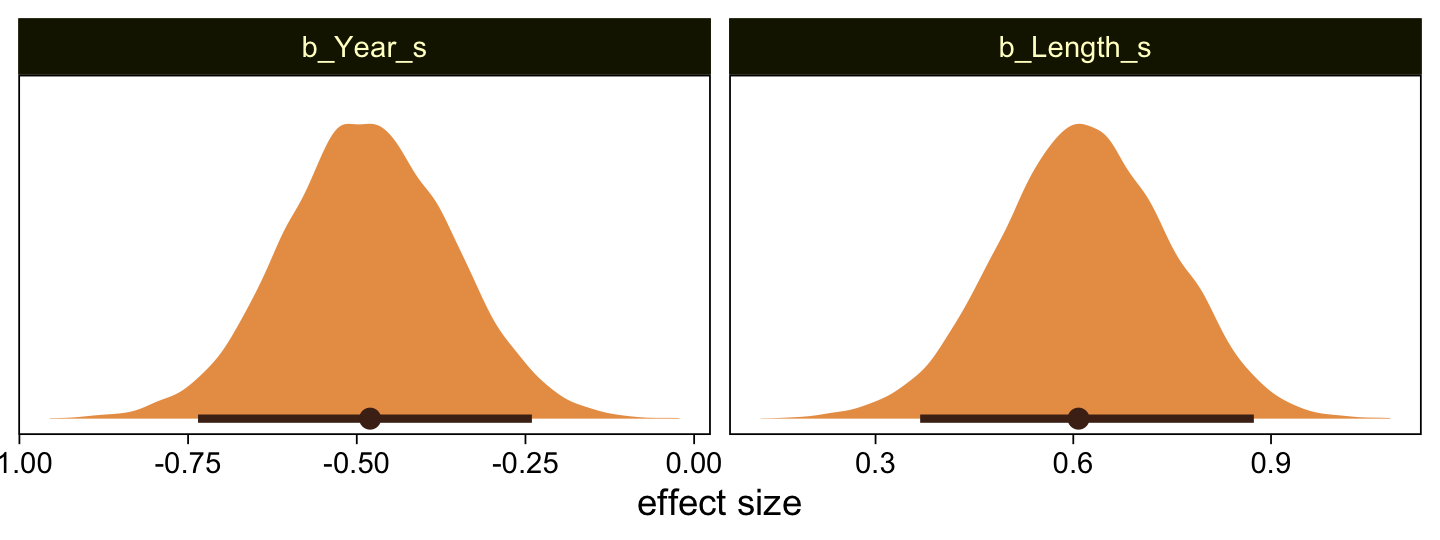

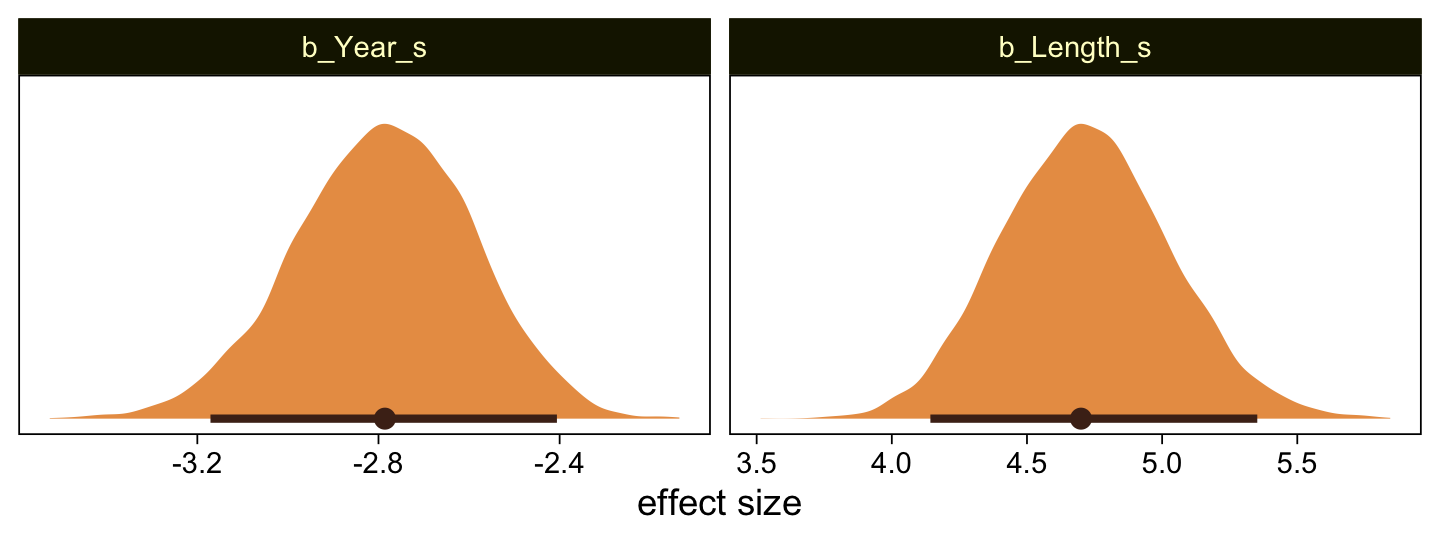

Here’s the marginal distribution of b_X_s, our effect size for the number of jokes.

draws %>%

ggplot(aes(x = b_X_s, y = 0)) +

stat_halfeye(point_interval = mode_hdi, .width = .95,

fill = sl[4], color = sl[8]) +

scale_y_continuous(NULL, breaks = NULL)

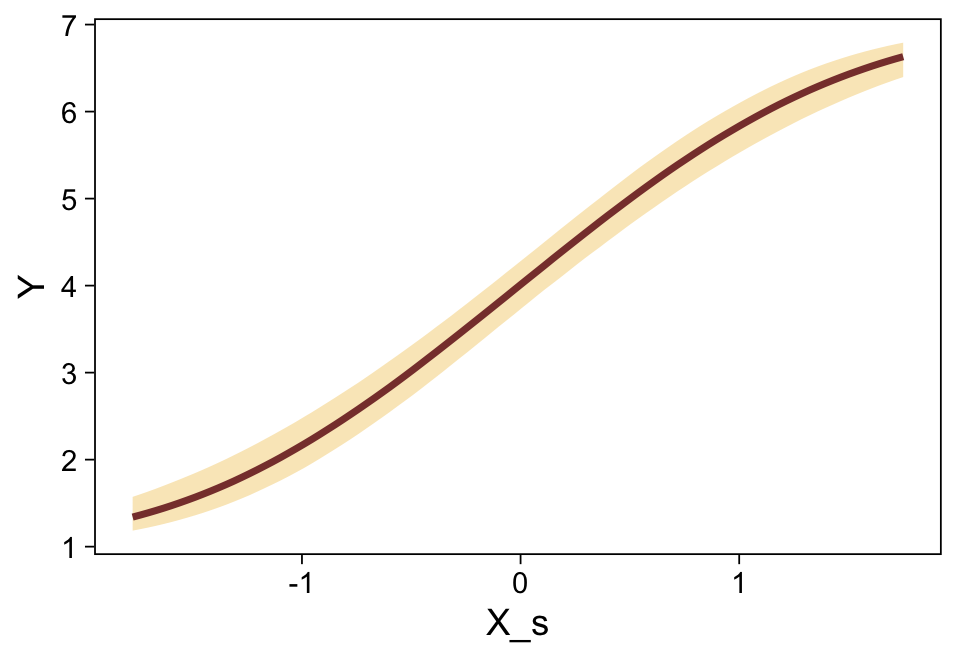

This differs from Kruschks’s \beta_1, which is in an unstandardized metric based on the parameters in his version of the model. But unlike the effect sizes from previous models, this one is not in a Cohen’s-d metric. Rather, this is a fully-standardized regression coefficient. As to the large subplot at the top of Figure 23.7, we can make something like it by nesting conditional_effects() within plot().

conditional_effects(fit23.9) %>%

plot(line_args = list(color = sl[7], fill = sl[3]))

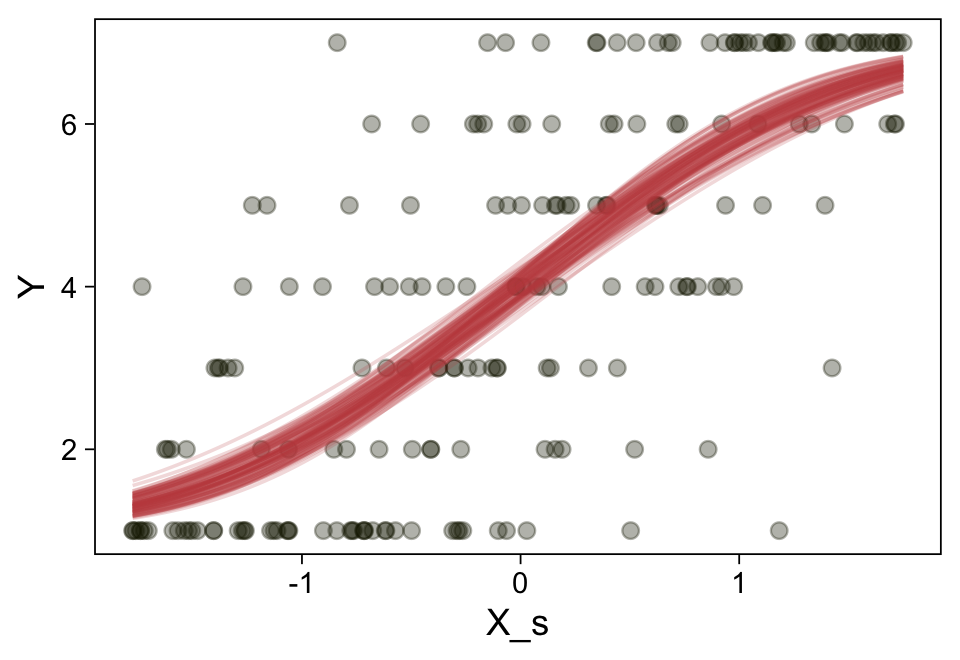

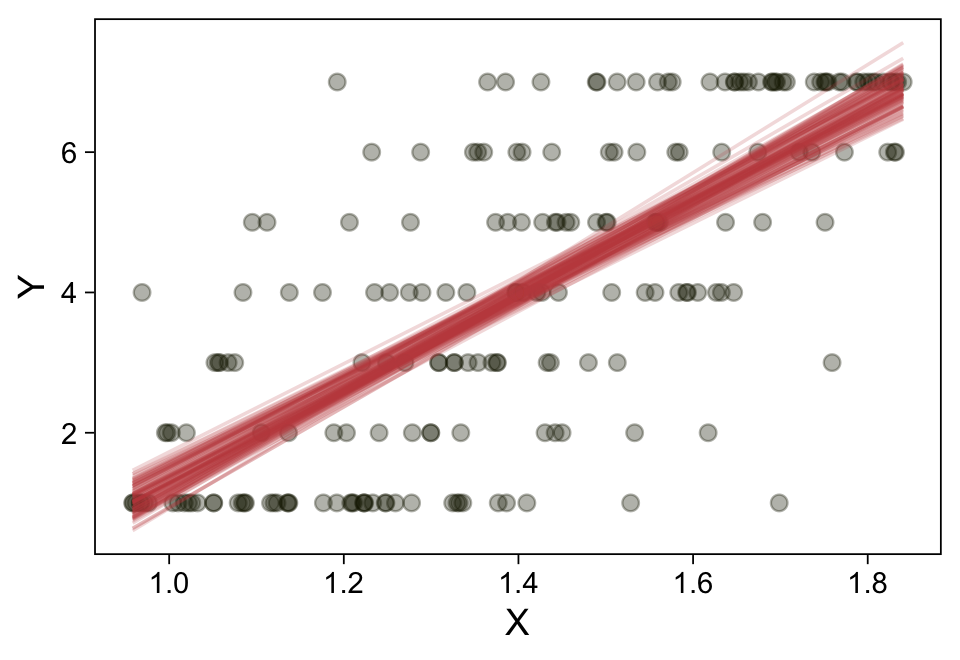

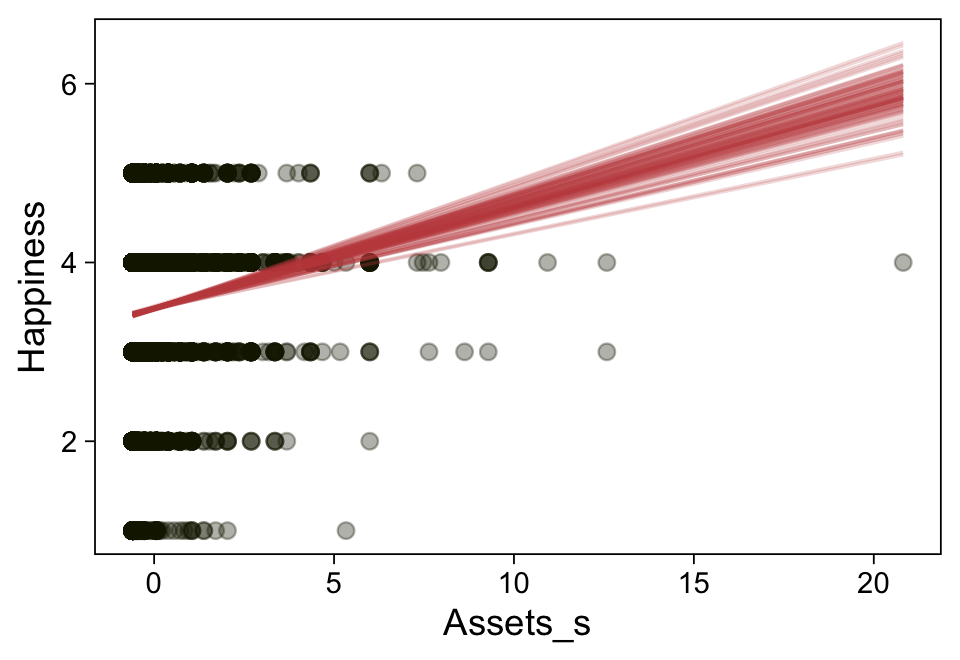

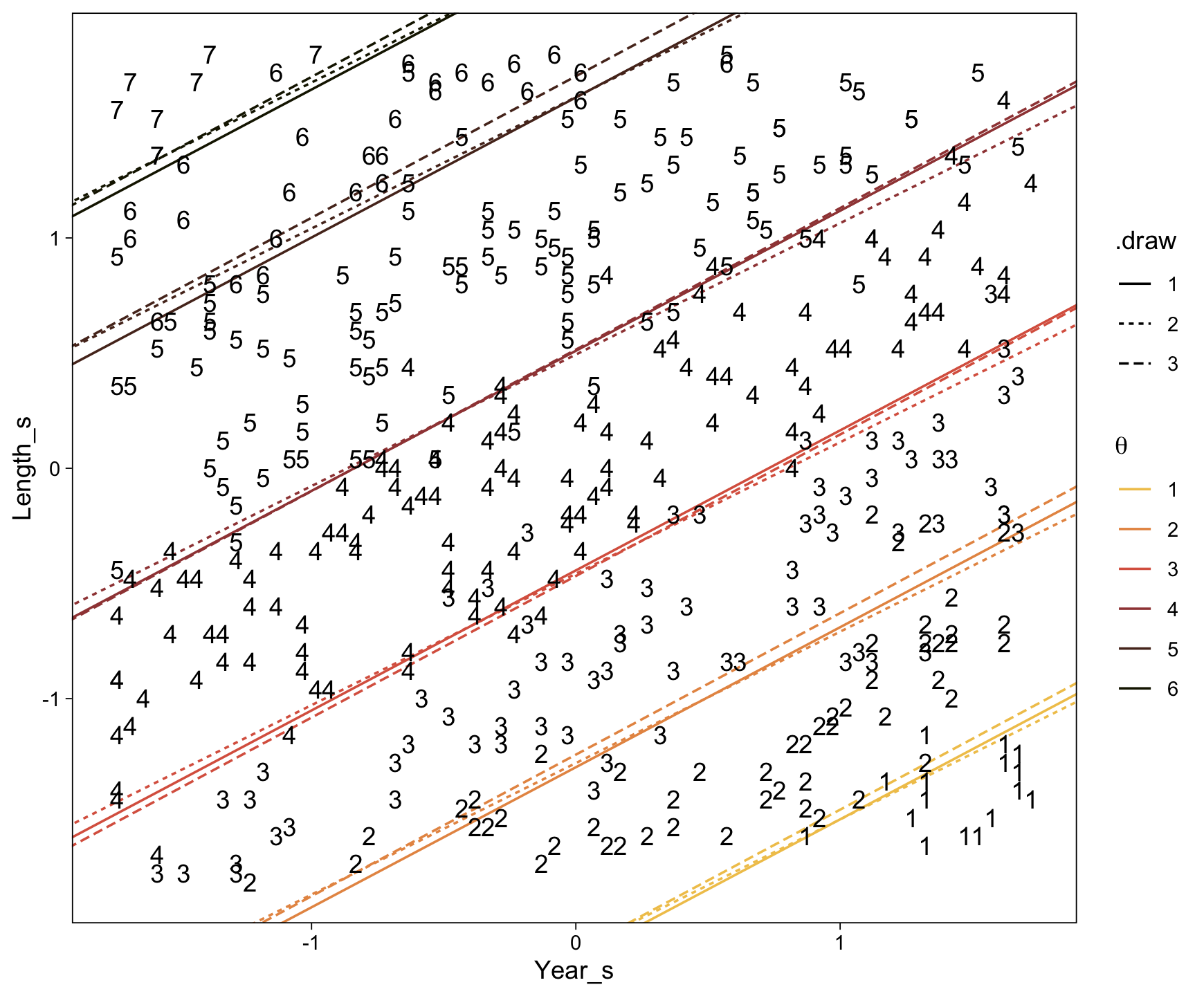

Here’s a more elaborated version of the same plot, this time depicting the model with 100 fitted lines randomly drawn from the posterior.

set.seed(23)

conditional_effects(fit23.9,

spaghetti = TRUE,

ndraws = 100) %>%

plot(points = T,

line_args = list(size = 0),

point_args = list(alpha = 1/3, color = sl[9]),

spaghetti_args = list(colour = alpha(sl[6], .2)))## Warning: Predictions are treated as continuous variables in 'conditional_effects' by default which is likely

## invalid for ordinal families. Please set 'categorical' to TRUE.

Note the warning message. There was a similar one in the first plot, which I suppressed for simplicity sake. The message suggests treating the fitted lines as “continuous variables” might lead to a deceptive plot. Here’s what happens if we follow the suggestion.

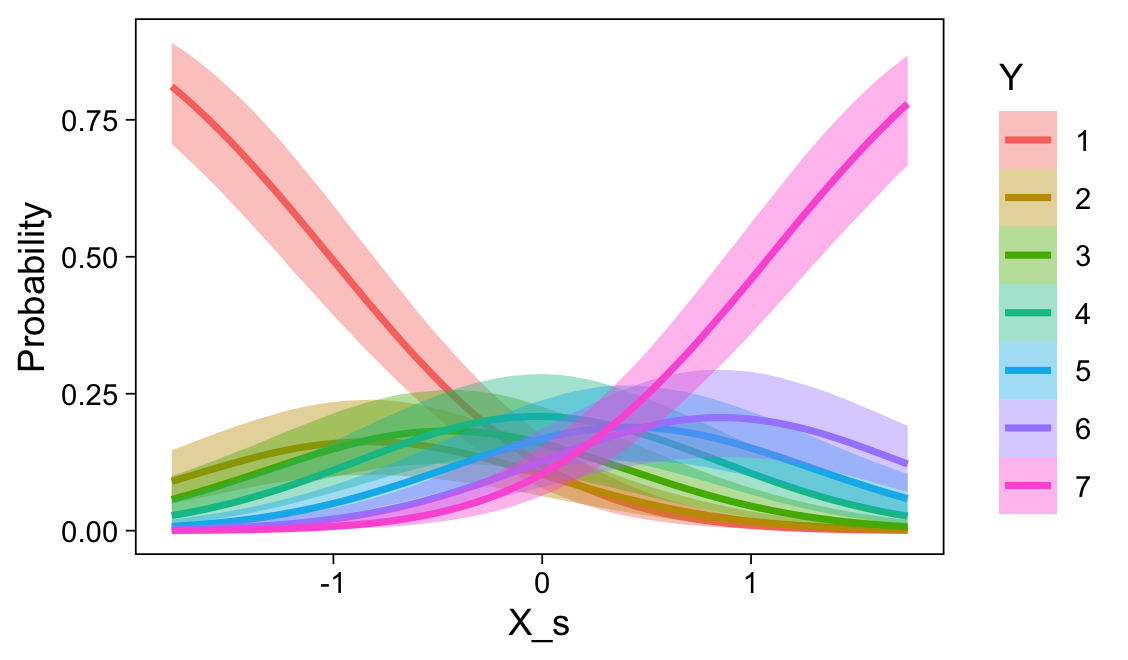

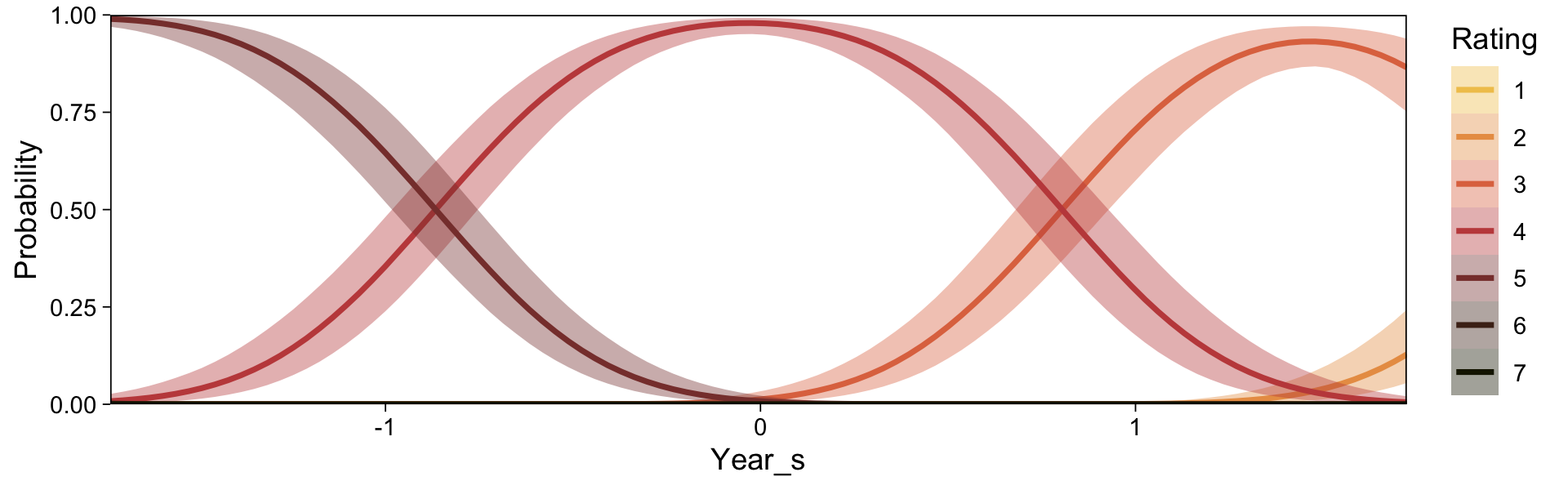

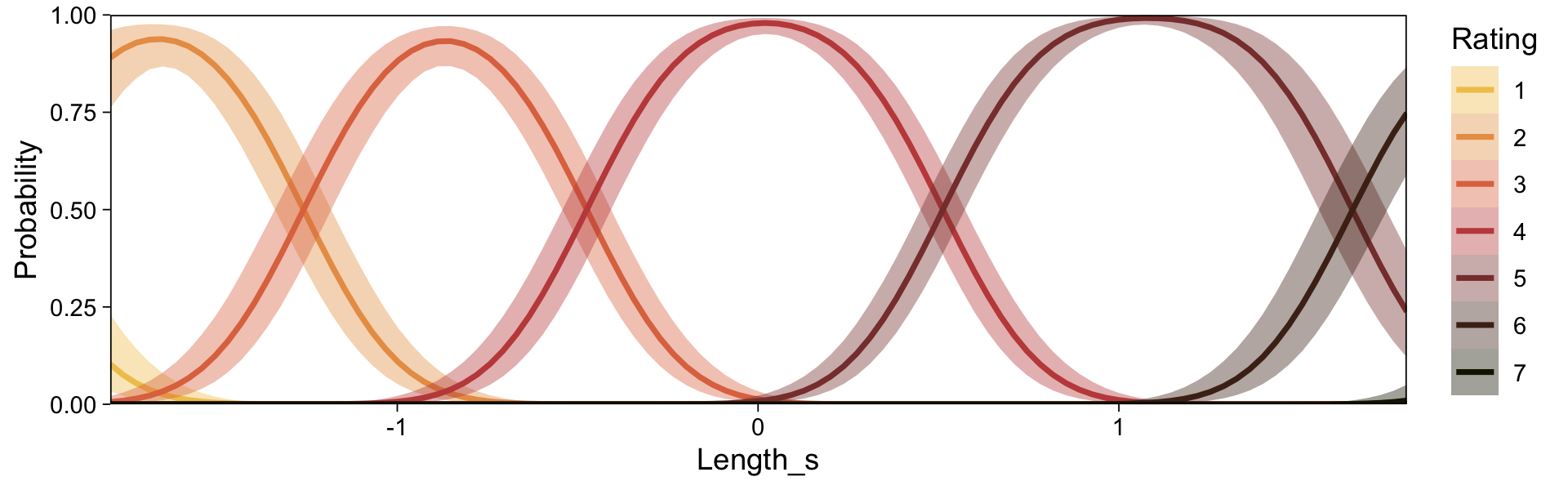

set.seed(23)

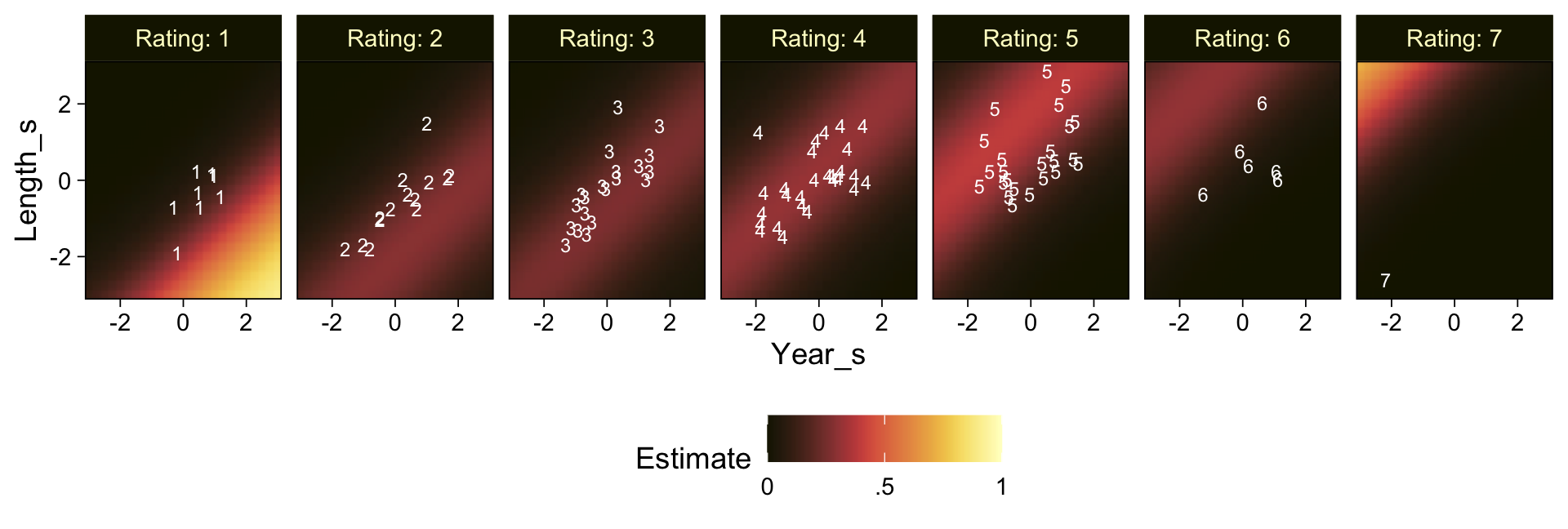

conditional_effects(fit23.9, categorical = T)

Recall that our thresholds, \theta_1,...,\theta_{K-1}, in conjunction with the standard normal density, give us the probability of a given Y value, given X_s. That is, p(y = k | \mu, \sigma, \{ \theta_j \}), where \mu is conditional on x. This plot returned the fitted lines of those conditional probabilities, each depicted by the posterior mean and percentile-based 95% intervals. At lower values of X_s, lower values of Y are more probable. At higher values of X_s, higher values of Y are more probable.

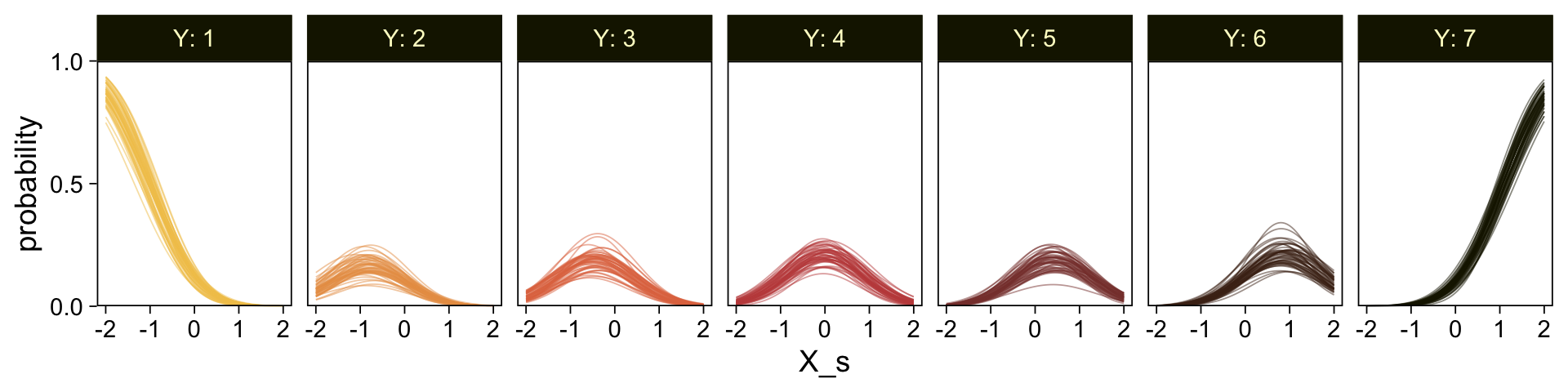

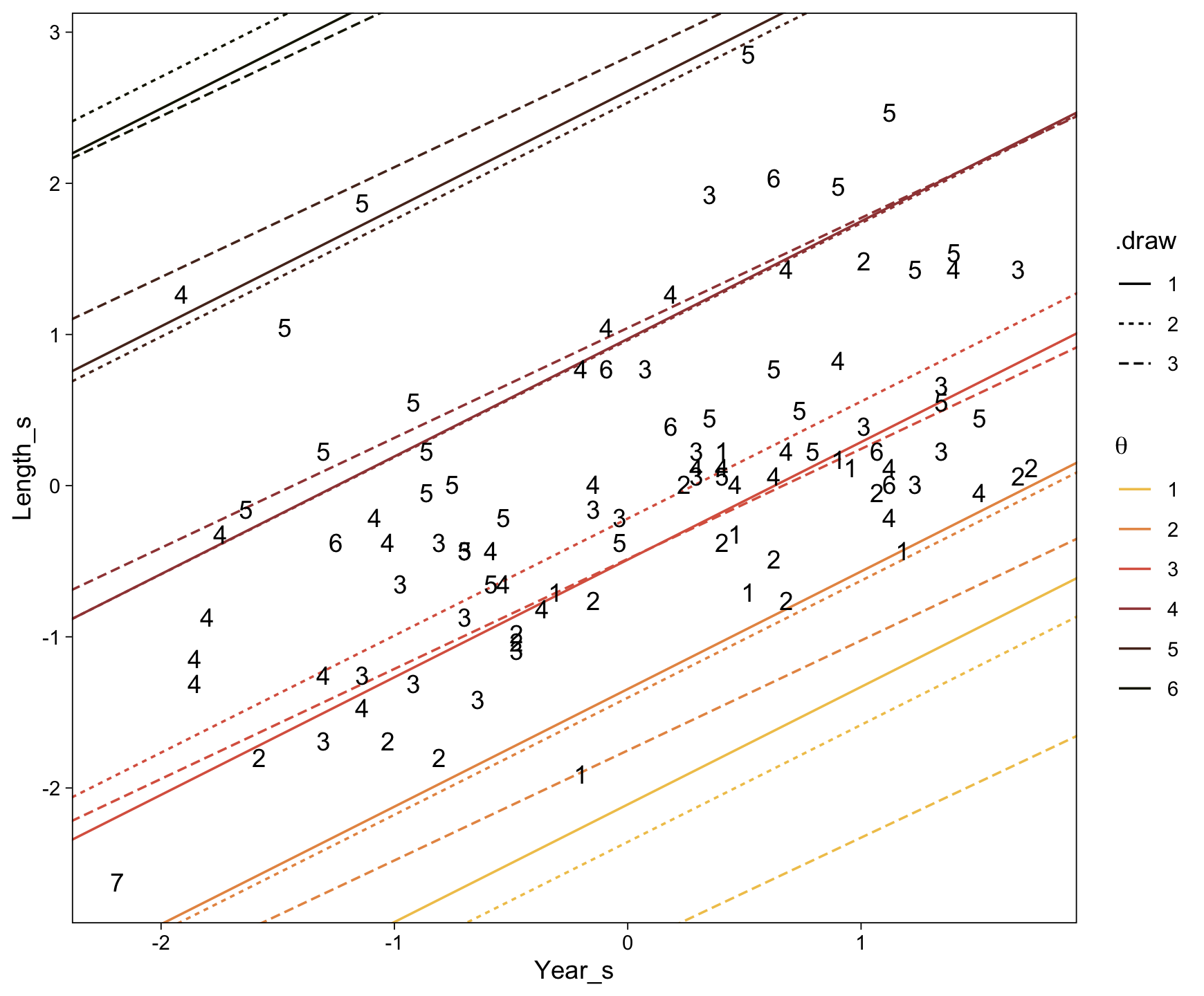

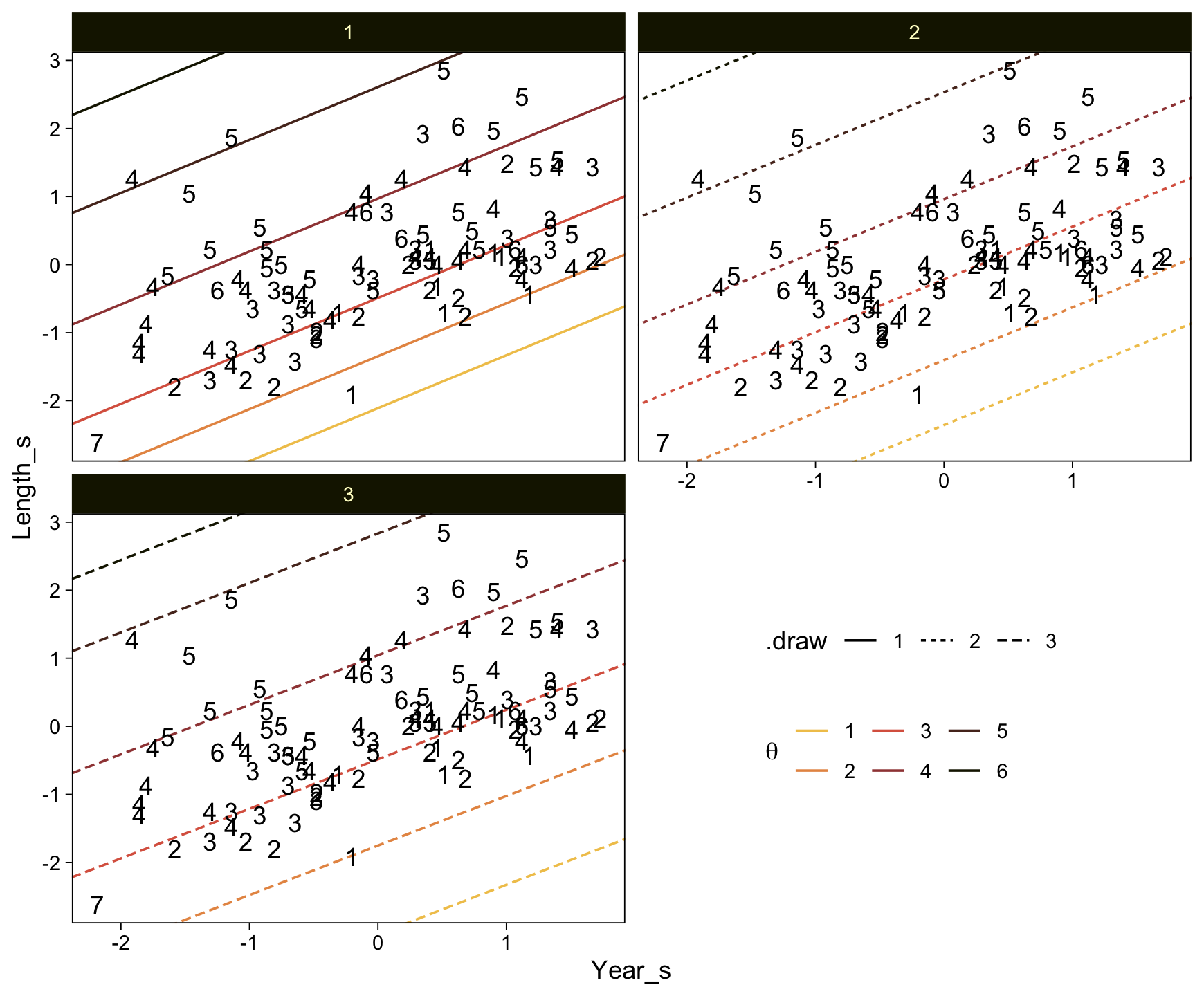

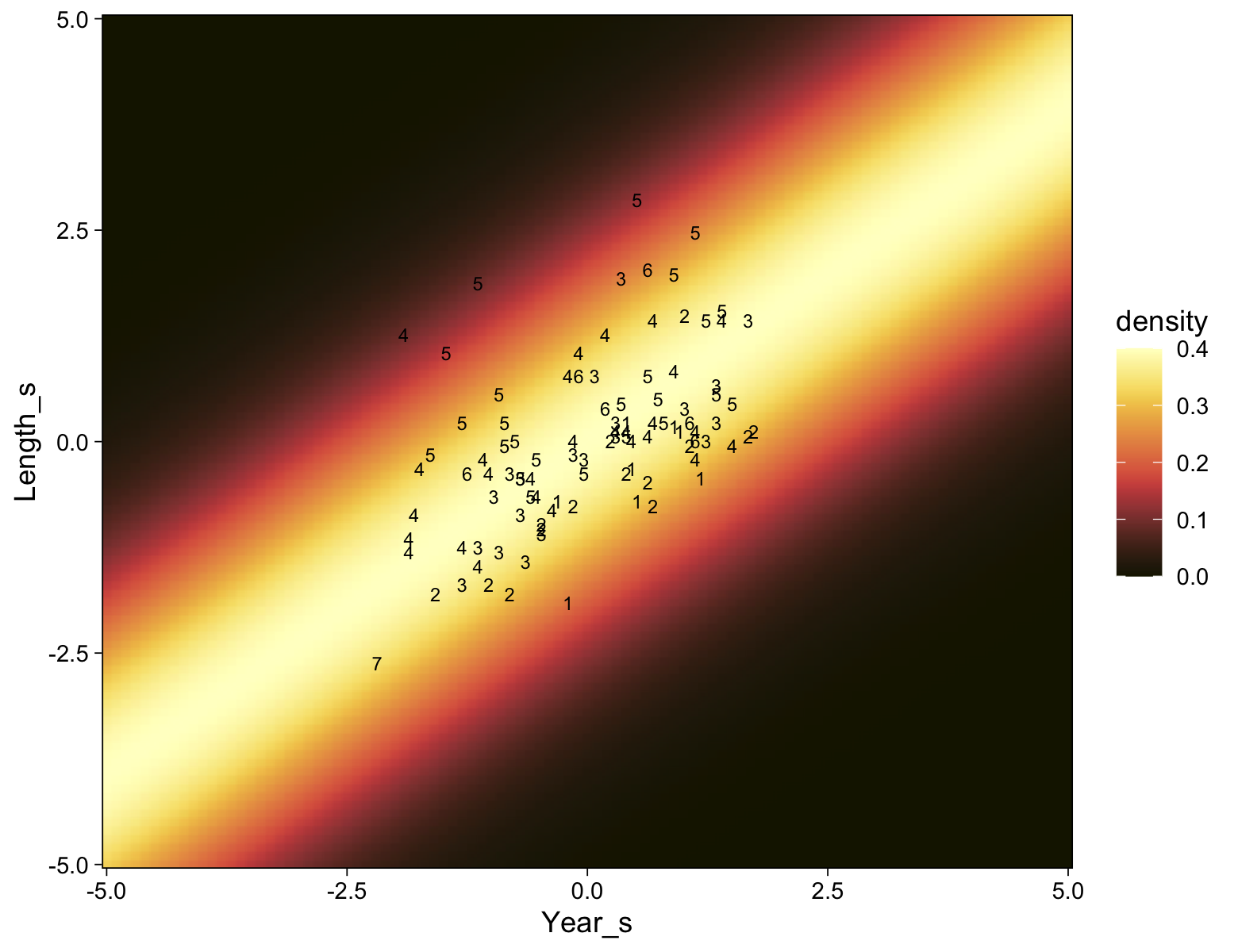

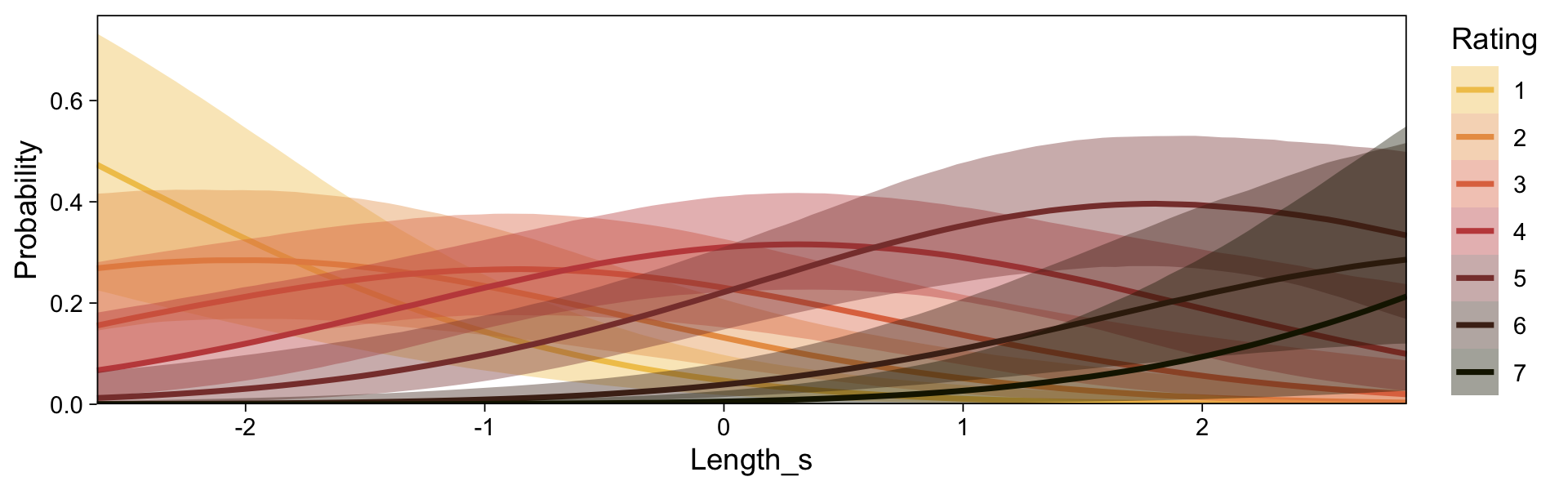

It might be useful to get more practice in with this model. Here we’ll use fitted() to make a similar plot, depicting the model with may fitted lines instead of summary statistics.

# how many fitted lines do you want?

n_draws <- 50

# define the `X_s` values you want to condition on