17 Metric Predicted Variable with One Metric Predictor

We will initially describe the relationship between the predicted variable, y and predictor, x, with a simple linear model and normally distributed residual randomness in y. This model is often referred to as ‘simple linear regression.’ We will generalize the model in three ways. First, we will give it a noise distribution that accommodates outliers, which is to say that we will replace the normal distribution with a t distribution as we did in the previous chapter. The model will be implemented in [brms]. Next, we will consider differently shaped relations between the predictor and the predicted, such as quadratic trend. Finally, we will consider hierarchical models of situations in which every individual has data that can be described by an individual trend, and we also want to estimate group-level typical trends across individuals. (Kruschke, 2015, p. 478)

17.1 Simple linear regression

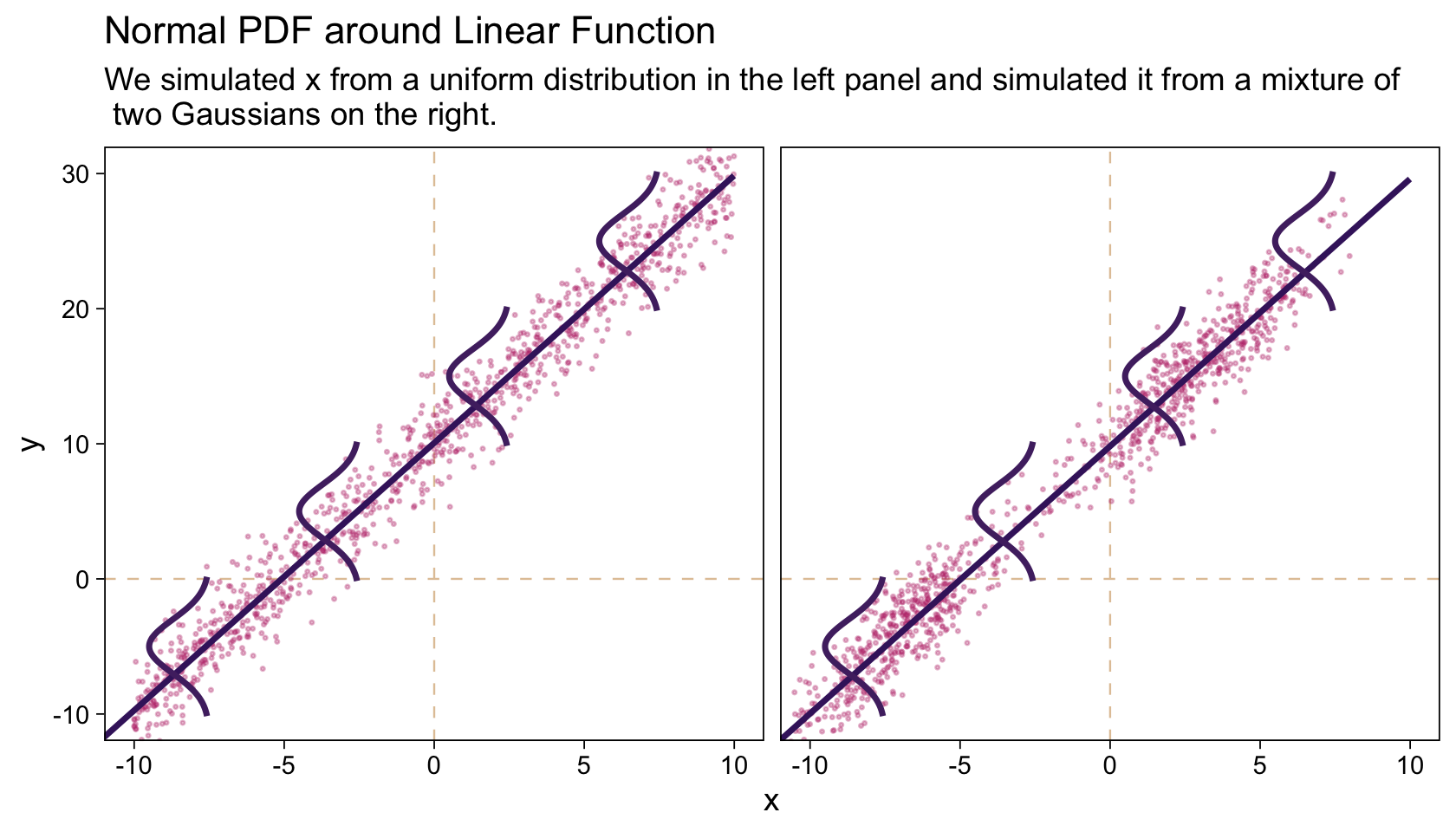

It wasn’t entirely clear how Kruschke simulated the bimodal data on the right panel of Figure 17.1. I figured an even split of two Gaussians would suffice and just sighted their μ’s and σ’s.

library(tidyverse)

# how many draws per panel would you like?

n_draw <- 1000

set.seed(17)

d <-

tibble(panel = rep(letters[1:2], each = n_draw),

x = c(runif(n = n_draw, min = -10, max = 10),

rnorm(n = n_draw / 2, mean = -7, sd = 2),

rnorm(n = n_draw / 2, mean = 3, sd = 2))) %>%

mutate(y = 10 + 2 * x + rnorm(n = n(), mean = 0, sd = 2))

head(d)## # A tibble: 6 × 3

## panel x y

## <chr> <dbl> <dbl>

## 1 a -6.90 -4.09

## 2 a 9.37 28.6

## 3 a -0.635 11.8

## 4 a 5.54 23.3

## 5 a -1.84 10.9

## 6 a 0.776 10.9In case you missed it, Kruschke defied the formula for these data in Figure 17.1. It is

yi∼Normal(μi,σ=2),whereμi=10+2xi.

“Note that the model only specifies the dependency of y on x. The model does not say anything about what generates x, and there is no probability distribution assumed for describing x” (p. 479). Let this sink into your soul. It took a long time, for me. E.g., a lot of people fret over the distributions of their x variables. Now one might should examine them to make sure nothing looks off, such as for data coding mistakes. But if they’re not perfectly or even approximately Gaussian, that isn’t necessarily an issue. The typical linear model makes no presumption about the distribution of the predictors. Often times, the largest issue is whether the x variables are categorical or continuous.

Before we make our Figure 17.1, we’ll want to make a separate tibble of the values necessary to plot those sideways Gaussians. Here are the steps.

curves <-

# define the 3 x-values we want the Gaussians to originate from

tibble(x = seq(from = -7.5, to = 7.5, length.out = 4)) %>%

# use the formula 10 + 2x to compute the expected y-value for x

mutate(y_mean = 10 + (2 * x)) %>%

# based on a Gaussian with `mean = y_mean` and `sd = 2`, compute the 99% intervals

mutate(ll = qnorm(.005, mean = y_mean, sd = 2),

ul = qnorm(.995, mean = y_mean, sd = 2)) %>%

# now use those interval bounds to make a sequence of y-values

mutate(y = map2(ll, ul, seq, length.out = 100)) %>%

# since that operation returned a nested column, we need to `unnest()`

unnest(y) %>%

# compute the density values

mutate(density = map2_dbl(y, y_mean, dnorm, sd = 2)) %>%

# now rescale the density values to be wider.

# since we want these to be our x-values, we'll

# just redefine the x column with these results

mutate(x = x - density * 2 / max(density))

str(curves)## tibble [400 × 6] (S3: tbl_df/tbl/data.frame)

## $ x : num [1:400] -7.57 -7.58 -7.59 -7.61 -7.62 ...

## $ y_mean : num [1:400] -5 -5 -5 -5 -5 -5 -5 -5 -5 -5 ...

## $ ll : num [1:400] -10.2 -10.2 -10.2 -10.2 -10.2 ...

## $ ul : num [1:400] 0.152 0.152 0.152 0.152 0.152 ...

## $ y : num [1:400] -10.15 -10.05 -9.94 -9.84 -9.74 ...

## $ density: num [1:400] 0.00723 0.00826 0.0094 0.01068 0.01209 ...Before we make Figure 17.1, let’s talk color. Like last chapter, we’ll take our color palette from the beyonce package. Our palette will be a nine-point version of #41.

library(beyonce)

bp <- beyonce_palette(41, n = 9, type = "continuous")

bp

The global theme will be ggplot2::theme_linedraw() with the grid lines removed. Make Figure 17.1.

theme_set(

theme_linedraw() +

theme(panel.grid = element_blank())

)

d %>%

ggplot(aes(x = x, y = y)) +

geom_vline(xintercept = 0, linewidth = 1/3, linetype = 2, color = bp[9]) +

geom_hline(yintercept = 0, linewidth = 1/3, linetype = 2, color = bp[9]) +

geom_point(size = 1/3, alpha = 1/3, color = bp[5]) +

stat_smooth(method = "lm", se = F, fullrange = T, color = bp[1]) +

geom_path(data = curves,

aes(group = y_mean),

color = bp[2], linewidth = 1) +

labs(title = "Normal PDF around Linear Function",

subtitle = "We simulated x from a uniform distribution in the left panel and simulated it from a mixture of\n two Gaussians on the right.") +

coord_cartesian(xlim = c(-10, 10),

ylim = c(-10, 30)) +

theme(strip.background = element_blank(),

strip.text = element_blank()) +

facet_wrap(~ panel)

Concerning causality,

the simple linear model makes no claims about causal connections between x and y. The simple linear model merely describes a tendency for y values to be linearly related to x values, hence “predictable” from the x values. When describing data with this model, we are starting with a scatter plot of points generated by an unknown process in the real world, and estimating parameter values that would produce a smattering of points that might mimic the real data. Even if the descriptive model mimics the data well (and it might not), the mathematical “process” in the model may have little if anything to do with the real-world process that created the data. Nevertheless, the parameters in the descriptive model are meaningful because they describe tendencies in the data. (p. 479, emphasis added)

I emphasized these points because I’ve heard and seen a lot of academics conflate linear regression models with causal models. For sure, it might well be preferable if your regression model was also a causal model. But good old prediction is fine, too.

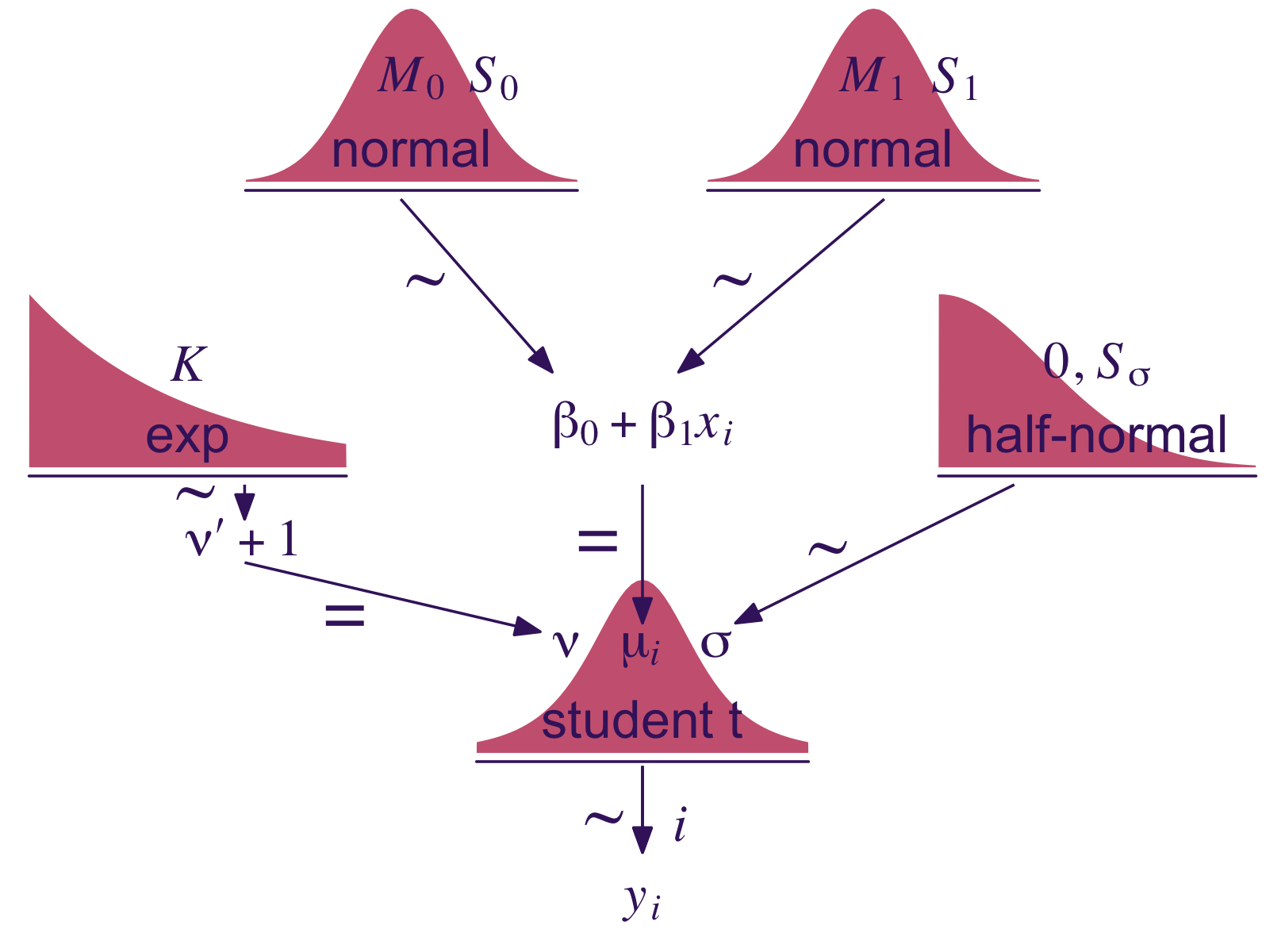

17.2 Robust linear regression

There is no requirement to use a normal distribution for the noise distribution. The normal distribution is traditional because of its relative simplicity in mathematical derivations. But real data may have outliers, and the use of (optionally) heavy-tailed noise distributions is straight forward in contemporary Bayesian software[, like brms]. (pp. 479–480)

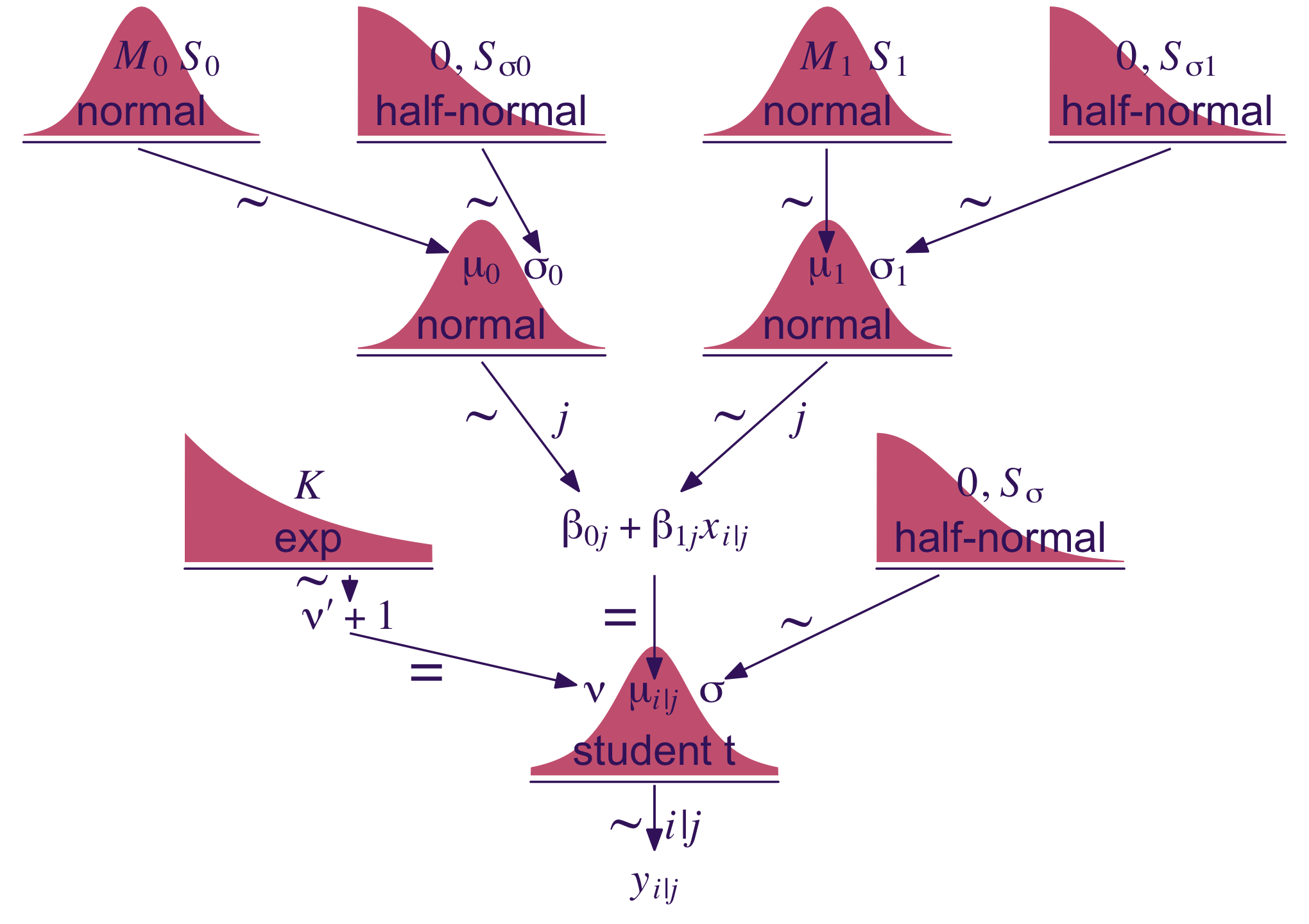

Let’s make our version of the model diagram in Figure 17.2 to get a sense of where we’re going.

library(patchwork)

# normal density

p1 <-

tibble(x = seq(from = -3, to = 3, by = .1)) %>%

ggplot(aes(x = x, y = (dnorm(x)) / max(dnorm(x)))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = 0, y = .2,

label = "normal",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = c(0, 1.5), y = .6,

label = c("italic(M)[0]", "italic(S)[0]"),

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

# a second normal density

p2 <-

tibble(x = seq(from = -3, to = 3, by = .1)) %>%

ggplot(aes(x = x, y = (dnorm(x)) / max(dnorm(x)))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = 0, y = .2,

label = "normal",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = c(0, 1.5), y = .6,

label = c("italic(M)[1]", "italic(S)[1]"),

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

## two annotated arrows

# save our custom arrow settings

my_arrow <- arrow(angle = 20, length = unit(0.35, "cm"), type = "closed")

p3 <-

tibble(x = c(.33, 1.67),

y = c(1, 1),

xend = c(.75, 1.1),

yend = c(0, 0)) %>%

ggplot(aes(x = x, xend = xend,

y = y, yend = yend)) +

geom_segment(arrow = my_arrow, color = bp[1]) +

annotate(geom = "text",

x = c(.4, 1.25), y = .5,

label = "'~'",

size = 10, color = bp[1], family = "Times", parse = T) +

xlim(0, 2) +

theme_void()

# exponential density

p4 <-

tibble(x = seq(from = 0, to = 1, by = .01)) %>%

ggplot(aes(x = x, y = (dexp(x, 2) / max(dexp(x, 2))))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = .5, y = .2,

label = "exp",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = .5, y = .6,

label = "italic(K)",

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

# likelihood formula

p5 <-

tibble(x = .5,

y = .25,

label = "beta[0]+beta[1]*italic(x)[italic(i)]") %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(size = 7, color = bp[1], parse = T, family = "Times") +

scale_x_continuous(expand = c(0, 0), limits = c(0, 1)) +

ylim(0, 1) +

theme_void()

# half-normal density

p6 <-

tibble(x = seq(from = 0, to = 3, by = .01)) %>%

ggplot(aes(x = x, y = (dnorm(x)) / max(dnorm(x)))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = 1.5, y = .2,

label = "half-normal",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = 1.5, y = .6,

label = "0*','*~italic(S)[sigma]",

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

# four annotated arrows

p7 <-

tibble(x = c(.43, .43, 1.5, 2.5),

y = c(1, .55, 1, 1),

xend = c(.43, 1.225, 1.5, 1.75),

yend = c(.8, .15, .2, .2)) %>%

ggplot(aes(x = x, xend = xend,

y = y, yend = yend)) +

geom_segment(arrow = my_arrow, color = bp[1]) +

annotate(geom = "text",

x = c(.3, .7, 1.38, 2), y = c(.92, .22, .65, .6),

label = c("'~'", "'='", "'='", "'~'"),

size = 10,

color = bp[1], family = "Times", parse = T) +

annotate(geom = "text",

x = .43, y = .7,

label = "nu*minute+1",

size = 7, color = bp[1], family = "Times", parse = T) +

xlim(0, 3) +

theme_void()

# student-t density

p8 <-

tibble(x = seq(from = -3, to = 3, by = .1)) %>%

ggplot(aes(x = x, y = (dt(x, 3) / max(dt(x, 3))))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = 0, y = .2,

label = "student t",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = 0, y = .6,

label = "nu~~~mu[italic(i)]~~~sigma",

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

# the final annotated arrow

p9 <-

tibble(x = c(.375, .625),

y = c(1/3, 1/3),

label = c("'~'", "italic(i)")) %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(size = c(10, 7), color = bp[1], parse = T, family = "Times") +

geom_segment(x = .5, xend = .5,

y = 1, yend = 0,

color = bp[1], arrow = my_arrow) +

xlim(0, 1) +

theme_void()

# some text

p10 <-

tibble(x = .5,

y = .5,

label = "italic(y[i])") %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(size = 7, color = bp[1], parse = T, family = "Times") +

xlim(0, 1) +

theme_void()

# define the layout

layout <- c(

area(t = 1, b = 2, l = 3, r = 5),

area(t = 1, b = 2, l = 7, r = 9),

area(t = 4, b = 5, l = 1, r = 3),

area(t = 4, b = 5, l = 5, r = 7),

area(t = 4, b = 5, l = 9, r = 11),

area(t = 3, b = 4, l = 3, r = 9),

area(t = 7, b = 8, l = 5, r = 7),

area(t = 6, b = 7, l = 1, r = 11),

area(t = 9, b = 9, l = 5, r = 7),

area(t = 10, b = 10, l = 5, r = 7)

)

# combine and plot!

(p1 + p2 + p4 + p5 + p6 + p3 + p8 + p7 + p9 + p10) +

plot_layout(design = layout) &

ylim(0, 1) &

theme(plot.margin = margin(0, 5.5, 0, 5.5))

Here’s Kruschke’s HtWtDataGenerator() code.

HtWtDataGenerator <- function(nSubj, rndsd = NULL, maleProb = 0.50) {

# Random height, weight generator for males and females. Uses parameters from

# Brainard, J. & Burmaster, D. E. (1992). Bivariate distributions for height and

# weight of men and women in the United States. Risk Analysis, 12(2), 267-275.

# Kruschke, J. K. (2011). Doing Bayesian data analysis:

# A Tutorial with R and BUGS. Academic Press / Elsevier.

# Kruschke, J. K. (2014). Doing Bayesian data analysis, 2nd Edition:

# A Tutorial with R, JAGS and Stan. Academic Press / Elsevier.

# require(MASS)

# Specify parameters of multivariate normal (MVN) distributions.

# Men:

HtMmu <- 69.18

HtMsd <- 2.87

lnWtMmu <- 5.14

lnWtMsd <- 0.17

Mrho <- 0.42

Mmean <- c(HtMmu, lnWtMmu)

Msigma <- matrix(c(HtMsd^2, Mrho * HtMsd * lnWtMsd,

Mrho * HtMsd * lnWtMsd, lnWtMsd^2), nrow = 2)

# Women cluster 1:

HtFmu1 <- 63.11

HtFsd1 <- 2.76

lnWtFmu1 <- 5.06

lnWtFsd1 <- 0.24

Frho1 <- 0.41

prop1 <- 0.46

Fmean1 <- c(HtFmu1, lnWtFmu1)

Fsigma1 <- matrix(c(HtFsd1^2, Frho1 * HtFsd1 * lnWtFsd1,

Frho1 * HtFsd1 * lnWtFsd1, lnWtFsd1^2), nrow = 2)

# Women cluster 2:

HtFmu2 <- 64.36

HtFsd2 <- 2.49

lnWtFmu2 <- 4.86

lnWtFsd2 <- 0.14

Frho2 <- 0.44

prop2 <- 1 - prop1

Fmean2 <- c(HtFmu2, lnWtFmu2)

Fsigma2 <- matrix(c(HtFsd2^2, Frho2 * HtFsd2 * lnWtFsd2,

Frho2 * HtFsd2 * lnWtFsd2, lnWtFsd2^2), nrow = 2)

# Randomly generate data values from those MVN distributions.

if (!is.null(rndsd)) {set.seed(rndsd)}

datamatrix <- matrix(0, nrow = nSubj, ncol = 3)

colnames(datamatrix) <- c("male", "height", "weight")

maleval <- 1; femaleval <- 0 # arbitrary coding values

for (i in 1:nSubj) {

# Flip coin to decide sex

sex <- sample(c(maleval, femaleval), size = 1, replace = TRUE,

prob = c(maleProb, 1 - maleProb))

if (sex == maleval) {datum = MASS::mvrnorm(n = 1, mu = Mmean, Sigma = Msigma)}

if (sex == femaleval) {

Fclust = sample(c(1, 2), size = 1, replace = TRUE, prob = c(prop1, prop2))

if (Fclust == 1) {datum = MASS::mvrnorm(n = 1, mu = Fmean1, Sigma = Fsigma1)}

if (Fclust == 2) {datum = MASS::mvrnorm(n = 1, mu = Fmean2, Sigma = Fsigma2)}

}

datamatrix[i, ] = c(sex, round(c(datum[1], exp(datum[2])), 1))

}

return(datamatrix)

}Let’s take this baby for a spin to simulate our data.

d <-

HtWtDataGenerator(nSubj = 300, rndsd = 17, maleProb = .50) %>%

as_tibble() %>%

# this will allow us to subset 30 of the values into their own group

mutate(subset = rep(0:1, times = c(9, 1)) %>% rep(., 30))

head(d)## # A tibble: 6 × 4

## male height weight subset

## <dbl> <dbl> <dbl> <int>

## 1 0 63.3 167. 0

## 2 0 62.4 126. 0

## 3 1 66.4 124 0

## 4 0 62.9 148. 0

## 5 1 65.5 151. 0

## 6 1 71.4 234. 0Note how we set the seed for the the pseudorandom number generator with the rndsd argument, which makes the results in this ebook reproducible. But since we do not know what seed value Kruschke used to simulate the data in this section of the test, the results of our models in the next section will differ a little from those in the text. However, if you want to more closely reproduce Kruschke’s examples, load the HtWtData30.csv and HtWtData300.csv data files and fit the models to those, instead.

Anyway,

fortunately, we do not have to worry much about analytical derivations because we can let JAGS or Stan generate a high resolution picture of the posterior distribution. Our job, therefore, is to specify sensible priors and to make sure that the MCMC process generates a trustworthy posterior sample that is converged and well mixed. (p. 483)

17.2.1 Robust linear regression in JAGS brms.

Presuming a data set with a sole standardized predictor x_z for a sole standardized criterion y_z, the basic brms code corresponding to the JAGS code Kruschke showed on page 483 looks like this.

fit <-

brm(data = my_data,

family = student,

y_z ~ 1 + x_z,

prior = c(prior(normal(0, 10), class = Intercept),

prior(normal(0, 10), class = b),

prior(normal(0, 1), class = sigma),

prior(exponential(one_over_twentynine), class = nu)),

stanvars = stanvar(1/29, name = "one_over_twentynine"))Like we discussed in Chapter 16, we won’t be using the uniform prior for σ. Since we’re presuming standardized data, a half-unit normal is a fine choice. But do note this is much tighter than Kruschke’s Uniform(0.001,1000) and it will have down-the-road consequences for our results versus those in the text.

Also, look at how we just pumped the definition of our sole stanvar(1/29, name = "one_over_twentynine") operation right into the stanvar argument. If we were defining multiple values this way, I’d prefer to save this as an object first and then just pump that object into stanvars. But in this case, it was simple enough to just throw directly into the brm() function.

17.2.1.1 Standardizing the data for MCMC sampling.

“Standardizing simply means re-scaling the data relative to their mean and standard deviation” (p. 485). For any variable x, that follows the formula

zi=xi−ˉxs,

where xi is the ith row in the vector of x values, ˉx is the sample mean for x, s is the sample standard deviation for x, and the product of the standardizing procedure is zi (i.e., the z-score).

Kruschke mentioned how standardizing your data before feeding it into JAGS often helps the algorithm operate smoothly. The same basic principle holds for brms and Stan. Stan can often handle unstandardized data just fine. But if you ever run into estimation difficulties, consider standardizing your data and trying again.

We’ll make a simple function to standardize the height and weight values.

standardize <- function(x) {

(x - mean(x)) / sd(x)

}

d <-

d %>%

mutate(height_z = standardize(height),

weight_z = standardize(weight))Somewhat analogous to how Kruschke standardized his data within the JAGS code, you could standardize the data within the brm() function. That would look something like this.

fit <-

brm(data = d %>% # the standardizing occurs in the next two lines

mutate(height_z = standardize(height),

weight_z = standardize(weight)),

family = student,

weight_z ~ 1 + height_z)But anyway, let’s open brms.

library(brms)We’ll fit the two models at once. fit1 will be of the total data sample. fit2 is of the n=30 subset.

fit17.1 <-

brm(data = d,

family = student,

weight_z ~ 1 + height_z,

prior = c(prior(normal(0, 10), class = Intercept),

prior(normal(0, 10), class = b),

prior(normal(0, 1), class = sigma),

prior(exponential(one_over_twentynine), class = nu)),

chains = 4, cores = 4,

stanvars = stanvar(1/29, name = "one_over_twentynine"),

seed = 17,

file = "fits/fit17.01")

fit17.2 <-

update(fit17.1,

newdata = d %>%

filter(subset == 1),

chains = 4, cores = 4,

seed = 17,

file = "fits/fit17.02")Here are the results.

print(fit17.1)## Family: student

## Links: mu = identity; sigma = identity; nu = identity

## Formula: weight_z ~ 1 + height_z

## Data: d (Number of observations: 300)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept -0.03 0.05 -0.13 0.07 1.00 3465 2645

## height_z 0.46 0.05 0.35 0.56 1.00 3893 2697

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.84 0.05 0.74 0.94 1.00 2948 2733

## nu 25.01 20.46 6.11 83.28 1.00 2741 2778

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).print(fit17.2)## Family: student

## Links: mu = identity; sigma = identity; nu = identity

## Formula: weight_z ~ 1 + height_z

## Data: d %>% filter(subset == 1) (Number of observations: 30)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept -0.11 0.12 -0.35 0.12 1.00 4055 2855

## height_z 0.61 0.11 0.41 0.82 1.00 3790 2757

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.63 0.09 0.48 0.84 1.00 3610 2469

## nu 38.97 31.30 5.45 121.75 1.00 3781 2603

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Based on Kruschke’s Equation 17.2, we can convert the standardized coefficients back to their original metric using the formulas

β0=ζ0SDy+My−ζ1MxSDySDxandβ1=ζ1SDySDx,

where ζ0 and ζ1 denote the intercept and slope for the model of the standardized data, respectively, and that model follows the familiar form

zˆy=ζ0+ζ1zx.

To implement those equations, we’ll first extract the posterior draws. We begin with fit17.1, the model for which N=300.

draws <- as_draws_df(fit17.1)

head(draws)## # A draws_df: 6 iterations, 1 chains, and 6 variables

## b_Intercept b_height_z sigma nu lprior lp__

## 1 0.033 0.46 0.77 5.1 -10 -405

## 2 0.091 0.47 0.73 10.8 -11 -408

## 3 0.154 0.41 0.81 6.3 -11 -411

## 4 -0.194 0.48 0.99 51.9 -12 -410

## 5 -0.143 0.48 1.00 40.0 -12 -408

## 6 -0.168 0.49 0.93 49.4 -12 -406

## # ... hidden reserved variables {'.chain', '.iteration', '.draw'}Let’s wrap the consequences of Equation 17.2 into two functions.

make_beta_0 <- function(zeta_0, zeta_1, sd_x, sd_y, m_x, m_y) {

zeta_0 * sd_y + m_y - zeta_1 * m_x * sd_y / sd_x

}

make_beta_1 <- function(zeta_1, sd_x, sd_y) {

zeta_1 * sd_y / sd_x

}After saving a few values, we’re ready to use our custom functions to convert our posteriors for b_Intercept and b_height_z to their natural metric.

sd_x <- sd(d$height)

sd_y <- sd(d$weight)

m_x <- mean(d$height)

m_y <- mean(d$weight)

draws <-

draws %>%

mutate(b_0 = make_beta_0(zeta_0 = b_Intercept,

zeta_1 = b_height_z,

sd_x = sd_x,

sd_y = sd_y,

m_x = m_x,

m_y = m_y),

b_1 = make_beta_1(zeta_1 = b_height_z,

sd_x = sd_x,

sd_y = sd_y))

glimpse(draws)## Rows: 4,000

## Columns: 11

## $ b_Intercept <dbl> 0.033449878, 0.091239362, 0.154258692, -0.193823671, -0.142858016, -0.16759966…

## $ b_height_z <dbl> 0.4630762, 0.4714897, 0.4064655, 0.4757892, 0.4848149, 0.4896576, 0.5275564, 0…

## $ sigma <dbl> 0.7678310, 0.7267777, 0.8082075, 0.9882686, 1.0003481, 0.9328399, 0.9104863, 0…

## $ nu <dbl> 5.109545, 10.798497, 6.279290, 51.899674, 39.956352, 49.405875, 55.019777, 10.…

## $ lprior <dbl> -10.47370, -10.63927, -10.54572, -12.28095, -11.88108, -12.14174, -12.31494, -…

## $ lp__ <dbl> -404.6587, -407.6282, -410.7937, -409.9921, -408.3061, -406.4314, -408.0862, -…

## $ .chain <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ .iteration <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22,…

## $ .draw <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22,…

## $ b_0 <dbl> -123.86501, -127.08109, -84.63352, -139.58365, -143.41445, -147.26777, -171.38…

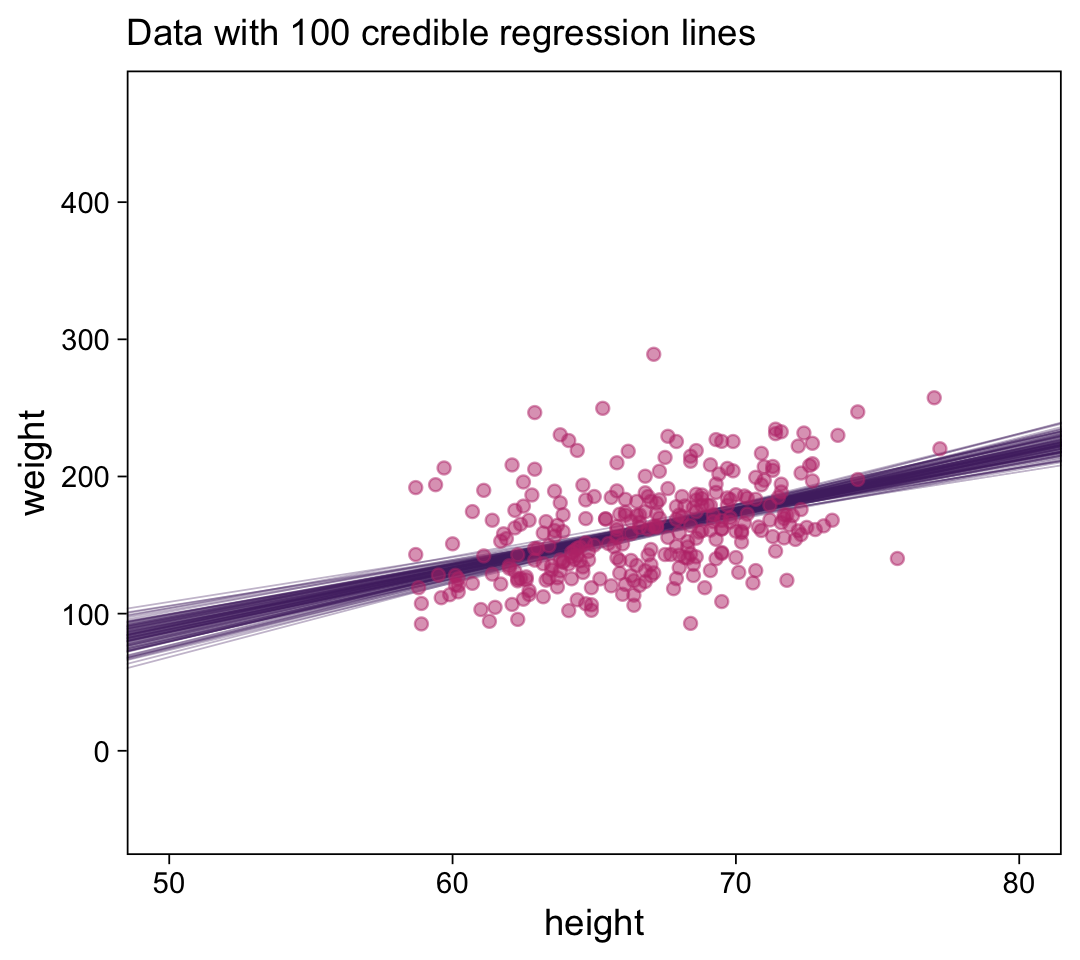

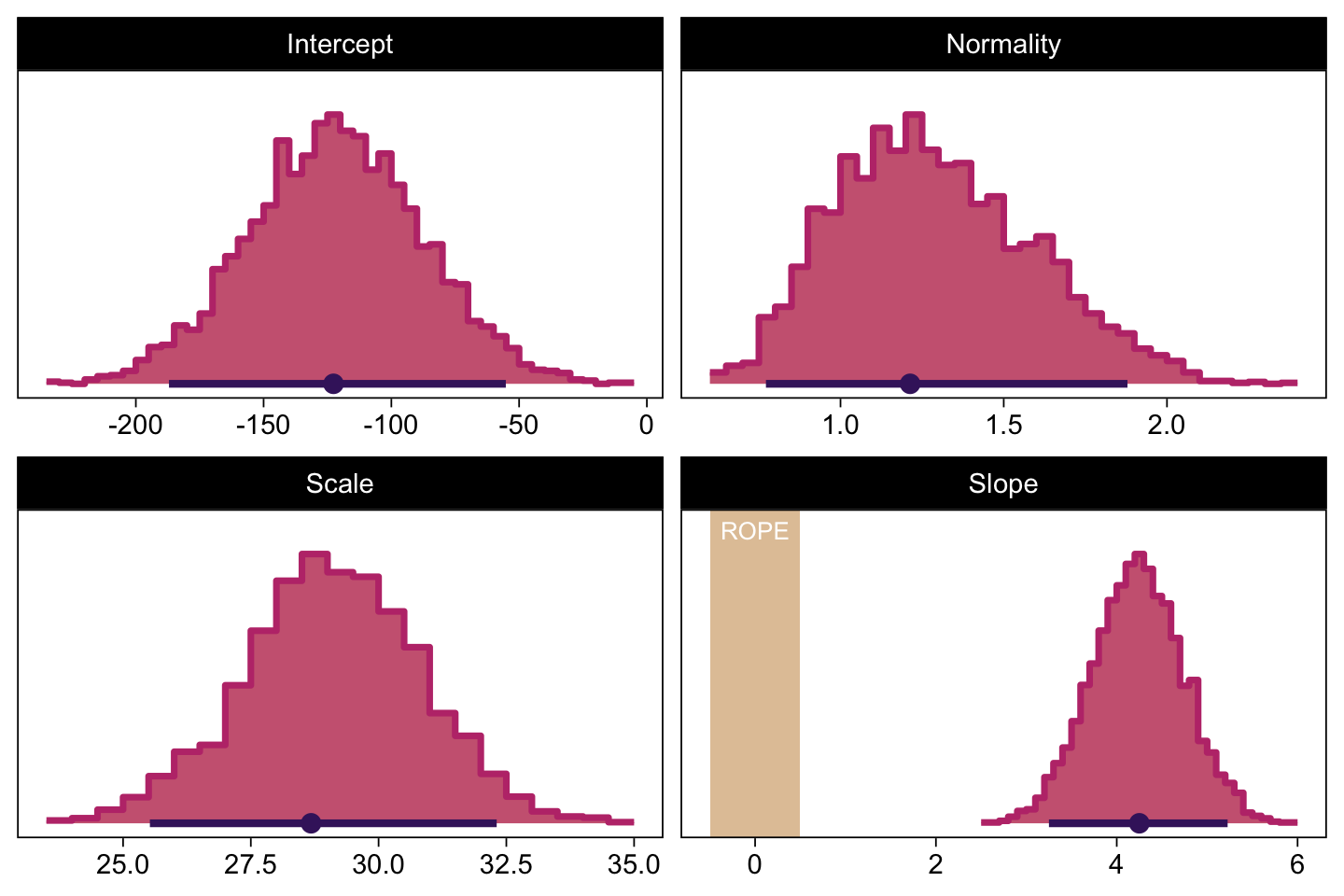

## $ b_1 <dbl> 4.297515, 4.375596, 3.772148, 4.415497, 4.499259, 4.544201, 4.895916, 3.510447…Now we’re finally ready to make the top panel of Figure 17.4.

# how many posterior lines would you like?

n_lines <- 100

d %>%

ggplot(aes(x = height, y = weight)) +

geom_abline(data = draws %>% slice(1:n_lines),

aes(intercept = b_0, slope = b_1, group = .draw),

color = bp[2], linewidth = 1/4, alpha = 1/3) +

geom_point(alpha = 1/2, color = bp[5]) +

labs(subtitle = eval(substitute(paste("Data with", n_lines, "credible regression lines"))),

x = "height",

y = "weight") +

coord_cartesian(xlim = c(50, 80),

ylim = c(-50, 470))

We’ll want to open the tidybayes package to help make the histograms.

library(tidybayes)

# we'll use this to mark off the ROPEs as white strips in the background

rope <-

tibble(name = "Slope",

xmin = -.5,

xmax = .5)

# annotate the ROPE

text <-

tibble(x = 0,

y = 0.98,

label = "ROPE",

name = "Slope")

# here are the primary data

draws %>%

transmute(Intercept = b_0,

Slope = b_1,

Scale = sigma * sd_y,

Normality = nu %>% log10()) %>%

pivot_longer(everything()) %>%

# the plot

ggplot() +

geom_rect(data = rope,

aes(xmin = xmin, xmax = xmax,

ymin = -Inf, ymax = Inf),

color = "transparent", fill = bp[9]) +

stat_histinterval(aes(x = value, y = 0),

point_interval = mode_hdi, .width = .95,

fill = bp[6], color = bp[1], slab_color = bp[5],

breaks = 40, normalize = "panels") +

geom_text(data = text,

aes(x = x, y = y, label = label),

size = 2.75, color = "white") +

scale_y_continuous(NULL, breaks = NULL) +

xlab(NULL) +

facet_wrap(~ name, scales = "free", ncol = 2)

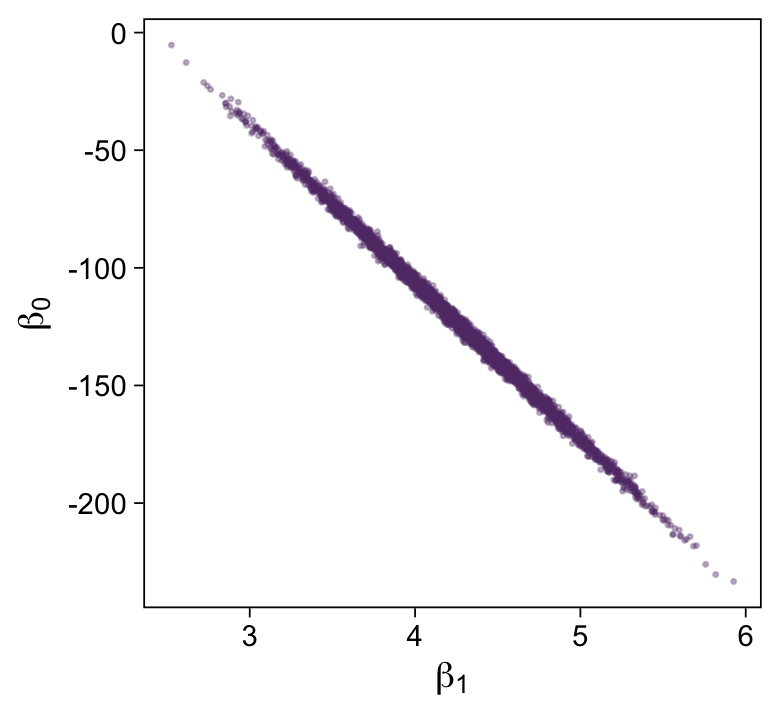

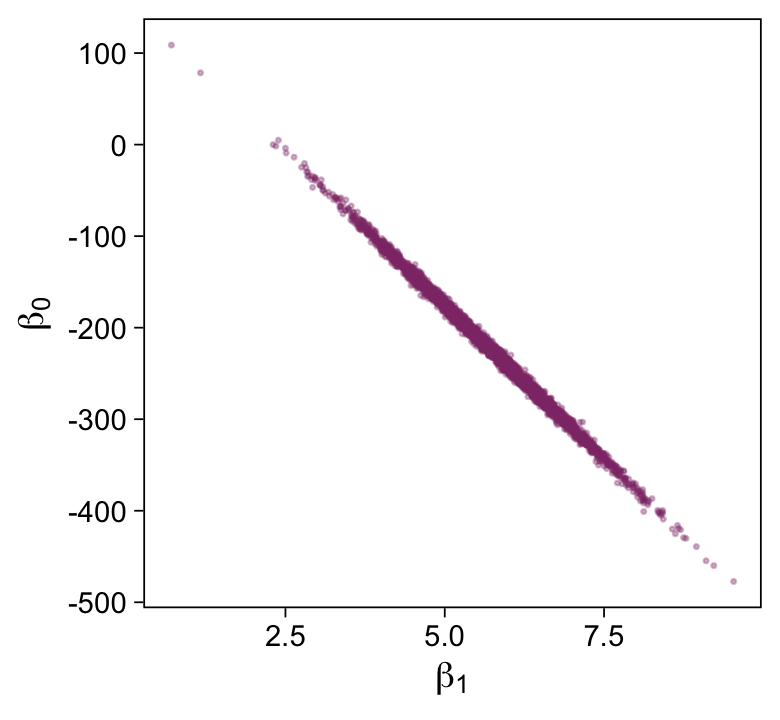

Here’s the scatter plot for the slope and intercept.

draws %>%

ggplot(aes(x = b_1, y = b_0)) +

geom_point(color = bp[3], size = 1/3, alpha = 1/3) +

labs(x = expression(beta[1]),

y = expression(beta[0]))

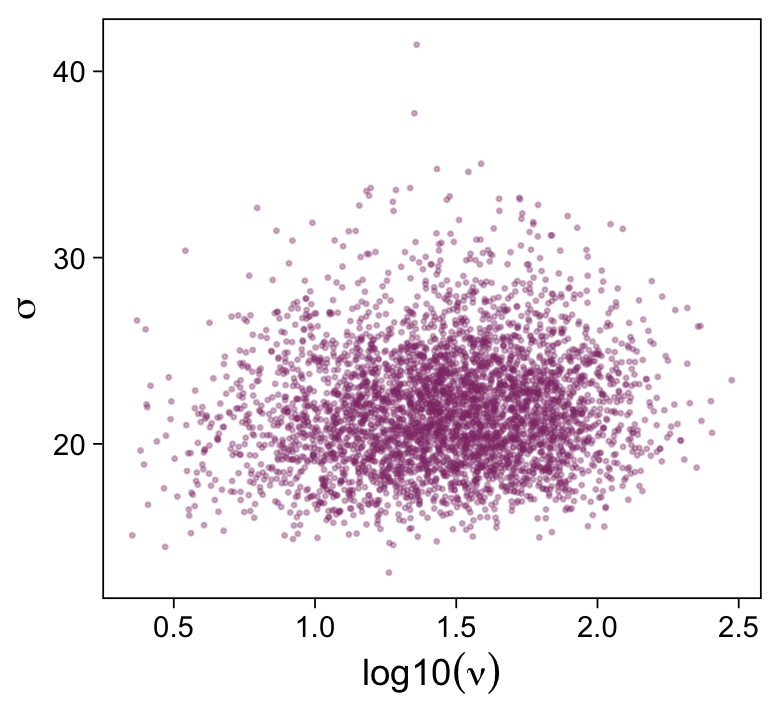

That is one strong correlation! Finally, here’s the scatter plot for log10(ν) and σtransformed back to its raw metric.

draws %>%

transmute(Scale = sigma * sd_y,

Normality = nu %>% log10()) %>%

ggplot(aes(x = Normality, y = Scale)) +

geom_point(color = bp[3], size = 1/3, alpha = 1/3) +

labs(x = expression(log10(nu)),

y = expression(sigma))

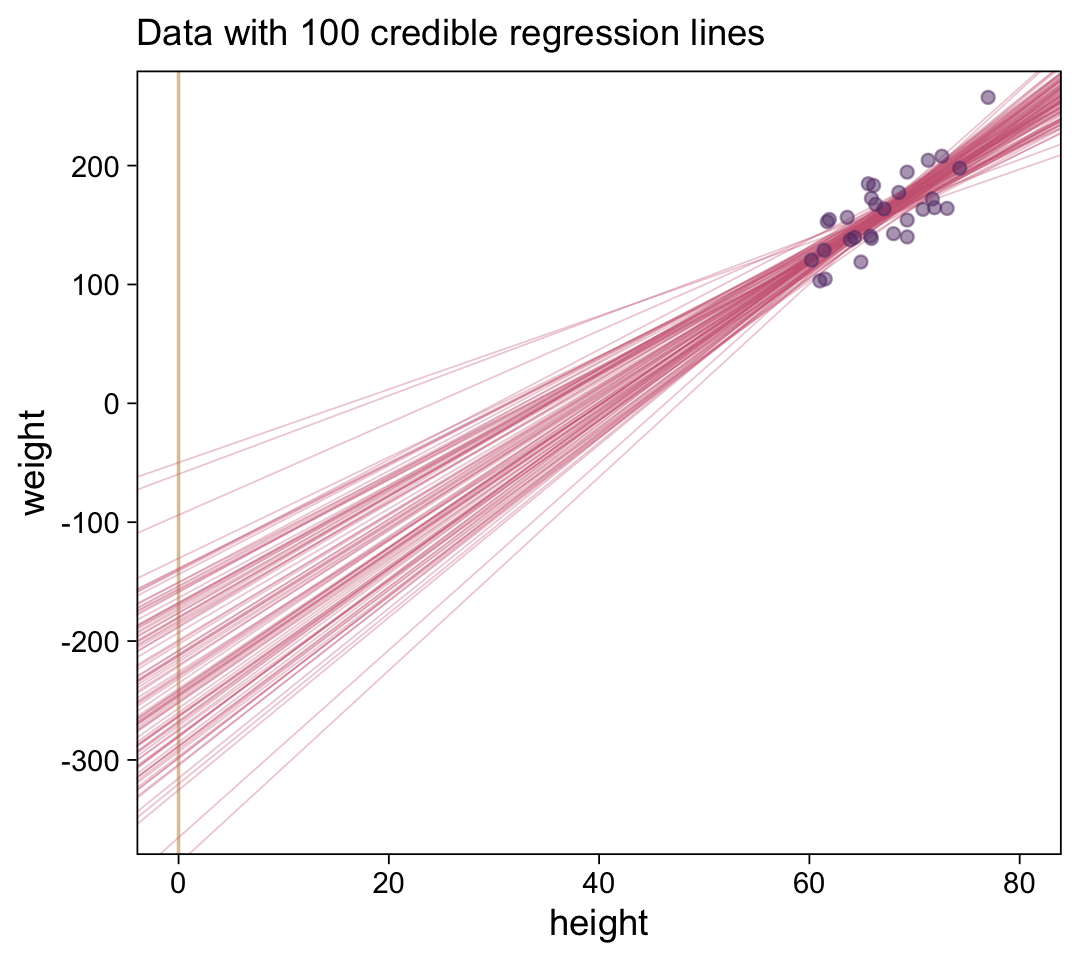

Let’s back track and make the plots for Figure 17.3 with fit17.2. We’ll need to extract the posterior draws and wrangle, as before.

draws <- as_draws_df(fit17.2)

draws <-

draws %>%

mutate(b_0 = make_beta_0(zeta_0 = b_Intercept,

zeta_1 = b_height_z,

sd_x = sd_x,

sd_y = sd_y,

m_x = m_x,

m_y = m_y),

b_1 = make_beta_1(zeta_1 = b_height_z,

sd_x = sd_x,

sd_y = sd_y))

glimpse(draws)## Rows: 4,000

## Columns: 11

## $ b_Intercept <dbl> -0.1019127271, -0.1602236195, -0.2453014631, -0.0767535353, -0.1667942551, -0.…

## $ b_height_z <dbl> 0.5989544, 0.6491469, 0.5190576, 0.5046634, 0.6864314, 0.7096647, 0.7160394, 0…

## $ sigma <dbl> 0.7103285, 0.5990408, 0.6441226, 0.7320464, 0.4938717, 0.8033633, 0.8161114, 0…

## $ nu <dbl> 42.477685, 45.532409, 56.704386, 27.973545, 31.975895, 29.216210, 15.016777, 7…

## $ lprior <dbl> -11.72048, -11.75331, -12.16595, -11.23548, -11.22862, -11.33463, -10.85538, -…

## $ lp__ <dbl> -36.70185, -36.15062, -37.40014, -37.47648, -37.73622, -39.23489, -40.09140, -…

## $ .chain <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ .iteration <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22,…

## $ .draw <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 18, 19, 20, 21, 22,…

## $ b_0 <dbl> -212.69271, -245.79182, -168.15852, -153.42572, -269.11056, -288.95231, -293.2…

## $ b_1 <dbl> 5.558515, 6.024319, 4.817043, 4.683460, 6.370333, 6.585946, 6.645106, 6.573859…Here’s the top panel of Figure 17.3.

# how many posterior lines would you like?

n_lines <- 100

ggplot(data = d %>%

filter(subset == 1),

aes(x = height, y = weight)) +

geom_vline(xintercept = 0, color = bp[9]) +

geom_abline(data = draws %>% slice(1:n_lines),

aes(intercept = b_0, slope = b_1, group = .draw),

color = bp[6], linewidth = 1/4, alpha = 1/3) +

geom_point(alpha = 1/2, color = bp[3]) +

scale_y_continuous(breaks = seq(from = -300, to = 200, by = 100)) +

labs(subtitle = eval(substitute(paste("Data with", n_lines, "credible regression lines"))),

x = "height",

y = "weight") +

coord_cartesian(xlim = c(0, 80),

ylim = c(-350, 250))

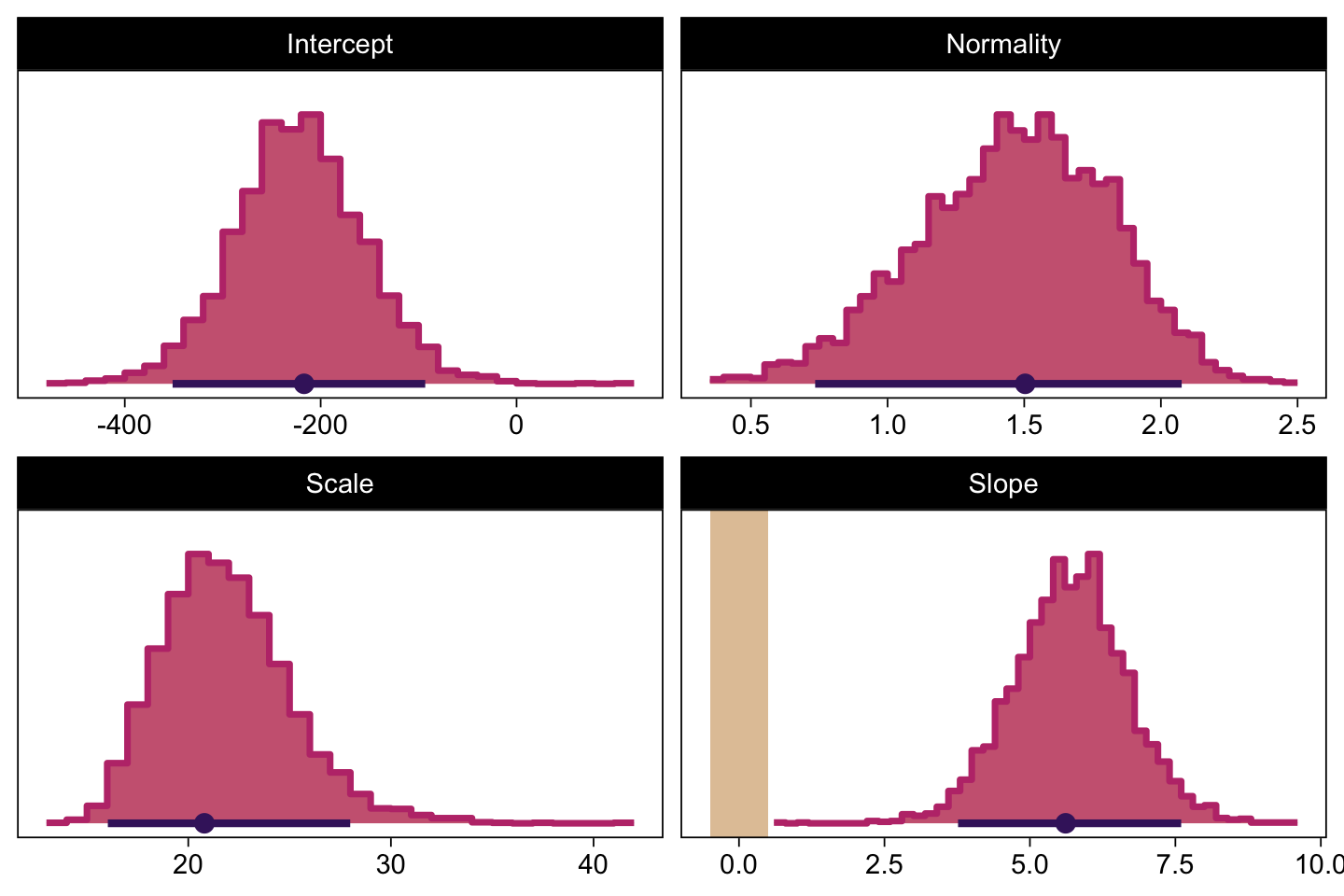

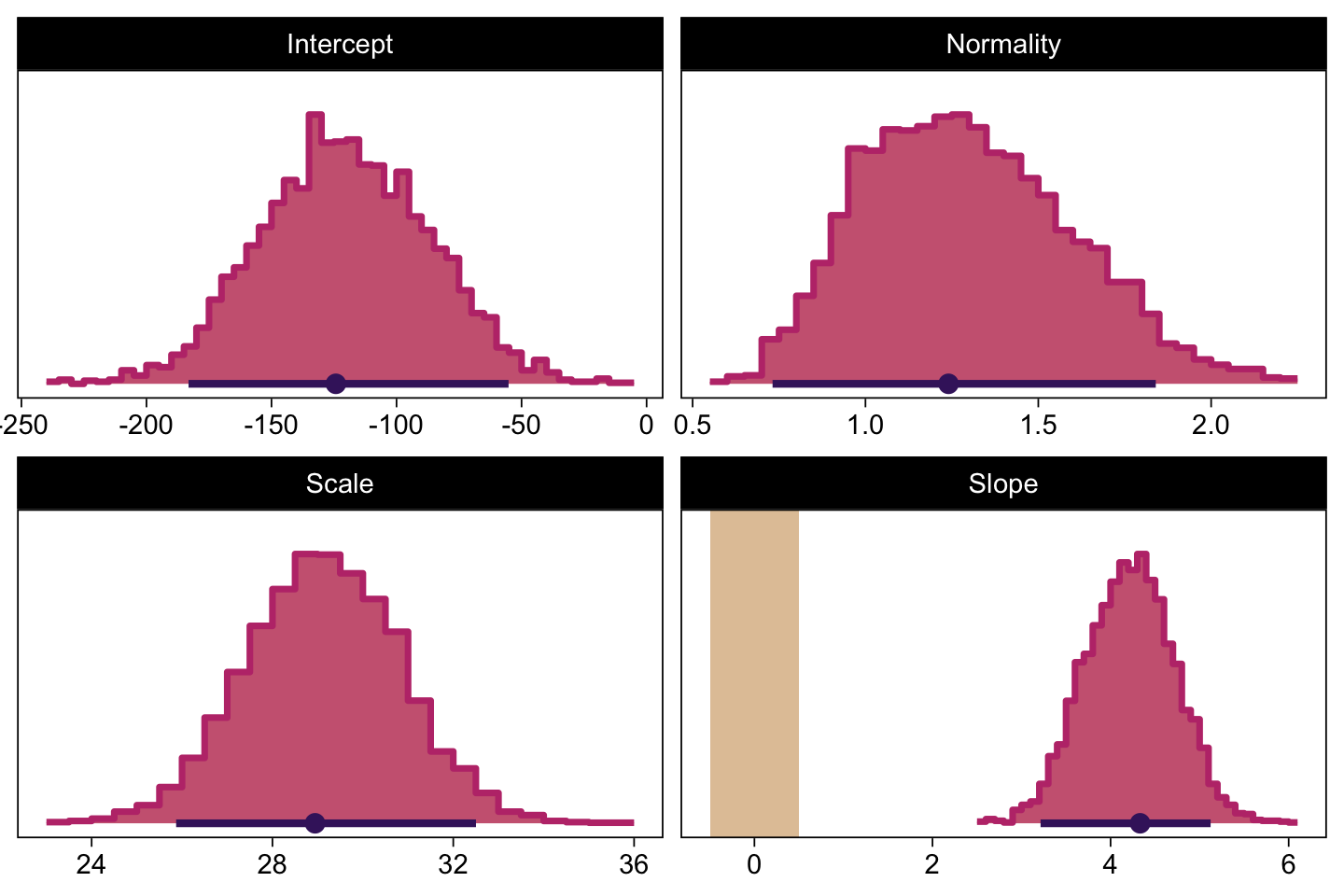

Next we’ll make the histograms.

# here are the primary data

draws %>%

transmute(Intercept = b_0,

Slope = b_1,

Scale = sigma * sd_y,

Normality = nu %>% log10()) %>%

pivot_longer(everything()) %>%

# the plot

ggplot() +

geom_rect(data = rope,

aes(xmin = xmin, xmax = xmax,

ymin = -Inf, ymax = Inf),

color = "transparent", fill = bp[9]) +

stat_histinterval(aes(x = value, y = 0),

point_interval = mode_hdi, .width = .95,

fill = bp[6], color = bp[1], slab_color = bp[5],

breaks = 40, normalize = "panels") +

scale_y_continuous(NULL, breaks = NULL) +

xlab(NULL) +

facet_wrap(~ name, scales = "free", ncol = 2)

And we’ll finish up with the scatter plots.

draws %>%

ggplot(aes(x = b_1, y = b_0)) +

geom_point(color = bp[4], size = 1/3, alpha = 1/3) +

labs(x = expression(beta[1]),

y = expression(beta[0]))

draws %>%

transmute(Scale = sigma * sd_y,

Normality = nu %>% log10()) %>%

ggplot(aes(x = Normality, y = Scale)) +

geom_point(color = bp[4], size = 1/3, alpha = 1/3) +

labs(x = expression(log10(nu)),

y = expression(sigma))

17.2.2 Robust linear regression in Stan.

Recall from Section 14.1 (p. 400) that Stan uses Hamiltonian dynamics to find proposed positions in parameter space. The trajectories use the gradient of the posterior distribution to move large distances even in narrow distributions. Thus, HMC by itself, without data standardization, should be able to efficiently generate a representative sample from the posterior distribution. (p. 487)

To be clear, we’re going to fit the models with Stan/brms twice. Above, we used the standardized data like Kruschke did with his JAGS code. Now we’re getting ready to follow along with the text and use Stan/brms to fit the models with the unstandardized data.

17.2.2.1 Constants for vague priors.

The issues is we want a system where we can readily specify vague priors on our regression models when the data are not standardized. As it turns out,

a regression slope can take on a maximum value of SDy/SDx for data that are perfectly correlated. Therefore, the prior on the slope will be given a standard deviation that is large compared to that maximum. The biggest that an intercept could be, for data that are perfectly correlated, is MxSDy/SDx. Therefore, the prior on the intercept will have a standard deviation that is large compared to that maximum. (p. 487)

With that in mind, we’ll specify our stanvars as follows.

beta_0_sigma <- 10 * abs(m_x * sd_y / sd_x)

beta_1_sigma <- 10 * abs(sd_y / sd_x)

stanvars <-

stanvar(beta_0_sigma, name = "beta_0_sigma") +

stanvar(beta_1_sigma, name = "beta_1_sigma") +

stanvar(sd_y, name = "sd_y") +

stanvar(1/29, name = "one_over_twentynine")As in Chapter 16, “set the priors to be extremely broad relative to the data” (p. 487). With our stanvars defined, we’re ready to fit fit17.3.

fit17.3 <-

brm(data = d,

family = student,

weight ~ 1 + height,

prior = c(prior(normal(0, beta_0_sigma), class = Intercept),

prior(normal(0, beta_1_sigma), class = b),

prior(normal(0, sd_y), class = sigma),

prior(exponential(one_over_twentynine), class = nu)),

chains = 4, cores = 4,

stanvars = stanvars,

seed = 17,

file = "fits/fit17.03")Here’s the model summary.

print(fit17.3)## Family: student

## Links: mu = identity; sigma = identity; nu = identity

## Formula: weight ~ 1 + height

## Data: d (Number of observations: 300)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept -120.60 33.49 -184.21 -56.13 1.00 3068 2594

## height 4.22 0.50 3.25 5.17 1.00 3119 2647

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 29.13 1.70 25.81 32.46 1.00 2484 2282

## nu 25.43 20.98 6.19 82.85 1.00 2059 2553

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Now compare the histograms for these posterior draws to those we made, above, from those fit17.1. You’ll see they’re quite similar.

# here are the primary data

as_draws_df(fit17.3) %>%

transmute(Intercept = b_Intercept,

Slope = b_height,

Scale = sigma,

Normality = nu %>% log10()) %>%

pivot_longer(everything()) %>%

# the plot

ggplot() +

geom_rect(data = rope,

aes(xmin = xmin, xmax = xmax,

ymin = -Inf, ymax = Inf),

color = "transparent", fill = bp[9]) +

stat_histinterval(aes(x = value, y = 0),

point_interval = mode_hdi, .width = .95,

fill = bp[6], color = bp[1], slab_color = bp[5],

breaks = 40, normalize = "panels") +

scale_y_continuous(NULL, breaks = NULL) +

xlab(NULL) +

facet_wrap(~ name, scales = "free", ncol = 2)

17.2.3 Stan or JAGS?

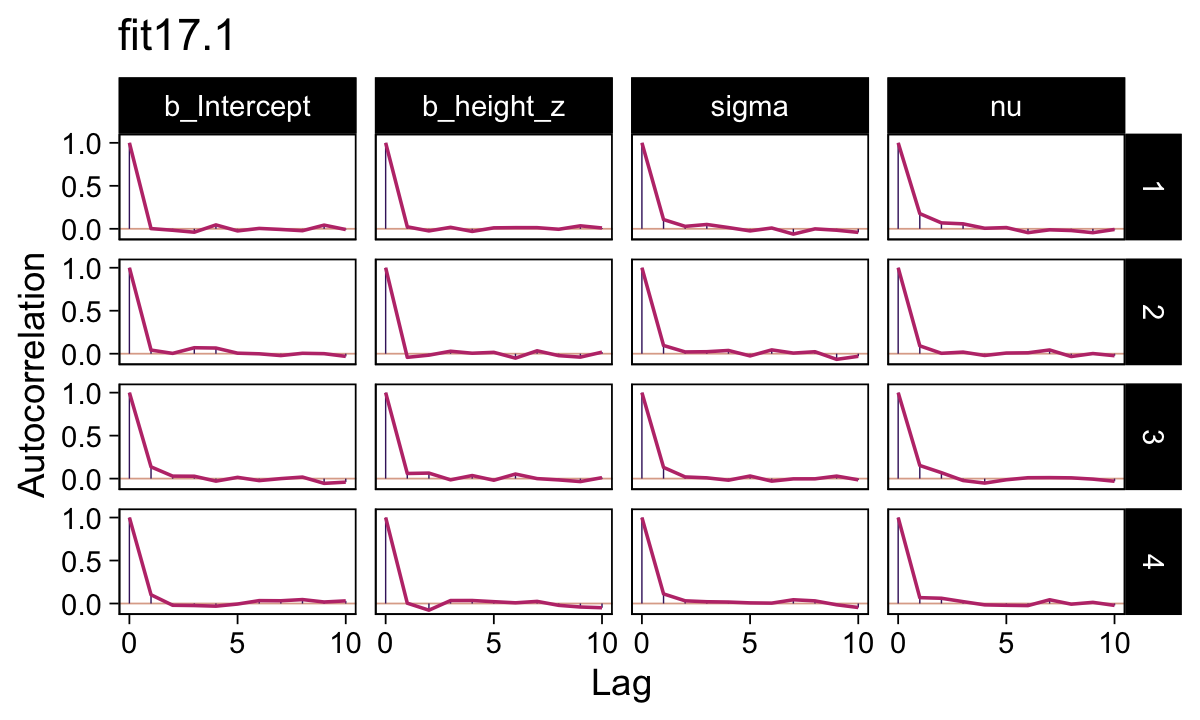

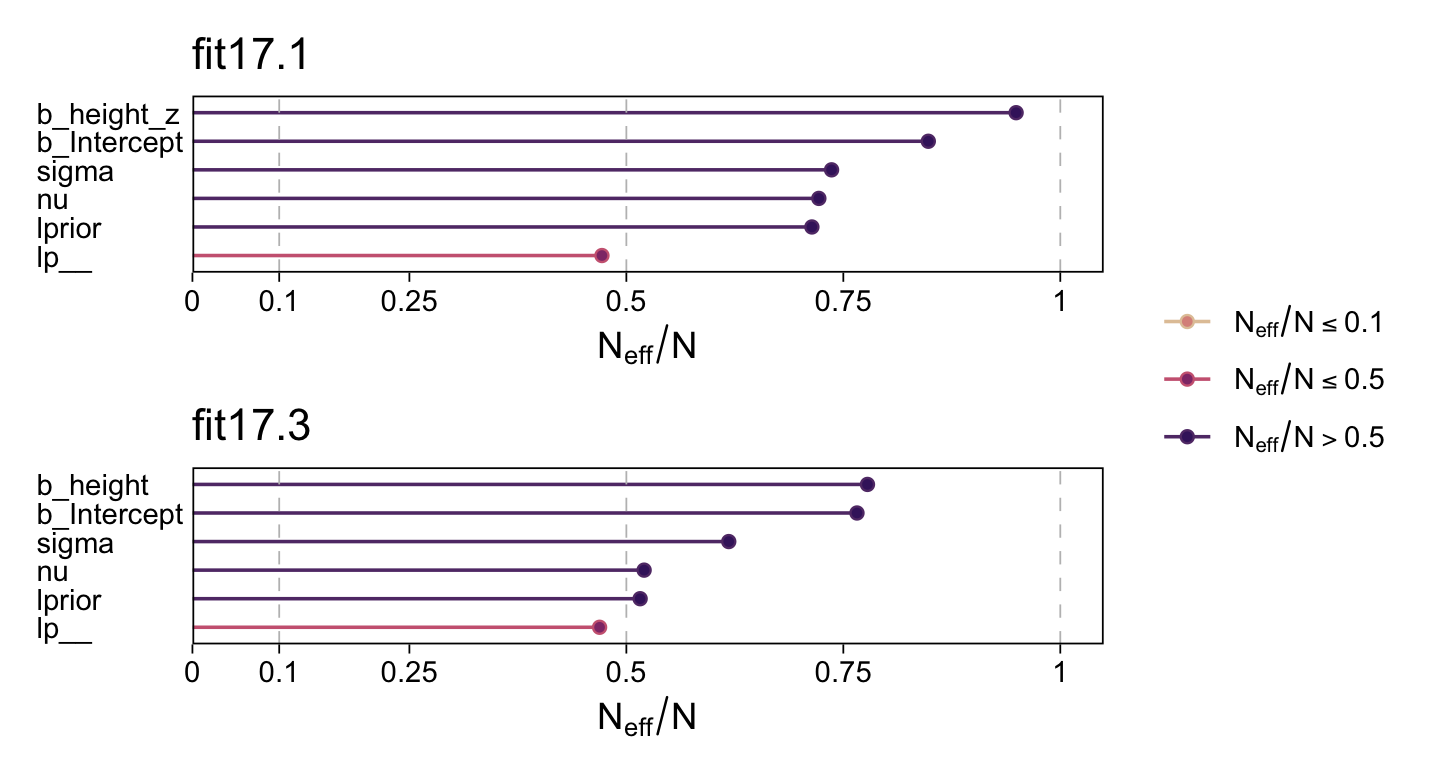

In this ebook we only fit the models with brms, which uses Stan under the hood. But since we fit the N=300 model with both standardized and unstandardized data, we can compare their performance. For that, we’ll want bayesplot.

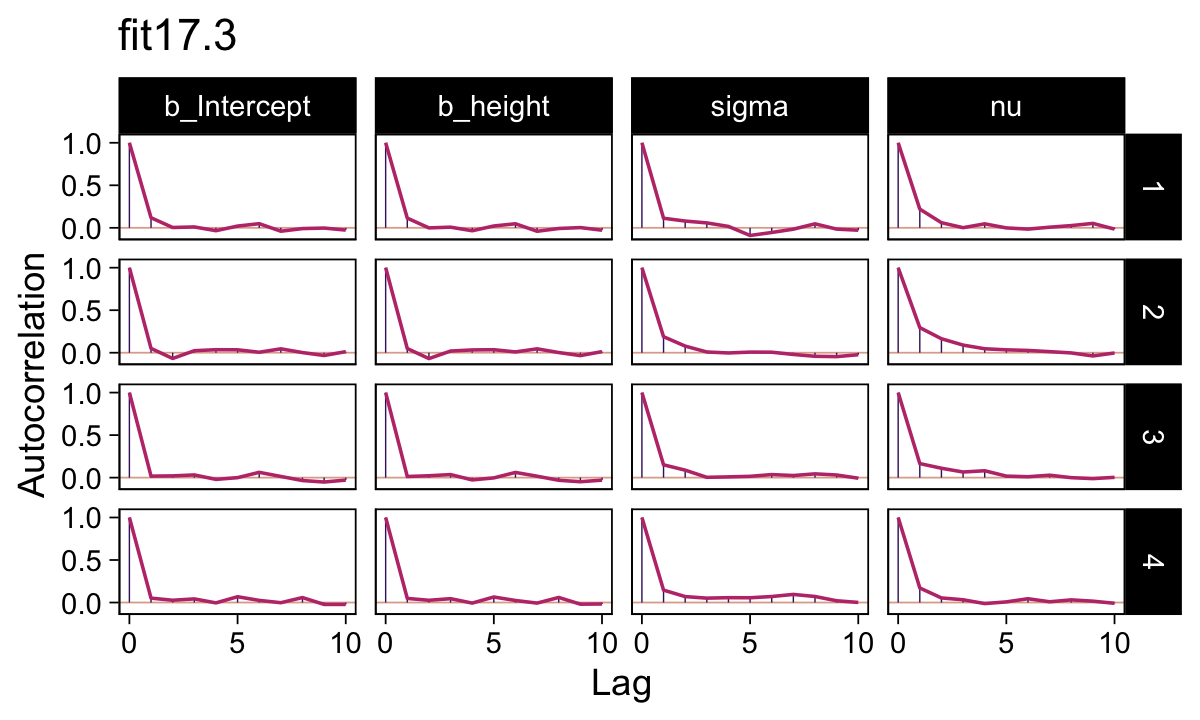

library(bayesplot)They had equally impressive autocorrelation plots.

# set the bayesplot color scheme

color_scheme_set(scheme = bp[c(1, 3, 8, 7, 5, 5)])

as_draws_df(fit17.1) %>%

mutate(chain = .chain) %>%

mcmc_acf(pars = vars(b_Intercept:nu),

lags = 10) +

ggtitle("fit17.1")

as_draws_df(fit17.3) %>%

mutate(chain = .chain) %>%

mcmc_acf(pars = vars(b_Intercept:nu),

lags = 10) +

ggtitle("fit17.3")

Their Neff/N ratios were pretty similar. Both were reasonable. You’d probably want to run a simulation to contrast them with any rigor.

# change the bayesplot color scheme

color_scheme_set(scheme = bp[c(1, 3, 4, 6, 7, 9)])

p1 <-

neff_ratio(fit17.1) %>%

mcmc_neff() +

yaxis_text(hjust = 0) +

ggtitle("fit17.1")

p2 <-

neff_ratio(fit17.3) %>%

mcmc_neff() +

yaxis_text(hjust = 0) +

ggtitle("fit17.3")

p1 / p2 + plot_layout(guide = "collect")

17.2.4 Interpreting the posterior distribution.

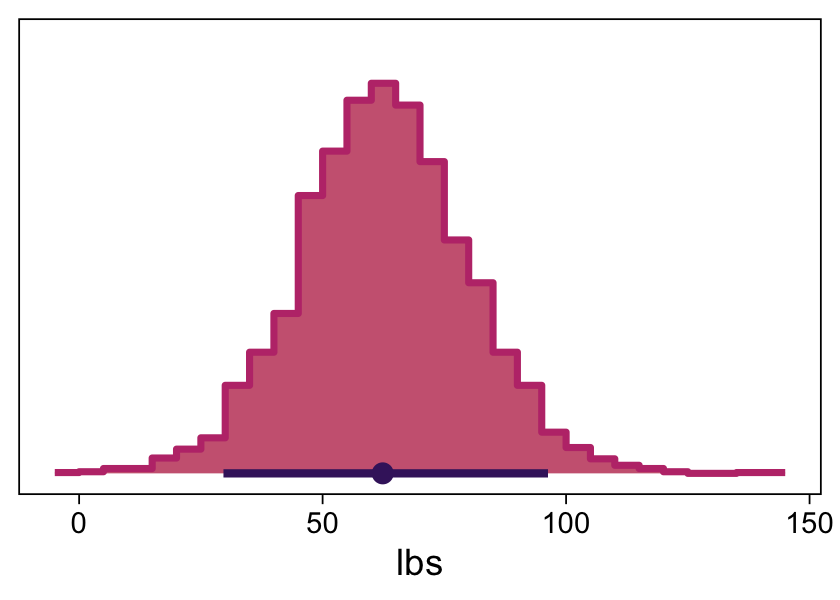

Halfway through the prose, Kruschke mentioned how the models provide entire posteriors for the weight of a 50-inch-tall person. brms offers a few ways to do so.

In some applications, there is interest in extrapolating or interpolating trends at x values sparsely represented in the current data. For instance, we might want to predict the weight of a person who is 50 inches tall. A feature of Bayesian analysis is that we get an entire distribution of credible predicted values, not only a point estimate. (p. 489)

Since this is such a simple model, one way is to work directly with the posterior draws Here we use the model formula yi=β0+β1xi by adding the transformed intercept b_0 to the product of 50 and the transformed coefficient for height, b_1.

draws %>%

mutate(weight_at_50 = b_0 + b_1 * 50) %>%

ggplot(aes(x = weight_at_50, y = 0)) +

stat_histinterval(point_interval = mode_hdi, .width = .95,

fill = bp[6], color = bp[1], slab_color = bp[5],

breaks = 40, normalize = "panels") +

scale_y_continuous(NULL, breaks = NULL) +

xlab("lbs")

Looks pretty wide, doesn’t it? Hopefully this isn’t a surprise. Recall that this draws is from fit17.2, the posterior based on the n=30 data. With so few cases, most predictions from that model are uncertain. But also, 50 inches is way out of the bounds of the data the model was based on, so we should be uncertain in this range.

Let’s practice a second method. With the brms::fitted() function, we can specify the desired height value into a tibble, which we’ll then feed into the newdata argument. Fitted will then return the model-implied criterion value for that predictor variable. To warm up, we’ll first to it with fit17.3, the model based on the untransformed data.

nd <- tibble(height = 50)

fitted(fit17.3,

newdata = nd)## Estimate Est.Error Q2.5 Q97.5

## [1,] 90.2715 8.668269 73.33173 107.1422The code returned a typical brms-style summary of the posterior mean, standard deviation, and 95% percentile-based intervals. The same basic method will work for the standardized models, fit17.1 or fit17.2. But that will take a little more wrangling. First, we’ll need to transform our desired value 50 into its standardized version.

nd <- tibble(height_z = (50 - mean(d$height)) / sd(d$height))When we feed this value into fitted(), it will return the corresponding posterior within the standardized metric. But we want unstandardized, so we’ll need to transform. That’ll be a few-step process. First, to do the transformation properly, we’ll want to work with the poster draws themselves, rather than summary values. So we’ll set summary = F. We’ll then convert the draws into a tibble format. Then we’ll use the transmute() function to do the conversion. In the final step, we’ll use mean_qi() to compute the summary values.

fitted(fit17.1,

newdata = nd,

summary = F) %>%

as_tibble() %>%

transmute(weight = V1 * sd(d$weight) + mean(d$weight)) %>%

mean_qi()## # A tibble: 1 × 6

## weight .lower .upper .width .point .interval

## <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 90.0 73.1 107. 0.95 mean qiIf you look above, you’ll see the results are within rounding error of those from fit3.

17.3 Hierarchical regression on individuals within groups

In the previous applications, the jth individual contributed a single xj,yj pair. But suppose instead that every individual, j, contributes multiple observations of xi|j,yi|j pairs. (The subscript notation i|j means the ith observation within the jth individual.) With these data, we can estimate a regression curve for every individual. If we also assume that the individuals are mutually representative of a common group, then we can estimate group-level parameters too. (p. 490)

Load the fictitious data and take a glimpse().

my_data <- read_csv("data.R/HierLinRegressData.csv")

glimpse(my_data)## Rows: 132

## Columns: 3

## $ Subj <dbl> 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 3, 3, 3, 3, 3, 3, 3, 4, 4, 5, 5, 5, 5, 5…

## $ X <dbl> 60.2, 61.5, 61.7, 62.3, 67.6, 69.2, 53.7, 60.1, 60.5, 62.3, 63.0, 64.0, 64.1, 66.7, 6…

## $ Y <dbl> 145.6, 157.3, 165.6, 158.8, 196.1, 183.9, 165.0, 166.9, 179.0, 196.2, 192.3, 200.7, 1…Our goal is to describe each individual with a linear regression, and simultaneously to estimate the typical slope and intercept of the group overall. A key assumption for our analysis is that each individual is representative of the group. Therefore, every individual informs the estimate of the group slope and intercept, which in turn inform the estimates of all the individual slopes and intercepts. Thereby we get sharing of information across individuals, and shrinkage of individual estimates toward the overarching mode. (p. 491)

17.3.1 The model and implementation in JAGS brms.

Kruschke described the model diagram in Figure 17.6 as “a bit daunting” (p. 491). The code to make our version of the diagram is “a bit daunting,” too. Just like the code for any other diagram, it’s modular. If you’re following along with me and making these on your own, just build it up, step by step.

# normal density

p1 <-

tibble(x = seq(from = -3, to = 3, by = .1)) %>%

ggplot(aes(x = x, y = (dnorm(x)) / max(dnorm(x)))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = 0, y = .2,

label = "normal",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = c(0, 1.5), y = .6,

label = c("italic(M)[0]", "italic(S)[0]"),

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

# half-normal density

p2 <-

tibble(x = seq(from = 0, to = 3, by = .01)) %>%

ggplot(aes(x = x, y = (dnorm(x)) / max(dnorm(x)))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = 1.5, y = .2,

label = "half-normal",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = 1.5, y = .6,

label = "0*','*~italic(S)[sigma][0]",

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

# a second normal density

p3 <-

tibble(x = seq(from = -3, to = 3, by = .1)) %>%

ggplot(aes(x = x, y = (dnorm(x)) / max(dnorm(x)))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = 0, y = .2,

label = "normal",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = c(0, 1.5), y = .6,

label = c("italic(M)[1]", "italic(S)[1]"),

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

# a second half-normal density

p4 <-

tibble(x = seq(from = 0, to = 3, by = .01)) %>%

ggplot(aes(x = x, y = (dnorm(x)) / max(dnorm(x)))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = 1.5, y = .2,

label = "half-normal",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = 1.5, y = .6,

label = "0*','*~italic(S)[sigma][1]",

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

# four annotated arrows

p5 <-

tibble(x = c(.05, .35, .65, .95),

y = c(1, 1, 1, 1),

xend = c(.32, .4, .65, .72),

yend = c(.2, .2, .2, .2)) %>%

ggplot(aes(x = x, xend = xend,

y = y, yend = yend)) +

geom_segment(arrow = my_arrow, color = bp[1]) +

annotate(geom = "text",

x = c(.15, .35, .625, .78), y = .55,

label = "'~'",

size = 10, color = bp[1], family = "Times", parse = T) +

xlim(0, 1) +

theme_void()

# third normal density

p6 <-

tibble(x = seq(from = -3, to = 3, by = .1)) %>%

ggplot(aes(x = x, y = (dnorm(x)) / max(dnorm(x)))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = 0, y = .2,

label = "normal",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = c(0, 1.5), y = .6,

label = c("mu[0]", "sigma[0]"),

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

# fourth normal density

p7 <-

tibble(x = seq(from = -3, to = 3, by = .1)) %>%

ggplot(aes(x = x, y = (dnorm(x)) / max(dnorm(x)))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = 0, y = .2,

label = "normal",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = c(0, 1.5), y = .6,

label = c("mu[1]", "sigma[1]"),

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

# two annotated arrows

p8 <-

tibble(x = c(.18, .82),

y = c(1, 1),

xend = c(.36, .55),

yend = c(0, 0)) %>%

ggplot(aes(x = x, xend = xend,

y = y, yend = yend)) +

geom_segment(arrow = my_arrow, color = bp[1]) +

annotate(geom = "text",

x = c(.18, .33, .64, .77), y = .55,

label = c("'~'", "italic(j)", "'~'", "italic(j)"),

size = c(10, 7, 10, 7),

color = bp[1], family = "Times", parse = T) +

xlim(0, 1) +

theme_void()

# exponential density

p9 <-

tibble(x = seq(from = 0, to = 1, by = .01)) %>%

ggplot(aes(x = x, y = (dexp(x, 2) / max(dexp(x, 2))))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = .5, y = .2,

label = "exp",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = .5, y = .6,

label = "italic(K)",

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

# likelihood formula

p10 <-

tibble(x = .5,

y = .25,

label = "beta[0][italic(j)]+beta[1][italic(j)]*italic(x)[italic(i)*'|'*italic(j)]") %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(size = 7, color = bp[1], parse = T, family = "Times") +

scale_x_continuous(expand = c(0, 0), limits = c(0, 1)) +

ylim(0, 1) +

theme_void()

# half-normal density

p11 <-

tibble(x = seq(from = 0, to = 3, by = .01)) %>%

ggplot(aes(x = x, y = (dnorm(x)) / max(dnorm(x)))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = 1.5, y = .2,

label = "half-normal",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = 1.5, y = .6,

label = "0*','*~italic(S)[sigma]",

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

# four annotated arrows

p12 <-

tibble(x = c(.43, .43, 1.5, 2.5),

y = c(1, .55, 1, 1),

xend = c(.43, 1.225, 1.5, 1.75),

yend = c(.8, .15, .2, .2)) %>%

ggplot(aes(x = x, xend = xend,

y = y, yend = yend)) +

geom_segment(arrow = my_arrow, color = bp[1]) +

annotate(geom = "text",

x = c(.3, .7, 1.38, 2), y = c(.92, .22, .65, .6),

label = c("'~'", "'='", "'='", "'~'"),

size = 10,

color = bp[1], family = "Times", parse = T) +

annotate(geom = "text",

x = .43, y = .7,

label = "nu*minute+1",

size = 7, color = bp[1], family = "Times", parse = T) +

xlim(0, 3) +

theme_void()

# student-t density

p13 <-

tibble(x = seq(from = -3, to = 3, by = .1)) %>%

ggplot(aes(x = x, y = (dt(x, 3) / max(dt(x, 3))))) +

geom_area(fill = bp[6]) +

annotate(geom = "text",

x = 0, y = .2,

label = "student t",

size = 7, color = bp[1]) +

annotate(geom = "text",

x = 0, y = .6,

label = "nu~~mu[italic(i)*'|'*italic(j)]~~sigma",

size = 7, color = bp[1], family = "Times", parse = T) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5, color = bp[1]))

# the final annotated arrow

p14 <-

tibble(x = c(.375, .625),

y = c(1/3, 1/3),

label = c("'~'", "italic(i)*'|'*italic(j)")) %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(size = c(10, 7), color = bp[1], parse = T, family = "Times") +

geom_segment(x = .5, xend = .5,

y = 1, yend = 0,

color = bp[1], arrow = my_arrow) +

xlim(0, 1) +

theme_void()

# some text

p15 <-

tibble(x = .5,

y = .5,

label = "italic(y)[italic(i)*'|'*italic(j)]") %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(size = 7, color = bp[1], parse = T, family = "Times") +

xlim(0, 1) +

theme_void()

# define the layout

layout <- c(

area(t = 1, b = 2, l = 1, r = 3),

area(t = 1, b = 2, l = 5, r = 7),

area(t = 1, b = 2, l = 9, r = 11),

area(t = 1, b = 2, l = 13, r = 15),

area(t = 4, b = 5, l = 5, r = 7),

area(t = 4, b = 5, l = 9, r = 11),

area(t = 3, b = 4, l = 1, r = 15),

area(t = 7, b = 8, l = 3, r = 5),

area(t = 7, b = 8, l = 7, r = 9),

area(t = 7, b = 8, l = 11, r = 13),

area(t = 6, b = 7, l = 5, r = 11),

area(t = 10, b = 11, l = 7, r = 9),

area(t = 9, b = 10, l = 3, r = 13),

area(t = 12, b = 12, l = 7, r = 9),

area(t = 13, b = 13, l = 7, r = 9)

)

# combine and plot!

(p1 + p2 + p3 + p4 + p6 + p7 + p5 + p9 + p10 + p11 + p8 + p13 + p12 + p14 + p15) +

plot_layout(design = layout) &

ylim(0, 1) &

theme(plot.margin = margin(0, 5.5, 0, 5.5))

Just look at that sweet thing! If you made you version, here; have a piece of cake. 🍰 You earned it.

Now let’s standardize the data and define our stanvars. I should note that standardizing and mean centering, more generally, becomes complicated with multilevel models. Here we’re just standardizing based on the grand mean and grand standard deviation. But there are other ways to standardize, such as within groups. Craig Enders has a good (2013) book chapter that touched on the topic, as well as an earlier (2007) paper with Tofighi.

my_data <-

my_data %>%

mutate(x_z = standardize(X),

y_z = standardize(Y))In my experience, you typically use the (|) syntax when fitting a hierarchical model with thebrm() function. The terms before the | are those varying by group and you tell brm() what the grouping variable is after the |. In the case of multiple group-level parameters–which is the case with this model (i.e., both intercept and the x_z slope)–, this syntax also estimates correlations among the group-level parameters. Kruschke’s model doesn’t appear to include such a correlation. Happily, we can use the (||) syntax instead, which omits correlations among the group-level parameters. If you’re curious about the distinction, fit the model both ways and explore the differences in the print() output. For more on the topic, see the Group-level terms subsection of the brmsformula section of the brms reference manual (Bürkner, 2022f).

fit17.4 <-

brm(data = my_data,

family = student,

y_z ~ 1 + x_z + (1 + x_z || Subj),

prior = c(prior(normal(0, 10), class = Intercept),

prior(normal(0, 10), class = b),

prior(normal(0, 1), class = sigma),

# the next line is new

prior(normal(0, 1), class = sd),

prior(exponential(one_over_twentynine), class = nu)),

chains = 4, cores = 4,

seed = 17,

stanvars = stanvar(1/29, name = "one_over_twentynine"),

file = "fits/fit17.04")Did you catch that prior(normal(0, 1), class = sd) line in the code? That’s the prior we used for our hierarchical variance parameters, σ0 and σ1. Just like with the scale parameter, σ, we used the zero-mean half-normal distribution. By default, brms sets their left boundary to zero, which keeps the HMC algorithm from exploring negative variance values.

Anyway, here’s the model summary().

summary(fit17.4)## Family: student

## Links: mu = identity; sigma = identity; nu = identity

## Formula: y_z ~ 1 + x_z + (1 + x_z || Subj)

## Data: my_data (Number of observations: 132)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~Subj (Number of levels: 25)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 1.02 0.18 0.72 1.44 1.00 1182 2090

## sd(x_z) 0.22 0.12 0.02 0.47 1.00 969 1609

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.07 0.22 -0.36 0.49 1.00 874 1376

## x_z 0.70 0.10 0.51 0.90 1.00 2991 3100

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.59 0.05 0.49 0.70 1.00 1764 2273

## nu 39.63 30.88 6.23 125.68 1.00 3813 2203

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).17.3.2 The posterior distribution: Shrinkage and prediction.

Keeping in the same spirit of Section 17.2.4, we’ll make the plots of Figure 17.5 in two ways. First, we’ll use our make_beta_0() and make_beta_1() functions to transform the model coefficients.

draws <- as_draws_df(fit17.4)

sd_x <- sd(my_data$X)

sd_y <- sd(my_data$Y)

m_x <- mean(my_data$X)

m_y <- mean(my_data$Y)

draws <-

draws %>%

mutate(b_0 = make_beta_0(zeta_0 = b_Intercept,

zeta_1 = b_x_z,

sd_x = sd_x,

sd_y = sd_y,

m_x = m_x,

m_y = m_y),

b_1 = make_beta_1(zeta_1 = b_x_z,

sd_x = sd_x,

sd_y = sd_y)) %>%

select(.draw, b_0, b_1)

head(draws)## # A tibble: 6 × 3

## .draw b_0 b_1

## <int> <dbl> <dbl>

## 1 1 -90.8 3.59

## 2 2 -64.0 3.20

## 3 3 -51.2 2.94

## 4 4 -84.8 3.51

## 5 5 -21.6 2.54

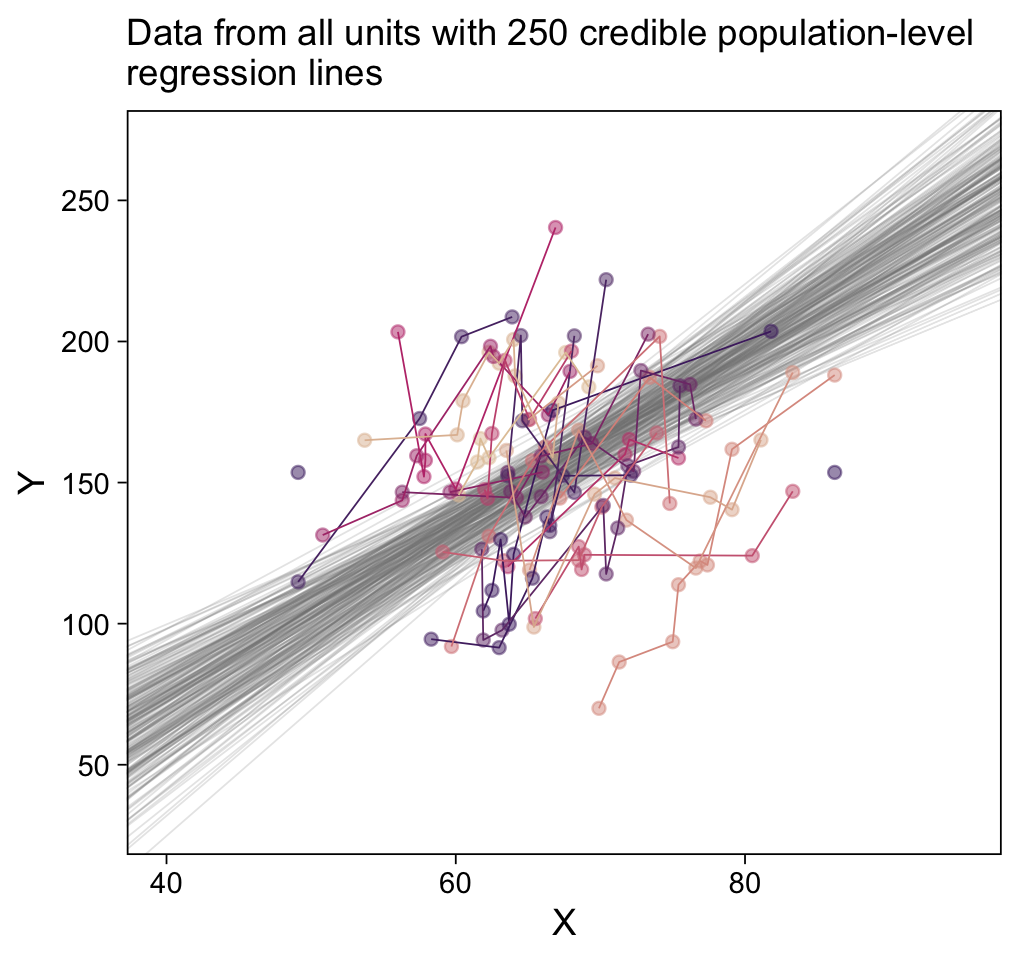

## 6 6 -82.9 3.49Here’s the top panel of Figure 17.4.

# how many posterior lines would you like?

n_lines <- 250

my_data %>%

mutate(Subj = factor(Subj, levels = 25:1)) %>%

ggplot(aes(x = X, y = Y)) +

geom_abline(data = draws %>% slice(1:n_lines),

aes(intercept = b_0, slope = b_1, group = .draw),

color = "grey50", linewidth = 1/4, alpha = 1/5) +

geom_point(aes(color = Subj),

alpha = 1/2) +

geom_line(aes(group = Subj, color = Subj),

linewidth = 1/4) +

scale_color_manual(values = beyonce_palette(41, n = 25, type = "continuous"), breaks = NULL) +

scale_y_continuous(breaks = seq(from = 50, to = 250, by = 50)) +

labs(subtitle = eval(substitute(paste("Data from all units with", n_lines, "credible population-level\nregression lines")))) +

coord_cartesian(xlim = c(40, 95),

ylim = c(30, 270))

Recall how we can use coef() to extract the Subj-specific parameters. But we’ll want posterior draws rather than summaries, which requires summary = F. It’ll take a bit of wrangling to get the output in a tidy format. Once we’re there, the plot code will be fairly simple.

c <-

# first collect and wrangle the draws for the Subj-level intercept and slopes

rbind(coef(fit17.4, summary = F)$Subj[, , "Intercept"],

coef(fit17.4, summary = F)$Subj[, , "x_z"]) %>%

data.frame() %>%

set_names(1:25) %>%

mutate(draw = rep(1:4000, times = 2),

param = rep(c("Intercept", "Slope"), each = 4000)) %>%

pivot_longer(`1`:`25`, names_to = "Subj") %>%

pivot_wider(names_from = param, values_from = value) %>%

# now we're ready to un-standardize the standardized coefficients

mutate(b_0 = make_beta_0(zeta_0 = Intercept,

zeta_1 = Slope,

sd_x = sd_x,

sd_y = sd_y,

m_x = m_x,

m_y = m_y),

b_1 = make_beta_1(zeta_1 = Slope,

sd_x = sd_x,

sd_y = sd_y))

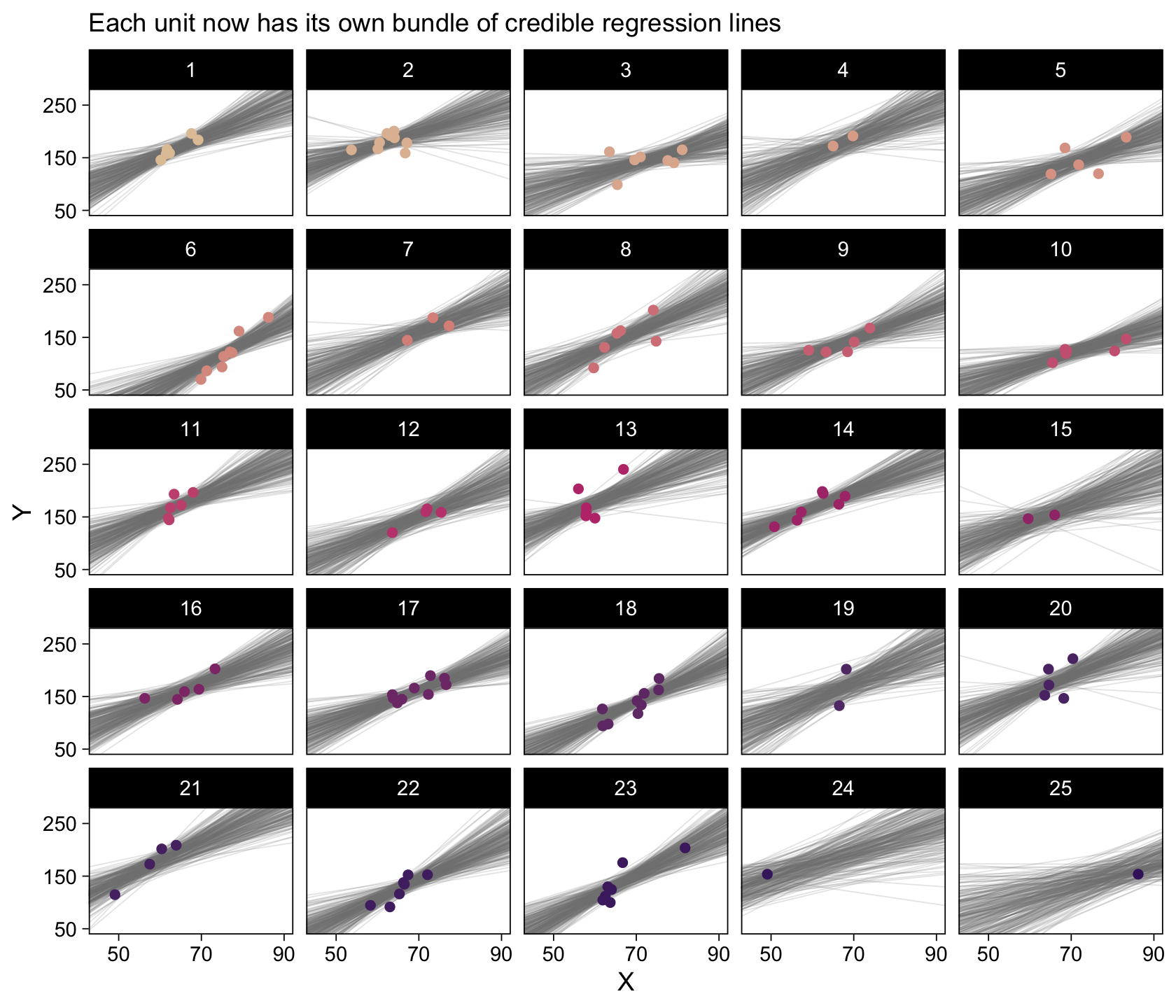

# how many lines would you like?

n_lines <- 250

# the plot:

my_data %>%

mutate(Subj = factor(Subj, levels = 25:1)) %>%

ggplot(aes(x = X, y = Y)) +

geom_abline(data = c %>% filter(draw <= n_lines),

aes(intercept = b_0, slope = b_1),

color = "grey50", linewidth = 1/4, alpha = 1/5) +

geom_point(aes(color = Subj)) +

scale_color_manual(values = beyonce_palette(41, n = 25, type = "continuous"), breaks = NULL) +

scale_x_continuous(breaks = seq(from = 50, to = 90, by = 20)) +

scale_y_continuous(breaks = seq(from = 50, to = 250, by = 100)) +

labs(subtitle = "Each unit now has its own bundle of credible regression lines") +

coord_cartesian(xlim = c(45, 90),

ylim = c(50, 270)) +

facet_wrap(~ Subj %>% factor(., levels = 1:25))

There’s some good pedagogy in that method. But I like having options and in this case fitted() affords a simpler workflow. Here’s the preparatory data wrangling step.

# how many posterior lines would you like?

n_lines <- 250

nd <-

# since we're working with straight lines, we only need two x-values

tibble(x_z = c(-5, 5)) %>%

mutate(X = x_z * sd(my_data$X) + mean(my_data$X),

name = str_c("V", 1:n()))

f <-

fitted(fit17.4,

newdata = nd,

# since we only want the fixed effects, we'll use `re_formula`

# to maginalize over the random effects

re_formula = Y_z ~ 1 + X_z,

summary = F,

# here we use `ndraws` to subset right from the get go

ndraws = n_lines) %>%

as_tibble() %>%

mutate(draw = 1:n()) %>%

pivot_longer(-draw) %>%

# transform the `y_z` values back into the `Y` metric

mutate(Y = value * sd(my_data$Y) + mean(my_data$Y)) %>%

# now attach the predictor values to the output

left_join(nd, by = "name")

head(f) ## # A tibble: 6 × 6

## draw name value Y x_z X

## <int> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 1 V1 -2.78 64.2 -5 31.4

## 2 1 V2 2.62 237. 5 103.

## 3 2 V1 -3.47 42.1 -5 31.4

## 4 2 V2 4.14 286. 5 103.

## 5 3 V1 -3.33 46.6 -5 31.4

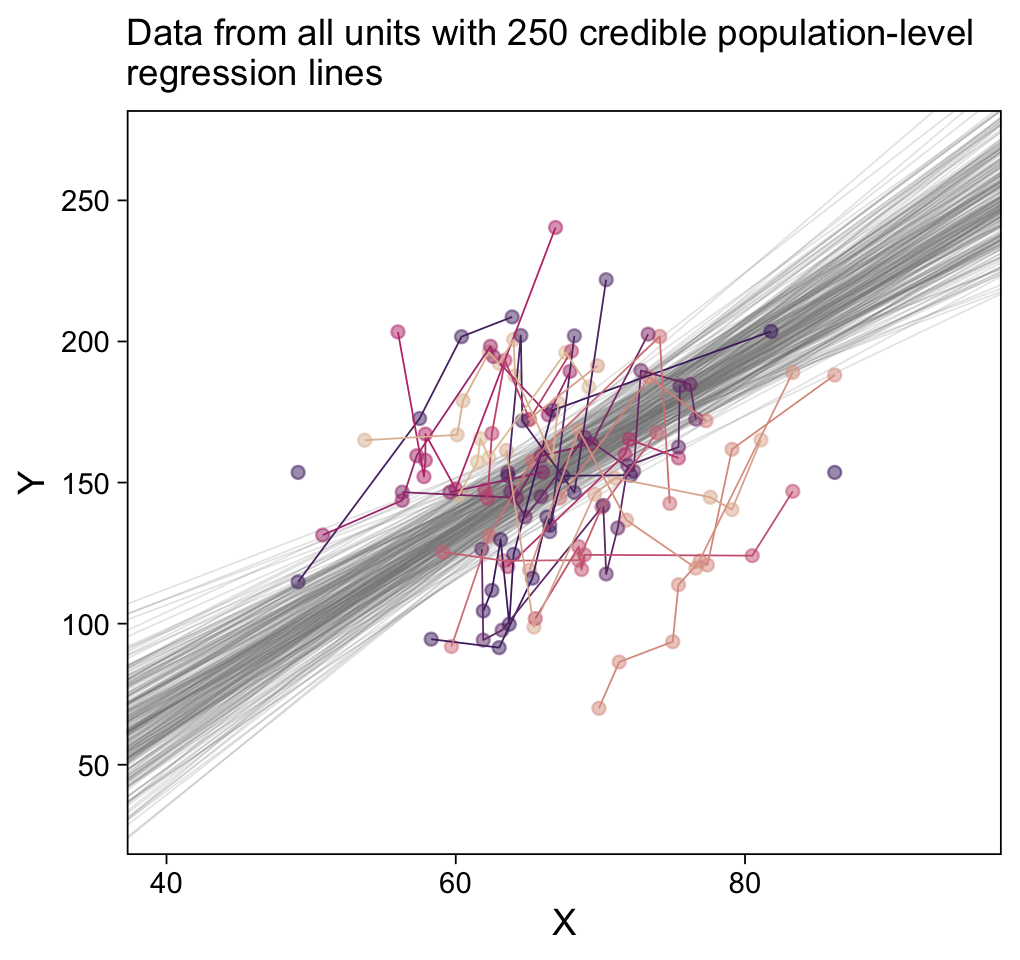

## 6 3 V2 3.99 281. 5 103.For the second time, here’s the top panel of Figure 17.4, this time based off of fitted().

p1 <-

my_data %>%

mutate(Subj = factor(Subj, levels = 25:1)) %>%

ggplot(aes(x = X, y = Y)) +

geom_line(data = f,

aes(group = draw),

color = "grey50", linewidth = 1/4, alpha = 1/5) +

geom_point(aes(color = Subj),

alpha = 1/2) +

geom_line(aes(group = Subj, color = Subj),

linewidth = 1/4) +

scale_color_manual(values = beyonce_palette(41, n = 25, type = "continuous")) +

scale_y_continuous(breaks = seq(from = 50, to = 250, by = 50)) +

labs(subtitle = eval(substitute(paste("Data from all units with", n_lines, "credible population-level\nregression lines")))) +

coord_cartesian(xlim = c(40, 95),

ylim = c(30, 270)) +

theme(legend.position = "none")

p1

The whole process is quite similar for the Subj-specific lines. There are two main differences. First, we need to specify which Subj values we’d like to get fitted() points for. That goes into our nd tibble. Second, we omit the re_formula argument. There are other subtleties, like with the contents of the bind_cols() function. But hopefully those are self-evident.

# how many posterior lines would you like?

n_lines <- 250

nd <-

tibble(x_z = c(-5, 5)) %>%

mutate(X = x_z * sd(my_data$X) + mean(my_data$X)) %>%

expand_grid(Subj = distinct(my_data, Subj) %>% pull()) %>%

mutate(name = str_c("V", 1:n()))

f <-

fitted(fit17.4,

newdata = nd,

summary = F,

ndraws = n_lines) %>%

as_tibble() %>%

mutate(draw = 1:n()) %>%

pivot_longer(-draw) %>%

mutate(Y = value * sd(my_data$Y) + mean(my_data$Y)) %>%

left_join(nd, by = "name")

head(f) ## # A tibble: 6 × 7

## draw name value Y x_z X Subj

## <int> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 V1 -2.19 83.3 -5 31.4 1

## 2 1 V2 -1.50 105. -5 31.4 2

## 3 1 V3 -2.70 66.9 -5 31.4 3

## 4 1 V4 -3.62 37.4 -5 31.4 4

## 5 1 V5 -3.51 40.8 -5 31.4 5

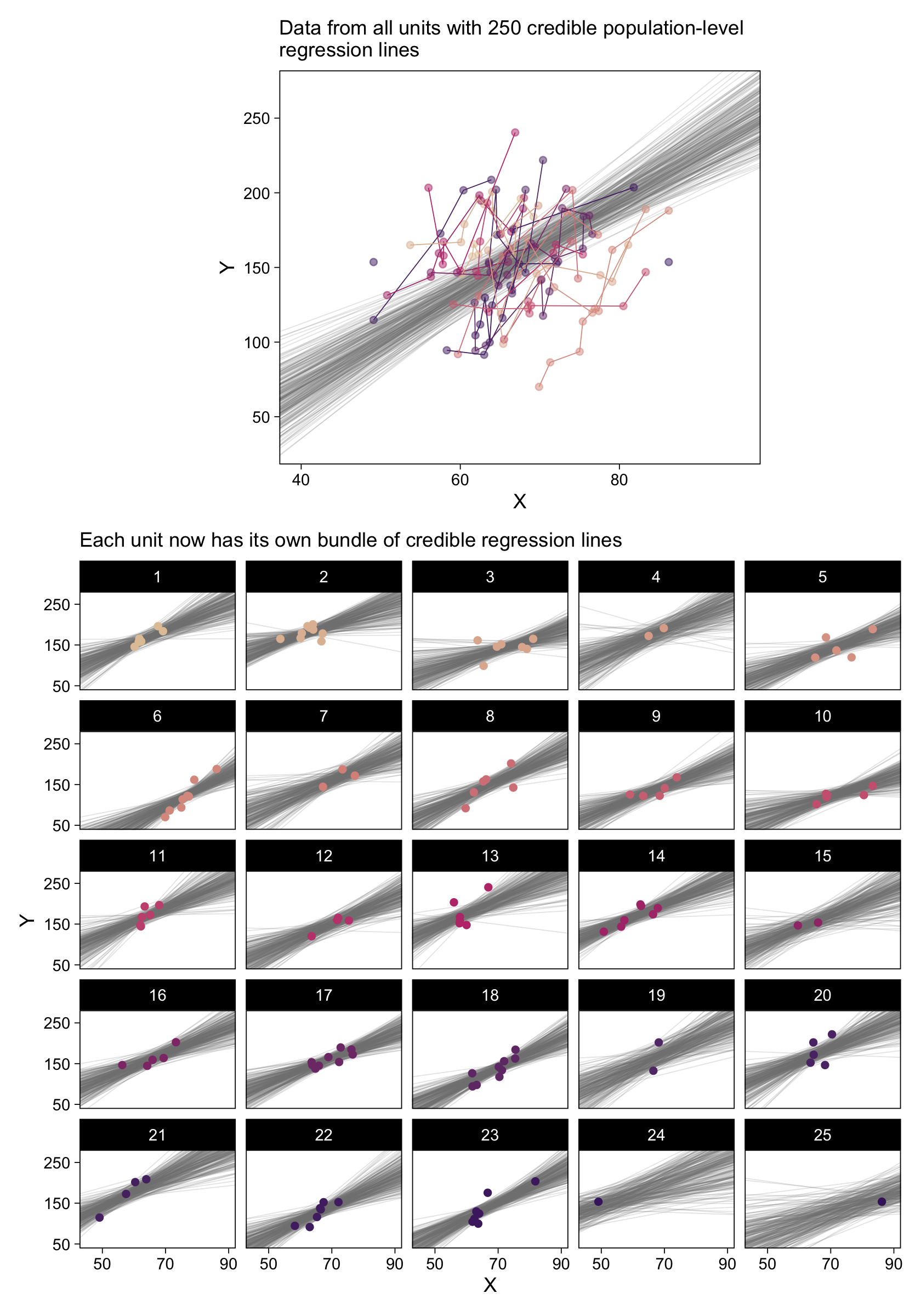

## 6 1 V6 -8.72 -126. -5 31.4 6And now for the second time, here’s the bottom panel of Figure 17.4, this time based off of fitted().

p2 <-

my_data %>%

mutate(Subj = factor(Subj, levels = 25:1)) %>%

ggplot(aes(x = X, y = Y)) +

geom_line(data = f,

aes(group = draw),

color = "grey50", linewidth = 1/4, alpha = 1/5) +

geom_point(aes(color = Subj)) +

scale_color_manual(values = beyonce_palette(41, n = 25, type = "continuous"), breaks = NULL) +

scale_x_continuous(breaks = seq(from = 50, to = 90, by = 20)) +

scale_y_continuous(breaks = seq(from = 50, to = 250, by = 100)) +

labs(subtitle = "Each unit now has its own bundle of credible regression lines") +

coord_cartesian(xlim = c(45, 90),

ylim = c(50, 270)) +

facet_wrap(~ Subj %>% factor(., levels = 1:25))

# combine with patchwork

p3 <- plot_spacer()

p4 <-

(p3 | p1 | p3) +

plot_layout(widths = c(1, 4, 1))

(p4 / p2) + plot_layout(heights = c(0.6, 1))

Especially if you’re new to these kinds of models, it’s easy to get lost in all that code. And for real–the wrangling required for those plots was no joke. The primary difficulty was that we had to convert standardized solutions to unstandardized solutions, which leads to an important distinction. When we used the first method of working with the as_draws_df() and coef() output, we focused on transforming the model parameters. In contrast, when we used the second method of working with the fitted() output, we focused instead on transforming the model predictions and predictor values. This distinction can be really confusing, at first. Stick with it! There will be times one method is more convenient or intuitive than the other. It’s good to have both methods in your repertoire.

17.4 Quadratic trend and weighted data

Quadratic models follow the general form

y=β0+β1x+β2x2,

where β2 is the quadratic term which, when 0, reduces the results to a simple linear model. That’s right; the linear model is a special case of the quadratic.

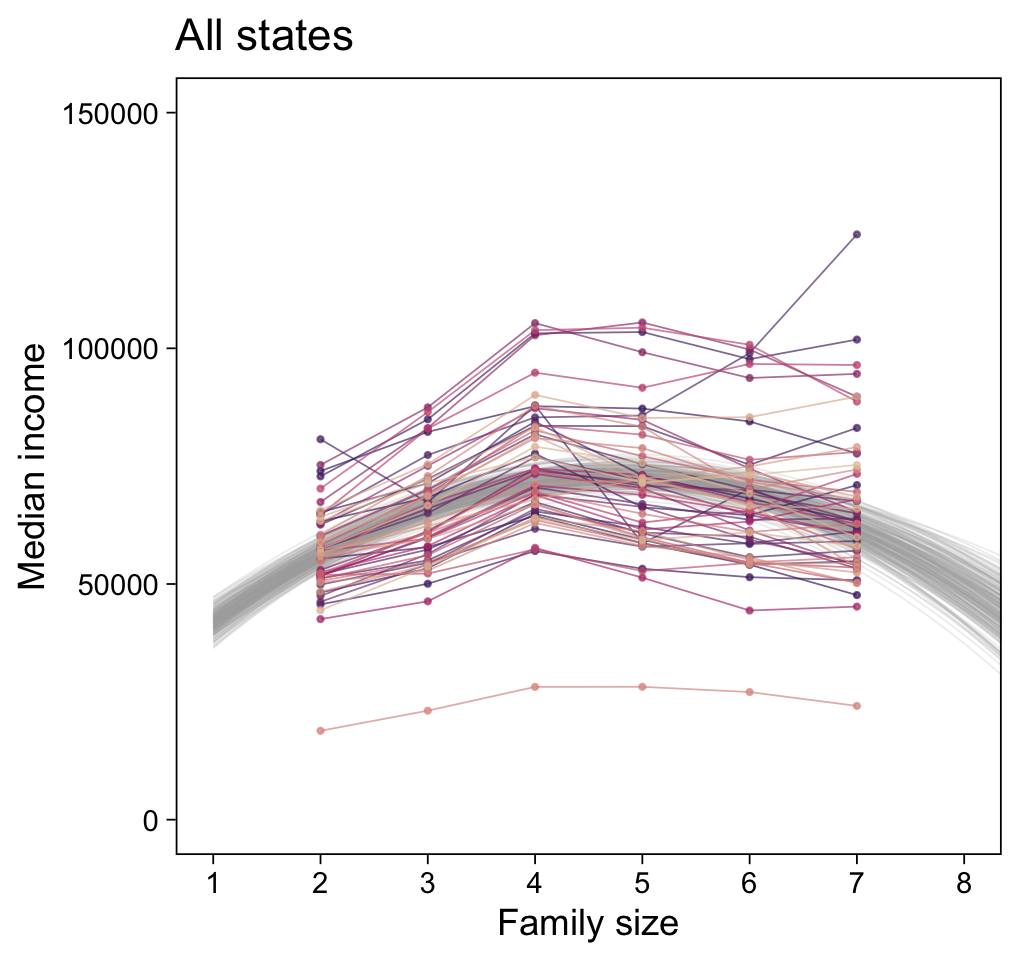

This time the data come from the American Community Survey and Puerto Rico Community Survey. In his footnote #3, Kruschke indicated “Data are from http://www.census.gov/hhes/www/income/data/Fam_Inc_SizeofFam1.xls, retrieved December 11, 2013. Median family income for years 2009-2011.” As to our read.csv() code, note the comment.char argument.

my_data <- read.csv("data.R/IncomeFamszState3yr.csv", comment.char = "#")

glimpse(my_data)## Rows: 312

## Columns: 4

## $ FamilySize <int> 2, 3, 4, 5, 6, 7, 2, 3, 4, 5, 6, 7, 2, 3, 4, 5, 6, 7, 2, 3, 4, 5, 6, 7, 2, 3,…

## $ State <chr> "Alabama", "Alabama", "Alabama", "Alabama", "Alabama", "Alabama", "Alaska", "…

## $ MedianIncome <int> 48177, 53323, 64899, 59417, 54099, 47655, 73966, 82276, 87726, 87216, 84488, …

## $ SampErr <int> 581, 1177, 1170, 2446, 3781, 3561, 1858, 3236, 3722, 6127, 6761, 5754, 590, 1…Here we’ll standardize all variables but State, our grouping variable. It’d be silly to try to standardize that.

my_data <-

my_data %>%

mutate(family_size_z = standardize(FamilySize),

median_income_z = standardize(MedianIncome),

se_z = SampErr / (mean(SampErr)))

glimpse(my_data)## Rows: 312

## Columns: 7

## $ FamilySize <int> 2, 3, 4, 5, 6, 7, 2, 3, 4, 5, 6, 7, 2, 3, 4, 5, 6, 7, 2, 3, 4, 5, 6, 7, 2,…

## $ State <chr> "Alabama", "Alabama", "Alabama", "Alabama", "Alabama", "Alabama", "Alaska"…

## $ MedianIncome <int> 48177, 53323, 64899, 59417, 54099, 47655, 73966, 82276, 87726, 87216, 8448…

## $ SampErr <int> 581, 1177, 1170, 2446, 3781, 3561, 1858, 3236, 3722, 6127, 6761, 5754, 590…

## $ family_size_z <dbl> -1.4615023, -0.8769014, -0.2923005, 0.2923005, 0.8769014, 1.4615023, -1.46…

## $ median_income_z <dbl> -1.26216763, -0.91386251, -0.13034520, -0.50139236, -0.86133924, -1.297499…

## $ se_z <dbl> 0.2242541, 0.4542979, 0.4515961, 0.9441060, 1.4593886, 1.3744731, 0.717150…With brms, there are a couple ways to handle measurement error on a variable (e.g., see Chapter 14 of my ebook, Statistical rethinking with brms, ggplot2, and the tidyverse (Kurz, 2023a). Here we’ll use the se() syntax, following the form response | se(se_response, sigma = TRUE). In this form, se stands for standard error, the loose frequentist analogue to the Bayesian posterior SD. Unless you’re fitting a meta-analysis on summary information, make sure to specify sigma = TRUE. Without that you’ll have no estimate for σ! For more information on the se() method, go to the brms reference manual and find the Additional response information subsection of the brmsformula section (Bürkner, 2022f, p. 42).

fit17.5 <-

brm(data = my_data,

family = student,

median_income_z | se(se_z, sigma = TRUE) ~ 1 + family_size_z + I(family_size_z^2) +

(1 + family_size_z + I(family_size_z^2) || State),

prior = c(prior(normal(0, 10), class = Intercept),

prior(normal(0, 10), class = b),

prior(normal(0, 1), class = sigma),

prior(normal(0, 1), class = sd),

prior(exponential(one_over_twentynine), class = nu)),

chains = 4, cores = 4,

stanvars = stanvar(1/29, name = "one_over_twentynine"),

seed = 17,

file = "fits/fit17.05")Did you notice the I(family_size_z^2) part of the formula? The brms package follows a typical convention in R statistical functions in that if you want to multiply a variable by itself as in a quadratic model, you nest the family_size_z^2 part within the I() function.

Take a look at the model summary.

print(fit17.5)## Family: student

## Links: mu = identity; sigma = identity; nu = identity

## Formula: median_income_z | se(se_z, sigma = TRUE) ~ 1 + family_size_z + I(family_size_z^2) + (1 + family_size_z + I(family_size_z^2) || State)

## Data: my_data (Number of observations: 312)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Group-Level Effects:

## ~State (Number of levels: 52)

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sd(Intercept) 0.75 0.09 0.60 0.93 1.00 732 1488

## sd(family_size_z) 0.07 0.04 0.00 0.16 1.01 778 1098

## sd(Ifamily_size_zE2) 0.05 0.03 0.00 0.13 1.01 695 1350

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.39 0.12 0.17 0.62 1.01 373 826

## family_size_z 0.12 0.05 0.02 0.22 1.00 3352 2759

## Ifamily_size_zE2 -0.44 0.04 -0.52 -0.36 1.00 3436 3041

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 0.05 0.04 0.00 0.13 1.00 2110 1921

## nu 68.97 36.04 22.04 158.27 1.00 6673 3217

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Do see that Ifamily_size_zE2 row? That’s the summary of our quadratic term.

17.4.1 Results and interpretation.

A new model type requires a different approach to un-standardizing our standardized coefficients. Based on Equation 17.3, we can convert our coefficients using the formulas

β0=ζ0SDy+My−ζ1MxSDySDx+ζ2M2xSDySD2x,β1=ζ1SDySDx−2ζ2MxSDySD2x,andβ2=ζ2SDySD2x.

We’ll make new custom functions to use them.

make_beta_0 <- function(zeta_0, zeta_1, zeta_2, sd_x, sd_y, m_x, m_y) {

zeta_0 * sd_y + m_y - zeta_1 * m_x * sd_y / sd_x + zeta_2 * m_x^2 * sd_y / sd_x^2

}

make_beta_1 <- function(zeta_1, zeta_2, sd_x, sd_y, m_x) {

zeta_1 * sd_y / sd_x - 2 * zeta_2 * m_x * sd_y / sd_x^2

}

make_beta_2 <- function(zeta_2, sd_x, sd_y) {

zeta_2 * sd_y / sd_x^2

}

# may as well respecify these, too

m_x <- mean(my_data$FamilySize)

m_y <- mean(my_data$MedianIncome)

sd_x <- sd(my_data$FamilySize)

sd_y <- sd(my_data$MedianIncome)Now we’ll extract our posterior draws and make the conversions.

draws <-

as_draws_df(fit17.5) %>%

mutate(b_0 = make_beta_0(zeta_0 = b_Intercept,

zeta_1 = b_family_size_z,

zeta_2 = b_Ifamily_size_zE2,

sd_x = sd_x,

sd_y = sd_y,

m_x = m_x,

m_y = m_y),

b_1 = make_beta_1(zeta_1 = b_family_size_z,

zeta_2 = b_Ifamily_size_zE2,

sd_x = sd_x,

sd_y = sd_y,

m_x = m_x),

b_2 = make_beta_2(zeta_2 = b_Ifamily_size_zE2,

sd_x = sd_x,

sd_y = sd_y)) %>%

select(.draw, b_0:b_2)Our geom_abline() approach from before won’t work with curves. We’ll have to resort to geom_line(). With the geom_line() approach, we’ll need many specific values of model-implied MedianIncome across a densely-packed range of FamilySize. We want to use a lot of FamilySize values, like 30 or 50 or so, to make sure the curves look smooth. Below, we’ll use 50 (i.e., length.out = 50). But if it’s still not clear why, try plugging in a lesser value, like 5 or so. You’ll see.

# how many posterior lines would you like?

n_lines <- 200

set.seed(17)

draws <-

draws %>%

slice_sample(n = n_lines) %>%

rownames_to_column(var = "draw") %>%

expand_grid(FamilySize = seq(from = 1, to = 9, length.out = 50)) %>%

mutate(MedianIncome = b_0 + b_1 * FamilySize + b_2 * FamilySize^2)

head(draws)## # A tibble: 6 × 7

## draw .draw b_0 b_1 b_2 FamilySize MedianIncome

## <chr> <int> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1 1970 22105. 22071. -2333. 1 41844.

## 2 1 1970 22105. 22071. -2333. 1.16 44623.

## 3 1 1970 22105. 22071. -2333. 1.33 47279.

## 4 1 1970 22105. 22071. -2333. 1.49 49809.

## 5 1 1970 22105. 22071. -2333. 1.65 52216.

## 6 1 1970 22105. 22071. -2333. 1.82 54498.Now we’re ready to make the top panel of Figure 17.7.

my_data %>%

ggplot(aes(x = FamilySize, y = MedianIncome)) +

geom_line(data = draws,

aes(group = .draw),

linewidth = 1/4, alpha = 1/5, color = "grey67") +

geom_line(aes(group = State, color = State),

alpha = 2/3, linewidth = 1/4) +

geom_point(aes(color = State),

alpha = 2/3, size = 1/2) +

scale_color_manual(values = beyonce_palette(41, n = 52, type = "continuous"), breaks = NULL) +

scale_x_continuous("Family size", breaks = 1:8) +

labs(title = "All states",

y = "Median income") +

coord_cartesian(xlim = c(1, 8),

ylim = c(0, 150000))

Like before, we’ll extract the group-level coefficients (i.e., those specific to the States) with the coef() function. And also like before, the coef() output will require a little wrangling.

c <-

# first collect and wrangle the draws for the State-level intercept and slopes

rbind(coef(fit17.5, summary = F)$State[, , "Intercept"],

coef(fit17.5, summary = F)$State[, , "family_size_z"],

coef(fit17.5, summary = F)$State[, , "Ifamily_size_zE2"]) %>%

data.frame() %>%

mutate(draw = rep(1:4000, times = 3),

param = rep(c("Intercept", "family_size_z", "Ifamily_size_zE2"), each = 4000)) %>%

pivot_longer(Alabama:Wyoming, names_to = "State") %>%

pivot_wider(names_from = param, values_from = value) %>%

# let's go ahead and make the standardized-to-unstandardized conversions, here

mutate(b_0 = make_beta_0(zeta_0 = Intercept,

zeta_1 = family_size_z,

zeta_2 = Ifamily_size_zE2,

sd_x = sd_x,

sd_y = sd_y,

m_x = m_x,

m_y = m_y),

b_1 = make_beta_1(zeta_1 = family_size_z,

zeta_2 = Ifamily_size_zE2,

sd_x = sd_x,

sd_y = sd_y,

m_x = m_x),

b_2 = make_beta_2(zeta_2 = Ifamily_size_zE2,

sd_x = sd_x,

sd_y = sd_y)) %>%

# we just want the first 25 states, from Alabama through Mississippi, so we'll `filter()`

filter(State <= "Mississippi")

str(c)## tibble [100,000 × 8] (S3: tbl_df/tbl/data.frame)

## $ draw : int [1:100000] 1 1 1 1 1 1 1 1 1 1 ...

## $ State : chr [1:100000] "Alabama" "Alaska" "Arizona" "Arkansas" ...

## $ Intercept : num [1:100000] 0.0546 0.6689 0.1472 -0.0836 0.6011 ...

## $ family_size_z : num [1:100000] 0.149 0.107 0.155 0.114 0.107 ...

## $ Ifamily_size_zE2: num [1:100000] -0.513 -0.468 -0.4 -0.524 -0.415 ...

## $ b_0 : num [1:100000] 9407 24709 22033 7598 29127 ...

## $ b_1 : num [1:100000] 24594 22182 19533 24790 19777 ...

## $ b_2 : num [1:100000] -2590 -2362 -2021 -2645 -2095 ...Now we’ll subset by n_lines, expand_grid() by FamilySize, and use the model formula to compute the expected MedianIncome values.

# how many posterior lines would you like?

n_lines <- 200

set.seed(17)

c <-

c %>%

group_by(State) %>%

slice_sample(n = n_lines) %>%

ungroup() %>%

select(draw, State, b_0, b_1, b_2) %>%

expand_grid(FamilySize = seq(from = 1, to = 9, length.out = 50)) %>%

mutate(MedianIncome = b_0 + b_1 * FamilySize + b_2 * FamilySize^2)

head(c)## # A tibble: 6 × 7

## draw State b_0 b_1 b_2 FamilySize MedianIncome

## <int> <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 1970 Alabama 12417. 22126. -2260. 1 32283.

## 2 1970 Alabama 12417. 22126. -2260. 1.16 35097.

## 3 1970 Alabama 12417. 22126. -2260. 1.33 37791.

## 4 1970 Alabama 12417. 22126. -2260. 1.49 40365.

## 5 1970 Alabama 12417. 22126. -2260. 1.65 42817.

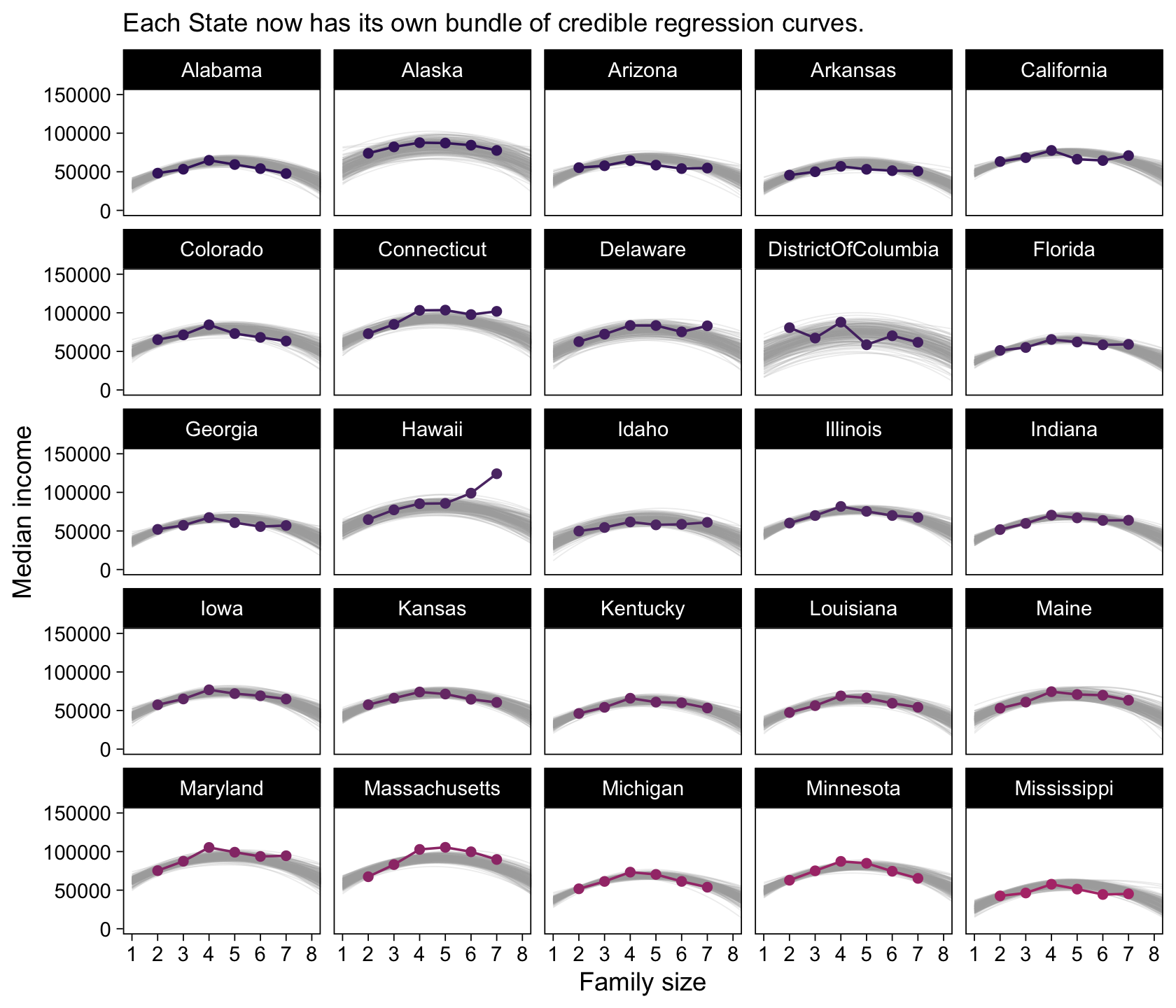

## 6 1970 Alabama 12417. 22126. -2260. 1.82 45150.Finally, we’re ready for the State-specific miniatures in Figure 17.7.

my_data %>%

filter(State <= "Mississippi") %>%

ggplot(aes(x = FamilySize, y = MedianIncome)) +

geom_line(data = c,

aes(group = draw),

linewidth = 1/4, alpha = 1/5, color = "grey67") +

geom_point(aes(color = State)) +

geom_line(aes(color = State)) +

scale_color_manual(values = beyonce_palette(41, n = 52, type = "continuous"), breaks = NULL) +

scale_x_continuous("Family size", breaks = 1:8) +

labs(subtitle = "Each State now has its own bundle of credible regression curves.",

y = "Median income") +

coord_cartesian(xlim = c(1, 8),

ylim = c(0, 150000)) +

theme(legend.position = "none") +

facet_wrap(~ State)

Magic! As our model coefficients proliferate, the fitted() approach from above starts to look more and more appetizing. Check it out for yourself.

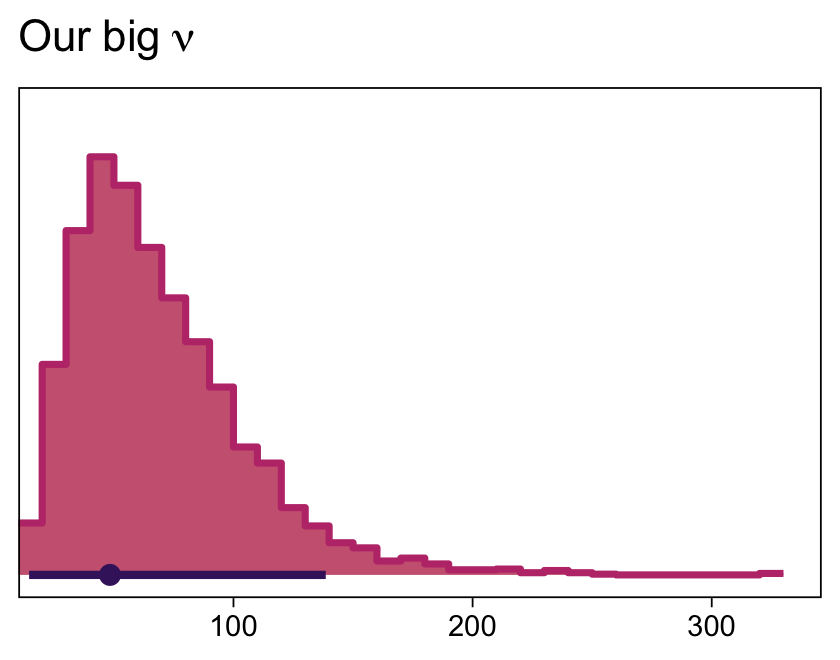

Although “almost all of the posterior distribution [was] below ν=4” in the text (p. 500), the bulk of our ν distribution spanned across much larger values.

as_draws_df(fit17.5) %>%

ggplot(aes(x = nu, y = 0)) +

stat_histinterval(point_interval = mode_hdi, .width = .95,

fill = bp[6], color = bp[1], slab_color = bp[5],

breaks = 40, normalize = "panels") +

scale_x_continuous(expand = expansion(mult = c(0, 0.05))) +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = expression(Our~big~nu),

x = NULL)

I’m guessing the distinction in our ν distribution and that in the text is our use of the se() syntax in the brm() formula. If you have a better explanation, share it.

17.4.2 Further extensions.

Kruschke discussed the ease with which users of Bayesian software might specify nonlinear models. Check out Bürkner’s (2022e) vignette, Estimating non-linear models with brms, for more on the topic. Though I haven’t used it, I believe it is also possible to use the t distribution to model group-level variation in brms (see this GitHub discussion for details).

17.5 Procedure and perils for expanding a model

Across several chapters, we’ve already dipped our toes into posterior predictive checks. For more on the PPC “double dipping” issue, check out Gelman’s Discussion with Sander Greenland on posterior predictive checks or Simpson’s Touch me, I want to feel your data, which is itself connected to Gabry et al. (2019), Visualization in Bayesian workflow.

Session info

sessionInfo()## R version 4.2.2 (2022-10-31)

## Platform: x86_64-apple-darwin17.0 (64-bit)

## Running under: macOS Big Sur ... 10.16

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRlapack.dylib

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] bayesplot_1.10.0 tidybayes_3.0.2 brms_2.18.0 Rcpp_1.0.9 patchwork_1.1.2

## [6] beyonce_0.1 forcats_0.5.1 stringr_1.4.1 dplyr_1.0.10 purrr_1.0.1

## [11] readr_2.1.2 tidyr_1.2.1 tibble_3.1.8 ggplot2_3.4.0 tidyverse_1.3.2

##

## loaded via a namespace (and not attached):

## [1] readxl_1.4.1 backports_1.4.1 plyr_1.8.7 igraph_1.3.4

## [5] svUnit_1.0.6 splines_4.2.2 crosstalk_1.2.0 TH.data_1.1-1

## [9] rstantools_2.2.0 inline_0.3.19 digest_0.6.31 htmltools_0.5.3

## [13] fansi_1.0.3 magrittr_2.0.3 checkmate_2.1.0 googlesheets4_1.0.1

## [17] tzdb_0.3.0 modelr_0.1.8 RcppParallel_5.1.5 matrixStats_0.63.0

## [21] vroom_1.5.7 xts_0.12.1 sandwich_3.0-2 prettyunits_1.1.1

## [25] colorspace_2.0-3 rvest_1.0.2 ggdist_3.2.1 haven_2.5.1