8 JAGS brms

We, of course, will be using brms in place of JAGS.

8.1 JAGS brms and its relation to R

In the opening paragraph in his GitHub repository for brms, Bürkner explained:

The brms package provides an interface to fit Bayesian generalized (non-)linear multivariate multilevel models using Stan, which is a C++ package for performing full Bayesian inference (see http://mc-stan.org/). The formula syntax is very similar to that of the package lme4 to provide a familiar and simple interface for performing regression analyses. A wide range of response distributions are supported, allowing users to fit – among others – linear, robust linear, count data, survival, response times, ordinal, zero-inflated, and even self-defined mixture models all in a multilevel context. Further modeling options include non-linear and smooth terms, auto-correlation structures, censored data, missing value imputation, and quite a few more. In addition, all parameters of the response distribution can be predicted in order to perform distributional regression. Multivariate models (i.e., models with multiple response variables) can be fit, as well. Prior specifications are flexible and explicitly encourage users to apply prior distributions that actually reflect their beliefs. Model fit can easily be assessed and compared with posterior predictive checks, cross-validation, and Bayes factors. (emphasis in the original)

Bürkner’s brms repository includes many helpful links, such as to where brms lives on CRAN, a list of blog posts highlighting brms, and a forum where users can ask questions about brms in specific or about Stan in general.

You can install the current official version of brms in the same way you would any other R package (i.e., install.packages("brms", dependencies = T)). If you want the current developmental version, you could download it from GitHub by executing the following.

if (!requireNamespace("devtools")) {

install.packages("devtools")

}

devtools::install_github("paul-buerkner/brms")8.2 A complete example

We express the likelihood for our coin toss example as

yi∼Bernoulli(θ).

Our prior will be

θ∼Beta(α,β).

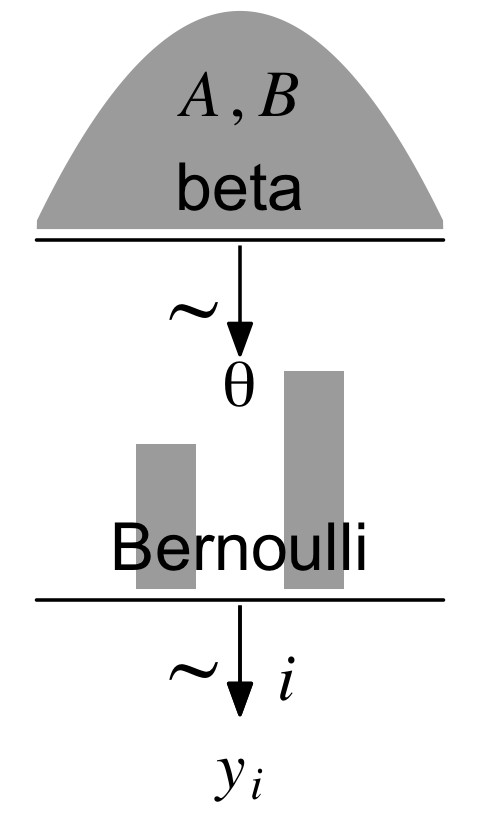

Kruschke pictured the relationships among the data, the likelihood, and the prior in the model diagram in Figure 8.2 (p. 196). If you’re tricky, you can make those in R. I’m not going to walk my method out in great detail in this ebook. If you want a step-by-step tutorial, check my blog post, Make model diagrams, Kruschke style. Here’s the code for our version of Figure 8.2.

library(tidyverse)

library(patchwork)

# plot of a beta density

p1 <-

tibble(x = seq(from = .01, to = .99, by = .01),

d = (dbeta(x, 2, 2)) / max(dbeta(x, 2, 2))) %>%

ggplot(aes(x = x, y = d)) +

geom_area(fill = "grey67") +

annotate(geom = "text",

x = .5, y = .2,

label = "beta",

size = 7) +

annotate(geom = "text",

x = .5, y = .6,

label = "italic(A)*', '*italic(B)",

size = 7, family = "Times", parse = TRUE) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5))

## an annotated arrow

# save our custom arrow settings

my_arrow <- arrow(angle = 20, length = unit(0.35, "cm"), type = "closed")

p2 <-

tibble(x = .5,

y = 1,

xend = .5,

yend = 0) %>%

ggplot(aes(x = x, xend = xend,

y = y, yend = yend)) +

geom_segment(arrow = my_arrow) +

annotate(geom = "text",

x = .375, y = 1/3,

label = "'~'",

size = 10, family = "Times", parse = T) +

xlim(0, 1) +

theme_void()

# bar plot of Bernoulli data

p3 <-

tibble(x = 0:1,

d = (dbinom(x, size = 1, prob = .6)) / max(dbinom(x, size = 1, prob = .6))) %>%

ggplot(aes(x = x, y = d)) +

geom_col(fill = "grey67", width = .4) +

annotate(geom = "text",

x = .5, y = .2,

label = "Bernoulli",

size = 7) +

annotate(geom = "text",

x = .5, y = .94,

label = "theta",

size = 7, family = "Times", parse = T) +

xlim(-.75, 1.75) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5))

# another annotated arrow

p4 <-

tibble(x = c(.375, .625),

y = c(1/3, 1/3),

label = c("'~'", "italic(i)")) %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(size = c(10, 7), parse = T, family = "Times") +

geom_segment(x = .5, xend = .5,

y = 1, yend = 0,

arrow = my_arrow) +

xlim(0, 1) +

theme_void()

# some text

p5 <-

tibble(x = 1,

y = .5,

label = "italic(y[i])") %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(size = 7, parse = T, family = "Times") +

xlim(0, 2) +

theme_void()layout <- c(

area(t = 1, b = 2, l = 1, r = 1),

area(t = 3, b = 3, l = 1, r = 1),

area(t = 4, b = 5, l = 1, r = 1),

area(t = 6, b = 6, l = 1, r = 1),

area(t = 7, b = 7, l = 1, r = 1)

)

(p1 + p2 + p3 + p4 + p5) +

plot_layout(design = layout) &

ylim(0, 1) &

theme(plot.margin = margin(0, 5.5, 0, 5.5))

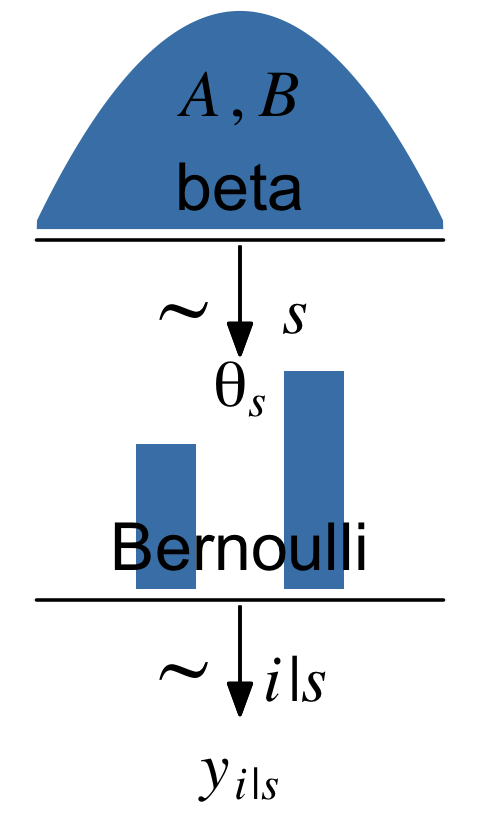

Diagrams like Figure 8.2 should be scanned from the bottom up. This is because models of data always start with the data, then conceive of a likelihood function that describes the data values in terms of meaningful parameters, and finally determine a prior distribution over the parameters. (p. 196)

8.2.1 Load data.

“Logically, models of data start with the data. We must know their basic scale and structure to conceive of a descriptive model” (p. 197).

Here we load the data with the readr::read_csv() function, the tidyverse version of base R read.csv().

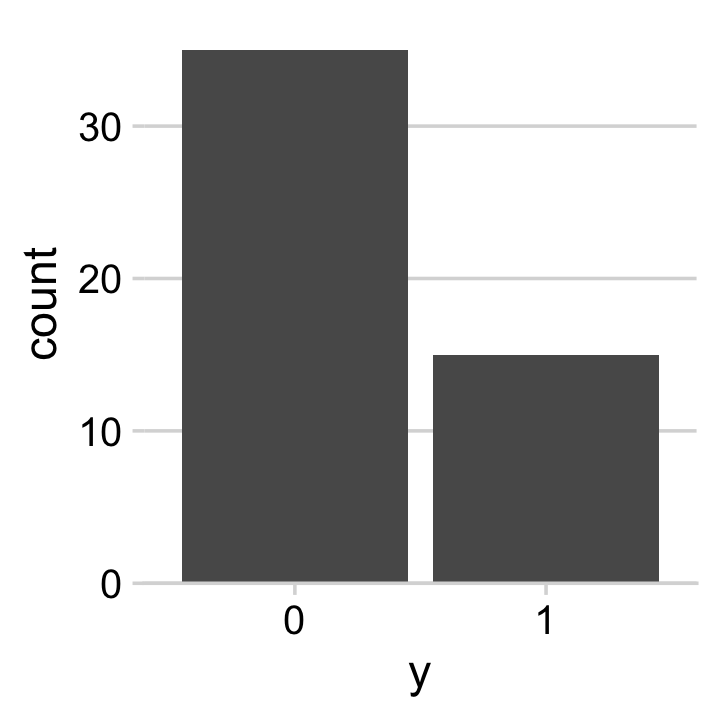

my_data <- read_csv("data.R/z15N50.csv")Unlike what Kruschke wrote about JAGS, the brms package does not require us to convert the data into a list. It can handle data in lists or data frames, of which tibbles are a special case. Here are what the data look like.

glimpse(my_data)## Rows: 50

## Columns: 1

## $ y <dbl> 0, 1, 0, 0, 0, 0, 0, 0, 0, 0, 1, 0, 0, 0, 1, 1, 1, 0, 0, 1, 0, 0, 0, 0, 1, 1, 0, 0, 0, 0…We might visualize them in a bar plot.

library(cowplot)

my_data %>%

mutate(y = y %>% as.character()) %>%

ggplot(aes(x = y)) +

geom_bar() +

scale_y_continuous(expand = expansion(mult = c(0, 0.05))) +

theme_minimal_hgrid()

For the past couple chapters, we’ve been using the cowplot::theme_cowplot() theme for our plots. The cowplot package, however, includes a few more. The theme_minimal_grid() is similar, but subtracts the axis lines and adds in minimalistic grid lines. In his (2020a) Themes vignette, however, Wilke showed vertical grid lines are often unsightly in bar plots. To avoid offending Wilke with our grid lines, we used the theme_minimal_hgrid() theme, which only added horizontal grid lines.

Anyway, if you wanted to compute “Ntotal”, the number of rows in our tibble, one way is with count().

my_data %>%

count()## # A tibble: 1 × 1

## n

## <int>

## 1 50However, we’re not going to do anything with an “Ntotal” value. For brms, the data are fine in their current data frame form. No need for a dataList.

8.2.2 Specify model.

Let’s open brms.

library(brms)The brms package does not have code blocks following the JAGS format or the sequence in Kruschke’s diagrams. Rather, its syntax is modeled in part after the popular frequentist mixed-effects package, lme4. To learn more about how brms compares to lme4, see Bürkner’s (2017) overview, brms: An R package for Bayesian multilevel models using Stan.

The primary function in brms is brm(). Into this one function we will specify the data, the model, the likelihood function, the prior(s), and any technical settings such as the number of MCMC chains, iterations, and so forth. You can order the arguments in any way you like. My typical practice is to start with data, family (i.e., the likelihood function), the model formula, and my priors. If there are any technical specifications such as the number of MCMC iterations I’d like to change from their default values, I usually do that last.

Here’s how to fit the model.

fit8.1 <-

brm(data = my_data,

family = bernoulli(link = identity),

formula = y ~ 1,

prior(beta(2, 2), class = Intercept, lb = 0, ub = 1),

iter = 500 + 3334, warmup = 500, chains = 3,

seed = 8,

file = "fits/fit08.01")Also note our use of the file argument. This automatically saved the fit object as an external file. You don’t have to do that and you can avoid doing so by omitting the file argument. For a more detailed explanation of the brms::brm() function, spend some time with the brm section of the brms reference manual (Bürkner, 2022g).

8.2.3 Initialize chains.

In Stan, and in brms by extension, the initial values have default settings. In the Initialization section of the Program Execution chapter in the Stan reference manual, Version 2.29 (Stan Development Team, 2022b) we read:

If there are no user-supplied initial values, the default initialization strategy is to initialize the unconstrained parameters directly with values drawn uniformly from the interval (−2,2). The bounds of this initialization can be changed but it is always symmetric around 0. The value of 0 is special in that it represents the median of the initialization. An unconstrained value of 0 corresponds to different parameter values depending on the constraints declared on the parameters.

In general, I do not recommend setting custom initial values in brms or Stan. Under the hood, Stan will transform the parameters to the unconstrained space in models where they are bounded. In our Bernoulli model, θ is bounded at 0 and 1. A little further down in the same section, we read:

For parameters bounded above and below, the initial value of 0 on the unconstrained scale corresponds to a value at the midpoint of the constraint interval. For probability parameters, bounded below by 0 and above by 1, the transform is the inverse logit, so that an initial unconstrained value of 0 corresponds to a constrained value of 0.5, −2 corresponds to 0.12 and 2 to 0.88. Bounds other than 0 and 1 are just scaled and translated.

If you want to play around with this, have at it. In my experience, it sometimes helps to set these manually to zero, which you can do that by specifying inits = 0 within brm(). But if you really want to experiment, you might check out my blog post, Don’t forget your inits.

8.2.4 Generate chains.

By default, brms will use 4 chains of 2,000 iterations each. The type of MCMC brms uses is Hamiltonian Monte Carlo (HMC). You can learn more about HMC at the Stan website, https://mc-stan.org, which includes resources such as the Stan user’s guide (Stan Development Team, 2022c), the Stan reference manual (Stan Development Team, 2022b), and a list of tutorials. McElreath has a nice intro lecture on MCMC in general and HMC in particular. Michael Bentacourt has some good lectures on Stan and HMC, such as here and here. And, of course, we will cover HMC with Kruschke in Chapter 14.

Within each HMC chain, the first n iterations are warmups. Within the Stan-HMC paradigm, warmups are somewhat analogous to but not synonymous with burn-in iterations as done by the Gibbs sampling in JAGS. But HMC warmups are like Gibbs burn-ins in that both are discarded and not used to describe the posterior. As such, the brms default settings yield 1,000 post-warmup iterations for each of the 4 HMC chains. However, we specified iter = 500 + 3334, warmup = 500, chains = 3. Thus instead of defaults, we have 3 HMC chains. Each chain has 500 + 3,334 = 3,834 total iterations, of which 500 were discarded warmup iterations.

To learn more about the warmup stage in Stan, check out the HMC Algorithm Parameters section of the MCMC Sampling chapter of the Stan reference manual.

8.2.5 Examine chains.

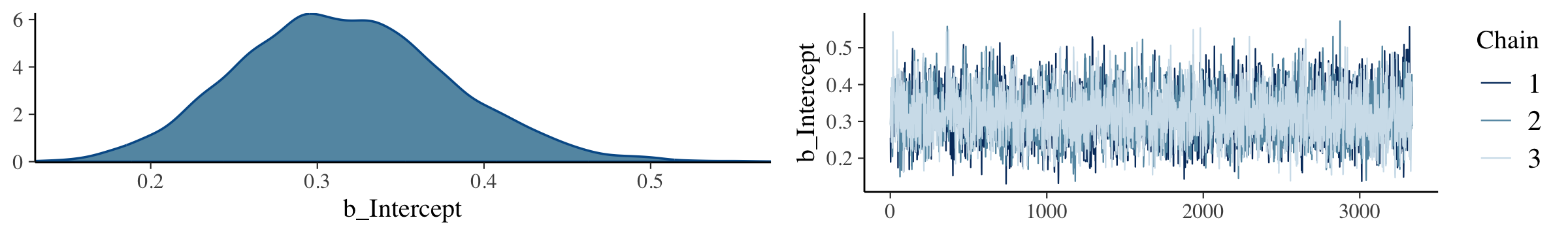

The brms::plot() function returns a density and trace plot for each model parameter.

plot(fit8.1)

Note how the brms::plot() function simply took our model fit object as input. Other post-processing functions will require us to pass the posterior draws in the form of a data frame. To get ready for them, here we’ll save them as a data frame with the as_draws_df() function.

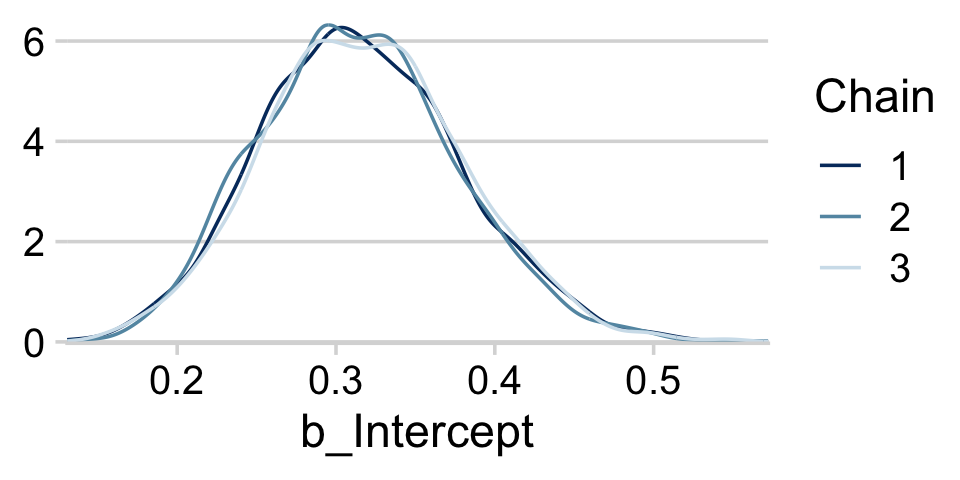

draws <- as_draws_df(fit8.1) If you want to display each chain as its own density, you can use the handy mcmc_dens_overlay() function from the bayesplot package.

library(bayesplot)Now we’re ready to use our draws object within the mcmc_dens_overlay() function to return the overlaid densities.

draws %>%

mutate(chain = .chain) %>%

mcmc_dens_overlay(pars = vars(b_Intercept)) +

theme_minimal_hgrid()

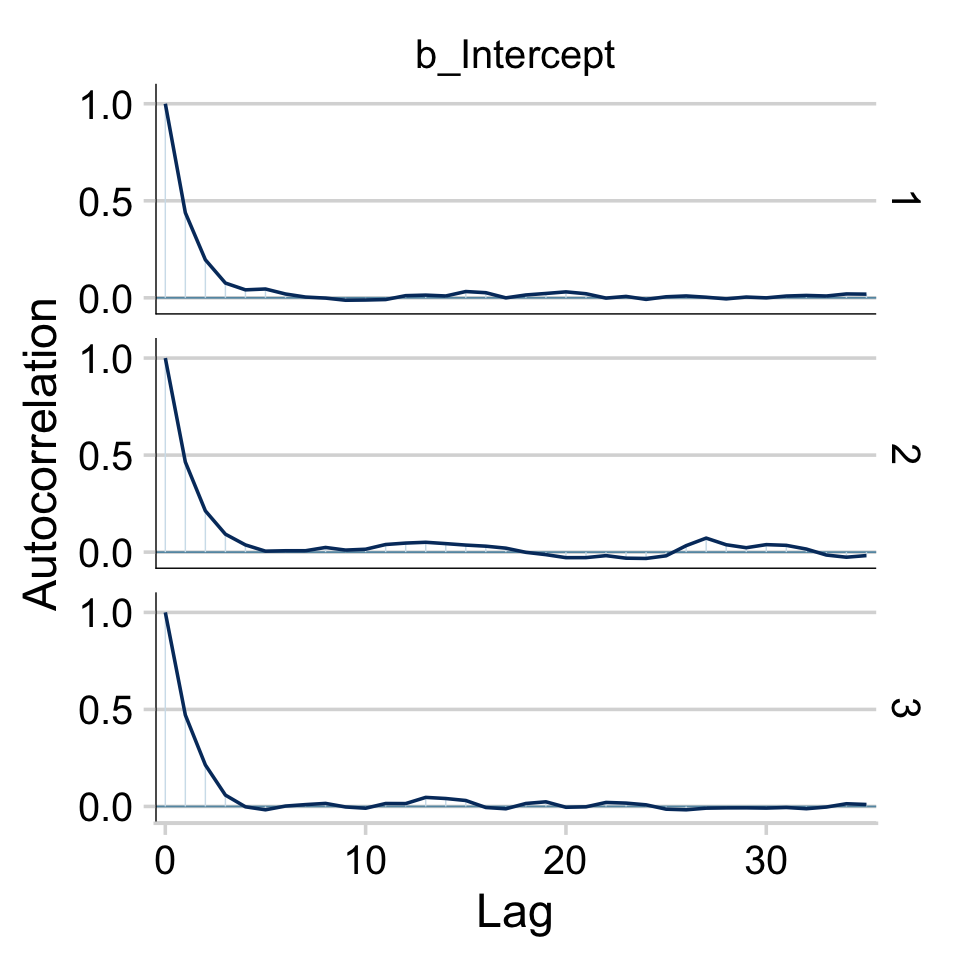

The bayesplot::mcmc_acf() function will give us the autocorrelation plots.

draws %>%

mutate(chain = .chain) %>%

mcmc_acf(pars = vars(b_Intercept), lags = 35) +

theme_minimal_hgrid()

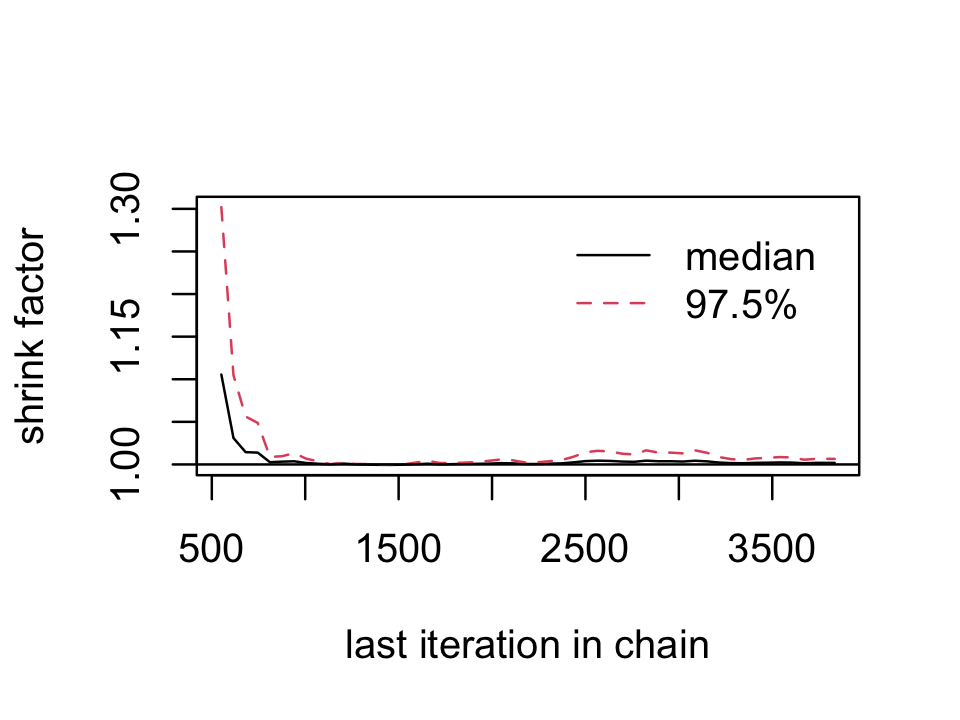

With brms functions, we get a sole ˆR value for each parameter rather than a running vector.

rhat(fit8.1)["b_Intercept"]## b_Intercept

## 1.00177We’ll have to employ brms::as.mcmc() and coda::gelman.plot() to make our running ˆR plot.

fit8.1_c <- as.mcmc(fit8.1)

coda::gelman.plot(fit8.1_c[, "b_Intercept", ])

For whatever reason, many of the package developers within the Stan/brms ecosystem don’t seem interested in shrink factor plots, like this.

8.2.5.1 The plotPost function How to plot your brms posterior distributions.

plotPost functionWe’ll get into plotting in just a moment. But before we do, here’s a summary of the model.

print(fit8.1)## Family: bernoulli

## Links: mu = identity

## Formula: y ~ 1

## Data: my_data (Number of observations: 50)

## Draws: 3 chains, each with iter = 3834; warmup = 500; thin = 1;

## total post-warmup draws = 10002

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.32 0.06 0.20 0.44 1.00 3617 5069

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).To summarize a posterior in terms of central tendency, brms defaults to the mean value (i.e., the value in the ‘Estimate’ column of the print() output). In many of the other convenience functions, you can also request the median instead. For example, we can set robust = TRUE to get the ‘Estimate’ in terms of the median.

posterior_summary(fit8.1, robust = T)## Estimate Est.Error Q2.5 Q97.5

## b_Intercept 0.3136384 0.06298898 0.19949123 0.4444603

## lprior 0.2558942 0.10535036 -0.04273333 0.3927531

## lp__ -32.0713842 0.30693948 -34.40289913 -31.8453143Across functions, the intervals default to 95%. With print() and summary() you can adjust the level with a prob argument. For example, here we’ll use 50% intervals.

print(fit8.1, prob = .5)## Family: bernoulli

## Links: mu = identity

## Formula: y ~ 1

## Data: my_data (Number of observations: 50)

## Draws: 3 chains, each with iter = 3834; warmup = 500; thin = 1;

## total post-warmup draws = 10002

##

## Population-Level Effects:

## Estimate Est.Error l-50% CI u-50% CI Rhat Bulk_ESS Tail_ESS

## Intercept 0.32 0.06 0.27 0.36 1.00 3617 5069

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).But in many other brms convenience functions, you can use the probs argument to request specific percentile summaries.

posterior_summary(fit8.1, probs = c(.025, .25, .75, .975))## Estimate Est.Error Q2.5 Q25 Q75 Q97.5

## b_Intercept 0.3160699 0.06281598 0.19949123 0.2721476 0.3571788 0.4444603

## lprior 0.2354402 0.11358623 -0.04273333 0.1726917 0.3203520 0.3927531

## lp__ -32.3504057 0.71534517 -34.40289913 -32.5122278 -31.8979067 -31.8453143Regardless of what prob or probs levels you use, brms functions always return percentile-based estimates. All this central tendency and interval talk will be important in a moment…

When plotting the posterior distribution of a parameter estimated with brms, you typically do so working with the results of an object returned by one of the as_draws_ functions. Recall we already saved those results as draws. Here’s a look at draws.

head(draws)## # A draws_df: 6 iterations, 1 chains, and 3 variables

## b_Intercept lprior lp__

## 1 0.26 0.133 -32

## 2 0.19 -0.080 -34

## 3 0.21 0.011 -33

## 4 0.27 0.165 -32

## 5 0.28 0.193 -32

## 6 0.31 0.244 -32

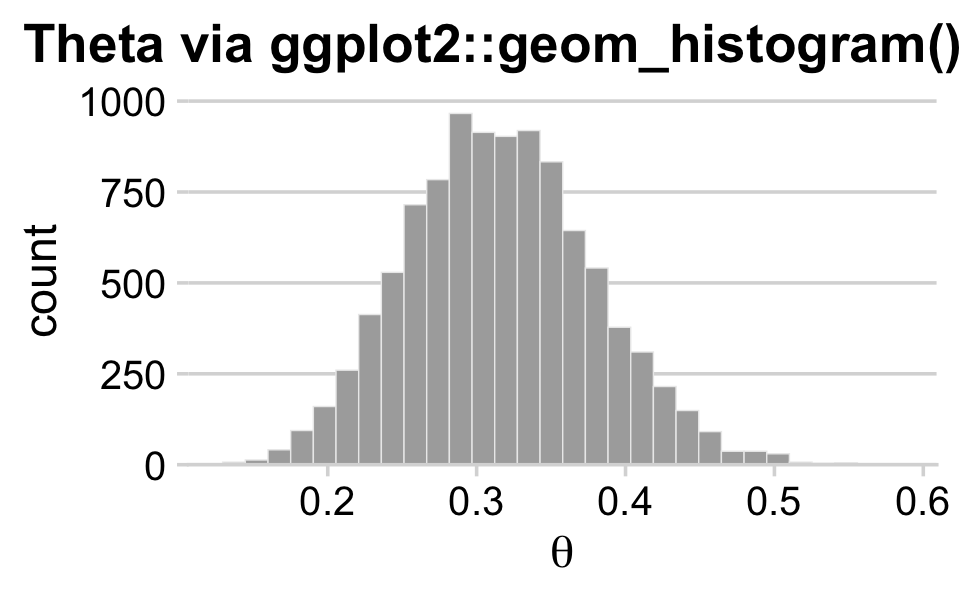

## # ... hidden reserved variables {'.chain', '.iteration', '.draw'}With draws in hand, we can use ggplot2 to do the typical distributional plots, such as with geom_histogram().

draws %>%

ggplot(aes(x = b_Intercept)) +

geom_histogram(color = "grey92", fill = "grey67",

linewidth = .2) +

scale_y_continuous(expand = expansion(mult = c(0, 0.05))) +

labs(title = "Theta via ggplot2::geom_histogram()",

x = expression(theta)) +

theme_minimal_hgrid() +

theme(plot.title.position = "plot")

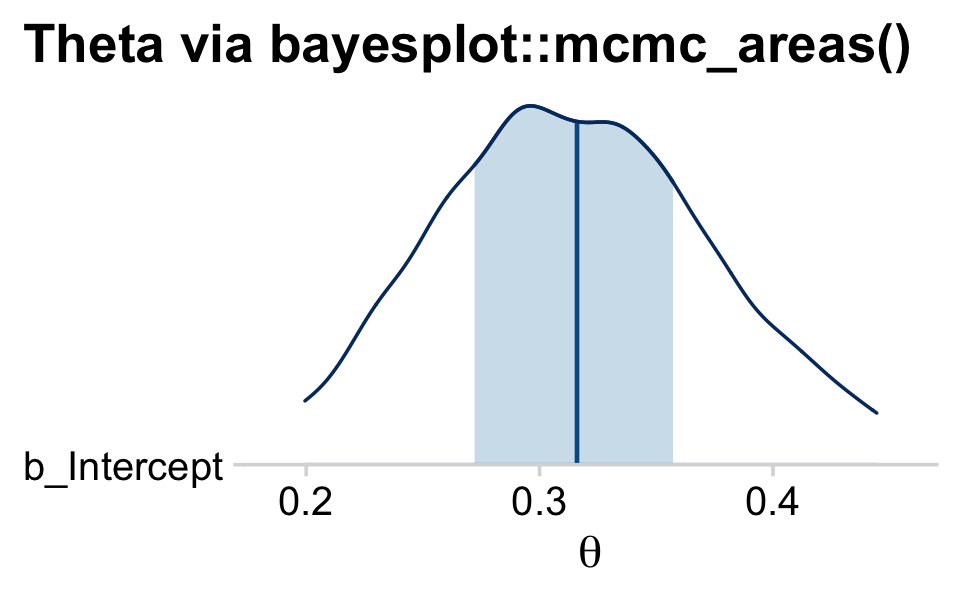

The bayesplot::mcmc_areas() function offers a nice way to depict the posterior densities, along with their percentile-based 50% and 95% ranges.

mcmc_areas(

draws,

pars = vars(b_Intercept),

prob = 0.5,

prob_outer = 0.95,

point_est = "mean"

) +

scale_y_discrete(expand = expansion(mult = c(0, 0.05))) +

labs(title = "Theta via bayesplot::mcmc_areas()",

x = expression(theta)) +

theme_minimal_hgrid() +

theme(plot.title.position = "plot")

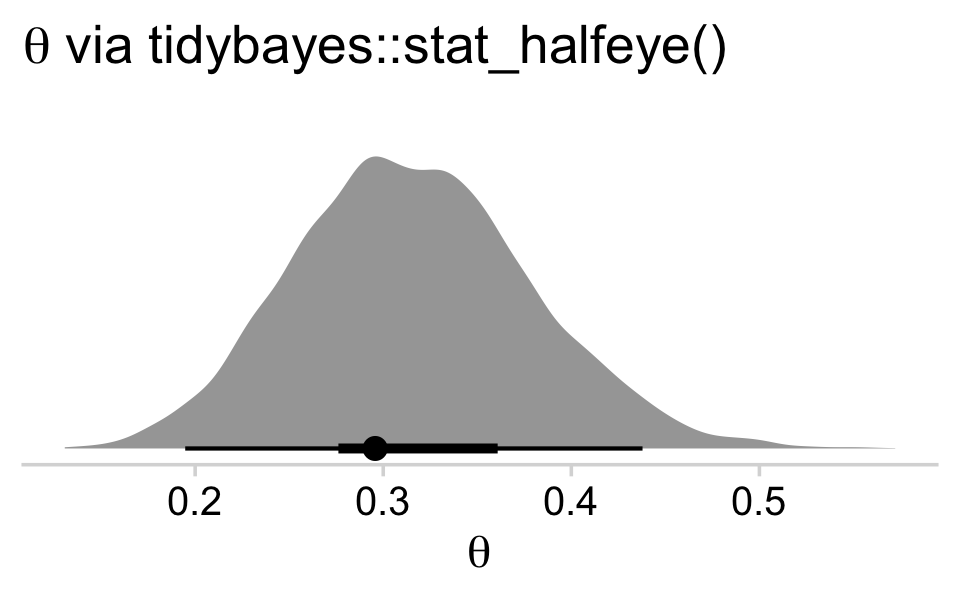

brms doesn’t have a convenient way to compute the posterior mode or HDIs. Base R is no help, either. But Matthew Kay’s tidybayes package makes it easy to compute posterior modes and HDIs with handy functions like stat_halfeye() and stat_histinterval().

library(tidybayes)

draws %>%

ggplot(aes(x = b_Intercept, y = 0)) +

stat_halfeye(point_interval = mode_hdi, .width = c(.95, .5)) +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = expression(theta*" via tidybayes::stat_halfeye()"),

x = expression(theta)) +

theme_minimal_hgrid()

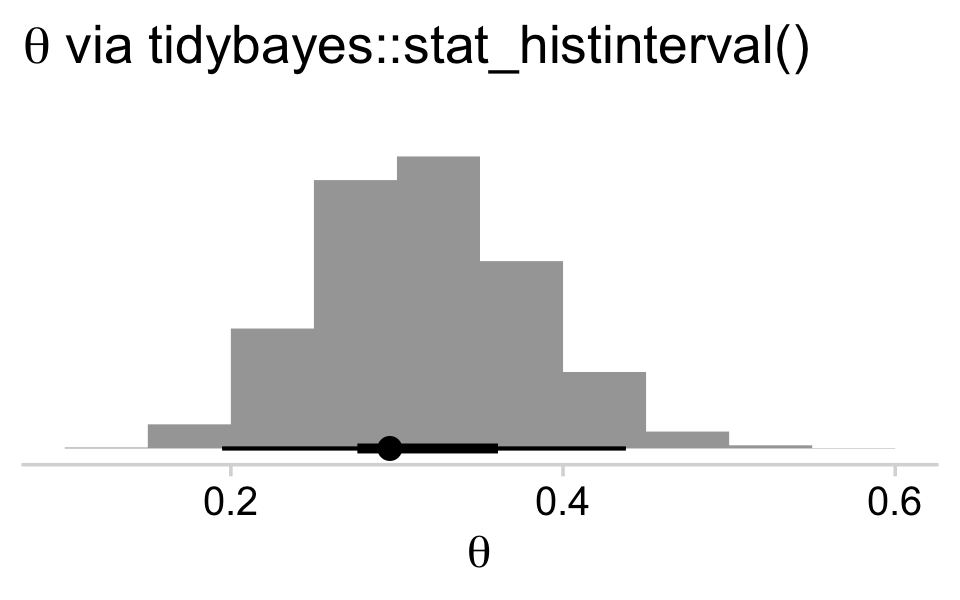

draws %>%

ggplot(aes(x = b_Intercept, y = 0)) +

stat_histinterval(point_interval = mode_hdi, .width = c(.95, .5)) +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = expression(theta*" via tidybayes::stat_histinterval()"),

x = expression(theta)) +

theme_minimal_hgrid()

The tidybayes::stat_halfeye() function returns a density with a measure of the posterior’s central tendency in a dot and one or multiple interval bands as horizontal lines at the base of the density. The stat_histinterval() function returns much the same, but replaces the density with a histogram. For both functions, the point_interval = mode_hdi argument allowed us to request the mode to be our measure of central tendency and the highest posterior density intervals to be our type intervals. With .width = c(.95, .5), we requested our HDIs be at both the 95% and 50% levels.

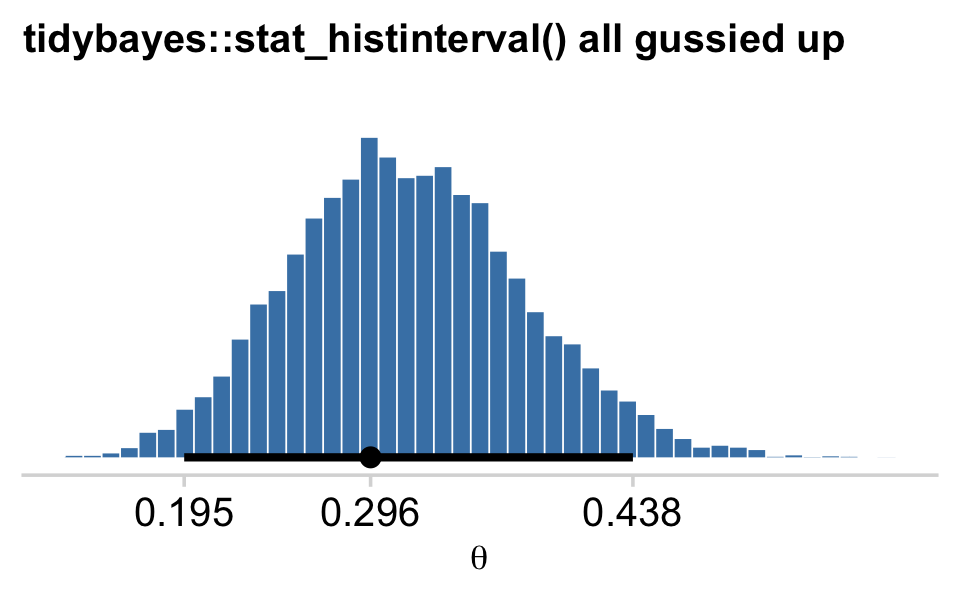

If we wanted to be more congruent with Kruschke’s plotting sensibilities, we could further modify tidybayes::stat_histinterval().

# this is unnecessary, but makes for nicer x-axis breaks

my_breaks <-

mode_hdi(draws$b_Intercept)[, 1:3] %>%

pivot_longer(everything(), values_to = "breaks") %>%

mutate(labels = breaks %>% round(digits = 3))

# here's the main plot code

draws %>%

ggplot(aes(x = b_Intercept, y = 0)) +

stat_histinterval(point_interval = mode_hdi, .width = .95,

fill = "steelblue", slab_color = "white",

breaks = 40, slab_size = .25, outline_bars = T) +

scale_x_continuous(breaks = my_breaks$breaks,

labels = my_breaks$labels) +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = "tidybayes::stat_histinterval() all gussied up",

x = expression(theta)) +

theme_minimal_hgrid() +

theme(title = element_text(size = 10.5))

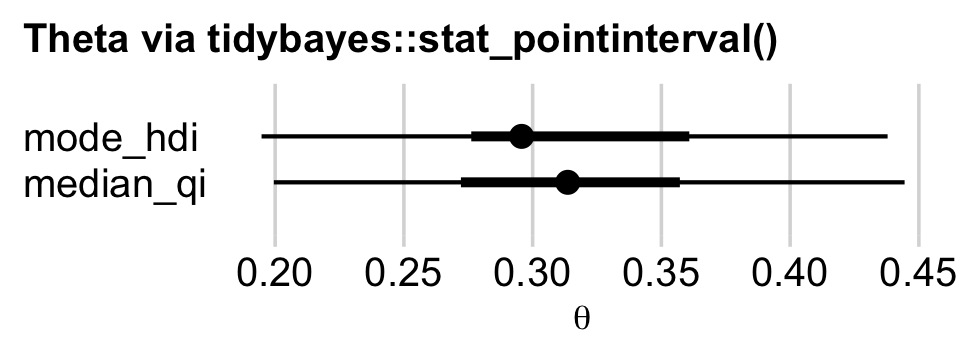

With the point_interval argument within stat_histinterval() and related functions, we can request different combinations of measures of central tendency (i.e., mean, median, mode) and interval types (i.e., percentile-based and HDIs). Although all of these are legitimate ways to summarize a posterior, they can yield somewhat different results. For example, here we’ll contrast our mode + HDI summary with a median + percentile-based interval summary using tidybayes::stat_pointinterval().

draws %>%

ggplot(aes(x = b_Intercept)) +

stat_pointinterval(aes(y = 1), point_interval = median_qi, .width = c(.95, .5)) +

stat_pointinterval(aes(y = 2), point_interval = mode_hdi, .width = c(.95, .5)) +

scale_y_continuous(NULL, breaks = 1:2,

labels = c("median_qi", "mode_hdi")) +

coord_cartesian(ylim = c(0, 3)) +

labs(title = "Theta via tidybayes::stat_pointinterval()",

x = expression(theta)) +

theme_minimal_vgrid() +

theme(axis.line.y.left = element_blank(),

axis.text.y = element_text(hjust = 0),

axis.ticks.y = element_blank(),

plot.title.position = "plot",

title = element_text(size = 10.5))

Similar, yet distinct. To get a sense of the full variety of ways tidybayes allows users to summarize and plot the results of a Bayesian model, check out Kay’s (2022a) vignette, Slab + interval stats and geoms. Also, did you notice how we switched to theme_minimal_vgrid()? That’s how we added the vertical grid lines in the absence of horizontal grid lines.

8.3 Simplified scripts for frequently used analyses

A lot has happened in R for Bayesian analysis since Kruschke wrote his (2015) text. In addition to our use of the tidyverse, the brms, bayesplot, and tidybayes packages offer an array of useful convenience functions. We can and occasionally will write our own. But really, the rich R ecosystem already has us pretty much covered.

8.4 Example: Difference of biases

Here are our new data.

my_data <- read_csv("data.R/z6N8z2N7.csv")

glimpse(my_data)## Rows: 15

## Columns: 2

## $ y <dbl> 1, 0, 1, 1, 1, 1, 1, 0, 0, 0, 1, 0, 0, 1, 0

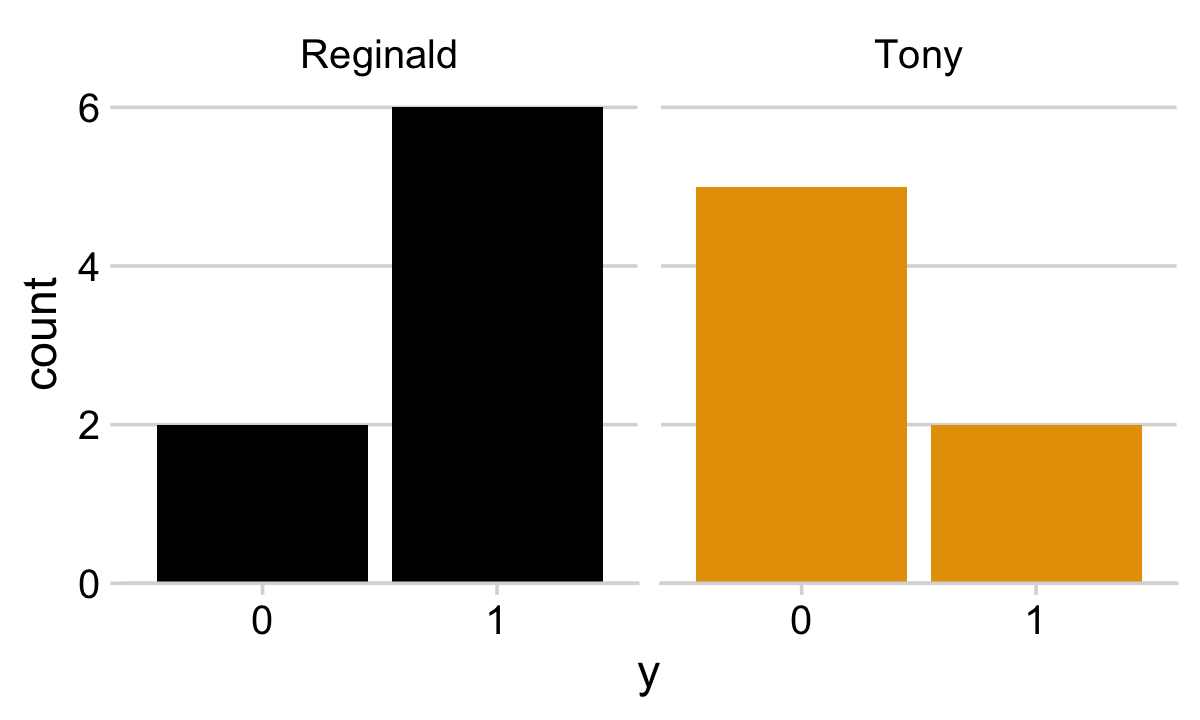

## $ s <chr> "Reginald", "Reginald", "Reginald", "Reginald", "Reginald", "Reginald", "Reginald", "Reg…They look like this.

library(ggthemes)

my_data %>%

mutate(y = y %>% as.character()) %>%

ggplot(aes(x = y, fill = s)) +

geom_bar(show.legend = F) +

scale_fill_colorblind() +

scale_y_continuous(expand = expansion(mult = c(0, 0.05))) +

theme_minimal_hgrid() +

facet_wrap(~ s)

Note our use of the scale_fill_colorblind() function from the ggthemes package (Arnold, 2021). When you want to use color to emphasize different factor levels, such as s, it’s a good idea to make sure those colors can be distinguished by folks who are colorblind. The scale_fill_colorblind() function provides a discrete palette of eight colorblind safe colors. If you’d prefer to use a different palette, but wand to make sure its accessible to folks with colorblindness, check out the colorblindr package (McWhite & Wilke, 2021).

Here’s our ggplot2 version of the model diagram in Figure 8.5.

# plot of a beta density

p1 <-

tibble(x = seq(from = .01, to = .99, by = .01),

d = (dbeta(x, 2, 2)) / max(dbeta(x, 2, 2))) %>%

ggplot(aes(x = x, y = d)) +

geom_area(fill = "steelblue") +

annotate(geom = "text",

x = .5, y = .2,

label = "beta",

size = 7) +

annotate(geom = "text",

x = .5, y = .6,

label = "italic(A)*', '*italic(B)",

size = 7, family = "Times", parse = TRUE) +

scale_x_continuous(expand = c(0, 0)) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5))

# an annotated arrow

p2 <-

tibble(x = c(.35, .65),

y = 1/3,

label = c("'~'", "italic(s)")) %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(size = c(10, 7), parse = T, family = "Times") +

geom_segment(x = .5, xend = .5,

y = 1, yend = 0,

arrow = my_arrow) +

xlim(0, 1) +

theme_void()

# bar plot of Bernoulli data

p3 <-

tibble(x = 0:1,

d = (dbinom(x, size = 1, prob = .6)) / max(dbinom(x, size = 1, prob = .6))) %>%

ggplot(aes(x = x, y = d)) +

geom_col(fill = "steelblue", width = .4) +

annotate(geom = "text",

x = .5, y = .2,

label = "Bernoulli",

size = 7) +

annotate(geom = "text",

x = .5, y = .92,

label = "theta[italic(s)]",

size = 7, family = "Times", parse = T) +

xlim(-.75, 1.75) +

theme_void() +

theme(axis.line.x = element_line(linewidth = 0.5))

# another annotated arrow

p4 <-

tibble(x = c(.35, .65),

y = c(1/3, 1/3),

label = c("'~'", "italic(i)*'|'*italic(s)")) %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(size = c(10, 7), parse = T, family = "Times") +

geom_segment(x = .5, xend = .5,

y = 1, yend = 0,

arrow = my_arrow) +

xlim(0, 1) +

theme_void()

# some text

p5 <-

tibble(x = 1,

y = .5,

label = "italic(y)[italic(i)*'|'*italic(s)]") %>%

ggplot(aes(x = x, y = y, label = label)) +

geom_text(size = 7, parse = T, family = "Times") +

xlim(0, 2) +

theme_void()

# define the layout

layout <- c(

area(t = 1, b = 2, l = 1, r = 1),

area(t = 3, b = 3, l = 1, r = 1),

area(t = 4, b = 5, l = 1, r = 1),

area(t = 6, b = 6, l = 1, r = 1),

area(t = 7, b = 7, l = 1, r = 1)

)

# combine and plot!

(p1 + p2 + p3 + p4 + p5) +

plot_layout(design = layout) &

ylim(0, 1) &

theme(plot.margin = margin(0, 5.5, 0, 5.5))

Here is how we might fit the model with brms::brm().

fit8.2 <-

brm(data = my_data,

family = bernoulli(identity),

y ~ 0 + s,

prior(beta(2, 2), class = b, lb = 0, ub = 1),

iter = 2000, warmup = 500, cores = 4, chains = 4,

seed = 8,

file = "fits/fit08.02")More typically, we’d parameterize the model as y ~ 1 + s. This form would yield an intercept and a slope. Behind the scenes, brms would treat the nominal s variable as an 0-1 coded dummy variable. One of the nominal levels would become the reverence category, depicted by the Intercept, and the difference between that and the other category would be the s slope. However, with our y ~ 0 + s syntax, we’ve suppressed the typical model intercept. The consequence is that each level of the nominal variable s gets its own intercept or [i] index, if you will. This is analogous to Kruschke’s y[i] ∼ dbern(theta[s[i]]) code.

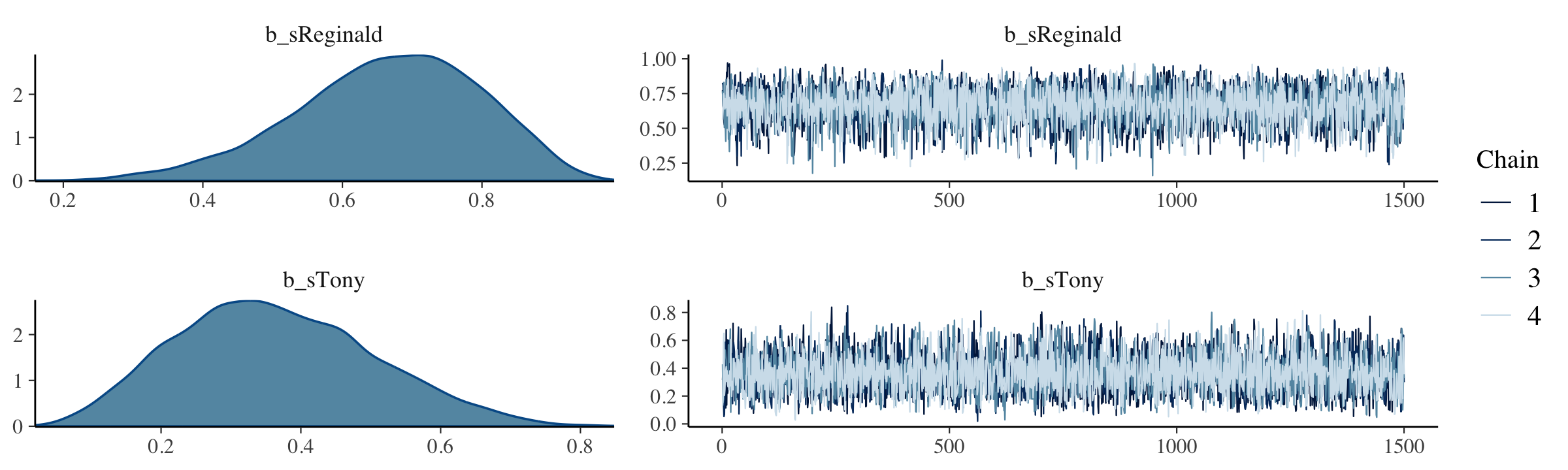

All that aside, here are the chains.

plot(fit8.2, widths = c(2, 3))

The model summary() is as follows:

summary(fit8.2)## Family: bernoulli

## Links: mu = identity

## Formula: y ~ 0 + s

## Data: my_data (Number of observations: 15)

## Draws: 4 chains, each with iter = 2000; warmup = 500; thin = 1;

## total post-warmup draws = 6000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sReginald 0.67 0.13 0.38 0.89 1.00 5152 3519

## sTony 0.36 0.14 0.12 0.66 1.00 4629 4001

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

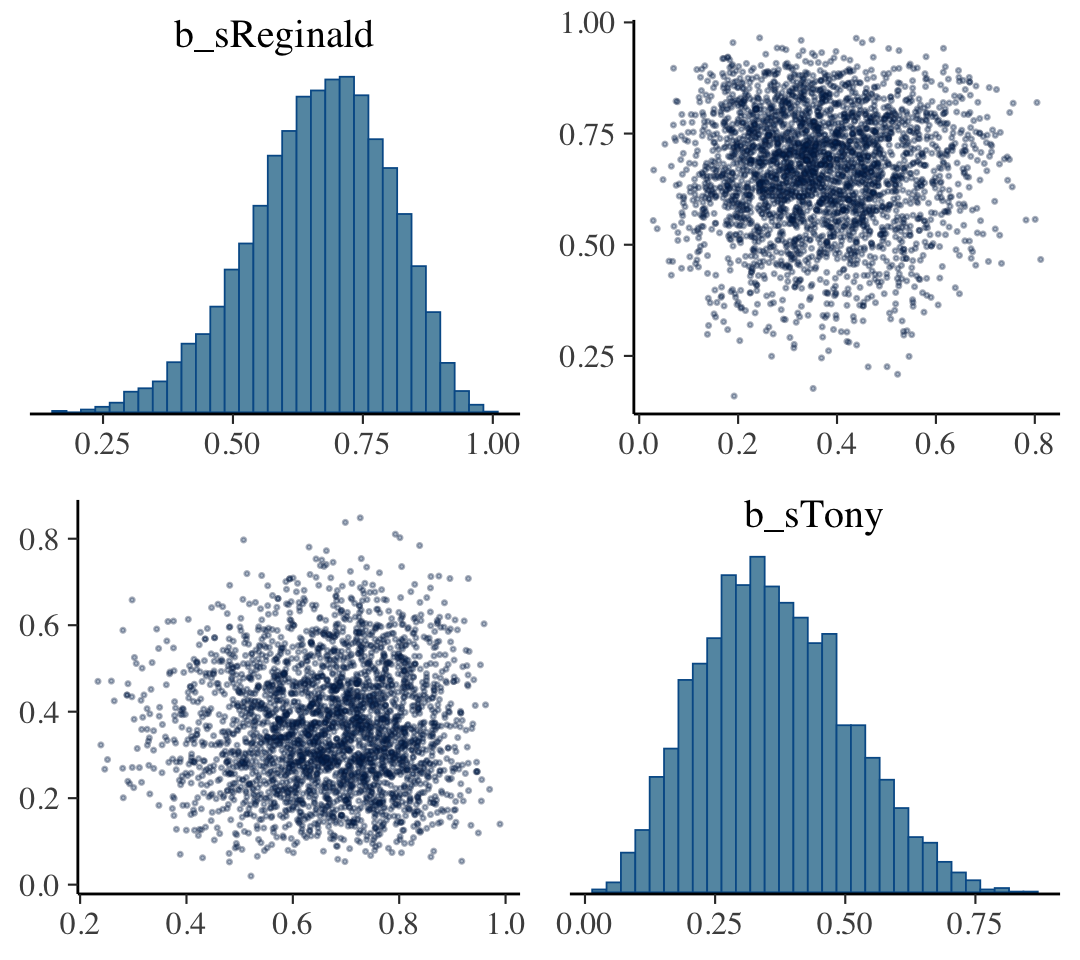

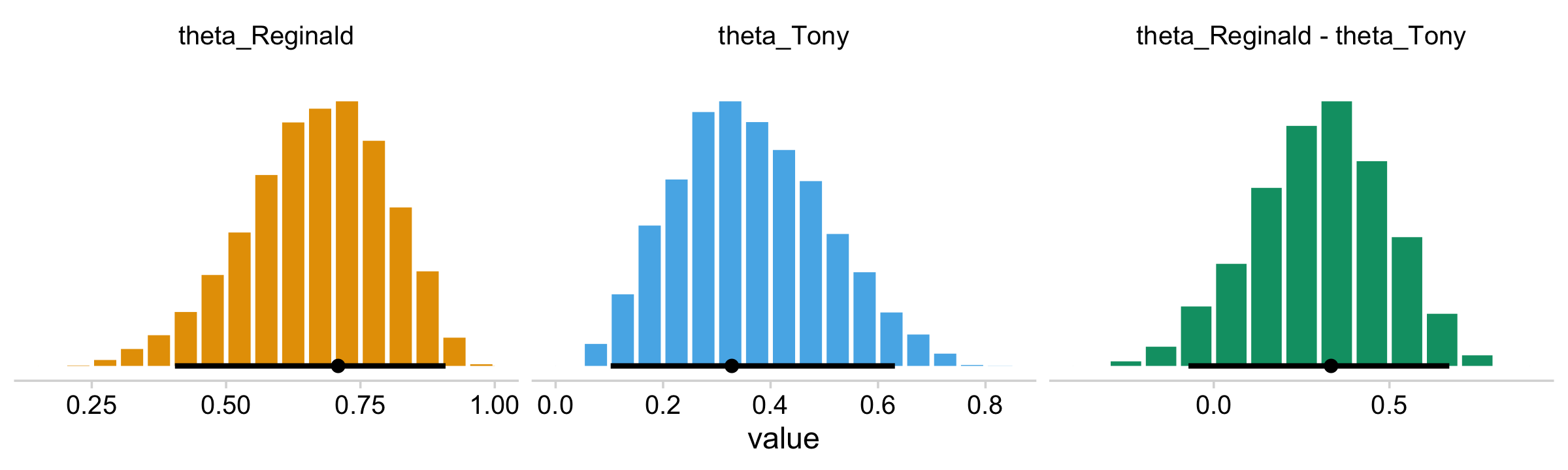

## scale reduction factor on split chains (at convergence, Rhat = 1).The brms::pairs() function gets us the bulk of Figure 8.6.

pairs(fit8.2,

off_diag_args = list(size = 1/3, alpha = 1/3))

But to get at that difference-score distribution, we’ll have extract the posterior draws with as_draws_df(), make difference score with mutate(), and manually plot with ggplot2.

draws <- as_draws_df(fit8.2)

draws <-

draws %>%

rename(theta_Reginald = b_sReginald,

theta_Tony = b_sTony) %>%

mutate(`theta_Reginald - theta_Tony` = theta_Reginald - theta_Tony)

glimpse(draws)## Rows: 6,000

## Columns: 8

## $ theta_Reginald <dbl> 0.7757244, 0.6615629, 0.6392801, 0.5430331, 0.5246347, 0.525…

## $ theta_Tony <dbl> 0.3163358, 0.4048103, 0.3239676, 0.2919574, 0.2351882, 0.209…

## $ lprior <dbl> 0.30344208, 0.66373987, 0.59782687, 0.61339128, 0.47929929, …

## $ lp__ <dbl> -11.69349, -11.30482, -11.32344, -11.77508, -12.21795, -12.4…

## $ .chain <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, …

## $ .iteration <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 1…

## $ .draw <int> 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 17, 1…

## $ `theta_Reginald - theta_Tony` <dbl> 0.45938855, 0.25675262, 0.31531244, 0.25107566, 0.28944655, …long_draws <-

draws %>%

select(starts_with("theta")) %>%

pivot_longer(everything()) %>%

mutate(name = factor(name, levels = c("theta_Reginald", "theta_Tony", "theta_Reginald - theta_Tony")))

long_draws %>%

ggplot(aes(x = value, y = 0, fill = name)) +

stat_histinterval(point_interval = mode_hdi, .width = .95,

slab_color = "white", outline_bars = T,

normalize = "panels") +

scale_fill_manual(values = colorblind_pal()(8)[2:4], breaks = NULL) +

scale_y_continuous(NULL, breaks = NULL) +

theme_minimal_hgrid() +

facet_wrap(~ name, scales = "free")

Note how this time we used the colorblind_pal() function within scale_fill_manual() to manually select three of the of the scale_fill_colorblind() colors.

Anyway, here’s a way to get the numeric summaries out of post.

long_draws %>%

group_by(name) %>%

mode_hdi()## # A tibble: 3 × 7

## name value .lower .upper .width .point .interval

## <fct> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 theta_Reginald 0.709 0.405 0.908 0.95 mode hdi

## 2 theta_Tony 0.328 0.102 0.631 0.95 mode hdi

## 3 theta_Reginald - theta_Tony 0.334 -0.0720 0.670 0.95 mode hdiIn this context, the mode_hdi() summary yields:

name(i.e., the name we used to denote the parameters)value(i.e., the value of the measure of central tendency).lower(i.e., the lower level of the 95% HDI).upper(i.e., the upper level…).width(i.e., what interval we used).point(i.e., the type of measure of central tendency).interval(i.e., the type of interval)

8.5 Sampling from the prior distribution in JAGS brms

There are a few ways to sample from the prior distribution with brms. Here we’ll practice by setting sample_prior = "only". As a consequence, brm() will ignore the likelihood and return draws based solely on the model priors.

fit8.3 <-

brm(data = my_data,

family = bernoulli(identity),

y ~ 0 + s,

prior = c(prior(beta(2, 2), class = b, coef = sReginald),

prior(beta(2, 2), class = b, coef = sTony),

# this just sets the lower and upper bounds

prior(beta(2, 2), class = b, lb = 0, ub = 1)),

iter = 2000, warmup = 500, cores = 4, chains = 4,

sample_prior = "only",

seed = 8,

file = "fits/fit08.03")Because we set sample_prior = "only", the as_draws_df() function will now return draws from the priors.

draws <- as_draws_df(fit8.3) %>%

select(starts_with("b_"))

head(draws)## # A tibble: 6 × 2

## b_sReginald b_sTony

## <dbl> <dbl>

## 1 0.693 0.942

## 2 0.156 0.448

## 3 0.242 0.176

## 4 0.452 0.445

## 5 0.275 0.818

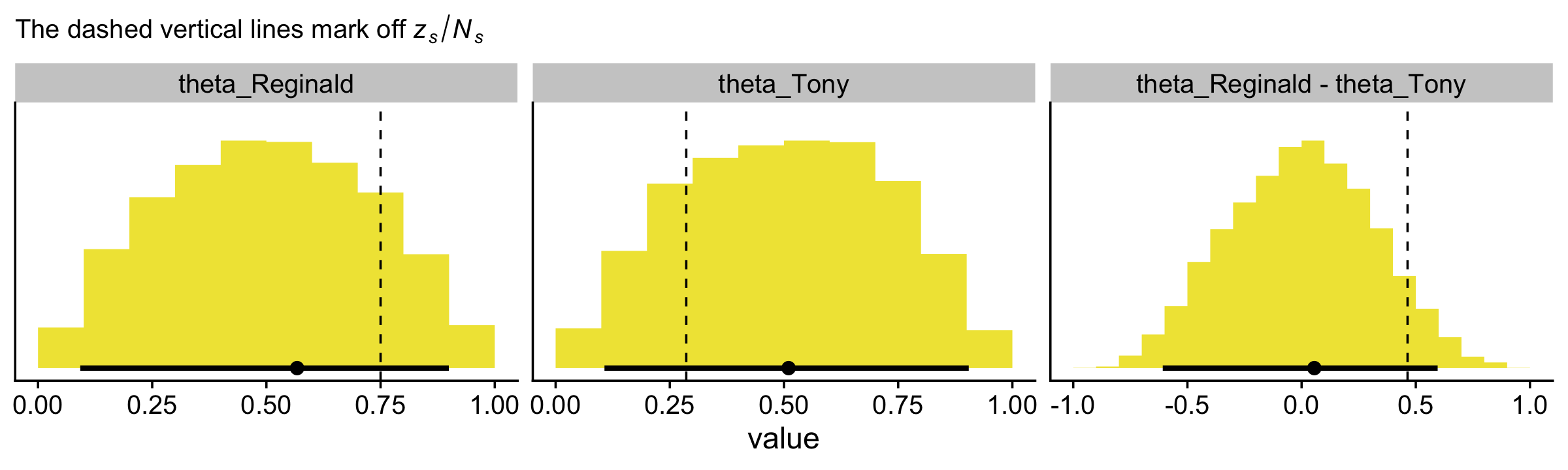

## 6 0.987 0.397With our prior draws in hand, we’re almost ready to make the prior histograms of Figure 8.7. But first we’ll want to determine the z/N values in order to mark them off in the plots. [You’ll note Kruschke did so with gray plus marks in his.]

my_data %>%

group_by(s) %>%

summarise(z = sum(y),

N = n()) %>%

mutate(`z/N` = z / N)## # A tibble: 2 × 4

## s z N `z/N`

## <chr> <dbl> <int> <dbl>

## 1 Reginald 6 8 0.75

## 2 Tony 2 7 0.286levels <- c("theta_Reginald", "theta_Tony", "theta_Reginald - theta_Tony")

d_line <-

tibble(value = c(.75, .286, .75 - .286),

name = factor(c("theta_Reginald", "theta_Tony", "theta_Reginald - theta_Tony"),

levels = levels))Behold the histograms of Figure 8.7.

draws %>%

rename(theta_Reginald = b_sReginald,

theta_Tony = b_sTony) %>%

mutate(`theta_Reginald - theta_Tony` = theta_Reginald - theta_Tony) %>%

pivot_longer(contains("theta")) %>%

mutate(name = factor(name, levels = levels)) %>%

ggplot(aes(x = value, y = 0)) +

stat_histinterval(point_interval = mode_hdi, .width = .95,

fill = colorblind_pal()(8)[5], normalize = "panels") +

geom_vline(data = d_line,

aes(xintercept = value),

linetype = 2) +

scale_y_continuous(NULL, breaks = NULL) +

labs(subtitle = expression("The dashed vertical lines mark off "*italic(z[s])/italic(N[s]))) +

theme_cowplot() +

facet_wrap(~ name, scales = "free")

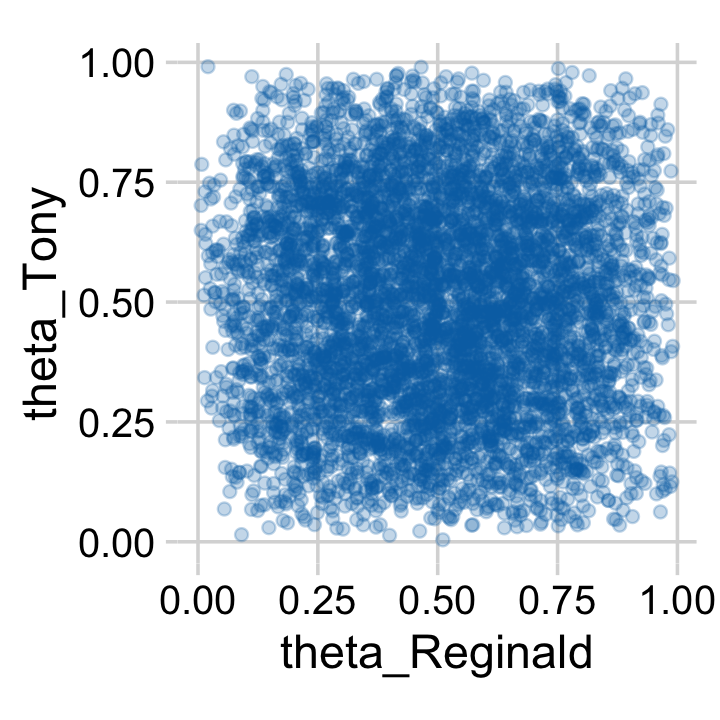

Here’s how you might make the scatter plot.

draws %>%

rename(theta_Reginald = b_sReginald,

theta_Tony = b_sTony) %>%

ggplot(aes(x = theta_Reginald, y = theta_Tony)) +

geom_point(alpha = 1/4, color = colorblind_pal()(8)[6]) +

coord_equal() +

theme_minimal_grid()

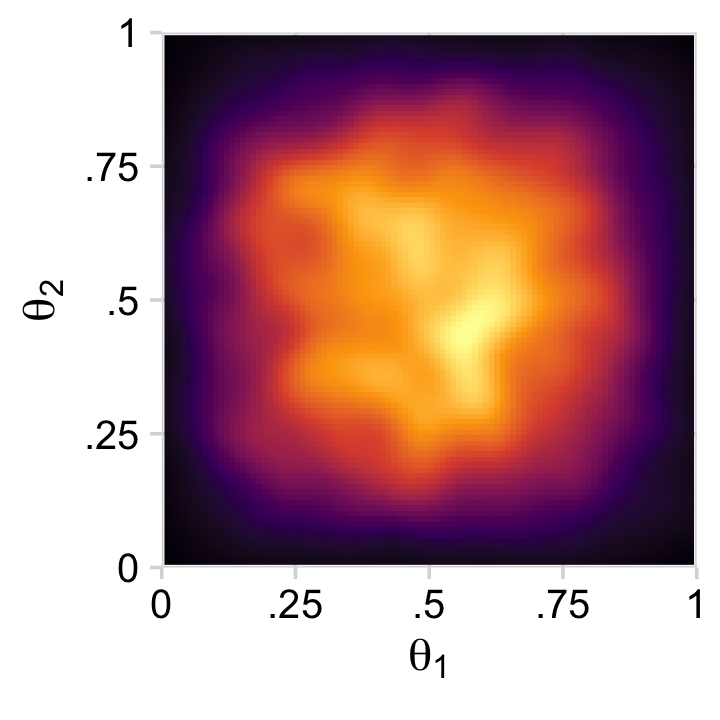

Or you could always use a two-dimensional density plot with stat_density_2d().

draws %>%

rename(theta_Reginald = b_sReginald,

theta_Tony = b_sTony) %>%

ggplot(aes(x = theta_Reginald, y = theta_Tony)) +

stat_density_2d(aes(fill = stat(density)),

geom = "raster", contour = F) +

scale_fill_viridis_c(option = "B", breaks = NULL) +

scale_x_continuous(expression(theta[1]),

expand = c(0, 0), limits = c(0, 1),

breaks = 0:4 / 4, labels = c("0", ".25", ".5", ".75", "1")) +

scale_y_continuous(expression(theta[2]),

expand = c(0, 0), limits = c(0, 1),

breaks = 0:4 / 4, labels = c("0", ".25", ".5", ".75", "1")) +

coord_equal() +

theme_minimal_grid()

The viridis color palettes in functions like scale_fill_viridis_c() and scale_fill_viridis_d() are designed to be colorblind safe, too (Garnier, 2021).

8.6 Probability distributions available in JAGS brms

[brms] has a large collection of frequently used probability distributions that are built-in. These distributions include the beta, gamma, normal, Bernoulli, and binomial along with many others. A complete list of distributions, and their [brms] names, can be found in [Bürkner’s (2022c) vignette Parameterization of response distributions in brms]. (Kruschke, 2015, pp. 213–214, emphasis added)

8.6.1 Defining new likelihood functions.

In addition to all the likelihood functions listed in above mentioned vignette, you can also make your own likelihood functions. Bürkner explained the method in his (2022d) vignette, Define custom response distributions with brms.

8.7 Faster sampling with parallel processing in runjags brms::brm()

We don’t need to open another package to sample in parallel in brms. In fact, we’ve already been doing that. Take another look at the code use used for the last model, fit8.2.

fit8.2 <-

brm(data = my_data,

family = bernoulli(identity),

y ~ 0 + s,

prior(beta(2, 2), class = b, lb = 0, ub = 1),

iter = 2000, warmup = 500, cores = 4, chains = 4,

seed = 8,

file = "fits/fit08.02")See the cores = 4, chains = 4 arguments? With that bit of code, we told brms::brm() we wanted 4 chains, which we ran in parallel across 4 cores.

8.8 Tips for expanding JAGS brms models

I’m in complete agreement with Kruschke, here:

Often, the process of programming a model is done is stages, starting with a simple model and then incrementally incorporating complexifications. At each step, the model is checked for accuracy and efficiency. This procedure of incremental building is useful for creating a desired complex model from scratch, for expanding a previously created model for a new application, and for expanding a model that has been found to be inadequate in a posterior predictive check. (p. 218)

Session info

sessionInfo()## R version 4.2.2 (2022-10-31)

## Platform: x86_64-apple-darwin17.0 (64-bit)

## Running under: macOS Big Sur ... 10.16

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRlapack.dylib

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] ggthemes_4.2.4 tidybayes_3.0.2 bayesplot_1.10.0 brms_2.18.0 Rcpp_1.0.9

## [6] cowplot_1.1.1 patchwork_1.1.2 forcats_0.5.1 stringr_1.4.1 dplyr_1.0.10

## [11] purrr_1.0.1 readr_2.1.2 tidyr_1.2.1 tibble_3.1.8 ggplot2_3.4.0

## [16] tidyverse_1.3.2

##

## loaded via a namespace (and not attached):

## [1] readxl_1.4.1 backports_1.4.1 plyr_1.8.7 igraph_1.3.4

## [5] svUnit_1.0.6 splines_4.2.2 crosstalk_1.2.0 TH.data_1.1-1

## [9] rstantools_2.2.0 inline_0.3.19 digest_0.6.31 htmltools_0.5.3

## [13] fansi_1.0.3 magrittr_2.0.3 checkmate_2.1.0 googlesheets4_1.0.1

## [17] tzdb_0.3.0 modelr_0.1.8 RcppParallel_5.1.5 matrixStats_0.63.0

## [21] vroom_1.5.7 xts_0.12.1 sandwich_3.0-2 prettyunits_1.1.1

## [25] colorspace_2.0-3 rvest_1.0.2 ggdist_3.2.1 haven_2.5.1

## [29] xfun_0.35 callr_3.7.3 crayon_1.5.2 jsonlite_1.8.4

## [33] lme4_1.1-31 survival_3.4-0 zoo_1.8-10 glue_1.6.2

## [37] gtable_0.3.1 gargle_1.2.0 emmeans_1.8.0 distributional_0.3.1

## [41] pkgbuild_1.3.1 rstan_2.21.8 abind_1.4-5 scales_1.2.1

## [45] mvtnorm_1.1-3 DBI_1.1.3 miniUI_0.1.1.1 viridisLite_0.4.1

## [49] xtable_1.8-4 HDInterval_0.2.4 bit_4.0.4 stats4_4.2.2

## [53] StanHeaders_2.21.0-7 DT_0.24 htmlwidgets_1.5.4 httr_1.4.4

## [57] threejs_0.3.3 arrayhelpers_1.1-0 posterior_1.3.1 ellipsis_0.3.2

## [61] pkgconfig_2.0.3 loo_2.5.1 farver_2.1.1 sass_0.4.2

## [65] dbplyr_2.2.1 utf8_1.2.2 tidyselect_1.2.0 labeling_0.4.2

## [69] rlang_1.0.6 reshape2_1.4.4 later_1.3.0 munsell_0.5.0

## [73] cellranger_1.1.0 tools_4.2.2 cachem_1.0.6 cli_3.6.0

## [77] generics_0.1.3 ggridges_0.5.4 broom_1.0.2 evaluate_0.18

## [81] fastmap_1.1.0 processx_3.8.0 knitr_1.40 bit64_4.0.5

## [85] fs_1.5.2 nlme_3.1-160 projpred_2.2.1 mime_0.12

## [89] xml2_1.3.3 compiler_4.2.2 shinythemes_1.2.0 rstudioapi_0.13

## [93] gamm4_0.2-6 reprex_2.0.2 bslib_0.4.0 stringi_1.7.8

## [97] highr_0.9 ps_1.7.2 Brobdingnag_1.2-8 lattice_0.20-45

## [101] Matrix_1.5-1 nloptr_2.0.3 markdown_1.1 shinyjs_2.1.0

## [105] tensorA_0.36.2 vctrs_0.5.1 pillar_1.8.1 lifecycle_1.0.3

## [109] jquerylib_0.1.4 bridgesampling_1.1-2 estimability_1.4.1 httpuv_1.6.5

## [113] R6_2.5.1 bookdown_0.28 promises_1.2.0.1 gridExtra_2.3

## [117] codetools_0.2-18 boot_1.3-28 colourpicker_1.1.1 MASS_7.3-58.1

## [121] gtools_3.9.4 assertthat_0.2.1 withr_2.5.0 shinystan_2.6.0

## [125] multcomp_1.4-20 mgcv_1.8-41 parallel_4.2.2 hms_1.1.1

## [129] grid_4.2.2 minqa_1.2.5 coda_0.19-4 rmarkdown_2.16

## [133] googledrive_2.0.0 shiny_1.7.2 lubridate_1.8.0 base64enc_0.1-3

## [137] dygraphs_1.1.1.6