8 Estimating Functions of GWN Model Parameters

Updated: May 9, 2021

Copyright © Eric Zivot 2015, 2016, 2020, 2021

In the previous chapter, we considered estimation of the parameters of the GWN model for asset returns and the construction of estimated standard errors and confidence intervals for the estimated parameters. In this chapter, we consider estimation of functions of the parameters of the GWN model, and the construction of estimated standard errors and confidence intervals for these estimates. This is an important topic as we are often more interested in a function of the GWN model parameters (e.g. \(\mathrm{VaR}_{\alpha}\)) than the GWN model parameters themselves. In order to do this, we introduce some new statistical tools including the analytic delta method and two computer intensive resampling methods called the jackknife and the bootstrap.

The R packages used in this chapter are boot, bootstrap, car, IntroCompFinR, and PerformanceAnalytics. Have these packages installed and loaded in R before replicating the chapter examples.

suppressPackageStartupMessages(library(boot))

suppressPackageStartupMessages(library(bootstrap))

suppressPackageStartupMessages(library(car))

suppressPackageStartupMessages(library(IntroCompFinR))

suppressPackageStartupMessages(library(PerformanceAnalytics))

options(digits=3)8.1 Functions of GWN Model Parameters

Consider the GWN model for returns (simple or continuously compounded):

\[\begin{align*} R_{it} & =\mu_{i}+\varepsilon_{it},\\ \{\varepsilon_{it}\}_{t=1}^{T} & \sim\mathrm{GWN}(0,\sigma^{2}), \\ \mathrm{cov}(\varepsilon_{it},\varepsilon_{js}) & =\left\{ \begin{array}{c} \sigma_{ij}\\ 0 \end{array}\right.\begin{array}{c} t=s\\ t\neq s \end{array}. \end{align*}\]

Let \(\theta\) denote a \(k \times 1\) vector of GWN model parameters from which we want to compute a function of interest (we drop the asset subscript \(i\) to simplify notation). Let \(f:R^k \rightarrow R\) be a continuous and differentiable function of \(\theta\), and define \(\eta = f(\theta)\) as the parameter of interest. To make things concrete, let \(\theta = (\mu,\sigma)^{\prime}\). Consider the following functions of \(\theta\):

\[\begin{align} f_{1}(\theta) & = \mu + \sigma \times q_{\alpha}^{Z} = q_{\alpha}^R \tag{8.1} \\ f_{2}(\theta) & = -W_{0}\times \left( \mu + \sigma \times q_{\alpha}^{Z} \right) = W_{0} \times f_{1}(\theta) = \mathrm{VaR}_{\alpha}^N \tag{8.2} \\ f_{3}(\theta) & = -W_{0}\times \left( \exp \left( \mu + \sigma \times q_{\alpha}^{Z} \right) - 1 \right) = W_{0} \times (\exp(f_{1}(\theta)) - 1) = \mathrm{VaR}_{\alpha}^{LN} \tag{8.3} \\ f_{4}(\theta) & = \frac{\mu-r_f}{\sigma} = \mathrm{SR} \tag{8.4} \end{align}\]

The first function (8.1) is the \(\alpha\)-quantile, \(q_{\alpha}^R\), of the \(N(\mu,\sigma^{2})\) distribution for simple returns. The second function (8.2) is \(\mathrm{VaR}_{\alpha}\) of an initial investment of \(W_{0}\) based on the normal distribution for simple returns. The third function (8.3) is \(\mathrm{VaR}_{\alpha}\) based on the log-normal distribution for simple returns (normal distribution for continuously compounded returns). The fourth function (8.4) is the Sharpe ratio, \(\mathrm{SR}\), of an asset, where \(r_f\) denotes the constant risk-free rate of interest. The first two functions are linear functions of the elements of \(\theta\), and second two functions are nonlinear linear functions of the elements of \(\theta\).

Given the plug-in estimate of \(\theta\), \(\hat{\theta} = (\hat{\mu},\hat{\sigma})'\), we want to estimate each of the above functions of \(\theta\), and construct estimated standard errors and confidence intervals.

For the examples in this chapter, we use data on monthly continuously compounded and simple returns for Microsoft over the period January 1998, through May 2012, from the IntroCompFinR package. The data is the same as that used in Chapter 5 and is constructed as follows:

data(msftDailyPrices)

msftPrices = to.monthly(msftDailyPrices, OHLC=FALSE)

smpl = "1998-01::2012-05"

msftPrices = msftPrices[smpl]

msftRetS = na.omit(Return.calculate(msftPrices, method="discrete"))

msftRetC = log(1 + msftRetS)The GWN model estimates for \(\mu_{msft}\) and \(\sigma_{msft}\), with estimated standard errors, are:

n.obs = nrow(msftRetC)

muhatC = mean(msftRetC)

sigmahatC = sd(msftRetC)

muhatS = mean(msftRetS)

sigmahatS = sd(msftRetS)

estimates = c(muhatC, sigmahatC, muhatS, sigmahatS)

se.muhatC = sigmahatC/sqrt(n.obs)

se.muhatS = sigmahatS/sqrt(n.obs)

se.sigmahatC = sigmahatC/sqrt(2*n.obs)

se.sigmahatS = sigmahatS/sqrt(2*n.obs)

stdErrors = c(se.muhatC, se.sigmahatC, se.muhatS, se.sigmahatS)

ans = rbind(estimates, stdErrors)

colnames(ans) = c("mu.cc", "sigma.cc", "mu.simple", "sigma.simple")

ans## mu.cc sigma.cc mu.simple sigma.simple

## estimates 0.00413 0.1002 0.00915 0.10150

## stdErrors 0.00764 0.0054 0.00774 0.00547\(\blacksquare\)

8.2 Estimation of Functions of GWN Model Parameters

In Chapter 7, we used the plug-in principle to motivate estimators of the GWN model parameters \(\mu_i\), \(\sigma_{i}^2\), \(\sigma_{i,j}\), \(\sigma_{ij}\), and \(\rho_{ij}\). We can also use the plug-in principle to estimate functions of the GWN model parameters.

Definition 8.1 (Plug-in principle for functions of GWN model parameters) Let \(\theta\) denote a \(k \times 1\) vector of GWN model parameters, and let \(\eta = f(\theta)\) where \(f:\mathbb{R}^k \rightarrow \mathbb{R}\) is a continuous and differentiable function of \(\theta\). Let \(\hat{\theta}\) denote the plug-in estimator of \(\theta\). Then the plug-in estimator of \(\eta\) is \(\hat{\eta} = f(\hat{\theta})\).

Let \(W_0 = \$100,000\), and \(r_f = 0.03/12 = 0.0025\). Using the plug-in estimates of the GWN model parameters, the plug-in estimates of the functions (8.1) - (8.4) are:

W0 = 100000

r.f = 0.03/12

f1.hat = muhatS + sigmahatS*qnorm(0.05)

f2.hat = -W0*f1.hat

f3.hat = -W0*(exp(muhatC + sigmahatC*qnorm(0.05)) - 1)

f4.hat = (muhatS-r.f)/sigmahatS

fhat.vals = cbind(f1.hat, f2.hat, f3.hat, f4.hat)

colnames(fhat.vals) = c("f1", "f2", "f3", "f4")

rownames(fhat.vals) = "Estimate"

fhat.vals## f1 f2 f3 f4

## Estimate -0.158 15780 14846 0.0655\(\blacksquare\)

Plug-in estimators of functions are random variables and are subject to estimation error. Just as we studied the statistical properties of the plug-in estimators of the GWN model parameters we can study the statistical properties of the plug-in estimators of functions of GWN model parameters.

8.2.1 Bias

As discussed in Chapter 7, a desirable finite sample property of an estimator \(\hat{\theta}\) of \(\theta\) is unbiasedness: on average over many hypothetical samples \(\hat{\theta}\) is equal to \(\theta\). When estimating \(f(\theta)\), unbiasedness of \(\hat{\theta}\) may or may not carry over to \(f(\hat{\theta})\).

Suppose \(f(\theta)\) is a linear function of the elements of \(\theta\) so that

\[ f(\theta) = a + b_1 \theta_1 + b_2 \theta_2 + \cdots + b_k \theta_k. \]

where \(a, b_1, b_2, \ldots b_k\) are constants. For example, the functions (8.1) and (8.2) are linear functions of the elements of \(\theta = (\mu, \sigma)^{\prime}.\) Let \(\hat{\theta}\) denote an estimator of \(\theta\) and let \(f(\hat{\theta})\) denote the plug-in estimator of \(f(\theta)\). If \(E[\hat{\theta}]=\theta\) for all elements of \(\theta\) (\(\hat{\theta_i}\) is unbiased for \(\theta_i\), \(i=1,\ldots, k\)), then

\[\begin{align*} E[f(\hat{\theta)}] & = a + b_1 E[\hat{\theta_1}] + b_2 E[\hat{\theta_2}] + \cdots + b_k E[\hat{\theta_k}] \\ & = a + b_1 \theta_1 + b_2 \theta_2 + \cdots + b_k \theta_k = f(\theta) \end{align*}\]

and so \(f(\hat{\theta})\) is unbiased for \(f(\theta)\).

Now suppose \(f(\theta)\) is a nonlinear function of the elements of \(\theta\). For example, the functions (8.3) and (8.4) are nonlinear functions of the elements of \(\theta = (\mu, \sigma)^{\prime}.\) Then, in general, \(f(\hat{\theta})\) is not unbiased for \(f(\theta)\) even if \(\hat{\theta}\) is unbiased for \(\theta\). The direction of the bias depends on the properties of \(f(\theta)\). If \(f(\cdot)\) is convex at \(\theta\) or concave at \(\theta\) then we can say something about the direction of the bias in \(f(\hat{\theta})\).

Definition 8.2 (Convex function) A scalar function \(f(\theta)\) is convex (in two dimensions) if all points on a straight line connecting any two points on the graph of \(f(\theta)\) is above or on that graph. More formally, we say \(f(\theta)\) is convex if for all \(\theta_1\) and \(\theta_2\) and for all \(0 \le \alpha \le1\)

\[\begin{equation*} f(\alpha \theta_1 + (1-\alpha) \theta_2)) \le \alpha f(\theta_1) + (1 - \alpha)f(\theta_2) \end{equation*}\]For example, the function \(f(\theta)=\theta^2\) is a convex function. Another common convex function is \(\exp(\theta)\).

For example, the function \(f(\theta) = - \theta^2\) is concave. Other common concave functions are \(log(\theta)\) and \(\sqrt{\theta}\).

Jensen’s inequality tells us that if \(f\) is convex (concave) and \(\hat{\theta}\) is unbiased then \(f(\hat{\theta})\) is positively (negatively) biased. For example, we know from Chapter 7 that \(\hat{\sigma}^2\) is unbiased for \(\sigma^2\). Now, \(\hat{\sigma} = f(\hat{\sigma}^2) = \sqrt{\hat{\sigma}^2}\) is a concave function of \(\hat{\sigma}\). From Jensen’s inequality, we know that \(\hat{\sigma}\) is negatively biased (i.e. too small).

\(\blacksquare\)

8.2.2 Consistency

An important property of the plug-in estimators of the GWN model parameters is that they are consistent: as the sample size gets larger and larger the estimators get closer and closer to their true values and eventually equal their true values for an infinitely large sample.

Consistency also holds for plug-in estimators of functions of GWN model parameters. The justification comes from Slutsky’s Theorem.

Proposition 8.2 (Slutsky’s Theorem) Let \(\hat{\theta}\) be consistent for \(\theta\), and let \(f:\mathbb{R}^k \rightarrow \mathbb{R}\) be continuous at \(\theta\). Then \(f(\hat{\theta})\) is consistent for \(f(\theta)\).

The key condition on \(f(\theta)\) is continuity at \(\theta\).39

All of the functions (8.1) - (8.4) are continuous at \(\theta = (\mu, \sigma)'\). By Slutsky’s Theorem, all of the plug-in estimators of the functions are also consistent.

\(\blacksquare\)

8.3 Asymptotic normality

Let \(\theta\) denote a scalar parameter of the GWN model for returns and let \(\hat{\theta}\) denote the plug-in estimator of \(\theta\). In Chapter 7 we stated that \(\hat{\theta}\) is asymptotically normally distributed

\[\begin{equation*} \hat{\theta}\sim N(\theta,\widehat{\mathrm{se}}(\hat{\theta})^{2}), \end{equation*}\]

for large enough \(T\), where \(\widehat{\mathrm{se}}(\hat{\theta})\) denotes the estimated standard error of \(\hat{\theta}.\) This result is justified by the CLT. Let \(f(\theta)\) denote a real-valued continuous and differentiable scalar function of \(\theta\). Using a technique called the delta method, we can show that the plug-in estimator \(f(\hat{\theta})\) is also asymptotically normally distributed:

\[\begin{equation*} f(\hat{\theta}) \sim N(f(\theta),\widehat{\mathrm{se}}(f(\hat{\theta}))^{2}), \end{equation*}\]

for large enough \(T\), where \(\widehat{\mathrm{se}}(f(\hat{\theta}))\) is the estimated standard error for \(f(\hat{\theta})\). The delta method shows us how to compute \(\widehat{\mathrm{se}}(f(\hat{\theta}))^2\):

\[\begin{equation} \widehat{\mathrm{se}}(f(\hat{\theta}))^{2} = f'(\hat{\theta})^2 \times \widehat{\mathrm{se}}(\hat{\theta})^{2}, \end{equation}\]

where \(f'(\hat{\theta})\) denotes the derivative of \(f(\theta)\) evaluated at \(\hat{\theta}\). Then,

\[\begin{equation} \widehat{\mathrm{se}}(f(\hat{\theta})) = \sqrt{f'(\hat{\theta})^2 \times \widehat{\mathrm{se}}(\hat{\theta})^{2}}. \end{equation}\]

In Chapter 7 we stated that, for large enough \(T\),

\[\begin{equation*} \hat{\sigma}^2 \sim N(\sigma^2, \mathrm{se}(\hat{\sigma}^2)^2), ~ \hat{\sigma} \sim N(\sigma,\mathrm{se}(\hat{\sigma})^2), \end{equation*}\]

where \(\mathrm{se}(\sigma^2)^2 = 2\sigma^4/T\) and \(\mathrm{se}(\sigma)^2 = \sigma^2/2T\). The formula for \(\mathrm{se}(\hat{\sigma})^2\) is the result of the delta method for the function \(f(\sigma^2)= \sqrt{\sigma^2}=\sigma\) applied to the asymptotic distribution of \(\hat{\sigma}^2\). To see this, first note that by the chain rule

\[\begin{equation*} f'(\sigma^2) = \frac{1}{2} (\sigma^2)^{-1/2}, \end{equation*}\]

so that \(f'(\sigma^2)^2 = \frac{1}{4}(\sigma^2)^{-1}\). Then,

\[\begin{align*} \mathrm{se}(f(\hat{\theta}))^{2} = f'(\sigma^2)^2 \times \mathrm{se}(\hat{\sigma}^2)^2 & = \frac{1}{4}(\sigma^2)^{-1} \times 2\sigma^4/T \\ & = \frac{1}{2}\sigma^2/T = \sigma^2/2T \\ & = \mathrm{se}(\hat{\sigma})^2. \end{align*}\]

The estimated squared standard error replaces \(\sigma^2\) with its estimate \(\hat{\sigma}^2\) and is given by \[\begin{equation*} \widehat{\mathrm{se}}(\hat{\sigma}^2)^2 = \hat{\sigma}^2/2T. \end{equation*}\]

\(\blacksquare\)

Now suppose \(\theta\) is a \(k \times 1\) vector of GWN model parameters, and \(f:\mathbb{R}^k \rightarrow \mathbb{R}\) is a continuous and differentiable function of \(\theta\). Define the \(k \times 1\) gradient function \(g(\theta)\) as:

\[\begin{equation} g(\theta) = \frac{\partial f(\theta)}{\partial \theta} = \left( \begin{array}{c} \frac{\partial f(\theta)}{\partial \theta_1} \\ \vdots \\ \frac{\partial f(\theta)}{\partial \theta_k} \end{array} \right). \end{equation}\]

Assume that \(\hat{\theta}\) is asymptotically normally distributed:

\[\begin{equation*} \hat{\theta} \sim N(\theta, \widehat{\mathrm{var}}(\hat{\theta})), \end{equation*}\]

where \(\widehat{\mathrm{var}}(\hat{\theta})\) is the \(k \times k\) estimated variance-covariance matrix of \(\hat{\theta}\). Then the delta method shows us that \(f(\hat{\theta})\) is asymptotically normally distributed:

\[\begin{equation*} f(\hat{\theta}) \sim N(f(\theta), \widehat{\mathrm{se}}(f(\hat{\theta}))^{2}), \end{equation*}\]

where

\[\begin{equation*} \widehat{\mathrm{se}}(f(\hat{\theta}))^{2} = g(\hat{\theta})'\widehat{\mathrm{var}}(\hat{\theta})g(\hat{\theta}). \end{equation*}\]

Let \(\theta = (\mu, \sigma)'\) and consider \(f_1(\theta) = q_{\alpha}^R = \mu + \sigma q_{\alpha}^Z\) (normal simple return quantile). The gradient function \(g_1(\theta)\) for \(f_1(\theta)\) is:

\[\begin{align*} g_1(\theta) = \frac{\partial f_1(\theta)}{\partial \theta} = \left( \begin{array}{c} \frac{\partial f_1(\theta)}{\partial \mu} \\ \frac{\partial f_1(\theta)}{\partial \sigma} \end{array} \right) = \left( \begin{array}{c} 1 \\ q_{\alpha}^Z \end{array} \right). \end{align*}\]

Regarding \(\mathrm{var}(\hat{\theta})\), recall from Chapter 7, in the GWN model: \[\begin{equation*} \left(\begin{array}{c} \hat{\mu}\\ \hat{\sigma}^2 \end{array}\right)\sim N\left(\left(\begin{array}{c} \mu\\ \sigma^2 \end{array}\right),\left(\begin{array}{cc} \mathrm{se}(\hat{\mu})^{2} & 0\\ 0 & \mathrm{se}(\hat{\sigma}^2)^{2} \end{array}\right)\right) \end{equation*}\]

for large enough \(T\). We show below, using the delta method, that

\[\begin{equation*} \left(\begin{array}{c} \hat{\mu}\\ \hat{\sigma} \end{array}\right)\sim N\left(\left(\begin{array}{c} \mu\\ \sigma \end{array}\right),\left(\begin{array}{cc} \mathrm{se}(\hat{\mu})^{2} & 0\\ 0 & \mathrm{se}(\hat{\sigma})^{2} \end{array}\right)\right) \end{equation*}\]

Hence,

\[\begin{equation*} \mathrm{var}(\hat{\theta}) = \left(\begin{array}{cc} \mathrm{se}(\hat{\mu})^{2} & 0\\ 0 & \mathrm{se}(\hat{\sigma})^{2} \end{array}\right) = \left(\begin{array}{cc} \frac{\sigma^2}{T} & 0\\ 0 & \frac{\sigma^2}{2T} \end{array}\right) \end{equation*}\]

Then,

\[\begin{align*} g_1(\theta)'\mathrm{var}(\hat{\theta})g_1(\theta) &= \left( \begin{array}{cc} 1 & q_{\alpha}^Z \end{array} \right) \left(\begin{array}{cc} \mathrm{se}(\hat{\mu})^{2} & 0\\ 0 & \mathrm{se}(\hat{\sigma})^{2} \end{array}\right) \left( \begin{array}{c} 1 \\ q_{\alpha}^Z \end{array} \right) \\ &= \mathrm{se}(\hat{\mu})^{2} + (q_{\alpha}^Z)^2 \mathrm{se}(\hat{\sigma})^{2} \\ & =\frac{\sigma^{2}}{T}+\frac{\left(q_{\alpha}^{Z}\right)^{2}\sigma^{2}}{2T}\\ & =\frac{\sigma^{2}}{T}\left[1+\frac{1}{2}\left(q_{\alpha}^{Z}\right)^{2}\right] \\ &= \mathrm{se}(\hat{q}_{\alpha}^Z)^2. \end{align*}\]

The standard error for \(\hat{q}_{\alpha}^Z\) is: \[\begin{equation*} \mathrm{se}(\hat{q}_{\alpha}^{R})=\sqrt{\mathrm{var}(\hat{q}_{\alpha}^{R})}=\frac{\sigma}{\sqrt{T}}\left[1+\frac{1}{2}\left(q_{\alpha}^{Z}\right)^{2}\right]^{1/2}.\tag{8.5} \end{equation*}\]

The formula (8.5) shows that \(\mathrm{se}(\hat{q}_{\alpha}^{R})\) increases with \(\sigma\) and \(q_{\alpha}^{Z}\), and decreases with \(T\). In addition, for fixed \(T\), \(\mathrm{se}(\hat{q}_{\alpha}^{R})\rightarrow\infty\) as \(\alpha\rightarrow0\) or as \(\alpha\rightarrow1\) because \(q_{\alpha}^{Z}\rightarrow-\infty\) as \(\alpha\rightarrow0\) and \(q_{\alpha}^{Z}\rightarrow\infty\) as \(\alpha\rightarrow1\). Hence, we have very large estimation error in \(\hat{q}_{\alpha}^{R}\) when \(\alpha\) is very close to zero or one (for fixed values of \(\sigma\) and \(T\)). This makes intuitive sense as we have very few observations in the extreme tails of the empirical distribution of the data for a fixed sample size and so we expect a larger estimation error.

Replacing \(\sigma\) with its estimate \(\hat{\sigma}\) gives the estimated standard error for \(\hat{q}_{\alpha}^{R}\):

\[\begin{equation} \widehat{\mathrm{se}}(\hat{q}_{\alpha}^{R})=\frac{\hat{\sigma}}{\sqrt{T}}\left[1+\frac{1}{2}\left(q_{\alpha}^{Z}\right)^{2}\right]^{1/2}.\tag{8.5} \end{equation}\]

Using the above results, the sampling distribution of \(\hat{q}_{\alpha}^{R}\) can be approximated by the normal distribution: \[\begin{equation} \hat{q}_{\alpha}^{R}\sim N(q_{\alpha}^{R},~\widehat{\mathrm{se}}(\hat{q}_{\alpha}^{R})^{2}),\tag{8.6} \end{equation}\] for large enough \(T\).

The estimates of \(q_{\alpha}^{R}\), \(\mathrm{se}(\hat{q}_{\alpha}^{R})\), and 95% confidence intervals, for \(\alpha=0.05,\,0.01\) and \(0.001\), from the simple monthly returns for Microsoft are:

qhat.05 = muhatS + sigmahatS*qnorm(0.05)

qhat.01 = muhatS + sigmahatS*qnorm(0.01)

qhat.001 = muhatS + sigmahatS*qnorm(0.001)

seQhat.05 = (sigmahatS/sqrt(n.obs))*sqrt(1 + 0.5*qnorm(0.05)^2)

seQhat.01 = (sigmahatS/sqrt(n.obs))*sqrt(1 + 0.5*qnorm(0.01)^2)

seQhat.001 = (sigmahatS/sqrt(n.obs))*sqrt(1 + 0.5*qnorm(0.001)^2)

lowerQhat.05 = qhat.05 - 2*seQhat.05

upperQhat.05 = qhat.05 + 2*seQhat.05

lowerQhat.01 = qhat.01 - 2*seQhat.01

upperQhat.01 = qhat.01 + 2*seQhat.01

lowerQhat.001 = qhat.001 - 2*seQhat.001

upperQhat.001 = qhat.001 + 2*seQhat.001

ans = cbind(c(qhat.05, qhat.01, qhat.001),

c(seQhat.05, seQhat.01, seQhat.001),

c(lowerQhat.05, lowerQhat.01, lowerQhat.001),

c(upperQhat.05, upperQhat.01, upperQhat.001))

colnames(ans) = c("Estimates", "Std Errors", "2.5%", "97.5%")

rownames(ans) = c("q.05", "q.01", "q.001")

ans## Estimates Std Errors 2.5% 97.5%

## q.05 -0.158 0.0119 -0.182 -0.134

## q.01 -0.227 0.0149 -0.257 -0.197

## q.001 -0.304 0.0186 -0.342 -0.267For Microsoft the values of \(\widehat{\mathrm{se}}(\hat{q}_{\alpha}^{R})\) are about 1.2%, 1.5%, and 1.9% for \(\alpha=0.05\), \(\alpha=0.01\) and \(\alpha=0.001\), respectively. The 95% confidence interval widths increase with decreases in \(\alpha\) indicating more uncertainty about the true value of \(q_{\alpha}^R\) for smaller values of \(\alpha\). For example, the 95% confidence for interval \(q_{0.01}^R\) indicates that the true loss with \(1\%\) probability (one month out of 100 months) is between \(19.7\%\) and \(25.7\%\).

\(\blacksquare\)

Let \(\theta = (\mu, \sigma)'\) and consider the functions \(f_2(\theta) = \mathrm{VaR}_{\alpha}^N = -W_0(\mu + \sigma q_{\alpha}^Z)\) (normal VaR), \(f_3(\theta) = \mathrm{VaR}_{\alpha}^{LN} = -W_0(\exp((\mu + \sigma q_{\alpha}^Z)) - 1)\) (log-normal VaR), and \(f_4(\theta) = \mathrm{SR} = \frac{\mu-r_f}{\sigma}\) (Sharpe Ratio). It is straightforward to show (see end-of-chapter exercises) that the gradient functions are:

\[\begin{align*} g_2(\theta) & = \frac{\partial f_2(\theta)}{\partial \theta} = \left( \begin{array}{c} -W_0 \\ -W_0 q_{\alpha}^Z \end{array} \right), \\ g_3(\theta) & = \frac{\partial f_3(\theta)}{\partial \theta} = \left( \begin{array}{c} -W_0 \exp(\mu + \sigma q_{\alpha}^Z) \\ -W_0 \exp(\mu + \sigma q_{\alpha}^Z)q_{\alpha}^Z \end{array} \right), \\ g_4(\theta) & = \frac{\partial f_4(\theta)}{\partial \theta} = \left( \begin{array}{c} \frac{-1}{\sigma} \\ -(\sigma)^{-2}(\mu - r_f) \end{array} \right). \end{align*}\]

The delta method variances are:

\[\begin{align*} g_2(\theta)'\mathrm{var}(\hat{\theta})g_2(\theta) &= W_0^2 \mathrm{se}(\hat{q}_{\alpha}^R)^2 = \mathrm{se} \left( \widehat{\mathrm{VaR}}_{\alpha}^{N} \right )^2 \\ g_3(\theta)'\mathrm{var}(\hat{\theta})g_3(\theta) &= W_0^2 \exp(q_{\alpha}^R)^2 \mathrm{se}(\hat{q}_{\alpha}^R)^2 = \mathrm{se} \left( \widehat{\mathrm{VaR}}_{\alpha}^{LN} \right )^2 \\ g_4(\theta)'\mathrm{var}(\hat{\theta})g_4(\theta) &= \frac{1}{T}\left( 1 + \frac{1}{2}\mathrm{SR}^2 \right ) = \mathrm{se}(\widehat{\mathrm{SR}})^2. \end{align*}\]

The corresponding standard errors are:

\[\begin{align*} \mathrm{se} \left( \widehat{\mathrm{VaR}}_{\alpha}^{N} \right ) &= W_0 \mathrm{se}(\hat{q}_{\alpha}^R) \\ \mathrm{se} \left( \widehat{\mathrm{VaR}}_{\alpha}^{LN} \right ) &= W_0 \exp(q_{\alpha}^R) \mathrm{se}(\hat{q}_{\alpha}^R) \\ \mathrm{se}(\widehat{\mathrm{SR}}) &= \sqrt{\frac{1}{T}\left( 1 + \frac{1}{2}\mathrm{SR}^2 \right ) }, \end{align*}\]

where

\[\begin{equation*} \mathrm{se}(\hat{q}_{\alpha}^Z) = \sqrt{\frac{\sigma^{2}}{T}\left[1+\frac{1}{2}\left(q_{\alpha}^{Z}\right)^{2}\right]}. \end{equation*}\]

We make the following remarks:

\(\mathrm{se}(\widehat{\mathrm{VaR}}_{\alpha}^{N})\) and \(\mathrm{se}(\widehat{\mathrm{VaR}}_{\alpha}^{LN})\) increase with \(W_{0}\), \(\sigma\), and \(q_{\alpha}^{Z}\), and decrease with \(T\).

For fixed values of \(W_{0}\), \(\sigma\), and \(T\), \(\mathrm{se}(\widehat{\mathrm{VaR}}_{\alpha}^N)\) and \(\mathrm{se}(\widehat{\mathrm{VaR}}_{\alpha}^{LN})\) become very large as \(\alpha\) approaches zero. That is, for very small loss probabilities we will have very bad estimates of \(\mathrm{VaR}_{\alpha}^N\) and \(\mathrm{VaR}_{\alpha}^{LN}\).

\(\mathrm{se}(\mathrm{SR})\) increases with \(\mathrm{SR}^2\) and decreases with \(T\).

The practically useful estimated standard errors replace the unknown values of \(q_{\alpha}^R\) and \(\mathrm{SR}\) with their estimated values \(\hat{q}_{\alpha}^R\) and \(\widehat{\mathrm{SR}}\) and are given by:

\[\begin{align} \mathrm{\widehat{se}} \left( \widehat{\mathrm{VaR}}_{\alpha}^{N} \right ) &= W_0 \mathrm{\widehat{se}}(\hat{q}_{\alpha}^R) \tag{8.7}\\ \mathrm{\widehat{se}} \left( \widehat{\mathrm{VaR}}_{\alpha}^{LN} \right ) &= W_0 \exp(\hat{q}_{\alpha}^R) \mathrm{\widehat{se}}(\hat{q}_{\alpha}^R) \tag{8.8} \\ \mathrm{\widehat{se}}(\widehat{\mathrm{SR}}) &= \sqrt{\frac{1}{T}\left( 1 + \frac{1}{2}\widehat{\mathrm{SR}}^2 \right ) } \end{align}\]

\(\blacksquare\)

Consider a \(\$100,000\) investment for one month in Microsoft. The estimates of \(\mathrm{VaR}_{\alpha}^{N}\), its standard error, and \(95\%\) confidence intervals, for \(\alpha=0.05\) and \(\alpha=0.01\) are:

W0=100000

qhat.05 = muhatS + sigmahatS*qnorm(0.05)

qhat.01 = muhatS + sigmahatS*qnorm(0.01)

VaR.N.05 = -qhat.05*W0

seVaR.N.05 = W0*seQhat.05

VaR.N.01 = -qhat.01*W0

seVaR.N.01 = W0*seQhat.01

lowerVaR.N.05 = VaR.N.05 - 2*seVaR.N.05

upperVaR.N.05 = VaR.N.05 + 2*seVaR.N.05

lowerVaR.N.01 = VaR.N.01 - 2*seVaR.N.01

upperVaR.N.01 = VaR.N.01 + 2*seVaR.N.01

ans = cbind(c(VaR.N.05, VaR.N.01),

c(seVaR.N.05, seVaR.N.01),

c(lowerVaR.N.05, lowerVaR.N.01),

c(upperVaR.N.05,upperVaR.N.01))

colnames(ans) = c("Estimate", "Std Error", "2.5%", "97.5%")

rownames(ans) = c("VaR.N.05", "VaR.N.01")

ans## Estimate Std Error 2.5% 97.5%

## VaR.N.05 15780 1187 13405 18154

## VaR.N.01 22696 1490 19717 25676The estimated values of \(\mathrm{VaR}_{.05}^N\) and \(\mathrm{VaR}_{.01}^N\) are \(\$15,780\) and \(\$22,696\), respectively, with estimated standard errors of \(\$1,172\) and \(\$1,471\). The estimated standard errors are fairly small compared with the estimated VaR values, so we can say that VaR is estimated fairly precisely here. Notice that the estimated standard error for \(\mathrm{VaR}_{.01}\) is about \(26\%\) larger than the estimated standard error for \(\mathrm{VaR}_{.05}^N\) indicating we have less precision for estimating VaR for very small values of \(\alpha\).

The estimates of \(\mathrm{VaR}_{\alpha}^{LN}\) and its standard error for \(\alpha=0.05\) and \(\alpha=0.01\) are:

qhat.05 = muhatC + sigmahatC*qnorm(0.05)

qhat.01 = muhatC + sigmahatC*qnorm(0.01)

seQhat.05 = (sigmahatC/sqrt(n.obs))*sqrt(1 + 0.5*qnorm(0.05)^2)

seQhat.01 = (sigmahatC/sqrt(n.obs))*sqrt(1 + 0.5*qnorm(0.01)^2)

VaR.LN.05 = -W0*(exp(qhat.05)-1)

seVaR.LN.05 = W0*exp(qhat.05)*seQhat.05

VaR.LN.01 = -W0*(exp(qhat.01)-1)

seVaR.LN.01 = W0*exp(qhat.01)*seQhat.01

lowerVaR.LN.05 = VaR.LN.05 - 2*seVaR.LN.05

upperVaR.LN.05 = VaR.LN.05 + 2*seVaR.LN.05

lowerVaR.LN.01 = VaR.LN.01 - 2*seVaR.LN.01

upperVaR.LN.01 = VaR.LN.01 + 2*seVaR.LN.01

ans = cbind(c(VaR.LN.05, VaR.LN.01),

c(seVaR.LN.05, seVaR.LN.01),

c(lowerVaR.LN.05, lowerVaR.LN.01),

c(upperVaR.LN.05,upperVaR.LN.01))

colnames(ans) = c("Estimate", "Std Error", "2.5%", "97.5%")

rownames(ans) = c("VaR.LN.05", "VaR.LN.01")

ans## Estimate Std Error 2.5% 97.5%

## VaR.LN.05 14846 998 12850 16842

## VaR.LN.01 20467 1170 18128 22807The estimates of \(\mathrm{VaR}_{\alpha}^{LN}\) (and its standard error) are slightly smaller than the estimates of \(\mathrm{VaR}_{\alpha}^{N}\) (and its standard error) due to the positive skewness in the log-normal distribution.

\(\blacksquare\)

The estimated monthly SR for Microsoft (using \(r_f = 0.0025\)), its standard error and 95% confidence interval are computed using:

SRhat = (muhatS - r.f)

seSRhat = sqrt((1/n.obs)*(1 + 0.5*(SRhat^2)))

lowerSR = SRhat - 2*seSRhat

upperSR = SRhat + 2*seSRhat

ans = c(SRhat, seSRhat, lowerSR, upperSR)

names(ans) = c("Estimate", "Std Error", "2.5%", "97.5%")

ans## Estimate Std Error 2.5% 97.5%

## 0.00665 0.07625 -0.14585 0.15915The estimated monthly SR for Microsoft is close to zero, with a large estimated standard error and a 95% confidence interval containing both negative and positive values. Clearly, the SR is not estimated very well.

\(\blacksquare\)

8.3.1 The numerical delta method

The delta method is an advanced statistical technique and requires a bit of calculus to implement, especially for nonlinear vector-valued functions. It can be easy to make mistakes when working out the math! Fortunately, in R there is an easy way to implement the delta method that does not require you to work out the math. The car function deltaMethod implements the delta method numerically, utilizing the stats function D() which implements symbolic derivatives. The arguments expected by deltaMethod are:

## function (object, g., vcov., func = g., constants, level = 0.95,

## rhs = NULL, ..., envir = parent.frame())

## NULLHere, object is the vector of named estimated parameters, \(\hat{\theta}\), g. is a quoted string that is the function of the parameter estimates to be evaluated, \(f(\theta)\), and vcov is the named estimated covariance matrix of the coefficient estimates, \(\widehat{\mathrm{var}}(\hat{\theta})\). The function returns an object of class “deltaMethod” for which there is a print method. The “deltaMethod” object is essentially a data.frame with columns giving the parameter estimate(s), the delta method estimated standard error(s), and lower and upper confidence limits. The following example illustrates how to use deltaMethod for the example functions.

We first create the named inputs \(\hat{\theta}\) and \(\widehat{\mathrm{var}}(\hat{\theta})\) from the GWN model for simple returns:

thetahatS = c(muhatS, sigmahatS)

names(thetahatS) = c("mu", "sigma")

var.thetahatS = matrix(c(se.muhatS^2,0,0,se.sigmahatS^2), 2, 2, byrow=TRUE)

rownames(var.thetahatS) = colnames(var.thetahatS) = names(thetahatS)

thetahatS## mu sigma

## 0.00915 0.10150## mu sigma

## mu 5.99e-05 0.00e+00

## sigma 0.00e+00 2.99e-05We do the same for the inputs from the GWN model for continuously compounded returns:

thetahatC = c(muhatC, sigmahatC)

names(thetahatC) = c("mu", "sigma")

var.thetahatC = matrix(c(se.muhatC^2,0,0,se.sigmahatC^2), 2, 2, byrow=TRUE)

rownames(var.thetahatC) = colnames(var.thetahatC) = names(thetahatC)It is important to name the elements in thetahat and var.thetahat as these names will be used in defining \(f(\theta)\). To implement the numerical delta method for the normal quantile, we need to supply a quoted string specifying the function \(f_1(\theta) = \mu + \sigma q_{\alpha}^Z\). Here we use the string "mu+sigma*q.05", where q.05 = qnorm(0.05). For, \(\alpha = 0.05\) the R code is:

## [1] "deltaMethod" "data.frame"The returned object dm1 is of class “deltaMethod”, inhereting from “data.frame”, and has named columns:

## [1] "Estimate" "SE" "2.5 %" "97.5 %"The print method shows the results of applying the delta method:

## Estimate SE 2.5 % 97.5 %

## mu + sigma * q.05 -0.1578 0.0119 -0.1811 -0.13The results match the analytic calculations performed above.40

The delta method for the normal VaR function \(f_2(\theta)\) is:

## Estimate SE 2.5 % 97.5 %

## -W0 * (mu + sigma * q.05) 15780 1187 13453 18106The delta method for the log-normal VaR function \(f_3(\theta)\) is:

## Estimate SE 2.5 % 97.5 %

## -W0 * (exp(mu + sigma * q.05) - 1) 14846 998 12890 16802The delta method for the Sharpe ratio function \(f_4(\theta)\) is

## Estimate SE 2.5 % 97.5 %

## (mu - r.f)/sigma 0.0655 0.0763 -0.0841 0.22\(\blacksquare\)

8.3.2 the delta method for vector valued functions (advanced)

Let \(\theta\) denote a \(k \times 1\) vector of GWN model parameters. For example, \(\theta = (\mu, \sigma^2)'\). Assume that \(\hat{\theta}\) is asymptotically normally distributed: \[\begin{equation} \hat{\theta} \sim N( \theta, \widehat{\mathrm{var}}(\theta)), \end{equation}\] for large enough \(T\) where \(\widehat{\mathrm{var}}(\theta)\) is the \(k \times k\) variance-covariance matrix of \(\hat{\theta}\). Sometimes we need to consider the asymptotic distribution of a vector-valued function of \(\theta\). Let \(f:\mathbb{R}^k \rightarrow \mathbb{R}^j\) where \(j \le k\) be a continuous and differentiable function, and define the \(j \times 1\) vector \(\eta=f(\theta)\). It is useful to express \(\eta\) as:

\[\begin{equation} \eta = \begin{pmatrix} \eta_1 \\ \eta_2 \\ \vdots \\ \eta_j \end{pmatrix} = \begin{pmatrix} f_1(\theta) \\ f_2(\theta) \\ \vdots \\ f_j(\theta) \end{pmatrix}. \end{equation}\]

For the first example let \(\theta=(\sigma_1^2, \sigma_2^2)'\), and define the \(2 \times 1\) vector of volatilities: \(\eta = f(\theta)=(\sigma_1, \sigma_2)'\). Then, \(\eta_1 = f_1(\theta) = \sqrt{\sigma_2^2}\) and \(\eta_2 = f_2(\theta) = \sqrt{\sigma_2^2}\).

For the second example, let \(\theta\) = \((\mu_1,\mu_2,\sigma_1,\sigma_2)'\) and define the \(2 \times 1\) vector of Sharpe ratios:

\[\begin{equation} \eta = f(\theta) = \begin{pmatrix} \frac{\mu_1 - r_f}{\sigma_1} \\ \frac{\mu_1 - r_f}{\sigma_1} \end{pmatrix} = \begin{pmatrix} \mathrm{SR}_1 \\ \mathrm{SR}_2 \end{pmatrix}, \end{equation}\]

where \(r_f\) denotes the constant risk-free rate. Then \[\begin{equation} \eta_1 = f_1(\theta) = \frac{\mu_1 - r_f}{\sigma_1} = \mathrm{SR}_1, ~ \eta_2 = f_2(\theta) = \frac{\mu_2 - r_f}{\sigma_2} = \mathrm{SR}_2. \end{equation}\]

\(\blacksquare\)

Define the \(j \times k\) Jacobian matrix: \[\begin{equation} \mathbf{G}(\theta)' = \begin{pmatrix} \frac{\partial f_1(\theta)}{\partial \theta_1} & \frac{\partial f_1(\theta)}{\partial \theta_2} & \cdots & \frac{\partial f_1(\theta)}{\partial \theta_k} \\ \frac{\partial f_2(\theta)}{\partial \theta_1} & \frac{\partial f_2(\theta)}{\partial \theta_2} & \cdots & \frac{\partial f_2(\theta)}{\partial \theta_k} \\ \vdots & \vdots & \ldots & \vdots \\ \frac{\partial f_j(\theta)}{\partial \theta_1} & \frac{\partial f_j(\theta)}{\partial \theta_2} & \cdots & \frac{\partial f_j(\theta)}{\partial \theta_k} \\ \end{pmatrix} \end{equation}\]

Then, by the delta method, the asymptotic normal distribution of \(\hat{\eta} = f(\hat{\theta})\) is: \[\begin{equation} \hat{\eta} \sim N(\eta, \widehat{\mathrm{var}}(\hat{\eta})), \end{equation}\] where \[\begin{equation} \widehat{\mathrm{var}}(\hat{\eta}) = \mathbf{G}(\hat{\theta})'\widehat{\mathrm{var}}(\hat{\theta})\mathbf{G}(\hat{\theta}).\tag{8.9} \end{equation}\]

Consider the first example function where \(\theta = (\sigma_1^2,\sigma_2^2)'\) and \(\eta = f(\theta)=(\sigma_1, \sigma_2)'\). Now, using the chain-rule:

\[\begin{align*} \frac{\partial f_1(\theta)}{\partial \sigma_1^2} & = \frac{1}{2}\sigma_1^{-1}, ~ \frac{\partial f_1(\theta)}{\partial \sigma_2^2} = 0 \\ \frac{\partial f_2(\theta)}{\partial \sigma_1^2} & = 0, ~ \frac{\partial f_2(\theta)}{\partial \sigma_2^2} = \frac{1}{2}\sigma_1^{-1} \end{align*}\]

The Jacobian matrix is

\[\begin{equation} \mathbf{G}(\theta)' = \begin{pmatrix} \frac{1}{2}\sigma_1^{-1} & 0 \\ 0 & \frac{1}{2}\sigma_2^{-1} \end{pmatrix} \end{equation}\]

From Chapter 7, Proposition 7.9,

\[\begin{equation} \widehat{\mathrm{var}}(\hat{\theta}) = \frac{1}{T}\begin{pmatrix} 2\hat{\sigma}_1^4 & 2\hat{\sigma}_{12}^2 \\ 2\hat{\sigma}_{12}^2 & 2\hat{\sigma}_2^4 \end{pmatrix} \end{equation}\]

Then

\[\begin{align*} \widehat{\mathrm{var}}(\hat{\eta}) & = \mathbf{G}(\hat{\theta})'\widehat{\mathrm{var}}(\hat{\theta})\mathbf{G}(\hat{\theta}) \\ & = \begin{pmatrix} \frac{1}{2}\hat{\sigma}_1^{-1} & 0 \\ 0 & \frac{1}{2}\hat{\sigma}_2^{-1} \end{pmatrix} \frac{1}{T}\begin{pmatrix} 2\hat{\sigma}_1^4 & 2\hat{\sigma}_{12}^2 \\ 2\hat{\sigma}_{12}^2 & 2\hat{\sigma}_2^4 \end{pmatrix} \begin{pmatrix} \frac{1}{2}\hat{\sigma}_1^{-1} & 0 \\ 0 & \frac{1}{2}\hat{\sigma}_2^{-1} \end{pmatrix} \\ &= \frac{1}{T} \begin{pmatrix} \frac{1}{2}\hat{\sigma}_1^2 & \frac{1}{2}\hat{\sigma}_{12}^2 \hat{\sigma}_{1}^{-1} \hat{\sigma}_{2}^{-1}\\ \frac{1}{2}\hat{\sigma}_{12}^2 \hat{\sigma}_{1}^{-1} \hat{\sigma}_{2}^{-1} & \frac{1}{2}\hat{\sigma}_2^2 \end{pmatrix} = \frac{1}{T} \begin{pmatrix} \frac{1}{2}\hat{\sigma}_1^2 & \frac{1}{2}\hat{\sigma}_{12} \hat{\rho}_{12} \\ \frac{1}{2}\hat{\sigma}_{12} \hat{\rho}_{12} & \frac{1}{2}\hat{\sigma}_2^2 \end{pmatrix}. \end{align*}\]

\(\blacksquare\)

Consider the second example function where \(\theta =(\mu_1,\mu_2, \sigma_1, \sigma_2)'\) and \(\eta = f(\theta)=(f_1(\theta), f_2(\theta))'\) where \[\begin{equation} f_1(\theta) = \frac{\mu_1 - r_f}{\sigma_1} = \mathrm{SR}_1, ~ f_2(\theta) = \frac{\mu_2 - r_f}{\sigma_2} = \mathrm{SR}_2. \end{equation}\]

Here, we want to use the delta method to get the joint distribution of \(\eta = (\mathrm{SR}_1, \mathrm{SR}_2)'\). Now, using the chain-rule:

\[\begin{align*} \frac{\partial f_1(\theta)}{\partial \mu_1} & = \sigma_1^{-1}, ~ \frac{\partial f_1(\theta)}{\partial \mu_2} = 0, ~ & \frac{\partial f_1(\theta)}{\partial \sigma_1} = -\sigma_1^{-2}(\mu_1 - r_f), ~ & \frac{\partial f_1(\theta)}{\partial \sigma_2} = 0, \\ \frac{\partial f_2(\theta)}{\partial \mu_1} & = 0, ~ \frac{\partial f_2(\theta)}{\partial \mu_2} = \sigma_2^{-1}, ~ & \frac{\partial f_2(\theta)}{\partial \sigma_1} = 0, ~ & \frac{\partial f_2(\theta)}{\partial \sigma_2} = -\sigma_1^{-2}(\mu_1 - r_f). \end{align*}\]

Then, using \(\mathrm{SR}_{i} = (\mu_i - r_f)/\sigma_i ~ (i=1,2)\), we have:

\[\begin{equation} \mathbf{G}(\theta)' = \begin{pmatrix} \sigma_1^{-1} & 0 & -\sigma_1^{-1} \mathrm{SR}_1 & 0 \\ 0 & \sigma_2^{-1} & 0 & -\sigma_2^{-1}\mathrm{SR}_2 & 0 \end{pmatrix} \end{equation}\]

Using Proposition 7.10 and the results from the previous example we have:

\[\begin{equation} \mathrm{var}(\hat{\theta}) = \frac{1}{T} \begin{pmatrix} \sigma_1^2 & \sigma_{12} & 0 & 0 \\ \sigma_{12} & \sigma_2^2 & 0 & 0 \\ 0 & 0 & \frac{1}{2}\sigma_1^2 & \frac{1}{2} \sigma_{12}^2 \sigma_1^{-1} \sigma_2^{-1} \\ 0 & 0 & \frac{1}{2} \sigma_{12}^2 \sigma_1^{-1} \sigma_2^{-1} & \frac{1}{2}\sigma_2^2 \end{pmatrix}. \end{equation}\]

Then, after some straightforward matrix algebra calculations (see end-of-chapter exercises) we get:

\[\begin{align} \widehat{\mathrm{var}}(\hat{\eta}) & = \mathbf{G}(\hat{\theta})'\widehat{\mathrm{var}}(\hat{\theta})\mathbf{G}(\hat{\theta}) \\ &= \begin{pmatrix} \widehat{\mathrm{var}}(\widehat{\mathrm{SR}}_1) & \widehat{\mathrm{cov}}(\widehat{\mathrm{SR}}_1, \widehat{\mathrm{SR}}_2) \\ \widehat{\mathrm{cov}}(\widehat{\mathrm{SR}}_1, \widehat{\mathrm{SR}}_2) & \widehat{\mathrm{var}}(\widehat{\mathrm{SR}}_2) \end{pmatrix} \\ & = \begin{pmatrix} \frac{1}{T}(1+\frac{1}{2}\widehat{\mathrm{SR}}_1^2) & \frac{\hat{\rho}_{12}}{T}(1 + \frac{1}{2}\hat{\rho}_{12}\widehat{\mathrm{SR}}_1 \widehat{\mathrm{SR}}_2) \\ \frac{\hat{\rho}_{12}}{T}(1 + \frac{1}{2}\hat{\rho}_{12} \widehat{\mathrm{SR}}_1\widehat{\mathrm{SR}}_2) & \frac{1}{T}(1+\frac{1}{2}\widehat{\mathrm{SR}}_2^2) \end{pmatrix}. \end{align}\]

The diagonal elements match the delta method variances for the estimated Sharpe ratio derived earlier. The off-diagonal covariance term shows that the two estimated Sharpe ratios are correlated. We explore this correlation in more depth in the end-of-chapter exercises.

\(\blacksquare\)

8.3.3 The delta method explained

The delta method deduces the asymptotic distribution of \(f(\hat{\theta})\) using a first order Taylor series approximation of \(f(\hat{\theta})\) evaluated at the true value \(\theta\). For simplicity, assume that \(\theta\) is a scalar parameter. Then, the first order Taylor series expansion gives

\[\begin{align*} f(\hat{\theta}) & = f(\theta) + f'(\theta)(\hat{\theta} - \theta) + \text{remainder} \\ & = f(\theta) + f'(\theta)\hat{\theta} + f'(\theta)\theta + \text{remainder} \\ & \approx f(\theta) + f'(\theta)\hat{\theta} + f'(\theta)\theta \end{align*}\]

The first order Taylor series expansion shows that \(f(\hat{\theta})\) is an approximate linear function of the random variable \(\hat{\theta}\). The values \(\theta\), \(f(\theta)\), and \(f'(\theta)\) are constants. Assuming that, for large enough T,

\[\begin{equation*} \hat{\theta}\sim N(\theta,\widehat{\mathrm{se}}(\hat{\theta})^{2}), \end{equation*}\]

it follows that

\[\begin{equation*} f(\hat{\theta}) \sim N(f(\theta), f'(\theta)^2 \widehat{\mathrm{se}}(\hat{\theta})^{2}) \end{equation*}\]

Since \(\hat{\theta}\) is consistent for \(\theta\) we can replace \(f'(\theta)^2\) with \(f'(\hat{\theta})^2\) giving the practically useful result

\[\begin{equation*} f(\hat{\theta}) \sim N(f(\theta), f'(\hat{\theta})^2 \widehat{\mathrm{se}}(\hat{\theta})^{2}) \end{equation*}\]

8.4 Resampling

As described in the previous sections, the delta method can be used to produce analytic expressions for standard errors of functions of estimated parameters that are asymptotically normally distributed. The formulas are specific to each function and are approximations that rely on large samples and the validity of the Central Limit Theorem to be accurate.

Alternatives to the analytic asymptotic distributions of estimators and the delta method are computer intensive resampling methods such as the jackknife and the bootstrap. These resampling methods can be used to produce numerical estimated standard errors for estimators and functions of estimators without the use of mathematical formulas. Most importantly, these jackknife and bootstrap standard errors are: (1) easy to compute, and (2) often numerically very close to the analytic standard errors based on CLT approximations. In some cases the bootstrap standard errors can even be more reliable than asymptotic approximations.

There are many advantages of the jackknife and bootstrap over analytic formulas. The most important are the following:

- Fewer assumptions. The jackknife and boostrap procedures typically requires fewer assumptions than are needed to derive analytic formulas. In particular, they do not require the data to be normally distributed or the sample size to be large enough so that the Central Limit Theorem holds.

- Greater accuracy. In small samples the bootstrap is often more reliable than analytic approximations based on the Central Limit Theorem. In large samples when the Central Limit Theorem holds the bootstrap can be even be more accurate.

- Generality. In many situations, the jackknife and bootstrap procedures are applied in the same way regardless of the estimator under consideration. That is, you don’t need a different jackknife or bootstrap procedure for each estimator.

8.5 The Jackknife

Let \(\theta\) denote a scalar parameter of interest. It could be a scalar parameter of the GWN model (e.g. \(\mu\) or \(\sigma\)) or it could be a scalar function of the parameters of the GWN model (e.g. VaR or SR). Let \(\hat{\theta}\) denote the plug-in estimator of \(\theta\). The goal is to compute a numerical estimated standard error for \(\hat{\theta}\), \(\widehat{\mathrm{se}}(\hat{\theta})\), using the jackknife resampling method.

The jackknife resampling method utilizes \(T\) resamples of the original data \(\left\{ R_t \right\}_{t=1}^{T}\). The resamples are created by leaving out a single observation. For example, the first jackknife resample leaves out the first observation and is given by \(\left\{ R_t \right\}_{t=2}^{T}\). This sample has \(T-1\) observations. In general, the \(t^{th}\) jackknife resample leaves out the \(t^{th}\) return \(R_{t}\) for \(t \le T\). Now, on each of the \(t=1,\ldots, T\) jackknife resamples you compute an estimate of \(\theta\). Denote this jackknife estimate \(\hat{\theta}_{-t}\) to indicate that \(\theta\) is estimated using the resample that omits observation \(t\). The estimator \(\hat{\theta}_{-t}\) is called the leave-one-out estimator of \(\theta\). Computing \(\hat{\theta}_{-t}\) for \(t=1,\ldots, T\) gives \(T\) leave-one-out estimators \(\left\{ \hat{\theta}_{-t} \right\}_{t=1}^{T}\).

The jackknife estimator of \(\widehat{\mathrm{se}}(\hat{\theta})\) is the scaled standard deviation of \(\left\{ \hat{\theta}_{-t} \right\}_{t=1}^{T}\):

\[\begin{equation} \widehat{\mathrm{se}}_{jack}(\hat{\theta}) = \sqrt{ \frac{T-1}{T}\sum_{t=1}^T (\hat{\theta}_{-t} - \bar{\theta})^2 }, \tag{8.10} \end{equation}\]

where \(\bar{\theta} = T^{-1}\sum_{t=1}^T \hat{\theta}_{-t}\) is the sample mean of \(\left\{ \hat{\theta}_{-t} \right\}_{t=1}^{T}\). The estimator (8.10) is easy to compute and does not require any analytic calculations from the asymptotic distribution of \(\hat{\theta}\).

The intuition for the jackknife standard error formula can be illustrated by considering the jackknife standard error estimate for the sample mean \(\hat{\theta} = \hat{\mu}\). We know that the analytic estimated squared standard error for \(\hat{\mu}\) is

\[\begin{equation*} \widehat{\mathrm{se}}(\hat{\mu})^2 = \frac{\hat{\sigma}^2}{T} = \frac{1}{T} \frac{1}{T-1} \sum_{t=1}^T (R_t - \hat{\mu})^2. \end{equation*}\]

Now consider the jackknife squared standard error for \(\hat{\mu}\):

\[\begin{equation*} \widehat{\mathrm{se}}_{jack}(\hat{\mu})^2= \frac{T-1}{T}\sum_{t=1}^T (\hat{\mu}_{-t} - \bar{\mu})^2, \end{equation*}\]

where \(\bar{\mu} = T^{-1}\sum_{t=1}^T \hat{\mu}_{-t}\). Here, we will show that \(\widehat{\mathrm{se}}(\hat{\mu})^2 = \widehat{\mathrm{se}}_{jack}(\hat{\mu})^2\).

First, note that

\[\begin{align*} \hat{\mu}_{-t} & = \frac{1}{T-1}\sum_{j \ne t} R_j = \frac{1}{T-1} \left( \sum_{j=1}^T R_j - R_t \right) \\ &= \frac{1}{T-1} \left( T \hat{\mu} - R_t \right) \\ &= \frac{T}{T-1}\hat{\mu} - \frac{1}{T-1}R_t. \end{align*}\]

Next, plugging in the above to the definition of \(\bar{\mu}\) we get

\[\begin{align*} \bar{\mu} &= \frac{1}{T} \sum_{j=1}^T \hat{\mu}_{-t} = \frac{1}{T}\sum_{t=1}^T \left( \frac{T}{T-1}\hat{\mu} - \frac{1}{T-1}R_t \right) \\ &= \frac{1}{T-1} \sum_{t=1}^T \hat{\mu} - \frac{1}{T}\frac{1}{T-1} \sum_{t=1}^T R_t \\ &= \frac{T}{T-1} \hat{\mu} - \frac{1}{T-1} \hat{\mu} \\ &= \hat{\mu} \left( \frac{T}{T-1} - \frac{1}{T-1} \right) \\ &= \hat{\mu}. \end{align*}\]

Hence, the sample mean of \(\hat{\mu}_{-t}\) is \(\hat{\mu}\). Then we can write

\[\begin{align*} \hat{\mu}_{-t} - \bar{\mu} & = \hat{\mu}_{-t} - \hat{\mu} \\ & = \frac{T}{T-1}\hat{\mu} - \frac{1}{T-1}R_t - \hat{\mu} \\ &= \frac{T}{T-1}\hat{\mu} - \frac{1}{T-1}R_t - \frac{T-1}{T-1}\hat{\mu} \\ &= \frac{1}{T-1}\hat{\mu} - \frac{1}{T-1}R_t \\ &= \frac{1}{T-1}(\hat{\mu} - R_t). \end{align*}\]

Using the above we can write

\[\begin{align*} \widehat{\mathrm{se}}_{jack}(\hat{\mu})^2 &= \frac{T-1}{T}\sum_{t=1}^T (\hat{\mu}_{-t} - \bar{\mu})^2 \\ &= \frac{T-1}{T}\left(\frac{1}{T-1}\right)^2 \sum_{t=1}^T (\hat{\mu} - R_t)^2 \\ &= \frac{1}{T}\frac{1}{T-1} \sum_{t=1}^T (\hat{\mu} - R_t)^2 \\ &= \frac{1}{T} \hat{\sigma}^2 \\ &= \widehat{\mathrm{se}}(\hat{\mu})^2. \end{align*}\]

We can compute the leave-one-out estimates \(\{\hat{\mu}_{-t}\}_{t=1}^T\) using a simple for loop as follows:

n.obs = length(msftRetS)

muhat.loo = rep(0, n.obs)

for(i in 1:n.obs) {

muhat.loo[i] = mean(msftRetS[-i])

}In the above loop, msftRetS[-i] extracts all observations except observation \(i\). The mean of the leave-one-out estimates is

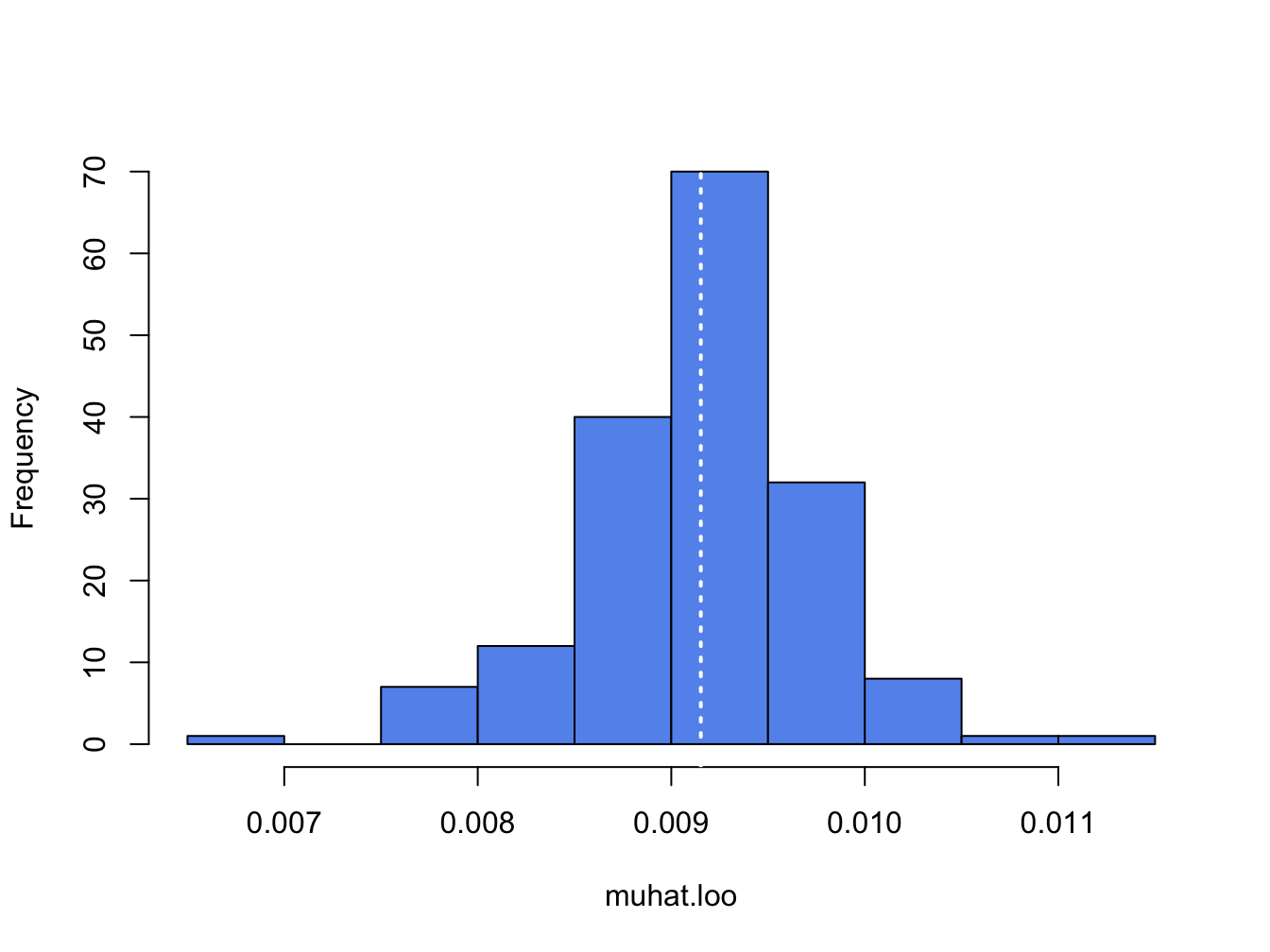

## [1] 0.00915which is the sample mean of msftRetS. It is informative to plot a histogram of the leave-one-out estimates:

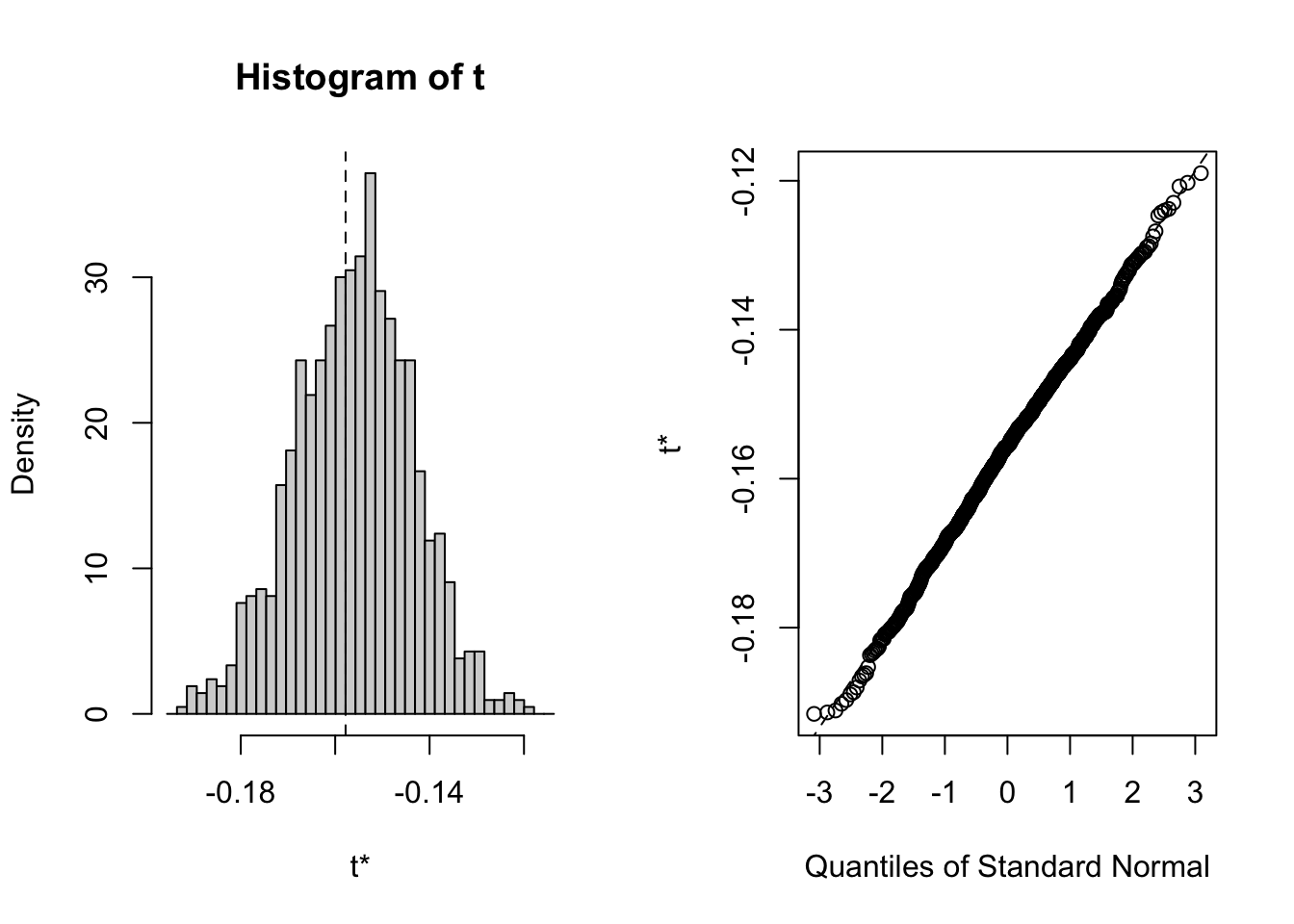

Figure 8.1: Histogram of leave-one-out estimator of \(\mu\) for Microsoft.

The white dotted line is at the point \(\hat{\mu} = 0.00915\). The jackknife estimated standard error is the scaled standard deviation of the \(T\) \(\hat{\mu}_{-t}\) values:41

## [1] 0.00774This value is exactly the same as the analytic standard error:

## [1] 0.00774You can also compute jackknife estimated standard errors using the bootstrap function jackknife:

## [1] "jack.se" "jack.bias" "jack.values" "call"By default, jackknife() takes as input a vector of data and the name of an R function and returns a list with components related to the jackknife procedure. The component jack.values contains the leave-one-out estimators, and the component jack.se gives you the jackknife estimated standard error:

## [1] 0.00774\(\blacksquare\)

The fact that the jackknife standard error estimate for \(\hat{\mu}\) equals the analytic standard error is main justification for using the jackknife to compute standard errors. For estimated parameters other than \(\hat{\mu}\) and general estimated functions the jackknife standard errors are often numerically very close to the delta method standard errors. The following example illustrates this point for the example functions (8.1) - (8.4).

Here, we use the bootstrap function jackknife() to compute jackknife estimated standard errors for the plug-in estimates of the example functions (8.1) - (8.4) and we compare the jackknife standard errors with the delta method standard errors. To use jackknife() with user-defined functions involving multiple parameters you must create the function such that the first input is an integer vector of index observations and the second input is the data. For example, a function for computing (8.1) to be passed to jackknife() is:

theta.f1 = function(x, xdata) {

mu.hat = mean(xdata[x, ])

sigma.hat = sd(xdata[x, ])

fun.hat = mu.hat + sigma.hat*qnorm(0.05)

fun.hat

}To compute the jackknife estimated standard errors for the estimate of (8.1) use:

f1.jack = jackknife(1:n.obs, theta=theta.f1, coredata(msftRetS))

ans = cbind(f1.jack$jack.se, dm1$SE)

colnames(ans) = c("Jackknife", "Delta Method")

rownames(ans) = "f1"

ans## Jackknife Delta Method

## f1 0.0133 0.0119Here, we see that the estimated jackknife standard error for \(f_1(\hat{\theta})\) is very close to the delta method standard error computed earlier.

To compute the jackknife estimated standard error for \(f_2(\hat{\theta})\) use:

theta.f2 = function(x, xdata, W0) {

mu.hat = mean(xdata[x, ])

sigma.hat = sd(xdata[x, ])

fun.hat = -W0*(mu.hat + sigma.hat*qnorm(0.05))

fun.hat

}

f2.jack = jackknife(1:n.obs, theta=theta.f2, coredata(msftRetS), W0)

ans = cbind(f2.jack$jack.se, dm2$SE)

colnames(ans) = c("Jackknife", "Delta Method")

rownames(ans) = "f2"

ans## Jackknife Delta Method

## f2 1329 1187Again, the jackknife standard error is close to the delta method standard error.

To compute the jackknife estimated standard error for \(f_3(\hat{\theta})\) use:

theta.f3 = function(x, xdata, W0) {

mu.hat = mean(xdata[x, ])

sigma.hat = sd(xdata[x, ])

fun.hat = -W0*(exp(mu.hat + sigma.hat*qnorm(0.05)) - 1)

fun.hat

}

f3.jack = jackknife(1:n.obs, theta=theta.f3, coredata(msftRetC), W0)

ans = cbind(f3.jack$jack.se, dm3$SE)

colnames(ans) = c("Jackknife", "Delta Method")

rownames(ans) = "f3"

ans## Jackknife Delta Method

## f3 1315 998For log-normal VaR, the jackknife standard error is a bit larger than the delta method standard error.

Finally, to compute the jacknife estimated standard for the Sharpe ratio use:

theta.f4 = function(x, xdata, r.f) {

mu.hat = mean(xdata[x, ])

sigma.hat = sd(xdata[x, ])

fun.hat = (mu.hat-r.f)/sigma.hat

fun.hat

}

f4.jack = jackknife(1:n.obs, theta=theta.f4, coredata(msftRetS), r.f)

ans = cbind(f4.jack$jack.se, dm4$SE)

colnames(ans) = c("Jackknife", "Delta Method")

rownames(ans) = "f4"

ans## Jackknife Delta Method

## f4 0.076 0.0763Here, the jackknife and delta method standard errors are very close.

\(\blacksquare\)

8.5.1 The jackknife for vector-valued estimates

The jackknife can also be used to compute an estimated variance matrix of a vector-valued estimate of parameters. Let \(\theta\) denote a \(k \times 1\) vector of GWN model parameters (e.g. \(\theta=(\mu,\sigma)'\)), and let \(\hat{\theta}\) denote the plug-in estimate of \(\theta\). Let \(\hat{\theta}_{-t}\) denote the leave-one-out estimate of \(\theta\), and \(\bar{\theta}=T^{-1}\sum_{t=1}^T \hat{\theta}_{-t}\). Then the jackknife estimate of the \(k \times k\) variance matrix \(\widehat{\mathrm{var}}(\hat{\theta})\) is the scaled sample covariance of \(\{\hat{\theta}_{-t}\}_{t=1}^T\):

\[\begin{equation} \widehat{\mathrm{var}}_{jack}(\hat{\theta}) = \frac{T-1}{T} \sum_{t=1}^T (\hat{\theta}_{-t} - \bar{\theta})(\hat{\theta}_{-t} - \bar{\theta})'. \tag{8.11} \end{equation}\]

The jackknife estimated standard errors for the elements of \(\hat{\theta}\) are given by the square root of the diagonal elements of \(\widehat{\mathrm{var}}_{jack}(\hat{\theta})\).

The same principle works for computing the estimated variance matrix of a vector of functions of GWN model parameters. Let \(f:R^k \rightarrow R^l\) with \(l \le k\) and define \(\eta = f(\theta)\) denote a \(l \times 1\) vector of functions of GWN model parameters. Let \(\hat{\eta}=f(\hat{\theta})\) denote the plug-in estimator of \(\eta\). Let \(\hat{\eta}_{-t}\) denote the leave-one-out estimate of \(\eta\), and \(\bar{\eta}=T^{-1}\sum_{t=1}^T \hat{\eta}_{-t}\). Then the jackknife estimate of the \(l \times l\) variance matrix \(\widehat{\mathrm{var}}(\hat{\eta})\) is the scaled sample covariance of \(\{\hat{\eta}_{-t}\}_{t=1}^T\):

\[\begin{equation} \widehat{\mathrm{var}}_{jack}(\hat{\eta}) = \frac{T-1}{T} \sum_{t=1}^T (\hat{\eta}_{-t} - \bar{\eta})(\hat{\eta}_{-t} - \bar{\eta})'. \tag{8.12} \end{equation}\]

The bootstrap function jackknife() function only works for scalar-valued parameter estimates, so we have to compute the jackknife variance estimate by hand using a for loop. The R code is:

n.obs = length(msftRetS)

muhat.loo = rep(0, n.obs)

sigmahat.loo = rep(0, n.obs)

for(i in 1:n.obs) {

muhat.loo[i] = mean(msftRetS[-i])

sigmahat.loo[i] = sd(msftRetS[-i])

}

thetahat.loo = cbind(muhat.loo, sigmahat.loo)

colnames(thetahat.loo) = c("mu", "sigma")

varhat.jack = (((n.obs-1)^2)/n.obs)*var(thetahat.loo)

varhat.jack## mu sigma

## mu 5.99e-05 1.49e-05

## sigma 1.49e-05 6.12e-05The jackknife variance estimate is somewhat close to the analytic estimate:

## mu sigma

## mu 5.99e-05 0.00e+00

## sigma 0.00e+00 2.99e-05Since the first element of \(\hat{\theta}\) is \(\hat{\mu}\), the (1,1) elements of the two variance matrix estimates match exactly. However, the varhat.jack has non-zero off diagonal elements and the (2,2) element corresponding to \(\hat{\sigma}\) is considerably bigger than (2,2) element of the analytic estimate. Converting var.thetahatS to an estimated correlation matrix gives:

## mu sigma

## mu 1.000 0.246

## sigma 0.246 1.000Here, we see that the jackknife shows a small positive correlation between \(\hat{\mu}\) and \(\hat{\sigma}\) whereas the analytic matrix shows a zero correlation.

\(\blacksquare\)

8.5.2 Pros and Cons of the Jackknife

The jackknife gets its name because it is a useful statistical device.42 The main advantages (pros) of the jacknife are:

It is a good “quick and dirty” method for computing numerical estimated standard errors of estimates that does not rely on asymptotic approximations.

The jackknife estimated standard errors are often close to the delta method standard errors.

This jackknife, however, has some limitations (cons). The main limitations are:

It can be computationally burdensome for very large samples (e.g., if used with ultra high frequency data).

It does not work well for non-smooth functions such as empirical quantiles.

It is not well suited for computing confidence intervals.

It is not as accurate as the bootstrap.

8.6 The Nonparametric Bootstrap

In this section, we describe the easiest and most common form of the bootstrap: the nonparametric bootstrap. As we shall see, the nonparametric bootstrap procedure is very similar to a Monte Carlo simulation experiment. The main difference is how the random data is generated. In a Monte Carlo experiment, the random data is created from a computer random number generator for a specific probability distribution (e.g., normal distribution). In the nonparametric bootstrap, the random data is created by resampling with replacement from the original data.

The procedure for the nonparametric bootstrap is as follows:

- Resample. Create \(B\) bootstrap samples by sampling with replacement from the original data \(\{r_{1},\ldots,r_{T}\}\).43 Each bootstrap sample has \(T\) observations (same as the original sample) \[\begin{eqnarray*} \left\{r_{11}^{*},r_{12}^{*},\ldots,r_{1T}^{*}\right\} & = & \mathrm{1st\,bootstrap\,sample}\\ & \vdots\\ \left\{r_{B1}^{*},r_{B2}^{*},\ldots,r_{BT}^{*}\right\} & = & \mathrm{Bth\,boostrap\,sample} \end{eqnarray*}\]

- Estimate \(\theta\). From each bootstrap sample estimate \(\theta\) and denote the resulting estimate \(\hat{\theta}^{*}\). There will be \(B\) values of \(\hat{\theta}^{*}\): \(\left\{\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\right\}\).

- Compute statistics. Using \(\left\{\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\right\}\) compute estimate of bias, standard error, and approximate 95% confidence interval.

This procedure is very similar to the procedure used to perform a Monte Carlo experiment described in Chapter 7. The main difference is how the hypothetical samples are created. With the nonparametric bootstrap, the hypothetical samples are created by resampling with replacement from the original data. In this regard the bootstrap treats the sample as if it were the population. This ensures that the bootstrap samples inherit the same distribution as the original data – whatever that distribution may be. If the original data is normally distributed, then the nonparametric bootstrap samples will also be normally distributed. If the data is Student’s t distributed, then the nonparametric bootstrap samples will be Student’s t distributed. With Monte Carlo simulation, the hypothetical samples are simulated under an assumed model and distribution. This requires one to specify values for the model parameters and the distribution from which to simulate.

8.6.1 Bootstrap bias estimate

The nonparametric bootstrap can be used to estimate the bias of an estimator \(\hat{\theta}\) using the bootstrap estimates {\(\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\)}. The key idea is to treat the empirical distribution (i.e., histogram) of the bootstrap estimates {\(\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\)} as an approximate to the unknown distribution of \(\hat{\theta}.\)

Recall, the bias of an estimator is defined as

\[\begin{equation*} E[\hat{\theta}]-\theta. \end{equation*}\]

The bootstrap estimate of bias is given by

\[\begin{align} \widehat{\mathrm{bias}}_{boot}(\hat{\theta},\theta)= \bar{\theta}^{\ast} - \hat{\theta} = \frac{1}{B}\sum_{j=1}^{\ B}\hat{\theta}_{j}^{\ast}-\hat{\theta.}\tag{8.13}\\ \text{(bootstrap mean - estimate)}\nonumber \end{align}\]

The bootstrap bias estimate (8.13) is the difference between the mean of the bootstrap estimates of \(\theta\) and the sample estimate of \(\theta\). This is similar to the Monte Carlo estimate of bias discussed in Chapter 7. However, the Monte Carlo estimate of bias is the difference between the mean of the Monte Carlo estimates of \(\theta\) and the true value of \(\theta.\) The bootstrap estimate of bias does not require knowing the true value of \(\theta\). Effectively, the bootstrap treats the sample estimate \(\hat{\theta}\) as the population value \(\theta\) and the bootstrap mean \(\bar{\theta}^{\ast} = \frac{1}{B}\sum_{j=1}^{\ B}\hat{\theta}_{j}^{\ast}\) as an approximation to \(E[\hat{\theta}]\). Here, \(\widehat{\mathrm{bias}}_{boot}(\hat{\theta},\theta)=0\) if the center of the histogram of {\(\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\)} is at \(\hat{\theta}.\)

Given the bootstrap estimate of bias (8.13), we can compute a bias-adjusted estimate:

\[\begin{equation} \hat{\theta}_{adj} = \hat{\theta} - (\bar{\theta}^{\ast} - \hat{\theta}) = 2\hat{\theta} - \bar{\theta}^{\ast} \tag{8.14} \end{equation}\]

if the bootstrap estimate of bias is large, it may be tempting to use the bias-adjusted estimate (8.14) in place of the original estimate. This is generally not done in practice because the bias adjustment introduces extra variability into the estimator. So what use is the bootstrap bias estimate? It provides information to you that your estimate contains bias (or not) and this information can influence your decision making based on the estimate.

8.6.2 Bootstrap standard error estimate

for a scalar estimate \(\hat{\theta}\), the bootstrap estimate of \(\widehat{\mathrm{se}}(\hat{\theta}\)) is given by the sample standard deviation of the bootstrap estimates {\(\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\)}:

\[\begin{equation} \widehat{\mathrm{se}}_{boot}(\hat{\theta})=\sqrt{\frac{1}{B-1}\sum_{j=1}^{B}\left(\hat{\theta}_{j}^{\ast} - \bar{\theta}^{\ast} \right)^{2}}.\tag{8.15} \end{equation}\]

where \(\bar{\theta}^{\ast} = \frac{1}{B}\sum_{j=1}^{\ B}\hat{\theta}_{j}^{\ast}\). Here, \(\widehat{\mathrm{se}}_{boot}(\hat{\theta})\) is the size of a typical deviation of a bootstrap estimate, \(\hat{\theta}^{*}\), from the mean of the bootstrap estimates (typical deviation from the middle of the histogram of the bootstrap estimates). This is very closely related to the Monte Carlo estimate of \(\widehat{\mathrm{se}}(\hat{\theta}\)) , which is the sample standard deviation of the estimates of \(\theta\) from the Monte Carlo samples.

For a \(k \times 1\) vector estimate \(\hat{\theta}\), the bootstrap estimate of \(\widehat{\mathrm{var}}(\hat{\theta})\) is

\[\begin{equation} \widehat{\mathrm{var}}(\hat{\theta}) = \frac{1}{B-1}\sum_{j=1}^B (\hat{\theta}_{j}^{\ast} - \bar{\theta}^{\ast})(\hat{\theta}_{j}^{\ast} - \bar{\theta}^{\ast})'. \end{equation}\]

8.6.3 Bootstrap confidence intervals

In addition to providing standard error estimates, the bootstrap is commonly used to compute confidence intervals for scalar parameters. There are several ways of computing confidence intervals with the bootstrap and which confidence interval to use in practice depends on the characteristics of the distribution of the bootstrap estimates {\(\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\)}.

If the bootstrap distribution appears to be normal then you can compute a 95% confidence interval for \(\theta\) using two simple methods. The first method mimics the rule-of-thumb that is justified by the CLT to compute a 95% confidence interval but uses the bootstrap standard error estimate (8.15):

\[\begin{equation} \hat{\theta}\pm2\times\widehat{\mathrm{se}}_{boot}(\hat{\theta}) = [\hat{\theta} - 2\times \widehat{\mathrm{se}}_{boot}(\hat{\theta}), ~ \hat{\theta} + 2\times \widehat{\mathrm{se}}_{boot}(\hat{\theta})].\tag{8.16} \end{equation}\]

The 95% confidence interval (8.16) is called the normal approximation 95% confidence interval.

The second method directly uses the distribution of the bootstrap estimates {\(\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\)} to form a 95% confidence interval:

\[\begin{equation} [\hat{q}_{.025}^{*},\,\hat{q}_{.975}^{*}],\tag{8.17} \end{equation}\]

where \(\hat{q}_{.025}^{*}\) and \(\hat{q}_{.975}^{*}\) are the 2.5% and 97.5% empirical quantiles of {\(\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\)}, respectively. By construction, 95% of the bootstrap estimates lie in the quantile-based interval (8.17). This 95% confidence interval is called the bootstrap percentile 95% confidence interval.

The main advantage of (8.17) over (8.16) is transformation invariance. This means that if (8.17) is a 95% confidence interval for \(\theta\) and \(f(\theta)\) is a monotonic function of \(\theta\) then \([f(\hat{q}_{.025}^{*}),\,f(\hat{q}_{.975}^{*})]\) is a 95% confidence interval for \(f(\theta)\). The interval (8.17) can also be asymmetric whereas (8.16) is symmetric.

The bootstrap normal approximation and percentile 95% confidence intervals (8.16) and (8.17) will perform well (i.e., will have approximately correct coverage probability) if the bootstrap distribution looks normally distributed. However, if the bootstrap distribution is asymmetric then these 95% confidence intervals may have coverage probability different from 95%, especially if the asymmetry is substantial. The basic shape of the bootstrap distribution can be visualized by the histogram of {\(\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\)}, and the normality of the distribution can be visually evaluated using the normal QQ-plot of {\(\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\)}. If the histogram is asymmetric and/or not bell-shaped or if the normal QQ-plot is not linear then normality is suspect and the confidence intervals (8.17) and (8.16) may be inaccurate.

If the bootstrap distribution is asymmetric then it is recommended to use Efron’s bias and skewness adjusted bootstrap percentile confidence (BCA) interval. The details of how to compute the BCA interval are a bit complicated, and so are omitted, and the interested reader is referred to Chapter 10 of Hansen (2011) for a good explanation. Suffice it to say that the 95% BCA method adjusts the 2.5% and 97.5% quantiles of the bootstrap distribution for bias and skewness. The BCA confidence interval can be computed using the boot function boot.ci() with optional argument type="bca".44

8.6.4 Performing the Nonparametric Bootstrap in R

The nonparametric bootstrap procedure is easy to perform in R. You can implement the procedure by “brute force” in very much the same way as you perform a Monte Carlo experiment. In this approach you program all parts of the bootstrapping procedure. Alternatively, you can use the R package boot which contains functions for automating certain parts of the bootstrapping procedure.

8.6.4.1 Brute force implementation

In the brute force implementation you progam all parts of the bootstrap procedure. This typically involves three steps:

- Sample with replacement from the original data using the R function

sample()to create \(\{r_{1}^{*},r_{2}^{*},\ldots,r_{T}^{*}\}\). Do this \(B\) times. - Compute \(B\) values of the statistic of interest \(\hat{\theta}\) from each bootstrap sample giving \(\{\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\}\). \(B=1,000\) for routine applications of the bootstrap. To minimize simulation noise it may be required to have \(B=10,000\) or higher.

- Compute the bootstrap bias and SE values from \(\{\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\}\) using the R functions

mean()andsd(), respectively. - Compute the histogram and normal QQ-plot of \(\{\hat{\theta}_{1}^{*},\ldots,\hat{\theta}_{B}^{*}\}\) using the R functions

hist()andqqnorm(), respectively, to see if the bootstrap distribution looks normal.

Typically steps 1 and 2 are performed inside a for loop or within

the R function apply().

In step 1 sampling with replacement for the original data is performed

with the R function sample(). To illustrate, consider sampling

with replacement from the \(5\times1\) return vector:

## [1] 0.10 0.05 -0.02 0.03 -0.04Recall, in R we can extract elements from a vector by subsetting

based on their location, or index, in the vector. Since the vector r

has five elements, its index is the integer vector 1:5. Then

to extract the first, second and fifth elements of r use

the index vector c(1, 2, 5):

## [1] 0.10 0.05 -0.04Using this idea, you can extract a random sample (of any given size)

with replacement from r by creating a random sample with

replacement of the integers \(\{1,2,\ldots,5\}\) and using this set

of integers to extract the sample from r. The R fucntion

sample() can be used to do this process. When you pass a

positive integer value n to sample(), with the optional

argument replace=TRUE, it returns a random sample from the

set of integers from \(1\) to n. For example, to create a

random sample with replacement of size 5 from the integers\(\{1,2,\ldots,5\}\)

use:45

## [1] 3 3 2 2 3We can then get a random sample with replacement from the vector r

by subsetting using the index vector idx:

## [1] -0.02 -0.02 0.05 0.05 -0.02This two step process is automated when you pass a vector of observations

to sample():

## [1] -0.02 -0.02 0.05 0.05 -0.02Consider using the nonparametric bootstrap to compute estimates of the bias and standard error for \(\hat{\mu}\) in the GWN model for Microsoft. The R code for the brute force “for loop” to implement the nonparametric bootstrap is:

B = 1000

muhat.boot = rep(0, B)

n.obs = nrow(msftRetS)

set.seed(123)

for (i in 1:B) {

boot.data = sample(msftRetS, n.obs, replace=TRUE)

muhat.boot[i] = mean(boot.data)

}The bootstrap bias estimate is:

## [1] 0.000483which is very close to zero and confirms that \(\hat{\mu}\) is unbiased. The bootstrap standard error estimate is

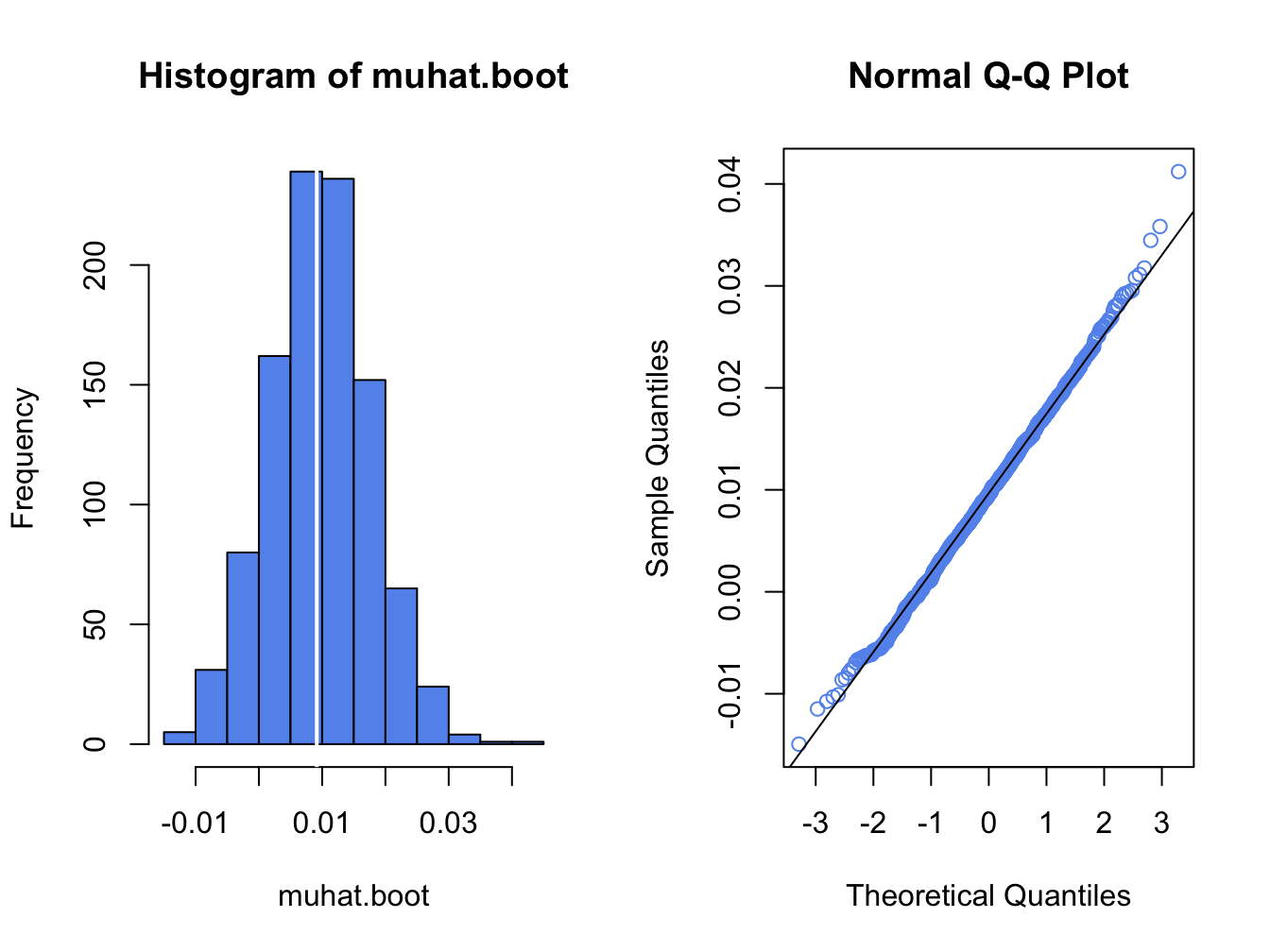

## [1] 0.00796and is equal (to three decimals) to the analytic standard error estimate computed earlier. This confirms that the nonparametric bootstrap accurately computes \(\widehat{\mathrm{se}}(\hat{\mu}).\) Figure 8.2 shows the histogram and normal QQ-plot of the bootstrap estimates created with:

par(mfrow=c(1,2))

hist(muhat.boot, col="cornflowerblue")

abline(v=muhatS, col="white", lwd=2)

qqnorm(muhat.boot, col="cornflowerblue")

qqline(muhat.boot)

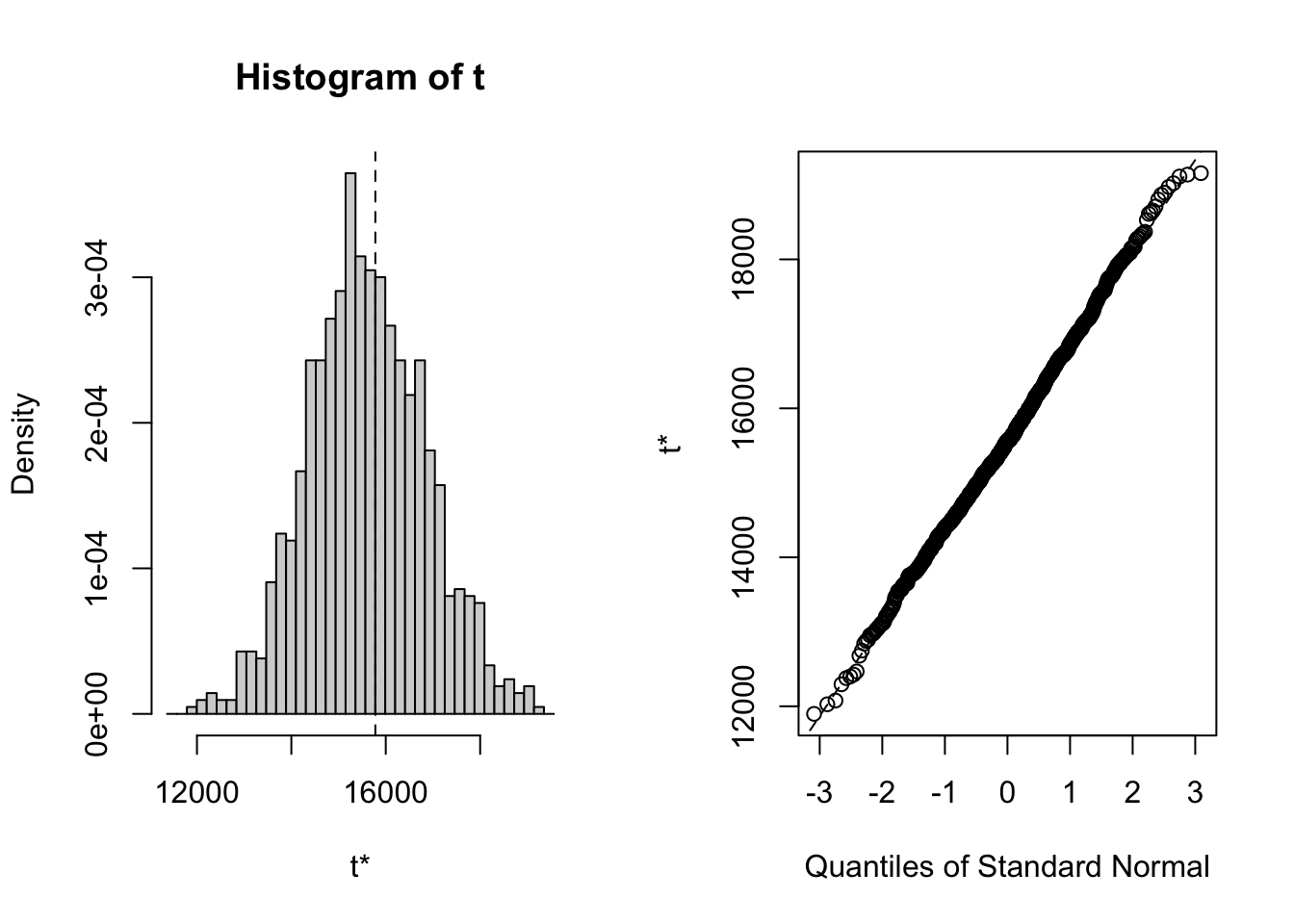

Figure 8.2: Histogram and normal QQ-plot of bootstrap estimates of \(\mu\) for Microsoft.

The distribution of the bootstrap estimates looks normal. As a result, the bootstrap 95% confidence interval for \(\mu\) has the form:

se.boot = sd(muhat.boot)

lower = muhatS + se.boot

upper = muhatS - se.boot

ans = cbind(muhatS, se.boot, lower, upper)

colnames(ans)=c("Estimate", "Std Error", "2.5%", "97.5%")

rownames(ans) = "mu"

ans## Estimate Std Error 2.5% 97.5%

## mu 0.00915 0.00796 0.0171 0.0012\(\blacksquare\)

It is important to keep in mind that the bootstrap resampling algorithm draws random samples from the observed data. In the example above, the random number generator in R was initially set with set.seed(123). If the seed is set to a different integer, say 345, then the \(B=1,000\) bootstrap samples will be different and we will get different numerical results for the bootstrap bias and estimated standard error. How different these results will be depends on number of bootstrap samples \(B\) and the statistic \(\hat{\theta}\) being bootstrapped. Generally, the smaller is \(B\) the more variable the bootstraps results will be. It is good practice to repeat the bootstrap analysis with several different random number seeds to see how variable the results are. If the results vary considerably for different random number seeds then it is advised to increase the number of bootstrap simulations \(B\). It is common to use \(B=1,000\) for routine calculations, and \(B=10,000\) for final results.

8.6.4.2 R package boot

The R package boot implements a variety of bootstrapping

techniques including the basic non-parametric bootstrap described

above. The boot package was written to accompany the textbook Bootstrap Methods and Their Application by (Davison and Hinkley 1997).

The two main functions in boot are boot() and

boot.ci(), respectively. The boot() function implements

the bootstrap for a statistic computed from a user-supplied function.

The boot.ci() function computes bootstrap confidence intervals

given the output from the boot() function.

The arguments to boot() are:

## function (data, statistic, R, sim = "ordinary", stype = c("i",

## "f", "w"), strata = rep(1, n), L = NULL, m = 0, weights = NULL,

## ran.gen = function(d, p) d, mle = NULL, simple = FALSE, ...,

## parallel = c("no", "multicore", "snow"), ncpus = getOption("boot.ncpus",

## 1L), cl = NULL)

## NULLwhere data is a data object (typically a vector or matrix

but can be a time series object too), statistic is a use-specified

function to compute the statistic of interest, and R is the

number of bootstrap replications. The remaining arguments are not

important for the basic non-parametric bootstrap. The function assigned

to the argument statistic has to be written in a specific

form, which is illustrated in the next example. The boot()

function returns an object of class boot for which

there are print and plot methods.

The arguments to boot.ci() are

## function (boot.out, conf = 0.95, type = "all", index = 1L:min(2L,

## length(boot.out$t0)), var.t0 = NULL, var.t = NULL, t0 = NULL,

## t = NULL, L = NULL, h = function(t) t, hdot = function(t) rep(1,

## length(t)), hinv = function(t) t, ...)

## NULLwhere boot.out is an object of class boot,

conf specifies the confidence level, and type is

a subset from c("norm", "basic", "stud", "perc", "bca") indicating the type of confidence interval to compute.

The choices norm, perc, and “bca” compute the

normal confidence interval (8.16), the percentile

confidence interval (8.17), and the BCA confidence interval, respectively. The remaining arguments are not important for the computation of these

bootstrap confidence intervals.

To use the boot() function to implement the bootstrap for

\(\hat{\mu},\) a function must be specified to compute \(\hat{\mu}\)

for each bootstrap sample. The function must have two arguments: x

and idx. Here, x represents the original data and

idx represents the random integer index (created internally

by boot()) to subset x for each bootstrap sample.

For example, a function to be passed to boot() for \(\hat{\mu}\) is

mean.boot = function(x, idx) {

# arguments:

# x data to be resampled

# idx vector of scrambled indices created by boot() function

# value:

# ans mean value computed using resampled data

ans = mean(x[idx])

ans

}To implement the nonparametric bootstrap for \(\hat{\mu}\) with 999 samples use

## [1] "boot"## [1] "t0" "t" "R" "data" "seed"

## [6] "statistic" "sim" "call" "stype" "strata"

## [11] "weights"The returned object muhat.boot is of class boot.

The component t0 is the sample estimate \(\hat{\mu}\), and

the component t is a \(999\times1\) matrix containing the bootstrap

estimates \(\{\hat{\mu}_{1}^{*},\ldots,\hat{\mu}_{999}^{*}\}\). The

print method shows the sample estimate, the bootstrap bias and the

bootstrap standard error:

##

## ORDINARY NONPARAMETRIC BOOTSTRAP

##

##

## Call:

## boot(data = msftRetS, statistic = mean.boot, R = 999)

##

##

## Bootstrap Statistics :

## original bias std. error

## t1* 0.00915 0.000482 0.00758These statistics can be computed directly from the components of muhat.boot

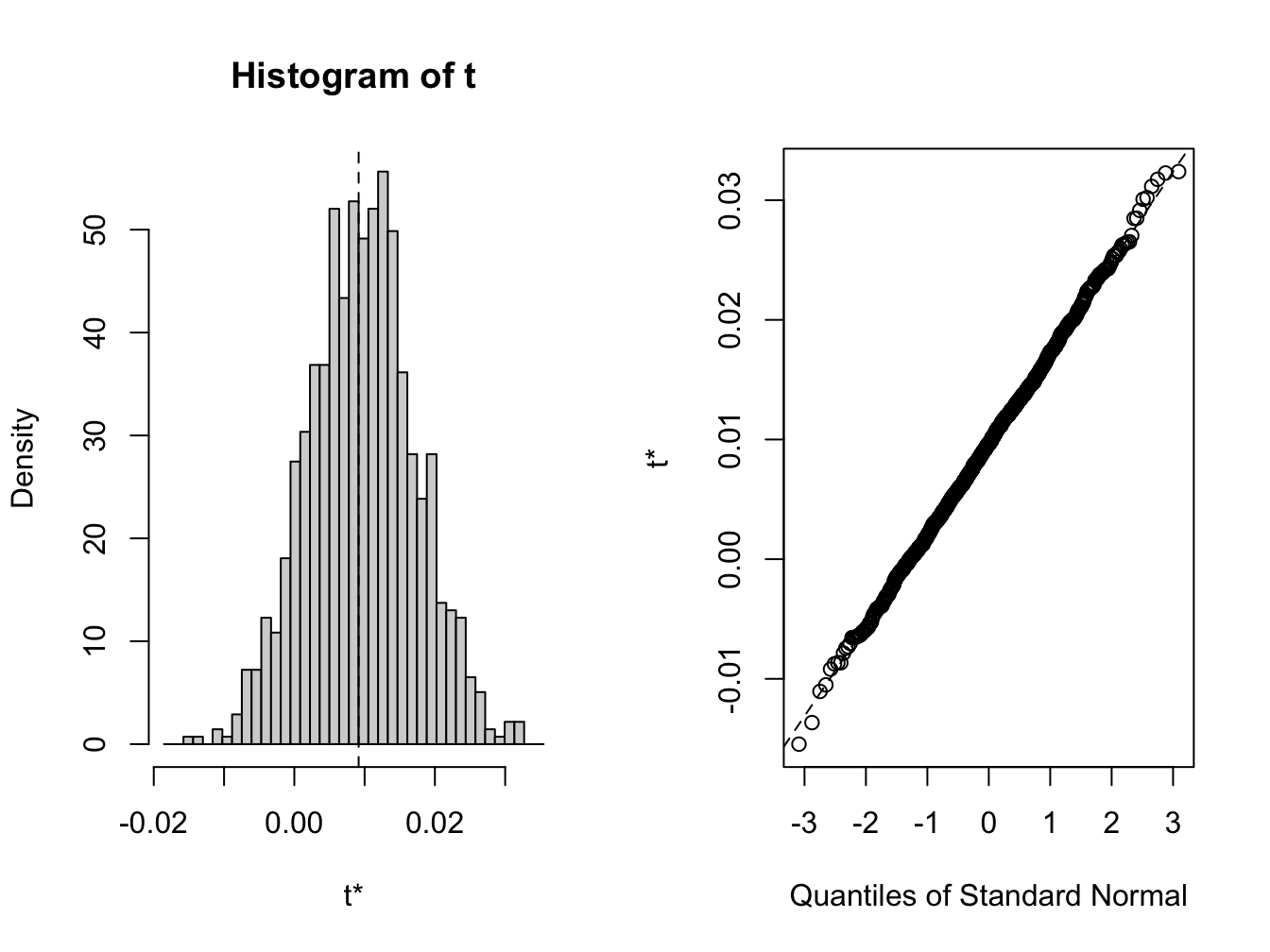

## [1] 0.009153 0.000482 0.007584A histogram and nornal QQ-plot of the bootstrap values, shown in Figure 8.3, can be created using the plot method

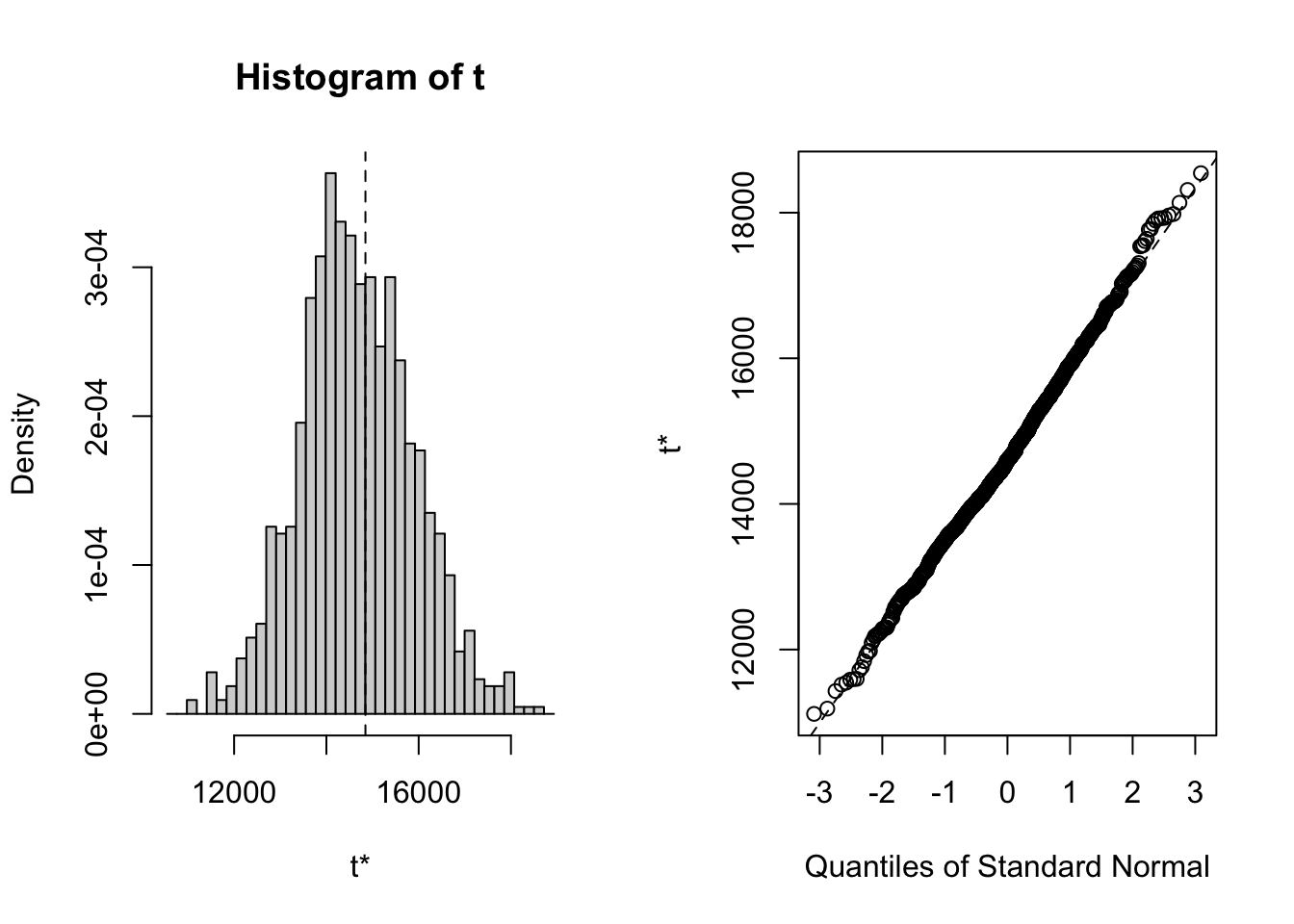

Figure 8.3: plot method for objects of class boot

Normal, percentile, and bias-adjusted (bca) percentile 95% confidence intervals can be computed using

## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS

## Based on 999 bootstrap replicates

##

## CALL :

## boot.ci(boot.out = muhat.boot, conf = 0.95, type = c("norm",

## "perc", "bca"))

##

## Intervals :

## Level Normal Percentile BCa

## 95% (-0.0062, 0.0235 ) (-0.0057, 0.0245 ) (-0.0062, 0.0239 )

## Calculations and Intervals on Original ScaleBecause the bootstrap distribution looks normal, the normal, percentile, and bca confidence intervals are very similar.

The GWN model estimate \(\hat{\sigma}\) can be “bootstrapped” in a similar fashion. First, we write a function to compute \(\hat{\sigma}\) for each bootstrap sample

Then we call boot() with statistic=sd.boot

##

## ORDINARY NONPARAMETRIC BOOTSTRAP

##

##

## Call:

## boot(data = msftRetS, statistic = sd.boot, R = 999)

##

##

## Bootstrap Statistics :

## original bias std. error

## t1* 0.101 -0.000783 0.00764

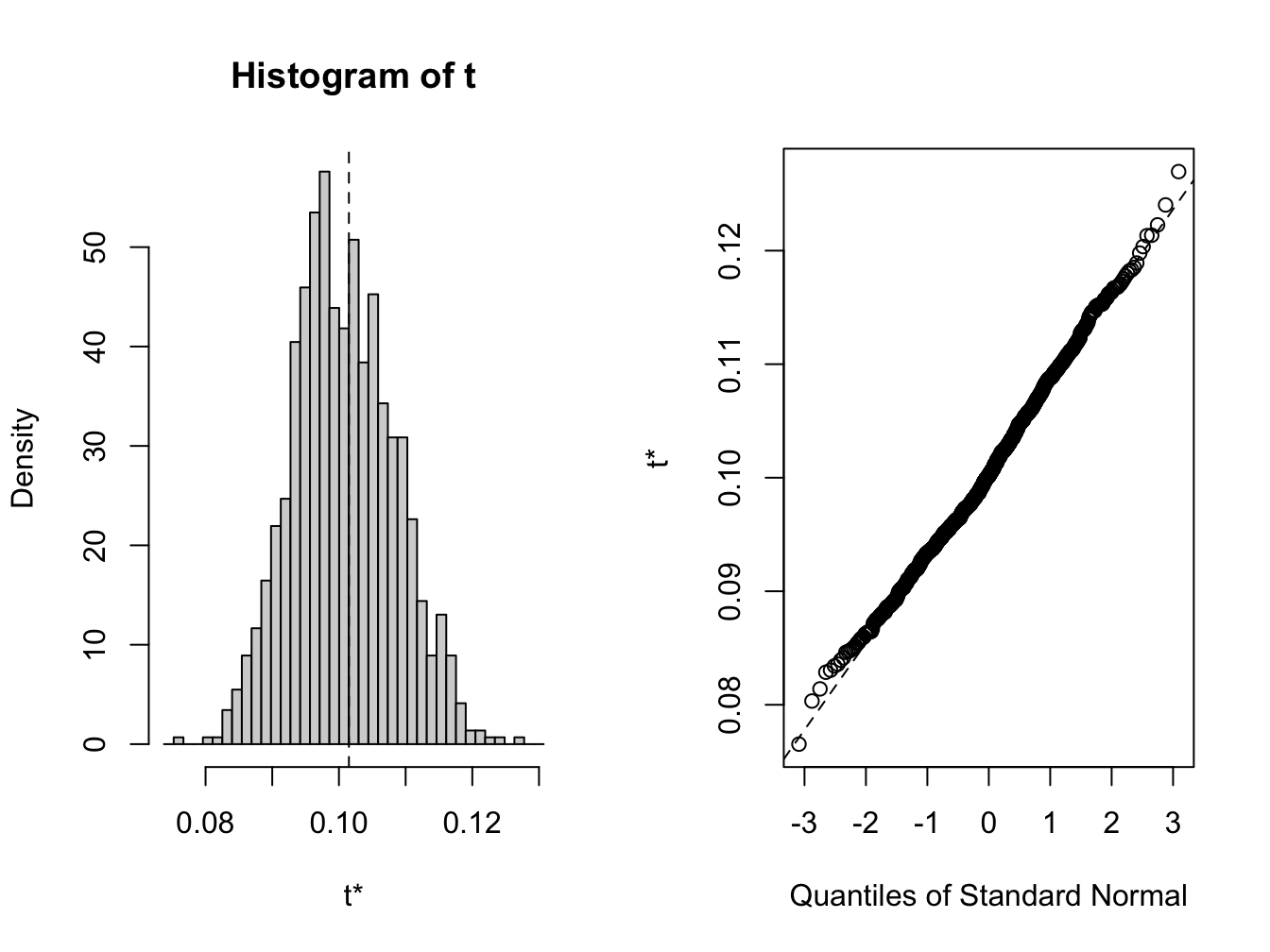

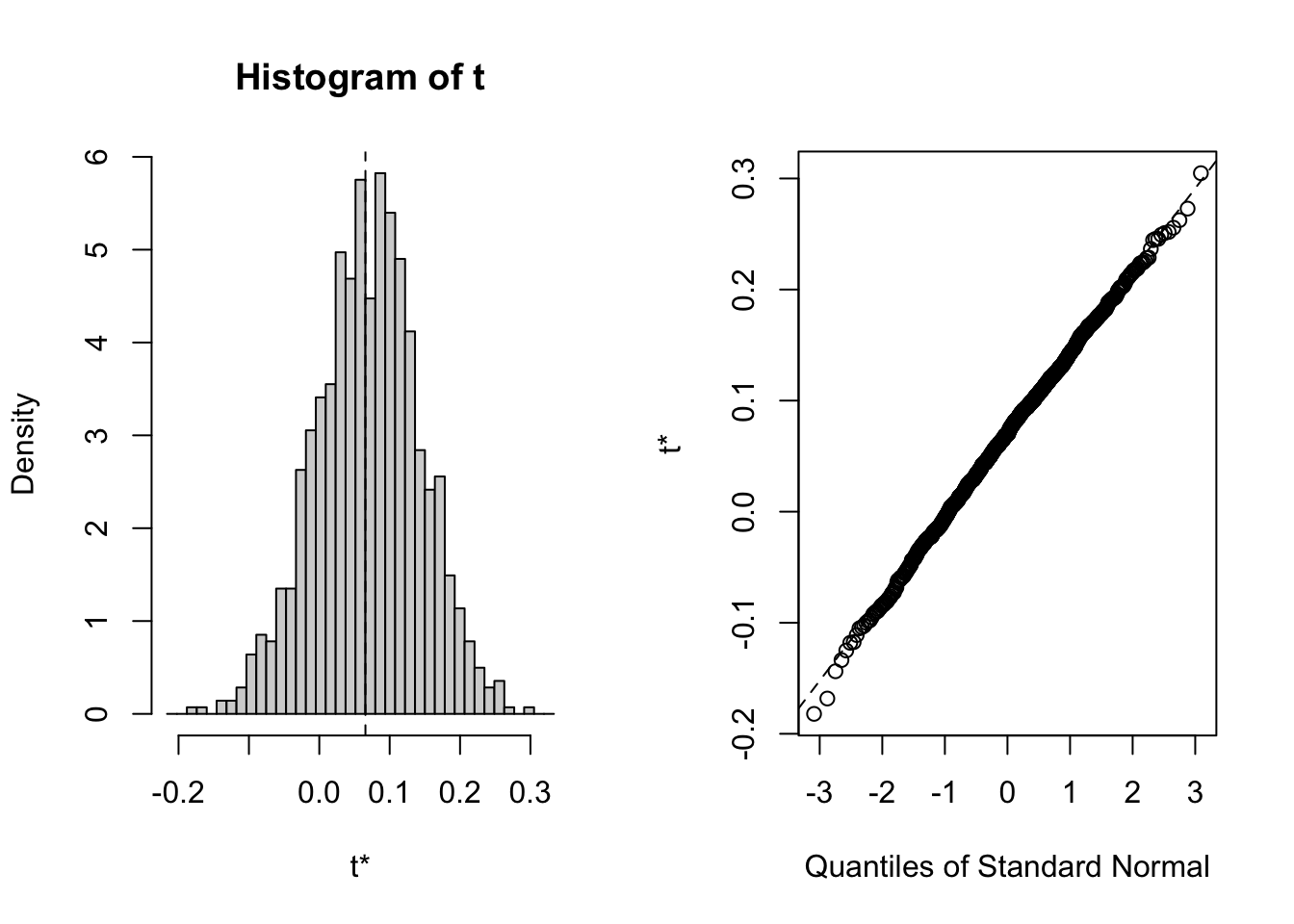

Figure 8.4: Bootstrap distribution for \(\hat{\sigma}\)

The bootstrap distribution, shown in Figure 8.4, looks a bit non-normal. The 95% confidences intervals are computed using

## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS

## Based on 999 bootstrap replicates

##

## CALL :

## boot.ci(boot.out = sigmahat.boot, conf = 0.95, type = c("norm",

## "perc", "bca"))

##

## Intervals :

## Level Normal Percentile BCa

## 95% ( 0.0873, 0.1173 ) ( 0.0864, 0.1162 ) ( 0.0892, 0.1200 )

## Calculations and Intervals on Original Scale

## Some BCa intervals may be unstableWe see that all of these intervals are quite similar.

\(\blacksquare\)

The real power of the bootstrap procedure comes when we apply it to

plug-in estimates of functions like (8.1)-(8.4) for which there

are no easy analytic formulas for estimating bias and standard errors. In this situation, the bootstrap procedure easily computes numerical estimates of the bias,

standard error, and 95% confidence interval. All we need is an R function for computing

the plug-in function estimate in a form suitable for boot(). The R functions for the example functions are:

f1.boot = function(x, idx, alpha=0.05) {

q = mean(x[idx]) + sd(x[idx])*qnorm(alpha)

q

}

f2.boot = function(x, idx, alpha=0.05, w0=100000) {

q = mean(x[idx]) + sd(x[idx])*qnorm(alpha)

VaR = -w0*q

VaR

}

f3.boot = function(x, idx, alpha=0.05, w0=100000) {

q = mean(x[idx]) + sd(x[idx])*qnorm(alpha)

VaR = -(exp(q) - 1)*w0

VaR

}

f4.boot = function(x, idx, r.f=0.0025) {

SR = (mean(x[idx]) - r.f)/sd(x[idx])

SR

}The functions \(f_1\), \(f_2\), and \(f_3\) have the additional argument alpha

which specifies the tail probability for the quantile, and the functions \(f_2\), and \(f_3\) have the additional argument w0 for the initial wealth invested. The function \(f_4\) has the additional argument r.f for the risk-free rate.

To compute the nonparametric bootstrap for \(f_1\) with \(\alpha=0.05\) use:

##

## ORDINARY NONPARAMETRIC BOOTSTRAP

##

##

## Call:

## boot(data = msftRetS, statistic = f1.boot, R = 999)

##

##

## Bootstrap Statistics :

## original bias std. error

## t1* -0.158 0.00177 0.0124Here, the bootstrap estimate of bias is small and the bootstrap estimated standard error is similar to the delta method and jackknife standard errors.

The bootstrap distribution looks normal, and the three methods for computing 95% confidence intervals are essentially the same:

## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS

## Based on 999 bootstrap replicates

##

## CALL :

## boot.ci(boot.out = f1.bs, type = c("norm", "perc", "bca"))

##

## Intervals :

## Level Normal Percentile BCa

## 95% (-0.184, -0.135 ) (-0.181, -0.132 ) (-0.189, -0.138 )

## Calculations and Intervals on Original Scale

## Some BCa intervals may be unstableTo compute the nonparametric bootstrap for \(f_2\) with \(\alpha=0.05\) and \(W_0=\$100,000\) use:

##

## ORDINARY NONPARAMETRIC BOOTSTRAP

##

##

## Call:

## boot(data = msftRetS, statistic = f2.boot, R = 999)

##

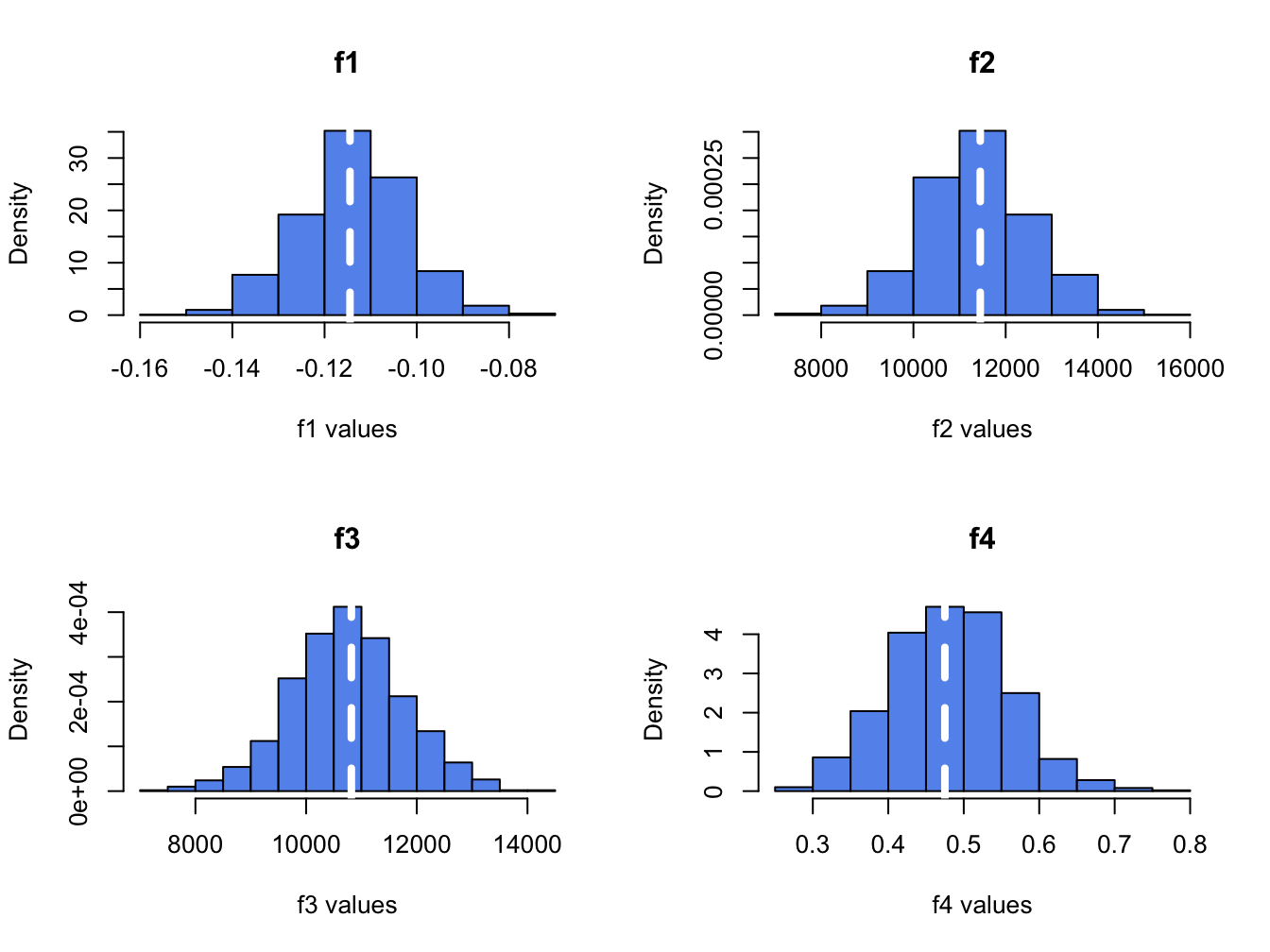

##

## Bootstrap Statistics :