Block Relaxation Methods in Statistics

Jan de Leeuw

Version 003, March 17, 2016

1 Preface

Note: This is a book in progress which will be expanded/updated frequently and unpredictably. It will never be finished, or, more precisely, it will be finished when I am. All suggestions for improvement are welcome. At gifi.stat.ucla.edu/bras there is a pdf version of the book, the Rmd file with all the code chunks, the tex, md, and html files, the figures, the R code, and whatever else is needed for perfect reproducibility.

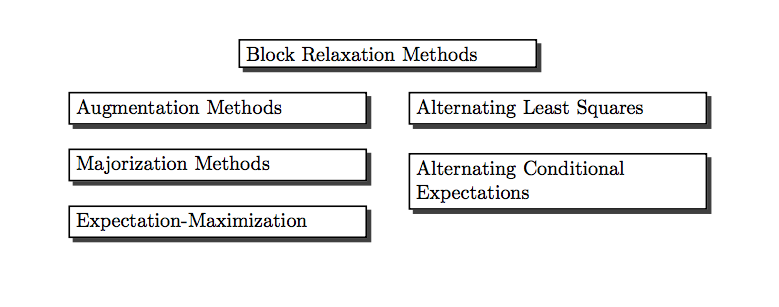

Many recent algorithms in computational statistics are variations on a common theme. In this book we discuss four such classes of algorithms. Or, more precisely, we discuss a single large class of algorithms, and we show how various well-known classes of statistical algorithms fit into this common framework. The types of algorithms we consider are, in logical order,

Figure 1.1: Algorithm Types

There is not much statistics, in the sense of data analysis or inference, in this book. It is almost exclusively about deterministic optimization problems (although we shall optimize a likelihood function or two). Some of our results have been derived in the statistical literature in the context of maximizing a multinomial or multinormal likelihood function. In most cases statisticians have developed their own results, not relying on the more comprehensive results in the optimization literature. We will try, from the start, to use existing results and apply them to our specific optimization methods.

There are many, many excellent books on optimization and mathematical programming. Without a doubt the canonical reference for statisticians is, and will be for many years to come, the book by Lange (2013). In particular his chapters 7,8, 9, and 12 have substantial overlap with this book. There is even more overlap with the book in progress MM Optimization Algorithms (Lange 2016 (in press)).

For the record, the books that have been most useful to me throughout my personal optimization career are Ortega and Rheinboldt (1970a), Ostrowski (1966), Rockafellar (1970), and, above all, Zangwill (1969). They were all published in a five year interval, at the end of the sixties. Around that time also started the ten-year period of my greatest intellectual curiosity and creativity.

Throughout the book we try to present our results in three different languages: the language of mathematics, the language of graphics, and the programming language R. We use R for all computations, tables, and figures (R Core Team 2016). In fact, all figures and tables are dynamically generated by chunks of R code using knitr (Xie 2015) and bookdown (Xie 2016) .

There are many examples throughout the book. They are usually presented in considerable and sometimes exasperating detail, with code, computations, and figures. I like to work on such examples, so please indulge me. It is nice to have an infinite number of pages available. The examples are mostly in separate subsections, so if you do not like them you can easily skip them.

In many cases the examples are simple one-dimensional optimization problems, which is maybe surprising, because the techniques we discuss are largely intended for high-dimensional problems. To further simplify the examples the functions involved are often cubic or quartic polynomials. Thus these small examples are not particularly representative for the types of applications we are interested in, but they are used to produce nice graphs, a more complete analysis, and illustrations of various general principles and properties. Also it sometimes makes sense to think of the polynomial examples as models, in the same sense in which the quadratic is a model for Newton’s method.

Many of the remaining examples are taken from multivariate statistical analysis, which we define in the broadest possible sense. It includes data analysis using linear and bilinear algebra, and in particular it includes multidimensional scaling and cluster analysis. Given my history, it is probably not surprising that many examples have their origin in psychometrics, and mre specifically in publications of researchers directly or loosely associated with the Data Theory group at Leiden University, starting in 1968. See Van der Heijden and Sijtsma (1996).

We take the point of view in this book that having global convergence of an iterative algorithm, i.e. convergence from an arbitrary starting point, is a desirable property. But it is neither necessary nor sufficient for the usefulness of the algorithm, because in addition one needs information about its speed of convergence. We try to give as much information as possible about convergence speed and complexity in our presentation of the examples.

It should be noted that this book is a corrected, updated, and expanded version of a twenty year old chapter in a conference proceedings volume (De Leeuw 1994). It will not be possible to erase all the traces of its humble beginnings. Specifically, references will often be to material from before 1994, and more recent work will probably be less completely covered.

Much of the material in this book is pasted together from published and unpublished papers and reports. This may lead to inconsistencies in the notation and to annoying duplications. Over time I will eliminate these blemishes as much as possible.

Items in the bibliography freely available on the internet are hyperlinked by title to external pdf files. This includes all published and unpublished works authored or co-authored by me. They can also be found in my bibliography of about 750 items, most of them linked to pdf files, on my server gifi.stat.ucla.edu.