Chapter 22 Pre-registration and Registered Reports

22.1 Learning objectives

By the end of this chapter, you will be able to:

- Understand how pre-registration of study protocols can help counteract publication bias
- Explain how a Registered Report differs from a standard pre-registered study

22.2 Study registration

The basic idea of study registration is that the researcher declares key details of the study in advance: effectively a full protocol that explains the research question and the methods that will be used to address it. Crucially, this should specify the primary outcomes and the method of analysis, leaving the researcher no wiggle room to tweak results to make them look more favourable. A study record in a trial registry should be public and time-stamped, and should be completed before any results have been collected. The Food and Drug Administration Modernization Act of 1997 first required FDA-regulated trials to be deposited in a registry, and other areas of medicine have since followed, with a general registry, https://clinicaltrials.gov/, established in 2000. Registration of clinical trials has become widely adopted, and is now required if one wants to publish clinical trial results in a reputable journal (De Angelis et al., 2004).

Study registration serves several functions, but perhaps the most important one is that it makes research studies visible, regardless of whether they obtain positive results. In Chapter 21, we showed results from a study that was able to document publication bias (De Vries et al., 2018) precisely because trials in this area were registered. Without registration, we would have no way of knowing that the unpublished trials had ever existed.

A second important function of trial registration is that it allows us to see whether researchers did what they planned to do. Of course, “The best laid schemes o’ mice an’ men / Gang aft a-gley”, as Robert Burns put it. It may turn out to be impossible to recruit all the participants one hoped for. An outcome variable may turn out to be unsuitable for analysis. A new analytic method may come along that is much more appropriate for the study. The purpose of registration is not to put the study in a straitjacket, but rather to make any departures from the protocol transparent. As noted in Chapter 14, it is not uncommon for researchers to (illegitimately) change their hypothesis after seeing the data, a practice that can mislead both the researcher and the intended audience. For instance, a researcher who is initially disappointed by a null result may notice that the hypothesis appears to be supported if a covariate is used to adjust the analysis, or if a particular subgroup of individuals is analyzed instead. But if we look at the data, observe interesting patterns, and then form subgroups based on those observations, we are p-hacking, and raising the likelihood that we will pursue chance findings rather than a true phenomenon (Senn, 2018). As discussed in Chapter 15, it can be entirely reasonable to suppose that some people are more responsive to the intervention than others, but there is a real risk of misinterpreting chance variation as a meaningful difference if we identify subgroups only after examining the results (see Chapter 14).
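
To make the subgroup problem concrete, here is a minimal Python simulation sketch. It is our own illustration, not an analysis from Senn (2018). It assumes a two-arm trial in which the intervention has no effect at all. Each participant is given a random, hypothetical subgroup label (say, one of four age bands). The pre-specified analysis tests the whole sample once. The "trawling" analysis also tests every subgroup and keeps whichever p-value is smallest. The function names, the sample sizes and the use of a large-sample z-test instead of a t-test are all illustrative assumptions.

```python
import random
from statistics import NormalDist


def z_test_p(a, b):
    """Two-sided p-value for a difference in means, using a large-sample z
    approximation (a simplification; a t-test would be more exact)."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    va = sum((x - ma) ** 2 for x in a) / (len(a) - 1)
    vb = sum((x - mb) ** 2 for x in b) / (len(b) - 1)
    z = (ma - mb) / (va / len(a) + vb / len(b)) ** 0.5
    return 2 * (1 - NormalDist().cdf(abs(z)))


def simulate_null_trial(rng, n_per_arm=100, n_subgroups=4):
    """Simulate one two-arm trial in which the intervention has NO true effect.

    Outcomes in both arms come from the same normal distribution; each
    participant also gets a random (hypothetical) subgroup label.
    Returns (p_prespecified, p_smallest_after_trawling_subgroups).
    """
    treated = [(rng.gauss(0, 1), rng.randrange(n_subgroups)) for _ in range(n_per_arm)]
    control = [(rng.gauss(0, 1), rng.randrange(n_subgroups)) for _ in range(n_per_arm)]

    # The single analysis that would have been registered in advance.
    p_overall = z_test_p([y for y, _ in treated], [y for y, _ in control])

    # Post hoc: also test every subgroup and keep the most favourable result.
    p_values = [p_overall]
    for g in range(n_subgroups):
        t = [y for y, s in treated if s == g]
        c = [y for y, s in control if s == g]
        if len(t) >= 2 and len(c) >= 2:  # a variance needs at least two values
            p_values.append(z_test_p(t, c))
    return p_overall, min(p_values)


def false_positive_rates(n_trials=1000, alpha=0.05, seed=1):
    """Proportion of null trials declared 'significant' under each strategy."""
    rng = random.Random(seed)
    prespecified_hits = 0
    trawled_hits = 0
    for _ in range(n_trials):
        p_pre, p_best = simulate_null_trial(rng)
        prespecified_hits += int(p_pre < alpha)
        trawled_hits += int(p_best < alpha)
    return prespecified_hits / n_trials, trawled_hits / n_trials


if __name__ == "__main__":
    pre, trawled = false_positive_rates()
    print(f"pre-specified analysis only: {pre:.3f}")
    print(f"best of overall + subgroups: {trawled:.3f}")
```

The intervention does nothing, so every "significant" result here is a false positive. The pre-specified analysis should flag roughly one trial in 20, as intended at α = 0.05. Picking the best of the overall and subgroup tests flags several times as many. The exact figures depend on the seed and the settings.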

Does this mean we are prohibited from exploring our data to discover unexpected findings? A common criticism of pre-registration is that it kills creativity and prevents us from making new discoveries, but this is not the case. Data exploration is an important part of scientific discovery and is to be encouraged, provided that the complete analysis timeline is presented and unregistered analyses are labelled as exploratory. An interesting-looking subgroup effect can then be followed up in a new study to see if it replicates. The problem arises when such analyses are presented as if they were part of the original plan, and results that favour an intervention effect are cherry-picked. As we saw in Chapter 14, the interpretation of statistical analyses depends heavily on whether a specific hypothesis is tested prospectively or whether the researcher is data-dredging, running numerous analyses in search of the elusive “significant” result. Registering the study protocol means that this distinction cannot be obscured.

In psychology, the move towards registration of studies has been largely prompted by concerns about high rates of p-hacking and HARKing in the literature (Simmons et al., 2011) (see Chapter 14), and the focus is less on clinical trials than on basic observational or experimental studies. The term pre-registration has been adopted to cover this situation. For psychologists, the Open Science Framework (https://osf.io) has become the most popular repository for pre-registrations. It allows researchers to deposit a time-stamped protocol, which can be embargoed for a period if they wish to keep this information private (Hardwicke & Wagenmakers, 2021). Examples of pre-registration templates are available at https://cos.io/rr/.

Does trial registration prevent outcome-switching?

A registered clinical trial protocol typically specifies a primary outcome measure, which should be used in the principal analysis of the study data. This protects against the situation where the researcher looks at numerous outcomes and picks the one that looks best, which is in effect p-hacking. In practice, trial registration does not always achieve its goals. Goldacre et al. (2019) identified 76 trials published over a six-week period in five journals: New England Journal of Medicine, The Lancet, Journal of the American Medical Association, British Medical Journal, and Annals of Internal Medicine. These are all high-impact journals that officially endorse the Consolidated Standards of Reporting Trials (CONSORT) guidelines, which specify that pre-specified primary outcomes should be reported. Not only did Goldacre et al. find high rates of outcome-switching in these trial reports; they also found that some of the journals were reluctant to publish a letter that drew attention to the mismatch. Their qualitative analysis demonstrated “extensive misunderstandings among journal editors about correct outcome reporting”.
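
A back-of-envelope calculation shows why picking the best-looking outcome amounts to p-hacking. As a simplification, assume a trial measures k independent outcomes, none of which is truly affected by the intervention, and tests each at α = 0.05. The chance that at least one outcome comes out "significant" is then 1 - (1 - α)^k. The minimal Python sketch below computes this and checks it by simulation. The check relies on the fact that, under the null hypothesis, a p-value is uniformly distributed between 0 and 1. The independence assumption and the function names are ours, for illustration only.

```python
import random


def chance_of_spurious_hit(k, alpha=0.05):
    """Probability that at least one of k independent null outcomes gives p < alpha."""
    return 1 - (1 - alpha) ** k


def simulate_best_outcome(k, alpha=0.05, n_trials=20000, seed=0):
    """Monte Carlo check of chance_of_spurious_hit.

    Under the null hypothesis each outcome's p-value is Uniform(0, 1), so
    switching to whichever outcome looks best is equivalent to taking the
    minimum of k uniform draws and asking whether it falls below alpha.
    """
    rng = random.Random(seed)
    hits = sum(min(rng.random() for _ in range(k)) < alpha for _ in range(n_trials))
    return hits / n_trials


if __name__ == "__main__":
    for k in (1, 3, 5, 10):
        print(k, round(chance_of_spurious_hit(k), 3), round(simulate_best_outcome(k), 3))
```

With five outcomes, the chance of a spurious "finding" is about 0.23; with ten, it is about 0.40. Real outcome measures are usually correlated, which makes the inflation smaller than this. Analysing only the pre-specified primary outcome keeps the false-positive rate at the nominal α.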

22.3 Registered Reports

Michael Mahoney, whose book was mentioned in Chapter 21, provided an early demonstration of publication bias with his little study of journal reviewers (Mahoney, 1976). Having found that reviewers are far too readily swayed by a paper’s results, he recommended:

Manuscripts should be evaluated solely on the basis of their relevance and their methodology. Given that they ask an important question in an experimentally meaningful way, they should be published - regardless of their results. (p. 105).

Thirty-seven years later, Chris Chambers independently came to the same conclusion. In his editorial role at the journal Cortex, he introduced a new publishing initiative adopting this model, heralded by an open letter in the Guardian newspaper: https://www.theguardian.com/science/blog/2013/jun/05/trust-in-science-study-pre-registration. The registered report is a specific type of journal article that embraces pre-registration as one element of the process; crucially, peer review occurs before the data are collected.

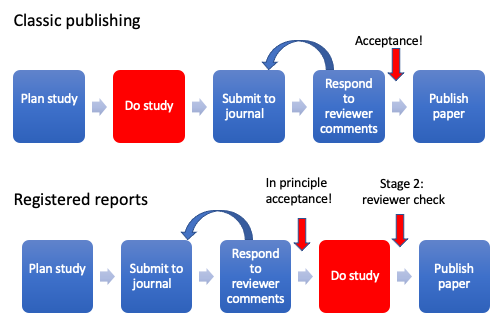

Figure 22.1: Comparison of stages in regular publishing model and Registered Reports

Figure 22.1 shows how registered reports differ from the traditional publishing model. In traditional publishing, reviewers evaluate the study after it has been completed, giving them ample opportunity to be swayed by the results. In addition, their input comes too late to remedy serious flaws in the experimental design. A huge amount of waste occurs when researchers put months or years into a piece of research that is then rejected because peer reviewers find serious flaws. With registered reports, by contrast, reviewers are involved at a much earlier stage. The decision whether to accept the article for publication is based on the introduction, methods and analysis plan, before any data are collected, so reviewers cannot be influenced by the results. The second-stage review is conducted after the study has been completed, but it is much lighter-touch. It checks only whether the authors did what they said they would do and whether the conclusions are reasonable given the data. The “in principle” acceptance cannot be overturned by reviewers coming up with new demands at this point. This format turns the traditional publishing paradigm on its head. Peer review becomes less a matter of “here’s what you got wrong and should have done” and more like input from a helpful collaborator, who gives feedback at a stage when the project can still be adapted to improve the study.

Methodological quality of registered reports tends to be high because no editor or reviewer wants to commit to publishing a study that is poorly conducted, underpowered or unlikely to give a clear answer to an interesting question. Authors of registered reports must specify clear hypotheses, set out an analysis plan for testing them, justify the sample size, and document how issues such as outlier exclusion and participant selection criteria will be handled. These requirements are more rigorous than those for clinical trial registration.

The terminology in this area can be rather confusing, and it is important to distinguish between pre-registration, as described in the previous section (which in the clinical trials literature is simply referred to as “trial registration”), and registered reports, which include peer review prior to data collection, with in-principle acceptance of the paper before results are known. Another point of difference is that trial registration is always made public; that is not necessarily the case for registered reports, where the initial protocol may be deposited with the journal but not placed in the public domain. Pre-registrations outside the clinical trials domain need to be deposited on a repository with a time-stamp, but there is flexibility about when, or indeed if, they are made public.

The more stringent requirements for a registered report, versus standard pre-registration, mean that this publication model can counteract four major sources of bias in scientific publications - referred to by Bishop (2019) as the four horsemen of the reproducibility apocalypse, namely:

Publication bias. Because reviews are based on the introduction and methods only, knowledge of results can no longer influence publication decisions. As Mahoney (1976) put it, this allows us to “place our trust in good questions rather than cheap answers”.

Low power. No journal editor wants to publish an ambiguous null result that could simply be the consequence of low statistical power (see Chapter 13). However, in an adequately powered intervention study, a null result is important and informative, telling us what does not work. Registered reports require authors to justify their sample size, reducing the likelihood of type II errors (false negatives).

P-hacking. Pre-specification of the analysis plan makes transparent the distinction between pre-planned hypothesis-testing analyses and post hoc exploration of the data. Note that exploratory analyses are not precluded in a registered report, but they are reported separately, on the grounds that statistical inferences need to be handled differently in this case (see Chapter 14).

HARKing. Because hypotheses are specified before the data are collected, it will be obvious if the researcher uses their data to generate a new hypothesis. HARKing is so common as to be normative in many fields, but it generates a high rate of false positives when a hypothesis that was specified only after seeing the data is presented as if it were the primary motivation for a study. Instead, in a registered report, authors are encouraged to present new ideas that emerge from the data in a separate section entitled “Exploratory analyses”.
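
The sample-size justification mentioned under "Low power" can be illustrated with a common normal-approximation formula for comparing two group means: n per group = 2 × (z(1 - α/2) + z(1 - β))² / d². Here d is the standardized effect size (Cohen's d) the study aims to detect, and 1 - β is the desired power. The Python sketch below is a rough approximation for illustration only. It is not a procedure that any particular journal requires, and exact calculations based on the t distribution give slightly larger numbers. The function names are hypothetical.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # mean 0, standard deviation 1


def n_per_group(d, alpha=0.05, power=0.80):
    """Approximate participants needed per arm to detect standardized effect d
    with a two-sided test at level alpha (normal approximation)."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)
    z_beta = STD_NORMAL.inv_cdf(power)
    return math.ceil(2 * (z_alpha + z_beta) ** 2 / d ** 2)


def approx_power(d, n, alpha=0.05):
    """Approximate power of a two-sided two-group comparison with n per arm
    (ignores the negligible chance of significance in the wrong direction)."""
    z_alpha = STD_NORMAL.inv_cdf(1 - alpha / 2)
    return STD_NORMAL.cdf(d * math.sqrt(n / 2) - z_alpha)


if __name__ == "__main__":
    for d in (0.2, 0.5, 0.8):
        print(f"d = {d}: about {n_per_group(d)} per group for 80% power")
    # A study with 20 per group and a true effect of d = 0.5 is badly underpowered.
    print(f"power with n = 20 per group: {approx_power(0.5, 20):.2f}")
```

For a medium effect (d = 0.5), this approximation gives 63 per group. With only 20 per group, power is roughly 0.35, so a null result would be hard to interpret. Registered reports ask authors to make this kind of reasoning explicit before any data are collected.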

Registered reports are becoming increasingly popular in psychology and are beginning to be adopted in other fields, but many journal editors have resisted the format. This is partly because any novel system requires extra work, and partly because of other concerns, such as the worry that it might lead to less interesting work being published in the journal. Answers to frequently asked questions about registered reports can be found on the Center for Open Science website: https://www.cos.io/initiatives/registered-reports. As might be gathered from this account, we are enthusiastic advocates of this approach, and have co-authored several registered reports ourselves.

22.4 Check your understanding

- Read this pre-registration of a study on Open Science Framework: https://osf.io/ecswy, and compare it with the article reporting results here: https://link.springer.com/article/10.1007/s11121-022-01455-4. Note any points where the report of the study diverges from the pre-registration and consider why this might have happened. Do the changes from pre-registration influence the conclusions you can draw from the study?