9 Publication Bias

L ooking back at the last chapters, we see that we already covered a vast range of meta-analytic techniques. Not only did we learn how to pool effect sizes, we also know now how to assess the robustness of our findings, inspect patterns of heterogeneity, and test hypotheses on why effects differ.

All of these approaches can help us to draw valid conclusions from our meta-analysis. This, however, rests on a tacit assumption concerning the nature of our data, which we have not challenged yet. When conducting a meta-analysis, we take it as a given that the data we collected is comprehensive, or at least representative of the research field under examination.

Back in Chapter 1.4.3, we mentioned that meta-analyses usually try to include all available evidence, in order to derive a single effect size that adequately describes the research field. From a statistical perspective, we may be able to tolerate that a few studies are missing in our analysis–but only if these studies were “left out” by chance.

Unfortunately, meta-analyses are often unable to include all existing evidence. To make things worse, there are also good reasons to assume that some studies are not missing completely “at random” from our collected data. Our world is imperfect, and so are the incentives and “rules” that govern scientific practice. This means that there are systemic biases that can determine if a study ends up in our meta-analysis or not.

A good example of this problem can be found in a not-so-recent anecdote from pharmacotherapy research. Even back in the 1990s, it was considered secured knowledge that antidepressive medication (such as selective serotonin re-uptake inhibitors, or SSRIs) are effective in treating patients suffering from depression. Most of this evidence was provided by meta-analyses of published pharmacotherapy trials, in which an antidepressant is compared to a pill placebo. The question regarding the effects of antidepressive medication is an important one, considering that the antidepressant drug market is worth billions of dollars, and growing steadily.

This may help to understand the turmoil caused by an article called The Emperor’s New Drugs, written by Irving Kirsch and colleagues (2002), which argued that things may not look so bright after all.

Drawing on the “Freedom of Information Act”, Kirsch and colleagues obtained previously unpublished antidepressant trial data which pharmaceutical companies had provided to the US Food and Drug Administration. They found that when this unpublished data was also considered, the benefits of antidepressants compared to placebos were at best minimal, and clinically negligible. Kirsch and colleagues argued that this was because companies only published studies with favorable findings, while studies with “disappointing” evidence were withheld (Kirsch 2010).

A contentious debate ensued, and Kirsch’s claims have remained controversial until today. We have chosen this example not to pick sides, but to illustrate the potential threat that missing studies can pose to the validity of meta-analytic inferences. In the meta-analysis literature, such problems are usually summarized under the term publication bias.

The problem of publication bias underlines that every finding in meta-analyses can only be as good as the data it is based on. Meta-analytic techniques can only work with the data at hand. Therefore, if the collected data is distorted, even the best statistical model will only reproduce inherent biases. Maybe you recall that we already covered this fundamental caveat at the very beginning of this book, where we discussed the “File Drawer” problem (see Chapter 1.3). Indeed, the terms “file drawer problem” and “publication bias” are often used synonymously.

The consequences of publication bias and related issues on the results of meta-analyses can be enormous. It can cause us to overestimate the effects of treatments, overlook negative side-effects, or reinforce the belief in theories that are actually invalid.

In this chapter, we will therefore discuss the various shapes and forms through which publication bias can distort our findings. We will also have a look at a few approaches that we as meta-analysts can use to examine the risk of publication bias in our data; and how publication bias can be mitigated in the first place.

9.1 What Is Publication Bias?

Publication bias exists when the probability of a study getting published is affected by its results (Rothstein, Sutton, and Borenstein 2005, chap. 2 and 5). There is widespread evidence that a study is more likely to find its way into the public if its findings are statistically significant, or confirm the initial hypothesis (Schmucker et al. 2014; Scherer et al. 2018; Chan et al. 2014; Dechartres et al. 2018).

When searching for eligible studies, we are usually constrained to evidence that has been made public in some form or the other, for example through peer-reviewed articles, preprints, books, or other kinds of accessible reports. In the presence of publication bias, this not only means that some studies are missing in our data set–it also means that the missing studies are likely the ones with unfavorable findings.

Meta-analytic techniques allow us to find an unbiased estimate of the average effect size in the population. But if our sample itself is distorted, even an effect estimate that is “true” from a statistical standpoint will not be representative of the reality. It is like trying to estimate the size of an iceberg, but only measuring its tip: our finding will inevitably be wrong, even if we are able to measure the height above the water surface with perfect accuracy.

Publication bias is actually just one of many non-reporting biases. There are several other factors that can also distort the evidence that we obtain in our meta-analysis (Page et al. 2020), including:

Citation bias: Even when published, studies with negative or inconclusive findings are less likely to be cited by related literature. This makes it harder to detect them through reference searches, for example.

Time-lag bias: Studies with positive results are often published earlier than those with unfavorable findings. This means that findings of recently conducted studies with positive findings are often already available, while those with non-significant results are not.

Multiple publication bias: Results of “successful” studies are more likely to be reported in several journal articles, which makes it easier to find at least one of them. The practice of reporting study findings across several articles is also known as “salami slicing”.

Language bias: In most disciplines, the primary language in which evidence is published is English. Publications in other languages are less likely to be detected, especially when the researchers themselves cannot understand the contents without translation. If studies in English systematically differ from the ones published in other languages, this may also introduce bias.

Outcome reporting bias: Many studies, and clinical trials in particular, measure more than one outcome of interest. Some researchers exploit this, and only report those outcomes for which positive results were attained, while the ones that did not confirm the hypothesis are dropped. This can also lead to bias: technically speaking, the study has been published, but its (unfavorable) result will still be missing in our meta-analysis because it is not reported.

Non-reporting biases can be seen as systemic factors which make it harder for us to find existing evidence. However, even if we were able to include all relevant findings, our results may still be flawed. Bias may also exist due to questionable research practices (QRPs) that researchers have applied when analyzing and reporting their findings (Simonsohn, Simmons, and Nelson 2020).

We already mentioned the concept of “researcher degrees of freedom” previously (Chapter 1.3). QRPs can be defined as practices in which researchers abuse these degrees of freedom to “bend” results into the desired direction. Unfortunately, there is no clear consensus on what constitutes a QRP. There are, however, a few commonly suggested examples.

One of the most prominent QRPs is p-hacking, in which analyses are tweaked until the conventional significance threshold of \(p<\) 0.05 is reached. This can include the way outliers are removed, analyses of subgroups, or the missing data handling.

Another QRP is HARKing (Kerr 1998), which stands for hypothesizing after the results are known. One way of HARKing is to pretend that a finding in exploratory analyses has been an a priori hypothesis of the study all along. A researcher, for example, may run various tests on a data set, and then “invent” hypotheses around all the tests that were significant. This is a seriously flawed approach, which inflates the false discovery rate of a study, and thus increases the risk of spurious findings (to name just a few problems). Another type of HARKing is to drop all hypotheses that were not supported by the data, which can ultimately lead to outcome reporting bias.

9.2 Addressing Publication Bias in Meta-Analyses

It is quite clear that publication bias, other reporting biases and QRPs can have a strong and deleterious effect on the validity of our meta-analysis. They constitute major challenges since it is usually practically impossible to know the exact magnitude of the bias–or if it exists at all.

In meta-analyses, we can apply techniques which can, to some extent, reduce the risk of distortions due to publication and reporting bias, as well as QRPs. Some of these approaches pertain to the study search, while others are statistical methods.

Study search. In Chapter 1.4.3, we discussed the process of searching for eligible studies. If publication bias exists, this step is of great import, because it means that that a search of the published literature may yield data that is not fully representative of all the evidence. We can counteract this by also searching for grey literature, which includes dissertations, preprints, government reports, or conference proceedings. Fortunately, pre-registration is also becoming more common in many disciplines. This makes it possible to search study registries such as the ICTRP or OSF Registries (see Table 1.1 in Chapter 1.4.3) for studies with unpublished data, and ask the authors if they can provide us with data that has not been made public (yet)37. Grey literature search can be tedious and frustrating, but it is worth the effort. One large study has found that the inclusion of grey and unpublished literature can help to avoid an overestimation of the true effects (McAuley et al. 2000).

Statistical methods. It is also possible to examine the presence of publication through statistical procedures. None of these methods can identify publication bias directly, but they can examine certain properties of our data that may be indicative of it. Some methods can also be used to quantify the true overall effect when correcting for publication bias.

In this chapter, we will showcase common statistical methods to evaluate and control for publication bias. We begin with methods focusing on small-study effects (Sterne, Gavaghan, and Egger 2000; Schwarzer, Carpenter, and Rücker 2015, chap. 5; Rothstein, Sutton, and Borenstein 2005, chap. 5). A common thread among these approaches is that they find indicators of publication bias by looking at the relationship between the precision and observed effect size of studies.

9.2.1 Small-Study Effect Methods

There are various small-study effect methods to assess and correct for publication bias in meta-analyses. Many of the techniques have been conventional for many years. As it says in the name, these approaches are particularly concerned with small studies. From a statistical standpoint, this translates to studies with a high standard error. Small-study effect methods assume that small studies are more likely to fall prey to publication bias.

This assumption is based on three core ideas (see Borenstein et al. 2011, chap. 30):

Because they involve a large commitment of resources and time, large studies are likely to get published, no matter whether the results are significant or not.

Moderately sized studies are at greater risk of not being published. However, even when the statistical power is only moderate, this is still often sufficient to produce significant results. This means that only some studies will not get published because they delivered “undesirable” (i.e. non-significant) results.

Small studies are at the greatest risk of generating non-significant findings, and thus of remaining in the “file drawer”. In small studies, only very large effects become significant. This means that only small studies with very high effect sizes will be published.

We see that the purported mechanism behind these assumptions is quite simple. Essentially, it says that publication bias exists because only significant effects are published. Since the probability of obtaining significant results rises with larger sample size, it follows that publication bias will disproportionately affect small studies.

9.2.1.1 The Funnel Plot

Earlier in this guide (Chapter 3.1), we learned that a study’s sample size and standard error are closely related. Larger standard errors of an effect size result in wider confidence intervals and increase the chance that the effect is not statistically significant. Therefore, it is sensible to assume that small-study effects will largely affect studies with larger standard errors.

Suppose that our collected data is burdened by publication bias. If this is the case, we can assume that the studies with large standard errors have higher effect sizes than the ones with a low standard error. This is because the smaller studies with lower effects were not significant, and thus never considered for publication. Consequently, we never included them in our meta-analysis.

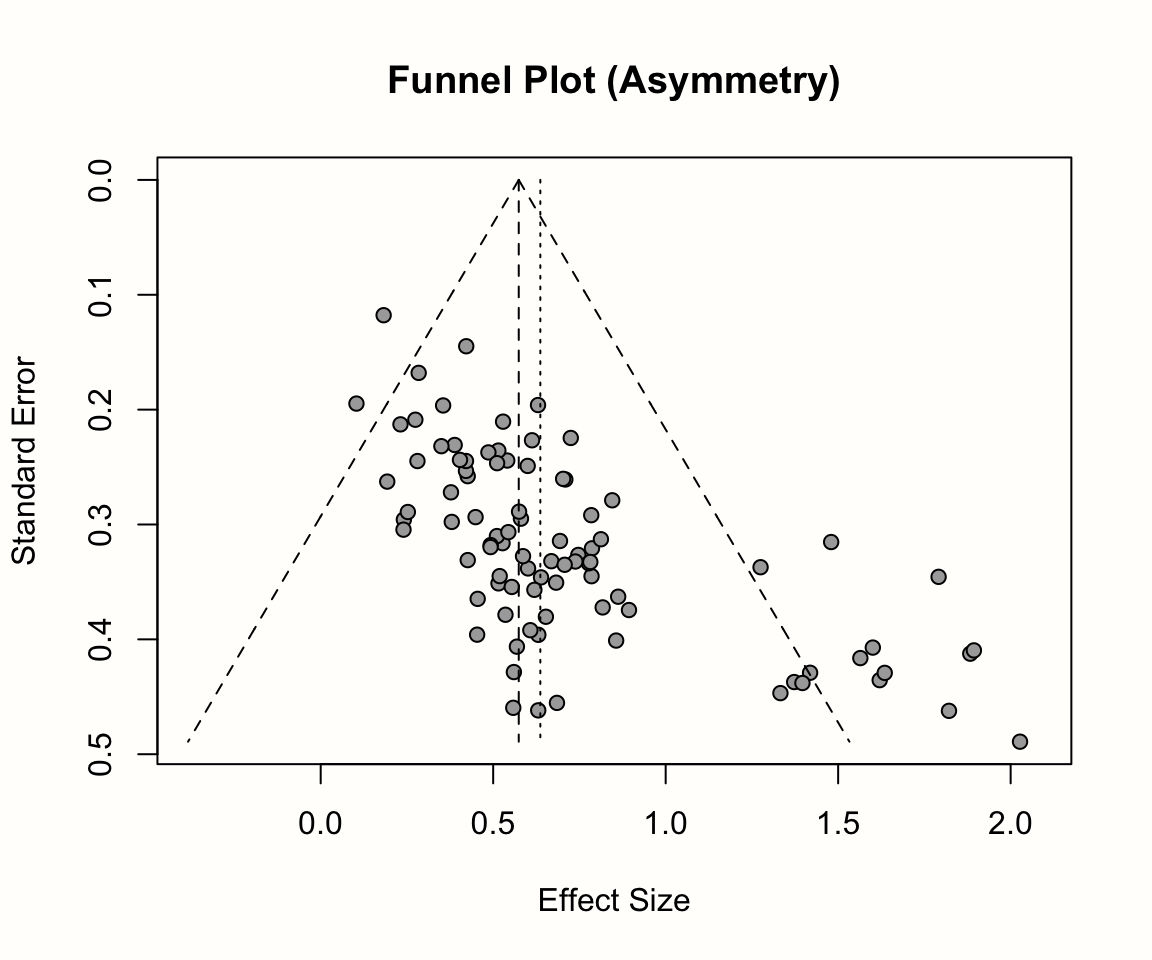

It is conventional to inspect small-study effects through funnel plots. A funnel plot is a scatter plot of the studies’ observed effect sizes on the x-axis against a measure of their standard error on the y-axis. Usually, the y-axis in funnel plots is inverted (meaning that “higher” values on the y-axis represent lower standard errors).

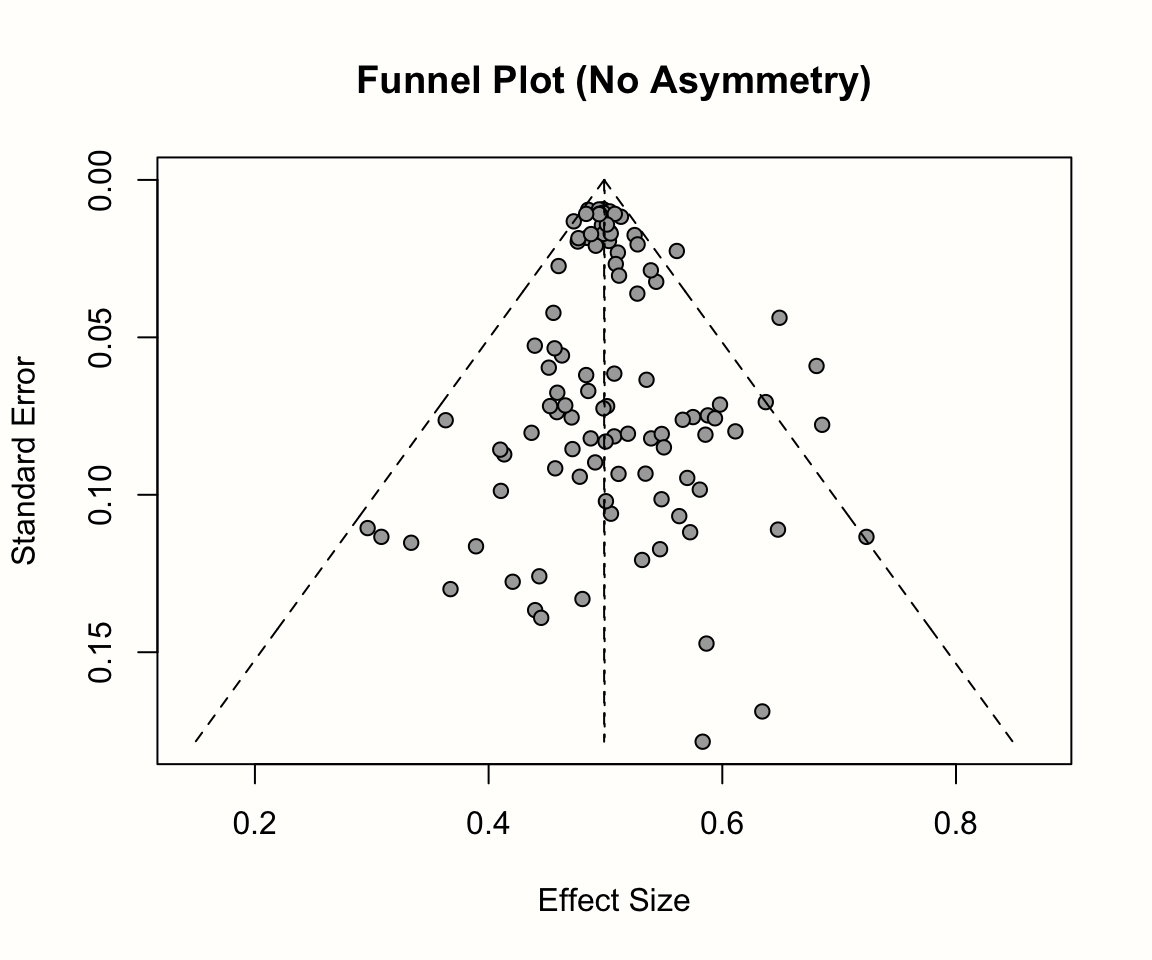

When there is no publication bias, the data points in such a plot should form a roughly symmetrical, upside-down funnel. This is why they are called funnel plots. Studies in the top part of the plot (those with low standard errors), should lie closely together, and not far away from the pooled effect size. In the lower part of the plot, with increasing standard errors, the funnel “opens up”, and effect sizes are expected to scatter more heavily to the left and right of the pooled effect.

It becomes easier to see why studies should form a funnel when we think back to what we learned about the behavior of effect sizes in Chapter 3.1, and when discussing the fixed-effect model in Chapter 4.1.1 (Figure 4.1). The standard error is indicative of a study’s precision: with decreasing standard error, we expect the observed effect size to become an increasingly good estimator of the true effect size. When the standard error is high, the effect size has a low precision and is therefore much more likely to be far off from the actual effect in the population.

We will now make this more concrete by generating a funnel plot ourselves. In the {meta} package, the meta::funnel38 function can be used to print a funnel plot for a meta-analysis object. Here, we produce a funnel plot for our m.gen meta-analysis object. We specify two further arguments, xlim and studlab. The first controls the limits of the x-axis in the plot, while the latter tells the function to include study labels. A call to the title function after running meta::funnel adds a title to the plot.

Our code looks like this:

# Load 'meta' package

library(meta)

# Produce funnel plot

meta::funnel(m.gen,

xlim = c(-0.5, 2),

studlab = TRUE)

# Add title

title("Funnel Plot (Third Wave Psychotherapies)")

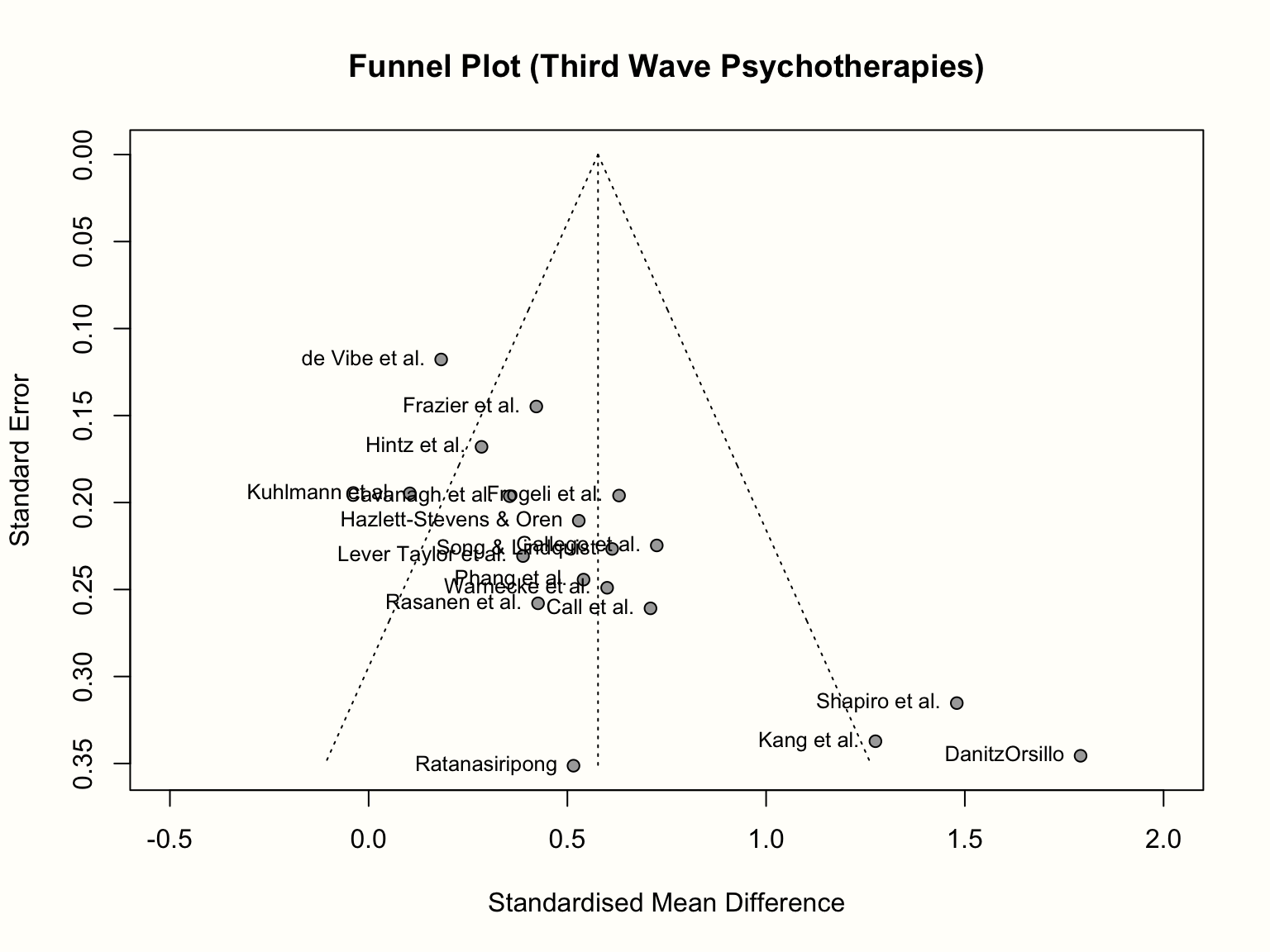

As discussed, the resulting funnel plot shows the effect size of each study (expressed as the standardized mean difference) on the x-axis, and the standard error (from large to small) on the y-axis. To facilitate the interpretation, the plot also includes the idealized funnel-shape that we expect our studies to follow. The vertical line in the middle of the funnel shows the average effect size. Because we used a random-effects model when generating m.gen, the funnel plot also uses the random-effects estimate.

In the absence of small-study effects, our studies should roughly follow the shape delineated by the funnel displayed in the plot. Is this the case in our example? Well, not really. While we see that studies with lower standard errors lie more concentrated around the estimated true effect, the pattern overall looks asymmetrical. This is because there are three small studies with very high effect sizes in the bottom-right corner of the plot (the ones by Shapiro, Kang, and Danitz-Orsillo).

These studies, however, have no equivalent in the bottom-left corner in the plot. There are no small studies with very low or negative effect sizes to “balance out” the ones with very high effects. Another worrisome detail is that the study with the greatest precision in our sample, the one by de Vibe, does not seem to follow the funnel pattern well either. Its effect size is considerably smaller than expected.

Overall, the data set shows an asymmetrical pattern in the funnel plot that might be indicative of publication bias. It could be that the three small studies are the ones that were lucky to find effects high enough to become significant, while there is an underbelly of unpublished studies with similar standard errors, but smaller and thus non-significant effects which did not make the cut.

A good way to inspect how asymmetry patterns relate to statistical significance is to generate contour-enhanced funnel plots (Peters et al. 2008). Such plots can help to distinguish publication bias from other forms of asymmetry. Contour-enhanced funnel plots include colors which signify the significance level of each study in the plot. In the meta::funnel function, contours can be added by providing the desired significance thresholds to the contour argument. Usually, these are 0.9, 0.95 and 0.99, which equals \(p\) < 0.1, 0.05 and 0.01, respectively. Using the col.contour argument, we can also specify the color that the contours should have. Lastly, the legend function can be used afterwards to add a legend to the plot, specifying what the different colors mean. We can position the legend on the plot using the x and y arguments, provide labels in legend, and add fill colors using the fill argument.

This results in the following code:

# Define fill colors for contour

col.contour = c("gray75", "gray85", "gray95")

# Generate funnel plot (we do not include study labels here)

meta::funnel(m.gen, xlim = c(-0.5, 2),

contour = c(0.9, 0.95, 0.99),

col.contour = col.contour)

# Add a legend

legend(x = 1.6, y = 0.01,

legend = c("p < 0.1", "p < 0.05", "p < 0.01"),

fill = col.contour)

# Add a title

title("Contour-Enhanced Funnel Plot (Third Wave Psychotherapies)")

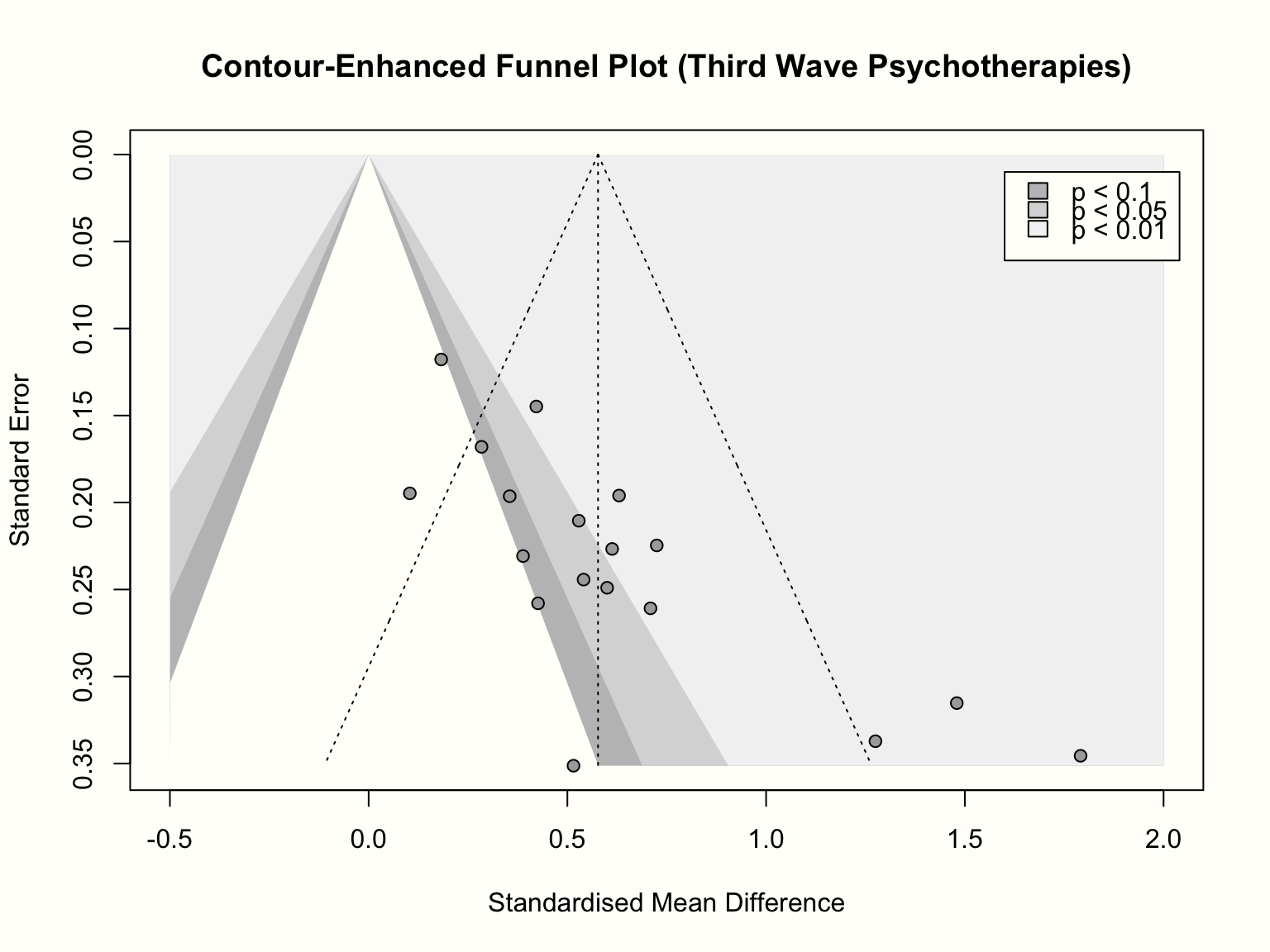

We see that the funnel plot now contains three shaded regions. We are particularly interested in the \(p<\) 0.05 and \(p<\) 0.01 regions, because effect sizes falling into this area are traditionally considered significant.

Adding the contour regions is illuminating: it shows that the three small studies all have significant effects, despite having a large standard error. There is only one study with a similar standard error that is not significant. If we would “impute” the missing studies in the lower left corner of the plot to increase the symmetry, these studies would lie in the non-significance region of the plot; or they would actually have a significant negative effect.

The pattern looks a little different for the larger studies. We see that there are several studies for which \(p>\) 0.05, and the distribution of effects is less lopsided. What could be problematic though is that, while not strictly significant, all but one study are very close to the significance threshold (i.e. they lie in the 0.1 \(> p >\) 0.05 region). It is possible that these studies simply calculated the effect size differently in the original paper, which led to a significant result. Or maybe, finding effects that are significant on a trend level was already convincing enough to get the study published.

In sum, inspection of the contour-enhanced funnel plot corroborates our initial hunch that there is asymmetry in the funnel plot and that this may be caused by publication bias. It is crucial, however, not to jump to conclusions, and interpret the funnel plot cautiously. We have to keep in mind that publication bias is just one of many possible reasons for funnel plot asymmetry.

Alternative Explanations for Funnel Plot Asymmetry

Although publication bias can lead to asymmetrical funnel plots, there are also other, rather “benign”, causes that may produce similar patterns (Page et al. 2020):

- Asymmetry can also be caused by between-study heterogeneity. Funnel plots assume that the dispersion of effect sizes is caused by the studies’ sampling error, but do not control for the fact the studies may be estimators of different true effects.

- It is possible that study procedures were different in small studies, and that this resulted in higher effects. In clinical studies, for example, it is easier to make sure that every participant receives the treatment as intended when the sample size is small. This may not be the case in large studies, resulting in a lower treatment fidelity, and thus lower effects. It can make sense to inspect the characteristics of the included studies in order to evaluate if such an alternative explanation is plausible.

- It is a common finding that low-quality studies tend to show larger effect sizes, because there is a higher risk of bias. Large studies require more investment, so it is likely that their methodology will also be more rigorous. This can also lead to funnel plot asymmetry, even when there is no publication bias.

- Lastly, it is perfectly possible that funnel plot asymmetry simply occurs by chance.

We see that visual inspection of the (contour-enhanced) funnel plot can already provide us with a few “red flags” that indicate that our results may be affected by publication bias.

However, interpreting the funnel plot just by looking at it clearly also has its limitations. There is no explicit rule when our results are “too asymmetric”, meaning that inferences from funnel plots are always somewhat subjective. Therefore, it is helpful to assess the presence of funnel plot asymmetry in a quantitative way. This is usually achieved through Egger’s regression test, which we will discuss next.

9.2.1.2 Egger’s Regression Test

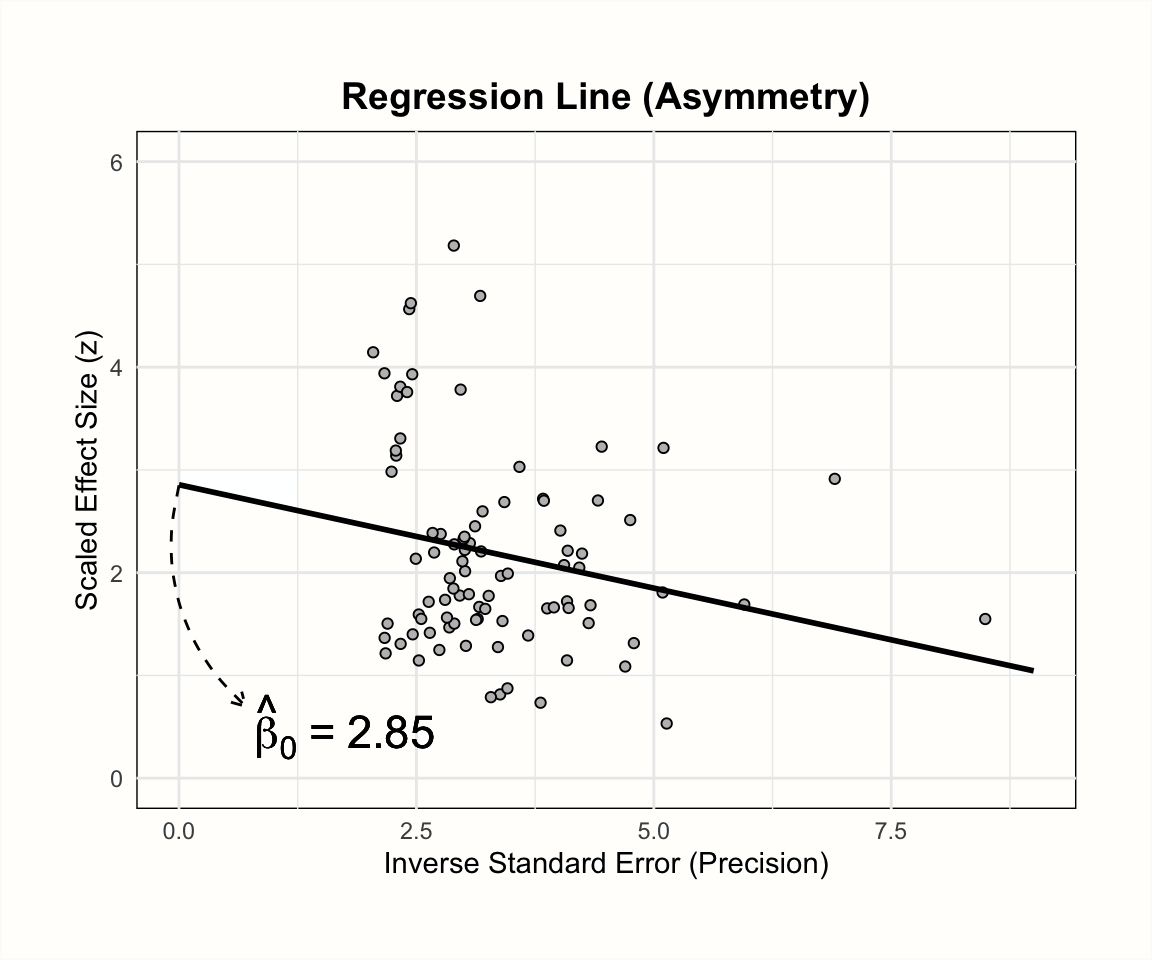

Egger’s regression test (Egger et al. 1997) is a commonly used quantitative method that tests for asymmetry in the funnel plot. Like visual inspection of the funnel plot, it can only identify small-study effects and not tell us directly if publication bias exists. The test is based on a simple linear regression model, the formula of which looks like this:

\[\begin{equation} \frac{\hat\theta_k}{SE_{\hat\theta_k}} = \beta_0 + \beta_1 \frac{1}{SE_{\hat\theta_k}} \tag{9.1} \end{equation}\]

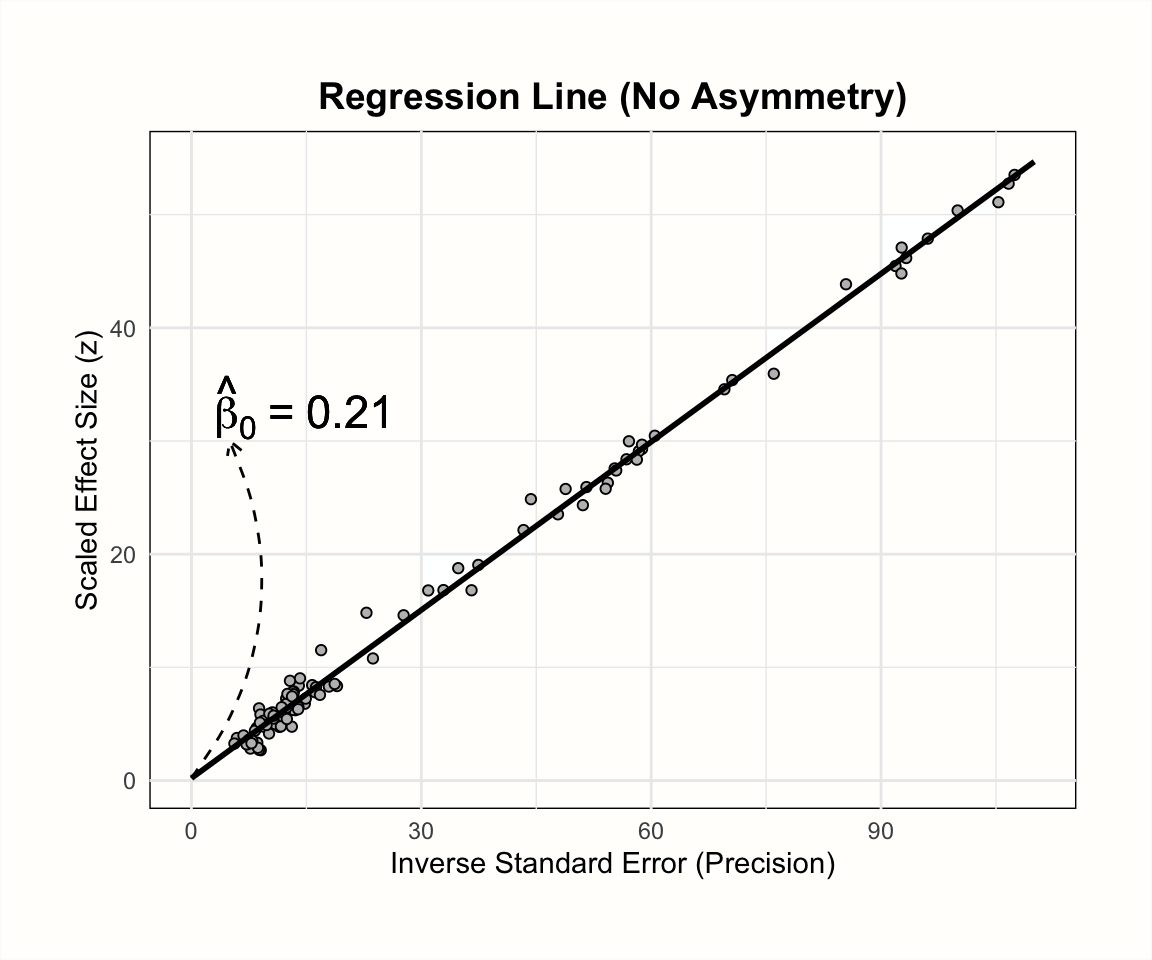

The responses \(y\) in this formula are the observed effect sizes \(\hat\theta_k\) in our meta-analysis, divided through their standard error. The resulting values are equivalent to \(z\)-scores. These scores tell us directly if an effect size is significant; when \(z \geq\) 1.96 or \(\leq\) -1.96, we know that the effect is significant (\(p<\) 0.05). This response is regressed on the inverse of the studies’ standard error, which is equivalent to their precision.

When using Egger’s test, however, we are not interested in the size and significance of the regression weight \(\beta_1\), but in the intercept \(\beta_0\). To evaluate the funnel asymmetry, we inspect the size of \(\hat\beta_0\), and if it differs significantly from zero. When this is the case, Egger’s test indicates funnel plot asymmetry.

Let us take a moment to understand why the size of the regression intercept tells us something about asymmetry in the funnel plot. In every linear regression model, the intercept represents the value of \(y\) when all other predictors are zero. The predictor in our model is the precision of a study, so the intercept shows the expected \(z\)-score when the precision is zero (i.e. when the standard error of a study is infinitely large).

When there is no publication bias, the expected \(z\)-score should be scattered around zero. This is because studies with extremely large standard errors have extremely large confidence intervals, making it nearly impossible to reach a value of \(|z| \geq\) 1.96. However, when the funnel plot is asymmetric, for example due to publication bias, we expect that small studies with very high effect sizes will be considerably over-represented in our data, leading to a surprisingly high number of low-precision studies with \(z\) values greater or equal to 1.96. Due to this distortion, the predicted value of \(y\) for zero precision will be much larger than zero, resulting in a significant intercept.

The plots below illustrate the effects of funnel plot asymmetry on the regression slope and intercept underlying Egger’s test.

Let us see what results we get when we fit such a regression model to the data in m.gen. Using R, we can extract the original data in m.gen to calculate the response y and our predictor x. In the code below, we do this using a pipe (Chapter 2.5.3) and the mutate function, which is part of the {tidyverse}. After that, we use the linear model function lm to regress the \(z\) scores y on the precision x. In the last part of the pipe, we request a summary of the results.

# Load required package

library(tidyverse)

m.gen$data %>%

mutate(y = TE/seTE, x = 1/seTE) %>%

lm(y ~ x, data = .) %>%

summary()## [...]

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 4.1111 0.8790 4.677 0.000252 ***

## x -0.3407 0.1837 -1.855 0.082140 .

## ---

## Signif. codes: 0 ‘***’ 0.001 ‘**’ 0.01 ‘*’ 0.05 ‘.’ 0.1 ‘ ’ 1

##

## [...]In the results, we see that the intercept of our regression model is \(\hat\beta_0\) = 4.11. This is significantly larger than zero (\(t\) = 4.677, \(p<\) 0.001), and indicates that the data in the funnel plot is indeed asymmetrical. Overall, this corroborates our initial findings that there are small-study effects. Yet, to reiterate, it is uncertain if this pattern has been caused by publication bias.

A more convenient way to perform Egger’s test of the intercept is to use the metabias function in {meta}. This function only needs the meta-analysis object as input, and we have to set the method.bias argument to "linreg". If we apply the function to m.gen, we get the same results as before.

metabias(m.gen, method.bias = "linreg")## Review: Third Wave Psychotherapies

##

## Linear regression test of funnel plot asymmetry

##

## Test result: t = 4.68, df = 16, p-value = 0.0003

## Bias estimate: 4.1111 (SE = 0.8790)

##

## Details:

## - multiplicative residual heterogeneity variance (tau^2 = 1.2014)

## - predictor: standard error

## - weight: inverse variance

## - reference: Egger et al. (1997), BMJReporting the Results of Egger’s Test

For Egger’s tests, it is usually sufficient to report the value of the intercept, its 95% confidence interval, as well as the \(t\) and \(p\)-value. In the {dmetar} package, we included a convenience function called eggers.test. This function is a wrapper for metabias, and provides the results of Egger’s test in a format suitable for reporting. In case you do not have {dmetar} installed, you can find the function’s source code online. Here is an example:

eggers.test(m.gen)

| \(~\) | Intercept |

ConfidenceInterval |

t |

p |

|---|---|---|---|---|

Egger's test |

4.111 |

2.347-5.875 |

4.677 |

0.00025 |

The effect size metric used in m.gen is the small sample bias-corrected SMD (Hedges’ \(g\)). It has been argued that running Egger’s test on SMDs can lead to an inflation of false positive results (J. E. Pustejovsky and Rodgers 2019). This is because a study’s standardized mean difference and standard error are not independent.

We can easily see this by looking at the formula used to calculate the standard error of between-group SMDs (equation 3.18, Chapter 3.3.1.2). This formula includes the SMD itself, which means that a study’s standard error changes for smaller or larger values of the observed effect (i.e. there is an artifactual correlation between the SMD and its standard error).

Pustejovsky and Rodgers (2019) propose to use a modified version of the standard error when testing for the funnel plot asymmetry of standardized mean differences. Only the first part of the standard error formula is used, which means that the observed effect size drops out of the equation. Thus, the formula looks like this:

\[\begin{equation} SE^*_{\text{SMD}_{\text{between}}}= \sqrt{\frac{n_1+n_2}{n_1n_2}} \tag{9.2} \end{equation}\]

Where \(SE^*_{\text{SMD}_{\text{between}}}\) is the modified version of the standard error. It might be a good idea to check if Egger’s test gives the same results when using this improvement. In the following code, we add the sample size per group of each study to our initial data set, calculate the adapted standard error, and then use it to re-run the analyses.

# Add experimental (n1) and control group (n2) sample size

n1 <- c(62, 72, 44, 135, 103, 71, 69, 68, 95,

43, 79, 61, 62, 60, 43, 42, 64, 63)

n2 <- c(51, 78, 41, 115, 100, 79, 62, 72, 80,

44, 72, 67, 59, 54, 41, 51, 66, 55)

# Calculate modified SE

ThirdWave$seTE_c <- sqrt((n1+n2)/(n1*n2))

# Re-run 'metagen' with modified SE to get meta-analysis object

m.gen.c <- metagen(TE = TE, seTE = seTE_c,

studlab = Author, data = ThirdWave, sm = "SMD",

fixed = FALSE, random = TRUE,

method.tau = "REML", hakn = TRUE,

title = "Third Wave Psychotherapies")## Warning: Use argument 'common' instead of 'fixed' (deprecated).## Warning: Use argument 'method.random.ci' instead of 'hakn' (deprecated).

# Egger's test

metabias(m.gen.c, method = "linreg")## Review: Third Wave Psychotherapies

##

## Linear regression test of funnel plot asymmetry

##

## Test result: t = 4.36, df = 16, p-value = 0.0005

## Bias estimate: 11.1903 (SE = 2.5667)

##

## Details:

## - multiplicative residual heterogeneity variance (tau^2 = 2.5334)

## - predictor: standard error

## - weight: inverse variance

## - reference: Egger et al. (1997), BMJWe see that, although the exact values differ, the interpretation of the results remains the same. This points to the robustness of our previous finding.

Using the Pustejovsky-Rodgers Approach Directly in metabias

In the latest versions of {meta}, the metabias function also contains an option to conduct Eggers’ test with the corrected standard error formula proposed by Pustejovsky and Rodgers. The option can be used by setting method.bias to "Pustejovsky".

Yet, this is only possible if the {meta} meta-analysis object already contains the sample sizes of the experimental and control groups in elements n.e and n.c, respectively. When using metagen objects (like in our example above), this is not typically the case, so these values need to be added manually. Let us use our m.gen meta-analysis object again as an example:

m.gen$n.e = n1; m.gen$n.c = n2

metabias(m.gen, method.bias = "Pustejovsky")

Please note that metabias, under these settings, uses equation (9.5) to perform Egger’s test, which is equivalent to equation (9.1) shown before. The main difference is that metabias uses the corrected standard error as the predictor in the model, and the inverse variance of included effect sizes as weights.

In our example, however, we used the corrected standard error on both sides of equation (9.1). This means that results of our approach as shown above, and results obtained via setting method.bias to "Pustejovsky", will not be completely identical.

9.2.1.3 Peters’ Regression Test

The dependence of effect size and standard error not only applies to standardized mean differences. This mathematical association also exists in effect sizes based on binary outcome data, such as (log) odds ratios (Chapter 3.3.2.2), risk ratios (Chapter 3.3.2.1) or proportions (Chapter 3.2.2).

To avoid an inflated risk of false positives when using binary effect size data, we can use another type of regression test, proposed by Peters and colleagues (Peters et al. 2006). To obtain the results of Peters’ test, the log-transformed effect size is regressed on the inverse of the sample size:

\[\begin{equation} \log\psi_k = \beta_0 + \beta_1\frac{1}{n_k} \tag{9.3} \end{equation}\]

In this formula, \(\log\psi_k\) can stand for any log-transformed effect size based on binary outcome data (e.g. the odds ratio), and \(n_k\) is the total sample size of study \(k\).

Importantly, when fitting the regression model, each study \(k\) is given a different weight \(w_k\), depending on its sample size and event counts. This results in a weighted linear regression, which is similar (but not identical) to a meta-regression model (see Chapter 8.1.3). The formula for the weights \(w_k\) looks like this:

\[\begin{equation} w_k = \frac{1}{\left(\dfrac{1}{a_k+c_k}+\dfrac{1}{b_k+d_k}\right)} \tag{9.4} \end{equation}\]

Where \(a_k\) is the number of events in the treatment group, \(c_k\) is the number of events in the control group; \(b_k\) and \(d_k\) are the number of non-events in the treatment and control group, respectively (see Chapter 3.3.2.1). In contrast to Eggers’ regression test, Peters’ test uses \(\beta_1\) instead of the intercept to test for funnel plot asymmetry. When the statistical test reveals that \(\beta_1 \neq 0\), we can assume that asymmetry exists in our data.

When we have calculated a meta-analysis based on binary outcome data using the metabin (Chapter 4.2.3.1) or metaprop (Chapter 4.2.6) function, the metabias function can be used to conduct Peters’ test. We only have to provide a fitting meta-analysis object and use "peters" as the argument in method.bias. Let us check for funnel plot asymmetry in the m.bin object we created in Chapter 4.2.3.1.

As you might remember, we used the risk ratio as the summary measure for this meta-analysis.

metabias(m.bin, method.bias = "peters")## Review: Depression and Mortality

##

## Linear regression test of funnel plot asymmetry

##

## Test result: t = -0.08, df = 16, p-value = 0.9368

## Bias estimate: -11.1728 (SE = 138.6121)

##

## Details:

## - multiplicative residual heterogeneity variance (tau^2 = 40.2747)

## - predictor: inverse of total sample size

## - weight: inverse variance of average event probability

## - reference: Peters et al. (2006), JAMAWe see that the structure of the output looks identical to the one of Eggers’ test. The output tells us that the results are the ones of a regression test based on sample size, meaning that Peters’ method has been used. The test is not significant (\(t\) = -0.08, \(p\) = 0.94), indicating no funnel plot asymmetry.

Statistical Power of Funnel Plot Asymmetry Tests

It is advisable to only test for funnel plot asymmetry when our meta-analysis includes a sufficient number of studies. When the number of studies is low, the statistical power of Eggers’ or Peters’ test may not be high enough to detect real asymmetry. It is generally recommended to only perform a test when \(K \geq 10\) (Sterne et al. 2011).

By default, metabias will throw an error when the number

of studies in our meta-analysis is smaller than that. However, it is

possible (although not advised) to prevent this by setting the

k.min argument in the function to a lower number.

9.2.1.4 Duval & Tweedie Trim and Fill Method

We have now learned several ways to examine (and test for) small-study effects in our meta-analysis. While it is good to know that publication bias may exist in our data, what we are primarily interested in is the magnitude of that bias. We want to know if publication bias has only distorted our estimate slightly, or if it is massive enough to change the interpretation of our findings.

In short, we need a method which allows us to calculate a bias-corrected estimate of the true effect size. Yet, we already learned that publication bias cannot be measured directly. We can only use small-study effects as a proxy that may point to publication bias.

We can therefore only adjust for small-study effects to attain a corrected effect estimate, not for publication bias per se. When effect size asymmetry was indeed caused by publication bias, correcting for this imbalance will yield an estimate that better represents the true effect when all evidence is considered.

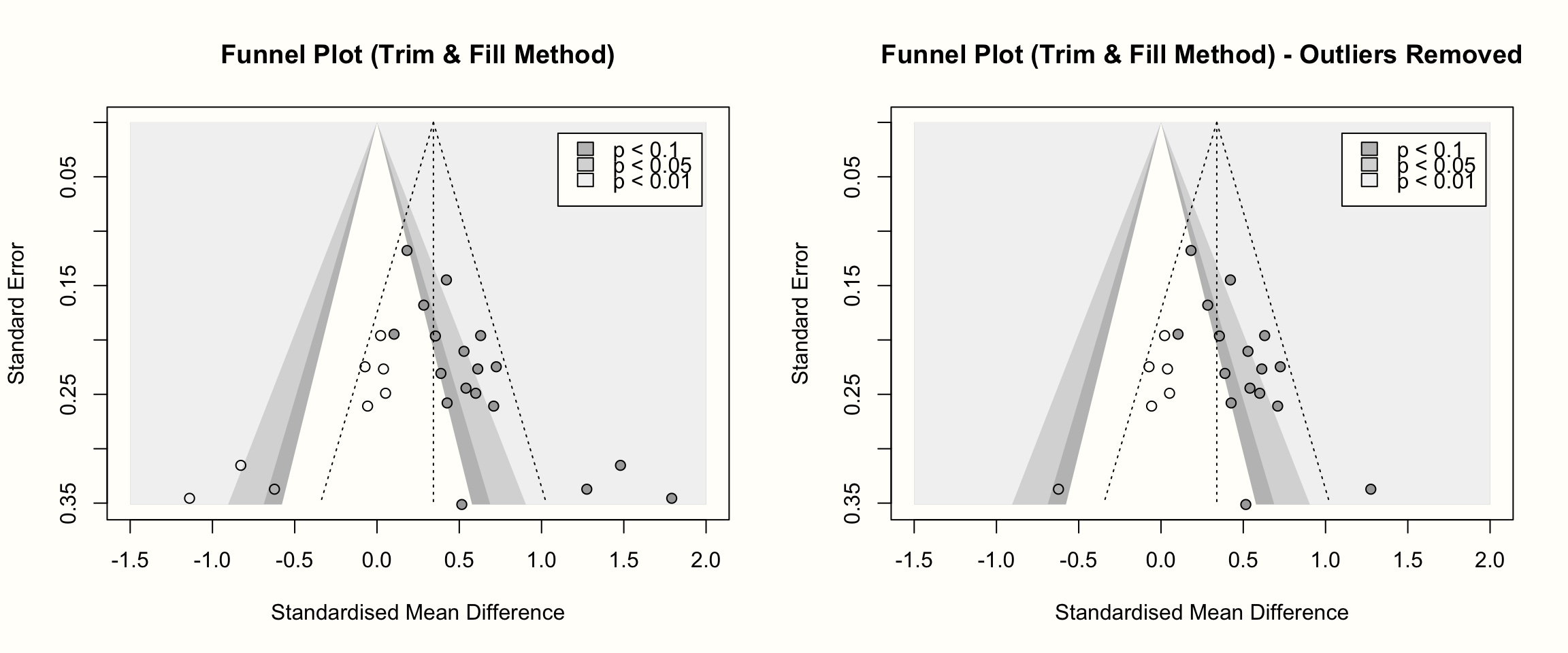

One of the most common methods to adjust for funnel plot asymmetry is the Duval & Tweedie trim and fill method (Duval and Tweedie 2000). The idea behind this method is simple: it imputes “missing” effects until the funnel plot is symmetric. The pooled effect size of the resulting “extended” data set then represents the estimate when correcting for small-study effects. This is achieved through a simple algorithm, which involves the “trimming” and “filling” of effects (Schwarzer, Carpenter, and Rücker 2015, chap. 5.3.1):

Trimming. First, the method identifies all the outlying studies in the funnel plot. In our example from before, these would be all small studies scattered around the right side of the plot. Once identified, these studies are trimmed: they are removed from the analysis, and the pooled effect is recalculated without them. This step is usually performed using a fixed-effect model.

Filling. For the next step, the recalculated pooled effect is now assumed to be the center of all effect sizes. For each trimmed study, one additional effect size is added, mirroring its results on the other side of the funnel. For example, if the recalculated mean effect is 0.5 and a trimmed study has an effect of 0.8, the mirrored study will be given an effect of 0.2. After this is done for all trimmed studies, the funnel plot will look roughly symmetric. Based on all data, including the trimmed and imputed effect sizes, the average effect is then recalculated again (typically using a random-effects model). The result is then used as the estimate of the corrected pooled effect size.

An important caveat pertaining to the trim-and-fill method is that it does not produce reliable results when the between-study heterogeneity is large (Peters et al. 2007; Terrin et al. 2003; Simonsohn, Nelson, and Simmons 2014b). When studies do not share one true effect, it is possible that even large studies deviate substantially from the average effect. This means that such studies are also trimmed and filled, even though it is unlikely that they are affected by publication bias. It is easy to see that this can lead to invalid results.

We can apply the trim and fill algorithm to our data using the trimfill function in {meta}. The function has very sensible defaults, so it is sufficient to simply provide it with our meta-analysis object. In our example, we use our m.gen object again. However, before we start, let us first check the amount of \(I^2\) heterogeneity we observed in this meta-analysis.

m.gen$I2## [1] 0.6263947We see that, with \(I^2\) = 63%, the heterogeneity in our analysis is substantial. In light of the trim and fill method’s limitations in heterogeneous data sets, this could prove problematic.

We will therefore conduct two trim and fill analyses: one with all studies, and a sensitivity analysis in which we exclude two outliers identified in chapter 5.4 (i.e. study 3 and 16). We save the results to tf and tf.no.out.

# Using all studies

tf <- trimfill(m.gen)

# Analyze with outliers removed

tf.no.out <- trimfill(update(m.gen,

subset = -c(3, 16)))First, let us have a look at the first analysis, which includes all studies.

summary(tf)## Review: Third Wave Psychotherapies

## SMD 95%-CI %W(random)

## [...]

## Filled: Warnecke et al. 0.0520 [-0.4360; 0.5401] 3.8

## Filled: Song & Lindquist 0.0395 [-0.4048; 0.4837] 4.0

## Filled: Frogeli et al. 0.0220 [-0.3621; 0.4062] 4.2

## Filled: Call et al. -0.0571 [-0.5683; 0.4541] 3.8

## Filled: Gallego et al. -0.0729 [-0.5132; 0.3675] 4.0

## Filled: Kang et al. -0.6230 [-1.2839; 0.0379] 3.3

## Filled: Shapiro et al. -0.8277 [-1.4456; -0.2098] 3.4

## Filled: DanitzOrsillo -1.1391 [-1.8164; -0.4618] 3.3

##

## Number of studies combined: k = 26 (with 8 added studies)

##

## SMD 95%-CI t p-value

## Random effects model 0.3428 [0.1015; 0.5841] 2.93 0.0072

##

## Quantifying heterogeneity:

## tau^2 = 0.2557 [0.1456; 0.6642]; tau = 0.5056 [0.3816; 0.8150];

## I^2 = 76.2% [65.4%; 83.7%]; H = 2.05 [1.70; 2.47]

##

## [...]

##

## Details on meta-analytical method:

## - Inverse variance method

## - Restricted maximum-likelihood estimator for tau^2

## - Q-profile method for confidence interval of tau^2 and tau

## - Hartung-Knapp adjustment for random effects model

## - Trim-and-fill method to adjust for funnel plot asymmetryWe see that the trim and fill procedure added a total of eight studies. Trimmed and filled studies include our detected outliers, but also a few other smaller studies with relatively high effects. We see that the imputed effect sizes are all very low, and some are even highly negative. The output also provides us with the estimate of the corrected effect, which is \(g=\) 0.34. This is still significant, but much lower than the effect of \(g=\) 0.58 we initially calculated for m.gen.

Now, let us compare this to the results of the analysis in which outliers were removed.

summary(tf.no.out)## Review: Third Wave Psychotherapies

## [...]

##

## Number of studies combined: k = 22 (with 6 added studies)

##

## SMD 95%-CI t p-value

## Random effects model 0.3391 [0.1904; 0.4878] 4.74 0.0001

##

## Quantifying heterogeneity:

## tau^2 = 0.0421 [0.0116; 0.2181]; tau = 0.2053 [0.1079; 0.4671];

## I^2 = 50.5% [19.1%; 69.7%]; H = 1.42 [1.11; 1.82]

## [...]With \(g=\) 0.34, the results are nearly identical. Overall, the trim and fill method indicates that the pooled effect of \(g=\) 0.58 in our meta-analysis is overestimated due to small-study effects. In reality, the effect may be considerably smaller. It is likely that this overestimation has been caused by publication bias, but this is not certain. Other explanations are possible too, and this could mean that the trim and fill estimate is invalid.

Lastly, it is also possible to create a funnel plot including the imputed studies. We only have to apply the meta::funnel function to the output of trimfill. In the following code, we create contour-enhanced funnel plots for both trim and fill analyses (with and without outliers). Using the par function, we can print both plots side by side.

# Define fill colors for contour

contour <- c(0.9, 0.95, 0.99)

col.contour <- c("gray75", "gray85", "gray95")

ld <- c("p < 0.1", "p < 0.05", "p < 0.01")

# Use 'par' to create two plots in one row (row, columns)

par(mfrow=c(1,2))

# Contour-enhanced funnel plot (full data)

meta::funnel(tf,

xlim = c(-1.5, 2), contour = contour,

col.contour = col.contour)

legend(x = 1.1, y = 0.01,

legend = ld, fill = col.contour)

title("Funnel Plot (Trim & Fill Method)")

# Contour-enhanced funnel plot (outliers removed)

meta::funnel(tf.no.out,

xlim = c(-1.5, 2), contour = contour,

col.contour = col.contour)

legend(x = 1.1, y = 0.01,

legend = ld, fill = col.contour)

title("Funnel Plot (Trim & Fill Method) - Outliers Removed")

In these funnel plots, the imputed studies are represented by circles that have no fill color.

9.2.1.5 PET-PEESE

Duval & Tweedie’s trim and fill method is relatively old, and arguably one of the most common methods to adjust for small-study effects. However, as we mentioned, it is an approach that is far from perfect, and not the only way to estimate a bias-corrected version of our pooled effect. In recent years, a method called PET-PEESE (T. D. Stanley and Doucouliagos 2014; T. D. Stanley 2008) has become increasingly popular; particularly in research fields where SMDs are frequently used as the outcome measure (for example psychology or educational research). Like all previous techniques, PET-PEESE is aimed at small-study effects, which are seen as a potential indicator of publication bias.

PET-PEESE is actually a combination of two methods: the precision-effect test (PET) and the precision-effect estimate with standard error (PEESE). Let us begin with the former. The PET method is based on a simple regression model, in which we regress a study’s effect size on its standard error:

\[\begin{equation} \theta_k = \beta_0 + \beta_1SE_{\theta_k} \tag{9.5} \end{equation}\]

Like in Peters’ test, we use a weighted regression. The study weight \(w_k\) is calculated as the inverse of the variance–just like in a normal (fixed-effect) meta-analysis:

\[\begin{equation} w_k = \frac{1}{s_k^2} \tag{9.6} \end{equation}\]

It is of note that the regression model used by the PET method is equivalent to the one of Eggers’ test. The main difference is that in the PET formula, the \(\beta_1\) coefficient quantifies funnel asymmetry, while in Eggers’ test, this is indicated by the intercept.

When using the PET method, however, we are not interested in the funnel asymmetry measured by \(\beta_1\), but in the intercept \(\beta_0\). This is because, in the formula above, the intercept represents the so-called limit effect. This limit effect is the expected effect size of a study with a standard error of zero. This is the equivalent of an observed effect size measured without sampling error. All things being equal, we know that an effect size measured without sampling error \(\epsilon_k\) will represent the true overall effect itself.

The idea behind the PET method is to control for the effect of small studies by including the standard error as a predictor. In theory, this should lead to an intercept \(\beta_0\) which represents the true effect in our meta-analysis after correction for all small-study effects:

\[\begin{equation} \hat\theta_{\text{PET}} = \hat\beta_{0_{\mathrm{PET}}} \tag{9.7} \end{equation}\]

The formula for the PEESE method is very similar. The only difference is that we use the squared standard error as the predictor (i.e. the effect size variance \(s_k^2\)):

\[\begin{equation} \theta_k = \beta_0 + \beta_1SE_{\theta_k}^2 \tag{9.8} \end{equation}\]

While the formula for the study weights \(w_k\) remains the same. The idea behind squaring the standard error is that small studies are particularly prone to reporting highly over-estimated effects. This problem, it is assumed, is far less pronounced for studies with high statistical power.

While the PET method works best when the true effect captured by \(\beta_0\) is zero, PEESE shows a better performance when the true effect is not zero. Stanley and Doucouliagos (2014) therefore proposed to combine both methods, in order to balance out their individual strengths. The resulting approach is the PET-PEESE method. PET-PEESE uses the intercept \(\beta_0\) of either PET or PEESE as the estimate of the corrected true effect.

Whether PET or PEESE is used depends on the size of the intercept calculated by the PET method. When \(\beta_{0_{\text{PET}}}\) is significantly larger than zero in a one-sided test with \(\alpha\) = 0.05, we use the intercept of PEESE as the true effect size estimate. If PET’s intercept is not significantly larger than zero, we remain with the PET estimate.

In most implementations of regression models in R, it is conventional to test the significance of coefficients using a two-sided test (i.e. we test if a \(\beta\) weight significantly differs from zero, no matter the direction). To assume a one-sided test with \(\alpha\) = 0.05, we already regard the intercept as significant when \(p\) < 0.1, and when the estimate of \(\beta_0\) is larger than zero39.

The rule to obtain the true effect size as estimated by PET-PEESE, therefore, looks like this:

\[\begin{equation} \hat\theta_{\text{PET-PEESE}}=\begin{cases} \mathrm{P}(\beta_{0_{\text{PET}}} = 0) <0.1~\mathrm{and}~\hat\beta_{0_{\text{PET}}} > 0: & \hat\beta_{0_{\text{PEESE}}}\\ \text{else}: & \hat\beta_{0_{\text{PET}}}. \end{cases} \tag{9.9} \end{equation}\]

It is somewhat difficult to wrap one’s head around this if-else logic, but a hands-on example may help to clarify things. Using our m.gen meta-analysis object, let us see what PET-PEESE’s estimate of the true effect size is.

There is currently no straightforward implementation of PET-PEESE in {meta}, so we write our own code using the linear model function lm. Before we can fit the PET and PEESE model, however, we first have to prepare all the variables we need in our data frame. We will call this data frame dat.petpeese. The most important variable, of course, is the standardized mean difference. No matter if we initially ran our meta-analysis using metacont or metagen, the calculated SMDs of each study will always be stored under TE in our meta-analysis object.

# Build data set, starting with the effect size

dat.petpeese <- data.frame(TE = m.gen$TE)Next, we need the standard error of the effect size. For PET-PEESE, it is also advisable to use the modified standard error proposed by Pustejovsky and Rodgers (2019, see Chapter 9.2.1.2)40.

Therefore, we use the adapted formula to calculate the corrected standard error seTE_c, so that it is not correlated with the effect size itself. We also save this variable to dat.petpeese. Furthermore, we add a variable seTE_c2, containing the squared standard error, since we need this as the predictor for PEESE.

# Experimental (n1) and control group (n2) sample size

n1 <- c(62, 72, 44, 135, 103, 71, 69, 68, 95,

43, 79, 61, 62, 60, 43, 42, 64, 63)

n2 <- c(51, 78, 41, 115, 100, 79, 62, 72, 80,

44, 72, 67, 59, 54, 41, 51, 66, 55)

# Calculate modified SE

dat.petpeese$seTE_c <- sqrt((n1+n2)/(n1*n2))

# Add squared modified SE (= variance)

dat.petpeese$seTE_c2 <- dat.petpeese$seTE_c^2Lastly, we need to calculate the inverse-variance weights w_k for each study. Here, we also use the squared modified standard error to get an estimate of the variance.

dat.petpeese$w_k <- 1/dat.petpeese$seTE_c^2Now, dat.petpeese contains all the variables we need to fit a weighted linear regression model for PET and PEESE. In the following code, we fit both models, and then directly print the estimated coefficients using the summary function. These are the results we get:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -1.353476 0.443164 -3.054119 0.0075732464

## seTE_c 11.190288 2.566719 4.359764 0.0004862409## Estimate Std. Error t value Pr(>|t|)

## (Intercept) -0.4366495 0.2229352 -1.958639 0.0678222117

## seTE_c2 33.3609862 7.1784369 4.647389 0.0002683009To determine if PET or PEESE should be used, we first need to have a look at the results of the PET method. We see that the limit estimate is \(g\) = -1.35. This effect is significant (\(p\) < 0.10), but considerably smaller than zero, indicating that the PET estimate should be used.

However, with, \(g\) = -1.35, PET’s estimate of the bias-corrected effect is not very credible. It indicates that in reality, the intervention type under study has a highly negative effect on the outcome of interest; that it is actually very harmful. That seems very unlikely. It may be possible that a “bona fide” intervention has no effect, but it is extremely uncommon to find interventions that are downright dangerous.

In fact, what we see in our results is a common limitation of PET-PEESE: it sometimes heavily overcorrects for biases in our data (Carter et al. 2019). This seems to be the case in our example: although all observed effect sizes have a positive sign, the corrected effect size is heavily negative. If we look at the second part of the output, we see that the same is also true for PEESE, even though its estimate is slightly less negative (\(g=\) -0.44).

When this happens, it is best not to interpret the intercept as a point estimate of the true effect size. We can simply say that PET-PEESE indicates, when correcting for small-sample effects, that the intervention type under study has no effect. This basically means that we set \(\hat\theta_{\mathrm{PET-PEESE}}\) to zero, instead of interpreting the negative effect size that was actually estimated.

Limitations of PET-PEESE

PET-PEESE can not only systematically over-correct the pooled effect size–it also sometimes overestimates the true effect, even when there is no publication bias at all. Overall, the PET-PEESE method has been found to perform badly when the number of included studies is small (i.e. \(K\) < 20), and the between-study heterogeneity is very high, i.e. \(I^2\) > 80% (T. D. Stanley 2017).

Unfortunately, it is common to find meta-analyses with a small number of studies and high heterogeneity. This restricts the applicability of PET-PEESE, and we do not recommend its use as the only method to adjust for small-study effects. Yet, it is good to know that this method exists and how it can be applied since it has become increasingly common in some research fields.

Using rma.uni Instead of lm for PET-PEESE

In our hands-on example, we used the lm function together with study weights to implement PET-PEESE. This approach, while used frequently, is not completely uncontroversial.

There is a minor, but crucial difference between weighted regression models implemented via lm, and meta-regression models employed by, for example, rma.uni. While lm uses a multiplicative error model, meta-analysis functions typically employ an additive error model. We will not delve into the technical minutiae of this difference here; more information can be found in an excellent vignette written by Wolfgang Viechtbauer on this topic.

The main takeaway is that lm models, by assuming a proportionality constant for the sampling error variances, are not perfectly suited for meta-analysis data. This means that implementing PET-PEESE via, say, rma.uni instead of lm is indicated, at least as a sensitivity analysis. Practically, this would mean running rma.uni with \(SE_{\theta_k}^{(2)}\) added as a moderator variable in mods; e.g. for PET:

rma.uni(TE, seTE^2, mods = ~seTE, data = dat, method = "FE").

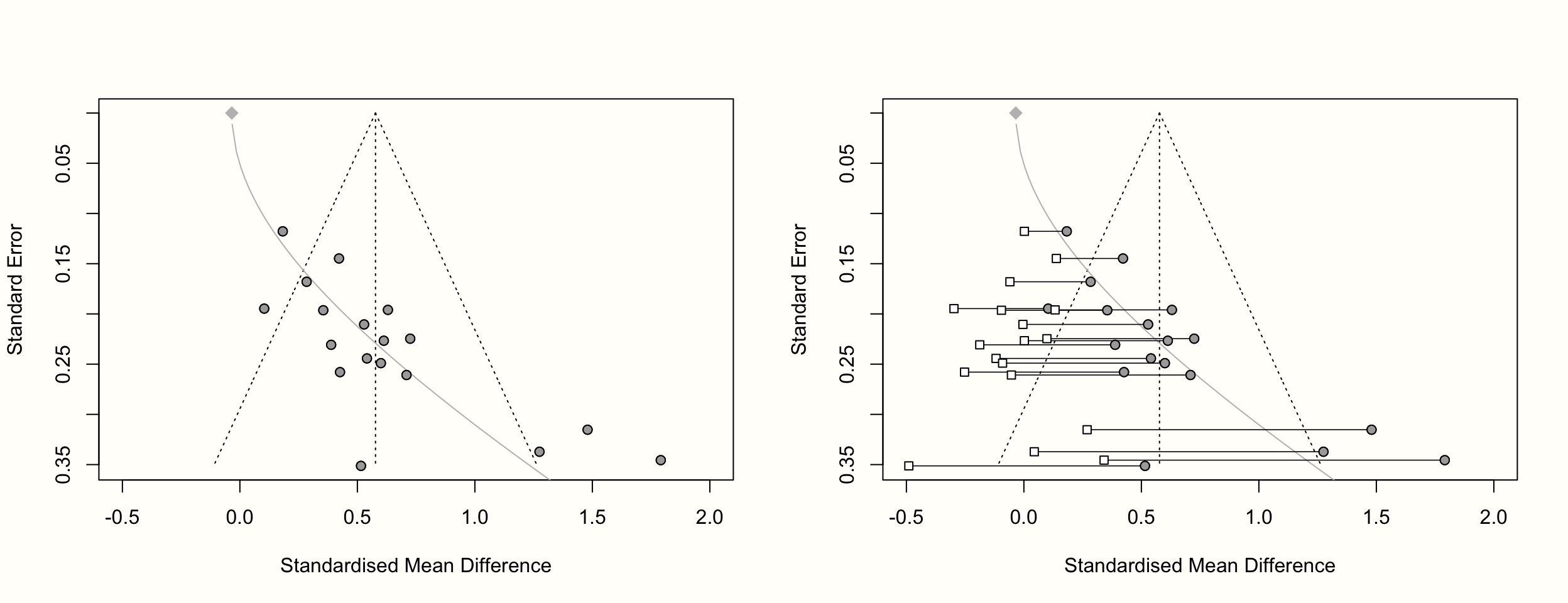

9.2.1.6 Rücker’s Limit Meta-Analysis Method

Another way to calculate an estimate of the adjusted effect size is to perform a limit meta-analysis as proposed by Rücker and colleagues (2011). This method is more sophisticated than PET-PEESE and involves more complex computations. Here, we therefore focus on understanding the general idea behind this method and let R do the heavy lifting after that.

The idea behind Rücker’s method is to build a meta-analysis model which explicitly accounts for bias due to small-study effects. As a reminder, the formula of a (random-effects) meta-analysis can be defined like this:

\[\begin{equation} \hat\theta_k = \mu + \epsilon_k+\zeta_k \tag{9.10} \end{equation}\]

Where \(\hat\theta_k\) is the observed effect size of study \(k\), \(\mu\) is the true overall effect size, \(\epsilon_k\) is the sampling error, and \(\zeta_k\) quantifies the deviation due to between-study heterogeneity.

In a limit meta-analysis, we extend this model. We account for the fact that the effect sizes and standard errors of studies are not independent when there are small-study effects. This is assumed because we know that publication bias particularly affects small studies, and that small studies will therefore have a larger effect size than big studies. In Rücker’s method, this bias is added to our model by introducing a new term \(\theta_{\text{Bias}}\). It is assumed that \(\theta_{\text{Bias}}\) interacts with \(\epsilon_k\) and \(\zeta_k\). It becomes larger as \(\epsilon_k\) increases. The adapted formula looks like this:

\[\begin{equation} \hat\theta_k = \mu_* + \theta_{\text{Bias}}(\epsilon_k+\zeta_k) \tag{9.11} \end{equation}\]

It is important to note that in this formula, \(\mu_*\) does not represent the overall true effect size anymore, but a global mean that has no direct equivalent in a “standard” random-effects meta-analysis (unless \(\theta_{\text{Bias}}=\) 0).

The next step is similar to the idea behind PET-PEESE (see previous chapter). Using the formula above, we suppose that studies’ effect size estimates become increasingly precise, meaning that their individual sampling error \(\epsilon_k\) approaches zero. This means that \(\epsilon_k\) ultimately drops out of the equation:

\[\begin{equation} \mathrm{E}(\hat\theta_k) \rightarrow \mu_{*} + \theta_{\text{Bias}}\zeta_k ~ ~ ~ ~ \text{as} ~ ~ ~ ~ \epsilon_k \rightarrow 0. \tag{9.12} \end{equation}\]

In this formula, \(\mathrm{E}(\hat\theta_k)\) stands for the expected value of \(\hat\theta_k\) as \(\epsilon_k\) approaches zero. The formula we just created is the one of a “limit meta-analysis”. It provides us with an adjusted estimate of the effect when removing the distorting influence of studies with a large standard error. Since \(\zeta_k\) is usually expressed by the between-study heterogeneity variance \(\tau^2\) (or its square root, the standard deviation \(\tau\)), we can use it to replace \(\zeta_k\) in the equation, which leaves us with this formula:

\[\begin{equation} \hat\theta_{*} = \mu_* + \theta_{\mathrm{Bias}}\tau \tag{9.13} \end{equation}\]

Where \(\hat\theta_*\) stands for the estimate of the pooled effect size after adjusting for small-study effects. Rücker’s method uses maximum likelihood to estimate the parameters in this formula, including the “shrunken” estimate of the true effect size \(\hat\theta_*\). Furthermore, it is also possible to obtain a shrunken effect size estimate \(\hat\theta_{{*}_k}\) for each individual study \(k\), using this formula:

\[\begin{equation} \hat\theta_{{*}_k} = \mu_* + \sqrt{\dfrac{\tau^2}{SE^2_k + \tau^2}}(\hat\theta_k - \mu_*) \end{equation}\]

in which \(SE^2_k\) stands for the squared standard error (i.e. the observed variance) of \(k\), and with \(\hat\theta_k\) being the originally observed effect size41.

An advantage of Rücker’s limit meta-analysis method, compared to PET-PEESE, is that the heterogeneity variance \(\tau^2\) is explicitly included in the model. Another more practical asset is that this method can be directly applied in R, using the limitmeta function. This function is included in the {metasens} package (Schwarzer, Carpenter, and Rücker 2020).

Since {metasens} and {meta} have been developed by the same group of researchers, they usually work together quite seamlessly. To conduct a limit meta-analysis of our m.gen meta-analysis, for example, we only need to provide it as the first argument in our call to limitmeta.

# Install 'metasens', then load from library

library(metasens)

# Run limit meta-analysis

limitmeta(m.gen)## Results for individual studies

## (left: original data; right: shrunken estimates)

##

## SMD 95%-CI SMD 95%-CI

## Call et al. 0.70 [ 0.19; 1.22] -0.05 [-0.56; 0.45]

## Cavanagh et al. 0.35 [-0.03; 0.73] -0.09 [-0.48; 0.28]

## DanitzOrsillo 1.79 [ 1.11; 2.46] 0.34 [-0.33; 1.01]

## de Vibe et al. 0.18 [-0.04; 0.41] 0.00 [-0.22; 0.23]

## Frazier et al. 0.42 [ 0.13; 0.70] 0.13 [-0.14; 0.42]

## Frogeli et al. 0.63 [ 0.24; 1.01] 0.13 [-0.25; 0.51]

## Gallego et al. 0.72 [ 0.28; 1.16] 0.09 [-0.34; 0.53]

## Hazlett-Stevens & Oren 0.52 [ 0.11; 0.94] -0.00 [-0.41; 0.40]

## Hintz et al. 0.28 [-0.04; 0.61] -0.05 [-0.38; 0.26]

## Kang et al. 1.27 [ 0.61; 1.93] 0.04 [-0.61; 0.70]

## Kuhlmann et al. 0.10 [-0.27; 0.48] -0.29 [-0.67; 0.08]

## Lever Taylor et al. 0.38 [-0.06; 0.84] -0.18 [-0.64; 0.26]

## Phang et al. 0.54 [ 0.06; 1.01] -0.11 [-0.59; 0.36]

## Rasanen et al. 0.42 [-0.07; 0.93] -0.25 [-0.75; 0.25]

## Ratanasiripong 0.51 [-0.17; 1.20] -0.48 [-1.17; 0.19]

## Shapiro et al. 1.47 [ 0.86; 2.09] 0.26 [-0.34; 0.88]

## Song & Lindquist 0.61 [ 0.16; 1.05] 0.00 [-0.44; 0.44]

## Warnecke et al. 0.60 [ 0.11; 1.08] -0.09 [-0.57; 0.39]

##

## Result of limit meta-analysis:

##

## Random effects model SMD 95%-CI z pval

## Adjusted estimate -0.0345 [-0.3630; 0.2940] -0.21 0.8367

## Unadjusted estimate 0.5771 [ 0.3782; 0.7760] -0.21 < 0.0001

## [...]The output first shows us the original (left) and shrunken estimates (right) of each study. We see that the adjusted effect sizes are considerably smaller than the observed ones–some are even negative now. In the second part of the output, we see the adjusted pooled effect estimate. It is \(g=\) -0.03, indicating that the overall effect is approximately zero when correcting for small-study effects.

If the small-study effects are indeed caused by publication bias, this result would be discouraging. It would mean that our initial finding has been completely spurious and that selective publication has concealed the fact that the treatment is actually ineffective. Yet again, it is hard to prove that publication bias has been the only driving force behind the small-study effects in our data.

It is also possible to create funnel plots for the limit meta-analysis: we simply have to provide the results of limitmeta to the funnel.limitmeta function. This creates a funnel plot which looks exactly like the one produced by meta::funnel. The only difference is that a gray curve is added to the plot. This curve indicates the adjusted average effect size when the standard error on the y-axis is zero, but also symbolizes the increasing bias due to small-study effects as the standard error increases.

When generating a funnel plot for limitmeta objects, it is also possible to include the shrunken study-level effect size estimates, by setting the shrunken argument to TRUE. Here is the code to produce these plots:

# Create limitmeta object

lmeta <- limitmeta(m.gen)

# Funnel with curve

funnel.limitmeta(lmeta, xlim = c(-0.5, 2))

# Funnel with curve and shrunken study estimates

funnel.limitmeta(lmeta, xlim = c(-0.5, 2), shrunken = TRUE)

Note that limitmeta can not only be applied to meta-analyses which use the standardized mean difference–any kind of {meta} meta-analysis object can be used. To exemplify this, let us check the adjusted effect size of m.bin, which used the risk ratio as the summary measure.

limitmeta(m.bin)## Result of limit meta-analysis:

##

## Random effects model RR 95%-CI z pval

## Adjusted estimate 2.2604 [1.8066; 2.8282] 7.13 < 0.0001

## Unadjusted estimate 2.0217 [1.5786; 2.5892] 7.13 < 0.0001We see that in this analysis, the original and adjusted estimate are largely identical. This is not very surprising, given that Peters’ test (Chapter 9.2.1.3) already indicated that small-study effects seem to play a minor role in this meta-analysis.

9.2.2 P-Curve

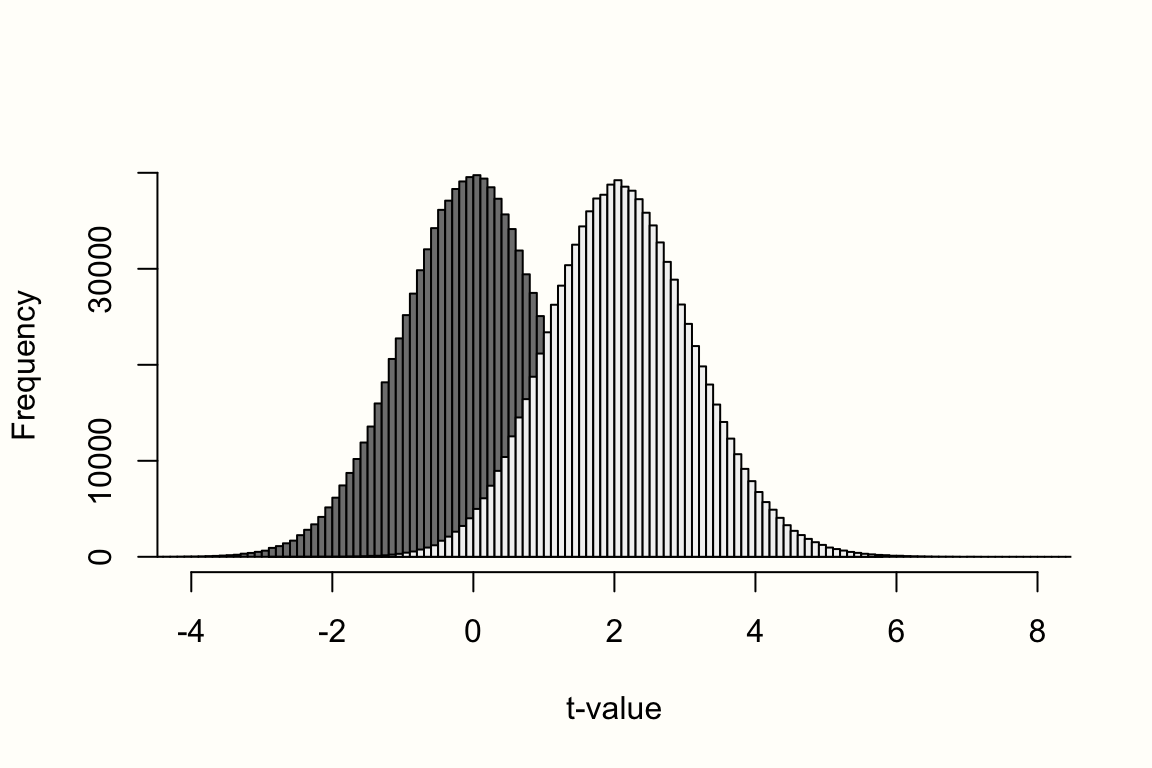

Previously, we covered various approaches that assess the risk of publication bias by looking at small-study effects. Although their implementation differs, all of these methods are based on the idea that selective reporting causes a study’s effect size to depend on its sample size. We assume that studies with a higher standard error (and thus a lower precision) have higher average effect sizes than large studies. This is because only small studies with a very high effect size are published, while others remain in the file drawer.

While this “theory” certainly sounds intuitive, one may also argue that it somewhat misses the point. Small-study methods assume that publication bias is driven by effect sizes. A more realistic stance, however, would be to say that it operates through \(p\)-values. In practice, research findings are only considered worth publishing when the results are \(p<\) 0.05.

As we mentioned before, research is conducted by humans, and thus influenced by money and prestige–just like many other parts of our lives. The infamous saying “significant \(p\), or no PhD” captures this issue very well. Researchers are often under enormous external pressure to “produce” \(p\)-values smaller than 0.05. They know that this significance threshold can determine if their work is going to get published, and if it is perceived as “successful”. These incentives may explain why negative and non-significant findings are increasingly disappearing from the published literature (Fanelli 2012).

One could say that small-study methods capture the mechanism behind publication bias indirectly. It is true that selective reporting can lead to smaller studies having higher effects. Yet, this is only correct because very high effects increase the chance of obtaining a test statistic for which \(p<\) 0.05. For small-study effect methods, there is hardly a difference between a study in which \(p=\) 0.049, and a study with a \(p\)-value of 0.051. In practice, however, this tiny distinction can mean the world to researchers.

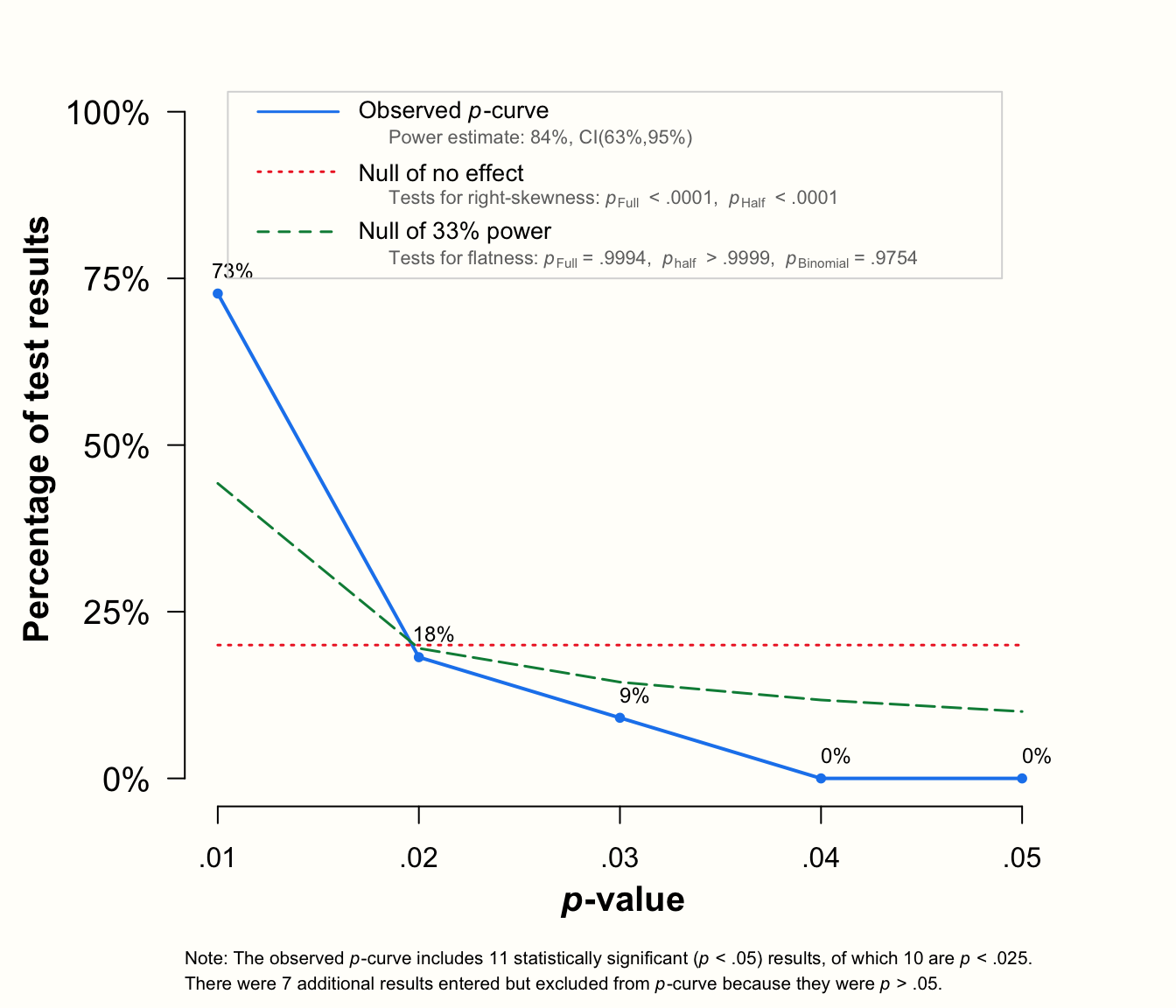

In the following, we will introduce a method called p-curve, which focuses on \(p\)-values as the main driver of publication bias (Simonsohn, Nelson, and Simmons 2014b, 2014a; Simonsohn, Simmons, and Nelson 2015). The special thing about this method is that it is restricted to significant effect sizes, and how their \(p\)-values are distributed. It allows to assess if there is a true effect behind our meta-analysis data, and can estimate how large it is. Importantly, it also explicitly controls for questionable research practices such as \(p\)-hacking, which small-study effect methods do not.

P-curve is a relatively novel method. It was developed in response to the “replication crisis” that affected the social sciences in recent years (Ioannidis 2005; Open Science Collaboration et al. 2015; McNutt 2014). This crisis was triggered by the observation that many seemingly well-established research findings are in fact spurious–they can not be systematically replicated. This has sparked renewed interest in methods to detect publication bias, since this may be a logical explanation for failed replications. Meta-analyses, by not adequately controlling for selective reporting, may have simply reproduced biases that already exist in the published literature.

P-curve was also developed in response to deficiencies of standard publication bias methods, in particular the Duval & Tweedie trim-fill-method. Simonsohn and colleagues (2014a) found that the trim-and-fill approach usually only leads to a small downward correction, and often misses the fact that there is no true effect behind the analyzed data at all.

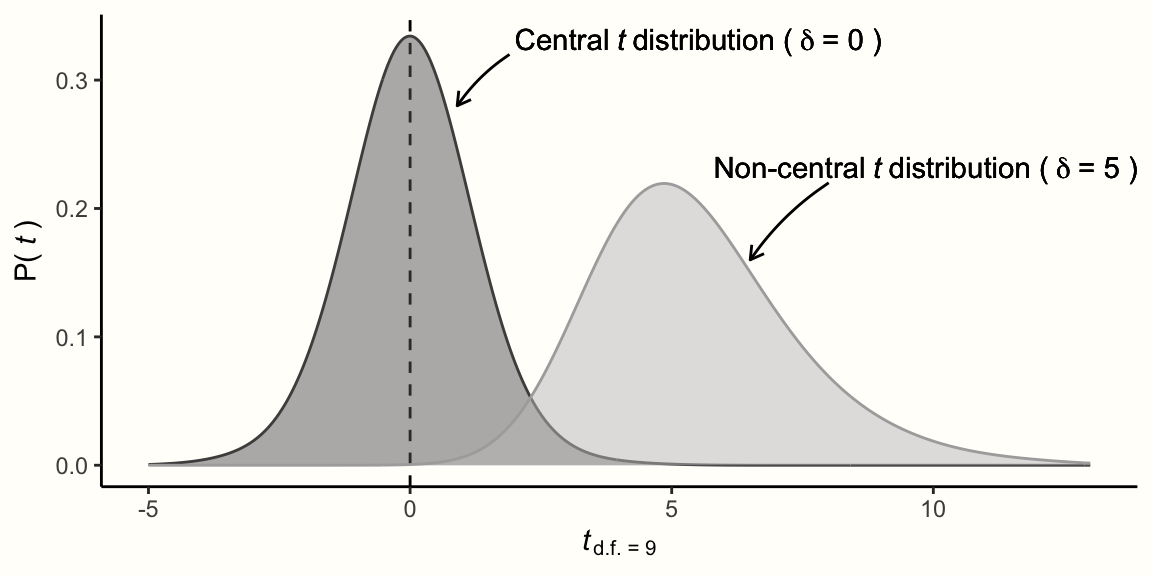

P-curve is, as it says in the name, based on a curve of \(p\)-values. A \(p\)-curve is like a histogram, showing the number of studies in a meta-analysis for which \(p<\) 0.05, \(p<\) 0.04, \(p<\) 0.03, and so forth. The p-curve method is based on the idea that the shape of this histogram of \(p\)-values depends on the sample sizes of studies, and–more importantly–on the true effect size behind our data.

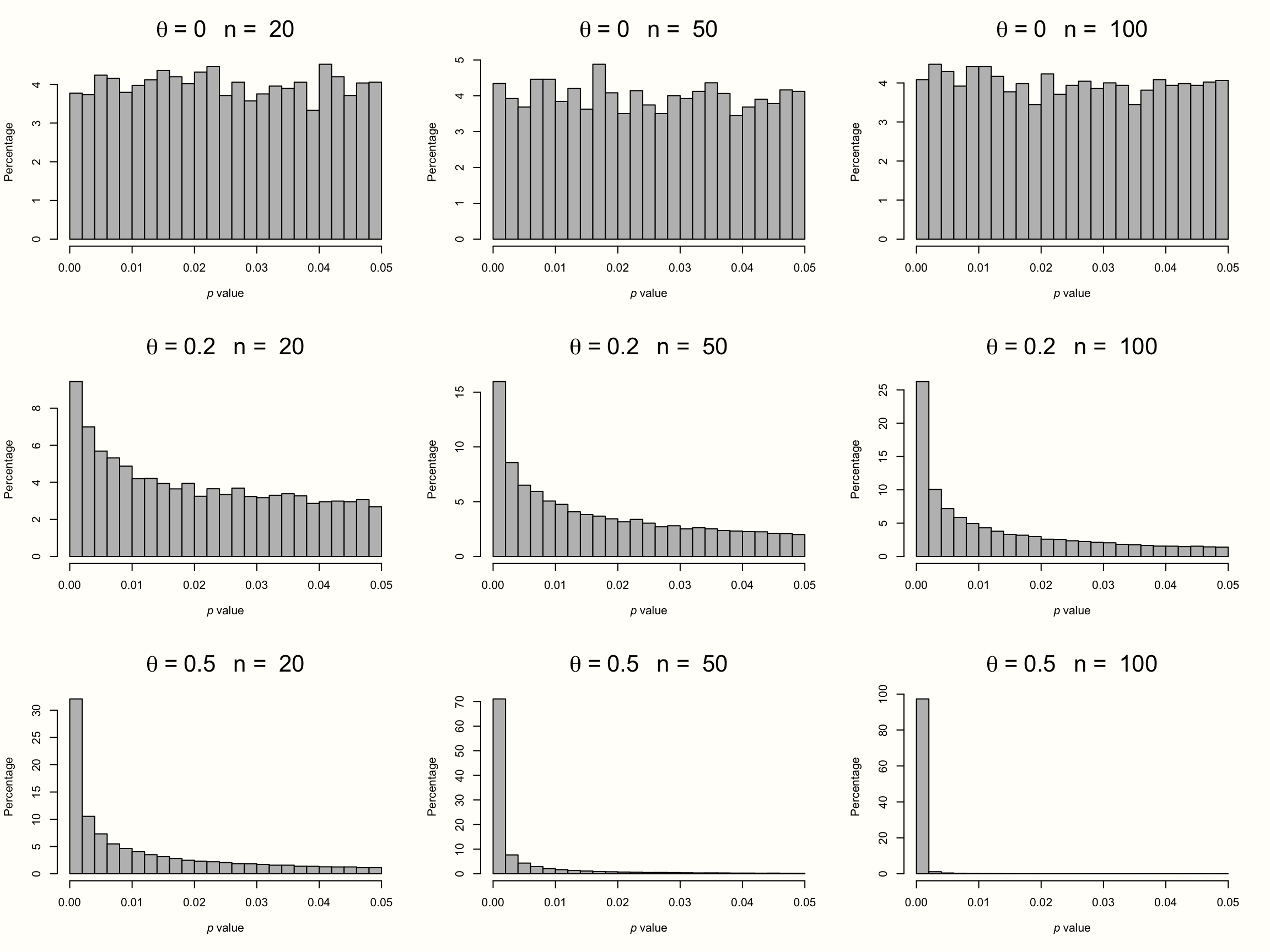

To illustrate this, we simulated the results of nine meta-analyses. To make patterns clearly visible, each of these imaginary meta-analyses contains the huge number of \(K=\) 10\(^{\text{5}}\) studies. In each of the nine simulations, we assumed different sample sizes for each individual study (ranging from \(n=\) 20 to \(n=\) 100), and a different true effect size (ranging from \(\theta=\) 0 to 0.5). We assumed that all studies in a meta-analysis share one true effect size, meaning effects follow the fixed-effect model. Then, we took the \(p\)-value of all significant effect sizes in our simulations and created a histogram. The results can be seen in the plot below.

Figure 9.1: P-curves for varying study sample size and true effect.

The first row displays the distribution of significant \(p\)-values when there is no true effect. We see that the pattern is identical in all simulations, no matter how large the sample size of the individual studies. The \(p\)-values in all three examples seem to be evenly distributed: a barely significant value of \(p=\) 0.04 seems to be just as likely as \(p=\) 0.01. Such a flat \(p\)-curve emerges when there is no underlying effect in our data, i.e. when the null hypothesis of \(\theta = 0\) is true.

When this is the case, \(p\)-values are assumed to follow a uniform distribution: every \(p\)-value is just as likely as the other. When the null hypothesis (\(\theta = 0\)) is true, it is still possible to find significant effect sizes just by chance. This results in a false positive, or \(\alpha\) error. But this is unlikely, and we know exactly how unlikely. Since they are uniformly distributed when the effect size is zero, 5% of all \(p\)-values can be expected to be smaller than 0.05. This is exactly the significance threshold of \(\alpha=\) 0.05 that we commonly use in hypothesis testing to reject the null hypothesis.

The \(p\)-curve looks completely different in the second and third row. In these examples, the null hypothesis is false, and a true effect exists in our data. This leads to a right-skewed distribution of \(p\)-values. When our data capture a true effect, highly significant (e.g. \(p=\) 0.01) effect sizes are more likely than effects that are barely significant (e.g. \(p=\) 0.049). This right-skew becomes more and more pronounced as the true effect size and study sample size increase.

Yet, we see that a right-skewed \(p\)-curve even emerges when the studies in our meta-analysis are drastically under-powered (i.e. containing only \(n=\) 20 participants while aiming to detect a small effect of \(\theta=\) 0.2). This makes it clear that \(p\)-curves are very sensitive to changes in the true underlying effect size. When a true effect size exists, we will often be able to detect it just by looking at the distribution of \(p\)-values that are significant.

Now, imagine how the \(p\)-curve would look like when researchers \(p\)-hacked their results. Usually, analysts start to use \(p\)-hacking when a result is not significant but close to that. Details of the analysis are then tweaked until a \(p\)-value smaller than 0.05 is reached. Since that is already enough to get the results published, no further \(p\)-hacking is conducted after that. It takes no imagination to see that widespread \(p\)-hacking would lead to a left-skewed \(p\)-curve: \(p\)-values slightly below 0.05 are over-represented, and highly significant results under-represented.

In sum, we see that a \(p\)-curve can be used as a diagnostic tool to assess the presence of publication bias and \(p\)-hacking. Next, we will discuss p-curve analysis, which is a collection of statistical tests based on an empirical \(p\)-curve. Importantly, none of these tests focuses on publication bias per se. The method instead tries to find out if our data contains evidential value. This is arguably what we are most interested in a meta-analysis: we want to make sure that the effect we estimated is not spurious; an artifact caused by selective reporting. P-curve addresses exactly this concern. It allows us to check if our findings are driven by an effect that exists in reality, or if they are–to put it dramatically–“a tale of sound and fury, signifying nothing”.

9.2.2.1 Testing for Evidential Value

To evaluate the presence of evidential value, p-curve uses two types of tests: a test for right-skewness, and a test for 33% power (the latter can be seen as a test for flatness of the \(p\)-curve). We begin with the test for right-skewness. As we learned, the right-skewness of the \(p\)-curve is a function of studies’ sample sizes and their true underlying effect. Therefore, a test which allows us to confirm that the \(p\)-curve of our meta-analysis is significantly right-skewed is very helpful. When we find a significant right-skew in our distribution of significant \(p\)-values, this would indicate that our results are indeed driven by a true effect.

9.2.2.1.1 Test for Right-Skewness

To test for right-skewness, the p-curve method first uses a binomial test. These tests can be used for data that follows a binomial distribution. A binomial distribution can be assumed for data that can be divided into two categories (e.g. success/failure, head/tail, yes/no), where \(p\) indicates the probability of one of the outcomes, and \(q = 1-p\) is the probability of the other outcome.

To use a binomial test, we have to split our \(p\)-curve into two sections. We do this by counting the number of \(p\)-values that are <0.025, and then the number of significant \(p\)-values that are >0.025. Since values in our \(p\)-curve can range from 0 to 0.05, we essentially use the middle of the x-axis as our cut-off. When the \(p\)-curve is indeed right-skewed, we would expect that the number of \(p\)-values in the two groups differ. This is because the probability \(p\) of obtaining a result that is smaller than 0.025 is considerably higher than the probability \(q\) of getting values that are higher than 0.025.

Imagine that our \(p\)-curve contains eight values, seven of which are below 0.025. We can use the binom.test function in R to test how likely it is to find such data under the null hypothesis that small and high \(p\)-values are equally likely42.

Since we assume that small \(p\)-values are more frequent than high \(p\)-values, we can use a one-sided test by setting the alternative argument to "greater".

k <- 7 # number of studies p<0.025

n <- 8 # total number of significant studies

p <- 0.5 # assumed probability of k (null hypothesis)

binom.test(k, n, p, alternative = "greater")$p.value## [1] 0.03515625We see that the binomial test is significant (\(p<\) 0.05). This means there are significantly more high than low \(p\)-values in our example. Overall, this indicates that the \(p\)-curve is right-skewed, and that there is a true effect.

A drawback of the binomial test is that is requires us to dichotomize our \(p\)-values, while they are in fact continuous. To avoid information loss, we need a test which does not require us to transform our data into bins.

P-curve achieves this by calculating a \(p\)-value for each \(p\)-value, which results in a so-called \(pp\)-value of each study. The \(pp\)-value tells us how likely it is to get a value at least as high as \(p\) when the \(p\)-curve is flat (i.e. when there is no true effect). It gives the probability of a \(p\)-value when only significant values are considered. Since \(p\)-values follow a uniform distribution when \(\theta = 0\), \(pp\)-values are nothing but significant \(p\)-values which we project to the \([0,1]\) range. For continuous outcomes measures, this is achieved through multiplying the \(p\)-value by 20, for example: \(p = 0.023\times20 = 0.46 \rightarrow pp\).

Using the \(pp_k\)-value of each significant study \(k\) in our meta-analysis, we can test for right-skewness using Fisher’s method. This method is an “archaic” type of meta-analysis developed by R. A. Fisher in the early 20th century (see Chapter 1.2). Fisher’s method allows to aggregate \(p\)-values from several studies, and to test if at least one of them measures a true effect (i.e. it tests if the distribution of submitted \(p\)-values is right-skewed). It entails log-transforming the \(pp\)-values, summing the result across all studies \(k\), and then multiplying by -2.

The resulting value is a test statistic which follows a \(\chi^2\) distribution (see Chapter 5.1.1) with \(2\times K\) degrees of freedom (where \(K\) is the total number of \(pp\)-values)43:

\[\begin{equation} \chi^2_{2K} = -2 \sum^K_{k=1} \log(pp_k) \tag{9.14} \end{equation}\]

Let us try out Fisher’s method in a brief example. Imagine that our \(p\)-curve contains five \(p\)-values: \(p=\) 0.001, 0.002, 0.003, 0.004 and 0.03. To test for right-skewness, we first have to transform these \(p\)-values into the \(pp\)-value:

p <- c(0.001, 0.002, 0.003, 0.004, 0.03)

pp <- p*20

# Show pp values

pp## [1] 0.02 0.04 0.06 0.08 0.60Using equation 9.15, we can calculate the value of \(\chi^2\) using this code:

## [1] 25.96173This results in \(\chi^2=\) 25.96. Since five studies were included, the degrees of freedom are \(\text{d.f.}=2\times5=10\). We can use this information to check how likely our data are under the null hypothesis of no effect/no right-skewness. This can be done in R using the pchisq function, which we have to provide with our value of \(\chi^2\) as well as the number of d.f.:

pchisq(26.96, df = 10, lower.tail = FALSE)## [1] 0.002642556This gives us a \(p\)-value of 0.0026. This means that the null hypothesis is very unlikely, and therefore rejected. The significant value of the \(\chi^2\) test tells us that, in this example, the \(p\)-values are indeed right-skewed. This can be seen as evidence for the assumption that there is evidential value behind our data.

9.2.2.1.2 Test for Flatness

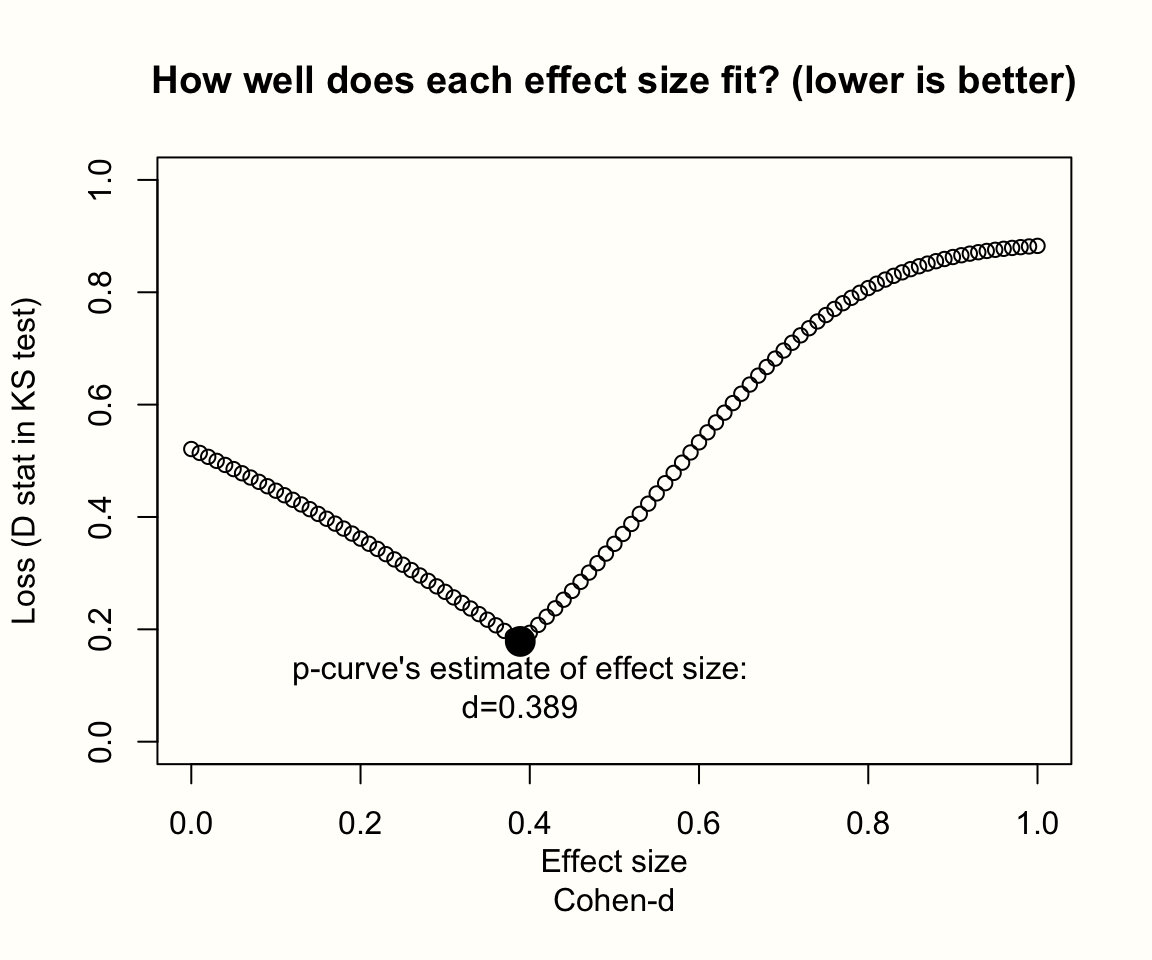

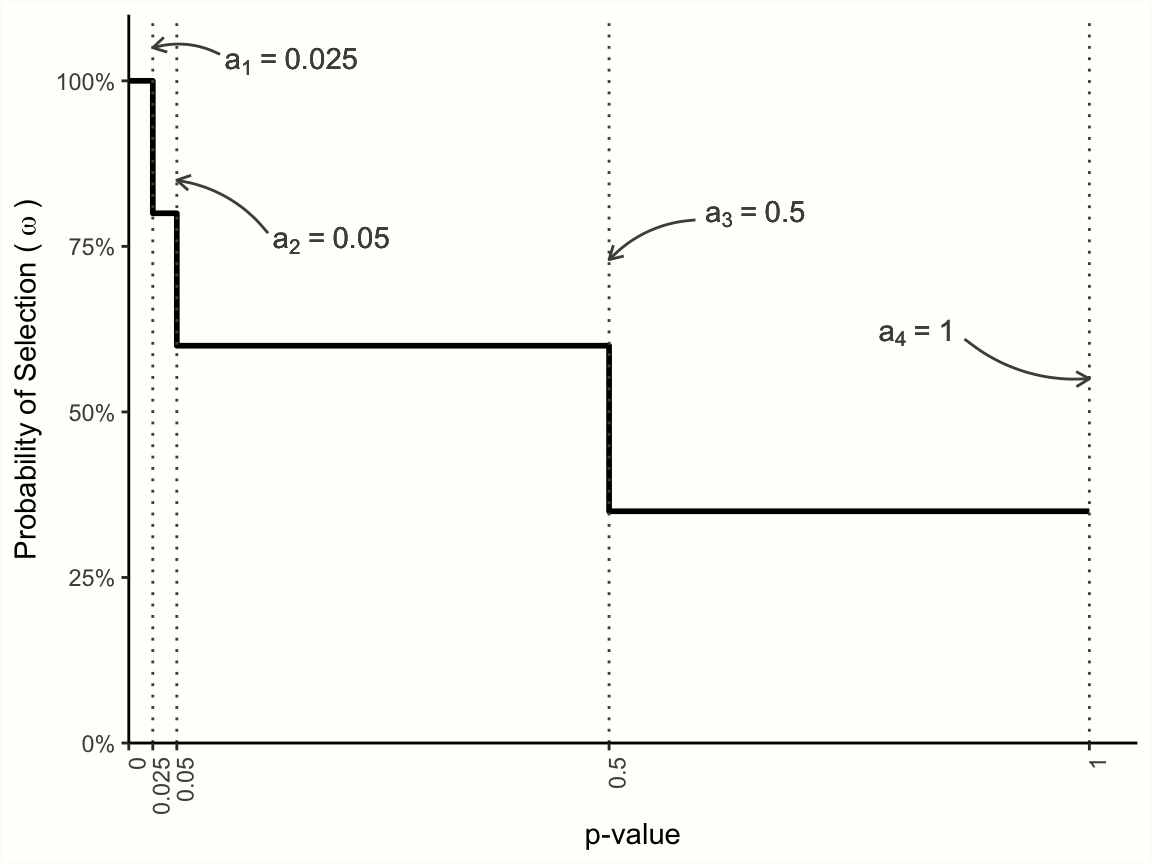

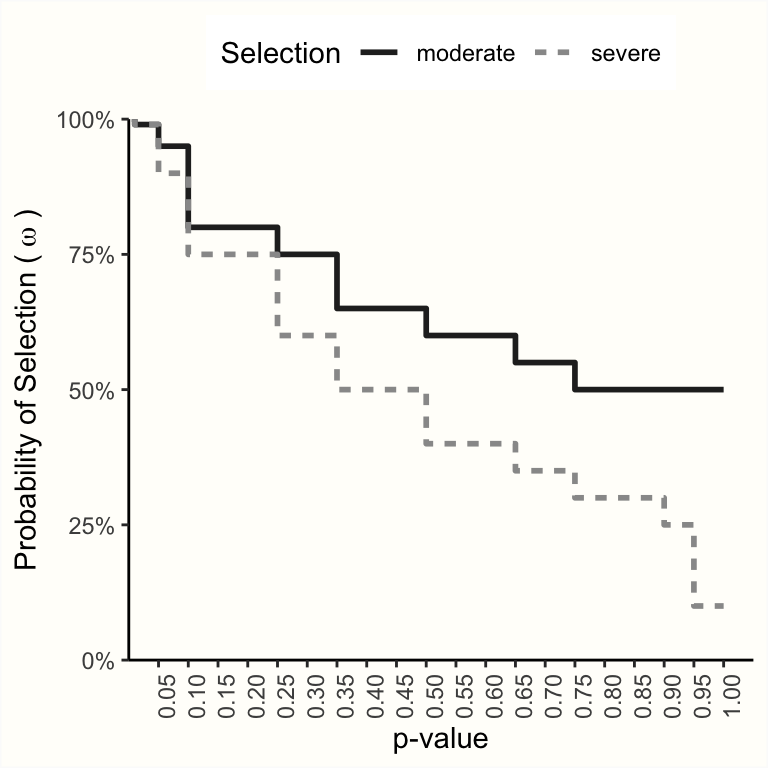

We have seen that the right-skewness test can be used to determine if the distribution of significant \(p\)-values represents a true overall effect. The problem is that this test depends on the statistical power of our data. Therefore, when the right-skewness test is not significant, this does not automatically mean that there is no evidential value. Two things are possible: either there is indeed no true effect, or the number of values in our \(p\)-curve is simply too small to render the \(\chi^2\) test significant–even if the data is in fact right-skewed.