Chapter 4 Macrofinancial Data Analysis

4.1 Imagine This

Your US-based company just landed a contract worth more than 20 percent of your company’s current revenue in Spain. Now that everyone has recovered from this coup, your management wants you to

- Retrieve and begin to analyze data about the Spanish economy

- Compare and contrast Spanish stock market and government-issued debt value versus the United States and several other countries

- Begin to generate economic scenarios based on political events that may, or may not, happen in Spain

Up to this point we had reviewed several ways to manipulate data in R. We then reviewed some basic finance and statistics concepts in R. We also got some idea of the financial analytics workflow.

- What decision(s) are we making?

- What are the key business questions we need to support this decision?

- What data do we need?

- What tools do we need to analyze the data?

- How do we communicate answers to inform the decision?

4.1.1 Working an example

Let’s use this workflow to motivate our work in this chapter.

Let’s identify a decision at work (e.g., investment in a new machine, financing a building, acquisition of customers, hiring talent, locating manufacturing).

For this decision we will list three business questions you need to inform the decision we chose.

Now we consider data we might need to answer one of those questions and choose from this set:

- Macroeconomic data: GDP, inflation, wages, population

- Financial data: stock market prices, bond prices, exchange rates, commodity prices

Here is the example using the scenario that started this chapter.

- Our decision is supply a new market segment

- Product: voltage devices with supporting software

- Geography: Spain

- Customers: major buyers at Iberdrola, Repsol, and Endesa

- We pose three business questions:

- How would the performance of these companies affect the size and timing of orders?

- How would the value of their products affect the value of our business with these companies?

- We are a US functional currency firm (see FAS 52), so how would we manage the repatriation of accounts receivable from Spain?

- Some data and analysis to inform the decision could include

- Customer stock prices: volatility and correlation

- Oil prices: volatility

- USD/EUR exchange rates: volatility

- All together: correlations among these indicators

4.1.2 How we will proceed

This chapter will develop styliZed facts of the market. These continue to be learned the hard Way: financial data is not independent, it possesses volatile volatility, and has extremes.

- Financial stock, bond, commodity…you name it…have highly interdependent relationships.

- Volatility is rarely constant and often has a structure (mean reversion) and is dependent on the past.

- Past shocks persist and may or may not dampen (rock in a pool).

- Extreme events are likely to happen with other extreme events.

- Negative returns are more likely than positive returns (left skew).

4.2 Building the Stylized Facts

Examples from the 70s, 80s, and 90s have multiple intersecting global events influencing decision makers. We will load some computational help and some data from Brent, format dates, and create a time series object (package zoo' will be needed by packagesfBasicsandevir`):

library(fBasics)

library(evir)

library(qrmdata)

library(zoo)

data(OIL_Brent)

str(OIL_Brent)## An 'xts' object on 1987-05-20/2015-12-28 containing:

## Data: num [1:7258, 1] 18.6 18.4 18.6 18.6 18.6 ...

## - attr(*, "dimnames")=List of 2

## ..$ : NULL

## ..$ : chr "OIL_Brent"

## Indexed by objects of class: [Date] TZ: UTC

## xts Attributes:

## NULLWe will compute rates of change for Brent oil prices next.

Brent.price <- as.zoo(OIL_Brent)

str(Brent.price)## 'zoo' series from 1987-05-20 to 2015-12-28

## Data: num [1:7258, 1] 18.6 18.4 18.6 18.6 18.6 ...

## - attr(*, "dimnames")=List of 2

## ..$ : NULL

## ..$ : chr "OIL_Brent"

## Index: Date[1:7258], format: "1987-05-20" "1987-05-21" "1987-05-22" "1987-05-25" "1987-05-26" ...Brent.return <- diff(log(Brent.price))[-1] *

100

colnames(Brent.return) <- "Brent.return"

head(Brent.return, n = 5)## Brent.return

## 1987-05-22 0.5405419

## 1987-05-25 0.2691792

## 1987-05-26 0.1611604

## 1987-05-27 -0.1611604

## 1987-05-28 0.0000000tail(Brent.return, n = 5)## Brent.return

## 2015-12-21 -3.9394831

## 2015-12-22 -0.2266290

## 2015-12-23 1.4919348

## 2015-12-24 3.9177726

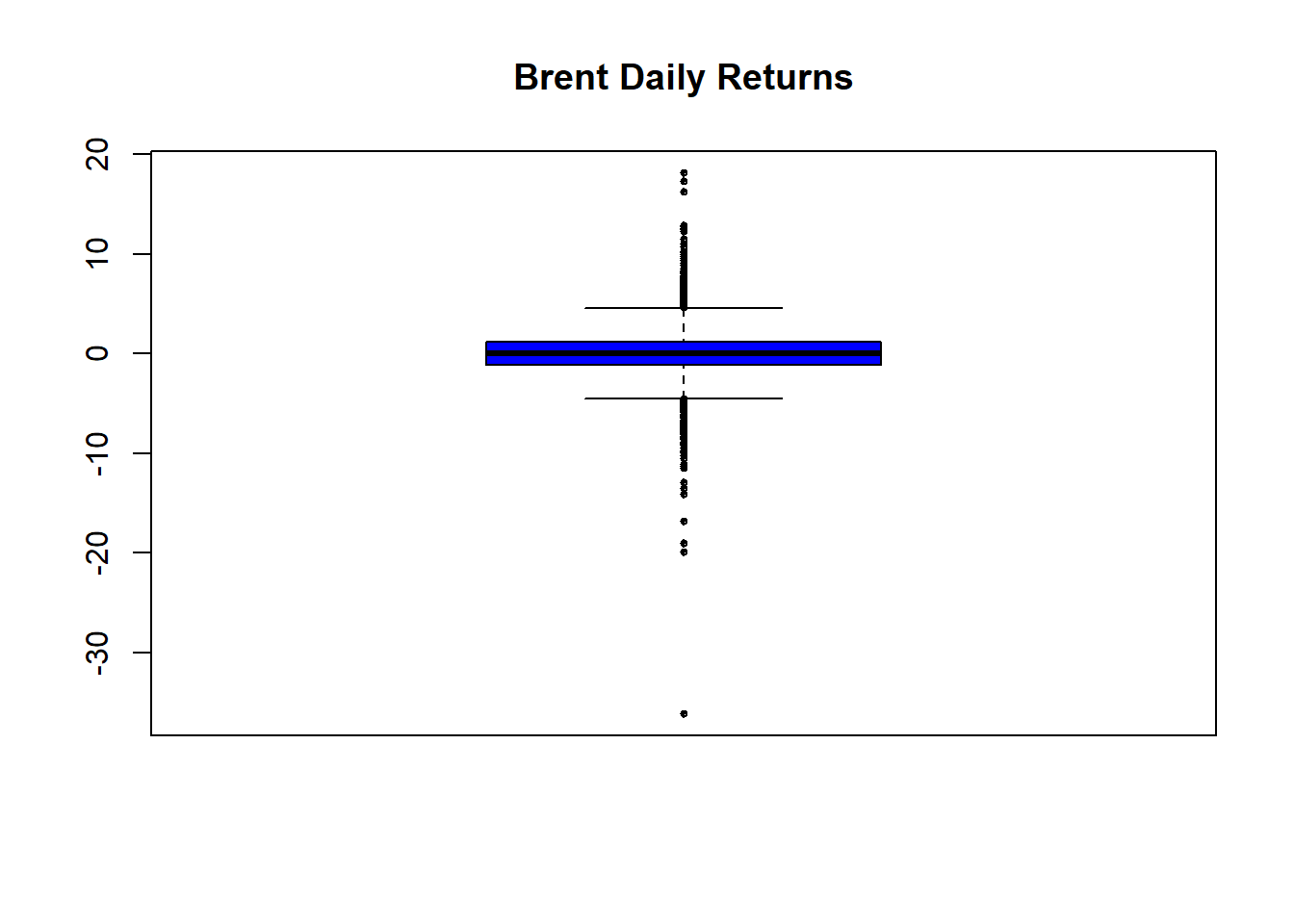

## 2015-12-28 -0.3768511Let’s look at this data with box plots and autocorrelation functions. Box plots will show minimum to maximum with the mean in the middle of the box. Autocorrelation plots will reveal how persistent the returns are over time.

We run these statements.

boxplot(as.vector(Brent.return), title = FALSE,

main = "Brent Daily % Change", col = "blue",

cex = 0.5, pch = 19)

skewness(Brent.return)

kurtosis(Brent.return)This time series plot shows lots of return clustering and spikes, especially negative ones.

Performing some “eyeball econometrics” these clusters seem to occur around - The oil embargo of the ’70s - The height of the new interest rate regime of Paul Volcker at the Fed - “Black Monday” stock market crash in 1987 - Gulf I - Barings and other derivatives business collapses in the ’90s

- Let’s look at the likelihood of positive versus negative returns. We might want to review skewness and kurtosis definitions and ranges to help us.

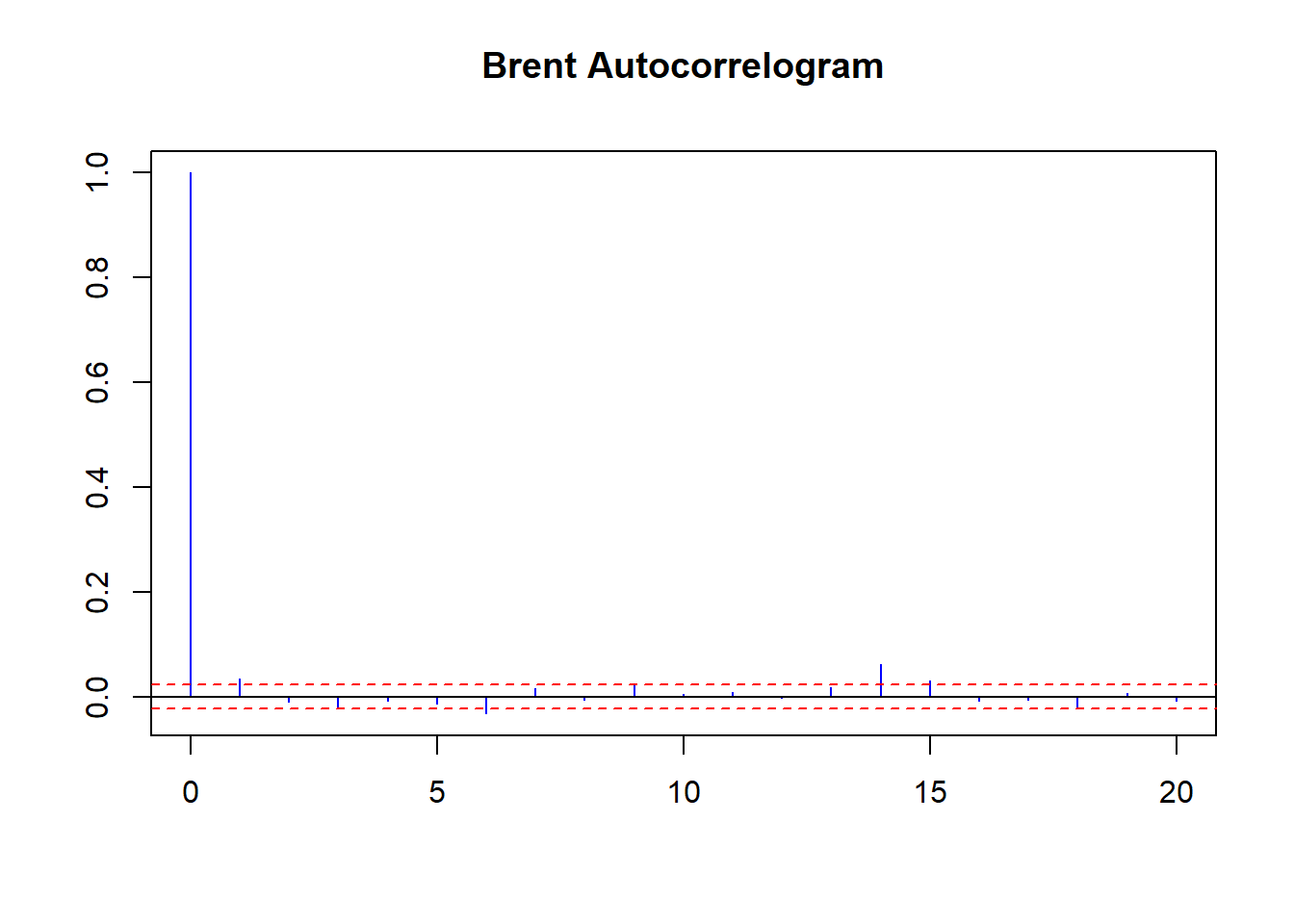

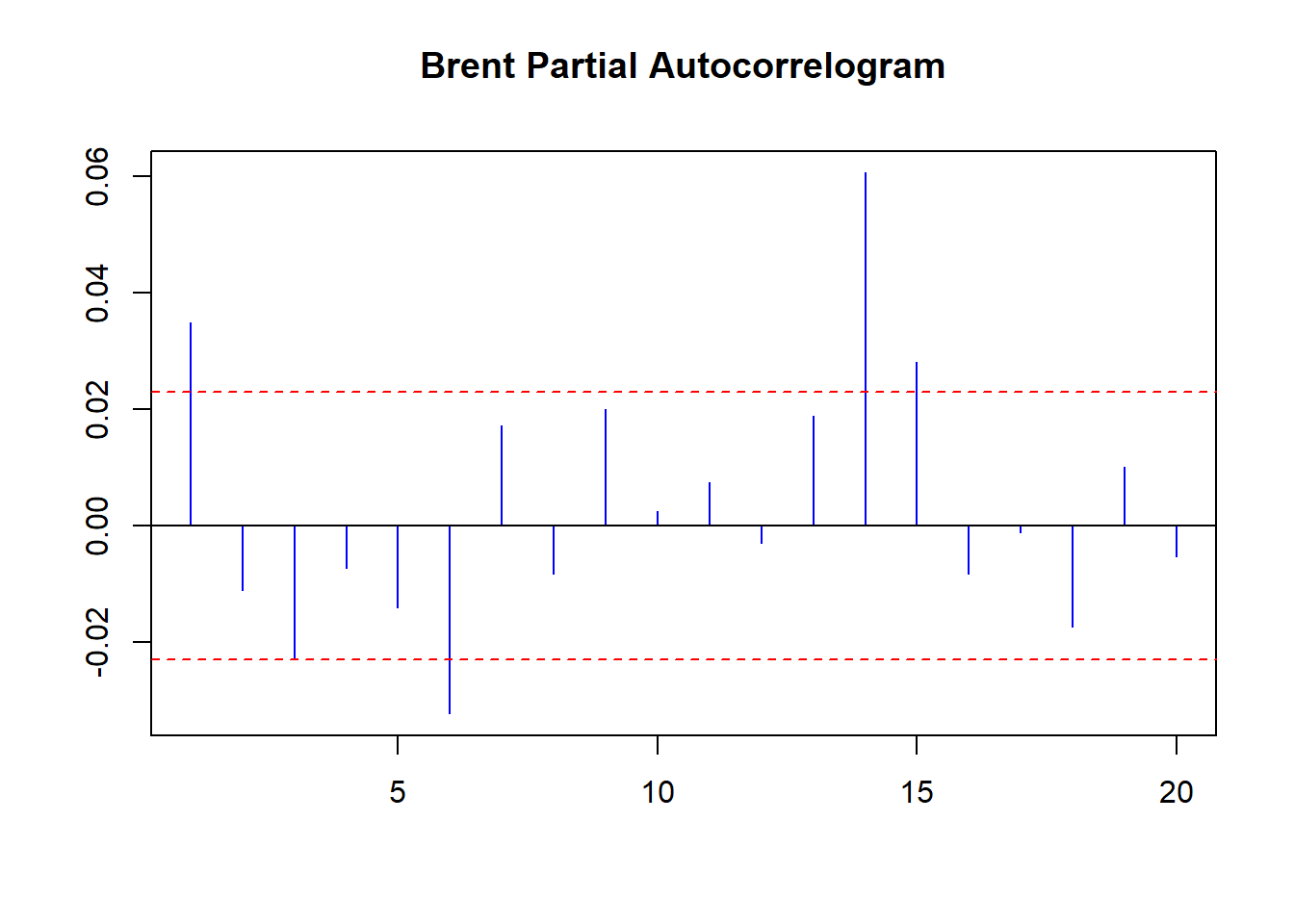

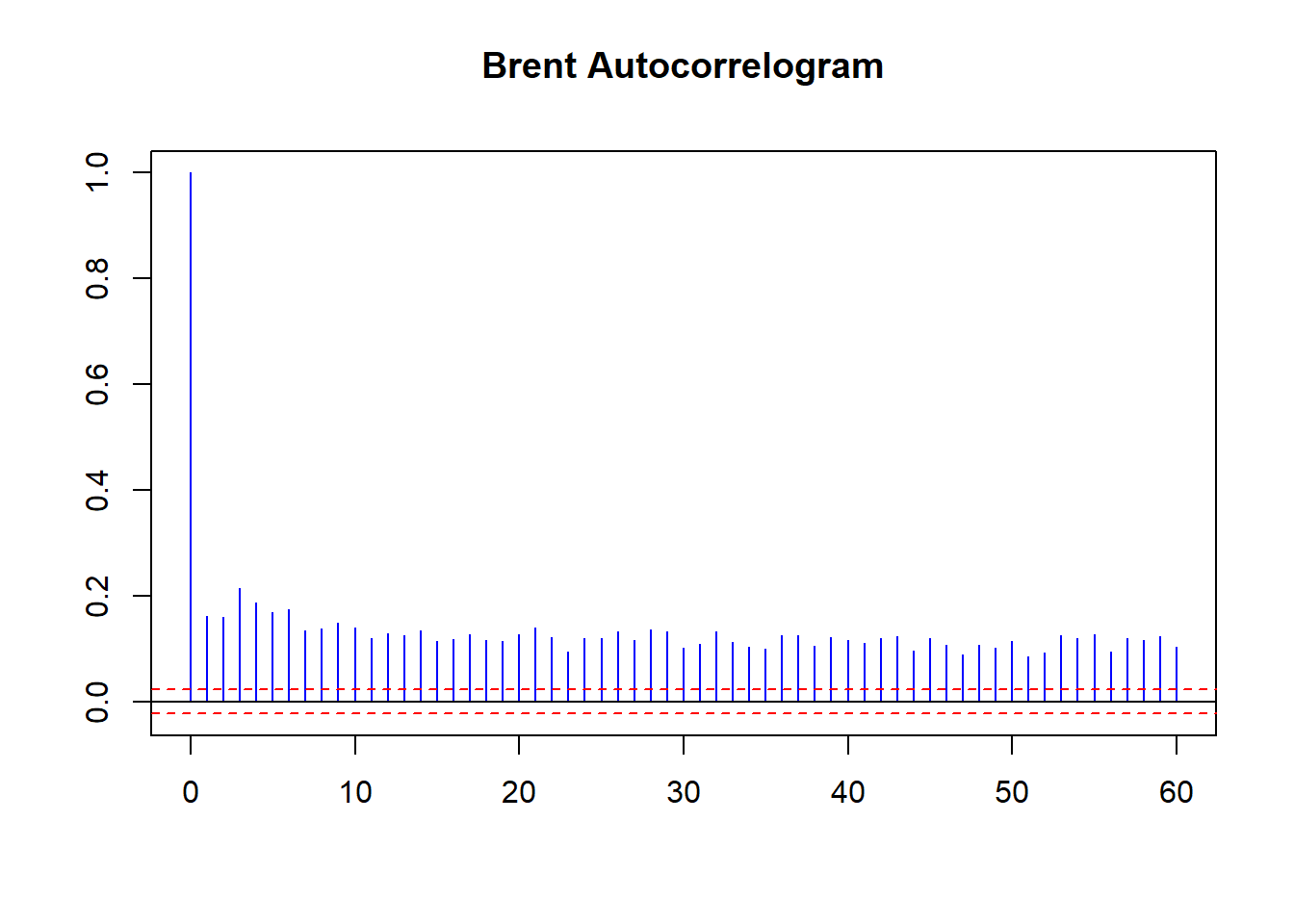

Now to look at persistence:

acf(coredata(Brent.return), main = "Brent Daily Autocorrelogram",

lag.max = 20, ylab = "", xlab = "",

col = "blue", ci.col = "red")

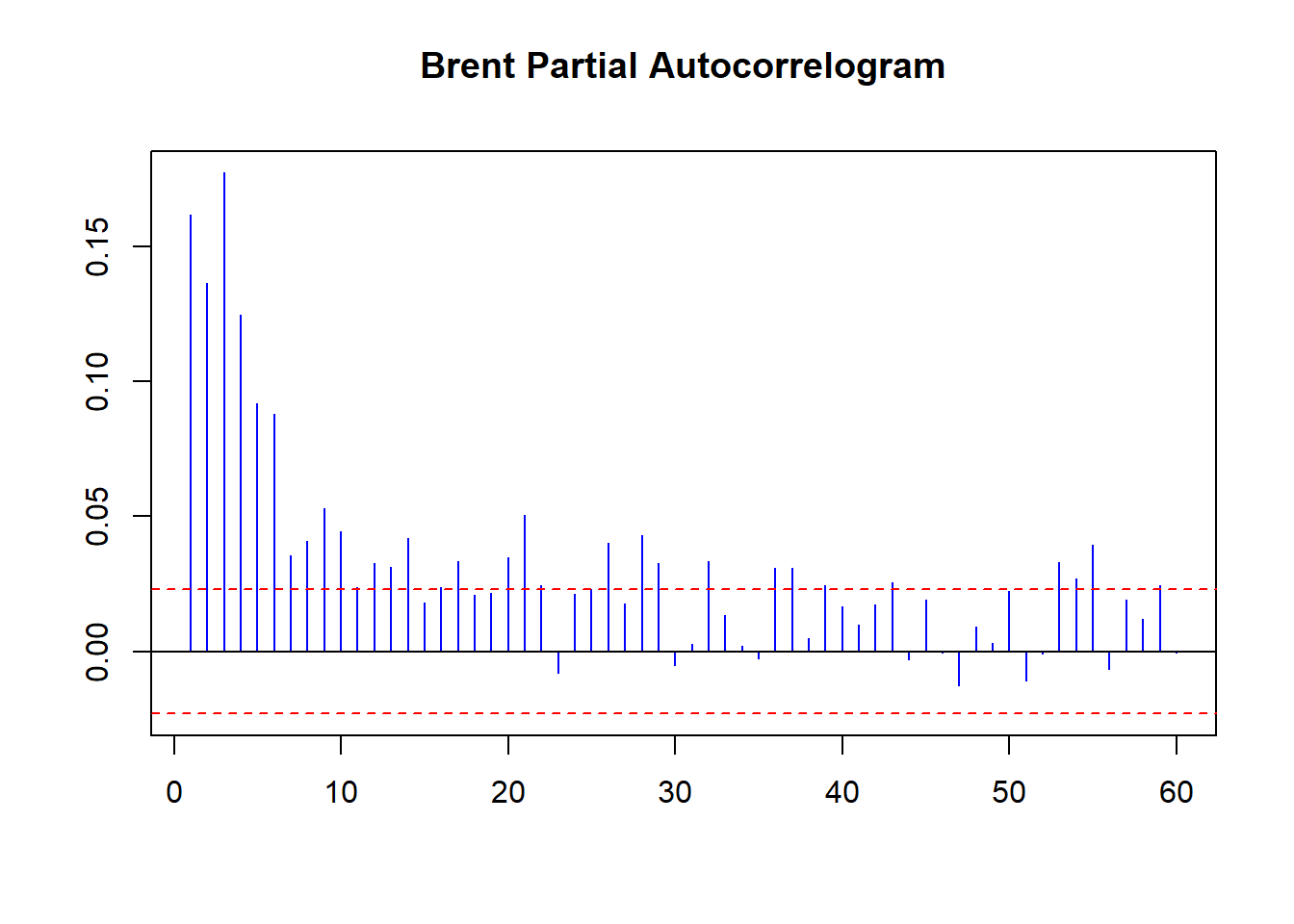

pacf(coredata(Brent.return), main = "Brent Daily Partial Autocorrelogram",

lag.max = 20, ylab = "", xlab = "",

col = "blue", ci.col = "red")Confidence intervals are the red dashed lines. ACF at lag 6 means the correlation of current Brent returns with returns 6 trading days ago, including any correlations from trading day 1 through 6. PACF is simpler: it is the raw correlation between day 0 and day 6. ACF starts at lag 0 (today); PACF starts at lag 1 (yesterday).

How many trading days in a typical week or in a month? Comment on the spikes (blue lines that grow over or under the red dashed lines).

How thick is that tail?

Here is a first look:

boxplot(as.vector(Brent.return), title = FALSE,

main = "Brent Daily Returns", col = "blue",

cex = 0.5, pch = 10)

… with some basic stats to back up the eyeball econometrics in the box plot:

skewness(Brent.return)## [1] -0.6210447

## attr(,"method")

## [1] "moment"kurtosis(Brent.return)## [1] 14.62226

## attr(,"method")

## [1] "excess"- A negative skew means there are more observations less than the median than greater.

- This high a kurtosis means a pretty heavy tail, especially in negative returns. That means they have happened more often than positive returns.

- A preponderance of negative returns frequently happening spells trouble for anyone owning these assets.

4.2.1 Implications

- We should recommend that management budget for the body of the distribution from the mean and out to positive levels.

- At the same time management should build a comprehensive playbook for the strong possibility that bad tail events frequently happen and might happen again (and why shouldn’t they?).

- Now for something really interesting

acf(coredata(Brent.return), main = "Brent Autocorrelogram",

lag.max = 20, ylab = "", xlab = "",

col = "blue", ci.col = "red")

pacf(coredata(Brent.return), main = "Brent Partial Autocorrelogram",

lag.max = 20, ylab = "", xlab = "",

col = "blue", ci.col = "red")

On average there are 5 days in the trading week and 20 in the trading month.

Some further thoughts:

- There seems to be positive weekly and negative monthly cycles.

- On a weekly basis negative rates (5 trading days ago) are followed by negative rates (today) and vice-versa with positive rates.

- On a monthly basis negative rates (20 days ago) are followed by positive rates (today).

- There is memory in the markets: positive correlation at least weekly up to a month ago reinforces the strong and frequently occurring negative rates (negative skew and leptokurtotic, a.k.a. heavy tails).

- Run the PACF for 60 days to see a 40-day negative correlation as well.

4.2.2 Now for somthing really interesting…again

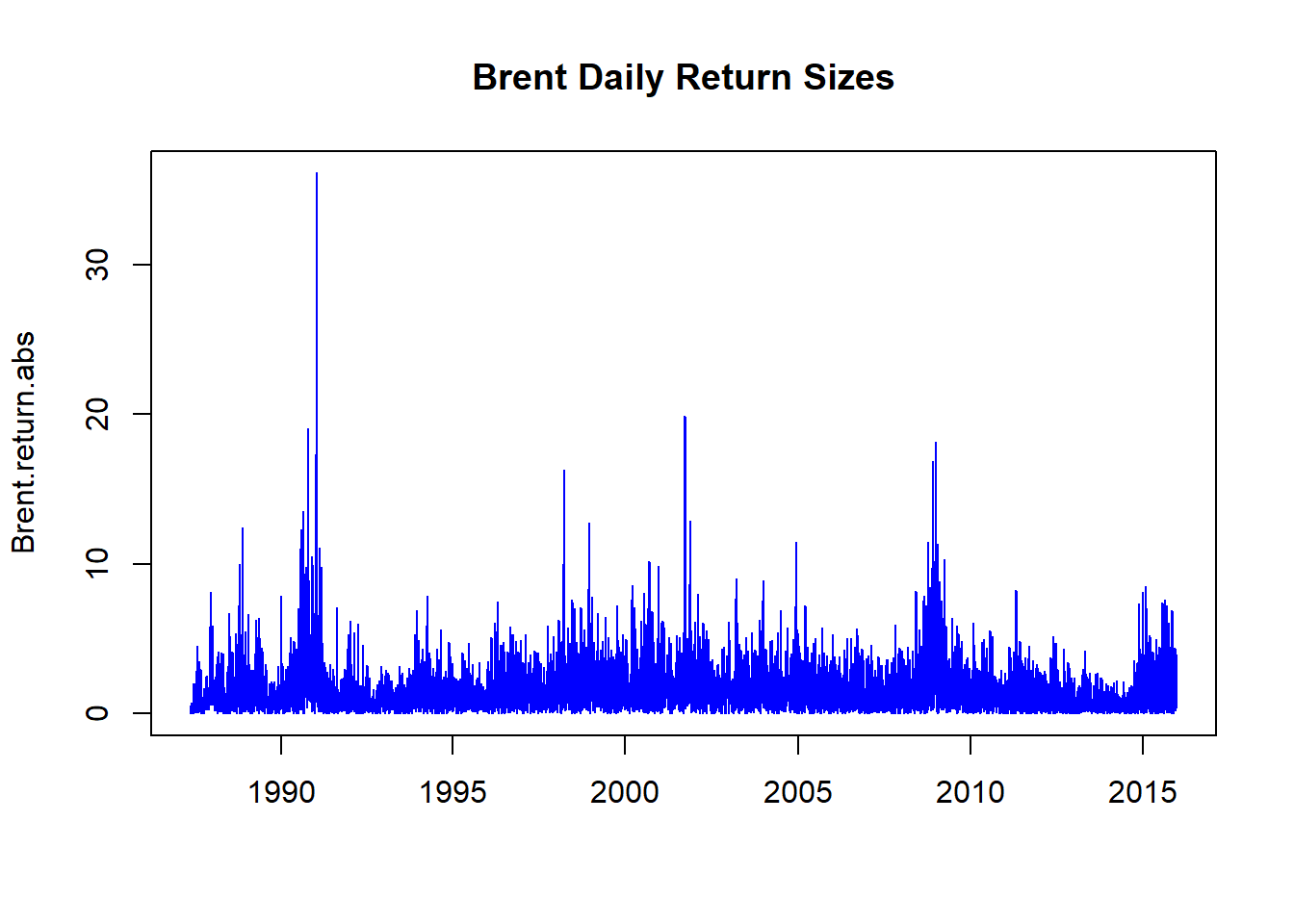

Let’s look just at the size of the Brent returns. The absolute value of the returns (think of oil and countries entering and leaving the EU!) can signal contagion, herd mentality, and simply very large margin calls (and the collateral to back it all up!). Let’s run this code:

Brent.return.abs <- abs(Brent.return)

## Trading position size matters

Brent.return.tail <- tail(Brent.return.abs[order(Brent.return.abs)],

100)[1]

## Take just the first of the 100

## observations and pick the first

index <- which(Brent.return.abs > Brent.return.tail,

arr.ind = TRUE)

## Build an index of those sizes that

## exceed the heavy tail threshold

Brent.return.abs.tail <- timeSeries(rep(0,

length(Brent.return)), charvec = time(Brent.return))

## just a lot of zeros we will fill up

## next

Brent.return.abs.tail[index, 1] <- Brent.return.abs[index]

## A Phew! is in orderWhat did we do? Let’s run some plots next.

plot(Brent.return.abs, xlab = "", main = "Brent Daily Return Sizes",

col = "blue")

We see lots of return volatility – just in the pure size along. These are correlated with financial innovations from the ’80s and ’90s, as well as Gulf 1, Gulf 2, Great Recession, and its 9/11 antecedents.

acf(coredata(Brent.return.abs), main = "Brent Autocorrelogram",

lag.max = 60, ylab = "", xlab = "",

col = "blue", ci.col = "red")

pacf(coredata(Brent.return.abs), main = "Brent Partial Autocorrelogram",

lag.max = 60, ylab = "", xlab = "",

col = "blue", ci.col = "red")

There is Volatility Clustering galore. Strong persistent lags of absolute movements in returns evidenced by the ACF plot. There is evidence of dampening with after shocks past trading 10 days 10 ago. Monthly volatility affects today’s performance.

Some of this volatility arises from the way Brent is traded. It is lifted through well-heads in the North Sea. It then is scheduled for loading onto ships and loads are then bid, along with routes to destination. It takes about five days to load crude and another five to unload. At each partial loading and unloading, the crude is re-priced. Then there is the voyage lag itself, where paper claims to wet crude create further pricing, and volatility.

Next we explore the relationships among financial variables.

4.3 Getting Caught in the Cross-Current

Now our job is to ask the really important questions around connectivity. Suppose we are banking our investments in certain sectors of an economy, with its GDP, financial capability, employment, exports and imports, and so on.

- How will we decide to contract for goods and services, segment vendors, segment customers, based on these interactions?

- How do we construct out portfolio of business opportunities?

- How do we identify insurgent and relational risks and build a playbook to manage these?

- How will changes in one sector’s factors (say, finance, political will) affect factors in another?

We will now stretch our univariate analysis a bit and look at cross-correlations to help us get the ground truth around these relationships, and begin to answer some of these business questions in a more specific context.

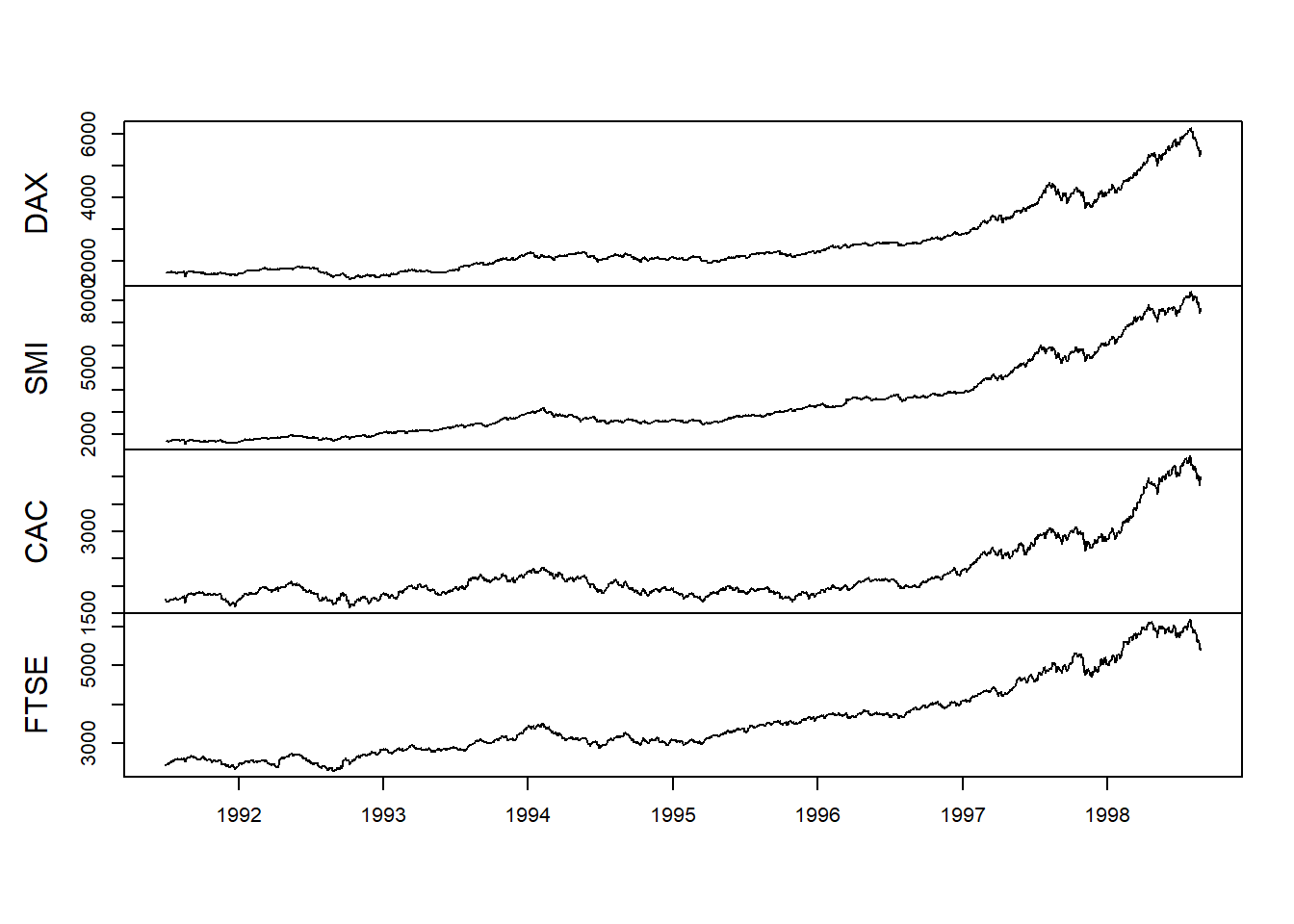

Let’s load the zoo and qrmdata libraries first and look at the EuroStoxx50 data set. Here we can imagine we are rebuilding our brand and footprint in the European Union and United Kingdom. Our customers might be the companies based in these countries as our target market.

- The data: 4 stock exchange indices across Europe (and the United Kingdom)

- This will allow us to profile the forward capabilities of these companies across their economies.

- Again we will look at returns data using the

diff(log(data))[-1]formula.

require(zoo)

require(qrmdata)

require(xts)

data("EuStockMarkets")

EuStockMarkets.price <- as.zoo(EuStockMarkets)

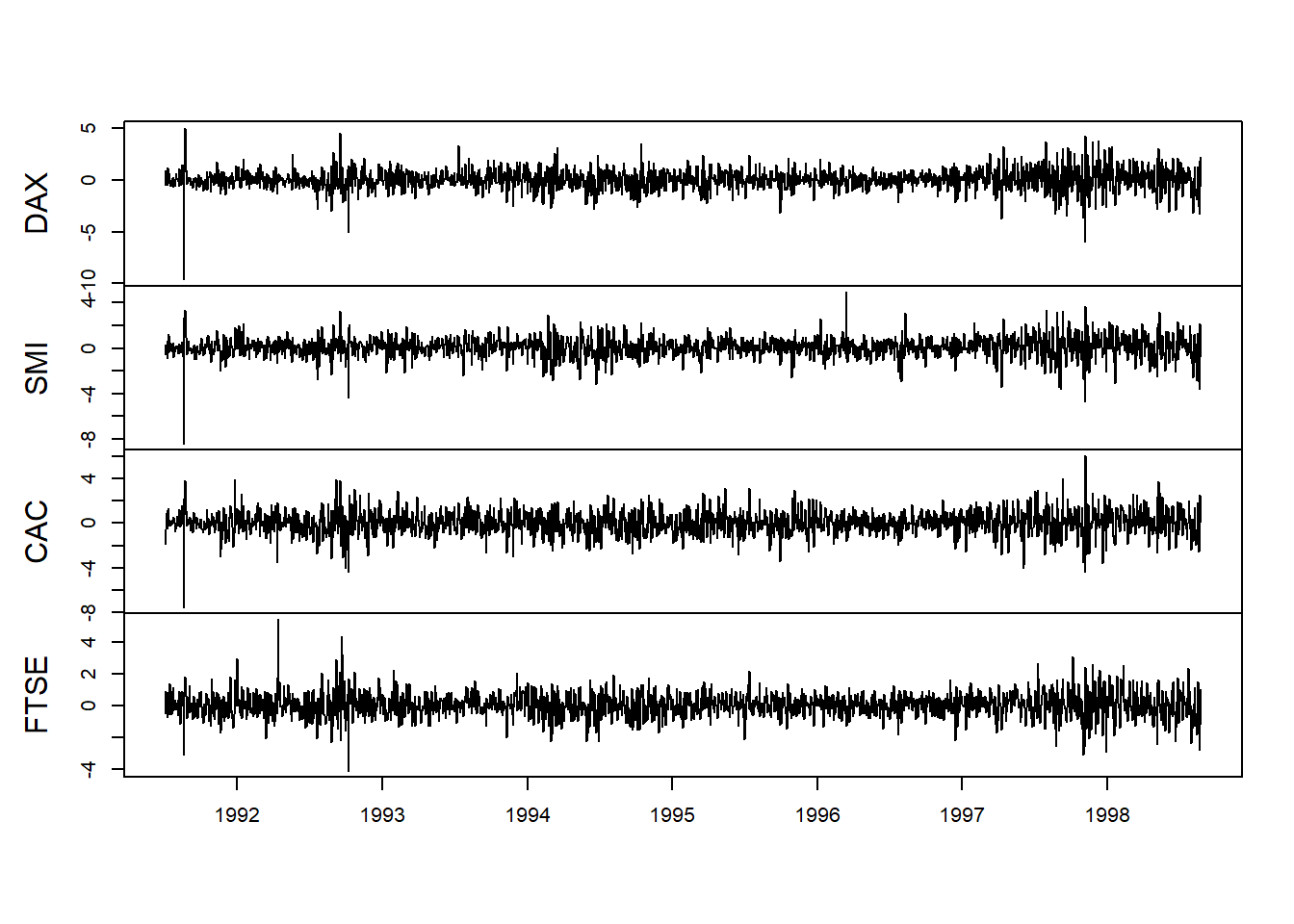

EuStockMarkets.return <- diff(log(EuStockMarkets.price))[-1] *

100We then plot price levels and returns.

plot(EuStockMarkets.price, xlab = " ",

main = " ")

plot(EuStockMarkets.return, xlab = " ",

main = " ")

We see much the same thing as Brent oil with volatility clustering and heavily weighted tails.

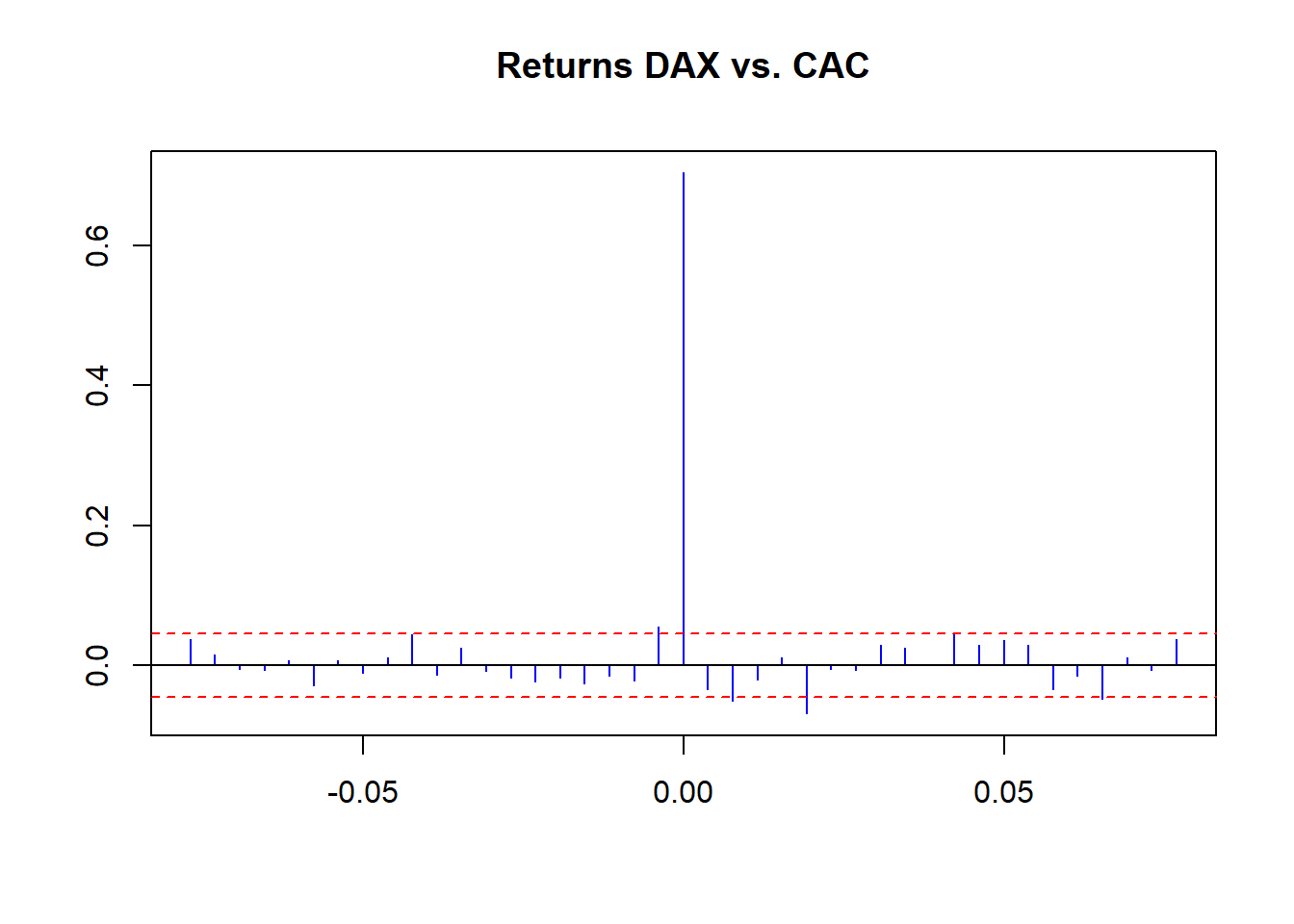

Let’s then look at cross-correlations among one pair of these indices to see how they are related across time (lags) for returns and the absolute value of returns. THe function ccf will aid us tremendously.

ccf(EuStockMarkets.return[, 1], EuStockMarkets.return[,

2], main = "Returns DAX vs. CAC",

lag.max = 20, ylab = "", xlab = "",

col = "blue", ci.col = "red")

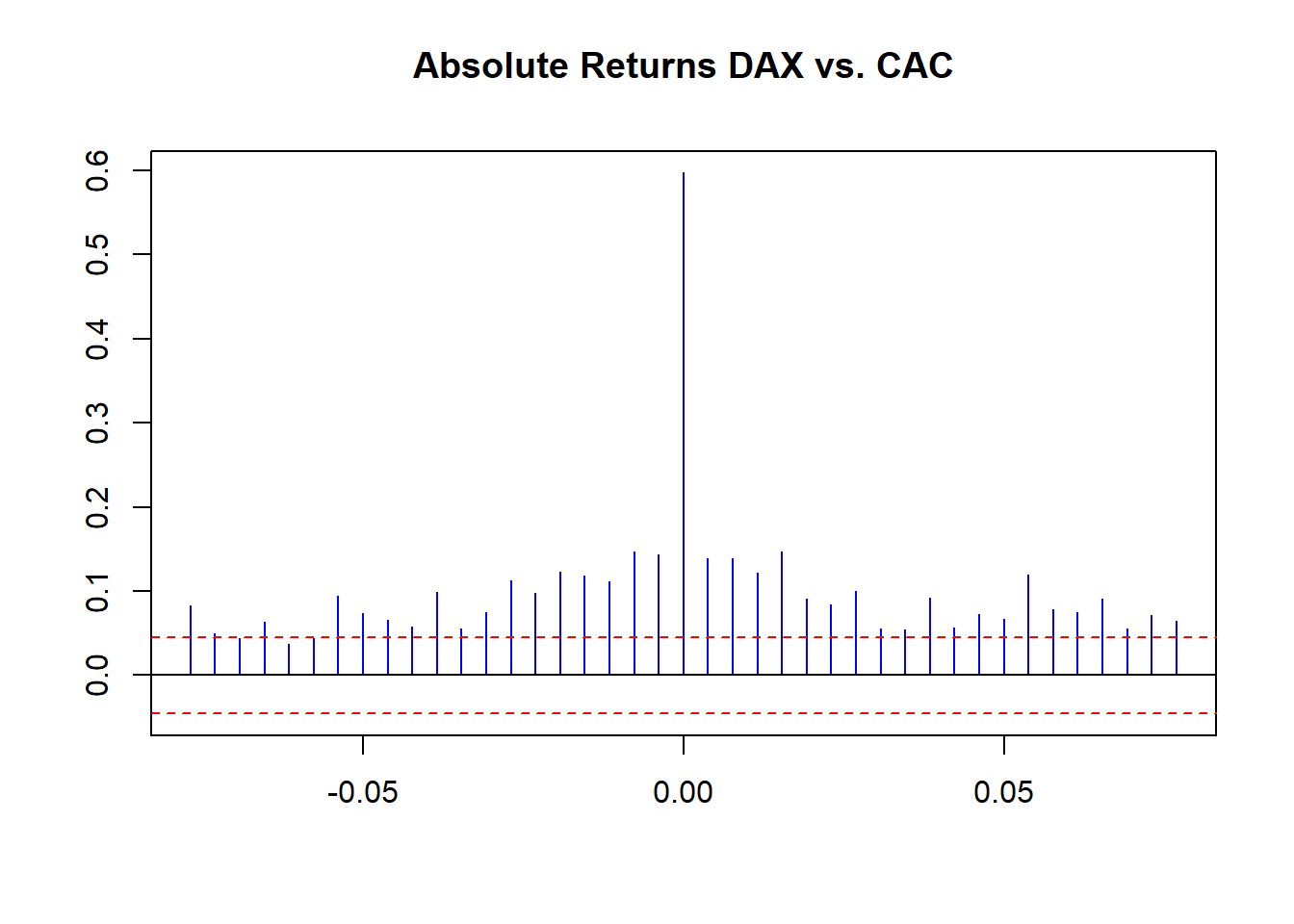

ccf(abs(EuStockMarkets.return[, 1]),

abs(EuStockMarkets.return[, 2]),

main = "Absolute Returns DAX vs. CAC",

lag.max = 20, ylab = "", xlab = "",

col = "blue", ci.col = "red")

We see some small raw correlations across time with raw returns. More revealing, we see volatility of correlation clustering using return sizes. We can conduct one more experiment: a rolling correlation using this function:

corr.rolling <- function(x) {

dim <- ncol(x)

corr.r <- cor(x)[lower.tri(diag(dim),

diag = FALSE)]

return(corr.r)

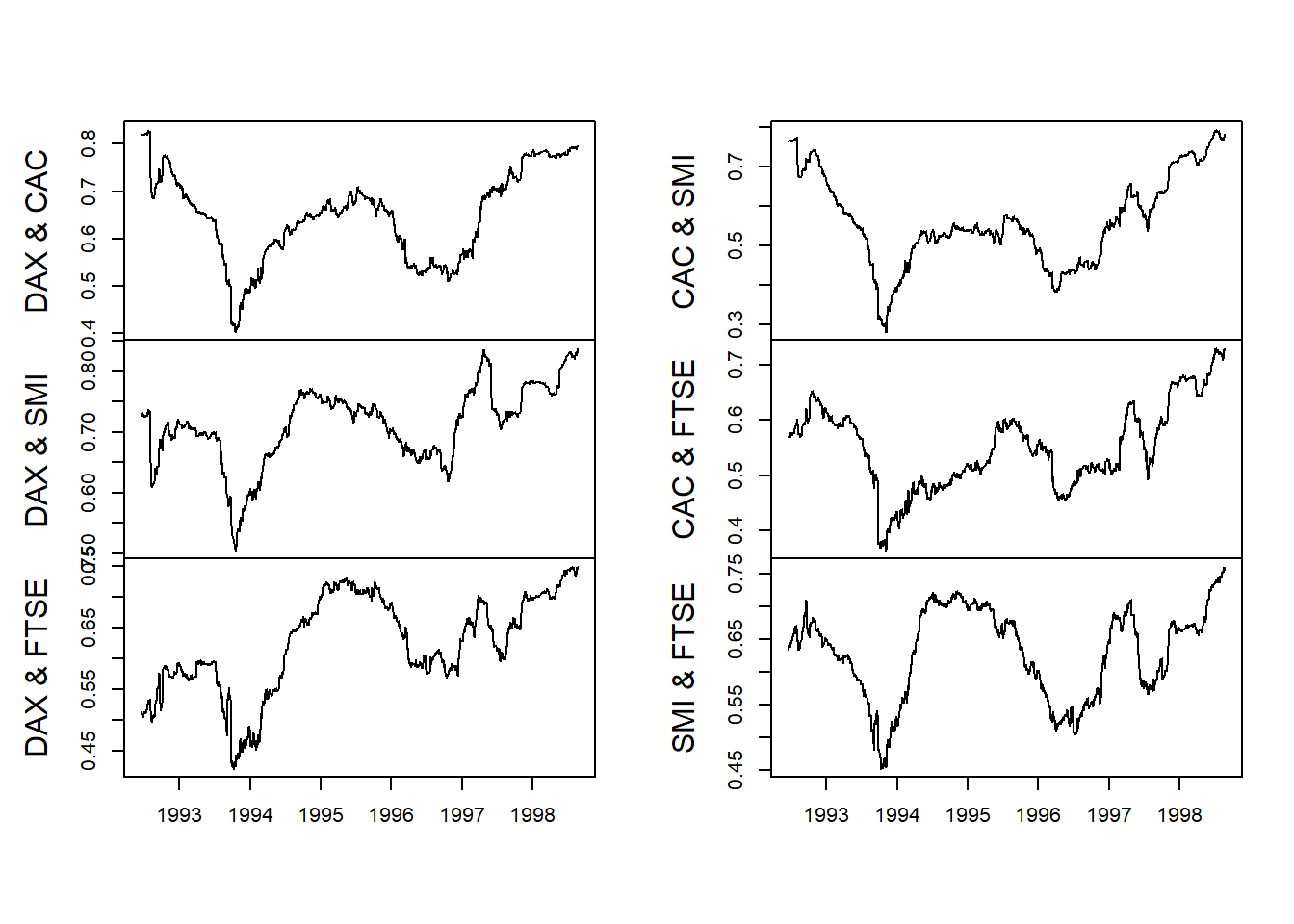

}We then embed our rolling correlation function, corr.rolling, into the function rollapply (look this one up using ??rollapply at the console). The question we need to answer is: What is the history of correlations, and from the history, the pattern of correlations in the UK and EU stock markets? If there is a “history” with a “pattern,” then we have to manage the risk that conducting business in one country will definitely affect business in another. The implication is that bad things will be followed by more bad things more often than good things. The implication compounds a similar implication across markets.

corr.returns <- rollapply(EuStockMarkets.return,

width = 250, corr.rolling, align = "right",

by.column = FALSE)

colnames(corr.returns) <- c("DAX & CAC",

"DAX & SMI", "DAX & FTSE", "CAC & SMI",

"CAC & FTSE", "SMI & FTSE")

plot(corr.returns, xlab = "", main = "")

Again we observe the volatility clustering from bunching up of the the absolute sizes of returns. Economic performance is certainly subject here to the same dynamics we saw for a single financial variable such as Brent.

Let’s redo some of the work we just did using another set of techniques. This time we are using the “Fisher” transformation. Look up Fisher in Wikipedia and in your reference texts.

- How can the Fisher Transformation possibly help us answer our business questions?

- For three Spanish companies, Iberdrola, Endesa, and Repsol, replicate the Brent and EU stock market experiments above with absolute sizes and tails. Here we already have “series” covered.

First, the Fisher transformation is a smoothing routine that helps us tabilize the volitility of a variate. It does this by pulling some of the shockiness (i.e., outliers and aberrant noise) out of the original time series. In a phrase, it helps us see the forest (or the wood) for the trees.

We now replicate the Brent and EU stock exchange experiments. We again load some packages and get some data using quantmod’s getSymbols off the Madrid stock exchange to match our initial working example of Iberian companies on account. Then compute returns and merge into a master file.

require(xts)

require(qrmdata)

require(quantreg)

require(quantmod)

require(matrixStats)

tickers <- c("ELE.MC", "IBE.MC", "REP.MC")

getSymbols(tickers)## [1] "ELE.MC" "IBE.MC" "REP.MC"REP.r <- na.omit(diff(log(REP.MC[, 4]))[-1])

IBE.r <- na.omit(diff(log(IBE.MC[, 4]))[-1])

ELE.r <- na.omit(diff(log(ELE.MC[, 4]))[-1]) # clean up missing values

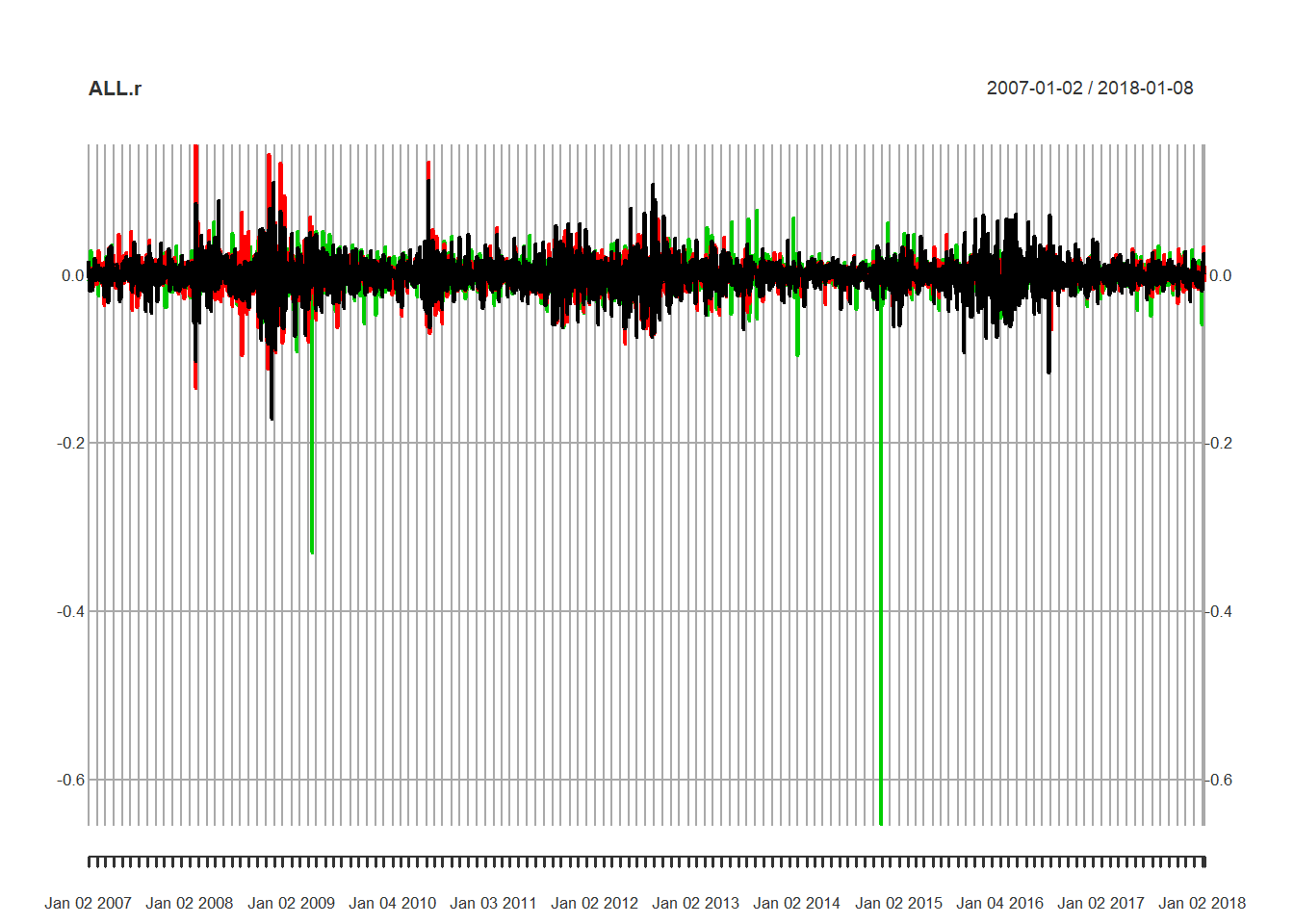

ALL.r <- na.omit(merge(REP = REP.r, IBE = IBE.r,

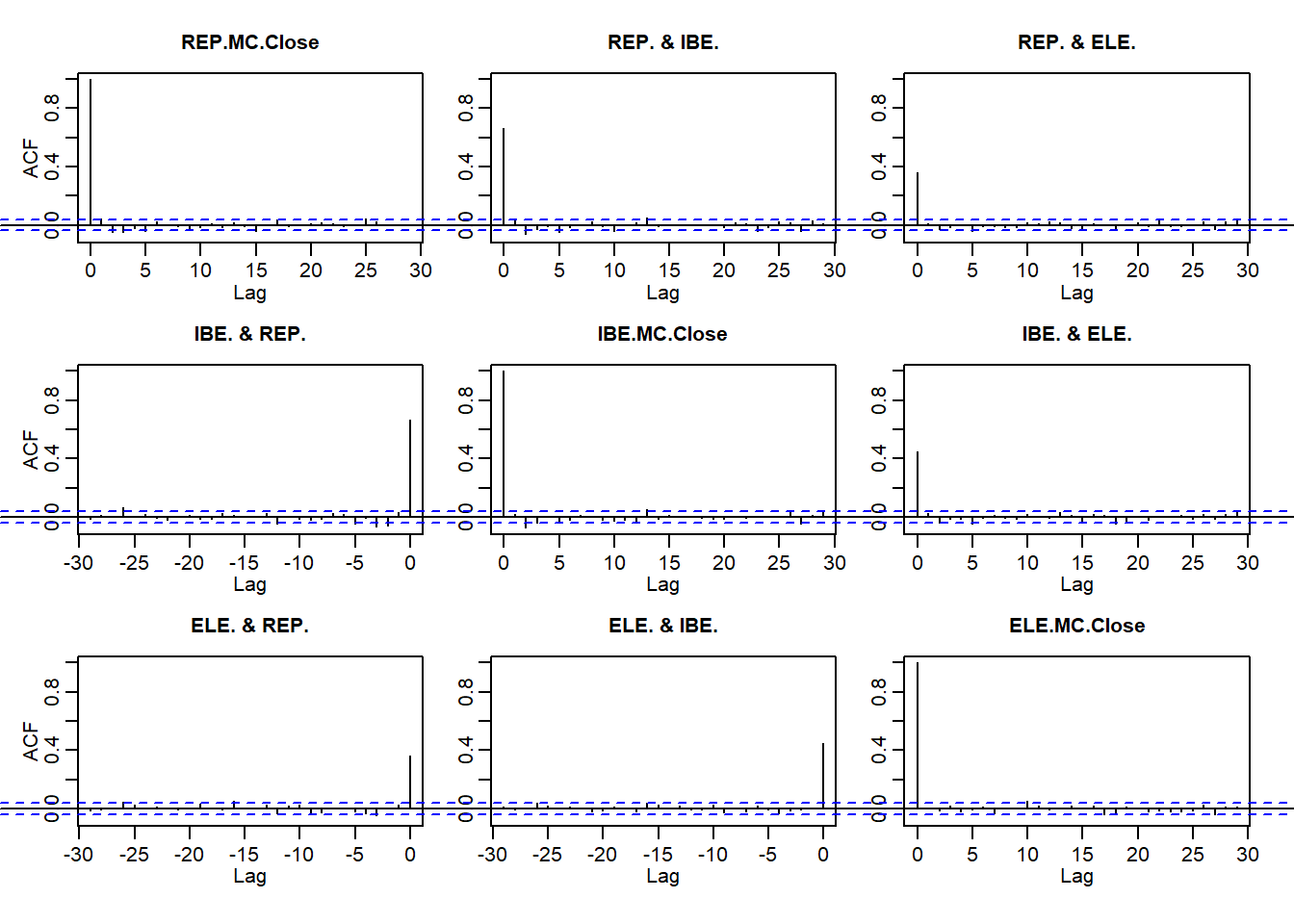

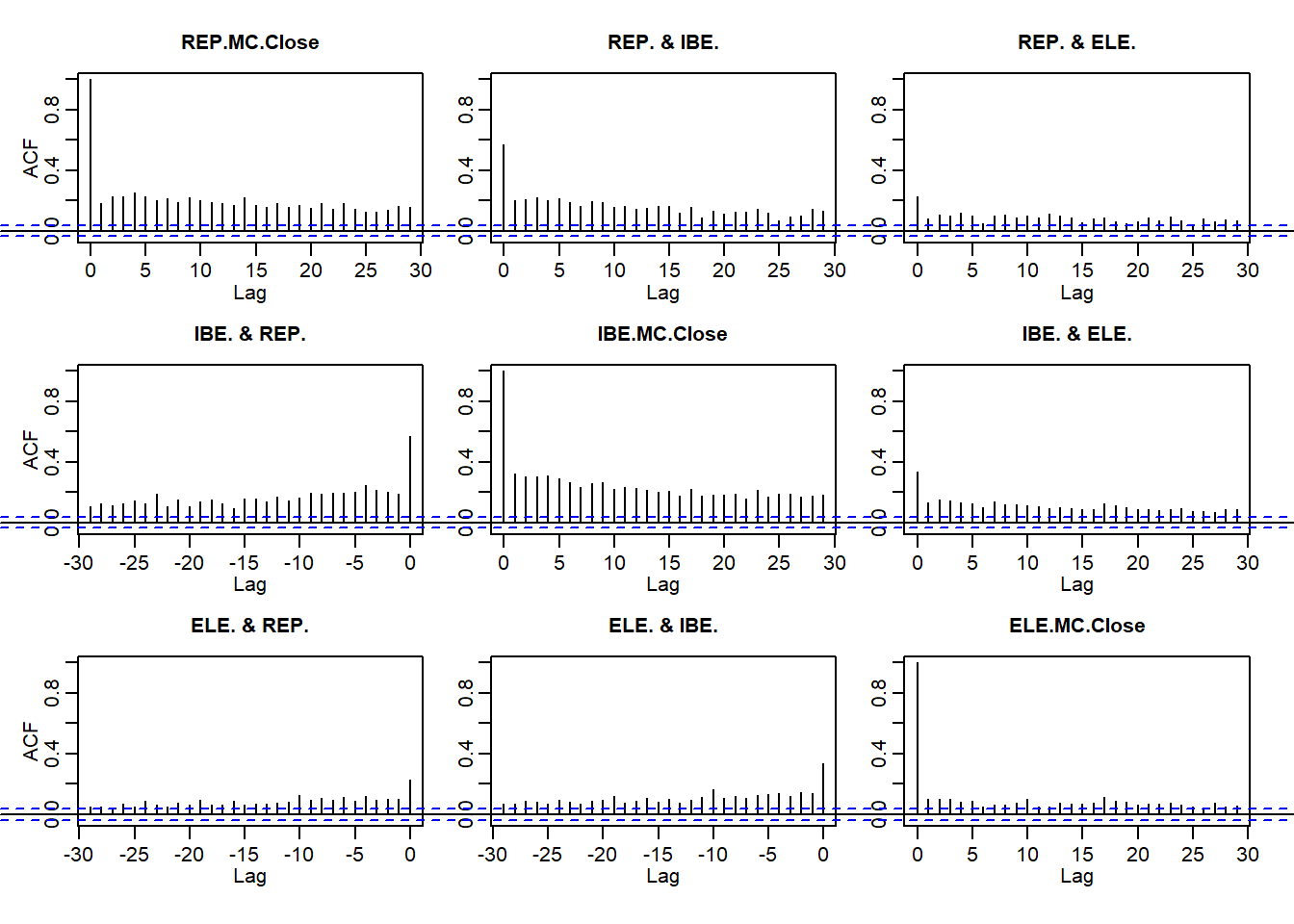

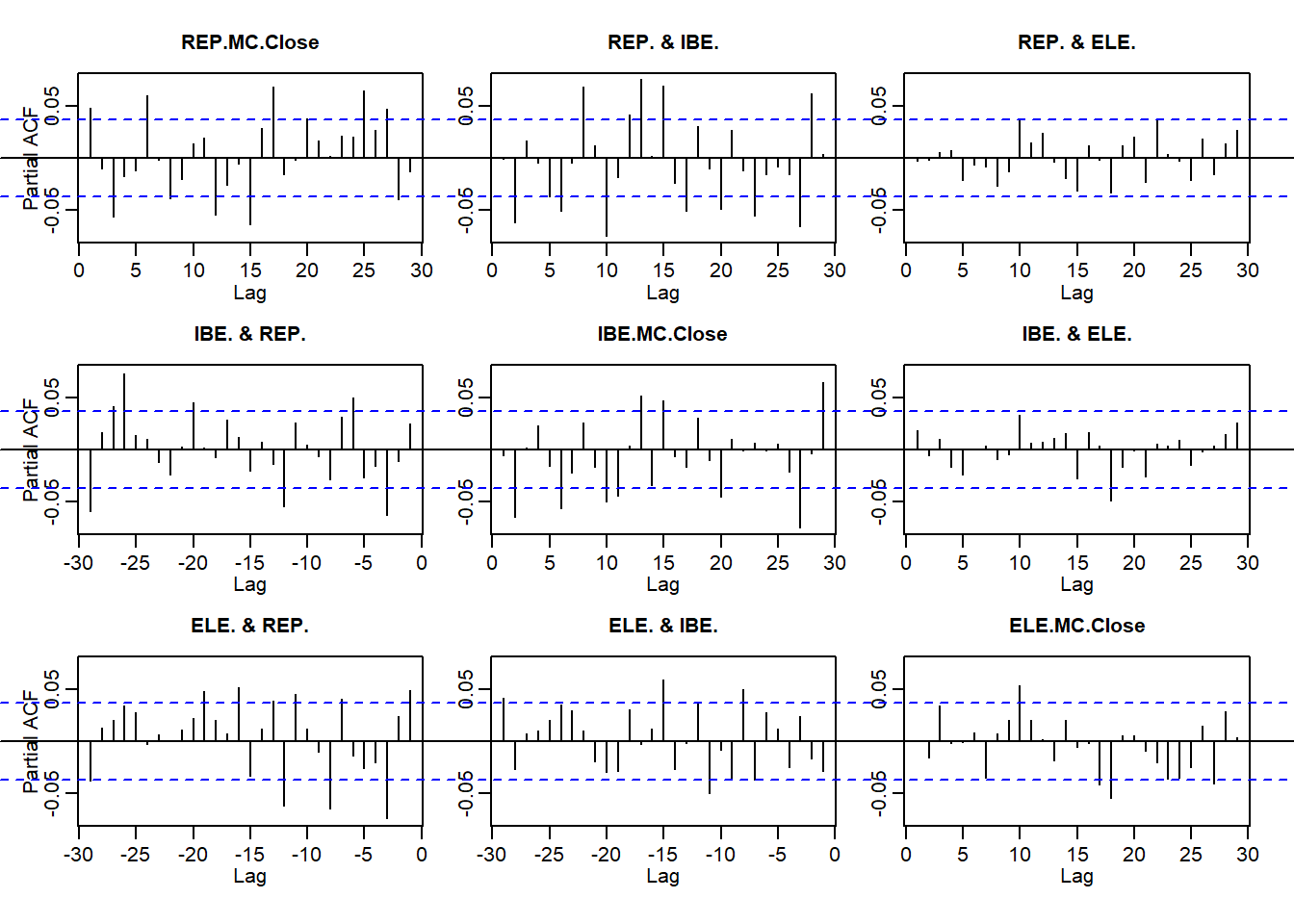

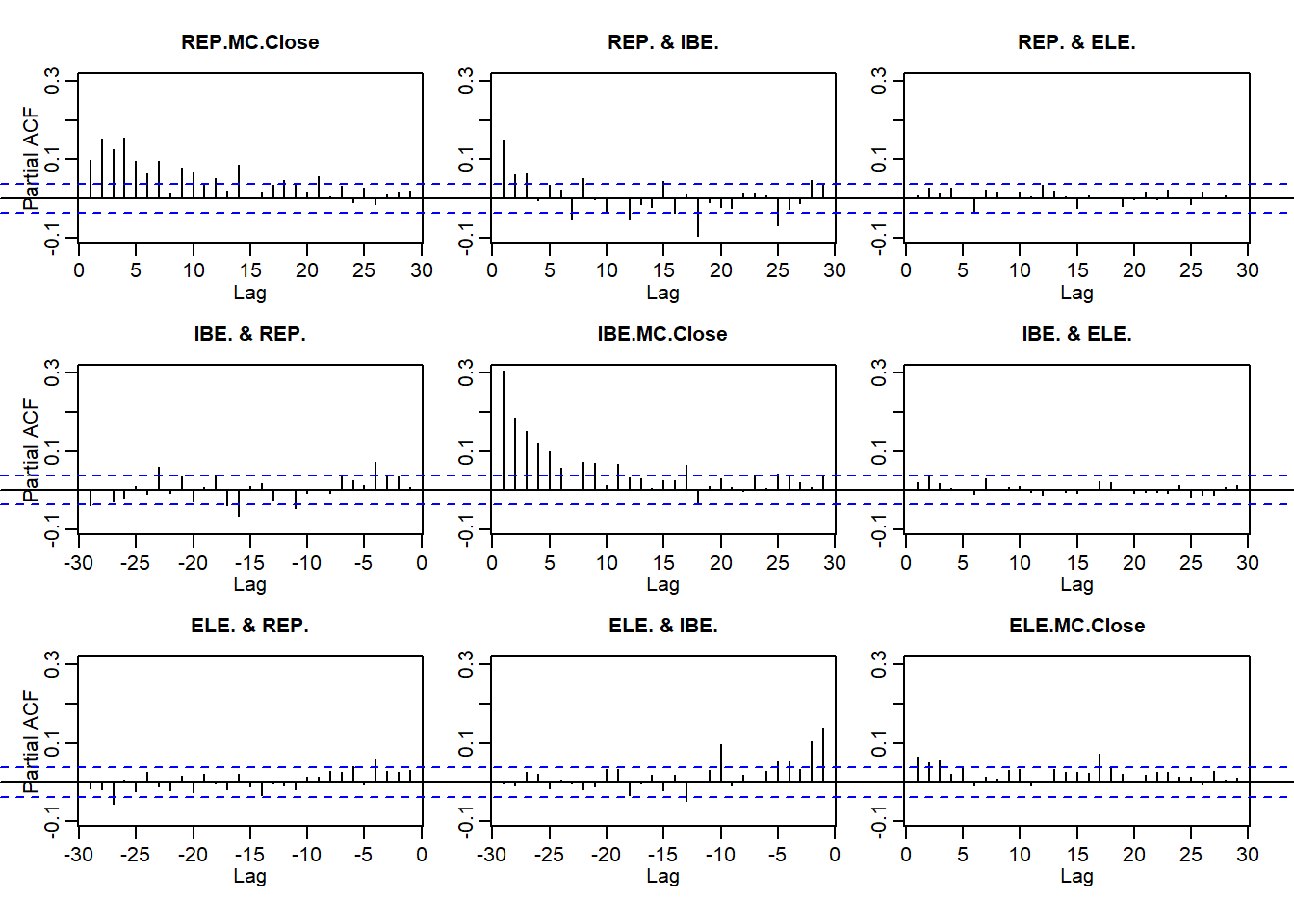

ELE = ELE.r, all = FALSE))Next we plot the returns and their absolute values, acf and pacf, all like we did in Brent. Again we see

- The persistence of returns

- The importance of return size

- Clustering of volatility

plot(ALL.r)

par(mfrow = c(2, 1))

acf(ALL.r)

par(mfrow = c(2, 1))

acf(abs(ALL.r))

par(mfrow = c(2, 1))

pacf(ALL.r)

par(mfrow = c(2, 1))

pacf(abs(ALL.r))

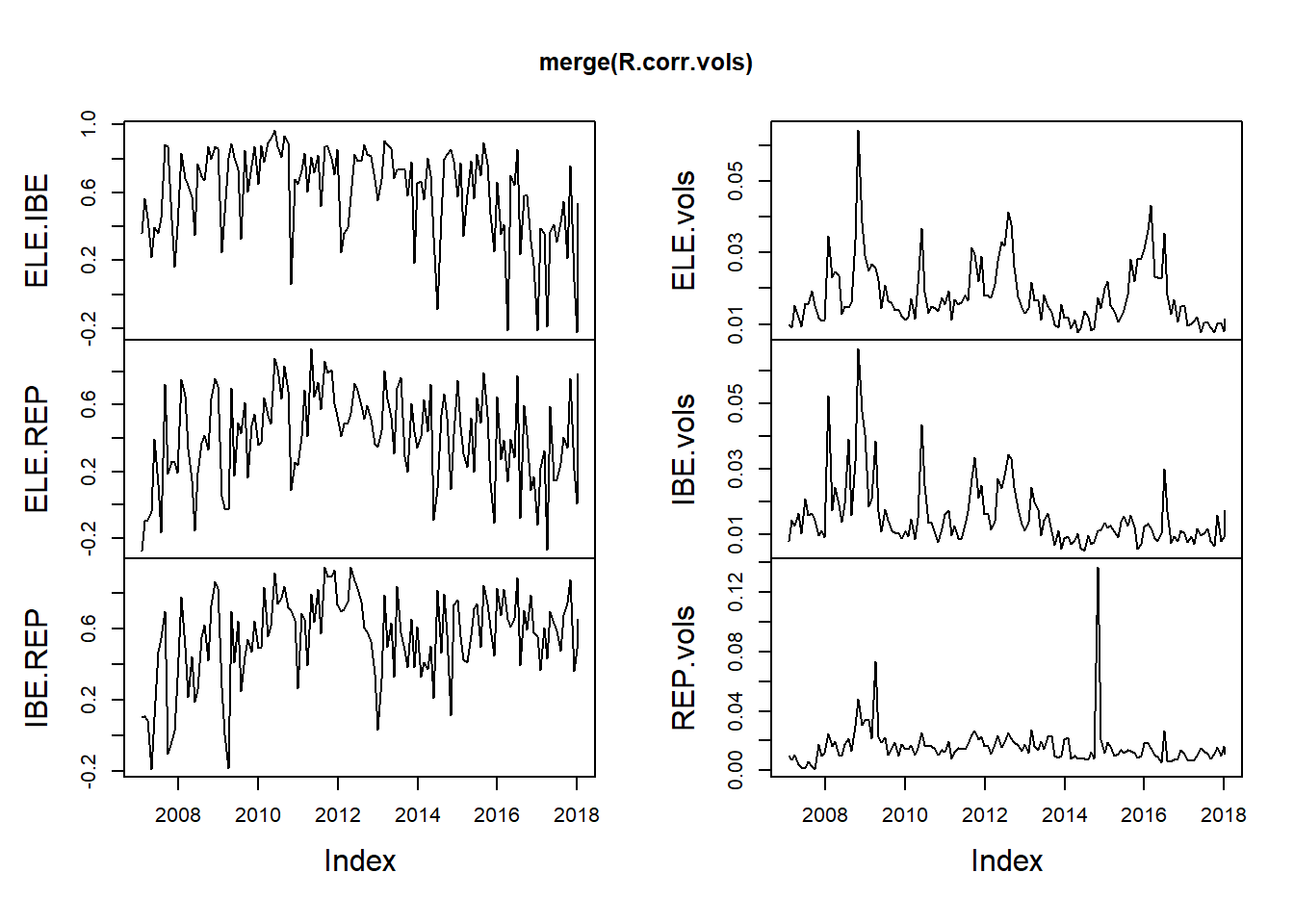

Let’s examine the correlation structure of markets where we can observe

- The relationship between correlation and volatility

- How quantile regression gets us to an understanding of high stress (high and low quantile) episodes

R.corr <- apply.monthly(ALL.r, FUN = cor)

R.vols <- apply.monthly(ALL.r, FUN = colSds) ## from MatrixStats

head(R.corr, 3)## [,1] [,2] [,3] [,4] [,5] [,6]

## 2007-01-31 1 0.3613554 -0.27540757 0.3613554 1 0.10413800

## 2007-02-28 1 0.5661814 -0.09855544 0.5661814 1 0.10760477

## 2007-03-30 1 0.4500982 -0.08874664 0.4500982 1 0.08538064

## [,7] [,8] [,9]

## 2007-01-31 -0.27540757 0.10413800 1

## 2007-02-28 -0.09855544 0.10760477 1

## 2007-03-30 -0.08874664 0.08538064 1head(R.vols, 3)## REP.MC.Close IBE.MC.Close ELE.MC.Close

## 2007-01-31 0.009787963 0.007892759 0.009777426

## 2007-02-28 0.009181099 0.014571945 0.007674848

## 2007-03-30 0.015317331 0.012719792 0.010919155R.corr.1 <- matrix(R.corr[1, ], nrow = 3,

ncol = 3, byrow = FALSE)

rownames(R.corr.1) <- tickers

colnames(R.corr.1) <- tickers

head(R.corr.1)## ELE.MC IBE.MC REP.MC

## ELE.MC 1.0000000 0.3613554 -0.2754076

## IBE.MC 0.3613554 1.0000000 0.1041380

## REP.MC -0.2754076 0.1041380 1.0000000R.corr <- R.corr[, c(2, 3, 6)]

colnames(R.corr) <- c("ELE.IBE", "ELE.REP",

"IBE.REP")

colnames(R.vols) <- c("ELE.vols", "IBE.vols",

"REP.vols")

head(R.corr, 3)## ELE.IBE ELE.REP IBE.REP

## 2007-01-31 0.3613554 -0.27540757 0.10413800

## 2007-02-28 0.5661814 -0.09855544 0.10760477

## 2007-03-30 0.4500982 -0.08874664 0.08538064head(R.vols, 3)## ELE.vols IBE.vols REP.vols

## 2007-01-31 0.009787963 0.007892759 0.009777426

## 2007-02-28 0.009181099 0.014571945 0.007674848

## 2007-03-30 0.015317331 0.012719792 0.010919155R.corr.vols <- merge(R.corr, R.vols)plot.zoo(merge(R.corr.vols))

ELE.vols <- as.numeric(R.corr.vols[,

"ELE.vols"])

IBE.vols <- as.numeric(R.vols[, "IBE.vols"])

REP.vols <- as.numeric(R.vols[, "REP.vols"])

length(ELE.vols)## [1] 133fisher <- function(r) {

0.5 * log((1 + r)/(1 - r))

}

rho.fisher <- matrix(fisher(as.numeric(R.corr.vols[,

1:3])), nrow = length(ELE.vols),

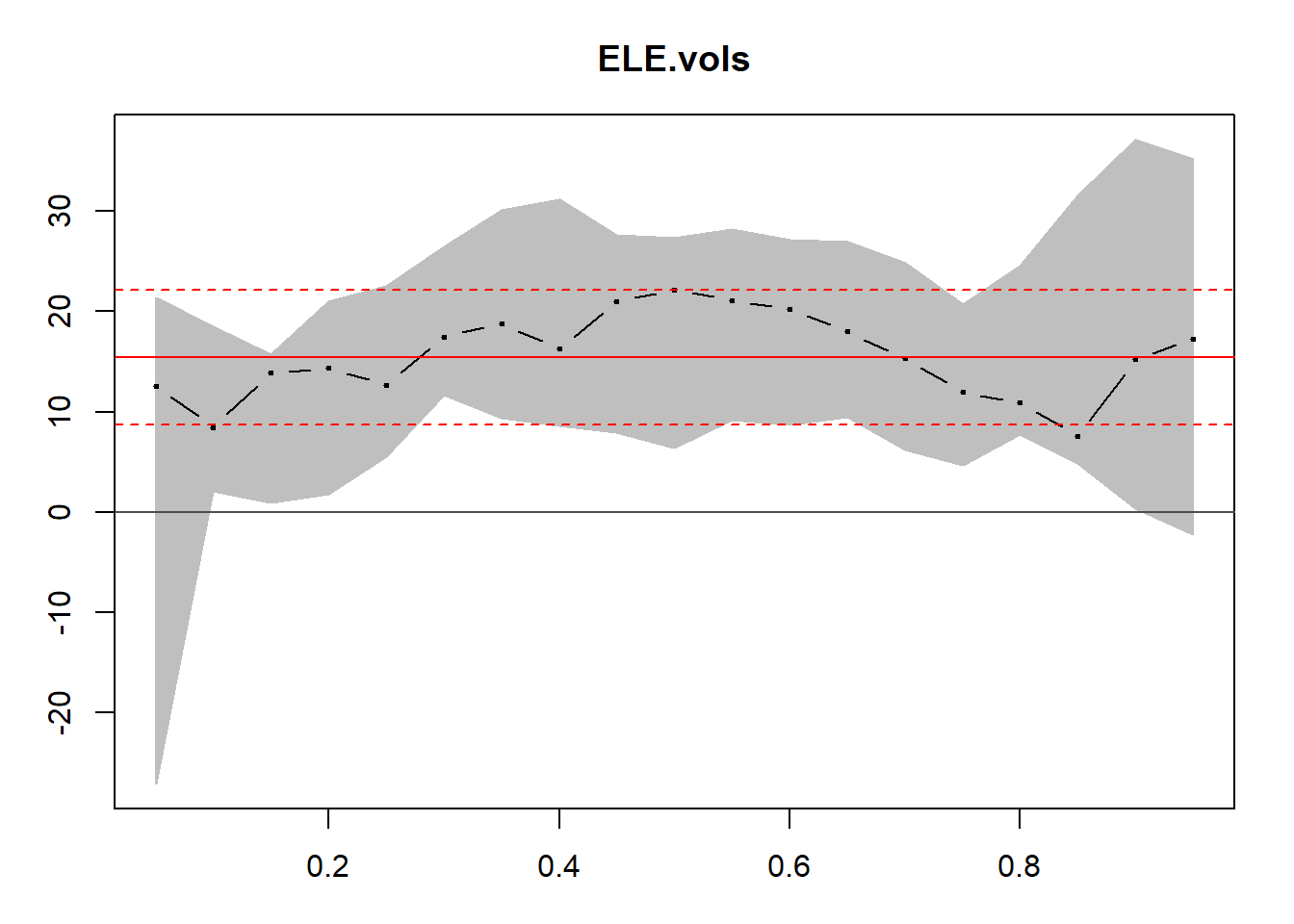

ncol = 3, byrow = FALSE)4.3.1 On to quantiles

Here is the quantile regression part of the package. Quantile regression finds the average relationship between dependent and independent variables just like ordinary least squares with one exception. Instead of centering the regression on the arithmetic mean of the dependent variable, quantile regression centers the regression on a specified quantile of the dependent variable. So instead of using the arithemetic average of the rolling correlations, we now use the 10th quantile, or the median, which is the 50th quantile as our reference. This makes great intuitive sense since we have already established that the series we deal with here are thick tailed, skewed, and certainly not normally distributed.

Here is how we use the quantreg package.

- We set

tausas the quantiles of interest. - We run the quantile regression using the

quantregpackage and a call to therqfunction. - We can overlay the quantile regression results onto the standard linear model regression.

- We can sensitize our analysis with the range of upper and lower bounds on the parameter estimates of the relationship between correlation and volatility. This sensitivity analysis is really a confidence interval based on quantile regressions.

require(quantreg)

taus <- seq(0.05, 0.95, 0.05)

fit.rq.ELE.IBE <- rq(rho.fisher[, 1] ~

ELE.vols, tau = taus)

fit.lm.ELE.IBE <- lm(rho.fisher[, 1] ~

ELE.vols)

plot(summary(fit.rq.ELE.IBE), parm = "ELE.vols")

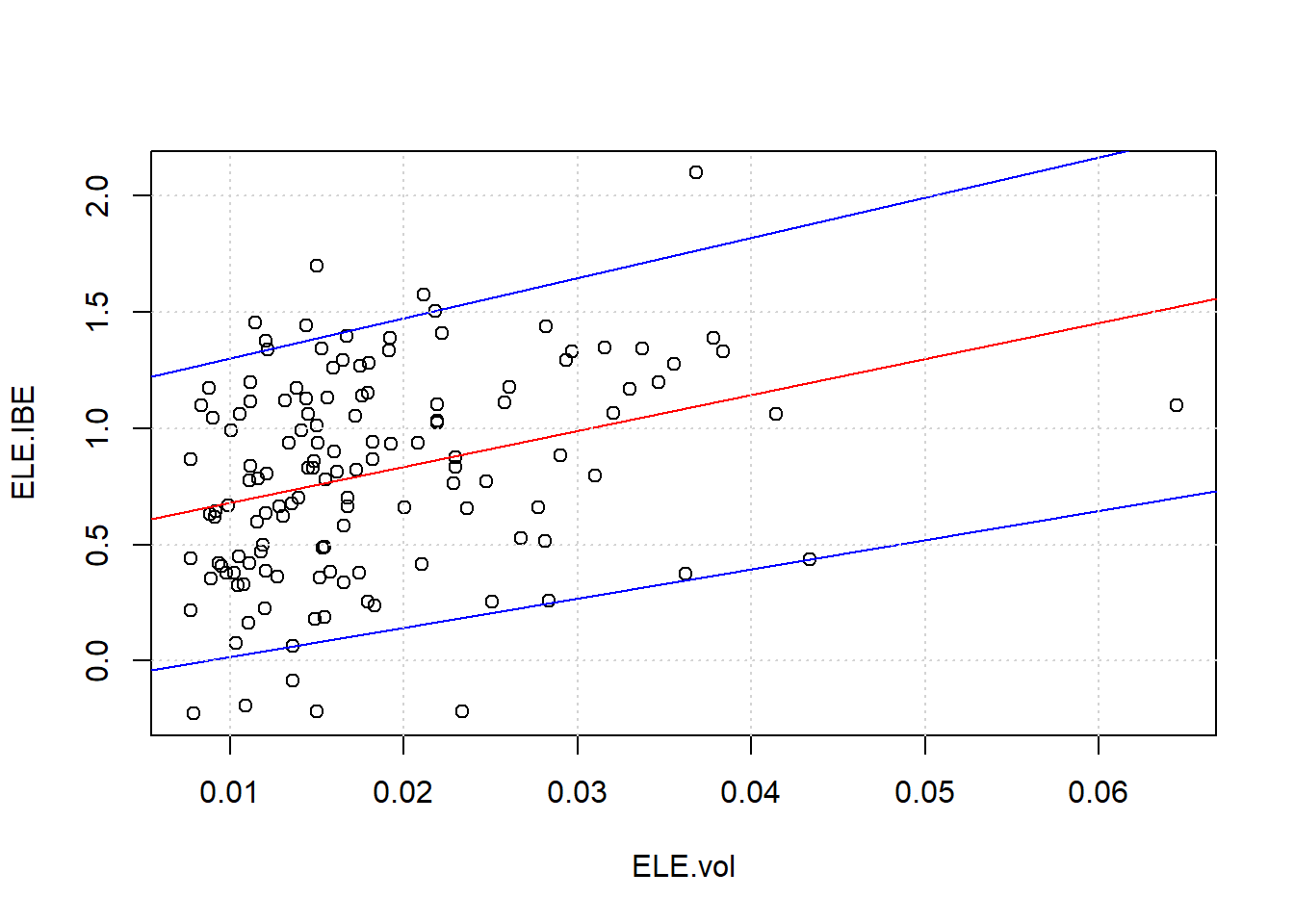

Here we build the estimations and plot the upper and lower bounds.

taus1 <- c(0.05, 0.95) ## fit the confidence interval (CI)

plot(ELE.vols, rho.fisher[, 1], xlab = "ELE.vol",

ylab = "ELE.IBE")

abline(fit.lm.ELE.IBE, col = "red")

for (i in 1:length(taus1)) {

## these lines will be the CI

abline(rq(rho.fisher[, 1] ~ ELE.vols,

tau = taus1[i]), col = "blue")

}

grid()

Quantile regression helps us to see the upper and lower bounds. Relationships between high-stress periods and correlation are abundant. These markets simply reflect normal buying behaviors across many types of exchanges: buying food at Safeway or Whole Foods, buying collateral to insure a project, selling off illiquid assets.

4.4 Time is on our Side

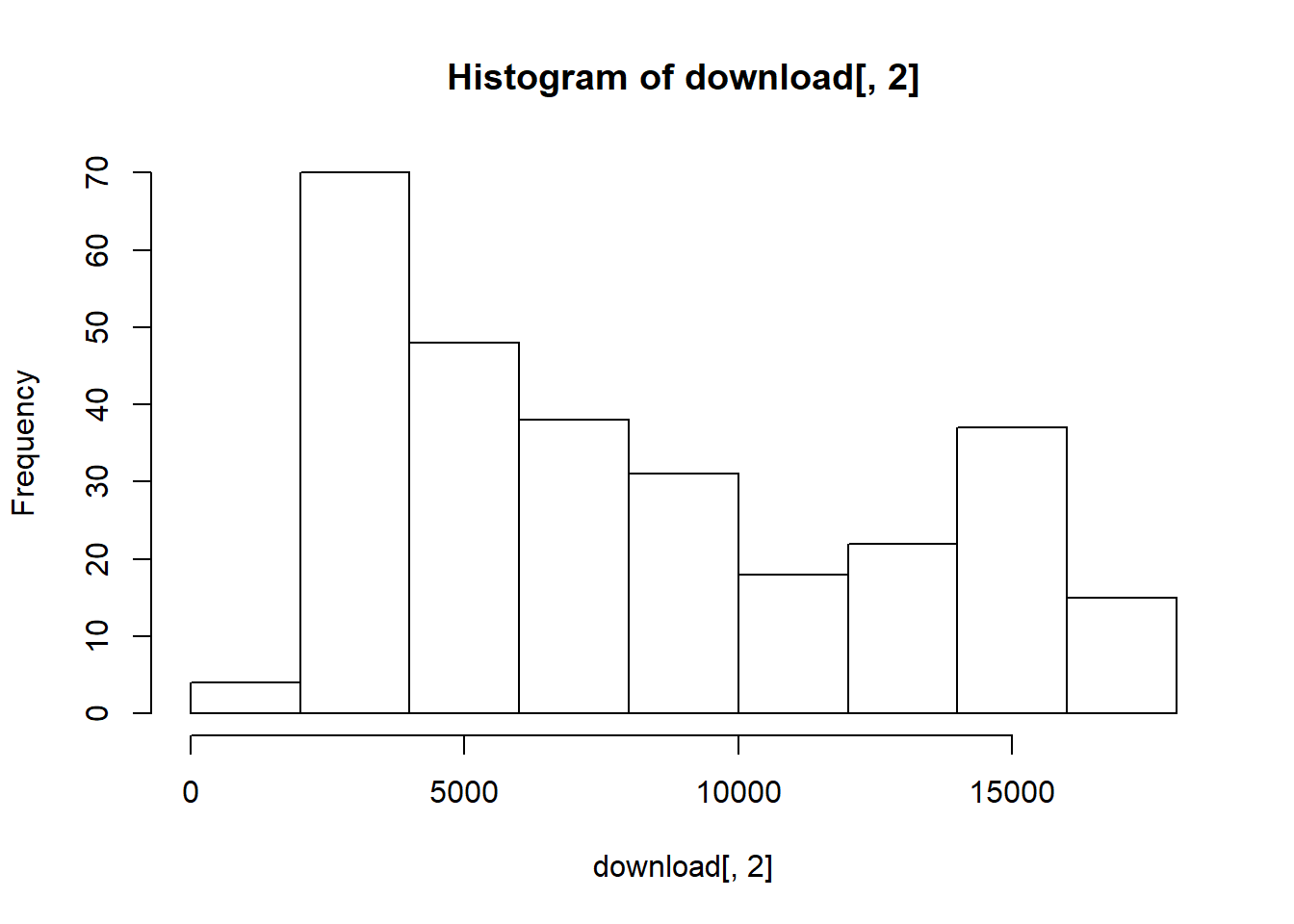

Off to another important variable, the level and growth rate of Gross National Product. Let’s start with some US Gross National Product (GNP) data from the St. Louis Fed’s open data website (“FRED”). We access https://fred.stlouisfed.org/series/GNPC96 to download real GNP in chained 1996 dollars. Saving this as a CSV file wwe then read the saved file into our R workspace.

name <- "GNP"

download <- read.csv("data/GNPC96.csv")Look at the data:

hist(download[, 2])

summary(download[, 2])## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 1943 3808 6659 8035 12385 17353We then create a raw time series object using the ts function where rownames are dates, select some data, and calculate growth rates. This will allow us and plotting functions to use the dates to index the data. Again we make use of the diff(log(data)) vector calculation.

GNP <- ts(download[1:85, 2], start = c(1995,

1), freq = 4)

GNP.rate <- 100 * diff(log(GNP)) # In percentage terms

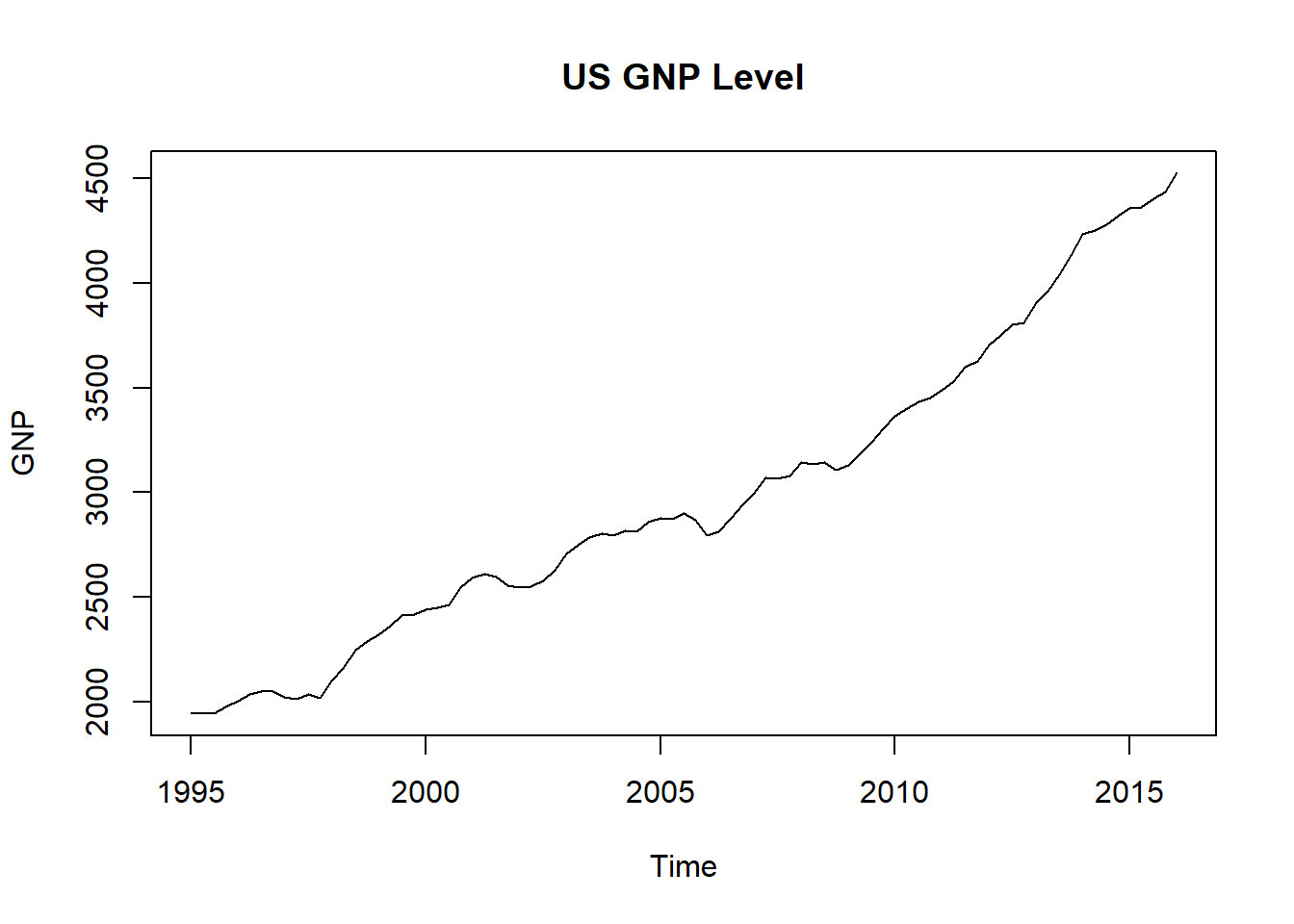

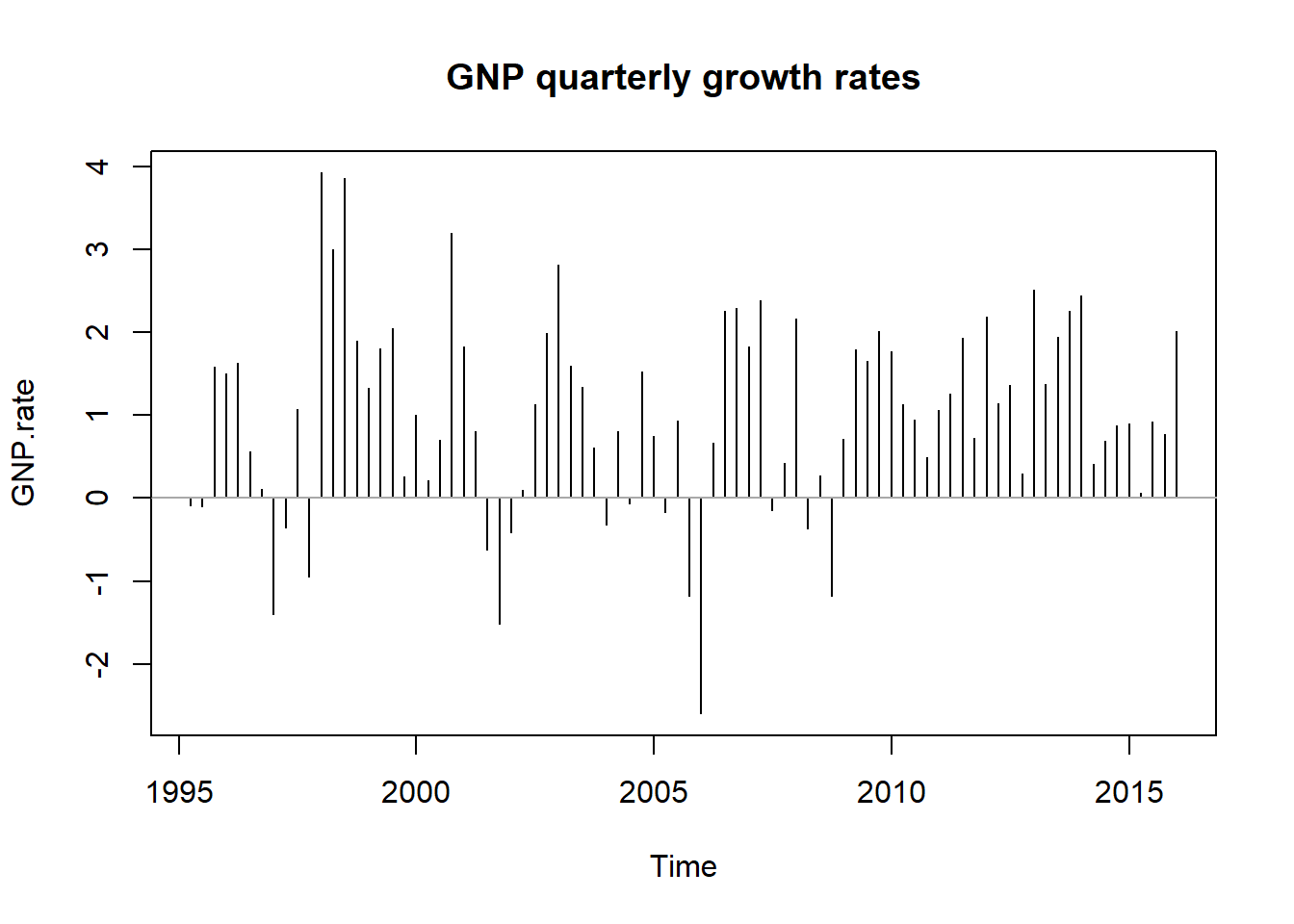

str(GNP)## Time-Series [1:85] from 1995 to 2016: 1947 1945 1943 1974 2004 ...head(GNP)## [1] 1947.003 1945.311 1943.290 1974.312 2004.218 2037.215head(GNP.rate)## [1] -0.08694058 -0.10394485 1.58375702 1.50339765 1.63297193 0.55749065Let’s plot the GNP level and rate and comment on the patterns.

plot(GNP, type = "l", main = "US GNP Level")

plot(GNP.rate, type = "h", main = "GNP quarterly growth rates")

abline(h = 0, col = "darkgray")

We see a phenomenon called “nonstationarity.” The probability distribution (think hist()) would seem to change over time (many versions of a hist()). This means that the standard deviation and mean change as well (and higher moments such as skewness and kurtosis). There is trend in the level and simply dampened sinusoidal in the rate. In a nutshell we observe several distributions mixed together in this series. This will occur again in the term structure of interest rates where we will use splines and their knots to get at parameterizing the various distributions lurking just beneath the ebb and flow of the data.

4.4.1 Forecasting GNP

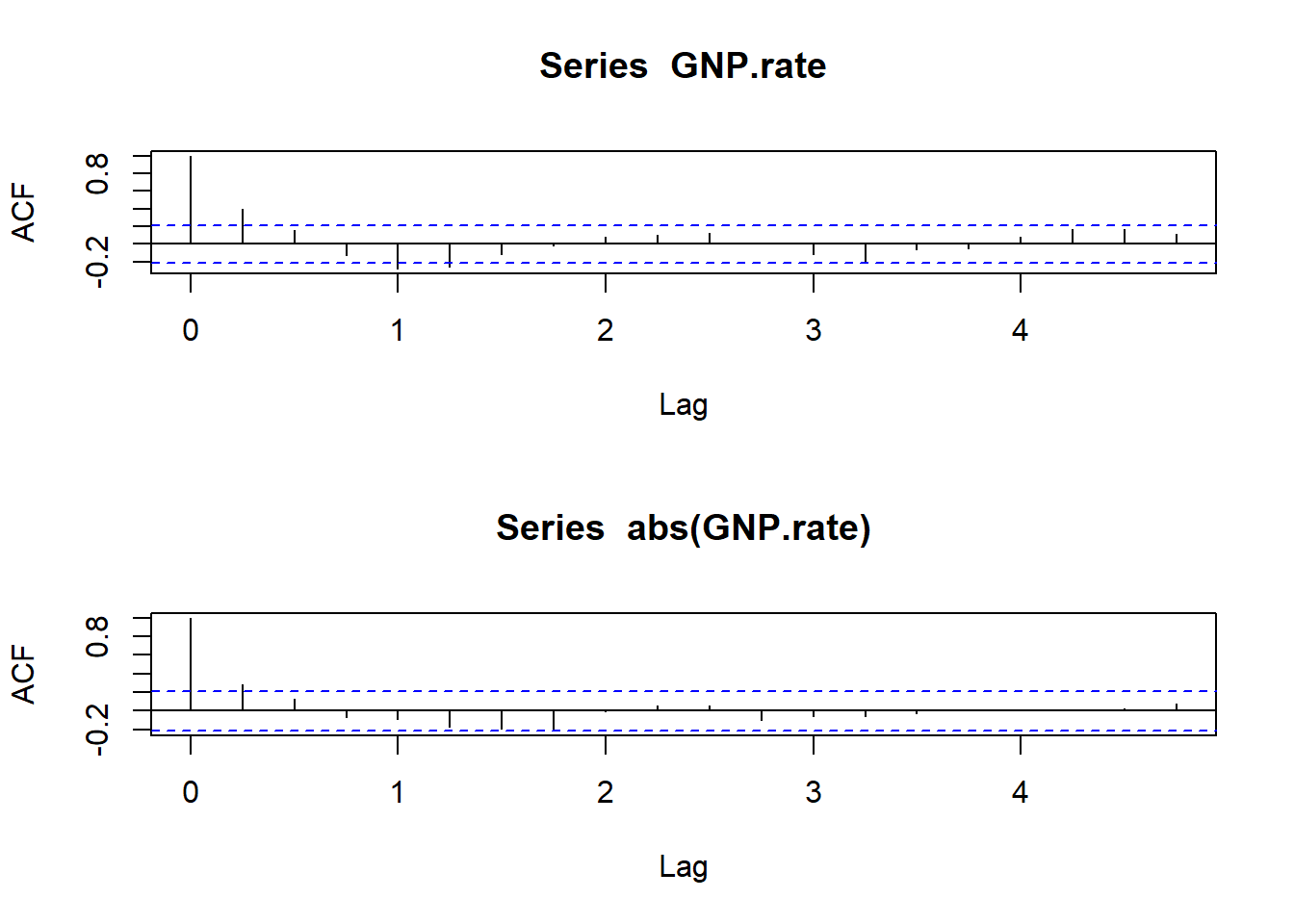

As always let’s look at ACF and PACF:

par(mfrow = c(2, 1)) ##stacked up and down

acf(GNP.rate)

acf(abs(GNP.rate))

par(mfrow = c(1, 1)) ##default settingWhat do we think is going on? There are several significant autocorrelations within the last 4 quarters. Partial autocorrelation also indicates some possible relationship 8 quarters back.

Let’s use R’s time series estimation and prediction tool arima. In this world we think there is a regression that looks like this:

\[ x_t = a_0 + a_1 x_{t-1} ... a_p x_{t-p} + b_1 \varepsilon_{t-1} + ... + b_q \varepsilon_{t-q} \]

where \(x_t\) is a first, \(d = 1\), differenced level of a variable, here GNP. There are \(p\) lags of the rate itself and \(q\) lags of residuals. We officially call this an Autoregressive Integrated Moving Average process of order \((p,d,q)\), or ARIMA(p,d,q) for short.

Estimation is quick and easy.

fit.rate <- arima(GNP.rate, order = c(2,

1, 1))The order is 2 lags of rates, 1 further difference (already differenced once when we calculated diff(log(GNP))), and 1 lag of residuals. Let’s diagnose the results with tsdiag(). What are the results?

fit.rate##

## Call:

## arima(x = GNP.rate, order = c(2, 1, 1))

##

## Coefficients:

## ar1 ar2 ma1

## 0.4062 0.0170 -1.000

## s.e. 0.1106 0.1107 0.038

##

## sigma^2 estimated as 1.182: log likelihood = -126.49, aic = 260.98Let’s take out the moving average term and compare:

fit.rate.2 <- arima(GNP.rate, order = c(2,

0, 0))

fit.rate.2##

## Call:

## arima(x = GNP.rate, order = c(2, 0, 0))

##

## Coefficients:

## ar1 ar2 intercept

## 0.3939 0.0049 1.0039

## s.e. 0.1092 0.1093 0.1946

##

## sigma^2 estimated as 1.168: log likelihood = -125.8, aic = 259.59We examine the residuals next.

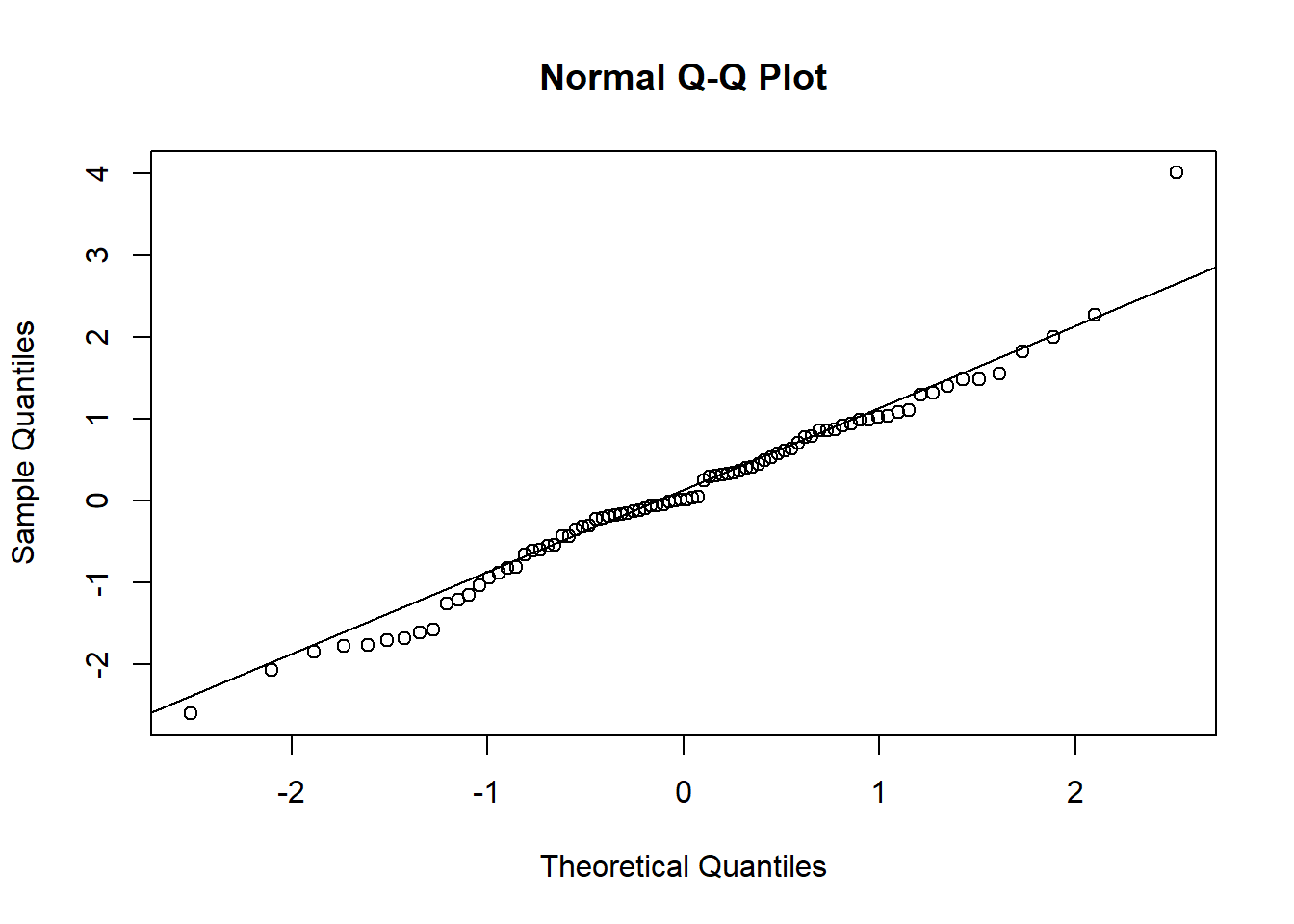

The qqnorm function plots actual quantiles against theoretical normal distributions of the quantiles. A line through the scatterplot will reveal deviations of actual quantiles from the normal ones. Those deviations are the key to understanding tail behavior, and thus the potential influence of outliers, on our understanding of the data.

qqnorm(GNP.resid)

qqline(GNP.resid)

Some ways to interpret the qq-chart include

- The diagonal line is the normal distribution quantile line.

- Deviations of actual quantiles from the normal quantile line mean nonnormal.

- Especially deviations at either (or both) end of the line spell thick tails and lots more “shape” than the normal distribution allows.

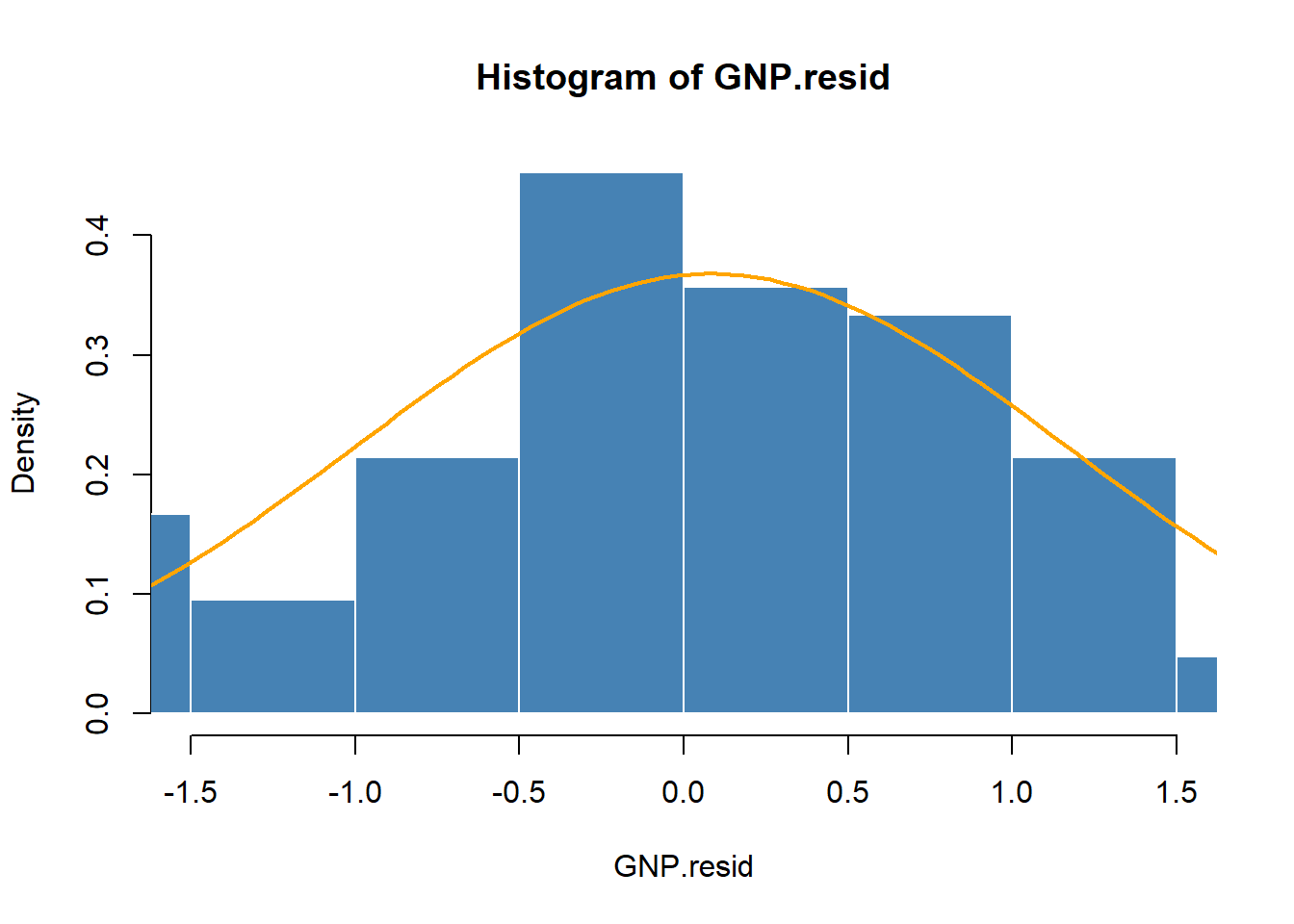

4.4.2 Residuals again

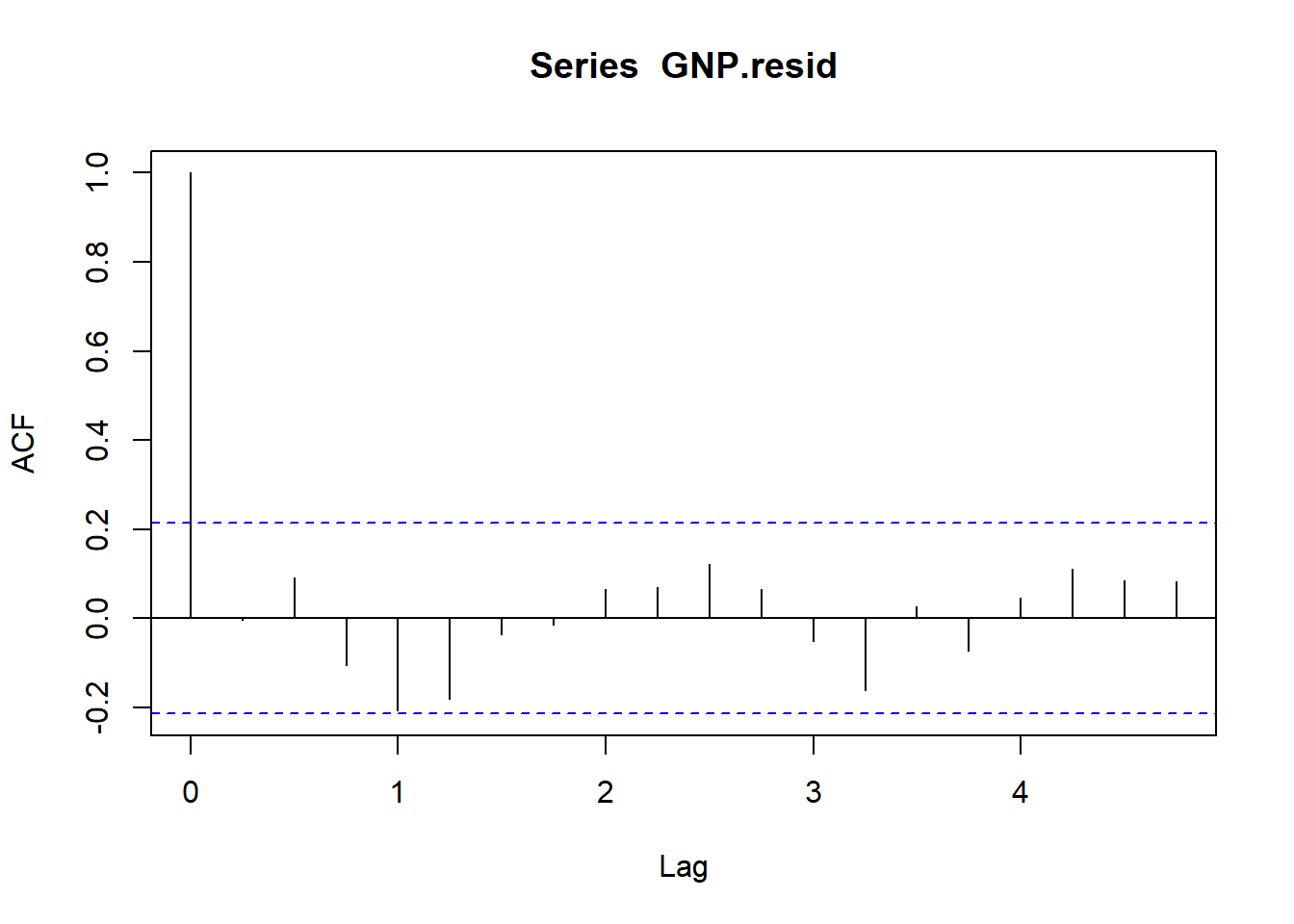

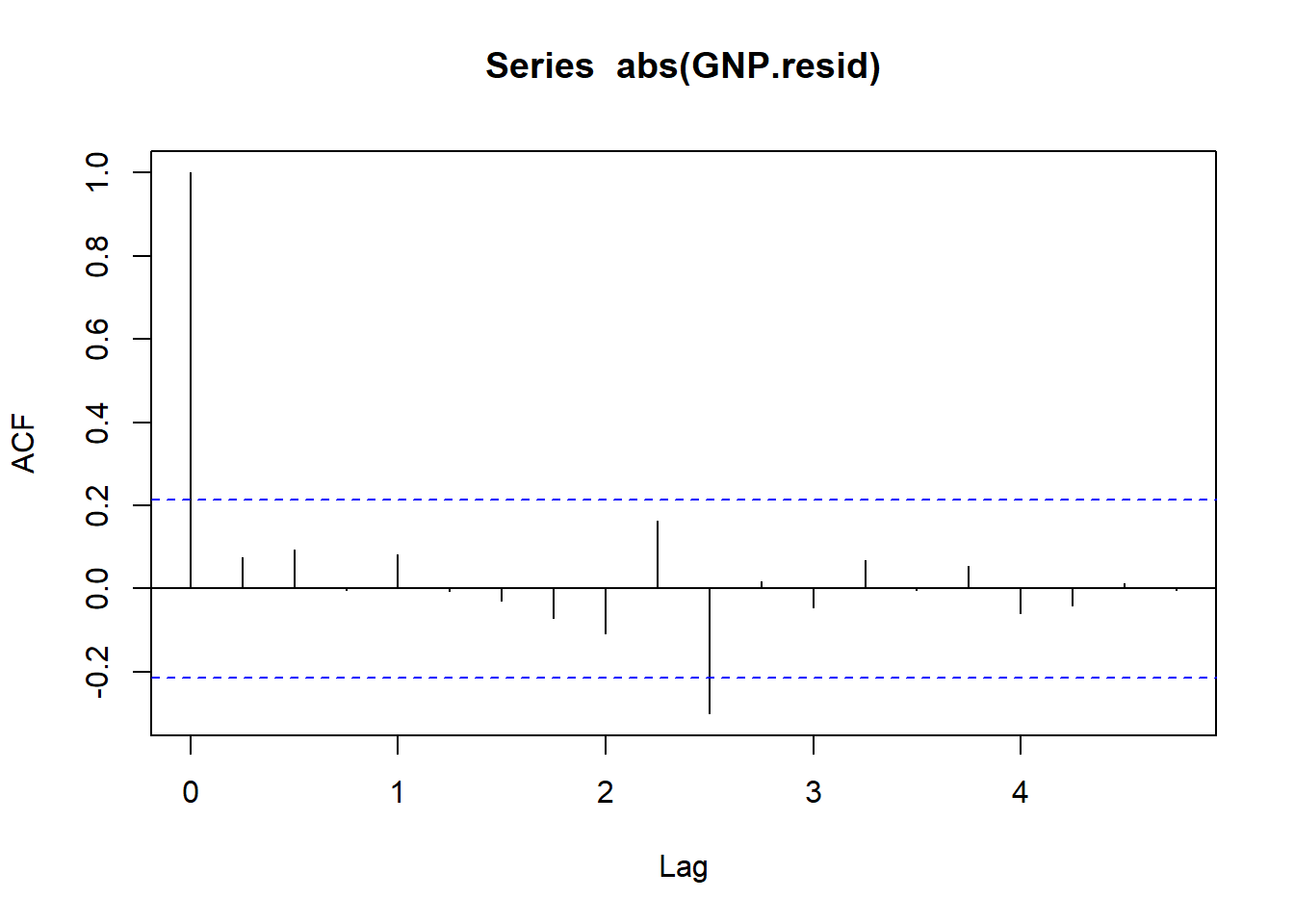

How can we begin to diagnose the GNP residuals? Let’s use the ACF and the moments package to calculate skewness and kurtosis. We find that the series is very thick tailed and serially correlated as evidenced by the usual statistical suspects. But no volatility clustering.

acf(GNP.resid)

Now let’s look at the absolute values of growth (i.e., GNP growth sizes). This will help us understand the time series aspects of the volatility of the GNP residuals.

acf(abs(GNP.resid))

…and compute tail statistics.

require(moments)

skewness(GNP.resid)## [1] 0.2283692

## attr(,"method")

## [1] "moment"kurtosis(GNP.resid)## [1] 1.037626

## attr(,"method")

## [1] "excess"The residuals are positively skewed and not so thick tailed, as the normal distribution has by definition a kurtosis equal to 3.00. By the by: Where’s the forecast?

(GNP.pred <- predict(fit.rate, n.ahead = 8))## $pred

## Qtr1 Qtr2 Qtr3 Qtr4

## 2016 1.410045 1.185815 1.084467

## 2017 1.039485 1.019489 1.010602 1.006652

## 2018 1.004896

##

## $se

## Qtr1 Qtr2 Qtr3 Qtr4

## 2016 1.093704 1.185259 1.204803

## 2017 1.209512 1.210836 1.211272 1.211437

## 2018 1.211504Now for something really interesting, yet another rendering of the notorious Efficient Markets Hypothesis.

4.5 Give it the Boot

Our goal is to infer the significance of a statistical relationship among variates. However, we do not have access to, or a “formula” does not exist, that allows us to compute the sample standard deviation of the mean estimator.

- The context is just how dependent is today’s stock return on yesterday’s?

- We want to use the distribution of real-world returns data, without needing assumptions about normality.

- The null hypothesis \(H_0\) is lack of dependence (i.e., an efficient market). The alternative hypothesis \(H_1\) is that today’s returns depend on past returns, on average.

Our strategy is to change the data repeatedly, and re-estimate a relationship. The data is sampled using the replicate function, and the sample ACF is computed. This gives us the distribution of the coefficient of the ACF under the null hypotheses, \(H0\): independence, while using the empirical distribution of the returns data.

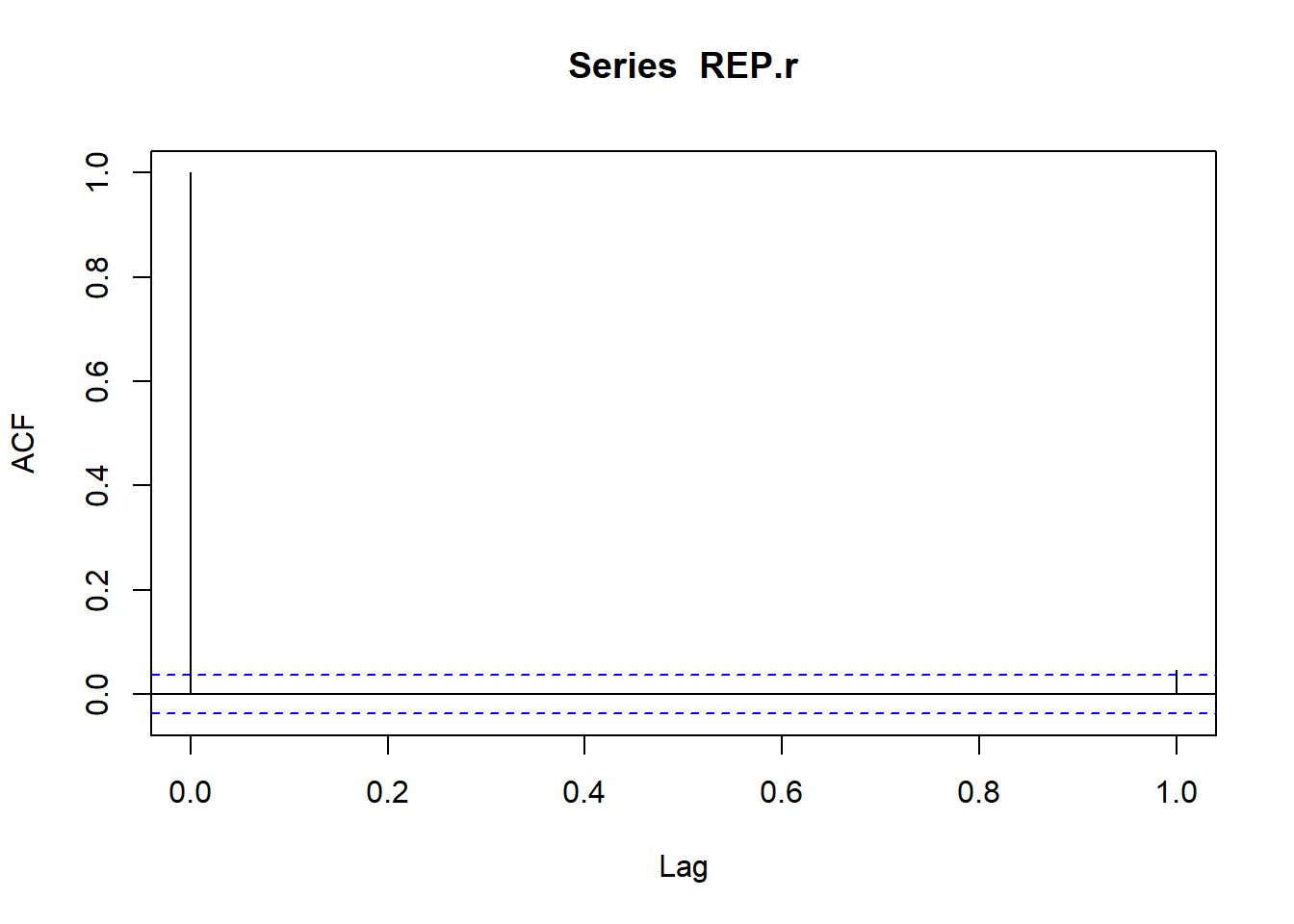

Let’s use the Repsol returns and pull the 1st autocorrelation from the sample with this simple code,

acf(REP.r, 1)

There is not much for us to see, barely a blip, but there is a correlation over the 95% line. Let’s further test this idea.

- We obtain 2500 draws from the distribution of the first autocorrelation using the

replicatefunction. - We operate under the null hypothesis of independence, assuming rational markets (i.e, rational markets is a “maintained hypothesis”).

set.seed(1016)

acf.coeff.sim <- replicate(2500, acf(sample(REP.r,

size = 2500, replace = FALSE), lag = 2,

plot = FALSE)$acf[2])

summary(acf.coeff.sim)## Min. 1st Qu. Median Mean 3rd Qu. Max.

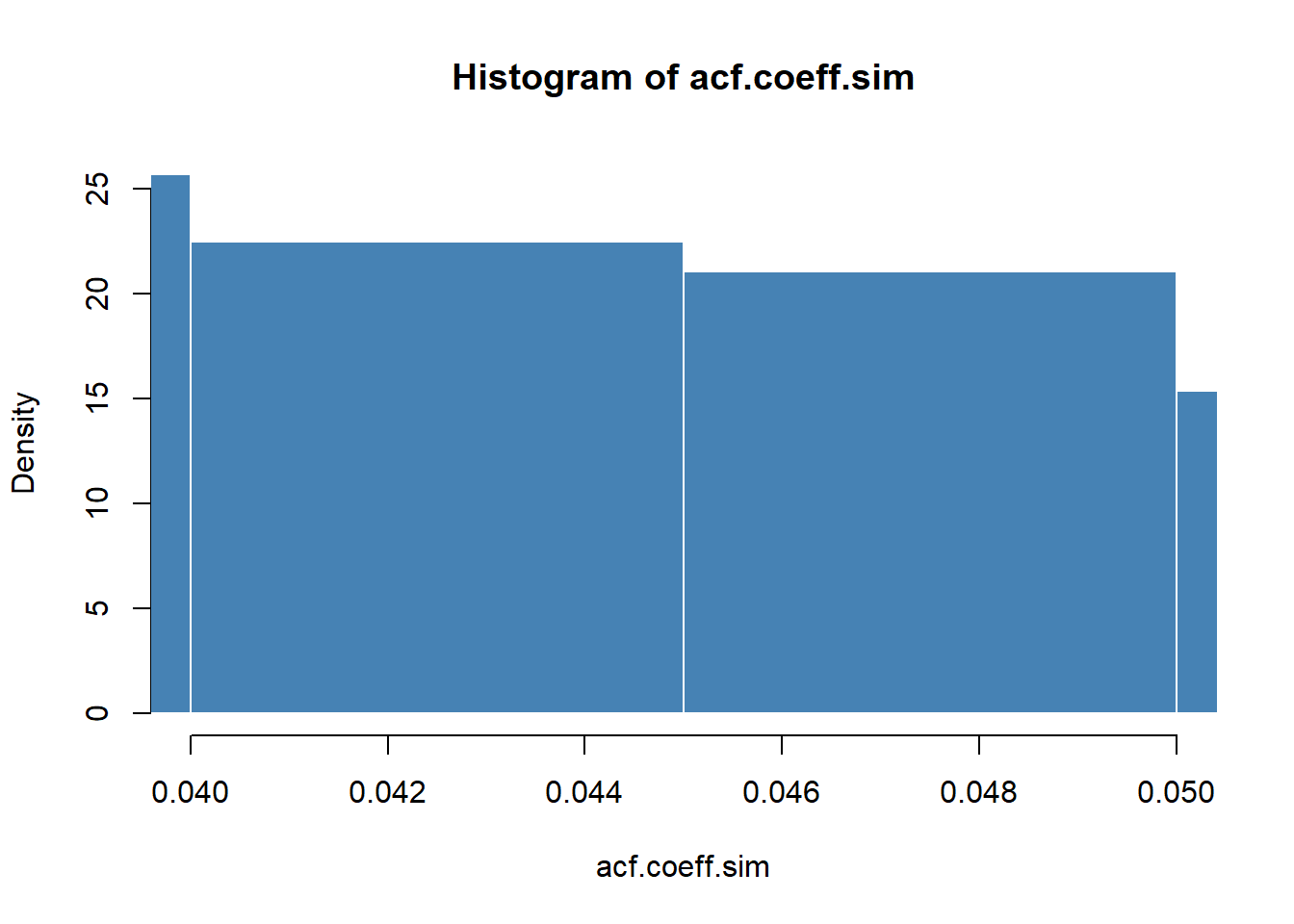

## -0.01496 0.02576 0.03610 0.03617 0.04649 0.09576Here is a plot of the distribution of the sample means of the one lag correlation between successive returns.

hist(acf.coeff.sim, probability = TRUE,

breaks = "FD", xlim = c(0.04, 0.05),

col = "steelblue", border = "white")

4.5.1 Try this exercise

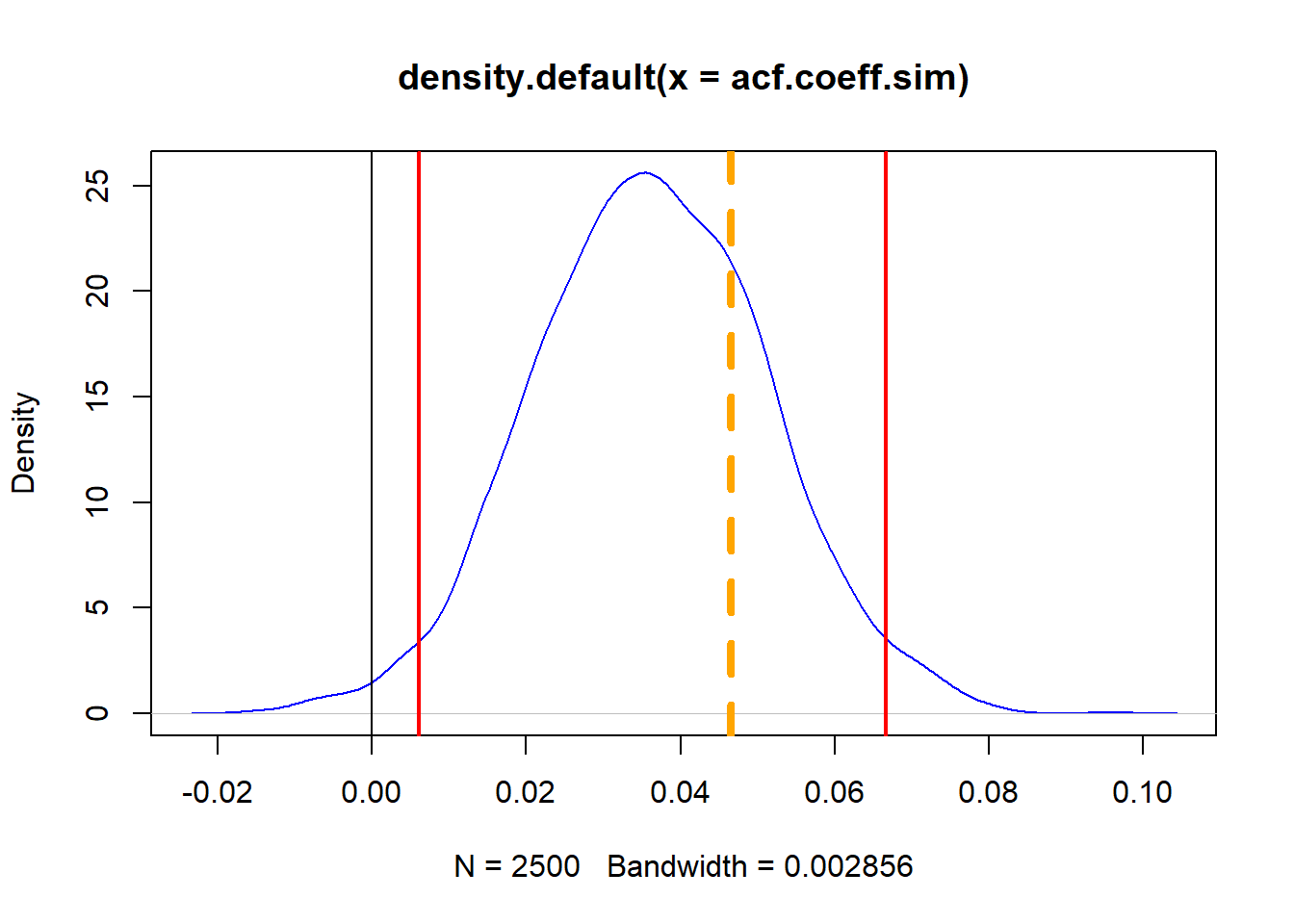

We will nvestigate tolerances of \(5\%\) and \(1\%\) from both ends of the distribution of the 1-lag acf coefficient using these statements. That was a mouthful! When we think of inference, we first identify a parameter of interest, and its estimator. That parameter is the coefficient of correlation between the current return and its 1-period lag. We estimate this parameter using the history of returns. If the parameter is significantly, and probably, not equal to zero, then we would have reason to believe there is “pattern” in the “history.”

## At 95% tolerance level

quantile(acf.coeff.sim, probs = c(0.025,

0.975))## 2.5% 97.5%

## 0.006069169 0.066687720## At 99% tolerance level

quantile(acf.coeff.sim, probs = c(0.005,

0.995))## 0.5% 99.5%

## -0.004529213 0.075026818## And the

(t.sim <- mean(acf.coeff.sim)/sd(acf.coeff.sim))## [1] 2.383801(1 - pt(t.sim, df = 2))## [1] 0.06998016Here are some highly preliminary and provisional answers to ponder.

- Quantile values are very narrow…

- How narrow (feeling like rejecting the null hypothesis)?

- Thet-stat is huge, but…

- …no buts!, the probability that we would be wrong to reject the null hypothesis is very small.

Here we plot the simulated density and lower and upper quantiles, along with the estimate of the lag-1 coefficient:

plot(density(acf.coeff.sim), col = "blue")

abline(v = 0)

abline(v = quantile(acf.coeff.sim, probs = c(0.025,

0.975)), lwd = 2, col = "red")

abline(v = acf(REP.r, 1, plot = FALSE)$acf[2],

lty = 2, lwd = 4, col = "orange")

Can we reject the null hypothesis that the coefficient = 0? Is the market “efficient”?

- Reject the null hypothesis since there is a less than 0.02% chance that the coefficient is zero.

- Read [Fama(2013, p. 365-367)]https://www.nobelprize.org/nobel_prizes/economic-sciences/laureates/2013/fama-lecture.pdf for a diagnosis.

- If the model is correct (ACF lag-1) then the previous day’s return can predict today’s return according to our analysis. Thus the market would seem to be inefficient.

- This means we might be able to create a profitable trading strategy that makes use of the little bit of correlation we found to be significant (net of the costs of trading).

4.6 Summary

We explored time series data using ACF, PACF, and CCF. We showed how to pull data from Yahoo! and FRED. We characterized several stylized facts of financial returns and inferred behavior using a rolling correlation regression on volatility. We then supplemented the ordinary least square regression confidence intervals using the entire distribution of the data with quantile regression. We also built Using bootstrapping techniques we simulated coefficient inference to check the efficient markets hypothesis. This, along with the quantile regression technique, allows us to examine risk tolerance from an inference point of view.

4.7 Further Reading

In this chapter we touch on the voluminous topic of time series analysis. Ruppert et al. in chapters 12, 13, 14, and 15 explore the basics, as in this chapter, as well as far more advanced topics such as GARCH and cointegration. We will explore GARCH in a later chapter as well. McNeil et al. in their chapter 1 surveys the perspective of risk, all of helps to yield the so-called stylized fact of financial data in chapter 5 and a more formal treatment of time series topics in chapter 4.

4.8 Practice Laboratory

4.8.1 Practice laboratory #1

4.8.1.1 Problem

4.8.1.2 Questions

4.8.2 Practice laboratory #2

4.8.2.1 Problem

4.8.2.2 Questions

4.9 Project

4.9.1 Background

4.9.2 Data

4.9.3 Workflow

4.9.4 Assessment

We will use the following rubric to assess our performance in producing analytic work product for the decision maker.

The text is laid out cleanly, with clear divisions and transitions between sections and sub-sections. The writing itself is well-organized, free of grammatical and other mechanical errors, divided into complete sentences, logically grouped into paragraphs and sections, and easy to follow from the presumed level of knowledge.

All numerical results or summaries are reported to suitable precision, and with appropriate measures of uncertainty attached when applicable.

All figures and tables shown are relevant to the argument for ultimate conclusions. Figures and tables are easy to read, with informative captions, titles, axis labels and legends, and are placed near the relevant pieces of text.

The code is formatted and organized so that it is easy for others to read and understand. It is indented, commented, and uses meaningful names. It only includes computations which are actually needed to answer the analytical questions, and avoids redundancy. Code borrowed from the notes, from books, or from resources found online is explicitly acknowledged and sourced in the comments. Functions or procedures not directly taken from the notes have accompanying tests which check whether the code does what it is supposed to. All code runs, and the

R Markdownfileknitstopdf_documentoutput, or other output agreed with the instructor.Model specifications are described clearly and in appropriate detail. There are clear explanations of how estimating the model helps to answer the analytical questions, and rationales for all modeling choices. If multiple models are compared, they are all clearly described, along with the rationale for considering multiple models, and the reasons for selecting one model over another, or for using multiple models simultaneously.

The actual estimation and simulation of model parameters or estimated functions is technically correct. All calculations based on estimates are clearly explained, and also technically correct. All estimates or derived quantities are accompanied with appropriate measures of uncertainty.

The substantive, analytical questions are all answered as precisely as the data and the model allow. The chain of reasoning from estimation results about the model, or derived quantities, to substantive conclusions is both clear and convincing. Contingent answers (for example, “if X, then Y , but if A, then B, else C”) are likewise described as warranted by the model and data. If uncertainties in the data and model mean the answers to some questions must be imprecise, this too is reflected in the conclusions.

All sources used, whether in conversation, print, online, or otherwise are listed and acknowledged where they used in code, words, pictures, and any other components of the analysis.

4.10 References

McNeill, Alexander J., Rudiger Frey, and Paul Embrechts. 2015. Quantitative Risk Management: Concepts, Techniques and Tools. Revised Edition. Princeton: Princeton University Press.

Ruppert, David and David S. Matteson. 2015. Statistics and Data Analysis for Financial Engineering with R Examples, Second Edition. New York: Springer.