Chapter 8 Introduction to Multilevel Models

8.1 Learning Objectives

After finishing this chapter, you should be able to:

- Recognize when response variables and covariates have been collected at multiple (nested) levels.

- Apply exploratory data analysis techniques to multilevel data.

- Write out a multilevel statistical model, including assumptions about variance components, in both by-level and composite forms.

- Interpret model parameters (including fixed effects and variance components) from a multilevel model, including cases in which covariates are continuous, categorical, or centered.

- Understand the taxonomy of models, including why we start with an unconditional means model.

- Select a final model, using criteria such as AIC, BIC, and deviance.

8.2 Case Study: Music Performance Anxiety

Stage fright can be a serious problem for performers, and understanding the personality underpinnings of performance anxiety is an important step in determining how to minimize its impact. Sadler and Miller (2010) studied the emotional state of musicians before performances and factors which may affect their emotional state. Data was collected by having 37 undergraduate music majors from a competitive undergraduate music program fill out diaries prior to performances over the course of an academic year. In particular, study participants completed a Positive Affect Negative Affect Schedule (PANAS) before each performance. The PANAS instrument provided two key outcome measures: negative affect (a state measure of anxiety) and positive affect (a state measure of happiness). We will focus on negative affect as our primary response measuring performance anxiety.

Factors which were examined for their potential relationships with performance anxiety included: performance type (solo, large ensemble, or small ensemble); audience (instructor, public, students, or juried); if the piece was played from memory; age; gender; instrument (voice, orchestral, or keyboard); and, years studying the instrument. In addition, the personalities of study participants were assessed at baseline through the Multidimensional Personality Questionnaire (MPQ). The MPQ provided scores for one lower-order factor (absorption) and three higher-order factors: positive emotionality (PEM—a composite of well-being, social potency, achievement, and social closeness); negative emotionality (NEM—a composite of stress reaction, alienation, and aggression); and, constraint (a composite of control, harm avoidance, and traditionalism).

Primary scientific hypotheses of the researchers included:

- Lower music performance anxiety will be associated with lower levels of a subject’s negative emotionality.

- Lower music performance anxiety will be associated with lower levels of a subject’s stress reaction.

- Lower music performance anxiety will be associated with greater number of years of study.

8.3 Initial Exploratory Analyses

8.3.1 Data Organization

Our examination of the data from Sadler and Miller (2010) in musicdata.csv will focus on the following key variables:

id= unique musician identification numberdiary= cumulative total of diaries filled out by musicianperf_type= type of performance (Solo, Large Ensemble, or Small Ensemble)audience= who attended (Instructor, Public, Students, or Juried)memory= performed from Memory, using Score, or Unspecifiedna= negative affect score from PANASgender= musician genderinstrument= Voice, Orchestral, or Pianompqab= absorption subscale from MPQmpqpem= positive emotionality (PEM) composite scale from MPQmpqnem= negative emotionality (NEM) composite scale from MPQ

Sample rows containing selected variables from our data set are illustrated in Table 8.1; note that each subject (id) has one row for each unique diary entry.

| Obs | id | diary | perf_type | memory | na | gender | instrument | mpqab | mpqpem | mpqnem |

|---|---|---|---|---|---|---|---|---|---|---|

| 1 | 1 | 1 | Solo | Unspecified | 11 | Female | voice | 16 | 52 | 16 |

| 2 | 1 | 2 | Large Ensemble | Memory | 19 | Female | voice | 16 | 52 | 16 |

| 3 | 1 | 3 | Large Ensemble | Memory | 14 | Female | voice | 16 | 52 | 16 |

| 495 | 43 | 2 | Solo | Score | 13 | Female | voice | 31 | 64 | 17 |

| 496 | 43 | 3 | Small Ensemble | Memory | 19 | Female | voice | 31 | 64 | 17 |

| 497 | 43 | 4 | Solo | Score | 11 | Female | voice | 31 | 64 | 17 |

As with any statistical analysis, our first task is to explore the data, examining distributions of individual responses and predictors using graphical and numerical summaries, and beginning to discover relationships between variables. With multilevel models, exploratory analyses must eventually account for the level at which each variable is measured. In a two-level study such as this one, Level One will refer to variables measured at the most frequently occurring observational unit, while Level Two will refer to variables measured on larger observational units. For example, in our study on music performance anxiety, many variables are measured at every performance. These “Level One” variables include:

- negative affect (our response variable)

- performance characteristics (type, audience, if music was performed from memory)

- number of previous performances with a diary entry

However, other variables measure characteristics of study participants that remain constant over all performances for a particular musician; these are considered “Level Two” variables and include:

- demographics (age and gender of musician)

- instrument used and number of previous years spent studying that instrument

- baseline personality assessment (MPQ measures of positive emotionality, negative emotionality, constraint, stress reaction, and absorption)

8.3.2 Exploratory Analyses: Univariate Summaries

Because of this data structure—the assessment of some variables on a performance-by-performance basis and others on a subject-by-subject basis—we cannot treat our data set as consisting of 497 independent observations. Although negative affect measures from different subjects can reasonably be assumed to be independent (unless, perhaps, the subjects frequently perform in the same ensemble group), negative affect measures from different performances by the same subject are not likely to be independent. For example, some subjects tend to have relatively high performance anxiety across all performances, so that knowing their score for Performance 3 was 20 makes it more likely that their score for Performance 5 is somewhere near 20 as well. Thus, we must carefully consider our exploratory data analysis, recognizing that certain plots and summary statistics may be useful but imperfect in light of the correlated observations.

First, we will examine each response variable and potential covariate individually. Continuous variables can be summarized using histograms and summaries of center and spread; categorical variables can be summarized with tables and possibly bar charts. When examining Level One covariates and responses, we will begin by considering all 497 observations, essentially treating each performance by each subject as independent even though we expect observations from the same musician to be correlated. Although these plots will contain dependent points, since each musician provides data for up to 15 performances, general patterns exhibited in these plots tend to be real. Alternatively, we can calculate mean scores across all performances for each of the 37 musicians so that we can more easily consider each plotted point to be independent. The disadvantage of this approach would be lost information which, in a study such as this with a relatively small number of musicians each being observed over many performances, could be considerable. In addition, if the sample sizes varied greatly by subject, a mean based on 1 observation would be given equal weight to a mean based on 15 observations. Nevertheless, both types of exploratory plots typically illustrate similar relationships.

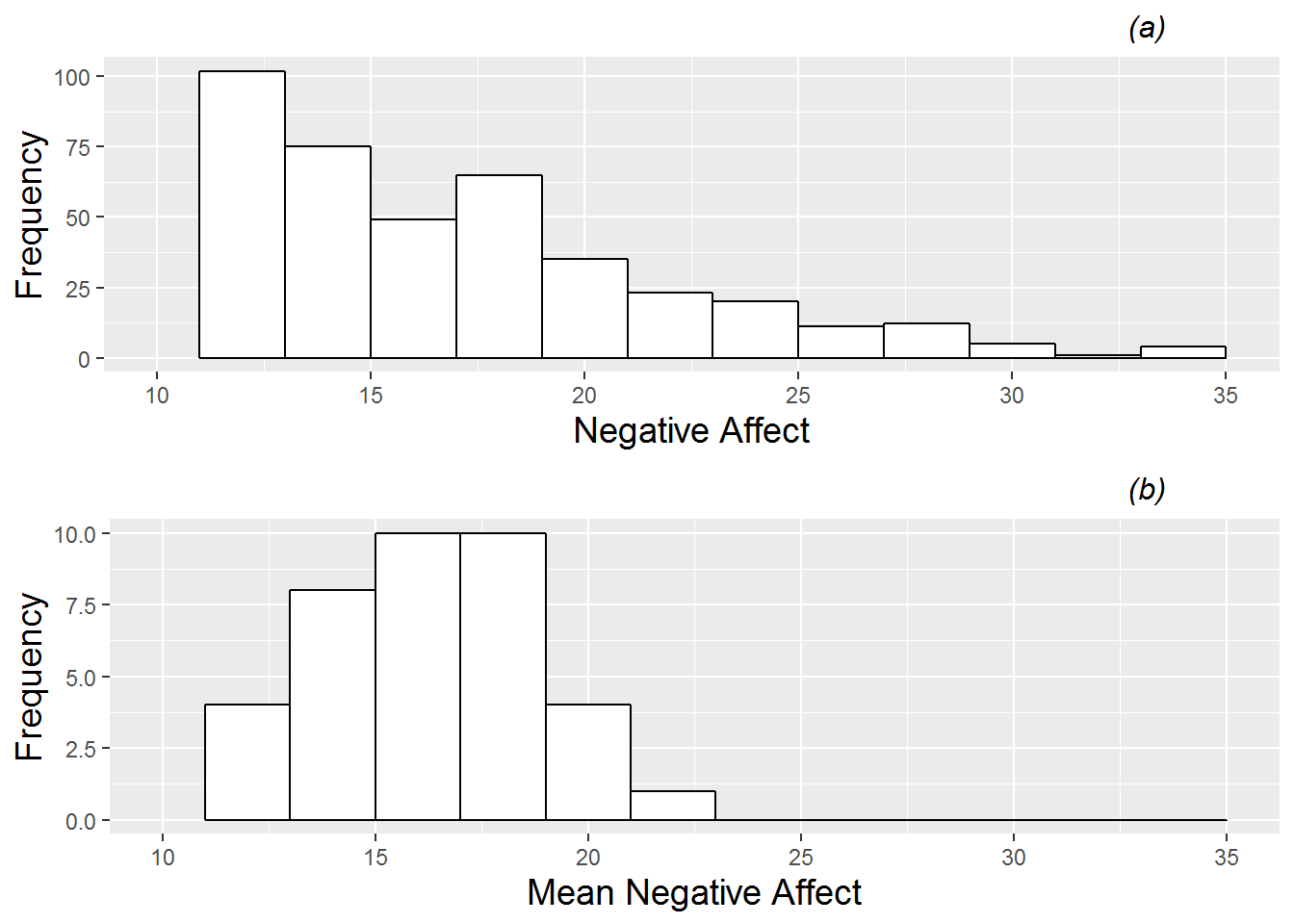

In Figure 8.1 we see histograms for the primary response (negative affect); plot (a) shows all 497 (dependent) observations, while plot (b) shows the mean negative affect for each of the 37 musicians across all their performances. Through plot (a), we see that performance anxiety (negative affect) across all performances follows a right skewed distribution with a lower bound of 10 (achieved when all 10 questions are answered with a 1). Plot (b) shows that mean negative affect is also right-skewed (although not as smoothly decreasing in frequency), with range 12 to 23.

Figure 8.1: Histogram of the continuous Level One response (negative effect). Plot (a) contains all 497 performances across the 37 musicians, while plot (b) contains one observation per musician (the mean negative affect across all performances).

We can also summarize categorical Level One covariates across all (possibly correlated) observations to get a rough relative comparison of trends. 56.1% of the 497 performances in our data set were solos, while 27.3% were large ensembles and 16.5% were small emsembles. The most common audience type was a public performance (41.0%), followed by instructors (30.0%), students (20.1%), and finally juried recitals (8.9%). In 30.0% of performances, the musician played by memory, while 55.1% used the score and 14.9% of performances were unspecified.

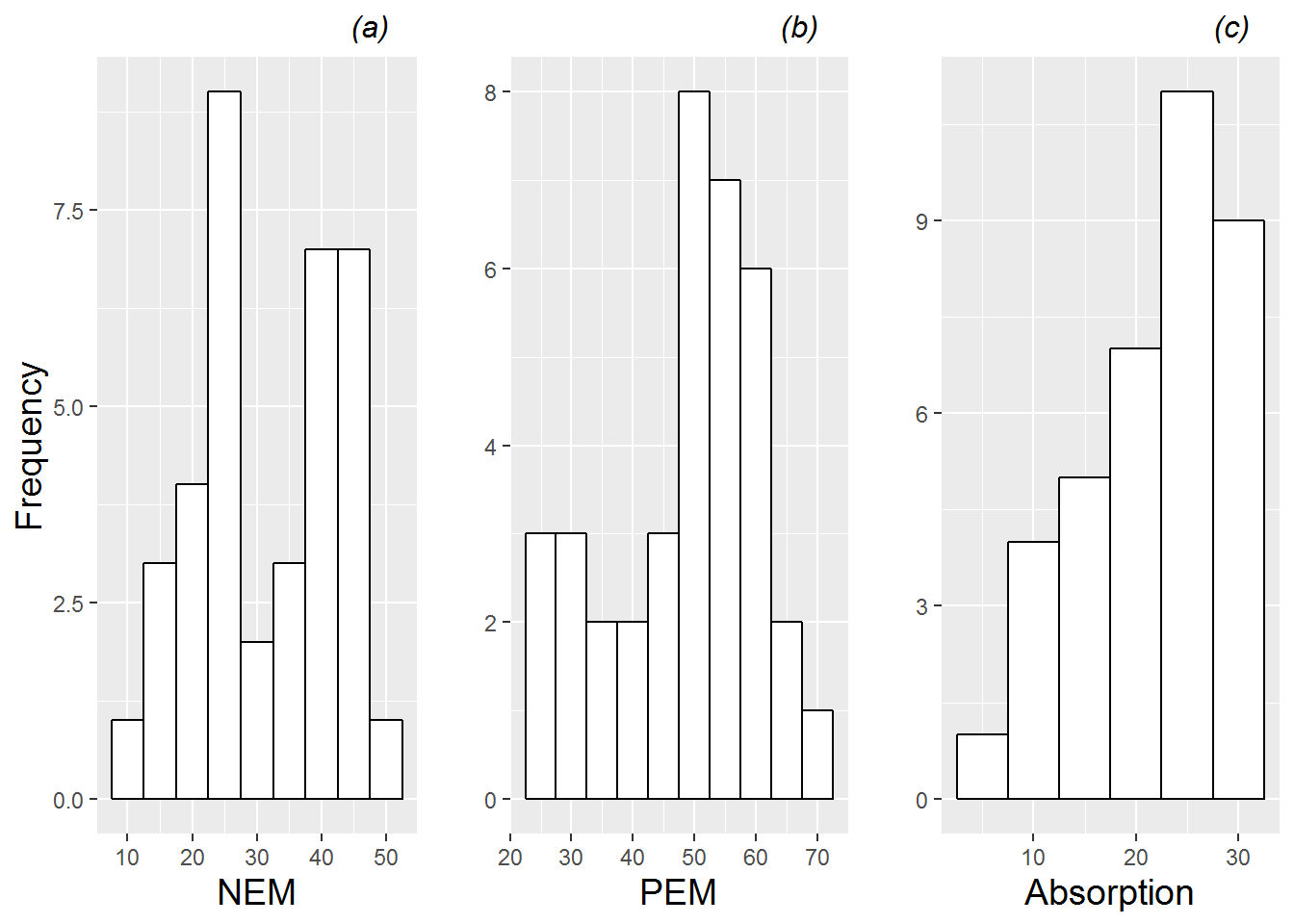

To generate an initial examination of Level Two covariates, we consider a data set with just one observation per subject, since Level Two variables are constant over all performances from the same subject. Then, we can proceed as we did with Level One covariates—using histograms to illustrate the distributions of continuous covariates (see Figure 8.2) and tables to summarize categorical covariates. For example, we learn that the majority of subjects have positive emotionality scores between 50 and 60, but that several subjects fall into a lengthy lower tail with scores between 20 and 50. A summary of categorical Level Two covariates reveals that among the 37 subjects (26 female and 11 male), 17 play an orchestral instrument, 15 are vocal performers, and 5 play a keyboard instrument.

Figure 8.2: Histograms of the 3 continuous Level Two covariates (negative emotionality (NEM), positive emotionality (PEM), and absorption). Each plot contains one observation per musician.

8.3.3 Exploratory Analyses: Bivariate Summaries

The next step in an initial exploratory analysis is the examination of numerical and graphical summaries of relationships between model covariates and responses. In examining these bivariate relationships, we hope to learn: (1) if there is a general trend suggesting that as the covariate increases the response either increases or decreases, (2) if subjects at certain levels of the covariate tend to have similar mean responses (low variability), and (3) if the variation in the response differs at different levels of the covariate (unequal variability).

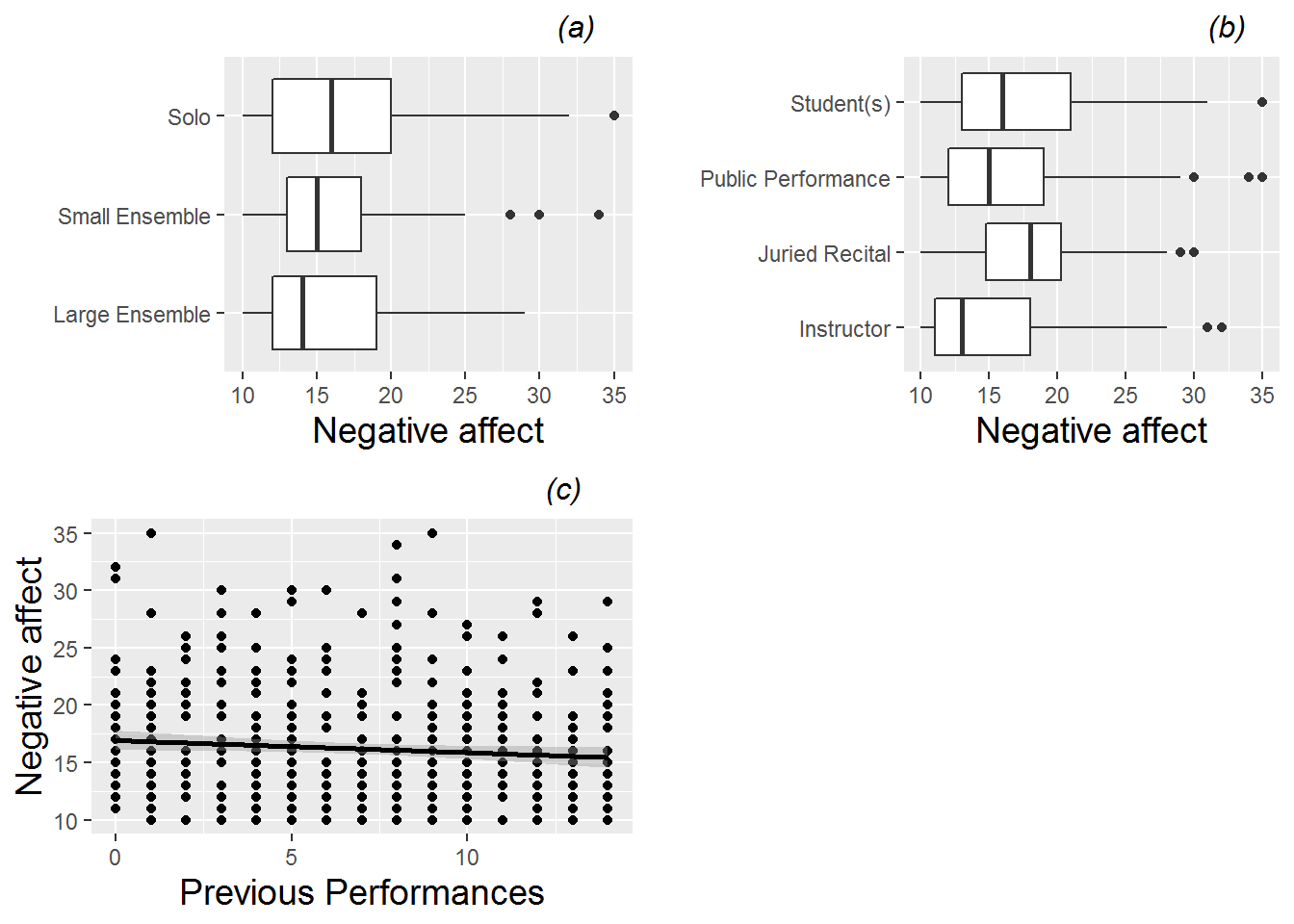

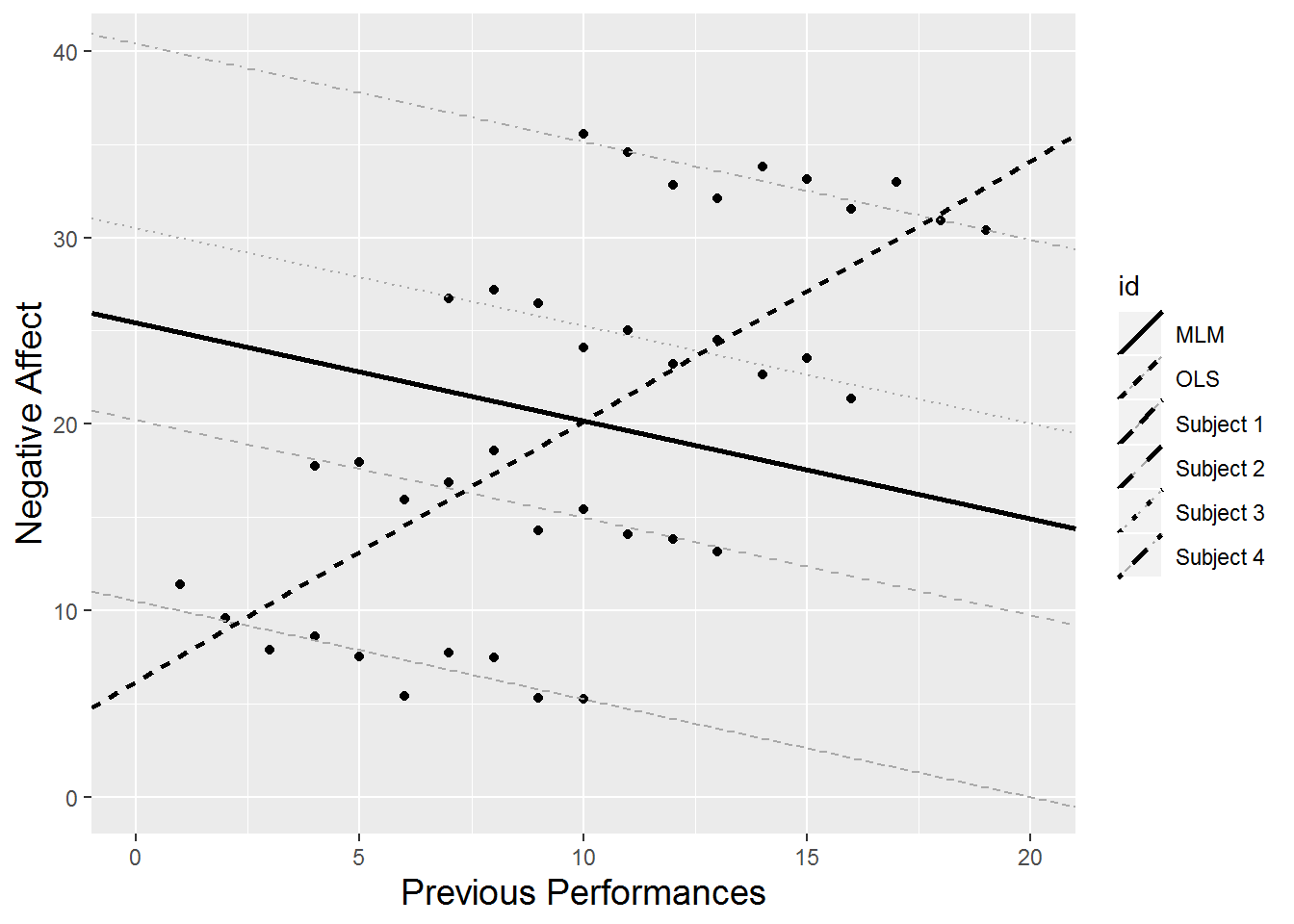

As with individual variables, we will begin by treating all 497 performances recorded as independent observations, even though blocks of 15 or so performances were performed by the same musician. For categorical Level One covariates, we can generate boxplots against negative affect as in Figure 8.3, plots (a) and (b). From these boxplots, we see that lower levels of performance anxiety seem to be associated with playing in large ensembles and playing in front of an instructor. For our lone continuous Level One covariate (number of previous performances), we can generate a scatterplot against negative affect as in plot (c) from Figure 8.3, adding a fitted line to illustrate general trends upward or downward. From this scatterplot, we see that negative affect seems to decrease slightly as a subject has more experience.

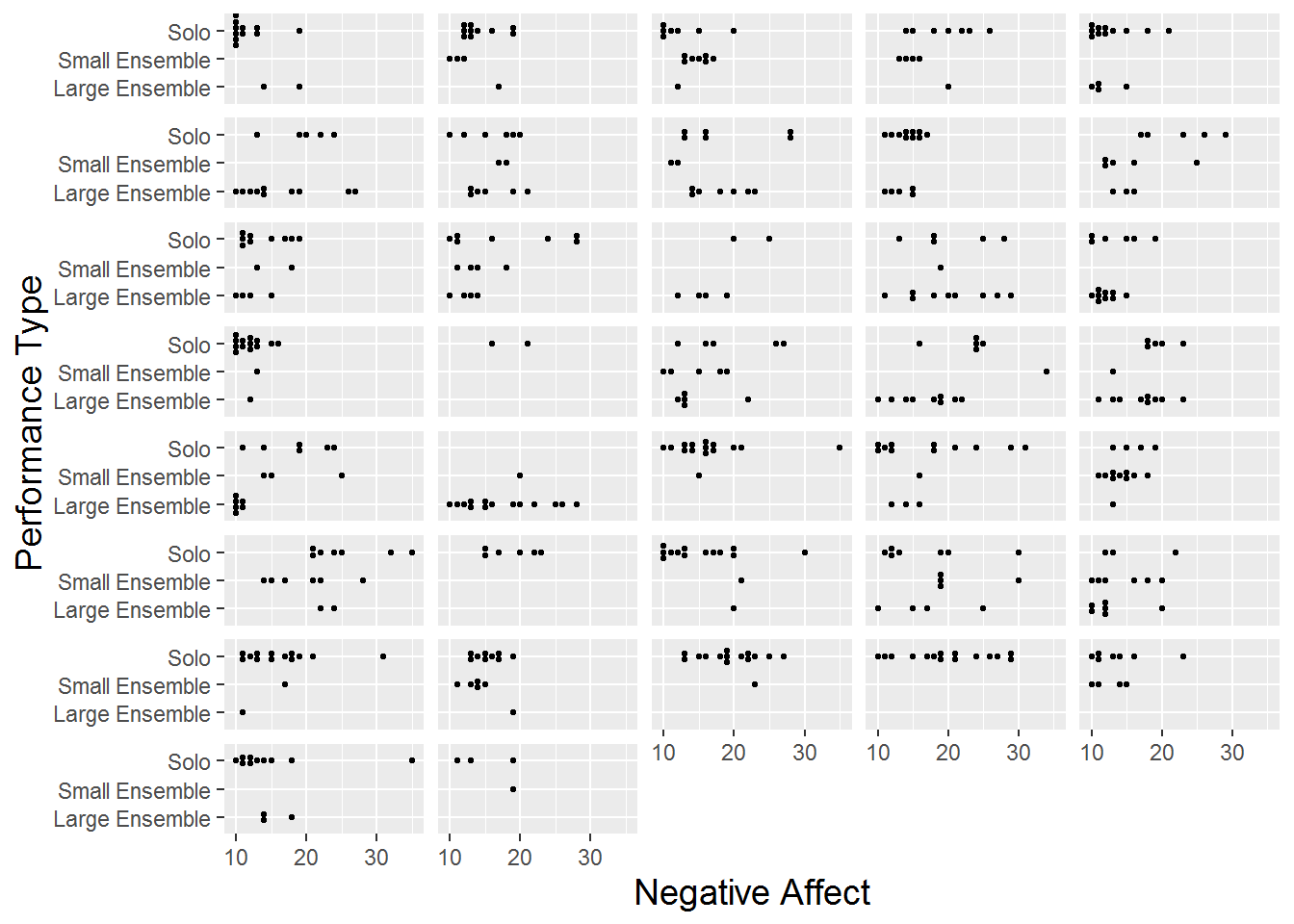

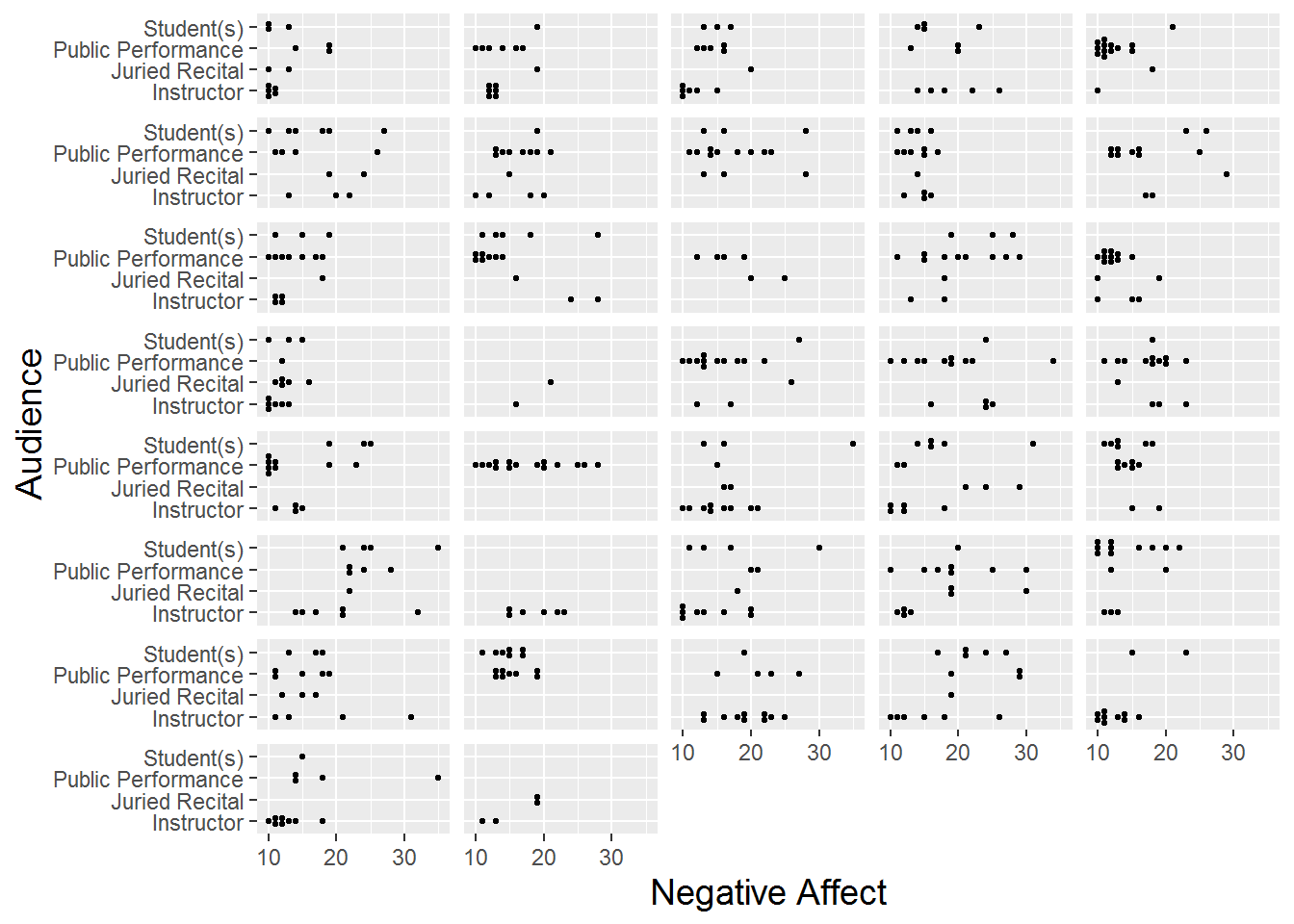

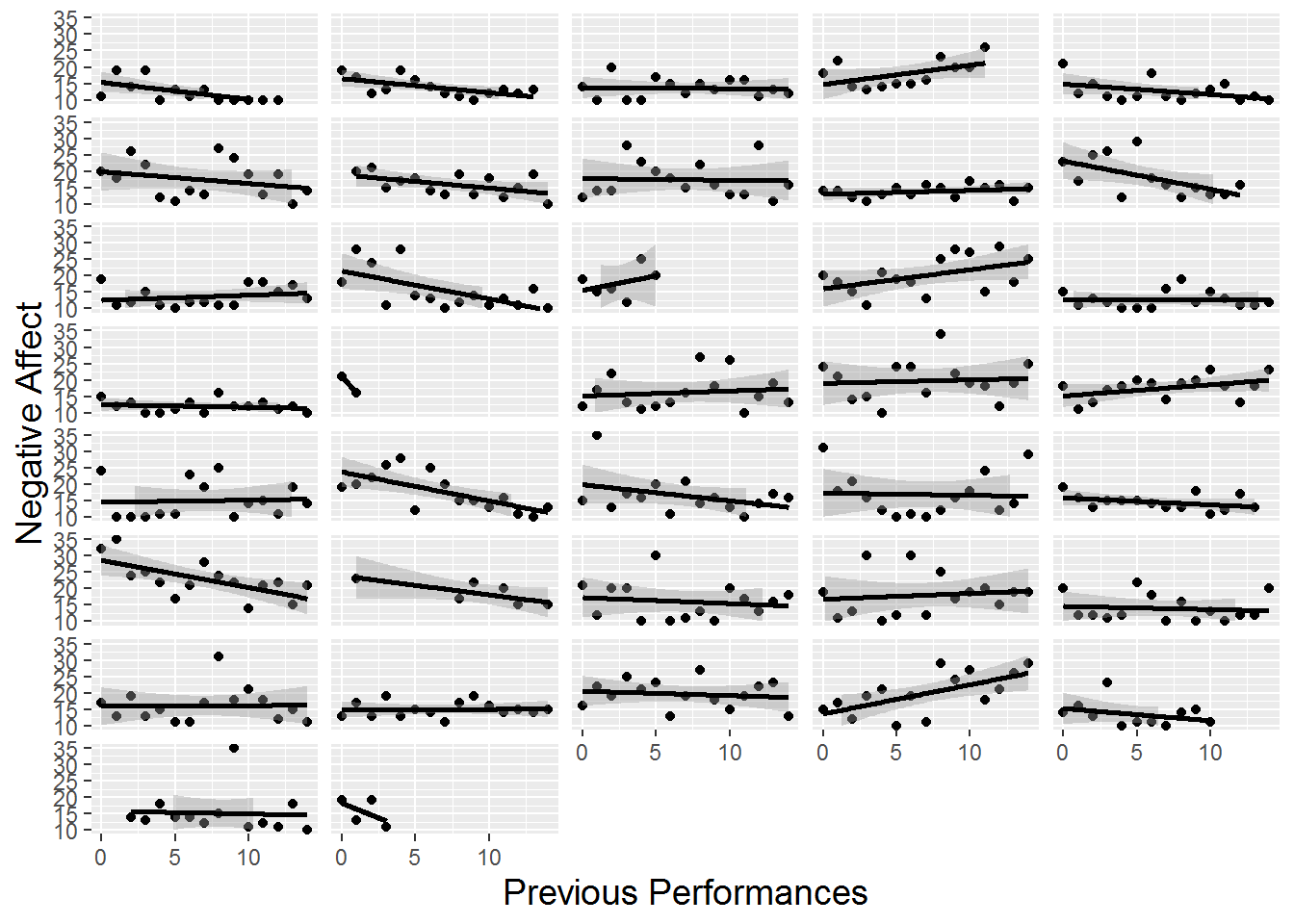

To avoid the issue of dependent observations in our three plots from Figure 8.3, we could generate separate plots for each subject and examine trends within and across subjects. These “lattice plots” are illustrated in Figures 8.4, 8.5, and 8.6; we discuss such plots more thoroughly in Chapter 9. While general trends are difficult to discern from these lattice plots, we can see the variety in subjects in sample size distributions and overall level of performance anxiety. In particular, in Figure 8.6, we notice that linear fits for many subjects illustrate the same same slight downward trend displayed in the overall scatterplot in Figure 8.3, although some subjects experience increasing anxiety and others exhibit non-linear trends. Having an idea of the range of individual trends will be important when we begin to draw overall conclusions from this study.

Figure 8.3: Boxplots of two categorical Level One covariates (performance type (a) and audience type (b)) vs. model response, and scatterplot of one continuous Level One covariate (number of previous diary entries (c)) vs. model response (negative affect). Each plot contains one observation for each of the 497 performances.

Figure 8.4: Lattice plot of performance type vs. negative affect, with separate dotplots by subject.

Figure 8.5: Lattice plot of audience type vs. negative affect, with separate dotplots by subject.

Figure 8.6: Lattice plot of previous performances vs. negative affect, with separate scatterplots with fitted lines by subject.

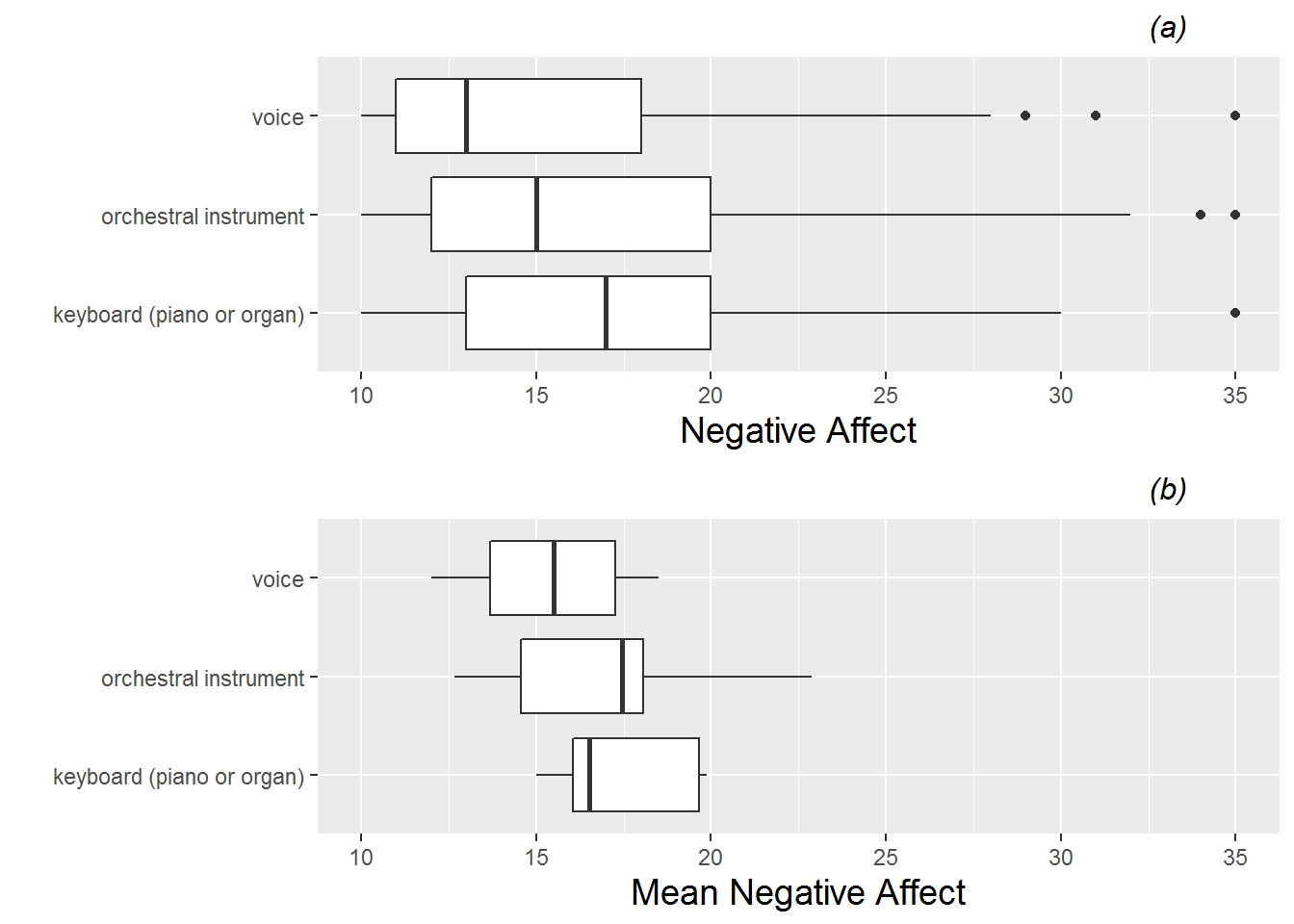

In Figure 8.7, we use boxplots to examine the relationship between our primary categorical Level Two covariate (instrument) and our continuous model response. Plot (a) uses all 497 performances, while plot (b) uses one observation per subject (the mean performance anxiety across all performances) regardless of how many performances that subject had. Naturally, plot (b) has a more condensed range of values, but both plots seem to support the notion that performance anxiety is slightly lower for vocalists and maybe a bit higher for keyboardists

Figure 8.7: Boxplots of the categorical Level Two covariate (instrument) vs. model response (negative affect). Plot (a) is based on all 497 observations from all 37 subjects, while plot (b) uses only one observation per subject.

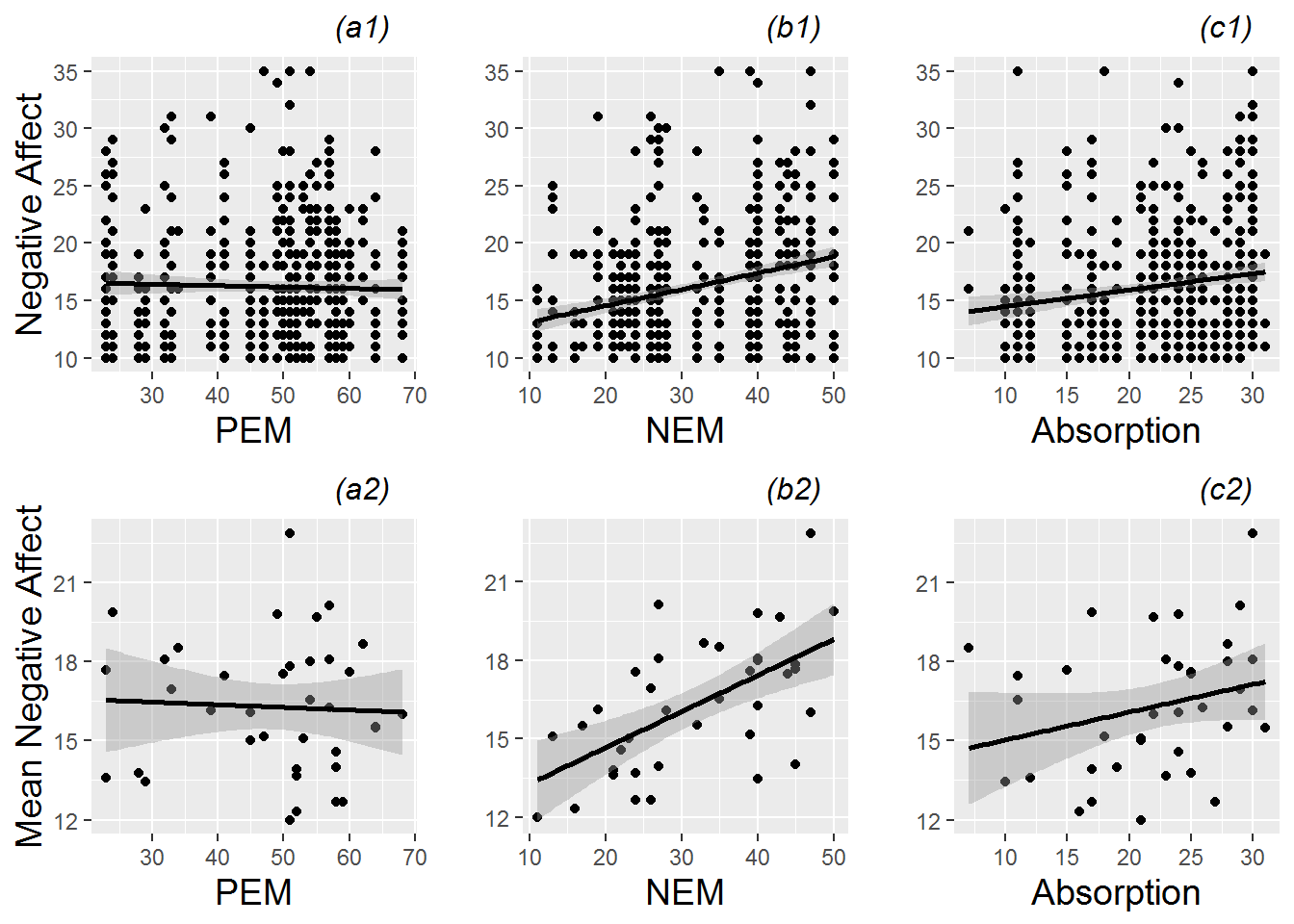

In Figure 8.8, we use scatterplots to examine the relationships between continuous Level Two covariates and our model response. Performance anxiety appears to vary little with a subject’s positive emotionality, but there is some evidence to suggest that performance anxiety increases with increasing negative emotionality and absorption level. Plots based on mean negative affect, with one observation per subject, support conclusions based on plots with all observations from all subjects; indeed the overall relationships are in the same direction and of the same magnitude.

Figure 8.8: Scatterplots of continuous Level Two covariates (positive emotionality (PEM), negative emotionality (NEM), and absorption) vs. model response (negative affect). The top plots (a1, b1, c1) are based on all 497 observations from all 37 subjects, while the bottom plots (a2, b2, c2) use only one observation per subject.

Of course, any graphical analysis is exploratory, and any notable trends at this stage should be checked through formal modeling. At this point, a statistician begins to ask familiar questions such as:

- which characteristics of individual performances are most associated with performance anxiety?

- which characteristics of study participants are most associated with performance anxiety?

- are any of these associations statistically significant?

- does the significance remain after controlling for other covariates?

- how do we account for the lack of independence in performances by the same musician?

As you might expect, answers to these questions will arise from proper consideration of variability and properly identified statistical models.

8.4 Two level modeling: preliminary considerations

8.4.1 Ignoring the two level structure (not recommended)

Armed with any statistical software package, it would be relatively simple to take our complete data set of 497 observations and run an OLS multiple linear regression model seeking to explain variability in negative affect with a number of performance-level or musician-level covariates. As an example, output from a model with two binary covariates (Does the subject play an orchestral instrument? and, Was the performance a large ensemble?) is presented below. Do you see any problems with this approach?

lm(formula = na ~ orch + large + orch:large, data = music)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 15.7212 0.3591 43.778 < 2e-16 ***

orch 1.7887 0.5516 3.243 0.00126 **

large -0.2767 0.7910 -0.350 0.72662

orch:large -1.7087 1.0621 -1.609 0.10831

---

Residual standard error: 5.179 on 493 degrees of freedom

Multiple R-squared: 0.02782, Adjusted R-squared: 0.0219

F-statistic: 4.702 on 3 and 493 DF, p-value: 0.003012Other than somewhat skewed residuals, residual plots (not shown) do not indicate any major problems with the OLS multiple regression model. However, another key assumption in these models is the independence of all observations. While we might reasonably conclude that responses from different study participants are independent (although possibly not if they are members of the same ensemble group), it is not likely that the 15 or so observations taken over multiple performances from a single subject are similarly independent. If a subject begins with a relatively high level of anxiety (compared to other subjects) before her first performance, chances are good that she will have relatively high anxiety levels before subsequent performances. Thus, OLS multiple linear regression using all 497 observations is not advisable for this study (or multilevel data sets in general).

8.4.2 A two-stage modeling approach (better but imperfect)

If we assume that the 37 study participants can reasonably be considered to be independent, we could use traditional OLS regression techniques to analyze data from this study if we could condense each subject’s set of responses to a single meaningful outcome. Candidates for this meaningful outcome include a subject’s last performance anxiety measurement, average performance anxiety, minimum anxiety level, etc. For example, in clinical trials, data is often collected over many weekly or monthly visits for each patient, except that many patients will drop out early for many reasons (e.g., lack of efficacy, side effects, personal reasons). In these cases, treatments are frequently compared using “last-value-carried-forward” methods—the final visit of each patient is used as the primary outcome measure, regardless of how long they remained in the study. However, “last-value-carried-forward” and other summary measures feel inadequate, since we end up ignoring much of the information contained in the multiple measures for each individual. A more powerful solution is to model performance anxiety at multiple levels.

We will begin by considering all performances by a single individual. For instance, consider the 15 performances for which Musician #22 recorded a diary, illustrated in Table 8.2.

| id | diary | perform_type | audience | na | instrument | |

|---|---|---|---|---|---|---|

| 240 | 22 | 1 | Solo | Instructor | 24 | orchestral instrument |

| 241 | 22 | 2 | Large Ensemble | Public Performance | 21 | orchestral instrument |

| 242 | 22 | 3 | Large Ensemble | Public Performance | 14 | orchestral instrument |

| 243 | 22 | 4 | Large Ensemble | Public Performance | 15 | orchestral instrument |

| 244 | 22 | 5 | Large Ensemble | Public Performance | 10 | orchestral instrument |

| 245 | 22 | 6 | Solo | Instructor | 24 | orchestral instrument |

| 246 | 22 | 7 | Solo | Student(s) | 24 | orchestral instrument |

| 247 | 22 | 8 | Solo | Instructor | 16 | orchestral instrument |

| 248 | 22 | 9 | Small Ensemble | Public Performance | 34 | orchestral instrument |

| 249 | 22 | 10 | Large Ensemble | Public Performance | 22 | orchestral instrument |

| 250 | 22 | 11 | Large Ensemble | Public Performance | 19 | orchestral instrument |

| 251 | 22 | 12 | Large Ensemble | Public Performance | 18 | orchestral instrument |

| 252 | 22 | 13 | Large Ensemble | Public Performance | 12 | orchestral instrument |

| 253 | 22 | 14 | Large Ensemble | Public Performance | 19 | orchestral instrument |

| 254 | 22 | 15 | Solo | Instructor | 25 | orchestral instrument |

Does this musician tend to have higher anxiety levels when he is playing in a large ensemble or playing in front of fellow students? Which factor is the biggest determinant of anxiety for a performance by Musician #22? We can address these questions through OLS multiple linear regression applied to only Musician #22’s data, using appropriate indicator variables for factors of interest.

Let \(Y_{j}\) be the performance anxiety score of Musician #22 before performance \(j\). Consider the observed performances for Musician #22 to be a random sample of all conceivable performances by that subject. If we are initially interested in examining the effect of playing in a large ensemble, we can model the performance anxiety for Musician #22 according to the model: \[\begin{equation} Y_{22j}=a_{22}+b_{22}\textstyle{LargeEns}_{22j}+\epsilon_{22j} \textrm{ where } \epsilon_{22j}\sim N(0,\sigma^2) \textrm{ and } \tag{8.1} \end{equation}\]\[ \textstyle{LargeEns}_{j} = \left\{ \begin{array}{ll} 1 & \mbox{if `perf_type` = Large Ensemble} \\ 0 & \mbox{if `perf_type` = Solo or Small Ensemble.} \end{array} \right. \] The parameters in this model (\(a_{22}\), \(b_{22}\), and \(\sigma^2\)) can be estimated through OLS methods. \(a_{22}\) represents the true intercept for Musician #22—the expected anxiety score for Musician #22 when performance type is a Solo or Small Ensemble (\(\textstyle{LargeEns}=0\)), or the true average anxiety for Musician #22 over all Solo or Small Ensemble performances he may conceivably give. \(b_{22}\) represents the true slope for Musician #22—the expected increase in performance anxiety for Musician #22 when performing as part of a Large Ensemble rather than in a Small Ensemble or as a Solo, or the true average difference in anxiety scores for Musician #22 between Large Ensemble performances and other types. Finally, the \(\epsilon_{22j}\) terms represent the deviations of Musician #22’s actual performance anxiety scores from the expected scores under this model—the part of Musician #22’s anxiety before performance \(j\) that is not explained by performance type. The variability in these deviations from the regression model is denoted by \(\sigma^2\).

For Subject 22, we estimate \(\hat{a}_{22}=24.5\), \(\hat{b}_{22}=-7.8\), and \(\hat{\sigma}=4.8\). Thus, according to our simple linear regression model, Subject 22 had an estimated anxiety score of 24.5 before Solo and Small Ensemble performances, and 16.7 (7.8 points lower) before Large Ensemble performances. With an \(R^2\) of 0.425, the regression model explains a moderate amount (42.5%) of the performance-to-performance variability in anxiety scores for Subject 22, and the trend toward lower scores for large ensemble performances is statistically significant at the 0.05 level (t(13)=-3.10, p=.009).

lm(formula = na ~ large, data = id22)

Coefficients:

Estimate Std. Error t value Pr(>|t|)

(Intercept) 24.500 1.960 12.503 1.28e-08 ***

large -7.833 2.530 -3.097 0.0085 **

---

Residual standard error: 4.8 on 13 degrees of freedom

Multiple R-squared: 0.4245, Adjusted R-squared: 0.3802

F-statistic: 9.588 on 1 and 13 DF, p-value: 0.008504We could continue trying to build a better model for Subject 22, adding indicators for audience and memory, and even adding a continuous variable representing the number of previous performance where a diary was kept. As our model R-square value increased, we would be explaining a larger proportion of Subject 22’s performance-to-performance variability in anxiety. It would not, however, improve our model to incorporate predictors for age, gender, or even negative emotionality based on the MPQ—why is that?

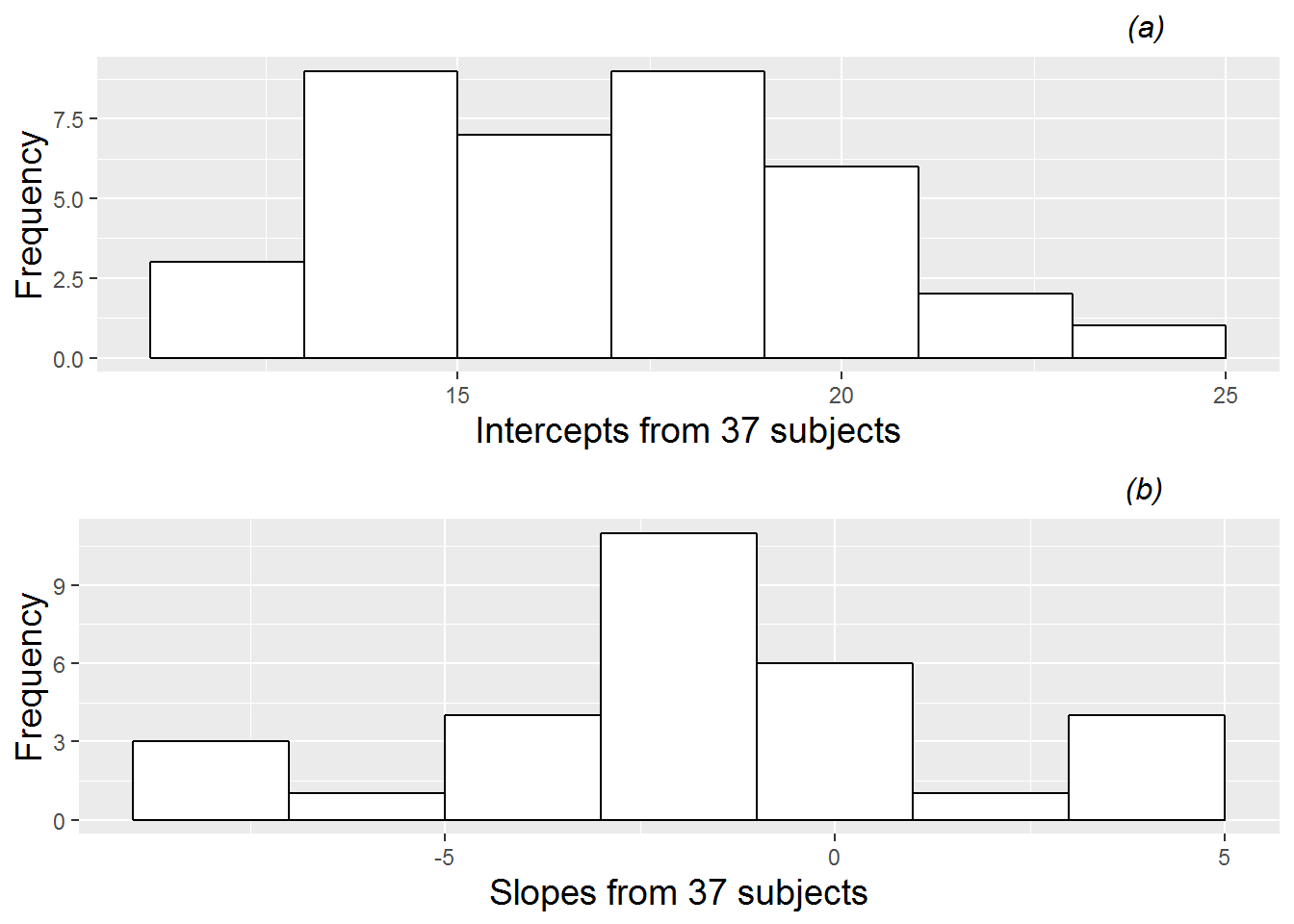

For the present time, we will model Subject 22’s anxiety scores for his 15 performances using the model given by Equation (8.1), with a lone indicator variable for performing in a Large Ensemble. We can then proceed to fit the OLS regression model in Equation (8.1) to examine the effect of performing in a Large Ensemble for each of the 37 subjects in this study. These are called Level One models. As displayed in Figure 8.9, there is considerable variability in the fitted intercepts and slopes among the 37 subjects. Mean performance anxiety scores for Solos and Small Ensembles range from 11.6 to 24.5, with a median score of 16.7, while mean differences in performance anxiety scores for Large Ensembles compared to Solos and Small Ensembles range from -7.9 to 5.0, with a median difference of -1.7. Can these differences among individual musicians be explained by (performance-invariant) characteristics associated with each individual, such as gender, age, instrument, years studied, or baseline levels of personality measures? Questions like these can be addressed through further statistical modeling.

Figure 8.9: Histograms of intercepts and slopes from fitting simple regression models by subject, where each model contained a single binary predictor indicating if a performance was part of a large ensemble.

where \(\textstyle{Orch}_{i}=1\) if Subject \(i\) plays an orchestral instrument and \(\textstyle{Orch}_{i}=0\) if Subject \(i\) plays keyboard or is a vocalist. Note that, at Level Two, our response variables are not observed measurements such as performance anxiety scores, but rather the fitted regression coefficients from the Level One models fit to each subject. (Well, in our theoretical model, the responses are actually the true intercepts and slopes from Level One models for each subject, but in reality, we have to use our estimated slopes and intercepts.)

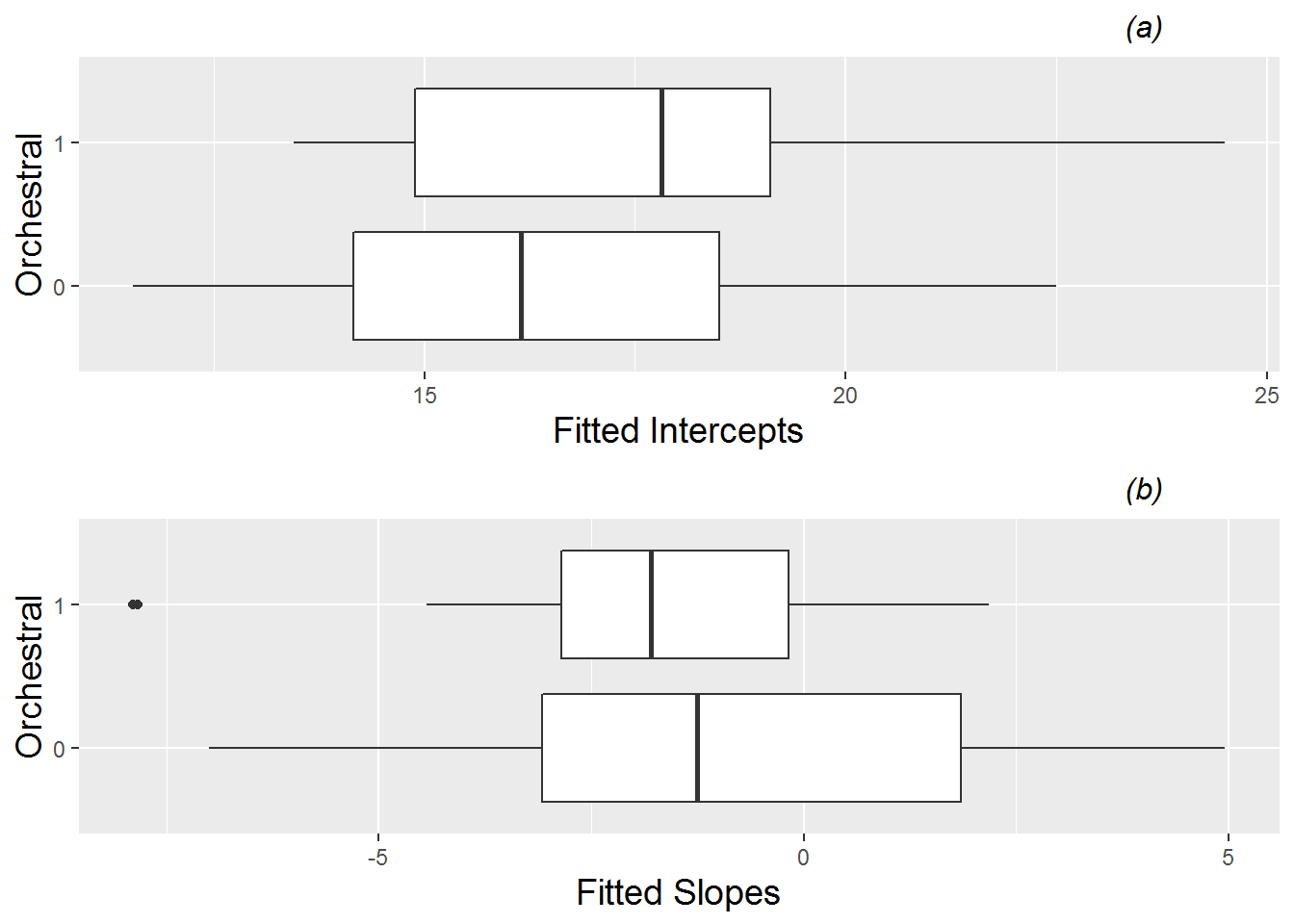

Exploratory data analysis (see boxplots by instrument in Figure 8.10 suggests that subjects playing orchestral instruments have higher intercepts than vocalists or keyboardists, and that orchestral instruments are associated with slight lower (more negative) slopes, although with less variability that the slopes of vocalists and keyboardists. These trends are borne out in regression modeling. If we fit Equations (8.2) and (8.3) using fitted intercepts and slopes as our response variables, we obtain the following estimated parameters: \(\hat{\alpha}_{0}=16.3\), \(\hat{\alpha}_{1}=1.4\), \(\hat{\beta}_{0}=-0.8\), and \(\hat{\beta}_{1}=-1.4\). Thus, the intercept (\(a_{i}\)) and slope (\(b_{i}\)) for Subject \(i\) can be modeled as: \[\begin{eqnarray} \hat{a}_{i} & = & 16.3+1.4\textstyle{Orch}_{i}+u_{i} \tag{8.4} \\ \hat{b}_{i} & = & -0.8-1.4\textstyle{Orch}_{i}+v_{i} \tag{8.5} \end{eqnarray}\]where \(a_{i}\) is the true mean negative affect when Subject \(i\) is playing solos or small ensembles, and \(b_{i}\) is the true mean difference in negative affect for Subject \(i\) between large ensembles and other performance types. Based on these models, average performance anxiety before solos and small ensembles is 16.3 for vocalists and keyboardists, but 17.7 (1.4 points higher) for orchestral instrumentalists. Before playing in large ensembles, vocalists and instrumentalists have performance anxiety (15.5) which is 0.8 points lower, on average, than before solos and small ensembles, while subjects playing orchestral instruments experience an average difference of 2.2 points, producing an average performance anxiety of 15.5 before playing in large ensembles just like subjects playing other instruments. However, the difference between orchestral instruments and others does not appear to be statistically significant for either intercepts (t=1.424, p=0.163) or slopes (t=-1.168, p=0.253).

Figure 8.10: Boxplots of fitted intercepts (plot (a)) and slopes (plot (b)) by orchestral instrument (1) vs. keyboard or vocalist (0).

This two stage modeling process does have some drawbacks. Among other things, (1) it weights every subject the same regardless of the number of diary entries we have, (2) it responds to missing individual slopes (from 7 subjects who never performed in a large ensemble) by simply dropping those subjects, and (3) it does not share strength effectively across individuals. These issues can be better handled through a unified multilevel modeling framework which we will develop over the remainder of this section.

8.5 Two level modeling: a unified approach

8.5.1 Our framework

For the unified approach, we will still envision two levels of models as in section 8.4.2, but we will use likelihood-based methods for parameter estimation rather than ordinary least squares to address the drawbacks associated with the two-stage approach. To illustrate the unified approach, we will first generalize the models presented in section 8.4.2. Let \(Y_{ij}\) be the performance anxiety score of the \(i^{th}\) subject before performance \(j\). If we are initially interested in examining the effects of playing in a large ensemble and playing an orchestral instrument, then we can model the performance anxiety for Subject \(i\) in performance \(j\) with the following system of equations:

- Level One: \[\begin{equation} Y_{ij} = a_{i}+b_{i}\textstyle{LargeEns}_{ij}+\epsilon_{ij} \tag{8.6} \end{equation}\]

- Level Two: \[\begin{eqnarray*} a_{i} & = & \alpha_{0}+\alpha_{1}\textstyle{Orch}_{i}+u_{i} \\ b_{i} & = & \beta_{0}+\beta_{1}\textstyle{Orch}_{i}+v_{i}, \end{eqnarray*}\]

From this point forward, when building multilevel models, we will use Greek letters (such as \(\alpha_{0}\)) to denote final fixed effects model parameters to be estimated empirically, and Roman letters (such as \(a_{0}\)) to denote preliminary fixed effects parameters at lower levels. Variance components that will be estimated empirically will be denoted with \(\sigma\) or \(\rho\), while terms such as \(\epsilon\) and \(u_{i}\) represent error terms. In our framework, we can estimate final parameters directly without first estimating preliminary parameters, which can be seen with the Composite Model formulation (although we can obtain estimates of preliminary parameters in those occasional cases when they are of interest to us). Note that when we model a slope term like \(b_{i}\) from Level One using Level Two covariates like \(\textstyle{Orch}_{i}\), the resulting Composite Model contains an interaction term, denoting that the effect of \(\textstyle{LargeEns}_{ij}\) depends on the instrument played.

Furthermore, with a binary predictor at Level Two such as instrument, we can write out what our Level Two model looks like for those who play keyboard or are vocalists (\(\textstyle{Orch}_{i}=0\)) and those who play orchestral instruments (\(\textstyle{Orch}_{i}=1\)):

- Keyboardists and Vocalists (\(\textstyle{Orch}_{i}=0\)) \[\begin{eqnarray*} a_{i} & = & \alpha_{0}+u_{i} \\ b_{i} & = & \beta_{0}+v_{i} \end{eqnarray*}\]

- Orchestral instrumentalists (\(\textstyle{Orch}_{i}=1\)) \[\begin{eqnarray*} a_{i} & = & (\alpha_{0}+\alpha_{1})+u_{i} \\ b_{i} & = & (\beta_{0}+\beta_{1})+v_{i} \label{eq:level2byorch} \end{eqnarray*}\]

Writing the Level Two model in this manner helps us interpret the model parameters from our two-level model. In this case, even the Level One covariate is binary, so that we can write out expressions for mean performance anxiety based on our model for four different combinations of instrument played and performance type:

- Keyboardists or vocalists playing solos or small ensembles: \(\alpha_{0}\)

- Keyboardists or vocalists playing large ensembles: \(\alpha_{0}+\beta_{0}\)

- Orchestral instrumentalists playing solos or small ensembles: \(\alpha_{0}+\alpha_{1}\)

- Orchestral instrumentalists playing large ensembles: \(\alpha_{0}+\alpha_{1}+\beta_{0}+\beta_{1}\)

8.5.2 Random vs. fixed effects

Before we can use likelihood-based methods to estimate our model parameters, we still must define the distributions of our error terms. The error terms \(\epsilon_{ij}\), \(u_{i}\), and \(v_{i}\) represent random effects in our model. In multilevel models, it is important to distinguish between fixed and random effects. Typically, fixed effects describe levels of a factor that we are specifically interested in drawing inferences about, and which would not change in replications of the study. For example, in our music performance anxiety case study, the levels of performance type will most likely remain as solos, small ensembles, and large ensembles even in replications of the study, and we wish to draw specific conclusions about differences between these three types of performances. Thus, performance type would be considered a fixed effect. On the other hand, random effects describe levels of a factor which can be thought of as a sample from a larger population of factor levels; we are not typically interested in drawing conclusions about specific levels of a random effect, although we are interested in accounting for the influence of the random effect in our model. For example, in our case study the different musicians included can be thought of as a random sample from a population of performing musicians. Although our goal is not to make specific conclusions about differences between any two musicians, we do want to account for inherent differences among musicians in our model, and by doing so, we will be able to draw more precise conclusions about our fixed effects of interest. Thus, musician would be considered a random effect.

8.5.3 Distribution of errors: the multivariate normal distribution

As part of our multilevel model, we must provide probability distributions to describe the behavior of random effects. Typically, we assume that random effects follow a normal distribution with mean 0 and a variance parameter which must be estimated from the data. For example, at Level One, we will assume that the errors associated with each performance of a particular musician can be described as: \(\epsilon_{ij}\sim N(0,\sigma^2)\). At Level Two, we have one error term (\(u_{i}\)) associated with subject-to-subject differences in intercepts, and one error term (\(v_{i}\)) associated with subject-to-subject differences in slopes. That is, \(u_{i}\) represents the deviation of Subject \(i\) from the mean performance anxiety before solos and small ensembles after accounting for his or her instrument, and \(v_{i}\) represents the deviation of Subject \(i\) from the mean difference in performance anxiety between large ensembles and other performance types after accounting for his or her instrument.

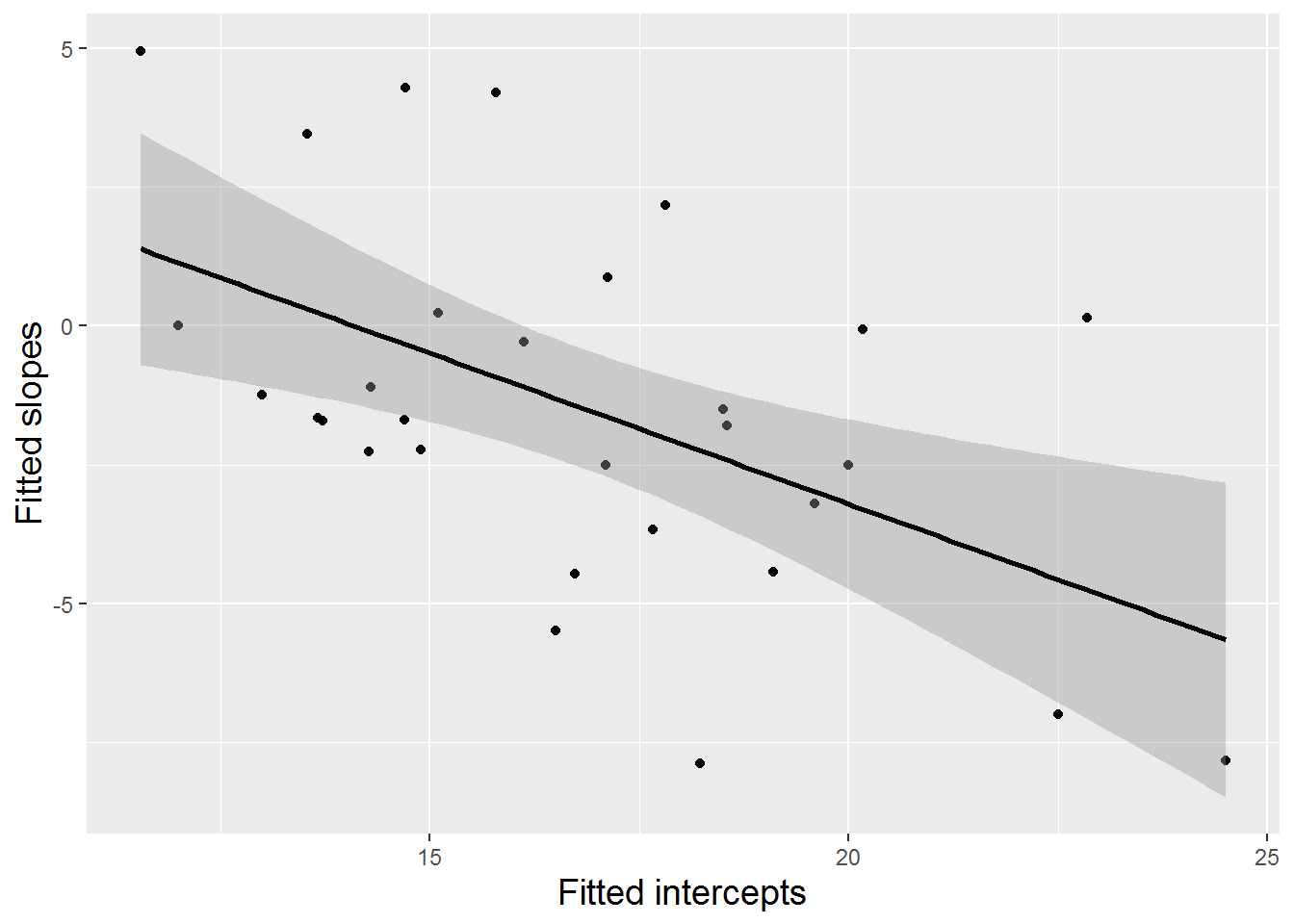

In modeling the random behavior of \(u_{i}\) and \(v_{i}\), we must also account for the possibility that random effects at the same level might be correlated. Subjects with higher baseline performance anxiety have a greater capacity for showing decreased anxiety in large ensembles as compared to solos and small ensembles, so we might expect that subjects with larger intercepts (performance anxiety before solos and small ensembles) would have smaller slopes (indicating greater decreases in anxiety before large ensembles). In fact, our fitted Level One intercepts and slopes in this example actually show evidence of a fairly strong negative correlation (\(r=-0.525\), see Figure 8.11).

Figure 8.11: Scatterplot with fitted regression line for estimated intercepts and slopes (one point per subject).

With this expression, we are allowing each error term to have its own variance (around a mean of 0) and each pair of error terms to have its own covariance (or correlation). Thus, if there are \(n\) equations at Level Two, we can have \(n\) variance terms and \(n(n-1)/2\) covariance terms for a total of \(n + n(n-1)/2\) variance components. These variance components are organized in matrix form, with variance terms along the diagonal and covariance terms in the off-diagonal. In our small example, we have \(n=2\) equations at Level Two, so we have 3 variance components to estimate—2 variance terms (\(\sigma_{u}^{2}\) and \(\sigma_{v}^{2}\)) and 1 covariance term (\(\sigma_{uv}\)).

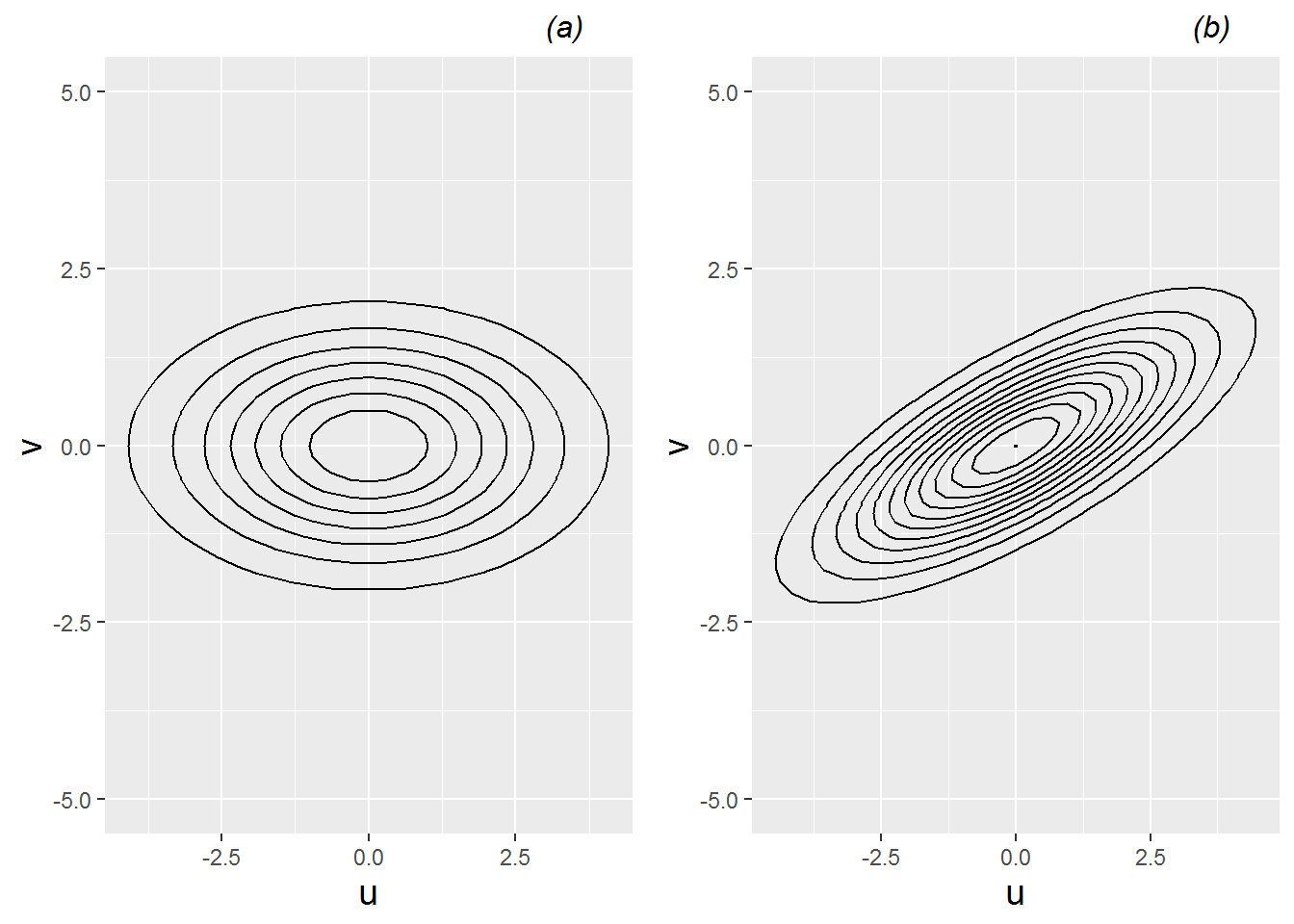

The multivariate normal distribution with \(n=2\) is illustrated in Figure 8.12 for two cases: (a) the error terms are uncorrelated (\(\sigma_{uv}=\rho_{uv}=0\)), and (b) the error terms are positively correlated (\(\sigma_{uv}>0\) and \(\rho_{uv} > 0\)). In general, if the errors in intercepts (\(u_{i}\)) are placed on the x-axis and the errors in slopes (\(v_{i}\)) are placed on the y-axis, then \(\sigma_{u}^{2}\) measures spread in the x-direction and \(\sigma_{v}^{2}\) measures spread in the y-direction, while \(\sigma_{uv}\) measures tilt. Positive tilt (\(\sigma_{uv}>0\)) indicates a tendency for errors from the same subject to both be positive or both be negative, while negative tilt (\(\sigma_{uv}<0\)) indicates a tendency for one error from a subject to be positive and the other to be negative. In Figure 8.12, \(\sigma_{u}^{2}=4\) and \(\sigma_{v}^{2}=1\), so both contour plots show a greater range of errors in the x-direction than the y-direction. Internal ellipses in the contour plot indicate pairs of \(u_{i}\) and \(v_{i}\) that are more likely. In Figure 8.12 (a) \(\sigma_{uv}=\rho_{uv}=0\), so the axes of the contour plot correspond to the x- and y-axes, but in Figure 8.12 (b) \(\sigma_{uv}=1.5\), so the contour plot tilts up, reflecting a tendency for high values of \(u_{i}\) to be associated with high values of \(v_{i}\).

Figure 8.12: Contour plots illustrating multivariate normal density with (a) no correlation between error terms, and (b) positive correlation between error terms.

8.5.4 Technical issues when estimating and testing parameters (Optional)

Now, our two-level model has 8 parameters that need to be estimated: 4 fixed effects (\(\alpha_{0}\), \(\alpha_{1}\), \(\beta_{0}\), and \(\beta_{1}\)), and 4 variance components (\(\sigma^{2}\), \(\sigma_{u}^{2}\), \(\sigma_{v}^{2}\), and \(\sigma_{uv}\)). Note that we use the term variance components to signify model parameters that describe the behavior of random effects. We can use statistical software, such as the lmer() function from the lme4 package in R, to obtain parameter estimates using our 497 observations. The most common methods for estimating model parameters—both fixed effects and variance components—are maximum likelihood (ML) and restricted maximum likelihood (REML). The method of maximum likelihood (ML) was introduced in Chapter 2, where parameter estimates are chosen to maximize the value of the likelihood function based on observed data. Restricted maximum likelihood (REML) is conditional on the fixed effects, so that the part of the data used for estimating variance components is separated from that used for estimating fixed effects. Thus REML, by accounting for the loss in degrees of freedom from estimating the fixed effects, provides an unbiased estimate of variance components, while ML estimators for variance components are biased under assumptions of normality, since they use estimated fixed effects rather than the true values. REML is preferable when the number of parameters is large or the primary interest is obtaining estimates of model parameters, either fixed effects or variance components associated with random effects. ML should be used if nested fixed effects models are being compared using a likelihood ratio test, although REML is fine for nested models of random effects (with the same fixed effects model). In this text, we will typically report REML estimates unless we are specifically comparing nested models with the same random effects. In most case studies and most models we consider, there is very little difference between ML and REML parameter estimates. Additional details are beyond the scope of this book (Singer and Willett 2003).

Note that the multilevel output shown beginning in the next section contains no p-values for performing hypothesis tests. This is primarily because the exact distribution of the test statistics under the null hypothesis (no fixed effect) is unknown, primarily because the exact degrees of freedom is not known (Bates et al. 2015). Finding good approximate distributions for test statistics (and thus good approximate p-values) in multilevel models is an area of active research. In most cases, we can simply conclude that t-values (ratios of parameter estimates to estimated standard errors) with absolute value above 2 indicate significant evidence that a particular model parameter is different than 0. Certain software packages will report p-values corresponding to hypothesis tests for parameters of fixed effects; these packages are typically using conservative assumptions, large-sample results, or approximate degrees of freedom for a t-distribution. In section 1.6.5, we introduced the bootstrap as a non-parametric, computational approach for producing confidence intervals for model parameters. In addition, in section 10.6, we will introduce a method called the parametric bootstrap which is being used more frequently by researchers to better approximate the distribution of the likelihood test statistic and produce more accurate p-values by simulating data under the null hypothesis (Efron 2012).

8.5.5 An initial model with parameter interpretations

The output below contains likelihood-based estimates of our 8 parameters from a two-level model applied to the music performance anxiety data:

Linear mixed model fit by REML ['lmerMod']

A) Formula: na ~ orch + large + orch:large + (large | id)

Data: music

B) REML criterion at convergence: 2987

B2) AIC BIC logLik deviance df.resid

3007.2 3040.9 -1495.6 2991.2 489

Random effects:

Groups Name Variance Std.Dev. Corr

C) id (Intercept) 5.655 2.3781

D) large 0.452 0.6723 -0.63

E) Residual 21.807 4.6698

F) Number of obs: 497, groups: id, 37

Fixed effects:

Estimate Std. Error t value

G) (Intercept) 15.9297 0.6415 24.833

H) orch 1.6926 0.9452 1.791

I) large -0.9106 0.8452 -1.077

J) orch:large -1.4239 1.0992 -1.295This output (except for the capital letters along the left column) was specifically generated by the lmer() function in R; multilevel modeling results from other packages will contain similar elements. Because we will use lmer() output to summarize analyses of case studies in this and following sections, we will spend a little time now orienting ourselves to the most important features in this output.

- A: How our multilevel model is written in R, based on the composite model formulation. For more details, see section 8.12.

- B: Measures of model performance. Since this model was fit using REML, this line only contains the REML criterion.

- B2: If the model is fit with ML instead of REML, the measures of performance will contain AIC, BIC, deviance, and the log-likelihood.

- C: Estimated variance components (\(\hat{\sigma}_{u}^2\) and \(\hat{\sigma}_{u}\)) associated with the intercept equation in Level Two.

- D: Estimated variance components (\(\hat{\sigma}_{v}^2\) and \(\hat{\sigma}_{v}\)) associated with the large ensemble effect equation in Level Two, along with the estimated correlation (\(\hat{\rho}_{uv}\)) between the two Level Two error terms.

- E: Estimated variance components (\(\hat{\sigma}^2\) and \(\hat{\sigma}\)) associated with the Level One equation.

- F: Total number of performances where data was collected (Level One observations = 497) and total number of subjects (Level Two observations = 37).

- G: Estimated fixed effect (\(\hat{\alpha}_{0}\)) for intercept term, along with its standard error and t-value (which is the ratio of the estimated coefficient to its standard error). As described in section 8.5.4, no p-value testing the significance of the coefficient is provided because the exact null distribution of the t-value is unknown.

- H: Estimated fixed effect (\(\hat{\alpha}_{1}\)) for the orchestral instrument effect, along with its standard error and t-value .

- I: Estimated fixed effect (\(\hat{\beta}_{0}\)) for the large ensemble effect, along with its standard error and t-value.

- J: Estimated fixed effect (\(\hat{\beta}_{1}\)) for the interaction between orchestral instruments and large ensembles, along with its standard error and t-value.

Assuming the 37 musicians in this study are representative of a larger population of musicians, parameter interpretations for our 8 model parameters are given below:

- Fixed effects:

- \(\hat{\alpha}_{0} = 15.9\). The estimated mean performance anxiety for solos and small ensembles (Large=0) for keyboard players and vocalists (Orch=0) is 15.9.

- \(\hat{\alpha}_{1} = 1.7\). Orchestral instrumentalists have an estimated mean performance anxiety for solos and small ensembles which is 1.7 points higher than keyboard players and vocalists.

- \(\hat{\beta}_{0} = -0.9\). Keyboard players and vocalists have an estimated mean decrease in performance anxiety of 0.9 points when playing in large ensembles instead of solos or small ensembles.

- \(\hat{\beta}_{1} = -1.4\). Orchestral instrumentalists have an estimated mean decrease in performance anxiety of 2.3 points when playing in large ensembles instead of solos or small ensembles, 1.4 points greater than the mean decrease among keyboard players and vocalists.

- Variance components

- \(\hat{\sigma}_{u} = 2.4\). The estimated standard deviation of performance anxiety levels for solos and small ensembles is 2.4 points, after controlling for instrument played.

- \(\hat{\sigma}_{v} = 0.7\). The estimated standard deviation of differences in performance anxiety levels between large ensembles and other performance types is 0.7 points, after controlling for instrument played.

- \(\hat{\rho}_{uv} = -0.64\). The estimated correlation between performance anxiety scores for solos and small ensembles and increases in performance anxiety for large ensembles is -0.64, after controlling for instrument played. Those subjects with higher performance anxiety scores for solos and small ensembles tend to have greater decreases in performance anxiety for large ensemble performances.

- \(\hat{\sigma} = 4.7.\) The estimated standard deviation in residuals for the individual regression models is 4.7 points.

Table 8.3 shows a side-by-side comparison of estimated coefficients from the approaches described to this point. Underlying assumptions, especially regarding the error and correlation structure, differ, and differences in estimated effects are potentially meaningful. Note that some standard errors are greatly underestimated under independence, and that no Level One covariates (such as performance type) can be analyzed under a method such as last-visit-carried-forward which uses one observation per subject. Moving forward, we will employ the unified multilevel approach to maximize the information being used to estimate model parameters and to remain faithful to the structure of the data.

| Variable | Independence | TwoStage | LVCF | Multilevel |

|---|---|---|---|---|

| Intercept | 15.72(0.36) | 16.28(0.67) | 15.20(1.25) | 15.93(0.64) |

| Orch | 1.79(0.55) | 1.41(0.99) | 1.45(1.84) | 1.69(0.95) |

| Large | -0.28(0.79) | -0.77(0.85) |

|

-0.91(0.85) |

| Orch*Large | -1.71(1.06) | -1.41(1.20) |

|

-1.42(1.10) |

Two level modeling as done with the music performance anxiety data usually involves fitting a number of models. Subsequent sections will describe a process of starting with the simplest two-level models and building toward a final model which addresses the research questions of interest.

8.6 Building a multilevel model

8.6.1 Model building strategy

Initially, it is advisable to first fit some simple, preliminary models, in part to establish a baseline for evaluating larger models. Then, we can build toward a final model for description and inference by attempting to add important covariates, centering certain variables, and checking model assumptions. In this study, we are particularly interested in Level Two covariates—those subject-specific variables that provide insight into why individuals react differently in anxiety-inducing situations. To get more precise estimates of the effect of Level Two covariates, we also want to control for Level One covariates that describe differences in individual performances.

Our strategy for building multilevel models will begin with extensive exploratory data analysis at each level. Then, after examining models with no predictors to assess variability at each level, we will first focus on creating a Level One model, starting simple and adding terms as necessary. Next, we will move to Level Two models, again starting simple and adding terms as necessary, beginning with the equation for the intercept term. Finally, we will examine the random effects and variance components, beginning with a full set of error terms and then removing covariance terms and variance terms where advisable (for instance, when parameter estimates are failing to converge or producing impossible or unlikely values). This strategy follows closely with that described by Raudenbush and Bryk (2002) and used by Singer and Willett (2003). Singer and Willett further find that the modeled error structure rarely matters in practical contexts. Other model building approaches are certainly possible. Diggle et al. (2002), for example, begins with a saturated fixed effects model, determines variance components based on that, and then simplifies the fixed part of the model after fixing the random part.

8.6.2 An initial model: unconditional means or random intercepts

The first model fit in almost any multilevel context should be the unconditional means model, also called a random intercepts model. In this model, there are no predictors at either level; rather, the purpose of the unconditional means model is to assess the amount of variation at each level—to compare variability within subject to variability between subjects. Expanded models will then attempt to explain sources of between and within subject variability.

The unconditional means (random intercepts) model, which we will denote as Model A, can be specified either using formulations at both levels:

- Level One: \[\begin{equation} Y_{ij} = a_{i}+\epsilon_{ij} \textrm{ where } \epsilon_{ij}\sim N(0,\sigma^2) \tag{8.8} \end{equation}\]

- Level Two: \[\begin{equation} a_{i} = \alpha_{0}+u_{i} \textrm{ where } u_{i}\sim N(0,\sigma_{u}^{2}) \tag{8.9} \end{equation}\]

In this model, the performance anxiety scores of subject \(i\) are not a function of performance type or any other Level One covariate, so that \(a_{i}\) is the true mean response of all observations for subject \(i\). On the other hand, \(\alpha_{0}\) is the grand mean – the true mean of all observations across the entire population. Our primary interest in the unconditional means model is the variance components – \(\sigma^2\) is the within-person variability, while \(\sigma_{u}^{2}\) is the between-person variability. The name random intercepts model then arises from the Level Two equation for \(a_{i}\): each subject’s intercept is assumed to be a random value from a normal distribution centered at \(\alpha_{0}\) with variance \(\sigma_{u}^{2}\).

Using the composite model specification, the unconditional means model can be fit to the music performance anxiety data using statistical software:

Formula: na ~ 1 + (1 | id)

Random effects:

Groups Name Variance Std.Dev.

id (Intercept) 4.95 2.225

Residual 22.46 4.739

Number of obs: 497, groups: id, 37

Fixed effects:

Estimate Std. Error t value

(Intercept) 16.2370 0.4279 37.94From this output, we obtain estimates of our three model parameters:

- \(\hat{\alpha}_{0}=16.2=\) the estimated mean performance anxiety score across all performances and all subjects.

- \(\hat{\sigma}^2=22.5=\) the estimated variance in within-person deviations.

- \(\hat{\sigma}_{u}^{2}=5.0=\) the estimated variance in between-person deviations.

Thus, 18.2% of the total variability in performance anxiety scores are attributable to differences among subjects. In this particular model, we can also say that the average correlation for any pair of responses from the same individual is a moderately low .182. As \(\rho\) approaches 0, responses from an individual are essentially independent and accounting for the multilevel structure of the data becomes less crucial. However, as \(\rho\) approaches 1, repeated observations from the same individual essentially provide no additional information and accounting for the multilevel structure becomes very important. With \(\rho\) near 0, the effective sample size (the number of independent pieces of information we have for modeling) approaches the total number of observations, while with \(\rho\) near 1, the effective sample size approaches the number of subjects in the study.

8.7 Binary covariates at Level One and Level Two

8.7.1 Random slopes and intercepts model

The next step in model fitting is to build a good model for predicting performance anxiety scores at Level One (within subject). We will add potentially meaningful Level One covariates—those that vary from performance-to-performance for each individual. In this case, mirroring our model from section 8.4, we will include a binary covariate for performance type: \[ \textstyle{LargeEns}_{ij} = \left\{ \begin{array}{ll}

1 & \mbox{if `perf_type` = Large Ensemble} \\

0 & \mbox{if `perf_type` = Solo or Small Ensemble.}

\end{array}

\right. \] and no other Level One covariates (for now). (Note that we may later also want to include an indicator variable for “Small Ensemble” to separate the effects of Solo performances and Small Ensemble performances.) The resulting model, which we will denote as Model B, can be specified either using formulations at both levels:

- Level One: \[\begin{equation} Y_{ij} = a_{i}+b_{i}\textstyle{LargeEns}_{ij}+\epsilon_{ij} \tag{8.12} \end{equation}\]

- Level Two:

where \(\epsilon_{ij}\sim N(0,\sigma^2)\) and \[ \left[ \begin{array}{c} u_{i} \\ v_{i} \end{array} \right] \sim N \left( \left[ \begin{array}{c} 0 \\ 0 \end{array} \right], \left[ \begin{array}{cc} \sigma_{u}^{2} & \\ \rho\sigma_{u}\sigma_{v} & \sigma_{v}^{2} \end{array} \right] \right). \] as discussed in section 8.5.3.

In this model, performance anxiety scores for subject \(i\) are assumed to differ (on average) for Large Ensemble performances as compared with Solos and Small Ensemble performances; the \(\epsilon_{ij}\) terms capture the deviation between the true performance anxiety levels for subjects (based on performance type) and their observed anxiety levels. \(\alpha_{0}\) is then the true mean performance anxiety level for Solos and Small Ensembles, and \(\beta_{0}\) is the true mean difference in performance anxiety for Large Ensembles compared to other performance types. As before, \(\sigma^2\) quantifies the within-person variability (the scatter of points around individuals’ means by performance type), while now the between-person variability is partitioned into variability in Solo and Small Ensemble scores (\(\sigma_{u}^{2}\)) and variability in differences with Large Ensembles (\(\sigma_{v}^{2}\)).

Using the composite model specification, Model B can be fit to the music performance anxiety data, producing the following output:

Formula: na ~ large + (large | id)

Random effects:

Groups Name Variance Std.Dev. Corr

id (Intercept) 6.3330 2.5165

large 0.7429 0.8619 -0.76

Residual 21.7712 4.6660

Number of obs: 497, groups: id, 37

Fixed effects:

Estimate Std. Error t value

(Intercept) 16.7297 0.4908 34.09

large -1.6762 0.5425 -3.09From this output, we obtain estimates of our six model parameters (2 fixed effects and 4 variance components):

- \(\hat{\alpha}_{0}=16.7=\) the mean performance anxiety level before solos and small ensemble performances.

- \(\hat{\beta}_{0}=-1.7=\) the mean decrease in performance anxiety before large ensemble performances.

- \(\hat{\sigma}^2=21.8=\) the variance in within-person deviations.

- \(\hat{\sigma}_{u}^{2}=6.3=\) the variance in between-person deviations in performance anxiety scores before solos and small ensembles.

- \(\hat{\sigma}_{v}^{2}=0.7=\) the variance in between-person deviations in increases (or decreases) in performance anxiety scores before large ensembles.

- \(\hat{\rho}_{uv}=-0.76=\) the correlation in subjects’ anxiety before solos and small ensembles and their differences in anxiety between large ensembles and other performance types.

We see that, on average, subjects had a performance anxiety level of 16.7 before solos and small ensembles, and their anxiety levels were 1.7 points lower, on average, before large ensembles, producing an average performance anxiety level before large ensembles of 15.0. According to the t-value listed in R, the difference between large ensembles and other performance types is statistically significant (t=-3.09).

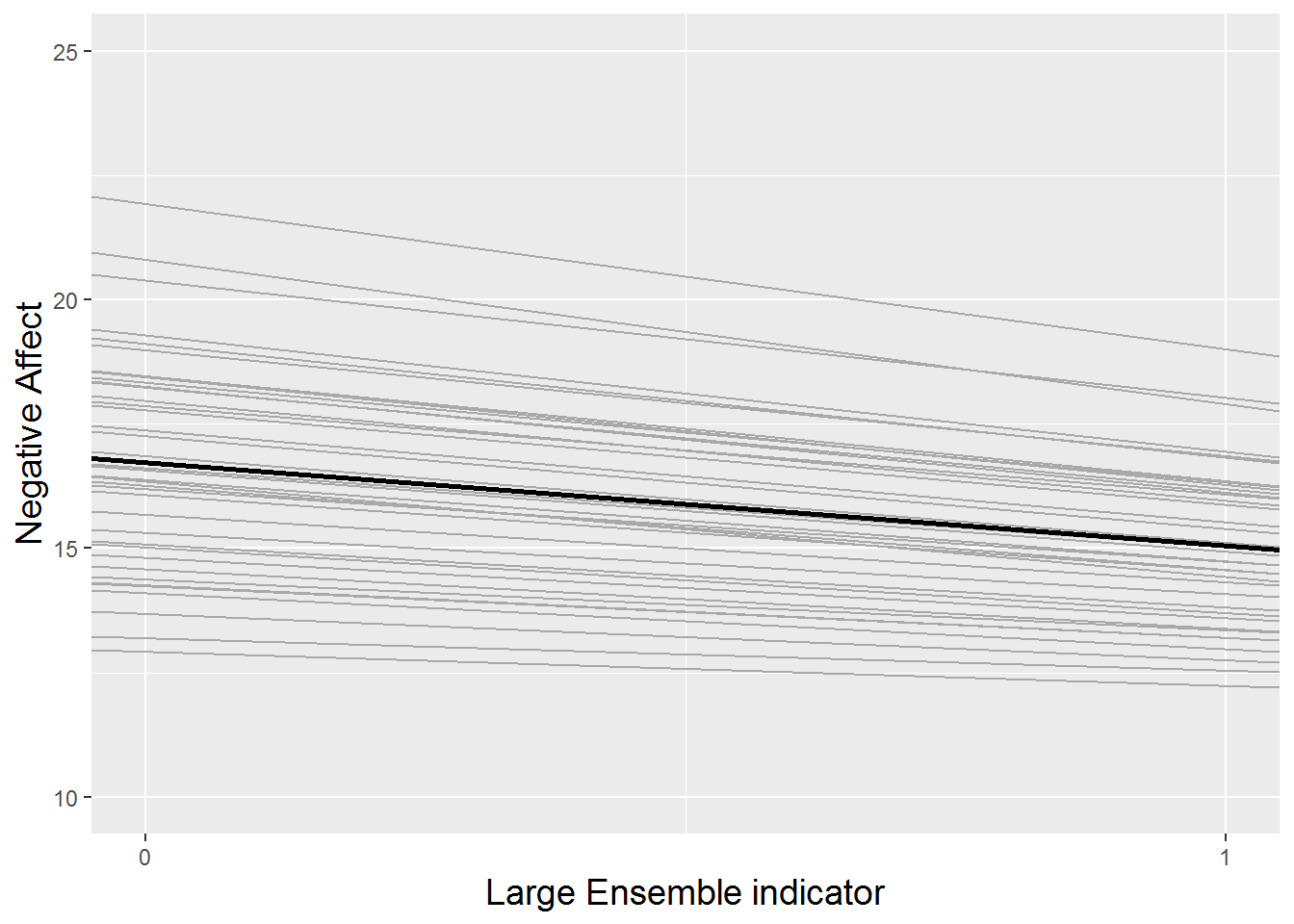

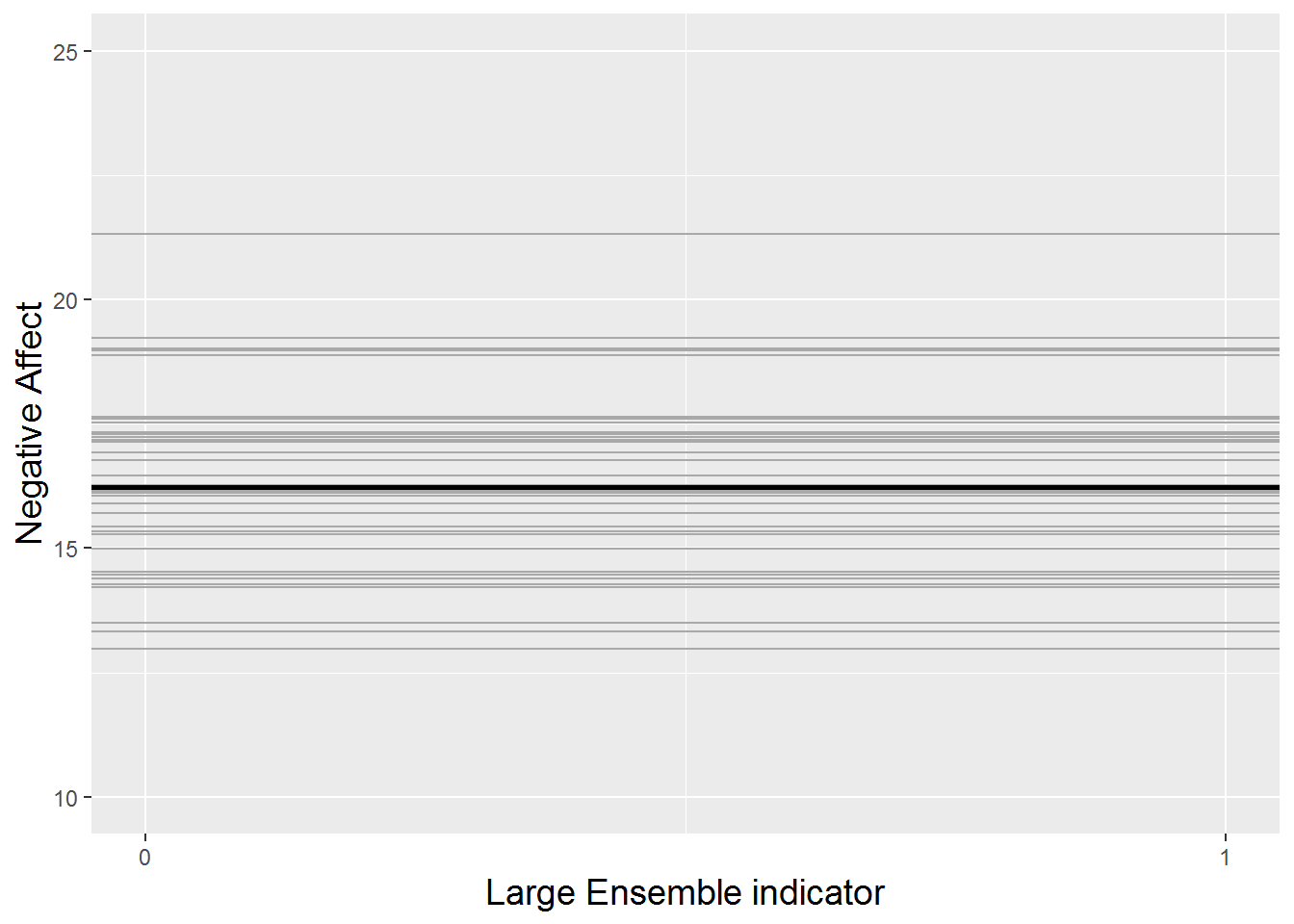

This random slopes and intercepts model is illustrated in Figure 8.13. The thicker black line shows the overall trends given by our estimated fixed effects: an intercept of 16.7 and a slope of -1.7. Then, each subject is represented by a gray line. Not only do the subjects’ intercepts differ (with variance 6.3), but their slopes differ as well (with variance 0.7). Additionally, subjects’ slopes and intercepts are negatively associated (with correlation -0.76), so that subjects with greater intercepts tend to have steeper negative slopes. We can compare this model with the random intercepts model from section 8.6.2, pictured in Figure 8.14. With no effect of large ensembles, each subject is represented by a gray line with the identical slope (0), but with varying intercepts (with variance 5.0).

Figure 8.13: The random slopes and intercepts model fitted to the music performance anxiety data. Each gray line represents one subject, and the thicker black line represents the trend across all subjects.

Figure 8.14: The random intercepts model fitted to the music performance anxiety data. Each gray line represents one subject, and the thicker black line represents the trend across all subjects.

Figures 8.13 and 8.14 use empirical Bayes estimates for the intercepts (\(a_{i}\)) and slopes (\(b_{i}\)) of individual subjects. Empirical Bayes estimates are sometimes called “shrinkage estimates” since they combine individual-specific information with information from all subjects, thus “shrinking” the individual estimates toward the group averages. Empirical Bayes estimates are often used when a term such as \(a_{i}\) involves both fixed and random components; further detail can be found in Raudenbush and Bryk (2002) and Singer and Willett (2003).

8.7.2 Pseudo \(R^2\) values

The estimated within-person variance \(\hat{\sigma}^2\) decreased by 3.1% (from 22.5 to 21.8) from the unconditional means model, implying that only 3.1% of within-person variability in performance anxiety scores can be explained by performance type. This calculation is considered a pseudo R-square value: \[\begin{equation} \textrm{Pseudo }R^2_{L1} = \frac{\hat{\sigma}^{2}(\textrm{Model A})-\hat{\sigma}^{2}(\textrm{Model B})}{\hat{\sigma}^{2}(\textrm{Model A})} = \frac{22.5-21.8}{22.5} = 0.031 \tag{8.14} \end{equation}\]Values of \(\hat{\sigma}_{u}^{2}\) and \(\hat{\sigma}_{v}^{2}\) cannot be compared to between-person variability from Model A, since the inclusion of performance type has changed the interpretation of these values, although they can provide important benchmarks for evaluating more complex Level Two predictions. Finally, \(\hat{\rho}_{uv}=-0.76\) indicates a strong negative relationship between a subject’s performance anxiety before solos and small ensembles and their (typical) decrease in performance anxiety before large ensembles. As might be expected, subjects with higher levels of performance anxiety before solos and small ensembles tend to have smaller increases (or greater decreases) in performance anxiety before large ensembles; those with higher levels of performance anxiety before solos and small ensembles have more opportunity for decreases before large ensembles.

Pseudo \(R^2\) values are not universally reliable as measures of model performance. Because of the complexity of estimating fixed effects and variance components at various levels of a multilevel model, it is not unusual to encounter situations in which covariates in a Level Two equation for, say, the intercept remain constant (while other aspects of the model change), yet the associated pseudo \(R^2\) values differ or are negative. For this reason, pseudo \(R^2\) values in multilevel models should be interpreted cautiously.

8.7.3 Adding a covariate at Level Two

The initial two-level model described in section 8.5.5 essentially expands upon the random slopes and intercepts model by adding a binary covariate for instrument played at Level Two. We will denote this as Model C:

- Level One: \[\begin{equation} Y_{ij} = a_{i}+b_{i}\textstyle{LargeEns}_{ij}+\epsilon_{ij} \tag{8.15} \end{equation}\]

- Level Two: \[\begin{eqnarray*} a_{i} & = & \alpha_{0}+\alpha_{1}\textstyle{Orch}_{i}+u_{i} \\ b_{i} & = & \beta_{0}+\beta_{1}\textstyle{Orch}_{i}+v_{i}, \end{eqnarray*}\] where \(\epsilon_{ij}\sim N(0,\sigma^2)\) and \[ \left[ \begin{array}{c} u_{i} \\ v_{i} \end{array} \right] \sim N \left( \left[ \begin{array}{c} 0 \\ 0 \end{array} \right], \left[ \begin{array}{cc} \sigma_{u}^{2} & \\ \rho\sigma_{u}\sigma_{v} & \sigma_{v}^{2} \end{array} \right] \right). \]

We found that there are no highly significant fixed effects in Model C (other than the intercept). In particular, we have no significant evidence that musicians playing orchestral instruments reported different performance anxiety scores, on average, for solos and small ensembles than keyboardists and vocalists, no evidence of a difference in performance anxiety by performance type for keyboard players and vocalists, and no evidence of an instrument effect in difference between large ensembles and other types.

Since no terms were added at Level One when expanding from the random slopes and intercepts model (Model B), no discernable changes should occur in explained within-person variability (although small changes could occur due to numerical estimation procedures used in likelihood-based parameter estimates). However, Model C expanded Model B by using the instrument which a subject plays to model both intercepts and slopes at Level Two. We can use pseudo R-square values for both intercepts and slopes to evaluate the impact on between-person variability of adding instrument to Model B. \[\begin{equation} \textrm{Pseudo }R^2_{L2_u} = \frac{\hat{\sigma}_{u}^{2}(\textrm{Model B})-\hat{\sigma}_{u}^{2}(\textrm{Model C})}{\hat{\sigma}_{u}^{2}(\textrm{Model B})} = \frac{6.33-5.66}{6.33} = 0.106 \tag{8.16} \end{equation}\] \[\begin{equation} \textrm{Pseudo }R^2_{L2_v} = \frac{\hat{\sigma}_{v}^{2}(\textrm{Model B})-\hat{\sigma}_{v}^{2}(\textrm{Model C})}{\hat{\sigma}_{v}^{2}(\textrm{Model B})} = \frac{0.74-0.45}{0.74} = 0.392 \tag{8.17} \end{equation}\]\(\textrm{Pseudo }R^2_{L2_u}\) describes the improvement in Model C over Model B in explaining subject-to-subject variability in intercepts, and \(\textrm{Pseudo }R^2_{L2_v}\) describes the improvement in Model C over Model B in explaining subject-to-subject variability in slopes. Thus, the addition of instrument at Level Two has decreased the between-person variability in mean performance anxiety before solos and small ensembles by 10.6%, and it has decreased the between-person variability in the effect of large ensembles on performance anxiety by 39.2%.

We could also run a “random intercepts” version of Model C, with no error term in the equation for the slope at Level Two (and thus no covariance between errors at Level Two as well):

- Level One: \[\begin{equation} Y_{ij} = a_{i}+b_{i}\textstyle{LargeEns}_{ij}+\epsilon_{ij} \tag{8.18} \end{equation}\]

- Level Two: \[\begin{eqnarray*} a_{i} & = & \alpha_{0}+\alpha_{1}\textstyle{Orch}_{i}+u_{i} \\ b_{i} & = & \beta_{0}+\beta_{1}\textstyle{Orch}_{i}, \end{eqnarray*}\] where \(\epsilon_{ij}\sim N(0,\sigma^2)\) and \(u_{i}\sim N(0,\sigma_{u}^{2})\).

The output below contains REML estimates of our 6 parameters from this simplified version of Model C:

Formula: na ~ orch + large + orch:large + (1 | id)

Random effects:

Groups Name Variance Std.Dev.

id (Intercept) 5.131 2.265

Residual 21.882 4.678

Number of obs: 497, groups: id, 37

Fixed effects:

Estimate Std. Error t value

(Intercept) 15.9026 0.6187 25.703

orch 1.7100 0.9131 1.873

large -0.8918 0.8415 -1.060

orch:large -1.4650 1.0880 -1.347 df AIC

model.c 8 3002.981

model.c2 6 2999.384 df BIC

model.c 8 3036.650

model.c2 6 3024.635Note that parameter estimates for the remaining 6 fixed effects and variance components closely mirror the corresponding parameter estimates from Model C. In fact, removing the error term on the slope has improved (reduced) both the AIC and BIC measures of overall model performance. Instead of assuming that the large ensemble effects, after accounting for instrument played, vary by individual, we are assuming that large ensemble effect is fixed across subjects. It is not unusual to run a two-level model like this, with an error term on the intercept equation to account for subject-to-subject differences, but with no error terms on other Level Two equations unless there is an a priori reason to allow effects to vary by subject or if the model performs better after building in those additional error terms.

8.8 Additional covariates: model comparison and interpretability

Recall that we are particularly interested in this study in Level Two covariates—those subject-specific variables that provide insight into why individuals react differently in anxiety-inducing situations. In section 1.5, we saw evidence that subjects with higher baseline levels of negative emotionality tend to have higher performance anxiety levels prior to performances. Thus, in our next step in model building, we will add negative emotionality as a Level Two predictor to Model C. With this addition, our new model can be expressed as a system of Level One and Level Two models:

- Level One: \[\begin{equation} Y_{ij} = a_{i}+b_{i}\textstyle{LargeEns}_{ij}+\epsilon_{ij} \tag{8.19} \end{equation}\]

- Level Two: \[\begin{eqnarray*} a_{i} & = & \alpha_{0}+\alpha_{1}\textstyle{Orch}_{i}+\alpha_{2}\textstyle{MPQnem}_{i}+u_{i} \\ b_{i} & = & \beta_{0}+\beta_{1}\textstyle{Orch}_{i}+\beta_{2}\textstyle{MPQnem}_{i}+v_{i}, \end{eqnarray*}\] or as a composite model: \[\begin{eqnarray*} Y_{ij} & = & [\alpha_{0}+\alpha_{1}\textstyle{Orch}_{i}+\alpha_{2}\textstyle{MPQnem}_{i}+\beta_{0}\textstyle{LargeEns}_{ij} \\ & & \mbox{} + \beta_{1}\textstyle{Orch}_{i}\textstyle{LargeEns}_{ij}+\beta_{2}\textstyle{MPQnem}_{i}\textstyle{LargeEns}_{ij}] \\ & & \mbox{} + [u_{i}+v_{i}\textstyle{LargeEns}_{ij}+\epsilon_{ij}] \end{eqnarray*}\] where error terms are defined as in Model C.

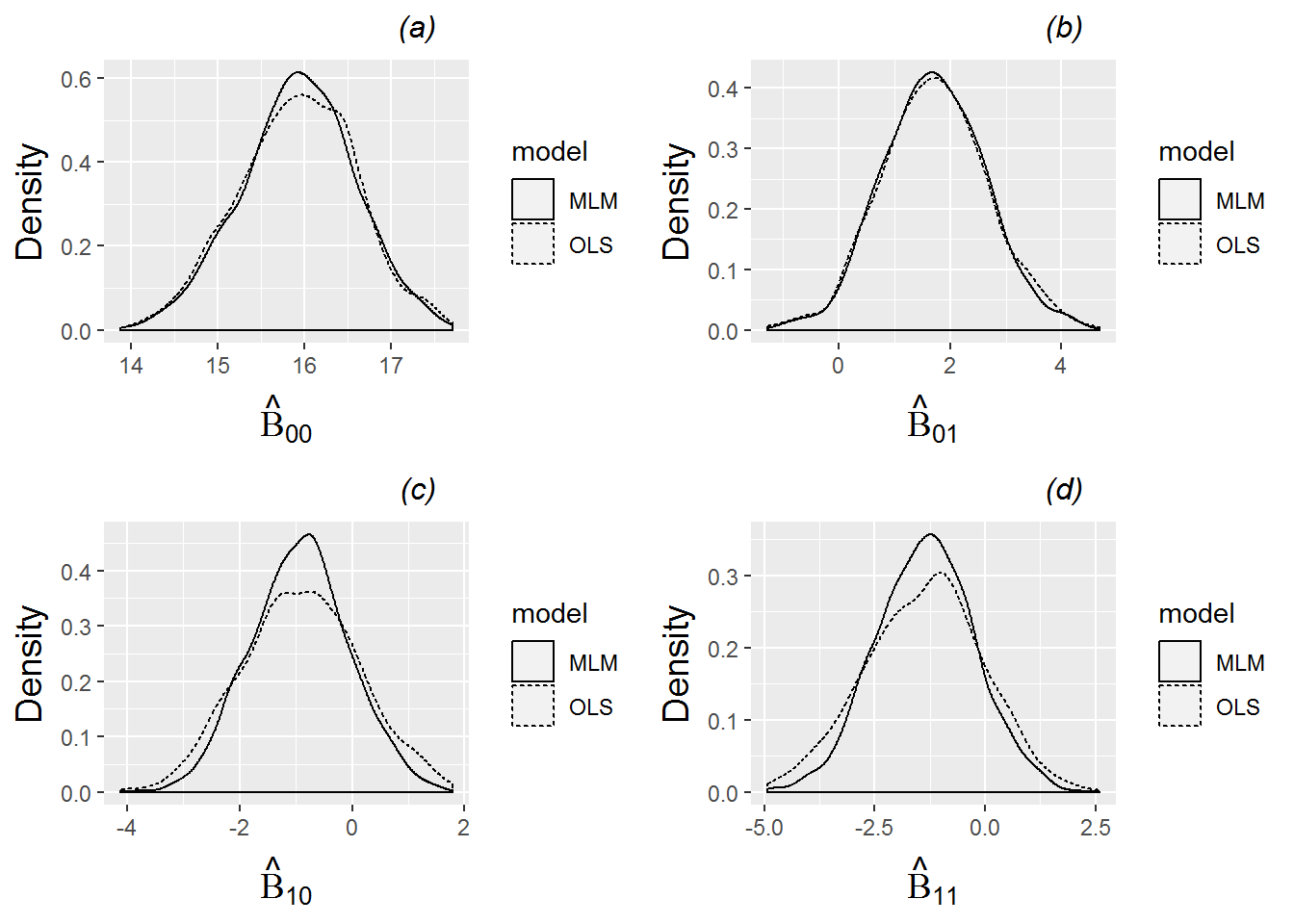

From the R output below, we see that, as our exploratory analyses suggested, subjects with higher baseline levels of stress reaction, alienation, and aggression (as measured by the MPQ negative emotionality scale) had significantly higher levels of performance anxiety before solos and small ensembles (t=3.893). They also had somewhat greater differences between large ensembles and other performance types, controlling for instrument (t=-0.575), although this interaction was not statistically significant.

Formula:

na ~ orch + mpqnem + large + orch:large + mpqnem:large + (large | id)

Random effects:

Groups Name Variance Std.Dev. Corr

id (Intercept) 3.2857 1.813

large 0.5565 0.746 -0.38

Residual 21.8114 4.670

Number of obs: 497, groups: id, 37

Fixed effects:

Estimate Std. Error t value

(Intercept) 11.56801 1.22058 9.477

orch 1.00069 0.81713 1.225

mpqnem 0.14823 0.03808 3.892

large -0.28019 1.83412 -0.153

orch:large -0.94927 1.10620 -0.858

mpqnem:large -0.03018 0.05246 -0.5758.8.1 Interpretation of parameter estimates

Compared to Model C, the directions of the effects of instrument and performance type are consistent, but the effect sizes and levels of significance are reduced because of the relative importance of the negative emotionality term. Interpretations will also change slightly to acknowledge that we have controlled for a covariate. In addition, interpretations of fixed effects involving negative emotionality must acknowledge that this covariate is a continuous measure and not binary like instrument and performance type:

- \(\hat{\alpha}_{0} = 11.57\). The estimated mean performance anxiety for solos and small ensembles (

large=0) is 11.57 for keyboard players and vocalists (orch=0) with negative emotionality of 0 at baseline (mpqnem=0). Since the minimum negative emotionality score in this study was 11, this interpretation, while technically correct, is not practically meaningful. - \(\hat{\alpha}_{1} = 1.00\). Orchestral instrument players have an estimated mean anxiety level before solos and small ensembles which is 1.00 point higher than keyboardists and vocalists, controlling for the effects of baseline negative emotionality.

- \(\hat{\alpha}_{2} = 0.15\). A one point increase in baseline negative emotionality is associated with an estimated 0.15 mean increase in anxiety levels before solos and small ensembles, after controlling for instrument.

- \(\hat{\beta}_{0} = -0.28\). Keyboard players and vocalists (

orch=0) with baseline negative emotionality levels of 0 (mpqnem=0) have an estimated mean decrease in anxiety level of 0.28 points before large ensemble performances compared to other performance types. - \(\hat{\beta}_{1} = -0.95\). Orchestral instrument players have an estimated mean difference in anxiety levels between large ensembles and other performance types that is 0.95 points lower than the difference for keyboard players and vocalists, controlling for the effects of baseline negative emotionality. For example, orchestral instrumentalists with

mpqnem=0have a larger estimated mean decrease in anxiety level of 1.23 points before large ensemble performances, rather than the mean decrease of 0.28 in those who don’t play orchestral instruments. - \(\hat{\beta}_{2} = -0.03\). A one point increase in baseline negative emotionality is associated with an estimated .03 mean decrease in the difference in anxiety levels for large ensembles and other performance types, after controlling for instrument.

Some of the detail in these parameter interpretations can be tricky—describing interaction terms, deciding if a covariate must be fixed at 0 or merely held constant, etc. Often it helps to write out models for special cases to isolate the effects of specific fixed effects. We will consider a few parameter estimates from above and see why the interpretations are written as they are.

- \(\hat{\alpha}_{1}\). For solos and small ensembles (

LargeEns=0), the following equations describe the fixed effects portion of the composite model for negative affect score for vocalists and keyboardsists (Orch=0) and orchestral instrumentalists (Orch=1): \[\begin{eqnarray*} \textstyle{Orch}=0: & & \\ Y_{ij} & = & \alpha_{0}+\alpha_{2}\textstyle{MPQnem}_{i} \\ \textstyle{Orch}=1: & & \\ Y_{ij} & = & (\alpha_{0}+\alpha_{1})+\alpha_{2}\textstyle{MPQnem}_{i} \end{eqnarray*}\]Regardless of the subjects’ baseline negative emotionality (

MPQnem), \(\hat{\alpha}_{1}\) represents the estimated difference in performance anxiety between those playing orchestral instruments and others. This interpretation, however, only holds for solos and small ensembles. For large ensembles, the difference between those playing orchestral instruments and others is actually given by \(\hat{\alpha}_{1}+\hat{\beta}_{1}\), holdingMPQnemconstant (Show!). - \(\hat{\beta}_{0}\). Because

LargeEnsinteracts with bothOrchandMPQnemin Model C, \(\hat{\beta}_{0}\) only describes the estimated difference between large ensembles and other performance types when bothOrch=0andMPQnem=0, thus removing the effects of the interaction terms. If, for instance,Orch=1andMPQnem=20, then the difference between large ensembles and other performance types is given by \(\hat{\beta}_{0}+\hat{\beta}_{1}+20\hat{\beta}_{2}\). - \(\hat{\beta}_{1}\). As with \(\hat{\alpha}_{1}\), we consider equations describing the fixed effects portion of the composite model for negative affect score for vocalists and keyboardsists (

Orch=0) and orchestral instrumentalists (Orch=1), except here we leave LargeEns as an unknown rather than restricting the model to solos and small ensembles: \[\begin{eqnarray*} \textstyle{Orch}=0: & & \\ Y_{ij} & = & \alpha_{0}+\alpha_{2}\textstyle{MPQnem}_{i}+\beta_{0}\textstyle{LargeEns}_{ij} \\ & & \mbox{} +\beta_{2}\textstyle{MPQnem}_{i}\textstyle{LargeEns}_{ij} \\ \textstyle{Orch}=1: & & \\ Y_{ij} & = & (\alpha_{0}+\alpha_{1})+\alpha_{2}\textstyle{MPQnem}_{i}+(\beta_{0}+\beta_{1})\textstyle{LargeEns}_{ij} \\ & & \mbox{} +\beta_{2}\textstyle{MPQnem}_{i}\textstyle{LargeEns}_{ij} \end{eqnarray*}\]As long as baseline negative emotionality is held constant (at any level, not just 0), then \(\hat{\beta}_{1}\) represents the estimated difference in the large ensemble effect between those playing orchestral instruments and others.

8.8.2 Model comparisons

At this point, we might ask: do the two extra fixed effects terms in Model D provide a significant improvement over Model C? Nested models such as these can be tested using a likelihood ratio (drop in deviance) test, as we’ve used in Sections 4.4.3 and 6.5.4 with certain generalized linear models. Since we are comparing models nested in their fixed effects, we use full maximum likelihood methods to estimate model parameters, as discussed in section 8.5.4. As expected, the likelihood is larger (and the log-likelihood is less negative) under the larger model (Model D); our test statistic (14.734) is then -2 times the difference in log-likelihood between Models C and D. Comparing the test statistic to a chi-square distribution with 2 degrees of freedom (signifying the number of additional terms in Model D), we obtain a p-value of .0006. Thus, Model D significantly outperforms Model C.

# anova() automatically uses ML for LRT tests

anova(model.d,model.c)Data: music

Models:

model.c: na ~ orch + large + orch:large + (large | id)

model.d: na ~ orch + mpqnem + large + orch:large + mpqnem:large + (large |

model.d: id)

Df AIC BIC logLik deviance Chisq Chi Df Pr(>Chisq)

model.c 8 3007.2 3040.8 -1495.6 2991.2

model.d 10 2996.4 3038.5 -1488.2 2976.4 14.734 2 0.0006319 ***

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1Two models, whether they are nested or not, can be compared using AIC and BIC measures, which were first seen in Chapter 1 and later used in evaluating generalized linear models. In this case, the AIC clearly favors Model D (2996.7) over Model C (3007.3), whereas the BIC favors Model D (3038.8) only slightly over Model C (3041.0) since the BIC imposes a stiffer penalty on additional terms and additional model complexity. However, the likelihood ratio test is a more reliable method for comparing nested models.

Finally, we note that Model D could be further improved by dropping the negative emotionality by large ensemble interaction term. Not only is the t-value (-0.575) associated with this term of low magnitude, but a likelihood ratio test comparing Model D to a model without mpqnem:large produces an insignificant p-value of 0.5534.

model.d1 <- lmer(na ~ orch + mpqnem + large + orch:large +

(large|id), data=music)

anova(model.d,model.d1)Data: music

Models:

model.d1: na ~ orch + mpqnem + large + orch:large + (large | id)

model.d: na ~ orch + mpqnem + large + orch:large + mpqnem:large + (large |

model.d: id)

Df AIC BIC logLik deviance Chisq Chi Df Pr(>Chisq)

model.d1 9 2994.8 3032.7 -1488.4 2976.8

model.d 10 2996.4 3038.5 -1488.2 2976.4 0.3513 1 0.55348.9 Center covariates

As we observed above, the addition of baseline negative emotionality in Model D did not always produce sensible interpretations of fixed effects. It makes no sense to draw conclusions about performance anxiety levels for subjects with MPQNEM scores of 0 at baseline (as in \(\hat{\beta}_{0}\)), since the minimum NEM composite score among subjects in this study was 11. In order to produce more meaningful interpretations of parameter estimates and often more stable parameter estimates, it is often wise to center explanatory variables. Centering involves subtracting a fixed value from each observation, where the fixed value represents a meaningful anchor value (e.g., last grade completed = 12; GPA = 3.0). Often, when there’s no pre-defined anchor value, the mean is used to represent a typical case. With this in mind, we can create a new variable \[\begin{eqnarray*} \textstyle{centeredbaselineNEM} = \textstyle{cmpqnem} = \textstyle{mpqnem - mean(mpqnem)} = \textstyle{mpqnem} - 31.63 \end{eqnarray*}\]and replace baseline NEM in Model D with its centered version:

Formula:

na ~ orch + cmpqnem + large + orch:large + cmpqnem:large + (large |

-2.0544 -0.6364 -0.1584 0.4826 4.0530

Random effects:

Groups Name Variance Std.Dev. Corr

id (Intercept) 3.2857 1.813

large 0.5565 0.746 -0.38

Residual 21.8114 4.670

Number of obs: 497, groups: id, 37

Fixed effects:

Estimate Std. Error t value

(Intercept) 16.25679 0.54756 29.689

orch 1.00069 0.81713 1.225

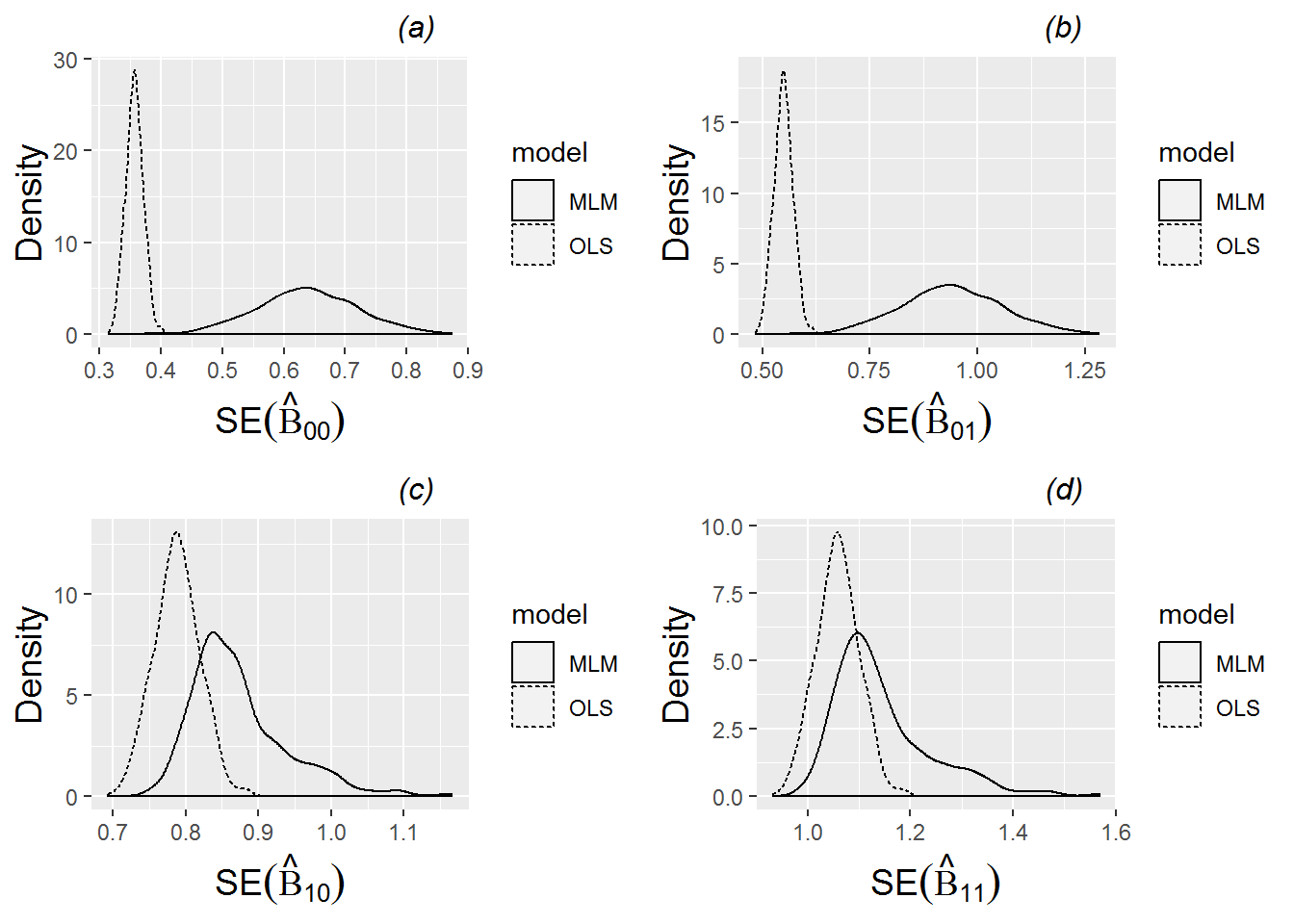

cmpqnem 0.14823 0.03808 3.892