Chapter 10 Multilevel Data With More Than Two Levels

10.1 Learning Objectives

After finishing this chapter, you should be able to:

- Extend the standard multilevel model to cases with more than two levels.

- Apply exploratory data analysis techniques specific to data from more than two levels.

- Formulate multilevel models including the variance-covariance structure.

- Build and understand a taxonomy of models for data with more than two levels.

- Interpret parameters in models with more than two levels.

- Develop strategies for handling an exploding number of parameters in multilevel models.

- Recognize when a fitted model has encountered boundary constraints and understand strategies for moving forward.

- Understand how a parametric bootstrap test of significance works and when it might be useful.

10.2 Case Studies: Seed Germination

It is estimated that 82-99% of historic tallgrass prairie ecosystems have been converted to agricultural use (Baer et al. 2002). A prime example of this large scale conversion of native prairie to agricultural purposes can be seen in Minnesota, where less than 1% of the prairies that once existed in the state still remain (Philip et al. 2004). Such large scale alteration of prairie communities has been associated with numerous problems. For example, erosion and decomposition that readily take place in cultivated soils have increased atmospheric CO2 levels and increased nitrogen inputs to adjacent waterways (Baer et al. (2002), Philip et al. (2004), Knops and Tilman (2000)). In addition, cultivation practices are known to affect rhizosphere composition as tilling can disrupt networks of soil microbes (Allison et al. 2005). The rhizosphere is the narrow region of soil that is directly influenced by root secretions and associated soil microorganisms; much of the nutrient cycling and disease suppression needed by plants occur immediately adjacent to roots. It is important to note that microbial communities in prairie soils have been implicated with plant diversity and overall ecosystem function by controlling carbon and nitrogen cycling in the soils (Zak et al. 2003).

There have been many responses to these claims, but one response in recent years is reconstruction of the native prairie community. These reconstruction projects provide new habitat for a variety of native prairie species, yet it is important to know as much as possible about the outcomes of prairie reconstruction projects in order to ensure that a functioning prairie community is established. The ecological repercussions resulting from prairie reconstruction are not well known. For example, all of the aforementioned changes associated with cultivation practices are known to affect the subsequent reconstructed prairie community (Baer et al. (2002), Philip et al. (2004)), yet there are few explanations for this phenomenon. For instance, prairies reconstructed in different years (using the same seed combinations and dispersal techniques) have yielded disparate prairie communities.

Researchers at a small Midwestern college decided to experimentally explore the underlying causes of variation in reconstruction projects in order to make future projects more effective. Introductory ecology classes were organized to collect longitudinal data on native plant species grown in a greenhouse setting, using soil samples from surrounding lands (Angell 2010). We will examine their data to compare germination and growth of two species of prairie plants—leadplants (Amorpha canescens) and coneflowers (Ratibida pinnata)—in soils taken from a remnant (natural) prairie, a cultivated (agricultural) field, and a restored (reconstructed) prairie. Additionally, half of the sampled soil was sterilized to determine if rhizosphere differences were responsible for the observed variation, so we will examine the effects of sterilization as well.

The data we’ll examine was collected through an experiment run using a 3x2x2 factorial design, with 3 levels of soil type (remnant, cultivated, and restored), 2 levels of sterilization (yes or no), and 2 levels of species (leadplant and coneflower). Each of the 12 treatments (unique combinations of factor levels) was replicated in 6 pots, for a total of 72 pots. Six seeds were planted in each pot (although a few pots had 7 or 8 seeds), and initially student researchers recorded days to germination (defined as when two leaves are visible), if germination occurred. In addition, the height of each germinated plant (in mm) was measured at 13, 18, 23, and 28 days after planting. The study design is illustrated in the diagram below.

The design of the seed germination study

10.3 Initial Exploratory Analyses

10.3.1 Data Organization

Data for Case Study 10.2 in seeds2.csv contains the following variables:

pot= Pot plant was grown in (1-72)plant= Unique plant identification numberspecies= L for leadplant and C for coneflowersoil= STP for reconstructed prairie, REM for remnant prairie, and CULT for cultivated landsterile= Y for yes and N for nogermin= Y if plant germinated, N if nothgt13= height of plant (in mm) 13 days after seeds plantedhgt18= height of plant (in mm) 18 days after seeds plantedhgt23= height of plant (in mm) 23 days after seeds plantedhgt28= height of plant (in mm) 28 days after seeds planted

This data is stored in wide format, with one row per plant (see 12 sample plants in Table 10.1). As we have done in previous multilevel analyses, we will convert to long format (one observation per plant-time combination) after examining the missing data pattern and removing any plants with no growth data. In this case, we are almost assuredly losing information by removing plants with no height data at all four time points, since these plants did not germinate, and there may well be differences between species, soil type, and sterilization with respect to germination rates. We will handle this possibility by analyzing germination rates separately (see Chapter 11); the analysis in this chapter will focus on effects of species, soil type, and sterilization on initial growth and growth rate among plants that germinate.

| pot | plant | soil | sterile | species | germin | hgt13 | hgt18 | hgt23 | hgt28 | |

|---|---|---|---|---|---|---|---|---|---|---|

| 135 | 23 | 231 | CULT | N | C | Y | 1.1 | 1.4 | 1.6 | 1.7 |

| 136 | 23 | 232 | CULT | N | C | Y | 1.3 | 2.2 | 2.5 | 2.7 |

| 137 | 23 | 233 | CULT | N | C | Y | 0.5 | 1.4 | 2.0 | 2.3 |

| 138 | 23 | 234 | CULT | N | C | Y | 0.3 | 0.4 | 1.2 | 1.7 |

| 139 | 23 | 235 | CULT | N | C | Y | 0.5 | 0.5 | 0.8 | 2.0 |

| 140 | 23 | 236 | CULT | N | C | Y | 0.1 | NA | NA | NA |

| 141 | 24 | 241 | STP | Y | L | Y | 1.8 | 2.6 | 3.9 | 4.2 |

| 142 | 24 | 242 | STP | Y | L | Y | 1.3 | 1.7 | 2.8 | 3.7 |

| 143 | 24 | 243 | STP | Y | L | Y | 1.5 | 1.6 | 3.9 | 3.9 |

| 144 | 24 | 244 | STP | Y | L | Y | NA | 1.0 | 2.3 | 3.8 |

| 145 | 24 | 245 | STP | Y | L | N | NA | NA | NA | NA |

| 146 | 24 | 246 | STP | Y | L | N | NA | NA | NA | NA |

Although the experimental design called for \(72*6=432\) plants, the wide data set has 437 plants because a few pots had more than six plants (likely because two of the microscopically small seeds stuck together when planted). Of those 437 plants, 154 had no height data (did not germinate by the 28th day) and were removed from analysis (for example, see rows 145-146 in Table 10.1). 248 plants had complete height data (e.g., rows 135-139 and 141-143), 13 germinated later than the 13th day but had complete heights once they germinated (e.g., row 144), and 22 germinated and had measurable height on the 13th day but died before the 28th day (e.g., row 140). Ultimately, the long data set contains 1132 unique observations where plants heights were recorded; representation of plants 236-242 in the long data set can be seen in Table 10.2.

| pot | plant | soil | sterile | species | germin | hgt | time13 |

|---|---|---|---|---|---|---|---|

| 23 | 236 | CULT | N | C | Y | 0.1 | 0 |

| 23 | 236 | CULT | N | C | Y | NA | 5 |

| 23 | 236 | CULT | N | C | Y | NA | 10 |

| 23 | 236 | CULT | N | C | Y | NA | 15 |

| 24 | 241 | STP | Y | L | Y | 1.8 | 0 |

| 24 | 241 | STP | Y | L | Y | 2.6 | 5 |

| 24 | 241 | STP | Y | L | Y | 3.9 | 10 |

| 24 | 241 | STP | Y | L | Y | 4.2 | 15 |

| 24 | 242 | STP | Y | L | Y | 1.3 | 0 |

| 24 | 242 | STP | Y | L | Y | 1.7 | 5 |

| 24 | 242 | STP | Y | L | Y | 2.8 | 10 |

| 24 | 242 | STP | Y | L | Y | 3.7 | 15 |

Notice the three-level structure of this data. Treatments (levels of the three experimental factors) were assigned at the pot level, then multiple plants were grown in each pot, and multiple measurements were taken over time for each plant. Our multilevel analysis must therefore account for pot-to-pot variability in height measurements (which could result from factor effects), plant-to-plant variability in height within a single pot, and variability over time in height for individual plants. In order to fit such a three-level model, we must extend the two-level model which we have used thus far.

10.3.2 Exploratory Analyses

We start by taking an initial look at the effect of Level Three covariates (factors applied at the pot level: species, soil type, and sterilization) on plant height, pooling observations across pot, across plant, and across time of measurement within plant. First, we observe that the initial balance which existed after randomization of pot to treatment no longer holds. After removing plants that did not germinate (and therefore had no height data), more height measurements exist for coneflowers (n=704, compared to 428 for leadplants), soil from restored prairies (n=524, compared to 288 for cultivated land and 320 for remnant prairies), and unsterilized soil (n=612, compared to 520 for sterilized soil). This imbalance indicates possible factor effects on germination rate; we will take up those hypotheses in Chapter 11. In this chapter, we will focus on the effects of species, soil type, and sterilization on the growth patterns of plants that germinate.

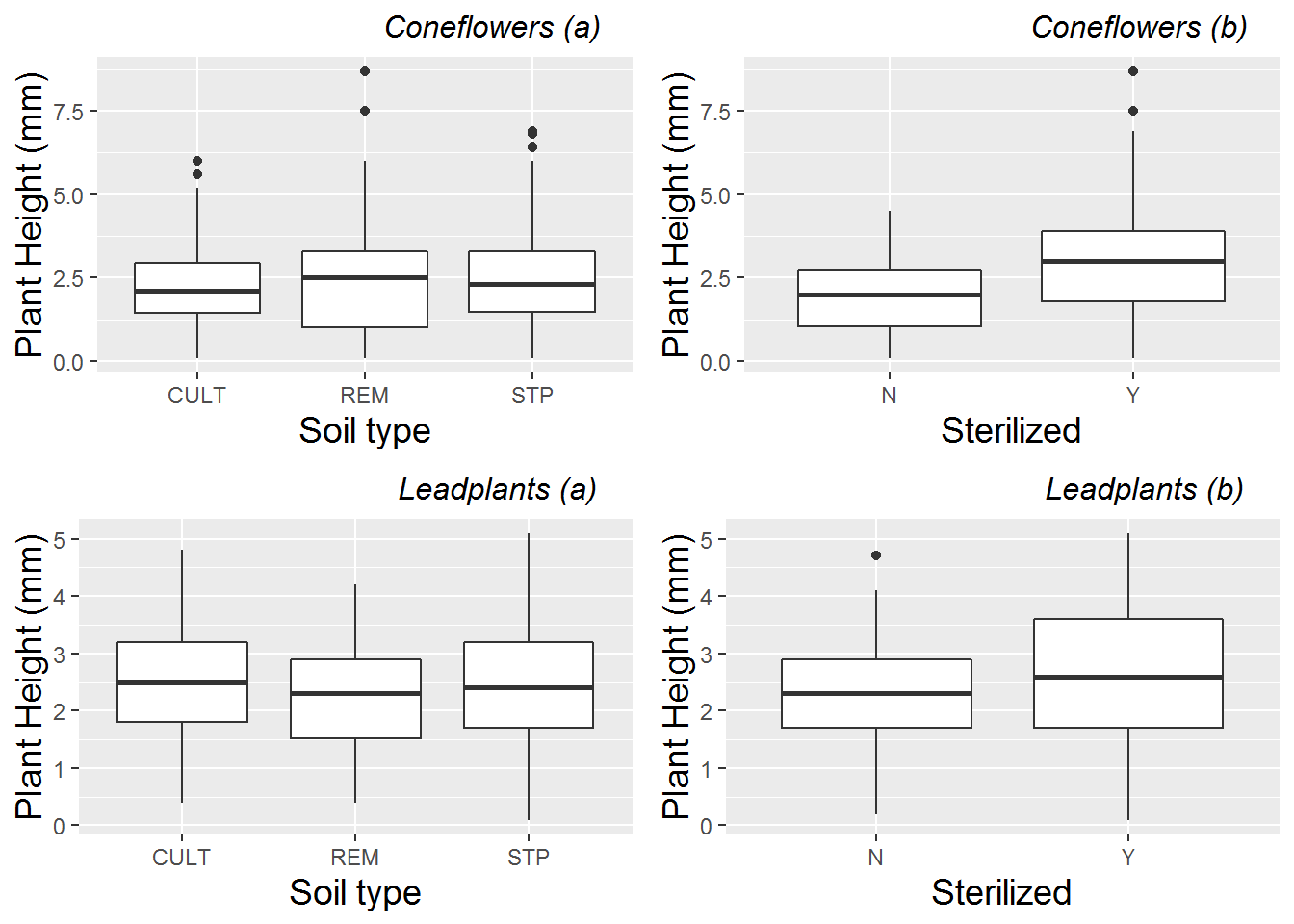

Because we suspect that height measurements over time for a single plant are highly correlated, while height measurements from different plants from the same pot are relatively uncorrelated, we calculate mean height per plant (over all available time points) before generating exploratory plots investigating Level Three factors. Figure 10.1 then examines the effects of soil type and sterilization separately by species. Sterilization seems to have a bigger benefit for coneflowers, while soil from remnant prairies seems to lead to smaller leadplants and taller coneflowers.

Figure 10.1: Plant height comparisons of (a) soil type and (b) sterilization within species. Each plant is represented by the mean height over all measurements at all time points for that plant.

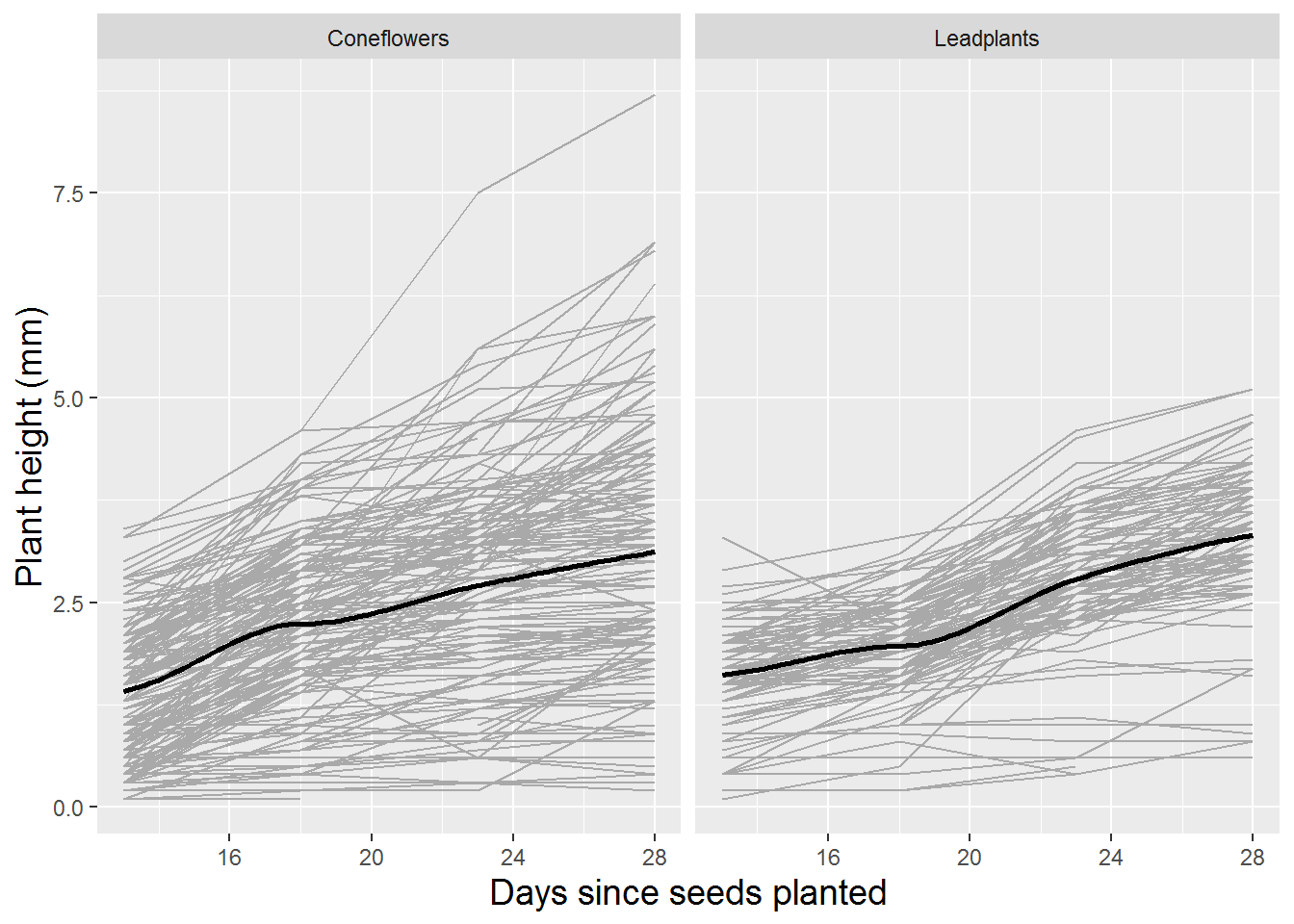

We also use spaghetti plots to examine time trends within species to see (a) if it is reasonable to assume linear growth between Day 13 and Day 28 after planting, and (b) if initial height and rate of growth is similar in the two species. Figure 10.2 illustrates differences between species. While both species have similar average heights 13 days after planting, coneflowers appear to have faster early growth which slows later, while leadplants have a more linear growth rate which culminates in greater average heights 28 days after planting. Coneflowers also appear to have greater variability in initial height and growth rate, although there are more coneflowers with height data.

Figure 10.2: Spaghetti plot by species with loess fit. Each “line” represents one plant.

Exploratory analyses such as these confirm the suspicions of biology researchers that leadplants and coneflowers should be analyzed separately. Because of biological differences, it is expected that these two species will show different growth patterns and respond differently to treatments such as fertilization. Coneflowers are members of the aster family, growing up to 4 feet tall with their distinctive gray seed heads and drooping yellow petals. Leadplants, on the other hand, are members of the bean family, with purple flowers, a height of 1 to 3 feet, and compound greyish green leaves which look to be dusted with white lead. Leadplants have deep root systems and are symbiotic N-fixers, which means they might experience stifled growth in sterilized soil compared with other species. For the remainder of this chapter, we will focus on leadplants and how their growth patterns are affected by soil type and sterilization. You will have a chance to analyze coneflower data later in the Exercises section.

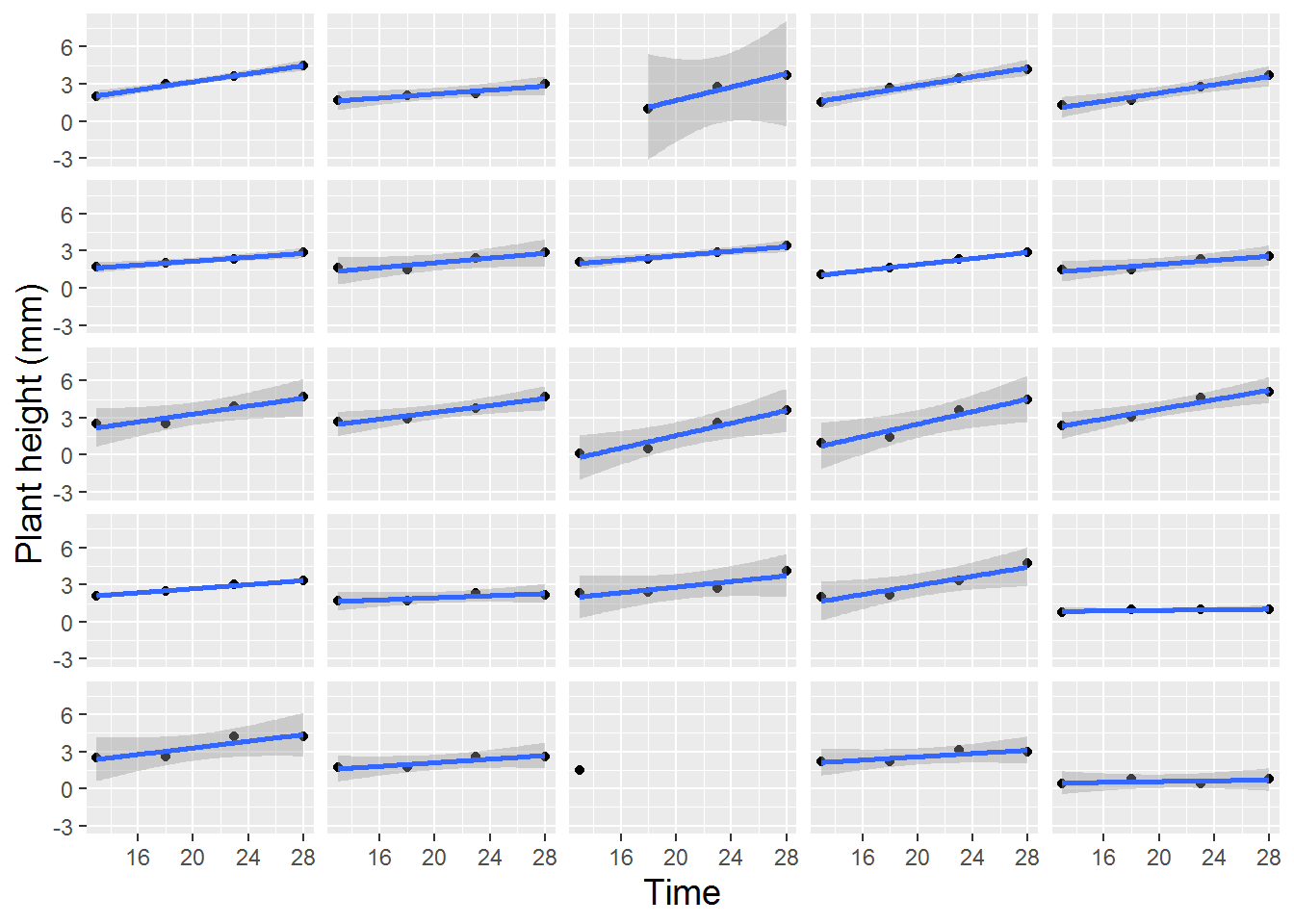

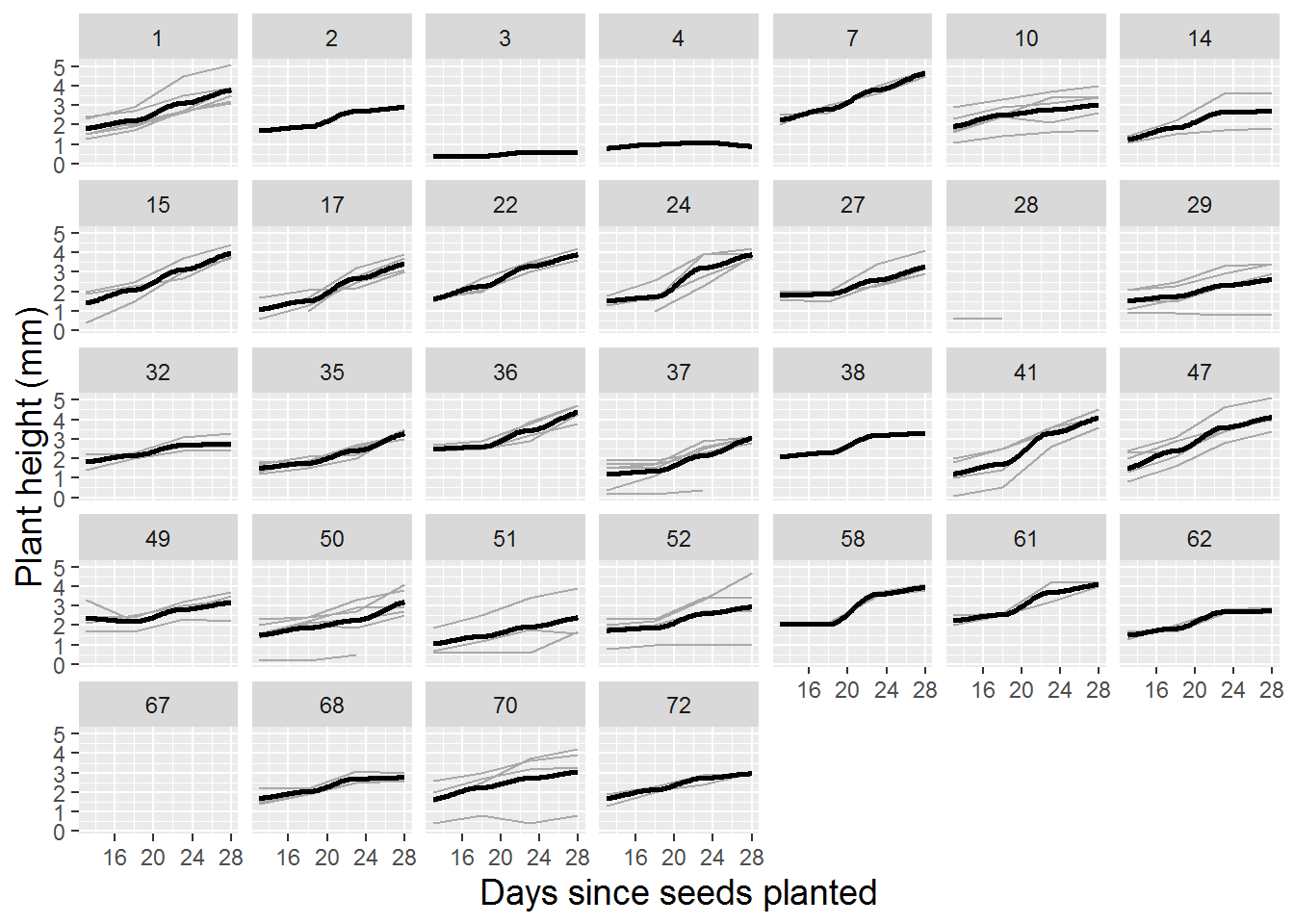

Lattice plots, illustrating several observational units simultaneously, each with fitted lines where appropriate, are also valuable to examine during the exploratory analysis phase. Figure 10.3 shows height over time for 24 randomly selected leadplants that germinated in this study, with a fitted linear regression line. Linearity appears reasonable in most cases, although there is some variability in the intercepts and a good deal of variability in the slopes of the fitted lines. These intercepts and slopes by plant, of course, will be potential parameters in a multilevel model which we will fit to this data. Given the three-level nature of this data, it is also useful to examine a spaghetti plot by pot (Figure 10.4). While linearity appears to reasonably model the average trend over time within pot, we see differences in the plant-to-plant variability within pot, but some consistency in intercept and slope from pot to pot.

Figure 10.3: Lattice plot of linear trends fit to 24 randomly selected leadplants. One plant with only a single height measurement has no associated regression line.

Figure 10.4: Spaghetti plot for leadplants by pot with loess fit.

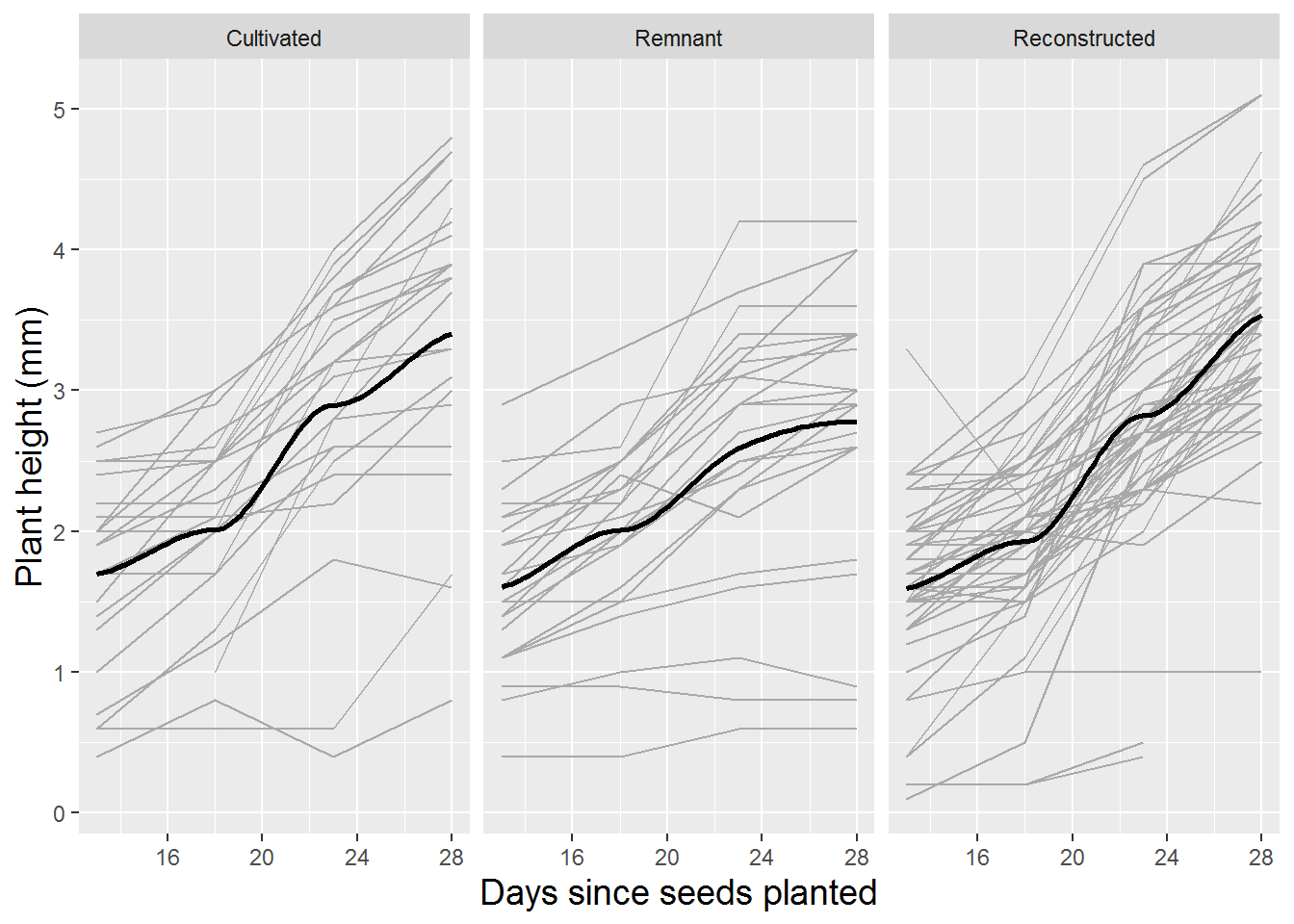

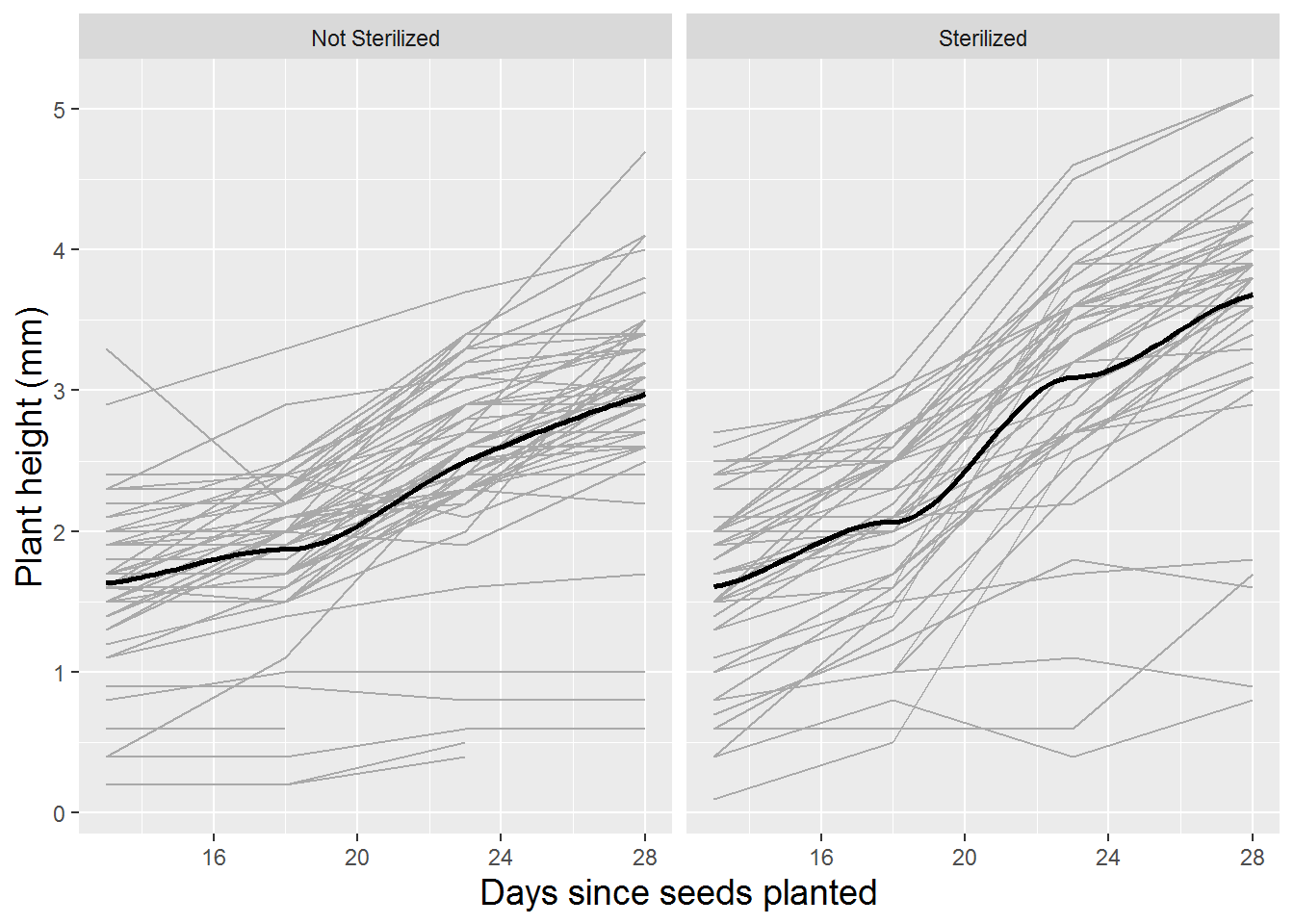

Spaghetti plots can also be an effective tool for examining the potential effects of soil type and sterilization on growth patterns of leadplants. Figure 10.5 and Figure 10.6 illustrate how the growth patterns of leadplants depend on soil type and sterilization. In general, we observe slower growth in soil from remnant prairies and soil that has not been sterilized.

Figure 10.5: Spaghetti plot for leadplants by soil type with loess fit.

Figure 10.6: Spaghetti plot for leadplants by sterilization with loess fit.

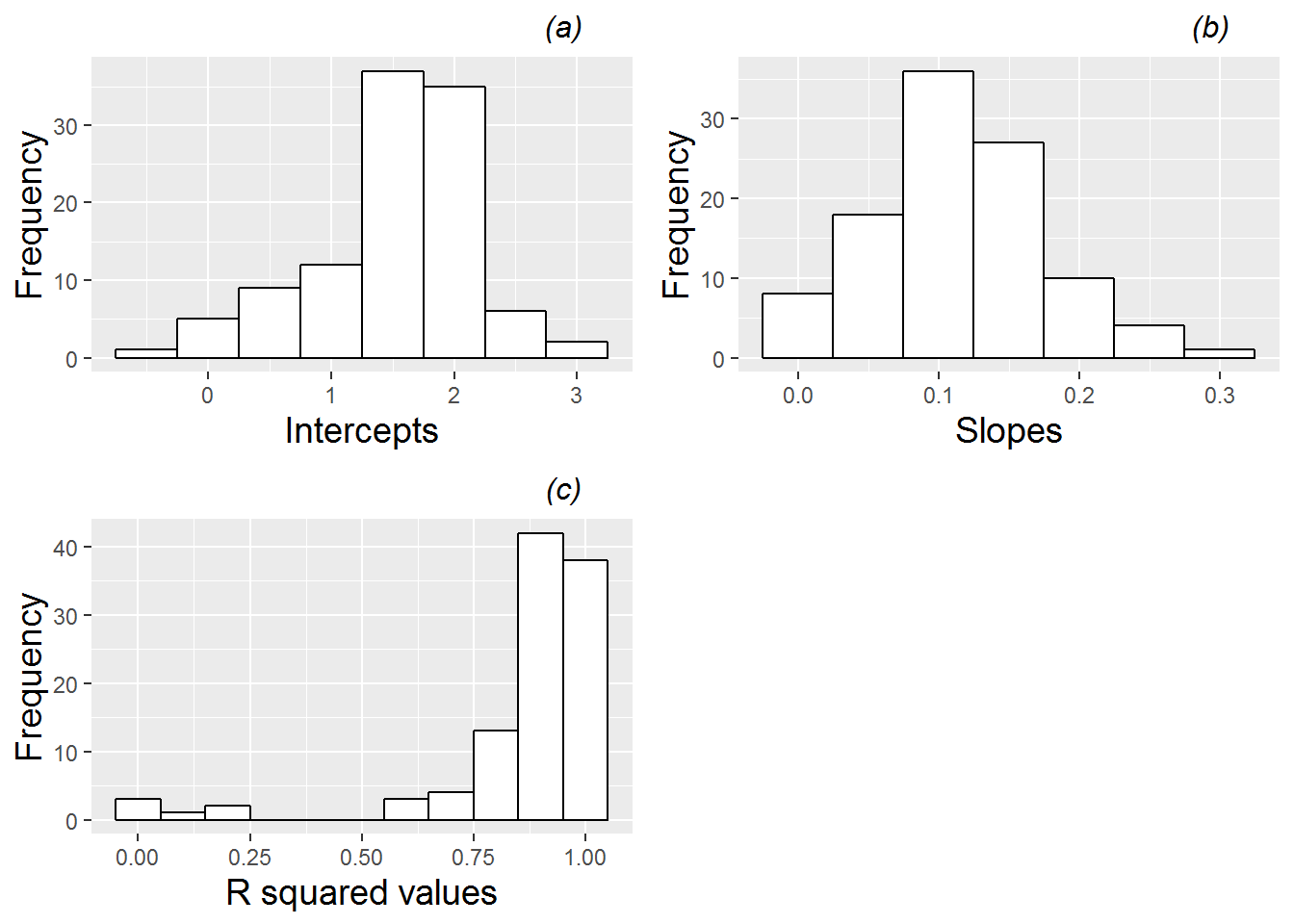

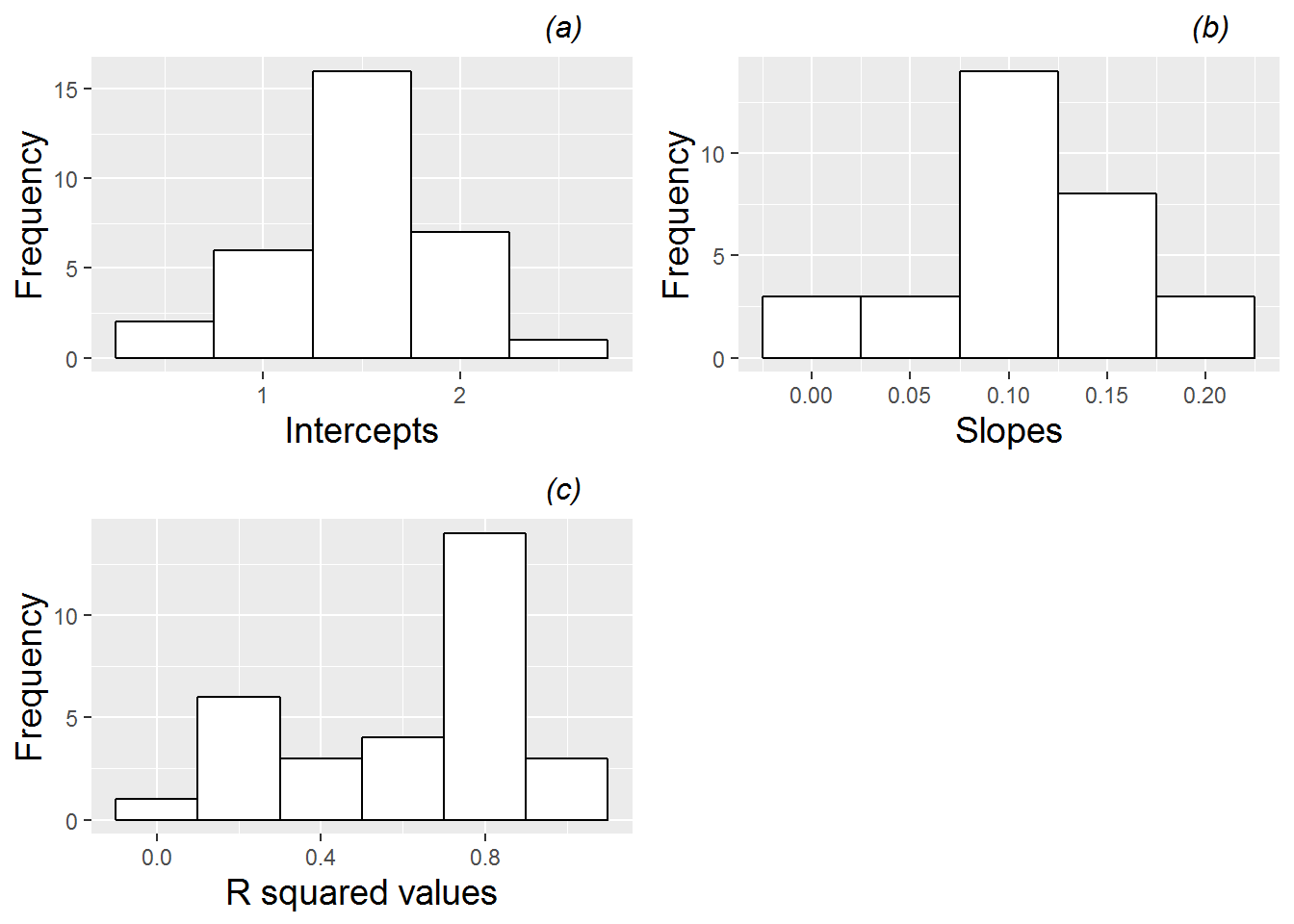

We can further explore the variability in linear growth among plants and among pots by fitting regression lines and examining the estimated intercepts and slopes, as well as the corresponding R-squared values. Figures 10.7 and 10.8 provide just such an analysis, where Figure 10.7 shows results of fitting lines by plant, and Figure 10.8 shows results of fitting lines by pot. Certain caveats accompany these summaries. In the case of fitted lines by plant, each plant is given equal weight regardless of the number of observations (2-4) for a given plant, and in the case of fitted lines by pot, a line is estimated by simply pooling all observations from a given pot, ignoring the plant from which the observations came, and equally weighting pots regardless of how many plants germinated and survived to Day 28. Nevertheless, the summaries of fitted lines provide useful information. When fitting regression lines by plant, we see a mean intercept of 1.52 (SD=0.66), indicating an estimated average height at 13 days of 1.5 mm, and a mean slope of 0.114 mm per day of growth from Days 13 to 28 (SD=0.059). Most R-squared values were strong (e.g., 84% were above 0.8). Summaries of fitted regression lines by pot show similar mean intercepts (1.50) and slopes (0.107), but somewhat less variability pot-to-pot than we observed plant-to-plant (SD=0.46 for intercepts and SD=0.050 for slopes).

Figure 10.7: Histograms of (a) intercepts, (b) slopes, and (c) R-squared values for linear fits across all leadplants.

Figure 10.8: Histograms of (a) intercepts, (b) slopes, and (c) R-squared values for linear fits across all pots with leadplants.

Another way to examine variability due to plant vs. variability due to pot is through summary statistics. Plant-to-plant variability can be estimated by averaging standard deviations from each pot (.489 for intercepts and .039 for slopes), while pot-to-pot variability can be estimated by finding the standard deviation of average intercept (.478) or slope (.051) within pot. Based on these rough measurements, variability due to plants and pots is comparable.

Fitted lines by plant and pot are modeled using a centered time variable (time13), adjusted so that the first day of height measurements (13 days after planting) corresponds to time13=0. This centering has two primary advantages. First, the estimated intercept becomes more interpretable. Rather than representing height on the day of planting (which should be 0 mm, but which represents a hefty extrapolation from our observed range of days 13 to 28), the intercept now represents height on Day 13. Second, the intercept and slope are much less correlated (r=-0.16) than when uncentered time is used, which improves the stability of future models.

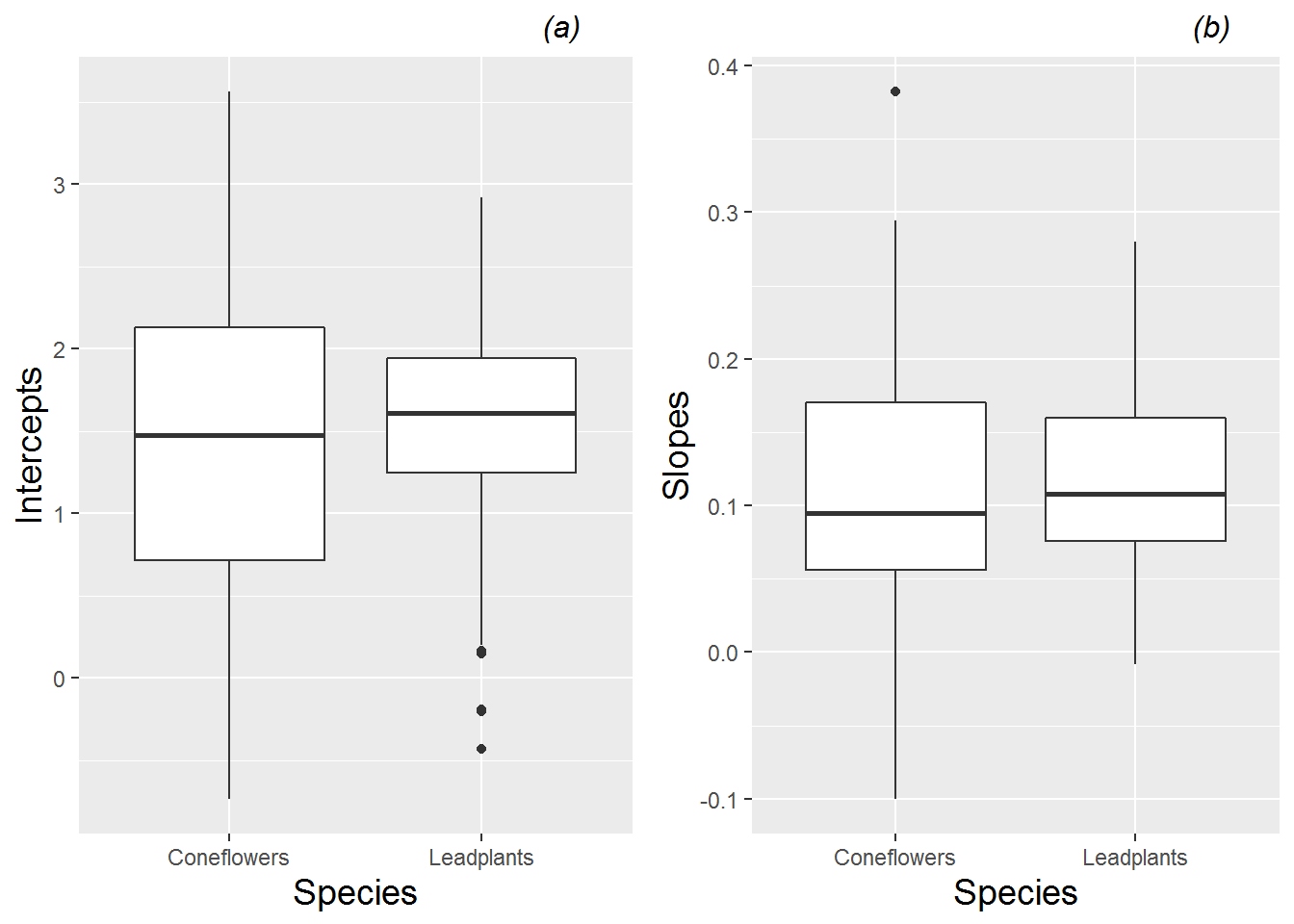

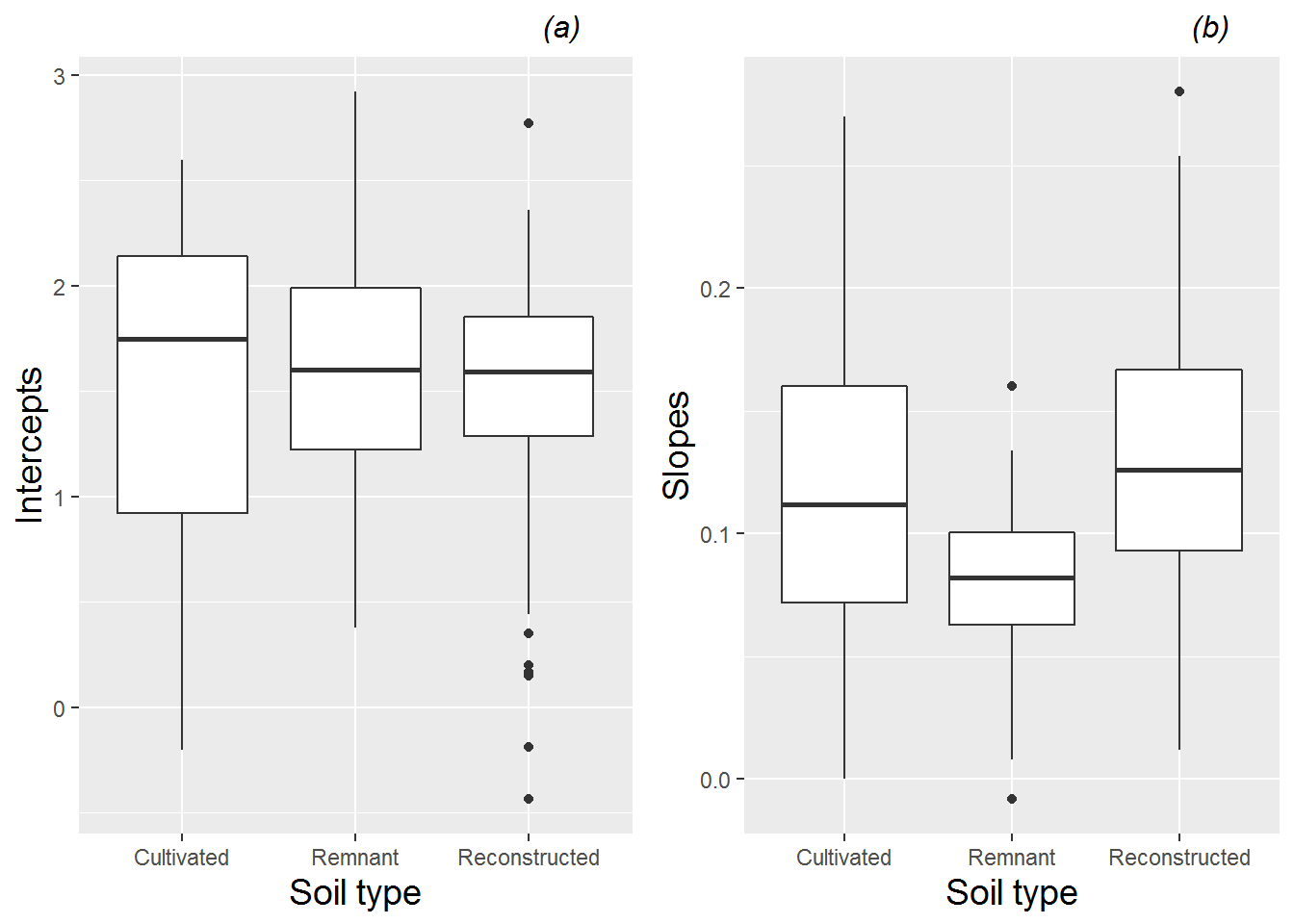

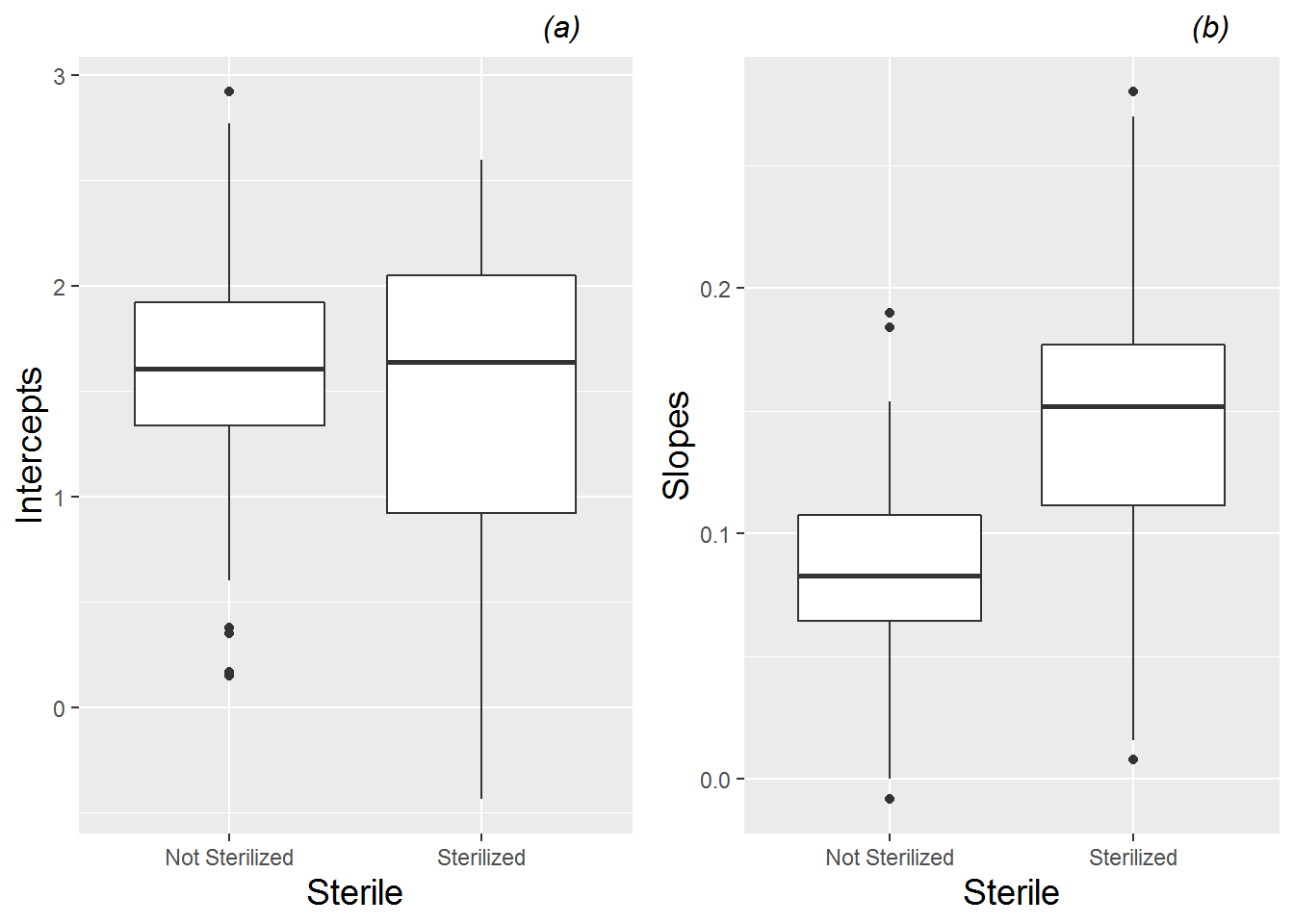

Fitted intercepts and slopes by plant can be used for an additional exploratory examination of factor effects to complement those from the earlier spaghetti plots. Figure 10.9 complements Figure 10.2, again showing differences between species—coneflowers tend to start smaller and have slower growth rates, although they have much more variability in growth patterns than leadplants. Returning to our focus on leadplants, Figure 10.10 shows that plants grown in soil from cultivated fields tends to be taller at Day 13, and plants grown in soil from remnant prairies tend to grow more slowly than plants grown in other soil types. Figure 10.11 shows the strong tendency for plants grown in sterilized soil to grow faster than plants grown in non-sterilized soil. We will soon see if our fitted multilevel models support these observed trends.

Figure 10.9: Boxplots of (a) intercepts and (b) slopes for all plants by species, based on a linear fit to height data from each plant.

Figure 10.10: Boxplots of (a) intercepts and (b) slopes for all leadplants by soil type, based on a linear fit to height data from each plant.

Figure 10.11: Boxplots of (a) intercepts and (b) slopes for all leadplants by sterilization, based on a linear fit to height data from each plant.

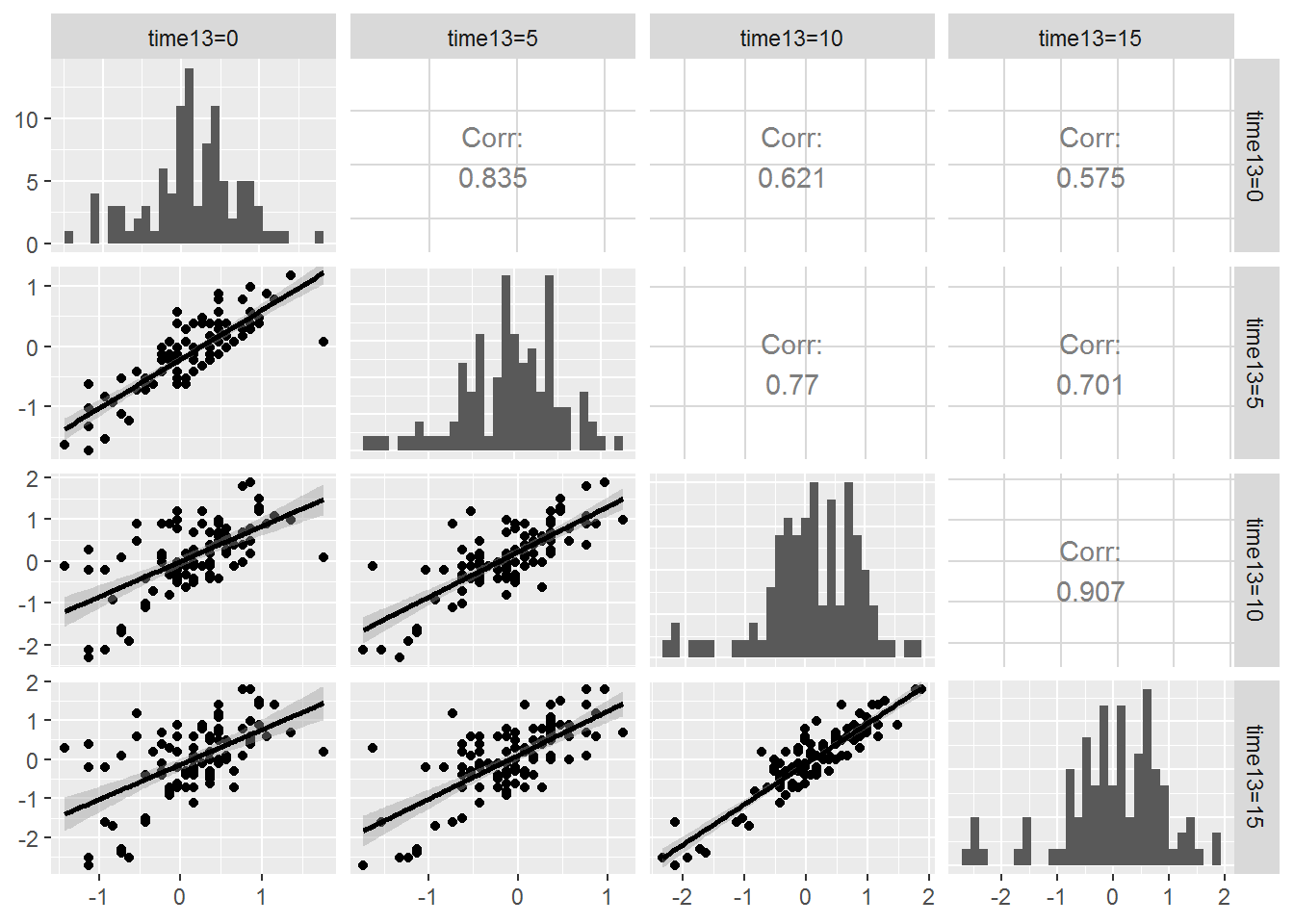

Since we have time at Level One, any exploratory analysis of Case Study 10.2 should contain an investigation of the variance-covariance structure within plant. Figure 10.12 shows the potential for an autocorrelation structure in which the correlation between observations from the same plant diminishes as the time between measurements increases. Residuals five days apart have correlations ranging from .77 to .91, while measurements ten days apart have correlations of .62 and .70, and measurements fifteen days apart have correlation of .58.

Figure 10.12: Correlation structure within plant. The upper right contains correlation coefficients between residuals at pairs of time points, the lower left contains scatterplots of the residuals at time point pairs, and the diagonal contains histograms of residuals at each of the four time points.

10.4 Initial models: unconditional means and unconditional growth

The structure and notation for three level models will closely resemble the structure and notation for two level models, just with extra subscripts. Therein lies some of the power of multilevel models—extensions are relatively easy and allow you to control for many sources of variability, obtaining more precise estimates of important parameters. However, the number of variance component parameters to estimate can quickly mushroom as covariates are added at lower levels, so implementing simplifying restrictions will often become necessary (see Section 10.7).

We once again begin with the unconditional means model, in which there are no predictors at any level, in order to assess the amount of variation at each level. Here, Level Three is pot, Level Two is plant within pot, and Level One is time within plant. Using model formulations at each of the three levels, the unconditional means three-level model can be expressed as:

- Level One (timepoint within plant): \[\begin{equation} Y_{ijk} = a_{ij}+\epsilon_{ijk} \textrm{ where } \epsilon_{ijk}\sim N(0,\sigma^2) \tag{10.1} \end{equation}\]

- Level Two (plant within pot): \[\begin{equation} a_{ij} = a_{i}+u_{ij} \textrm{ where } u_{ij}\sim N(0,\sigma_{u}^{2}) \tag{10.2} \end{equation}\]

- Level Three (pot): \[\begin{equation} a_{i} = \alpha_{0}+\tilde{u}_{i} \textrm{ where } \tilde{u}_{i} \sim N(0,\sigma_{\tilde{u}}^{2}) \tag{10.3} \end{equation}\]

where the heights of plants from different pots are considered independent, but plants from the same pot are correlated as well as measurements at different times from the same plant.

Keeping track of all the model terms, especially with three subscripts, is not a trivial task, but it’s worth spending time thinking through. Here is a quick guide to the meaning of terms found in our three-level model:

- \(Y_{ijk}\) is the height (in mm) of plant \(j\) from pot \(i\) at time \(k\)

- \(a_{ij}\) is the true mean height for plant \(j\) from pot \(i\) across all time points. This is not considered a model parameter, since we further model \(a_{ij}\) at Level Two.

- \(a_{i}\) is the true mean height for pot \(i\) across all plants from that pot and all time points. This is also not considered a model parameter, since we further model \(a_{i}\) at Level Three.

- \(\alpha_{0}\) is a fixed effects model parameter representing the true mean height across all pots, plants, and time points

- \(\epsilon_{ijk}\) describes how far an observed height \(Y_{ijk}\) is from the mean height for plant \(j\) from pot \(i\)

- \(u_{ij}\) describe how far the mean height of plant \(j\) from pot \(i\) is from the mean height of all plants from pot \(i\)

- \(\tilde{u}_{i}\) describes how far the mean height of all observations from pot \(i\) is from the overall mean height across all pots, plants, and time points. None of the error terms (\(\epsilon, u, \tilde{u}\)) are considered model parameters; they simply account for differences between the observed data and expected values under our model.

- \(\sigma^2\) is a variance component (random effects model parameter) that describes within-plant variability over time

- \(\sigma_{u}^{2}\) is the variance component describing plant-to-plant variability within pot

- \(\sigma_{\tilde{u}}^{2}\) is the variance component describing pot-to-pot variability.

and this composite model can be fit using statistical software:

Formula: hgt ~ 1 + (1 | plant) + (1 | pot)

Random effects:

Groups Name Variance Std.Dev.

plant (Intercept) 0.27817 0.5274

pot (Intercept) 0.04873 0.2207

Residual 0.72782 0.8531

Number of obs: 413, groups: plant, 107; pot, 32

Fixed effects:

Estimate Std. Error t value

(Intercept) 2.38808 0.07887 30.28From this output, we obtain estimates of our four model parameters:

- \(\hat{\alpha}_{0}=2.39=\) the mean height (in mm) across all time points, plants, and pots.

- \(\hat{\sigma}^2=0.728=\) the variance over time within plants.

- \(\hat{\sigma}_{u}^{2}=0.278=\) the variance between plants from the same pot.

- \(\hat{\sigma}_{\tilde{u}}^{2}=0.049=\) the variance between pots.

From the estimates of variance components, 69.0% of total variability in height measurements is due to differences over time for each plant, 26.4% of total variability is due to differences between plants from the same pot, and only 4.6% of total variability is due to difference between pots. Accordingly, we will next explore whether the incorporation of time as a linear predictor at Level One can reduce the unexplained variability within plant.

The unconditional growth model introduces time as a predictor at Level One, but there are still no predictors at Levels Two or Three. The unconditional growth model allows us to assess how much of the within-plant variability (the variability among height measurements from the same plant at different time points) can be attributed to linear changes over time, while also determining how much variability we see in the intercept (Day 13 height) and slope (daily growth rate) from plant-to-plant and pot-to-pot. Later, we can model plant-to-plant and pot-to-pot differences in intercepts and slopes with Level Two and Three covariates.

The three-level unconditional growth model (Model B) can be specified either using formulations at each level:

- Level One (timepoint within plant): \[\begin{equation} Y_{ijk} = a_{ij}+b_{ij}\textstyle{time}_{ijk}+\epsilon_{ijk} \tag{10.5} \end{equation}\]

- Level Two (plant within pot): \[\begin{eqnarray*} a_{ij} & = & a_{i}+u_{ij} \\ b_{ij} & = & b_{i}+v_{ij} \end{eqnarray*}\]

- Level Three (pot): \[\begin{eqnarray*} a_{i} & = & \alpha_{0}+\tilde{u}_{i} \\ b_{i} & = & \beta_{0}+\tilde{v}_{i} \end{eqnarray*}\]

where \(\epsilon_{ijk}\sim N(0,\sigma^2)\), \[ \left[ \begin{array}{c} u_{ij} \\ v_{ij} \end{array} \right] \sim N \left( \left[ \begin{array}{c} 0 \\ 0 \end{array} \right], \left[ \begin{array}{cc} \sigma_{u}^{2} & \\ \sigma_{uv} & \sigma_{v}^{2} \end{array} \right] \right), \] and \[ \left[ \begin{array}{c} \tilde{u}_{i} \\ \tilde{v}_{i} \end{array} \right] \sim N \left( \left[ \begin{array}{c} 0 \\ 0 \end{array} \right], \left[ \begin{array}{cc} \sigma_{\tilde{u}}^{2} & \\ \sigma_{\tilde{u}\tilde{v}} & \sigma_{\tilde{v}}^{2} \end{array} \right] \right). \]

In this model, at Level One the trajectory for plant \(j\) from pot \(i\) is assumed to be linear, with intercept \(a_{ij}\) (height on Day 13) and slope \(b_{ij}\) (daily growth rate between Days 13 and 28); the \(\epsilon_{ijk}\) terms capture the deviation between the true growth trajectory of plant \(j\) from pot \(i\) and its observed heights. At Level Two, \(a_{i}\) represents the true mean intercept and \(b_{i}\) represents the true mean slope for all plants from pot \(i\), while \(u_{ij}\) and \(v_{ij}\) capture the deviation between plant \(j\)’s true growth trajectory and the mean intercept and slope for pot \(i\). The deviations in intercept and slope at Level Two are allowed to be correlated through the covariance parameter \(\sigma_{uv}\). Finally, \(\alpha_{0}\) is the true mean intercept and \(\beta_{0}\) is the true mean daily growth rate over the entire population of leadplants, while \(\tilde{u}_{i}\) and \(\tilde{v}_{i}\) capture the deviation between pot \(i\)’s true overall growth trajectory and the population mean intercept and slope. Note that between-plant and between-pot variability are both partitioned now into variability in initial status (\(\sigma_{u}^{2}\) and \(\sigma_{\tilde{u}}^{2}\)) and variability in rates of change (\(\sigma_{v}^{2}\) and \(\sigma_{\tilde{v}}^{2}\)).

Using the composite model specification, the unconditional growth model can be fit to the seed germination data:

Formula: hgt ~ time13 + (time13 | plant) + (time13 | pot)

Random effects:

Groups Name Variance Std.Dev. Corr

plant (Intercept) 0.299162 0.54696

time13 0.001194 0.03456 0.28

pot (Intercept) 0.044218 0.21028

time13 0.001261 0.03551 -0.61

Residual 0.082157 0.28663

Number of obs: 413, groups: plant, 107; pot, 32

Fixed effects:

Estimate Std. Error t value

(Intercept) 1.537696 0.070304 21.87

time13 0.112117 0.007924 14.15From this output, we obtain estimates of our nine model parameters (two fixed effects and seven variance components):

- \(\hat{\alpha}_{0}=1.538=\) the mean height of leadplants 13 days after planting.

- \(\hat{\beta}_{0}=0.112=\) the mean daily change in height of leadplants from 13 to 28 days after planting.

- \(\hat{\sigma}=.287=\) the standard deviation in within-plant residuals after accounting for time.

- \(\hat{\sigma}_{u}=.547=\) the standard deviation in Day 13 heights between plants from the same pot.

- \(\hat{\sigma}_{v}=.0346=\) the standard deviation in rates of change in height between plants from the same pot.

- \(\hat{\rho}_{uv}=.280=\) the correlation in plants’ Day 13 height and their rate of change in height.

- \(\hat{\sigma}_{\tilde{u}}=.210=\) the standard deviation in Day 13 heights between pots.

- \(\hat{\sigma}_{\tilde{v}}=.0355=\) the standard deviation in rates of change in height between pots.

- \(\hat{\rho}_{\tilde{u}\tilde{v}}=-.610=\) the correlation in pots’ Day 13 height and their rate of change in height.

We see that, on average, leadplants have a height of 1.54 mm thirteen days after planting (pooled across pots and treatment groups), and their heights tend to grow by 0.11 mm per day, producing an average height at the end of the study (Day 28) of 3.22 mm. According to the t-values listed in R, both the Day 13 height and the growth rate are statistically significant. The estimated within-plant variance \(\hat{\sigma}^2\) decreased by 88.7% from the unconditional means model (from 0.728 to 0.082), implying that 88.7% of within-plant variability in height can be explained by linear growth over time.

10.5 Encountering boundary constraints

Typically, with models consisting of three or more levels, the next step after adding covariates at Level One (such as time) is considering covariates at Level Two. In the seed germination experiment, however, there are no Level Two covariates of interest, and the treatments being studied were applied to pots (Level Three). We are primarily interested in the effects of soil type and sterilization on the growth of leadplants. Since soil type is a categorical factor with three levels, we can represent soil type in our model with indicator variables for cultivated lands (cult) and remnant prairies (rem), using reconstructed prairies as the reference level. For sterilization, we create a single indicator variable (strl) which takes on the value 1 for sterilized soil.

Our Level One and Level Two models will look identical to those from Model B; our Level Three models will contain the new covariates for soil type (cult and rem) and sterilization (strl):

where the error terms at Level Three follow the same multivariate normal distribution as in Model B. In our case, the composite model can be written as:

\[\begin{eqnarray*} Y_{ijk} & = & (\alpha_{0}+\alpha_{1}\textstyle{strl}_{i}+\alpha_{2}\textstyle{cult}_{i}+\alpha_{3}\textstyle{rem}_{i}+\tilde{u}_{i}+u_{ij}) + \\ & & (\beta_{0}+\beta_{1}\textstyle{strl}_{i}+\beta_{2}\textstyle{cult}_{i}+\beta_{3}\textstyle{rem}_{i}+\tilde{v}_{i}+ v_{ij})\textstyle{time}_{ijk}+\epsilon_{ijk} \\ \end{eqnarray*}\]which, after combining fixed effects and random effects, can be rewritten as:

\[\begin{eqnarray*} Y_{ijk} & = & [\alpha_{0}+\alpha_{1}\textstyle{strl}_{i}+\alpha_{2}\textstyle{cult}_{i}+\alpha_{3}\textstyle{rem}_{i} + \beta_{0}\textstyle{time}_{ijk} + \\ & & \beta_{1}\textstyle{strl}_{i}\textstyle{time}_{ijk}+\beta_{2}\textstyle{cult}_{i}\textstyle{time}_{ijk}+ \beta_{3}\textstyle{rem}_{i}\textstyle{time}_{ijk}] + \\ & & [\tilde{u}_{i}+u_{ij}+\epsilon_{ijk}+\tilde{v}_{i}\textstyle{time}_{ijk}+v_{ij}\textstyle{time}_{ijk}] \end{eqnarray*}\]From the output below, the addition of Level Three covariates in Model C (cult, rem, strl, and their interactions with time) appears to provide a significant improvement (likelihood ratio test statistic = 32.2 on 6 df, \(p<.001\)) to the unconditional growth model (Model B).

Formula: hgt ~ time13 + strl + cult + rem + time13:strl + time13:cult +

time13:rem + (time13 | plant) + (time13 | pot)

Random effects:

Groups Name Variance Std.Dev. Corr

plant (Intercept) 0.2980206 0.54591

time13 0.0012081 0.03476 0.28

pot (Intercept) 0.0531503 0.23054

time13 0.0001317 0.01148 -1.00

Residual 0.0820769 0.28649

Number of obs: 413, groups: plant, 107; pot, 32

Fixed effects:

Estimate Std. Error t value

(Intercept) 1.502893 0.127001 11.834

time13 0.101069 0.008292 12.189

strl -0.076546 0.151368 -0.506

cult 0.130013 0.182723 0.712

rem 0.138387 0.176196 0.785

time13:strl 0.058917 0.010282 5.730

time13:cult -0.029765 0.012263 -2.427

time13:rem -0.035860 0.011978 -2.994...

Df AIC BIC logLik deviance Chisq Chi Df Pr(>Chisq)

modelbl 9 603.76 639.97 -292.88 585.76

modelcl 15 583.55 643.90 -276.78 553.55 32.202 6 1.493e-05 ***

...However, Model C has encountered a boundary constraint with an estimated Level 3 correlation between the intercept and slope error terms of -1. “Allowable” values of correlation coefficients run from -1 to 1; by definition, it is impossible to have a correlation between two error terms below -1. Thus, our estimate of -1 is right on the boundary of the allowable values. But how did this happen, and why is it potentially problematic?

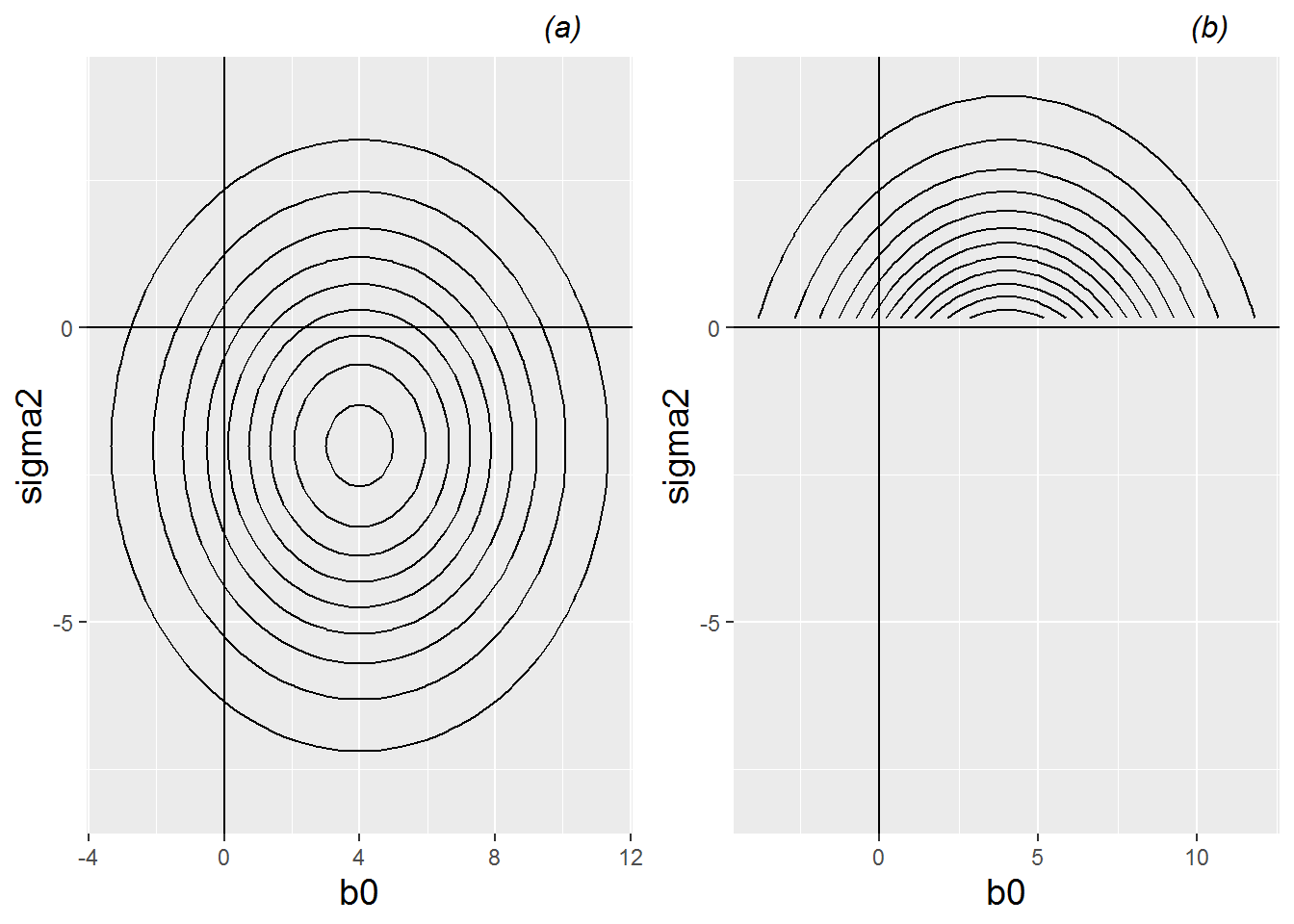

Consider a model in which we have two parameters that must be estimated: \(\beta_0\) and \(\sigma^2\). As the intercept, \(\beta_0\) can take on any value; any real number is “allowable”. But, by definition, variance terms such as \(\sigma^2\) must be non-negative; that is, \(\sigma^2 \geq 0\). Under the Principle of Maximum Likelihood, maximum likelihood estimators for \(\beta_0\) and \(\sigma^2\) will be chosen to maximize the likelihood of observing our given data. The left plot in Figure 10.13 shows hypothetical contours of the likelihood function \(L(\beta_0, \sigma^2)\); the likelihood is clearly maximized at \((\hat{\beta}_0 , \hat{\sigma}^2)=(4,-2)\). However, variance terms cannot be negative! A more sensible approach would have been to perform a constrained search for MLEs, considering any potential values for \(\beta_0\) but only non-negative values for \(\sigma^2\). This constrained search is illustrated in the right plot in Figure 10.13. In this case, the likelihood is maximized at \((\hat{\beta}_0 , \hat{\sigma}^2)=(4,0)\). Note that the estimated intercept did not change, but the estimated variance is simply set at the smallest allowable value – at the boundary constraint.

Figure 10.13: Left (a): hypothetical contours of the likelihood function \(L(\beta_0, \sigma^2)\) with no restrictions on \(\sigma^2\); the likelihood function is maximized at \((\hat{\beta}_0, \hat{\sigma}^2)=(4,-2)\). Right (b): hypothetical contours of the likelihood function \(L(\beta_0, \sigma^2)\) with the restriction that \(\sigma^2 \geq 0\); the constrained likelihood function is maximized at \((\hat{\beta}_0, \hat{\sigma}^2)=(4,0)\)

Graphically, in this simple illustration, the effect of the boundary constraint is to alter the likelihood function from a nice hill (in the left plot in Figure 10.13) with a single peak at \((4,-2)\), to a hill with a huge cliff face where \(\sigma^2=0\). The highest point overlooking this cliff is at \((4,0)\), straight down the hill from the original peak.

In general, then, boundary constraints occur when the maximum likelihood estimator of at least one model parameter occurs at the limits of allowable values (such as estimated correlation coefficients of -1 or 1, or estimated variances of 0). Maximum likelihood estimates at the boundary tend to indicate that the likelihood function would be maximized at non-allowable values of that parameter, if an unconstrained search for MLEs was conducted. Most software packages, however, will only report maximum likelihood estimates with allowable values. Therefore, boundary constraints would ideally be avoided, if possible.

What should you do if you encounter boundary constraints? Often, boundary constraints signal that your model needs to be reparameterized – i.e., you should alter your model to feature different parameters or ones that are interpreted differently. This can be accomplished in several ways:

- remove parameters, especially those variance and correlation terms which are being estimated on their boundaries

- fix the values of certain parameters; for instance, you could set two variance terms equal to each other, thereby reducing the number of unknown parameters to estimate by one

- transform covariates. Centering variables, standardizing variables, or changing units can all help stabilize a model. Numerical procedures for searching for and finding maximum likelihood estimates can encounter difficulties when variables have very high or low values, extreme ranges, outliers, or are highly correlated.

Although it is worthwhile attempting to reparameterize models to remove boundary constraints, sometimes they can be tolerated if (a) you are not interested in estimates of those parameters encountering boundary issues, and (b) removing those parameters does not affect conclusions about parameters of interest. For example, in the output below we explore the implications of simply removing the correlation between error terms at the pot level (i.e., assume \(\rho_{\tilde{u}\tilde{v}}=0\) rather than accepting the (constrained) maximum likelihood estimate of \(\hat{\rho}_{\tilde{u}\tilde{v}}=-1\) that we saw in Model C).

Formula: hgt ~ time13 + strl + cult + rem + time13:strl + time13:cult +

time13:rem + (time13 | plant) + (1 | pot) + (0 + time13 | pot)

Random effects:

Groups Name Variance Std.Dev. Corr

plant (Intercept) 0.2941016 0.54231

time13 0.0012062 0.03473 0.22

pot (Intercept) 0.0591619 0.24323

pot.1 time13 0.0001375 0.01173

Residual 0.0821742 0.28666

Number of obs: 413, groups: plant, 107; pot, 32

Fixed effects:

Estimate Std. Error t value

(Intercept) 1.51209 0.12965 11.663

time13 0.10158 0.00836 12.150

strl -0.08743 0.15407 -0.567

cult 0.13235 0.18563 0.713

rem 0.10661 0.17931 0.595

time13:strl 0.05869 0.01038 5.653

time13:cult -0.03065 0.01234 -2.484

time13:rem -0.03810 0.01209 -3.150Note that the estimated variance components are all very similar to Model C, and the estimated fixed effects and their associated t-statistics are also very similar to Model C. Therefore, in this case we could consider simply reporting the results of Model C despite the boundary constraint.

However, when removing boundary constraints through reasonable model reparameterizations is possible, that is typically the preferred route. In this case, one option we might consider is simplifying Model C by setting \(\sigma_{\tilde{v}}^{2}=\sigma_{\tilde{u}\tilde{v}}=0\). We can then write our new model (Model C.1) in level-by-level formulation:

- Level One (timepoint within plant): \[\begin{equation} Y_{ijk} = a_{ij}+b_{ij}\textstyle{time}_{ijk}+\epsilon_{ijk} \end{equation}\]

- Level Two (plant within pot): \[\begin{eqnarray*} a_{ij} & = & a_{i}+u_{ij} \\ b_{ij} & = & b_{i}+v_{ij} \end{eqnarray*}\]

- Level Three (pot): \[\begin{eqnarray*} a_{i} & = & \alpha_{0}+\alpha_{1}\textstyle{strl}_{i}+\alpha_{2}\textstyle{cult}_{i}+\alpha_{3}\textstyle{rem}_{i}+\tilde{u}_{i} \\ b_{i} & = & \beta_{0}+\beta_{1}\textstyle{strl}_{i}+\beta_{2}\textstyle{cult}_{i}+\beta_{3}\textstyle{rem}_{i} \end{eqnarray*}\]

Note that there is no longer an error term associated with the model for mean growth rate \(b_{i}\) at the pot level. The growth rate for pot \(i\) is assumed to be fixed, after accounting for soil type and sterilization; all pots with the same soil type and sterilization are assumed to have the same growth rate. As a result, our error assumption at Level Three is no longer bivariate normal, but rather univariate normal: \(\tilde{u}_{i}\sim N(0,\sigma_{\tilde{u}}^{2})\). By removing one of our two Level Three error terms (\(\tilde{v}_{i}\)), we effectively removed two parameters – the variance for \(\tilde{v}_{i}\) and the correlation between \(\tilde{u}_{i}\) and \(\tilde{v}_{i}\). Fixed effects remain similar, as can be seen in the output below:

Formula: hgt ~ time13 + strl + cult + rem + time13:strl + time13:cult +

time13:rem + (time13 | plant) + (1 | pot)

Random effects:

Groups Name Variance Std.Dev. Corr

plant (Intercept) 0.294718 0.54288

time13 0.001327 0.03642 0.19

pot (Intercept) 0.057653 0.24011

Residual 0.082221 0.28674

Number of obs: 413, groups: plant, 107; pot, 32

Fixed effects:

Estimate Std. Error t value

(Intercept) 1.512224 0.129006 11.722

time13 0.101091 0.007453 13.565

strl -0.087518 0.153440 -0.570

cult 0.132876 0.184886 0.719

rem 0.106537 0.178601 0.597

time13:strl 0.059264 0.009492 6.244

time13:cult -0.030824 0.011353 -2.715

time13:rem -0.036244 0.011102 -3.265We now have a more stable model, free of boundary constraints. In fact, we can attempt to determine whether or not removing the two variance component parameters for Model C.1 provides a significant reduction in performance. Based on a likelihood ratio test (see below), we do not have significant evidence (chi-square test statistic=2.089 on 2 df, p=0.3519) that \(\sigma_{\tilde{v}}^{2}\) or \(\sigma_{\tilde{u}\tilde{v}}\) is non-zero, so it is advisable to use the simpler Model C.1. However, Section 10.6 describes why this test may be misleading and prescribes a potentially better approach.

...

Df AIC BIC logLik deviance Chisq Chi Df Pr(>Chisq)

modelcl0 13 581.64 633.95 -277.82 555.64

modelcl 15 583.55 643.90 -276.78 553.55 2.089 2 0.3519

...10.6 Parametric bootstrap testing

When testing random effects at the boundary (such as \(\sigma_{\tilde{v}}^{2} = 0\)), using a chi-square distribution to conduct a likelihood ratio test like we did in Section 10.5 is not appropriate. In fact, this will produce a conservative test, with p-values that are too large and not rejected enough (Raudenbush and Bryk (2002), Singer and Willett (2003), J. Faraway (2005)). For example, we should suspect that the p-value (.3519) produced by the likelihood ratio test comparing Models C and C.1 in Section 10.5 is too large, that the real probability of getting a likelihood ratio test statistic of 2.089 or greater when Model C.1 is true is smaller than .3519. Researchers often use the parametric bootstrap to better approximate the distribution of the likelihood test statistic and produce more accurate p-values by simulating data under the null hypothesis.

Here are the basic steps for running a parametric bootstrap procedure to compare Model C.1 with Model C (see associated diagram below):

- Fit Model C.1 (the null model) to obtain estimated fixed and random effects (This is the “parametric” part.)

- Use the estimated fixed and random effects from the null model to regenerate a new set of plant heights with the same sample size (\(n=413\)) and associated covariates for each observation as the original data (This is the “bootstrap” part.)

- Fit both Model C.1 (the reduced model) and Model C (the full model) to the new data

- Compute a likelihood ratio statistic comparing Models C.1 and C

- Repeat the previous 3 steps many times (e.g., 1000)

- Produce a histogram of likelihood ratio statistics to illustrate its behavior when the null hypothesis is true

- Calculate a p-value by finding the proportion of times the bootstrapped test statistic is greater than our observed test statistic

The steps in conducting a parametric bootstrap test comparing Models C and C.1.

Let’s see how new plant heights are generated under the parametric bootstrap. Consider, for instance, \(i=1\) and \(j=1,2\). That is, consider Plants #11 and #12. These plants are found in Pot #1, which was randomly assigned to contain sterilized soil from a restored prairie (STP):

pot plant soil sterile species germin hgt13 hgt18 hgt23 hgt28

1 1 11 STP Y L Y 2.3 2.9 4.5 5.1

2 1 12 STP Y L Y 1.9 2.0 2.6 3.5Level Three

One way to see the data generation process under the null model (Model C.1) is to start with Level Three and work backwards to Level One. Recall that our Level Three models for \(a_{i}\) and \(b_{i}\), the true intercept and slope from Pot \(i\), in Model C.1 are:

\[\begin{eqnarray*} a_{i} & = & \alpha_{0}+\alpha_{1}\textstyle{strl}_{i}+\alpha_{2}\textstyle{cult}_{i}+\alpha_{3}\textstyle{rem}_{i}+\tilde{u}_{i} \\ b_{i} & = & \beta_{0}+\beta_{1}\textstyle{strl}_{i}+\beta_{2}\textstyle{cult}_{i}+\beta_{3}\textstyle{rem}_{i} \end{eqnarray*}\]All the \(\alpha\) and \(\beta\) terms will be fixed at their estimated values, so the one term that will change for each bootstrapped data set is \(\tilde{u}_{i}\). As we obtain a numeric value for \(\tilde{u}_{i}\) for each pot, we will fix the subscript. For example, if \(\tilde{u}_{i}\) is set to -.192 for Pot #1, then we would denote this by \(\tilde{u}_{1}=-.192\). Similarly, in the context of Model C.1, \(a_{1}\) represents the mean height at Day 13 across all plants in Pot #1, where \(\tilde{u}_{1}\) quantifies how Pot #1’s Day 13 height relates to other pots with the same sterilization and soil type.

According to Model C.1, each \(\tilde{u}_{i}\) is sampled from a normal distribution with mean 0 and standard deviation .240 (note that the standard deviation \(\sigma^2_{u}\) is also fixed at its estimated value from Model C.1, given in Section 10.5). That is, a random component to the intercept for Pot #1 (\(\tilde{u}_{1}\)) would be sampled from a normal distribution with mean 0 and SD .240; say, for instance, \(\tilde{u}_{1}=-.192\). We would sample \(\tilde{u}_{2},...,\tilde{u}_{72}\) in a similar manner. Then we can produce a model-based intercept and slope for Pot #1:

\[\begin{eqnarray*} a_{1} & = & 1.512-.088(1)+.133(0)+.107(0)-.192 = 1.232 \\ b_{1} & = & .101+.059(1)-.031(0)-.036(0) = .160 \end{eqnarray*}\]Notice a couple of features of the above derivations. First, all of the coefficients from the above equations (\(\alpha_{0}=1.512\), \(\alpha_{1}=-.088\), etc.) come from the estimated fixed effects from Model C.1 reported in Section 10.5. Second, “restored prairie” is the reference level for soil type, so that indicators for “cultivated land” and “remnant prairie” are both 0. Third, the mean intercept (Day 13 height) for observations from sterilized restored prairie soil is 1.512 - 0.088 = 1.424 mm across all pots, while the mean daily growth is .160 mm. Pot #1 therefore has mean Day 13 height that is .192 mm below the mean for all pots with sterilized restored prairie soil, but every such pot is assumed to have the same growth rate of .160 mm/day because of our assumption that there is no pot-to-pot variability in growth rate (i.e., \(\tilde{v}_{i}=0\)).

Level Two

We next proceed to Level Two, where our equations for Model C.1 are:

\[\begin{eqnarray*} a_{ij} & = & a_{i}+u_{ij} \\ b_{ij} & = & b_{i}+v_{ij} \end{eqnarray*}\]We will initially focus on Plant #11 from Pot #1. Notice that the intercept (Day 13 height = \(a_{11}\)) for Plant #11 has two components: the mean Day 13 height for Pot #1 (\(a_{1}\)) which we specified at Level Three, and an error term (\(u_{11}\)) which indicates how the Day 13 height for Plant #11 differs from the overall average for all plants from Pot #1. The slope (daily growth rate = \(b_{11}\)) for Plant #11 similarly has two components. Since both \(a_{1}\) and \(b_{1}\) were determined at Level Three, at this point we need to find the two error terms for Plant #11: \(u_{11}\) and \(v_{11}\). According to our multilevel model, we can sample \(u_{11}\) and \(v_{11}\) from a bivariate normal distribution with means both equal to 0, standard deviation for the intercept of .543, standard deviation for the slope of .036, and correlation between the intercept and slope of .194.

For instance, suppose we sample \(u_{11}=.336\) and \(v_{11}=.029\). Then we can produce a model-based intercept and slope for Plant #11: \[\begin{eqnarray*} a_{11} & = & 1.232+.336 = 1.568 \\ b_{11} & = & .160+.029 = .189 \end{eqnarray*}\]Although plants from Pot #1 have a mean Day 13 height of 1.232 mm, Plant #11’s mean Day 13 height is .336 mm above that. Similarly, although plants from Pot #1 have a mean growth rate of .160 mm/day (just like every other pot with sterilized restored prairie soil), Plant #11’s growth rate is .029 mm/day faster.

Level One

Finally we proceed to Level One, where the height of Plant #11 is modeled as a linear function of time (\(1.568 + .189\textstyle{time}_{11k}\)) with a normally distributed residual \(\epsilon_{11k}\) at each time point \(k\). Four residuals (one for each time point) are sampled independently from a normal distribution with mean 0 and standard deviation .287 – the standard deviation again coming from parameter estimates from fitting Model C.1 to the actual data as reported in Section 10.5. Suppose we obtain residuals of \(\epsilon_{111}=-.311\), \(\epsilon_{112}=.119\), \(\epsilon_{113}=.241\), and \(\epsilon_{114}=-.066\). In that case, our parametrically generated data for Plant #11 from Pot #1 would look like:

\[ \begin{array}{rcccl} Y_{111} & = & 1.568+.189(0)-.311 & = & 1.257 \\ Y_{112} & = & 1.568+.189(5)+.119 & = & 2.632 \\ Y_{113} & = & 1.568+.189(10)+.241 & = & 3.699 \\ Y_{114} & = & 1.568+.189(15)-.066 & = & 4.337 \\ \end{array} \]

We would next turn to Plant #12 from Pot #1 (\(i=1\) and \(j=2\)). Fixed effects would remain the same, as would coefficients for Pot #1, \(a_{1} = 1.232\) and \(b_{1} = .160\), at Level Three. We would, however, sample new residuals \(u_{12}\) and \(v_{12}\) at Level Two, producing a different intercept \(a_{12}\) and slope \(b_{12}\) than those observed for Plant #11. Four new independent residuals \(\epsilon_{12k}\) would also be selected at Level One, from the same normal distribution as before with mean 0 and standard deviation .287.

Once an entire set of simulated heights for every pot, plant, and time point have been generated based on Model C.1, two models are fit to this data:

- Model C.1 – the correct (null) model that was actually used to generate the responses

- Model C – the incorrect (full) model that contains two extra variance components – \(\sigma_{\tilde{v}}^{2}\) and \(\sigma_{\tilde{u}\tilde{v}}\) – that were not actually used when generating the responses

# Generate 1 set of bootstrapped data and run chi-square test

set.seed(3333)

d <- drop(simulate(modelcl0))

m2 <-refit(modelcl, newresp=d)

m1 <-refit(modelcl0, newresp=d)

anova(m2,m1)## Data: leaddata

## Models:

## m1: hgt ~ time13 + strl + cult + rem + time13:strl + time13:cult +

## m1: time13:rem + (time13 | plant) + (1 | pot)

## m2: hgt ~ time13 + strl + cult + rem + time13:strl + time13:cult +

## m2: time13:rem + (time13 | plant) + (time13 | pot)

## Df AIC BIC logLik deviance Chisq Chi Df Pr(>Chisq)

## m1 13 588.48 640.78 -281.24 562.48

## m2 15 591.07 651.42 -280.54 561.07 1.4054 2 0.4953A likelihood ratio test statistic is calculated comparing Model C.1 to Model C. For example, after continuing as above to generate new \(Y_{ijk}\) values corresponding to all 413 leadplant height measurements, we fit the “bootstrapped” data using both models. Since the data was generated using Model C.1, we would expect the two extra terms in Model C (\(\sigma^2_{\tilde{v}}\) and \(\sigma_{\tilde{u}\tilde{v}}\)) to contribute very little to the quality of the fit; Model C will have a slightly larger likelihood and loglikelihood since it contains every parameter from Model C.1 plus two more, but the difference in the likelihoods should be due to chance. In fact, that is what the output above shows. Model C does have a larger loglikelihood than Model C.1 (-280.54 vs. -281.24), but this small difference is not statistically significant based on a chi-square test with 2 degrees of freedom (p=.4953).

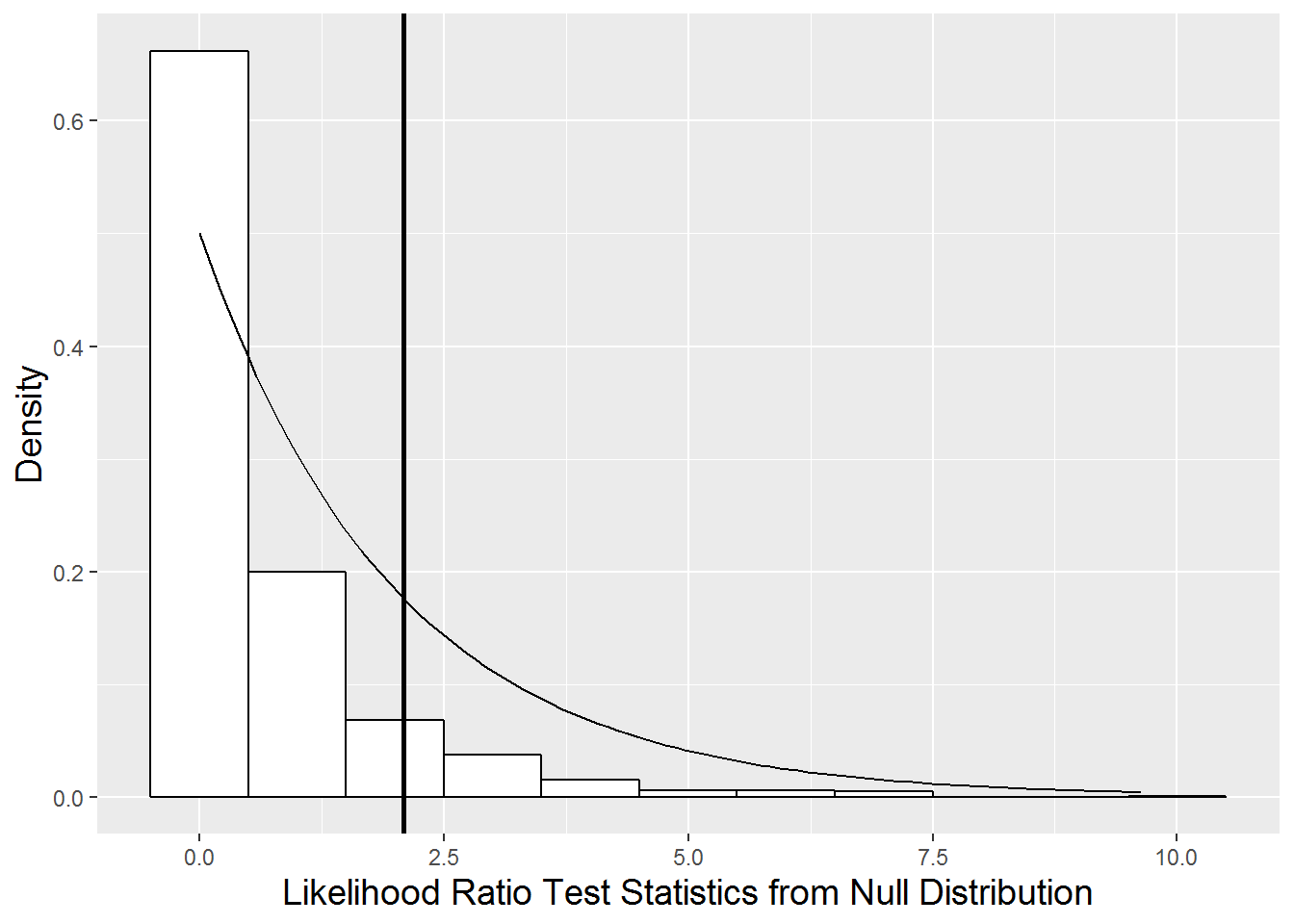

However, we are really only interested in saving the likelihood ratio test statistic from this bootstrapped sample (\(2*(-280.54 - (-281.24) = 1.40\)). By generating (“bootstrapping”) many sets of responses based on estimated parameters from Model C.1 and calculating many likelihood ratio test statistics, we can observe how this test statistic behaves under the null hypothesis of \(\sigma_{\tilde{v}}^{2} = \sigma_{\tilde{u}\tilde{v}} = 0\), rather than making the (dubious) assumption that its behavior is described by a chi-square distribution with 2 degrees of freedom. Figure 10.14 illustrates the null distribution of the likelihood ratio test statistic derived by the parametric bootstrap procedure as compared to a chi-square distribution. A p-value for comparing our full and reduced models can be approximated by finding the proportion of likelihood ratio test statistics generated under the null model which exceed our observed likelihood ratio test (2.089). The parametric bootstrap provides a more reliable p-value in this case (.088 from table below); a chi-square distribution puts too much mass in the tail and not enough near 0, leading to overestimation of the p-value. Based on this test, we would still choose our simpler Model C.1, but we nearly had enough evidence to favor the more complex model.

Data: leaddata

Parametric bootstrap with 1000 samples.

Models:

m0: hgt ~ time13 + strl + cult + rem + time13:strl + time13:cult +

m0: time13:rem + (time13 | plant) + (1 | pot)

mA: hgt ~ time13 + strl + cult + rem + time13:strl + time13:cult +

mA: time13:rem + (time13 | plant) + (time13 | pot)

Df AIC BIC logLik deviance Chisq Chi Df Pr_boot(>Chisq)

m0 13 581.64 633.95 -277.82 555.64

mA 15 583.55 643.90 -276.78 553.55 2.089 2 0.088

Figure 10.14: Null distribution of likelihood ratio test statistic derived using parametric bootstrap (histogram) compared to a chi-square distribution with 2 degrees of freedom (smooth curve). The horizontal line represents the observed likelihood ratio test statistic.

Another way of testing whether or not we should stick with the reduced model or reject it in favor of the larger model is by generating parametric bootstrap samples, and then using those samples to produce 95% confidence intervals for both \(\sigma_{\tilde{u}\tilde{v}} = 0\) and \(\sigma_{\tilde{v}}^{2} = 0\). From the output below, the confidence intervals for \(\sigma_{\tilde{u}\tilde{v}}\) (Index 10) and \(\sigma_{\tilde{v}}^{2}\) (Index 11) both contain 0, showing again that we do not have significant evidence to reject the reduced model in favor of the larger one.

# Use lmeresampler to get bootstrapped CIs for var components

## running a parametric bootstrap (30 seconds or so if B=100)

set.seed(333)

boo1 <- lmeresampler::bootstrap(model = modelcl, fn = varcomp.mer,

type = "parametric", B = 100)

## bootstrap confidence intervals are easily found using 'boot.ci'

## - there are actually 7 variance components

## - varcomp.mer lists 11, but 4 are actually 0

boot.ci(boo1, index = 10, type="perc")## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS

## Based on 100 bootstrap replicates

##

## CALL :

## boot.ci(boot.out = boo1, type = "perc", index = 10)

##

## Intervals :

## Level Percentile

## 95% (-0.0043, 0.0012 )

## Calculations and Intervals on Original Scale

## Some percentile intervals may be unstableboot.ci(boo1, index = 11, type="perc")## BOOTSTRAP CONFIDENCE INTERVAL CALCULATIONS

## Based on 100 bootstrap replicates

##

## CALL :

## boot.ci(boot.out = boo1, type = "perc", index = 11)

##

## Intervals :

## Level Percentile

## 95% ( 0.0000, 0.0004 )

## Calculations and Intervals on Original Scale

## Some percentile intervals may be unstable## you can also examine the bootstrap samples graphically

# plot(boo1, index = 10)

# plot(boo1, index = 11)In this section, we have offered the parametric bootstrap as a noticeable improvement over the likelihood ratio test with an approximate chi-square distribution for testing random effects near the boundary. And typically when we conduct hypothesis tests involving variance terms we are testing at the boundary, since we are asking if the variance term is really necessary (i.e., \(H_O: \sigma^2=0\) vs. \(H_A: \sigma^2 > 0\)). However, what if we are conducting a hypothesis test about a fixed effect? For the typical test of whether or not a fixed effect is significant – e.g., \(H_O: \alpha_i=0\) vs. \(H_A: \alpha_i \neq 0\) – we are not testing at the boundary, since most fixed effects have no bounds on allowable values. We have often used a likelihood ratio test with an approximate chi-square distribution in these settings – does that provide accurate p-values? Although some research (e.g., J. Faraway (2005)) shows that p-values of fixed effects from likelihood ratio tests can tend to be anti-conservative (too low), in general the approximation is not bad. We will continue to use the likelihood ratio test with a chi-square distribution for fixed effects, but you could always check your p-values using a parametric bootstrap approach.

10.7 Exploding variance components

Our modeling task in Section 10.5 was simplified by the absence of covariates at Level Two. As multilevel models grow to include three or more levels, the addition of just a few covariates at lower levels can lead to a huge increase in the number of parameters (fixed effects and variance components) that must be estimated throughout the model. In this section, we will examine when and why the number of model parameters might explode, and we will consider strategies for dealing with these potentially complex models.

For instance, consider Model C, where we must estimate a total of 15 parameters: 8 fixed effects plus 7 variance components (1 at Level One, 3 at Level Two, and 3 at Level Three). By adding just a single covariate to the equations for \(a_{ij}\) and \(b_{ij}\) at Level Two in Model C (say, for instance, the size of each seed), we would now have a total of 30 parameters to estimate! The new multilevel model (Model Cplus) could be written as follows:

- Level One (timepoint within plant): \[\begin{equation} Y_{ijk} = a_{ij}+b_{ij}\textstyle{time}_{ijk}+\epsilon_{ijk} \tag{10.7} \end{equation}\]

- Level Two (plant within pot): \[\begin{eqnarray*} a_{ij} & = & a_{i}+c_{i}\textstyle{seedsize}_{ij}+u_{ij} \\ b_{ij} & = & b_{i}+d_{i}\textstyle{seedsize}_{ij}+v_{ij} \end{eqnarray*}\]

- Level Three (pot): \[\begin{eqnarray*} a_{i} & = & \alpha_{0}+\alpha_{1}\textstyle{strl}_{i}+\alpha_{2}\textstyle{cult}_{i}+\alpha_{3}\textstyle{rem}_{i}+ \tilde{u}_{i}\\ b_{i} & = & \beta_{0}+\beta_{1}\textstyle{strl}_{i}+\beta_{2}\textstyle{cult}_{i}+\beta_{3}\textstyle{rem}_{i}+ \tilde{v}_{i} \\ c_{i} & = & \gamma_{0}+\gamma_{1}\textstyle{strl}_{i}+\gamma_{2}\textstyle{cult}_{i}+\gamma_{3}\textstyle{rem}_{i}+ \tilde{w}_{i} \\ d_{i} & = & \delta_{0}+\delta_{1}\textstyle{strl}_{i}+\delta_{2}\textstyle{cult}_{i}+\delta_{3}\textstyle{rem}_{i}+ \tilde{z}_{i} \end{eqnarray*}\]

where \(\epsilon_{ijk}\sim N(0,\sigma^2)\), \[ \left[ \begin{array}{c} u_{ij} \\ v_{ij} \end{array} \right] \sim N \left( \left[ \begin{array}{c} 0 \\ 0 \end{array} \right], \left[ \begin{array}{cc} \sigma_{u}^{2} & \\ \sigma_{uv} & \sigma_{v}^{2} \end{array} \right] \right), \] and \[ \left[ \begin{array}{c} \tilde{u}_{i} \\ \tilde{v}_{i} \\ \tilde{w}_{i} \\ \tilde{z}_{i} \end{array} \right] \sim N \left( \left[ \begin{array}{c} 0 \\ 0 \\ 0 \\ 0 \end{array} \right], \left[ \begin{array}{cccc} \sigma_{\tilde{u}}^{2} & & & \\ \sigma_{\tilde{u}\tilde{v}} & \sigma_{\tilde{v}}^{2} & & \\ \sigma_{\tilde{u}\tilde{w}} & \sigma_{\tilde{v}\tilde{w}} & \sigma_{\tilde{w}}^{2} & \\ \sigma_{\tilde{u}\tilde{z}} & \sigma_{\tilde{v}\tilde{z}} & \sigma_{\tilde{w}\tilde{z}} & \sigma_{\tilde{z}}^{2} \end{array} \right] \right). \] We would have 16 fixed effects from the four equations at Level Three, each with 4 fixed effects to estimate if we use the same 3 indicators for soil type and sterilization for each \(a\), \(b\), \(c\), and \(d\). And, with four equations at Level Three, the error covariance matrix at the pot level would be 4x4 with 10 variance components to estimate; each error term (4) has a variance associated with it, and each pair of error terms (6) has an associated correlation. The error structure at Levels One (1 variance term) and Two (2 variance terms and 1 correlation) would remain the same, for a total of 14 variance components.

Now consider adding an extra Level One covariate to the model in the previous paragraph. How many model parameters would now need to be estimated? (Try writing out the multilevel models and counting parameters…) The correct answer is 52 total parameters! There are 24 fixed effects (from 6 Level Three equations) and 28 variance components (1 at Level One, 6 at Level Two, and 21 at Level Three).

Estimating even 30 parameters as in Model Cplus from a single set of data is an ambitious task and computationally very challenging. Essentially we (or the statistics package we are using) must determine which combination of values for the 30 parameters would maximize the likelihood associated with our model and the observed data. Even in the absolute simplest case, with only two options for each parameter, there would be over one billion possible combinations to consider. But if our primary interest is in fixed effects, we really only have 5 covariates (and their associated interactions) in our model. What can be done to make fitting a 3-level model with 1 covariate at Level One, 1 at Level Two, and 3 at Level Three more manageable? Reasonable options include:

- Reduce the number of variance components by assuming all error terms to be independent; that is, set all correlation terms to 0.

- Reduce the number of variance components by removing error terms from certain Level Two and Three equations. Often, researchers will begin with a random intercepts model, in which only the first equation at Level Two and Three has an error term. With the leadplant data, we would account for variability in Day 13 height (intercept) among plants and pots, but assume that the effects of time and seed size are constant among pots and plants.

- Reduce the number of fixed effects by removing interaction terms that are not expected to be meaningful. Interaction terms between covariates at different levels can be eliminated simply by reducing the number of terms in certain equations at Levels Two and Three. There is no requirement that all equations at a certain level contain the same set of predictors. Often, researchers will not include covariates in equations beyond the intercept at a certain level unless there’s a compelling reason.

By following the options above, our potential 30-parameter model can be simplified to this 9-parameter model:

- Level One: \[\begin{equation} Y_{ijk} = a_{ij}+b_{ij}\textstyle{time}_{ijk}+\epsilon_{ijk} \end{equation}\]

- Level Two: \[\begin{eqnarray*} a_{ij} & = & a_{i}+c_{i}\textstyle{seedsize}_{ij}+u_{ij} \\ b_{ij} & = & b_{i} \end{eqnarray*}\]

- Level Three: \[\begin{eqnarray*} a_{i} & = & \alpha_{0} + \alpha_{1}\textstyle{strl}_{i} + \alpha_{2}\textstyle{cult}_{i} + \alpha_{3}\textstyle{rem}_{i} + \tilde{u}_{i} \\ b_{i} & = & \beta_{0} \\ c_{i} & = & \gamma_{0} \end{eqnarray*}\]

where \(\epsilon_{ijk}\sim N(0,\sigma^2)\), \(u_{ij}\sim N(0,\sigma_{u}^{2})\), and \(\tilde{u}_{i}\sim N(0,\sigma_{\tilde{u}}^{2})\). Or, in terms of a composite model:

\[\begin{eqnarray*} Y_{ijk} & = & [\alpha_{0}+\alpha_{1}\textstyle{strl}_{i}+\alpha_{2}\textstyle{cult}_{i}+\alpha_{3}\textstyle{rem}_{i} + \gamma_{0}\textstyle{seedsize}_{ij} + \beta_{0}\textstyle{time}_{ijk}] + \\ & & [\tilde{u}_{i}+u_{ij}+\epsilon_{ijk}] \end{eqnarray*}\]According to the second option, we have built a random intercepts model with error terms only at the first (intercept) equation at each level. Not only does this eliminate variance terms associated with the missing error terms, but it also eliminates correlation terms between errors (as suggested by Option 1) since there are no pairs of error terms that can be formed at any level. In addition, as suggested by Option 3, we have eliminated predictors (and their fixed effects coefficients) at every equation other than the intercept at each level.

The simplified 9-parameter model essentially includes a random effect for pot (\(\sigma_{\tilde{u}}^{2}\)) after controlling for sterilization and soil type, a random effect for plant within pot (\(\sigma_{u}^{2}\)) after controlling for seed size, and a random effect for error about the time trend for individual plants (\(\sigma^{2}\)). We must assume that the effect of time is the same for all plants and all pots, and it does not depend on seed size, sterilization, or soil type. Similarly, we must assume that the effect of seed size is the same for each pot and does not depend on sterilization or soil type. While somewhat proscriptive, a random intercepts model such as this can be a sensible starting point, since the simple act of accounting for variability of observational units at Level Two or Three can produce better estimates of fixed effects of interest and their standard errors.

10.8 Building to a final model

In Model C we considered the main effects of soil type and sterilization on leadplant initial height and growth rate, but we did not consider interactions—even though biology researchers expect that sterilization will aid growth in certain soil types more than others. Thus, in Model D we will build Level Three interaction terms into Model C.1:

- Level One: \[\begin{equation} Y_{ijk} = a_{ij}+b_{ij}\textstyle{time}_{ijk}+\epsilon_{ijk} \end{equation}\]

- Level Two: \[\begin{eqnarray*} a_{ij} & = & a_{i}+u_{ij} \\ b_{ij} & = & b_{i}+v_{ij} \end{eqnarray*}\]

- Level Three: \[\begin{eqnarray*} a_{i} & = & \alpha_{0} + \alpha_{1}\textstyle{strl}_{i} + \alpha_{2}\textstyle{cult}_{i} + \alpha_{3}\textstyle{rem}_{i} + \alpha_{4}\textstyle{strl}_{i}\textstyle{rem}_{i} + \alpha_{5}\textstyle{strl}_{i}\textstyle{cult}_{i} + \tilde{u}_{i} \\ b_{i} & = & \beta_{0}+\beta_{1}\textstyle{strl}_{i}+\beta_{2}\textstyle{cult}_{i}+\beta_{3}\textstyle{rem}_{i} + \beta_{4}\textstyle{strl}_{i}\textstyle{rem}_{i} + \beta_{5}\textstyle{strl}_{i}\textstyle{cult}_{i} \end{eqnarray*}\]

where error terms are defined as in Model C.1.

From the output below, we see that the interaction terms were not especially helpful, except possibly for a differential effect of sterilization in remnant and reconstructed prairies on the growth rate of leadplants. But it’s clear that Model D can be simplified through the removal of certain fixed effects with low t-ratios.

Formula: hgt ~ time13 + strl + cult + rem + time13:strl + time13:cult +

time13:rem + strl:cult + strl:rem + time13:strl:cult + time13:strl:rem +

(time13 | plant) + (1 | pot)

Random effects:

Groups Name Variance Std.Dev. Corr

plant (Intercept) 0.292722 0.54104

time13 0.001276 0.03573 0.20

pot (Intercept) 0.070544 0.26560

Residual 0.082139 0.28660

Number of obs: 413, groups: plant, 107; pot, 32

Fixed effects:

Estimate Std. Error t value

(Intercept) 1.531250 0.153897 9.950

time13 0.094919 0.008119 11.692

strl -0.126511 0.222661 -0.568

cult -0.061835 0.329916 -0.187

rem 0.124258 0.239585 0.519

time13:strl 0.072790 0.012005 6.063

time13:cult -0.022624 0.020836 -1.086

time13:rem -0.020474 0.013165 -1.555

strl:cult 0.276162 0.407097 0.678

strl:rem -0.071397 0.378048 -0.189

time13:strl:cult -0.016082 0.024812 -0.648

time13:strl:rem -0.051990 0.023951 -2.171To form Model F, we begin by removing all covariates describing the intercept (Day 13 height), since neither sterilization nor soil type nor their interaction appear to be significantly related to initial height. However, sterilization, remnant prairie soil, and their interaction appear to have significant influences on growth rate, although the effect of cultivated soil on growth rate did not appear significantly different from that of restored prairie soil (the reference level). A likelihood ratio test shows no significant difference between Models D and F (chi-square test statistic = 11.15 on 7 df, p=.1323), supporting the use of simplified Model F.

Formula:

hgt ~ time13 + time13:strl + time13:rem + time13:strl:rem + (time13 |

plant) + (1 | pot)

Random effects:

Groups Name Variance Std.Dev. Corr

plant (Intercept) 0.294044 0.54226

time13 0.001425 0.03775 0.16

pot (Intercept) 0.048710 0.22070

Residual 0.082045 0.28643

Number of obs: 413, groups: plant, 107; pot, 32

Fixed effects:

Estimate Std. Error t value

(Intercept) 1.529092 0.071336 21.435

time13 0.091371 0.007745 11.798

time13:strl 0.059670 0.010375 5.751

time13:rem -0.016489 0.013238 -1.246

time13:strl:rem -0.039389 0.023847 -1.652Data: leaddata

Models:

modelfl0: hgt ~ time13 + time13:strl + time13:rem + time13:strl:rem + (time13 |

modelfl0: plant) + (1 | pot)

modeldl0: hgt ~ time13 + strl + cult + rem + time13:strl + time13:cult +

modeldl0: time13:rem + strl:cult + strl:rem + time13:strl:cult + time13:strl:rem +

modeldl0: (time13 | plant) + (1 | pot)

Df AIC BIC logLik deviance Chisq Chi Df Pr(>Chisq)

modelfl0 10 581.20 621.43 -280.60 561.20

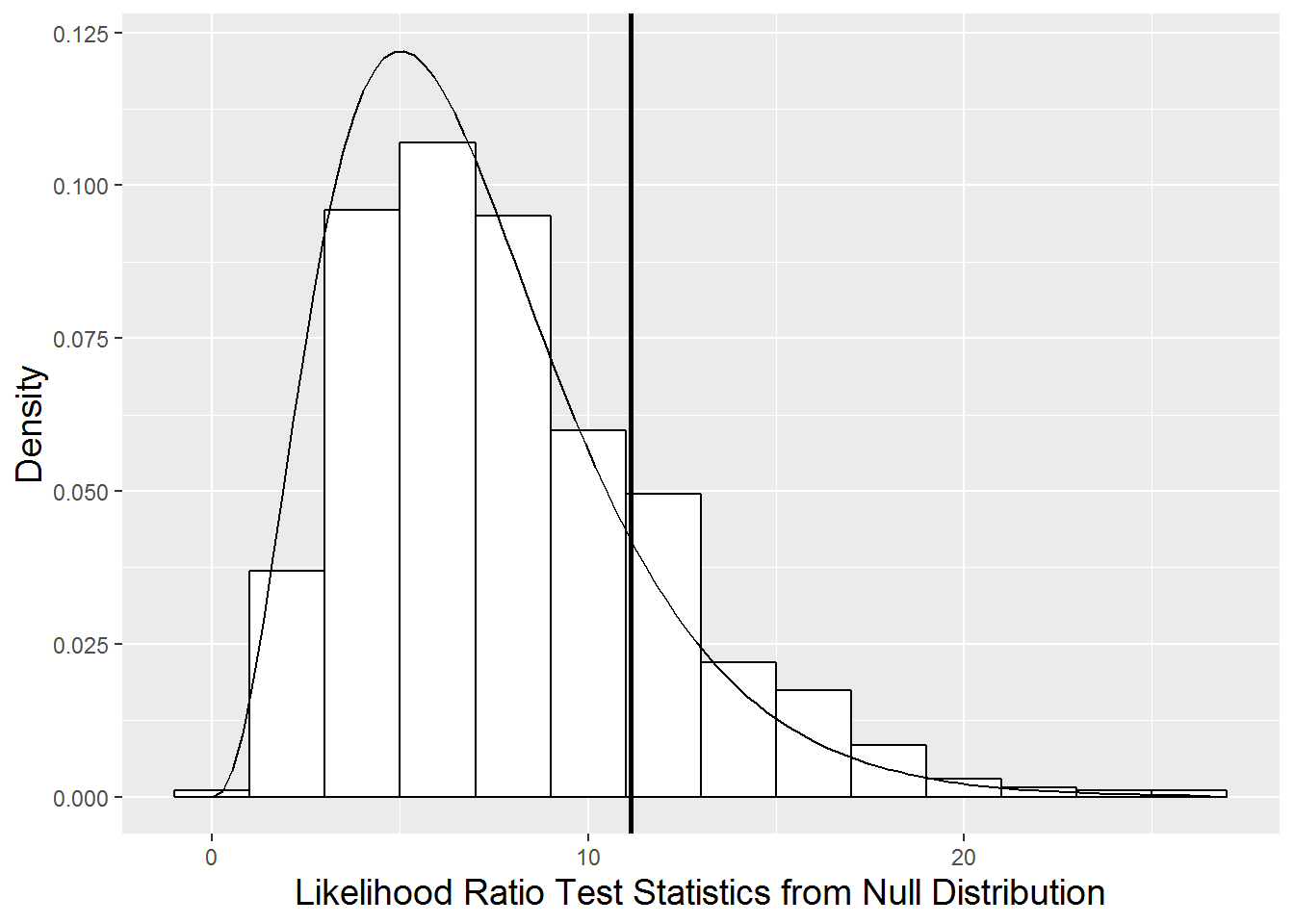

modeldl0 17 584.05 652.45 -275.02 550.05 11.148 7 0.1323We mentioned in Section 10.6 that we could compare Model F and Model D, which differ only in their fixed effects terms, using the parametric bootstrap approach. In fact, in Section 10.6 we suggested that the p-value using the chi-square approximation (.1323) may be a slight under-estimate of the true p-value, but probably in the ballpark. In fact, when we generated 1000 bootstrapped samples of plant heights under Model F, and produced 1000 simulated likelihood ratios comparing Models D and F, we produced a p-value of .201. In Figure 10.15, we see that the chi-square distribution has too much area in the peak and too little area in the tails, although in general it approximates the parametric bootstrap distribution of the likelihood ratio pretty nicely.

Figure 10.15: Null distribution of likelihood ratio test statistic derived using parametric bootstrap (histogram) compared to a chi-square distribution with 7 degrees of freedom (smooth curve). The horizontal line represents the observed likelihood ratio test statistic.

The effects of remnant prairie soil and the interaction between remnant soil and sterilization appear to have marginal benefit in Model F, so we remove those two terms to create Model E. A likelihood ratio test comparing Models E and F, however, shows that Model F significantly outperforms Model E (chi-square test statistic = 9.40 on 2 df, p=.0090). Thus, we will use Model F as our “Final Model” for generating inference.

Formula: hgt ~ time13 + time13:strl + (time13 | plant) + (1 | pot)

Random effects:

Groups Name Variance Std.Dev. Corr

plant (Intercept) 0.29441 0.54260

time13 0.00159 0.03987 0.14

pot (Intercept) 0.04712 0.21707

Residual 0.08199 0.28634

Number of obs: 413, groups: plant, 107; pot, 32

Fixed effects:

Estimate Std. Error t value

(Intercept) 1.528770 0.070965 21.543

time13 0.085682 0.006524 13.134

time13:strl 0.058645 0.009364 6.263refitting model(s) with ML (instead of REML)...

Df AIC BIC logLik deviance Chisq Chi Df Pr(>Chisq)

modelel0 8 586.6 618.78 -285.3 570.6

modelfl0 10 581.2 621.43 -280.6 561.2 9.401 2 0.009091 **

---

Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

...Our final model (Model F), with its constraints on Level Three error terms, can be expressed level-by-level as:

- Level One: \[\begin{equation} Y_{ijk} = a_{ij}+b_{ij}\textstyle{time}_{ijk}+\epsilon_{ijk} \end{equation}\]

- Level Two: \[\begin{eqnarray*} a_{ij} & = & a_{i}+u_{ij} \\ b_{ij} & = & b_{i}+v_{ij} \end{eqnarray*}\]

- Level Three: \[\begin{eqnarray*} a_{i} & = & \alpha_{0} + \tilde{u}_{i} \\ b_{i} & = & \beta_{0}+\beta_{1}\textstyle{strl}_{i}+\beta_{2}\textstyle{rem}_{i} + \beta_{3}\textstyle{strl}_{i}\textstyle{rem}_{i} \end{eqnarray*}\]

where \(\epsilon_{ijk}\sim N(0,\sigma^2)\), \[ \left[ \begin{array}{c} u_{ij} \\ v_{ij} \end{array} \right] \sim N \left( \left[ \begin{array}{c} 0 \\ 0 \end{array} \right], \left[ \begin{array}{cc} \sigma_{u}^{2} & \\ \sigma_{uv} & \sigma_{v}^{2} \end{array} \right] \right), \] and \(\tilde{{u}_{i}}\sim N(0,\sigma_{\tilde{u}}^{2})\).

In composite form, we have:

\[\begin{eqnarray*} Y_{ijk} & = & [\alpha_{0}+ \beta_{0}\textstyle{time}_{ijk} + \beta_{1}\textstyle{strl}_{i}\textstyle{time}_{ijk} + \beta_{2}\textstyle{rem}_{i}\textstyle{time}_{ijk} + \beta_{3}\textstyle{strl}_{i}\textstyle{rem}_{i}\textstyle{time}_{ijk}] + \\ & & [\tilde{u}_{i}+u_{ij}+\epsilon_{ijk}+v_{ij}\textstyle{time}_{ijk}] \end{eqnarray*}\]Estimates of model parameters can be interpreted in the following manner:

- \(\hat{\sigma}=.287=\) the standard deviation in within-plant residuals after accounting for time.

- \(\hat{\sigma}_{u}=.543=\) the standard deviation in Day 13 heights between plants from the same pot.

- \(\hat{\sigma}_{v}=.037=\) the standard deviation in rates of change in height between plants from the same pot.

- \(\hat{\rho}_{uv}=.157=\) the correlation in plants’ Day 13 height and their rate of change in height.

- \(\hat{\sigma}_{\tilde{u}}=.206=\) the standard deviation in Day 13 heights between pots.

- \(\hat{\alpha}_{0}=1.529=\) the mean height for leadplants 13 days after planting.

- \(\hat{\beta}_{0}=0.091=\) the mean daily change in height from 13 to 28 days after planting for leadplants from reconstructed prairies or cultivated lands (

rem=0) with no sterilization (strl=0) . - \(\hat{\beta}_{1}=0.060=\) the increase in mean daily change in height for leadplants from using sterilized soil instead of unsterilized soil in reconstructed prairies or cultivated lands. Thus, leadplants grown in sterilized soil from reconstructed prairies or cultivated lands have an estimated daily increase in height of 0.151 mm.

- \(\hat{\beta}_{2}=-0.017=\) the decrease in mean daily change in height for leadplants from using unsterilized soil from remnant prairies, rather than unsterilized soil from reconstructed prairies or cultivated lands. Thus, leadplants grown in unsterilized soil from remnant prairies have an estimated daily increase in height of 0.074 mm.

- \(\hat{\beta}_{3}=-0.039=\) the decrease in mean daily change in height for leadplants from sterilized soil from remnant prairies, compared to the expected daily change based on \(\hat{\beta}_{1}\) and \(\hat{\beta}_{2}\). Three-way interactions show that the size of an interaction between two predictors differs depending on the level of a third predictor. Whew! In this case, we might focus on how the interaction between remnant prairies and time differs for unsterilized and sterilized soil. Specifically, the negative effect of remnant prairies on growth rate (compared to reconstructed prairies or cultivated lands) is larger in sterilized soil than unsterilized; in sterilized soil, plants from remnant prairie soil grow .056 mm/day slower on average than plants from other soil types (.095 vs. .151 mm/day), while in unsterilized soil, plants from remnant prairie soil grow just .017 mm/day slower than plants from other soil types (.074 vs. .091 mm/day). Note that the difference between .056 and .017 is our three-way interaction coefficient. Through this three-way interaction term, we also see that leadplants grown in sterilized soil from remnant prairies have an estimated daily increase in height of 0.095 mm.

Based on t-values produced by Model F, sterilization has the most significant effect on leadplant growth, while there is some evidence that growth rate is somewhat slower in remnant prairies, and that the effect of sterilization is also somewhat muted in remnant prairies. Sterilization leads to an estimated 66% increase in growth rate of leadplants from Days 13 to 28 in soil from reconstructed prairies and cultivated lands, and an estimated 28% increase in soil from remnant prairies. In unsterilized soil, plants from remnant prairies grow an estimated 19% slower than plants from other soil types.

10.9 Covariance structure (Optional)

As in Chapter 9, it is important to be aware of the covariance structure implied by our chosen models (focusing initially on Model B). Our three-level model, through error terms at each level, defines a specific covariance structure at both the plant level (Level Two) and the pot level (Level Three). For example, our standard model implies a certain level of correlation among measurements from the same plant and among plants from the same pot. Although three-level models are noticeably more complex than two-level models, it is still possible to systematically determine the implication of our standard model; by doing this, we can evaluate whether our fitted model agrees with our exploratory analyses, and we can also decide if it’s worth considering alternative covariance structures.

We will first consider Model B with \(\tilde{v}_{i}\) at Level Three, and then we will evaluate the resulting covariance structure that results from removing \(\tilde{v}_{i}\), thereby restricting \(\sigma_{\tilde{v}}^{2}=\sigma_{\tilde{u}\tilde{v}}=0\). The composite version of Model B has been previously expressed as:

\[\begin{equation} Y_{ijk}=[\alpha_{0}+\beta_{0}\textstyle{time}_{ijk}]+ [\tilde{u}_{i}+u_{ij}+\epsilon_{ijk}+(\tilde{v}_{i}+v_{ij})\textstyle{time}_{ijk}] \tag{10.8} \end{equation}\]where \(\epsilon_{ijk}\sim N(0,\sigma^2)\), \[ \left[ \begin{array}{c} u_{ij} \\ v_{ij} \end{array} \right] \sim N \left( \left[ \begin{array}{c} 0 \\ 0 \end{array} \right], \left[ \begin{array}{cc} \sigma_{u}^{2} & \\ \sigma_{uv} & \sigma_{v}^{2} \end{array} \right] \right), \] and \[ \left[ \begin{array}{c} \tilde{u}_{i} \\ \tilde{v}_{i} \end{array} \right] \sim N \left( \left[ \begin{array}{c} 0 \\ 0 \end{array} \right], \left[ \begin{array}{cc} \sigma_{\tilde{u}}^{2} & \\ \sigma_{\tilde{u}\tilde{v}} & \sigma_{\tilde{v}}^{2} \end{array} \right] \right). \]

In order to assess the implied covariance structure from our standard model, we must first derive variance and covariance terms for related observations (i.e., same timepoint and same plant, different timepoints but same plant, different plants but same pot). Each derivation will rely on the random effects portion of the composite model, since there is no variability associated with fixed effects. For ease of notation, we will let \(t_{k}=\textstyle{time}_{ijk}\), since all plants were planned to be observed on the same 4 days.