Chapter3 Single Missing data imputation

The topic of this Chapter is to explain how simple missing data methods like complete case analysis, mean and single regression imputation work. These procedures are still very often applied (Eekhout et al. 2012) but generally not recommended because they decreases statistical power or lead to an incorrect estimation of standard errors when the data is MCAR, MAR and MNAR (Eekhout et al. 2014; Van Buuren 2018; Enders 2010).

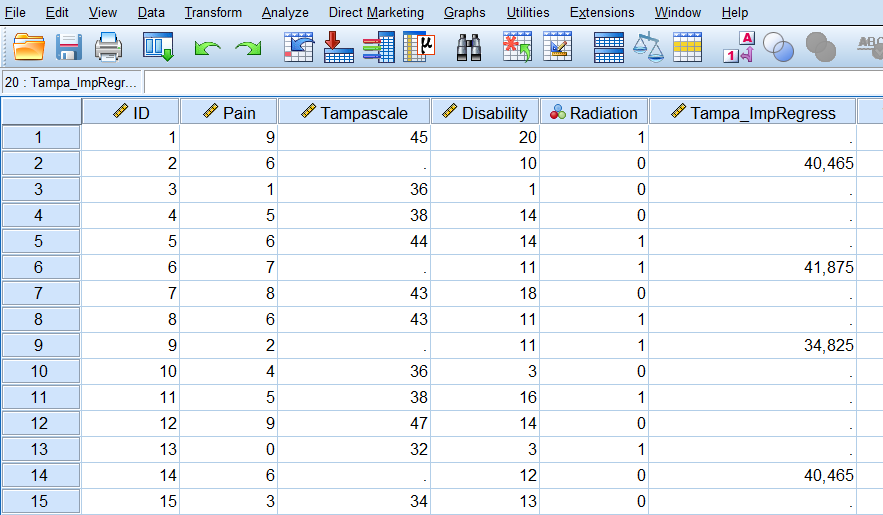

We use as an example data from a study about low back pain and we want to study if the Tampa scale variable is a predictor of low back pain. Both variables are continuous. Pain represents the intensity of the low back pain and the Tampa scale measures fear of moving the low back. The Tampa scale variable contains missing values. The number or type of missing values is not important because the main topic is to show how simple missing data methods work in SPSS and R.

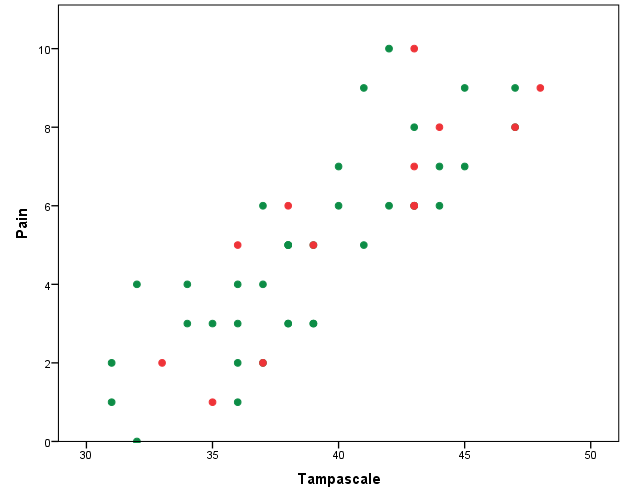

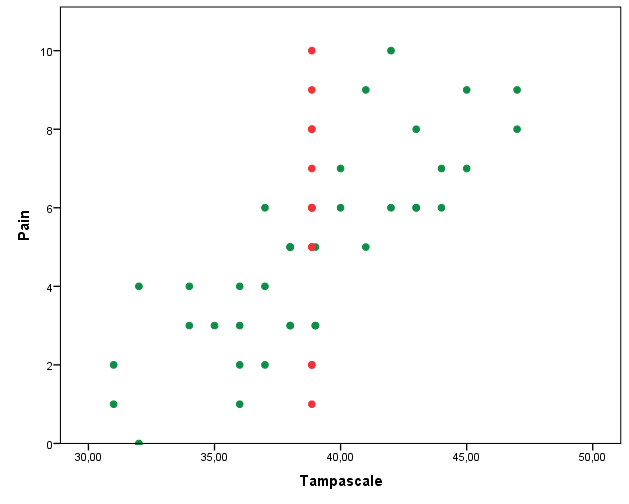

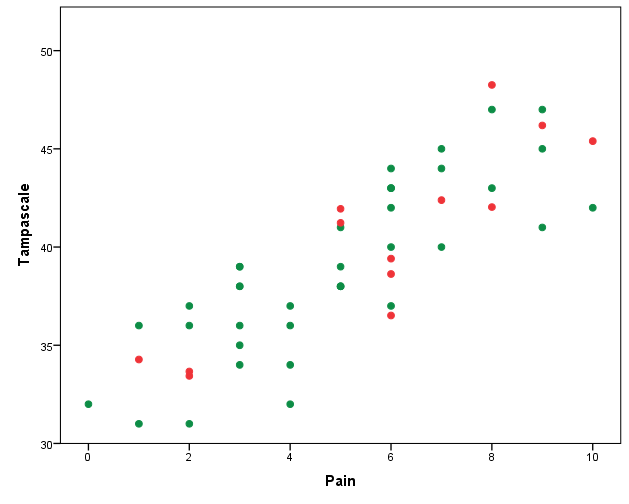

To get a first impression about the relationship between the Pain and the Tampa scale variables we make a scatterplot. The scatterplots with the complete and intended incomplete data is displayed in Figure 3.1.

Figure 3.1: Relationship between the Tampa scale and Pain variables (green dots are observed and red dots are the missing data

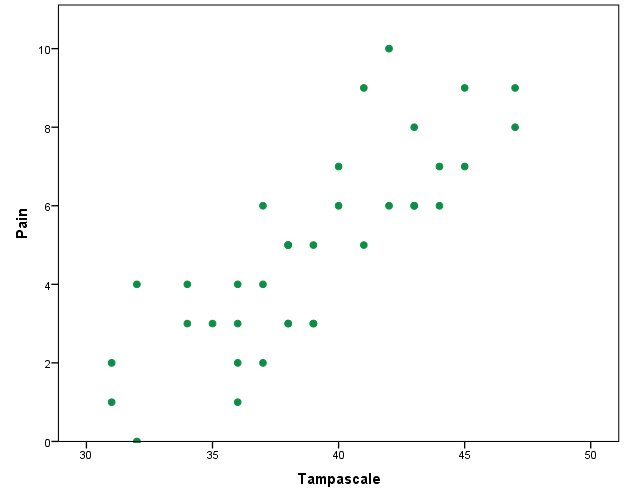

The green dots in Figure 3.1 represent the observed data and the red dots the missing data points. In practice, you work with the available points that are visualized in Figure 3.2.

Figure 3.2: Relationship between the Tampa scale and Pain variable. Missing data are excluded

3.1 Complete cases analysis

Complete case analysis (CCA) means that persons with a missing data point are excluded from the dataset before statistical analyses are performed. Nevertheless it is the default procedure in many statistical software packages such as SPSS.

3.2 Mean Imputation

With mean imputation the mean of a variable that contains missing values is calculated and used to replace all missing values in that variable.

3.2.1 Mean imputation in SPSS

Descriptive Statistics

The easiest method to do mean imputation is by calculating the mean using

Analyze -> Descriptive Statistics -> Descriptives

and than replace the missing values by the mean value by using the “Recode into Same Variables”under the Transform menu.

Other procedures for mean imputation are the Replace Missing Values procedure under Transform and by using the Linear Regression procedure.

Replace Missing Values procedure

You can find the Replace Missing Values dialog box via

Transform -> Replace Missing Values.

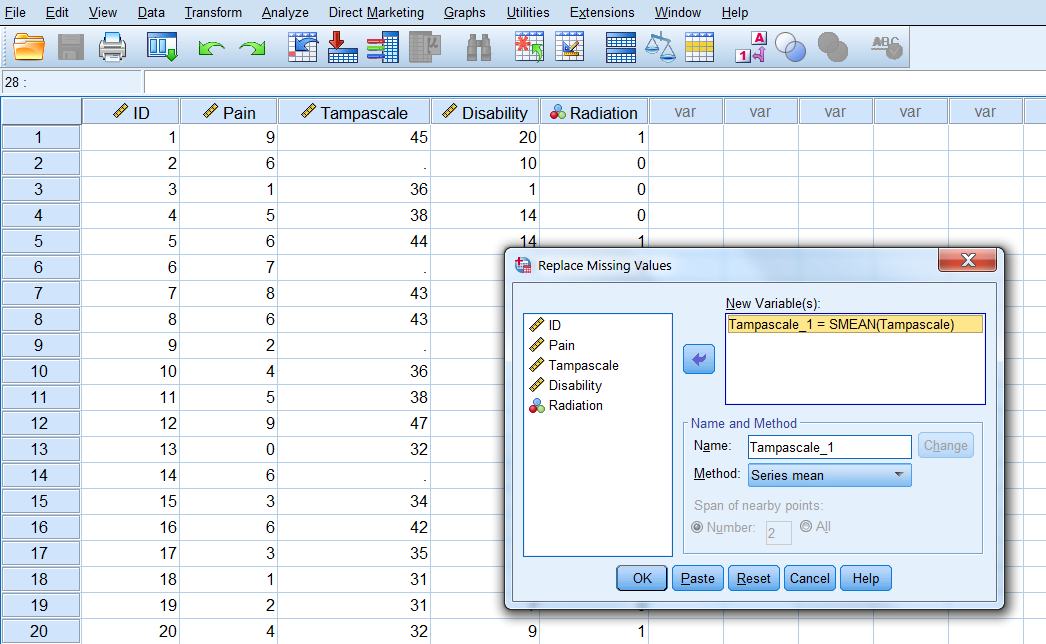

A new window opens. Transport the Tampa scale variable to the New variable(s) window (Figure 3.3). The default imputation procedure is Mean imputation or called “Series mean”.

Figure 3.3: Window for mean imputation of the Tampa scale variable.

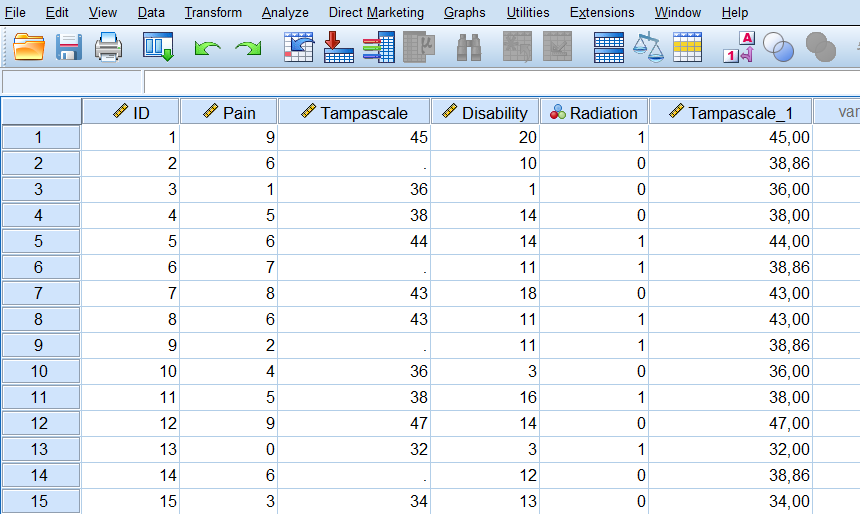

When you click on OK, a new variable is created in the dataset using the existing variable name followed by an underscore and a sequential number. The result is shown in Figure 3.4.

Figure 3.4: Mean imputation of the Tampa scale variable with the Replace Missing Values procedure.

If we now make the scatterplot between the Pain and the Tampa scale variable it clearly shows the result of the mean imputation procedure, all imputed values are located at the mean value (Figure 3.5).

Figure 3.5: Scatterplot between the Tampa scale and Pain variable, after the missing values of the Tampa scale variable have been replaced by the mean.

Linear Regression

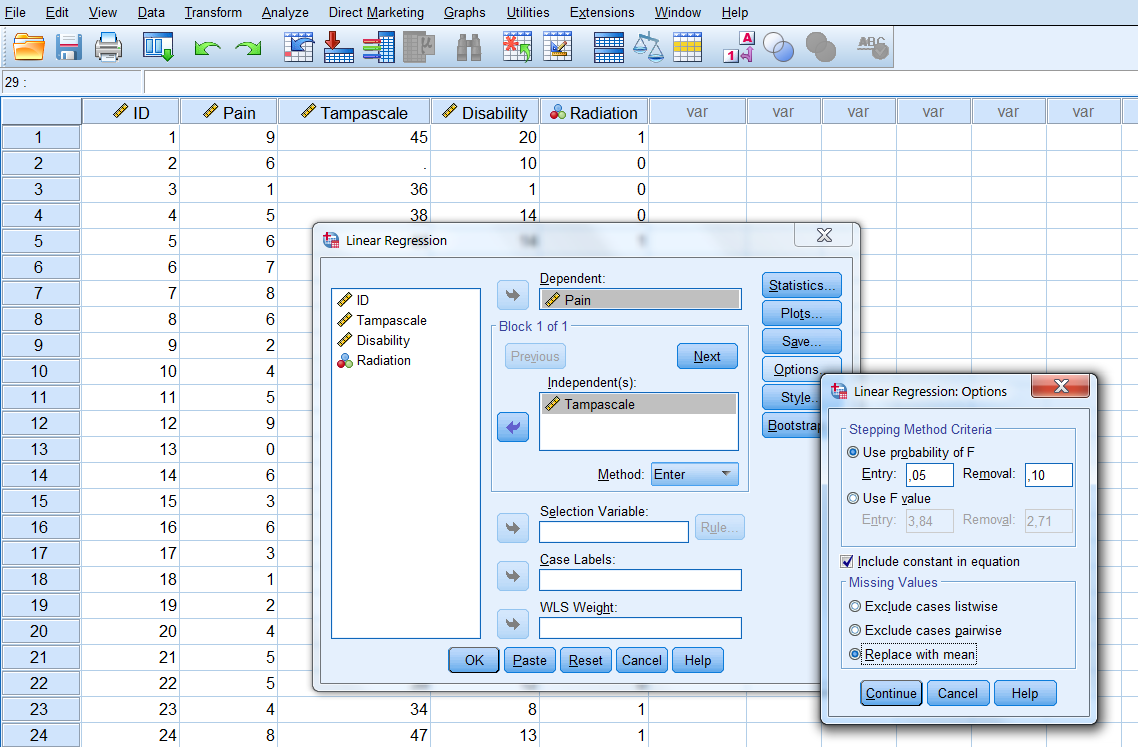

Mean imputation is also integrated in the Linear Regression menu via:

Analyze -> Regression -> Linear -> Options.

In the Missing Values group you choose for Replace with mean (Figure 3.6).

Figure 3.6: The option Replace with mean in the Linear Regression menu.

3.2.2 Mean imputation in R

You can do mean imputation by using the mice function in the mice package and choose as method “mean”.

library(foreign) # activate the foreign package to use the read.spss function

dataset <- read.spss(file="data/Backpain 50 missing.sav", to.data.frame=T)

library(mice) # Activate the mice package to use the mice function

imp_mean <- mice(dataset, method="mean", m=1, maxit=1)##

## iter imp variable

## 1 1 TampascaleYou can extract the mean imputed dataset by using the complete function as follows: complete(imp_mean)

3.3 Regression imputation

With regression imputation the information of other variables is used to predict the missing values in a variable by using a regression model. Commonly, first the regression model is estimated in the observed data and subsequently using the regression weights the missing values are predicted and replaced.

3.3.1 Regression imputation in SPSS

You can apply regression imputation in SPSS via the Missing Value Analysis menu. There are two options for regression imputation, the Regression option and the Expectation Maximization (EM) option. The Regression option in SPSS has some flaws in the estimation of the regression parameters (Hippel 2004). Therefore, we recommend the EM algorithm. This algorithm is a likelihood-based procedure. This means that the most likely values of the regression coefficients are estimated given the data and subsequently used to impute the missing value. This EM procedure gives the same results as first performing a simple regression analysis in the dataset and subsequently estimate the missing values from the regression equation. Both methods are described below.

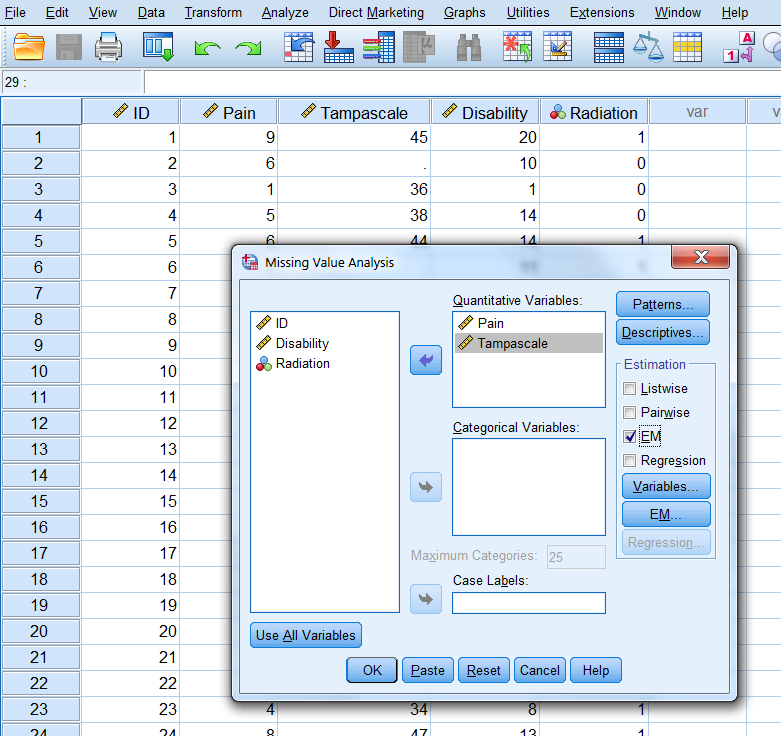

3.3.1.1 EM procedure

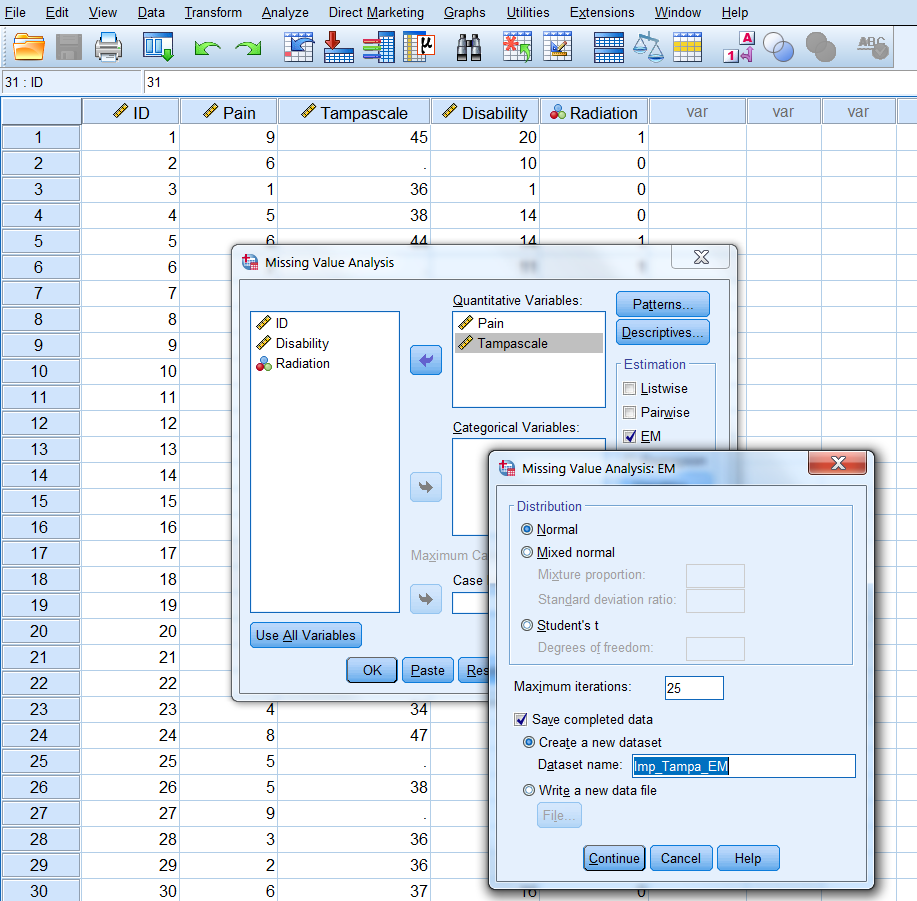

Step 1, go to:

Analyze -> Missing Value Analysis…

In the main Missing Value Analysis dialog box, select the variable(s) and select EM in the Estimation group (Figure 3.7).

Figure 3.7: EM Selection in the Missing Value Analysis window.

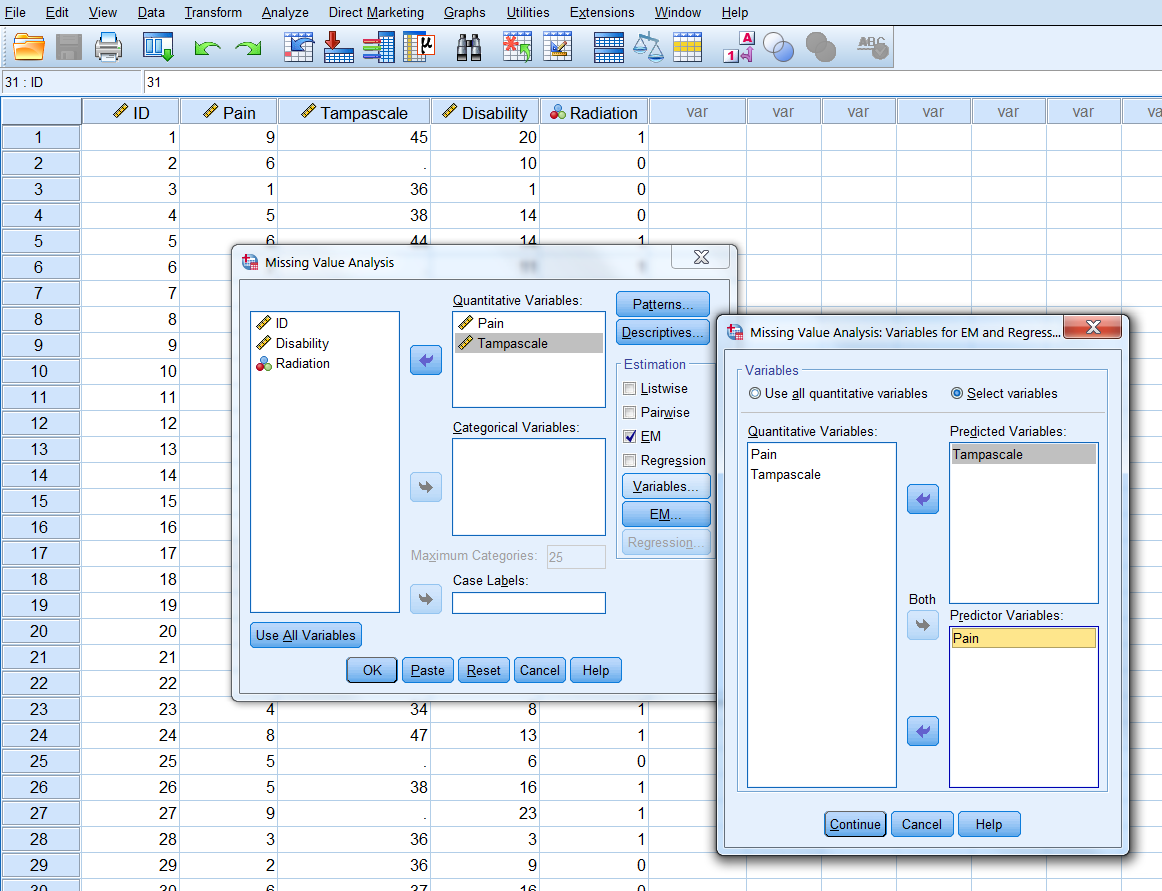

Step 2 click Variables, to specify predicted and predictor variables. Place the Tampascale variable in the Predicted variables window and the Pain variable in the Predictor Variables window (Figure 3.8).

Figure 3.8: Transfer of the Tampascale and Pain variables to the Predicted and Predictor Variables windows.

Step 3 Click on Continue -> EM and select Normal in the Distribution group. Than thick Save completed data and give the dataset a name, for example “ImpTampa_EM” (Figure 3.9).

Figure 3.9: Name of dataset to save the EM results in.

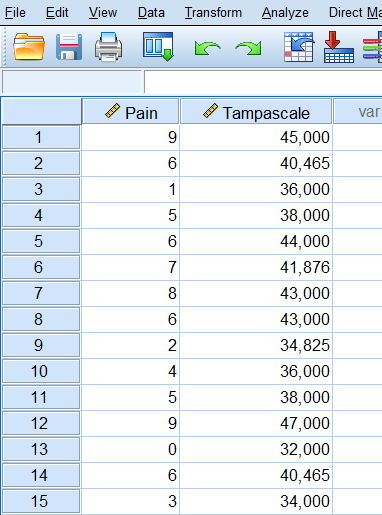

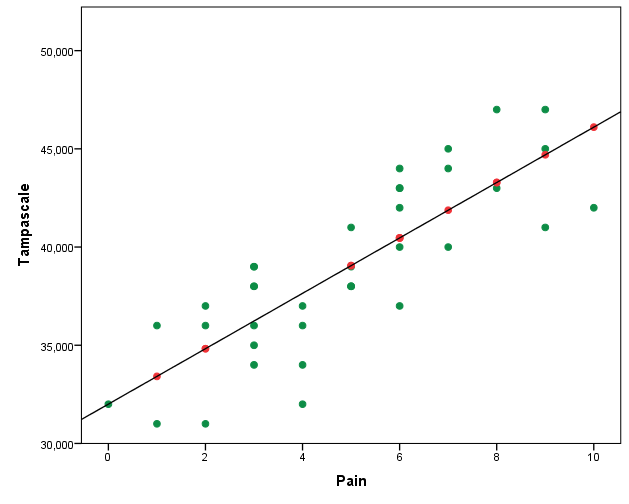

Step 4 Click Continue -> OK. The new dataset “ImpTampa_EM” will open in a new window in SPSS. In this dataset the imputed data for the Tampascale Variable together with the original data is stored (Figure 3.10, first 15 patients are shown).

Figure 3.10: Result of the EM procedure.

BNote that SPSS uses as default only quantitative variables to impute the missing values with the EM algorithm.

3.3.1.2 Normal Linear Regression imputation

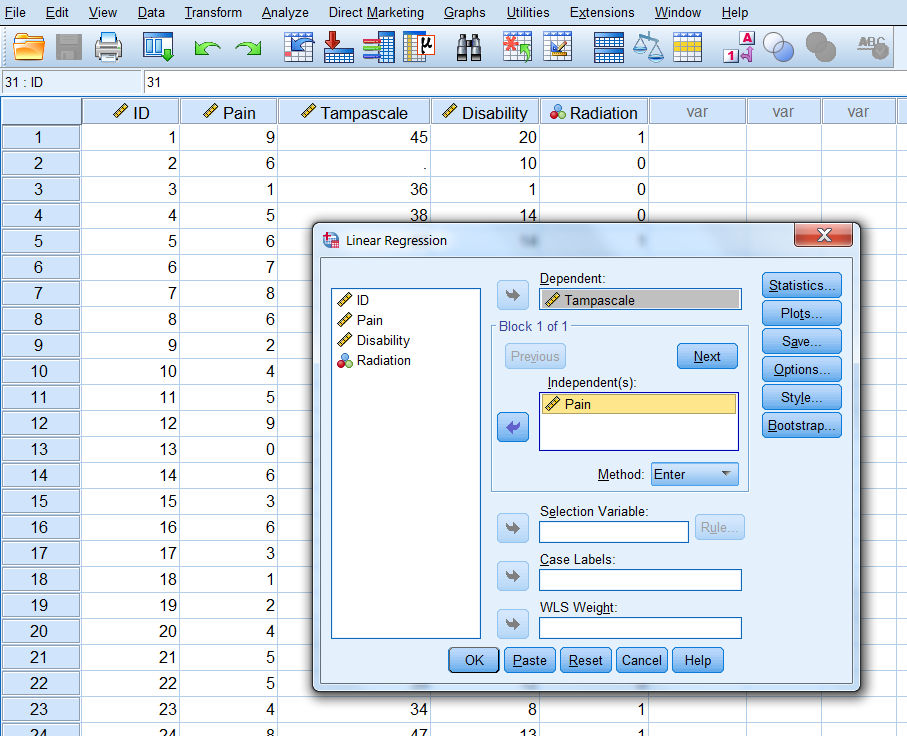

We first estimate the relationship between Pain and the Tampa scale variable in the dataset with linear regression, by default subjects with missing values are excluded. Subsequently, we use the regression coefficients from this regression model to estimate the imputed values in the Tampa scale variable.

To estimate the linear regression model, choose:

Analyze -> Regression -> Linear

Transfer the Tampa scale variable to the Dependent variable box and the Pain variable to the “Independent(s) in the Block 1 of 1 group. Then click OK.

Figure 3.11: Linear regression analysis with the Tampa scale as the outcome and Pain as the independent variable.

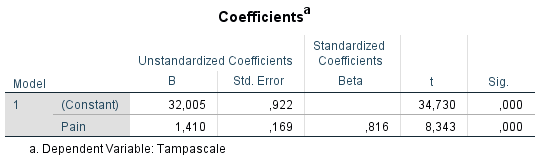

Figure 3.12: Result of the linear regression analysis.

The linear regression model can be described as:

\[Tampascale = 32.005 + 1.410 × Pain\]

Now impute the missing values in the Tampa scale variable and compare them with the EM estimates. You see that the results are the same.

Figure 3.13: Predictions of the missing Tampa scale values on basis of the regression model estimated in the dataset after the missing values were excluded.

In the scatterplot of the imputations from the regression model you see that, as expected, the imputed values are directly on the regression line (Figure 3.14).

Figure 3.14: Relationship between the Tampa scale and the Pain variable.

3.3.2 Regression imputation in R

You can aply regression imputation in R with as method setting “norm.predict” in the mice function. The Pain variable is used to predict the missing values in the Tampa scale variable.

library(foreign)

dataset <- read.spss(file="Mean imputation.sav", to.data.frame=T)

dataset <- dataset[, c("Pain", "Tampascale")]

imp.regress <- mice(dataset, method="norm.predict", m=1, maxit=1)##

## iter imp variable

## 1 1 Tampascaleimp.regress$imp$Tampascale # Extract the imputed values## 1

## 2 40.46554

## 6 41.87566

## 9 34.82506

## 14 40.46554

## 21 39.05542

## 25 39.05542

## 27 44.69590

## 31 46.10602

## 35 40.46554

## 37 43.28578

## 44 33.41494

## 49 34.82506

## 50 43.28578Expectantly, this gives comparable results as the regression imputation to SPSS above. The method “norm.predict” in the mice package fits a linear regression model in the dataset and generates the imputed values for the Tampa scale variable by using the regression coefficients of the linear regression model. The completed dataset can be extracted by using the complete function in the mice package.

3.3.3 Stochastic regression imputation

In Stochastic regression models imputation uncertainty is accounted for by adding extra error variance to the predicted values from the linear regression model. Stochastic regression can be activated in SPSS via the Missing Value Analysis and the Regression Estimation option. However, the Regression Estimation option generates incorrect regression coefficient estimates (Hippel 2004) and will therefore not further discussed.

3.3.4 Stochastic regression imputation in R

You can apply stochastic regression imputation in R with the mice function using the method “norm.nob”.

dataset <- read.spss(file="data/Backpain 50 missing.sav", to.data.frame=T)

dataset <- dataset[, c("Pain", "Tampascale")]

imp_nob <- mice(dataset, method="norm.nob", m=1, maxit=1)##

## iter imp variable

## 1 1 TampascaleThe completed dataset can be extracted by using the complete function in the mice package.

3.4 Bayesian Stochastic regression imputation

With Bayesian Stochastic regression imputation uncertainty is not only accounted for by adding error variance to the predicted values but also by taking into account the uncertainty in estimating the regression coefficients of the imputation model. The Bayesian idea is used that there is not one (true) population regression coefficient but that the regression coefficients itself also follows a distribution. For more information about the theory of Bayesian statistics we refer to the books of (Box and Tiao 2007; Enders 2010; Gelman et al. 2014).

3.4.1 Bayesian Stochastic regression imputation in SPSS

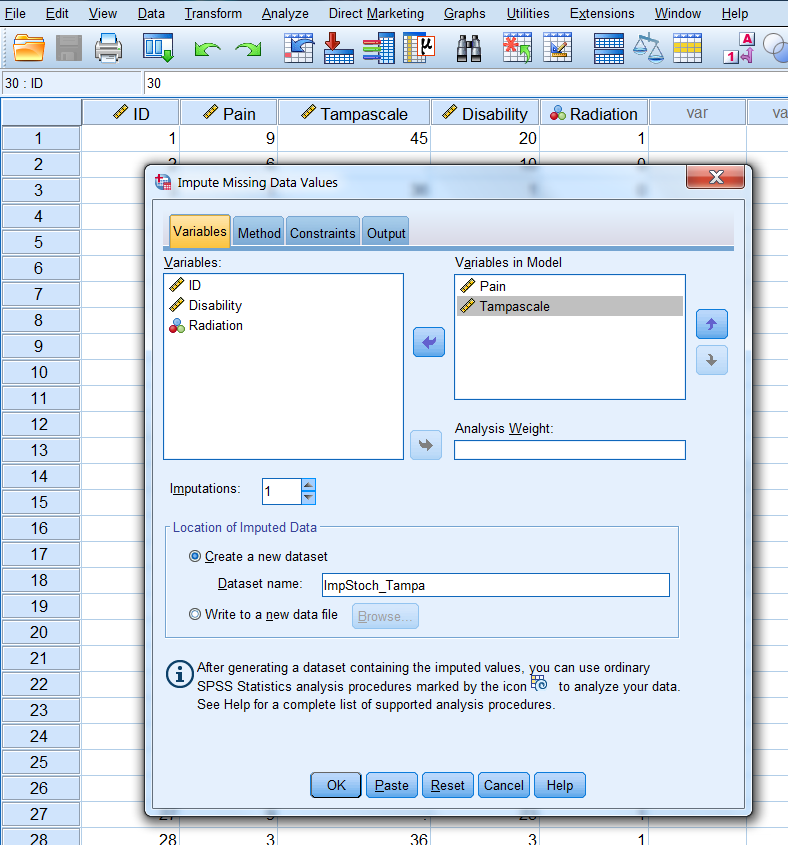

In SPSS Bayesian Stochastic regression imputation can be performed via the multiple imputation menu. To generate imputations for the Tampa scale variable, we use the Pain variable as the only predictor.

Step 1

To start the imputation procedure, Go to

Analyze -> Multiple Imputation -> Impute Missing Data Values.

In the first window you define which variables are included in the imputation model. Transfer the Tampa scale and Pain variable to the Variables in Model box. Than set the number of imputed datasets to 1 under Imputations and give the dataset where the imputed values are stored under “Create a new dataset” a name. Here we give it the name “ImpStoch_Tampa” (Figure 3.15).

Figure 3.15: The Variables window.

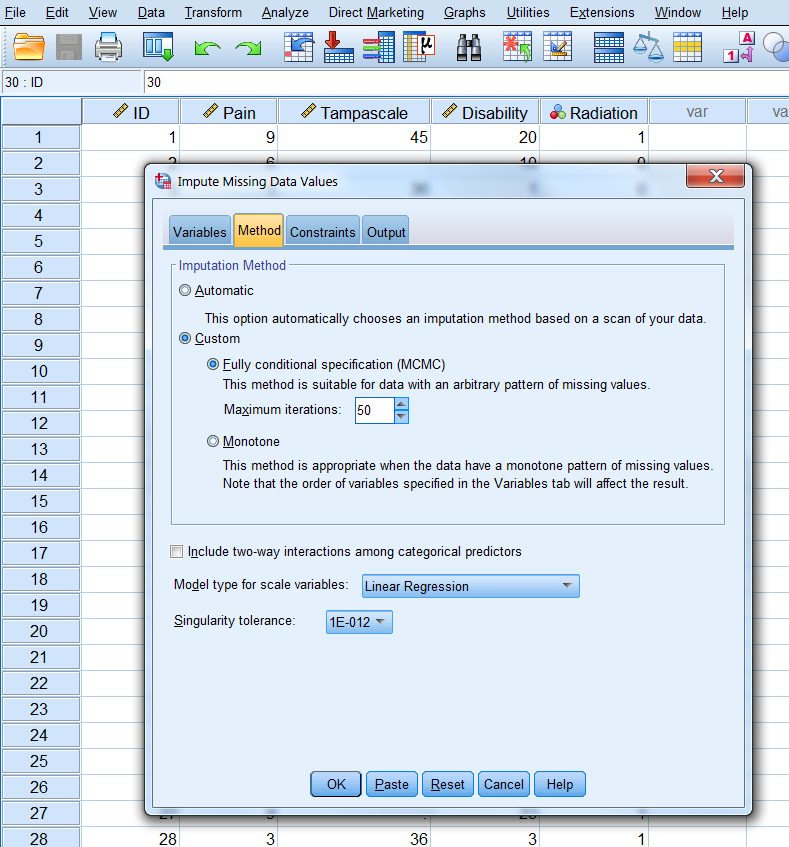

Step 2

In the Methods tab, choose under Imputation Method for custom and then Fully conditional specification (MCMC). Set the Maximum iterations number at 50. This specifies the number of iterations as part of the FCS method (Figure 3.16). We further use the default settings.

Figure 3.16: The Methods tab.

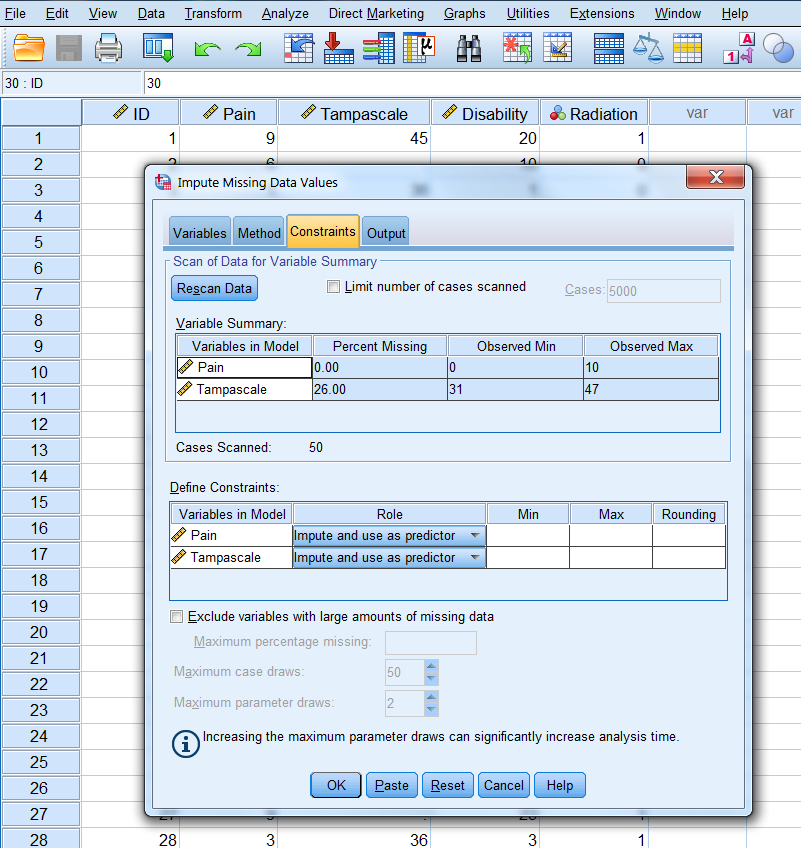

Step 3

In the constraints window (Figure 3.17) click on the Scan Data button and further use the default settings.

Figure 3.17: Bayesian Stochastic regression imputation

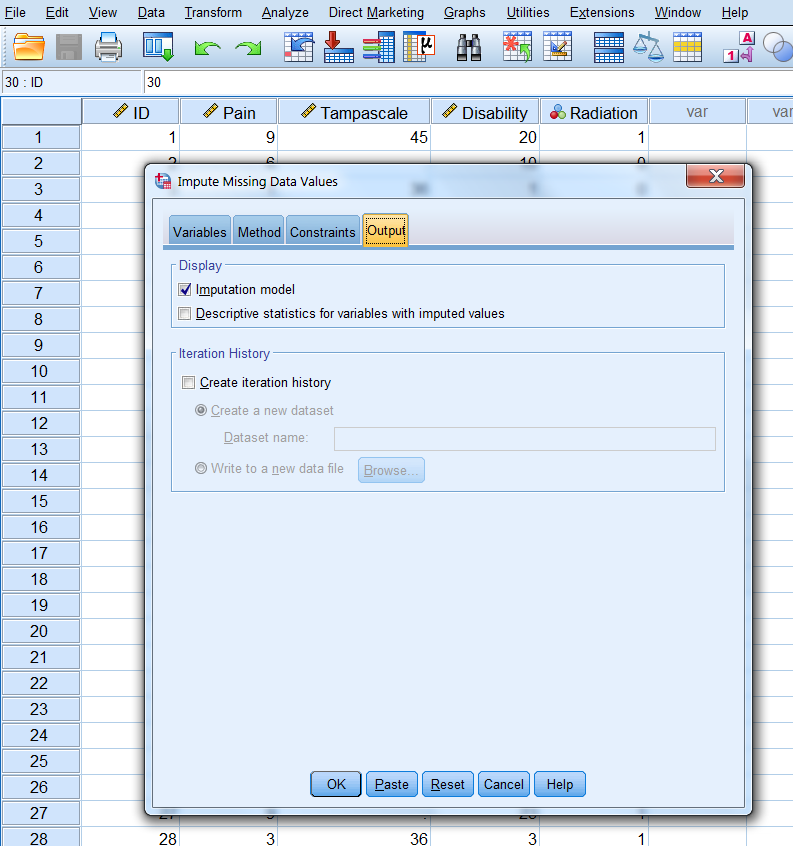

Step 4

In the Output window we only use the default settings.

Figure 3.18: The Output tab.

Step 5

Now click on OK button to start the imputation procedure

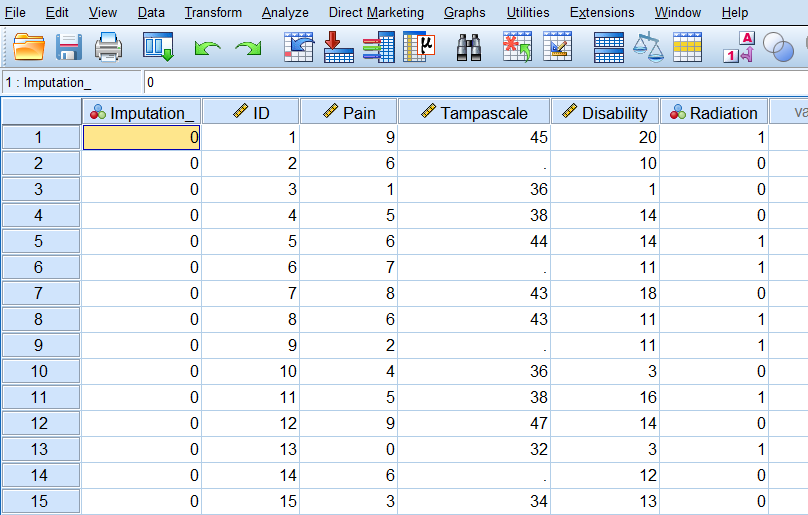

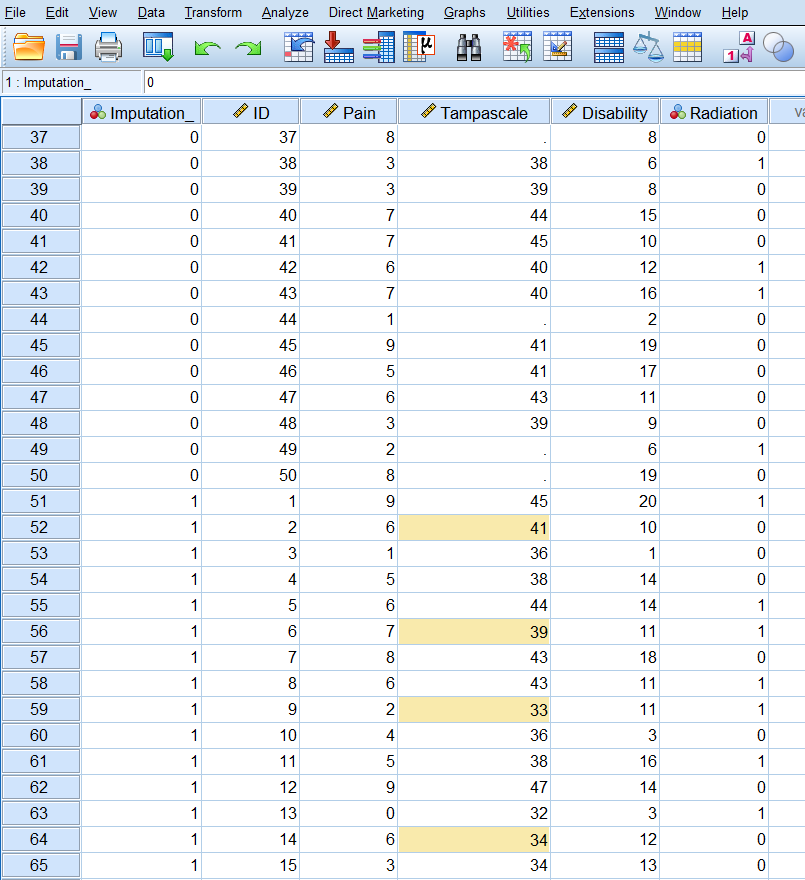

The output dataset consists of the original data with missing data plus a set of cases with imputed values for each imputation. The imputed datasets are stacked under each other. The file also contains a new variable, Imputation_, which indicates the number of the imputed dataset (0 for original data and more than 0 for the imputed datasets). The variable Imputation_ is added to the dataset and the imputed values are marked yellow.

Figure 3.19: Imputed dataset.

Figure 3.20: Imputed dataset with the imputed values marked yellow.

When we make a scatterplot of the Pain and the Tampascale variable (Figure 3.21) we see that there is more variation in the Tampascale variable, or you could say that the variation in the Tampascale variable is “repaired”.

Figure 3.21: Scatterplot of the relationship between Tampascale and the Pain variable, including the imputed values for the Tampascale variable (red dots).

The full Multiple Imputation procedure will be discussed in more detail in the next Chapter.

3.4.2 Bayesian Stochastic regression imputation in R

The package mice also include a Bayesian stochastic regression imputation procedure. You can apply this imputation procedure with the mice function and use as method “norm”. The pain variable is the only predictor variable for the missing values in the Tampa scale variable.

library(haven)

dataset <- read_sav(file="data/Backpain 50 missing.sav")

dataset <- dataset[, c("Pain", "Tampascale")]

imp_b <- mice(dataset, method="norm", m=1, maxit=1)##

## iter imp variable

## 1 1 TampascaleThe completed dataset can be extracted by using the complete function in the mice package.