10 Multicategorical Focal Antecedents and Moderators

In this chapter, [Hayes] extend[ed] the principles of moderation analysis described in Chapters 7 and 8 to testing interaction involving a multicategorical focal antecedent variable or moderator. As you will see, the principles discussed in those chapters generalize quite readily, although the model necessarily requires more than one product to capture an interaction between two variables. This makes the formulas a bit more complex, and the visualizing and probing process a bit more involved. But with comfort with the fundamentals described so far, you should not find it difficult to master this extension of multiple regression analysis. (Andrew F. Hayes, 2018, p. 350)

10.1 Moderation of the effect of a multicategorical antecedent variable

Take the case of a continuous or dichotomous moderator \(W\) and a multicategorical \(X\) “with \(g\) groups, include \(g − 1\) variables coding membership in the groups, the moderator variable \(W\), and \(g − 1\) products between the \(g − 1\) group codes and moderator \(W\) in a regression model” (p. 351) following the form

\[ Y = i_Y + \sum_{i = 1}^{g - 1} b_i D_i + b_g W + \sum_{j = g + 1}^{2g - 1} b_j D_{j - g} W + e_Y, \]

where \(D_i\) denotes the \(i\)th dummy variable. Given the case where \(g = 4\), that formula can be re-expressed as

\[\begin{align*} Y & = i_Y + b_1 D_1 + b_2 D_2 + b_3 D_3 + b_4 W + b_5 D_1 W + b_6 D_2 W + b_7 D_3 W + e_Y, \;\;\;\text{or} \\ & = i_Y + (b_1 + b_5 W) D_1 + (b_2 + b_6 W) D_2 + (b_3 + b_7 W) D_3 + b_4 W + e_Y. \end{align*}\]

10.2 An example from the sex disrimination in the workplace study

Here we load a couple necessary packages, load the data, and take a glimpse().

library(tidyverse)

protest <- read_csv("data/protest/protest.csv")

glimpse(protest)## Rows: 129

## Columns: 6

## $ subnum <dbl> 209, 44, 124, 232, 30, 140, 27, 64, 67, 182, 85, 109, 122, 69, 45, 28, 170, 66, 1…

## $ protest <dbl> 2, 0, 2, 2, 2, 1, 2, 0, 0, 0, 2, 2, 0, 1, 1, 0, 1, 2, 2, 1, 2, 1, 1, 2, 2, 0, 1, …

## $ sexism <dbl> 4.87, 4.25, 5.00, 5.50, 5.62, 5.75, 5.12, 6.62, 5.75, 4.62, 4.75, 6.12, 4.87, 5.8…

## $ angry <dbl> 2, 1, 3, 1, 1, 1, 2, 1, 6, 1, 2, 5, 2, 1, 1, 1, 2, 1, 3, 4, 1, 1, 1, 5, 1, 5, 1, …

## $ liking <dbl> 4.83, 4.50, 5.50, 5.66, 6.16, 6.00, 4.66, 6.50, 1.00, 6.83, 5.00, 5.66, 5.83, 6.5…

## $ respappr <dbl> 4.25, 5.75, 4.75, 7.00, 6.75, 5.50, 5.00, 6.25, 3.00, 5.75, 5.25, 7.00, 4.50, 6.2…With a little if_else(), computing the dummies d1 and d2 is easy enough.

protest <- protest %>%

mutate(d1 = if_else(protest == 1, 1, 0),

d2 = if_else(protest == 2, 1, 0))Load brms.

library(brms)With model10.1 and model10.2 we fit the multicategorical multivariable model and the multicategorical moderation models, respectively.

model10.1 <- brm(

data = protest,

family = gaussian,

liking ~ 1 + d1 + d2 + sexism,

cores = 4,

file = "fits/model10.01")

model10.2 <- update(

model10.1,

newdata = protest,

liking ~ 1 + d1 + d2 + sexism + d1:sexism + d2:sexism,

cores = 4,

file = "fits/model10.02")Behold the \(R^2\) summaries.

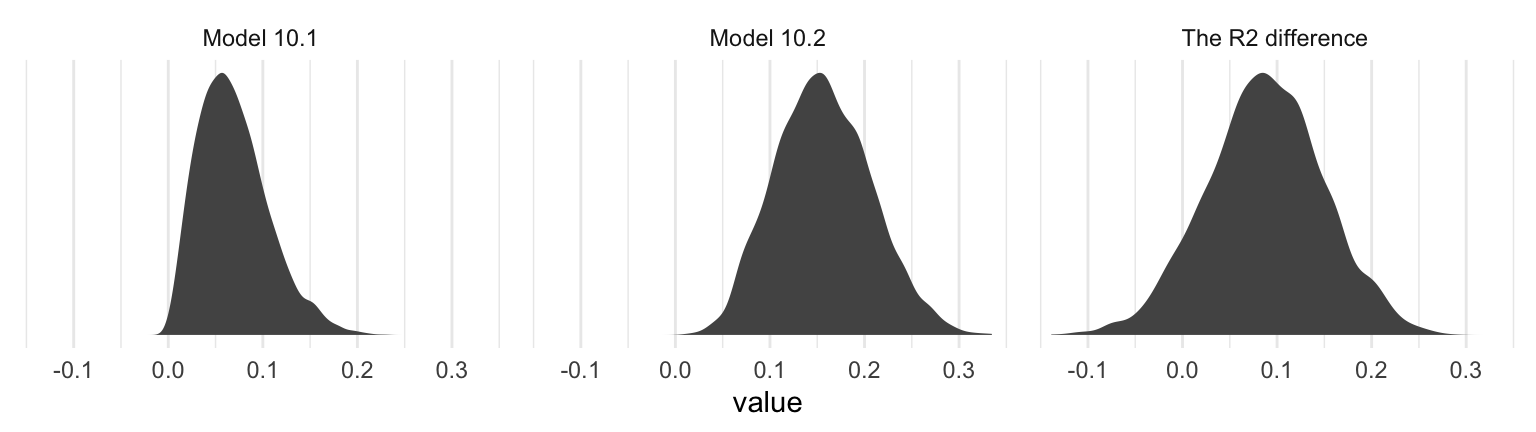

r2 <- tibble(`Model 10.1` = bayes_R2(model10.1, summary = F)[, 1],

`Model 10.2` = bayes_R2(model10.2, summary = F)[, 1]) %>%

mutate(`The R2 difference` = `Model 10.2` - `Model 10.1`)

r2 %>%

pivot_longer(everything()) %>%

# this line isn't necessary, but it sets the order the summaries appear in

mutate(name = factor(name, levels = c("Model 10.1", "Model 10.2", "The R2 difference"))) %>%

group_by(name) %>%

summarize(mean = mean(value),

median = median(value),

ll = quantile(value, probs = .025),

ul = quantile(value, probs = .975)) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 3 × 5

## name mean median ll ul

## <fct> <dbl> <dbl> <dbl> <dbl>

## 1 Model 10.1 0.069 0.065 0.012 0.154

## 2 Model 10.2 0.156 0.155 0.067 0.26

## 3 The R2 difference 0.087 0.087 -0.035 0.208Interestingly, even though our posterior means and medians for the model-specific \(R^2\) values differed some from the OLS estimates in the text, their difference corresponded quite nicely to the one in the text. Let’s take a look at their distributions.

r2 %>%

pivot_longer(everything()) %>%

ggplot(aes(x = value)) +

geom_density(linewidth = 0, fill = "grey33") +

scale_y_continuous(NULL, breaks = NULL) +

facet_wrap(~ name, scales = "free_y") +

theme_minimal()

The model coefficient summaries cohere well with those in Table 10.1.

print(model10.1, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: liking ~ 1 + d1 + d2 + sexism

## Data: protest (Number of observations: 129)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 4.766 0.611 3.563 6.020 1.001 3937 2695

## d1 0.490 0.228 0.043 0.948 1.001 4257 3029

## d2 0.441 0.221 -0.002 0.878 1.000 4012 3326

## sexism 0.108 0.116 -0.128 0.333 1.001 3886 2642

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 1.044 0.065 0.923 1.178 1.001 4476 2841

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).print(model10.2, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: liking ~ d1 + d2 + sexism + d1:sexism + d2:sexism

## Data: protest (Number of observations: 129)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 7.721 1.092 5.602 9.903 1.001 1549 2071

## d1 -4.157 1.510 -7.177 -1.181 1.000 1632 1695

## d2 -3.511 1.454 -6.409 -0.679 1.002 1433 1941

## sexism -0.475 0.213 -0.907 -0.066 1.001 1518 2195

## d1:sexism 0.906 0.291 0.329 1.486 1.000 1620 1799

## d2:sexism 0.781 0.284 0.228 1.344 1.003 1400 1814

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 1.007 0.065 0.886 1.141 1.006 2730 2479

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).10.3 Visualizing the model

To get our version of the values in Table 10.2, we’ll first recreate columns for \(d_1\) through \(W\) (SEXISM) and save then as a tibble, nd.

(

nd <- tibble(d1 = c(0, 1, 0),

d2 = c(0, 0, 1)) %>%

expand_grid(sexism = quantile(protest$sexism, probs = c(.16, .5, .84)))

)## # A tibble: 9 × 3

## d1 d2 sexism

## <dbl> <dbl> <dbl>

## 1 0 0 4.31

## 2 0 0 5.12

## 3 0 0 5.87

## 4 1 0 4.31

## 5 1 0 5.12

## 6 1 0 5.87

## 7 0 1 4.31

## 8 0 1 5.12

## 9 0 1 5.87With nd in hand, we’ll feed the predictor values into fitted() for the typical posterior summaries.

fitted(model10.2, newdata = nd) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## [1,] 5.676 0.227 5.237 6.115

## [2,] 5.290 0.157 4.984 5.597

## [3,] 4.934 0.231 4.479 5.378

## [4,] 5.422 0.244 4.928 5.900

## [5,] 5.772 0.155 5.470 6.083

## [6,] 6.096 0.206 5.685 6.496

## [7,] 5.529 0.206 5.131 5.937

## [8,] 5.777 0.150 5.483 6.074

## [9,] 6.007 0.214 5.600 6.438The values in our Estimate column correspond to those in the \(\hat Y\) column in the table. We, of course, add summaries of uncertainty to the point estimates.

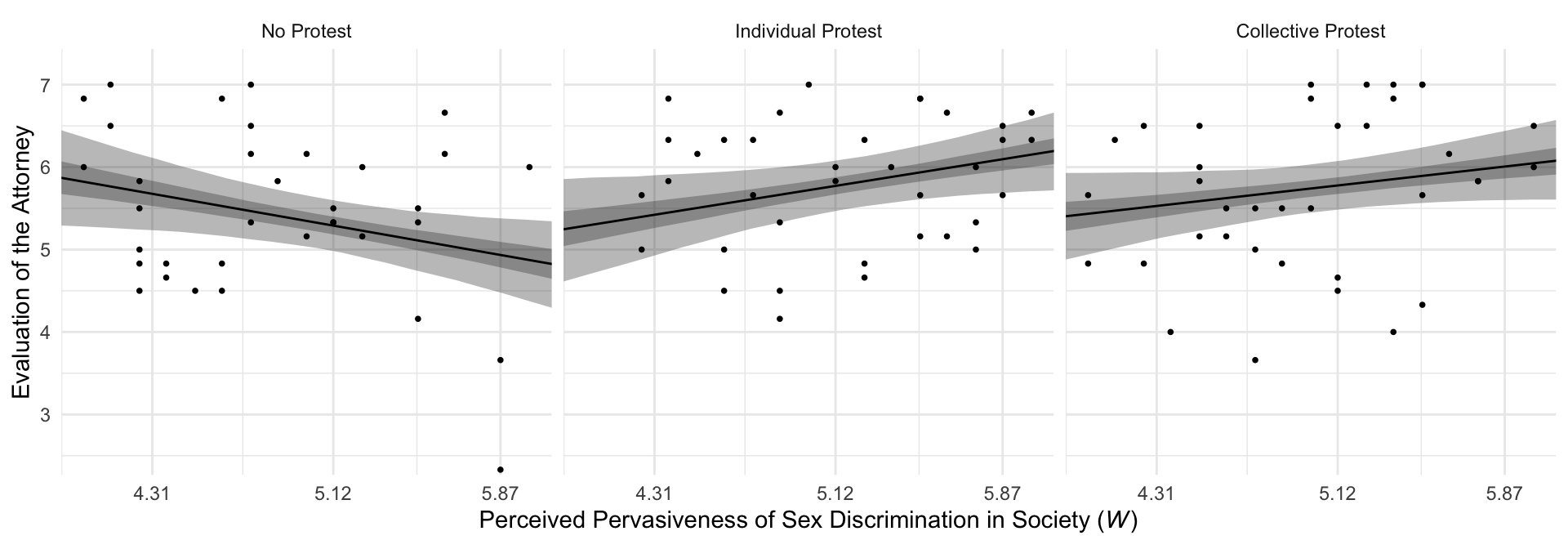

If we want to make a decent line plot for our version of Figure 10.3, we’ll need many more values for sexism, which will appear on the \(x\)-axis.

nd <- tibble(d1 = c(0, 1, 0),

d2 = c(0, 0, 1)) %>%

expand_grid(sexism = seq(from = 3.5, to = 6.5, length.out = 30))This time we’ll save the results from fitted() as a tlbble and wrangle a bit to get ready for the plot.

f <- fitted(model10.2,

newdata = nd,

probs = c(.025, .25, .75, .975)) %>%

data.frame() %>%

bind_cols(nd) %>%

mutate(condition = if_else(d1 == 1, "Individual Protest",

if_else(d2 == 1, "Collective Protest", "No Protest"))) %>%

# this line is not necessary, but it will help order the facets of the plot

mutate(condition = factor(condition, levels = c("No Protest", "Individual Protest", "Collective Protest")))

glimpse(f)## Rows: 90

## Columns: 10

## $ Estimate <dbl> 6.059469, 6.010364, 5.961258, 5.912152, 5.863046, 5.813941, 5.764835, 5.715729, …

## $ Est.Error <dbl> 0.3705058, 0.3506737, 0.3311204, 0.3118986, 0.2930733, 0.2747262, 0.2569597, 0.2…

## $ Q2.5 <dbl> 5.327310, 5.316933, 5.311093, 5.296486, 5.290319, 5.276974, 5.262534, 5.246104, …

## $ Q25 <dbl> 5.811743, 5.774995, 5.741417, 5.704867, 5.668613, 5.633454, 5.598857, 5.558785, …

## $ Q75 <dbl> 6.310026, 6.247090, 6.183489, 6.122550, 6.061244, 6.000544, 5.941004, 5.880845, …

## $ Q97.5 <dbl> 6.787213, 6.698888, 6.610746, 6.521764, 6.434821, 6.347595, 6.260357, 6.174676, …

## $ d1 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

## $ d2 <dbl> 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0, 0,…

## $ sexism <dbl> 3.500000, 3.603448, 3.706897, 3.810345, 3.913793, 4.017241, 4.120690, 4.224138, …

## $ condition <fct> No Protest, No Protest, No Protest, No Protest, No Protest, No Protest, No Prote…For Figure 10.3 and many to follow for this chapter, we’ll superimpose 50% intervals on top of 95% intervals.

# this will help us add the original data points to the plot

protest <- protest %>%

mutate(condition = ifelse(protest == 0, "No Protest",

ifelse(protest == 1, "Individual Protest",

"Collective Protest"))) %>%

mutate(condition = factor(condition, levels = c("No Protest", "Individual Protest", "Collective Protest")))

# this will help us with the x-axis

breaks <- tibble(values = quantile(protest$sexism, probs = c(.16, .5, .84))) %>%

mutate(labels = values %>% round(digits = 2) %>% as.character())

# Here we plot

f %>%

ggplot(aes(x = sexism)) +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5),

alpha = 1/3) +

geom_ribbon(aes(ymin = Q25, ymax = Q75),

alpha = 1/3) +

geom_line(aes(y = Estimate)) +

geom_point(data = protest,

aes(y = liking),

size = 2/3) +

scale_x_continuous(breaks = breaks$values,

labels = breaks$labels) +

coord_cartesian(xlim = c(4, 6),

ylim = c(2.5, 7.2)) +

labs(x = expression(paste("Perceived Pervasiveness of Sex Discrimination in Society (", italic(W), ")")),

y = "Evaluation of the Attorney") +

theme_minimal() +

facet_wrap(~ condition)

By adding the data to the plots, they are both more informative and now serve as a posterior predictive check.

10.4 Probing the interaction

These will involve both omnibus tests and pairwise comparisons.

10.4.1 The pick-a-point approach.

“The pick-a-point approach requires you to choose values of the moderator \(W\) and then estimate the conditional effect of \(X\) on \(Y\) at those values and conduct an inferential test” [evaluate the posterior distribution] (p. 368).

10.4.1.1 Omnibus inference.

Hayes used the omnibus testing framework to assess how important coefficients \(b_1\) and \(b_2\) were to our interaction model, model1. Before fitting the models, he discussed why he preferred to fit models after centering sexism (i.e., \(W\)) to 4.25. Here we’ll call our centered variable sexism_p, where _p stands in for “prime”.

protest <- protest %>%

mutate(sexism_p = sexism - 4.25)From here on, model10.3 is the moderation model without the lower-order d1 and d2 terms; model10.4 is the full moderation model. But we’re going to be fitting both these models three different ways, based on how we centersexism. So for this first set where we centered sexism on 4.25, we’ll give them the suffix a.

# the model without d1 + d2

model10.3a <- update(

model10.2,

newdata = protest,

liking ~ 1 + sexism_p + d1:sexism_p + d2:sexism_p,

cores = 4,

file = "fits/model10.03a")

# the full model with d1 + d2

model10.4a <- update(

model10.2,

newdata = protest,

liking ~ 1 + d1 + d2 + sexism_p + d1:sexism_p + d2:sexism_p,

cores = 4,

file = "fits/model10.04a")The coefficient summaries for model10.4a correspond to the top section of Table 10.3 (p. 373).

fixef(model10.4a) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## Intercept 5.697 0.235 5.247 6.156

## d1 -0.301 0.344 -0.957 0.376

## d2 -0.177 0.318 -0.803 0.467

## sexism_p -0.469 0.212 -0.883 -0.060

## d1:sexism_p 0.899 0.289 0.322 1.459

## d2:sexism_p 0.769 0.284 0.217 1.328We can compare their Bayesian \(R^2\) distributions like we usually do.

library(tidybayes)

r2 <- tibble(`Model without d1 + d2` = bayes_R2(model10.3a, summary = F)[, 1],

`Model with d1 + d2` = bayes_R2(model10.4a, summary = F)[, 1]) %>%

mutate(`The R2 difference` = `Model with d1 + d2` - `Model without d1 + d2`)

r2 %>%

pivot_longer(everything()) %>%

mutate(name = factor(name, levels = c("Model without d1 + d2", "Model with d1 + d2", "The R2 difference"))) %>%

group_by(name) %>%

median_qi(value) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 3 × 7

## name value .lower .upper .width .point .interval

## <fct> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 Model without d1 + d2 0.139 0.051 0.238 0.95 median qi

## 2 Model with d1 + d2 0.154 0.063 0.254 0.95 median qi

## 3 The R2 difference 0.015 -0.124 0.146 0.95 median qiOur results differ a bit from those in the text (p. 370), but the substantive interpretation is the same. The d1 and d2 parameters added little predictive power to the model in terms of \(R^2\). We can also use information criteria to compare the models. Here are the results from using the LOO-CV.

model10.3a <- add_criterion(model10.3a, criterion = "loo")

model10.4a <- add_criterion(model10.4a, criterion = "loo")

loo_compare(model10.3a, model10.4a) %>%

print(simplify = F)## elpd_diff se_diff elpd_loo se_elpd_loo p_loo se_p_loo looic se_looic

## model10.3a 0.0 0.0 -186.0 11.1 6.5 2.0 371.9 22.2

## model10.4a -1.4 0.8 -187.3 10.9 7.9 2.0 374.7 21.8The LOO-CV difference between the two models was pretty small. Thus, the LOO-CV gives the same general message as the \(R^2\). The d1 and d2 parameters were sufficiently small and uncertain enough that constraining them to zero did little in terms of reducing the explanatory power of the statistical model.

Here’s the same thing all over again, but this time after centering sexism on 5.120.

protest <- protest %>%

mutate(sexism_p = sexism - 5.120)Now fit the models.

# the model without d1 + d2

model10.3b <- update(

model10.2,

newdata = protest,

liking ~ 1 + sexism_p + d1:sexism_p + d2:sexism_p,

cores = 4,

file = "fits/model10.03b")

# the full model with d1 + d2

model10.4b <- update(

model10.2,

newdata = protest,

liking ~ 1 + d1 + d2 + sexism_p + d1:sexism_p + d2:sexism_p,

cores = 4,

file = "fits/model10.04b")These coefficient summaries correspond to the middle section of Table 10.3 (p. 373).

fixef(model10.4b) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## Intercept 5.290 0.155 4.985 5.589

## d1 0.483 0.218 0.060 0.916

## d2 0.489 0.216 0.068 0.906

## sexism_p -0.470 0.207 -0.876 -0.070

## d1:sexism_p 0.894 0.294 0.323 1.482

## d2:sexism_p 0.774 0.277 0.230 1.315Here are the Bayesian \(R^2\) summaries and the summary for their difference.

r2 <- tibble(`Model without d1 + d2` = bayes_R2(model10.3b, summary = F)[, 1],

`Model with d1 + d2` = bayes_R2(model10.4b, summary = F)[, 1]) %>%

mutate(`The R2 difference` = `Model with d1 + d2` - `Model without d1 + d2`)

r2 %>%

pivot_longer(everything()) %>%

mutate(name = factor(name, levels = c("Model without d1 + d2", "Model with d1 + d2", "The R2 difference"))) %>%

group_by(name) %>%

median_qi(value) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 3 × 7

## name value .lower .upper .width .point .interval

## <fct> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 Model without d1 + d2 0.1 0.029 0.197 0.95 median qi

## 2 Model with d1 + d2 0.152 0.065 0.258 0.95 median qi

## 3 The R2 difference 0.052 -0.08 0.185 0.95 median qiThis time, our \(\Delta R^2\) distribution was more similar to the results Hayes reported in the text (p. 370, toward the bottom).

Here’s the updated LOO-CV.

model10.3b <- add_criterion(model10.3b, criterion = "loo")

model10.4b <- add_criterion(model10.4b, criterion = "loo")

loo_compare(model10.3b, model10.4b) %>%

print(simplify = F)## elpd_diff se_diff elpd_loo se_elpd_loo p_loo se_p_loo looic se_looic

## model10.4b 0.0 0.0 -187.4 10.9 7.9 2.0 374.7 21.7

## model10.3b -1.3 3.0 -188.7 11.9 6.0 1.9 377.4 23.7Here again our Bayesian \(R^2\) and loo() results cohere, both suggesting the d1 and d2 parameters were of little predictive utility. Note how this differs a little from the second \(F\)-test on page 370.

Here’s what happens when we center sexism on 5.896. First center.

protest <- protest %>%

mutate(sexism_p = sexism - 5.896)Fit the models.

# the model without d1 + d2

model10.3c <- update(

model10.2,

newdata = protest,

liking ~ 1 + sexism_p + d1:sexism_p + d2:sexism_p,

cores = 4,

file = "fits/model10.03c")

# the full model with d1 + d2

model10.4c <- update(

model10.2,

newdata = protest,

liking ~ 1 + d1 + d2 + sexism_p + d1:sexism_p + d2:sexism_p,

cores = 4,

file = "fits/model10.04c")These coefficient summaries correspond to the lower section of Table 10.3 (p. 373).

fixef(model10.4c) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## Intercept 4.922 0.225 4.482 5.367

## d1 1.181 0.298 0.596 1.773

## d2 1.089 0.308 0.496 1.689

## sexism_p -0.472 0.203 -0.870 -0.068

## d1:sexism_p 0.898 0.283 0.333 1.451

## d2:sexism_p 0.775 0.274 0.223 1.315Again, compute the \(R^2\) distributions and their difference-score distribution.

r2 <- tibble(`Model without d1 + d2` = bayes_R2(model10.3c, summary = F)[, 1],

`Model with d1 + d2` = bayes_R2(model10.4c, summary = F)[, 1]) %>%

mutate(`The R2 difference` = `Model with d1 + d2` - `Model without d1 + d2`)

r2 %>%

pivot_longer(everything()) %>%

mutate(name = factor(name, levels = c("Model without d1 + d2", "Model with d1 + d2", "The R2 difference"))) %>%

group_by(name) %>%

median_qi(value) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 3 × 7

## name value .lower .upper .width .point .interval

## <fct> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 Model without d1 + d2 0.027 0.003 0.088 0.95 median qi

## 2 Model with d1 + d2 0.152 0.067 0.252 0.95 median qi

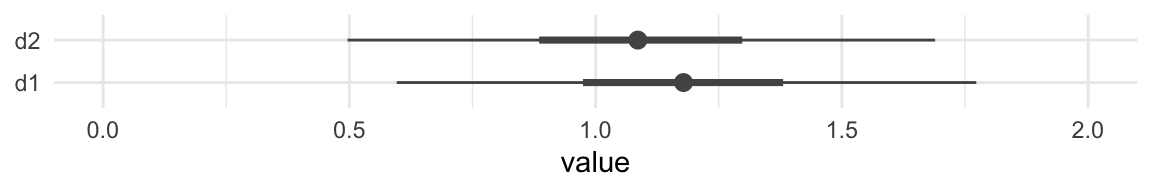

## 3 The R2 difference 0.121 0.023 0.23 0.95 median qiThat \(\Delta R^2\) distribution matches up nicely with the one Hayes reported at the bottom of page 370. Now compare the models with the LOO.

model10.3c <- add_criterion(model10.3c, "loo")

model10.4c <- add_criterion(model10.4c, "loo")

loo_compare(model10.3c, model10.4c) %>%

print(simplify = F)## elpd_diff se_diff elpd_loo se_elpd_loo p_loo se_p_loo looic se_looic

## model10.4c 0.0 0.0 -187.1 10.9 7.7 1.9 374.2 21.8

## model10.3c -6.6 5.5 -193.7 13.3 5.7 1.9 387.4 26.7Although our Bayesian \(R^2\) difference is now predominantly positive, the LOO-CV difference for the two models remains uncertain. Here’s a look at the two parameters in question using a handmade coefficient plot.

as_draws_df(model10.4c) %>%

pivot_longer(b_d1:b_d2) %>%

mutate(name = str_remove(name, "b_")) %>%

ggplot(aes(x = value, y = name)) +

stat_summary(fun = median,

fun.min = function(i) quantile(i, probs = .025),

fun.max = function(i) quantile(i, probs = .975),

color = "grey33") +

stat_summary(geom = "linerange",

fun.min = function(i) quantile(i, probs = .25),

fun.max = function(i) quantile(i, probs = .75),

color = "grey33",

linewidth = 1.25) +

ylab(NULL) +

coord_cartesian(xlim = c(0, 2)) +

theme_minimal()

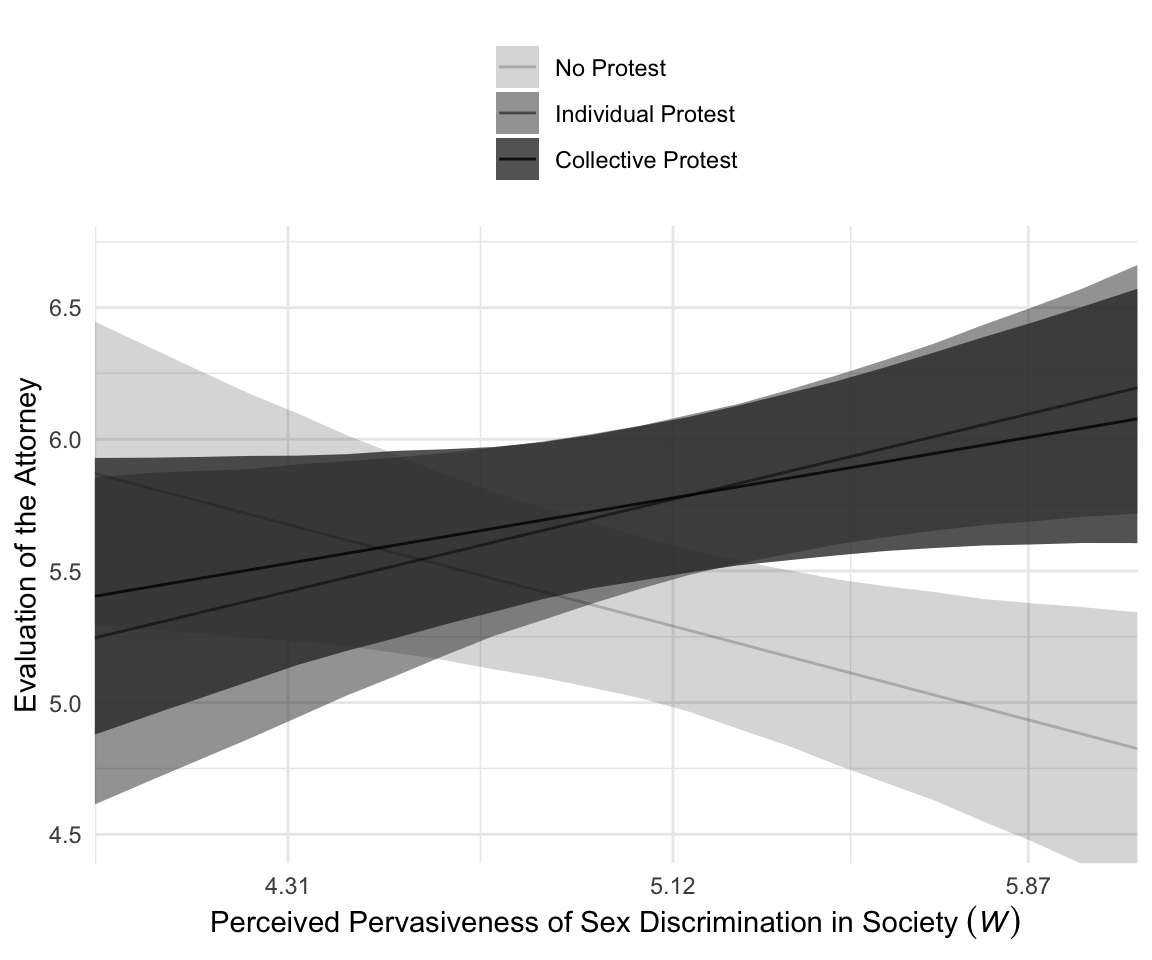

For Figure 10.4, we’ll drop our faceting approach and just make one big plot. Heads up: I’m going to drop the 50% intervals from this plot. They’d just make it too busy.

f %>%

ggplot(aes(x = sexism, y = Estimate, ymin = Q2.5, ymax = Q97.5, alpha = condition)) +

geom_ribbon() +

geom_line() +

scale_alpha_manual(values = c(.2, .5, .8)) +

scale_x_continuous(breaks = breaks$values,

labels = breaks$labels) +

coord_cartesian(xlim = c(4, 6),

ylim = c(4.5, 6.7)) +

labs(x = expression("Perceived Pervasiveness of Sex Discrimination in Society "*(italic(W))),

y = "Evaluation of the Attorney") +

theme_minimal() +

theme(legend.direction = "vertical",

legend.position = "top",

legend.title = element_blank())

10.4.1.2 Pairwise inference.

Hayes continues to reference Table 10.3. In the last subsection, we reproduced those results one model at a time. Why not practice doing it altogether? There are a lot of ways you could do this. A good first try is to extend the fixef() approach from before with a little help from bind_rows().

bind_rows(

# start with `model4a`

fixef(model10.4a) %>%

data.frame() %>%

rownames_to_column("parameter"),

# add `model4b`

fixef(model10.4b) %>%

data.frame() %>%

rownames_to_column("parameter"),

# add `model4c`

fixef(model10.4c) %>%

data.frame() %>%

rownames_to_column("parameter")

) %>%

# wrangle a bit

mutate(`w'` = str_c("w - ", c(4.25, 5.12, 5.896)) %>% rep(., each = 6)) %>%

select(`w'`, everything()) %>%

mutate_if(is.double, round, digits = 3)## w' parameter Estimate Est.Error Q2.5 Q97.5

## 1 w - 4.25 Intercept 5.697 0.235 5.247 6.156

## 2 w - 4.25 d1 -0.301 0.344 -0.957 0.376

## 3 w - 4.25 d2 -0.177 0.318 -0.803 0.467

## 4 w - 4.25 sexism_p -0.469 0.212 -0.883 -0.060

## 5 w - 4.25 d1:sexism_p 0.899 0.289 0.322 1.459

## 6 w - 4.25 d2:sexism_p 0.769 0.284 0.217 1.328

## 7 w - 5.12 Intercept 5.290 0.155 4.985 5.589

## 8 w - 5.12 d1 0.483 0.218 0.060 0.916

## 9 w - 5.12 d2 0.489 0.216 0.068 0.906

## 10 w - 5.12 sexism_p -0.470 0.207 -0.876 -0.070

## 11 w - 5.12 d1:sexism_p 0.894 0.294 0.323 1.482

## 12 w - 5.12 d2:sexism_p 0.774 0.277 0.230 1.315

## 13 w - 5.896 Intercept 4.922 0.225 4.482 5.367

## 14 w - 5.896 d1 1.181 0.298 0.596 1.773

## 15 w - 5.896 d2 1.089 0.308 0.496 1.689

## 16 w - 5.896 sexism_p -0.472 0.203 -0.870 -0.068

## 17 w - 5.896 d1:sexism_p 0.898 0.283 0.333 1.451

## 18 w - 5.896 d2:sexism_p 0.775 0.274 0.223 1.315This code works okay, but it’s redundant. Here’s a streamlined approach where we use a combination of nested tibbles and the purrr::map() function to work with our three model fits–model10.4a, model10.4b, and model10.4c–in bulk.

t <- tibble(`w'` = str_c("w - ", c(4.25, 5.12, 5.896)),

name = str_c("model10.4", letters[1:3])) %>%

mutate(fit = map(name, get)) %>%

mutate(s = map(fit, ~fixef(.) %>%

data.frame() %>%

rownames_to_column("parameter"))) %>%

unnest(s) %>%

select(`w'`, parameter:Q97.5)

t %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 18 × 6

## `w'` parameter Estimate Est.Error Q2.5 Q97.5

## <chr> <chr> <dbl> <dbl> <dbl> <dbl>

## 1 w - 4.25 Intercept 5.70 0.235 5.25 6.16

## 2 w - 4.25 d1 -0.301 0.344 -0.957 0.376

## 3 w - 4.25 d2 -0.177 0.318 -0.803 0.467

## 4 w - 4.25 sexism_p -0.469 0.212 -0.883 -0.06

## 5 w - 4.25 d1:sexism_p 0.899 0.289 0.322 1.46

## 6 w - 4.25 d2:sexism_p 0.769 0.284 0.217 1.33

## 7 w - 5.12 Intercept 5.29 0.155 4.99 5.59

## 8 w - 5.12 d1 0.483 0.218 0.06 0.916

## 9 w - 5.12 d2 0.489 0.216 0.068 0.906

## 10 w - 5.12 sexism_p -0.47 0.207 -0.876 -0.07

## 11 w - 5.12 d1:sexism_p 0.894 0.294 0.323 1.48

## 12 w - 5.12 d2:sexism_p 0.774 0.277 0.23 1.32

## 13 w - 5.896 Intercept 4.92 0.225 4.48 5.37

## 14 w - 5.896 d1 1.18 0.298 0.596 1.77

## 15 w - 5.896 d2 1.09 0.308 0.496 1.69

## 16 w - 5.896 sexism_p -0.472 0.203 -0.87 -0.068

## 17 w - 5.896 d1:sexism_p 0.898 0.283 0.333 1.45

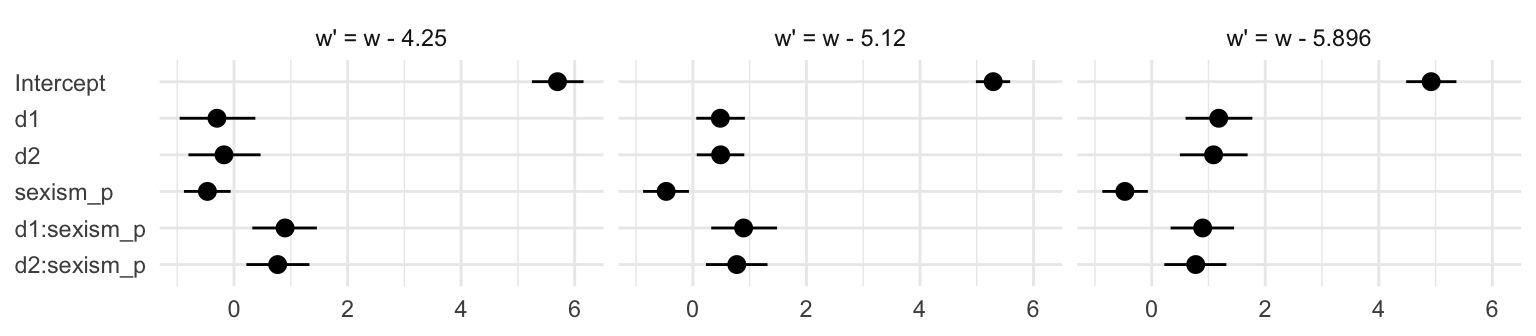

## 18 w - 5.896 d2:sexism_p 0.775 0.274 0.223 1.32Summary tables like this are precise and very common in the literature. But you can get lost in all those numbers. A coefficient plot can be better. This first version is pretty close to the Table 10.3 format.

t %>%

# this will help us order our y-axis

mutate(parameter = factor(parameter,

levels = c("d2:sexism_p", "d1:sexism_p",

"sexism_p", "d2", "d1", "Intercept")),

# this is just for aesthetics

`w'` = str_c("w' = ", `w'`)) %>%

# plot!

ggplot(aes(x = Estimate, xmin = Q2.5, xmax = Q97.5, y = parameter)) +

geom_pointrange() +

labs(x = NULL,

y = NULL) +

theme_minimal() +

theme(axis.text.y = element_text(hjust = 0)) +

facet_wrap(~ `w'`, nrow = 1)

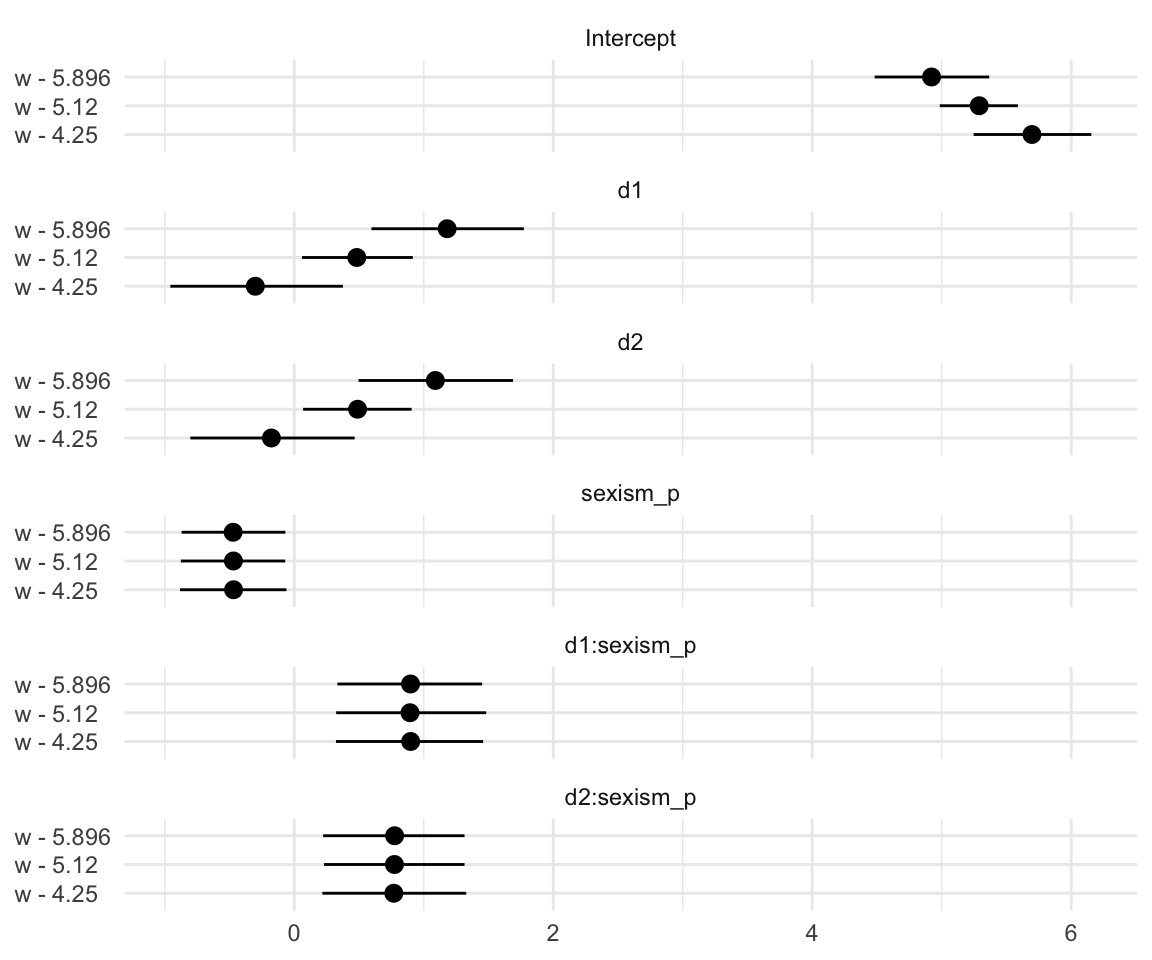

Notice how this arrangement makes it easiest to compare coefficients within models. If we wanted to make it easier to compare coefficients across models, we might arrange the plot like so.

t %>%

# this will help us order our y-axis

mutate(parameter = factor(parameter,

levels = c("Intercept", "d1", "d2", "sexism_p", "d1:sexism_p", "d2:sexism_p"))) %>%

# plot!

ggplot(aes(x = Estimate, xmin = Q2.5, xmax = Q97.5, y = `w'`)) +

geom_pointrange() +

labs(x = NULL,

y = NULL) +

theme_minimal() +

theme(axis.text.y = element_text(hjust = 0)) +

facet_wrap(~ parameter, ncol = 1)

Oh man–with sweet plots like these, who needs tables! This makes it much easier to see what happened as we changed values we centered sexism on. In the middle paragraph on page 374, Hayes pointed out “that \(b_1\) and \(b_2\) differ in these analyses, but \(b_3\), \(b_4\), and \(b_5\) are unaffected by the centering”. Our coefficient plot clarified that in a way I don’t think a table ever could. But before we move on, let’s back up a little in the text.

“To make this more concrete, consider the effect of Catherine’s behavior on how she is perceived among people who are relatively high in their perceptions of the pervasiveness of sex discrimination in society” (p. 372). For this, Hayes defined “relatively high” as \(W = 5.896\). To get those estimates for each condition, we’ll use fitted(). Since the number of unique predictor values is small for this example, we’ll just plug them directly into the newdata argument rather than first saving them as a nd object.

fitted(model10.2,

newdata = tibble(d1 = c(0, 1, 0),

d2 = c(0, 0, 1),

sexism = 5.896)) %>%

round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## [1,] 4.922 0.235 4.460 5.373

## [2,] 6.107 0.210 5.692 6.512

## [3,] 6.015 0.218 5.602 6.451Those posterior summaries match up nicely with the point estimates Hayes presented at the bottom of page 372. Hayes further expounded:

So by using the regression centering strategy described earlier in the context of an omnibus test of equality of values of \(\hat Y\), the regression coefficients \(b_1\) and \(b_2\) provide pairwise inferences consistent with the coding system used to represent the three groups, conditioned on the value that \(W\) is centered around.

In the next few sentences, he focused on what happened when \(W = 4.250\) (i.e., in model4a). Recall that the two coefficients in question, \(b_1\) and \(b_2\), are named d1 and d2 when we pull their summaries with fixef().

fixef(model10.4a)[c("d1", "d2"), ] %>%

round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## d1 -0.301 0.344 -0.957 0.376

## d2 -0.177 0.318 -0.803 0.467Hayes then clarified that in this model

\[\begin{align*} b_1 & = \theta_{D_1 \rightarrow Y} | (W = 4.250) = 5.400 - 5.698 = -0.299 \;\;\; \text{ and} \\ b_2 & = \theta_{D_2 \rightarrow Y} | (W = 4.250) = 5.513 - 5.698 = -0.185. \end{align*}\]

That is, it is the same as a difference score of each of the experimental conditions minus the “No protest” condition. To further show the difference-score quality of these coefficients, we can continue using fitted() in conjunction with the original model10.2 to get the group comparisons for when \(W = 4.250\). Since these involve computing difference scores, we’ll have to use summary = F and do some wrangling.

fitted(model10.2,

newdata = tibble(d1 = c(0, 1, 0),

d2 = c(0, 0, 1),

sexism = 4.25),

summary = F) %>%

data.frame() %>%

set_names("No Protest", "Individual Protest", "Collective Protest") %>%

mutate(difference_a = `Individual Protest` - `No Protest`,

difference_b = `Collective Protest` - `No Protest`) %>%

pivot_longer(everything()) %>%

mutate(name = factor(name, levels = c("No Protest", "Individual Protest", "Collective Protest",

"difference_a", "difference_b"))) %>%

group_by(name) %>%

mean_qi(value) %>%

select(name:.upper) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 5 × 4

## name value .lower .upper

## <fct> <dbl> <dbl> <dbl>

## 1 No Protest 5.70 5.24 6.16

## 2 Individual Protest 5.40 4.88 5.89

## 3 Collective Protest 5.51 5.09 5.94

## 4 difference_a -0.306 -0.997 0.348

## 5 difference_b -0.192 -0.824 0.42Within simulation variance, difference_a is the same as \(b_{1 | \text{model10.4a}}\) and difference_b is the same as \(b_{2 | \text{model10.4a}}\). Here’s the same thing for when \(W = 5.120\).

fitted(model10.2,

newdata = tibble(d1 = c(0, 1, 0),

d2 = c(0, 0, 1),

sexism = 5.120),

summary = F) %>%

data.frame() %>%

set_names("No Protest", "Individual Protest", "Collective Protest") %>%

mutate(difference_a = `Individual Protest` - `No Protest`,

difference_b = `Collective Protest` - `No Protest`) %>%

pivot_longer(everything()) %>%

mutate(name = factor(name, levels = c("No Protest", "Individual Protest", "Collective Protest",

"difference_a", "difference_b"))) %>%

group_by(name) %>%

mean_qi(value) %>%

select(name:.upper) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 5 × 4

## name value .lower .upper

## <fct> <dbl> <dbl> <dbl>

## 1 No Protest 5.29 4.98 5.60

## 2 Individual Protest 5.77 5.47 6.08

## 3 Collective Protest 5.78 5.48 6.07

## 4 difference_a 0.482 0.057 0.915

## 5 difference_b 0.487 0.081 0.914Finally, here it is for when \(W = 5.986\).

fitted(model10.2,

newdata = tibble(d1 = c(0, 1, 0),

d2 = c(0, 0, 1),

sexism = 5.986),

summary = F) %>%

data.frame() %>%

set_names("No Protest", "Individual Protest", "Collective Protest") %>%

mutate(difference_a = `Individual Protest` - `No Protest`,

difference_b = `Collective Protest` - `No Protest`) %>%

pivot_longer(everything()) %>%

mutate(name = factor(name, levels = c("No Protest", "Individual Protest", "Collective Protest",

"difference_a", "difference_b"))) %>%

group_by(name) %>%

mean_qi(value) %>%

select(name:.upper) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 5 × 4

## name value .lower .upper

## <fct> <dbl> <dbl> <dbl>

## 1 No Protest 4.88 4.38 5.36

## 2 Individual Protest 6.15 5.70 6.57

## 3 Collective Protest 6.04 5.61 6.50

## 4 difference_a 1.27 0.634 1.93

## 5 difference_b 1.16 0.492 1.8510.4.2 The Johnson-Neyman technique.

As discussed in section 7.4, a problem with the pick-a-point approach to probing an interaction is having to choose values of the moderator. When the moderator is a continuum, you may not have any basis for choosing some values rather than others, and the choice you make will certainly influence the results of the probing exercise to some extent… Actively choosing a different system or con- vention, such as using the sample mean of \(W\), a standard deviation below the mean, and a standard deviation above the mean also does not eliminate the problem. But the Johnson–Neyman (JN) technique avoids this problem entirely. (p. 376)

10.4.2.1 Omnibus inference.

Consider the first sentence of the section:

Applied to probing an interaction between a multicategorical \(X\) and a continuous \(W\), an omnibus version of the JM technique involves finding the value or values of \(W\) where their \(F\)-ratio comparing the \(g\) estimated values of \(Y\) is just statistically significant. (p. 376)

Since we’re not using \(F\)-tests with our approach to Bayesian modeling, the closest we might have is a series of \(R^2\) difference tests, which would require refitting the model multiple times over many ways of centering the \(W\)-variable, sexism. I suppose you could do this if you wanted, but it just seems silly, to me. I’ll leave this one up to the interested reader.

10.4.2.2 Pairwise inference.

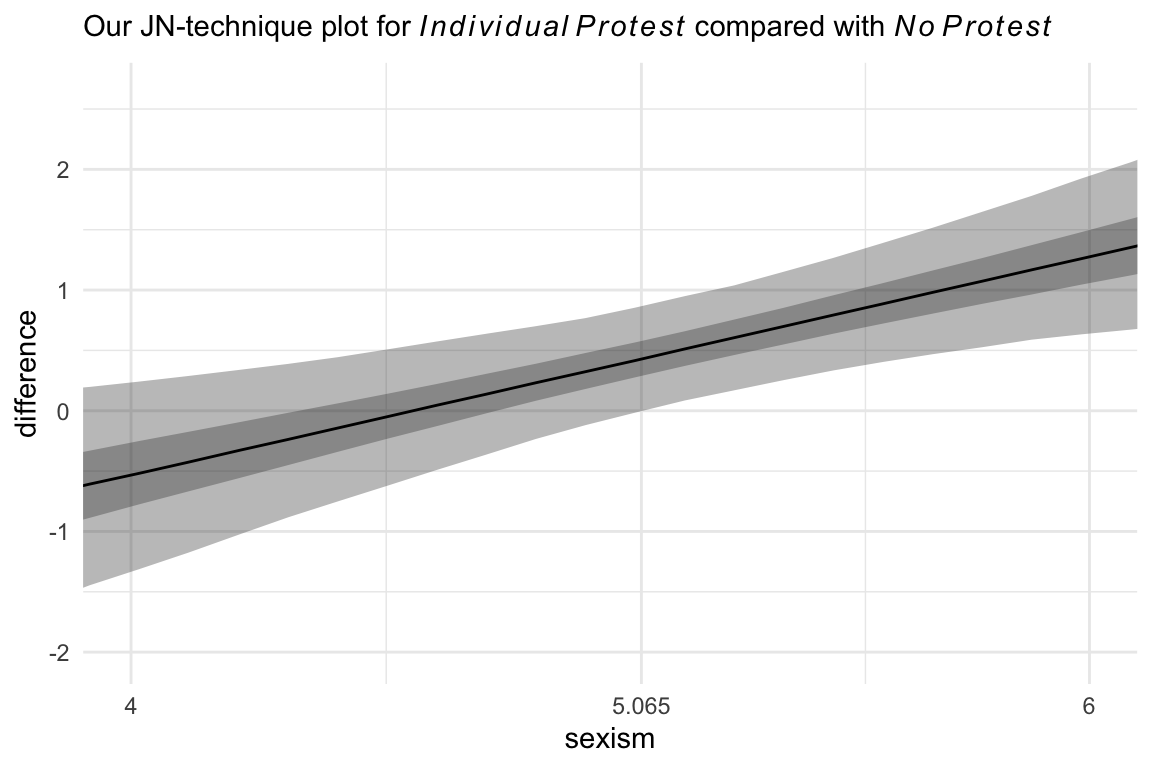

Hayes didn’t make plots for this section, but if you’re careful constructing your nd and with the subsequent wrangling, you can make the usual plots. Since we have two conditions we’d like to compare with No Protest, we’ll make two plots. Here’s the comparison using Individual Protest, first.

# the transition value Hayes identified in the text

Hayes_value <- 5.065

# we need some new data

nd <- tibble(d1 = 0:1,

d2 = 0) %>%

expand_grid(sexism = seq(from = 3.5, to = 6.5, length.out = 30)) %>%

mutate(row = 1:n())

# plug those data into `fitted()`

fitted(model10.2,

newdata = nd,

summary = F) %>%

# wrangle

data.frame() %>%

set_names(pull(nd, row)) %>%

mutate(draw = 1:n()) %>%

pivot_longer(-draw, values_to = "estimate") %>%

mutate(row = as.double(name)) %>%

left_join(nd, by = "row") %>%

mutate(condition = if_else(d1 == 0, "No Protest", "Individual Protest")) %>%

select(estimate, sexism, draw, condition) %>%

pivot_wider(names_from = condition, values_from = estimate) %>%

mutate(difference = `Individual Protest` - `No Protest`)%>%

# plot!

ggplot(aes(x = sexism, y = difference)) +

stat_summary(geom = "ribbon",

fun.min = function(i) quantile(i, probs = .025),

fun.max = function(i) quantile(i, probs = .975),

alpha = 1/3) +

stat_summary(geom = "ribbon",

fun.min = function(i) quantile(i, probs = .25),

fun.max = function(i) quantile(i, probs = .75),

alpha = 1/3) +

stat_summary(geom = "line",

fun = median) +

scale_x_continuous(breaks = c(4, Hayes_value, 6),

labels = c("4", Hayes_value, "6")) +

coord_cartesian(xlim = c(4, 6)) +

labs(subtitle = expression("Our JN-technique plot for "*italic(Individual~Protest)*" compared with "*italic(No~Protest))) +

theme_minimal()

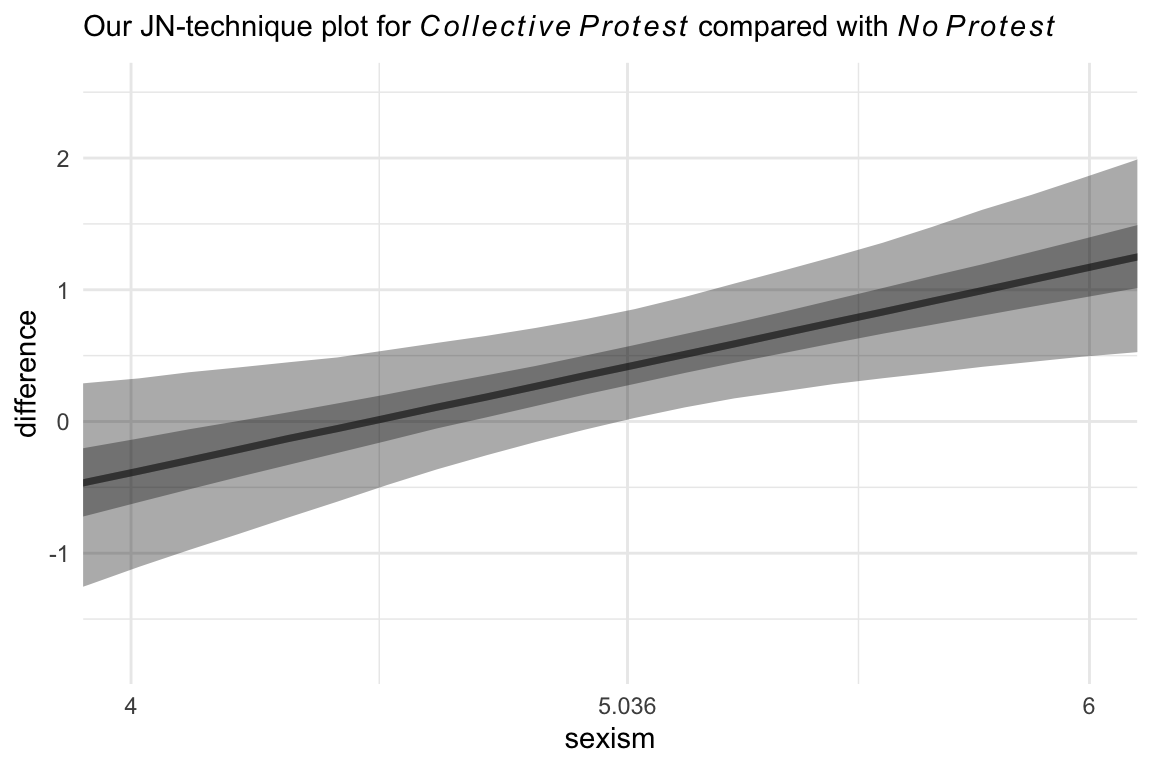

Now we’re ready to compare No Protest to Collective Protest. The main data difference is which values we assigned to the d1 and d2 columns in nd. For kicks, we should practice another way to get the median line and interval ribbons. The stat_summary() approach from above works great, but it’s verbose. The tidybayes::stat_lineribbon() function will give us the same results with fewer lines of code.

# the transition value Hayes identified in the text

Hayes_value <- 5.036

# new data

nd <- tibble(d1 = 0,

d2 = 0:1) %>%

expand_grid(sexism = seq(from = 3.5, to = 6.5, length.out = 30)) %>%

mutate(row = 1:n())

# this part is the same as before

fitted(model10.2,

newdata = nd,

summary = F) %>%

data.frame() %>%

set_names(pull(nd, row)) %>%

mutate(draw = 1:n()) %>%

pivot_longer(-draw, values_to = "estimate") %>%

mutate(row = as.double(name)) %>%

left_join(nd, by = "row") %>%

# there are some mild differences in the two mutate() lines

mutate(condition = if_else(d2 == 0, "No Protest", "Collective Protest")) %>%

select(estimate, sexism, draw, condition) %>%

pivot_wider(names_from = condition, values_from = estimate) %>%

mutate(difference = `Collective Protest` - `No Protest`) %>%

# plot!

ggplot(aes(x = sexism, y = difference)) +

# look how compact this is!

stat_lineribbon(.width = c(0.5, 0.95),

alpha = 1/3, fill = "black") +

scale_x_continuous(breaks = c(4, Hayes_value, 6),

labels = c("4", Hayes_value, "6")) +

coord_cartesian(xlim = c(4, 6)) +

labs(subtitle = expression("Our JN-technique plot for "*italic(Collective~Protest)*" compared with "*italic(No~Protest))) +

theme_minimal()

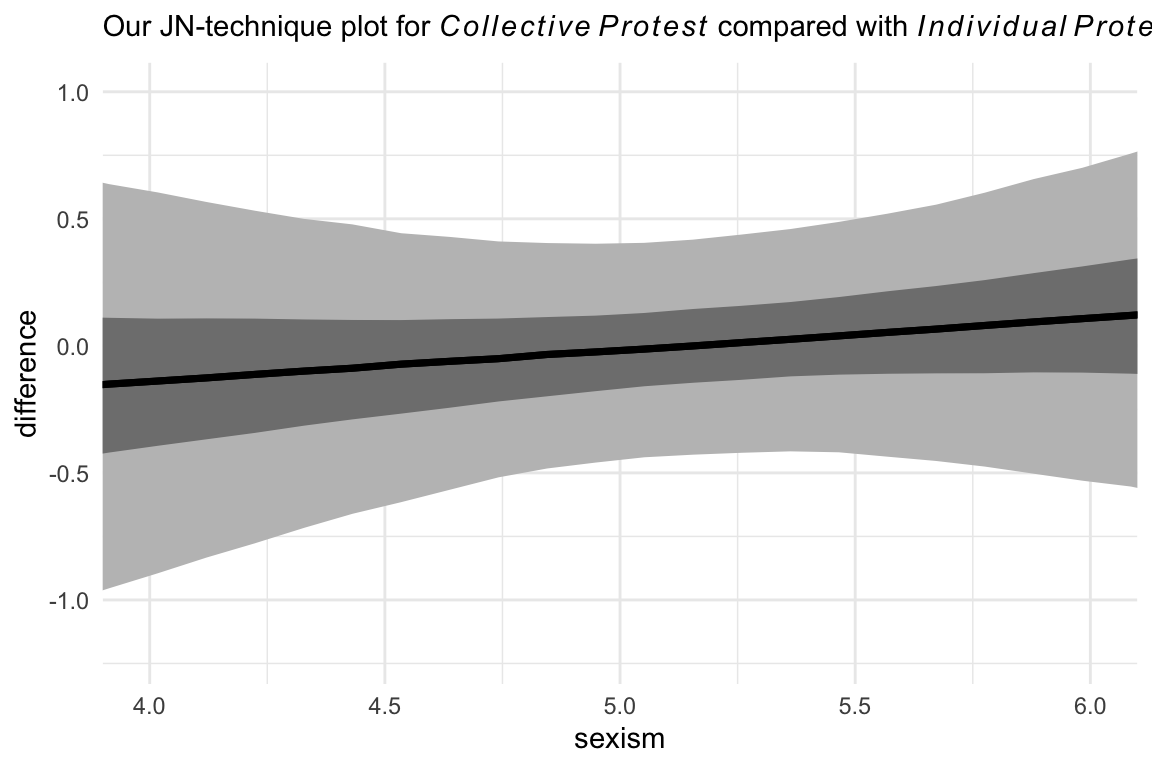

And here we do it one last time between the two active protest conditions. For good measure, we will continue experimenting with different ways of plotting the results. This time well first summarize the posterior median and intervals with tidybayes::median_qi() before plotting. We’ll then feed those results into our plot with the aid of tidybayes::geom_lineribbon() and a follow-up scale_fill_manual() line.

nd <- tibble(d1 = 1:0,

d2 = 0:1) %>%

expand_grid(sexism = seq(from = 3.5, to = 6.5, length.out = 30)) %>%

mutate(row = 1:n())

fitted(model10.2,

newdata = nd,

summary = F) %>%

data.frame() %>%

set_names(pull(nd, row)) %>%

mutate(draw = 1:n()) %>%

pivot_longer(-draw, values_to = "estimate") %>%

mutate(row = as.double(name)) %>%

left_join(nd, by = "row") %>%

# there are some mild differences in the two mutate() lines

mutate(condition = if_else(d1 == 0, "Individual Protest", "Collective Protest")) %>%

select(estimate, sexism, draw, condition) %>%

pivot_wider(names_from = condition, values_from = estimate) %>%

mutate(difference = `Collective Protest` - `Individual Protest`) %>%

# group and summarise, here

group_by(sexism) %>%

median_qi(difference, .width = c(.5, .95)) %>%

# plot!

ggplot(aes(x = sexism, y = difference, ymin = .lower, ymax = .upper)) +

# look how simple these two lines are

geom_lineribbon(show.legend = F) +

scale_fill_manual(values = c("grey75", "grey50")) +

coord_cartesian(xlim = c(4, 6)) +

labs(subtitle = expression("Our JN-technique plot for "*italic(Collective~Protest)*" compared with "*italic(Individual~Protest))) +

theme_minimal()

Little difference between those conditions.

10.5 When the moderator is multicategorical

From a substantive standpoint the combination of

- a multicategorical variable \(X\) and a dichotomous or continuous moderator \(W\) versus

- a dichotomous or continuous variable \(X\) and a multicategorical moderator \(W\)

might seem different. From a modeling perspective, the difference is trivial. As Hayes pointed out, “when we claim from a statistical test of moderation that \(X\)’s effect is moderated by \(W\), then it is also true that \(W\)’s effect is moderated by \(X\). This is the symmetry property of interactions” (p. 381). This symmetry holds when we’re not using the hypothesis-testing framework, too.

10.5.1 An example.

Just as a refresher, here’s the print() output for model2.

print(model10.2, digits = 3)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: liking ~ d1 + d2 + sexism + d1:sexism + d2:sexism

## Data: protest (Number of observations: 129)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 7.721 1.092 5.602 9.903 1.001 1549 2071

## d1 -4.157 1.510 -7.177 -1.181 1.000 1632 1695

## d2 -3.511 1.454 -6.409 -0.679 1.002 1433 1941

## sexism -0.475 0.213 -0.907 -0.066 1.001 1518 2195

## d1:sexism 0.906 0.291 0.329 1.486 1.000 1620 1799

## d2:sexism 0.781 0.284 0.228 1.344 1.003 1400 1814

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 1.007 0.065 0.886 1.141 1.006 2730 2479

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).The Bayesian \(R^2\):

bayes_R2(model10.2) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## R2 0.156 0.05 0.067 0.26And the \(R^2\) difference between this and the model excluding the interaction terms:

tibble(`Model 10.1` = bayes_R2(model10.1, summary = F)[, 1],

`Model 10.2` = bayes_R2(model10.2, summary = F)[, 1]) %>%

transmute(difference = `Model 10.2` - `Model 10.1`) %>%

mean_qi(difference) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 1 × 6

## difference .lower .upper .width .point .interval

## <dbl> <dbl> <dbl> <dbl> <chr> <chr>

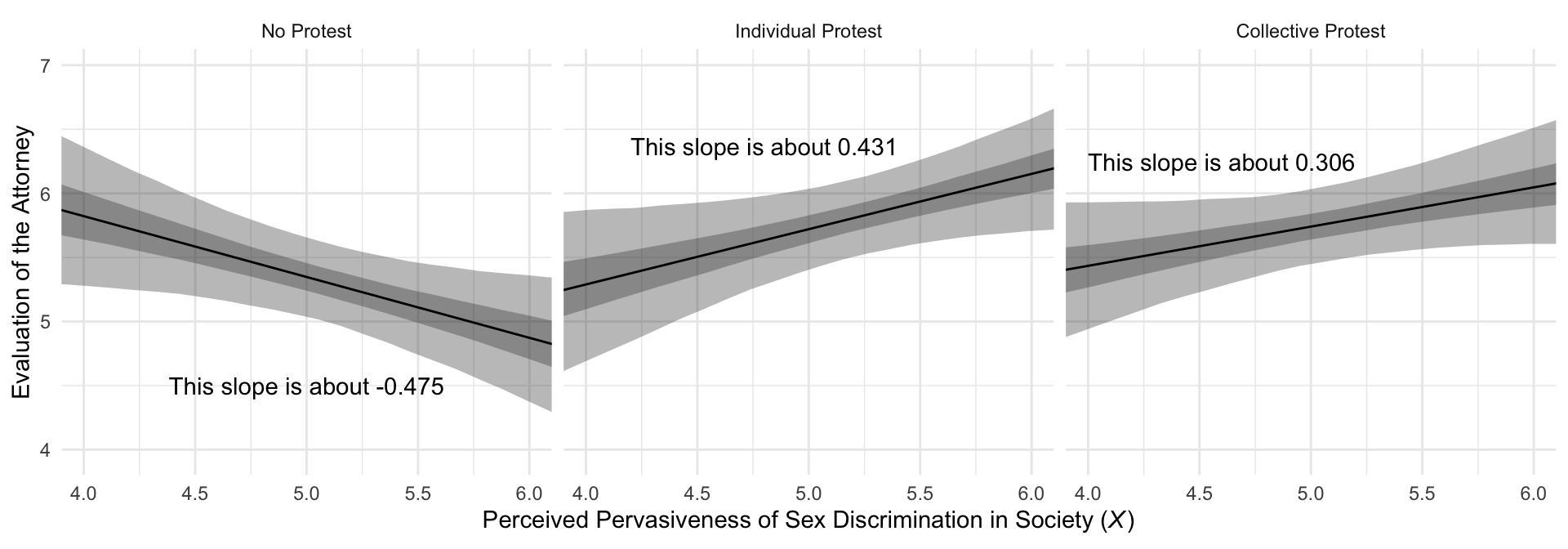

## 1 0.087 -0.035 0.208 0.95 mean qiMuch like in the text, our Figure 10.7 is just a little different from what we did with Figure 10.3.

# this will help us with the `geom_text()` annotation

slopes <- tibble(

slope = c(fixef(model10.2)["sexism", "Estimate"] + fixef(model10.2)["d1:sexism", "Estimate"],

fixef(model10.2)["sexism", "Estimate"] + fixef(model10.2)["d2:sexism", "Estimate"],

fixef(model10.2)["sexism", "Estimate"]),

x = c(4.8, 4.6, 5),

y = c(6.37, 6.25, 4.5),

condition = factor(c("Individual Protest", "Collective Protest", "No Protest"),

levels = c("No Protest", "Individual Protest", "Collective Protest"))) %>%

mutate(label = str_c("This slope is about ", slope %>% round(digits = 3)))

# now we plot

f %>%

ggplot(aes(x = sexism)) +

geom_ribbon(aes(ymin = Q2.5, ymax = Q97.5),

alpha = 1/3) +

geom_ribbon(aes(ymin = Q25, ymax = Q75),

alpha = 1/3) +

geom_line(aes(y = Estimate)) +

geom_text(data = slopes,

aes(x = x, y = y, label = label)) +

coord_cartesian(xlim = c(4, 6)) +

labs(x = expression(paste("Perceived Pervasiveness of Sex Discrimination in Society (", italic(X), ")")),

y = "Evaluation of the Attorney") +

facet_wrap(~ condition) +

theme_minimal()

10.5.2 Probing the interaction and interpreting the regression coefficients.

We computed the posterior means for the slopes when prepping for the figure, above. Here’s how we might get more complete posterior summaries. Much like in the text, our Figure 10.7 is just a little different from what we did with Figure 10.3.

draws <- as_draws_df(model10.2) %>%

transmute(`No Protest` = b_sexism + `b_d1:sexism` * 0 + `b_d2:sexism` * 0,

`Individual Protest` = b_sexism + `b_d1:sexism` * 1 + `b_d2:sexism` * 0,

`Collective Protest` = b_sexism + `b_d1:sexism` * 0 + `b_d2:sexism` * 1)

draws %>%

pivot_longer(everything()) %>%

mutate(name = factor(name, levels = c("No Protest", "Individual Protest", "Collective Protest"))) %>%

group_by(name) %>%

mean_qi(value) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 3 × 7

## name value .lower .upper .width .point .interval

## <fct> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 No Protest -0.475 -0.907 -0.066 0.95 mean qi

## 2 Individual Protest 0.431 0.024 0.837 0.95 mean qi

## 3 Collective Protest 0.306 -0.065 0.677 0.95 mean qiHere are the differences among the three protest groups.

draws %>%

transmute(`Individual Protest - No Protest` = `Individual Protest` - `No Protest`,

`Collective Protest - No Protest` = `Collective Protest` - `No Protest`,

`Individual Protest - Collective Protest` = `Individual Protest` - `Collective Protest`) %>%

pivot_longer(everything()) %>%

# again, not necessary, but useful for reordering the summaries

mutate(name = factor(name, levels = c("Individual Protest - No Protest", "Collective Protest - No Protest", "Individual Protest - Collective Protest"))) %>%

group_by(name) %>%

mean_qi(value) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 3 × 7

## name value .lower .upper .width .point .interval

## <fct> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 Individual Protest - No Protest 0.906 0.329 1.49 0.95 mean qi

## 2 Collective Protest - No Protest 0.781 0.228 1.34 0.95 mean qi

## 3 Individual Protest - Collective Protest 0.125 -0.428 0.668 0.95 mean qiSession info

sessionInfo()## R version 4.2.2 (2022-10-31)

## Platform: x86_64-apple-darwin17.0 (64-bit)

## Running under: macOS Big Sur ... 10.16

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRlapack.dylib

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] tidybayes_3.0.2 brms_2.18.0 Rcpp_1.0.9 forcats_0.5.1 stringr_1.4.1 dplyr_1.0.10

## [7] purrr_1.0.1 readr_2.1.2 tidyr_1.2.1 tibble_3.1.8 ggplot2_3.4.0 tidyverse_1.3.2

##

## loaded via a namespace (and not attached):

## [1] readxl_1.4.1 backports_1.4.1 plyr_1.8.7 igraph_1.3.4

## [5] svUnit_1.0.6 sp_1.5-0 splines_4.2.2 crosstalk_1.2.0

## [9] TH.data_1.1-1 rstantools_2.2.0 inline_0.3.19 digest_0.6.31

## [13] htmltools_0.5.3 fansi_1.0.3 magrittr_2.0.3 checkmate_2.1.0

## [17] googlesheets4_1.0.1 tzdb_0.3.0 modelr_0.1.8 RcppParallel_5.1.5

## [21] matrixStats_0.63.0 vroom_1.5.7 xts_0.12.1 sandwich_3.0-2

## [25] prettyunits_1.1.1 colorspace_2.0-3 rvest_1.0.2 ggdist_3.2.1

## [29] haven_2.5.1 xfun_0.35 callr_3.7.3 crayon_1.5.2

## [33] jsonlite_1.8.4 lme4_1.1-31 survival_3.4-0 zoo_1.8-10

## [37] glue_1.6.2 gtable_0.3.1 gargle_1.2.0 emmeans_1.8.0

## [41] distributional_0.3.1 pkgbuild_1.3.1 rstan_2.21.8 abind_1.4-5

## [45] scales_1.2.1 mvtnorm_1.1-3 DBI_1.1.3 miniUI_0.1.1.1

## [49] viridisLite_0.4.1 xtable_1.8-4 bit_4.0.4 stats4_4.2.2

## [53] StanHeaders_2.21.0-7 DT_0.24 htmlwidgets_1.5.4 httr_1.4.4

## [57] threejs_0.3.3 arrayhelpers_1.1-0 posterior_1.3.1 ellipsis_0.3.2

## [61] pkgconfig_2.0.3 loo_2.5.1 farver_2.1.1 sass_0.4.2

## [65] dbplyr_2.2.1 utf8_1.2.2 labeling_0.4.2 tidyselect_1.2.0

## [69] rlang_1.0.6 reshape2_1.4.4 later_1.3.0 munsell_0.5.0

## [73] cellranger_1.1.0 tools_4.2.2 cachem_1.0.6 cli_3.6.0

## [77] generics_0.1.3 broom_1.0.2 evaluate_0.18 fastmap_1.1.0

## [81] processx_3.8.0 knitr_1.40 bit64_4.0.5 fs_1.5.2

## [85] nlme_3.1-160 mime_0.12 projpred_2.2.1 xml2_1.3.3

## [89] compiler_4.2.2 bayesplot_1.10.0 shinythemes_1.2.0 rstudioapi_0.13

## [93] gamm4_0.2-6 reprex_2.0.2 bslib_0.4.0 stringi_1.7.8

## [97] highr_0.9 ps_1.7.2 Brobdingnag_1.2-8 lattice_0.20-45

## [101] Matrix_1.5-1 nloptr_2.0.3 markdown_1.1 shinyjs_2.1.0

## [105] tensorA_0.36.2 vctrs_0.5.1 pillar_1.8.1 lifecycle_1.0.3

## [109] jquerylib_0.1.4 bridgesampling_1.1-2 estimability_1.4.1 raster_3.5-15

## [113] httpuv_1.6.5 R6_2.5.1 bookdown_0.28 promises_1.2.0.1

## [117] gridExtra_2.3 codetools_0.2-18 boot_1.3-28 colourpicker_1.1.1

## [121] MASS_7.3-58.1 gtools_3.9.4 assertthat_0.2.1 withr_2.5.0

## [125] shinystan_2.6.0 multcomp_1.4-20 mgcv_1.8-41 parallel_4.2.2

## [129] hms_1.1.1 terra_1.5-21 grid_4.2.2 minqa_1.2.5

## [133] coda_0.19-4 rmarkdown_2.16 googledrive_2.0.0 shiny_1.7.2

## [137] lubridate_1.8.0 base64enc_0.1-3 dygraphs_1.1.1.6