6 Mediation Analysis with a Multicategorical Antecedent

“Historically, investigators interested in doing a mediation analysis with a multicategorical antecedents \(X\) have resorted to some less optimal strategies than the one [Hayes] discuss[ed] in this chapter” (Andrew F. Hayes, 2018, p. 188). Happily, the approach outlined in this chapter avoids such gaffs. Hayes’s procedure “does not require discarding any data; the entire sample is analyzed simultaneously. Furthermore, the multicategorical nature of \(X\) is respected and retained (p. 189).”

6.1 Relative total, direct, and indirect effects

In review of regression analysis in Chapter 2, we saw that a multicategorical antecedent variable with \(g\) categories can be used as an antecedent variable in a regression model if it is represented by \(g - 1\) variables using some kind of group coding system (see section 2.7). [Hayes] described indicator or dummy coding as one such system, where groups are represented with \(g - 1\) variables set to either zero or one (see Table 2.1). With indicator coding, one of the \(g\) groups is chosen as the reference group. Cases in the reference group receive a zero on all \(g - 1\) variables coding \(X\). Each of the remaining \(g - 1\) groups gets its own indicator variable that is set to 1 for cases in that group, with all other cases set to zero. Using such a system, which of the \(g\) groups a case is in is represented by its pattern of zeros and ones on the \(g - 1\) indicator variables. These \(g - 1\) indicator variables are then used as antecedent variables in a regression model as a stand-in for \(X\). (pp. 189–190, emphasis in the original)

6.1.1 Relative indirect effects.

When our \(X\) is multicategorical, we end up with \(g - 1\) \(a\) coefficients. Presuming the \(M\) variable is continuous or binary, this will yield \(g - 1\) relative indirect effects, \(a_j b\).

6.2 An example: Sex discrimination in the workplace

Here we load a couple necessary packages, load the data, and take a glimpse().

library(tidyverse)

protest <- read_csv("data/protest/protest.csv")

glimpse(protest)## Rows: 129

## Columns: 6

## $ subnum <dbl> 209, 44, 124, 232, 30, 140, 27, 64, 67, 182, 85, 109, 122, 69, 45, 28, 170, 66, 168, 97, 7,…

## $ protest <dbl> 2, 0, 2, 2, 2, 1, 2, 0, 0, 0, 2, 2, 0, 1, 1, 0, 1, 2, 2, 1, 2, 1, 1, 2, 2, 0, 1, 1, 0, 1, 1…

## $ sexism <dbl> 4.87, 4.25, 5.00, 5.50, 5.62, 5.75, 5.12, 6.62, 5.75, 4.62, 4.75, 6.12, 4.87, 5.87, 4.87, 4…

## $ angry <dbl> 2, 1, 3, 1, 1, 1, 2, 1, 6, 1, 2, 5, 2, 1, 1, 1, 2, 1, 3, 4, 1, 1, 1, 5, 1, 5, 1, 1, 2, 1, 1…

## $ liking <dbl> 4.83, 4.50, 5.50, 5.66, 6.16, 6.00, 4.66, 6.50, 1.00, 6.83, 5.00, 5.66, 5.83, 6.50, 4.50, 4…

## $ respappr <dbl> 4.25, 5.75, 4.75, 7.00, 6.75, 5.50, 5.00, 6.25, 3.00, 5.75, 5.25, 7.00, 4.50, 6.25, 5.00, 5…Here are the ungrouped means and \(\textit{SD}\)’s for respappr and liking shown at the bottom of Table 6.1.

protest %>%

pivot_longer(liking:respappr) %>%

group_by(name) %>%

summarize(mean = mean(value),

sd = sd(value)) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 2 × 3

## name mean sd

## <chr> <dbl> <dbl>

## 1 liking 5.64 1.05

## 2 respappr 4.87 1.35We compute the summaries for respappr and liking, grouped by protest, like so.

protest %>%

pivot_longer(liking:respappr) %>%

group_by(protest, name) %>%

summarize(mean = mean(value),

sd = sd(value)) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 6 × 4

## # Groups: protest [3]

## protest name mean sd

## <dbl> <chr> <dbl> <dbl>

## 1 0 liking 5.31 1.30

## 2 0 respappr 3.88 1.46

## 3 1 liking 5.83 0.819

## 4 1 respappr 5.14 1.08

## 5 2 liking 5.75 0.936

## 6 2 respappr 5.49 0.936It looks like Hayes has a typo in the \(\textit{SD}\) for liking when protest == 0. It seems he accidentally entered the value for when protest == 1 in that slot.

You’ll have to wait a minute to see where the adjusted \(Y\) values came from.

With a little if_else(), computing the dummies d1 and d2 is easy enough.

protest <- protest %>%

mutate(d1 = if_else(protest == 1, 1, 0),

d2 = if_else(protest == 2, 1, 0))We’re almost ready to fit the model. Let’s load brms.

library(brms)This is the first time we’ve had a simple univariate regression model in a while–no special mvbind() syntax or multiple bf() formulas, just straight up brms::brm().

model6.1 <- brm(

data = protest,

family = gaussian,

liking ~ 1 + d1 + d2,

cores = 4,

file = "fits/model06.01")Check the coefficient summaries.

fixef(model6.1)## Estimate Est.Error Q2.5 Q97.5

## Intercept 5.3124235 0.1624501 5.004147895 5.6168536

## d1 0.5141982 0.2292392 0.061921766 0.9594998

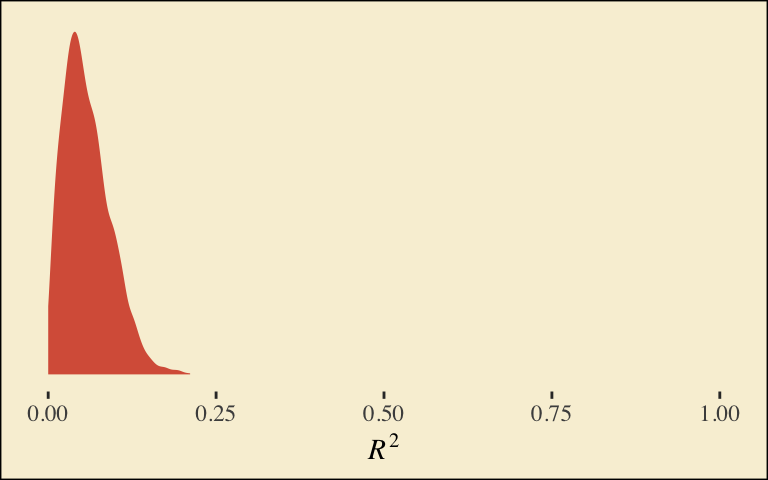

## d2 0.4380813 0.2264952 0.001383588 0.8721201Our \(R^2\) differences a bit from the OLS version in the text. This shouldn’t be surprising when it’s near the boundary.

bayes_R2(model6.1)## Estimate Est.Error Q2.5 Q97.5

## R2 0.0579167 0.03516063 0.006511632 0.1371208Here’s its shape. For the plots in this chapter, we’ll take a few formatting cues from Edward Tufte (2001), courtesy of the ggthemes package. The theme_tufte() function will change the default font and remove some chart junk. We will take our color palette from Pokemon via the palettetown package (Lucas, 2016).

library(ggthemes)

library(palettetown)

bayes_R2(model6.1, summary = F) %>%

data.frame() %>%

ggplot(aes(x = R2)) +

geom_density(linewidth = 0, fill = pokepal(pokemon = "plusle")[2]) +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = 0:1) +

xlab(expression(italic(R)^2)) +

theme_tufte() +

theme(legend.title = element_blank(),

plot.background = element_rect(fill = pokepal(pokemon = "plusle")[8]))

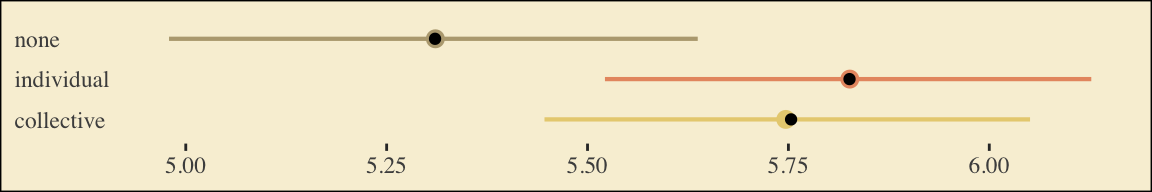

To use the model-implied equations to compute the means for each group on the criterion, we’ll extract the posterior draws.

draws <- as_draws_df(model6.1)

draws %>%

mutate(Y_np = b_Intercept + b_d1 * 0 + b_d2 * 0,

Y_ip = b_Intercept + b_d1 * 1 + b_d2 * 0,

Y_cp = b_Intercept + b_d1 * 0 + b_d2 * 1) %>%

pivot_longer(contains("Y_")) %>%

# this line will order our output the same way Hayes did in the text (p. 197)

mutate(name = factor(name, levels = c("Y_np", "Y_ip", "Y_cp"))) %>%

group_by(name) %>%

summarize(mean = mean(value),

sd = sd(value))## # A tibble: 3 × 3

## name mean sd

## <fct> <dbl> <dbl>

## 1 Y_np 5.31 0.162

## 2 Y_ip 5.83 0.161

## 3 Y_cp 5.75 0.157What Hayes called the “relative total effects” \(c_1\) and \(c_2\) are the d1 and d2 lines in our fixef() output, above.

Here are the sub-models for the mediation model.

m_model <- bf(respappr ~ 1 + d1 + d2)

y_model <- bf(liking ~ 1 + d1 + d2 + respappr)There’s a third way to fit multivariate models in brms. It uses either the mvbrmsformula() function, or its abbreviated version, mvbf(). With these, we first define our submodels in br() statements like before. We then combine them within mvbf(), separated with a comma. If we’d like to avoid estimating a residual correlation, which we do in this project–, we then set rescore = FALSE. Here’s how it looks like for our second model.

model6.2 <- brm(

data = protest,

family = gaussian,

mvbf(m_model, y_model, rescor = FALSE),

cores = 4,

file = "fits/model06.02")print(model6.2)## Family: MV(gaussian, gaussian)

## Links: mu = identity; sigma = identity

## mu = identity; sigma = identity

## Formula: respappr ~ 1 + d1 + d2

## liking ~ 1 + d1 + d2 + respappr

## Data: protest (Number of observations: 129)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## respappr_Intercept 3.89 0.19 3.52 4.26 1.00 5405 3275

## liking_Intercept 3.71 0.31 3.10 4.32 1.00 5684 3394

## respappr_d1 1.26 0.26 0.75 1.77 1.00 5483 3326

## respappr_d2 1.61 0.26 1.09 2.13 1.00 5312 3470

## liking_d1 -0.01 0.22 -0.45 0.42 1.00 3560 3073

## liking_d2 -0.22 0.23 -0.67 0.21 1.00 3534 2828

## liking_respappr 0.41 0.07 0.28 0.56 1.00 3898 3103

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma_respappr 1.18 0.08 1.04 1.33 1.00 6785 2943

## sigma_liking 0.93 0.06 0.82 1.05 1.00 5831 2738

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

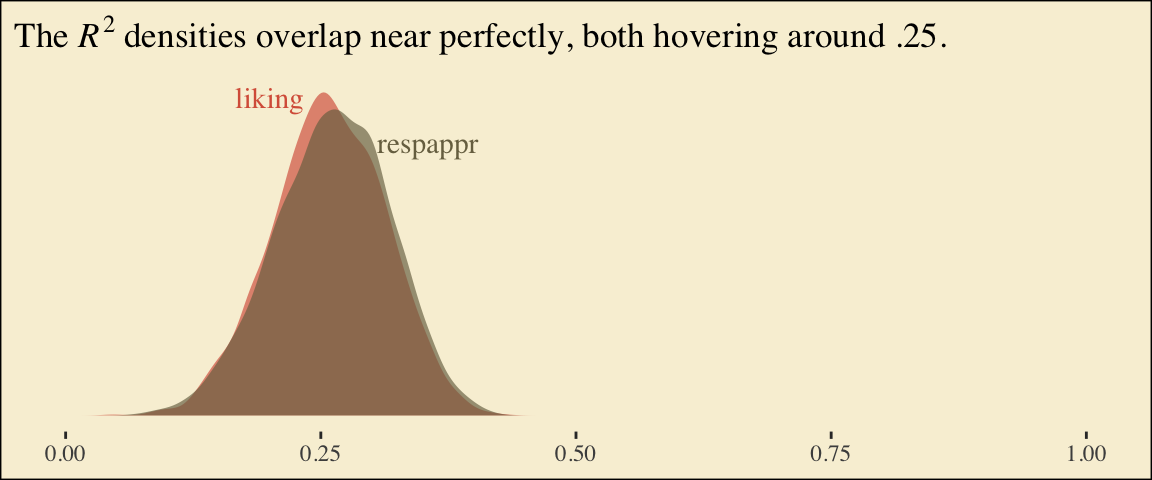

## scale reduction factor on split chains (at convergence, Rhat = 1).Behold the Bayesian \(R^2\) posteriors.

bayes_R2(model6.2, summary = F) %>%

data.frame() %>%

pivot_longer(everything()) %>%

ggplot(aes(x = value, fill = name)) +

geom_density(linewidth = 0, alpha = 2/3) +

annotate("text", x = .2, y = 7, label = "liking", color = pokepal(pokemon = "plusle")[2], family = "Times") +

annotate("text", x = .355, y = 6, label = "respappr", color = pokepal(pokemon = "plusle")[6], family = "Times") +

scale_fill_manual(values = pokepal(pokemon = "plusle")[c(2, 6)]) +

scale_x_continuous(NULL, limits = c(0:1)) +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = expression(The~italic(R)^2*" densities overlap near perfectly, both hovering around .25.")) +

theme_tufte() +

theme(legend.position = "none",

plot.background = element_rect(fill = pokepal(pokemon = "plusle")[8]))

To get the model summaries as presented in the second two columns in Table 6.2, we use as_draws_df(), rename a bit, and summarize. Like in the last chapter, here we’ll do so with a little help from tidybayes.

library(tidybayes)

draws <- as_draws_df(model6.2) %>%

mutate(a1 = b_respappr_d1,

a2 = b_respappr_d2,

b = b_liking_respappr,

c1_prime = b_liking_d1,

c2_prime = b_liking_d2,

i_m = b_respappr_Intercept,

i_y = b_liking_Intercept)

draws %>%

pivot_longer(a1:i_y) %>%

group_by(name) %>%

mean_qi(value) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 7 × 7

## name value .lower .upper .width .point .interval

## <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 a1 1.26 0.747 1.77 0.95 mean qi

## 2 a2 1.61 1.09 2.13 0.95 mean qi

## 3 b 0.413 0.278 0.557 0.95 mean qi

## 4 c1_prime -0.008 -0.448 0.424 0.95 mean qi

## 5 c2_prime -0.225 -0.666 0.206 0.95 mean qi

## 6 i_m 3.89 3.52 4.26 0.95 mean qi

## 7 i_y 3.71 3.10 4.32 0.95 mean qiWorking with the \(\overline M_{ij}\) formulas in page 199 is quite similar to what we did above.

draws %>%

mutate(M_np = b_respappr_Intercept + b_respappr_d1 * 0 + b_respappr_d2 * 0,

M_ip = b_respappr_Intercept + b_respappr_d1 * 1 + b_respappr_d2 * 0,

M_cp = b_respappr_Intercept + b_respappr_d1 * 0 + b_respappr_d2 * 1) %>%

pivot_longer(starts_with("M_")) %>%

# this line will order our output the same way Hayes did in the text (p. 199)

mutate(name = factor(name, levels = c("M_np", "M_ip", "M_cp"))) %>%

group_by(name) %>%

summarize(mean = mean(value),

sd = sd(value))## # A tibble: 3 × 3

## name mean sd

## <fct> <dbl> <dbl>

## 1 M_np 3.89 0.190

## 2 M_ip 5.15 0.178

## 3 M_cp 5.49 0.180The \(\overline Y^*_{ij}\) formulas are more of the same.

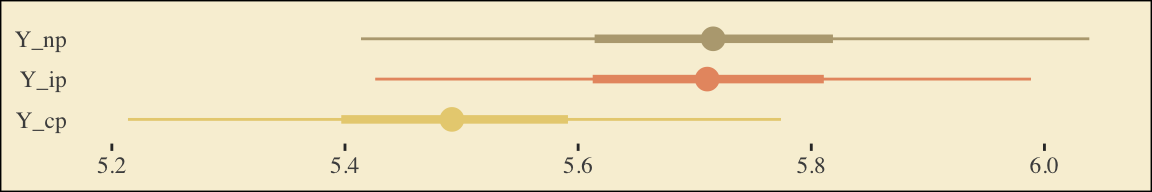

draws <- draws %>%

mutate(Y_np = b_liking_Intercept + b_liking_d1 * 0 + b_liking_d2 * 0 + b_liking_respappr * mean(protest$respappr),

Y_ip = b_liking_Intercept + b_liking_d1 * 1 + b_liking_d2 * 0 + b_liking_respappr * mean(protest$respappr),

Y_cp = b_liking_Intercept + b_liking_d1 * 0 + b_liking_d2 * 1 + b_liking_respappr * mean(protest$respappr))

draws %>%

pivot_longer(starts_with("Y_")) %>%

mutate(name = factor(name, levels = c("Y_np", "Y_ip", "Y_cp"))) %>%

group_by(name) %>%

summarize(mean = mean(value),

sd = sd(value))## # A tibble: 3 × 3

## name mean sd

## <fct> <dbl> <dbl>

## 1 Y_np 5.72 0.158

## 2 Y_ip 5.71 0.144

## 3 Y_cp 5.49 0.143Note, these are where the adjusted \(Y\) values came from in Table 6.1.

This is as fine a spot as any to introduce coefficient plots. The brms, tidybayes, and bayesplot packages all offer convenience functions for coefficient plots. Before we get all lazy using convenience functions, it’s good to know how to make coefficient plots by hand. Here’s ours for those last three \(\overline Y^*_{ij}\)-values.

draws %>%

pivot_longer(starts_with("Y_")) %>%

ggplot(aes(x = value, y = name, color = name)) +

stat_summary(geom = "pointrange",

fun = median,

fun.min = function(x) {quantile(x, probs = .025)},

fun.max = function(x) {quantile(x, probs = .975)},

size = 0.75) +

stat_summary(geom = "linerange",

fun.min = function(x) {quantile(x, probs = .25)},

fun.max = function(x) {quantile(x, probs = .75)},

size = 1.5) +

scale_color_manual(values = pokepal(pokemon = "plusle")[c(3, 7, 9)]) +

labs(x = NULL, y = NULL) +

theme_tufte() +

theme(axis.ticks.y = element_blank(),

legend.position = "none",

plot.background = element_rect(fill = pokepal(pokemon = "plusle")[8]))

The points are the posterior medians, the thick inner lines the 50% intervals, and the thinner outer lines the 95% intervals. For kicks, we distinguished the three values by color.

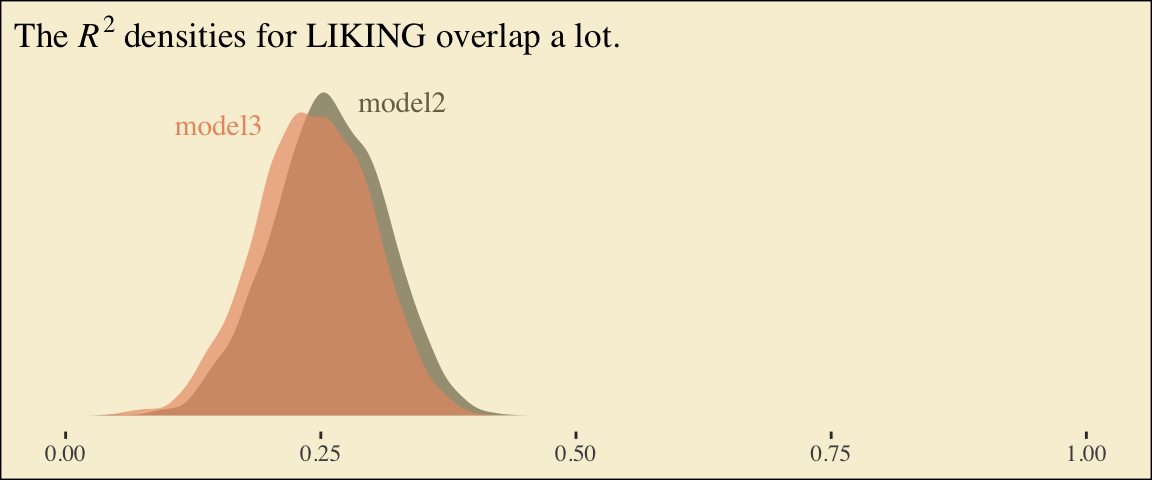

If we want to examine \(R^2\) change for dropping the dummy variables, we’ll first fit a model that omits them.

model6.3 <- brm(

data = protest,

family = gaussian,

liking ~ 1 + respappr,

cores = 4,

file = "fits/model06.03")Here are the competing \(R^2\) distributions.

# get the R2 draws and wrangle

r2 <-

rbind(bayes_R2(model6.2, resp = "liking", summary = F),

bayes_R2(model6.3, summary = F)) %>%

data.frame() %>%

set_names("R2") %>%

mutate(fit = rep(c("model6.2", "model6.3"), each = n() / 2))

# plot!

r2 %>%

ggplot(aes(x = R2, fill = fit)) +

geom_density(linewidth = 0, alpha = 2/3) +

annotate("text", x = .15, y = 6.4, label = "model3", color = pokepal(pokemon = "plusle")[7], family = "Times") +

annotate("text", x = .33, y = 6.9, label = "model2", color = pokepal(pokemon = "plusle")[6], family = "Times") +

scale_fill_manual(values = pokepal(pokemon = "plusle")[c(6, 7)]) +

scale_x_continuous(NULL, limits = 0:1) +

scale_y_continuous(NULL, breaks = NULL) +

ggtitle(expression(The~italic(R)^2*" densities for LIKING overlap a lot.")) +

theme_tufte() +

theme(legend.position = "none",

plot.background = element_rect(fill = pokepal(pokemon = "plusle")[8]))

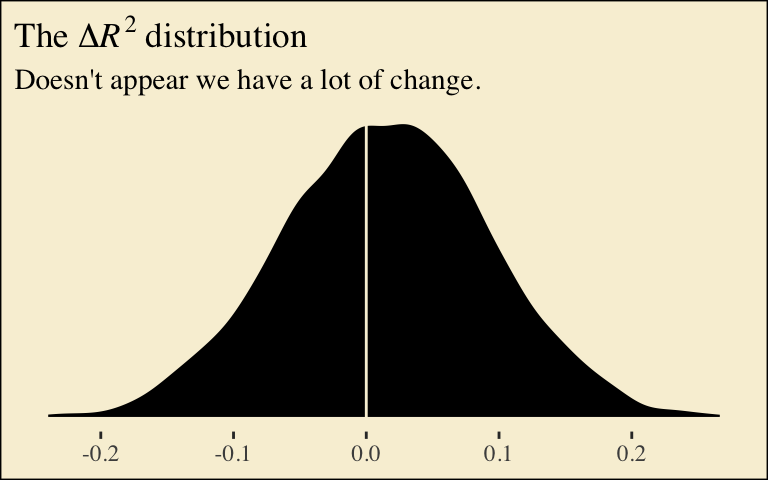

If you want to compare then with a change score, do something like this.

r2 %>%

mutate(iter = rep(1:4000, times = 2)) %>%

pivot_wider(names_from = fit, values_from = R2) %>%

ggplot(aes(x = model6.2 - model6.3)) +

geom_density(linewidth = 0, fill = pokepal(pokemon = "plusle")[4]) +

geom_vline(xintercept = 0, color = pokepal(pokemon = "plusle")[8]) +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = expression(The~Delta*italic(R)^2~distribution),

subtitle = "Doesn't appear we have a lot of change.",

x = NULL) +

theme_tufte() +

theme(legend.title = element_blank(),

plot.background = element_rect(fill = pokepal(pokemon = "plusle")[8]))

Now compute the posterior means and 95% intervals for \(a_1 b\) and \(a_2 b\), the conditional indirect effects.

draws %>%

mutate(a1b = a1 * b,

a2b = a2 * b) %>%

pivot_longer(a1b:a2b) %>%

group_by(name) %>%

mean_qi(value) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 2 × 7

## name value .lower .upper .width .point .interval

## <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 a1b 0.52 0.277 0.816 0.95 mean qi

## 2 a2b 0.664 0.384 1.00 0.95 mean qi6.3 Using a different group coding system

Here we’ll make our alternative dummies, what we’ll call d_1 and d_2, with orthogonal contrast coding.

protest <- protest %>%

mutate(d_1 = if_else(protest == 0, -2/3, 1/3),

d_2 = if_else(protest == 0, 0,

if_else(protest == 1, -1/2, 1/2)))Here are the sub-models.

m_model <- bf(respappr ~ 1 + d_1 + d_2)

y_model <- bf(liking ~ 1 + d_1 + d_2 + respappr)Now we fit using the mvbf() approach.

model6.4 <- brm(

data = protest,

family = gaussian,

mvbf(m_model, y_model, rescor = FALSE),

cores = 4,

file = "fits/model06.04")Here are our intercepts and regression coefficient summaries.

fixef(model6.4)## Estimate Est.Error Q2.5 Q97.5

## respappr_Intercept 4.8409907 0.10372701 4.6349775 5.0469873

## liking_Intercept 3.6361860 0.35047400 2.9662744 4.3446079

## respappr_d_1 1.4406897 0.22283830 1.0013720 1.8755853

## respappr_d_2 0.3485198 0.24525265 -0.1272072 0.8297255

## liking_d_1 -0.1088995 0.19831868 -0.4897816 0.2816862

## liking_d_2 -0.2142112 0.19657459 -0.6013251 0.1662995

## liking_respappr 0.4118475 0.07058589 0.2685722 0.5495288It’s important to note that these will not correspond to the “TOTAL EFFECT MODEL” section of the PROCESS output of Figure 6.3. Hayes’s PROCESS has the mcx=3 command which tells the program to reparametrize the orthogonal contrasts. brms doesn’t have such a command.

For now, we’ll have to jump to Equation 6.8 towards the bottom of page 207. Those parameters are evident in our output. For good measure, here we’ll practice with posterior_summary().

posterior_summary(model6.4) %>%

data.frame() %>%

rownames_to_column("parameter") %>%

filter(str_detect(parameter, "b_respappr"))## parameter Estimate Est.Error Q2.5 Q97.5

## 1 b_respappr_Intercept 4.8409907 0.1037270 4.6349775 5.0469873

## 2 b_respappr_d_1 1.4406897 0.2228383 1.0013720 1.8755853

## 3 b_respappr_d_2 0.3485198 0.2452527 -0.1272072 0.8297255Thus it’s easy to get the \(\overline M_{ij}\) means with a little posterior manipulation.

draws <- as_draws_df(model6.4) %>%

mutate(M_np = b_respappr_Intercept + b_respappr_d_1 * -2/3 + b_respappr_d_2 * 0,

M_ip = b_respappr_Intercept + b_respappr_d_1 * 1/3 + b_respappr_d_2 * -1/2,

M_cp = b_respappr_Intercept + b_respappr_d_1 * 1/3 + b_respappr_d_2 * 1/2)

draws %>%

pivot_longer(starts_with("M_")) %>%

mutate(name = factor(name, levels = c("M_np", "M_ip", "M_cp"))) %>%

group_by(name) %>%

summarize(mean = mean(value),

sd = sd(value))## # A tibble: 3 × 3

## name mean sd

## <fct> <dbl> <dbl>

## 1 M_np 3.88 0.182

## 2 M_ip 5.15 0.181

## 3 M_cp 5.50 0.172With these in hand, we can compute \(a_1\) and \(a_2\).

draws <- draws %>%

mutate(a1 = (M_ip + M_cp) / 2 - M_np,

a2 = M_cp - M_ip)

draws %>%

pivot_longer(a1:a2) %>%

group_by(name) %>%

summarize(mean = mean(value),

sd = sd(value))## # A tibble: 2 × 3

## name mean sd

## <chr> <dbl> <dbl>

## 1 a1 1.44 0.223

## 2 a2 0.349 0.245Happily, our model output will allow us to work with Hayes’s \(\overline Y^*_{ij}\) equations in the middle of page 210.

draws <- draws %>%

mutate(Y_np = b_liking_Intercept + b_liking_d_1 * -2/3 + b_liking_d_2 * 0 + b_liking_respappr * mean(protest$respappr),

Y_ip = b_liking_Intercept + b_liking_d_1 * 1/3 + b_liking_d_2 * -1/2 + b_liking_respappr * mean(protest$respappr),

Y_cp = b_liking_Intercept + b_liking_d_1 * 1/3 + b_liking_d_2 * 1/2 + b_liking_respappr * mean(protest$respappr))

draws %>%

pivot_longer(starts_with("Y_")) %>%

mutate(name = factor(name, levels = c("Y_np", "Y_ip", "Y_cp"))) %>%

group_by(name) %>%

summarize(mean = mean(value),

sd = sd(value))## # A tibble: 3 × 3

## name mean sd

## <fct> <dbl> <dbl>

## 1 Y_np 5.71 0.158

## 2 Y_ip 5.71 0.138

## 3 Y_cp 5.50 0.144And with these in hand, we can compute \(c'_1\) and \(c'_2\).

draws <- draws %>%

mutate(c1_prime = (Y_ip + Y_cp) / 2 - Y_np,

c2_prime = Y_cp - Y_ip)

draws %>%

pivot_longer(c1_prime:c2_prime) %>%

group_by(name) %>%

summarize(mean = mean(value),

sd = sd(value))## # A tibble: 2 × 3

## name mean sd

## <chr> <dbl> <dbl>

## 1 c1_prime -0.109 0.198

## 2 c2_prime -0.214 0.197It appears Hayes has a typo in the formula for \(c'_2\) on page 211. The value he has down for \(\overline Y^*_{IP}\), 5.145, is incorrect. It’s not the one he displayed at the bottom of the previous page and it also contradicts the analyses herein. So it goes… These things happen.

We haven’t spelled it out, but the \(b\) parameter is currently labeled b_liking_respappr in our draws object. Here we’ll make a b column to make things easier. While we’re at it, we’ll compute the indirect effects, too.

draws <- draws %>%

mutate(b = b_liking_respappr) %>%

mutate(a1b = a1 * b,

a2b = a2 * b)

draws %>%

pivot_longer(a1b:a2b) %>%

group_by(name) %>%

mean_qi(value) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 2 × 7

## name value .lower .upper .width .point .interval

## <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 a1b 0.593 0.34 0.878 0.95 mean qi

## 2 a2b 0.144 -0.049 0.371 0.95 mean qiNow we can compute and summarize() our \(c_1\) and \(c_2\).

draws <- draws %>%

mutate(c1 = c1_prime + a1b,

c2 = c2_prime + a2b)

draws %>%

pivot_longer(c1:c2) %>%

group_by(name) %>%

summarize(mean = mean(value),

sd = sd(value))## # A tibble: 2 × 3

## name mean sd

## <chr> <dbl> <dbl>

## 1 c1 0.485 0.190

## 2 c2 -0.0699 0.222Session info

sessionInfo()## R version 4.2.2 (2022-10-31)

## Platform: x86_64-apple-darwin17.0 (64-bit)

## Running under: macOS Big Sur ... 10.16

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRlapack.dylib

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] tidybayes_3.0.2 palettetown_0.1.1 ggthemes_4.2.4 brms_2.18.0 Rcpp_1.0.9

## [6] forcats_0.5.1 stringr_1.4.1 dplyr_1.0.10 purrr_1.0.1 readr_2.1.2

## [11] tidyr_1.2.1 tibble_3.1.8 ggplot2_3.4.0 tidyverse_1.3.2

##

## loaded via a namespace (and not attached):

## [1] readxl_1.4.1 backports_1.4.1 plyr_1.8.7 igraph_1.3.4 svUnit_1.0.6

## [6] splines_4.2.2 crosstalk_1.2.0 TH.data_1.1-1 rstantools_2.2.0 inline_0.3.19

## [11] digest_0.6.31 htmltools_0.5.3 fansi_1.0.3 magrittr_2.0.3 checkmate_2.1.0

## [16] googlesheets4_1.0.1 tzdb_0.3.0 modelr_0.1.8 RcppParallel_5.1.5 matrixStats_0.63.0

## [21] vroom_1.5.7 xts_0.12.1 sandwich_3.0-2 prettyunits_1.1.1 colorspace_2.0-3

## [26] rvest_1.0.2 ggdist_3.2.1 haven_2.5.1 xfun_0.35 callr_3.7.3

## [31] crayon_1.5.2 jsonlite_1.8.4 lme4_1.1-31 survival_3.4-0 zoo_1.8-10

## [36] glue_1.6.2 gtable_0.3.1 gargle_1.2.0 emmeans_1.8.0 distributional_0.3.1

## [41] pkgbuild_1.3.1 rstan_2.21.8 abind_1.4-5 scales_1.2.1 mvtnorm_1.1-3

## [46] DBI_1.1.3 miniUI_0.1.1.1 xtable_1.8-4 bit_4.0.4 stats4_4.2.2

## [51] StanHeaders_2.21.0-7 DT_0.24 htmlwidgets_1.5.4 httr_1.4.4 threejs_0.3.3

## [56] arrayhelpers_1.1-0 posterior_1.3.1 ellipsis_0.3.2 pkgconfig_2.0.3 loo_2.5.1

## [61] farver_2.1.1 sass_0.4.2 dbplyr_2.2.1 utf8_1.2.2 labeling_0.4.2

## [66] tidyselect_1.2.0 rlang_1.0.6 reshape2_1.4.4 later_1.3.0 munsell_0.5.0

## [71] cellranger_1.1.0 tools_4.2.2 cachem_1.0.6 cli_3.6.0 generics_0.1.3

## [76] broom_1.0.2 evaluate_0.18 fastmap_1.1.0 processx_3.8.0 knitr_1.40

## [81] bit64_4.0.5 fs_1.5.2 nlme_3.1-160 mime_0.12 projpred_2.2.1

## [86] xml2_1.3.3 compiler_4.2.2 bayesplot_1.10.0 shinythemes_1.2.0 rstudioapi_0.13

## [91] gamm4_0.2-6 reprex_2.0.2 bslib_0.4.0 stringi_1.7.8 highr_0.9

## [96] ps_1.7.2 Brobdingnag_1.2-8 lattice_0.20-45 Matrix_1.5-1 nloptr_2.0.3

## [101] markdown_1.1 shinyjs_2.1.0 tensorA_0.36.2 vctrs_0.5.1 pillar_1.8.1

## [106] lifecycle_1.0.3 jquerylib_0.1.4 bridgesampling_1.1-2 estimability_1.4.1 httpuv_1.6.5

## [111] R6_2.5.1 bookdown_0.28 promises_1.2.0.1 gridExtra_2.3 codetools_0.2-18

## [116] boot_1.3-28 colourpicker_1.1.1 MASS_7.3-58.1 gtools_3.9.4 assertthat_0.2.1

## [121] withr_2.5.0 shinystan_2.6.0 multcomp_1.4-20 mgcv_1.8-41 parallel_4.2.2

## [126] hms_1.1.1 grid_4.2.2 minqa_1.2.5 coda_0.19-4 rmarkdown_2.16

## [131] googledrive_2.0.0 shiny_1.7.2 lubridate_1.8.0 base64enc_0.1-3 dygraphs_1.1.1.6