11 Fundamentals of Conditional Process Analysis

Thus far in this book, mediation and moderation have been treated as distinct, separate, and independent concepts with different analytical procedures and interpretations. Yet processes modeled with mediation analysis likely are contingent and hence moderated, in that they operate differently for different people or in different contexts or circumstances. A more complete analysis, therefore, should attempt to model the mechanisms at work linking \(X\) to \(Y\) while simultaneously allowing those effects to be contingent on context, circumstance, or individual differences. (Andrew F. Hayes, 2018, p. 395)

11.1 Examples of conditional process models in the literature

You can look up the various examples in the literature on your own. The main point is

moderation can be combined with mediation in a number of different ways. But these examples [we skipped for the sake of brevity] only scratch the surface of what is possible. Think about the number of possibilities when you increase the number of mediators, distinguish between moderation of paths in a parallel versus serial multiple mediator model, or allow for multiple moderators of different paths or the same path, and so forth. The possibilities are nearly endless. But regardless of the configuration of moderated paths or complexity of the model, conditional process analysis involves the estimation and interpretation of direct and indirect effects, just as in a simple mediation analysis. However, when causal effects in a mediation model are moderated, they will be conditional on those moderators. Thus, an understanding of the concepts of the conditional direct effect and the conditional indirect effect is required before one should attempt to undertake a conditional process analysis. (p. 401, emphasis in the original)

11.2 Conditional direct and indirect effects

When a direct or indirect effect is conditional, analysis and interpretation of the results of the modeling process should be based on a formal estimate of and inference about conditional direct and/or conditional in- direct effects. In this section, [Hayes illustrated] the computation of conditional direct and indirect effects for example models that combine moderation and mediation. (p. 403)

11.3 Example: Hiding your feelings from your work team

Here we load a couple necessary packages, load the data, and take a glimpse().

library(tidyverse)

teams <- read_csv("data/teams/teams.csv")

glimpse(teams)## Rows: 60

## Columns: 4

## $ dysfunc <dbl> -0.23, -0.13, 0.00, -0.33, 0.39, 1.02, -0.35, -0.23, 0.39, -0.08, -0.23, 0.09, -0.29, -0.06,…

## $ negtone <dbl> -0.51, 0.22, -0.08, -0.11, -0.48, 0.72, -0.18, -0.13, 0.52, -0.26, 1.08, 0.53, -0.19, 0.15, …

## $ negexp <dbl> -0.49, -0.49, 0.84, 0.84, 0.17, -0.82, -0.66, -0.16, -0.16, -0.16, -0.16, 0.50, 0.84, 0.50, …

## $ perform <dbl> 0.12, 0.52, -0.08, -0.08, 0.12, 1.12, -0.28, 0.32, -1.08, -0.28, -1.08, -0.28, -0.28, -0.88,…Load the brms package.

library(brms)Recall that we fit mediation models with brms using multivariate syntax. In previous attempts, we’ve defined and saved the model components outside of the brm() function and then plugged then into brm() using their identifier. Just to shake things up a bit, we’ll just do all the steps right in brm(), this time.

model11.1 <- brm(

data = teams,

family = gaussian,

bf(negtone ~ 1 + dysfunc) +

bf(perform ~ 1 + dysfunc + negtone + negexp + negtone:negexp) +

set_rescor(FALSE),

chains = 4, cores = 4,

file = "fits/model11.01")Check the model summary.

print(model11.1, digits = 3)## Family: MV(gaussian, gaussian)

## Links: mu = identity; sigma = identity

## mu = identity; sigma = identity

## Formula: negtone ~ 1 + dysfunc

## perform ~ 1 + dysfunc + negtone + negexp + negtone:negexp

## Data: teams (Number of observations: 60)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## negtone_Intercept 0.027 0.063 -0.098 0.153 1.003 6480 3157

## perform_Intercept -0.012 0.060 -0.130 0.104 1.001 6134 3086

## negtone_dysfunc 0.619 0.168 0.285 0.951 1.000 6095 3154

## perform_dysfunc 0.366 0.182 0.007 0.722 1.003 5474 3128

## perform_negtone -0.438 0.132 -0.698 -0.180 1.001 4981 3105

## perform_negexp -0.020 0.120 -0.258 0.217 1.001 6282 3137

## perform_negtone:negexp -0.512 0.250 -0.990 -0.019 1.000 5007 3062

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma_negtone 0.486 0.046 0.405 0.585 1.001 6244 2974

## sigma_perform 0.459 0.046 0.380 0.563 1.002 4892 2588

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Our model summary coheres nicely with Table 11.1 and the formulas on page 409. Here are the \(R^2\) distribution summaries.

bayes_R2(model11.1) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## R2negtone 0.192 0.078 0.045 0.344

## R2perform 0.320 0.078 0.160 0.461On page 410 Hayes reported two sample means. Compute them like so.

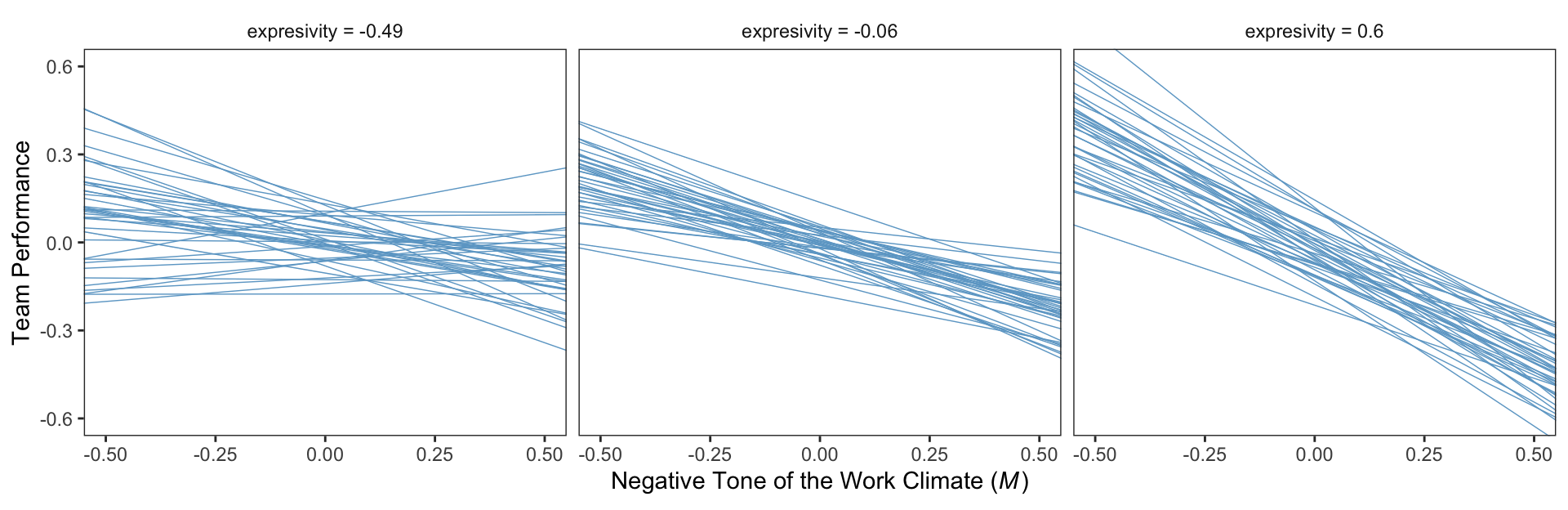

mean(teams$negexp) %>% round(digits = 3) # w_bar## [1] -0.008mean(teams$perform) %>% round(digits = 3) # m_bar## [1] -0.032For our Figure 11.4 and other similar figures in this chapter, we’ll use spaghetti plots. Recall that with a spaghetti plots for linear models, we only need two values for the variable on the \(x\)-axis, rather than the typical 30+.

nd <- crossing(negtone = c(-.8, .8),

negexp = quantile(teams$negexp, probs = c(.16, .50, .84))) %>%

mutate(dysfunc = mean(teams$dysfunc),

row = 1:n())

nd## # A tibble: 6 × 4

## negtone negexp dysfunc row

## <dbl> <dbl> <dbl> <int>

## 1 -0.8 -0.49 0.0347 1

## 2 -0.8 -0.06 0.0347 2

## 3 -0.8 0.6 0.0347 3

## 4 0.8 -0.49 0.0347 4

## 5 0.8 -0.06 0.0347 5

## 6 0.8 0.6 0.0347 6Here’s our Figure 11.4, which uses only the first 40 HMC iterations for the spaghetti-plot lines.

# compute

fitted(model11.1,

newdata = nd,

resp = "perform",

summary = F) %>%

# wrangle

data.frame() %>%

set_names(pull(nd, row)) %>%

mutate(draw = 1:n()) %>%

pivot_longer(-draw) %>%

mutate(row = as.double(name)) %>%

left_join(nd, by = "row") %>%

mutate(negexp = factor(str_c("expresivity = ", negexp),

levels = c("expresivity = -0.49", "expresivity = -0.06", "expresivity = 0.6"))) %>%

filter(draw < 41) %>%

# plot

ggplot(aes(x = negtone, y = value, group = draw)) +

geom_line(color = "skyblue3",

linewidth = 1/4) +

coord_cartesian(xlim = c(-.5, .5),

ylim = c(-.6, .6)) +

labs(x = expression(paste("Negative Tone of the Work Climate (", italic(M), ")")),

y = "Team Performance") +

theme_bw() +

theme(panel.grid = element_blank(),

strip.background = element_rect(color = "transparent", fill = "transparent")) +

facet_wrap(~ negexp)

Also, the plot theme in this chapter is a nod to the style John Kruschke frequently uses in his papers and texts (e.g., Kruschke, 2015).

Using Hayes’s notation from the top of page 412, we can express \(M\)’s conditional effect on \(Y\) as

\[\theta_{M \rightarrow Y} = b_1 + b_3 W,\]

where \(M\) is negtone, \(Y\) is perform, and \(W\) is negexp. We can extract our posterior summaries for \(b_1\) and \(b_3\) like so.

fixef(model11.1)[c("perform_negtone", "perform_negtone:negexp"), ]## Estimate Est.Error Q2.5 Q97.5

## perform_negtone -0.4375692 0.1324377 -0.6980568 -0.17993006

## perform_negtone:negexp -0.5118960 0.2496913 -0.9903911 -0.0186218511.4 Estimation of a conditional process model using PROCESS

We just fit the model in the last section. No need to repeat.

11.5 Quantifying and visualizing (conditional) indirect and direct effects.

The analysis presented thus far has been piecemeal, in that [Hayes] addressed how to estimate the regression coefficients for each equation in this conditional process model and how to interpret them using standard principles of regression analysis, moderation analysis, and so forth. But a complete analysis goes further by integrating the estimates of each of the effects in the model (i.e., \(X \rightarrow M, \theta_{M \rightarrow Y}\)) to yield the direct and indirect effects of \(X\) on \(Y\). That is, the individual effects as quantified with the regression coefficients (conditional or otherwise) in equations 11.10 and 11.11 are not necessarily of immediate interest or relevance. Estimating them is a means to an end. What matters is the estimation of the direct and indirect effects, for they convey information about how \(X\) influences \(Y\) directly or through a mediator and how those effects are contingent on a moderator. (pp. 417–418)

11.5.0.1 The conditional indirect effect of \(X\).

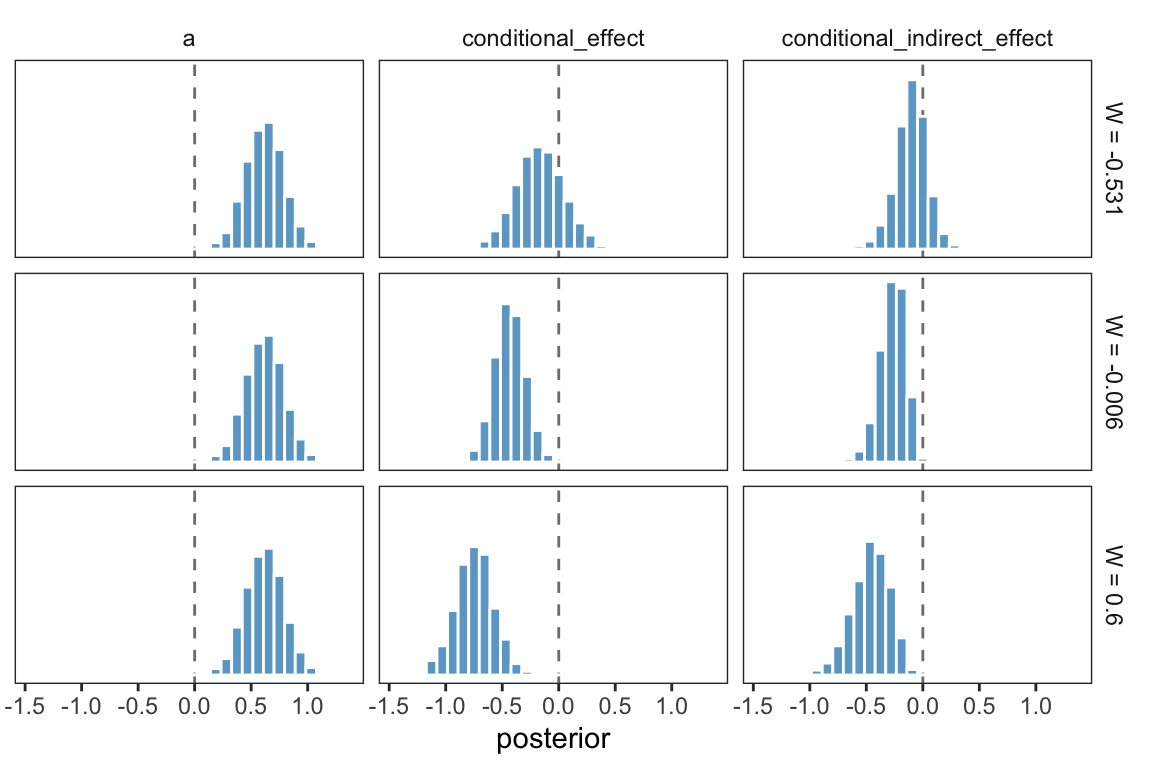

One way to make a version of Table 11.2 is to work with the as_draws_df(), simply summarizing the distributions with means.

draws <- as_draws_df(model11.1)

draws %>%

transmute(a = b_negtone_dysfunc,

b1 = b_perform_negtone,

b3 = `b_perform_negtone:negexp`) %>%

expand_grid(w = c(-0.531, -0.006, 0.600)) %>%

mutate(conditional_effect = b1 + b3 * w,

conditional_indirect_effect = a * (b1 + b3 * w)) %>%

select(-(b1:b3)) %>%

pivot_longer(-w) %>%

group_by(w, name) %>%

summarise(mean = mean(value) %>% round(digits = 3)) %>%

pivot_wider(names_from = name, values_from = mean)## # A tibble: 3 × 4

## # Groups: w [3]

## w a conditional_effect conditional_indirect_effect

## <dbl> <dbl> <dbl> <dbl>

## 1 -0.531 0.619 -0.166 -0.103

## 2 -0.006 0.619 -0.434 -0.269

## 3 0.6 0.619 -0.745 -0.462That kind of summary isn’t the most Bayesian of us. Why not plot, instead?

draws %>%

transmute(a = b_negtone_dysfunc,

b1 = b_perform_negtone,

b3 = `b_perform_negtone:negexp`) %>%

expand_grid(w = c(-0.531, -0.006, 0.600)) %>%

mutate(conditional_effect = b1 + b3 * w,

conditional_indirect_effect = a * (b1 + b3 * w)) %>%

select(-(b1:b3)) %>%

pivot_longer(-w) %>%

mutate(label = str_c("W = ", w),

w = fct_reorder(label, w)) %>%

ggplot(aes(x = value)) +

geom_vline(xintercept = 0, color = "grey50", linetype = 2) +

geom_histogram(color = "white", fill = "skyblue3") +

scale_y_continuous(NULL, breaks = NULL) +

xlab("posterior") +

theme_bw() +

theme(panel.grid = element_blank(),

strip.background = element_rect(color = "transparent", fill = "transparent")) +

facet_grid(w ~ name)

Now the posterior distribution for each is on full display.

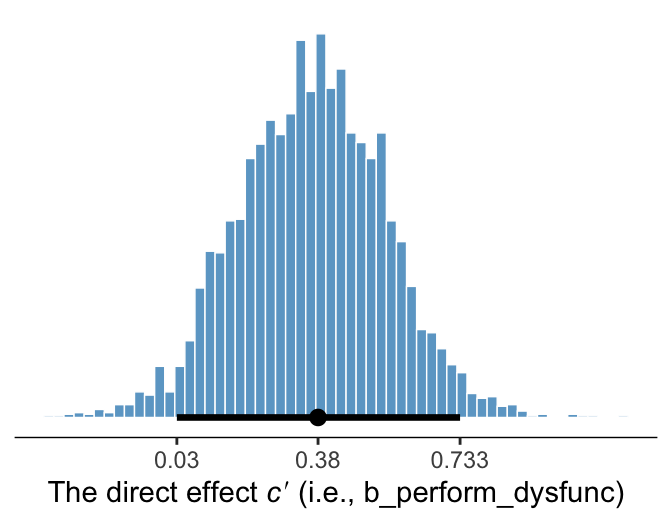

11.5.0.2 The direct effect.

The direct effect of \(X\) on \(Y\) (i.e., dysfunc on perform) for this model is b_perform_dysfunc in brms. Here’s how to get its summary values from posterior_summary().

posterior_summary(model11.1)["b_perform_dysfunc", ] %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## 0.366 0.182 0.007 0.72211.5.1 Visualizing the direct and indirect effects.

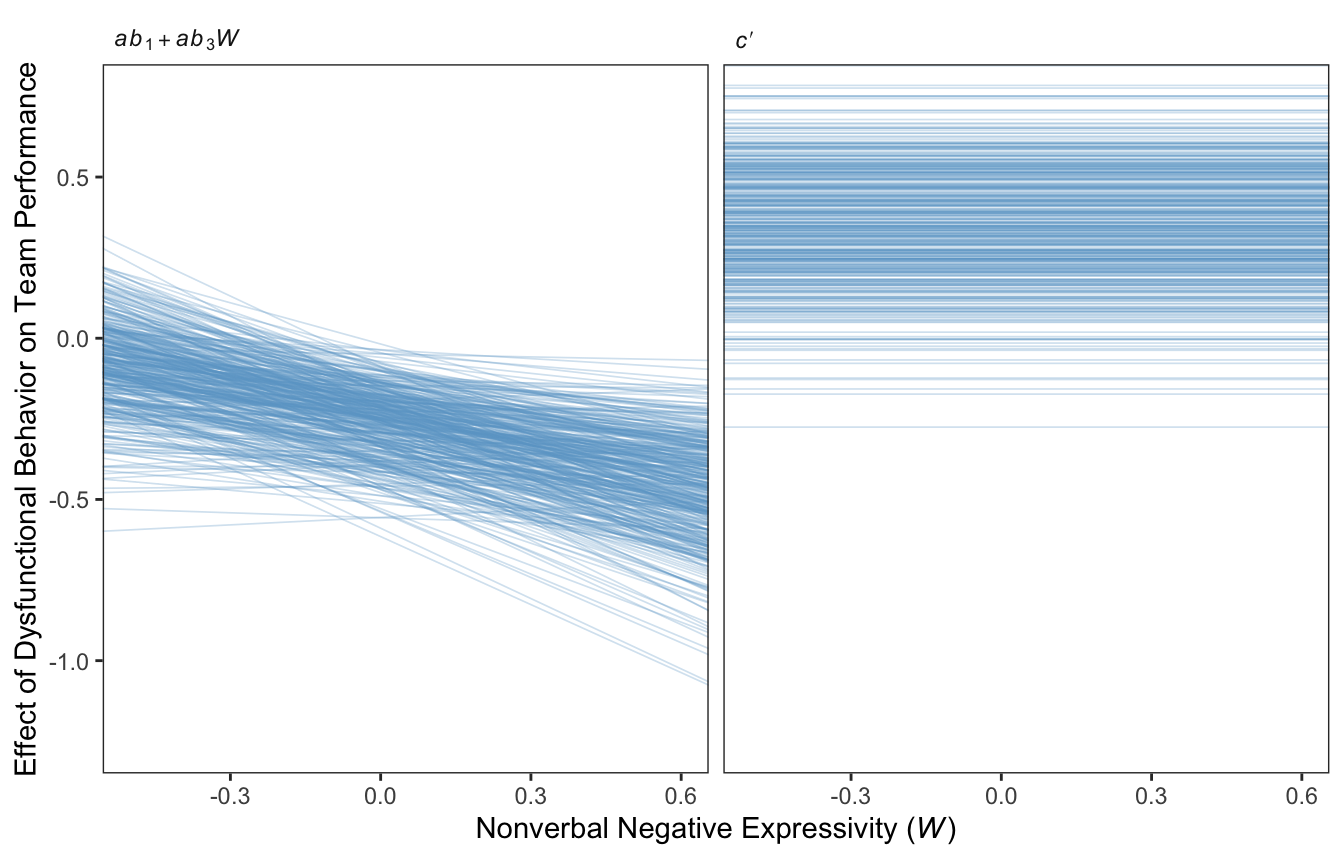

For Figure 11.7 we’ll use the first 400 HMC iterations, and a faceted approach to make the plot.

draws %>%

mutate(a = b_negtone_dysfunc,

b1 = b_perform_negtone,

b3 = `b_perform_negtone:negexp`,

`italic(c)*minute` = b_perform_dysfunc) %>%

expand_grid(negexp = c(-1, 1)) %>%

mutate(`italic(ab)[1]+italic(ab)[3]*italic(W)` = a * b1 + a * b3 * negexp) %>%

pivot_longer(cols = c(`italic(c)*minute`, `italic(ab)[1]+italic(ab)[3]*italic(W)`)) %>%

filter(.draw < 401) %>%

ggplot(aes(x = negexp, y = value, group = .draw)) +

geom_line(color = "skyblue3",

linewidth = .3, alpha = .3) +

coord_cartesian(xlim = c(-.5, .6),

ylim = c(-1.25, .75)) +

labs(x = expression(paste("Nonverbal Negative Expressivity (", italic(W), ")")),

y = "Effect of Dysfunctional Behavior on Team Performance") +

theme_bw() +

theme(panel.grid = element_blank(),

strip.background = element_rect(color = "transparent", fill = "transparent"),

strip.text = element_text(hjust = 0)) +

facet_wrap(~ name, labeller = label_parsed)

Since the \(c'\) values are constant across \(W\), the individual HMC iterations end up perfectly parallel in the spaghetti plot on the right This is an example of a visualization I’d avoid making with a spaghetti plot for a professional presentation. But hopefully it has some pedagogical value, here.

11.6 Statistical inference

11.6.1 Inference about the direct effect.

We’ve already been expressing uncertainty in terms of percentile-based 95% intervals and histograms. Here’s a plot of the direct effect, b_perform_dysfunc.

library(tidybayes)

# breaks

breaks <-

mode_hdi(draws$b_perform_dysfunc, .width = .95) %>%

pivot_longer(starts_with("y")) %>%

pull(value)

draws %>%

ggplot(aes(x = b_perform_dysfunc)) +

geom_histogram(binwidth = .025, boundary = 0,

color = "white", fill = "skyblue3", size = 1/4) +

stat_pointinterval(aes(y = 0),

point_interval = mode_hdi, .width = .95) +

scale_x_continuous(expression("The direct effect "*italic(c)*minute*" (i.e., b_perform_dysfunc)"),

breaks = breaks, labels = round(breaks, digits = 3)) +

scale_y_continuous(NULL, breaks = NULL) +

theme_bw() +

theme(axis.line.x = element_line(size = 1/4),

panel.border = element_blank(),

panel.grid = element_blank())

Since we’re plotting in a style similar to Kruschke, we switched from emphasizing the posterior mean or median to marking off the posterior mode, which is Kruschkes’ preferred measure of central tendency. We also ditched our typical percentile-based 95% intervals for highest posterior density intervals. The stat_pointinterval() function from the Matthew Kay’s tidybayes package made it easy to compute those values with the point_interval = mode_hdi argument. Note how we also used tidybayes::mode_hdi() to compute those values and plug them into scale_x_continuous().

11.6.2 Inference about the indirect effect.

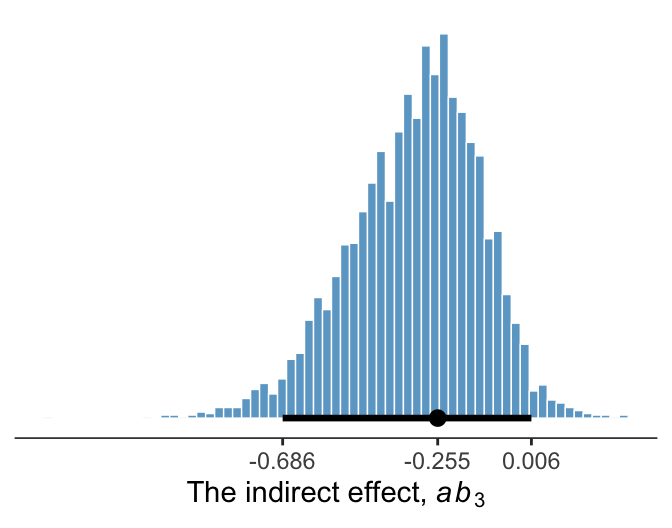

Much like above, we can make a plot of the conditional indirect effect \(ab_3\).

draws <- draws %>%

mutate(ab3 = b_negtone_dysfunc * `b_perform_negtone:negexp`)

# breaks

breaks <- mode_hdi(draws$ab3, .width = .95) %>%

pivot_longer(starts_with("y")) %>%

pull(value)

draws %>%

ggplot(aes(x = ab3)) +

geom_histogram(binwidth = .025, boundary = 0,

color = "white", fill = "skyblue3", size = 1/4) +

stat_pointinterval(aes(y = 0),

point_interval = mode_hdi, .width = .95) +

scale_x_continuous(expression(paste("The indirect effect, ", italic(ab)[3])),

breaks = breaks, labels = round(breaks, digits = 3)) +

scale_y_continuous(NULL, breaks = NULL) +

theme_bw() +

theme(axis.line.x = element_line(size = 1/4),

panel.border = element_blank(),

panel.grid = element_blank())

11.6.3 Probing moderation of mediation.

One of the contributions of Preacher et al. (2007) to the literature on moderated mediation analysis was their discussion of inference for conditional indirect effects. They suggested two approaches, one a normal theory-based approach that is an analogue of the Sobel test in unmoderated mediation analysis, and another based on bootstrapping. (p. 426)

One of the contributions of this project is moving away from NHST in favor of Bayesian modeling. Since we’ve already been referencing him with our plot themes, you might check out Kruschke’s (2015) textbook for more discussion on Bayes versus NHST.

11.6.3.1 Normal theory approach.

As we’re square within the Bayesian modeling paradigm, we have no need to appeal to normal theory for the posterior \(\textit{SD}\)’s or 95% intervals.

11.6.3.2 Bootstrap confidence intervals Two types of Bayesian credible intervals.

We produced the posterior means corresponding to those in Table 11.3 some time ago. Here they are, again, with percentile-based 95% intervals via tidybayes::mean_qi().

draws %>%

transmute(a = b_negtone_dysfunc,

b1 = b_perform_negtone,

b3 = `b_perform_negtone:negexp`) %>%

expand_grid(w = c(-0.531, -0.006, 0.600)) %>%

mutate(`a(b1 + b3w)` = a * (b1 + b3 * w)) %>%

group_by(w) %>%

mean_qi(`a(b1 + b3w)`) %>%

select(w:.upper) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 3 × 4

## w `a(b1 + b3w)` .lower .upper

## <dbl> <dbl> <dbl> <dbl>

## 1 -0.531 -0.103 -0.41 0.157

## 2 -0.006 -0.269 -0.52 -0.083

## 3 0.6 -0.462 -0.82 -0.175If we wanted to summarize those same effects with posterior modes and 95% highest posterior density intervals, instead, we’d replace our mean_qi() line with mode_hdi().

draws %>%

transmute(a = b_negtone_dysfunc,

b1 = b_perform_negtone,

b3 = `b_perform_negtone:negexp`) %>%

expand_grid(w = c(-0.531, -0.006, 0.600)) %>%

mutate(`a(b1 + b3w)` = a * (b1 + b3 * w)) %>%

group_by(w) %>%

# here's the change

mode_hdi(`a(b1 + b3w)`) %>%

select(w:.upper) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 3 × 4

## w `a(b1 + b3w)` .lower .upper

## <dbl> <dbl> <dbl> <dbl>

## 1 -0.531 -0.079 -0.396 0.169

## 2 -0.006 -0.245 -0.491 -0.065

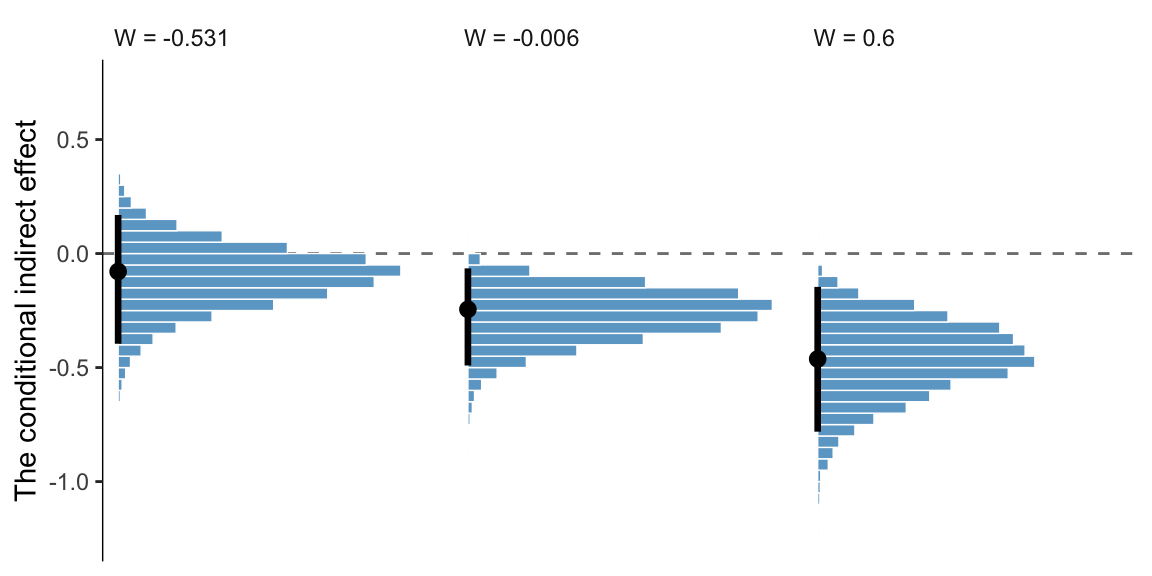

## 3 0.6 -0.465 -0.782 -0.146And we might plot these with something like this.

draws %>%

transmute(a = b_negtone_dysfunc,

b1 = b_perform_negtone,

b3 = `b_perform_negtone:negexp`) %>%

expand_grid(w = c(-0.531, -0.006, 0.600)) %>%

mutate(`a(b1 + b3w)` = a * (b1 + b3 * w)) %>%

select(w:`a(b1 + b3w)`) %>%

mutate(label = str_c("W = ", w),

w = fct_reorder(label, w)) %>%

ggplot(aes(y = `a(b1 + b3w)`)) +

geom_hline(yintercept = 0, color = "grey50", linetype = 2) +

geom_histogram(binwidth = .05, boundary = 0,

color = "white", fill = "skyblue3", linewidth = 1/4) +

stat_pointinterval(aes(x = 0),

point_interval = mode_hdi, .width = .95) +

scale_x_continuous(NULL, breaks = NULL) +

scale_y_continuous("The conditional indirect effect", limits = c(-1.25, .75)) +

theme_bw() +

theme(axis.line.y = element_line(size = 1/4),

panel.border = element_blank(),

panel.grid = element_blank(),

strip.background = element_rect(color = "transparent", fill = "transparent"),

strip.text = element_text(hjust = 0)) +

facet_wrap(~ w, nrow = 1)

This, of course, leads us right into the next section.

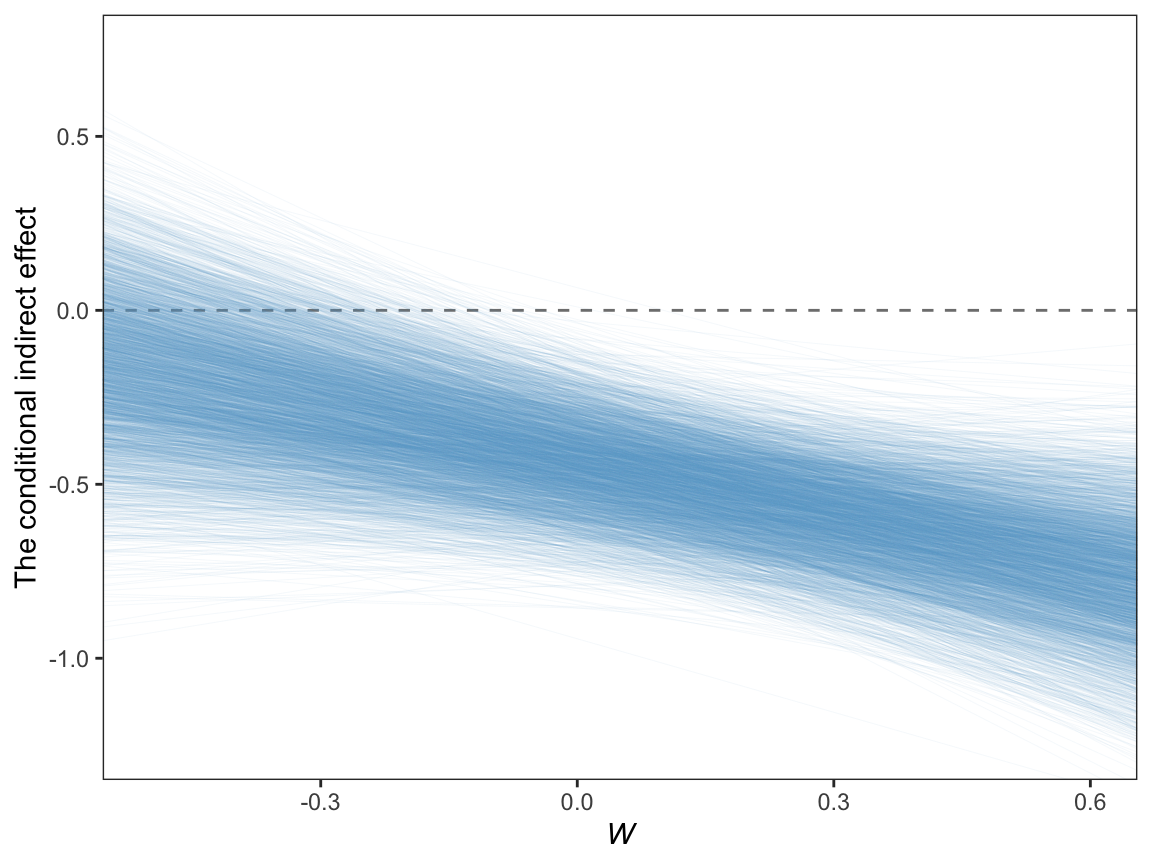

11.6.3.3 A Johnson-Neyman approach.

On page 429, Hayes discussed how Preacher et al. (2007)’s attempt to apply the JN technique in this context presumed

the sampling distribution of the conditional indirect effect is normal. Given that the sampling distribution of the conditional indirect effect is not normal, the approach they describe yields, at best, an approximate solution. To [Hayes’s] knowledge, no one has ever proposed a bootstrapping-based analogue of the Johnson-Neyman method for probing the moderation of an indirect effect.

However, our Bayesian HMC approach makes no such assumption. All we need to do is manipulate the posterior as usual. Here it is, this time using all 4,000 iterations.

draws %>%

transmute(.draw = .draw,

`-0.8` = b_perform_negtone + `b_perform_negtone:negexp` * -0.8,

`0.8` = b_perform_negtone + `b_perform_negtone:negexp` * 0.8) %>%

pivot_longer(-.draw) %>%

mutate(name = name %>% as.double()) %>%

ggplot(aes(x = name, y = value, group = .draw)) +

geom_hline(yintercept = 0, color = "grey50", linetype = 2) +

geom_line(color = "skyblue3",

linewidth = 1/6, alpha = 1/15) +

coord_cartesian(xlim = c(-.5, .6),

ylim = c(-1.25, .75)) +

labs(x = expression(italic(W)),

y = "The conditional indirect effect") +

theme_bw() +

theme(panel.grid = element_blank())

Glorious.

Session info

sessionInfo()## R version 4.2.2 (2022-10-31)

## Platform: x86_64-apple-darwin17.0 (64-bit)

## Running under: macOS Big Sur ... 10.16

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRlapack.dylib

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] tidybayes_3.0.2 brms_2.18.0 Rcpp_1.0.9 forcats_0.5.1 stringr_1.4.1 dplyr_1.0.10

## [7] purrr_1.0.1 readr_2.1.2 tidyr_1.2.1 tibble_3.1.8 ggplot2_3.4.0 tidyverse_1.3.2

##

## loaded via a namespace (and not attached):

## [1] readxl_1.4.1 backports_1.4.1 plyr_1.8.7 igraph_1.3.4 svUnit_1.0.6

## [6] sp_1.5-0 splines_4.2.2 crosstalk_1.2.0 TH.data_1.1-1 rstantools_2.2.0

## [11] inline_0.3.19 digest_0.6.31 htmltools_0.5.3 fansi_1.0.3 magrittr_2.0.3

## [16] checkmate_2.1.0 googlesheets4_1.0.1 tzdb_0.3.0 modelr_0.1.8 RcppParallel_5.1.5

## [21] matrixStats_0.63.0 vroom_1.5.7 xts_0.12.1 sandwich_3.0-2 prettyunits_1.1.1

## [26] colorspace_2.0-3 rvest_1.0.2 ggdist_3.2.1 haven_2.5.1 xfun_0.35

## [31] callr_3.7.3 crayon_1.5.2 jsonlite_1.8.4 lme4_1.1-31 survival_3.4-0

## [36] zoo_1.8-10 glue_1.6.2 gtable_0.3.1 gargle_1.2.0 emmeans_1.8.0

## [41] distributional_0.3.1 pkgbuild_1.3.1 rstan_2.21.8 abind_1.4-5 scales_1.2.1

## [46] mvtnorm_1.1-3 DBI_1.1.3 miniUI_0.1.1.1 xtable_1.8-4 HDInterval_0.2.4

## [51] bit_4.0.4 stats4_4.2.2 StanHeaders_2.21.0-7 DT_0.24 htmlwidgets_1.5.4

## [56] httr_1.4.4 threejs_0.3.3 arrayhelpers_1.1-0 posterior_1.3.1 ellipsis_0.3.2

## [61] pkgconfig_2.0.3 loo_2.5.1 farver_2.1.1 sass_0.4.2 dbplyr_2.2.1

## [66] utf8_1.2.2 labeling_0.4.2 tidyselect_1.2.0 rlang_1.0.6 reshape2_1.4.4

## [71] later_1.3.0 munsell_0.5.0 cellranger_1.1.0 tools_4.2.2 cachem_1.0.6

## [76] cli_3.6.0 generics_0.1.3 broom_1.0.2 evaluate_0.18 fastmap_1.1.0

## [81] processx_3.8.0 knitr_1.40 bit64_4.0.5 fs_1.5.2 nlme_3.1-160

## [86] mime_0.12 projpred_2.2.1 xml2_1.3.3 compiler_4.2.2 bayesplot_1.10.0

## [91] shinythemes_1.2.0 rstudioapi_0.13 gamm4_0.2-6 reprex_2.0.2 bslib_0.4.0

## [96] stringi_1.7.8 highr_0.9 ps_1.7.2 Brobdingnag_1.2-8 lattice_0.20-45

## [101] Matrix_1.5-1 nloptr_2.0.3 markdown_1.1 shinyjs_2.1.0 tensorA_0.36.2

## [106] vctrs_0.5.1 pillar_1.8.1 lifecycle_1.0.3 jquerylib_0.1.4 bridgesampling_1.1-2

## [111] estimability_1.4.1 raster_3.5-15 httpuv_1.6.5 R6_2.5.1 bookdown_0.28

## [116] promises_1.2.0.1 gridExtra_2.3 codetools_0.2-18 boot_1.3-28 colourpicker_1.1.1

## [121] MASS_7.3-58.1 gtools_3.9.4 assertthat_0.2.1 withr_2.5.0 shinystan_2.6.0

## [126] multcomp_1.4-20 mgcv_1.8-41 parallel_4.2.2 hms_1.1.1 terra_1.5-21

## [131] grid_4.2.2 minqa_1.2.5 coda_0.19-4 rmarkdown_2.16 googledrive_2.0.0

## [136] shiny_1.7.2 lubridate_1.8.0 base64enc_0.1-3 dygraphs_1.1.1.6