2 Fundamentals of Linear Regression

Linear regression is the foundation of most of the methods [Hayes described in his] book, so a solid understanding of the fundamentals of linear regression is essential. I assume that most readers have been exposed to linear regression in some form before discovering this book, and so some of the material will be review. Even so, I encourage everyone to read this chapter. (Andrew F. Hayes, 2018, p. 30)

Since we’re adding Bayes and the tidyverse into the mix, walking through this chapter will be double important, for us.

2.1 Correlation and prediction

Here we load a couple necessary packages, load the data, and take a peek.

library(tidyverse)

glbwarm <- read_csv("data/glbwarm/glbwarm.csv")

glimpse(glbwarm)## Rows: 815

## Columns: 7

## $ govact <dbl> 3.6, 5.0, 6.6, 1.0, 4.0, 7.0, 6.8, 5.6, 6.0, 2.6, 1.4, 5.6, 7.0, 3.8, 3.4, 4.2, 1.0, 2.6, 5…

## $ posemot <dbl> 3.67, 2.00, 2.33, 5.00, 2.33, 1.00, 2.33, 4.00, 5.00, 5.00, 1.00, 4.00, 1.00, 5.67, 3.00, 1…

## $ negemot <dbl> 4.67, 2.33, 3.67, 5.00, 1.67, 6.00, 4.00, 5.33, 6.00, 2.00, 1.00, 4.00, 5.00, 4.67, 2.00, 1…

## $ ideology <dbl> 6, 2, 1, 1, 4, 3, 4, 5, 4, 7, 6, 4, 2, 4, 5, 2, 6, 4, 2, 4, 4, 2, 6, 4, 4, 3, 4, 5, 4, 5, 4…

## $ age <dbl> 61, 55, 85, 59, 22, 34, 47, 65, 50, 60, 71, 60, 71, 59, 32, 36, 69, 70, 41, 48, 38, 63, 71,…

## $ sex <dbl> 0, 0, 1, 0, 1, 0, 1, 1, 1, 1, 1, 0, 1, 0, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 0, 0, 1, 0…

## $ partyid <dbl> 2, 1, 1, 1, 1, 2, 1, 1, 2, 3, 2, 1, 1, 1, 1, 1, 2, 3, 1, 3, 2, 1, 3, 2, 1, 1, 1, 3, 1, 1, 1…If you are new to tidyverse-style syntax, possibly the oddest component is the pipe (i.e., %>%). I’m not going to explain the %>% in this project, but you might learn more about in this brief clip, starting around minute 21:25 in this talk by Wickham, or in Section 5.6.1 from Grolemund & Wickham (2017). Really, all of Chapter 5 of R4DS is great for new R and new tidyverse users, and Chapter 3 is a nice introduction to plotting with ggplot2.

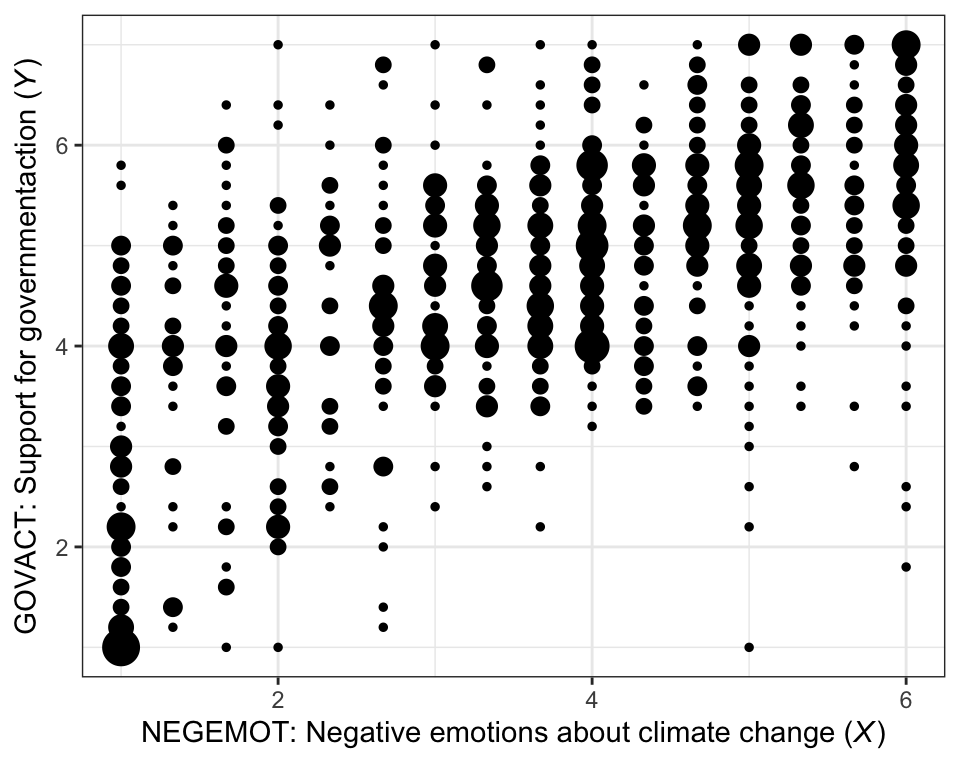

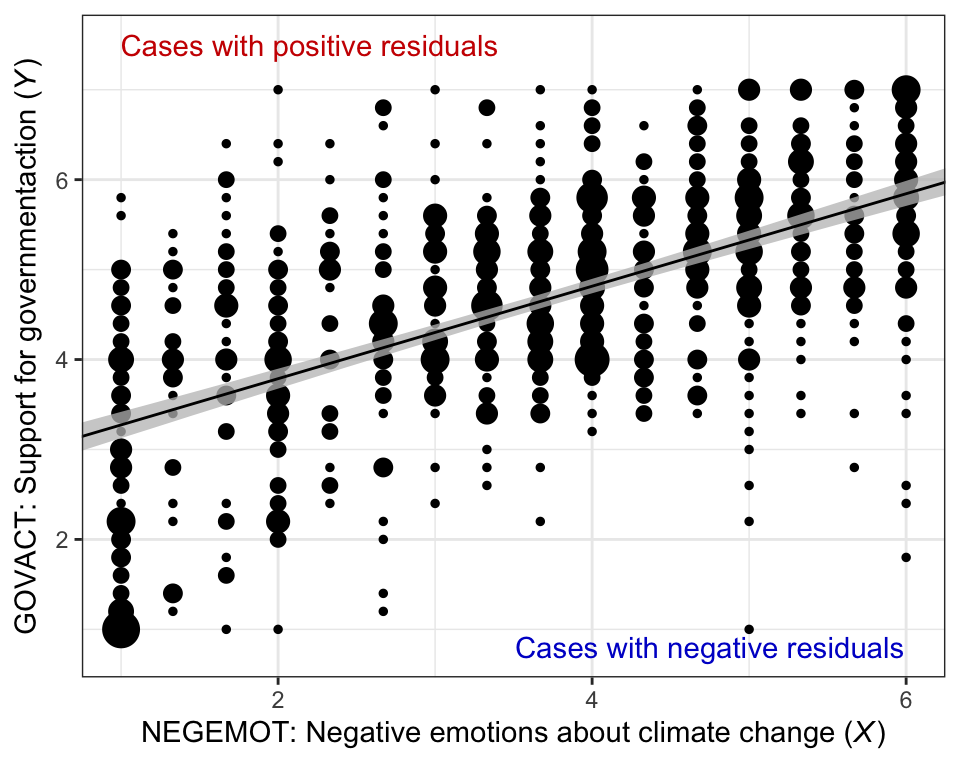

Here is our version of Figure 2.1.

glbwarm %>%

group_by(negemot, govact) %>%

count() %>%

ggplot(aes(x = negemot, y = govact, size = n)) +

geom_point(show.legend = F) +

labs(x = expression(paste("NEGEMOT: Negative emotions about climate change (", italic("X"), ")")),

y = expression(paste("GOVACT: Support for governmentaction (", italic("Y"), ")"))) +

theme_bw()

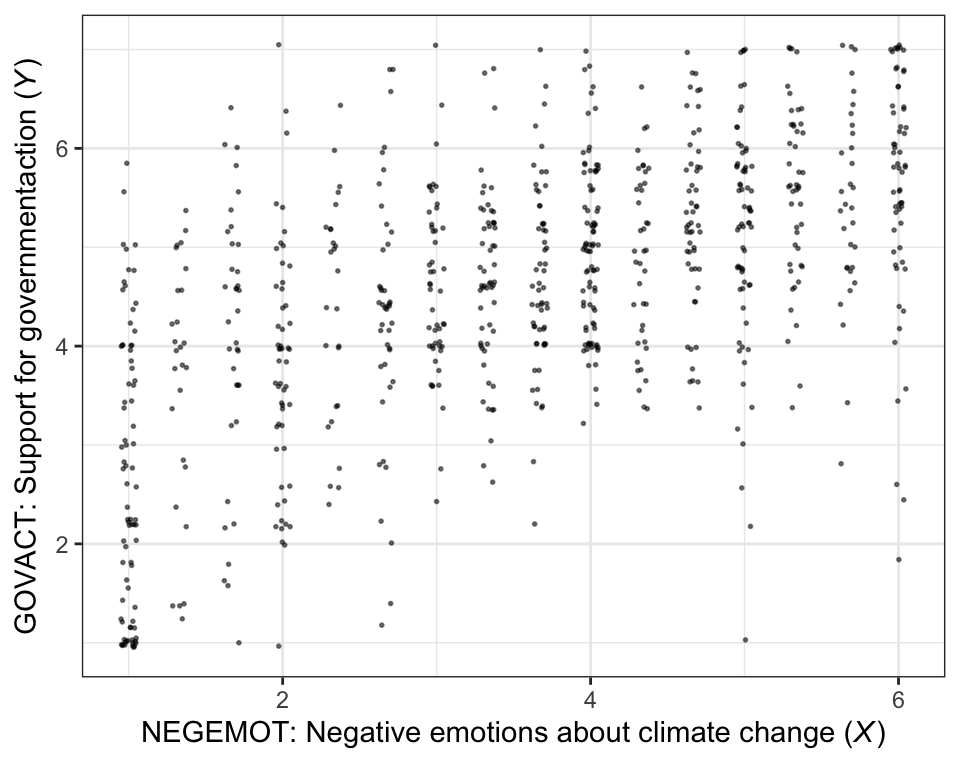

There are other ways to handle the overplotting issue, such as jittering.

glbwarm %>%

ggplot(aes(x = negemot, y = govact)) +

geom_jitter(height = .05, width = .05,

alpha = 1/2, size = 1/3) +

labs(x = expression(paste("NEGEMOT: Negative emotions about climate change (", italic("X"), ")")),

y = expression(paste("GOVACT: Support for governmentaction (", italic("Y"), ")"))) +

theme_bw()

The cor() function is a simple way to compute a Pearson’s correlation coefficient.

cor(glbwarm$negemot, glbwarm$govact)## [1] 0.5777458If you want more plentiful output, the cor.test() function returns a \(t\)-value, the degrees of freedom, the corresponding \(p\)-value and the 95% confidence intervals, in addition to the Pearson’s correlation coefficient.

cor.test(glbwarm$negemot, glbwarm$govact)##

## Pearson's product-moment correlation

##

## data: glbwarm$negemot and glbwarm$govact

## t = 20.183, df = 813, p-value < 2.2e-16

## alternative hypothesis: true correlation is not equal to 0

## 95 percent confidence interval:

## 0.5301050 0.6217505

## sample estimates:

## cor

## 0.5777458To get the Bayesian version, we’ll open our focal statistical package, Bürkner’s brms. I should briefly note that you could also do many of these analyses with other packages, such as blavaan (Merkle et al., 2021, 2022; Merkle & Rosseel, 2018) or rstanarm (Brilleman et al., 2018; Gabry & Goodrich, 2022). I just prefer brms.

library(brms)We’ll start simple and just use the default priors and settings, but with the addition of parallel sampling via cores = 4 and telling brms to save our output as an external file with the file argument.

model2.1 <- brm(

data = glbwarm,

family = gaussian,

bf(mvbind(negemot, govact) ~ 1) +

set_rescor(TRUE),

cores = 4,

file = "fits/model02.01")We can examine a summary of the output with the print() function.

print(model2.1)## Family: MV(gaussian, gaussian)

## Links: mu = identity; sigma = identity

## mu = identity; sigma = identity

## Formula: negemot ~ 1

## govact ~ 1

## Data: glbwarm (Number of observations: 815)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## negemot_Intercept 3.56 0.05 3.45 3.66 1.00 3384 2769

## govact_Intercept 4.59 0.05 4.49 4.68 1.00 3339 2436

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma_negemot 1.53 0.04 1.46 1.60 1.00 3377 3162

## sigma_govact 1.36 0.03 1.30 1.43 1.00 2925 3102

##

## Residual Correlations:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## rescor(negemot,govact) 0.58 0.02 0.53 0.62 1.00 3056 2514

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).In regression models, we typically use predictor variables to explain variation in our criterion variables. It’s pretty much never the case that our predictors explain all the variation. The variation that’s left over is often called residual variation, residual variance, residuals, error, or even \(\epsilon\). Throughout the text, Hayes typically referred to it as \(e\).

More formally, when we use likelihood-based estimators, such as maximum likelihood (popular with multilevel modeling and structural equation modeling) or Bayesian estimators, we express the models for our criterion variables in terms of likelihood functions. Probably the most common likelihood function, and the one consistent with the models in Hayes’s text, is the Gaussian likelihood. With that likelihood we say our criterion variable \(y_i\) is normally distributed with a mean \(\mu\) and standard deviation \(\sigma\). We might express that formally as

\[ y_i \sim \operatorname{Normal} (\mu, \sigma), \]

where \(\sim\) stands for “is distributed as” and \(i\) indexes the \(i\)th row in the data. When we add predictors to the model, they are typically used to model the mean \(\mu\). Thus, in the case there we have a sole predictor \(x_i\) which varies across participants, we’d expand our model formula to

\[\begin{align*} y_i & \sim \operatorname{Normal} (\mu_i, \sigma) \\ \mu_i & = \beta_0 + \beta_1 x_i, \end{align*}\]

where \(\beta_0\) is the intercept and \(\beta_1\) is the slope for predictor \(x\), which varies across cases. In this formulation, \(\sigma\) is the standard deviation after accounting for the systemic variation explained by \(x_i\). That is, it’s the residual variance (i.e., \(\epsilon\)), but in a standard-deviation metric. Why in a standard deviation metric?, you ask. There are technical reasons why brms expresses it as a standard deviation which I’m not going to go into, here. Just beware that whereas many frequentist software packages express the residual variation in a variance metric, it’s expressed in a standard-deviation metric in brms. Just go with it and move on.

Within the brms framework, \(\sigma\) of the Gaussian likelihood is considered a “family-specific” parameter. As it turns out, there are many other fine likelihood functions in addition to the Gaussian and not all of them have a \(\sigma\) parameter. For example, there is no \(\sigma\) for the Poisson 1 distribution, which is popular likelihood function for count variables. Because Bürkner made the brms package capable of a variety of likelihood functions, it behooved him to give this section of the output a generic name.

When you have a regression model with multiple Gaussian criterion variables, those variables will typically have some degree of covariation. It’s often termed residual covariance, particularly within the structural equation modeling paradigm 2. But when you have an intercept-only regression model with multiple variables, that residual covariance is just a covariance. And when you express those variation parameters in terms of standard deviations \(\sigma\), their covariance is expressed as a correlation \(\rho\). Since our multivariate model is of two variables, we have one \(\rho\) parameter for the \(\sigma\)’s, rescor(negemot,govact), which is our Bayesian analogue to the Pearson’s correlation.

To learn more about the multivariate syntax in brms, check out Bürkner’s (2022b) vignette, Estimating multivariate models with brms, or execute vignette("brms_multivariate"). Or just hold your horses until we get into mediation. All of our mediation models will use one version of the multivariate syntax or another.

But to clarify the output, above:

- ‘Estimate’ = the posterior mean, analogous to the frequentist point estimate,

- ‘Est.Error’ = the posterior \(\textit{SD}\), analogous to the frequentist standard error,

- ‘l-95% CI’ = the lower-level of the percentile-based 95% Bayesian credible interval, and

- ‘u-95% CI’ = the upper-level of the same.

2.2 The simple linear regression model

Here is how one might get the simple OLS coefficients in base R with the lm() function.

(model2.2 <- lm(data = glbwarm, govact ~ 1 + negemot))##

## Call:

## lm(formula = govact ~ 1 + negemot, data = glbwarm)

##

## Coefficients:

## (Intercept) negemot

## 2.7573 0.5142For more detailed output, put the model object model2.2 into the summary() function.

summary(model2.2)##

## Call:

## lm(formula = govact ~ 1 + negemot, data = glbwarm)

##

## Residuals:

## Min 1Q Median 3Q Max

## -4.3285 -0.6731 0.1018 0.7554 3.2142

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 2.75732 0.09866 27.95 <2e-16 ***

## negemot 0.51424 0.02548 20.18 <2e-16 ***

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 1.111 on 813 degrees of freedom

## Multiple R-squared: 0.3338, Adjusted R-squared: 0.333

## F-statistic: 407.3 on 1 and 813 DF, p-value: < 2.2e-16Here’s the Bayesian model in brms.

model2.3 <- brm(

data = glbwarm,

family = gaussian,

govact ~ 1 + negemot,

cores = 4,

file = "fits/model02.03")There are several ways to get a brms model summary. A go-to is with the summary() function, just like we did with our OLS version of the model.

summary(model2.3) ## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: govact ~ 1 + negemot

## Data: glbwarm (Number of observations: 815)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 2.76 0.10 2.57 2.94 1.00 4183 2804

## negemot 0.51 0.02 0.47 0.56 1.00 4243 3058

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 1.11 0.03 1.06 1.17 1.00 3410 2874

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).The print() function works very much the same way. To get a more focused look, you can use the posterior_summary() function:

posterior_summary(model2.3)## Estimate Est.Error Q2.5 Q97.5

## b_Intercept 2.7589620 0.096779459 2.5709679 2.9429637

## b_negemot 0.5140993 0.024492016 0.4678335 0.5624802

## sigma 1.1127269 0.027565258 1.0620343 1.1679305

## lprior -3.2741231 0.006381825 -3.2872712 -3.2626796

## lp__ -1245.9427589 1.226504608 -1249.1391499 -1244.5787873That also yields the log posterior, lp__, which you can learn more about in this section of the (2023) vignette by the Stan Development Team, RStan: the R interface to Stan or this brief blog post by Xulong Wang. We won’t focus on the lp__ directly in this project. But its influence will be lurking in the shadows.

The output also contains a row for lprior. This is the log of the joint prior and is a new addition to the output based on brms version 2.17.0. It is related to functionality from the up-and-coming priorsense package (Kallioinen et al., 2022), which is based on new work, such as Kallioinen et al. (2021). Though you’ll see it pop up in our output from time to time, the lprior will also be outside of our focus in this project.

But anyways, the Q2.5 and Q97.5, are the lower- and upper-levels of the 95% credible intervals. The Q prefix stands for quantile (see this thread). In this case, these are a renamed version of the l-95% CI and u-95% CI columns from our summary() output.

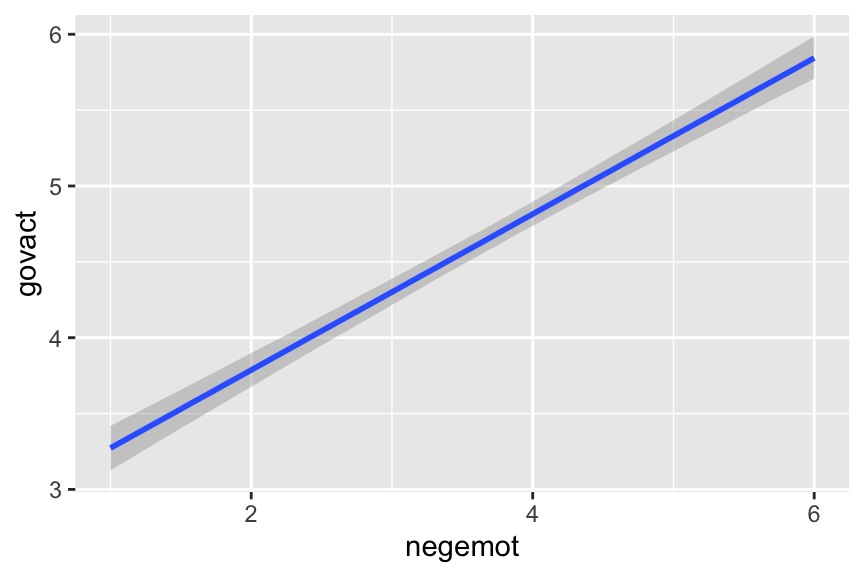

To make a quick plot of the regression line, one can use the convenient brms::conditional_effects() function.

conditional_effects(model2.3)

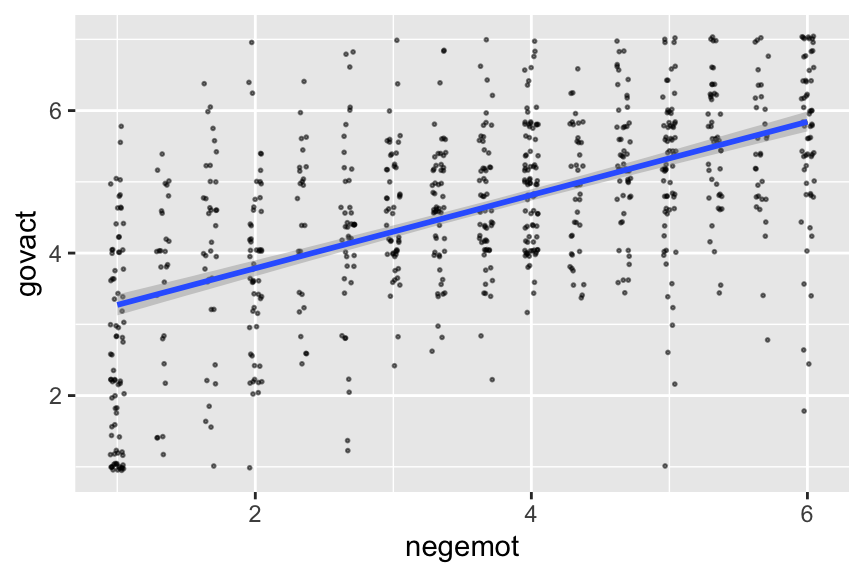

If you want to customize that output, you might nest it in plot().

plot(conditional_effects(model2.3),

points = T,

point_args = c(height = .05, width = .05,

alpha = 1/2, size = 1/3))

It’s also useful to be able to work with the output of a brms model directly. For our first step, we’ll put our posterior draws into a data frame with the as_draws_df() function.

draws <- as_draws_df(model2.3)

head(draws)## # A draws_df: 6 iterations, 1 chains, and 5 variables

## b_Intercept b_negemot sigma lprior lp__

## 1 2.8 0.51 1.1 -3.3 -1245

## 2 2.7 0.56 1.1 -3.3 -1248

## 3 2.9 0.47 1.1 -3.3 -1246

## 4 2.8 0.49 1.1 -3.3 -1246

## 5 2.8 0.50 1.1 -3.3 -1245

## 6 2.7 0.54 1.1 -3.3 -1246

## # ... hidden reserved variables {'.chain', '.iteration', '.draw'}Next, we’ll use the fitted() function to compute model-implied summaries for the expected govact value, given particular predictor values. Our first model only has negemot as a predictor, and we’ll ask for the expected govact values for negemot ranging from 0 to 7.

nd <- tibble(negemot = seq(from = 0, to = 7, length.out = 30))

f <-

fitted(model2.3,

newdata = nd) %>%

data.frame() %>%

bind_cols(nd)

head(f)## Estimate Est.Error Q2.5 Q97.5 negemot

## 1 2.758962 0.09677946 2.570968 2.942964 0.0000000

## 2 2.883055 0.09142674 2.705143 3.059423 0.2413793

## 3 3.007148 0.08614717 2.838623 3.174034 0.4827586

## 4 3.131241 0.08095506 2.972113 3.287673 0.7241379

## 5 3.255334 0.07586836 3.106983 3.402155 0.9655172

## 6 3.379427 0.07090976 3.240109 3.516169 1.2068966The first two columns should look familiar to the output from summary(model2.3), above. The next two columns, Q2.5 and Q97.5, are the lower- and upper-levels of the 95% credible intervals, like we got from posterior_summary(). The final column is the result of the bind_cols(nd) code.

Here’s our bespoke version of Figure 2.4.

glbwarm %>%

group_by(negemot, govact) %>%

count() %>%

ggplot(aes(x = negemot)) +

geom_point(aes(y = govact, size = n),

show.legend = F) +

geom_ribbon(data = f,

aes(ymin = Q2.5, ymax = Q97.5),

fill = "grey75", alpha = 3/4) +

geom_line(data = f,

aes(y = Estimate)) +

annotate("text", x = 2.2, y = 7.5, label = "Cases with positive residuals", color = "red3") +

annotate("text", x = 4.75, y = .8, label = "Cases with negative residuals", color = "blue3") +

labs(x = expression("NEGEMOT: Negative emotions about climate change ("*italic("X")*")"),

y = expression("GOVACT: Support for governmentaction ("*italic("Y")*")")) +

coord_cartesian(xlim = range(glbwarm$negemot)) +

theme_bw()

Note how that figure is a combination of the original glbwarm data and our f output.

2.2.1 Interpretation of the constant and regression coefficient.

“The regression constant is conceptually equivalent to the \(Y\)-intercept in the equation for a line. It quantifies the estimated value of \(Y\) when \(X = 0\)” (p. 39).

2.2.2 The standardized regression model.

Thus far, the interpretation of the regression coefficients in a regression model has been couched in unstandardized or raw metric form. Many regression routines will also produce a version of the model in standardized form. The standardized regression model is what results when all variables are first standardized prior to estimation of the model by expressing each measurement in units of standard deviations from the sample mean. (p. 40, emphasis in the original)

brms will not produce standardized solutions on the fly. To get them, you will have to manually standardize the variables before entering them into the model.

2.2.3 Simple linear regression with a dichotomous antecedent variable.

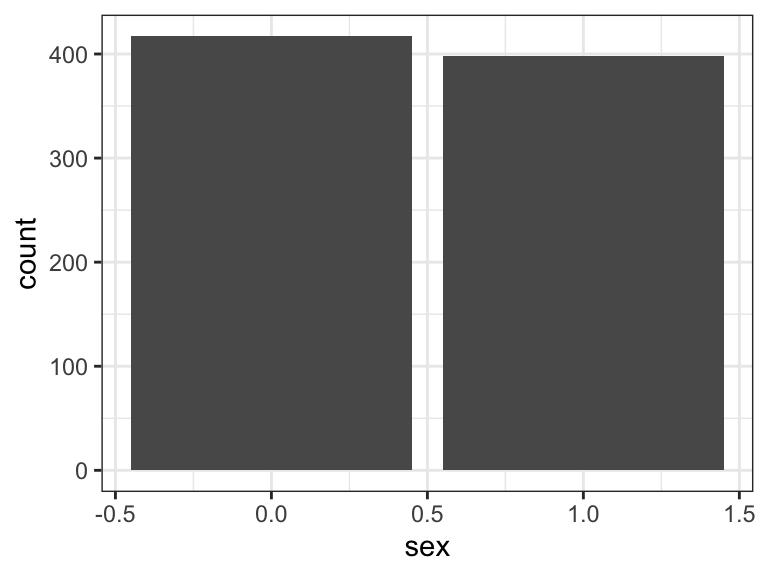

In the glbwarm data, sex is a dichotomous variable.

glbwarm %>%

ggplot(aes(x = sex)) +

geom_bar() +

theme_bw()

In these data, sex is coded females = 0, males = 1. Here we add sex to the model.

model2.4 <- brm(

data = glbwarm,

family = gaussian,

govact ~ 1 + sex,

cores = 4,

file = "fits/model02.04")Check the summary.

print(model2.4)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: govact ~ 1 + sex

## Data: glbwarm (Number of observations: 815)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 4.72 0.07 4.58 4.85 1.00 3967 2964

## sex -0.27 0.10 -0.46 -0.08 1.00 3834 2662

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 1.36 0.03 1.29 1.43 1.00 4223 2883

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Our model summary is very close to that in the text. If you just wanted the coefficients, you might use the fixef() function.

fixef(model2.4) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## Intercept 4.716 0.067 4.585 4.847

## sex -0.267 0.097 -0.456 -0.081Though not necessary, we used the round() function to reduce the number of significant digits in the output. You can get a little more information with the posterior_summary() function.

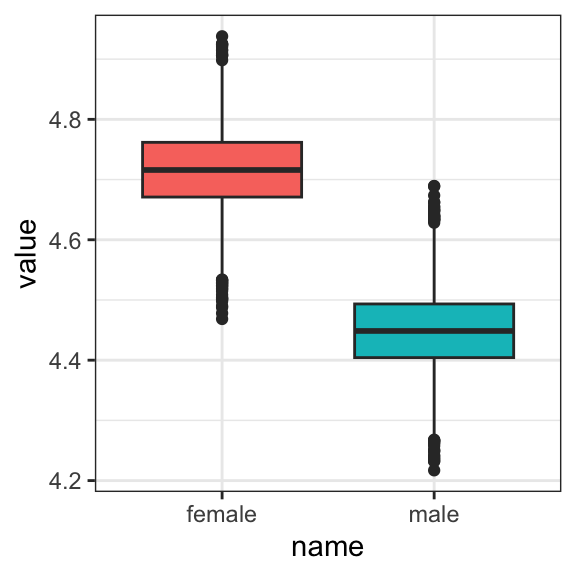

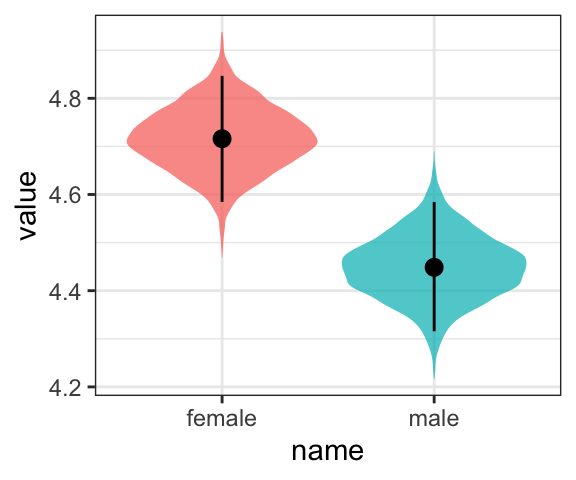

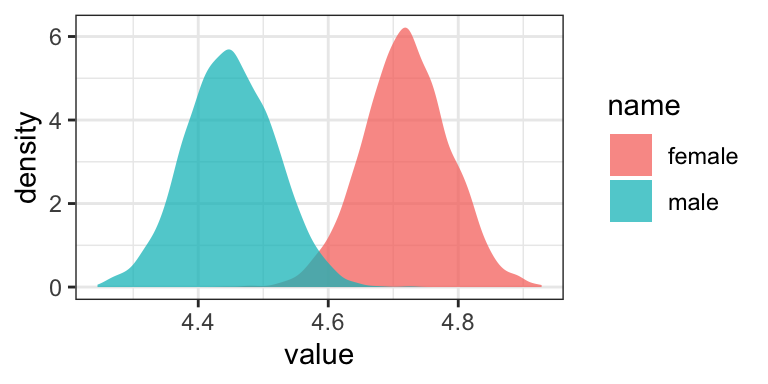

But since Bayesian estimation yields an entire posterior distribution, you can visualize that distribution in any number of ways. Because we’ll be using ggplot2, we’ll need to put the posterior draws into a data frame before plotting.

draws <- as_draws_df(model2.4)We could summarize the posterior with box plots

draws %>%

rename(female = b_Intercept) %>%

mutate(male = female + b_sex) %>%

pivot_longer(cols = c(male, female)) %>%

ggplot(aes(x = name, y = value)) +

geom_boxplot(aes(fill = name)) +

theme_bw() +

theme(legend.position = "none")

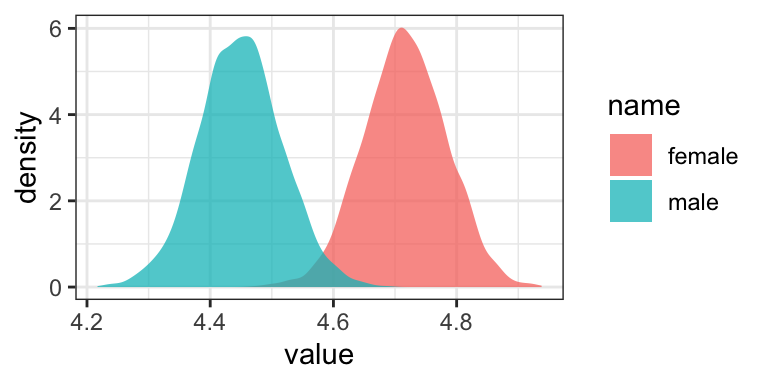

or with overlapping density plots

draws %>%

rename(female = b_Intercept) %>%

mutate(male = female + b_sex) %>%

pivot_longer(cols = c(male, female)) %>%

ggplot(aes(x = value, group = name, fill = name)) +

geom_density(color = "transparent", alpha = 3/4) +

theme_bw()

or even with violin plots with superimposed posterior medians and 95% intervals.

draws %>%

rename(female = b_Intercept) %>%

mutate(male = female + b_sex) %>%

pivot_longer(cols = c(male, female)) %>%

ggplot(aes(x = name, y = value)) +

geom_violin(aes(fill = name), color = "transparent", alpha = 3/4) +

stat_summary(fun = median,

fun.min = function(i){quantile(i, probs = .025)},

fun.max = function(i){quantile(i, probs = .975)}) +

theme_bw() +

theme(legend.position = "none")

For even more ideas, see Matthew Kay’s tidybayes and ggdist packages.

As Hayes discussed on page 42, you can also get a sense of the model estimates for women and men with a little addition. Here we continue to use the round() function to simplify the output.

# for women

round(fixef(model2.4)[1, ], digits = 2)## Estimate Est.Error Q2.5 Q97.5

## 4.72 0.07 4.58 4.85# for men (just the posterior mean)

round(fixef(model2.4)[1, 1] + fixef(model2.4)[2, 1], digits = 2)## [1] 4.45Hayes then considered that

although the model will always generate the group means, the regression coefficient and regression constant will depend on how the two groups are coded. For instance, suppose females were coded \(X = −1\) and males were coded \(X = 1\). (p. 42)

To follow along, we’ll first recode sex, saving it as sex_recode.

glbwarm <- glbwarm %>%

mutate(sex_recode = if_else(sex == 0, -1, 1))Now fit the new model.

model2.5 <- brm(

data = glbwarm,

family = gaussian,

govact ~ 1 + sex_recode,

cores = 4,

file = "fits/model02.05")Check the primary coefficients.

fixef(model2.5)## Estimate Est.Error Q2.5 Q97.5

## Intercept 4.5842056 0.04757457 4.4901617 4.67730937

## sex_recode -0.1349992 0.04797450 -0.2303585 -0.03947171They match up well with the results in the middle of page 42. Now the Intercept in the output, what Hayes called \(i_Y\) is the unweighted mean of the means, \(\big (\overline Y_\text{male} + \overline Y_\text{female} \big ) \big / 2\), and the coefficient sex_recode (i.e., what Hayes called \(b\)) is one half the difference between those means. Here’s how to work with the posterior draws from this model to reproduce the group mean estimates.

as_draws_df(model2.5) %>%

transmute(male = b_Intercept + b_sex_recode * 1,

female = b_Intercept + b_sex_recode * -1) %>%

pivot_longer(everything()) %>%

ggplot(aes(x = value, group = name, fill = name)) +

geom_density(color = "transparent", alpha = 3/4) +

theme_bw()

You’ll see it looks just like the plot from above.

2.2.3.1 A caution about the standardized regression coefficient.

The standardized regression coefficient is a function of both the mean difference and the distribution of the cases across the groups. This is an undesirable property of \(\tilde b\) when \(X\) is dichotomous. I recommend that the standardized regression coefficient for a dichotomous antecedent variable not be interpreted or reported.

If you desire an index of a mean difference in standard deviation units, I recommend standardizing \(Y\) but not the dichotomous \(X\) and then interpreting the unstandardized regression coefficient in a model estimating \(Z_Y\) from \(X\). In such a model, \(b\) is a partially standardized regression coefficient. (pp. 43–44, emphasis in the original)

Here’s how to fit the partially-standardized model, first with lm().

# standardize the Y

glbwarm <- glbwarm %>%

mutate(govact_z = (govact - mean(govact)) / sd(govact))

# fit the model

lm(data = glbwarm, govact_z ~ 1 + sex) %>%

# summarize

summary()##

## Call:

## lm(formula = govact_z ~ 1 + sex, data = glbwarm)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.7329 -0.5278 0.1104 0.6985 1.8746

##

## Coefficients:

## Estimate Std. Error t value Pr(>|t|)

## (Intercept) 0.09629 0.04876 1.975 0.04865 *

## sex -0.19717 0.06978 -2.826 0.00483 **

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.9957 on 813 degrees of freedom

## Multiple R-squared: 0.009726, Adjusted R-squared: 0.008508

## F-statistic: 7.985 on 1 and 813 DF, p-value: 0.004833Now we’ll fit the model as Bayesians with brms::brm().

model2.6 <- brm(

data = glbwarm,

family = gaussian,

govact_z ~ 1 + sex,

cores = 4,

file = "fits/model02.06")fixef(model2.6)## Estimate Est.Error Q2.5 Q97.5

## Intercept 0.09780898 0.04818554 0.0002537162 0.19502714

## sex -0.19805783 0.06898876 -0.3341843382 -0.06063056The constant \(i_Y\) is the mean standardized \(Y\) for females, and \(b\) is the mean difference between males and females in standard deviations of \(Y\). So men are estimated to differ from women by 0.197 standard deviations in their support for government action. The negative sign for \(b\) means males are lower than females, on average, in their support. (p. 44)

The parameter summaries from our Bayesian model was the same as the OLS summaries up to two decimal places. This will often be the case. One is not more correct. They are the results of using different procedures.

2.2.4 A note on symbolic representations.

This section is worthy of repeating in full.

A brief digression is in order at this point. It is important when reporting the results of an analysis to define the symbols you use unless there is a strong convention, for a failure to do so can invite confusion. Different uses of \(b\) and \(\beta\) in regression analysis are an important case in point. There is much inconsistency in the substantive and methodology literature as to how regression coefficients are symbolized in unstandardized versus standardized form. Some use \(b\) or \(B\) to refer to the unstandardized regression coefficient and \(\beta\) to refer to the standardized regression coefficient. Others, rather than using \(\beta\), spell it out by referencing “beta weights” or just talk about the “betas.” Some use \(\beta\) to refer to a population regression coefficient, to distinguish it from a sample estimate, others use \(\beta\) as the unstandardized regression weight, and there are still others who use \(\hat \beta\) to refer to a sample unstandardized regression coefficient and leave the hat off for its corresponding population or “true” value. In this book, I use \(\tilde \beta\) for the standardized regression weight.

Ultimately, the symbols we use are for the most part arbitrary. We can use any symbols we want. My point is that you should not assume others will know what symbols you use mean, for your familiar symbols to represent certain concepts may not be understood as representing those concepts by all. The same applies to terms such as “beta coefficient” or other verbalizations of symbols. Best to define your symbols in advance, or otherwise let your reader know what your symbols mean when used in text and tables. This will help others better understand and interpret your work. (p. 44)

2.3 Alternative explanations for association

That “correlation does not imply causation” is etched into the brains of all scientists. If variables \(X\) and \(Y\) are correlated, that doesn’t mean that \(X\) causes \(Y\) or that \(Y\) causes \(X\). The ability to infer cause–effect is not even a statistical matter in the end. Rather, it is the design of one’s study, the data collection procedures one employs, and theoretical plausibility that most directly influence whether a cause–effect claim can be made and with what degree of confidence, not the size or sign of a statistical index of association. (p. 45)

On page 46, Hayes reported a couple correlations. Here’s how to get them from base R.

cor(glbwarm$sex, glbwarm$negemot)## [1] -0.1173564cor(glbwarm$sex, glbwarm$govact)## [1] -0.09861854Again, if we wanted to get full Bayesian estimates, we’d fit an intercept-only multivariate model.

model2.7 <- brm(

data = glbwarm,

family = gaussian,

bf(mvbind(negemot, govact, sex) ~ 1) +

set_rescor(TRUE),

cores = 4,

file = "fits/model02.07")print(model2.7, digits = 3)## Family: MV(gaussian, gaussian, gaussian)

## Links: mu = identity; sigma = identity

## mu = identity; sigma = identity

## mu = identity; sigma = identity

## Formula: negemot ~ 1

## govact ~ 1

## sex ~ 1

## Data: glbwarm (Number of observations: 815)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## negemot_Intercept 3.558 0.052 3.456 3.659 1.000 5210 3607

## govact_Intercept 4.587 0.046 4.495 4.680 1.001 5030 3475

## sex_Intercept 0.488 0.018 0.453 0.524 1.002 6910 2899

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma_negemot 1.532 0.039 1.457 1.610 1.001 6173 3221

## sigma_govact 1.364 0.034 1.299 1.431 1.000 5658 3362

## sigma_sex 0.501 0.013 0.477 0.527 1.002 7446 2803

##

## Residual Correlations:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## rescor(negemot,govact) 0.576 0.024 0.528 0.621 1.000 5450 3211

## rescor(negemot,sex) -0.117 0.034 -0.181 -0.050 1.001 7425 2856

## rescor(govact,sex) -0.098 0.034 -0.164 -0.031 1.000 7115 2976

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).For our purposes, the action is in the ‘rescor(\(i\), \(j\))’ portions of the ‘Family Specific Parameters’ section.

Anyway, if you wanted to get all the Pearson’s correlations among the glbwarm variables, rather than piecewise cor() approach, you could use the lowerCor() function from the psych package (Revelle, 2022).

psych::lowerCor(glbwarm[, 1:7], digits = 3)## govct posmt negmt idlgy age sex prtyd

## govact 1.000

## posemot 0.043 1.000

## negemot 0.578 0.128 1.000

## ideology -0.418 -0.029 -0.349 1.000

## age -0.097 0.042 -0.057 0.212 1.000

## sex -0.099 0.074 -0.117 0.133 0.166 1.000

## partyid -0.360 -0.036 -0.324 0.619 0.154 0.109 1.0002.4 Multiple linear regression

The simple linear regression model is easily extended to the estimation of a consequent variable using more than one antecedent variable. Including more than one antecedent in a regression model allows you to simultaneously investigate the role of multiple influences on a consequent variable. An additional and important benefit of the multiple regression model is that it provides various measures of partial association that quantify the component of the association between an antecedent and a consequent that is unique to that antecedent relative to other antecedent variables in the model. (p. 48, emphasis in the original)

Using Hayes’ notation, the linear model we’re about to fit follows the basic equation

\[\hat Y = i_Y + b_1 X_1 + b_2 X_2 + b_3 X_3 + b_4 X_4 + b_4 X_5.\]

For us, there’s technically more involved because our Bayesian paradigm includes priors, which we’re not focusing on at the moment. Anyway, there’s nothing particularly special about jumping from univariable to multivariable models with brms. You just keep tacking on predictors with the + operator.

model2.8 <- brm(

data = glbwarm,

family = gaussian,

govact ~ 1 + negemot + posemot + ideology + sex + age,

cores = 4,

file = "fits/model02.08")print(model2.8)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: govact ~ 1 + negemot + posemot + ideology + sex + age

## Data: glbwarm (Number of observations: 815)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 4.06 0.21 3.66 4.46 1.00 5255 3316

## negemot 0.44 0.03 0.39 0.49 1.00 5468 3047

## posemot -0.03 0.03 -0.08 0.03 1.00 5267 3212

## ideology -0.22 0.03 -0.27 -0.17 1.00 5270 3107

## sex -0.01 0.08 -0.16 0.14 1.00 6013 3219

## age -0.00 0.00 -0.01 0.00 1.00 5776 3240

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 1.07 0.03 1.02 1.12 1.00 6157 3074

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Following Hayes on pages 50 and 51, here is the posterior mean (i.e., what you might call the Bayesian point estimate) for someone with

- negative emotions = 3,

- positive emotions = 4,

ideology= 2,- is male (i.e.,

sex= 1), and - is 30 years of

age.

fixef(model2.8)[1] +

fixef(model2.8)[2] * 3 +

fixef(model2.8)[3] * 4 +

fixef(model2.8)[4] * 2 +

fixef(model2.8)[5] * 1 +

fixef(model2.8)[6] * 30## [1] 4.792134Here’s the same deal for a man of the same profile, but with one point higher on negemot.

fixef(model2.8)[1] +

fixef(model2.8)[2] * 4 +

fixef(model2.8)[3] * 4 +

fixef(model2.8)[4] * 2 +

fixef(model2.8)[5] * 1 +

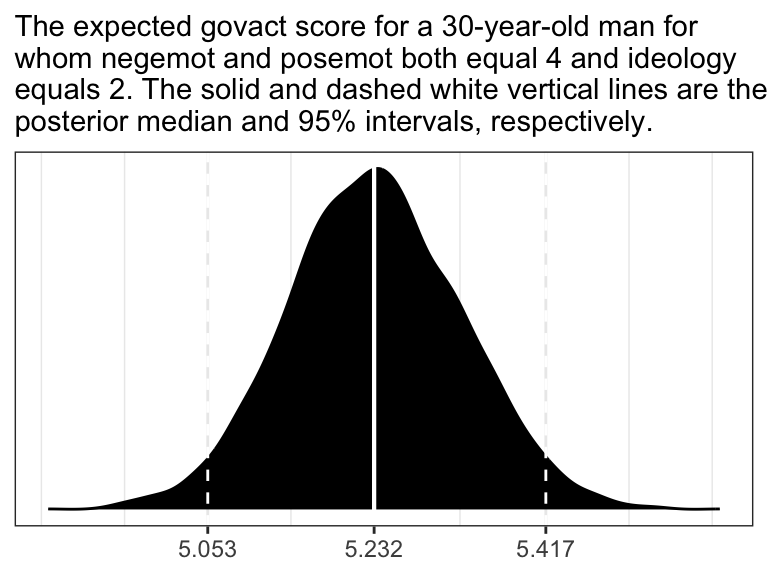

fixef(model2.8)[6] * 30## [1] 5.233116If you want a full expression of the model uncertainty in terms of the shape of the posterior distribution and the 95% intervals, you’ll might use as_draws_df() and do a little data processing.

draws <- as_draws_df(model2.8)

draws <- draws %>%

mutate(our_posterior = b_Intercept + b_negemot * 4 + b_posemot * 4 + b_ideology * 2 + b_sex * 1 + b_age * 30)

# this intermediary step will make it easier to specify the break points and their labels for the x-axis

post_summary <-

quantile(draws$our_posterior, probs = c(.025, .5, .975)) %>%

as_tibble() %>%

mutate(labels = value %>%

round(digits = 3) %>%

as.character())

# plot!

ggplot(data = draws,

aes(x = our_posterior)) +

geom_density(fill = "black") +

geom_vline(xintercept = post_summary$value,

size = c(.5, .75, .5), linetype = c(2, 1, 2), color = "white") +

scale_x_continuous(NULL,

breaks = post_summary$value,

labels = post_summary$labels) +

scale_y_continuous(NULL, breaks = NULL) +

labs(subtitle = "The expected govact score for a 30-year-old man for\nwhom negemot and posemot both equal 4 and ideology\nequals 2. The solid and dashed white vertical lines are the\nposterior median and 95% intervals, respectively.") +

theme_bw()

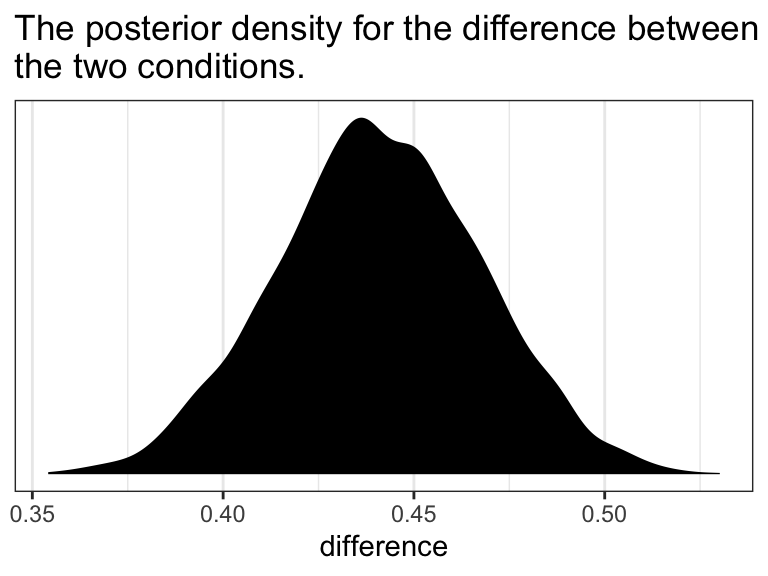

In the text, Hayes showed that individuals based on these two profiles would be expected to differ by 0.441 (i.e., \(5.244 - 4.803 = 0.441\)). That’s fine if you’re only working with OLS point estimates. But a proper Bayesian approach would express the difference in terms of an entire poster distribution, or at least a point estimate accompanied by some sort of intervals. Here we’ll just work with the posterior to create a difference distribution. You could do that with a little deft as_draws_df() wrangling. Here we’ll employ fitted().

nd <- tibble(

negemot = c(3, 4),

posemot = 4,

ideology = 2,

sex = 1,

age = 30)

fitted(model2.8,

newdata = nd,

summary = F) %>%

data.frame() %>%

set_names(str_c("condition_", letters[1:2])) %>%

mutate(difference = condition_b - condition_a) %>%

ggplot(aes(x = difference)) +

geom_density(fill = "black", color = "transparent") +

scale_y_continuous(NULL, breaks = NULL) +

ggtitle("The posterior density for the difference between\nthe two conditions.") +

theme_bw()

2.4.1 The standardized regression model.

As brms doesn’t automatically give us the standardized coefficients the way OLS output often does, we’ll have to be proactive. One solution is just to standardized the data themselves and then re-fit the model with those standardized variables. That leads us to the issue of how one standardized variables to begin with. Recall that standardizing entails subtracting the mean of a variable from that variable and then dividing that value by the standard deviation. We don’t want to do that by hand. So one handy way is to make a custom function to do that work for us.

standardize <- function(x) {

(x - mean(x)) / sd(x)

}To learn more about making custom functions in R, check our Chapter 19 of R4DS.

Here we’ll employ our custom standardize() function to make standardized versions of our variables.

glbwarm <- glbwarm %>%

mutate(posemot_z = standardize(posemot),

negemot_z = standardize(negemot),

ideology_z = standardize(ideology),

sex_z = standardize(sex),

age_z = standardize(age))Now we’ve got us our standardized variables, let’s fit a standardized model.

model2.9 <- brm(

data = glbwarm,

family = gaussian,

govact_z ~ 1 + negemot_z + posemot_z + ideology_z + sex_z + age_z,

cores = 4,

file = "fits/model02.09")Here are the newly standardized coefficient summaries, minus the Intercept.

fixef(model2.9)[-1, ] %>% round(3)## Estimate Est.Error Q2.5 Q97.5

## negemot_z 0.495 0.030 0.436 0.554

## posemot_z -0.027 0.028 -0.083 0.029

## ideology_z -0.242 0.031 -0.303 -0.182

## sex_z -0.004 0.029 -0.061 0.053

## age_z -0.016 0.029 -0.072 0.041Our coefficients match up nicely with those on page 52 in the text. Just as with Hayes’s OLS estimates, we should not attempt to interpret the standardized sex_z coefficient from our Bayesian model.

Here’s how we’d fit a partially-standardized model–a model in which all variables except for sex are standardized.

model2.10 <- update(

model2.9,

newdata = glbwarm,

formula = govact_z ~ 1 + negemot_z + posemot_z + ideology_z + sex + age_z,

cores = 4,

file = "fits/model02.10")Notice our use of the update() function. If you want to hastily fit a brms model of the same basic form of a prior model, you can just update some of the parameters of that original fit. In this case, all we did was swap out one of the predictors. To accommodate that change, we used the newdata argument so the model had access to the new variable and then we fed in the new formula.

Anyway, here are the coefficient summaries, including the Intercept, for the partially-standardized model.

fixef(model2.10) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## Intercept 0.004 0.039 -0.071 0.081

## negemot_z 0.496 0.030 0.438 0.554

## posemot_z -0.026 0.028 -0.082 0.027

## ideology_z -0.243 0.030 -0.302 -0.186

## sex -0.007 0.056 -0.117 0.103

## age_z -0.016 0.028 -0.070 0.037As Hayes wrote, now sex = -0.007 has a sensible interpretation. “We can say that men and women differ by [-0.007] standard deviations in their support for government action when all other variables in the model are held constant (p. 53).”

On page 54, Hayes gave us the equation to transform unstandardized coefficients to standardized ones:

\[ \tilde b_i = b_i \left ( \frac{\textit{SD}_{X_{i}}}{\textit{SD}_{Y}} \right ) \]

Let’s give it a whirl with negemot.

# here's the coefficient for `negemot` from the standardized model, `model2.9`

fixef(model2.9)["negemot_z", "Estimate"]## [1] 0.4954079# here's the coefficient for `negemot` from the unstandardized model, `model2.8`

fixef(model2.8)["negemot", "Estimate"]## [1] 0.4409823# and here we use Hayes' formula to standardize the unstandardized coefficient

fixef(model2.8)["negemot", "Estimate"] * (sd(glbwarm$negemot) / sd(glbwarm$govact))## [1] 0.4954375Looks like we got it within rounding error–pretty good! However, that was just the posterior mean, the Bayesian point estimate. If we want to more fully express the uncertainty around the mean–and we do–, we’ll need to work with the posterior draws.

# the posterior draws from the unstandardized model

as_draws_df(model2.8) %>%

# using Hayes' formula to standardize `b_negemot`

mutate(`hand-made b_negemot_z` = b_negemot * (sd(glbwarm$negemot) / sd(glbwarm$govact))) %>%

# tacking on the `b_negemot_z` column from the standardized `model2.9` models posterior draws

bind_cols(as_draws_df(model2.9) %>%

select(b_negemot_z)) %>%

# converting the data to the long format and grouping by `name`

pivot_longer(c(`hand-made b_negemot_z`, b_negemot_z)) %>%

group_by(name) %>%

# here we summarize the results

summarise(mean = mean(value),

sd = sd(value),

ll = quantile(value, probs = .025),

ul = quantile(value, probs = .975)) %>%

mutate_if(is.double, round, digits = 3)## # A tibble: 2 × 5

## name mean sd ll ul

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 b_negemot_z 0.495 0.03 0.436 0.554

## 2 hand-made b_negemot_z 0.495 0.03 0.437 0.553Our summary confirms that we can apply Hayes’s formula to a as_draws_df() column in order to get fuller summary statistics for a hand-converted standardized coefficient. This would be in full compliance with, say, APA recommendations to include 95% intervals with all effect sizes–the standardized regression coefficient being the effect size, here.

2.5 Measures of model fit

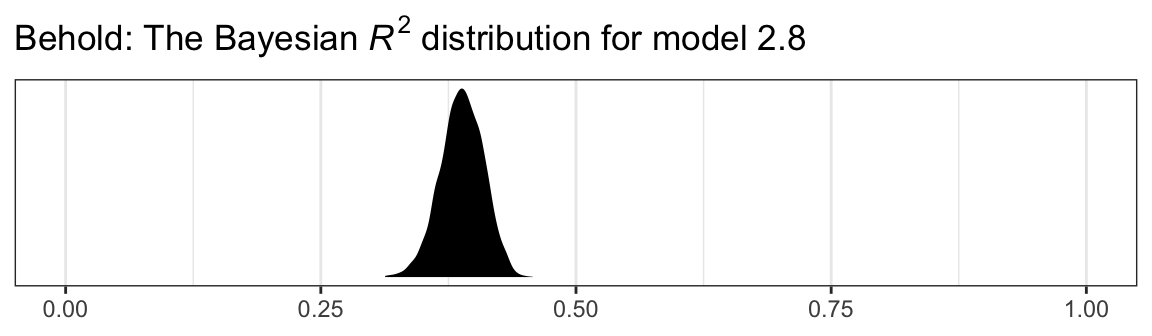

In the Bayesian world, we don’t tend to appeal to the \(\textit{SS}_{residual}\), the \(\textit{MS}_{residual}\), or the standard error of estimate. We do sometimes, however, appeal to the \(R^2\). I’m not going to go into the technical details here, but you should be aware that the Bayesian \(R^2\) is not calculated the same as the OLS \(R^2\). If you want to dive in, check out the (2019) paper by Gelman, Goodrich, Gabry, and Vehtari, R-squared for Bayesian regression models. Here’s how to get it with brms.

bayes_R2(model2.8, summary = T) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## R2 0.388 0.021 0.346 0.428It even comes with 95% intervals, which will make the editors at APA journals happy. If you want to go beyond summary statistics and take a look at the full posterior, just set summary = F and data wrangle and plot as usual.

bayes_R2(model2.8, summary = F) %>%

data.frame() %>%

ggplot(aes(x = R2)) +

geom_density(fill = "black", color = "transparent") +

scale_y_continuous(NULL, breaks = NULL) +

labs(title = expression(paste("Behold: The Bayesian ", italic("R")^{2}, " distribution for model 2.8")),

x = NULL) +

coord_cartesian(xlim = 0:1) +

theme_bw()

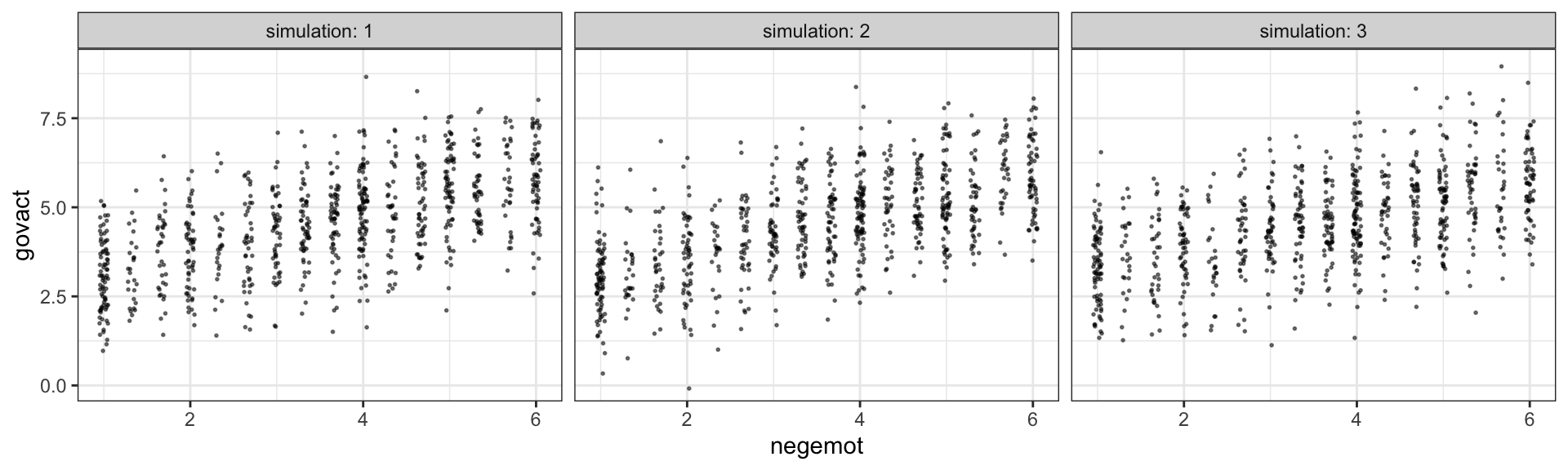

Another way we examine model fit is with graphical posterior predictive checks. Posterior predictive checking is a very general approach, which you might learn more about from Gabry et al. (2019) or with a few keyword searches in on Gelman’s blog. One basic way is to use the model in order to simulate data and then compare those data with the original data–the basic idea being that good fitting models should produce data similar to the original data.

Recall how we’ve used fitted() to make regression lines and expected values? We’ll, now we’ll use predict() to simulate data based on our models.

set.seed(2)

predict(model2.8,

summary = F,

nsamples = 3) %>%

data.frame() %>%

set_names(1:nrow(glbwarm)) %>%

mutate(simulation = str_c("simulation: ", 1:n())) %>%

pivot_longer(-simulation,

names_to = "row",

values_to = "govact") %>%

bind_cols(

bind_rows(

glbwarm %>% select(-govact),

glbwarm %>% select(-govact),

glbwarm %>% select(-govact))

) %>%

ggplot(aes(x = negemot, y = govact)) +

geom_jitter(height = .05, width = .05,

alpha = 1/2, size = 1/3) +

coord_cartesian(ylim = c(0, 9)) +

theme_bw() +

facet_wrap(~ simulation, ncol = 3)

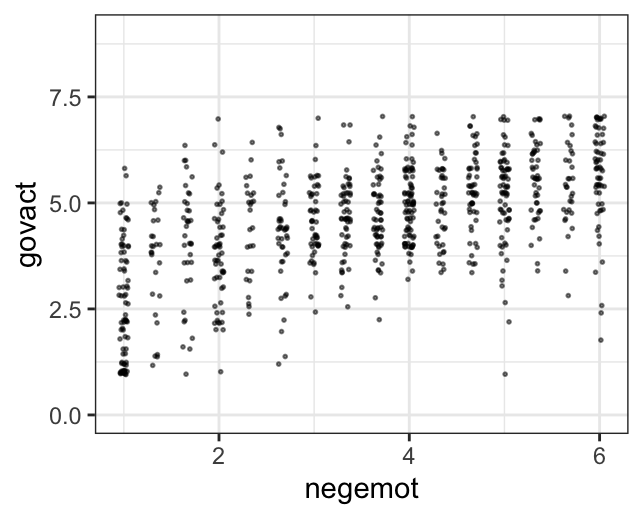

The question is, do these simulated data sets look like the original data? Let’s see.

glbwarm %>%

ggplot(aes(x = negemot, y = govact)) +

geom_jitter(height = .05, width = .05,

alpha = 1/2, size = 1/3) +

coord_cartesian(ylim = c(0, 9)) +

theme_bw()

Overall, the simulations aren’t bad. But in all three govact tends to veer above 7.5, which is where the original data appear to be bounded. But otherwise the overall shape is pretty close, at least with respect to negemot.

There’s nothing special about three simulations. Three is just more than one and gives you a sense of the variance across simulations. Also, we only examined the model fit with respect to negemot. Since there are other variables in the model, we might also assess the model based on them.

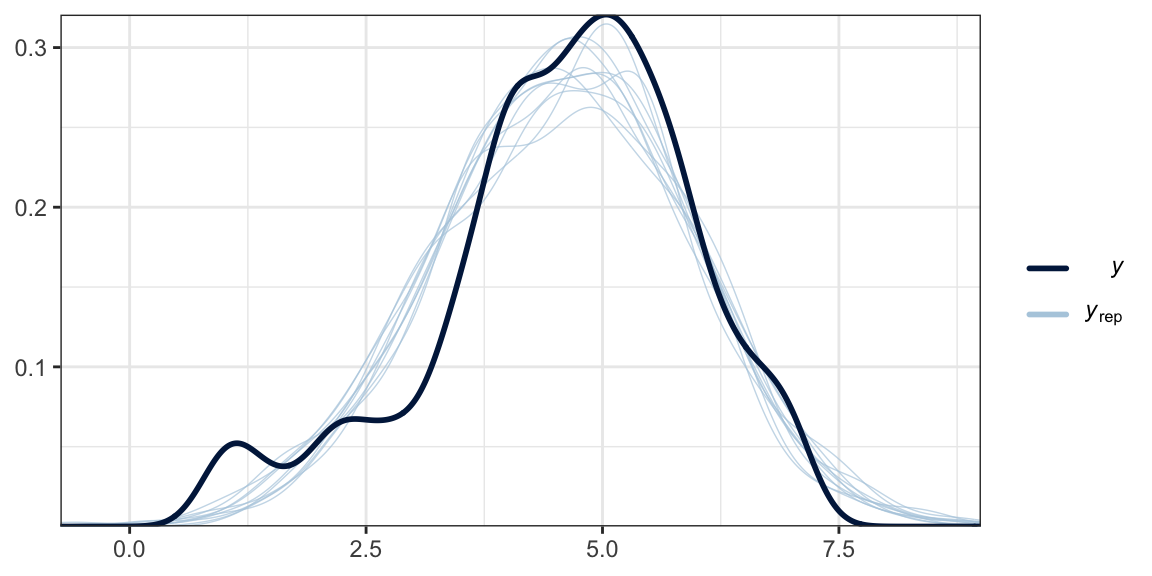

Another method is with the brms::pp_check() function, which allows users to access a variety of convenience functions from the bayesplot package (Gabry et al., 2019; Gabry & Mahr, 2022). Here we’ll use the default settings and just tack on theme_bw() for aesthetics.

set.seed(2)

pp_check(model2.8) +

theme_bw()

What we did was simulate 10 data sets worth of govact values, plot their densities (i.e., the thin blue lines) and compare them with the density of the original govact values. What we want is for the thin blue lines to largely align with the thick blue line. Though not perfect, the simulations from our model2.8 did a pretty okay job of reproducing the original govact distribution. For more ideas on this method, see the brms reference manual (Bürkner, 2022e) and Gabry’s (2019) vignette, Graphical posterior predictive checks using the bayesplot package.

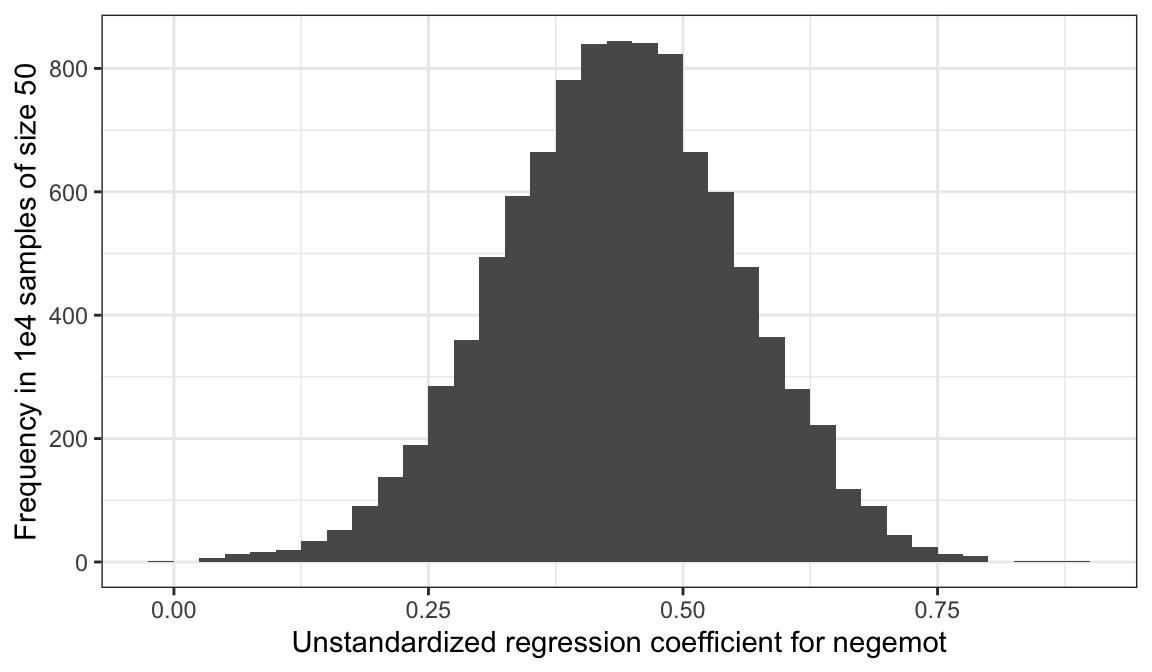

2.6 Statistical inference

Here’s a tidyverse way to do Hayes’ simulation from page 57. We’re just using OLS regression with the lm() function. You could do this with Bayesian HMC estimation, but man would it take a while. For our first step, we’ll define two custom functions.

# this first one will use the `slice_sample()` function to randomly sample from `glbwarm`

make_sample <- function(i) {

set.seed(i)

slice_sample(glbwarm, n = 50, replace = F)

}

# this second function will fit our model, the same one from `model2.8`, to each of our subsamples

glbwarm_model <- function(df) {

lm(govact ~ 1 + negemot + posemot + ideology + sex + age, data = df)

}Now we’ll run the simulation, wrangle the output, and plot in one fell swoop.

# we need an iteration index, which will double as the values we set our seed with in our `make_sample()` function

tibble(iter = 1:1e4) %>%

group_by(iter) %>%

# inserting our subsamples

mutate(sample = map(iter, make_sample)) %>%

# fitting our models

mutate(model = map(sample, glbwarm_model)) %>%

# taking those model results and tidying them with the broom package

mutate(broom = map(model, broom::tidy)) %>%

# unnesting allows us to access our model results

unnest(broom) %>%

# we're only going to focus on the estimates for `negemot`

filter(term == "negemot") %>%

# here it is, Figure 2.7

ggplot(aes(x = estimate)) +

geom_histogram(binwidth = .025, boundary = 0) +

labs(x = "Unstandardized regression coefficient for negemot",

y = "Frequency in 1e4 samples of size 50") +

theme_bw()

To learn more about this approach to simulations, see Section 25.2.1 in R4DS.

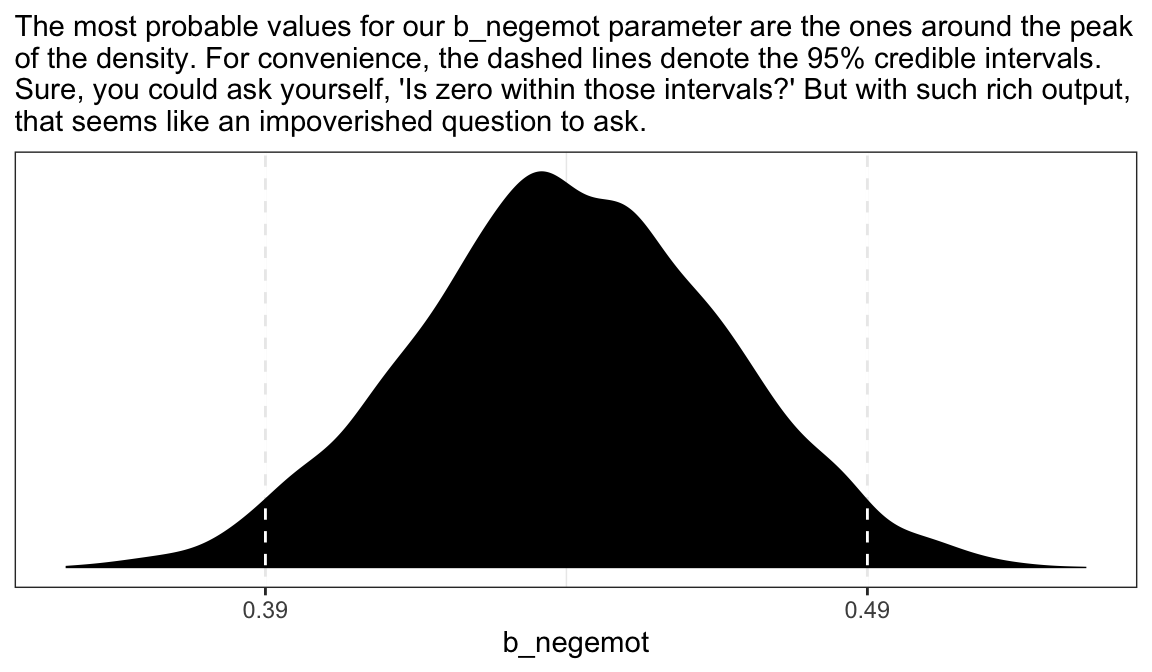

2.6.1 Testing a null hypothesis.

As Bayesians, we don’t need to wed ourselves to the null hypothesis. We’re not interested in the probability of the data given the null hypothesis. Bayes’ rule,

\[p(\theta | D) = \frac{p(D | \theta) p(\theta)}{p(D)},\]

gives us the probability of the model parameters, given the data. Though I acknowledge different researchers might set out to ask different things of their data, I propose we’re generally more interested in determining the most probable parameter values than we are the probabilities tested within the NHST paradigm.

as_draws_df(model2.8) %>%

ggplot(aes(x = b_negemot)) +

geom_density(fill = "black", color = "transparent") +

geom_vline(xintercept = posterior_interval(model2.8)["b_negemot", ],

color = "white", linetype = 2) +

scale_x_continuous(breaks = posterior_interval(model2.8)["b_negemot", ] %>% as.double(),

labels = posterior_interval(model2.8)["b_negemot", ] %>% as.double() %>% round(digits = 2) %>% as.character()) +

scale_y_continuous(NULL, breaks = NULL) +

labs(subtitle = "The most probable values for our b_negemot parameter are the ones around the peak\nof the density. For convenience, the dashed lines denote the 95% credible intervals.\nSure, you could ask yourself, 'Is zero within those intervals?' But with such rich output,\nthat seems like an impoverished question to ask.") +

theme_bw()

For more discussion the NHST paradigm and how it compares with variations of Bayesian statistics, check out Kruschke’s (2015) text, Doing Bayesian data analysis: A tutorial with R, JAGS, and Stan, particularly chapters 10 through 13. You can find my (2023a) brms + tidyverse translation of that text at https://bookdown.org/content/3686/.

2.6.2 Interval estimation.

Within the Bayesian paradigm, we don’t use 95% intervals based on the typical frequentist formula. With the brms package, we typically use percentile-based intervals. Take the 95% credible intervals for the negemot coefficient from model model2.8:

posterior_interval(model2.8)["b_negemot", ]## 2.5% 97.5%

## 0.3886046 0.4924091We can actually get those intervals with the simple use of the base R quantile() function.

as_draws_df(model2.8) %>%

summarize(the_2.5_percentile = quantile(b_negemot, probs = .025),

the_97.5_percentile = quantile(b_negemot, probs = .975))## # A tibble: 1 × 2

## the_2.5_percentile the_97.5_percentile

## <dbl> <dbl>

## 1 0.389 0.492The consequence of this is that our Bayesian credible intervals aren’t necessarily symmetric, which is fine because the posterior distribution for a given parameter isn’t always symmetric. But not all Bayesian intervals are percentile based. John Kruschke, for example, often recommends highest posterior density intervals in his work. The brms package doesn’t have a convenience function for these, but you can compute them with help from the HDInterval package (Meredith & Kruschke, 2018).

library(HDInterval)

hdi(as_draws_df(model2.8)[ , "b_negemot"], credMass = .95)## b_negemot

## lower 0.3884334

## upper 0.4921508

## attr(,"credMass")

## [1] 0.95Finally, because Bayesians aren’t bound to the NHST paradigm, we aren’t bound to 95% intervals, either. For example, in both his excellent (2020) text and as a default in its accompanying rethinking package, Richard McElreath often uses 89% intervals. Alternatively, Andrew Gelman has publicly advocated for 50% intervals. The most important thing is to express the uncertainty in the posterior in a clearly-specified way. If you’d like, say, 80% intervals in your model summary, you can insert a prob argument into either print() or summary().

print(model2.8, prob = .8)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: govact ~ 1 + negemot + posemot + ideology + sex + age

## Data: glbwarm (Number of observations: 815)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-80% CI u-80% CI Rhat Bulk_ESS Tail_ESS

## Intercept 4.06 0.21 3.79 4.32 1.00 5255 3316

## negemot 0.44 0.03 0.41 0.48 1.00 5468 3047

## posemot -0.03 0.03 -0.06 0.01 1.00 5267 3212

## ideology -0.22 0.03 -0.25 -0.18 1.00 5270 3107

## sex -0.01 0.08 -0.11 0.09 1.00 6013 3219

## age -0.00 0.00 -0.00 0.00 1.00 5776 3240

##

## Family Specific Parameters:

## Estimate Est.Error l-80% CI u-80% CI Rhat Bulk_ESS Tail_ESS

## sigma 1.07 0.03 1.03 1.10 1.00 6157 3074

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Note how the names of two of our columns changed to ‘l-80% CI’ and ‘u-80% CI’.

You can specify custom percentile levels with posterior_summary():

posterior_summary(model2.8, probs = c(.9, .8, .7, .6, .4, .3, .2, .1)) %>%

round(digits = 2)## Estimate Est.Error Q90 Q80 Q70 Q60 Q40 Q30 Q20 Q10

## b_Intercept 4.06 0.21 4.32 4.23 4.17 4.11 4.01 3.95 3.89 3.79

## b_negemot 0.44 0.03 0.48 0.46 0.46 0.45 0.43 0.43 0.42 0.41

## b_posemot -0.03 0.03 0.01 0.00 -0.01 -0.02 -0.03 -0.04 -0.05 -0.06

## b_ideology -0.22 0.03 -0.18 -0.19 -0.20 -0.21 -0.22 -0.23 -0.24 -0.25

## b_sex -0.01 0.08 0.09 0.06 0.03 0.01 -0.03 -0.05 -0.08 -0.11

## b_age 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00 0.00

## sigma 1.07 0.03 1.10 1.09 1.08 1.07 1.06 1.05 1.05 1.03

## lprior -3.26 0.01 -3.26 -3.26 -3.26 -3.26 -3.27 -3.27 -3.27 -3.27

## lp__ -1213.22 1.87 -1211.12 -1211.63 -1212.05 -1212.48 -1213.39 -1213.94 -1214.59 -1215.65And of course, you can use multiple interval summaries when you summarize() the output from as_draws_df(). E.g.,

as_draws_df(model2.8) %>%

pivot_longer(b_Intercept:b_age) %>%

group_by(name) %>%

summarize(`l-95% CI` = quantile(value, probs = .025),

`u-95% CI` = quantile(value, probs = .975),

`l-50% CI` = quantile(value, probs = .25),

`u-50% CI` = quantile(value, probs = .75)) %>%

mutate_if(is.double, round, digits = 2)## # A tibble: 6 × 5

## name `l-95% CI` `u-95% CI` `l-50% CI` `u-50% CI`

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 b_age -0.01 0 0 0

## 2 b_ideology -0.27 -0.17 -0.24 -0.2

## 3 b_Intercept 3.66 4.46 3.93 4.2

## 4 b_negemot 0.39 0.49 0.42 0.46

## 5 b_posemot -0.08 0.03 -0.05 -0.01

## 6 b_sex -0.16 0.14 -0.06 0.04Throughout this project, I’m going to be lazy and default to conventional 95% intervals, with occasional appearances of 50% intervals.

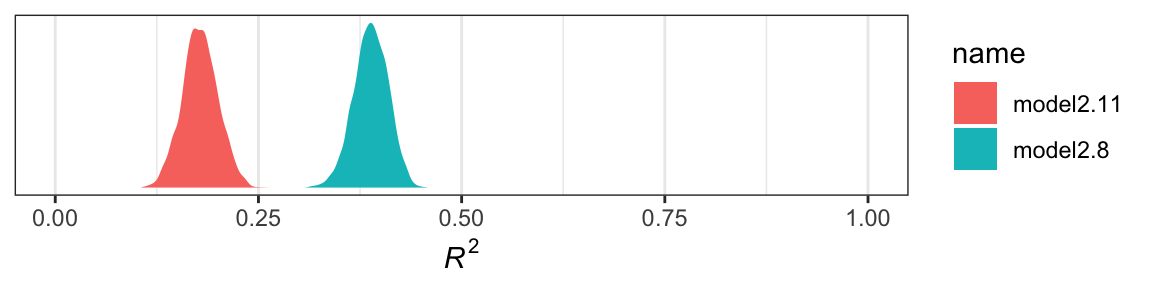

2.6.3 Testing a hypothesis about a set of antecedent variables.

Here we’ll make use of the update() function to hastily fit our reduced model, model2.11.

model2.11 <- update(

model2.8,

govact ~ 1 + ideology + sex + age,

cores = 4,

file = "fits/model02.11")We can get a look at the \(R^2\) summaries for our competing models like this.

bayes_R2(model2.8) %>% round(digits = 2)## Estimate Est.Error Q2.5 Q97.5

## R2 0.39 0.02 0.35 0.43bayes_R2(model2.11) %>% round(digits = 2)## Estimate Est.Error Q2.5 Q97.5

## R2 0.18 0.02 0.13 0.22So far it looks like our fuller model, model2.8, accounts for more variation in the data. If we wanted to look at their distributions, we’d set summary = F in the bayes_R2() function and convert the results to a data frame. Here we use bind_cols() to put the \(R^2\) results for both in the same tibble.

r2 <- cbind(bayes_R2(model2.8, summary = F),

bayes_R2(model2.11, summary = F)) %>%

data.frame() %>%

set_names(str_c("model2.", c(8, 11)))

head(r2)## model2.8 model2.11

## 1 0.3999961 0.1847054

## 2 0.3855340 0.1656601

## 3 0.4023894 0.1751479

## 4 0.3881968 0.1287015

## 5 0.4074221 0.1764577

## 6 0.4023420 0.1649124With our r2 tibble in hand, we’re ready to plot.

r2 %>%

pivot_longer(everything()) %>%

ggplot(aes(x = value, fill = name)) +

geom_density(color = "transparent") +

scale_y_continuous(NULL, breaks = NULL) +

xlab(expression(paste(italic(R)^2))) +

coord_cartesian(xlim = 0:1) +

theme_bw()

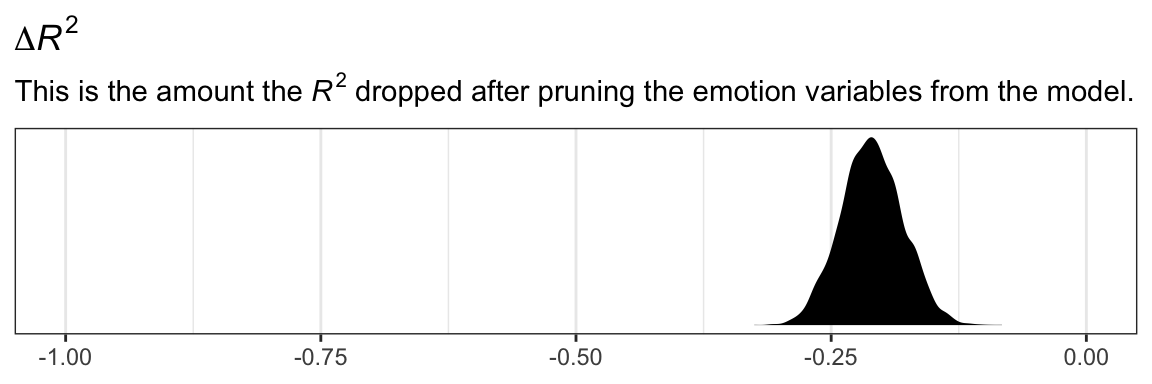

Yep, the \(R^2\) distribution for model2.8, the one including the emotion variables, is clearly larger than that for the more parsimonious model2.11. And it’d just take a little more data wrangling to get a formal \(R^2\) difference score.

r2 %>%

mutate(difference = model2.11 - model2.8) %>%

ggplot(aes(x = difference)) +

geom_density(fill = "black", color = "transparent") +

scale_y_continuous(NULL, breaks = NULL) +

coord_cartesian(xlim = -1:0) +

labs(title = expression(paste(Delta, italic(R)^{2})),

subtitle = expression(paste("This is the amount the ", italic(R)^{2}, " dropped after pruning the emotion variables from the model.")),

x = NULL) +

theme_bw()

The \(R^2\) approach is popular within the social sciences. But it has its limitations, the first of which is that it doesn’t correct for model complexity. The second is it’s not applicable to a range of models, such as those that do not use the Gaussian likelihood (e.g., logistic regression) or to multilevel models.

Happily, information criteria offer a more general framework. The AIC is the most popular information criteria among frequentists. Within the Bayesian world, we have the DIC, the WAIC, and the LOO. The DIC is quickly falling out of favor and is not immediately available with the brms package. However, we can use the WAIC and the LOO, both of which are computed in brms via the loo package (Vehtari et al., 2017; Vehtari et al., 2022; Yao et al., 2018).

With brms, you can compute the WAIC or LOO values and add them to your model fit object with the add_criterion() function.

model2.8 <- add_criterion(model2.8, criterion = c("loo", "waic"))

model2.11 <- add_criterion(model2.11, criterion = c("loo", "waic"))Here’s the main loo-summary output for model4.

model2.8$criteria$loo##

## Computed from 4000 by 815 log-likelihood matrix

##

## Estimate SE

## elpd_loo -1213.9 22.7

## p_loo 7.7 0.7

## looic 2427.9 45.5

## ------

## Monte Carlo SE of elpd_loo is 0.0.

##

## All Pareto k estimates are good (k < 0.5).

## See help('pareto-k-diagnostic') for details.You get a wealth of output, more of which can be seen with str(model2.8$loo). For now, notice the “All Pareto k estimates are good (k < 0.5).” For technical details on Pareto \(k\) values, see Vehtari & Gabry (2020). In short, each case in the data gets its own \(k\) value and we like it when those \(k\)’s are low. The makers of the loo package get worried when those \(k\)’s exceed 0.7 and as a result you will get a warning message when they do. Happily, we have no such warning messages in this example.

If you want to work with the \(k\) values directly, you can extract them and place them into a data frame like so.

model2.8$criteria$loo$diagnostics %>%

data.frame() %>%

head()## pareto_k n_eff

## 1 -0.02469817 5622.864

## 2 -0.03357609 4935.060

## 3 0.14501532 5874.881

## 4 0.16879274 2421.769

## 5 -0.19777749 6086.299

## 6 -0.02300993 5010.521The pareto_k values can be used to examine cases that are overly-influential on the model parameters, something akin to a Cook’s \(D_i\). See, for example this discussion on stackoverflow.com in which several members of the Stan team weighed in. The issue is also discussed in Vehtari et al. (2017) and in this lecture by Aki Vehtari.

But anyway, we’re getting ahead of ourselves. Back to the LOO.

Like other information criteria, the LOO values aren’t of interest in and of themselves. However, the values of one model’s LOO relative to that of another is of great interest. We generally prefer models with lower information criteria. With compare_ic(), we can compute a formal difference score between multiple loo objects.

loo_compare(model2.8, model2.11) %>% print(simplify = F)## elpd_diff se_diff elpd_loo se_elpd_loo p_loo se_p_loo looic se_looic

## model2.8 0.0 0.0 -1213.9 22.7 7.7 0.7 2427.9 45.5

## model2.11 -118.7 15.9 -1332.7 20.6 5.2 0.4 2665.3 41.1We also get a standard error. Here it looks like model2.8 was substantially better, in terms of LOO-values, than model2.11.

For more on the LOO, see the loo reference manual (Gabry, 2022), Vehtari and Gabry’s handy (2020) vignette, or the scholarly papers referenced therein. Also, McElreath discussed the LOO in the second (2020) version of his text, as well as in this lecture.

2.7 Multicategorical antecedent variables

“To include a multicategorical antecedent variable representing \(g\) groups in a regression model, it must be represented with \(g − 1\) variables using one of a variety of different group coding systems”. (p. 66) This isn’t strictly true, but we digress. Hayes went on…

One popular system for coding groups is indicator coding, also known as dummy coding. With indicator coding, \(g − 1\) indicator variables containing either a zero or one represent which of the \(g\) groups a case belongs in, and these indicator variables are used as antecedents in a regression model. To construct indicator codes, create \(g − 1\) variables \(D_i\) for each case set to 1 if the case is in group \(i\), otherwise set \(D_i\) to zero. (p. 66, emphasis in the original)

Before we get to that, we should examine our multicategorical antecedent variable, partyid. It’s coded 1 = Democrat 2 = Independent 3 = Republican. You can get a count of the cases within a give partyid like this.

glbwarm %>%

count(partyid)## # A tibble: 3 × 2

## partyid n

## <dbl> <int>

## 1 1 359

## 2 2 192

## 3 3 264We can get grouped means for govact like this.

glbwarm %>%

group_by(partyid) %>%

summarize(mean_support_for_governmental_action = mean(govact))## # A tibble: 3 × 2

## partyid mean_support_for_governmental_action

## <dbl> <dbl>

## 1 1 5.06

## 2 2 4.61

## 3 3 3.92We can make dummies with the if_else() function. Here we’ll just go ahead and do that right within the brm() function.

model2.12 <- brm(

data = glbwarm %>%

mutate(Democrat = if_else(partyid == 1, 1, 0),

Republican = if_else(partyid == 3, 1, 0)),

family = gaussian,

govact ~ 1 + Democrat + Republican,

chains = 4, cores = 4,

file = "fits/model02.12")Check the results.

fixef(model2.12)## Estimate Est.Error Q2.5 Q97.5

## Intercept 4.6039657 0.0926150 4.4240278 4.7829894

## Democrat 0.4586668 0.1141390 0.2280444 0.6818560

## Republican -0.6775160 0.1221056 -0.9201040 -0.4375259The intercept is the stand-in for Independents and the other two coefficients are difference scores.

The \(R^2\) is okay.

bayes_R2(model2.12) %>% round(digits = 3)## Estimate Est.Error Q2.5 Q97.5

## R2 0.132 0.02 0.093 0.173There’s no need to compute an \(F\)-test on our \(R^2\). The posterior mean and its 95% intervals are well away from zero. But you could use your bayes_R2(model2.12, summary = F) plotting skills from above to more fully inspect the posterior, if you’d like.

We could also use information criteria. One method would be to compare the WAIC or LOO value of model2.12 with an intercept-only model. First, we’ll need to fit that model.

model2.13 <- brm(

data = glbwarm,

family = gaussian,

govact ~ 1,

chains = 4, cores = 4,

file = "fits/model02.13")Here we’ll compute the WAIC for each, save the results to their fit objects, and them compare them with loo_compare().

model2.12 <- add_criterion(model2.12, criterion = "waic")

model2.13 <- add_criterion(model2.13, criterion = "waic")

loo_compare(model2.12, model2.13, criterion = "waic") %>%

print(simplify = F)## elpd_diff se_diff elpd_waic se_elpd_waic p_waic se_p_waic waic se_waic

## model2.12 0.0 0.0 -1353.6 20.1 4.0 0.3 2707.1 40.1

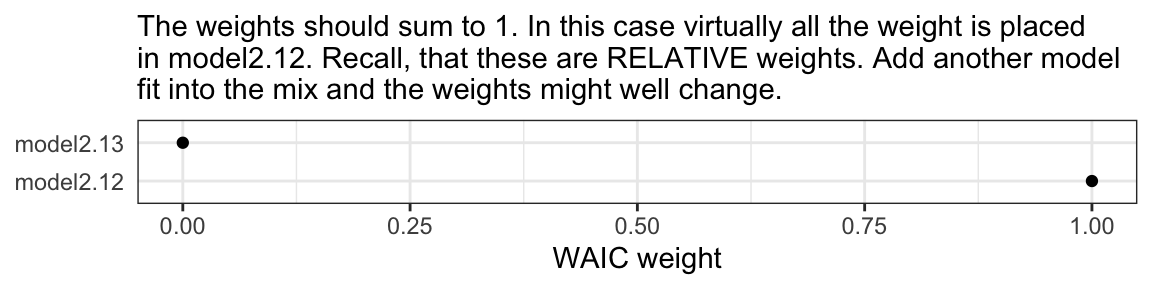

## model2.13 -55.3 10.4 -1408.8 21.4 2.1 0.2 2817.6 42.8The WAIC comparison suggests model2.12, the one with the partyid dummies, is an improvement over the simple intercept-only model. Another way to compare the information criteria is with AIC-type weighting. The brms package offers a variety of weighting methods via the model_weights() function.

mw <- model_weights(model2.12, model2.13, weights = "waic")

mw## model2.12 model2.13

## 1.000000e+00 1.011758e-24If you’re not a fan of scientific notation, you can put the results in a tibble and look at them on a plot.

mw %>%

data.frame() %>%

rownames_to_column() %>%

set_names(c("model", "WAIC weight")) %>%

ggplot(aes(x = `WAIC weight`, y = model)) +

geom_point() +

labs(subtitle = "The weights should sum to 1. In this case virtually all the weight is placed\nin model2.12. Recall, that these are RELATIVE weights. Add another model\nfit into the mix and the weights might well change.",

y = NULL) +

theme_bw() +

theme(axis.ticks.y = element_blank())

You could, of course, do all this with the LOO.

2.8 Assumptions for interpretation and statistical inference

Regression is a handy tool to have in your statistical toolbox. Its utility as a “general data analytic system” (Cohen, 1968) will be apparent throughout this book. But it is a human invention that isn’t perfect, it can lead you astray if used indiscriminately, and it is founded on some assumptions that aren’t always realistic or likely to be met in the circumstances in which the method is applied. (p. 68)

2.8.1 Linearity.

“When using OLS regression to model some consequent variable of interest \(Y\), you must be willing to assume that the relationship between the variables in the model are linear in nature, or at least approximately linear” (p. 69)

2.8.2 Normality.

The brms package is quite general and allows users to fit models from a variety of likelihoods other than the Gaussian. For example, users can accommodate outliers/extreme values with Student’s t regression. You can do count regression with the Poisson or the negative binomial… For more, see McElreath’s lecture introducing the generalized linear model or Bürkner’s (2022d) vignette, Parameterization of response distributions in brms

2.8.3 Homoscedasticity.

The brms package can also accommodate homoscedasticity with distributional modeling. In short, one simply models \(\sigma\) in addition to the mean, \(\mu\). See Bürkner’s handy (2022a) vignette, Estimating distributional models with brms on the topic.

2.8.4 Independence.

The issue of independence is where the multilevel model comes in. See any relevant text, such as Statistical rethinking or Data analysis using regression and multilevel/hierarchical models (Gelman & Hill, 2006). And yes, brms is fully capable of handling multilevel models (see Bürkner, 2017, 2018).

Session info

sessionInfo()## R version 4.2.2 (2022-10-31)

## Platform: x86_64-apple-darwin17.0 (64-bit)

## Running under: macOS Big Sur ... 10.16

##

## Matrix products: default

## BLAS: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRblas.0.dylib

## LAPACK: /Library/Frameworks/R.framework/Versions/4.2/Resources/lib/libRlapack.dylib

##

## locale:

## [1] en_US.UTF-8/en_US.UTF-8/en_US.UTF-8/C/en_US.UTF-8/en_US.UTF-8

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] HDInterval_0.2.4 brms_2.18.0 Rcpp_1.0.9 forcats_0.5.1 stringr_1.4.1 dplyr_1.0.10

## [7] purrr_1.0.1 readr_2.1.2 tidyr_1.2.1 tibble_3.1.8 ggplot2_3.4.0 tidyverse_1.3.2

##

## loaded via a namespace (and not attached):

## [1] readxl_1.4.1 backports_1.4.1 plyr_1.8.7 igraph_1.3.4 splines_4.2.2

## [6] crosstalk_1.2.0 TH.data_1.1-1 rstantools_2.2.0 inline_0.3.19 digest_0.6.31

## [11] htmltools_0.5.3 fansi_1.0.3 magrittr_2.0.3 checkmate_2.1.0 googlesheets4_1.0.1

## [16] tzdb_0.3.0 modelr_0.1.8 RcppParallel_5.1.5 matrixStats_0.63.0 vroom_1.5.7

## [21] xts_0.12.1 sandwich_3.0-2 prettyunits_1.1.1 colorspace_2.0-3 rvest_1.0.2

## [26] haven_2.5.1 xfun_0.35 callr_3.7.3 crayon_1.5.2 jsonlite_1.8.4

## [31] lme4_1.1-31 survival_3.4-0 zoo_1.8-10 glue_1.6.2 gtable_0.3.1

## [36] gargle_1.2.0 emmeans_1.8.0 distributional_0.3.1 pkgbuild_1.3.1 rstan_2.21.8

## [41] abind_1.4-5 scales_1.2.1 mvtnorm_1.1-3 DBI_1.1.3 miniUI_0.1.1.1

## [46] xtable_1.8-4 bit_4.0.4 stats4_4.2.2 StanHeaders_2.21.0-7 DT_0.24

## [51] htmlwidgets_1.5.4 httr_1.4.4 threejs_0.3.3 posterior_1.3.1 ellipsis_0.3.2

## [56] pkgconfig_2.0.3 loo_2.5.1 farver_2.1.1 sass_0.4.2 dbplyr_2.2.1

## [61] utf8_1.2.2 tidyselect_1.2.0 labeling_0.4.2 rlang_1.0.6 reshape2_1.4.4

## [66] later_1.3.0 munsell_0.5.0 cellranger_1.1.0 tools_4.2.2 cachem_1.0.6

## [71] cli_3.6.0 generics_0.1.3 broom_1.0.2 evaluate_0.18 fastmap_1.1.0

## [76] processx_3.8.0 knitr_1.40 bit64_4.0.5 fs_1.5.2 nlme_3.1-160

## [81] mime_0.12 projpred_2.2.1 xml2_1.3.3 compiler_4.2.2 bayesplot_1.10.0

## [86] shinythemes_1.2.0 rstudioapi_0.13 gamm4_0.2-6 reprex_2.0.2 bslib_0.4.0

## [91] stringi_1.7.8 highr_0.9 ps_1.7.2 Brobdingnag_1.2-8 lattice_0.20-45

## [96] Matrix_1.5-1 psych_2.2.9 nloptr_2.0.3 markdown_1.1 shinyjs_2.1.0

## [101] tensorA_0.36.2 vctrs_0.5.1 pillar_1.8.1 lifecycle_1.0.3 jquerylib_0.1.4

## [106] bridgesampling_1.1-2 estimability_1.4.1 httpuv_1.6.5 R6_2.5.1 bookdown_0.28

## [111] promises_1.2.0.1 gridExtra_2.3 codetools_0.2-18 boot_1.3-28 colourpicker_1.1.1

## [116] MASS_7.3-58.1 gtools_3.9.4 assertthat_0.2.1 withr_2.5.0 mnormt_2.1.0

## [121] shinystan_2.6.0 multcomp_1.4-20 mgcv_1.8-41 parallel_4.2.2 hms_1.1.1

## [126] grid_4.2.2 minqa_1.2.5 coda_0.19-4 rmarkdown_2.16 googledrive_2.0.0

## [131] shiny_1.7.2 lubridate_1.8.0 base64enc_0.1-3 dygraphs_1.1.1.6References

Hayes did not cover count regression with the Poisson likelihood in this textbook. If you’d like to learn more, one place to start is with McElreath’s lecture on binomial and Poisson models.↩︎

We will not be covering structural equation modeling (SEM) in this book If you’re interested, you might check out this video series by Erin M. Buchanan on SEM within R, particularly with the handy lavaan package (Merkle & Rosseel, 2018; Rosseel & Jorgensen, 2019).↩︎