Chapter 4 Exploratory Data Analysis, part 1

In the next chapters, we will be looking at parts of exploratory data analysis (EDA). Here we will cover:

Looking at data

Basic Exploratory Data Analysis

Missing Data

Imputations (how to impute missing data)

Basic Overview of Statistics

4.1 Lesson 1: Looking at data

Here, we will look primarily at the package dplyr (do have a look at the documentation), which is generally installed as part of the tidyverse package. dplyr is for data manipulation and is the successor to plyr. We will look at some basic commands and things you’d generally want to check when initially looking at data.

Let’s have a look at iris:

library(tidyverse)

library(dplyr)

#you might want to look at the dimensions of a dataset, where we call dim()

dim(iris)

## [1] 150 5

#we can just look at the dataset by calling it; iris is a plain data.frame, so every row is printed

iris

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3.0 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 4.6 3.1 1.5 0.2 setosa

## 5 5.0 3.6 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosa

## 7 4.6 3.4 1.4 0.3 setosa

## 8 5.0 3.4 1.5 0.2 setosa

## 9 4.4 2.9 1.4 0.2 setosa

## 10 4.9 3.1 1.5 0.1 setosa

## 11 5.4 3.7 1.5 0.2 setosa

## 12 4.8 3.4 1.6 0.2 setosa

## 13 4.8 3.0 1.4 0.1 setosa

## 14 4.3 3.0 1.1 0.1 setosa

## 15 5.8 4.0 1.2 0.2 setosa

## 16 5.7 4.4 1.5 0.4 setosa

## 17 5.4 3.9 1.3 0.4 setosa

## 18 5.1 3.5 1.4 0.3 setosa

## 19 5.7 3.8 1.7 0.3 setosa

## 20 5.1 3.8 1.5 0.3 setosa

## 21 5.4 3.4 1.7 0.2 setosa

## 22 5.1 3.7 1.5 0.4 setosa

## 23 4.6 3.6 1.0 0.2 setosa

## 24 5.1 3.3 1.7 0.5 setosa

## 25 4.8 3.4 1.9 0.2 setosa

## 26 5.0 3.0 1.6 0.2 setosa

## 27 5.0 3.4 1.6 0.4 setosa

## 28 5.2 3.5 1.5 0.2 setosa

## 29 5.2 3.4 1.4 0.2 setosa

## 30 4.7 3.2 1.6 0.2 setosa

## 31 4.8 3.1 1.6 0.2 setosa

## 32 5.4 3.4 1.5 0.4 setosa

## 33 5.2 4.1 1.5 0.1 setosa

## 34 5.5 4.2 1.4 0.2 setosa

## 35 4.9 3.1 1.5 0.2 setosa

## 36 5.0 3.2 1.2 0.2 setosa

## 37 5.5 3.5 1.3 0.2 setosa

## 38 4.9 3.6 1.4 0.1 setosa

## 39 4.4 3.0 1.3 0.2 setosa

## 40 5.1 3.4 1.5 0.2 setosa

## 41 5.0 3.5 1.3 0.3 setosa

## 42 4.5 2.3 1.3 0.3 setosa

## 43 4.4 3.2 1.3 0.2 setosa

## 44 5.0 3.5 1.6 0.6 setosa

## 45 5.1 3.8 1.9 0.4 setosa

## 46 4.8 3.0 1.4 0.3 setosa

## 47 5.1 3.8 1.6 0.2 setosa

## 48 4.6 3.2 1.4 0.2 setosa

## 49 5.3 3.7 1.5 0.2 setosa

## 50 5.0 3.3 1.4 0.2 setosa

## 51 7.0 3.2 4.7 1.4 versicolor

## 52 6.4 3.2 4.5 1.5 versicolor

## 53 6.9 3.1 4.9 1.5 versicolor

## 54 5.5 2.3 4.0 1.3 versicolor

## 55 6.5 2.8 4.6 1.5 versicolor

## 56 5.7 2.8 4.5 1.3 versicolor

## 57 6.3 3.3 4.7 1.6 versicolor

## 58 4.9 2.4 3.3 1.0 versicolor

## 59 6.6 2.9 4.6 1.3 versicolor

## 60 5.2 2.7 3.9 1.4 versicolor

## 61 5.0 2.0 3.5 1.0 versicolor

## 62 5.9 3.0 4.2 1.5 versicolor

## 63 6.0 2.2 4.0 1.0 versicolor

## 64 6.1 2.9 4.7 1.4 versicolor

## 65 5.6 2.9 3.6 1.3 versicolor

## 66 6.7 3.1 4.4 1.4 versicolor

## 67 5.6 3.0 4.5 1.5 versicolor

## 68 5.8 2.7 4.1 1.0 versicolor

## 69 6.2 2.2 4.5 1.5 versicolor

## 70 5.6 2.5 3.9 1.1 versicolor

## 71 5.9 3.2 4.8 1.8 versicolor

## 72 6.1 2.8 4.0 1.3 versicolor

## 73 6.3 2.5 4.9 1.5 versicolor

## 74 6.1 2.8 4.7 1.2 versicolor

## 75 6.4 2.9 4.3 1.3 versicolor

## 76 6.6 3.0 4.4 1.4 versicolor

## 77 6.8 2.8 4.8 1.4 versicolor

## 78 6.7 3.0 5.0 1.7 versicolor

## 79 6.0 2.9 4.5 1.5 versicolor

## 80 5.7 2.6 3.5 1.0 versicolor

## 81 5.5 2.4 3.8 1.1 versicolor

## 82 5.5 2.4 3.7 1.0 versicolor

## 83 5.8 2.7 3.9 1.2 versicolor

## 84 6.0 2.7 5.1 1.6 versicolor

## 85 5.4 3.0 4.5 1.5 versicolor

## 86 6.0 3.4 4.5 1.6 versicolor

## 87 6.7 3.1 4.7 1.5 versicolor

## 88 6.3 2.3 4.4 1.3 versicolor

## 89 5.6 3.0 4.1 1.3 versicolor

## 90 5.5 2.5 4.0 1.3 versicolor

## 91 5.5 2.6 4.4 1.2 versicolor

## 92 6.1 3.0 4.6 1.4 versicolor

## 93 5.8 2.6 4.0 1.2 versicolor

## 94 5.0 2.3 3.3 1.0 versicolor

## 95 5.6 2.7 4.2 1.3 versicolor

## 96 5.7 3.0 4.2 1.2 versicolor

## 97 5.7 2.9 4.2 1.3 versicolor

## 98 6.2 2.9 4.3 1.3 versicolor

## 99 5.1 2.5 3.0 1.1 versicolor

## 100 5.7 2.8 4.1 1.3 versicolor

## 101 6.3 3.3 6.0 2.5 virginica

## 102 5.8 2.7 5.1 1.9 virginica

## 103 7.1 3.0 5.9 2.1 virginica

## 104 6.3 2.9 5.6 1.8 virginica

## 105 6.5 3.0 5.8 2.2 virginica

## 106 7.6 3.0 6.6 2.1 virginica

## 107 4.9 2.5 4.5 1.7 virginica

## 108 7.3 2.9 6.3 1.8 virginica

## 109 6.7 2.5 5.8 1.8 virginica

## 110 7.2 3.6 6.1 2.5 virginica

## 111 6.5 3.2 5.1 2.0 virginica

## 112 6.4 2.7 5.3 1.9 virginica

## 113 6.8 3.0 5.5 2.1 virginica

## 114 5.7 2.5 5.0 2.0 virginica

## 115 5.8 2.8 5.1 2.4 virginica

## 116 6.4 3.2 5.3 2.3 virginica

## 117 6.5 3.0 5.5 1.8 virginica

## 118 7.7 3.8 6.7 2.2 virginica

## 119 7.7 2.6 6.9 2.3 virginica

## 120 6.0 2.2 5.0 1.5 virginica

## 121 6.9 3.2 5.7 2.3 virginica

## 122 5.6 2.8 4.9 2.0 virginica

## 123 7.7 2.8 6.7 2.0 virginica

## 124 6.3 2.7 4.9 1.8 virginica

## 125 6.7 3.3 5.7 2.1 virginica

## 126 7.2 3.2 6.0 1.8 virginica

## 127 6.2 2.8 4.8 1.8 virginica

## 128 6.1 3.0 4.9 1.8 virginica

## 129 6.4 2.8 5.6 2.1 virginica

## 130 7.2 3.0 5.8 1.6 virginica

## 131 7.4 2.8 6.1 1.9 virginica

## 132 7.9 3.8 6.4 2.0 virginica

## 133 6.4 2.8 5.6 2.2 virginica

## 134 6.3 2.8 5.1 1.5 virginica

## 135 6.1 2.6 5.6 1.4 virginica

## 136 7.7 3.0 6.1 2.3 virginica

## 137 6.3 3.4 5.6 2.4 virginica

## 138 6.4 3.1 5.5 1.8 virginica

## 139 6.0 3.0 4.8 1.8 virginica

## 140 6.9 3.1 5.4 2.1 virginica

## 141 6.7 3.1 5.6 2.4 virginica

## 142 6.9 3.1 5.1 2.3 virginica

## 143 5.8 2.7 5.1 1.9 virginica

## 144 6.8 3.2 5.9 2.3 virginica

## 145 6.7 3.3 5.7 2.5 virginica

## 146 6.7 3.0 5.2 2.3 virginica

## 147 6.3 2.5 5.0 1.9 virginica

## 148 6.5 3.0 5.2 2.0 virginica

## 149 6.2 3.4 5.4 2.3 virginica

## 150 5.9 3.0 5.1 1.8 virginica

#then we might want to look at the first 10 rows of the dataset

head(iris, 10) #number at the end states number of rows

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3.0 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 4.6 3.1 1.5 0.2 setosa

## 5 5.0 3.6 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosa

## 7 4.6 3.4 1.4 0.3 setosa

## 8 5.0 3.4 1.5 0.2 setosa

## 9 4.4 2.9 1.4 0.2 setosa

## 10 4.9 3.1 1.5 0.1 setosa

Here, it is important to note that iris is a plain data.frame (the typical format we see in base R), which is why calling it printed every row. Other datasets used in this chapter, such as diamonds, are stored as a tibble, which is the tidyverse’s version of a data.frame. You can see this in the console: a tibble prints a header such as ‘# A tibble: 6 × 10’ and only shows its first rows. The tibble is described as a ‘modern reimagining of the data.frame’, which you can read about here (https://tibble.tidyverse.org/), and it is useful for handling larger datasets with complex objects within them. This is something to keep an eye on, as the format is not always cross-compatible with other packages that might need a data.frame.
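A quick way to check which of the two formats you are holding, and to convert between them, is sketched below. It assumes the tibble package is installed (it comes with the tidyverse); the object names iris_tbl and iris_df are only illustrative.

```r
library(tibble)

# iris ships with base R as a plain data.frame
class(iris)

# as_tibble() converts a data.frame into a tibble
iris_tbl <- as_tibble(iris)
class(iris_tbl)
is_tibble(iris_tbl)

# as.data.frame() converts back, for packages that need a plain data.frame
iris_df <- as.data.frame(iris_tbl)
class(iris_df)
```

Converting back with as.data.frame() is usually the simplest fix if another package complains about receiving a tibble.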

When first looking at data, there are a number of commands in dplyr that will help your EDA. We can group these by whether they impact the:

- Rows

  - filter() - keeps rows based on column values (e.g., for numeric columns, you can filter on value using >, <, etc., or filter on categorical values using == 'value')

  - arrange() - changes the order of rows (e.g., change to ascending or descending order, this can be useful when doing data viz)

- Columns

  - select() - literally selects columns to be included (e.g., you are creating a new data.frame/tibble with only certain columns)

  - rename() - if you want to rename columns for ease of plotting/analysis

  - mutate() - creates a new column/variable and preserves the rest of the dataset

  - transmute() - use with caution, this adds new variables and drops existing ones

- Groups

  - summarise() - this collapses or aggregates data into a single row (e.g., by the mean, median), this can also be mixed with group_by() in order to summarise by groups (e.g., male, female or other categorical variables)

Please note, this is not an exhaustive list of the functions in dplyr; please look at the documentation. These are the ones I’ve pulled out as perhaps the most useful and common that you’ll see and likely need when doing general data cleaning, processing, and analyses.
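Three of the verbs above (arrange(), summarise() with group_by(), and transmute()) can be sketched on iris as follows. This is a minimal illustration rather than a full tour: the calls are written as plain function calls, and the object names (longest_petals, species_summary, petal_ratio) are only illustrative.

```r
library(dplyr)

# arrange(): order rows by Petal.Length, largest first
longest_petals <- arrange(iris, desc(Petal.Length))
head(longest_petals, 3)

# group_by() + summarise(): collapse to one row per species
species_summary <- summarise(
  group_by(iris, Species),
  mean_petal_length = mean(Petal.Length),
  n_flowers = n()
)
species_summary

# transmute(): keeps only the columns you name or create
petal_ratio <- transmute(iris, Species, petal_ratio = Petal.Length / Petal.Width)
head(petal_ratio)
```

Note how transmute() returns only two columns, which is why it should be used with caution compared with mutate().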

Another key feature of dplyr that will come in useful is the ability to ‘pipe’ using the %>% operator (which dplyr borrows from the magrittr package). I think the easiest way to think of %>% is as AND THEN do a thing. In the example below, the code literally means: take the iris dataset, AND THEN filter it (so that only observations that are setosa are pulled through). Note that we are not creating a new dataframe here, so the result will just print in the console.

iris %>% filter(Species == 'setosa') #so you can see that the dataset printed now only consists of setosa rows

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3.0 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 4.6 3.1 1.5 0.2 setosa

## 5 5.0 3.6 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosa

## 7 4.6 3.4 1.4 0.3 setosa

## 8 5.0 3.4 1.5 0.2 setosa

## 9 4.4 2.9 1.4 0.2 setosa

## 10 4.9 3.1 1.5 0.1 setosa

## 11 5.4 3.7 1.5 0.2 setosa

## 12 4.8 3.4 1.6 0.2 setosa

## 13 4.8 3.0 1.4 0.1 setosa

## 14 4.3 3.0 1.1 0.1 setosa

## 15 5.8 4.0 1.2 0.2 setosa

## 16 5.7 4.4 1.5 0.4 setosa

## 17 5.4 3.9 1.3 0.4 setosa

## 18 5.1 3.5 1.4 0.3 setosa

## 19 5.7 3.8 1.7 0.3 setosa

## 20 5.1 3.8 1.5 0.3 setosa

## 21 5.4 3.4 1.7 0.2 setosa

## 22 5.1 3.7 1.5 0.4 setosa

## 23 4.6 3.6 1.0 0.2 setosa

## 24 5.1 3.3 1.7 0.5 setosa

## 25 4.8 3.4 1.9 0.2 setosa

## 26 5.0 3.0 1.6 0.2 setosa

## 27 5.0 3.4 1.6 0.4 setosa

## 28 5.2 3.5 1.5 0.2 setosa

## 29 5.2 3.4 1.4 0.2 setosa

## 30 4.7 3.2 1.6 0.2 setosa

## 31 4.8 3.1 1.6 0.2 setosa

## 32 5.4 3.4 1.5 0.4 setosa

## 33 5.2 4.1 1.5 0.1 setosa

## 34 5.5 4.2 1.4 0.2 setosa

## 35 4.9 3.1 1.5 0.2 setosa

## 36 5.0 3.2 1.2 0.2 setosa

## 37 5.5 3.5 1.3 0.2 setosa

## 38 4.9 3.6 1.4 0.1 setosa

## 39 4.4 3.0 1.3 0.2 setosa

## 40 5.1 3.4 1.5 0.2 setosa

## 41 5.0 3.5 1.3 0.3 setosa

## 42 4.5 2.3 1.3 0.3 setosa

## 43 4.4 3.2 1.3 0.2 setosa

## 44 5.0 3.5 1.6 0.6 setosa

## 45 5.1 3.8 1.9 0.4 setosa

## 46 4.8 3.0 1.4 0.3 setosa

## 47 5.1 3.8 1.6 0.2 setosa

## 48 4.6 3.2 1.4 0.2 setosa

## 49 5.3 3.7 1.5 0.2 setosa

## 50 5.0 3.3 1.4 0.2 setosa

To use this as a new dataset, you can create it using an assignment operator (I tend to use ‘=’, however it is more common in R to use ‘<-’). To create a new dataset in the R environment, we give it a name, iris_setosa, and then assign something to it. The thing assigned can be a dataframe (or tibble), or it can be a list or a vector, among other formats. It is useful to know about these structures for more advanced programming.

iris_setosa = iris %>%

  filter(Species == 'setosa')

head(iris_setosa)

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3.0 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 4.6 3.1 1.5 0.2 setosa

## 5 5.0 3.6 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosa

#we can check that this has filtered out any other species using unique()

unique(iris_setosa$Species)

## [1] setosa

## Levels: setosa versicolor virginica

#this shows only one value (setosa); all three levels are still listed because Species is a factor and filtering does not drop unused levels

If you want to select columns, let’s try it using the diamonds dataset as an example and select particular columns:

head(diamonds)

## # A tibble: 6 × 10

## carat cut color clarity depth table price x y z

## <dbl> <ord> <ord> <ord> <dbl> <dbl> <int> <dbl> <dbl> <dbl>

## 1 0.23 Ideal E SI2 61.5 55 326 3.95 3.98 2.43

## 2 0.21 Premium E SI1 59.8 61 326 3.89 3.84 2.31

## 3 0.23 Good E VS1 56.9 65 327 4.05 4.07 2.31

## 4 0.29 Premium I VS2 62.4 58 334 4.2 4.23 2.63

## 5 0.31 Good J SI2 63.3 58 335 4.34 4.35 2.75

## 6 0.24 Very Good J VVS2 62.8 57 336 3.94 3.96 2.48

#let's select carat, cut, color, and clarity only

#here we are creating a new dataframe called smaller_diamonds, then we call the select() command

#and then list the variables we want to keep

smaller_diamonds = dplyr::select(diamonds,

                                 carat,

                                 cut,

                                 color,

                                 clarity)

head(smaller_diamonds)

## # A tibble: 6 × 4

## carat cut color clarity

## <dbl> <ord> <ord> <ord>

## 1 0.23 Ideal E SI2

## 2 0.21 Premium E SI1

## 3 0.23 Good E VS1

## 4 0.29 Premium I VS2

## 5 0.31 Good J SI2

## 6 0.24 Very Good J VVS2

You can use additional helper functions with select(), such as:

- starts_with() - where you define a start pattern that is selected (e.g., ‘c’ in the diamonds dataset should pull all columns starting with ‘c’)

- ends_with() - pulls anything that ends with a defined pattern

- matches() - matches a specific regular expression (to be explained later)

- contains() - contains a literal string (e.g., ‘ri’ will only bring through pRIce and claRIty)

(Examples to be added…)
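A minimal sketch of these helpers on the diamonds dataset is given here. It assumes dplyr and ggplot2 (which provides diamonds) are installed; the object names and the regular expression "^[xyz]$" are illustrative choices.

```r
library(dplyr)
library(ggplot2) # provides the diamonds dataset

# starts_with(): columns whose names begin with "c"
c_cols <- diamonds %>% dplyr::select(starts_with("c"))
names(c_cols)

# ends_with(): columns whose names end with "e"
e_cols <- diamonds %>% dplyr::select(ends_with("e"))
names(e_cols)

# contains(): columns whose names contain the literal string "ri"
ri_cols <- diamonds %>% dplyr::select(contains("ri"))
names(ri_cols)

# matches(): columns whose names match a regular expression,
# here names that are exactly x, y, or z
xyz_cols <- diamonds %>% dplyr::select(matches("^[xyz]$"))
names(xyz_cols)
```

Calling names() on each result is a cheap way to confirm that a helper picked up the columns you expected before you carry on.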

If you ever find you want to rename variables for any reason… do it sooner rather than later. Also, sometimes it is easier to do this outside of R… however, this is how to do it in R:

#let's rename the price variable as price_tag

#again we are creating a new dataset

#THEN %>% we are calling rename() and then renaming by defining the new name = old name

diamond_rename = diamonds %>%

  dplyr::rename(price_tag = price)

head(diamond_rename) #check

## # A tibble: 6 × 10

## carat cut color clarity depth table price_tag x y z

## <dbl> <ord> <ord> <ord> <dbl> <dbl> <int> <dbl> <dbl> <dbl>

## 1 0.23 Ideal E SI2 61.5 55 326 3.95 3.98 2.43

## 2 0.21 Premium E SI1 59.8 61 326 3.89 3.84 2.31

## 3 0.23 Good E VS1 56.9 65 327 4.05 4.07 2.31

## 4 0.29 Premium I VS2 62.4 58 334 4.2 4.23 2.63

## 5 0.31 Good J SI2 63.3 58 335 4.34 4.35 2.75

## 6 0.24 Very Good J VVS2 62.8 57 336 3.94 3.96 2.48

So, let’s create some new variables! Here, we use the mutate() function. Let’s fiddle around with the diamonds dataset:

# Let's change the price by halving it...

# we will reuse the diamond_rename as we'll use the original diamonds one later

diamond_rename %>%

  dplyr::mutate(half_price = price_tag/2)

## # A tibble: 53,940 × 11

## carat cut color clarity depth table price_tag x y z half_price

## <dbl> <ord> <ord> <ord> <dbl> <dbl> <int> <dbl> <dbl> <dbl> <dbl>

## 1 0.23 Ideal E SI2 61.5 55 326 3.95 3.98 2.43 163

## 2 0.21 Premium E SI1 59.8 61 326 3.89 3.84 2.31 163

## 3 0.23 Good E VS1 56.9 65 327 4.05 4.07 2.31 164.

## 4 0.29 Premium I VS2 62.4 58 334 4.2 4.23 2.63 167

## 5 0.31 Good J SI2 63.3 58 335 4.34 4.35 2.75 168.

## 6 0.24 Very Good J VVS2 62.8 57 336 3.94 3.96 2.48 168

## 7 0.24 Very Good I VVS1 62.3 57 336 3.95 3.98 2.47 168

## 8 0.26 Very Good H SI1 61.9 55 337 4.07 4.11 2.53 168.

## 9 0.22 Fair E VS2 65.1 61 337 3.87 3.78 2.49 168.

## 10 0.23 Very Good H VS1 59.4 61 338 4 4.05 2.39 169

## # … with 53,930 more rows

# we can create multiple new columns at once

diamond_rename %>%

  dplyr::mutate(price300 = price_tag - 300, # 300 OFF from the original price

                price30perc = price_tag * 0.30, # 30% of the original price

                price30percoff = price_tag * 0.70, # 30% OFF from the original price

                pricepercarat = price_tag / carat) # ratio of price to carat

## # A tibble: 53,940 × 14

## carat cut color clarity depth table price_tag x y z price300 price30perc price30percoff pricepercarat

## <dbl> <ord> <ord> <ord> <dbl> <dbl> <int> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 0.23 Ideal E SI2 61.5 55 326 3.95 3.98 2.43 26 97.8 228. 1417.

## 2 0.21 Premium E SI1 59.8 61 326 3.89 3.84 2.31 26 97.8 228. 1552.

## 3 0.23 Good E VS1 56.9 65 327 4.05 4.07 2.31 27 98.1 229. 1422.

## 4 0.29 Premium I VS2 62.4 58 334 4.2 4.23 2.63 34 100. 234. 1152.

## 5 0.31 Good J SI2 63.3 58 335 4.34 4.35 2.75 35 100. 234. 1081.

## 6 0.24 Very Good J VVS2 62.8 57 336 3.94 3.96 2.48 36 101. 235. 1400

## 7 0.24 Very Good I VVS1 62.3 57 336 3.95 3.98 2.47 36 101. 235. 1400

## 8 0.26 Very Good H SI1 61.9 55 337 4.07 4.11 2.53 37 101. 236. 1296.

## 9 0.22 Fair E VS2 65.1 61 337 3.87 3.78 2.49 37 101. 236. 1532.

## 10 0.23 Very Good H VS1 59.4 61 338 4 4.05 2.39 38 101. 237. 1470.

## # … with 53,930 more rows

4.2 Lesson 2: Basic EDA

EDA is an iterative cycle. What we looked at above is definitely part of EDA, but it was also to give a little overview of the dplyr package and some of the main commands that will likely be useful if you are newer to R. However, there are a number of tutorials out there that will teach you more about R programming.

Let’s look at EDA more closely. What is it and what will you be doing?

Generate questions about your data,

Search for answers by visualising, transforming, and modelling your data, and

Use what you learn to refine your questions and/or generate new questions.

We will cover all of this over the next two chapters. EDA is not a formal process with a strict set of rules. More than anything, EDA is the analyst’s time to actually get to know the data and understand what can really be done with it. During the initial phases of EDA you should feel free to investigate every idea that occurs to you. This is all about understanding your data. Some of these ideas will pan out, and some will be dead ends. All of it is important and will help you figure out your questions… Additionally, it is important to log what you do, and do not worry about ‘null’ findings, as these are just as important!

It is important to point out here that this will get easier and better with practice and experience. The more you look at data and the more time you work with it, the easier you’ll find it to spot odd artefacts in there. For instance, I’ve looked at haul truck data recently (don’t ask…), and to give you an idea of what I am talking about: these trucks are three storeys high and weigh about 300 tonnes. Now, the data said one of these moved at >1,000 km/hr… Hopefully this is absolutely not true, as it would be horrifying, and hopefully you’ve also realized it is very unlikely. In other data coming from smartphones, we found various instances of missing data during the EDA process, where it was difficult to know whether this was a logging error or something else. However, the interesting and important discussion is to ask (a) why might this have happened, (b) what does this mean for the data, (c) what do we do with this missing data, (d) is this a one-off or has this happened elsewhere too, and (e) what does this mean for the participants involved in terms of the inferences we will draw from our findings?

4.2.1 Brief ethics consideration based on point (e)

When thinking about data, often (not always) this comes from people. One thing I want to stress in this work, even though the first chapters at present use non-human data (to be updated), is to remember that you are handling people’s data. Each observation (usually) is a person, and if you were that person, how would you feel about how these data are being used? Are you making fair judgements and assessments? How are you justifying your decision making? If there are ‘outliers’, who are they? Was this a genuine data entry/logging error or was this real data? Are you discriminating against a sub-set of the population by removing them? It is critically important to consider the consequences of every decision you make when doing data analysis. Unfortunately there is no tool or framework for this; it is down to you for the most part to be open, honest, and think about what you’re doing…

(to be expanded and have another chapter on this…)

4.2.2 Quick reminders of definitions for EDA

A variable is a quantity, quality, or property that you can measure.

A value is the state of a variable when you measure it. The value of a variable may change from measurement to measurement.

An observation is a set of measurements made under similar conditions (you usually make all of the measurements in an observation at the same time and on the same object). An observation will contain several values, each associated with a different variable. I’ll sometimes refer to an observation as a data point.

Tabular data is a set of values, each associated with a variable and an observation. Tabular data is tidy if each value is placed in its own “cell”, each variable in its own column, and each observation in its own row.
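To make the idea of tidy data concrete, here is a small sketch using a made-up table. The data, the column names (plant, week1, week2, height_cm), and the object names are all hypothetical; the sketch assumes the tibble and tidyr packages (both part of the tidyverse) are installed.

```r
library(tibble)
library(tidyr)

# Hypothetical untidy table: the variable "week" is spread across column names
untidy <- tribble(
  ~plant, ~week1, ~week2,
  "A",    10.2,   12.5,
  "B",     9.8,   11.1
)

# pivot_longer() moves the week columns into rows, so that each row is one
# observation (one plant in one week) and each column is one variable
tidy <- pivot_longer(untidy,
                     cols = c(week1, week2),
                     names_to = "week",
                     values_to = "height_cm")
tidy
```

In the tidy version, every value sits in its own cell, with one column per variable (plant, week, height_cm) and one row per observation.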

4.2.3 Variation

Variation is the tendency of the values of a variable to change from measurement to measurement. You can see variation easily in real life; if you measure any continuous variable twice, you will get two different results. This is true even if you measure quantities that are constant, like the speed of light. Each of your measurements will include a small amount of error that varies from measurement to measurement.

(This is why in STEM fields, equipment needs to be calibrated before use… this is not a thing in the social sciences, which is an interesting issue in itself – to be expanded upon in another forthcoming chapter).

Categorical variables can also vary if you measure across different subjects (e.g., the eye colors of different people), or different times (e.g., the energy levels of an electron at different moments). Every variable has its own pattern of variation, which can reveal interesting information. It is important to understand the variation in your datasets, and the best (and one of the easiest) ways to understand that pattern is to visualize the distribution of the variable’s values (we will have a chapter purely on data visualization, too). Let’s start by loading the following libraries (or installing them first using the command install.packages(), making sure to put quotes around each package name): dplyr, tidyverse, magrittr, and ggplot2.

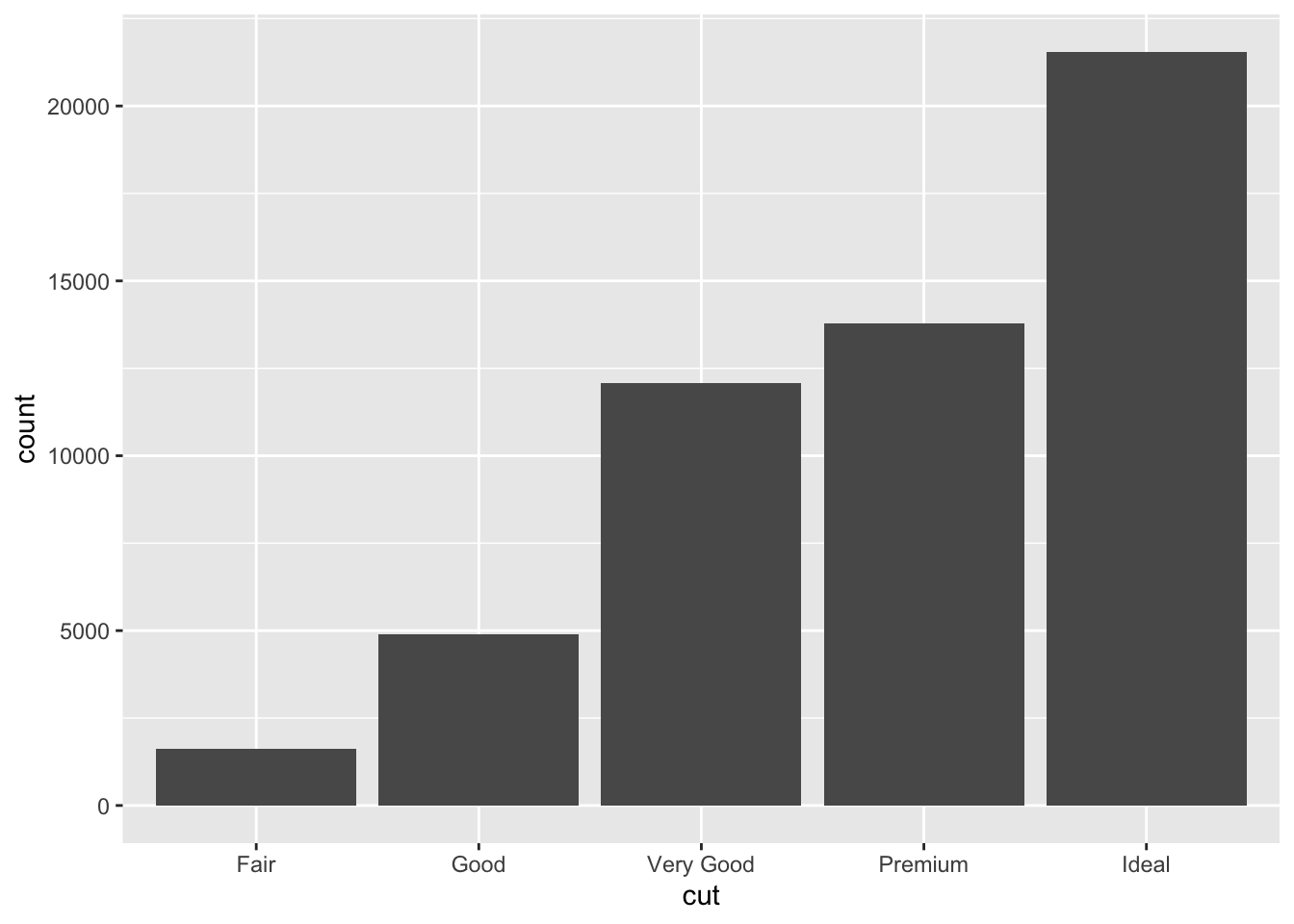

How you visualise the distribution of a variable will depend on whether the variable is categorical or continuous. A variable is categorical if it can only take one of a small set of values (e.g., color, size, level of education). These can also be ranked or ordered. In R, categorical variables are usually saved as factors or character vectors. To examine the distribution of a categorical variable, use a bar chart:

library('dplyr')

library('magrittr')

library('ggplot2')

library('tidyverse')

#Here we will look at the diamonds dataset

#let's look at the variable cut, which is a categorical variable, but how do you check?

#you can check using the is.factor() command and it'll return a boolean TRUE or FALSE

is.factor(diamonds$cut) #here we call the diamonds dataset and use the $ sign to specify which column

## [1] TRUE

#if something is not a factor when it needs to be, you can use the

# as.factor() command, but check using is.factor() after to ensure this worked as anticipated

#let's visualize the diamonds dataset using a bar plot

ggplot(data = diamonds) +

  geom_bar(mapping = aes(x = cut))

You can also look at this without data visualization, for example using the count command in dplyr.

#this will give you a count for each category. There are a number of ways to do this, but this is the simplest:

#note we are calling dplyr:: here as this count command can be masked by other packages, this is just to ensure this code runs

diamonds %>%

  dplyr::count(cut)

## # A tibble: 5 × 2

## cut n

## <ord> <int>

## 1 Fair 1610

## 2 Good 4906

## 3 Very Good 12082

## 4 Premium 13791

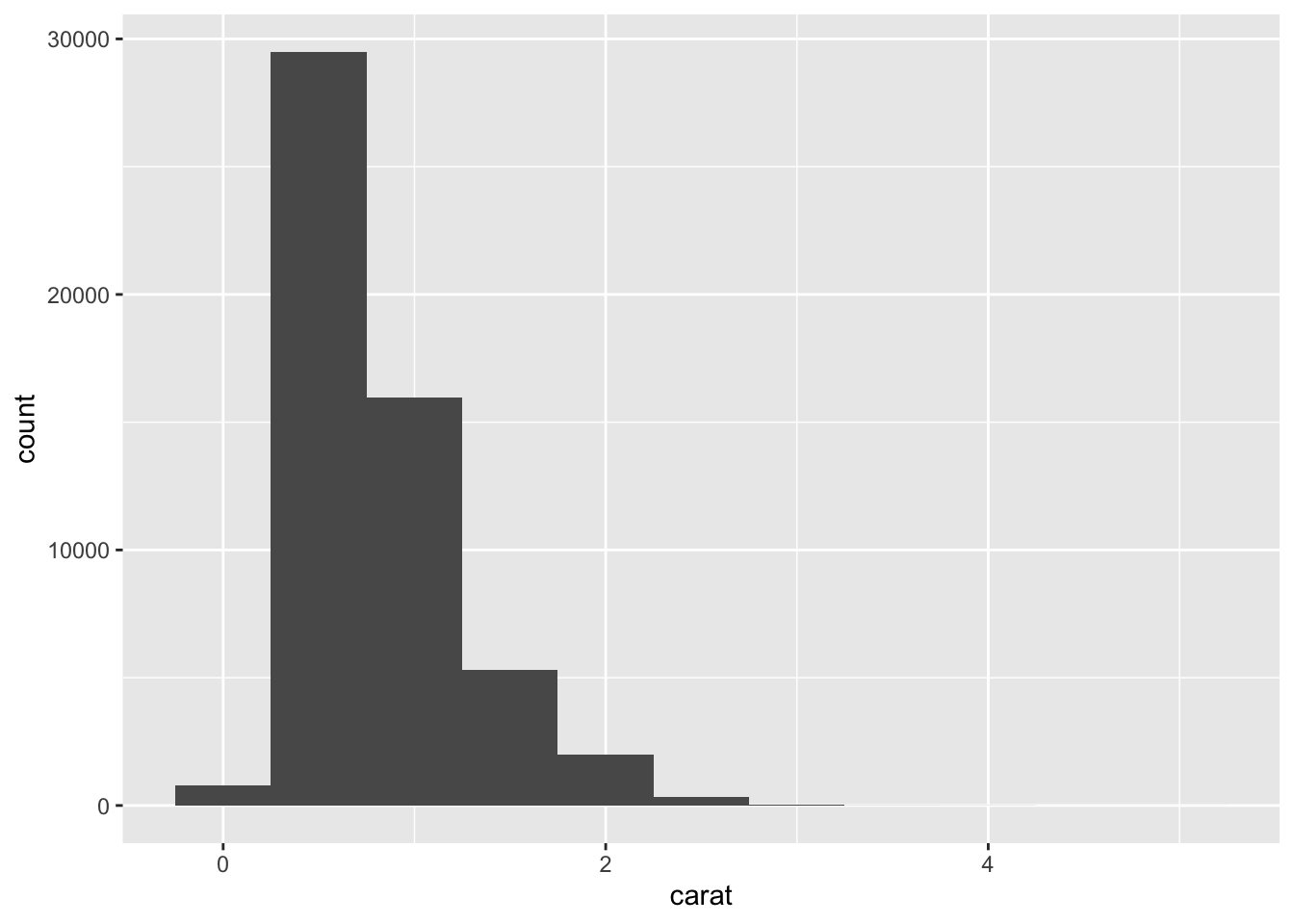

## 5 Ideal 21551If we are looking at continuous variables, a histogram is the better way to look at the distribution of each variable:

ggplot(data = diamonds) +

geom_histogram(mapping = aes(x = carat), binwidth = 0.5)

A histogram divides the x-axis into equally spaced bins and then uses the height of a bar to display the number of observations that fall in each bin. In the graph above, the tallest bar shows that almost 30,000 observations have a carat value between 0.25 and 0.75, which are the left and right edges of the bar.
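The counts behind those bars can also be computed by hand, which is a handy check on what the histogram is showing. The sketch below assumes dplyr and ggplot2 are loaded; cut_width() is a ggplot2 helper that cuts a numeric variable into bins of a given width, and the names bin and bin_counts are only illustrative.

```r
library(dplyr)
library(ggplot2) # provides cut_width() and the diamonds dataset

# Count how many diamonds fall into each 0.5-carat-wide bin
bin_counts <- diamonds %>%
  dplyr::count(bin = cut_width(carat, 0.5))

bin_counts
```

Each row of bin_counts corresponds to one bar of the histogram, with n giving the height of that bar.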

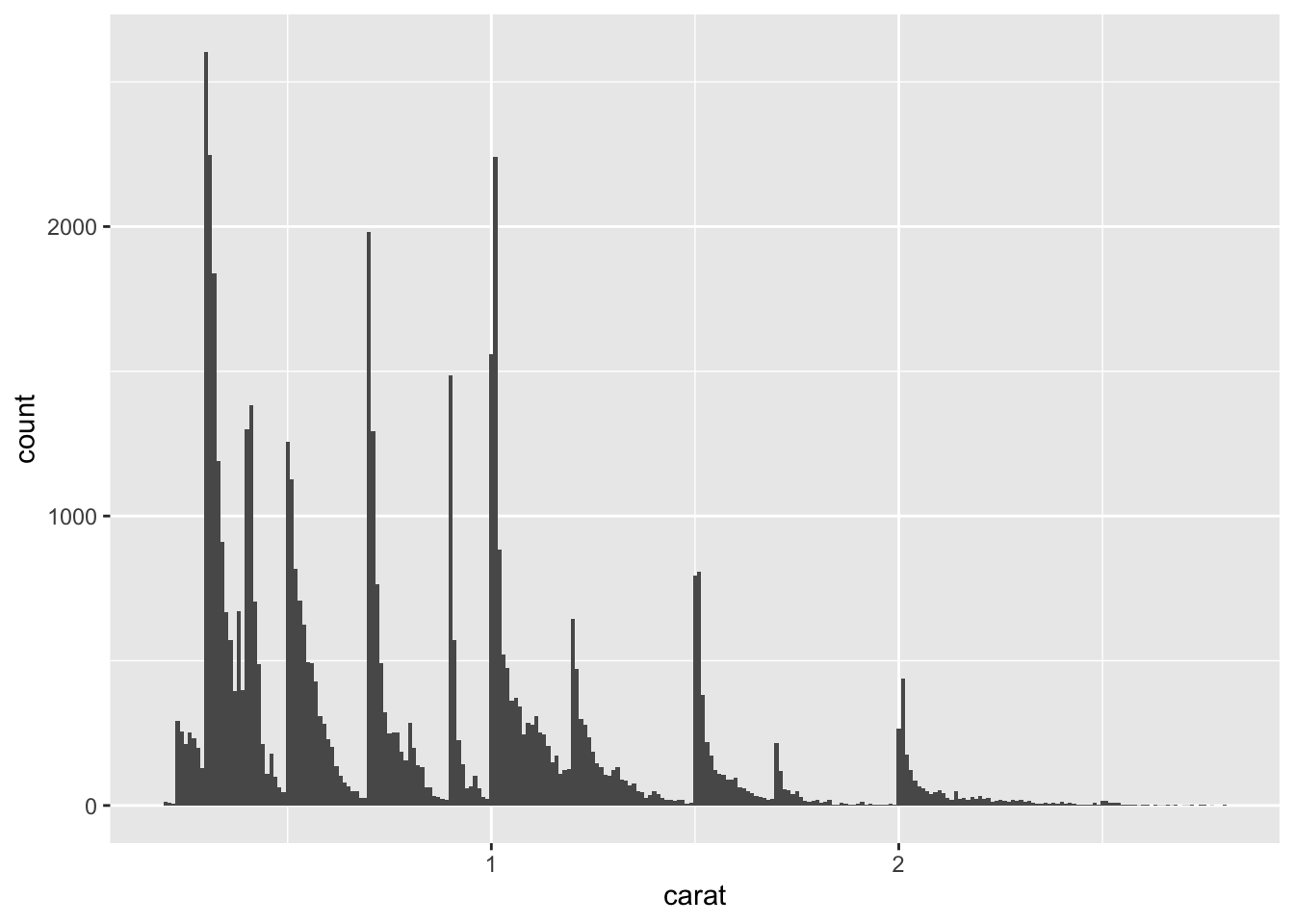

You can set the width of the intervals in a histogram with the binwidth argument, which is measured in the units of the x variable. You should always explore a variety of binwidths when working with histograms, as different binwidths can reveal different patterns. It is really important to experiment and get this right, otherwise you’ll see too much or too little variation in the variable. Please note: you should do this for every variable you have so you can understand how much or how little variation you have in your dataset and whether it is roughly normally distributed or not (i.e., whether the histogram looks like a bell curve).

For example, here is how the graph above looks when we zoom into just the diamonds with a size of less than three carats and choose a smaller binwidth:

smaller <- diamonds %>%

filter(carat < 3)

ggplot(data = smaller, mapping = aes(x = carat)) +

geom_histogram(binwidth = 0.01)
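One way to explore several binwidths without copying and pasting the plotting code is to loop over them. This is only a sketch: the three binwidths chosen and the object names (binwidths, plots) are arbitrary illustrations.

```r
library(dplyr)
library(ggplot2)

smaller <- diamonds %>%
  dplyr::filter(carat < 3)

# Candidate binwidths to compare (arbitrary choices for illustration)
binwidths <- c(0.01, 0.1, 0.5)

# Build one histogram per binwidth and keep them in a list
plots <- lapply(binwidths, function(bw) {
  ggplot(data = smaller, mapping = aes(x = carat)) +
    geom_histogram(binwidth = bw) +
    ggtitle(paste("binwidth =", bw))
})

# Print each plot in turn, e.g. the first one
plots[[1]]
```

Flicking through plots[[1]], plots[[2]], and plots[[3]] shows how the same variable can look spiky or smooth depending on the binwidth.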

4.2.3.1 Activity

Part 1: Please take the code from above and experiment with it and see how changing various arguments changes what you see.

Part 2: Use the code above to explore the ‘iris’ dataset as well; this ships with base R (in the datasets package), so it is already available.

4.2.4 Data in Context

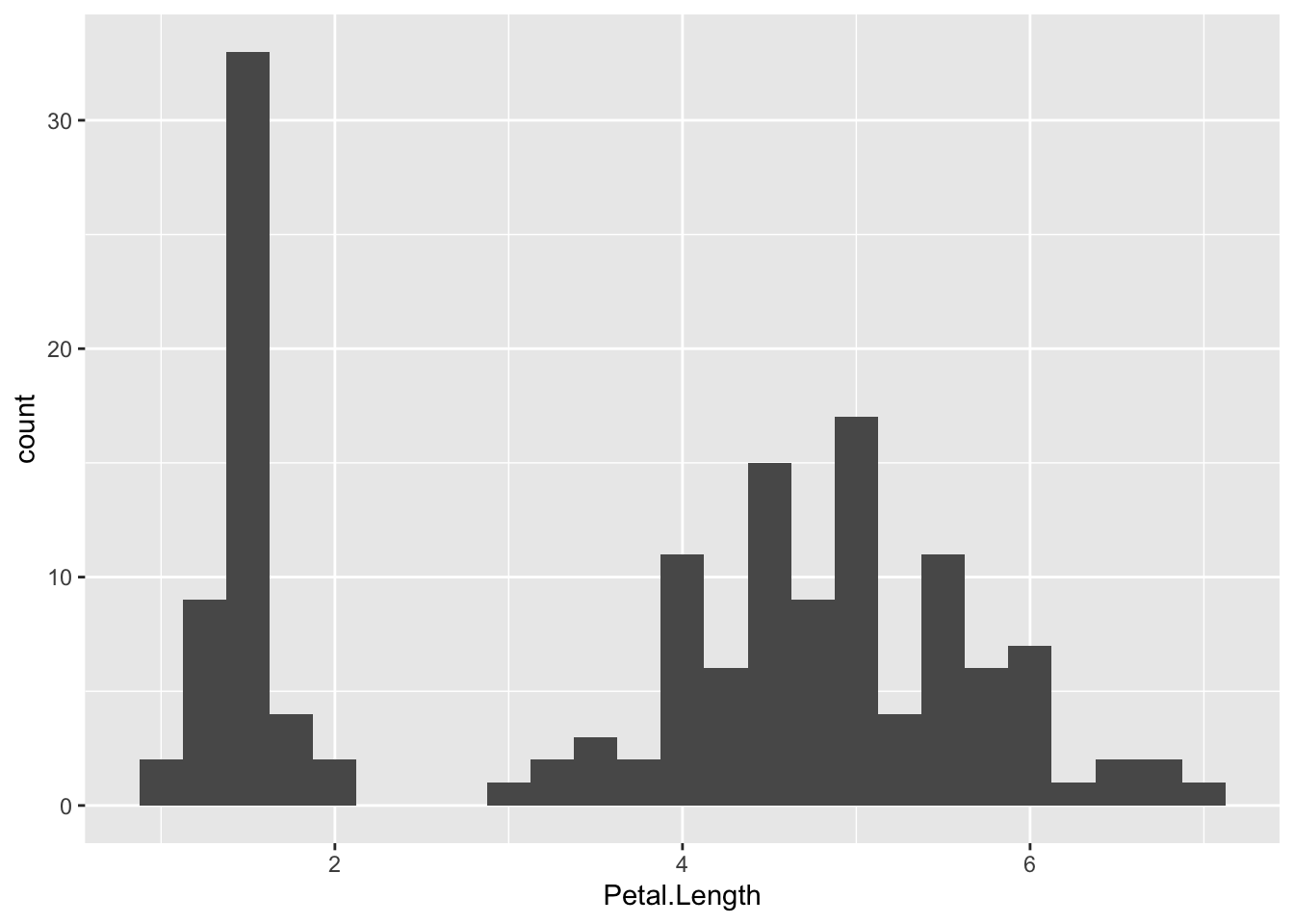

In both bar charts and histograms, tall bars show the common values of a variable, and shorter bars show less-common values. Places that do not have bars reveal values that were not seen in your data. To turn this information into useful questions, look for anything unexpected:

Which values are the most common? Why? Do we think there might be missing data here at all?

Which values are rare? Why? Does that match your expectations? Why could this be if you’re unsure?

Can you see any unusual patterns? What might explain them? (Here, if we are looking at longitudinal data, we might also look at weekday effects or even daily effects, where if we are looking at something like mood, we might see mood changing throughout the day or week consistently.)

So, if we look at the classic iris dataset, what naturally occurring groups can we see? In the histogram produced by the code below, you can see that there are two key groups: petals that are quite small (<2cm) and larger petals (>3cm).

ggplot(data = iris, mapping = aes(x = Petal.Length)) +

geom_histogram(binwidth = 0.25)

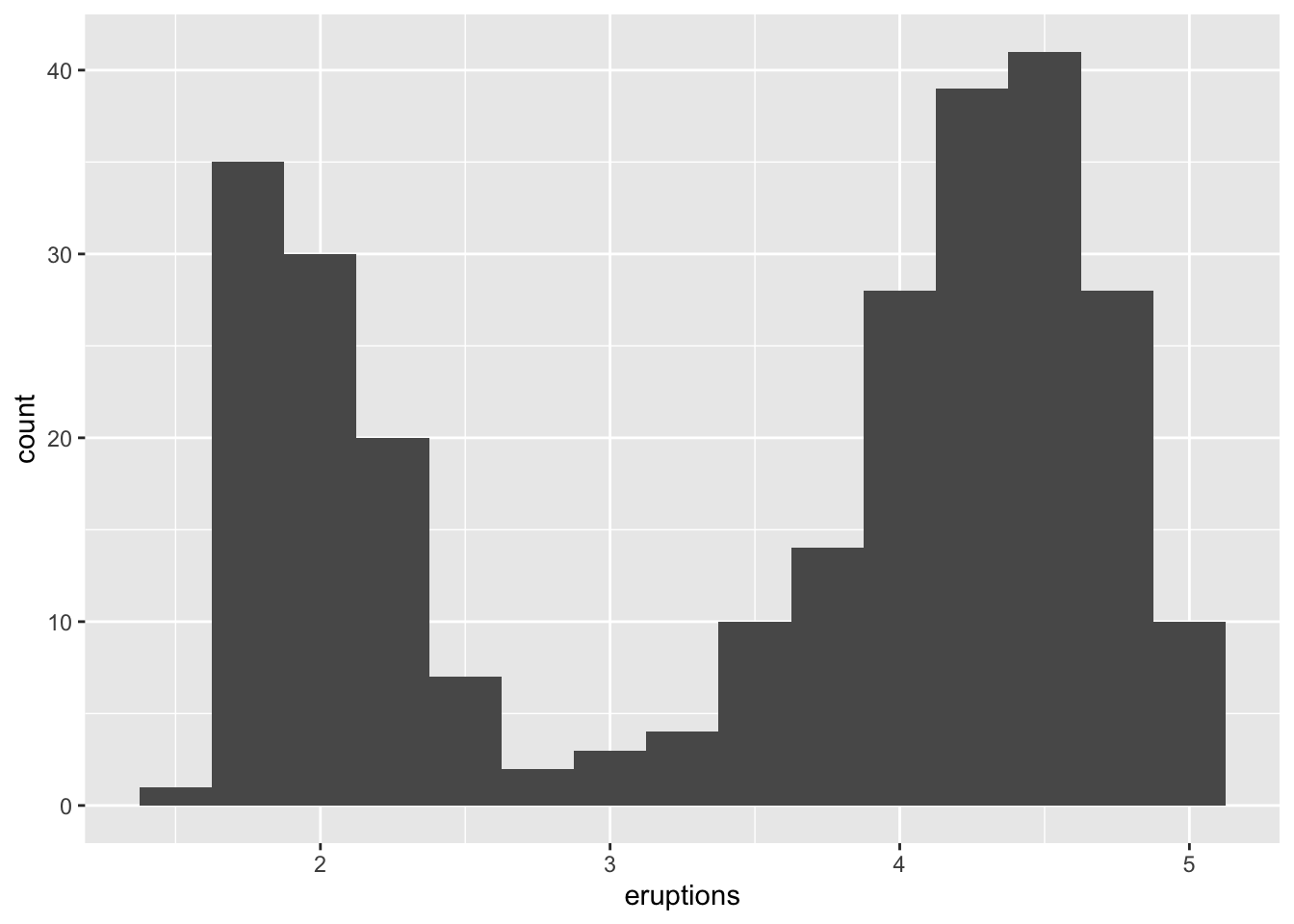

Another example is the faithful dataset, which records eruptions of the Old Faithful geyser in Yellowstone National Park. Eruption times appear to be clustered into two groups: there are short eruptions (of around 2 minutes) and long eruptions (4-5 minutes), but little in between.

ggplot(data = faithful, mapping = aes(x = eruptions)) +

geom_histogram(binwidth = 0.25)
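Once a histogram suggests groups like these, you can count how many observations fall on each side of a cut-off. In this sketch the 3-minute cut-off is an assumption chosen by eye from the histogram, and the names type and eruption_groups are illustrative.

```r
library(dplyr)

# Label each eruption as short or long using an assumed 3-minute cut-off
eruption_groups <- faithful %>%
  mutate(type = ifelse(eruptions < 3, "short", "long")) %>%
  dplyr::count(type)

eruption_groups
```

Trying a slightly different cut-off and seeing whether the counts change much is a simple check on how clear the separation between the two groups really is.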

4.2.4.1 Activity

For all datasets (iris, faithful, diamonds), play with exploring more relationships in the data itself using bar plots, histograms, and other plots you think might help you understand your data distributions.

4.2.5 Data in Context, part 2

When you look at your data, you might find some outliers, or extreme values that are unusual or unexpected. These are extremely important to address and investigate, as they might be data entry or logging issues. For example, with technology usage time estimates, if someone who is asked how long they use their phone each day (in hours) inputs 23, this might be a mistake, and in sensor data it could be a logging issue. Alternatively, the value may be an interesting artifact in your dataset.

It is important here to understand which values are expected and which are unexpected. When you’re working in a new context it is critical to ask the data owners, whoever collected the data, or someone who knows the context for help. Do not come up with your own rules on this if you do not have enough knowledge of the context. Be risk averse: it is better to be a little slower and get things right than to rush and potentially cause issues down the line, as mistakes here will only get bigger as these data are further analysed. It is common to see rules of thumb saying that any value more than 3 standard deviations (SDs) above or below the mean should be removed from analyses. This is not always a good idea without knowing the context and whether it is actually appropriate.
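If you do want to see what a 3 SD rule would pick up, a safer first step is to flag those values rather than delete them, so you can inspect them in context. The sketch below applies this to carat in the diamonds dataset; the column names carat_z and beyond_3sd are illustrative, and the 3 SD threshold is the rule of thumb mentioned above, not a recommendation.

```r
library(dplyr)
library(ggplot2) # provides the diamonds dataset

# Flag (do not remove) values more than 3 SDs from the mean
flagged <- diamonds %>%
  mutate(carat_z = (carat - mean(carat)) / sd(carat),
         beyond_3sd = abs(carat_z) > 3)

# How many observations would the rule of thumb affect?
flagged %>% dplyr::count(beyond_3sd)

# Look at the flagged rows alongside other variables before deciding anything
flagged %>%
  dplyr::filter(beyond_3sd) %>%
  arrange(desc(carat)) %>%
  head()
```

Because the flag is just an extra column, nothing is lost, and the decision about what to do with these rows can be made (and documented) later.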

4.2.6 Ethics interjection

As we talked about a little before: what if those ‘extreme’ values are genuine? What if these are typically underrepresented sub-groups of a population and we are now just removing them without reason? It is important to think carefully about your research questions (RQs) and consider what you are doing before just removing data with a simple rule of thumb. If these are underrepresented groups being removed, this may end up continuing to solidify a bias seen in data, so be careful.

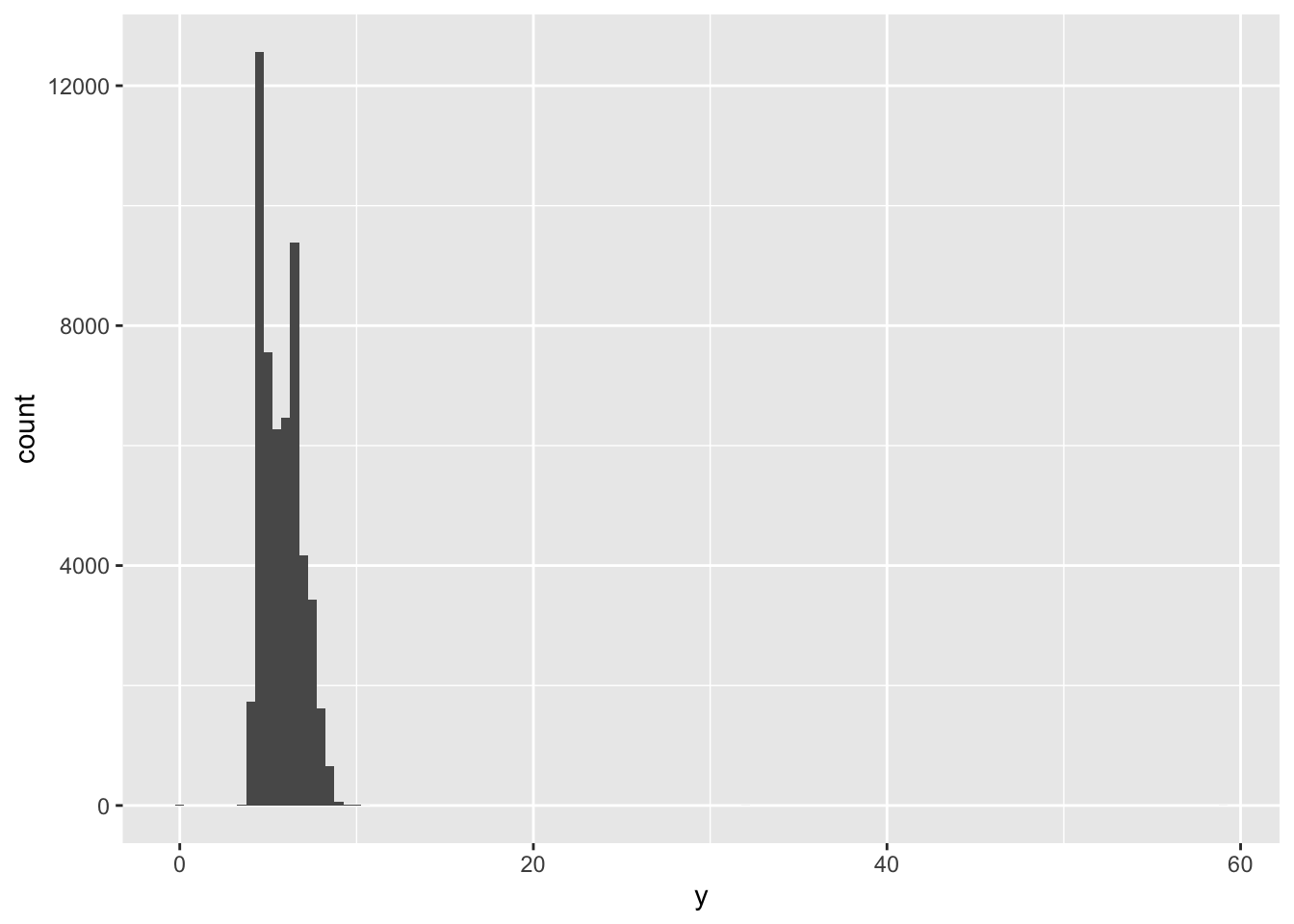

So, if we look again at the diamonds dataset, specifically looking at the distribution of the variable ‘y’, which is the ‘width in mm’ of each diamond, we get the following histogram:

ggplot(diamonds) +

  geom_histogram(mapping = aes(x = y), binwidth = 0.5)

This clearly shows there is something extreme happening (also noting these data are about diamonds, not people, so we can tailor our thinking accordingly)… here we can see this is a rare occurrence, as we can hardly see the bars towards the right-hand side of the plot.

Let’s look at this more…

# note: be careful with duplicate command names... if your code worked once and errors another time, it might be due to conflicts between packages

#hence here, we call select using dplyr:: to ensure this does not happen

unusual <- diamonds %>%

  filter(y < 3 | y > 20) %>%

  dplyr::select(price, x, y, z) %>%

  arrange(y)

unusual

## # A tibble: 9 × 4

## price x y z

## <int> <dbl> <dbl> <dbl>

## 1 5139 0 0 0

## 2 6381 0 0 0

## 3 12800 0 0 0

## 4 15686 0 0 0

## 5 18034 0 0 0

## 6 2130 0 0 0

## 7 2130 0 0 0

## 8 2075 5.15 31.8 5.12

## 9 12210 8.09 58.9 8.06

The y variable (width in mm) measures one of the three dimensions of these diamonds (all in mm)… so what do we infer here? Well, we know that diamonds can’t have a width of 0mm, so these values must be incorrect. Similarly, at the other extreme, we might also suspect that measurements of 32mm and 59mm are implausible: those diamonds are over an inch long, but don’t cost hundreds of thousands of dollars! (This is why it is important to look at distributions in the context of other variables, like price!)

It’s good practice to repeat your analysis with and without the outliers. If they have minimal effect on the results, and you can’t figure out why they’re there, it’s reasonable to drop them and move on (but keep note of the number of observations you remove and why). However, if they have a substantial effect on your results, you shouldn’t drop them without justification. Some may be completely implausible observations (e.g., someone reporting they use their smartphone for more than 24 hours in a day), but others may be worth further investigation and decision-making. You’ll need to figure out what caused them (e.g., a data entry error) and disclose whether or not you removed them in your write-ups.
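A sketch of this ‘with and without’ comparison for the y variable is shown below. It reuses the same cut-offs as the filter above (y < 3 or y > 20) and replaces the unusual values with NA rather than dropping whole rows; the names diamonds_flagged, y_clean, and comparison are illustrative.

```r
library(dplyr)
library(ggplot2) # provides the diamonds dataset

# Replace the implausible widths with NA instead of deleting the rows
diamonds_flagged <- diamonds %>%
  mutate(y_clean = ifelse(y < 3 | y > 20, NA, y))

# Keep a note of how many values were set aside
n_set_aside <- sum(is.na(diamonds_flagged$y_clean))
n_set_aside

# Compare simple summaries with and without the unusual values
comparison <- diamonds_flagged %>%
  summarise(mean_y_all   = mean(y),
            sd_y_all     = sd(y),
            mean_y_clean = mean(y_clean, na.rm = TRUE),
            sd_y_clean   = sd(y_clean, na.rm = TRUE))

comparison
```

If the two sets of summaries barely differ, the unusual values have little influence on this particular result; if they differ a lot, that is a sign the decision needs more justification.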

4.2.7 Activity

Explore the distribution of each of the x (length in mm), y (width in mm), and z (depth in mm) variables in the diamonds dataset. What do you learn? Are there any other outliers or unusual or unexpected values? If so, why are they unusual?

Explore the distribution of price. Do you discover anything unusual or surprising? (Hint: Carefully think about the binwidth and make sure you try a wide range of values.)

Do some research about the following commands: coord_cartesian() vs xlim() or ylim() when zooming in on a histogram. Try them out, what do they do? What is the difference between them? What happens if you leave binwidth unset?

4.2.8 Covariation

If variation describes the behavior within a variable, covariation describes the behavior between variables. Covariation is the tendency for the values of two or more variables to vary together in a related way. The best way to spot covariation is to visualize the relationship between two or more variables.

4.2.8.1 Categorical and Continuous Variables

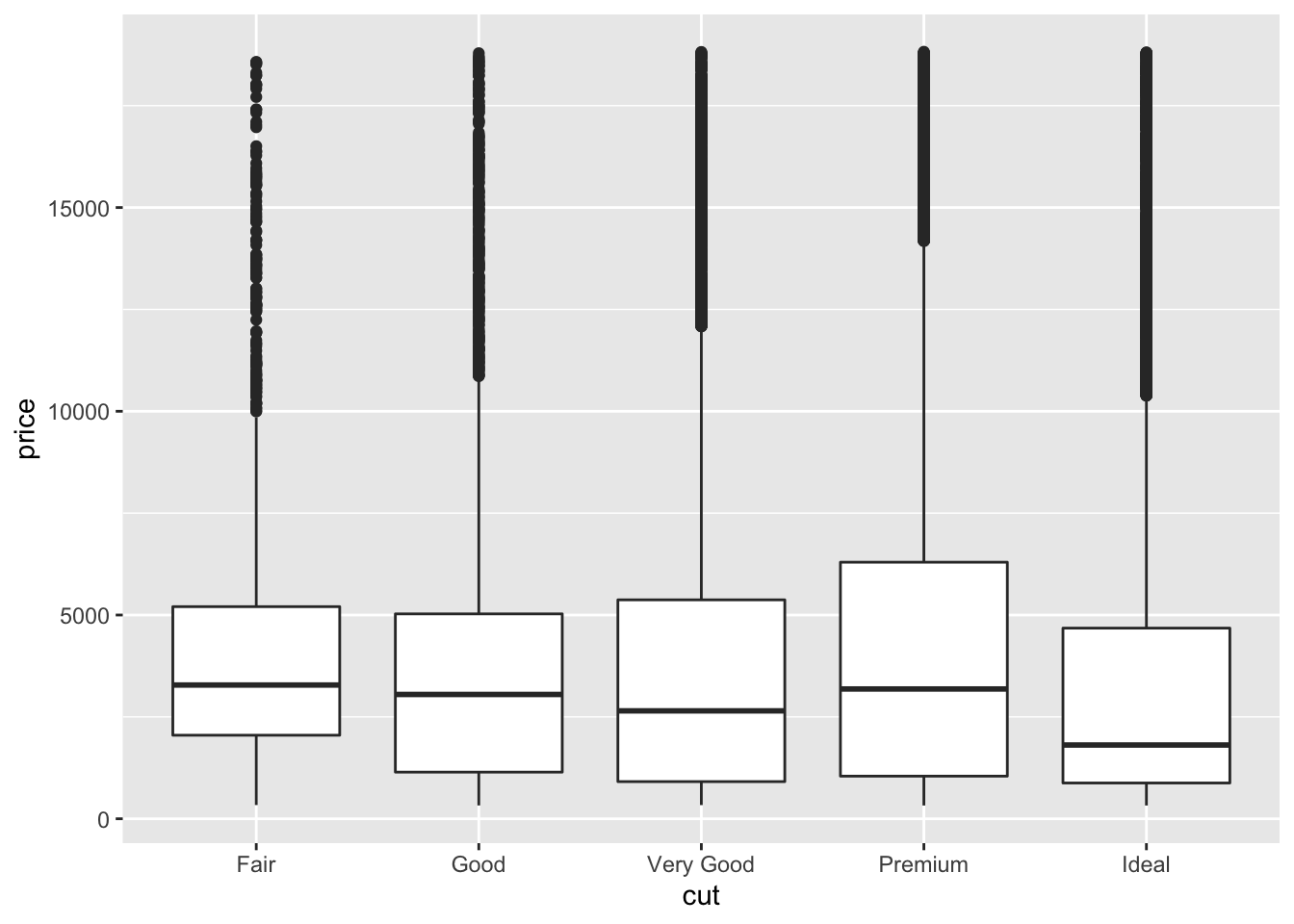

Let’s look back at the diamonds dataset. Boxplots can be a really nice way to look at the data across several variables. So, let’s have a little reminder of boxplot elements:

A box that stretches from the 25th percentile of the distribution to the 75th percentile, a distance known as the interquartile range (IQR). In the middle of the box is a line that displays the median, i.e., 50th percentile, of the distribution. These three lines give you a sense of the spread of the distribution and whether or not the distribution is symmetric about the median or skewed to one side.

Visual points that display observations that fall more than 1.5 times the IQR from either edge of the box. These outlying points are unusual so are plotted individually.

A line (or whisker) that extends from each end of the box and goes to the farthest non-outlier point in the distribution.
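The three boxplot elements listed above can be worked out by hand. This is a sketch on a made-up vector using base R's quantile() with its default method; plotting functions may compute the box edges slightly differently, so treat it as an illustration rather than an exact reproduction of what geom_boxplot() draws.

```r
# Made-up values with one obviously large observation
x <- c(2, 3, 4, 5, 6, 7, 8, 30)

# Box edges (25th and 75th percentiles) and the median
q <- quantile(x, probs = c(0.25, 0.5, 0.75))
iqr <- q[["75%"]] - q[["25%"]]

# Points further than 1.5 * IQR from either box edge are plotted individually
lower_fence <- q[["25%"]] - 1.5 * iqr
upper_fence <- q[["75%"]] + 1.5 * iqr
outliers <- x[x < lower_fence | x > upper_fence]

# Whiskers stop at the most extreme points that are not outliers
whiskers <- range(x[x >= lower_fence & x <= upper_fence])

q
outliers
whiskers
```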

Let’s have a go!

ggplot(data = diamonds, mapping = aes(x = cut, y = price)) +

  geom_boxplot()

Please note: cut here is an ordered factor (whereby the plot automatically orders from the lowest quality = Fair, to the highest = Ideal). This is not always the case for categorical variables (or factors). For example:

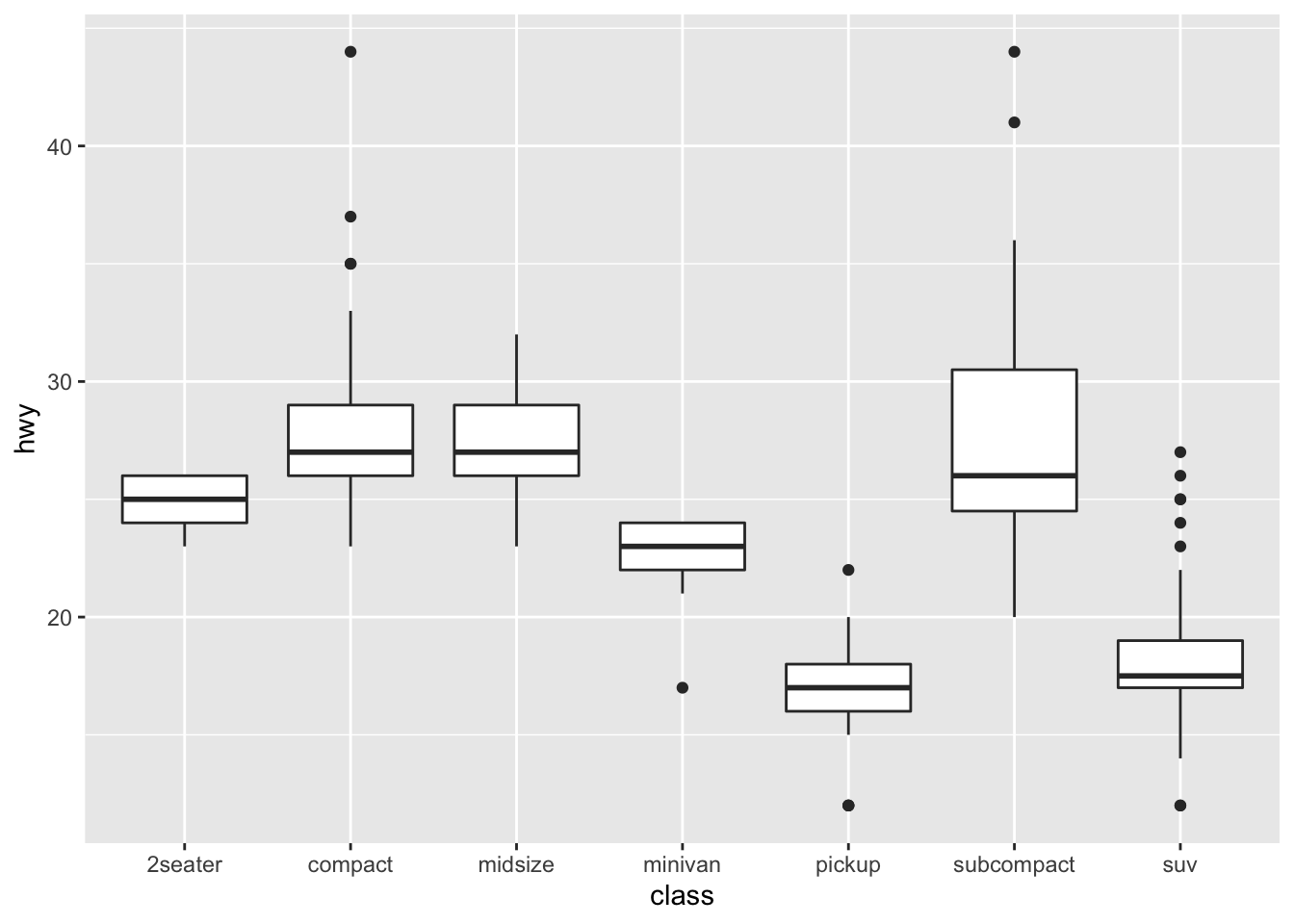

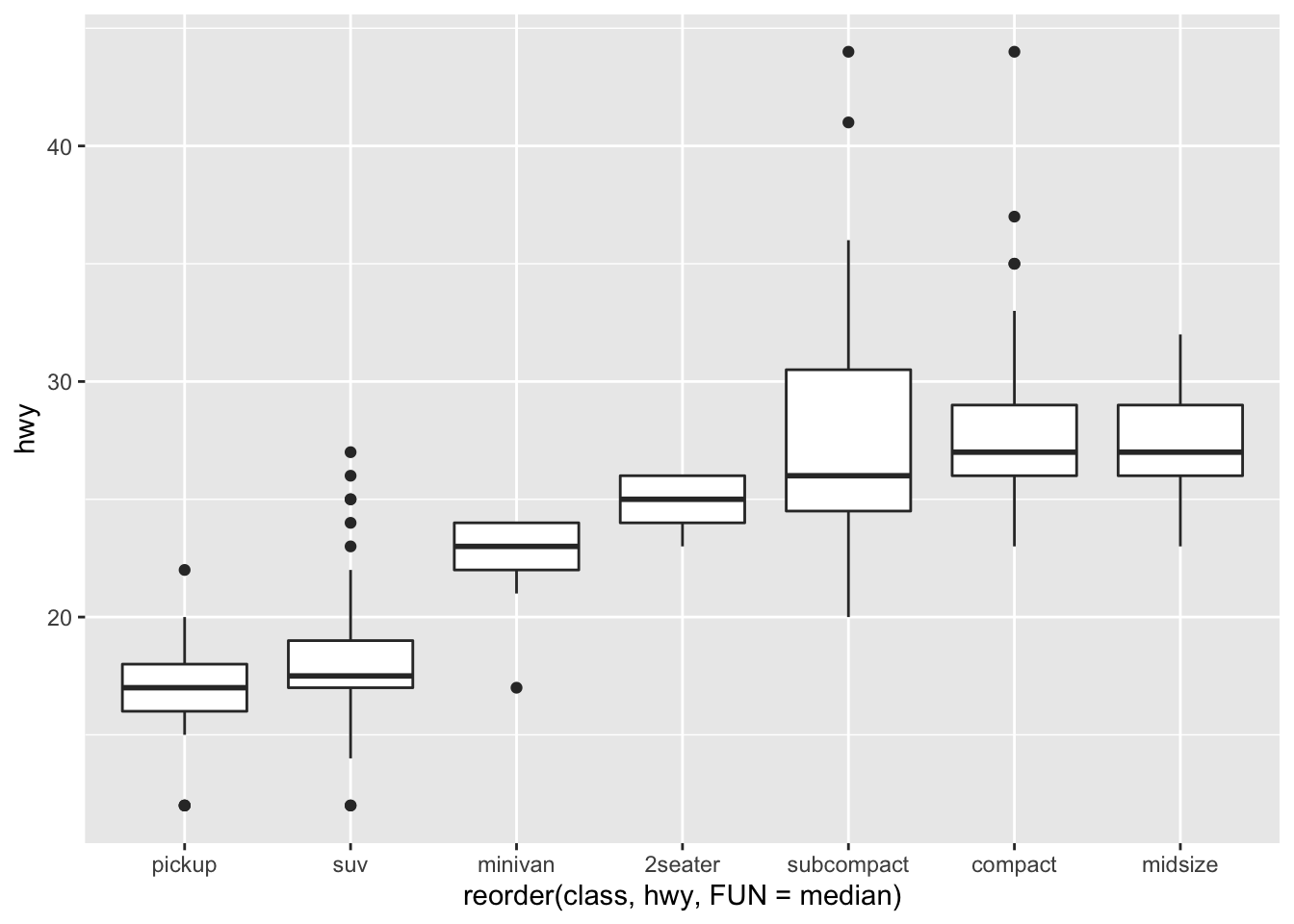

ggplot(data = mpg, mapping = aes(x = class, y = hwy)) +

geom_boxplot()

We can reorder them easily, for example by the median:

ggplot(data = mpg) +

  geom_boxplot(mapping = aes(x = reorder(class, hwy, FUN = median), y = hwy)) #here we could order them on other things like the mean, too

Hence, you can start to see patterns and relationships between categorical variables and continuous ones.
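To see what reorder() does on its own, outside a plot, here is a small sketch using the built-in iris data (chosen here only so the example needs no extra packages). It reorders the levels of a factor by the median of a second variable.

```r
# Median sepal width per species
tapply(iris$Sepal.Width, iris$Species, median)

# The default level order of the factor
levels(iris$Species)

# reorder() sorts the levels by the chosen summary (here the median)
species_by_width <- reorder(iris$Species, iris$Sepal.Width, FUN = median)
levels(species_by_width)
```

Swapping FUN = median for FUN = mean orders the levels by the mean instead.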

4.2.8.2 Two Categorical Variables

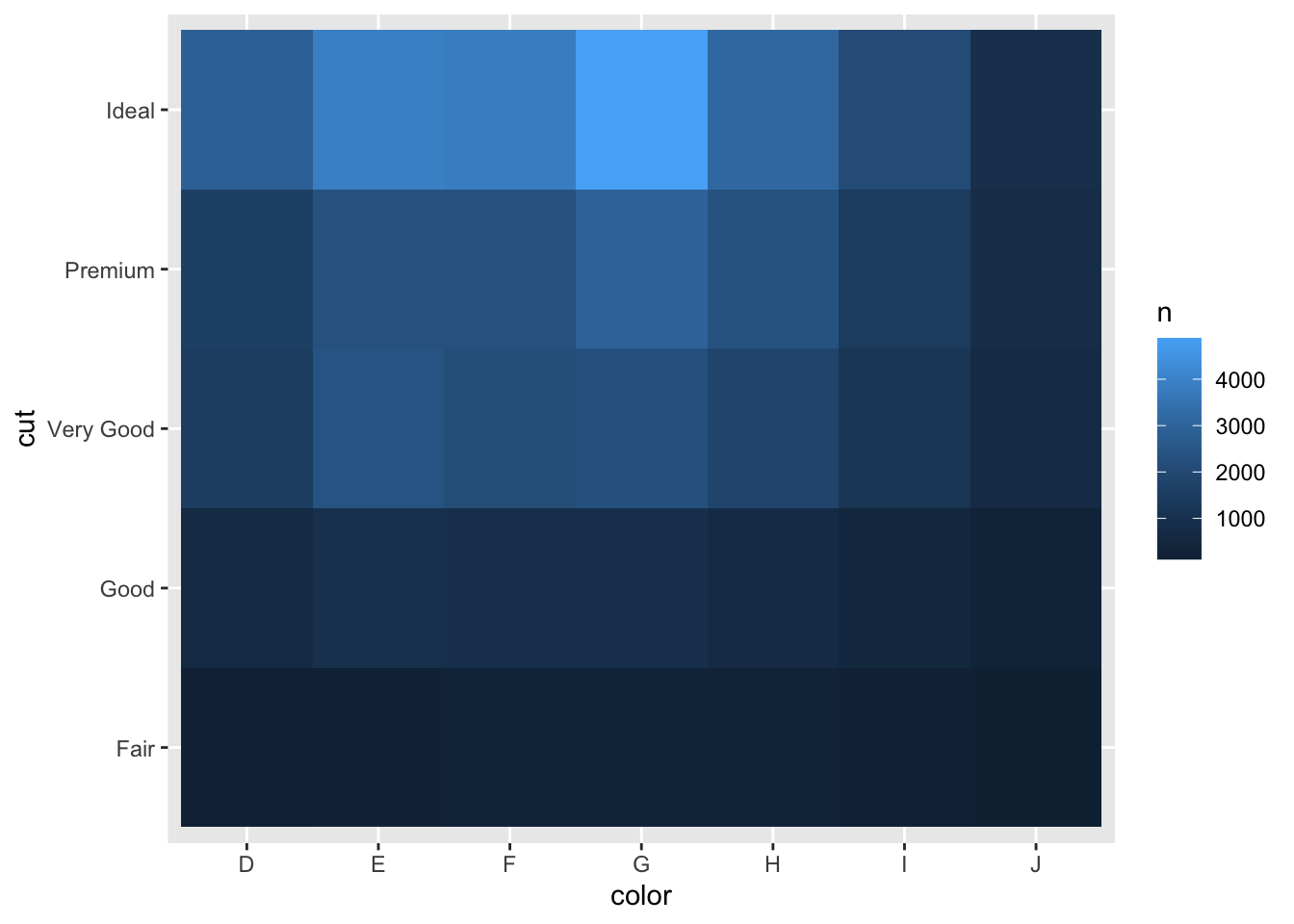

You can look at the covariation between categorical variables by counting the observations in each combination, as we have done earlier using the dplyr package:

diamonds %>%

  dplyr::count(color, cut)

## # A tibble: 35 × 3

## color cut n

## <ord> <ord> <int>

## 1 D Fair 163

## 2 D Good 662

## 3 D Very Good 1513

## 4 D Premium 1603

## 5 D Ideal 2834

## 6 E Fair 224

## 7 E Good 933

## 8 E Very Good 2400

## 9 E Premium 2337

## 10 E Ideal 3903

## # … with 25 more rows

We could also visualize this using a heatmap (drawn with geom_tile()), which is a really nice way to see these counts:

diamonds %>%

dplyr::count(color, cut) %>%

ggplot(mapping = aes(x = color, y = cut)) +

geom_tile(mapping = aes(fill = n))

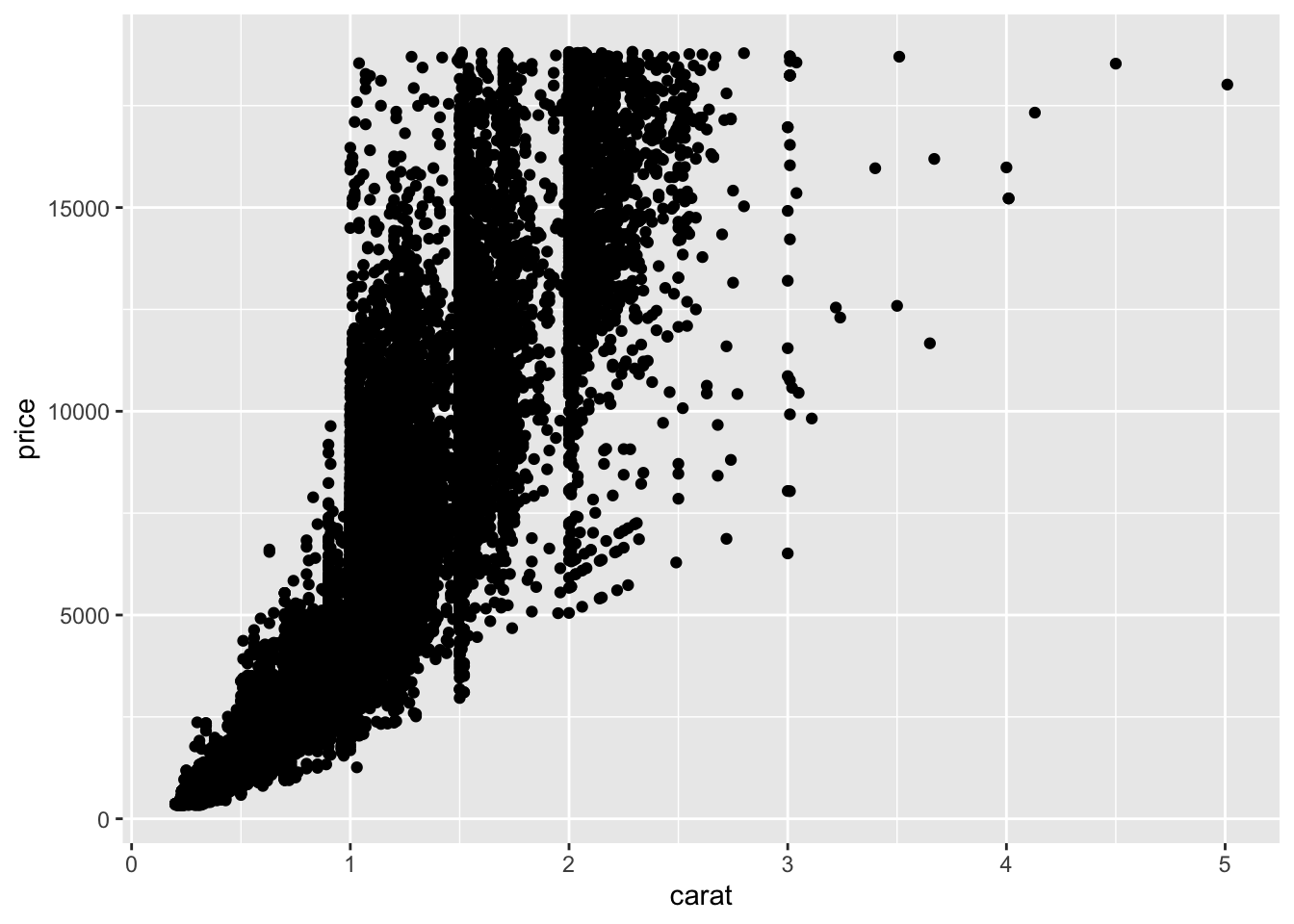
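The same kind of two-way count can be sketched in base R. The example below uses the built-in mtcars data (an assumption made here so the code runs without extra packages) and treats cyl and gear as categorical variables.

```r
# Cross-tabulate number of cylinders against number of gears
counts <- xtabs(~ cyl + gear, data = mtcars)
counts

# Long format, one row per combination; unlike dplyr::count(),
# combinations with zero observations are kept
as.data.frame(counts)

# Proportions within each row (each cyl group sums to 1)
prop.table(counts, margin = 1)
```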

4.2.8.3 Two Continuous Variables

The classic way to look at two continuous variables is a scatter plot, which also gives you an idea of the correlations in the dataset. For example, there is a roughly exponential relationship between carat size and price in the diamonds dataset:

ggplot(data = diamonds) +

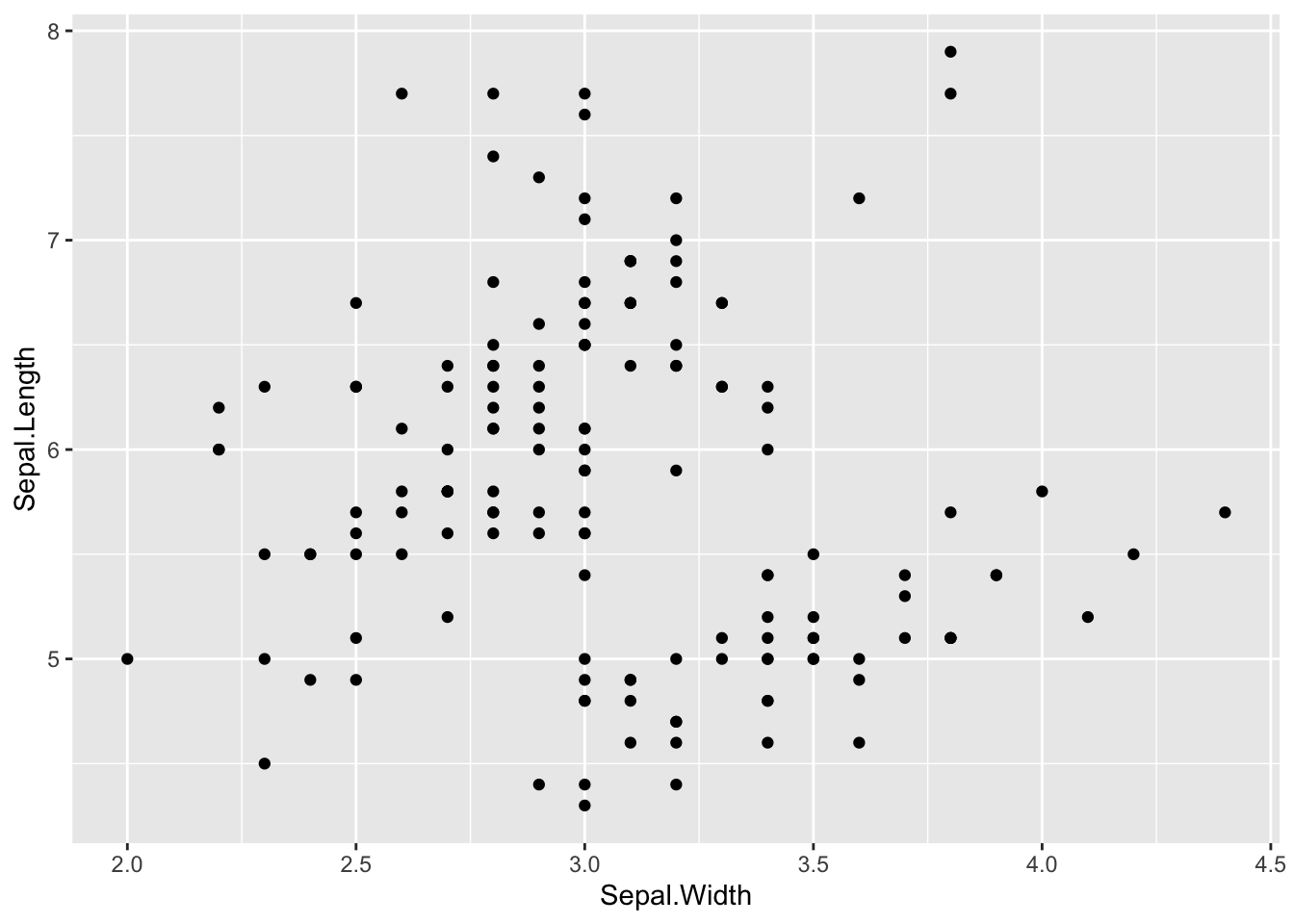

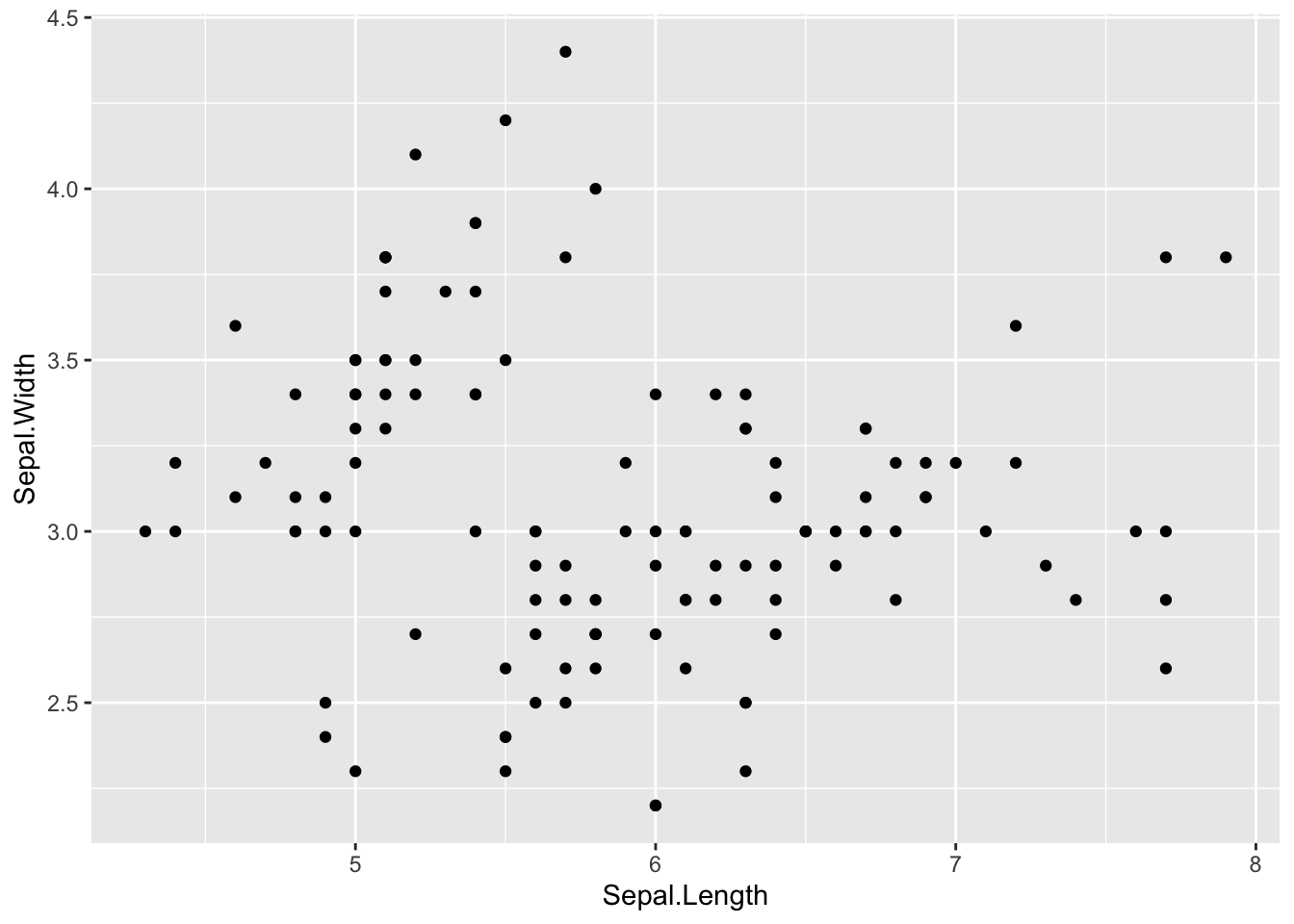

geom_point(mapping = aes(x = carat, y = price)) Similarly, there is not always an obvious relationship, where the correlation/relationship between Sepal Length and Width is less clear.

Similarly, there is not always an obvious relationship, where the correlation/relationship between Sepal Length and Width is less clear.

ggplot(iris, aes(Sepal.Width, Sepal.Length)) +

geom_point()
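One way to put a number on how clear or unclear a relationship is would be the Pearson correlation. The sketch below uses base R's cor() on the iris data; splitting by Species is an extra step added here for illustration and is not part of the plot above.

```r
# Correlation across all 150 flowers
overall <- cor(iris$Sepal.Width, iris$Sepal.Length)

# Correlation within each species
by_species <- sapply(
  split(iris, iris$Species),
  function(d) cor(d$Sepal.Width, d$Sepal.Length)
)

round(overall, 2)
round(by_species, 2)
```

The overall correlation is weak and negative, while the correlation within each species is positive, which is one reason the combined scatter plot looks muddled.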

Activity

1. Explore the relationships between variables in the iris, diamonds, and faithful datasets.

- Try out other visualization methods if you want, we will come back to this next week

4.3 Lesson 3: Missing Data

Sometimes when you collect data or analyze secondary data, there are missing values. These can appear as NULL or NA, and sometimes in other ways, so it is good to check for them straight away. Missing values are often the first obstacle in (predictive) modeling, so it’s important to consider the variety of methods for handling them. Please note that some of the methods here rely on rather advanced statistics. I only cover them conceptually, so please be aware of this before you start employing these methods: it is important to know why you are using a method and to check whether it is appropriate first. These sections will be expanded with time.

4.3.1 NA v NULL in R

Focusing on R… there are two similar but different types of missing value, NA and NULL – what is the difference? Well, the documentation for R states:

NULL represents the null object in R: it is a reserved word. NULL is often returned by expressions and functions whose values are undefined.

NA is a logical constant of length 1 which contains a missing value indicator. NA can be freely coerced to any other vector type except raw. There are also constants NA_integer_, NA_real_, NA_complex_ and NA_character_ of the other atomic vector types which support missing values: all of these are reserved words in the R language.

While there are subtle differences, R is not always consistent with how it works. Please be careful. (To be expanded…)
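A short base R sketch of the practical difference: NA is a placeholder for a missing value inside a vector, whereas NULL is the absence of an object altogether. The vectors here are made up for illustration.

```r
x <- c(1, NA, 3)    # NA keeps its place in the vector
y <- c(1, NULL, 3)  # NULL is dropped when values are combined

length(x)
length(y)

is.na(x)        # element-wise check for missing values
is.null(NULL)   # checks whether the whole object is NULL
length(NULL)    # NULL has length zero

class(NA)       # "logical"
class(NA_real_) # "numeric"
```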

4.3.2 So, what do we do?

Well, thankfully there is a large choice of methods for handling missing values, and the choice can strongly influence a model’s predictive ability. In most statistical analysis methods, ‘listwise deletion’ is the default way of dealing with missing values. By this, I mean we delete the entire record/row/observation from the dataset if any of its columns/variables are incomplete. This is not ideal, since it leads to information loss. However, there are other ways we can handle missing values.

There are a couple of very simple ways you can quickly handle this without imputation:

- Remove any missing data observations (listwise deletion) – most common method (not necessarily the most sensible, either…):

#Let's look at the iris dataset and randomly add in some missing values to demonstrate (as the original dataset does not have any missing values)

library('mice') #package for imputations

library('missForest') #another package for imputations and has a command to induce missing values

iris_missing <- prodNA(iris, noNA = 0.1) #creating 10% missing values

head(iris_missing)

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## 1 5.1 3.5 1.4 0.2 setosa

## 2 4.9 3.0 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 NA 3.1 1.5 0.2 setosa

## 5 5.0 NA 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosa

#hopefully when you use the head() function you will see some of the missing values, if not call the dataset and check they are there

So we have induced NA values into the iris dataset. Let’s just remove them (there are many ways to do this; this is just one):

iris_remove_missing <- na.omit(iris_missing)

dim(iris_remove_missing) #get the dimensions of the new dataframe

## [1] 89 5

So, you should see here that there are far fewer rows of data, because we dropped every row containing at least one NA value. This is a valid method for handling missing values, but it does mean we might have far less data left over. The issue here is that we might be removing important participants, so this can be an extreme option. Think critically about whether this is the right decision for your data and research questions.
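Before deleting rows, it can help to count how much would be lost. The sketch below uses only base R and places NA values at fixed positions (an assumption made here so the numbers are the same on every run, unlike the random prodNA() example above).

```r
# Copy iris and blank out a few cells at known positions
iris_demo <- iris
iris_demo$Sepal.Length[c(1, 5)] <- NA
iris_demo$Petal.Width[c(5, 10)] <- NA

# Missing values per column
colSums(is.na(iris_demo))

# Rows that listwise deletion would drop
incomplete <- !complete.cases(iris_demo)
sum(incomplete)
which(incomplete)

# Rows left after listwise deletion
nrow(na.omit(iris_demo))
```

Four cells are missing, but only three rows are lost, because row 5 has two missing values.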

- Leave them in:

This is another important option, where you leave the data alone and continue with the missing values in place. If you do this, be careful with how various commands handle missing values. For example, ggplot2 does not know where to plot missing values, so it leaves them out of the plot, but it does warn that they’ve been removed:

ggplot(iris_missing, aes(Sepal.Length, Sepal.Width)) +

geom_point()## Warning: Removed 29 rows containing missing values (geom_point).

# where the warning states: Removed 30 rows containing missing values (geom_point).

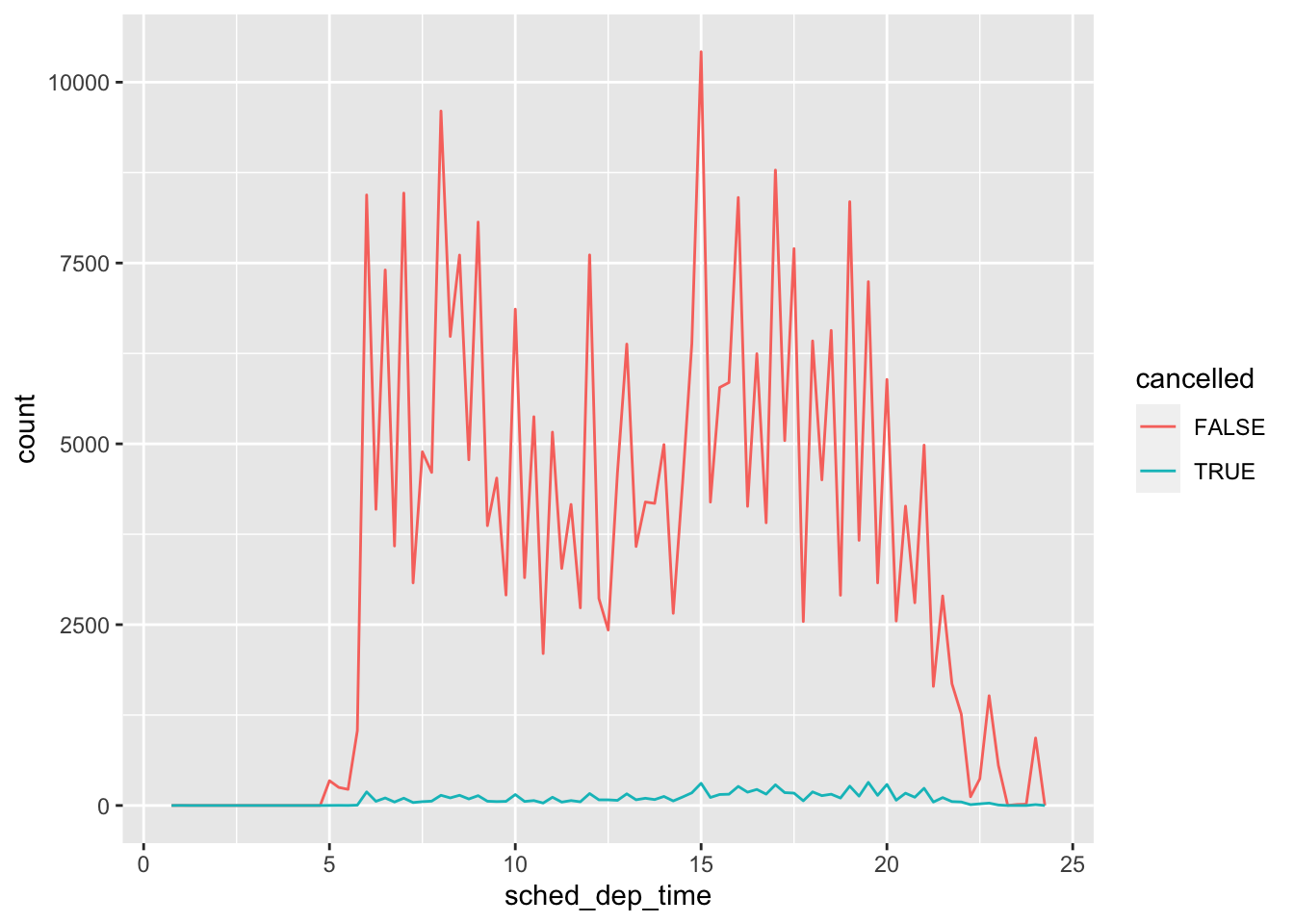

#please note this might be a different number to you because we RANDOMLY added in NA values, so do not worry!There are times where you will want to keep in missing data, for example, in the flights dataset, a missing value in departure time (dep_time) indicates a flight was cancelled. Therefore, be careful for whenever you decide to remove or leave in missing data!

library('nycflights13')

flights %>%

mutate(

cancelled = is.na(dep_time),

sched_hour = sched_dep_time %/% 100,

sched_min = sched_dep_time %% 100,

sched_dep_time = sched_hour + sched_min / 60

) %>%

ggplot(mapping = aes(sched_dep_time)) +

geom_freqpoly(mapping = aes(colour = cancelled), binwidth = 1/4)

4.3.3 Activity

Part 1: Experiment with missing values and histograms. What happens when you try to plot a variable with missing values? Try inserting your own missing values into the iris, flights, diamonds, or faithful datasets.

Part 2: Try out other simple commands on variables with missing values:

try mean()

try mean() with the argument na.rm = TRUE

try sum()

try sum() with the argument na.rm = TRUE

What happens?

4.4 Lesson 4: Imputations

We are lucky that in R there are a number of packages built to handle imputations. For example:

1. Mice

2. Amelia

3. missForest

This is not an exhaustive list; there are more. We will look at a couple of these as examples of how to impute missing values. There are a number of things to consider when imputing rather than using listwise deletion. For example, are the values missing at random? This is an extremely important question, because the answer determines how we should handle the imputation, and it is sometimes hard to know.

4.4.1 Definitions

Let’s discuss the different types of missing data briefly. Definitions are taken from [this paper](https://www.tandfonline.com/doi/pdf/10.1080/08839514.2019.1637138?casa_token=HlDOGucxnF4AAAAA:kMM2t7SeZ4fRedtfAsyXw0BuJB3pxzLEdE5wrrc_L1cusq75SuAtvBySQiiSDzjdgvWNn1LfLwQ):

Missing Completely at Random (MCAR): MCAR is the highest level of randomness and it implies that the pattern of missing value is totally random and does not depend on any variable which may or may not be included in the analysis. Thus, if missingness does not depend on any information in the dataset then it means that data is missing completely at random. The assumption of MCAR is that probability of the missingness depends neither on the observed values in any variable of the dataset nor on unobserved part of dataset.

Missing at Random (MAR): In this case, probability of missing data is dependent on observed information in the dataset. It means that probability of missingness depends on observed information but does not depend on the unobserved part. Missing value of any of the variable in the dataset depends on observed values of other variables in the dataset because some correlation exists between attribute containing missing value and other attributes in the dataset. The pattern of missing data may be traceable from the observed values in the dataset.

Missing Not at Random (MNAR): In this case, missingness is dependent on unobserved data rather than observed data. Missingness depends on missing data or item itself because of response variable is too sensitive to answer. When data are MNAR, the probability of missing data is related to the value of the missing data itself. The pattern of missing data is not random and is non predictable from observed values of the other variables in the dataset.
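The three definitions above can be made concrete with a small simulation. This is a sketch under stated assumptions: the variables age and income, the missingness probabilities, and the seed are all made up for illustration and do not come from the paper.

```r
set.seed(42)  # arbitrary seed so the sketch is reproducible
n <- 1000
age <- round(runif(n, 18, 80))
income <- 20000 + 500 * age + rnorm(n, sd = 5000)

# MCAR: every income value has the same 20% chance of being missing
income_mcar <- ifelse(runif(n) < 0.2, NA, income)

# MAR: the chance of missingness depends on an observed variable (age)
p_mar <- ifelse(age > 60, 0.4, 0.1)
income_mar <- ifelse(runif(n) < p_mar, NA, income)

# MNAR: the chance of missingness depends on the income value itself
p_mnar <- ifelse(income > median(income), 0.4, 0.1)
income_mnar <- ifelse(runif(n) < p_mnar, NA, income)

# Compare the mean of the observed values with the true mean
observed_means <- c(
  true = mean(income),
  mcar = mean(income_mcar, na.rm = TRUE),
  mar  = mean(income_mar, na.rm = TRUE),
  mnar = mean(income_mnar, na.rm = TRUE)
)
round(observed_means)
```

In the MAR case the missingness can be explained by age, which is observed, so a model that uses age has a chance of correcting for it. In the MNAR case the cause of the missingness is hidden inside the missing values themselves.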

4.4.2 Imputation Examples

4.4.2.1 [Mice package](https://cran.r-project.org/web/packages/mice/mice.pdf)

MICE (Multivariate Imputation via Chained Equations) assumes that the missing data are Missing at Random (MAR), which means that the probability that a value is missing depends only on observed values and can be predicted using them. It imputes data on a variable-by-variable basis by specifying an imputation model per variable.

By default, Mice uses predictive mean matching for continuous (numeric) variables and logistic-type regression models for categorical variables. Once the imputation cycle is complete, multiple data sets are generated. These data sets differ only in their imputed values. Generally, it’s considered good practice to build models on these data sets separately and then combine their results.

Precisely, the methods used by this package are:

PMM (Predictive Mean Matching) – For numeric variables

logreg (Logistic Regression) – For binary variables (with 2 levels)

polyreg (Bayesian polytomous regression) – For unordered factor variables (>= 2 levels)

polr (Proportional odds model) – For ordered factor variables (>= 2 levels)
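The advice above about building a model on each imputed data set and then combining the results is usually done with Rubin's rules. The sketch below applies them by hand in base R to made-up estimates and standard errors (hypothetical numbers, not output from this chapter). In practice the mice package provides with() and pool() to do this for you.

```r
# Hypothetical estimates of the same coefficient from m = 5 imputed data sets
estimates  <- c(0.52, 0.48, 0.55, 0.50, 0.45)
std_errors <- c(0.10, 0.11, 0.09, 0.10, 0.12)

m <- length(estimates)

# Pooled estimate: the average across imputed data sets
pooled_estimate <- mean(estimates)

# Within-imputation variance: average of the squared standard errors
within_var <- mean(std_errors^2)

# Between-imputation variance: how much the estimates disagree
between_var <- var(estimates)

# Total variance combines both sources of uncertainty
total_var <- within_var + (1 + 1 / m) * between_var
pooled_se <- sqrt(total_var)

c(estimate = pooled_estimate, se = pooled_se)
```

The pooled standard error is larger than the average within-imputation error because it also reflects the uncertainty caused by the missing values.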

We will use the missing iris data we created above (iris_missing). First, for the sake of simplicity, we will remove the Species variable (categorical):

iris_missing <- subset(iris_missing, select = -c(Species))

summary(iris_missing)

## Sepal.Length Sepal.Width Petal.Length Petal.Width

## Min. :4.300 Min. :2.000 Min. :1.000 Min. :0.1

## 1st Qu.:5.100 1st Qu.:2.800 1st Qu.:1.550 1st Qu.:0.3

## Median :5.800 Median :3.000 Median :4.200 Median :1.3

## Mean :5.846 Mean :3.043 Mean :3.703 Mean :1.2

## 3rd Qu.:6.400 3rd Qu.:3.300 3rd Qu.:5.100 3rd Qu.:1.8

## Max. :7.900 Max. :4.200 Max. :6.900 Max. :2.5

## NA's :14 NA's :17 NA's :11 NA's :15

We loaded Mice above, so we do not need to do that again. We will first use the command md.pattern(), which returns a table of the missing-data patterns in the dataset.

md.pattern(iris_missing, plot = FALSE)

## Petal.Length Sepal.Length Petal.Width Sepal.Width

## 101 1 1 1 1 0

## 12 1 1 1 0 1

## 9 1 1 0 1 1

## 3 1 1 0 0 2

## 11 1 0 1 1 1

## 2 1 0 1 0 2

## 1 1 0 0 1 2

## 9 0 1 1 1 1

## 2 0 1 0 1 2

## 11 14 15 17 57

[Insert Week2_Fig1 here as a static image]

The numbers will change each time the code is run, so let’s read one example together.

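Each row is one missingness pattern. Reading from left to right, the first number is how many observations show that pattern, a 1 means the variable is observed, a 0 means it is missing, and the last number counts the missing variables in that pattern. In the output printed above, the first row shows 101 observations with no missing values. The second row shows 12 observations that are missing only Sepal.Width, the third row shows 9 observations that are missing only Petal.Width, and so on. The final row gives the number of missing values per variable (57 in total). Your numbers will differ because the NA values were added randomly.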

Take the above code and try running this with ‘plot = TRUE’ and see how the output changes.
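To see where the numbers in a missingness-pattern table come from, the same kind of count can be built by hand in base R. The data frame below is made up for illustration, and this sketch only mimics the idea behind md.pattern(), not its exact layout.

```r
# Small hypothetical data frame with a few missing cells
demo <- data.frame(
  a = c(1, NA, 3, 4, NA),
  b = c(NA, 2, 3, 4, 5),
  c = c(1, 2, 3, 4, 5)
)

# 1 = observed, 0 = missing (same coding as the table above)
observed <- 1 * !is.na(demo)

# Collapse each row into a pattern string such as "101"
pattern <- apply(observed, 1, paste, collapse = "")

# How many rows share each pattern
pattern_counts <- table(pattern)
pattern_counts

# Missing values per variable (the bottom row of the table above)
colSums(is.na(demo))
```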

Let’s impute the values. Here are the parameters used in this example:

m – the number of imputed data sets to create (here, 5)

maxit – the number of iterations used to impute the missing values (here, 50)

method – the imputation method; here we use predictive mean matching ('pmm')

seed – a fixed value for the random number generator, so the results are reproducible
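To give a feel for what predictive mean matching does, here is a heavily simplified base R sketch on made-up data. It is not the mice implementation: it skips the step where the regression parameters are drawn at random, and the choice of 3 donors is an assumption for illustration.

```r
set.seed(1)  # arbitrary seed so the donor draws are reproducible

# Hypothetical data: y has three missing values, x is fully observed
x <- c(1, 2, 3, 4, 5, 6, 7, 8, 9, 10)
y <- c(2.1, 3.9, NA, 8.2, 9.8, NA, 14.1, 16.2, 17.9, NA)
obs <- !is.na(y)

# Step 1: fit a regression on the observed cases (lm drops NA rows by default)
fit <- lm(y ~ x)

# Step 2: predict y for every row, observed or not
pred <- predict(fit, newdata = data.frame(x = x))

# Step 3: for each missing row, find the observed rows with the closest
# predictions and copy the real observed value of one of them at random
y_imputed <- y
for (i in which(!obs)) {
  distance <- abs(pred[obs] - pred[i])
  donors <- order(distance)[1:3]
  y_imputed[i] <- sample(y[obs][donors], 1)
}

y_imputed
```

Because imputed values are copied from real observations, they always stay within the range of values that were actually observed.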

imputed_Data <- mice(iris_missing, m = 5, maxit = 50, method = 'pmm', seed = 500)

##

## iter imp variable

## 1 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 1 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 1 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 1 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 1 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 2 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 2 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 2 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 2 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 2 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 3 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 3 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 3 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 3 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 3 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 4 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 4 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 4 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 4 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 4 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 5 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 5 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 5 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 5 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 5 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 6 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 6 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 6 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 6 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 6 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 7 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 7 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 7 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 7 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 7 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 8 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 8 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 8 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 8 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 8 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 9 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 9 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 9 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 9 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 9 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 10 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 10 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 10 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 10 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 10 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 11 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 11 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 11 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 11 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 11 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 12 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 12 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 12 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 12 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 12 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 13 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 13 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 13 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 13 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 13 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 14 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 14 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 14 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 14 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 14 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 15 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 15 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 15 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 15 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 15 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 16 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 16 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 16 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 16 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 16 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 17 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 17 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 17 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 17 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 17 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 18 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 18 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 18 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 18 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 18 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 19 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 19 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 19 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 19 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 19 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 20 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 20 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 20 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 20 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 20 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 21 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 21 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 21 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 21 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 21 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 22 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 22 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 22 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 22 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 22 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 23 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 23 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 23 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 23 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 23 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 24 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 24 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 24 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 24 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 24 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 25 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 25 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 25 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 25 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 25 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 26 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 26 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 26 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 26 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 26 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 27 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 27 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 27 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 27 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 27 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 28 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 28 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 28 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 28 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 28 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 29 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 29 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 29 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 29 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 29 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 30 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 30 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 30 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 30 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 30 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 31 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 31 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 31 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 31 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 31 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 32 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 32 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 32 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 32 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 32 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 33 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 33 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 33 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 33 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 33 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 34 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 34 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 34 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 34 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 34 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 35 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 35 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 35 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 35 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 35 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 36 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 36 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 36 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 36 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 36 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 37 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 37 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 37 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 37 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 37 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 38 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 38 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 38 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 38 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 38 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 39 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 39 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 39 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 39 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 39 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 40 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 40 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 40 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 40 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 40 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 41 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 41 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 41 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 41 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 41 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 42 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 42 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 42 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 42 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 42 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 43 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 43 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 43 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 43 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 43 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 44 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 44 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 44 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 44 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 44 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 45 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 45 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 45 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 45 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 45 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 46 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 46 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 46 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 46 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 46 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 47 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 47 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 47 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 47 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 47 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 48 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 48 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 48 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 48 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 48 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 49 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 49 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 49 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 49 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 49 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 50 1 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 50 2 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 50 3 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 50 4 Sepal.Length Sepal.Width Petal.Length Petal.Width

## 50 5 Sepal.Length Sepal.Width Petal.Length Petal.Width

summary(imputed_Data)

## Class: mids

## Number of multiple imputations: 5

## Imputation methods:

## Sepal.Length Sepal.Width Petal.Length Petal.Width

## "pmm" "pmm" "pmm" "pmm"

## PredictorMatrix:

## Sepal.Length Sepal.Width Petal.Length Petal.Width

## Sepal.Length 0 1 1 1

## Sepal.Width 1 0 1 1

## Petal.Length 1 1 0 1

## Petal.Width 1 1 1 0

We can look at and check our newly imputed data (note there are 5 columns, as it created 5 new datasets):

imputed_Data$imp$Sepal.Length

## 1 2 3 4 5

## 4 4.9 5.0 4.9 4.6 4.6

## 8 4.8 4.9 5.4 4.3 4.8

## 32 4.6 5.0 4.6 5.0 5.0

## 45 5.7 5.0 6.0 5.5 5.4

## 49 5.8 5.4 5.8 5.1 5.4

## 65 4.9 4.9 5.8 4.9 5.8

## 75 6.5 6.5 5.6 6.0 6.3

## 81 5.5 5.2 4.8 5.4 5.0

## 85 6.2 5.8 6.7 6.7 6.0

## 97 6.3 5.6 5.9 5.6 5.8

## 102 6.7 6.0 5.8 6.3 6.1

## 108 6.7 7.7 7.2 7.7 6.8

## 131 6.7 7.2 6.4 7.2 6.8

## 140 6.6 6.4 6.7 6.7 6.7

Let’s take the 3rd dataset and use this…

completeData <- complete(imputed_Data, 3) #3 signifies the 3rd dataset

head(completeData) #preview of the new complete dataset, where there will be no more missing values

## Sepal.Length Sepal.Width Petal.Length Petal.Width

## 1 5.1 3.5 1.4 0.2

## 2 4.9 3.0 1.4 0.2

## 3 4.7 3.2 1.3 0.2

## 4 4.9 3.1 1.5 0.2

## 5 5.0 3.4 1.4 0.2

## 6 5.4 3.9 1.7 0.4

4.4.2.2 [Amelia Package](https://cran.r-project.org/web/packages/Amelia/Amelia.pdf)

This package also performs multiple imputation (it generates several imputed datasets) to deal with missing values. Multiple imputation helps to reduce bias and increase efficiency. Amelia uses a bootstrap-based EMB (expectation-maximization with bootstrapping) algorithm, which makes it fast and robust when imputing many variables, including cross-sectional and time-series data. It can also run imputations in parallel on multicore CPUs.

This package also assumes:

All variables in a data set have a Multivariate Normal Distribution (MVN). It uses means and covariances to summarize data. So please check your data for skew/normality before you use this!

Missing data is random in nature (Missing at Random)
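A quick way to act on the normality assumption above is to check each numeric variable before imputing. This sketch uses base R only, with a hand-written skewness helper (a hypothetical function defined here, not part of Amelia) and the Shapiro-Wilk test on two iris variables.

```r
# Hypothetical helper: a simple moment-based skewness (0 means symmetric)
skewness <- function(v) {
  v <- v[!is.na(v)]
  mean((v - mean(v))^3) / sd(v)^3
}

skew_sepal_width  <- skewness(iris$Sepal.Width)
skew_petal_length <- skewness(iris$Petal.Length)

# Shapiro-Wilk test: a small p-value suggests the data are not normal
sw_sepal_width  <- shapiro.test(iris$Sepal.Width)
sw_petal_length <- shapiro.test(iris$Petal.Length)

c(sepal_width = sw_sepal_width$p.value,
  petal_length = sw_petal_length$p.value)
```

Petal.Length is clearly not normal (it has two clusters of values), so it would be a candidate for transformation or for a method with weaker assumptions. A histogram or a qqnorm() plot is a useful visual companion to these numbers.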

What’s the difference between Amelia and Mice?

Mice imputes data on a variable-by-variable basis, whereas MVN (Amelia) uses a joint modeling approach based on the multivariate normal distribution.

Mice is capable of handling different types of variables, whereas the variables in MVN (Amelia) need to be normally distributed or transformed to approximate normality.

Mice can manage imputation of variables defined on a subset of data whereas MVN (Amelia) cannot.

Let’s have a go (and create a new iris missing dataset that keeps the categorical Species variable):

library('Amelia')

iris_missing2 <- prodNA(iris, noNA = 0.15) #taking iris dataset and adding in 15% missing values

head(iris_missing2)

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## 1 NA 3.5 1.4 0.2 <NA>

## 2 4.9 3.0 1.4 0.2 setosa

## 3 4.7 3.2 1.3 0.2 setosa

## 4 NA 3.1 1.5 0.2 setosa

## 5 5.0 NA 1.4 0.2 setosa

## 6 5.4 3.9 1.7 0.4 setosa

When using Amelia, we need to specify some arguments. First, idvars: the ID variables and any other variables that we do not want to impute (they are kept in the output but left out of the imputation model). Second, noms: the nominal/categorical variables. iris has no ID variable, so here we only set noms.
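For wider datasets, the vector passed to noms can be built from the column types rather than typed by hand. The sketch below uses base R only; treating every factor or character column as nominal is a convention assumed for this example, so check the result before passing it on. The name nominal_vars is likewise just illustrative.

```r
# Flag columns stored as factors or character strings as nominal.
# This rule is an assumption of the sketch; ordered or ID-like columns
# may need to be handled separately (for example via idvars).
is_nominal <- sapply(iris, function(col) is.factor(col) || is.character(col))

# Column names to hand to the noms argument.
nominal_vars <- names(iris)[is_nominal]
nominal_vars

# The result could then be used in the call, for example:
# amelia_fit <- amelia(iris_missing2, m = 5, noms = nominal_vars)
```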

amelia_fit <- amelia(iris_missing2, m=5, parallel = 'multicore', noms = 'Species')

## -- Imputation 1 --

##

## 1 2 3 4 5 6 7 8 9 10

##

## -- Imputation 2 --

##

## 1 2 3 4 5 6 7 8

##

## -- Imputation 3 --

##

## 1 2 3 4 5 6 7 8 9

##

## -- Imputation 4 --

##

## 1 2 3 4 5 6 7 8 9

##

## -- Imputation 5 --

##

## 1 2 3 4 5 6 7 8

Let’s look at the imputed datasets:

amelia_fit$imputations[[1]]

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## 1 5.122513 3.500000 1.400000 0.20000000 setosa

## 2 4.900000 3.000000 1.400000 0.20000000 setosa

## 3 4.700000 3.200000 1.300000 0.20000000 setosa

## 4 4.855997 3.100000 1.500000 0.20000000 setosa

## 5 5.000000 3.424770 1.400000 0.20000000 setosa

## 6 5.400000 3.900000 1.700000 0.40000000 setosa

## 7 4.600000 3.400000 1.400000 0.30000000 setosa

## 8 5.000000 3.400000 1.826089 0.20000000 setosa

## 9 4.256297 2.900000 1.400000 0.20000000 setosa

## 10 4.900000 3.100000 1.500000 0.32117244 setosa

## 11 5.400000 3.700000 1.500000 0.20000000 setosa

## 12 4.800000 3.400000 1.251924 0.03269126 setosa

## 13 4.878230 3.000000 1.611682 0.10000000 versicolor

## 14 4.300000 3.000000 1.100000 0.10000000 setosa

## 15 5.800000 4.000000 1.200000 0.20000000 setosa

## 16 5.700000 4.400000 1.500000 0.40000000 setosa

## 17 5.400000 3.493592 1.300000 0.40000000 setosa

## 18 4.984986 3.500000 1.400000 0.30000000 setosa

## 19 5.700000 3.800000 1.700000 0.30000000 setosa

## 20 5.100000 3.800000 1.500000 0.36340399 setosa

## 21 5.400000 3.400000 1.700000 0.20000000 setosa

## 22 5.100000 3.700000 1.500000 0.40000000 setosa

## 23 4.600000 3.600000 1.013468 0.20000000 setosa

## 24 5.183689 3.300000 1.700000 0.50000000 setosa

## 25 4.800000 3.400000 1.900000 0.20000000 setosa

## 26 5.000000 2.654395 1.600000 0.20000000 versicolor

## 27 5.000000 3.292835 1.600000 0.40000000 setosa

## 28 5.200000 3.480169 1.500000 0.20000000 setosa

## 29 4.798636 3.400000 1.400000 0.20000000 setosa

## 30 4.700000 3.200000 1.600000 0.20000000 setosa

## 31 5.093587 3.100000 1.600000 0.20000000 setosa

## 32 5.125637 3.400000 1.500000 0.40000000 setosa

## 33 5.200000 4.100000 1.500000 0.10000000 setosa

## 34 5.500000 4.200000 1.855383 0.23547964 setosa

## 35 4.900000 3.100000 1.500000 0.20000000 setosa

## 36 5.000000 3.200000 1.652803 0.20000000 setosa

## 37 5.500000 3.500000 1.952497 0.20000000 setosa

## 38 4.900000 3.600000 1.400000 0.10000000 setosa

## 39 4.400000 3.000000 1.300000 0.20000000 setosa

## 40 5.100000 3.400000 1.500000 0.20000000 setosa

## 41 5.000000 3.673719 1.300000 0.30000000 setosa

## 42 4.500000 2.300000 1.300000 0.30000000 setosa

## 43 4.400000 3.200000 1.300000 0.21746672 setosa

## 44 5.000000 3.500000 1.600000 0.60000000 setosa

## 45 5.100000 3.800000 1.900000 0.40000000 setosa

## 46 4.800000 3.000000 1.724644 0.30000000 setosa

## 47 5.100000 3.800000 1.600000 0.40213199 setosa

## 48 4.600000 3.200000 1.400000 0.20000000 setosa

## 49 5.300000 3.700000 1.879704 0.20000000 setosa

## 50 5.000000 3.300000 1.400000 0.20000000 setosa

## 51 7.000000 3.720792 4.700000 1.56616499 virginica

## 52 6.400000 3.200000 4.500000 1.71846740 versicolor

## 53 6.900000 3.100000 5.081878 1.50000000 versicolor

## 54 5.500000 2.300000 4.000000 1.30000000 versicolor

## 55 6.055398 2.800000 4.600000 1.50000000 versicolor

## 56 5.700000 2.679558 4.500000 1.30000000 versicolor

## 57 6.739645 3.300000 4.700000 1.55699836 versicolor

## 58 4.900000 2.400000 3.300000 1.00000000 versicolor

## 59 6.600000 3.286172 4.600000 1.17660780 versicolor

## 60 5.200000 2.700000 3.900000 1.40000000 versicolor

## 61 5.000000 2.000000 3.500000 0.64070637 versicolor

## 62 5.900000 3.000000 4.200000 1.50000000 versicolor

## 63 6.000000 2.200000 4.055943 1.00000000 versicolor

## 64 6.344858 2.900000 4.700000 1.40000000 versicolor

## 65 5.600000 2.900000 4.022980 1.30000000 versicolor

## 66 6.700000 3.100000 4.400000 1.40000000 versicolor

## 67 5.600000 3.000000 4.500000 1.50000000 versicolor

## 68 5.800000 2.700000 4.100000 1.00000000 versicolor

## 69 6.200000 2.200000 4.500000 1.50000000 versicolor

## 70 5.600000 2.500000 3.900000 1.10000000 versicolor

## 71 5.900000 3.200000 4.800000 1.62375847 versicolor

## 72 6.100000 2.800000 4.000000 1.30000000 versicolor

## 73 6.300000 2.500000 4.900000 1.50000000 versicolor

## 74 6.100000 2.800000 4.700000 1.20000000 versicolor

## 75 6.400000 3.327792 4.300000 1.30000000 virginica

## 76 5.374737 2.512130 4.400000 1.40000000 versicolor

## 77 6.234053 2.800000 4.800000 1.40000000 versicolor

## 78 6.892560 3.000000 5.000000 1.70000000 versicolor

## 79 6.000000 2.900000 4.500000 1.50000000 versicolor

## 80 5.700000 2.718342 3.500000 1.00000000 versicolor

## 81 5.500000 2.864249 3.800000 1.10000000 versicolor

## 82 5.500000 2.400000 3.700000 1.00000000 versicolor

## 83 5.800000 2.700000 3.900000 1.34342602 versicolor

## 84 6.000000 2.700000 5.100000 1.60000000 virginica

## 85 5.400000 3.000000 3.904452 1.50000000 versicolor

## 86 6.000000 3.400000 4.500000 1.56019808 versicolor

## 87 6.700000 3.100000 4.700000 1.43434690 versicolor

## 88 6.300000 2.801866 4.280387 1.30000000 versicolor

## 89 5.947290 3.000000 4.100000 1.30000000 versicolor

## 90 5.500000 2.500000 4.000000 1.30000000 versicolor

## 91 5.500000 2.600000 4.400000 1.18090649 versicolor

## 92 6.100000 3.000000 4.600000 1.40000000 versicolor

## 93 5.800000 2.600000 4.000000 1.20000000 versicolor

## 94 5.000000 2.300000 3.300000 1.00000000 versicolor

## 95 5.600000 2.700000 4.200000 1.30000000 versicolor

## 96 6.220751 3.000000 4.200000 1.20000000 versicolor

## 97 5.700000 2.900000 4.200000 1.30000000 versicolor

## 98 6.200000 2.900000 4.300000 1.28270798 versicolor

## 99 5.100000 2.500000 3.000000 1.10000000 versicolor

## 100 5.700000 2.800000 4.100000 1.30000000 versicolor

## 101 6.300000 3.300000 6.000000 2.50000000 virginica

## 102 5.800000 2.700000 5.100000 1.90000000 virginica

## 103 6.764507 3.000000 5.900000 2.10000000 virginica

## 104 6.300000 2.900000 5.600000 1.92648364 virginica

## 105 6.710200 3.000000 5.800000 2.20000000 virginica

## 106 7.116988 3.000000 6.600000 2.28345823 virginica

## 107 4.900000 2.500000 4.500000 1.70000000 virginica

## 108 7.300000 2.900000 6.300000 1.80000000 virginica

## 109 6.700000 2.500000 5.800000 1.82017641 virginica

## 110 7.200000 3.600000 5.671587 2.50000000 virginica

## 111 6.500000 3.004604 5.100000 1.95963729 virginica

## 112 6.400000 2.700000 5.952137 1.90000000 versicolor

## 113 6.800000 3.000000 5.500000 2.10000000 virginica

## 114 5.700000 2.500000 4.954623 2.00000000 virginica

## 115 6.362269 2.800000 5.100000 2.40000000 virginica

## 116 6.400000 3.200000 5.300000 2.30000000 virginica

## 117 6.500000 3.000000 5.676349 2.15437475 virginica

## 118 7.700000 3.800000 6.700000 2.20000000 virginica

## 119 7.700000 2.600000 6.900000 2.30000000 virginica

## 120 6.000000 3.025006 5.000000 1.50000000 virginica

## 121 6.900000 3.200000 5.700000 2.30000000 virginica

## 122 5.963757 2.800000 4.900000 2.00000000 virginica

## 123 7.700000 2.800000 6.929864 2.00000000 virginica

## 124 6.300000 3.256212 5.437117 1.80000000 virginica

## 125 6.700000 3.300000 5.700000 2.10000000 virginica

## 126 7.200000 3.200000 6.000000 1.80000000 virginica

## 127 6.200000 2.800000 4.800000 1.80000000 virginica

## 128 6.100000 3.000000 4.900000 1.80000000 virginica

## 129 6.400000 2.800000 5.600000 2.10000000 virginica

## 130 7.200000 3.000000 5.800000 1.60000000 versicolor

## 131 7.400000 3.496670 6.100000 1.90000000 virginica

## 132 7.900000 3.800000 6.400000 2.00000000 virginica

## 133 6.400000 2.800000 5.600000 2.20000000 virginica

## 134 6.300000 2.800000 5.100000 1.50000000 virginica

## 135 6.100000 2.600000 5.600000 1.40000000 virginica

## 136 7.700000 3.335620 6.100000 2.30000000 virginica

## 137 6.301903 3.288039 5.600000 2.40000000 virginica

## 138 6.400000 3.100000 5.500000 1.80000000 virginica

## 139 6.000000 3.000000 4.800000 1.80000000 virginica

## 140 6.900000 3.100000 5.400000 2.10000000 virginica

## 141 6.700000 3.100000 5.600000 2.40000000 virginica

## 142 6.900000 3.616496 5.100000 2.30000000 versicolor

## 143 5.800000 2.700000 5.100000 1.90000000 versicolor

## 144 6.800000 3.200000 5.900000 2.30000000 virginica

## 145 6.700000 3.300000 5.700000 2.50000000 virginica

## 146 7.075201 3.000000 6.538618 2.30000000 virginica

## 147 6.300000 2.500000 5.586423 1.90000000 virginica

## 148 6.500000 3.000000 5.200000 2.00000000 virginica

## 149 6.200000 3.400000 5.400000 2.28827489 virginica

## 150 5.900000 3.000000 5.100000 2.22949020 virginica

amelia_fit$imputations[[2]]

## Sepal.Length Sepal.Width Petal.Length Petal.Width Species

## 1 5.070645 3.500000 1.4000000 0.20000000 setosa

## 2 4.900000 3.000000 1.4000000 0.20000000 setosa

## 3 4.700000 3.200000 1.3000000 0.20000000 setosa

## 4 5.053140 3.100000 1.5000000 0.20000000 setosa

## 5 5.000000 3.249470 1.4000000 0.20000000 setosa

## 6 5.400000 3.900000 1.7000000 0.40000000 setosa

## 7 4.600000 3.400000 1.4000000 0.30000000 setosa

## 8 5.000000 3.400000 1.0724104 0.20000000 setosa

## 9 4.749461 2.900000 1.4000000 0.20000000 setosa

## 10 4.900000 3.100000 1.5000000 0.16367355 setosa

## 11 5.400000 3.700000 1.5000000 0.20000000 setosa

## 12 4.800000 3.400000 1.0614038 0.12463265 setosa

## 13 4.758201 3.000000 1.3002041 0.10000000 versicolor

## 14 4.300000 3.000000 1.1000000 0.10000000 setosa

## 15 5.800000 4.000000 1.2000000 0.20000000 setosa

## 16 5.700000 4.400000 1.5000000 0.40000000 setosa

## 17 5.400000 3.271765 1.3000000 0.40000000 setosa

## 18 4.783556 3.500000 1.4000000 0.30000000 setosa

## 19 5.700000 3.800000 1.7000000 0.30000000 setosa

## 20 5.100000 3.800000 1.5000000 0.50358186 setosa

## 21 5.400000 3.400000 1.7000000 0.20000000 setosa

## 22 5.100000 3.700000 1.5000000 0.40000000 setosa

## 23 4.600000 3.600000 0.6024071 0.20000000 versicolor

## 24 5.057136 3.300000 1.7000000 0.50000000 setosa

## 25 4.800000 3.400000 1.9000000 0.20000000 setosa

## 26 5.000000 2.945860 1.6000000 0.20000000 setosa

## 27 5.000000 3.369704 1.6000000 0.40000000 setosa

## 28 5.200000 3.617906 1.5000000 0.20000000 setosa

## 29 5.201287 3.400000 1.4000000 0.20000000 setosa

## 30 4.700000 3.200000 1.6000000 0.20000000 setosa

## 31 5.335579 3.100000 1.6000000 0.20000000 setosa

## 32 5.187145 3.400000 1.5000000 0.40000000 setosa