Chapter 5 Multiple Hierarchical Linear Models

5.1 Objective

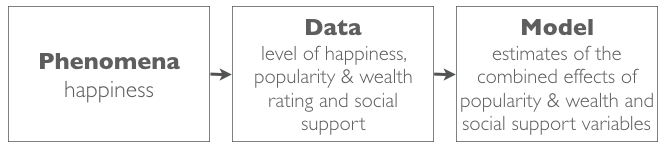

This exercise uses the Happiness Study database form research with CLSBE students. In this exercise the research question is:

- Does the combined effect of Emotional Support and Instrumental Support adds explanatory power to the combined effect of perceived Popularity and Wealth on the level of Happiness relative?

- What is the effect of social support on happiness after we take in account the effect of self-perceptions about Popularity and Wealth?

We expect positive associations between all the four variables and happiness as well for Emotional Support and Instrumental Support to add explanatory power to the combined effect of perceived Popularity and Wealth.

Schematic representation of the research question.

5.2 Load the Data Base

The data base of the Happiness Study is available here. Download the data base load it in the R environment.

Happy <- read.csv("Happy.csv")5.3 Model Estimation

5.3.1 Data Analysis Equation

In review, The fundamental equation for data analysis is data = model + error. In mathematical notation:

\[Y_i=\bar{Y}_i + e_i\]

- \(Y_i\) is the value of the phenomena in subject i;

- \(\bar{Y}_i\) an estimate of the value of the phenomena in subject i;

- \(e_i\) is the difference between the value and the estimated value of the phenomena in subject i.

5.4 The Proposed Model

In review, the proposed model is a more complete statistical model for our data. This model makes specific predictions for every observation base on one or more independent variables of interest (IV). The notation for this model is:

\[\bar{Y}_i = b_0+b_1\times X_{i1}...b_j\times X_{ij}\]

- \(b_0\) corresponds to?the best estimate for our data when the independent variables are 0;

- \(b_1\) is the parameter estimating the effect of the independent variable \(1\) for every subject \(i\);

- \(b_j\) is the \(j\) parameter estimating the effect of the IV \(j\) for every subject \(i\).

In the case of our research problem there are two proposed models. The Proposed Model 1 estimates the combined effect of Popularity and Wealth:

\[\bar{Happiness}_i = b_0+b_1*emotional_{i}+b_2*instrumental_{i}\]

- \(b_0\) correspondes to the best estimate for the level of Happiness when all the independent variables are \(0\) for the subject \(i\);

- \(b_1\) is the parameter estimating the effect of Popularity on subject \(i\);

- \(b_2\) is the parameter estimating the effect of Wealth on subject \(i\).

Proposed Model 2 estimates the combined effect of Popularity, Wealth, Emotional Support and Instrumental Support:

\[\bar{Happiness}_i = b_0+b_1*emotional_{i}+b_2*instrumental_{i}+b_3*popularity_{i}+b_4*wealth_{i}\]

- \(b_0\) correspondes to the best estimate for the level of happiness when all the independent variables are \(0\) for the subject \(i\);

- \(b_1\) and \(b_2\) have the same meaning as for Model 1;

- \(b_3\) is the parameter estimating the effect of Emotional Support on subject \(i\);

- \(b_4\) is the parameter estimating the effect of Instrumental Support on subject \(i\);

Note that we could calculate the parameters of the proposed model by had using the appropriate formulas. Here we follow a simpler approach and ask R to compute the parameters of the model for us.

Compute the parameters for the Proposed Model 1 and Proposed Model 2 using the lm.beta() function.

library(lm.beta) # lm.beta function

Model.3.1 <- lm(

Happy$HappyScale~

Happy$Popularity+

Happy$Wealth

)

Model.3.2 <- lm(

Happy$HappyScale~

Happy$Popularity+

Happy$Wealth+

Happy$EmotinalSup+

Happy$InstruSup

)

summary(lm.beta(Model.3.1))##

## Call:

## lm(formula = Happy$HappyScale ~ Happy$Popularity + Happy$Wealth)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.48040 -0.48951 -0.04461 0.50625 2.24289

##

## Coefficients:

## Estimate Standardized Std. Error t value Pr(>|t|)

## (Intercept) 4.00748 0.00000 0.42689 9.388 0.000000000000002 ***

## Happy$Popularity 0.14790 0.19377 0.07850 1.884 0.0624 .

## Happy$Wealth 0.05591 0.07518 0.07649 0.731 0.4665

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.8923 on 101 degrees of freedom

## Multiple R-squared: 0.05302, Adjusted R-squared: 0.03427

## F-statistic: 2.827 on 2 and 101 DF, p-value: 0.06386summary(lm.beta(Model.3.2))##

## Call:

## lm(formula = Happy$HappyScale ~ Happy$Popularity + Happy$Wealth +

## Happy$EmotinalSup + Happy$InstruSup)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.46898 -0.48250 -0.07665 0.59724 2.36715

##

## Coefficients:

## Estimate Standardized Std. Error t value Pr(>|t|)

## (Intercept) 2.325592 0.000000 0.807926 2.878 0.0049 **

## Happy$Popularity 0.135983 0.178152 0.077094 1.764 0.0808 .

## Happy$Wealth 0.008084 0.010869 0.078209 0.103 0.9179

## Happy$EmotinalSup 0.536155 0.240288 0.301178 1.780 0.0781 .

## Happy$InstruSup 0.014560 0.007677 0.257399 0.057 0.9550

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.8746 on 99 degrees of freedom

## Multiple R-squared: 0.1084, Adjusted R-squared: 0.07234

## F-statistic: 3.008 on 4 and 99 DF, p-value: 0.02178Based on the output, the equations for the proposed models can be written like this using the extract_eq()function:

library(equatiomatic)

extract_eq(Model.3.1)\[ \operatorname{Happy\$HappyScale} = \alpha + \beta_{1}(\operatorname{Happy\$Popularity}) + \beta_{2}(\operatorname{Happy\$Wealth}) + \epsilon \]

extract_eq(Model.3.2)\[ \operatorname{Happy\$HappyScale} = \alpha + \beta_{1}(\operatorname{Happy\$Popularity}) + \beta_{2}(\operatorname{Happy\$Wealth}) + \beta_{3}(\operatorname{Happy\$EmotinalSup}) + \beta_{4}(\operatorname{Happy\$InstruSup}) + \epsilon \]

With these equations compute the Proposed Model 1 and 2 estimates for every subject and the corresponding error.

Happy$propmodel1 <-

4.00748 +

0.14790 * Happy$Popularity +

0.05591 * Happy$Wealth # proposed model 1

Happy$propmodelerroSQR1 <-

(Happy$HappyScale-Happy$propmodel1)^2 # squared error

sum(Happy$propmodelerroSQR1) # SSE of model 1## [1] 80.42002Happy$propmodel2 <-

2.325592 +

0.135983 * Happy$Popularity +

0.008084 * Happy$Wealth +

0.536155 * Happy$EmotinalSup +

0.014560 * Happy$InstruSup # proposed model 2

Happy$propmodelerroSQR2 <-

(Happy$HappyScale-Happy$propmodel2)^2 # squared error

sum(Happy$propmodelerroSQR2) # SSE of model 2## [1] 75.71979The total error for our proposed model 1 is 514.74553 and of proposed model 2 is 514.7500747.

5.4.1 Model Comparison

To address our research question, Emotional Support and Instrumental Support will have a combined added effect only the Proposed Model 2 has less error that the Proposed Model 1. In other words, if Model 2 has less error than Model 1 we can say that the parameters \(b_3\) and \(b_4\) estimating the added effects of Emotional Support and Instrumental Support on Happiness in Model 2 reduce the error in the data and provide a better model than Model 1 with just \(b_1\) and \(b_2\) estimating the effects of Popularity and Wealth.

To compare the proposed and null model we first need to compute the SSE of the null model:

Happy$nullmodel <- mean(Happy$HappyScale) # null model

Happy$nullmodelerroSQR <- (Happy$HappyScale-Happy$nullmodel)^2 # squared difference

sum(Happy$nullmodelerroSQR) # sum of the squared erros of the null model## [1] 84.92248To compare Model 1 and Model 2 compute the proportion of the reduction of the error (PRE) change according to equation:

\[PRE_{change}=\frac{SSE_{null}-SSE_{Model\;2}}{SSE_{null}}-\frac{SSE_{null}-SSE_{Model\;1}}{SSE_{null}}\]

PRE_Model.3.1 <- (sum(Happy$nullmodelerroSQR)-sum(Happy$propmodelerroSQR1))/sum(Happy$nullmodelerroSQR)

PRE_Model.3.2 <- (sum(Happy$nullmodelerroSQR)-sum(Happy$propmodelerroSQR2))/sum(Happy$nullmodelerroSQR)

PRE_Change <- PRE_Model.3.2 - PRE_Model.3.1

PRE_Model.3.1## [1] 0.05301839PRE_Model.3.2## [1] 0.1083657PRE_Change## [1] 0.05534731The Proportion of the Reduction of Error (PRE) for Model 1 in this case is 0.0530184 and indicates that this model reduces the error of the Null Model in \(5.3\%\). The PRE for Model is 0.1083657 and indicates that this model reduces the error of the Null Model in \(10.8\%\). Finally, the difference between Model 1 and 2 is 0.0553473 and indicates that the Model 2 reduces the error of Model 1 in \(5.5\%\).

To preform this multiple comparison of models in R you can use the aov(), anova() and summary(lm())functions. Compute these functions as indicated below.

anova(Model.3.1) # SSE and test statistics for model 1## Analysis of Variance Table

##

## Response: Happy$HappyScale

## Df Sum Sq Mean Sq F value Pr(>F)

## Happy$Popularity 1 4.077 4.0770 5.1203 0.02579 *

## Happy$Wealth 1 0.425 0.4255 0.5344 0.46647

## Residuals 101 80.420 0.7962

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1anova(Model.3.2) # SSE test statistics for model 2## Analysis of Variance Table

##

## Response: Happy$HappyScale

## Df Sum Sq Mean Sq F value Pr(>F)

## Happy$Popularity 1 4.077 4.0770 5.3304 0.02304 *

## Happy$Wealth 1 0.425 0.4255 0.5563 0.45752

## Happy$EmotinalSup 1 4.698 4.6978 6.1421 0.01489 *

## Happy$InstruSup 1 0.002 0.0024 0.0032 0.95501

## Residuals 99 75.720 0.7648

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1anova(

Model.3.2,

Model.3.1

) # test statistics for PRE change## Analysis of Variance Table

##

## Model 1: Happy$HappyScale ~ Happy$Popularity + Happy$Wealth + Happy$EmotinalSup +

## Happy$InstruSup

## Model 2: Happy$HappyScale ~ Happy$Popularity + Happy$Wealth

## Res.Df RSS Df Sum of Sq F Pr(>F)

## 1 99 75.72

## 2 101 80.42 -2 -4.7002 3.0727 0.05074 .

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1summary(

lm.beta(Model.3.1)

) # parameters and test statistics for model 1##

## Call:

## lm(formula = Happy$HappyScale ~ Happy$Popularity + Happy$Wealth)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.48040 -0.48951 -0.04461 0.50625 2.24289

##

## Coefficients:

## Estimate Standardized Std. Error t value Pr(>|t|)

## (Intercept) 4.00748 0.00000 0.42689 9.388 0.000000000000002 ***

## Happy$Popularity 0.14790 0.19377 0.07850 1.884 0.0624 .

## Happy$Wealth 0.05591 0.07518 0.07649 0.731 0.4665

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.8923 on 101 degrees of freedom

## Multiple R-squared: 0.05302, Adjusted R-squared: 0.03427

## F-statistic: 2.827 on 2 and 101 DF, p-value: 0.06386summary(

lm.beta(Model.3.2)

) # parameters and test statistics for model 2##

## Call:

## lm(formula = Happy$HappyScale ~ Happy$Popularity + Happy$Wealth +

## Happy$EmotinalSup + Happy$InstruSup)

##

## Residuals:

## Min 1Q Median 3Q Max

## -2.46898 -0.48250 -0.07665 0.59724 2.36715

##

## Coefficients:

## Estimate Standardized Std. Error t value Pr(>|t|)

## (Intercept) 2.325592 0.000000 0.807926 2.878 0.0049 **

## Happy$Popularity 0.135983 0.178152 0.077094 1.764 0.0808 .

## Happy$Wealth 0.008084 0.010869 0.078209 0.103 0.9179

## Happy$EmotinalSup 0.536155 0.240288 0.301178 1.780 0.0781 .

## Happy$InstruSup 0.014560 0.007677 0.257399 0.057 0.9550

## ---

## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1

##

## Residual standard error: 0.8746 on 99 degrees of freedom

## Multiple R-squared: 0.1084, Adjusted R-squared: 0.07234

## F-statistic: 3.008 on 4 and 99 DF, p-value: 0.021785.5 Statistical Inference

5.5.1 Model Overveiw

At this point we know that:

- \(PRE_{Model\;1}=0.05\), meaning that the Proposed Model 1 is 5% better than the Null Model.

- \(PRE_{Model\;2}=0.11\), meaning that the Proposed Model 2 is 11% better than the Null Model.

- \(PRE_{change}=0.06\), meaning that the Proposed Model 2 adding the predictors Emotional and Instrumental Support is 6% better than the Proposed Model 1.

- In Model 1:

- \(beta_1=0.19\), meaning that Popularity has week positive correlation with Happiness;

- \(beta_2=0.08\), meaning that Wealth has a even weaker positive correlation with Happiness;

- In Model 2:

- \(beta_1=0.18\), meaning that Popularity maintains a week positive correlation with Happiness;

- \(beta_2=0.08\), meaning that Wealth maintains the even weaker positive correlation with Happiness;

- \(beta_3=0.24\), meaning that Emotional Support has a week (almost moderate) positive correlation with Happiness;

- \(beta_4=0.01\), meaning that Instrumental Support as a very small (close to zero) positive correlation with Happiness.

Statistical inference is method that allows to determine if the estimates produced for our sample (i.e., all the \(b_s\) and \(PRE_s\)) can be generalized to the the population where the data was collected. In other words, it is the method to test the likelihood of an estimate for our sample to happen by chance if the value is generalized to the population. On a Multiple Hierarchical Linear Regression the \(PRE_{change}\) is the most important estimate regarding the models performance. For instance, if none of the proposed models error reduction are statistically significant, the \(PRE_{change}\) will not be significant. However, it often happens that both proposed models error reduction are statistically significant, simply because the second proposed model (or any other more complex model) adds more variables and increases, even if slightly, the explanatory power of the previous proposed model. Here it is crucial to test if the change produced by adding variables to a previous model is, on its own, statistically significant.

5.5.2 Inference for \(PRE\)

The statistical inference for \(PRE\) tests the likelihood a reduction of the error observed in the sample happening by chance if the value is generalised to the population. We have three \(PRE_s\) to test:

- \(PRE_{Model\;1}=0.05\);

- \(PRE_{Model\;2}=0.11\);

- \(PRE_{change}=0.06\).

The most important estimate regarding the models performance is the \(PRE_{change}\) and that is where we will start. The statistical hypothesis for \(PRE_{change}\) are:

\[H_0:\eta^2=0\;vs\;H_1:\eta^2\neq0\]

- \(H_0\) corresponds to the null hypothesis and states that the the reduction of the error of Model 2 relative to Model 1 observed is the sample (\(R^2_{change}\)) is likely to have happened by chance if the value is generalised to the population (\(\eta^2\)).

- \(H_1\) corresponds to the alternative hypothesis and states that the reduction of the error of Model 2 relative to Model 1 observed in the sample (\(R^2_{change}\)) is not likely to have happened by chance if the value is generalised to the population (\(\eta^2\)).

The value of the test statistic and the corresponding p-value for this inference given by the function anova(Model.3.2, Model.3.1) and is \(F=3.07\) and \(p-value=0.05\). Considering that \(p-value\leq0.05\), the test statistic has a small likelihood of happening by chance. Consequently, the decision for this test is to reject \(H_0\). This means that the reduction of the error of Model 2 relative to Model 1 observed in the sample is statistically significant.

Once the \(PRE_{change}\), the \(PRE_{Model\;2}\) will also be statistically significant because it has the same variables determining the \(PRE_{change}\) plus the the variables from Model 1. Consequently, no further hypotheses testing is required for the \(PRE_{Model\;2}\). Additionally, because out interest is to look at the added explanatory power Emotional Support and Instrumental Support and to the relative contribution of each one of the 4 independent variables, the \(PRE_{Model\;1}\) has little relevance.

5.5.3 Inference for the parameters estimates \(b_s\)

The statistical inference for \(b_s\) tests the likelihood of the assocaition between each independent variable and Happiness, in the sample (represented by \(b\) values) happening by chance if the value of this effect is generalised to the population (represented by the \(\beta\) in the hypothesis testing). Because we expect all the variables to have a positive effect on Happiness, the statistical hypothesis for is similar and is given by the following notation:

\[H_0:\beta=0\;vs\;H_1:\beta>0\]

- \(H_0\) corresponds to the null hypothesis and states that the effect of the independent variable represented in the (\(b\)) value is likely to have happened by chance if the value is generalised to the population (\(\beta\));

- \(H_1\) corresponds to the alternative hypothesis and states that the positive effect of the independent variable represented in the (\(b\)) is not likely to have happened by chance if the value is generalised to the population (\(\beta\)).

Note that the \(\frac{p-value}{2}\) because we have a unilateral hypothesis testing with a \(H_1:\beta_1>0\). Here we are interested in the likelihood of getting test statistics that are specifically equal or higher \(Pr(>t)\) and not generally equal or more extreme \(Pr(>|t|)\)!

5.5.3.1 Inference for \(b_1\)

The value of the test statistic and the corresponding p-value for the effect of Popularity is \(t=1.76\) and \(p-value=\frac{0.08}{2}\). Considering that \(p-value\leq0.05\), the test statistics has small likelihood of happening by chance. Consequently, the decision for this test is to reject \(H_0\), meaning that that the effect of Popularity observed in the sample is statistically significant.

5.5.3.2 Inference for \(b_2\)

The value of the test statistic and the corresponding p-value for the effect of Wealth is \(t=0.13\) and \(p-value=\frac{0.92}{2}\). Considering that \(p-value>0.05\), the test statistics has big likelihood of happening by chance. Consequently, the decision for this test is to not reject \(H_0\), meaning that that the effect of Wealth observed in the sample is not statistically significant.

5.5.3.3 Inference for \(b_3\)

The value of the test statistic and the corresponding p-value for the effect of Emotional Support is \(t=1.78\) and \(p-value=\frac{0.08}{2}\). Considering that \(p-value\leq0.05\), the test statistics has small likelihood of happening by chance. Consequently, the decision for this test is to reject \(H_0\), meaning that that the effect of Emotional Support observed in the sample is statistically significant.

5.5.3.4 Inference for \(b_4\)

The value of the test statistic and the corresponding p-value for the effect of Instrumental Support is \(t=0.06\) and \(p-value=\frac{0.96}{2}\). Considering that \(p-value>0.05\), the test statistics has big likelihood of happening by chance. Consequently, the decision for this test is to not reject \(H_0\), meaning that that the effect of Instrumental Support observed in the sample is not statistically significant.

5.6 Estimation and Inference Conclusion

Remember that experienced researchers report promptly the results of the model estimation, they don’t need to estimate in detail the null a proposed models nor the hypothesis testing process. These serve as a demonstration of the origin and meaning of the concepts of model, error, effect, test statistic and p-value in the context of the a simple multiple hierarchical linear model. Here is an example of how to write down the results.

With this analysis we intend to estimate if the combined effect of Emotional Support and Instrumental Support adds explanatory power to the combined effect of perceived Popularity and Wealth on the level of Happiness. Additionally, we intend to determine which of the four predictors is the most important in accounting for happiness. For this purpose, a multiple hierarchical regression with two models was used: Model 1 includes the self-perceptions of popularity and wealth; and Model 2 adds to Model 1 emotional and instrumental dimensions of social support. The results indicate that Model 2 adds 5.4% in the explanation of Happiness in relation to Model 1 and that this effect is statistically significant, F=3.07 p=0.05. All together, Model 2 accounts for 10.8% of the variance in Happiness in our sample. The results also indicate that the variables with the strongest association with Happiness are Emotional Support (beta = 0.24) and popularity (beta = 0.18), both in the expected direction and statistically significant with t=1.78, p=0.04 (unilateral) and t=1.76, p=0.04 (unilateral). Instrumental Support and Wealth have both very weak associations (both betas = 0.01) and non-statistically significant with t=0.06, p=0.48 (unilateral) and t=0.13, p=0.46 (unilateral).