2.1 Bisection Algorithm

The bisection algorithm is a simple method for finding the roots of one-dimensional functions. The goal is to find a root \(x_0\in[a, b]\) such that \(f(x_0)=0\). The algorithm starts with a large interval, known to contain \(x_0\), and then successively reduces the size of the interval until it brackets the root. The theoretical underpinning of the algorithm is the intermediate value theorem which states that if a continuous function \(f\) takes values \(f(a)\) and \(f(b)\) at the end points of the interval \([a, b]\), then \(f\) must take all values between \(f(a)\) and \(f(b)\) somewhere in the interval. So if \(f(a) < \gamma < f(b)\), then there exists a \(c\in[a, b]\) such that \(f(c)=\gamma\).

Using this information, we can present the bisection algorithm. First we must check that \(\text{sign}(f(a)) \ne \text{sign}(f(b))\). Otherwise, the interval does not contain the root and might need to be widened. Then we can proceed:

Let \(c = \frac{a + b}{2}\).

If \(f(c) = 0\), stop and return \(c\).

If \(\text{sign}(f(a))\ne\text{sign}(f(c))\), then set \(b\leftarrow c\). Else if \(\text{sign}(f(b))\ne\text{sign}(f(c))\), then set \(a\leftarrow c\).

Goto the beginning and repeat until convergence (see below).

After \(n\) iterations, the size of the interval bracketing the root will be \(2^{-n}(b-a)\).

The bisection algorithm is useful, conceptually simple, and is easy to implement. In particular, you do not need any special information about the function \(f\) except the ability to evaluate it at various points in the interval. The downsides are that it is only useful in one dimension and its convergence is linear, which is the slowest rate of convergence for algorithms we will discuss (more on that later).

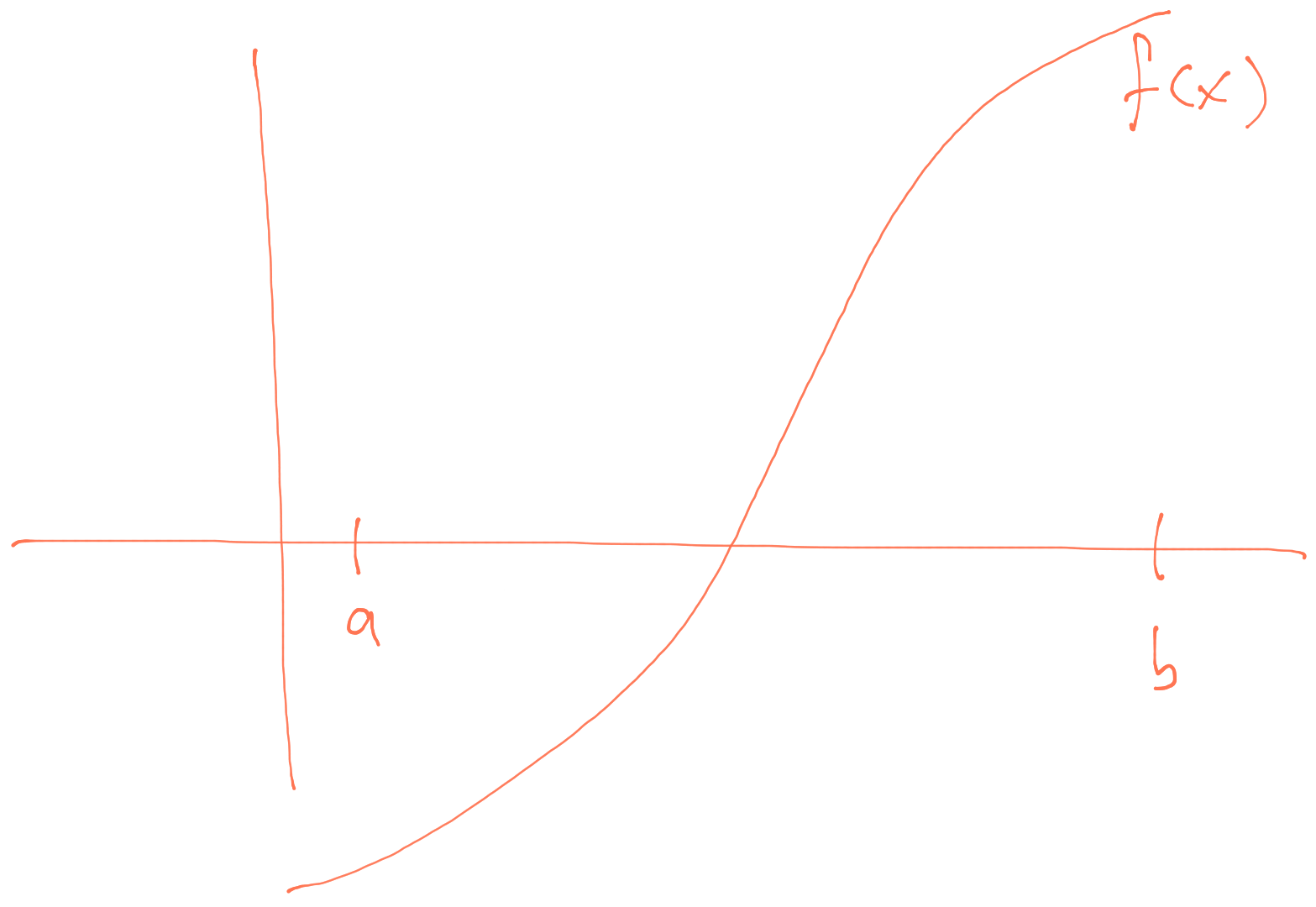

The bisection algorithm can run into problems in situations where the function \(f\) is not well behaved. The ideal situation for the bisection algorithm looks something like this.

Ideal setup for bisection algorithm.

Here, \(f(a)\) and \(f(b)\) are of opposite signs and the root is clearly in between \(a\) and \(b\).

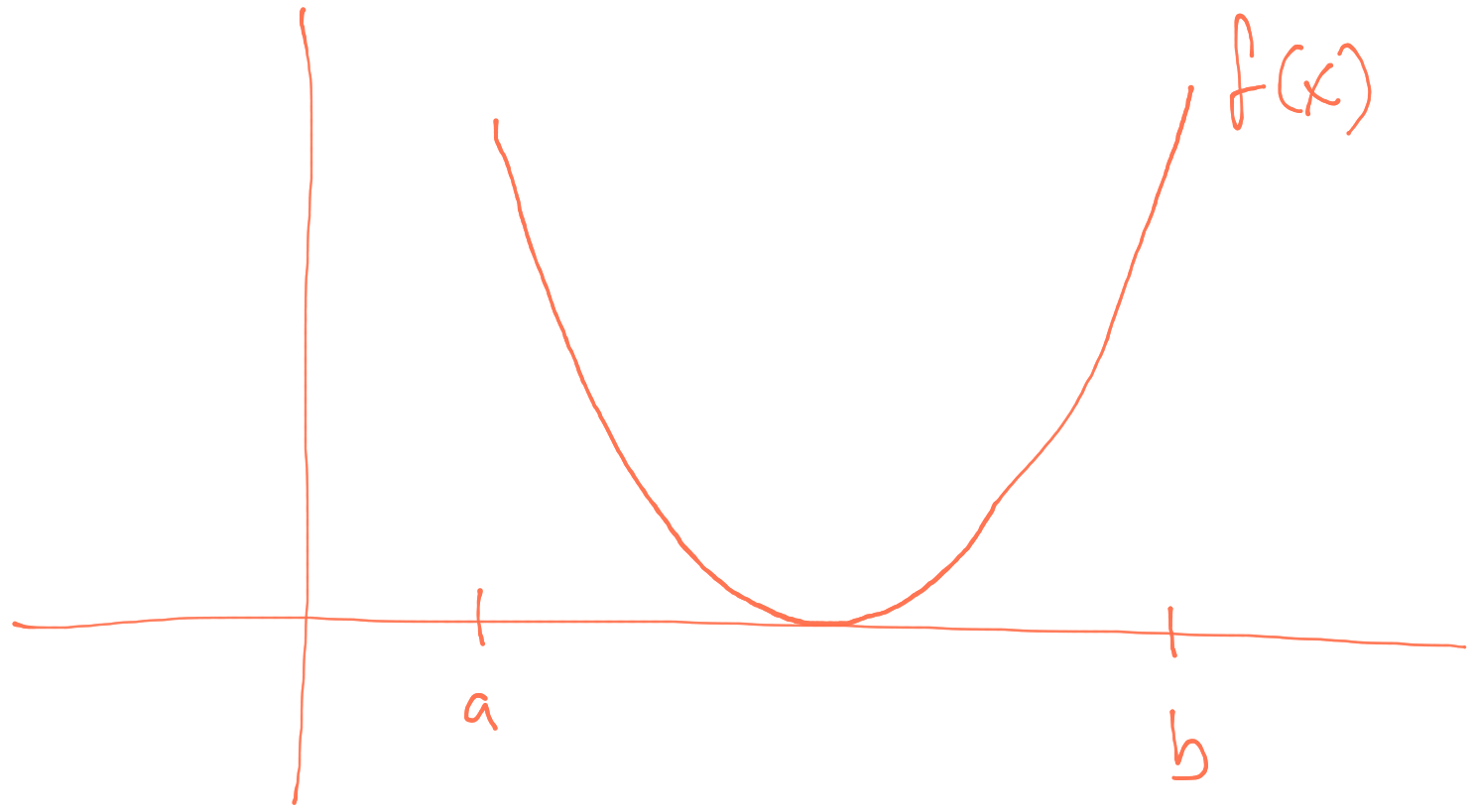

In the scenario below, the algorithm will not start because \(f(a)>0\) and \(f(b)>0\).

Derivative of \(f\) at the root is \(0\).

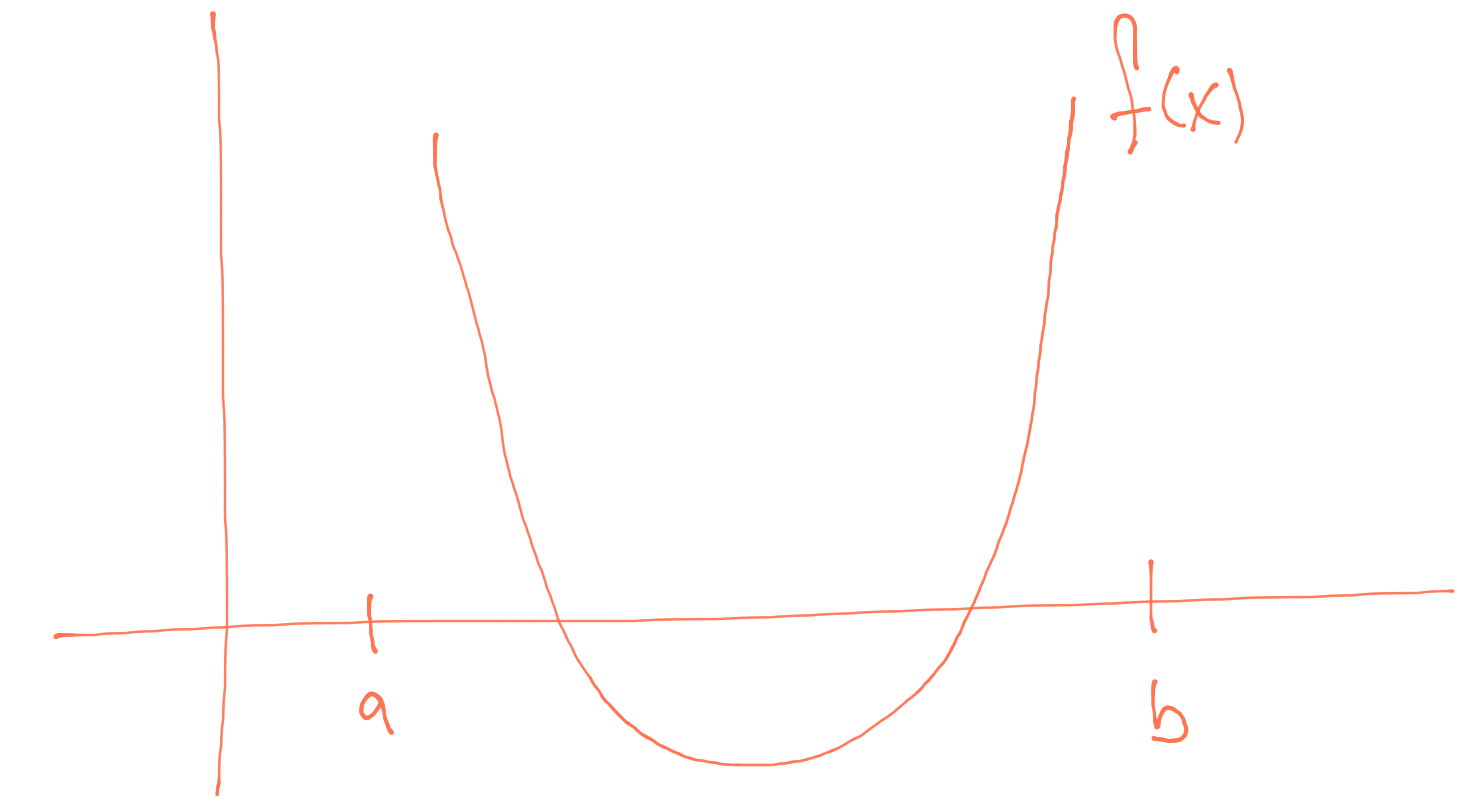

In this next scenario, there are two roots between \(a\) and \(b\), in addition to having \(f(a)>0\) and \(f(b)>0\). One would need to reduce the length of the starting interval in order to find either root.

Two roots within the interval.

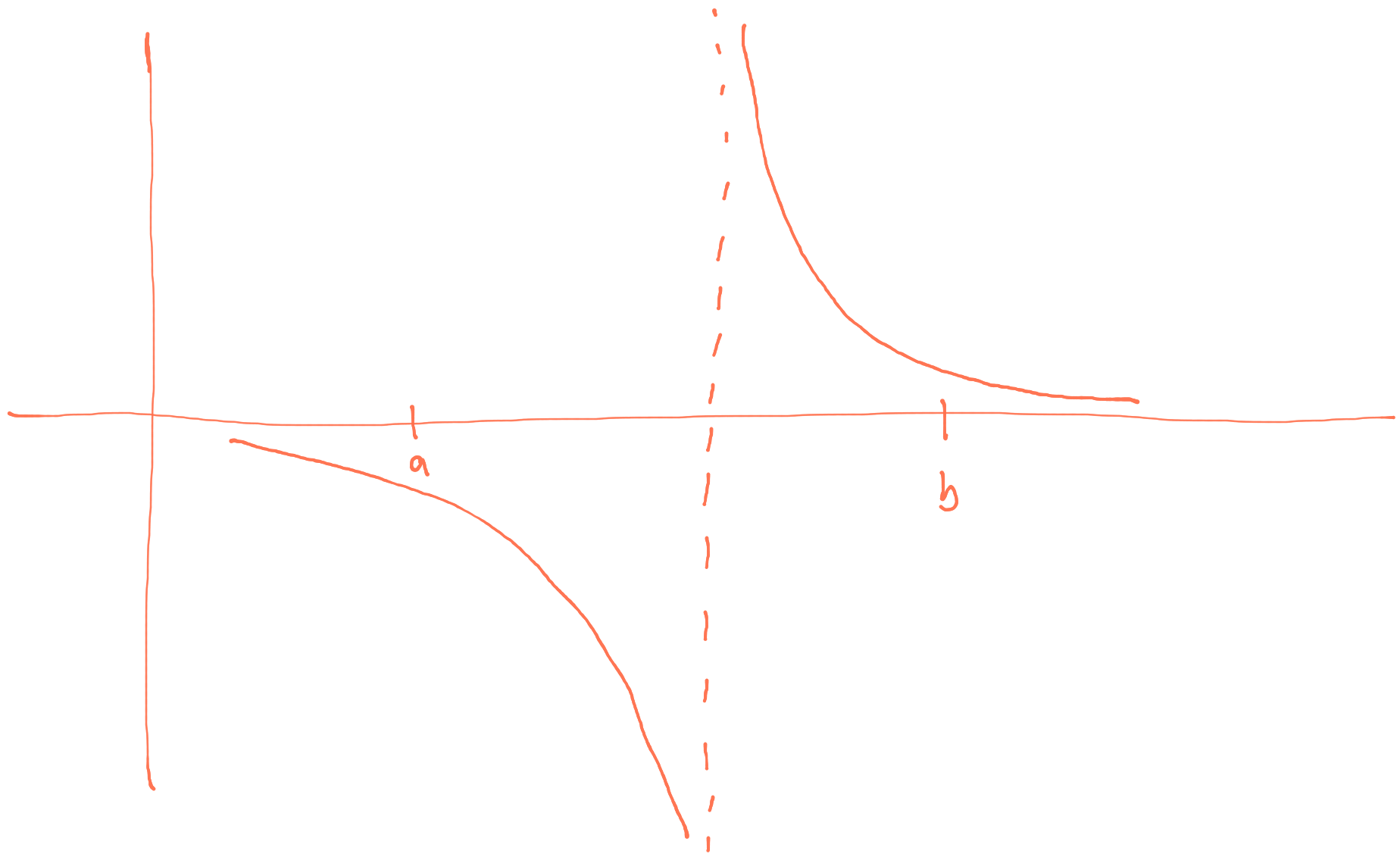

In the scenario below, the algorithm will start because \(f(a)\) and \(f(b)\) are of opposite sign, but there is no root.

Interval contains an asymptote but no root.

Convergence of the bisection algorithm can be determined by either having \(|b-a|<\varepsilon\) for some small \(\varepsilon\) or having \(|f(b)-f(a)|<\varepsilon\). Which criterion you use will depend on the specific application and on what kinds of tolerances are required.

2.1.1 Example: Quantiles

Given a cumulative distribution function \(F(x)\) and a number \(p\in (0, 1)\), a quantile of \(F\) is a number \(x\) such that \(F(x) = p\). The bisection algorithm can be used to find a quantile \(x\) for a given \(p\) by defining the function \(g(x) = F(x) - p\) and solving for the value of \(x\) that achieves \(g(x) = 0\).

Another way to put this is that we are inverting the CDF to compute \(x = F^{-1}(p)\). So the bisection algorithm can be used to invert functions in these situations.