Code

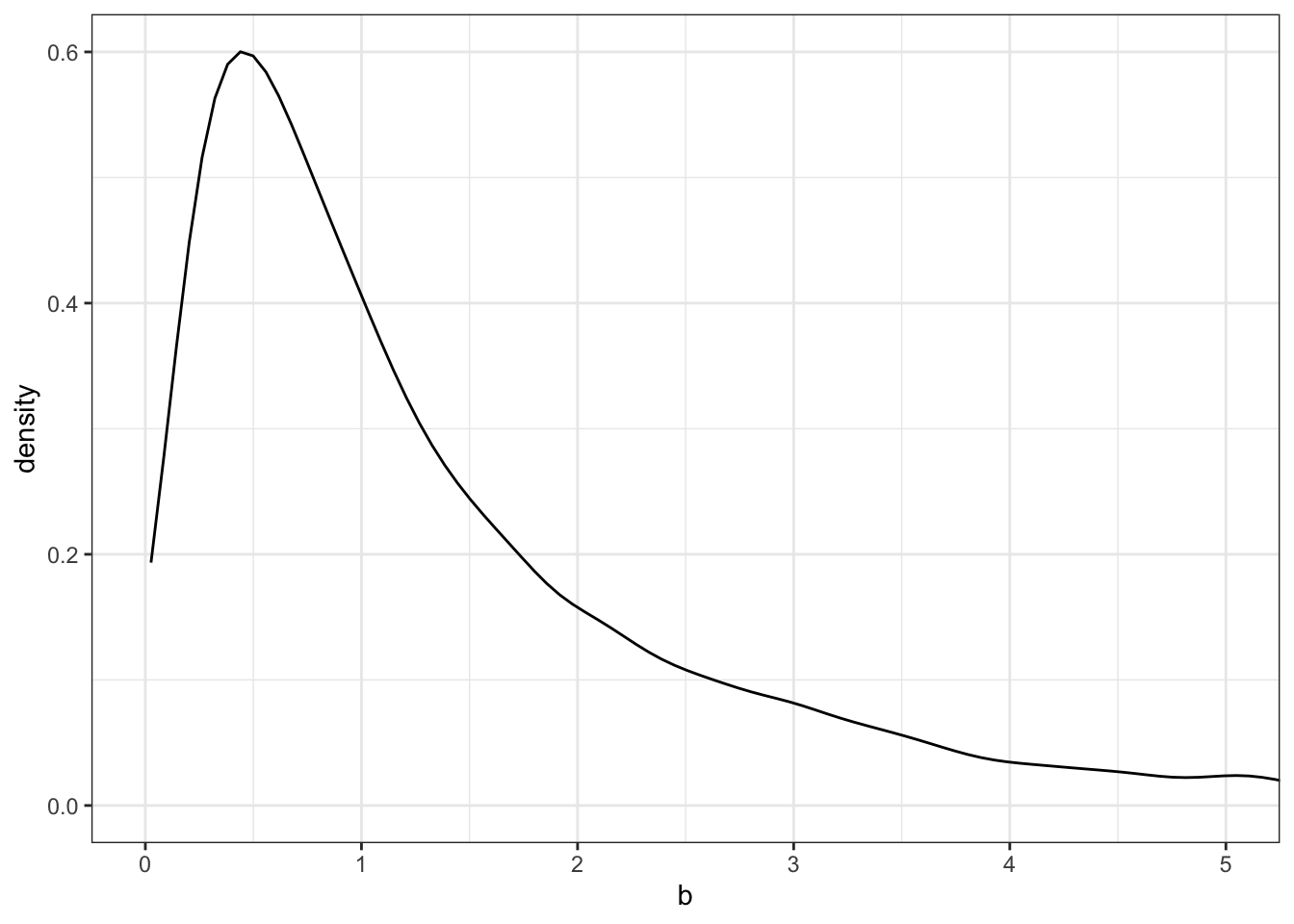

## R code 4.7 not executed! #######################

# library(rethinking)

# data(Howell1)

# d_a <- Howell1Learning Objectives:

“This chapter introduces linear regression as a Bayesian procedure. Under a probability interpretation, which is necessary for Bayesian work, linear regression uses a Gaussian (normal) distribution to describe our golem’s uncertainty about some measurement of interest.” (McElreath, 2020, p. 71) (pdf)

Why are there so many distribution approximately normal, resulting in a Gaussian curve? Because there will be more combinations of outcomes that sum up to a “central” value, rather than to some extreme value.

Why are normal distributions normal?

“Any process that adds together random values from the same distribution converges to a normal.” (McElreath, 2020, p. 73) (pdf)

“Whatever the average value of the source distribution, each sample from it can be thought of as a fluctuation from that average value. When we begin to add these fluctuations together, they also begin to cancel one another out. A large positive fluctuation will cancel a large negative one. The more terms in the sum, the more chances for each fluctuation to be canceled by another, or by a series of smaller ones in the opposite direction. So eventually the most likely sum, in the sense that there are the most ways to realize it, will be a sum in which every fluctuation is canceled by another, a sum of zero (relative to the mean).” (McElreath, 2020, p. 73) (pdf)

“It doesn’t matter what shape the underlying distribution possesses. It could be uniform, like in our example above, or it could be (nearly) anything else. Depending upon the underlying distribution, the convergence might be slow, but it will be inevitable. Often, as in this example, convergence is rapid.” (McElreath, 2020, p. 74) (pdf)

Resources: Why normal distributions?

See the excellent article Why is normal distribution so ubiquitous? which also explains the example of random walks from SR2. See also the scientific paper Why are normal distribution normal? of the The British Journal for the Philosophy of Science.

This is not only valid for addition but also for multiplication of small values: Multiplying small numbers is approximately the same as addition.

But even the multiplication of large values tend to produce Gaussian distributions on the log scale.

The justifications for using the Gaussian distribution fall into two broad categories:

WATCH OUT! Many processes have heavier tails than the Gaussian distribution

“… the Gaussian distribution has some very thin tails—there is very little probability in them. Instead most of the mass in the Gaussian lies within one standard deviation of the mean. Many natural (and unnatural) processes have much heavier tails.” (McElreath, 2020, p. 76) (pdf)

Definition 4.1 : Probability mass and probability density

Probability distributions with only discrete outcomes, like the binomial, are called probability mass functions and denoted Pr.

Continuous ones like the Gaussian are called probability density functions, denoted with \(p\) or just plain old \(f\), depending upon author and tradition.

“Probability density is the rate of change in cumulative probability. So where cumulative probability is increasing rapidly, density can easily exceed 1. But if we calculate the area under the density function, it will never exceed 1. Such areas are also called probability mass.” (McElreath, 2020, p. 76) (pdf)

For example dnorm(0,0,0.1) which is the way to make R calculate \(p(0 \mid 0, 0.1)\) results to 3.9894228.

“The Gaussian distribution is routinely seen without σ but with another parameter, \(\tau\) . The parameter \(\tau\) in this context is usually called precision and defined as \(\tau = 1/σ^2\). When \(\sigma\) is large, \(\tau\) is small.” (McElreath, 2020, p. 76) (pdf)

“This form is common in Bayesian data analysis, and Bayesian model fitting software, such as BUGS or JAGS, sometimes requires using \(\tau\) rather than \(\sigma\).” (McElreath, 2020, p. 76) (pdf)

Procedure 4.1 : General receipt for describing models

Definition 4.2 : What are statistical models?

Models are “mappings of one set of variables through a probability distribution onto another set of variables.” (McElreath, 2020, p. 77) (pdf)

Example 4.1 : Describe the globe tossing model from Chapter 3

\[ \begin{align*} W \sim \operatorname{Binomial}(N, p) \space \space (1)\\ p \sim \operatorname{Uniform}(0, 1) \space \space (2) \end{align*} \tag{4.1}\]

W: observed count of waterN: total number of tossesp: proportion of water on the globeThe first line in these kind of models always defines the likelihood function used in Bayes’ theorem. The other lines define priors.

Read the above statement as:

N and probability p.p is assumed to be uniform between zero and one.Both of the lines in the model of Equation 4.1 are stochastic, as indicated by the ~ symbol. A stochastic relationship is just a mapping of a variable or parameter onto a distribution. It is stochastic because no single instance of the variable on the left is known with certainty. Instead, the mapping is probabilistic: Some values are more plausible than others, but very many different values are plausible under any model. Later, we’ll have models with deterministic definitions in them.

In this section we want a single measurement variable to model as a Gaussian distribution. It is a preparation for the linear regression model in Section 4.4 where we will construct and add a predictor variable to the model.

“There will be two parameters describing the distribution’s shape, the mean

μand the standard deviationσ. Bayesian updating will allow us to consider every possible combination of values for μ and σ and to score each combination by its relativ // plausibility, in light of the data. These relative plausibilities are the posterior probabilities of each combination of values μ, σ.” (McElreath, 2020, p. 78/79) (pdf)

Resource: Nancy Howell data

The data contained in data(Howell1) are partial census data for the Dobe area !Kung San, compiled from interviews conducted by Nancy Howell in the late 1960s.

Much more raw data is available for download from the University of Toronto Library

“For the non-anthropologists reading along, the !Kung San are the most famous foraging population of the twentieth century, largely because of detailed quantitative studies by people like Howell.” (McElreath, 2020, p. 79) (pdf)

WATCH OUT! Loading data without attaching the package with library()

Loading data from a package with the data() function is only possible if you have already loaded the package.

R Code 4.1 : Data loading from a package – Standard procedure

## R code 4.7 not executed! #######################

# library(rethinking)

# data(Howell1)

# d_a <- Howell1The standard loading of data from packages with

`library(rethinking)`

`data(Howell1)`is in this book not executed: I want to prevent clashes with loading {rethinking} and {brms} at the same time, because of their similar functions.

Because of many function name conflicts with {brms} I do not want to load {rethinking} and will call the function of these conflicted packages with <package name>::<function name>() Therefore I have to use another, not so usual loading strategy of the data set.

R Code 4.2 a: Data loading from a package – Unusual procedure (Original)

The advantage of this unusual strategy is that I have not always to detach the {rethinking} package and to make sure {rethinking} is detached before using {brms} as it is necessary in the Kurz’s {tidyverse} / {brms} version.

Because of many function name conflicts with {brms} I do not want to load {rethinking} and will call the function of these conflicted packages with <package name>::<function name>() Therefore I have to use another, not so usual loading strategy of the data set.

R Code 4.3 b: Data loading from a package – Unusual procedure (Tidyverse)

The advantage of this unusual strategy is that I have not always to detach the {rethinking} package and to make sure {rethinking} is detached before using {brms} as it is necessary in the Kurz’s {tidyverse} / {brms} version.

Example 4.2 : Show and inspect the data

R Code 4.4 a: Compactly Display the Structure of an Arbitrary R Object (Original)

## R code 4.8 ####################

str(d_a)#> 'data.frame': 544 obs. of 4 variables:

#> $ height: num 152 140 137 157 145 ...

#> $ weight: num 47.8 36.5 31.9 53 41.3 ...

#> $ age : num 63 63 65 41 51 35 32 27 19 54 ...

#> $ male : int 1 0 0 1 0 1 0 1 0 1 ...utils::str() displays compactly the internal structure of any reasonable R object.

Our Howell1 data contains four columns. Each column has 544 entries, so there are 544 individuals in these data. Each individual has a recorded height (centimeters), weight (kilograms), age (years), and “maleness” (0 indicating female and 1 indicating male).

R Code 4.5 a: Displays concise parameter estimate information for an existing model fit (Original)

## R code 4.9 ###################

rethinking::precis(d_a)#> mean sd 5.5% 94.5% histogram

#> height 138.2635963 27.6024476 81.108550 165.73500 ▁▁▁▁▁▁▁▂▁▇▇▅▁

#> weight 35.6106176 14.7191782 9.360721 54.50289 ▁▂▃▂▂▂▂▅▇▇▃▂▁

#> age 29.3443934 20.7468882 1.000000 66.13500 ▇▅▅▃▅▂▂▁▁

#> male 0.4724265 0.4996986 0.000000 1.00000 ▇▁▁▁▁▁▁▁▁▇rethinking::precis() creates a table of estimates and standard errors, with optional confidence intervals and parameter correlations.

In this case we see the mean, the standard deviation, the width of a 89% posterior interval and a small histogram of four variables: height (centimeters), weight (kilograms), age (years), and “maleness” (0 indicating female and 1 indicating male).

Additionally there is also a console output. In our case: 'data.frame': 544 obs. of 4 variables: .

R Code 4.6 b: Get a glimpse of your data (Tidyverse)

d_b |>

dplyr::glimpse()#> Rows: 544

#> Columns: 4

#> $ height <dbl> 151.7650, 139.7000, 136.5250, 156.8450, 145.4150, 163.8300, 149…

#> $ weight <dbl> 47.82561, 36.48581, 31.86484, 53.04191, 41.27687, 62.99259, 38.…

#> $ age <dbl> 63.0, 63.0, 65.0, 41.0, 51.0, 35.0, 32.0, 27.0, 19.0, 54.0, 47.…

#> $ male <int> 1, 0, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 1, 0, 1, 0, 0, …pillar::glimpse() is re-exported by {dplyr} and is the tidyverse analogue for str(). It works like a transposed version of print(): columns run down the page, and data runs across.

dplyr::glimpse() shows that the Howell1 data contains four columns. Each column has 544 entries, so there are 544 individuals in these data. Each individual has a recorded height (centimeters), weight (kilograms), age (years), and “maleness” (0 indicating female and 1 indicating male).

R Code 4.7 : Object summaries (Tidyverse)

d_b |>

base::summary()#> height weight age male

#> Min. : 53.98 Min. : 4.252 Min. : 0.00 Min. :0.0000

#> 1st Qu.:125.09 1st Qu.:22.008 1st Qu.:12.00 1st Qu.:0.0000

#> Median :148.59 Median :40.058 Median :27.00 Median :0.0000

#> Mean :138.26 Mean :35.611 Mean :29.34 Mean :0.4724

#> 3rd Qu.:157.48 3rd Qu.:47.209 3rd Qu.:43.00 3rd Qu.:1.0000

#> Max. :179.07 Max. :62.993 Max. :88.00 Max. :1.0000Kurz tells us that the {brms} package does not have a function that works like rethinking::precis() for providing numeric and graphical summaries of variables, as seen in R Code 4.5 Kurz suggests therefore to use base::summary() to get some of the information from rethinking::precis().

R Code 4.8 b: Skim a data frame, getting useful summary statistics

I think skimr::skim() is a better option as an alternative to rethinking::precis() as base::summary() because it also has a graphical summary of the variables. {skimr} has many other useful functions and is very adaptable. I propose to install and to try it out.

d_b |>

skimr::skim() | Name | d_b |

| Number of rows | 544 |

| Number of columns | 4 |

| _______________________ | |

| Column type frequency: | |

| numeric | 4 |

| ________________________ | |

| Group variables | None |

Variable type: numeric

| skim_variable | n_missing | complete_rate | mean | sd | p0 | p25 | p50 | p75 | p100 | hist |

|---|---|---|---|---|---|---|---|---|---|---|

| height | 0 | 1 | 138.26 | 27.60 | 53.98 | 125.10 | 148.59 | 157.48 | 179.07 | ▁▂▂▇▇ |

| weight | 0 | 1 | 35.61 | 14.72 | 4.25 | 22.01 | 40.06 | 47.21 | 62.99 | ▃▂▃▇▂ |

| age | 0 | 1 | 29.34 | 20.75 | 0.00 | 12.00 | 27.00 | 43.00 | 88.00 | ▇▆▅▂▁ |

| male | 0 | 1 | 0.47 | 0.50 | 0.00 | 0.00 | 0.00 | 1.00 | 1.00 | ▇▁▁▁▇ |

R Code 4.9 : Show a random number of data records

set.seed(4)

d_b |>

dplyr::slice_sample(n = 6)#> # A tibble: 6 × 4

#> height weight age male

#> <dbl> <dbl> <dbl> <int>

#> 1 124. 21.5 11 1

#> 2 130. 24.6 13 1

#> 3 83.8 9.21 1 0

#> 4 131. 25.3 15 0

#> 5 112. 17.8 8.90 1

#> 6 162. 51.6 36 1R Code 4.10 b: Randomly select a small number of observations and put it into knitr::kable()

set.seed(4)

d_b |>

bayr::as_tbl_obs() | Obs | height | weight | age | male |

|---|---|---|---|---|

| 62 | 164.4650 | 45.897841 | 50.0 | 1 |

| 71 | 129.5400 | 24.550667 | 13.0 | 1 |

| 130 | 149.2250 | 42.155707 | 27.0 | 0 |

| 307 | 130.6068 | 25.259404 | 15.0 | 0 |

| 312 | 111.7600 | 17.831836 | 8.9 | 1 |

| 371 | 83.8200 | 9.213587 | 1.0 | 0 |

| 414 | 161.9250 | 51.596090 | 36.0 | 1 |

| 504 | 123.8250 | 21.545620 | 11.0 | 1 |

I just learned another method to print variables from a data frame. In base R there is utils::head() and utils::tail() with the disadvantage that the start resp. the end of data file could be atypical for the variable values. The standard tibble printing method has the same problem. In contrast bayr::as_tbl_obs() prints a random selection of maximal 8 rows as a compact and nice output, that works on both, console and {knitr} output.

Although bayr::as_tbl_obs() does not give a data summary as discussed here in Example 4.2 but I wanted mention this printing method as I have always looked for an easy way to display a representative sample of some values of data frame.

R Code 4.11 b: Show data with the internal printing method of tibbles

print(d_b, n = 10)#> # A tibble: 544 × 4

#> height weight age male

#> <dbl> <dbl> <dbl> <int>

#> 1 152. 47.8 63 1

#> 2 140. 36.5 63 0

#> 3 137. 31.9 65 0

#> 4 157. 53.0 41 1

#> 5 145. 41.3 51 0

#> 6 164. 63.0 35 1

#> 7 149. 38.2 32 0

#> 8 169. 55.5 27 1

#> 9 148. 34.9 19 0

#> 10 165. 54.5 54 1

#> # ℹ 534 more rowsAnother possibility is to use the tbl_df internal printing method, one of the main features of tibbles. Printing can be tweaked for a one-off call by calling print() explicitly and setting arguments like \(n\) and \(width\). More persistent control is available by setting the options described in pillar::pillar_options.

Again this printing method does not give a data summary as is featured in Example 4.2. But it is an easy method – especially as you are already working with tibbles – and sometimes this method is enough to get a sense of the data.

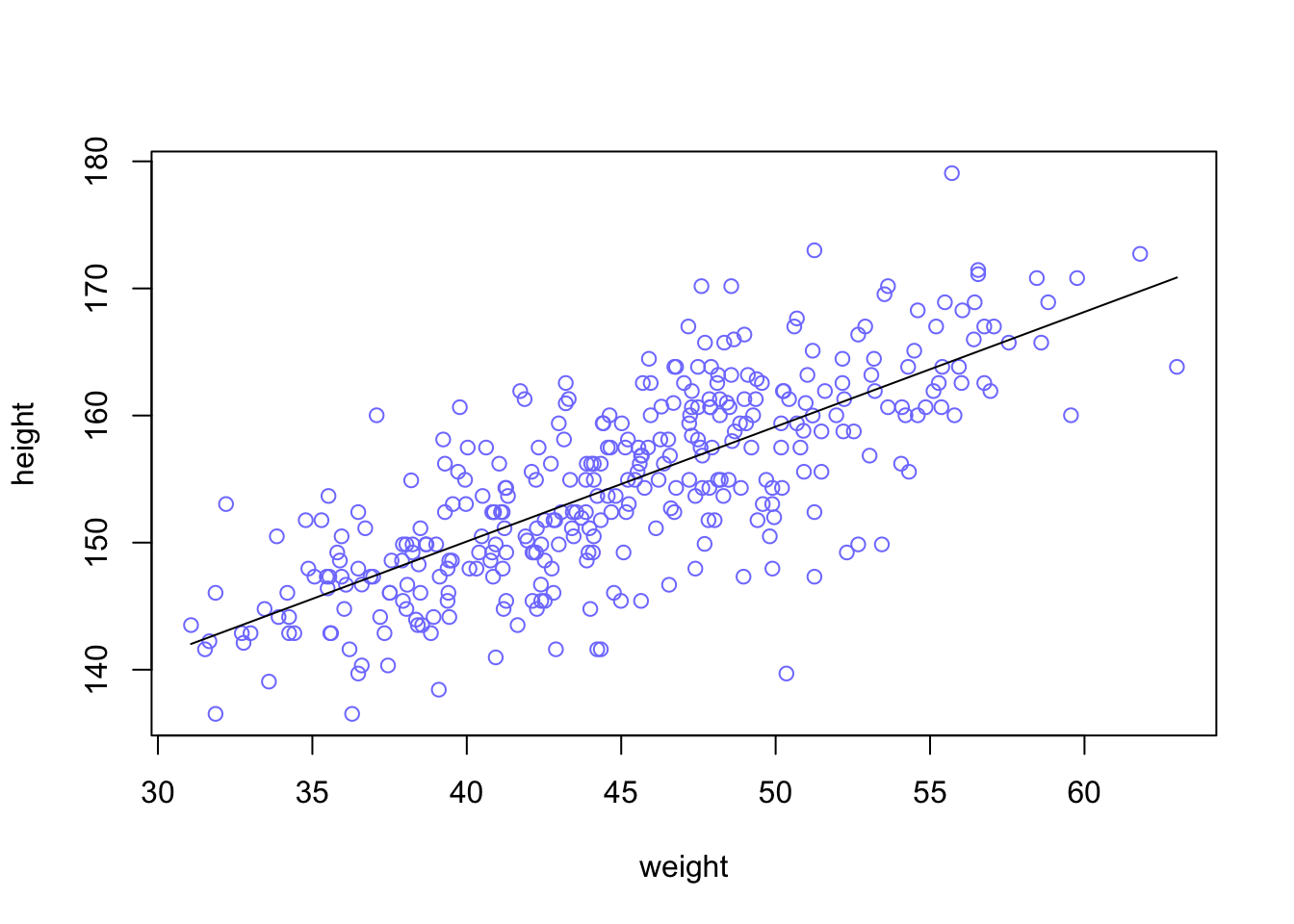

“All we want for now are heights of adults in the sample. The reason to filter out nonadults for now is that height is strongly correlated with age, before adulthood.” (McElreath, 2020, p. 80) (pdf)

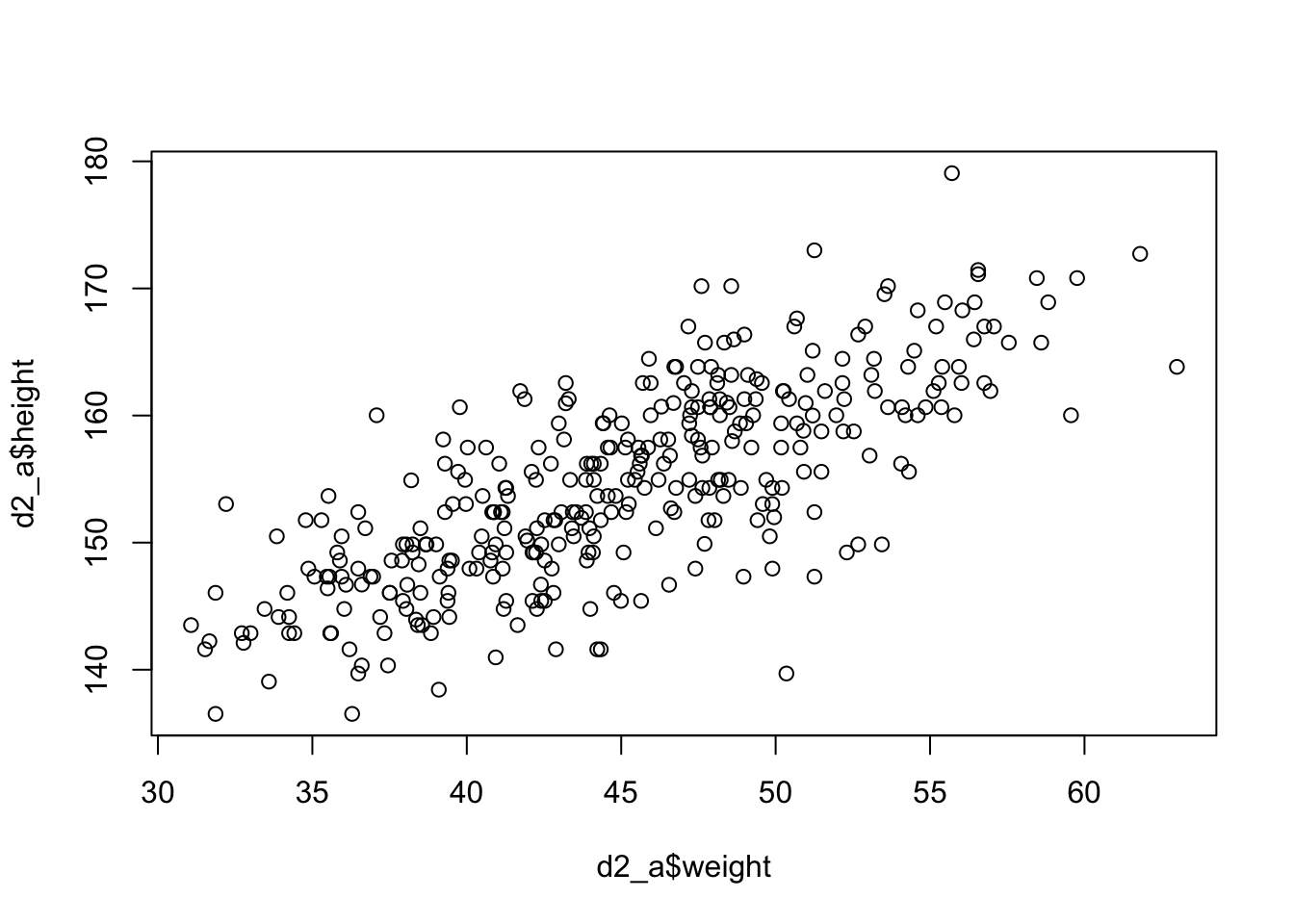

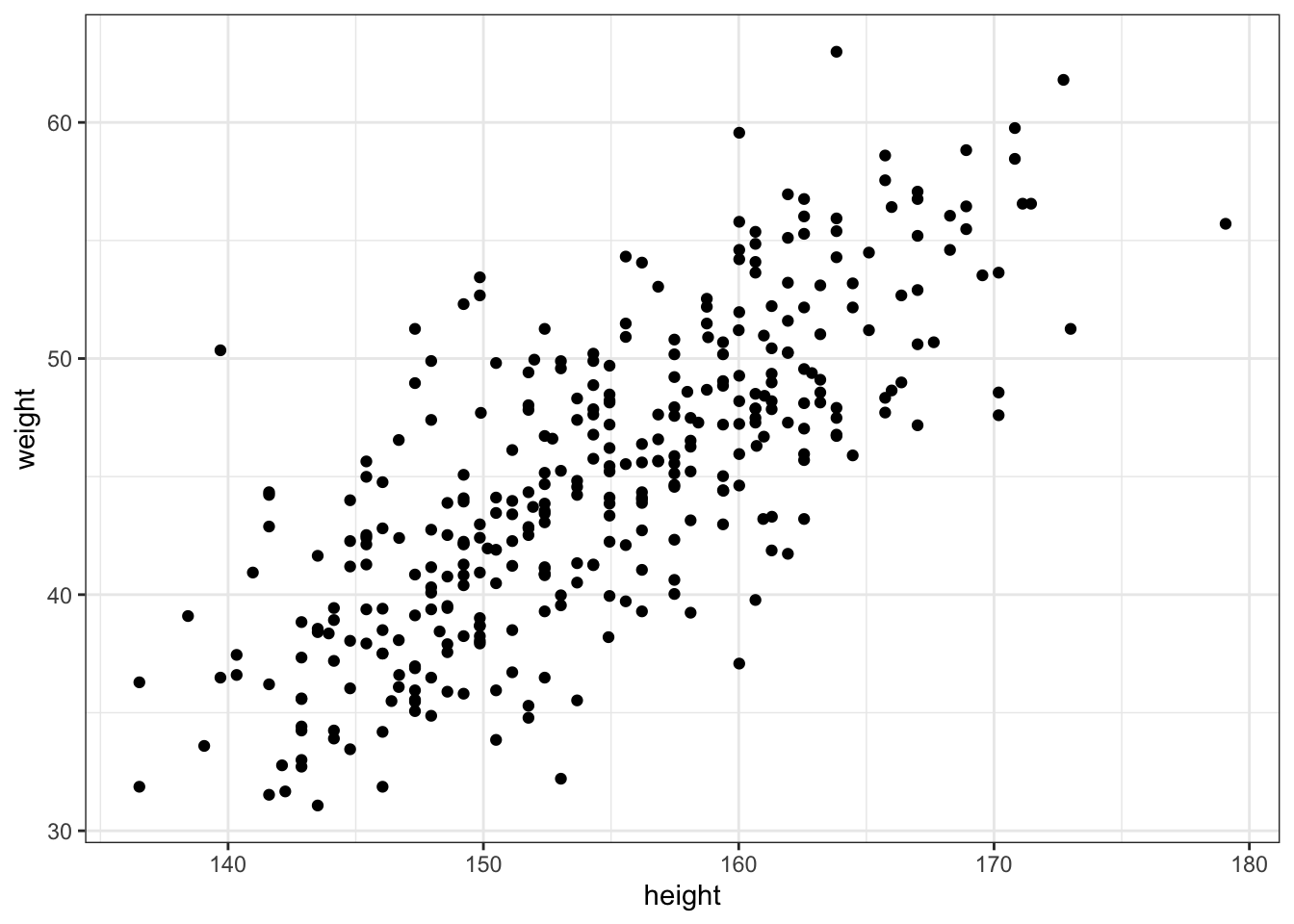

Example 4.3 : Select the height data of adults (individuals older or equal than 18 years)

R Code 4.12 a: Select individuals older or equal than 18 years (Original)

## R code 4.11a ###################

d2_a <- d_a[d_a$age >= 18, ]

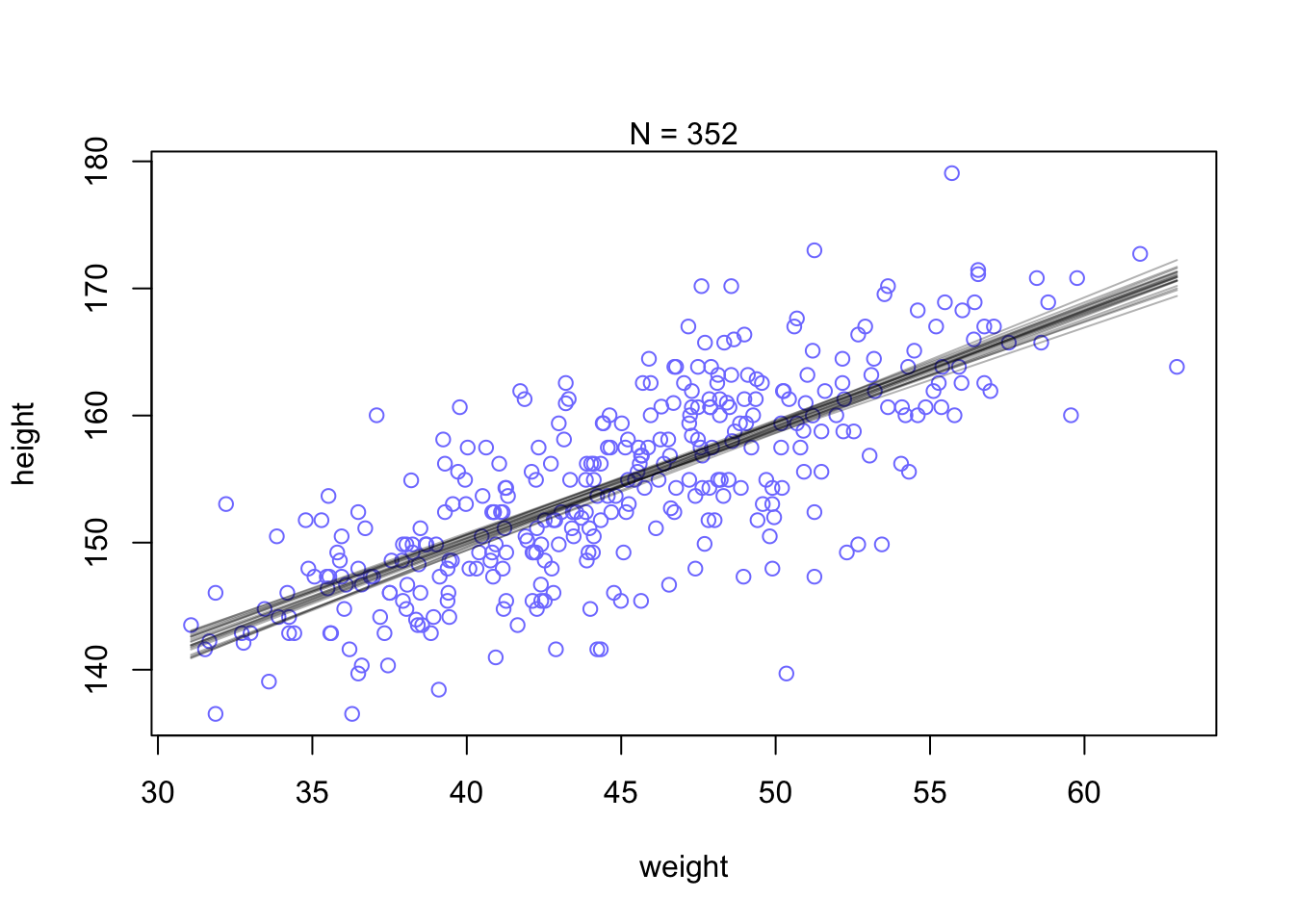

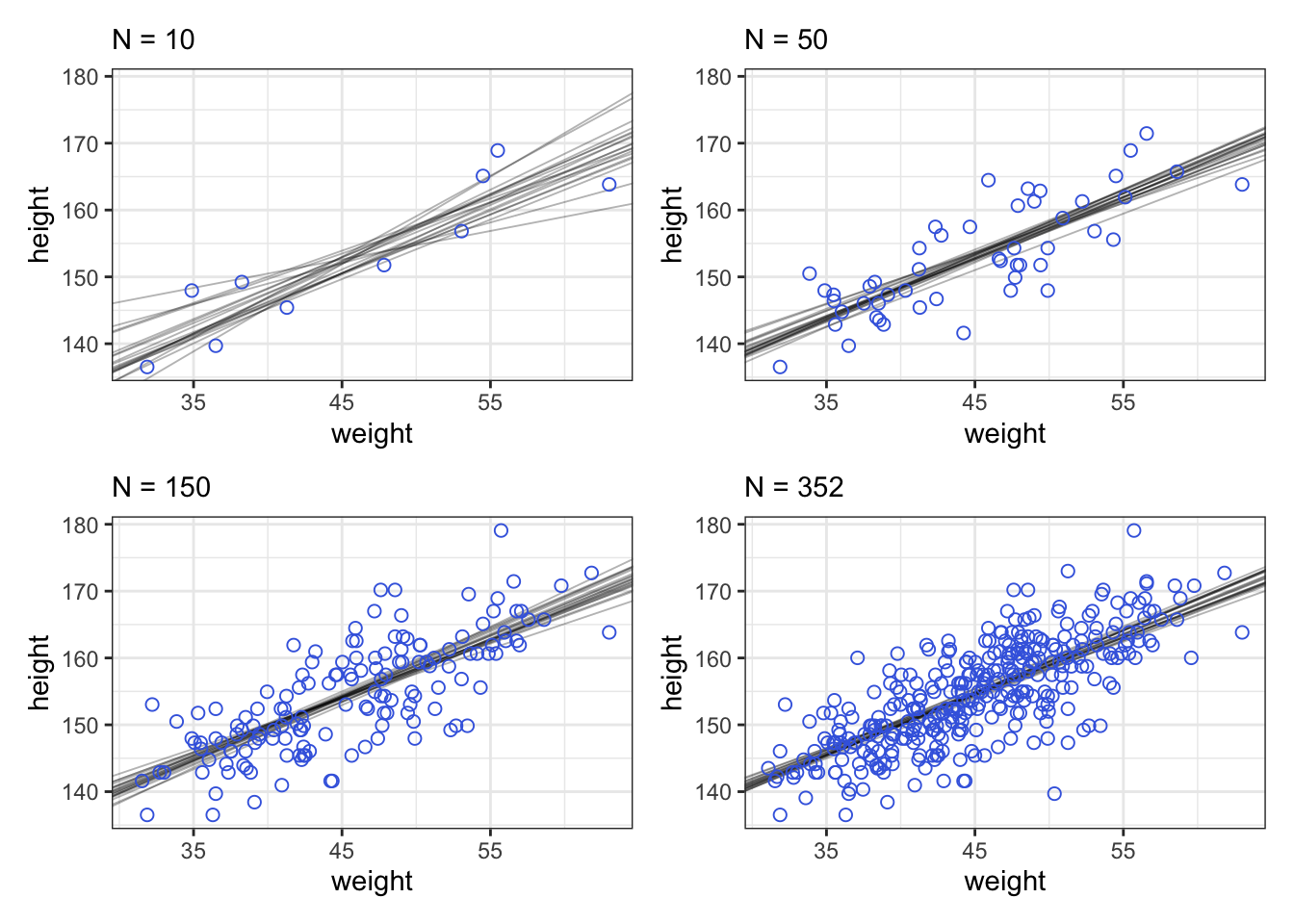

str(d2_a)#> 'data.frame': 352 obs. of 4 variables:

#> $ height: num 152 140 137 157 145 ...

#> $ weight: num 47.8 36.5 31.9 53 41.3 ...

#> $ age : num 63 63 65 41 51 35 32 27 19 54 ...

#> $ male : int 1 0 0 1 0 1 0 1 0 1 ...R Code 4.13 b: Select individuals older or equal than 18 years (Tidyverse)

#> Rows: 352

#> Columns: 4

#> $ height <dbl> 151.7650, 139.7000, 136.5250, 156.8450, 145.4150, 163.8300, 149…

#> $ weight <dbl> 47.82561, 36.48581, 31.86484, 53.04191, 41.27687, 62.99259, 38.…

#> $ age <dbl> 63.0, 63.0, 65.0, 41.0, 51.0, 35.0, 32.0, 27.0, 19.0, 54.0, 47.…

#> $ male <int> 1, 0, 0, 1, 0, 1, 0, 1, 0, 1, 0, 1, 0, 0, 0, 1, 1, 0, 1, 0, 1, …Our goal is to model the data using a Gaussian distribution.

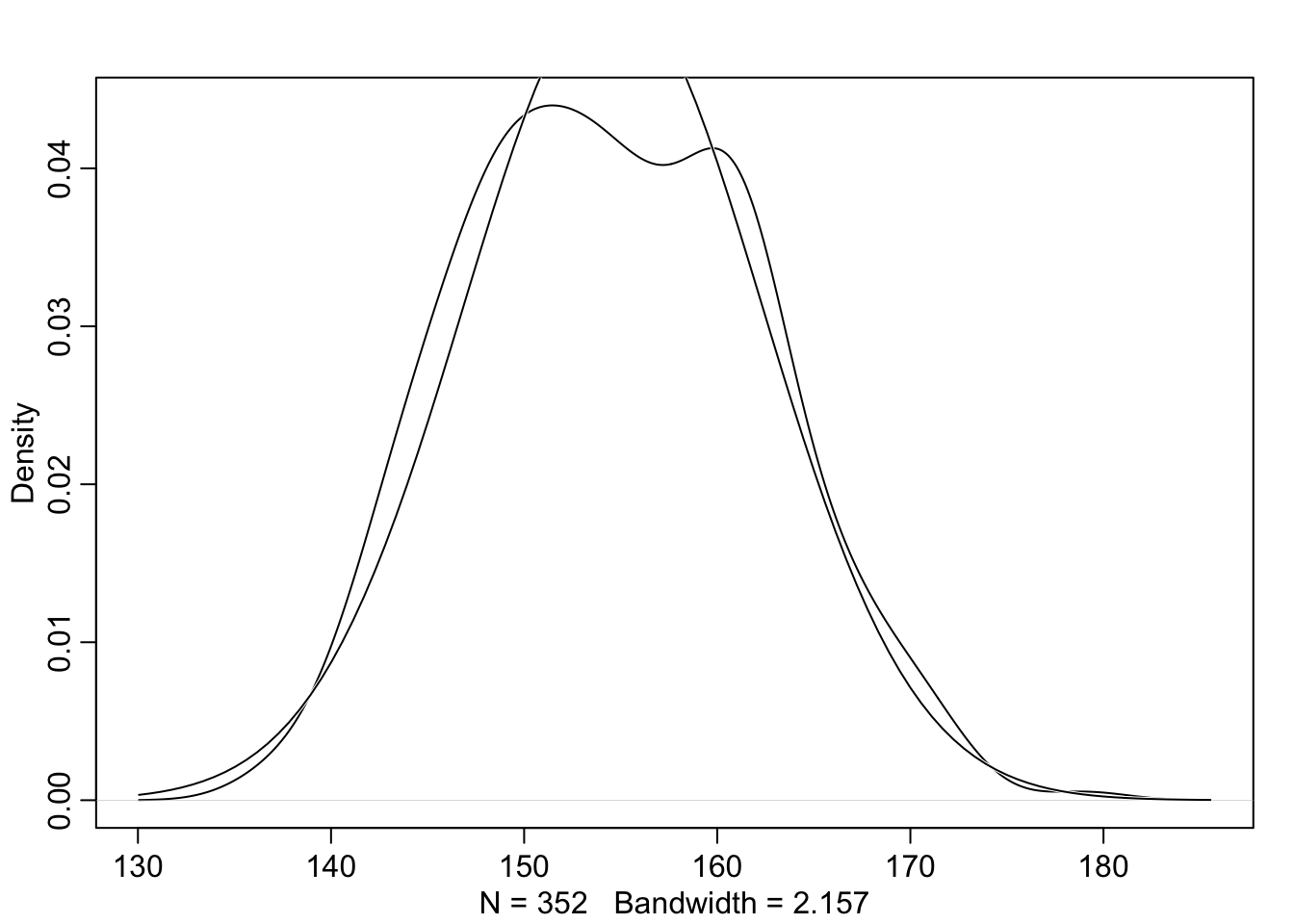

Example 4.4 : Plotting the distribution of height

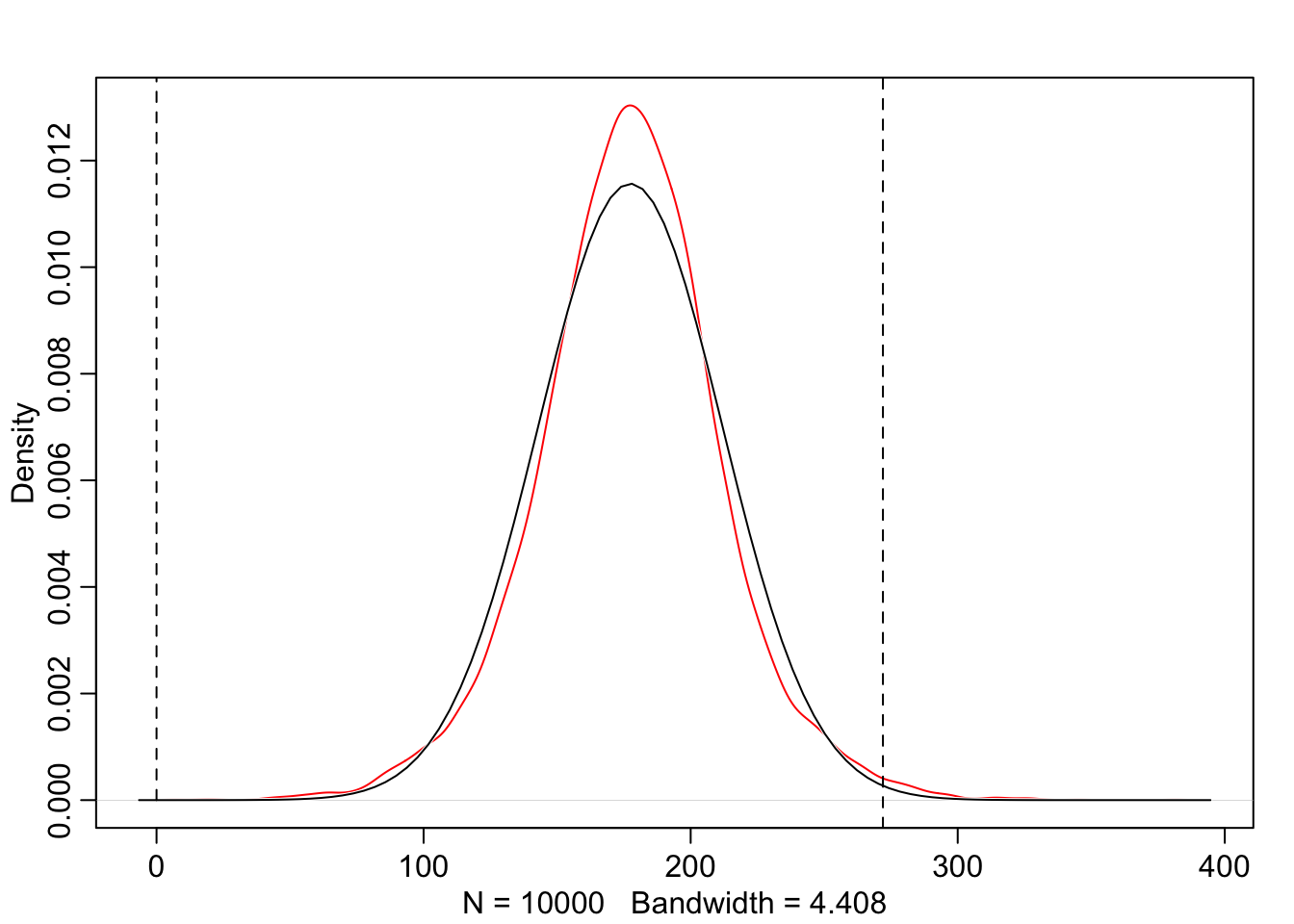

R Code 4.14 a: Plot the distribution of the heights of adults, overlaid by an ideal Gaussian distribution (Original)

rethinking::dens(d2_a$height, adj = 1, norm.comp = TRUE)

With the option norm.comp = TRUE I have overlaid a Gaussian distribution to see the differences to the actual data. There are some differences locally, especially on the peak of the distribution. But the tails looks nice and we can say that the overall impression of the curve is Gaussian.

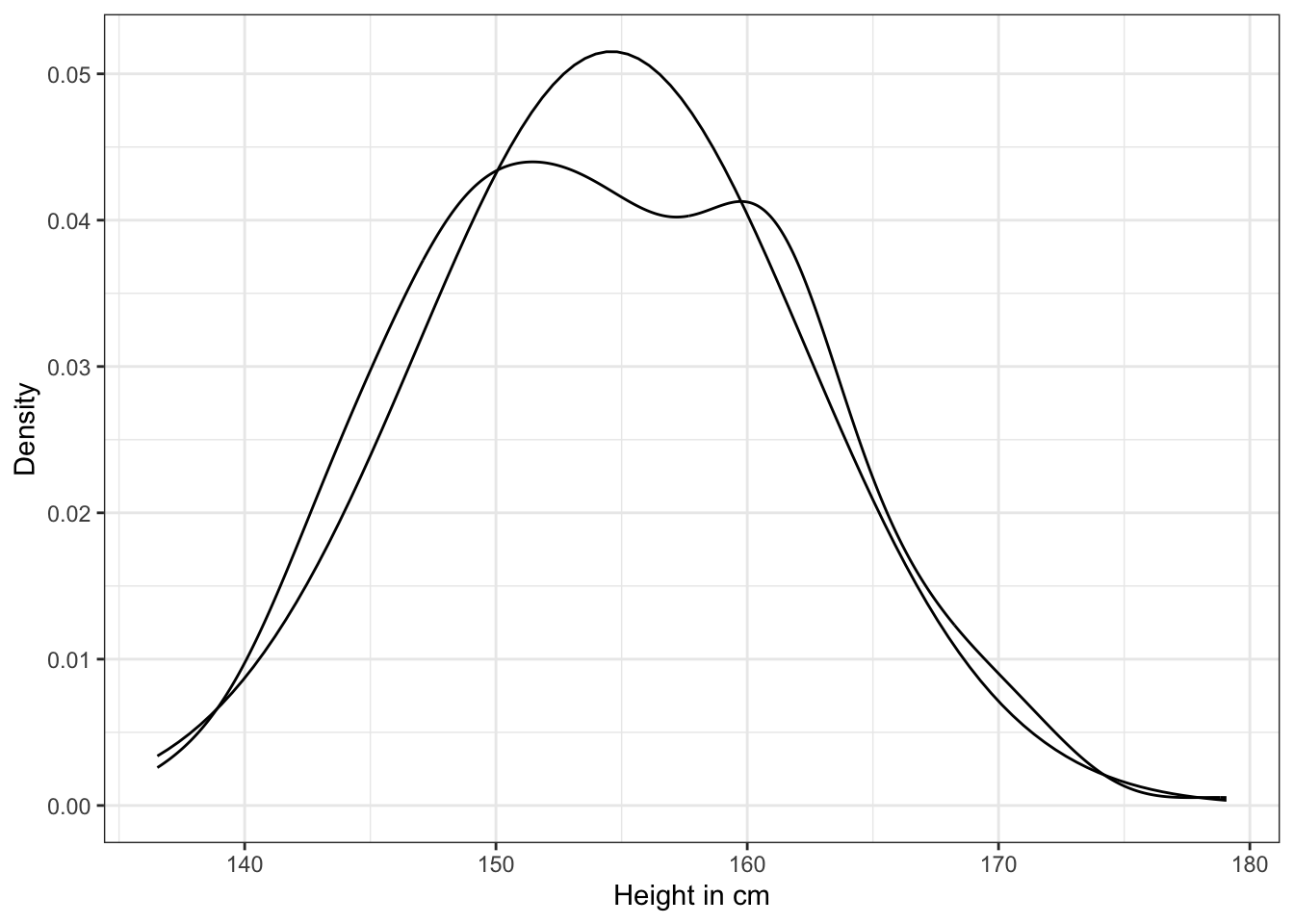

R Code 4.15 b: Plot the distribution of the heights of adults, overlaid by an ideal Gaussian distribution (Tidyverse)

d2_b |>

ggplot2::ggplot(ggplot2::aes(height)) +

ggplot2::geom_density() +

ggplot2::stat_function(

fun = dnorm,

args = with(d2_b, c(mean = mean(height), sd = sd(height)))

) +

ggplot2::labs(

x = "Height in cm",

y = "Density"

) +

ggplot2::theme_bw()

The plot of the heights distribution compared with the standard Gaussian distribution is missing in Kurz’s version. I added this plot after an internet research by using the last example of How to Plot a Normal Distribution in R. It uses the ggplot2::stat_function() to compute and draw a function as a continuous curve. This makes it easy to superimpose a function on top of an existing plot.

WATCH OUT! Looking at the raw data is not enough for a model decision

“Gawking at the raw data, to try to decide how to model them, is usually not a good idea. The data could be a mixture of different Gaussian distributions, for example, and in that case you won’t be able to detect the underlying normality just by eyeballing the outcome distribution.” (McElreath, 2020, p. 81) (pdf)

Theorem 4.1 : Define the heights as normally distributed with a mean \(\mu\) and standard deviation \(\sigma\)

\[ h_{i} \sim \operatorname{Normal}(σ, μ) \tag{4.2}\]

d2_a$height). As such, the model above is saying that all the golem knows about each height measurement is defined by the same normal distribution, with mean \(\mu\) and standard deviation \(\sigma\).Equation 4.2 assumes that the values \(h_{i}\) are i.i.d. (independent and identically distributed)

“The i.i.d. assumption doesn’t have to seem awkward, as long as you remember that probability is inside the golem, not outside in the world. The i.i.d. assumption is about how the golem represents its uncertainty. It is an epistemological assumption. It is not a physical assumption about the world, an ontological one. E. T. Jaynes (1922–1998) called this the mind projection fallacy, the mistake of confusing epistemological claims with ontological claims.” (McElreath, 2020, p. 81) (pdf)

“To complete the model, we’re going to need some priors. The parameters to be estimated are both \(\mu\) and \(\sigma\), so we need a prior \(Pr(\mu, \sigma)\), the joint prior probability for all parameters. In most cases, priors are specified independently for each parameter, which amounts to assuming \(Pr(\mu, \sigma) = Pr(\mu)Pr(\sigma)\).” (McElreath, 2020, p. 82) (pdf)

Theorem 4.2 : Define the linear heights model

\[ \begin{align*} h_{i} \sim \operatorname{Normal}(\mu, \sigma) \space \space (1) \\ \mu \sim \operatorname{Normal}(178, 20) \space \space (2) \\ \sigma \sim \operatorname{Uniform}(0, 50) \space \space (3) \end{align*} \tag{4.3}\]

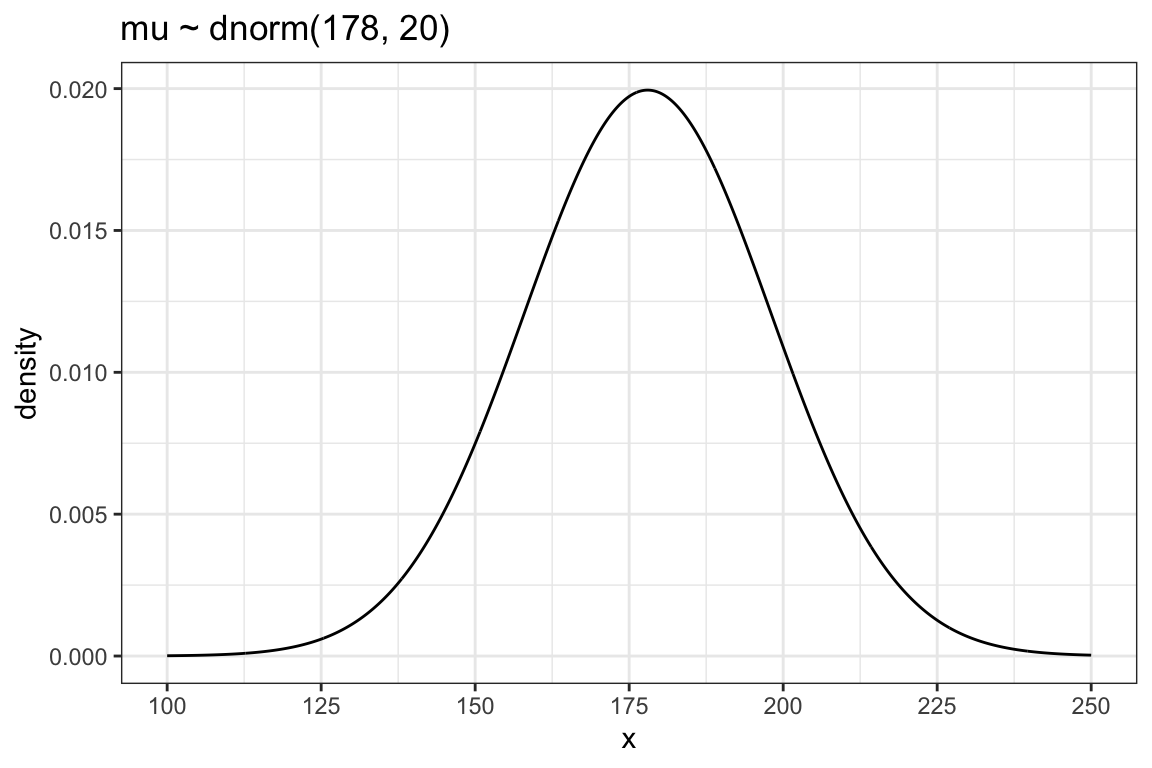

Let’s think about the chosen value for the priors more in detail:

1. Choosing the mean prior

\[ \begin{align} \Pr(\mu-1\sigma \le X \le \mu+1\sigma) & \approx 68.27\% \\ \Pr(\mu-2\sigma \le X \le \mu+2\sigma) & \approx 95.45\% \\ \Pr(\mu-3\sigma \le X \le \mu+3\sigma) & \approx 99.73\% \end{align} \]

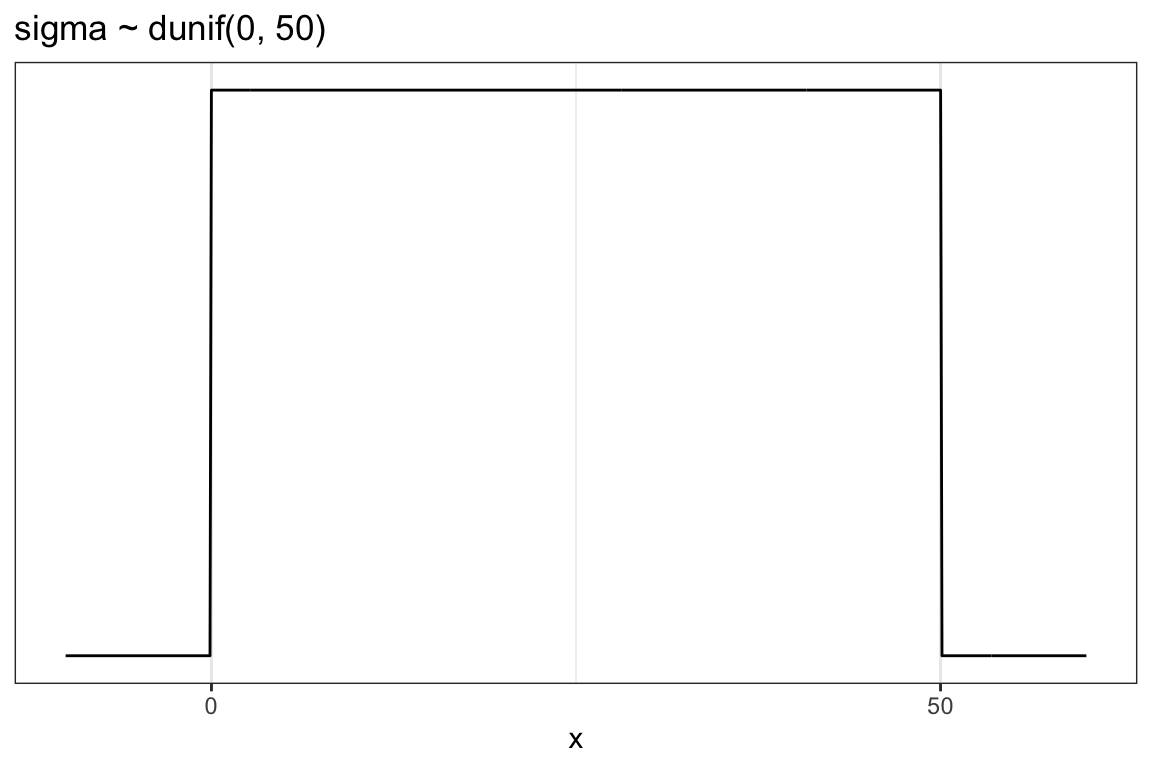

2. Choosing the sigma prior

Plot the chosen priors!

It is important to plot the priors to get an idea about the assumptions they build into your model.

Example 4.5 : Numbered Example Title

R Code 4.16 a: Plot the chosen mean prior (Original)

You can see that the golem is assuming that the average height (not each individual height) is almost certainly between 140 cm and 220 cm. So this prior carries a little information, but not a lot.

b: Plot the chosen mean prior (Tidyverse)

tibble::tibble(x = base::seq(from = 100, to = 250, by = .1)) |>

ggplot2::ggplot(ggplot2::aes(x = x, y = stats::dnorm(x, mean = 178, sd = 20))) +

ggplot2::geom_line() +

ggplot2::scale_x_continuous(breaks = base::seq(from = 100, to = 250, by = 25)) +

ggplot2::labs(title = "mu ~ dnorm(178, 20)",

y = "density") +

ggplot2::theme_bw()

As there is only one variable \(y\) (= dnorm(x, mean = 178, sd = 20)) we need to specify \(x\) as a sequence of 1501 points to provide a \(x\) and \(y\) aesthetic for the plot.

b: Plot the chosen prior for the standard deviation (Tidyverse)

tibble::tibble(x = base::seq(from = -10, to = 60, by = .1)) |>

ggplot2::ggplot(ggplot2::aes(x = x, y = stats::dunif(x, min = 0, max = 50))) +

ggplot2::geom_line() +

ggplot2::scale_x_continuous(breaks = c(0, 50)) +

ggplot2::scale_y_continuous(NULL, breaks = NULL) +

ggplot2::ggtitle("sigma ~ dunif(0, 50)") +

ggplot2::theme_bw()

We don’t really need the \(y\)-axis when looking at the shapes of a density, so we’ll just remove it with scale_y_continuous(NULL, breaks = NULL).

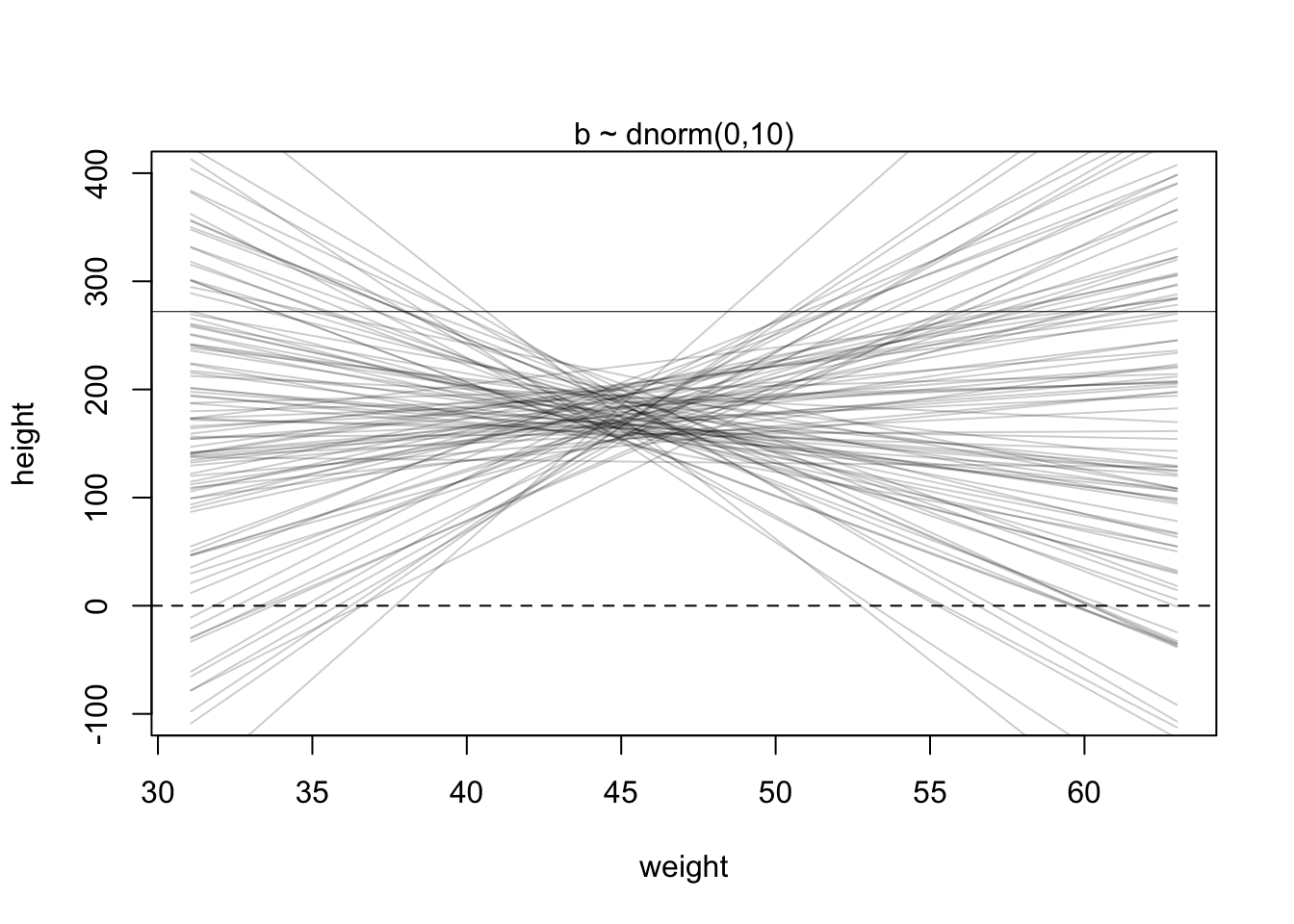

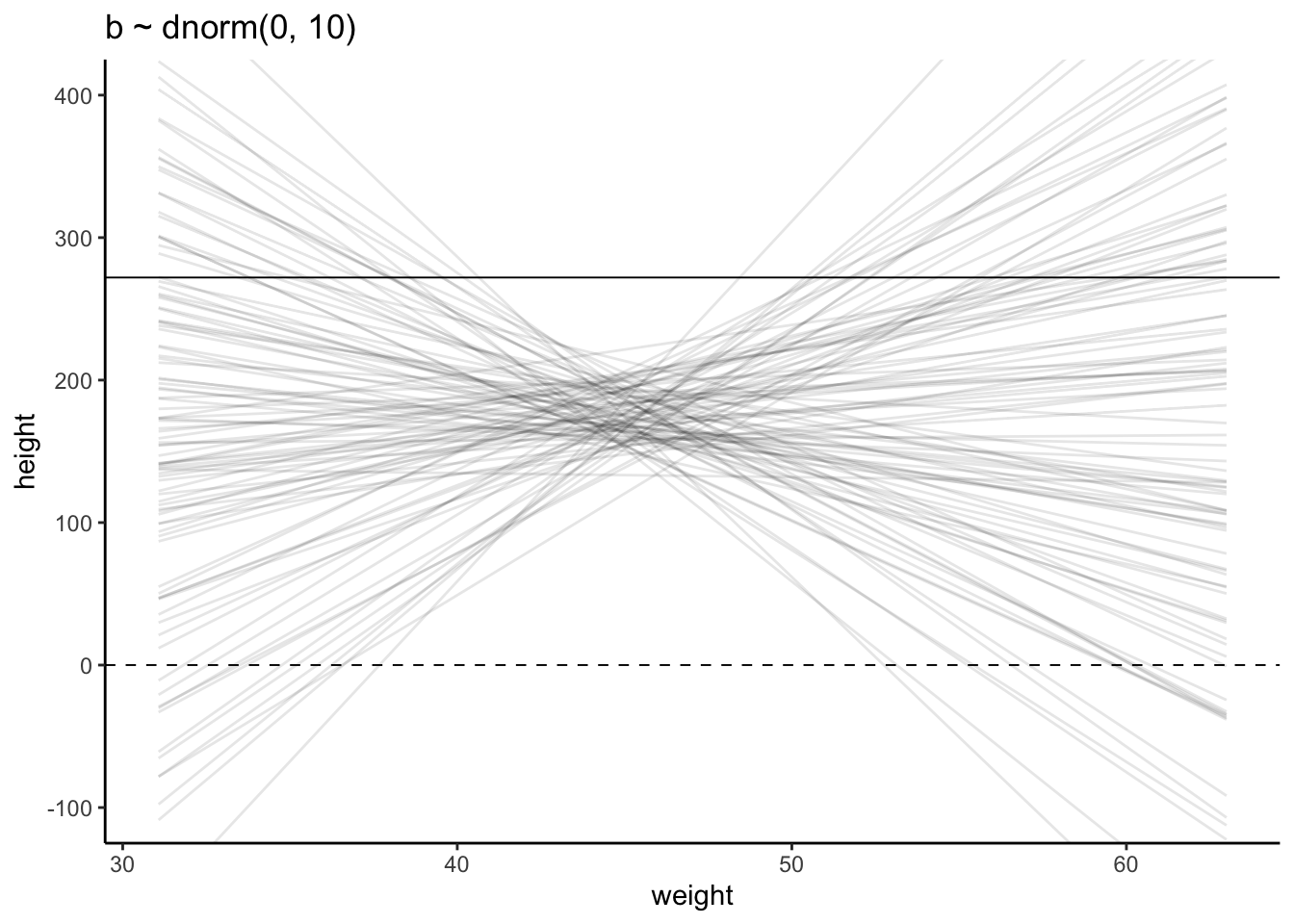

Simulate the prior predictive distribution!

“Prior predictive simulation is very useful for assigning sensible priors, because it can be quite hard to anticipate how priors influence the observable variables.” (McElreath, 2020, p. 83) (pdf)

To see the difference we will look at two prior predictive distributions:

“Okay, so how to do this? You can quickly simulate heights by sampling from the prior, like you sampled from the posterior back in Chapter 3. Remember, every posterior is also potentially a prior for a subsequent analysis, so you can process priors just like posteriors.” (McElreath, 2020, p. 82) (pdf)

Example 4.6 : Prior Predictive Simulation

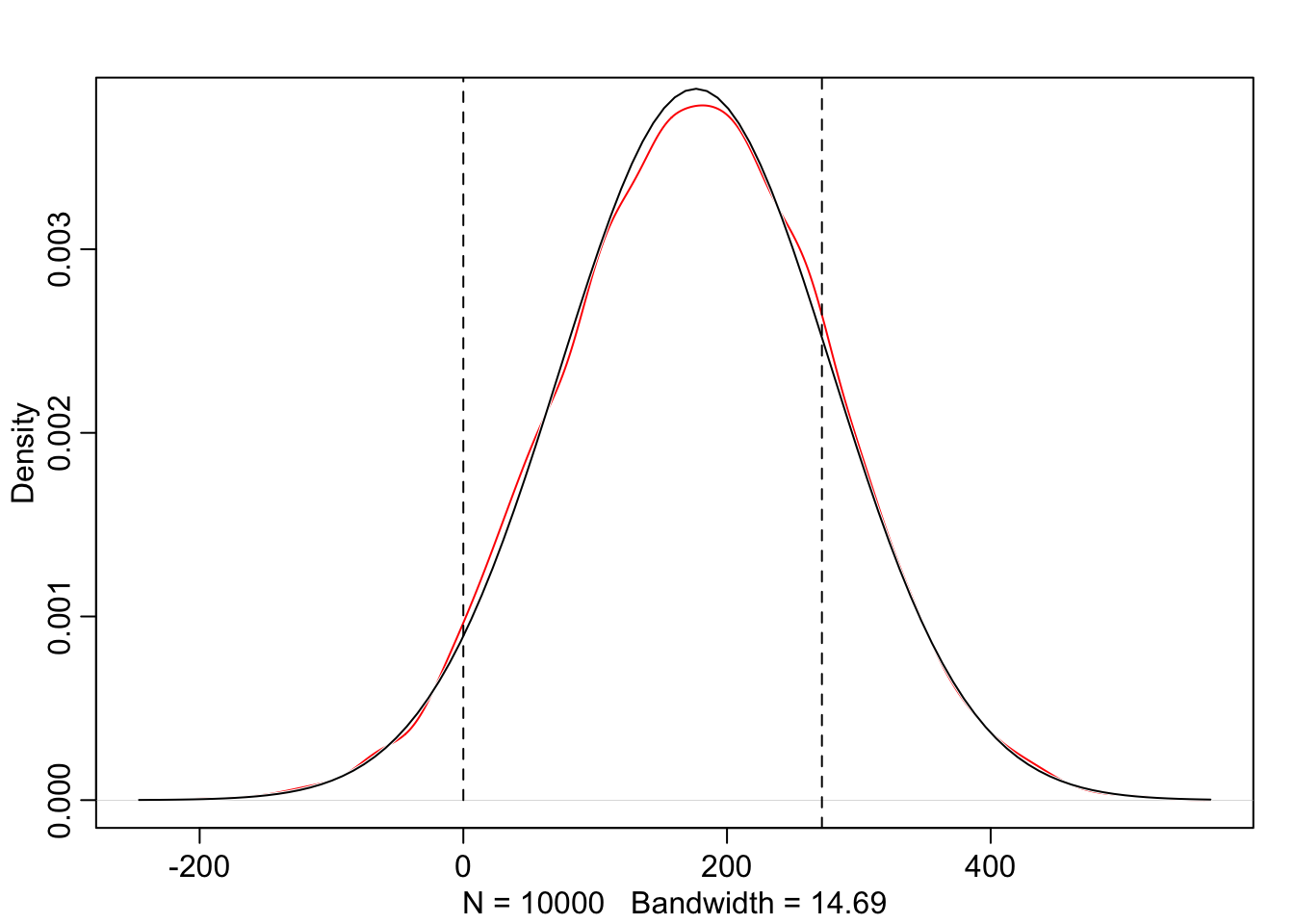

R Code 4.18 a: Simulate heights by sampling from the priors with \(\mu \sim Normal(178, 20)\) (Original)

N_sim_height_a <- 1e4

set.seed(4) # to make example reproducible

## R code 4.14a adapted #######################################

sample_mu_a <- rnorm(N_sim_height_a, 178, 20)

sample_sigma_a <- runif(N_sim_height_a, 0, 50)

priors_height_a <- rnorm(N_sim_height_a, sample_mu_a, sample_sigma_a)

rethinking::dens(priors_height_a,

adj = 1,

norm.comp = TRUE,

show.zero = TRUE,

col = "red")

graphics::abline(v = 272, lty = 2)

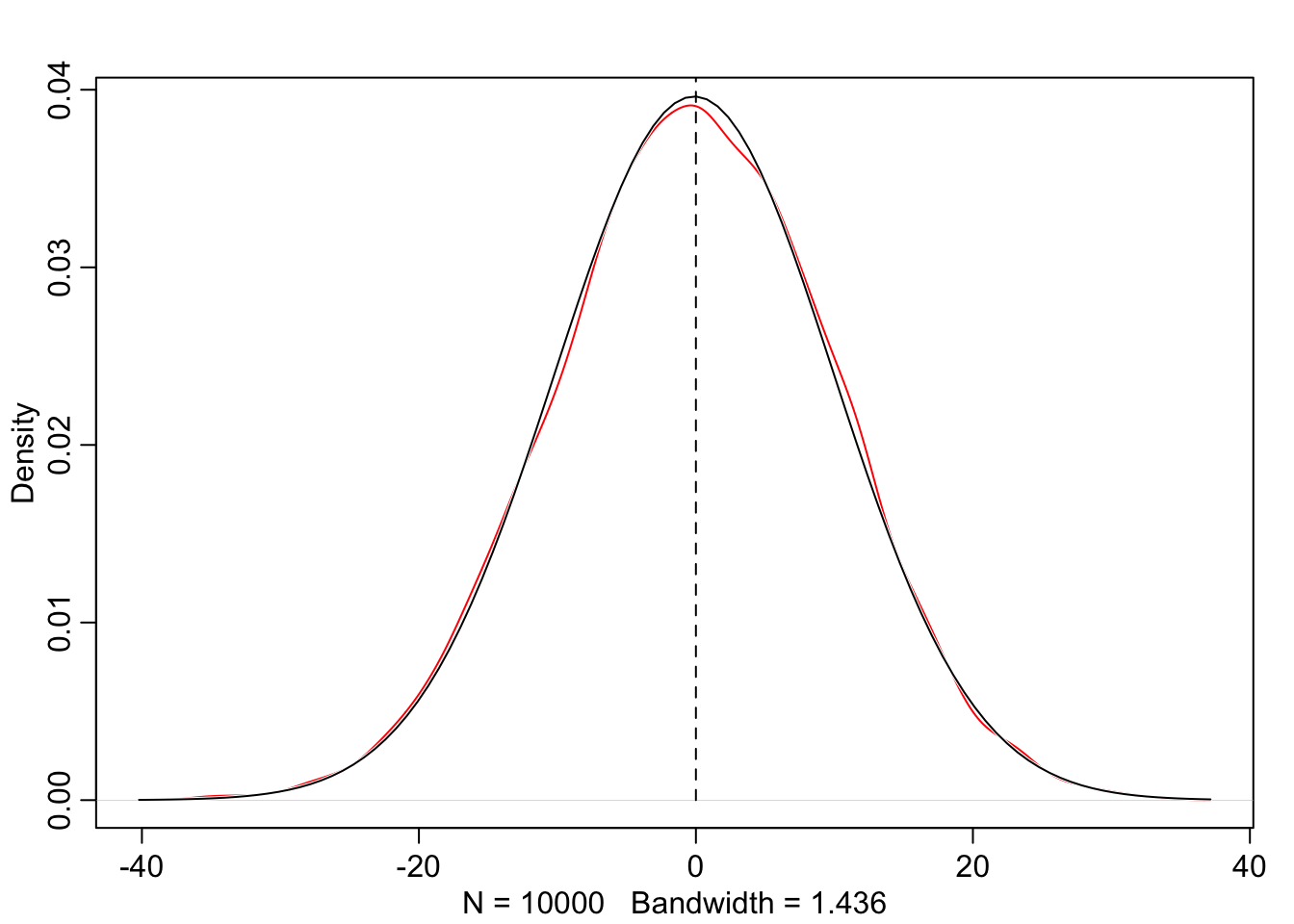

The prior predictive simulation generates a plausible distribution; There are no values negative (left dashed vertical line at 0) and one of the tallest people in recorded history, Robert Pershing Wadlow (1918–1940) with 272 cm (right dashed vertical line) has only a small probability.

The prior probability distribution of height is not itself Gaussian because it is approaching the mean too thin and to high, respectively its tails are too thick. But this is ok.

“The distribution you see is not an empirical expectation, but rather the distribution of relative plausibilities of different heights, before seeing the data.” (McElreath, 2020, p. 83) (pdf)

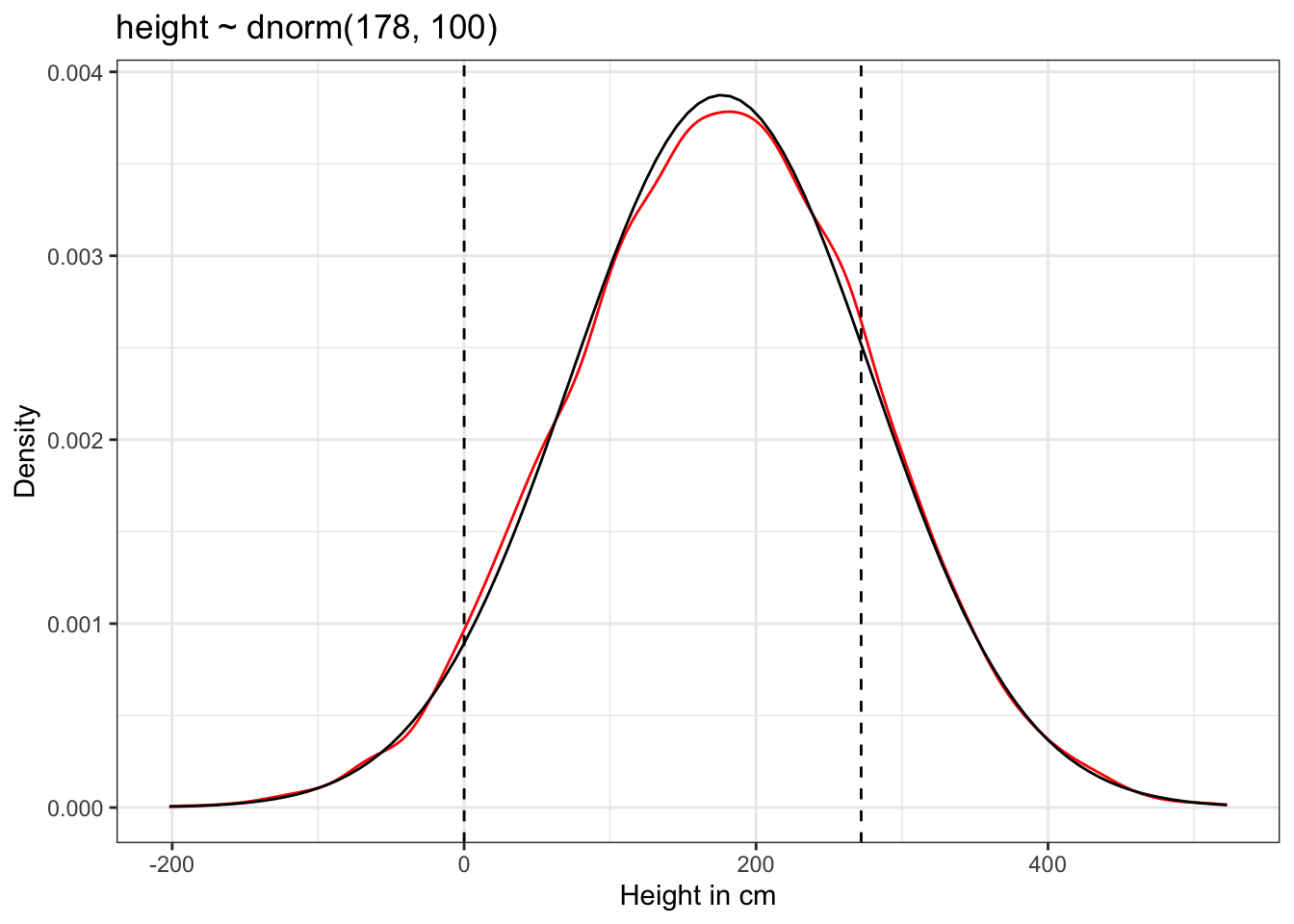

R Code 4.19 a: Simulate heights by sampling from the priors with \(\mu \sim Normal(178, 100)\) (Original)

N_sim_height_a <- 1e4

set.seed(4) # to make example reproducible

## R code 4.14a adapted #######################################

sample_mu2_a <- rnorm(N_sim_height_a, 178, 100)

sample_sigma2_a <- runif(N_sim_height_a, 0, 50)

priors_height2_a <- rnorm(N_sim_height_a, sample_mu2_a, sample_sigma2_a)

rethinking::dens(priors_height2_a,

adj = 1,

norm.comp = TRUE,

show.zero = TRUE,

col = "red")

graphics::abline(v = 272, lty = 2)

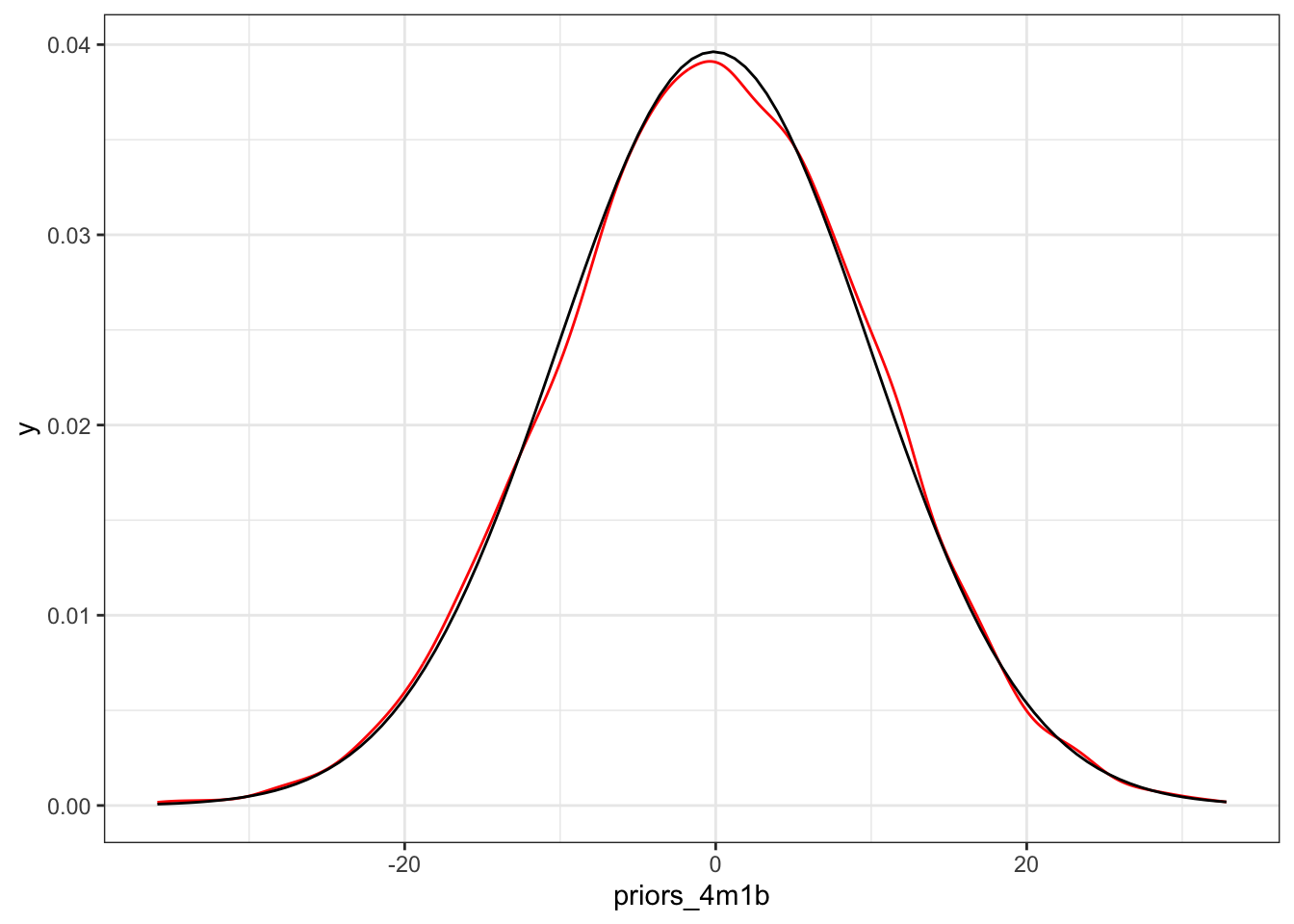

The results of Graph 4.8 contradicts our scientific knowledge — but also our common sense — about possible height values of humans. Now the model, before seeing the data, expects people to have negative height. It also expects some giants. One of the tallest people in recorded history, Robert Pershing Wadlow (1918–1940) stood 272 cm tall. In our prior predictive simulation many people are taller than this.

“Does this matter? In this case, we have so much data that the silly prior is harmless. But that won’t always be the case. There are plenty of inference problems for which the data alone are not sufficient, no matter how numerous. Bayes lets us proceed in these cases. But only if we use our scientific knowledge to construct sensible priors. Using scientific knowledge to build priors is not cheating. The important thing is that your prior not be based on the values in the data, but only on what you know about the data before you see it.” (McElreath, 2020, p. 84) (pdf)

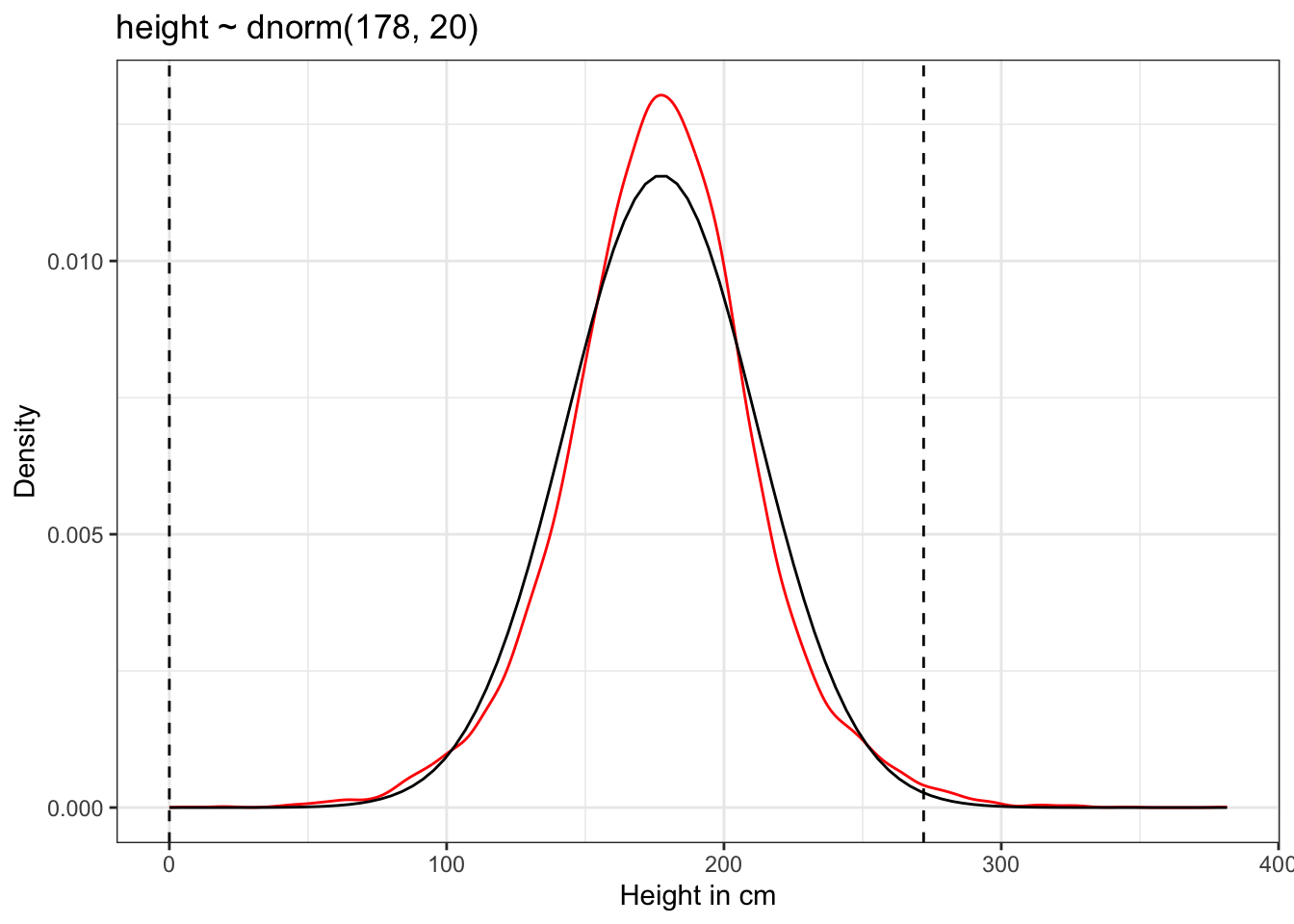

R Code 4.20 b: Simulate heights by sampling from the priors with \(\mu \sim Normal(178, 20)\) (Tidyverse)

N_sim_height_b <- 1e4

set.seed(4) # to make example reproducible

## R code 4.14b #######################################

sim_height_b <-

tibble::tibble(sample_mu_b = stats::rnorm(N_sim_height_b, mean = 178, sd = 20),

sample_sigma_b = stats::runif(N_sim_height_b, min = 0, max = 50)) |>

dplyr::mutate(priors_height_b = stats::rnorm(N_sim_height_b,

mean = sample_mu_b,

sd = sample_sigma_b))

sim_height_b |>

ggplot2::ggplot(ggplot2::aes(x = priors_height_b)) +

ggplot2::geom_density(color = "red") +

ggplot2::stat_function(

fun = dnorm,

args = with(sim_height_b, c(mean = mean(priors_height_b), sd = sd(priors_height_b)))

) +

ggplot2::geom_vline(xintercept = c(0, 272), linetype = "dashed") +

ggplot2::ggtitle("height ~ dnorm(178, 20)") +

ggplot2::labs(x = "Height in cm", y = "Density") +

ggplot2::theme_bw()

ggplot2::geom_density() computes and draws kernel density estimates, which is a smoothed version of the histogram. Note that there is no data mentioned explicitly in the call of ggplot2::geom_density(). When this is the case (data = NULL) then the data will be inherited from the plot data as specified in the call to ggplot2::ggplot(). Otherwise the function needs a data frame or a function with a single argument to override the plot data. (geom_density() help file).

The prior predictive simulation generates a plausible distribution; There are no values negative (left dashed vertical line at 0) and one of the tallest people in recorded history, Robert Pershing Wadlow (1918–1940) with 272 cm (right dashed vertical line) has only a small probability.

The prior probability distribution of height is not itself Gaussian because it is approaching the mean too thin and to high, respectively its tails are too thick. But this is ok.

“The distribution you see is not an empirical expectation, but rather the distribution of relative plausibilities of different heights, before seeing the data.” (McElreath, 2020, p. 83) (pdf)

R Code 4.21 b: Simulate heights by sampling from the priors with \(\mu \sim Normal(178, 100)\) (Tidyverse)

N_sim_height_b <- 1e4

set.seed(4) # to make example reproducible

## R code 4.14b #######################################

sim_height2_b <-

tibble::tibble(sample_mu2_b = stats::rnorm(N_sim_height_b, mean = 178, sd = 100),

sample_sigma2_b = stats::runif(N_sim_height_b, min = 0, max = 50)) |>

dplyr::mutate(priors_height2_b = stats::rnorm(N_sim_height_b,

mean = sample_mu2_b,

sd = sample_sigma2_b))

sim_height2_b |>

ggplot2::ggplot(ggplot2::aes(x = priors_height2_b)) +

ggplot2::geom_density(color = "red") +

ggplot2::stat_function(

fun = dnorm,

args = with(sim_height2_b, c(mean = mean(priors_height2_b), sd = sd(priors_height2_b)))

) +

ggplot2::geom_vline(xintercept = c(0, 272), linetype = "dashed") +

ggplot2::ggtitle("height ~ dnorm(178, 100)") +

ggplot2::labs(x = "Height in cm", y = "Density") +

ggplot2::theme_bw()

The results of Graph 4.10 contradicts our scientific knowledge — but also our common sense — about possible height values of humans. Now the model, before seeing the data, expects people to have negative height. It also expects some giants. One of the tallest people in recorded history, Robert Pershing Wadlow (1918–1940) stood 272 cm tall. In our prior predictive simulation many people are taller than this.

“Does this matter? In this case, we have so much data that the silly prior is harmless. But that won’t always be the case. There are plenty of inference problems for which the data alone are not sufficient, no matter how numerous. Bayes lets us proceed in these cases. But only if we use our scientific knowledge to construct sensible priors. Using scientific knowledge to build priors is not cheating. The important thing is that your prior not be based on the values in the data, but only on what you know about the data before you see it.” (McElreath, 2020, p. 84) (pdf)

We are going to map out the posterior distribution through brute force calculations.

This is not recommended because it is

Therefore the grid approximation technique has limited relevance. Later on we will use the quadratic approximation with rethinking::quap().

The strategy is the same grid approximation strategy as before in Section 2.4.3. But now there are two dimensions, and so there is a geometric (literally) increase in bother.

Procedure 4.2 : Grid approximation as R code (compare with Procedure 2.3)

post_a.base::sapply() passes the unique combination of \(\mu\) and \(\sigma\) on each row of post_a to a function that computes the log-likelihood of each observed height, and adds all of these log-likelihoods together with base::sum().base::exp(post_a$prod) but have to scale all log-products by the maximum log-product.Use log-probability to prevent rounding to zero!

“Remember, in large samples, all unique samples are unlikely. This is why you have to work with log-probability.” (McElreath, 2020, p. 562) (pdf)

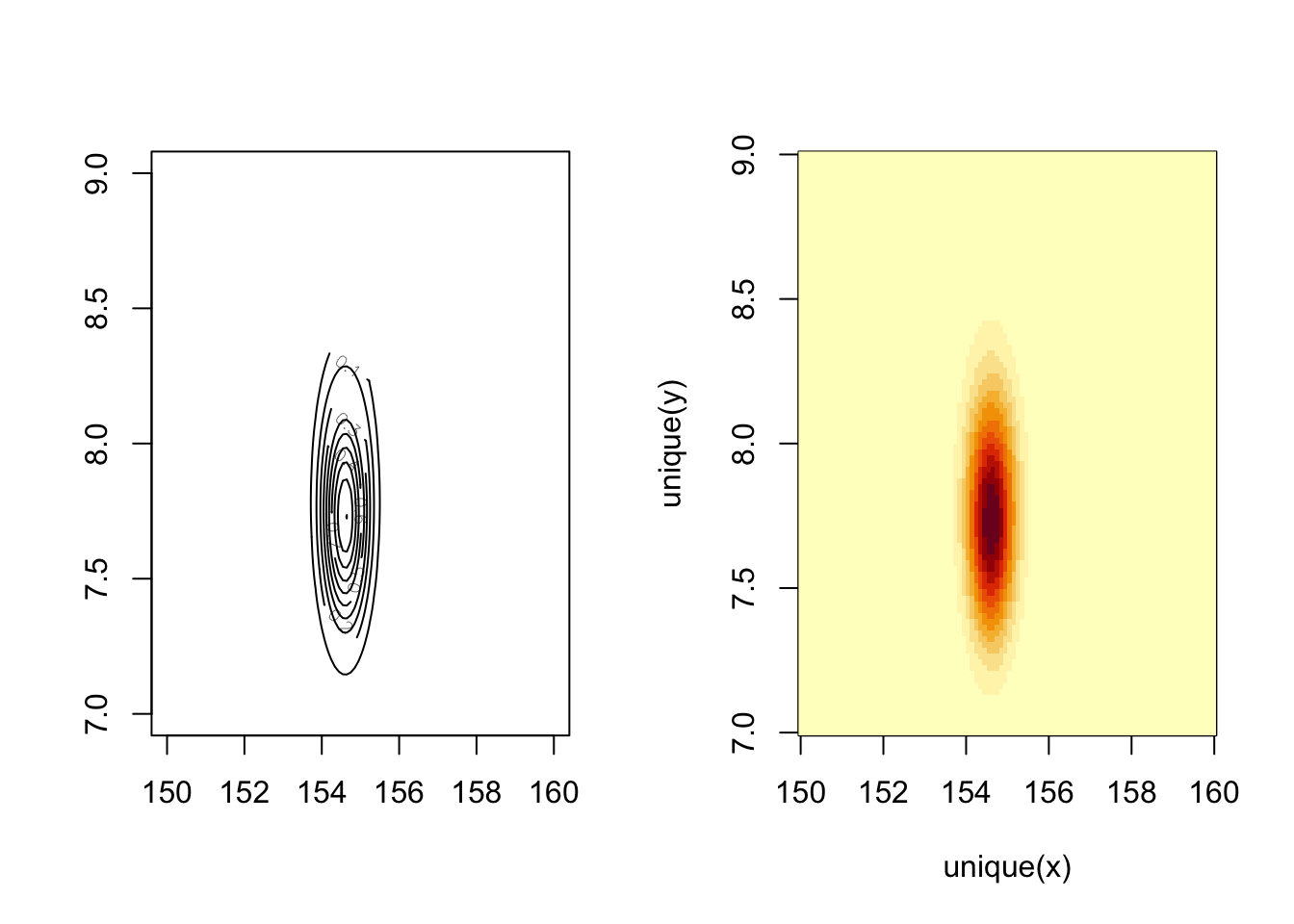

: Grid approximation of the posterior distribution

R Code 4.22 a: Grid approximation of the posterior distribution (Original)

## R code 4.16a Grid approx. ##################################

## 1. Define the grid ##########

mu.list_a <- seq(from = 150, to = 160, length.out = 100)

sigma.list_a <- seq(from = 7, to = 9, length.out = 100)

## 2. All Combinations of μ & σ ##########

post_a <- expand.grid(mu_a = mu.list_a, sigma_a = sigma.list_a)

## 3. Compute log-likelihood #######

post_a$LL <- sapply(1:nrow(post_a), function(i) {

sum(

dnorm(d2_a$height, post_a$mu[i], post_a$sigma[i], log = TRUE)

)

})

## 4. Multiply prior by likelihood ##########

## as the priors are on the log scale adding = multiplying

post_a$prod <- post_a$LL + dnorm(post_a$mu_a, 178, 20, TRUE) +

dunif(post_a$sigma_a, 0, 50, TRUE)

## 5. Back to probability scale #########

## without rounding error

post_a$prob <- exp(post_a$prod - max(post_a$prod))

## define plotting area as one row and two columns

par(mfrow = c(1, 2))

## R code 4.17a Contour plot ##################################

rethinking::contour_xyz(post_a$mu_a, post_a$sigma_a, post_a$prob)

## R code 4.18a Heat map ##################################

rethinking::image_xyz(post_a$mu_a, post_a$sigma_a, post_a$prob)

b: Numbered R Code Title (Tidyverse)

## R code 4.16b Grid approx ##################################

## 1./2. Define grid & combinations ##########

d_grid_b <-

tidyr::expand_grid(mu_b = base::seq(from = 150, to = 160, length.out = 100),

sigma_b = base::seq(from = 7, to = 9, length.out = 100))

## 3a. Compute log-likelihood #######

grid_function <- function(mu, sigma) {

stats::dnorm(d2_b$height, mean = mu, sd = sigma, log = TRUE) |>

sum()

}

d_grid2_b <-

d_grid_b |>

## 3b. Compute log-likelihood #######

dplyr::mutate(log_likelihood_b = purrr::map2(mu_b, sigma_b, grid_function)) |>

tidyr::unnest(log_likelihood_b) |>

dplyr::mutate(prior_mu_b = stats::dnorm(mu_b, mean = 178, sd = 20, log = T),

prior_sigma_b = stats::dunif(sigma_b, min = 0, max = 50, log = T)) |>

## 4. Multiply prior by likelihood ##########

## as the priors are on the log scale adding = multiplying

dplyr::mutate(product_b = log_likelihood_b + prior_mu_b + prior_sigma_b) |>

## 5. Back to probability scale #########

dplyr::mutate(probability_b = exp(product_b - max(product_b)))

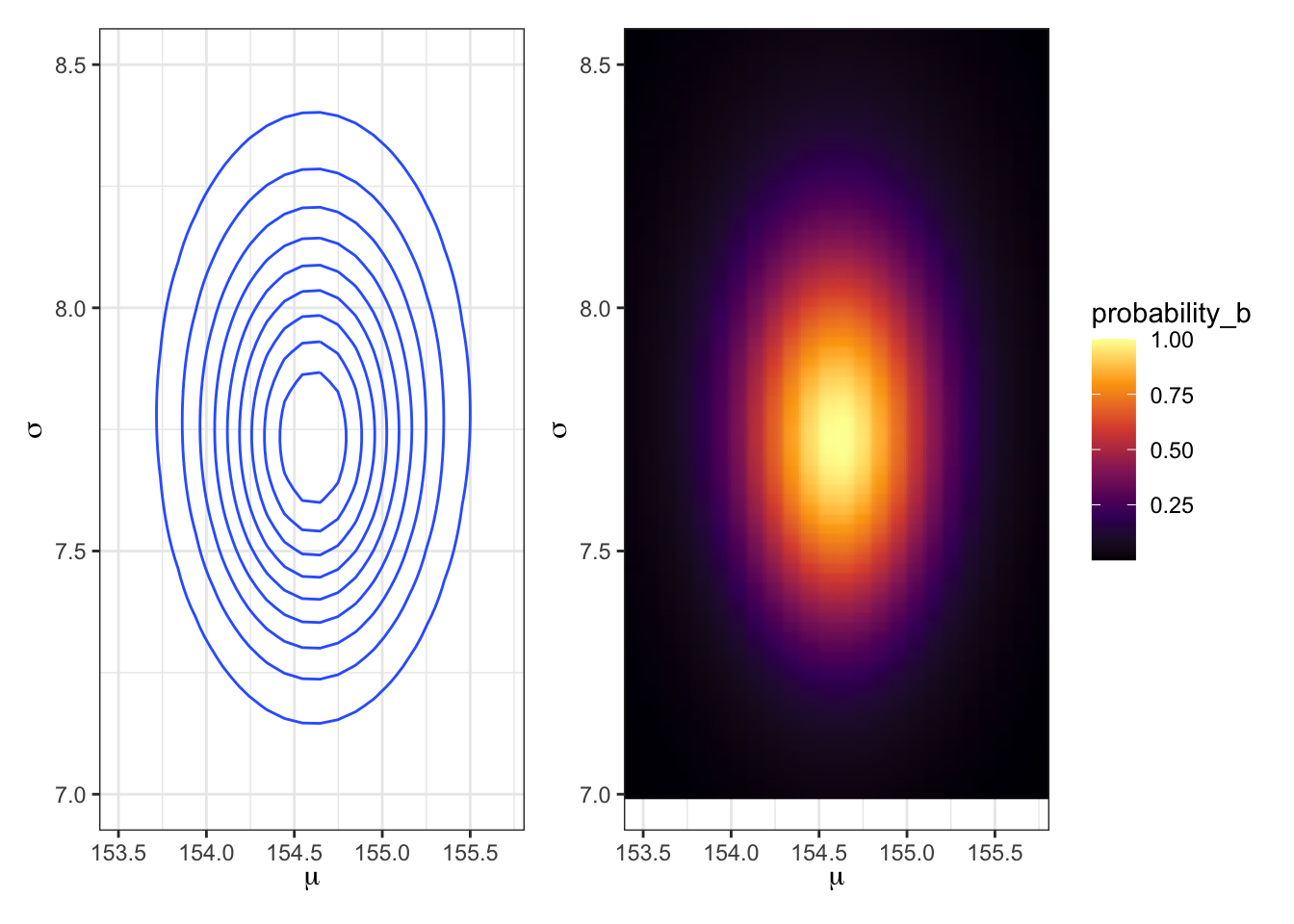

## R code 4.17b Contour plot ##################################

p1_b <-

d_grid2_b |>

ggplot2::ggplot(ggplot2::aes(x = mu_b, y = sigma_b, z = probability_b)) +

ggplot2::geom_contour() +

ggplot2::labs(x = base::expression(mu),

y = base::expression(sigma)) +

ggplot2::coord_cartesian(xlim = c(153.5, 155.7),

ylim = c(7, 8.5)) +

ggplot2::theme_bw()

## R code 4.18b Heat map ##################################

p2_b <-

d_grid2_b |>

ggplot2::ggplot(ggplot2::aes(x = mu_b, y = sigma_b, fill = probability_b)) +

ggplot2::geom_raster(interpolate = TRUE) +

ggplot2::scale_fill_viridis_c(option = "B") +

ggplot2::labs(x = base::expression(mu),

y = base::expression(sigma)) +

ggplot2::coord_cartesian(xlim = c(153.5, 155.7),

ylim = c(7, 8.5)) +

ggplot2::theme_bw()

library(patchwork)

p1_b + p2_b

tidyr::unnest() function to expand the list-column containing data frames into rows and columns.ggplot2::coord_cartesian() I zoomed into the graph to concentrate on the most important x/y ranges.base::expression().| PURPOSE | ORIGINAL | TIDYVERSE |

|---|---|---|

| All combinations | base::expand.grid() | tidyr::expand_grid() |

| Apply function to each element | base::sapply() | purrr::map2() |

| Contour plot | rethinking::contour_xyz() | ggplot2::geom_contour() |

| Heat map | rethinking::image_xyz() | ggplot2::geom_raster() |

Creating data frame resp. tibble of all combinations

There are several related function for base::expand.grid() in {tidyverse}:

tidyr::expand_grid(): Create a tibble from all combination of inputs. This is the most similar function to base::expand.grid() as its input are vectors rather than a data frame. But it different in five aspects:

tidyr::expand(): Generates all combination of variables found in a dataset. It is paired with

tidyr::crossing(): A wrapper around tidyr::expand_grid() the de-duplicates and sorts the inputs.tidyr::nesting(): Finds only combinations already present in the data.“To study this posterior distribution in more detail, again I’ll push the flexible approach of sampling parameter values from it. This works just like it did in R Code 3.6, when you sampled values of \(p\) from the posterior distribution for the globe tossing example.” (McElreath, 2020, p. 85) (pdf)

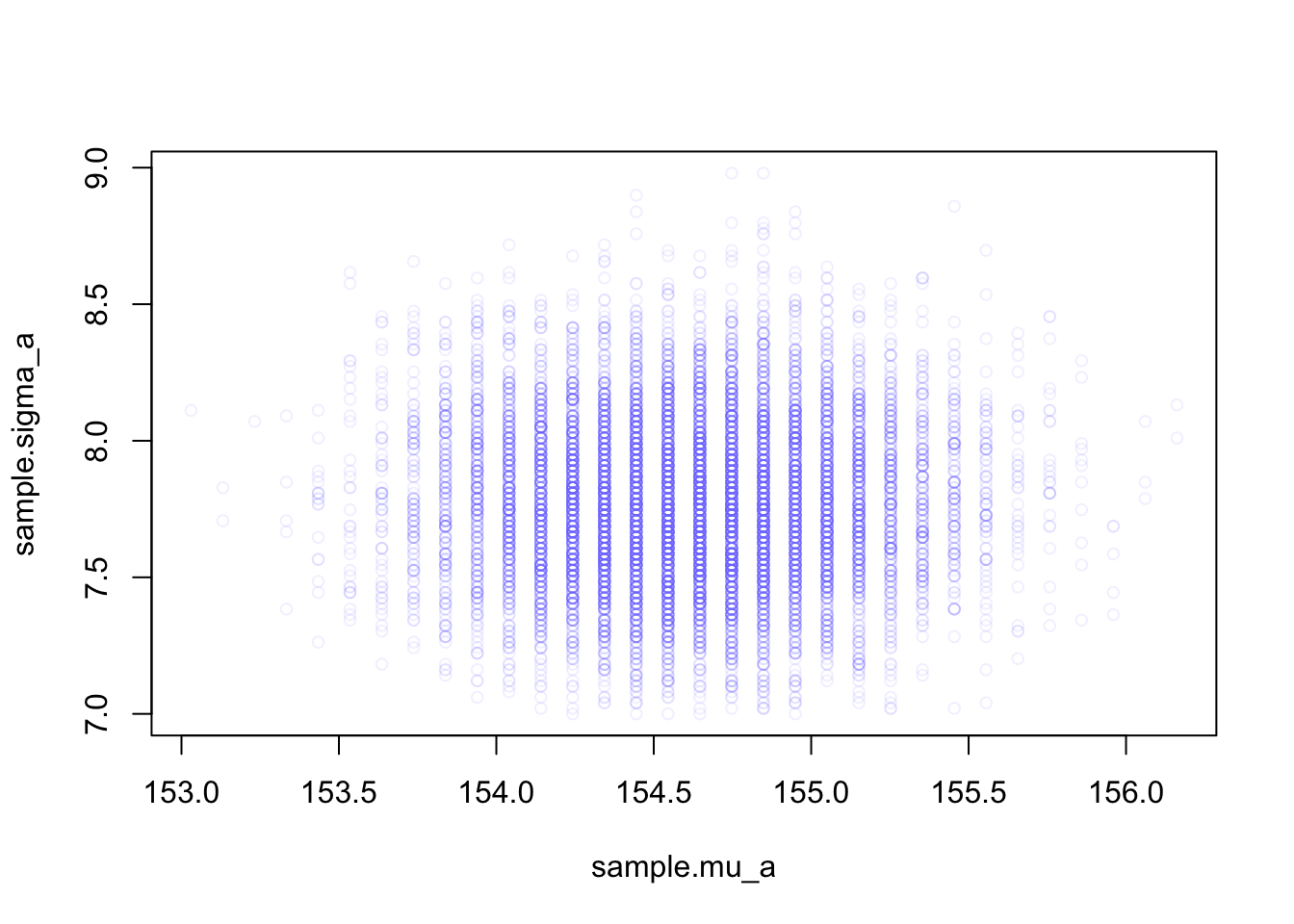

Procedure 4.3 : Sampling from the posterior

Since there are two parameters, and we want to sample combinations of them:

post_a in proportion to the values in post_a$prob.Example 4.7 : Sampling from the posterior

R Code 4.23 a: Samples from the posterior distribution for the heights data (Original)

## R code 4.19a ###########################

# 1. Sample row numbers #########

# randomly sample row numbers in post_a

# in proportion to the values in post_a$prob.

sample.rows <- sample(1:nrow(post_a),

size = 1e4, replace = TRUE,

prob = post_a$prob

)

# 2. pull out parameter values ########

sample.mu_a <- post_a$mu[sample.rows]

sample.sigma_a <- post_a$sigma[sample.rows]

## R code 4.20a ###########################

plot(sample.mu_a, sample.sigma_a,

cex = 0.8, pch = 21,

col = rethinking::col.alpha(rethinking:::rangi2, 0.1)

)

rethinking::col.alpha() is part of the {rethinking} R package. It makes colors transparent for a better inspections of values where data overlap.rethinking:::rangi2 itself is just the definition of a hex color code (“#8080FF”) specifying the shade of blue.Adjust the plot to your tastes by playing around with cex (character expansion, the size of the points), pch (plot character), and the \(0.1\) transparency value.

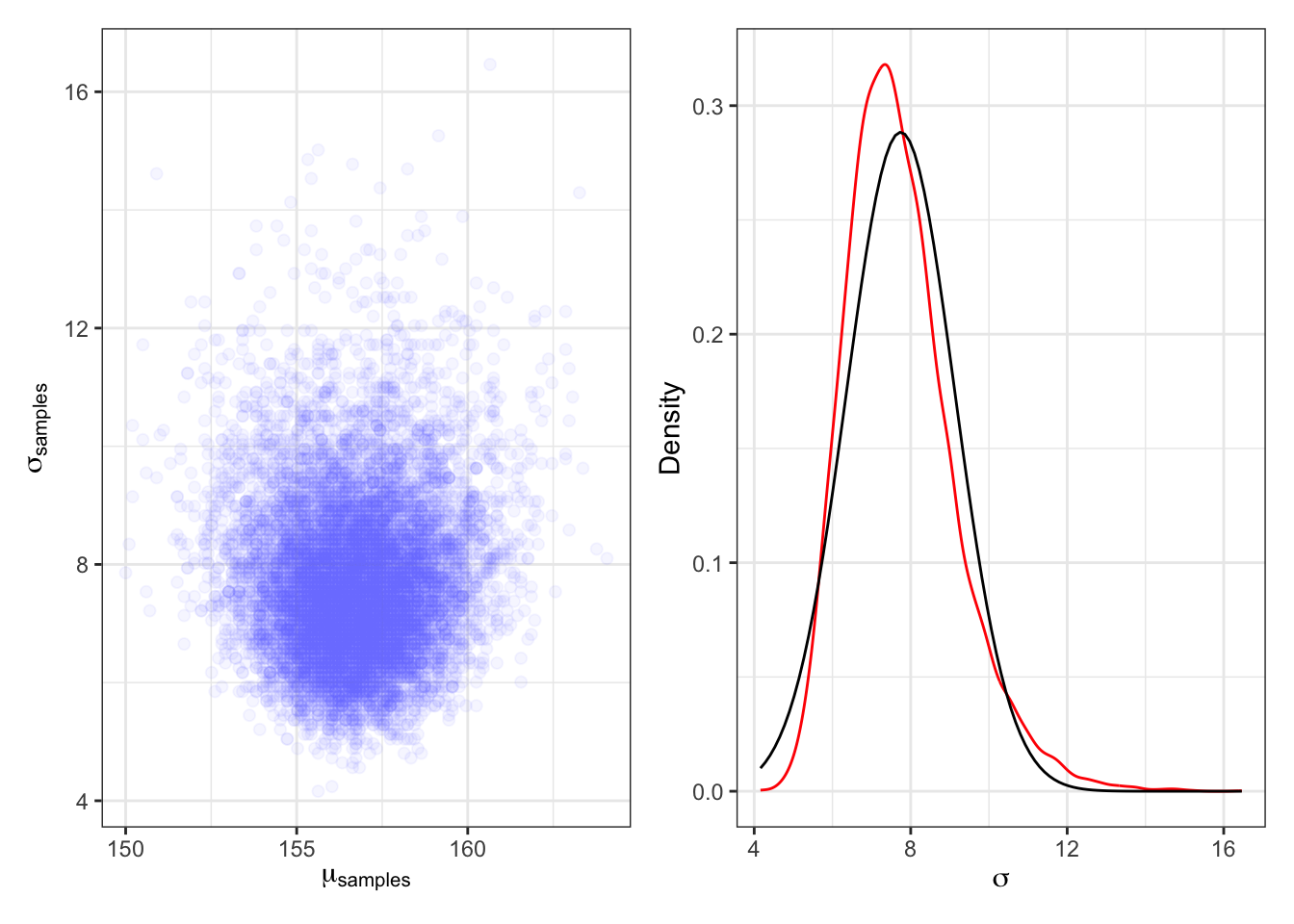

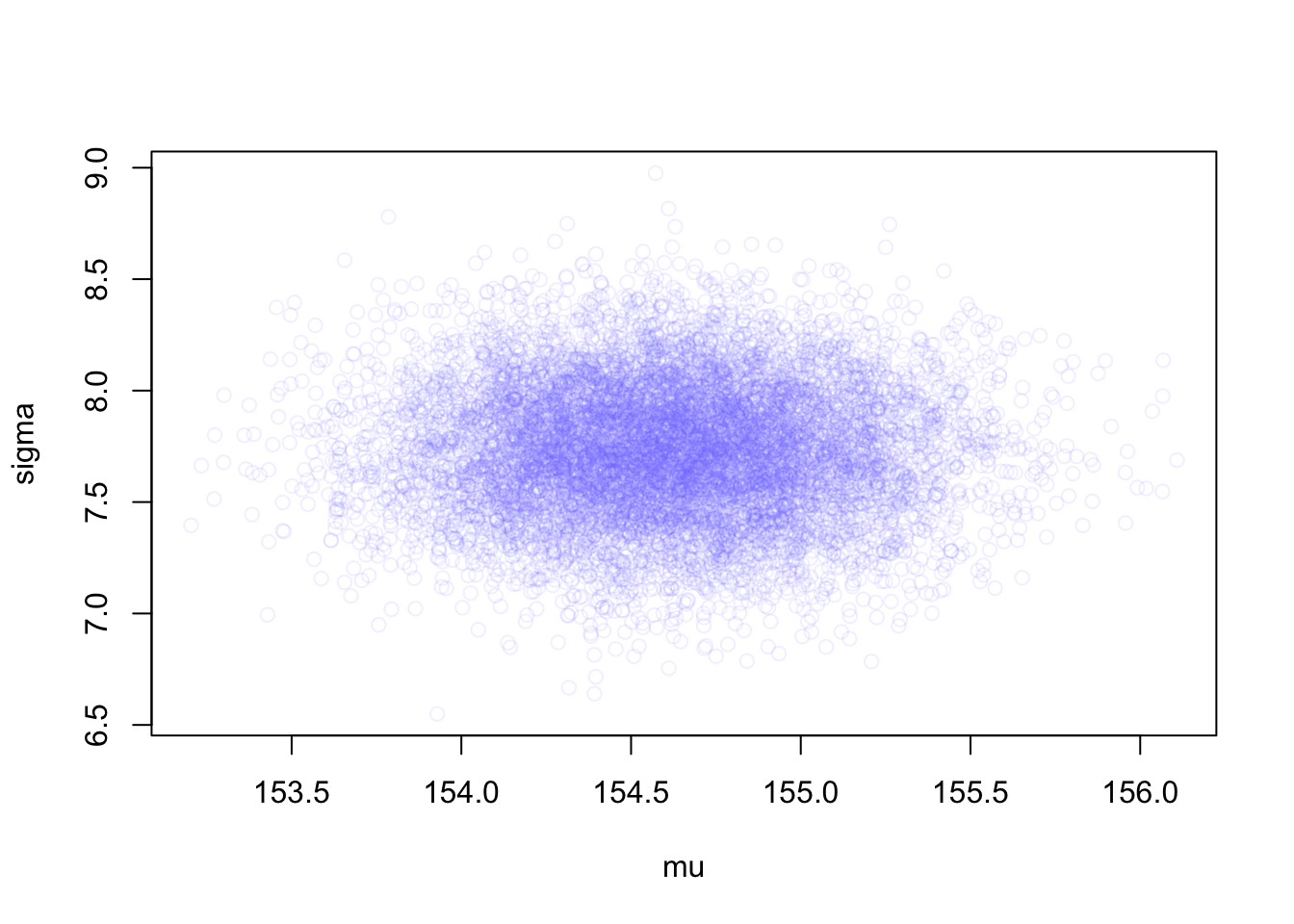

The density of points is highest in the center, reflecting the most plausible combinations of \(\mu\) and \(\sigma\). There are many more ways for these parameter values to produce the data, conditional on the model.

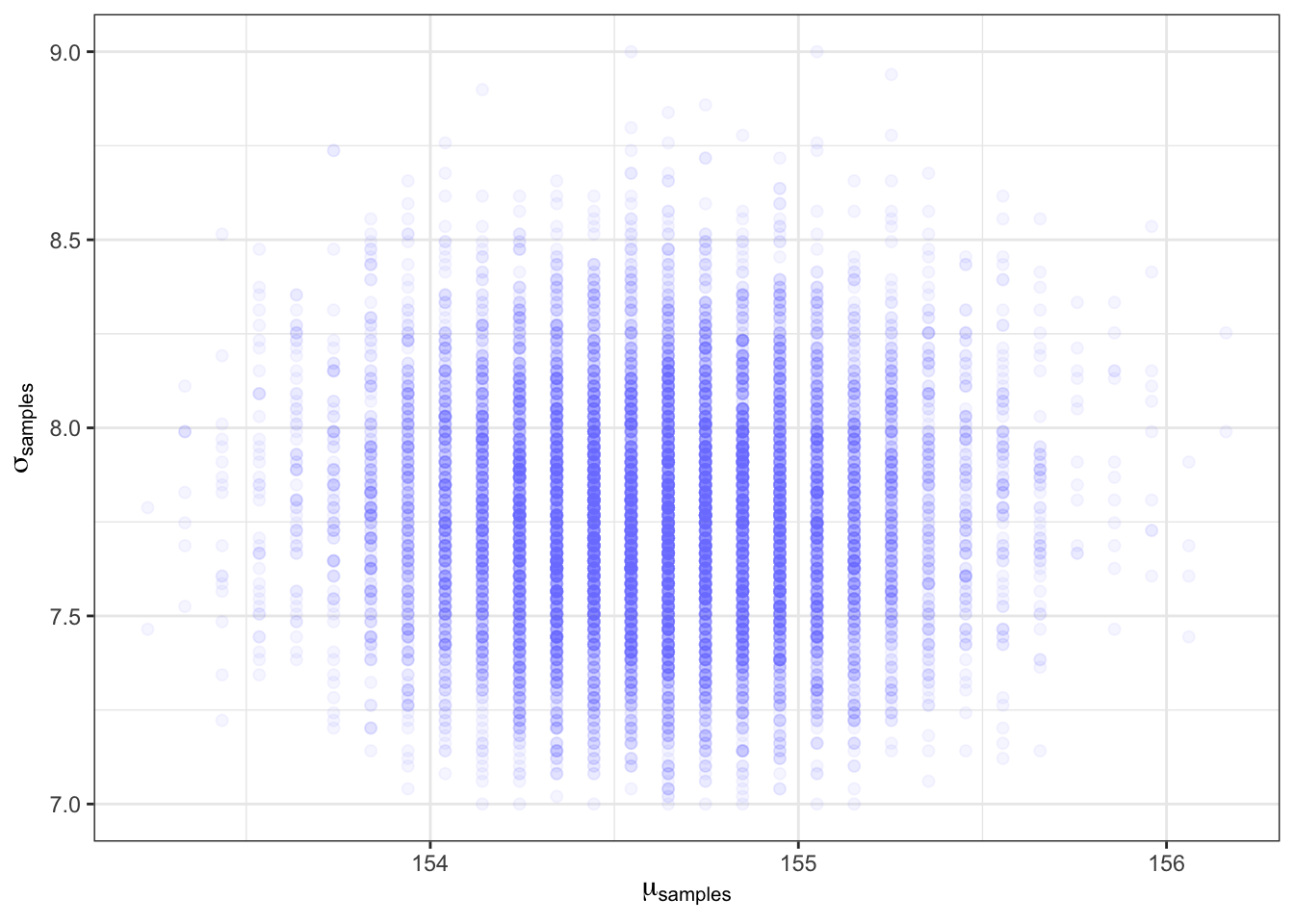

R Code 4.24 b: Samples from the posterior distribution for the heights data (Tidyverse)

set.seed(4)

d_grid_samples_b <-

d_grid2_b |>

dplyr::slice_sample(n = 1e4,

replace = TRUE,

weight_by = probability_b

)

d_grid_samples_b |>

ggplot2::ggplot(ggplot2::aes(x = mu_b, y = sigma_b)) +

ggplot2::geom_point(size = 1.8, alpha = 1/15, color = "#8080FF") +

ggplot2::scale_fill_viridis_c() +

ggplot2::labs(x = expression(mu[samples]),

y = expression(sigma[samples])) +

ggplot2::theme_bw()

Kurz used the superseded dplyr::sample_n() to sample rows with replacement from d_grid2_b. I used instead the newer dplyr::slice_sample() that should used to sample rows.

The density of points is highest in the center, reflecting the most plausible combinations of μ and σ. There are many more ways for these parameter values to produce the data, conditional on the model.

The jargon marginal here means “averaging over the other parameters.”

We described the distribution of confidence in each combination of \(\mu\) and \(\sigma\) by summarizing the samples. Think of them like data and describe them, just like in Section 3.2. For example, to characterize the shapes of the marginal posterior densities of \(\mu\) and \(\sigma\), all we need to do is to call rethinking::dens() with the appropriate vector sample.mu_a resp. sample.sigma_a.

Example 4.8 : Marginal posterior densities of \(\mu\) and \(\sigma\) for the heights data

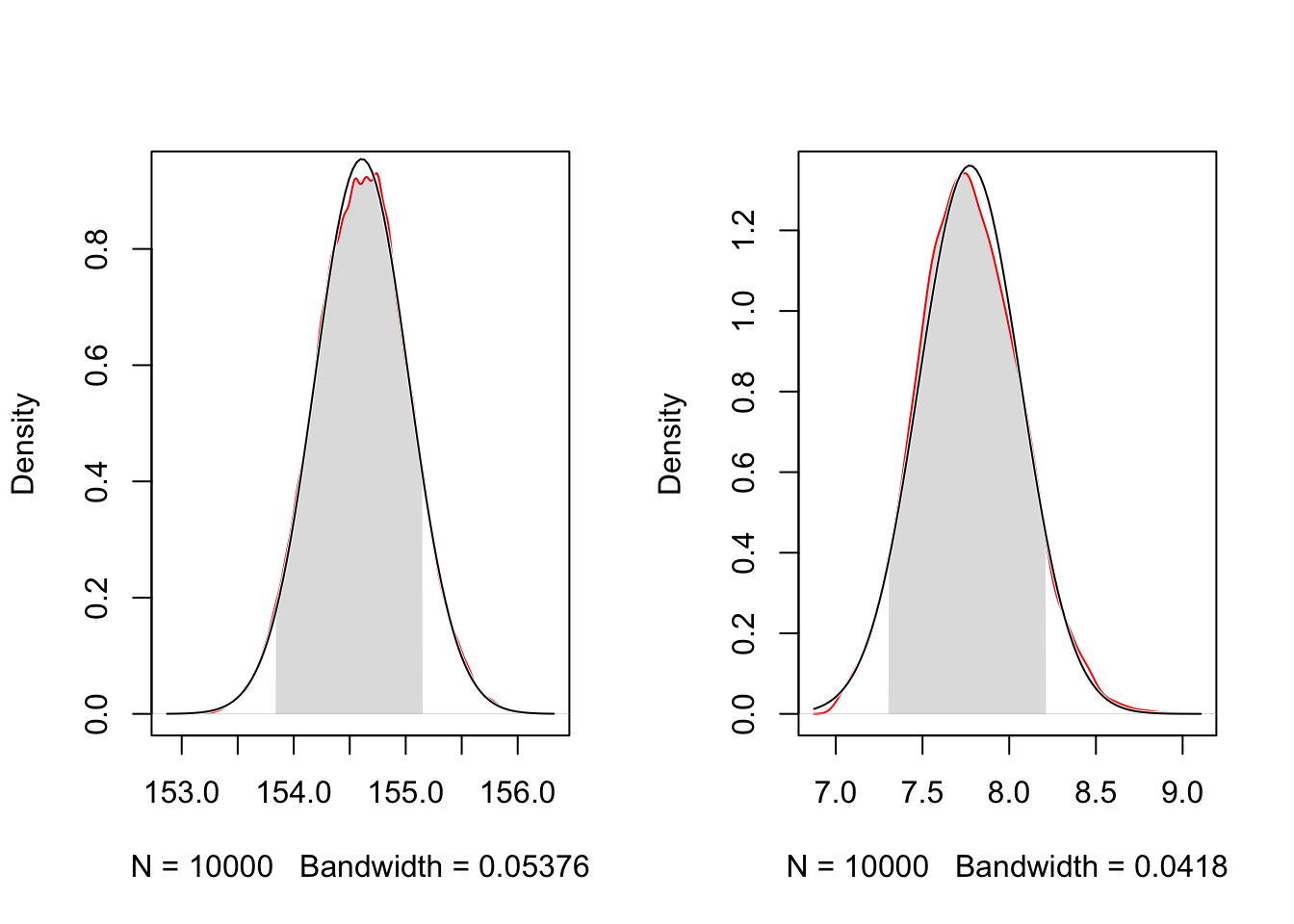

R Code 4.25 a: Marginal posterior densities of \(\mu\) and \(\sigma\) for the heights data (Original)

## define plotting area as one row and two columns

par(mfrow = c(1, 2))

## R code 4.21a adapted #########################

rethinking::dens(sample.mu_a, adj = 1, show.HPDI = 0.89,

norm.comp = TRUE, col = "red")

rethinking::dens(sample.sigma_a, adj = 1, show.HPDI = 0.89,

norm.comp = TRUE, col = "red")

For a comparison I have overlaid the normal distribution and shown the .89% HPDI. Compare the grayed area with the calculation of the values in Example 4.9.

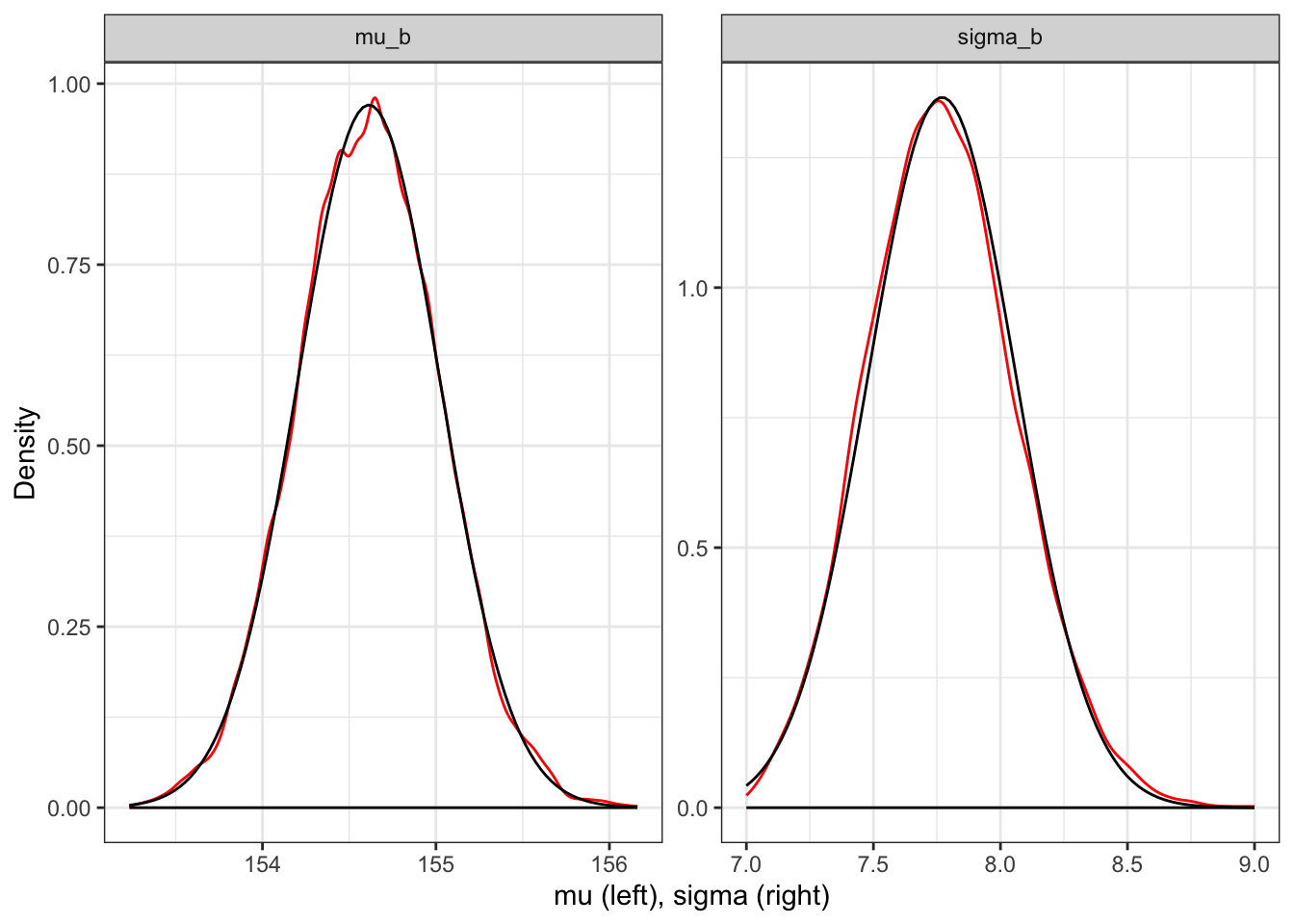

R Code 4.26 b: Marginal posterior densities of \(\mu\) and \(\sigma\) for the heights data (Tidyverse)

d_grid_samples_b |>

tidyr::pivot_longer(mu_b:sigma_b) |>

ggplot2::ggplot(ggplot2::aes(x = value)) +

ggplot2::geom_density(color = "red") +

# ggplot2::scale_y_continuous(NULL, breaks = NULL) +

# ggplot2::xlab(NULL) +

ggplot2::stat_function(

fun = dnorm,

args = with(d_grid_samples_b, c(

mean = mean(mu_b),

sd = sd(mu_b)))

) +

ggplot2::stat_function(

fun = dnorm,

args = with(d_grid_samples_b, c(

mean = mean(sigma_b),

sd = sd(sigma_b)))

) +

ggplot2::labs(x = "mu (left), sigma (right)",

y = "Density") +

ggplot2::theme_bw() +

ggplot2::facet_wrap(~ name, scales = "free",

labeller = ggplot2::label_parsed)

Kurz used tidyr::pivot_longer() and then ggplot2::facet_wrap() to plot the densities for both mu and sigma at once. For a comparison I have overlaid the normal distribution. But I do not know how to prevent the base line at density = 0. See Tidyverse 2 (Graph 4.15) where I have constructed the plots of both distribution separately.

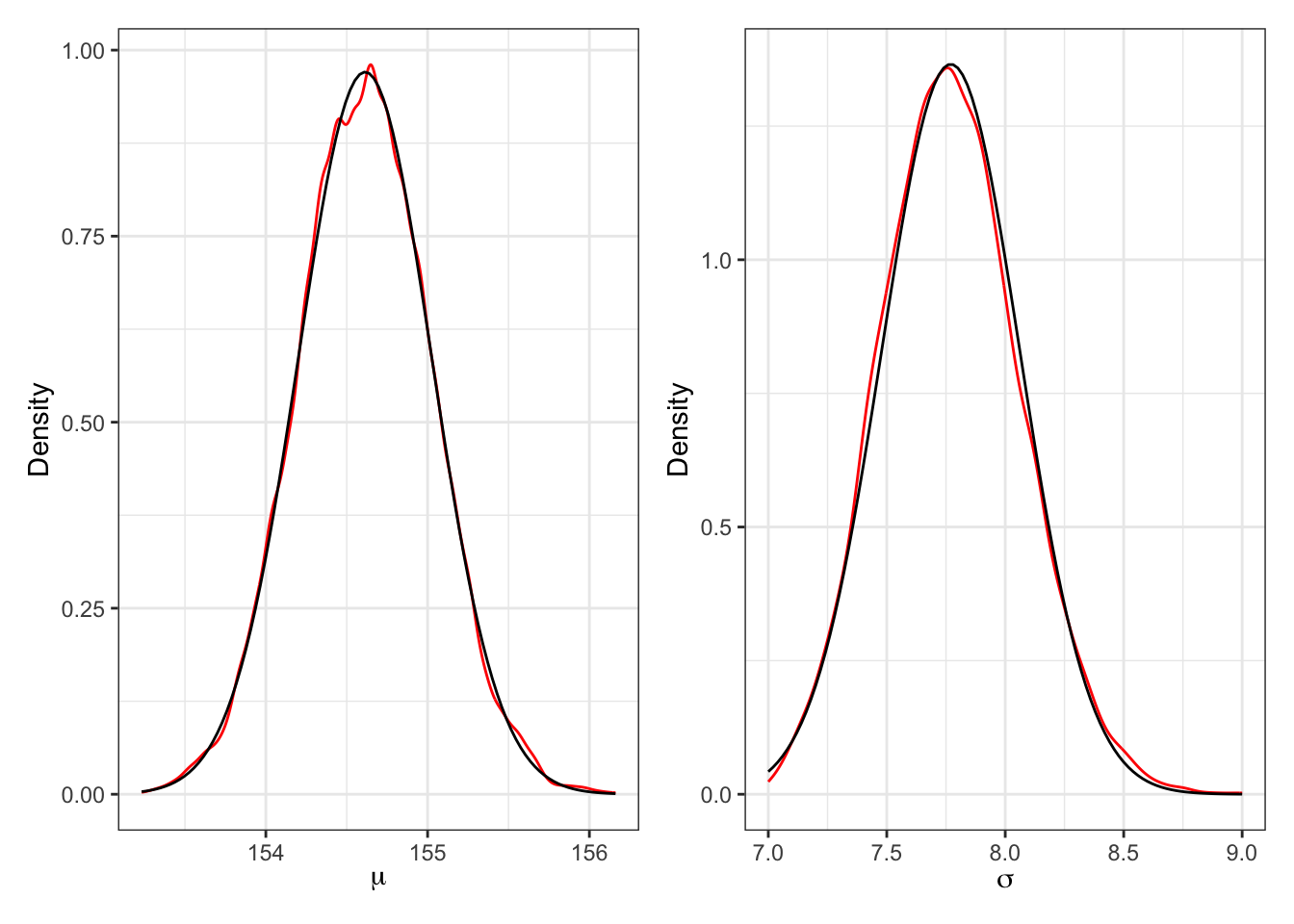

R Code 4.27 b: Marginal posterior densities of \(\mu\) and \(\sigma\) for the heights data (Tidyverse)

plot_mu_b <-

d_grid_samples_b |>

ggplot2::ggplot(ggplot2::aes(x = mu_b)) +

ggplot2::geom_density(color = "red") +

ggplot2::stat_function(

fun = dnorm,

args = with(d_grid_samples_b, c(

mean = mean(mu_b),

sd = sd(mu_b)))

) +

ggplot2::labs(x = expression(mu),

y = "Density") +

ggplot2::theme_bw()

plot_sigma_b <-

d_grid_samples_b |>

ggplot2::ggplot(ggplot2::aes(x = sigma_b)) +

ggplot2::geom_density(color = "red") +

ggplot2::stat_function(

fun = dnorm,

args = with(d_grid_samples_b, c(

mean = mean(sigma_b),

sd = sd(sigma_b)))

) +

ggplot2::labs(x = expression(sigma),

y = "Density") +

ggplot2::theme_bw()

library(patchwork)

plot_mu_b + plot_sigma_b

“These densities are very close to being normal distributions. And this is quite typical. As sample size increases, posterior densities approach the normal distribution. If you look closely, though, you’ll notice that the density for \(\sigma\) has a longer right-hand tail. I’ll exaggerate this tendency a bit later, to show you that this condition is very common for standard deviation parameters.” (McElreath, 2020, p. 86) (pdf)

Since the drawn samples of Example 4.7 are just vectors of numbers, you can compute any statistic from them that you could from ordinary data: mean, median, or quantile, for example.

As examples we will compute PI and HPDI/HDCI.

We’ll use the {tidybayes} resp. {ggdist} package to compute their posterior modes of the 89% HDIs (and not the standard 95% intervals, as recommended by McElreath).

{tidybayes} has a companion package {ggdist}

There is a companion package {ggdist} which is imported completely by {tidybayes}. Whenever you cannot find the function in {tidybayes} then look at the documentation of {ggdist}. This is also the case for the tidybayes::mode_hdi() function. In the help files of {tidybayes} you will just find notes about a deprecated tidybayes::mode_hdih() function but not the arguments of its new version without the last h (for horizontal) tidybayes::mode_hdi(). But you can look up these details in the {ggdist} documentation. This observation is valid for many families of deprecated functions.

There is a division of functionality between {tidybayes} and {ggdist}:

Example 4.9 : Summarize the widths with posterior compatibility intervals

R Code 4.28 a: Posterior compatibility interval (PI) (Original)

R Code 4.29 a: Highest Posterior Density Interval (HPDI) (Original)

R Code 4.30 b: Posterior compatibility interval (PI) (Tidyverse)

d_grid_samples_b |>

tidyr::pivot_longer(mu_b:sigma_b) |>

dplyr::group_by(name) |>

ggdist::mode_hdi(value, .width = 0.89) #> # A tibble: 2 × 7

#> name value .lower .upper .width .point .interval

#> <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

#> 1 mu_b 155. 154. 155. 0.89 mode hdi

#> 2 sigma_b 7.75 7.30 8.23 0.89 mode hdiR Code 4.31 b: Highest Density Continuous Interval (HDCI) (Tidyverse)

d_grid_samples_b |>

tidyr::pivot_longer(mu_b:sigma_b) |>

dplyr::group_by(name) |>

ggdist::mode_hdci(value, .width = 0.89) #> # A tibble: 2 × 7

#> name value .lower .upper .width .point .interval

#> <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

#> 1 mu_b 155. 154. 155. 0.89 mode hdci

#> 2 sigma_b 7.75 7.30 8.23 0.89 mode hdciIn {tidybayes} resp. {ggdist} the shortest probability interval (= Highest Posterior Density Interval (HPDI)) is called Highest Density Continuous Interval (HDCI).

{rethinking} versus {tidybayes/ggdist}

There are small differences in the results of both packages (rethinking) and {ggdist/tidybayes} that are not important.

“Before moving on to using quadratic approximation (quap) as shortcut to all of this inference, it is worth repeating the analysis of the height data above, but now with only a fraction of the original data. The reason to do this is to demonstrate that, in principle, the posterior is not always so Gaussian in shape. There’s no trouble with the mean, μ. For a Gaussian likelihood and a Gaussian prior on μ, the posterior distribution is always Gaussian as well, regardless of sample size. It is the standard deviation σ that causes problems. So if you care about σ—often people do not—you do need to be careful of abusing the quadratic approximation.” (McElreath, 2020, p. 86) (pdf)

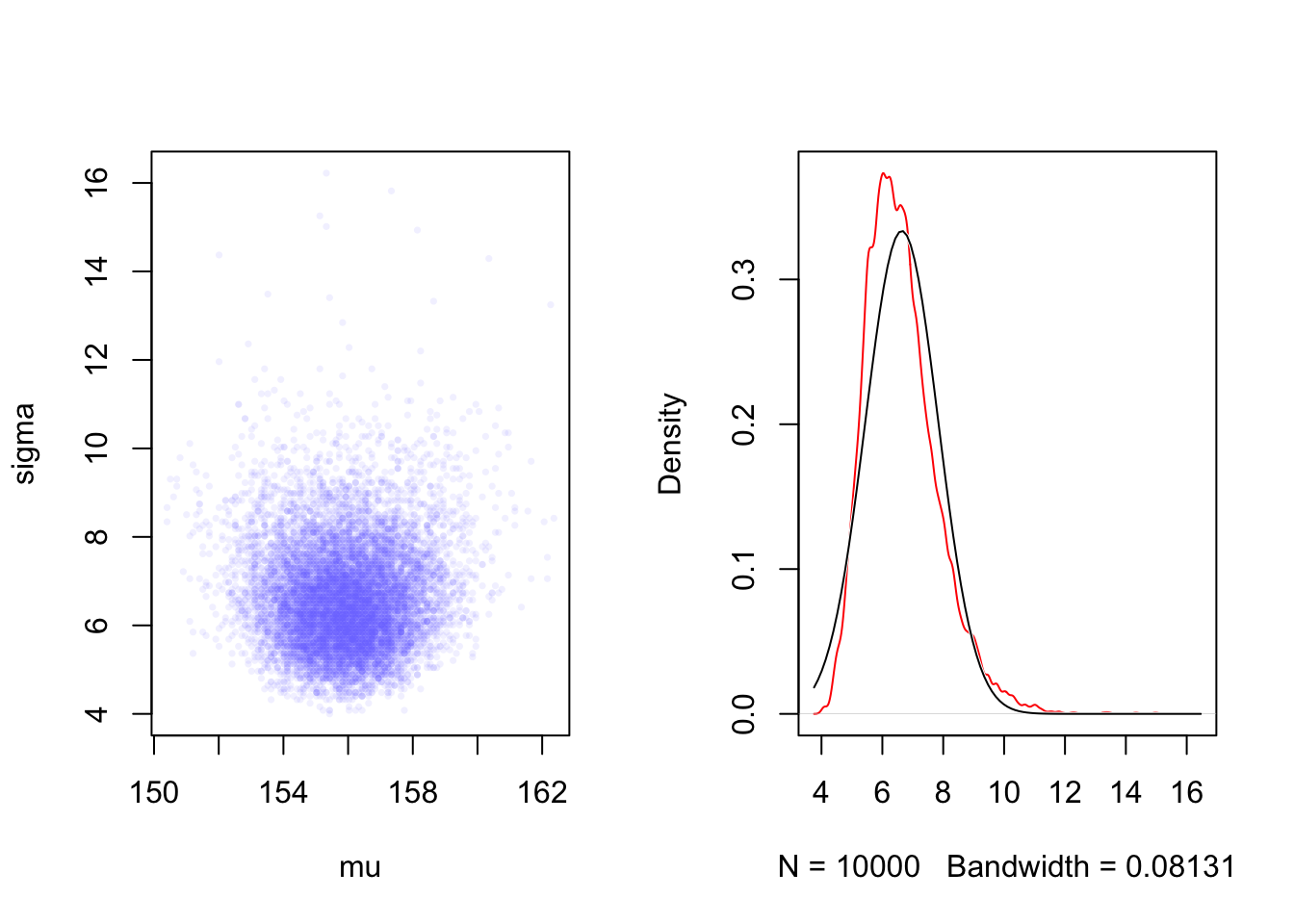

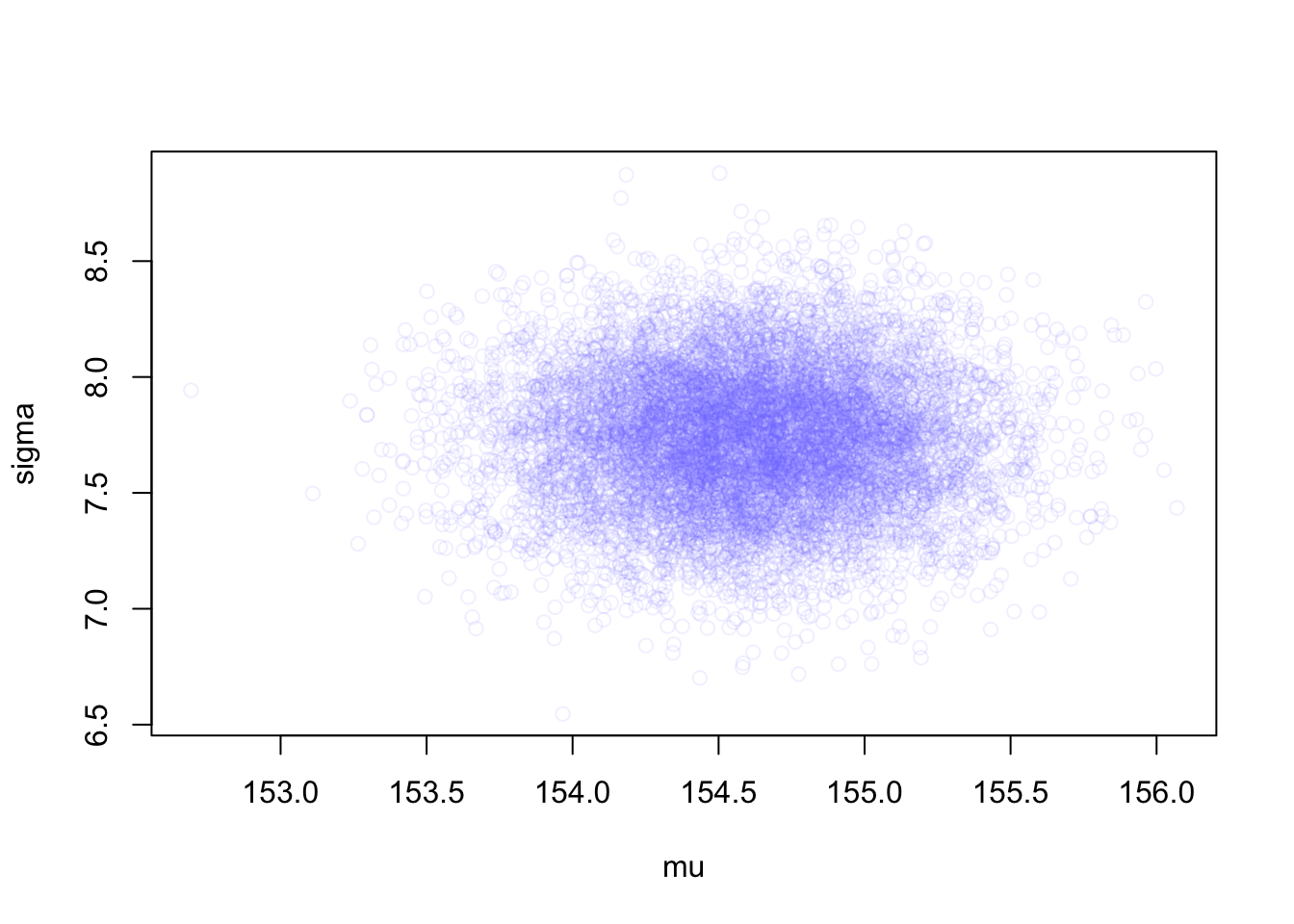

Example 4.10 : Sample size and the normality of \(\sigma\)’s posterior

R Code 4.32 a: Sample size and the normality of \(\sigma\)’s posterior (Original)

## R code 4.23a ######################################

d3_a <- sample(d2_a$height, size = 20)

## R code 4.24a ######################################

mu2_a.list <- seq(from = 150, to = 170, length.out = 200)

sigma2_a.list <- seq(from = 4, to = 20, length.out = 200)

post2_a <- expand.grid(mu = mu2_a.list, sigma = sigma2_a.list)

post2_a$LL <- sapply(1:nrow(post2_a), function(i) {

sum(dnorm(d3_a,

mean = post2_a$mu[i], sd = post2_a$sigma[i],

log = TRUE

))

})

post2_a$prod <- post2_a$LL + dnorm(post2_a$mu, 178, 20, TRUE) +

dunif(post2_a$sigma, 0, 50, TRUE)

post2_a$prob <- exp(post2_a$prod - max(post2_a$prod))

sample2_a.rows <- sample(1:nrow(post2_a),

size = 1e4, replace = TRUE,

prob = post2_a$prob

)

sample2_a.mu <- post2_a$mu[sample2_a.rows]

sample2_a.sigma <- post2_a$sigma[sample2_a.rows]

## define plotting area as one row and two columns

par(mfrow = c(1, 2))

plot(sample2_a.mu, sample2_a.sigma,

cex = 0.5,

col = rethinking::col.alpha(rethinking:::rangi2, 0.1),

xlab = "mu", ylab = "sigma", pch = 16

)

## R code 4.25a ############

rethinking::dens(sample2_a.sigma,

norm.comp = TRUE,

col = "red")

R Code 4.33 b: Sample size and the normality of \(\sigma\)’s posterior (Tidyverse)

set.seed(4)

d3_b <- sample(d2_b$height, size = 20)

n <- 200

# note we've redefined the ranges of `mu` and `sigma`

d3_grid_b <-

tidyr::crossing(mu3_b = seq(from = 150, to = 170, length.out = n),

sigma3_b = seq(from = 4, to = 20, length.out = n))

grid_function3_b <- function(mu, sigma) {

dnorm(d3_b, mean = mu, sd = sigma, log = T) |>

sum()

}

d3_grid_b <-

d3_grid_b |>

dplyr::mutate(log_likelihood3_b =

purrr::map2_dbl(mu3_b, sigma3_b, grid_function3_b)) |>

dplyr::mutate(prior3_mu_b = stats::dnorm(mu3_b, mean = 178, sd = 20, log = T),

prior3_sigma_b = stats::dunif(sigma3_b, min = 0, max = 50, log = T)) |>

dplyr::mutate(product3_b = log_likelihood3_b + prior3_mu_b + prior3_sigma_b) |>

dplyr::mutate(probability3_b = base::exp(product3_b - base::max(product3_b)))

set.seed(4)

d3_grid_samples_b <-

d3_grid_b |>

dplyr::slice_sample(n = 1e4,

replace = T,

weight_by = probability3_b)

plot3_d3_scatterplot <-

d3_grid_samples_b |>

ggplot2::ggplot(ggplot2::aes(x = mu3_b, y = sigma3_b)) +

ggplot2::geom_point(size = 1.8, alpha = 1/15, color = "#8080FF") +

ggplot2::labs(x = base::expression(mu[samples]),

y = base::expression(sigma[samples])) +

ggplot2::theme_bw()

plot3_d3_sigma3_b <-

d3_grid_samples_b |>

ggplot2::ggplot(ggplot2::aes(x = sigma3_b)) +

ggplot2::geom_density(color = "red") +

ggplot2::stat_function(

fun = dnorm,

args = with(d3_grid_samples_b, c(

mean = mean(sigma3_b),

sd = sd(sigma3_b)))

) +

ggplot2::labs(x = expression(sigma),

y = "Density") +

ggplot2::theme_bw()

library(patchwork)

plot3_d3_scatterplot + plot3_d3_sigma3_b

Compare the left panel with Graph 4.12 and the right panel with the right panel of Graph 4.15 to see that now \(\sigma\) has a long right tail and does not follow a Gaussian distribution.

“The deep reasons for the posterior of σ tending to have a long right-hand tail are complex. But a useful way to conceive of the problem is that variances must be positive. As a result, there must be more uncertainty about how big the variance (or standard deviation) is than about how small it is.” (McElreath, 2020, p. 86) (pdf)

“For example, if the variance is estimated to be near zero, then you know for sure that it can’t be much smaller. But it could be a lot bigger.” (McElreath, 2020, p. 87) (pdf)

“To build the quadratic approximation, we’ll use

quap(), a command in the {rethinking} package. Thequap()function works by using the model definition you were introduced to earlier in this chapter. Each line in the definition has a corresponding definition in the form of R code. The engine inside quap then uses these definitions to define the posterior probability at each combination of parameter values. Then it can climb the posterior distribution and find the peak, its MAP (Maximum A Posteriori estimate). Finally, it estimates the quadratic curvature at the MAP to produce an approximation of the posterior distribution. Remember: This procedure is very similar to what many non-Bayesian procedures do, just without any priors.” (McElreath, 2020, p. 87, parenthesis and emphasis are mine) (pdf)

Procedure 4.4 : Finding the posterior distribution

Howell1 data frame for adults (age >= 18) (Code 4.26).base::alist() We are going to use the Equation 4.3 (Code 4.27).Example 4.11 : Finding the posterior distribution

R Code 4.34 a: Finding the posterior distribution with rethinking::quap() (Original)

## R code 4.26a ######################

data(package = "rethinking", list = "Howell1")

d_a <- Howell1

d2_a <- d_a[d_a$age >= 18, ]

## R code 4.27a ######################

flist <- alist(

height ~ dnorm(mu, sigma),

mu ~ dnorm(178, 20),

sigma ~ dunif(0, 50)

)

## R code 4.30a #####################

start <- list(

mu = mean(d2_a$height),

sigma = sd(d2_a$height)

)

## R code 4.28a ######################

m4.1a <- rethinking::quap(flist, data = d2_a, start = start)

m4.1a#>

#> Quadratic approximate posterior distribution

#>

#> Formula:

#> height ~ dnorm(mu, sigma)

#> mu ~ dnorm(178, 20)

#> sigma ~ dunif(0, 50)

#>

#> Posterior means:

#> mu sigma

#> 154.607024 7.731333

#>

#> Log-likelihood: -1219.41The rethinking::quap() function returns a “map” object.

Sometimes I got an error message when computing this code chunk. The reason was that quap() has chosen an inconvenient value to start for its estimation of the posterior. I believe that one could visualize the problem with a metaphor: Instead of climbing up the hill quap() started with a value where it was captured in a narrow valley.

The three parts of rethinking::quap()

base::alist() of formulas that define the likelihood and priors.list(), not an alist() like the formula list is.R Code 4.35 a: Printing with rethinking::precis (Original)

## R code 4.29a ######################

(precis_m4.1a <- rethinking::precis(m4.1a))#> mean sd 5.5% 94.5%

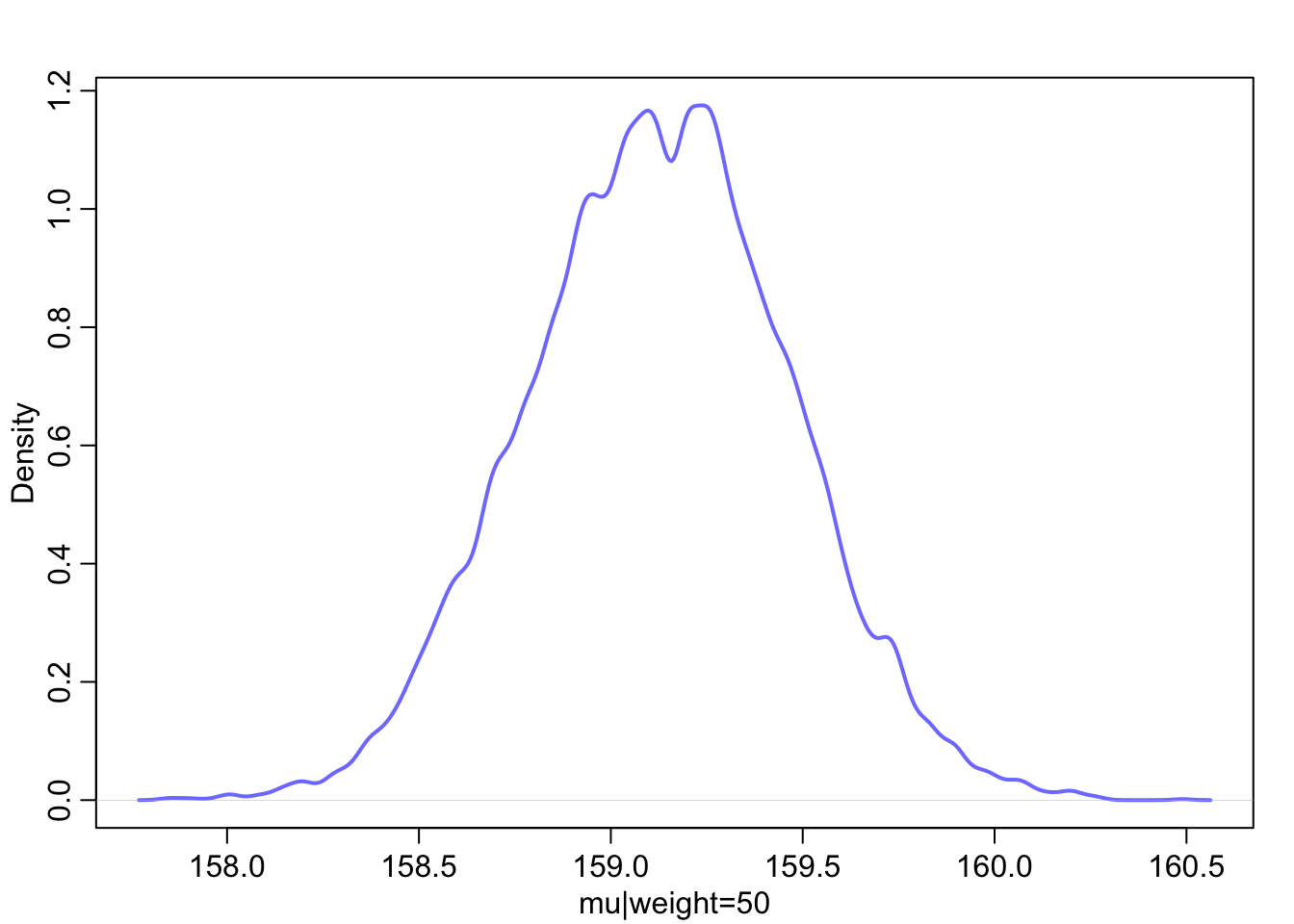

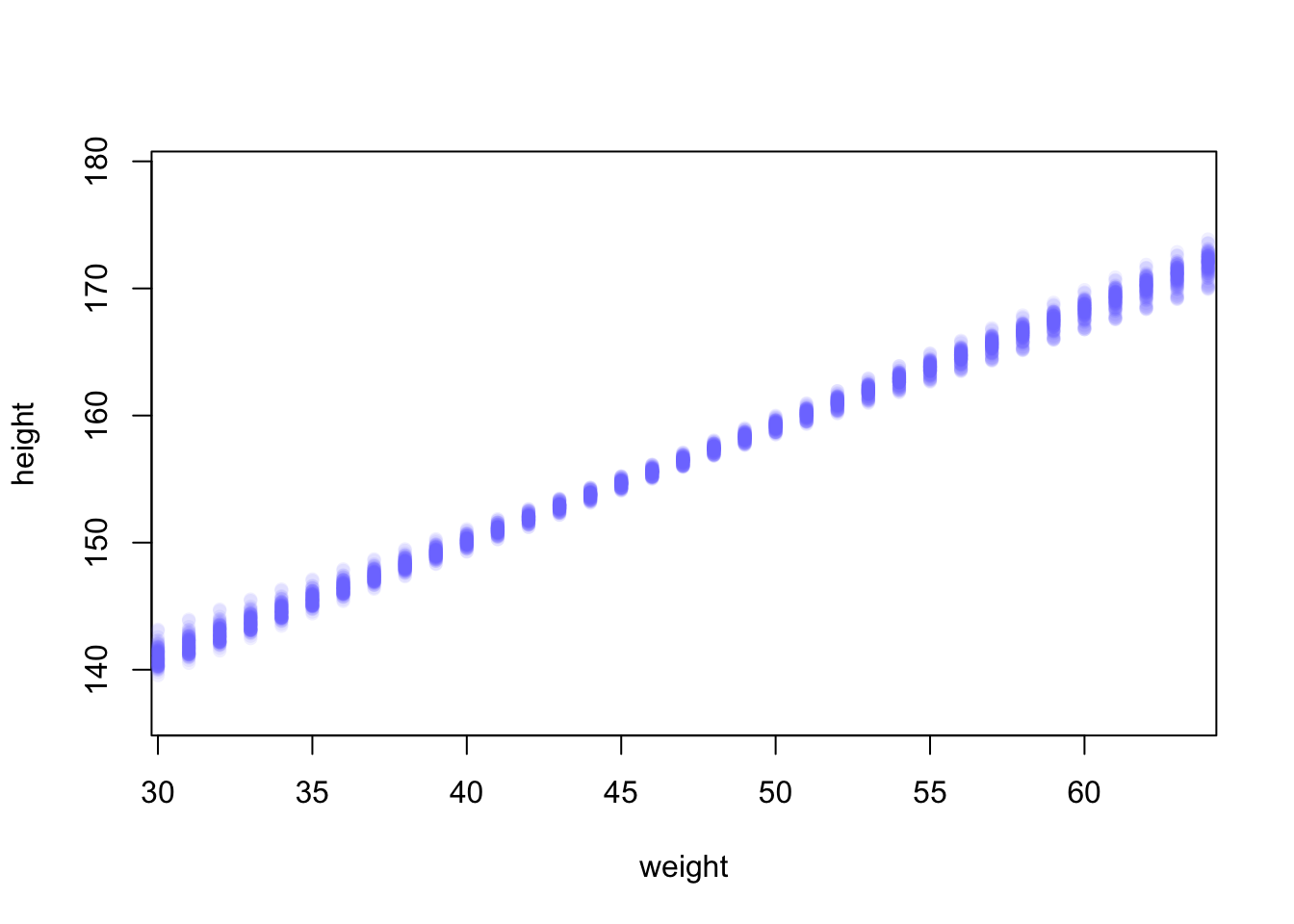

#> mu 154.607024 0.4119947 153.948576 155.265471

#> sigma 7.731333 0.2913860 7.265642 8.197024“These numbers provide Gaussian approximations for each parameter’s marginal distribution. This means the plausibility of each value of μ, after averaging over the plausibilities of each value of σ, is given by a Gaussian distribution with mean 154.6 and standard deviation 0.4. The 5.5% and 94.5% quantiles are percentile interval boundaries, corresponding to an 89% compatibility interval. Why 89%? It’s just the default. It displays a quite wide interval, so it shows a high-probability range of parameter values. If you want another interval, such as the conventional and mindless 95%, you can use precis(m4.1,prob=0.95). But I don’t recommend 95% intervals, because readers will have a hard time not viewing them as significance tests. 89 is also a prime number, so if someone asks you to justify it, you can stare at them meaningfully and incant,”Because it is prime.” That’s no worse justification than the conventional justification for 95%.” (McElreath, 2020, p. 88) (pdf)

When you compare the 89% boundaries with the result of the grid approximation in Example 4.9 you will see that they are almost identical as the posterior is approximately Gaussian.

R Code 4.36 b: Finding the posterior distribution with brms::brm()

## R code 4.26b ######################

data(package = "rethinking", list = "Howell1")

d_b <- Howell1

d2_b <-

d_b |>

dplyr::filter(age >= 18)

## R code 4.27b ######################

## R code 4.28b ######################

m4.1b <-

brms::brm(

formula = height ~ 1, # 1

data = d2_b, # 2

family = gaussian(), # 3

prior = c(brms::prior(normal(178, 20), class = Intercept), # 4

brms::prior(uniform(0, 50), class = sigma, ub = 50)), # 4

iter = 2000, # 5

warmup = 1000, # 6

chains = 4, # 7

cores = 4, # 8

seed = 4, # 9

file = "brm_fits/m04.01b") # 10

m4.1b#> Family: gaussian

#> Links: mu = identity; sigma = identity

#> Formula: height ~ 1

#> Data: d2_b (Number of observations: 352)

#> Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

#> total post-warmup draws = 4000

#>

#> Population-Level Effects:

#> Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

#> Intercept 154.60 0.41 153.81 155.42 1.00 2763 2635

#>

#> Family Specific Parameters:

#> Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

#> sigma 7.77 0.29 7.21 8.39 1.00 3400 2561

#>

#> Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

#> and Tail_ESS are effective sample size measures, and Rhat is the potential

#> scale reduction factor on split chains (at convergence, Rhat = 1).The brms::brm() function returns a “brmsfit” object.

If you don’t want to specify the result more in detail (for instance to change the PI) than you get the same result with brms:::print.brmsfit(m4.1b) and brms:::summary.brmsfit(m4.1b).

Description of the convergence diagnostics Rhat, Bulk_ESS, and Tail_ESS

Rhat, Bulk_ESS, and Tail_ESS are described in detail in (Vehtari et al. 2021).brms::brm() has more than 40 arguments but only three (formula, data and prior) are for our case mandatory. All the other have sensible default values. The correspondence of these three arguments to the {rethinking} version is obvious.

In the simple demonstration of brms::brm() in the toy globe example (R Code 2.25), I have just used Kurz’ code lines without any explanation. This time I will explain all 10 arguments from Kurz’ example.

~ 1, the trend component is a single value, e.g. the mean value of the data, i.e. the linear model only has an intercept. (StackOverflow) In other words, it is the value the dependent variable is expected to have when the independent variables are zero or have no influence. (StackOverflow). The formula y ~ 1 is just a model with a constant (intercept) and no regressor (StackOverflow). Or more understandable for our case are Kurz’ explication: “… the intercept of a typical regression model with no predictors is the same as its mean. In the special case of a model using the binomial likelihood, the mean is the probability of a 1 in a given trial, \(\theta\).” (Kurz) .gaussian model is applied. So this line would not have been necessary. There are standard family functions stats::family()that will work with {brms}, but there are also special family functions brms::brmsfamily() that work only for {brms} models. Additionally you can specify custom families for use in brms with the brms::custom_family() function.brms::brm() function work. — class specifies the parameter class. It defaults to “b” ((i.e. population-level – ‘fixed’ – effects)). (There is also the argument group for grouping of factors for group-level effects. Not used in this code example.) — Besides the “b” class there are other classes for the “Intercept” and the standard deviation “Sigma” on the population level: There is also a “sd” class for the standard deviation of group-level effects. Finally there is the special case of class = "cor" to set the same prior on every correlation matrix. — ub = 50 sets the upper bound to 50. There is also a lb (lower bound). Both bounds are for parameter restriction, so that population-level effects must fall within a certain interval using the lb and ub arguments. lb and ub default to NULL, i.e. there is no restriction.warmup; defaults to 2000).iter and the default is \(iter/2\).mc.cores option to be as many processors as the hardware and RAM allow (up to the number of chains).set.seed() in other code chunks the chapter number. If NA (the default), Stan will set the seed randomly.NULL or a character string. In the latter case, the fitted model object is saved via base::saveRDS() in a file named after the string supplied in file. The .rds extension is added automatically. If the file already exists, brms::brm() will load and return the saved model object instead of refitting the model. Unless you specify the file_refit argument as well, the existing files won’t be overwritten, you have to manually remove the file in order to refit and save the model under an existing file name. The file name is stored in the brmsfit object for later usage.brm() function, you use the init argument for the start values for the sampler. “If NULL (the default) or”random”, Stan will randomly generate initial values for parameters in a reasonable range. If 0, all parameters are initialized to zero on the unconstrained space. This option is sometimes useful for certain families, as it happens that default random initial values cause draws to be essentially constant. Generally, setting init = 0 is worth a try, if chains do not initialize or behave well. Alternatively, init can be a list of lists containing the initial values …” (Help file)R Code 4.37 b: Print the specified results of the brmsfit object

## R code 4.29b print ######################

brms:::print.brmsfit(m4.1b, prob = .89)

## brms:::summary.brmsfit(m4.1b, prob = .89) # (same result)#> Family: gaussian

#> Links: mu = identity; sigma = identity

#> Formula: height ~ 1

#> Data: d2_b (Number of observations: 352)

#> Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

#> total post-warmup draws = 4000

#>

#> Population-Level Effects:

#> Estimate Est.Error l-89% CI u-89% CI Rhat Bulk_ESS Tail_ESS

#> Intercept 154.60 0.41 153.94 155.25 1.00 2763 2635

#>

#> Family Specific Parameters:

#> Estimate Est.Error l-89% CI u-89% CI Rhat Bulk_ESS Tail_ESS

#> sigma 7.77 0.29 7.33 8.26 1.00 3400 2561

#>

#> Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

#> and Tail_ESS are effective sample size measures, and Rhat is the potential

#> scale reduction factor on split chains (at convergence, Rhat = 1).Description of the convergence diagnostics Rhat, Bulk_ESS, and Tail_ESS

Rhat, Bulk_ESS, and Tail_ESS are described in detail in (Vehtari et al. 2021).brms:::summary.brmsfit() results in the same output as brms:::print.brmsfit(). Both return a brmssummary object. But there are some internal differences:

There is also a \(print() method that prints the same summary stats but removes the extra formatting used for printing tibbles and returns the fitted model object itself. The \)print() method may also be faster than \(summary() because it is designed to only compute the summary statistics for the variables that will actually fit in the printed output whereas \)summary() will compute them for all of the specified variables in order to be able to return them to the user.

Using print.brmsfit() or summary.brmsfit() defaults to 95% intervals. As {rethinking} defaults to 89% intervals, I have changed the prob parameter of the print method also to 89%.

WATCH OUT! Using three colons to address generic functions of S3 classes

As I have learned shortly: print() or summary() are generic functions where one can add new printing methods with new classes. In this case class(m4.1b) = brmsfit. This means I do not need to add brms:: to secure that I will get the {brms} printing or summary method as I didn’t load the {brms} package. Quite the contrary: Adding brms:: would result into the message: “Error: ‘summary’ is not an exported object from ‘namespace:brms’”.

As I really want to specify explicitly the method these generic functions should use, I need to use the syntax with three colons, like brms:::print.brmsfit() or brms:::summary.brmsfit() respectively.

Learning more about S3 classes in R

In this respect I have to learn more about S3 classes. There are many important web resources about this subject that I have found with the search string “r what is s3 class”. Maybe I should start with the S3 chapter in Advanced R.

For the interpretation of this output I am going to use the explication in the How to use brms section of the {brms} GitHup page.

Estimate) and the standard deviation (Est.Error) of the posterior distribution as well as two-sided 95% credible intervals (l-95% CI and u-95% CI) based on quantiles. The last three values (ESS_bulk, ESS_tail, and Rhat) provide information on how well the algorithm could estimate the posterior distribution of this parameter. If Rhat is considerably greater than 1, the algorithm has not yet converged and it is necessary to run more iterations and / or set stronger priors.R Code 4.38 b: Print a Stan like summary

## R code 4.29b stan like summary ######################

m4.1b$fit#> Inference for Stan model: anon_model.

#> 4 chains, each with iter=2000; warmup=1000; thin=1;

#> post-warmup draws per chain=1000, total post-warmup draws=4000.

#>

#> mean se_mean sd 2.5% 25% 50% 75% 97.5%

#> b_Intercept 154.60 0.01 0.41 153.81 154.31 154.59 154.88 155.42

#> sigma 7.77 0.01 0.29 7.21 7.57 7.76 7.97 8.39

#> lprior -8.51 0.00 0.02 -8.56 -8.53 -8.51 -8.50 -8.46

#> lp__ -1227.04 0.02 0.97 -1229.63 -1227.44 -1226.75 -1226.33 -1226.06

#> n_eff Rhat

#> b_Intercept 2778 1

#> sigma 3404 1

#> lprior 2773 1

#> lp__ 1798 1

#>

#> Samples were drawn using NUTS(diag_e) at Mon Dec 4 22:27:38 2023.

#> For each parameter, n_eff is a crude measure of effective sample size,

#> and Rhat is the potential scale reduction factor on split chains (at

#> convergence, Rhat=1).Kurz refers to RStan: the R interface to Stan for a detailed description. I didn’t study the extensive documentation but I found out two items:

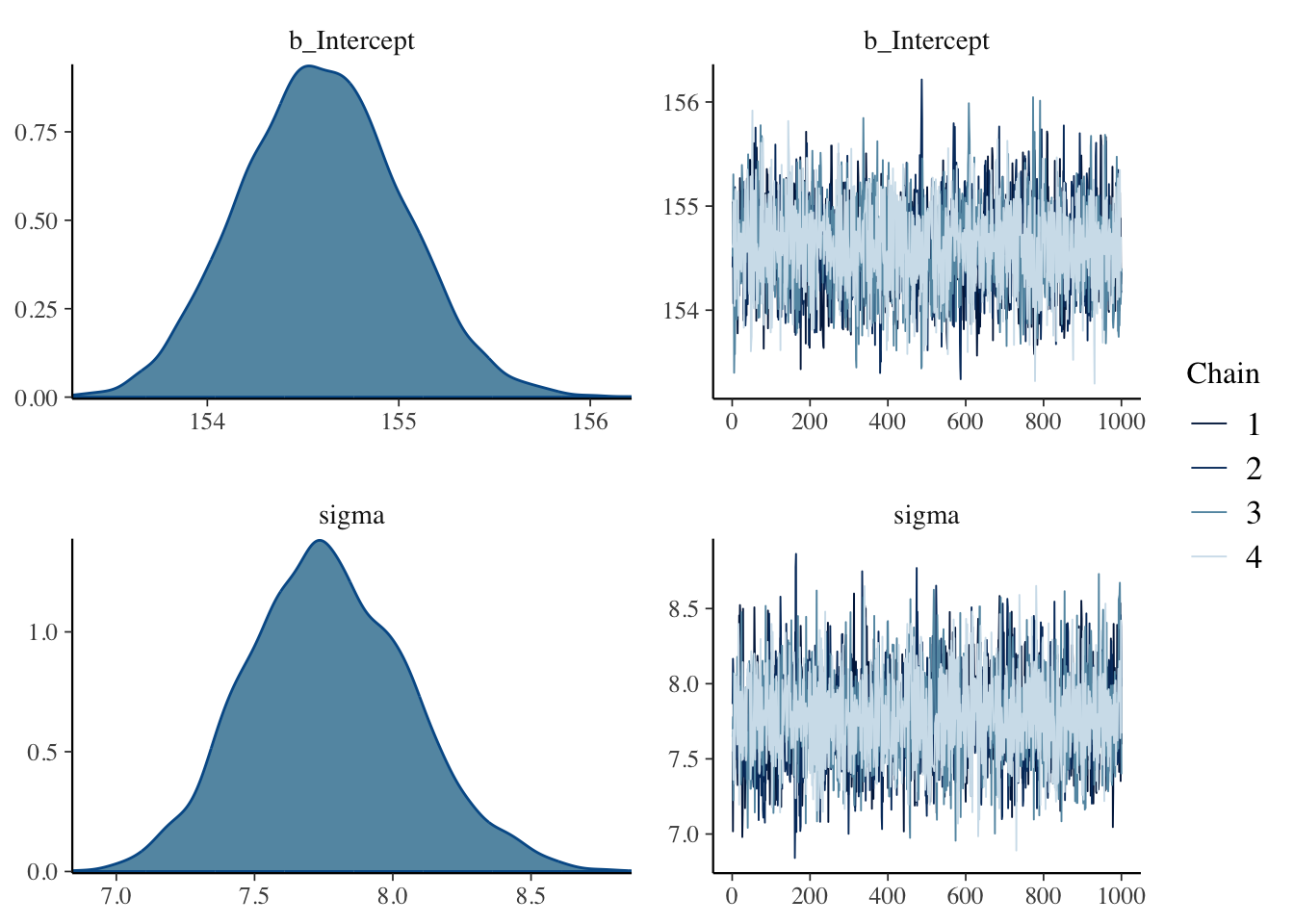

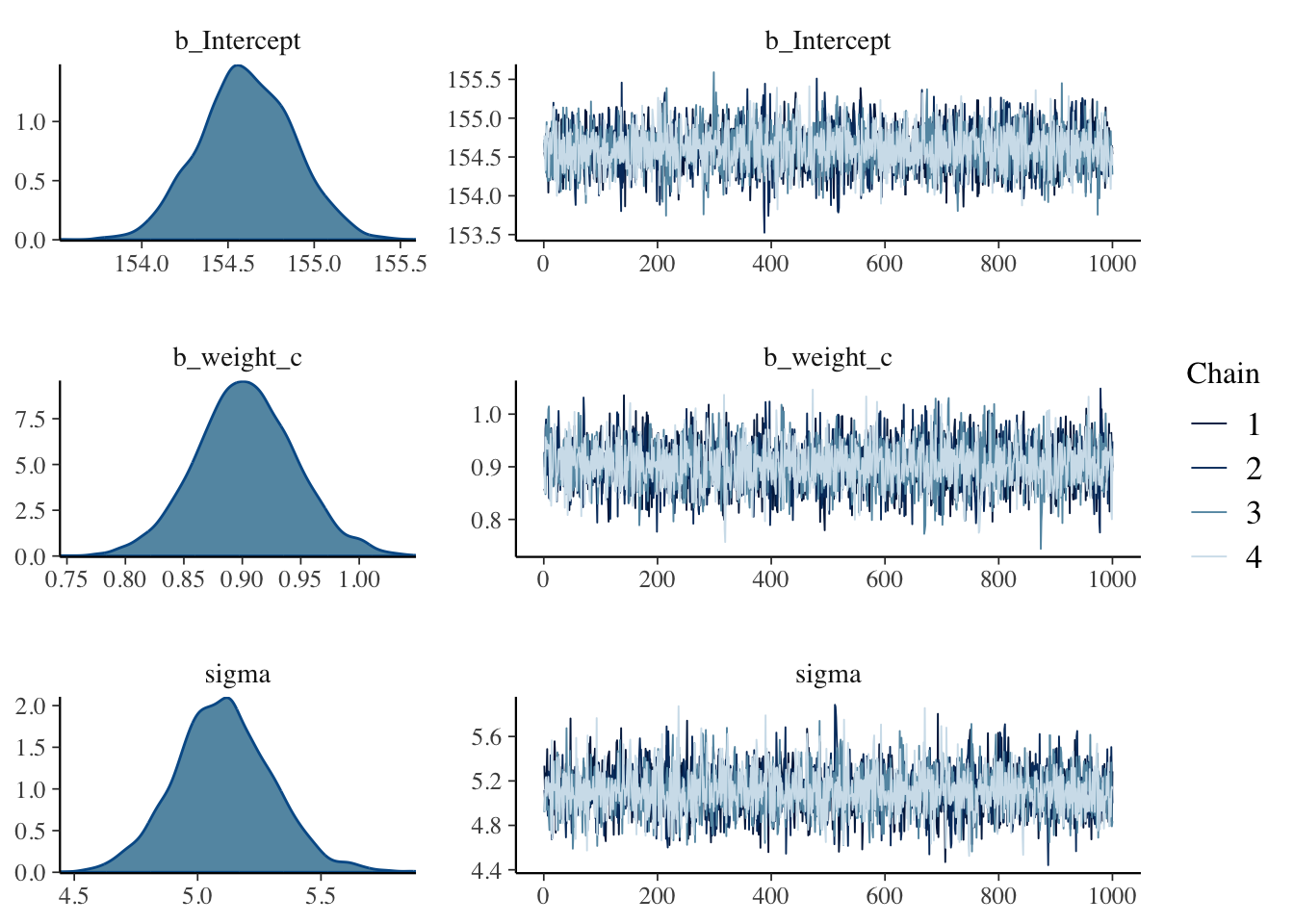

lp__ is the logarithm of the (unnormalized) posterior density as calculated by Stan. This log density can be used in various ways for model evaluation and comparison.Rhat estimates the degree of convergence of a random Markov Chain based on the stability of outcomes between and within chains of the same length. Values close to one indicate convergence to the underlying distribution. Values greater than 1.1 indicate inadequate convergence.R Code 4.39 b: Plot the visual chain diagnostics of the brmsfit object

## R code 4.29b plot ######################

brms:::plot.brmsfit(m4.1b)

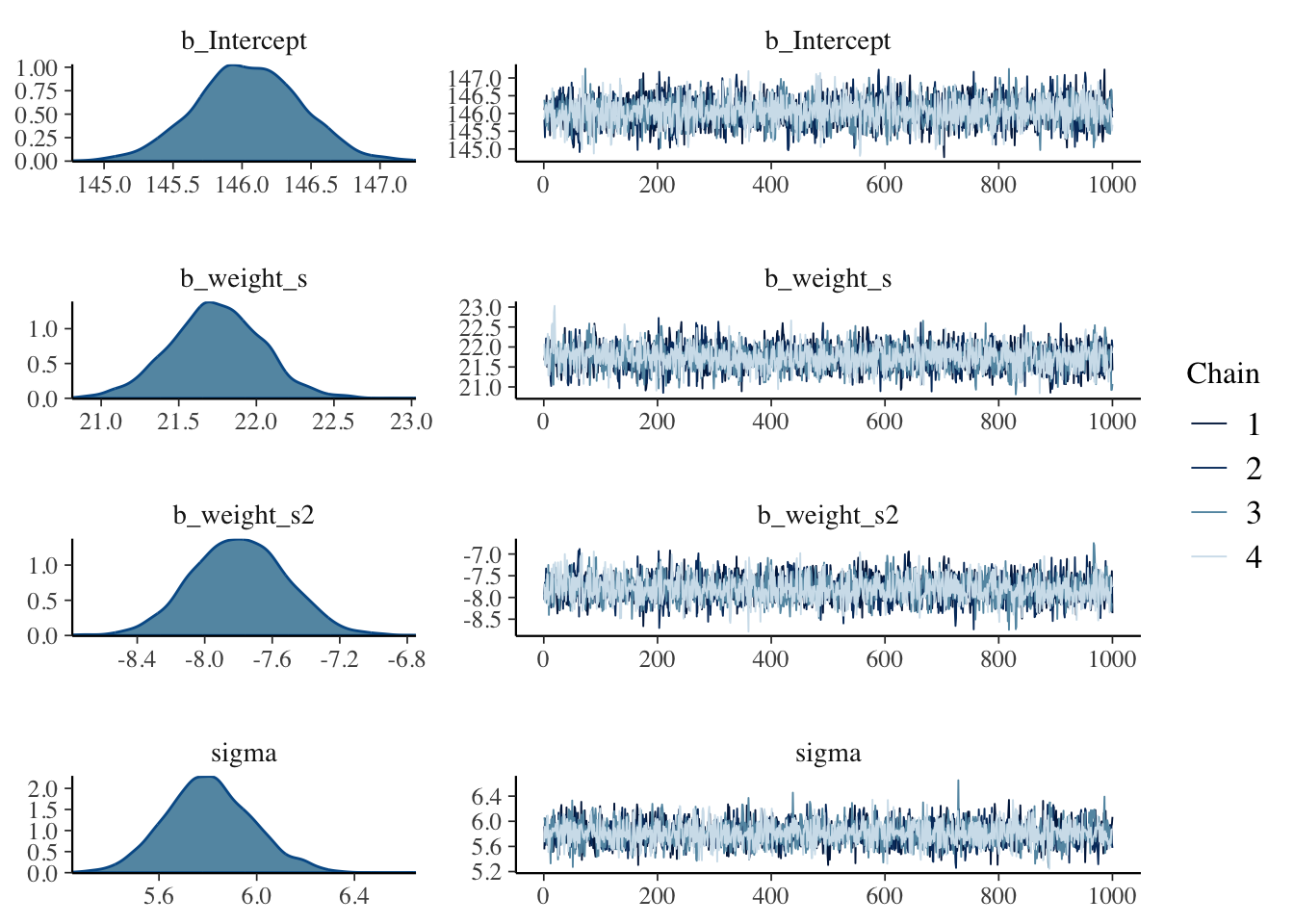

After running a model fit with HMC, it’s a good idea to inspect the chains. As we’ll see, McElreath covered visual chain diagnostics in ?sec-chap09. … If you want detailed diagnostics for the HMC chains, call

launch_shinystan(m4.1b). (Kurz)

Just a quick preview: It is important over the whole length of the samples (not counting the warmups, e.g., in our case: 1000 iteration) the width is constant and that the graph show all (four) chains at the top resp. bottom. Unfortunately is looking at the (small) graph not always conclusive. (See also tab “trace plot” in Example 4.19.)

Learn more about {shinystan}

I haven’t applied launch_shinystan(m4.1b) as it takes much time and I do not (yet) understand the detailed report anyway. To learn to work with {shinystan} see the ShinyStan website and the vignettes of the R package vignettes (Deploying to shinyapps.io, Getting Started) and documentation.

Package documentation of Stan and friends

brms::launch_shinystan)Ooops, this opens up Pandora’s box!

I do not even understand completely what the different packages do. What follows is a first try where I copied from the documentation pages:

{rstanarm} is an R package that emulates other R model-fitting functions but uses Stan (via the {rstan} package) for the back-end estimation. The primary target audience is people who would be open to Bayesian inference if using Bayesian software were easier but would use frequentist software otherwise.

RStan is the R interface to Stan. It is distributed on CRAN as the {rstan} package and its source code is hosted on GitHub.

Stan is a state-of-the-art platform for statistical modeling and high-performance statistical computation.

The next example shows the effect a very narrow \(\mu\) prior has. Instead of a sigma of 20 we provide only a standard deviation of 0.1.

Example 4.12 : The same model but with a more informative, e.g., very narrow \(\mu\) prior

R Code 4.40 a: Model with a very narrow \(\mu\) prior (Original)

## R code 4.27a ######################

flist <- alist(

height ~ dnorm(mu, sigma),

mu ~ dnorm(178, .1),

sigma ~ dunif(0, 50)

)

## R code 4.30a #####################

start <- list(

mu = mean(d2_a$height),

sigma = sd(d2_a$height)

)

## R code 4.28a ######################

m4.2a <- rethinking::quap(flist, data = d2_a, start = start)

rethinking::precis(m4.2a)#> mean sd 5.5% 94.5%

#> mu 177.86382 0.1002354 177.70363 178.0240

#> sigma 24.51934 0.9290875 23.03448 26.0042“Notice that the estimate for μ has hardly moved off the prior. The prior was very concentrated around 178. So this is not surprising. But also notice that the estimate for σ has changed quite a lot, even though we didn’t change its prior at all. Once the golem is certain that the mean is near 178—as the prior insists—then the golem has to estimate σ conditional on that fact. This results in a different posterior for σ, even though all we changed is prior information about the other parameter.” (McElreath, 2020, p. 89) (pdf)

R Code 4.41 b: Model with a very narrow \(\mu\) prior (brms)

m4.2b <-

brms::brm(

formula = height ~ 1,

data = d2_b,

family = gaussian(),

prior = c(prior(normal(178, 0.1), class = Intercept),

prior(uniform(0, 50), class = sigma, ub = 50)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

file = "brm_fits/m04.02b")

base::rbind(

brms:::summary.brmsfit(m4.1b, prob = .89)$fixed,

brms:::summary.brmsfit(m4.2b, prob = .89)$fixed

)#> Estimate Est.Error l-89% CI u-89% CI Rhat Bulk_ESS Tail_ESS

#> Intercept 154.5959 0.4144632 153.9365 155.2540 1.000708 2762.982 2635.159

#> Intercept1 177.8647 0.1052528 177.6973 178.0278 1.001224 2720.796 2586.491Subsetting the summary.brmsfit() output of the brmssummary object with $fixed provides a convenient way to compare the Intercept summaries between m4.1b and m4.2b.

rethinking::quap()

How do we get samples from the quadratic approximate posterior distribution?

“… a quadratic approximation to a posterior distribution with more than one parameter dimension—μ and σ each contribute one dimension—is just a multi-dimensional Gaussian distribution.” (McElreath, 2020, p. 90) (pdf)

“As a consequence, when R constructs a quadratic approximation, it calculates not only standard deviations for all parameters, but also the covariances among all pairs of parameters. Just like a mean and standard deviation (or its square, a variance) are sufficient to describe a one-dimensional Gaussian distribution, a list of means and a matrix of variances and covariances are sufficient to describe a multi-dimensional Gaussian distribution.” (McElreath, 2020, p. 90) (pdf)

WATCH OUT! Rethinking and Tidyverse (brms) Codes in separate examples

As there are quite big differences in the calculation of the variance-covariance matrix, I will explain the appropriate steps in different examples.

Example 4.13 a: Sampling from a quap() (Original)

R Code 4.42 a: Variance-covariance matrix (Original)

## R code 4.32a vcov #########

rethinking::vcov(m4.1a)#> mu sigma

#> mu 0.1697396109 0.0002180307

#> sigma 0.0002180307 0.0849058224“The above is a variance-covariance matrix. It is the multi-dimensional glue of a quadratic approximation, because it tells us how each parameter relates to every other parameter in the posterior distribution. A variance-covariance matrix can be factored into two elements: (1) a vector of variances for the parameters and (2) a correlation matrix that tells us how changes in any parameter lead to correlated changes in the others. This decomposition is usually easier to understand.” (McElreath, 2020, p. 90) (pdf)

vcov() returns the variance-covariance matrix of the main parameters of a fitted model object. In the above {rethinking} version is uses the class map2stan for a fitted Stan model as m4.1a is of class map.

WATCH OUT! Two different vcov() functions

I am explicitly using the package {rethinking} for the vcov() function. The same function is also available as a base R function with stats::vcov(). But this generates an error because there is no method known for an object of class map from the {rethinking} package. The help file for stats::vcov() only says that the vcov object is an S3 method for classes lm, glm, mlm and aov but not for map.

Error in UseMethod(“vcov”) : no applicable method for ‘vcov’ applied to an object of class “map”

I could have used only vcov(). But this only works when the {rethinking} package is already loaded. In that case R knows because of the class of the object which vcov() version to use. In this case: class of object = class(m4.1a) map.

R Code 4.43 a: List of variances (Original)

## R code 4.33a var adapted ##########

(var_list <- base::diag(rethinking::vcov(m4.1a)))#> mu sigma

#> 0.16973961 0.08490582“The two-element vector in the output is the list of variances. If you take the square root of this vector, you get the standard deviations that are shown in

rethinking::precis()(Example 4.11) output.” (McElreath, 2020, p. 90) (pdf)

Let’s check this out:

| Result / Parameter | mu sd, sigma sd |

|---|---|

sqrt(base::unname(var_list)) |

0.4119947, 0.291386 |

precis_m4.1a[["sd"]] |

0.4119947, 0.291386 |

R Code 4.44 a: Correlation matrix (Original)

## R code 4.33a cor #############

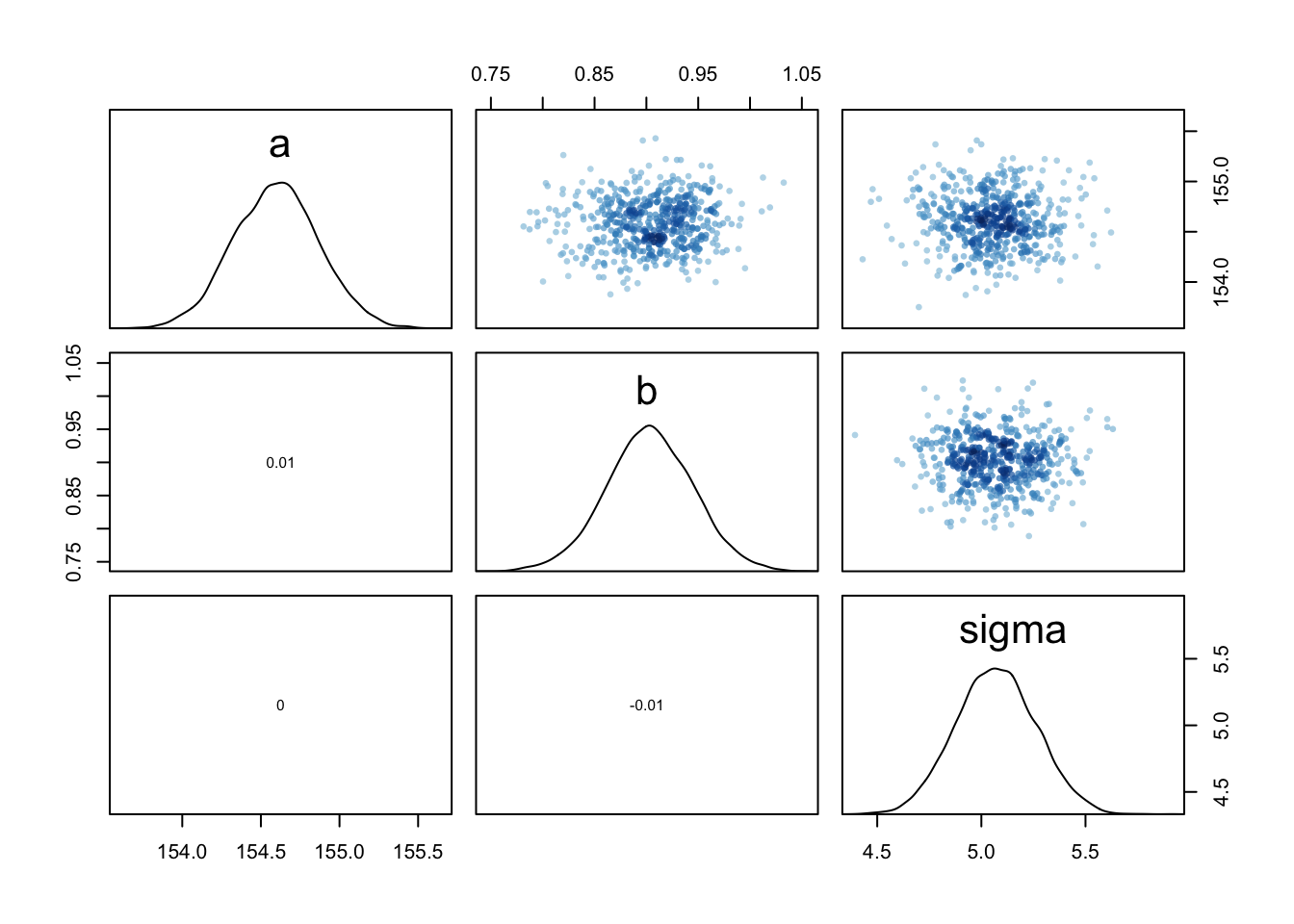

stats::cov2cor(rethinking::vcov(m4.1a))#> mu sigma

#> mu 1.000000000 0.001816174

#> sigma 0.001816174 1.000000000“The two-by-two matrix in the output is the correlation matrix. Each entry shows the correlation, bounded between −1 and +1, for each pair of parameters. The 1’s indicate a parameter’s correlation with itself. If these values were anything except 1, we would be worried. The other entries are typically closer to zero, and they are very close to zero in this example. This indicates that learning μ tells us nothing about σ and likewise that learning σ tells us nothing about μ. This is typical of simple Gaussian models of this kind. But it is quite rare more generally, as you’ll see in later chapters.” (McElreath, 2020, p. 90) (pdf)

a: Compute covariance matrix from the correlation using base::sweep() (Original)

#> mu sigma

#> mu 0.1697396109 0.0002180307

#> sigma 0.0002180307 0.0849058224I wonder how to compute the correlation matrix by hand form the covariance-variance matrix. I thought that I have to use sqrt(), but it didn’t work. After I inspected the code of the cov2cor() function I noticed that it uses the expression sqrt(1/diag(V)).

From the stats::cor() help file:

Scaling a covariance matrix into a correlation one can be achieved in many ways, mathematically most appealing by multiplication with a diagonal matrix from left and right, or more efficiently by using

base::sweep(.., FUN = "/")twice. Thestats::cov2cor()function is even a bit more efficient, and provided mostly for didactical reasons.

For computing the covariance matrix with base::sweep() see the answer in StackOverflow.

R Code 4.45 a: Samples from the multi-dimensional posterior (Original)

## R code 4.34a ########################

set.seed(4)

post3_a <- rethinking::extract.samples(m4.1a, n = 1e4)

bayr::as_tbl_obs(post3_a)| Obs | mu | sigma |

|---|---|---|

| 1349 | 153.8802 | 7.432261 |

| 2281 | 154.8268 | 7.526618 |

| 3240 | 154.1099 | 7.540981 |

| 3418 | 154.1668 | 7.634100 |

| 4467 | 155.0349 | 7.321992 |

| 6128 | 154.0472 | 7.421992 |

| 7317 | 154.7729 | 8.097497 |

| 7892 | 154.1290 | 7.631920 |

“You end up with a data frame, post, with 10,000 (1e4) rows and two columns, one column for μ and one for σ. Each value is a sample from the posterior, so the mean and standard deviation of each column will be very close to the MAP values from before.” (McElreath, 2020, p. 91) (pdf)

R Code 4.46 a: Summary from the samples of the multi-dimensional posterior (Original)

## R code 4.35a precis ##################

rethinking::precis(post3_a)#> mean sd 5.5% 94.5% histogram

#> mu 154.61196 0.4106276 153.960323 155.272265 ▁▁▅▇▂▁▁

#> sigma 7.73298 0.2936393 7.267091 8.199897 ▁▁▁▁▂▅▇▇▃▁▁▁▁Compare these values to the output from the summaries with rethinking::precis() in Example 4.11.

R Code 4.47 a: Plot the samples distribution from the multi-dimensional posterior (Original)

“see how much they resemble the samples from the grid approximation in Graph 4.11. These samples also preserve the covariance between \(\mu\) and \(\sigma\). This hardly matters right now, because \(\mu\) and \(\sigma\) don’t covary at all in this model. But once you add a predictor variable to your model, covariance will matter a lot.” (McElreath, 2020, p. 91) (pdf)

R Code 4.48 a: Extract samples from the vectors of values from a multi-dimensional Gaussian distribution with MASS::mvrnorm() and plot the result (Original)

MASS::mvrnorm()The function rethinking::extract.samples() in the “Sample1” tab is for convenience. It is just running a simple simulation of the sort you conducted near the end of Chapter 3 with R Code 3.52.

Under the hood the work of rethinking::extract.samples() is done by a multi-dimensional version of stats::rnorm(), MASS::mvrnorm(). The function stats::rnorm() simulates random Gaussian values, while MASS::mvrnorm() simulates random vectors of multivariate Gaussian values.

How to interpret covariances?

A large covariance can mean a strong relationship between variables. However, you can’t compare variances over data sets with different scales (like pounds and inches). A weak covariance in one data set may be a strong one in a different data set with different scales.

The main problem with interpretation is that the wide range of results that it takes on makes it hard to interpret. For example, your data set could return a value of 3, or 3,000. This wide range of values is caused by a simple fact: The larger the X and Y values, the larger the covariance. A value of 300 tells us that the variables are correlated, but unlike the correlation coefficient, that number doesn’t tell us exactly how strong that relationship is. The problem can be fixed by dividing the covariance by the standard deviation to get the correlation coefficient. (Statistics How To)

WATCH OUT! Confusing m4.1a with m4.3a

Frankly speaking I had troubles to understand why the correlation in m4.1a is almost 0. It turned out that I had unconsciously in mind a correlation between height and weight, an issue that is raised later in the chapter with m4.3a.

Although with m4.1bis a multi-dimensional Gaussian distribution in discussion but only with the height correlation of \(\mu\) and \(\sigma\). \(\mu\) of the height distribution does not help you in the estimation of \(\sigma\) in this distribution – and vice-versa.

brms::brm() fitIn contrast to the {rethinking} approach the {brms} doesn’t seem to have the same convenience functions and therefore we have to use different workarounds to get the same results. To get equivalent output it is the best strategy to put the Hamilton Monte Carlo (HMC) chains in a data frame and then apply the appropriate functions.

Example 4.14 b: Sampling with as_draw() functions from a brms::brm() fit

R Code 4.49 b: Variance-covariance matrix (Original)

brms:::vcov.brmsfit(m4.1b)#> Intercept

#> Intercept 0.1717797The vcov() function working with brmsfit objects only returns the first element in the matrix it did for {rethinking}. That is, it appears brms::vcov.brmsfit() only returns the variance/covariance matrix for the single-level _β_ parameters.

If we want the same information as with rethinking::vcov(), we have to put the HMC chains in a data frame with the brms::as_draws_df() function as shown in the next tab “draws”.

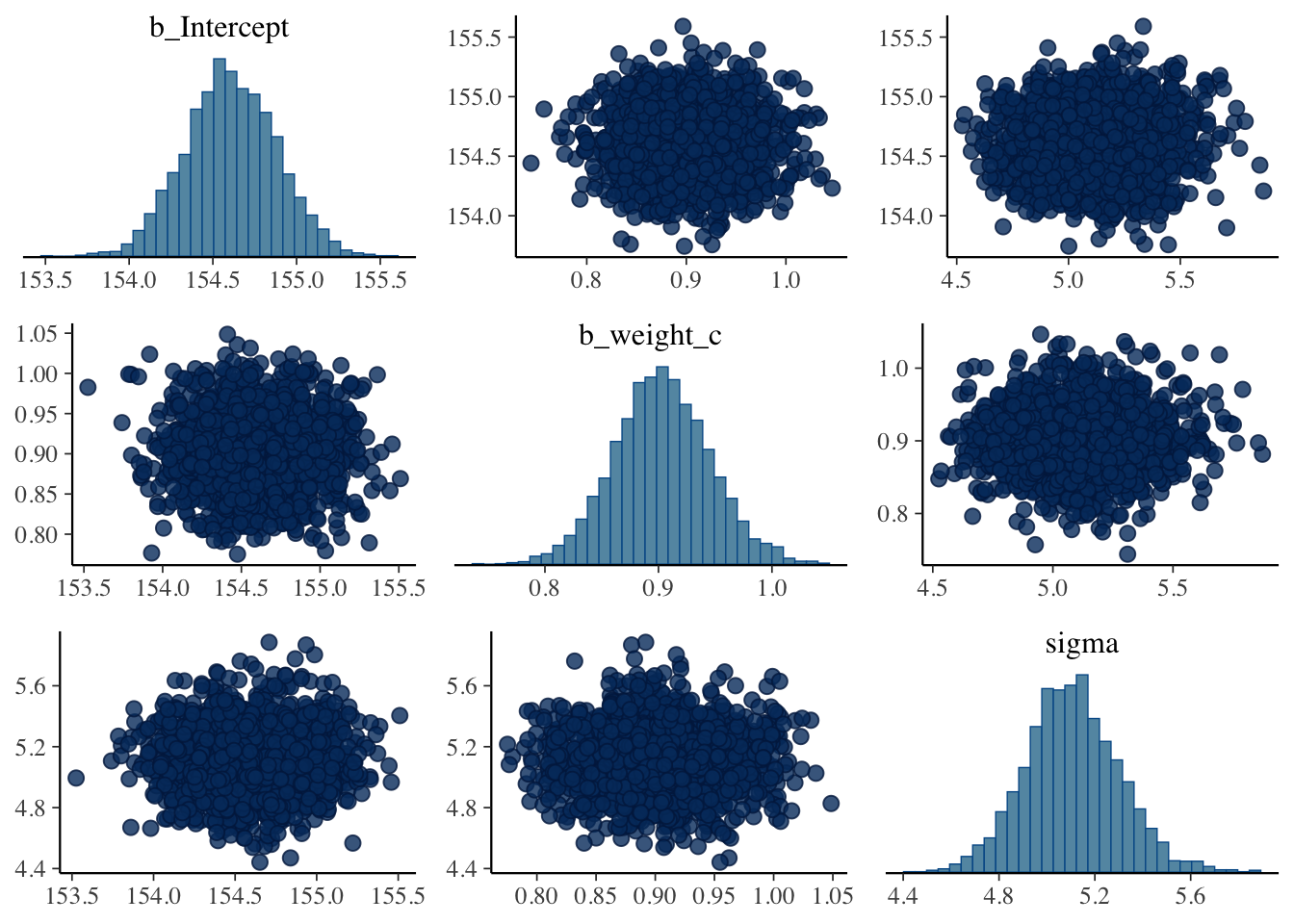

R Code 4.50 b: Extract the iteration of the Hamilton Monte Carlo (HMC) chains into a data frame (Tidyverse)

set.seed(4)

post_b <- brms::as_draws_df(m4.1b)

bayr::as_tbl_obs(post_b)| Obs | b_Intercept | sigma | lprior | lp__ | .chain | .iteration | .draw |

|---|---|---|---|---|---|---|---|

| 71 | 154.9925 | 7.868132 | -8.488375 | -1226.553 | 1 | 71 | 71 |

| 587 | 154.3334 | 7.626869 | -8.526828 | -1226.342 | 1 | 587 | 587 |

| 684 | 154.8032 | 8.290861 | -8.499311 | -1227.720 | 1 | 684 | 684 |

| 1528 | 154.4008 | 7.591280 | -8.522846 | -1226.302 | 2 | 528 | 1528 |

| 1795 | 155.7369 | 7.777169 | -8.446249 | -1229.762 | 2 | 795 | 1795 |