4 Geocentric Models

Linear regression is the geocentric model of applied statistics. By “linear regression,” we will mean a family of simple statistical golems that attempt to learn about the mean and variance of some measurement, using an additive combination of other measurements. Like geocentrism, linear regression can usefully describe a very large variety of natural phenomena. Like geocentrism, linear regression is a descriptive model that corresponds to many different process models. If we read its structure too literally, we’re likely to make mistakes. But used wisely, these little linear golems continue to be useful. (McElreath, 2020a, p. 71, emphasis, in the original)

4.1 Why normal distributions are normal

After laying out his soccer field coin toss shuffle premise, McElreath wrote:

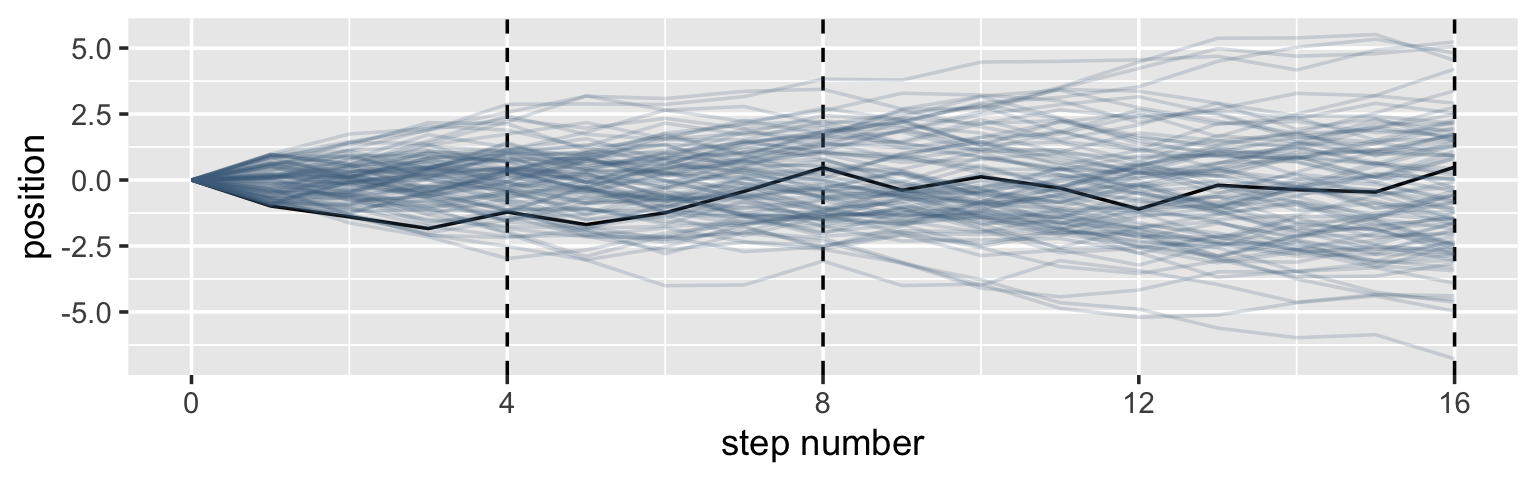

It’s hard to say where any individual person will end up, but you can say with great confidence what the collection of positions will be. The distances will be distributed in approximately normal, or Gaussian, fashion. This is true even though the underlying distribution is binomial. It does this because there are so many more possible ways to realize a sequence of left-right steps that sums to zero. There are slightly fewer ways to realize a sequence that ends up one step left or right of zero, and so on, with the number of possible sequences declining in the characteristic bell curve of the normal distribution. (p. 72)

4.1.1 Normal by addition.

Here’s a way to do the simulation necessary for the plot in the top panel of Figure 4.2.

library(tidyverse)

# we set the seed to make the results of `runif()` reproducible.

set.seed(4)

pos <-

# make data with 100 people, 16 steps each with a starting point of `step == 0` (i.e., 17 rows per person)

crossing(person = 1:100,

step = 0:16) %>%

# for all steps above `step == 0` simulate a `deviation`

mutate(deviation = map_dbl(step, ~if_else(. == 0, 0, runif(1, -1, 1)))) %>%

# after grouping by `person`, compute the cumulative sum of the deviations, then `ungroup()`

group_by(person) %>%

mutate(position = cumsum(deviation)) %>%

ungroup() That map_dbl() code within the first mutate() line might look odd. Go here to learn more about iterating with purrr::map_dbl().

We might glimpse() at the data.

glimpse(pos)## Rows: 1,700

## Columns: 4

## $ person <int> 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 1, 2, 2, 2, 2, 2, 2, 2, 2, 2, 2,…

## $ step <int> 0, 1, 2, 3, 4, 5, 6, 7, 8, 9, 10, 11, 12, 13, 14, 15, 16, 0, 1, 2, 3, 4, 5, 6, 7…

## $ deviation <dbl> 0.00000000, -0.98210841, -0.41252078, -0.44525008, 0.62714843, -0.47914446, 0.44…

## $ position <dbl> 0.0000000, -0.9821084, -1.3946292, -1.8398793, -1.2127308, -1.6918753, -1.243063…Here’s the code to make the top panel of Figure 4.2.

ggplot(data = pos,

aes(x = step, y = position, group = person)) +

geom_vline(xintercept = c(4, 8, 16), linetype = 2) +

geom_line(aes(color = person < 2, alpha = person < 2)) +

scale_color_manual(values = c("skyblue4", "black")) +

scale_alpha_manual(values = c(1/5, 1)) +

scale_x_continuous("step number", breaks = 0:4 * 4) +

theme(legend.position = "none")

Here’s the code for the bottom three plots of Figure 4.2.

# Figure 4.2.a.

p1 <-

pos %>%

filter(step == 4) %>%

ggplot(aes(x = position)) +

geom_line(stat = "density", color = "dodgerblue1") +

labs(title = "4 steps")

# Figure 4.2.b.

p2 <-

pos %>%

filter(step == 8) %>%

ggplot(aes(x = position)) +

geom_density(color = "dodgerblue2", outline.type = "full") +

labs(title = "8 steps")

# this is an intermediary step to get an SD value

sd <-

pos %>%

filter(step == 16) %>%

summarise(sd = sd(position)) %>%

pull(sd)

# Figure 4.2.c.

p3 <-

pos %>%

filter(step == 16) %>%

ggplot(aes(x = position)) +

stat_function(fun = dnorm,

args = list(mean = 0, sd = sd),

linetype = 2) +

geom_density(color = "transparent", fill = "dodgerblue3", alpha = 1/2) +

labs(title = "16 steps",

y = "density")

# combine the ggplots

library(patchwork)

(p1 | p2 | p3) & coord_cartesian(xlim = c(-6, 6))

While we were at it, we explored a few ways to express densities. The main action was with the geom_line(), geom_density(), and stat_function() functions, respectively.

Any process that adds together random values from the same distribution converges to a normal. But it’s not easy to grasp why addition should result in a bell curve of sums. Here’s a conceptual way to think of the process. Whatever the average value of the source distribution, each sample from it can be thought of as a fluctuation from that average value. When we begin to add these fluctuations together, they also begin to cancel one another out. A large positive fluctuation will cancel a large negative one. (p. 73)

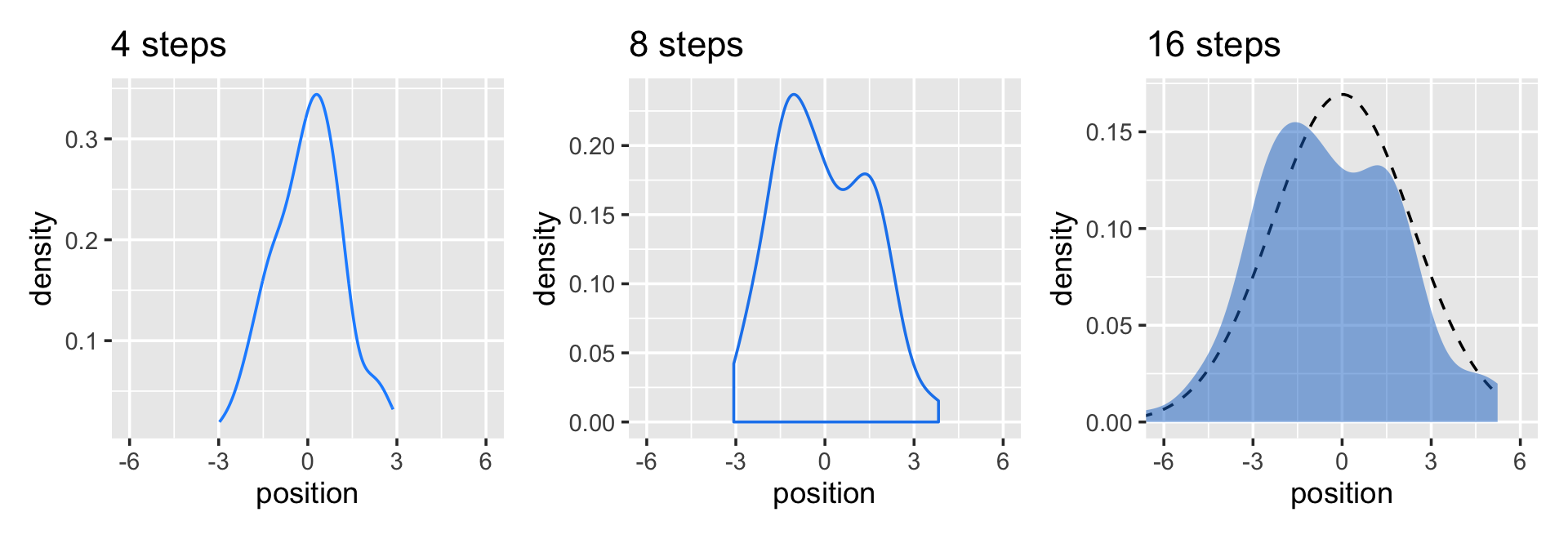

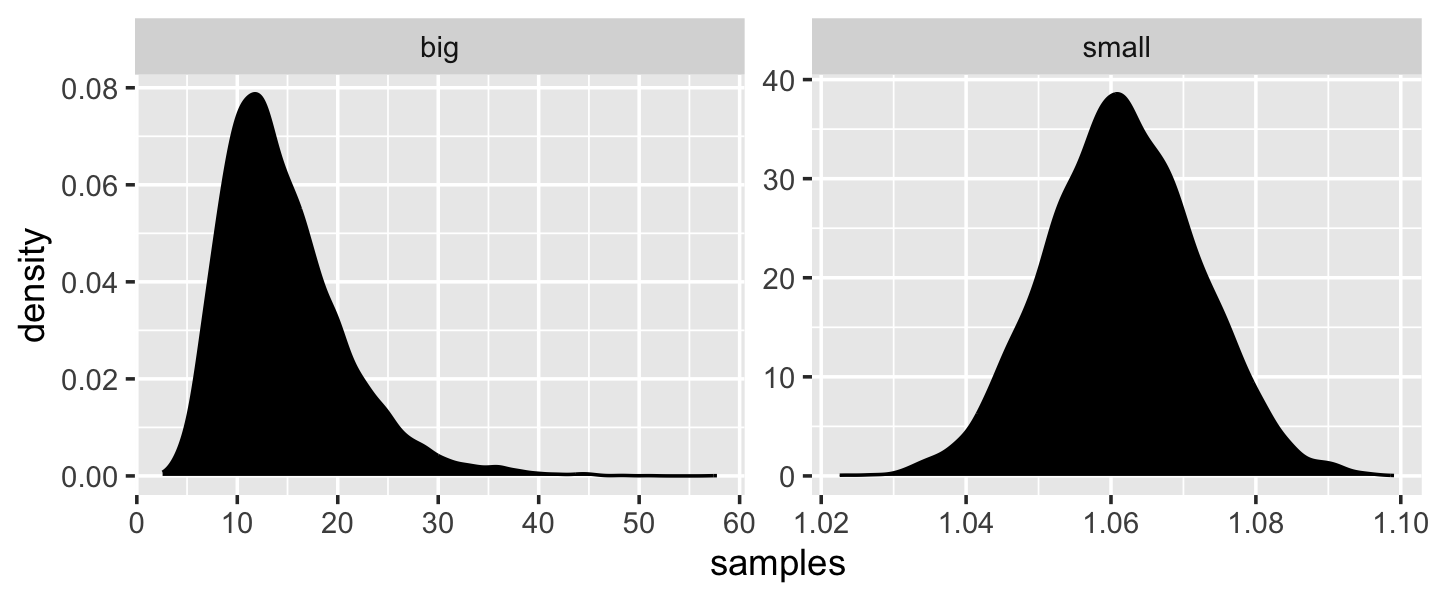

4.1.2 Normal by multiplication.

Here’s McElreath’s simple random growth rate.

set.seed(4)

prod(1 + runif(12, 0, 0.1))## [1] 1.774719In the runif() part of that code, we generated 12 random draws from the uniform distribution with bounds \([0, 0.1]\). Within the prod() function, we first added 1 to each of those values and then computed their product. Consider a more explicit variant of the code.

set.seed(4)

tibble(a = 1,

b = runif(12, 0, 0.1)) %>%

mutate(c = a + b) %>%

summarise(p = prod(c))## # A tibble: 1 × 1

## p

## <dbl>

## 1 1.77Same result. Rather than using base R replicate() to do this many times, let’s practice with purrr::map_dbl() like before.

set.seed(4)

growth <-

tibble(growth = map_dbl(1:10000, ~ prod(1 + runif(12, min = 0, max = 0.1))))

ggplot(data = growth, aes(x = growth)) +

geom_density()

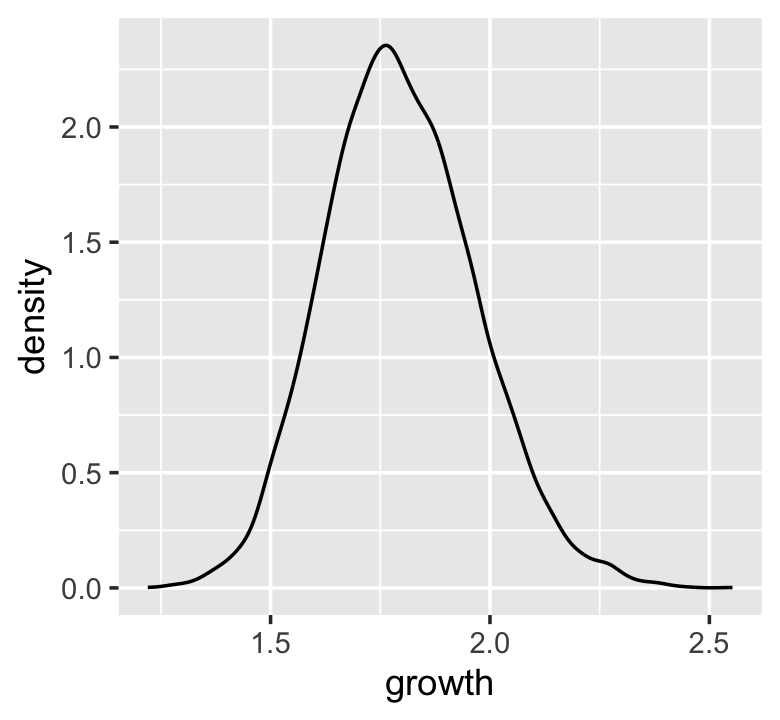

“The smaller the effect of each locus, the better this additive approximation will be” (p. 74). Let’s compare big and small.

# simulate

set.seed(4)

samples <-

tibble(big = map_dbl(1:10000, ~ prod(1 + runif(12, min = 0, max = 0.5))),

small = map_dbl(1:10000, ~ prod(1 + runif(12, min = 0, max = 0.01))))

# wrangle

samples %>%

pivot_longer(everything(), values_to = "samples") %>%

# plot

ggplot(aes(x = samples)) +

geom_density(fill = "black") +

facet_wrap(~ name, scales = "free")

Yep, the small samples were more Gaussian. “The interacting growth deviations, as long as they are sufficiently small, converge to a Gaussian distribution. In this way, the range of causal forces that tend towards Gaussian distributions extends well beyond purely additive interactions” (p. 74).

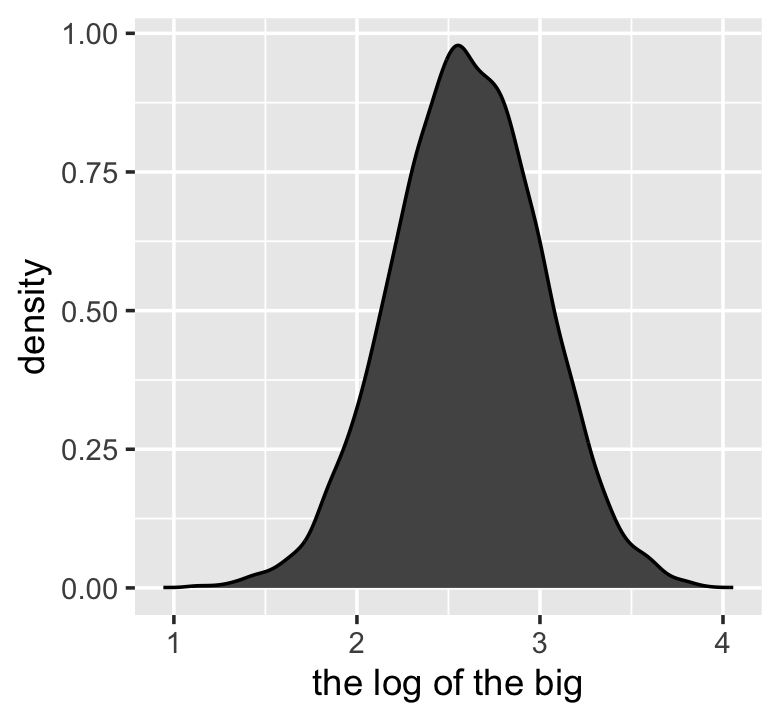

4.1.3 Normal by log-multiplication.

Instead of saving our tibble, we’ll just feed it directly into our plot.

samples %>%

mutate(log_big = log(big)) %>%

ggplot(aes(x = log_big)) +

geom_density(fill = "gray33") +

xlab("the log of the big")

Yet another Gaussian distribution. We get the Gaussian distribution back, because adding logs is equivalent to multiplying the original numbers. So even multiplicative interactions of large deviations can produce Gaussian distributions, once we measure the outcomes on the log scale. (p. 75)

4.1.4 Using Gaussian distributions.

“The justifications for using the Gaussian distribution fall into two broad categories: (1) ontological and (2) epistemological” (p. 75). I’m a fan of the justifications to follow.

4.1.4.1 Ontological justification.

The Gaussian is

a widespread pattern, appearing again and again at different scales and in different domains. Measurement errors, variations in growth, and the velocities of molecules all tend towards Gaussian distributions. These processes do this because at their heart, these processes add together fluctuations. And repeatedly adding finite fluctuations results in a distribution of sums that have shed all information about the underlying process, aside from mean and spread.

[However,] one consequence of this is that statistical models based on Gaussian distributions cannot reliably identify micro-process. (p. 75)

But like Ptolemy’s circles within circles, the Gaussian can still be useful even if it sheds information.

4.1.4.2 Epistemological justification.

By the epistemological justification, the Gaussian represents a particular state of ignorance. When all we know or are willing to say about a distribution of measures (measures are continuous values on the real number line) is their mean and variance, then the Gaussian distribution arises as the most consistent with our assumptions.

That is to say that the Gaussian distribution is the most natural expression of our state of ignorance, because if all we are willing to assume is that a measure has finite variance, the Gaussian distribution is the shape that can be realized in the largest number of ways and does not introduce any new assumptions. It is the least surprising and least informative assumption to make. In this way, the Gaussian is the distribution most consistent with our assumptions. Or rather, it is the most consistent with our golem’s assumptions. If you don’t think the distribution should be Gaussian, then that implies that you know something else that you should tell your golem about, something that would improve inference. (p. 75)

We’ll dive deeper into why the Gaussian is such a natural expression of ignorance in these contexts when we cover maximum entropy in Chapter 7.

4.1.4.3 Rethinking: Heavy tails.

The Gaussian distribution is common in nature and has some nice properties. But there are some risks in using it as a default data model. The extreme ends of a distribution are known as its tails. And the Gaussian distribution has some very thin tails–there is very little probability in them. Instead most of the mass in the Gaussian lies within one standard deviation of the mean. Many natural (and unnatural) processes have much heavier tails. (p. 76)

You have no idea how excited I am that we’ll be covering some of these heavy-tailed alternatives!

4.1.4.4 Overthinking: Gaussian distribution.

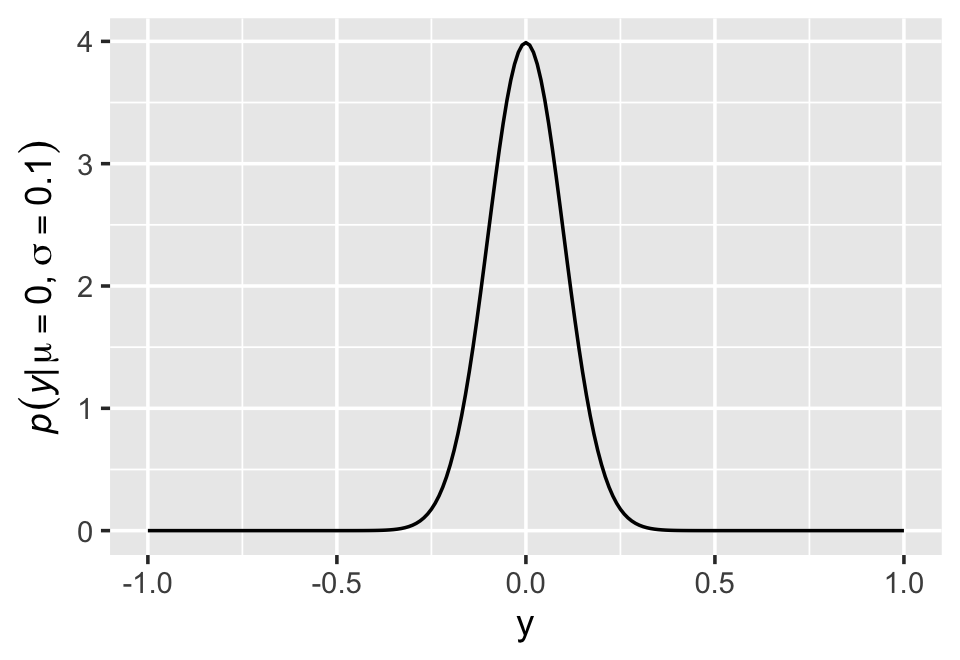

In this section McElreath gave the formula for the Gaussian probability density function. Let \(y\) be the criterion, \(\mu\) be the mean, and \(\sigma\) be the standard deviation. Then the probability density of some Gaussian value \(y\) is

\[p(y|\mu, \sigma) = \frac{1}{\sqrt{2 \pi \sigma^2}} \exp \left (- \frac{(y - \mu)^2}{2 \sigma^2} \right).\]

McElreath’s right. “This looks monstrous” (p. 76). Why not demystify that monster with a little R code? For simplicity, we’ll compute \(p(y|\mu, \sigma)\) for a series of \(y\)-values ranging from -1 to 1, holding \(\mu = 0\) and \(\sigma = 0.1\). Then we’ll plot.

tibble(y = seq(from = -1, to = 1, by = .01),

mu = 0,

sigma = 0.1) %>%

# compute p(y) with a hand-made Gaussian probability density function

mutate(p = (1 / sqrt(2 * pi * sigma^2)) * exp(-((y - mu)^2 / (2 * sigma^2)))) %>%

ggplot(aes(x = y, y = p)) +

geom_line() +

ylab(expression(italic(p)(italic("y|")*mu==0*","~sigma==0.1)))

Notice how \(p(y|\mu, \sigma)\) peaks around 4 when \(y = 0\). We can also get that value with the dnorm() function, which will return the \(p(y)\) value for a given combination of \(y\), \(\mu\), and \(\sigma\).

dnorm(0, mean = 0, sd = 0.1)## [1] 3.989423The answer, about 4, is no mistake. Probability density is the rate of change in cumulative probability. So where cumulative probability is increasing rapidly, density can easily exceed 1. But if we calculate the area under the density function, it will never exceed 1. Such areas are also called probability mass. You can usually ignore these density/mass details while doing computational work. But it’s good to be aware of the distinction. (p. 76, emphasis in the original)

4.2 A language for describing models

Our mathy ways of summarizing models will be something like

\[\begin{align*} \text{criterion}_i & \sim \operatorname{Normal}(\mu_i, \sigma) \\ \mu_i & = \beta \times \text{predictor}_i \\ \beta & \sim \operatorname{Normal}(0, 10) \\ \sigma & \sim \operatorname{Exponential}(1) \\ x_i & \sim \operatorname{Normal}(0, 1). \end{align*}\]

“If that doesn’t make much sense, good. That indicates that you are holding the right textbook” (p. 77). Welcome applied statistics! 🤓

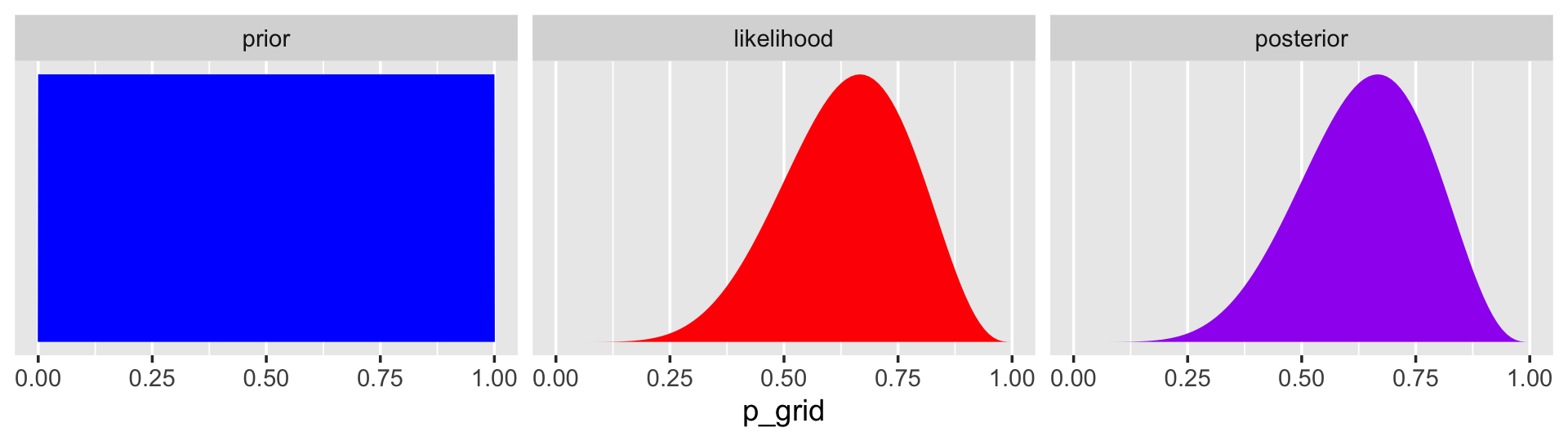

4.2.1 Re-describing the globe tossing model.

For the globe tossing model, the probability \(p\) of a count of water \(w\) based on \(n\) trials was

\[\begin{align*} w & \sim \operatorname{Binomial}(n, p) \\ p & \sim \operatorname{Uniform}(0, 1), \end{align*}\]

where the top line indicates we’re using the Binomial likelihood function to model \(w\) given unique combinations of \(n\) and \(p\). In our example, \(w\) and \(n\) were already defined in the data, so all we need to do is compute \(p\). Since \(p\) is the only parameter, it’s the only element that gets a prior, which is what that second line was.

4.2.1.1 Overthinking: From model definition to Bayes’ theorem.

We can use grid approximation to work through our globe tossing model.

# how many `p_grid` points would you like?

n_points <- 100

d <-

tibble(p_grid = seq(from = 0, to = 1, length.out = n_points),

w = 6,

n = 9) %>%

mutate(prior = dunif(p_grid, min = 0, max = 1),

likelihood = dbinom(w, size = n, prob = p_grid)) %>%

mutate(posterior = likelihood * prior / sum(likelihood * prior))

head(d)## # A tibble: 6 × 6

## p_grid w n prior likelihood posterior

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 0 6 9 1 0 0

## 2 0.0101 6 9 1 8.65e-11 8.74e-12

## 3 0.0202 6 9 1 5.37e- 9 5.43e-10

## 4 0.0303 6 9 1 5.93e- 8 5.99e- 9

## 5 0.0404 6 9 1 3.23e- 7 3.26e- 8

## 6 0.0505 6 9 1 1.19e- 6 1.21e- 7In case you were curious, here’s what they look like.

d %>%

pivot_longer(prior:posterior) %>%

# this line allows us to dictate the order in which the panels will appear

mutate(name = factor(name, levels = c("prior", "likelihood", "posterior"))) %>%

ggplot(aes(x = p_grid, y = value, fill = name)) +

geom_area() +

scale_fill_manual(values = c("blue", "red", "purple")) +

scale_y_continuous(NULL, breaks = NULL) +

theme(legend.position = "none") +

facet_wrap(~ name, scales = "free")

The posterior is a combination of the prior and the likelihood. When the prior is flat across the parameter space, the posterior is just the likelihood re-expressed as a probability. As we go along, you’ll see we almost never use flat priors in practice. Be warned that eschewing flat priors is a recent development, however. You only have to look at the literature from a couple decades ago to see mounds and mounds of flat priors.

4.3 A Gaussian model of height

There are an infinite number of possible Gaussian distributions. Some have small means. Others have large means. Some are wide, with a large \(\sigma\). Others are narrow. We want our Bayesian machine to consider every possible distribution, each defined by a combination of \(\mu\) and \(\sigma\), and rank them by posterior plausibility. Posterior plausibility provides a measure of the logical compatibility of each possible distribution with the data and model. (p. 79)

4.3.1 The data.

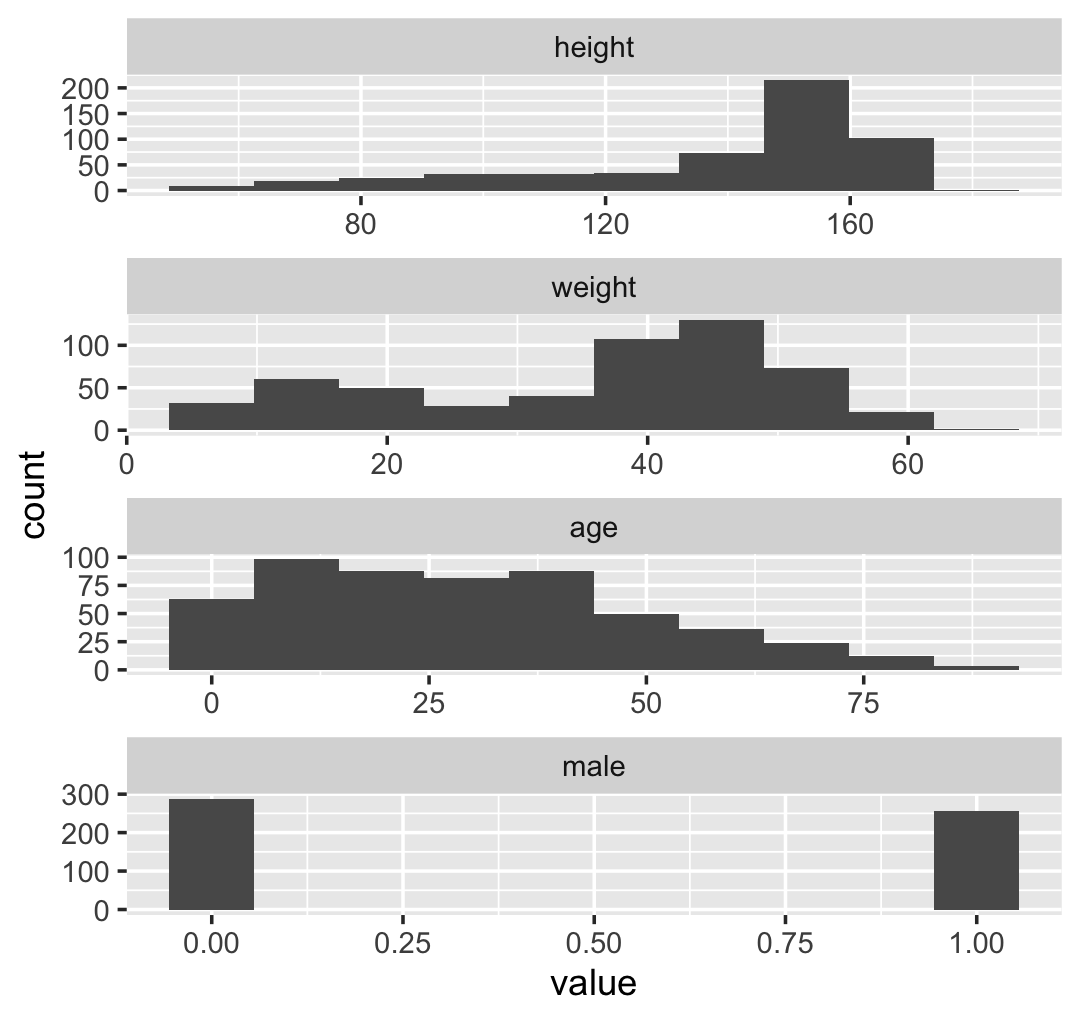

Let’s load the Howell (2001, 2010) data from McElreath’s (2020b) rethinking package.

library(rethinking)

data(Howell1)

d <- Howell1Here we open our focal statistical package, Bürkner’s brms. But before we do, we’ll want to detach the rethinking package. R will not allow us to use a function from one package that shares the same name as a different function from another package if both packages are open at the same time. The rethinking and brms packages are designed for similar purposes and, unsurprisingly, overlap in some of their function names. To prevent problems, we will always make sure rethinking is detached before using brms. To learn more on the topic, see this R-bloggers post.

rm(Howell1)

detach(package:rethinking, unload = T)

library(brms)Go ahead and investigate the data with str(), the tidyverse analogue for which is glimpse().

d %>%

str()## 'data.frame': 544 obs. of 4 variables:

## $ height: num 152 140 137 157 145 ...

## $ weight: num 47.8 36.5 31.9 53 41.3 ...

## $ age : num 63 63 65 41 51 35 32 27 19 54 ...

## $ male : int 1 0 0 1 0 1 0 1 0 1 ...The brms package does not have a function that works like rethinking::precis() for providing numeric and graphical summaries of variables, as see in McElreath’s R code 4.9. We can get some of that information with summary().

d %>%

summary()## height weight age male

## Min. : 53.98 Min. : 4.252 Min. : 0.00 Min. :0.0000

## 1st Qu.:125.09 1st Qu.:22.008 1st Qu.:12.00 1st Qu.:0.0000

## Median :148.59 Median :40.058 Median :27.00 Median :0.0000

## Mean :138.26 Mean :35.611 Mean :29.34 Mean :0.4724

## 3rd Qu.:157.48 3rd Qu.:47.209 3rd Qu.:43.00 3rd Qu.:1.0000

## Max. :179.07 Max. :62.993 Max. :88.00 Max. :1.0000We might make the histograms like this.

d %>%

pivot_longer(everything()) %>%

mutate(name = factor(name, levels = c("height", "weight", "age", "male"))) %>%

ggplot(aes(x = value)) +

geom_histogram(bins = 10) +

facet_wrap(~ name, scales = "free", ncol = 1)

If you’re curious, McElreath made those tiny histograms with help from Wickham’s histospark() function. Here’s the code.

sparks <- c("\u2581", "\u2582", "\u2583", "\u2585", "\u2587")

histospark <- function(x, width = 10) {

bins <- graphics::hist(x, breaks = width, plot = FALSE)

factor <- cut(

bins$counts / max(bins$counts),

breaks = seq(0, 1, length = length(sparks) + 1),

labels = sparks,

include.lowest = TRUE

)

paste0(factor, collapse = "")

}Here’s how it works.

histospark(d$weight)## [1] "▁▂▃▂▂▂▂▅▇▇▃▂▁"One of the neat things about the histospark() function is you can insert the output right in your R Markdown prose. For example, we can use is to casually show how left skewed the our height variable is: ▁▁▁▁▁▁▁▂▁▇▇▅▁. But it’s time to get back on track. You can isolate height values with the dplyr::select() function.

d %>%

select(height) %>%

glimpse()## Rows: 544

## Columns: 1

## $ height <dbl> 151.7650, 139.7000, 136.5250, 156.8450, 145.4150, 163.8300, 149.2250, 168.9100, 147…If you want the values in a numeric vector rather than in a data fame, try pull(d, height).

We can use the dplyr::filter() function to make an adults-only data frame.

d2 <-

d %>%

filter(age >= 18)Our reduced d2 does indeed have \(n = 352\) cases.

d2 %>%

count()## n

## 1 3524.3.1.1 Overthinking: Data frames and indexes.

For more on indexing, check out Chapter 9 of Peng’s (2022) R programming for data science, or even the Subsetting subsection in R4DS.

This probably reflects my training history, but the structure of a data frame seems natural and inherently appealing. If you’re in the other camp, do check out either of these two data wrangling talks (here and here) by the ineffable Jenny Bryan.

4.3.2 The model.

Please heed McElreath’s warnings to

be careful about choosing the Gaussian distribution only when the plotted outcome variable looks Gaussian to you. Gawking at the raw data, to try to decide how to model them, is usually not a good idea. The data could be a mixture of different Gaussian distributions, for example, and in that case you won’t be able to detect the underlying normality just by eyeballing the outcome distribution. Furthermore, as mentioned earlier in this chapter, the empirical distribution needn’t be actually Gaussian in order to justify using a Gaussian probability distribution. (p. 81)

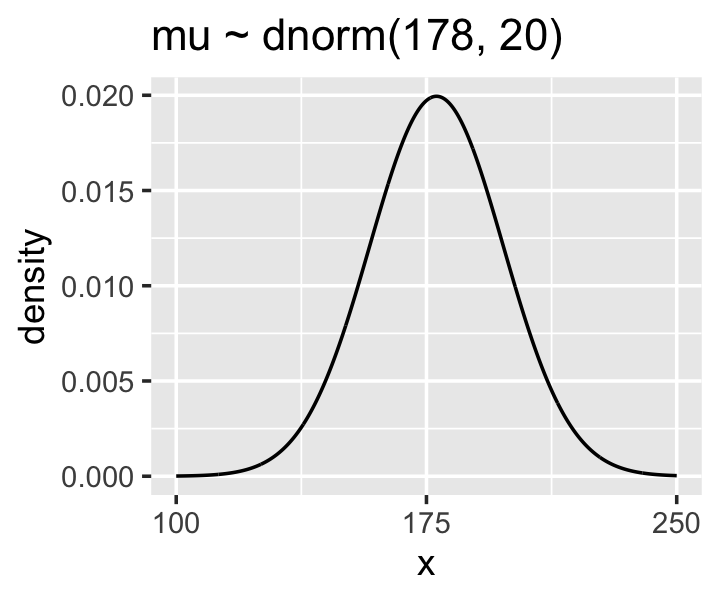

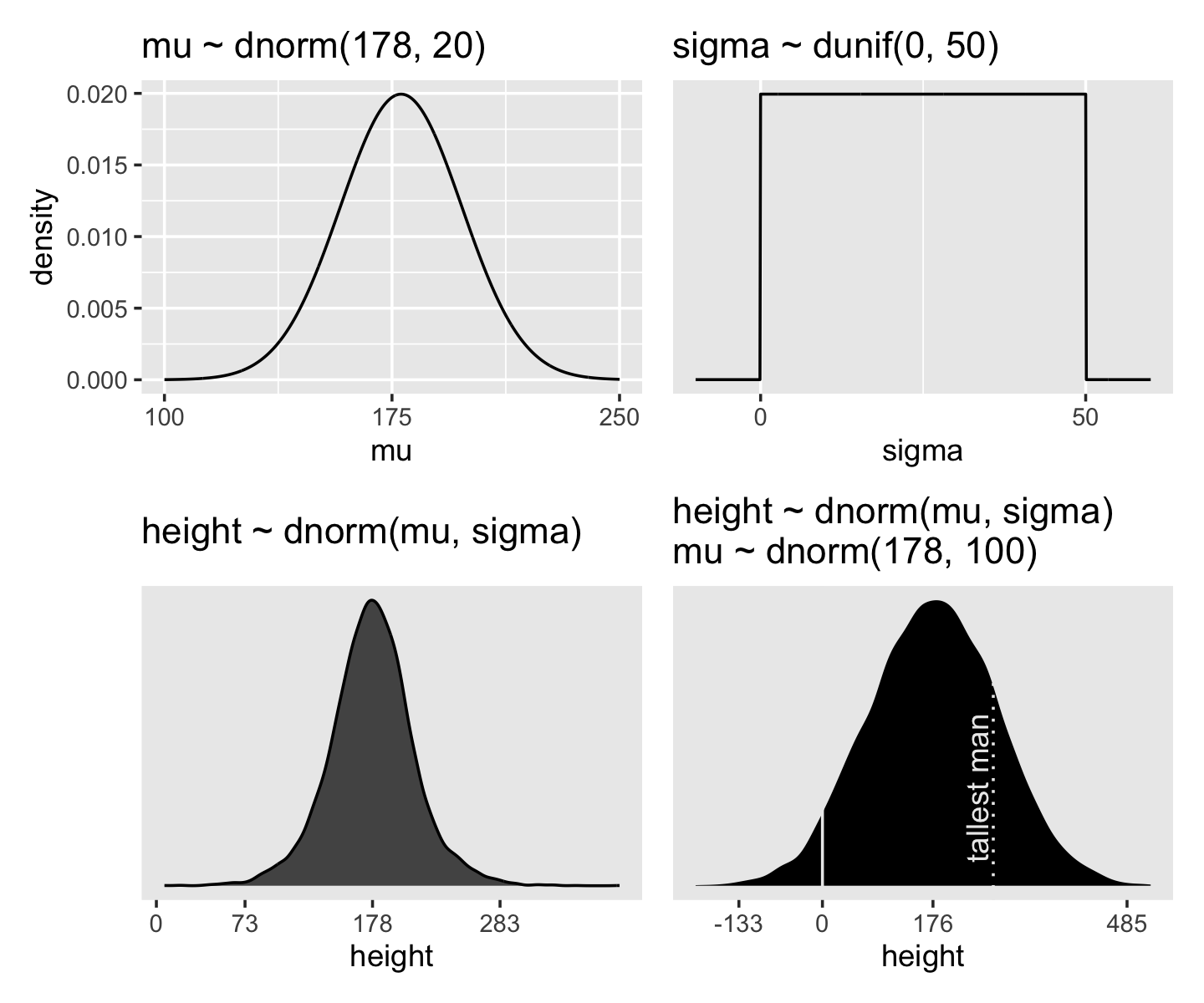

Anyway, the likelihood for our model is

\[\text{heights}_i \sim \operatorname{Normal}(\mu, \sigma),\]

where the \(i\) subscript indexes the individual cases in the data. Our two parameters are \(\mu\) and \(\sigma\), which we will estimate using Bayes’ formula. Our prior for \(\mu\) will be

\[\mu \sim \operatorname{Normal}(178, 20)\]

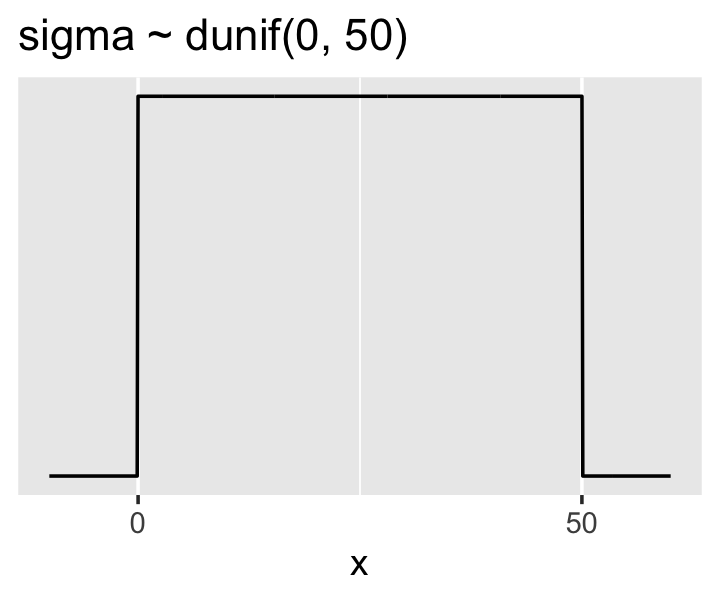

and our prior for \(\sigma\) will be

\[\sigma \sim \operatorname{Uniform}(0, 50).\]

Here’s the shape of the prior for \(\mu\), \(\mathcal N(178, 20)\).

p1 <-

tibble(x = seq(from = 100, to = 250, by = .1)) %>%

ggplot(aes(x = x, y = dnorm(x, mean = 178, sd = 20))) +

geom_line() +

scale_x_continuous(breaks = seq(from = 100, to = 250, by = 75)) +

labs(title = "mu ~ dnorm(178, 20)",

y = "density")

p1

And here’s the ggplot2 code for our prior for \(\sigma\), a uniform distribution with a minimum value of 0 and a maximum value of 50. We don’t really need the \(y\)-axis when looking at the shapes of a density, so we’ll just remove it with scale_y_continuous().

p2 <-

tibble(x = seq(from = -10, to = 60, by = .1)) %>%

ggplot(aes(x = x, y = dunif(x, min = 0, max = 50))) +

geom_line() +

scale_x_continuous(breaks = c(0, 50)) +

scale_y_continuous(NULL, breaks = NULL) +

ggtitle("sigma ~ dunif(0, 50)")

p2

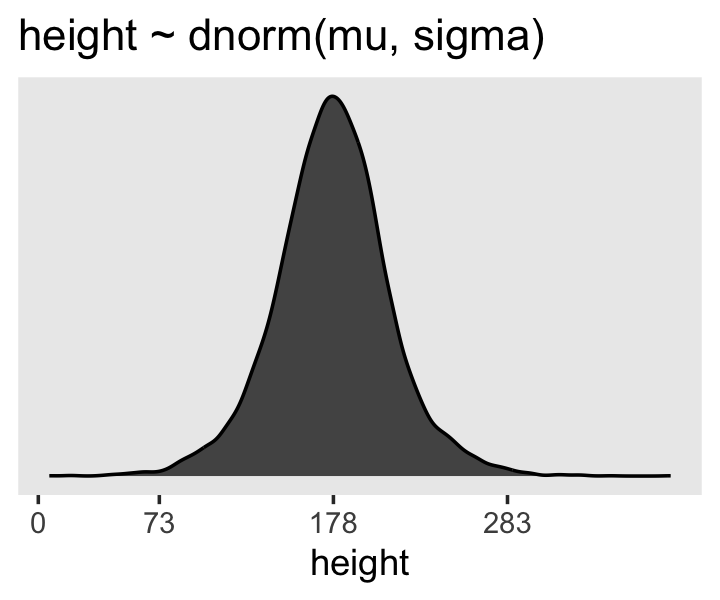

We can simulate from both priors at once to get a prior probability distribution of heights.

n <- 1e4

set.seed(4)

sim <-

tibble(sample_mu = rnorm(n, mean = 178, sd = 20),

sample_sigma = runif(n, min = 0, max = 50)) %>%

mutate(height = rnorm(n, mean = sample_mu, sd = sample_sigma))

p3 <- sim %>%

ggplot(aes(x = height)) +

geom_density(fill = "grey33") +

scale_x_continuous(breaks = c(0, 73, 178, 283)) +

scale_y_continuous(NULL, breaks = NULL) +

ggtitle("height ~ dnorm(mu, sigma)") +

theme(panel.grid = element_blank())

p3

If you look at the \(x\)-axis breaks on the plot in McElreath’s lower left panel in Figure 4.3, you’ll notice they’re intentional. To compute the mean and 3 standard deviations above and below, you might do this.

sim %>%

summarise(ll = mean(height) - sd(height) * 3,

mean = mean(height),

ul = mean(height) + sd(height) * 3) %>%

mutate_all(round, digits = 1)## # A tibble: 1 × 3

## ll mean ul

## <dbl> <dbl> <dbl>

## 1 73.9 177. 281.Our values are very close to his, but are off by just a bit due to simulation variation.

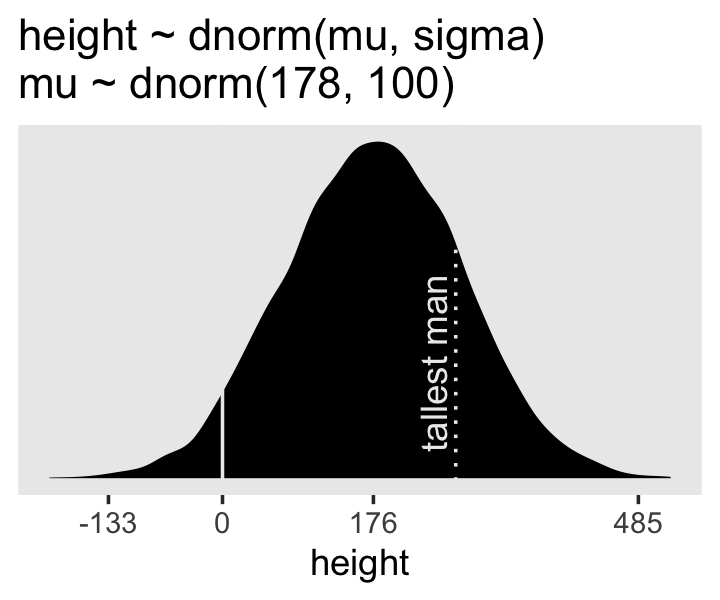

Here’s the work to make the lower right panel of Figure 4.3. Watch out; we’re starting to get fancy.

# simulate

set.seed(4)

sim <-

tibble(sample_mu = rnorm(n, mean = 178, sd = 100),

sample_sigma = runif(n, min = 0, max = 50)) %>%

mutate(height = rnorm(n, mean = sample_mu, sd = sample_sigma))

# compute the values we'll use to break on our x axis

breaks <-

c(mean(sim$height) - 3 * sd(sim$height), 0, mean(sim$height), mean(sim$height) + 3 * sd(sim$height)) %>%

round(digits = 0)

# this is just for aesthetics

text <-

tibble(height = 272 - 25,

y = .0013,

label = "tallest man",

angle = 90)

# plot

p4 <-

sim %>%

ggplot(aes(x = height)) +

geom_density(fill = "black", linewidth = 0) +

geom_vline(xintercept = 0, color = "grey92") +

geom_vline(xintercept = 272, color = "grey92", linetype = 3) +

geom_text(data = text,

aes(y = y, label = label, angle = angle),

color = "grey92") +

scale_x_continuous(breaks = breaks) +

scale_y_continuous(NULL, breaks = NULL) +

ggtitle("height ~ dnorm(mu, sigma)\nmu ~ dnorm(178, 100)") +

theme(panel.grid = element_blank())

p4

You may have noticed how we were saving each of the four last plots as p1 through p4. Let’s combine the four to make our version of McElreath’s Figure 4.3.

(p1 + xlab("mu") | p2 + xlab("sigma")) / (p3 | p4)

On page 84, McElreath said his prior simulation indicated 4% of the heights would be below zero. Here’s how we might determe that percentage for our simulation.

sim %>%

count(height < 0) %>%

mutate(percent = 100 * n / sum(n))## # A tibble: 2 × 3

## `height < 0` n percent

## <lgl> <int> <dbl>

## 1 FALSE 9571 95.7

## 2 TRUE 429 4.29Here’s the break down compared to the tallest man on record, Robert Pershing Wadlow (1918–1940).

sim %>%

count(height < 272) %>%

mutate(percent = 100 * n / sum(n))## # A tibble: 2 × 3

## `height < 272` n percent

## <lgl> <int> <dbl>

## 1 FALSE 1761 17.6

## 2 TRUE 8239 82.4Does this matter? In this case, we have so much data that the silly prior is harmless. But that won’t always be the case. There are plenty of inference problems for which the data alone are not sufficient, no matter how numerous. Bayes lets us proceed in these cases. But only if we use our scientific knowledge to construct sensible priors. Using scientific knowledge to build priors is not cheating. The important thing is that your prior not be based on the values in the data, but only on what you know about the data before you see it. (p. 84)

4.3.2.1 Rethinking: A farewell to epsilon.

Some readers will have already met an alternative notation for a Gaussian linear model:

\[\begin{align*} h_i & = \mu + \epsilon_i \\ \epsilon_i & \sim \operatorname{Normal}(0, \sigma) \end{align*}\]

This is equivalent to the \(h_i \sim \operatorname{Normal}(\mu, \sigma)\) form, with the \(\epsilon\) standing in for the Gaussian density. But this \(\epsilon\) form is poor form. The reason is that it does not usually generalize to other types of models. This means it won’t be possible to express non-Gaussian models using tricks like \(\epsilon\). Better to learn one system that does generalize. (p. 84)

Agreed.

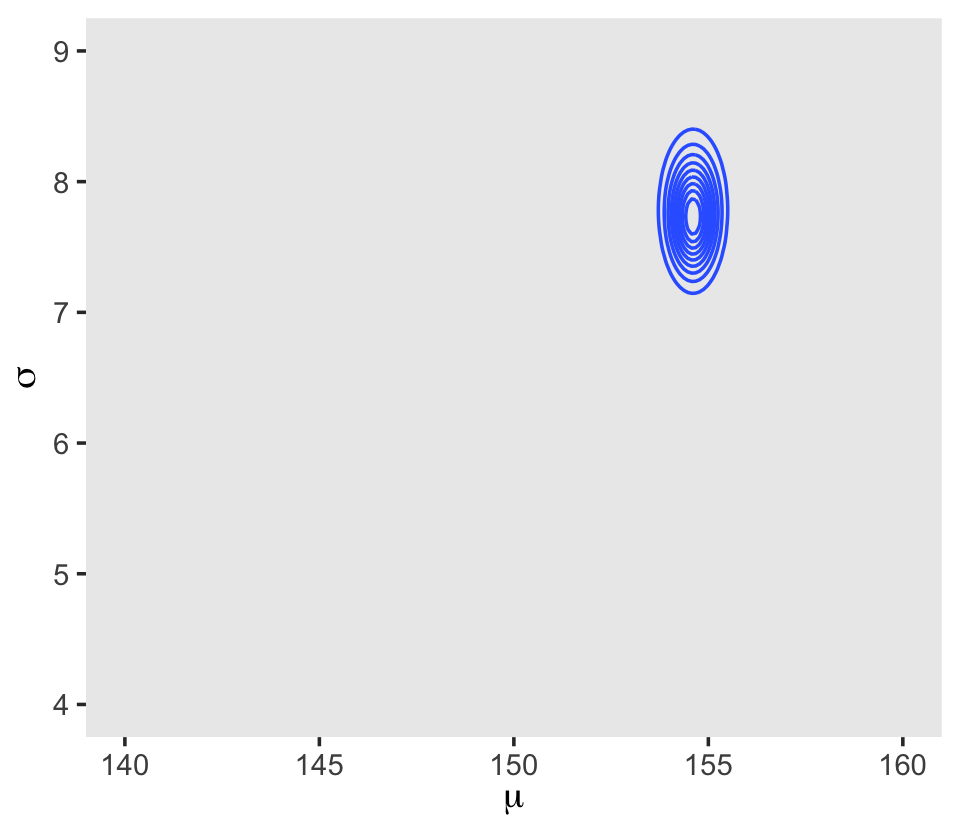

4.3.3 Grid approximation of the posterior distribution.

As McElreath explained, you’ll never use this for practical data analysis. But I found this helped me better understanding what exactly we’re doing with Bayesian estimation. So let’s play along.

n <- 200

d_grid <-

# we'll accomplish with `tidyr::crossing()` what McElreath did with base R `expand.grid()`

crossing(mu = seq(from = 140, to = 160, length.out = n),

sigma = seq(from = 4, to = 9, length.out = n))

glimpse(d_grid)## Rows: 40,000

## Columns: 2

## $ mu <dbl> 140, 140, 140, 140, 140, 140, 140, 140, 140, 140, 140, 140, 140, 140, 140, 140, 140,…

## $ sigma <dbl> 4.000000, 4.025126, 4.050251, 4.075377, 4.100503, 4.125628, 4.150754, 4.175879, 4.20…d_grid contains every combination of mu and sigma across their specified values. Instead of base R sapply(), we’ll do the computations by making a custom function which we’ll then plug into purrr::map2().

grid_function <- function(mu, sigma) {

dnorm(d2$height, mean = mu, sd = sigma, log = T) %>%

sum()

}Now we’re ready to complete the tibble.

d_grid <-

d_grid %>%

mutate(log_likelihood = map2(mu, sigma, grid_function)) %>%

unnest(log_likelihood) %>%

mutate(prior_mu = dnorm(mu, mean = 178, sd = 20, log = T),

prior_sigma = dunif(sigma, min = 0, max = 50, log = T)) %>%

mutate(product = log_likelihood + prior_mu + prior_sigma) %>%

mutate(probability = exp(product - max(product)))

head(d_grid)## # A tibble: 6 × 7

## mu sigma log_likelihood prior_mu prior_sigma product probability

## <dbl> <dbl> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 140 4 -3813. -5.72 -3.91 -3822. 0

## 2 140 4.03 -3778. -5.72 -3.91 -3787. 0

## 3 140 4.05 -3743. -5.72 -3.91 -3753. 0

## 4 140 4.08 -3709. -5.72 -3.91 -3719. 0

## 5 140 4.10 -3676. -5.72 -3.91 -3686. 0

## 6 140 4.13 -3644. -5.72 -3.91 -3653. 0In the final d_grid, the probability vector contains the posterior probabilities across values of mu and sigma. We can make a contour plot with geom_contour().

d_grid %>%

ggplot(aes(x = mu, y = sigma, z = probability)) +

geom_contour() +

labs(x = expression(mu),

y = expression(sigma)) +

coord_cartesian(xlim = range(d_grid$mu),

ylim = range(d_grid$sigma)) +

theme(panel.grid = element_blank())

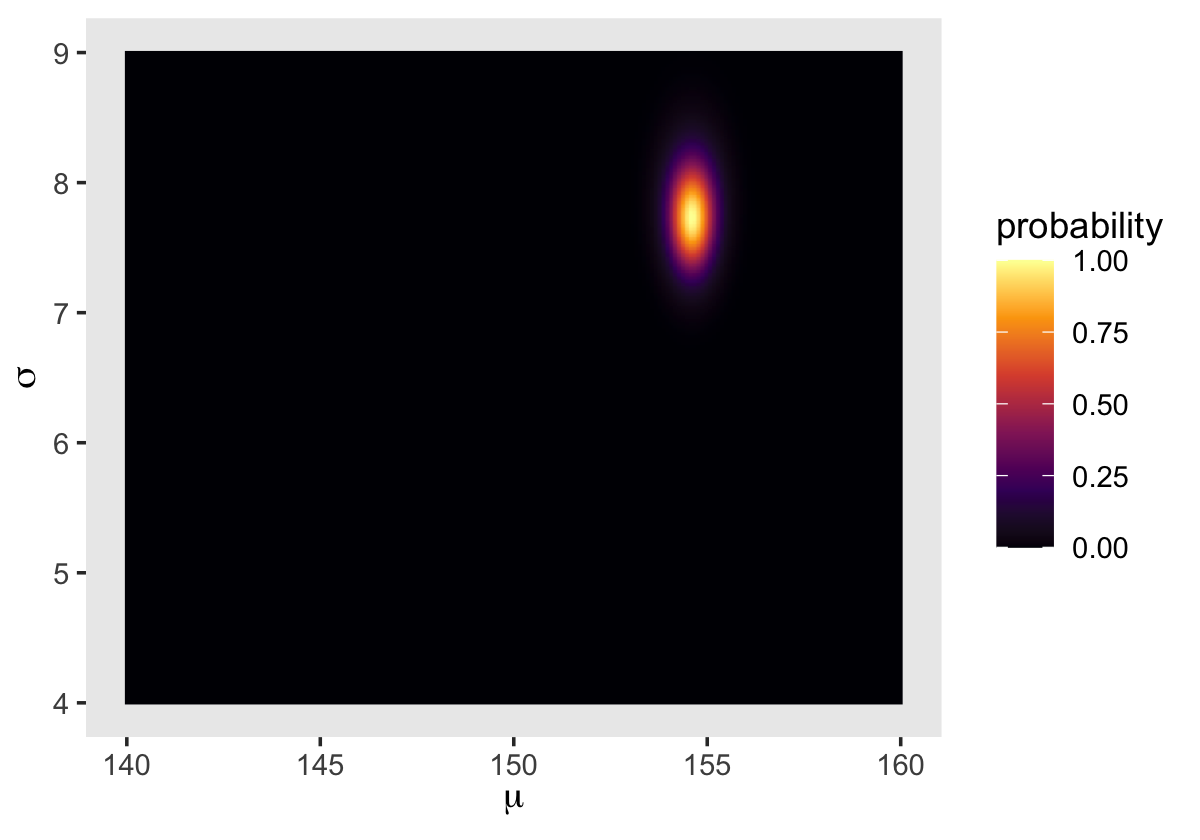

We’ll make our heat map with geom_raster().

d_grid %>%

ggplot(aes(x = mu, y = sigma, fill = probability)) +

geom_raster(interpolate = T) +

scale_fill_viridis_c(option = "B") +

labs(x = expression(mu),

y = expression(sigma)) +

theme(panel.grid = element_blank())

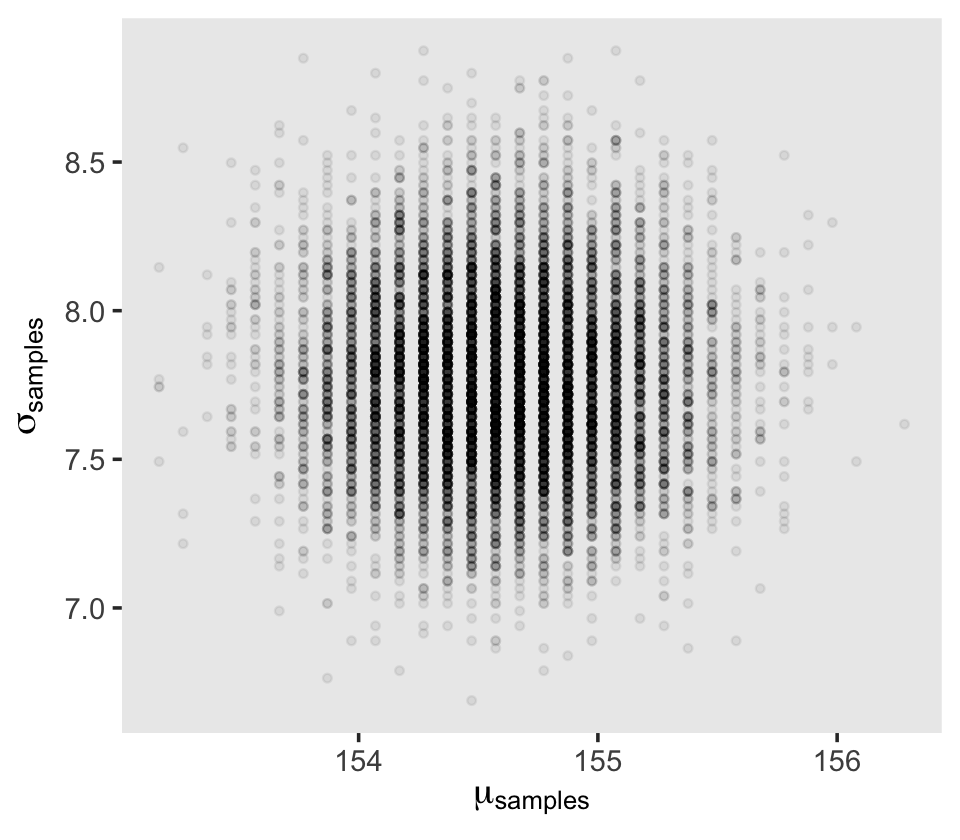

4.3.4 Sampling from the posterior.

We can use dplyr::sample_n() to sample rows, with replacement, from d_grid.

set.seed(4)

d_grid_samples <-

d_grid %>%

sample_n(size = 1e4, replace = T, weight = probability)

d_grid_samples %>%

ggplot(aes(x = mu, y = sigma)) +

geom_point(size = .9, alpha = 1/15) +

scale_fill_viridis_c() +

labs(x = expression(mu[samples]),

y = expression(sigma[samples])) +

theme(panel.grid = element_blank())

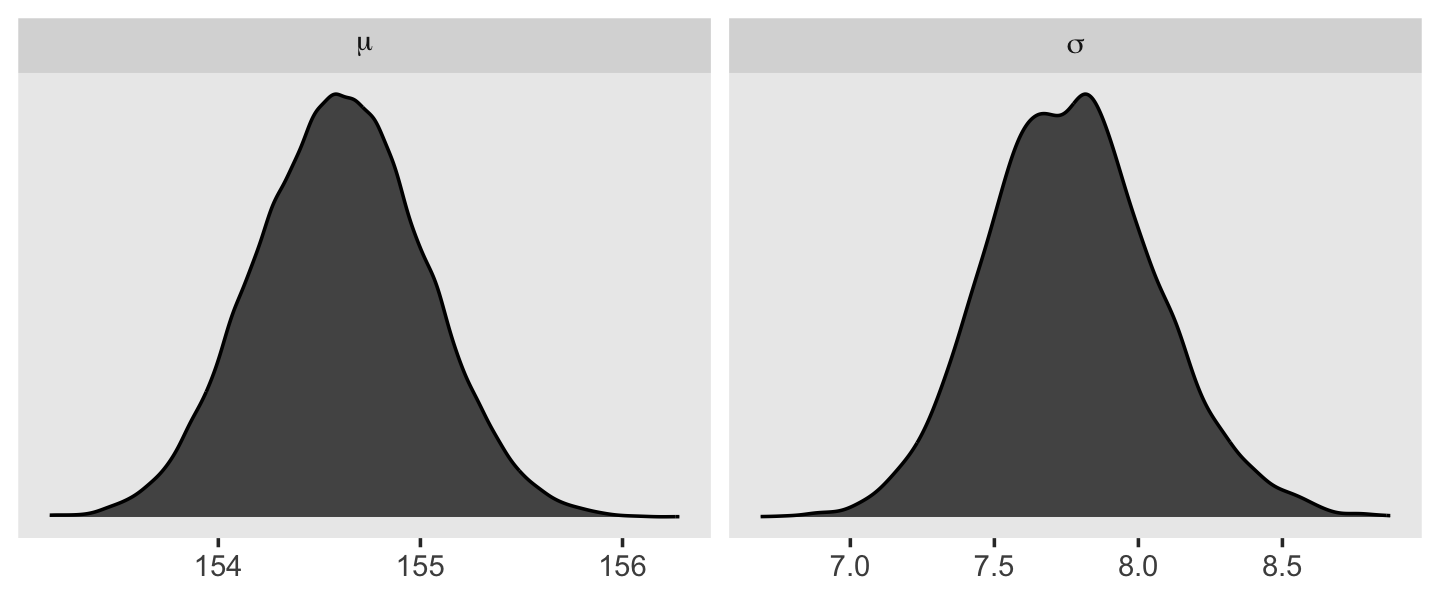

We can use pivot_longer() and then facet_wrap() to plot the densities for both mu and sigma at once.

d_grid_samples %>%

pivot_longer(mu:sigma) %>%

ggplot(aes(x = value)) +

geom_density(fill = "grey33") +

scale_y_continuous(NULL, breaks = NULL) +

xlab(NULL) +

theme(panel.grid = element_blank()) +

facet_wrap(~ name, scales = "free", labeller = label_parsed)

We’ll use the tidybayes package to compute their posterior modes and 95% HDIs.

library(tidybayes)

d_grid_samples %>%

pivot_longer(mu:sigma) %>%

group_by(name) %>%

mode_hdi(value)## # A tibble: 2 × 7

## name value .lower .upper .width .point .interval

## <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 mu 155. 154. 155. 0.95 mode hdi

## 2 sigma 7.82 7.14 8.30 0.95 mode hdiLet’s say you wanted their posterior medians and 50% quantile-based intervals, instead. Just switch out the last line for median_qi(value, .width = .5).

4.3.4.1 Overthinking: Sample size and the normality of \(\sigma\)’s posterior.

Since we’ll be fitting models with brms almost exclusively from here on out, this section is largely mute. But we’ll do it anyway for the sake of practice. I’m going to break the steps up like before rather than compress the code together. Here’s d3.

set.seed(4)

(d3 <- sample(d2$height, size = 20))## [1] 147.3200 154.9400 168.9100 156.8450 165.7350 151.7650 165.7350 156.2100 144.7800 154.9400

## [11] 151.1300 147.9550 149.8600 162.5600 161.9250 164.4650 160.9852 151.7650 163.8300 149.8600For our first step using d3, we’ll redefine d_grid.

n <- 200

# note we've redefined the ranges of `mu` and `sigma`

d_grid <-

crossing(mu = seq(from = 150, to = 170, length.out = n),

sigma = seq(from = 4, to = 20, length.out = n))Second, we’ll redefine our custom grid_function() function to operate over the height values of d3.

grid_function <- function(mu, sigma) {

dnorm(d3, mean = mu, sd = sigma, log = T) %>%

sum()

}Now we’ll use the amended grid_function() to make the posterior.

d_grid <-

d_grid %>%

mutate(log_likelihood = map2_dbl(mu, sigma, grid_function)) %>%

mutate(prior_mu = dnorm(mu, mean = 178, sd = 20, log = T),

prior_sigma = dunif(sigma, min = 0, max = 50, log = T)) %>%

mutate(product = log_likelihood + prior_mu + prior_sigma) %>%

mutate(probability = exp(product - max(product)))Did you catch our use of purrr::map2_dbl(), there, in place of purrr::map2()? It turns out that purrr::map() and purrr::map2() always return a list (see here and here). But we can add the _dbl suffix to those functions, which will instruct the purrr package to return a double vector (i.e., a common kind of numeric vector). The advantage of that approach is we no longer need to follow our map() or map2() lines with unnest(). To learn more about the ins and outs of the map() family, check out this section from R4DS or Jenny Bryan’s purrr tutorial.

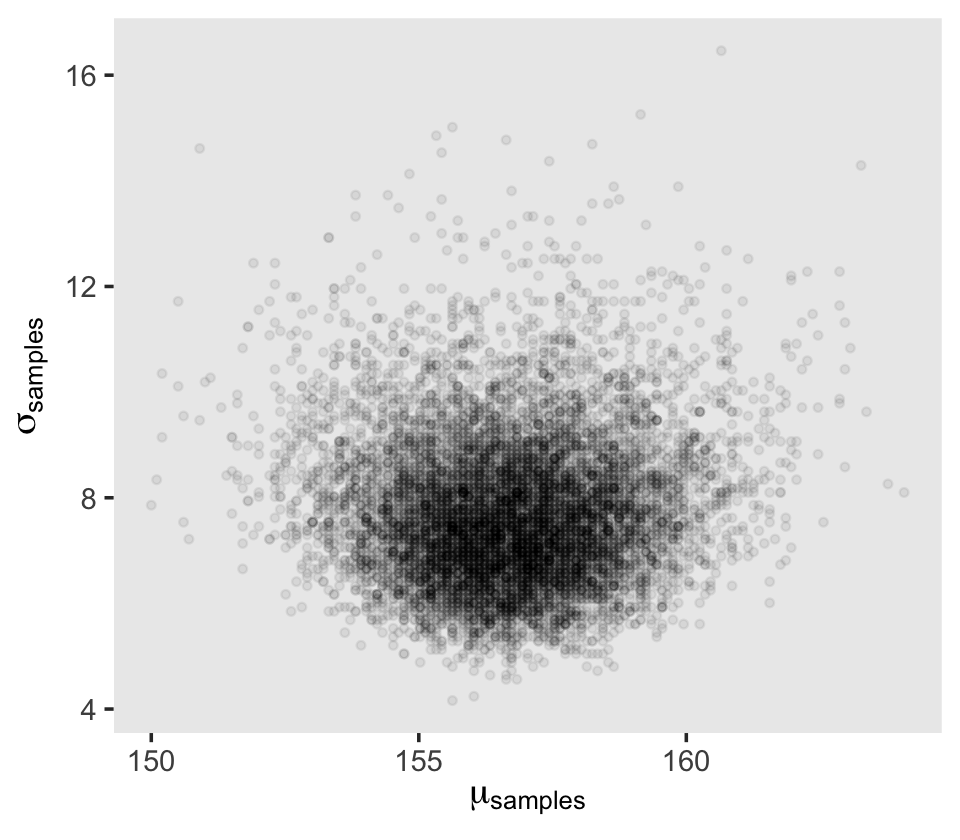

Next we’ll sample_n() and plot.

set.seed(4)

d_grid_samples <-

d_grid %>%

sample_n(size = 1e4, replace = T, weight = probability)

d_grid_samples %>%

ggplot(aes(x = mu, y = sigma)) +

geom_point(size = .9, alpha = 1/15) +

labs(x = expression(mu[samples]),

y = expression(sigma[samples])) +

theme(panel.grid = element_blank())

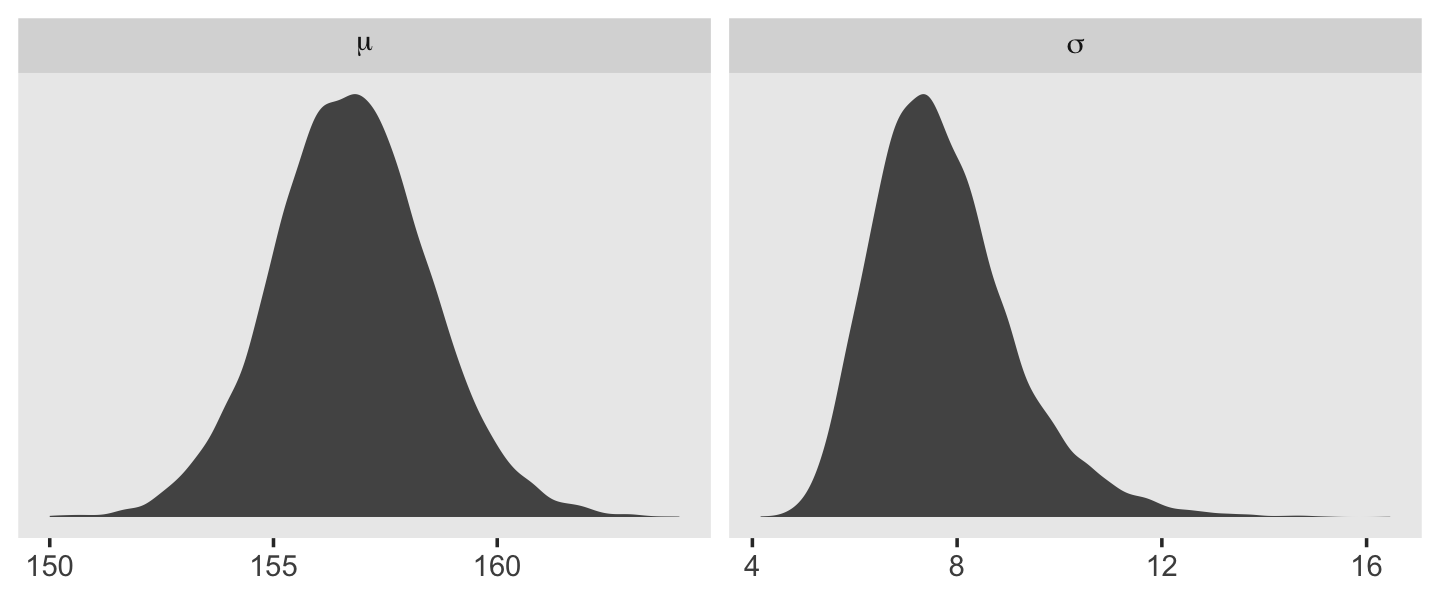

Behold the updated densities.

d_grid_samples %>%

pivot_longer(mu:sigma) %>%

ggplot(aes(x = value)) +

geom_density(fill = "grey33", linewidth = 0) +

scale_y_continuous(NULL, breaks = NULL) +

xlab(NULL) +

theme(panel.grid = element_blank()) +

facet_wrap(~ name, scales = "free", labeller = label_parsed)

That labeller = label_parsed bit in the facet_wrap() function is what converted our subplot strip labels into Greek. You can learn more about labeller here. Anyway, our posterior for \(\sigma\) isn’t so Gaussian with that small \(n\).

This is the point in the project where we hop off the grid-approximation train. On the one hand, I think this is a great idea. Most of y’all reading this will never use grid approximation in a real-world applied data analysis. On the other hand, there is some pedagogical utility in practicing with it. It can help you grasp what it is we’re doing when we apply Bayes’ theorem. If you’d like more practice, check out the first several chapters in John Kruschke’s (2015) textbook and the corresponding chapters in my (2023a) ebook translating it into brms and tidyverse.

4.3.5 Finding the posterior distribution with quapbrm().

quapHere we rewrite the statistical model, this time using font color to help differentiate the likelihood from the prior(s).

\[\begin{align*} \color{red}{\text{heights}_i} & \color{red}\sim \color{red}{\operatorname{Normal}(\mu, \sigma)} && \color{red}{\text{likelihood}} \\ \color{blue}\mu & \color{blue}\sim \color{blue}{\operatorname{Normal}(178, 20)} && \color{blue}{\text{prior}} \\ \color{blue}\sigma & \color{blue}\sim \color{blue}{\operatorname{Uniform}(0, 50)} \end{align*}\]

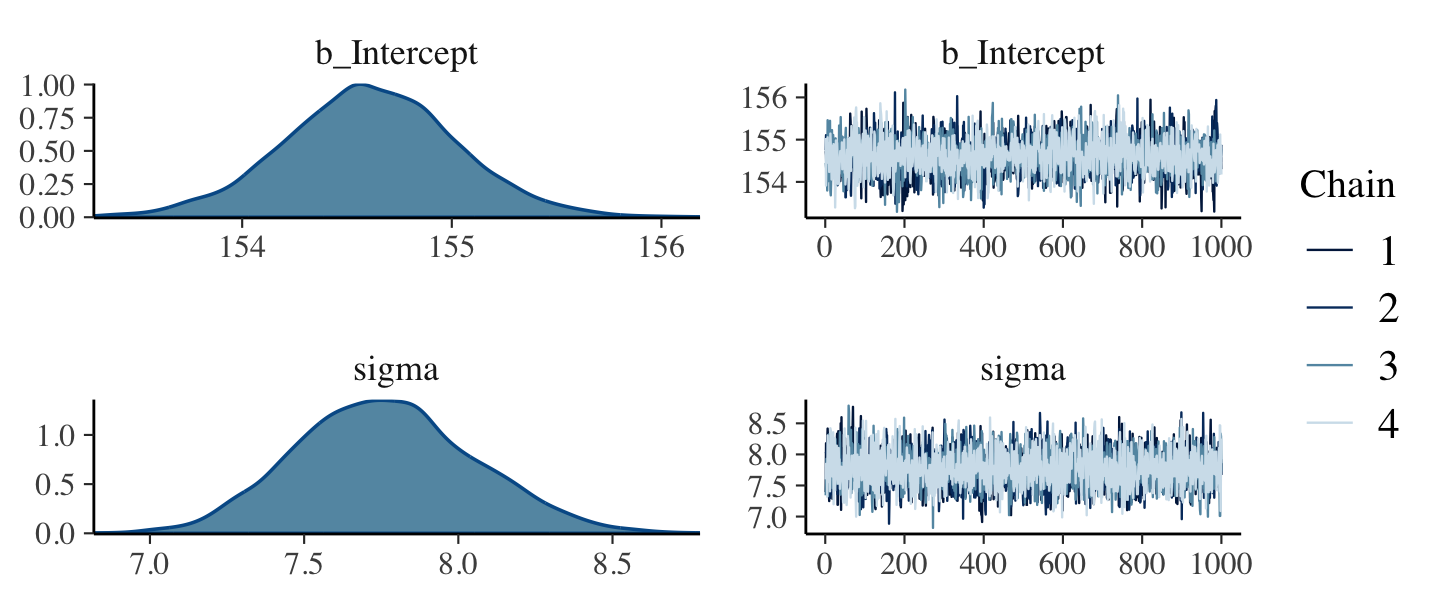

We won’t actually use rethinking::quap(). It’s time to jump straight to the primary brms modeling function, brm(). In the text, McElreath indexed his models with names like m4.1. I will largely follow that convention, but will replace the m with a b to stand for the brms package. Here’s how to fit the first model for this chapter.

b4.1 <-

brm(data = d2,

family = gaussian,

height ~ 1,

prior = c(prior(normal(178, 20), class = Intercept),

prior(uniform(0, 50), class = sigma, ub = 50)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

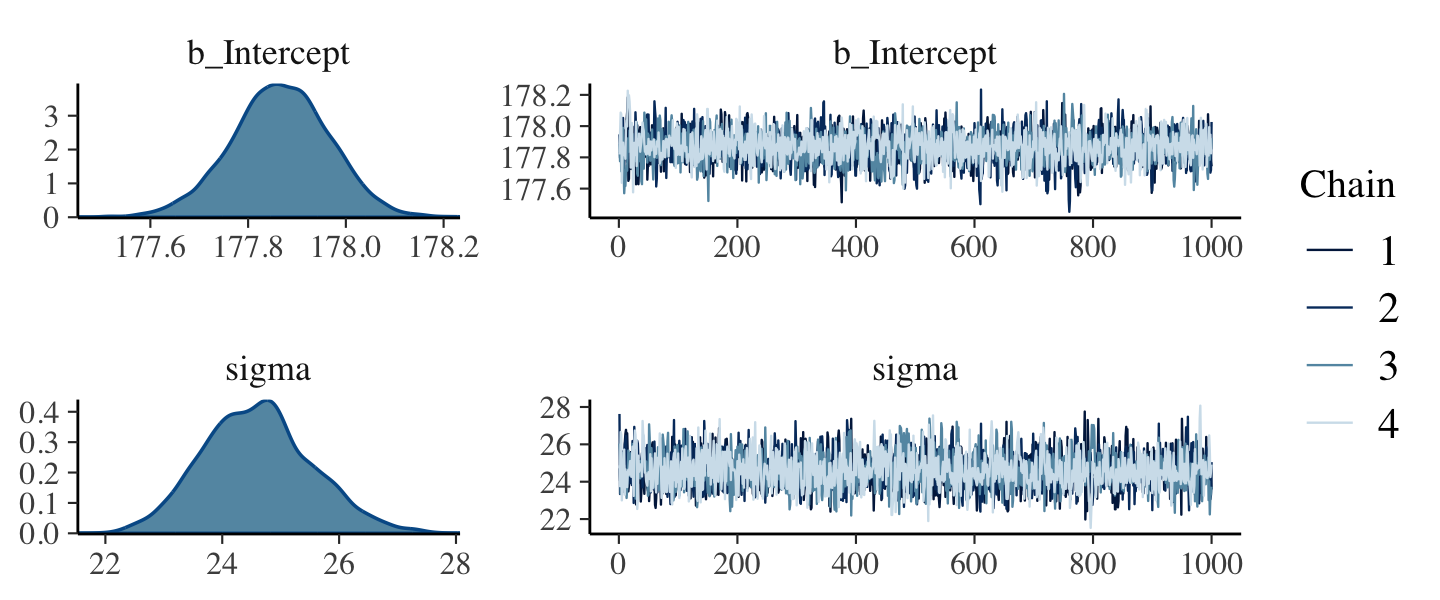

file = "fits/b04.01")Note our use of the ub parameter for the uniform prior on \(\sigma\). If you want to use an upper-bound prior on \(\sigma\) with a brm() model, the up setting will help out a lot. We’ll start to get a sense of why when we cover Hamiltonian Monte Carlo (HMC) in Chapter 9. This leads to an important point. After running a model fit with HMC, it’s a good idea to inspect the chains. As we’ll see, McElreath covered visual chain diagnostics in Chapter 9. Here’s a typical way to do so with brms.

plot(b4.1)

If you want detailed diagnostics for the HMC chains, call launch_shinystan(b4.1). That’ll keep you busy for a while. But anyway, the chains look good. We can reasonably trust the results. Here’s how to get the model summary of our brm() object.

print(b4.1)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: height ~ 1

## Data: d2 (Number of observations: 352)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 154.61 0.41 153.77 155.43 1.00 3285 2401

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 7.76 0.29 7.23 8.35 1.00 4000 2696

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).The summary() function works in a similar way. You can also get a Stan-like summary [see the RStan: the R interface to Stan vignette; Stan Development Team (2023)] with a little indexing.

b4.1$fit## Inference for Stan model: d1a1a9941e111c880584a682efcf0a73.

## 4 chains, each with iter=2000; warmup=1000; thin=1;

## post-warmup draws per chain=1000, total post-warmup draws=4000.

##

## mean se_mean sd 2.5% 25% 50% 75% 97.5% n_eff Rhat

## b_Intercept 154.61 0.01 0.41 153.77 154.34 154.61 154.88 155.43 3337 1

## sigma 7.76 0.00 0.29 7.23 7.56 7.75 7.95 8.35 3985 1

## lprior -8.51 0.00 0.02 -8.56 -8.53 -8.51 -8.49 -8.46 3332 1

## lp__ -1227.02 0.02 1.00 -1229.71 -1227.39 -1226.71 -1226.31 -1226.06 1634 1

##

## Samples were drawn using NUTS(diag_e) at Tue Sep 20 16:25:00 2022.

## For each parameter, n_eff is a crude measure of effective sample size,

## and Rhat is the potential scale reduction factor on split chains (at

## convergence, Rhat=1).Whereas rethinking defaults to 89% intervals, using print() or summary() with brms models defaults to 95% intervals. Unless otherwise specified, I will stick with 95% intervals throughout. However, if you really want those 89% intervals, an easy way is with the prob argument within brms::summary() or brms::print().

summary(b4.1, prob = .89)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: height ~ 1

## Data: d2 (Number of observations: 352)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-89% CI u-89% CI Rhat Bulk_ESS Tail_ESS

## Intercept 154.61 0.41 153.95 155.27 1.00 3285 2401

##

## Family Specific Parameters:

## Estimate Est.Error l-89% CI u-89% CI Rhat Bulk_ESS Tail_ESS

## sigma 7.76 0.29 7.31 8.24 1.00 4000 2696

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Anyways, here’s the brms::brm() code for the model with the very-narrow-\(\mu\)-prior corresponding to the rethinking::quap() code in McElreath’s R code 4.31.

b4.2 <-

brm(data = d2,

family = gaussian,

height ~ 1,

prior = c(prior(normal(178, 0.1), class = Intercept),

prior(uniform(0, 50), class = sigma, ub = 50)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

file = "fits/b04.02")plot(b4.2, widths = c(1, 2))

The chains look great. Here’s the model summary().

summary(b4.2)## Family: gaussian

## Links: mu = identity; sigma = identity

## Formula: height ~ 1

## Data: d2 (Number of observations: 352)

## Draws: 4 chains, each with iter = 2000; warmup = 1000; thin = 1;

## total post-warmup draws = 4000

##

## Population-Level Effects:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 177.87 0.10 177.66 178.06 1.00 2514 2190

##

## Family Specific Parameters:

## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## sigma 24.60 0.93 22.89 26.55 1.00 2613 2290

##

## Draws were sampled using sampling(NUTS). For each parameter, Bulk_ESS

## and Tail_ESS are effective sample size measures, and Rhat is the potential

## scale reduction factor on split chains (at convergence, Rhat = 1).Subsetting the summary() output with $fixed provides a convenient way to compare the Intercept summaries between b4.1_hc and b4.2.

rbind(summary(b4.1)$fixed,

summary(b4.2)$fixed)## Estimate Est.Error l-95% CI u-95% CI Rhat Bulk_ESS Tail_ESS

## Intercept 154.6111 0.4129813 153.7729 155.4321 1.000552 3284.680 2401.409

## Intercept1 177.8663 0.1005478 177.6627 178.0598 1.003814 2514.203 2190.3304.3.6 Sampling from a quap()brm() fit.

quap()brms doesn’t seem to have a convenience function that works the way vcov() does for rethinking. For example:

vcov(b4.1)## Intercept

## Intercept 0.1705535This only returns the first element in the matrix it did for rethinking. That is, it appears brms::vcov() only returns the variance/covariance matrix for the single-level \(\beta\) parameters. However, if you really wanted this information, you could get it after putting the HMC chains in a data frame. We do that with the as_draws_df() function, which we’ll be using a lot of as we go along.

post <- as_draws_df(b4.1)

head(post)## # A draws_df: 6 iterations, 1 chains, and 4 variables

## b_Intercept sigma lprior lp__

## 1 155 7.4 -8.5 -1227

## 2 155 8.2 -8.5 -1227

## 3 154 7.6 -8.5 -1227

## 4 155 7.8 -8.5 -1226

## 5 155 8.2 -8.5 -1227

## 6 154 8.4 -8.5 -1228

## # ... hidden reserved variables {'.chain', '.iteration', '.draw'}Now select() the columns containing the draws from the desired parameters and feed them into cov().

select(post, b_Intercept:sigma) %>%

cov()## b_Intercept sigma

## b_Intercept 0.1705535224 -0.0002227235

## sigma -0.0002227235 0.0833750930That was “(1) a vector of variances for the parameters and (2) a correlation matrix” for them (p. 90). Here are just the variances (i.e., the diagonal elements) and the correlation matrix.

# variances

select(post, b_Intercept:sigma) %>%

cov() %>%

diag()## b_Intercept sigma

## 0.17055352 0.08337509# correlation

post %>%

select(b_Intercept, sigma) %>%

cor()## b_Intercept sigma

## b_Intercept 1.000000000 -0.001867745

## sigma -0.001867745 1.000000000With our post <- as_draws_df(b4.1) code from a few lines above, we’ve already produced the brms version of what McElreath achieved with extract.samples() on page 90. However, what happened under the hood was different. Whereas rethinking used the mvnorm() function from the MASS package (Ripley, 2022; Venables & Ripley, 2002), with brms we just extracted the iterations of the HMC chains and put them in a data frame. It’s also noteworthy that the as_draws_df() is part of a larger class of as_draws() functions brms currently imports from the posterior package (Bürkner et al., 2022).

str(post)## draws_df [4,000 × 7] (S3: draws_df/draws/tbl_df/tbl/data.frame)

## $ b_Intercept: num [1:4000] 155 155 154 155 155 ...

## $ sigma : num [1:4000] 7.39 8.18 7.58 7.8 8.25 ...

## $ lprior : num [1:4000] -8.5 -8.51 -8.53 -8.5 -8.51 ...

## $ lp__ : num [1:4000] -1227 -1227 -1227 -1226 -1227 ...

## $ .chain : int [1:4000] 1 1 1 1 1 1 1 1 1 1 ...

## $ .iteration : int [1:4000] 1 2 3 4 5 6 7 8 9 10 ...

## $ .draw : int [1:4000] 1 2 3 4 5 6 7 8 9 10 ...Thus, our post object is not just a data frame, but also of class draws_df, which means it contains three metadata variables–.chain, .iteration, and .draw–which will are often hidden from view, but are there in the background when needed. As you’ll see, we’ll make good use of the .draw variable in the future. Notice how our post data frame also includes a vector named lp__. That’s the log posterior. For details, see the brms reference manual (Bürkner, 2022i), the “The Log-Posterior (function and gradient)” section of the Stan Development Team’s (2023) vignette, RStan: the R interface to Stan, or Stephen Martin’s nice explanation of the log posterior on the Stan Forums. The log posterior will largely be outside of our focus in this ebook.

The summary() function doesn’t work for brms posterior data frames quite the way precis() does for posterior data frames from the rethinking package. Behold the results.

summary(post[, 1:2])## b_Intercept sigma

## Min. :153.3 Min. :6.818

## 1st Qu.:154.3 1st Qu.:7.562

## Median :154.6 Median :7.755

## Mean :154.6 Mean :7.764

## 3rd Qu.:154.9 3rd Qu.:7.953

## Max. :156.2 Max. :8.784Here’s one option using the transpose of a quantile() call nested within apply(), which is a very general function you can learn more about here or here.

t(apply(post[, 1:2], 2, quantile, probs = c(.5, .025, .75)))## 50% 2.5% 75%

## b_Intercept 154.605597 153.772880 154.881059

## sigma 7.754982 7.229028 7.952742The base R code is compact, but somewhat opaque. Here’s how to do something similar with more explicit tidyverse code.

post %>%

pivot_longer(b_Intercept:sigma) %>%

group_by(name) %>%

summarise(mean = mean(value),

sd = sd(value),

`2.5%` = quantile(value, probs = .025),

`97.5%` = quantile(value, probs = .975)) %>%

mutate_if(is.numeric, round, digits = 2)## # A tibble: 2 × 5

## name mean sd `2.5%` `97.5%`

## <chr> <dbl> <dbl> <dbl> <dbl>

## 1 b_Intercept 155. 0.41 154. 155.

## 2 sigma 7.76 0.29 7.23 8.35You can always get pretty similar information by just putting the brm() fit object into posterior_summary().

posterior_summary(b4.1)## Estimate Est.Error Q2.5 Q97.5

## b_Intercept 154.611115 0.4129813 153.772880 155.432077

## sigma 7.763805 0.2887475 7.229028 8.351164

## lprior -8.510707 0.0241456 -8.560385 -8.463333

## lp__ -1227.019686 0.9983204 -1229.709193 -1226.060608And if you’re willing to drop the posterior \(\textit{SD}\)s, you can use tidybayes::mean_hdi(), too.

post %>%

pivot_longer(b_Intercept:sigma) %>%

group_by(name) %>%

mean_qi(value)## # A tibble: 2 × 7

## name value .lower .upper .width .point .interval

## <chr> <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 b_Intercept 155. 154. 155. 0.95 mean qi

## 2 sigma 7.76 7.23 8.35 0.95 mean qiThough none of these solutions get you those sweet little histograms, you can always make those for your HMC models by inserting the desired posterior draws into histospark().

rbind(histospark(post$b_Intercept),

histospark(post$sigma))## [,1]

## [1,] "▁▁▅▇▂▁▁"

## [2,] "▁▁▂▅▇▇▃▂▁▁"Hell, you can even tack those onto the output from our verbose tidyverse code from a few blocks up.

post %>%

pivot_longer(b_Intercept:sigma) %>%

group_by(name) %>%

summarise(mean = mean(value),

sd = sd(value),

`2.5%` = quantile(value, probs = .025),

`97.5%` = quantile(value, probs = .975)) %>%

mutate_if(is.numeric, round, digits = 2) %>%

mutate(histospark = c(histospark(post$b_Intercept), histospark(post$sigma)))## # A tibble: 2 × 6

## name mean sd `2.5%` `97.5%` histospark

## <chr> <dbl> <dbl> <dbl> <dbl> <chr>

## 1 b_Intercept 155. 0.41 154. 155. ▁▁▅▇▂▁▁

## 2 sigma 7.76 0.29 7.23 8.35 ▁▁▂▅▇▇▃▂▁▁4.3.6.1 Overthinking: Start values for quap()brm().

quap()We won’t be emphasizing start values in this ebook. But, yes, you can set start values for the HMC chains from brms, too. Within the brm() function, you do so with the init argument. From the brm section within the brms reference manual, we read:

Initial values for the sampler. If

NULL(the default) or"random", Stan will randomly generate initial values for parameters in a reasonable range. If0, all parameters are initialized to zero on the unconstrained space. This option is sometimes useful for certain families, as it happens that default random initial values cause draws to be essentially constant. Generally, settinginit = 0is worth a try, if chains do not initialize or behave well. Alternatively,initcan be a list of lists containing the initial values, or a function (or function name) generating initial values. The latter options are mainly implemented for internal testing but are available to users if necessary. If specifying initial values using a list or a function then currently the parameter names must correspond to the names used in the generated Stan code (not the names used inR).

4.3.6.2 Overthinking: Under the hood with multivariate sampling.

Again, brms::as_draws_df() is not the same as rethinking::extract.samples(). Rather than use the MASS::mvnorm(), brms takes the draws from the HMC chains. McElreath covered all of this in Chapter 9 and we will too. You might also look at the brms reference manual or GitHub page for details. To get documentation in a hurry, you could also just execute ?as_draws_df.

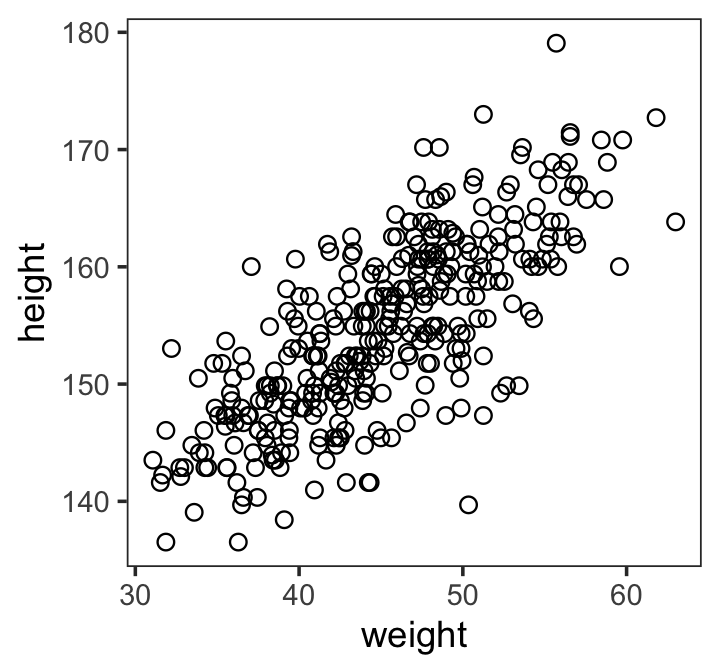

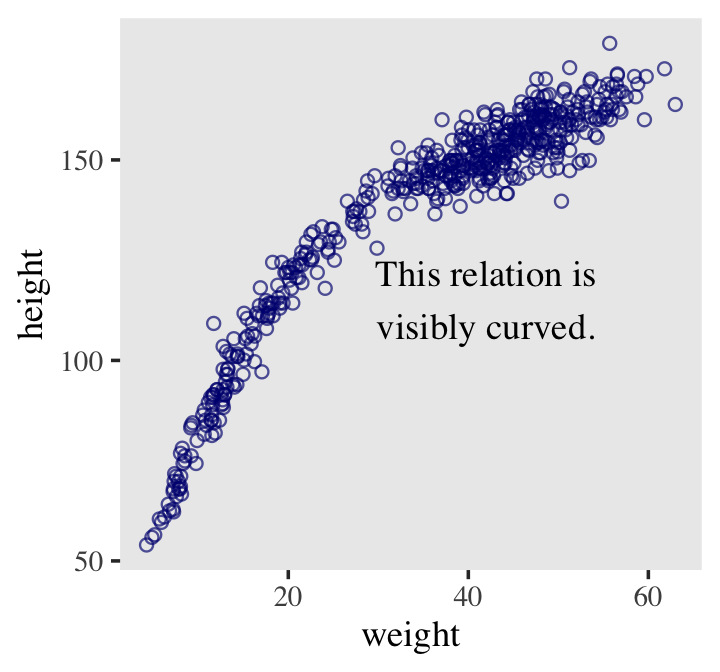

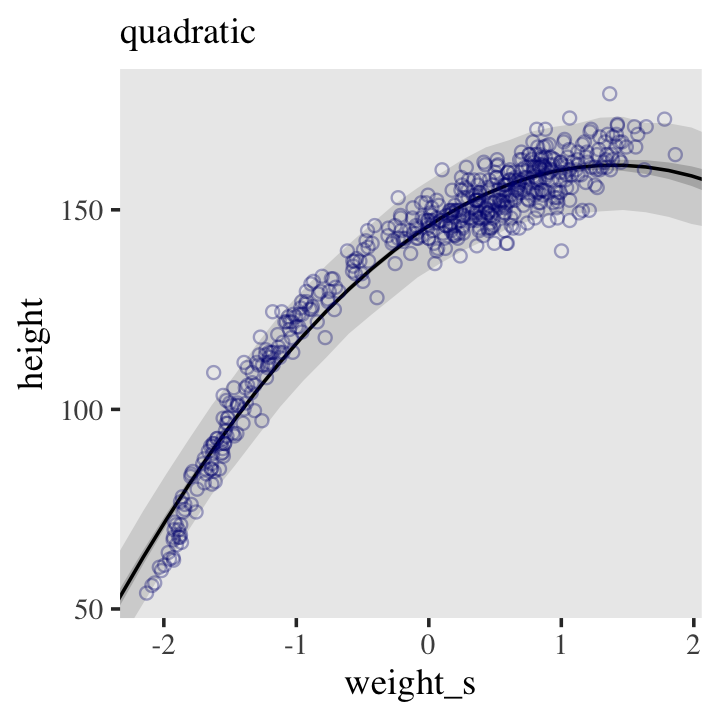

4.4 Linear prediction

Here’s our scatter plot of weight and height.

ggplot(data = d2,

aes(x = weight, y = height)) +

geom_point(shape = 1, size = 2) +

theme_bw() +

theme(panel.grid = element_blank())

There’s obviously a relationship: Knowing a person’s weight helps you predict height.

To make this vague observation into a more precise quantitative model that relates values of

weightto plausible values ofheight, we need some more technology. How do we take our Gaussian model from the previous section and incorporate predictor variables? (p. 92)

4.4.1 The linear model strategy.

The strategy is to make the parameter for the mean of a Gaussian distribution, \(\mu\), into a linear function of the predictor variable and other, new parameters that we invent. This strategy is often simply called the linear model. The linear model strategy instructs the golem to assume that the predictor variable has a constant and additive relationship to the mean of the outcome. The golem then computes the posterior distribution of this constant relationship. (p. 92, emphasis in the original)

Like we did for our first model without a predictor, we’ll use font color to help differentiate between the likelihood and prior(s) of our new univariable model,

\[\begin{align*} \color{red}{\text{height}_i} & \color{red}\sim \color{red}{\operatorname{Normal}(\mu_i, \sigma)} && \color{red}{\text{likelihood}} \\ \color{red}{\mu_i} & \color{red}= \color{red}{\alpha + \beta (\text{weight}_i - \overline{\text{weight}})} && \color{red}{\text{\{the linear model is just a special part of the likelihood\}} } \\ \color{blue}\alpha & \color{blue}\sim \color{blue}{\operatorname{Normal}(178, 20)} && \color{blue}{\text{prior(s)}} \\ \color{blue}\beta & \color{blue}\sim \color{blue}{\operatorname{Normal}(0, 10)} \\ \color{blue}\sigma & \color{blue}\sim \color{blue}{\operatorname{Uniform}(0, 50)}. \end{align*}\]

Do note that \((\text{weight}_i - \overline{\text{weight}})\) part. As we’ll see, it’s often advantageous to mean center our predictors.

4.4.1.1 Probability of the data.

Let’s begin with just the probability of the observed height, the first line of the model. This is nearly identical to before, except now there is a little index \(i\) on the \(\mu\) as well as the [\(\text{height}\)]. You can read [\(\text{height}_i\)] as “each [\(\text{height}\)]” and \(\mu_i\) as “each \(\mu\).” The mean \(\mu\) now depends upon unique values on each row \(i\). So the little \(i\) on \(\mu_i\) indicates that the mean depends upon the row. (p. 93, emphasis in the original)

4.4.1.2 Linear model.

The mean \(\mu\) is no longer a parameter to be estimated. Rather, as seen in the second line of the model, \(\mu_i\) is constructed from other parameters, \(\alpha\) and \(\beta\), and the observed variable [\(\text{weight}\)]. This line is not a stochastic relationship–there is no \(\sim\) in it, but rather an \(=\) in it–because the definition of \(\mu_i\) is deterministic. That is to say that, once we know \(\alpha\) and \(\beta\) and [\(\text{weight}_i\)], we know \(\mu_i\) with certainty.

The value [\(\text{weight}_i\)] is just the weight value on row \(i\). It refers to the same individual as the height value, [\(\text{height}_i\)], on the same row. The parameters \(\alpha\) and \(\beta\) are more mysterious. Where did they come from? We made them up….

You’ll be making up all manner of parameters as your skills improve. (p. 93)

4.4.1.2.1 Rethinking: Nothing special or natural about linear models.

Note that there’s nothing special about the linear model, really. You can choose a different relationship between \(\alpha\) and \(\beta\) and \(\mu\). For example, the following is a perfectly legitimate definition for \(\mu_i\):

\[\mu_i = \alpha \exp(- \beta x_i)\]

This does not define a linear regression, but it does define a regression model. The linear relationship we are using instead is conventional, but nothing requires that you use it. (p. 94)

4.4.1.3 Priors.

The remaining lines in the model define distributions for the unobserved variables. These variables are commonly known as parameters, and their distributions as priors. There are three parameters: \(\alpha\), \(\beta\), and \(\sigma\). You’ve seen priors for \(\alpha\) and \(\sigma\) before, although \(\alpha\) was called \(\mu\) back then.

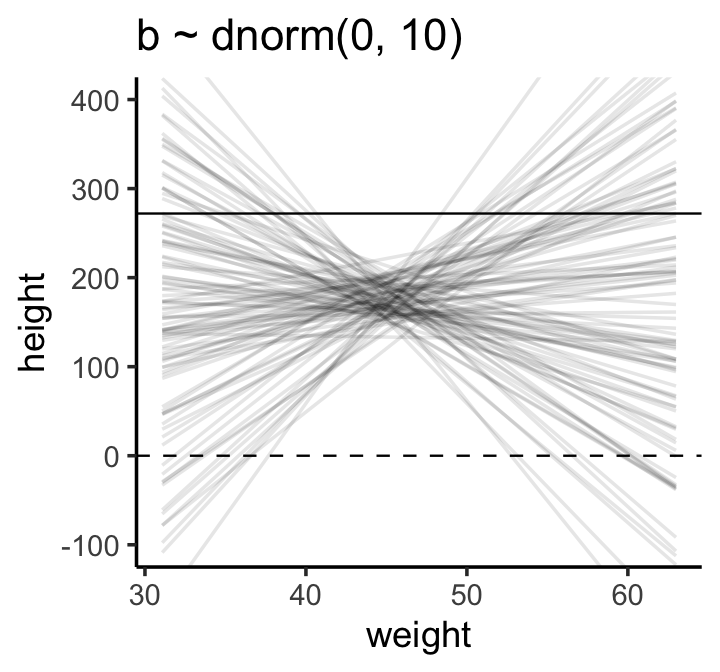

The prior for \(\beta\) deserves explanation. Why have a Gaussian prior with mean zero? (p. 94)

We’ll simulate to find out. Instead of using a loop to make our data for Figure 4.5, we’ll stay within the tidyverse.

set.seed(2971)

# how many lines would you like?

n_lines <- 100

lines <-

tibble(n = 1:n_lines,

a = rnorm(n_lines, mean = 178, sd = 20),

b = rnorm(n_lines, mean = 0, sd = 10)) %>%

expand_grid(weight = range(d2$weight)) %>%

mutate(height = a + b * (weight - mean(d2$weight)))

head(lines)## # A tibble: 6 × 5

## n a b weight height

## <int> <dbl> <dbl> <dbl> <dbl>

## 1 1 191. -7.06 31.1 289.

## 2 1 191. -7.06 63.0 63.5

## 3 2 199. 0.839 31.1 187.

## 4 2 199. 0.839 63.0 214.

## 5 3 202. 3.93 31.1 147.

## 6 3 202. 3.93 63.0 272.Now we’ll plot the left panel from Figure 4.5.

lines %>%

ggplot(aes(x = weight, y = height, group = n)) +

geom_hline(yintercept = c(0, 272), linetype = 2:1, linewidth = 1/3) +

geom_line(alpha = 1/10) +

coord_cartesian(ylim = c(-100, 400)) +

ggtitle("b ~ dnorm(0, 10)") +

theme_classic()

The pattern doesn’t look like any human population at all. It essentially says that the relationship between weight and height could be absurdly positive or negative. Before we’ve even seen the data, this is a bad model. Can we do better?

We can do better immediately. (pp. 95–96)

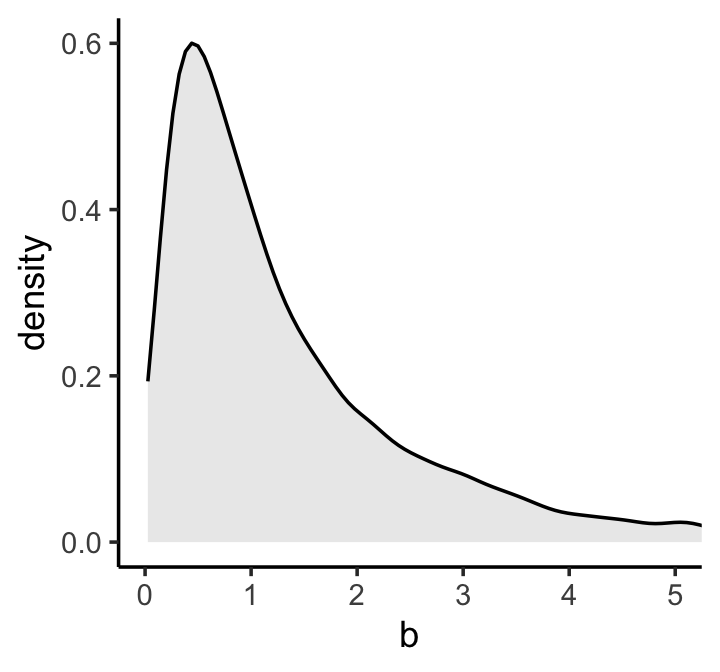

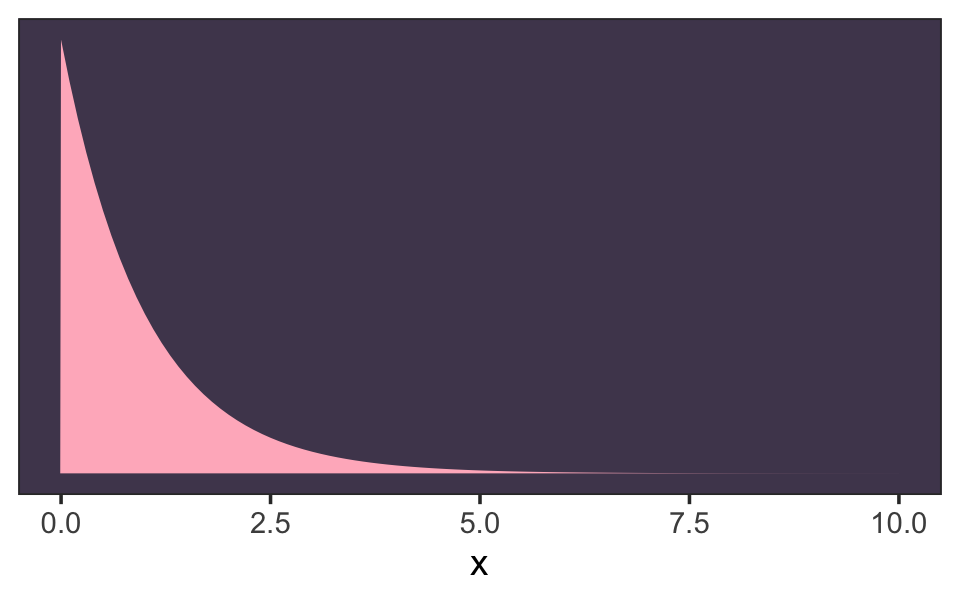

One thing we know from the outset is that the correlation between human height and weight is positive. We might not be sure of the magnitude, but it’s definitely the case that, on average, taller people are heavier people. Within the univariable regression context, this implies that the regression coefficient for weight predicting height will be positive. It might be unclear how large that coefficient will be, but it will certainly be above zero. One way we might encode this information in our data is by using the log-normal distribution for our \(\beta\) prior. Here’s what \(\operatorname{Log-Normal}(0, 1)\) looks like.

set.seed(4)

tibble(b = rlnorm(1e4, mean = 0, sd = 1)) %>%

ggplot(aes(x = b)) +

geom_density(fill = "grey92") +

coord_cartesian(xlim = c(0, 5)) +

theme_classic()

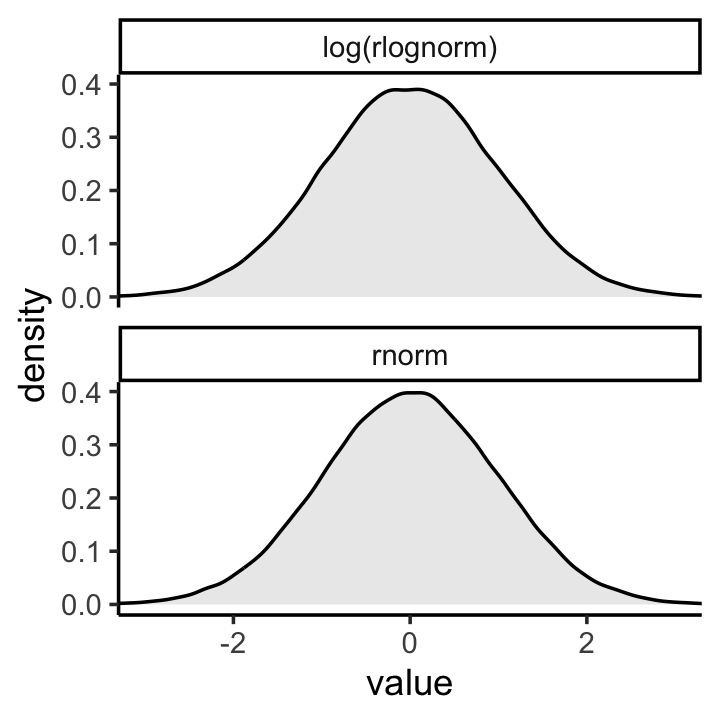

If you’re unfamiliar with the log-normal distribution, it is the distribution whose logarithm is normally distributed. For example, here’s what happens when we compare \(\operatorname{Normal}(0, 1)\) with \(\log \big ( \operatorname{Log-Normal}(0, 1) \big)\).

set.seed(4)

tibble(rnorm = rnorm(1e5, mean = 0, sd = 1),

`log(rlognorm)` = log(rlnorm(1e5, mean = 0, sd = 1))) %>%

pivot_longer(everything()) %>%

ggplot(aes(x = value)) +

geom_density(fill = "grey92") +

coord_cartesian(xlim = c(-3, 3)) +

theme_classic() +

facet_wrap(~ name, nrow = 2)

They are the same within simulation variance. Also, did you notice how we simulated those log-normal data with mean = 0, sd = 1? Those values are what the mean and standard deviation of the output from the rlnorm() function after they are log transformed. The formulas for the actual mean and standard deviation for the log-normal distribution itself are complicated (see here). They are

\[\begin{align*} \text{mean} & = \exp \left (\mu + \frac{\sigma^2}{2} \right) & \text{and} \\ \text{standard deviation} & = \sqrt{[\exp(\sigma ^{2})-1] \; \exp(2\mu +\sigma ^{2})}. \end{align*}\]

Let’s try our hand at those formulas and compute the mean and standard deviation for \(\operatorname{Log-Normal}(0, 1)\).

mu <- 0

sigma <- 1

# mean

exp(mu + (sigma^2) / 2)## [1] 1.648721# sd

sqrt((exp(sigma^2) - 1) * exp(2 * mu + sigma^2))## [1] 2.161197Let’s confirm with simulated draws from rlnorm().

set.seed(4)

tibble(x = rlnorm(1e7, mean = 0, sd = 1)) %>%

summarise(mean = mean(x),

sd = sd(x))## # A tibble: 1 × 2

## mean sd

## <dbl> <dbl>

## 1 1.65 2.17But okay, “so what [do all these complications] earn us? Do the prior predictive simulation again, now with the Log-Normal prior:” (p. 96).

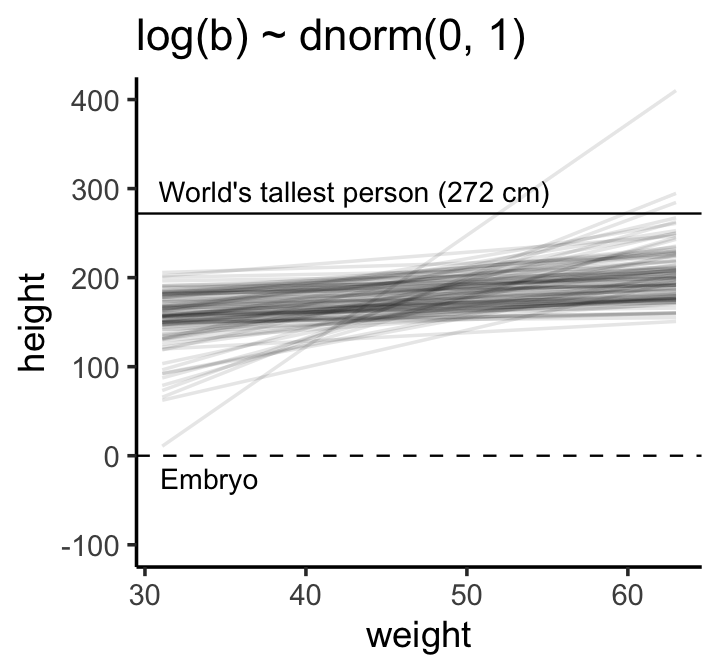

# make a tibble to annotate the plot

text <-

tibble(weight = c(34, 43),

height = c(0 - 25, 272 + 25),

label = c("Embryo", "World's tallest person (272 cm)"))

# simulate

set.seed(2971)

tibble(n = 1:n_lines,

a = rnorm(n_lines, mean = 178, sd = 20),

b = rlnorm(n_lines, mean = 0, sd = 1)) %>%

expand_grid(weight = range(d2$weight)) %>%

mutate(height = a + b * (weight - mean(d2$weight))) %>%

# plot

ggplot(aes(x = weight, y = height, group = n)) +

geom_hline(yintercept = c(0, 272), linetype = 2:1, linewidth = 1/3) +

geom_line(alpha = 1/10) +

geom_text(data = text,

aes(label = label),

size = 3) +

coord_cartesian(ylim = c(-100, 400)) +

ggtitle("log(b) ~ dnorm(0, 1)") +

theme_classic()

“This is much more sensible. There is still a rare impossible relationship. But nearly all lines in the joint prior for \(\alpha\) and \(\beta\) are now within human reason” (p. 96)

4.4.1.3.1 Rethinking: What’s the correct prior?

Good luck with that question. Hang around on academic Twitter long enough and you’ll see folks debating this.

This is a mistake. There is no more a uniquely correct prior than there is a uniquely correct likelihood. Statistical models are machines for inference. Many machines will work, but some work better than others. Priors can be wrong, but only in the same sense that a kind of hammer can be wrong for building a table. (p. 96)

4.4.1.3.2 Rethinking: Prior predictive simulation and \(p\)-hacking.

“We don’t pay any attention to \(p\)-values in this book” (p. 97). Off hand, I’m not sure of the exact origin of the term \(p\)-hacking. But the paper by Simmons, Nelson and Simonsohn (2011), False-positive psychology: Undisclosed flexibility in data collection and analysis allows presenting anything as significant, is often cited as an introduction to the problem.

4.4.2 Finding the posterior distribution.

Unlike with McElreath’s quap() formula syntax, I’m not aware that you can just specify something like weight – xbar in the formula argument in brm(). However, the alternative is easy: Just make a new variable in the data that is equivalent to weight – mean(weight). We’ll call it weight_c.

d2 <-

d2 %>%

mutate(weight_c = weight - mean(weight))Unlike with McElreath’s rethinking package, the conventional brms::brm() syntax doesn’t mirror the statistical notation. But here are the analogues to the exposition at the bottom of page 97:

- \(\text{height}_i \sim \operatorname{Normal}(\mu_i, \sigma)\):

family = gaussian, - \(\mu_i = \alpha + \beta \text{weight}_i\):

height ~ 1 + weight_c, - \(\alpha \sim \operatorname{Normal}(178, 20)\):

prior(normal(178, 20), class = Intercept, - \(\beta \sim \operatorname{Log-Normal}(0, 1)\):

prior(lognormal(0, 1), class = b), and - \(\sigma \sim \operatorname{Uniform}(0, 50)\):

prior(uniform(0, 50), class = sigma).

Thus, to add a predictor you just the + operator in the model formula.

b4.3 <-

brm(data = d2,

family = gaussian,

height ~ 1 + weight_c,

prior = c(prior(normal(178, 20), class = Intercept),

prior(lognormal(0, 1), class = b),

prior(uniform(0, 50), class = sigma, ub = 50)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

file = "fits/b04.03")Here are the trace plots.

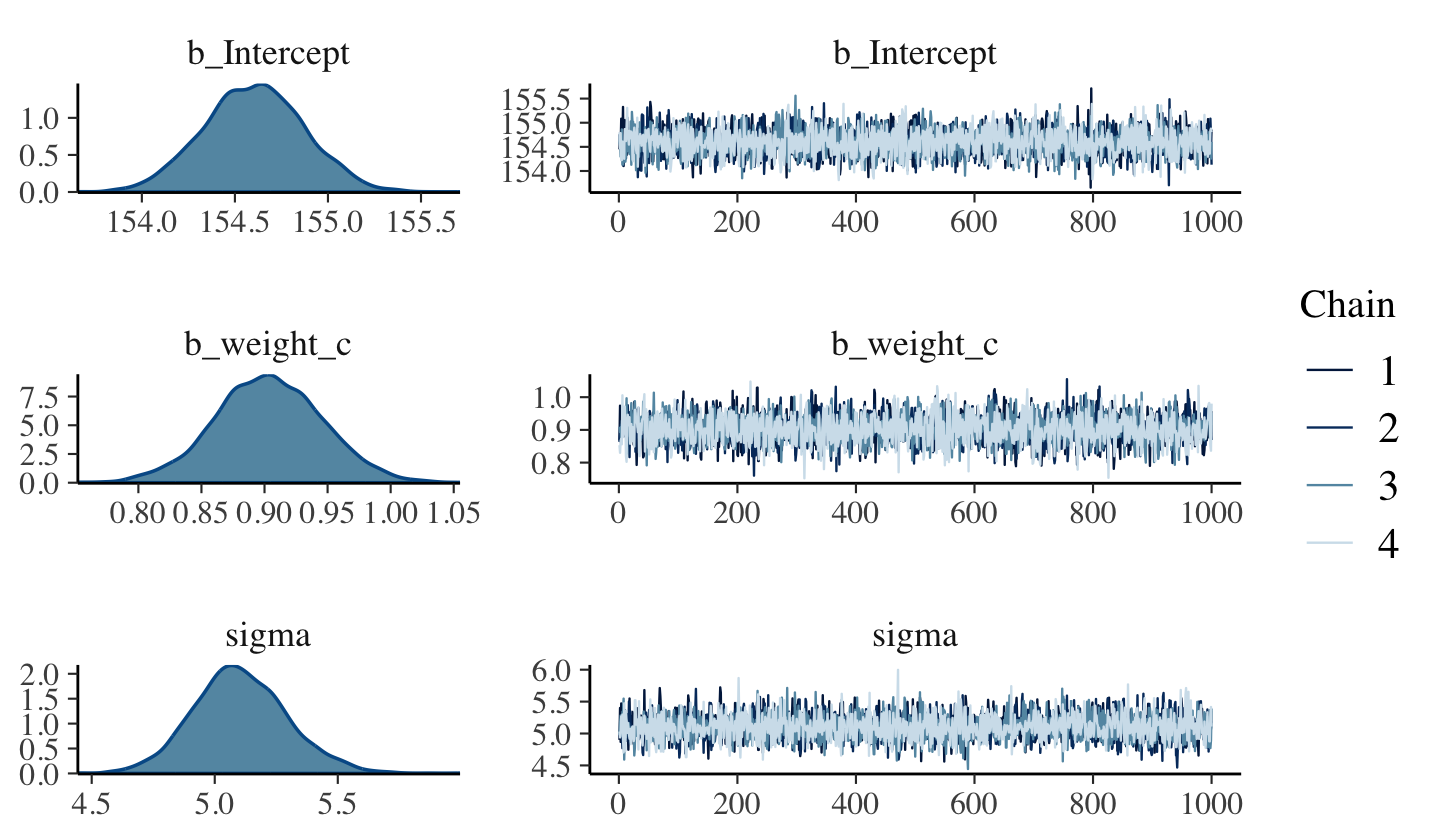

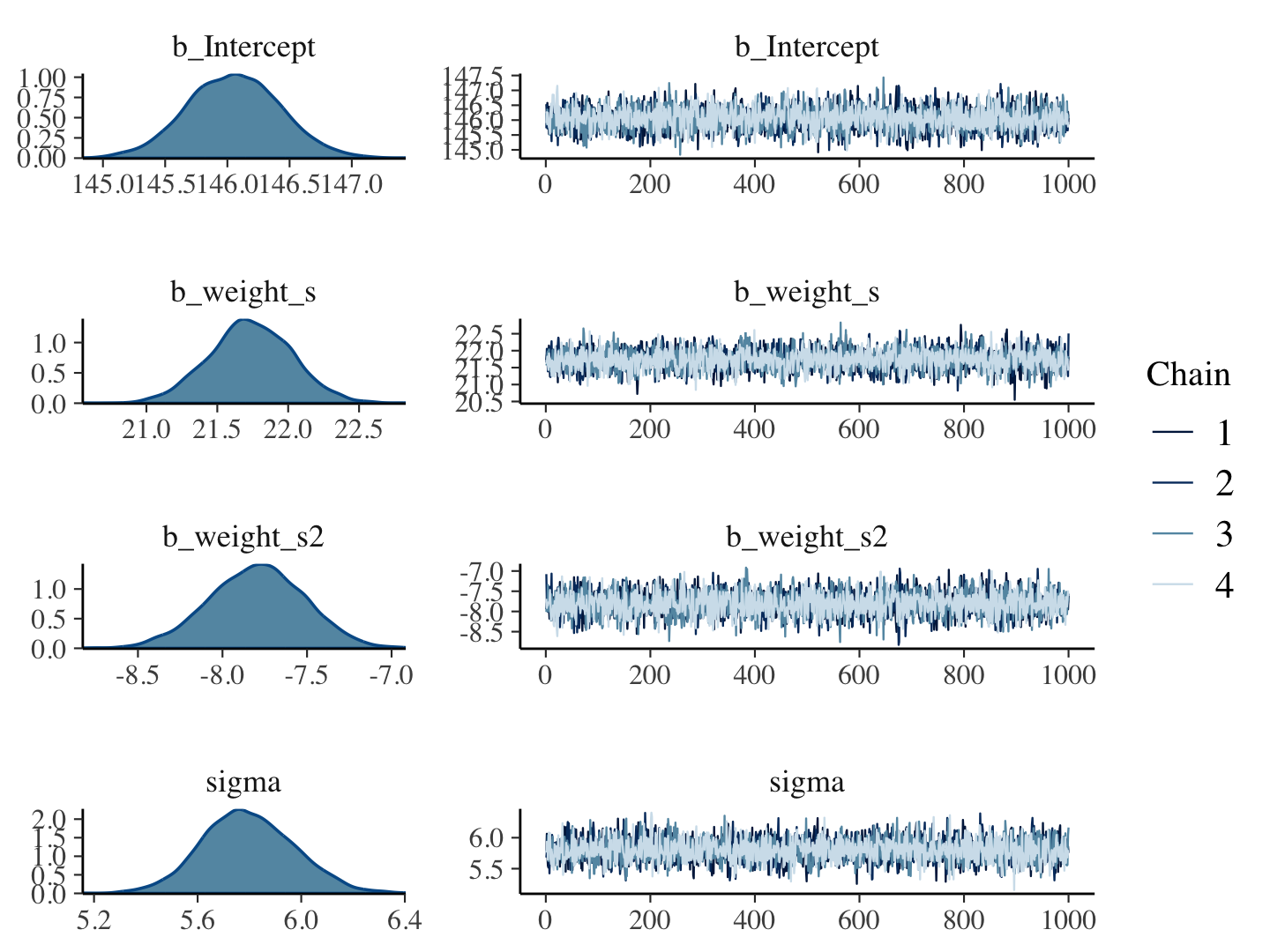

plot(b4.3, widths = c(1, 2))

4.4.2.1 Overthinking: Logs and exps, oh my.

brms does not allow users to insert coefficients into functions like \(\exp()\) within the conventional formula syntax. We can fit a brms model like McElreath’s m4.3b if we adopt what’s called the non-linear syntax (Bürkner, 2022e). The non-linear syntax is a lot like the syntax McElreath uses in rethinking in that it typically includes both predictor and variable names in the formula. Since this is so early in the book and we’re just working through a problem in an Overthinking tangent, I won’t go into a full-blown explanation, here. There will be many more opportunities to practice with the non-linear syntax in the chapters to come (e.g., Section 5.3.2, Section 6.2.1). For now, here’s how we might fit the model.

b4.3b <-

brm(data = d2,

family = gaussian,

bf(height ~ a + exp(lb) * weight_c,

a ~ 1,

lb ~ 1,

nl = TRUE),

prior = c(prior(normal(178, 20), class = b, nlpar = a),

prior(normal(0, 1), class = b, nlpar = lb),

prior(uniform(0, 50), class = sigma, ub = 50)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

file = "fits/b04.03b")If you execute summary(b4.3b), you’ll see the intercept and \(\sigma\) summaries for this model are about the same as those for b4.3, above. The difference is for the \(\beta\) parameter, which we called lb in the b4.3b model. If we term that parameter from b4.3 as \(\beta^\text{b4.3}\) and the one from our new model \(\beta^\text{b4.3b}\), it turns out that \(\beta^\text{b4.3} = \exp \left (\beta^\text{b4.3b} \right )\).

fixef(b4.3)["weight_c", "Estimate"]## [1] 0.9033555fixef(b4.3b)["lb_Intercept", "Estimate"] %>% exp()## [1] 0.9019411They’re the same within simulation variance.

4.4.3 Interpreting the posterior distribution.

“One trouble with statistical models is that they are hard to understand” (p. 99). Welcome to the world of applied statistics, friends. 😅

4.4.3.0.1 Rethinking: What do parameters mean?

A basic issue with interpreting model-based estimates is in knowing the meaning of parameters. There is no consensus about what a parameter means, however, because different people take different philosophical stances towards models, probability, and prediction. The perspective in this book is a common Bayesian perspective: Posterior probabilities of parameter values describe the relative compatibility of different states of the world with the data, according to the model. (p. 99, emphasis in the original)

4.4.3.1 Tables of marginal distributions.

With a little [] subsetting we can exclude the log posterior from our summary for b4.3.

posterior_summary(b4.3)[1:3, ] %>%

round(digits = 2)## Estimate Est.Error Q2.5 Q97.5

## b_Intercept 154.6 0.27 154.09 155.12

## b_weight_c 0.9 0.04 0.82 0.99

## sigma 5.1 0.19 4.74 5.50If we put our brms fit into the vcov() function, we’ll get the variance/covariance matrix of the intercept and weight_c coefficient.

vcov(b4.3) %>%

round(3)## Intercept weight_c

## Intercept 0.072 0.000

## weight_c 0.000 0.002No \(\sigma\), however. To get that, we’ll have to extract the posterior draws and use the cov() function, instead.

as_draws_df(b4.3) %>%

select(b_Intercept:sigma) %>%

cov() %>%

round(digits = 3)## b_Intercept b_weight_c sigma

## b_Intercept 0.072 0.000 -0.001

## b_weight_c 0.000 0.002 0.000

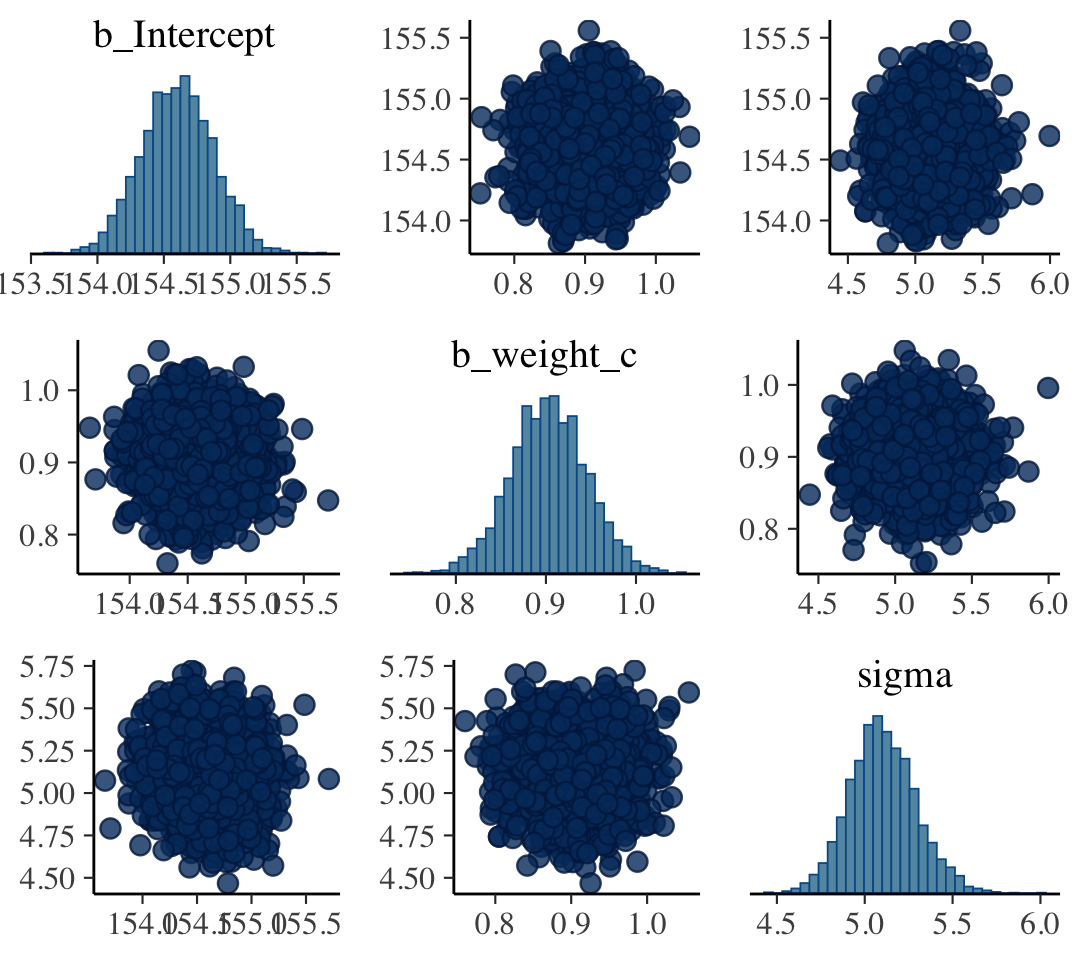

## sigma -0.001 0.000 0.036The pairs() function will work for a brms fit much like it would one from rethinking. It will show “both the marginal posteriors and the covariance” (p. 100).

pairs(b4.3)

4.4.3.2 Plotting posterior inference against the data.

“It’s almost always much more useful to plot the posterior inference against the data. Not only does plotting help in interpreting the posterior, but it also provides an informal check on model assumptions” (p. 100).

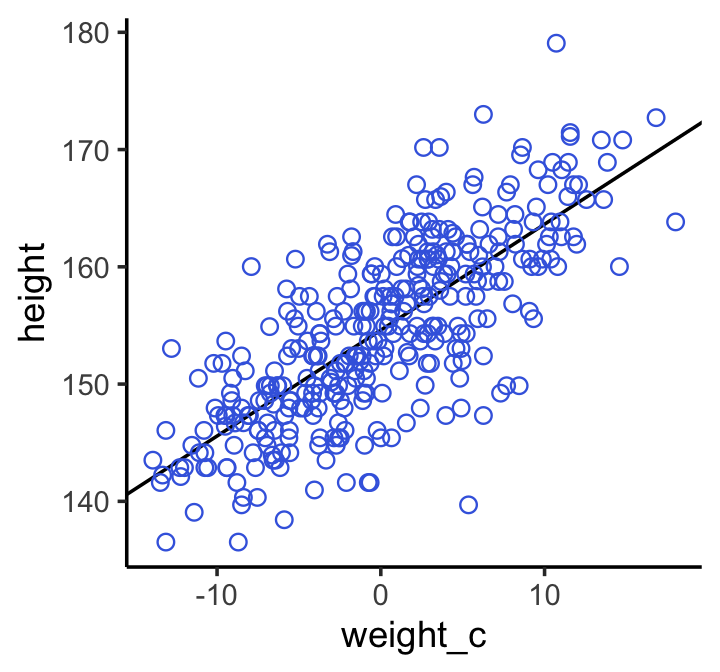

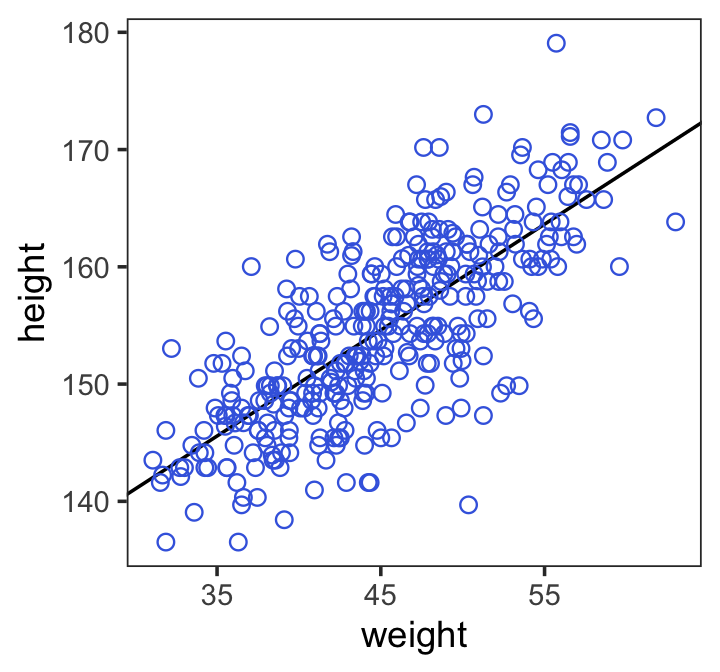

Here is the code for Figure 4.6. Note our use of the fixef() function within geom_abline().

d2 %>%

ggplot(aes(x = weight_c, y = height)) +

geom_abline(intercept = fixef(b4.3)[1],

slope = fixef(b4.3)[2]) +

geom_point(shape = 1, size = 2, color = "royalblue") +

theme_classic()

Note how the breaks on our \(x\)-axis look off. That’s because we fit the model with weight_c and we plotted the points in that metric, too. Since we computed weight_c by subtracting the mean of weight from the data, we can adjust the \(x\)-axis break point labels by simply adding that value back.

labels <-

c(-10, 0, 10) + mean(d2$weight) %>%

round(digits = 0)

d2 %>%

ggplot(aes(x = weight_c, y = height)) +

geom_abline(intercept = fixef(b4.3)[1],

slope = fixef(b4.3)[2]) +

geom_point(shape = 1, size = 2, color = "royalblue") +

scale_x_continuous("weight",

breaks = c(-10, 0, 10),

labels = labels) +

theme_bw() +

theme(panel.grid = element_blank())

4.4.3.3 Adding uncertainty around the mean.

Be default, we extract all the posterior draws with as_draws_df().

post <- as_draws_df(b4.3)

post %>%

slice(1:5) # this serves a similar function as `head()`## # A draws_df: 5 iterations, 1 chains, and 5 variables

## b_Intercept b_weight_c sigma lprior lp__

## 1 154 0.87 4.9 -9.3 -1080

## 2 155 0.97 5.4 -9.4 -1081

## 3 155 0.86 4.9 -9.3 -1080

## 4 155 0.93 5.5 -9.3 -1082

## 5 154 0.93 5.1 -9.4 -1079

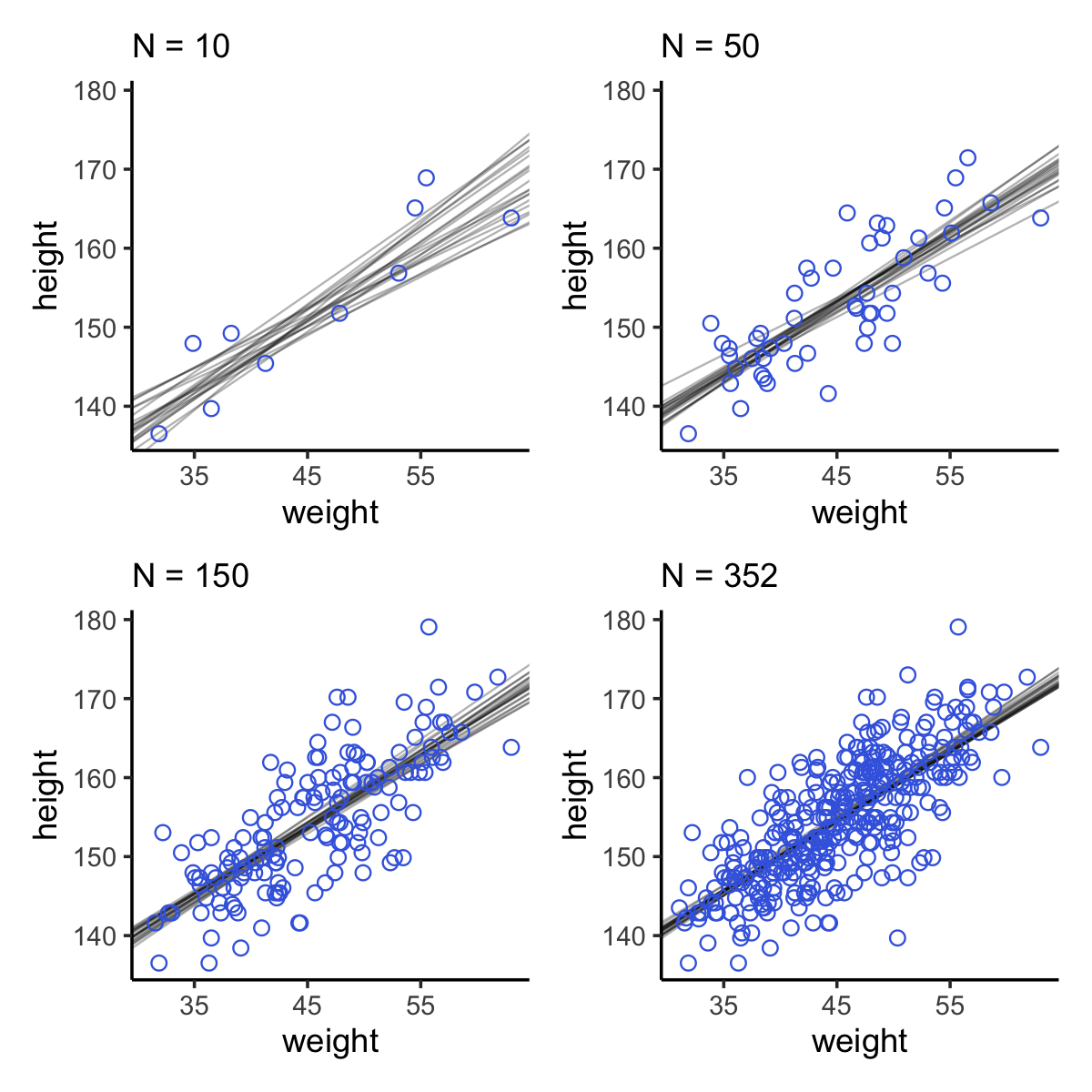

## # ... hidden reserved variables {'.chain', '.iteration', '.draw'}Here are the four models leading up to McElreath’s Figure 4.7.

N <- 10

b4.3_010 <-

brm(data = d2 %>%

slice(1:N), # note our tricky use of `N` and `slice()`

family = gaussian,

height ~ 1 + weight_c,

prior = c(prior(normal(178, 20), class = Intercept),

prior(lognormal(0, 1), class = b),

prior(uniform(0, 50), class = sigma, ub = 50)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

file = "fits/b04.03_010")

N <- 50

b4.3_050 <-

brm(data = d2 %>%

slice(1:N),

family = gaussian,

height ~ 1 + weight_c,

prior = c(prior(normal(178, 20), class = Intercept),

prior(lognormal(0, 1), class = b),

prior(uniform(0, 50), class = sigma, ub = 50)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

file = "fits/b04.03_050")

N <- 150

b4.3_150 <-

brm(data = d2 %>%

slice(1:N),

family = gaussian,

height ~ 1 + weight_c,

prior = c(prior(normal(178, 20), class = Intercept),

prior(lognormal(0, 1), class = b),

prior(uniform(0, 50), class = sigma, ub = 50)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

file = "fits/b04.03_150")

N <- 352

b4.3_352 <-

brm(data = d2 %>%

slice(1:N),

family = gaussian,

height ~ 1 + weight_c,

prior = c(prior(normal(178, 20), class = Intercept),

prior(lognormal(0, 1), class = b),

prior(uniform(0, 50), class = sigma, ub = 50)),

iter = 2000, warmup = 1000, chains = 4, cores = 4,

seed = 4,

file = "fits/b04.03_352")I’m not going to clutter up the document with all the trace plots and coefficient summaries from these four models. But here’s how to get that information.

plot(b4.3_010)

print(b4.3_010)

plot(b4.3_050)

print(b4.3_050)

plot(b4.3_150)

print(b4.3_150)

plot(b4.3_352)

print(b4.3_352)We’ll need to put the chains of each model into data frames.

post010 <- as_draws_df(b4.3_010)

post050 <- as_draws_df(b4.3_050)

post150 <- as_draws_df(b4.3_150)

post352 <- as_draws_df(b4.3_352)Here is the code for the four individual plots.

p1 <-

ggplot(data = d2[1:10, ],

aes(x = weight_c, y = height)) +

geom_abline(data = post010 %>% slice(1:20),

aes(intercept = b_Intercept, slope = b_weight_c),

linewidth = 1/3, alpha = .3) +

geom_point(shape = 1, size = 2, color = "royalblue") +

coord_cartesian(xlim = range(d2$weight_c),

ylim = range(d2$height)) +

labs(subtitle = "N = 10")

p2 <-

ggplot(data = d2[1:50, ],

aes(x = weight_c, y = height)) +

geom_abline(data = post050 %>% slice(1:20),

aes(intercept = b_Intercept, slope = b_weight_c),

linewidth = 1/3, alpha = .3) +

geom_point(shape = 1, size = 2, color = "royalblue") +

coord_cartesian(xlim = range(d2$weight_c),

ylim = range(d2$height)) +

labs(subtitle = "N = 50")

p3 <-

ggplot(data = d2[1:150, ],

aes(x = weight_c, y = height)) +

geom_abline(data = post150 %>% slice(1:20),

aes(intercept = b_Intercept, slope = b_weight_c),

linewidth = 1/3, alpha = .3) +

geom_point(shape = 1, size = 2, color = "royalblue") +

coord_cartesian(xlim = range(d2$weight_c),

ylim = range(d2$height)) +

labs(subtitle = "N = 150")

p4 <-

ggplot(data = d2[1:352, ],

aes(x = weight_c, y = height)) +

geom_abline(data = post352 %>% slice(1:20),

aes(intercept = b_Intercept, slope = b_weight_c),

linewidth = 1/3, alpha = .3) +

geom_point(shape = 1, size = 2, color = "royalblue") +

coord_cartesian(xlim = range(d2$weight_c),

ylim = range(d2$height)) +

labs(subtitle = "N = 352")Now we can combine the ggplots with patchwork syntax to make the full version of Figure 4.7.

(p1 + p2 + p3 + p4) &

scale_x_continuous("weight",

breaks = c(-10, 0, 10),

labels = labels) &

theme_classic()

“Notice that the cloud of regression lines grows more compact as the sample size increases. This is a result of the model growing more confident about the location of the mean” (p. 102).

4.4.3.4 Plotting regression intervals and contours.

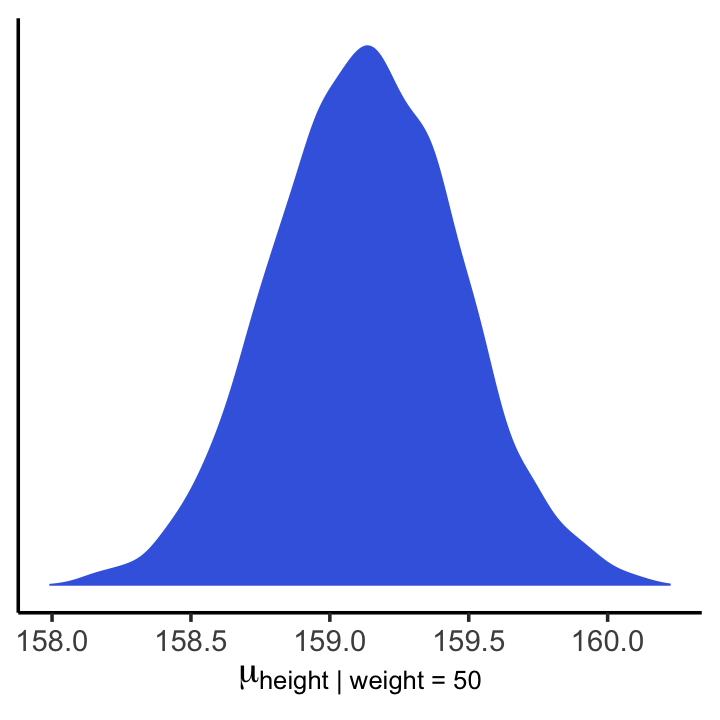

Since we used weight_c to fit our model, we might first want to understand what exactly the mean value is for weight.

mean(d2$weight)## [1] 44.99049Just a hair under 45. If we’re interested in \(\mu\) at weight = 50, that implies we’re also interested in \(\mu\) at weight_c + 5.01. Within the context of our model, we compute this with \(\alpha + \beta \cdot 5.01\). Here’s what that looks like with post.

mu_at_50 <-

post %>%

transmute(mu_at_50 = b_Intercept + b_weight_c * 5.01)

head(mu_at_50)## # A tibble: 6 × 1

## mu_at_50

## <dbl>

## 1 159.

## 2 160.

## 3 159.

## 4 160.

## 5 159.

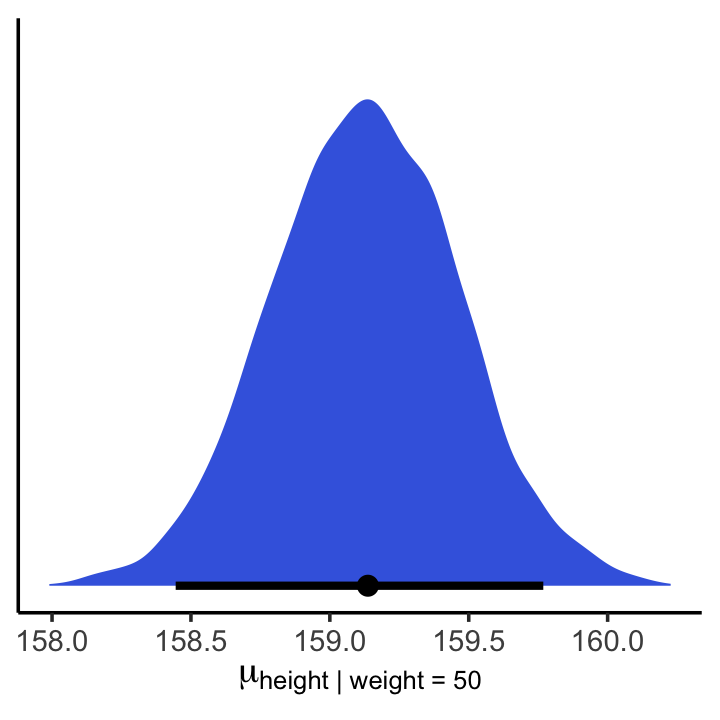

## 6 159.And here is a version McElreath’s Figure 4.8 density plot.

mu_at_50 %>%

ggplot(aes(x = mu_at_50)) +

geom_density(linewidth = 0, fill = "royalblue") +

scale_y_continuous(NULL, breaks = NULL) +

xlab(expression(mu["height | weight = 50"])) +

theme_classic()

We’ll use mean_hdi() to get both 89% and 95% HPDIs along with the mean.

mean_hdi(mu_at_50[, 1], .width = c(.89, .95))## # A tibble: 2 × 6

## mu_at_50 .lower .upper .width .point .interval

## <dbl> <dbl> <dbl> <dbl> <chr> <chr>

## 1 159. 159. 160. 0.89 mean hdi

## 2 159. 158. 160. 0.95 mean hdiIf you wanted to express those sweet 95% HPDIs on your density plot, you might use tidybayes::stat_halfeye(). Since stat_halfeye() also returns a point estimate, we’ll throw in the mode.

mu_at_50 %>%

ggplot(aes(x = mu_at_50, y = 0)) +

stat_halfeye(point_interval = mode_hdi, .width = .95,

fill = "royalblue") +

scale_y_continuous(NULL, breaks = NULL) +

xlab(expression(mu["height | weight = 50"])) +

theme_classic()

With brms, you would use fitted() to do what McElreath accomplished with link().

mu <- fitted(b4.3, summary = F)

str(mu)## num [1:4000, 1:352] 157 158 157 158 157 ...When you specify summary = F, fitted() returns a matrix of values with as many rows as there were post-warmup draws across your HMC chains and as many columns as there were cases in your analysis. Because we had 4,000 post-warmup draws and \(n = 352\), fitted() returned a matrix of 4,000 rows and 352 vectors. If you omitted the summary = F argument, the default is TRUE and fitted() will return summary information instead.

Much like rethinking’s link(), brms::fitted() can accommodate custom predictor values with its newdata argument.

weight_seq <-

tibble(weight = 25:70) %>%

mutate(weight_c = weight - mean(d2$weight))

mu <-

fitted(b4.3,

summary = F,

newdata = weight_seq) %>%

data.frame() %>%

# here we name the columns after the `weight` values from which they were computed

set_names(25:70) %>%

mutate(iter = 1:n())Anticipating ggplot2, we went ahead and converted the output to a data frame. But we might do a little more data processing with the aid of tidyr::pivot_longer(), which will convert the data from the wide format to the long format. If you are new to the distinction between wide and long data, you can learn more from the Pivot data from wide to long vignette from the tidyverse team (2020); Simon Ejdemyr’s blog post, Wide & long data; or Karen Grace-Martin’s blog post, The wide and long data format for repeated measures data.

mu <-

mu %>%

pivot_longer(-iter,

names_to = "weight",

values_to = "height") %>%

# we might reformat `weight` to numerals

mutate(weight = as.numeric(weight))

head(mu)## # A tibble: 6 × 3

## iter weight height

## <int> <dbl> <dbl>

## 1 1 25 137.

## 2 1 26 138.

## 3 1 27 139.

## 4 1 28 140.

## 5 1 29 141.

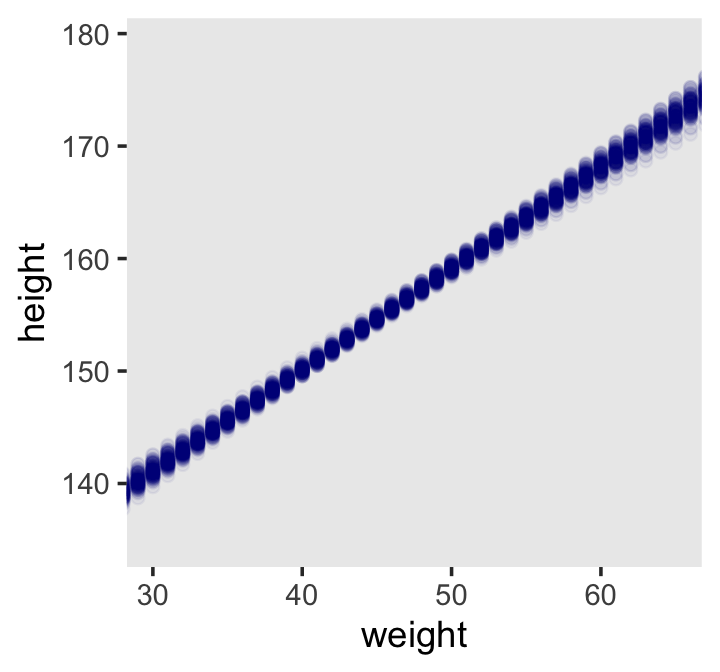

## 6 1 30 141.Now our data processing is done, here we reproduce McElreath’s Figure 4.9.a.

d2 %>%

ggplot(aes(x = weight, y = height)) +

geom_point(data = mu %>% filter(iter < 101),

color = "navyblue", alpha = .05) +

coord_cartesian(xlim = c(30, 65)) +

theme(panel.grid = element_blank())

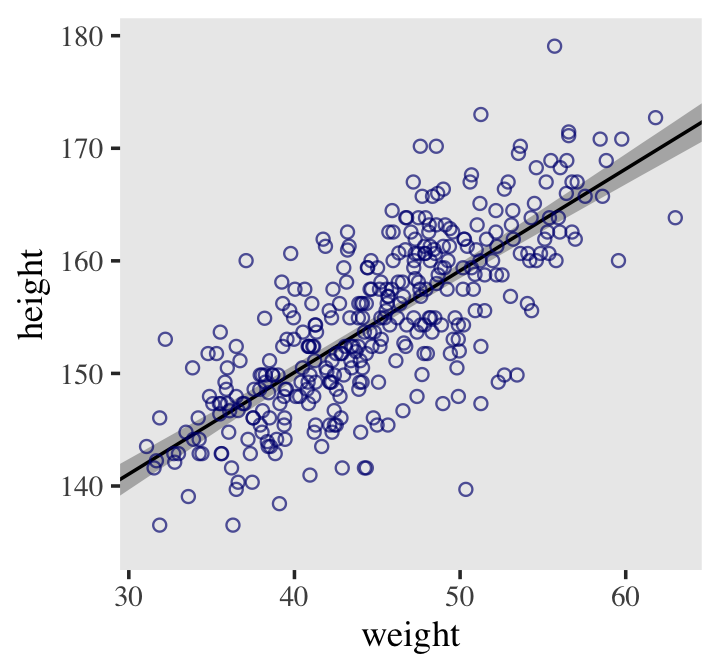

With fitted(), it’s quite easy to plot a regression line and its intervals. Just omit the summary = T argument.

mu_summary <-

fitted(b4.3,

newdata = weight_seq) %>%

data.frame() %>%

bind_cols(weight_seq)

head(mu_summary)## Estimate Est.Error Q2.5 Q97.5 weight weight_c

## 1 136.5440 0.8980997 134.7610 138.2850 25 -19.99049

## 2 137.4474 0.8577127 135.7422 139.1166 26 -18.99049

## 3 138.3507 0.8175317 136.7125 139.9426 27 -17.99049

## 4 139.2541 0.7775885 137.6966 140.7603 28 -16.99049

## 5 140.1575 0.7379219 138.6831 141.5796 29 -15.99049

## 6 141.0608 0.6985789 139.6677 142.3997 30 -14.99049Here it is, our analogue to Figure 4.9.b.

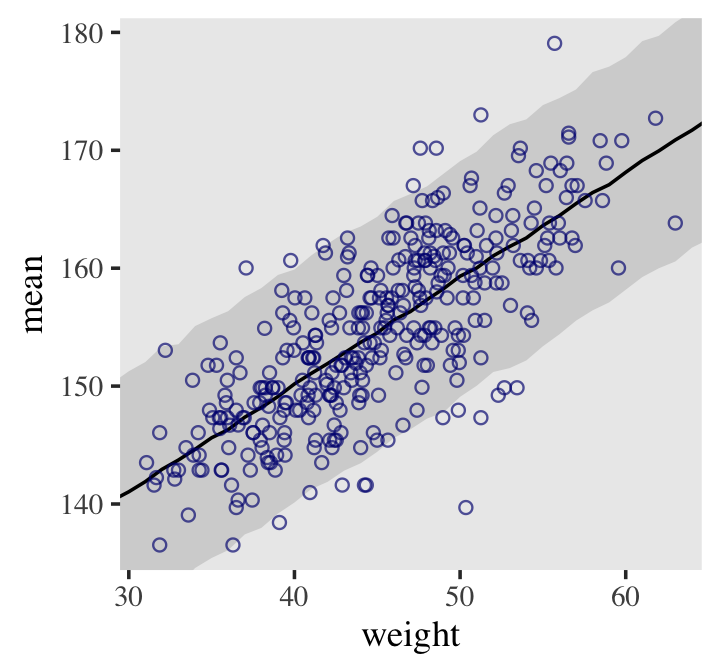

d2 %>%

ggplot(aes(x = weight, y = height)) +

geom_smooth(data = mu_summary,

aes(y = Estimate, ymin = Q2.5, ymax = Q97.5),

stat = "identity",

fill = "grey70", color = "black", alpha = 1, linewidth = 1/2) +

geom_point(color = "navyblue", shape = 1, linewidth = 1.5, alpha = 2/3) +

coord_cartesian(xlim = range(d2$weight)) +

theme(text = element_text(family = "Times"),

panel.grid = element_blank())

If you wanted to use intervals other than the default 95% ones, you’d include the probs argument like this: fitted(b4.3, newdata = weight.seq, probs = c(.25, .75)). The resulting third and fourth vectors from the fitted() object would be named Q25 and Q75 instead of the default Q2.5 and Q97.5. The Q prefix stands for quantile.

4.4.3.4.1 Rethinking: Overconfident intervals.

The compatibility interval for the regression line in Figure 4.9 clings tightly to the MAP line. Thus there is very little uncertainty about the average height as a function of average weight. But you have to keep in mind that these inferences are always conditional on the model. Even a very bad model can have very tight compatibility intervals. It may help if you think of the regression line in Figure 4.9 as saying: Conditional on the assumption that height and weight are related by a straight line, then this is the most plausible line, and these are its plausible bounds. (p. 107, emphasis in the original)

4.4.3.4.2 Overthinking: How link fitted() works.

Similar to rethinking::link(), brms::fitted() uses the formula from your model to compute the model expectations for a given set of predictor values. I use it a lot in this project. If you follow along, you’ll get a good handle on it. But to dive deeper, you can go here for the documentation. Though we won’t be using it in this project, brms users might want to know that fitted() is also an alias for the posterior_epred() function, about which you might learn more here. Users can always learn more about them and other functions in the brms reference manual.

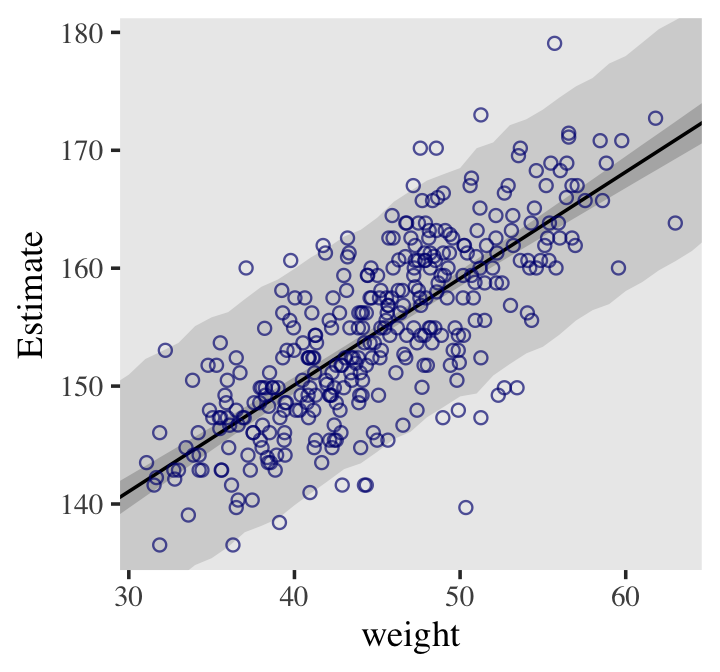

4.4.3.5 Prediction intervals.

Even though our statistical model (omitting priors for the sake of simplicity) is

\[\text{height}_i \sim \operatorname{Normal}(\mu_i = \alpha + \beta x_, \sigma),\]

we’ve only been plotting the \(\mu\) part. In order to bring in the variability expressed by \(\sigma\), we’ll have to switch to the predict() function. Much as brms::fitted() was our analogue to rethinking::link(), brms::predict() is our analogue to rethinking::sim().

We can reuse our weight_seq data from before. But in case you forgot, here’s that code again.

weight_seq <-

tibble(weight = 25:70) %>%

mutate(weight_c = weight - mean(d2$weight))The predict() code looks a lot like what we used for fitted().

pred_height <-

predict(b4.3,

newdata = weight_seq) %>%

data.frame() %>%

bind_cols(weight_seq)

pred_height %>%

slice(1:6)## Estimate Est.Error Q2.5 Q97.5 weight weight_c

## 1 136.6175 5.127009 126.4819 146.4957 25 -19.99049

## 2 137.4013 5.192623 127.2867 147.4380 26 -18.99049

## 3 138.5001 5.156907 128.3914 148.2272 27 -17.99049

## 4 139.0617 5.229955 128.8768 149.3872 28 -16.99049

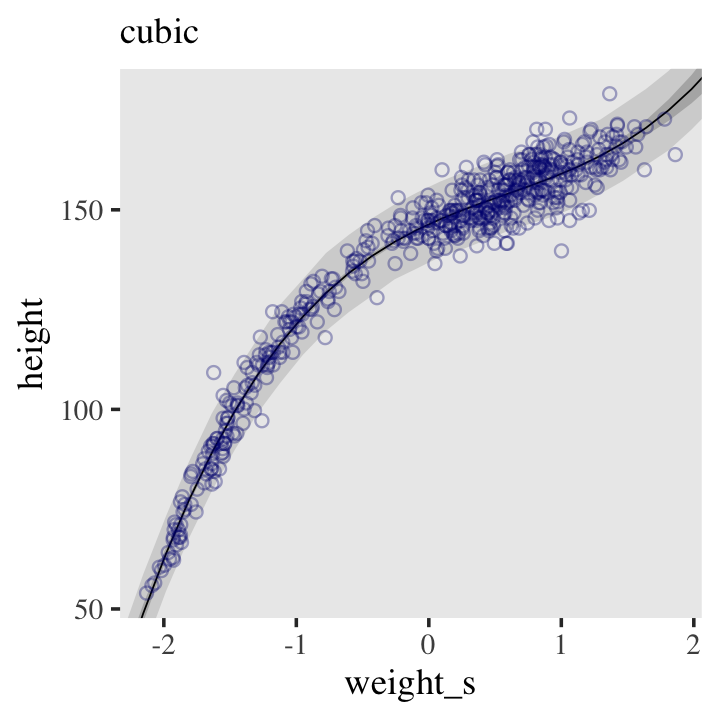

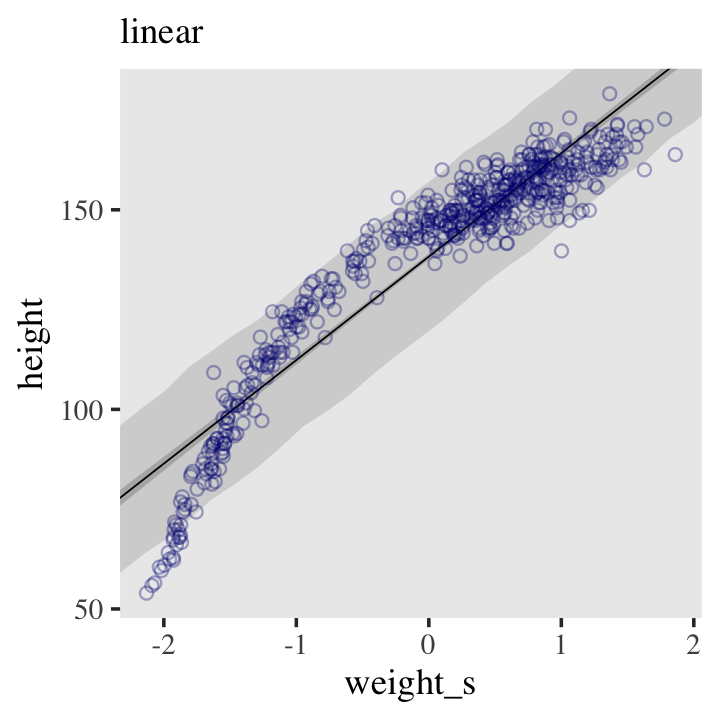

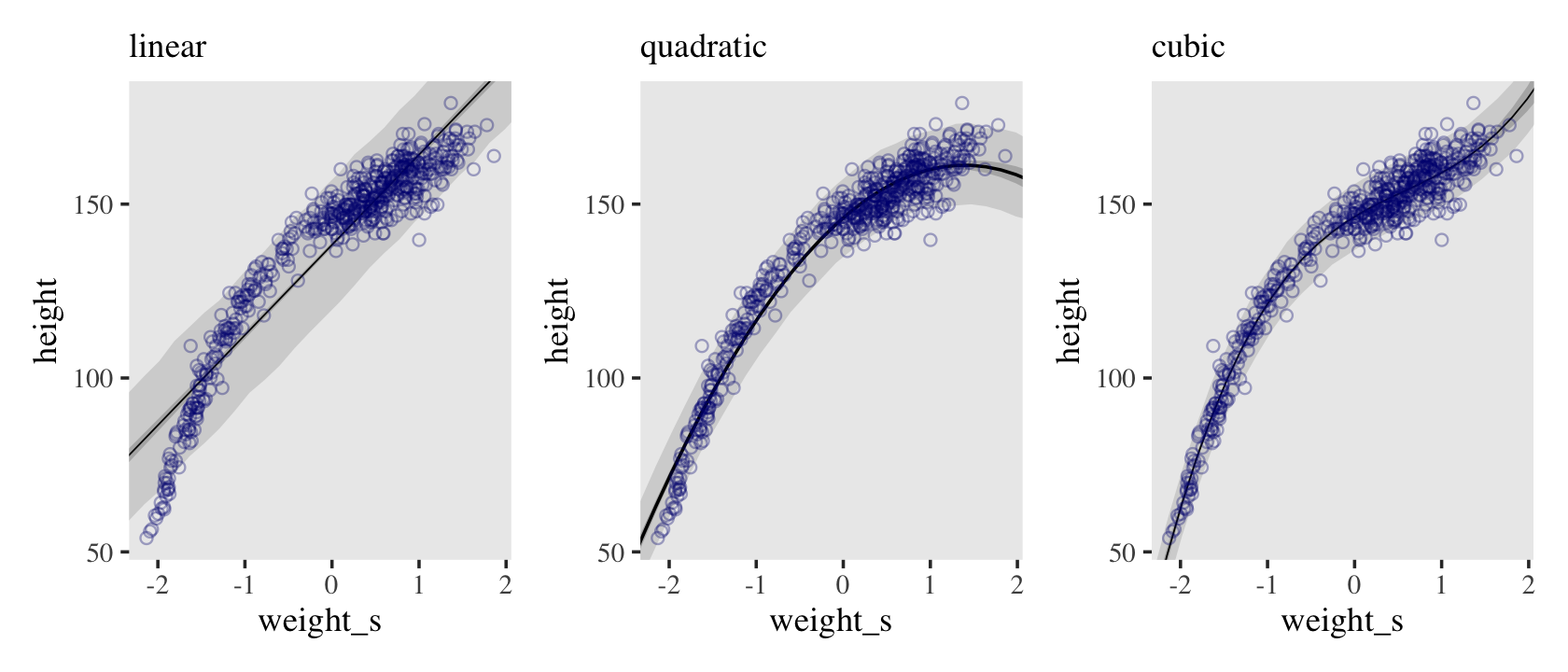

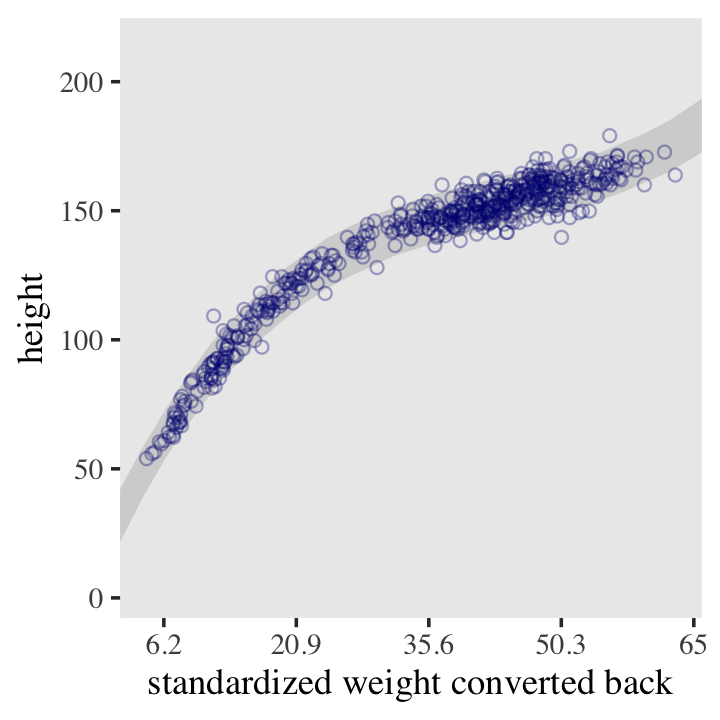

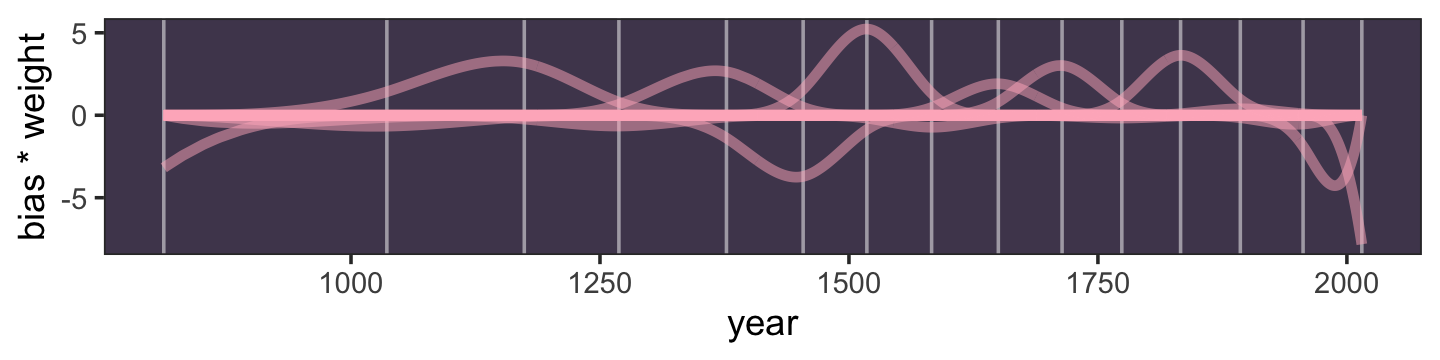

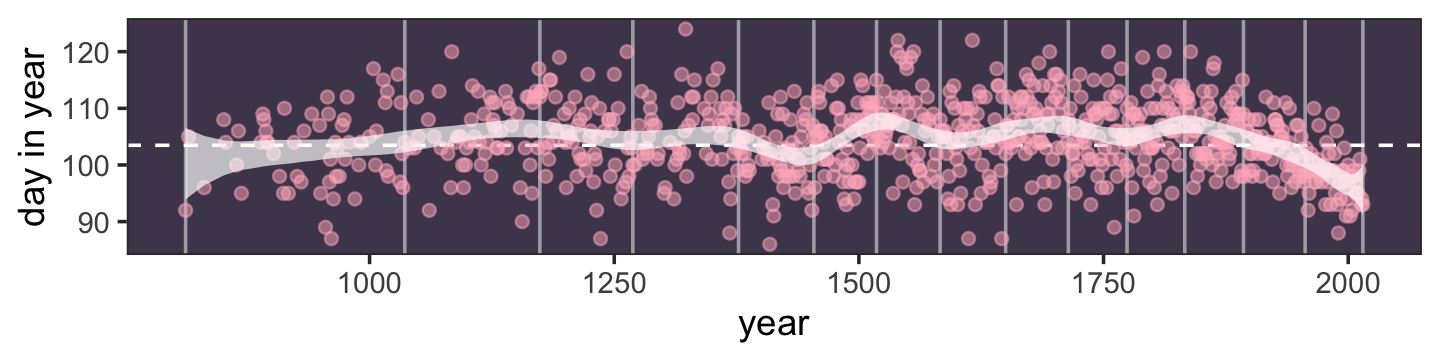

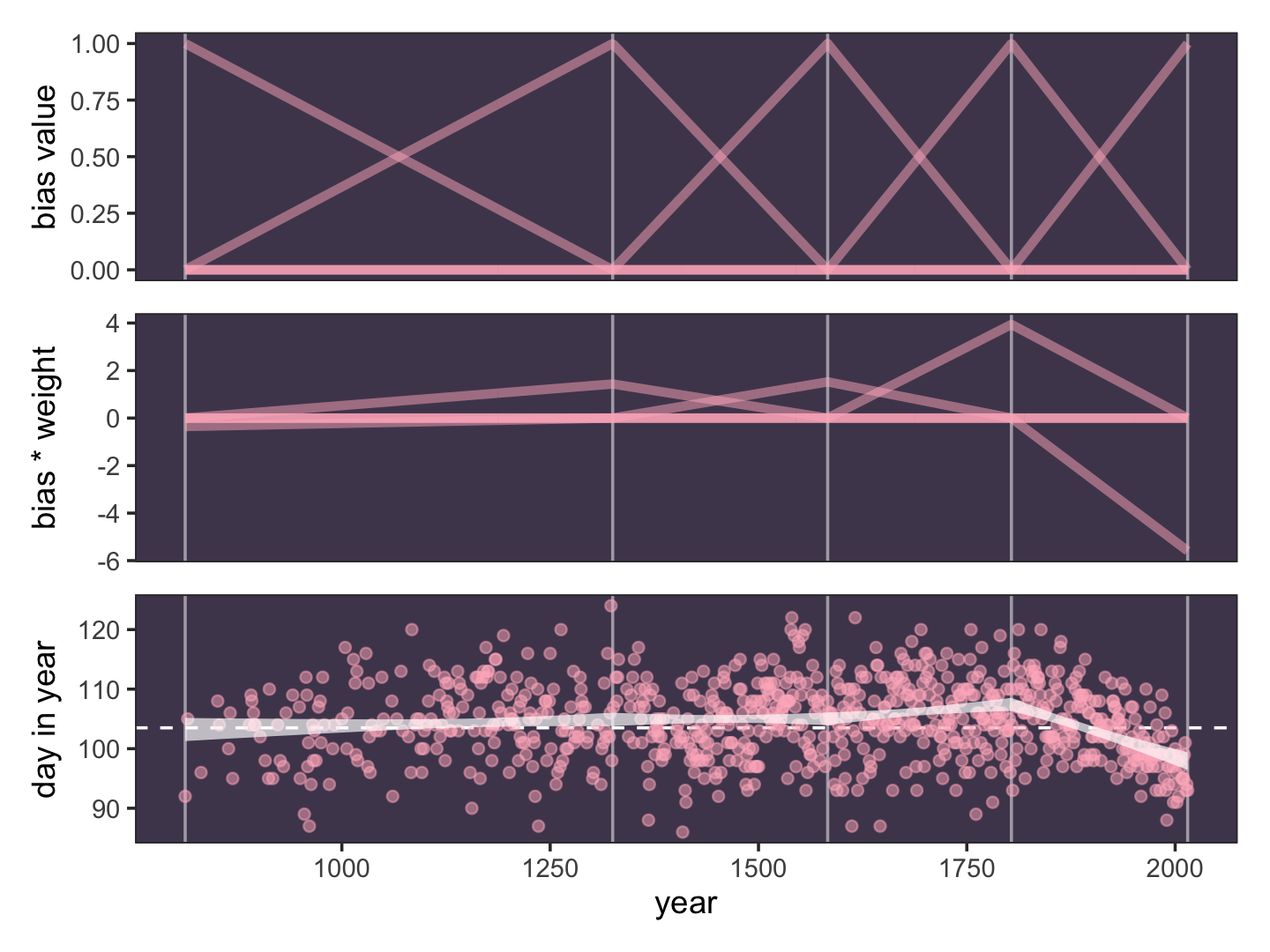

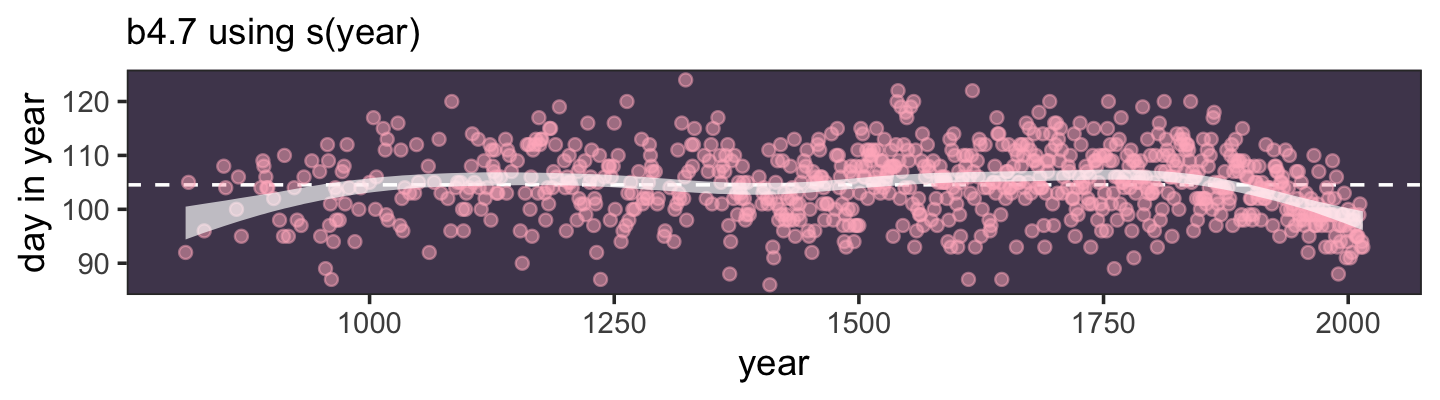

## 5 140.1661 5.115493 130.1331 150.0594 29 -15.99049